Abstract

The prevalence of machine learning in biomedical research is rapidly growing, yet the trustworthiness of such research is often overlooked. While some previous works have investigated the ability of adversarial attacks to degrade model performance in medical imaging, the ability to falsely improve performance via recently developed “enhancement attacks” may be a greater threat to biomedical machine learning. In the spirit of developing attacks to better understand trustworthiness, we developed two techniques to drastically enhance the prediction performance of classifiers with minimal changes to features: 1) general enhancement of prediction performance, and 2) enhancement of a particular method over another. Our enhancement framework falsely improved classifiers’ accuracy from 50% to almost 100% while maintaining high feature similarities between original and enhanced data (Pearson’s r′s > 0.99). Similarly, the method-specific enhancement framework was effective in falsely improving the performance of one method over another. For example, a simple neural network outperformed logistic regression by 17% on our enhanced dataset, although no performance differences were present in the original dataset. Crucially, the original and enhanced data were still similar (r = 0.99). Our results demonstrate that minor data manipulations can achieve essentially any desired prediction performance, which presents an interesting ethical challenge for the future of biomedical machine learning. These findings emphasize the need for more robust data provenance tracking and other precautionary measures to ensure the integrity of biomedical machine learning research. Code is available at https://github.com/mattrosenblatt7/enhancement_EPIMI.

Keywords: machine learning, adversarial attacks, neuroimaging

1. Introduction

Machine learning has demonstrated great success across numerous fields. However, these models are not immune to attacks. Adversarial attacks, or data manipulations designed to alter a model’s predictions [3], threaten real-world machine learning applications. Adversarial attacks include evasion attacks [2,30,12,4], where only test data are manipulated, and poisoning attacks [19,3,4,7], where the attacker may contribute manipulated test and/or training data. Understanding adversarial attacks and developing corresponding defenses is crucial to the integrity of machine learning applications.

Machine learning is becoming increasingly prevalent in biomedical research, including biomedical imaging. Previous studies of adversarial attacks in medical imaging have focused on clinical applications in which a malicious party would be interested in altering prediction outcomes for financial or other purposes. Most of these studies implemented evasion attacks [11,10,32,17,6], while a smaller subset used poisoning attacks [20,9,18,26].

An equally relevant yet understudied concern in scientific machine learning is the feasibility of manipulating data to falsely improve model performance. In scientific research, data manipulations designed to make results seem more impressive are regarded as a major ethical issue. For example, a line of highly cited Alzheimer’s research was recently flagged for likely manipulation of biological images [22]. This case is just one of many in which manipulated scientific data harmed their respective fields [1,5]. However, the potential for data manipulation is not widely acknowledged in the scientific machine learning community. Traditional approaches to preventing and detecting academic fraud include data and code sharing. While data and code sharing are useful for improving replicability, they do not necessarily ensure that the results can be trusted. In this work, we show that even when data and code are shared, scientific machine learning results can be modified through subtle, hard-to-detect data manipulations, which could have major consequences.

For example, a malicious party might manipulate their data to improve model performance, falsely claim that they can classify a specific mental health condition, and thereby make a paper more publishable or increase the valuation of a healthcare start-up (Fig 1). If they manipulate the data subtly, they could then publicly share the manipulated dataset, and other researchers would likely not notice that anything was wrong with it. Such manipulations could waste grant money, misdirect future research, and potentially cause public harm. One recent work showed that the performance of regression models using neuroimaging data could be falsely enhanced by injecting subtle associations into the data, termed “enhancement attacks” [25]. However, that framework relies on manually adding patterns to the data that are correlated with the prediction outcome of interest. Thus, it only works for regression problems and cannot generalize to other settings, such as classification. Given the prevalence of classification problems in biomedical machine learning, a general enhancement framework is both novel and important for understanding the feasibility of data manipulations in machine learning.

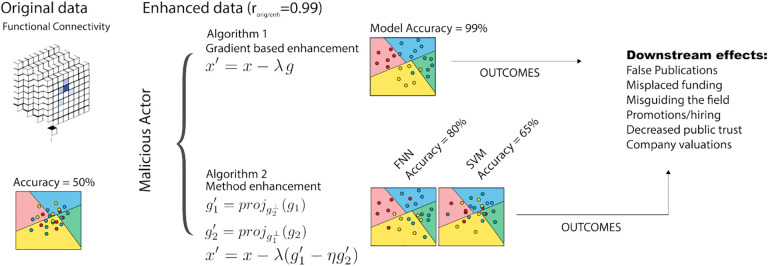

Fig.1.

Overview of enhancement methods used in this paper. Classification accuracy in the original dataset is 50%. After applying gradient-based enhancement attacks following Algorithm 1, classification accuracy in the enhanced dataset is 99%. Using method enhancement attacks (Algorithm 2), datasets are altered such that a specific method (e.g., feedforward neural network) outperforms another (e.g., support vector machine). In all cases, the changes between the original and enhanced datasets are minor. The “Downstream effects” box highlights possible implications of enhancement attacks.

In this work, we first extend the enhancement attack to classification models with a gradient-based enhancement framework (GOAL #1). Then, we present an additional way in which data can be enhanced with only subtle manipulations: falsely demonstrating that a particular method (e.g., a type of machine learning model) outperforms another (GOAL #2). We found that both methods were successful in falsely improving classification accuracy with only minimal changes to the dataset. Finally, given the vulnerability of biomedical datasets to enhancement attacks, we discuss the implications and potential solutions.

2. Methods

In the following sections, we considered two separate attacker goals: falsely enhancing 1) overall classifier performance (GOAL #1), and 2) the performance of one method over another (GOAL #2) (Fig 1). Whereas traditional adversarial attackers may have complete (“white-box”) or limited (“black-box” or “gray-box”) knowledge of the model and/or data [4], enhancement attackers have even greater knowledge than the “white-box” setting. Our methods can modify the entire dataset, which includes both training and test data. These enhancement attacker assumptions mimic a realistic setting, where a researcher could modify their data to falsely improve performance and then publicly release the data such that their results are computationally reproduced by others. We assess all methods with two main metrics: classification accuracy in the enhanced dataset and similarity between the original and enhanced datasets, as measured by Pearson’s correlation. A highly effective enhancement attack is one that falsely increases the accuracy while the original and enhanced datasets remain highly similar.

GOAL #1: Gradient-based enhancement

The key idea behind gradient-based enhancement is to “push” samples along the input gradient of a learned model, making the decision boundaries clearer and more consistent across all samples and thus improving performance. The method is similar to an adversarial evasion attack, except that we alter the entire dataset rather than only the test data. For a single held-out point, one can most efficiently change the classification (to first order) by perturbing the point in the direction of g = ∇xA, where A can be a decision function or a loss function. For example, in the case of a binary linear support vector machine (SVM) or logistic regression (LR):

$$x' = x + \epsilon w, \quad \text{if } y = 1 \tag{1}$$

$$x' = x - \epsilon w, \quad \text{if } y = 0 \tag{2}$$

where w is a vector of model coefficients and ϵ is a scaling factor. Equations 1–2 move the corresponding held-out points toward the correct side of the decision boundary. As summarized in Algorithm 1, a model f is first trained with one or more points held out using K-fold partitioning. Then, the held-out point(s) are updated with the attacker gradient g = ∇xA such that the model will predict them correctly. This process repeats until every point in the dataset has been held out. Empirically, we found that enhancement was most effective for complex models (i.e., neural networks) when the held-out data were updated iteratively within the cross-validation loop, whereas updating the dataset once after the cross-validation loop was more effective for simpler models (i.e., SVM or LR). Because the learned model coefficients are similar when only a small fraction of the points is held out, this method pushes all points of a given class in a consistent direction. As a result, an independent researcher who receives the enhanced dataset would be unlikely to notice the perturbations but would falsely find higher performance.
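For the linear case, the update in Equations 1–2 can be illustrated in a few lines of NumPy. This is a sketch, not the authors' code: it assumes the equations take the form x + ϵw for class 1 and x − ϵw for class 0, and the function name `enhance_linear`, the 0/1 label coding, and the toy data are all hypothetical. Because the gradient of a linear decision function w·x + b with respect to x is w, each update shifts that decision function by exactly ϵ‖w‖² toward the sample's own class.

```python
import numpy as np


def enhance_linear(X, y, w, eps):
    """Shift samples along +w (class 1) or -w (class 0), the assumed form of Eqs. 1-2.

    X: (n_samples, n_features) held-out data; y: labels coded 0/1;
    w: linear model coefficients; eps: scaling factor.
    """
    X = np.asarray(X, dtype=float)
    sign = np.where(np.asarray(y) == 1, 1.0, -1.0)  # +1 for class 1, -1 for class 0
    return X + eps * sign[:, None] * np.asarray(w, dtype=float)[None, :]


# Toy check on random data (hypothetical values, not CNP data).
rng = np.random.default_rng(0)
X = rng.normal(size=(6, 4))
y = np.array([0, 1, 0, 1, 1, 0])
w = rng.normal(size=4)
b = 0.1
eps = 0.5
X_enh = enhance_linear(X, y, w, eps)

# Change in the decision function w.x + b for every sample.
shift = (X_enh @ w + b) - (X @ w + b)
expected = np.where(y == 1, 1.0, -1.0) * eps * (w @ w)
print(np.allclose(shift, expected))  # True
```

The same shift is applied regardless of where a sample currently sits, which is why a consistent w across folds moves whole classes apart.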

Algorithm 1 Gradient-based enhancement attacks

Input: dataset D = {X, y}; model f; number of folds nfolds for K-fold partitioning; enhancement step size λ

for k = 1 : nfolds do

  Establish Dtrain, Dheld-out

  Train f on Dtrain

  g ← ∇xA, where A = L(f, x) (loss function) or DF(f, x) (decision function)

  Xheld-out ← Xheld-out − λg

end for
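A minimal, self-contained sketch of Algorithm 1 follows. It is not the released implementation and rests on several assumptions: binary labels coded 0/1; a hand-written L2-regularized logistic regression in NumPy in place of the scikit-learn and PyTorch models; A taken to be the logistic loss; each sample's gradient rescaled to unit norm; and the variant in which every fold's gradients come from models trained on the original data, which is equivalent to updating the dataset once after the cross-validation loop (the variant reported above to work best for linear models). All function names are hypothetical.

```python
import numpy as np


def fit_logreg(X, y, l2=1.0, lr=0.1, n_iter=500):
    """L2-regularized logistic regression fitted by gradient descent (y in {0, 1})."""
    n, p = X.shape
    w, b = np.zeros(p), 0.0
    for _ in range(n_iter):
        err = 1.0 / (1.0 + np.exp(-(X @ w + b))) - y  # predicted prob minus label
        w -= lr * (X.T @ err + l2 * w) / n
        b -= lr * err.mean()
    return w, b


def loss_input_gradient(X, y, w, b):
    """Gradient of the logistic loss with respect to each input row: (p - y) * w."""
    prob = 1.0 / (1.0 + np.exp(-(X @ w + b)))
    return (prob - y)[:, None] * w[None, :]


def kfold_indices(n, n_folds, seed):
    """Shuffled, disjoint index sets covering 0..n-1."""
    return np.array_split(np.random.default_rng(seed).permutation(n), n_folds)


def gradient_enhance(X, y, n_folds=5, step=1.0, seed=0):
    """Sketch of Algorithm 1: X_held-out <- X_held-out - step * g for every fold."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    X_enh = X.copy()
    for held in kfold_indices(len(y), n_folds, seed):
        train = np.setdiff1d(np.arange(len(y)), held)
        w, b = fit_logreg(X[train], y[train])  # model trained on the other folds
        g = loss_input_gradient(X[held], y[held], w, b)
        g /= np.linalg.norm(g, axis=1, keepdims=True) + 1e-12  # unit norm per sample
        X_enh[held] = X[held] - step * g
    return X_enh


def cv_accuracy(X, y, n_folds=5, seed=1):
    """Plain k-fold accuracy, as an unsuspecting researcher might compute it."""
    correct = 0
    for held in kfold_indices(len(y), n_folds, seed):
        train = np.setdiff1d(np.arange(len(y)), held)
        w, b = fit_logreg(X[train], y[train])
        correct += np.sum((X[held] @ w + b > 0) == (y[held] == 1))
    return correct / len(y)


# Hypothetical toy data: pure-noise features with labels unrelated to them.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 10))
y = np.repeat([0.0, 1.0], 100)
X_enh = gradient_enhance(X, y, step=2.0)
acc_orig, acc_enh = cv_accuracy(X, y), cv_accuracy(X_enh, y)
print(f"original accuracy {acc_orig:.2f}, enhanced accuracy {acc_enh:.2f}")
```

On such data, cross-validated accuracy typically rises from near chance to well above it, even though the labels carry no information about the original features. With only 10 features the per-feature perturbation is large, so this toy does not reproduce the high original-versus-enhanced similarity reported for the much higher-dimensional connectomes.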

GOAL #2: Enhancement of a particular method

A roadblock to method-specific enhancement is that the gradients used in Equations 1–2 generally transfer well across model types [8], which would make this process ineffective in enhancing the performance of a specific method over another. Transferability of attacks from a base classifier f1 to another classifier f2 is defined by [8] as how well an attack designed for f1 works on f2. In this case, we do not wish for the attacks to transfer between models. We want to find a new direction that enhances the performance of f1 but does not affect f2. We achieve this by taking the component of g1 that is orthogonal to g2:

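$$g_{1\perp} = g_1 - \frac{g_1 \cdot g_2}{\lVert g_2 \rVert^2}\, g_2 \tag{3}$$

Furthermore, Equation 3 may not be sufficient to limit the performance of f2, since f2 can learn a new decision boundary after retraining. We therefore include a term to suppress the performance of f2:

$$g_{2\perp} = g_2 - \frac{g_2 \cdot g_1}{\lVert g_1 \rVert^2}\, g_1 \tag{4}$$

Then, a held-out sample x can be updated as follows to improve the performance of f1 but not f2:

$$x \leftarrow x - \lambda\, g_{1\perp} + \eta\, g_{2\perp} \tag{5}$$

where the enhancement step size λ and the suppression coefficient η control the influence of $g_{1\perp}$ and $g_{2\perp}$, respectively.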
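The single-sample update for method enhancement can be sketched as follows. This sketch assumes that g1 and g2 are the input gradients of the losses of f1 and f2, that Equation 3 is the component of g1 orthogonal to g2, that the suppression term is the component of g2 orthogonal to g1, and that the update is x − λg1⊥ + ηg2⊥; the function names are hypothetical. The toy vectors make the geometry visible: to first order, the step lowers f1's loss without changing f2's, and the suppression term raises f2's loss without changing f1's.

```python
import numpy as np


def orthogonal_component(a, b):
    """Part of vector a orthogonal to vector b: a - (a.b / b.b) * b."""
    return a - (a @ b) / (b @ b) * b


def method_enhance_update(x, g1, g2, lam, eta):
    """Hypothetical single-sample update: enhance f1 (gradient g1), suppress f2 (g2)."""
    g1_perp = orthogonal_component(g1, g2)  # enhancement direction (Eq. 3)
    g2_perp = orthogonal_component(g2, g1)  # suppression direction (assumed Eq. 4)
    return x - lam * g1_perp + eta * g2_perp  # combined update (assumed Eq. 5)


x = np.zeros(3)
g1 = np.array([1.0, 1.0, 0.0])  # toy loss gradient for f1
g2 = np.array([1.0, 0.0, 0.0])  # toy loss gradient for f2
x_new = method_enhance_update(x, g1, g2, lam=1.0, eta=0.5)  # [0.25, -1.25, 0.0]
step = x_new - x
print(step @ g1, step @ g2)  # first-order loss changes: -1.0 0.25
```

Projecting each term onto the orthogonal complement of the other model's gradient is what keeps the two objectives from cancelling to first order; after retraining, however, f2 can still partly recover, which is why η is tuned rather than fixed.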

Algorithm 2 Method enhancement

Input: dataset D = {X, y}; model to enhance f1; model to avoid enhancement f2; number of folds nfolds for K-fold partitioning; enhancement step size λ; enhancement suppression coefficient η

for k = 1 : nfolds do

  Establish Dtrain, Dheld-out

  Train f1, f2 on Dtrain

  g1 ← ∇xA(f1, Xheld-out)

  g2 ← ∇xA(f2, Xheld-out)

  Update Xheld-out using Equations 3–5

end for

Similar to gradient-based enhancement (Algorithm 1), we split the data into K folds. For each partitioning, we train two models: 1) a model that we want to enhance (f1), and 2) a second model that we do not want to enhance (f2). Subsequently, Equations 3–5 are applied to update the held-out data, and the process is repeated until each sample has been held out once.

3. Experiments

Datasets

Resting-state functional MRI (fMRI) data were obtained from the UCLA Consortium for Neuropsychiatric Phenomics (CNP) [23] dataset. For all data, we performed motion correction, registration to common space, regression of covariates of no interest, temporal smoothing, and gray matter masking. Participants were also excluded for lack of full-brain coverage. After these exclusions, 245 participants remained. We parcellated the fMRI data for each participant into 268 nodes using the Shen atlas [29]. To form functional connectivity matrices, the time series from each pair of nodes (regions of interest) were correlated using Pearson’s correlation, and Fisher’s transform was then applied. Before inputting the functional connectivity matrices into machine learning models, we vectorized the upper triangle of each matrix to use as features. In all the following models, we classified participants in CNP based on their diagnoses: no diagnosis (n=117), schizophrenia (n=46), bipolar disorder (n=44), and ADHD (n=38). All plots below were made with Matplotlib [14] and seaborn [31].
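The feature construction described above (node-by-node Pearson correlation, Fisher transform, upper triangle) can be sketched for one participant as follows. This is a NumPy sketch, not the study's pipeline: the input is assumed to be an already parcellated (timepoints × 268 nodes) array, the random 200-timepoint array is a stand-in for real data, the function name is hypothetical, and the clipping before the Fisher transform is an added safeguard against infinite values.

```python
import numpy as np


def connectivity_features(timeseries):
    """Vectorized functional connectivity for one participant.

    timeseries: (n_timepoints, n_nodes) array, one column per atlas node.
    """
    r = np.corrcoef(timeseries, rowvar=False)      # node-by-node Pearson correlation
    iu = np.triu_indices(r.shape[0], k=1)          # upper triangle, diagonal excluded
    r_upper = np.clip(r[iu], -0.999999, 0.999999)  # guard against arctanh(+/-1) = inf
    return np.arctanh(r_upper)                     # Fisher r-to-z transform


rng = np.random.default_rng(0)
ts = rng.normal(size=(200, 268))  # hypothetical parcellated time series
features = connectivity_features(ts)
print(features.shape)  # (35778,)
```

With 268 nodes, the upper triangle holds 268 × 267 / 2 = 35,778 edges, so each participant contributes one 35,778-dimensional feature vector.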

GOAL #1: Gradient-based enhancement

We enhanced the CNP dataset for a four-class classification problem: participants with 1) no diagnosis, 2) bipolar disorder, 3) schizophrenia, and 4) ADHD. We used a linear support vector machine (SVM), logistic regression (LR) [21], and a feedforward neural network (FNN). Our FNN consisted of three fully connected layers with ReLU activation functions. During the enhancement attack, all model hyperparameters were held constant. For SVM and LR, this included an L2 regularization parameter C = 1. For the FNN, we used cross-entropy loss and the Adam optimizer [16] with a learning rate of α = 0.001 and a batch size of 10 for 10 epochs.

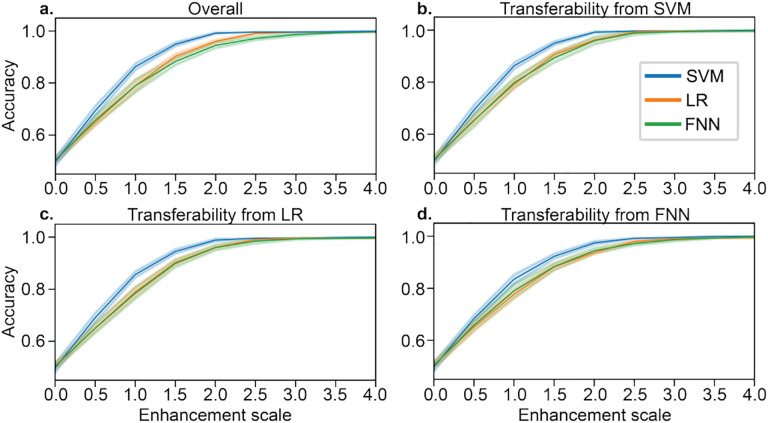

Gradients were computed as the model coefficients for SVM and LR (linear models), while PyTorch’s autograd feature was used for the FNN. All gradients (i.e., ∇xA in Algorithm 1) were normalized to have a Frobenius norm of 1 for each sample and then multiplied by the corresponding enhancement scale in Figure 2. After creating an “enhanced dataset,” we evaluated enhanced performance with nested k-fold cross-validation, as one would do when receiving this dataset without any knowledge of the enhancement, with a grid search within each fold over the L2 regularization parameter C ∈ {1e-4, 1e-3, 1e-2, 1e-1, 1} for SVM and LR. Due to computational constraints, we did not perform a hyperparameter search for the FNN but used the same hyperparameters described above. Enhancement brought prediction performance from 50% to 99% in all three models (Fig 2a), despite similar feature values between the original and enhanced datasets (r = 0.99).
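The per-sample normalization and the similarity check can be sketched as follows. Assumptions: gradients are stored one row per sample, so the Frobenius norm of each row is its Euclidean norm; the scaled perturbation is subtracted as in Algorithm 1; and similarity is a single Pearson r over all flattened feature values, which may differ from exactly how the reported correlations were computed. Random numbers stand in for both the data and the gradients, and the function names are hypothetical.

```python
import numpy as np


def scale_perturbation(g, scale):
    """Rescale each row of g (one gradient per sample) to unit norm, then by scale."""
    norms = np.linalg.norm(g, axis=1, keepdims=True)
    return scale * g / np.where(norms == 0, 1.0, norms)  # all-zero rows stay zero


def feature_similarity(X_orig, X_enh):
    """Pearson r between original and enhanced values, flattened across samples."""
    return np.corrcoef(X_orig.ravel(), X_enh.ravel())[0, 1]


rng = np.random.default_rng(0)
X = rng.normal(size=(245, 2000))  # hypothetical features (real connectomes: 35,778)
g = rng.normal(size=X.shape)      # stand-in for per-sample gradients
delta = scale_perturbation(g, scale=2.0)
X_enh = X - delta
r = feature_similarity(X, X_enh)
print(np.linalg.norm(delta, axis=1)[:3], round(r, 3))
```

Because the enhancement scale fixes the total perturbation per sample, spreading it over thousands of features leaves each individual value nearly unchanged, which is how large accuracy gains can coexist with r close to 1.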

Fig.2.

a) Model-based enhancement of SVM, LR, and FNN models for various enhancement scales. The enhancement scale is multiplied by the unit norm direction of the perturbation for each sample, where an enhancement scale of 0 reflects the original dataset. b-d) Transferability of enhancement between the three models. All accuracies were evaluated with 5-fold cross-validation, with error bars showing standard deviation across 10 random seeds.

Furthermore, we considered whether the enhancement attacks could transfer between models. After data were enhanced using Algorithm 1 for each of the three models (Figure 2a), we re-trained the other two types of models to assess transferability. For example, in Figure 2b, data are first enhanced using SVM and Algorithm 1; then, the performance of that enhanced dataset is evaluated using LR and FNN. Overall, we found that the enhancement attacks transferred between each of the three models (Figure 2b–d).

GOAL #2: Enhancement of a particular method

Since the enhancement attacks above transferred between models, we investigated how enhancement may be targeted to a specific model. Although there are countless machine learning models, we selected three of the most common models for functional connectivity data for a case study: SVM, LR, and an FNN. We demonstrated the hypothetical scenario in which one may perturb a dataset such that an FNN outperforms simpler methods like SVM and LR.

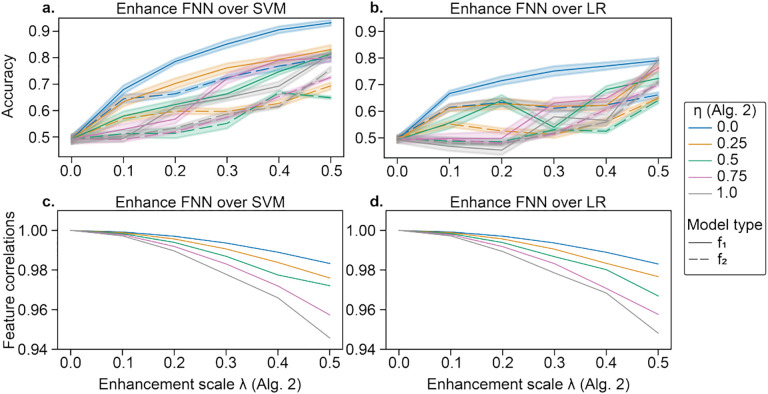

CNP data were manipulated following Algorithm 2 to promote the performance of a particular method over another. Hyperparameters were held constant when enhancing the data with Algorithm 2 (as detailed in GOAL #1). After enhancing the data, we performed 10-fold cross-validation to evaluate accuracy, with nested folds and a grid search for the L2 regularization parameter C in SVM and LR. We considered different enhancement scales λ for the classifier of interest (f1 = FNN) and different suppression values η for the classifier that we do not wish to perform well (f2 = SVM or LR). Despite no differences in the original dataset, FNN outperformed SVM and LR (Fig 3a–b), while maintaining high feature similarities (Fig 3c–d). The performance of f2 generally increased as the performance of f1 increased, though to a lesser extent, and increasing the suppression coefficient η limited the performance improvements of f2. Furthermore, attacks transferred between SVM and LR. For f2 = SVM, λ = 0.4, and η = 0, accuracies for SVM and LR were 76.8% and 77.8%, vs. 90.6% for FNN. For f2 = LR, λ = 0.4, and η = 0, accuracies for SVM and LR were 61.8% and 62.3%, vs. 77.0% for FNN. This method was less effective than Algorithm 1, likely because the enhancement is weakened by the suppression coefficient and by the projection of the gradients.

Fig.3.

Enhancement of an FNN over a,c) SVM and b,d) LR in CNP. In a,b), data are enhanced with increasing λ (see Algorithm 2). Solid lines represent the accuracy for f1 (FNN), while dashed lines show the accuracy for f2 (SVM in a and LR in b). Error bars reflect the standard deviation across 10 random seeds for k-fold cross-validation and FNN initialization. Line color shows the suppression coefficient for f2, η. In c,d), the correlation between original and enhanced features is shown with increasing λ. The original and enhanced features are highly correlated (r′s > 0.94).

Proof-of-concept validation in other models and datasets

While the focus of this work is enhancement of SVM, LR, and FNN models in the CNP dataset, we also wanted to demonstrate that other datasets and models could be manipulated just as easily. To demonstrate generalizability to another dataset, we performed enhancement attacks with enhancement scale λ = 2 in the Philadelphia Neurodevelopmental Cohort [28,27] (N = 1126) to predict self-reported sex from resting-state functional connectivity data. The accuracies (baseline/after enhancement) were: SVM (79.40%/100%), LR (79.13%/100%), and FNN (76.47%/99.91%).

Furthermore, we tested our approach in the CNP dataset with another model, BrainNetCNN [15], a deep learning model for brain connectivity data. BrainNetCNN consists of two edge-to-edge filters, one edge-to-node filter, one node-to-graph filter, and three dense layers. Further details of BrainNetCNN are in the original paper [15]. BrainNetCNN was trained with cross-entropy loss and the Adam optimizer [16], with a learning rate of α = 0.001 and a batch size of 10 for 20 epochs. For the gradient-based enhancement attack with enhancement scale λ = 3, the resulting accuracy was 79.31% (baseline: 41.10%). When enhancing BrainNetCNN over the FNN (λ = 0.15, η = 0), accuracy was 85.43% for BrainNetCNN and 52.33% for the FNN. When enhancing the FNN over BrainNetCNN (λ = 0.15, η = 0), accuracy was 80.16% for BrainNetCNN and 99.27% for the FNN. These results demonstrate that complex models are also susceptible to deliberately designed data manipulations.

4. Discussion

In this work, we developed a gradient-based framework for enhancement attacks and showed that a functional neuroimaging dataset could be modified to achieve essentially any desired prediction performance. The vulnerability of machine learning pipelines to possible fraud (enhancement) bears directly on the trustworthiness of the field. Considering the prevalence of research fraud [1,5,22] and the increasing popularity of machine learning in biomedical research, we believe that enhancement could become a major issue, if it is not one already.

For GOAL #1, we demonstrated that a four-way classification task went from near-chance performance, where chance is defined as the proportion of the most frequent group (47.76%), to over 99% accuracy while the original and enhanced data remained highly similar (r > 0.99). In a hypothetical scenario, a malicious researcher could collect data, enhance it to perform well in a specific classification or regression task, and release the data on a public repository. For example, if the enhanced CNP dataset in this paper were released, the scientific community would likely be excited by the near-perfect classification accuracy between participants with no diagnosis, schizophrenia, bipolar disorder, and ADHD. In the academic sector, such false results could influence hiring decisions and the allocation of future grant funding under false pretenses. In the industrial sector, they could drive increased investment in certain companies. Furthermore, enhancement attacks could have additional downstream effects, such as undermining public trust or wasting the time and resources of other researchers who attempt to build on the false results.
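The chance level quoted above follows directly from the CNP group sizes listed in the Datasets section; a quick arithmetic check, where chance is the majority-class proportion:

```python
# CNP group sizes as reported in the Datasets section.
counts = {"no diagnosis": 117, "schizophrenia": 46, "bipolar disorder": 44, "ADHD": 38}
n_total = sum(counts.values())           # total participants after exclusions
chance = max(counts.values()) / n_total  # accuracy of always predicting the largest group
print(f"{n_total} participants, chance = {100 * chance:.2f}%")  # 245 participants, chance = 47.76%
```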

For GOAL #2, we found that in the best case, an FNN outperformed LR and SVM by 17% in the enhanced dataset, despite no differences in original performance and high similarity between original and enhanced data (r = 0.99). The feasibility of modifying a dataset such that a specific type of model outperforms another is both powerful and potentially dangerous. For instance, a start-up company may demonstrate that their new model outperforms the current industry standard to increase their valuation. Alternatively, researchers may perturb a dataset so that their novel method performs best, leading to a (falsely) more exciting paper.

There were several limitations to our study. First, we investigated enhancement only in functional neuroimaging. Future work should extend these concepts to other disciplines, which may have different sample sizes or dimensionality. Second, the method enhancement algorithm (Algorithm 2) only allows for the suppression of a single model, and it remains to be seen how it can be extended to improve the performance of one model over many others. However, we demonstrated that the method-specific enhancement transferred across SVM and LR, which is promising for extending it to multiple models. Third, we evaluated enhancement attacks on processed connectome data, and applying enhancement earlier in the processing pipeline (e.g., to raw fMRI data) may reduce its effectiveness. Fourth, future work should evaluate gradient-based enhancement of additional model types. By design, the gradient-based enhancement attack is generalizable and should apply to any machine learning model, including both classification and regression models, for which the gradient of the loss with respect to the input can be computed.

In conclusion, although our analysis was restricted to functional neuroimaging, these problems extend to the broader biomedical machine learning community, where many view data and code sharing as the panacea for trustworthiness. Whereas adversarial attackers typically have limited access to the model and data, enhancement attackers have unrestricted access, which makes developing defenses more difficult. Still, future work should explore whether existing adversarial defenses, including augmentation and input transformations [24], can be adapted to defend against enhancement attacks, though we expect these defenses to be less effective given the much greater capabilities of enhancement attackers relative to adversarial attackers. Another possible defense is data provenance tracking, for example with DataLad [13]. However, one caveat is that data could be manipulated before provenance tracking begins. Thus, an alternative solution could be the replication of studies in an independent dataset. Overall, we hope that this work sparks additional discussion about possible defenses against enhancement attacks and data manipulations to secure the integrity of the field.

Footnotes

Data use declaration and acknowledgment: The UCLA Consortium for Neuropsychiatric Phenomics (download: https://openneuro.org/datasets/ds000030/versions/00016) and the Philadelphia Neurodevelopmental Cohort (dbGaP Study Accession: phs000607.v1.p1) are public datasets that obtained consent from participants and supervision from ethical review boards. We have local human research approval for using these datasets.

References

- 1. Al-Marzouki S., Evans S., Marshall T., Roberts I.: Are these data real? Statistical methods for the detection of data fabrication in clinical trials. BMJ 331(7511), 267–270 (Jul 2005)

- 2. Biggio B., Corona I., Maiorca D., Nelson B., Šrndić N., Laskov P., Giacinto G., Roli F.: Evasion attacks against machine learning at test time. In: Machine Learning and Knowledge Discovery in Databases. pp. 387–402. Springer Berlin Heidelberg (2013)

- 3. Biggio B., Nelson B., Laskov P.: Poisoning attacks against support vector machines (Jun 2012)

- 4. Biggio B., Roli F.: Wild patterns: Ten years after the rise of adversarial machine learning. Pattern Recognit. 84, 317–331 (Dec 2018)

- 5. Bik E.M., Casadevall A., Fang F.C.: The prevalence of inappropriate image duplication in biomedical research publications. MBio 7(3) (Jun 2016)

- 6. Bortsova G., González-Gonzalo C., Wetstein S.C., Dubost F., Katramados I., Hogeweg L., Liefers B., van Ginneken B., Pluim J.P.W., Veta M., Sánchez C.I., de Bruijne M.: Adversarial attack vulnerability of medical image analysis systems: Unexplored factors. Med. Image Anal. 73, 102141 (Oct 2021)

- 7. Cinà A.E., et al.: Wild patterns reloaded: A survey of machine learning security against training data poisoning (May 2022)

- 8. Demontis A., et al.: Why do adversarial attacks transfer? Explaining transferability of evasion and poisoning attacks. In: USENIX Security Symposium 2019. pp. 321–338 (2019)

- 9. Feng Y., Ma B., Zhang J., Zhao S., Xia Y., Tao D.: FIBA: Frequency-injection based backdoor attack in medical image analysis. arXiv preprint arXiv:2112.01148 (Dec 2021)

- 10. Finlayson S.G., Bowers J.D., Ito J., Zittrain J.L., Beam A.L., Kohane I.S.: Adversarial attacks on medical machine learning. Science 363(6433), 1287–1289 (Mar 2019)

- 11. Finlayson S.G., Chung H.W., Kohane I.S., Beam A.L.: Adversarial attacks against medical deep learning systems. arXiv preprint arXiv:1804.05296 (Apr 2018)

- 12. Goodfellow I.J., Shlens J., Szegedy C.: Explaining and harnessing adversarial examples (Dec 2014)

- 13. Halchenko Y., et al.: DataLad: distributed system for joint management of code, data, and their relationship (2021)

- 14. Hunter J.D.: Matplotlib: A 2D graphics environment. Computing in Science & Engineering 9(3), 90–95 (2007)

- 15. Kawahara J., Brown C.J., Miller S.P., Booth B.G., Chau V., Grunau R.E., Zwicker J.G., Hamarneh G.: BrainNetCNN: Convolutional neural networks for brain networks; towards predicting neurodevelopment. Neuroimage 146, 1038–1049 (Feb 2017)

- 16. Kingma D.P., Ba J.: Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)

- 17. Ma X., Niu Y., Gu L., Wang Y., Zhao Y., Bailey J., Lu F.: Understanding adversarial attacks on deep learning based medical image analysis systems. Pattern Recognit. 110, 107332 (Feb 2021)

- 18. Matsuo Y., Takemoto K.: Backdoor attacks to deep neural network-based system for COVID-19 detection from chest X-ray images. Appl. Sci. 11(20), 9556 (Oct 2021)

- 19. Muñoz-González L., et al.: Towards poisoning of deep learning algorithms with back-gradient optimization. In: Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security. pp. 27–38 (Nov 2017)

- 20. Nwadike M., Miyawaki T., Sarkar E., Maniatakos M., Shamout F.: Explainability matters: Backdoor attacks on medical imaging. arXiv preprint arXiv:2101.00008 (Dec 2020)

- 21. Pedregosa F., et al.: Scikit-learn: Machine learning in Python. Journal of Machine Learning Research 12, 2825–2830 (2011)

- 22. Piller C.: Blots on a field? Science 377(6604), 358–363 (2022)

- 23. Poldrack R.A., et al.: A phenome-wide examination of neural and cognitive function. Sci. Data 3, 160110 (Dec 2016)

- 24. Ren K., Zheng T., Qin Z., Liu X.: Adversarial attacks and defenses in deep learning. Engineering 6(3), 346–360 (Mar 2020)

- 25. Rosenblatt M., Rodriguez R., Westwater M.L., Horien C., Greene A.S., Constable R.T., Noble S., Scheinost D.: Connectome-based machine learning models are vulnerable to subtle data manipulations. Patterns (Jul 2023)

- 26. Rosenblatt M., Scheinost D.: Data poisoning attack and defenses in connectome-based predictive models. In: Workshop on the Ethical and Philosophical Issues in Medical Imaging. pp. 3–13. Springer Nature Switzerland (2022)

- 27. Satterthwaite T.D., Connolly J.J., Ruparel K., Calkins M.E., Jackson C., Elliott M.A., Roalf D.R., Hopson R., Prabhakaran K., Behr M., Qiu H., Mentch F.D., Chiavacci R., Sleiman P.M.A., Gur R.C., Hakonarson H., Gur R.E.: The Philadelphia Neurodevelopmental Cohort: A publicly available resource for the study of normal and abnormal brain development in youth. Neuroimage 124(Pt B), 1115–1119 (Jan 2016)

- 28. Satterthwaite T.D., Elliott M.A., Ruparel K., Loughead J., Prabhakaran K., Calkins M.E., Hopson R., Jackson C., Keefe J., Riley M., Mentch F.D., Sleiman P., Verma R., Davatzikos C., Hakonarson H., Gur R.C., Gur R.E.: Neuroimaging of the Philadelphia Neurodevelopmental Cohort. Neuroimage 86, 544–553 (Feb 2014)

- 29. Shen X., Tokoglu F., Papademetris X., Constable R.T.: Groupwise whole-brain parcellation from resting-state fMRI data for network node identification. Neuroimage 82, 403–415 (Nov 2013)

- 30. Szegedy C., Zaremba W., Sutskever I., Bruna J., Erhan D., Goodfellow I., Fergus R.: Intriguing properties of neural networks (Dec 2013)

- 31. Waskom M.: seaborn: statistical data visualization. J. Open Source Softw. 6(60), 3021 (Apr 2021)

- 32. Yao Q., He Z., Lin Y., Ma K., Zheng Y., Kevin Zhou S.: A hierarchical feature constraint to camouflage medical adversarial attacks (2021)