Abstract

Stance detection is an evolving opinion mining research area motivated by the vast increase in the variety and volume of user-generated content. In this regard, considerable research has been recently carried out in the area of stance detection. In this study, we review the different techniques proposed in the literature for stance detection as well as other applications such as rumor veracity detection. Particularly, we conducted a systematic literature review of empirical research on the machine learning (ML) models for stance detection that were published from January 2015 to October 2022. We analyzed 96 primary studies, which spanned eight categories of ML techniques. In this paper, we categorize the analyzed studies according to a taxonomy of six dimensions: approaches, target dependency, applications, modeling, language, and resources. We further classify and analyze the corresponding techniques from each dimension’s perspective and highlight their strengths and weaknesses. The analysis reveals that deep learning models that adopt a mechanism of self-attention have been used more frequently than the other approaches. It is worth noting that emerging ML techniques such as few-shot learning and multitask learning have been used extensively for stance detection. A major conclusion of our analysis is that despite that ML models have shown to be promising in this field, the application of these models in the real world is still limited. Our analysis lists challenges and gaps to be addressed in future research. Furthermore, the taxonomy presented can assist researchers in developing and positioning new techniques for stance detection-related applications.

Keywords: Stance detection, Stance classification, Sentiment analysis, Rumor detection, Machine learning, PRISMA

Introduction

With the advent of Web 2.0, many online platforms for producing User-Generated Content (UGC) have been established, such as social media, wikis, and debate websites. UGC usually comes in the form of pictures, videos, reviews, or blog posts. Currently, social media platforms are being inherent parts of our daily lives as a media of communication and expressing opinions. Consequently, the amount of available data is rapidly increasing. However, most data are unstructured, where texts represent a substantial part. As the volume of these data increases, the demand for the automatic processing of UGC significantly increases. Advances in machine learning (ML) techniques aid in the extraction of useful information from texts using Natural Language Processing (NLP). This new source of information could be used to measure people’s opinions, stances, and attitudes toward products, events, services, controversial news, and politics. These measurements can play a valuable role in decision-making for companies, policymakers, politicians, and even regular people. Furthermore, detecting the stances expressed in a piece of text can be a powerful tool for a range of tasks, such as rumor veracity detection and fake news detection [1, 2].

Stance detection is the task of automatically predicting the writers’ stance on a subject of interest (target). It depends on the examination of a written text and sometimes the user’s social activity on debate sites (e.g., social media platforms). There are other definitions for stance detection. In the following, we present the definitions of stance detection from different perspectives, and then we will present some related problems.

Before presenting the stance detection definitions, we provide a definition of stance itself from a sociolinguistic perspective. Du Bois [3] defined a stance as “a public act by a social actor, achieved dialogically through overt communicative means, of simultaneously evaluating objects, positioning subjects, and aligning with other subjects, with respect to any salient dimension of the sociocultural field”. Kockelman [4] defined it as an expression of the stance taker’s attitude and judgment toward a proposition and thereby aligns himself/herself with others. Several definitions of stance detection (also known as stance classification) can be found in the field of sociolinguistics. The main concern in stance detection is to infer the embedded viewpoint from the authors’ text. A study on stance detection is conducted by linking the stance to one or more of the following three factors: linguistic features (tense, lexical aspect, subject, and object), individual identity, and social activity [5]. Stance considerably determines the tone of the writers’ message and words that they choose [3].

The term “stance detection” is used in the ML field to refer to a classification problem. The input in this problem is usually in the form of a pair of text and a target, and the output is a category from the set: {Favor, Against, None}. Furthermore, some scholars add to the set the category “Neutral”, which implies that the author is neutral toward the target [6]. However, a neutral stance arguably does not exist as people usually position themselves to be against or in favor of a proposition [5]. In addition, there is good agreement in the literature that if the stance of a text toward a target is not in favor of or against it, then the proper stance category would be “None” instead of “Neutral”, because no stance information can be obtained from the text. Thus, the “None” category is usually assigned to all cases other than the Favor or Against categories.

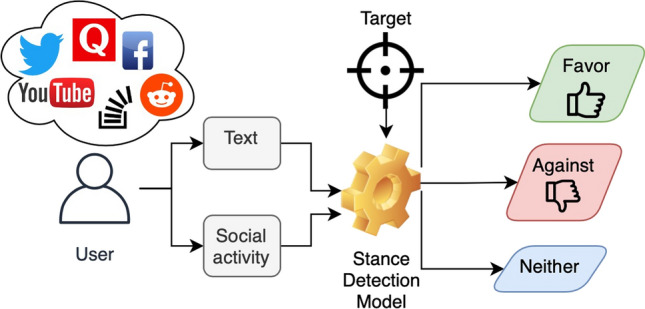

In general, stance detection, as observed in the literature, is defined as predicting writers’ stance on the target by examining the text they wrote and/or their social activity on social media platforms (connection, preferences, etc.). This definition is schematically illustrated in Fig. 1.

Fig. 1.

General representation of the stance detection system

Moreover, stance detection is a problem related to sentiment analysis (or opinion mining). Sentiment analysis focuses on the sentiment polarity that is explicitly expressed by a text. The main sentiment polarities considered by several scholars are Positive, Negative, and Neutral. By contrast, stance detection aims to classify the stance of a piece of text toward a target (event, entity, idea, claim, topic, etc.) explicitly or implicitly mentioned in the text.

There are two subproblems of sentiment analysis that are more related to stance detection: (1) Target-dependent sentiment analysis, and (2) Aspect-based sentiment analysis. Both problems are concerned with the identification of the sentiment concerning a specific target (e.g., iPhone vs Galaxy) or different aspects of a target (e.g., screen and battery life of iPhone). It has been noticed in some social media analysis studies that there is a misconception between the definition of stance detection with the generic sentiment analysis as well as the two subproblems (i.e., Aspect-based, Target-dependent) [7]. Thus, we list here the main theoretical differences between them:

The generic sentiment analysis is concerned with the emotion polarity without a specific target. Meanwhile, in stance detection, a well-defined target must be given to evaluate the position toward this target.

-

The stance may not be aligned with the sentiment for a target within a text. That is, a text may have a positive polarity, whereas the stance is against the target, and vice versa. For example, the sentence: “I am so glad that Trump lost the election”

has a positive sentiment, but the stance is against Trump.

Sentiment analysis studies focus on non-ideological topics (e.g., products and services). Meanwhile, stance detection targets ideological topics (e.g., atheism, feminist movement, and political issues), which are harder to detect.

In two subproblems of sentiment analysis (i.e., aspect-based, target-dependent), the target in sentiment analysis is usually an entity or an aspect (e.g., reviews about hotels, movies, or products), whereas the target in stance detection may be an event (e.g., the US presidential election).

Further, stance detection, as a research area in the ML field, is related to other problems besides sentiment analysis. These problems include (i) emotion detection [8], (ii) sarcasm detection [9], (iii) perspective identification [10], (iv) argument mining [11], (v) controversy detection [12], and (vi) biased language detection [13].

Achieving stance detection is challenging due to the fact that determining stance is subjective. In addition, concepts and opinions are formed through a variety of expressions and linguistic compositions, making it more difficult to detect. In social media, stance detection is more demanding due to the nature of social media text [7]. For example, the text is usually short (e.g., a tweet can contain up to 280 characters), informal, containing many abbreviations, and with a nonstandard format due to the users’ inconsistent use of grammar. Furthermore, social media discussions are more scattered and lack contextual information [14].

An increasing number of research papers and applications are published by multiple communities on the stance detection problem. With this large number of studies, there is a need to have a framework to classify the available approaches in the literature, since they use various techniques and rely on different underlying models. This is crucial to enable researchers and practitioners to understand the contexts of the different approaches and their suitability for different circumstances. Furthermore, there are still open gaps and promising future trends to be explored toward more robust stance detection models. This study aimed to propose a framework for classifying different approaches, evaluating the current state of affairs, and identifying open gaps.

In this study, we present a systematic literature review (SLR) that focuses on stance detection. To the best of our knowledge, there is no SLR in this area, which motivates our work in the current study. Our research investigates the ML techniques used in the literature for stance detection by addressing five research questions following a well-defined methodology. The contributions of this SLR lay on:

Covering the most recent studies (2015–2022) and a significant number of papers resulting from an established literature review protocol.

Proposing a taxonomy to classify the literature on the stance detection domain, as well as a taxonomy of different techniques used for stance modeling.

Classifying 96 selected studies according to the proposed taxonomy.

Summarizing the current state-of-the-art stance models with a focus on ML techniques.

Introducing open gaps to be explored for future research toward more robust approaches for stance detection.

The rest of this article is organized as follows. Section 2 presents previous works regarding literature reviews related to stance detection. Section 3 presents the methodology used for performing the present SLR, starting with our research questions. Section 4 presents and discusses our results from this SLR by addressing the research questions. Finally, Sect. 5 concludes this survey.

Related reviews

Survey studies can be broadly divided into two categories, namely, traditional literature reviews and SLRs [15]. Traditional literature reviews mainly cover the research trends, whereas the SLRs aim to answer various research questions. In the field of social computing, stance detection is a comparatively recent computational problem. Although the fact that there are multiple survey studies in this newly established area [2, 7, 16–18], there is no existing SLR in this domain, which motivates our work in this survey.

Furthermore, some of these survey studies targeted only one aspect of stance detection. Hardalov et al. [16] surveyed the applications of stances for misinformation and disinformation detection. Alkhalifa and Zubiaga [17] investigated the existing directions in capturing stance dynamics in social media. They reviewed the relevant literature on the temporal dynamics of social media and discussed their impact on the development of stance detection models. Wang et al. [18] surveyed the opinion mining methods in general, with a particular focus on customers’ stances toward products. Their study emphasized the methods for extracting textual features of social media posts only, where they examined numerous techniques for extracting aspects from posts commenting about products.

Relatively comparative surveys in stance detection were published in 2019 and 2020 by Küçük and Can [2] and Aldayel and Magdy [7], respectively. Küçük and Can [2] discussed the NLP techniques used with stance detection. Their survey includes a useful explanation for the intersections and distinctions between stance and related tasks, such as emotion recognition, sarcasm, and argument mining. Aldayel and Magdy [7] surveyed studies on stance detection targeting the social media domain, starting by providing a broad overview of the stance detection task, including the definition, theoretical comparison between stance and sentiment, feature modeling, and different types of stance targets. Then, they presented a breakdown of the most recent approaches to stance modeling in social media.

The related reviews presented above are limited by study selection bias as they did not seem to follow a systematic selection methodology. Moreover, those studies are not comprehensive as they seemed overly restrictive in terms of the approaches and applications considered. These shortcomings motivated us to conduct this SLR that comprehensively explores and analyzes relevant prominent studies from different domains and applications. This SLR also outlines the present literature gaps and suggests possible research directions to improve the current state of the affairs. In addition, related reviews did not deeply discuss the emerging techniques of machine learning (e.g., inductive transfer learning and low-shot learning) as presented in this survey.

Methodology

In this study, we compile, categorize, and present a comprehensive and up-to-date survey of stance detection models and applications. To enforce sound inclusion eligibility criteria, we followed the SLR procedure proposed by Kitchenham [19]. The main advantage of this procedure over others is that it was designed primarily for computer science surveys, which helps in adapting it well to the stance detection topic. In addition, following this well-defined protocol makes the study reproducible and reduces the possibility of bias in the results of the literature.

During the planning stage of this SLR, we developed a review protocol that is broken down into five phases: research question definition, search strategy design, study selection, quality assessment, and data extraction. Details of the review protocol will be presented in the following subsections.

Research questions

The goal of our study is to answer the following research questions:

RQ1 : What is the current state of the stance detection research?

RQ2 : What taxonomy could be used to represent the stance detection applications?

RQ3 : What is the focus of the stance detection research? Particularly, what are the platforms and domains for which stance detection models were proposed? How is stance modeled in the selected studies?

RQ4 : What are the major developments in the stance detection research? Particularly, what are the ML techniques used and how can they be classified?

RQ5 : What are the research gaps observed in the literature?

Search strategy

Preliminary searches were performed to determine the number of possibly relevant studies in the stance detection area. When we applied the query by searching full texts, an unfeasible volume of irrelevant papers was returned (hundreds of thousands) as the searched phrases are common in other fields (e.g., sociolinguistics). Therefore, we have decided to conduct our search based on title, abstract, and keywords. In addition, we used alternative terms and synonyms for the topics we were looking for throughout our preliminary searches. As a result, the following query string was used for identifying primary studies:

“stance detection” OR “stance prediction” OR “stance identification” OR “stance classification” OR “stance recognition”.

We restricted the search to the period from January 2015 to October 2022. The period constraint was set due to the significant increase in the number of studies that targeted stance detection compared with the studies published before 2015. Furthermore, most of the techniques proposed prior to 2015 relied primarily on statistical modeling rather than machine learning for stance detection.

The following electronic libraries were selected as sources for our study: ACM Digital Library1, Scopus2, Springer3, Web of Science4, and IEEE-Xplore5. These libraries were selected because they host the major journals and conference proceedings related to social computing and ML. To complement these libraries, we also searched Google Scholar6. Consequently, a total of six libraries were examined in this SLR.

In addition, we conducted backward snowballing by scanning the references in the relevant papers. We identified ten extra papers, and three of them were found to be relevant and passed the quality assessment (presented in Sect. 3.3). These papers have been included in the final number of selected papers.

The applied search strategy was based on preferred reporting items for systematic review and meta-analysis (PRISMA) statements [20], which is summarized in Fig. 2. The search results were managed and stored using the Mendeley software package (https://www.mendeley.com/). According to the search procedure, we identified 96 primary studies out of 654 studies that resulted from the first search phase. Figure 2 presents the detailed search procedure as well as the number of papers found at each phase.

Fig. 2.

PRISMA flow diagram for the search strategy; where n is the number of papers

Study selection

The first search phase resulted in 654 candidate papers (see the identification phase in Fig. 2). These papers obtained from the identification phase were evaluated by applying the inclusion and exclusion criteria to identify the most relevant papers for our SLR. Papers that met all inclusion criteria were included, whereas those that met any exclusion criterion were excluded. The following inclusion and exclusion criteria were developed and refined through a pilot selection. We selected papers by looking at their titles, abstracts, and full texts.

- Inclusion criteria

- Empirical studies using the ML techniques for stance detection, whether as a main task or as an auxiliary task for other applications.

- Papers that study the detection of stance based on the following forms of data: text and social media networks.

- Papers written in English.

- Peer-reviewed papers.

- In the case of multiple publications of the same study, only the most recent and comprehensive version was included.

- For notebook papers of the annual SemEval workshop and other competitions related to stance detection, only the top two papers (based on the reported results referenced in the official overview papers of the workshops) were included.

- Exclusion criteria

- Papers that do not satisfy any of the specified inclusion criteria.

- Survey or review papers without any findings.

- Extended abstracts, posters, books, patents, tutorials, and short papers (as categorized by conferences).

- Inaccessible papers.

- Studies focusing on building a resource for stance detection, such as datasets, lexicons, annotation framework, or solutions for addressing imbalanced data.

The use of these selection criteria resulted in the identification of 132 studies. The final selected studies were obtained using the quality assessment criteria, which we formed for evaluating the relevance and strength of the main studies. The quality assessment criteria are listed in Table 1. The questions are ranked as follows: “Yes” = 1, “Partly” = 0.5, and “No” = 0. After summing the values assigned to each question, the total score is calculated. A study could have a maximum score of 8 and a minimum score of 0. We considered only the relevant studies with a quality score greater than 4 (i.e., 50% of the maximum score), which were eventually used for data extraction. Accordingly, we further dropped 36 relevant papers with a quality score of 4 or less. Consequently, 96 studies were finally identified for the data extraction process.

Table 1.

Quality assessment questions

| Q# | Quality questions |

|---|---|

| Q1 | Does the paper have a well-defined methodology? |

| Q2 | Is the information about the dataset size and data source identified? |

| Q3 | Are the pre-processing techniques clearly described and justified? |

| Q4 | Are the ML techniques sufficiently defined? |

| Q5 | Are the performance measures fully defined and reported? |

| Q6 | Is there a comparison with other approaches? |

| Q7 | Does the study add/contribute to academia? |

| Q8 | Does the study have sufficient number of the average citations per year? |

Data extraction and data synthesis

Relevant data were extracted from each of the selected papers in order to fulfill RQs 1–5. In addition, we collected the metadata information on each paper for further statistical investigation. The metadata included the title, publication year, authors, type of publication, venue, and the number of citations. The extracted data were organized using Excel spreadsheets.

The primary goal of data synthesis is to collect and combine facts and statistics from the selected studies to answer RQs 1–5 and build a response. Grouping studies with similar and comparable outcomes helped obtain conclusive answers to RQs by presenting research evidence. We examined both quantitative and qualitative data, such as prediction accuracy, approach category, feature extraction technique, ML method, language, domain, and dataset. To synthesize data from the primary studies and address RQs 1–5, various techniques were used, including visualization techniques (e.g., treemap and word cloud). Tables were also used to summarize and present the findings.

Results and discussion

In this section, we present and discuss the results of our literature analysis. In each of the following five Sects. (4.1, 4.2, 4.3, 4.4, 4.5), we present and discuss our findings in-line with RQs 1–5.

The current state of research on stance detection (RQ1)

The objective of this section is to answer RQ1, which is related to showing the current research state on stance detection. Therefore, we start by presenting the population of the published literature on stance detection and the leading publication venues. In addition, we survey the competitions (shared tasks) related to stance detection in Sect. 4.1.2. Furthermore, we present the datasets and resources used in the current stance detection models in Sect. 4.1.3.

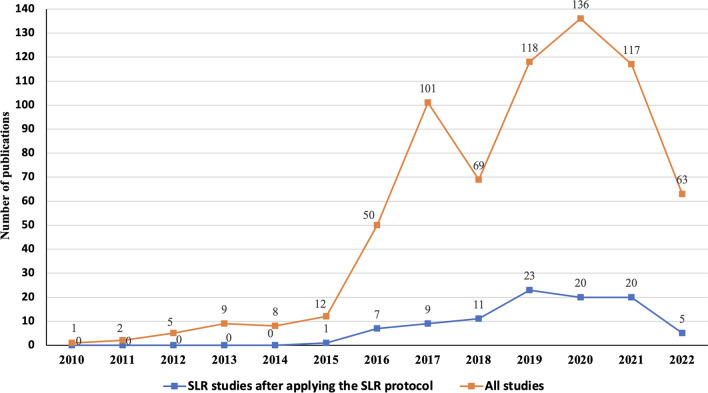

Description of primary studies

Stance detection (also known as stance classification, stance identification, and stance prediction) is a considerably recent computational problem in the area of social computing. One of the observations during our literature review is the significant growth in the number of studies on the stance detection topic in recent years. Figure 3 presents the number of stance detection publications and the publications from 2015 to 2022 after applying the SLR protocol (presented in Sect. 3). It can be observed from the figure that there is a noticeable growth in the number of publications from 2016, which is attributable to the publication of the SemEval-2016 competition that presented the first benchmarked dataset for stance detection based on social media contents [21]. This dataset opened up opportunities to develop models for stance representations on social media.

Fig. 3.

Number of stance detection studies between 2010 and 2022

We selected 96 of 654 identified papers that used ML techniques for stance detection (based on the SLR protocol presented in Sect. 3). About 21% of these papers were issued in journals, and the rest were published in conference proceedings. Table 2 presents the publication venues and distribution of the papers per venue. As presented in Table 2, the top two publication venues are EMNLP and ACL conferences, with around 23% of the selected papers (14% and 9%, respectively). Both conferences are prestigious in the computational linguistics field, where substantial advances in NLP are likely to be published.

Table 2.

Publication venues and the distribution of selected studies

| Publication Venue | Type | # studies | Percent |

|---|---|---|---|

| Empirical Methods in Natural Language Processing (EMNLP) | Conference | 13 | 13.54 |

| Association for Computational Linguistics (ACL) | Conference | 9 | 9.38 |

| International Workshop on Semantic Evaluation (SemEval) | Conference | 6 | 6.25 |

| International Conference on Computational Linguistics (COLING) | Conference | 4 | 4.17 |

| IEEE ACCESS | Journal | 3 | 3.13 |

| World Wide Web Conference (WWW) | Conference | 3 | 3.13 |

| ACM Transactions on Information Systems (TOIS) | Journal | 2 | 2.08 |

| IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining | Conference | 2 | 2.08 |

| Information Processing & Management | Journal | 2 | 2.08 |

| International AAAI Conference on Web and Social Media (ICWSM) | Conference | 2 | 2.08 |

| International Conference on Artificial Neural Networks (ICANN) | Conference | 2 | 2.08 |

| International Conference on Data Mining (ICDMW) | Conference | 2 | 2.08 |

| International Conference on Natural Language Processing and Chinese Computing | Conference | 2 | 2.08 |

| International Joint Conference on Neural Networks (IJCNN) | Conference | 2 | 2.08 |

| Other journals | Journal | 15 | 15.63 |

| Other conferences | Conference | 27 | 28.13 |

| Total | 96 | 100 |

Stance detection competitions

Besides the SemEval-2016 competition mentioned earlier, there are six competitions have been held for stance detection. All these competitions contributed to the advancement of stance detection research by offering annotated datasets of different languages, annotation guidelines, evaluation metrics, and an overview of the participating teams. The details of these competitions are presented next in chronological order. Furthermore, the information on the datasets used in these competitions is presented in Table 3.

SemEval-2016 Task 6 (SE16-T6): This is the first shared task on stance detection that was organized as a part of the International Workshop on Semantic Evaluation [21]. The competition comprised two subtasks: Tasks A and B. Task A is a supervised stance detection in English Tweets where the participants are provided with 70% of annotated training data. Task B is a weakly supervised stance detection where the participants are given only a large unlabeled dataset along with a smaller test dataset for a new target. Notably, in this competition, the worst performing systems are based on deep learning methods [21]. It has been hypothesized that due to the irregular syntax of social media text and the small size of training data, traditional deep learning methods cannot model tweet text well.

NLPCC-2016 Task 4: For Chinese microblogs, a stance detection competition was held with two subtasks similar to SE16-T6 [22].

IberEval-2017: A shared task conducted for stance and gender detection in Spanish and Catalan tweets [23].

SemEval-2017 Task 8 (RumourEval-2017): A shared task aimed at identifying rumors and the stance of Twitter users through their textual replies [24].

SemEval-2019 Task 7 (RumourEval-2019): A shared task that comprised two tasks: rumor verification and rumor stance prediction on Twitter and Reddit posts [25].

EVALITA-2020 (SardiStance): SardiStance, held during the EVALITA-2020 conference, was the first shared task for stance detection in the Italian language [26]. This competition also comprised two subtasks: Tasks A and B. Task A is related to textual stance detection, and Task B is based on contextual stance detection that uses additional information from the user’s social network and tweets, as well as information about the user profile.

Table 3.

Publicly available stance detection datasets

| Dataset Name | Language | Target depen. | Domain | Targets | Annotation | Dataset Size |

|---|---|---|---|---|---|---|

| Emergent [27] | English | TI | Claims from different sites | Several topics | Favor, against, observe | 300 claims and 2,595 articles |

| SemEval-2016 Task 6 [21] | TS | Tweets | Atheism, Climate change, Feminist movement, Hillary Clinton, Abortion | Favor, against, none | 4163 tweets | |

| Multi-Target SD [28] | MrT | Tweets | Donald Trump, Ted Cruz, Hillary Clinton, Bernie Sanders | Favor, against, none | 4455 tweets | |

| IBM Debater [29] | TI | Claims and evidence from Wikipedia | 55 topics | Pros, cons | 2394 claims | |

| RumourEval-17 [24] | TI | Tweets | Rumors about ten events | Support, deny, query, comment | 5568 tweets | |

| FNC-1 [30] | TI | News headlines | Several topics | Agree, disagree, discuss, unrelated | 2587 news headlines | |

| UKP or AM [11] | TS | Posts from debate websites | Several topics | Favor, against, none | 25,492 comments | |

| Perspectrum [31] | TI | Posts from debate websites | Several topics | Support, opposing | 11,876 pairs (perspective, claim) | |

| Args.me [32] | TI | Posts from debate websites | Several topics | Pros, cons* | 387,606 arguments | |

| RumourEval-19 [25] | TI | Tweets, Reddit posts | Natural disasters | Support, deny, query, comment | 8574 posts | |

| VAST [33] | CT | Posts from The New York Times | Several topics | Pros, cons, neutral | 23,525 comments | |

| WT-WT [34] | TS | Tweets | Health insurance companies | Support, refute, comment | 51,284 tweets | |

| TW-BREXIT [35] | TS | Tweets | BREXIT referendum | Leave, remain, none | 1800 triplets of tweets | |

| Procon20 [36] | TS | Posts from procon.org | 419 controversial issues. | Pros, cons | 6094 pairs (question, opinion) | |

| Grimminger et al. [6] | TS | Tweets | Donald Trump, Joe Biden, Kanye West | Favor, against, none, hateful, non-hateful | 3000 tweets | |

| Baly et al. [37] | Arabic | TI | Posts from Verify and Reuters | War in Syria and related political issues | Agree, disagree, discuss, unrelated | 422 claims and 3,042 articles |

| Arabic News Stance [38] | TI | News headlines | Several topics | Agree, disagree, other | 3786 pairs (claim, evidence) | |

| ConRef-STANCE-ita [39] | Italian | TS | Tweets | The reform of the Italian Constitution | Favor, against, none | 963 triplets (tweet, retweet, reply) |

| SardiStance [26] | TS | Tweets | Sardines movement | Favor, against, none | 3242 tweets | |

| NLPCC-2016 Task 4 [22] | Chinese | TS | Weibo posts | Several topics | Favor, against, none | 3250 posts |

| Hercig et al. [40] | Czech | TS | News comments | Miloš Zeman, Smoking ban in restaurants | Favor, against, none | 5423 comments |

| KÜÇÜK et al. [41] | Turkish | TS | Tweets | Football clubs | Favor, against | 1065 tweets |

| Pheme [42] | Multi (English, French, German) | TI | Tweets | Rumors about nine events | Support, deny, query, comment | 4842 tweets |

| X-stance [43] | Multi (French, German, Italian) | CT | Posts from Smartvote website | 150 political issues | Favor, against * | German: 40,200, French: 14,129, Italy: 1,173 |

| IberEval 2017 [23] | Multi (Catalan, Spanish) | TS | Tweets | Catalan Independence | Favor, against, none | 5400 tweets (for each language) |

| Zotova et al. [44] | TS | Tweets | Catalan Independence | Favor, against, none * | Spanish: 10K, Catalan: 10K |

The in the annotation column means that the dataset is annotated automatically

Resources

In this SLR, we also reviewed the resources that were employed across all selected studies for stance detection. These resources involve datasets, lexicons, and knowledge graphs. Although stance classification is a recent research area, extensive effort is dedicated to creating and annotating datasets for this task. The annotated datasets have been used to train both supervised and weakly supervised models. In addition, they have been used for validating unsupervised models. In the surveyed literature, we encountered many public stance detection datasets of different text types (news headlines, news comments, tweets, and posts in online forums). The datasets targeted ten languages: Arabic, Catalan, Chinese, Czech, English, French, German, Italian, Spanish, and Turkish.

Table 3 presents the details of the surveyed datasets in terms of language, target dependency (TS: target-specific, MrT: multi-related targets, CT: cross-target, and TI: target-independent), domain, targets, annotation classes, and dataset size. We only included the publicly available datasets that are listed in chronological order in Table 3. The table lists 26 public datasets, 6 of them are shared-task datasets: NLPCC-2016 Task 4, SE16-T6, RumourEval-17, RumourEval-19, SardiStance, and IberEval-2017. It is worthwhile noting that 55 of the 96 reviewed studies considered shared-task datasets. SE16-T6 is the most dominant one and was used by 38 studies.

Aside from the datasets, different lexicons (e.g., VADER [45]) were used by 13 studies (out of 96). These lexicons were used as extra features to train ML models. In the following, we list the top eight lexicons along with the studies that used them (note that some studies used more than one lexicon).

NRC (also known as EmoLex)7 [46]: an emotion lexicon used in [36, 47–51].

Hu and Liu8 [52]: an opinion lexicon used in [35, 48, 50, 53–55].

MPQA9 [56]: a subjectivity lexicon used in [48, 50, 51, 53, 57].

LIWC (Linguistic Inquiry and Word Count)10 [58]: an emotion lexicon used in [35, 47, 55, 59].

AFINN (Affective Norms for English Words)11 [61]: a sentiment lexicon used in [35, 51, 55].

VADER (Valence Aware Dictionary and sEntiment Reasoner)12 [45]: a lexicon and rule-based sentiment analysis tool used in [36, 59].

In addition to the aforementioned lexicons, only one study created a new lexicon as part of their work. The authors of [54] constructed a stance lexicon14 to guide the attention mechanism in their stance detection model. Specifically, they built a stance lexicon for each target in the SE16-T6 dataset as well as 1000 additional tweets that have been collected using specific hashtags for each target.

External knowledge graphs are another resource used for stance detection. Two studies (out of 96) used this resource [63, 64]. Both studies adopted the ConceptNet knowledge graph [65], which comprises millions of relation triples (head concept, relation, and tail concept). ConceptNet was used to construct relational subgraphs for building a commonsense knowledge-enhanced module to be used by low-shot techniques for stance detection.

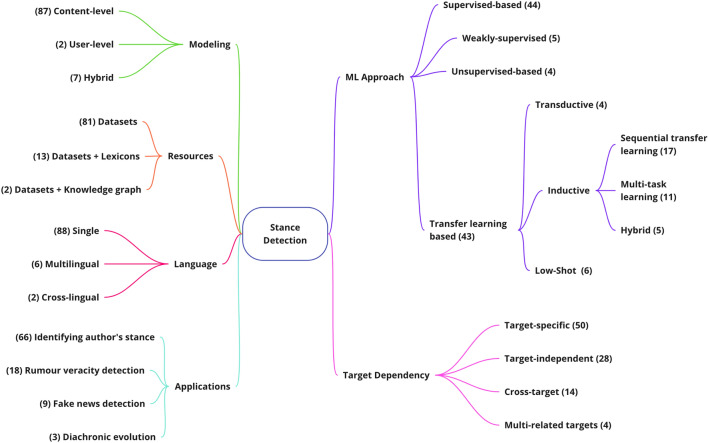

Stance detection taxonomy (RQ2)

The second research question that we are trying to answer in this survey is “What taxonomy could be used to represent the stance detection applications?” Aiming to answer this question, we propose a taxonomy of research work in stance detection which is shown in Fig. 4. As depicted in the figure, the reviewed studies can be classified in six dimensions: ML approaches, target dependency, applications, modeling (stance representation), language, and resources. The number of studies belonging to each dimension is presented in Fig. 4. It should be noted that each study can fit into all the different dimensions. In addition, there is no overlap between branches (i.e., categories) within a dimension. Meaning that we can describe each study using a category from each of the six dimensions. In the following, we describe each dimension:

Fig. 4.

Proposed taxonomy of the Stance Detection problem with the number of surveyed studies in each subcategory

ML approaches Existing approaches for stance detection can be broadly categorized into two based on feature extraction and learning: non-machine learning (or feature-based) and machine learning (or data-driven) techniques. The non-machine learning approaches involve techniques that depend on hand-crafted features to represent the stance (e.g., arguing lexicon and social activity). These techniques have been employed by some studies in the literature; however, we excluded them during the inclusion and exclusion stage of the SLR protocol. Meanwhile, data-driven techniques use machine learning or deep learning algorithms to train a classifier in a supervised, weakly supervised, or unsupervised manner. Some studies combine both approaches for the stance detection problem [35, 55, 66]. More details on the different ML techniques for stance detection are presented in Sect. 4.4.

Target Dependency Target dependency in stance detection studies can be categorized into four: target-specific (or specific target), multi-related targets, cross-target, and target-independent (as shown in Fig. 4). In target-specific studies, the text or the user is the main input to identify the stance toward specific and predefined targets, such as Donald Trump in the US election and the BREXIT referendum. Few studies considered multi-related targets by applying one stance detection model to multiple related targets. In these studies, it was assumed that when people express their stance on one target, they indicate their stance toward the other related targets (e.g., Trump versus Biden).

In both target-specific and multi-related targets studies, the task’s boundary is defined by the target on which the stance is taken, and training data for every target are usually given for prediction on the same target. However, in cross-target studies, researchers investigate the possibility of generalizing classifiers across targets. The objective of cross-target systems is to propose models that can transfer learned knowledge between targets (from a source target to a destination target), for instance, training a classifier on “Donald Trump” and predicting on “Joe Biden”. For the target-independent studies, in which the target of the stance is not an explicit entity. In fact, the target in these studies is a claim in a piece of news. Target-independent models aim to detect the stance in the comments about some news (confirming the news or denying its validity), or to predict whether a given pair of arguments argue for the same stance (i.e., same side stance classification). Table 4 lists the surveyed studies categorized by target dependency and publication year; most studies targeted a specific topic (target-specific). Meanwhile, there are few studies on multi-related targets due to the challenges associated with this task and the lack of annotated datasets.

Table 4.

Selected studies categorized by target dependency and publication year

| Target dependency | 2015–2016 | 2017–2018 | 2019–2020 | 2021–2022 |

|---|---|---|---|---|

| Target-specific | [48, 59, 67–71] | [50, 57, 72–79] | [35, 36, 51, 54, 55, 66, 80–99] | [100–106] |

| Target-independent | [42, 107–113] | [38, 47, 53, 114–124] | [125–130] | |

| Cross-target | [131] | [132] | [33, 43, 49] | [63, 64, 133–139] |

| Multi-related targets | [140] | [141, 142] | [143] |

Applications The applications of stance detection (other than identifying the stance of a user toward some target) can be categorized into three: rumor veracity detection, fake news detection, and diachronic evolution analysis. In the rumor veracity task, a stance detection model is used to determine the veracity of a currently circulating story or information that is yet to be verified at the time of spreading [113]. In more formal terms, given a pair of textual rumors and responses, stance detection refers to the classification of the text’s position toward the rumor into a label from the set {Support, Deny, Query, Comment}. This configuration has been widely examined in the context of social media microblogs [144]. Fake news detection is a similar field in which the veracity of circulating information does not need to be confirmed at the time of dissemination, as the fake news is intentionally written to mislead consumers. Thus, the task is to detect news that is always fake and contains specific types of misinformation. A well-known example of this task is to determine the relationship between a headline and the content of an article (probably from another news source). The possible classes for this task are Agree, Disagree, Discuss, and Unrelated. However, the challenges of recognizing fake news and rumors are essentially the same; usually, auxiliary information, such as user credibility on social media, is required to make a decision.

Moreover, the analysis of diachronic evolution is a recent research area in stance detection, in which the researchers explore the stance toward a specific target at the user level by aggregating data over time, considering different time-window sizes [101]. This task is usually defined as a three-way classification where each post is assigned to a stance in favor, against, or neutral. The goal of studying diachronic evolution is to understand the temporal variations in the real world and their impact on public opinion. Developing models for this task requires large datasets collected over different periods of time. Table 5 provides some examples of input formulation with the corresponding target and stance polarity in different stance detection applications.

Table 5.

Examples of input formulation with the corresponding target and stance in different stance detection applications

| Application | Input formulation | Target | Stance | Ref. |

|---|---|---|---|---|

| Identifying author’s stance | Tweet (e.g., “The woman has a voice. Who speaks for the baby? I’m just asking”) | Legalization of abortion | Against | [50] |

| Diachronic evolution analysis | Tweets from different time-window (six-year time period) | Gender equality | Favor, against, or none | [101] |

| Rumor veracity detection | Tree-structured thread discussing the veracity of a source tweet introducing a rumor | NA | Support, deny, comment, or query | [128] |

| Fake news detection | News headlines and a set of articles | NA | Agree, disagree, discuss, or unrelated | [126] |

Modeling Modeling the features of stance on social media can be classified into three: content-level, user-level, and hybrid. The content-level modeling is modeled by the linguistic features (e.g., topic modeling, N-gram, and word embeddings) and sentiment information. User-level features include the users’ interactions, preferences, connections, and timelines on their social platforms. Hybrid models learn representation from both content and user features. The details of the features used for stance modeling are presented in Sect. 4.3.3.

Language The literature on stance detection can also be categorized based on the targeted language: single language, multilingual, and cross-lingual. However, most studies on stance detection target a single language. English is the main language targeted by most stance detection studies; only a handful of stance detection studies considered languages other than English. In multilingual studies, researchers create one model for different languages using datasets for each language. For stance detection in a cross-lingual setting, the domain adaptation approach is generally considered when there are sufficient labeled data in one language, and the aim is to learn representations from this language that are useful for another language with few learning data.

Resources Different types of resources have been used in the literature for stance detection. The three main forms of these resources are datasets (labeled or unlabeled), lexicons (e.g., VADER for sentiment polarity [45]), and knowledge graphs (e.g., ConceptNet [65]) used in [63, 64]. The details of these resources are presented in Sect. 4.1.3.

Context of stance detection studies (RQ3)

In this section, we aim to answer RQ3 by presenting the focus of the stance detection research in terms of the platforms and domains for which stance detection models are proposed and how the stance is modeled in the selected studies. Section 4.2 presented the different aspects that were adopted in the selected studies. In this section, we show how the tasks were implemented for three aspects: platforms, domain areas, and stance modeling.

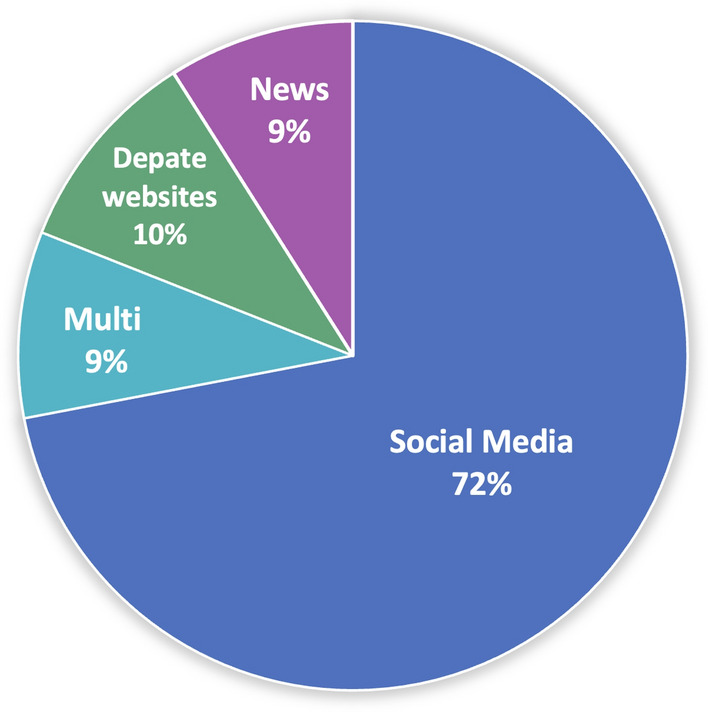

Platforms

Several platforms have been used in the literature as data sources for model training and evaluation. The main platforms that have been used in the literature for stance detection are social media, news websites, and debate websites. Figure 5 presents the percentage of studies per platform; notably, 9% of the selected studies adopted multiple types of platforms. From the figure, most selected studies adopted social media platforms as their context for building models. Twitter is the most used dataset resource; it was used by 64 of the 96 selected studies. Meanwhile, only two studies used Weibo [75, 93], and one study considered Facebook [68]. These findings highlight the significance and popularity of social media for research and development in this field. The high dependency on Twitter can be attributed to the accessibility and ethical considerations in data extraction using Twitter APIs compared with other social media platforms (e.g., Facebook) that pose more challenges in data extraction.

Fig. 5.

Distribution of the data source platforms used by the selected studies

Moreover, ten studies considered debate websites to collect data and evaluate their models. For example, www.procon.org is used in [36, 88] to collect a set of controversial issues and their related pros and cons posts. This resulted in long documents with numerous words per document (the average number is 166 words) in contrast to data collected from social media that use samples with fewer words (Twitter uses a maximum of 280 characters per sample). The websites www.Idebate.com and www.debatewise.org were also considered in [116] to have a set of controversial claims and users’ perspectives in order to infer these perspectives in terms of supporting or opposing the claim. Although these debate websites are being used as a resource to encourage critical thinking and present information in a nonpartisan format, the topics covered are limited, and the training data would not extend to general topics, such as those discussed on social media platforms.

The news domain has been considered by several studies. This type of platform is considered mostly by target-independent studies. The models in these studies were built for fake news detection or rumor veracity detection tasks. In fake news detection studies, the models are depending on the news headlines and body texts to evaluate the stance of the body text toward a specific target. Several polarities have been targeted in these studies such as agrees, disagrees, discusses, and unrelated. On the other hand, the typical input for rumor veracity models is a stream of social media posts that report circulating news or story. The goal of these models is to classify each post as a rumor or not rumor.

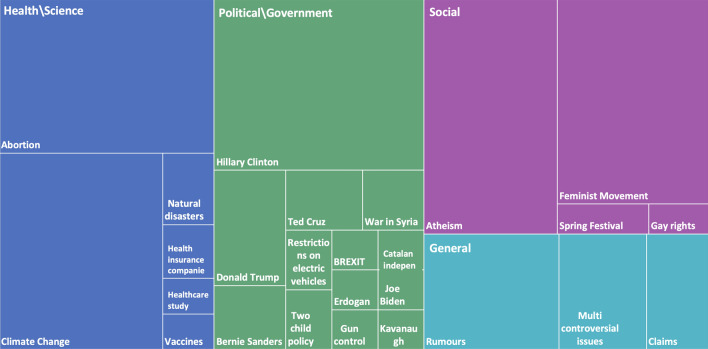

Domain area

Most selected studies focused on one or more controversial topics. Figure 6 presents a treemap that shows the main domains that have been targeted by stance detection studies, where the sizes of the rectangles represent the number of studies. The main domains, as shown in Fig. 6, are political issues (e.g., the US election), social issues (e.g., feminist movement), health (e.g., COVID-19 vaccine), and science (e.g., climate change) issues. However, some studies do not target-specific topics, where their proposed models are designed to detect the veracity of rumors/fake news in general or to assess the position of a claim toward any topic.

Fig. 6.

Domain areas targeted by stance detection studies (sizes of rectangles represent the number of studies)

The political or government domain is the dominant topic area targeted by most stance detection approaches. These approaches are applied to different political events or actors, such as Hilary Clinton (all studies that considered the SE16-T6 dataset), the Turkish election [94, 95], the war in Syria [75, 83, 87, 93], Catalan independence [55, 74], the US presidential candidates [103, 140–142], gun control and rights [80, 96], and the BREXIT referendum [35, 90]. In terms of the social domain, all studies that considered the SE16-T6 dataset evaluated their models on two social topics: atheism and the feminist movement. In addition, some other studies focused on gay rights [68, 125] or gender equality [101].

The health domain is also used by some studies, where it focuses on either the legalization of abortion (all studies that considered the SE16-T6 dataset), health insurance companies [133, 136], controversial health studies [67], or vaccination [96, 104]. Some other studies targeted scientific events, such as climate change (all studies that considered the SE16-T6 dataset) and natural disasters [118, 121, 124, 128].

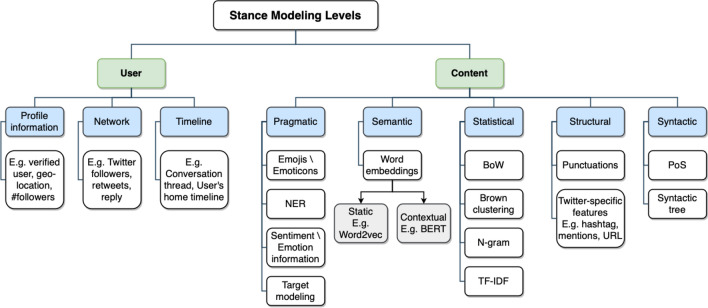

Stance modeling

Generally, stance modeling can be performed at two levels: content and user levels. The content-level modeling includes textual and social media specific features (e.g., hashtags and mentions). The user-level modeling employs the user’s network features, timeline, and profile information for stance detection. Figure 7 presents in detail the different forms of features at each level used for building stance detection models. Further, data on the features adopted in each of the 96 selected studies are listed in Tables 6, 7, and 8.

Fig. 7.

Stance modeling

Table 6.

Supervised-based learning studies (ordered by: Type, Language, Dataset)

| Type | Paper | Language | Features | ML models | Dataset | Best score (macro-) |

|---|---|---|---|---|---|---|

| Target-specific | [48] | English | N-gram, SE, Sentiment lexicons | SVM | SE16-T6 | 59.21 |

| [70] | English | N-gram, Sentiment lexicons, Topic modeling | Maximum entropy | SE16-T6 | 61.04 | |

| [71] | English | SE | CNN, voting scheme | SE16-T6 | 67.33 | |

| [72] | English | N-gram, POS, Structural, Sentiment lexicons | LibSVM | SE16-T6 | 77.11 | |

| [50] | English | SE, N-gram, POS, Sentiment lexicons, Target | SVM | SE16-T6 | 70.30 | |

| [57] | English | Text, N-gram, Sentiment/subjectivity lexicons, Syntactic | SVM | SE16-T6 | 74.44 | |

| [73] | English | SE, Target modeling | BiGRU, CNN | SE16-T6 | 67.40 | |

| [76] | English | POS, Syntactic tree, Structural | SVM tree kernel, majority voting count | SE16-T6 | 70.03 | |

| [77] | English | SE, Sentiment lexicon, Dependency parser, Argument information | LSTM+attention | SE16-T6 | 61.00 | |

| [79] | English | SE, Target modeling | BiGRU+attention+memory network | SE16-T6 | 71.04 | |

| [66] | English | Network, N-gram | SVM | SE16-T6 | 71.85 | |

| [89] | English | SE | CNN+attention | SE16-T6 | 62.45 | |

| [80] | English | SE, Target embedding | RNN-Capsule | SE16-T6 | 69.44 | |

| [91] | English | TF-IDF, Sentiment lexicons | Weighted KNN | SE16-T6 | 76.45 | |

| [92] | English | SE, POS, Structural, Statistical | Random forest, MLP, CNN, BiLSTM | SE16-T6 | 70.46 | |

| [100] | English | CE, N-gram | Ensemble model (RoBERTa+BiLSTM+attention) | SE16-T6 | 73.77 | |

| [106] | English | CE, Topic modeling, Sentiment, N-gram, TF-IDF | SVM, LR, Extremely Randomized Trees, AdaBoost | SE16-T6 | 74.63 | |

| [51] | English | N-gram, Sentiment lexicons, Target modeling, Structural, SE | Ensemble classifier, DNRFAF, DSRFE, DECCV | SE16-T6, AM | SE16-T6: 71.24, AM: 57.61 | |

| [35] | English | N-gram, BoW, Structural, Sentiment lexicons, Common-knowledge | SVM | TW-BREXIT | 67.01 | |

| [36] | English | SE, CE, Emotion lexicons | GRU, BERT | Procon20 | 76.90 | |

| [90] | English | SE, User’s timeline | LSTM+attention, GRU, Hierarchical LDA | Brexit, US Election-2016 | Brexit:65, Election: 72 | |

| [101] | English | SE, Temporal features | CNN | Temporally annotated | 72.20 | |

| [68] | English, Chinese | Network, SE, Topic modeling | CNN, LDA | CreateDebate, FBFans | 75.50 | |

| [75] | English, Chinese | SE, Target modeling | RNN, LSTM+attention | SE16-T6, NLPCC-2016 | English: 68.79, Chinese: 72.88 | |

| [93] | English, Chinese | SE | BiLSTM+attention | SE16-T6, NLPCC-2016 | English: 69.21, Chinese: 74.14 | |

| [55] | English, French, Italian, Spanish, Catalan | N-gram, BoW, Structural, Emotion lexicons, Domain knowledge | SVM, LR, CNN, LSTM, biLSTM | SE16-T6, IberEval 2017, Extended dataset for other languages | 64.51 | |

| [83] | Chinese | SE | CNN, GRU | NLPCC2016 | 62.20 | |

| [82] | Italy | BoW, Structural | SVM | ConRef-STANCE-ita + user network | 85.00 | |

| [74] | Spanish, Catalan | BoW, POS, Structural | SVM | IberEval2017 | Spanish: 48.88, Catalan: 49.01 | |

| Target-independent | [42] | English | SE, Structural, Text similarity | LSTM | RumourEval-17 | 43.40 |

| [108] | English | Structural, Sentiment score, POS, Text similarity | XGBoost | RumourEval-17 | 45.00 | |

| [111] | English | CE, Conversation structure, Timestamp | CNN, BiGRU, MLP, attention | RumourEval-17 | 79.86 | |

| [47] | English | Structural, Pragmatic, Conversation structure, Text similarity | SVM | RumourEval-17 | 47.00 | |

| [119] | English | Structural, Similarity scores, Sentiment, User information | LR | RumourEval-17 | 57.40 | |

| [112] | English | CE, Statistical, Structural, Sentiment lexicons | MLP, LSTM, GRU | FNC-1 | 83.08(Acc.) | |

| [109] | English | SE, Similarity score between claim and evidence | CNN, LSTM | FNC-1 | 56.88 | |

| [114] | English | CE, SE, Structural, Statistical, Pragmatic, Text similarity, Sentiment, BLEU and ROUGE scores | BiLSTM+max-pooling+attention | FNC-1 | 82.23(Acc.) | |

| [129] | English | SE, Statistical, Sentiment, Text similarity, POS | Cascading classifiers, SVM, CNN | FNC-1 | 38.00 | |

| [107] | English | BoW, Brown cluster, POS, Pragmatic, Structural, Confidence score, User profile information | Random forest | PHEME, RumourEval-17 | PHEME: 77.42, RumourEval: 79.02(Acc.) | |

| [113] | English | SE, Structural, POS, BoW, Text similarity, Social network, Hawkes processes | LSTM-branch | PHEME | 44.90 | |

| [124] | English | CE, TF-IDF | RoBERTa, MLP | RumourEval-19 | 64.00 | |

| Cross-target | [49] | English | SE, Semantic/emotion lexicons, Knowledge graph | GCN, BiLSTM+knowledge-aware memory unit | SE16-T6 | 53.60 |

| [133] | English | CE, Syntactical dependency, Pragmatic dependency graph, Stance tokens | BiLSTM, GCN, attention | SE16-T6, WT-WT | SE16-T6: 59.5, WT-WT: 74.2 | |

| Multi-related targets | [142] | English | SE | Multi-kernel Convolution+Attentive LSTM | MultiTarget SD | 58.72 |

Table 7.

Unsupervised-based and weakly supervised learning studies (ordered by: Type, Language, Dataset)

| Type | Paper | Language | Features | ML models | Dataset | Best score (macro-) |

|---|---|---|---|---|---|---|

| Target-specific | [84] | English | SE, Topic modeling, Noisy stance labeling | BiGRU, SRNet | SE16-T6 | 60.78 |

| [59] | English | N-gram, Emotion lexicons, Followers list | HL-MRFs, SVM | SE16-T6.B (unlabeled set) | 57.52 | |

| [78] | English | Network, BoW, TF-IDF | RNN+GRU | SE16-T6+ Tweets about gun control and gun rights | 53.00 | |

| [67] | English | TF-IDF, Predicted argument tags | SVM | 1,063 comments about health study from news websites | 77.00 | |

| [96] | English | SE, CE, Network | Multilingual-BERT | Tweets on 8 polarizing US-centric topics | 92.10 | |

| [95] | English, Turkish | Network, Structural | UMAP, Mean shift, SVM | 3 labeled sets (Kavanaugh, Trump, Erdogan), 1 unlabeled set of 6 topics in USA | 90.40 | |

| [94] | Turkish | CE, User’s timeline | SVM, MUSE | 108M Turkish election-related tweets+ Timeline tweets of 168k users | 85.00 | |

| Target- independent | [53] | English | Text, Syntactical dependencies, Sentiment lexicons | Unsupervised approach | 1,502 labeled arguments with consequences from Debatepedia | 73.00 |

| Cross- target | [131] | English | SE, CE, Target modeling | LSTM | SE16-T6 | 58.03 |

Table 8.

Transfer learning-based studies (ordered by: Type, Language, Dataset). *T: Transductive, S: Sequential, MT: Multitask, and LS: Low-shot

| Type | Paper | Language | Transfer learning type* | Features | ML models | Dataset | Best score (macro-) | |||

|---|---|---|---|---|---|---|---|---|---|---|

| T | S | MT | LS | |||||||

| Target-specific | [69] | English | SE, Hashtag prediction | LSTM | SE16-T6 | 67.80 | ||||

| [85] | English | SE, Topic modeling, Sentiment labeling | Attention | SE16-T6 | 68.54 | |||||

| [54] | English | SE, Sentiment and stance lexicons | Attention | SE16-T6 | 65.33 | |||||

| [81] | English | N-gram, BoW, Sentiment labeling | LSTM | SE16-T6 | 60.16 | |||||

| [98] | English | CE, Topic modeling | RoBERTa, Hierarchical capsule network | SE16-T6 | 78.43 | |||||

| [99] | English | CE, Target modeling | BERT+stance-wise convolution layer | SE16-T6 | 73.73 | |||||

| [105] | English | CE, Target modeling | BERTweet+AKD | SE16-T6, Multi-Target SD, AM, WT-WT, COVID-19, Election-2020 | 68.17 | |||||

| [86] | English | CE, Structural, Emotions | RoBERTa, LR | ACD, IAC 2.0 | ACD: 77.13, IAC: 80.30 | |||||

| [88] | English | CE | LSTM, ULMFiT | ProCon | 69.60 | |||||

| [102] | English | CE | BERT+Adversarial attacks | 10 datasets | 66.95 | |||||

| [103] | English | CE, Stance relevant tokens | BERT | US election | 77.27 | |||||

| [104] | English | SE, N-gram, TF-IDF | BERT | 7,530 labeled tweets about COVID-19 vaccination | 78.94 (Acc.) | |||||

| [87] | English, Arabic | CE | LSTM, CNN | FNC-1, Baly et al. | 45.20 | |||||

| [97] | Italy | CE | UmBERTo | Sardistance | 68.53 | |||||

| Target-independent | [38] | Arabic | CE | Multilingual-BERT | Arabic News Stance | 76.70 | ||||

| [120] | English | SE | BERT, Self-attention | RumourEval-17 | 47.50 | |||||

| [117] | English | CE, Stance information, Temporal modeling | BiGRU, Conversational-GCN, RNN | RumourEval-17 | 49.90 | |||||

| [127] | English | CE, Conversation structure, Stance information | BERT, GCN | PHEME, RumourEval-17 | PHEME: 42.70, RumourEval-17: 70.20 | |||||

| [130] | English | CE (textual: BistilBERT, visual: VGG-19) | Attention | PHEME, RumourEval-17 | PHEME: 82.02, RumourEval-17: 80.41 | |||||

| [122] | English | CE, Auxiliary data for paraphrase detection | BERT | FNC-1 | 74.40 | |||||

| [110] | English | SE, Stance information | GRU+enhanced shared-layer | FNC-1, PHEME | FNC-1: 32.80, PHEME: 43.00 | |||||

| [123] | English | CE, User profile information, Stance information | LSTM+task specific layers, VAE | PHEME | 35.00 | |||||

| [115] | English | SE, Statistical(BoW, Brown clusters, Kullback-Leibler), Target modeling | Gaussian processes | PHEME, England Riots | PHEME: 59.80, England Riots: 70.80 | |||||

| [121] | English | CE, Structural, Cosine distance to source tweet | BERT, Ensemble methods | RumourEval-19 | 61.67 | |||||

| [118] | English | Structural, Sentiment information, User profile information | OpenAI GPT, input concatenation mechanism | RumourEval-19 | 61.87 | |||||

| [128] | English | CE, SE, Structural, Pragmatic, Syntactic, Timeline | Longformer (trained from RoBERa), LSTM, Ensembling | RumourEval-19 | 67.20 | |||||

| [125] | English | CE | ALBERTv2 | Args.me | 73.70 | |||||

| [116] | English | CE | BERT+cosine embedding loss+joint loss | Perspectrum | 79.95 | |||||

| [126] | English | CE, Confidence score, Negated perspective tokens | BERT | Perspectrum, IBM debater | Perspectrum: 81.35, IBM debater: 71.16 | |||||

| Cross-target | [132] | English | SE, CE, Target modeling | CrossNet: Attention+MLP | SE16-T6 | 46.10 | ||||

| [135] | English | CE, Topic modeling | Adversarial learning, 2-Layer feedforward network | SE16-T6 | 54.10 | |||||

| [137] | English | CE, Topic modeling, Sentiment | BERT, Adversarial attention network | SE16-T6, Perspectrum | 68.47 | |||||

| [138] | English | CE, Entity recognition, Sentiment, Idelogy representation | RoBERTa, CNN | SE16-T6, VAST, Basil | 67.66 | |||||

| [139] | English | CE, Syntactic, Sentiment, Opinion-toward | BERT, GAT, BiLSTM | SE16-T6, COVID-19, Election-2020 | SE16-T6: 67.46, COVID-19: 82.6, Election: 79.37 | |||||

| [33] | English | CE, Text similarity, Topic modeling | BERT, Ward hierarchical clustering | VAST | 66.60 | |||||

| [63] | English | CE, Knowledge graph | BERT, Concept-Net | VAST | 70.20 | |||||

| [64] | English | CE, Knowledge graph, Sentiment, Commonsense representation | BERT, Graph autoencoder | VAST | 72.6 | |||||

| [134] | English | CE, Target modeling | RoBERTa, Mixture-of-experts, Domain-adversarial learning | 16 datasets | 42.67 | |||||

| [136] | English | TF-IDF | MLP | WT-WT+ 134,922 synthetically annotated tweets | 37.69 | |||||

| [43] | French, German, Italian | CE | Multilingual-BERT | X-stance | 76.60 | |||||

| Multi-related targets | [140] | English | SE, Target modeling | BiLSTM, Dynamic memory | MultiTarget SD | 56.73 | ||||

| [141] | English | SE | Seq2seq | MultiTarget SD | 54.81 | |||||

| [143] | English | CE, Target modeling | BERTweet, RoBERTa | MultiTarget SD, SE16-T6 | 60.56 | |||||

The majority of the studies in this SLR (87 out of 96) modeled the stance at the content-level by extracting one or more of the five feature levels: pragmatic, semantic, statistical, structural, and syntactic (see Fig. 7). Most studies extracted the semantic features of the text using static word embedding (e.g., Glove and word2vec) or contextual word embedding (e.g., Bidirectional Encoder Representations From Transformers (BERT)). Statistical features (e.g., N-gram) have also been widely employed to model the textual content, especially in earlier work (i.e., publications during 2015–2018). Pragmatic and syntactic features have been considered to model the textual content or enrich the textual content using external information, such as sentiment and emotion lexicons, target information, or syntactical dependency tree.

A graph-based approach was employed in four studies to perform a form of stance modeling at content-level [49, 63, 117, 133]. Wei et al. [117] proposed a modified graph convolutional network (GCN) to learn stance features by encoding conversation threads. Zhang et al. [49] used external emotion and semantic lexicons to build a semantic-emotion heterogeneous graph, which is then fed into a GCN to capture multi-hop semantic connections between emotion tags and words. Liang et al. [133] proposed an approach to capture the exact role of contextual words by investigating a novel technique of creating target-adaptive pragmatic dependency graphs with interactive GCN blocks for each tweet. Liu et al. [63] proposed a commonsense knowledge-enhanced model based on CompGCN [145]. The proposed model exploits both the semantic-level and structural-level information of the relation knowledge graph extracted from ConceptNet [65], allowing the model to improve its reasoning and generalization capabilities.

In addition, out of the 87 studies that modeled the stance at content-level, only one study [130] considered visual content with textual content. The authors of [130] proposed multimodal content as embedding vectors using BERT to obtain the embedding of the text content and used VGG19 to generate the visual embedding of an attached image.

User-level modality was used by a few stance detection studies (9 out of 96) compared with content-level modality. However, seven of the nine studies combined both users features and content features for stance detection [66, 68, 78, 90, 96, 119, 123], whereas two of them modeled the stance only at the user level [94, 95]. Darwish et al. [95] introduced a model for detecting the stance of prolific Twitter users using retweeted tweets, retweeting accounts, and hashtags as features for computing the similarities between users. Rashed et al. [94] used Google’s convolutional neural network (CNN)-based multilingual universal sentence encoder to map the users into an n-dimensional embedding space. Furthermore, other studies [66, 68, 96] combined text embedding with user embedding generated from user network information comments such as likes, retweets, mentions, and following accounts. Benton et al. [78] constructed user embeddings by combining textual embedding generated from Term Frequency-Inverse Document Frequency (TF-IDF), weighted bag of words (BoW), and social network embeddings. In addition, two studies utilized user profile information as a feature combined with textual features [119, 123]. Finally, the authors of [90] proposed to model the users’ posts and the topical context of users’ neighbors in social networks for user-level stance prediction.

The content-level-based approaches utilize the raw text for stance detection without the need for other information related to the writer. This feature makes these techniques applicable to all UGC platforms. However, relying solely on the text may not provide a complete understanding of the user’s stance, especially when the user employs sarcasm to present his opinion on a specific topic. In contrast, user-level-based approaches employ the user’s information to understand the user’s stance. These approaches can be used only with UGC platforms that provide access to user information, such as social media.

To combine the features of content-level and user-level modelings, hybrid models have been proposed recently for stance detection. The attained results of these models outperformed the models that depend only on content-level. However, studies that depend on community features may compromise user privacy. This highlights the need for further research aimed at protecting social media users from unconsciously disclosing their views and beliefs. Therefore, due to privacy concerns, most social media platforms have recently begun restricting access to user information. This makes the application domain of user-level-based techniques limited and depends on the availability of the user’s information.

Machine learning techniques (RQ4)

In this section, we consider RQ4. We analyze the ML approaches that contributed to the major developments in stance detection research. The ML techniques proposed for stance detection can be broadly classified into supervised-based, unsupervised-based, weakly supervised, and transfer learning-based. The transfer learning models used for stance detection in turn can be subclassified into transductive, inductive, and low-shot. As highlighted in Fig. 4, 44 surveyed studies adopted supervised approaches for stance detection, five studies proposed weakly supervised models, four studies employed unsupervised models, and 43 studies applied transfer learning through unsupervised, supervised, or distantly supervised source tasks.

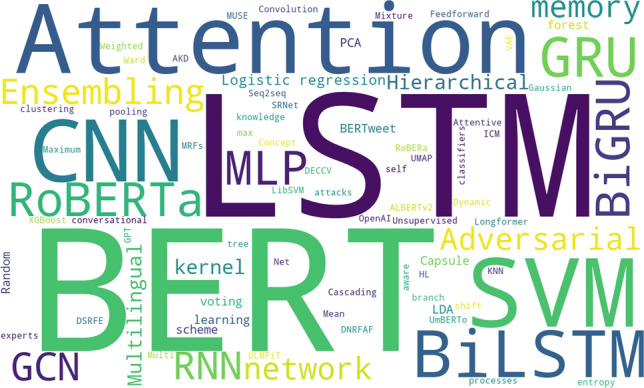

Figure 8 depicts a word cloud representing the frequency of ML techniques used in the selected studies. The significance of each technique is associated with its font size. It should be noted that the ML technique in this word cloud corresponds to the best reported technique (in terms of performance score) in each selected study. As shown in the figure, deep learning models that adopt the mechanism of self-attention (e.g., BERT) are used more frequently than the other approaches. The word cloud also shows that attention mechanism and recurrent neural network (RNN) models, such as long short-term memory (LSTM) and gated recurrent unit (GRU), are employed in a significant number of studies.

Fig. 8.

A word cloud of the ML techniques used in the selected papers

Supervised-based learning

The majority of the surveyed techniques (44 out of 96) applied supervised learning for stance detection. In supervised-based learning studies, the aim is to train a model on labeled data for a given target and domain, and expect it to perform well on new data of the same target and domain.

Earlier work in stance detection (between 2016 and 2018) employed traditional ML techniques to classify a stance toward a target. Most of them used a support vector machine (SVM) classifier [35, 47, 50, 66, 72, 74, 82]. Dey et al. [57] proposed a simple two-phase strategy with traditional SVM learning. A new syntactic feature was used in the first phase. This feature was learned from external subjectivity lexicons to differentiate between neutral and non-neutral tweets. In the second phase, non-neutral tweets were classified to favor or against by using a novel semantic feature extracted from external sentiment lexicons. Other stance detection studies employed other traditional ML techniques, such as random forest [107], gradient boosting [108], logistic regression (LR) [119], and k-nearest neighbors (KNN) [91]. A recent study [106], published in 2022, aimed to explain the stance detection model performance and provide a qualitative understanding of the classifier behavior. The authors exploited the Biterm Topic Model (BTM) to identify textual content that affected the stance. However, traditional ML techniques do not consider the contextual meaning of words. Given that having labeled data for every setting is infeasible, the performance score of such techniques is low compared with other approaches.

Several scholars have provided supervised models for stance detection using deep learning architectures. RNNs, a robust class of artificial neural networks adapted to work for processing and identifying patterns in sequential data (e.g., natural language), were used in four studies [75, 80, 111, 114]. Sun et al. [80] were the first who introduced RNN-Capsule into the stance detection problem by extracting multiple vectors for stance features instead of one vector. In addition, they developed an attention mechanism to identify the dependency relationship between a text and a target. The results of experimenting with the proposed capsule network with attention mechanism on the SemEval-2016 dataset were encouraging with an average F1 score of 69.44.

LSTM, an RNN architecture specifically designed to handle long-term dependencies, is the most widely used deep learning architecture among the supervised learning studies used for stance detection (Fig. 8). LSTM algorithm was developed to deal with the vanishing gradient problem that can be encountered when training traditional RNN models. This feature makes the LSTM algorithm capable of learning and memorizing long-term dependencies. In total, 10 of 44 supervised learning studies made use of this algorithm [36, 42, 55, 75, 77, 90, 109, 112, 113, 142]. Five other studies [49, 92, 93, 100, 133] used BiLSTM, a variant of the standard LSTM that can improve the performance on sequence classification problems.

GRU is the newest entrant after LSTM and RNN; hence, it provides an improvement over them. Similar to LSTM, gates are used in GRU to control information flow. GRU is less complex than LSTM because it has a smaller number of gates. This advantage usually results in some performance improvements with GRU over LSTM. In total, 6 of 44 studies [36, 73, 79, 83, 90, 112] used GRU. Notably, Zhu et al. [90] introduced a novel neural dynamic model that jointly models topical contextual and user’s sequential posting behavior. This model was simulated by a GRU to exploit the temporal contextual information for online learning. Their approach can dynamically identify topic-dependent stances, in contrast to static models that perform one-time predictions. In addition, a two-channel CNN–GRU fusion network was proposed in [83] to overcome the problems of CNN, such as information loss when handling time-series data and not being able to extract features with varying lengths from text accurately.

Although LSTM and GRU are efficient for learning time-series data, these algorithms lack the capability of CNN for learning the spatial features of the input data [146]. To overcome this problem, several researchers employed CNN as a feature extractor and fed these features into a time-series learning technique, such as LSTM or GRU. CNN was used in 12 of the 44 studies [36, 55, 68, 71, 73, 83, 89, 92, 101, 109, 111, 129]. Notably, Mohtarami et al. [109] proposed a novel memory network model enhanced with LSTM and CNN networks based on a similarity-based matrix that has been used at inference time. Their results indicated that their model is capable of extracting significant snippets from an input text, which is useful not only for stance recognition but also for human experts deciding on the veracity of a claim.

Compared with single classifiers, ensemble classifiers have several advantages, such as decreasing the possibility of selecting an unstable subset of features, more precise prediction results, and avoiding the problem of local optimum [147]. Siddiqua et al. [76] proposed an ensemble learning approach in which different SVM tree kernel classifiers were consolidated to arrive at a final stance output using a majority voting scheme. In addition, the authors of [51] proposed an ensemble method using three algorithms—DNRFAF, DSRFE, and DECCV— developed in [148–150] for selecting the best set of features and classifiers. Chen et al. [100] proposed a novel ensemble model that combined a robustly optimized BERT approach (RoBERTa) with N-gram features and bidirectional LSTM (BiLSTM) with a target-specific attention mechanism. This fusion improved the results by 1.2% in micro-F1 score compared with state-of-the-art systems on the SemEval-2016 dataset. Similarly, Prakash et al. [124] used a multilayer perceptron (MLP) with RoBERTa in an ensemble model with TF-IDF features as the input. This approach has been evaluated on the RumourEval-2019 dataset; the reported results indicated that the ensemble model outperformed the base RoBERTa model by 0.07% in the macro-F1 score, achieving state-of-the-art results [25].

For stance detection in a multilingual setting, Lai et al. [55] evaluated their model in four languages: English, Spanish, French and Italian. SemEval-2016 was used for English, IberEval-2017 for Spanish, and a new dataset was collected for French and Italian. Four types of features were used in this work: stylistic, structural, affective, and contextual. For feature learning and classification, several techniques were experimented with in this work, such as SVM, LR, CNN, LSTM, and BiLSTM. The reported results over all languages and domains of the classical ML models (SVM and LR) proved to be competitive compared with the considered deep learning models (CNN, LSTM, and BiLSTM). Three studies combined English and Chinese datasets to evaluate their models [68, 75, 93]. The authors of [68] proposed a CNN-based model by incorporating user information (from user comments and likes) and topic information obtained from topic modeling using linear discriminant analysis (LDA). Du et al. [75] proposed a neural attention model to extract target-related information for stance detection. Overfitting and gradient vanishing, as well as dealing with long-term dependencies during multilayer LSTM training, are all issues that were addressed in [93]. The authors presented a two-stage deep attention neural network that encodes tweet tokens with densely connected BiLSTM and target tokens with traditional BiLSTM.

Modeling of the interaction between stance and sentiment has been investigated by some researchers to boost the results of stance detection. Sobhani et al. [48] conducted several experiments to elucidate the interaction between stance and sentiment. They trained SVM using three features: N-gram, word embedding, and sentiment lexicon. They concluded that although sentiment features are useful, they alone are insufficient for stance detection. Ebrahim et al. [70] proposed maximum entropy (as discriminative) and Naive Bayes (as generative) to model the interactions between stance and sentiment by training the SemEval-2016 dataset. Hosseinia et al. [36] demonstrated that bidirectional transformers can achieve competitive performance, even without fine-tuning, by leveraging sentiment and emotion lexicons. Their findings suggested that employing sentiment information is more beneficial than emotion in detecting the stance.

The main advantages of supervised-based learning techniques are their reliable and accurate performance, given the appropriate representation of data and appropriate algorithms. However, the main drawback of these approaches is the need for a sufficient amount of annotated data for the desired task. Considering the plethora of human languages and the complexity of NLP problems in the real world, having labeled data for every setting is infeasible. Thus, supervised learning may fail given these real-world challenges.

Table 6 summarizes the supervised-based learning stance detection techniques used in the selected studies. In this table, we present the following comparison criteria:

Type of the target dependency: target-specific, multi-related targets, cross-target, or target-independent.

Target language of the study: single language or multilingual.

Features used for model learning. The abbreviations in the feature column are SE: static embeddings, and CE: contextualized embeddings.

ML models adopted by the study.

Dataset name used for model training or the resource of collecting data if there is no defined dataset name.

Best score. The literature on stance classification varies on the used performance measures; however, the macro-average F1 score is the most popular measure in the surveyed studies. Thus, we report the macro-average F1 score of the best ML model in each study. Note that few studies did not report their results in macro-average F1; thus, we report their results with the accuracy (Acc.) score.

As can be seen from Table 6, the majority of the supervised techniques have targeted a specific topic using the SemEval-2016 dataset. The English language is the main language considered by most supervised-based stance detection studies. In addition, deep learning models (e.g., LSTM and transformers) achieved higher performance scores compared to traditional ML models, such as SVM.

Unsupervised-based learning