Abstract

The current multiphase, in vitro study developed and validated a 3-dimensional convolutional neural network (3D-CNN) to generate partial dental crowns (PDC) for use in restorative dentistry. The effectiveness of desktop laser and intraoral scanners in generating data for the 3D-CNN was first evaluated (phase 1). There were no significant differences in surface area [t-stat(df) = −0.01 (10), mean difference = −0.058, P > 0.99] or volume [t-stat(df) = 0.357 (10), mean difference = 21.25, P = 0.375]. However, the intraoral scans were chosen for phase 2 because they produced a greater level of volumetric detail (343.83 ± 43.52 mm³) than desktop laser scanning (322.70 ± 40.15 mm³). In phase 2, 120 tooth preparations were digitally synthesized from intraoral scans, and two clinicians designed the respective PDCs using computer-aided design (CAD) workflows on a personal computer setup. Statistical comparison by 3-factor ANOVA demonstrated significant differences in surface area (P < 0.001), volume (P < 0.001), and spatial overlap (P < 0.001), and therefore only the most accurate PDCs (n = 30) were picked to train the neural network (phase 3). The current 3D-CNN produced a validation accuracy of 60%, a validation loss of 0.68–0.87, a sensitivity of 1.00, and a precision of 0.50–0.83, and serves as a proof-of-concept that a 3D-CNN can predict and generate PDC prostheses in CAD for restorative dentistry.

Subject terms: Computational biology and bioinformatics, Medical research, Engineering, Materials science

Introduction

The development of artificial intelligence (AI) began in 1943, but the term “artificial intelligence” was coined at a session in Dartmouth in 1956 [1]. Within this framework, deep learning, neural networks, and machine learning are subsets of AI. Machines can learn by building algorithms that solve predictive problems without human insight [2]. The neural networks (NN) used are non-linear mathematical models that mimic the learning and decision-making traits of the human brain, simulating human cognitive skills [3]. Such NNs can be complex, with hidden layers that can be trained as multilayer perceptrons to represent, predict, and process data through deep learning [2]. Convolutional neural networks and artificial neural networks are the most used designs for processing data in planning prophylaxis, pivotal therapies, and projecting treatment costs [3]. Looking to the near future, this technology will lead to various new application areas within public domains in the form of smart assistants [4]. One field that would benefit is dental medicine, where routine tasks initially performed by dental staff could be carried out with improved quality of care [5,6].

Previously, AI models have been commonly used for the mapping and finishing of tooth preparations and for different prosthodontic applications. Computer-aided design methods have also been used for tooth anatomy selection in automated dental restoration designs. Successful casting of metal frameworks, tooth shade selection, and porcelain shade matching have been recommended features of AI models [7]. Indirect restorations such as partial dental crowns or PDCs (inlays and onlays) have recently begun gaining popularity in the ‘minimally invasive dentistry’ movement. Reinforcing their proposed advantages, it has been established that gold and ceramic onlay preparations resulted in significantly less coronal tooth structure reduction than their full-coverage equivalents on the same tooth when performed by undergraduate students [8,9]. While commercially available digital solutions provided CAD assistance to dentists for digital inlay and onlay preparations, most free or open-source implementations were documented for dentures and larger prostheses as opposed to PDCs [10,11]. Furthermore, the literature suggested that both desktop laser scanners and intraoral scanners were accurate devices in their own right and carried out specific functions effectively [12–14]. The literature did not, however, specify the ideal device for digitally recording tooth preparations as input data for PDC design and machine learning purposes. Given this gap, previous reports of open-source CAD design were analyzed and modified to develop novel reconstruction workflows suitable for the current research. The workflows were revised and simplified to eliminate the steep learning curve that is commonly reported by dentists adopting clinical digitization [15–17]. Therefore, it was deemed appropriate that dentists design the digital PDCs in CAD that would then be used in the machine learning process.

There are various impediments associated with AI or any other newly introduced technology. Recent technological developments in medicine and dentistry have been expensive and were accompanied by uncertain patient compliance and acceptance amongst dental professionals [18,19]. Machine learning and neural networks have been proven successful in classifying and grouping data in a multitude of different fields of medicine and dentistry. In the past there have been a few documented methods of tooth preparation detection from radiographic image analysis using deep learning [20]. However, the prospect of using 3-dimensional (3D) data of partial dental tooth preparations has until now evaded translational possibilities, with 3D medical machine learning still in its infancy [21].

The current study aimed to generate an accurate, novel 3D dental prosthetic dataset for 3D convolutional neural network (3D-CNN) training purposes. To generate a novel prosthetic dataset, the first challenge was to obtain accurate scans of prepared teeth. Based on past experiments, this can be achieved by both a desktop laser scanning system and an intraoral scanner with no significant difference in surface topography and precision [12,13]. While both devices use imaging, infrared, and laser sensors to take thousands of images in a fraction of a second and compile them into 3D meshes, the key difference lies in the level of triangulation or magnification, with intraoral scanners providing larger magnification [12,13]. However, no study had evaluated the systems’ accuracy on partial dental crowns, and therefore in phase 1 the two systems were virtually compared to determine which generated more accurate 3D models relevant to the current prosthetic design.

The second challenge was that dental prostheses can be designed using both free and commercial software, with the most prominent differences being the number of features relevant to dentistry and ease of use for the human operator. As no previous study documented a comparison between the two options for partial dental crowns, in phase 2 the current study recruited two dentists with no previous computer-aided design (CAD) experience and trained them on the workflows to design partial dental crowns on both the free and commercial CAD systems. The scanned 3D models were augmented according to published medical AI practices [22] and super-sampled to produce a larger dataset.

Therefore, the aim of the current study was to analyze different digital workflows to identify the most accessible workflow that can generate accurate novel digital data of partial dental crowns for 3D machine learning purposes. It was hypothesized that the proposed digital workflows could generate accurate dental data of partial dental crowns for 3D machine learning purposes.

Results

The development of the convolutional neural network was possible using 3D STL models of tooth preparations of partial dental crowns.

Phase 1

All assumptions for normality were met and an independent sample t-test was carried out. There were no significant differences in surface area [t-stat(df) = −0.01 (10), mean difference = −0.058, P > 0.99] and volume [t-stat(df) = 0.357 (10), mean difference = 21.25, P = 0.375]. HD values ranged from −0.02 to 0.10 mm, with DSC ranging from 0.90 to 0.98. Intraoral scans produced greater volumetric details (343.83 ± 43.52 mm³) in comparison to desktop laser scanning (322.70 ± 40.15 mm³).

Phase 2

A 3-factor ANOVA produced significant differences for MSA (F-stat = 111.28, P < 0.001) (Table 1) and VV (F-stat = 112.91, P < 0.001) (Supplementary Table 1) when type of prosthesis was an independent factor, with no significant differences in the other factors. There were no significant interaction effects among the 3 independent factors. However, workflow 1 generated substantially larger surface areas (129.63–140.30 mm²) and volumes (38.20–39.16 mm³) for inlays for both operators. Analyses of HD (Supplementary Table 2) and DSC (Supplementary Table 3) demonstrated significant interchangeable interactions between virtual workflow, clinical operator and type of prosthesis being designed. Workflow 2 produced greater DSC (0.85–0.95) for onlays. One 3D model was digitally corrupted at the time of spatial overlap analysis, and therefore the mesh and its three counterparts generated by the clinicians from the other workflows were removed prior to statistical evaluation to maintain the integrity of the report.

Table 1.

Analysis of variance (n = 116) for mesh surface area.

| Independent factor | Level | Mean ± SD | F (df) | P |
|---|---|---|---|---|
| 1. Type of Prosthesis | Inlay | 112.44 ± 46.05 | 111.279 (1) | < 0.001 |
| | Onlay | 291.65 ± 117.62 | | |
| 2. Virtual Workflow | Workflow 1 (3matics) | 214.59 ± 120.21 | 1.355 (1) | 0.247 |
| | Workflow 2 (Meshmixer) | 195.68 ± 134.36 | | |
| 3. Clinical Operator | Operator 1 | 206.21 ± 127.48 | 0.018 (1) | 0.894 |
| | Operator 2 | 204.07 ± 128.19 | | |

| Prosthesis | Workflow | Operator 1 (Mean ± SD) | Operator 2 (Mean ± SD) |
|---|---|---|---|
| Inlay | Workflow 1 | 140.30 ± 60.739 | 129.63 ± 40.131 |
| Inlay | Workflow 2 | 90.298 ± 26.026 | 89.550 ± 27.355 |
| Onlay | Workflow 1 | 285.95 ± 122.64 | 291.87 ± 119.51 |
| Onlay | Workflow 2 | 296.17 ± 117.45 | 292.62 ± 122.96 |

| Interaction effect | F (df) | P |
|---|---|---|
| 1. Type of Prosthesis vs. Virtual Workflow | 2.211 (1) | 0.140 |
| 2. Type of Prosthesis vs. Clinical Operator | 0.041 (1) | 0.840 |
| 3. Virtual Workflow vs. Clinical Operator | < 0.001 (1) | 0.995 |
| 4. Type of Prosthesis vs. Virtual Workflow vs. Clinical Operator | 0.081 (1) | 0.776 |

Phase 3

The 30-specimen dataset produced a maximum validation accuracy of 60% in determining the type of prosthesis required for each tooth preparation. A validation loss of 0.8748 was seen in the tooth preparation dataset and 0.6832 in the prosthetic dataset (Supplementary Fig. 1). Sensitivity was 1.00 for both datasets, with the 3D tooth preparation dataset producing a precision of 0.50 and the 3D prostheses dataset producing 0.83. Apart from precision, every accuracy indicator of the automatic segmentation revealed a distinction between the groups that was statistically significant (P < 0.05).
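As a reminder of how the two reported indicators are defined, the following minimal sketch computes sensitivity and precision from confusion-matrix counts. The function name and the counts are hypothetical; the study does not report its confusion matrix, so the numbers below only illustrate how a sensitivity of 1.00 can coexist with a precision below 1.

```python
def sensitivity_and_precision(tp, fp, fn):
    """Return (sensitivity, precision) from true-positive, false-positive
    and false-negative counts. Returns 0.0 for an undefined ratio."""
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    return sensitivity, precision


# Hypothetical counts (not from the study): no missed positives,
# one false alarm among six positive predictions.
sens, prec = sensitivity_and_precision(tp=5, fp=1, fn=0)
print(sens)            # 1.0
print(round(prec, 2))  # 0.83
```

A sensitivity of 1.00 means no false negatives occurred, whereas a lower precision indicates that some positive predictions were false positives.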

Discussion

The current study aimed to investigate the most reliable workflows to generate novel 3D data for dental machine learning purposes and subsequently classify 3D models of partial dental crowns and their tooth preparations by training the model using multidisciplinary medical datasets. In addition, it aimed to validate an innovative AI-driven tool for time-efficient and precise automation. To the authors’ knowledge, this is the first study to have attempted the said approach. The AI-driven tool demonstrated high accuracy and a fast performance. While both scanning apparatuses in phase 1 are considered standard for their respective roles according to previous literature [20,21], the current study found that intraoral scans captured greater volumetric detail than desktop scans, although the differences were not statistically significant, indicating that intraoral scans may be more appropriate for detecting the line angles and finish lines on the prepared teeth. The thin layer of titanium dioxide applied may have affected the outcomes, but further validation is required to confirm this hypothesis. Unlike radiomic datasets, where clinical experience is critical and dictates the overall success of machine learning [23], both workflows in phase 2 produced respectable results when operated by 2 dentists with little to no experience with dental CAD. However, surface reconstruction for inlays and Boolean subtraction for onlays generated more reliable outcomes, supporting the age-old argument that commercial software is highly optimized for smaller details while open-source or crowd-supported projects are more accessible to users for general projects [9,10].

Accuracy metrics are widely acknowledged for assessing AI segmentation quality. However, time as a factor has received less attention throughout the process, which makes it a pivotal influencer when considering clinical applicability and relevance. The current study was greatly limited by the size of the original dataset (n = 6) and by the need to fit the data within GPU memory. 3D neural network applications require substantial graphical computing resources, which are still being optimized for the inevitable era of augmented reality and the virtual metaverse. To tackle this, transfer learning of similar data was investigated with robust techniques such as the application of 3D convolutional networks [24,25]. The lack of 3D data for machine learning in dental restorative sciences encouraged transfer learning from multidisciplinary lung datasets to train a smaller dental dataset. The current work demonstrated that 3D images obtained from .stl file data can be directly fed into a 3D neural network model instead of regarding the 3D spatial information as a stacked input of 2D-based methods [26]. A validation accuracy of 60% with a validation loss (the sum of errors made for each example in training or validation sets) below 1.00 on such a small dataset would indicate promising prospects for further development. Oversampling the data in CAD to increase sample size and appointing different practitioners to design the prostheses helped introduce minute variations on the 6 specimens, which led to the production of 120 specimens. Future studies with an increased dental sample size, with the current model transferred into the network, and with application of the promising generative adversarial networks [21] can potentially increase accuracy and lower issues of overfitting, while clinically allowing for more targeted machine learning applications in dental diagnostics and treatment planning.

The findings of the current report suggest that the 3D STL models of tooth preparations of partial dental crowns can be analyzed and processed using neural networks. However, the main errors identified during the process for all groups tested were instances of under-estimation or under-evaluation. Such limitations may be explained by the presence of artifacts that can produce higher false-positive voxels or by the wide parameter adjustments commonly accompanying neural networks [27,28]. At the same time, these errors are unlikely to have a detrimental impact in a clinical scenario, and the approach can be implemented in challenging and complex cases.

The conversion of solid objects into 3D data matrices creates the potential for segmentation, classification, and analysis of dental cavities, oral cysts, and neoplastic lesions from models generated directly from 3D radiographic imaging. Finally, accurate generation of fillings and crowns through autoencoders (i.e., neural networks that can compress and create meaningful information for decoding later) can be performed just by analyzing the cavity itself, completely mitigating the need for operator intervention in designing the prosthesis [29]. With the availability of a larger dataset and a reduced graphics-intensive workload, the use of autoencoders and 3D-CNNs can potentially negate the need for an expensive computer setup to run 3D machine learning applications and make this technology commonplace.

Conclusion

Within the limitations of the study, the findings of the current in vitro study are as follows:

- Intraoral scans can produce more accurate surface texture details of teeth prepared for partial dental crowns than laser scanner-derived 3D images.

- The study demonstrated that clinicians do not require expensive computer setups or experience with CAD to design virtual crown prostheses that are fit to facilitate machine learning.

- The study serves as a proof of concept that both open-source and commercial CAD workflows can process virtual data for tooth preparations which are acceptable for machine learning in restorative dentistry.

- 3D deep learning can generate and predict partial dental crown restorations appropriate for tooth preparations in dentistry.

Materials and methods

This study was conducted in compliance with the World Medical Association Declaration of Helsinki on medical research. Informed consent was taken from all subjects providing tooth samples. All experimental protocols were approved by the Joint Ethical Committee/Institutional Review Board at International Medical University under Project No. 259/2020. The current experiment was designed and executed in 3 phases as summarized in Supplementary Fig. 2.

Phase 1: scanning

Six extracted natural teeth were prepared for inlay and onlay restorations following standardized methodology. A mesio-occluso-distal cavity was prepared on the proximal surfaces. The bur was kept at 90 degrees to the occlusal plane and slightly tilted laterally. The bur was in rotation when applied and did not stop rotating until it was removed from the tooth. The marginal ridge was thinned out before dropping into the proximal box. For the occlusal segment, the line and point angles were defined with buccal and lingual walls parallel to each other and at 90 degrees to the occlusal plane. The mesial and distal walls were divergent pulpo-occlusally. The bur was moved facially and lingually along the dentino-enamel junction and oriented according to the proximal wall directions. The gingival margins were extended gingivally [30]. The prepared teeth were coated in titanium dioxide spray to reduce surface reflection of ambient light for scanning [31,32].

Each preparation was scanned once using an intraoral scanner (3Shape; Trios) and once using a desktop laser scanner (3D Scanner Ultra HD; Next Engine Santa Monica). The scans were exported as standard tessellation language (STL) files and quantitatively evaluated in a virtual environment for likeness in surface contour and for geometric and volumetric similarities by measuring four separate parameters [33,34]: mesh surface area (MSA), virtual volume (VV), Hausdorff distance (HD) and Dice similarity coefficient (DSC). MSA evaluated the surface contour, VV measured the volumetric similarities, HD broke the two objects into points and measured the number of interpoint mismatches, while DSC measured the volumetric spatial overlap between the two objects. The scanning method that produced the best visual (Supplementary Fig. 3) and quantitative results was selected for phase 2.
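To make the two overlap parameters concrete, the sketch below gives the standard textbook definitions of the Dice similarity coefficient on voxel sets and of the (unsigned, symmetric) Hausdorff distance on point clouds. This is an illustrative assumption rather than the implementation used in the study: the evaluation software reported signed HD values, and the function names and toy cubes here are hypothetical.

```python
import math


def dice_coefficient(voxels_a, voxels_b):
    """Dice similarity coefficient between two sets of occupied voxel indices."""
    a, b = set(voxels_a), set(voxels_b)
    if not a and not b:
        return 1.0  # two empty volumes are treated as a perfect overlap
    return 2 * len(a & b) / (len(a) + len(b))


def directed_hausdorff(points_a, points_b):
    """Largest distance from any point in A to its nearest neighbour in B."""
    return max(min(math.dist(p, q) for q in points_b) for p in points_a)


def hausdorff_distance(points_a, points_b):
    """Symmetric Hausdorff distance between two point clouds."""
    return max(directed_hausdorff(points_a, points_b),
               directed_hausdorff(points_b, points_a))


# Toy data: two 2 x 2 x 2 voxel cubes, the second shifted by one voxel along x.
cube_a = {(x, y, z) for x in range(2) for y in range(2) for z in range(2)}
cube_b = {(x + 1, y, z) for (x, y, z) in cube_a}

print(dice_coefficient(cube_a, cube_b))                # 0.5
print(hausdorff_distance(list(cube_a), list(cube_b)))  # 1.0
```

The brute-force nearest-neighbour search is quadratic in the number of points, so a real mesh comparison would normally rely on a spatial index or dedicated mesh-processing software.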

Phase 2: restoration design

The tooth preparations were categorized according to the type of restoration (inlay or onlay) and two workflows were developed for each category of restoration: Workflow 1, medical-grade commercial software (3matics; Materialise NV), and Workflow 2, free software (Meshmixer; Autodesk Inc). Inlays for the medical-grade commercial software were designed following the ‘surface construction’ principles (Fig. 1), as documented in previous literature [11,35]. The inlay design for the free software (Supplementary Fig. 4) and the onlay designs for both the commercial (Fig. 2) and free software (Supplementary Fig. 5) followed the ‘Boolean subtraction’ principles, as previously documented [36]. Virtual crown templates were obtained from scanned physical restorations on dental casts and were used to reconstruct the onlay cusps [9,10,37].

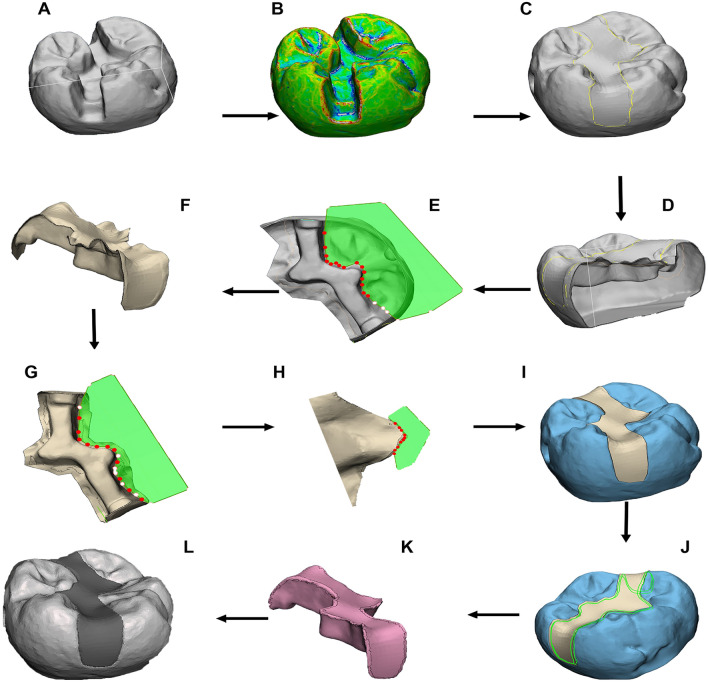

Figure 1.

Workflow 1 for inlay design with respective commands: (A) 3D model loaded into 3-matics, (B) curvature analysis and curve creation on model surface, (C) surface reconstruction function to bridge defect, (D) hollow the model and trim down, (E) manual trimming of isolated segments, (F) apply wrap function, (G) manually trim after wrapping, (H) manually trim any overhangs, (I) check fit onto 3D preparation model, (J) fine smoothing of edges, (K) final wrap and apply autofix function to fix any defects, (L) final output.

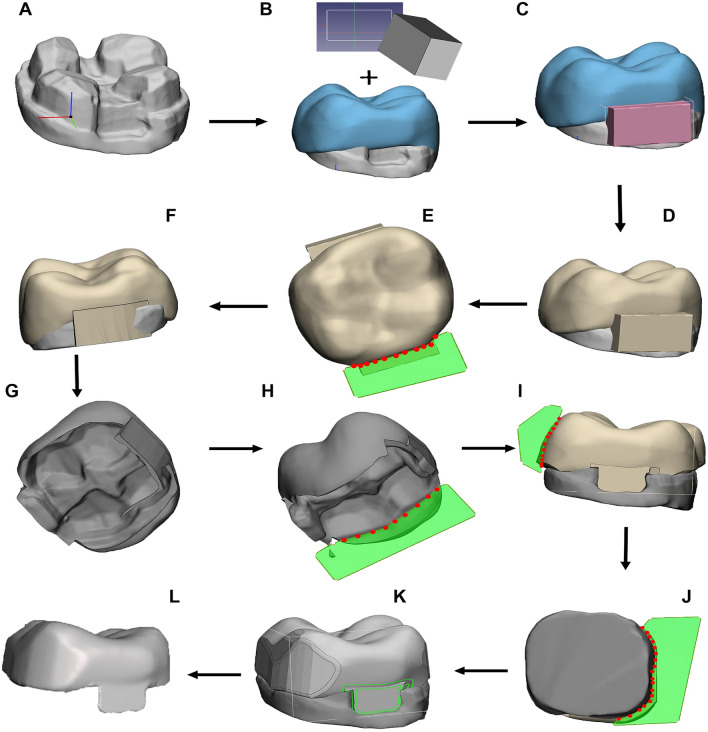

Figure 2.

Workflow 1 for onlay design: (A) import 3D tooth preparation, (B) superimpose crown template, (C) import and scale block template, (D) perform Boolean union, (E) trim excess, (F) check contour for overhang, (G) perform Boolean intersection, (H) remove excess, (I) contour edges, (J) crown shaping through manual trim, (K) edge smoothing, (L) sculpt and smooth.

The process and commands for the restorations in both workflows were video recorded using low-detail practice crown templates and documented in text (Supplementary file). The videos and template files were provided to two dentists with no prior experience in CAD-based rehabilitation to offset potential operator-dependent biases [36]. The dentists practiced digital rehabilitation using the templates at their convenience for one week before being introduced to the actual crown templates as selected and designed by a certified prosthodontist. This process was followed to discourage memorized familiarity-induced fatigue [38]. The six specimens were digitally oversampled five times, introducing incremental variations in tooth preparation size and depth to facilitate a machine learning model trained using a larger variation in tooth preparations. This resulted in 30 samples (Supplementary Fig. 6) assigned to each workflow, thereby producing 120 samples (60 from each dentist). This graphics-intensive task of oversampling was undertaken on a computer (ROG Flow X13; Asus) with an AMD Ryzen 7 5800HS processor, 16 GB of RAM, NVIDIA GTX 1650 Max-Q dedicated graphics and a thermal design power (TDP) of 30 W. However, to standardize the evaluation and keep it practically relevant, both practitioners designed all the prostheses on a personal computer (IdeaPad Flex 5; Lenovo) with an Intel Core i5-1135G7 processor, 8 GB of RAM, a 512 GB NVMe solid-state drive, no dedicated GPU, and a TDP of 15 W, reflecting an average laptop computer of 2022. The workflows with the most consistent and favorable MSA, VV, HD and DSC values were evaluated, and the best performing workflows, as determined by greater surface area, volumetric similarities, and spatial overlap, were selected to train the machine learning model in Phase 3.
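The sample counts in this design follow from a simple full-factorial enumeration, sketched below. The identifiers are hypothetical labels; only the factor sizes (six specimens, five oversampled variants, two workflows, two operators) come from the text, and the excluded preparation is chosen arbitrarily to show how removing one corrupted mesh and its three counterparts leaves the 116 specimens analyzed.

```python
from collections import Counter
from itertools import product

SPECIMENS = range(1, 7)   # six scanned tooth preparations
VARIANTS = range(1, 6)    # five oversampled variants per specimen
WORKFLOWS = ("Workflow 1", "Workflow 2")
OPERATORS = ("Operator 1", "Operator 2")

# One designed prosthesis per (specimen, variant, workflow, operator).
designs = list(product(SPECIMENS, VARIANTS, WORKFLOWS, OPERATORS))

per_operator = Counter(op for _, _, _, op in designs)
per_cell = Counter((wf, op) for _, _, wf, op in designs)

print(len(designs))                              # 120
print(per_operator["Operator 1"])                # 60
print(per_cell[("Workflow 1", "Operator 1")])    # 30

# Hypothetical choice of the corrupted preparation: dropping it from all
# four workflow-operator cells removes four meshes in total.
corrupted = (3, 2)
analyzed = [d for d in designs if (d[0], d[1]) != corrupted]
print(len(analyzed))                             # 116
```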

Phase 3: deep learning from 3D data

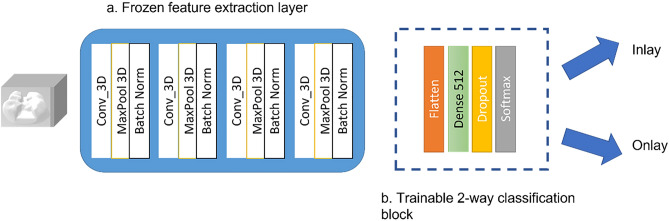

Thirty specimens and their corresponding restorations from the best workflows were selected for transfer learning and machine learning application, sliced into 2D segments (Supplementary Fig. 7), and later appended into a readable 3D matrix. A 17-layer 3D convolutional neural network (CNN) with four 3D layers was implemented at a kernel size of 3 × 3 × 3 [39]. The 17-layer CNN had a frozen feature extraction block consisting of 13 layers and a classification block consisting of four layers. The first two layers consisted of 64–128 and 256 filters, with each CNN layer followed by a 2-stride max-pool layer and a ReLU activation, ending with a batch normalization (BN) layer. The classification block had a dense ‘Flatten’ layer of 512 neurons followed by a 60% ‘Dropout’ layer and finally a ‘SoftMax’ layer. The primary dataset for training was obtained from the ImageCLEF Tuberculosis 2019 dataset [40] and the network was frozen after obtaining a 73.3% validation accuracy. 132,097 trainable parameters were transferred to train the current dataset. The dental dataset was split in a 2:1 ratio for training and validation. Training was set for 100 epochs, with early termination planned if validation accuracy did not improve within 15 epochs. The developed 3D network is shown in Fig. 3.

Figure 3.

The 3D neural network.
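The data split and stopping rule described for Phase 3 can be sketched in plain Python as below. This is a minimal illustration under stated assumptions, not the training code used in the study: the function names are hypothetical, the split is taken in order without shuffling, and the accuracy history is invented solely to show when a 15-epoch patience rule would halt a 100-epoch run.

```python
def split_two_to_one(items):
    """Split a sequence into training and validation parts at a 2:1 ratio."""
    cut = (2 * len(items)) // 3
    return items[:cut], items[cut:]


def epochs_until_stop(val_accuracy, max_epochs=100, patience=15):
    """Return the epoch at which training would halt.

    Training stops early once `patience` consecutive epochs pass without
    the validation accuracy exceeding its best value so far.
    """
    best = float("-inf")
    stale = 0
    for epoch, acc in enumerate(val_accuracy[:max_epochs], start=1):
        if acc > best:
            best, stale = acc, 0
        else:
            stale += 1
            if stale >= patience:
                return epoch
    return min(len(val_accuracy), max_epochs)


specimens = list(range(30))
train, val = split_two_to_one(specimens)
print(len(train), len(val))        # 20 10

# Hypothetical history: accuracy reaches 0.60 at epoch 5 and then plateaus.
history = [0.40, 0.45, 0.50, 0.55, 0.60] + [0.60] * 95
print(epochs_until_stop(history))  # 20
```

In practice the specimens would usually be shuffled, or stratified by prosthesis type, before splitting so that both classes appear in the validation set.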

Statistical analysis

Sample size estimation for an adequate evaluation of MSA, VV, HD, and DSC was carried out using G*Power [41]. A large effect size f = 0.40, α = 0.05, and power = 0.85 determined a minimum sample size of 108 digital specimens. A 3-way analysis of variance (ANOVA) with pairwise comparison and interaction effects was applied to MSA, VV, HD, and DSC from 116 specimens to determine the best outcomes from Phase 2. This was carried out using statistical software (SPSS, IBM Corp.).

Acknowledgements

Dr Taseef Farook is a recipient of the University of Adelaide Research Scholarship. The authors thank Ms Suzana Yahya and Dr Johari Yap Abdullah of Universiti Sains Malaysia for facilitating the laser scanning procedure.

Author contributions

Study idea and design conceptualization: N.B.J., T.H.F., U.M.D.; Study methodology design: T.H.F., S.A., P.R.S., P.K.; Digital data synthesis: T.H.F., F.R., A.B., S.A., A.M.L.; Data analysis: F.R., A.B., S.A., P.K.; Statistical analyses: F.R., A.B., P.R.S., A.M.L.; Formulation of supplementary data: F.R., A.B.; Data validation: T.H.F., S.A., S.Z.; Manuscript writing: T.H.F., J.D., P.K., S.Z.; Manuscript revision: N.B.J., J.D., U.M.D., S.Z.; Final approval for submission: N.B.J., J.D., U.M.D.

Data availability

The data supporting the findings of this study are available within the article and its Supplementary Information file. Source data are provided with this paper.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-023-28442-1.

References

- 1. Moor J. The Dartmouth College artificial intelligence conference: The next fifty years. AI Mag. 2006;27:87.

- 2. Mupparapu M, Wu C-W, Chen Y-C. Artificial intelligence, machine learning, neural networks, and deep learning: Futuristic concepts for new dental diagnosis. Quintessence Int. 2018;49:687–688. doi: 10.3290/j.qi.a41107.

- 3. Hamet P, Tremblay J. Artificial intelligence in medicine. Metabolism. 2017;69:S36–S40. doi: 10.1016/j.metabol.2017.01.011.

- 4. Ivanov SH, Webster C, Berezina K. Adoption of robots and service automation by tourism and hospitality companies. Revista Turismo & Desenvolvimento. 2017;27:1501–1517.

- 5. Grischke, J., Johannsmeier, L., Eich, L. & Haddadin, S. Dentronics: Review, first concepts and pilot study of a new application domain for collaborative robots in dental assistance. In 2019 International Conference on Robotics and Automation (ICRA) 6525–6532 (IEEE, 2019).

- 6. Farook TH, Jamayet NB, Abdullah JY, Alam MK. Machine learning and intelligent diagnostics in dental and orofacial pain management: A systematic review. Pain Res. Manag. 2021;2021:6659133. doi: 10.1155/2021/6659133.

- 7. Zhang B, Dai N, Tian S, Yuan F, Yu Q. The extraction method of tooth preparation margin line based on S-Octree CNN. Int. J. Numer. Methods Biomed. Eng. 2019;35:e3241. doi: 10.1002/cnm.3241.

- 8. Dudley J. Comparison of coronal tooth reductions resulting from different crown preparations. Int. J. Prosthodont. 2018;31:142–144. doi: 10.11607/ijp.5569.

- 9. Tran J, Dudley J, Richards L. All-ceramic crown preparations: An alternative technique. Aust. Dent. J. 2017;62:65–70. doi: 10.1111/adj.12433.

- 10. Farook TH, Barman A, Abdullah JY, Jamayet NB. Optimization of prosthodontic computer-aided designed models: A virtual evaluation of mesh quality reduction using open source software. J. Prosthodont. 2021;30:420–429. doi: 10.1111/jopr.13286.

- 11. Farook TH, et al. Development and virtual validation of a novel digital workflow to rehabilitate palatal defects by using smartphone-integrated stereophotogrammetry (SPINS). Sci. Rep. 2021;11:1–10. doi: 10.1038/s41598-021-87240-9.

- 12. Bohner L, et al. Accuracy of digital technologies for the scanning of facial, skeletal, and intraoral tissues: A systematic review. J. Prosthet. Dent. 2019;121:246–251. doi: 10.1016/j.prosdent.2018.01.015.

- 13. Patzelt SBM, Emmanouilidi A, Stampf S, Strub JR, Att W. Accuracy of full-arch scans using intraoral scanners. Clin. Oral Investig. 2014;18:1687–1694. doi: 10.1007/s00784-013-1132-y.

- 14. Patil, P. G. & Lim, H. F. The use of intraoral scanning and 3D printed casts to facilitate the fabrication and retrofitting of a new metal-ceramic crown supporting an existing removable partial denture. J. Prosthet. Dent. (2021).

- 15. Kuo R-F, Fang K-M, Su F-C. Open-source technologies and workflows in digital dentistry. In: Sasaki K, Suzuki O, Takahashi N, editors. Interface Oral Health Science 2016. Springer; 2017. pp. 165–171.

- 16. Jokstad A. Computer-assisted technologies used in oral rehabilitation and the clinical documentation of alleged advantages – A systematic review. J. Oral Rehabil. 2017;44:261–290. doi: 10.1111/joor.12483.

- 17. Khanagar SB, et al. Developments, application, and performance of artificial intelligence in dentistry – A systematic review. J. Dent. Sci. 2021;16:508–522. doi: 10.1016/j.jds.2020.06.019.

- 18. Milner MN, et al. Patient perceptions of new robotic technologies in clinical restorative dentistry. J. Med. Syst. 2020;44:1–10. doi: 10.1007/s10916-019-1488-x.

- 19. Jeelani S, et al. Robotics and medicine: A scientific rainbow in hospital. J. Pharm. Bioallied Sci. 2015;7:S381. doi: 10.4103/0975-7406.163460.

- 20. Lee J-H, Kim D-H, Jeong S-N, Choi S-H. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J. Dent. 2018;77:106–111. doi: 10.1016/j.jdent.2018.07.015.

- 21. Tian S, et al. DCPR-GAN: Dental crown prosthesis restoration using two-stage generative adversarial networks. IEEE J. Biomed. Health. Inform. 2021;26:151–160. doi: 10.1109/JBHI.2021.3119394.

- 22. Chlap P, et al. A review of medical image data augmentation techniques for deep learning applications. J. Med. Imaging Radiat. Oncol. 2021;65:545–563. doi: 10.1111/1754-9485.13261.

- 23. Nozawa M, et al. Automatic segmentation of the temporomandibular joint disc on magnetic resonance images using a deep learning technique. Dentomaxillofac. Radiol. 2022;51:20210185. doi: 10.1259/dmfr.20210185.

- 24. Pan SJ, Yang Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2009;22:1345–1359. doi: 10.1109/TKDE.2009.191.

- 25. Tran, D., Bourdev, L., Fergus, R., Torresani, L. & Paluri, M. Learning spatiotemporal features with 3d convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision 4489–4497 (2015).

- 26. Zheng G. Effective incorporating spatial information in a mutual information-based 3D–2D registration of a CT volume to X-ray images. Comput. Med. Imaging Graph. 2010;34:553–562. doi: 10.1016/j.compmedimag.2010.03.004.

- 27. Leite AF, et al. Artificial intelligence-driven novel tool for tooth detection and segmentation on panoramic radiographs. Clin. Oral Investig. 2021;25:2257–2267. doi: 10.1007/s00784-020-03544-6.

- 28. Kareem SA, Pozos-Parra P, Wilson N. An application of belief merging for the diagnosis of oral cancer. Appl. Soft Comput. 2017;61:1105–1112. doi: 10.1016/j.asoc.2017.01.055.

- 29. Bank, D., Koenigstein, N. & Giryes, R. Autoencoders. arXiv preprint arXiv:2003.05991 (2020).

- 30. Ritter AV. Sturdevant’s Art & Science of Operative Dentistry-e-Book. Elsevier Health Sciences; 2017.

- 31. Rashid F, et al. Color variations during digital imaging of facial prostheses subjected to unfiltered ambient light and image calibration techniques within dental clinics: An in vitro analysis. PLoS ONE. 2022;17:e0273029. doi: 10.1371/journal.pone.0273029.

- 32. Rashid F, et al. Factors affecting color stability of maxillofacial prosthetic silicone elastomer: A systematic review and meta-analysis. J. Elastom. Plast. 2021;53:698–754. doi: 10.1177/0095244320946790.

- 33. Farook TH, Abdullah JY, Jamayet NB, Alam MK. Percentage of mesh reduction appropriate for designing digital obturator prostheses on personal computers. J. Prosthet. Dent. 2020;128:219–224. doi: 10.1016/j.prosdent.2020.07.039.

- 34. Jamayet, N.B., Farook, T. H., Ayman, A.-O., Johari, Y. & Patil, P. G. Digital workflow and virtual validation of a 3D-printed definitive hollow obturator for a large palatal defect. J. Prosthet. Dent. (2021).

- 35. Beh YH, et al. Evaluation of the differences between conventional and digitally developed models used for prosthetic rehabilitation in a case of untreated palatal cleft. Cleft Palate Craniofac. J. 2020;58:386–390. doi: 10.1177/1055665620950074.

- 36. Farook TH, et al. Designing 3D prosthetic templates for maxillofacial defect rehabilitation: A comparative analysis of different virtual workflows. Comput. Biol. Med. 2020;118:103646. doi: 10.1016/j.compbiomed.2020.103646.

- 37. Paulus D, Wolf M, Meller S, Niemann H. Three-dimensional computer vision for tooth restoration. Med Image Anal. 1999;3:1–19. doi: 10.1016/S1361-8415(99)80013-9.

- 38. Rystedt H, Reit C, Johansson E, Lindwall O. Seeing through the dentist’s eyes: Video-based clinical demonstrations in preclinical dental training. J. Dent. Educ. 2013;77:1629–1638. doi: 10.1002/j.0022-0337.2013.77.12.tb05642.x.

- 39. Zunair, H., Rahman, A., Mohammed, N. & Cohen, J. P. Uniformizing techniques to process CT scans with 3D CNNs for tuberculosis prediction. In International Workshop on PRedictive Intelligence in Medicine 156–168 (Springer, 2020).

- 40. Cid, Y. D. et al. Overview of ImageCLEFtuberculosis 2019-Automatic CT-based Report Generation and Tuberculosis Severity Assessment. In CLEF (Working Notes) (2019).

- 41. Faul F, Erdfelder E, Lang A-G, Buchner A. G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav Res Methods. 2007;39:175–191. doi: 10.3758/BF03193146.
