Abstract

Purpose:

Individual differences and variability in outcomes following cochlear implantation (CI) in patients with hearing loss remain significant unresolved clinical problems. Case reports of specific individuals allow for detailed examination of the information processing mechanisms underlying variability in outcomes. Two adults who displayed exceptionally good postoperative CI outcomes shortly after activation were administered a novel battery of auditory, speech recognition, and neurocognitive processing tests.

Method:

This case study examined two adult CI recipients with postlingually acquired hearing loss who displayed excellent postoperative speech recognition scores within 3 months of initial activation. Preoperative City University of New York (CUNY) sentence testing and a postoperative battery of sensitive speech recognition tests were combined with auditory and visual neurocognitive information processing tests to identify each patient's strengths, weaknesses, and milestones.

Results:

Preactivation CUNY auditory-only (A) scores were < 5% correct, while auditory + visual (A + V) scores were ≥ 74%. Listening with their CIs, both participants exceeded the average scores of CI users by 1–2 standard deviations on tests of speech recognition, environmental sound identification, and speech recognition in noise. On nonacoustic visual measures of language and neurocognitive functioning, both participants achieved above-average scores relative to normal-hearing adults in vocabulary knowledge, rapid phonological coding of visually presented words and nonwords, verbal working memory, and executive functioning.

Conclusions:

Measures of multisensory (A + V) speech recognition and visual neurocognitive functioning were associated with excellent speech recognition outcomes in two postlingual adult CI recipients. These neurocognitive information processing domains may underlie the exceptional speech recognition performance of these two patients and offer new directions for research explaining variability in postimplant outcomes. The results further suggest that current clinical outcome measures should be expanded beyond conventional speech recognition measures to include more sensitive and robust tests of speech recognition, as well as neurocognitive measures of working memory, vocabulary, lexical access, and executive functioning.

Individual differences and variability in outcomes following cochlear implantation (CI) have remained formidable, unresolved clinical problems since CIs were first developed as a medical treatment for profound sensorineural hearing loss (SNHL; Bilger et al., 1977; Carlson, 2020; Kral et al., 2019; Niparko, 2009; Wilson & Blake, 2008; Wilson et al., 2011). We are still unable to reliably predict from preoperative measures which patients will do well with their CIs and which will have poor outcomes (Moberly et al., 2016; Velde et al., 2021).

Knowing which sensory, cognitive, and linguistic factors underlie individual differences and variability in speech recognition outcomes is a prerequisite for achieving optimal outcomes in all patients, especially CI patients who display poor outcomes. The effects of auditory and cognitive training after implantation are also highly variable. More importantly, most patients with CIs show very little evidence that training generalizes or transfers to novel speech materials and untrained information processing tasks (Klingberg, 2010; Nunes et al., 2014).

First, recent evidence suggests that cognitive and multisensory information processing are important for both normal-hearing listeners and CI recipients. Speech recognition in everyday, real-world environments reflects not only hearing, audibility, and the sensory processing of speech by the peripheral and central auditory systems but also significant contributions from downstream cognitive factors and prior linguistic knowledge, which have global effects on speech recognition (Pisoni, 2021). Recent studies demonstrate that variability in speech recognition outcomes following implantation reflects the joint contributions of hearing and cognition, particularly cognitive factors related to rapid phonological coding, working memory capacity, controlled attention, inhibitory control, executive functioning, perceptual learning, verbal learning, and memory (Tamati et al., 2022).

Second, most clinical research studies on speech recognition outcomes following CI have relied heavily on traditional demographic measures, hearing histories, and patient-related factors, such as residual hearing and duration of hearing loss before implantation, as the major predictors of outcomes and benefits (Blamey et al., 2013; Holden et al., 2013; Velde et al., 2021). Demographic variables are only proxies for the more elementary neural and cognitive factors that underlie the information processing operations used in rapid phonological coding, lexical retrieval, verbal working memory, attention, and inhibitory control. Considered alone, therefore, demographic variables provide no novel insights or mechanistic explanations of the sensory, perceptual, and cognitive processes that underlie speech recognition and comprehension. Human speech recognition is a complex, dynamic process in which multiple sensory, cognitive, and linguistic subsystems work together seamlessly to support language comprehension (Pisoni, 2021).

Traditional speech recognition measures are limited because they reflect only the endpoint product of a long series of sensory, perceptual, cognitive, and linguistic operations (Moberly et al., 2018). Although endpoint measures provide useful clinical benchmarks for assessing benefit and tracking progress after implantation, they are not explanatory: they are not closely linked to core theoretical constructs describing how information is encoded, stored, retrieved, and used to accomplish specific information processing tasks. Moreover, a small set of outcome measures, such as Consonant–Nucleus–Consonant monosyllabic word lists or AzBio sentences, provides only a limited assessment of the strengths, weaknesses, and milestones of a patient's speech understanding after implantation (Adunka et al., 2018; Luxford & Ad Hoc Subcommittee of the Committee on Hearing and Equilibrium of the American Academy of Otolaryngology-Head and Neck Surgery, 2001; Owens et al., 1981; Spahr et al., 2012). Conventional assessment of speech recognition outcomes after implantation should therefore be broadened to include measures that reflect perceptual learning, attention, and the listener's adaptive functioning under adverse and challenging listening conditions, such as noise, multitalker babble, foreign-accented speech, cognitive load, and dual-task paradigms that require controlled, focused attention in the face of distraction.

Finally, the traditional audiological approach to assessing speech recognition in listeners with hearing loss has relied almost exclusively on auditory-only presentation of test materials (Pisoni, 2021). With few exceptions, measures of audio-visual (AV) integration skills (e.g., perceptual processes involving lipreading and other optical facial cues presented concurrently with auditory signals) are rarely obtained from patients either before or after implantation (Stevenson et al., 2017; Wolfe, 2020), even though everyday speech communication relies on AV integration. Measures of AV speech recognition and AV integration, as well as visual-only lipreading, provide a unique window into the brain networks underlying speech perception and spoken language processing, and into the neural reorganization and remodeling that have taken place as a result of auditory deprivation before implantation (Alzaher et al., 2021; Lee et al., 2021; Rosenblum & Dorsi, 2021; Stevenson et al., 2017). Thus, there is a continuing need to identify sensory and cognitive influences on post-CI speech recognition in order to understand their contribution to individual differences and variability in outcomes.

Despite their known limitations in sample size, case reports allow for the selection of unique or paragon cases that may reveal novel mechanisms underlying outcomes (Ernst et al., 2013; Schork & Goetz, 2017; Woolston, 2020). For patients with significant hearing loss, case reports offer an opportunity to describe atypical cases and to suggest potential mechanisms underlying extraordinarily positive or negative outcomes. Case reports can also demonstrate the value of expanding the clinical use of novel predictor and outcome measures suggested by prior research. In this clinical focus article, we report results from two exceptional postlingual adult CI patients referred by their neurotologist for an expanded, more detailed evaluation because of their near-ceiling clinical speech recognition outcomes. After only a brief period of CI use following activation, both participants reached ceiling-level speech recognition in quiet, comparable to that of normal-hearing adults. Participant 001 achieved ceiling-level speech recognition on novel lists of AzBio Sentences in quiet after only 3 months of CI use. Participant 002 displayed ceiling-level performance on novel lists of AzBio Sentences presented both in quiet and in noise at a +10 dB signal-to-noise ratio (SNR) on the day of activation.

To better understand the factors influencing outcomes in these two patients, we administered an expanded battery of speech recognition measures as well as a novel battery of tests assessing cognitive and linguistic abilities that prior large-sample research has identified as predictive of post-CI outcomes (e.g., working memory, executive functioning, and linguistic knowledge; see Kronenberger & Pisoni, 2019, 2020). By studying these two postlingual adult CI users in greater depth with a diverse battery of sensory, cognitive, and linguistic information processing measures, we hoped to gain new insights into the factors that may underlie variability in speech recognition outcomes following implantation. Additional assessments of these two patients may also help identify potential preoperative predictors of speech recognition outcomes. These case reports may therefore point to targets for future research and novel interventions.

Method

Participants and Procedure

The two participants in this report were recruited from an ongoing study of individual differences approved by the university institutional review board. Both participants agreed in writing to have their clinical and research data used in these case reports. Both were adult males (aged 18 years or older) with a diagnosis of postlingual severe-to-profound hearing loss; both scored 30/30 on the Mini Mental State Examination, indicating the absence of significant cognitive impairment (Folstein et al., 1975); and both had received at least one cochlear implant at the time of testing. Both participants met current Food and Drug Administration candidacy criteria for CI (Adunka et al., 2018; Gifford et al., 2010); their preoperative scores are displayed in Table 2. Both used listening and speaking as their primary mode of communication, and neither had any experience with American Sign Language. Participants were specifically referred by their neurotologist (R.F.N.) because of their exceptional preactivation auditory + visual (A + V) integration skills on the City University of New York (CUNY) sentences and their remarkable speech recognition outcomes shortly after implantation.

Table 2.

Preoperative clinical scores.

| Measure | Participant 001 | Participant 002 |
|---|---|---|
| Unaided thresholds | Severe-to-profound bilateral sensorineural hearing loss | Bilateral mixed hearing loss: severe-to-profound, right ear; moderately-severe-to-profound, left ear |
| Aided thresholds | Responses within the mild sloping to severe hearing loss range | Responses within the mild sloping to severe hearing loss range |
| AzBio Sentences in quiet | 0% with binaural super-power behind-the-ear hearing aids | 45% with osseointegrated device with right abutment; 70% with hearing aid, left* |
| AzBio Sentences at +10 dB SNR | DNT | 27% with osseointegrated device with right abutment**; 43% with hearing aid, left |
| CUNY Sentences, auditory-only (A) | 0% (aided binaurally) | 46% (unaided left ear only) |
| CUNY Sentences, visual-only (V) | 21% (aided binaurally) | 5% (unaided left ear only) |
| CUNY Sentences, auditory + visual (A + V) | 74% (aided binaurally) | 85% (unaided left ear only) |

Note. CUNY = City University of New York; SNR = signal-to-noise ratio; DNT = did not test.

*Patient retested in noise.

**Qualifying score.

Both participants were implanted with Cochlear lateral wall (LW) electrode arrays. The intrascalar location of the electrode array has been theorized to affect speech recognition in CI users. Because spiral ganglion neural elements emanate from the modiolus, placing the electrodes closer to the modiolus and its neural elements is thought to improve sound perception and speech recognition. In addition, the greater distance between LW electrodes and the neural elements may produce overlapping stimulation due to voltage spread. However, a direct comparison of perimodiolar arrays inserted through the round window with LW arrays found no measurable difference in postoperative AzBio scores (MacPhail et al., 2021).

A novel assessment battery of sensory, cognitive, and linguistic information processing tasks was administered to both participants to measure several foundational information processing domains (Moberly & Reed, 2019). Selection of these domains was guided by recent findings from expanded post-implantation speech recognition assessments (Aronoff & Landsberger, 2013; Pisoni & Kronenberger, 2021; Won et al., 2007) and from research on cognitive and linguistic predictors of outcomes (Kramer et al., 2018; Moberly & Reed, 2019; Zhan et al., 2020). Participants were tested by a licensed, certified audiologist (C.J.H.). Tests were administered in the same order using standard instructions (with breaks as needed), delivered via live voice in auditory-verbal format, without sign language and with the examiner's face in full view. Speech recognition tests used audio-recorded stimuli. Unless otherwise noted, recorded items were presented in quiet at 65 dB sound pressure level from a high-quality loudspeaker (Advent AV570) located approximately 3 ft from the participant in an acoustically insulated room; tests presented instead through a GSI 61 audiometer in a sound-attenuated booth are identified below. The remaining cognitive tests were administered using standard instructions, as described below.

Research Measures

Auditory Information Processing Tasks

The Spectral-Temporally Modulated Ripple Test (SMRT) was used to assess spectral resolution by measuring each participant's ripple-resolution threshold in ripples per octave (RPO; Aronoff & Landsberger, 2013). On each trial, participants heard three complex nonspeech sounds and identified the one that differed from the other two. The threshold was obtained using a three-interval, two-alternative forced-choice task with a one-up/one-down adaptive procedure. Testing consisted of six runs of 10 reversals each. The first three runs were discarded as practice, and the RPO value was based on the last six reversals of each remaining run. Higher RPO thresholds indicate better spectral resolution.
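The reversal-based scoring rule just described (discard three practice runs, then average the last six reversals of each remaining run) can be sketched in Python. This is an illustrative sketch rather than the SMRT software: the function names are hypothetical, and the starting level, step size, floor, and the convention that a correct response raises ripple density are assumptions chosen only to show how a one-up/one-down track produces reversals.

```python
from statistics import mean


def staircase_reversals(responses, start_rpo=0.5, step=0.2, floor=0.2, max_reversals=10):
    """Track a one-up/one-down staircase and return the RPO value at each reversal.

    Assumed (hypothetical) rules: a correct response raises ripple density by
    `step`, an incorrect response lowers it by `step`, the level never drops
    below `floor`, and the track stops after `max_reversals` reversals.
    """
    rpo = start_rpo
    last_direction = 0  # +1 = last move was up, -1 = last move was down, 0 = no move yet
    reversals = []
    for correct in responses:
        direction = 1 if correct else -1
        if last_direction != 0 and direction != last_direction:
            reversals.append(rpo)  # direction changed: record the current level
            if len(reversals) == max_reversals:
                break
        last_direction = direction
        rpo = max(floor, rpo + direction * step)
    return reversals


def smrt_threshold(runs, n_practice_runs=3, n_last_reversals=6):
    """Discard practice runs, average the last reversals of each remaining run,
    then average those per-run values."""
    scored_runs = runs[n_practice_runs:]
    if not scored_runs:
        raise ValueError("no runs left after discarding practice runs")
    per_run = [mean(run[-n_last_reversals:]) for run in scored_runs]
    return mean(per_run)


# Hypothetical data: three practice runs, then runs whose last six reversals are 3, 4, and 2 RPO.
runs = [[9.0] * 10] * 3 + [[0] * 4 + [3] * 6, [0] * 4 + [4] * 6, [0] * 4 + [2] * 6]
print(smrt_threshold(runs))
```

For example, `staircase_reversals([True, True, False, True, False], start_rpo=1.0, step=0.5)` records reversals at 2.0, 1.5, and 2.0 RPO, and the hypothetical `runs` above yield a threshold of 3 RPO.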

The Environmental Sound Recognition Test was used to assess sound identification (Anaya et al., 2016; Marcell et al., 2000). Participants heard 25 common, familiar environmental sounds and identified each one in an open-set format, without context or response alternatives. The stimuli included sounds from several categories (animals, vehicles, nonspeech sounds made by humans, music, and others such as glass breaking and a police siren) and were normed on a group of typically developing young adults on several indexes, including familiarity and naming response latency (Marcell et al., 2000).

The Children's Test of Nonword Repetition (CNRep) was administered to assess rapid phonological coding of speech (Gathercole et al., 1994). Participants repeated recorded, phonologically permissible nonwords, that is, sequences of English phonemes that have no meaning and are not words in the language (e.g., “altupatory”). Performance on nonword repetition tasks has been found to be strongly related to speech and language outcomes in deaf children with CIs and may be a reliable clinical marker of developmental milestones in rapid phonological coding (Casserly & Pisoni, 2013; Nittrouer et al., 2014).

The QuickSIN was used to evaluate speech recognition in noise (Killion et al., 2004). Participants repeated sentences produced by a female talker in the presence of multitalker babble at progressively decreasing signal-to-noise ratios (SNRs). QuickSIN sentences were presented in the free field through a GSI 61 audiometer and loudspeaker in a sound-attenuated booth at 70 dB HL, following calibration as specified in the QuickSIN manual (Version 1.3).
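The SNR-loss values reported for the QuickSIN can be derived with a short Python sketch. It assumes the standard QuickSIN scoring rule, which the text above does not spell out: each list has six sentences with five key words each, presented from +25 to 0 dB SNR in 5-dB steps, and SNR loss equals 25.5 minus the total number of key words repeated correctly. The function names are hypothetical, the "severe" category above 15 dB comes from the QuickSIN manual rather than Table 4, and assigning boundary values to the lower category is an assumption.

```python
from statistics import mean

WORDS_PER_LIST = 30  # 6 sentences x 5 key words per QuickSIN list


def snr_loss_for_list(words_correct):
    """SNR loss (dB) for one list: 25.5 minus total key words correct."""
    if not 0 <= words_correct <= WORDS_PER_LIST:
        raise ValueError("words_correct must be between 0 and 30")
    return 25.5 - words_correct


def snr_loss(words_correct_per_list):
    """Average SNR loss across all lists administered."""
    return mean(snr_loss_for_list(w) for w in words_correct_per_list)


def classify_snr_loss(db):
    """Map an SNR loss to a descriptive category.

    Boundary values (3, 7, 15 dB) are assigned to the lower category here,
    which is an assumption.
    """
    if db <= 3:
        return "normal/near normal"
    if db <= 7:
        return "mild"
    if db <= 15:
        return "moderate"
    return "severe"


# Hypothetical example: 23 and 22 key words correct on two lists.
loss = snr_loss([23, 22])
print(loss, classify_snr_loss(loss))
```

Averaging over two or more lists, as in this example, is a common way to reduce the variability of a single 6-sentence list.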

The Perceptually Robust English Sentence Test Open-Set (PRESTO)-Standard and PRESTO-Foreign-Accented English (FAE) sentence tests were used to assess speech recognition under challenging conditions; both are sensitive, high-variability multitalker tests of sentence recognition (Gilbert et al., 2013; Mendel & Danhauer, 1997; Tamati et al., 2013, 2014). In the PRESTO-Standard task, participants repeated sentences, each produced by a different talker drawn from six different geographical regions of the United States. The PRESTO-FAE task likewise used high-variability multitalker sentences, but these were produced by different nonnative speakers of English (Tamati et al., 2021). Foreign-accented sentences present the listener with degraded acoustic–phonetic speech cues.

A set of Harvard-Anomalous Sentences was also used to evaluate recognition of grammatically correct sentences that lack semantic meaning (Herman & Pisoni, 2000; Marks & Miller, 1964; Miller & Isard, 1963). Participants repeated familiar English words embedded in syntactically intact sentence frames that were semantically incoherent and uninterpretable (e.g., “The trout is straight and also writes brass.”).
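The PRESTO and Harvard-Anomalous results are reported as the percentage of key words repeated correctly. The Python sketch below illustrates only that percentage computation, under a deliberately simplified, hypothetical rule (a key word counts if it appears as an exact, case-insensitive word in the response). The published tests have their own scoring keys and conventions, and the key words chosen for the example sentence are illustrative, not taken from the test materials.

```python
import re


def percent_key_words_correct(trials):
    """Percentage of key words repeated correctly across trials.

    Each trial is a (key_words, response) pair. A key word is counted as
    correct if it appears in the response as an exact, case-insensitive
    word match (a simplification of real scoring rules).
    """
    total = 0
    correct = 0
    for key_words, response in trials:
        said = set(re.findall(r"[a-z']+", response.lower()))
        total += len(key_words)
        correct += sum(1 for word in key_words if word.lower() in said)
    if total == 0:
        raise ValueError("no key words to score")
    return 100.0 * correct / total


# Hypothetical key words for the example anomalous sentence; "writes" is misheard.
trial = (["trout", "straight", "writes", "brass"],
         "The trout is straight and also rides brass")
print(percent_key_words_correct([trial]))  # 75.0
```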

Cognitive Information Processing Tasks

The Letter–Number Sequencing (LNS) test from the Wechsler Intelligence Scale for Children–Fifth Edition (WISC-V) was used to assess verbal working memory (Wechsler, 2014). Participants listened to random sequences of letters and numbers and then repeated the numbers in ascending order followed by the letters in alphabetical order. Following the instructions in the WISC-V manual, items were presented by monitored live voice at the participant's most comfortable listening level in the free field through a GSI 61 audiometer and loudspeaker in a sound-attenuated booth.
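The LNS response rule can be expressed as a small Python function that derives the expected response from a presented sequence. The function name and the example sequences are hypothetical rather than actual WISC-V items, and the sketch says nothing about how the test awards credit.

```python
def lns_expected_response(items):
    """Expected LNS response: numbers in ascending order, then letters alphabetically."""
    # Sort numbers by numeric value so that, e.g., "10" follows "2".
    numbers = sorted((item for item in items if item.isdigit()), key=int)
    letters = sorted(item.upper() for item in items if item.isalpha())
    return numbers + letters


print(lns_expected_response(["B", "3", "A", "1"]))  # ['1', '3', 'A', 'B']
```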

The Stroop Color-Word Test (Stroop test) was used to measure inhibition and controlled attention (Stroop, 1935). Participants identified the ink color of a printed word while ignoring the word's meaning. Each stimulus was a congruent color word, an incongruent color word, or a control stimulus. Testing was computerized, using the publicly available Stroop task (http://www.millisecond.com). Response times were recorded for the control, congruent, and incongruent conditions, and a separate mean response time was computed for each condition. For all three measures, higher scores reflect longer (slower) response times.
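The per-condition summary described above amounts to grouping trials by condition and averaging their response times. In this Python sketch the trial format and the function name are hypothetical, and excluding error trials (`correct_only=True`) is an assumption, since the text does not state whether incorrect responses were included.

```python
from collections import defaultdict
from statistics import mean


def mean_rt_by_condition(trials, correct_only=True):
    """Mean response time (ms) for each Stroop condition.

    Each trial is a (condition, rt_ms, correct) tuple. When correct_only is
    True, error trials are excluded before averaging.
    """
    rts = defaultdict(list)
    for condition, rt_ms, correct in trials:
        if correct or not correct_only:
            rts[condition].append(rt_ms)
    return {condition: mean(values) for condition, values in rts.items()}


# Hypothetical trials for illustration.
trials = [
    ("control", 800, True),
    ("control", 900, True),
    ("congruent", 850, True),
    ("incongruent", 1000, True),
    ("incongruent", 1400, False),  # error trial, dropped when correct_only=True
]
print(mean_rt_by_condition(trials))
```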

The Test of Word Reading Efficiency–Second Edition (TOWRE-2) was used to evaluate single-word reading fluency, including word recognition and lexical access for real words and phonetic decoding of nonwords (Torgesen et al., 2012). Participants read aloud as many items as possible in 45 s; words and phonologically permissible nonwords were presented in separate blocks to measure word recognition and phonetic decoding skills, respectively.

The Visual Flanker Task was used to measure visual attentional control under conditions of distraction, sustained effort, and mental flexibility (Eriksen & Eriksen, 1974; Weintraub et al., 2013). Participants reported the direction of the middle arrow in a string of five arrows; the center arrow was either congruent or incongruent with the other four arrows in the display.

The Pattern Comparison Processing Speed Test from the NIH Toolbox was used to measure visual perceptual processing speed (Weintraub et al., 2013). Participants were asked to indicate if two visual displays were the same or different.

Linguistic Information Processing Task

The WordFAM word familiarity rating task was used to assess vocabulary and lexical knowledge (Lewellen et al., 1993). Participants rated their subjective familiarity with 150 visually presented words that varied in word frequency and subjective familiarity, based on normative data obtained from young college-aged adults.
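The WordFAM summary scores reported in Table 5 are mean ratings within each familiarity band plus an overall mean. Assuming each word's band label (low, medium, or high) is supplied from the normative data, the computation can be sketched in Python as follows. The function name and ratings are hypothetical, and taking the overall mean across all words (rather than averaging the three band means) is an assumption.

```python
from statistics import mean

BANDS = ("low", "medium", "high")


def familiarity_summary(ratings):
    """Mean 1-7 familiarity rating per band and overall.

    Each item is a (band, rating) pair; band labels are assumed to come from
    normative word-familiarity data.
    """
    by_band = {band: [] for band in BANDS}
    for band, rating in ratings:
        if band not in by_band:
            raise ValueError(f"unknown band: {band!r}")
        if not 1 <= rating <= 7:
            raise ValueError(f"rating out of range: {rating}")
        by_band[band].append(rating)
    summary = {band: mean(values) for band, values in by_band.items() if values}
    # Overall mean is taken over all words, so bands with more words weigh more.
    summary["overall"] = mean(r for values in by_band.values() for r in values)
    return summary


example = [("low", 2), ("low", 4), ("medium", 5), ("high", 7), ("high", 7)]
print(familiarity_summary(example))
```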

Results

Participant 001

Demographics and Clinical History

Participant 001's demographics and clinical history are summarized in the left-hand panels of Table 1 and Figure 1. He was diagnosed with progressive, postlingual severe-to-profound SNHL at age 7 years and used bilateral hearing aids for 20 years. He was sequentially implanted (right, then left). His preoperative and postoperative clinical AzBio speech recognition scores for each ear are summarized in the left-hand columns of Tables 2 and 3. CUNY sentence scores (A-only, V-only, A + V) obtained preoperatively from Participant 001 are shown in the middle column of Table 2.

Table 1.

Demographic data.

| Characteristic | Participant 001 | Participant 002 |
|---|---|---|
| Summary of demographics | | |
| Age, sex, race | Late 20s, male, White | Early 40s, male, White |
| Native/fluent language | English only | English only |
| Etiology of hearing loss | Unknown | Unknown, with reported otosclerosis and noise exposure |
| Duration of hearing loss | 20 years | 20 years |
| Approximate age of amplification | 7 years | Early 20s |
| Noise exposure | Occupational, 3 years | Occupational, 6 years; recreational, over 20 years |
| Occupation | Manual laborer | Skilled laborer |
| Education | College degree | College degree |
| Communication mode | Auditory/oral | Auditory/oral |
| Device use | Listening devices over 10 hr per day for over 10 years | Listening devices over 10 hr per day for over 10 years |
| Lifestyle | Lives alone; rarely attends social activities | Lives with spouse and other family members; regularly attends social activities |
| Summary of clinical chart review | | |
| Amplification history | Bilateral behind-the-ear power hearing aids with ear molds | Bilateral hearing aids, followed by a unilateral behind-the-ear power hearing aid with ear mold (left) and osseointegrated (OI) amplification (right) |
| Summary of cochlear implantation | | |
| Implants | Initial implant: Cochlear Nucleus 522, right, with Nucleus 7 (CP1000); contralateral implant: Cochlear Nucleus 522, left, with Nucleus 7 (CP1000), 4 months after the initial implant | Implant: Cochlear Nucleus 622, right, with Nucleus 7 (CP1000) |

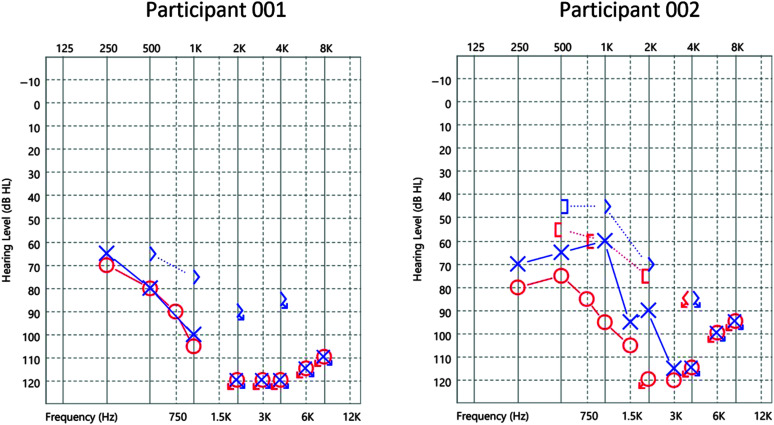

Figure 1.

Preimplantation air- and bone-conduction audiometric thresholds for Participant 001 (left panel) and Participant 002 (right panel) at frequencies from 0.25 to 8 kHz, expressed in dB hearing level (dB HL).

Table 3.

Postoperative clinical scores on AzBio sentences in quiet.

| Participant 001, right ear | Percentage correct | Participant 001, left ear | Percentage correct | Participant 002, right ear | Percentage correct |
|---|---|---|---|---|---|
| Pre-op aided | 0% (inferred) | Pre-op aided | 0% (inferred) | Pre-op aided* | 45% (27% at +10 dB SNR) |
| 1 month | 56% | 1 month | 92% | Day 1 | 96% |
| 3 months | 91% | 3 months | 98% | 3 months | 99.3% |

Note. Participant 001 was implanted sequentially (right, then left). SNR = signal-to-noise ratio.

*Tested in quiet and in +10 dB SNR noise.

Research Session Data

Auditory information processing tasks. Participant 001's average SMRT ripple-resolution threshold was 3.30 RPO, which is lower than the averages reported for adults with normal hearing (9.35 RPO) and for experienced CI users (4.30 RPO; mean CI use 4.13 years, range: 0–12 years; Landsberger et al., 2018). Participant 001 correctly identified 50% of the 25 nonspeech, biologically significant sounds on the open-set Environmental Sound Recognition Test, a score lower than the average for young adults with normal hearing (70.55%; Anaya et al., 2016). He correctly repeated 55% of the auditory nonwords on the CNRep, which is lower than the average for young adults with normal hearing (87.44%) but higher than the average for postlingually implanted adult CI users (25.82%; Kronenberger & Pisoni, 2019). His QuickSIN performance yielded a 7-dB SNR loss, reflecting a mild-to-moderate functional SNR loss (normal-hearing control subjects = 2 dB SNR loss; Killion et al., 2004). Participant 001's scores on the PRESTO-Standard and PRESTO-FAE sentence tests were comparable to those of young adults with normal hearing and much higher than the average scores of adult and young adult CI users (Smith et al., 2019). On the Harvard-Anomalous sentence test, he correctly recognized 76% of the key words, which is lower than the average for high-performing pediatric listeners with normal hearing but higher than the average for experienced adult CI users (Moberly & Reed, 2019; Pisoni & Kronenberger, 2021).

Cognitive information processing. Participant 001 obtained a scaled score of 12 on the LNS task (normative mean of 10 and standard deviation of 3; Wechsler, 2014), which corresponds to a standard score of 110 (normative mean of 100 and standard deviation of 15). On the visual Stroop task, he responded faster than the averages reported for both older normal-hearing controls and experienced CI users (see Table 5; Moberly et al., 2018). His TOWRE-2 standard scores were 106 for words and 111 for nonwords/phonetic decoding (normative mean of 100 and standard deviation of 15; Torgesen et al., 2012). His age-corrected standard scores on the Flanker and Pattern Comparison tasks were 95 and 94, respectively (normative mean of 100 and standard deviation of 15; Weintraub et al., 2013).
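The conversion from an LNS scaled score to a standard score is a linear rescaling through a z-score: z = (12 − 10)/3 ≈ 0.67, and 100 + 15z = 110. A minimal Python sketch of that arithmetic follows; the function name is hypothetical.

```python
def rescale(score, from_mean, from_sd, to_mean=100.0, to_sd=15.0):
    """Convert a score between normed scales by matching z-scores.

    z = (score - from_mean) / from_sd, and the result is to_mean + to_sd * z.
    """
    # Multiplying before dividing keeps whole-number cases such as 12 -> 110 exact.
    return to_mean + to_sd * (score - from_mean) / from_sd


print(rescale(12, from_mean=10, from_sd=3))  # 110.0
print(rescale(10, from_mean=10, from_sd=3))  # 100.0
```

The second call reproduces Participant 002's conversion in Table 5 (scaled score 10, standard score 100). This linear mapping holds only because both scales are transforms of the same z-score.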

Table 5.

Cognitive and linguistic processing postoperative assessment data.

| Measure | Participant 001 | Participant 002 |
|---|---|---|
| Cognitive information processing | | |
| Auditory WISC-V spoken letter–number sequencing (Wechsler, 2014) | | |
| Scaled score | 12 | 10 |
| Standard score | 110 | 100 |
| Visual TOWRE-2 (Torgesen et al., 2012) | | |
| Total words standard score | 106 | 123 |
| Total nonwords standard score | 111 | 112 |
| Visual Stroop Color-Word Test (Stroop, 1935) | | |
| Control (speed), ms (older NH = 1105.4, ECI = 1342.5; Moberly et al., 2019) | 827.78 | 968.46 |
| Congruent (concentration), ms (older NH = 1135.5, ECI = 1371.1; Moberly et al., 2019) | 846.57 | 1004.59 |
| Incongruent (inhibition), ms (older NH = 1466.1, ECI = 1779.0; Moberly et al., 2019) | 934.79 | 2149.07 |
| Visual flanker (NIH Toolbox; Weintraub et al., 2013) | | |
| Age-corrected standard score | 95 | 105 |
| Visual pattern comparison (NIH Toolbox; Weintraub et al., 2013) | | |
| Age-corrected standard score | 94 | 93 |
| Linguistic information processing | | |
| Word familiarity rating task (WordFAM; Lewellen et al., 1993; ratings range from 1 [low] to 7 [high]; ECI means from Tamati et al., 2021) | | |
| Low familiarity (ECI mean rating = 3.10) | 2.9 | 5.76 |
| Medium familiarity (ECI mean rating = 4.49) | 5.3 | 6.86 |
| High familiarity (ECI mean rating = 6.33) | 6.92 | 6.94 |
| Overall (ECI mean rating = 4.65) | 5.06 | 6.53 |

Note. WISC-V = Wechsler Intelligence Scale for Children–Fifth Edition; TOWRE-2 = Test of Word Reading Efficiency–Second Edition; NH = normal hearing control; ECI = experienced cochlear implant user.

Linguistic information processing. On the Word Familiarity Rating Task, Participant 001 gave an overall average rating of 5.06 (range: 1 [low familiarity] to 7 [high familiarity]). He rated low-familiarity words at an average of 2.9, medium-familiarity words at 5.3, and high-familiarity words at 6.92.

Participant 002

Demographic and Clinical History

Participant 002's demographics and clinical history are summarized in the right-hand panels of Table 1 and Figure 1, documenting his previous use of bilateral traditional amplification for progressive, postlingual hearing loss. For the 5 years before CI, Participant 002 also used an osseointegrated device (Cochlear Americas, Baha 5 SuperPower) on a percutaneous abutment on his right side (Reinfeldt et al., 2015). His pre- and postoperative speech recognition scores are summarized in the right-hand columns of Tables 2 and 3. On the second day of his 2-day initial stimulation procedure, the attending CI audiologist administered the CUNY tests to Participant 002 under unaided binaural conditions (i.e., without his left hearing aid or his right external CI processor). These CUNY scores are shown in the right-hand column of Table 2.

Research Session Data

Wearing his right CI processor and his left hearing aid for the research testing session, Participant 002 completed all the information processing tasks in the battery, spanning several domains; the auditory, cognitive, and linguistic information processing measures are shown in the right-hand columns of Tables 4 and 5.

Table 4.

Auditory information processing postoperative assessment data.

| Measure | Participant 001 | Participant 002 |
|---|---|---|
| Spectral-Temporally Modulated Ripple Test (SMRT; NH average = 9.35 RPO, experienced CI average = 4.30 RPO; Landsberger et al., 2018) | | |
| Average of ripples per octave for last reversal (RPO) | 3.30 | 3.73 |
| Environmental sound recognition (young adults with normal hearing = 70.55%; Anaya et al., 2016) | | |
| Percent sounds correctly identified | 50% | 92% |
| CNRep auditory nonword repetition task (NH average = 87.44%, CI average = 25.82%; Kronenberger & Pisoni, 2019) | | |
| Percent nonwords correctly repeated | 55% | 80% |
| QuickSIN (0–3 dB SNR loss = normal/near normal; 3–7 dB = mild SNR loss; 7–15 dB = moderate SNR loss; Killion et al., 2004) | | |
| SNR loss (dB) | 7 | 3 |
| PRESTO-Standard sentences (NH average = 96.0%, CI average = 48.4%; Smith et al., 2019) | | |
| Percent key words correct | 86% | 99% |
| PRESTO-FAE sentences (NH average = 81.4%, CI average = 33.0%; Smith et al., 2019) | | |
| Percent key words correct | 66% | 88% |
| Harvard-Anomalous Sentences (high-performing pediatric NH average = 96%, Pisoni & Kronenberger, 2021; experienced CI average = 45.4%, Moberly & Reed, 2019) | | |
| Percent key words correct | 76% | 87% |

Note. NH = normal hearing control; RPO = ripples per octave; CI = cochlear implant user; CNRep = Children's Test of Nonword Repetition; SNR = signal-to-noise ratio; FAE = foreign-accented English.

Auditory information processing. Participant 002's average score on the SMRT ripple discrimination task was 3.73 RPO, which, like that of Participant 001, is lower than the averages reported for adults with normal hearing (9.35 RPO) and for experienced cochlear implant users (4.30 RPO; mean CI use 4.13 years, range: 0–12 years; Landsberger et al., 2018). Participant 002 correctly identified 92% of the 25 nonspeech auditory test signals on the open-set Environmental Sound Recognition Test, which is much higher than the average for young adults with normal hearing (70.55%; Anaya et al., 2016). He correctly repeated 80% of the auditory nonwords presented in the CNRep task, only slightly lower than the average for young adults with normal hearing (87.44%; Kronenberger & Pisoni, 2019). His performance on the QuickSIN speech-in-noise test revealed a 3-dB SNR loss, which reflects a minimal-to-normal degree of functional SNR loss (Killion et al., 2004). On the PRESTO-Standard and PRESTO-FAE sentence tests, Participant 002 correctly recognized 99% and 88% of key words, respectively, at or above the averages reported for young adults with normal hearing (96.0% and 81.4%; Smith et al., 2019). On the Harvard-Anomalous sentence test, he correctly recognized 87% of key words, which is lower than the average of pediatric listeners with normal hearing (96%) but much higher than that of the average experienced cochlear implant user (45.4%; Moberly & Reed, 2019; Pisoni & Kronenberger, 2021).
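The comparisons in this paragraph place each score relative to two published averages. As a minimal sketch, assuming that higher values are better on every measure included (true for percent correct and for SMRT scores in RPO, but not for QuickSIN SNR loss, which is therefore left out), the placement can be made explicit with a hypothetical helper, `place_score`, using benchmark values transcribed from Table 4. The helper and its labels are illustrative only and are not part of the study's procedure; note also that the Harvard-Anomalous NH value is the pediatric benchmark cited in the table.

```python
# Benchmark averages transcribed from Table 4 as (CI average, NH average).
# None marks a CI benchmark that Table 4 does not report.
BENCHMARKS = {
    "SMRT (RPO)": (4.30, 9.35),
    "Environmental sounds (%)": (None, 70.55),
    "CNRep nonwords (%)": (25.82, 87.44),
    "PRESTO-Standard (%)": (48.4, 96.0),
    "PRESTO-FAE (%)": (33.0, 81.4),
    "Harvard-Anomalous (%)": (45.4, 96.0),
}

# Participant 002's scores from Table 4.
PARTICIPANT_002 = {
    "SMRT (RPO)": 3.73,
    "Environmental sounds (%)": 92.0,
    "CNRep nonwords (%)": 80.0,
    "PRESTO-Standard (%)": 99.0,
    "PRESTO-FAE (%)": 88.0,
    "Harvard-Anomalous (%)": 87.0,
}


def place_score(score, ci_avg, nh_avg):
    """Hypothetical helper: coarse placement of a score relative to two averages.

    Assumes higher values are better for the measure.
    """
    if score >= nh_avg:
        return "at or above NH average"
    if ci_avg is None:
        return "below NH average (no CI average tabulated)"
    if score > ci_avg:
        return "between CI and NH averages"
    return "at or below CI average"


for measure, score in PARTICIPANT_002.items():
    ci_avg, nh_avg = BENCHMARKS[measure]
    print(f"{measure}: {score} -> {place_score(score, ci_avg, nh_avg)}")
```

Run on Participant 002's scores, the labels track the prose: the SMRT score falls below both averages, CNRep and Harvard-Anomalous fall between the CI and NH averages, and environmental sounds and both PRESTO tests fall at or above the NH average.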

Cognitive information processing. Participant 002 obtained a scaled score of 10 on the LNS task (normative mean of 10, normative standard deviation of 3; Wechsler, 2014). This scaled score was converted to a standard score of 100 (normative mean of 100, normative standard deviation of 15). His standard scores on the TOWRE-2 word reading test were 111 for words and 112 for nonwords (normative mean of 100, normative standard deviation of 15; Torgesen et al., 2012). On the Stroop task, Participant 002 showed average response times on the Control and Congruent trials and slightly longer response times on the Incongruent trials compared with the average times found for older adults with normal hearing and experienced cochlear implant users (see Table 5; Moberly et al., 2018). His age-corrected standard scores on the Flanker and Pattern Comparison tasks were 105 and 93, respectively (normative mean of 100, normative standard deviation of 15; Weintraub et al., 2013).
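The conversion from an LNS scaled score to a standard score is a linear rescaling through a z-score: z = (x - 10) / 3 on the scaled-score metric, then 100 + 15z on the standard-score metric. A minimal sketch follows; the function names are hypothetical, and the percentile helper additionally assumes normally distributed scores, which the published norms may not follow exactly.

```python
from statistics import NormalDist


def to_z(score, mean, sd):
    """Express a score as standard deviations from the normative mean."""
    return (score - mean) / sd


def scaled_to_standard(scaled):
    """Map a scaled score (mean 10, SD 3) onto the standard-score metric (mean 100, SD 15)."""
    return 100 + 15 * to_z(scaled, 10, 3)


def approx_percentile(standard_score):
    """Percentile rank under an assumed normal distribution (mean 100, SD 15)."""
    return 100 * NormalDist(mu=100, sigma=15).cdf(standard_score)


# LNS scaled score reported for Participant 002.
print(scaled_to_standard(10))

# Standard scores reported for Participant 002 on the same metric.
for label, score in [("TOWRE-2 words", 111), ("TOWRE-2 nonwords", 112),
                     ("Flanker", 105), ("Pattern Comparison", 93)]:
    print(label, round(to_z(score, 100, 15), 2), round(approx_percentile(score)))
```

Because both metrics are defined by a mean and SD, a scaled score of 10 (exactly at the mean) maps to a standard score of exactly 100, and a scaled score one SD above the mean (13) would map to 115.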

Linguistic information processing. On the Word Familiarity Rating Task, Participant 002 provided an overall average rating of 6.53 (range: 1 [low familiarity] to 7 [high familiarity]). Less familiar words were rated at an average of 5.76, medium-familiarity words at an average of 6.86, and highly familiar words at an average of 6.94.
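If each familiarity level contributes the same number of words (an assumption; the item counts are not given in this section), the overall rating should approximately equal the unweighted mean of the three category means. The sketch below, with a hypothetical function name, checks this for both participants; the estimates come out within a few hundredths of a point of the reported overall ratings (5.04 vs. 5.06 and 6.52 vs. 6.53), a gap small enough to be attributable to rounding of the reported means.

```python
from statistics import mean

# Overall and category mean ratings reported for the Word Familiarity Rating Task.
WORDFAM = {
    "001": {"overall": 5.06, "low": 2.9, "medium": 5.3, "high": 6.92},
    "002": {"overall": 6.53, "low": 5.76, "medium": 6.86, "high": 6.94},
}


def unweighted_overall(ratings):
    """Mean of the three category means.

    Equals the item-level overall mean only if every familiarity level
    contributes the same number of items (assumed here, not reported).
    """
    return mean([ratings["low"], ratings["medium"], ratings["high"]])


for pid, ratings in WORDFAM.items():
    estimate = unweighted_overall(ratings)
    print(pid, round(estimate, 2), ratings["overall"])
```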

General Discussion

Understanding the factors that underlie variability in outcomes is clearly one of the most important challenges facing clinicians and research scientists working in the field of CIs today (Moberly et al., 2016; Pisoni et al., 2018). Numerous studies published over the years have found that conventional demographic and hearing history variables are insufficient for predicting speech recognition outcomes (see papers by Blamey et al., 2013; Boisvert et al., 2020; Carlyon & Goehring, 2021; Goudey et al., 2021). Exceptionally good speech recognition outcomes by high functioning patients with CIs like the two participants described in this report can provide some new insights into potential factors that may explain variability in outcomes and can demonstrate the translational value of novel research methods and measures for individual cases in the clinical setting.

One of the major reasons we have made so little progress in understanding and explaining individual differences and variability in patients with CIs has been the heavy reliance on a small number of narrowly focused speech recognition outcome measures in the clinical setting (see Carhart, 1951; Davis, 1948; Hirsh, 1947; Hudgins et al., 1947; Newby, 1958). Relying on a single limited (albeit valid and clinically relevant) endpoint/product “gold standard” outcome measure such as AzBio sentences risks obscuring other sources of variability in speech recognition outcomes, as well as the more elementary sensory, cognitive, and linguistic processes that underlie them, which may provide valuable new insights into outcomes after implantation. In the current case analysis, both participants scored over 90% on AzBio sentences in quiet within 3 months after activation and were therefore considered remarkable successes. However, administering additional speech recognition and neurocognitive tests revealed a more detailed range of outcomes within specific speech, language, and neurocognitive domains and provided a more complete picture of these exceptional post-CI outcomes (Moberly et al., 2018; Moberly & Reed, 2019). Using only traditional clinical measures of speech recognition limits the potential to understand and explain the underlying sensory, cognitive, and linguistic factors that could better predict outcomes.

Conventional clinical speech recognition measures used to assess outcomes and benefits following implantation reflect the joint operation of several more elementary, foundational sensory, neural, and cognitive component processes (Friedman & Miyake, 2017; Miyake et al., 2000; Pisoni, 2021). Focusing only on traditional endpoint or product measures of outcome may mask the underlying sources of individual differences and the specific strengths and weaknesses of individual patients, which may provide valuable new insights into how CI users differ from each other and how they may achieve the same end result via alternative pathways or information processing strategies (Moberly et al., 2018). There is also a pressing need for “converging operations” that use multiple measures of the same underlying theoretical construct. Current endpoint/product measures of outcome cannot provide detailed mechanistic explanations of why some patients, like Participants 001 and 002, do very well with their CIs while others do poorly (Moberly et al., 2018). Moreover, conventional endpoint/product clinical measures of outcome provide no detailed information about the “time-course” of information processing, one of the foundational assumptions of the information processing approach to cognition (Haber, 1969; Neisser, 1967; Pisoni, 2000, 2021). As a result, conventional endpoint measures contribute very little to identifying and understanding the causal mechanisms that support speech recognition, or how these mechanisms may have changed and become reorganized as a result of auditory sensory deprivation prior to implantation (Alzaher et al., 2021).

Clinical Impressions of Participant 001 From Research Session Data

Within 3 months after implantation, Participant 001 demonstrated the pattern of performance on clinical measures of speech recognition that is routinely obtained from adults with normal hearing. Despite a preimplant score of 0% on CUNY sentences (auditory-only), he scored over 90% on AzBio sentences in each ear individually within 3 months after implantation. Because AzBio scores were the primary speech recognition results obtained in the clinical setting, Participant 001 was at ceiling on clinically measured speech recognition outcomes in quiet.

However, the profile of Participant 001's auditory and speech recognition test results revealed several specific weaknesses that were not apparent from his ceiling performance on AzBio sentences. His average RPO on the SMRT indicated some weakness in spectral discrimination of complex, nonspeech signals. Additionally, although his performance on the Environmental Sound Recognition Test was in the range found for typical adult CI users, he recognized only 50% of the sounds, lower than the scores obtained from young adults with normal hearing (70.55%; Anaya et al., 2016).

Participant 001 also performed at a mildly suboptimal level on more challenging speech recognition tests. His 7-dB SNR loss on the QuickSIN test of speech recognition in noise indicated mild to moderate difficulty recognizing speech in noise. Furthermore, although Participant 001 scored 86% on the high-variability multitalker PRESTO-Standard sentences, he displayed weaknesses in recognizing high-variability multitalker speech produced by nonnative speakers of English (66% on PRESTO-FAE). His difficulty with nonnative, foreign-accented English could reflect a speech processing load that overwhelmed his limited-capacity processing resources, a load that may not be manifest under more ideal listening conditions using sentences from native speakers of English presented in quiet. This hypothesis is consistent with the challenges Participant 001 experienced on the Harvard-Anomalous Sentences, which eliminate downstream contextual/semantic cues and introduce interference and conflict, thereby placing additional strain on limited speech recognition resources that AzBio sentences alone do not measure. While Participant 001's scores on these more challenging speech and sound recognition tests did not reach ceiling or normal-hearing levels, they were in a very strong range compared with those of typical CI users with several years of experience using their devices (Kramer et al., 2018; Smith et al., 2019; Zhan et al., 2020).
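Table 4 lists the QuickSIN bands with shared endpoints (0–3, 3–7, and 7–15 dB), so a 7-dB SNR loss sits exactly on the boundary between mild and moderate loss, which is one way to read the "mild to moderate" description. A minimal sketch of that reading follows. It assumes closed intervals, so boundary scores are reported in both adjacent bands; this is our assumption, since the original test documentation may assign boundary scores to a single band. The function name is hypothetical.

```python
# SNR-loss bands as listed in Table 4 (Killion et al., 2004); adjacent bands share endpoints.
QUICKSIN_BANDS = [
    (0.0, 3.0, "normal/near normal"),
    (3.0, 7.0, "mild SNR loss"),
    (7.0, 15.0, "moderate SNR loss"),
]


def quicksin_bands(snr_loss_db):
    """Return every tabulated band whose closed interval contains the score.

    A score exactly on a shared endpoint is reported in both adjacent bands.
    """
    matches = [label for low, high, label in QUICKSIN_BANDS if low <= snr_loss_db <= high]
    return matches or ["outside the tabulated bands"]


print("Participant 001:", quicksin_bands(7))
print("Participant 002:", quicksin_bands(3))
```

Under this reading, Participant 001's 7-dB loss is labeled both mild and moderate, and Participant 002's 3-dB loss is labeled both normal/near normal and mild; a score such as 5 dB falls in the mild band only.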

On measures of executive functioning and language processing, Participant 001 performed at an average level or better, suggesting that strong neurocognitive and foundational language functioning may support his outstanding adaptation to the CI. His score on the LNS test of working memory relative to norms (for older adolescents, the oldest norm sample on the WISC-V) was average to above average, indicating strong verbal working memory, and his accuracy and speed scores on the Stroop, Flanker, and Pattern Comparison tests demonstrated average or better speed of information processing, inhibition, and concentration skills. Similarly, his WordFam ratings suggest vocabulary knowledge in the average to above-average range, and his TOWRE-2 results indicate single-word reading fluency (reflecting phonological processing, vocabulary, and word recognition) in the average to above-average range when compared with experienced CI users (Tamati et al., 2021).

In summary, Participant 001 is doing remarkably well for a new cochlear implant user. In addition to scoring at ceiling on AzBio sentences, the gold-standard clinical measure of speech recognition in quiet, he obtained scores on more challenging speech recognition measures in a range consistent with more experienced CI users. On the other hand, his scores on these more sensitive speech recognition tests also suggest that he is not performing at normal-hearing levels on those measures, possibly because their information processing demands overwhelmed his available limited-capacity resources. Participant 001's average to above-average language and executive functioning skills may provide some insight into his excellent adaptation to the CI, as these factors have been found in past research to predict CI outcomes (Kronenberger & Pisoni, 2020).

Clinical Impressions of Participant 002 From Research Session Data

Like Participant 001, Participant 002 demonstrated exceptionally strong sentence recognition performance on AzBio sentences shortly after implantation. Although Participant 002 also demonstrated a weakness on the SMRT, he scored at near-ceiling levels, at or close to those of listeners with normal hearing, on more challenging measures of speech recognition, including the QuickSIN, PRESTO-Standard, PRESTO-FAE, and Harvard-Anomalous Sentences. It is possible that Participant 002's extraordinary speech recognition performance reflects his previous 5 years of experience processing speech via his osseointegrated device, but we cannot assess this hypothesis with the current experimental design and test protocol. These findings also suggest that Participant 002's hair cells may be less damaged than those of typical cochlear implant users, and the presence of a mixed hearing loss suggests improved coupling of the CI to the remaining sensory receptors. We do not have detailed information or test scores on how Participant 002 performed before he received his Cochlear Americas Baha, or during the 5 years he actively used this device before receiving his current CI (Reinfeldt et al., 2015).

Like Participant 001, Participant 002 showed average to above-average performance on measures of executive functioning (Stroop, Flanker, Pattern Comparison, and Letter–Number Sequencing) and language processing (WordFam and TOWRE-2). In fact, Participant 002 scored higher than Participant 001 on the WordFam test, indicating very strong vocabulary skills, which may have provided additional downstream support for his excellent speech recognition under challenging conditions. The presence of strong executive and language functioning in both Participant 001 and Participant 002 provides further suggestive evidence of the potential role of these core neurocognitive functions in supporting excellent speech and language outcomes.

Overview of Similarities and Differences Between Participants 001 and 002

An examination of the similarities and differences in auditory, speech-language, and neurocognitive functioning between these two participants provides some new insights into potential outcome profiles among patients with excellent speech recognition outcomes following implantation, as well as potential factors explaining those outcomes. Both participants demonstrated evidence of remarkably fast perceptual learning following implantation. In his first implanted ear, Participant 001's AzBio score improved dramatically from 56% at his 1-month assessment to 91% at his 3-month assessment; he then scored at a near-ceiling level on the AzBio within 1 month of implantation in his second ear. This pattern suggests very rapid perceptual learning over the first 3 months in the first implanted ear, and possibly that his experience with the first CI helped him adapt rapidly (within 1 month) to the second. Participant 002 showed even faster perceptual learning, scoring 96% correct on AzBio sentences on the day of initial activation. Overall, within 3 months of implantation, the clinical speech recognition scores of Participants 001 and 002 on AzBio sentences in quiet were very similar and at near-ceiling levels (> 90% accuracy), much higher than the speech recognition scores typically obtained from the general postlingually implanted clinical population (Kramer et al., 2018; Zhan et al., 2020).

Similarities Between 001 and 002

Preimplantation auditory experience may be one common factor explaining the exceptional adaptation and rapid perceptual learning of both participants with their CIs. Both participants had normal hearing early in life (until about age 7 years for 001 and into his 20s for 002), followed by severe-to-profound bilateral hearing loss and auditory stimulation via traditional amplification for well over 10 years. Prior to implantation, both patients showed very strong A + V speech recognition performance on CUNY sentences. This ongoing multimodal A + V sensory stimulation may have helped actively maintain the neural networks used in hearing, speech recognition, and language processing, resulting in an auditory system primed to receive the degraded and compromised stimulation provided by a cochlear implant. A causal relationship between preoperative multisensory speech processing and postoperative speech recognition seems intuitive, but there is currently no robust confirmation of this account in postlingual adult CI recipients (see Lee et al., 2021). The lack of confirmation may be due to neural reorganization within the brain (Kral et al., 2016, 2019): preservation of left-hemispheric dominance for language appears to be optimal, whereas joint involvement of the right temporal cortex and visual cortices predicts poor speech outcomes in profoundly deaf CI recipients (Lazard & Giraud, 2017). We suggest that the presence of preoperative low-frequency acoustic hearing in both 001 and 002 may also allow robust multimodal speech processing without the maladaptive cortical reorganization observed in profoundly deaf adults, although future studies are necessary to evaluate multimodal processing specifically in patients with residual preoperative hearing (Moberly, 2021).

Participants 001 and 002 displayed comparable scores on spectral ripple discrimination (3.30 and 3.73 RPO, respectively). These scores indicate that both participants have spectral resolution for complex nonspeech sounds close to, though somewhat below, the average reported for experienced cochlear implant users (4.30 RPO) and much poorer than that of normal-hearing young adults (9.35 RPO; Landsberger et al., 2018).

On language (word familiarity and reading fluency) and neurocognitive (working memory and executive functioning) tests, both participants performed in the average to above-average range compared with benchmark values. The strong language test scores indicate that both patients had good vocabulary, language familiarity, and fast, automatic phonological coding skills. The neurocognitive results demonstrate strong working memory, speed of information processing, and executive functioning (inhibition and concentration) skills. Notably, these language and neurocognitive subdomains have been identified as reliable predictors of speech-language outcomes after CI (Kronenberger & Pisoni, 2019).

Differences Between 001 and 002

On measures of speech recognition and rapid phonological processing, Participant 001 consistently performed more poorly than Participant 002. Participant 001 showed weaker speech recognition in noise on the QuickSIN test (7-dB vs. 3-dB SNR loss), as well as poorer identification of environmental sounds (50% vs. 92%). Similarly, on the nonword repetition test, Participant 001 accurately repeated 25 percentage points fewer nonwords than Participant 002 (55% vs. 80%). However, it is important to note that these differences reflected exceptionally strong performance by Participant 002 rather than weak performance by Participant 001. Compared with benchmarks from samples of experienced adult CI users, Participant 001 scored as high as or higher than those samples on measures of auditory information processing (Kramer et al., 2018; Zhan et al., 2020).
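For percent-correct scores, a gap between two participants can be stated in two ways that are easy to conflate: as a difference in percentage points, or as a proportion of one participant's score. With the CNRep scores in Table 4 (55% vs. 80%), the gap is 25 percentage points, which corresponds to Participant 001 repeating 31.25% fewer nonwords than Participant 002 in relative terms. The hypothetical helpers below make the distinction explicit.

```python
def point_difference(score_a, score_b):
    """Difference in percentage points between two percent-correct scores."""
    return score_b - score_a


def relative_shortfall(score_a, score_b):
    """How much lower score_a is, expressed as a percentage of score_b."""
    return 100 * (score_b - score_a) / score_b


# CNRep percent correct from Table 4.
cnrep_001, cnrep_002 = 55.0, 80.0

print(point_difference(cnrep_001, cnrep_002))    # gap in percentage points
print(relative_shortfall(cnrep_001, cnrep_002))  # gap relative to Participant 002's score
```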

A similar pattern of outcomes was observed on the more sensitive, challenging measures of speech recognition. Participant 002 scored at normal-hearing levels and near ceiling (87% or higher) on recognition of speech from multiple talkers (PRESTO), from nonnative English speakers with foreign accents (PRESTO-FAE), and in anomalous sentences that prevent coherent, meaningful semantic interpretation (Harvard-Anomalous). In contrast, Participant 001 scored below levels typical of normal-hearing listeners but in a range typical of strong CI users on those same tests. Thus, Participant 002's AzBio scores in the normal-hearing range were further supported by normal-hearing-range performance on more challenging tests of sentence recognition, whereas Participant 001's AzBio scores in the normal-hearing range masked suboptimal performance on more challenging, robust tests of sentence recognition, suggesting that those more demanding conditions may have overwhelmed his listening and information processing resources.

In summary, although Participants 001 and 002 displayed comparable, very high levels of speech recognition on clinical AzBio sentences after a very short period of CI use, they differed in a number of ways on a range of sensory, cognitive, and linguistic information processing tasks in the expanded test battery. The differences uncovered by these novel performance tests provide a better understanding of their speech-language and neurocognitive functioning than the conventional AzBio clinical assessment alone would have provided. Furthermore, these differences suggest directions for better understanding the elementary sensory, cognitive, and linguistic factors that might underlie variability and individual differences in outcomes following CI.

Limitations

The primary limitation of this investigation is the case study approach. Data obtained before and immediately after implantation were collected clinically and therefore lacked the consistency of administration timing typically found in more formal, large-sample research. Additionally, results may be specific to the two patients studied, and any generalization should be made with caution and awareness of the hazards of single-N evidence. However, the case study method provided a demonstration and proof of concept of a translational approach to enhancing the clinical evaluation of individual patients with CIs (Kronenberger & Pisoni, 2020) and also suggested new research on speech, language, and neurocognitive functioning that may explain individual differences in outcomes following implantation. Future research should extend these findings to larger samples of patients with exceptionally positive post-CI outcomes in order to provide statistical tests of the findings and hypotheses generated in this study. Future research should also use an expanded battery of audiological and neurocognitive assessments to investigate longer periods after implantation and longitudinal changes in functioning over time.

Because of time constraints, we were unable to assess a number of information processing domains, such as perception of the indexical properties of speech, divided attention and multitasking, mental workload and cognitive effort, music perception, higher level language processing, and speed of information processing. These other domains may reflect important speech-language-hearing outcomes as well as important contributors to those outcomes. Additionally, future work should more thoroughly investigate intervention histories both pre- and postimplantation, including use of formal auditory rehabilitation or cognitive training after implantation. Finally, more systematic assessment of functional outcomes such as everyday hearing, listening effort, quality of life, and psychosocial outcomes is a fruitful direction for future case study research.

Summary and Conclusions

The goal of this study was to uncover strengths, weaknesses, and milestones in auditory, speech, language, and neurocognitive functioning in two remarkably successful postlingual adult patients who received cochlear implants, using a battery of novel sensory, cognitive, and linguistic outcome measures. Both patients had early pre-CI experiences that may have primed their auditory systems for the novel input of a CI, and both showed exceptionally strong performance on the clinical AzBio speech recognition test. However, expanded auditory and speech recognition testing revealed a pattern of similarities and differences that demonstrates the value and clinical utility of a broader assessment battery in characterizing and understanding outcomes; these outcomes were accompanied by a pattern of strong language and neurocognitive functioning. The findings obtained in these two case reports support enhancing clinical assessments of adult patients after implantation and suggest novel directions for better understanding and explaining differences in outcomes following CI.

Acknowledgments

This research was supported by National Institute on Deafness and Other Communication Disorders Grant R01DC015257 to William G. Kronenberger and David B. Pisoni, a National Institutes of Health Grant K0DC016034 to Rick F. Nelson, and grants from the American Laryngological, Rhinological, and Otological Society and American College of Surgeons to Rick F. Nelson.

References

- Adunka, O. F., Gantz, B. J., Dunn, C., Gurgel, R. K., & Buchman, C. A. (2018). Minimum reporting standards for adult cochlear implantation. Otolaryngology—Head & Neck Surgery, 159(2), 215–219. https://doi.org/10.1177/0194599818764329

- Alzaher, M., Vannson, N., Deguine, O., Marx, M., Barone, P., & Strelnikov, K. (2021). Brain plasticity and hearing disorders. Revue Neurologique, 177(9), 1121–1132. https://doi.org/10.1016/j.neurol.2021.09.004

- Anaya, E. M., Pisoni, D., & Kronenberger, W. (2016). Long-term musical experience and auditory and visual perceptual abilities under adverse conditions. The Journal of the Acoustical Society of America, 140(3), 2074–2081. https://doi.org/10.1121/1.4962628

- Aronoff, J. M., & Landsberger, D. M. (2013). The development of a modified spectral ripple test. The Journal of the Acoustical Society of America, 134(2), EL217–EL222. https://doi.org/10.1121/1.4813802

- Bilger, R. C. , Black F. O., & Hopkinson N. T. (1977). Evaluation of subjects presently fitted with implanted auditory prostheses. Annals of Otology, Rhinology & Laryngology, 86(3), 1–176. https://doi.org/10.1177/00034894770860S303 [DOI] [PubMed]

- Blamey, P. , Artieres, F. , Başkent, D. , Bergeron, F. , Beynon, A. , Burke, E. , Dillier, N. , Dowell, R. , Fraysse, B. , Gallégo, S. , Govaerts, P. J. , Green, K. , Huber, A. M. , Kleine-Punte, A. , Maat, B. , Marx, M. , Mawman, D. , Mosnier, I. , O'Connor, A. F. , … Lazard, D. S. (2013). Factors affecting auditory performance of postlinguistically deaf adults using cochlear implants: An update with 2251 patients. Audiology and Neurotology, 18(1), 36–47. https://doi.org/10.1159/000343189 [DOI] [PubMed] [Google Scholar]

- Boisvert, I. , Reis, M. , Au, A. , Cowan, R. , & Dowell, R. C. (2020). Cochlear implantation outcomes in adults: A scoping review. PLOS ONE, 15(5), Article e0232421. https://doi.org/10.1371/journal.pone.0232421 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Carhart, R. (1951). Basic principles of speech audiometry. Acta Oto-Laryngologica, 40(1–2), 62–71. https://doi.org/10.3109/00016485109138908 [DOI] [PubMed] [Google Scholar]

- Carlson, M. L. (2020). Cochlear implantation in adults. The New England Journal of Medicine, 382(16), 1531–1542. https://doi.org/10.1056/NEJMra1904407 [DOI] [PubMed] [Google Scholar]

- Carlyon, R. P. , & Goehring, T. (2021). Cochlear implant research and development in the twenty-first century: A critical update. Journal of the Association for Research in Otolaryngology, 22(5), 481–508. https://doi.org/10.1007/s10162-021-00811-5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Casserly, E. D. , & Pisoni, D. B. (2013). Nonword repetition as a predictor of long-term speech and language skills in children with cochlear implants. Otology & Neurotology, 34(3), 460–470. https://doi.org/10.1097/MAO.0b013e3182868340 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Davis, H. (1948). The articulation area and the social adequacy index for hearing. The Laryngoscope, 58(8), 761–778. https://doi.org/10.1288/00005537-194808000-00002 [DOI] [PubMed] [Google Scholar]

- Eriksen, B. A. , & Eriksen, C. W. (1974). Effects of noise letters upon the identification of a target letter in a nonsearch task. Perception & Psychophysics, 16(1), 143–149. https://doi.org/10.3758/BF03203267 [Google Scholar]

- Ernst, M. M. , Barhight, L. R. , Bierenbaum, M. L. , Piazza-Waggoner, C. , & Carter, B. D. (2013). Case studies in clinical practice in pediatric psychology: The “why” and “how to.” Clinical Practice in Pediatric Psychology, 1(2), 108–120. https://doi.org/10.1037/cpp0000021 [Google Scholar]

- Folstein, M. F. , Folstein, S. E. , & McHugh, P. R. (1975). “Mini-mental state”: A practical method for grading the cognitive state of patients for the clinician. Journal of Psychiatric Research, 12(3), 189–198. https://doi.org/10.1016/0022-3956(75)90026-6 [DOI] [PubMed] [Google Scholar]

- Friedman, N. P. , & Miyake, A. (2017). Unity and diversity of executive functions: Individual differences as a window on cognitive structure. Cortex, 86, 186–204. https://doi.org/10.1016/j.cortex.2016.04.023 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gathercole, S. E. , Willis, C. S. , Baddeley, A. D. , & Emslie, H. (1994). The Children's Test of Nonword Repetition: A test of phonological working memory. Memory, 2(2), 103–127. https://doi.org/10.1080/09658219408258940 [DOI] [PubMed] [Google Scholar]

- Gifford, R. H. , Dorman, M. F. , Shallop, J. K. , & Sydlowski, S. A. (2010). Evidence for the expansion of adult cochlear implant candidacy. Ear and Hearing, 31(2), 186–194. https://doi.org/10.1097/AUD.0b013e3181c6b831 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gilbert, J. L. , Tamati, T. N. , & Pisoni, D. B. (2013). Development, reliability and validity of PRESTO: A new high-variability sentence recognition test. Journal of the American Academy of Audiology, 24(01), 026–036. https://doi.org/10.3766/jaaa.24.1.4 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Goudey, B. , Plant, K. , Kiral, I. , Jimeno-Yepes, A. , Swan, A. , Gambhir, M. , Büchner, A. , Kludt, E. , Eikelboom, R. H. , Sucher, C. , Gifford, R. H. , Rottier, R. , & Anjomshoa, H. (2021). A multicenter analysis of factors associated with hearing outcome for 2,735 adults with cochlear implants. Trends in Hearing, 25. https://doi.org/10.1177/23312165211037525 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Haber, R. N. (1969). Information processing approaches to visual perception. Holt, Rinehart & Winston. [Google Scholar]

- Herman, R. , & Pisoni, D. B. (2000). Perception of “elliptical speech” following cochlear implantation: Use of broad phonetic categories in speech perception. The Volta Review, 102(4), 321–347. [PMC free article] [PubMed] [Google Scholar]

- Hirsh, I. J. (1947). Clinical application of two Harvard auditory tests. Journal of Speech Disorders, 12(2), 151–158. https://doi.org/10.1044/jshd.1202.151 [DOI] [PubMed] [Google Scholar]

- Holden, L. K. , Finley, C. C. , Firszt, J. B. , Holden, T. A. , Brenner, C. , Potts, L. G. , Gotter, B. D. , Vanderhoof, S. S. , Mispagel, K. , Heydebrand, G. , & Skinner, M. W. (2013). Factors affecting open-set word recognition in adults with cochlear implants. Ear and Hearing, 34(3), 342–360. https://doi.org/10.1097/AUD.0b013e3182741aa7 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hudgins, C. V., Hawkins, J. E., Karlin, J. E., & Stevens, S. S. (1947). The development of recorded auditory tests for measuring hearing loss for speech. The Laryngoscope, 57(1), 57–89. https://doi.org/10.1288/00005537-194701000-00005

- Killion, M. C., Niquette, P. A., Gudmundsen, G. I., Revit, L. J., & Banerjee, S. (2004). Development of a quick speech-in-noise test for measuring signal-to-noise ratio loss in normal-hearing and hearing-impaired listeners. The Journal of the Acoustical Society of America, 116(4), 2395–2405. https://doi.org/10.1121/1.1784440

- Klingberg, T. (2010). Training and plasticity of working memory. Trends in Cognitive Sciences, 14(7), 317–324. https://doi.org/10.1016/j.tics.2010.05.002

- Kral, A., Dorman, M. F., & Wilson, B. S. (2019). Neuronal development of hearing and language: Cochlear implants and critical periods. Annual Review of Neuroscience, 42(1), 47–65. https://doi.org/10.1146/annurev-neuro-080317-061513

- Kral, A., Kronenberger, W. G., Pisoni, D. B., & O'Donoghue, G. M. (2016). Neurocognitive factors in sensory restoration of early deafness: A connectome model. The Lancet Neurology, 15(6), 610–621. https://doi.org/10.1016/S1474-4422(16)00034-X

- Kramer, S., Vasil, K. J., Adunka, O. F., Pisoni, D. B., & Moberly, A. C. (2018). Cognitive functions in adult cochlear implant users, cochlear implant candidates, and normal-hearing listeners. Laryngoscope Investigative Otolaryngology, 3(4), 304–310. https://doi.org/10.1002/lio2.172

- Kronenberger, W. G., & Pisoni, D. B. (2019). Neurocognitive functioning in deaf children with cochlear implants. In H. Knoors & M. Marschark (Eds.), Evidence-based practice in deaf education (pp. 363–396). Oxford University Press.

- Kronenberger, W. G., & Pisoni, D. B. (2020). Why are children with cochlear implants at risk for executive functioning delays?: Language only or something more? In M. Marschark & H. Knoors (Eds.), The Oxford handbook of deaf studies in learning and cognition. Oxford University Press.

- Landsberger, D. M., Padilla, M., Martinez, A. S., & Eisenberg, L. S. (2018). Spectral-temporal modulated ripple discrimination by children with cochlear implants. Ear and Hearing, 39(1), 60–68. https://doi.org/10.1097/AUD.0000000000000463

- Lazard, D. S., & Giraud, A. L. (2017). Faster phonological processing and right occipito-temporal coupling in deaf adults signal poor cochlear implant outcome. Nature Communications, 8(1), Article 14872. https://doi.org/10.1038/ncomms14872

- Lee, H. J., Lee, J. M., Choi, J. Y., & Jung, J. (2021). The effects of preoperative audiovisual speech perception on the audiologic outcomes of cochlear implantation in patients with postlingual deafness. Audiology & Neurotology, 26(3), 149–156. https://doi.org/10.1159/000509969

- Lewellen, M. J., Goldinger, S. D., Pisoni, D. B., & Greene, B. G. (1993). Lexical familiarity and processing efficiency: Individual differences in naming, lexical decision, and semantic categorization. Journal of Experimental Psychology: General, 122(3), 316–330. https://doi.org/10.1037/0096-3445.122.3.316

- Luxford, W. M., & Ad Hoc Subcommittee of the Committee on Hearing and Equilibrium of the American Academy of Otolaryngology-Head and Neck Surgery. (2001). Minimum speech test battery for postlingually deafened adult cochlear implant patients. Otolaryngology—Head & Neck Surgery, 124(2), 125–126. https://doi.org/10.1067/mhn.2001.113035

- MacPhail, M. E., Connell, N. T., Totten, D. J., Gray, M. T., Pisoni, D. B., Yates, C. W., & Nelson, R. F. (2021). Speech recognition outcomes in adults with slim straight and slim modiolar cochlear implant electrode arrays. Otolaryngology—Head & Neck Surgery, 166(5), 943–950. https://doi.org/10.1177/01945998211036339

- Marcell, M. M., Borella, D., Greene, M., Kerr, E., & Rogers, S. (2000). Confrontation naming of environmental sounds. Journal of Clinical and Experimental Neuropsychology, 22(6), 830–864. https://doi.org/10.1076/jcen.22.6.830.949

- Marks, L., & Miller, G. A. (1964). The role of semantic and syntactic constraints in the memorization of English sentences. Journal of Verbal Learning and Verbal Behavior, 3(1), 1–5. https://doi.org/10.1016/S0022-5371(64)80052-9

- Mendel, L. L., & Danhauer, J. L. (1997). Audiologic evaluation and management and speech perception assessment. Singular.

- Miller, G. A., & Isard, S. (1963). Some perceptual consequences of linguistic rules. Journal of Verbal Learning and Verbal Behavior, 2(3), 217–228. https://doi.org/10.1016/S0022-5371(63)80087-0

- Miyake, A., Emerson, M. J., & Friedman, N. P. (2000). Assessment of executive functions in clinical settings: Problems and recommendations. Seminars in Speech and Language, 21(2), 169–183. https://doi.org/10.1055/s-2000-7563

- Moberly, A. C. (2021). Audiovisual integration skills before cochlear implantation predict post-operative speech recognition in adults [Paper presentation]. Cochlear Implant Team, Department of Otolaryngology—HNS, Indiana University School of Medicine, Indianapolis, IN.

- Moberly, A. C., Bates, C., Harris, M. S., & Pisoni, D. B. (2016). The enigma of poor performance by adults with cochlear implants. Otology & Neurotology, 37(10), 1522–1528. https://doi.org/10.1097/MAO.0000000000001211

- Moberly, A. C., Castellanos, I., Vasil, K. J., Adunka, O. F., & Pisoni, D. B. (2018). “Product” versus “process” measures in assessing speech recognition outcomes in adults with cochlear implants. Otology & Neurotology, 39(3), e195–e202. https://doi.org/10.1097/MAO.0000000000001694

- Moberly, A. C., & Reed, J. (2019). Making sense of sentences: Top-down processing of speech by adult cochlear implant users. Journal of Speech, Language, and Hearing Research, 62(8), 2895–2905. https://doi.org/10.1044/2019_JSLHR-H-18-0472

- Neisser, U. (1967). Cognitive psychology. Appleton-Century-Crofts.

- Newby, H. A. (1958). Audiology principles and practice. Appleton-Century-Crofts.

- Niparko, J. K. (2009). Cochlear implants: Principles and practices (2nd ed.). Lippincott Williams and Wilkins.

- Nittrouer, S., Caldwell-Tarr, A., Sansom, E., Twersky, J., & Lowenstein, J. H. (2014). Nonword repetition in children with cochlear implants: A potential clinical marker of poor language acquisition. American Journal of Speech-Language Pathology, 23(4), 679–695. https://doi.org/10.1044/2014_AJSLP-14-0040

- Nunes, T., Barros, R., Evans, D., & Burman, D. (2014). Improving deaf children's working memory through training. International Journal of Speech & Language Pathology and Audiology, 2(2), 51–66.

- Owens, E., Kessler, D. K., Telleen, C. T., & Schubert, E. D. (1981). The Minimal Auditory Capabilities (MAC) battery. Hearing Aid Journal, 34, 9–34.

- Pisoni, D. B. (2000). Cognitive factors and cochlear implants: Some thoughts on perception, learning, and memory in speech perception. Ear and Hearing, 21(1), 70–78. https://doi.org/10.1097/00003446-200002000-00010

- Pisoni, D. B. (2021). Cognitive audiology: An emerging landscape in speech perception. In J. Pardo, L. C. Nygaard, R. E. Remez, & D. B. Pisoni (Eds.), Handbook of speech perception (2nd ed.). Wiley-Blackwell.

- Pisoni, D. B., Kronenberger, W. G., Harris, M. S., & Moberly, A. C. (2018). Three challenges for future research on cochlear implants. World Journal of Otorhinolaryngology-Head and Neck Surgery, 3, 240–254.

- Pisoni, D. B., & Kronenberger, W. G. (2021). Recognizing spoken words in semantically anomalous sentences: Effects of executive control in early-implanted deaf children with cochlear implants. Cochlear Implants International, 22(4), 223–236. https://doi.org/10.1080/14670100.2021.1884433

- Reinfeldt, S., Håkansson, B., Taghavi, H., & Eeg-Olofsson, M. (2015). New developments in bone-conduction hearing implants: A review. Medical Devices: Evidence and Research, 8, 79–93. https://doi.org/10.2147/MDER.S39691

- Rosenblum, L. D., & Dorsi, J. (2021). Primacy of multimodal speech perception for the brain and science. In J. Pardo, L. C. Nygaard, R. E. Remez, & D. B. Pisoni (Eds.), Handbook of speech perception (2nd ed., pp. 28–57). Wiley-Blackwell. https://doi.org/10.1002/9781119184096.ch2

- Schork, N. J., & Goetz, L. H. (2017). Single-subject studies in translational nutrition research. Annual Review of Nutrition, 37(1), 395–422. https://doi.org/10.1146/annurev-nutr-071816-064717

- Smith, G. N. L., Pisoni, D. B., & Kronenberger, W. G. (2019). High-variability sentence recognition in long-term cochlear implant users: Associations with rapid phonological coding and executive functioning. Ear and Hearing, 40(5), 1149–1161. https://doi.org/10.1097/AUD.0000000000000691

- Spahr, A. J., Dorman, M. F., Litvak, L. M., Van Wie, S., Gifford, R. H., Loizou, P. C., Loiselle, L. M., Oakes, T., & Cook, S. (2012). Development and validation of the AzBio sentence lists. Ear and Hearing, 33(1), 112–117. https://doi.org/10.1097/AUD.0b013e31822c2549

- Stevenson, R. A., Sheffield, S. W., Butera, I. M., Gifford, R. H., & Wallace, M. T. (2017). Multisensory integration in cochlear implant recipients. Ear and Hearing, 38(5), 521–538. https://doi.org/10.1097/AUD.0000000000000435

- Stroop, J. R. (1935). Studies of interference in serial verbal reactions. Journal of Experimental Psychology, 18(6), 643–662. https://doi.org/10.1037/h0054651

- Tamati, T. N., Gilbert, J. L., & Pisoni, D. B. (2013). Some factors underlying individual differences in speech recognition on PRESTO: A first report. Journal of the American Academy of Audiology, 24(7), 616–634. https://doi.org/10.3766/jaaa.24.7.10

- Tamati, T. N., & Pisoni, D. B. (2014). Non-native listeners' recognition of high-variability speech using PRESTO. Journal of the American Academy of Audiology, 25(9), 869–892. https://doi.org/10.3766/jaaa.25.9.9

- Tamati, T. N., Pisoni, D. B., & Moberly, A. C. (2022). Speech and language outcomes in adults and children with cochlear implants. Annual Review of Linguistics, 8, 299–319. https://doi.org/10.1146/annurev-linguistics-031220-011554

- Tamati, T. N., Vasil, K. J., Kronenberger, W. G., Pisoni, D. B., Moberly, A. C., & Ray, C. (2021). Word and nonword reading efficiency in postlingually deafened adult cochlear implant users. Otology & Neurotology, 42(3), e272–e278. https://doi.org/10.1097/MAO.0000000000002925

- Torgesen, J. K., Wagner, R. K., & Rashotte, C. A. (2012). Test of Word Reading Efficiency–Second Edition. Pro-Ed.

- Velde, H. M., Rademaker, M. M., Damen, J. A. A., Smit, A. L., & Stegeman, I. (2021). Prediction models for clinical outcome after cochlear implantation: A systematic review. Journal of Clinical Epidemiology, 137, 182–194. https://doi.org/10.1016/j.jclinepi.2021.04.005

- Wechsler, D. (2014). Wechsler Intelligence Scale for Children–Fifth Edition (WISC-V) Technical and Interpretive Manual. NCS Pearson.

- Weintraub, S., Dikmen, S. S., Heaton, R. K., Tulsky, D. S., Zelazo, P. D., Slotkin, J., Carlozzi, N. E., Bauer, P. J., Wallner-Allen, K., Fox, N., Havlik, R., Beaumont, J. L., Mungas, D., Manly, J. J., Moy, C., Conway, K., Edwards, E., Nowinski, C. J., & Gershon, R. (2013). The cognition battery of the NIH toolbox for assessment of neurological and behavioral function: Validation in an adult sample. Journal of the International Neuropsychological Society, 20(6), 567–578.

- Wilson, B. S., & Dorman, M. F. (2008). Cochlear implants: A remarkable past and a brilliant future. Hearing Research, 242(1–2), 3–21. https://doi.org/10.1016/j.heares.2008.06.005

- Wilson, B. S., Dorman, M. F., Woldorff, M. G., & Tucci, D. L. (2011). Cochlear implants: Matching the prosthesis to the brain and facilitating desired plastic changes in brain function. Progress in Brain Research, 194, 117–129. https://doi.org/10.1016/B978-0-444-53815-4.00012-1

- Wolfe, J. (2020). Cochlear implants: Audiological management and considerations for implantable hearing devices. Plural.

- Won, J. H., Drennan, W. R., & Rubinstein, J. T. (2007). Spectral-ripple resolution correlates with speech reception in noise in cochlear implant users. Journal of the Association for Research in Otolaryngology, 8(3), 384–392. https://doi.org/10.1007/s10162-007-0085-8

- Woolston, C. (2020, January 9). Singular science. Knowable Magazine from Annual Reviews. https://doi.org/10.1146/knowable-010820-1

- Zhan, K., Lewis, J. H., Vasil, K. J., Tamati, T. N., Harris, M. S., Pisoni, D. B., Kronenberger, W. G., Ray, C., & Moberly, A. C. (2020). Cognitive functions in adults receiving cochlear implants: Predictors of speech recognition and changes after implantation. Otology & Neurotology, 41(3), e322–e329. https://doi.org/10.1097/MAO.0000000000002544