Abstract

Identifications of novel genetic signals conferring susceptibility to human complex diseases is pivotal to the disease diagnosis, prevention, and treatment. Genetic association study is a powerful tool to discover candidate genetic signals that contribute to diseases, through statistical tests for correlation between the disease status and genetic variations in study samples. In such studies with a case-control design, a standard practice is to perform the Cochran-Armitage (CA) trend test under an additive genetic model, which suffers from power loss when the model assumption is wrong. The Jonckheere-Terpstra (JT) trend test is an alternative method to evaluate association in a nonparametric way. This study compares the power of the JT trend test and the CA trend test in various scenarios, including different sample sizes (200–2000), minor allele frequencies (0.05–0.4), and underlying modes of inheritance (dominant genetic model to recessive genetic model). By simulation and real data analysis, it is shown that in general the JT trend test has higher, similar, and lower power than the CA trend test when the underlying mode of inheritance is dominant, additive, and recessive, respectively; when the sample size is small and the minor allele frequency is low, the JT trend test outperforms the CA trend test across the spectrum of genetic models. In sum, the JT trend test is a valuable alternative to the CA trend test under certain circumstances with higher statistical power, which could lead to better detection of genetic signals to human diseases and finer dissection of their genetic architecture.

Introduction

Over the past fifteen years, genome-wide association studies have significantly expanded the knowledge base for genetic factors in important healthcare outcomes [1]. Such studies have identified numerous genetic signals contributing to various complex human diseases, which can be very important to the diseases’ diagnosis, prevention, and treatment. One commonly used approach to test association in a case-control genetic study is the Cochran-Armitage (CA) trend test [2, 3] under the assumption of an additive genetic model [4, 5], which can reach the optimal power when the underlying genetic model is also additive. However, it can suffer from power loss when the true genetic model is nonadditive (see, e.g., [6–9]). Power loss is one critical issue in genetic association studies. On the one hand, conducting a statistical test with reduced power may fail to detect true genetic signals, leading to false negative results. On the other hand, to achieve the same level of statistical power, the sample sizes needed will increase, leading to higher study expenses and resource requirements.

To test for associations between the disease status and genetic variation, one alternative approach to the CA trend test is the Jonckheere-Terpstra (JT) trend test [10, 11], which is a rank-based nonparametric test. The JT trend test does not make assumptions on genetic models or data distribution, and thus has the potential to achieve better statistical power than the parametric CA trend test under certain circumstances. The potentially higher power from the JT trend test may result in novel genetic discoveries for complex human diseases, which can help researchers better understand the genetic etiology and eventually aid in the development of effective diagnosis, prevention, and treatment strategies of the diseases. Although the JT trend test offers an alternative to the CA trend test with potential advantages, their comparative performance has not been examined in the genetic literature. In this study, we aim to fill this research gap by comparing the power of the two tests in various conditions via simulations and real data analysis. The knowledge gained in this study can help guide the model selection between the CA and JT trend tests when conducting genetic case-control studies in practice.

Methods

Consider a diallelic locus with the major and minor alleles denoted as a and A, respectively, the genotype distribution in a case-control study can be summarized as in Table 1. Specifically, denote ri and si as the number of cases and controls, respectively, for genotype Gi, where i∈{0,1,2} reflects the number of A alleles a subject has. Thus G0, G1, and G2 correspond to genotypes aa, Aa, and AA, respectively. Denote by R, S, and ni the marginal sums such that , and ni = ri+si, and by N the total sample size such that . Assume (r0, r1, r2) follow a trinomial distribution with parameters R and (τ0, τ1, τ2), and (s0, s1, s2) follow a trinomial distribution with parameters S and (υ0, υ1, υ2). The null hypothesis of no association between the disease and genotype is then H0: τi = υi, for i∈{0,1,2}. Equivalently, we can also assume ri’s are drawn from binomial distributions Bin(ni, πi). The null hypothesis of no association between the disease and genotype is H0: π0 = π1 = π2. Assuming G0, G1, and G2 are three ordered categories, a restricted alternative hypothesis for a trend test is H1: π0≤π1≤π2 or π0≥π1≥π2 with at least one strict inequality.

Table 1. Genotype distribution at a diallelic marker in a case-control study.

| Genotype* | ||||

|---|---|---|---|---|

| Phenotype | aa | Aa | AA | Total |

| Cases | r 0 | r 1 | r 2 | R |

| Controls | s 0 | s 1 | s 2 | S |

| Total | n 0 | n 1 | n 2 | N |

* A denotes the minor allele across the paper.

To test H1, the CA trend test assigns a set of scores (x0, x1, x2) to G0, G1, and G2, respectively, with the constraints x0≤x1≤x2 and x0<x2, and examines whether there is a linear relationship between πi’s and xi’s by fitting a linear regression model. The test statistic is . Under H0, TCA follows a χ2 distribution with 1 degree-of-freedom (d.f.). The choices of (x0, x1, x2) represent assumptions on the genetic models. In practice, the additive model with (x0, x1, x2) = (0,0.5,1) is usually assumed because of its robustness. Hereinafter we denote it as .

Alternatively, the JT trend test compares the ranks of subjects based on their affection status between genotype groups to test H1. Consider the disease status of case and control as an ordinal variable Y, and denote by Yij∈{0,1} individual j′s phenotypic value with genotype Gi. The JT test statistic is , where , and , in which and . The components of U are the Mann-Whitney U statistics defined as . For large N and ni′s not too small, TJT also follows a χ2 distribution with 1 d.f. under H0.

For simplicity, hereinafter we use TJT and to refer to both tests and test statistics.

Simulation

We conduct simulations to compare performance between TJT and in terms of statistical power under various conditions. Define the penetrance function for each genotype as fi = P(affected|Gi), i = 0, 1, 2, and define genotype relative risk as λi = fi/f0; thus λ0 = 1. The dominant, additive, and recessive genetic models can be specified by λ1 = λ2, λ1 = (1+λ2)/2, and λ1 = 1, respectively. Note that the genetic model is defined in regard to the minor allele in this study. The model can be reparameterized by defining λ1 = 1+λcosθ and λ2 = 1+λsinθ, where λ≥0 is the distance between point P = (λ1, λ2) and point O = (1,1), which determines how far the genetic effect is from the null, and θ∈[π/4, π/2] is the angle between OP and the horizontal line in a two-dimensional space, which determines the genetic model [12]. Therefore, the null hypothesis can be rewritten as H0: λ = 0. In terms of genetic models, θ = π/4, arctan 2, and π/2 correspond to dominant, additive, and recessive models, respectively. We performed simulations under the following alternative settings. Assume a disease prevalence (K) of 0.1 and the minor allele frequency (MAF) q∈(0.05,0.1,0.2,0.3). Fix the alternative hypothesis as λ = 1 and vary the genetic models by setting θ‘ = θ/π from 1/4 to 1/2, i.e., from the dominant model to the recessive model, with an increment of 0.01. Under each genetic model, penetrances are determined by and fi = λif0. The probabilities of the two trinomial distributions for cases and controls are then τi = P(Gi)fi/K and υi = P(Gi)(1−fi)/(1−K), respectively. Consider a balanced design, i.e., R = S with the total sample size N∈(200, 500, 1000). At each setting 10,000 replicates are simulated, and each dataset is examined by both TJT and . The empirical power is estimated as the proportion of the replicates for which the p-value is less than or equal to 0.05. In an additional set of simulations for wider range of sample size and MAF, we considered N = 1500 and 2000 as well as q = 0.4 across the genetic models. Because the sample sizes and MAF were large, the effect size in the alternative hypothesis was set to λ = 0.5 to make the maximum power less than 1 for the sake of comparison.

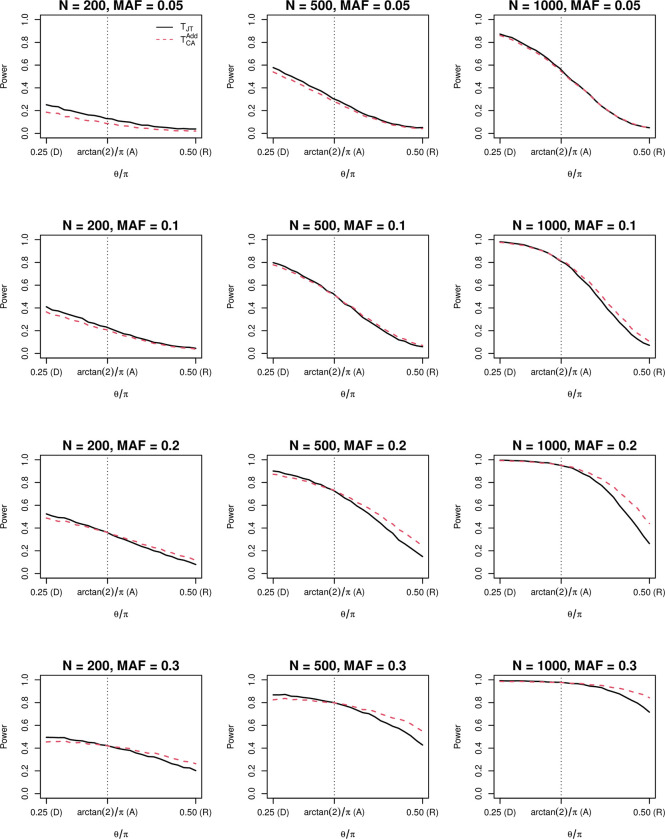

The simulation results across the main settings are presented in Fig 1 and the results for the additional set of simulations are presented in S1 Table. In all situations TJT is more powerful than when the underlying genetic model is dominant. The power advantage of TJT diminishes as the genetic model evolves toward the additive model. In most situations except for small sample sizes and low MAFs, the two tests have approximately equivalent power when the underlying model is additive. TJT becomes less powerful than and the disadvantage enlarges when the genetic model keeps evolving toward the recessive end. In case of low MAFs and small sample sizes, e.g., N = 200 & q≤0.1 or N≤1000 & q = 0.05, TJT is more powerful than .

Fig 1. Power comparison between the Jonckheere-Terpstra trend test (TJT) and the Cochran−Armitage trend test .

The black solid line denotes TJT and the red dashed line denotes . Along the x-axis, θ = π/4, arctan 2, and π/2 correspond to dominant (D), additive (A), and recessive (R) genetic models, respectively. The y-axis is the average empirical power at the 0.05 level based on 10,000 replicates each. The disease prevalence equals 0.1. MAF: minor allele frequency.

To verify the above findings, for each simulation setting we construct a table with the value in each cell equal to the expected value under the trinomial distributions for cases and controls, respectively, i.e., E(ri) = τiN/2 and E(si) = υiN/2, i∈{0,1,2}. Specifically, in each simulation setting, using the fixed combination of sample size (N), MAF (q), and genetic model (θ), the cell probabilities of each genotype for cases and controls in the genotype distribution table (Table 1) can be calculated, and therefore, the expected cell values can also be calculated by multiplying the probabilities with the sample size. This table consists of the expected cell values, which allows us to evaluate the relative performance of the two tests by comparing their theoretical test statistics (TJT and ) in each simulation setting. For each table, the theoretical TJT and are calculated and compared by . Therefore, a positive (or negative) value of ΔT indicates the JT trend test is more (or less) powerful than the CA trend test. The results of ΔT for dominant, additive, and recessive genetic models across simulation settings are reported in Table 2. Consistent with the simulation results, ΔT>0 when the genetic model was dominant; |ΔT|<2% when the genetic model was additive, and ΔT<0 when the genetic model was recessive. The only discrepancy is that in case of low MAFs and small sample sizes, theoretically ΔT would be less than zero under the recessive model, but empirically it is greater than zero. We suspect it is because the parametric assumptions and asymptotic theory behind do not hold in these circumstances, whereas TJT does not impose assumptions on data distribution.

Table 2. Percent difference between the Jonckheere-Terpstra trend test statistic (TJT) and the Cochran-Armitage trend test statistic () based on the expectation of genotype distributions under dominant, additive, and recessive genetic models.

| N | Minor Allele Frequency | |||

|---|---|---|---|---|

| Dominant | Additive | Recessive | ||

| 200 | 0.05 | 2.15% | -1.39% | -69.47% |

| 200 | 0.1 | 4.97% | -1.67% | -62.83% |

| 200 | 0.2 | 9.92% | -1.00% | -46.62% |

| 200 | 0.3 | 11.58% | 0.14% | -27.71% |

| 500 | 0.05 | 2.46% | -1.10% | -69.38% |

| 500 | 0.1 | 5.28% | -1.38% | -62.71% |

| 500 | 0.2 | 10.25% | -0.70% | -46.46% |

| 500 | 0.3 | 11.91% | 0.44% | -27.49% |

| 1000 | 0.05 | 2.56% | -1.00% | -69.35% |

| 1000 | 0.1 | 5.39% | -1.28% | -62.68% |

| 1000 | 0.2 | 10.36% | -0.60% | -46.41% |

| 1000 | 0.3 | 12.03% | 0.54% | -27.42% |

Real data analysis

We further compared TJT and in real data, which confirmed the simulation results. The first example is on the association between the variant rs2398162 and hypertension in the Wellcome Trust Case Control Consortium study [13]. There were 1940 cases (r0 = 1205, r1 = 624, r2 = 111) and 2923 controls (s0 = 1608, s1 = 1121, s2 = 194), and it was suggested the minor allele have a dominant effect. Applying the two trend tests on the dataset, we can obtain TJT = 22.82 (p-value = 1.8×10−6) and (p-value = 7.9×10−6). These results were in line with the observations in the simulation that TJT is more powerful than when the underlying genetic model is dominant.

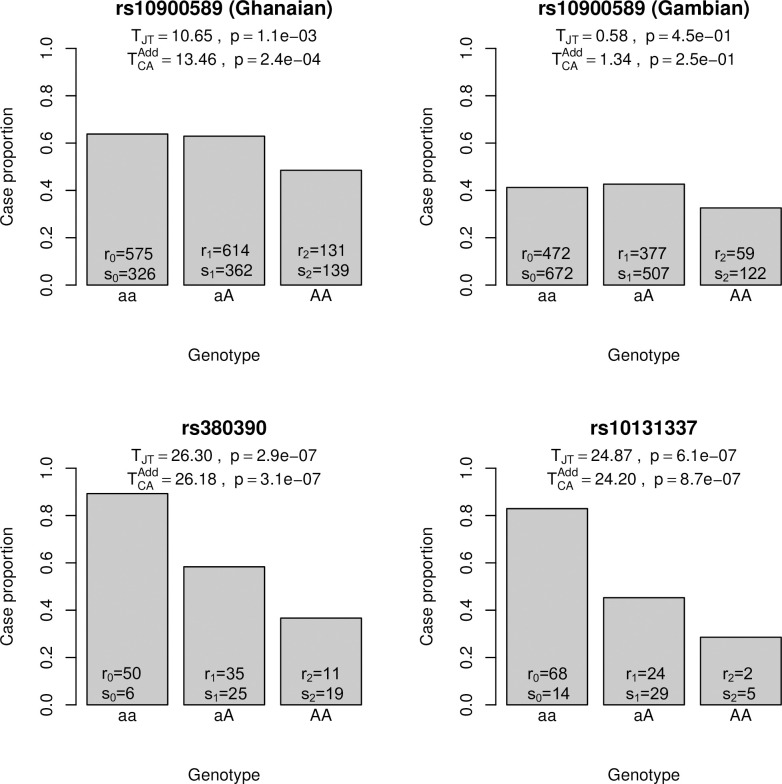

Additional real data analyses came from case-control studies for falciparum malaria [14], age-related macular degeneration (AMD) [15] and hypertension with additional variants, with the genotype counts all extracted from [9]. In the falciparum malaria study, variant rs10900589 in ATP2B4 was associated with the disease in the Ghanaian samples. This association was also evaluated in the Gambian samples and it was significant under a recessive model but insignificant under dominant and additive models. In the AMD study, variants rs380390 and rs10131337 in CFH were associated with AMD. We examined the associations of the three variants with the diseases using both tests. Cell counts, test statistics and p-values are reported in Fig 2. For rs10900589, the minor allele approximately acts in a recessive mode and , which were consistent with the simulation results that TJT is less powerful than when the underlying genetic model is recessive. For both rs380390 and rs10131337, the minor allele approximately acts in an additive mode and , which were consistent with the simulation results that the two tests have comparable power when the underlying model is additive. In the hypertension study, we compared the two tests on three SNPs that showed genome-wide significance. The results are reported in S2 Table. The conclusion still holds in this real data analysis: TJT and had similar power when the genetic model was close to be additive (rs7961152, rs1937506, rs6997709).

Fig 2. Comparison between the Jonckheere-Terpstra trend test (TJT) and the Cochran−Armitage trend test in four real datasets.

A denotes the minor allele; ri′s and si′s are as defined in Table 1.

To assess the potential genotyping errors among the variants considered in the real data analysis, we tested the Hardy-Weinberg equilibrium (HWE) among the cases and controls, separately, for each variant [16, 17]. An exact test for HWE was conducted using the R package HardyWeinberg [18], and the results with exact p-values for the variants were reported in S3 Table. Results showed that the p-values of the HWE tests for all the variants were larger than 0.01, with only two between 0.01 and 0.05, suggesting that there was little evidence of genotyping error among the variants. Moreover, we conducted allelic test to evaluate the association for the variants. The allelic test assesses the genetic association at the allele level by collapsing the genotypes into the counts for reference and alternative alleles, between cases and controls, however, this approach is not robust against the HWE departures [4]. The test statistics and p-values of allelic test results were summarized in S3 Table. Of note, the results were close to those of , suggesting that the assumptions of HWE were not violated.

Discussion

Our previous work elucidates the mechanism of the CA trend test that it examines the location shift of genotype scores between the case and control groups [19] by measuring the goodness of fit of a linear regression model correlating proportions of cases in genotype groups with their respective scores [20]. The preassigned scores reflect assumptions on the genetic model. In contrast, the JT trend test examines the location shift of phenotype scores among genotype groups in a rank-based nonparametric way without making assumptions on genetic models or data distribution. The power difference between the two tests in different situations shown in this study can be explained by their properties. When the underlying model is dominant, suffers power loss because of the wrong model assumption that it is inferior to TJT; when the underlying model is recessive, the limited information on location shift hampers TJT more than the wrong model assumption hampers ; in case of low MAFs and small sample sizes where the large-sample theory breaks, TJT outperforms because it does not impose assumptions on data distribution as the latter does.

In sum, in this study we compared the power of and TJT under different situations. By simulation and real data examples, we show TJT can provide a valuable alternative to in case of small sample sizes and low MAFs; when the genetic mechanism is known to be dominant, or that is the only model of interest, TJT is preferred. However, in a moderate to large sample size study with the true mode of inheritance unknown, the use of the JT trend test is not recommended compared to the CA trend test under an additive model, which is more robust under a wide range of modes of inheritance.

Supporting information

(DOCX)

(DOCX)

(DOCX)

Acknowledgments

The authors acknowledge the Texas Advanced Computing Center (https://www.tacc.utexas.edu) at The University of Texas at Austin for providing high performance computing resources that have contributed to the research results reported within this paper.

Data Availability

Real data analyzed in this study can be obtained from the cited articles (Liu et al., 2000; Timmann et al., 2012; Loley et al., 2013).

Funding Statement

This work is supported by the National Institute of Environmental Health Sciences grant R03ES034138 to C.X. and Z.Z. D.F.D. and Z.Z. are also supported in part by the National Institute on Minority Health and Health Disparities grant 5U54MD013376-8281. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript. There was no additional external funding received for this study.

References

- 1.Visscher P.M., et al., 10 years of GWAS discovery: Biology, function, and translation. Am J Hum Genet, 2017. 101(1): p. 5–22. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Cochran W.G., Some Methods for Strengthening the Common χ2 Tests. Biometrics, 1954. 10(4): p. 417–451. [Google Scholar]

- 3.Armitage P., Tests for Linear Trends in Proportions and Frequencies. Biometrics, 1955. 11(3): p. 375–386. [Google Scholar]

- 4.Sasieni P.D., From genotypes to genes: doubling the sample size. Biometrics, 1997: p. 1253–1261. [PubMed] [Google Scholar]

- 5.Clarke G.M., et al., Basic statistical analysis in genetic case-control studies. Nature Protocols, 2011. 6(2): p. 121–133. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Gonzalez J.R., et al., Maximizing association statistics over genetic models. Genet Epidemiol, 2008. 32(3): p. 246–54. [DOI] [PubMed] [Google Scholar]

- 7.Kuo C.L. and Feingold E., What’s the best statistic for a simple test of genetic association in a case-control study? Genet Epidemiol, 2010. 34(3): p. 246–253. doi: 10.1002/gepi.20455 [DOI] [PubMed] [Google Scholar]

- 8.Li Q., et al., Robust tests for single-marker analysis in case-control genetic association studies. Ann Hum Genet, 2009. 73(2): p. 245–52. doi: 10.1111/j.1469-1809.2009.00506.x [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Loley C., et al., A unifying framework for robust association testing, estimation, and genetic model selection using the generalized linear model. Eur J Hum Genet, 2013. 21(12): p. 1442–8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Jonckheere A.R., A Distribution-Free k-Sample Test Against Ordered Alternatives. Biometrika, 1954. 41(1/2): p. 133–145. [Google Scholar]

- 11.Terpstra T.J., The asymptotic normality and consistency of Kendall’s test against trend, when ties are present in one ranking. Indagationes Mathematicae, 1952. 14(3): p. 327–333. [Google Scholar]

- 12.Zheng G., Joo J., and Yang Y., Pearson’s test, trend test, and MAX are all trend tests with different types of scores. Annals of Human Genetics, 2009. 73(2): p. 133–140. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.The Wellcome Trust Case Control Consortium, Genome-wide association study of 14,000 cases of seven common diseases and 3,000 shared controls. Nature, 2007. 447(7145): p. 661–678. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Timmann C., et al., Genome-wide association study indicates two novel resistance loci for severe malaria. Nature, 2012. 489(7416): p. 443–446. [DOI] [PubMed] [Google Scholar]

- 15.Li Q., et al., MAX-rank: a simple and robust genome-wide scan for case-control association studies. Human Genetics, 2008. 123(6): p. 617–623. [DOI] [PubMed] [Google Scholar]

- 16.Leal S.M., Detection of genotyping errors and pseudo‐SNPs via deviations from Hardy‐Weinberg equilibrium. Genetic Epidemiology, 2005. 29(3): p. 204–214. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Hosking L., et al., Detection of genotyping errors by Hardy–Weinberg equilibrium testing. European Journal of Human Genetics, 2004. 12(5): p. 395–399. [DOI] [PubMed] [Google Scholar]

- 18.Graffelman J., Exploring diallelic genetic markers: the HardyWeinberg package. Journal of Statistical Software, 2015. 64: p. 1–23. [Google Scholar]

- 19.Zhou Z., et al., Differentiating the Cochran-Armitage trend test and Pearson’s χ2 test: Location and dispersion. Annals of Human Genetics, 2017. 81(5): p. 184–189. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Zhou Z., et al., Decomposing Pearson’s χ2 test: A linear regression and its departure from linearity. Annals of Human Genetics, 2018. 82(5): p. 318–324. [DOI] [PMC free article] [PubMed] [Google Scholar]