SUMMARY

Machine learning leverages statistical and computer science principles to develop algorithms capable of improving their performance through interpretation of data rather than through explicit instructions. Alongside widespread use in image recognition, language processing, and data mining, machine learning techniques have received increasing attention in medical applications, ranging from automated imaging analysis to disease forecasting. This review examines the parallel progress made in epilepsy, highlighting applications in automated seizure detection from EEG, video, and kinetic data, automated imaging analysis and pre-surgical planning, prediction of medication response, and prediction of medical and surgical outcomes using a wide variety of data sources. A brief overview of commonly used machine learning approaches, as well as challenges in further application of machine learning techniques in epilepsy, is also presented. With increasing computational capabilities, availability of effective machine learning algorithms, and accumulation of larger datasets, clinicians and researchers will increasingly benefit from familiarity with these techniques and the significant progress already made in their application in epilepsy.

Keywords: Artificial intelligence, Deep learning, Seizure detection, Epilepsy imaging, Epilepsy surgery

INTRODUCTION

Within the field of artificial intelligence, machine learning bridges statistics and computer science to develop algorithms whose performance improves with exposure to meaningful data, rather than with explicit instructions.1 Alongside ubiquitous applications in speech recognition, image classification, and text translation,2 machine learning has increasingly been used in a variety of medical applications, including triaging of ophthalmology referrals based on optical coherence tomography data,3 diagnosis of malignant melanoma from dermoscopic and photographic images,4 and identification of influenza from emergency department encounter records,5 in all three instances matching or exceeding the performance of clinical experts. Similar advances have also been made in epilepsy, driven by continuous improvements in data collection, storage, and processing. To illustrate the broad utility of machine learning techniques in epilepsy, this work highlights recent applications in automated seizure detection, analysis of imaging and clinical data, epilepsy localization, and prediction of medical and surgical outcomes, following a brief overview of commonly used machine learning algorithms.

MACHINE LEARNING APPROACHES

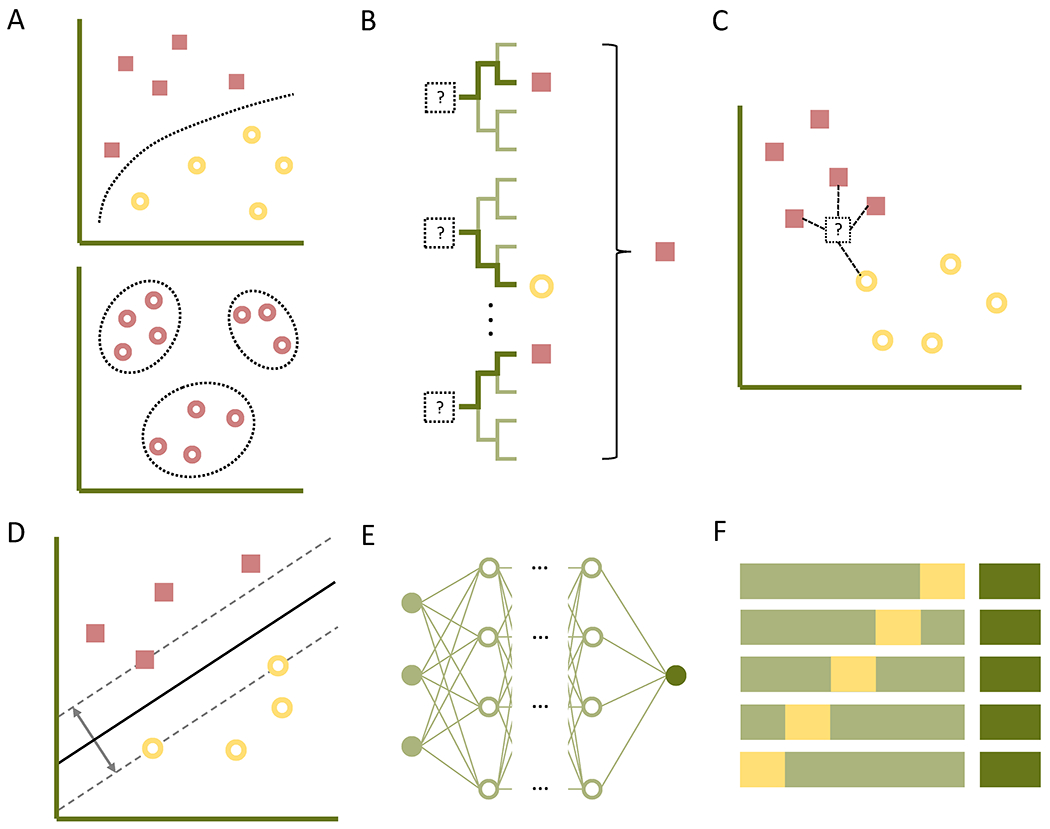

Machine learning tasks may broadly be classified as supervised or unsupervised learning (Figure 1A). In a supervised learning task, an algorithm is ‘trained’ on a set of previously labeled input data in order to estimate outputs for unlabeled data.6 For example, annotated EEG recordings may be used to train an algorithm to automatically detect epileptiform discharges. In contrast, in unsupervised learning, an algorithm is used to uncover trends, subgroups, or outliers in unlabeled input data.6 Applied to the same problem, an unsupervised algorithm may identify candidate epileptiform discharges by detecting outliers from the background EEG recording. With either approach, informative input features are identified in a process termed feature selection (performed either manually, based on expert knowledge, or by the algorithm itself, without requiring domain expertise) and are then analyzed using a mapping function that generates output predictions from these features.7
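As a toy illustration of the distinction above, the sketch below contrasts a supervised rule, which learns an amplitude cut-off from annotated windows, with an unsupervised rule, which flags statistical outliers without using any labels. The amplitude values, the single-feature representation of each EEG window, and the z-score cut-off of 3 are all hypothetical choices made for illustration; practical detectors use many features and considerably more robust methods.

```python
import statistics

# Hypothetical peak amplitudes (in microvolts), one value per EEG window.
# Reducing each window to a single feature is a simplifying assumption.
amplitudes = [20, 22, 19, 21, 23, 20, 95, 18, 22, 21, 20, 19, 21]
labels = [0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0]  # 1 = annotated discharge


def fit_threshold(values, labels):
    """Supervised: learn a cut-off from labeled windows as the midpoint
    between the mean feature value of each class."""
    pos = [v for v, y in zip(values, labels) if y == 1]
    neg = [v for v, y in zip(values, labels) if y == 0]
    return (statistics.fmean(pos) + statistics.fmean(neg)) / 2


def flag_outliers(values, z_cutoff=3.0):
    """Unsupervised: flag windows whose z-score relative to the whole
    recording exceeds z_cutoff; no labels are used."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    if sd == 0:
        return []
    return [i for i, v in enumerate(values) if abs(v - mean) / sd > z_cutoff]


threshold = fit_threshold(amplitudes, labels)
supervised_hits = [i for i, v in enumerate(amplitudes) if v > threshold]
unsupervised_hits = flag_outliers(amplitudes)
print(threshold, supervised_hits, unsupervised_hits)  # 57.75 [6] [6]
```

Both rules flag the same window here, but only the supervised rule needed annotations; the unsupervised rule depends entirely on the discharge standing out from the rest of the recording.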

Figure 1.

Overview of machine learning concepts. A, In supervised learning (top), an algorithm is trained on labeled input data to generate predicted outputs, while in unsupervised learning (bottom), the algorithm uncovers subgroups or outliers in unlabeled data. B, The random forest algorithm generates a forest of decision trees, each using subsets of input features as bifurcation points to separate the training data into expected outputs; the output of the ensemble (e.g., the majority vote) is reported for new inputs. C, In k-nearest neighbor classification, an input is plotted as a vector within a feature space alongside labeled data and is assigned to the majority class among its k nearest neighbors (here, k = 4). D, Support vector machines generate a hyperplane in a higher-dimensional feature space to maximally separate labeled training data. E, In artificial neural networks, input data (far left) are weighted, summed, and passed through a non-linear activation function at each node, projecting through intermediary nodes until reaching the output node (far right) for classification. F, In cross-validation, a subset of the training data is withheld as the validation set (yellow), allowing fine-tuning of an algorithm parametrized on the training set (light green); after multiple iterations (here showing K-fold cross-validation with K = 5), the algorithm may be tested on an initially withheld testing set (dark green) to assess the accuracy and generalizability of the finalized model.

Among the more commonly used mapping functions, the random forest algorithm creates multiple decision trees from training data, in which each tree uses a randomly selected subset of input features as iterative bifurcation points to best separate the input data into the expected outputs (Figure 1B).8 The resultant forest of decision trees then generates an ensemble output (e.g., the most frequently predicted classification among the decision trees) for new inputs.8 In k-nearest neighbor (k-NN) classification (Figure 1C), a given input is represented by a vector within a feature space, and the distances between the input vector and the labeled vectors in the training set are calculated; the input is then assigned to the majority class among its k nearest neighbors (e.g., for k = 5, the class most common among its five nearest training data points).9 Support vector machine (SVM) classifiers, in contrast, generate a hyperplane in a higher-dimensional feature space to maximally separate clusters of labeled training data, providing a decision boundary for classifying new inputs (Figure 1D).10 Multilayer artificial neural networks process data through layers of nodes, in each of which weighted inputs are summed and passed through a non-linear activation function to yield intermediary outputs; these may in turn proceed through additional layers of nodes as desired, ultimately reaching output nodes (Figure 1E).11 Deep learning refers to this use of multiple ‘hidden layers’ and provides the advantage of automated feature selection with sufficiently large datasets, enabling high accuracy without incorporating domain expertise; in other words, training data can effectively serve as programming code for a deep learning model.11 Advancements in deep learning have thus enabled many of the recent accomplishments in machine learning, such as automated image classification,12 speech recognition,13 and text translation.14
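Two of the mapping functions described above can be sketched in a few lines of plain Python: a k-NN classifier that takes a majority vote among the k nearest labeled points, and the forward pass of a small neural network in which each node sums its weighted inputs and applies a non-linear activation. The feature values, class names, and weights below are hypothetical. The network weights are set by hand rather than learned; training (e.g., by backpropagation) is omitted for brevity.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k=3):
    """k-NN: assign x to the majority class among its k nearest
    training points (Euclidean distance)."""
    nearest = sorted(zip(train_X, train_y), key=lambda pair: math.dist(pair[0], x))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


def dense_layer(inputs, weights, biases, activation):
    """One network layer: each node sums its weighted inputs plus a bias,
    then applies a non-linear activation function."""
    return [
        activation(sum(w * v for w, v in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical two-feature training data (e.g., two features per EEG window).
train_X = [(1.0, 1.2), (0.8, 1.0), (1.1, 0.9), (5.0, 5.1), (4.8, 5.3), (5.2, 4.9)]
train_y = ["background"] * 3 + ["discharge"] * 3
new_window = (4.5, 5.2)

print(knn_predict(train_X, train_y, new_window, k=3))  # discharge

# Hand-set (not learned) weights: 2 inputs -> 2 hidden nodes -> 1 output node.
hidden = dense_layer(new_window, [[0.5, 0.5], [-0.5, -0.5]], [-2.0, 2.0], relu)
output = dense_layer(hidden, [[1.0, -1.0]], [0.0], sigmoid)
print(round(output[0], 3))  # 0.945
```

An odd k avoids tied votes in two-class problems; in a deep network, the same layer computation is simply repeated across several hidden layers.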

The choice among mapping functions for a given application may be influenced by several factors, including sample size (e.g., deep learning requires larger datasets to achieve high accuracy),11 ease of use (whether from fewer user-specified variables, as in k-NN, or from the availability of user-friendly software packages), and relative interpretability (increasingly facilitated by a growing number of algorithm-specific and algorithm-agnostic approaches)15; ultimately, however, the selection is often empiric, iterative, and informed by investigator experience.16 Mapping functions may also be combined in a given classification task to form an ensemble, based on the principle that a committee of predictors prone to non-identical errors will prove more accurate than any single predictor alone.17 Ensembles may be generated by applying the same mapping function to subsets of data, by training the same function using different sets of parameters, or by combining different mapping functions; overall, ensembles improve accuracy at the expense of computation time, data size, and comprehensibility.18 Along similar lines, automated machine learning (auto-ML) treats the selection of a mapping function and its parameters as a machine learning task in its own right, allowing a mapping function or ensemble of functions to be selected and optimized with little user input.16
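The committee principle above can be illustrated with a hypothetical majority-vote ensemble of three imperfect binary classifiers. The labels and predictions are invented so that each model errs on a different case; the sketch shows the idealized benefit and is not a model of any specific study.

```python
from collections import Counter


def majority_vote(predictions_per_model):
    """Combine several models' predictions case by case by majority vote."""
    return [Counter(case).most_common(1)[0][0] for case in zip(*predictions_per_model)]


def accuracy(predicted, truth):
    return sum(p == t for p, t in zip(predicted, truth)) / len(truth)


# Hypothetical labels (1 = seizure, 0 = no seizure) and three imperfect models,
# each wrong on a different case (i.e., non-identical errors).
truth = [1, 0, 1, 1, 0, 0]
model_a = [0, 0, 1, 1, 0, 0]  # wrong on case 0
model_b = [1, 1, 1, 1, 0, 0]  # wrong on case 1
model_c = [1, 0, 0, 1, 0, 0]  # wrong on case 2

ensemble = majority_vote([model_a, model_b, model_c])
for name, preds in [("A", model_a), ("B", model_b), ("C", model_c), ("ensemble", ensemble)]:
    print(name, round(accuracy(preds, truth), 3))
# A 0.833, B 0.833, C 0.833, ensemble 1.0
```

If all three models erred on the same cases, the vote would simply reproduce those errors; this is why the principle requires predictors with non-identical errors.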

With small datasets or excessively complex models, the mapping function may ‘overfit’ the training data, learning properties idiosyncratic to the sample rather than relationships in the overall population; as a result, the generalizability, or out-of-sample accuracy, of the algorithm is diminished.19 To limit overfitting, the training data may be divided into a training set, from which the algorithm learns its parameters, and a validation set, from which a preliminary estimate of the algorithm’s generalizability is obtained (Figure 1F). The validation set can then be used to adjust the hyperparameters of the algorithm, i.e., aspects of the algorithm not directly learned from training data (including selected features, type of mapping function, or prespecified properties of the mapping function, such as the number of neighbors in k-NN classification) that affect its performance.19 This partitioning and readjustment can be repeated a prespecified number of times, with the data split into K subsets that each serve once as the validation set (K-fold cross-validation), or repeated across the entire dataset by holding out a single data point for validation in each iteration (leave-one-out cross-validation).19 Subsequently, a third, testing set of withheld data may be used to estimate the generalizability of the final model (Figure 1F), e.g., by determining accuracy, sensitivity, and specificity for binary classifiers.19 Another commonly reported measure is the area under the receiver operating characteristic curve (AUC); the receiver operating characteristic curve plots sensitivity against the false-positive rate (1 − specificity) across decision thresholds, and the area under this curve provides a prevalence-independent measure of the test’s discriminatory ability.20 An alternative approach to mitigating overfitting is transfer learning, wherein a deep learning model previously trained for one task is re-trained using a far smaller dataset for a different task, for instance by re-training an image classification algorithm to classify Gram stain images.21
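The partitioning scheme and the AUC described above can be sketched as follows. This is a minimal illustration under stated assumptions: folds are contiguous and unshuffled (it assumes cases are already in random order, whereas in practice one would shuffle and often stratify by class), and the classifier scores are hypothetical. The AUC is computed as the probability that a randomly chosen positive case scores higher than a randomly chosen negative case (the Mann–Whitney formulation), which equals the area under the ROC curve.

```python
def k_fold_indices(n, k):
    """Yield (train_idx, val_idx) for K-fold cross-validation: the n cases are
    split into k contiguous folds and each fold serves once as the validation
    set. Setting k = n gives leave-one-out cross-validation."""
    if not 2 <= k <= n:
        raise ValueError("k must satisfy 2 <= k <= n")
    start = 0
    for i in range(k):
        size = n // k + (1 if i < n % k else 0)
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n))
        yield train_idx, val_idx
        start += size


def auc(scores, labels):
    """AUC as the probability that a randomly chosen positive case scores
    higher than a randomly chosen negative case (ties count as 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


for train_idx, val_idx in k_fold_indices(10, 5):
    print(val_idx)  # [0, 1], then [2, 3], ..., [8, 9]

# Hypothetical classifier scores on a separate, held-out test set (1 = seizure).
scores = [0.9, 0.8, 0.35, 0.6, 0.2, 0.1]
labels = [1, 1, 1, 0, 0, 0]
print(round(auc(scores, labels), 3))  # 0.889
```

Because the AUC depends only on how positive and negative cases are ranked, it does not change with the proportion of positive cases, which is why it is described above as prevalence-independent.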

AUTOMATED SEIZURE DETECTION

Given the utility of machine learning in analyzing large, complex datasets, considerable attention has been paid to automated seizure detection in EEG recordings. A wide variety of techniques have been applied to this task, including SVM,22,23 k-NN,24,25 and deep learning classifiers.26,27 Similar techniques have also been applied to seizure forecasting from EEG data, achieving prediction horizons of up to several minutes in limited databases,28,29 including on low-power chips suitable for wearable or implantable devices.30 Recent progress and future directions in seizure detection and forecasting have been reviewed in greater depth elsewhere.31–33

Aside from EEG recordings, however, machine learning techniques have also been applied to novel data sources for seizure detection. In neonatal epilepsy, Karayiannis et al examined extremity movements in bedside video recordings, training neural networks to classify recordings as focal clonic seizures, myoclonic seizures, or non-seizure movements; after training on 120 recordings, the authors achieved seizure detection sensitivities of 85.5–94.4% and specificities of 92.5–97.9% (varying with the type of input data, with overall better detection of myoclonic seizures) on a matched testing set.34 A follow-up study with modified video analysis algorithms and neural network architecture improved on this performance, yielding a peak sensitivity of 96.8% and specificity of 97.7%.35 Ogura et al added EEG data to video-based extremity movement measurements, demonstrating a sensitivity of 96.2% and specificity of 94.2% for detecting epileptic spasms using a probabilistic neural network.36 In pediatric and adult epilepsy, early studies relied on infrared markers attached to anatomic landmarks for motion detection, and were therefore limited by occlusion of the reflective markers.37 Accordingly, Cuppens et al tracked spatio-temporal interest points defined by corner detection algorithms in nocturnal video recordings, achieving a peak sensitivity of 77% in detecting myoclonic jerks using an SVM classifier (though noting reduced performance for recordings including subtle myoclonic jerks or intermixed epileptic and non-epileptic movements).38 Along similar lines, Achilles et al extracted depth and position data from an infrared Kinect camera (Microsoft, Redmond, WA) set up in an epilepsy monitoring unit, using a convolutional neural network to identify tonic, tonic-clonic, and focal motor seizures. 
After training on videos of seizures from five patients, the algorithm achieved an AUC of 78.3% in five test patients at a processing speed (10 frames per second) compatible with real-time analysis.39 Taken together, while there remains room for improvement in the accuracy of video-based seizure detection, machine learning-enabled techniques are rapidly approaching real-time applicability in seizure telemetry and characterization in epilepsy monitoring units, as well as in supplemental analysis of ambulatory video recordings.

In addition to video data, machine learning approaches have also been applied to movement data. One recent multicenter study of 69 patients combined wrist-worn accelerometer data with measurements of electrodermal activity (a reflection of sweat gland activation by the sympathetic nervous system), achieving a sensitivity of 94.6% and a false-positive rate of 0.20 per day (AUC 94%) with a median detection latency of 29.3 seconds using an SVM classifier.40 Milošević et al also applied SVM to data from extremity-worn accelerometers combined with surface electromyography (sEMG) recordings from both biceps, achieving a combined sensitivity of 90.9%, a clinical latency of 10.5 seconds, and a false-positive rate of 0.45 alarms per 12-hour recording in seven pediatric patients. Notably, the authors found 40–45% of these false alarms to be of clinical interest, including falls, EEG lead removal, or concerning behaviors.41 In another notable study, individual muscle component transforms of upper-extremity accelerometer data were examined with an SVM classifier, demonstrating 93.3% sensitivity and 85.0% specificity for distinguishing non-epileptic convulsions from motor seizures.42 Additional machine learning approaches, including random forest43 and k-NN44 classifiers, have also been successfully applied to movement data. Overall, the portability of accelerometer- and sEMG-based systems holds promise for more accurate detection and tracking of seizures in the ambulatory setting, potentially also incorporating pulse oximetry,45 electrocardiography,46 and audio47 data in future applications.

MACHINE LEARNING IN THE DIAGNOSIS OF EPILEPSY

Imaging Analysis in Epilepsy

Aside from detection or prediction of individual seizures, machine learning approaches have also been investigated in the broader diagnosis of epilepsy using a wide variety of data sources. In particular, a number of studies have applied machine learning techniques to analysis of imaging data. Zhang et al examined asymmetry of functional connectivity in homologous brain regions on resting-state functional MRI (fMRI) in 100 patients with epilepsy and 80 controls, achieving a peak diagnostic sensitivity of 82.5% and specificity of 85% using an SVM classifier.48 Amarreh et al examined fractional anisotropy, mean diffusivity, radial diffusivity, and axial diffusivity in diffusion tensor imaging (DTI) data from 20 pediatric patients and 29 controls, finding that an SVM classifier was able to accurately distinguish patients with active epilepsy from those in remission (i.e., seizure-free for 12 months and not on medications) as well as from controls.49 Soriano et al, in contrast, examined resting-state magnetoencephalography (MEG) data from 14 patients with focal frontal epilepsy, 14 patients with idiopathic generalized epilepsy, and 14 controls, achieving 88% sensitivity and 86% specificity in differentiating the three groups using sequential neural network classifiers.50 While these studies are limited by modest sample sizes, they illustrate the capabilities of machine learning techniques in uncovering subtle anatomic and functional network changes in epilepsy, thereby enabling novel lines of inquiry in imaging analysis. 
In one illustrative example, Pardoe et al trained a Gaussian process regression algorithm to estimate patient age from T1-weighted MRI sequences using a database of 2001 healthy controls; applying the algorithm to 42 patients with newly diagnosed focal epilepsy, 94 patients with medically refractory focal epilepsy, and 74 contemporaneous controls, they found a 4.5-year disparity between imaging-based estimated brain age and chronological age in medically refractory patients, with larger disparities associated with earlier age of onset.51

One particular application of machine learning techniques in imaging analysis has been the automated characterization of focal cortical lesions. Hong et al applied SVM classifiers to cortical thickness and curvature maps from 41 patients with focal cortical dysplasias (FCDs) as well as matched controls, achieving 98% accuracy in distinguishing histologic subtypes, 92% and 86% accuracy (for type I and II FCDs, respectively) in lateralization, and 92% and 82% accuracy (again for type I and II FCDs, respectively) in predicting Engel I seizure freedom at an average of four years of follow-up.52 Noting the difficulty of adequately capturing lesional variability with a small number of samples, El Azami et al instead demonstrated an unsupervised approach, training a one-class support vector machine on T1-weighted images from 77 healthy controls to identify heterotopias and blurring of the gray-white matter junction as outliers. When tested on 11 patient scans, the classifier achieved a sensitivity of 77% with a mean of 3.2 false-positive detections per patient, compared to a sensitivity of 54% and an average of 6.3 false positives using state-of-the-art statistical parametric mapping (Wellcome Centre for Human Neuroimaging, London, UK), a difference driven by improved detection of lesions not visible on expert-annotated MRI studies.53 More recently, a neural network classifier demonstrated a sensitivity of 73.7% and specificity of 90.0% in detecting solitary FCDs in a dataset of 61 patients with type II FCDs and 120 controls from three different epilepsy centers; notably, the controls comprised both healthy individuals and patients with hippocampal sclerosis.54

Non-Imaging Diagnostics

Outside of imaging analysis, machine learning techniques have similarly enabled epilepsy diagnosis from a wide range of clinical data. Goker et al applied several classification algorithms to 105 scanning EMG recordings from nine patients with juvenile myoclonic epilepsy and ten controls, achieving 100% diagnostic sensitivity and 83.6% specificity using an artificial neural network.55 Kassahun et al employed a genetics-based data mining approach (which incorporated an auto-ML algorithm) to distinguish temporal lobe epilepsies (TLEs) from extratemporal epilepsies using clinical semiologies, finding favorable performance compared to an individual clinician and comparable performance to a team of clinicians examining the same data.56 Using an SVM classifier, Won et al examined visual evoked potential measurements of contrast gain control to differentiate 10 patients with idiopathic generalized epilepsy from 19 controls, achieving sensitivities of 80–86% and specificities of 77–85%.57 Frank et al examined neuropsychological batteries administered to 228 patients with epilepsy, using an SVM classifier to distinguish patients with TLEs from those with extratemporal epilepsies (with 72.2–78.0% accuracy) and to lateralize the epileptic focus among the TLE patients (with 52.8–61.3% accuracy).58 A number of investigators have also applied machine learning techniques to the analysis of unstructured, free-text medical records for epilepsy characterization.
Connolly et al examined progress notes for pediatric patients with focal, generalized, or otherwise unspecified epilepsies, analyzing frequencies of word strings using an SVM classifier; after training on 90 notes of each category from one institution, the algorithm demonstrated better-than-chance classification of progress notes from a different institution, with improved performance when the classifier was trained using notes from two institutions before being tested on notes from a third.59 Biswal et al, in contrast, trained a Naïve Bayes classifier on 3,277 EEG reports labeled with the presence or absence of seizures or epileptiform discharges; on a testing set of 39,695 reports, the classifier achieved an AUC of 99.05% for detecting reports with seizures and 96.15% for those with epileptiform discharges.60 Building on this approach, Goodwin and Harabagiu combined automated indexing of EEG reports with neural network-generated ‘fingerprints’ of the associated recordings to create a searchable database, allowing patient cohorts to be identified with queries such as “History of seizures and EEG with TIRDA without sharps, spikes, or electrographic seizures” (TIRDA, temporal intermittent rhythmic delta activity).61 A panel of expert reviewers found that the addition of EEG ‘fingerprint’ indices provided more relevant results and captured pertinent records not recovered by searches of EEG reports alone.61 The broad range of data sources examined in these studies highlights the utility of machine learning techniques in leveraging underutilized clinical data for patient- and population-level characterization of epilepsy.
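The general idea of classifying free-text reports from word frequencies can be sketched with a multinomial Naïve Bayes classifier. This is a hypothetical illustration and not a reconstruction of the pipelines used in the studies cited: the report snippets, the whitespace tokenization, and add-one (Laplace) smoothing are assumptions chosen for brevity.

```python
import math
from collections import Counter


def tokenize(text):
    # Naive whitespace tokenization; real report pipelines need far more care
    # (e.g., handling negation such as "no epileptiform discharges").
    return text.lower().split()


def train_naive_bayes(docs, labels, alpha=1.0):
    """Multinomial Naive Bayes with add-alpha (Laplace) smoothing."""
    vocab = {w for d in docs for w in tokenize(d)}
    log_prior, log_lik = {}, {}
    for c in set(labels):
        class_docs = [d for d, y in zip(docs, labels) if y == c]
        log_prior[c] = math.log(len(class_docs) / len(docs))
        counts = Counter(w for d in class_docs for w in tokenize(d))
        denom = sum(counts.values()) + alpha * len(vocab)
        log_lik[c] = {w: math.log((counts[w] + alpha) / denom) for w in vocab}
    return log_prior, log_lik


def predict_naive_bayes(model, doc):
    """Return the class with the highest posterior log-probability; words
    not seen during training are ignored."""
    log_prior, log_lik = model
    scores = {
        c: log_prior[c] + sum(log_lik[c][w] for w in tokenize(doc) if w in log_lik[c])
        for c in log_prior
    }
    return max(scores, key=scores.get)


# Hypothetical report snippets: 1 = seizures/epileptiform discharges, 0 = neither.
reports = [
    "left temporal sharp waves",
    "frequent spikes and sharp waves",
    "electrographic seizure recorded",
    "normal awake and asleep background",
    "normal posterior dominant rhythm",
    "normal study",
]
labels = [1, 1, 1, 0, 0, 0]

model = train_naive_bayes(reports, labels)
print(predict_naive_bayes(model, "right temporal spikes"))  # 1
print(predict_naive_bayes(model, "normal background"))      # 0
```

Working in log-probabilities avoids numerical underflow when many word probabilities are multiplied, and smoothing prevents a single unseen word from driving a class probability to zero.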

Lateralization of Epilepsy

Another area of focus in machine learning-enhanced epilepsy diagnostics has been the detection and lateralization of temporal lobe epilepsies. In early studies, Bakken et al analyzed hippocampal magnetic resonance spectroscopy data from 15 patients with TLE and 13 controls using an artificial neural network, correctly distinguishing and lateralizing patients with TLEs from controls in 60 of 67 spectra.62 In another study, a neural network classifier demonstrated 85% agreement with an expert reviewer in detecting and lateralizing TLEs on interictal 18F-fluorodeoxyglucose-positron-emission tomography (FDG-PET) images from 197 patients and 64 controls.63 Kerr et al later applied neural networks to interictal FDG-PET images from 73 patients with TLE and 32 patients with non-epileptic seizures, accurately identifying and lateralizing TLE in 76% of cases, compared to accuracies of 78% with expert review of FDG-PET data and 71% with expert review of MRI data.64 Interestingly, limited correlation was noted between the results of expert review and the neural network classifier, suggesting that the neural network did not recapitulate manual analysis.64 Multifractal analysis of ictal and interictal single photon emission computed tomography (SPECT) data, examined using an SVM classifier, has also been shown to accurately localize TLE in 19 of 20 cases, comparing favorably to conventional image subtraction.65 A number of studies have also applied machine learning techniques to morphometric analysis of structural MRI using T1-weighted and fluid-attenuated inversion recovery (FLAIR) sequences, both for detection66 and lateralization67 of TLE. 
Similar results have more recently been shown for analysis of diffusion-weighted imaging,68 diffusion tensor imaging,69 and diffusion kurtosis imaging.70 In addition, Jin and Chung recently applied an SVM classifier to functional connectivity data from resting-state MEG, distinguishing 46 TLE patients from matched controls with 95.1% accuracy and lateralizing TLEs with 76.2% accuracy.71

APPLICATIONS IN SURGICAL MANAGEMENT OF EPILEPSY

Machine learning approaches have also increasingly been applied in surgical planning and prediction of surgical outcomes in epilepsy. Dian et al examined intracranial EEG recordings from six patients undergoing resection for extratemporal epilepsy to identify regions of interest using an SVM classifier, finding close concordance with the ultimate resection area in patients achieving Engel I outcomes but limited concordance in patients with Engel III or IV outcomes; in the latter group, this discordance potentially suggests either the need for a larger resection area or inaccurate identification of the seizure onset zone.72 In a pediatric population, Roland et al highlighted the use of a neural network-based algorithm to identify canonical resting-state networks on fMRI as part of presurgical planning, allowing clinically relevant networks to be identified even under general anesthesia.73 Alongside applications in FCD detection and TLE lateralization, these findings begin to highlight the broader applicability of machine learning techniques in surgical planning for extratemporal epilepsies or those with ostensibly unrevealing imaging findings.

In the domain of predicting surgical outcomes, an early study by Grigsby et al trained a neural network classifier on encoded clinical, electrographic, neuropsychologic, imaging, and surgical data from 65 patients undergoing anterior temporal lobectomy, yielding a sensitivity of 80.0% and specificity of 83.3% in predicting Engel I outcomes (improving to 100% and 85.7%, respectively, for Engel I or II outcomes) in a testing cohort of 22 patients.74 Arle et al also applied neural networks of varying architecture to a similar dataset (though also incorporating an index of medication number and dosage), reporting an accuracy of 96% in predicting Engel I outcomes in 80 surgical patients; curiously, the authors noted an improvement in accuracy to 98% when excluding intraoperative variables (such as electrocorticography or pathology data).75 More recently, Armañanzas et al compared k-NN and Naïve Bayes classifiers in predicting Engel I vs. II–III outcomes using similar presurgical data, though with the notable addition of a larger neuropsychological battery that included personality style as assessed using a Rorschach test; after narrowing the classifiers to the three most informative variables (including personality style), the authors reported predictive accuracies of 89.47% with either classifier.76 Memarian et al instead compared linear discriminant analysis with Naïve Bayes and SVM classifiers in examining preoperative clinical, electrophysiologic, and structural MRI data from 20 patients, finding the highest accuracy (95%) in predicting Engel I outcomes with a least-squares SVM classifier.77 In contrast, a study applying an SVM classifier to T1-weighted MRI sequences alone from 49 patients demonstrated 100% sensitivity and 88%–92% specificity (in male and female cohorts, respectively) in predicting post-surgical seizure freedom.78 More recently, a neural network classifier examining DTI-based structural connectomes from 50 TLE patients yielded a positive predictive value of 88% and negative predictive value of 79% in predicting Engel I outcomes, comparing favorably to a discriminant function classifier using only clinical variables.79 Measures of thalamocortical connectivity on resting-state fMRI have similarly been used to predict favorable response (>50% seizure reduction) to vagus nerve stimulation, achieving an AUC of 86% using an SVM classifier in 21 pediatric patients.80 Antony et al, in contrast, applied an SVM classifier to measures of functional connectivity from stereo-EEG in 23 patients undergoing anterior temporal lobectomy, achieving a sensitivity of 90% and specificity of 85% in predicting seizure freedom at one year of follow-up.81 Tomlinson et al also examined functional connectivity using interictal intracranial EEG recordings from 17 pediatric patients, achieving 100% sensitivity and 87.5% specificity in predicting Engel I outcomes with an SVM classifier.82 Interestingly, however, a retrospective study of 118 patients found that neither MRI nor routine, video, or intracranial EEG data predicted post-surgical seizure freedom at 2 years using conditional logistic regression,83 and a separate study similarly failed to predict seizure freedom from FDG-PET and 11C-flumazenil-PET data assessed with a random forest classifier.84 Nevertheless, when viewed as a whole, these studies demonstrate the ability of machine learning techniques to uncover prognostically valuable trends within the complex, multimodality data obtained during a typical presurgical evaluation, potentially allowing for improved patient selection and counseling.
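The outcome studies above report performance variously as sensitivity, specificity, positive and negative predictive values (PPV and NPV), and accuracy. The sketch below derives all five from confusion-matrix counts and shows why PPV, unlike sensitivity and specificity, depends on how common the outcome is in the cohort studied. All counts and prevalences are hypothetical.

```python
def binary_metrics(tp, fp, tn, fn):
    """Summary measures for a binary classifier from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),  # true-positive rate
        "specificity": tn / (tn + fp),  # true-negative rate
        "ppv": tp / (tp + fp),          # positive predictive value
        "npv": tn / (tn + fn),          # negative predictive value
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


def ppv_at_prevalence(sensitivity, specificity, prevalence):
    """PPV implied by fixed sensitivity and specificity at a given
    prevalence (Bayes' rule)."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


# Hypothetical test cohort: 20 patients with the outcome, 30 without.
m = binary_metrics(tp=18, fp=6, tn=24, fn=2)
print({name: round(value, 3) for name, value in m.items()})
# {'sensitivity': 0.9, 'specificity': 0.8, 'ppv': 0.75, 'npv': 0.923, 'accuracy': 0.84}

# Same sensitivity and specificity, but the outcome is rarer in another cohort:
print(round(ppv_at_prevalence(0.9, 0.8, 0.40), 3))  # 0.75
print(round(ppv_at_prevalence(0.9, 0.8, 0.10), 3))  # 0.333
```

Predictive values from small surgical cohorts may therefore not carry over to populations with a different proportion of favorable outcomes, even when the classifier itself performs identically.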

APPLICATIONS IN MEDICAL MANAGEMENT OF EPILEPSY

As with surgical planning and prediction of surgical outcomes, machine learning techniques have also been applied to medical decision-making and prediction of medical outcomes in epilepsy. Aslan et al, for example, trained a neural network classifier using seven clinical features (including age of onset, history of febrile seizures, and presentation to clinic >1 year after disease onset) from 302 patients, achieving an accuracy of 91.1% in 456 test cases for predicting seizure freedom, seizure reduction, or absence of significant change (i.e., <50% reduction) in seizure frequency.85 Cohen et al instead examined clinic visit notes of 200 pediatric patients (half undergoing epilepsy surgery) to predict surgical candidacy using Naïve Bayes and SVM classifiers, finding comparable performance to a panel of four neurologists up to several months before actual referral; notably, however, this comparison was limited by high measures of dispersion around the mean predictions.86 In contrast, Kimiskidis et al examined features derived from paired-pulse transcranial magnetic stimulation-EEG recordings using a Naïve Bayes classifier, achieving a mean sensitivity of 86% and specificity of 82% in distinguishing patients with genetic generalized epilepsies from controls, as well as a mean sensitivity of 80% and specificity of 73% in predicting seizure freedom at 12 months’ follow-up.87 More recently, An et al compared machine learning algorithms for prediction of drug-resistant epilepsy (defined as requiring >3 medication changes during the study period) utilizing comprehensive U.S. claims data from 2006–2015. 
The authors found that the best-performing algorithm, a random forest classifier trained using 635 features (comprising demographic variables, comorbidities, treatment regimens, insurance data, and clinical encounters) from 175,735 records, yielded an AUC of 76.4% and was able to identify patients with drug-resistant epilepsy an average of 1.97 years before failing a second medication trial, using data available at the time of the first medication prescription.88

A number of studies have also demonstrated the capabilities of machine learning algorithms in predicting individual medication responses. Devinsky et al examined clinical characteristics (e.g., type and number of medications used, age, and comorbidities) from records of 34,990 patients extracted from a medical claims database, and trained a random forest classifier to predict the medication regimen least likely to require changes in the following 12 months (serving as a proxy for regimen efficacy and tolerability). In a testing set of 8,292 patients, the classifier yielded an AUC of 72%; its predicted regimens might have resulted in 281.5 fewer hospitalization days and fewer physician visits per year if prescribed at the time of the prediction, although they matched the prescribed regimen in only 13% of cases.89 Another study examining six EEG features before and after a medication change in 20 pediatric epilepsy patients achieved 85.71% sensitivity and 76.92% specificity in predicting subsequent treatment response (i.e., >50% reduction in seizure frequency) using an SVM classifier.90 In a similar study, clinical data (e.g., age at onset, seizure frequency, family history, and abnormal imaging) were combined with EEG features (sample entropy of the α, β, δ, and θ frequency bands) from 36 newly diagnosed patients started on levetiracetam monotherapy to predict Engel I outcomes, achieving a sensitivity of 100% and specificity of 80.0% (AUC 96%) using an SVM classifier.91 Petrovski et al notably investigated five single nucleotide polymorphisms (SNPs) in 115 patients using a k-NN classifier in order to predict seizure freedom at one year of follow-up, achieving a sensitivity of 91% and specificity of 82% in this cohort of patients with newly diagnosed epilepsy, as well as a sensitivity and specificity of 81% in a testing cohort of 108 patients on chronic pharmacotherapy.92

Aside from reductions in seizure frequency, machine learning techniques have also shed light on a number of other pertinent clinical outcomes. Paldino et al found that a random forest classifier achieved 100% sensitivity and 95.4% specificity in predicting language impairment using DTI-based whole-brain tractography data from 33 pediatric patients with malformations of cortical development.93 A later study by the same group trained a random forest classifier on resting-state fMRI images from 45 pediatric epilepsy patients. The classifier estimated disease duration with high accuracy (r = 0.95 between estimated and true disease duration, p = 0.0004), and disease duration was in turn inversely correlated with full-scale intelligence quotient.94 Piña-Garza et al investigated healthcare utilization in patients with Lennox-Gastaut syndrome using a multi-state Medicaid claims database. They used a random forest classifier to identify probable patients based on clinical variables (e.g., prescriptions for felbamate or clobazam, vagus nerve stimulator placement, corpus callosotomy, helmet use, or claims for intellectual disability). In addition to higher lifetime healthcare costs, particularly for home-based or long-term care, the authors found lower rates of clobazam or rufinamide use in older patients, raising questions of suboptimal management in this cohort.95 Grinspan et al examined demographic, insurance, comorbidity, and medication data in medical records at two pediatric referral centers. They demonstrated that a random forest classifier achieved AUCs of 84.1% and 73.4% at the two centers, respectively, in predicting emergency department visit rates for the following year.96 The range of clinical outcomes examined in these studies, from seizure rates to medication response to healthcare utilization, highlights the potential utility of machine learning techniques in clinical practice.
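
The disease-duration result above is reported as a Pearson correlation coefficient. The short Python sketch below computes r from its definition as a reminder of what it does and does not capture. It shows that r measures linear association rather than agreement: estimates that are consistently two years too long still give r = 1. The function name and the durations are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation: covariance of x and y divided by the product of their standard deviations."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical true disease durations (years) and model estimates biased by +2 years.
true_years = [1, 3, 5, 8, 12]
estimated_years = [t + 2 for t in true_years]
print(f"r = {pearson_r(true_years, estimated_years):.3f}")  # r = 1.000 despite the constant bias
```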

LIMITATIONS AND FUTURE DIRECTIONS

Although machine learning techniques have demonstrated high discriminatory ability in a wide range of applications in epilepsy, the literature contains considerably fewer external validation studies. In one notable example, Shazadi et al97 applied the five-SNP classifier described by Petrovski et al92 to two external cohorts recruited in the UK. They found that the algorithm could not reproduce the predictive performance reported in the original study. The classifier similarly failed to predict outcomes when re-trained using a subset of the UK cohorts. The authors noted differences in medication use between the groups: the original cohort had high rates of carbamazepine and valproate use, whereas the UK cohorts had a greater proportion of lamotrigine use. When re-trained using the UK cohorts, the classifier demonstrated modest predictive power only for patients treated with carbamazepine or valproate, although there was no clear functional relationship between the SNPs and these medications. The two study populations also differed in sample size, ethnic composition, and clinical setting (the larger UK cohorts were recruited into, and evaluated as part of, clinical trials).

The study by Shazadi et al highlights several potential barriers to the generalizability of machine learning applications, including training dataset size, confounding clinical variables, and variability in data collection and interpretation. As noted previously, smaller datasets increase the risk of overfitting, particularly for excessively complex models, which can fit noise and outliers in the training data rather than generalizable patterns, whereas models of insufficient complexity risk underfitting.19 Moreover, very small samples may yield ostensibly high classification accuracies by chance, regardless of the selected mapping function or cross-validation approach.98 Recognizing the difficulty of accumulating large clinical datasets, several groups have developed cloud-based repositories to allow for cross-institution data sharing99 and centralized data quality assurance.100 These approaches present an opportunity to create larger and more representative sample populations and to promote consistency in data collection and classification, with the overall aim of developing more generalizable models. Robust external validation, alongside advances in interpretability, may improve clinician confidence in these models and facilitate their incorporation into clinical practice.
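
The chance-level problem noted above can be made concrete. Combrisson and Jerbi98 suggest judging decoding accuracy against a threshold derived from the binomial distribution rather than against the theoretical chance level (e.g., 50% for two classes). The Python sketch below follows that idea under simplifying assumptions: independent test samples, equally likely classes, and a one-sided significance level α. It returns the accuracy a classifier must exceed before its performance can be distinguished from chance. The function names are hypothetical.

```python
from math import comb


def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def chance_threshold(n_samples, n_classes=2, alpha=0.05):
    """Accuracy at the (1 - alpha) quantile of the number of correct guesses
    expected under pure chance; observed accuracy must exceed it."""
    p = 1.0 / n_classes  # chance probability of a correct guess, assuming balanced classes
    for k in range(n_samples + 1):
        if binom_cdf(k, n_samples, p) >= 1 - alpha:
            return k / n_samples
    return 1.0


for n in (20, 40, 100, 500):
    print(f"n = {n:>3}: accuracy must exceed {chance_threshold(n):.1%}")
```

With 20 test samples, for example, a two-class classifier must exceed 70% accuracy before its performance can be distinguished from chance at α = 0.05. The threshold approaches the theoretical 50% only as the sample grows.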

CONCLUSIONS

Mirroring the increasingly ubiquitous use of machine learning in business, industry, and several medical subspecialties, machine learning techniques have already found widespread application in challenging areas within epilepsy. As evidenced by the rapidly maturing literature in seizure detection, machine learning techniques show promise in expanding the capabilities of inpatient and ambulatory seizure monitoring, and may provide an avenue for further progress in seizure forecasting. Advances in automated analysis of EEG and imaging data have similarly demonstrated the capabilities of machine learning in uncovering diagnostic and prognostic information currently inaccessible to expert review. These advances create opportunities for improved selection of pharmacotherapy, prediction of clinical outcomes, and surgical planning. The relatively limited experience in the literature with validation studies does, however, raise questions about the generalizability of these models. It also underscores the need for larger, more diverse datasets as well as increased investment in external validation studies. Despite these concerns, novel approaches highlight exciting avenues for developing increasingly robust and generalizable algorithms suitable for introduction into clinical practice. These include the use of automated machine learning to reduce barriers to entry and the development of cross-institution, cloud-based data repositories to improve training datasets.

KEY POINTS:

Advances in machine learning, particularly in deep learning, have enabled increasingly widespread applications in medicine

Machine learning has already demonstrated utility in seizure detection, imaging analysis, and prediction of medical and surgical outcomes

Validation studies with larger, more diverse cohorts are needed to bridge the gap to widespread acceptance in clinical practice

DISCLOSURE OF CONFLICTS OF INTEREST

Daniel Goldenholz has received grant support from the National Institute of Neurological Disorders and Stroke Division of Intramural Research, and serves on the advisory board of Magic Leap. Bardia Abbasi has no conflicts of interest.

Footnotes

ETHICAL PUBLICATION STATEMENT

We confirm that we have read the Journal’s position on issues involved in ethical publication and affirm that this report is consistent with those guidelines.

REFERENCES

- 1. Libbrecht MW, Noble WS. Machine learning applications in genetics and genomics. Nat Rev Genet 2015; 16:321–32.

- 2. LeCun Y, Bengio Y, Hinton G. Deep learning. Nature 2015; 521:436–44.

- 3. De Fauw J, Ledsam JR, Romera-Paredes B, et al. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nat Med 2018; 24:1342–50.

- 4. Esteva A, Kuprel B, Novoa RA, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017; 542:115–8.

- 5. López Pineda A, Ye Y, Visweswaran S, et al. Comparison of machine learning classifiers for influenza detection from emergency department free-text reports. J Biomed Inform 2015; 58:60–9.

- 6. Yu K-H, Beam AL, Kohane IS. Artificial intelligence in healthcare. Nat Biomed Eng 2018; 2:719–31.

- 7. Deo RC. Machine learning in medicine. Circulation 2015; 132:1920–30.

- 8. Speiser JL, Durkalski VL, Lee WM. Random forest classification of etiologies for an orphan disease. Stat Med 2015; 34:887–99.

- 9. Hu L-Y, Huang M-W, Ke S-W, et al. The distance function effect on k-nearest neighbor classification for medical datasets. Springerplus 2016; 5:1304.

- 10. Huang S, Cai N, Pacheco PP, et al. Applications of support vector machine (SVM) learning in cancer genomics. Cancer Genomics Proteomics 2018; 15:41–51.

- 11. Chartrand G, Cheng PM, Vorontsov E, et al. Deep learning: A primer for radiologists. Radiographics 2017; 37:2113–31.

- 12. Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. Commun ACM 2012; 60:84–90.

- 13. Hinton G, Deng L, Yu D, et al. Deep Neural Networks for Acoustic Modeling in Speech Recognition: The Shared Views of Four Research Groups. IEEE Signal Process Mag 2012; 29:82–97.

- 14. Sutskever I, Vinyals O, Le QV. Sequence to Sequence Learning with Neural Networks. In Ghahramani Z, Welling M, Cortes C, et al. (eds.) Advances in Neural Information Processing Systems 27. Curran Associates, Inc.; 2014:3104–12.

- 15. Guidotti R, Monreale A, Ruggieri S, et al. A survey of methods for explaining black box models. ACM Comput Surv 2018; 51:1–42.

- 16. Luo G. A review of automatic selection methods for machine learning algorithms and hyper-parameter values. Netw Model Anal Health Inform Bioinforma 2016; 5:18.

- 17. Wall R, Cunningham P, Walsh P, et al. Explaining the output of ensembles in medical decision support on a case by case basis. Artif Intell Med 2003; 28:191–206.

- 18. Kotsiantis SB, Zaharakis ID, Pintelas PE. Machine learning: A review of classification and combining techniques. Artif Intell Rev 2006; 26:159–90.

- 19. Lemm S, Blankertz B, Dickhaus T, et al. Introduction to machine learning for brain imaging. Neuroimage 2011; 56:387–99.

- 20. Akobeng AK. Understanding diagnostic tests 3: Receiver operating characteristic curves. Acta Paediatr 2007; 96:644–7.

- 21. Smith KP, Kang AD, Kirby JE. Automated interpretation of blood culture gram stains by use of a deep convolutional neural network. J Clin Microbiol 2018; 56.

- 22. Zhang Z, Parhi KK. Seizure detection using wavelet decomposition of the prediction error signal from a single channel of intra-cranial EEG. Conf Proc IEEE Eng Med Biol Soc 2014; 2014:4443–6.

- 23. Chavakula V, Sánchez Fernández I, Peters JM, et al. Automated quantification of spikes. Epilepsy Behav 2013; 26:143–52.

- 24. Siuly S, Kabir E, Wang H, et al. Exploring sampling in the detection of multicategory EEG signals. Comput Math Methods Med 2015; 2015:576437.

- 25. Firpi H, Goodman E, Echauz J. On prediction of epileptic seizures by means of genetic programming artificial features. Ann Biomed Eng 2006; 34:515–29.

- 26. Kang J-H, Chung YG, Kim S-P. An efficient detection of epileptic seizure by differentiation and spectral analysis of electroencephalograms. Comput Biol Med 2015; 66:352–6.

- 27. Asif U, Roy S, Tang J, et al. SeizureNet: A Deep Convolutional Neural Network for Accurate Seizure Type Classification and Seizure Detection. arXiv preprint arXiv:1903.03232. 2019.

- 28. Sharif B, Jafari AH. Prediction of epileptic seizures from EEG using analysis of ictal rules on Poincaré plane. Comput Methods Programs Biomed 2017; 145:11–22.

- 29. Alexandre Teixeira C, Direito B, Bandarabadi M, et al. Epileptic seizure predictors based on computational intelligence techniques: a comparative study with 278 patients. Comput Methods Programs Biomed 2014; 114:324–36.

- 30. Kiral-Kornek I, Roy S, Nurse E, et al. Epileptic seizure prediction using big data and deep learning: toward a mobile system. EBioMedicine 2018; 27:103–11.

- 31. Acharya UR, Hagiwara Y, Adeli H. Automated seizure prediction. Epilepsy Behav 2018; 88:251–61.

- 32. Freestone DR, Karoly PJ, Cook MJ. A forward-looking review of seizure prediction. Curr Opin Neurol 2017; 30:167–73.

- 33. Stacey WC. Seizure Prediction Is Possible-Now Let’s Make It Practical. EBioMedicine 2018; 27:3–4.

- 34. Karayiannis NB, Tao G, Xiong Y, et al. Computerized motion analysis of videotaped neonatal seizures of epileptic origin. Epilepsia 2005; 46:901–17.

- 35. Karayiannis NB, Xiong Y, Tao G, et al. Automated detection of videotaped neonatal seizures of epileptic origin. Epilepsia 2006; 47:966–80.

- 36. Ogura Y, Hayashi H, Nakashima S, et al. A neural network based infant monitoring system to facilitate diagnosis of epileptic seizures. Conf Proc IEEE Eng Med Biol Soc 2015; 2015:5614–7.

- 37. Li Z, Martins da Silva A, Cunha JPS. Movement quantification in epileptic seizures: a new approach to video-EEG analysis. IEEE Trans Biomed Eng 2002; 49:565–73.

- 38. Cuppens K, Chen C-W, Wong KB-Y, et al. Using Spatio-Temporal Interest Points (STIP) for myoclonic jerk detection in nocturnal video. Conf Proc IEEE Eng Med Biol Soc 2012; 2012:4454–7.

- 39. Achilles F, Tombari F, Belagiannis V, et al. Convolutional neural networks for real-time epileptic seizure detection. Computer Methods in Biomechanics and Biomedical Engineering: Imaging & Visualization 2016; 6:1–6.

- 40. Onorati F, Regalia G, Caborni C, et al. Multicenter clinical assessment of improved wearable multimodal convulsive seizure detectors. Epilepsia 2017; 58:1870–9.

- 41. Milosevic M, Van de Vel A, Bonroy B, et al. Automated Detection of Tonic-Clonic Seizures Using 3-D Accelerometry and Surface Electromyography in Pediatric Patients. IEEE J Biomed Health Inform 2016; 20:1333–41.

- 42. Kusmakar S, Gubbi J, Yan B, et al. Classification of convulsive psychogenic non-epileptic seizures using muscle transforms obtained from accelerometry signal. Conf Proc IEEE Eng Med Biol Soc 2015; 2015:582–5.

- 43. Larsen SN, Conradsen I, Beniczky S, et al. Detection of tonic epileptic seizures based on surface electromyography. Conf Proc IEEE Eng Med Biol Soc 2014; 2014:942–5.

- 44. Borujeny GT, Yazdi M, Keshavarz-Haddad A, et al. Detection of epileptic seizure using wireless sensor networks. J Med Signals Sens 2013; 3:63–8.

- 45. Goldenholz DM, Kuhn A, Austermuehle A, et al. Long-term monitoring of cardiorespiratory patterns in drug-resistant epilepsy. Epilepsia 2017; 58:77–84.

- 46. Amengual-Gual M, Ulate-Campos A, Loddenkemper T. Status epilepticus prevention, ambulatory monitoring, early seizure detection and prediction in at-risk patients. Seizure 2019; 68:31–7.

- 47. Arends JB, van Dorp J, van Hoek D, et al. Diagnostic accuracy of audio-based seizure detection in patients with severe epilepsy and an intellectual disability. Epilepsy Behav 2016; 62:180–5.

- 48. Zhang J, Cheng W, Wang Z, et al. Pattern classification of large-scale functional brain networks: identification of informative neuroimaging markers for epilepsy. PLoS ONE 2012; 7:e36733.

- 49. Amarreh I, Meyerand ME, Stafstrom C, et al. Individual classification of children with epilepsy using support vector machine with multiple indices of diffusion tensor imaging. Neuroimage Clin 2014; 4:757–64.

- 50. Soriano MC, Niso G, Clements J, et al. Automated Detection of Epileptic Biomarkers in Resting-State Interictal MEG Data. Front Neuroinformatics 2017; 11:43.

- 51. Pardoe HR, Cole JH, Blackmon K, et al. Structural brain changes in medically refractory focal epilepsy resemble premature brain aging. Epilepsy Res 2017; 133:28–32.

- 52. Hong S-J, Bernhardt BC, Schrader DS, et al. Whole-brain MRI phenotyping in dysplasia-related frontal lobe epilepsy. Neurology 2016; 86:643–50.

- 53. El Azami M, Hammers A, Jung J, et al. Detection of Lesions Underlying Intractable Epilepsy on T1-Weighted MRI as an Outlier Detection Problem. PLoS ONE 2016; 11:e0161498.

- 54. Jin B, Krishnan B, Adler S, et al. Automated detection of focal cortical dysplasia type II with surface-based magnetic resonance imaging postprocessing and machine learning. Epilepsia 2018; 59:982–92.

- 55. Goker I, Osman O, Ozekes S, et al. Classification of juvenile myoclonic epilepsy data acquired through scanning electromyography with machine learning algorithms. J Med Syst 2012; 36:2705–11.

- 56. Kassahun Y, Perrone R, De Momi E, et al. Automatic classification of epilepsy types using ontology-based and genetics-based machine learning. Artif Intell Med 2014; 61:79–88.

- 57. Won D, Kim W, Chaovalitwongse WA, et al. Altered visual contrast gain control is sensitive for idiopathic generalized epilepsies. Clin Neurophysiol 2017; 128:340–8.

- 58. Frank B, Hurley L, Scott TM, et al. Machine learning as a new paradigm for characterizing localization and lateralization of neuropsychological test data in temporal lobe epilepsy. Epilepsy Behav 2018; 86:58–65.

- 59. Connolly B, Matykiewicz P, Bretonnel Cohen K, et al. Assessing the similarity of surface linguistic features related to epilepsy across pediatric hospitals. J Am Med Inform Assoc 2014; 21:866–70.

- 60. Biswal S, Nip Z, Moura Junior V, et al. Automated information extraction from free-text EEG reports. Conf Proc IEEE Eng Med Biol Soc 2015; 2015:6804–7.

- 61. Goodwin TR, Harabagiu SM. Multi-modal Patient Cohort Identification from EEG Report and Signal Data. AMIA Annu Symp Proc 2016; 2016:1794–803.

- 62. Bakken IJ, Axelson D, Kvistad KA, et al. Applications of neural network analyses to in vivo 1H magnetic resonance spectroscopy of epilepsy patients. Epilepsy Res 1999; 35:245–52.

- 63. Lee JS, Lee DS, Kim SK, et al. Localization of epileptogenic zones in F-18 FDG brain PET of patients with temporal lobe epilepsy using artificial neural network. IEEE Trans Med Imaging 2000; 19:347–55.

- 64. Kerr WT, Nguyen ST, Cho AY, et al. Computer-Aided Diagnosis and Localization of Lateralized Temporal Lobe Epilepsy Using Interictal FDG-PET. Front Neurol 2013; 4:31.

- 65. Lopes R, Steinling M, Szurhaj W, et al. Fractal features for localization of temporal lobe epileptic foci using SPECT imaging. Comput Biol Med 2010; 40:469–77.

- 66. Rudie JD, Colby JB, Salamon N. Machine learning classification of mesial temporal sclerosis in epilepsy patients. Epilepsy Res 2015; 117:63–9.

- 67. Keihaninejad S, Heckemann RA, Gousias IS, et al. Classification and lateralization of temporal lobe epilepsies with and without hippocampal atrophy based on whole-brain automatic MRI segmentation. PLoS ONE 2012; 7:e33096.

- 68. Davoodi-Bojd E, Elisevich KV, Schwalb J, et al. TLE lateralization using whole brain structural connectivity. Conf Proc IEEE Eng Med Biol Soc 2016; 2016:1103–6.

- 69. Kamiya K, Amemiya S, Suzuki Y, et al. Machine learning of DTI structural brain connectomes for lateralization of temporal lobe epilepsy. Magn Reson Med Sci 2016; 15:121–9.

- 70. Del Gaizo J, Mofrad N, Jensen JH, et al. Using machine learning to classify temporal lobe epilepsy based on diffusion MRI. Brain Behav 2017; 7:e00801.

- 71. Jin S-H, Chung CK. Electrophysiological resting-state biomarker for diagnosing mesial temporal lobe epilepsy with hippocampal sclerosis. Epilepsy Res 2017; 129:138–45.

- 72. Dian JA, Colic S, Chinvarun Y, et al. Identification of brain regions of interest for epilepsy surgery planning using support vector machines. Conf Proc IEEE Eng Med Biol Soc 2015; 2015:6590–3.

- 73. Roland JL, Griffin N, Hacker CD, et al. Resting-state functional magnetic resonance imaging for surgical planning in pediatric patients: a preliminary experience. J Neurosurg Pediatr 2017; 20:583–90.

- 74. Grigsby J, Kramer RE, Schneiders JL, et al. Predicting outcome of anterior temporal lobectomy using simulated neural networks. Epilepsia 1998; 39:61–6.

- 75. Arle JE, Perrine K, Devinsky O, et al. Neural network analysis of preoperative variables and outcome in epilepsy surgery. J Neurosurg 1999; 90:998–1004.

- 76. Armañanzas R, Alonso-Nanclares L, Defelipe-Oroquieta J, et al. Machine learning approach for the outcome prediction of temporal lobe epilepsy surgery. PLoS ONE 2013; 8:e62819.

- 77. Memarian N, Kim S, Dewar S, et al. Multimodal data and machine learning for surgery outcome prediction in complicated cases of mesial temporal lobe epilepsy. Comput Biol Med 2015; 64:67–78.

- 78. Feis D-L, Schoene-Bake J-C, Elger C, et al. Prediction of post-surgical seizure outcome in left mesial temporal lobe epilepsy. Neuroimage Clin 2013; 2:903–11.

- 79. Gleichgerrcht E, Munsell B, Bhatia S, et al. Deep learning applied to whole-brain connectome to determine seizure control after epilepsy surgery. Epilepsia 2018; 59:1643–54.

- 80. Ibrahim GM, Sharma P, Hyslop A, et al. Presurgical thalamocortical connectivity is associated with response to vagus nerve stimulation in children with intractable epilepsy. Neuroimage Clin 2017; 16:634–42.

- 81. Antony AR, Alexopoulos AV, González-Martínez JA, et al. Functional connectivity estimated from intracranial EEG predicts surgical outcome in intractable temporal lobe epilepsy. PLoS ONE 2013; 8:e77916.

- 82. Tomlinson SB, Porter BE, Marsh ED. Interictal network synchrony and local heterogeneity predict epilepsy surgery outcome among pediatric patients. Epilepsia 2017; 58:402–11.

- 83. Goldenholz DM, Jow A, Khan OI, et al. Preoperative prediction of temporal lobe epilepsy surgery outcome. Epilepsy Res 2016; 127:331–8.

- 84. Yankam Njiwa J, Gray KR, Costes N, et al. Advanced [(18)F]FDG and [(11)C]flumazenil PET analysis for individual outcome prediction after temporal lobe epilepsy surgery for hippocampal sclerosis. Neuroimage Clin 2015; 7:122–31.

- 85. Aslan K, Bozdemir H, Sahin C, et al. Can neural network able to estimate the prognosis of epilepsy patients according to risk factors? J Med Syst 2010; 34:541–50.

- 86. Cohen KB, Glass B, Greiner HM, et al. Methodological issues in predicting pediatric epilepsy surgery candidates through natural language processing and machine learning. Biomed Inform Insights 2016; 8:11–8.

- 87. Kimiskidis VK, Tsimpiris A, Ryvlin P, et al. TMS combined with EEG in genetic generalized epilepsy: A phase II diagnostic accuracy study. Clin Neurophysiol 2017; 128:367–81.

- 88. An S, Malhotra K, Dilley C, et al. Predicting drug-resistant epilepsy - A machine learning approach based on administrative claims data. Epilepsy Behav 2018; 89:118–25.

- 89. Devinsky O, Dilley C, Ozery-Flato M, et al. Changing the approach to treatment choice in epilepsy using big data. Epilepsy Behav 2016; 56:32–7.

- 90. Ouyang C-S, Chiang C-T, Yang R-C, et al. Quantitative EEG findings and response to treatment with antiepileptic medications in children with epilepsy. Brain Dev 2018; 40:26–35.

- 91. Zhang J-H, Han X, Zhao H-W, et al. Personalized prediction model for seizure-free epilepsy with levetiracetam therapy: a retrospective data analysis using support vector machine. Br J Clin Pharmacol 2018; 84:2615–24.

- 92. Petrovski S, Szoeke CE, Sheffield LJ, et al. Multi-SNP pharmacogenomic classifier is superior to single-SNP models for predicting drug outcome in complex diseases. Pharmacogenet Genomics 2009; 19:147–52.

- 93. Paldino MJ, Hedges K, Zhang W. Independent contribution of individual white matter pathways to language function in pediatric epilepsy patients. Neuroimage Clin 2014; 6:327–32.

- 94. Paldino MJ, Zhang W, Chu ZD, et al. Metrics of brain network architecture capture the impact of disease in children with epilepsy. Neuroimage Clin 2017; 13:201–8.

- 95. Piña-Garza JE, Montouris GD, Vekeman F, et al. Assessment of treatment patterns and healthcare costs associated with probable Lennox-Gastaut syndrome. Epilepsy Behav 2017; 73:46–50.

- 96. Grinspan ZM, Patel AD, Hafeez B, et al. Predicting frequent emergency department use among children with epilepsy: A retrospective cohort study using electronic health data from 2 centers. Epilepsia 2018; 59:155–69.

- 97. Shazadi K, Petrovski S, Roten A, et al. Validation of a multigenic model to predict seizure control in newly treated epilepsy. Epilepsy Res 2014; 108:1797–805.

- 98. Combrisson E, Jerbi K. Exceeding chance level by chance: The caveat of theoretical chance levels in brain signal classification and statistical assessment of decoding accuracy. J Neurosci Methods 2015; 250:126–36.

- 99. Kini LG, Davis KA, Wagenaar JB. Data integration: Combined imaging and electrophysiology data in the cloud. Neuroimage 2016; 124:1175–81.

- 100. Cui L, Huang Y, Tao S, et al. ODaCCI: Ontology-guided Data Curation for Multisite Clinical Research Data Integration in the NINDS Center for SUDEP Research. AMIA Annu Symp Proc 2016; 2016:441–50.