Abstract

Viewed historically, the methodology of economics has developed from qualitative to quantitative analysis and from small samples to very large data sets. Because deep learning models excel at learning the regularities and hierarchical representations inherent in data, they help to realize intelligent decision-making in economics. After presenting statistical results on the relevant literature, this paper systematically investigates deep learning in economics, including a survey of the deep learning models frequently used in economics and several of their applications. It then provides a critical review of deep learning in economics, covering models and applications, why and how to implement deep learning in economics, and research gaps and future challenges. The survey shows that several deep learning models and their variants have been widely applied in different subfields of economics, e.g., financial economics, macroeconomics and monetary economics, agricultural and natural resource economics, industrial organization, urban, rural, regional, real estate and transportation economics, health, education and welfare, business administration and microeconomics, etc. Because research on deep learning in economics has recently become an active and important topic, we are confident that decision-making in economics will become more intelligent as deep learning develops.

Keywords: Deep learning, Economics, Critical review, Intelligent decision-making

Introduction

Economics is a significant subject that studies the economic activities and corresponding economic relations of human society, aiming to help people discover economic regularities, guide economic practice and forecast economic behavior (Marshall, 1992). Economic methodology was constructed in order to study the concepts, theories and basic reasoning principles of economics (Blaug, 1981). Its development has gone through the following five stages: (1) Before the 1790s, possible economic laws were inferred from complicated historical and statistical data on economic and social phenomena, as represented by the book “An Inquiry Into the Nature and Causes of the Wealth of Nations” (Smith, 1976); (2) From the mid-17th to the mid-19th century, the period of classical economics, researchers adopted a mode of reasoning in which deduction played the leading role and induction a supplementary one; (3) From the late 19th century to the early 20th century, Marshall founded the neoclassical school, which provided the economic community with tools such as the static method, local analysis and general equilibrium analysis. He then began to pay attention to the statistical supplement of economic theory (Marshall, 1992); (4) In the 1930s, the publication of Keynes’s General Theory broke the neoclassical harmony between individual and social interests, reintroducing moral issues and dynamic changes into economics. From the 1930s to the 1960s, positivism and falsificationism contended with each other, and this period became the golden age of the development of economic methodology (Keynes, 1936); (5) After the 1960s, with the rapid development of econometrics, statistical and econometric methods and tools became the main research instruments adopted by mainstream economics, and positivism gained the upper hand (Ahelegbey, 2016; Lee, 2020).

As far as we know, the methodology of economics has developed from qualitative to quantitative, and mathematical models play an important role (Lindenlaub & Prummer, 2021; Page & Clemen, 2013; Tsakas et al., 2021). It is undeniable, however, that criticism of and reflection on this methodological system have never stopped (Cerniglia & Fabozzi, 2020; Rattinger, 1976). Especially with the emergence of many “black swan events” such as financial crises and epidemics, the explanatory and predictive ability of positivist economics has been greatly challenged, and the effectiveness of the policy measures it proposes has been seriously questioned. Because it does not require strict hypotheses or mathematical models, machine learning has been adopted to handle large amounts of data and economic problems that cannot be described by mathematical models (Hugo et al., 2019; Ozgur & Akkoc, 2021).

However, if the amount of data in an economic field is extremely large and the information is buried in meaningless features, the feature learning efficiency of machine learning algorithms decreases considerably and their performance is severely affected. Deep learning aims to mine the relationships and regularities hidden in the data by constructing a hierarchy of data representations that supports downstream tasks such as classification and decision-making. Hence, it can also improve intelligent decision-making in economic fields. Because they can deal with larger amounts of higher-dimensional data and mine the latent information and rules in the data, deep learning models usually perform better than traditional machine learning models. Combined with other models, deep learning variants solve many practical problems in economics. Deep learning has therefore greatly advanced economics research. A comprehensive literature review of and critical commentary on deep learning in economics not only provides economists with new ideas and methods for solving economic problems, but also expands the application scenarios available to the machine learning community.

As a special type of machine learning, deep learning models are representation-learning methods with multiple simple but non-linear modules, constructed in the form of a multi-layer neural network (LeCun et al., 2015). They have strong expressive power and can represent sufficiently complex functions for fitting features. The concept attracted wide attention when the deep belief network (DBN) was proposed by Hinton and Salakhutdinov (2006). Since then, deep learning has become an important driving force for scientific research and application in the field of artificial intelligence. Several deep learning models, e.g., Deep Neural Network (DNN), Restricted Boltzmann Machine (RBM), Convolutional Neural Network (CNN), Recurrent Neural Network (RNN), Autoencoder (AE), and hybrid techniques such as Deep Reinforcement Learning (DRL), have developed rapidly and been widely applied in pattern recognition (Salakhutdinov & Hinton, 2012), speech recognition (Arisoy et al., 2015), computer vision (Guo et al., 2020), automatic control (Roopaei et al., 2017), mechanical equipment (Wu et al., 2019), medical systems (Xu et al., 2020), finance (Huang et al., 2020) and other fields. The main differences between these deep learning models are summarized in Table 1.

Table 1.

Main differences between the deep learning models

| Model | Features | Advantages | Disadvantages | Main variants |
|---|---|---|---|---|
| DNN | a. Can be regarded as a neural network with many fully connected hidden layers; b. Uses forward propagation and back propagation algorithms to tune parameters. | a. Can be used in a wide range of applications; b. Network structure is simple. | a. The number of parameters inflates easily; b. Needs a lot of computation. | DNN-HMM, DNN-CTC |
| RBM | a. A stochastic generative neural network that can learn a probability distribution from an input data set; b. The building block of DBN. | a. Flexible and efficient computation; b. Easy to reason about. | a. Only suitable for binary-valued data; b. The model is relatively simple, so its expressive power is limited. | Conditional RBM, Point-wise Gated RBM, Temporal RBM |
| DBN | a. Can be regarded as either a generative model or a discriminative model; b. Can be used for both unsupervised and supervised learning. | a. The generative model learns the joint probability density distribution, so it can represent the distribution of data from a statistical point of view and reflect the similarity of similar data; b. The generative model can recover the conditional probability distribution, which is equivalent to the discriminative model. | a. The classification accuracy of the generative model is not as high as that of the discriminative model when it is used for classification problems; b. Because the generative model learns the joint distribution of data, the learning problem is more complex; c. The input data are required to be labeled for training. | Convolutional DBN |
| CNN | a. Effectively reduces the dimension of large image data to small data (without affecting the results); b. Can retain the characteristics of the picture, similar to the principle of human vision. | a. The weight sharing strategy reduces the number of parameters that need to be trained, making the generalization ability of the trained model stronger; b. The pooling operation can reduce the spatial resolution of the network, so that translation invariance of the input data is not required. | Deep models are prone to vanishing gradients. | GoogLeNet, VGG, Deep Residual Learning |
| RNN | a. Long-term information can be effectively retained; b. Selects important information to keep and “forgets” less important information. | The model is deep in the time dimension and can model sequence content. | a. There are many parameters to train, which makes the model prone to vanishing or exploding gradients; b. Without feature learning ability. | LSTM, GRU |
| AE | a. Data dependent; b. Learns automatically from data samples. | a. Strong generalization; b. Can be used for dimension reduction; c. Can be used as a feature detector; d. Can be used as a generative model. | a. Some information is lost in the process of encoding and decoding; b. The compression ability only applies to samples similar to the training samples. | Denoising AE, Stacked AE, Undercomplete AE, Regular AE |
| Transformer | a. Self-attention mechanism; b. Focuses on global information. | a. Can model longer-distance dependencies; b. Parallel computing. | a. High program complexity; b. Not Turing complete; c. Compute resource input average. | Linear Transformer, Sparse Transformer, Reformer, Set Transformer, Transformer-XL |
| DRL | a. Combines deep learning with reinforcement learning; b. End-to-end training. | a. Learns control strategies directly from high-dimensional raw data; b. Large numbers of samples can be produced for supervised learning. | a. Difficult to achieve continuous motion control; b. Overestimation, that is, the estimated value function is larger than the true value function. | QR-DQN, Rainbow DQN |

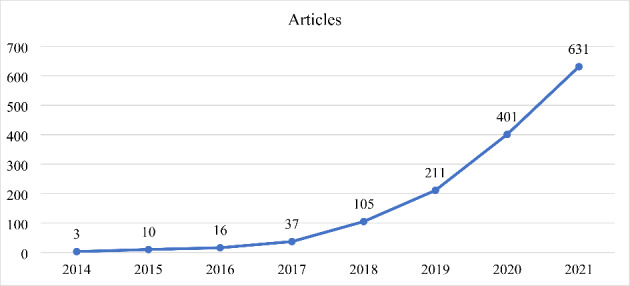

Although the application of deep learning in the economic field started late, it has developed very rapidly. A search of the Web of Science, Scopus and DBLP databases shows that the first three papers on deep learning and economics were published in 2014. From 2015 onwards, the number of relevant articles increased every year at an average rate of 222.4%, reaching 631 published papers in 2021. A comprehensive review of advanced machine learning and deep learning methods (Nosratabadi et al., 2020) summarized four classes of data science methods in economics: deep learning models, hybrid deep learning, hybrid machine learning, and ensemble models. Its findings indicate a trend in which hybrid models outperform other learning algorithms. Moreover, a bibliometric analysis of deep learning (Li et al., 2020b) provides researchers with a statistical perspective on the development of the field. A state-of-the-art survey on fusing deep learning and fuzzy systems (Zheng et al., 2021) gives a systematic analysis of the effect of combining fuzzy technology with deep learning.

In this paper, we try to investigate and present answers to the following research questions:

Which deep learning models are preferred (and more successful) in economics? What are the characteristics of different deep learning algorithms in the economic field?

What economic application areas are of interest to the deep learning community? How do deep learning models differ from traditional soft computing/machine learning techniques when applied in these areas?

What are the drawbacks of the current applications of deep learning in economics?

What are the future directions of research on deep learning in economics?

Therefore, this paper systematically investigates the deep learning models frequently used in economics and several applications of deep learning in economics, aiming to provide a comprehensive understanding of deep learning in economics and to seek new paths or ideas for its development. The main contributions of the paper are as follows: (1) Introducing deep learning to the economics community and surveying the deep learning models frequently used in economic applications, including DNN, RBM, DBN, CNN, RNN, AE, Transformer and DRL (the main differences between these models are shown in Table 1); (2) Presenting applications of deep learning in economics, classified according to the Journal of Economic Literature (JEL) classification system, a standard method of classifying scholarly literature in the field of economics (“JEL classification system”); (3) Providing a critical review of deep learning in economics and identifying possible trends and opportunities for further fusion.

The rest of the paper is organized as follows: Sect. 2 presents statistical results on the literature about deep learning in economics. Sect. 3 introduces eight frequently used deep learning methods and their applications in economics, and Sect. 4 analyzes the most common applications of deep learning in economics. Sect. 5 offers a critical review of deep learning in economics, covering models and applications, why and how to implement deep learning in economics, and research gaps and future challenges. Finally, Sect. 6 presents the answers to our initially stated research questions and some conclusions.

Statistical results

In order to collect as many relevant published documents as possible, broad search strings were first defined. Web of Science: TS = (economics OR economy) AND TS = “deep learning”, with the search span set from 2006 to 2021 (because the concept of deep learning attracted wide attention in 2006, when DBN was proposed); as of Dec. 31, 2021, 548 articles matched the constraints. Scopus: (TITLE-ABS-KEY (economics) OR TITLE-ABS-KEY (economy) AND TITLE-ABS-KEY (“deep learning”)) AND PUBYEAR > 2005 AND PUBYEAR < 2022; as of Dec. 31, 2021, 1,384 articles matched the constraints. DBLP: searching for “economy, deep learning” OR “economics, deep learning” returned 6 matching articles. After combining the articles from the different databases and removing duplicates, 1,468 articles were retained for further consideration. To make the final search results as accurate as possible, only peer-reviewed academic journal articles were kept, leaving a total of 1,414 articles. These articles were ordered by publication date and analyzed in terms of the number of articles per year.

As shown in Fig. 1, the three earliest relevant articles were published in 2014, after the concept of deep learning had emerged (Hinton and Salakhutdinov, 2006). From 2014 to 2015, only a few researchers paid attention to this topic. Starting from 2016, however, the number of articles increased year by year, reaching 631 in 2021. This shows that more and more scholars are devoting themselves to research on deep learning and economics.

Fig. 1.

The number of articles by year

Frequently-used deep learning models in economics applications

Deep neural network (DNN) in economics

DNN is one of the fundamental models of deep learning (Hinton et al., 2012) and can be thought of as a neural network with many hidden layers. As shown in Fig. 2, a DNN is made up of three types of network layer: the first layer is the input layer, the last layer is the output layer, and the layers in between are all hidden layers. Adjacent layers are fully connected, and each layer performs a specific kind of sorting and ordering of its input, in a process that some call a “representation hierarchy”.

Fig. 2.

Architecture of DNN model
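The layered structure described above (input layer, fully connected hidden layers, output layer) can be illustrated with a minimal forward pass. This is only a sketch under stated assumptions: the layer sizes, the ReLU and sigmoid activations, the random weights, and the helper names `dense`, `init_layer` and `forward` are illustrative choices, not taken from any of the surveyed studies.

```python
import math
import random


def relu(values):
    """Element-wise ReLU non-linearity (an assumed choice of activation)."""
    return [max(0.0, v) for v in values]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense(x, weights, biases):
    """Fully connected layer: out[j] = sum_i x[i] * weights[i][j] + biases[j]."""
    return [sum(xi * row[j] for xi, row in zip(x, weights)) + biases[j]
            for j in range(len(biases))]


def init_layer(n_in, n_out, rng):
    """Random weights and zero biases for one layer (untrained, illustrative)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_out)] for _ in range(n_in)]
    return weights, [0.0] * n_out


def forward(x, layers):
    """Pass x through the hidden layers (ReLU) and a single sigmoid output unit."""
    h = x
    for weights, biases in layers[:-1]:
        h = relu(dense(h, weights, biases))
    out_weights, out_biases = layers[-1]
    return sigmoid(dense(h, out_weights, out_biases)[0])


rng = random.Random(0)
sizes = [4, 8, 8, 1]  # input layer, two hidden layers, output layer (hypothetical)
layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

# Four hypothetical input features, e.g. lagged returns of an asset.
prob_up = forward([0.2, -0.1, 0.4, 0.0], layers)
print(prob_up)
```

In practice the weights would be tuned by back propagation on labeled data; the sketch only shows how each layer re-represents the output of the previous one.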

As one of the fundamental methods of deep learning, DNNs have been applied in various research fields of economics, e.g., financial economics, macroeconomics and monetary economics, urban, rural, regional, real estate, and transportation economics, industrial organization, etc. Some of these applications are shown in Table 2. Both single DNN models (Bazan-Krzywoszanska & Bereta, 2018; Feng et al., 2018; Kremsner et al., 2020; Lukman et al., 2020) and hybrid models (Chatzis et al., 2018; Ding et al., 2019; Frey et al., 2019; Galeshchuk & Mukherjee, 2017; He et al., 2019; Tan et al., 2020; Yuan & Lee, 2020; Zhong & Enke, 2019) often achieve higher classification or prediction accuracy than the benchmark methods.

Table 2.

DNN in economics

| Article | Aim of study | Specific approach | Benchmark methods for comparison | Superiority of the proposed method |

|---|---|---|---|---|

| (Zhong & Enke, 2019) | Predict the daily return direction of the SPDR S&P 500 ETF | DNN, ANN | Single DNN or single ANN | Significantly higher classification accuracy |

| (Chatzis et al., 2018) | Forecast stock market crisis events | MXNET DNN | LOGIT, CART, RF, SVM, NN, XGBoost | Higher discriminatory power and superior predictive accuracy |

| (He et al., 2019) | Forecast financial time series | DNN, LSTM | Five strategies | Outperforms others in MAPE, RMSE, R2 |

| (Kremsner et al., 2020) | Compute risk measure | DNN | Classical methods in references | Can solve problems with high dimension |

| (Alaminos et al., 2019) | Predict currency crisis event | DNN, DNDT | LOGIT, MLP, SVM, AdaBoost | Higher levels of accuracy |

| (Galeshchuk & Mukherjee, 2017) | Predict exchange rate | DNN, LSTM | Shallow neural network | Significantly higher predictive accuracy |

| (Lukman et al., 2020) | Predict the amount of salvage and waste materials | DNN | The component based neural network model | Higher and more steady prediction accuracy |

| (Bazan-Krzywoszanska & Bereta, 2018) | Forecast real estate value | DNN | Linear regression | Perform better in test data according to prediction criteria MAE, MRE |

| (Ding et al., 2019) | Estimate socioeconomic status | S2S models containing DNN and LSTM | Random Guess, STL, GBDT | Outperform other models in precision, recall, and F1-score |

| (Yuan & Lee, 2020) | Forecast intelligent sales volume | DNN, grey analysis, LSSVR | GA-ANN, GA-LSSVR, and PSO-LSSVR | Superior performance in Google Index |

| (Feng et al., 2018) | Recognize pattern and make classification | DNN | Traditional auction | Better performance when the number of SUs exceeds a certain value |

| (Frey et al., 2019) | Predict for investment decisions | DNN, Gradient Boosting, RF | GLM | Higher prediction accuracy |

| (Tan et al., 2020) | Estimate poverty | Deep ResNet, FPN | Linear regression model with night-time light data, linear regression model with both night-time light data and spectral index data | Outperform other models with the Pearson correlation coefficient |

Note: ANN (Artificial Neural Network), LOGIT (Logistic Regression), MXNET (Deep Learning Techniques), CART (Classification and Regression Trees), RF (Random Forest), SVM (Support Vector Machine), NN (Neural Network), XGBoost (Extreme Gradient Boosting), DNDT (Deep Neural Decision Tree), MLP (Multilayer Perceptron), LSTM (Long Short-Term Memory), RMSE (Root Mean Square Error), STL (Standard Template Library), GBDT (Gradient Boosting Decision Tree), LSSVR (Least-Square Support Vector Regression), GA (Genetic Algorithm), PSO (Particle Swarm Optimization), GLM (Generalized Linear Models), FPN (Feature Pyramid Network), ResNet (Residual neural Network)

Restricted Boltzmann Machine (RBM) in economics

Inspired by the energy function of statistical physics, RBM is a stochastic generative neural network that can learn probability distributions from input data sets (Le Roux & Bengio, 2008). As a special topology of the Boltzmann Machine (BM), RBM has two layers: a visible layer and a hidden layer. Unlike convolutional BM (Krefl et al., 2020), in RBM every unit is connected to all units of the other layer, while there are no connections between units in the same layer. The architecture of RBM is shown in Fig. 3. Because of its strong representation ability and ease of inference, RBM has been successfully applied to recommendation systems (Chen et al., 2020), image segmentation (Li & Wang, 2020), natural language processing (Tsutsui & Hagiwara, 2019), etc.

Fig. 3.

Architecture of RBM model
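The two-layer structure and the energy-based view of RBM can be made concrete with a small sketch. It assumes binary visible and hidden units and the commonly used energy function E(v, h) = -Σ a_i v_i - Σ b_j h_j - Σ v_i W_ij h_j; the weights, the function names and the example vectors are hypothetical and serve only as illustration.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def energy(v, h, W, a, b):
    """Energy of a joint configuration (v, h) of a binary RBM."""
    visible_term = sum(ai * vi for ai, vi in zip(a, v))
    hidden_term = sum(bj * hj for bj, hj in zip(b, h))
    interaction = sum(v[i] * W[i][j] * h[j]
                      for i in range(len(v)) for j in range(len(h)))
    return -(visible_term + hidden_term + interaction)


def p_hidden_given_visible(v, W, b):
    """P(h_j = 1 | v). Hidden units are independent given v because
    there are no connections inside the hidden layer."""
    return [sigmoid(b[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(b))]


def p_visible_given_hidden(h, W, a):
    """P(v_i = 1 | h), by the same argument applied to the visible layer."""
    return [sigmoid(a[i] + sum(W[i][j] * h[j] for j in range(len(h))))
            for i in range(len(a))]


def sample(probs, rng):
    return [1 if rng.random() < p else 0 for p in probs]


def gibbs_step(v, W, a, b, rng):
    """One alternating sampling step: visible -> hidden -> visible."""
    h = sample(p_hidden_given_visible(v, W, b), rng)
    v_new = sample(p_visible_given_hidden(h, W, a), rng)
    return v_new, h


# Hypothetical RBM with 3 visible and 2 hidden units.
W = [[0.5, -0.2], [0.1, 0.3], [-0.4, 0.2]]
a = [0.0, 0.0, 0.0]
b = [0.0, 0.0]
rng = random.Random(0)

v0 = [1, 0, 1]
v1, h0 = gibbs_step(v0, W, a, b, rng)
print(energy(v0, [1, 0], W, a, b), v1, h0)
```

Because units within a layer are not connected, each conditional distribution factorizes over units, which is what keeps inference in an RBM simple.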

The RBM model can better preserve the intrinsic characteristics of the original data because the feature reconstruction error is lower in the process of feature learning (Mittelman et al., 2014). These intrinsic characteristics enable RBM to learn more reconstructable features and to build an accurate prediction model. In the field of economics, RBM has been applied in several subfields, such as macroeconomics and monetary economics (Galeshchuk, 2017), urban, rural, regional, real estate, and transportation economics (Rafiei & Adeli, 2016), agricultural and natural resource economics (Li et al., 2017; Pei et al., 2020), etc. Please refer to Table 3 for more details. These approaches have also proven effective in economic prediction and performed better than the methods they were compared with.

Table 3.

RBM in economics

| Article | Aim of study | Specific approach | Benchmark methods for comparison | Superiority of the proposed method |

|---|---|---|---|---|

| (Galeshchuk, 2017) | Predict exchange rate | RBM, AE | Multilayer perceptron, Autoregressive integrated moving average model, Random walk model | Higher accuracy |

| (Rafiei & Adeli, 2016) | Estimate the sale prices of real estate units | Deep RBM, nonmating genetic algorithm | Standard genetic search, best first, linear forward selection, and correlation-based feature subset | The superiority of the new method is substantiated in accuracy of classifier |

| (Pei et al., 2020) | Forecast vehicle velocity | RBM, bidirectional LSTM | RBF-NN, BP-NN, EV, 5MC, RBF-WT | Performs better in RMSE |

| (Li et al., 2017) | Time series forecasting | RBM, BSASA | BP, Elman, RBMBP, BSABP, DEBP | Superior capability in preventing the search result from falling into the minimum |

Note: BSASA (Backtracking Search Algorithm with Simulated Annealing), BP (Back Propagation), RBF-NN (Radial Basis Function Neural Network), BP-NN (Back Propagation Neural Network), EV (Exponentially Varying prediction method), 5MC (5-stage Markov chain prediction method), WT (a novel velocity prediction method based on Wavelet Transform), RBMBP (Restricted Boltzmann Machine Back Propagation), BSABP (Backtracking Search Algorithm Back Propagation), DEBP (Differential Evolution Back Propagation)

Deep Belief Network (DBN) in economics

DBN is a kind of probabilistic generative model (Hinton et al., 2006), which is used for statistical modeling and for representing abstract features or statistical distributions of things. As shown in Fig. 4, the graph structure of DBN is composed of multiple layers of nodes. There are no connections between nodes of the same layer, and nodes of two adjacent layers are fully connected. The lowest layer of the network holds the observable variables, and the nodes of all other layers are hidden variables.

Fig. 4.

Architecture of DBN model

DBN has been used for solar power forecasting (Gensler et al., 2016), short-term load forecasting of integrated energy systems (Huan et al., 2020), and very short-term bus load forecasting (Shi et al., 2019). It is also helpful for fault signal recognition in power distribution systems (Rao et al., 2020) and power plant control (Cui et al., 2020) (for detailed information, please see Table 4). Compared with other machine learning algorithms or benchmark methods, DBN performs better and produces more accurate results. Researchers have found that DBN has excellent nonlinear fitting ability, which allows it to fit the trajectory of moving points and to predict that trajectory (Shi et al., 2019). DBN can also extract abstract high-level features and analyze the correlation of multiple features, so that it learns better features and improves forecasting accuracy (Huan et al., 2020). Moreover, according to the results of a systematic review, DBN is regarded as one of the advanced artificial intelligence techniques for building robust computer vision methods applied to precision agriculture (Patricio & Rieder, 2018).

Table 4.

DBN in economics

| Article | Aim of study | Specific approach | Benchmark methods for comparison | Superiority of the proposed method |

|---|---|---|---|---|

| (Gensler et al., 2016) | Forecast the energy output of solar power plants | DBN, AE, LSTM | Multilayer perceptrons, physical models | Superior forecasting performance |

| (Cui et al., 2020) | Predictive control for ultrasupercritical power plant | DBN, Economic model predictive control | Subspace model identification | Performs better in terms of economic performance and tracking performance |

| (Rao et al., 2020) | Detect and classify the fault signal in power distribution system | DBN | SVM, quadratic SVM, RBF SVM, polynomial SVM, MLP SVM, LM-NN, GD-NN | Effectively detects and classifies the fault signal |

| (Huan et al., 2020) | Forecast short-term load of integrated energy systems | DBN, BP, multitask regression layer | SVR, ARIMA, BPNN | Learns better features and improves the forecasting accuracy |

| (Shi et al., 2019) | Forecast very short-term bus load | Phase space reconstruction, DBN | PSR-NN, DBN, ARIMA, NN, LSTM, PSR-DBN (no tuning) | Higher prediction accuracy and better adaptability |

Note: LM-NN (Levenberg-Marquardt Neural Network), GD-NN (Gradient Descent Neural Network), BPNN (Back Propagation Neural Network), SVR (Support Vector Regression), ARIMA (Autoregressive Integrated Moving Average Model), PSR-NN (Phase Space Reconstruction Neural Network), PSR-DBN (Phase Space Reconstruction Deep Belief Network)

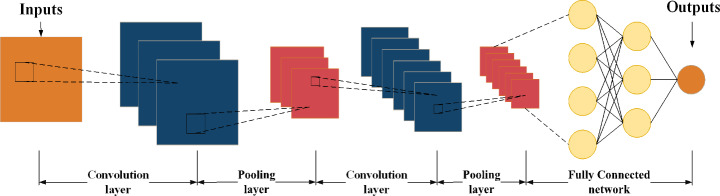

Convolutional neural network (CNN) in economics

Convolutional neural network (CNN) is one of the representative algorithms in deep learning, composed of three types of layers: convolution layers, pooling layers and fully connected layers (Goodfellow et al., 2016; Gu et al., 2018) (see Fig. 5 for its architecture). Compared with other neural network structures, CNN requires relatively few parameters, which enables it to be widely used and to achieve higher computational efficiency (Fujita & Cimr, 2019). Developed by LeCun and his team, CNN successfully solved the handwritten digit classification problem (LeCun et al., 1998) and therefore quickly attracted the attention of computer vision researchers (Abdalla et al., 2019; Kheradpisheh et al., 2018; Selim et al., 2016; Zhang et al., 2020b). In other fields, CNN has been applied to natural language processing (Gu et al., 2018), recommendation systems (Zhang & Yang, 2019), remote sensing science (Zhang et al., 2018), etc., where it has also achieved excellent results.

Fig. 5.

Architecture of CNN model
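The three layer types named above can be sketched on a toy input. The example below is an illustration under assumptions, not a reproduction of any surveyed model: the 5x5 input, the hand-set 2x2 edge-detecting kernel, the 2x2 max pooling and the fully connected weights are all hypothetical.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image without padding. The same kernel
    weights are reused at every position (weight sharing)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


def relu2d(fmap):
    return [[max(0, x) for x in row] for row in fmap]


def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling, which lowers the spatial resolution."""
    return [[max(fmap[r + i][c + j] for i in range(size) for j in range(size))
             for c in range(0, len(fmap[0]) - size + 1, size)]
            for r in range(0, len(fmap) - size + 1, size)]


# Toy 5x5 "image" with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
kernel = [[-1, 1], [-1, 1]]  # responds to left-to-right intensity increases

feature_map = relu2d(conv2d_valid(image, kernel))  # convolution layer
pooled = max_pool2d(feature_map)                   # pooling layer

# Fully connected layer: flatten and take a weighted sum (weights hypothetical).
flat = [x for row in pooled for x in row]
fc_weights = [0.5, 0.5, 0.5, 0.5]
score = sum(w * x for w, x in zip(fc_weights, flat))
print(feature_map, pooled, score)
```

The kernel has only four weights regardless of the image size, which illustrates why CNN needs relatively few parameters compared with a fully connected network on the same input.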

CNN has been widely applied to problems in macroeconomics and monetary economics (Chen et al., 2019; Galeshchuk & Demazeau, 2017; Yasir et al., 2019; Yu et al., 2020a), industrial organization (Adebowale et al., 2020; Guo, 2020; Ullah et al., 2019; Wang & Zeng, 2020), financial economics (Liu et al., 2020; Liu et al., 2018), agricultural and natural resource economics (Conte et al., 2019; Gadekallu et al., 2020), urban, rural, regional, real estate and transportation economics (Ajami et al., 2019; Yao et al., 2018), business administration and business economics (Lan et al., 2018), and health, education, and welfare (Yeh et al., 2020). As shown in Table 5, CNN and its variants, combined with other machine learning approaches or deep learning algorithms, usually outperform baseline methods or improve estimation and classification accuracy. Among deep learning models, CNN is well suited to financial forecasting and economic assessment for two main reasons: first, noise filters and dimensionality reduction approaches help to select well-crafted input features (Yasir et al., 2019); second, mining information from visual images provides a unique and complementary perspective that improves economic prediction performance (Galeshchuk & Demazeau, 2017).

Table 5.

CNN in economics

| Article | Aim of study | Specific approach | Benchmark methods for comparison | Superiority of the proposed method |

|---|---|---|---|---|

| (Yasir et al., 2019) | Forecast foreign exchange rate | CNN | Linear regression, SVR | Perform better than other methods in prediction accuracy |

| (Chen et al., 2019) | Forecast interaction of exchange rates | CNN, fixed-length binary strings, a binary component | A random selection rule method, a trend rule method | Higher prediction performance |

| (Galeshchuk & Demazeau, 2017) | Predict exchange rates | CNN | RW, ARIMA, Shallow neural networks | Outperform the baseline methods in prediction |

| (Yu et al., 2020a) | Estimate economy | CNN | Luminosity product | Improve the estimation accuracy |

| (Guo, 2020) | Encode image features and select the image features of commodities | CNN, attention mechanism | Analyze different impact of different situations with the assistance of CNN | Successfully extract the most important image feature corresponding to the decoding time |

| (Ullah et al., 2019) | Detect cyber security threats | CNN, DNN | GIST-SVM, LBP-SVM, CLGM-SVM | Outperform when measuring the cybersecurity threats |

| (Wang & Zeng, 2020) | Select typical economic indicators | CNN | Deep confidence network, Multilayer trestle automatic coder | Improve the classification accuracy and adaptability |

| (Adebowale et al., 2020) | Detect intelligent phishing | CNN, LSTM | Single CNN, single LSTM | Higher classifier prediction performance and less training time |

| (Liu et al., 2020) | Forecast stock price | CNN, GBoost | WSAEs-LSTM | More accurate prediction |

| (Liu et al., 2018) | Predict stock price movement from financial news | TransE Model, CNN, LSTM | T-SVM, J-SVM, C-SVM, C-LSTM, J-LSTM | Predict better |

| (Conte et al., 2019) | Estimate catfish density | CNN, Aerial images analysis | - | - |

| (Gadekallu et al., 2020) | Classify tomato plant diseases | CNN, Whale optimization algorithm | DNN without Dimensionality Reduction, DNN with Dimensionality Reduction using PCA | Higher accuracy and low rate, lesser time for training and testing of the data |

| (Ajami et al., 2019) | Predict data-driven index of multiple deprivation | CNN | Principal component regression model combining hand-crafted and GIS features, ensemble model | Outperform than others in terms of R2, RMSE, BIAS |

| (Yao et al., 2018) | Map fine-scale urban housing prices | UMCNN and RF | CNN (HSR), PCA-CNN (HSR), SD, CNN (HSR & SD), PCA-CNN (HSR & SD), CNN (HSR) & SD, PCA-CNN (HSR) & SD, CNN (SD), CNN (HSR) & CNN (SD) | The highest housing price simulation accuracy |

| (Lan et al., 2018) | Extract features of trademark images | CNN, Constraint theory | LBP, SIFT, HOG, CNN-original, CNN-LBP, CNN-Siamese | Best comprehensive retrieval ability |

| (Yeh et al., 2020) | Predict asset wealth | Deep CNN | Simpler KNN, scalar NL | Meets or exceeds published performance |

Note: RW (Random walk without a drift), GIST (Generalized Search Tree), T-SVM (Tf-idf algorithm feature extraction and SVM prediction model), J-SVM (Joint learning feature extraction and SVM prediction model), C-SVM (CNN feature extraction and SVM prediction model), C-LSTM (CNN feature extraction and LSTM prediction model), J-LSTM (Joint learning and LSTM prediction model), PCA (Principal Component Analysis), GIS (Geographic Information System), UMCNN (Convolutional Neural Network for United Mining), HSR (High Spatial Resolution), SD (Spatial Data), LBP (Local Binary Pattern), SIFT (Scale Invariant Feature Transform), HOG (Histogram of Oriented Gradients), KNN (K-Nearest Neighbor), NL (Nightlights)

Recurrent neural network (RNN) in economics

Motivated by the idea that human cognition draws on memory and experience, the recurrent neural network (RNN) is a class of neural networks with recurrent connections that takes sequence data as input (Goodfellow et al., 2016). Unlike feedforward networks, an RNN considers not only the current input but also a hidden state carried over from earlier time steps, which gives the network a “memory” for situations in which the decision at the current state depends on previous states. Starting from the Jordan network in 1986 (Jordan, 1986) and the Elman network in 1990 (Elman, 1990), RNN has occupied an important position among deep learning algorithms. It has been successfully applied to natural language processing tasks such as speech recognition (Wang, 2020), language modeling (Noaman et al., 2018) and machine translation (Mahata et al., 2019), and has also been used in time series forecasting (Waheeb & Ghazali, 2020), music recommendation (Kim et al., 2019), commodity recommendation (Chen et al., 2021), etc. The architecture of RNN and its unfolded framework through time are shown in Fig. 6.

Fig. 6.

Architecture of RNN and its unfolded framework through time

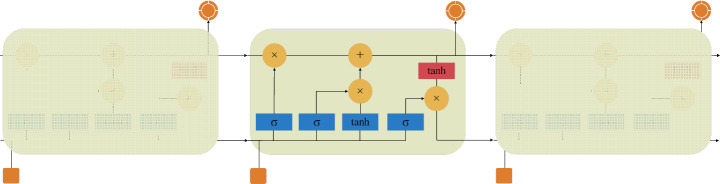
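To make the “memory” mechanism concrete, the following is a minimal sketch of an Elman-style forward pass in plain Python. It is an illustration under stated assumptions rather than the implementation used in any surveyed study: the function name `rnn_forward`, the tanh activation and the toy weight values are all chosen here for demonstration.

```python
import math

def rnn_forward(xs, W_xh, W_hh, b_h):
    """Run an Elman-style RNN over a sequence of input vectors.

    Returns the list of hidden states, one per time step.
    """
    h = [0.0] * len(b_h)  # initial hidden state (the "memory")
    states = []
    for x in xs:
        # New state depends on the current input AND the previous state.
        h = [
            math.tanh(
                sum(w * xi for w, xi in zip(W_xh[j], x))
                + sum(w * hi for w, hi in zip(W_hh[j], h))
                + b_h[j]
            )
            for j in range(len(b_h))
        ]
        states.append(h)
    return states

# Toy weights: 2 inputs, 2 hidden units (values chosen arbitrarily).
W_xh = [[0.5, -0.3], [0.1, 0.8]]
W_hh = [[0.2, 0.4], [-0.6, 0.1]]
b_h = [0.0, 0.0]

# Two sequences that differ only in their FIRST element.
seq_a = [[1.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
seq_b = [[0.0, 1.0], [0.0, 0.0], [0.0, 0.0]]
final_a = rnn_forward(seq_a, W_xh, W_hh, b_h)[-1]
final_b = rnn_forward(seq_b, W_xh, W_hh, b_h)[-1]
print(final_a, final_b)
```

Although the two sequences are identical after the first step, their final hidden states differ, because the first input is carried forward through the recurrent weights `W_hh`.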

Then, Hochreiter and Schmidhuber (1997) proposed an RNN variant called Long Short-Term Memory (LSTM), which tackles the difficulty of learning long-term dependencies identified by Bengio et al. (1994). Its structure, shown in Fig. 7, is composed of special units: blocks and gates.

Fig. 7.

Architecture of LSTM model
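The gating idea can be sketched for a single hidden unit as follows. This is a simplified illustration, assuming the commonly used formulation with a forget gate (a later addition to the 1997 design); the function `lstm_step`, the parameter layout and the numeric values are hypothetical and chosen only to show how the gates control the cell state.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step for a scalar input and a single hidden unit.

    params maps each gate name ("f", "i", "o", "g") to (w_x, w_h, b).
    """
    def pre(name):
        w_x, w_h, b = params[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("f"))    # forget gate: how much old cell state to keep
    i = sigmoid(pre("i"))    # input gate: how much new candidate to write
    o = sigmoid(pre("o"))    # output gate: how much cell state to expose
    g = math.tanh(pre("g"))  # candidate cell content
    c = f * c_prev + i * g   # updated cell state
    h = o * math.tanh(c)     # updated hidden state
    return h, c

def run(params, steps=50):
    """Start with cell state 1.0 and feed a constant input for `steps` steps."""
    h, c = 0.0, 1.0
    for _ in range(steps):
        h, c = lstm_step(0.5, h, c, params)
    return c

# Forget gate biased open, input gate biased shut: the cell retains its content.
keep = {"f": (0.0, 0.0, 10.0), "i": (0.0, 0.0, -10.0),
        "o": (0.0, 0.0, 0.0), "g": (1.0, 0.0, 0.0)}
# Same setup but with the forget gate biased shut: the content is erased.
forget = dict(keep, f=(0.0, 0.0, -10.0))

c_keep = run(keep)
c_forget = run(forget)
print(c_keep, c_forget)
```

With the forget gate open, the initial cell value survives 50 steps almost unchanged, whereas with the forget gate closed it is erased almost immediately. This is the mechanism that lets LSTM preserve long-term information.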

RNN is applied in industrial organization, macroeconomics and monetary economics, agricultural and natural resource economics, and financial economics. Combined with other machine learning techniques such as logistic regression, encoder-decoder architectures and attention mechanisms, RNN and its variants (Alsmadi et al., 2020; Andrijasa, 2019; Becerra-Vicario et al., 2020; Mishev et al., 2020; Zhang et al., 2020a) outperform the compared methods, e.g., SVM and conventional neural networks, in prediction or evaluation. As a typical deep learning model, RNN is considered better suited than regular feedforward neural networks to modeling sequential dynamic data and capturing contextual information (Alsmadi et al., 2020; Anbazhagan & Kumarappan, 2013). More importantly, time series such as electricity prices or exchange rates exhibit strongly periodic patterns and call for multi-step-ahead prediction, which makes RNN a natural option (Andrijasa, 2019; Zhang et al., 2020a). As a variant of RNN, LSTM introduces the concept of a cell state. Unlike a plain RNN, which only carries forward the most recent state, the cell state of LSTM determines which information should be retained and which should be forgotten. Hence, LSTM plays an important role in many fields of economic research. In the surveyed studies, the authors define evaluation metrics and compare against benchmark methods; the results show that the proposed LSTM-based methods offer high reliability and good capability in forecasting, estimation and detection. Table 6 exhibits some typical applications of RNN in economics.

Table 6.

RNN in economics

| Article | Aim of study | Specific approach | Benchmark methods for comparison | Superiority of the proposed method |

|---|---|---|---|---|

| (Alsmadi et al., 2020) | Predict helpful reviews | RCNN | Fasttext, SVM, Bi-LSTM, (3 Layers) CNN | Outperform conventional as well as deep learning-based models in classification accuracy |

| (Becerra-Vicario et al., 2020) | Predict bankruptcy | DRCNN, LOGIT | Single DRCNN, single LOGIT, neural network | Predict well |

| (Ebrahimi et al., 2020) | Identify semi-supervised cyber threat | Transductive SVMs, LSTM | k-NN, LOGIT, RF, SVM, CNN, LSTM, Transductive SVM | State-of-the-art classification performance |

| (Agarwal et al., 2021) | Detect fraudulent resource consumption (FRC) attacks | LSTM | DTC, RFC, LR, SVM, KNN, ANN | Performs best in effectively and accurately detecting FRC attacks |

| (Arkhangelski et al., 2020) | Evaluate the economic benefits | LSTM | MILP, fuzzy logic, or another linear optimization technique | Higher prediction accuracy |

| (Haytamy & Omara, 2020) | Predict the Cloud QoS provisioned values | LSTM, PSO | MQPM | Outperforms the existing MQPM model in terms of RMSE |

| (Andrijasa, 2019) | Predict exchange currency rates | Encoder-decoder RNN | - | - |

| (Tang et al., 2020) | Forecast economic recession through Share Price | LSTM | MA, KNN, ARIMA, Prophet | LSTM outperforms the other models in prediction |

| (Zhang et al., 2020a) | Forecast day-ahead electricity price | DRNN | Single SVM, hybrid SVM network | Outperform in terms of simulating the relationships between external factors and the electricity price |

| (Zhang et al., 2019) | Promote the accuracy of wind prediction | LSTM, multi-objective PSO | Grey Model | Numeric results demonstrate that LSTM is superior to the traditional grey model in terms of prediction accuracy, robustness, and computational efficiency |

| (Zhou et al., 2019) | Forecast electricity price | LSTM, SMBO | SVR, BPNN, GTB, DTR, LSTM series models include shallow LSTM, stacked LSTM, EEMD-LSTM and EEMD-LSTM-SMBO | Much better than that of the general LSTM model and traditional models in accuracy and stability |

| (Abdel-Nasser & Mahmoud, 2019) | Forecast photovoltaic power | LSTM-RNN | MLR, BRT, and neural networks | Further reduction in the forecasting error |

| (Guo et al., 2018) | Forecast short-term power load | Integrating several LSTM networks | LSTM, ARIMA, SVR, MLP | Improve the forecasting performance |

| (Mishev et al., 2020) | Analyze sentiment in finance | RNN, RNN-Attention, CNN, Dense Network | SVC, XGB, Dense, CNN, RNN | Better performance in several criteria |

| (Ji et al., 2021) | Forecast stock indices | IPSO and LSTM | Support-vector regression, LSTM and PSO-LSTM | High reliability and good forecasting capability |

| (Jin et al., 2020) | Predict stock closing price | LSTM, sentiment analysis, attention mechanism, empirical modal decomposition | LS_RF, S_LSTM, S_AM_LSTM | The highest accuracy, the lowest time offset and the closest predictive value when predicting the stock market |

| (Nikou et al., 2019) | Predict stock price | LSTM | ANN, RF, SVM | Better in prediction of the close price of iShares MSCI United Kingdom than the other methods |

| (Niu et al., 2020) | Predict stock price index | VMD-LSTM | PNN, ELM, CNN, and LSTM, and the hybrid models EMD-BPNN, EMD-ELM, EMD-CNN, EMD-LSTM, VMD-BPNN, VMD-ELM, and VMD-CNN | The hybrid models perform significantly better than the single models |

| (Sharaf et al., 2021) | Predict stock price | LSTM, CNN, Stacked-LSTM, and Bidirectional-LSTM | SVM, Linear Regression, LOGIT, K-Neighbors, Decision Tree, RF | Outperform the other models based on several evaluation metrics |

| (Katayama et al., 2019) | Identify sentiment polarity in financial news | LSTM, Convolution model | Common polarity dictionary | Captures more news sentiment |

| (Tao et al., 2020) | Evaluate the impact of the Northridge Earthquake | LSTM, NAR neural network | Single LSTM, single NAR | Perform better based on some criteria |

| (He, 2021) | Predict investment benefits and national economic attributes | EEMD-LSTM | BP model, EEMD-BP model, LSTM model, and ARMA | Highest prediction accuracy |

| (Wu et al., 2018) | Estimate remaining useful life of complex engineered systems | Vanilla LSTM | RNN, GRU | Significant performance improvement |

| (Sehovac & Grolinger, 2020) | Forecast electrical load | S2S RNN | Vanilla RNN, LSTM, and GRU | Outperform other models |

| (Li et al., 2021) | Predict the price of gold | VMD-ICSS-BiGRU | SVR, LR, ANN, LSTM | Consistently reduce the forecasting error and improve the fitting performance effectively |

Note: RCNN (Recurrent Convolutional Neural Network), Bi-LSTM (Bidirectional-LSTM), DRCNN (Deep Recurrent Convolutional Neural Network), DRNN (Deep Recurrent Neural Network), SVC (Support Vector Classifier), XGB (Extreme Gradient Boosting), IPSO (Improved Particle Swarm Optimization), LS_RF (Random Forest estimates using LSboost), S_LSTM (The LSTM model considering the sentiment index), S_AM_LSTM (The LSTM model that considers the sentiment index and attention mechanism), EMD (Empirical Mode Decomposition), NAR (Nonlinear Autoregressive), DTC (Decision Tree Classifier), RFC (Random Forest Classifier), MINLP (Mixed-Integer Nonlinear Programming), MQPM (Multivariate Quality of service Prediction Model), SMBO (Sequence Model-Based Optimization), GTB (Gradient Boosting Regressor), DTR (Decision Tree Regressor), EEMD (Ensemble Empirical Mode Decomposition), MLR (Multiple Linear Regression), BRT (Bagged Regression Trees), MA (Moving Average), ARMA (Autoregressive Moving Average), S2S RNN (Sequence to Sequence Recurrent Neural Network), ICSS (Iterated Cumulative Sums of Squares), BiGRU (Bidirectional Gated Recurrent Unit), GRU (Gated Recurrent Unit), LR (Linear Regression)

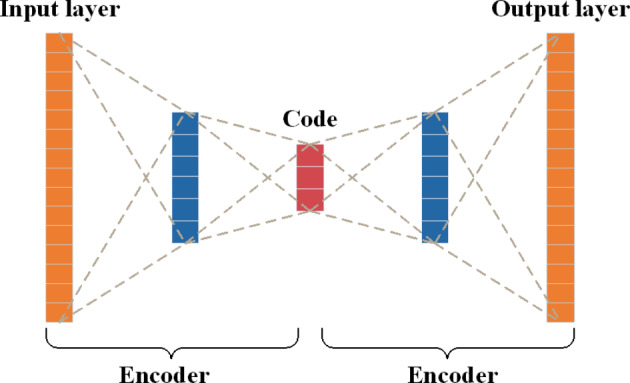

Autoencoder (AE) in economics

Proposed by LeCun (1987), AE is a kind of artificial neural network used in semi-supervised or unsupervised learning. It performs representation learning by taking the input itself as the learning target (Bengio et al., 2013; Goodfellow et al., 2016). As shown in Fig. 8, AE consists of an encoder and a decoder, which makes it useful for dimensionality reduction (Chen et al., 2018), feature extraction (Meng et al., 2017) and anomaly detection (Han et al., 2020). Its variants include the undercomplete autoencoder (Buongiorno et al., 2019), regularized autoencoder (Hong et al., 2020) and variational autoencoder (Che et al., 2020).

Fig. 8.

Architecture of AE model

In economics, AE is usually employed to automatically learn features from high-dimensional data (Long et al., 2020; Ranjan et al., 2021) and for self-adaptive feature reduction (Li et al., 2020). To force AE to learn useful information, noise is often added to the input data (Vincent et al., 2008), and the network is then trained to reconstruct the original, noise-free data. Meanwhile, a sparsity penalty is added to the encoding layer so that only a limited number of its neurons are active and the original data can be represented by the discovered features (Li et al., 2020; Xu et al., 2016). In addition, a merit of AE is its interpretability (Suimon et al., 2020), in which it compares favorably with most deep learning models applied to economic issues. As shown in Table 7, compared with some prediction technologies and other deep learning models, AE also performs well and yields more accurate predictions while requiring fewer trainable parameters and less training time.
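The denoising and sparsity ideas described above can be combined into a single training objective. The sketch below computes such an objective for one sample in plain Python. It is illustrative only: the helper names (`encode`, `decode`, `dae_loss`), the toy weights and the noise level are assumptions, and the sparsity term uses a simple L1 penalty on the code as a stand-in for whichever penalty a given study adopts.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def encode(x, W_enc, b_enc):
    """Map the input to a hidden code with sigmoid activations."""
    return [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(W_enc, b_enc)]

def decode(h, W_dec, b_dec):
    """Linear decoder that maps the code back to input space."""
    return [sum(w * hi for w, hi in zip(row, h)) + b
            for row, b in zip(W_dec, b_dec)]

def dae_loss(x_clean, params, noise_std, sparsity_weight, rng):
    """Denoising reconstruction error plus an L1 sparsity penalty on the code."""
    W_enc, b_enc, W_dec, b_dec = params
    # Corrupt the input, but measure the error against the CLEAN input.
    x_noisy = [xi + rng.gauss(0.0, noise_std) for xi in x_clean]
    h = encode(x_noisy, W_enc, b_enc)
    x_hat = decode(h, W_dec, b_dec)
    recon = sum((a - b) ** 2 for a, b in zip(x_hat, x_clean)) / len(x_clean)
    sparsity = sum(abs(v) for v in h) / len(h)
    return recon + sparsity_weight * sparsity

# Toy setup: 3 input features compressed to a 2-unit code (arbitrary weights).
params = (
    [[0.4, -0.2, 0.1], [0.3, 0.5, -0.6]],     # W_enc: 2 x 3
    [0.0, 0.0],                               # b_enc
    [[0.7, 0.1], [-0.3, 0.9], [0.2, -0.5]],   # W_dec: 3 x 2
    [0.0, 0.0, 0.0],                          # b_dec
)
x = [1.0, 0.5, -0.5]
loss_plain = dae_loss(x, params, 0.1, 0.0, random.Random(0))
loss_sparse = dae_loss(x, params, 0.1, 0.5, random.Random(0))
print(loss_plain, loss_sparse)
```

In practice this loss would be minimized over the weights by gradient descent. The sketch only shows how the noisy input, the clean target and the sparsity term enter the objective.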

Table 7.

AE in economics

| Article | Aim of study | Specific approach | Benchmark methods for comparison | Superiority of the proposed method |

|---|---|---|---|---|

| (Wang et al., 2020a) | Forecast long-term time series in industrial production | SSAEN, SAEGN | BPNN, DLSTM, GrC-based long-term prediction model | Significantly improve the long-term time series prediction accuracy |

| (Long et al., 2020) | Learn features and recognize fault of Delta 3-D printers | SAE, ESN | ESN, SAE-Softmax, DBN-ESN | Best forecasting performance |

| (Heaton et al., 2017) | Predict and classify financial market | Stacked auto-encoders, DL | - | - |

| (Li et al., 2020a) | Detect feedwater heater performance | SDSAE | PCA(T2), PCA(SPE), GA-ELM, PCA-BPNN | Achieves the best performance according to detection threshold, computation accuracy, Accnormal, Accfault |

| (Suimon et al., 2020) | Represent the Japanese yield curve | AE | LSTM, VAR | Effective, and interpretable |

| (Ranjan et al., 2021) | Analyze and predict large-scale road network congestion | Convolutional AE | ConvLSTM, PredNet | More accurate prediction result, less trainable parameter and training time |

Note: SSAEN (Stacked Sparse Auto-Encoders Network), SAEGN (Sparse Auto-Encoder Granulation Network), DLSTM (Deep Long Short-Term Memory), SAE (Sparse Autoencoder), ESN (Echo State Network), SDSAE (Stacked Denoising Sparse Autoencoder), SPE (Squared Prediction Error), GA-ELM (Genetic Algorithm based Extreme Learning Machine), VAR (Vector Autoregression), ConvLSTM (Convolutional Long Short-Term Memory), PredNet (Prediction Network)

Transformer in economics

The Transformer model, proposed by Google in 2017, is a well-known deep learning architecture that performs well on a variety of natural language processing tasks (He et al., 2021). It is based on the self-attention mechanism and dispenses with recurrence and convolutions entirely (Vaswani et al., 2017). Transformer is composed of two parts, an encoder and a decoder (Fan et al., 2022): the encoder encodes the input, and the decoder decodes the encoded information to produce the output. Its structure is shown in Fig. 9. Besides natural language processing, it has also been successfully applied to image classification, object detection, and segmentation tasks (Bazi et al., 2021; Yu et al., 2020b). Its variants include Vision Transformer (ViT) (Fisichella & Garolla, 2021), Data-efficient image Transformers (DeiT) (Touvron et al., 2020), Convolutional vision Transformer (CvT) (Wu et al., 2021), and Swin-Transformer (Liu et al., 2021).

Fig. 9.

Architecture of Transformer model
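The self-attention mechanism at the core of Transformer can be illustrated with a short sketch of scaled dot-product attention in plain Python. The function names and the toy input are assumptions made for illustration. For simplicity, the queries, keys and values are taken to be the input itself, whereas a full Transformer layer would first apply learned projections and use multiple heads.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    weights = []
    for q in Q:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in K]
        weights.append(softmax(scores))
    out = [
        [sum(w * v[j] for w, v in zip(w_row, V)) for j in range(len(V[0]))]
        for w_row in weights
    ]
    return out, weights

# Three "tokens" with 2-dimensional embeddings (toy values).
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = self_attention(X, X, X)
for row in weights:
    print([round(w, 3) for w in row])
```

Each output row is a weighted average of all value vectors, so every position can draw on every other position in a single step, without recurrence.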

In economics, Transformer performs well on a variety of natural language processing tasks by treating a sentence as a sequence of words and applying a self-attention layer structure (Liao et al., 2021). In addition, Bidirectional Encoder Representation from Transformer (BERT) (Devlin et al., 2018) is used to pre-train deep bidirectional representations (Wang & Li, 2022; Yue et al., 2020), and ViT, applied directly to sequences of image patches, can perform very well on image classification tasks (Fisichella & Garolla, 2021). Therefore, compared with some prediction technologies and conventional deep learning methods, Transformer offers higher prediction accuracy, a more efficient and robust training process, and less accumulation of errors (Table 8).

Table 8.

Transformer in economics

| Article | Aim of study | Specific approach | Benchmark methods for comparison | Superiority of the proposed method |

|---|---|---|---|---|

| (Wang & Li, 2022) | Detect renewable energy incidents from news articles containing accidents in various renewable energy systems | PTM word2vec, BERT, TCNN, TRNN | BERT-TCNNs, BERT-TRNNs, word2vec-TCNNs, word2vec-TRNNs, TCNNs, TRNNs | Effective and robust in detecting renewable energy incidents from large-scale textual materials |

| (Liao et al., 2021) | Predict multistep-ahead location marginal price | Transformer, with seq2seq architecture | LSTM, Bi-LSTM, GRU, Bi-GRU, and TCN | Avoid the error accumulation of the results, higher accuracy |

| (Yue et al., 2020) | Predict accurate energy and classify simultaneous status | BERT | GRU, LSTM, CNN | More stable and precise, higher prediction consistency |

| (Fisichella & Garolla, 2021) | Develop a complete trading system with a combination of trading rules on Forex time series data | ViT | ResNet50 | Fewer computational resources to train |

Note: PTM (Pre-Trained Model), BERT (Bidirectional Encoder Representation from Transformer), TRNN (Text Recursive Neural Network), TCNN (Text Convolution Neural Network), seq2seq (sequence to sequence), GRU (Gated Recurrent Unit), Bi-GRU (Bi-directional Gated Recurrent Unit), TCN (Temporal Convolutional Network), ViT (Vision Transformer).

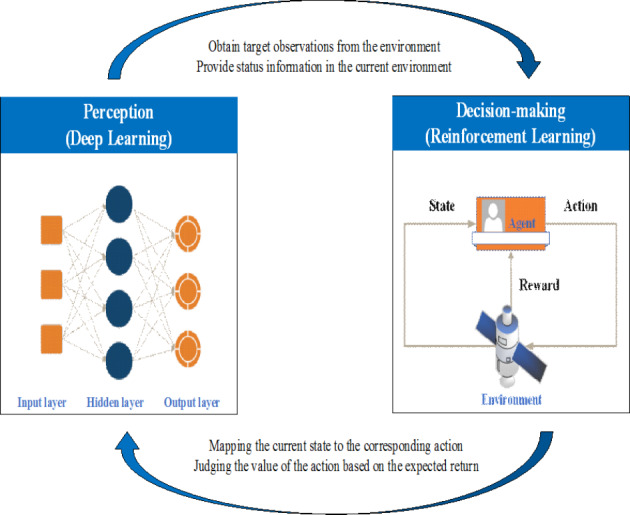

Deep reinforcement learning (DRL) in economics

DRL is a technique that combines deep learning with reinforcement learning to realize end-to-end learning from perception to action. Although the idea had been explored before, the publication of “Playing Atari with Deep Reinforcement Learning” brought DRL to researchers' attention (Mnih et al., 2013). DeepMind subsequently improved the deep Q-network (DQN), and Hinton, Bengio and LeCun regarded DRL as one of the important future directions of deep learning (LeCun et al., 2015). DRL is used to describe and solve problems in which agents learn a strategy to maximize return or achieve specific targets while interacting with an environment. The architecture of DRL is shown in Fig. 10.

Fig. 10.

Architecture of DRL
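The agent-environment loop can be illustrated with a small sketch. The example below uses tabular Q-learning on a hypothetical five-state chain, not a deep method: DQN-style approaches replace the table `Q` with a neural network but keep the same temporal-difference target. The environment, reward and hyperparameters are assumptions made for illustration.

```python
import random

def q_learning(n_states=5, episodes=500, alpha=0.1, gamma=0.9,
               epsilon=0.1, seed=0):
    """Tabular Q-learning on a chain; reaching the last state pays reward 1."""
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(n_states)]  # actions: 0 = left, 1 = right
    goal = n_states - 1
    for _ in range(episodes):
        s = 0
        while s != goal:
            # Epsilon-greedy action selection (ties go to "right").
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = 0 if Q[s][0] > Q[s][1] else 1
            s_next = max(s - 1, 0) if a == 0 else s + 1
            r = 1.0 if s_next == goal else 0.0
            # Temporal-difference update toward r + gamma * max_a' Q(s', a').
            target = r + gamma * max(Q[s_next])
            Q[s][a] += alpha * (target - Q[s][a])
            s = s_next
    return Q

Q = q_learning()
policy = ["right" if q[1] > q[0] else "left" for q in Q[:-1]]
print(policy)
```

After training, the greedy policy moves toward the rewarding state from every non-terminal position. The agent learns this purely from interaction, without being told the structure of the environment.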

DRL is widely applied in economics to help people make intelligent and reliable decisions (Table 9). A comprehensive review (Mosavi et al., 2020) has discussed the development of DRL methods and their applications in economics. For example, in financial economics, Chakole and Kurhekar (2020) proposed an algorithm using deep Q-learning techniques to make trading decisions. In agricultural and natural resource economics, a state-of-the-art proximal policy optimization (PPO) algorithm was adopted to derive alternating current optimal power flow solutions under operational constraints (Zhou et al., 2020b), and a novel DRL method combining deep deterministic policy gradient (DDPG) principles with a prioritized experience replay (PER) strategy was developed to solve an electric vehicle pricing problem (Qiu et al., 2020). In macroeconomics and monetary economics and in health, education, and welfare, a deep neural model of DRL, DQN and DDPG are used to recommend cryptocurrency trading points (Sattarov et al., 2020) and to learn an optimal policy for COVID-19 prevention (Uddin et al., 2020). Furthermore, in the comparisons with conventional methods reported in these studies, the proposed DRL approaches perform best. This is attributed to the ability of DRL not only to forecast the direction of a trend statistically but also to capture the discrete nature of the environmental state, which helps humans make rapid and effective decisions.

Table 9.

DRL in economics

| Article | Aim of study | Specific approach | Benchmark methods for comparison | Superiority of the proposed method |

|---|---|---|---|---|

| (Chakole & Kurhekar, 2020) | Make trading decisions | Deep Q-learning | Decision Tree strategy, Buy-and-Hold strategy | Outperforms in terms of some economic indicators: Accumulated Return, Maximum Drawdown, Average daily return, average annual return, Skewness, Kurtosis, Sharpe ratio, and Standard Deviation |

| (Zhou et al., 2020b) | Derive optimal power flow | DRL, PPO with IL | IL, PPO | Perform better in accuracy and running time |

| (Qiu et al., 2020) | Pricing electric vehicles | PDDPG | Q-learning, DQN, DDPG | Better performance in standard deviation, learning pace, flexibility and computational time |

| (Sattarov et al., 2020) | Recommend cryptocurrency trading points | Deep Neural Model of DRL | Double cross strategy, swing trading, scalping trading | Best performance in number of actions and quality of trading |

| (Uddin et al., 2020) | Estimate impact of COVID-19 on the spread of the infection, personal satisfaction or quality of life, resource use and economy | DQN, DDPG | Random, Q-Learning, SARSA | Perform better in terms of best rewards and best policy |

Note: IL (Imitation Learning), PDDPG (Prioritized Deep Deterministic Policy Gradient), DQN (Deep Q Network), DDPG (Deep Deterministic Policy Gradient), SARSA (State-Action-Reward-State-Action).

Applications of deep learning in economics

Financial economics

Financial economics covers issues in several subfields: general financial markets dealing with securities (stocks, bonds, commodities and other futures), financial institutions and services, and corporate finance and governance (“JEL classification system”). Table 10 shows that the selected data come from relevant databases, websites or references, and that the time span of the data is usually several years. In this field, various deep learning models such as AE, RNN, CNN, DNN, LSTM, DRL and their variants have been used to predict and classify financial markets, forecast stock prices, evaluate and analyze supply chain financial credit levels, etc. In particular, many scholars are interested in exploiting different deep learning models to make effective and accurate predictions for the stock market (Chatzis et al., 2018; Katayama et al., 2019; Nikou et al., 2019; Sharaf et al., 2021; Tao et al., 2020; Zhong & Enke, 2019). Depending on the aim and the type of data, deep learning models play different roles in financial economics. For example, when temporal or long-term information matters, RNN or LSTM models are popular for forecasting stock prices; when image information is involved in the estimation process, CNN may be an ideal option; and when trading decisions must be made or the direction of a trend determined, DRL and its variants stand out.

Table 10.

Applications of deep learning for financial economics

| Application subfield | Article | Aim of study | Data set | Data size | Time span | Used models |

|---|---|---|---|---|---|---|

| Financial market | (Heaton et al., 2017) | Predict and classify financial market | Component stocks of the biotechnology IBB index | Weekly returns data | 2012–2016 | Stacked AE |

| | (Mishev et al., 2020) | Analyze sentiment in finance | Financial Phrase-Bank dataset, SemEval-2017 task dataset | 4,845 English sentences, 2,510 news headlines | - | RNN, RNN-Attention, CNN, Dense Network |

| Stock market | (Zhong & Enke, 2019) | Forecast daily stock return | SPDR S&P 500 ETF (ticker symbol: SPY) | 60 factors over 2,518 trading days | 2003–2013 | DNN, ANN |

| | (Chatzis et al., 2018) | Forecast crisis events | FRED and the SNL | More than 5,000 records | 1996–2017 | MXNET, DNN |

| | (Nikou et al., 2019) | Predict stock price | iShares MSCI United Kingdom exchange | 869 data | 2015–2018 | LSTM |

| | (Sharaf et al., 2021) | Predict stock price | Quandl dataset | - | 2000–2019 | LSTM, CNN, Stacked-LSTM, Bi-LSTM |

| | (Tao et al., 2020) | Evaluate the impact of the Northridge Earthquake | http://finance.yahoo.com/ | 616 listed companies | 1992–1994 | LSTM, NAR neural network |

| | (Katayama et al., 2019) | Identify sentiment polarity in financial news | Economy Watchers Survey | 234,626 samples | 2000–2018 | LSTM, Convolution model |

| | (Ji et al., 2021) | Forecast stock indices | Australian stock market index | 2,523 records | 2010–2020 | LSTM-IPSO |

| | (Jin et al., 2020) | Predict stock closing price | Stock of Apple from (https://stocktwits.com/) | 96,903 comments | 2013–2018 | LSTM, sentiment analysis, attention mechanism, empirical modal decomposition |

| | (Liu et al., 2020) | Forecast stock price | CSMAR and WIND | - | 2008–2016 | CNN, Gboost |

| | (Xu et al., 2016) | Select feature and forecast price | Apple, S&P 500 in Yahoo Finance | 6,423 financial news headlines | 2011–2017 | TransE Model, CNN, LSTM |

| | (Niu et al., 2020) | Predict stock price index | HIS, SPX, FTSE and IXIC | - | 2010–2019 | VMD-LSTM |

| | (Sattarov et al., 2020) | Recommend cryptocurrency trading points | Bitcoin, Litecoin, and Ethereum hourly historical data from (https://www.cryptodatadownload.com) | - | 2019 | DRL |

| | (Chakole & Kurhekar, 2020) | Make trading decisions | DJIA, NASDAQ, NIFTY and SENSEX index stocks | - | 2001–2018 | Deep Q-learning |

| Insurance mathematics | (Kremsner et al., 2020) | Compute risk measure | Dataset coming from references | - | - | DNN |

Note: IPSO (Improved Particle Swarm Optimization), VMD (Variational Mode Decomposition), S&P 500 (Standard & Poor’s 500 index), FRED (Federal Reserve Economic Database), SNL (S&P Global Market Intelligence), CSMAR (China Stock Market & Accounting Research Database), HIS (Daily closing prices of the Hong Kong Hang Seng Index), SPX (S&P 500 Index), FTSE (London FTSE Index) and IXIC (Nasdaq Index), DJIA (Dow Jones Industrial Average), NASDAQ (National Association of Securities Dealers Automated Quotations)

Macroeconomics and monetary economics

Macroeconomics and monetary economics mainly comprises research on the aggregate performance of an economy: output, employment, prices, and interest rates and their determinants (“JEL classification system”). This field concentrates on the laws governing the operation of an economy, using aggregate statistical concepts such as national income and overall investment and consumption. The data are mainly obtained from bank websites or government offices, and most studies consider long-term data spanning, for example, more than ten years, because issues in macroeconomics and monetary economics need to be addressed by observing and exploring long-term data. As shown in Table 11, various deep learning models including DNN, LSTM, CNN, RNN, AE and RBM are used to predict currency crisis events (Alaminos et al., 2019), forecast exchange rates (Andrijasa, 2019; Galeshchuk, 2017; Galeshchuk & Mukherjee, 2017; Yasir et al., 2019), or estimate economic conditions (Tang et al., 2020; Yu et al., 2020a). Among these models, DNN seems to be one of the most popular for forecasting problems with large amounts of data, while CNN is good at reducing the dimensionality of high-dimensional data and fusing image information. Similarly, RNN and LSTM are both suitable for problems with short-term or long-term dependencies in macroeconomics and monetary economics.

Table 11.

Applications of deep learning for macroeconomics and monetary economics

| Application sub field | Article | Aim of study | Data set | Data size | Time span | Models |

|---|---|---|---|---|---|---|

| International monetary system | (Alaminos et al., 2019) | Predict currency crisis event | IMF International Financial Statistics, World Bank Development Indicators, World Economic Outlook, and the World Bank Global Financial Database | 7,708 observations | 1970–2017 | DNN, decision trees |

| Circular economy | (Lukman et al., 2020) | Predict the amount of salvage and waste materials | UK Demolition Industry | 2,280 building demolition records | - | DNN |

| Cost efficient cardiac health monitoring | (Faust et al., 2020) | Machine classification: signal processing and make decisions | https://www.polar.com/uk-en | - | - | DL |

| Economic condition | (Tang et al., 2020) | Forecast economic recession through Share Price | Yahoo Finance | Six kinds of stock, and 1232 rows of data for each stock | 2015–2019 | LSTM |

| Currency exchange market | (Andrijasa, 2019) | Predict exchange currency rates | Bank Indonesia official websites | 4,344 daily data series | 2001–2018 | Encoder-decoder RNN |

| | (Yasir et al., 2019) | Forecast foreign exchange rate | Daily data of exchange rate | 1,304 observations | 2008–2018 | CNN |

| | (Galeshchuk, 2017) | Predict exchange rate | http://www.global-view.com/forex-trading-tools/forex-history/index.html | Each time series contains 1,304 observations | 2010–2014 | RBM, AE |

| | (Galeshchuk & Demazeau, 2017) | Forecast Hungarian forint exchange rate | https://www.bloomberg.com/markets/currencies | - | 2000–2017 | CNN |

| | (Galeshchuk & Mukherjee, 2017) | Predict exchange rates | http://www.globalview.com/forex-trading-tools/forex-history/ | - | 2000–2015 | DNN, LSTM |

| Macroeconomics and Monetary Economics | (Chen et al., 2019) | Forecast interaction of exchange rates | Poloniex, Kaggle, Dukascopy Bank’s website | - | 2016–2017 | CNN, fixed-length binary Strings, a binary component |

| Social-economic | (Yu et al., 2020a) | Estimate economy | ImageNet data set, Landsat image dataset | - | 2009 | CNN |

| Government bonds | (Suimon et al., 2020) | Represent the Japanese yield curve | Several weekly Japanese Government Bond rates with varying maturities | - | 1992–2019 | AE |

Note: IMF (International Monetary Fund)

Agricultural and natural resource economics

Agricultural and natural resource economics mainly discusses economic issues pertaining to two closely related fields: agriculture and natural resources (“JEL classification system”). Table 12 presents some examples of applying deep learning in agricultural and natural resource economics. Depending on the features of the problem and the selected data, CNN is used to learn useful models from large numbers of images for catfish density estimation (Conte et al., 2019) and tomato plant disease classification (Gadekallu et al., 2020); DNN is used to support complex investment decisions (Frey et al., 2019); RNN and LSTM are suitable for forecasting short-term power load or energy prices (Abdel-Nasser & Mahmoud, 2019; Guo et al., 2018; Zhang et al., 2020a; Zhang et al., 2019; Zhou et al., 2019); and DRL can derive optimal power flow (Zhou et al., 2020b) and decide the price of electric vehicles (Qiu et al., 2020). Unlike in the two application fields above, the time span of the investigated data varies widely: some data sets cover as long as thirteen years, while others cover as little as two months. The data are drawn from various sources, including dataset repositories, electricity markets, relevant websites and references.

Table 12.

Applications of deep learning for agricultural and natural resource economics

| Application sub field | Article | Aim of study | Data set | Data size | Time span | Model |

|---|---|---|---|---|---|---|

| Food economic chain | (Conte et al., 2019) | Estimate catfish density | Aerial Images | 300 images | - | CNN, Aerial Images Analysis |

| | (Gadekallu et al., 2020) | Classify tomato plant diseases | Plant-village dataset repository | - | - | DNN, Whale optimization algorithm |

| Energy market | (Frey et al., 2019) | Predict for Investment decisions | Energymap.info | 1.4 million solar installations | - | DNN, gradient boosting, random forests |

| | (Huang & Wu, 2018) | Forecast price | 5 crude oil spot prices (WTI, Brent, Forties, Dubai, and Oman), 2 financial prices (the gold prices and the U.S. exchange rate) | 435 daily observations | 2009–2010 | DMKL, directed deep acyclic graph |

| | (Zhang et al., 2020a) | Forecast day-ahead electricity price | The New England electricity market, ISO launches Standard Market | - | - | DRNN |

| | (Zhang et al., 2019) | Promote the accuracy of wind prediction | Wind power from Hongfeng Eco-town | - | 2009–2017 | LSTM, multi-objective particle swarm optimization |

| | (Zhou et al., 2019) | Forecast electricity price | The electricity price of the Pennsylvania-New Jersey-Maryland power market | - | 2018 | LSTM, SMBO |

| | (Abdel-Nasser & Mahmoud, 2019) | Forecast photovoltaic power | Two photovoltaic datasets for locations in Aswan (Dataset1) and Cairo (Dataset2) cities, Egypt | - | 1 year | LSTM-RNN |

| | (Guo et al., 2018) | Forecast short-term power load | https://www.torontohydro.com, http://climate.weather.gc.ca/indexe.html | - | 2002–2016 | Integrating several LSTM networks |

| | (Zhou et al., 2020b) | Derive optimal power flow | Data come from references | 17,364 in data set I and 2,000 in data set II on the IEEE 14-bus system. 20,000 in data set I and 5,000 in data set II on the Illinois 200-bus system | - | DRL |

| Electric vehicles | (Pei et al., 2020) | Forecast vehicle velocity | Open Street Map | - | - | DBM, bidirectional LSTM |

| | (Qiu et al., 2020) | Pricing electric vehicles | Data come from the reference | - | - | DRL |

Note: DMKL (Deep Multiple Kernel Learning), DBM (Deep restricted Boltzmann Machines)

Industrial organization

Industrial organization has many subcategories that are closely related to those in microeconomics. Studies of industry fall into two types: manufacturing and services (“JEL classification system”). As shown in Table 13, in manufacturing, Phitthayanon and Rungreunganun (2019) used deep learning techniques to predict material cost for jewelry production, Wang et al. (2020a) adopted a deep granular network with an adaptive unequal-length granulation strategy to forecast long-term time series for industrial enterprises, and Wang and Zeng (2020) used a multilayer CNN to select features and estimate the influence of the COVID-19 epidemic on the sports industry. In services, LSTM, CNN and their variants have been widely applied to problems in Internet services and the restaurant industry, such as economic benefit evaluation (Arkhangelski et al., 2020), security threat detection (Adebowale et al., 2020; Ebrahimi et al., 2020; Guo, 2020; Ullah et al., 2019), and review helpfulness and bankruptcy prediction (Alsmadi et al., 2020; Becerra-Vicario et al., 2020). Meanwhile, the collected data come from many sources, including websites, industrial enterprises, references and databases, which may reflect the wide coverage of industrial organization.

Table 13.

Applications of deep learning for industrial organization

| Application sub field | Article | Aim of study | Data set | Data size | Time span | Model |

|---|---|---|---|---|---|---|

| Jewelry Production | (Phitthayanon & Rungreunganun, 2019) | Predict material cost | XAUUSD and XAGUSD at London Fixed market. The gold and silver price data were collected and archived by usagold.com. The diamond price data were obtained from the reference | - | 2000–2018 | NAR model, NARX |

| Industry enterprise | (Wang et al., 2020a) | Forecast long-term time series in industrial production | Mackey–Glass time series, Rössler time series, passenger flow on Paris metro lines 3 and 13, and two practical industrial datasets | - | - | SSAEN model, SAEGN model |

| Sports industry | (Wang & Zeng, 2020) | Select typical economic indicators | Standard economic parameter database and Yale industrial economic database | - | - | Multilayer CNN |

| Smart-community microgrid | (Arkhangelski et al., 2020) | Evaluate the economic benefits | A real conventional rural grid in France | - | - | LSTM |

| IoT (Internet of Things) | (Ullah et al., 2019) | Detect cyber security threats | GCJ | - | - | Deep CNN |

| Online attacks | (Adebowale et al., 2020) | Intelligently detect phishing | A data set of URLs | 1 million URLs | - | CNN-LSTM |

| E-commerce | (Guo, 2020) | Encode and select image features of commodities | MSCOCO-2015 data set | About 160,000 product images for training and about 80,000 for testing and validation | - | CNN, attention mechanism |

| | (Alsmadi et al., 2020) | Predict helpful reviews | 2014 version of the Amazon reviews dataset | Around 83.7 million unique reviews | 1996–2014 | RCNN |

| Dark net marketplaces | (Ebrahimi et al., 2020) | Identify cyber threats with semi-supervised learning | https://github.com/mohammadrezaebrahimi/JMIS-DarkNetMarketData | 79k product listings | - | Transductive SVM-LSTM |

| Cloud services and security | (Haytamy & Omara, 2020) | Predict provisioned cloud QoS values | Data come from references: TimeSynth open source library and cloud providers’ dataset | - | 6 months | LSTM-PSO |

| | (Agarwal et al., 2021) | Detect fraudulent resource consumption attacks | NASA web-server logs | - | 2 months | LSTM |

| Restaurant industry | (Becerra-Vicario et al., 2020) | Predict bankruptcy | SABI database | 460 solvent and bankrupt companies | 2008–2017 | DRCNN, LOGIT |

Note: NARX (Nonlinear Autoregressive neural network with exogenous variables), XAUUSD (Gold Spot US Dollar), XAGUSD (Silver Spot US Dollar), GCJ (Google Code Jam)

Urban, rural, regional, real estate, and transportation economics

This subfield covers research on urban, rural, and regional economics, real estate and transportation economics (“JEL classification system”). As shown in Table 14, some data in this subfield are collected from official bureaus, and others come from companies and websites. To identify a slum’s degree of deprivation and predict a data-driven index of multiple deprivation, CNN was used to capture features from 1,114 very high-resolution images (Ajami et al., 2019). In transportation economics, various deep learning techniques, such as DNN, LSTM, deep capsule networks and their variants, were applied to predict transportation demand or estimate socioeconomic status (Ding et al., 2019; He, 2021; Markou et al., 2020). In real estate economics, DNN was used to forecast real estate value (Bazan-Krzywoszanska & Bereta, 2018), CNN was used to map fine-scale urban housing prices from images (Yao et al., 2018), and a deep RBM was used to estimate the sale prices of real estate units (Rafiei & Adeli, 2016).

Table 14.

Applications of deep learning for urban, rural, regional, real estate, and transportation economics

| Application sub field | Article | Aim of study | Data set | Data size | Time span | Model |

|---|---|---|---|---|---|---|

| Slums’ degree of deprivation | (Ajami et al., 2019) | Predict data-driven index of multiple deprivation | Households living in 37 notified slums | 1,114 households | 2010 | CNN |

| Transportation system | (He, 2021) | Predict investment benefits and national economic attributes | Railway transportation industry from the National Bureau of Statistics | - | 2013–2019 | EEMD-LSTM |

| | (Ding et al., 2019) | Estimate socioeconomic status | Smart card dataset containing all subway records in Shanghai; POI dataset of Shanghai crawled via the GaoDe Map API service; housing price dataset crawled from Lianjia.com | - | 2015 | S2S models containing DNN and LSTM |

| | (Markou et al., 2020) | Forecast taxi demand time series | Taxi data made available by the NYC TLC | Around 600 million taxi trips after data filtering | 2013–2016 | A neural network architecture based on FC dense layers and a Deep Gaussian Processes architecture |

| Real estate market | (Bazan-Krzywoszanska & Bereta, 2018) | Forecast real estate value | the city center of Zielona Gora | 163 sale and purchase transactions | 2016–2017 | DNN |

| | (Yao et al., 2018) | Map fine-scale urban housing prices | Fang.com, Tianditu.cn, several basic geographic and social media datasets | - | - | UMCNN, RF |

| | (Rafiei & Adeli, 2016) | Estimate the sale prices of real estate units | Tehran, Iran | 360 residential condominiums (3–9 stories) | 1993–2008 | Deep RBM, nonmating genetic algorithm |

Note: EEMD (Ensemble Empirical Mode Decomposition), FC (Fully-Connected), UMCNN (Convolutional Neural Network for United Mining), NYC TLC (New York City Taxi and Limousine Commission)

Health, education, and welfare

Deep learning models have also been applied to issues in health, education, and welfare (Table 15). Deep residual neural networks were used to estimate poverty (Tan et al., 2020), a deep CNN was applied to predict asset wealth (Yeh et al., 2020), and DRL was adopted to estimate the impact of COVID-19 on the spread of infection, personal satisfaction or quality of life, resource use and the economy (Uddin et al., 2020). These studies show that deep residual neural networks, as well as CNN, can handle image information, while DRL remains well suited to assessing trends and impacts.

Table 15.

Applications of deep learning for health, education, and welfare

| Application sub field | Article | Aim of study | Data set | Data size | Time span | Model |

|---|---|---|---|---|---|---|

| Poverty | (Tan et al., 2020) | Estimate poverty | Landsat 8 images, spectral index data (NDVI, MNDWI, and NDBI), night-time light data, and statistical yearbook data | 39,145 satellite images | 2014–2017 | Deep residual neural networks, feature pyramid networks |

| Economic well-being | (Yeh et al., 2020) | Predict asset wealth | Nationally representative DHS | More than 500,000 households living in 19,669 villages across 23 countries in Africa | 2009–2016 | Deep CNN |

| Policy learning | (Uddin et al., 2020) | Estimate impact of COVID-19 on the spread of the infection, personal satisfaction or quality of life, resource use and economy | Simulation data | 100 episodes | - | DRL |

Note: DHS (Demographic and Health Surveys)

Business administration and microeconomics

This part covers studies of business administration, including production, personnel, and information technology management, new firms, corporate culture, and international business administration. Microeconomics is a branch of modern economics that takes a single economic unit (a single producer, a single consumer, or the economic activity of a single market) as its subject of analysis. As illustrated in Table 16, a method combining CNN with constraint theory was adopted to extract features of trademark images for the commodity economy (Lan et al., 2018), DNN was used to assess the risk of overseas investment by enterprises (Xu, 2020) and to recognize patterns and perform classification for multi-slot spectrum auctions (Feng et al., 2018), and LSTM was adopted to learn useful sentiment information from a large number of consumer review records (Luo et al., 2021).

Table 16.

Applications of deep learning for business administration and microeconomics

| Application sub field | Article | Aim of study | Data set | Data size | Time span | Model |

|---|---|---|---|---|---|---|

| Commodity economy | (Lan et al., 2018) | Extract features of trademark images | Self-built trademark training database | 1,141 trademarks | - | CNN, Constraint Theory |

| Overseas investment of enterprises | (Xu, 2020) | Assess risk | Countries and regions that have continuity in the Fraser risk assessment learning label | Training: 124 research samples containing 4,284 feature values; test: 41 research samples containing 1,426 feature values | 2018–2019 | DNN |

| Auction market | (Feng et al., 2018) | Recognize pattern and make classification | Simulation data | - | - | DNN |

| Hotel management | (Luo et al., 2021) | Investigate the sentiment of hotel guests | eLong.com | 363,723 reviews | 2018 | Bidirectional LSTM, conditional random field |

Critical review of deep learning in economics

Critical reviews on deep learning models and applications in economics

Because of its excellent feature learning capability, deep learning is of great value in economic research. Models built on deep learning can not only handle large amounts of experimental data but also offer an effective way to solve economic problems. Our comments on deep learning models and applications in economics are as follows:

1) Deep learning models are reviewed from two points of view: basic models and hybrid techniques. On the one hand, different basic deep learning models play different roles in economics. DNN, AE and RBM are general-purpose models for constructing the learning architecture because they compute efficiently on large, high-dimensional data. When long-term or short-term actions affect the current state, or the economic problem is dynamic over time, RNN, Transformer and their variants are the most suitable options for describing the situation and constructing the learning model. CNN and its variants have been widely applied to handle large numbers of images in economic research and to forecast exchange rates. On the other hand, hybrid deep learning combines with various technologies to handle problems in economics. For example, DRL is a good way to help people make decisions and evaluate economic conditions. The decision tree, a classic decision-making method, was combined with DNN to successfully predict currency crisis events (Alaminos et al., 2019). With the assistance of an attention mechanism, CNN successfully extracted the most important image features (Guo, 2020). Combined with logistic regression, RNN obtained better bankruptcy prediction results than a single model (Becerra-Vicario et al., 2020). The combination of LSTM and sentiment analysis improved stock closing price prediction (Jin et al., 2020). Furthermore, deep learning models were successfully combined with optimization technologies, e.g., improved particle swarm optimization (Ji et al., 2021), the whale optimization algorithm (Gadekallu et al., 2020), multi-objective particle swarm optimization (Zhang et al., 2019), and sequence model-based optimization (Zhou et al., 2019). To sum up, basic and hybrid deep learning models accelerate the development of economic research.
In the future, various types of deep learning models may achieve better performance in economics, and novel combinations of deep learning models with other technologies may be used to tackle economic issues, such as combining deep learning with social network systems to forecast the state of economic development, or developing deep learning in multi-agent systems for better economic evaluation and intelligent decision-making.
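
As a rough illustration of how a deep learning model can be paired with an optimization technique such as particle swarm optimization, the sketch below runs a plain PSO (not the improved variant of Ji et al., 2021) over two hyperparameters. The function `toy_validation_loss`, the search bounds and the swarm settings are all hypothetical assumptions made for this example; in a real study the loss would come from training and validating a network.

```python
import random


def toy_validation_loss(params):
    """Hypothetical stand-in for a network's validation loss.

    A real study would train a model here. This bowl-shaped function
    has its minimum at log10(learning rate) = -3 and 64 hidden units.
    """
    log_lr, hidden = params
    return (log_lr + 3.0) ** 2 + ((hidden - 64.0) / 32.0) ** 2


def pso_minimize(loss, bounds, n_particles=20, n_iters=100,
                 inertia=0.7, c_personal=1.5, c_global=1.5, seed=0):
    """Plain particle swarm optimization over a box-bounded search space."""
    rng = random.Random(seed)
    dim = len(bounds)
    # Random initial positions inside the bounds, zero initial velocity.
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds]
           for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]        # best position seen by each particle
    best_val = [loss(p) for p in pos]     # loss at that position
    g = min(range(n_particles), key=best_val.__getitem__)
    g_pos, g_val = best_pos[g][:], best_val[g]   # best seen by the whole swarm

    for _ in range(n_iters):
        for i in range(n_particles):
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                # Velocity blends momentum, pull toward the particle's own
                # best, and pull toward the swarm's best.
                vel[i][d] = (inertia * vel[i][d]
                             + c_personal * r1 * (best_pos[i][d] - pos[i][d])
                             + c_global * r2 * (g_pos[d] - pos[i][d]))
                # Move, then clamp to the search bounds.
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            val = loss(pos[i])
            if val < best_val[i]:
                best_val[i], best_pos[i] = val, pos[i][:]
                if val < g_val:
                    g_val, g_pos = val, pos[i][:]
    return g_pos, g_val


# Search log10(learning rate) in [-5, -1] and hidden units in [8, 256].
best_params, best_loss = pso_minimize(
    toy_validation_loss, bounds=[(-5.0, -1.0), (8.0, 256.0)])
print(best_params, best_loss)
```

In a hybrid of the kind surveyed above, each call to the loss function would involve fitting a network, so the swarm size and iteration count mainly trade search quality against training cost.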