Summary

Genotype imputation is a fundamental step in genomic data analysis, where missing variant genotypes are predicted using the existing genotypes of nearby ‘tag’ variants. Although researchers can outsource genotype imputation, privacy concerns may prohibit genetic data sharing with an untrusted imputation service. Here, we developed secure genotype imputation using efficient homomorphic encryption (HE) techniques. In HE-based methods, the genotype data is secure while it is in transit, at rest, and in analysis. It can only be decrypted by the owner. We compared secure imputation with three state-of-the-art non-secure methods and found that HE-based methods provide genetic data security with comparable accuracy for common variants. HE-based methods have time and memory requirements that are comparable to or lower than those of the non-secure methods. Our results provide evidence that HE-based methods can practically perform resource-intensive computations for high-throughput genetic data analysis. Source code is freely available for download at https://github.com/K-miran/secure-imputation. A record of this paper’s Transparent Peer Review process is included in the Supplemental Information.

Introduction

Whole-genome sequencing (WGS) (Ng & Kirkness 2010, Shendure et al. 2017) has become the standard technique in clinical settings for tailoring personalized treatments (Rehm 2017) and in research settings for building reference genetic databases (Chisholm et al. 2013, Consortium 2015, Schwarze et al. 2018). Technological advances in the last decade enabled a massive increase in the throughput of WGS methods (Heather & Chain 2016), which provided the opportunity for population-scale sequencing (Goldfeder et al. 2017), where a large sample from a population is sequenced, for studying ancestry, complex phenotypes (Allen et al. 2010, Locke et al. 2015), as well as rare (Agarwala et al. 2013, Chen et al. 2019, Gibson 2012) and chronic diseases (Cooper et al. 2008). While the price of sequencing has been decreasing, the sample sizes are increasing to accommodate the power necessary for new studies. It is anticipated that tens of millions of individuals will have access to their personal genomes in the next few years.

The increasing size of sequencing data creates new challenges for the sharing, storage, and analysis of genomic data. Among these, genomic data security and privacy have received much attention in recent years. Most notably, the increasing prevalence of genomic data, e.g., from direct-to-consumer testing and recreational genealogy, makes it harder to share genomic data due to privacy concerns. Genotype data is very accurate in identifying the owner because of its high dimensionality, and leakage can cause concerns about discrimination and stigmatization (Nissenbaum 2009). Also, the recent cases of forensic usage of genotype data are making it very complicated to share data for research purposes. The identification risks extend to family members of the owner since a large portion of genetic data is shared with relatives. Many attacks have been proposed on genomic data sharing models, where the correlative structure of the variant genotypes provides enough power to adversaries to make phenotype inference and individual re-identification (Nyholt et al. 2009) possible. Therefore, it is of the utmost importance to ensure that genotype data is shared securely. There is a strong need for new methods and frameworks that decrease the cost and facilitate the analysis and management of genome sequencing.

One of the main techniques used for decreasing the cost of large-scale genotyping is in-silico genotype imputation, i.e., measuring genotypes at a subsample of variants, e.g., using a genotyping array, and then utilizing the correlations among the genotypes of nearby variants (the variants that are close to each other in genomic coordinates) to impute the missing genotypes from the sparsely genotyped variants (Das et al. 2018, Howie et al. 2011, Marchini & Howie 2010). Imputation methods aim at capturing the linkage disequilibrium patterns on the genome. These patterns emerge because the genomic recombination occurs at hotspots rather than at uniformly random positions along the genome. The genotyping arrays are designed around the idea of selecting a small set of ‘tag’ variants, as few as 1% of all variants, that optimize the trade-off between the cost and the imputation accuracy (Hoffmann et al. 2011, Stram 2004). Imputation methods learn the correlations among variant genotypes by using population-scale sequencing projects (Loh et al. 2016). In addition to filling missing genotypes, the imputation process has many other advantages. Combining low-cost array platforms with computational genotype imputation methods decreases genotyping costs and increases the power of genome-wide association studies (GWAS) by increasing the sample sizes (Tam et al. 2019). Accurate imputation can also greatly help with the fine mapping of causal variants (Schaid et al. 2018) and is vital for meta-analysis of the GWAS (Evangelou & Ioannidis 2013). Genotype imputation is now a standard and integral step in performing GWAS. Although imputation methods can predict only the variant genotypes that exist in the panels, the panels’ sample sizes are increasing rapidly, e.g., in projects such as TOPMed (NHLBI Trans-Omics for Precision Medicine Whole Genome Sequencing Program 2016, Taliun et al. 2019). These larger panels will provide training data for imputation methods to predict rarer variant genotypes, and this can increase the sensitivity of GWAS.

Although imputation and sparse genotyping methods enable a vast decrease in genotyping costs, they are computationally very intensive and require management of large genotype panels and interpretation of the results (Howie et al. 2012). The imputation tasks can be outsourced to third parties, such as the Michigan Imputation Server, where users upload the genotypes (as a VCF file) to a server that performs imputation internally using a large computing system. The imputed genotypes are then sent back to the user. There are, however, major privacy (Naveed et al. 2015) and data security (Berger & Cho 2019) concerns over using these services, since the genotype data is analyzed in plaintext format where any adversary who has access to the third party’s computer can view, copy, or even modify the genotype data. As genotype imputation is one of the central initial steps in many genomic analysis pipelines, it is essential that the imputation be performed securely to ensure that these pipelines can be computed securely as a whole. For instance, although several secure methods for GWAS have been developed (Cho et al. 2018), if the genotype imputation (a vital step in GWAS analyses) is not performed securely, it is not possible to make sure GWAS analysis can be performed securely.

In order to test the current state-of-the-art methodologies for benchmarking the feasibility of the cryptographic methods for genotype imputation, we organized the genotype imputation track in iDASH2019 Genomic Privacy Challenges. This track benchmarked more than a dozen methods at a small scale (Supplementary Information, Supplementary Table 1) to rank the most promising approaches for secure genotype imputation. The methods developed by the top winning teams led us (organizers and contestants) to perform this study to report a more comprehensive analysis of the secure genotype imputation framework including benchmarks with state-of-the-art methods. We developed and implemented several approaches for secure genotype imputation. Our methods make use of the homomorphic encryption (HE) formalism (Gentry 2009) that provides mathematically provable, and potentially the strongest security guarantees for protecting genotype data while imputation is performed in an untrusted semi-honest environment. To include a comprehensive set of approaches, we focus on three state-of-the-art HE cryptosystems, namely Brakerski/Fan-Vercauteren (BFV) (Brakerski 2012, Fan & Vercauteren 2012), Cheon-Kim-Kim-Song (CKKS) (Cheon et al. 2017), and Fully Homomorphic Encryption over the Torus (TFHE) (Boura et al. 2018, Chillotti et al. 2019). In our HE-based framework, genotype data is encrypted by the data owner before outsourcing the data. After this point, data remains always encrypted, i.e., encrypted in-transit, in-use, and at-rest; it is never decrypted until the results are sent to the data owner. The strength of our HE-based framework stems from the fact that the genotype data remains encrypted even while the imputation is being performed. Hence, even if the imputation is outsourced to an untrusted third party, any semi-honest adversaries learn nothing from the encrypted data. 
This property makes the HE-based framework very powerful: For an untrusted third party who does not have access to the private key, the genotype data is indistinguishable from random noise (i.e., practically of no use) at any stage of the imputation process. Our HE-framework provides the strongest form of security for outsourcing genotype imputation compared to any other approaches under the same adversarial model.
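The idea of computing on data that stays encrypted can be illustrated with a much simpler scheme than those used in this study. The sketch below uses textbook Paillier encryption, which is only additively homomorphic and is not one of the BFV, CKKS, or TFHE cryptosystems evaluated here; the tiny primes, the integer weights, and all function names are assumptions chosen for illustration and offer no real security. It shows a server evaluating a linear score over encrypted genotypes without ever seeing them.

```python
import math
import random

# Toy Paillier scheme (NOT BFV/CKKS/TFHE; tiny primes, insecure, illustration only).

def keygen(p=1009, q=1013):
    """Return (public modulus n, private key (lam, mu)) from two small primes."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # inverse of lam mod n (valid for generator g = n + 1)
    return n, (lam, mu)

def encrypt(n, m):
    """Encrypt integer m (0 <= m < n) under public modulus n."""
    n2 = n * n
    while True:
        r = random.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    return ((1 + m * n) % n2) * pow(r, n, n2) % n2

def decrypt(n, priv, c):
    """Decrypt ciphertext c with the private key."""
    lam, mu = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n) * mu % n

def server_score(n, enc_tags, weights, bias):
    """Server side: compute Enc(sum(w_i * g_i) + bias) using only ciphertexts.

    Weights and bias are non-negative integers (e.g., fixed-point scaled)."""
    n2 = n * n
    acc = 1  # trivial encryption of 0
    for c, w in zip(enc_tags, weights):
        acc = acc * pow(c, w, n2) % n2   # ciphertext^w encrypts w * g
    return acc * (1 + bias * n) % n2     # multiplying by (1 + b*n) adds b

# The data owner encrypts genotypes; the server never sees them in plaintext.
n, priv = keygen()
enc_tags = [encrypt(n, g) for g in (2, 1, 0)]
enc_score = server_score(n, enc_tags, weights=[3, 0, 2], bias=1)
print(decrypt(n, priv, enc_score))  # 3*2 + 0*1 + 2*0 + 1 = 7
```

Only the holder of the private key can recover the score. The schemes used in this work additionally support packing many values per ciphertext and, for CKKS, approximate real-valued arithmetic, which this toy example does not attempt to reproduce.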

HE-based frameworks have been deemed impractical since their inception. Therefore, in comparison to other cryptographically secure methods, such as multiparty computation (Cho et al. 2018) and trusted execution environments (Kockan et al. 2020), HE-based frameworks have received little attention. Recent theoretical breakthroughs in the HE literature, and a strong community effort (Homomorphic Encryption Standardization (HES) n.d.) have since rendered HE-based systems practical. Many of these improvements, however, are only beginning to be reflected in practical implementations and applications of HE algorithms. In this study, we provide evidence for the practicality of the HE formalism by building secure and ready-to-deploy methods for genotype imputation. We perform detailed benchmarking of the time and memory requirements of HE-based imputation methods and demonstrate the feasibility of large-scale secure imputation. In addition, we compared HE-based imputation methods with the state-of-the-art plaintext, i.e., non-secure, imputation methods, and we found comparable performance (with a slight decrease) in the imputation accuracy with the benefit of total genomic data security.

We present HE-based imputation methods in the context of two main steps, as this enables a general modular approach. The first step is imputation model building, where imputation models are trained using the reference genotype panel with a set of tag variants (variant genotypes on an Illumina array platform) to impute the genotypes for a set of target variants, e.g., common variants in the 1000 Genomes Project (Consortium 2015) samples. The second step is the secure imputation step where the encrypted tag variant genotypes are used to predict the target genotypes (which are encrypted) by using the imputation models trained in the first step. This step, i.e., imputation model evaluation using the encrypted tag variant genotypes, is where the HE-based methods are deployed. In principle, the model training step needs to be performed only once when the tag variants do not change, i.e., the same array platform is used for multiple studies. Although these steps seem independent, model evaluation is heavily dependent on the representation and encoding of the genotype data, and the model complexity affects the timing and memory requirements of the secure outsourced imputation methods. Our results suggest, however, that linear models (or any other model that can be approximated by linear models) can be almost seamlessly trained and evaluated securely, where the model builders (1st step) and model evaluators (2nd step) can work independently. Our results, however, also show that there is an accompanying performance penalty, especially for the rare variants, in using these models and we believe that new and accurate methods are needed to provide both privacy and imputation accuracy. It should be noted that the performance penalty stems not from HE-model evaluation but the lower performance of plaintext models. We provide a pipeline that implements both model training and evaluation steps so that it can be run on any selection of tag variants. 
We make the implementations publicly available so that they can be used as a reference by the computational genomics community.

Results

We present the scenario and the setting for secure imputation and describe the secure imputation approaches we developed. Next, we present accuracy comparisons with the current state-of-the-art non-secure imputation methods and the time and memory requirements of the secure imputation methods. Finally, we present the comparison of time and memory requirements of our secure imputation pipeline with the non-secure methods.

Genotype Imputation Scenario

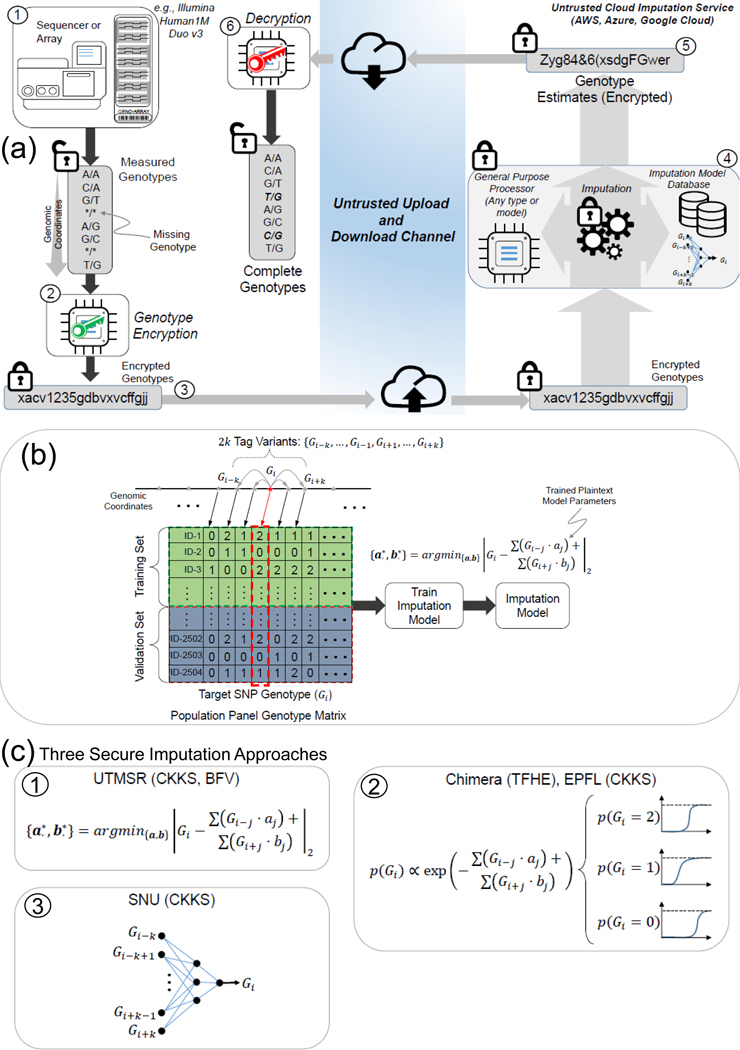

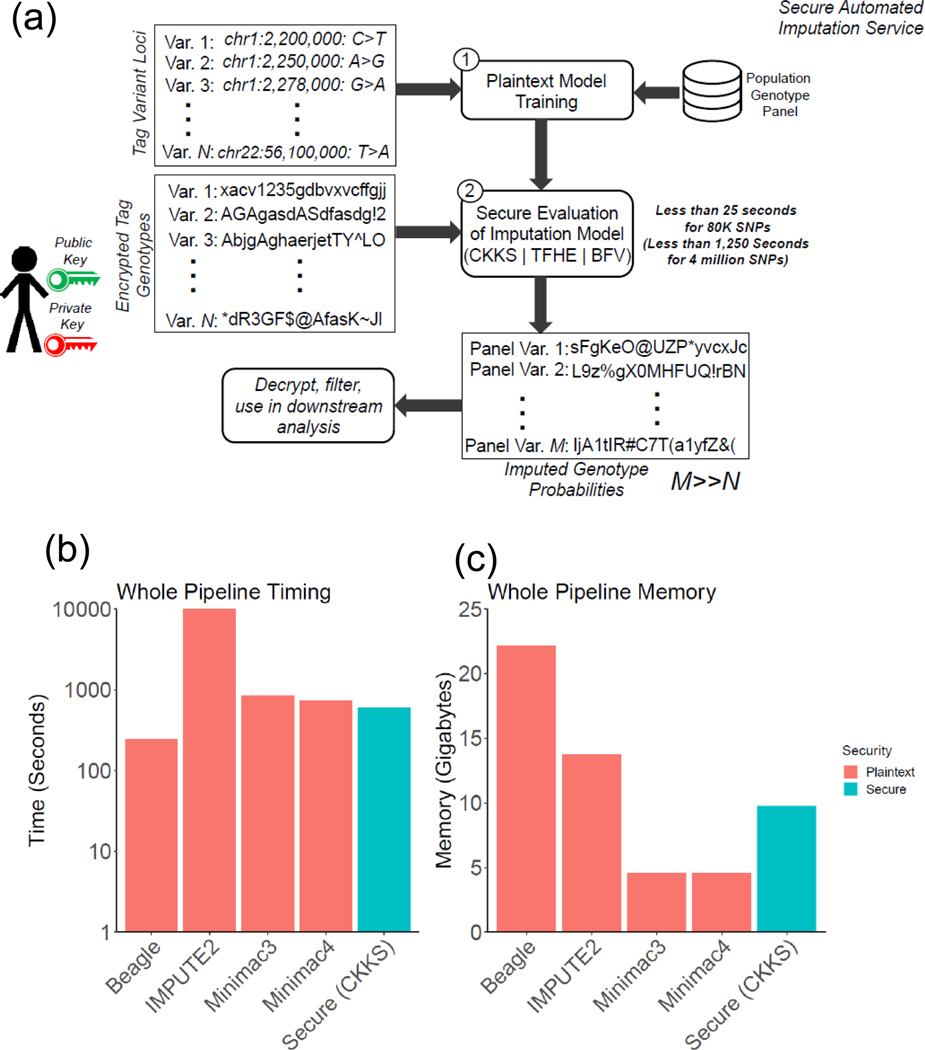

Figure 1a illustrates the secure imputation scenario. A researcher genotypes a cohort of individuals by using genotyping arrays or other targeted methods, such as whole-exome sequencing, and calls the variants using a variant caller such as GATK (Depristo et al. 2011). After the genotyping, the genotypes are stored in plaintext, i.e., unencrypted and not secure for outsourcing. Each variant genotype is represented by one of the three values {0,1,2}, where 0 indicates a homozygous reference genotype, 1 indicates a heterozygous genotype, and 2 indicates a homozygous alternate genotype. To secure the genotype data, the researcher generates two keys, a public key for encrypting the genotype data and a private key for decrypting the imputed data. The public key is used to encrypt the genotype data into ciphertext, i.e., random-looking data that contains the genotype data in a secure form. It is mathematically provable (i.e., equivalent to the hardness of solving the ring learning with errors, or RLWE, problem (Lyubashevsky et al. 2010)) that the encrypted genotypes cannot be decrypted into plaintext genotype data by a third party without the private key, which is in the possession of only the researcher. Even if an unauthorized third party copies the encrypted data (e.g., through hacking or stolen hard drives), they cannot gain any information from the data, as it is essentially random noise without the private key. The security (and privacy) of the genotype data is therefore guaranteed, as long as the private key is not compromised. The security guarantee of the imputation methods is based on the fact that genotype data is encrypted in transit, during analysis, and at rest. The only plaintext data that is transmitted to the untrusted entity is the locations of the variants, i.e., the chromosomes and positions of the variants. Since the variant locations are publicly known for genotyping arrays, they should not leak any information. 
However, when the genotyping is performed by sequencing-based methods, the variant positions may leak information, as we discuss more below.
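The {0,1,2} genotype coding described above can be sketched as follows. This is a minimal illustration that assumes biallelic, diploid calls written as VCF-style GT strings such as `0/1` or `1|1`; the function name `encode_genotype` is hypothetical and not taken from the released code.

```python
def encode_genotype(gt: str) -> int:
    """Map a biallelic diploid GT string to the alternate-allele count.

    0 = homozygous reference, 1 = heterozygous, 2 = homozygous alternate.
    """
    alleles = gt.replace("|", "/").split("/")  # treat phased and unphased alike
    if len(alleles) != 2 or any(a not in ("0", "1") for a in alleles):
        raise ValueError(f"unsupported genotype string: {gt!r}")
    return sum(int(a) for a in alleles)


# One individual's calls at four tag variants, before encryption.
tag_genotypes = [encode_genotype(gt) for gt in ["0/0", "0|1", "1|0", "1/1"]]
print(tag_genotypes)  # [0, 1, 1, 2]
```

Missing or multi-allelic calls are rejected here for simplicity; how such calls are handled in practice is outside what this section specifies.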

Figure 1.

Illustration of secure genotype imputation. a) Illustration of the genotype imputation scenario. The incomplete genotypes are measured by genotyping arrays with missing genotypes (represented by stars). Encryption generates a random-looking string from the genotypes. At the server, encrypted genotypes are encoded, then they are used to compute the missing variant genotype probabilities. The encrypted probabilities are sent to the researcher, who decrypts the probabilities and identifies the genotypes with the highest probabilities (italic values). b) Building of the plaintext model for genotype imputation. The server uses a publicly available panel to build genotype estimation models for each variant. The models are stored in the plaintext domain. The model in the current study is a linear model where each variant genotype is modeled using genotypes of variants within a k variant vicinity of the target variant. c) The plaintext models implemented under the secure frameworks.

The encrypted genotypes are sent through a channel to the imputation service. The channel does not have to be secure against an eavesdropper because the genotype data is encrypted by the researcher. However, the channel should still be authenticated to prevent malicious man-in-the-middle attacks (Gangan 2015). The encrypted genotypes are received by the imputation service, an honest-but-curious entity, i.e., they will receive the data legitimately and extract all the private information they can from the data. A privacy breach is, however, impossible as the data is always encrypted when it is in the possession of the imputation service. Hence, the only reasonable action for the secure imputation server is to perform the genotype imputation and to return the data to the researcher. It is possible that the imputation server acts maliciously and intentionally returns bad-quality data to the researcher using badly calibrated models. It is, however, economically or academically reasonable to assume that this is unlikely, since it would be easy to detect this behavior on the researcher’s side and to publicize the malicious behavior or low quality of the service to other researchers. Therefore, we assume that the secure server is semi-honest, and it performs the imputation task as accurately as possible. However, more sophisticated malicious entities that perform complex attacks (e.g., slight biases in the models) are harder to detect. We treat these scenarios as out of scope of our current study. Providing secure services against malicious entities is a worthwhile direction to explore for future studies.

After receipt of the encrypted genotypes by the server, the first step is re-coding of the encrypted data into a packed format (Supplementary Figure 1) that is optimized for the secure imputation process. This step is performed to decrease time requirements and to optimize the memory usage of the imputation process. The data is coded to enable analysis of multiple genotypes in one cycle of the imputation process (Dowlin et al. 2017). The next step is the secure evaluation of the imputation models, which entails securely computing the genotype probability for each variant by using the encrypted genotypes. The variants received from the researcher are treated as the tag variants whose genotypes are used as features in the imputation model to predict the “target” variants, i.e., the missing variants (Fig. 1b). For each target variant, the corresponding imputation model uses the genotypes of the nearby tag variants to predict the target variant genotype in terms of genotype probabilities. In other words, we use a number of nearby tag variants to build an imputation model for the respective target variant such that the tag variants that are nearby (in genomic coordinates) are treated as the features for assigning genotype scores for the target variant. After the imputation is performed, the encrypted genotype probabilities are sent to the researcher. The researcher decrypts the genotype probabilities by using the private key. The final genotypes can be assigned using the maximum probability genotype estimate, i.e., by selecting the genotype with the highest probability for each variant.
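The two plaintext-side operations in this workflow, choosing nearby tag variants as features and assigning the final genotype from decrypted probabilities, can be sketched as below. This is an illustrative sketch only: the rule of taking the k closest tags by absolute distance, and the names `nearest_tags` and `call_genotype`, are assumptions rather than the teams' actual selection procedures.

```python
def nearest_tags(target_pos, tag_positions, k):
    """Return indices (in genomic order) of the k tag variants closest to target_pos."""
    by_distance = sorted(
        range(len(tag_positions)),
        key=lambda i: (abs(tag_positions[i] - target_pos), i),
    )
    return sorted(by_distance[:k])


def call_genotype(probs):
    """Pick the genotype (0, 1, or 2) with the highest decrypted probability."""
    if len(probs) != 3:
        raise ValueError("expected probabilities for genotypes 0, 1, and 2")
    return max(range(3), key=lambda g: probs[g])


# Hypothetical positions of five tag variants and one target variant.
tags = [10, 90, 95, 120, 300]
print(nearest_tags(100, tags, k=3))     # [1, 2, 3]
print(call_genotype([0.1, 0.7, 0.2]))   # 1
```

Feature selection runs on public variant coordinates, and genotype calling runs after decryption on the researcher's side, so neither step needs to be performed under encryption.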

Genotype Imputation Models.

We provide five approaches implemented by four different teams. For simplicity of description, we refer to the teams as Chimera, EPFL, SNU, and UTMSR (see Methods). Among these, CKKS is used in three different approaches (EPFL-CKKS, SNU-CKKS, UTMSR-CKKS), and BFV and TFHE are each utilized by one separate approach (UTMSR-BFV and Chimera-TFHE, respectively). The teams independently developed and trained the plaintext imputation models using the reference genotype panel dataset. For each target variant, the tag variants in the vicinity of the target variant are used for imputing the target variant, i.e., the tag variants in the vicinity are used as the features in the imputation models. The Chimera team trained a logistic regression model and the EPFL team trained a multinomial logistic regression model (Supplementary Figure 4, Table 7, and Tables 3, 4); the SNU team used a 1-hidden layer neural network (Fig. 1c, Supplementary Figures 2, 3, Table 5); and the UTMSR team trained a linear regression model (Fig. 1c, Supplementary Figure 5).

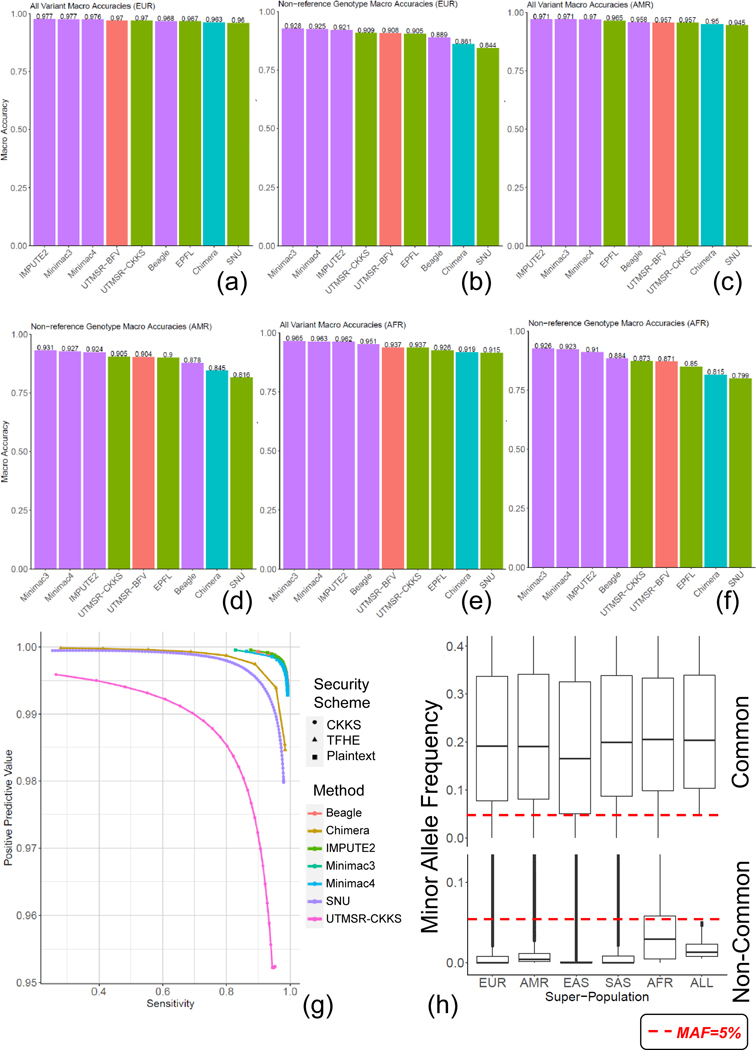

Figure 3.

The population stratification of the accuracy is shown for EUR all genotypes (a) and non-ref genotypes (b), AMR all (c) and non-ref (d) genotypes, and AFR all (e) and non-ref (f) genotypes. Precision-recall curve for rare variants (g). The box plots illustrate the super-population-specific minor allele frequency distribution (y-axis) for the common (top) and uncommon variants (bottom) (h). ALL indicates the MAF distribution for all populations. The center and the two ends of the boxplots show the median and 25–75% values of the MAF distributions.

Genotype Representation.

All methods treat the genotypes as continuous predictions, except for Chimera and SNU teams who utilized a one-hot encoding of the genotypes (see Methods), e.g., 0 → (1,0,0), 1 → (0,1,0), 2 → (0,0,1).
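A minimal sketch of the one-hot genotype encoding mentioned above is given below. The helper names are hypothetical, and flattening the per-variant vectors into a single feature vector is an assumption about how such an encoding would typically be fed to a model.

```python
def one_hot(genotype: int) -> tuple:
    """Encode a genotype in {0, 1, 2} as a length-3 indicator vector."""
    if genotype not in (0, 1, 2):
        raise ValueError(f"genotype must be 0, 1, or 2, got {genotype!r}")
    return tuple(1 if i == genotype else 0 for i in range(3))


def one_hot_features(genotypes):
    """Concatenate one-hot vectors of several tag genotypes into one feature list."""
    return [bit for g in genotypes for bit in one_hot(g)]


print(one_hot(1))                   # (0, 1, 0)
print(one_hot_features([0, 2]))     # [1, 0, 0, 0, 0, 1]
```

Compared with using the raw value as a single continuous feature, this triples the number of inputs per tag variant but lets a model assign separate weights to heterozygous and homozygous alternate genotypes.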

Tag Variant (Feature) Selection.

The selection of the tag variants is important as these represent the features that are used for imputing each target variant. In general, we found that the models that use 30–40 tag variants provide optimal results (for the current array platform) in terms of imputation accuracy (Supplementary Tables 2, 5, 6, 8). As previous studies have shown, tag variant selection can provide an increase in imputation accuracy (Yu & Schaid 2007). Finally, we observed a general trend of linear scaling with the number of target variants (as shown in Supplementary Figure 6 and other supplementary tables). This provides evidence that there is minimal extra overhead (beyond the linear increase with sample size) to scaling to genome-wide and population-wide computations.

Training and Secure Evaluation of Models

We present the accuracy comparison results below. We include extended discussion of the specific ideas used for training and for secure evaluation of the genotype imputation models in Supplementary Information.

Accuracy Comparisons with the Non-Secure Methods

We first compared the imputation accuracy of the secure methods with that of their plaintext (non-secure) counterparts, which are the most popular state-of-the-art imputation methods. We compared secure imputation methods with IMPUTE2 (Howie et al. 2009), Minimac3 (Das et al. 2016) (and Minimac4, which is an efficient re-implementation of Minimac3), and Beagle (Browning et al. 2018). These plaintext methods utilize hidden Markov models (HMM) for genotype imputation (see Methods). The population panels and the pre-computed estimates of the recombination frequencies are taken as input to the methods. Each method is set to provide a measure of genotype probabilities, in addition to the imputed genotype values.

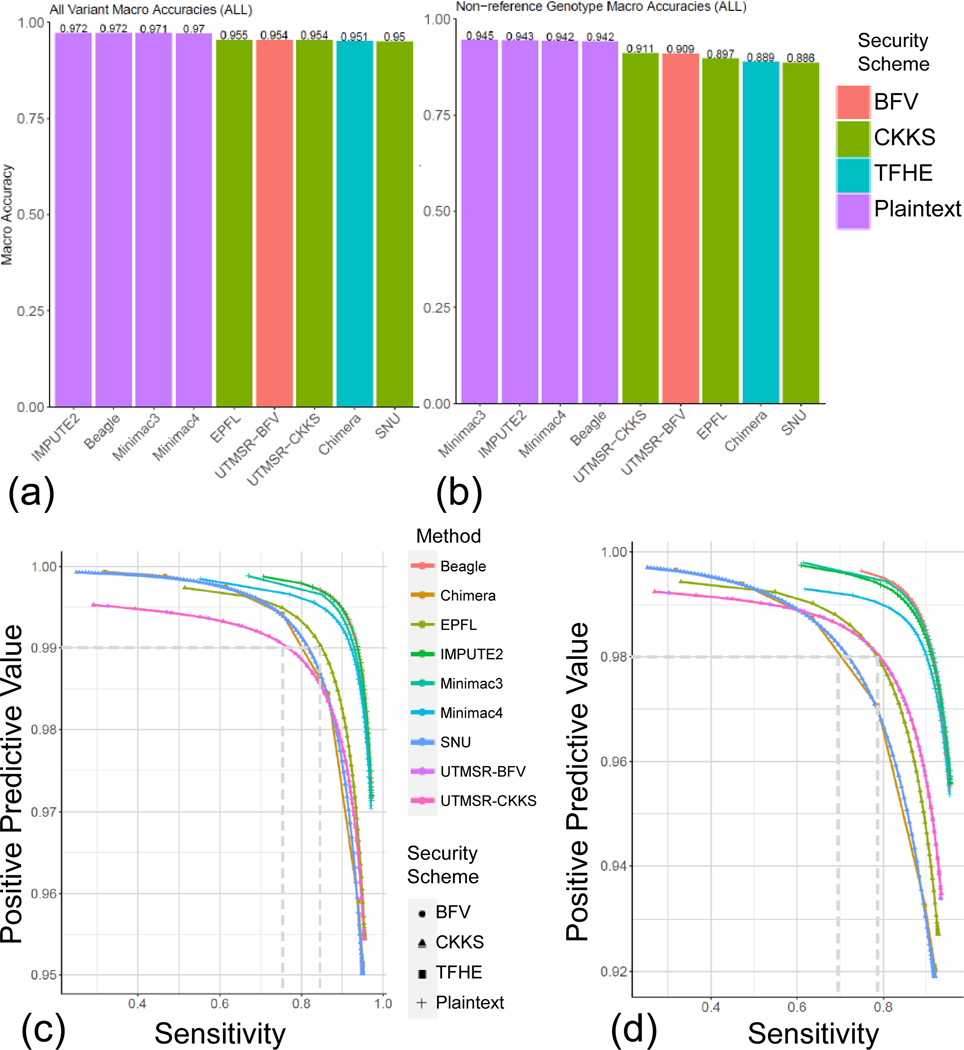

To perform comparisons in a realistic setting, we used the variants on the Illumina Duo 1M version 3 array platform (Johnson et al. 2013). This is a popular array platform that covers more than 1.1 million variants and is used by population-scale genotyping studies such as HAPMAP (Belmont et al. 2003). We extracted the genotypes of the variants that are probed by this array platform and overlap with the variants identified by the 1000 Genomes Project population panel of 2,504 individuals. For simplicity of comparisons, we focused on chromosome 22. The variants that are probed by the array are treated as the tag variants that are used to estimate the target variant genotypes. The target variants are defined as the variants on chromosome 22 whose allele frequency is greater than 5%, as estimated by the 1000 Genomes Project (Consortium 2015). We used the 16,184 tag variants and 80,882 common target variants. Then, we randomly divided the 2,504 individuals into a training genotype panel of 1,500 samples and a testing panel of 1,004 samples. The training panel is used as the input to the plaintext methods (i.e., IMPUTE2, Minimac3–4, Beagle) and also for building the plaintext imputation models of the secure methods. Each method is then used to impute the target variants using the tag variants. Figure 2a shows the comparison of genotype prediction accuracy computed over all the predictions made by the methods. The non-secure methods show the highest accuracy among all the methods. The secure methods exhibit very similar accuracy, whereas the closest method follows with only a 2–3% decrease in accuracy. To understand the differences between the methods, we also computed the accuracy of the non-reference genotype predictions (see Methods, Fig. 2b). The non-secure methods show slightly higher accuracy compared to the secure methods. These results indicate that the proposed secure methods provide perfect data privacy at the cost of a slight decrease in imputation accuracy.
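The two accuracy measures compared above can be sketched as follows. The exact definitions are given in Methods, which is not reproduced in this section, so the sketch assumes that overall accuracy is the fraction of correctly imputed genotypes and that non-reference accuracy restricts this fraction to entries whose known genotype is not homozygous reference. Function names are hypothetical.

```python
def accuracy(known, imputed):
    """Fraction of genotypes imputed correctly over all entries."""
    if len(known) != len(imputed) or not known:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(k == i for k, i in zip(known, imputed)) / len(known)


def non_ref_accuracy(known, imputed):
    """Accuracy restricted to entries whose known genotype is 1 or 2 (assumed definition)."""
    pairs = [(k, i) for k, i in zip(known, imputed) if k != 0]
    if not pairs:
        raise ValueError("no non-reference genotypes in the known set")
    return sum(k == i for k, i in pairs) / len(pairs)


known   = [0, 0, 0, 1, 2, 0]
imputed = [0, 0, 0, 0, 2, 0]
print(accuracy(known, imputed))          # 5 of 6 correct
print(non_ref_accuracy(known, imputed))  # 1 of 2 correct
```

In this toy input the overall accuracy is high because most entries are homozygous reference, while the non-reference accuracy exposes the missed heterozygous call. This is one reason the two measures are useful to report side by side.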

Figure 2.

Accuracy benchmarks. Accuracy for all genotypes (a) and the non-reference genotypes (b) are shown for each method (x-axis). Average accuracy value is shown at the top of each bar for comparison. Precision-recall curves are plotted for all genotypes (c) and the non-reference genotypes (d). Plaintext indicates the non-secure methods.

We next assessed whether the genotype probabilities (or scores) computed from the secure methods provide meaningful measures for choosing reliably imputed genotypes. For this, we calculated the sensitivity and the positive predictive value (PPV) of the imputed genotypes whose scores exceed a cutoff (see Methods). To analyze how the cutoff selections affect the accuracy metrics, we shifted the cutoff (swept the cutoff over the range of genotype scores) so that the accuracy is computed for the most reliable genotypes (high cutoff) and for the most inclusive genotypes (low cutoff). We then plotted the sensitivity versus the PPV (Fig 2c). Compared to the secure methods, the non-secure methods generally show a higher sensitivity at the same PPV. However, secure methods can capture more than 80% of the known genotypes at 98% accuracy. The same results hold for the non-reference genotypes’ prediction accuracy (Fig 2d). These results indicate that secure genotype predictions can be filtered by setting cutoffs to improve accuracy.
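The cutoff sweep can be sketched as below. The precise definitions of sensitivity and PPV are in Methods and are not restated here, so this sketch assumes that sensitivity is the number of correct calls above the cutoff divided by all known genotypes, and that PPV is the number of correct calls above the cutoff divided by the number of calls above the cutoff. The function name and toy data are illustrative.

```python
def sensitivity_ppv(known, imputed, scores, cutoff):
    """Return (sensitivity, PPV) for calls whose score exceeds the cutoff.

    PPV is reported as NaN when no call passes the cutoff.
    """
    kept = [(k, i) for k, i, s in zip(known, imputed, scores) if s > cutoff]
    correct = sum(k == i for k, i in kept)
    sensitivity = correct / len(known)
    ppv = correct / len(kept) if kept else float("nan")
    return sensitivity, ppv


known   = [0, 1, 2, 1]
imputed = [0, 1, 1, 1]
scores  = [0.9, 0.8, 0.4, 0.6]

# Sweep from inclusive (low cutoff) to strict (high cutoff).
for cutoff in (0.0, 0.5, 0.85):
    print(cutoff, sensitivity_ppv(known, imputed, scores, cutoff))
```

Raising the cutoff discards the low-scoring incorrect call first, which raises PPV, and eventually discards correct calls as well, which lowers sensitivity. Plotting these pairs over a fine grid of cutoffs yields a curve of the kind shown in Fig. 2c.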

We also evaluated the population-specific effects on the imputation accuracy. For this, we divided the testing panel into three populations, 210 European (EUR), 135 American (AMR), and 272 African (AFR) samples, as provided by the 1000 Genomes Project. The training panel yielded 389 AFR, 212 AMR, and 293 EUR samples. Figures 3a and 3b show genotype and non-ref genotype accuracy for EUR, respectively. We observed that the non-secure and secure methods are similar in terms of accuracy. The secure CKKS (UTMSR-CKKS) scheme with a linear prediction model outperformed Beagle in the EUR population, with marginally higher accuracy. We observed similar results for AMR populations, where the non-secure methods performed at the top and secure methods show very similar but slightly lower accuracy (Fig. 3c, 3d). For AFR populations, the non-reference genotype prediction accuracy is lower for all the methods (Fig. 3e, 3f). This is mainly rooted in the fact that the African populations show distinct properties that are not yet well characterized by the 1000 Genomes Panels. We expect that larger panels can provide better imputation accuracy.

To further investigate the nature of the imputation errors, we analyzed the characteristics of imputation errors of each method by computing the confusion matrices (Supplementary Fig. 7). We found that the most frequent errors are made when the real genotype is heterozygous, and the imputed genotype is a homozygous reference genotype. The pattern holds predominantly in secure and non-secure methods, although the errors are slightly lower, as expected, for the non-secure methods. Overall, these results indicate that secure imputation models can provide genotype imputations comparable to non-secure counterparts.

To test the performance of the methods on rare variants, we focused on the 117,904 variants whose MAFs are between 0.5% and 5%. These variants are harder to impute since they are much less represented compared to the common variants. The results show that the vicinity-based approaches that our methods use show a clear decrease in performance compared to the HMM-based approaches (Figure 3g). This is expected since our approaches depend heavily on the existence of well-represented training datasets. In the rare variants, however, the number of training examples for the non-reference genotypes goes as low as 1 or 2 examples over 1000 individuals. That is why we observed a substantial decrease in imputation power in our methods. Interestingly, we observed that the more complex methods (Chimera’s logistic regression and SNU’s neural network approach) provided comparably better accuracy than the ordinary linear model, which suggests that the more complex vicinity-based models can perform more accurate imputation for the rare variants. In summary, the rare variants represent challenging cases and a limitation for the vicinity-based secure approaches.

It should be noted that a substantial portion of the rare variants are shown to be population-specific (Bomba et al. 2017). To test for this, we analyzed the population-specificity of the variants by computing the population-specific AF of these variants. We observed that most of the rare variants show enrichment in the African populations (Figure 3h) with a median MAF of around 2–3% for AFR. Compared to the rare variants, the common variants showed a much more frequent and more uniform representation among the populations. These results highlight that the rare variants can potentially be more accurately imputed using population-specific panels, which is in concordance with the previous studies (Kowalski et al. 2019). Finally, from the perspective of the downstream analyses such as GWAS, high allele frequency variants are much more useful since even the highly powered GWAS studies perform stringent MAF cutoff at 2–3% to ensure that the causal variants are not false-positives (Sung et al. 2018).

Timing and Memory Requirements of Secure Imputation Methods

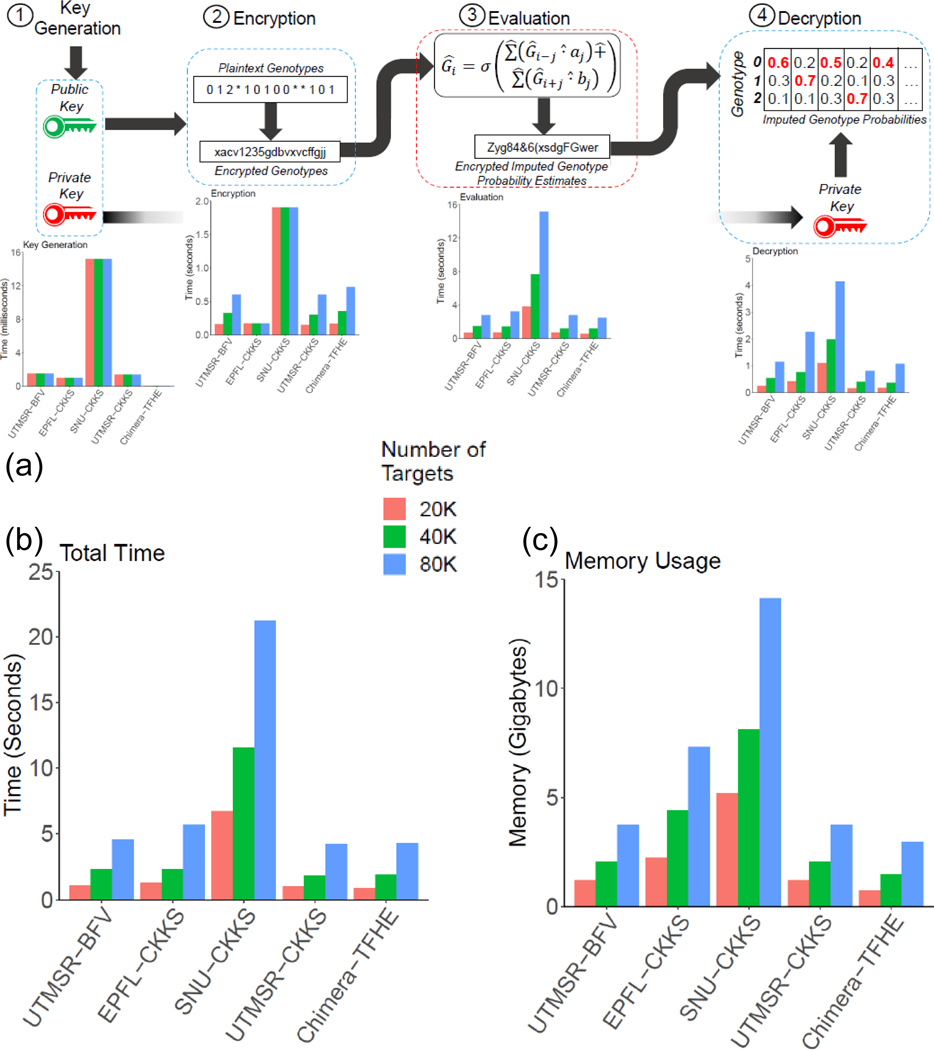

One of the main critiques of homomorphic encryption methods is that they are impractical due to memory and time requirements. We, therefore, believe that the most important challenge is to make HE methods practical in terms of memory and time. To assess and demonstrate the practicality of the secure methods, we performed a detailed analysis of the time and memory requirements of secure imputation methods. We divided the imputation process into four steps (key generation, encryption, secure model evaluation, and decryption), and we measured the time and the overall memory requirements. Figure 4a shows the detailed time requirements for each step. In addition, we studied the scalability of secure methods. For this, we report the time requirements for 20,000 (20K), 40,000 (40K), and 80,000 (80K) target variants to present how the time requirements scale with the number of target variants. The secure methods spend up to 10 milliseconds for key generation. In the encryption step, all methods were well below 2 seconds. The most time-consuming step of evaluation took less than 10 seconds, even for the largest set of 80K variants. Decryption, the last step, took less than 2 seconds. Except for the key generation and encryption, all methods exhibited a linear scaling with the increasing number of target variants. Overall, secure model evaluation took less than 25 seconds in total (Fig. 4b). This is negligible compared to the total time requirements of the non-secure imputation. Assuming that time usage scales linearly with the number of target variants (Fig. 4a), 4 million variants can be evaluated in approximately 1,250 seconds, which is less than half an hour. In other terms, secure evaluation is approximately 312 microseconds per variant per 1000 individuals ((25 sec×1000 individuals)/(80,000 variants×1004 individuals)). 
It can be decreased even further by scaling to a higher number of CPUs (i.e., cores on local machines or instances on cloud resources). In terms of memory usage, all methods required less than 15 gigabytes of main memory, and three of the five approaches required less than 5 gigabytes (Fig. 4c). These results highlight the fact that secure methods could be deployed even on commodity computer systems. The training of the methods on rare variants was performed to ensure that the assigned scores are best-tuned for the unbalanced training data in rare variants. The Chimera and SNU teams (best performing methods) have a diverse range of requirements for secure evaluation: the neural network approach (SNU) requires high resources, while the logistic regression approach has much more practicable resource requirements (Supplementary Tables 9, 10).
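The extrapolation in the paragraph above can be reproduced with a few lines of arithmetic. The sketch below only restates the figures quoted in the text (25 seconds, 80,000 variants, 1,004 individuals) and assumes, as the text does, that run time scales linearly with the number of target variants; the function and variable names are illustrative.

```python
# Figures quoted in the text above.
TOTAL_SECONDS_80K = 25.0   # upper bound on the total secure evaluation time
N_VARIANTS = 80_000        # largest benchmarked target variant set
N_INDIVIDUALS = 1_004      # cohort size used in the per-variant figure


def seconds_for(n_variants, ref_seconds=TOTAL_SECONDS_80K, ref_variants=N_VARIANTS):
    """Linear extrapolation of run time to a different number of target variants."""
    return ref_seconds * n_variants / ref_variants


# Genome-wide estimate for 4 million target variants.
genome_wide_seconds = seconds_for(4_000_000)

# Seconds per variant, normalized to 1000 individuals.
per_variant_per_1000 = TOTAL_SECONDS_80K * 1000 / (N_VARIANTS * N_INDIVIDUALS)

print(f"{genome_wide_seconds:.0f} s (about {genome_wide_seconds / 60:.1f} min)")
print(f"{per_variant_per_1000 * 1e6:.1f} microseconds per variant per 1000 individuals")
```

The per-variant value evaluates to roughly 311 microseconds, in line with the approximately 312 microseconds quoted in the text.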

Figure 4.

Memory and time requirements of the secure methods. Each method is divided into 4 steps: (1) key generation, (2) encryption, (3) evaluation, and (4) decryption. The bar plots show the time requirements (a) using 20K, 40K, and 80K target variant sets. The aggregated time (b) and the maximum memory usage (c) of the methods are also shown.

Resource Usage Comparison between Secure and Non-Secure Imputation Methods

An important aspect of practicality is whether the methods are adaptable to different tag variants. This issue arises when a new array platform is used for genotyping the tag variants with a new set of tag variant loci. In this case, the current security framework requires that the plaintext models must be re-parametrized, and this may require a large amount of time and memory. To evaluate this, we optimized the linear models for the UTMSR-CKKS approach and measured the total time (training and evaluation) and the memory for the target variant set.

In order to make the comparisons fair with the HMM-based methods, we included the rare variants and common variants in this benchmark where the variants with MAF greater than 0.5% are used. In total, we used the 200,976 target variants in this range. This way, we believe that we perform a fair comparison of resource usage with other non-secure methods. We assumed that the training and secure evaluation would be run sequentially, and we measured the time requirement of the secure approach by summing the time for key generation, encryption, secure evaluation, decryption, and the time for training. For memory, we computed the peak memory required for training and the peak memory required for secure evaluation. These time and memory requirements provided us with an estimate of resources used by the secure pipeline (Fig. 5a) that can be fairly compared to the non-secure methods.

Figure 5.

Illustration of a secure outsourced imputation service (a). The time (b) and memory requirements (c) are illustrated in the bar plots where colors indicate security context. The y-axis shows the time (in seconds) and main memory (in gigabytes) used by each method to perform the imputation of the 80K variants where the secure outsourced method includes the plaintext model training and secure model evaluation steps.

We measured the time and memory requirements of all the methods by using a dedicated computer cluster to ensure resource requirements are measured accurately (see Methods). For IMPUTE2, there was no option for specifying multiple threads. Hence, we divided the sequenced portion of chromosome 22 into 16 regions and imputed variants in each region in parallel using IMPUTE2, as instructed by the manual, i.e., we ran 16 IMPUTE2 instances in parallel to complete the computation. We then measured the total memory required by all 16 runs and used this as the memory requirement of IMPUTE2. We used the maximum time among all the 16 runs as the time requirement of the parallelized IMPUTE2. Beagle, Minimac3, and Minimac4 were run with 16 threads as this option was available in the command line. In addition, Minimac4 requires model parametrization and preprocessing of the reference panel, which required a large amount of CPU time. We therefore included this step in the timing requirements. Figures 5b and 5c show the time and memory requirements, respectively, of the three non-secure approaches and our secure method. The results show that the secure pipeline provides competitive timing (2nd fastest after Beagle) and memory requirements (3rd in terms of least usage after Minimac3 and Minimac4). Our results also show that Minimac3/Minimac4 and our secure approach provided a good tradeoff between memory and timing because Beagle and IMPUTE2 exhibit the highest time or highest memory requirements compared to other methods.
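The two aggregation rules used in this comparison can be sketched as follows. The numbers are hypothetical placeholders, not measurements from this study. Taking the larger of the training and evaluation peaks as the memory of the sequential secure pipeline is our reading of the description above and should be treated as an assumption.

```python
def sequential_pipeline(stage_seconds, stage_peak_gb):
    """Stages run one after another: times add up, memory is the largest stage peak."""
    return sum(stage_seconds), max(stage_peak_gb)


def parallel_instances(instance_seconds, instance_peak_gb):
    """Instances run at the same time: wall time is the slowest run, memory adds up."""
    return max(instance_seconds), sum(instance_peak_gb)


# Hypothetical secure pipeline: key generation, encryption, secure evaluation,
# decryption, and plaintext training (seconds), with peak memory (GB) for the
# training stage and for the secure evaluation stage.
secure_time, secure_mem = sequential_pipeline(
    stage_seconds=[0.01, 1.5, 9.0, 1.8, 600.0],
    stage_peak_gb=[12.0, 4.5],
)

# Hypothetical parallel runs, one region per instance (three shown for brevity).
parallel_time, parallel_mem = parallel_instances(
    instance_seconds=[100.0, 140.0, 120.0],
    instance_peak_gb=[2.0, 2.5, 2.0],
)

print(secure_time, secure_mem)
print(parallel_time, parallel_mem)
```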

We also compared the secure models and found that different secure models exhibit diverse accuracy depending on allele frequency and position of variants (Supplemental Information).

Discussion

We presented fully secure genotype imputation methods that can practically scale to genome-wide imputation tasks by using efficient homomorphic encryption techniques where the data is encrypted in transit, in analysis, and at rest. This is a unique aspect of the HE-based frameworks because, when appropriately performed, encryption is one of the few approaches that are recognized at the legislative level as a way of secure sharing of biomedical data, e.g. by HIPAA (Wilson 2006) and partially by GDPR (Hoofnagle et al. 2019).

Our study was enabled by several key developments in the fields of genomics and computer science. First, the recent theoretical breakthroughs in homomorphic encryption techniques have enabled massive increases in the speed of secure algorithms. Although much of the data science community still regards HE as a theoretical and not-so-practical framework, the reality is far from this image. We hope that our study can provide a reference for the development of privacy-aware and fully secure approaches that employ homomorphic encryption. Second, the amount of genomic data has increased by several orders of magnitude in recent years. This provides enormous genotype databases where we can train the imputation models and test them in detail before implementing them in secure evaluation frameworks. Another significant development is the recent formation of genomic privacy communities and alliances, e.g., the Global Alliance for Genomics and Health (GA4GH), where researchers build interdisciplinary approaches for developing privacy-aware methods. For example, our international study stemmed from the 2019 iDASH Genomic Privacy Challenge. We firmly believe that these communities will help bring together further interdisciplinary collaborations for the development of secure genomic analysis methods.

The presented imputation methods train an imputation model for each target variant. Our approaches handle millions of models, i.e., parameters. Unlike the HMM models that can adapt seamlessly to a new set of tag variants (i.e., a new array platform), our approaches need to be retrained when the tag variants are updated. We expect that the training can be performed a-priori for a new genotyping array and that they can be re-used in the imputation. The decoupling of the (1) plaintext training and (2) secure evaluation steps is very advantageous because plaintext training can be independently performed at the third party without the need to wait for the data to arrive. This way, the users would have to accrue only the secure evaluation time that is, as our results show, much smaller compared to the time requirements of the non-secure models, as small as 312 microseconds per variant per 1000 individuals. Nevertheless, even with the training, our results show that the secure imputation framework can train and evaluate in run times comparable to the plaintext (non-secure) methods. In the future, we expect many optimizations can be introduced to the models we presented. For example, we foresee that the linear model training can be replaced with more complex feature selection and training methods. Deep neural networks are potential candidates for imputation tasks as they can be trained for learning the complex haplotype patterns to provide better imputation accuracy (Das et al. 2018). With the introduction of the graphical processing units (GPUs) on the cloud, these models can be trained and evaluated securely and efficiently. It is, however, important to be thorough about the security of the data because, as we mentioned before, even the number of untyped target variants that the researcher sends to the server can leak some information about the datasets. These stealthy leakages highlight the importance of using semantic security approaches. 
It is important to note that the secure evaluation steps implemented in our study replicate the results of the plaintext models virtually exactly, which indicates that “HE-conversion” does not incur any performance penalty.

Our study aims to demonstrate the feasibility of secure genotype imputation in a high-throughput manner. As such, there are currently numerous limitations that must be overcome in future studies (Supplemental Information). For example, our approaches provide sub-optimal accuracy when compared to the non-secure methods, especially for rare variants. As we mentioned earlier, we foresee that our methods can be optimized in numerous ways. For instance, it has been previously shown that the vicinity-based methods can make use of tag SNP selection to increase accuracy (Yu & Schaid 2007). We also foresee that new methods can be adapted to the hard-to-impute regions (Chen & Shi 2019b, Duan et al. 2013) to provide higher accuracy for these regions with complex haplotype structures.

Finally, we believe that the multitude of models and the secure evaluation approaches that we presented here can help provide a much-needed reference point for the development and improvement of the imputation methods. Moreover, the developed models can be easily adapted to solve other privacy-sensitive problems by using secure linear, logistic, and network model evaluations, such as the secure rare variant association tests (Wu et al. 2011). Therefore, we believe that our codebases represent an essential resource for the computational genomics community. We have organized the codebases to ensure that they are accessible to users without cryptography expertise. We hope that our codebase can play a central role in the development of a community (similar to dynverse (dynverse: benchmarking, constructing and interpreting single-cell trajectories n.d.) or TAPE (Chen & Shi 2019a, Tasks Assessing Protein Embeddings (TAPE), a set of five biologically relevant semi-supervised learning tasks spread across different domains of protein biology. n.d.) repositories for trajectory inference and protein embedding, respectively) where users can use the developed methods and datasets for uniform benchmarking of their new imputation method.

STAR Methods

We present and describe the data sources, accuracy metrics, and non-secure imputation method parameters. The detailed methods are presented in the Supplementary Information.

RESOURCE AVAILABILITY

Lead Contact:

Further information and requests for resources and reagents should be directed to and will be fulfilled by the Lead Contact, Arif Harmanci (arif.o.harmanci@uth.tmc.edu).

Materials Availability:

This study did not generate new materials.

Data and Code Availability:

Source data statement. Accuracy and resource benchmarking related source data have been deposited at DOI 10.5281/zenodo.4947832. The 1000 Genomes Project dataset is publicly available from the NCBI portal at ftp://ftp-trace.ncbi.nih.gov/1000genomes/ftp/release/20130502/ALL.chr22.phase3_shapeit2_mvncall_integrated_v5a.20130502.genotypes.vcf.gz. The Illumina array platform metadata is available from https://support.illumina.com/downloads/human1m-duo_v3–0_product_files.html.

Code statement. The original source code, documentation, and usage examples for the imputation models are deposited at https://github.com/K-miran/secure-imputation and are also archived and deposited at DOI 10.5281/zenodo.4948000.

Scripts statement. The source and scripts for generating the figures and associated instructions are archived and deposited under DOI 10.5281/zenodo.4947832 and are co-located with the figure-related datasets.

Any additional information required to reproduce this work is available from the Lead Contact.

Variant and Genotype Datasets

All the tag and target variant loci and the genotypes are collected from public resources. We downloaded the Illumina Duo 1M version 3 variant loci from the array’s specification at the Illumina web site (https://support.illumina.com/downloads/human1m-duo_v3–0_product_files.html). The file was parsed to extract the variants on chromosome 22, which yielded 17,777 variants. We did not use the CNVs and indels while filtering the variants and we focused only on the single nucleotide polymorphisms (SNPs). We then intersected these variants with the 1000 Genomes variants on chromosome 22 to identify the array variants that are detected by the 1000 Genomes Project. We identified 16,184 variants from this intersection. This variant set represents the tag variants that are used to perform the imputation. The phased genotypes on chromosome 22 for the 2,504 individuals in the 1000 Genomes Project are downloaded from the NCBI portal (ftp://ftp-trace.ncbi.nih.gov/1000genomes/ftp/release/20130502/ALL.chr22.phase3_shapeit2_mvncall_integrated_v5a.20130502.genotypes.vcf.gz). We filtered out the variants for which the allele frequency reported by the 1000 Genomes Project is less than 5%. After excluding the tag variants on the array platform, we identified 83,072 target variants that are to be used for imputation. As the developed secure methods use vicinity variants, the variants at the ends of the chromosome are not imputed. We believe this is acceptable because these variants are located very close to the centromere and at the very end of the chromosome. After filtering the non-imputed variants, we focused on the 80,882 variants that were used for consistent benchmarking of all the secure and non-secure methods.

Accuracy Benchmark Metrics

We describe the genotype level and variant level accuracy. For each variant, we assign the genotype with the highest assigned genotype probability. The variant level accuracy is the average variant accuracy where each variant’s accuracy is estimated based on how well these imputed genotypes of the individuals match the known genotypes:

Variant level accuracy is also referred to as the macro-aggregated accuracy.

At the genotype level, we simply count the number of correctly computed genotypes and divide this with the total number of genotypes:

In the sensitivity vs positive predictive value (PPV) plots, the sensitivity and PPV are computed after filtering the imputed genotypes with respect to the imputation probability. The sensitivity at the probability cutoff τ is computed as:

Positive predictive value measures the fraction of correctly imputed genotypes among the genotypes whose probability is above the cutoff threshold:

Next, we swept a large cutoff range for τ from −5 to 5 with steps 0.01. We finally plotted the sensitivity versus PPV to generate the precision-recall curves for each method.
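The metrics in this section can be sketched on toy data as follows. This is a minimal illustration under stated assumptions, not the benchmarking code of this study: the function names and the toy probabilities are hypothetical, and the sensitivity is assumed here to be the number of correctly imputed genotypes above the cutoff divided by the number of all known genotypes. The two toy variants deliberately have different numbers of genotypes so that the variant level and genotype level accuracies differ.

```python
def hard_call(p):
    """Genotype (0, 1 or 2) with the highest assigned probability."""
    return max(range(3), key=lambda g: p[g])


def genotype_level_accuracy(probs, truth):
    """Correctly imputed genotypes divided by all genotypes."""
    pairs = [(hard_call(p), t) for prow, trow in zip(probs, truth)
             for p, t in zip(prow, trow)]
    return sum(c == t for c, t in pairs) / len(pairs)


def variant_level_accuracy(probs, truth):
    """Average of the per-variant accuracies (macro aggregation)."""
    per_variant = [
        sum(hard_call(p) == t for p, t in zip(prow, trow)) / len(trow)
        for prow, trow in zip(probs, truth)
    ]
    return sum(per_variant) / len(per_variant)


def sensitivity_and_ppv(probs, truth, tau):
    """Sensitivity (assumed definition, see above) and PPV at cutoff tau."""
    total = kept = correct = 0
    for prow, trow in zip(probs, truth):
        for p, t in zip(prow, trow):
            total += 1
            g = hard_call(p)
            if p[g] >= tau:          # keep only confident calls
                kept += 1
                correct += (g == t)
    ppv = correct / kept if kept else float("nan")
    return correct / total, ppv


# probs[v][i] holds three genotype probabilities for variant v and individual i.
probs = [
    [[0.9, 0.1, 0.0], [0.2, 0.7, 0.1], [0.4, 0.5, 0.1], [0.1, 0.2, 0.7]],
    [[0.6, 0.3, 0.1], [0.3, 0.3, 0.4]],
]
truth = [[0, 1, 0, 2], [0, 1]]

micro = genotype_level_accuracy(probs, truth)
macro = variant_level_accuracy(probs, truth)
curve = [sensitivity_and_ppv(probs, truth, tau) for tau in (0.4, 0.6, 0.8)]
print(micro, macro, curve)
```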

Micro-AUC Accuracy Statistics.

For parameterizing the accuracy and demonstrating how different parameters affect algorithm performance, we used micro-AUC as the accuracy metric. This was also the original accuracy metric for measuring the algorithm performance in the iDASH19 competition. Micro-AUC treats the imputation problem as a three-level classification problem where each variant is “classified” into one of three classes, i.e., genotypes, {0,1,2}. Micro-AUC computes an AUC metric for each genotype then microaggregates the AUCs for all the genotypes. This enables assigning one score to a multi-class classification problem. We use the implementation in the scikit-learn package to measure the micro-AUC scores for each method (https://scikit-learn.org/stable/modules/generated/sklearn.metrics.roc_auc_score.html).
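To make the micro-aggregation concrete, the sketch below computes a micro-averaged AUC in pure Python. It is not the scikit-learn code: it assumes the common definition of micro-averaging, in which the one-vs-rest indicators and scores of the three genotype classes are pooled into one binary problem, and it uses a rank-based (Mann-Whitney) AUC. All names and the toy scores are illustrative.

```python
def binary_auc(labels, scores):
    """Rank-based AUC; tied scores receive their average rank."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1           # 1-based average rank of the tie group
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    rank_sum = sum(r for r, y in zip(ranks, labels) if y == 1)
    return (rank_sum - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


def micro_auc(true_genotypes, class_scores):
    """Pool the one-vs-rest problems of genotypes 0, 1 and 2, then compute one AUC."""
    flat_labels, flat_scores = [], []
    for g, scores in zip(true_genotypes, class_scores):
        for cls in (0, 1, 2):
            flat_labels.append(1 if g == cls else 0)
            flat_scores.append(scores[cls])
    return binary_auc(flat_labels, flat_scores)


true_genotypes = [0, 1, 2]
good_scores = [[0.8, 0.1, 0.1], [0.2, 0.7, 0.1], [0.1, 0.2, 0.7]]
flat_scores = [[1.0, 1.0, 1.0]] * 3          # uninformative: every score is tied

print(micro_auc(true_genotypes, good_scores))
print(micro_auc(true_genotypes, flat_scores))
```

A perfectly separating score matrix yields 1.0 and fully tied scores yield 0.5, which is the expected behavior of an AUC.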

Measurement of Time and Memory Requirements.

For consistently measuring the time and memory usage among all the benchmarked methods, we used ‘/usr/bin/time -f "%e\t%M"’ to report the wall time (in seconds) and peak memory usage (in kilobytes) of each method.
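A comparable measurement can be sketched with the Python standard library, which may be convenient when the benchmark is driven from a script. This is an illustrative alternative, not the procedure used in this study; the helper name is hypothetical and the example command is a placeholder. The `resource` module is only available on Unix-like systems.

```python
import resource
import subprocess
import sys
import time


def run_and_measure(cmd):
    """Run cmd and return (wall-clock seconds, peak resident set size of children)."""
    start = time.perf_counter()
    subprocess.run(cmd, check=True)
    elapsed = time.perf_counter() - start
    # ru_maxrss is reported in kilobytes on Linux (bytes on macOS). It is the
    # maximum over all children waited for so far, so for clean numbers measure
    # one command per Python process.
    peak = resource.getrusage(resource.RUSAGE_CHILDREN).ru_maxrss
    return elapsed, peak


# Placeholder command standing in for an imputation tool invocation.
elapsed_s, peak_kb = run_and_measure(
    [sys.executable, "-c", "x = list(range(100000))"]
)
print(f"{elapsed_s:.2f}\t{peak_kb}")
```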

Secure Methods

We briefly describe the secure methods.

UTMSR-BFV and UTMSR-CKKS.

The UTMSR (UTHealth-Microsoft Research) team uses a linear model with the nearby tag variants as features for each target variant. The plaintext model training is performed using the GNU Scientific Library. The collinear features are removed by performing the SVD and removing features with singular values smaller than 0.01. The target variant genotype is modeled as a continuous variable that represents the “soft” estimate of the genotype (or the estimated dosage of the alternate allele) and can take any value from negative to positive infinity. The genotype probabilities are assigned by converting the soft genotype estimation to a score in the range [0,1]:

| (1) |

where g denotes one of the genotypes and g̃ represents the decrypted value of the imputed genotype estimate. Suppose that each variant genotype is modeled using genotypes of variants within k variant vicinity of the variant. In plaintext domain, the imputed value can be written as follows:

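$$\tilde{g}_j = w_0^{(j)} + \sum_{r=1}^{k} \left( w_{r,\mathrm{up}}^{(j)}\, t_{r,\mathrm{up}}^{(j)} + w_{r,\mathrm{down}}^{(j)}\, t_{r,\mathrm{down}}^{(j)} \right) \qquad (2)$$

where $w_0^{(j)}$ is the intercept of the linear model, and $w_{r,\mathrm{up}}^{(j)}$ and $w_{r,\mathrm{down}}^{(j)}$ denote the linear model weights for the jth target variant’s rth upstream and downstream tag variants, respectively, whose genotypes are denoted by $t_{r,\mathrm{up}}^{(j)}$ and $t_{r,\mathrm{down}}^{(j)}$.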
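The plaintext linear model described above can be sketched as follows. The function signature, the indexing convention, and the toy weights are illustrative assumptions. In particular, we assume here that k tag variants are taken on each side of the target variant.

```python
def impute_dosage(tag_genotypes, n_upstream, k, intercept, w_up, w_down):
    """Soft genotype estimate from k upstream and k downstream tag variants.

    tag_genotypes: genotypes (0, 1 or 2) of all tag variants for one
                   individual, ordered by genomic position.
    n_upstream:    number of tag variants located upstream of the target, so
                   tag_genotypes[n_upstream - 1] is the nearest upstream tag.
    w_up, w_down:  weights of the r-th upstream and downstream tags (r = 1..k).
    """
    estimate = intercept
    for r in range(1, k + 1):
        estimate += w_up[r - 1] * tag_genotypes[n_upstream - r]          # r-th upstream
        estimate += w_down[r - 1] * tag_genotypes[n_upstream + r - 1]    # r-th downstream
    return estimate


# Toy example with six tag variants; the target lies between tags 2 and 3.
tags = [0, 1, 2, 1, 0, 2]
dosage = impute_dosage(
    tags, n_upstream=3, k=2,
    intercept=0.1, w_up=[0.3, 0.1], w_down=[0.2, 0.05],
)
print(dosage)
```

The estimate is not clipped to [0, 2], which matches the description of the soft genotype as an unbounded continuous value.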

The secure outsourcing imputation protocols are implemented on two popular ring-based HE cryptosystems – BFV (Brakerski 2012, Fan & Vercauteren 2012) and CKKS (Cheon et al. 2017). These HE schemes share the same parameter setup and key-generation phase but have different algorithms for message encoding and homomorphic operations. In a nutshell, a ciphertext is generated by adding a random encryption of zero to an encoded plaintext, which makes the ring-based HE schemes secure under the RLWE assumption. More precisely, each tag variant is first encoded as a polynomial with its coefficients, and the encoded plaintext is encrypted into a ciphertext using the underlying HE scheme. The plaintext polynomial in the BFV scheme is separated from an error polynomial (inserted for security), whereas the plaintext polynomial in the CKKS scheme embraces the error. Then Eq. (2) is homomorphically evaluated on the encrypted genotype data by using the plain weight parameters. We exploit parallel computation on multiple individual data, and hence it enables us to obtain the predicted genotype estimates over different samples at a time. Our experimental results indicate that the linear model with 32 tag variants as features for each target variant shows the most balanced performance in terms of timing and imputation accuracy in the current testing dataset (see Supplementary Table 8 and Supplementary Figure 5). Our protocols achieve at least a 128-bit security level from the HE standardization workshop paper (Albrecht et al. 2018). We defer the complete details to the “UTHealth-Microsoft Research team solution” section in the supplementary document.
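The data flow of the batched evaluation can be illustrated with a stand-in for a packed ciphertext. The class below performs no encryption and offers no security. It only restricts the computation to the operations that the linear model needs (slot-wise addition, multiplication by a plaintext weight, addition of a plaintext constant), with one slot per individual. All names are hypothetical and do not correspond to the API of any HE library.

```python
class PackedSlots:
    """Stand-in for a packed ciphertext: slot i holds the value of individual i.

    NOT encryption. A real scheme would hide the slot values from the server.
    """

    def __init__(self, values):
        self.values = list(values)

    def add(self, other):
        return PackedSlots(a + b for a, b in zip(self.values, other.values))

    def mul_plain(self, weight):
        return PackedSlots(weight * a for a in self.values)

    def add_plain(self, constant):
        return PackedSlots(a + constant for a in self.values)


def evaluate_linear_model(packed_tags, weights, intercept):
    """Evaluate one target variant's model for all individuals at once."""
    acc = packed_tags[0].mul_plain(weights[0])
    for ct, w in zip(packed_tags[1:], weights[1:]):
        acc = acc.add(ct.mul_plain(w))
    return acc.add_plain(intercept)


# Two tag variants, three individuals per packed object.
packed_tags = [PackedSlots([0, 1, 2]), PackedSlots([2, 1, 0])]
result = evaluate_linear_model(packed_tags, weights=[0.5, 0.25], intercept=0.0)
print(result.values)
```

Because the weights stay in plaintext, no ciphertext-ciphertext multiplication appears in this sketch.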

Chimera-TFHE.

The Chimera team used multi-class logistic regression (logreg) models trained over one-hot encoded tag features: each tag SNP variant is mapped to 3 Boolean variables. Chimera’s model training and architecture performed the best (with respect to accuracy and resource requirement) among six other solutions in the iDASH2019 Genotype Imputation Challenge.

We build three models per target SNP (one model per variant), i.e., target SNPs are also one-hot-encoded. These models give the probabilities for each target SNP variant. The maximal probability variant is the imputed target SNP value. A fixed number d of the nearest tag SNPs (in relation to the current target SNP) are used in model building. We train the models with different values of d in order to study the influence of neighborhood size: from 5 to 50 neighbors with an increment of 5. The most accurate model, in terms of micro-AUC score, is obtained for a neighborhood size d = 45. The fastest model with an acceptable accuracy (micro-AUC > 0.99) is obtained for d = 10. However, the fastest model is only ≈ 2 times faster than the most accurate model (refer to Table 2 in the Supplementary document).
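The one-hot encoding and the three-model structure can be sketched in plaintext as below. The weights are toy values and the function names are hypothetical. Only the linear scores are computed, and the hard call is taken as the index of the largest score, which gives the same answer as comparing sigmoid outputs because the sigmoid is monotonic.

```python
def one_hot(genotype):
    """Map a genotype in {0, 1, 2} to three Boolean indicator variables."""
    return [1 if genotype == g else 0 for g in (0, 1, 2)]


def encode_tags(tag_genotypes):
    """Concatenate the one-hot encodings of the d nearest tag SNPs."""
    features = []
    for g in tag_genotypes:
        features.extend(one_hot(g))
    return features


def impute(tag_genotypes, models):
    """models[c] = (intercept, weights) is the model for target genotype c."""
    x = encode_tags(tag_genotypes)
    scores = [b + sum(w * xi for w, xi in zip(ws, x)) for b, ws in models]
    return max(range(3), key=lambda c: scores[c]), scores


# Toy models for d = 2 tag SNPs, i.e., 6 Boolean features per model.
models = [
    (0, [2, 0, 0, 0, 0, 0]),   # model for target genotype 0
    (0, [0, 1, 0, 0, 1, 0]),   # model for target genotype 1
    (0, [0, 0, 1, 0, 0, 1]),   # model for target genotype 2
]
call, scores = impute([0, 2], models)
print(call, scores)
```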

During the homomorphic evaluation, only the linear part of the logreg model is executed, which means in particular that we do not homomorphically apply the sigmoid function on the output scores. We use the coefficient packing strategy and pack as many plaintext values as possible in a single ciphertext. The maximum number of values that can be packed in a RingLWE ciphertext equals the used ring dimension, which is n = 1024 in our solution. We chose to pack one or several columns of the input (tag SNPs) into a single ciphertext. Since the TFHE library RingLWE ciphertexts encrypt polynomials with Torus (mod 1) coefficients, we downscale the data to Torus values (multiples of $2^{-14}$) and upscale the model coefficients to integers.
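The scaling step can be illustrated with exact rational arithmetic in plaintext. The sketch below represents Torus elements as fractions modulo 1, with data stored as multiples of 2^-14 as stated above. The coefficient scaling factor of 100 and all function names are hypothetical. Decoding is only correct while the integer result stays below 2^13 in absolute value, since larger values wrap around the Torus.

```python
from fractions import Fraction

DATA_SCALE = 2 ** 14     # from the text: data become multiples of 2^-14
COEFF_SCALE = 100        # hypothetical factor for turning weights into integers


def to_torus(value):
    """Downscale an integer data value to a Torus element (a fraction mod 1)."""
    return Fraction(value, DATA_SCALE) % 1


def quantize(weights):
    """Upscale real-valued model coefficients to integers."""
    return [round(w * COEFF_SCALE) for w in weights]


def linear_combination(torus_values, int_coeffs):
    """Integer-weighted sum of Torus elements, reduced mod 1."""
    return sum(c * t for c, t in zip(int_coeffs, torus_values)) % 1


def from_torus(t):
    """Map a Torus element back to a signed integer (centered representative)."""
    centered = t - 1 if t >= Fraction(1, 2) else t
    return int(centered * DATA_SCALE)


features = [1, 0, 1]                      # Boolean one-hot features
weights = [0.05, -0.07, -0.08]            # toy real-valued coefficients
int_coeffs = quantize(weights)
encoded = [to_torus(v) for v in features]
raw = from_torus(linear_combination(encoded, int_coeffs))
score = raw / COEFF_SCALE                 # undo the coefficient scaling
print(int_coeffs, raw, score)
```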

In our solution, we use linear combinations with public integer coefficients. The evaluation is based on the security of LWE and only the encryption phase uses RingLWE security notions with no additional bootstrapping or key-switching keys. The security parameters have been tuned to support binary keys. Of course, as neither bootstrapping nor key-switching is used in our solution, the key distribution can be changed to any distribution (including the full domain distribution) without any time penalty. Our scheme achieves 130 bits of security, according to the LWE estimator (Albrecht et al. 2015). More information about our solution is given in the supplementary document (“Chimera-TFHE team solution”).

EPFL-CKKS.

EPFL uses a multinomial logistic regression model with d −1 neighboring coefficients and 1 intercept variable for each target variant, with three classes {0,1,2}. The plaintext model is trained using the scikit-learn python library. The input variants are represented as values {0,1,2}. There is no pre-processing applied to the training data. For a target position j, the predicted probabilities for each class label are given by:

$$P(y_{j,p} = i \mid x_{j,p}) = \frac{\exp\left(\beta_i^{(j)} \cdot x_{j,p}\right)}{\sum_{l \in \{0,1,2\}} \exp\left(\beta_l^{(j)} \cdot x_{j,p}\right)} \qquad (3)$$

where $\beta_i^{(j)}$ are the trained regression coefficients for label $i$ and position j, and $x_{j,p}$ are the neighboring variants for patient p around target position j. The hard prediction for position j is given by $\arg\max_i P(y_{j,p} = i \mid x_{j,p})$. The variants are sent encrypted and packed to the server, using the CKKS homomorphic cryptosystem, and the exponents in Eq. (3) are computed homomorphically. The client decrypts the result and can obtain the label probabilities and hard predictions for each position. For the prediction, we use several numbers of regression coefficients, ranging from 8 to 64; as this number increases, both the obtained accuracy and the computational complexity increase (see Supplementary Table 6). We use a single parametrization of the cryptosystem (see the “EPFL-Lattigo team solution” section in the Supplementary document) for all the regression sizes, which keeps the cipher expansion asymptotically constant. The security of this solution is based on the hardness of the RLWE problem with Gaussian secrets.
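The split between the server and the client can be sketched in plaintext as follows: the linear exponents are what would be evaluated on encrypted data, and the normalization into probabilities is what the client can do after decryption. The coefficient layout (intercept first), the function names, and the toy values are assumptions made for illustration.

```python
import math


def exponents(coeffs, neighbors):
    """Linear scores per label; coeffs[i] = [intercept, b_1, ..., b_(d-1)]."""
    x = [1.0] + list(neighbors)              # constant 1 for the intercept
    return [sum(b * xi for b, xi in zip(row, x)) for row in coeffs]


def label_probabilities(scores):
    """Normalize the exponents into label probabilities (softmax)."""
    m = max(scores)                           # subtract the max for numerical stability
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return [w / total for w in weights]


# Toy model with d - 1 = 2 neighboring variants and three labels {0, 1, 2}.
coeffs = [
    [0.0, 0.0, 0.0],    # label 0
    [0.0, 1.0, 0.0],    # label 1
    [0.0, 0.0, 1.0],    # label 2
]
scores = exponents(coeffs, neighbors=[2, 0])
probs = label_probabilities(scores)
hard_prediction = max(range(3), key=lambda i: probs[i])
print(scores, probs, hard_prediction)
```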

SNU-CKKS.

The SNU team applies a one-hidden-layer neural network for genotype imputation. The model is obtained from the Tensorflow module in the plain (unencrypted) state, and the inference phase is performed in the encrypted state for the given test SNP data encrypted by the CKKS HE scheme. We encode each ternary SNP value into a 3-dimensional binary vector, i.e., 0 → (1,0,0), 1 → (0,1,0) and 2 → (0,0,1). For better performance in terms of both accuracy and speed, we utilize the inherent property that each target SNP is mostly related to its adjacent tag SNPs. We set the number of adjacent tag SNPs as a pre-determined parameter d, and run experiments on various choices of the parameter (d = 8k for 1 ≤ k ≤ 9). As a result, we find that d = 40 shows the best accuracy in terms of micro-AUC. Since the running time of computing the genotype score grows linearly with d, the fastest result is obtained at d = 8. We regard the intermediate value d = 24 as the most balanced choice in terms of accuracy and speed.
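A plaintext forward pass of a one-hidden-layer network on one-hot encoded tag SNPs can be sketched as below. The activation function is not specified in this section. The sketch assumes a square activation, a polynomial choice that is often paired with CKKS, and this should be read as an assumption rather than as the team's actual architecture. The weights and names are toy values.

```python
def one_hot(genotype):
    """0 -> (1,0,0), 1 -> (0,1,0), 2 -> (0,0,1), as described in the text."""
    return [1.0 if genotype == g else 0.0 for g in (0, 1, 2)]


def forward(tag_genotypes, W1, b1, W2, b2):
    """One hidden layer with an assumed square activation; returns 3 genotype scores."""
    x = [v for g in tag_genotypes for v in one_hot(g)]
    hidden = [
        (sum(w * xi for w, xi in zip(row, x)) + b) ** 2     # assumed activation
        for row, b in zip(W1, b1)
    ]
    return [sum(w * h for w, h in zip(row, hidden)) + b for row, b in zip(W2, b2)]


# Candidate neighborhood sizes explored in the text: d = 8k for 1 <= k <= 9.
candidate_d = [8 * k for k in range(1, 10)]

# Toy network for d = 2 adjacent tag SNPs (6 inputs), 2 hidden units, 3 outputs.
W1 = [[0, 1, 0, 0, 0, 0], [0, 0, 0, 0, 0, 2]]
b1 = [0, 0]
W2 = [[1, 0], [0, 1], [1, 1]]
b2 = [0, 0, 0]
scores = forward([1, 2], W1, b1, W2, b2)
prediction = max(range(3), key=lambda g: scores[g])
print(candidate_d, scores, prediction)
```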

The security of the utilized CKKS scheme relies on the hardness of solving the RLWE problem with ternary (signed binary) secret. For the security estimation, we applied the LWE estimator (Albrecht et al. 2015), a sage module that computes the computational costs of state-of-art (R)LWE attack algorithms. The script for the security estimation is attached as a figure in the “SNU team solution” section in the supplementary document.

Non-Secure Methods

We describe the versions and the details of how the non-secure methods were run. The benchmarks were performed on a Linux workstation with 769 Gigabytes of main memory on an Intel Xeon Platinum 8168 CPU at 2.7 GHz with 96 cores. No other tools were run in the course of benchmarks.

Beagle

We obtained the jar formatted Java executable file for Beagle version 5.1 from the Beagle web site. The population panel (1,500 individuals) and the testing panel data are converted into VCF file format as required by Beagle. We ran Beagle using the chromosome 22 maps provided from the web site. The number of threads is specified as 16 threads at the command line (option ‘nthreads=16’). We set the ‘gp’ and ‘ap’ flags in the command line to explicitly ask Beagle to save genotype probabilities that are used for building the sensitivity versus PPV curves. Beagle supplies the per genotype probabilities for each imputed variant. These probabilities were used in plotting the curves.

IMPUTE2

IMPUTE2 is downloaded from the IMPUTE2 website. The haplotype, legend, genotype, and the population panels are converted into specific formats that are required by IMPUTE2. We could not find a command line option to run IMPUTE2 with multiple threads. To be fair, we divided the sequenced portion of the chromosome 22 (from 16,000,000 to 51,000,000 base pairs) into 16 equally spaced regions of length 2.333 megabases. Next, we ran 16 different IMPUTE2 instances in parallel, as described in the IMPUTE2 manual. The output from the 16 runs is pooled to evaluate the imputation accuracy of IMPUTE2. IMPUTE2 provides per genotype probabilities, which were used for plotting the precision-recall curves.

Minimac3 and Minimac4

Minimac3 and Minimac4 are downloaded from the University of Michigan web site. We next downloaded Eagle 2.4.1 phasing software for phasing input genotypes. ‘Eagle+Minimac3’ and ‘Eagle+Minimac4’ were used in the Michigan Imputation Server’s pipeline that is served for the public use. The panels are converted into indexed VCF files as required by Eagle, Minimac3, and Minimac4. We first used the Eagle protocol to phase the input genotypes. The phased genotypes are supplied to Minimac3 and Minimac4, and final imputations are performed. Eagle, Minimac3, and Minimac4 were run with 16 threads using the command line options (‘--numThreads=16’ and ‘--cpus 16’ options for Eagle and Minimac3, respectively). Minimac3 and Minimac4 report an estimated dosage of the alternate allele, which we converted to a score as in the above equation for UTMSR’s scoring.

The Minimac4 algorithm requires a preprocessing of the reference haplotypes with a parameter estimation step. We observed that the parameter estimation step adds a substantial amount of processing time, and Minimac4 requires the parameter estimates to perform imputation.

Supplementary Material

KEY RESOURCES TABLE

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Antibodies | | |
| Bacterial and virus strains | | |
| Biological samples | | |
| Chemicals, peptides, and recombinant proteins | | |
| Critical commercial assays | | |
| Deposited data | | |
| The chromosome 22 genotype calls for the 2,504 individuals in The 1000 Genomes Project’s 3rd phase. | The 1000 Genomes Consortium | ftp://ftp-trace.ncbi.nih.gov/1000genomes/ftp/release/20130502/ALL.chr22.phase3_shapeit2_mvncall_integrated_v5a.20130502.genotypes.vcf.gz |
| Illumina Duo version 3 genotyping array documentation | Illumina Inc. Web Site | https://support.illumina.com/downloads/human1m-duo_v3–0_product_files.html |
| Source Data for Figures 1–5 and Supplementary Information | This work | DOI: 10.5281/zenodo.4947832 |
| Experimental models: Cell lines | | |
| Experimental models: Organisms/strains | | |
| Oligonucleotides | | |
| Recombinant DNA | | |
| Software and algorithms | | |
| R Statistical Computing Platform | The R Foundation | https://www.r-project.org/ |
| Source Code and Documentation for Secure Imputation Models | This work | DOI: 10.5281/zenodo.4948000 |
| Source Code for generating Figures 1–5 and the supplementary figures | This work | DOI: 10.5281/zenodo.4947832 |
| Other | | |

Fast homomorphic encryption enables secure and practical genotype imputations.

Secure methods use resources comparable to those of non-secure methods.

Secure methods are limited in accuracy especially for the rare variants.

Acknowledgments

The authors thank the National Human Genome Research Institute (NHGRI) of the National Institutes of Health for providing funding and support for the iDASH Genomic Privacy challenges (R13HG009072). We also thank Luyao Chen for providing technical support to set up the computational environment for unified evaluation.

Footnotes

Declaration of Interests

The authors declare no competing interests.


References

- Agarwala V, Flannick J, Sunyaev S, Altshuler D, Consortium G. et al. (2013), ‘Evaluating empirical bounds on complex disease genetic architecture’, Nature genetics 45(12), 1418.

- Albrecht M, Chase M, Chen H, Ding J, Goldwasser S, Gorbunov S, Halevi S, Hoffstein J, Laine K, Lauter K, Lokam S, Micciancio D, Moody D, Morrison T, Sahai A. & Vaikuntanathan V. (2018), Homomorphic encryption security standard, Technical report, HomomorphicEncryption.org, Toronto, Canada.

- Albrecht MR, Player R. & Scott S. (2015), ‘On the concrete hardness of learning with errors’, J. Mathematical Cryptology 9(3), 169–203. URL: http://www.degruyter.com/view/j/jmc.2015.9.issue-3/jmc-2015-0016/jmc-2015-0016.xml

- Allen HL, Estrada K, Lettre G, Berndt SI, Weedon MN, Rivadeneira F, Willer CJ, Jackson AU, Vedantam S, Raychaudhuri S. et al. (2010), ‘Hundreds of variants clustered in genomic loci and biological pathways affect human height’, Nature 467(7317), 832–838.

- Belmont JW, Hardenbol P, Willis TD, Yu F, Yang H, Ch’Ang LY, Huang W, Liu B, Shen Y, Tam PKH, Tsui LC, Waye MMY, Wong JTF, Zeng C, Zhang Q, Chee MS, Galver LM, Kruglyak S, Murray SS, Oliphant AR, Montpetit A, Chagnon F, Ferretti V, Leboeuf M, Phillips MS, Verner A, Duan S, Lind DL, Miller RD, Rice J, Saccone NL, Taillon-Miller P, Xiao M, Sekine A, Sorimachi K, Tanaka Y, Tsunoda T, Yoshino E, Bentley DR, Hunt S, Powell D, Zhang H, Matsuda I, Fukushima Y, Macer DR, Suda E, Rotimi C, Adebamowo CA, Aniagwu T, Marshall PA, Matthew O, Nkwodimmah C, Royal CD, Leppert MF, Dixon M, Cunningham F, Kanani A, Thorisson GA, Chen PE, Cutler DJ, Kashuk CS, Donnelly P, Marchini J, McVean GA, Myers SR, Cardon LR, Morris A, Weir BS, Mullikin JC, Feolo M, Daly MJ, Qiu R, Kent A, Dunston GM, Kato K, Niikawa N, Watkin J, Gibbs RA, Sodergren E, Weinstock GM, Wilson RK, Fulton LL, Rogers J, Birren BW, Han H, Wang H, Godbout M, Wallenburg JC, L’Archevêque P, Bellemare G, Todani K, Fujita T, Tanaka S, Holden AL, Collins FS, Brooks LD, McEwen JE, Guyer MS, Jordan E, Peterson JL, Spiegel J, Sung LM, Zacharia LF, Kennedy K, Dunn MG, Seabrook R, Shillito M, Skene B, Stewart JG, Valle DL, Clayton EW, Jorde LB, Chakravarti A, Cho MK, Duster T, Foster MW, Jasperse M, Knoppers BM, Kwok PY, Licinio J, Long JC, Ossorio P, Wang VO, Rotimi CN, Spallone P, Terry SF, Lander ES, Lai EH, Nickerson DA, Abecasis GR, Altshuler D, Boehnke M, Deloukas P, Douglas JA, Gabriel SB, Hudson RR, Hudson TJ, Kruglyak L, Nakamura Y, Nussbaum RL, Schaffner SF, Sherry ST, Stein LD & Tanaka T. (2003), ‘The international hapmap project’, Nature 426(6968), 789–796.

- Berger B. & Cho H. (2019), ‘Emerging technologies towards enhancing privacy in genomic data sharing’.

- Bomba L, Walter K. & Soranzo N. (2017), ‘The impact of rare and low-frequency genetic variants in common disease’, Genome Biology 18(1), 77.

- Boura C, Gama N, Georgieva M. & Jetchev D. (2018), Chimera: Combining ring-LWE-based fully homomorphic encryption schemes, Technical report, Cryptology ePrint Archive, Report 2018/758. https://eprint.iacr.org/2018/758.

- Brakerski Z. (2012), Fully homomorphic encryption without modulus switching from classical GapSVP, in Safavi-Naini R. & Canetti R, eds, ‘CRYPTO 2012’, Vol. 7417 of Lecture Notes in Computer Science, Springer, pp. 868–886.

- Browning BL, Zhou Y. & Browning SR (2018), ‘A one-penny imputed genome from next-generation reference panels’, American Journal of Human Genetics 103(3), 338–348.

- Chen J, Harmanci AS & Harmanci AO (2019), Detecting and Annotating Rare Variants, Elsevier, pp. 388–399.

- Chen J. & Shi X. (2019a), ‘Evaluating protein transfer learning with TAPE’, bioRxiv (9). URL: https://www.biorxiv.org/content/10.1101/676825v1

- Chen J. & Shi X. (2019b), ‘Sparse convolutional denoising autoencoders for genotype imputation’, Genes 10(9), 652. DOI: 10.3390/genes10090652

- Cheon JH, Kim A, Kim M. & Song Y. (2017), Homomorphic encryption for arithmetic of approximate numbers, in ‘International Conference on the Theory and Application of Cryptology and Information Security’, Springer, pp. 409–437.

- Chillotti I, Gama N, Georgieva M. & Izabachène M. (2019), ‘TFHE: Fast fully homomorphic encryption over the torus’, Journal of Cryptology.

- Chisholm J, Caulfield M, Parker M, Davies J. & Palin M. (2013), ‘Briefing Genomics England and the 100K genome project’, Genomics Engl.

- Cho H, Wu DJ & Berger B. (2018), ‘Secure genome-wide association analysis using multiparty computation’, Nature Biotechnology 36(6), 547–551.

- Consortium, The 1000 Genomes Project (2015), ‘A global reference for human genetic variation’, Nature 526(7571), 68–74.

- Cooper JD, Smyth DJ, Smiles AM, Plagnol V, Walker NM, Allen JE, Downes K, Barrett JC, Healy BC, Mychaleckyj JC et al. (2008), ‘Meta-analysis of genome-wide association study data identifies additional type 1 diabetes risk loci’, Nature Genetics 40(12), 1399.

- Das S, Abecasis GR & Browning BL (2018), ‘Genotype imputation from large reference panels’, Annual Review of Genomics and Human Genetics 19, 73–96.

- Das S, Forer L, Schönherr S, Sidore C, Locke AE, Kwong A, Vrieze SI, Chew EY, Levy S, McGue M, Schlessinger D, Stambolian D, Loh PR, Iacono WG, Swaroop A, Scott LJ, Cucca F, Kronenberg F, Boehnke M, Abecasis GR & Fuchsberger C. (2016), ‘Next-generation genotype imputation service and methods’, Nature Genetics 48(10), 1284–1287.

- DePristo MA, Banks E, Poplin R, Garimella VK, Maguire JR, Hartl C, Philippakis AA, Del Angel G, Rivas MA, Hanna M, McKenna A, Fennell TJ, Kernytsky AM, Sivachenko AY, Cibulskis K, Gabriel SB, Altshuler D. & Daly MJ (2011), ‘A framework for variation discovery and genotyping using next-generation DNA sequencing data’, Nature Genetics 43(5), 491–501.

- Dowlin N, Gilad-Bachrach R, Laine K, Lauter K, Naehrig M. & Wernsing J. (2017), ‘Manual for using homomorphic encryption for bioinformatics’, Proceedings of the IEEE.

- Duan Q, Liu EY, Croteau-Chonka DC, Mohlke KL & Li Y. (2013), ‘A comprehensive SNP and indel imputability database’, Bioinformatics 29(4), 528–531. DOI: 10.1093/bioinformatics/bts724

- dynverse: benchmarking, constructing and interpreting single-cell trajectories (n.d.), https://github.com/dynverse. Accessed: 2021-04-19.

- Evangelou E. & Ioannidis JP (2013), ‘Meta-analysis methods for genome-wide association studies and beyond’, Nature Reviews Genetics 14(6), 379–389.

- Fan J. & Vercauteren F. (2012), ‘Somewhat practical fully homomorphic encryption’, IACR Cryptology ePrint Archive 2012, 144.

- Gangan S. (2015), ‘A review of man-in-the-middle attacks’, CoRR abs/1504.02115. URL: http://arxiv.org/abs/1504.02115

- Gentry C. (2009), Fully homomorphic encryption using ideal lattices, in ‘Proceedings of the Forty-first Annual ACM Symposium on Theory of Computing’, STOC ’09, ACM, pp. 169–178. URL: http://doi.acm.org/10.1145/1536414.1536440

- Gibson G. (2012), ‘Rare and common variants: twenty arguments’, Nature Reviews Genetics 13(2), 135–145.

- Goldfeder RL, Wall DP, Khoury MJ, Ioannidis JP & Ashley EA (2017), ‘Human genome sequencing at the population scale: a primer on high-throughput DNA sequencing and analysis’, American Journal of Epidemiology 186(8), 1000–1009.

- Heather JM & Chain B. (2016), ‘The sequence of sequencers: The history of sequencing DNA’, Genomics 107(1), 1–8.

- Hoffmann TJ, Kvale MN, Hesselson SE, Zhan Y, Aquino C, Cao Y, Cawley S, Chung E, Connell S, Eshragh J. et al. (2011), ‘Next generation genome-wide association tool: design and coverage of a high-throughput European-optimized SNP array’, Genomics 98(2), 79–89.

- Homomorphic Encryption Standardization (HES) (n.d.), https://homomorphicencryption.org. HES.

- Hoofnagle CJ, van der Sloot B. & Zuiderveen Borgesius F. (2019), ‘The European Union general data protection regulation: What it is and what it means’, Information and Communications Technology Law 28(1), 65–98.

- Howie B, Fuchsberger C, Stephens M, Marchini J. & Abecasis GR (2012), ‘Fast and accurate genotype imputation in genome-wide association studies through pre-phasing’, Nature Genetics 44(8), 955–959.

- Howie B, Marchini J. & Stephens M. (2011), ‘Genotype imputation with thousands of genomes’, G3: Genes, Genomes, Genetics 1(6), 457–470.

- Howie BN, Donnelly P. & Marchini J. (2009), ‘A flexible and accurate genotype imputation method for the next generation of genome-wide association studies’, PLoS Genetics 5(6).

- Johnson EO, Hancock DB, Levy JL, Gaddis NC, Saccone NL, Bierut LJ & Page GP (2013), ‘Imputation across genotyping arrays for genome-wide association studies: Assessment of bias and a correction strategy’, Human Genetics 132(5), 509–522.

- Kockan C, Zhu K, Dokmai N, Karpov N, Kulekci MO, Woodruff DP & Sahinalp SC (2020), ‘Sketching algorithms for genomic data analysis and querying in a secure enclave’, Nature Methods 17(3), 295–301.

- Kowalski MH, Qian H, Hou Z, Rosen JD, Tapia AL, Shan Y, Jain D, Argos M, Arnett DK, Avery C, Barnes KC, Becker LC, Bien SA, Bis JC, Blangero J, Boerwinkle E, Bowden DW, Buyske S, Cai J, Cho MH, Choi SH, Choquet H, Adrienne Cupples L, Cushman M, Daya M, de Vries PS, Ellinor PT, Faraday N, Fornage M, Gabriel S, Ganesh SK, Graff M, Gupta N, He J, Heckbert SR, Hidalgo B, Hodonsky CJ, Irvin MR, Johnson AD, Jorgenson E, Kaplan R, Kardia SL, Kelly TN, Kooperberg C, Lasky-Su JA, Loos RJ, Lubitz SA, Mathias RA, McHugh CP, Montgomery C, Moon JY, Morrison AC, Palmer ND, Pankratz N, Papanicolaou GJ, Peralta JM, Peyser PA, Rich SS, Rotter JI, Silverman EK, Smith JA, Smith NL, Taylor KD, Thornton TA, Tiwari HK, Tracy RP, Wang T, Weiss ST, Weng LC, Wiggins KL, Wilson JG, Yanek LR, Zöllner S, North KE, Auer PL, Raffield LM, Reiner AP & Li Y. (2019), ‘Use of >100,000 NHLBI Trans-Omics for Precision Medicine (TOPMed) Consortium whole genome sequences improves imputation quality and detection of rare variant associations in admixed African and Hispanic/Latino populations’, PLoS Genetics 15(12).

- Locke AE, Kahali B, Berndt SI, Justice AE, Pers TH, Day FR, Powell C, Vedantam S, Buchkovich ML, Yang J. et al. (2015), ‘Genetic studies of body mass index yield new insights for obesity biology’, Nature 518(7538), 197–206.

- Loh P-R, Danecek P, Palamara PF, Fuchsberger C, Reshef YA, Finucane HK, Schoenherr S, Forer L, McCarthy S, Abecasis GR et al. (2016), ‘Reference-based phasing using the Haplotype Reference Consortium panel’, Nature Genetics 48(11), 1443.

- Lyubashevsky V, Peikert C. & Regev O. (2010), On ideal lattices and learning with errors over rings, in ‘Advances in Cryptology – EUROCRYPT 2010’, Vol. 6110 of Lecture Notes in Computer Science, Springer, pp. 1–23.

- Marchini J. & Howie B. (2010), ‘Genotype imputation for genome-wide association studies’, Nature Reviews Genetics 11(7), 499–511.

- Naveed M, Ayday E, Clayton EW, Fellay J, Gunter CA, Hubaux J-P, Malin BA & Wang X. (2015), ‘Privacy in the genomic era’, ACM Computing Surveys (CSUR) 48(1), 1–44.

- Ng PC & Kirkness EF (2010), Whole genome sequencing, in ‘Genetic variation’, Springer, pp. 215–226.

- NHLBI Trans-Omics for Precision Medicine Whole Genome Sequencing Program (2016), https://www.nhlbiwgs.org/. TOPMed.

- Nissenbaum H. (2009), Privacy in context: Technology, policy, and the integrity of social life, Stanford University Press.

- Nyholt DR, Yu C-E & Visscher PM (2009), ‘On Jim Watson’s APOE status: genetic information is hard to hide’, European Journal of Human Genetics 17(2), 147–149.

- Rehm HL (2017), ‘Evolving health care through personal genomics’, Nature Reviews Genetics 18(4), 259.

- Schaid DJ, Chen W. & Larson NB (2018), ‘From genome-wide associations to candidate causal variants by statistical fine-mapping’, Nature Reviews Genetics 19(8), 491–504.

- Schwarze K, Buchanan J, Taylor JC & Wordsworth S. (2018), ‘Are whole-exome and whole-genome sequencing approaches cost-effective? A systematic review of the literature’, Genetics in Medicine 20(10), 1122–1130.

- Shendure J, Balasubramanian S, Church GM, Gilbert W, Rogers J, Schloss JA & Waterston RH (2017), ‘DNA sequencing at 40: past, present and future’, Nature 550(7676), 345–353.

- Stram DO (2004), ‘Tag SNP selection for association studies’, Genetic Epidemiology: The Official Publication of the International Genetic Epidemiology Society 27(4), 365–374.