Abstract

Recent technological applications have made it necessary to replicate human vision artificially, and the issues that solutions must address grow in proportion to the number of applications. Face classification for access control and video surveillance is a typical open-ended application, for which suitable models have been proposed to meet users' needs. Although successive efforts have produced powerful face recognition models, factors that limit the quality of the results must still be considered. These include low-resolution images, partial occlusion of faces, and adversarial attacks. This paper presents RoFace, a formal representation of the face that addresses these issues by taking into account the quality of the input image, the detection of face occlusion in the image, the features derived from the image, and the ability to withstand adversarial attacks. Experiments have been conducted to assess the impact of these components on classification/recognition accuracy.

Keywords: Adversarial attack, Classification, Face recognition, Low-resolution image, Partial occlusion

1. Introduction

The recent shift of many applications to biometric technology explains the growing interest in methods for monitoring people in a given area. These methods include facial recognition and verification, iris-based control systems, and fingerprint-based control systems, to name a few. Because facial recognition does not require interaction between the user and the system, the need to deploy autonomous systems focuses our attention on this area. One of the essential requirements for accurate face recognition is face representation.

To see how challenging this can be, consider a face stored as a 3-D matrix. A single rotation of the face can produce a completely different matrix. A face representation must therefore yield a description that is fairly simple and invariant under any operation (rotation, translation, etc.) and any factor (illumination, age, etc.).

Among the plethora of approaches that have emerged, fractal representation [1] was one of the earliest. It relies on the principle of representing an object by itself, that is, through self-similar elements. Its advantage was that it required fewer processing resources than the conventional Principal Component Analysis (PCA) method [2]. However, the feature vectors had to be recalculated whenever a new sample was added. The same idea, namely the transposition of a 3-D matrix into a one-dimensional feature vector, was then applied to a number of other representations. This reduces spatial and temporal complexity but unfortunately assumes that images are of very high quality.

To address these issues, this paper presents a face representation called RoFace, an acronym for robust face representation approach for precise categorization. The goal is to propose a model that handles both the identification process and reinforcement processes such as image enhancement, occlusion removal, and protection against adversarial attacks. The rest of the paper is structured as follows. Section 2 summarizes prior research, selected for its quality and relevance to our topic. Section 3 presents the RoFace signature in detail and explains the formalization procedure. Section 4 details the implementation and the associated results, which allow the model to be validated. Section 5 concludes with a summary and perspectives for further investigation.

2. Related work

In the past, the approaches used for this purpose were hand-engineered methods, including local binary patterns (LBP), Fisher vectors, and Scale-Invariant Feature Transform (SIFT) features.

The term “sparsity” refers to representing a particular face accurately and robustly using the fewest possible elements derived from the face's original matrix. The result is known as the feature vector. The features are most often determined using a mathematical formulation. The literature contains countless approaches, some more popular than others, such as eigenfaces, local binary patterns (LBP), and Fisherfaces.

Fisherfaces [3] are similar to eigenfaces but additionally rely on linear discriminant analysis (LDA) [4]. This addition makes it possible to take the classes of the sample images into account during the extraction of eigenvalues, so that the extracted characteristics separate the different classes from one another. It has the benefit of reducing error rates.

Other algorithms use SIFT (Scale-Invariant Feature Transform) features [5]. SIFT begins with scale-space extrema detection, which is designed to detect point and blob structures. Each candidate key point is then evaluated, and low-confidence points (those whose value falls below a threshold) are removed. This is followed by orientation assignment, where one or more orientations are assigned to each key point based on the local image gradient directions. Finally, key point descriptors are computed from the image gradients sampled around the key point, using the key point's scale to select the level of Gaussian blur for the image.

For LBP [6], the neighborhood of every pixel is examined. Based on it, binary computations are made: a formula involving a given threshold is applied at every pixel, and the resulting values are grouped to obtain the histogram of the image. Because it depends on this threshold, the approach is sensitive to local variations as well as to illumination. Consequently, it does not perform well when the images contain occlusions.
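The neighborhood computation described for LBP can be illustrated with a minimal sketch. It assumes the basic 8-neighbor variant, in which each neighbor is thresholded against the center pixel; the function names `lbp_code` and `lbp_histogram` and the clockwise bit ordering are illustrative choices, not details taken from Ref. [6].

```python
def lbp_code(patch):
    """Return the 8-bit LBP code of the center pixel of a 3x3 patch."""
    center = patch[1][1]
    # Neighbors read clockwise, starting at the top-left corner (assumed order).
    neighbors = [
        patch[0][0], patch[0][1], patch[0][2],
        patch[1][2], patch[2][2], patch[2][1],
        patch[2][0], patch[1][0],
    ]
    code = 0
    for bit, value in enumerate(neighbors):
        # A neighbor contributes a 1 when it is at least as bright as the center.
        if value >= center:
            code |= 1 << bit
    return code


def lbp_histogram(image):
    """Histogram of LBP codes over all interior pixels of a grayscale image."""
    histogram = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            patch = [row[c - 1:c + 2] for row in image[r - 1:r + 2]]
            histogram[lbp_code(patch)] += 1
    return histogram
```

The histogram, rather than the raw codes, is what serves as the feature vector, which is why a change affecting many neighborhoods at once (such as an occluding object) alters the description.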

Whereas LBP uses local pixel data to calculate the feature values, eigenfaces [7] project the image onto a new vector space of reduced dimension using principal component analysis [8]. This method is advantageous in that it structures the recognition process and does not require knowledge of the geometry or reflectance of faces. The process is cumbersome, though, and numerous example images must be taken into account to reach an acceptable degree of accuracy.

Since 2012, new face representations have emerged thanks to the popularization of neural networks, whose accurate results made them widely adopted. Their specificity stems from the fact that they are designed to receive 3-D matrices, i.e., images, as input. Among the plethora of models that have been proposed, two families have established their reputation: the Visual Geometry Group (VGG) model [9] and the FaceNet (Face Network) model [10]. Both families employ neural networks with a specific number of layers. Consider FaceNet, one of the most popular models, which includes 22 layers and a triplet loss layer at the end. During training, this layer increases the distance between samples from different classes and decreases it for samples from the same class. The VGG model family, on the other hand, contains numerous sub-models, the most notable of which are VGG-16 [9], VGG-19 [11], and ResNet-50 (Residual Network) [12]. Several other popular models exist, for example AlexNet [13] and Inception [14]. For more information, refer to Ref. [15].
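The behavior of the triplet loss layer can be made concrete with a short sketch. It assumes squared Euclidean distances between embeddings and a margin of 0.2; the function name `triplet_loss` and the plain-list embeddings are illustrative assumptions rather than the implementation of Ref. [10].

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two embeddings of equal length."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Loss for one (anchor, positive, negative) triplet.

    The loss is zero once the negative (different class) is farther from the
    anchor than the positive (same class) by at least `margin`.
    """
    d_pos = squared_distance(anchor, positive)
    d_neg = squared_distance(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)
```

Minimizing this quantity pulls same-class embeddings together and pushes different-class embeddings apart, which is the effect described above.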

In the same vein, more complex models exist, in particular DeepID3 [16], which is built from stacked inception and convolution layers that give it a remarkable capacity for facial recognition. Erjin et al. [17] provide a model that achieves a precision of 99.5% on LFW. The DeepID2+ model [18] also achieves promising results, using identification supervision for its learning. This is made possible by adding more convolutional layers and expanding the size of the hidden representations. Zhu et al. [19] developed a deep architecture that can recover a canonical view of faces, drastically reducing intra-person variations while preserving adequate inter-person discrimination. Xudong et al. [20], for their part, elaborate on a transfer learning method that combines data from the source domain with a small number of carefully chosen samples from the target domain to build a classifier that performs as well as if rich target-domain data were available. The Bayesian method introduced in Ref. [21] recognizes faces through a joint modeling of a pair of facial representations under suitable assumptions about the representation of the face, leading to excellent results. Along the same lines, the method of Ref. [22] trains its model on a dataset containing many facial images of approximately 4000 individuals. Consequently, the error is decreased by more than 27%, and the accuracy on LFW is increased to 97.35%. A summary of state-of-the-art approaches is presented in Table 1, where the performance metric is accuracy.

Table 1.

Comparing cutting-edge facial recognition methods using the LFW dataset.

2.1. Choice of convolutional neural network

It is generally accepted that convolutional neural networks are specifically designed to process images as 3-D matrices, which gives them an advantage in handling positioning, performance, localization, and adversarial examples.

This is made possible by inserting artificial shifts at the inputs [48], which gives the network a capacity for differentiation. An implicit coding of position information is thereby introduced into the extracted feature maps. In addition, the initial layers perform edge detection, allowing the following layers to capture increasingly high-level concepts as depth in the network increases.

Adversarial examples are a particular challenge: patches placed at strategic locations in the classifier's field of view can produce a targeted class [28], leading the classifier to predict an erroneous class with a high degree of confidence. The system can be fooled in this way through the introduction of almost imperceptible noise. To counter this, the recommended approach is to train the classifier to recognize the attacker, that is, to present attacks to the model during training. This is what authors such as Papernot et al. [29] or, more extensively, Bingcai et al. [30] present.

A network from the ResNet family, which is a convolutional neural network, has been chosen because it has better accuracy, as shown in Table 1. The values in the table were taken from the literature, from authors who evaluated different implementations on the same dataset, namely Labeled Faces in the Wild (LFW).

3. Presentation of RoFace approach

3.1. Generalities

A top-down approach is usually used to design a complex algorithm, and the analysis proceeds by successive refinements. This approach leads to independence from any implementation. As a consequence, information whose concrete representation may vary receives a consistent description through its data: this is the notion of abstract data type. The resulting representation emphasizes the structure of the data, the actions that may be carried out on it (known as primitives), and the semantic properties of these operations. Following Ref. [31], an abstract data type (ADT) is a mathematical representation that specifies the operations applied to data. Since the structure of the data is subject to a set of specifications, a correspondence can be established between this structure and the nature of the specification. This enables the definition of data types that are not natively available in programming languages, i.e., non-primitive types. The scheme for formally defining these types and the operations applied to objects of these types is known as algebraic specification. To associate a semantics with an algebraic specification, one or more of the data types it describes must be defined. An algebraic specification therefore uses a signature and a set of axioms to specify one or several data types. The signature consists of a set of type names, commonly called sorts, together with a collection of operations, where each operation name is associated with a profile of the form S1 S2 … Sn → S0, where the Si (i = 0 … n) are sorts.

The definition of an abstract data type therefore comprises the following fields:

- Introduction: This includes the definition of the type name as well as any useful specification.
- Description (optional): Where operations are informally described.
- Operations: Here, the syntax of all operations is defined together with their parameters; they constitute the interface.
- Axioms: The semantics of the operations is defined here by means of equations.

As a result, a specification that consists of a set of sorts and operations refers to a signature, and the operations have to be accurately and fully described by axioms. Note that sort is a more specific word than type: a sort can be thought of as a syntactic object, whereas a type refers to a set of data together with the operations that can be applied to these data [32]. Different types can therefore be associated with an identical set of data, depending on the functionality considered. In addition, the term arity [32] of an operation, which indicates how many elements are needed to perform it, applies in this context to any operation.

3.2. Face representation: signature

The authors of [33], for their part, define a signature as a set S of sorts together with a set A of operation names, each having an arity over S. The set A need not be finite, but the operation names must be pairwise distinct. In practice, however, the need to write specifications with a limited number of characters makes the set A finite.
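The definition of a signature as a set of sorts plus a set of distinct operation names with profiles S1 S2 … Sn → S0 can be sketched as a small data structure. The class name `Signature`, its methods, and the stack example are hypothetical illustrations and do not come from Refs. [31–33].

```python
from dataclasses import dataclass, field
from typing import Dict, Sequence, Set, Tuple


@dataclass
class Signature:
    """A set of sorts and a set of operation names, each with a profile."""
    sorts: Set[str]
    operations: Dict[str, Tuple[Tuple[str, ...], str]] = field(default_factory=dict)

    def declare(self, name: str, arg_sorts: Sequence[str], result_sort: str) -> None:
        """Register the operation `name : arg_sorts -> result_sort`."""
        if name in self.operations:
            # Operation names must be pairwise distinct.
            raise ValueError(f"operation {name!r} is already declared")
        for sort in (*arg_sorts, result_sort):
            if sort not in self.sorts:
                raise ValueError(f"unknown sort {sort!r}")
        self.operations[name] = (tuple(arg_sorts), result_sort)

    def arity(self, name: str) -> int:
        """Number of arguments the operation takes."""
        return len(self.operations[name][0])


# Illustrative example: the signature of a stack ADT.
stack = Signature(sorts={"Stack", "Elem", "Bool"})
stack.declare("empty", [], "Stack")
stack.declare("push", ["Stack", "Elem"], "Stack")
stack.declare("top", ["Stack"], "Elem")
stack.declare("is_empty", ["Stack"], "Bool")
```

The axioms, which give the operations their meaning (for instance, that `top` applied to `push(s, e)` yields `e`), are deliberately left out of this sketch; the signature alone fixes only the syntax.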

Prior to any facial representation, the input image must be cropped to keep only the area where the face appears, as determined by a face detection method. The method employed in this study is MTCNN [34], a cascade of three neural networks that detects the face and locates it with a bounding box.

Given an image I representing a face, we seek a representation S of I that has sufficient components to depict the face accurately, so that it can be used to differentiate between two faces. It was shown earlier that a model from the ResNet family best fits this purpose. For a given face image, a feature vector is therefore extracted with the VGGFace approach [12,35], leading to a unique feature vector of size 2622. These operations are performed under the hypothesis that the images are of good quality, although this is often not the case in reality. In this regard, the authors of [36] proposed an approach that improves the quality of the image, in particular by reconstructing it at a higher resolution. Additionally, two other parameters are considered, namely the ability to resist adversarial attacks and the capability to reconstruct the face when it is occluded.

3.3. Equation representing the face

The concept behind this representation is to obtain a description f(I) for an image I, as given in Equation (1):

| (1) |

The characteristic elements in Equation (1) can be either vectors or real-valued parameters. Here, we are looking for a representation that captures the distinctive features of a face; the more parameters there are, the better the representation. Additionally, unlike in benchmark datasets, the images handled in real-world situations are not necessarily of excellent quality, which introduces a new set of constraints that may be linked to illumination, resolution, rotation, occlusion, etc. In this view, a face representation is proposed that integrates a set of elements that guarantee its robustness. It includes the image enhancement module, the occlusion module, the vector of invariant characteristics, and a coefficient of sensitivity to adversarial attacks. Thus, the face representation is defined as follows: a signature S is extracted from a facial image using Equation (2):

| (2) |

Super-resolution is an approach that enhances image quality, specifically the resolution of an image, using the formulas given in Equations (3), (4) and (5).

| (3) |

with

| (4) |

and

| (5) |

In Equations (3)–(5), Y is a low-resolution image, the kernels and biases are those of the successive convolutions, and F(Y) is the reconstructed image with greater resolution. Occlusion removal depends on two parameters: the mask and the occlusion feature map (see Section 4.3). The features of the input image, which must be of size 224, are extracted by means of the Resnet-50 model [12], which computes the feature vector. Finally, the adversarial component is a factor determined by generating adversarial images, fine-tuning the classification model, and computing the percentage of improvement. More details on the principle used are presented in Section 9. Thus, the components of the vector representing the signature are given in Equation (6):

| (6) |
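The three-stage mapping described for Equations (3)–(5), with a low-resolution input Y, kernels and biases of successive convolutions, and a reconstructed output F(Y), has the same shape as the standard three-layer SRCNN formulation. A sketch under that assumption is given below; the symbols $W_i$, $B_i$, $F_1$ and $F_2$ are assumed names for the kernels, biases and intermediate feature maps, and $*$ denotes convolution.

```latex
\begin{align}
F_1(Y) &= \max\bigl(0,\; W_1 * Y + B_1\bigr) \\
F_2(Y) &= \max\bigl(0,\; W_2 * F_1(Y) + B_2\bigr) \\
F(Y)   &= W_3 * F_2(Y) + B_3
\end{align}
```

Under this reading, the first two layers extract and non-linearly map patch features through a rectifier, while the last layer is a purely linear reconstruction.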

3.4. Using the specified signature

Any input image I passes through four steps:

- First step: The dimensions of the input image are determined and compared with a threshold value Rs, which is the minimum resolution required for further processing. If the resolution is greater than Rs, nothing is done. Otherwise, the image resolution is increased by super resolution, producing a new image with the required resolution.
- Second step: If the face is occluded, the occlusion is removed using the second component of the representation. In practice, the emphasis has been placed on eyeglasses and face mask occlusion.
- Third step: Feature extraction. In this stage, the feature vector F is extracted from image I, and its closest class is found using a SoftMax layer, which is a regression layer. At the end of this process, the individual is assigned an identity.
- Fourth step: Refinement of the model to make it robust to adversarial attacks.

These steps and all components are illustrated in Fig. 1.

Fig. 1.

Flow chart of RoFace.
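The inference-time part of this flow (the first three steps) can be sketched as a simple control structure. Every name below (`RoFacePipeline`, the callable fields, the list-of-rows image type) is a hypothetical placeholder for the corresponding trained component, not the authors' implementation; the fourth step is a training-time refinement and therefore does not appear in `run`.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

Image = List[List[float]]  # assumed image type: rows of pixel values


@dataclass
class RoFacePipeline:
    min_resolution: int                                    # threshold Rs
    super_resolve: Callable[[Image], Image]                # step 1 component
    is_occluded: Callable[[Image], bool]                   # step 2 detector
    remove_occlusion: Callable[[Image], Image]             # step 2 repair
    extract_features: Callable[[Image], Sequence[float]]   # step 3 extractor
    classify: Callable[[Sequence[float]], str]             # step 3 classifier

    def run(self, image: Image) -> str:
        """Return the identity predicted for a cropped face image."""
        height, width = len(image), len(image[0])
        # Step 1: raise the resolution only when it falls below the threshold.
        if min(height, width) < self.min_resolution:
            image = self.super_resolve(image)
        # Step 2: repair the face only when an occlusion is detected.
        if self.is_occluded(image):
            image = self.remove_occlusion(image)
        # Step 3: extract the feature vector and map it to an identity.
        return self.classify(self.extract_features(image))
```

Keeping each component behind a callable makes it possible to measure the contribution of each one separately, which is how the results in Section 4 are organized.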

4. Implementation and results

The RoFace approach is made up of three parts: image enhancement, feature extraction, and verification. This section presents the results obtained for each component, with an emphasis on their advantages over previously obtained results.

The implementations in this study rest on some assumptions. First, it is assumed that the data are of good quality, that is, they have the properties (variability, absence of noise, sufficient size, etc.) needed for proper training. In addition, it is assumed that the datasets used reflect the reality of our case study, so that the results obtained can readily be applied to it. Any other parameter that can affect these results will be investigated in further studies.

Apart from images that one of the authors willingly provided for experimentation, all images were obtained from publicly available datasets. Consequently, no ethical rules were violated with respect to either consent or disclosure.

For normalization, all images are resized before being passed to a model. For the face recognition and occlusion removal models, images are of size 224 × 224 pixels, whereas the super-resolution model accepts any image size.

Parameters were adjusted based on information from previous works. We tested the most commonly used activation functions and found that softmax activation gives better results for the recognition model. Sparse categorical cross-entropy is used as the loss function and Nadam as the optimizer, with a learning rate of 0.001.

To determine the number of epochs, we started with a very high number and noticed, while plotting the training curve, that the training accuracy stabilized after 100 epochs; this is why 200 was chosen as the number of epochs. In addition, the analysis of the confusion matrix, intended to avoid falling into local minima, led to the adjustment of the batch size, which was set to 32 in the different experiments.

4.1. Module of super resolution

When the image resolution is below the threshold resolution, improving image quality by raising the resolution is a necessary step. Fig. 2 depicts the super-resolution architecture in use.

Fig. 2.

Architecture of FSRCNN network.

4.1.1. Module's guiding concept of operation

Since images of excellent quality are rarely available in real-life situations, the subsequent processing is affected, which results in poor or insufficient feature extraction. A super-resolution module was developed to deal with this scenario by raising the dimensions of the facial image when they fall below an empirically determined threshold. With the help of a convolutional neural network, this technique creates a higher-resolution image from a lower-resolution one. Many techniques are described in the literature; the Fast Super-Resolution Convolutional Neural Network (FSRCNN), a well-known and recent one, was selected based on its results. FSRCNN enhances images of objects by increasing their resolution by a factor k. In this work, we adapted it to enhance images of people's faces using a dataset we created.

4.1.2. Creation of training datasets

The regular approach involves training the model on a collection of random images, provided they are of high quality (resolution). A model trained in this way, however, performs poorly in the specific case of human face representation. A dataset of human face images was therefore used instead. Because it comprises enough images (13233), the Labeled Faces in the Wild (LFW) dataset is the basis for face retrieval in the current investigation. The images were divided into two subsets: 75% were used for training, and the remaining 25% were used as the test dataset. This produced 9924 training images and 3309 testing images. The model was trained for 20 epochs. This number of epochs was determined empirically, like the previous parameters, because the accuracy was observed to stabilize beyond 20 epochs.
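The subset sizes follow directly from the 75%/25% proportions when the training count is rounded down. The short check below illustrates the arithmetic; the helper name `split_counts` is a hypothetical choice, and rounding down is an assumption that happens to reproduce the reported figures.

```python
def split_counts(total, train_fraction):
    """Return (train, test) sizes, rounding the training share down."""
    train = int(total * train_fraction)
    return train, total - train


train_count, test_count = split_counts(13233, 0.75)
print(train_count, test_count)  # 9924 3309
```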

The intent of this network is to use the natural ability of convolutional neural networks to reconstruct a higher-resolution version of the original image. It differs from a straightforward zoom, whose algorithm refits the values of the image matrix to a new dimension and typically loses some feature elements. The super-resolution method, on the other hand, starts with a low-resolution image and reconstructs a higher-resolution version of it. In this case, it is used to enhance feature extraction by bringing out subtle but relevant information.

4.1.3. Validation of super-resolution model

The super-resolution model was validated as follows. A validation dataset was generated from sample images taken from the LFW test set described above (81 images). The images were downscaled by a factor k (in our case, k = 4), giving the input images and their corresponding downscaled samples. The downscaled images were then reconstructed with the proposed model and with two additional models: the SRCNN model and the FSRCNN_mobileNet model. The factor of 4 was chosen empirically: significant results were obtained starting at 1.5, and 4 was chosen for illustration purposes only.

MobileNet is TensorFlow's first computer vision model designed for mobile applications. By using depth-wise separable convolutions, it drastically reduces the number of parameters compared with a network of the same depth that uses ordinary convolutions. The SRCNN model [37], on the other hand, is the model from which FSRCNN [38] was derived. It learns a mapping from low to high resolution in which all layers are jointly optimized. Despite its compact design, it achieves state-of-the-art restoration quality and runs at a speed suitable for practical online applications.

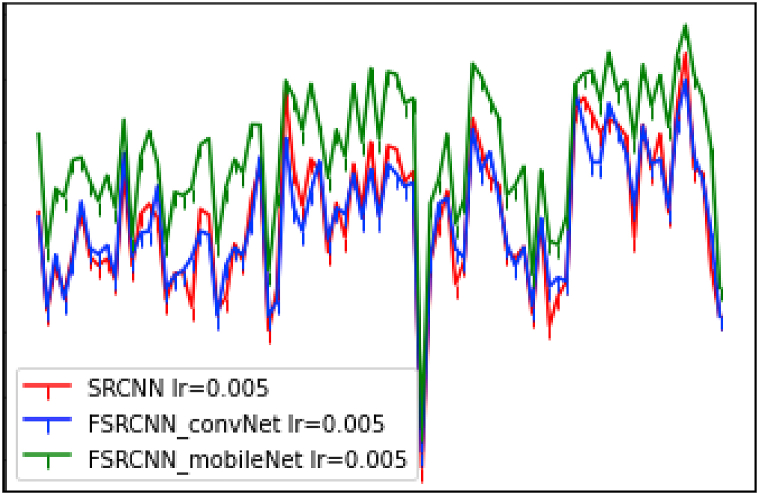

The peak signal-to-noise ratio (PSNR) is the ratio between the maximum possible power of an image signal and the power of the corrupting noise that affects the fidelity of its representation. The higher this value, the better the reconstruction. Fig. 3 displays the PSNR values for the 81 images.

Fig. 3.

Evolution of PSNR values of images for each of the three models.
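For reference, PSNR is commonly computed from the mean squared error between the original and reconstructed images and expressed in decibels. The sketch below assumes 8-bit grayscale images stored as lists of rows, with a peak value of 255; the function names are illustrative and the code is not taken from the experiments reported here.

```python
import math


def mean_squared_error(reference, reconstructed):
    """Average squared pixel difference between two images of equal size."""
    total, count = 0.0, 0
    for ref_row, rec_row in zip(reference, reconstructed):
        for ref_px, rec_px in zip(ref_row, rec_row):
            total += (ref_px - rec_px) ** 2
            count += 1
    return total / count


def psnr(reference, reconstructed, max_value=255.0):
    """Peak signal-to-noise ratio in decibels; infinite for identical images."""
    error = mean_squared_error(reference, reconstructed)
    if error == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / error)
```

In a validation such as the one described above, `reference` would be the original image and `reconstructed` the output of a model applied to its downscaled copy.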

Fig. 3 shows that the best values were obtained with the FSRCNN model, more precisely its mobileNet version: in each case, the highest PSNR value was obtained when the mobileNet version was used.

In addition, the average PSNR value and the total processing time during the validation phase were recorded for each model. These are presented in Table 2. As expected, the mobileNet version had the best value in terms of average PSNR. Also, both FSRCNN models took less time than SRCNN to process the 81 images, which is why FSRCNN was adopted as the super-resolution model.

Table 2.

Average PSNR and time taken during training phase.

| Model | Average PSNR | Time (s) |
|---|---|---|
| SRCNN model | 26.113 | 309 |
| FSRCNN_convNet | 27.287 | 103 |
| FSRCNN_mobileNet | 25.596 | 91 |

4.1.4. An analysis of how super resolution affects face detection

To analyze this effect, a model was developed that increases the input resolution by a factor of 4. It was trained for 100 epochs to obtain acceptable results. This was done to enhance the quality of the input image and thus minimize the loss function. Following this procedure, 200 images were used, including 20 people × 20 images. For each image, copies at different scales were created to illustrate the gain in performance when using super resolution. This led to copies with the following low resolutions: 25 × 25, 35 × 35, 45 × 45 and 55 × 55. Table 3 presents the number of faces found together with the corresponding percentage. In each case, the use of super resolution resulted in the detection of more faces than without it. It is also worth noting that the super-resolution method produced a better output image as the resolution of the original image increased. A resolution of 35 × 35 is good enough; this empirical value served as the threshold in the process flow presented earlier.

Table 3.

Distribution of Face Count vs. Image Resolution.

| Resolution | 25 × 25 | 35 × 35 | 45 × 45 | 55 × 55 |
|---|---|---|---|---|
| Faces detected at low resolution (out of 200) | 175 | 189 | 189 | 191 |
| Detection rate at low resolution (%) | 87.5 | 94.5 | 94.5 | 95.5 |
| Faces detected at high resolution (out of 200) | 191 | 195 | 195 | 197 |
| Detection rate at high resolution (%) | 95.5 | 97.5 | 97.5 | 98.5 |
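The percentages in Table 3 are the face counts divided by the 200 test images. The check below recomputes them; it assumes that the low-resolution count in the 45 × 45 column is 189, which is the value implied by its listed rate of 94.5%. The variable and function names are illustrative.

```python
TOTAL_IMAGES = 200
RESOLUTIONS = ["25x25", "35x35", "45x45", "55x55"]
# Counts read from Table 3; the 45x45 low-resolution entry is an assumption.
FACES_LOW = [175, 189, 189, 191]
FACES_HIGH = [191, 195, 195, 197]


def detection_rates(counts, total=TOTAL_IMAGES):
    """Percentage of images in which a face was found."""
    return [100 * count / total for count in counts]


rates_low = detection_rates(FACES_LOW)
rates_high = detection_rates(FACES_HIGH)
# Gain in percentage points brought by super resolution at each resolution.
gains = [high - low for low, high in zip(rates_low, rates_high)]
```

The gain is largest at 25 × 25 and smaller at the other three resolutions, which is consistent with taking 35 × 35 as the cutoff below which super resolution is applied.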

4.2. Features extraction of the face

Here, we are working with a component whose sole purpose is to extract features from input images, giving each image its own distinct representation.

Several models have been presented in the literature to achieve this goal, with FaceNet, VGG, and ResNet being three of the best known. These models have drawn much attention because of the trade-off they offer between the accuracy of the results and image processing time. Tests were therefore conducted, and the outcomes are shown in Table 4.

Table 4.

Examination of competing feature extraction models.

| Network type | Number of images | Number of faces detected | Feature vector size | Recognition accuracy (%) |
|---|---|---|---|---|
| FaceNet | 3243 | 2610 | 128 | 79 |
| VGG | 3243 | 2610 | 512 | 80 |
| Resnet-50 | 3243 | 2610 | 2048 | 95 |

As input, a VGG-based model requires an RGB image of exactly 224 × 224 pixels. The input image then passes through a series of convolutional layers, where filters with a 3 × 3 pixel receptive field are applied. This is the smallest size that captures the notions of left/right, up/down, and center. In some cases, 1 × 1 convolution filters are also employed, which can be thought of as linear transformations of the input channels. Spatial pooling is ensured by 4 max-pooling layers that follow some of the convolutional layers, with a 2 × 2 window and a stride of 2. This family of models always requires huge resources and a long time (weeks to months) for training. Commonly, a Titan graphics processing unit (GPU) is employed, with a core clock of 837 MHz and a Graphics Double Data Rate version 5 (GDDR5) memory clock of 3004 MHz.

4.2.1. Face classification or recognition

This is the final step in the pipeline. It consists of matching the feature vector derived from the input image to another vector whose components are the features of a class in the knowledge base. K-nearest neighbors (KNN) is a popular algorithm that is often used for this. It calculates the distance between the feature vector of the input image and those of all the images in the knowledge base, and returns the class with the smallest distance. Another option is a support vector machine (SVM), which can be trained to classify or regress data efficiently. Once trained, this classifier has a relatively low processing time, which is a major advantage. However, its efficiency drops dramatically as the number of classes grows or when the data cannot be separated by a linear function. This justifies the choice of a softmax classifier, which takes the details of the feature vectors into account and, like SVM, has a reduced execution time.

4.2.2. Complexity analysis of softmax and KNN

To illustrate, let n denote the number of feature vectors per class and m the number of classes (here, the number of individuals). It is assumed that each class contains roughly n feature vectors. The following procedures are then obtained.

Algorithm 1: Classification using KNN.

The execution of the KNN algorithm is presented in Algorithm 1.

- With one individual, the number of computations is n × 1, giving a complexity of order θ(n).

- With two persons, the number of computations is n × 2, giving a complexity of θ(2n).

- With three persons, there are n × 3 computations, giving a complexity of θ(3n).

- etc.

- With m persons, there are n × m computations, giving a complexity of θ(nm).

- If, however, m is greater than n, as is typically the case in practice, the complexity exceeds θ(n²).
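The counting argument can be illustrated with a minimal nearest-neighbor sketch (k = 1) that also reports how many distance computations were made. The Euclidean distance, the dictionary layout of the knowledge base, and the toy feature vectors are assumptions made for illustration; they are not taken from the paper's implementation.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_neighbor_classify(query, knowledge_base):
    """Return (label of the closest stored vector, number of distance computations).

    knowledge_base maps a class label to the list of its feature vectors.
    """
    best_label, best_dist, comparisons = None, math.inf, 0
    for label, vectors in knowledge_base.items():
        for vec in vectors:
            comparisons += 1
            dist = euclidean(query, vec)
            if dist < best_dist:
                best_label, best_dist = label, dist
    return best_label, comparisons


# Hypothetical knowledge base: m = 3 classes with n = 2 feature vectors each.
toy_base = {
    "A": [[0.0, 0.0], [0.0, 1.0]],
    "B": [[5.0, 5.0], [6.0, 5.0]],
    "C": [[10.0, 0.0], [9.0, 1.0]],
}
label, comparisons = nearest_neighbor_classify([5.2, 4.9], toy_base)
```

With m = 3 classes and n = 2 vectors per class, every query triggers n × m = 6 distance computations, and this count grows with both the number of individuals and the number of samples stored for each.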

Therefore, a trained softmax layer was selected, as unsupervised learning is not practical in most applications. This is illustrated in Algorithm 2.

Since just one computation is required, the complexity is θ(ε), where ε ≪ n and ε is the instruction complexity.
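For comparison, classification with a trained softmax layer amounts to a single forward pass whose cost does not depend on the number of stored samples. The sketch below assumes a plain linear layer followed by softmax; the weights, biases, and feature vector are hypothetical values, not parameters from the trained model.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of class scores."""
    shift = max(scores)
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_classify(features, weights, biases):
    """One forward pass: linear scores, softmax, then the most probable class index."""
    scores = [
        sum(w * x for w, x in zip(row, features)) + b
        for row, b in zip(weights, biases)
    ]
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs


# Hypothetical parameters for 3 classes and 2-dimensional features.
toy_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
toy_biases = [0.0, 0.0, 0.0]
predicted, probabilities = softmax_classify([2.0, 0.5], toy_weights, toy_biases)
```

The work done per query is fixed by the size of the layer (number of classes times feature dimension), whereas the nearest-neighbor search has to visit every stored feature vector.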

4.2.3. Repercussions of the principle on validation and training

The Concatenated Rectified Linear Unit (CReLU) activation function has been shown to further improve classification capability after the introduction of a margin layer [39,40]. Four scenarios were therefore considered: (1) training a softmax layer under standard conditions and parameters; (2) training with the addition of a new layer type, the margin layer; (3) training with the CReLU module in place of the activation function; and (4) combining the two previous scenarios. During training, it was readily observed that stabilization was best with the combined approach, on both counts (a rise in accuracy and a decrease in loss).
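As a reminder of what CReLU computes, the sketch below applies its standard definition to a flat list of activations: the ReLU of the input concatenated with the ReLU of its negation, which doubles the output size. The margin layer is not sketched because its exact form is not given in this section.

```python
def relu(values):
    """Element-wise ReLU on a list of activations."""
    return [max(0.0, v) for v in values]


def crelu(values):
    """Concatenated ReLU: ReLU(x) followed by ReLU(-x), so the output is twice as long."""
    return relu(values) + relu([-v for v in values])


activations = crelu([1.5, -2.0, 0.0])
```

Unlike a plain ReLU, the negative part of the input is kept in the second half of the output instead of being discarded.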

Algorithm 2: Classification using softmax.

Note that the features fed to the softmax layer are extracted with the VGGFace model, which is a ResNet-based model (specifically ResNet-50). The pre-trained weights from [12], which are tailored toward face feature extraction, were then loaded. The last piece of the puzzle is a softmax regressor trained on our own dataset. To demonstrate this approach, the layer was trained using 400 image samples from the preceding twenty persons, with twenty samples per person (70% for training, 30% for testing).
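The 70%/30% partition can be sketched as follows. The split is done per person here so that every individual appears in both subsets; this stratification, the random seed, and the file names are assumptions, since the text only gives the overall proportions.

```python
import random


def split_per_person(samples_by_person, train_fraction=0.7, seed=0):
    """Shuffle each person's samples and split them into train and test lists."""
    rng = random.Random(seed)
    train, test = [], []
    for person, samples in samples_by_person.items():
        shuffled = list(samples)
        rng.shuffle(shuffled)
        cut = round(train_fraction * len(shuffled))
        train += [(person, s) for s in shuffled[:cut]]
        test += [(person, s) for s in shuffled[cut:]]
    return train, test


# Hypothetical file names: 20 persons with 20 samples each (400 images in total).
dataset = {p: [f"person{p}_img{i}.jpg" for i in range(20)] for p in range(20)}
train_set, test_set = split_per_person(dataset)
```

With twenty samples per person, this yields 14 training and 6 test images for each individual.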

The confusion matrix, which is needed for any further analysis, was not saved for all scenarios because the focus was on the impact of introducing the CReLU activation function and adding the margin layer, which is better illustrated by tracking the evolution of training accuracy and loss over time.

Subsequently, 200 sample images were used, at ten images per person, all from the same group of 20 persons. As in the training process, many copies of each 450 image were constructed and downscaled to the following image resolutions: 25 × 25, 35 × 35, 45 × 45, and 55 × 55. The objective was to show that recognition performance increases when super resolution is used. Table 5 reports the recognition rate as a function of input image resolution.

Table 5.

Average recognition rate against image resolution.

| Resolution | 25 × 25 | 35 × 35 | 45 × 45 | 55 × 55 |
|---|---|---|---|---|
| Recognition rate (%) | 5 | 36 | 63.5 | 79 |
| Recognition rate with super resolution (%) | 32.5 | 58 | 76 | 88 |
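The gain at each resolution can be read off Table 5 with a few lines of arithmetic. The sketch assumes that the second row of the table reports the recognition rate obtained when super resolution is applied, which is our reading of the surrounding text.

```python
resolutions = ["25 x 25", "35 x 35", "45 x 45", "55 x 55"]
baseline_rate = [5, 36, 63.5, 79]        # first row of Table 5 (%)
enhanced_rate = [32.5, 58, 76, 88]       # second row of Table 5 (%), assumed to use super resolution

# Improvement in percentage points at each input resolution.
gains = [round(e - b, 1) for b, e in zip(baseline_rate, enhanced_rate)]
gain_by_resolution = dict(zip(resolutions, gains))
```

The improvement is largest at the lowest resolution and shrinks as the input resolution increases.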

The model used was based on ResNet-50, which can account for rotation, pose, and age differences. It was pre-trained and then fine-tuned on the dataset at hand, and finally a layer with fully connected components was added for feature extraction. The resulting features did not depend on any of these transformations.

4.3. Reconstruction module

4.3.1. Introduction

In controlled conditions, such as frontal or near-frontal poses, neutral expressions, and near-uniform illumination, face recognition systems show impressive performance, but in uncontrolled environments they fail to work [41]. Image patterns of the face change dramatically due to occlusions such as hair, eyeglasses, and face masks, which degrades the performance of facial landmark detection and, consequently, of face recognition. Fig. 4 shows an example of a face occluded by a mask.

Fig. 4.

An example of partially occluded facial image.

Facial occlusion is the main reason why face recognition fails in uncontrolled contexts [42]. Partial occlusions on facial images occur in the wild and provide a challenge to facial identification in these situations. In principle, any obstructing item can constitute a partial occlusion, but in practice, the occlusion needs to be less than half of the face for it to be deemed partial [43].

An approach based on semi-supervised training of a generative adversarial network (GAN) is proposed by Cai et al. [44]. Their model, an Occlusion-Aware Generative Adversarial Network (OA-GAN), uses natural face images when the ground-truth non-occluded image of an occluded face is not available, and paired face images when the occlusion is artificial. It simultaneously predicts the occluded areas and recovers a face image free of occlusion.

4.3.2. Principle

Let X and Y denote the training sets of natural face images with and without occlusion, respectively. For a facial image $x_i \in X$ with natural occlusion, there is no matched ground-truth facial image $y_i \in Y$. The goal is to learn a mapping from X to Y, denoted by $G: X \rightarrow Y$, such that for each $x_i$ we can derive $\hat{y}_i = G(x_i)$, where $\hat{y}_i$ is in the domain Y. Fig. 5 depicts a simplified example of this principle in action.

Fig. 5.

Overview of the proposed occlusion-aware GAN (OA-GAN) for semi-supervised face de-occlusion, which does not rely on natural paired images or occlusion masks ([45]).

To detect and restore the occluded parts of the input face image, the OA-GAN generator incorporates a face-occlusion-aware module as well as a face completion module with an encoder-decoder architecture. The non-occluded areas are preserved by regressing the occlusion mask, a binary filter (0 for occluded and 1 for non-occluded pixels).

$$\widetilde{Feat} = M \odot Feat \qquad (7)$$

In Equation (7), $\odot$ denotes element-wise multiplication and $M$ the mask. $Feat$ is the intermediate facial feature of the occlusion-aware module, and $\widetilde{Feat}$ is the occlusion-free feature map that is supplied to the face completion module.

The occlusion-free feature map is fed into the face completion module, which then produces a synthetic face image. Equation (8) determines the final image of the recovered face.

$$x_{final} = M \odot x + (1 - M) \odot x_{synth} \qquad (8)$$

where $x_{final}$, $x$, and $x_{synth}$ stand for the final de-occluded image, the original occluded face image, and the synthetic facial image produced by the face completion module, respectively. The discriminator acts as a critic while the generator is being trained. Its purpose is to check whether the attributes of the original face image have been preserved in the recovered face, and whether the face is real or fake.
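The role of the mask can be illustrated on small arrays. The sketch uses the mask convention given above (0 for occluded, 1 for non-occluded). The blending rule, which keeps the original value where the mask is 1 and takes the synthesized value where it is 0, is our reading of the description and is not a formula confirmed by the source; the 2 × 2 values are purely illustrative.

```python
def apply_mask(feature_map, mask):
    """Element-wise product of a 2-D feature map with a binary mask."""
    return [
        [f * m for f, m in zip(f_row, m_row)]
        for f_row, m_row in zip(feature_map, mask)
    ]


def blend(original, synthesized, mask):
    """Keep original values where mask == 1, synthesized values where mask == 0."""
    return [
        [m * o + (1 - m) * s for o, s, m in zip(o_row, s_row, m_row)]
        for o_row, s_row, m_row in zip(original, synthesized, mask)
    ]


# Hypothetical 2 x 2 example: the top-right position is marked as occluded.
toy_mask = [[1, 0], [1, 1]]
masked_features = apply_mask([[2.0, 4.0], [6.0, 8.0]], toy_mask)
recovered = blend([[10, 20], [30, 40]], [[1, 2], [3, 4]], toy_mask)
```

Only the position flagged as occluded is replaced by synthesized content, so the visible parts of the face are left untouched.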

4.3.3. Dataset

The naturally unpaired dataset used in [44] is made up of naturally occluded images with no non-occluded counterpart; that is, no naturally occluded face image has a non-occluded counterpart that matches it in both identity and image settings. A naturally unpaired paired dataset is built and used instead, in which the non-occluded counterpart of an occluded image differs in image settings but preserves the person's identity. The CelebA dataset is used to construct both the synthetic paired dataset and the naturally unpaired paired dataset. The dataset is split into two sets, according to whether or not the person wears eyeglasses: one set of 13,193 face images with eyeglasses and one set of 189,406 face images without eyeglasses. The 189,406 face images without eyeglasses are used to create the paired dataset by projecting a collection of 12 eyeglasses and 24 face masks onto the face images, using the Dlib 68-point face landmarks and the annotation keys of each occlusion (eyeglasses and face masks). The annotation key files are made using an open-source online tool called makesense.

The identity file (identity_CelebA.csv) and the attribute file (list_attr_celeba.csv) are used to create a CSV file of the unpaired paired dataset. For each non-occluded image, four naturally occluded images of the same person are selected. A naturally unpaired paired dataset of 12,501 image pairs is obtained.
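The pairing rule can be sketched on in-memory data. The two dictionaries below stand in for the contents of the identity and attribute files; their layout, the file names, and the choice of the first non-occluded image of each person as the reference are assumptions made for illustration, not the authors' procedure.

```python
from collections import defaultdict


def build_unpaired_pairs(identity_of, has_eyeglasses, occluded_per_clean=4):
    """Pair one non-occluded image per person with up to four occluded images of that person.

    identity_of: image name -> person identifier
    has_eyeglasses: image name -> True if the face wears eyeglasses
    """
    by_person = defaultdict(lambda: {"clean": [], "occluded": []})
    for image, person in identity_of.items():
        key = "occluded" if has_eyeglasses[image] else "clean"
        by_person[person][key].append(image)

    pairs = []
    for person in sorted(by_person):
        groups = by_person[person]
        if not groups["clean"] or not groups["occluded"]:
            continue  # a pair needs both kinds of images for the same identity
        clean = groups["clean"][0]
        for occluded in groups["occluded"][:occluded_per_clean]:
            pairs.append((occluded, clean))
    return pairs


# Hypothetical toy records for three identities.
toy_identity = {"a1.jpg": 1, "a2.jpg": 1, "a3.jpg": 1, "b1.jpg": 2, "b2.jpg": 2, "c1.jpg": 3}
toy_glasses = {"a1.jpg": False, "a2.jpg": True, "a3.jpg": True,
               "b1.jpg": False, "b2.jpg": False, "c1.jpg": True}
toy_pairs = build_unpaired_pairs(toy_identity, toy_glasses)
```

Identities that lack either an occluded or a non-occluded image contribute no pair, which is one possible reason the number of pairs is smaller than the number of images with eyeglasses.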

4.3.4. Training

The OA-GAN module is trained in steps, alternating between the two datasets and employing different loss combinations throughout. It is first trained on synthetically occluded image pairs to form a foundation for the network's face de-occlusion capabilities, and then on unpaired natural images to generalize those skills to real-world conditions. Equation (9) defines the discriminator's loss function.

$$L_D = \alpha L_{adv} + \beta L_{attr} \qquad (9)$$

where $L_{adv}$ represents the adversarial loss and $L_{attr}$ the mean square error between the attributes of the ground-truth image and those of the image obtained after face recovery. $L_{attr}$ is used exclusively for paired facial images with synthesized occlusions. Both α and β are hyper-parameters that balance the two losses.

Equation (10) defines the cumulative loss function for the synthesized paired facial images used to train the generator:

| (10) |

Equation (11) defines the cumulative loss function for the natural unpaired facial images used to train the generator:

| (11) |

The training follows the diagram in Fig. 6. In total, 1,515,000 pairs of the paired dataset and 12,023 pairs of the unpaired dataset are used in the training process. Due to the large size of the paired dataset and the limited resources at hand, it was divided into chunks of 12,023 pairs (126 chunks) for training. Each chunk of the unpaired paired dataset is further divided into three parts, and the portion used is increased after each epoch. The alternating training is run for 3 epochs on each chunk of the paired dataset and on the unpaired dataset.

Fig. 6.

The training schedule for our OA-GAN, which features alternate periods [44].

The training is repeated for each chunk and 39 chunks of the paired dataset were used to train the model.
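The chunk arithmetic implied by these figures can be verified directly. The sketch only uses numbers quoted in the text (1,515,000 paired samples, chunks of 12,023 pairs, 39 chunks actually used); the variable names are illustrative.

```python
paired_total = 1_515_000   # pairs in the synthetic paired dataset, as quoted in the text
chunk_size = 12_023        # pairs per chunk
chunks_used = 39           # chunks actually used for training

full_chunks = paired_total // chunk_size           # number of complete chunks
pairs_in_full_chunks = full_chunks * chunk_size    # pairs covered by complete chunks
pairs_seen = chunks_used * chunk_size              # pairs seen during training
fraction_seen = pairs_seen / pairs_in_full_chunks  # share of the chunked data used
```

The quoted total is consistent with 126 complete chunks, and training on 39 of them covers a little under a third of the paired data.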

4.3.5. Performance evaluation

To assess the resemblance between two images, the Structural SIMilarity (SSIM) index is used. SSIM values range from 0 to 1, where 1 implies a perfect match between the reconstructed image and the original one. Generally, SSIM values above 0.80 indicate a reconstruction of acceptable quality [46].

The peak signal-to-noise ratio (PSNR) [45], in contrast, is defined as the ratio between the maximum possible power of a signal and the power of the corrupting noise. Because many signals have a very wide dynamic range, PSNR is usually expressed on a logarithmic scale, in decibels. In high-volume conditions, image quality can be considered high at a PSNR of 60 dB or more, but when it comes to low quality losses, 20 dB–25 dB is considered acceptable. In the present scenario, values are to be considered under low-volume conditions because of the resolutions of the processed images.
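Both metrics can be sketched in plain Python for grayscale images stored as nested lists. PSNR follows its usual definition from the mean squared error. The SSIM function below is a simplified, single-window variant computed over the whole image, whereas the index defined in [46] averages the same expression over local windows; the constants 0.01 and 0.03 and the 8-bit peak value of 255 are the commonly used defaults and are assumptions here.

```python
import math


def _flatten(image):
    """Turn a 2-D list of pixel values into a flat list of floats."""
    return [float(p) for row in image for p in row]


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    ref, tst = _flatten(reference), _flatten(test)
    mse = sum((a - b) ** 2 for a, b in zip(ref, tst)) / len(ref)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)


def global_ssim(reference, test, max_value=255.0):
    """Simplified SSIM using one window that spans the whole image."""
    x, y = _flatten(reference), _flatten(test)
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mean_x) ** 2 for a in x) / n
    var_y = sum((b - mean_y) ** 2 for b in y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / n
    c1, c2 = (0.01 * max_value) ** 2, (0.03 * max_value) ** 2
    numerator = (2 * mean_x * mean_y + c1) * (2 * cov + c2)
    denominator = (mean_x ** 2 + mean_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


# Hypothetical 2 x 2 images: the second is the first brightened by 10 gray levels.
original = [[100, 120], [140, 160]]
brightened = [[110, 130], [150, 170]]
psnr_value = psnr(original, brightened)
ssim_value = global_ssim(original, brightened)
```

A uniform brightness shift leaves the structure intact, so the simplified SSIM stays close to 1 while the PSNR reflects the pixel-wise error.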

It can be noticed that non-occluded images can be reconstructed from occluded images by means of a GAN architecture composed of a discriminator and a generator whose sub-modules detect and remove occlusions.

Additionally, the reconstructed images are of quite acceptable quality, as indicated by the PSNR and SSIM values reported in Table 6.

Table 6.

PSNR and SSIM evaluation of the results obtained.

| Personalities | Nicole | Lionel | Travolta | Freeman |
|---|---|---|---|---|
| PSNR (dB) | 23.73 | 17.75 | 22.34 | 24.67 |
| SSIM | 0.89 | 0.83 | 0.92 | 0.87 |

4.3.6. Impact on face recognition

The same individuals were used to verify how image reconstruction impacts the recognition process, and the following observations can be made.

- For faces occluded by a face mask, the system is unable to detect faces in the images (see Fig. 8). This is understandable because the face mask, as illustrated in Fig. 7, covers almost half of the image, so the detection algorithm cannot perform adequately. Once the images are reconstructed, the system recognizes them with very high accuracy, almost equal to that of the original faces.

- For faces occluded by eyeglasses (see Fig. 8), the eyeglasses do not significantly influence recognition: the faces are detected normally and are recognized with very high accuracy. This is expected because the region affected by the glasses is small, so it perturbs neither the detection algorithm nor the recognition algorithm.

Fig. 8.

Sample face detection for original images, occluded images, and reconstructed images.

Fig. 7.

Results obtained by the approach on synthetic occlusions on some images of the dataset used.

The accuracies obtained are summarized in Table 7. Whenever faces are detected, the values are high: at least 0.99 for every individual except Nicole (0.917 for the original face and 0.944 for the reconstructed face).

Table 7.

Evaluation of image reconstruction on recognition accuracy.

| Personalities | Nicole | Lionel | Travolta | Freeman |
|---|---|---|---|---|
| Original faces | 0.917 | 0.997 | 0.992 | 0.998 |
| Occluded faces | not detected | not detected | 0.993 | 0.997 |
| Reconstructed faces | 0.944 | 0.990 | 0.996 | 0.996 |

4.4. Adversarial defense component

4.4.1. Introduction

As mentioned earlier, to defend against adversarial attacks, adversarial sample images were generated and used to fine-tune the classification model. Many approaches exist in the literature, but the emphasis here is on a popular method called the Fast Gradient Sign Method (FGSM). This choice was motivated by the fact that FGSM uses the gradients of the network itself to generate new samples and thereby anticipates future manipulations by attackers. Despite its simplicity, it is an efficient method thanks to its capacity to generate pertinent adversarial images. The method, first presented by Goodfellow et al. [47], involves feeding an input image into a trained convolutional neural network, which makes a prediction about the image. The correct class label is then used to calculate the loss of the prediction, and the gradient of the loss is computed with respect to the input image. The signed gradient is then used to create the adversarial image, and the process repeats until a misclassified image is produced.

4.4.2. Working principle

This approach uses the gradients of the neural network to construct adversarial examples. It works by calculating the gradients of a loss function (such as MSE, hinge loss, etc.) with respect to the input image. The sign of the gradients is then used to create a new image (i.e., the adversarial facial image) that increases the loss. The resulting image can confuse or fool the neural network even though it appears identical to the original image. Previous studies have shown that training on a combination of adversarial and clean examples regularizes the neural network. This training differs from other data augmentation schemes, which usually augment the data with transformations, such as translations, that are expected to occur in the test set. This form of augmentation instead uses inputs that are very unlikely to occur naturally but that expose flaws in the way the model builds its decision function [47].

This is expressed by Equation (12):

$$x_{adv} = x + \varepsilon \cdot \mathrm{sign}\left(\nabla_x J(\theta, x, y)\right) \qquad (12)$$

Equation (12) is used to make the inserted perturbations as unnoticeable as possible to the human eye, yet large enough to fool a neural network (see Fig. 9 for an illustration). Here $x$ is the genuine input image, $y$ is the ground-truth label of the input, $\theta$ denotes the model parameters, $J$ is the loss function, and $\varepsilon$ is a small value that scales the signed gradients.

Fig. 9.

The Fast Gradient Sign Method expressed mathematically [47].
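A single FGSM step can be sketched on a toy model. To keep the example self-contained, the network is replaced by a linear softmax classifier, for which the gradient of the cross-entropy loss with respect to the input has a closed form; the weights, the input, and the value of ε are hypothetical. With a real CNN the gradient would come from automatic differentiation instead, and pixel values would normally be clipped back to the valid range.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of class scores."""
    shift = max(scores)
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_probs(weights, biases, x):
    """Class probabilities of a linear softmax classifier."""
    scores = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
    return softmax(scores)


def cross_entropy(weights, biases, x, true_label):
    """Cross-entropy loss of the classifier for the correct class."""
    return -math.log(predict_probs(weights, biases, x)[true_label])


def input_gradient(weights, biases, x, true_label):
    """Gradient of the cross-entropy loss with respect to the input: W^T (p - onehot)."""
    probs = predict_probs(weights, biases, x)
    errors = [p - (1.0 if k == true_label else 0.0) for k, p in enumerate(probs)]
    return [sum(errors[k] * weights[k][j] for k in range(len(weights))) for j in range(len(x))]


def sign(value):
    """Sign of a number: -1, 0, or 1."""
    return (value > 0) - (value < 0)


def fgsm(weights, biases, x, true_label, epsilon):
    """One FGSM step: move each input component by epsilon along the sign of the gradient."""
    grad = input_gradient(weights, biases, x, true_label)
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


# Hypothetical two-class model and input.
toy_w, toy_b = [[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]
clean_x, clean_label = [0.6, 0.4], 0
adv_x = fgsm(toy_w, toy_b, clean_x, clean_label, epsilon=0.2)
```

In this toy setting the perturbed input is assigned to the other class, while no component has moved by more than ε.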

5. Results

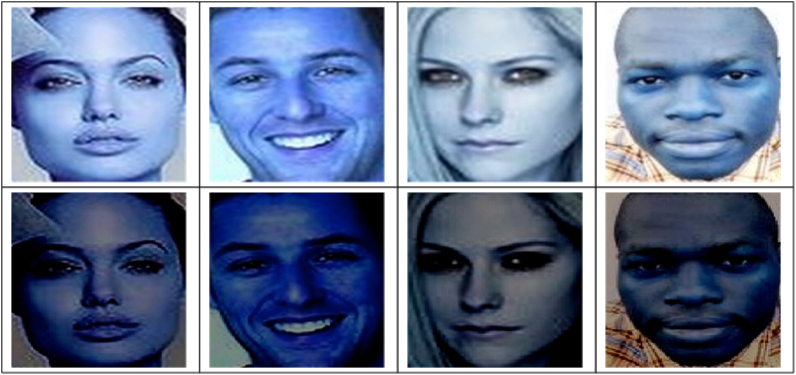

When FGSM is used to generate adversarial examples from the dataset, these images are used to fine-tune the baseline model so that it becomes robust enough to resist similar attacks. To this end, a subset of the training set was used to generate adversarial images. Fig. 10 presents some of the images obtained; they are remarkably faithful to the source images and almost impossible to tell apart with the human eye.

Fig. 10.

Original images compared to adversarial images. First row: original images; second row: adversarial images.

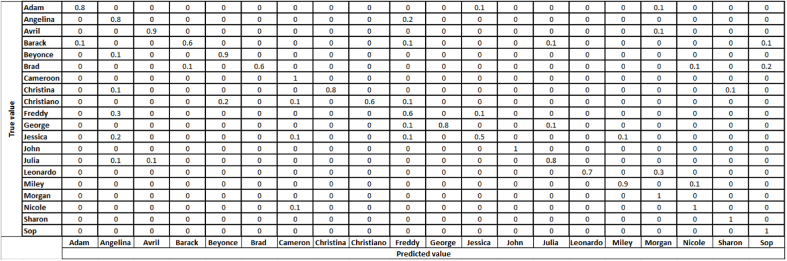

Once the images have been generated through this method, they are used to fine-tune the recognition model, as mentioned earlier. Confusion matrices are then computed for the images of the 20 classes used since the beginning. Concretely, the images are passed through the model before and after fine-tuning, and the same exercise is repeated for the generated images. Fig. 11 shows the model's confusion matrix for regular images, Fig. 12 shows its confusion matrix for the generated adversarial images, and Fig. 13 shows the confusion matrix of the fine-tuned model on adversarial images.

Fig. 11.

Confusion matrix of recognition model on normal images.

Fig. 12.

Confusion matrix of recognition model on adversarial images.

Fig. 13.

Confusion matrix of fine-tuned model on adversarial images.
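For reference, a confusion matrix of this kind can be computed as sketched below, with rows indexed by the true class and columns by the predicted class. The label lists are hypothetical toy data with three classes, not the results of the experiments.

```python
def confusion_matrix(true_labels, predicted_labels, num_classes):
    """Count how often each true class (row) is predicted as each class (column)."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for truth, prediction in zip(true_labels, predicted_labels):
        matrix[truth][prediction] += 1
    return matrix


def accuracy(matrix):
    """Share of samples on the diagonal, i.e. correctly classified."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total if total else 0.0


# Hypothetical labels for six samples and three classes.
toy_truth = [0, 0, 1, 1, 2, 2]
toy_predictions = [0, 1, 1, 1, 2, 0]
toy_matrix = confusion_matrix(toy_truth, toy_predictions, num_classes=3)
toy_accuracy = accuracy(toy_matrix)
```

Comparing the diagonal of such matrices before and after fine-tuning gives a quick numerical summary of what the figures show visually.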

It can be noticed that the generated adversarial images considerably reduce the performance of the model (compare Figs. 11 and 12), which was predictable because adversarial images are designed to fool the network. When the model is fine-tuned, an improvement is observed (compare Figs. 12 and 13), meaning that the proposed mechanism was able to improve robustness, that is, to reduce sensitivity to such attacks. These preliminary results show that it is a promising path.

For all the CNNs used, a thorough sensitivity analysis (SA) will be required for an optimized implementation of the proposed approach. The end goal is to be able to control the effect of the input parameters. Many approaches exist in the literature for this purpose, from the area under the curve (AUC) and receiver operating characteristic (ROC) [48] to more specific approaches such as one-at-a-time (OAT/OFAT), automated differentiation, screening emulators, etc. More details about sensitivity analysis can be found in [49].

Although significant results were obtained with the different models used in the present work, some factors can negatively affect them. Important ones are uncertainty and reliability, which will be investigated in the near future to consolidate the results.

6. Conclusion

This paper presented RoFace, a robust face recognition pipeline that tackles several challenges affecting recognition/classification accuracy: image quality, partial occlusion, and sensitivity to adversarial attacks. To this end, after a brief study of relevant approaches in the literature, detailed explanations of the proposed pipeline were presented. RoFace uses a super resolution module, an occlusion removal component, a ResNet-based architecture for feature extraction, and a mechanism enabling the model to defend against adversarial attacks. The results showed that the approach provides benefits in several respects: it enhances the image aspect (the characteristic studied here is resolution) when dealing with low-resolution images, it removes occlusions when the face is hidden by a face mask or eyeglasses, and it is robust to adversarial examples. Additionally, the extracted features are invariant to transformations such as rotation. However, the gains obtained, although acceptable, are still not considerable, so future studies will further investigate the factors affecting recognition in order to increase the improvements as much as possible. Moreover, other related factors such as model uncertainty and reliability will also be investigated in depth to reach a more realistic solution.

Author contribution statement

Elie TAGNE FUTE; Lionel Landry SOP DEFFO: Conceived and designed the experiments; Performed the experiments; Analyzed and interpreted the data; Contributed reagents, materials, analysis tools or data; Wrote the paper.

Emmanuel TONYE: Conceived and designed the experiments; Analyzed and interpreted the data; Contributed reagents, materials, analysis tools or data; Wrote the paper.

Funding statement

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Data availability statement

Data will be made available on request.

Declaration of interest's statement

The authors declare no competing interests.

References

- 1. Tang Zhijie, Wu Xiaocheng, Fu Bin, Chen Weiwei, Feng Hao. Fast face recognition based on fractal theory. Appl. Math. Comput. 2018;321:721–730. doi: 10.1016/j.amc.2017.11.017. ISSN 0096-3003.

- 2. Paul Harris, Chris Brunsdon, Martin Charlton Geographically weighted principal components analysis, Int. J. Geogr. Inf. Sci., vol 25, pp 1717-1736.

- 3. Yang Ming-Hsuan. Proceedings of the fifth IEEE International Conference on Automatic Face and Gesture Recognition. 2018. Kernel eigenfaces vs kernel fisherfaces: face recognition using kernel methods face recognition using eigen-faces, Fisher-faces; pp. 215–230.

- 4. Li Mengyu, Zhao Junlong. Communication-efficient distributed linear discriminant analysis for binary classification. Stat. Sin. 2022;32:1343–1361.

- 5. Krizaj J., truc V., Pavesic N. In: Campilho A., Kamel M., editors. vol. 6111. Springer; Berlin: 2010. Adaptation of SIFT features for robust face recognition. (Image Analysis and Recognition. ICIAR).

- 6. Rahim Abdur, Hossain Najmul, Wahid Tanzillah, Azam Shafiul. Face recognition using local binary patterns (LBP) Global J. Comp. Sci. Technol. Graphics and Vision. 2013;13

- 7. Carik Muge, Ozen Figen. vol. 1. Elsevier; 2012. pp. 118–123. (A Face Recognition System Based on Eigenfaces Method, Procedia Technology).

- 8. Gazley Michael, Collins Katie, Roberston J., Benjamin Hines, Fisher L., Angus McFarlane. NZAusIMM Branch Conference at Dunedin; 2015. Application of Principal Component Analysis and Cluster Analysis to Mineral Exploration and Mine Geology.

- 9. Krishna M., Neelima M., Harshali Mane, Venu Matcha. Image classification using Deep learning. Int. J. Eng. Technol. 2018;7(614) doi: 10.14419/ijet.v7i2.7.10892.

- 10. Schroff F., Kalenichenko D., Philbin J., Facenet A unified embedding for face recognition and clustering. IEEE Conference on Computer Vision and Pattern Recognition. 2015;6(7):815–823. doi: 10.1109/cvpr.2015.7298682.

- 11. Lu X., Zheng X., Yuan Y. Remote sensing scene classification by unsupervised Representation Learning. IEEE Transactions on Geoscience and Remote Sensing. 2017;55(9):5148–5157. doi: 10.1109/TGRS.2017.2702596.

- 12. Cao Q., Shen L., Xie W., Parkhi O.M., Zisserman A. IEEE Conference on Automatic Face and Gesture Recognition. 2018. Vggface2: a dataset for recognizing faces across pose and age. arXiv:1710.08092 [cs.CV]

- 13. Li Shaojuan, Wang Lizhi, Jia Li, Yao Yuan. Image classification algorithm based on improved AlexNet. J. Phys. Conf. 2021;1813(12051) doi: 10.1088/1742-6596/1813/1/012051.

- 14. Zhong Zilong, Li Jonathan, Ma Lingfei, Jiang Han, He Zhao. International Geoscience and Remote Sensing Symposium (IGARSS) 2017. Deep residual networks for hyperspectral image classification, IEEE.

- 15. He Kaiming, Zhang Xiangyu, Ren Shaoqing, Sun Jian. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016. Deep residual learning for image recognition; pp. 770–778.

- 16. Wang Mei, Deng Weihong. Deep face recognition: a survey, neurocomputing. Elsevier. 2021;429(14):215–244.

- 17. Zhou E., Cao Z., Yin Q. 2015. Naive-deep Face Recognition: Touching the Limit of Lfw Benchmark or Not? arXiv:1501.04690v1 [cs.CV] (January.

- 18. Sun Y., Wang X., Tang X. 2014. Deeply Learned Face Representations Are Sparse, Selective, and Robust. arXiv:1412.1265 [cs.CV] (December.

- 19. Zhu Z., Luo P., Wang X., Tang X. 2014. Recover Canonical-View Faces in the Wild with Deep Neural Networks. arXiv:1404.3543 [cs.CV]

- 20. Cao X., Wipf D., Wen F., Duan G., Sun J. IEEE International Conference on Computer Vision (ICCV) 2013. A practical transfer learning algorithm for face verification.

- 21. Moghaddam Baback, Jebara Tony, Pentland Alex. Bayesian face recognition. Pattern Recogn. 2000;33(11):1771–1782. doi: 10.1016/S0031-3203(99)00179-X. ISSN 0031-3203.

- 22. Poorni R., Charulatha S., Amritha B., Bhavyashree P. Real-time face detection and recognition using deepface convolutional neural network. The Electrochemical Society. 2022;107(5091)

- 23. Sharath Shylaja S., Balasubramanya Murthy K.N., Natarajan S. 2010. Dimensionality Reduction Techniques for Face Recognition, Refinements and New Ideas in Face Recognition.

- 24. Li Haoxiang, Hua Gang. IEEE Proceeding in Computer Vision and Pattern Recognition. 2015. Hierarchical-PEP model for real-world face recognition.

- 25. Balaprasad Tegala, Krishna R.V.V. Face recognition based security system using sift algorithm. Int. J. Sci. Engin. Adv. Technol. IJSEAT. 2015;3(11)

- 26. Ammar Sirine, Bouwmans Thierry, Neji Mahmoud. Face identification using data augmentation based on the combination of DCGANs and basic manipulations. Information MDPI. 2022;12(370) doi: 10.3390/info13080370.

- 27. Liu F., Chen D., Wang F., et al. Artif Intell Rev; 2022. Deep Learning Based Single Sample Face Recognition: a Survey.

- 28. Aneja Sandhya, Aneja Nagender, Abas Pg Emeroylariffion, Naim Abdul Ghani. Defense against adversarial attacks on deep convolutional neural networks through nonlocal denoising. IAES Int. J. Artif. Intell. 2022;11(3):961–968. doi: 10.1159/ijai.v11.i3.pp961-968.

- 29. Papernot Nicolas, McDaniel Patrick, Wu Xi, Jha Somesh, Swamiz Ananthram. 37th IEEE Symposium on Security & Privacy. 2016. Distillation as a Defense to Adversarial Perturbations against Deep Neural Network.

- 30. Kathrin Grosse, Nicolas Papernot, Praveen Manoharan, Michael Backes, Patrick McDaniel, Adversarial Examples for Malware Detection, Computer Security – ESORICS, Vol vol. 10493, ISBN : 978-3-319-66398-2.

- 31. Mila E. Majster, Data types, abstract data types and their specification problem. Theor. Comput. Sci. 1979;8(1):89–127. doi: 10.1016/0304-3975(79)90059-8. ISSN 0304-3975.

- 32. Thatcher J.W., Wagner E.G., Wright J.B. Data type specification: parameterization and the power of specification techniques. ACM Trans. Program Lang. Syst. 1982;4(4)

- 33. Gaudel Marie-Claude, Gall Pascale Le. In: Formal Methods and Testing. Hierons R., Bowen J., Harman M., editors. Springer-Verlag; 2008. Testing data types implementations from algebraic specifications; pp. 209–239.

- 34. Zhang K., Zhang Z., Li Z., Qiao Y. Joint face detection and alignment using multi-task cascaded convolutional networks. IEEE Signal Process. Lett. 2016 doi: 10.1109/LSP.2016.2603342.

- 35. Hasan Fuad Tahmid, Ahmed Fime Awal, Sikder Delowar, Raihan Iftee Akil, Rabbi Jakaria, Mabrook S., Al-rakhami, Gumae Abdu, Sen Ovishake, Fuad Mohtasim, Islam Nazrul. Recent advances in deep learning techniques for face recognition. IEEE Access. 2021;9:99112–99142. doi: 10.1109/ACCESS.2021.3096136.

- 36. Yu(AUST) Zhang. Xu Huan. PeerJ Comput Science; 2021. Chengfei Pei and Gaoming Yang, Adversarial Example Defense Based on Image Reconstruction.

- 37. Dong Chao, Loy Chen Change, He Kaiming, Tang Xiaoou. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 2014;38(2) doi: 10.1109/TPAMI.2015.2439281.

- 38. Turhan C.G., Bilge H.S. 2018 26th Signal Processing and Communications Applications Conference. SIU); 2018. Single Image Super Resolution Using Deep Convolutional Generative Neural Networks; pp. 1–4.

- 39. Fute E.T., Deffo L.L.S., Tonye E. Eff-vibe: an efficient and improved background subtraction approach based on vibe. Int. J. Image Graph. Signal Process. 2019;11(2):1–14. doi: 10.5815/ijigsp.2019.02.01.

- 40. Deffo L.L.S., Fute E.T., Tonye E. Cnnsfr: a convolutional neural network system for face detection and recognition. Int. J. Adv. Comput. Sci. Appl. 2018;9(12) doi: 10.14569/IJACSA.2018.091235.

- 41. Zhang Jinlu, Liu Mingliang, Wang Xiaohang, Cao Chengcheng. 40th Chinese Control Conference. CCC); 2021. Residual net use on FSRCNN for image super-resolution; p. 8077. 8077.

- 42. Darvish Mahdi, Pouramini Mahsa, Hamid Bahador. 2021. Towards Fine-grained Image Classification with Generative Adversarial Networks and Facial Landmark Detection. arXiv:2109.00891 [cs.CV]

- 43. Alhlffee Mahmood H.B., Huang Yea-Shuan, Chen Yi-An. PeerJ Computer Science; 2022. 2D Facial Landmark Localization Method for Multi-View Face Synthesis Image Using a Two-Pathway Generative Adversarial Network Approach.

- 44. Li Lei, Zhang Tianfang, Oehmcke Stefan, Gieseke Fabian, Igel Christian. 2022. Mask-FPAN: Semi-supervised Face Parsing in the Wild with De-occlusion and UV GAN. arXiv:2212.09098v1 [cs.CV]

- 45. Sajati Haruno. The effect of peak signal to noise ratio (PSNR) values on object detection accuracy in viola jones method. Proceeding senatik. 2018;4

- 46. Wang Z., Bovik A., Sheikh H.R., Simoncelli E.P. Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 2004;13(4):600–612. doi: 10.1109/TIP.2003.819861.

- 47. Goodfellow I.J., Shlens J., Szegedy C. International Conference on Machine Learning. 2015. Explaining and Harnessing Adversarial Examples.

- 48. Islam Amirul, Kowal Matthew, Jia Sen, Derpanis Konstantinos G., Neil D., Bruce B. Computer Vision Foundation; 2021. Global Pooling, More than Meets the Eye: Position Information Is Encoded Channel-Wise in CNNs.

- 49. Ceker H., Upadhyaya S. IEEE International Workshop on Information Forensics and Security (WIFS) 2017. Sensitivity analysis in keystroke dynamics using convolutional neural networks.
