Abstract

The TRIPOD-Cluster (transparent reporting of multivariable prediction models developed or validated using clustered data) statement comprises a 19 item checklist, which aims to improve the reporting of studies developing or validating a prediction model in clustered data, such as individual participant data meta-analyses (clustering by study) and electronic health records (clustering by practice or hospital). This explanation and elaboration document describes the rationale; clarifies the meaning of each item; and discusses why transparent reporting is important, with a view to assessing risk of bias and clinical usefulness of the prediction model. Each checklist item of the TRIPOD-Cluster statement is explained in detail and accompanied by published examples of good reporting. The document also serves as a reference of factors to consider when designing, conducting, and analysing prediction model development or validation studies in clustered data. To aid the editorial process and help peer reviewers and, ultimately, readers and systematic reviewers of prediction model studies, authors are recommended to include a completed checklist in their submission.

Medical decisions are often guided by predicted probabilities, for example, regarding the presence of a specific disease or condition (diagnosis) or the occurrence of a specific outcome within a given time (prognosis).1 2 3 4 5 Predicted probabilities are typically estimated using multivariable models that combine information or values from multiple variables (called predictors) observed or measured in an individual. Prediction models are typically aimed at assisting healthcare professionals in making clinical decisions for individual patients.5 6 In essence, a prediction model is an equation that converts an individual’s observed predictor values into a probability (or risk) of a particular outcome occurring.
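To make the idea of "an equation that converts predictor values into a probability" concrete, the sketch below shows a logistic regression model, one common form for binary outcomes. The predictor names and coefficients are hypothetical, chosen only for illustration, and do not come from any published model.

```python
import math

# Hypothetical intercept and coefficients (illustration only, not a published model)
INTERCEPT = -5.0
COEFFICIENTS = {"age_years": 0.05, "smoker": 0.7, "systolic_bp": 0.01}


def predicted_risk(predictors: dict[str, float]) -> float:
    """Convert an individual's predictor values into a predicted probability."""
    # Linear predictor: intercept plus the weighted sum of predictor values
    linear_predictor = INTERCEPT + sum(
        COEFFICIENTS[name] * value for name, value in predictors.items()
    )
    # The inverse logit maps the linear predictor onto the 0-1 probability scale
    return 1.0 / (1.0 + math.exp(-linear_predictor))


risk = predicted_risk({"age_years": 60, "smoker": 1, "systolic_bp": 140})
print(f"predicted risk: {risk:.3f}")
```

Each coefficient is the "weight" of a predictor; changing a predictor value shifts the linear predictor, and the inverse logit keeps the result between 0 and 1.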

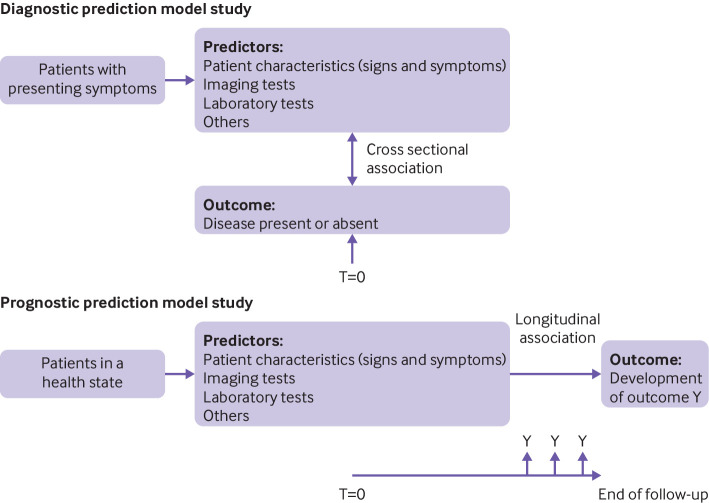

Prediction models fall into two broad categories: diagnostic and prognostic (box 1; fig 1).1 3 In a diagnostic model, two or more predictors are combined and used to estimate the probability that a certain condition is present at the moment of prediction: a cross sectional relation or prediction. Diagnostic models are typically developed for individuals suspected of having that condition based on presenting symptoms or signs. In a prognostic model, multiple predictors are combined and used to estimate the probability of a particular event occurring within a given prediction (time) horizon: a longitudinal relation or prediction. Prognostic models are developed for individuals in a particular health state who are at risk of developing the outcome of interest.5 8 9 We use the term “prognostic models” in the broad sense, referring to the prediction of a future condition in individuals with symptoms or in those without (eg, individuals in the general population), rather than the narrower definition of predicting the disease course of patients receiving a diagnosis of a particular disease with or without treatment. The prediction horizon can vary considerably depending on the event of interest. For example, the horizon for predicting in-hospital complications after surgery is shorter than that for predicting mortality at three months in patients receiving a diagnosis of pancreatic cancer, which in turn is shorter than that for predicting outcomes such as coronary heart disease in the general population, where the prediction horizon is often 10 years.10
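For a prognostic model, a predicted risk only has meaning together with its horizon. The sketch below assumes a Cox proportional hazards model, where the risk of the event before horizon t is 1 - S0(t)^exp(LP - mean LP), with S0(t) the baseline survival at t and LP the individual's linear predictor. The baseline survival of 0.95 at 10 years and the mean linear predictor are hypothetical values.

```python
import math

# Hypothetical values: baseline survival at a 10 year horizon and the mean
# linear predictor in the development data (Cox proportional hazards model)
BASELINE_SURVIVAL_10Y = 0.95
MEAN_LINEAR_PREDICTOR = 1.2


def risk_within_horizon(linear_predictor: float,
                        baseline_survival: float = BASELINE_SURVIVAL_10Y,
                        mean_lp: float = MEAN_LINEAR_PREDICTOR) -> float:
    """Probability that the event occurs before the prediction horizon."""
    # Relative hazard compared with an individual at the mean linear predictor
    relative_hazard = math.exp(linear_predictor - mean_lp)
    # Survival at the horizon is the baseline survival raised to the relative hazard
    return 1.0 - baseline_survival ** relative_hazard


print(f"{risk_within_horizon(1.2):.3f}")  # individual at the mean linear predictor
print(f"{risk_within_horizon(2.0):.3f}")  # individual with a higher linear predictor
```

A different horizon would require the baseline survival at that horizon; the coefficients alone are not enough to produce a prognostic risk.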

Box 1. Types of prediction model studies, adapted from TRIPOD E&E* (2015)2

Prediction model development studies

These studies aim to develop or produce a prediction model by identifying predictors of the outcome (eg, based on a priori knowledge or data driven analysis) and estimating the weight (or coefficient) of each predictor, so that the model can be used for tailored predictions. Sometimes, the development can focus on updating an existing prediction model by including one or more additional predictors, for example, predictors that were identified after the original model was developed.

Quantification of the model’s predictive performance (eg, by calibration, discrimination, clinical utility) using the same dataset in which the model was developed (often referred to as apparent performance) will tend to give optimistic results, particularly in small datasets. This is the result of overfitting, which is the tendency of models to capture some of the random variation that is present in any dataset. Overfitting is more problematic when the sample size is insufficient for model development.

Hence, prediction model development studies that use the same data for estimating the developed model’s predictive performance should also include a procedure to estimate optimism corrected performance, known as internal validation. The methods are referred to as internal validation because no new dataset is used; rather, performance is estimated internally using the dataset at hand. The most common approaches to internal validation are bootstrapping and cross validation. These methods aim to give more realistic estimates of the performance that we might expect in new participants from the same underlying population that was used for model development.

External validation studies†

These studies aim to assess (and compare) the predictive performance of one or more existing prediction models by using participant data that were not used to develop the prediction model. External validation can also be part of a model development study. It involves calculating outcome predictions for each individual in the validation dataset using the original model (ie, the developed model or formula) and comparing the model predictions with the observed outcomes. External validation can use participant data collected by the same investigators, with the same predictor and outcome definitions and measurements, but sampled from a later time period; data collected by other investigators in another hospital or country; data from similar participants, but from an intentionally different setting (eg, models developed in secondary care and tested in similar participants selected from primary care); or even data from other types of participants (eg, models developed in adults and tested in children, or developed for predicting fatal events and tested for predicting non-fatal events).

Randomly splitting a single dataset (at the participant level) into a development part and a validation part is often erroneously referred to as a form of external validation† of the model. In fact, this process is a weak and inefficient form of internal validation: for small datasets, it reduces the development sample size and leaves insufficient data for evaluation, while for large datasets, the two parts differ only by chance, so the evaluation is weak.

For large datasets, a non-random split (eg, at the hospital level) might be useful, although more informative approaches (eg, internal-external cross validation) are available to examine heterogeneity in model performance.7 A chronological split into development and validation parts resembles temporal external validation, but this process usually still differs from a completely separate validation study conducted at a later point in time. When a model performs poorly, a validation study can be followed by updating or adjusting the existing model (eg, recalibrating or extending the model by including additional predictors).

*TRIPOD=Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis; E&E=explanation and elaboration document.

†The term validation, although widely used, is misleading, because it indicates that model validation studies lead to a “yes” (good validation) or “no” (poor validation) answer on the model’s performance. However, the aim of internal or external validation is to evaluate (quantify) the model’s predictive performance.
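The bootstrap approach to internal validation mentioned in box 1 can be sketched as follows. This is a minimal sketch of the widely used optimism bootstrap, assuming an unpenalised logistic regression with a fixed set of predictors and the c statistic as the performance measure; the simulated data, the seed values, and the helper names (`fit_logistic`, `optimism_corrected_c`) are hypothetical. Every modelling step (including any predictor selection, which this sketch omits) should be repeated in each bootstrap sample.

```python
import math
import random


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def linear_predictor(beta, x):
    """Intercept plus weighted sum of predictor values."""
    return beta[0] + sum(b * v for b, v in zip(beta[1:], x))


def fit_logistic(X, y, iterations=10):
    """Unpenalised maximum likelihood logistic regression fitted by Newton-Raphson."""
    k = len(X[0]) + 1
    beta = [0.0] * k
    for _ in range(iterations):
        score = [0.0] * k
        information = [[0.0] * k for _ in range(k)]
        for x, yi in zip(X, y):
            row = [1.0] + list(x)
            p = 1.0 / (1.0 + math.exp(-linear_predictor(beta, x)))
            for i in range(k):
                score[i] += (yi - p) * row[i]
                for j in range(k):
                    information[i][j] += p * (1.0 - p) * row[i] * row[j]
        step = solve(information, score)
        beta = [b + s for b, s in zip(beta, step)]
    return beta


def c_statistic(scores, outcomes):
    """Proportion of event/non-event pairs ranked correctly (ties count one half)."""
    events = [s for s, o in zip(scores, outcomes) if o == 1]
    non_events = [s for s, o in zip(scores, outcomes) if o == 0]
    concordance = sum(1.0 if e > ne else 0.5 if e == ne else 0.0
                      for e in events for ne in non_events)
    return concordance / (len(events) * len(non_events))


def optimism_corrected_c(X, y, n_boot=50, seed=1):
    """Bootstrap estimate of the optimism in the apparent c statistic."""
    rng = random.Random(seed)
    beta = fit_logistic(X, y)
    apparent = c_statistic([linear_predictor(beta, x) for x in X], y)
    optimisms = []
    for _ in range(n_boot):
        idx = [rng.randrange(len(y)) for _ in range(len(y))]  # resample with replacement
        Xb, yb = [X[i] for i in idx], [y[i] for i in idx]
        beta_b = fit_logistic(Xb, yb)  # redevelop the model in the bootstrap sample
        c_boot = c_statistic([linear_predictor(beta_b, x) for x in Xb], yb)
        c_orig = c_statistic([linear_predictor(beta_b, x) for x in X], y)
        optimisms.append(c_boot - c_orig)
    optimism = sum(optimisms) / n_boot
    return apparent, optimism, apparent - optimism


# Simulated development data (hypothetical): three predictors, one of them pure noise
rng = random.Random(2024)
X = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(120)]
true_beta = [-1.0, 0.8, 0.4, 0.0]
y = [1 if rng.random() < 1.0 / (1.0 + math.exp(-linear_predictor(true_beta, x))) else 0
     for x in X]

apparent, optimism, corrected = optimism_corrected_c(X, y)
print(f"apparent c {apparent:.3f}, optimism {optimism:.3f}, corrected c {corrected:.3f}")
```

The difference between performance in each bootstrap sample and performance of the same bootstrap model in the original data estimates how much the apparent performance is inflated by overfitting.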

Fig 1.

Schematic representation of diagnostic and prognostic prediction modelling studies. T=time of prediction. Adapted by permission from BMJ Publishing Group Limited [Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): the TRIPOD statement. Collins GS, Reitsma JB, Altman DG, Moons KGM. BMJ 2015;350:g7594]1

Prediction model studies can look at the development of an entirely new prediction model, or the evaluation of a previously developed model in new data (often referred to as model validation) (box 1). Model development studies also include studies that update the model parameters (or include additional predictors) with the goal of improving the predictive accuracy in a specific setting, for example, in a different subgroup (such as race/ethnic group or sex/gender) or in a specific hospital or country.11 12 13
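One of the simplest ways to update an existing model for a new setting is recalibration-in-the-large: the original linear predictor (LP) is kept as a fixed offset and only a correction a to the intercept is estimated, in logit(p) = a + LP. The sketch below assumes a logistic model; the linear predictors and outcomes are hypothetical. At the maximum likelihood estimate, the mean predicted risk equals the observed outcome proportion in the new data.

```python
import math


def expit(z: float) -> float:
    """Inverse logit."""
    return 1.0 / (1.0 + math.exp(-z))


def recalibrate_intercept(linear_predictors, outcomes, iterations=25):
    """Estimate the intercept correction a in logit(p) = a + LP, with LP as a fixed offset."""
    a = 0.0
    for _ in range(iterations):
        probs = [expit(a + lp) for lp in linear_predictors]
        score = sum(y - p for y, p in zip(outcomes, probs))  # derivative of the log likelihood
        information = sum(p * (1.0 - p) for p in probs)
        a += score / information  # Newton-Raphson step
    return a


# Hypothetical validation data: linear predictors from the original model and outcomes
lps = [-2.0, -1.5, -1.0, -0.5, 0.0, 0.5, 1.0, 1.5]
outcomes = [0, 0, 0, 1, 0, 1, 1, 1]

expected = sum(expit(lp) for lp in lps)
print(f"observed/expected before updating: {sum(outcomes) / expected:.3f}")
a = recalibrate_intercept(lps, outcomes)
print(f"intercept correction: {a:.3f}")
```

More extensive updates (re-estimating the calibration slope, or adding predictors) follow the same idea but estimate more parameters, and therefore need more data.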

The development and validation of prediction models is often based on participant level data from a specific setting such as a single hospital, institute, or centre. However, datasets might combine or use participant level data from multiple sources or settings (referred to here as clusters). An example is an individual participant data (IPD) meta-analysis that uses IPD from multiple studies or sources, and another is an electronic health records (EHR) study containing IPD recorded from multiple hospitals or general practices.

Although single cluster studies (eg, from one particular hospital) can be convenient (eg, to facilitate data collection), they can cause problems for either model development or validation.14 Firstly, single cluster studies will typically have smaller sample sizes than studies with data from multiple clusters. Prediction models that are developed in small datasets are prone to overfitting and tend to have poor reproducibility.15 Briefly, overfitting implies that the model is too closely tailored to the development sample, and no longer yields accurate predictions for new individuals from the same source population. To reduce the risk of overfitting, it is generally recommended to adopt penalisation (ie, shrinkage) methods that decrease the variability of model predictions. However, the effectiveness of these methods can be very limited when the sample size is small.16
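As an illustration of shrinkage, the sketch below uses the heuristic uniform shrinkage factor of van Houwelingen and le Cessie, S = (LR chi2 - df) / LR chi2, where LR chi2 is the model's likelihood ratio statistic and df the number of estimated predictor parameters. This is one simple post hoc form of shrinkage, shown here instead of penalised likelihood methods such as ridge or lasso. The coefficients and test statistics are hypothetical. After shrinking, the intercept should be re-estimated so that the mean predicted risk still matches the outcome frequency; that step is omitted here.

```python
def heuristic_shrinkage_factor(lr_chi2: float, df: int) -> float:
    """Heuristic uniform shrinkage factor (van Houwelingen and le Cessie)."""
    return (lr_chi2 - df) / lr_chi2


def shrink_coefficients(coefficients: dict[str, float], factor: float) -> dict[str, float]:
    """Multiply every predictor coefficient by the same shrinkage factor.

    The intercept is not included; it should be re-estimated afterwards.
    """
    return {name: factor * beta for name, beta in coefficients.items()}


# Hypothetical coefficients and likelihood ratio statistics (10 predictor parameters)
coefficients = {"age_years": 0.8, "smoker": 0.5}
for lr_chi2 in (40.0, 15.0):  # a weaker fit, as can arise in a smaller dataset
    s = heuristic_shrinkage_factor(lr_chi2, df=10)
    print(f"LR chi2 {lr_chi2:.0f}: shrinkage factor {s:.2f}",
          shrink_coefficients(coefficients, s))
```

The less information the data carry relative to the number of parameters, the more the coefficients are shrunk, which reflects how strongly an unpenalised model would otherwise overfit.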

Secondly, estimates of prediction model performance can be very imprecise when derived from small samples.17 18 Although at least 100 events and 100 non-events have been recommended for assessing prediction model performance,19 even larger samples can give imprecise estimates.20 Unfortunately, the sample size of (single cluster) validation studies is often limited.21 For example, a systematic review of prediction models for coronavirus disease 2019 found that most (79%) published validation studies included fewer than 100 events.22
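The imprecision of a c statistic estimated in a small validation sample can be gauged with the approximate standard error of Hanley and McNeil (1982). The sketch below assumes that formula and a normal approximation for the 95% confidence interval; the c statistic of 0.75 and the sample sizes are hypothetical.

```python
import math


def c_statistic_se(c: float, n_events: int, n_non_events: int) -> float:
    """Approximate standard error of a c statistic (Hanley and McNeil, 1982)."""
    q1 = c / (2.0 - c)
    q2 = 2.0 * c ** 2 / (1.0 + c)
    variance = (c * (1.0 - c)
                + (n_events - 1) * (q1 - c ** 2)
                + (n_non_events - 1) * (q2 - c ** 2)) / (n_events * n_non_events)
    return math.sqrt(variance)


# Hypothetical c statistic evaluated with 100 versus 1000 events (and as many non-events)
for n in (100, 1000):
    se = c_statistic_se(0.75, n, n)
    print(f"{n} events and {n} non-events: approx 95% CI "
          f"{0.75 - 1.96 * se:.3f} to {0.75 + 1.96 * se:.3f}")
```

With 100 events and 100 non-events the interval spans roughly 0.68 to 0.82, which is wide enough to cover models of quite different discriminative ability.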

Thirdly, the intended use of prediction models is rarely restricted to the narrow setting or context in which they were developed. In practice, prediction models are often applied months or even years after their development, possibly in new hospitals, medical settings (eg, from primary to secondary care), domains (eg, from adults to children), regions, or even countries. Although single centre validation studies might help to assess the model’s performance in a new sample, they do not directly reveal the extent of the model’s transportability and generalisability across different but related source populations.15

Fourthly, when data from multiple clusters are combined into a single dataset, participants from the same cluster (eg, the same hospital, centre, city, or perhaps even country) will often tend to be more similar than participants from different clusters, because they will have been subject to similar healthcare processes and other related factors. Hence, correlation is likely to be present between observations from the same data cluster.23 This effect is also known as clustering, and can lead to differences (or heterogeneity) between clusters regarding participant characteristics, baseline risk, predictor effects, and outcome occurrence. As a consequence, the performance of prediction models developed or validated in clustered datasets will tend to vary across the clusters.24 25 26 Using a dataset from a single cluster to develop or validate a prediction model can therefore be of limited value, because the resulting model or model performance is unlikely to generalise to other relevant clusters that intend to use the prediction model.
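The degree of clustering in a binary outcome is often summarised by the intraclass correlation coefficient (ICC). The sketch below assumes a random intercept logistic model, in which the ICC on the latent scale is tau2 / (tau2 + pi^2 / 3), where tau2 is the between-cluster variance of the intercepts. The values of tau2 and of the average baseline risk are hypothetical; the second function shows how even a modest ICC implies a wide spread of baseline risks across clusters.

```python
import math


def latent_icc(tau2: float) -> float:
    """ICC on the latent logistic scale for a random intercept model with variance tau2."""
    return tau2 / (tau2 + math.pi ** 2 / 3.0)


def expit(z: float) -> float:
    """Inverse logit."""
    return 1.0 / (1.0 + math.exp(-z))


def baseline_risk_range(intercept: float, tau2: float) -> tuple[float, float]:
    """Approximate range covering 95% of cluster-specific baseline risks."""
    half_width = 1.96 * math.sqrt(tau2)
    return expit(intercept - half_width), expit(intercept + half_width)


# Hypothetical: baseline risk of 10% in a typical cluster, between-cluster variance 0.25
intercept = math.log(0.10 / 0.90)
tau2 = 0.25
low, high = baseline_risk_range(intercept, tau2)
print(f"ICC {latent_icc(tau2):.3f}; cluster baseline risks roughly {low:.3f} to {high:.3f}")
```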

The TRIPOD (transparent reporting of a multivariable prediction model for individual prognosis or diagnosis) statement was published in 2015 to provide guidance on the reporting of prediction model studies.1 2 27 It comprises a checklist of 22 minimum reporting items that should be addressed in all prediction model studies, with translations available in Chinese and Japanese (https://www.tripod-statement.org/). However, the 2015 statement does not detail important issues that arise when prediction model studies are based on clustered datasets. For this reason, we developed a new standalone guideline for prediction model reporting, TRIPOD-Cluster, that provides guidance for transparent reporting of studies that describe the development or validation (including updating) of a diagnostic or prognostic prediction model using clustered data.28 TRIPOD-Cluster covers both diagnostic and prognostic prediction models, and we will collectively refer to them more generally as “prediction models” in this article, while highlighting issues that are specific to either type of model.

Summary points.

To evaluate whether a prediction model is fit for purpose, and to properly assess its quality and any risks of bias, full and transparent reporting of prediction model studies is essential

TRIPOD-Cluster is a new reporting checklist for prediction model studies that are based on clustered datasets

Clustered datasets can be obtained by combining individual participant data from multiple studies, by conducting multicentre studies, or by retrieving individual participant data from registries or datasets with electronic health records; presence of clustering can lead to differences (or heterogeneity) between clusters regarding participant characteristics, baseline risk, predictor effects, and outcome occurrence

Performance of prediction models can vary across clusters, and thereby affect their generalisability

Additional reporting efforts are needed in clustered data to clarify the identification of eligible data sources, data preparation, risk-of-bias assessment, heterogeneity in prediction model parameters, and heterogeneity in prediction model performance estimates

Types of clustered datasets covered by TRIPOD-Cluster

We distinguish three types of large clustered datasets that are addressed by TRIPOD-Cluster. Specifically, IPD could come from:

Existing multiple studies (eg, cohort studies or randomised trials) where each study contributes separate datasets that are combined into a single dataset (clustering by study)

Large scale, multicentre studies (which cluster by centre)

EHR or registries from multiple practices or hospitals (clustering by practice or hospital).

Examples of large, clustered datasets of each type are provided in table 1. A specific type of clustered dataset arises when predictor or outcome variables are assessed at multiple time points.34 Because repeated measurements are clustered within individuals, their analysis requires special methods during the development and validation of prediction models,34 35 which is beyond the scope of TRIPOD-Cluster.

Table 1.

Examples of large datasets with clustering

| Dataset | IMPACT29 | EPIC30 | CPRD31 | MIMIC-III32 |
|---|---|---|---|---|
| Population | Patients with a head injury | Volunteers agreeing to participate | Patients attending primary care practices in the UK | Patients admitted to the Beth Israel Deaconess Medical Center (Boston, MA, USA) |
| Data source | IPD from multiple studies | IPD from a prospective multicentre study | Linked database with EHR data | Hospital database with EHR data |
| Total sample size | 11 022 | 519 978 | 11 299 221 | 38 597 |
| No of clusters | 15 studies | 23 centres | 674 general practices | 5 critical care units |
| Heterogeneity in study designs | Phase 3 clinical trials; observational cohort studies | Observational cohort studies; nested case-control studies | Not applicable | Not applicable |
| Heterogeneity in included populations | Data collection from 1984 to 1997; data from high, low, and middle income countries; variable severity of brain injury | Participant enrolment from 1992 to 2000; data from 10 European countries; heterogeneity in participant recruitment schemes | Data collection from 1987 to present; data from England, Wales, Scotland, and Northern Ireland | Data collection from 2001 to 2012; variable patient ethnic group and social status, among other factors |
| Heterogeneity in data quality | Variable classification for head injuries; variable time points for outcome assessment | Lack of standardised procedures across cohorts; heterogeneity in dietary assessment methods; heterogeneity in anthropometric measurement methods; heterogeneity in questionnaires across countries | Selective linkage with other databases; large variation in data recording between practices; variable frequency of data recording by age, sex, and underlying morbidity; informative missingness of patient characteristics; non-standardised definitions of diagnoses and outcomes; possible variation in extent of misclassification between diseases | Different critical care information systems in place during data collection; protected health information removed from free text fields |
| Heterogeneity in level of care | Variability in level of local care; clear improvement of treatment standards over time | Not applicable | Not applicable | Variable efforts to health prevention owing to variability in health insurance programmes among patients33 |

IPD=individual participant data; EHR=electronic health records data; IMPACT=International Mission for Prognosis And Clinical Trial; EPIC=European Prospective Investigation into Cancer and Nutrition; CPRD=Clinical Practice Research Datalink; MIMIC-III=Medical Information Mart for Intensive Care III.

Individual participant data from existing multiple studies

A common approach to increase sample size and capture variability between clusters is to combine the IPD from multiple primary studies.5 14 36 37 38 Eligible datasets are ideally, but not necessarily, identified through a systematic review of published primary studies.39 Alternatively, datasets can be obtained through a data sharing platform. For instance, the International Mission for Prognosis And Clinical Trial (IMPACT) database was set up in 2007 to combine the IPD from patients with head injuries who participated in different randomised trials and observational studies.29 The included studies adopted different protocols, predictor and outcome definitions, measurement times, and data collection procedures. As a consequence, baseline population characteristics differed among the included studies, with variability being particularly high in some of the observational studies.40 Furthermore, some trials had lower mortality, possibly because they excluded patients with severe head injury. Using so-called IPD meta-analysis (IPD-MA) techniques, the resulting large IMPACT database has been used to develop various prediction models, for example, to estimate the risk of mortality at six months in patients with traumatic brain injury. Several efforts were made to investigate the presence and potential impact of heterogeneity between clusters (here, between studies) in performance.40

As another example, Geersing et al conducted an IPD-MA to assess the performance of the Wells rule, a prediction model for the presence of deep vein thrombosis.41 Eligible datasets were identified by contacting principal investigators of published primary studies on the diagnosis of deep vein thrombosis, and by conducting a literature search. Authors of 13 studies provided datasets, after which the predictive performance of the Wells rule and its heterogeneity between studies were investigated using meta-analysis techniques.

Individual participant data from predesigned multicentre studies

An IPD database can also be set up by establishing a collaboration of participating researchers across multiple centres (eg, primary, secondary, or tertiary healthcare practices) by design and thus before data collection. Such predesigned multicentre studies are typically prospective and share a common master protocol outlining participant eligibility criteria, variable definitions, measurement methods, and other study features. Participating investigators and healthcare professionals agree before the start of their study to pool their data, and to predefine the participant eligibility criteria, data collection methods, and analysis techniques. For example, the European Prospective Investigation into Cancer and Nutrition (EPIC) study is a multinational cohort in which 23 research centres within Europe prospectively collected IPD from more than half a million participants to study the role of nutrition in the causation and prevention of cancer.30 Questionnaires and interviews were used to retrieve baseline data on diet and non-dietary variables, as well as anthropometric measurements and blood samples. Participant outcomes were determined using insurance records, cancer registries, pathology registries, mortality registries, active follow-up, and collection of death records. Over the past few years, the EPIC dataset has been used to develop and validate prognostic risk prediction models for ovarian cancer,42 colorectal cancer,43 HIV infection,44 type 2 diabetes,45 and several other disease outcomes.

When combining IPD from existing studies, these studies could themselves be multicentre studies. However, when such study datasets are used for prediction model development or validation, the data are typically treated as a single study dataset. In this scenario, too, developed and validated models could be subject to variability in predictive accuracy across centres.

Electronic healthcare records or registries from multiple practices or hospitals

Another type of clustered data comes from large databases with routinely collected data from multiple hospitals, primary care, or other healthcare practices.46 47 48 These registry databases usually contain EHR for thousands or even millions of individuals from multiple practices, hospitals, or countries. Prediction model studies using EHR data are increasingly common.48 For example, QRISK3 was developed using EHR data from 1309 QResearch general practices in England.49 Unlike data that are collected specifically for research purposes, EHR data are collected as per routine practice requirements.48 As a consequence, data quality and completeness often vary between individuals, clinical domains, geographical regions, and individual databases.50 51 52 53 54 For instance, patients with clinically obvious disease might receive less expensive and less invasive investigation than patients with less severe disease that is more difficult to diagnose. Further problems arise when registries cannot be linked reliably for all patients or have very limited follow-up.

Challenges and opportunities in using clustered datasets for prediction modelling

If clustering is neither recognised nor accounted for when developing a prediction model, the estimated model parameters (eg, baseline risk, predictor effects, or weights) and the resulting predicted probabilities could be misleading. For example, when a standard logistic regression, time-to-event, or machine learning model that ignores the inherent clustering is used, the final model might yield estimated probabilities that are too close to the overall outcome frequency in the entire study dataset.55 The presence of clustering might also affect the transportability of developed prediction models, and the interpretation of validation study results. In particular, clusters can differ in outcome occurrence, in participant characteristics, or even in predictor effects, which could lead to heterogeneity in prediction model performance across clusters and thereby affect its generalisability. This effect is also known as the spectrum effect.56 57

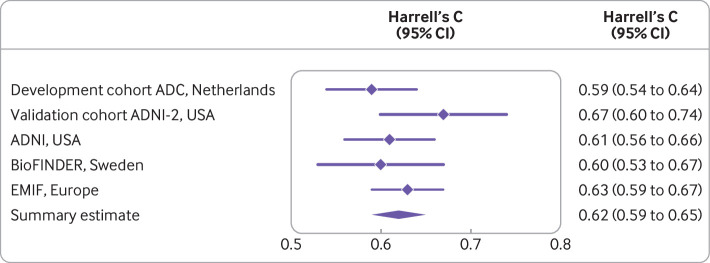

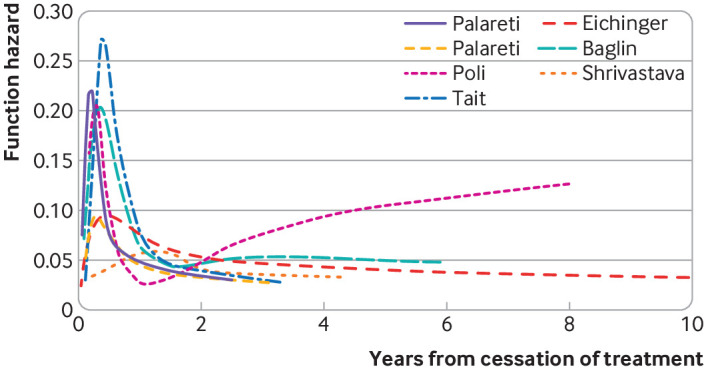

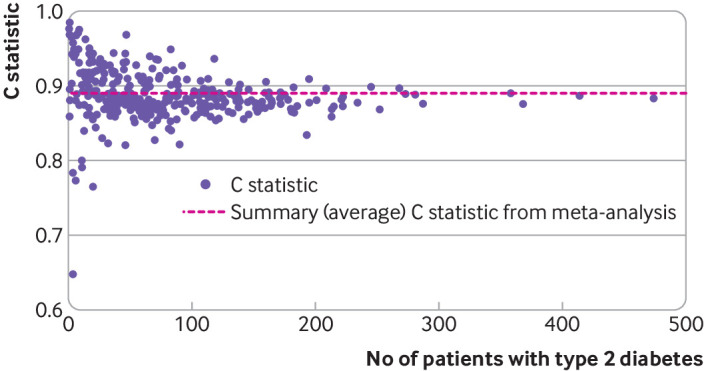

For instance, the ability of a model to distinguish between patients with or without the outcome event, which is often measured by the concordance (c) statistic, depends on the homogeneity of the case mix in the entire dataset: the more similar the values of a predictor are in a given set of individuals, the lower the c statistic tends to be.26 By case mix, we mean the distribution of predictor values and other relevant participant or cluster characteristics (such as treatments received), and the outcome prevalence or incidence. As an example, Steyerberg et al used IPD from 15 studies to validate a prediction model for unfavourable outcome in patients with traumatic brain injury.40 They found that case mix variability was particularly high in the four observational studies (compared with the remaining 11 clinical trials), which also yielded a higher c statistic than the clinical trials.
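The dependence of the c statistic on case mix can be illustrated by simulation. The sketch below assumes a single predictor with the same logistic effect (intercept -1, slope 1) in two hypothetical populations that differ only in how spread out the predictor values are; the sample size and seed are arbitrary. Because the model is identical, any difference in the c statistic comes from case mix alone.

```python
import math
import random


def c_statistic(scores, outcomes):
    """Proportion of event/non-event pairs ranked correctly (ties count one half)."""
    events = [s for s, o in zip(scores, outcomes) if o == 1]
    non_events = [s for s, o in zip(scores, outcomes) if o == 0]
    concordance = sum(1.0 if e > ne else 0.5 if e == ne else 0.0
                      for e in events for ne in non_events)
    return concordance / (len(events) * len(non_events))


def simulate_c(predictor_sd, n=1000, intercept=-1.0, slope=1.0, seed=7):
    """Simulate outcomes from a fixed logistic model and return the resulting c statistic."""
    rng = random.Random(seed)
    xs = [rng.gauss(0.0, predictor_sd) for _ in range(n)]
    ys = [1 if rng.random() < 1.0 / (1.0 + math.exp(-(intercept + slope * x))) else 0
          for x in xs]
    return c_statistic(xs, ys)  # the predictor itself ranks individuals like the model


c_heterogeneous = simulate_c(predictor_sd=2.0)  # widely spread predictor values
c_homogeneous = simulate_c(predictor_sd=0.5)    # similar predictor values
print(f"heterogeneous case mix: c = {c_heterogeneous:.3f}")
print(f"homogeneous case mix:   c = {c_homogeneous:.3f}")
```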

Heterogeneity in outcomes

The performance of a prediction model can also vary according to the outcome prevalence or incidence within a cluster, because the outcome occurrence might not only be determined by predictors that are included in the model, but also by the distribution of other participant or cluster characteristics. Hence, clusters that vary considerably in outcome prevalence or incidence might also differ in case mix.56 58 For example, Wilson et al previously developed a prediction model for coronary heart disease. This model has been validated across different time periods and geographical regions with substantial heterogeneity in baseline characteristics (eg, age, sex) and outcome incidence.10 Recently, a systematic review found that the model originally developed by Wilson et al overestimates the risk of developing coronary heart disease, and that the extent of miscalibration substantially varies across settings.59

Heterogeneity in design or patient characteristics

Case mix can also vary between studies with major differences in design or eligibility criteria, or even within individual studies. For example, Vergouwe et al used data from a randomised trial to develop a model to predict unfavourable outcome (ie, death, a vegetative state, or severe disability) in patients with traumatic brain injury.26 When the developed prediction model’s performance was assessed in the original development dataset, it yielded a c statistic of 0.740 (which was optimism corrected) and an explained variation (ie, Nagelkerke R2) of 0.379. However, when the model was externally validated in the data of another trial with similar eligibility criteria, the c statistic increased to 0.779 and R2 increased to 0.485. Further analyses indicated that a large part of the higher performance should be attributed to a more heterogeneous case mix.

Heterogeneity in predictor effects

The predictive performance of a prediction model, when evaluated in different settings, is not only influenced by case mix variation, but also by differences in predictor effects.15 26 Heterogeneity in predictor effects could, for instance, arise when predictors are measured differently (eg, using different equipment, assays, or techniques), recorded at different time points (eg, before or after surgery), or quantified differently (eg, using a different cut-off point to define high and low values) across clusters. The magnitude and distribution of measurement error in predictors might also be inconsistent, which can further contribute to heterogeneity in predictor effects.60 61 Many other clinical, laboratory, and methodological differences might also exist across clusters, including differences in local healthcare, treatment or management strategies, clinical experience, disease and outcome definitions, or follow-up durations.

To summarise, developing or validating prediction models on data from a single cluster, or on a clustered dataset where clustering is ignored, does not allow the heterogeneity that we can expect between clusters to be adequately studied and understood.15 To investigate heterogeneity in prediction model performance and to identify underlying causes of such heterogeneity, we need not only studies in which development and validation take place in multiple clusters, but also analysis techniques that account for such clustering.15 26 40 46 62 Such techniques are often referred to as IPD-MA techniques, and typically adopt hierarchical models (eg, random effects) to account for clustering and for heterogeneity between studies.63 Accounting for clustering potentially allows researchers to develop prediction models with improved generalisability across different settings and populations,38 40 64 to investigate heterogeneity in prediction model performance across multiple clusters, and to assess the generalisability of model predictions across different sources of variation.15 40
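A common way to summarise cluster-specific performance is a random effects meta-analysis. The sketch below assumes the DerSimonian-Laird estimator, pools c statistics on the logit scale (with standard errors transformed by the delta method), and reports an approximate 95% prediction interval for the c statistic in a new cluster. For simplicity it uses a normal quantile; a t distribution with k - 2 degrees of freedom is often recommended instead. The cluster-specific c statistics and standard errors are hypothetical.

```python
import math
from statistics import NormalDist


def logit(p: float) -> float:
    return math.log(p / (1.0 - p))


def expit(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def random_effects_meta(estimates, std_errors):
    """DerSimonian-Laird random effects pooling; returns (pooled, se_pooled, tau2)."""
    w = [1.0 / se ** 2 for se in std_errors]
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))  # Cochran's Q
    df = len(estimates) - 1
    c_dl = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c_dl)  # between-cluster variance
    w_star = [1.0 / (se ** 2 + tau2) for se in std_errors]
    pooled = sum(wi * yi for wi, yi in zip(w_star, estimates)) / sum(w_star)
    return pooled, math.sqrt(1.0 / sum(w_star)), tau2


# Hypothetical cluster-specific c statistics and their standard errors
c_stats = [0.72, 0.78, 0.69, 0.81, 0.75]
c_ses = [0.03, 0.025, 0.04, 0.03, 0.035]

# Delta method: SE(logit c) is approximately SE(c) / (c (1 - c))
logit_c = [logit(c) for c in c_stats]
logit_se = [se / (c * (1.0 - c)) for c, se in zip(c_stats, c_ses)]
mu, se_mu, tau2 = random_effects_meta(logit_c, logit_se)

z = NormalDist().inv_cdf(0.975)
half_width = z * math.sqrt(tau2 + se_mu ** 2)
print(f"pooled c = {expit(mu):.3f}; approx 95% prediction interval "
      f"{expit(mu - half_width):.3f} to {expit(mu + half_width):.3f}")
```

The prediction interval, unlike the confidence interval of the pooled estimate, incorporates the between-cluster variance tau2 and so indicates the range of performance that might be expected in a new cluster.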

TRIPOD-Cluster

Aim and outline of this document

Prediction modelling studies based on evidently clustered data have statistical complexities that are not explicitly covered in the original TRIPOD reporting guideline but need complete and transparent reporting.1 2 As mentioned above, these issues include, for example, the presence and handling of systematically missing data within and across clusters, handling of differences in predictor and outcome definitions and measurements across clusters, the (un)availability of specific primary studies, different analysis strategies to account for the presence and modelling of statistical heterogeneity across clusters, and the generalisability and quantification of prediction model performance across clusters.

TRIPOD-Cluster28 provides guidance comprising a checklist of 19 items for the reporting of studies describing the development or validation of a multivariable diagnostic or prognostic prediction model using clustered data (table 2). Studies describing an update (eg, adding predictors) or recalibration of a prediction model using clustered data (ie, a type of model development) are also covered by TRIPOD-Cluster.28 The aim of this explanation and elaboration document is to describe the guidance, provide the rationale for the reporting items, and give examples of good reporting. TRIPOD-Cluster28 is a reporting guideline and does not prescribe how prediction model studies using clustered data should be conducted. However, we believe that the detailed guidance provided for each item will also help researchers and readers in this respect. We therefore also summarise aspects of good methodological conduct (and the limitations of inferior approaches) to more broadly outline the benefits and implications of developing and validating prediction models using clustered data.

Table 2.

Checklist of items to include when reporting a study developing or validating a multivariable prediction model using clustered data (TRIPOD-Cluster)

| Section/topic | Item No | Description | Page No |

|---|---|---|---|

| Title and abstract | |||

| Title | 1 | Identify the study as developing and/or validating a multivariable prediction model, the target population, and the outcome to be predicted | |

| Abstract | 2 | Provide a summary of research objectives, setting, participants, data source, sample size, predictors, outcome, statistical analysis, results, and conclusions* | |

| Introduction | |||

| Background and objectives | 3a | Explain the medical context (including whether diagnostic or prognostic) and rationale for developing or validating the prediction model, including references to existing models, and the advantages of the study design* | |

| 3b | Specify the objectives, including whether the study describes the development or validation of the model* | ||

| Methods | |||

| Participants and data | 4a | Describe eligibility criteria for participants and datasets* | |

| 4b | Describe the origin of the data, and how the data were identified, requested, and collected | ||

| Sample size | 5 | Explain how the sample size was arrived at* | |

| Outcomes and predictors | 6a | Define the outcome that is predicted by the model, including how and when assessed* | |

| 6b | Define all predictors used in developing or validating the model, including how and when measured* | ||

| Data preparation | 7a | Describe how the data were prepared for analysis, including any cleaning, harmonisation, linkage, and quality checks | |

| 7b | Describe the method for assessing risk of bias and applicability in the individual clusters (eg, using PROBAST) | ||

| 7c | For validation, identify any differences in definition and measurement from the development data (eg, setting, eligibility criteria, outcome, predictors)* | ||

| 7d | Describe how missing data were handled* | ||

| Data analysis | 8a | Describe how predictors were handled in the analyses | |

| 8b | Specify the type of model, all model building procedures (eg, any predictor selection and penalisation), and method for validation* | ||

| 8c | Describe how any heterogeneity across clusters (eg, studies or settings) in model parameter values was handled | ||

| 8d | For validation, describe how the predictions were calculated | ||

| 8e | Specify all measures used to assess model performance (eg, calibration, discrimination, and decision curve analysis) and, if relevant, to compare multiple models | ||

| 8f | Describe how any heterogeneity across clusters (eg, studies or settings) in model performance was handled and quantified | ||

| 8g | Describe any model updating (eg, recalibration) arising from the validation, either overall or for particular populations or settings* | ||

| Sensitivity analysis | 9 | Describe any planned subgroup or sensitivity analysis—eg, assessing performance according to sources of bias, participant characteristics, setting | |

| Results | |||

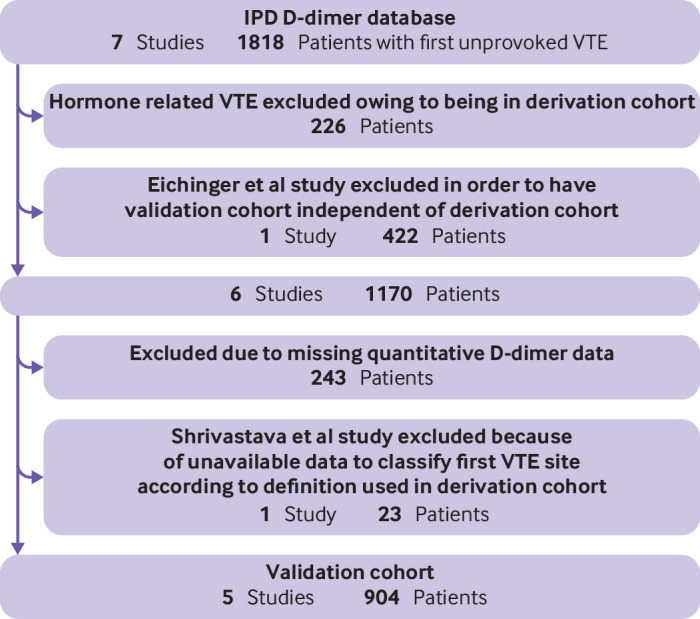

| Participants and datasets | 10a | Describe the number of clusters and participants from data identified through to data analysed; a flowchart might be helpful* | |

| 10b | Report the characteristics overall and where applicable for each data source or setting, including the key dates, predictors, treatments received, sample size, number of outcome events, follow-up time, and amount of missing data* | ||

| 10c | For validation, show a comparison with the development data of the distribution of important variables (demographics, predictors, and outcome) | ||

| Risk of bias | 11 | Report the results of the risk-of-bias assessment in the individual clusters | |

| Model development and specification | 12a | Report the results of any assessments of heterogeneity across clusters that led to subsequent actions during the model’s development (eg, inclusion or exclusion of particular predictors or clusters) | |

| 12b | Present the final prediction model (ie, all regression coefficients, and model intercept or baseline estimate of the outcome at a given time point) and explain how to use it for predictions in new individuals* | ||

| Model performance | 13a | Report performance measures (with uncertainty intervals) for the prediction model, overall and for each cluster | |

| 13b | Report results of any heterogeneity across clusters in model performance | ||

| Model updating | 14 | Report the results from any model updating (including the updated model equation and subsequent performance), overall and for each cluster* | |

| Sensitivity analysis | 15 | Report results from any subgroup or sensitivity analysis | |

| Discussion | |||

| Interpretation | 16a | Give an overall interpretation of the main results, including heterogeneity across clusters in model performance, in the context of the objectives and previous studies* | |

| 16b | For validation, discuss the results with reference to the model performance in the development data, and in any previous validations | ||

| 16c | Discuss the strengths of the study and any limitations (eg, missing or incomplete data, non-representativeness, data harmonisation problems) | ||

| Implications | 17 | Discuss the potential use of the model and implications for future research, with specific view to generalisability and applicability of the model across different settings or (sub)populations | |

| Other information | |||

| Supplementary information | 18 | Provide information about the availability of supplementary resources (eg, study protocol, analysis code, datasets)* | |

| Funding | 19 | Give the source of funding and the role of the funders for the present study | |

TRIPOD-Cluster=transparent reporting of multivariable prediction models developed or validated using clustered data. A separate version of the checklist is also available in the supplementary materials. *Item text is an adaptation of one or more existing items from the original TRIPOD checklist.

Reporting of all relevant information might not always be feasible, for instance, because of word count limits. In these situations, researchers can summarise the relevant information in a table or figure, and provide additional details in the supplementary material.

Use of examples

For each item, we aimed to present two examples from published articles: one using an IPD-MA from multiple existing studies or a predesigned study with multiple clusters (types 1 and 2 above), and one using EHR data (type 3). By referencing only two types of examples, we do not suggest that the TRIPOD-Cluster guidance is limited to these two settings. These examples illustrate the information that we recommend reporting. Our use of a particular example to illustrate a specific item does not imply that all aspects or items of the study were well conducted and reported, or that the methods being reported are necessarily the best methods to be used in prediction model research. Rather, the examples illustrate a particular aspect of an item that has been well reported in the context of the methods used by the study authors. Some of the quoted examples have been edited for clarity, with text omitted (denoted by . . .), text added (denoted by []), citations removed, or abbreviations spelled out, and some tables have been simplified.

TRIPOD-Cluster checklist: title and abstract

Item 1: identify the study as developing and/or validating a multivariable prediction model, the target population, and the outcome to be predicted

The main purpose of this item is to recommend an informative title that helps readers easily identify relevant articles. The original TRIPOD guidance recommends that authors include four main elements in their title2; here, we add a fifth:

The target population in which the model was developed, validated, or updated

The outcome to be predicted

Whether it is a diagnostic or prognostic model (which might already be clear from the target population and outcome to be predicted, or from the prediction model’s acronym)

Whether the paper reports model development, external validation (including updating), or both

The type of clustering—for example, IPD from a given number of clusters (eg, studies, hospitals), a multicentre study, or EHR data from a given number of practices or hospitals

The examples given demonstrate that these five aspects can easily be addressed in a title without making it unnecessarily long. For example, the target population sometimes directly indicates whether the model has a diagnostic or a prognostic aim.1 If a study internally or externally validates an existing prediction model with a known name or acronym, then this name should be mentioned in the title. The title can also indicate the type of predictors used, such as the addition of laboratory predictors to an existing prediction model.

Example of individual participant data meta-analysis

Pooled individual patient data from five countries were used to derive a clinical prediction rule for coronary artery disease in primary care.65

Example using electronic health records data

Development and validation of prediction models to estimate risk of primary total hip and knee replacements using data from the UK: two prospective open cohorts using the UK Clinical Practice Research Datalink.66

Item 2: provide a summary of research objectives, setting, participants, data source, sample size, predictors, outcome, statistical analysis, results, and conclusions

This item largely follows the same recommendations and guidance as in the original TRIPOD,1 2 and the TRIPOD for Abstracts guidance,67 with some additions.

The abstract should provide enough detail to help readers and reviewers identify the study and then persuade them to read the full paper. It should describe the objectives, study design, analysis methods, main findings (such as a description of the model and its performance), and conclusions. Journal word count restrictions will dictate how much of this detail can be presented. For example, it might not be possible to list all potential predictors evaluated for inclusion in the prediction model in a development study.

Ideally, the predictors included in the final model or their categories (eg, sociodemographic predictors, history taking and physical examination items, laboratory or imaging tests, and disease characteristics) can be listed. Abstracts reporting prediction model studies using clustered datasets should also include:

An explicit reference to whether the study is based on a systematic review of available datasets, a convenient combination of existing datasets, a single multicentre study, or a clustered EHR database

The level within which individuals are clustered (eg, studies, datasets, countries, regions, centres, hospitals, practices) and number of clusters

A summary of how the data were used for model development or validation (eg, which clusters were used to develop and validate the model, the use of internal-external cross validation (as explained below))

How clustering was dealt with in the analysis (eg, one stage IPD-MA)

Information about the heterogeneity in the model’s performance across clusters.

Example of individual participant data meta-analysis

“Background: External validations and comparisons of prognostic models or scores are a prerequisite for their use in routine clinical care but are lacking in most medical fields including chronic obstructive pulmonary disease (COPD). Our aim was to externally validate and concurrently compare prognostic scores for 3-year all-cause mortality in mostly multimorbid patients with COPD.

“Methods: We relied on 24 cohort studies of the COPD Cohorts Collaborative International Assessment consortium, corresponding to primary, secondary, and tertiary care in Europe, the Americas, and Japan. These studies include globally 15 762 patients with COPD (1,871 deaths and 42 203 person years of follow-up). We used network meta-analysis adapted to multiple score comparison (MSC), following a frequentist two-stage approach; thus, we were able to compare all scores in a single analytical framework accounting for correlations among scores within cohorts. We assessed transitivity, heterogeneity, and inconsistency and provided a performance ranking of the prognostic scores.

“Results: Depending on data availability, between two and nine prognostic scores could be calculated for each cohort. The BODE score (body mass index, airflow obstruction, dyspnea, and exercise capacity) had a median area under the curve (AUC) of 0.679 [1st quartile − 3rd quartile=0.655 − 0.733] across cohorts. The ADO score (age, dyspnea, and airflow obstruction) showed the best performance for predicting mortality (difference AUC(ADO) − AUC(BODE)=0.015 [95% confidence interval (CI)= −0.002 to 0.032]; P=0.08) followed by the updated BODE (AUC(BODE updated) − AUC(BODE)=0.008 [95% CI= −0.005 to 0.022]; P=0.23). The assumption of transitivity was not violated. Heterogeneity across direct comparisons was small, and we did not identify any local or global inconsistency.

“Conclusions: Our analyses showed best discriminatory performance for the ADO and updated BODE scores in patients with COPD. A limitation to be addressed in future studies is the extension of MSC network meta-analysis to measures of calibration. MSC network meta-analysis can be applied to prognostic scores in any medical field to identify the best scores, possibly paving the way for stratified medicine, public health, and research.”68

Example using electronic health records data

“Background. An easy-to-use prediction model for long term renal patient survival based on only four predictors [age, primary renal disease, sex and therapy at 90 days after the start of renal replacement therapy (RRT)] has been developed in The Netherlands. To assess the usability of this model for use in Europe, we externally validated the model in 10 European countries.

“Methods. Data from the European Renal Association European Dialysis and Transplant Association (ERA-EDTA) Registry were used. Ten countries that reported individual patient data to the registry on patients starting RRT in the period 1995–2005 were included. Patients <16 years of age and/or with missing predictor variable data were excluded. The external validation of the prediction model was evaluated for the 10- (primary endpoint), 5- and 3-year survival predictions by assessing the calibration and discrimination outcomes.

“Results. We used a dataset of 136 304 patients from 10 countries. The calibration in the large and calibration plots for 10 deciles of predicted survival probabilities showed average differences of 1.5, 3.2 and 3.4% in observed versus predicted 10-, 5- and 3-year survival, with some small variation on the country level. The c index, indicating the discriminatory power of the model, was 0.71 in the complete ERA-EDTA Registry cohort and varied according to country level between 0.70 and 0.75.

“Conclusions. A prediction model for long term renal patient survival developed in a single country, based on only four easily available variables, has a comparably adequate performance in a wide range of other European countries.”69

TRIPOD-Cluster checklist: introduction

Item 3a: explain the medical context (including whether diagnostic or prognostic) and rationale for developing or validating the prediction model, including references to existing models, and the advantages of the study design

The original TRIPOD guidance1 2 also applies to prediction model studies using clustered datasets. Authors should describe:

The medical context and target population for which the prediction model is intended (eg, diagnostic model to predict the probability of deep vein thrombosis in patients with a red or swollen leg or a prognostic model to predict the risk of remission in women diagnosed with breast cancer)

The predicted health outcomes and their relevance

The context and moment in healthcare when the prediction should be made (eg, predict before surgery the risk of postoperative nausea and vomiting in a patient undergoing surgery, or predict in the second trimester of pregnancy the risk of developing pre-eclampsia later in the pregnancy)

The consequences or aims of the model predictions (eg, a diagnostic model to guide decisions about further tests or a prognostic model to guide decisions about treatment or preventive interventions)

If needed, the type of predictors studied (eg, adding specific types of predictors, such as laboratory measurements obtained from more advanced tests, to established, easily obtainable predictors).

Authors of prediction model development studies should reference any existing models and indicate why a new model is needed, ideally supported by a systematic review of existing models.70 Authors who validate a prediction model should explicitly reference the original development study and any previous validations of that model and discuss why validation is needed for the current setting or population.

Finally, authors should explain why the study uses a clustered dataset and clarify the clinical relevance of the clusters. For example, IPD from multiple studies might be used during model development to increase sample size, improve the identification and estimation of predictors, or increase generalisability.14 38 When analysing large registries with EHR data, the presence of clustering might help to tailor predictions to centres with specific characteristics.71 In validation studies, clustered data might be used to evaluate heterogeneity in model performance and to determine whether the model needs to be updated for specific settings or subpopulations.40 46 72 When multiple sources of clustering are present (eg, if individuals are clustered by centre and by physician), authors should justify and report which clusters were chosen for the analysis.

Example of individual participant data meta-analysis

“Various clinical decision rules have been developed to improve the clinical investigations for suspected deep vein thrombosis. These rules combine different clinical factors to yield a score, which is then used to estimate the probability of deep vein thrombosis being present. The most widely used clinical decision rule is probably that developed by Wells and colleagues . . . Although the Wells rule seems to be a valid tool in the clinical investigation of suspected deep vein thrombosis in unselected patients, its validity in various clinically important subgroups is unclear; most original diagnostic studies on deep vein thrombosis contained few patients in these important subgroups. To determine whether the Wells rule behaves differently in such subgroups we combined individual patient data from 13 diagnostic studies of patients with suspected deep vein thrombosis (n=10 002). Such meta-analyses of individual patient data (data of individual studies combined at patient level) provide a unique opportunity to perform robust subgroup analyses.”41

Example using electronic health records data

“It is hypothesised that earlier detection of acute kidney injury (AKI) may improve patient outcomes through the increased opportunity to treat the patient and the prevention of further renal insults in the setting of evolving injury . . . Over the last few years, several groups have reported both electronic health record (EHR)-based and non-EHR-based risk algorithms that can forecast AKI earlier than serum creatinine. Many of these algorithms use patient demographics, past medical history, vital signs, and laboratory values. However, the EHR contains a wealth of other data that could be used to predict the development of AKI, including nephrotoxin exposure, fluid administration, and other orders and treatments. These additional variables could improve model accuracy, resulting in improved detection of AKI and fewer false positives.”73

Item 3b: specify the objectives, including whether the study describes the development or validation of the model

This item remains unchanged from the original TRIPOD guidance.1 2 The objectives (typically at the end of the introduction) should summarise in a few sentences the healthcare setting, target population, and study focus (model development, validation, or both). Clarifying the study’s main aim facilitates its critical appraisal by readers.

Example of individual participant data meta-analysis

“We therefore analysed clinical, cognitive, and genetic data of patients with amyotrophic lateral sclerosis (ALS) from ALS centers in Europe with a view to predicting a composite survival outcome . . . We aimed to develop and externally validate a prediction model in multiple cohorts.”74

Example using electronic health records data

“This study validates a machine-learning algorithm, InSight, which uses only six vital signs taken directly from the EHR, in the detection and prediction of sepsis, severe sepsis and septic shock in a mixed-ward population at the University of California, San Francisco. We investigate the effects of induced data sparsity on InSight performance and compare all results with other scores that are commonly used in the clinical setting for the detection and prediction of sepsis.”75

TRIPOD-Cluster checklist: methods

Item 4a: describe eligibility criteria for participants and datasets

Readers need a clear description of a study’s eligibility criteria to understand the model’s applicability and generalisability. Prediction model studies that combine IPD from multiple sources often identify relevant studies through collaborative networks or a systematic review of the literature.37 Authors should clearly report the eligibility criteria at both levels: the criteria for selecting studies or datasets, and the criteria for including individual participants within those studies or datasets in the development or validation of the prediction model.

Studies might be selected on the basis of their study design (eg, data from randomised trials), study characteristics (eg, a predefined sample size), population characteristics (eg, treatments received), or data availability (eg, availability of particular predictors or outcomes). Prediction model studies that involve multicentre data should report the eligibility criteria for both participants and, when possible, for specific centres (eg, location or sample size). Sometimes, the eligibility criteria of the prediction model study can differ from the eligibility criteria used originally for the included individual studies. For example, some of the identified studies might have targeted particular subpopulations (eg, younger patients) or used eligibility criteria that do not fully match the current study. If the prediction model study therefore excluded certain participants from the included studies, this should be clearly described.

Example of individual participant data meta-analysis

“IPD for model validation was identified using the same systematical search in PubMed, EMBASE and the Cochrane Library as described above. Prospective studies were included when recording disease status of pneumonia and clinical signs and symptoms . . . Individual studies were included when containing patients who: (a) were at least 18 years old; (b) presented through self-referral in primary care, ambulatory care or at an emergency department with an acute or worsened cough (28 days of duration) or with a clinical presentation of lower respiratory tract infection; (c) consulted for the first time for this disease episode; (d) were immunocompetent.”76

Example using electronic health records data

“This study used data from the General Practice Research Database in the United Kingdom which is part of the Clinical Practice Research Datalink (CPRD) . . . People in CPRD have now been linked individually and anonymously to the national registry of hospital admission (Hospital Episode Statistics) and death certificates . . . The main study population consisted of people aged 35–74 years, using the November 2011 version of CPRD and drawn from CPRD practices that participated in the linkages . . . The following persons were excluded: (i) those with cardiovascular disease before the index date or with missing dates, (ii) those prescribed a statin before the index date or with missing dates, (iii) those temporarily registered with the practice.”77

Item 4b: describe the origin of the data, and how the data were identified, requested, and collected

Prediction model studies based on existing data sources should fully describe how those data were identified, requested, and collected. Item 7a covers data cleaning, data harmonisation, and database linkage. The provenance of all included data sources should be made clear (eg, citations or web links).

Investigators conducting prediction model studies based on IPD from multiple studies might identify and collect source datasets by performing a review of the literature (systematic or not) and requesting data from the authors or by forming a collaborative network.37 If IPD collection from all the eligible studies identified in a review is not possible or not necessary, as is often the case, the reasons for excluding any identified studies should be reported. Prediction model studies that used a systematic review should cite that review if it has been published or should report the full search string, with dates and databases searched, using the PRISMA (preferred reporting items for systematic reviews and meta-analyses)-Search extension (https://osf.io/ygn9w/).

Studies using EHR data must report the data source or registry (eg, by referring to a publication or web link) and the data extraction methods (eg, structured query language (SQL) queries). For prospective multicentre studies, this reporting item mostly relates to data collection procedures, such as the use of data storage platforms and encryption standards.
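One way to make an EHR extraction reproducible is to report the exact query text, for example in the supplementary material. The sketch below uses Python's built-in sqlite3 module on a toy in-memory table purely for illustration; the table name `patients`, its columns, and the eligibility rules (loosely modelled on the CPRD example under item 4a) are hypothetical assumptions, not the schema of any real EHR database.

```python
import sqlite3

# Hypothetical extraction query. In a real study, the exact query text (with the
# database version and extraction date) is what would be reported.
EXTRACTION_QUERY = """
SELECT patient_id, practice_id, age, sex
FROM patients
WHERE age BETWEEN 35 AND 74        -- age eligibility (inclusive bounds)
  AND prior_cvd = 0                -- exclude prevalent cardiovascular disease
  AND temporary_registration = 0   -- exclude temporarily registered patients
ORDER BY practice_id, patient_id
"""


def build_demo_db() -> sqlite3.Connection:
    """Create a toy in-memory table with a hypothetical schema."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE patients (patient_id INTEGER, practice_id INTEGER, "
        "age INTEGER, sex TEXT, prior_cvd INTEGER, temporary_registration INTEGER)"
    )
    conn.executemany(
        "INSERT INTO patients VALUES (?, ?, ?, ?, ?, ?)",
        [
            (1, 10, 50, "F", 0, 0),  # eligible
            (2, 10, 80, "M", 0, 0),  # excluded: outside age range
            (3, 11, 40, "M", 1, 0),  # excluded: prior cardiovascular disease
            (4, 11, 60, "F", 0, 1),  # excluded: temporary registration
            (5, 12, 35, "M", 0, 0),  # eligible (lower age bound)
        ],
    )
    return conn


def extract_cohort(conn: sqlite3.Connection) -> list:
    """Run the documented extraction query and return the eligible rows."""
    return conn.execute(EXTRACTION_QUERY).fetchall()


cohort = extract_cohort(build_demo_db())
print(cohort)  # [(1, 10, 50, 'F'), (5, 12, 35, 'M')]
```

Keeping the query as a single named constant makes it straightforward to paste verbatim into a supplement, so that readers can see exactly which records were retained.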

Example of individual participant data meta-analysis

“A systematic search of literature was performed to identify all published studies with no restriction on language. This study protocol was started in December 2008, followed by literature searched in PubMed/Medline, Ovid, Web of knowledge and Embase, with additional MESH and free text terms for ‘secondary cytoreductive surgery and ovarian cancer or secondary cytoreductive surgery and ovarian carcinoma’, were supplemented by hand searches of conference proceedings, reference lists in the publications and review articles. We sent the invitation letters to all available investigators or groups of studies we identified, who had reported articles with regard to SCR [secondary cytoreductive surgery]. An international collaborative study group was then set up . . . Individual patient data were collected from all participating groups in which these involved already complete datasets.”78

Example using electronic health records data

“We gathered data from the cost-accounting systems of 433 hospitals that participated in the Premier Inc Data Warehouse (PDW; a voluntary, fee-supported database) between January 1, 2009, and June 30, 2011 . . . PDW includes ≈15% to 20% of all US hospitalizations. Participating hospitals are drawn from all regions of the United States, with greater representation from urban and southern hospitals.”79

Item 5: explain how the sample size was arrived at

One of the main reasons for using large or clustered datasets to develop or validate a model is the greater sample size and access to a broader population.14 46 71 The larger the sample size the better, because large samples lead to more precise results. The effective sample size in a prediction model study is calculated differently depending on the type of outcome:

Binary outcomes: the smaller of the two outcome frequencies80 81 82

Time-to-event outcomes: the number of participants with the event by the main time point of interest80
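The two definitions above can be computed directly from the outcome data. This is a minimal sketch, assuming outcomes are coded 1 for an event and 0 for no event (or censoring); the function names are hypothetical.

```python
from typing import Sequence


def effective_n_binary(outcomes: Sequence[int]) -> int:
    """Binary outcome: size of the smaller outcome category (events or non-events)."""
    events = sum(1 for y in outcomes if y == 1)
    return min(events, len(outcomes) - events)


def effective_n_time_to_event(
    times: Sequence[float], events: Sequence[int], horizon: float
) -> int:
    """Time-to-event outcome: participants whose event occurred by the time horizon.

    Assumes events[i] == 1 for an observed event and 0 for censoring.
    """
    return sum(1 for t, e in zip(times, events) if e == 1 and t <= horizon)


print(effective_n_binary([1, 0, 0, 0, 1, 0]))  # 2 (two events, four non-events)
print(effective_n_time_to_event([2, 5, 7, 12], [1, 1, 0, 1], horizon=10))  # 2
```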

Sample size considerations for model development

Prediction models developed using small datasets are likely to be affected by overfitting, particularly if they have many candidate predictors relative to the number of outcome events.84 Empirical simulations focusing on the accuracy and precision of the regression coefficients (rather than model performance) have suggested that at least 10 participants with the outcome event are needed per candidate predictor variable.85 More precisely, the denominator should be the number of parameters (degrees of freedom) needed to represent these candidate predictors. For example, more than one degree of freedom is required for categorical predictors with more than two levels, and for continuous predictors modelled using splines or fractional polynomials.
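Counting parameters rather than variables can be sketched as follows. This is an illustration under stated assumptions: dummy coding for categorical predictors (one parameter per non-reference level, so a two level categorical predictor needs one), restricted cubic splines with k knots using k − 1 parameters, and hypothetical predictor names and event count. Fractional polynomials are left out because how their degrees of freedom are counted depends on the selection procedure.

```python
def n_parameters(kind: str, levels: int = 2, knots: int = 0) -> int:
    """Regression parameters (degrees of freedom) used by one candidate predictor."""
    if kind in ("binary", "linear"):
        return 1
    if kind == "categorical":
        return levels - 1  # dummy coding: one parameter per non-reference level
    if kind == "spline":
        return knots - 1  # restricted cubic spline with `knots` knots
    raise ValueError(f"unknown predictor kind: {kind}")


def events_per_parameter(n_events: int, candidates: dict) -> float:
    """Events divided by the total number of candidate predictor parameters."""
    total = sum(n_parameters(**spec) for spec in candidates.values())
    return n_events / total


# Hypothetical candidate predictors (7 parameters in total)
candidates = {
    "age": {"kind": "spline", "knots": 4},            # 3 parameters
    "sex": {"kind": "binary"},                        # 1 parameter
    "smoking": {"kind": "categorical", "levels": 3},  # 2 parameters
    "bmi": {"kind": "linear"},                        # 1 parameter
}
print(round(events_per_parameter(150, candidates), 1))  # 21.4
```

Counting four "variables" here would give 37.5 events per variable, which overstates the information available per estimated parameter.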

However, some researchers have argued that 10 events per variable is too conservative,86 and others have suggested larger values to avoid bias in estimated regression coefficients.87 88 Simulation studies have shown that the presence of clustering and between-study heterogeneity has little effect on the sample size needed for prediction model development; rather, variable selection and the total number of events and non-events are more influential.89 Recent simulation studies have found no rationale for the rule of thumb of 10 events per variable,90 and no strong relation between events per variable and predictive performance.91

These recent results suggest that sample size requirements should be tailored to the problem and setting. Minimum sample size criteria have been proposed for models developed using linear regression,83 logistic regression,80 91 and time-to-event models.80 Adaptive sample size procedures have also been proposed.92 Models developed using internal-external cross validation (see below) should ensure that each omitted cluster is sufficiently large for validation. Although formal guidance about the minimum number of clusters or minimum sample size per cluster is currently lacking, it is well known that the effective sample size of a clustered dataset decreases as the similarity of participants within each cluster increases.93
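One common way to express how within-cluster similarity reduces the effective sample size is the design effect for cluster samples, 1 + (m − 1)ρ, where m is the average cluster size and ρ the intraclass correlation. The sketch below assumes roughly equal cluster sizes and a single intraclass correlation, and uses hypothetical numbers. It illustrates the general principle only. It is not a sample size criterion for prediction models, and, as noted above, simulation work suggests that clustering has a limited impact on the sample size needed for model development.

```python
def design_effect(mean_cluster_size: float, icc: float) -> float:
    """Design effect for clusters of (roughly) equal size: 1 + (m - 1) * ICC."""
    return 1 + (mean_cluster_size - 1) * icc


def effective_sample_size(n: int, mean_cluster_size: float, icc: float) -> float:
    """Number of independent observations carrying roughly the same information."""
    return n / design_effect(mean_cluster_size, icc)


# Hypothetical dataset: 2000 participants in 20 practices of 100 participants each
for icc in (0.0, 0.01, 0.05):
    print(icc, round(effective_sample_size(2000, 100, icc), 1))
```

With no within-practice correlation all 2000 participants count fully. With an intraclass correlation of 0.05, the design effect is 5.95 and the dataset behaves more like roughly 336 independent participants.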

Authors should explain how the sample size was determined, fully describing any statistical or practical considerations, including any estimates (rationale and provenance) used in the calculation. Sample size is often determined by practical considerations, such as time, data availability (particularly when obtaining IPD from multiple studies), and cost. In these instances, it is helpful to discuss the adequacy of the sample size in relation to the number of predictors under study, the need for variable selection, and the primary performance measures.
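As an illustration of the kind of calculation, with explicit input estimates, that could be reported, the sketch below implements two of the minimum sample size criteria that Riley and colleagues proposed for binary outcomes. Criterion 1 targets an expected uniform shrinkage factor of at least 0.9. Criterion 3 targets precise estimation of the overall outcome proportion (within ±0.05). Their criterion 2, which targets small optimism in apparent R², is omitted for brevity. The formulas are written from our understanding of that approach, and the anticipated Cox-Snell R² and outcome prevalence are hypothetical inputs. In practice, dedicated software (eg, the pmsampsize package) should be preferred.

```python
import math


def n_for_shrinkage(n_params: int, r2_cs: float, shrinkage: float = 0.9) -> int:
    """Smallest n for which the expected uniform shrinkage factor is >= `shrinkage`.

    r2_cs is the anticipated Cox-Snell R-squared of the new model (an input that
    must itself be justified, eg, from a previous model in the same setting).
    """
    return math.ceil(n_params / ((shrinkage - 1) * math.log(1 - r2_cs / shrinkage)))


def n_for_overall_risk(prevalence: float, margin: float = 0.05) -> int:
    """Smallest n to estimate the overall outcome proportion within +/- margin (95% CI)."""
    return math.ceil((1.96 / margin) ** 2 * prevalence * (1 - prevalence))


# Hypothetical inputs: 10 candidate parameters, anticipated R2_CS 0.10, prevalence 20%
n_required = max(n_for_shrinkage(10, 0.10), n_for_overall_risk(0.20))
print(n_required, "participants, about", math.ceil(n_required * 0.20), "events")
```

Reporting the anticipated R² and prevalence, and where they came from, is what allows readers to judge the adequacy of the resulting sample size.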

Sample size considerations for model validation

Validation studies aim to quantify a model’s predictive performance when evaluated on a dataset different from that used in development. Current recommendations suggest that at least 100 participants with the outcome and 100 without the outcome are needed,19 while more than 200 outcome events are preferable to ensure precise estimates of predictive performance.20 82 94 95 Datasets tend to be large when they originate from multiple sources or from EHRs. Small sample concerns are then less of an issue, unless the outcome is very rare or model performance is evaluated in each dataset or cluster.46 Authors should explain how they determined the sample size and whether they considered the potential presence of clustering.
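The rules of thumb above can be checked for each validation cluster as well as for the pooled data. The sketch below uses hypothetical hospitals and counts, and the thresholds are simply the values quoted in the text. It shows how pooled counts can look comfortable while an individual cluster falls short, which matters when performance is estimated per cluster.

```python
from dataclasses import dataclass


@dataclass
class ClusterCounts:
    name: str
    events: int
    non_events: int


def validation_adequacy(
    c: ClusterCounts, min_each: int = 100, preferred_events: int = 200
) -> str:
    """Classify a validation dataset against the rules of thumb in the text."""
    if c.events < min_each or c.non_events < min_each:
        return "below minimum"
    if c.events <= preferred_events:
        return "meets minimum"
    return "meets preferred"


# Hypothetical validation clusters
clusters = [
    ClusterCounts("hospital A", events=450, non_events=5000),
    ClusterCounts("hospital B", events=120, non_events=900),
    ClusterCounts("hospital C", events=40, non_events=600),
]
pooled = ClusterCounts(
    "pooled", sum(c.events for c in clusters), sum(c.non_events for c in clusters)
)
for c in clusters + [pooled]:
    print(f"{c.name}: {validation_adequacy(c)}")
```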

If investigators use clustered data, they should state which clusters were used to develop the model and which were used to validate it. Sample size requirements should be considered for both model development and validation, which might affect which datasets or clusters are used. For example, if a single cluster is kept back for validation, it must contain enough outcome events to provide precise estimates of predictive performance. However, if a very large cluster is withheld from model development for use in validation, the resulting model might give less accurate predictions (ie, raise greater overfitting concerns), especially if the clusters used for development are much smaller. In this scenario, it would be preferable to use all data for model development.

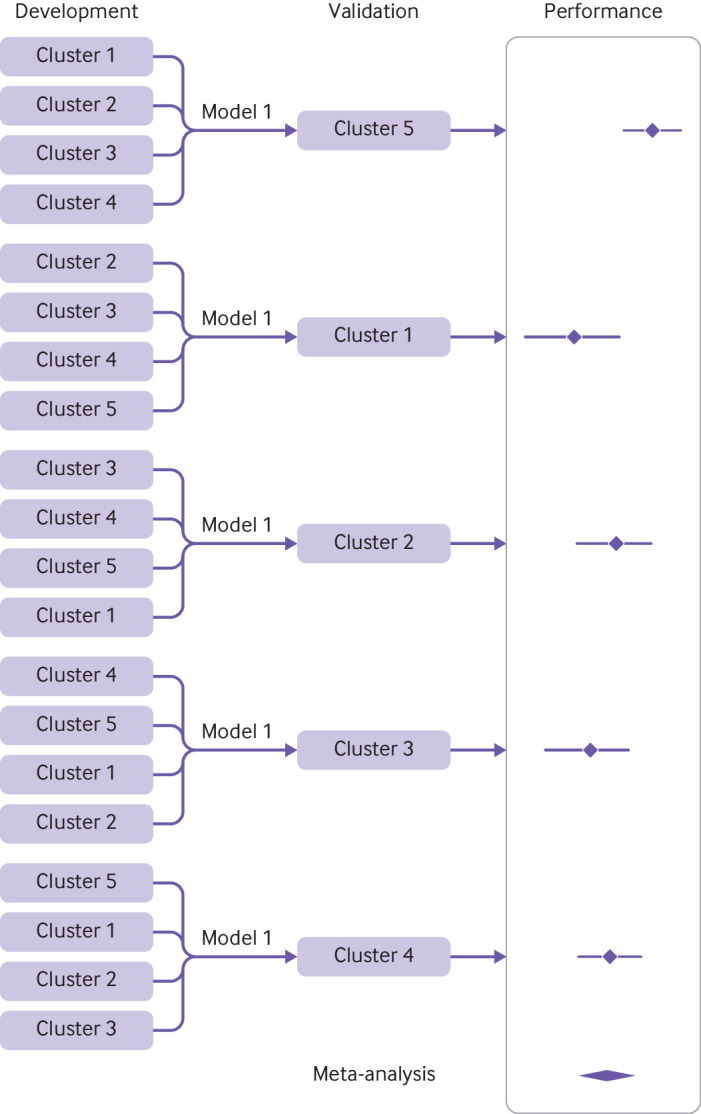

Internal-external cross validation

Internal-external cross validation combines the strength of external validation with the strength of developing a prediction model using all available data.7 96 A model is developed using the full data minus one cluster and then validated in the excluded cluster (fig 2). This process is repeated so that each cluster is omitted and reserved for validation in turn. The consistency of the developed model and its performance can thus be examined on multiple occasions, and heterogeneity in performance can be examined across the different settings and populations represented by the clusters (see also item 8f).
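The leave-one-cluster-out loop described above can be sketched in plain Python. This minimal sketch rests on several assumptions: a single continuous predictor, an unpenalised logistic regression fitted by Newton-Raphson, simulated data for four hypothetical clusters, and two simple performance measures (the c statistic, and observed minus mean predicted risk as a crude form of calibration-in-the-large). A real analysis would use established software, repeat the full model building strategy (including any predictor selection) within each development fold, and might then summarise the per-cluster estimates, for example with a random effects meta-analysis.

```python
import math
import random


def expit(z: float) -> float:
    return 1 / (1 + math.exp(-z))


def fit_logistic(xs, ys, iterations: int = 25):
    """Newton-Raphson for logit P(y=1) = b0 + b1*x (one predictor, unpenalised)."""
    b0 = b1 = 0.0
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, y in zip(xs, ys):
            p = expit(b0 + b1 * x)
            w = p * (1 - p)
            g0 += y - p  # score for the intercept
            g1 += (y - p) * x  # score for the slope
            h00 += w
            h01 += w * x
            h11 += w * x * x
        det = h00 * h11 - h01 * h01
        b0 += (h11 * g0 - h01 * g1) / det  # solve the 2x2 information system
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1


def c_statistic(preds, ys) -> float:
    """Proportion of event/non-event pairs ranked correctly (ties count one half)."""
    pos = [p for p, y in zip(preds, ys) if y == 1]
    neg = [p for p, y in zip(preds, ys) if y == 0]
    score = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in pos for b in neg)
    return score / (len(pos) * len(neg))


def internal_external_cv(data):
    """data: list of (cluster, x, y). Each cluster is held out once for validation."""
    results = {}
    for held_out in sorted({c for c, _, _ in data}):
        dev = [(x, y) for c, x, y in data if c != held_out]
        val = [(x, y) for c, x, y in data if c == held_out]
        b0, b1 = fit_logistic([x for x, _ in dev], [y for _, y in dev])
        preds = [expit(b0 + b1 * x) for x, _ in val]
        ys = [y for _, y in val]
        results[held_out] = {
            "c_statistic": c_statistic(preds, ys),
            # observed minus mean predicted risk (simple calibration-in-the-large)
            "citl": sum(ys) / len(ys) - sum(preds) / len(preds),
        }
    return results


# Simulated (hypothetical) data: four clusters that differ in baseline risk
rng = random.Random(2024)
data = []
for cluster, shift in [("A", -0.5), ("B", 0.0), ("C", 0.3), ("D", 0.8)]:
    for _ in range(300):
        x = rng.gauss(0, 1)
        y = 1 if rng.random() < expit(-1.0 + shift + 1.2 * x) else 0
        data.append((cluster, x, y))

results = internal_external_cv(data)
for cluster, perf in results.items():
    print(cluster, round(perf["c_statistic"], 3), round(perf["citl"], 3))
```

Because the simulated clusters differ only in baseline risk, discrimination should be similar across held-out clusters, while calibration-in-the-large should vary systematically. This is the kind of heterogeneity the approach is designed to reveal.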

Fig 2.

Illustration of internal-external cross validation

For example, Takada et al implemented internal-external cross validation to evaluate different modelling strategies for predicting heart failure.97 For this purpose, they used an existing large population level dataset that links three sources of EHR databases in England: primary care records from the Clinical Practice Research Datalink, secondary care diagnoses and procedures recorded during admissions in Hospital Episodes Statistics, and the cause specific death registration information sourced from the Office for National Statistics registry. This clustered dataset included 871 687 individuals from 225 general practices and was used to assess the model’s discrimination and calibration performance across the included practices.

Example of individual participant data meta-analysis

“A general rule of thumb is for at least 10 events to be available for each candidate predictor considered in a prognostic model. There were seven candidate predictors (age, sex, site of VTE [venous thromboembolism], BMI [body mass index], D-dimer post-treatment, lag time and treatment duration) for consideration, but some of these were continuous predictors, which may potentially require non-linear modelling (e.g., fractional polynomials) that would slightly increase the number of variables further (e.g., if age+age² is included, then “age” relates to two predictors). The RVTEC database has seven trials in total, with 1,634 patients with follow-up information post-treatment and 230 of these have a recurrence . . . six of the seven trials are used for model development, so there are between 1,196 and 1,543 patients and between 161 and 221 recurrences available for the development phase of the prognostic models. Thus, there will be at least 23 (=161 events divided by seven candidate predictors) events for each of the seven candidate predictors, which is considerably greater than the minimum 10 per variable required, this gives adequate scope for fractional polynomial modelling of non-linear trends as necessary . . . Furthermore, the external validation databases also had large numbers. The RIETE database has 6,291 patients with follow-up information post-treatment and 742 of these have a recurrence.”98
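The events per variable reasoning in this example reduces to simple arithmetic, sketched below in Python with the figures quoted (161 recurrences in the smallest development set, seven candidate predictors). The second call assumes, for illustration only, that one extra parameter is spent on a quadratic age term; the threshold of 10 events per variable is the rule of thumb quoted by the authors.

```python
def events_per_parameter(n_events: int, n_parameters: int) -> float:
    """Events available per estimated regression parameter."""
    return n_events / n_parameters


# Smallest development set in the quoted example: 161 recurrences, 7 candidate predictors.
print(events_per_parameter(161, 7))   # 23.0
# Hypothetical: one extra parameter for age squared (8 parameters in total).
print(events_per_parameter(161, 8))   # 20.125
```

Even with the extra non-linear term, the ratio stays well above the quoted minimum of 10, which is the basis for the authors' statement that there is scope for fractional polynomial modelling.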

Example using electronic health records data

“Based on a previous work using linked CPRD data in which 5118 incident cirrhosis patients were identified in primary or secondary care during a 12-year period from 1998 to 2009, we estimate that at least 426 patients per year are diagnosed with cirrhosis. Therefore, during our study period from 1998 to 2016, there will be around 6887 new cirrhosis cases (study period ends in February 2016) . . . This will give us a population of approximately 765 111 patients (with an abnormal liver blood test result) with the estimated 3443 outcome events. As we are planning to omit 30 GP practices for model validation, assuming all of the 411 practices will be included in our dataset and an even distribution of outcome events across practices, we will have 251 events in the validation dataset (above the estimated minimum requirement of 100 events). For our development dataset, we will have 3192 events. We are interested in 39 predictor variables that may require potentially as many as 65 parameters to be estimated (counting in multiple categories and assuming each of our 14 continuous predictors requires an extra parameter for a non-linear term). This would provide our model with 49 events per variable.”99
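The projected split of events in this protocol can be reproduced with the short Python sketch below, which makes the same assumption as the authors: outcome events are spread evenly across the 411 practices. The function name is illustrative.

```python
def split_events(total_events: int, n_practices: int, n_held_out: int):
    """Expected (validation, development) events, assuming events are spread evenly across practices."""
    validation = round(total_events * n_held_out / n_practices)
    return validation, total_events - validation


validation, development = split_events(3443, 411, 30)
print(validation, development)   # 251 3192
print(round(development / 65))   # 49 events per parameter (65 parameters)
```

If events were unevenly distributed across practices, the realised number of validation events could differ considerably from this projection, so the actual counts in the held-out practices would still need checking.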

Item 6a: define the outcome that is predicted by the model, including how and when assessed

A clear definition and description of how and when the outcomes are measured is key. Readers need this information to judge what the actual outcome was and whether any outcomes were missed or misclassified. The original TRIPOD guidance and explanation and elaboration document (E&E) give clear explanations of how to report outcome assessment and of the differences between diagnostic and prognostic prediction studies,2 which we do not repeat here.

Multiple data sources or clusters might have defined or measured their common outcomes slightly differently (see also item 7b). Authors should clearly report these differences and any efforts to redefine or reclassify outcomes from particular data sources to a common outcome definition and classification (see also item 7a).
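As a minimal sketch of how reclassification to a common outcome definition might be made explicit and reportable, the Python code below maps source-specific outcome codes to a single binary definition and counts values that could not be mapped. The source names, codes, and mapping decisions are hypothetical; in particular, treating "no record" as no outcome is an assumption that would itself need to be reported.

```python
from collections import Counter
from typing import Dict, Iterable, Optional, Tuple

# Hypothetical source-specific outcome codings mapped to a common binary outcome.
OUTCOME_MAPS: Dict[str, Dict[str, int]] = {
    "study_1": {"dead": 1, "alive": 0},
    "study_2": {"1": 1, "0": 0},
    # Reclassification decisions such as these should be reported explicitly.
    "registry_3": {"death_confirmed": 1, "death_probable": 1, "no_record": 0},
}


def harmonise_outcome(source: str, raw: str) -> Optional[int]:
    """Return the common outcome (0/1), or None if the raw value is unmapped."""
    if source not in OUTCOME_MAPS:
        raise KeyError(f"no outcome mapping defined for source {source!r}")
    return OUTCOME_MAPS[source].get(raw)


def unmapped_by_source(records: Iterable[Tuple[str, str]]) -> Dict[str, int]:
    """Count raw outcome values per source that have no common definition."""
    counts: Counter = Counter()
    for source, raw in records:
        if harmonise_outcome(source, raw) is None:
            counts[source] += 1
    return dict(counts)


if __name__ == "__main__":
    records = [("study_1", "dead"), ("study_2", "9"), ("registry_3", "death_probable")]
    print([harmonise_outcome(s, r) for s, r in records])  # [1, None, 1]
    print(unmapped_by_source(records))                     # {'study_2': 1}
```

Keeping the mapping in a single table makes it straightforward to report, per source, exactly how each original outcome category was reclassified.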

Prediction model studies using registry data could be at higher risk of problems in detecting or classifying the outcome than studies using data from dedicated, prospective studies. Authors are encouraged, where possible, to report each source study’s protocol for measuring the outcome, data checks, and quality measures.

Example of individual participant data meta-analysis

“The outcome was time to death in years. Participants were contacted by study interviewers in every wave and those who were not located or whose relatives informed they had died, had their mortality information confirmed by the national vital statistics records or by a next-of-kin.”100

Example using electronic health records data

“We defined the primary outcome of critical illness during hospitalization as intensive care unit location stated in the EHRs with concomitant delivery of organ support (either mechanical ventilation or vasopressor use). The delivery of mechanical ventilation was identified using intubation, extubation, and tracheostomy events and ventilator mode data in the EHRs. Vasopressor use was defined as the administration of vasoactive agents (e.g., norepinephrine, dopamine, epinephrine) by infusion for more than 1 h recorded in the EHRs.”101
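An outcome definition of this kind can be written as an explicit, testable rule. The Python sketch below encodes the quoted definition: intensive care unit location together with either mechanical ventilation or a vasoactive infusion lasting more than one hour. The data structure and field names are hypothetical, mechanical ventilation is taken as an already derived flag, and the drug list contains only the examples named in the quote, so it is not exhaustive.

```python
from dataclasses import dataclass
from typing import Iterable

# Examples named in the quoted definition; a real study would use a complete list.
VASOACTIVE_AGENTS = {"norepinephrine", "dopamine", "epinephrine"}


@dataclass
class Infusion:
    drug: str
    hours: float  # infusion duration recorded in the EHR


def vasopressor_use(infusions: Iterable[Infusion]) -> bool:
    """True if any listed vasoactive agent was infused for more than 1 hour."""
    return any(i.drug.lower() in VASOACTIVE_AGENTS and i.hours > 1.0 for i in infusions)


def critical_illness(in_icu: bool, mechanical_ventilation: bool,
                     infusions: Iterable[Infusion]) -> bool:
    """ICU location with concomitant organ support (ventilation or vasopressors)."""
    return in_icu and (mechanical_ventilation or vasopressor_use(infusions))


if __name__ == "__main__":
    print(critical_illness(True, False, [Infusion("Norepinephrine", 3.0)]))  # True
    print(critical_illness(True, False, [Infusion("dopamine", 0.5)]))        # False
    print(critical_illness(False, True, []))                                 # False
```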

Item 6b: define all predictors used in developing or validating the model, including how and when measured

Prediction models typically use multiple predictors in combination and might therefore include demographic characteristics, medical history and physical examination items, information on treatments received, and more complex measurements from, for example, medical imaging, electrophysiology, pathology, and biomarkers. When prediction models are developed using clustered data, they might also include characteristics of the healthcare setting (eg, line of care, location). Authors should clearly report how predictors were measured and when, to help readers identify situations where the model is suitable for use.

Authors should indicate whether predictors were measured differently in each cluster and whether any formal harmonisation was done (see also item 7a). Item 7d discusses how to report when one predictor of interest is completely missing from one or more clusters.

Some predictors might be measured differently in, for example, EHR datasets than in prospective studies, including trials.102 For instance, different healthcare providers and systems can record substantially different amounts of detail about medical histories, clinical variables, and laboratory results.103 These differences can lead to differences in the measurement error (either random or systematic error), which will affect an existing model’s performance and a new model’s regression coefficients.60 104 105 106 Authors should therefore explain how predictors were measured, so that readers can evaluate this information before choosing to use a model in practice.

Example of individual participant data meta-analysis

“In all cohorts, information on age, gender, comorbidities, lifestyle factors, and functional status were collected through structured interviews . . . Comorbidities and lifestyle factors included disease history (heart disease, lung disease, stroke, cancer, diabetes, and hypertension), depression, body mass index (BMI), alcohol use, smoking, and physical activity . . . Depression was defined by the Center for Epidemiologic Studies Depression Scale score ≥3 in ELSA, HRS, and MHAS; by 15-item Geriatric Depression Scale score ≥5 in SABE; and by EURO-D score ≥4 in SHARE. Height and body weight were self-reported in all cohorts, except SABE in which both were measured during a visit . . . Participants were considered physically active if they had engaged in vigorous physical activity (sports, heavy housework, or a job that involves physical labour) at least three times a week in HRS, MHAS, and SABE; and at least once a week in ELSA and SHARE.”100
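Cohort-specific definitions such as these can be kept in one lookup table so that every harmonisation rule is visible and reportable. The Python sketch below encodes the depression cut-offs and physical activity frequencies quoted above; the function and variable names are hypothetical, and scale scores are assumed to have already been computed within each cohort.

```python
from typing import Dict, Tuple

# (scale, cut-off) used to define depression in each cohort, as quoted.
DEPRESSION_RULES: Dict[str, Tuple[str, int]] = {
    "ELSA": ("CES-D", 3),
    "HRS": ("CES-D", 3),
    "MHAS": ("CES-D", 3),
    "SABE": ("GDS-15", 5),
    "SHARE": ("EURO-D", 4),
}

# Minimum weekly frequency of vigorous activity to count as physically active.
ACTIVITY_RULES: Dict[str, int] = {"HRS": 3, "MHAS": 3, "SABE": 3, "ELSA": 1, "SHARE": 1}


def is_depressed(cohort: str, score: int) -> bool:
    """Apply the cohort-specific scale cut-off (score at or above cut-off)."""
    _scale, cutoff = DEPRESSION_RULES[cohort]
    return score >= cutoff


def is_active(cohort: str, vigorous_per_week: int) -> bool:
    """Apply the cohort-specific minimum frequency of vigorous activity."""
    return vigorous_per_week >= ACTIVITY_RULES[cohort]


if __name__ == "__main__":
    print(is_depressed("ELSA", 3), is_depressed("SABE", 4))  # True False
    print(is_active("SHARE", 1), is_active("HRS", 2))        # True False
```

The same raw answer (vigorous activity twice a week) is classified as active in ELSA but not in HRS, which is the kind of between-cluster difference in predictor measurement that authors should report.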

Example using electronic health records data

“Clinical and administrative data were extracted from SingHealth’s electronic health records system, Electronic Health Intelligence System, which is an enterprise data repository that integrates information from multiple sources, including administrative, clinical and ancillary . . . Patient demographics included age, gender, and ethnicity. Social determinants of health included the requirement of financial assistance using Medifund and admission to a subsidized hospital ward . . . For medical comorbidities, chronic diseases such as heart failure, chronic obstructive pulmonary disease, cerebrovascular accident, peripheral vascular disease among other major diseases listed under the Charlson Comorbidity Index, Elixhauser comorbidities and Singapore Ministry of Health Chronic Diseases Program were extracted. These diseases were extracted using International Classification of Diseases 10 codes of primary and secondary discharge diagnoses dating back to seven years.”107
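A lookback rule like the one quoted can be sketched as follows: a comorbidity is flagged if any diagnosis code with a matching ICD-10 prefix was recorded within seven years before the index date. The code list is a deliberately tiny, illustrative subset (I50 for heart failure, J44 for chronic obstructive pulmonary disease), seven years are approximated as 7 × 365 days, and the function name is hypothetical; this is not the quoted study's implementation.

```python
from datetime import date
from typing import Dict, Iterable, Tuple

LOOKBACK_DAYS = 7 * 365  # approximate seven-year window (assumption)

# Illustrative ICD-10 prefixes only; real studies use validated code lists.
COMORBIDITY_PREFIXES: Dict[str, Tuple[str, ...]] = {
    "heart_failure": ("I50",),
    "copd": ("J44",),
}


def flag_comorbidities(diagnoses: Iterable[Tuple[str, date]],
                       index_date: date) -> Dict[str, bool]:
    """Flag each comorbidity recorded within the lookback window before index_date."""
    flags = {name: False for name in COMORBIDITY_PREFIXES}
    for code, recorded_on in diagnoses:
        days_before = (index_date - recorded_on).days
        if not 0 <= days_before <= LOOKBACK_DAYS:
            continue  # recorded after the index date or before the window starts
        for name, prefixes in COMORBIDITY_PREFIXES.items():
            if code.upper().startswith(prefixes):
                flags[name] = True
    return flags


if __name__ == "__main__":
    history = [("I50.9", date(2015, 3, 1)), ("J44.1", date(2005, 6, 1))]
    print(flag_comorbidities(history, date(2018, 1, 1)))
    # {'heart_failure': True, 'copd': False}
```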

Item 7a: describe how the data were prepared for analysis, including any cleaning, harmonisation, linkage, and quality checks

This new item helps to ensure transparency in how researchers clean, harmonise, and link their obtained data. This information will allow readers to better appraise the quality and integrity of data and determine whether the data are truly comparable and combinable across clusters.

Data cleaning is an essential part of any research study and involves tasks such as identifying duplicate records, checking outliers, and dealing with missing values. The quality of large, routinely collected health data has often been criticised, particularly with respect to their completeness and accuracy.47 108 For example, the quality of routinely collected primary care data can vary substantially, because data are entered by general practitioners during routine consultations, and not for the purpose of research.31 Researchers must therefore undertake comprehensive data quality checks before conducting their analyses. Particular weaknesses include missing data, and the potential for data to be missing not at random; non-standardised definitions of diagnoses and outcomes; interpreting the absence of a disease or outcome recording as absence of the disease or outcome itself, when patients with the disease or outcome can sometimes simply fail to present to the general practitioner; incomplete follow-up times and event dates (such as hospital admission and length of stay); and lack of recording of potentially important predictors.
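A simple starting point for such checks is to tabulate missingness separately for each cluster, which also reveals predictors that are systematically missing (entirely unrecorded) in some clusters (see item 7d). The Python sketch below assumes that records are dictionaries with a "cluster" key and that None, or an absent key, denotes a missing value; both conventions are assumptions for illustration.

```python
from typing import Dict, List


def missingness_by_cluster(rows: List[dict], variables: List[str]) -> Dict[str, Dict[str, float]]:
    """Proportion of missing values (None or absent key) per variable within each cluster."""
    grouped: Dict[str, List[dict]] = {}
    for row in rows:
        grouped.setdefault(row["cluster"], []).append(row)
    return {
        cluster: {v: sum(r.get(v) is None for r in members) / len(members) for v in variables}
        for cluster, members in grouped.items()
    }


def systematically_missing(summary: Dict[str, Dict[str, float]]) -> Dict[str, List[str]]:
    """Variables that are missing for every participant in a cluster."""
    result = {}
    for cluster, props in summary.items():
        absent = [v for v, p in props.items() if p == 1.0]
        if absent:
            result[cluster] = absent
    return result


if __name__ == "__main__":
    rows = [
        {"cluster": "practice_1", "bmi": 27.1, "smoking": None},
        {"cluster": "practice_1", "bmi": None, "smoking": 1},
        {"cluster": "practice_2", "bmi": None, "smoking": 0},
        {"cluster": "practice_2", "smoking": 1},
    ]
    summary = missingness_by_cluster(rows, ["bmi", "smoking"])
    print(summary)
    # {'practice_1': {'bmi': 0.5, 'smoking': 0.5}, 'practice_2': {'bmi': 1.0, 'smoking': 0.0}}
    print(systematically_missing(summary))  # {'practice_2': ['bmi']}
```

Such a table describes only the extent and pattern of missingness; it cannot by itself establish whether data are missing not at random.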

When IPD are obtained from multiple studies or through a multicentre collaboration, researchers need a careful, often prolonged, process to clean each received dataset and harmonise information, so that the IPD can be combined in a meta-analysis.39 102 This process often involves merging the data into one storage/query system (technical harmonisation) and integrating datasets into a logically coherent entity (semantic harmonisation).109 Data integration is often achieved by adopting common vocabularies (eg, CDISC Operational Data Model) or taxonomies (eg, SNOMED Clinical Terms) for naming variables or for standardising values for those variables used commonly in clinical research. For example, measurement units can differ across clusters (eg, kilograms v pounds for weight) and might therefore require standardisation. In addition, when mapping data from different data sources, we recommend distinguishing between what is measured (eg, systolic blood pressure) and how the measurement is done (eg, using a sphygmomanometer). Authors should describe any process for querying and confirming data with the original investigators, and report any steps taken to standardise measurements. Managing IPD can be enormously labour intensive and can require considerable clinical insight.29 Problems might include identification of the same individual in multiple studies (duplicates), exclusion of ineligible participants who do not meet the inclusion criteria, inconsistent recording of continuous predictors and outcomes between studies, inconsistent timing and method of measuring predictors, and coding of censoring information. Authors should clearly describe how they handled these problems and add any detailed descriptions that do not fit within article word limits to the supplementary material.
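Two of the routine harmonisation steps mentioned here, standardising measurement units and flagging possible duplicate participants, can be sketched in Python as below. The accepted unit labels and the fields used to match duplicates are hypothetical; exact matching on a few fields is a crude heuristic, and flagged pairs would still need to be queried with the original investigators.

```python
from typing import Dict, List, Sequence, Tuple

KG_PER_LB = 0.45359237  # exact definition of the international avoirdupois pound


def weight_in_kg(value: float, unit: str) -> float:
    """Convert a recorded weight to kilograms."""
    unit = unit.strip().lower()
    if unit in {"kg", "kilogram", "kilograms"}:
        return value
    if unit in {"lb", "lbs", "pound", "pounds"}:
        return value * KG_PER_LB
    raise ValueError(f"unrecognised weight unit: {unit!r}")


def possible_duplicates(records: List[Dict], key_fields: Sequence[str]) -> List[Tuple[int, int]]:
    """(first index, later index) pairs of records with identical values on key_fields."""
    first_seen: Dict[tuple, int] = {}
    pairs = []
    for i, rec in enumerate(records):
        key = tuple(rec[f] for f in key_fields)
        if key in first_seen:
            pairs.append((first_seen[key], i))
        else:
            first_seen[key] = i
    return pairs


if __name__ == "__main__":
    print(round(weight_in_kg(154, "lbs"), 1))  # 69.9
    recs = [
        {"study": "S1", "birth_date": "1950-02-01", "sex": "F"},
        {"study": "S2", "birth_date": "1950-02-01", "sex": "F"},
        {"study": "S2", "birth_date": "1961-07-15", "sex": "M"},
    ]
    print(possible_duplicates(recs, ["birth_date", "sex"]))  # [(0, 1)]
```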

Prediction model studies that use routinely collected data should also describe any required data linkage, for example, to join information about a participant’s characteristics from one database to their recorded outcomes in another database (eg, national death registries or even another database from the same cluster). The RECORD (reporting of studies conducted using observational routinely collected data) statement specifically asks researchers to report whether the study “included person-level, institutional-level, or other data linkage across two or more databases,” and, if so, to provide “the methods of linkage and methods of linkage quality evaluation.”110 A flow diagram might be helpful to demonstrate the linkage process.
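A deterministic linkage on a shared identifier, together with the counts a linkage flow diagram would need, might look like the Python sketch below. The identifier name, the registry structure, and the interpretation of an unmatched person as alive are all illustrative assumptions; real linkages are often probabilistic and need their own quality evaluation.

```python
from datetime import date
from typing import Dict, List, Optional, Tuple


def link_death_registry(cohort: List[dict],
                        registry: Dict[str, date]) -> Tuple[List[dict], Dict[str, int]]:
    """Attach a death date from the registry using a shared linkage identifier."""
    linked = []
    for person in cohort:
        key: Optional[str] = person.get("linkage_id")
        death = registry.get(key) if key is not None else None
        # No registry entry gives death_date None; reading this as "alive" is an assumption.
        linked.append({**person, "linkable": key is not None, "death_date": death})
    counts = {
        "in_cohort": len(cohort),
        "not_linkable": sum(not r["linkable"] for r in linked),
        "deaths_linked": sum(r["death_date"] is not None for r in linked),
    }
    return linked, counts


if __name__ == "__main__":
    cohort = [{"pid": 1, "linkage_id": "A1"}, {"pid": 2, "linkage_id": "B2"}, {"pid": 3}]
    registry = {"A1": date(2019, 5, 4)}
    _, counts = link_death_registry(cohort, registry)
    print(counts)  # {'in_cohort': 3, 'not_linkable': 1, 'deaths_linked': 1}
```

The returned counts (participants in the cohort, participants who could not be linked, deaths found) are the kind of numbers that could populate each box of a linkage flow diagram.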

Example of individual participant data meta-analysis