Abstract

Introduction

Providers’ communication skills have a significant impact on patients’ satisfaction. Improved patients’ satisfaction has been positively correlated with various healthcare and financial outcomes. Patients’ satisfaction in the inpatient setting is measured using the Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) survey. In this study, we evaluated the impact of dynamic real-time feedback to the providers on the HCAHPS scores.

Methods

This was a randomized study conducted at our 550-bed level-1 tertiary care center. Twenty-six out of 27 hospitalists staffing our 12 medicine teams (including teams containing advanced practice providers (APPs) and house-staff teams) were randomized into intervention and control groups. Our research assistant interviewed 1110 patients over a period of 7 months and asked them the three provider communication–specific questions from the HCAHPS survey. Our intervention was a daily computer-generated email which alerted providers to their performance on HCAHPS questions (proportions of “always” responses) along with the performance of their peers and Medicare benchmarks.

Results

The intervention and control groups were similar with regard to baseline HCAHPS scores and clinical experience. The proportion of “always” responses to the three questions related to provider communication was statistically significantly higher in the intervention group compared to the control group (86% vs 80.5%, p < 0.00001). HCAHPS scores were also lower overall on the house-staff teams and higher on the teams with APPs.

Conclusion

Real-time patients’ feedback to inpatient providers with peer comparison via email has a positive impact on the provider-specific HCAHPS scores.

INTRODUCTION

Patients’ satisfaction with providers’ communication is both a key component of the Institute for Healthcare Improvement triple aim for high-performing healthcare organizations and a key driver of performance-based reimbursement systems for hospitals. Patients’ satisfaction in the inpatient setting is measured using the Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) survey. HCAHPS has been employed by the Centers for Medicare and Medicaid Services (CMS) since 2012 as one of the factors that determine reimbursement to the hospital through the Value-Based Purchasing (VBP) program (1). In addition to the financial incentives, the impact of patient satisfaction and a positive inpatient experience goes well beyond the index hospitalization. A high level of patient satisfaction has been associated with improved health outcomes, adherence to medications, use of preventive care services, and reduced resource utilization, including readmissions and length of stay (2, 3). Physicians’ communication skills have a significant impact on patients’ satisfaction and have been shown to be positively correlated with improved patient satisfaction scores (4).

Providers’ performance feedback is increasingly being used to improve quality of care in healthcare (5). Two studies have evaluated the impact of real-time performance feedback on patient satisfaction scores in the inpatient setting. A non-randomized, pre-post design trial showed an improvement in patient satisfaction scores after real-time feedback was provided to internal medicine residents (2). However, a randomized trial of real-time feedback to internal medicine attendings did not show an improvement in the top-box responses on the satisfaction surveys administered to patients in the inpatient setting (6). A top-box response is the highest possible response on a survey scale. Neither study included peer performance information as part of its real-time feedback. Peer or benchmark comparison improves the effectiveness of performance feedback and is a recommended component of performance feedback systems for physicians (5).

Hospitalists in academic medical centers can see patients in different roles, i.e., on sole hospitalist-led teams, in collaboration with advanced practice providers (APPs), or while supervising resident physicians. Retrospective studies have shown either higher scores in the physician communication domain for the resident teams compared to teams with APPs, or no difference in scores between resident teams, APP teams, and solo hospitalist-led teams (7). One study showed higher patient satisfaction with overall care in patients discharged from the hospitalist teams as opposed to the resident teams (8). There are no prospective data on differences in patient satisfaction scores by the hospitalist role on the team. It is also unknown whether patient satisfaction scores on teams with residents and APPs are affected by real-time feedback provided to the hospitalist attendings.

The purpose of our randomized controlled trial was to evaluate the effectiveness of daily email-based feedback, with peer and benchmark comparison, on improving top-box scores on the three provider-specific communication questions on the HCAHPS survey. We also studied the differences in patient satisfaction scores on the physician communication domain of the HCAHPS by hospitalists’ role on different teams, i.e. sole hospitalist-led teams versus patients cared for by hospitalist attendings in collaboration with APPs versus resident teams.

METHODS

Our facility is a 550-bed academic medical center in the Midwest. There were 27 full-time faculty hospitalists staffing a total of 12 general internal medicine ward teams in our group at the time this study was conducted. Six teams were resident (house-staff) teams, and the remaining were direct care teams. House-staff teams are made up of one attending hospitalist, one internal medicine post graduate year (PGY) 2 or 3 resident, two internal medicine PGY 1 residents, one fourth-year medical student, and two third-year medical students. Direct care teams are made up of one attending hospitalist, one advanced practice provider (APP), and one third-year medical student. While all patients on our direct care teams are seen and staffed by the attending hospitalist, roughly half are cared for by the APPs who serve as the primary inpatient provider for these patients. Twenty-six of 27 hospitalists consented to participate in the study. Hospitalists were randomized to intervention and control groups. Informed consent was obtained from all participants. This study was approved by the Medical College of Wisconsin Institutional Review Board (PRO00021494).
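The source does not describe how the allocation sequence was generated. As a minimal sketch, assuming simple 1:1 allocation by shuffling, provider-level randomization of the 26 consenting hospitalists might look like the following. The identifiers, the seed, and the `randomize_providers` helper are hypothetical and used only for illustration.

```python
import random


def randomize_providers(provider_ids, seed=None):
    """Shuffle provider IDs and split them into two equal-sized arms.

    Assumption: simple 1:1 randomization; the study's actual procedure
    is not specified in the text.
    """
    rng = random.Random(seed)       # local generator so the result is reproducible
    shuffled = list(provider_ids)   # copy, so the caller's list is left untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        "intervention": sorted(shuffled[:half]),
        "control": sorted(shuffled[half:]),
    }


# Hypothetical identifiers for the 26 consenting hospitalists.
providers = [f"H{i:02d}" for i in range(1, 27)]
arms = randomize_providers(providers, seed=2021)
print(len(arms["intervention"]), len(arms["control"]))
```

Randomizing at the provider level, rather than the patient level, keeps each hospitalist in a single arm for the whole study period.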

A research assistant picked a random sample of patients from our 12 GIM ward teams every morning and alternately assigned them to the intervention and control groups. A random team would be selected first followed by a random patient on that team. The number of patients selected each day was determined by the time the research assistant had available to dedicate to the project. She interviewed each selected patient in their room after obtaining informed consent and asked them the three questions related to provider communication from the HCAHPS survey:

During this hospital stay, how often did doctors explain things in a way you could understand?

During this hospital stay, how often did doctors listen carefully to you?

During this hospital stay, how often did doctors treat you with courtesy and respect?

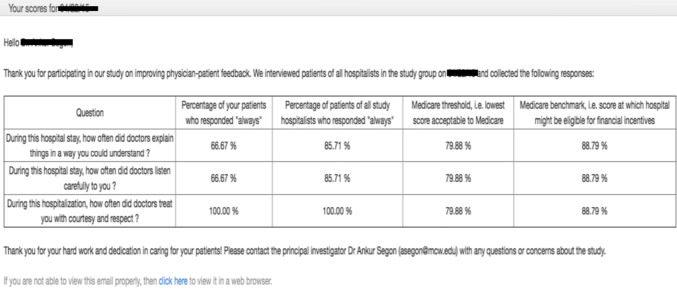

Each patient was interviewed only once. Non-English-speaking patients, patients with moderate or advanced dementia, and patients admitted from prison under custody of sheriff deputies were excluded from the study. Patients’ responses were recorded on the following scale: Never, sometimes, usually, and always. The research assistant entered patient responses into a website developed for the project 5 days a week (Monday to Friday). The website tabulated the percentage of a hospitalist’s patients in the intervention group who responded “always” to each of the three questions, along with the percentage of patients of all hospitalists (in both the intervention and control groups) who responded “always” to the three questions for comparison. Medicare threshold and Medicare benchmark scores were also included. The website pushed out an email to each hospitalist in the intervention group at 7 am every morning (Fig. 1) except Sundays and Mondays. Emails were not sent to hospitalists in the control group. Hospitalist attendings on house-staff teams were encouraged to forward the emails to their team and use this as an opportunity to discuss patient satisfaction and HCAHPS scores with their learners.

Fig. 1.

Sample of the email sent to the providers in the intervention group containing their respective patients’ HCAHPS scores along with peer comparison and Medicare threshold and benchmark.
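The tabulation behind the daily email (each hospitalist's share of “always” responses alongside the all-hospitalist comparison) can be sketched as follows. This is an illustrative sketch only: the record layout, the short question labels, and the `top_box_rates` helper are assumptions, not the project website's actual implementation, and the sample responses are invented.

```python
from collections import defaultdict


def top_box_rates(responses, key):
    """Return the fraction of "always" answers for each group defined by `key`."""
    counts = defaultdict(lambda: [0, 0])  # group -> [always_count, total_count]
    for row in responses:
        cell = counts[key(row)]
        cell[1] += 1
        if row["answer"].strip().lower() == "always":
            cell[0] += 1
    return {group: always / total for group, (always, total) in counts.items()}


# Invented example records; "explain" and "listen" are hypothetical labels
# for two of the three HCAHPS provider communication questions.
responses = [
    {"hospitalist": "H01", "question": "explain", "answer": "Always"},
    {"hospitalist": "H01", "question": "explain", "answer": "Usually"},
    {"hospitalist": "H02", "question": "explain", "answer": "Always"},
    {"hospitalist": "H02", "question": "listen", "answer": "Sometimes"},
]

# Per-hospitalist rates (the recipient's own scores in the email).
own = top_box_rates(responses, key=lambda r: (r["hospitalist"], r["question"]))
# Rates pooled over all hospitalists (the peer comparison in the email).
peers = top_box_rates(responses, key=lambda r: r["question"])
```

Only “always” counts toward the numerator, which mirrors the top-box scoring used throughout the analysis.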

A total of 1110 patients were randomized over a period of 7 months. Ninety-one percent of randomized patients agreed to participate in the study. Of the 1013 patients who agreed to participate, 500 belonged to the intervention group and 513 belonged to the control group. The proportions of top-box (or “always”) responses to each question were compared between the intervention and control groups using the χ² test. Of note, only top-box responses were included in the analysis to mirror the HCAHPS reporting methodology adopted by the CMS.
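For readers who want to see the comparison worked through, the following sketch computes a Pearson χ² statistic for a 2 × 2 table of top-box versus other responses. It assumes no continuity correction and uses only the Python standard library; the authors' software and exact procedure are not stated, so reported p-values may not be reproduced exactly. The example counts are the house-staff row of Table 3 (361/420 versus 344/438), and the `chi_square_2x2` helper is hypothetical.

```python
import math


def chi_square_2x2(success_a, total_a, success_b, total_b):
    """Pearson chi-square test (1 degree of freedom, no continuity correction)."""
    observed = [
        [success_a, total_a - success_a],
        [success_b, total_b - success_b],
    ]
    row_totals = [total_a, total_b]
    grand_total = total_a + total_b
    col_totals = [success_a + success_b, grand_total - success_a - success_b]

    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / grand_total
            chi2 += (observed[i][j] - expected) ** 2 / expected

    # With 1 degree of freedom, P(X > chi2) equals erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# "Always" responses, attending with house-staff (Table 3).
chi2, p_value = chi_square_2x2(361, 420, 344, 438)
print(f"chi2 = {chi2:.2f}, p = {p_value:.4f}")
```

Under these assumptions the result is close to the p-value of 0.005 reported for that row.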

RESULTS

Physicians in the intervention and control groups were evenly matched with regard to demographics, experience, and baseline performance on the HCAHPS survey (Table 1).

Table 1.

Baseline characteristics

| Characteristic | Intervention group (N = 13) | Control group (N = 13) | p-value |
|---|---|---|---|
| Age (mean) | 37.4 years | 36.2 years | 0.23 |
| Gender | 7 male, 6 female | 7 male, 6 female | NA |
| Baseline HCAHPS scores | 76% | 74% | 0.62 |
| Years since completion of medical school | 7.9 years | 10.8 years | 0.08 |
| Years of hospitalist experience | 5.3 years | 6.3 years | 0.54 |

The proportion of “always” responses to the three provider-specific communication questions was statistically significantly higher in the intervention group (86%, 1290/1500) compared to the control group (80.5%, 1239/1539), with a p-value of less than 0.00001. The proportion of “always” responses reached statistical significance for question 1 (doctor explained things in a way you could understand) and question 3 (doctor treated you with respect and courtesy) in the intervention group when compared to the controls (Table 2).

Table 2.

Proportion of “always” responses to the three provider communication–specific questions of the HCAHPS score

| Question | Intervention group* | Control group* | p-value |
|---|---|---|---|
| During this hospitalization, how often did doctors explain things in a way you could understand? | 82% (1230/1500) | 74% (1140/1539) | 0.0034 |
| During this hospitalization, how often did doctors listen carefully to you? | 83% (1245/1500) | 78% (1200/1539) | 0.05 |
| During this hospitalization, how often did doctors treat you with courtesy and respect? | 93% (1395/1500) | 89% (1371/1539) | 0.03 |

*Each patient in both groups was asked three provider-specific questions. The top-box response to each question was analyzed, leading to 1500 responses (3 × 500) in the intervention group and 1539 (3 × 513) in the control group.

We also analyzed “always” responses to the three survey questions by the role of the hospitalist on the team. The three roles were working with residents on house-staff teams, working with APPs on direct care teams for patients assigned to APPs, and working on their own on direct care teams. The proportion of “always” responses was higher in the intervention group when hospitalists were working on their own (87% versus 81%, p < 0.0001) and when hospitalists were working with the residents (86% versus 78%, p = 0.005). The proportion of “always” responses was not significantly different between the intervention and control groups when hospitalists were working in collaboration with APPs (Table 3).

Table 3.

Proportion of “always” Responses to the Three Survey Questions by the Attendings’ Role

| Provider role | Intervention group | Control group | p-value |
|---|---|---|---|
| Attending on their own | 87% (757/870) | 81% (701/864) | < 0.0001 |
| Attending with APP | 85% (178/210) | 86% (203/237) | 0.79 |
| Attending with house-staff | 86% (361/420) | 78% (344/438) | 0.005 |

Lastly, we calculated the proportion of “always” responses to the 3 survey questions by the attending hospitalist’s role in the control group. The proportion of “always” responses was lowest on the house-staff teams (78%) and highest for patients seen by hospitalists while working with APPs on direct care teams (86%) (Table 4).

Table 4.

Proportion of “always” Responses by Attendings’ Role in the Control Group Patients

| Role | Proportion of “always” responses | p-value |
|---|---|---|
| Attending with APP | 86% (203/237) | 0.02*/0.10** |
| Attending on their own | 81% (701/864) | 0.27* |
| Attending with house-staff | 78% (344/438) | NA |

*Compared to attending with house-staff; **compared to attending on their own

DISCUSSION

Our study shows that dynamic real-time feedback improves provider-specific HCAHPS scores. We saw higher scores in the intervention group on the questions related to clear explanations and to treating patients with courtesy and respect. Interestingly, HCAHPS scores were lower overall on the house-staff teams and higher on the teams with APPs.

Feedback on individual performance has been shown to improve physician scores on quality metrics (9). Further, a combination of target sharing and performance feedback is more effective in improving performance than either strategy alone (10). Sharing peer performance and target benchmarks as part of the feedback packet helps drive performance improvement for both highly motivated and underperforming physicians (11). Locke and Latham’s goal setting theory postulates that feedback is a key moderator of goal attainment (11). Feedback modulates performance by making a subject aware of the gap between their current performance and target performance. This allows subjects to adjust the intensity of their effort and deploy alternative tactics if they are underperforming on a goal (12).

To date, there has been only one other randomized trial (Indovina et al.) (6) that has assessed the impact of real-time feedback on providers’ HCAHPS scores. Like Indovina et al., we alerted physicians in the intervention group via email about their patients’ real-time feedback. Our approach differed in two ways. First, we did not employ in-person feedback and coaching on improving HCAHPS scores. Instead, our emails contained group comparison scores for all the hospitalists surveyed the previous day along with the Medicare benchmark and threshold scores (Fig. 1). Second, we randomized providers to intervention and control groups, while Indovina et al. randomized patients. In contrast to our results, they did not see an improvement in the top-box scores on daily patient satisfaction surveys. This could be related to overlap in exposure of providers to the intervention, since 65% of the providers took care of patients in both the control and intervention groups.

The benefits of real-time feedback were also demonstrated in a pre-post, non-randomized trial by Banka et al. (2). There was a statistically significant increase in the physician component of HCAHPS scoring with real-time feedback, educational sessions, monthly recognition, and incentives. The study population was limited to resident physicians, which limits the generalizability of the results to attending physicians. Also, because multiple interventions were tested simultaneously, it was unclear whether real-time feedback played a significant role in the improvement of the HCAHPS scores.

Various other techniques to improve and strengthen physicians’ communication skills have been employed and tested in other studies. These include communication skills training workshops (4, 13), interactive skills-based seminars with standardized patients (14), standardized medical simulation and coaching (1), a standardized communication model with a scripted approach (15), and interactive small group reflection (16). These approaches have been variably effective in improving provider communication scores and are limited by time and scheduling constraints, which make them difficult to sustain over time. In addition, the efficacy of these interventions is uncertain. A recent systematic review of interventions to improve HCAHPS scores showed that most studies are of low quality and vary widely in their approach, methodology, and design (17). Using email to deliver patient satisfaction scores along with peer comparison and goal performance, as in our study, is an efficient and practical way to provide real-time feedback. We received grant funding to develop a website that automated the process of generating and sending emails every morning. Patient surveys were administered by a research assistant, who could be replaced by a bedside nurse, hospital volunteers, or members of hospital-employed patient experience teams.

We did not see a statistically significant difference in patient satisfaction scores between the intervention and control groups for patients cared for by both attendings and APPs. Nonetheless, in the control group the attending-APP teams had higher patient satisfaction scores than the attending-resident (house-staff) and solo hospitalist-led teams. This is different from the findings of Lappé et al., who found no difference in HCAHPS scores between solo attending, attending-APP, and attending-resident teams (18). In another retrospective study, Iannuzzi et al. found higher patient satisfaction scores in patients cared for on house-staff teams as opposed to attending-APP teams (7). In our study, we did not collect patient-level demographic or comorbidity data to determine if patients in attending-APP and attending-resident groups were similar. Also, we did not characterize the training and experience level of our APPs. Thus, further studies are needed to determine the impact of hospitalist team structure on patient satisfaction scores.

Our study has limitations. While we randomized attending physicians and found the intervention and control groups to be comparable with regard to demographics, experience, and baseline performance (Table 1), residual confounding is always possible. Ours was a single-center study and this limits generalizability. Furthermore, as we are a large hospital medicine group at an academic medical center attached to a level 1 tertiary care hospital, we cannot generalize our results to dissimilar groups and centers. In addition, our sampling of patients on any given day was limited by the time available to the research assistant to interview patients. While we randomly selected patients to interview each day, we did not collect patient-level demographic or clinical information and cannot assert with complete certainty that patients assigned to the intervention and control groups were similar. However, all our medicine teams admit adult patients with similar inclusion and exclusion diagnostic criteria; therefore, significant differences between patients in the intervention and control groups are unlikely.

There are potential risks to employing patient satisfaction scores as a quality metric. A retrospective study showed an association between higher patient satisfaction scores and higher utilization of inpatient care and greater inpatient expenditure (19). There are concerns that a single-minded focus on patient satisfaction scores may result in overprescription of opiates and other addictive medications (20). Addressing these balancing measures was outside the scope of our study. In addition, we did not inquire about email fatigue or the impact of receiving daily comparative patient satisfaction scores on providers’ wellness and burnout. Lastly, we did not collect data on strategies implemented by individual providers to improve their scores in response to daily emails. Further studies are needed to address these important areas.

In conclusion, providing real-time patient feedback to inpatient providers with peer comparison and group goals via email has a positive impact on provider-specific HCAHPS scores. This intervention is a pragmatic model for academic centers to promote patient-centered care, enhance providers’ communication skills, and improve revenue in the era of value-based purchasing.

Declarations

Conflict of Interest

The authors declare that they do not have a conflict of interest.

Contributor Information

Asif Surani, Email: asurani@mcw.edu.

Muhammad Hammad, Email: mhammad@mcw.edu.

Nitendra Agarwal, Email: agarwaln@uthscsa.edu.

Ankur Segon, Email: segon@uthscsa.edu.

References

- 1. Seiler A, Knee A, Shaaban R, et al. Physician communication coaching effects on patient experience. PLoS One. 2017;12(7). doi: 10.1371/journal.pone.0180294.

- 2. Banka G, Edgington S, Kyulo N, et al. Improving patient satisfaction through physician education, feedback, and incentives. J Hosp Med. 2015;10(8). doi: 10.1002/jhm.2373.

- 3. Doyle C, Lennox L, Bell D. A systematic review of evidence on the links between patient experience and clinical safety and effectiveness. BMJ Open. Published online 2013. doi: 10.1136/bmjopen-2012-001570.

- 4. Boissy A, Windover AK, Bokar D, et al. Communication Skills Training for Physicians Improves Patient Satisfaction. J Gen Intern Med. 2016;31(7). doi: 10.1007/s11606-016-3597-2.

- 5. Becker B, Nagavally S, Wagner N, Walker R, Segon Y, Segon A. Creating a culture of quality: our experience with providing feedback to frontline hospitalists. BMJ Open Qual. 2021;10(1):e001141. doi: 10.1136/bmjoq-2020-001141.

- 6. Indovina K, Keniston A, Reid M, et al. Real-time patient experience surveys of hospitalized medical patients. J Hosp Med. 2016;11(4). doi: 10.1002/jhm.2533.

- 7. Iannuzzi MC, Iannuzzi JC, Holtsbery A, Wright SM, Knohl SJ. Comparing Hospitalist-Resident to Hospitalist-Midlevel Practitioner Team Performance on Length of Stay and Direct Patient Care Cost. J Grad Med Educ. 2015 Mar;7(1):65-9. doi: 10.4300/JGME-D-14-00234.1.

- 8. Wray CM, Flores A, Padula WV, Prochaska MT, Meltzer DO, Arora VM. Measuring patient experiences on hospitalist and teaching services: Patient responses to a 30-day postdischarge questionnaire. J Hosp Med. 2016 Feb;11(2):99-104. doi: 10.1002/jhm.2485. Epub 2015 Sep 18.

- 9. Ivers NM, Barrett J. Using report cards and dashboards to drive quality improvement: lessons learnt and lessons still to learn. BMJ Quality & Safety. 2018;27:417–420. doi: 10.1136/bmjqs-2017-007563.

- 10. Bandura A, Cervone D. Self-evaluative and self-efficacy mechanisms governing the motivational effects of goal systems. Journal of Personality and Social Psychology. 45:1017-1028. doi: 10.1037/0022-3514.45.5.1017.

- 11. Locke EA, Latham GP. Building a practically useful theory of goal setting and task motivation. A 35-year odyssey. Am Psychol. 2002;57(9):705–17. doi: 10.1037//0003-066x.57.9.705.

- 12. Matsui T, et al. Mechanism of feedback affecting task performance. Organizational Behavior and Human Performance. 1983;31:114–122. doi: 10.1016/0030-5073(83)90115-0.

- 13. O’Leary KJ, Darling TA, Rauworth J, Williams M V. Impact of hospitalist communication-skills training on patient-satisfaction scores. J Hosp Med. 2013;8(6). doi: 10.1002/jhm.2041.

- 14. Zabar S, Hanley K, Stevens DL, et al. Can interactive skills-based seminars with standardized patients enhance clinicians’ prevention skills? Measuring the impact of a CME program. Patient Educ Couns. 2010;80(2). doi: 10.1016/j.pec.2009.11.015.

- 15. Horton DJ, Yarbrough PM, Wanner N, Murphy RD, Kukhareva P V., Kawamoto K. Improving physician communication with patients as measured by HCAHPS using a standardized communication model. Am J Med Qual. Published online 2017. doi: 10.1177/1062860616689592.

- 16. Richards SE, Thompson R, Paulmeyer S, et al. Can specific feedback improve patients’ satisfaction with hospitalist physicians? A feasibility study using a validated tool to assess inpatient satisfaction. Patient Exp J. 2018;5(3). doi: 10.35680/2372-0247.1299.

- 17. Davidson KW, Shaffer J, Ye S, et al. Interventions to improve hospital patient satisfaction with Healthcare Providers and systems: A systematic review. BMJ Qual Saf. 2017;26(7). doi: 10.1136/bmjqs-2015-004758.

- 18. Lappé KL, Raaum SE, Ciarkowski CE, Reddy SP, Johnson SA. Impact of Hospitalist Team Structure on Patient-Reported Satisfaction with Physician Performance. J Gen Intern Med. 2020;35(9). doi: 10.1007/s11606-020-05775-5.

- 19. Fenton JJ, Jerant AF, Bertakis KD, Franks P. The cost of satisfaction: a national study of patient satisfaction, health care utilization, expenditures, and mortality. Arch Intern Med. 2012 Mar 12;172(5):405-11. doi: 10.1001/archinternmed.2011.1662. Epub 2012 Feb 13.

- 20. Zgierska A, Miller M, Rabago D. Patient satisfaction, prescription drug abuse, and potential unintended consequences. JAMA. 2012;307(13):1377-1378. doi: 10.1001/jama.2012.419.