Abstract

Natural language processing (NLP) is a promising tool for collecting data that are usually hard to obtain during extreme weather, such as community response and infrastructure performance. Patterns and trends in abundant data sources such as weather reports, news articles, and social media may provide insights into potential impacts and early warnings of impending disasters. This paper reviews peer-reviewed studies (journal articles and conference proceedings) that used NLP to assess extreme weather events, focusing on heavy rainfall events. The methodology searched four databases (ScienceDirect, Web of Science, Scopus, and IEEE Xplore) for articles published in English before June 2022. The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines were followed to select and refine the search. This process identified thirty-five studies covering hurricanes, typhoons, and flooding. NLP models were implemented for information extraction, topic modeling, clustering, and classification. The findings show that NLP remains underutilized in studying extreme weather events. The review demonstrates that NLP could improve the usefulness of social media platforms, newspapers, and other data sources for weather event assessment. In addition, NLP could generate new information that complements data from ground-based sensors, reducing monitoring costs. The key outcomes of NLP use identified in this study include improved accuracy, increased public safety, improved data collection, and enhanced decision-making. On the other hand, to use NLP properly, researchers must overcome inadequate, inaccessible, and nonrepresentative data, immature NLP approaches, and computing skill requirements.

Keywords: Natural language processing, Extreme weather events, Text mining, Disaster management

Introduction

Meteorologists have always faced the challenge of predicting extreme precipitation events (Lazo et al. 2009). According to recent surveys of the public’s use of weather forecasts, precipitation prediction (e.g., where, when, and how much rain will fall) is the most widely used information in standard forecasts (Lazo et al. 2009). End users in many communities and fields, such as transportation, water resources, flood control, and emergency management, require reliable precipitation forecasts. In the U.S. Weather Research Program’s (USWRP) community planning report, experts in hydrology, transportation, and emergency management discussed how quantitative precipitation forecasts (QPF) relate to their specific communities (Ralph 2005). On average, users receive weather information four to five times daily through mobile phone apps, TV, newspapers, tweets, and other channels (Purwandari et al. 2021). Rainfall forecasting is challenging because precipitation is dynamic and takes a variety of physical forms, so assessing climate data can involve a mixed discrete–continuous problem (whether it rains and, if so, how much) (Purwandari et al. 2021). QPF is determined at short, medium, and long ranges with different levels of difficulty and is usually delivered with varying uncertainty (Purwandari et al. 2021). Meteorologists have always tried to assimilate as many observations as possible, from satellite, airborne, and ground-based sources, to improve their forecast skill. The more reliable observations, even qualitative ones, are examined, processed, and eventually assimilated into models, the better the forecast. The increasing use of the Internet, particularly social media streams, has created a wealth of information that could help improve weather models. 
However, much of the data available on the Internet is unstructured. It lacks the identifiable tabular organization that traditional data analysis methods require, which diminishes its potential (Gandomi and Haider 2015). Unstructured data, such as Web pages, emails, and mobile phone records, may contain numerical and quantitative information (e.g., dates), but they are usually text-heavy. Unlike numbers, textual data are inherently imprecise and vague; according to Britton (1978), at least 32% of the words used in English text are lexically ambiguous. Because textual data are often unstructured, researchers find them difficult to use for enhancing meteorological models. Nevertheless, the large amount of textual data provides new opportunities for urban researchers to investigate people’s perceptions, attitudes, and behaviors, which will help them better understand the impact of natural hazards. By analyzing text gathered from social media, Jang and Kim (2019) demonstrated that crowd-sourced text data can effectively represent the collective identity of urban spaces. Conventional data collection methods, such as surveys, focus groups, and interviews, are often time-consuming and expensive. Raw text data collected without a predetermined purpose can be valuable if used wisely and can complement purposefully designed data collection strategies.

Machines can analyze and comprehend human language thanks to a process known as natural language processing (NLP). It is at the heart of the technologies we use daily, including search engines, chatbots, spam filters, grammar checkers, voice assistants, and social media monitoring tools (Chowdhary 2020). NLP makes it possible to better grasp the syntax, semantics, pragmatics, and morphology of human language. Computer science then uses this understanding to create rule-based and machine learning algorithms that solve particular problems and carry out specific tasks (Chowdhary 2020). NLP has demonstrated tremendous capability in harvesting the abundant textual data available. Hirschberg and Manning (2015) define it as a form of artificial intelligence, related to machine learning and deep learning, that uses computational algorithms to learn, understand, and produce human language content. Basic NLP procedures involve processing text data, converting text into features, and identifying semantic relationships (Ghosh and Gunning 2019). In addition to structuring large volumes of unstructured data, NLP can improve the accuracy of text processing and analysis because it applies rules and criteria consistently. NLP has benefited a wide range of fields. Guetterman et al. (2018) compared the results of traditional text analysis with those of an NLP analysis and reported that NLP could identify the major themes that conventional text analysis had summarized manually. Syntactic and semantic analyses are frequently employed in NLP to break human discourse into machine-readable segments (Chowdhary 2020). Syntactic analysis, commonly called parsing or syntax analysis, detects a text’s syntactic structure and the dependencies between words, which can be represented as a parse tree (Chowdhary 2020). 
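
The three basic procedures named above (processing text, converting it into features, and relating documents to one another) can be sketched in standard-library Python. This is a minimal illustration and not a method from any reviewed study. It assumes a toy stop-word list, uses TF-IDF weights as the features, and uses cosine similarity as a simple stand-in for "identifying semantic relationships". The example posts are invented.

```python
import math
import re
from collections import Counter

# A tiny illustrative stop-word list (an assumption; real pipelines use curated
# lists such as those shipped with NLTK or spaCy).
STOP_WORDS = {"a", "after", "an", "and", "are", "in", "is", "of", "on", "the", "to"}


def preprocess(text: str) -> list[str]:
    """Step 1, process text: lowercase, tokenize on letters, drop stop words."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return [t for t in tokens if t not in STOP_WORDS]


def tf_idf(docs: list[list[str]]) -> list[dict[str, float]]:
    """Step 2, convert text into features: sparse TF-IDF vectors (term -> weight)."""
    n_docs = len(docs)
    doc_freq = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        vectors.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return vectors


def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """Step 3, relate documents: cosine similarity of two sparse vectors."""
    dot = sum(weight * v.get(term, 0.0) for term, weight in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


posts = [
    "Street flooding on Main Road after heavy rain",
    "Heavy rain and flooding closed the Main Road bridge",
    "Power outage reported downtown",
]
vectors = tf_idf([preprocess(p) for p in posts])
print(cosine(vectors[0], vectors[1]) > 0)  # True: shared flood-related terms
print(cosine(vectors[0], vectors[2]))      # 0.0: no shared terms
```

Bag-of-words features like these ignore word order and context. This is one reason the reviewed studies also rely on embeddings and transformer models.
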
Semantic analysis aims to determine what the language means, that is, to extract the exact or dictionary meaning of the text. However, semantics is regarded as one of the most challenging domains in NLP because of the polysemy and ambiguity of language (Chowdhary 2020). Semantic tasks examine sentence structure, word interactions, and related ideas to grasp the topic of a text and the meaning of its words. The ambiguity of human language is one of the main reasons for NLP’s complexity. For instance, sarcasm is problematic for NLP (Suhaimin et al. 2017). Likewise, it would be challenging to teach a machine the figurative meaning of statements such as “I was excited about the weekend, but then my neighbor rained on my parade” or “it is raining cats and dogs,” yet humans interpret them quickly. Researchers have worked on training NLP systems to look beyond word meanings and word order to understand context, word ambiguity, and other intricate aspects of communication. They must also consider factors such as culture, background, and gender when adjusting NLP models. For instance, idioms, including weather-related ones, can vary significantly from one country to the next.
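
The idiom problem can be made concrete with a toy filter that flags posts as rain reports. The sketch below is illustrative only. The keyword set and both idiom lists are hypothetical, hand-written assumptions, and the approach is not taken from any reviewed study. A pure keyword match treats "rained on my parade" as a weather report. Normalizing known idioms first removes that false positive, while "raining cats and dogs" is kept as a genuine (figurative) rain report.

```python
import re

# Words that signal a literal rain report (illustrative, not exhaustive).
RAIN_TERMS = {"rain", "rained", "raining", "rainfall", "downpour"}

# Hypothetical hand-written idiom lists; real lists vary by language and culture.
# Figurative phrases that mention rain but are not about the weather: removed.
NON_WEATHER_IDIOMS = [r"rain(?:ed|s)? on (?:my|our|your|his|her|their) parade"]
# Weather idioms whose literal words are misleading: rewritten to a paraphrase.
WEATHER_IDIOMS = {r"raining cats and dogs": "raining heavily"}


def naive_is_rain_report(text: str) -> bool:
    """Flag a post if any rain keyword appears, ignoring context."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return bool(words & RAIN_TERMS)


def idiom_aware_is_rain_report(text: str) -> bool:
    """Normalize known idioms first, then apply the same keyword test."""
    lowered = text.lower()
    for pattern in NON_WEATHER_IDIOMS:
        lowered = re.sub(pattern, " ", lowered)
    for pattern, literal in WEATHER_IDIOMS.items():
        lowered = re.sub(pattern, literal, lowered)
    return naive_is_rain_report(lowered)


post = "I was excited about the weekend, but then my neighbor rained on my parade"
print(naive_is_rain_report(post))        # True: a false positive
print(idiom_aware_is_rain_report(post))  # False
```

A hand-maintained list like this does not scale across languages and cultures. Context-aware models, such as the BERT-based classifiers listed in Table 1, are the usual alternative.
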

NLP can help address the data challenges of extreme weather events by analyzing large amounts of weather-related data for patterns and trends (Kahle et al. 2022). These patterns can support more accurate forecasts and early warning systems for extreme weather events (Kitazawa and Hale 2021; Rossi et al. 2018; Vayansky et al. 2019; Zhou et al. 2022). NLP can also monitor social media for information on extreme weather events, allowing the detection of local events that may not be reported through official channels (Kitazawa and Hale 2021; Zhou et al. 2022). Additionally, NLP can be used to create automated chatbots that provide information to people affected by extreme weather events, such as directions to shelters, medical assistance, and other resources.
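
Detecting local events from social media can be illustrated with a simple count-based burst detector. This is a minimal sketch, assuming a hypothetical flood vocabulary, invented posts, and arbitrary thresholds (`window`, `factor`, `min_count`). It is not the method of any reviewed study, which typically use trained classifiers rather than keywords. An hour is flagged when its number of flood-related posts is well above the recent average.

```python
import re
from collections import Counter
from datetime import datetime, timedelta

# Hypothetical flood vocabulary; a deployed system would use a trained classifier.
FLOOD_TERMS = {"flood", "flooded", "flooding", "inundated", "submerged"}


def hourly_flood_counts(posts: list[tuple[str, str]]) -> Counter:
    """Count flood-related posts per hour. posts: (ISO timestamp, text) pairs."""
    counts: Counter = Counter()
    for timestamp, text in posts:
        if set(re.findall(r"[a-z]+", text.lower())) & FLOOD_TERMS:
            hour = datetime.fromisoformat(timestamp).replace(minute=0, second=0, microsecond=0)
            counts[hour] += 1
    return counts


def detect_bursts(counts: Counter, start: datetime, n_hours: int,
                  window: int = 3, factor: float = 3.0, min_count: int = 3) -> list[datetime]:
    """Flag hours whose count is at least `min_count` and exceeds `factor` times
    the mean of the previous `window` hours (baseline floored at 1)."""
    hours = [start + timedelta(hours=i) for i in range(n_hours)]
    bursts = []
    for i, hour in enumerate(hours):
        history = [counts[h] for h in hours[max(0, i - window):i]]
        baseline = sum(history) / len(history) if history else 0.0
        current = counts[hour]
        if current >= min_count and current > factor * max(baseline, 1.0):
            bursts.append(hour)
    return bursts


posts = [
    ("2022-01-01T10:05:00", "Light rain, some flooding near the park"),
    ("2022-01-01T11:20:00", "Street flooded again"),
    ("2022-01-01T12:40:00", "Minor flooding on 5th Ave"),
    ("2022-01-01T13:02:00", "Flood water entering homes"),
    ("2022-01-01T13:10:00", "Cars submerged on the highway"),
    ("2022-01-01T13:25:00", "Whole block flooded"),
    ("2022-01-01T13:30:00", "Traffic jam downtown"),
    ("2022-01-01T13:40:00", "Basement flooding everywhere"),
    ("2022-01-01T13:55:00", "Neighborhood inundated"),
]
counts = hourly_flood_counts(posts)
bursts = detect_bursts(counts, datetime(2022, 1, 1, 10), n_hours=4)
print(bursts)  # [datetime.datetime(2022, 1, 1, 13, 0)]
```
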

This article comprehensively reviews how researchers have used NLP in extreme weather event assessment. To our knowledge, the present study is the first attempt to synthesize the opportunities and challenges of adopting natural language processing in extreme event assessment research. The methodology section details the approach, the selection method, and the search terms used to select articles. The search results are then summarized and categorized. Next, the role of NLP and its challenges in supporting extreme event assessment are examined. Finally, the limitations of this literature review are listed.

Methodology

First, the protocol registration and information sources were determined. This systematic review followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (Page et al. 2021). The protocol (Tounsi 2022) was registered with the Open Science Framework on June 6, 2022. We searched databases for peer-reviewed publications that fell within the scope and eligibility criteria of this systematic literature review.

Then, the search strategy was defined. Our search terms were developed systematically to capture all related and eligible papers in the databases. The keywords were first identified through a preliminary literature review and then modified based on feedback from content experts and the librarian. We then used a collaborative search strategy to ensure that all papers on the use of NLP for assessing extreme weather events were included. We searched four databases: IEEE Xplore, Web of Science, ScienceDirect, and Scopus.

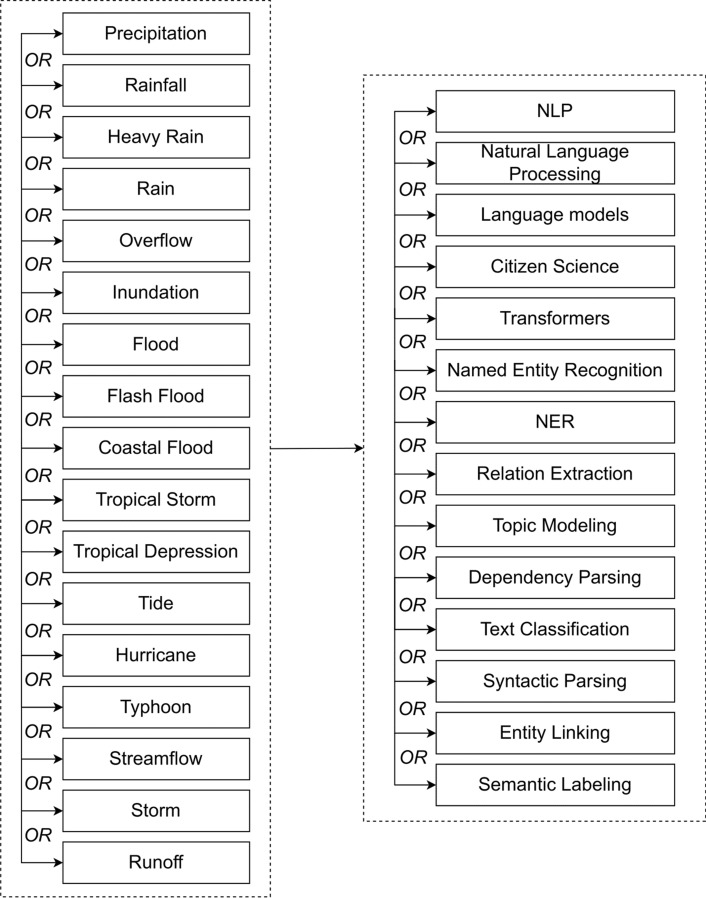

We grouped the query keywords to identify relevant studies that meet our scope and inclusion criteria and combined the groups using the Boolean operators AND and OR. For example, we combined keyword terms such as “NLP OR Natural Language Processing” with narrower terms such as “Precipitation OR Rainfall.” Figure 1 shows all the combinations of search terms used in the keyword search.
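
The grouping logic (OR within a group of synonyms, AND across groups) can be sketched as a small query builder. This sketch uses only the four terms quoted in the text; the full term set appears in Fig. 1 and is not reproduced here. The function name is hypothetical. Each database also has its own field syntax (Scopus, for example, wraps terms in `TITLE-ABS-KEY(...)`), so the output is only a generic Boolean string.

```python
def build_query(term_groups: list[list[str]]) -> str:
    """OR the synonyms within each group, then AND the groups together."""
    clauses = []
    for group in term_groups:
        # Quote multi-word terms so databases treat them as exact phrases.
        terms = [f'"{term}"' if " " in term else term for term in group]
        clauses.append("(" + " OR ".join(terms) + ")")
    return " AND ".join(clauses)


query = build_query([
    ["NLP", "Natural Language Processing"],  # method terms
    ["Precipitation", "Rainfall"],           # narrower event terms
])
print(query)
# (NLP OR "Natural Language Processing") AND (Precipitation OR Rainfall)
```

Adding a further group (for instance, more event terms) narrows the result set, because every group must be matched.
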

Fig. 1.

Conceptual framework of the search terms used to query studies for the literature review

This study focused on peer-reviewed publications that satisfied two primary conditions:

(a) they applied an NLP technique to solve the problem stated in the study and showed the results of the model used, rather than only suggesting it; and
(b) they reported results related to the assessment of precipitation-related extreme weather events.

Papers that did not meet these conditions were excluded. For example, studies that only developed numerically based deep learning models were excluded. Secondary research, such as reviews, commentaries, and conceptual articles, was also excluded. The search was limited to English-language papers published until June 2022.
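
The eligibility rules can be expressed as a single predicate over a screening record. This is a hypothetical sketch. The `Record` fields are assumptions standing in for judgments that reviewers made by reading each paper, and the cutoff date is one reading of "until June 2022". The example records are invented.

```python
from dataclasses import dataclass
from datetime import date


# Hypothetical screening record; each flag is coded by a human reviewer.
@dataclass
class Record:
    title: str
    language: str
    published: date
    study_type: str            # e.g. "primary", "review", "commentary", "conceptual"
    implements_nlp: bool       # an NLP technique was applied, not only proposed
    reports_results: bool      # the model's results are shown
    precipitation_event: bool  # results concern a precipitation-related extreme event


SECONDARY = {"review", "commentary", "conceptual"}
CUTOFF = date(2022, 6, 1)  # assumption: "until June 2022" read as "before 1 June 2022"


def is_eligible(r: Record) -> bool:
    """Apply conditions (a) and (b) plus the exclusion and language/date limits."""
    condition_a = r.implements_nlp and r.reports_results
    condition_b = r.precipitation_event
    return (
        condition_a
        and condition_b
        and r.study_type not in SECONDARY
        and r.language == "English"
        and r.published < CUTOFF
    )


candidates = [
    Record("Tweet classification during a hurricane", "English", date(2020, 5, 1), "primary", True, True, True),
    Record("NLP could help flood response", "English", date(2021, 3, 1), "primary", False, False, True),
    Record("A review of text mining for disasters", "English", date(2021, 9, 1), "review", True, True, True),
]
print([r.title for r in candidates if is_eligible(r)])
# ['Tweet classification during a hurricane']
```
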

Two authors screened the publications in parallel and decided by consensus whether to include each one. First, duplicates were removed, and the titles and abstracts were screened. We then read the full text of the remaining papers to finalize the selection. To minimize selection bias, all discrepancies were resolved through discussion requiring consensus from both reviewers. Standardized information was recorded for each paper using a data abstraction form.
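
The bookkeeping behind dual screening can be sketched as follows. One step flags the records on which the two reviewers disagree so they can be discussed; a second step merges the decisions once the discussion outcomes are known. This is a hypothetical illustration: the record IDs and function names are invented, and the resolution itself happened through discussion, not code.

```python
def find_discrepancies(a: dict[str, bool], b: dict[str, bool]) -> list[str]:
    """IDs where the reviewers' include (True) / exclude (False) decisions differ."""
    return [rid for rid in sorted(set(a) | set(b)) if a.get(rid) != b.get(rid)]


def merge_decisions(a: dict[str, bool], b: dict[str, bool],
                    resolved: dict[str, bool]) -> dict[str, bool]:
    """Agreed decisions pass through; disputed ones take the consensus outcome."""
    disputed = set(find_discrepancies(a, b))
    missing = sorted(disputed - set(resolved))
    if missing:
        raise ValueError(f"unresolved discrepancies: {missing}")
    return {rid: resolved[rid] if rid in disputed else a[rid]
            for rid in sorted(set(a) | set(b))}


reviewer_a = {"rec-001": True, "rec-002": False, "rec-003": True}
reviewer_b = {"rec-001": True, "rec-002": True, "rec-003": True}
print(find_discrepancies(reviewer_a, reviewer_b))  # ['rec-002']
final = merge_decisions(reviewer_a, reviewer_b, resolved={"rec-002": False})
print(final)  # {'rec-001': True, 'rec-002': False, 'rec-003': True}
```

Raising an error on unresolved records enforces the rule that no paper leaves screening without both reviewers' agreement.
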

Results

Search results

The flowchart of the procedure used to choose the articles included in this systematic literature review is shown in Fig. 2. The initial search, using a set of queries, returned 1225 documents. We used EndNote to manage filtering and duplicate removal. We eliminated duplicates and all review, opinion, and perspective papers. Two authors then conducted a second round of filtering by reading titles and abstracts (n = 846). After screening against the inclusion criteria, 316 documents remained for full-text examination. After reading the full texts, we removed a further 281 articles that were outside the scope of the study. For example, some studies suggested NLP as a solution but did not implement it. We excluded such studies because they did not show that NLP was an effective solution to the problem. Other excluded studies explained the consequences of different extreme weather events (including rain-related issues) for other fields, such as construction or maritime transportation.
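
Duplicate removal across the four databases was done in EndNote. The sketch below does not reproduce EndNote's algorithm. It is a hypothetical stand-in that matches records on a normalized title or on a DOI. The record fields and the placeholder DOIs are invented for illustration.

```python
import re
import unicodedata


def normalize_title(title: str) -> str:
    """Lowercase, strip accents and punctuation, and collapse whitespace."""
    text = unicodedata.normalize("NFKD", title)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^a-z0-9 ]", " ", text.lower())
    return " ".join(text.split())


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first record seen for each DOI or normalized title."""
    seen: set[tuple[str, str]] = set()
    unique = []
    for rec in records:
        keys = {("title", normalize_title(rec["title"]))}
        doi = (rec.get("doi") or "").strip().lower()
        if doi:
            keys.add(("doi", doi))
        if keys & seen:
            continue  # matches an earlier record on title or DOI
        seen |= keys
        unique.append(rec)
    return unique


records = [
    {"title": "Flood detection from tweets", "doi": "10.0000/example.1", "db": "Scopus"},
    {"title": "Flood Detection from Tweets.", "db": "Web of Science"},                             # same title
    {"title": "Flood detection from Twitter posts", "doi": "10.0000/EXAMPLE.1", "db": "IEEE Xplore"},  # same DOI
    {"title": "Typhoon damage assessment with BERT", "db": "ScienceDirect"},
]
unique = deduplicate(records)
print([r["title"] for r in unique])
# ['Flood detection from tweets', 'Typhoon damage assessment with BERT']
```

Title normalization also handles accented author-style strings; for example, "GRÜNDER-Fahrer" becomes "grunder fahrer".
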

Fig. 2.

PRISMA flow diagram of the search and selection process

Consequently, with consensus from both authors, 35 studies were included in the systematic review. We used a systematic approach to extract the information listed in Table 1 from each eligible article. Overall, 29 were journal articles, and nine were conference proceedings (Table 2).
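
The counts reported along the PRISMA flow can be checked with simple arithmetic. The stage totals below are taken from the text. The number removed at each step is derived by subtraction. The 379 records removed before title and abstract screening combine duplicates with review, opinion, and perspective papers, which the text does not report separately.

```python
# Record counts at each stage, as reported in the text.
stages = [
    ("identified by the database searches", 1225),
    ("screened on title and abstract", 846),
    ("assessed in full text", 316),
    ("included in the review", 35),
]


def removed_per_stage(stages: list[tuple[str, int]]) -> list[tuple[str, int]]:
    """Records removed before reaching each stage after the first."""
    return [(later[0], earlier[1] - later[1]) for earlier, later in zip(stages, stages[1:])]


for label, removed in removed_per_stage(stages):
    print(f"{removed:>4} removed before being {label}")
#  379 removed before being screened on title and abstract
#  530 removed before being assessed in full text
#  281 removed before being included in the review
```

The last figure matches the 281 articles excluded after full-text reading.
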

Table 1.

Summary of included literature

| Citation | Objective | Data source | Data type | Model (name) | Type of extreme event | Country |
|---|---|---|---|---|---|---|
| Zhou et al. (2021) | Propose a guided latent Dirichlet allocation (LDA) workflow for investigating latent temporal topics in tweets from a hurricane event | | Unstructured | Latent Dirichlet Allocation (LDA) | Hurricanes and storms | USA |
| Fan et al. (2020a, b) | Discover how catastrophic occurrences related to various areas unfold from social media posts during disasters, and propose and test a hybrid machine learning pipeline | Social media; Google Maps Geocoding API | Unstructured | Fine-tuned BERT classifier and graph-based clustering | Hurricanes and storms | USA |
| Lai et al. (2022) | Use natural language processing (NLP) to build a hybrid named entity recognition (NER) model that combines a domain-specific machine learning model, linguistic characteristics, and rule-based matching to extract information from newspapers | Online newspaper articles | Unstructured | Information extraction with a hydrology NER model (spaCy); relationship extraction; entity linking; geocoding of locations; location filtering with a binary classification model | Hurricanes and storms | USA |
| Fan et al. (2018) | Propose and evaluate a system analytics framework, based on social sensing and text mining, that identifies the evolution of topics related to infrastructure system functionality in disasters | | Unstructured | Text lemmatization; part-of-speech (POS) tagging; TF-IDF vectorization; topic modeling with Latent Dirichlet Allocation (LDA); K-means clustering | Hurricanes and storms | USA |
| Vayansky et al. (2019) | Build a set of workable models for sentiment analysis and Latent Dirichlet Allocation (LDA) topic modeling to trace sentiment trends over the full development of the storm | | Unstructured | Sentiment analysis; Latent Dirichlet Allocation (LDA) | Hurricanes and storms | USA |
| Sermet and Demir (2018) | Develop Flood AI, an intelligent system that increases societal readiness for floods by offering a knowledge engine based on a generic disaster ontology with a particular focus on flooding; Flood AI employs speech recognition, artificial intelligence, and natural language processing | Ontology (XML parser) | Structured/unstructured | Stanford NLP; wit.ai; NlpTool | Flooding | USA |
| Xin et al. (2019) | Analyze the consistency of hurricane-related tweets over time and the similarity of themes across Twitter and other private social media data | | Unstructured | Latent Dirichlet Allocation (LDA) | Hurricanes and storms | USA |
| Kahle et al. (2022) | Analyze flood events using four methodologies: (1) hermeneutics, i.e., the analog interpretation of printed sources and newspapers; (2) text mining and natural language processing of digital newspaper articles available online; (3) precipitation and discharge models based on instrumental data; and (4) relating the findings to the extreme historic floods of 1804 and 1910 through documentary source analysis | Journals; online media; numerical climate and gauge data; historical records (newspapers and chronicles) | Structured/unstructured | NLP tokenization and lemmatization; named entity extraction | Flooding | Germany |
| Alam et al. (2020) | Describe a methodology based on state-of-the-art AI techniques, from unsupervised to supervised learning, for a thorough analysis of multimodal social media data gathered during disasters | Textual and imagery content from millions of tweets | Unstructured | Relevancy classification; sentiment classification; topic modeling (Latent Dirichlet Allocation); NER (Stanford CoreNLP); duplicate detection | Hurricanes and storms | USA |
| Barker and Macleod (2019) | Create a prototype national-scale Twitter data mining pipeline that retrieves pertinent social geodata, based on environmental data sources (flood warnings and river levels), to increase stakeholder situational awareness during flooding disasters in Great Britain | | Unstructured | Latent Dirichlet Allocation (LDA); word embeddings (Doc2Vec); logistic regression | Flooding | Great Britain |
| Maulana and Maharani (2021) | Classify forecasts as "flooded" or "not flooded" using geographical data and tweets | | Unstructured | BERT-MLP | Flooding | Indonesia |
| Farnaghi et al. (2020) | Present dynamic spatiotemporal tweet mining, a technique for dynamic event extraction from geotagged tweets over broad study regions | | Unstructured | Word2vec, GloVe, and FastText | Hurricanes and storms | USA |
| Chen and Ji (2021) | Examine the use of social media to systematically monitor post-disaster infrastructure conditions; propose a practical topic modeling approach that incorporates domain knowledge into correlation explanation (CorEx) to model infrastructure condition-related topics, and examine spatiotemporal patterns of topic engagement to sense infrastructure functioning, damage, and restoration conditions | | Unstructured | Topic modeling (CorEx) | Hurricanes and storms | USA |
| Zhang et al. (2021) | Use a BERT-BiLSTM-CRF model in a social sensing strategy that identifies flooding sites by separating waterlogged places from common locations | Chinese wiki released by Google | Unstructured | GeoSemantic2vec algorithm; BERT or BERT-BiLSTM-CRF model | Flooding | China |
| Zhang and Wang (2022) | Use literature mining to identify quantitative and spatial distribution elements of flood disaster research trends and hotspots | Disaster Risk Reduction Knowledge Service | Unstructured | spaCy NER, Stanford NER | Flooding | World |
| Reynard and Shirgaokar (2019) | Classify geolocated tweets concerning Hurricane Irma and Florida using geospatial and machine learning approaches; use sentiment analysis to categorize tweets about damage and/or transportation as negative, neutral, or positive; test with a multinomial logit specification whether tweet, tweeter, or location characteristics are more likely to be associated with positive or negative attitudes; and use the 2017 American Community Survey (ACS) geography to provide socioeconomic context | | Unstructured | Multinomial logit model | Hurricanes and storms | USA |
| Karimiziarani et al. (2022) | Offer a thorough spatiotemporal analysis of millions of tweets from several southeast Texas counties affected by Hurricane Harvey (2017) | | Unstructured | Latent Dirichlet Allocation (LDA) | Hurricanes and storms | USA |
| Wang et al. (2018) | Show how computer vision and natural language processing can be used to gather and analyze data for urban flood studies | Crowdsourced photos | Unstructured | Named entity recognition (Stanford NER); computer vision (CNN) | Flooding | USA |
| Sit et al. (2019) | Introduce an analytical framework that combines spatial analysis and a two-step classification approach to (1) identify and classify fine-grained disaster information from tweets, such as affected people, damaged infrastructure, and disrupted services; and (2) distinguish impact areas, time frames, and the relative importance of each type of disaster-related information across spatial and temporal scales | | Unstructured | LSTM, SVM, CNN, LDA | Hurricanes and storms | USA |
| de Bruijn et al. (2020) | Create a multimodal neural network that combines contextual hydrological information with textual information to improve the classification of tweets for flood detection in four languages | | Unstructured | Multimodal neural network | Flooding | Netherlands |
| Chao Fan et al. (2020a, b) | Propose and test a methodological framework that combines natural language processing and meta-network analysis to use social media data for situation assessment and action prioritization in disasters | | Unstructured | Named entity recognition, convolutional neural networks, and meta-network analysis | Hurricanes and storms | USA |
| Shannag and Hammo (2019) | Create two classifiers with the Python Natural Language Toolkit (NLTK) package to filter and identify events extracted from Arabic tweets | | Unstructured | Information retrieval; named entity recognition; Latent Dirichlet Allocation | Flooding | Jordan |
| Devaraj et al. (2020) | Assess whether helpful information for first responders can be extracted from public tweets during a hurricane | | Unstructured | Support vector machine (SVM), multilayer perceptron (MLP), and convolutional neural network (CNN) trained on word embeddings, average word embeddings, and part-of-speech (POS) tags, respectively | Hurricanes and storms | USA |
| Xiao et al. (2018) | Present a real-time identification method for urban storm disasters using Weibo data | Weibo data | Unstructured | Jieba segmentation module for word segmentation; term frequency-inverse document frequency (TF-IDF); naive Bayes, support vector machine, and random forest text classification algorithms | Hurricanes and storms | China |
| Rahmadan et al. (2020) | Use a lexicon-based technique to examine the attitudes expressed by the population when floods occur | | Unstructured | Latent Dirichlet Allocation (LDA) | Flooding | Indonesia |
| Kitazawa and Hale (2021) | Use the examples of Typhoon Etau and the heavy rains in Kanto-Tohoku, Japan, to examine how the general population reacts online to warnings | | Unstructured | Latent Dirichlet Allocation (LDA) | Typhoon | Japan |
| Chen and Lim (2021) | Introduce a novel method to evaluate the extent of damage indicated by social media texts | | Unstructured | BERT | Typhoon | China |
| Yuan et al. (2021) | Investigate differences in sentiment polarity between racial/ethnic and gender groups; examine the concern themes in their expressions, including the popularity of these themes and the sentiment toward them; and use disparities in disaster response to improve understanding of the social aspects of disaster resilience | | Unstructured | Latent Dirichlet Allocation (LDA) | Hurricanes and storms | USA |
| Cerna et al. (2022) | Analyze multiple ML models built to predict intervention peaks due to uncommon weather phenomena, and highlight the significant influence of NLP models applied to weather bulletin texts | SDIS25 (weather bulletins) | Unstructured | Long short-term memory (LSTM), convolutional neural networks (CNN), FlauBERT, and CamemBERT | Wildfire | France |
| Gründer-Fahrer et al. (2018) | Investigate the thematic and temporal structure of social media communication in the context of a natural disaster | | Unstructured | Latent Dirichlet Allocation (LDA) | Flooding | Germany/Austria |
| Lam et al. (2017) | Propose a strategy for weakly annotating tweets about typhoons when only a small annotated subset is available | | Unstructured | Naive Bayes classifiers; Latent Dirichlet Allocation (LDA) | Typhoon | Philippines |
| Wang et al. (2020) | Combine computer vision (CV) and NLP approaches to extract information from both the visual and linguistic content of social media in a deep learning-based framework for flood monitoring | | Structured/unstructured | NeuroNER (LSTM-based), CNN | Hurricanes and storms | USA |
| Yuan et al. (2020) | Analyze how the public's emotions and expressions changed over time in response to Hurricane Matthew | | Unstructured | Latent Dirichlet Allocation (LDA), CNN | Hurricanes and storms | USA |
| Sattaru et al. (2021) | Propose a social media-based, near-real-time flood monitoring system with a robust architecture, and describe effective ways to analyze the many components of social media data | | Unstructured | Naive Bayes classifier | Flooding | India |
| Zhou et al. (2022) | Develop VictimFinder models based on state-of-the-art NLP techniques, including BERT, to identify tweets requesting rescue | | Unstructured | Bidirectional Encoder Representations from Transformers (BERT) | Hurricanes and storms | USA |
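
Latent Dirichlet Allocation (LDA) is the most frequently used model in Table 1. The sketch below is a minimal, textbook collapsed Gibbs sampler written in standard-library Python to show how LDA assigns words to topics. It is not the implementation used by any reviewed study. Library implementations such as gensim's `LdaModel` and scikit-learn's `LatentDirichletAllocation` use variational inference instead. The hyperparameters (`alpha`, `beta`, `n_iter`) and the toy tweets are assumptions.

```python
import random
from collections import defaultdict


def lda_gibbs(docs, n_topics, alpha=0.1, beta=0.01, n_iter=200, seed=0):
    """Collapsed Gibbs sampling for LDA.

    docs: list of token lists. Returns (doc_topic, topic_word) count tables:
    doc_topic[d][k] counts tokens of document d assigned to topic k, and
    topic_word[k][w] counts assignments of word w to topic k.
    """
    rng = random.Random(seed)
    vocab_size = len({w for doc in docs for w in doc})
    doc_topic = [[0] * n_topics for _ in docs]
    topic_word = [defaultdict(int) for _ in range(n_topics)]
    topic_total = [0] * n_topics
    assignments = []

    # Assign every token a random initial topic.
    for d, doc in enumerate(docs):
        z_doc = []
        for w in doc:
            k = rng.randrange(n_topics)
            z_doc.append(k)
            doc_topic[d][k] += 1
            topic_word[k][w] += 1
            topic_total[k] += 1
        assignments.append(z_doc)

    for _ in range(n_iter):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                # Remove the token's current assignment from the counts.
                k = assignments[d][i]
                doc_topic[d][k] -= 1
                topic_word[k][w] -= 1
                topic_total[k] -= 1
                # Full conditional p(z = t | other assignments), up to a constant.
                weights = [
                    (doc_topic[d][t] + alpha)
                    * (topic_word[t][w] + beta)
                    / (topic_total[t] + vocab_size * beta)
                    for t in range(n_topics)
                ]
                k = rng.choices(range(n_topics), weights=weights)[0]
                assignments[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][w] += 1
                topic_total[k] += 1
    return doc_topic, topic_word


def top_words(topic_word, n=3):
    """Most frequent words per topic (ties broken alphabetically)."""
    return [sorted(counts, key=lambda w: (-counts[w], w))[:n] for counts in topic_word]


tweets = [
    "flood water road closed flood water",
    "road closed flood water rising",
    "water rising flood road",
    "power outage electricity restored power",
    "outage power electricity restored",
    "electricity outage power restored outage",
]
docs = [t.split() for t in tweets]
doc_topic, topic_word = lda_gibbs(docs, n_topics=2)
print(top_words(topic_word))
```

On a toy corpus whose two themes share no vocabulary, the sampler would be expected to place flood terms and power terms in separate topics. Real tweet corpora are far noisier, and results depend on the seed, the priors, and the number of iterations.
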

Table 2.

Opportunities and challenges reported in the included studies

| Citation | Opportunities | Challenges | ||||

|---|---|---|---|---|---|---|

| Model (how it helps) | Data | Application opportunities | Data-related challenges | Model limitations | Application limitations | |

| Zhou et al. (2021) | The Latent Dirichlet Allocation (LDA) model was developed to ease the workload and manage variable data | Because individuals tend to interact and disseminate information on open social media platforms during a crisis, Twitter has promoted quick disaster response and management | Investigate latent temporal subjects to provide decision-makers and residents with more information during Hurricane Laura |

Tweets contained considerable noise from fake news, automated messaging, adverts, and unrelated cross-event subjects It is challenging to separately analyze the subjects specifically for Storm Laura due to the co-occurrence of multiple events, including COVID-19, wildfires in California, the presidential election, and other hurricane occurrences. Most tweets were hybrids, including information across events |

It is worthwhile to experiment with the many labels produced by the study using an enhanced attention model to improve situational awareness | Depending on the population and the impacted areas, authorities should carefully distribute resources and adopt and adjust plans daily |

| Fan et al. (2020a, b) |

The algorithm can successfully filter out the noise and useless posts from social media's situational information postings The model can accurately categorize the tweets' humanitarian content and find reliable situational information |

Using social media data for disaster management has sparked the development of several information processing analytics tools The degree of a disaster’s effects in various areas might be reflected in social media posts relating to various local entities |

Prioritizing disaster response and recovery measures requires understanding the development of catastrophe scenarios in an area Advising on how to improve disaster management's efficiency and effectiveness by opening a new line of contact with volunteers and individuals in danger |

The demands for aid and the level of damage based on situational information collected from social media may differ from the actual condition of various areas in disasters due to unequal social media attention to local entities The attention to location entities is unevenly distributed across multiple areas in social media due to the co-occurrence of many crisis incidents |

The model would be unable to learn the categories’ characteristics very effectively due to the lack of training data | Situational data are not used to inform disaster management strategy |

| Lai et al. (2022) |

To extract named entities and associated entities from geographically dispersed, multi-paragraph manuscripts using a hybrid named entity recognition model that combines rule-based and deep learning techniques Syntactic neighbors are used to derive entity connections of flooded and toponym entities instead of the nearest neighbor heuristic technique |

A considerable historical flood record hit the Contiguous USA over the past 20 years. This dataset introduces a new dataset for future flood pattern analysis since it contains information on flood episodes, including their kind, severity, and location | The study developed a database that may be utilized for future flood pattern prediction and analysis as an alternative to official sources and commercial databases |

The neighborhood, city, and street did not correspond to an actual site The written structure and patterns of the news articles substantially vary since the material is drawn from various news sources It is quite challenging in many articles to precisely pinpoint the causes of streets, cities, and floods without additional information from sources unrelated to the original article |

Only 73% of locations are correctly classified using the binary model used to decide whether a site is acceptable, misclassifying numerous locations even at that stage | Many river and coastal regions are still unmapped, and even those mapped have limits and understate population danger. Government sources sometimes cover a larger geographical area than at the parcel level |

| Fan et al. (2018) | To handle vast amounts of text and minimize the dimensionality of the dataset for subsequent research, topic modeling is a practical strategy |

Filter social media posts on infrastructure, group tweets into distinct clusters, highlight important events, and examine how these events evolve as crises occur A key word-based strategy makes it possible to find specific subjects about infrastructure performance |

Disaster responders can further identify possible difficulties (such as a lack of resources that can be transported and distributed) in this cluster of tweets based on the extracted subjects regarding the airport |

Rate limitation of Twitter’s public API Since relative terms or events may arise in other parts of the world, the keyword-based collection always results in much noise in datasets During catastrophes, neither the Twitter Rest API nor the Twitter Streaming API was used to gather the dataset; instead, a web crawler used the Twitter search platform |

For finding specific information in social communications, the suggested framework can combine more text mining techniques (such as named entity recognition and event detection) | There is not a trained model for entity and event detection and summarization for infrastructure |

| Vayansky et al. (2019) | Inland counties showed the most negative attitudes, indicating a lack of readiness in non-coastal areas | By assessing, in real time, the concerns of those affected and users’ attitudes toward ongoing response efforts, these data may substantially help improve and optimize relief activities in the study region and in other countries | Data are analyzed to identify the concerns that need attention, whether the responses produced positive or negative feelings, and which feelings predominated at different times as the storm developed |

Social media text is poorly suited to sentiment analysis, as posts often contain few words, slang, videos and images, and emoticons. Accessing and understanding the data has proven challenging |

Training on Twitter data was difficult to achieve in a limited time | Public response is limited during disasters |

| Sermet and Demir (2018) |

Creation of knowledge engines that accept natural language queries, making it easy to access and evaluate massive amounts of flood-related data and models. Flood AI removes the need for users to navigate complicated information systems and datasets by providing knowledge access that mimics speaking with a real flood expert |

Educating people on how to better prepare for, recover from, and adapt to catastrophes is essential to increase resilience and reduce the consequences of these events | Depending on the host server's rules, accessing external sources may require a proxy server |

Most of these libraries and tools take longer to analyze a query and extract its grammatical features than is acceptable for a web-based system. Limited ability to customize the ontology connection techniques |

Although it focuses on floods, Flood Ontology AI is built to allow extension to other natural catastrophes, such as wildfires, earthquakes, meteorological disasters, and space disasters | |

| Xin et al. (2019) | LDA can successfully classify new tweets not yet assigned to a topic and identify the themes present in each dataset | Many humanitarian initiatives and organizations use social media during catastrophes to understand the status of victims, rescuers, and others | By identifying recurring thematic regions and topics in disaster-related tweets, the results are expected to help first responders and government organizations detect urgent content in tweets more quickly and with less human intervention |

Dirty data (e.g., personal names identical to hurricane names). Unequal data sizes between tweets and audio transcripts. Because tweets frequently contain more slang, URLs, linguistic variety, and similar elements than generic text or other social media posts, they are challenging to examine in a comparative context with other media |

The different media within a single dataset affected LDA's performance | Not ready for operational use |

| Kahle et al. (2022) | Text mining techniques can collect digital articles from web portals to document severe flood catastrophes, a considerable improvement over analog analyses, which are slower and more subjective | Text analysis makes the event vivid and tangible, emphasizing its emotional effect and providing real-life images of the calamity |

By incorporating past events, long-term analysis can be connected to contemporary flood risk management. Jointly assessing contemporary and historical texts makes it possible to estimate impact pathways and damage patterns and thereby to link return periods, inundation intensities, and inundated areas |

Because spotlight data recordings are susceptible to technical failure during severe weather events, cross-validation with hermeneutic and documentary sources is crucial for building long-term risk assessment systems. The different technological standards of communication and infrastructure, and the different levels of economic development, in 2021 compared with 1804 or 1910 introduce a temporal bias into the data |

LDA extracts topics that are largely inconsistent with the anticipated themes, making them less useful than those recovered using non-negative matrix factorization (NMF) | Because historical events are insufficiently represented and the current time series does not go back far enough, intensities and return periods are estimated inaccurately |

| Alam et al. (2020) |

For humanitarian organizations to fully understand an event, textual and visual content provide crucial and often complementary information. When named entity recognition is applied to social media data, identifying frequently mentioned named entities can help uncover essential stories, either by filtering them out to concentrate on messages relevant to actual local emergency needs or by selecting some for more in-depth analysis |

Millions of people use social media during natural and human-made disasters | Because clustering algorithms produce cohesive groupings of data based on content, skilled annotators need to examine manually only a small number of tweets from each cluster, allowing experts to identify disaster-specific humanitarian categories quickly |

Tweets are often brief, informal, noisy, and full of abbreviations, typos, and unusual characters. Challenges include information credibility, handling noisy content, information overload, efficient information processing, and dull talks, among others (see the original paper for further references). The most significant challenges that could benefit considerably from the supplementary information offered in text messages and photos are understanding context and handling missing information |

Topic modeling also requires human oversight to make sense of the automatically discovered topics. Because social media data are noisy, identifying named entities in tweets is more complicated. NER requires human interaction as a post-processing step and is less effective on noisy social media text |

Not ready to be implemented in real life due to data quality and noise |

| Barker and Macleod (2019) | Logistic regression was chosen as a strong discriminative model for two-class (binary) problems; for example, it performed well with word embeddings in tasks such as labeling tweets relevant to landslide events and a semantic relatedness test | More and more sources of near-real-time environmental data provide details on hazard zones, river levels, and rainfall | By using Paragraph Vectors and a logistic regression classifier to identify relevant tweets automatically, this study responds to calls for better situational awareness during crises |

Because the boundaries of at-risk locations determine whether tweet bounding boxes overlap them and produce a match, defining those boundaries needs further consideration and study. People do not always tweet “on the scene” but relatively frequently from nearby or afterward. Using additional sources of information would probably be advantageous |

Machine learning pipelines for social geodata need further development and testing, for instance, on how to integrate automated and manual labeling of tweets and make greater use of unsupervised learning. The pipeline's potential must be demonstrated across different types (and causes) of flooding, such as pluvial and fluvial flooding, and it remains to be investigated how real-time rainfall information could better identify areas at risk of pluvial flooding, especially during the summer months. Words that are not part of the trained model's vocabulary are ignored when inferring vectors |

Not ready for real-world implementation because of data quality issues and the need for further model optimization |
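The bounding-box matching that Barker and Macleod describe, where a tweet counts as located in an at-risk area only if its bounding box overlaps that area, can be sketched as an axis-aligned overlap test. This is a minimal illustration under stated assumptions: boxes are `(min_lon, min_lat, max_lon, max_lat)` tuples in degrees, antimeridian wrapping is ignored, and the names `BBox`, `overlaps`, and `match_tweets` are hypothetical, not taken from the study.

```python
from __future__ import annotations

from typing import NamedTuple


class BBox(NamedTuple):
    """Hypothetical axis-aligned box in degrees: (min_lon, min_lat, max_lon, max_lat)."""
    min_lon: float
    min_lat: float
    max_lon: float
    max_lat: float


def overlaps(a: BBox, b: BBox) -> bool:
    """True if the boxes share any area or edge (antimeridian wrap is ignored)."""
    return (a.min_lon <= b.max_lon and b.min_lon <= a.max_lon
            and a.min_lat <= b.max_lat and b.min_lat <= a.max_lat)


def match_tweets(tweet_boxes: dict[str, BBox],
                 risk_zones: dict[str, BBox]) -> dict[str, list[str]]:
    """Map each tweet id to the ids of the at-risk zones its box overlaps."""
    return {
        tid: [zid for zid, zone in risk_zones.items() if overlaps(box, zone)]
        for tid, box in tweet_boxes.items()
    }


# Hypothetical example: one at-risk river reach and three tweet boxes.
zones = {"river_reach": BBox(-1.70, 52.40, -1.50, 52.50)}
tweets = {
    "t1": BBox(-1.60, 52.45, -1.55, 52.48),  # small box inside the zone
    "t2": BBox(-2.00, 53.00, -1.90, 53.10),  # far away
    "t3": BBox(-1.80, 52.30, -1.40, 52.60),  # city-sized place box
}
print(match_tweets(tweets, zones))
```

In this toy case both `t1` and the city-sized box `t3` match, which shows why the study stresses careful definition of at-risk boundaries: a coarse Twitter place box can overlap a zone without the tweet actually coming from it.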

| Maulana and Maharani (2021) | During training, BERT learns from both the left and right context of each token | Natural disasters in Indonesia are a hot topic on Twitter, with a wide range of popular themes | Twitter is an excellent source of knowledge that can help decision-makers prevent fatalities | Although this study is confined to case studies of flood disasters, the approach may also be applied to other kinds of natural catastrophes | The model can identify tweets discussing floods, from which the geospatial dataset delineates an affected region, although it does not provide the precise location of the disaster. Misclassification arises from the stemming process cutting affixes off words, tweets with negative context, and misclassified keywords about affected users | Obtaining complete situational awareness to support disaster management is challenging because natural catastrophes behave unpredictably |

| Farnaghi et al. (2020) |

Ability to handle the geographical heterogeneity of Twitter data and sensitivity to shifts in tweet density across areas. Ability to extract spatiotemporal clusters in real time while considering textual similarity as well as geographical and temporal distances. Computation of textual similarity between tweets that accounts for semantic similarity using advanced NLP techniques, particularly vectorization and text embedding approaches |

Twitter data can be used for disaster management over large study regions | Decision-makers and disaster managers may use the real-time data retrieved by DSTTM to respond quickly and effectively to various situations before, during, and after a disaster | The impact of spatial autocorrelation on the ability of geotagged tweets to identify events |

Most density-based clustering methods, including DBSCAN and its variants, do not consider the geographical heterogeneity of Twitter data. Frequency-based techniques (count vector (CV), term frequency (TF), and term frequency-inverse document frequency (TF-IDF)) have the drawback of producing very large vectors for tweet representation. They also ignore the texts’ context and semantics and the effect of synonyms and antonyms, and they cannot capture the acronyms and misspellings commonly used in tweets. Because tweets are at most 280 characters long, these algorithms’ output vectors are highly sparse, making it difficult to use distance functions such as cosine distance to measure how similar tweets are to one another. Another crucial problem is determining how the geographical, temporal, and textual components should be merged into an overall metric of the distance between geotagged tweets |

These problems prevent the development of a disaster management system that can dynamically identify spatiotemporal emergency events in large areas with varying densities without human intervention |
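The two drawbacks raised for Farnaghi et al. (2020), sparse frequency-based vectors that miss synonyms and the open question of how to merge textual, spatial, and temporal distances, can be made concrete with a small sketch. It builds TF-IDF vectors as sparse dictionaries, compares tweets with cosine similarity, and blends the three distances with weights. This is not DSTTM, which relies on embeddings; the smoothed IDF is one common variant, and the function names, weights, and caps (`max_km`, `max_hours`) are hypothetical assumptions chosen only for illustration.

```python
import math
from collections import Counter


def tfidf_vectors(docs: list[list[str]]) -> tuple[list[str], list[dict[str, float]]]:
    """Return the vocabulary and one sparse TF-IDF vector (term -> weight) per document."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    vocab = sorted(df)
    vectors = []
    for doc in docs:
        tf = Counter(doc)
        # Relative term frequency times a smoothed IDF (one common variant).
        vectors.append({t: (c / len(doc)) * (math.log((1 + n) / (1 + df[t])) + 1)
                        for t, c in tf.items()})
    return vocab, vectors


def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """Cosine similarity of two sparse vectors; 0.0 if either is empty."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    nu = math.sqrt(sum(w * w for w in u.values()))
    nv = math.sqrt(sum(w * w for w in v.values()))
    return dot / (nu * nv) if nu and nv else 0.0


def combined_distance(text_sim: float, km: float, hours: float,
                      w_text: float = 0.5, w_space: float = 0.3, w_time: float = 0.2,
                      max_km: float = 50.0, max_hours: float = 24.0) -> float:
    """Hypothetical weighted blend of textual, spatial, and temporal distances in [0, 1]."""
    d_text = 1.0 - text_sim
    d_space = min(km / max_km, 1.0)
    d_time = min(hours / max_hours, 1.0)
    return w_text * d_text + w_space * d_space + w_time * d_time


tweets = [
    "flood water on main street".split(),
    "inundation near the bridge".split(),
    "power outage after the storm".split(),
]
vocab, vecs = tfidf_vectors(tweets)
print(len(vocab), [len(v) for v in vecs])  # each vector uses few of the vocabulary terms
print(cosine(vecs[0], vecs[1]))            # "flood" and "inundation" share no terms
print(cosine(vecs[1], vecs[2]))            # nonzero only because both contain "the"
```

The toy output shows the limitations the study lists: the two flood tweets score 0.0 because the synonyms share no term, while two unrelated tweets score above zero through a stop word. The fixed weights in `combined_distance` are exactly the kind of ad hoc choice the study identifies as an open problem.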

| Chen and Ji (2021) | The proposed topic modeling-based technique can quickly detect relevant social media posts for each infrastructure scenario. Such rapid identification is crucial at the onset of a crisis, when little information is available for situational assessment | Because it does not need a training dataset, the proposed method considerably improves the efficiency of processing social media posts. Unlike knowledge-based approaches, it does not require in-depth domain expertise, making it simpler to implement | Integrating situational assessment from social media into infrastructure management procedures can increase infrastructure resilience and lessen the effects of disasters on communities | Social media posts about infrastructure are few, and a data-driven strategy is reliable. Critical infrastructure is mentioned in only a tiny percentage of tweets, indicating that little infrastructure-related information is available on social media | However, learning-based systems are difficult to adapt to new catastrophes because the semantics of social media posts change across disasters, and labeling a new training dataset takes considerable effort | Less research has been done on using social media for systematic sensing of infrastructure conditions (such as functioning, damage, and restoration) |

| Zhang et al. (2021) | Using economic and social spatial semantics, the new GeoSemantic2vec algorithm can learn spatial context relationships from point-of-interest (POI) data to generate urban functional zones (regions assigned to various social and economic activities) | In the urban environment, such data record human activities, for example, POIs, taxi trajectories, Twitter posts, and Flickr images | Based on a data-driven analysis of the socioeconomic flood risk to people and on crowdsourced extraction of flood areas that also considers the socioeconomic characteristics of flood locations |

Data are not weighted by POI floor area. The urban functional zones labeled “Other” need to be refined |

Because of computing power constraints, a low spatial resolution was set and only a small amount of semantic data was acquired. New supervised classification techniques are still needed because the requirements of remote sensing image classification are not fully met |

Not ready for operational use |

| Zhang and Wang (2022) | A model was used to categorize many articles quickly and identify essential entities | Global-scale data (including many studies from around the world) | Rainfall-induced, coastal, and flash floods, the most frequent hazard types, are the main topics of flood disaster research |

Full-text literature mining; data extraction from images and tables; multilingual literature. The primary data used in this research include disaster news reports, disaster site data, and essential geographical data |

Identify influencing factors and consider them in evaluations and simulation-based prediction models so that the outcomes represent reality as closely as possible | Geographical disparity in the spatial distribution of flood research at global and intercontinental scales |

| Reynard and Shirgaokar (2019) | Good classifier (sentiment analysis) | Twitter is a valuable tool for social scientists since it allows for the fast and low-cost collection of massive amounts of data |

Twitter data may be used to inform policy before, during, and after crises. Location information in tweets may help reveal content relevant to policy. This method increases the likelihood of timely responses and effective resource allocation during and after a disaster |

To prevent longer tweets from unduly influencing algorithm training, researchers may sample tweets randomly across all lengths. Researchers may also examine tweets from long-term Twitter users rather than relying on popular tweets or prominent accounts. Block group polygons and Twitter bounding boxes lacked contiguous borders |

Further investigation is lacking on how the temporal component of this corpus could reveal when opinions form and shift | Future research may explore how and when attitudes change over time. Such studies may benefit the development of evaluation methods for disaster aid organizations |

| Karimiziarani et al. (2022) | A well-known topic modeling technique extracts themes from a huge volume of tweets to reveal what was being discussed on Twitter during Hurricane Harvey | By providing useful information on social factors, impacts, and urban resilience to a natural disaster, social media data may help crisis managers and responders meet their data needs | The study provides valuable county-level data for the periods before, during, and after Harvey that could greatly help disaster managers and first responders reduce its effects and improve community readiness |

Small number of geotagged tweets. Most studies on community susceptibility to natural disasters have been based on factual information |

Lack of benchmarking against other types of models | Some studies used public opinion polls to gather stated preferences rather than revealed preferences expressed freely through information-sharing behavior on social media platforms |

| Wang et al. (2018) | NER tool performance on location recognition from tweets may improve when trained on Twitter data |

Few hyper-resolution datasets exist for urban floods. A Twitter-based strategy would be preferable because it allows relatively low-cost monitoring of a much larger area |

Datasets built from conventional remote sensing and eyewitness accounts can be supplemented with social media and crowdsourced data |

The GPS position precision of crowdsourced data can reach meters, but Twitter-based data are only accurate to the level of street names. Because the Twitter-based technique is highly noisy, data must be cleaned before analysis |

Constructing the (manually labeled) dataset takes considerable time | The study is not ready to be implemented |

| Sit et al. (2019) | LSTM networks outperform previous approaches by considering word order and overall text structure through long-term semantic relationships between words and features | Unlike retrospective studies, which frequently lack data for the first few hours of an event, prospective studies acquire information before, during, and after the catastrophe | Identifies tweet categories: affected people and infrastructure damage, targeting emergency services, rescue teams, and utility companies; donations and support, targeting government agencies and non-profit institutions planning recovery and relief efforts; affected people and pets, targeting animal control and shelters; and advice, warnings, and alerts, targeting local governments and FEMA for constructing and enhancing |

Data were manually labeled. Stemming introduces noise into the data and does not make topic models easier to interpret. Determining whether a tweet is informative typically requires more than reading its text; its content, timing, and location must also be considered |

CNN: had trouble retaining features during training. RNN: the network's weights receive only a negligibly small fraction of the loss gradient (the vanishing gradient problem), which stalls learning |

Due to space constraints, the study did not investigate in depth the site-specific data discovered through the analysis |
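The RNN limitation reported by Sit et al. is the vanishing gradient problem. A standard back-propagation-through-time argument makes it concrete; the sketch assumes a simple recurrent cell $h_t = \tanh(W h_{t-1} + U x_t)$ and a loss $\mathcal{L}_T$ evaluated at the last step, which is an illustrative simplification rather than the study's exact architecture.

```latex
\begin{aligned}
\frac{\partial \mathcal{L}_T}{\partial h_1}
  &= \frac{\partial \mathcal{L}_T}{\partial h_T}\,
     \frac{\partial h_T}{\partial h_{T-1}} \cdots \frac{\partial h_2}{\partial h_1},
  \qquad
  \frac{\partial h_t}{\partial h_{t-1}} = \operatorname{diag}\!\left(1 - h_t \odot h_t\right) W, \\
\left\lVert \frac{\partial \mathcal{L}_T}{\partial h_1} \right\rVert
  &\le \left\lVert \frac{\partial \mathcal{L}_T}{\partial h_T} \right\rVert \, \lVert W \rVert^{\,T-1}.
\end{aligned}
```

The bound holds because every diagonal entry $1 - h_t^2$ lies in $(0, 1]$. If $\lVert W \rVert = 0.9$ and $T = 50$, the early-step gradient is scaled by at most $0.9^{49} \approx 0.006$, so the weights barely learn from early tokens. LSTM gating, which carries information through an additive cell state, is the usual remedy, consistent with the LSTM results reported above.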

| de Bruijn et al. (2020) | Ideally, the network should learn both intramodal and intermodal dynamics | Effective filtering of these tweets is required to improve user experience, boost the performance of downstream algorithms, and reduce human labor |

Applying neural networks can improve tweet classification, enabling a faster and more efficient disaster response. The classification method performs better at classifying tweets in unseen languages when hydrological context is used |

Low-resolution dataset. Only Latin languages are analyzed in this experiment |

Basic neural network; better geoparsing processes exist elsewhere | Poor modeling of hydrological processes and few hydrological variables (e.g., runoff, snowmelt, and evaporation) |

| Chao Fan et al. (2020a, b) |

Stanford NER has proven reliable for a broad range of entity recognition applications. Convolutional neural networks (CNNs) excel at text classification |

Twitter data can be used for disaster management over large study regions | Situation assessment based on the location-event-actor nexus (i.e., what event is occurring where and who is taking which response actions) is crucial for residents, volunteers, and relief organizations to coordinate their response to natural disasters |

Twitter data may contain noise, such as nonsense symbols, punctuation, and misspelled words. Some emoticons may be informative about tweet content |

Models are not well optimized. Other models would be worth exploring |

Social sensing is a complementary approach to providing evidence for physical sensing and other data sources |

| Shannag and Hammo (2019) | A mixed vector combining vector-space and NER features may improve text categorization | Hashtags facilitated the search process | Provided valuable information that authorities and decision-makers can take into account to prevent the problem in the future |

Twitter posts are brief; users are currently limited to 280 characters. Some posts may include rumors, nonsensical statements, and contextual information such as usernames, tags, and URLs that can interfere with event detection. Informal language is widespread and may include misspelled words and phrases, emojis, and uncommon acronyms |

More NLP and machine learning methods are needed to improve the strategy. Information retrieval (IR) cannot identify the similarity between two words that share the same contextual meaning. LDA does not produce reliable results on microblogging platforms such as Twitter because of their particular features, especially the brevity of tweets |

Not ready for deployment because event extraction and detection from Arabic tweets is still under investigation (not very developed) |
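Several studies above (Chao Fan et al.; Shannag and Hammo) list the same noise sources: URLs, usernames, hashtags, punctuation, and emoticons that may themselves carry meaning. A minimal regex-based cleaning step can be sketched as follows. The patterns and the `clean_tweet` name are illustrative assumptions, not the preprocessing used by any reviewed study; the emoticon pattern is deliberately tiny, and Arabic-specific normalization is not covered, although Python's Unicode-aware `\w` keeps Arabic letters intact.

```python
import re

# Illustrative patterns (assumptions, not taken from any reviewed study).
URL_RE = re.compile(r"https?://\S+|www\.\S+")
MENTION_RE = re.compile(r"@\w+")
HASHTAG_RE = re.compile(r"#(\w+)")
# Deliberately small emoticon pattern: misses many emoticons and can over-match.
EMOTICON_RE = re.compile(r"[:;=]-?[()DP]")
# Anything that is not a word character or whitespace; \w is Unicode-aware.
PUNCT_RE = re.compile(r"[^\w\s]")


def clean_tweet(text: str) -> tuple[str, list[str]]:
    """Return (normalized lowercase text, emoticons found) for one tweet."""
    text = URL_RE.sub(" ", text)
    text = MENTION_RE.sub(" ", text)
    emoticons = EMOTICON_RE.findall(text)  # kept aside: they may be informative
    text = EMOTICON_RE.sub(" ", text)
    text = HASHTAG_RE.sub(r"\1", text)     # keep the hashtag word, drop '#'
    text = PUNCT_RE.sub(" ", text)
    return " ".join(text.lower().split()), emoticons


print(clean_tweet("Flooding on Main St!! @cityalert see https://t.co/abc123 #HoustonFlood :("))
```

Returning the emoticons separately, instead of discarding them, follows the observation that some emoticons are informative; a downstream classifier could use them as extra features.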

| Devaraj et al. (2020) |

Averaged word embeddings are a new and powerful feature class for non-neural disaster models. Classifiers may help first responders identify people in need of rescue |

People increasingly use social media sites such as Twitter to post urgent requests for aid. During Harvey, specific, urgent pleas for assistance were broadcast on Twitter, and machine learning models can be built to recognize these requests precisely |

Even though these messages are “needles in a haystack,” locating any affected individuals during a cyclone can significantly help first responders and other relevant parties |

Tweets expressing emergency needs after a natural catastrophe are rare but extremely helpful to categorize. The data consist only of tweets about Hurricane Harvey. Users who list their location in their profile sometimes provide false or overly general descriptions. It would also help to determine whether a tweet’s geographical origin during a crisis affects the likelihood that it is urgent |

The models’ capacity to generalize to other catastrophes has not been examined because they were trained only on Hurricane Harvey data | It would be helpful to identify small groups of people affected by the hurricane from their location data and movement patterns rather than focusing on individual persons who need support |
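The averaged word embeddings used as features by Devaraj et al. (2020), and the out-of-vocabulary behavior noted for Barker and Macleod (2019), can be illustrated with a short function that averages the vectors of known tokens and skips unknown ones. The three-dimensional embedding table below is a hypothetical toy; real systems load pretrained vectors with hundreds of dimensions, and `average_embedding` is an illustrative name.

```python
from __future__ import annotations


def average_embedding(tokens: list[str],
                      embeddings: dict[str, list[float]],
                      dim: int) -> list[float]:
    """Average the vectors of in-vocabulary tokens; unknown tokens are skipped.

    Returns a zero vector if no token is in the vocabulary.
    """
    vecs = [embeddings[t] for t in tokens if t in embeddings]
    if not vecs:
        return [0.0] * dim
    # zip(*vecs) groups the i-th components of all vectors together.
    return [sum(component) / len(vecs) for component in zip(*vecs)]


# Hypothetical toy embedding table (real embeddings are learned, not hand-set).
toy_embeddings = {
    "need": [1.0, 0.0, 0.0],
    "rescue": [0.0, 1.0, 0.0],
    "roof": [0.0, 0.0, 1.0],
}
print(average_embedding(["need", "rescue", "asap"], toy_embeddings, 3))
```

Because "asap" is not in the toy vocabulary it contributes nothing, so the tweet vector is the mean of "need" and "rescue" only. The resulting fixed-length vector can then be fed to a non-neural classifier such as logistic regression.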

| Xiao et al. (2018) | Has been used successfully to solve multivariate problems in many different sectors | Mining Weibo data can help avoid and mitigate earthquake-related losses | Mitigating the financial costs and deaths caused by waterlogging disasters from urban rainstorms |

Little information exists on using microblog data for emergency management during urban heavy rain catastrophes. Because conventional media disseminate information inefficiently, they cannot immediately gather timely disaster information to help relevant agencies implement proper countermeasures when a downpour causes waterlogging. Data noise also affected the performance of each classification algorithm |

The location and ambiguity of words, as well as the semantics and syntax of the text, were not taken into account in this study; as a result, the algorithm’s evaluation scores are poor | Not ready for deployment |

| Rahmadan et al. (2020) | LDA is appropriate for studying massive text datasets, such as social media data | Social media has proven to be an excellent tool for obtaining information about catastrophes from those who have personally experienced them | Related parties can use the information to establish disaster management plans, map at-risk floodplains, assess causes, monitor effects after a flood catastrophe, etc. | Emotion analysis was not conducted; geolocation is lacking | To improve topic modeling outcomes, further research may use an improved LDA approach or other topic modeling techniques | Not ready for deployment |

| Kitazawa and Hale (2021) | LDA topic modeling was chosen as an unsupervised approach because labeled data for supervised approaches were lacking, there was no solid prior for the specific conversation topics that would make up each of the four categories, and the words pertinent to each class were expected to vary between locations and change as the disaster progressed | Social media may be a valuable source of information for determining how individuals act in response to early warning system (EWS) calls | These insights can help authorities create more focused social media strategies that reach the public more rapidly and extensively than traditional communication channels, improve the circulation of information to the public at large, and gather more detailed disaster data |

Limited case studies (one event, in Japan only). Several factors may play a role, including disaster-related aspects (such as severity, duration, magnitude, and type of catastrophe) and non-disaster-related aspects (such as regional socioeconomic variations and day/night) |

Network analysis and other techniques should be explored, instead of the manual analysis employed, to obtain further insights for disaster-related authorities | Further studies are needed before implementation |

| Chen and Lim (2021) | A large pre-trained model with a vast number of parameters was used to transform text tokens into continuous representations; having been trained extensively on enormous volumes of data, the model is readily available for various purposes | The staggering volume of data that social media platforms generate daily worldwide is a powerful motivation for sociological research on social media | Identifies damage reports among tweets in the established categories of infrastructure, transportation, water supply, and power supply, the most frequent types of damage in typhoon catastrophes |

Social media data are absent in some areas. Noisy data |

In multi-instance learning, some information about instance labels can be obtained, but this knowledge is highly speculative and insufficient to identify the label of each instance | Assessment is limited to the available locations, and no generalization can be made |

| Yuan et al. (2021) | An LDA topic model can uncover the latent themes of a text document; specifically, it assigns a word distribution to each topic | Social media has shown promise as a new data source for studying human actions and reactions in times of crisis | Supports the skills needed to react quickly to a disaster (short-term resilience) and those required to develop long-term resilience | Disparities in responses among demographic groups on these social media platforms remain unexplored | Other methods may perform better than the model used | The differences in how affected persons, characterized by their demographics, interact dynamically with catastrophic events and the built environment during the crisis remain unstudied |

| Cerna et al. (2022) |

LSTM is best suited to three distinct types of problems: sequence labeling, classification, and generation. The BERT architecture enables the model to learn language in general and in both directions |

The great majority of brigades have gathered significant information about their previous interventions | By developing better techniques to prepare for natural catastrophes (storms, floods, etc.), fire departments and EMS in general could pinpoint peak intervention times and optimize their response, keeping the population better protected | Limited; meteorological bulletins from other departments of the country should be added |

Use texts in French and English to develop and evaluate new text preprocessing and modeling techniques. Incorporate the results of this classification technique into a larger regression model that projects the frequency of interventions by place (principal cities and mountain cities) and over time (hourly, daily, and monthly) |

Needs more work before deployment |

| Gründer-Fahrer et al. (2018) | Topic model approaches have proven quite helpful for searching, browsing, summarizing, and soft clustering of large document collections. Because the topics emerge from the analysis of the original texts, their implementation does not require any prior annotation or labeling of documents |

Facebook and Twitter play very different and complementary roles rather than simply competing in the same area of the media spectrum. The temporal development of social media content closely matches end users’ shifting interests at different stages of the disaster life cycle |

Social media content has tremendous potential across the disaster management life cycle and across the factual, organizational, and psychological aspects of the crisis | Increases opportunities for tangible assistance and social interaction during the response and early recovery stages |

Temporal phases are detected only at low resolution. Much more needs to be done to adapt other NLP techniques to the particular features of German and of social media language |

No reliable, unique methodological framework for social media processing is available so far |

| Lam et al. (2017) | The topic model improves as the algorithm iterates because words are gradually assigned more often to topics that are already frequent, and topics become more prevalent in texts where they are already present | Small, ready-to-use manually labeled dataset | Government and other concerned bodies can use tweet classifiers to find additional information about a disaster on social media |

Noisy data. An insufficient annotation approach may prevent the development of classifiers that apply to diverse typhoons |

The weak annotation approach used in this study identifies the month in which a topic appears most frequently, but it could be adapted to weeks, days, or any other relevant filtering criterion | Because data are scarce, particularly at the beginning of a natural catastrophe, creative approaches are needed to build classifiers quickly |

| Wang et al. (2020) | Neural network-based models generally outperform traditional models such as CRF in the task of NER |

Manual evaluation of automatically filtered tweets has shown promise in improving data mining outcomes. The extensive data gathered from social media may offer a new and rich textual stream to inform end users |

It is used to establish a passive hotline to guide search and rescue activities |

Disinformation is frequently present in social media streams during catastrophes. Data privacy issues |

The model cannot detect fake and spam social media messages | |

| Yuan et al. (2020) | The LDA topic model provides both the distribution of topics in each text and the distribution of words in each topic | As a new, real-time data source, social media has shown its ability to help understand how the public reacts to catastrophes | By understanding how public responses evolve during disasters, crisis response managers can develop and implement response plans |

The regularity of social media users’ posting was disregarded. Other significant events besides Hurricane Matthew, such as the 2016 general election, occurred in the same period. The conclusions about the evolution of public reactions are based only on Florida's experience with Hurricane Matthew. Twitter users may not accurately represent Florida’s population after Hurricane Matthew |

The number of topics to be generated |

A baseline of sentiment is also lacking to determine how a calamity has affected public attitude Quantification indexes are required but have not yet been researched to describe changes in public worries throughout catastrophe periods |

| Sattaru et al. (2021) |

In the Naive Bayes classifier, conditional independence between predictors is given top priority on the supposition that all characteristics are conditionally independent * very useful for high dimensional datasets, and they are Easy to build |

Social media is a crowdsourcing tool that may be used in catastrophe mapping to complement and add to remote sensing data | Helpful in constructing a near-real-time monitoring system during crises is the suggested structure |

The user’s non-use of the location function offered by the Twitter platform The user failed to indicate the location in the tweet’s content One of this system’s complex tasks is determining the tweet's precise position |

Benchmark of other models |

the flood map generated from social Media data alone is not a complete solution for flood mapping and management, |

| Zhou et al. (2022) | Bidirectional Transformer, an attention mechanism that learns contextual relationships between words during fine-tuning, has been used to BERT, which pre-trains deep bidirectional representations using unlabeled text | For instance, social media has increasingly become a part of our everyday lives and has contributed significantly to effective social sensing, providing significant potential for disaster management | Future victim-finding applications are built on the tests’ findings. Web apps can be created to present near-real-time rescue request locations that emergency responders and volunteers may use as a guide for dispatching assistance. The ideal model can also be included in GIS tools |

Social media users who use languages other than English cannot use this study's findings Locational bias, temporal bias, and dependability problems plague social media posts |

Tweets from a single event, Hurricane Harvey, are used to train and test the algorithms, which may impact their generalizability | Because there are currently no systems that can automatically and quickly recognize rescue request messages on social media, effectively utilizing social media in rescue operations remains difficult |
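
The table lists a Naive Bayes classifier for Sattaru et al. (2021) and credits its conditional independence assumption for making it easy to build. The following is a minimal multinomial Naive Bayes sketch with add-one smoothing, assuming a simple regex tokenizer and a toy, hypothetical training set; it is an illustration of the technique, not the authors' implementation.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase a post and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


class MultinomialNaiveBayes:
    """Multinomial Naive Bayes with Laplace (add-one) smoothing."""

    def fit(self, docs, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)  # class -> word frequencies
        self.vocab = set()
        for doc, label in zip(docs, labels):
            tokens = tokenize(doc)
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        return self

    def predict(self, doc):
        n_docs = sum(self.class_counts.values())
        v = len(self.vocab)
        best_label, best_score = None, -math.inf
        for label, count in self.class_counts.items():
            # Log prior plus summed log likelihoods: the independence
            # assumption lets per-word terms simply be added together.
            score = math.log(count / n_docs)
            total = sum(self.word_counts[label].values())
            for token in tokenize(doc):
                if token not in self.vocab:
                    continue  # ignore words never seen in training
                score += math.log((self.word_counts[label][token] + 1) / (total + v))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical labeled posts, for illustration only.
train_docs = [
    "street flooded water rising near the river",
    "flood water in my basement need help",
    "beautiful sunny day at the beach",
    "great game tonight with friends",
]
train_labels = ["flood", "flood", "other", "other"]
model = MultinomialNaiveBayes().fit(train_docs, train_labels)
print(model.predict("water flooded the street"))  # → flood
```

Working in log space avoids numerical underflow when many small word probabilities are multiplied.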

NLP areas of application

The authors of the selected literature have explored numerous NLP topics, as shown in Table 1. In summary, researchers have used NLP in four areas: (1) social influence and trend analysis, (2) event impact assessment and mapping, (3) event detection, and (4) disaster resilience. Social influence and trend analysis is the dominant topic (37% of all literature) and includes crowdsourcing, sentiment analysis, topic modeling, and citizen engagement. Researchers have also used NLP for the impact assessment and mapping of extreme events (25% of all literature), covering disaster response, event impacts on infrastructure, and flood mapping. Event detection is another popular research area (21% of all literature), in which authors used NLP to detect and monitor extreme weather events and to design early warning systems. Lastly, researchers adopted NLP models for disaster resilience (17% of all literature).

Data

Multiple data sources have been used in the selected studies. Figure 3 shows the distribution of data sources used in the literature. They include social media (Twitter, Weibo, and Facebook); publications (newspapers, the Disaster Risk Reduction Knowledge Service, numerical climate and gauge data, weather bulletins, and a generic ontology); public encyclopedias and services (Chinese wiki and Google Maps); and posted photographs. Social media plays an essential role in data sourcing for these studies. Twitter alone is used by 57.1% of the studies, which emphasizes both the role and the potential of this platform as a real-time and historical public data provider whose features and functionality support collecting more precise, complete, and unbiased datasets. Typically, researchers take the content of social media posts and evaluate it together with the associated geolocation data. Authors also used NLP to process other data sources such as weather bulletins, gauge data, online photographs, and newspapers. Dataset sizes range from small (dozens of photographs, hundreds of weather bulletins) to large (millions of tweets). Notably, when a study’s goal was predictive modeling, it was common practice to compare the accuracy of the NLP findings with data from reliable sources.
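
Evaluating post content together with geolocation can be as simple as keeping only posts that both mention a hazard and carry coordinates inside the study area. The sketch below assumes hypothetical, simplified post records with a `coords` field holding a (longitude, latitude) pair; real platform payloads are structured differently, and most posts carry no coordinates at all. The New York City bounding box is approximate and illustrative.

```python
import re

# Hypothetical, simplified post records; real payloads differ.
posts = [
    {"id": 1, "text": "Flooding on Canal St, cars stuck", "coords": (-74.003, 40.720)},
    {"id": 2, "text": "Flooding reported downtown", "coords": None},
    {"id": 3, "text": "Lovely weather in Boston", "coords": (-71.06, 42.36)},
    {"id": 4, "text": "Storm surge flooding in Red Hook", "coords": (-74.010, 40.675)},
]

# Approximate lon/lat bounding box around New York City (illustrative values).
NYC_BBOX = (-74.26, 40.47, -73.70, 40.92)  # (min_lon, min_lat, max_lon, max_lat)
KEYWORDS = re.compile(r"\b(flood\w*|storm surge)\b", re.IGNORECASE)


def in_bbox(coords, bbox):
    """Return True if a (lon, lat) pair lies inside the bounding box."""
    if coords is None:
        return False  # post is not geotagged
    lon, lat = coords
    min_lon, min_lat, max_lon, max_lat = bbox
    return min_lon <= lon <= max_lon and min_lat <= lat <= max_lat


def relevant_geotagged(posts, bbox, pattern):
    """Keep posts that mention a hazard keyword AND are geotagged inside bbox."""
    return [p for p in posts if pattern.search(p["text"]) and in_bbox(p["coords"], bbox)]


hits = relevant_geotagged(posts, NYC_BBOX, KEYWORDS)
print([p["id"] for p in hits])  # → [1, 4]
```

Post 2 is relevant but is dropped for lacking coordinates, which illustrates why several studies also extract place names from the text itself.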

Fig. 3.

Pie chart of the data sources used within the literature

NLP tasks and models in the reviewed literature

All of the studies in this review applied at least one NLP task involving either syntactic or semantic analysis. Because the studies deal with extensive unstructured social media data, clustering was used to group tweets and understand what was being discussed on social platforms. Clustering proved a highly effective machine learning approach for identifying structure in datasets, including unlabeled ones. Studies used K-means and graph-based clustering.
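
As an illustration of K-means clustering of tweets, the sketch below builds L2-normalized TF-IDF vectors and runs Lloyd's algorithm (alternating nearest-centroid assignment and centroid update) with Euclidean distance. It assumes hand-picked initial centroids and toy posts for reproducibility; in practice a library such as scikit-learn's `KMeans`, which uses k-means++ initialization by default, would be the usual choice.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


def tfidf_vectors(docs):
    """Build L2-normalized TF-IDF vectors (as dicts) using a smoothed IDF."""
    token_lists = [tokenize(d) for d in docs]
    n = len(docs)
    df = Counter(t for tokens in token_lists for t in set(tokens))
    idf = {t: math.log((1 + n) / (1 + c)) + 1 for t, c in df.items()}
    vectors = []
    for tokens in token_lists:
        tf = Counter(tokens)
        vec = {t: tf[t] * idf[t] for t in tf}
        norm = math.sqrt(sum(w * w for w in vec.values()))
        vectors.append({t: w / norm for t, w in vec.items()})
    return vectors


def sq_distance(a, b):
    """Squared Euclidean distance between two sparse dict vectors."""
    return sum((a.get(k, 0.0) - b.get(k, 0.0)) ** 2 for k in set(a) | set(b))


def mean_vector(vectors):
    total = Counter()
    for v in vectors:
        total.update(v)
    return {k: w / len(vectors) for k, w in total.items()}


def kmeans(vectors, init_indices, max_iter=100):
    """Lloyd's algorithm starting from the given documents as centroids."""
    centroids = [vectors[i] for i in init_indices]
    labels = None
    for _ in range(max_iter):
        new_labels = [
            min(range(len(centroids)), key=lambda c: sq_distance(v, centroids[c]))
            for v in vectors
        ]
        if new_labels == labels:
            break  # assignments are stable: converged
        labels = new_labels
        for c in range(len(centroids)):
            members = [v for v, lab in zip(vectors, labels) if lab == c]
            if members:  # keep the old centroid if a cluster empties
                centroids[c] = mean_vector(members)
    return labels


# Hypothetical posts about two different impacts.
posts = [
    "power outage no electricity",
    "still no power outage here",
    "road flooded water rising",
    "flooded road closed water",
]
labels = kmeans(tfidf_vectors(posts), init_indices=[0, 2])
print(labels)  # → [0, 0, 1, 1]
```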

In addition, pre-trained models (PTMs) for NLP are deep learning models trained on large datasets and then applied to specific NLP tasks. When trained on a large corpus, PTMs can learn universal language representations. This helps solve actual NLP tasks (downstream tasks) and avoids the need to train new models from scratch. Several studies in the literature used PTMs such as Bidirectional Encoder Representations from Transformers (BERT), spaCy NER, Stanford NLP, NeuroNER, FlauBERT, and CamemBERT, either for named entity recognition or for classification.
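
When a pre-trained model is used for named entity recognition as a token classifier, its per-token predictions are commonly expressed in a BIO (begin, inside, outside) scheme that must be merged into entity spans, for example to recover street names from rescue requests. The decoder below is a minimal sketch; the tokens and tags are hypothetical model output, not results from any cited study.

```python
def bio_to_spans(tokens, tags):
    """Merge token-level BIO tags (e.g. B-LOC, I-LOC, O) into (label, text) spans."""
    spans = []
    current_label, current_tokens = None, []
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-") or (tag.startswith("I-") and tag[2:] != current_label):
            # A new entity begins (a stray I- tag is treated as a begin).
            if current_label:
                spans.append((current_label, " ".join(current_tokens)))
            current_label, current_tokens = tag[2:], [token]
        elif tag.startswith("I-"):
            current_tokens.append(token)  # continue the open entity
        else:  # "O": outside any entity
            if current_label:
                spans.append((current_label, " ".join(current_tokens)))
            current_label, current_tokens = None, []
    if current_label:  # flush an entity that runs to the end of the post
        spans.append((current_label, " ".join(current_tokens)))
    return spans


# Hypothetical tagger output for one post.
tokens = ["Family", "trapped", "on", "Main", "Street", "in", "Houston", "Texas"]
tags = ["O", "O", "O", "B-LOC", "I-LOC", "O", "B-LOC", "B-LOC"]
spans = bio_to_spans(tokens, tags)
print(spans)  # → [('LOC', 'Main Street'), ('LOC', 'Houston'), ('LOC', 'Texas')]
```

The extracted location spans would then typically be passed to a geocoder before mapping.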

Moreover, automatically extracting the subjects being discussed from vast amounts of text is another application addressed in the literature. Topic modeling is a statistical technique for discovering and separating these subjects from a large volume of documents in an unsupervised manner. The authors have used models such as Latent Dirichlet Allocation (LDA) and Correlation Explanation (CorEx) (Barker and Macleod 2019; Chen and Ji 2021; Karimiziarani et al. 2022; Xin et al. 2019; Zhou et al. 2021). Furthermore, other NLP subtasks such as tokenization, part-of-speech tagging, dependency parsing, and lemmatization and stemming have also been applied in several studies to address data-related problems.
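
To show how LDA separates topics, the sketch below implements collapsed Gibbs sampling, one common inference method for LDA; widely used libraries such as gensim instead rely on variational inference. The sampling weights make visible the behavior noted for Lam et al. (2017) in the table: words drift toward topics in which they are already frequent. The hyperparameters, stopword list, and posts are illustrative assumptions, and the usage also includes basic tokenization and stopword removal as preprocessing.

```python
import random
from collections import defaultdict


def lda_gibbs(docs, n_topics, alpha=0.1, beta=0.01, n_iter=200, seed=0):
    """Collapsed Gibbs sampling for LDA on pre-tokenized documents.

    Returns (doc_topic, topic_word): smoothed per-document topic
    proportions and per-topic word distributions.
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    v = len(vocab)
    n_dk = [[0] * n_topics for _ in docs]               # doc -> topic counts
    n_kw = [defaultdict(int) for _ in range(n_topics)]  # topic -> word counts
    n_k = [0] * n_topics                                # tokens per topic
    z = []                                              # topic of each token
    for d, doc in enumerate(docs):                      # random initialization
        z_d = []
        for w in doc:
            k = rng.randrange(n_topics)
            z_d.append(k)
            n_dk[d][k] += 1
            n_kw[k][w] += 1
            n_k[k] += 1
        z.append(z_d)

    for _ in range(n_iter):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k = z[d][i]
                # Remove the token's current assignment from the counts.
                n_dk[d][k] -= 1
                n_kw[k][w] -= 1
                n_k[k] -= 1
                # Full conditional: favors topics common in this document
                # and topics in which this word is already frequent.
                weights = [
                    (n_dk[d][t] + alpha) * (n_kw[t][w] + beta) / (n_k[t] + v * beta)
                    for t in range(n_topics)
                ]
                k = rng.choices(range(n_topics), weights=weights)[0]
                z[d][i] = k
                n_dk[d][k] += 1
                n_kw[k][w] += 1
                n_k[k] += 1

    doc_topic = [
        [(n_dk[d][k] + alpha) / (len(doc) + n_topics * alpha) for k in range(n_topics)]
        for d, doc in enumerate(docs)
    ]
    topic_word = [
        {w: (n_kw[k][w] + beta) / (n_k[k] + v * beta) for w in vocab}
        for k in range(n_topics)
    ]
    return doc_topic, topic_word


# Hypothetical posts; tokenization and stopword removal as preprocessing.
STOPWORDS = {"the", "in", "and", "on", "no", "still"}
posts = [
    "river flood water rising in the street",
    "flood water entered the basement",
    "power outage and no electricity on the block",
    "electricity outage still no power",
]
docs = [[w for w in p.lower().split() if w not in STOPWORDS] for p in posts]
doc_topic, topic_word = lda_gibbs(docs, n_topics=2, seed=42)
for k, dist in enumerate(topic_word):
    print(f"topic {k}:", sorted(dist, key=dist.get, reverse=True)[:3])
```

The number of topics (`n_topics`) must be fixed in advance, which is the model limitation listed for Yuan et al. (2020) in the table.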

Study area

Studies using NLP have been conducted in different countries. Most research concentrated on metropolitan areas such as Beijing, China, and New York City, USA. This may be because these cities are heavily populated, which increases the likelihood of data availability (more social media users, for example). The USA was the leading country by number of Twitter users as of January 2022, with more than 76.9 million users, while 252 million Chinese users actively use Weibo daily. In addition, New York City is exposed to tropical cyclones, and both cities may be affected by several types of flooding, such as coastal flooding due to storm surges, pluvial flooding due to heavy precipitation over a short time, or fluvial flooding due to their proximity to rivers. For example, on July 21, 2012, a flash flood struck Beijing for over twenty hours; 56,933 people were evacuated within a day, and the flooding caused 79 fatalities, at least 10 billion yuan in damages, and the destruction of at least 8,200 dwellings. Figure 4 shows the distribution of the studies by country. Some researchers compared data from various cities, and the analysis may span multiple scales, from a single town to a whole continent.

Fig. 4.

Distribution of studies by country

Types of extreme weather events

Three types of extreme events were addressed in the surveyed literature: hurricanes and storms, typhoons, and flooding. Hurricanes, which form in the North Atlantic, the northeastern Pacific, the Caribbean Sea, or the Gulf of Mexico, can cause significant damage over wide, densely populated areas, which explains the focus on this type of extreme event and its presence on social media. More than 48% of the studies focused in one way or another on hurricanes. Hurricanes have hazardous impacts: storm surges and large waves produced by hurricanes pose the greatest threat to life and property along the coast (Rappaport et al. 2009). In emergencies, governments have to invest resources (financial and human) to support the affected areas and populations and to help spread updates and warnings (Vanderford et al. 2007).

Flooding is a challenging and complex phenomenon because it may occur at different spatial and temporal scales (Istomina et al. 2005). Floods can result from intense rainfall, ocean surges, rapid snowmelt, or the failure of dams or levees (Istomina et al. 2005). The most dangerous floods are flash floods, which combine extreme speed with a flood’s devastating strength (Sene 2016). Because flooding is considered the deadliest type of severe weather, decision-makers must use every possible data source to confront it. Flooding was the second most frequently addressed hazard in the literature; floods vary in magnitude from a few inches of water to several feet and may arrive quickly or build gradually. Nearly 42% of the studies covered flooding.

Three studies, representing 10% of the literature, covered typhoons, which develop in the northwestern Pacific and usually affect Asia. Typhoons can cause enormous casualties and economic losses, and governments and decision-makers have difficulty gathering data on typhoon crisis scenarios. Meanwhile, modern social media platforms such as Twitter and Weibo offer access to near-real-time disaster-related information (Jiang et al. 2019).

Discussion

Hurricanes, storms, and floods are the extreme events most frequently addressed in the literature. The duration of weather hazards varies greatly, from a few hours for some powerful storms to years or decades for protracted droughts. The occurrence of a weather hazard usually raises awareness of such hazards and therefore heightens sensitivity to extreme events and the tendency of the public and Internet users to report them. Even short-lived catastrophes may leave a lasting mark on the public’s mind and continue to be referred to online as reference events. Using ground-based sensors to understand the dynamics of weather hazards is often constrained by limited resources, leading to sparse networks and data scarcity. Thus, a better understanding and monitoring of these extreme events can only be ensured by expanding the dataset to other structured and unstructured data sources. In this regard, only through NLP can this kind of data be valorized and made available to weather modelers. This systematic review addressed a critical gap in the literature by exploring the applications of NLP in assessing extreme events.

Role of NLP in supporting extreme event assessment

Hurricanes

NLP can help extract knowledge from structured and unstructured textual data from reliable sources, supporting decision-making on preventive and corrective measures in emergencies. We found in this study that many different NLP models are used together with multiple data sources. For example, 12 studies used topic modeling, with LDA and CorEx models applied to social media data (Facebook and Twitter), to support decision-making for hurricanes and storms. Vayansky et al. (2019) used sentiment analysis to measure changes in Twitter users’ emotions during natural disasters. Their work can help authorities limit the damage from natural disasters, specifically hurricanes and storms, and, beyond the corrective measures of recovering from a disaster, can help them adjust future response efforts accordingly (Vayansky et al. 2019). Yuan et al. (2021) investigated differences in sentiment polarity between racial/ethnic and gender groups. They examined the themes of concern in these groups’ expressions, including the popularity of the themes and the sentiment toward them, and used the resulting disparities in disaster response to better understand the social aspects of disaster resilience. The findings can help crisis response managers identify the most sensitive or vulnerable groups and target the appropriate demographic groups with catastrophe evolution reports and relief resources. In a different vein, Yuan et al. (2020) considered how often people posted on social media and used the yearly average sentiment as a sentiment baseline. They creatively used LDA to determine the sentiment and weight of various topics in public discourse. By providing a better understanding of the public’s specific worries and panics as catastrophes progress, their work may help crisis response managers design and carry out successful response strategies.

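
The baseline idea attributed to Yuan et al. (2020) can be illustrated by comparing each day's mean post sentiment with a long-run average. The sketch below uses a tiny hypothetical polarity lexicon and a hypothetical baseline value of 0.1; real studies use established sentiment tools or trained classifiers, and this is not the LDA-based procedure of the cited work. Tokenization is a plain whitespace split, so punctuation is not handled.

```python
from collections import defaultdict
from statistics import mean

# Tiny hypothetical lexicon, for illustration only.
LEXICON = {"safe": 1.0, "thankful": 1.0, "scared": -1.0, "destroyed": -1.0, "flooded": -1.0}


def score(text):
    """Mean polarity of the lexicon words found in a post (0.0 if none match)."""
    hits = [LEXICON[w] for w in text.lower().split() if w in LEXICON]
    return mean(hits) if hits else 0.0


def daily_deviation(posts, baseline):
    """Mean sentiment per day minus a baseline such as a yearly average."""
    by_day = defaultdict(list)
    for day, text in posts:
        by_day[day].append(score(text))
    return {day: mean(scores) - baseline for day, scores in sorted(by_day.items())}


# Hypothetical (date, text) posts and a hypothetical yearly baseline.
posts = [
    ("2016-10-06", "scared of the storm"),
    ("2016-10-06", "house flooded and destroyed"),
    ("2016-10-08", "we are safe and thankful"),
]
deviation = daily_deviation(posts, baseline=0.1)
print(deviation)
```

Negative deviations flag days on which public mood fell below its usual level, which is the kind of signal a crisis manager could track as an event unfolds.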
In addition to protecting people’s lives, NLP can be used to monitor post-disaster infrastructure conditions. Chen and Ji (2021) used the CorEx topic model, incorporating domain knowledge into the correlation explanation, to capture topics related to infrastructure conditions, and examined spatiotemporal patterns of topic engagement levels to systematically sense infrastructure functioning, damage, and restoration. Their systematic situational assessment can help practitioners maintain essential infrastructure after catastrophes. Additionally, the proposed method examined how people and infrastructure systems interact, advancing human-centered infrastructure management (Chen and Ji 2021).
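
Topic engagement levels of the kind used by Chen and Ji (2021) can be tabulated per area and day once posts are linked to topics. The sketch below swaps in a much simpler keyword-anchor proxy (a post counts toward a topic if it contains any of that topic's anchor words) instead of anchored CorEx, and the anchor sets, area names, and posts are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical anchor words per infrastructure topic (illustrative only).
ANCHORS = {
    "power": {"outage", "electricity", "power"},
    "transport": {"road", "bridge", "closed"},
}


def engagement_levels(posts):
    """Share of posts per (area, day) that mention each topic's anchor words."""
    totals = Counter()
    hits = defaultdict(Counter)
    for area, day, text in posts:
        words = set(text.lower().split())
        totals[(area, day)] += 1
        for topic, anchors in ANCHORS.items():
            if words & anchors:  # any anchor word present
                hits[(area, day)][topic] += 1
    return {
        key: {topic: hits[key][topic] / n for topic in ANCHORS}
        for key, n in sorted(totals.items())
    }


# Hypothetical (area, day, text) posts.
posts = [
    ("A", "day1", "power outage on our block"),
    ("A", "day1", "road closed near the bridge"),
    ("A", "day1", "stay safe everyone"),
    ("A", "day2", "electricity restored finally"),
]
levels = engagement_levels(posts)
print(levels[("A", "day2")])  # → {'power': 1.0, 'transport': 0.0}
```

Note that the day-2 post reports restoration rather than damage; a keyword proxy cannot separate the two, which is one reason richer topic models are used for this task.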