Abstract

Purpose:

Text messaging is a pervasive form of communication in today's digital society. Our prior research indicates that individuals with aphasia text, but they vary widely in how actively they engage in texting, the types of messages they send, and the number of contacts with whom they text. People with aphasia have been reported to experience difficulties with texting; however, the degree to which they successfully convey information via text message is unknown. This study describes the development of a rating scale that measures transactional success via texting and reports on the transactional success of a sample of 20 individuals with chronic aphasia. The relationships between texting transactional success and aphasia severity, texting confidence, and texting activity are explored.

Method:

Performance on a texting script was evaluated using a three-category rating scale in which each turn elicited from participants with aphasia received a score of 0 (no transaction of message), 1 (partial transaction), or 2 (successful transaction). Internal consistency was assessed using Cronbach's alpha. Interrater reliability was determined using the intraclass correlation coefficient (ICC) and Krippendorff's alpha.

Results:

Although preliminary, results suggest adequate internal consistency and strong interrater reliability. Texting transactional success on the script response items was significantly correlated with overall aphasia severity and severity of reading and writing deficits, but there was no relationship between transactional success and texting confidence or overall texting activity.

Conclusions:

This study describes initial efforts to develop a rating scale of texting transactional success and to evaluate the validity of scores derived from this measure. Information from a texting transactional success measure could inform treatment that aims to improve electronic messaging in people with aphasia.

Text messaging is pervasive in today's digital society. In the United States, texting is the most common nonvoice application used by mobile phone owners (Smith, 2011, 2015). Currently, 97% of Americans own a cell phone of some kind. While there is notable variation based on age, education, and household income, the proportion of Americans who own a smartphone has increased dramatically in the last decade, from 35% to 85% (Pew Research Center, 2021). Alongside this rapid growth has come an increase in mobile phone messaging. In 2021, an estimated 3.09 billion people worldwide used text or other messaging apps to communicate (Ceci, 2021). The popularity of text messaging is influencing patterns of communication. For example, 31% of respondents to a Pew Research Center survey reported a preference for texting over talking on the phone (Smith, 2011).

There are many ways in which texting and other forms of electronic communication mirror face-to-face conversation. A recent review of conversation analysis and online interaction details core organizational features of conversation, such as turn taking and repair, that are also present in electronic exchanges (Meredith, 2019). However, texting also differs from spoken conversation. For example, turns typically occur synchronously in spoken conversation but asynchronously in text exchanges (Cherny, 1999). Sequence organization is also different in texting exchanges, as recipients will often choose to respond to multiple turns in the order they were posted (Nilsen & Mäkitalo, 2010). Furthermore, communication via text may include various forms of media, including emojis, pictures, and GIFs. Nonetheless, texting can serve one of the primary functions of conversation, that is, to establish and maintain interpersonal connections (Armstrong et al., 2016).

Aphasia affects reading and writing abilities in addition to spoken language. Therefore, it is no surprise that texting abilities in individuals with aphasia may also be impacted. Cases of dystextia, in which patients presented with compromised texting or an inability to text after a stroke, have been reported in the literature (Al Hadidi et al., 2014; Lakhotia et al., 2016). There are also reports of individuals with aphasia who received training to target compromised texting abilities (Beeson et al., 2013; Fein et al., 2020).

The process of texting for individuals with aphasia is complex, requiring lexical retrieval of appropriate words and their correct orthography, in addition to the visuospatial and motor skills needed to locate keys and carry out keyboard movements (Beeson et al., 2013). The central language impairment associated with aphasia may interfere with both comprehension and formulation of text messages. Studies examining typed written discourse indicate that individuals with aphasia type more slowly, use less complex syntax, and produce less text than people without aphasia. The lower production rate among individuals with aphasia has been attributed to a time-consuming editing process, in addition to lexical retrieval and spelling difficulties (Behrns et al., 2008, 2010; Johansson-Malmeling et al., 2021). These same underlying issues with written discourse production are likely implicated in the production of text messages. However, typing on a mobile phone differs from typing on a standard keyboard because of the small size of the keys. This difference, together with sensorimotor impairments that may co-occur with aphasia, such as hemiparesis of the dominant hand, can interfere with the ability to navigate a mobile phone (Greig et al., 2008).

Despite these potential barriers, there are numerous advantages to texting as a communication modality for individuals with aphasia. Text messaging offers more time to read, process, and formulate responses compared to face-to-face communication or voice calls; the “time taken to compose a text message is entirely up to the individual” (p. 2, Beeson et al., 2013). Electronic writing also gives users with language and/or memory challenges a way to track conversations and save messages for repeated future use (Todis et al., 2005). In addition, features that are standard on some mobile phones, including predictive text and autocorrect, as well as nonlexical tools such as GIFs, emojis, and photographs, can support texting for individuals with impaired lexical retrieval and/or writing ability. Importantly, the prevalence of cell phone use in the general population and the social nature of text messaging make texting an attractive communication mode for people with aphasia.

Texting Behaviors of Individuals With Aphasia

While texting is widespread in the general population, the extent to which individuals with aphasia communicate via texting in their everyday lives had not been examined until recently. We explored this question in a descriptive study of the texting behaviors of a sample of 20 individuals with chronic aphasia (Kinsey et al., 2021). Participants provided informed consent for researchers to view and analyze texts they had sent and received over a 7-day period. Text messages were recorded, transcribed, and coded based on whether the message was sent or received and whether it represented an initiation or a response to a texting partner. Results indicated that participants in our sample texted less frequently than reported for the general population. For example, participants with aphasia exchanged an average of 40.3 texts over the 7-day period, whereas Pew Research Center data indicate that neurologically healthy Americans aged 18–65+ years exchange an average of 41.5 texts per day (Smith, 2011). Participants varied in the types of texts sent; some initiated a greater proportion of texts, whereas others more frequently responded to messages. Forty percent of the sample used multimedia content in their messages, such as photographs, emoticons, GIFs, and links to websites. We also found that texting activity (i.e., the number of text messages sent and received over the 7-day period) was not correlated with aphasia severity as measured by the aphasia quotient (AQ) of the Western Aphasia Battery–Revised (WAB-R; Kertesz, 2007), or with the severity of writing impairments as measured by the WAB-R Writing subscale score. In brief, our study confirmed that people with aphasia do engage in texting, but that their texting behaviors are highly variable.

While we examined the content of participants' messages for information such as the mean number of words per message, we did not evaluate how successfully participants communicated their intended messages via text. To date, the extent to which persons with aphasia are able to convey meaningful information via texting has not been reported. Yet transaction is a key component of communication in any modality and is highly relevant to participation in texting.

Measuring Transactional Success

Transaction is conceptualized as the expression of content or the exchange of information, whereas interaction refers to the social function of language (Brown & Yule, 1983). The concept of transaction is well recognized within the context of conversation. Perhaps Holland put it best when she wrote, “people with aphasia communicate better than they talk” (Holland, 1977), embracing the notion that people with aphasia are able to successfully convey information in an exchange despite linguistic errors. Successful transaction involves both verbal and nonverbal behaviors; individuals with aphasia and their conversation partners often work together to construct meaning and confirm understanding (Kagan et al., 2001). However, transaction via written language is unique, with demands that differ from those of face-to-face spoken communication. According to Brown and Yule (1983), when communication occurs via writing, an individual does not have access to the same nonverbal cues or immediate feedback from their partner as in face-to-face conversation; therefore, some interpretation may be required.

To our knowledge, there are currently no measures that attempt to capture transactional success in written communication, and more specifically, in the text exchanges of individuals with aphasia. Yet, with the proliferation of texting activity in the last decade, texting is likely to emerge as a target for intervention. Consequently, there is a need for tools to assess texting transactional success, both as a starting point for therapy and as a means of monitoring progress.

Efforts to evaluate transactional success in the spoken conversation of people with aphasia have informed the conceptualization and development of a measure of transactional success in texting. Ramsberger and Rende (2002) describe the development of a tool that uses standardized procedures to elicit natural conversation surrounding the retelling of popular television sitcom episodes. With regard to the theoretical basis of their measure, the authors suggest that understanding is dynamically constructed within the conversation; partners “continually edit, infer, correct, and confirm their understanding of the information being exchanged” (p. 339; Ramsberger & Rende, 2002). Thus, their measure was based on the collective success of participants with aphasia and their conversation partners. After watching a sitcom episode, the person with aphasia communicated its main ideas using whatever means they chose, including speaking, writing, drawing, or gesturing. Afterward, the partner without aphasia was instructed to retell the story without feedback from the person with aphasia. The number of main ideas expressed by the partner without aphasia served as the measure of transactional success. The researchers were deliberate in their rationale for selecting validity and reliability procedures. For example, construct validity was evaluated using a multimethod approach in which their measure of transactional success was correlated with a comprehensiveness rating and with main ideas elicited from additional story retells. Coefficients of equivalence comparing the number of main ideas across parallel sitcom episodes (two more complex and two less complex episodes) were calculated as a measure of internal consistency. Adequate interrater reliability via point-to-point agreement was also reported.

Leaman and Edmonds adapted Ramsberger and Rende's (2002) measure to examine communicative success in the conversations of persons with aphasia across communication partners (2019) and within a structured narrative task (2021). Similar to Ramsberger and Rende's measure, their success rating was based on verbal and nonverbal behaviors of the person with aphasia as well as the listener's understanding of the person with aphasia. Understanding was judged by observing the conversation partner's response to each turn of the person with aphasia. Conversational success was measured on a 4-point scale, with 4 = successful, indicating that the message was clearly communicated, and 1 = not successful, indicating that nothing understandable was communicated. Leaman and Edmonds (2021) reported good interrater reliability and test–retest stability on their measure when it was applied to unstructured conversation and a structured narrative task.

Kagan et al. (2004) also applied the concept of transactional success in their assessment tool, the Measure of Participation in Conversation (MPC). This observational measure calls for “a global rating of the ability to transact (exchange information) through observable communication behaviors” (Kagan et al., 2004). Accuracy and speed of responses, ability to reliably indicate yes/no/don't know, ability to initiate topics, and ability to repair misunderstandings are factors to consider when judging transactional success. Following a brief conversation with the person with aphasia (or observation of a video-recorded conversation), the examiner scores transaction on a Likert scale ranging from 0 (no successful transaction) through 2 (several sufficiently successful transactions) to 4 (consistently successful transactions). Anchors and guidance for scoring interaction are also provided; transaction and interaction scores are combined to yield an overall rating of conversational participation. The authors report support for construct validity based on significant correlations between scores on the measure and experienced clinicians' judgments of participants' communicative skill. They also obtained acceptable interrater reliability for scores from trained and experienced raters. Notably, however, interrater reliability for the MPC interaction category was lower (r = .65, p < .001) when the measure was used in their experimental study (Kagan et al., 2001), indicating that reliability may need to be re-evaluated for new applications. Although the measure needs further evaluation, it has promising clinical applications, and its anchors for transactional success and Likert scale provide a useful model for rating this construct.

In short, while the tools described above apply to transaction within spoken conversation, they can certainly inform the development of a measure of transactional success applied to texting. These measures are theoretically grounded (Brown & Yule, 1983), acknowledging the need to assess not only the content of information conveyed by the person with aphasia but also the comprehension of the message by the conversational partner. Importantly, developers aimed to assess validity and reliability of the scores yielded from their measures (with varying degrees of success), acknowledging the importance of these concepts for instrument development. However, these tools were developed to evaluate transaction within conversation and were not designed for use with texting. While there are many similarities between texting and spoken conversation, as described previously, texting is a unique communication modality that involves asynchronous timing, distinct sequence organization, and various forms of multimedia. Therefore, a specific measure of transactional success via texting is needed. Such a measure would need to consider factors such as graphemic or spelling errors that potentially influence successful written communication, as well as factors specific to texting, such as the use of multimedia (e.g., emojis, pictures, GIFs) that can be transactional.

As discussed previously, individuals with aphasia experience difficulties with texting (e.g., Beeson et al., 2013; Fein et al., 2020; Lakhotia et al., 2016) and text less frequently (Kinsey et al., 2021) than neurologically healthy adults of a similar age (Smith, 2011). However, the degree to which individuals with aphasia are successful in conveying information via text message is unknown. This article describes the initial development of a measure of transactional success via texting and a preliminary assessment of its psychometric properties to support the validity of inferences drawn from its scores. We applied the rating scale to data collected during participants' baseline assessments as part of a clinical trial investigating an electronic writing intervention for aphasia (Clinical Trials Identifier: NCT03773419). As a follow-up to our original article on texting behaviors of individuals with aphasia, we report on the same sample of 20 participants described in Kinsey et al. (2021). More specifically, we aim to:

describe the development of a texting transactional success (TTS) rating scale, including the training procedures for raters;

assess the validity of scores derived from the TTS by evaluating internal consistency and interrater reliability of the scale;

report on the TTS of the sample of 20 participants with chronic aphasia; and

identify variables that may be related to transactional success including amount of texting activity, confidence with texting, and aphasia severity.

Whereas our previous work suggested texting behaviors were not associated with aphasia severity or severity of writing impairments (Kinsey et al., 2021), we anticipated that there would be a relationship between TTS and overall severity of aphasia and severity of reading and writing skills, as measured by the WAB-R AQ, language quotient (LQ), and Writing subscale score. We also anticipated a positive relationship between transactional success and amount of texting activity, as well as confidence with texting.

Method

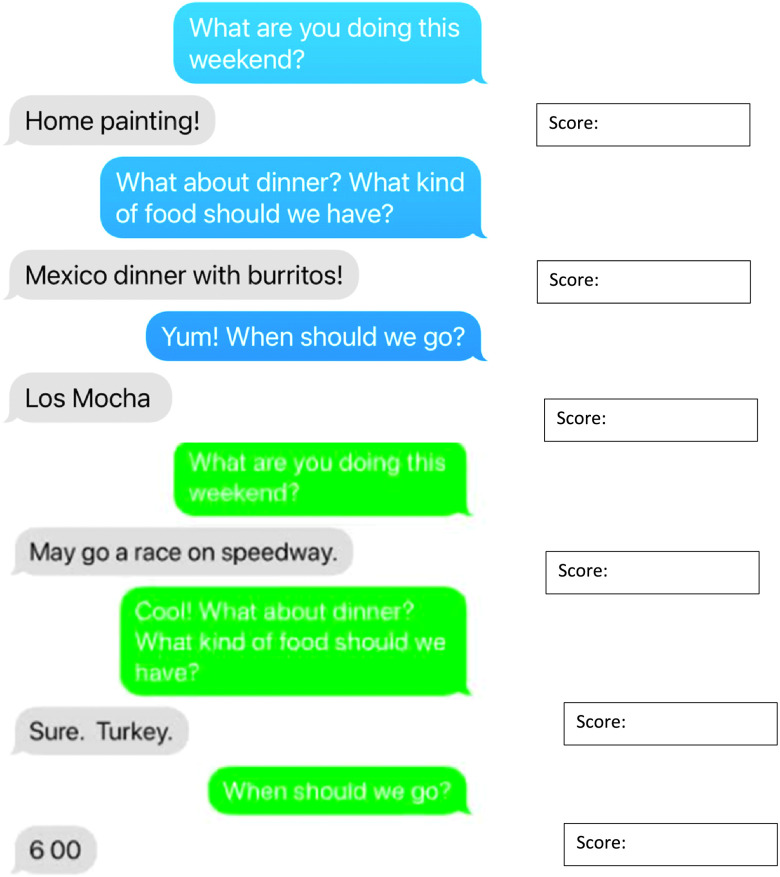

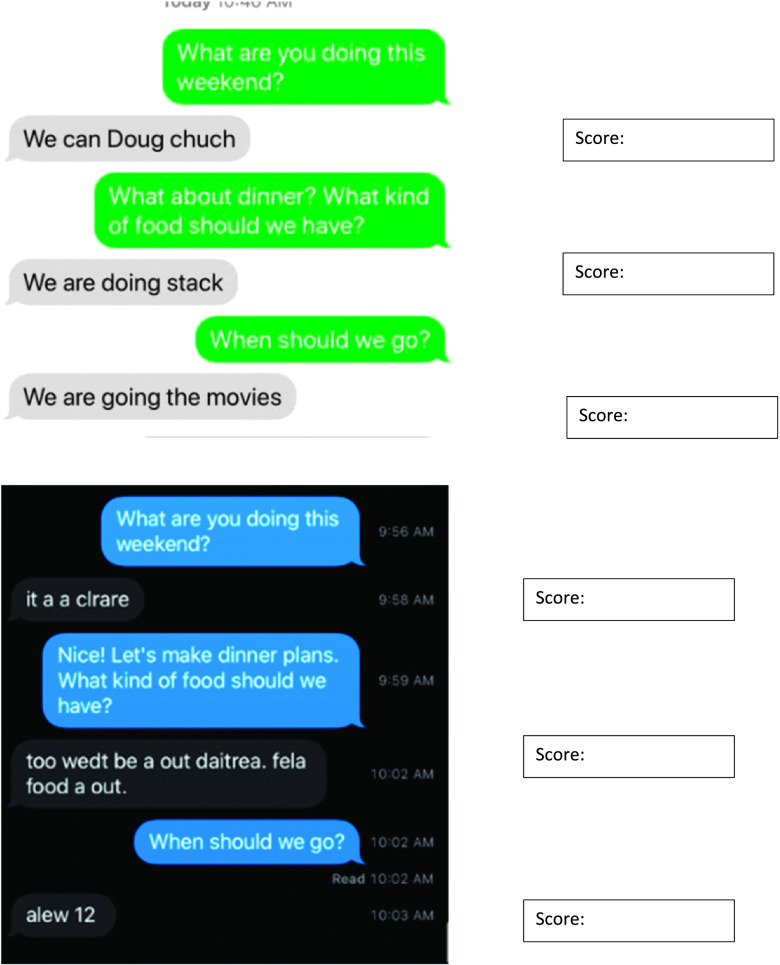

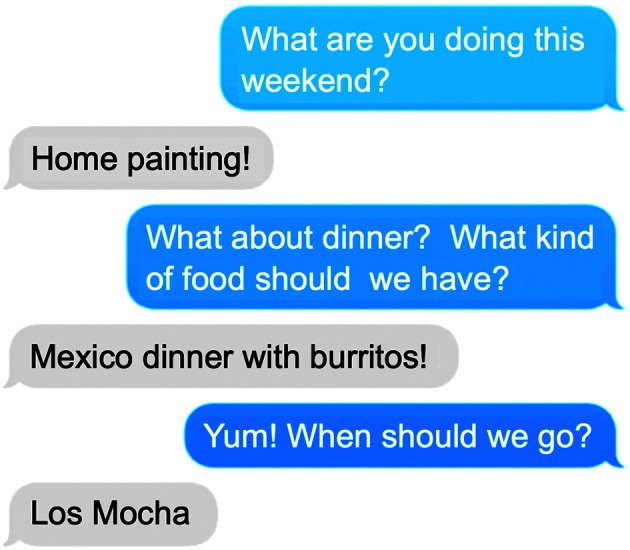

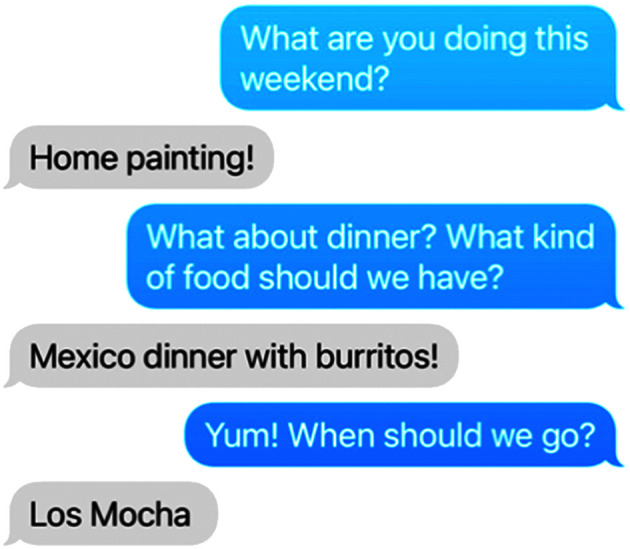

A standard “texting script” was developed and administered to participants enrolled in a clinical trial investigating an electronic writing treatment. We used this script as a first step in developing the TTS, an idea that arose after the clinical trial was completed. The original purpose of the script was to evaluate potential gains on a functional measure of electronic writing following experimental and control treatments. The research team involved in the trial created the script after discussing typical everyday topics around which people exchange text messages. The resulting three-turn “dinner plans” script is shown in Figure 1. To administer the script, the evaluator delivered a written prompt (e.g., “What are you doing this weekend?”), and the participant with aphasia was instructed to respond via texting. Following any response, the evaluator delivered the next prompt, and so on until three responses were elicited. To emulate a more authentic texting experience, the procedure allowed the evaluator to add a relevant written response, such as “yum” or “great,” before the subsequent prompt. Participants used their personal smartphone or, if they did not own a mobile phone, an iPad supplied by the researchers. Assistive features, including spell check and predictive text, were disabled for the evaluation; because the assessment was conducted as part of a clinical trial evaluating a writing intervention, the aim was to capture unaided linguistic skills.

Figure 1.

Example texting script with participant responses.

The team considered potential methods for evaluating participants' responses on the texting script, such as applying correct information units (CIU; Nicholas & Brookshire, 1993) or the Naming and Oral Reading for Language in Aphasia 6-Point scale (NORLA-6; Pitts et al., 2017) modified for writing to account for lexical errors and spelling accuracy. However, we strongly endorse the notion that individuals with aphasia are competent and able to convey meaningful information regardless of errors or “incorrect” output. Therefore, we recognized that a functional measure of texting should not be limited to capturing accuracy, but rather should reflect the ability to exchange meaningful information.

Development of the TTS Rating Scale

Development of the TTS rating scale was grounded in Brown and Yule's (1983) theory of transaction within communication. As previously discussed, transaction reflects the exchange of information and involves both understanding and expression of meaningful messages and information. When communication occurs via writing, there is not the same access to nonverbal cues or immediate feedback from a partner as in face-to-face conversation. Judging how successfully one conveys meaningful information in writing thus requires some interpretation.

The scoring anchors for the TTS were based on the idea that the information conveyed in a participant's response to a texting script prompt could be readily interpreted within the context of that prompt. Scoring was also informed by Kagan et al.'s (2004) MPC, which uses a global rating of the ability to transact through observable communication behaviors. Similar to the MPC's operationally defined markers, TTS scores range from no successful transaction (0) through partial transaction (1) to successful transaction (2). We operationally defined each category based on the extent to which the prompt was likely to have been understood and meaningful information was conveyed. Next, the definitions associated with each score were refined through a data-driven approach. The researchers examined a random sample of responses to the standard texting script elicited from all 60 participants in the clinical trial (see Participants below) and, through discussion, added parameters and further definitions, with examples of responses corresponding to ratings of 0, 1, and 2. We also considered how these ratings could be applied to texting responses more broadly, beyond the specific texting script used in the study. Table 1 shows the finalized operationalized definitions of ratings with illustrative examples. Note that the ratings consider both the information conveyed by the person with aphasia and the comprehension of the message by the conversational partner.

Table 1.

Operationalized definitions of ratings with examples.

| Rating | Definition | Example | Rationale |
|---|---|---|---|
| 2: Successful transaction | Response reflects comprehension of prompt and conveys to the communication partner meaningful information related to immediate prompt. Considerations: ◆ Graphemic/spelling errors acceptable as long as word is recognizable; ◆ Morphological and syntactical errors that do not affect meaning are acceptable; ◆ Successful self-corrections are acceptable; ◆ Irrelevant or additional words or graphemes that do not change meaning are acceptable. | P: What are you doing this weekend? PwA: We in NFL Saturday and Sunday | Conveys meaningful information despite syntactical error |
| | | P: What about dinner? What kind of food should we have? PwA: pizza like | Conveys meaningful information; additional word does not alter meaning |
| 1: Partial transaction | Response provides partial or incomplete information that conveys to the communication partner meaning within the context of the prompt. Considerations: ◆ Response may reflect impaired comprehension of prompt, e.g., ○ answers a related question, such as a similar “wh” question; ○ conveys temporally inaccurate information; ○ conveys a request for clarification. ◆ Response to which communication partner may need to clarify, i.e., communication burden shared with communication partner: ○ response contains words or additional information that may alter the meaning, or information that is incongruent. | P: What about dinner? What kind of food should we have? PwA: Subway for dinner. Mexico! | Communication partner needs to clarify due to incongruent information (Do you want Subway or Mexican food?) |
| | | P: When should we go? PwA: Nick's | Response reflects impaired comprehension; answers “where” with name of restaurant |
| | | P: What are you doing this weekend? PwA: I saw a good movie. | Response reflects impaired comprehension of prompt; temporally inaccurate |
| 0: No successful transaction | Response does not convey to the communication partner meaningful information within the context of the prompt. Considerations: ◆ Response contains words or graphemes that are unrecognizable in the context; ◆ Response repeats prompt or part of prompt without adding additional meaningful information. | P: When should we go? PwA: I go… | Not meaningful relative to the prompt; word from prompt is repeated without additional information |
| | | P: What are you doing this weekend? PwA: i w e | Single graphemes that do not convey meaningful information |

Note. P = prompt; PwA = person with aphasia.

Raters and Rater Training

Experienced raters included the first author and two speech-language pathologists (SLPs) who served as Research SLPs in the clinical trial. These three raters have 8–18 years of clinical and research experience working with individuals with aphasia and particular experience rating performance data with other established methods (e.g., CIU Analysis, Nicholas & Brookshire, 1993; NORLA-6 scoring, Pitts et al., 2017). The three experienced raters used the operationalized scoring definitions and examples to rate a training set. This training set included 21 items (i.e., three-turn “dinner plans” standard texting script responses from seven participants from the clinical trial representing a range of transactional success scores) to determine reliability prior to coding the sample of 20 participants for this study. We intentionally did not include data elicited from the 20 participants in the training set.

Two students, a first-year SLP graduate student and an undergraduate Communication Sciences and Disorders student, who were not involved in the instrument development or the clinical trial, were then trained to serve as naïve raters. Student raters received phased training that included a brief overview of aphasia and its impact, followed by structured practice during which they received feedback on their ratings and had an opportunity to ask questions, in order to establish their reliability on the TTS with the experienced raters. Structured practice included 12 items (elicited from four participants whose data were not included in the training set or in this study). These items represented both straightforward and challenging rating scenarios. For example, one response to the prompt “When should we go?” consisted of a string of graphemes and the number 12. This example was selected to encourage discussion about a score of 1, representing “partial transaction” and a need for clarification from the communication partner. Students completed the first phase independently in approximately 30–60 min; the subsequent discussion with the first author took approximately 15 min more. The next phase of training consisted of scoring the aforementioned 21-item training set, with a criterion ICC of at least .80 required before the students coded the sample for this study. Had a rater not achieved criterion, she would have received feedback and an additional training set. See Appendix for training materials and instructions for raters.
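To make the .80 training criterion concrete, the following is a minimal Python sketch of a two-way random-effects, absolute-agreement ICC computed from ANOVA mean squares. It assumes the single-rater form (often written ICC(2,1) or ICC(A,1)), complete data, and items as rows with raters as columns. The text does not state whether single or average measures were used, and the function name `icc_a1` and the ratings below are hypothetical illustrations, not study data or the SPSS procedure used in the study.

```python
from statistics import fmean


def icc_a1(ratings: list[list[float]]) -> float:
    """Two-way random-effects, absolute-agreement ICC for a single rater.

    ratings[i][j] is the score rater j gave item i; every cell must be filled.
    """
    n = len(ratings)     # rated items (script responses)
    k = len(ratings[0])  # raters
    grand = fmean(x for row in ratings for x in row)
    row_means = [fmean(row) for row in ratings]
    col_means = [fmean(row[j] for row in ratings) for j in range(k)]

    # Partition total variability into items, raters, and residual error.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    # Rater (column) variance stays in the denominator, so systematic
    # leniency or harshness by one rater counts as disagreement.
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


CRITERION = 0.80  # training criterion described in the text

# Hypothetical 0/1/2 ratings from a trainee and an experienced rater (not study data).
trainee_vs_expert = [[2, 2], [1, 2], [0, 0], [2, 1]]
icc = icc_a1(trainee_vs_expert)
print(f"ICC(A,1) = {icc:.2f}; meets criterion: {icc >= CRITERION}")
```

With these four hypothetical items the pair reaches an ICC of 0.70, so a trainee with this pattern would not yet meet the criterion. The absolute-agreement form is the stricter choice for rater training because a consistency-type ICC would ignore a trainee who scores every item one point higher than the experienced raters.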

Participants

Participants were individuals with aphasia enrolled in a randomized controlled trial investigating an electronic writing treatment for aphasia (Clinical Trials Identifier: NCT03773419). Eligibility criteria for the clinical trial were a diagnosis of aphasia from a single left hemisphere stroke at least 6 months postonset, premorbid right-hand dominance, at least an eighth-grade education, and premorbid proficiency in English per self-report. Participants were required to have a WAB-R AQ between 40 and 85 or, if their AQ fell outside this range, a WAB-R Writing score between 40 and 80. Exclusion criteria included any premorbid neurological condition other than stroke, uncontrolled psychiatric conditions, and/or active substance abuse. The study was approved by the institutional review board of Northwestern University in Chicago, Illinois.

Assessment Data

Language Assessment

A comprehensive language profile for participants was obtained from administration of the WAB-R (Kertesz, 2007). Three components of the WAB-R were collected and analyzed: AQ, LQ, and the Writing subscale score of the LQ. The AQ of the WAB-R was used to characterize the type and severity of the aphasia, and together with the Writing subscale was used to determine eligibility as previously noted. The Writing scale, a component of the LQ, includes tasks such as writing one's name and address, writing automatic sequences including the alphabet and the numbers 0–20, writing letters, numbers, words and sentences to dictation, copying a sentence, and writing a story about a picture. The maximum possible score is 100 points.

Technology Survey

A survey was administered to obtain information about participants' use of, and confidence with, various forms of technology. It was written in an aphasia-friendly format (e.g., short, grammatically simple questions, pictures, large font) and administered verbally at the initial appointment. The technology survey asked about participants' confidence in using the various forms of technology they owned (e.g., smartphone, tablet), as well as their confidence in their texting ability, rated on a Likert scale from 0 to 10 (0 = not at all, 5–6 = somewhat, 10 = very much). A copy of the survey is included in the Supplemental Materials of Kinsey et al. (2021).

Texting Activity Data

Participants provided informed consent for the research team to view and extract sent and received text messages from their phones over a 7-day period immediately prior to the initial assessment. To obtain texting data, the Research SLP scrolled through text history on the participant's phone while video recording the information. Measures of interest related to texting behaviors included the number of contacts and number of texts sent and received. Detailed findings are reported in Kinsey et al. (2021).

Measures of Validity and Reliability

Instrument development demands careful attention to the concepts of validity and reliability. Validity is the degree to which interpretations derived from an assessment are relevant and meaningful (Messick, 1989). Cook and Beckman (2006) argue that evidence should be sought from several sources to support the validity of inferences made from instrument scores. Reliability, or the consistency of scores from an assessment, is a “necessary, but not sufficient, component of validity…an instrument that does not yield reliable scores does not permit valid interpretations” (p. 166, Cook & Beckman, 2006). Therefore, in this preliminary evaluation of the TTS measure, we chose to focus on sources of evidence derived from the assessment of (a) interrater reliability and (b) internal consistency. The rationale for evaluating interrater reliability was to assess whether reliable scores could be attained not only from experienced raters, but also from naïve raters with limited experience with individuals with aphasia or their written language output. The purpose of evaluating internal consistency was to test the assumption that items within the texting script measured the same construct, i.e., transaction.
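Internal consistency of the three script items can be illustrated with a short Python sketch of Cronbach's alpha, alpha = k/(k - 1) × (1 - Σ item variances / variance of total scores), where k is the number of items. For comparison, the sketch also computes a two-way, consistency-type, average-measures ICC from the same participant-by-item matrix (treating items as "raters"); the two values coincide, which is why an ICC procedure can supply a confidence interval for alpha. The function names and the scores are hypothetical illustrations, not study data.

```python
from statistics import fmean, variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """scores[p][i] = rating (0, 1, or 2) of participant p on script item i."""
    k = len(scores[0])  # number of items (three script turns here)
    item_vars = [variance([row[i] for row in scores]) for i in range(k)]
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def icc_ck(scores: list[list[float]]) -> float:
    """Two-way, consistency, average-measures ICC with items treated as raters."""
    n, k = len(scores), len(scores[0])
    grand = fmean(x for row in scores for x in row)
    ss_rows = k * sum((fmean(row) - grand) ** 2 for row in scores)
    ss_cols = n * sum((fmean(row[j] for row in scores) - grand) ** 2 for j in range(k))
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ms_rows = ss_rows / (n - 1)
    ms_error = (ss_total - ss_rows - ss_cols) / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / ms_rows


# Hypothetical ratings for five participants on three items (not study data).
example_scores = [[2, 2, 2], [2, 1, 2], [1, 1, 0], [0, 1, 0], [2, 2, 1]]
print(f"alpha    = {cronbach_alpha(example_scores):.3f}")
print(f"ICC(C,k) = {icc_ck(example_scores):.3f}")
```

Both lines print 0.830 for these hypothetical scores. With only three items per participant, alpha is sensitive to a single inconsistent item, which is one reason to report it alongside a confidence interval.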

Statistical Analysis

Interrater reliability was analyzed using intraclass correlation coefficients (ICCs) with a two-way random effects, absolute agreement model. Krippendorff's alpha (Hayes & Krippendorff, 2007; Krippendorff, 2011) was also calculated as a more conservative estimate of interrater reliability that accounts for agreement occurring by chance. Interrater reliability on the training set was evaluated before reliability between raters was evaluated on scores elicited from the participant sample. Internal consistency was analyzed using Cronbach's alpha (Cronbach, 1951; Tavakol & Dennick, 2011), which estimates the intercorrelation among the scores participants received on the three items of the script. To obtain a 95% confidence interval for Cronbach's alpha, we calculated a two-way mixed model intraclass correlation coefficient in SPSS (IBM SPSS Statistics, Version 27), because this coefficient is identical to Cronbach's alpha (Bravo & Potvin, 1991).
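The two-way ANOVA decomposition behind these coefficients can be sketched in a few lines of Python. This is a minimal sketch, not the SPSS procedure used in the study: it assumes a complete matrix with no missing ratings, the function names are hypothetical, and it computes point estimates only (no confidence intervals or p values). Rows are the targets being rated; columns are raters (for the ICC) or script items (for Cronbach's alpha). The sketch also makes the Bravo and Potvin (1991) identity concrete: Cronbach's alpha equals the consistency-type, average-measures ICC computed from the same mean squares.

```python
"""Sketch: two-way ANOVA mean squares behind ICC(A,k) and Cronbach's alpha."""
from statistics import fmean, variance


def two_way_mean_squares(x):
    """Return (MS_rows, MS_cols, MS_error) for a complete n x k matrix.

    Rows are the targets being rated (scored responses or participants);
    columns are raters or script items. Missing cells are not supported.
    """
    n, k = len(x), len(x[0])
    grand = fmean(v for row in x for v in row)
    row_means = [fmean(row) for row in x]
    col_means = [fmean(row[j] for row in x) for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((v - grand) ** 2 for row in x for v in row)
    ss_error = ss_total - ss_rows - ss_cols
    return ss_rows / (n - 1), ss_cols / (k - 1), ss_error / ((n - 1) * (k - 1))


def icc_absolute_average(x):
    """ICC(A,k): two-way random effects, absolute agreement, average measures."""
    ms_r, ms_c, ms_e = two_way_mean_squares(x)
    return (ms_r - ms_e) / (ms_r + (ms_c - ms_e) / len(x))


def icc_consistency_average(x):
    """ICC(C,k): consistency, average measures (numerically equal to alpha)."""
    ms_r, _, ms_e = two_way_mean_squares(x)
    return (ms_r - ms_e) / ms_r


def cronbach_alpha(x):
    """Cronbach's alpha for an n x k matrix of participants x items."""
    k = len(x[0])
    item_vars = sum(variance([row[j] for row in x]) for j in range(k))
    total_var = variance([sum(row) for row in x])
    return k / (k - 1) * (1 - item_vars / total_var)
```

For a hypothetical participants × items matrix such as `[[2, 2, 1], [1, 1, 0], [2, 1, 2], [0, 1, 0], [2, 2, 2]]`, `cronbach_alpha` and `icc_consistency_average` return the same value. `icc_absolute_average` additionally penalizes systematic differences between columns, for example one rater scoring consistently higher than another, which is why an absolute agreement model suits interrater reliability.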

Spearman rank correlations were used to examine associations between transactional success and the variables of interest, because nonnormal distributions violated the assumptions of parametric tests. Given the exploratory nature of the study and the small sample size, Spearman correlations were not corrected for multiple comparisons. Statistical analyses were carried out in SPSS (IBM SPSS Statistics, Version 27).
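The study computed Spearman correlations in SPSS. The rank-based logic can be sketched in Python under one stated assumption: tied values receive the average of the ranks they span (a common convention for Spearman's rho), and rho is then the Pearson correlation of those ranks. Ties matter here because TTS raw scores can take only seven values (0–6). The function names are hypothetical, and the sketch does not compute p values.

```python
"""Sketch: Spearman rank correlation with average ranks for ties."""
from statistics import fmean


def average_ranks(values):
    """Rank values 1..n; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # positions are 0-based; ranks are 1-based
        for pos in range(start, end + 1):
            ranks[order[pos]] = mean_rank
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; raises ZeroDivisionError if either input is constant."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))
```

When there are no ties, this agrees with the familiar shortcut 1 − 6Σd²/(n(n² − 1)); computing Pearson on average ranks is the more general form that remains valid with ties.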

Results

Participants included the first 20 individuals with poststroke aphasia (six women, 14 men) who enrolled in the clinical trial. Thirteen individuals presented with nonfluent aphasia and seven with fluent aphasia, as judged by the experienced SLP following administration of the WAB-R (Kertesz, 2007). Participants had a mean (SD) age of 55.15 (11.15) years, a mean (SD) time postonset of 30.95 (17.13) months, and a mean (SD) WAB-R AQ of 69.99 (13.09). Table 1 presents the sample's aphasia severity. A complete description of participant characteristics is reported in Kinsey et al. (2021).

Interrater Reliability and Internal Consistency

There was a high degree of reliability among the three expert raters on the training set: ICC = .953, 95% CI [.904, .980], p < .001. Before coding the sample, the two student raters met criterion interrater reliability on the training set (ICC = .818 and .897, respectively). For the responses of the 20 participants with chronic aphasia on the standard texting script, a high degree of reliability was found among the five raters: the average measures ICC was .959, 95% CI [.940, .973], p < .001. Krippendorff's alpha was .826, 95% CI [.787, .862], confirming strong interrater reliability. Cronbach's alpha was .717, 95% CI [.403, .879], suggesting that internal consistency may be adequate.

TTS of the Sample

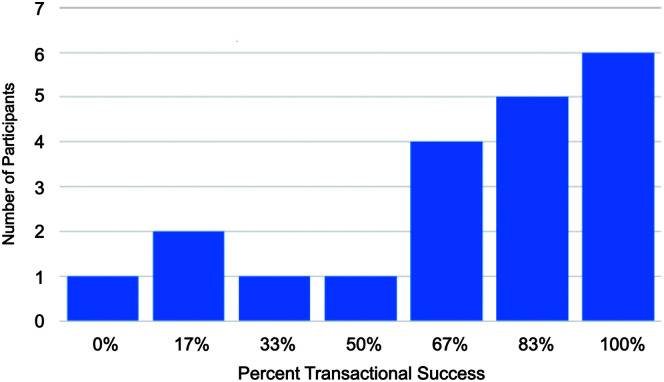

Transactional success, texting activity (messages sent and received over a 7-day period), and texting confidence are presented in Table 2. TTS raw scores ranged from 0/6 to 6/6. Figure 2 shows the number of participants at each level of percent transactional success.
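The relationship between item ratings, raw scores, and percent scores can be made concrete with a short sketch. It assumes a single final rating (0, 1, or 2) per script item; how ratings from multiple raters were combined into one score is not described in this section, and the function name is hypothetical. The raw scores below are transcribed from Table 2, and the tally gives the counts that a histogram such as Figure 2 would display.

```python
"""Sketch: TTS raw and percent scores from three item ratings (0-2 each)."""
from collections import Counter

VALID_RATINGS = {0, 1, 2}  # 0 = no transaction, 1 = partial, 2 = successful
N_ITEMS = 3
MAX_RAW = 2 * N_ITEMS  # 6 points possible


def tts_score(item_ratings):
    """Return (raw score, percent rounded to 2 decimals) for one participant."""
    if len(item_ratings) != N_ITEMS or any(r not in VALID_RATINGS for r in item_ratings):
        raise ValueError("expected three ratings, each 0, 1, or 2")
    raw = sum(item_ratings)
    return raw, round(100 * raw / MAX_RAW, 2)


# Raw TTS scores for P1-P20, transcribed from Table 2.
RAW_SCORES = [6, 4, 5, 1, 5, 5, 6, 5, 4, 3, 3, 0, 6, 6, 1, 3, 5, 6, 4, 5]

# Number of participants at each percent value (the counts behind a histogram).
percent_counts = Counter(round(100 * r / MAX_RAW, 2) for r in RAW_SCORES)

for percent in sorted(percent_counts):
    print(f"{percent:6.2f}%  {'#' * percent_counts[percent]}")
```

For example, ratings of 2, 1, and 1 on the three items give a raw score of 4/6, or 66.67%, matching how percent scores are reported in Table 2.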

Table 2.

Texting transactional success, severity, texting activity, and confidence.

| Participant | TTS raw score | TTS percent | WAB-R AQ | WAB-R LQ | WAB-R Writing | Texts sent | Texts received | Texts total | Texting confidence |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 6/6 | 100.00 | 84.7 | 86.6 | 80 | 13 | 10 | 23 | 3 |
| 2 | 4/6 | 66.67 | 61.5 | 61.4 | 36 | 24 | 15 | 39 | 10 |
| 3 | 5/6 | 83.33 | 77.4 | 80 | 78 | 6 | 27 | 33 | 10 |
| 4 | 1/6 | 16.67 | 54.9 | 57.3 | 38.5 | 35 | 33 | 68 | 6 |
| 5 | 5/6 | 83.33 | 76.8 | 78.8 | 62.5 | 2 | 3 | 5 | 6.5 |
| 6 | 5/6 | 83.33 | 71.6 | 75.9 | 65.5 | 82 | 57 | 139 | 7 |
| 7 | 6/6 | 100.00 | 69.4 | 79.9 | 91 | 67 | 103 | 170 | 4 |
| 8 | 5/6 | 83.33 | 57.6 | 61.5 | 70 | 10 | 92 | 102 | 10 |
| 9 | 4/6 | 66.67 | 78.4 | 78.1 | 67 | 41 | 37 | 78 | 10 |
| 10 | 3/6 | 50.00 | 68.8 | 66.2 | 62.5 | 0 | 4 | 4 | 7 |
| 11 | 3/6 | 50.00 | 73 | 65.8 | 48 | 0 | 3 | 3 | 5 |
| 12 | 0/6 | 0.00 | 53.6 | 49.9 | 41.5 | 1 | 15 | 16 | 2 |
| 13 | 6/6 | 100.00 | 79.6 | 84.6 | 89 | 1 | 5 | 6 | 2 |
| 14 | 6/6 | 100.00 | 91.8 | 90.2 | 71.5 | 4 | 24 | 28 | 9 |
| 15 | 1/6 | 16.67 | 36.5 | 38.2 | 46 | 6 | 45 | 51 | 8 |
| 16 | 3/6 | 50.00 | 82.2 | 81.8 | 85.5 | 1 | 7 | 8 | 9 |
| 17 | 5/6 | 83.33 | 70.5 | 74.2 | 83 | 0 | 1 | 1 | 5 |
| 18 | 6/6 | 100.00 | 87.4 | 87.2 | 69.5 | 11 | 7 | 18 | 5 |
| 19 | 4/6 | 66.67 | 77.4 | 79.4 | 76 | 0 | 4 | 4 | 5 |
| 20 | 5/6 | 83.33 | 67.2 | 69.3 | 67 | 4 | 6 | 10 | 9 |

Note. TTS = texting transactional success; WAB-R = Western Aphasia Battery–Revised (Kertesz, 2007); AQ = aphasia quotient; LQ = language quotient. Numbers of texts were recorded over the 7-day period before the initial assessment; texting confidence was self-rated on the technology survey (0–10).

Aphasia severity (WAB-R), number of texts, and texting confidence data were reported in Kinsey et al. (2021).

Figure 2.

Histogram of the sample's percent transactional success.

For Aim 4, we evaluated the relationship between transactional success and the amount of texting activity, confidence with texting, and aphasia severity. There was no significant relationship between TTS and the number of texts sent over a 7-day period (r_s = .334, p = .15), the number of texts received (r_s = .133, p = .57), or overall texting activity (r_s = .226, p = .339). There was also no significant relationship between TTS and self-reported confidence with texting on the technology survey (r_s = −.056, p = .81).

There was a moderate correlation between transactional success and aphasia severity, as measured by the WAB-R AQ (r_s = .698, p < .01). There was a strong correlation between transactional success and the WAB-R LQ (r_s = .848, p < .01) and between transactional success and writing severity, as measured by the WAB-R Writing subtest (r_s = .824, p < .01). A post hoc analysis also showed a moderate correlation between transactional success and the spontaneous writing output subtest of the WAB-R Writing section (r_s = .690, p < .01).

Discussion

This article describes the initial development of a measure to evaluate TTS in individuals with aphasia. Results, while preliminary, provide support for the validity of scores yielded from the TTS rating scale. Transactional success scores are reported for a sample of 20 individuals with chronic poststroke aphasia that included both fluent and nonfluent aphasia and a range of language severity levels. TTS was significantly correlated with aphasia severity, as measured by the WAB-R AQ, and with reading and writing severity, as measured by the WAB-R LQ and Writing subtest.

Texting is quickly growing as a primary means of exchanging information and connecting socially. Despite the challenges individuals with aphasia may encounter with texting, this modality offers a number of advantages. As reported in Kinsey et al. (2021), people with aphasia do engage in texting. However, if improving texting success is to emerge as a rehabilitation target for persons with aphasia, functional tools are needed to evaluate performance in this domain. This study, while preliminary, suggests that the TTS measure may be able to fill this pressing need.

Development and Validity of Transactional Success Scores

Development of the TTS rating scale was systematic and comprehensive. The initial assignment of scores was grounded in Brown and Yule's (1983) theory of transaction and informed by measures of transactional success in conversation (Kagan et al., 2004; Ramsberger & Rende, 2002). We used an iterative, data-driven approach to refine and operationalize the definitions for each rating category (0–2). In addition, we carefully considered how scores could reflect both comprehension and production of meaningful and relevant information within the context of the texting script prompt. The team that developed the scale consisted of SLPs with research and clinical experience working with individuals with aphasia. We were also very familiar with the texting script and the types of responses it elicited, having administered the measure in the context of a clinical trial. It was therefore critical to develop training procedures and scoring definitions that were also accessible to raters with limited or no experience with persons with aphasia.

The training developed for student raters was sufficient without being time intensive. Our student raters completed the first phase of the training in less than 1 hr, and examples from the structured practice set elicited good questions and discussion of the scoring rules. This discussion, and in particular the feedback provided in response to students' questions, was likely instrumental in how quickly they became reliable. The training also appeared adequate: the student raters achieved criterion reliability on the training set of items without needing additional feedback, discussion, or a second training set. These results suggest that the training process and scoring definitions are sufficient and therefore may also be appropriate for clinicians who lack extensive experience working with individuals with aphasia and who have limited time for assessment. Nonetheless, only two students were included in this study, so further examination of the clinical feasibility of training on this measure is warranted.

In this study, we aimed to assess the validity and reliability of scores yielded from the TTS rating scale, recognizing that development of a measure necessitates careful attention to these concepts. An instrument cannot be valid unless it is reliable (Feldt & Brennan, 1989), so evaluating reliability was a primary focus. We assessed the interrater reliability of scores from five raters; as discussed, three experienced and two novice raters were included. ICCs were used to estimate how well scores from different raters coincided. Because ICCs do not account for agreement that could occur by chance, we also used Krippendorff's alpha (Krippendorff, 2011) as a more conservative analysis. Results suggest good interrater reliability. We assessed internal consistency using Cronbach's alpha to determine the extent to which TTS items were intercorrelated and potentially measured the same construct. Our results (Cronbach's alpha = .717), while preliminary, suggest adequate internal consistency and encourage confidence in the agreement among the scale's items. Some reports consider a Cronbach's alpha of .70 to indicate acceptable internal consistency (Tavakol & Dennick, 2011). However, the wide confidence interval around alpha reflects the uncertainty of this estimate, so this finding should be interpreted with caution (Hazra, 2017). In addition, internal consistency estimates can be influenced by individual variability in participants' responses. Future investigations should use a larger sample to mitigate individual variability, narrow the confidence interval around the current estimate, and refine the estimate of Cronbach's alpha. The results of this study are merely a starting point.
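Krippendorff's alpha compares the disagreement actually observed within each rated response with the disagreement expected if the same ratings had been paired at random, which is how it corrects for chance agreement. A minimal sketch follows. The paper does not state which difference metric was used, so this version assumes the interval metric (squared differences between ratings on the 0–2 scale); an ordinal metric would be another defensible choice for a three-category scale. The function name is hypothetical, and the sketch returns the point estimate only, without a confidence interval.

```python
"""Sketch: Krippendorff's alpha with the interval (squared-difference) metric."""
from itertools import permutations


def krippendorff_alpha_interval(units):
    """Alpha = 1 - D_o / D_e for a list of units, each a list of rater values.

    Units with fewer than two values cannot be paired and are skipped, so
    missing ratings are allowed.
    """
    pairable = [u for u in units if len(u) >= 2]
    values = [v for u in pairable for v in u]
    n = len(values)
    # Observed disagreement: squared differences between ordered pairs of
    # values within the same unit, each unit weighted by 1 / (m_u - 1).
    d_obs = sum(
        sum((a - b) ** 2 for a, b in permutations(u, 2)) / (len(u) - 1)
        for u in pairable
    ) / n
    # Expected disagreement: squared differences between ordered pairs of
    # all pairable values, ignoring which unit they came from (chance pairing).
    d_exp = sum((a - b) ** 2 for a, b in permutations(values, 2)) / (n * (n - 1))
    return 1 - d_obs / d_exp
```

Each unit would be one scored response and its list the scores from the raters, for example `[[2, 2, 2], [1, 1, 2], [0, 0, 0]]` for three hypothetical responses rated by three raters. Because D_e is computed from the pooled ratings, alpha falls when raters agree only about as often as the overall distribution of scores would predict, which is what makes it more conservative than raw agreement.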

Additional aspects of the measure warrant discussion. The three-turn texting script was not originally designed for use with a transactional success rating scale. We applied the TTS to this script, which addresses a single topic: making weekend dinner plans. Given the nature of this script, the items are not independent of each other, and this dependency among responses represents a threat to the validity of the measure. A longer script, or a series of scripts on different topics, might address this issue. One may also question whether three turns are sufficient to estimate transactional success, or whether a longer script would yield similar results. Future research could address script length and item interdependency, both limitations of the present study.

In our previous study of authentic texting behaviors over a 7-day period (Kinsey et al., 2021), we examined the content of participants' messages (e.g., mean number of words per message); however, we did not attempt to evaluate how successful participants were in communicating their intended messages via text, in part because there were no tools to assess transactional success via texting. Future research should investigate whether the rating scale can be applied to authentic texting data and whether it yields results similar to or different from those obtained with the standard texting script. Similar results would offer support for the external validity of this measure. Extracting and analyzing texting data is time intensive and potentially intrusive for many individuals; the texting script would therefore offer a more efficient and private method for evaluating transactional success.

TTS in Aphasia

All participants except one achieved some degree of transactional success on the standard three-turn “dinner plans” texting script. Transactional success varied across participants, just as the amount of texting (i.e., number of texts sent or received) each week varied (Kinsey et al., 2021). However, unlike prior findings in which there was no relationship between amount of texting and aphasia severity, transactional success was significantly related to aphasia severity. This relationship held for measures addressing comprehension and expression of both spoken and written language, as determined by the AQ and LQ. Importantly, it was strongest for the LQ and the WAB-R Writing subtest.

Clinically, this finding suggests the importance of specifically addressing written expressive skills during therapy in order to improve TTS. Further investigation is warranted to determine the specific focus of therapy. For example, it is not known whether focusing treatment on single-word writing would be sufficient, or whether it would be more beneficial to target connected text (phrases and sentences) to improve transactional success. In this study, assistive features, including spell check and predictive text, were disabled for the evaluation. How individuals with aphasia use the assistive features on their devices during texting, and whether these tools increase transactional success, also warrant investigation; this information would have clear implications for therapy.

Although the sample included participants with both fluent and nonfluent aphasia, we did not assess whether there were differences in transactional success based on type of aphasia. This would also be a worthwhile endeavor for future research with larger samples of participants.

We had anticipated a relationship between texting activity and TTS; that is, we expected that people with aphasia who engaged more actively in texting would demonstrate greater transactional success. However, there was no significant relationship between these variables. One possibility is that poor transaction leads to misunderstanding on the part of the texting partner. As a result, the texting partner may not respond to the text or may respond incorrectly, prompting the person with aphasia to send additional texts and thereby increasing the number of texts sent and possibly received. Strategies that help the texting partner respond to the person with aphasia in ways that facilitate transaction of the message are one treatment approach that warrants further exploration. Relatedly, whether a person can self-identify errors or recognizes errors only after a reply from the texting partner may also play a role in the relationship between texting activity and transactional success. This factor, as well as the efficiency of a text exchange, provides additional avenues for future research.

It was also anticipated that participants who were more confident in their ability to send texts would be more successful in transacting the meaning of their texts to the texting partner. However, there was no significant relationship between confidence in texting and transactional success. This finding might reflect a weakness in how texting confidence was measured. The prompt on the technology survey was: “I am confident in my ability to text.” Participants may have rated their confidence in physically accessing or executing a text message rather than their confidence in their ability to communicate via text message. The finding may also suggest that individuals with aphasia do not associate texting confidence with their ability to successfully transact, that is, convey information, via texting. For example, about 25% of our sample (e.g., Participant 1 [P1], P7) appeared to lack texting confidence but demonstrated a high percentage of transactional success on the TTS measure: P1 and P7 rated their confidence 3/10 and 4/10, respectively, yet achieved 100% transactional success. Another 25% of our sample (e.g., P9, P15, P16) expressed confidence with texting that was not reflected in their relatively low transactional success scores. This pattern may reflect poor self-monitoring in some individuals with aphasia. Interestingly, the confidence ratings of approximately half of the sample appear roughly consistent with their transactional success scores, suggesting that these individuals are aware of their communication abilities via texting. Individuals who demonstrate this awareness may be particularly receptive to strategies and tools to enhance TTS.

Conclusions

One of the primary goals of conversation, whether spoken or written, is the exchange of information and ideas. Texting holds a number of advantages as a communication tool for individuals with aphasia and is an appropriate target for treatment. This article describes the development of a rating scale of TTS and preliminary data to support the validity of scores derived from this measure. Future research is needed to refine this tool. Nonetheless, information derived from a TTS measure could inform treatment that aims to improve electronic messaging in individuals with aphasia. Understanding how successfully individuals with aphasia understand and convey meaningful information via text is also fundamental to training family members and friends to support texting by their loved one with aphasia.

Author Contributions

Jaime B. Lee: Conceptualization (Lead), Data curation (Lead), Formal analysis (Lead), Investigation (Lead), Methodology (Equal), Writing – original draft (Lead), Writing – review & editing (Equal). Leora R. Cherney: Conceptualization (Equal), Funding acquisition (Lead), Methodology (Equal), Supervision (Supporting), Writing – original draft (Supporting), Writing – review & editing (Equal).

Acknowledgments

The contents of this article were developed under a grant to Leora R. Cherney from the National Institute on Disability, Independent Living, and Rehabilitation Research (NIDILRR Grant 90IFRE0007). NIDILRR is a Center within the Administration for Community Living (ACL), Department of Health and Human Services (HHS). The contents of this article do not necessarily represent the policy of NIDILRR, ACL, or HHS, and you should not assume endorsement by the Federal Government. We extend our gratitude to our participants for helping to further this important research. We thank Jamie Azios, who contributed to the conceptualization of the measure, along with Laura Kinsey and Elissa Conlon, who also served as raters. We would also like to thank William Hula and Gerasimos Fergadiotis for consulting on the instrument development and evaluation aspects of this study.

Appendix

Training for Student Raters

Hello, and thank you for your participation in this project!

This project has two phases: (1) Training and (2) Coding. The first phase involves reviewing some general information about aphasia, as well as reading about the development of the Texting Transactional Success measure and the operationalized definitions of the 3-category rating scale. You will also code selected mock responses from TTS administrations as a structured practice activity. You will receive feedback and have the opportunity to ask questions during this phase.

The second phase involves coding responses from a sample of TTS administrations. You may review provided training materials; however, we will not be available to provide feedback or answer questions specific to coding.

Let's get started!

WHAT IS APHASIA?

Familiarize yourself with aphasia, its symptomology and impact. Review the About Aphasia tab on the Aphasia Access website: https://www.aphasiaaccess.org/about-aphasia/

Consider how different types of aphasia result in varying language problems.

Next, learn about aphasia relative to the concept of competence on the Aphasia Institute's website: https://www.aphasia.ca/family-and-friends-of-people-with-aphasia/what-is-aphasia-2/

Finally, experience aphasia symptomology for yourself. https://vohadmin.github.io/Aphasia_Sim/aphasia-simulations/what-is-aphasia.html

Review the “WHAT IS APHASIA” tabs and complete the simulations for Listening, Reading, Writing, and Speaking.

Development of the Scoring System

Development of the Texting Transactional Success (TTS) measure is grounded in Brown and Yule's (1983) conceptualization of transaction and interaction within communication. Transaction is the expression of content or transference of information, whereas interaction pertains to social aspects of communication. Transaction reflects the exchange of information and involves both the expression and understanding of meaningful messages and information. According to Brown and Yule (1983), when communication occurs via writing, an individual does not have access to the same nonverbal cues or immediate feedback from her partner that are present in conversation; therefore, some interpretation may be required.

The scoring anchors for texting transactional success were based on the idea that the information conveyed in an examinee's response to the texting script prompt could be readily interpreted, i.e., meaningful within the context of the prompt. Scoring was also informed by Kagan and colleagues' Measure of Participation in Conversation (Kagan et al., 2004). This observational measure calls for “a global rating of the ability to transact (exchange information) through observable communication behaviors.” Kagan et al. suggest that accuracy and speed of responses, ability to reliably indicate yes/no/don't know, ability to initiate topics and ability to repair misunderstandings are factors to consider when judging transactional success. Following a brief conversation with the person with aphasia, the examiner scores transaction on a 9-point Likert scale ranging from 0 (no successful transaction) through 2 (several sufficiently successful transactions) to 4 (consistently successful transactions).

Similarly, our TTS scores range from no successful transaction (0) through partial transaction (1) to successful transaction (2). We operationally defined each category based on the extent to which meaningful information was conveyed. Next, definitions associated with each score were refined through a data-driven approach. The research team examined a sample of responses to the texting script and via discussion added parameters and further definitions with examples.

Texting Transactional Success Measure

Scoring Definitions

Item 1: What are you doing this weekend?

2: Successful Transaction

Response reflects comprehension of the prompt and conveys meaningful information related to the immediate prompt. The following are acceptable:

- Graphemic/spelling errors, as long as the word is recognizable
- Morphological and syntactical errors that do not affect meaning
- Emojis and other nonlexical tools/symbols that reflect meaning
- Successful self-corrections
- Irrelevant or additional words or graphemes that do not change meaning

Example responses yielding a score of 2:

| Response | Rationale |
|---|---|
| We in NFL Saturday and Sunday | Conveys meaning despite syntactical error |
| Church | Conveys meaningful information |
| Nothing | Conveys absence of plans |

1: Partial Transaction

Response provides partial or incomplete information that conveys meaning within the context of the prompt. In addition:

- Response may reflect impaired comprehension of the prompt, e.g.:
  - Response conveys temporally inaccurate information
  - Response conveys a request for clarification
- Response may need clarification by the communication partner (i.e., communication burden is shared with the communication partner), e.g.:
  - Response contains words or additional information that may alter the meaning, or information that is incongruent

Example responses yielding a score of 1:

| Response | Rationale |
|---|---|
| Good weekend… | Partial/incomplete information conveyed |
| Feb 13–14: They was home at raining. | (Administered Feb 15) Temporally inaccurate; response reflects impaired comprehension |
| I saw a good movie. | Temporally inaccurate; response reflects impaired comprehension |

0: No successful transaction

Response does not convey meaningful information within the context of the prompt; Response contains words or graphemes that are unrecognizable in the context.

Example responses yielding a score of 0:

| Response | Rationale |
|---|---|
| Nope | No meaning conveyed |
| Yes | Not meaningful relative to the prompt |
| Love | Not meaningful relative to the prompt |
| i w e | Single graphemes that do not convey meaningful information |

Item 2: What about dinner? What kind of food should we have?

2: Successful Transaction

Response reflects comprehension of the prompt and conveys meaningful information related to the immediate prompt. The following are acceptable:

- Graphemic/spelling errors, as long as the word is recognizable
- Morphological and syntactical errors that do not affect meaning
- Emojis and other nonlexical tools/symbols that reflect meaning
- Successful self-corrections
- Irrelevant or additional words or graphemes that do not change meaning

Example responses yielding a score of 2:

| Response | Rationale |
|---|---|
| pizza like | Conveys meaningful information; additional word “like” does not alter meaning |
| meatloaf and mashed potato | Conveys meaningful information |
| Steaks [steak emoji]. Logan Restaurant mashed, corn and salad. | Conveys meaningful information; uses meaningful emoji |
| I am the mood for sushi in | Conveys meaningful information; successful self-correction |
| Spaghet | Conveys meaningful information despite lexical error; intended word is still recognizable |

1: Partial Transaction

Response provides partial or incomplete information that conveys meaning within the context of the prompt. In addition:

- Response may reflect impaired comprehension of the prompt, e.g.:
  - Response conveys temporally inaccurate information
  - Response conveys a request for clarification
- Response may need clarification by the communication partner (i.e., communication burden is shared with the communication partner), e.g.:
  - Response contains words or additional information that may alter the meaning, or information that is incongruent

Example responses yielding a score of 1:

| Response | Rationale |
|---|---|
| dinner for I doant | Some information conveyed; communication partner may need to clarify (do you want to have dinner together?) |
| Subway for dinner. Mexico! | Communication partner needs to clarify due to incongruent information (Do you want Subway or Mexican food?) |
| Yes | Partial response |
| We had pizza. | Reflects impaired comprehension, temporally inaccurate |
| The pizza Friday. Saturday the movie. Sunday grizz out hammer, hot dride, the chicken. | Communication partner could attempt to clarify; response contains additional information that alters meaning. |

0: No successful transaction

Response does not convey meaningful information within the context of the prompt; Response contains words or graphemes that are unrecognizable in the context.

Example responses yielding a score of 0:

| Response | Rationale |
|---|---|
| Would to there to should to spariance. | No meaning conveyed |
| i dinner. What food | Repetition of prompt, with no additional meaning conveyed |

Item 3: When should we go?

2: Successful Transaction

Response reflects comprehension of the prompt and conveys meaningful information related to the immediate prompt. The following are acceptable:

- Graphemic/spelling errors, as long as the word is recognizable
- Morphological and syntactical errors that do not affect meaning
- Emojis and other nonlexical tools/symbols that reflect meaning
- Successful self-corrections
- Irrelevant or additional words or graphemes that do not change meaning

Example responses yielding a score of 2:

| Response | Rationale |
|---|---|
| Saterday at 5:00 | Conveys meaningful information despite spelling error |
| Sunday | Conveys meaningful information |
| At 4:30 | Conveys meaningful information |

1: Partial Transaction

Response provides partial or incomplete information that conveys meaning within the context of the prompt. In addition:

- Response may reflect impaired comprehension of the prompt, e.g.:
  - Response answers a related question, such as a similar “wh” question
  - Response conveys temporally inaccurate information
  - Response conveys a request for clarification
- Response may need clarification by the communication partner (i.e., communication burden is shared with the communication partner), e.g.:
  - Response contains words or additional information that may alter the meaning, or information that is incongruent

Example responses yielding a score of 1:

| Response | Rationale |
|---|---|
| Come up. So should lunch. | Some meaningful information conveyed though communication partner may need to clarify |
| I should be here by 9:20 am. | Response reflects impaired comprehension (conversation about dinner plans, not morning plans); communication partner will need to clarify |
| Nick's | Response reflects impaired comprehension of prompt; answers “where” with name of restaurant; communication partner may need to repeat the question. |

0: No successful transaction

Response does not convey meaningful information within the context of the prompt; Response contains words or graphemes that are unrecognizable in the context.

Example responses yielding a score of 0:

| Response | Rationale |
|---|---|
| Great. | Not meaningful within context of prompt |
| Yes | No meaning conveyed |
| I go… | No meaning conveyed |
| Pick to some f | No meaning conveyed |

Texting Transactional Success in Aphasia Measure

Structured Practice

References

Aphasia Access. About Aphasia. Retrieved from https://www.aphasiaaccess.org/about-aphasia/

Aphasia Institute. Family and Friends of People with Aphasia: What is Aphasia? Retrieved from https://www.aphasia.ca/family-and-friends-of-people-with-aphasia/what-is-aphasia-2/

Brown, G., & Yule, G. (1983). Discourse analysis. Cambridge University Press. https://doi.org/10.1017/CBO9780511805226

Kagan, A., Winckel, J., Black, S., Duchan, J. F., Simmons-Mackie, N., & Square, P. (2004). A set of observational measures for rating support and participation in conversation between adults with aphasia and their conversation partners. Topics in Stroke Rehabilitation, 11(1), 67–83. Retrieved from https://www.unboundmedicine.com/medline/citation/14872401/A_set_of_observational_measures_for_rating_support_and_participation_in_conversation_between_adults_with_aphasia_and_their_conversation_partners_

Voices of Hope for Aphasia. Aphasia Simulations. Retrieved from https://vohadmin.github.io/Aphasia_Sim/aphasia-simulations/what-is-aphasia.html


References

- Al Hadidi, S. , Towfiq, B. , & Bachuwa, G. (2014). Dystextia as a presentation of stroke. BMJ Case Report, 2014(1), bcr2014206987. https://doi.org/10.1136/bcr-2014-206987 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Armstrong, E., Bryant, L., Ferguson, A., & Simmons-Mackie, N. (2016). Approaches to assessment and treatment of everyday talk in aphasia. In I. Papathanasiou & P. Coppens (Eds.), Aphasia and related neurogenic communication disorders (2nd ed., pp. 269–285). Jones & Bartlett Learning.

- Beeson, P. M., Higginson, K., & Rising, K. (2013). Writing treatment for aphasia: A texting approach. Journal of Speech, Language, and Hearing Research, 56(3), 945–955. https://doi.org/10.1044/1092-4388(2012/11-0360)

- Behrns, I., Ahlsén, E., & Wengelin, Å. (2008). Aphasia and the process of revision in writing a text. Clinical Linguistics & Phonetics, 22(2), 95–110. https://doi.org/10.1080/02699200701699603

- Behrns, I., Ahlsén, E., & Wengelin, Å. (2010). Aphasia and text writing. International Journal of Language & Communication Disorders, 45(2), 230–243. https://doi.org/10.3109/13682820902936425

- Bravo, G., & Potvin, L. (1991). Estimating the reliability of continuous measures with Cronbach's alpha or the intraclass correlation coefficient: Toward the integration of two traditions. Journal of Clinical Epidemiology, 44(4–5), 381–390. https://doi.org/10.1016/0895-4356(91)90076-l

- Brown, G., & Yule, G. (1983). Discourse analysis. Cambridge University Press. https://doi.org/10.1017/CBO9780511805226

- Ceci, L. (2021). Statista mobile Internet report. https://www.statista.com/statistics/483255/number-of-mobile-messaging-users-worldwide/

- Cherny, L. (1999). Conversation and community: Chat in a virtual world. Center for the Study of Language and Information.

- Cook, D. A., & Beckman, T. J. (2006). Current concepts in validity and reliability for psychometric instruments: Theory and application. The American Journal of Medicine, 119(2), 166.e7–166.e16. https://doi.org/10.1016/j.amjmed.2005.10.036

- Cronbach, L. J. (1951). Coefficient alpha and the internal structure of tests. Psychometrika, 16(3), 297–334. https://doi.org/10.1007/BF02310555

- Fein, M., Bayley, C., Rising, K., & Beeson, P. M. (2020). A structured approach to train text messaging in an individual with aphasia. Aphasiology, 34(1), 102–118. https://doi.org/10.1080/02687038.2018.1562150

- Feldt, L. S., & Brennan, R. L. (1989). Reliability. In R. L. Linn (Ed.), Educational measurement (3rd ed., pp. 105–146). Macmillan Publishing Company.

- Greig, C.-A., Harper, R., Hirst, T., Howe, T., & Davidson, B. (2008). Barriers and facilitators to mobile phone use for people with aphasia. Topics in Stroke Rehabilitation, 15(4), 307–324. https://doi.org/10.1310/tsr1504-307

- Hayes, A. F., & Krippendorff, K. (2007). Answering the call for a standard reliability measure for coding data. Communication Methods and Measures, 1(1), 77–89. https://doi.org/10.1080/19312450709336664

- Hazra, A. (2017). Using the confidence interval confidently. Journal of Thoracic Disease, 9(10), 4124–4129. https://doi.org/10.21037/jtd.2017.09.14

- Holland, A. (1977). Some practical considerations in aphasia rehabilitation. In M. Sullivan & M. Kommers (Eds.), Rationale for adult aphasia therapy (pp. 167–180). University of Nebraska Press.

- Johansson-Malmeling, C., Hartelius, L., Wengelin, Å., & Henriksson, I. (2021). Written text production and its relationship to writing processes and spelling ability in persons with post-stroke aphasia. Aphasiology, 35(5), 615–632. https://doi.org/10.1080/02687038.2020.1712585

- Kagan, A., Black, S. E., Duchan, J. F., Simmons-Mackie, N., & Square, P. (2001). Training volunteers as conversation partners using “Supported Conversation for Adults With Aphasia” (SCA). Journal of Speech, Language, and Hearing Research, 44(3), 624–638. https://doi.org/10.1044/1092-4388(2001/051)

- Kagan, A., Winckel, J., Black, S., Duchan, J. F., Simmons-Mackie, N., & Square, P. (2004). A set of observational measures for rating support and participation in conversation between adults with aphasia and their conversation partners. Topics in Stroke Rehabilitation, 11(1), 67–83. https://doi.org/10.1310/CL3V-A94A-DE5C-CVBE

- Kertesz, A. (2007). Western Aphasia Battery–Revised (WAB-R) [Database record]. APA PsycTests. https://doi.org/10.1037/t15168-000

- Kinsey, L. E., Lee, J. B., Larkin, E. M., & Cherney, L. R. (2021). Texting behaviors of individuals with chronic aphasia: A descriptive study. American Journal of Speech-Language Pathology, 31(1), 99–112. https://doi.org/10.1044/2021_AJSLP-20-00287

- Krippendorff, K. (2011). Computing Krippendorff's alpha-reliability. https://repository.upenn.edu/asc_papers/43

- Lakhotia, A., Sachdeva, A., Mahajan, S., & Bass, N. (2016). Aphasic dystextia as presenting feature of ischemic stroke in a pediatric patient. Case Reports in Neurological Medicine, 2016, Article 3406038. https://doi.org/10.1155/2016/3406038

- Leaman, M. C., & Edmonds, L. A. (2019). Conversation in aphasia across communication partners: Exploring stability of microlinguistic measures and communicative success. American Journal of Speech-Language Pathology, 28(1S), 359–372. https://doi.org/10.1044/2018_AJSLP-17-0148

- Leaman, M. C., & Edmonds, L. A. (2021). Assessing language in unstructured conversation in people with aphasia: Methods, psychometric integrity, normative data, and comparison to a structured narrative task. Journal of Speech, Language, and Hearing Research, 64(11), 4344–4365. https://doi.org/10.1044/2021_JSLHR-20-00641

- Meredith, J. (2019). Conversation analysis and online interaction. Research on Language and Social Interaction, 52(3), 241–256. https://doi.org/10.1080/08351813.2019.1631040

- Messick, S. L. (1989). Validity. In R. L. Linn (Ed.), Educational measurement (3rd ed., pp. 13–103). Macmillan Publishing Company.

- Nicholas, L. E., & Brookshire, R. H. (1993). A system for quantifying the informativeness and efficiency of the connected speech of adults with aphasia. Journal of Speech and Hearing Research, 36(2), 338–350. https://doi.org/10.1044/jshr.3602.338

- Nilsen, M., & Mäkitalo, Å. (2010). Towards a conversational culture? How participants establish strategies for co-ordinating chat postings in the context of in-service training. Discourse Studies, 12(1), 90–105. https://doi.org/10.1177/1461445609346774

- Pew Research Center. (2021). Mobile fact sheet. https://www.pewresearch.org/internet/fact-sheet/mobile/

- Pitts, L. L., Hurwitz, R., Lee, J. B., Carpenter, J., & Cherney, L. R. (2017). Validity, reliability and sensitivity of the NORLA-6: Naming and Oral Reading for Language in Aphasia 6-Point scale. International Journal of Speech-Language Pathology, 20(2), 274–283. https://doi.org/10.1080/17549507.2016.1276962

- Ramsberger, G., & Rende, B. (2002). Measuring transactional success in the conversation of people with aphasia. Aphasiology, 16(3), 337–353. https://doi.org/10.1080/02687040143000636

- Smith, A. (2011). How Americans use text messaging. https://www.pewresearch.org/internet/2011/09/19/how-americans-use-text-messaging/

- Smith, A. (2015). U.S. smartphone use in 2015. Pew Research Center. https://www.pewresearch.org/internet/2015/04/01/us-smartphone-use-in-2015/

- Tavakol, M., & Dennick, R. (2011). Making sense of Cronbach's alpha. International Journal of Medical Education, 2, 53–55. https://doi.org/10.5116/ijme.4dfb.8dfd

- Todis, B., Sohlberg, M. M., Hood, D., & Fickas, S. (2005). Making electronic mail accessible: Perspectives of people with acquired cognitive impairments, caregivers and professionals. Brain Injury, 19(6), 389–401. https://doi.org/10.1080/02699050400003957