Abstract

Purpose:

The study's primary aim was to investigate developmental changes in the perception of vocal loudness and voice quality in children 3–6 years of age. A second aim was to evaluate a testing procedure—the intermodal preferential looking paradigm (IPLP)—for the study of voice perception in young children.

Method:

Participants were categorized in two age groups: 3- to 4-year-olds and 5- to 6-year-olds. Children were tested remotely via a Zoom appointment and completed two perceptual tasks: (a) voice discrimination and (b) voice identification. Each task consisted of two tests: a vocal loudness test and a voice quality test.

Results:

Children in the 5- to 6-year-old group were significantly more accurate than children in the 3- to 4-year-old group in discriminating and identifying differences between voices for both loudness and voice quality. The IPLP, used in the identification task, was found to successfully detect differences between the age groups for overall accuracy and for most of the sublevels of vocal loudness and voice quality.

Conclusions:

Results suggest that children's ability to discriminate and identify differences in vocal loudness and voice quality improves with age. Findings also support the use of the IPLP as a useful tool to study voice perception in young children.

Voice is a critical aspect of communication. Children express their emotions and intents through their voice from the day they are born. Infants cry to indicate their physical and emotional needs such as hunger and pain. Voice perception completes the circle of communication, as the child can perceive the emotions and intents of others through others' voices. Voice and specifically prosodic modulations hold information that is not expressed in words but is crucial for communication (Belin & Grosbras, 2010; Kreiman & Sidtis, 2011). Pitch change, for example, can differentiate a question from a statement. Changes in loudness may signal the importance of a message and the emotions of the speaker. Voice quality (e.g., breathiness and hoarseness) can inform the listener about the health state of the speaker.

Despite the central role of voice and voice perception in human communication in general and in children in particular, data regarding the development of voice perception in early childhood (beyond infancy) is scarce. Newborns were shown to discriminate unfamiliar voices (Floccia et al., 2000; Spence & Freeman, 1996). Some data suggest that newborns can discriminate their mother's voice from another female's voice (DeCasper & Fifer, 1980; Hepper et al., 1993; Ockleford et al., 1988; Spence & Freeman, 1996) and respond differently to the mother's versus father's voice (Lee & Kisilevsky, 2014). Three- to 4-week-old infants discriminate their mother's voice from a set of other female voices (Mehler et al., 1978; Mills & Melhuish, 1974). By 7 months of age, infants discriminate adult voices based on gender (Miller, 1983; Miller et al., 1982), and by 9 months of age, infants match pictures of males and females with the same gender voice (Poulin-Dubois et al., 1994). These findings suggest that voice discrimination is among infants' first perceptual abilities, and it is present even before children have had significant exposure to spoken language and different speakers.

Perception of voice continues to develop throughout preschool and elementary school, with children discriminating increasingly subtle differences between voices as they mature. Heller Murray et al. (2019) tested children ages 5;6–11;7 (years;months) and adults in a vocal pitch discrimination task using the just noticeable difference (JND), also known as the difference threshold and defined as “the smallest difference between two stimuli that can be consistently and accurately detected on 75% of trials” (American Psychological Association, 2007). These authors found that the JND for voice pitch decreases with age but had not yet matured to the adult level even for the oldest children in this age range. Similar findings were reported by Nagels et al. (2020). In their study, children ages 4–12 years and adults were tested on their ability to discriminate and categorize two vocal features: (a) fundamental frequency (fo) and (b) vocal tract length (expressed by the manipulated spectral envelope of the soundwave; Koelewijn et al., 2021). In the discrimination task, the JND for both fo and vocal tract length decreased with age. Again, the JND for fo had not reached the adult level by the age of 12 years. The JND for vocal tract length, on the other hand, was similar to that of adults for children in the 8- to 10-year and older group. Interestingly, for the categorization task, children were able to recognize voices (i.e., indicate whether a voice matched a picture of a male or female) at the adult level from the age of 6 years for fo and 10 years for vocal tract length. To our knowledge, these two studies are the only ones that have examined the development of the perception of specific voice features. Together, the studies support the notion of a developmental trajectory in the refinement of voice perception skills that is linked to age.

A well-established body of auditory-perceptual literature on nonvocal auditory stimuli, such as pure tones, warble tones, and noises, has documented advances in perceptual abilities as children mature (e.g., Banai & Yifat, 2011; Berg & Boswell, 2000; Gillen et al., 2018; Jensen & Neff, 1993; Moore et al., 2008). Findings show that the ability to discriminate frequency, intensity, and duration improves with age for these stimuli. The perception of the noted acoustic features develops at different rates: the JND for intensity decreases (matures) the fastest, followed by the JND for fo and the JND for duration, which has the latest maturation (Jensen & Neff, 1993). Though research in pure-tone perception and other manipulated sounds can provide us with some meaningful clues as to the developmental trajectory of voice perception, results from this research cannot replace the study of voice perception completely. The human voice signal is more complex than any of the manipulated sounds noted. Human voice is typically composed of many frequencies (in contrast to pure tones), which are enhanced in the vocal tract in a way that creates meaningful utterances together with the paralinguistic aspects of communication.

As noted, prior work has primarily focused on vocal features related to vocal frequency, but other voice features, such as vocal loudness and voice quality, have not yet been studied in pediatric populations. Specifically, vocal loudness and voice quality are often addressed in voice therapy, and although they are central to the treatment of voice disorders in children, many questions remain regarding the development of perception of vocal loudness and voice quality and, ultimately, how those voice features may affect the learning of new voice patterns.

Considering this gap, it is critical to better understand the developmental trajectory of voice perception in phonotypical children, which we aimed to do in this study. This project was designed to generate a baseline for future studies in children with voice disorders. The current project had two main objectives: to examine age-related changes in the perception of (a) vocal loudness (henceforth “loudness”) and (b) voice quality in two age groups: 3- to 4-year-olds and 5- to 6-year-olds. We tested children in two tasks: voice discrimination and voice identification. We hypothesized that older children would outperform younger children in discriminating and identifying differences between voices for both loudness and voice quality.

Additionally, this study aimed to evaluate whether the intermodal preferential looking paradigm (IPLP; Golinkoff et al., 1987, 2013; Hirsh-Pasek & Golinkoff, 1996), which has been primarily used to assess word learning (Halberda, 2003; Hollich et al., 2000; Newman et al., 2018) and word recognition (Fernald et al., 2001; Houston-Price et al., 2007; Morini & Newman, 2019; Swingley & Aslin, 2002), could also be used as a tool to assess voice perception abilities in young children. In the IPLP, pairs of images appear on a screen, and participants hear auditory stimuli that provide information about which object to look at. This paradigm relies on eye gaze instead of verbal production; the child “answers” by looking at an object on the screen. One advantage of the approach is that it bypasses verbal responses, which are not always reliable in young children. Thus, the ability to utilize less overt methods such as the IPLP might provide more accurate and finely grained measures of children's abilities in a variety of domains than verbal responses. We chose to use the IPLP in the current study because the study sample included young children. We aimed to explore an instrument that may eventually allow us to study this population, which is underrepresented in pediatric voice research, in voice perception studies. The data for the identification task were collected using a modified version of the IPLP. Additional information on this task, as well as the discrimination (same–different task), is provided in the following sections.

Method

The study was approved by the University of Delaware Institutional Review Board (IRB 1533420). All study procedures were conducted remotely using virtual Zoom appointments. A recent study in word learning showed that children's performance in IPLP tasks was similar when the measures were collected virtually or in person (Morini & Blair, 2021). These data supported our decision to collect data for this study virtually, at a time when in-person testing was restricted by the COVID-19 pandemic.

Study Design Overview

The study included two tasks that were completed in one session. The first was a task of voice discrimination in which children heard two voices and indicated whether the voices were the same or different from each other. This task comprised two tests: one for loudness and another for voice quality. The second task was a voice identification task that likewise comprised separate loudness and voice quality tests. For this task, children listened to two voices and identified which one was louder or quieter (loudness test) or which one had the good or bad voice (voice quality test). Further details are provided in the next sections. The total duration of the study was approximately 30 min, including light and sound check, camera setup, and study procedures.

Participants

A total of 68 children between the ages 3;1–6;11 participated in the study. Participation for three additional children was truncated due to their failure to complete the procedure, and their data were not analyzed. One of the study aims was to investigate developmental changes in voice perception in children; thus, we recruited children from two age groups: preschool children (3- to 4-year-olds, M = 46.35 months, SD = 7.63 months, 18 girls and 16 boys) and early school–age children (5- to 6-year-olds, M = 72.11 months, SD = 6.67 months, 21 girls and 13 boys). Parental signed consent forms and verbal assent were obtained for all children. Based on parental report, all children were typically developing with normal hearing and normal or corrected-to-normal vision. Additionally, parents reported their child had no history of a voice disorder (with the exception of brief periods associated with a cold). All children were monolingual native speakers of English.

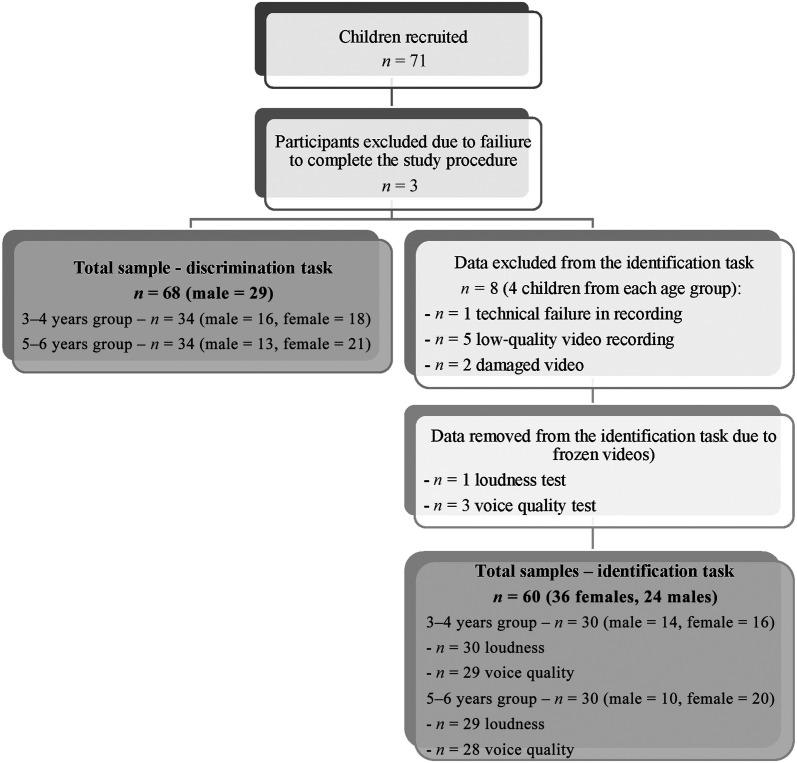

Data were obtained from all 68 children for the discrimination task, 34 in each group. For the identification task, data were obtained from 60 children: 30 children in the 3- to 4-year-old group (M = 45.9 months, SD = 7.50 months, 16 girls and 14 boys) and 30 children in the 5- to 6-year-old group (M = 72.40 months, SD = 6.514 months, 20 girls and 10 boys). Data losses are described in Figure 1.

Figure 1.

Study participants' inclusion in the discrimination and identification tasks. Data from eight children who completed the discrimination task were removed from analyses for the identification task due to technical failure (one child), poor quality video recording (five children), or damaged video (two children) that did not allow for reliable eye gaze coding. Technical issues (frozen video) prevented the coding of a loudness test for one child from the 5- to 6-year-old group. Similarly, data from the voice quality test were not coded for one child from the 3- to 4-year-old group and two children from the 5- to 6-year-old group. Data from the identification task were not processed in cases in which the entire video was damaged or was of poor quality (e.g., blurry images and low resolution). In cases in which the video from just one test was damaged, the other test was coded and the data were used for that test only.

Stimuli Development and Presentation

Voice stimuli for both discrimination and identification tasks involved the sustained vowel /a/ produced by a single female adult with expertise in voice qualities. Four voice qualities were produced: breathy, pressed, hoarse, and normal. All recordings were made in an acoustic booth using a Shure SM48 dynamic microphone. Voice samples were recorded using Praat software (Version 6.1.06; Boersma & Weenink, 2020) at 44.1 kHz, 16 bits, and saved in .wav format. Voice samples were manipulated digitally using Praat for fo (220 Hz), intensity (60 dB), and duration (1.8 s). For the loudness test, only the normal voice sample was used, and it was digitally manipulated to produce three intensities: 50, 60, and 70 dB. These intensities were chosen to create pairs of stimuli that differed by 10 or 20 dB. The interstimulus difference of 10 dB was used following Jensen and Neff (1993), who reported a 7.5-dB JND in 4-year-old children, with a standard deviation of about 5 dB. To avoid ceiling effects in the older age group, a difference of 10 dB was chosen. The range of intensities between 50 and 70 dB was chosen to mimic the typical intensity range in normal conversational speech. In that range, 50 dB was considered to be the lowest intensity that can be detected without much effort, and 70 dB was the maximum intensity used to protect children's hearing.
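To make the 10- and 20-dB steps concrete, the following is a minimal sketch of how a level change in decibels maps onto a linear amplitude gain. It is not the Praat procedure used for the stimuli; the function names, the synthetic 220-Hz tone, and its 0.1 peak amplitude are assumptions introduced purely for illustration.

```python
import math

def amplitude_ratio(delta_db: float) -> float:
    """Linear amplitude ratio that corresponds to a level change in dB."""
    return 10 ** (delta_db / 20)

def rescale(samples, delta_db):
    """Return a copy of the samples raised or lowered by delta_db."""
    gain = amplitude_ratio(delta_db)
    return [s * gain for s in samples]

def level_db(samples):
    """RMS level in dB relative to an amplitude of 1.0 (arbitrary reference)."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms)

# Hypothetical stand-in for the middle-level sample: 1 s of a 220 Hz tone
# sampled at 44.1 kHz. The real stimuli were recorded voice samples.
SAMPLE_RATE = 44_100
tone = [0.1 * math.sin(2 * math.pi * 220 * n / SAMPLE_RATE)
        for n in range(SAMPLE_RATE)]

quieter = rescale(tone, -10)  # analogous to the 50 dB version
louder = rescale(tone, +10)   # analogous to the 70 dB version
```

Under these assumptions, a 10-dB step multiplies the waveform amplitude by roughly 3.16 and a 20-dB step by exactly 10, so the 50- and 70-dB stimuli differ tenfold in amplitude.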

To confirm that the voice samples corresponded to the desired voice qualities (breathy, pressed, hoarse, and normal), two speech-language pathologists specialized in voice rated the auditory samples using the Consensus Auditory-Perceptual Evaluation of Voice (CAPE-V; Kempster et al., 2009). The CAPE-V includes six scales ranging from normal (0) to severe (100) for overall severity, roughness, breathiness, strain, pitch, and loudness. The voice samples were, as noted, controlled for pitch (~fo) and loudness (~intensity), and thus, these parameters were not rated. The breathy voice sample received an average score of 79 on the breathiness scale (severe breathiness on the CAPE-V scale), the pressed sample was scored 40 on average for strain (moderate strain), and the hoarse sample was rated, on average, 71.5 for breathiness and 79 for roughness (both in the “severe” range), where “hoarse” is generally understood to be a combination of breathiness and roughness (Fairbanks, 1960). All deviant (nonnormal) voice samples received a high score on the overall severity scale (76, 40, and 89.5, respectively). The normal voice sample was rated as 0 (normal) on all scales except for strain, for which the average score was 0.5, which effectively can be considered a zero score.

Because this study focuses on perception, it was important to determine whether the stimuli were perceived as we intended when we created them. Moreover, there is currently no perfect match between perception and any acoustic measure. This relationship would be interesting to pursue in a future study but is not the focus of the current one.

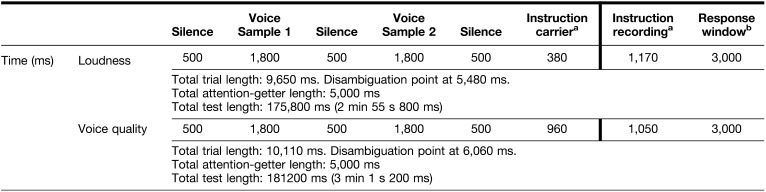

Short videos were created, in which pairs of cartoon animal-like creatures (see Figure 2) were paired with voices. Twelve types of creatures were generated using the SPORE Creature Creator software. Creatures in each video differed only by their color—one creature was green and the other was yellow, counterbalanced (see Figure 2). For each trial, two creatures appeared on the screen simultaneously, and each produced a voice token sequentially. The left creature was always the first to produce a voice. The creatures were animated to enlarge when they produced a voice token. After both creatures produced their tokens, children were asked a question (discrimination task) or given an instruction (identification task) regarding the voices they heard. For the discrimination task, children were asked if the creatures' voices were the same or different (“Were the creatures' voices the same?” or “Were the creatures' voices different?”). For the identification task, children were asked to look at the louder or quieter creature for the loudness test (“look at the quieter one” or “look at the louder one”) or to look at the creature with the good or bad voice for the voice quality test (“look at the one with the good voice” or “look at the one with the bad voice”). The timelines for the different types of trials are shown in Tables 1 and 2.

Figure 2.

Examples of cartoon-like animal creatures. Each trial presented two creatures that differed only by color. One creature was green and the other was yellow, with alternating locations.

Table 1.

Full timeline for the discrimination task: loudness and voice quality.

| | Silence | Voice Sample 1 | Silence | Voice Sample 2 | Silence | Question | Response windowᵃ |
|---|---|---|---|---|---|---|---|
| Time (ms) | 500 | 1,800 | 500 | 1,800 | 500 | 1,800 | 3,000 |

Total trial length: 9,900 ms. Total attention-getter length: 5,000 ms. Total white slide length: 4,000 ms. Total test length: 226,800 ms (3 min 46 s 800 ms).

ᵃCreatures appear on the screen with no voice stimuli.
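The timing arithmetic behind Table 1 can be checked with a short sketch. The segment durations are copied from the table and the count of 12 trials per test comes from the Discrimination section; the dictionary keys and function names are illustrative assumptions.

```python
# Segment durations (ms) for one discrimination trial, copied from Table 1.
SEGMENTS_MS = {
    "silence_1": 500,
    "voice_sample_1": 1_800,
    "silence_2": 500,
    "voice_sample_2": 1_800,
    "silence_3": 500,
    "question": 1_800,
    "response_window": 3_000,
}
ATTENTION_GETTER_MS = 5_000  # shown before each trial
WHITE_SLIDE_MS = 4_000       # shown after each discrimination trial
N_TRIALS = 12                # trials per test

def trial_length_ms(segments=SEGMENTS_MS):
    """Sum of all segments within a single trial."""
    return sum(segments.values())

def test_length_ms(n_trials=N_TRIALS):
    """Attention-getter + trial + white slide, repeated for every trial."""
    per_trial = ATTENTION_GETTER_MS + trial_length_ms() + WHITE_SLIDE_MS
    return n_trials * per_trial

def format_duration(ms):
    """Render milliseconds as 'M min S s MS ms'."""
    minutes, remainder = divmod(ms, 60_000)
    seconds, millis = divmod(remainder, 1_000)
    return f"{minutes} min {seconds} s {millis} ms"

print(trial_length_ms(), test_length_ms(), format_duration(test_length_ms()))
```

The segments sum to 9,900 ms per trial, and twelve repetitions of attention-getter, trial, and white slide give the 226,800 ms (3 min 46 s 800 ms) reported for the full test.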

Table 2.

Full timeline for the identification task: loudness and voice quality.

Note. The bolded line marks the disambiguation point at 5,480 ms (loudness) and 6,060 ms (voice quality). The accuracy of response was calculated from the disambiguation point.

Instruction carrier refers to the part of the instruction that precedes the disambiguation point. It includes a carrier phrase (“look at the” for loudness and “look at the one with the” for voice quality). Eye gaze from this part of the instruction was not analyzed. Instruction recording refers to the part of the instruction that includes the specific information about the creature children are asked to look at (“louder/quieter one” for loudness and “good/bad voice” for voice quality). Eye gaze from the instruction recording and the response window was analyzed to capture the accuracy of response.

Creatures appear on the screen with no voice stimuli.

We chose to maintain the same order of stimulus presentation across all trials (i.e., the left creature always produced voice first) to create consistency and minimize cognitive load for the children. Regarding cognitive load, children had to remember the two voices and contrast them to respond to the question or instruction. Children also had to remember the location of the creature that produced each voice. The consistent production order—left first/right second—would minimize cognitive load in preparing a response. Post hoc analyses showed that a left–right response bias was not detected for either of the age groups. Specifically, we examined children's fixation patterns at the moment the instruction was given for each trial (i.e., the “disambiguation point”) and found that 3- to 4-year-olds looked at the object on the left 41% of the time, looked at the object on the right 34% of the time, and away from the screen 25% of the time. Similarly, children in the 5- to 6-year-old group looked 35% to the left, 36% to the right, and 29% away from the screen. This suggests that although the creature on the left always produced sound first, participants were not biased to always look at that side when the looking instruction was heard.

Before each trial, children saw a 5-s attention-getter (repetitive video cartoon), which provided them with a break from the stimulus string and helped direct attention to the center of the screen. Each video started with an attention-getter that had a black background, followed by a test trial with a white background. The difference in background color was chosen to create a clear contrast that would allow coders to identify beginnings and ends of trials. Duration of trials and details about onset of stimuli and instructions are provided in Tables 1 and 2. Discrimination tests included an additional 4-s white slide after each video, which was meant to provide children with more time to verbally answer questions if needed.

Procedure

The study procedure was conducted remotely using Zoom. Children were tested in their home, and parents were instructed to choose a quiet room and use a computer with a screen 13 in. in size or larger. All participants received headphones by mail (Noot Products K11) to use during the study. By providing the same type of headphones to all participants, differences in the delivery of audio signals would be minimized across subjects. The use of headphones aimed to improve the quality of the signal and minimize noise from the home environment.

The virtual meeting started with light and audio testing. Children watched a short video of whales swimming with music playing. The video background changed repeatedly from black to white at a 5-s rate. The experimenter then looked to see whether changes in brightness on the child's face were detectable. In cases in which changes in brightness were not detectable, the experimenter helped the parent adjust the room lighting (e.g., close the window shades, turn off a light) or the brightness of the computer screen, and the video was played again. The video included music presented in an intensity similar to the maximum intensity used for the study stimuli. Parents were asked to adjust the computer volume to a comfortable level. We instructed parents to choose a comfortable volume on their computer in order to avoid intensity levels that could harm the child's hearing or would be clipped by the headphones that had a maximum delivery intensity of 94 dB. The experimenter also looked for distractors in the room, and if a distractor was detected (e.g., a toy held by the child), the parent was asked to remove it.

The testing procedure included a pretest assessment followed by a discrimination task and finally an identification task. Before the identification task, the experimenter instructed caregivers on the operation of the camera app on their computer and the recording of the child's face. By recording the child locally from their home computer, we aimed to avoid lags in the recording field that could affect the eye gaze coding. Children sat either on their parent's lap or on a chair in front of the computer screen and completed the procedure in one session. A short break was given to children between discrimination and identification tasks.

Pretest

The pretest task was designed to assess the child's understanding of two concepts considered essential for successful responses in discrimination and identification tasks: (a) same–different and (b) adjective + “er” = more + adjective (e.g., smaller = “more small”). For this pretest, each child was presented with two pairs of circles (sequentially), one pair similar in size and color and one pair similar in size but not in color. For each pair, children were asked if the circles were the same or if they were different. Children who did not answer correctly on the same–different question were redirected and were tested again with a new pair of circles. Next, a pair of circles similar in color but different in size appeared and was followed by an instruction to point at the bigger or smaller circle. All children pointed correctly. Pointing was chosen because it does not require complex analyses, and results for this task were needed to evaluate inclusion criteria regarding children's ability to understand the linguistic concept at hand and therefore their suitability to continue in the study. The pretest phase did not include training children in the task or linguistic terms used in the study. Training was not needed because the linguistic terms used are familiar to 3- to 6-year-old children.

Discrimination

Following successful completion of the pretest, children completed the discrimination task, which included two separate tests: loudness and voice quality. Children watched short videos of two cartoon animal-like creatures (see Figure 2) who produced voices (as described in the Stimuli Development and Presentation section) and responded verbally to indicate whether the two creatures' voices were the same or different.

An all-pairs approach was used. Each test included four sublevels (pairs). For the loudness test, the sublevels were 50–60 dB, 60–70 dB, 50–70 dB, and “same” dB (50–50 dB, 60–60 dB, and 70–70 dB). For the voice quality test, one of three voice qualities (breathy, pressed, and hoarse) was compared to the normal voice sample, and for the “same pairs,” each voice quality was compared to itself (hoarse–hoarse, pressed–pressed, and breathy–breathy). For both loudness and voice quality trials, each pair was repeated twice, each with a different stimulus order. For example, in one pair, the child heard the hoarse voice followed by the normal voice, and in the other, the child heard the normal voice followed by the hoarse voice. “Same” pairs were also repeated twice. Each test consisted of a total of 12 trials (two for each type of pair). The order of trials within each test was semirandomized so that one type of instruction (same or different) and the order of colors (green–yellow or yellow–green) were not repeated more than 3 times in a row. The order of tests was counterbalanced across children. Each test (loudness and voice quality separately) lasted for approximately 3 min (see Table 1).
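The trial structure described above can be sketched as follows for the loudness test. This is an illustration rather than the authors' stimulus-ordering procedure: the rejection-sampling approach, the balanced 6/6 assignment of question wording and color order, and all names are assumptions.

```python
import random
from itertools import groupby

LEVELS_DB = (50, 60, 70)

def build_loudness_pairs():
    """12 pairs: each 'different' pair in both orders, each 'same' pair twice."""
    different = [(a, b) for a in LEVELS_DB for b in LEVELS_DB if a != b]
    same = [(a, a) for a in LEVELS_DB for _ in range(2)]
    return different + same

def longest_run(values):
    """Length of the longest stretch of identical consecutive values."""
    return max(len(list(group)) for _, group in groupby(values))

def semirandom_trials(pairs, rng, max_run=3, max_tries=10_000):
    """Shuffle until no question wording or color order repeats > max_run times.

    Assumption: wording and color order are each split evenly across trials.
    """
    half = len(pairs) // 2
    for _ in range(max_tries):
        order = list(pairs)
        questions = ["same"] * half + ["different"] * (len(pairs) - half)
        colors = ["green-yellow"] * half + ["yellow-green"] * (len(pairs) - half)
        rng.shuffle(order)
        rng.shuffle(questions)
        rng.shuffle(colors)
        if longest_run(questions) <= max_run and longest_run(colors) <= max_run:
            return [
                {"pair": p, "question": q, "colors": c}
                for p, q, c in zip(order, questions, colors)
            ]
    raise RuntimeError("no valid order found; relax the constraints")

trials = semirandom_trials(build_loudness_pairs(), random.Random(1))
```

Rejection sampling is used here only because it is easy to verify; with 12 trials and a run limit of 3, a valid order is typically found within a few shuffles.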

Identification

The identification task also included two tests—loudness and voice quality—and both visual and auditory stimuli were identical to the ones described for the discrimination task. Data were collected using the IPLP.

For this condition, children watched the screen as the two creatures (i.e., the visual stimuli) were paired with voices (i.e., the auditory stimuli). Participants were then asked to “look at the louder one” or “look at the quieter one” for the loudness test, and to “look at the one with the good voice” or “look at the one with the bad voice” for the voice quality test. Children were recorded by the computer camera as they watched the videos for later coding of their eye movements.

As in the discrimination task, each test had 12 trials. However, for this task, the “same” pairs were omitted, and each of the other pairs was repeated 4 times, two for each stimulus order (e.g., 50–70 dB × 2 and 70–50 dB × 2). The order of trials within each test was semirandomized so that one type of instruction (e.g., louder or quieter) and the order of colors (green–yellow or yellow–green) were not repeated more than 3 times in a row, and a pair type did not repeat more than twice in a row. The order of tests was counterbalanced across children. Each test (loudness and voice quality separately) lasted approximately 3 min (see Table 2).

Data Coding

Discrimination

All data were coded as 1 (correct answer) or 0 (wrong answer). The total correct answers were then divided by the total questions answered and multiplied by 100 to calculate the percent accuracy of responses (see Equation 1). The same calculation was used for both loudness and voice quality separately.

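$$\text{Accuracy (\%)} = \frac{\text{total correct answers}}{\text{total questions answered}} \times 100 \tag{1}$$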
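The scoring rule for the discrimination task is simple enough to express directly. In this sketch, the use of `None` to mark an unanswered question is an assumption; the text states only that the denominator is the number of questions answered.

```python
def percent_accuracy(responses):
    """Percent of answered questions that were answered correctly.

    responses: iterable of 1 (correct), 0 (wrong), or None (no answer).
    Unanswered questions are excluded from the denominator.
    """
    answered = [r for r in responses if r is not None]
    if not answered:
        raise ValueError("no answered questions to score")
    return 100 * sum(answered) / len(answered)

# Hypothetical child: 12 trials, 7 correct, 4 wrong, 1 unanswered.
example = [1, 1, 0, 1, None, 0, 1, 1, 0, 1, 0, 1]
print(round(percent_accuracy(example), 1))
```

The same function would be applied separately to the loudness and voice quality tests.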

Identification

As noted, children were video-recorded to capture their eye gaze. Two trained researchers, who were blinded to the correct answer (i.e., the target side), coded the videos on a frame-by-frame basis to capture eye movements using Datavyu software (Datavyu Team, 2014). Data from the two coders were compared for reliability, and in situations in which there was a difference of more than 500 ms between the coders for a particular trial, a third coder was introduced. Coding files were then compared, and the one that matched the third coding was used for final data analyses. A third coder was required for 10.9% of trials, which is in line with other studies that have used the same paradigm (Morini & Blair, 2021). A minimum fixation duration was set at 500 ms. Data from trials that failed to meet this criterion were removed from analyses.
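The reconciliation rule between coders might be sketched as below. Several details are assumptions not stated in the text: that the compared quantity is a per-trial looking time in milliseconds, that the first coder's value is kept when the two coders agree, and that "matched the third coding" means the closer of the two original values.

```python
def resolve_trial(coder_a_ms, coder_b_ms, coder_c_ms=None, threshold_ms=500):
    """Return (value_used, third_coder_needed) for one trial.

    If the two coders differ by more than threshold_ms, the third coder's
    value decides which of the two original codings is kept.
    """
    if abs(coder_a_ms - coder_b_ms) <= threshold_ms:
        # Assumption: when coders agree, the first coder's value is used.
        return coder_a_ms, False
    if coder_c_ms is None:
        raise ValueError("discrepancy exceeds threshold; third coding required")
    closer_to_a = abs(coder_a_ms - coder_c_ms) <= abs(coder_b_ms - coder_c_ms)
    return (coder_a_ms if closer_to_a else coder_b_ms), True

# Hypothetical trials: (coder A, coder B, coder C or None)
codings = [(2000, 2300, None), (2000, 2900, 2800), (1500, 700, 1450)]
resolved = [resolve_trial(a, b, c) for a, b, c in codings]
share_needing_third = sum(flag for _, flag in resolved) / len(resolved)
```

Tracking the flag alongside the value makes it straightforward to report the share of trials that required a third coder.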

Accuracy was calculated as the proportion of time the child looked at the correct answer (see Equation 2) calculated over a window of 4,170 ms for loudness and 4,050 ms for voice quality trials. This window started with the disambiguation point and continued to the end of the trial (for details, see Table 2). As for the discrimination task, accuracy was averaged across all trials and separately for each sublevel of loudness and voice quality. These windows of analysis were longer than in other IPLP studies. In other eye gaze studies, children have been asked to look at a concrete item (“look at the cookie”), whereas in this study, participants were asked to process the question and compare two items to correctly identify an object that was not directly named (“look at the louder one”). Furthermore, the correct item had to be determined based on how it compared to the competing item; thus, greater times were required for responses.

It is important to note that 100% accuracy is not an expected performance in the IPLP. Participants are expected to spend some of the time looking at each of the objects on the screen before committing to a particular object. Hence, the proportion of time looking at the correct response is usually not a perfect 100%. With this in mind, scores that are significantly above chance (50%) are taken as an indication that children are able to accurately identify the target item from the stimuli presented.

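$$\text{Accuracy} = \frac{\text{time looking at the correct creature}}{\text{time looking at the correct creature} + \text{time looking at the incorrect creature}} \tag{2}$$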
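A minimal sketch of the looking-time accuracy computation follows. It assumes that coded looks are available as (onset, offset, side) intervals in milliseconds and that the denominator is the time spent on either creature, so that looks away from the screen are ignored and chance is 50%. The interval format, side labels, and function names are hypothetical.

```python
def overlap_ms(onset, offset, win_start, win_end):
    """Milliseconds of a look that fall inside the analysis window."""
    return max(0, min(offset, win_end) - max(onset, win_start))

def looking_accuracy(looks, target_side, win_start, win_len):
    """Proportion of on-creature looking time spent on the target.

    looks: list of (onset_ms, offset_ms, side), side in {"left", "right", "away"}.
    Returns None when the child never looked at either creature in the window.
    """
    win_end = win_start + win_len
    on_target = on_other = 0
    for onset, offset, side in looks:
        duration = overlap_ms(onset, offset, win_start, win_end)
        if side == target_side:
            on_target += duration
        elif side in ("left", "right"):
            on_other += duration
    total = on_target + on_other
    return on_target / total if total else None

# Hypothetical loudness trial: window opens at 5,480 ms and lasts 4,170 ms.
looks = [(5000, 6480, "left"), (6480, 7480, "away"), (7480, 9650, "right")]
accuracy = looking_accuracy(looks, "right", win_start=5480, win_len=4170)
```

In this example, 1,000 ms of the left look and 2,170 ms of the right look fall inside the window, giving an accuracy of about 0.68. The 500-ms minimum fixation criterion is not applied in this sketch.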

Data Analysis

A separate one-way analysis of variance (ANOVA) test was conducted for each of the four tests (i.e., discrimination loudness, discrimination voice quality, identification loudness, and identification voice quality separately) to compare the overall accuracy (all pairs) of children in the two age groups (3- to 4-year-olds and 5- to 6-year-olds). The effect size for significant ANOVA tests was computed using η² and interpreted as follows: .01–.058, small effect size; .059–.137, medium effect size; and ≥ .138, large effect size (Cohen, 1988). As a second step for the identification test, t tests for comparing the accuracy of the two age groups were calculated for each of the sublevels of each of the two tests (L1–L2, hoarse–normal, etc.). Cohen's d was used to calculate the effect size of the significant t tests. The effect size was interpreted following Cohen (1988), where an effect size of 0.20–0.49 was considered small, 0.50–0.79 was considered medium, and ≥ 0.80 was considered large. The difference between the groups was not tested for the sublevels of the discrimination task because of the small number of repetitions for each sublevel. Chance-level hit rates of the accuracy scores were computed using one-sample t tests at a 50% chance level. An alpha level of .05 was set a priori for all analyses.
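For readers who wish to check the group comparisons against the reported summary statistics, a one-way ANOVA can be recomputed from group sizes, means, and standard deviations. The sketch below is not the authors' analysis script; the function names and the "negligible" label for values below .01 are assumptions, and small discrepancies can arise because the published means and SDs are rounded.

```python
def anova_from_summary(groups):
    """One-way ANOVA from summary data.

    groups: list of (n, mean, sd) tuples, one per group.
    Returns (F, df_between, df_within, eta_squared).
    """
    total_n = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / total_n
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between = len(groups) - 1
    df_within = total_n - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    eta_sq = ss_between / (ss_between + ss_within)
    return f_stat, df_between, df_within, eta_sq

def eta_squared_label(eta_sq):
    """Interpretation bands given in the text (Cohen, 1988)."""
    if eta_sq >= 0.138:
        return "large"
    if eta_sq >= 0.059:
        return "medium"
    if eta_sq >= 0.01:
        return "small"
    return "negligible"  # label assumed; the text gives no band below .01

# Discrimination, loudness: (n, M, SD) for the 3-4 and 5-6 year groups.
f_stat, df_b, df_w, eta_sq = anova_from_summary(
    [(34, 55.633, 13.482), (34, 65.263, 18.076)]
)
print(round(f_stat, 3), df_b, df_w, round(eta_sq, 3), eta_squared_label(eta_sq))
```

With the loudness discrimination values, this yields an F of about 6.20 on 1 and 66 degrees of freedom and an η² of about .086, which falls in the medium band.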

Results

Discrimination

Loudness

As shown in Figure 3a, children in the 5- to 6-year-old group were more accurate than children in the 3- to 4-year-old group on the discrimination of loudness test (5- to 6-year-old group: M = 65.263%, SD = 18.076%; 3- to 4-year-old group: M = 55.633%, SD = 13.482%). The between-groups difference for loudness accuracy was significant, F(1, 66) = 6.201, p = .015, η² = .086. Data are presented in Table 3. The accuracy of both groups was found to be better than chance (5- to 6-year-old group: t = 4.923, p < .001, d = 0.844; 3- to 4-year-old group: t = 2.436, p = .010, d = 0.418). See Table 4.
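The chance-level comparison can likewise be recomputed from the reported means and standard deviations. This is a sketch with assumed function names; it returns only the t statistic and Cohen's d, and it leaves p values to a statistics package.

```python
import math

def one_sample_vs_chance(mean, sd, n, chance=50.0):
    """One-sample t statistic and Cohen's d against a fixed chance level."""
    diff = mean - chance
    t_stat = diff / (sd / math.sqrt(n))
    cohens_d = diff / sd
    return t_stat, cohens_d

def cohens_d_label(d):
    """Interpretation bands given in the Data Analysis section (Cohen, 1988)."""
    size = abs(d)
    if size >= 0.80:
        return "large"
    if size >= 0.50:
        return "medium"
    if size >= 0.20:
        return "small"
    return "negligible"  # label assumed; the text gives no band below 0.20

# Discrimination, loudness (n = 34 per group).
t_old, d_old = one_sample_vs_chance(65.263, 18.076, 34)
t_young, d_young = one_sample_vs_chance(55.633, 13.482, 34)
print(round(t_old, 2), round(d_old, 3), cohens_d_label(d_old))
print(round(t_young, 2), round(d_young, 3), cohens_d_label(d_young))
```

Because d is the mean difference divided by the SD and t is d multiplied by the square root of n, the two statistics always share the same sign.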

Figure 3.

Percent accuracy calculated for the two age groups in the discrimination task for vocal loudness (a) and voice quality (b) tests. An X inside the boxes marks the mean.

Table 3.

Mean accuracy and standard deviation for the discrimination task (loudness and voice quality) and a summary of the analysis of variance and t tests for the two groups.

| Test | Sublevel | 3–4 years (n = 34), M | 3–4 years (n = 34), SD | 5–6 years (n = 34), M | 5–6 years (n = 34), SD | Test statistic | p | Effect size |
|---|---|---|---|---|---|---|---|---|
| Loudness | All pairs | 55.633% | 13.482% | 65.263% | 18.076% | f = 6.201 | .015 | η2 = .086 |
| Voice quality | All pairs | 62.366% | 16.029% | 81.239% | 14.932% | f = 25.234 | < .001 | η2 = .277 |

Note. Significant test results are bolded.

Table 4.

Chance-level hit rates for the discrimination and identification tests.

| Task | Test | Sublevel | 3–4 years, t score | 3–4 years, p | 3–4 years, Cohen's d | 5–6 years, t score | 5–6 years, p | 5–6 years, Cohen's d |
|---|---|---|---|---|---|---|---|---|
| Discrimination | Loudness | All pairs | 2.436 | .010 | 0.418 | 4.923 | < .001 | 0.844 |
| | Voice quality | All pairs | 4.499 | < .001 | 0.771 | 12.199 | < .001 | 2.092 |
| Identification | Loudness | All pairs | 6.394 | < .001 | 1.167 | 10.410 | < .001 | 1.933 |
| | | L1–L2 (50–60 dB) | 2.768 | .005 | 0.505 | 5.253 | < .001 | 0.975 |
| | | L2–L3 (60–70 dB) | 5.325 | < .001 | 0.972 | 9.891 | < .001 | 1.837 |
| | | L1–L3 (50–70 dB) | 6.476 | < .001 | 1.182 | 11.513 | < .001 | 2.138 |
| | Voice quality | All pairs | 0.958 | .173 | 0.178 | 5.143 | < .001 | 0.972 |
| | | Normal–hoarse | 1.614 | .059 | 0.300 | 6.742 | < .001 | 1.274 |
| | | Normal–pressed | 1.147 | .131 | 0.213 | 4.443 | < .001 | 0.840 |
| | | Normal–breathy | −0.447 | .671 | −0.083 | −0.064 | .525 | 0.012 |

Note. Significant test results are bolded. Accuracy scores were compared to chance level of 50%.
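The chance-level comparisons in Table 4 follow the one-sample t test described in the Data Analysis section. As a hedged illustration, the Python sketch below recomputes the t score and Cohen's d from a group's mean, standard deviation, and sample size. The function name is hypothetical, and the sketch assumes that d is the distance of the mean from chance in standard deviation units, which is the usual one-sample definition. The inputs are the loudness discrimination values from Table 3.

```python
from math import sqrt


def chance_level_test(mean, sd, n, chance=50.0):
    """One-sample t test of a mean accuracy score against a chance level.

    Returns (t, d), where d is the one-sample Cohen's d.
    """
    d = (mean - chance) / sd   # distance from chance in SD units
    t = d * sqrt(n)            # equivalent to (mean - chance) / (sd / sqrt(n))
    return t, d


# Loudness discrimination, Table 3 (n = 34 per group)
for label, mean, sd in [("3-4 years", 55.633, 13.482), ("5-6 years", 65.263, 18.076)]:
    t, d = chance_level_test(mean, sd, 34)
    print(f"{label}: t = {t:.3f}, d = {d:.3f}")
```

With these inputs, the sketch yields values that agree with the discrimination loudness row of Table 4 to within rounding (t ≈ 2.44, d ≈ 0.42 for the younger group; t ≈ 4.92, d ≈ 0.84 for the older group).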

Voice Quality

Similar to the results for loudness discrimination, children in the older age group were more accurate in discriminating voice quality (5- to 6-year-old group: M = 81.239%, SD = 14.932%; 3- to 4-year-old group: M = 62.366%, SD = 16.029%; see Figure 3b). The group difference was significant, f(68) = 25.234, p < .001, η2 = .277, favoring the older age group. Data are presented in Table 3. Accuracy was better than chance for both groups (5- to 6-year-old group: t = 12.199, p < .001, d = 2.092; 3- to 4-year-old group: t = 4.499, p < .001, d = 0.771). See Table 4.

Identification

Loudness

The 5- to 6-year-old group was found to perform more accurately than the 3- to 4-year-old group on the identification–loudness test (5- to 6-year-old group: M = 74.327%, SD = 12.585%; 3- to 4-year-old group: M = 63.537%, SD = 11.596%; see Figure 4a). The difference between groups was significant, f(59) = 11.742, p = .001, η2 = .171. The analysis of loudness sublevels revealed that older children (5- to 6-year-olds) were significantly more accurate than younger children (3- to 4-year-olds) for all sublevels (see Table 5). Accuracy scores for overall performance (all levels) and sublevels were better than chance for both groups (see Table 4).

Figure 4.

Percent accuracy calculated for the two age groups in the identification task for vocal loudness (a) and voice quality (b) tests. An X inside the boxes marks the mean.

Table 5.

Mean accuracy and standard deviation for the identification task (loudness and voice quality) and a summary of the analysis of variance and t tests for the two groups.

Group sizes: 3–4 years (loudness: n = 30; voice quality: n = 29); 5–6 years (loudness: n = 29; voice quality: n = 28).

| Test | Sublevel | 3–4 years, M | 3–4 years, SD | 5–6 years, M | 5–6 years, SD | Test statistic | p | Effect size |
|---|---|---|---|---|---|---|---|---|
| Loudness | All pairs | 63.537% | 11.596% | 74.327% | 12.585% | f = 11.742 | .001 | η2 = .171 |
| | L1–L2 (50–60 dB) | 60.184% | 20.152% | 69.998% | 20.501% | t = −1.854 | .034 | d = 0.483 |
| | L2–L3 (60–70 dB) | 63.216% | 14.956% | 76.157% | 12.614% | t = −2.573 | < .001 | d = 0.930 |
| | L1–L3 (50–70 dB) | 67.684% | 13.593% | 76.966% | 14.241% | t = −3.571 | .006 | d = 0.670 |
| Voice quality | All pairs | 52.295% | 12.895% | 62.840% | 13.211% | f = 9.300 | .004 | η2 = .145 |
| | Normal–hoarse | 54.506% | 15.034% | 71.706% | 17.036% | t = −4.045 | < .001 | d = 1.072 |
| | Normal–pressed | 53.974% | 18.656% | 66.805% | 20.017% | t = −2.505 | .008 | d = 0.664 |
| | Normal–breathy | 48.514% | 17.889% | 49.721% | 22.920% | t = −0.222 | .413 | d = 0.059 |

Note. Significant test results are bolded.
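The sublevel comparisons in Table 5 pair a between-groups t test with Cohen's d. The Python sketch below shows one common way to obtain both from summary statistics. It assumes a pooled-variance (Student's) t test and a pooled standard deviation for d; the text does not state which variant was used, so this is an illustration and not a reconstruction of the authors' procedure. The function names are hypothetical, and the label "negligible" for d below 0.20 is likewise an assumption. The example uses the normal–hoarse row of Table 5.

```python
from math import sqrt


def independent_t_and_d(m1, sd1, n1, m2, sd2, n2):
    """Pooled-variance t test and Cohen's d for two independent groups.

    Returns (t, d). t keeps the sign of (m1 - m2); d is reported as a magnitude.
    """
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    pooled_sd = sqrt(pooled_var)
    t = (m1 - m2) / (pooled_sd * sqrt(1 / n1 + 1 / n2))
    d = abs(m1 - m2) / pooled_sd
    return t, d


def interpret_cohens_d(d):
    """Label Cohen's d using the cutoffs stated in the Data Analysis text."""
    if d >= 0.80:
        return "large"
    if d >= 0.50:
        return "medium"
    if d >= 0.20:
        return "small"
    return "negligible"  # assumed label; the text does not name this range


# Normal-hoarse pair, Table 5: younger group first, then older group
t, d = independent_t_and_d(54.506, 15.034, 29, 71.706, 17.036, 28)
print(f"t = {t:.3f}, d = {d:.3f} ({interpret_cohens_d(d)})")
```

Under the pooled-variance assumption, the normal–hoarse inputs give t ≈ −4.05 and d ≈ 1.07, consistent with the corresponding row of Table 5.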

Voice Quality

The accuracy for children in the older age group (5- to 6-year-olds) was higher than for the younger age group (5- to 6-year-old group: M = 62.840%, SD = 13.211%; 3- to 4-year-old group: M = 52.295%, SD = 12.895%; see Figure 4b). Children in the older age group were significantly more accurate in identifying voice quality than those in the younger group, f(57) = 9.300, p = .004, η2 = .145. Analysis of sublevels of voice quality showed a significant advantage in accuracy for the older age group for all sublevels except the normal–breathy pair, which did not show significant group differences (see Table 5). Accuracy scores were better than chance only for overall accuracy, the normal–hoarse pair, and the normal–pressed pair for the 5- to 6-year-old group. All other scores (including the normal–breathy pair for the 5- to 6-year-old group) were not different from chance (see Table 4).

Discussion

Key Findings

The current project aimed to study the development of voice perception in young children. Results suggest that 5- to 6-year-old children are more accurate at perceiving differences between vocal loudness for both discrimination and identification tasks compared to 3- to 4-year-olds. Findings for voice quality were similar. Overall, our findings suggest that as children mature, they are better able to perceive subtle differences in loudness and voice quality. That is not to say that, early on, young children are not able to perceive loudness and voice quality. Although older children performed better in the two perceptual tasks, younger children still perceived differences in loudness and voice quality. This study is a first step in a series in which we examine vocally healthy children in the perception of loudness and voice quality. Future studies will focus on children with voice disorders and will assess how voice perception may affect learning in therapy (to be elaborated in the Future Directions section).

Bruner (1957) defines perception as an “act of categorization,” a nonconscious process in which sensory information is organized based on stimulus features (color, shape, smell, etc.). As children mature, they are able to process finer stimulus details and organize the information into smaller, more specific categories (Eimas & Quinn, 1994). This specification process allows children to handle information with increasing precision as they mature. The results of both the discrimination and identification tasks suggest that voice perception follows the same trend. That is, voice perception becomes more specific with age. Although newborns and infants discriminate voices broadly, for example, with respect to the distinction between different speakers or gender differences (DeCasper & Fifer, 1980; Miller et al., 1982; Ockleford et al., 1988; Poulin-Dubois et al., 1994), preschoolers and kindergarten children can discriminate and categorize more finely grained details such as fundamental frequency (fo; Nagels et al., 2020) and, in our study, loudness and voice quality. Voice perception continues to develop throughout the school years (Heller Murray et al., 2019); as children mature, their accuracy in discriminating and identifying differences between voices improves. Given the importance of voice perception in communication, an improved ability to perceive vocal dimensions may assist children in perceiving and interpreting speech through prosodic changes and other metalinguistic information. More studies are required to determine when voice perception matures to the adult level.

Our findings also align with former studies that found an improvement in the ability to perceive small differences between nonvoice stimuli (such as pure tones and noises) with age (e.g., Banai & Yifat, 2011; Berg & Boswell, 2000; Gillen et al., 2018; Jensen & Neff, 1993; Moore et al., 2008).

Breathy and Normal Voice Outcomes

Interestingly, the only sublevel that did not differ between the age groups on the identification task was the normal–breathy distinction. Both groups had an average score of about 50% accuracy, which indicates that children were not certain about the difference and had to guess which voice was the bad one and which was the good one. One possible explanation is that because incomplete glottal closure, which leads to a breathy voice, is common in women (Biever & Bless, 1989; Henton & Bladon, 1985; Södersten & Lindestad, 1990), children are exposed to it fairly often and consider it to be the norm. Hence, although children identified the breathy voice as different from the normal voice in the discrimination task, they did not identify it as being deviant (“bad”) compared to the normal voice in the identification task. Stated differently, the word “bad” may not have applied to children's understanding of breathy voice.

It is also possible that although the breathy voice sample was rated as having a breathy quality by two speech-language pathologists, it was not deviant enough from the normal voice sample, and thus, children were not able to perceive the differences. However, when observing the data from the normal–breathy sublevel of the discrimination task, it appears that 3- to 4-year-old children and 5- to 6-year-old children had an average of 61.765% and 75.000% accuracy, respectively. The averages were marginally above chance for the younger age group (t = 1.852, p = .073, d = 0.365) and significantly above chance for the older age group (t = 4.123, p < .001, d = 0.707), which suggests that children did discriminate the two voice types. This observation should be taken with caution due to the small number of repetitions in the discrimination task.

Performance on the Discrimination Task Compared to the Identification Task

Children's accuracy differed on the discrimination task versus the identification task. This finding is not surprising. Children's performance in auditory-perceptual studies varies depending on the type of task used. Several studies have shown that the design of the study affects children's performance on auditory-perceptual tasks (Andrews & Madeira, 1977; Banai, 2008; Sutcliffe & Bishop, 2005). However, the difference in performance between the discrimination and identification tasks was dissimilar for the two vocal features tested. Examination of accuracy scores suggests that overall accuracy (all pairs) was higher on the identification task than on the discrimination task for loudness but not for voice quality. For voice quality, children had higher accuracy on the discrimination task. One possible reason for these conflicting trends is language use. Language has been shown to modulate perceptual processes top-down and alter task performance (Lupyan, 2012). We suggest that the language used in the identification task influenced children's performance on the tests differently, supporting performance for loudness but not for voice quality.

For the discrimination task, the same terms were used to compare the stimuli for both blocks (“Are the creatures' voices the same–different?”). For the identification task, on the other hand, each test used different terms. For the loudness test, the terms used to instruct children were louder and quieter. These terms described the targeted voice features directly and are familiar to children from daily life. These familiar terms may have added some redundancy that helped children categorize the stimuli while performing the task, which in turn increased accuracy. On the voice quality test, by contrast, we chose to use the words “good” and “bad” to describe normal and deviant voices, respectively. These terms are more abstract to children in relation to voice and were chosen after numerous discussions among the research team and with other researchers and clinicians. In the absence of better terms to describe normal and deviant voices in a way that even 3-year-olds will understand, we decided to use simple terms that indirectly describe the quality of a voice. We acknowledge that thinking of voice as good or bad is abstract and can even be considered judgmental. The use of the terms good and bad did not create a redundancy (as we suggest happened for “louder” and “quieter”) and may even have confused some of the children, leading to lower accuracy in the voice quality test. Further investigation to optimize terms used to study voice quality perception is warranted.

The motor theory of speech perception may provide another explanation for the difference in performance between loudness and voice quality responses across tasks. According to the motor theory of speech perception, production has a role in perception, and the two share a common representation (Galantucci et al., 2006; Liberman & Mattingly, 1985; Mole, 2009). The notion is that the existence of a motor pattern in production is expressed in improved recognition perceptually because of a shared representation. In the identification task, children had to label the stimuli and associate them with categories to accurately identify them. Loudness variations are common in children's productions; children use different voice intensities throughout the day, and hence, a category for loudness likely already exists in children's repertoire. The loudness category supported the labeling of voices on the identification task. Voice quality does not share the same advantage of a preexisting category associated with performance. Since only phonotypical children participated in the study, participants likely had almost no experience in producing deviant voice, and thus, we speculate that the category for voice quality was limited or did not exist in their repertoire. When trying to label voices in the voice quality test in the identification task, children did not have an internal representation to serve as a reference to support the labeling process.

The motor theory of speech perception has had a variable reception in the literature. Although some questions still exist around this theory (e.g., Mole, 2009), neurophysiological literature indicates a link between perception and production (Assaneo & Poeppel, 2018; Fadiga et al., 2002; Iacoboni, 2008; Wilson et al., 2004), evidence that we find compelling. For this reason, we have included the theory in this report.

Feasibility of the IPLP in Pediatric Voice Research

Another aim of this study was to assess whether the IPLP could be extended to the field of voice perception. Overall, the pattern of results was similar across the two tasks (i.e., discrimination, which did not use the IPLP, and identification, which did). In other words, older children surpassed younger children in accuracy regardless of testing paradigm. However, the identification task that used the IPLP revealed differences between groups for all sublevels of loudness and two of the three sublevels of voice quality. We acknowledge the small number of repetitions (trials) in both tasks compared to other studies that examined older children (e.g., Heller Murray et al., 2019). More repetitions could have potentially provided more finely grained outcomes in the discrimination task. However, given the young age of the participants and their limited attention spans, including a larger number of repetitions was not possible. The IPLP, which requires fewer repetitions, is shorter and less tiring than methods used in previous studies with older children and hence may be better suited for young children. The findings from the current study suggest that despite the small number of repetitions, data from the IPLP task were sensitive enough to allow for group comparisons in all sublevels of loudness and in two of the three sublevels of voice quality.

Chance-level hit rates for the identification test indicated that the performance of all children on the loudness test was significantly better than chance, which suggests that children were able to identify the target object successfully. With that said, accuracy scores for the younger age group were not different from chance for the voice quality test, which we speculate may stem from the terms used to describe voice quality (good vs. bad), as indicated in former sections. Future studies should continue to investigate the overall reliability of the IPLP for use in voice perception research, as it has the potential to expand the type of questions that can be examined.

Limitations

The current study had several limitations that should be noted. The study was conducted remotely due to limitations imposed by the COVID-19 pandemic that did not allow us to test children in the lab. Because of the remote format, the computer intensity level was not controlled across participants. The volume of the home computer was adjusted by parents to a comfortable level to protect children's hearing and avoid high intensities that are filtered by the headphones. The differences of 10 or 20 dB between stimuli were maintained across children. The lack of control over absolute intensity did not pose a problem for the voice quality tests, in which all stimuli were delivered at the same intensity. Additional studies are required and should compare our results to those obtained in controlled lab settings where audio intensity levels across children are the same.

A fixed set of stimuli was used for all tasks; the differences between stimuli were predetermined and did not change based on the child's response as is traditionally done in the study of auditory perception. Classically, to find the just-noticeable difference (JND; e.g., in intensity or frequency), an adaptive procedure is used. In such a procedure, stimulus differences increase or decrease from trial to trial based on the participant's responses until the JND is found (e.g., see Jensen & Neff, 1993; Moore et al., 2008). In our study, the differences between pairs of stimuli and the order in which stimuli were presented were predetermined. The method we chose limits our ability to detect children's JND and maturation of voice perception for loudness and voice quality. Two main reasons guided us in choosing this method. First, when using the IPLP, children's responses are analyzed only after the completion of the procedure, which does not allow for stimulus changes to be made automatically as in the traditional methods. Second, there is currently no agreement on the amount of change to the acoustic components of voice that is required to create voice deviations such as those used in this study. Voice quality is multidimensional (Kreiman et al., 2005); it is not possible to create scaled differences between stimuli that would allow us to use more traditional methods to study the perception of voice quality.
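For readers unfamiliar with the adaptive procedures mentioned above, which were not used in this study, the following Python sketch shows a simple one-up/one-down staircase. Every detail here is an illustrative assumption and does not describe the procedures in the cited studies: the function name, the fixed step size, the stopping rule based on a set number of reversals, and the idealized simulated listener who responds correctly whenever the stimulus difference meets a fixed threshold.

```python
def staircase_jnd(responds_correctly, start=20.0, step=2.0,
                  n_reversals=6, max_trials=200):
    """Estimate a JND with a simple one-up/one-down staircase.

    responds_correctly: callable taking the current stimulus difference and
        returning True for a correct response, False otherwise.
    The difference shrinks after a correct response and grows after an
    incorrect one. The estimate is the mean difference at the reversals.
    """
    delta = start
    last_direction = 0          # -1 = getting harder, +1 = getting easier
    reversals = []

    for _ in range(max_trials):
        direction = -1 if responds_correctly(delta) else 1
        # A reversal occurs when the direction of change flips
        if last_direction != 0 and direction != last_direction:
            reversals.append(delta)
            if len(reversals) == n_reversals:
                break
        last_direction = direction
        delta = max(0.0, delta + direction * step)

    if not reversals:
        raise ValueError("no reversals observed; threshold not bracketed")
    return sum(reversals) / len(reversals)


# Idealized listener (an assumption): correct whenever the difference is >= 7 dB
estimate = staircase_jnd(lambda delta: delta >= 7.0)
print(f"Estimated JND: {estimate:.1f} dB")
```

Because each step depends on the previous response, a procedure of this kind needs responses to be scored trial by trial, which is the constraint noted above for the IPLP, where looking behavior is coded only after the session ends.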

Future Directions

Our results may have some implications for voice treatment in children. A recent review found that data regarding the effect of different developmental issues (cognition, emotion, anatomy, etc.) on pediatric voice treatment outcomes are lacking (Feinstein & Verdolini Abbott, 2021). A preliminary study of voice treatment outcomes in children suggests that several cognitive mechanisms may contribute to vocal learning (Feinstein et al., 2021). The current project examined the development of voice perception in phonotypical children. Future studies should examine the development of loudness and voice quality perception in children with voice disorders to ultimately understand whether voice perception plays a role in vocal learning and should be addressed directly in pediatric voice treatment. Some authors suggest that vocal perception issues may underlie behavioral voice disorders (e.g., vocal nodules) in some children (Heller Murray et al., 2019). A study that examined vocal pitch perception in children with and without vocal nodules did not find differences between the groups (Heller Murray et al., 2019). Perhaps this is not the case for other vocal features, such as loudness and voice quality. Evidence of auditory processing deficits in children with voice disorders (Arnaut et al., 2011; Szkiełkowska et al., 2022) suggests that more investigation of auditory-perceptual performance in this population is warranted. The current study provides the first evidence on the development of the perception of loudness and voice quality in young children. A study that examines the perception of loudness and voice quality in children with and without voice disorders and the impact of voice perception on vocal learning in children is currently underway in our lab.

Conclusions

The current study is the first to investigate the development of vocal loudness and voice quality perception in 3- to 6-year-old children. The findings from this study are in line with other studies of voice perception that found that children improve in their ability to detect and identify differences between voices as they mature. We were also able to show that the IPLP can be used to study voice perception in young children, which will potentially allow us to study voice in populations that have been thus far understudied. Future studies that examine the influence of voice perception on vocal learning in children with and without voice disorders will build on current findings.

Data Availability Statement

Numerical data are available from the authors by request. For confidentiality reasons, videos are not available.

Acknowledgments

The writing of this article was partially funded by the University of Delaware Graduate School Dissertation Fellowship award to H.F. Support was also received from the National Institute on Deafness and Other Communication Disorders (R01 DC017923; K.V.A., principal investigator). The authors thank all children and families who participated in the study. Some participants for this study were recruited through http://ChildrenHelpingScience.com. The authors also thank Gabriela Vallejo, Jacqueline Fitzula, Mackensie Blair, Katherine Richard, Sydney Horne, Taylor Hallacy, Dea Harjianto, and Chaithra Reddy for their assistance in data collection and eye gaze coding. Lastly, the authors thank Hod Wolff for his invaluable contribution in data analysis and technical support in all stages of the study.

References

- American Psychological Association. (2007). Difference threshold. In VandenBos G. R. (Ed.), APA dictionary of psychology. https://dictionary.apa.org/difference-threshold

- Andrews, M. L. , & Madeira, S. S. (1977). The assessment of pitch discrimination ability in young children. Journal of Speech and Hearing Disorders, 42(2), 279–286. https://doi.org/10.1044/jshd.4202.279 [DOI] [PubMed] [Google Scholar]

- Arnaut, M. A. , Agostinho, C. V. , Pereira, L. D. , Weckx, L. L. M. , & de Avila, C. R. B. (2011). Auditory processing in dysphonic children. Brazilian Journal of Otorhinolaryngology, 77(3), 362–368. https://doi.org/10.1590/S1808-86942011000300015 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Assaneo, M. F. , & Poeppel, D. (2018). The coupling between auditory and motor cortices is rate-restricted: Evidence for an intrinsic speech-motor rhythm. Science Advances, 4(2), eaao3842. https://doi.org/10.1126/sciadv.aao3842 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Banai, K. (2008). Auditory frequency discrimination development depends on the assessment procedure. Journal of Basic and Clinical Physiology and Pharmacology, 19(3–4), 209–222. https://doi.org/10.1515/JBCPP.2008.19.3-4.209 [DOI] [PubMed] [Google Scholar]

- Banai, K. , & Yifat, R. (2011). Perceptual anchoring in preschool children: Not adultlike, but there. PLOS ONE, 6(5), Article e19769. https://doi.org/10.1371/journal.pone.0019769 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Belin, P. , & Grosbras, M.-H. (2010). Before speech: Cerebral voice processing in infants. Neuron, 65(6), 733–735. https://doi.org/10.1016/j.neuron.2010.03.018 [DOI] [PubMed] [Google Scholar]

- Berg, K. M. , & Boswell, A. E. (2000). Noise increment detection in children 1 to 3 years of age. Perception & Psychophysics, 62(4), 868–873. https://doi.org/10.3758/BF03206928 [DOI] [PubMed] [Google Scholar]

- Biever, D. M. , & Bless, D. M. (1989). Vibratory characteristics of the vocal folds in young adult and geriatric women. Journal of Voice, 3(2), 120–131. https://doi.org/10.1016/S0892-1997(89)80138-9 [Google Scholar]

- Boersma, P. , & Weenink, D. (2020). Praat: Doing phonetics by computer (6.1.06) [Computer software] . http://www.fon.hum.uva.nl/praat

- Bruner, J. S. (1957). On perceptual readiness. Psychological Review, 64(2), 123–152. https://doi.org/10.1037/h0043805 [DOI] [PubMed] [Google Scholar]

- Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Erlbaum. [Google Scholar]

- Datavyu Team. (2014). Datavyu: A video coding tool. Databrary Project. http://datavyu.org [Google Scholar]

- DeCasper, A. J. , & Fifer, W. P. (1980). Of human bonding: Newborns prefer their mothers' voices. Science, 208(4448), 1174–1176. https://doi.org/10.1126/science.7375928 [DOI] [PubMed] [Google Scholar]

- Eimas, P. D. , & Quinn, P. C. (1994). Studies on the formation of perceptually based basic-level categories in young infants. Child Development, 65(3), 903–917. https://doi.org/10.1111/j.1467-8624.1994.tb00792.x [PubMed] [Google Scholar]

- Fadiga, L. , Craighero, L. , Buccino, G. , & Rizzolatti, G. (2002). Speech listening specifically modulates the excitability of tongue muscles: A TMS study. European Journal of Neuroscience, 15(2), 399–402. https://doi.org/10.1046/j.0953-816x.2001.01874.x [DOI] [PubMed] [Google Scholar]

- Fairbanks, G. (1960). Voice and articulation drillbook (2nd ed.). Harper & Row. [Google Scholar]

- Feinstein, H. , Daşdöğen, Ü. , Libertus, M. E. , Awan, S. N. , Galera, R. I. , Dohar, J. E. , & Abbott, K. V. (2021). Cognitive mechanisms in pediatric voice therapy—An initial examination. Journal of Voice, S0892199721003246. https://doi.org/10.1016/j.jvoice.2021.09.014 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Feinstein, H. , & Verdolini Abbott, K. (2021). Behavioral treatment for benign vocal fold lesions in children: A systematic review. American Journal of Speech-Language Pathology, 30(2), 772–788. https://doi.org/10.1044/2020_AJSLP-20-00304 [DOI] [PubMed] [Google Scholar]

- Fernald, A. , Swingley, D. , & Pinto, J. P. (2001). When half a word is enough: Infants can recognize spoken words using partial phonetic information. Child Development, 72(4), 1003–1015. https://doi.org/10.1111/1467-8624.00331 [DOI] [PubMed] [Google Scholar]

- Floccia, C. , Nazzi, T. , & Bertoncini, J. (2000). Unfamiliar voice discrimination for short stimuli in newborns. Developmental Science, 3(3), 333–343. https://doi.org/10.1111/1467-7687.00128 [Google Scholar]

- Galantucci, B. , Fowler, C. A. , & Turvey, M. T. (2006). The motor theory of speech perception reviewed. Psychonomic Bulletin & Review, 13(3), 361–377. https://doi.org/10.3758/BF03193857 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gillen, N. , Agus, T. , & Fosker, T. (2018). Measuring auditory discrimination thresholds in preschool children: An empirically based analysis. Auditory Perception & Cognition, 1(3–4), 173–204. https://doi.org/10.1080/25742442.2019.1600970 [Google Scholar]

- Golinkoff, R. M. , Hirsh-Pasek, K. , Cauley, K. M. , & Gordon, L. (1987). The eyes have it: Lexical and syntactic comprehension in a new paradigm. Journal of Child Language, 14(1), 23–45. https://doi.org/10.1017/S030500090001271X [DOI] [PubMed] [Google Scholar]

- Golinkoff, R. M. , Ma, W. , Song, L. , & Hirsh-Pasek, K. (2013). Twenty-five years using the intermodal preferential looking paradigm to study language acquisition: What have we learned? Perspectives on Psychological Science, 8(3), 316–339. https://doi.org/10.1177/1745691613484936 [DOI] [PubMed] [Google Scholar]

- Halberda, J. (2003). The development of a word-learning strategy. Cognition, 87(1), B23–B34. https://doi.org/10.1016/S0010-0277(02)00186-5 [DOI] [PubMed] [Google Scholar]

- Heller Murray, E. S. , Hseu, A. F. , Nuss, R. C. , Harvey Woodnorth, G. , & Stepp, C. E. (2019). Vocal pitch discrimination in children with and without vocal fold nodules. Applied Sciences, 9(15), 3042. https://doi.org/10.3390/app9153042 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Henton, C. G. , & Bladon, R. A. (1985). Breathiness in normal female speech: Inefficiency versus desirability. Language & Communication, 5(3), 221–227. https://doi.org/10.1016/0271-5309(85)90012-6 [Google Scholar]

- Hepper, P. G. , Scott, D. , & Shahidullah, S. (1993). Newborn and fetal response to maternal voice. Journal of Reproductive and Infant Psychology, 11(3), 147–153. https://doi.org/10.1080/02646839308403210 [Google Scholar]

- Hirsh-Pasek, K. , & Golinkoff, R. M. (1996). The intermodal preferential looking paradigm: A window onto emerging language comprehension. In McDaniel D., McKee C., & Cairns H. S. (Eds.), Language, speech, and communication. Methods for assessing children's syntax (pp. 105–124). MIT Press. [Google Scholar]

- Hollich, G. J. , Hirsh-Pasek, K. , & Golinkoff, R. M. (2000). Breaking the language barrier: An emergentist coalition model for the origins of word learning. Monographs of the Society for Research in Child Development, 65(3), 1–123. [PubMed] [Google Scholar]

- Houston-Price, C. , Mather, E. , & Sakkalou, E. (2007). Discrepancy between parental reports of infants' receptive vocabulary and infants' behaviour in a preferential looking task. Journal of Child Language, 34(4), 701–724. https://doi.org/10.1017/S0305000907008124 [DOI] [PubMed] [Google Scholar]

- Iacoboni, M. (2008). The role of premotor cortex in speech perception: Evidence from fMRI and rTMS. Journal of Physiology-Paris, 102(1–3), 31–34. https://doi.org/10.1016/j.jphysparis.2008.03.003 [DOI] [PubMed] [Google Scholar]

- Jensen, J. K. , & Neff, D. L. (1993). Development of basic auditory discrimination in preschool children. Psychological Science, 4(2), 104–107. https://doi.org/10.1111/j.1467-9280.1993.tb00469.x [Google Scholar]

- Kempster, G. B. , Gerratt, B. R. , Verdolini Abbott, K. , Barkmeier-Kraemer, J. , & Hillman, R. E. (2009). Consensus Auditory-Perceptual Evaluation of Voice: Development of a standardized clinical protocol. American Journal of Speech-Language Pathology, 18(2), 124–132. https://doi.org/10.1044/1058-0360(2008/08-0017) [DOI] [PubMed] [Google Scholar]

- Koelewijn, T. , Gaudrain, E. , Tamati, T. , & Başkent, D. (2021). The effects of lexical content, acoustic and linguistic variability, and vocoding on voice cue perception. The Journal of the Acoustical Society of America, 150(3), 1620–1634. https://doi.org/10.1121/10.0005938 [DOI] [PubMed] [Google Scholar]

- Kreiman, J. , & Sidtis, D. (2011). Foundations of voice studies: An interdisciplinary approach to voice production and perception. Wiley. https://doi.org/10.1002/9781444395068 [Google Scholar]

- Kreiman, J. , Vanlancker-Sidtis, D. , & Gerratt, B. R. (2005). Perception of voice quality. In Pisoni D. B. & Remez R. E. (Eds.), The handbook of speech perception (pp. 338–362). Blackwell. https://doi.org/10.1002/9780470757024.ch14 [Google Scholar]

- Lee, G. Y. , & Kisilevsky, B. S. (2014). Fetuses respond to father's voice but prefer mother's voice after birth. Developmental Psychobiology, 56(1), 1–11. https://doi.org/10.1002/dev.21084 [DOI] [PubMed] [Google Scholar]

- Liberman, A. M., & Mattingly, I. G. (1985). The motor theory of speech perception revised. Cognition, 21(1), 1–36. https://doi.org/10.1016/0010-0277(85)90021-6

- Lupyan, G. (2012). Linguistically modulated perception and cognition: The label-feedback hypothesis. Frontiers in Psychology, 3. https://doi.org/10.3389/fpsyg.2012.00054

- Mehler, J., Bertoncini, J., Barriere, M., & Jassik-Gerschenfeld, D. (1978). Infant recognition of mother's voice. Perception, 7(5), 491–497. https://doi.org/10.1068/p070491

- Miller, C. L. (1983). Developmental changes in male/female voice classification by infants. Infant Behavior and Development, 6(2–3), 313–330. https://doi.org/10.1016/S0163-6383(83)80040-X

- Miller, C. L., Younger, B. A., & Morse, P. A. (1982). The categorization of male and female voices in infancy. Infant Behavior and Development, 5(2–4), 143–159. https://doi.org/10.1016/S0163-6383(82)80024-6

- Mills, M., & Melhuish, E. (1974). Recognition of mother's voice in early infancy. Nature, 252(5479), 123–124. https://doi.org/10.1038/252123a0

- Mole, C. (2009). The motor theory of speech perception. In M. Nudds & C. O'Callaghan (Eds.), Sounds and perception: New philosophical essays (pp. 211–233). Oxford University Press. https://doi.org/10.1093/acprof:oso/9780199282968.003.0010

- Moore, D. R., Ferguson, M. A., Halliday, L. F., & Riley, A. (2008). Frequency discrimination in children: Perception, learning and attention. Hearing Research, 238(1–2), 147–154. https://doi.org/10.1016/j.heares.2007.11.013

- Morini, G., & Blair, M. (2021). Webcams, songs, and vocabulary learning: A comparison of in-person and remote data collection as a way of moving forward with child-language research. Frontiers in Psychology, 12. https://doi.org/10.3389/fpsyg.2021.702819

- Morini, G., & Newman, R. S. (2019). Dónde está la ball? Examining the effect of code switching on bilingual children's word recognition. Journal of Child Language, 46(6), 1238–1248. https://doi.org/10.1017/S0305000919000400

- Nagels, L., Gaudrain, E., Vickers, D., Hendriks, P., & Başkent, D. (2020). Development of voice perception is dissociated across gender cues in school-age children. Scientific Reports, 10(1), 5074. https://doi.org/10.1038/s41598-020-61732-6

- Newman, R. S., Morini, G., Kozlovsky, P., & Panza, S. (2018). Foreign accent and toddlers' word learning: The effect of phonological contrast. Language Learning and Development, 14(2), 97–112. https://doi.org/10.1080/15475441.2017.1412831

- Ockleford, E. M., Vince, M. A., Layton, C., & Reader, M. R. (1988). Responses of neonates to parents' and others' voices. Early Human Development, 18(1), 27–36. https://doi.org/10.1016/0378-3782(88)90040-0

- Poulin-Dubois, D., Serbin, L. A., Kenyon, B., & Derbyshire, A. (1994). Infants' intermodal knowledge about gender. Developmental Psychology, 30(3), 436–442. https://doi.org/10.1037/0012-1649.30.3.436

- Södersten, M., & Lindestad, P.-Å. (1990). Glottal closure and perceived breathiness during phonation in normally speaking subjects. Journal of Speech and Hearing Research, 33(3), 601–611. https://doi.org/10.1044/jshr.3303.601

- Spence, M. J., & Freeman, M. S. (1996). Newborn infants prefer the maternal low-pass filtered voice, but not the maternal whispered voice. Infant Behavior and Development, 19(2), 199–212. https://doi.org/10.1016/S0163-6383(96)90019-3

- Sutcliffe, P., & Bishop, D. (2005). Psychophysical design influences frequency discrimination performance in young children. Journal of Experimental Child Psychology, 91(3), 249–270. https://doi.org/10.1016/j.jecp.2005.03.004

- Swingley, D., & Aslin, R. N. (2002). Lexical neighborhoods and the word-form representations of 14-month-olds. Psychological Science, 13(5), 480–484. https://doi.org/10.1111/1467-9280.00485

- Szkiełkowska, A., Krasnodębska, P., & Miaśkiewicz, B. (2022). Assessment of auditory processing in childhood dysphonia. International Journal of Pediatric Otorhinolaryngology, 155, 111060. https://doi.org/10.1016/j.ijporl.2022.111060

- Wilson, S. M., Saygin, A. P., Sereno, M. I., & Iacoboni, M. (2004). Listening to speech activates motor areas involved in speech production. Nature Neuroscience, 7(7), 701–702. https://doi.org/10.1038/nn1263

Data Availability Statement

Numerical data are available from the authors upon request. For confidentiality reasons, video recordings are not available.