Abstract

Many studies have recently used deep learning methods to detect skin cancer. Hyperspectral imaging (HSI), a noninvasive optical technique that can obtain wavelength information at the location of skin cancer lesions, requires further investigation. Hyperspectral technology can capture hundreds of narrow bands of the electromagnetic spectrum both within and outside the visible wavelength range, as well as bands that enhance the distinction of image features. In this study, a dataset from the ISIC library was used to detect and classify skin lesions as basal cell carcinoma (BCC), squamous cell carcinoma (SCC), or seborrheic keratosis (SK). The dataset was divided into training and test sets, and you only look once (YOLO) version 5 was applied to train the model. Model performance was judged from the generated confusion matrix and five indicators: precision, recall, specificity, accuracy, and F1-score. Two models, a hyperspectral narrowband image (HSI-NBI) model and an RGB classification model, were built and compared to assess the performance of HSI relative to RGB. Experimental results showed that the HSI model learned SCC features better than the model trained on the original RGB images, possibly because these features are more prominent or because SCC is less often confused with the other categories. The SCC recall of the RGB and HSI models was 0.722 and 0.794, respectively, an increase of 0.072 (about 7.2 percentage points) when using the HSI model.

Keywords: skin cancer, hyperspectral imaging, convolutional neural network, YOLO

1. Introduction

Nonmelanoma skin cancer (NMSC) is the most common and most frequently diagnosed cancer [1]. Common skin lesions include basal cell carcinoma (BCC), squamous cell carcinoma (SCC), and seborrheic keratosis (SK) [2]. BCC is the least aggressive NMSC and is characterized by cells that resemble epidermal basal cells, whereas SCC is invasive, proliferates abnormally, and may metastasize [3]. BCC is the most prevalent type of skin cancer; in about 80% of patients it develops in the head and neck region, and it usually does not metastasize [4,5]. SCC is the second most common skin cancer and is associated with aggressive, highly invasive tumors [6].

The demand for rapid diagnosis of skin cancer has grown because of its steadily increasing incidence [7,8,9,10,11,12]. Early diagnosis is a critical factor in skin cancer treatment [13,14,15]. Doctors typically rely on biopsy, but this method is slow, painful, and time consuming [16] because samples must be collected from suspected skin cancer spots and then examined medically for cancer cells [17]. With the development of artificial intelligence (AI), many computer-aided detection (CAD) models have been developed in recent years to detect various types of cancers [18,19,20,21].

Haenssle et al. [22] used the InceptionV4 model to detect skin cancer and compared the results with the findings of 58 dermatologists. The CAD model outperformed the dermatologists in sensitivity by 8% but underperformed in specificity by 9%. Han et al. [23] used ResNet-152 to classify images in the Asan training dataset and achieved results only on par with those of 16 dermatologists. Fujisawa et al. [24] applied a deep learning method to 6000 images to distinguish malignant from benign cases and achieved an accuracy of 76%. In addition to CAD, biosensors are a cost-effective tool for early cancer diagnosis [23,24,25]; however, the environmental adaptability of their nanomaterials remains a challenge [26,27]. CAD models based on conventional red, green, and blue (RGB) images have also reached an upper saturation limit in performance [28]. Hyperspectral imaging (HSI) is an approach that can overcome these disadvantages of conventional methods [29,30].

HSI is an emerging method that analyzes a wide range of wavelengths instead of assigning each pixel only the three primary-color values [31,32]. HSI has been applied in numerous fields, such as agriculture [33], cancer detection [34,35,36,37], military [38], air pollution detection [29,39], remote sensing [40], dental imaging [41], environment monitoring [42], satellite photography [43], counterfeit verification [44,45,46], forestry monitoring [47], food security [48], natural resource surveying [49], vegetation observation [50], and geological mapping [51].

Many studies have also been conducted on skin cancer detection using HSI [52,53,54]. Leon et al. proposed a method based on supervised and unsupervised learning techniques for the automatic identification and classification of pigmented skin lesions (PSL) [55]. Courtenay et al. built an ad hoc platform combined with a visible near-infrared (VNIR) hyperspectral imaging sensor to find the ideal spectral wavelengths for distinguishing between normal and cancerous skin [56]. In another study [57], HSI was utilized to obtain spectral differences between benign and cancerous dermal tissue specimens. However, most current methods capture the skin with a dedicated HSI sensor or camera, which is expensive and difficult to operate.

Therefore, this study proposes a rapid skin cancer detection method that combines the you only look once (YOLO) version 5 model with HSI technology. The developed model was evaluated on the basis of five indicators, namely, precision, recall, specificity, accuracy, and F1-score.

2. Methods

2.1. Data Preprocessing

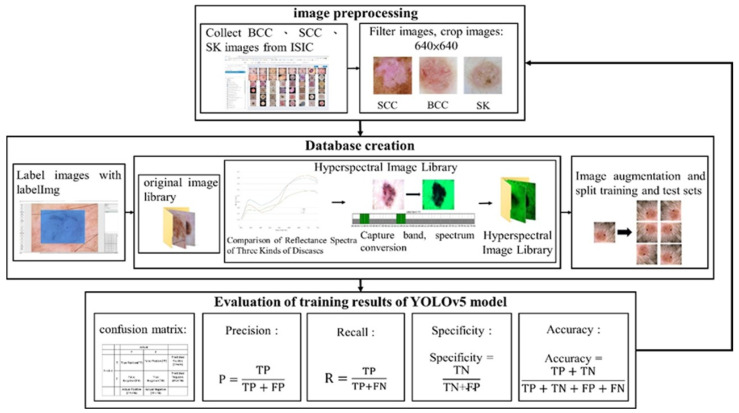

This study focuses on three skin diseases: BCC, SCC, and SK. The dataset, obtained from the ISIC library, consists of a training set of 654, 336, and 480 images and a validation set of 168, 90, and 126 images of BCC, SCC, and SK, respectively, giving a total of 1470 training images and 384 validation images. The whole study can be divided into three parts, namely, image preprocessing, database creation, and evaluation of the training results of the YOLOv5 model, as shown in Figure 1. Figures 2, 3, and 4 show examples of basal cell carcinoma (BCC), squamous cell carcinoma (SCC), and seborrheic keratosis (SK), respectively.

Figure 1.

Overall experimental flow chart.

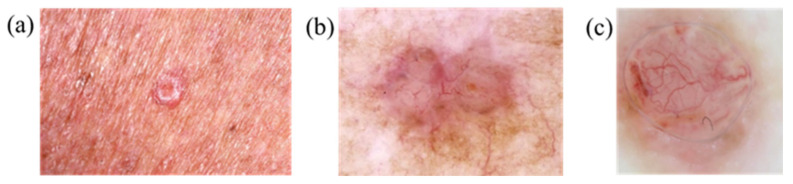

Figure 2.

Basal cell carcinoma: (a) bright protrusions around the epidermis and (b,c) enlarged and frequently hemorrhagic lesions.

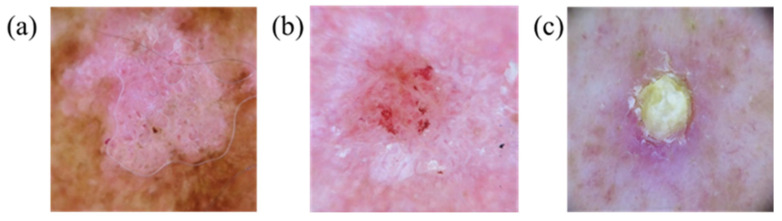

Figure 3.

Squamous cell carcinoma appears as (a,b) red squamous plaques or (c) nodules.

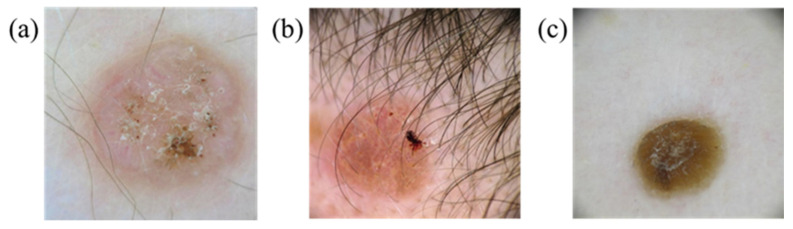

Figure 4.

Seborrheic keratosis: (a,b) lesions caused by hyperplasia of mutant epidermal keratinocytes and (c) a black mass of keratinocytes.

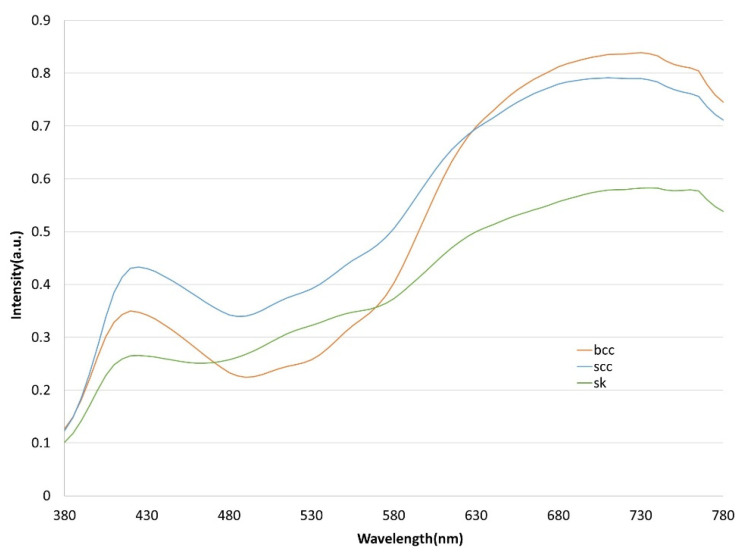

During image preprocessing, each image was resized to 640 × 640 pixels to keep the format consistent and to avoid problems, such as insufficient computer memory, during spectrum conversion. LabelImg was used to annotate the images of the three classes; the annotations were exported as XML files, which were then converted to TXT files and fed to the YOLOv5 model for training. Before training, the annotated images were converted into HSI narrowband images (HSI-NBI) through a spectrum conversion algorithm. The three lesion types were first analyzed by comparing the normalized reflectance spectra of their lesion areas, as shown in Figure 5, and the wavelength bands with large differences in reflected light intensity were compared. The spectrum is converted into an HSI with a spectral resolution of 1 nm in the range of 380–780 nm. However, such high-dimensional data result in high computing and storage requirements. Principal component analysis (PCA) is used to counter this problem by linearly transforming the image data into a new data space. PCA generates new components, extracts the most important parts of the features, and reduces the dimensionality of the image. The data are finally projected into a low-dimensional space in which the spectral information is decorrelated.
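A minimal NumPy sketch of the PCA step follows. It is an illustration under stated assumptions, not the authors' implementation: the function name `pca_reduce`, the random stand-in cube, and the choice of three retained components are hypothetical. Each pixel is treated as one sample with 401 spectral features (380–780 nm at 1 nm), and the data are projected onto the eigenvectors of the band covariance matrix that have the largest eigenvalues.

```python
import numpy as np


def pca_reduce(cube: np.ndarray, n_components: int) -> np.ndarray:
    """Project an (H, W, bands) hyperspectral cube onto its leading principal components.

    Returns an (H, W, n_components) array whose channels are mutually decorrelated.
    """
    h, w, bands = cube.shape
    # Treat every pixel as one sample with `bands` spectral features.
    x = cube.reshape(-1, bands).astype(np.float64)
    x -= x.mean(axis=0)  # center each band
    # Band-by-band covariance matrix and its eigen-decomposition
    # (np.linalg.eigh returns eigenvalues in ascending order).
    cov = np.cov(x, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)
    # Indices of the largest eigenvalues, i.e. the directions of greatest variance.
    order = np.argsort(eigvals)[::-1][:n_components]
    components = eigvecs[:, order]
    # Linear projection into the low-dimensional space.
    return (x @ components).reshape(h, w, n_components)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_bands = 780 - 380 + 1  # 1 nm steps from 380 to 780 nm -> 401 bands
    cube = rng.random((64, 64, n_bands))  # random stand-in for a converted HSI cube
    reduced = pca_reduce(cube, n_components=3)
    print(reduced.shape)  # (64, 64, 3)
```

Decomposing the 401 × 401 band covariance matrix is cheap relative to the image itself, which is why this route is sketched here; a library routine such as scikit-learn's `PCA` class would be a reasonable alternative.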

Figure 5.

Normalized reflection spectra of BCC, SCC, and SK. The orange line represents BCC, the blue line represents SCC, and the green line represents SK.

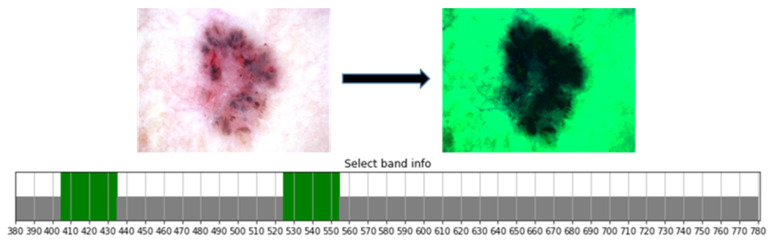

The characteristic bands of the visible light absorption spectrum of irradiated skin lie at 405–435 nm and 525–555 nm, because the intensity and wavelength position of visible light absorption by heme in the blood flow change in these ranges. The 405–435 and 525–555 nm bands are therefore used to extract the important parts of skin lesions, producing the HSI-NBI image shown in Figure 6.
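The band selection can be illustrated with a short sketch that maps the two wavelength windows onto channel indices of a 401-channel cube sampled at 1 nm from 380 nm. The helper names `band_indices` and `extract_bands` are hypothetical, and how the selected channels are then combined into the displayed HSI-NBI image is not specified in the text, so the sketch stops at extracting them.

```python
import numpy as np

# Wavelength grid assumed by this sketch: 380-780 nm in 1 nm steps (401 channels).
WAVELENGTHS = np.arange(380, 781)


def band_indices(lo_nm: int, hi_nm: int) -> np.ndarray:
    """Channel indices whose wavelength lies in [lo_nm, hi_nm], inclusive."""
    return np.flatnonzero((WAVELENGTHS >= lo_nm) & (WAVELENGTHS <= hi_nm))


def extract_bands(cube: np.ndarray, windows=((405, 435), (525, 555))) -> np.ndarray:
    """Keep only the channels that fall inside the given wavelength windows.

    cube: (H, W, 401) array sampled on WAVELENGTHS.
    Returns an (H, W, K) array, where K is the total number of selected channels.
    """
    idx = np.concatenate([band_indices(lo, hi) for lo, hi in windows])
    return cube[..., idx]


if __name__ == "__main__":
    cube = np.zeros((4, 4, WAVELENGTHS.size))
    print(band_indices(405, 435)[[0, -1]])  # [25 55]
    print(extract_bands(cube).shape)  # (4, 4, 62): 31 + 31 channels
```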

Figure 6.

Bands of 380–780, 405–435, and 525–555 nm are selected and extracted from the 401 channels.

Two datasets were thus obtained, one consisting of RGB images and one of HSI images. After image augmentation, 80% of each dataset was used for training and the remaining 20% for testing the model. The PyTorch deep learning framework was run on the Windows 10 operating system, and the programs were written in Python 3.9.12.
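An 80/20 split of this kind can be sketched as below. The function `split_dataset`, the seed value, and the image identifiers are hypothetical, and the text does not say whether the authors stratified the split by class; this sketch uses a plain seeded shuffle.

```python
import random


def split_dataset(items, train_fraction=0.8, seed=42):
    """Shuffle sample identifiers reproducibly and split them into (train, test) lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # a fixed seed makes the split repeatable
    n_train = round(len(shuffled) * train_fraction)
    return shuffled[:n_train], shuffled[n_train:]


if __name__ == "__main__":
    image_ids = [f"img_{i:04d}" for i in range(1000)]  # hypothetical image identifiers
    train_ids, test_ids = split_dataset(image_ids)
    print(len(train_ids), len(test_ids))  # 800 200
```

Splitting image identifiers once and reusing the same split for the RGB and HSI versions of each image would keep the comparison between the two models paired; this is a suggested design choice, not a detail reported in the study.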

2.2. YOLOv5 Model

YOLOv5 was chosen in this study because previous research [58] showed that, compared with models such as RetinaNet and SSD, YOLO detects objects faster, which helps to achieve real-time performance. YOLOv5 comprises three main parts: the backbone, the neck, and the head (detailed information on its structure is presented in Section S1 of the Supplementary Materials). The backbone is the convolutional neural network (CNN) feature extractor and is mainly composed of the Focus, CONV-BN-Leaky ReLU (CBL), cross stage partial (CSP), and spatial pyramid pooling (SPP) modules. The Focus module slices the input image, which reduces the required CUDA memory and the number of layers, increases forward and backward propagation speed, and aggregates fine-grained image information into features. Focus cuts a 640 × 640 × 3 input image into four 320 × 320 × 3 images, concatenates them (CONCAT) into a 320 × 320 × 12 tensor, and passes the result through a convolution layer with 32 kernels to obtain a 320 × 320 × 32 feature map; reducing the spatial dimension in this way accelerates training. SPP is a pooling layer that frees the network from a fixed input size while preserving image integrity.

The neck consists of CBL, Upsample, CSP2_X, and other modules; it is a series of feature aggregation layers that combines image features through a feature pyramid network (FPN) and a path aggregation network (PAN). YOLOv5 retains the CSP1_X structure of CSPDarknet-53, the YOLOv4 backbone, and adds the CSP2_X structure to reduce the model size and extract more complete image features. The head uses the GIoU loss as the loss function for bounding boxes.
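The slicing performed by the Focus module is a space-to-depth rearrangement, sketched below in NumPy on a channels-last array. This is an illustration, not the YOLOv5 source: the real module operates on PyTorch tensors in (N, C, H, W) layout and is followed by a learned convolution (32 kernels in the configuration described above), which is omitted here. The function name `focus_slice` is hypothetical.

```python
import numpy as np


def focus_slice(image: np.ndarray) -> np.ndarray:
    """Space-to-depth slicing in the style of the YOLOv5 Focus module.

    image: (H, W, C) array with even H and W.
    Returns an (H/2, W/2, 4C) array: the four pixel-interleaved sub-images
    concatenated along the channel axis. No pixel values are discarded.
    """
    h, w, _ = image.shape
    if h % 2 or w % 2:
        raise ValueError("height and width must be even")
    return np.concatenate(
        [
            image[0::2, 0::2],  # even rows, even columns
            image[1::2, 0::2],  # odd rows, even columns
            image[0::2, 1::2],  # even rows, odd columns
            image[1::2, 1::2],  # odd rows, odd columns
        ],
        axis=-1,
    )


if __name__ == "__main__":
    x = np.zeros((640, 640, 3), dtype=np.float32)
    print(focus_slice(x).shape)  # (320, 320, 12)
```

Because every input pixel ends up in exactly one output channel, the spatial resolution is halved without losing information, and the following convolution can then mix the 12 channels into 32 feature maps.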

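The loss function in YOLOv5 is divided into three types: bounding box regression, confidence, and classification losses. The loss function quantifies the difference between the predicted and true values of the model. The bounding box regression loss of the YOLOv5 model is expressed as follows:

$$L_{box} = \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{obj} \left(1 - \mathrm{GIoU}\right) \tag{1}$$

where $S^2$ represents the number of grids and $B$ is the number of bounding boxes in each grid. The value of $\mathbb{1}_{ij}^{obj}$ is equal to 1 when an object exists in the bounding box; otherwise, it is 0.

$$
\begin{aligned}
L_{conf} = {} & -\sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{obj} \left[ \hat{C}_i^j \log C_i^j + \left(1 - \hat{C}_i^j\right) \log\left(1 - C_i^j\right) \right] \\
& - \lambda_{noobj} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{noobj} \left[ \hat{C}_i^j \log C_i^j + \left(1 - \hat{C}_i^j\right) \log\left(1 - C_i^j\right) \right]
\end{aligned}
\tag{2}
$$

where $C_i^j$ is the predicted confidence of bounding box $j$ in grid $i$, $\hat{C}_i^j$ is the true confidence of bounding box $j$ in grid $i$, $\mathbb{1}_{ij}^{noobj} = 1 - \mathbb{1}_{ij}^{obj}$, and $\lambda_{noobj}$ is the confidence weight when objects are absent in the bounding box.

$$L_{cls} = -\sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{obj} \sum_{c \in classes} \left[ \hat{p}_i(c) \log p_i(c) + \left(1 - \hat{p}_i(c)\right) \log\left(1 - p_i(c)\right) \right] \tag{3}$$

$$L = L_{box} + L_{conf} + L_{cls} \tag{4}$$

where $p_i(c)$ is the probability that the detected object is predicted to be category $c$ and $\hat{p}_i(c)$ is the probability that it actually belongs to category $c$.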
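Since the head uses the GIoU loss for bounding boxes, a plain-Python sketch of GIoU for two axis-aligned boxes may help. The box format (x1, y1, x2, y2), the function names `giou` and `giou_loss`, and the assumption that both boxes have positive area are illustrative choices, not details taken from the study or from the YOLOv5 source.

```python
def giou(box_a, box_b):
    """Generalized IoU of two axis-aligned boxes given as (x1, y1, x2, y2).

    Assumes x2 > x1 and y2 > y1 for both boxes (positive area).
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    # Overlap rectangle; width or height clamps to 0 when the boxes are disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = area_a + area_b - inter
    iou = inter / union
    # Smallest axis-aligned box that encloses both boxes.
    enclose = (max(ax2, bx2) - min(ax1, bx1)) * (max(ay2, by2) - min(ay1, by1))
    # GIoU subtracts the fraction of the enclosing box not covered by the union.
    return iou - (enclose - union) / enclose


def giou_loss(pred_box, true_box):
    """Bounding-box regression term 1 - GIoU (0 for a perfect match, at most 2)."""
    return 1.0 - giou(pred_box, true_box)


if __name__ == "__main__":
    print(giou_loss((0, 0, 2, 2), (0, 0, 2, 2)))  # 0.0
    print(giou((0, 0, 2, 2), (1, 0, 3, 2)))  # IoU = 2/6; enclosing box adds no penalty
    print(giou((0, 0, 1, 1), (2, 0, 3, 1)))  # disjoint boxes give a negative GIoU
```

Unlike plain IoU, which is 0 for every pair of disjoint boxes, GIoU still decreases as disjoint boxes move apart, so the loss provides a useful gradient even before the predicted box overlaps the target.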

3. Results

The batch size was set to 16, and the loss curves of the training and validation sets were recorded over 300 training iterations (detailed information on the original images is presented in Section S1 of the Supplementary Materials). The confusion matrices of the developed models are presented in Table 1. Among the 384 test images, 301 were correctly predicted by the RGB model and 274 by the HSI model. The detection performance of the YOLOv5 network was evaluated using several indicators, including precision, recall, specificity, accuracy, and F1-score (see Section S2 of the Supplementary Materials for the equations of these indicators). The results are listed in Table 2 (see Table S1 of the Supplementary Materials for a heatmap of the confusion matrix). The accuracies of the RGB and HSI models, 0.792 and 0.787, respectively, are similar, possibly because the number of images used was comparatively small. In the HSI model, however, the SCC category improved more markedly relative to the other labels than in the RGB model, suggesting that the HSI model learned SCC lesion features more completely. Although most results are similar, the HSI model improved the SCC recall from 0.722 to 0.794 and the SCC specificity from 0.833 to 0.878, and it improved the specificity (true negative rate) of the SK category from 0.71 to 0.764. These results indicate that conversion algorithms capable of transforming RGB images into HSI images have the potential to support the classification and detection of skin cancers.
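The indicators can be computed from a confusion matrix as sketched below, using the class-only counts of Table 1 (rows: predicted class, columns: true class, background row and column omitted). The diagonal sums reproduce the 301 and 274 correct predictions quoted above. The per-class formulas are textbook one-vs-rest definitions and are an assumption here; the paper's exact equations are given in Section S2 of the Supplementary Materials and may treat background detections differently, so these values need not match Table 2. The names `per_class_metrics`, `RGB_CM`, and `HSI_CM` are hypothetical.

```python
import numpy as np

CLASSES = ("BCC", "SCC", "SK")
# Class-only parts of the confusion matrices in Table 1
# (rows: predicted class, columns: true class; background row and column omitted).
RGB_CM = np.array([[133, 7, 8],
                   [6, 66, 0],
                   [6, 1, 102]])
HSI_CM = np.array([[102, 4, 19],
                   [17, 72, 0],
                   [6, 0, 100]])


def per_class_metrics(cm, names=CLASSES):
    """Textbook one-vs-rest precision, recall, specificity and F1 for each class."""
    total = cm.sum()
    results = {}
    for k, name in enumerate(names):
        tp = cm[k, k]
        fp = cm[k, :].sum() - tp  # predicted as this class, truly another class
        fn = cm[:, k].sum() - tp  # truly this class, predicted as another class
        tn = total - tp - fp - fn
        precision = tp / (tp + fp)
        recall = tp / (tp + fn)
        specificity = tn / (tn + fp)
        f1 = 2 * precision * recall / (precision + recall)
        results[name] = (precision, recall, specificity, f1)
    return results


if __name__ == "__main__":
    # Diagonal sums = correctly classified lesions.
    print(int(np.trace(RGB_CM)), int(np.trace(HSI_CM)))  # 301 274
    for name, values in per_class_metrics(RGB_CM).items():
        print(name, [round(float(v), 3) for v in values])
```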

Table 1.

Confusion matrix for the YOLOv5 detection model.

| RGB model: predicted (rows) vs. true (columns) | BCC | SCC | SK | Background FP |
|---|---|---|---|---|
| BCC | 133 | 7 | 8 | 45 |
| SCC | 6 | 66 | 0 | 27 |
| SK | 6 | 1 | 102 | 54 |
| Background FN | 23 | 16 | 16 | |

| HSI model: predicted (rows) vs. true (columns) | BCC | SCC | SK | Background FP |
|---|---|---|---|---|
| BCC | 102 | 4 | 19 | 74 |
| SCC | 17 | 72 | 0 | 10 |
| SK | 6 | 0 | 100 | 55 |
| Background FN | 43 | 14 | 7 | |

Table 2.

Performance results of YOLOv5 training.

| Model | Class | Precision | Recall | Specificity | F1-Score | Accuracy |
|---|---|---|---|---|---|---|
| RGB | All | 0.888 | 0.758 | 0.798 | 0.818 | 0.792 |
| RGB | BCC | 0.899 | 0.747 | 0.791 | 0.816 | |
| RGB | SCC | 0.812 | 0.722 | 0.833 | 0.764 | |
| RGB | SK | 0.954 | 0.805 | 0.71 | 0.873 | |
| HSI | All | 0.8 | 0.726 | 0.786 | 0.761 | 0.787 |
| HSI | BCC | 0.813 | 0.624 | 0.716 | 0.706 | |
| HSI | SCC | 0.746 | 0.794 | 0.878 | 0.769 | |
| HSI | SK | 0.841 | 0.76 | 0.764 | 0.798 | |
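As a worked check, the F1-scores in Table 2 can be recomputed as the harmonic mean of the reported precision and recall; every row agrees to three decimal places. The sketch below also computes the absolute change in SCC recall between the two models. The names `f1_score` and `TABLE2` are illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from Table 2.
TABLE2 = {
    ("RGB", "All"): (0.888, 0.758, 0.818),
    ("RGB", "BCC"): (0.899, 0.747, 0.816),
    ("RGB", "SCC"): (0.812, 0.722, 0.764),
    ("RGB", "SK"): (0.954, 0.805, 0.873),
    ("HSI", "All"): (0.800, 0.726, 0.761),
    ("HSI", "BCC"): (0.813, 0.624, 0.706),
    ("HSI", "SCC"): (0.746, 0.794, 0.769),
    ("HSI", "SK"): (0.841, 0.760, 0.798),
}

if __name__ == "__main__":
    for (model, cls), (p, r, reported) in TABLE2.items():
        print(model, cls, round(f1_score(p, r), 3), reported)
    # Absolute change in SCC recall from the RGB model to the HSI model.
    print(round(0.794 - 0.722, 3))  # 0.072
```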

In future work, the hyperspectral conversion technique will be applied to capsule endoscopy. A capsule endoscope has no space for an additional narrow-band imaging filter, which would otherwise improve cancer detection performance; the same algorithm can instead convert its RGB images into HSI images. Another advantage of this algorithm is that it avoids the cost of a hyperspectral dermatologic acquisition system, which is not cost-effective. Therefore, this method can be applied to ordinary RGB images taken to detect skin cancers. In addition, video dermatoscopes combined with AI software can also be used for automatic diagnosis.

4. Conclusions

In this study, skin lesion images were classified into three categories, SCC, BCC, and SK, using the CNN-based YOLOv5 model. For both the RGB and hyperspectral datasets, the predictions for each lesion category were used to create a confusion matrix and to calculate the precision, recall, specificity, accuracy, and F1-score, which served as classification indicators. The experimental results showed that the HSI model successfully learned the characteristic features of the SCC category, for which it detected lesions better than the model trained on the original RGB images: the SCC recall rate improved from 0.722 to 0.794. The HSI model can extract detailed spectral bands, reduce image noise, and highlight lesion features. Because some symptoms of BCC are similar to those of SK, the two are easily confused, so BCC has the lowest recall rate in the HSI model. Compared with previous versions, YOLOv5 is simpler to use because input images are automatically scaled to the required size, and its relatively small model structure achieves an accuracy similar to that of the previous version at a faster speed. These gains can be exploited in subsequent experimental designs to improve accuracy in future investigations.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/jcm12031134/s1, Figure S1. YOLOv5 network architecture. Figure S2. Convergence of loss functions for training set and validation set of original images and precision, recall, and average precision. Figure S3. Hyperspectral image training set and validation set loss functions and convergence of precision, recall, and mean precision. Table S1. Heatmap of the confusion matrix.

Author Contributions

Conceptualization, H.-Y.H., Y.-M.T., Y.-P.H. and H.-C.W.; data curation, A.M., Y.-M.T. and H.-C.W.; formal analysis, Y.-P.H., Y.-M.T. and W.-Y.C.; funding acquisition, H.-Y.H., W.-Y.C. and H.-C.W.; investigation, Y.-M.T., W.-Y.C., A.M. and H.-C.W.; methodology, H.-Y.H., Y.-P.H., A.M. and H.-C.W.; project administration, W.-Y.C. and H.-C.W.; resources, H.-C.W. and W.-Y.C.; software, Y.-P.H. and A.M.; supervision, H.-C.W.; validation, H.-Y.H., W.-Y.C. and H.-C.W.; writing—original draft, A.M.; writing—review and editing, H.-C.W. and A.M. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Institutional Review Board of the Ditmanson Medical Foundation Chia-Yi Christian Hospital (CYCH) (IRB2022084).

Informed Consent Statement

Written informed consent was waived in this study because of the retrospective anonymized nature of the study design.

Data Availability Statement

The data presented in this study are available in this article.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research was supported by the National Science and Technology Council, The Republic of China, under grant NSTC 111-2221-E-194-007. This work was also financially supported in part by the Advanced Institute of Manufacturing with High-tech Innovations (AIM-HI) and the Center for Innovative Research on Aging Society (CIRAS) from The Featured Areas Research Center Program within the framework of the Higher Education Sprout Project by the Ministry of Education (MOE), the Ditmanson Medical Foundation Chia-Yi Christian Hospital, and the National Chung Cheng University Joint Research Program (CYCH-CCU-2022-08), and Kaohsiung Armed Forces General Hospital research project KAFGH_D_112040 in Taiwan.

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

- 1. Didona D., Paolino G., Bottoni U., Cantisani C. Non Melanoma Skin Cancer Pathogenesis Overview. Biomedicines. 2018;6:6. doi: 10.3390/biomedicines6010006.

- 2. Leiter U., Eigentler T., Garbe C. Sunlight, Vitamin D and Skin Cancer. Springer; New York, NY, USA: 2014. Epidemiology of skin cancer; pp. 120–140.

- 3. Apalla Z., Nashan D., Weller R.B., Castellsagué X. Skin cancer: Epidemiology, disease burden, pathophysiology, diagnosis, and therapeutic approaches. Dermatol. Ther. 2017;7:5–19. doi: 10.1007/s13555-016-0165-y.

- 4. Cameron M.C., Lee E., Hibler B.P., Barker C.A., Mori S., Cordova M., Nehal K.S., Rossi A.M. Basal cell carcinoma: Epidemiology; pathophysiology; clinical and histological subtypes; and disease associations. J. Am. Acad. Dermatol. 2019;80:303–317. doi: 10.1016/j.jaad.2018.03.060.

- 5. Cives M., Mannavola F., Lospalluti L., Sergi M.C., Cazzato G., Filoni E., Cavallo F., Giudice G., Stucci L.S., Porta C., et al. Non-Melanoma Skin Cancers: Biological and Clinical Features. Int. J. Mol. Sci. 2020;21:5394. doi: 10.3390/ijms21155394.

- 6. Chantrain C.F., Henriet P., Jodele S., Emonard H., Feron O., Courtoy P.J., DeClerck Y.A., Marbaix E. Mechanisms of pericyte recruitment in tumour angiogenesis: A new role for metalloproteinases. Eur. J. Cancer. 2006;42:310–318. doi: 10.1016/j.ejca.2005.11.010.

- 7. Godar D.E. Worldwide increasing incidences of cutaneous malignant melanoma. J. Ski. Cancer. 2011;2011:858425. doi: 10.1155/2011/858425.

- 8. Smittenaar C., Petersen K., Stewart K., Moitt N. Cancer incidence and mortality projections in the UK until 2035. Br. J. Cancer. 2016;115:1147–1155. doi: 10.1038/bjc.2016.304.

- 9. Donaldson M.R., Coldiron B.M. Seminars in Cutaneous Medicine and Surgery. WB Saunders; Philadelphia, PA, USA: 2011. No end in sight: The skin cancer epidemic continues; pp. 3–5.

- 10. Sung H., Ferlay J., Siegel R.L., Laversanne M., Soerjomataram I., Jemal A., Bray F. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA A Cancer J. Clin. 2021;71:209–249. doi: 10.3322/caac.21660.

- 11. Ferlay J., Soerjomataram I., Dikshit R., Eser S., Mathers C., Rebelo M., Parkin D.M., Forman D., Bray F. Cancer incidence and mortality worldwide: Sources, methods and major patterns in GLOBOCAN 2012. Int. J. Cancer. 2015;136:E359–E386. doi: 10.1002/ijc.29210.

- 12. Wen D., Khan S.M., Ji Xu A., Ibrahim H., Smith L., Caballero J., Zepeda L., de Blas Perez C., Denniston A.K., Liu X., et al. Characteristics of publicly available skin cancer image datasets: A systematic review. Lancet Digit. Health. 2022;4:e64–e74. doi: 10.1016/S2589-7500(21)00252-1.

- 13. Senan E.M., Jadhav M.E. Classification of dermoscopy images for early detection of skin cancer—A review. Int. J. Comput. Appl. 2019;975:8887.

- 14. Janda M., Cust A.E., Neale R.E., Aitken J.F., Baade P.D., Green A.C., Khosrotehrani K., Mar V., Soyer H.P., Whiteman D.C. Early detection of melanoma: A consensus report from the Australian skin and skin cancer research centre melanoma screening Summit. Aust. N. Z. J. Public Health. 2020;44:111–115. doi: 10.1111/1753-6405.12972.

- 15. Demir A., Yilmaz F., Kose O. Early detection of skin cancer using deep learning architectures: Resnet-101 and inception-v3; Proceedings of the 2019 Medical Technologies Congress (TIPTEKNO); Izmir, Turkey. 3–5 October 2019; pp. 1–4.

- 16. Dildar M., Akram S., Irfan M., Khan H.U., Ramzan M., Mahmood A.R., Alsaiari S.A., Saeed A.H.M., Alraddadi M.O., Mahnashi M.H. Skin Cancer Detection: A Review Using Deep Learning Techniques. Int. J. Environ. Res. Public Health. 2021;18:5479. doi: 10.3390/ijerph18105479.

- 17. Brinker T.J., Hekler A., Utikal J.S., Grabe N., Schadendorf D., Klode J., Berking C., Steeb T., Enk A.H., Von Kalle C. Skin cancer classification using convolutional neural networks: Systematic review. J. Med. Internet Res. 2018;20:e11936. doi: 10.2196/11936.

- 18. Adla D., Reddy G., Nayak P., Karuna G. Deep learning-based computer aided diagnosis model for skin cancer detection and classification. Distrib. Parallel Databases. 2021;40:717–736. doi: 10.1007/s10619-021-07360-z.

- 19. Henriksen E.L., Carlsen J.F., Vejborg I.M., Nielsen M.B., Lauridsen C.A. The efficacy of using computer-aided detection (CAD) for detection of breast cancer in mammography screening: A systematic review. Acta Radiol. 2019;60:13–18. doi: 10.1177/0284185118770917.

- 20. di Ruffano L.F., Takwoingi Y., Dinnes J., Chuchu N., Bayliss S.E., Davenport C., Matin R.N., Godfrey K., O’Sullivan C., Gulati A. Computer-assisted diagnosis techniques (dermoscopy and spectroscopy-based) for diagnosing skin cancer in adults. Cochrane Database Syst. Rev. 2018;12. doi: 10.1002/14651858.CD013186.

- 21. Fang Y.-J., Mukundan A., Tsao Y.-M., Huang C.-W., Wang H.-C. Identification of Early Esophageal Cancer by Semantic Segmentation. J. Pers. Med. 2022;12:1204. doi: 10.3390/jpm12081204.

- 22. Haenssle H.A., Fink C., Toberer F., Winkler J., Stolz W., Deinlein T., Hofmann-Wellenhof R., Lallas A., Emmert S., Buhl T. Man against machine reloaded: Performance of a market-approved convolutional neural network in classifying a broad spectrum of skin lesions in comparison with 96 dermatologists working under less artificial conditions. Ann. Oncol. 2020;31:137–143. doi: 10.1016/j.annonc.2019.10.013.

- 23. Mukundan A., Feng S.-W., Weng Y.-H., Tsao Y.-M., Artemkina S.B., Fedorov V.E., Lin Y.-S., Huang Y.-C., Wang H.-C. Optical and Material Characteristics of MoS2/Cu2O Sensor for Detection of Lung Cancer Cell Types in Hydroplegia. Int. J. Mol. Sci. 2022;23:4745. doi: 10.3390/ijms23094745.

- 24. Mukundan A., Tsao Y.-M., Artemkina S.B., Fedorov V.E., Wang H.-C. Growth Mechanism of Periodic-Structured MoS2 by Transmission Electron Microscopy. Nanomaterials. 2022;12:135. doi: 10.3390/nano12010135.

- 25. Hsiao Y.-P., Mukundan A., Chen W.-C., Wu M.-T., Hsieh S.-C., Wang H.-C. Design of a Lab-On-Chip for Cancer Cell Detection through Impedance and Photoelectrochemical Response Analysis. Biosensors. 2022;12:405. doi: 10.3390/bios12060405.

- 26. Chao L., Liang Y., Hu X., Shi H., Xia T., Zhang H., Xia H. Recent advances in field effect transistor biosensor technology for cancer detection: A mini review. J. Phys. D Appl. Phys. 2021;55:153001. doi: 10.1088/1361-6463/ac3f5a.

- 27. Goldoni R., Scolaro A., Boccalari E., Dolci C., Scarano A., Inchingolo F., Ravazzani P., Muti P., Tartaglia G. Malignancies and biosensors: A focus on oral cancer detection through salivary biomarkers. Biosensors. 2021;11:396. doi: 10.3390/bios11100396.

- 28. Johansen T.H., Møllersen K., Ortega S., Fabelo H., Garcia A., Callico G.M., Godtliebsen F. Recent advances in hyperspectral imaging for melanoma detection. Wiley Interdiscip. Rev. Comput. Stat. 2020;12:e1465. doi: 10.1002/wics.1465.

- 29. Mukundan A., Huang C.-C., Men T.-C., Lin F.-C., Wang H.-C. Air Pollution Detection Using a Novel Snap-Shot Hyperspectral Imaging Technique. Sensors. 2022;22:6231. doi: 10.3390/s22166231.

- 30. Tsai C.-L., Mukundan A., Chung C.-S., Chen Y.-H., Wang Y.-K., Chen T.-H., Tseng Y.-S., Huang C.-W., Wu I.-C., Wang H.-C. Hyperspectral Imaging Combined with Artificial Intelligence in the Early Detection of Esophageal Cancer. Cancers. 2021;13:4593. doi: 10.3390/cancers13184593.

- 31. Schneider A., Feussner H. Chapter 5—Diagnostic Procedures. In: Schneider A., Feussner H., editors. Biomedical Engineering in Gastrointestinal Surgery. Academic Press; Cambridge, MA, USA: 2017. pp. 87–220.

- 32. Schelkanova I., Pandya A., Muhaseen A., Saiko G., Douplik A. 13—Early optical diagnosis of pressure ulcers. In: Meglinski I., editor. Biophotonics for Medical Applications. Woodhead Publishing; Sawston, UK: 2015. pp. 347–375.

- 33. Lu B., Dao P.D., Liu J., He Y., Shang J. Recent advances of hyperspectral imaging technology and applications in agriculture. Remote Sens. 2020;12:2659. doi: 10.3390/rs12162659.

- 34. Hsiao Y.-P., Chiu C.W., Lu C.W., Nguyen H.T., Tseng Y.S., Hsieh S.C., Wang H.-C. Identification of skin lesions by using single-step multiframe detector. J. Clin. Med. 2021;10:144. doi: 10.3390/jcm10010144.

- 35. Hsiao Y.-P., Wang H.-C., Chen S.H., Tsai C.H., Yang J.H. Identified early stage mycosis fungoides from psoriasis and atopic dermatitis using non-invasive color contrast enhancement by LEDs lighting. Opt. Quantum Electron. 2015;47:1599–1611. doi: 10.1007/s11082-014-0017-x.

- 36. Hsiao Y.-P., Wang H.-C., Chen S.H., Tsai C.H., Yang J.H. Optical perception for detection of cutaneous T-cell lymphoma by multi-spectral imaging. J. Opt. 2014;16:125301. doi: 10.1088/2040-8978/16/12/125301.

- 37. Tsai T.-J., Mukundan A., Chi Y.-S., Tsao Y.-M., Wang Y.-K., Chen T.-H., Wu I.-C., Huang C.-W., Wang H.-C. Intelligent Identification of Early Esophageal Cancer by Band-Selective Hyperspectral Imaging. Cancers. 2022;14:4292. doi: 10.3390/cancers14174292.

- 38. Gross W., Queck F., Vögtli M., Schreiner S., Kuester J., Böhler J., Mispelhorn J., Kneubühler M., Middelmann W. A multi-temporal hyperspectral target detection experiment: Evaluation of military setups; Proceedings of the Target and Background Signatures VII; Online. 13–17 September 2021; pp. 38–48.

- 39. Chen C.-W., Tseng Y.-S., Mukundan A., Wang H.-C. Air Pollution: Sensitive Detection of PM2.5 and PM10 Concentration Using Hyperspectral Imaging. Appl. Sci. 2021;11:4543. doi: 10.3390/app11104543.

- 40. Gerhards M., Schlerf M., Mallick K., Udelhoven T. Challenges and future perspectives of multi-/Hyperspectral thermal infrared remote sensing for crop water-stress detection: A review. Remote Sens. 2019;11:1240. doi: 10.3390/rs11101240.

- 41. Lee C.-H., Mukundan A., Chang S.-C., Wang Y.-L., Lu S.-H., Huang Y.-C., Wang H.-C. Comparative Analysis of Stress and Deformation between One-Fenced and Three-Fenced Dental Implants Using Finite Element Analysis. J. Clin. Med. 2021;10:3986. doi: 10.3390/jcm10173986.

- 42. Stuart M.B., McGonigle A.J., Willmott J.R. Hyperspectral imaging in environmental monitoring: A review of recent developments and technological advances in compact field deployable systems. Sensors. 2019;19:3071. doi: 10.3390/s19143071.

- 43. Mukundan A., Wang H.-C. Simplified Approach to Detect Satellite Maneuvers Using TLE Data and Simplified Perturbation Model Utilizing Orbital Element Variation. Appl. Sci. 2021;11:10181. doi: 10.3390/app112110181.

- 44. Huang S.-Y., Mukundan A., Tsao Y.-M., Kim Y., Lin F.-C., Wang H.-C. Recent Advances in Counterfeit Art, Document, Photo, Hologram, and Currency Detection Using Hyperspectral Imaging. Sensors. 2022;22:7308. doi: 10.3390/s22197308.

- 45. Mukundan A., Tsao Y.-M., Lin F.-C., Wang H.-C. Portable and low-cost hologram verification module using a snapshot-based hyperspectral imaging algorithm. Sci. Rep. 2022;12:18475. doi: 10.1038/s41598-022-22424-5.

- 46. Mukundan A., Wang H.-C., Tsao Y.-M. A Novel Multipurpose Snapshot Hyperspectral Imager used to Verify Security Hologram; Proceedings of the 2022 International Conference on Engineering and Emerging Technologies (ICEET); Kuala Lumpur, Malaysia. 27–28 October 2022; pp. 1–3.

- 47. Vangi E., D’Amico G., Francini S., Giannetti F., Lasserre B., Marchetti M., Chirici G. The new hyperspectral satellite PRISMA: Imagery for forest types discrimination. Sensors. 2021;21:1182. doi: 10.3390/s21041182.

- 48. Zhang X., Han L., Dong Y., Shi Y., Huang W., Han L., González-Moreno P., Ma H., Ye H., Sobeih T. A deep learning-based approach for automated yellow rust disease detection from high-resolution hyperspectral UAV images. Remote Sens. 2019;11:1554. doi: 10.3390/rs11131554.

- 49. Hennessy A., Clarke K., Lewis M. Hyperspectral classification of plants: A review of waveband selection generalisability. Remote Sens. 2020;12:113. doi: 10.3390/rs12010113.

- 50. Terentev A., Dolzhenko V., Fedotov A., Eremenko D. Current State of Hyperspectral Remote Sensing for Early Plant Disease Detection: A Review. Sensors. 2022;22:757. doi: 10.3390/s22030757.

- 51. De La Rosa R., Tolosana-Delgado R., Kirsch M., Gloaguen R. Automated Multi-Scale and Multivariate Geological Logging from Drill-Core Hyperspectral Data. Remote Sens. 2022;14:2676. doi: 10.3390/rs14112676.

- 52. Vo-Dinh T. A hyperspectral imaging system for in vivo optical diagnostics. IEEE Eng. Med. Biol. Mag. 2004;23:40–49. doi: 10.1109/memb.2004.1360407.

- 53. Lu G., Fei B. Medical hyperspectral imaging: A review. J. Biomed. Opt. 2014;19:010901. doi: 10.1117/1.JBO.19.1.010901.

- 54. Aggarwal S.L.P., Papay F.A. Applications of multispectral and hyperspectral imaging in dermatology. Exp. Dermatol. 2022;31:1128–1135. doi: 10.1111/exd.14624.

- 55. Leon R., Martinez-Vega B., Fabelo H., Ortega S., Melian V., Castaño I., Carretero G., Almeida P., Garcia A., Quevedo E., et al. Non-Invasive Skin Cancer Diagnosis Using Hyperspectral Imaging for In-Situ Clinical Support. J. Clin. Med. 2020;9:1662. doi: 10.3390/jcm9061662.

- 56. Courtenay L.A., González-Aguilera D., Lagüela S., del Pozo S., Ruiz-Mendez C., Barbero-García I., Román-Curto C., Cañueto J., Santos-Durán C., Cardeñoso-Álvarez M.E., et al. Hyperspectral imaging and robust statistics in non-melanoma skin cancer analysis. Biomed. Opt. Express. 2021;12:5107–5127. doi: 10.1364/BOE.428143.

- 57. Dicker D.T., Lerner J., Van Belle P., Guerry t.D., Herlyn M., Elder D.E., El-Deiry W.S. Differentiation of normal skin and melanoma using high resolution hyperspectral imaging. Cancer Biol. Ther. 2006;5:1033–1038. doi: 10.4161/cbt.5.8.3261.

- 58. Tan L., Huangfu T., Wu L., Chen W. Comparison of RetinaNet, SSD, and YOLO v3 for real-time pill identification. BMC Med. Inform. Decis. Mak. 2021;21:324. doi: 10.1186/s12911-021-01691-8.
