Abstract

Machine learning (ML) is being increasingly employed in dental research and application. We aimed to systematically compile studies using ML in dentistry and assess their methodological quality, including the risk of bias and reporting standards. We evaluated studies employing ML in dentistry published from 1 January 2015 to 31 May 2021 on MEDLINE, IEEE Xplore, and arXiv. We assessed publication trends and the distribution of ML tasks (classification, object detection, semantic segmentation, instance segmentation, and generation) in different clinical fields. We appraised the risk of bias and adherence to reporting standards, using the QUADAS-2 and TRIPOD checklists, respectively. Out of 183 identified studies, 168 were included, focusing on various ML tasks and employing a broad range of ML models, input data, data sources, strategies to generate reference tests, and performance metrics. Classification tasks were most common. Forty-two different metrics were used to evaluate model performance, with accuracy, sensitivity, precision, and intersection-over-union being the most common. We observed considerable risk of bias and moderate adherence to reporting standards, both of which hamper replication of results. A minimum (core) set of outcomes and outcome metrics is necessary to facilitate comparisons across studies.

Keywords: dental radiography, dentistry, machine learning, neural networks, scoping review

1. Introduction

With the advent of the big data era, machine learning (ML) methods such as Support Vector Machine (SVM), Naïve Bayesian Classifier, Decision Tree, Random Forest (RF), K-Nearest Neighbor, and deep learning involving Convolutional Neural Networks (CNNs) have been increasingly adopted in fields such as finance, spatial sciences, and speech recognition [1]. In medicine and dentistry, ML has likewise been employed for a range of applications, for example, image analysis in dermatology, ophthalmology, or radiology, with accuracy values similar to or better than those of experienced clinicians [1,2].

In the field of ML, mathematical models are employed to enable computers to learn inherent structures in data and to use the learned understanding to make predictions on new, unseen data [3]. For deep learning models, specifically CNNs, different types of model ‘architecture’ can be used. An ML workflow involves training the model, where a subset of the data is used to learn the underlying statistical patterns in the data, and testing it on a yet unseen test data subset. ML models tend to become more accurate when larger training datasets are used [4]. Moreover, basic learning parameters are usually optimized on a separate data subset, referred to as validation data, in a process called hyperparameter tuning. Testing the model on the test data involves a wealth of performance metrics (accuracy, sensitivity (also known as recall), specificity, and F-scores, among others), while the assessment of a model’s generalizability, achievable by assessing its performance on an external (independent) dataset, is not yet frequently performed.
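The partitioning described above can be illustrated with a minimal sketch. The function name `split_dataset`, the 70/15/15 proportions, and the fixed random seed are assumptions chosen for illustration only; the included studies used a wide range of partition sizes.

```python
import random


def split_dataset(items, train_frac=0.7, val_frac=0.15, seed=0):
    """Shuffle items and split them into training, validation, and test subsets.

    The fractions are illustrative defaults, not values taken from any study.
    """
    if not 0 < train_frac < 1 or not 0 <= val_frac < 1 or train_frac + val_frac >= 1:
        raise ValueError("fractions must leave a non-empty share for the test set")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]                 # used to fit the model
    val = shuffled[n_train:n_train + n_val]    # used for hyperparameter tuning
    test = shuffled[n_train + n_val:]          # held out for the final evaluation
    return train, val, test


# Hypothetical example: 100 image identifiers.
train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))
```

Because all three subsets stem from the same source, such a split does not replace testing on an external (independent) dataset.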

Notably, studies in the field of dental ML can vary widely [1]. Different research questions translate into different ML tasks, which in turn necessitate different model specifications. Various input data (numerical, imagery, speech, etc.) can be employed and varied models (SVM, Extreme Learning Machine, Decision Tree, RF, K-Nearest Neighbor, Neural Network, etc.) can be used. Datasets of different sizes and partitions (training, testing, and validation sets) can be used, and a range of methods for balancing the input datasets via synthetic data generation can be applied. Moreover, the reference test can be established either by having a “hard” ground truth (for example, for imagery, histological sectioning) or fuzzy labeling schemes (for example, multiple human annotators labeling the same image), and a variety of performance metrics can be used to evaluate the model’s performance. These metrics differ with the ML task (classification or, for imagery, detection of objects, or segmentation of specific pixels in an image, or even generation of new images), and can be determined on different hierarchical levels, e.g., patient level, image level, tooth level, surface level or pixel level. Exemplary metrics are accuracy, the confusion matrix and (associated with it) sensitivity (also known as recall), specificity, positive predictive value (precision), and negative predictive value as well as the area under the receiver-operating-characteristics curve (c-statistic). For image segmentation tasks (where each pixel has its own classification accuracy), measures of the overlap between labeled and predicted pixels are often used, namely the intersection-over-union (IoU, also known as the Jaccard index) and the closely related Dice coefficient.
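To make the relationship between these metrics concrete, the following sketch derives them from the four cells of a binary confusion matrix. The function names and the example counts are hypothetical and serve only as an illustration; they are not taken from any included study.

```python
def binary_metrics(tp, fp, fn, tn):
    """Return common classification metrics from a 2x2 confusion matrix."""
    def ratio(num, den):
        # Return NaN instead of raising when a denominator is zero.
        return num / den if den else float("nan")

    return {
        "accuracy": ratio(tp + tn, tp + fp + fn + tn),
        "sensitivity": ratio(tp, tp + fn),  # also known as recall
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),          # positive predictive value (precision)
        "npv": ratio(tn, tn + fn),          # negative predictive value
    }


def overlap_metrics(tp, fp, fn):
    """Return IoU (Jaccard index) and Dice coefficient from pixel-level counts."""
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else float("nan")
    dice = 2 * tp / (2 * tp + fp + fn) if (2 * tp + fp + fn) else float("nan")
    return {"iou": iou, "dice": dice}


# Hypothetical counts for illustration only.
print(binary_metrics(tp=40, fp=10, fn=20, tn=30))
print(overlap_metrics(tp=40, fp=10, fn=20))
```

Note that IoU and Dice are not identical but monotonically related (Dice = 2·IoU / (1 + IoU)), so values reported as one cannot be compared directly with values reported as the other without conversion.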

As a result, there is significant heterogeneity in the data, tasks, models, and performance metrics, which makes it difficult to contrast studies and assess the robustness and consistency of the emerging body of evidence for ML in dentistry. Additionally, the quality of ML studies, with regard to both the risk of bias and the reporting of methods and results, has been shown to vary [5], and such variance in quality and replicability is very likely also present in dental ML studies.

We aimed to assess the quality of recent ML studies in dentistry, focusing on risk of bias and reporting quality, and to characterize the overall body of evidence with regard to the clinical and ML tasks frequently studied, the model types and underlying datasets, and the employed metrics. An overview of these aspects, together with an appraisal of the consistency and robustness of existing ML studies in our field, helps to highlight current strengths and weaknesses and to identify future research needs. In contrast to recent focused reviews on certain clinical tasks (e.g., caries detection on radiographs [6], cephalometric landmark detection [2], etc.), this scoping review does not primarily target clinical applicability and performance in a subfield of dentistry but captures the overall picture of ML in our field with a broader focus; thus, a higher number of studies was expected to be included.

2. Materials and Methods

2.1. Search Strategy and Selection Criteria

We screened three electronic databases (MEDLINE via PubMed, Institute of Electrical and Electronics Engineers (IEEE) Xplore, and arXiv). Search terms used were ‘deep learning’, ‘artificial intelligence’, ‘machine learning’, ‘convolutional neural network’, ‘dental’ and ‘teeth’. The search strategy for all three databases is specified in the Supplementary Materials. No language restrictions were applied. The search was overall designed to account for different publication cultures across disciplines. Reviews, editorials, and technical standards were excluded.

The following inclusion criteria were applied:

(1) Studies which had a dental/oral focus, including technical papers.

(2) Studies employing ML, for example, SVM, RF, Artificial Neural Network, CNN.

(3) Studies published between 1 January 2015 and 31 May 2021, as we aimed to gather recent studies and specifically include deep learning as the most rapidly evolving ML field at present.

Reporting of this scoping review followed the PRISMA checklist [7,8]. Our PICOS question was as follows: Which ML practices are being employed by studies in dentistry and what are the methodological quality and findings? The question was constructed according to the Participants, Intervention, Comparison, Outcome, and Study (PICOS) strategy.

Population: All types of data with a dental or oral component.

Intervention/Comparison: ML techniques applied with a dental or oral focus for the diagnosis, management, or prognosis of dental conditions or for improving data quality, at the patient, tooth, surface, or pixel level.

Outcome: Performance evaluation of the ML models in terms of metrics, for example, accuracy, IoU, sensitivity, precision, area under the receiver operating characteristic curve, F indices, specificity, negative predictive value, rank-N recognition rate, error estimates, correlation coefficients, etc.

Study design type: For this review, we considered all kinds of studies except reviews, editorials, and technical standards, with no language restrictions.

Ethics approval was not sought because this study was based exclusively on published literature.

Screening of titles or abstracts was performed by one reviewer (A.C.). Inclusion or exclusion was decided by two reviewers in consensus (F.S. and A.C.). All papers which were found to be potentially eligible were assessed in full text against the inclusion criteria. We did not limit the inclusion of studies based on the target study population, outcome of interest, or the context in which ML was used. All original studies related to dentistry and ML, without gross reporting fallacies, such as failure to define the type of ML used, failure to minimally describe which dataset was employed for training and testing, and failure to report study findings, were included in this scoping review.

2.2. Data Collection, Items, and Pre-Processing

Data extraction was performed jointly by A.C., A.M., and L.T.A.-S. The extracted data was reviewed by L.T.A.-S. Adjudication in case of any disagreement was performed by discussion (L.T.A.-S. and J.K.). A pretested Excel spreadsheet was used to record the extracted data. Study characteristics included country, year of publication, aim of study and clinical field, type of input data (covariates or imagery [photographs or radiographs; 2-D or 3-D imagery]), dataset source, size and partitions (training, test, validation sets), type of model used and, for deep learning, architecture, augmentation strategies employed, reference test and its definition, comparators (if available, e.g., current standard of care, clinicians, etc.), and performance metrics and their values. In each study, all data items that were compatible with a domain of the extracted data were sought and recorded (e.g., all performance metrics, models employed). No assumptions were made regarding missing or unclear data.

2.3. Quality Assessment

The risk of bias was assessed using the QUADAS-2 tool in four domains [9]. First, risk of bias in data selection was assessed using the parameters of ‘inappropriate exclusions’, ‘case-control design’, and ‘consecutive or random patient enrollment’. Second, risk of bias in the index test was assessed using the parameters of ‘assessment independent of reference standard’ and ‘pre-specification of thresholds used’. Third, risk of bias in the reference standard was assessed using the parameters of ‘validity of reference standard’ and ‘assessment independent of index test’. Fourth, risk of bias in the flow and timing was assessed using the parameters of ‘appropriate interval between index test and reference standard’, ‘use of a reference standard for all patients’, ‘use of the same reference standard for all patients’, and ‘inclusion of all patients in the analysis’. Using the same tool, applicability concerns in three domains were also evaluated. First, applicability concerns for data selection were assessed using the parameter of ‘mismatch between the included patients and the review question’. Second, applicability concerns for the index test were assessed via the parameter of ‘mismatch between the test, its conduct, or its interpretation and the review question’. Last, applicability concerns for the reference standard were assessed via the parameter of ‘mismatch between the target condition as defined by the reference standard and the review question’. We note that alternatively (or even complementarily), the PROBAST tool [10] could have been used for the same assessment.

Adherence to reporting standards was assessed using the Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis (TRIPOD) tool, which is a 22-item checklist that provides reporting standards for prediction model studies [11]. Note that not all studies included were prediction model studies (studies varied widely in their broader approach, as discussed below), but all involved a mathematical model (ML) for a specific task, which is why we assumed that this checklist would require most studies to adhere to the large majority of domains. TRIPOD has been used for similar purposes in other domains [5]. Risk of bias and adherence to reporting standards were independently assessed by one reviewer (L.T.A.-S.).

2.4. Data Synthesis

We describe various aspects of the included studies, such as country of origin, type of input data used, source of datasets, type of ML methods used, etc. We had initially attempted to conduct a meta-analysis using the results of the confusion matrices reported by the included studies; however, out of 168 studies, only 16 (10%) presented their confusion matrices in a way that could be used for analysis. Furthermore, these studies differed from each other in terms of their clinical research question/task, type of input data, model architecture, etc.

Instead, a narrative synthesis was performed, displaying which ML tasks (i.e., classification, object detection, semantic segmentation, instance segmentation, and generation) have been studied in different clinical fields of dentistry, namely restorative dentistry and endodontics, oral medicine, oral radiology, orthodontics, oral surgery and implantology, periodontology, prosthodontics, and others, i.e., non-specific field or general dentistry. We briefly explain the different tasks in the following section:

In ML, classification refers to a predictive modeling problem where a class label is predicted for a given example of input data. An example is to classify a given handwritten character as one of the known characters. Algorithms popularly used for classification in the included studies were logistic regression, k-Nearest Neighbors, Decision Trees, Naïve Bayes, RF, Gradient Boosting, etc.

In object detection tasks, one attempts to identify and locate objects within an image or video. Specifically, object detection draws bounding boxes around the detected objects, which allows them to be located. Given the complexity of handling image data, deep learning models based on CNNs, such as Region-based CNN, Fast Region-based CNN, You Only Look Once, and Single Shot MultiBox Detector, are popularly used for this task.
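For object detection, agreement between a predicted and a reference bounding box is commonly quantified by their IoU. The sketch below assumes axis-aligned boxes given as (x_min, y_min, x_max, y_max); this coordinate convention, the function name `box_iou`, and the example boxes are assumptions for illustration.

```python
def box_iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap rectangle (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - intersection
    return intersection / union if union > 0 else 0.0


# Hypothetical reference and predicted boxes.
reference = (0, 0, 10, 10)
predicted = (5, 5, 15, 15)
print(round(box_iou(reference, predicted), 3))  # 0.143 (25 / 175)
```

A detection is then typically counted as correct if its IoU with a reference box exceeds a chosen threshold (0.5 is a frequent choice), which in turn determines the sensitivity and precision reported for the detector.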

In image segmentation tasks, one aims to identify the exact outline of a detected object in an image. There are two types of segmentation tasks: semantic segmentation and instance segmentation. Semantic segmentation classifies each pixel in the image into a particular class. It does not differentiate between different instances of the same object. For example, if there are two cats in an image, semantic segmentation gives the same label, for instance, ‘cat’, to all the pixels of both cats. Instance segmentation differs from this in the sense that it gives a unique label to every instance of a particular object in the image. Thus, in the example of an image containing two cats, each cat would receive a distinct label, for instance, ‘cat1’ and ‘cat2’. Currently, the most popular models for image segmentation are fully convolutional networks and their variants, such as U-Net, DeepLab, PointNet, etc.

A fifth type of ML task is a generation task, which is not predictive in nature. Such tasks involve the generation of new images from the input images, for example, generation of artifact-free CT images from those containing metal artifacts.
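The difference between the two label types can be shown on a toy mask. In the sketch below, a semantic mask (0 = background, 1 = object) is converted into an instance mask by numbering each connected region separately. This connected-component labeling is only a simplified illustration of the output format; it is an assumption of this example and not how instance segmentation models, or any included study, derive instances.

```python
from collections import deque


def label_instances(semantic_mask, target_class=1):
    """Give each 4-connected region of target_class its own integer id."""
    rows, cols = len(semantic_mask), len(semantic_mask[0])
    instance_mask = [[0] * cols for _ in range(rows)]
    next_id = 0
    for r in range(rows):
        for c in range(cols):
            if semantic_mask[r][c] != target_class or instance_mask[r][c]:
                continue  # background or already assigned to an instance
            next_id += 1
            instance_mask[r][c] = next_id
            queue = deque([(r, c)])
            while queue:  # breadth-first flood fill of the current region
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and semantic_mask[ny][nx] == target_class
                            and instance_mask[ny][nx] == 0):
                        instance_mask[ny][nx] = next_id
                        queue.append((ny, nx))
    return instance_mask, next_id


# Toy semantic mask with two separate objects of the same class.
semantic = [
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 1, 1],
    [0, 0, 0, 0, 1, 1],
]
instances, count = label_instances(semantic)
for row in instances:
    print(row)
print("instances found:", count)
```

In the semantic mask both objects share the label 1, whereas in the instance mask the first object is labeled 1 and the second is labeled 2.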

The study protocol was registered after the initial screening stage (PROSPERO registration no. CRD42021288159).

3. Results

3.1. Study Selection and Characteristics

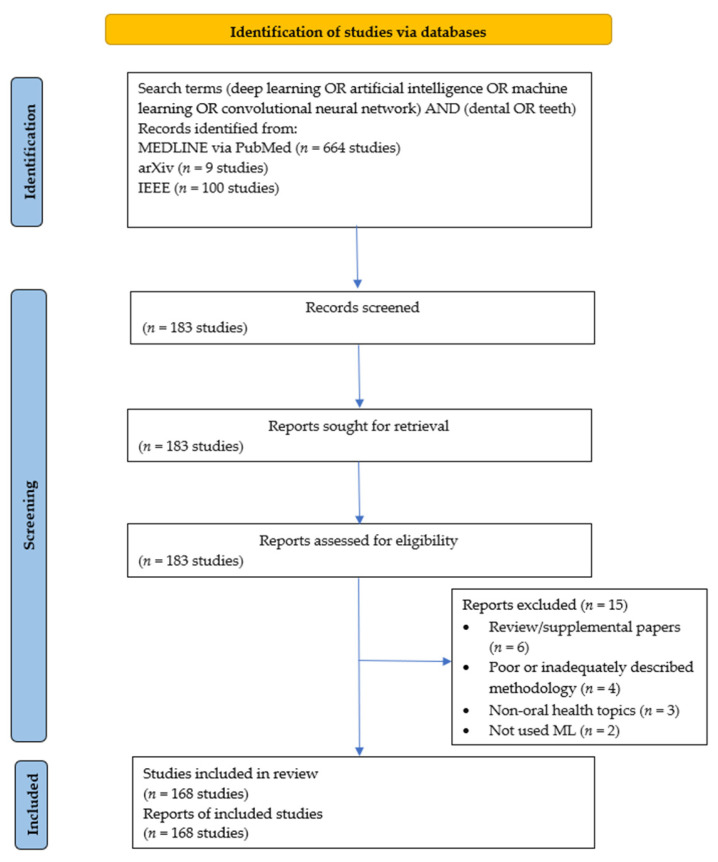

A total of 183 studies were identified and 168 (92%) studies were included (Figure 1). The included studies [3,4,12-177] and their characteristics can be found in Table S1. The excluded studies with reasons for exclusion are listed in Table S2. The included studies were published between 1 January 2015 and 31 May 2021 (median: 2019), with the number of published studies increasing over time; 2015: six studies, 2016: four studies, 2017: 13 studies, 2018: 21 studies, 2019: 49 studies, 2020: 68 studies (for 2021, data only until May was available). The included studies stemmed from 40 countries (Figure S1) and used different kinds of input data, such as 2-D data (radiographs: 42% of studies; photographs or other kinds of images: 16% of studies), 3-D data (radiographic scans: 18% of studies; non-radiographic scans: 4% of studies), non-image data (survey data: 10% of studies; single nucleotide polymorphism sequences: 1% of studies), and combinations of the aforementioned types of data (9% of studies). Further, 97% of studies used data from universities, hospitals, and private practices, whereas 1% of studies each used data from the National Health and Nutrition Examination Survey, M3BE database, 2013 Nationwide Readmissions Database of the USA, and the National Institute of Dental and Craniofacial Research dataset.

Figure 1. PRISMA study flow diagram.

Additionally, 85% of studies partitioned their total dataset into training and testing data subsets, and 59% of studies also created validation data subsets from the same data source. The median size of the training datasets was 450 (range: 12 to 1,296,000 data instances) and of the test datasets was 126 (range: 1 to 144,000). Nearly half of the studies tested model performance on a hold-out test dataset while the remaining used cross-validation. Cross-validation is a resampling method that uses different portions of the data to test and train a model during each iteration. For example, in a 10-fold cross-validation, the original dataset is randomly partitioned into 10 subsamples. Ten iterations are then carried out: in each iteration, the model is trained on nine subsamples and tested on the remaining one, with a different subsample serving as the test data each time, so that a different combination of subsamples constitutes the training data. The final estimate of model performance is the average of the ten results.
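The k-fold procedure can be sketched as follows. The helper names, the synthetic labels, and the trivial majority-class "model" are assumptions made purely for illustration; in practice an actual ML model would be trained and evaluated in each iteration.

```python
import random
from statistics import mean


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and the number of samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)        # random partition into k folds
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test_idx = folds[i]                     # one fold is held out for testing
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, test_idx


def cross_validate(n_samples, evaluate, k=10, seed=0):
    """Average the score returned by evaluate(train_idx, test_idx) over k folds."""
    return mean(evaluate(tr, te) for tr, te in k_fold_indices(n_samples, k, seed))


# Hypothetical labels and a placeholder "model" that predicts the majority class.
labels = [0] * 60 + [1] * 40


def majority_class_accuracy(train_idx, test_idx):
    train_labels = [labels[i] for i in train_idx]
    majority = max(set(train_labels), key=train_labels.count)
    return mean(1.0 if labels[i] == majority else 0.0 for i in test_idx)


print(cross_validate(len(labels), majority_class_accuracy, k=10))
```

Each data instance is used for testing exactly once, which is why cross-validation is attractive for the small datasets that many of the included studies relied on.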

In addition, 65% of studies augmented their input data, mainly the training data, but a few augmented the testing data, too. Only 20% of studies used an external dataset to validate their model’s performance. The reference test (i.e., how the ground truth was defined) was established by professional experts in 73% of studies: one expert in 18% of studies, two experts in 11%, three experts in 10%, four and five experts in 2% each, six experts in 1%, and seven, eight, 12, and 20 experts in 0.5% each; the remaining 27% of studies used experts for establishing the reference test but did not provide details on the exact numbers. Additionally, 22% of studies used information from their datasets as the reference test (for example, age, diagnosis from medical records) and 1% of studies used a software tool to generate the reference test. The remaining 4% of studies did not provide details on how the reference test was established.

Of all studies, 70% used deep learning models: CNNs as classifiers (59 studies), CNNs for other tasks (14 studies), Faster R-CNN (seven studies), fully convolutional networks (19 studies), Mask R-CNN (seven studies), 3-D CNN (three studies), adaptive CNN and pulse-coupled CNN (one study each), and non-convolutional deep neural networks (seven studies) (Table S1). Another 22% of studies used non-deep learning models: perceptron (four studies), other neural networks (three studies), and other types of models, such as fuzzy classifier, SVM, RF, etc. (30 studies). In addition, 6% of studies used various combinations of the aforementioned models and 2% of studies did not provide details of the model architecture employed. Both models using and models not using deep learning were employed in higher proportions by studies in restorative dentistry and endodontics, oral medicine, and non-specific field or general dentistry (Table S3). Additionally, models not using deep learning were frequently employed by studies in orthodontics and periodontology. Finally, 20% of studies compared their model’s performance with that of human comparators.

3.2. Risk of Bias and Applicability Concerns

The risk of bias was assessed in four domains, namely data selection, index test, reference standard, and flow and timing. It was found to be high for 54% of the studies regarding data selection and for 58% of the studies regarding the reference standard (Table 1). On the other hand, the risk of bias was low for the majority of studies regarding the index test (77%) and flow and timing (89%). Applicability concerns were found to be high for 53% of the studies regarding data selection but were low for most studies regarding the index test (79%) and reference standard (73%).

Table 1. Evaluation of risk of bias in studies included (n = 168) using the QUADAS-2 tool.

| Sr. No. [Citation] | Data Selection: Risk of Bias/Applicability Concerns | Index Test: Risk of Bias/Applicability Concerns | Reference Standard: Risk of Bias/Applicability Concerns | Flow and Timing: Risk of Bias |
|---|---|---|---|---|
| 1. [12] | high/high | low/high | high/high | low |
| 2. [13] | low/low | low/low | low/low | low |
| 3. [14] | high/low | low/low | low/low | low |
| 4. [15] | low/low | low/high | high/high | low |
| 5. [16] | low/low | low/low | low/low | low |
| 6. [17] | high/high | low/high | high/high | low |
| 7. [18] | high/high | low/low | high/low | low |
| 8. [19] | low/low | low/high | low/low | low |
| 9. [20] | low/low | low/low | low/high | low |
| 10. [21] | high/high | low/low | high/low | low |
| 11. [22] | high/high | low/low | high/high | low |
| 12. [23] | high/low | high/low | high/low | low |
| 13. [24] | low/high | low/low | high/high | low |
| 14. [25] | high/high | high/low | low/low | low |
| 15. [26] | low/low | high/low | low/low | low |
| 16. [27] | high/low | low/low | high/low | low |
| 17. [28] | high/high | low/low | high/low | low |
| 18. [29] | high/low | low/low | high/low | low |
| 19. [30] | high/high | low/low | high/low | low |
| 20. [31] | high/high | low/high | high/low | low |
| 21. [32] | high/high | high/high | high/high | low |
| 22. [33] | low/low | low/low | low/low | low |
| 23. [34] | low/high | low/low | low/high | low |
| 24. [35] | high/high | low/low | low/low | low |
| 25. [36] | low/low | low/low | low/low | low |
| 26. [37] | high/high | low/low | high/low | low |
| 27. [38] | high/high | low/low | high/low | low |
| 28. [39] | high/high | low/low | high/low | low |
| 29. [40] | high/high | high/low | high/low | low |
| 30. [41] | low/low | low/low | low/low | low |
| 31. [42] | high/low | high/low | low/low | low |
| 32. [43] | low/high | low/high | low/high | low |
| 33. [44] | low/low | high/low | high/low | low |
| 34. [45] | high/high | low/high | low/high | low |
| 35. [46] | high/low | low/low | low/low | low |
| 36. [47] | high/high | low/low | low/low | low |
| 37. [48] | high/high | low/high | low/high | low |
| 38. [49] | low/low | low/low | high/low | low |
| 39. [50] | low/high | low/low | high/low | high |
| 40. [51] | low/high | low/low | low/low | low |
| 41. [52] | high/low | low/high | high/low | low |
| 42. [53] | high/high | low/low | low/low | high |
| 43. [54] | low/low | low/high | low/high | low |
| 44. [55] | high/high | low/low | high/low | low |
| 45. [56] | high/high | low/high | high/low | low |
| 46. [57] | high/high | low/low | high/high | low |
| 47. [58] | high/high | high/high | high/high | low |
| 48. [59] | low/high | low/low | high/high | low |
| 49. [60] | low/high | low/low | high/high | low |
| 50. [61] | low/low | low/low | high/low | high |
| 51. [62] | high/high | low/low | high/low | low |
| 52. [63] | low/high | low/high | high/high | low |
| 53. [64] | high/high | high/high | high/high | low |
| 54. [65] | high/high | low/low | high/low | low |
| 55. [66] | low/high | low/low | high/low | low |
| 56. [67] | high/high | low/high | low/high | low |
| 57. [68] | low/high | low/low | low/low | high |
| 58. [69] | low/low | low/low | low/low | low |
| 59. [70] | high/high | low/low | low/low | low |
| 60. [71] | low/low | low/low | low/low | low |
| 61. [72] | low/high | low/low | high/low | low |
| 62. [73] | low/low | low/low | high/low | low |
| 63. [74] | low/low | low/low | low/low | low |
| 64. [75] | low/low | low/low | low/low | low |
| 65. [76] | low/low | low/low | low/low | low |
| 66. [77] | high/high | high/low | high/low | low |
| 67. [78] | high/low | high/low | high/low | low |
| 68. [79] | high/low | high/low | high/low | low |
| 69. [80] | high/low | high/low | low/low | low |
| 70. [81] | low/low | low/low | low/low | low |
| 71. [82] | low/low | low/low | high/low | low |
| 72. [83] | low/low | low/low | low/low | low |
| 73. [84] | high/low | low/low | high/low | low |
| 74. [85] | low/low | low/low | low/low | high |
| 75. [86] | high/high | low/low | low/low | low |
| 76. [87] | high/high | high/low | low/low | low |
| 77. [88] | low/low | low/low | low/low | low |
| 78. [89] | high/high | high/high | high/high | low |
| 79. [90] | high/high | high/high | high/high | low |
| 80. [91] | high/high | low/low | high/low | low |
| 81. [92] | low/low | low/low | high/low | low |
| 82. [93] | low/high | low/low | high/high | low |
| 83. [94] | low/low | low/low | low/low | high |
| 84. [95] | high/high | high/low | high/high | low |
| 85. [96] | low/high | high/low | high/high | low |
| 86. [97] | high/high | low/high | low/high | low |
| 87. [98] | high/high | low/low | low/low | low |
| 88. [99] | low/high | low/high | high/high | low |
| 89. [100] | low/high | low/high | high/high | low |
| 90. [101] | low/high | low/low | low/high | low |
| 91. [102] | high/high | low/low | high/low | low |
| 92. [103] | low/low | low/low | low/low | low |
| 93. [4] | high/low | low/high | high/high | low |
| 94. [104] | low/low | low/low | high/low | low |
| 95. [105] | high/high | low/high | high/low | low |
| 96. [106] | low/high | low/low | low/high | low |
| 97. [107] | low/low | low/low | high/low | low |
| 98. [108] | low/low | low/low | low/low | low |
| 99. [109] | high/high | high/low | high/low | low |
| 100. [110] | low/low | low/low | high/low | low |
| 101. [111] | low/low | low/low | high/low | low |
| 102. [112] | high/low | high/low | high/high | low |
| 103. [113] | high/high | low/low | low/high | high |
| 104. [3] | low/high | low/low | low/low | low |
| 105. [114] | low/low | low/low | low/low | low |
| 106. [115] | low/low | low/low | low/low | low |
| 107. [116] | high/high | high/low | high/low | low |
| 108. [117] | high/low | high/low | low/low | low |
| 109. [118] | high/high | low/low | high/low | low |
| 110. [119] | low/low | low/low | low/low | low |
| 111. [120] | low/low | low/high | high/high | low |
| 112. [121] | low/low | low/low | high/low | low |
| 113. [122] | high/high | high/low | low/low | low |
| 114. [123] | low/low | low/low | low/low | low |
| 115. [124] | low/high | low/low | high/low | low |
| 116. [125] | high/high | low/low | low/high | low |
| 117. [126] | high/low | high/low | high/low | high |
| 118. [127] | high/high | low/low | high/low | low |
| 119. [128] | low/low | high/low | low/low | low |
| 120. [129] | high/low | low/low | low/low | low |
| 121. [130] | high/high | low/low | high/low | high |
| 122. [131] | high/low | high/low | high/low | low |
| 123. [132] | high/high | low/low | high/low | low |
| 124. [133] | high/high | low/low | high/low | high |
| 125. [134] | low/high | high/low | high/low | low |
| 126. [135] | high/low | high/low | low/low | low |
| 127. [136] | high/low | high/low | high/low | low |
| 128. [137] | high/low | high/high | low/low | low |
| 129. [138] | low/high | low/high | high/low | low |
| 130. [139] | high/low | low/low | low/low | low |
| 131. [140] | high/low | low/high | high/high | low |
| 132. [141] | low/low | low/low | high/low | low |
| 133. [142] | high/high | low/low | high/low | low |
| 134. [143] | high/high | low/low | low/low | low |
| 135. [144] | high/high | low/low | high/low | low |
| 136. [145] | high/high | high/low | high/low | low |
| 137. [146] | high/high | low/low | high/low | low |
| 138. [147] | high/low | high/low | low/low | low |
| 139. [148] | high/high | low/low | high/low | low |
| 140. [149] | high/high | low/high | high/high | low |
| 141. [150] | high/high | low/high | high/high | low |
| 142. [151] | low/high | low/low | high/high | low |
| 143. [152] | high/high | low/high | high/high | low |
| 144. [153] | high/low | low/low | high/low | low |
| 145. [154] | low/low | low/high | high/high | low |
| 146. [155] | low/low | high/low | low/low | low |
| 147. [156] | low/high | low/low | low/low | low |
| 148. [157] | high/high | high/low | high/low | high |
| 149. [158] | low/low | low/low | low/low | low |
| 150. [159] | low/high | low/high | low/high | low |
| 151. [160] | high/low | low/high | low/low | low |
| 152. [161] | low/low | high/low | high/low | high |
| 153. [162] | high/low | low/low | low/high | low |
| 154. [163] | low/low | low/high | low/high | low |
| 155. [164] | high/low | low/low | high/low | low |
| 156. [165] | low/low | low/low | high/low | low |
| 157. [166] | low/high | low/high | high/high | high |
| 158. [167] | low/low | low/low | low/low | low |
| 159. [168] | low/low | low/low | high/low | low |
| 160. [169] | low/high | high/low | high/high | low |
| 161. [170] | high/high | low/low | low/low | low |
| 162. [171] | low/low | low/low | high/low | low |
| 163. [172] | low/low | low/low | low/low | low |
| 164. [173] | low/low | low/low | high/low | low |
| 165. [174] | low/low | low/low | low/low | low |
| 166. [175] | high/high | high/high | high/high | low |
| 167. [176] | high/high | high/low | low/low | low |
| 168. [177] | high/high | low/low | high/low | low |

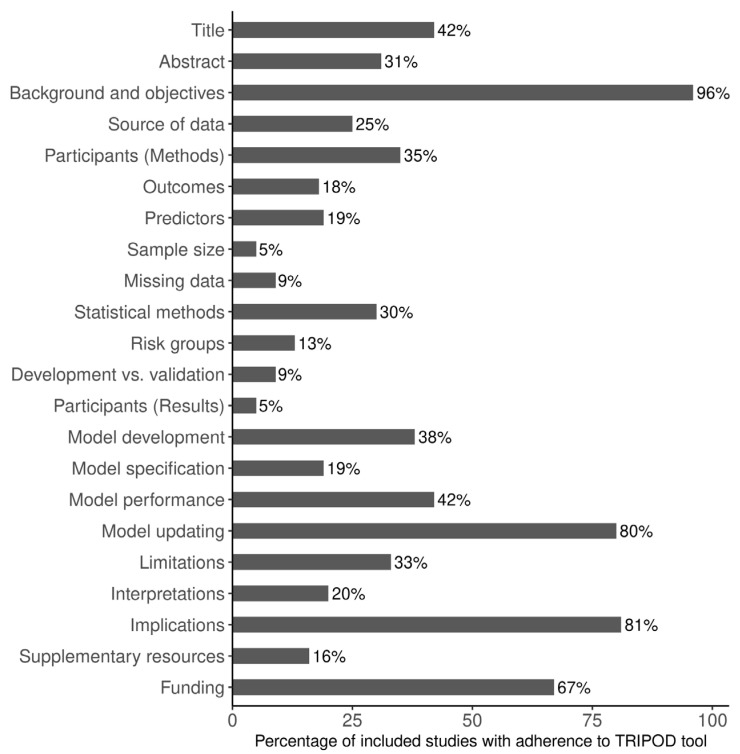

3.3. Adherence to Reporting Standards

Overall adherence to the TRIPOD reporting checklist was 33.3%, with 18/22 domains having an adherence rate of less than 50% (Figure 2). Reporting adherence was at or above 80% for background and objectives, and potential clinical use of the model and implications for future research, but below 10% for sample size calculation, handling of missing data, differences between development and validation data, and details on participants. In particular, less than 20% of studies adequately defined their predictors and outcomes (in terms of their blinded assessments), described stratification into risk groups, presented the full prediction model, or provided information on supplementary resources, such as study protocol, web calculator, or data sets. Less than 40% of the studies adequately reported on their data sources (i.e., study dates), participant eligibility, statistical methods (specifically, details on model refinement), model results (in terms of results from crude models), study limitations, and results with reference to performance in the development data and any other validation data.

Figure 2. Reporting adherence of studies (n = 168) to Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis (TRIPOD) tool.

3.4. Tasks, Metrics, and Findings of the Studies

Based on the nature of the ML task formulated, the 168 included studies were classified into five major categories of ML tasks: classification (n = 85), object detection (n = 22), semantic segmentation (n = 37), instance segmentation (n = 19), and generation (n = 5). Classification tasks were most commonly used in oral medicine studies (22%), whereas object detection, semantic segmentation, and instance segmentation tasks were each most commonly used in non-specific field or general dentistry studies (36%, 38%, and 58%, respectively; Table 2). Generation tasks, though few in number, were most commonly used in oral radiology studies (80%).

Table 2.

Number of studies in each field of dentistry, stratified by type of machine learning task (n = 168).

| | Classification Task | Object Detection Task | Semantic Segmentation Task | Instance Segmentation Task | Generation Task |
|---|---|---|---|---|---|
| n | 85 | 22 | 37 | 19 | 5 |
| Field of dentistry, n (%) | | | | | |
| Restorative dentistry and endodontics | 13 (15%) | 1 (4%) | 9 (24%) | 2 (11%) | 0 (0%) |
| Oral medicine | 19 (22%) | 5 (23%) | 1 (3%) | 0 (0%) | 0 (0%) |
| Oral radiology | 3 (4%) | 0 (0%) | 2 (5%) | 2 (11%) | 4 (80%) |
| Orthodontics | 10 (12%) | 3 (14%) | 1 (3%) | 3 (15%) | 1 (20%) |
| Oral surgery and implantology | 11 (13%) | 3 (14%) | 3 (8%) | 0 (0%) | 0 (0%) |
| Periodontology | 9 (11%) | 2 (9%) | 7 (19%) | 1 (5%) | 0 (0%) |
| Prosthodontics | 2 (2%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) |
| Others (non-specific field, general dentistry) | 18 (21%) | 8 (36%) | 14 (38%) | 11 (58%) | 0 (0%) |

A total of 42 different metrics were used by the studies to evaluate model performance. Some of these could be grouped into one class; for example, the various correlation coefficients could be combined. Such grouping (or consolidation) resulted in 26 distinct classes of metrics. Note that most studies reported multiple metrics. Studies on classification tasks commonly reported accuracy, sensitivity, area under the receiver operating characteristic curve, specificity, and precision, and those on object detection reported sensitivity, precision, and accuracy. Studies on semantic segmentation reported IoU and sensitivity, and those on instance segmentation reported accuracy, sensitivity, and IoU. Lastly, studies using generation tasks commonly reported peak signal-to-noise ratio, structural similarity index, and relative error. Table S4 shows the number of studies which used the different metrics, stratified by ML task.
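As a point of reference for the most frequently reported metrics, the following minimal Python sketch computes accuracy, sensitivity, specificity, precision, and IoU from binary confusion-matrix counts. The function name `binary_metrics` and the example counts are illustrative assumptions and are not taken from any included study. IoU is given here in its count-based form, TP / (TP + FP + FN).

```python
def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Common performance metrics from binary confusion-matrix counts.

    For segmentation tasks, the counts are taken per pixel (or voxel).
    A metric whose denominator is zero is returned as None.
    """
    def ratio(numerator, denominator):
        return numerator / denominator if denominator else None

    return {
        "accuracy": ratio(tp + tn, tp + fp + fn + tn),
        "sensitivity": ratio(tp, tp + fn),  # recall, true-positive rate
        "specificity": ratio(tn, tn + fp),  # true-negative rate
        "precision": ratio(tp, tp + fp),    # positive predictive value
        "iou": ratio(tp, tp + fp + fn),     # intersection-over-union (Jaccard index)
    }


# Hypothetical counts, for illustration only.
m = binary_metrics(tp=80, fp=10, fn=20, tn=890)
```

With these hypothetical counts, accuracy is 0.97 whereas IoU is about 0.73, which illustrates why studies reporting different metrics are difficult to compare directly.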

After stratifying the studies by ML task and clinical field of dentistry, we attempted to evaluate studies that reported on accuracy, or mean average precision, or IoU. A formal comparison was inhibited by the large variability at the level of clinical or diagnostic tasks amongst the studies.

4. Discussion

ML in dentistry is characterized by a plethora of clinical tasks, which necessitate the use of a wide range of input data types, ML models, performance metrics, etc. This has given rise to a large body of evidence with limited comparability. The present scoping review synthesized this evidence and allowed us to assess it comprehensively. We will begin by discussing our findings in detail.

First, the included studies aimed for different ML tasks on a wide variety of data. These data then differed once more within specific subtypes (e.g., imagery, with radiographs, scans, photographs, each of them being sub-classified again, and differing in resolution, contrast, etc.). Moreover, data usually stemmed from single centers, representing only a limited population (and limited diversity in terms of data generation strategy or technique), all of which likely adversely impacts the generalizability of results. The data used were almost never available, except for the few studies employing data from open databases, leading to difficulties in replicating results. Researchers are urged to comply with journals’ data sharing policies and make their data available upon reasonable request. We acknowledge that there may be data sharing and privacy concerns across institutions and countries. Alternatives to centralized learning of ML models, like federated learning, which do not require data sharing, may be of relevance, especially for data which are hard to de-identify [178]. Practices of data linkage and triangulation, i.e., using a variety of data sources to create a richer dataset, were almost non-existent. This limits the options for verifying data integrity and for increasing the learning output of an ML model by leveraging information from multiple data sources on hierarchical structures and correlations.
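To make the idea of federated learning more concrete, the sketch below shows only the server-side aggregation step in the style of federated averaging: each site trains locally and shares its model parameters, never its data, and the server combines the parameters weighted by local sample size. This is a simplified illustration under stated assumptions, not the approach described in [178]; the function name `federated_average` and all numbers are hypothetical.

```python
from typing import List, Sequence


def federated_average(site_weights: Sequence[Sequence[float]],
                      site_sizes: Sequence[int]) -> List[float]:
    """Aggregate locally trained parameter vectors into a global one.

    Each site shares only its parameters; the server weights each site
    by its number of training samples.
    """
    if len(site_weights) != len(site_sizes) or not site_weights:
        raise ValueError("need one parameter vector and one size per site")
    total = sum(site_sizes)
    n_params = len(site_weights[0])
    return [
        sum(w[i] * n for w, n in zip(site_weights, site_sizes)) / total
        for i in range(n_params)
    ]


# Hypothetical parameters from three clinics (illustration only).
global_weights = federated_average(
    site_weights=[[0.2, 1.0], [0.4, 0.8], [0.6, 0.6]],
    site_sizes=[100, 300, 600],
)
```

In a full federated setup, this aggregation would alternate with rounds of local training at each site; those rounds are omitted here for brevity.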

Second, a wide range of outcome measures was used by the included studies. These can be measured on different levels, such as patient-level, tooth-level, and surface-level, and while this is relevant for any comparison or synthesis across studies, it was not always reported on what level the outcomes were assessed. Another issue was the high number of performance metrics in use, as evident from our results, leading to only a few studies being comparable to each other. Defining an agreed-upon set of outcome metrics for specific subtasks in ML in dentistry (e.g., classification, detection, segmentation on images), along with standards for the level of outcome assessment, seems warranted. This outcome set should reflect various aspects of performance (e.g., under- and over-detection), consider the impact of prevalence (e.g., predictive values), and attempt to convey not only diagnostic value, but also clinical usefulness. For the latter, studies attempting to assess the value of ML in the hands of clinicians against the current standard of care are needed.
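The dependence of predictive values on prevalence can be illustrated with a short worked example based on Bayes' theorem. The sketch below is illustrative only; the function name `predictive_values` and the sensitivity, specificity, and prevalence values are assumptions chosen for the example, not results from the included studies.

```python
def predictive_values(sensitivity: float, specificity: float,
                      prevalence: float) -> tuple:
    """Positive and negative predictive value via Bayes' theorem.

    Assumes 0 < prevalence < 1 and a test that yields at least some
    positive and some negative results.
    """
    tp = sensitivity * prevalence
    fp = (1 - specificity) * (1 - prevalence)
    tn = specificity * (1 - prevalence)
    fn = (1 - sensitivity) * prevalence
    ppv = tp / (tp + fp)
    npv = tn / (tn + fn)
    return ppv, npv


# Hypothetical model with sensitivity = specificity = 0.90.
for prevalence in (0.05, 0.50):
    ppv, npv = predictive_values(0.90, 0.90, prevalence)
    print(f"prevalence={prevalence:.2f}  PPV={ppv:.2f}  NPV={npv:.2f}")
```

For this hypothetical model, the positive predictive value is 0.90 at a prevalence of 50% but only about 0.32 at a prevalence of 5%, although sensitivity and specificity are unchanged.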

Third, the use of reference tests (i.e., how the ground truth was established) warrants discussion. A wide range of strategies to establish reference tests were employed. In many studies, no details on the definition of the reference tests were provided. A few studies using image data used only one human annotator as the reference test, a decision which may be criticized given the known wide variability in experts’ annotations [2]. Alternative concepts of applying the reference test to training datasets should be employed and compared to gauge the impact of different approaches and validate the one eventually selected. Additionally, testing datasets should be standardized and heterogeneous to ensure class balance and generalizability. One approach is to establish open benchmarking datasets, as attempted by the ITU/WHO Focus Group on Artificial Intelligence for Health [179].
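One commonly used alternative to a single annotator is a consensus label derived from several annotators. The sketch below shows a simple majority vote in which ties are flagged for adjudication. It is one possible strategy among many and is not drawn from any specific included study; the function name `majority_vote` and the example labels are hypothetical.

```python
from collections import Counter
from typing import Hashable, List, Optional, Sequence


def majority_vote(
    annotations: Sequence[Sequence[Hashable]],
) -> List[Optional[Hashable]]:
    """Consensus label per item from several annotators.

    annotations[a][i] is annotator a's label for item i.
    Ties are returned as None so they can be sent to adjudication.
    """
    consensus = []
    for labels in zip(*annotations):
        (top, top_n), *rest = Counter(labels).most_common()
        tied = bool(rest) and rest[0][1] == top_n
        consensus.append(None if tied else top)
    return consensus


# Hypothetical labels from three annotators for four teeth.
reference = majority_vote([
    ["caries", "sound", "caries", "sound"],
    ["caries", "sound", "sound", "sound"],
    ["caries", "caries", "sound", "sound"],
])
```

Reporting how many items required adjudication, alongside the consensus labels, would also document the level of annotator disagreement.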

Fourth, the quality of conducting and reporting ML studies in dentistry remains problematic. Notably, the specific risks emanating from ML and the underlying data are insufficiently addressed, e.g., biases, data leakage, or overfitting of the model. Furthermore, many studies suffered from unclear or absent validation of their results on external datasets. The evaluation of a model’s performance on unseen data is a crucial aspect as it relates to the generalizability of ML models regarding performance on data from other sources. Exploration of why some models were not generalizable was even less common, thus preventing identification of the steps required to improve the models. Generally, the majority of studies performed application testing, developed models, and showed that ML can learn and, in many studies, predict. Understanding why this is, how it could be improved, what the clinical domain needs, or which safeguards for ML in dentistry are required, was seldom an issue. General reporting did not allow full replication, as many details were not presented, and additionally, the display of the model performance remained, as discussed, insufficient. Researchers need to adhere to the published guidelines on study conduct and reporting [180,181,182].
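One common source of data leakage arises when several images from the same patient end up in both the training and the test set. The following sketch shows a patient-level split that avoids this by assigning whole patients to one set or the other. It is a minimal illustration; the function name `split_by_patient`, the identifiers, and the split fraction are assumptions made for the example.

```python
import random
from typing import List, Sequence, Tuple


def split_by_patient(patient_ids: Sequence[str], test_fraction: float = 0.2,
                     seed: int = 0) -> Tuple[List[int], List[int]]:
    """Split sample indices so that no patient appears in both sets."""
    unique_patients = sorted(set(patient_ids))
    rng = random.Random(seed)  # fixed seed for a reproducible split
    rng.shuffle(unique_patients)
    n_test = max(1, round(len(unique_patients) * test_fraction))
    test_patients = set(unique_patients[:n_test])
    train_idx = [i for i, p in enumerate(patient_ids) if p not in test_patients]
    test_idx = [i for i, p in enumerate(patient_ids) if p in test_patients]
    return train_idx, test_idx


# Hypothetical example: several radiographs per patient.
ids = ["p1", "p1", "p2", "p3", "p3", "p3", "p4", "p5", "p5", "p6"]
train_idx, test_idx = split_by_patient(ids, test_fraction=0.33, seed=42)
```

Splitting at the patient level rather than the image level means that the test set measures performance on unseen patients, which is closer to the intended clinical use.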

In an effort to characterize the emerging pattern in the included studies, first, we would like to elaborate on the nature of clinical tasks employed by the studies. A wide array of research questions was present: from detecting dental artifacts in images to investigating the benefits of transfer learning, and from classifying different dental conditions to aiding in decision-making and assessing cost-effectiveness. Thus, there is evidence of a broadening of the avenues where ML could be exploited. As stated earlier, classification tasks were the most common, and this may be because diagnosing dental structures or anomalies on images is a vital step towards successful treatment outcomes and prognosis. However, over the years, ML methods have improved their classification performance on images at the cost of increased model complexity and opacity [183]. The inability to explain ML’s methods and decisions is one of the contributing factors towards the development of explainable AI, i.e., a set of processes that allows human users to comprehend and trust the results created by ML algorithms. Second, more recent studies tended to employ image segmentation models [2,25,39,48,59,60,73,151].

The presented scoping review has a few salient features. First, it is the most comprehensive overview on ML in dentistry, with 168 studies being included. Second, and as a limitation, we could not include randomized controlled trials because none were available, and we found the included studies to have a considerable risk of bias; both points should be considered when interpreting our results. Third, to our knowledge this study is the first to employ TRIPOD for gauging the reporting quality of studies using ML in dentistry. TRIPOD is a checklist designed to assess prediction models which has not been validated specifically for ML applications [5]. However, previous studies have used it to evaluate ML models since the quality assessment criteria for clinical prediction tools and ML models are similar [5]. At present, a TRIPOD-ML tool is under construction [5]. Fourth, we included studies until May 2021 only, as the systematic critique of the 168 studies required considerable time and effort since then. We acknowledge that inclusion of recently published studies may have strengthened our review. Furthermore, we acknowledge that arXiv, an archiving database, may include studies which did not undergo a formal peer-review process and this may be a limitation for our study. However, studies on arXiv are reviewed by peers in a non-formal process and updated after peer-review. Last, any clinical usability cannot be inferred from this study because it was not the focus of this comprehensive review.

5. Conclusions

In conclusion, we demonstrated that ML has been employed for a large number of tasks in dentistry, building on a wide range of methods and employing highly heterogeneous reporting metrics. As a result, comparisons across studies or benchmarking of the developed ML models are only possible to a limited extent. A minimum (core) set of defined outcomes and outcome metrics would help to overcome this and facilitate comparisons, whenever appropriate. The overall body of evidence showed considerable risk of bias as well as moderate adherence to reporting standards. Researchers are urged to adhere more closely to reporting standards and plan their studies with even greater scientific rigor to reduce any risk of bias. Last, the included studies mainly focused on developing ML models, while presenting their generalizability, robustness, or clinical usefulness was uncommon. Future studies should aim to demonstrate that ML positively impacts the quality and efficiency of healthcare.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/jcm12030937/s1, Search strategy, Figure S1: Geographical trends in number of publications of machine learning methods in dentistry between 1 January 2015 and 31 May 2021; Table S1: Studies included in the scoping review along with their characteristics (n = 168); Table S2: Studies excluded from the scoping review along with the reason for exclusion (n = 15); Table S3: Number of studies in each field of dentistry, stratified by the machine learning model used (n = 168); Table S4: Number of studies using the various performance metrics stratified by type of machine learning task. References [1,184,185,186,187,188,189,190,191,192,193,194,195,196,197] are cited in the Supplementary Materials.

Author Contributions

Conceptualization, F.S., A.C. and J.K.; methodology, L.T.A.-S., A.C. and A.M.; software, A.C. and L.T.A.-S.; validation, L.T.A.-S. and J.K.; formal analysis, L.T.A.-S.; investigation, A.C.; resources, F.S. and A.C.; data curation, L.T.A.-S. and A.M.; writing—original draft preparation, L.T.A.-S.; writing—review and editing, F.S.; visualization, L.T.A.-S.; supervision, F.S.; project administration, J.K.; funding acquisition, F.S. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All relevant data are available through the paper and supplementary material. Additional information is available from the authors upon reasonable request.

Conflicts of Interest

F.S. and J.K. are co-founders of the startup dentalXrai GmbH. dentalXrai GmbH had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Funding Statement

We acknowledge financial support from the Open Access Publication Fund of Charité—Universitätsmedizin Berlin and the German Research Foundation (DFG).

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

- 1.Sun M.-L., Liu Y., Liu G.-M., Cui D., Heidari A.A., Jia W.-Y., Ji X., Chen H.-L., Luo Y.-G. Application of Machine Learning to Stomatology: A Comprehensive Review. IEEE Access. 2020;8:184360–184374. doi: 10.1109/ACCESS.2020.3028600. [DOI] [Google Scholar]

- 2.Schwendicke F., Chaurasia A., Arsiwala L., Lee J.-H., Elhennawy K., Jost-Brinkmann P.-G., Demarco F., Krois J. Deep learning for cephalometric landmark detection: Systematic review and meta-analysis. Clin. Oral Investig. 2021;25:4299–4309. doi: 10.1007/s00784-021-03990-w. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Farhadian M., Shokouhi P., Torkzaban P. A decision support system based on support vector machine for diagnosis of periodontal disease. BMC Res. Notes. 2020;13:337. doi: 10.1186/s13104-020-05180-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Abdalla-Aslan R., Yeshua T., Kabla D., Leichter I., Nadler C. An artificial intelligence system using machine-learning for automatic detection and classification of dental restorations in panoramic radiography. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2020;130:593–602. doi: 10.1016/j.oooo.2020.05.012. [DOI] [PubMed] [Google Scholar]

- 5.Ben Li B., Feridooni T., Cuen-Ojeda C., Kishibe T., de Mestral C., Mamdani M., Al-Omran M. Machine learning in vascular surgery: A systematic review and critical appraisal. NPJ Digit. Med. 2022;5:7. doi: 10.1038/s41746-021-00552-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Schwendicke F., Tzschoppe M., Paris S. Radiographic caries detection: A systematic review and meta-analysis. J. Dent. 2015;43:924–933. doi: 10.1016/j.jdent.2015.02.009. [DOI] [PubMed] [Google Scholar]

- 7.Moher D., Liberati A., Tetzlaff J., Altman D.G., PRISMA Group Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Med. 2009;6:e1000097. doi: 10.1371/journal.pmed.1000097. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Page M.J., McKenzie J.E., Bossuyt P.M., Boutron I., Hoffmann T.C., Mulrow C.D., Shamseer L., Tetzlaff J.M., Akl E.A., Brennan S.E., et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. BMJ. 2021;372:n71. doi: 10.1136/bmj.n71. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Whiting P.F., Rutjes A.W.S., Westwood M.E., Mallett S., Deeks J.J., Reitsma J.B., Leeflang M.M.G., Sterne J.A.C., Bossuyt P.M.M., QUADAS-2 Group QUADAS-2: A Revised Tool for the Quality Assessment of Diagnostic Accuracy Studies. Ann. Intern. Med. 2011;155:529–536. doi: 10.7326/0003-4819-155-8-201110180-00009. [DOI] [PubMed] [Google Scholar]

- 10.Wolff R.F., Moons K.G., Riley R., Whiting P.F., Westwood M., Collins G.S., Reitsma J.B., Kleijnen J., Mallett S., for the PROBAST Group PROBAST: A Tool to Assess the Risk of Bias and Applicability of Prediction Model Studies. Ann. Intern. Med. 2019;170:51–58. doi: 10.7326/M18-1376. [DOI] [PubMed] [Google Scholar]

- 11.Collins G.S., Reitsma J.B., Altman D.G., Moons K.G.M. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): The TRIPOD Statement. BMC Med. 2015;13:214. doi: 10.1186/s12916-014-0241-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Aliaga I.J., Vera V., De Paz J.F., García A.E., Mohamad M.S. Modelling the Longevity of Dental Restorations by means of a CBR System. BioMed Res. Int. 2015;2015:540306. doi: 10.1155/2015/540306. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Gupta A., Kharbanda O.P., Sardana V., Balachandran R., Sardana H.K. A knowledge-based algorithm for automatic detection of cephalometric landmarks on CBCT images. Int. J. Comput. Assist. Radiol. Surg. 2015;10:1737–1752. doi: 10.1007/s11548-015-1173-6. [DOI] [PubMed] [Google Scholar]

- 14.Gupta A., Kharbanda O.P., Sardana V., Balachandran R., Sardana H.K. Accuracy of 3D cephalometric measurements based on an automatic knowledge-based landmark detection algorithm. Int. J. Comput. Assist. Radiol. Surg. 2016;11:1297–1309. doi: 10.1007/s11548-015-1334-7. [DOI] [PubMed] [Google Scholar]

- 15.Hadley A.J., Krival K.R., Ridgel A.L., Hahn E.C., Tyler D.J. Neural Network Pattern Recognition of Lingual–Palatal Pressure for Automated Detection of Swallow. Dysphagia. 2015;30:176–187. doi: 10.1007/s00455-014-9593-y. [DOI] [PubMed] [Google Scholar]

- 16.Kavitha M.S., An S.-Y., An C.-H., Huh K.-H., Yi W.-J., Heo M.-S., Lee S.-S., Choi S.-C. Texture analysis of mandibular cortical bone on digital dental panoramic radiographs for the diagnosis of osteoporosis in Korean women. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2015;119:346–356. doi: 10.1016/j.oooo.2014.11.009. [DOI] [PubMed] [Google Scholar]

- 17.Mansoor A., Patsekin V., Scherl D., Robinson J.P., Rajwa B. A Statistical Modeling Approach to Computer-Aided Quantification of Dental Biofilm. IEEE J. Biomed. Health Inform. 2015;19:358–366. doi: 10.1109/JBHI.2014.2310204. [DOI] [PubMed] [Google Scholar]

- 18.Jung S.-K., Kim T.-W. New approach for the diagnosis of extractions with neural network machine learning. Am. J. Orthod. Dentofac. Orthop. 2016;149:127–133. doi: 10.1016/j.ajodo.2015.07.030. [DOI] [PubMed] [Google Scholar]

- 19.Kavitha M.S., Kumar P.G., Park S.-Y., Huh K.-H., Heo M.-S., Kurita T., Asano A., An S.-Y., Chien S.-I. Automatic detection of osteoporosis based on hybrid genetic swarm fuzzy classifier approaches. Dentomaxillofac. Radiol. 2016;45:20160076. doi: 10.1259/dmfr.20160076. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Mahmoud Y.E., Labib S.S., Mokhtar H.M.O. Teeth periapical lesion prediction using machine learning techniques; Proceedings of the 2016 SAI Computing Conference (SAI); London, UK. 13–15 July 2016; pp. 129–134. [DOI] [Google Scholar]

- 21.Wang L., Li S., Chen R., Liu S.-Y., Chen J.-C. An Automatic Segmentation and Classification Framework Based on PCNN Model for Single Tooth in MicroCT Images. PLoS ONE. 2016;11:e0157694. doi: 10.1371/journal.pone.0157694. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.De Tobel J., Radesh P., Vandermeulen D., Thevissen P.W. An automated technique to stage lower third molar development on panoramic radiographs for age estimation: A pilot study. J. Forensic Odonto-Stomatol. 2017;35:42–54. [PMC free article] [PubMed] [Google Scholar]

- 23.Hwang J.J., Lee J.-H., Han S.-S., Kim Y.H., Jeong H.-G., Choi Y.J., Park W. Strut analysis for osteoporosis detection model using dental panoramic radiography. Dentomaxillofac. Radiol. 2017;46:20170006. doi: 10.1259/dmfr.20170006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Imangaliyev S., van der Veen M.H., Volgenant C., Loos B.G., Keijser B.J., Crielaard W., Levin E. Classification of quantitative light-induced fluorescence images using convolutional neural network. arXiv. 20171705.09193 [Google Scholar]

- 25.Johari M., Esmaeili F., Andalib A., Garjani S., Saberkari H. Detection of vertical root fractures in intact and endodontically treated premolar teeth by designing a probabilistic neural network: An ex vivo study. Dentomaxillofac. Radiol. 2017;46:20160107. doi: 10.1259/dmfr.20160107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Liu Y., Li Y., Fu Y., Liu T., Liu X., Zhang X., Fu J., Guan X., Chen T., Chen X., et al. Quantitative prediction of oral cancer risk in patients with oral leukoplakia. Oncotarget. 2017;8:46057–46064. doi: 10.18632/oncotarget.17550. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Miki Y., Muramatsu C., Hayashi T., Zhou X., Hara T., Katsumata A., Fujita H. Classification of teeth in cone-beam CT using deep convolutional neural network. Comput. Biol. Med. 2017;80:24–29. doi: 10.1016/j.compbiomed.2016.11.003. [DOI] [PubMed] [Google Scholar]

- 28.Oktay A.B. Tooth detection with Convolutional Neural Networks; Proceedings of the 2017 Medical Technologies National Congress (TIPTEKNO); Trabzon, Turkey. 12–14 October 2017; pp. 1–4. [DOI] [Google Scholar]

- 29.Prajapati A.S., Nagaraj R., Mitra S. Classification of dental diseases using CNN and transfer learning; Proceedings of the 2017 5th International Symposium on Computational and Business Intelligence (ISCBI); Dubai, United Arab Emirates. 11–14 August 2017; pp. 70–74. [Google Scholar]

- 30.Raith S., Vogel E.P., Anees N., Keul C., Güth J.-F., Edelhoff D., Fischer H. Artificial Neural Networks as a powerful numerical tool to classify specific features of a tooth based on 3D scan data. Comput. Biol. Med. 2017;80:65–76. doi: 10.1016/j.compbiomed.2016.11.013. [DOI] [PubMed] [Google Scholar]

- 31.Rana A., Yauney G., Wong L.C., Gupta O., Muftu A., Shah P. Automated segmentation of gingival diseases from oral images; Proceedings of the 2017 IEEE Healthcare Innovations and Point of Care Technologies (HI-POCT); Bethesda, MD, USA. 6–8 November 2017; pp. 144–147. [DOI] [Google Scholar]

- 32.Srivastava M.M., Kumar P., Pradhan L., Varadarajan S. Detection of tooth caries in bitewing radiographs using deep learning. arXiv. 20171711.07312 [Google Scholar]

- 33.Štepanovský M., Ibrová A., Buk Z., Velemínská J. Novel age estimation model based on development of permanent teeth compared with classical approach and other modern data mining methods. Forensic Sci. Int. 2017;279:72–82. doi: 10.1016/j.forsciint.2017.08.005. [DOI] [PubMed] [Google Scholar]

- 34.Yilmaz E., Kayikcioglu T., Kayipmaz S. Computer-aided diagnosis of periapical cyst and keratocystic odontogenic tumor on cone beam computed tomography. Comput. Methods Programs Biomed. 2017;146:91–100. doi: 10.1016/j.cmpb.2017.05.012. [DOI] [PubMed] [Google Scholar]

- 35.Du X., Chen Y., Zhao J., Xi Y. A Convolutional Neural Network Based Auto-Positioning Method for Dental Arch In Rotational Panoramic Radiography; Proceedings of the 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Honolulu, HI, USA. 18–21 July 2018; pp. 2615–2618. [DOI] [PubMed] [Google Scholar]

- 36.Egger J., Pfarrkirchner B., Gsaxner C., Lindner L., Schmalstieg D., Wallner J. Fully Convolutional Mandible Segmentation on a valid Ground- Truth Dataset; Proceedings of the 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Honolulu, HI, USA. 18–21 July 2018; pp. 656–660. [DOI] [PubMed] [Google Scholar]

- 37.Fakhriy N.A.A., Ardiyanto I., Nugroho H.A., Pratama G.N.P. Machine Learning Algorithms for Classifying Abscessed and Impacted Tooth: Comparison Study; Proceedings of the 2018 2nd International Conference on Biomedical Engineering (IBIOMED); Bali, Indonesia. 24–26 July 2018; pp. 88–93. [DOI] [Google Scholar]

- 38.Fariza A., Arifin A.Z., Astuti E.R. Interactive Segmentation of Conditional Spatial FCM with Gaussian Kernel-Based for Panoramic Radiography; Proceedings of the 2018 International Symposium on Advanced Intelligent Informatics (SAIN); Yogyakarta, Indonesia. 29–30 August 2018; pp. 157–161. [DOI] [Google Scholar]

- 39.Gavinho L.G., Araujo S.A., Bussadori S.K., Silva J.V.P., Deana A.M. Detection of white spot lesions by segmenting laser speckle images using computer vision methods. Lasers Med. Sci. 2018;33:1565–1571. doi: 10.1007/s10103-018-2520-y. [DOI] [PubMed] [Google Scholar]

- 40.Ha S.-R., Park H.S., Kim E.-H., Kim H.-K., Yang J.-Y., Heo J., Yeo I.-S.L. A pilot study using machine learning methods about factors influencing prognosis of dental implants. J. Adv. Prosthodont. 2018;10:395–400. doi: 10.4047/jap.2018.10.6.395. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Heinrich A., Güttler F., Wendt S., Schenkl S., Hubig M., Wagner R., Mall G., Teichgräber U. Forensic Odontology: Automatic Identification of Persons Comparing Antemortem and Postmortem Panoramic Radiographs Using Computer Vision. Rofo. 2018;190:1152–1158. doi: 10.1055/a-0632-4744. [DOI] [PubMed] [Google Scholar]

- 42.Jader G., Fontineli J., Ruiz M., Abdalla K., Pithon M., Oliveira L. Deep Instance Segmentation of Teeth in Panoramic X-ray Images; Proceedings of the 2018 31st SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI); Parana, Brazil. 29 October–1 November 2018; pp. 400–407. [DOI] [Google Scholar]

- 43.Jiang M.-X., Chen Y.-M., Huang W.-H., Huang P.-H., Tsai Y.-H., Huang Y.-H., Chiang C.-K. Teeth-Brushing Recognition Based on Deep Learning; Proceedings of the 2018 IEEE International Conference on Consumer Electronics-Taiwan (ICCE-TW); Taichung, Taiwan. 19–21 May 2018; pp. 1–2. [DOI] [Google Scholar]

- 44.Kim D.W., Kim H., Nam W., Kim H.J., Cha I.-H. Machine learning to predict the occurrence of bisphosphonate-related osteonecrosis of the jaw associated with dental extraction: A preliminary report. Bone. 2018;116:207–214. doi: 10.1016/j.bone.2018.04.020. [DOI] [PubMed] [Google Scholar]

- 45.Lee J.-H., Kim D.-H., Jeong S.-N., Choi S.-H. Diagnosis and prediction of periodontally compromised teeth using a deep learning-based convolutional neural network algorithm. J. Periodontal Implant. Sci. 2018;48:114–123. doi: 10.5051/jpis.2018.48.2.114. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Lee J.-H., Kim D.-H., Jeong S.-N., Choi S.-H. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J. Dent. 2018;77:106–111. doi: 10.1016/j.jdent.2018.07.015. [DOI] [PubMed] [Google Scholar]

- 47.Lu S., Yang J., Wang W., Li Z., Lu Z. Teeth Classification Based on Extreme Learning Machine; Proceedings of the 2018 Second World Conference on Smart Trends in Systems, Security and Sustainability (WorldS4); London, UK. 30–31 October 2018; pp. 198–202. [DOI] [Google Scholar]

- 48.Shah H., Hernandez P., Budin F., Chittajallu D., Vimort J.B., Walters R., Mol A., Khan A., Paniagua B. Automatic quantification framework to detect cracks in teeth. Proc. SPIE Int. Soc. Opt. Eng. 2018;10578:105781K. doi: 10.1117/12.2293603. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Song B., Sunny S., Uthoff R., Patrick S., Suresh A., Kolur T., Keerthi G., Anbarani A., Wilder-Smith P., Kuriakose M.A., et al. Automatic classification of dual-modalilty, smartphone-based oral dysplasia and malignancy images using deep learning. Biomed. Opt. Express. 2018;9:5318–5329. doi: 10.1364/BOE.9.005318. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Thanathornwong B. Bayesian-Based Decision Support System for Assessing the Needs for Orthodontic Treatment. Health Inform. Res. 2018;24:22–28. doi: 10.4258/hir.2018.24.1.22. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Yang J., Xie Y., Liu L., Xia B., Cao Z., Guo C. Automated Dental Image Analysis by Deep Learning on Small Dataset; Proceedings of the 2018 IEEE 42nd Annual Computer Software and Applications Conference (COMPSAC); Tokyo, Japan. 23–27 July 2018; pp. 492–497. [DOI] [Google Scholar]

- 52.Yoon S., Odlum M., Lee Y., Choi T., Kronish I.M., Davidson K.W., Finkelstein J. Applying Deep Learning to Understand Predictors of Tooth Mobility Among Urban Latinos. Stud. Health Technol. Inform. 2018;251:241–244. [PMC free article] [PubMed] [Google Scholar]

- 53.Zakirov A., Ezhov M., Gusarev M., Alexandrovsky V., Shumilov E. Dental pathology detection in 3D cone-beam CT. arXiv. 20181810.10309 [Google Scholar]

- 54.Zanella-Calzada L.A., Galván-Tejada C.E., Chávez-Lamas N.M., Rivas-Gutierrez J., Magallanes-Quintanar R., Celaya-Padilla J.M., Galván-Tejada J.I., Gamboa-Rosales H. Deep Artificial Neural Networks for the Diagnostic of Caries Using Socioeconomic and Nutritional Features as Determinants: Data from NHANES 2013–2014. Bioengineering. 2018;5:47. doi: 10.3390/bioengineering5020047. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Zhang K., Wu J., Chen H., Lyu P. An effective teeth recognition method using label tree with cascade network structure. Comput. Med. Imaging Graph. 2018;68:61–70. doi: 10.1016/j.compmedimag.2018.07.001. [DOI] [PubMed] [Google Scholar]

- 56.Ali H., Khursheed M., Fatima S.K., Shuja S.M., Noor S. Object Recognition for Dental Instruments Using SSD-MobileNet; Proceedings of the 2019 International Conference on Information Science and Communication Technology (ICISCT); Karachi, Pakistan. 9–10 March 2019; pp. 1–6. [DOI] [Google Scholar]

- 57.Alkaabi S., Yussof S., Al-Mulla S. Evaluation of Convolutional Neural Network based on Dental Images for Age Estimation; Proceedings of the 2019 International Conference on Electrical and Computing Technologies and Applications (ICECTA); Ras Al Khaimah, United Arab Emirates. 19–21 November 2019; pp. 1–5. [DOI] [Google Scholar]

- 58.Askarian B., Tabei F., Tipton G.A., Chong J.W. Smartphone-Based Method for Detecting Periodontal Disease; Proceedings of the 2019 IEEE Healthcare Innovations and Point of Care Technologies, (HI-POCT); Bethesda, MD, USA. 20–22 November 2019; pp. 53–55. [DOI] [Google Scholar]

- 59.Bouchahma M., Ben Hammouda S., Kouki S., Alshemaili M., Samara K. An Automatic Dental Decay Treatment Prediction using a Deep Convolutional Neural Network on X-ray Images; Proceedings of the 2019 IEEE/ACS 16th International Conference on Computer Systems and Applications (AICCSA); Abu Dhabi, United Arab Emirates. 3–7 November 2019; pp. 1–4. [DOI] [Google Scholar]

- 60.Casalegno F., Newton T., Daher R., Abdelaziz M., Lodi-Rizzini A., Schürmann F., Krejci I., Markram H. Caries Detection with Near-Infrared Transillumination Using Deep Learning. J. Dent. Res. 2019;98:1227–1233. doi: 10.1177/0022034519871884. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Chen H., Zhang K., Lyu P., Li H., Zhang L., Wu J., Lee C.-H. A deep learning approach to automatic teeth detection and numbering based on object detection in dental periapical films. Sci. Rep. 2019;9:3840. doi: 10.1038/s41598-019-40414-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Cheng B., Wang W. Dental hard tissue morphological segmentation with sparse representation-based classifier. Med. Biol. Eng. Comput. 2019;57:1629–1643. doi: 10.1007/s11517-019-01985-0. [DOI] [PubMed] [Google Scholar]

- 63.Chin C.-L., Lin J.-W., Wei C.-S., Hsu M.-C. Dentition Labeling and Root Canal Recognition Using GAN and Rule-Based System; Proceedings of the 2019 International Conference on Technologies and Applications of Artificial Intelligence (TAAI); Kaohsiung, Taiwan. 21–23 November 2019; pp. 1–6. [DOI] [Google Scholar]

- 64.Choi H.-I., Jung S.-K., Baek S.-H., Lim W.H., Ahn S.-J., Yang I.-H., Kim T.-W. Artificial Intelligent Model with Neural Network Machine Learning for the Diagnosis of Orthognathic Surgery. J. Craniofac. Surg. 2019;30:1986–1989. doi: 10.1097/SCS.0000000000005650. [DOI] [PubMed] [Google Scholar]

- 65.Cui Z., Li C., Wang W. ToothNet: Automatic Tooth Instance Segmentation and Identification from Cone Beam CT Images; Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); Long Beach, CA, USA. 15–20 June 2019; pp. 6361–6370. [DOI] [Google Scholar]

- 66.Dasanayaka C., Dharmasena B., Bandara W.R., Dissanayake M.B., Jayasinghe R. Segmentation of Mental Foramen in Dental Panoramic Tomography using Deep Learning; Proceedings of the 2019 14th Conference on Industrial and Information Systems (ICIIS); Kandy, Sri Lanka. 18–20 December 2019; pp. 81–84. [DOI] [Google Scholar]

- 67.Cruz J.C.D., Garcia R.G., Cueto J.C.C.V., Pante S.C., Toral C.G.V. Automated Human Identification through Dental Image Enhancement and Analysis; Proceedings of the 2019 IEEE 11th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management (HNICEM); Laoag, Philippines. 29 November–1 December 2019; pp. 1–6. [DOI] [Google Scholar]

- 68.Duong D.Q., Nguyen K.-C.T., Kaipatur N.R., Lou E.H.M., Noga M., Major P.W., Punithakumar K., Le L.H. Fully Automated Segmentation of Alveolar Bone Using Deep Convolutional Neural Networks from Intraoral Ultrasound Images; Proceedings of the 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Berlin, Germany. 23–27 July 2019; pp. 6632–6635. [DOI] [PubMed] [Google Scholar]

- 69.Ekert T., Krois J., Meinhold L., Elhennawy K., Emara R., Golla T., Schwendicke F. Deep Learning for the Radiographic Detection of Apical Lesions. J. Endod. 2019;45:917–922.e5. doi: 10.1016/j.joen.2019.03.016. [DOI] [PubMed] [Google Scholar]

- 70.Hatvani J., Basarab A., Tourneret J.-Y., Gyongy M., Kouame D. A Tensor Factorization Method for 3-D Super Resolution with Application to Dental CT. IEEE Trans. Med. Imaging. 2019;38:1524–1531. doi: 10.1109/TMI.2018.2883517. [DOI] [PubMed] [Google Scholar]

- 71.Hatvani J., Horvath A., Michetti J., Basarab A., Kouame D., Gyongy M. Deep Learning-Based Super-Resolution Applied to Dental Computed Tomography. IEEE Trans. Radiat. Plasma Med. Sci. 2019;3:120–128. doi: 10.1109/TRPMS.2018.2827239. [DOI] [Google Scholar]

- 72.Hegazy M.A.A., Cho M.H., Cho M.H., Lee S.Y. U-net based metal segmentation on projection domain for metal artifact reduction in dental CT. Biomed. Eng. Lett. 2019;9:375–385. doi: 10.1007/s13534-019-00110-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73.Hiraiwa T., Ariji Y., Fukuda M., Kise Y., Nakata K., Katsumata A., Fujita H., Ariji E. A deep-learning artificial intelligence system for assessment of root morphology of the mandibular first molar on panoramic radiography. Dentomaxillofac. Radiol. 2019;48:20180218. doi: 10.1259/dmfr.20180218. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Hu Z., Jiang C., Sun F., Zhang Q., Ge Y., Yang Y., Liu X., Zheng H., Liang D. Artifact correction in low-dose dental CT imaging using Wasserstein generative adversarial networks. Med. Phys. 2019;46:1686–1696. doi: 10.1002/mp.13415. [DOI] [PubMed] [Google Scholar]

- 75.Hung M., Voss M.W., Rosales M.N., Li W., Su W., Xu J., Bounsanga J., Ruiz-Negrón B., Lauren E., Licari F.W. Application of machine learning for diagnostic prediction of root caries. Gerodontology. 2019;36:395–404. doi: 10.1111/ger.12432. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 76.Ilic I., Vodanovic M., Subasic M. Gender Estimation from Panoramic Dental X-ray Images using Deep Convolutional Networks; Proceedings of the IEEE EUROCON 2019 - 18th International Conference on Smart Technologies; Novi Sad, Serbia. 1–4 July 2019; pp. 1–5. [DOI] [Google Scholar]

- 77.Kats L., Vered M., Zlotogorski-Hurvitz A., Harpaz I. Atherosclerotic carotid plaque on panoramic radiographs: Neural network detection. Int. J. Comput. Dent. 2019;22:163–169. [PubMed] [Google Scholar]

- 78.Kats L., Vered M., Zlotogorski-Hurvitz A., Harpaz I. Atherosclerotic carotid plaques on panoramic imaging: An automatic detection using deep learning with small dataset. arXiv. 2018. arXiv:1808.08093. [Google Scholar]

- 79.Kim D.W., Lee S., Kwon S., Nam W., Cha I.-H., Kim H.J. Deep learning-based survival prediction of oral cancer patients. Sci. Rep. 2019;9:6994. doi: 10.1038/s41598-019-43372-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 80.Kim J., Lee H.-S., Song I.-S., Jung K.-H. DeNTNet: Deep Neural Transfer Network for the detection of periodontal bone loss using panoramic dental radiographs. Sci. Rep. 2019;9:17615. doi: 10.1038/s41598-019-53758-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 81.Kise Y., Shimizu M., Ikeda H., Fujii T., Kuwada C., Nishiyama M., Funakoshi T., Ariji Y., Fujita H., Katsumata A., et al. Usefulness of a deep learning system for diagnosing Sjögren’s syndrome using ultrasonography images. Dentomaxillofac. Radiol. 2020;49:20190348. doi: 10.1259/dmfr.20190348. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 82.Koch T.L., Perslev M., Igel C., Brandt S.S. Accurate segmentation of dental panoramic radiographs with U-NETS; Proceedings of the 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019); Venice, Italy. 8–11 April 2019; pp. 15–19. [Google Scholar]

- 83.Krois J., Ekert T., Meinhold L., Golla T., Kharbot B., Wittemeier A., Dörfer C., Schwendicke F. Deep Learning for the Radiographic Detection of Periodontal Bone Loss. Sci. Rep. 2019;9:8495. doi: 10.1038/s41598-019-44839-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 84.Lee J.-S., Adhikari S., Liu L., Jeong H.-G., Kim H., Yoon S.-J. Osteoporosis detection in panoramic radiographs using a deep convolutional neural network-based computer-assisted diagnosis system: A preliminary study. Dentomaxillofac. Radiol. 2019;48:20170344. doi: 10.1259/dmfr.20170344. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 85.Li X., Zhang Y., Cui Q., Yi X., Zhang Y. Tooth-Marked Tongue Recognition Using Multiple Instance Learning and CNN Features. IEEE Trans. Cybern. 2019;49:380–387. doi: 10.1109/TCYB.2017.2772289. [DOI] [PubMed] [Google Scholar]

- 86.Liu L., Xu J., Huan Y., Zou Z., Yeh S.-C., Zheng L.-R. A Smart Dental Health-IoT Platform Based on Intelligent Hardware, Deep Learning, and Mobile Terminal. IEEE J. Biomed. Health Inform. 2020;24:898–906. doi: 10.1109/JBHI.2019.2919916. [DOI] [PubMed] [Google Scholar]

- 87.Liu Y., Shang X., Shen Z., Hu B., Wang Z., Xiong G. 3D Deep Learning for 3D Printing of Tooth Model; Proceedings of the 2019 IEEE International Conference on Service Operations and Logistics, and Informatics (SOLI); Zhengzhou, China. 6–8 November 2019; pp. 274–279. [DOI] [Google Scholar]

- 88.Milosevic D., Vodanovic M., Galic I., Subasic M. Estimating Biological Gender from Panoramic Dental X-ray Images; Proceedings of the 2019 11th International Symposium on Image and Signal Processing and Analysis (ISPA); Dubrovnik, Croatia. 23–25 September 2019; pp. 105–110. [DOI] [Google Scholar]

- 89.Minnema J., van Eijnatten M., Hendriksen A.A., Liberton N., Pelt D.M., Batenburg K.J., Forouzanfar T., Wolff J. Segmentation of dental cone-beam CT scans affected by metal artifacts using a mixed-scale dense convolutional neural network. Med. Phys. 2019;46:5027–5035. doi: 10.1002/mp.13793. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 90.Moriyama Y., Lee C., Date S., Kashiwagi Y., Narukawa Y., Nozaki K., Murakami S. Evaluation of Dental Image Augmentation for the Severity Assessment of Periodontal Disease; Proceedings of the 2019 International Conference on Computational Science and Computational Intelligence (CSCI); Las Vegas, NV, USA. 5–7 December 2019; pp. 924–929. [DOI] [Google Scholar]

- 91.Moutselos K., Berdouses E., Oulis C., Maglogiannis I. Recognizing Occlusal Caries in Dental Intraoral Images Using Deep Learning; Proceedings of the 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Berlin, Germany. 23–27 July 2019; pp. 1617–1620. [DOI] [PubMed] [Google Scholar]

- 92.Murata M., Ariji Y., Ohashi Y., Kawai T., Fukuda M., Funakoshi T., Kise Y., Nozawa M., Katsumata A., Fujita H., et al. Deep-learning classification using convolutional neural network for evaluation of maxillary sinusitis on panoramic radiography. Oral Radiol. 2019;35:301–307. doi: 10.1007/s11282-018-0363-7. [DOI] [PubMed] [Google Scholar]

- 93.Patcas R., Bernini D., Volokitin A., Agustsson E., Rothe R., Timofte R. Applying artificial intelligence to assess the impact of orthognathic treatment on facial attractiveness and estimated age. Int. J. Oral Maxillofac. Surg. 2019;48:77–83. doi: 10.1016/j.ijom.2018.07.010. [DOI] [PubMed] [Google Scholar]

- 94.Patcas R., Timofte R., Volokitin A., Agustsson E., Eliades T., Eichenberger M., Bornstein M.M. Facial attractiveness of cleft patients: A direct comparison between artificial-intelligence-based scoring and conventional rater groups. Eur. J. Orthod. 2019;41:428–433. doi: 10.1093/ejo/cjz007. [DOI] [PubMed] [Google Scholar]

- 95.Sajad M., Shafi I., Ahmad J. Automatic Lesion Detection in Periapical X-rays; Proceedings of the 2019 International Conference on Electrical, Communication, and Computer Engineering (ICECCE); Swat, Pakistan. 24–25 July 2019; pp. 1–6. [DOI] [Google Scholar]

- 96.Senirkentli G.B., Sen S., Farsak O., Bostanci E. A Neural Expert System Based Dental Trauma Diagnosis Application; Proceedings of the 2019 Medical Technologies Congress (TIPTEKNO); Izmir, Turkey. 3–5 October 2019; pp. 1–4. [DOI] [Google Scholar]

- 97.Stark B., Samarah M. Ensemble and Deep Learning for Real-time Sensors Evaluation of algorithms for real-time sensors with application for detecting brushing location; Proceedings of the 2019 IEEE 5th International Conference on Computer and Communications (ICCC); Chengdu, China. 5–9 December 2019; pp. 555–559. [DOI] [Google Scholar]

- 98.Tian S., Dai N., Zhang B., Yuan F., Yu Q., Cheng X. Automatic Classification and Segmentation of Teeth on 3D Dental Model Using Hierarchical Deep Learning Networks. IEEE Access. 2019;7:84817–84828. doi: 10.1109/ACCESS.2019.2924262. [DOI] [Google Scholar]

- 99.Tuzoff D.V., Tuzova L.N., Bornstein M.M., Krasnov A.S., Kharchenko M.A., Nikolenko S.I., Sveshnikov M.M., Bednenko G.B. Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofac. Radiol. 2019;48:20180051. doi: 10.1259/dmfr.20180051. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 100.Vinayahalingam S., Xi T., Bergé S., Maal T., de Jong G. Automated detection of third molars and mandibular nerve by deep learning. Sci. Rep. 2019;9:9007. doi: 10.1038/s41598-019-45487-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 101.Woo J., Xing F., Prince J.L., Stone M., Green J.R., Goldsmith T., Reese T.G., Wedeen V.J., El Fakhri G. Differentiating post-cancer from healthy tongue muscle coordination patterns during speech using deep learning. J. Acoust. Soc. Am. 2019;145:EL423–EL429. doi: 10.1121/1.5103191. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 102.Xu X., Liu C., Zheng Y. 3D Tooth Segmentation and Labeling Using Deep Convolutional Neural Networks. IEEE Trans. Vis. Comput. Graph. 2019;25:2336–2348. doi: 10.1109/TVCG.2018.2839685. [DOI] [PubMed] [Google Scholar]

- 103.Yamaguchi S., Lee C., Karaer O., Ban S., Mine A., Imazato S. Predicting the Debonding of CAD/CAM Composite Resin Crowns with AI. J. Dent. Res. 2019;98:1234–1238. doi: 10.1177/0022034519867641. [DOI] [PubMed] [Google Scholar]

- 104.Yauney G., Rana A., Wong L.C., Javia P., Muftu A., Shah P. Automated Process Incorporating Machine Learning Segmentation and Correlation of Oral Diseases with Systemic Health; Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Berlin, Germany. 23–27 July 2019; pp. 3387–3393. [DOI] [PubMed] [Google Scholar]

- 105.Alalharith D.M., Alharthi H.M., Alghamdi W.M., Alsenbel Y.M., Aslam N., Khan I.U., Shahin S.Y., Dianišková S., Alhareky M.S., Barouch K.K. A Deep Learning-Based Approach for the Detection of Early Signs of Gingivitis in Orthodontic Patients Using Faster Region-Based Convolutional Neural Networks. Int. J. Environ. Res. Public Health. 2020;17:8447. doi: 10.3390/ijerph17228447. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 106.Aliaga I., Vera V., Vera M., García E., Pedrera M., Pajares G. Automatic computation of mandibular indices in dental panoramic radiographs for early osteoporosis detection. Artif. Intell. Med. 2020;103:101816. doi: 10.1016/j.artmed.2020.101816. [DOI] [PubMed] [Google Scholar]

- 107.Banar N., Bertels J., Laurent F., Boedi R.M., De Tobel J., Thevissen P., Vandermeulen D. Towards fully automated third molar development staging in panoramic radiographs. Int. J. Leg. Med. 2020;134:1831–1841. doi: 10.1007/s00414-020-02283-3. [DOI] [PubMed] [Google Scholar]

- 108.Cantu A.G., Gehrung S., Krois J., Chaurasia A., Rossi J.G., Gaudin R., Elhennawy K., Schwendicke F. Detecting caries lesions of different radiographic extension on bitewings using deep learning. J. Dent. 2020;100:103425. doi: 10.1016/j.jdent.2020.103425. [DOI] [PubMed] [Google Scholar]