Abstract

Over the last decade, developments in sensor technologies and artificial intelligence applications have received extensive attention for recognizing activities of daily life. Autism spectrum disorder (ASD) in children is a neurodevelopmental disorder that causes significant impairments in social interaction and communication, along with sensory deficits. Children with ASD have deficits in memory, emotion, cognition, and social skills. ASD affects children's communication skills and speaking abilities, and ASD children have restricted interests and repetitive behavior. They can communicate in sign language but have difficulty communicating with others, because not everyone knows sign language. This paper proposes a body-worn multi-sensor-based Internet of Things (IoT) platform that uses machine learning to recognize the complex sign language of speech-impaired children. Optimal sensor location is essential for feature extraction, because variations in placement affect recognition accuracy. We acquire time-series sensor data, extract various time-domain and frequency-domain features, and evaluate different classifiers for recognizing ASD children's gestures. We compare the accuracy of the decision tree (DT), random forest, artificial neural network (ANN), and k-nearest neighbour (KNN) classifiers for recognizing ASD children's gestures, and the results show more than 96% recognition accuracy.

Keywords: autism spectrum disorder, sign language, gesture recognition, speech-impaired, IoT, artificial neural network, decision tree, random forest, k-nearest neighbors

1. Introduction

Autism spectrum disorder (ASD) is a complex neurodevelopmental disorder characterized by significant impairments in social interaction and communication, and by ritualistic behavior [1]. According to 2015 World Health Organization statistics, more than 5% of the world's population has a hearing impairment. Deafness also places a burden on society, as the unemployment rate among deaf workers is about 75% [2]. About 1 in 200 children is diagnosed with ASD, and boys are four to five times more likely to be affected than girls [3]. Biao et al. [4] stated that, according to the Centers for Disease Control and Prevention (CDC), about 1 in 59 children has ASD. Maenner et al. [5] reported that, in recent CDC estimates for eight-year-old children, 23 in 1000 (i.e., about 1 in 44) meet the criteria for ASD, an increase over prior estimates. Areeb et al. [6] reported that, according to the 2011 census of India, about 2.7 million people cannot speak and 1.8 million are deaf. Each ASD child has specific needs, and ASD symptoms usually appear when a child is one to two years old. ASD children often have problems with social contact and show reduced social interaction, social behavior, and physical activity. They have difficulty communicating with other people and require extra care because they often do not understand other people's actions. They can also be difficult to understand, since they struggle with speech and communication. Ramos-Cabo et al. [7] studied the impact of gesture types and cognition in ASD. In this paper, we propose a system based on hand- and head-worn sensors that recognizes the sign language of autistic children and conveys their messages to non-ASD people.

Autistic people can communicate in sign language, but it is not easy for others to understand [8,9], because sign language is generally understood only by deaf and mute people. People rarely learn sign language unless it becomes necessary, for example, when they regularly encounter ASD people [10]. Padden [11] identified 6909 spoken languages and 138 sign languages. Sign language uses arm, hand, head, and body movements to construct a gesture-based language. Providing proper facilitation to disabled people is essential because autistic and hearing-impaired people make up a considerable portion of our society. Traditional, non-technical solutions offered to these people include cochlear implants, writing, and interpreters. A cochlear implant is not an inclusive solution, as 10.1% of people with cochlear implants still have hearing problems [12]. Writing is a frustrating mode of communication because it is ambiguous and slow: handwriting rates are 15 to 25 WPM (words per minute), and written language is usually the person's second language. Autistic people also use interpreters, but interpreters raise privacy issues and charge high hourly rates, and finding an interpreter for a person with a specialized vocabulary is challenging. Ivani et al. [13] designed and implemented an algorithm to automatically recognize small and similar gestures within a humanoid-robot therapy called IOGIOCO for ASD children. Wearable computing provides proactive support in collecting data from the user's context. Wrist-worn sensor modules have been used to collect gesture data and recognize the gestures of ASD children [14]. Borkar et al. [15] designed a glove with flex sensor pads to sense various movements, specifically the bending of the fingers. 
The glove senses the change in resistance caused by hand movement. Most of the literature focuses on hand movements only and is limited in recognizing complex gestures that involve multiple kinds of body movement. In this article, we propose a fusion-based IoT platform in which multiple sensors are placed at different body locations for complex gesture recognition of ASD children.

Wearable sensors, the Internet of Things (IoT), machine learning, and deep learning have gained a significant role in daily life. IoT integrates actuators, sensors, and communication technologies to acquire data, control the environment, and access statuses anytime and anywhere [16,17,18]. Features are extracted from the sensor data and then classified with different classification techniques. Placing the sensors at optimal locations is essential, as variations in placement affect classification. To avoid relative movement, sensors attached to the body require tight-fitting garments, which reduce the wearer's comfort [19]. A gesture-based recognition system is a promising solution that enables mute people to communicate more easily in society. A sensor-based recognition system has several advantages over other recognition approaches: the sensors are portable, easy to use and install, low-power, low-cost, durable, and safe [20]. Some gestures of these people are complex and require a specialized interpreter, so there is a need for a system that efficiently translates the gestures of mute and deaf people.

The overall contributions of the paper can be summarized as follows:

ASD children have difficulties with communication skills and suffer from a speech disorder. We propose a multi-sensor body-worn area sensor network for complex sign language recognition of ASD children, focused on helping ASD children convey their messages to non-ASD people.

We acquired and built a dataset of ten complex gestures of ASD children's sign language, each performed twenty times by ten ASD children, using the designed flex sensor glove and accelerometers and gyroscopes placed at the hand and head positions.

We extracted statistical measures from the sensor data in both the time and frequency domains as features, to reduce processing time and improve accuracy.

We compared the performance of machine learning algorithms to select the one with the highest accuracy, precision, and recall for efficient recognition of ASD gestures.

We tested a real-time implementation on a Raspberry Pi system-on-chip to demonstrate the system.

The rest of the paper is organized as follows. Section 2 briefly introduces related work and background on sensors, IoT, and machine learning techniques for activity and gesture recognition. Section 3 presents the proposed body-worn sensor-based IoT platform, covering ASD children's gesture acquisition, feature extraction, and recognition algorithms. Section 4 discusses the results, and Section 5 concludes the paper.

2. Related Work

A body area sensor network (BASN), also termed a wireless body area network (WBAN), is a network of portable computing devices. BASN devices can be implanted in the body, attached to the body at a fixed position, or combined with devices that people carry in various positions, such as in pockets, in the hand, or in bags [21]. Devices are trending toward miniaturization; body area networks consist of multiple mini sensor networks, which are collectively called body central units (BCUs). Larger smart devices (such as tablets) and associated devices serve as data centers or data portals and provide a user interface to view and manage BASN applications on-site [22]. A WBAN can use wireless personal area network (WPAN) technology to extend its range [23]. IoT makes it possible to connect portable devices implanted in the human body to the internet, so healthcare professionals can access a patient's data online regardless of the patient's location. TEMPO 3.1 (tech-medical sensitive observation) is a portable, third-generation body area sensor platform that captures and processes precise six-degree-of-freedom motion data and transmits it wirelessly. TEMPO 3.1 is designed for users and researchers and supports motion capture applications in BASN networks [24].

Human gesture recognition uses ambient sensors, cameras, wearable sensors, and mobile sensor-based systems. Ambient sensors, used in external or local frameworks, are installed in the environment and have no physical contact with the person being monitored. These sensors include radar, RF signals, event (switch-based) sensors, and pressure sensors [25,26]. The main advantages of these systems are that users can ignore the presence of the sensors in their environment and that they do not raise privacy leakage issues. For example, to monitor an older person's activity, sensors can be installed in a living room or anywhere in the home [27]. In addition to ambient sensors, cameras are used for gesture detection. Cameras placed in a limited area provide images or videos of human activities, for example, to implement fall detection algorithms [28]. Zheng et al. [29] proposed a large-vocabulary sign language recognition system that uses an entropy-based forward and backward matching algorithm to segment each gesture signal. Their gesture recognizer consists of a candidate gesture generator and a semantic-based voter; the candidate gesture generator provides candidate gestures using a three-branch convolutional neural network (CNN). Han et al. [30] proposed a three-dimensional CNN that concurrently models the spatial appearance and temporal evolution of signs, using RGB video for sign language recognition. To reduce risks and improve the quality of life at home, activities of daily living (ADL) can be monitored using RGB-D cameras [31]. However, camera surveillance raises general privacy concerns and is therefore unpopular; although cameras are well suited to living rooms, privacy makes them difficult to place there. Electronic devices such as smartphones have recently become de facto everyday tools, with many integrated sensors such as GPS receivers, gyroscopes, and accelerometers [32,33]. 
These sensors provide data remotely and accurately. Despite their many advantages, recognition approaches based on portable devices also have disadvantages. Keeping a smartphone in a pocket reduces the effectiveness of recognizing certain activities, such as writing, eating, and cooking [34]. In addition, women tend to keep their phones in their bags, where the phone loses almost all contact with the body [35,36]. Installing wearable sensors on the desired body parts avoids these problems and improves recognition performance.

Wearable devices enable innovative health services, including predictive health monitoring, by continuously collecting data from the wearer [37]. When installed on the desired limb muscles, wearable sensors provide precise and accurate data, enabling a more reliable gesture recognition system [38]. Such a system consists of sensors worn by the person for data acquisition, including accelerometers, gyroscopes, flex sensors, pedometers, and goniometers [39]. The sensors are installed on different body parts, such as the hip, wrist, ankle, arm, or thigh, to recognize gestures performed by the corresponding muscles [40]. The advantages of wearable sensors are that the collected data are of higher quality, the movements of multiple muscles can be monitored, and, above all, they are effortless to use for the affected person [41]. Studies on gesture recognition have found that sensor location depends primarily on the purpose of data collection, and that recognition accuracy depends on where the sensor is placed on the body [42]. They also show that gestures involving movement, posture, or activity are best captured by placing a sensor on the ankle or hip or in a pocket [43], whereas exercises involving the upper body require sensors on the chest, neck, arms, or elbows for better recognition accuracy [44]. Accordingly, the proposed system places the sensor network at optimal locations on different muscles to improve accuracy. Li Lang et al. [45] designed SkinGest, which uses five strain sensors and machine learning algorithms such as k-nearest neighbor (KNN) and support vector machine (SVM) to recognize numerical sign language for the digits 0–9; they acquired data from ten subjects. Zhang et al. designed WearSign for sign language translation using inertial and electromyography (EMG) sensors [46]. 
Table 1 shows the literature review related to wearable sensors.

Table 1.

Literature review focused on wearable sensors used for gestures and activity recognition.

| Ref. No. | Dataset Details | Extracted Features | Types of Sensor |
|---|---|---|---|
| [50] | 20 numbers, 20 letters | Mean value, standard deviation, percentiles, and correlation; frequency domain (energy, entropy) | 5 capacitive touch sensors |
| [43] | 200 words, 10 iterations | Mean, standard deviation, kurtosis, skewness, correlation, range, spectral energy, peak frequencies, cross-spectral densities, and spectral entropy | 2 accelerometers |
| [51] | 35 gestures, 20 trials each, 5 persons | Mean value, variance, energy, spectral entropy, and FFT | 2 accelerometers, 1 gyroscope |
| [52] | Arabic letters, 20 trials | Average, standard deviation, time between peaks, binned distribution, and average resultant acceleration | 2 accelerometers, 2 gyroscopes |
| [53] | 50 letters, 50 iterations | Mean, median, standard deviation, frequency domain, time domain | 5 flex sensors |
| [49] | 20 gestures, 4 persons, 20 iterations | Mean, variance, energy, spectral entropy, and discrete FFT | 2 accelerometers, 1 gyroscope |
| [8] | English letters | Mean, standard deviation, average | 1 accelerometer |

Sensor data are collected at different sampling frequencies depending on the nature of the activities to be recognized. The data are divided into time-series segments called windows, whose length is the window size. Zappi et al. [47] collected accelerometer data sampled at 50 Hz for several related tasks and proposed a two-step acquisition and selection method. In the acquisition step, 37 features were extracted using one-second windows with a half-second overlap. In the selection step, they used the ReliefF algorithm to select 7 of the 37 features [48]. They first pre-processed the signal by filtering to remove the DC component and then computed the desired features (minimum, maximum, average, RMS value, standard deviation, and maximum frequency) from the processed data. Classifiers are trained and tested on these feature data. For supervised classification, the training data consist of feature vectors together with their class labels. The learning algorithm adjusts its parameters to build a model (hypothesis), which can then assign labels to new inputs [49]. The classifiers most commonly used for this task are random forest (RF), KNN, SVM, multilayer perceptron (MLP), artificial neural network (ANN), and decision tree. Table 2 summarizes the literature on these algorithms.
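The windowing step described above can be sketched with a small NumPy helper. This is a minimal illustration, not code from the paper or from Zappi et al.; the function name `sliding_windows` and the random example data are hypothetical. The usage shows a 50 Hz stream cut into one-second windows with a half-second overlap, and, for comparison, into the four-second non-overlapping windows used later in this paper.

```python
import numpy as np


def sliding_windows(signal: np.ndarray, window: int, step: int) -> np.ndarray:
    """Split a (samples, channels) array into fixed-length windows.

    Consecutive windows start `step` samples apart, so neighbouring windows
    overlap by `window - step` samples; step == window gives non-overlapping
    windows. Trailing samples that cannot fill a whole window are dropped.
    Returns an array of shape (n_windows, window, channels).
    """
    if window <= 0 or step <= 0:
        raise ValueError("window and step must be positive")
    starts = list(range(0, signal.shape[0] - window + 1, step))
    if not starts:
        return np.empty((0, window) + signal.shape[1:], dtype=signal.dtype)
    return np.stack([signal[s:s + window] for s in starts])


# Example: 10 s of hypothetical 3-axis accelerometer data sampled at 50 Hz.
fs = 50
acc = np.random.default_rng(0).normal(size=(10 * fs, 3))
overlapping = sliding_windows(acc, window=fs, step=fs // 2)   # 1 s windows, 0.5 s overlap
disjoint = sliding_windows(acc, window=4 * fs, step=4 * fs)   # 4 s windows, no overlap
print(overlapping.shape, disjoint.shape)  # (19, 50, 3) (2, 200, 3)
```

Dropping the incomplete tail is a design choice of this sketch; padding the last window would be an equally valid alternative.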

Table 2.

Literature review related to algorithms

| Ref. No. | Paper Proposal | Hardware/Software | Datasets | Applied Algorithms | Accuracy |
|---|---|---|---|---|---|
| [54] | This paper proposes an electronic system equipped with wearable sensors for gesture recognition that uses a machine learning algorithm to interpret the abnormal activities of children to their parents. | Flex sensor, Arduino, MPU6050, Bluetooth | Letters of the alphabet, 20 iterations | KNN | 95% |
| [55] | This work provides ICT solutions for autistic children by examining a person's voice, body language, and facial expressions to monitor their behavior while performing gestures. | Accelerometer, PC, RGB camera | 40 gestures, 10 persons, 10 repetitions | Parallel HMM | 99% |
| [56] | This article presents the links between known attention processes and descriptive indicators, emotional and traditional gestures, and nonverbal gestures between ASDs in attention processes and gestures. | Flex sensor, MPU6050, contact sensor, Arduino, Bluetooth, PC | 1300 words | HMM | 80% |
| [57] | This paper proposes a system in which the recognition process uses wearable glasses, allowing these children to interpret their gestures easily. | RGB camera, PC | 26 postures, 28,000 images | Haar cascade algorithm | 94.5% |
| [58] | This paper analyzes the development of conventional gestures in different groups of children, such as typically developing and ASD children. | RGB camera, PC | English and Arabic letters | BLOB, MRB, least difference, GMM | 89.5%, 80% |
| [59] | This paper presents an IoT-based system called "Wear Sense" to detect atypical and unusual movements and behaviors in children with ASD. | Capacitive touch sensor, Raspberry Pi, Python | 36 gestures, 30 trials each | Binary detection system | 86% |

3. The Proposed Body-Worn Sensors-Based IoT Platform for ASD Children Gesture Recognition

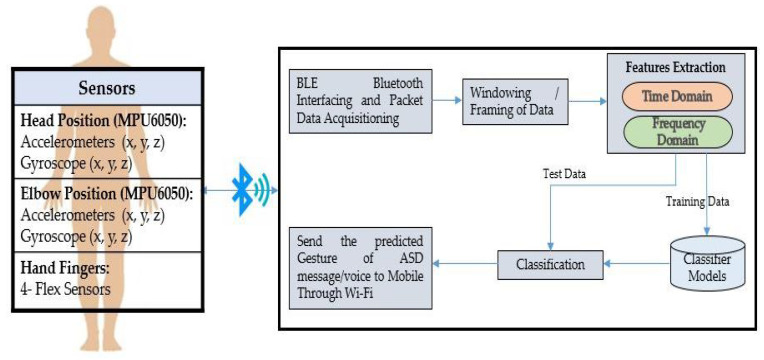

This article aims to develop a body-worn multi-sensor-based IoT platform that recognizes the sign language of ASD children and converts it into voice and text messages. Figure 1 shows the proposed system architecture for the sign language translator and explains the complete system operation. The proposed system consists of the following modules:

Body-worn sensors interfacing platform

Pictorial overview of gestures and data acquisition

Sampling, windowing, and features extraction

Classification/recognition algorithms

Figure 1.

The proposed architecture for body-worn sensors-based IoT platform for ASD children’s gestures recognition.

3.1. Body-Worn Sensors Interfacing Platform

We use body-worn sensors placed in three positions: (1) an MPU6050 sensor module installed in a head cap at the head position; (2) an MPU6050 sensor module installed at the elbow position; and (3) flex sensors for finger movement and bending. An MPU6050 module consists of a 3-axis accelerometer and a 3-axis gyroscope. For the flex sensors, we designed a glove, shown in Figure 2, that holds the flex sensors in place to capture finger bending. The flex sensors act as variable resistors whose resistance increases with the bend of the fingers: when the fingers bend, the resistance increases, and vice versa. A flex sensor has a flat resistance of 25 kΩ, which can increase up to 125 kΩ depending on the bend. Each flex sensor is connected in series with a fixed resistor to form a voltage divider. Equation (1) gives the voltage divider rule, from which the flex resistance is calculated.

$$V_{flex} = V_{in}\,\frac{R_{flex}}{R_{flex} + R_{fixed}} \qquad (1)$$

where $V_{flex}$ is the voltage across the flex sensor, $V_{in}$ is the supply voltage (5 V in this case), $R_{flex}$ is the resistance of the flex sensor, and $R_{fixed}$ is the fixed resistance. The MPU6050 motion sensor is used in this paper to measure the linear and angular motion of the head and hand; it embeds a three-axis accelerometer and a three-axis gyroscope, both of which provide continuous output over time. The values are digitized by a 16-bit analog-to-digital converter (ADC) and sent to the controller over the I2C communication protocol. The ADC samples the data at a specific frequency ($f_s$) and then quantizes the samples. We used a sampling frequency of 50 Hz for the accelerometer and gyroscope. The sensor data are collected by an Arduino and then sent to the Raspberry Pi over Bluetooth: data from the motion sensors are read over the I2C protocol, and data from the flex sensors are read at the analog inputs (ADC). I2C is a two-wire, bidirectional serial communication protocol used to send and receive data between two or more electronic circuits.
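Converting raw readings into physical quantities can be sketched as follows. This is a hedged illustration under stated assumptions, not the authors' firmware: it assumes the divider form $V_{flex} = V_{in} R_{flex} / (R_{flex} + R_{fixed})$ with the ADC measuring the node across the flex sensor, a 10-bit Arduino ADC (counts 0 to 1023 for 0 to $V_{in}$), and a hypothetical 47 kΩ fixed resistor, none of which the text specifies. The MPU6050 scale factors are the sensor's documented sensitivities at its default full-scale ranges (±2 g and ±250 °/s).

```python
# Assumptions (not stated in the paper): the ADC node sits across the flex
# sensor, the Arduino ADC is 10-bit (0..1023 for 0..V_IN), and the fixed
# resistor is 47 kOhm. Only V_IN = 5 V comes from the text.
V_IN = 5.0
ADC_MAX = 1023
R_FIXED_OHMS = 47_000.0  # hypothetical value

# MPU6050 sensitivities at its default full-scale ranges (+/-2 g, +/-250 deg/s)
ACCEL_LSB_PER_G = 16384.0
GYRO_LSB_PER_DPS = 131.0


def flex_resistance(adc_count: float, r_fixed: float = R_FIXED_OHMS) -> float:
    """Invert V_flex = V_in * R_flex / (R_flex + R_fixed) to get R_flex in ohms."""
    v_flex = V_IN * adc_count / ADC_MAX
    if v_flex >= V_IN:
        raise ValueError("reading at supply voltage: divider saturated")
    return r_fixed * v_flex / (V_IN - v_flex)


def accel_in_g(raw: int) -> float:
    """Convert a signed 16-bit accelerometer reading to g."""
    return raw / ACCEL_LSB_PER_G


def gyro_in_dps(raw: int) -> float:
    """Convert a signed 16-bit gyroscope reading to degrees per second."""
    return raw / GYRO_LSB_PER_DPS


print(flex_resistance(511.5))  # half-scale reading -> R_flex equals R_fixed
print(accel_in_g(16384), gyro_in_dps(-131))
```

Solving the divider for $R_{flex}$ gives $R_{flex} = R_{fixed} V_{flex} / (V_{in} - V_{flex})$, which is what `flex_resistance` computes; a half-scale reading therefore means the two resistances are equal.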

Figure 2.

Placement of flex sensors in glove for hand gesture data.

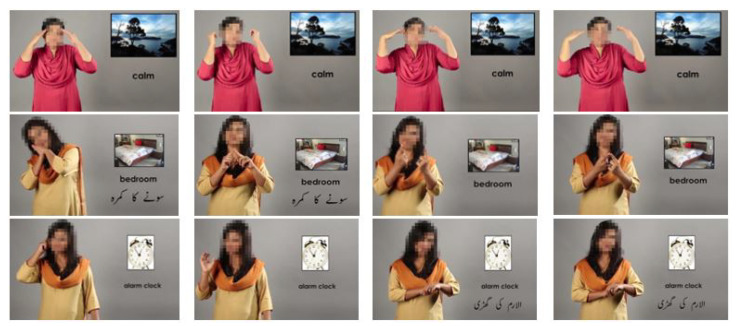

3.2. Pictorial Overview of Gestures and Data Acquisition

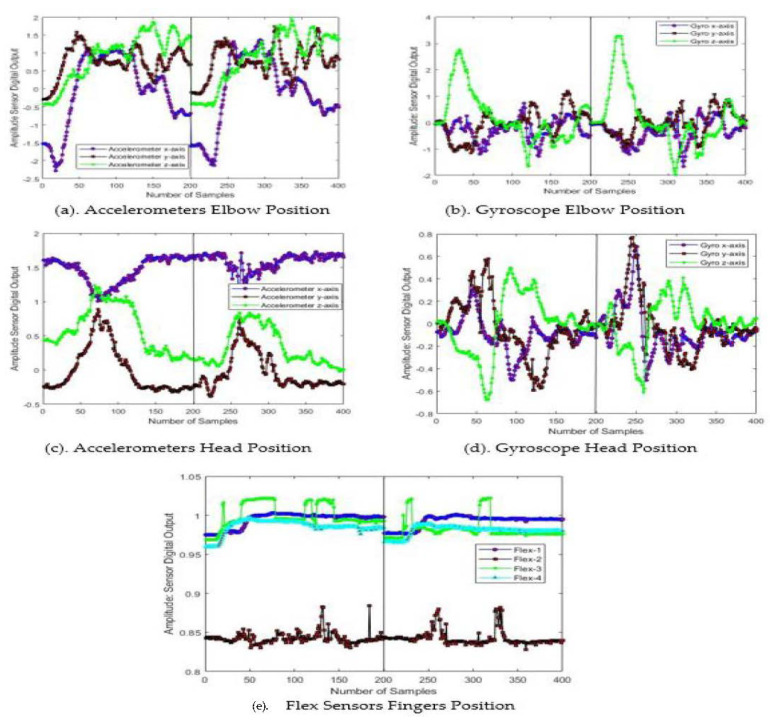

In this article, we acquire data from ASD children using motion sensors installed at the elbow and head positions and a flex sensor glove for finger bending. Figure 3 shows pictorial overviews of the gestures, taken from Pakistan Sign Language (PSL), and clearly shows that each gesture involves multiple parts of the body; we therefore installed sensors on several body parts. We collected data for ten gestures from ten ASD children using MPU1, MPU2, and flex1 through flex4, and labeled the gestures GST-1 to GST-10, as shown in Table 3. Each ASD child performed each gesture 20 times. Figure 4 shows the sensors' responses over two cycles performed by an ASD subject for gesture GST-1 (alarm clock). Each cycle comprises 200 samples, i.e., 4 s at 50 Hz, to complete each gesture. Figure 4 shows that the sensors' responses are similar across cycles, with small variations in amplitude and timing.

Figure 3.

Pictorial overview of different gestures performed.

Table 3.

Information about the gestures.

| Gesture | Label | Gesture | Label |
|---|---|---|---|
| Alarm clock | GST-1 | Brilliant | GST-6 |
| Beautiful | GST-2 | Calm | GST-7 |
| Bed | GST-3 | Skull | GST-8 |
| Bedroom | GST-4 | Door Open | GST-9 |
| Blackboard | GST-5 | Fan | GST-10 |

Figure 4.

Two cycles of sensor response for acquiring the gesture alarm clock.

3.3. Sampling, Windowing, and Features Extraction

The sampling rate and windowing used for feature extraction play a leading role in the gesture recognition process. The accelerometer, gyroscope, and flex sensor data are acquired at 50 Hz, and features are extracted using non-overlapping sliding windows of four seconds, i.e., 200 samples. We extract features such as the standard deviation, mean, minimum, and maximum values of the accelerometers and flex sensors. The RMS feature is used only for the gyroscope, and the remaining features are used only for the accelerometers. Table 4 lists the statistical measures used as features for classifying and recognizing ASD children's gestures. Each gesture is represented by a feature vector of length 58.
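The segmentation and the basic statistics named in the paragraph above can be sketched in NumPy. This is a minimal sketch, not the authors' pipeline: the function names and the channel layout (six accelerometer axes from two MPU6050s, four flex sensors, six gyroscope axes) are hypothetical, and the result covers only part of the 58-dimensional feature vector; the other Table 4 features would be appended to it.

```python
import numpy as np

FS_HZ = 50              # sampling rate stated in the paper
WINDOW = 4 * FS_HZ      # 4 s non-overlapping window = 200 samples


def segment(stream: np.ndarray) -> np.ndarray:
    """Cut a (samples, channels) stream into non-overlapping 200-sample windows,
    dropping any incomplete window at the end."""
    n = stream.shape[0] // WINDOW
    return stream[: n * WINDOW].reshape(n, WINDOW, stream.shape[1])


def basic_features(window: np.ndarray) -> np.ndarray:
    """Per-channel mean, standard deviation, minimum, and maximum of one window,
    grouped by statistic: [means..., stds..., mins..., maxs...]."""
    return np.concatenate([window.mean(axis=0), window.std(axis=0),
                           window.min(axis=0), window.max(axis=0)])


def rms(window: np.ndarray) -> np.ndarray:
    """Per-channel root mean square, used here for the gyroscope axes."""
    return np.sqrt(np.mean(window ** 2, axis=0))


# Hypothetical channel layout for one window: 6 accelerometer axes (two MPUs),
# 4 flex sensors, then 6 gyroscope axes.
rng = np.random.default_rng(0)
w = rng.normal(size=(WINDOW, 16))
acc_flex, gyro = w[:, :10], w[:, 10:]
vector = np.concatenate([basic_features(acc_flex), rms(gyro)])
print(vector.shape)  # (46,)
```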

Table 4.

Feature extraction from time-series data of multiple sensors

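| Feature | Description | Equation |
|---|---|---|
| Mean | Mean value of the accelerometer and gyroscope signals. | $\mu = \frac{1}{N}\sum_{i=1}^{N} x_i$ (2) |
| Standard deviation | Spread of the sensor data around the mean. | $\sigma = \sqrt{\frac{1}{N}\sum_{i=1}^{N} (x_i - \mu)^2}$ (3) |
| Skewness | Degree of asymmetry of the variable's distribution. | $\gamma = \frac{1}{N}\sum_{i=1}^{N} \left(\frac{x_i - \mu}{\sigma}\right)^3$ (4) |
| Kurtosis | Tailedness of the variable's distribution. | $\kappa = \frac{1}{N}\sum_{i=1}^{N} \left(\frac{x_i - \mu}{\sigma}\right)^4$ (5) |
| Maximum value | Maximum value of the accelerometer (x, y, z) signals. | $x_{max} = \max_{i} x_i$ (6) |
| Minimum value | Minimum value of the accelerometer (x, y, z) signals. | $x_{min} = \min_{i} x_i$ (7) |
| Entropy | Helps differentiate between activities; $p_i$ is the probability of the $i$-th value bin. | $H = -\sum_{i} p_i \log_2 p_i$ (8) |
| Cosine similarity | Distinguishes activities that fluctuate along an axis; computed between two axis signals $\mathbf{a}$ and $\mathbf{b}$. | $\cos\theta = \frac{\sum_{i} a_i b_i}{\sqrt{\sum_{i} a_i^2}\,\sqrt{\sum_{i} b_i^2}}$ (9) |
| Root mean square | Angular movement along the x, y, and z axes (gyroscope). | $x_{rms} = \sqrt{\frac{1}{N}\sum_{i=1}^{N} x_i^2}$ (10) |
| Absolute time difference between peaks | Absolute difference between the times of the maximum and minimum peaks. | $\Delta t = \lvert t_{max} - t_{min} \rvert$ (11) |
| Frequency-domain features | Frequency-domain features of the acceleration data based on the fast Fourier transform (FFT). | $X_k = \sum_{n=0}^{N-1} x_n e^{-j 2\pi k n / N}$ (12) |
| Quartile range | Spread of the middle half of the sample data; $Q_1$ is the middle value between the minimum and the median, and $Q_3$ is the middle value between the median and the maximum. | $\mathrm{IQR} = Q_3 - Q_1$ (13) |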
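The remaining statistical measures in Table 4 can be sketched in NumPy as below. This is a hedged illustration rather than the authors' implementation: the population (1/N) moments, the 10-bin histogram used for entropy, and NumPy's default linear percentile interpolation for the quartiles are all assumptions, since the paper does not specify them. All function names are hypothetical.

```python
import numpy as np

FS_HZ = 50  # sampling rate stated in the paper


def _standardize(x):
    sd = x.std()
    return np.zeros_like(x, dtype=float) if sd == 0 else (x - x.mean()) / sd


def skewness(x):
    """Third standardized moment (population form)."""
    return float(np.mean(_standardize(x) ** 3))


def kurtosis(x):
    """Fourth standardized moment (not excess kurtosis)."""
    return float(np.mean(_standardize(x) ** 4))


def histogram_entropy(x, bins=10):
    """Shannon entropy (bits) of the histogram of x; the binning is an assumption."""
    counts, _ = np.histogram(x, bins=bins)
    p = counts[counts > 0] / counts.sum()
    return float(-np.sum(p * np.log2(p)))


def cosine_similarity(a, b):
    """Cosine of the angle between two axis signals, e.g. acc-x and acc-y."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def peak_time_difference(x, fs=FS_HZ):
    """|t_max - t_min| in seconds between the maximum and minimum samples."""
    return abs(int(np.argmax(x)) - int(np.argmin(x))) / fs


def fft_magnitude(x):
    """One-sided FFT magnitude spectrum, from which frequency features are taken."""
    return np.abs(np.fft.rfft(x))


def interquartile_range(x):
    """Q3 - Q1 using NumPy's default (linear) percentile interpolation."""
    q1, q3 = np.percentile(x, [25, 75])
    return float(q3 - q1)


x = np.array([1.0, 2.0, 3.0, 4.0])
print(skewness(x), kurtosis(x), interquartile_range(x))
```

Each function takes one axis of one window, so applying them channel by channel and concatenating the results yields a per-window feature vector.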

3.4. Classification and Recognition Using Machine Learning Algorithms

The Raspberry Pi serves as the platform for feature extraction and runs the machine learning libraries that recognize the gesture. We used the KNN, decision tree, random forest, and neural network classifiers, which are explained in the following subsections.

K-Nearest Neighbours (KNN) Algorithm

KNN is a lazy learning method, because learning (discovering the relationship between the input features and the corresponding labels) is deferred until a test input arrives. The algorithm computes the similarity (distance) between the test feature vector and each training feature vector, sorts the training samples by this measure, selects the top K neighbors (the K training samples closest to the test input), and assigns the majority label among them. Mathematically, the similarity between a training sample $\mathbf{x}$ and a test sample $\mathbf{y}$ with $n$ features can be calculated using the Euclidean, Manhattan, or Minkowski distance, given by Equations (14)–(16), respectively. Algorithm 1 shows the pseudocode.

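$$d(\mathbf{x},\mathbf{y}) = \sqrt{\sum_{i=1}^{n} (x_i - y_i)^2} \qquad (14)$$

$$d(\mathbf{x},\mathbf{y}) = \sum_{i=1}^{n} \lvert x_i - y_i \rvert \qquad (15)$$

$$d(\mathbf{x},\mathbf{y}) = \left(\sum_{i=1}^{n} \lvert x_i - y_i \rvert^{p}\right)^{1/p} \qquad (16)$$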

Algorithm 1. KNN pseudocode.
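The KNN procedure described above can be sketched as a short NumPy function. This is a minimal sketch, not the authors' code: the names are hypothetical, Manhattan and Euclidean are treated as the Minkowski distance with p = 1 and p = 2, and the default p = 3 for the general Minkowski case is an assumption because the paper does not report the value it used.

```python
import numpy as np
from collections import Counter


def minkowski_distances(X_train, x, p):
    """Distances from x to every row of X_train.
    p = 1 gives Manhattan, p = 2 gives Euclidean, other p give Minkowski."""
    return np.sum(np.abs(X_train - x) ** p, axis=1) ** (1.0 / p)


P_BY_METRIC = {"manhattan": 1.0, "euclidean": 2.0}


def knn_predict(X_train, y_train, x, k=3, metric="manhattan", p=3.0):
    """Majority label among the k training samples nearest to x.

    `p` is only used when metric == "minkowski"; its default of 3 is a
    hypothetical choice, as the paper does not report it.
    """
    order = p if metric == "minkowski" else P_BY_METRIC[metric]
    d = minkowski_distances(X_train, x, order)
    nearest = np.argsort(d, kind="stable")[:k]
    # Counter keeps first-seen order, so vote ties go to the closer neighbour's label
    votes = Counter(y_train[i] for i in nearest)
    return votes.most_common(1)[0][0]


# Toy feature vectors (hypothetical, two features each)
X = np.array([[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [6.0, 5.0]])
y = np.array(["GST-1", "GST-1", "GST-2", "GST-2"])
print(knn_predict(X, y, np.array([0.2, 0.3]), k=3))  # GST-1
```

Because KNN is lazy, "training" is just storing `X` and `y`; all the work happens per query, which is worth keeping in mind on a Raspberry Pi.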

3.5. Decision Tree

The fundamental structure of a decision tree includes a root node, branches, and leaf nodes. The root node represents a test on an attribute, the branches give the test outcomes, the leaf nodes give the decision taken after considering the attributes (in other words, each leaf node gives a class label), and the internal sub-nodes represent the structure of the dataset. The algorithm builds a tree for the whole dataset and produces a single outcome at each leaf node while minimizing errors. Two choices play a key role in shaping the tree: the attributes and the attribute selection method. Nodes are selected using criteria such as the Gini index, entropy (information gain), and the information gain ratio. Algorithm 2 gives the decision tree pseudocode.

Algorithm 2. Decision tree pseudocode.
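The node-selection criteria named above (Gini index, entropy-based information gain, and gain ratio) can be sketched for a single numeric feature as follows. This is an illustrative sketch, not the J48 implementation used in the results: J48 is a C4.5 variant with its own split and pruning rules, and the function names here are hypothetical.

```python
import numpy as np


def gini(labels):
    """Gini impurity 1 - sum(p_c^2) over the class proportions p_c."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(1.0 - np.sum(p ** 2))


def entropy(labels):
    """Shannon entropy (bits) of the class proportions."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-np.sum(p * np.log2(p)))


def information_gain(labels, mask, impurity=entropy):
    """Impurity decrease when splitting labels into labels[mask] and labels[~mask]."""
    left, right = labels[mask], labels[~mask]
    n = len(labels)
    weighted = (len(left) * impurity(left) + len(right) * impurity(right)) / n
    return impurity(labels) - weighted


def gain_ratio(labels, mask):
    """Information gain normalized by the split information (C4.5-style)."""
    frac = np.array([mask.mean(), 1.0 - mask.mean()])
    frac = frac[frac > 0]
    split_info = -np.sum(frac * np.log2(frac))
    return information_gain(labels, mask) / split_info if split_info > 0 else 0.0


def best_threshold(feature, labels, impurity=gini):
    """Threshold t maximizing the impurity decrease of the split feature <= t."""
    best = (None, -np.inf)
    for t in np.unique(feature)[:-1]:  # the largest value would leave one side empty
        gain = information_gain(labels, feature <= t, impurity)
        if gain > best[1]:
            best = (float(t), gain)
    return best


labels = np.array([0, 0, 1, 1])
feature = np.array([1.0, 2.0, 3.0, 4.0])
print(best_threshold(feature, labels))  # (2.0, 0.5)
```

A full tree applies `best_threshold` to every feature at each node, keeps the best split, and recurses on the two children until the leaves are pure or a stopping rule is met.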

3.5.1. Random Forest Algorithms

The random forest method [60] is a classification method that builds multiple decision trees and combines the decisions of these weak learners, typically by majority vote. Pruning these trees often helps to prevent overfitting; pruning trades off complexity against accuracy, and no pruning implies high complexity, long run times, and greater resource use. We use this classifier to predict the gesture. Algorithm 3 shows the pseudocode of the random forest.

Algorithm 3. Random forest pseudocode.

3.5.2. Artificial Neural Network

This paper uses neural networks [61] for complex models and multi-class classification. Neural networks are inspired by the brain, which is a network of neurons. The network consists of an input layer, hidden layers whose neurons apply activation functions to weighted inputs, and an output layer. When an input arrives, it is multiplied by the weight of the connection. The input layer does not process the input; it passes it on to the next layers, called hidden layers. These layers process the signals and produce an output that is delivered to the output layer. The weight of a connection defines the influence of one neuron over another, and during training these weights are updated in the back-propagation process to reduce the error. Algorithm 4 shows the neural network training procedure using back-propagation.

Algorithm 4. Neural network training using the back-propagation method (pseudocode).
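Back-propagation training for a network with one hidden layer can be sketched in NumPy as follows. This is a minimal sketch, not the authors' training code: it uses 100 tan-sigmoid (tanh) hidden units as in Section 4.4, a softmax output with mean cross-entropy loss, and plain full-batch gradient descent; the learning rate, epoch count, initialization, and toy data are hypothetical choices.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def train_mlp(X, y, n_classes, hidden=100, lr=0.05, epochs=500, seed=0):
    """One-hidden-layer network (tanh hidden units, softmax output) trained with
    full-batch gradient descent on the mean cross-entropy loss."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(0.0, 1.0 / np.sqrt(X.shape[1]), (X.shape[1], hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 1.0 / np.sqrt(hidden), (hidden, n_classes))
    b2 = np.zeros(n_classes)
    T = np.eye(n_classes)[y]  # one-hot targets
    losses = []
    for _ in range(epochs):
        # Forward pass
        H = np.tanh(X @ W1 + b1)
        P = softmax(H @ W2 + b2)
        losses.append(float(-np.mean(np.sum(T * np.log(P + 1e-12), axis=1))))
        # Backward pass: propagate the output error back through the layers
        dZ2 = (P - T) / len(X)
        dW2, db2 = H.T @ dZ2, dZ2.sum(axis=0)
        dZ1 = (dZ2 @ W2.T) * (1.0 - H ** 2)  # tanh'(a) = 1 - tanh(a)^2
        dW1, db1 = X.T @ dZ1, dZ1.sum(axis=0)
        # Gradient-descent weight updates
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return (W1, b1, W2, b2), losses


def predict_mlp(params, X):
    W1, b1, W2, b2 = params
    return np.argmax(np.tanh(X @ W1 + b1) @ W2 + b2, axis=1)


# Toy two-class example (hypothetical data, not the paper's features)
X = np.array([[-1.0, -1.0], [-1.0, -0.5], [1.0, 1.0], [1.0, 0.5]])
y = np.array([0, 0, 1, 1])
params, losses = train_mlp(X, y, n_classes=2)
print(losses[0], losses[-1], predict_mlp(params, X))
```

The `losses` list corresponds to the cross-entropy convergence curve plotted in Figure 6; swapping `np.tanh` for a logistic function (and its derivative) gives the log-sigmoid variant.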

After the gesture is recognized, we send the gesture text message to the person/guardian’s mobile for interaction.

4. Results and Discussion

This section discusses the sensors' responses and the classifier results. The inputs to the classifiers are the features extracted from the original dataset. We used accuracy, precision, and recall as performance metrics to evaluate and compare the algorithms.

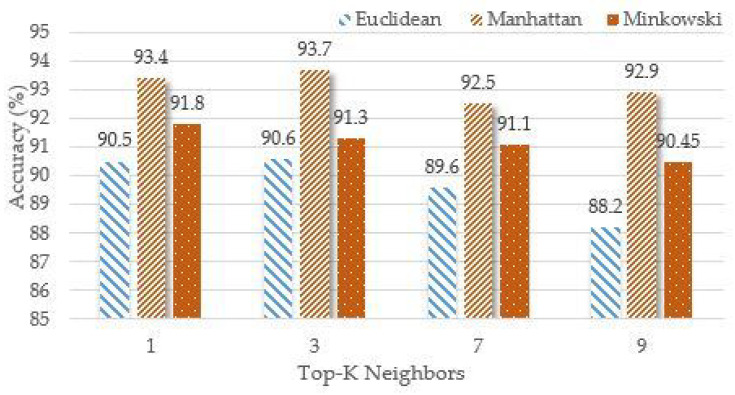

4.1. K-Nearest Neighbours (KNN) Algorithm Results

KNN can use several distance measures; here, we used three: Euclidean, Manhattan, and Minkowski distance. The value of K was varied over 1, 3, 7, and 9 to obtain the maximum accuracy. Figure 5 shows the accuracy of the KNN classifier on the dataset for different K values and distance measures. The maximum accuracy achieved with KNN is 93.7%, using Manhattan distance with K = 3 and 10-fold cross-validation. Table 5 shows the confusion matrix for this configuration.

Figure 5.

The KNN algorithm accuracy using different K-values and distance measures.

Table 5.

Confusion matrix for KNN classifier using Manhattan distance with the cross-validation factor of 10%.

| Actual/Predicted | GST-1 | GST-2 | GST-3 | GST-4 | GST-5 | GST-6 | GST-7 | GST-8 | GST-9 | GST-10 |
|---|---|---|---|---|---|---|---|---|---|---|
| GST-1 | 193 | 2 | 0 | 0 | 1 | 2 | 0 | 0 | 2 | 0 |
| GST-2 | 0 | 194 | 1 | 0 | 1 | 0 | 3 | 1 | 0 | 0 |
| GST-3 | 0 | 0 | 188 | 6 | 2 | 2 | 2 | 0 | 0 | 0 |
| GST-4 | 0 | 2 | 8 | 187 | 0 | 1 | 0 | 2 | 0 | 0 |
| GST-5 | 0 | 2 | 2 | 0 | 176 | 5 | 11 | 2 | 2 | 0 |
| GST-6 | 0 | 1 | 0 | 0 | 2 | 192 | 3 | 2 | 0 | 0 |
| GST-7 | 0 | 0 | 1 | 0 | 11 | 10 | 177 | 1 | 0 | 0 |
| GST-8 | 0 | 0 | 2 | 0 | 7 | 1 | 3 | 187 | 0 | 0 |
| GST-9 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 192 | 6 |
| GST-10 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 10 | 188 |
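Accuracy, precision, and recall follow directly from a confusion matrix such as Table 5. The sketch below (function name hypothetical) computes them in NumPy with rows as actual classes and columns as predicted classes. Applied to the Table 5 counts, the diagonal sums to 1874 of 2000 samples, which reproduces the 93.7% accuracy reported for KNN.

```python
import numpy as np


def metrics_from_confusion(cm):
    """Accuracy plus per-class precision and recall from a confusion matrix
    whose rows are actual classes and whose columns are predicted classes."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    accuracy = tp.sum() / cm.sum()
    precision = tp / cm.sum(axis=0)  # column sums: samples predicted as each class
    recall = tp / cm.sum(axis=1)     # row sums: samples that truly belong to each class
    return accuracy, precision, recall


# Table 5 (KNN, Manhattan distance, K = 3); rows GST-1..GST-10 are actual classes
TABLE_5 = [
    [193, 2, 0, 0, 1, 2, 0, 0, 2, 0],
    [0, 194, 1, 0, 1, 0, 3, 1, 0, 0],
    [0, 0, 188, 6, 2, 2, 2, 0, 0, 0],
    [0, 2, 8, 187, 0, 1, 0, 2, 0, 0],
    [0, 2, 2, 0, 176, 5, 11, 2, 2, 0],
    [0, 1, 0, 0, 2, 192, 3, 2, 0, 0],
    [0, 0, 1, 0, 11, 10, 177, 1, 0, 0],
    [0, 0, 2, 0, 7, 1, 3, 187, 0, 0],
    [0, 0, 0, 0, 2, 0, 0, 0, 192, 6],
    [2, 0, 0, 0, 0, 0, 0, 0, 10, 188],
]

acc, precision, recall = metrics_from_confusion(TABLE_5)
print(round(acc, 3))  # 0.937
print(recall[0])      # 0.965 (GST-1: 193 of 200)
```

A class that is never predicted would have a zero column sum and an undefined precision; this sketch does not guard against that case.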

4.2. Decision Trees (J48) Results

The results of the J48 classifier are summarized in Table 6. We varied the cross-validation setting to examine how the size of the training set affects accuracy. The maximum accuracy is achieved with a 90%/10% split, i.e., ten-fold cross-validation.
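The ten-fold scheme can be sketched as a shuffled k-fold index generator. This is an assumption-labeled illustration: the function name is hypothetical and the split is not stratified by gesture or child, details the paper does not report. With 10 children × 10 gestures × 20 repetitions = 2000 samples, each fold holds out 200 samples (10%), and every sample is tested exactly once, which is consistent with each confusion matrix in this section summing to 2000.

```python
import numpy as np


def kfold_indices(n_samples, k=10, seed=0):
    """Yield (train_idx, test_idx) pairs for shuffled k-fold cross-validation.

    Every sample appears in exactly one test fold, so predictions pooled over
    all k folds cover the whole dataset once."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, folds[i]


splits = list(kfold_indices(2000, k=10))
print(len(splits), len(splits[0][0]), len(splits[0][1]))  # 10 1800 200
```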

Table 6.

Results for J48 decision tree classifier.

| Number of Iterations | Cross-Validations (%) | Accuracy (%) |
|---|---|---|
| 1 | 5 | 86.1 |
| 2 | 10 | 87.91 |
| 3 | 15 | 86.6 |
| 4 | 20 | 87.7 |
| 5 | 25 | 87.7 |

The maximum accuracy obtained using this classifier is 87.912% at a cross-validation of 10%. Table 7 shows the corresponding confusion matrix.

Table 7.

Confusion matrix for J48 classifier with the cross-validation factor of 10%.

| Actual/Predicted | GST-1 | GST-2 | GST-3 | GST-4 | GST-5 | GST-6 | GST-7 | GST-8 | GST-9 | GST-10 |
|---|---|---|---|---|---|---|---|---|---|---|
| GST-1 | 191 | 1 | 0 | 1 | 1 | 3 | 0 | 1 | 0 | 2 |
| GST-2 | 0 | 183 | 2 | 6 | 3 | 1 | 4 | 1 | 0 | 0 |
| GST-3 | 0 | 5 | 167 | 9 | 8 | 4 | 1 | 6 | 0 | 0 |
| GST-4 | 0 | 5 | 12 | 167 | 3 | 5 | 2 | 6 | 0 | 0 |
| GST-5 | 0 | 1 | 7 | 2 | 157 | 5 | 20 | 6 | 2 | 0 |
| GST-6 | 3 | 4 | 2 | 7 | 3 | 172 | 8 | 1 | 0 | 0 |
| GST-7 | 0 | 4 | 3 | 1 | 16 | 13 | 162 | 1 | 0 | 0 |
| GST-8 | 2 | 4 | 6 | 7 | 4 | 3 | 5 | 169 | 0 | 0 |
| GST-9 | 0 | 0 | 0 | 0 | 3 | 0 | 0 | 0 | 195 | 2 |
| GST-10 | 3 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 195 |

4.3. Random Forest Results

The results in Table 8 show the output with changing cross-validation and maximum depth.

Table 8.

Results of RF classifiers with maximum depth and cross-validation factor.

| Iteration | Cross-Validation (%) | Accuracy (%) |
|---|---|---|
| 1 | 5 | 95.25 |
| 2 | 10 | 95.9 |
| 3 | 15 | 95.875 |
| 4 | 20 | 95.13 |
| 5 | 25 | 94.25 |
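Random forests [60] grow many decision trees, each trained on a bootstrap replicate of the data and restricted to a random subset of features at each split, and combine the trees by majority vote. The sketch below shows only those randomization and voting steps; growing the trees themselves (including the maximum-depth limit) is left out, and the function names and the 20-feature example are hypothetical.

```python
import random
from collections import Counter


def bootstrap_indices(n_samples, rng):
    """One bootstrap replicate: n_samples indices drawn with replacement."""
    return [rng.randrange(n_samples) for _ in range(n_samples)]


def random_feature_subset(n_features, m, rng):
    """The m distinct feature indices a tree may consider at one split."""
    return sorted(rng.sample(range(n_features), m))


def majority_vote(tree_predictions):
    """Most common label among the trees; ties go to the label seen first."""
    return Counter(tree_predictions).most_common(1)[0][0]


rng = random.Random(42)
sample = bootstrap_indices(2000, rng)
# On average about 63% of distinct samples appear in a replicate; the rest are "out of bag".
print(len(set(sample)) / len(sample))
print(random_feature_subset(20, 4, rng))           # hypothetical 20 features
print(majority_vote(["GST-5", "GST-7", "GST-5"]))  # GST-5
```

Averaging many such decorrelated trees is what typically lets a random forest outperform a single tree such as J48, consistent with the gap between Tables 6 and 8.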

The maximum accuracy obtained is 95.9%, at a cross-validation factor of 10%. Table 9 shows the corresponding confusion matrix.

Table 9.

Confusion matrix of the random forest classifier (accuracy 95.9%).

| Actual/Predicted | GST-1 | GST-2 | GST-3 | GST-4 | GST-5 | GST-6 | GST-7 | GST-8 | GST-9 | GST-10 |
|---|---|---|---|---|---|---|---|---|---|---|
| GST-1 | 197 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 0 |
| GST-2 | 0 | 195 | 0 | 1 | 0 | 0 | 1 | 3 | 0 | 0 |
| GST-3 | 0 | 0 | 191 | 7 | 0 | 1 | 1 | 0 | 0 | 0 |
| GST-4 | 0 | 0 | 4 | 195 | 0 | 1 | 0 | 0 | 0 | 0 |
| GST-5 | 0 | 0 | 5 | 1 | 184 | 2 | 4 | 2 | 2 | 0 |
| GST-6 | 0 | 2 | 1 | 0 | 1 | 194 | 1 | 1 | 0 | 0 |
| GST-7 | 0 | 0 | 0 | 0 | 7 | 7 | 186 | 0 | 0 | 0 |
| GST-8 | 0 | 0 | 2 | 1 | 4 | 1 | 2 | 190 | 0 | 0 |
| GST-9 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 194 | 5 |
| GST-10 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 6 | 192 |
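Table 9 also yields per-gesture precision and recall: recall divides each diagonal count by its row total (actual samples of that gesture), and precision divides it by its column total (samples predicted as that gesture). The sketch below computes both, plus F1, from the Table 9 counts; the function name is illustrative.

```python
# Rows: actual gesture; columns: predicted gesture (Table 9, random forest).
RF_CM = [
    [197, 1, 0, 0, 0, 0, 0, 0, 2, 0],
    [0, 195, 0, 1, 0, 0, 1, 3, 0, 0],
    [0, 0, 191, 7, 0, 1, 1, 0, 0, 0],
    [0, 0, 4, 195, 0, 1, 0, 0, 0, 0],
    [0, 0, 5, 1, 184, 2, 4, 2, 2, 0],
    [0, 2, 1, 0, 1, 194, 1, 1, 0, 0],
    [0, 0, 0, 0, 7, 7, 186, 0, 0, 0],
    [0, 0, 2, 1, 4, 1, 2, 190, 0, 0],
    [0, 0, 0, 0, 1, 0, 0, 0, 194, 5],
    [2, 0, 0, 0, 0, 0, 0, 0, 6, 192],
]


def per_class_metrics(cm):
    """Precision, recall, and F1 for every class of a square confusion matrix."""
    n = len(cm)
    metrics = {}
    for k in range(n):
        tp = cm[k][k]
        actual = sum(cm[k])                          # row total
        predicted = sum(cm[i][k] for i in range(n))  # column total
        recall = tp / actual
        precision = tp / predicted
        f1 = 2 * precision * recall / (precision + recall)
        metrics[f"GST-{k + 1}"] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics


for gesture, m in per_class_metrics(RF_CM).items():
    print(f"{gesture}: P={m['precision']:.3f} R={m['recall']:.3f} F1={m['f1']:.3f}")
```

For these counts GST-5 has both the lowest recall (184/200 = 92%) and the lowest precision (184/197, about 93.4%) of the ten gestures.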

4.4. Artificial Neural Network

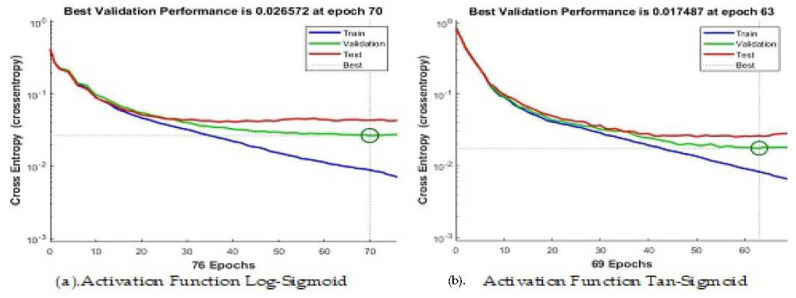

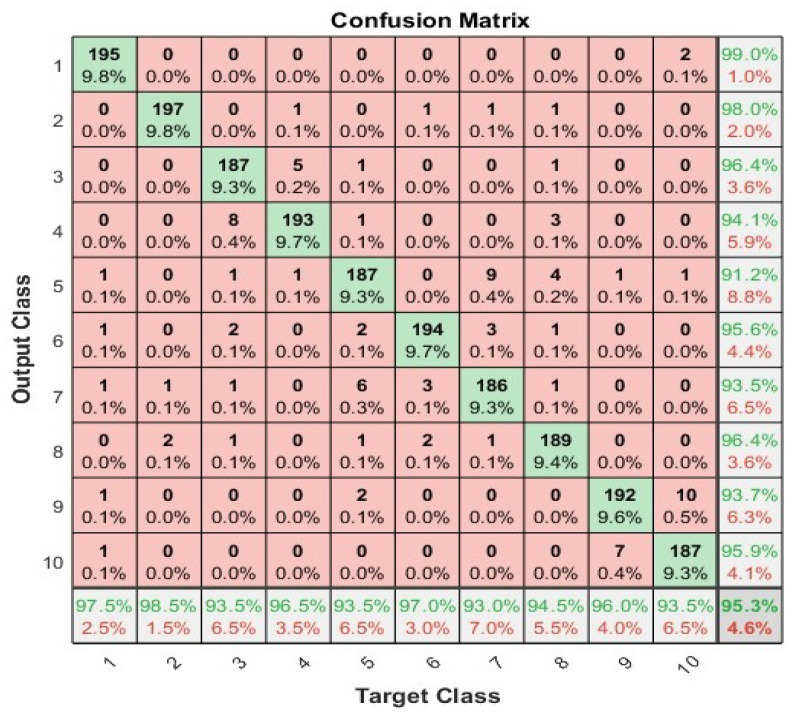

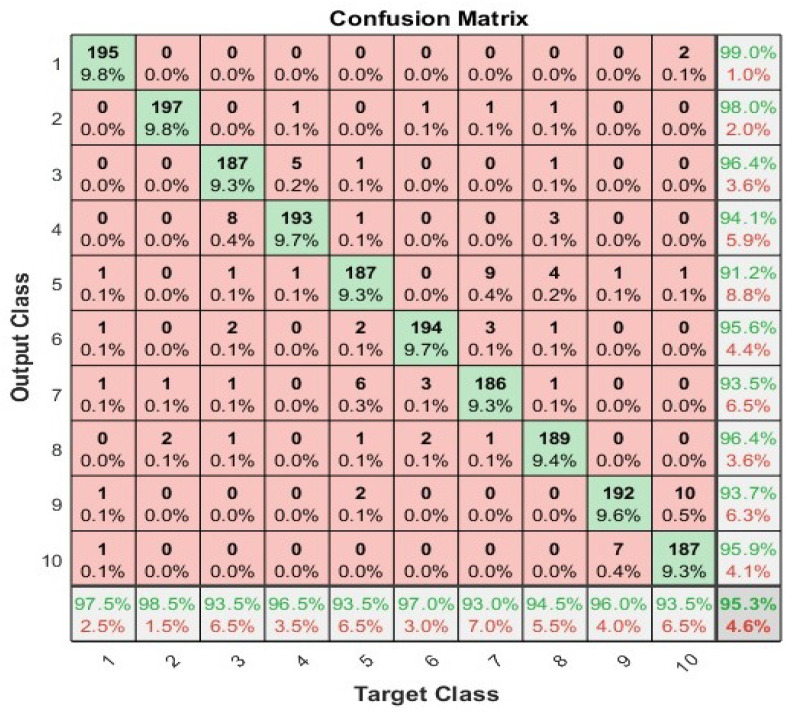

We trained the neural network with a 70%/15%/15% split for training, validation, and testing, respectively, which gave the highest accuracy. The hidden layer has 100 neurons, and we compared two activation functions: log-sigmoid and tan-sigmoid. Figure 6 shows the cross-entropy convergence curves for both activation functions. The tan-sigmoid converges earlier and reaches a higher accuracy. Figures 7 and 8 show the confusion matrices for the log-sigmoid and tan-sigmoid networks, respectively.
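The two activation functions and the cross-entropy measure can be written out directly. The sketch below assumes MATLAB-style definitions (log-sigmoid 1/(1 + e^(-x)); tan-sigmoid 2/(1 + e^(-2x)) - 1, which equals tanh(x)), a softmax output layer over the ten gestures, and a random 70/15/15 index split. These implementation details are not stated in the text, and the function names and the eight-feature input are hypothetical. The weights are random and untrained, so the printed losses only illustrate the computation.

```python
import math
import random


def logsig(x):
    """Log-sigmoid 1 / (1 + exp(-x)), computed without overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def tansig(x):
    """Tan-sigmoid 2 / (1 + exp(-2x)) - 1, which is mathematically tanh(x)."""
    return math.tanh(x)


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def forward(x, w_hidden, b_hidden, w_out, b_out, activation):
    """One hidden layer with the given activation, then a softmax output layer."""
    hidden = [activation(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w_hidden, b_hidden)]
    scores = [sum(w * h for w, h in zip(row, hidden)) + b
              for row, b in zip(w_out, b_out)]
    return softmax(scores)


def cross_entropy(probs, true_class):
    """Cross-entropy loss for one sample whose true class index is true_class."""
    return -math.log(probs[true_class])


def split_70_15_15(n_samples, seed=0):
    """Shuffle indices and cut them into training, validation, and test sets."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    n_train, n_val = round(0.70 * n_samples), round(0.15 * n_samples)
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


rng = random.Random(1)
n_features, n_hidden, n_classes = 8, 100, 10  # n_features is hypothetical
w_h = [[rng.gauss(0, 0.1) for _ in range(n_features)] for _ in range(n_hidden)]
b_h = [0.0] * n_hidden
w_o = [[rng.gauss(0, 0.1) for _ in range(n_hidden)] for _ in range(n_classes)]
b_o = [0.0] * n_classes
x = [rng.random() for _ in range(n_features)]
for act in (logsig, tansig):
    probs = forward(x, w_h, b_h, w_o, b_o, act)
    print(act.__name__, round(cross_entropy(probs, true_class=4), 3))
print([len(part) for part in split_70_15_15(2000)])  # [1400, 300, 300]
```

One commonly cited reason tan-sigmoid trains faster is that its outputs are centred on zero, whereas log-sigmoid outputs are always positive; this is a general observation, not a result reported here.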

Figure 6.

The error convergence and optimization curves using different activation functions with 100 hidden neurons.

Figure 7.

Confusion matrix of the neural network using the log-sigmoid activation function.

Figure 8.

Confusion matrix of the neural network using the tan-sigmoid activation function.

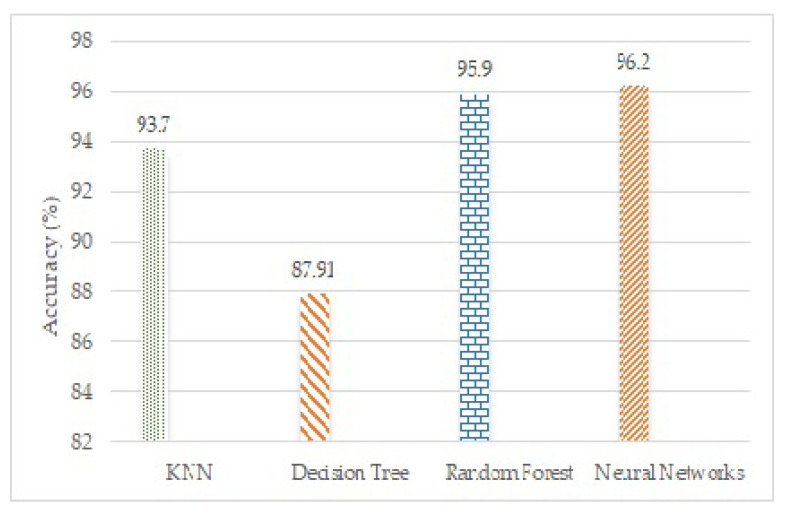

4.5. Comparison of Classifiers

Figure 9 compares the classifiers in a bar graph in which each bar represents a classifier’s average accuracy. The neural network with the tan-sigmoid activation function has the highest average accuracy.
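If the bars in Figure 9 are averages over the cross-validation runs (an assumption; how the figure averages the results is not stated), the J48 and random forest values can be reproduced from Tables 6 and 8 as sketched below. The neural network is omitted because per-run accuracies for it are not tabulated; the `summarize` helper is illustrative.

```python
from statistics import mean

# Accuracy (%) per cross-validation factor (%), copied from Tables 6 and 8.
RUNS = {
    "J48": {5: 86.1, 10: 87.91, 15: 86.6, 20: 87.7, 25: 87.7},
    "Random forest": {5: 95.25, 10: 95.9, 15: 95.875, 20: 95.13, 25: 94.25},
}


def summarize(runs):
    """Return (mean accuracy, best cross-validation factor, best accuracy)."""
    best_cv = max(runs, key=runs.get)
    return mean(list(runs.values())), best_cv, runs[best_cv]


for name, runs in RUNS.items():
    avg, best_cv, best_acc = summarize(runs)
    print(f"{name}: mean {avg:.2f}%, best {best_acc}% at {best_cv}%")
```

This gives means of 87.20% for J48 and 95.28% for the random forest, with both classifiers peaking at the 10% cross-validation factor.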

Figure 9.

Performance comparison of the classifiers.

5. Conclusions

This article proposed a wearable-sensor-based body area IoT system that acquires gesture time-series data from ASD children and uses machine learning (ML) to recognize what they are trying to say in sign language. The research focuses on recognizing the everyday gestures of ASD children so that they can communicate their messages to non-ASD people without hesitation. The system uses wearable sensors, namely accelerometers, gyroscopes, and flex sensor modules, placed at the head, elbow, and hand–finger positions. It acquires the time-series data, sends them over Bluetooth Low Energy (BLE) to a Raspberry Pi-based processing unit that extracts the features, and recognizes the gesture using ML algorithms. Because complex gestures involve the movement of multiple body parts, we placed sensors at the head, hand, and fingers: two motion sensor modules (one on the head and one on the hand at the elbow) and four flex sensors (one per finger). We collected the sensor data and extracted features from 200 samples of every gesture. We used k-nearest neighbor, random forest, decision tree, and neural network classifiers to predict the performed gesture. On average, the accuracy obtained is more than 90% for each gesture, and the maximum accuracy of 96% is achieved by the neural networks. Finally, the predicted gesture is sent over Wi-Fi to the user’s smartphone, where it is displayed to support gesture-to-verbal communication.
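The feature-extraction step on the Raspberry Pi can be illustrated with a few common time-domain and frequency-domain features computed over one window of a single sensor channel. The particular features (mean, standard deviation, RMS, range, dominant frequency, spectral energy), the 50 Hz sampling rate, and the function names are assumptions for illustration, not the paper's documented feature set; a plain DFT keeps the sketch free of dependencies.

```python
import cmath
import math


def time_features(window):
    """Simple time-domain statistics of one sensor channel."""
    n = len(window)
    mean = sum(window) / n
    std = math.sqrt(sum((x - mean) ** 2 for x in window) / n)
    rms = math.sqrt(sum(x * x for x in window) / n)
    return {"mean": mean, "std": std, "rms": rms, "range": max(window) - min(window)}


def frequency_features(window, fs_hz):
    """Dominant frequency and spectral energy from a one-sided DFT (DC removed)."""
    n = len(window)
    mean = sum(window) / n
    centered = [x - mean for x in window]
    # Magnitude of DFT bins 1 .. n // 2; bin k corresponds to k * fs_hz / n Hz.
    mags = [abs(sum(centered[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            for k in range(1, n // 2 + 1)]
    peak_bin = 1 + max(range(len(mags)), key=mags.__getitem__)
    return {"dominant_hz": peak_bin * fs_hz / n,
            "spectral_energy": sum(m * m for m in mags) / n}


# Synthetic 2 s window at a hypothetical 50 Hz rate: a 5 Hz oscillation.
fs = 50
window = [math.sin(2 * math.pi * 5 * t / fs) for t in range(100)]
features = {**time_features(window), **frequency_features(window, fs)}
print(features["dominant_hz"])  # 5.0
```

In a full pipeline, a feature dictionary like this would be computed per channel (each accelerometer, gyroscope, and flex sensor axis) and concatenated into one feature vector per gesture sample before classification.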

Author Contributions

Conceptualization, F.U.; data curation, A.U. and A.A.S.; formal analysis, F.U. and N.A.A.; funding acquisition, N.A.A.; methodology, R.U.; software, U.A.S. and R.U.; supervision, F.U. and N.A.A.; writing—original draft, U.A.S. and A.U.; Writing—review & editing, F.U. and N.A.A. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

The study was conducted in accordance with the relevant guidelines and was approved by the Graduate and Research Ethics Committee of the Institutes.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

All authors declare no conflict of interest.

Funding Statement

This work was funded by the Big Data Analytics Center, UAEU, under grant number G00003800.

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

1. Razmkon A., Maghsoodzadeh S., Abdollahifard S. The effect of deep brain stimulation in children and adults with autism spectrum disorder: A systematic review. Interdiscip. Neurosurg. 2022;29:101567. doi: 10.1016/j.inat.2022.101567.

2. Zablotsky B., Black L.I., Maenner M.J., Schieve L.A., Danielson M.L., Bitsko R.H., Blumberg S.J., Kogan M.D., Boyle C.A. Prevalence and trends of developmental disabilities among children in the United States: 2009–2017. Pediatrics. 2019;144:e20190811. doi: 10.1542/peds.2019-0811.

3. Alwakeel S.S., Alhalabi B., Aggoune H., Alwakeel M. A machine learning based WSN system for autism activity recognition; Proceedings of the 2015 IEEE 14th International Conference on Machine Learning and Applications (ICMLA); Miami, FL, USA. 9–11 December 2015; pp. 771–776.

4. Baio J., Wiggins L., Christensen D.L., Maenner M.J., Daniels J., Warren Z., Kurzius-Spencer M., Zahorodny W., Rosenberg C.R., et al. Prevalence of autism spectrum disorder among children aged 8 years—Autism and developmental disabilities monitoring network, 11 sites, United States, 2014. MMWR Surveill. Summ. 2018;67:1. doi: 10.15585/mmwr.ss6706a1.

5. Maenner M.J., Shaw K.A., Bakian A.V., Bilder D.A., Durkin M.S., Esler A., Furnier S.M., Hallas L., Hall-Lande J., Hudson A., et al. Prevalence and characteristics of autism spectrum disorder among children aged 8 years—Autism and developmental disabilities monitoring network, 11 sites, United States, 2018. MMWR Surveill. Summ. 2021;70:1. doi: 10.15585/mmwr.ss7011a1.

6. Areeb Q.M., Nadeem M., Alroobaea R., Anwer F. Helping hearing-impaired in emergency situations: A deep learning-based approach. IEEE Access. 2022;10:8502–8517. doi: 10.1109/ACCESS.2022.3142918.

7. Ramos-Cabo S., Acha J., Vulchanov V., Vulchanova M. You may point, but do not touch: Impact of gesture-types and cognition on language in typical and atypical development. Int. J. Lang. Commun. Disord. 2022;57:324–339. doi: 10.1111/1460-6984.12697.

8. Liang R.-H., Ouhyoung M. A real-time continuous gesture recognition system for sign language; Proceedings of the Third IEEE International Conference on Automatic Face and Gesture Recognition; Nara, Japan. 14–16 April 1998; pp. 558–567.

9. Dalimunte M., Daulay S.H. Echolalia Communication for Autism: An Introduction. AL-ISHLAH J. Pendidik. 2022;14:3395–3404. doi: 10.35445/alishlah.v14i3.740.

10. Abdulla D., Abdulla S., Manaf R., Jarndal A.H. Design and implementation of a sign-to-speech/text system for deaf and dumb people; Proceedings of the 2016 5th International Conference on Electronic Devices, Systems and Applications (ICEDSA); Ras Al Khaimah, United Arab Emirates. 6–8 December 2016; pp. 1–4.

11. Padden C. Deaf around the World: The Impact of Language. Oxford University Press; New York, NY, USA: 2010. Sign language geography; pp. 19–37.

12. McGuire R.M., Hernandez-Rebollar J., Starner T., Henderson V., Brashear H., Ross D.S. Towards a one-way American sign language translator; Proceedings of the Sixth IEEE International Conference on Automatic Face and Gesture Recognition; Seoul, Republic of Korea. 19–19 May 2004; pp. 620–625.

13. Ivani A.S., Giubergia A., Santos L., Geminiani A., Annunziata S., Caglio A., Olivieri I., Pedrocchi A. A gesture recognition algorithm in a robot therapy for ASD children. Biomed. Signal Process. Control. 2022;74:103512. doi: 10.1016/j.bspc.2022.103512.

14. Siddiqui U.A., Ullah F., Iqbal A., Khan A., Ullah R., Paracha S., Shahzad H., Kwak K.S. Wearable-Sensors-Based Platform for Gesture Recognition of Autism Spectrum Disorder Children Using Machine Learning Algorithms. Sensors. 2021;21:3319. doi: 10.3390/s21103319.

15. Borkar P., Balpande V., Aher U., Raut R. Hand-Talk Assistance: An Application for Hearing and Speech Impaired People. Intell. Syst. Rehabil. Eng. 2022:197–222.

16. Zhang Q., Xin C., Shen F., Gong Y., Zi Y., Guo H., Li Z., Peng Y., Zhang Q., Wang Z.L. Human body IoT systems based on the triboelectrification effect: Energy harvesting, sensing, interfacing and communication. Energy Environ. Sci. 2022;15:3688–3721. doi: 10.1039/D2EE01590K.

17. Alam T., Gupta R. Federated Learning and Its Role in the Privacy Preservation of IoT Devices. Future Internet. 2022;14:246. doi: 10.3390/fi14090246.

18. Iqbal A., Ullah F., Anwar H., Ur Rehman A., Shah K., Baig A., Ali S., Yoo S., Kwak K.S. Wearable Internet-of-Things platform for human activity recognition and health care. Int. J. Distrib. Sens. Netw. 2020;16:1550147720911561. doi: 10.1177/1550147720911561.

19. Atallah L., Lo B., King R., Yang G.-Z. Sensor positioning for activity recognition using wearable accelerometers. IEEE Trans. Biomed. Circuits Syst. 2011;5:320–329. doi: 10.1109/TBCAS.2011.2160540.

20. Hayek H.E., Nacouzi J., Kassem A., Hamad M., El-Murr S. Sign to letter translator system using a hand glove; Proceedings of the Third International Conference on e-Technologies and Networks for Development (ICeND2014); Beirut, Lebanon. 29 April–1 May 2014; pp. 146–150.

21. Lukowicz P., Amft O., Roggen D., Cheng J. On-body sensing: From gesture-based input to activity-driven interaction. Computer. 2010;43:92–96. doi: 10.1109/MC.2010.294.

22. Mao J., Zhao J., Wang G., Yang H., Zhao B. Live demonstration: A hand gesture recognition wristband employing low power body channel communication; Proceedings of the 2017 IEEE Biomedical Circuits and Systems Conference (BioCAS); Turin, Italy. 19–21 October 2017; p. 1.

23. Naik M.R.K., Samundiswary P. Survey on Game Theory Approach in Wireless Body Area Network; Proceedings of the 2019 3rd International Conference on Computing and Communications Technologies (ICCCT); Chennai, India. 21–22 February 2019; pp. 54–58.

24. Duan H., Huang M., Yang Y., Hao J., Chen L. Ambient light based hand gesture recognition enabled by recurrent neural network. IEEE Access. 2020;8:7303–7312. doi: 10.1109/ACCESS.2019.2963440.

25. Grosse-Puppendahl T., Beck S., Wilbers D., Zeiss S., von Wilmsdorff J., Kuijper A. Ambient gesture-recognizing surfaces with visual feedback; Proceedings of the International Conference on Distributed, Ambient, and Pervasive Interactions; Heraklion, Greece. 22–27 June 2014; pp. 97–108.

26. Cheng H.-T., Chen A.M., Razdan A., Buller E. Contactless gesture recognition system using proximity sensors; Proceedings of the 2011 IEEE International Conference on Consumer Electronics (ICCE); Las Vegas, NV, USA. 9–12 January 2011; pp. 149–150.

27. Zhang C., Tian Y. RGB-D camera-based daily living activity recognition. J. Comput. Vis. Image Process. 2012;2:12.

28. Harville M., Li D. Fast, integrated person tracking and activity recognition with plan-view templates from a single stereo camera; Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition; Washington, DC, USA. 27 June–2 July 2004; p. II.

29. Zhiwen Z., Wang Q., Yang D., Wang Q., Huang W., Xu Y. L-Sign: Large-Vocabulary Sign Gestures Recognition System. IEEE Trans. Hum.-Mach. Syst. 2022;52:290–301.

30. Xiangzu H., Lu F., Yin J., Tian G., Liu J. Sign Language Recognition Based on R (2 + 1) D With Spatial-Temporal-Channel Attention. IEEE Trans. Hum.-Mach. Syst. 2022:687–698. doi: 10.1109/THMS.2022.3144000.

31. Song B., Kamal A.T., Soto C., Ding C., Farrell J.A., Roy-Chowdhury A.K. Tracking and activity recognition through consensus in distributed camera networks. IEEE Trans. Image Process. 2010;19:2564–2579. doi: 10.1109/TIP.2010.2052823.

32. Choudhury T., Borriello G., Consolvo S., Haehnel D., Harrison B., Hemingway B., Hightower J., Pedja P., Koscher K., LaMarca A., et al. The mobile sensing platform: An embedded activity recognition system. IEEE Pervasive Comput. 2008;7:32–41. doi: 10.1109/MPRV.2008.39.

33. Sardar A.W., Ullah F., Bacha J., Khan J., Ali F., Lee S. Mobile sensors based platform of Human Physical Activities Recognition for COVID-19 pandemic spread minimization. Comput. Biol. Med. 2022;146:105662. doi: 10.1016/j.compbiomed.2022.105662.

34. Weiss G.M., Lockhart J. The impact of personalization on smartphone-based activity recognition; Proceedings of the Workshops at the Twenty-Sixth AAAI Conference on Artificial Intelligence; Toronto, ON, Canada. 22–23 July 2012.

35. Shoaib M., Bosch S., Incel O.D., Scholten H., Havinga P.J. A survey of online activity recognition using mobile phones. Sensors. 2015;15:2059–2085. doi: 10.3390/s150102059.

36. Zeng M., Nguyen L.T., Yu B., Mengshoel O.J., Zhu J., Wu P., Zhang J. Convolutional neural networks for human activity recognition using mobile sensors; Proceedings of the 6th International Conference on Mobile Computing, Applications and Services; Austin, TX, USA. 6–7 November 2014; pp. 197–205.

37. Lei C., Clifton D.A., Pimentel M.A.F., Watkinson P.J., Tarassenko L. Predictive monitoring of mobile patients by combining clinical observations with data from wearable sensors. IEEE J. Biomed. Health Inform. 2013;18:722–730. doi: 10.1109/JBHI.2013.2293059.

38. Ferrone A., Jiang X., Maiolo L., Pecora A., Colace L., Menon C. A fabric-based wearable band for hand gesture recognition based on filament strain sensors: A preliminary investigation; Proceedings of the 2016 IEEE Healthcare Innovation Point-of-Care Technologies Conference (HI-POCT); Cancun, Mexico. 9–11 November 2016; pp. 113–116.

39. Jani A.B., Kotak N.A., Roy A.K. Sensor based hand gesture recognition system for English alphabets used in sign language of deaf-mute people; Proceedings of the 2018 IEEE SENSORS; New Delhi, India. 28–31 October 2018; pp. 1–4.

40. Liang X., Heidari H., Dahiya R. Wearable capacitive-based wrist-worn gesture sensing system; Proceedings of the 2017 New Generation of CAS (NGCAS); Genova, Italy. 6–9 September 2017; pp. 181–184.

41. Atallah L., Lo B., King R., Yang G.-Z. Sensor placement for activity detection using wearable accelerometers; Proceedings of the 2010 International Conference on Body Sensor Networks; Singapore. 7–9 June 2010; pp. 24–29.

42. Tian J., Du Y., Peng S., Ma H., Meng X., Xu L. Research on Human gesture Recognition Based on Wearable Technology; Proceedings of the 2019 IEEE 8th Joint International Information Technology and Artificial Intelligence Conference (ITAIC); Chongqing, China. 24–26 May 2019; pp. 1627–1630.

43. Oprea S., Garcia-Garcia A., Garcia-Rodriguez J., Orts-Escolano S., Cazorla M. A recurrent neural network based Schaeffer gesture recognition system; Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN); Anchorage, AK, USA. 14–19 May 2017; pp. 425–431.

44. Vutinuntakasame S., Jaijongrak V.-R., Thiemjarus S. An assistive body sensor network glove for speech-and hearing-impaired disabilities; Proceedings of the 2011 International Conference on Body Sensor Networks; Dallas, TX, USA. 23–25 May 2011; pp. 7–12.

45. Ling L., Jiang S., Shull P.B., Gu G. SkinGest: Artificial skin for gesture recognition via filmy stretchable strain sensors. Adv. Robot. 2018;32:1112–1121.

46. Qian Z., Jing J., Wang D., Zhao R. WearSign: Pushing the Limit of Sign Language Translation Using Inertial and EMG Wearables. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2022;6:1–27.

47. Zappi P., Stiefmeier T., Farella E., Roggen D., Benini L., Troster G. Activity recognition from on-body sensors by classifier fusion: Sensor scalability and robustness; Proceedings of the 2007 3rd International Conference on Intelligent Sensors, Sensor Networks and Information; Melbourne, VIC, Australia. 3–6 December 2007; pp. 281–286.

48. Mamun A.A., Polash M.S.J.K., Alamgir F.M. Flex Sensor Based Hand Glove for Deaf and Mute People. Int. J. Comput. Networks Commun. Secur. 2017;5:38.

49. Gałka J., Mąsior M., Zaborski M., Barczewska K. Inertial motion sensing glove for sign language gesture acquisition and recognition. IEEE Sens. J. 2016;16:6310–6316. doi: 10.1109/JSEN.2016.2583542.

50. Poon C.C., Zhang Y.T., Bao S.D. A novel biometrics method to secure wireless body area sensor networks for telemedicine and m-health. IEEE Commun. Mag. 2006;44:73–81. doi: 10.1109/MCOM.2006.1632652.

51. Uddin M.Z. A wearable sensor-based activity prediction system to facilitate edge computing in smart healthcare system. J. Parallel Distrib. Comput. 2019;123:46–53. doi: 10.1016/j.jpdc.2018.08.010.

52. Swee T.T., Ariff A.K., Salleh S., Seng S.K., Huat L.S. Wireless data gloves Malay sign language recognition system; Proceedings of the 2007 6th International Conference on Information, Communications and Signal Processing; Singapore. 10–13 December 2007; pp. 1–4.

53. Vogler C., Metaxas D. Parallel hidden Markov models for American sign language recognition; Proceedings of the Seventh IEEE International Conference on Computer Vision; Kerkyra, Greece. 20–27 September 1999; pp. 116–122.

54. Sadek M.I., Mikhael M.N., Mansour H.A. A new approach for designing a smart glove for Arabic Sign Language Recognition system based on the statistical analysis of the Sign Language; Proceedings of the 2017 34th National Radio Science Conference (NRSC); Alexandria, Egypt. 13–16 March 2017; pp. 380–388.

55. Truong V.N., Yang C.K., Tran Q.V. A translator for American sign language to text and speech; Proceedings of the 2016 IEEE 5th Global Conference on Consumer Electronics; Kyoto, Japan. 11–14 October 2016; pp. 1–2.

56. Jamdal A., Al-Maflehi A. On design and implementation of a sign-to-speech/text system; Proceedings of the 2017 International Conference on Electrical, Electronics, Communication, Computer, and Optimization Techniques (ICEECCOT); Mysuru, India. 15–16 December 2017; pp. 186–190.

57. Abhishek K.S., Qubeley L.C.F., Ho D. Glove-based hand gesture recognition sign language translator using capacitive touch sensor; Proceedings of the 2016 IEEE International Conference on Electron Devices and Solid-State Circuits (EDSSC); Hong Kong, China. 3–5 August 2016; pp. 334–337.

58. Sole M.M., Tsoeu M.S. Sign language recognition using the Extreme Learning Machine. IEEE Africon. 2011;11:1–6. doi: 10.1109/AFRCON.2011.6072114.

59. Shukor A.Z., Miskon M.F., Jamaluddin M.H., Ibrahim F.B.A., Asyraf M.F., Bahar M.B.B. A New Data Glove Approach for Malaysian Sign Language Detection. Procedia Comput. Sci. 2015;76:60–67. doi: 10.1016/j.procs.2015.12.276.

60. Breiman L. Random forests. Mach. Learn. 2001;45:5–32.

61. Hecht-Nielsen R. Neural Networks for Perception. Academic Press; Cambridge, MA, USA: 1992. Theory of the backpropagation neural network; pp. 65–93.
