Abstract

Background

Health systems increasingly need to implement complex practice changes such as the routine capture of patient-reported outcome (PRO) measures. Yet health systems have struggled to bring practice change to scale across systems at large. While implementation science can guide the evaluation of implementation determinants, teams first need tools to systematically understand and compare workflow activities across practice sites. Structured analysis and design technique (SADT), a systems engineering method of workflow modeling, may offer an opportunity to enhance the scalability of implementation evaluation for complex practice changes like PROs.

Method

We utilized SADT to identify the core workflow activities needed to implement PROs across diverse settings and goals for use, establishing a generalizable PRO workflow diagram. We then used the PRO workflow diagram to guide implementation monitoring and evaluation for a 1-year pilot implementation of the electronic Patient Health Questionnaire-9 (ePHQ). The pilot occurred across multiple clinical settings and for two clinical use cases: depression screening and depression management.

Results

SADT identified five activities central to the use of PROs in clinical care: deploying PRO measures, collecting PRO data, tracking PRO completion, reviewing PRO results, and documenting PRO data for future use. During the 1-year pilot, 8,596 patients received the ePHQ for depression screening via the patient portal, of whom 1,787 (21%) submitted the ePHQ; 367 patients received the ePHQ for depression management, of whom 174 (47%) submitted the ePHQ. We present three case examples of how the SADT PRO workflow diagram augmented implementation monitoring, tailoring, and evaluation activities.

Conclusions

Use of a generalizable PRO workflow diagram aided the ability to systematically assess barriers and facilitators to fidelity and identify needed adaptations. The use of SADT offers an opportunity to align systems science and implementation science approaches, augmenting the capacity for health systems to advance system-level implementation.

Plain Language Summary

Health systems increasingly need to implement complex practice changes such as the routine capture of patient-reported outcome (PRO) measures. Yet these system-level changes can be challenging to manage given the variability in practice sites and implementation context across the system at large. We utilized a systems engineering method—structured analysis and design technique—to develop a generalizable diagram of PRO workflow that captures five common workflow activities: deploying PRO measures, collecting PRO data, tracking PRO completion, reviewing PRO results, and documenting PRO data for future use. Next, we used the PRO workflow diagram to guide our implementation of PROs for depression care in multiple clinics. Our experience showed that use of a standard workflow diagram supported our implementation evaluation activities in a systematic way. The use of structured analysis and design technique may enhance future implementation efforts in complex health settings.

Keywords: patient-reported outcomes, workflow modeling, implementation process

Introduction

Health systems are increasingly charged with implementing complex practice change across the organization. Implementation science theories and frameworks can be instrumental in helping to characterize the role of implementation context, identify determinants of adoption, and broadly guide the stages of implementation (e.g., planning, acting, reflecting, and evaluating) (Nilsen, 2015). Yet in order to support implementation of practice change at scale, teams first need an approach to systematically characterize workflow activities across practice sites. Some methods of systems engineering, such as methods that produce generalizable workflow diagrams, can fill this gap as they are rooted in the goal of distilling complex processes into simple steps that can be easily defined, replicated, and compared (IEEE Computer Society, 1998; Marca & McGowan, 1988; Mutic et al., 2010). The method of structured analysis and design technique (SADT), for example, has a particular focus on characterizing the complexity of workflow activities in ways that can simplify and demystify implementation details (Mutic et al., 2010; Reid et al., 2005).

In 2005, the National Academy of Engineering and Institute of Medicine advocated for the widespread application of systems engineering methods like SADT to improve health care delivery (Reid et al., 2005). Similar to implementation science, systems engineering aims to address the goal of making sense of the messiness of real-world practice through the systematic study of how implementations unfold; however, these fields operate in silos, utilizing disparate vocabulary, methods, and theory (Nilsen, 2015). Further, there is a paucity of research demonstrating the use of systems engineering in tandem with implementation science to guide rigorous implementation monitoring and evaluation in practice, especially for the system-level integration of new forms of patient data. An opportunity exists to align systems engineering and implementation science methods in ways that enhance our ability to understand implementation-related outcomes and address the growing complexity of system-level change.

A critical gap in system-level implementation efforts is understanding workflows across practice sites in a meaningful way. There are many approaches to workflow modeling (i.e., the iterative process of understanding dynamic interactions of workflow activities and/or workflow changes) and to workflow documentation (i.e., the generation of a diagram or other static representation that describes workflow activities). These range from traditional process mapping, such as decision diagrams or flow charts, to sophisticated computer-based simulation, such as discrete event modeling. Each of these approaches can be useful for different goals, but each has important trade-offs to consider. For example, process maps are often used by local implementation teams to document particular decisions about which sequence of activities their stakeholders will take to use an intervention. Process maps are useful for informational or instructional purposes but are often too detailed to support systems-level implementation. In contrast, discrete event modeling is a design analysis methodology that allows system-level stakeholders to understand and make decisions about the impact on care team performance and resource requirements when making organization-level changes. Discrete event modeling, however, requires specialized software and expertise and is not effective for guiding implementation processes.

SADT is a relatively simple and accessible systems engineering method of modeling that may be well-suited for teams who want to evaluate experiences and outcomes across complex, system-level implementations (Congram & Epelman, 1995). SADT modeling expands on and refines traditional process maps to generate a workflow diagram that can support system-level views of common workflow activities (IEEE Computer Society, 1998; Marca & McGowan, 1988; Mutic et al., 2010). To do this, SADT distills multiple process maps for similar workflows into a unified diagram of core workflow activities that can apply across practice sites. SADT diagrams therefore reflect the common activities that are essential for completing the workflow (i.e., the core functions), without getting mired in the specific details of roles, modalities, or local constraints (i.e., forms) that may vary by context in ways that are not important for the system as a whole to understand. This is not dissimilar to the terminology of “form vs. function” for complex interventions (Perez Jolles et al., 2019), and SADT can be utilized in the absence of already-established core functions for a given intervention.

In 2018, our health system launched an effort to support expanded integration of patient-reported data for depression screening and management through the electronic capture of the Patient Health Questionnaire-9 (i.e., electronic Patient Health Questionnaire-9 [ePHQ]) in the patient portal. While there is a growing body of literature citing the value of patient-reported outcomes (PROs) in clinical practice, evidence surrounding PRO implementation experiences and best practice models is limited, particularly for system-wide implementations (Nelson et al., 2020; Nordan et al., 2018; Stover et al., 2021). Early in our planning, we recognized the challenge of documenting and evaluating workflows for depression screening and management (two distinct use cases) across 18 primary care clinics—up to 36 diverse workflows to consider. To address this challenge, we combined SADT with implementation science approaches. In this methods article, we describe our planned effort and learnings, organized around two phases. In Phase 1, we used SADT to develop a generalizable workflow diagram for PRO implementation that could be used systemwide. In Phase 2, we used the SADT workflow diagram that resulted from Phase 1 as a tool—in tandem with the Consolidated Framework for Implementation Research—to guide our evaluation of the ePHQ pilot implementation, including activities of implementation monitoring, tailoring and adaptations, and assessment of barriers and facilitators.

PHASE 1: Development of PRO Workflow Diagram

Overview of SADT

SADT methods generate core workflow activities through an inductive process that starts with documentation of on-the-ground workflows, such as detailed process maps. Then, SADT uses a hierarchical aggregation and decomposition approach to identify and organize the core workflow activities that are essential and remain present across the variability of each practice site. Through aggregation, lower-level workflow activities in process maps are collapsed under larger activities (i.e., core functions). Through decomposition, larger activities are expanded to ensure they fully encompass lower-level activities across all contexts. This process is conducted iteratively: a set of core workflow activities is identified from one process map, those core workflow activities are compared to another process map, and cyclical processes of aggregation and decomposition confirm either the fit of the core workflow activities or the need for adjustments. Core workflow activities are sufficient if they are present in all local workflows, encompass the full breadth of lower-level workflow activities, and are distinct and mutually exclusive from one another. The resulting SADT diagram helps teams focus on the core workflow activities that serve a critical function to accomplish the task objective, regardless of implementation context. Initial SADT diagrams are created with paper and pencil. As the diagrams are refined during the modeling process, a computer version may be developed with simple drawing programs such as Visio (the method used by our team) or the graphics tools in PowerPoint.
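As a minimal illustration, the three sufficiency criteria described above (presence at every site, full coverage of lower-level activities, and mutual exclusivity) could be checked programmatically once process maps are recorded as sets of activities. The sketch below is an assumption-laden example rather than a description of how the diagram was developed: the function name `check_core_activities`, the site names, and the activity labels are all hypothetical.

```python
from itertools import combinations


def check_core_activities(core_map, site_maps):
    """Return a list of sufficiency problems (an empty list means none were found).

    core_map:  dict of core activity -> set of lower-level activities aggregated under it
    site_maps: dict of practice site -> set of lower-level activities observed there
    """
    problems = []

    # Criterion 1: every core activity is present in every local workflow.
    for core, lower in core_map.items():
        for site, observed in site_maps.items():
            if not (lower & observed):
                problems.append(f"{core!r} has no lower-level activity at {site!r}")

    # Criterion 2: core activities encompass all observed lower-level activities.
    covered = set().union(*core_map.values())
    for site, observed in site_maps.items():
        for activity in sorted(observed - covered):
            problems.append(
                f"{activity!r} at {site!r} is not covered by any core activity"
            )

    # Criterion 3: core activities are mutually exclusive.
    for (a, lower_a), (b, lower_b) in combinations(core_map.items(), 2):
        for activity in sorted(lower_a & lower_b):
            problems.append(f"{activity!r} falls under both {a!r} and {b!r}")

    return problems


# Hypothetical process maps for a paper-based and a tablet-based clinic.
site_maps = {
    "clinic_paper": {"hand out paper form", "patient fills in form", "provider reads score"},
    "clinic_tablet": {"hand out tablet", "patient fills in form", "provider reads score",
                      "staff wipes tablet"},
}

# First attempt at aggregation: one lower-level activity is left uncovered.
core_map = {
    "collect": {"hand out paper form", "hand out tablet", "patient fills in form"},
    "review": {"provider reads score"},
}
first_pass = check_core_activities(core_map, site_maps)

# Decomposition step: expand "collect" so that it encompasses the missing activity.
core_map["collect"].add("staff wipes tablet")
second_pass = check_core_activities(core_map, site_maps)

print(first_pass)
print(second_pass)
```

In this toy example the first pass flags one uncovered activity and the second pass returns no problems, mirroring the iterative cycle of aggregation and decomposition.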

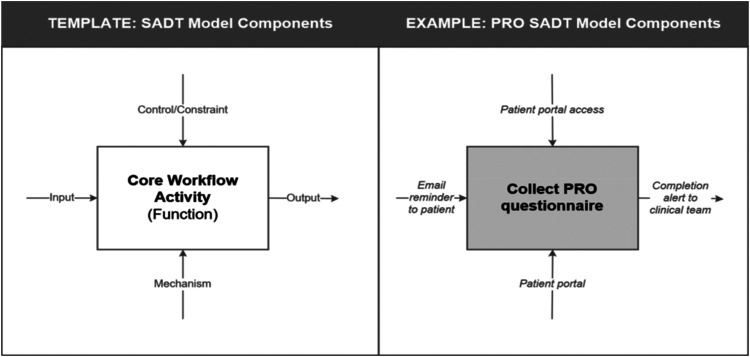

In addition to core workflow activities, SADT also considers the signals (i.e., inputs and outputs) that communicate the status of an activity, as well as the resources (i.e., what is needed to perform the activity) and constraints (i.e., rules or standards that limit the activity) that may influence activity execution (see Figure 1). Consistent with SADT principles of aggregation and decomposition, core workflow activities may involve a collection of lower-level activities. For example, decomposing the “Collect PRO questionnaire” activity in Figure 1 might identify the following lower-level activities, performed by the patient and the patient portal:

- 1.1 Patient receives email reminder alerting them to PRO questionnaire (input signal).
- 1.2 Patient logs in to patient portal and opens PRO questionnaire.
- 1.3 Patient completes PRO questions and submits completed questionnaire.
- 1.4 Patient portal generates alert for clinical teams that PRO questionnaire is complete (output signal).

Figure 1.

Template and example of components involved in structured analysis and design technique (SADT) diagramming.

The way each of these lower-level activities is set up may vary across settings yet still achieve the same function of the core workflow activity, therefore enabling a systematic view of diverse implementations.
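For readers who prefer a data-structure view, the components of an SADT activity (inputs, outputs, resources, constraints, and decomposed sub-activities) can be sketched as a small recursive record. This is only an illustrative sketch: the class name `Activity`, its field names, and the example resource value are assumptions introduced here, while the sub-activity text is taken from the decomposition example above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Activity:
    """One box in an SADT-style diagram (hypothetical representation)."""
    number: str
    name: str
    inputs: List[str] = field(default_factory=list)       # signals that start the activity
    outputs: List[str] = field(default_factory=list)      # signals that report its status
    resources: List[str] = field(default_factory=list)    # what is needed to perform it
    constraints: List[str] = field(default_factory=list)  # rules or standards that limit it
    children: List["Activity"] = field(default_factory=list)  # decomposed sub-activities

    def outline(self, depth=0):
        """Return the activity and its decomposition as indented text lines."""
        lines = ["  " * depth + f"{self.number} {self.name}"]
        for child in self.children:
            lines.extend(child.outline(depth + 1))
        return lines


collect = Activity(
    number="1",
    name="Collect PRO questionnaire",
    inputs=["email reminder"],
    outputs=["completion alert"],
    resources=["patient portal"],  # illustrative value, not taken from Figure 1
    children=[
        Activity("1.1", "Patient receives email reminder alerting them to PRO questionnaire"),
        Activity("1.2", "Patient logs in to patient portal and opens PRO questionnaire"),
        Activity("1.3", "Patient completes PRO questions and submits completed questionnaire"),
        Activity("1.4", "Patient portal generates alert for clinical teams that PRO questionnaire is complete"),
    ],
)

print("\n".join(collect.outline()))
```

Keeping the core activity and its sub-activities in one structure makes it straightforward to swap in a different set of children for another practice site while leaving the core activity unchanged.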

The goal of our first phase was to develop a generalizable PRO workflow diagram that could function as a template reflecting the common PRO workflow activities observed across multiple clinical practice settings. We launched this inductive process with three primary requirements: (1) that the methodology could adequately model the different levels of complexity and scale across the many clinical workflows observed; (2) that no special technology, training, or resources were required to use the methodology effectively; and (3) that the resulting diagram could be easily shared and understood by multidisciplinary stakeholders (e.g., operational leaders, frontline staff). We considered multiple modeling approaches, including process mapping and computer-based discrete event simulation, and determined that SADT methods best aligned with our requirements and overall implementation goals.

Methods for SADT PRO Diagram Development

To develop a generalizable PRO workflow diagram via SADT, we began by documenting multiple existing workflows for PRO collection. First, we conducted participant observation of four outpatient clinics that were already collecting PRO measures separately from our planned implementation of the ePHQ. One observer made multiple visits (n = 3–5) to each clinic, each time observing different areas of operations (e.g., waiting room, front desk, nursing station). The observer documented the clinic activities in a structured log, which included describing the nature of the activity, the stakeholders involved, the standards and practices which guided the completion of each activity, results of the activities (e.g., patient in exam room), and the time required to accomplish the activity. Observations were iteratively reviewed by a multidisciplinary team of systems engineers, biomedical informatics researchers, and health services researchers, and used to generate a detailed process map of each clinic's activities related to PRO data collection.

With four examples in place, we then compared and contrasted process maps across the clinics to identify patterns and core workflow activities for PRO workflow. Starting with one process map, we iteratively hid (aggregated) and expanded (decomposed) workflow activities until we were able to group and minimize lower-level activities and identify a set of core workflow activities. We then compared this set of core workflow activities to the other three process maps, repeating the iterative process of aggregating and decomposing workflow details to ensure that the core workflow activities accurately represented all four process maps in full. For example, two of our four example clinics collected PRO measures via paper while the other two collected PRO measures via tablet. By aggregating the lower-level activities involved in each data collection modality, we could identify that a common objective of PRO workflow is to collect the PRO measure. Any further granularity of detail beyond collect would have started to vary by practice site and therefore would not have been appropriate for the SADT diagram; however, each practice site consistently had workflow activities related to collecting the PRO from the patient. These common elements served as the basis for the PRO workflow diagram, which provided a standardized template for documenting the variation in operational details of specific implementations while maintaining a consistent perspective for their comparison. The draft PRO workflow diagram was subsequently reviewed with key stakeholders (e.g., frontline staff, implementation team, information technology [IT] teams) to validate the accuracy and sufficiency of those representations. More detailed information about SADT methods can be found in the Congram and Epelman (1995) article and the Marca and McGowan (1988) textbook.

Description of Resulting PRO Workflow Diagram

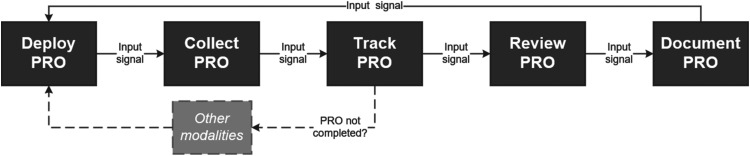

Using the SADT modeling process, we identified five core workflow activities that are considered central to the use of PROs in clinical care: deploy, collect, track, review, and document (see Figure 2). The deploy activity encompasses the activities to identify when a PRO questionnaire is needed and send the PRO to the individual patient. The collect activity comprises the tasks associated with patient receipt and completion of the PRO questionnaire. The track activity includes the steps the clinical staff take to monitor the completion status of the deployed PRO questionnaire, and if necessary, re-deploy the PRO in an alternate modality. The review activity represents the steps clinical teams take to access and interpret the PRO scores with patients. The document activity includes the steps taken by the clinical team to archive the reviewed PRO score for future reference or for use by other stakeholders (e.g., billing or population health teams).

Figure 2.

Patient-reported outcome (PRO) workflow diagram.

In preparation for our pilot implementation of the ePHQ, we utilized the PRO workflow diagram to systematically document PRO workflows for depression screening and management. Each of the five core workflow activities represents a critical component of the workflow for PRO use by clinical teams, and therefore a framework for guiding implementation monitoring and fidelity across individual settings. As is consistent with SADT methods, the specific sub-steps associated with each core activity varied by PRO use case (i.e., screening, management) and clinical setting. For example, in the screening context, the deploy activity was achieved via an automated algorithm in the electronic medical record that identified when a patient was due for an ePHQ, whereas in the depression management context, the deploy activity was accomplished via the sub-steps of provider chart review and manual creation of a patient message in the patient portal. In some clinics, ePHQ completion was tracked by nursing staff, whereas in other clinics it was tracked by front desk staff. Table 1 provides definitions and descriptions of each of the five core workflow activities for the contexts of depression screening and management. The PRO workflow diagram was used to guide our evaluation of the pilot implementation, including via the review of process metrics for each of the five core workflow activities, as is described in the following section.

Table 1.

Description of 5 Core Activities in PRO Workflow.

| Workflow activity | Definition | Workflow description: depression screening | Workflow description: depression management |
|---|---|---|---|
| Deploy | Delivering the PRO questionnaire to the patient | Automated, 7 days before appointment, based on algorithm of last PHQ completion date | Manual, clinic staff sends patient message directly based on clinical need, pathway criteria, or upcoming appointment |
| Collect | Patient's completion of the PRO questionnaire | Patient receives generic request to complete the ePHQ2 or ePHQ9 and two reminder prompts. Patient completes ePHQ in patient portal or waiting room kiosk. | Patient receives tailored message to complete ePHQ9 and no reminders. Patient completes ePHQ in patient portal. |
| Track | Monitoring the completion status of PRO questionnaire by clinical staff | ePHQ completion status is tracked via appointment check-in or rooming views of EHR | ePHQ completion status is tracked via direct messages to care provider (i.e., “in-baskets”) |
| Review | Accessing and viewing the PRO scores by clinical teams | Provider reviews ePHQ before or during visit | Provider reviews ePHQ when submitted (either with or without clinical visit) |
| Document | Archiving the PRO scores for future use or use by other stakeholders | Clinic staff or provider saves (i.e., “files”) ePHQ data to EHR | Clinic staff or provider saves (i.e., “files”) ePHQ data to EHR |

Note. PRO = patient-reported outcome; PHQ = Patient Health Questionnaire; ePHQ9 = electronic Patient Health Questionnaire-9; EHR = electronic health record.

PHASE 2: ePHQ Pilot Implementation Evaluation

Overview of ePHQ Pilot Implementation

We piloted the ePHQ over a 12-month period from September 2018 through September 2019 across two contexts of care: depression screening (e.g., preventive care) and depression management (e.g., chronic care) (Austin et al., 2020). The depression screening pilot occurred in one primary care clinic with 16 primary care providers. Because ePHQ deployment for depression screening was automated, ePHQs were deployed for all eligible patients (n = 8,596) who were due for an annual depression screen during the 1-year period. The depression management pilot occurred across 15 clinics; however, use of the ePHQ was managed by a centralized population health team involved in depression care pathway activities. The ePHQ for depression management was manually deployed to 367 patients actively engaged in depression care during the 1-year period. The pilot implementation and evaluation were led by a multidisciplinary team, including stakeholders with expertise in clinical care (primary care, psychiatry), healthcare operations, IT and informatics, systems engineering, and health services research. Two representatives from the pilot sites (one representing depression screening and one representing depression management workflows) were also included on the implementation team. Prior to the pilot launch, the implementation team conducted a number of trainings with clinical staff at pilot sites and developed multiple training resources for frontline staff. Once the pilot launched, the implementation team met on a bi-weekly basis for 12 months to assess implementation progress and barriers and regularly provided feedback to individual clinical staff and/or clinical teams. As barriers arose, the team conducted additional outreach to frontline staff and system-level leadership councils, such as Medical Directors, leaders of Ambulatory Care and IT, and the Patient and Family Advisory Council, to solicit further input and consensus on needed adaptations and implementation supports. At the conclusion of the 12-month pilot, the implementation team shared summaries of pilot data (quantitative, qualitative) with pilot sites and system-wide stakeholders and engaged in reflexive debriefing with stakeholders.

Methods for ePHQ Pilot Evaluation

Using an embedded implementation research approach, our evaluation of the pilot incorporated multiple forms of quantitative and qualitative data, collected throughout the 12-month pilot period and reviewed sequentially (Churruca et al., 2019). Quantitative data, described below, were analyzed on an ongoing basis to support implementation monitoring efforts, and then a summative analysis was done at the end of the pilot. Qualitative data, also described below, were analyzed after the pilot implementation was completed and triangulated with quantitative data via joint displays that showed quantitative and qualitative data together for each context of ePHQ use (screening, management) and PRO workflow activity. Activities were reviewed and approved by the University of Washington Institutional Review Board, and informed consent was acquired when appropriate in accordance with our protocol.

Quantitative Data. Quantitative data sources included process metrics generated from electronic health record (EHR) data. EHR data documented aspects of ePHQ deployment and utilization, including the number of ePHQs deployed per clinic and provider, the number of ePHQs submitted by patients, time to completion, completeness of ePHQ responses, and the number of ePHQs documented within the medical record. Variables were automatically generated from the EHR in real time, and the implementation team analyzed data on a bi-weekly basis using descriptive statistics. The team generated reports that visualized data for each bi-weekly timeframe and for the cumulative implementation period over time, as well as metrics for individual clinics and the combined sample of clinics. Reporting of process metrics was organized around the PRO workflow activities generated in Phase 1, such that data were available for each workflow activity to support comparison across settings.
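To make the reporting structure concrete, the sketch below shows one way that event-level records could be grouped into bi-weekly and cumulative counts per workflow activity. It is a hypothetical illustration only: the record layout, the function names `biweekly_counts` and `cumulative`, the example dates, and the assumed start date of September 1, 2018 are not drawn from the EHR reports used in the pilot.

```python
from collections import Counter
from datetime import date


def biweekly_counts(events, start):
    """Group (event_date, activity) records into 14-day periods counted from `start`."""
    periods = {}
    for event_date, activity in events:
        index = (event_date - start).days // 14  # 0 = first two weeks, 1 = next two, ...
        periods.setdefault(index, Counter())[activity] += 1
    return periods


def cumulative(periods):
    """Running totals per activity across the ordered bi-weekly periods."""
    total = Counter()
    running = {}
    for index in sorted(periods):
        total += periods[index]
        running[index] = Counter(total)  # copy so later periods do not alter earlier ones
    return running


# Hypothetical records; the real pilot start day is not stated in the text.
start = date(2018, 9, 1)
events = [
    (date(2018, 9, 3), "deploy"),
    (date(2018, 9, 5), "collect"),
    (date(2018, 9, 20), "deploy"),
    (date(2018, 9, 21), "deploy"),
    (date(2018, 9, 25), "collect"),
    (date(2018, 9, 26), "document"),
]

periods = biweekly_counts(events, start)
running = cumulative(periods)

for index in sorted(periods):
    print(index, dict(periods[index]), dict(running[index]))
```

Keying every count by workflow activity is what allows the same report layout to be reused for individual clinics and for the combined sample.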

Qualitative Data. Qualitative data included detailed fieldnotes from all implementation team meetings, trainings with clinical staff, and meetings with frontline staff and other stakeholders throughout the pilot. Additionally, specific requests for technical assistance and resulting adaptations made in response to clinical staff feedback were documented via a semistructured implementation change log. After completion of the pilot, a trained qualitative researcher (EJA) began by reviewing and deductively coding fieldnotes and free text data from change logs using directed content analysis. Coding was a priori guided by constructs of the Consolidated Framework for Implementation Research (CFIR) so as to identify commonalities in implementation determinants across contexts and support replicability of findings (Damschroder et al., 2009). Coded excerpts were then grouped by each PRO workflow diagram activity to ensure barriers and facilitators experienced for each activity were explored. Next, the evaluation team refined the identified barriers and facilitators through iterative review and compared implementation determinants across each workflow activity (e.g., deploy, collect), across each implementation context (e.g., screening, and management), and across the implementation overall to identify relationships between determinants and cross-cutting themes. Preliminary summaries of learnings were shared back with stakeholders involved in the ePHQ implementation as well as a broader network of stakeholders involved in PRO implementation for refinement and verification.

Pilot Results and Case Examples

Over the course of the pilot, 8,963 ePHQs were deployed across 16 clinics (Table 2). On average, 20.8% of depression screening ePHQs and 47.4% of depression management ePHQs were submitted by patients prior to their appointment. Patients took an average of 5 days to submit their ePHQ for depression screening and 1 day to submit their ePHQ for depression management. Providers documented the ePHQs in the medical record 62%–66% of the time. Qualitative data identified multiple barriers and facilitators to ePHQ implementation (Table 3) that align with CFIR constructs, many of which varied between the screening and management context of use. Below, we describe three brief case examples of how the SADT-guided PRO workflow diagram aided activities of implementation monitoring, tailoring and adaptations, and assessment of implementation determinants. Of note, no adjustments were identified for the SADT PRO workflow diagram itself during implementation monitoring, confirming its accuracy and sufficiency.

Table 2.

Implementation Monitoring Goals and Example Measures.

| Workflow activity | Implementation monitoring goal | Example measure | Depression screening | Depression management |
|---|---|---|---|---|
| Deploy | Ensure all appropriate patients were receiving the electronic Patient Health Questionnaire (ePHQ) for screening or management contexts; monitor provider adoption of ePHQ tools | Number of clinics involved | 1 | 15 |
| | | Number of providers involved | 16 | 44 |
| | | Number of ePHQs deployed | 8,596 | 367 |
| Collect | Understand patient use of the ePHQ | Number (and rate) of ePHQs submitted | 1,787 (20.8%) | 174 (47.4%) |
| Track | Identify patterns in missed ePHQ submissions | Mean time to ePHQ submission | 5 days | 1 day |
| Review | Characterize frequency of high value ePHQs received | Prevalence, n (%), of suicidal ideation reported | 74 (4.1%) | 15 (8.6%) |
| Document | Ensure all ePHQ responses are filed in chart for future use | Mean rate of ePHQs documented in chart | 62.2% (20%–100%) | 66.1% (0%–100%) |
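The rates in Table 2 can be reproduced from the reported counts with simple arithmetic. The short sketch below assumes that submission rates use deployed ePHQs as the denominator and that suicidal ideation prevalence uses submitted ePHQs as the denominator; that reading is inferred from the reported percentages rather than stated explicitly. The dictionary layout and the helper name `rate` are hypothetical.

```python
def rate(numerator, denominator):
    """Percentage rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)


# Counts as reported in Table 2.
table2 = {
    "screening": {"deployed": 8596, "submitted": 1787, "suicidal_ideation": 74},
    "management": {"deployed": 367, "submitted": 174, "suicidal_ideation": 15},
}

total_deployed = sum(context["deployed"] for context in table2.values())

# Collect: share of deployed ePHQs that patients submitted.
submission_rates = {
    name: rate(context["submitted"], context["deployed"])
    for name, context in table2.items()
}

# Review: share of submitted ePHQs reporting suicidal ideation (assumed denominator).
ideation_rates = {
    name: rate(context["suicidal_ideation"], context["submitted"])
    for name, context in table2.items()
}

print(total_deployed)
print(submission_rates)
print(ideation_rates)
```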

Table 3.

Barriers and Facilitators by Workflow Activity and Implementation Context (With Associated CFIR Constructs).

| Workflow activity | Barriers and facilitators: depression screening | Barriers and facilitators: depression management |
|---|---|---|
| Deploy | Barriers: / Facilitators: | Barriers: |
| Collect | Barriers: | Barriers: / Facilitators: |
| Track | Barriers: / Facilitators: | Barriers: / Facilitators: |
| Review | Barriers: / Facilitators: | Barriers: / Facilitators: |
| Document | Barriers: / Facilitators: | Barriers: / Facilitators: |

Note. CFIR = Consolidated Framework for Implementation Research; PHQ = Patient Health Questionnaire; ePHQ = electronic Patient Health Questionnaire.

Case Example 1—Implementation Monitoring. Implementation monitoring activities involved bi-weekly review of process metrics generated from the EHR and organized around the PRO workflow diagram activities. Early in our implementation monitoring, we identified that the document activity, where ePHQ scores are saved within the medical record for future use, was happening very inconsistently, suggesting low fidelity across providers. We utilized the strategy of audit and feedback (Powell et al., 2015), providing individual providers with weekly data that included the list of their patients who had completed the ePHQ (i.e., the ePHQ had been successfully deployed, collected, tracked, and reviewed) and how many of those ePHQs had not been documented within the medical record. By focusing on this single workflow activity, we were able to send data reports to providers that were brief and easy to follow. Providers in turn reported feedback to our team about the barriers they were facing with the documentation activity. Barriers were cataloged across providers and compared across the screening and management use cases. Analysis of barriers revealed that challenges with the documentation activity were not unique to use case or setting but were mostly related to (1) provider training and comfort with the EHR and (2) the ways in which individual providers customized their EHR screens, which often made the ePHQ scores less visible. As a result, our team developed additional training tools (e.g., job aid, brief instructional video) that provided both education on the documentation activity and recommendations for EHR screen customization.

Case Example 2—Intervention Tailoring and Adaptations. Requests for tailoring and adaptations to the ePHQ tools were documented in a semi-structured change log that was organized by the PRO workflow diagram. When multiple adaptation requests emerged for the same workflow activity, we triangulated feedback from the change log, implementation monitoring data, and fieldnotes from team meetings and debriefs with frontline staff. For example, to increase the rate of ePHQ collection, stakeholders requested adaptations related to the frequency and framing of reminder messages patients received. Specifically, they requested sending multiple automated reminders to patients in the week leading up to their clinical visit, and tailoring the language within the patient portal to frame depression screening as a part of routine primary care delivery. We detailed the micro-workflows within the collect activity for the screening and management use cases. We then modeled the proposed adaptations for both use cases and sought feedback from our implementation team, frontline staff, and Patient and Family Advisory Council to consider potential unintended consequences of the proposed adaptations. Suggested refinements included limiting automated reminders to two reminders and ensuring the patient portal language considered the perspectives of patients with active depression, not just those due for annual screening, as all patients would see the same language about ePHQ rationale. Modeling helped us maintain focus on the core function (e.g., to collect the ePHQ) while also appreciating the variability in workflows by context of use. Through this, we refined the proposed adaptations to maintain fidelity for our core objectives and balanced the needs of multiple stakeholders.

Case Example 3—Assessment of Barriers and Facilitators. Throughout the implementation we documented staff-reported barriers to ePHQ use in our fieldnotes from debriefs with frontline staff and the implementation change log (e.g., documenting an email exchange between implementation team members and frontline staff). At the end of our pilot implementation period, we used these data to assess staff perspectives on barriers and facilitators to adoption of the ePHQ using a deductive analysis guided by the PRO workflow diagram. One theme that emerged was variable staff perspectives regarding the relative advantage of the ePHQ tool over current practice, which specifically related to the track activity. For teams that were capturing PHQs by phone prior to the launch of the ePHQ, the ePHQ offered a clear relative advantage over current practice as it reduced staff burden, even if ePHQ submissions needed to be tracked by staff. However, in settings where workflows for capturing the PHQ on paper were already well established and efficient, the introduction of another modality for PHQ capture increased the burden on staff to track PHQ completion and adjust workflows during clinical visits. Exploring barriers and facilitators by core workflow activity provided a perspective on comparison across settings that allowed us to identify cross-cutting themes and actionable areas for implementation support.

Discussion

In this work, we sought to leverage a systems engineering method—SADT—to guide and augment the implementation process across complex contexts of care delivery. In Phase 1, we interrogated existing workflows for patient-reported data collection to identify the common workflow activities and the basis of an SADT diagram for PRO workflow. In Phase 2, we leveraged the PRO workflow diagram to guide our evaluation of implementation experiences, barriers, and facilitators by context of care delivery. Our experience demonstrated the value SADT methods can add to large-scale implementation evaluation efforts and provides an example of how systems engineering and implementation science methods can mutually augment one another.

Care delivery continues to become more complex, and therefore the role of implementation science is becoming both more challenging and more critical (Westbrook et al., 2007). Health systems and implementation teams need tools to aid them in fostering implementations that are adaptive and responsive to the unique contextual factors of local settings while still enabling a systems-level perspective on outcomes and best practice learnings (Sturmberg, 2018). Even further, implementation efforts need to incorporate a sociotechnical approach to almost all implementations, regardless of whether technology is involved in the intervention itself, given the pervasive role EHRs play in facilitating care delivery (Sittig & Singh, 2010; Westbrook et al., 2007). As this example demonstrates, standardized workflow diagrams can offer an organizing framework to understand implementation barriers, balance the needs of multiple stakeholders, and replicate best practices across clinical settings. Focusing on the experiences and outcomes from a single implementation may stymie the ability of complex health systems to achieve system-level goals for patient engagement and measurement-based care. The pairing of implementation science theory with systems engineering methods such as SADT may offer a complement that greatly strengthens our ability to address the increasing complexity of transforming care (Barnes et al., 2021). SADT modeling offers a relatively simple approach to modeling workflow that can aid systems-level implementation without the use of sophisticated technology or technical expertise. SADT can also lay the foundation for implementation teams to utilize more sophisticated approaches to simulation modeling and integration of systems science goals. For example, SADT models can serve as templates for the development of discrete event simulation models, which can be used to systematically identify opportunities to reduce variance in implementation processes.

The learnings from this work reinforce prior studies that emphasize the importance of understanding the local context when integrating PROs and other forms of patient-generated data into care delivery (Lewis et al., 2019; Stover et al., 2021). In our example, the barriers and facilitators related to ePHQ use varied widely between the contexts of depression screening vs. management. These differences were influenced by the existing practices in place before implementation, as well as the variation in how the same PRO measure was used for different clinical contexts of care delivery. For health system change, this will be an increasingly common challenge, as technical resources such as EHR tools are often limited in scope and have limited adaptability to accommodate the multiple uses of a single tool. Implementation within complex adaptive systems will need to consider how to design and implement interventions that simultaneously meet the needs of multiple users and multiple clinical use cases (Westbrook et al., 2007). The PRO workflow diagram, though, aided our ability to navigate these implementation challenges by providing a framework to systematically assess and diagnose implementation barriers across contexts. This enabled a systems-level view of implementation experiences and potential unintended consequences of proposed adaptations. Leveraging the PRO workflow diagram therefore allowed us to more thoughtfully identify implementation strategies and adaptations while reducing the risk of unintended consequences on the system overall.

There are some important limitations to acknowledge for this work. First, our experience reflects one health system; while the PRO workflow diagram was intended to be generalizable, other health systems may have important contextual differences that influence their implementation experiences. Second, we leveraged EHR-based reporting tools to guide our implementation monitoring efforts, which have some inherent limitations to data breadth and quality. Third, our methods were iterative and emergent so as to capture the complexity of real-world practice; however, this may limit the validity and transferability of our learnings. In particular, while we are able to report system-level outcomes for ePHQ use, we continued to experience high variability in ePHQ workflows across contexts of care and practice sites. Future work beyond this pilot should identify strategies to reduce variation in ways that further enhance implementation outcomes for ePHQ use. Lastly, the use of SADT methods may be appropriate for other interventions that can be reasonably distilled into a set of common workflow activities, such as other forms of patient-generated data collection. However, there may be interventions that are too complex in nature (e.g., too unstable and/or frequently changing), or too reliant on clinical judgment and/or complex decision-making, to support the application of SADT. Future teams should consider the unique characteristics of the intervention they are looking to implement and assess the appropriateness of SADT methods.

In summary, the use of SADT methods aided our ability to navigate complex workflow and stakeholder perspectives, compare fidelity and variation across settings and users, and generate cross-setting implementation learnings. Furthermore, while our pilot was focused on depression care, there is an opportunity to expand use of the PRO workflow diagram for other clinical areas and types of patient-reported or patient-generated health data. This example showcases the potential for systems engineering methods to enhance future implementation efforts in complex health settings.

Acknowledgments

We would like to acknowledge the members of the University of Washington Medicine Patient Reported Outcomes Governance Committee and the broader community of practice through many local and national engagement activities for their contributions throughout this work.

Footnotes

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by the Agency for Healthcare Research and Quality (grant number R01HS023785). The content is solely the responsibility of the authors and does not necessarily represent the official views of the Agency for Healthcare Research and Quality.

Ethical Approval: This work was reviewed and approved by the University of Washington Institutional Review Board.

Informed Consent: Informed consent was acquired when appropriate in accordance with our protocol.

ORCID iD: Elizabeth J. Austin https://orcid.org/0000-0002-4221-1362

References

- Austin E., LeRouge C., Hartzler A. L., Segal C., Lavallee D. C. (2020). Capturing the patient voice: Implementing patient-reported outcomes across the health system. Quality of Life Research, 29(2), 347–355. 10.1007/s11136-019-02320-8

- Barnes G. D., Acosta J., Kurlander J. E., Sales A. E. (2021). Using health systems engineering approaches to prepare for tailoring of implementation interventions. Journal of General Internal Medicine, 36(1), 178–185. 10.1007/s11606-020-06121-5

- Churruca K., Ludlow K., Taylor N., Long J. C., Best S., Braithwaite J. (2019). The time has come: Embedded implementation research for health care improvement. Journal of Evaluation in Clinical Practice, 25(3), 373–380. 10.1111/jep.13100

- Congram C., Epelman M. (1995). How to describe your service: An invitation to the structured analysis and design technique. International Journal of Service Industry Management, 6(2), 6–23. 10.1108/09564239510084914

- Damschroder L., Aron D., Keith R., Kirsh S., Alexander J., Lowery J. (2009). Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science, 4. 10.1186/1748-5908-4-50

- IEEE Computer Society. (1998). IEEE Standard for Functional Modeling Language–Syntax and Semantics for IDEF0, IEEE Std 1320.1–1998 (R2004). Accessed March 11, 2020.

- Lewis C. C., Boyd M., Puspitasari A., Navarro E., Howard J., Kassab H., Hoffman M., Scott K., Lyon A., Douglas S., Simon G., Kroenke K. (2019). Implementing measurement-based care in behavioral health: A review. JAMA Psychiatry, 76(3), 324. 10.1001/jamapsychiatry.2018.3329

- Marca D. A., McGowan C. L. (1988). SADT: Structured Analysis and Design Technique. McGraw-Hill Book Co.

- Mutic S., Brame R. S., Oddiraju S., Michalski J. M., Wu B. (2010). System mapping of complex healthcare processes using IDEF0: A radiotherapy example. International Journal of Collaborative Enterprise, 1(3/4), 316. 10.1504/IJCENT.2010.038356

- Nelson T. A., Anderson B., Bian J., Boyd A. D., Burton S. V., Davis K., Starren J. B. (2020). Planning for patient-reported outcome implementation: Development of decision tools and practical experience across four clinics. Journal of Clinical and Translational Science, 1–10. 10.1017/cts.2020.37

- Nilsen P. (2015). Making sense of implementation theories, models and frameworks. Implementation Science, 10(1), 53. 10.1186/s13012-015-0242-0

- Nordan L., Blanchfield L., Niazi S., Sattar J., Coakes C. E., Uitti R., Vizzini M., Naessens J. M., Spaulding A. (2018). Implementing electronic patient-reported outcomes measurements: Challenges and success factors. BMJ Quality & Safety, 27(10), 852–856. 10.1136/bmjqs-2018-008426

- Perez Jolles M., Lengnick-Hall R., Mittman B. S. (2019). Core functions and forms of complex health interventions: A patient-centered medical home illustration. Journal of General Internal Medicine, 34(6), 1032–1038. 10.1007/s11606-018-4818-7

- Powell B. J., Waltz T. J., Chinman M. J., Damschroder L. J., Smith J. L., Matthieu M. M., Proctor E. K., Kirchner J. E. (2015). A refined compilation of implementation strategies: Results from the expert recommendations for implementing change (ERIC) project. Implementation Science, 10(1), 21. 10.1186/s13012-015-0209-1

- Reid P. P., Compton W. D., Grossman J. H., Fanjiang G. (2005). Building a better delivery system: A new engineering/health care partnership. In Building a better delivery system: A new engineering/health care partnership. National Academies Press. 10.17226/11378

- Sittig D. F., Singh H. (2010). A new socio-technical model for studying health information technology in complex adaptive healthcare systems. Quality & Safety in Health Care, 19(Suppl 3), i68–i74. 10.1136/qshc.2010.042085

- Stover A. M., Haverman L., van Oers H. A., Greenhalgh J., Potter C. M., On behalf of the ISOQOL PROMs/PREMs in Clinical Practice Implementation Science Work Group (Ahmed, S., Greenhalgh, J., Gibbons, E., Haverman, L., Manalili, K., Potter, C., Roberts, N., Santana, M., Stover, A. M., & van Oers, H.) (2021). Using an implementation science approach to implement and evaluate patient-reported outcome measures (PROM) initiatives in routine care settings. Quality of Life Research, 30(11), 3015–3033. 10.1007/s11136-020-02564-9

- Sturmberg J. P. (2018). Embracing complexity in health and health care-translating a way of thinking into a way of acting. Journal of Evaluation in Clinical Practice, 24(3), 598–599. 10.1111/jep.12935

- Westbrook J. I., Braithwaite J., Georgiou A., Ampt A., Creswick N., Coiera E., Iedema R. (2007). Multimethod evaluation of information and communication technologies in health in the context of wicked problems and sociotechnical theory. Journal of the American Medical Informatics Association, 14(6), 746–755. 10.1197/jamia.M2462