Abstract

Background:

Systematic reviews of measures can facilitate advances in implementation research and practice by locating reliable and valid measures and highlighting measurement gaps. Our team completed a systematic review of implementation outcome measures published in 2015 that indicated a severe measurement gap in the field. Now, we offer an update with this enhanced systematic review to identify and evaluate the psychometric properties of measures of eight implementation outcomes used in behavioral health care.

Methods:

The systematic review methodology is described in detail in a previously published protocol paper and summarized here. The review proceeded in three phases. Phase I, data collection, involved search string generation, title and abstract screening, full text review, construct assignment, and measure forward searches. Phase II, data extraction, involved coding psychometric information. Phase III, data analysis, involved two trained specialists independently rating each measure using PAPERS (Psychometric And Pragmatic Evidence Rating Scales).

Results:

Searches identified 150 outcome measures, of which 48 were deemed unsuitable for rating and thus excluded, leaving 102 measures for review. We identified measures of acceptability (N = 32), adoption (N = 26), appropriateness (N = 6), cost (N = 31), feasibility (N = 18), fidelity (N = 18), penetration (N = 23), and sustainability (N = 14). Information about internal consistency and norms was available for most measures (59%). Information about other psychometric properties was often not available. Ratings for internal consistency and norms ranged from “adequate” to “excellent.” Ratings for other psychometric properties ranged mostly from “poor” to “good.”

Conclusion:

While measures of implementation outcomes used in behavioral health care (including mental health, substance use, and other addictive behaviors) are unevenly distributed and exhibit mostly unknown psychometric quality, the data reported in this article show an overall improvement in availability of psychometric information. This review identified a few promising measures, but targeted efforts are needed to systematically develop and test measures that are useful for both research and practice.

Plain language abstract:

When implementing an evidence-based treatment into practice, it is important to assess several outcomes to gauge how effectively it is being implemented. Outcomes such as acceptability, feasibility, and appropriateness may offer insight into why providers do not adopt a new treatment. Similarly, outcomes such as fidelity and penetration may provide important context for why a new treatment did not achieve desired effects. It is important that methods to measure these outcomes are accurate and consistent. Without accurate and consistent measurement, high-quality evaluations cannot be conducted. This systematic review of published studies sought to identify questionnaires (referred to as measures) that ask staff at various levels (e.g., providers, supervisors) questions related to implementation outcomes, and to evaluate the quality of these measures. We identified 150 measures and rated the quality of their evidence with the goal of recommending the best measures for future use. Our findings suggest that a great deal of work is needed to generate evidence for existing measures or build new measures to achieve confidence in our implementation evaluations.

Keywords: Implementation outcomes, implementation, dissemination, evidence based, behavioral health, measurement evaluation

It is well established that evidence-based practices are slow to be implemented into routine care (Carnine, 1997). Implementation science seeks to narrow the research-to-practice gap by identifying barriers and facilitators to effective implementation and designing strategies to achieve desired implementation outcomes. Proctor and colleagues’ seminal work (Proctor et al., 2009, 2011) articulated at least eight implementation outcomes for the field: (1) acceptability, (2) adoption, (3) appropriateness, (4) cost, (5) feasibility, (6) fidelity, (7) penetration, and (8) sustainability (Table 1) (Lewis et al., 2015). While many other implementation frameworks exist (Glasgow et al., 1999), the outcomes framework developed by Proctor and colleagues provides clear differentiation of implementation outcomes from clinical and services outcomes and offers a harmonized focus for the field. Despite this, measurement inherently lags behind framework development and construct specification. Indeed, a 2015 systematic review led by our team found 104 measures used in behavioral health (including mental health, substance use, and other addictive behaviors) across these eight outcomes, with 50 identified for acceptability, 19 for adoption, but fewer than 10 for each of the other six outcomes. Our systematic review and psychometric assessment revealed that evidence of measures’ reliability and validity is largely unknown or poor (Lewis et al., 2015). Of the psychometric properties assessed, only four measures had been tested for responsiveness or sensitivity to change, meaning that it is not clear whether the majority of implementation outcome measures are designed and able to detect change over time. Without accurate measurement of implementation outcomes, we cannot be sure if implementation efforts are (un)successful or if the measures are simply unfit to identify change in outcomes when it in fact occurs.

Table 1.

Definitions of implementation outcomes.

| Implementation outcome | Definition |
|---|---|
| Acceptability | The perception among implementation stakeholders that a given treatment, service, practice, or innovation is agreeable, palatable, or satisfactory. |
| Adoption | The intention, initial decision, or action to try or employ an innovation or evidence-based practice. |
| Appropriateness | The perceived fit, relevance, or compatibility of the innovation or evidence-based practice for a given practice setting, provider, or consumer; and/or perceived fit of the innovation to address a particular issue or problem. |
| Cost | The cost impact of an implementation effort. |
| Feasibility | The extent to which a new treatment, or an innovation, can be successfully used or carried out within a given agency or setting. |
| Fidelity | The degree to which an intervention was implemented as it was prescribed in the original protocol or as it was intended by the program developers. |
| Penetration | The integration of a practice within a service setting and its subsystems. |
| Sustainability | The extent to which a newly implemented treatment is maintained or institutionalized within a service setting’s ongoing, stable operation. |

Source: From Proctor et al. (2009, 2011).

Since 2015, we have sought to update and expand these reviews. Full details about our updated approach are published in a protocol paper (Lewis et al., 2018). Three major differences are worth noting. One, we expanded our assessment of measures to their scales given that many measures purportedly assess numerous constructs delineated by scales. For example, the Texas Christian University Organizational Readiness for Change Scale contains 19 scales measuring constructs such as motivation for change, available resources, staff attributes, and organizational climate (Lehman et al., 2002). Moreover, researchers tend to select and deploy individual scales versus full measures given their interest in minimizing respondent burden. Two, we added additional validity assessments including convergent validity, discriminant validity, concurrent validity, and known-groups validity. Three, we used a more comprehensive and critical approach to mapping measure content (i.e., items) to constructs by engaging content experts in the review of measure items: If at least two items in a scale reflected the definition of a given construct, an assignment was made in an effort to reflect real-world practices of measure use. Taken together, these changes will offer much richer and more useful information to implementation researchers and practitioners when selecting measures.

Although it has been only 4 years since the publication of our initial systematic review (Lewis et al., 2015), the field of implementation science has evolved at a rapid pace. This progress, together with the enhancements made to our systematic review protocol (Lewis et al., 2018), calls for an update to our assessment of measures of implementation outcomes. Specifically, this article presents the findings from systematic reviews of the eight implementation outcomes, including a robust synthesis of psychometric evidence for all identified measures.

Method

Design overview

The systematic literature review and synthesis consisted of three phases. Phase I, data collection, included the following five steps: (1) search string generation, (2) title and abstract screening, (3) full text review, (4) measure assignment to implementation outcome(s), and (5) measure forward (cited-by) searches. Phase II, data extraction, consisted of coding relevant psychometric information. Phase III consisted of data analysis.

Phase I: data collection

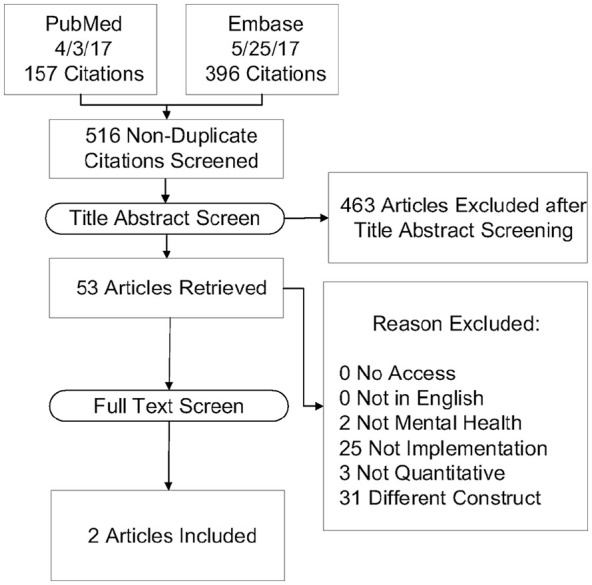

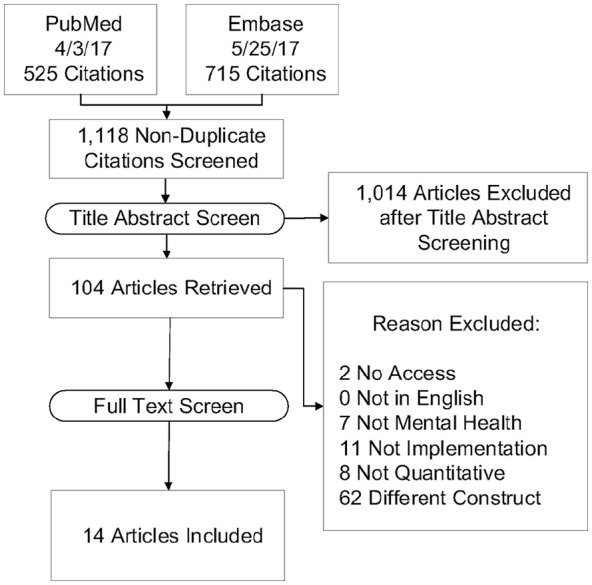

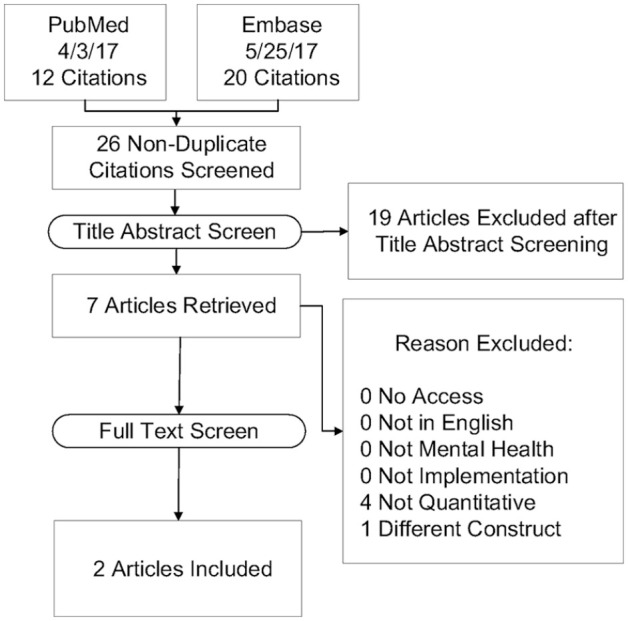

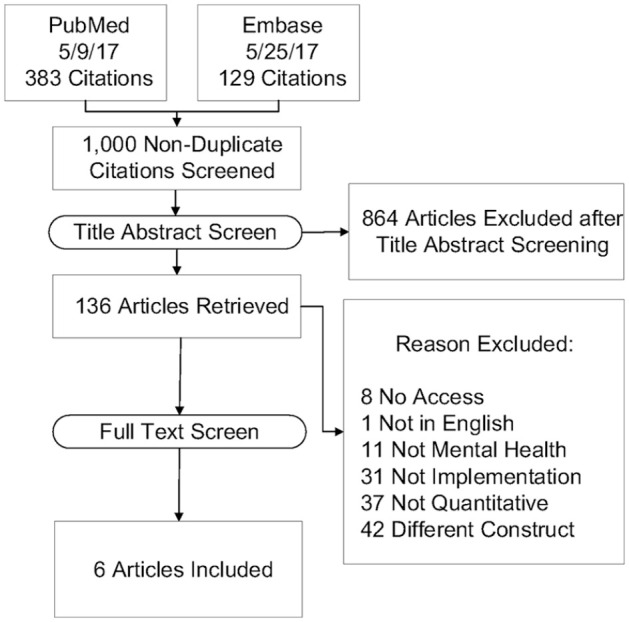

First, literature searches were conducted in the PubMed and Embase bibliographic databases using search strings curated in consultation with PubMed support specialists and a library scientist. Consistent with our funding source and aim to identify and assess implementation-related measures in mental and behavioral health, our search was built on four core levels: (1) terms for implementation (e.g., diffusion, knowledge translation, adoption); (2) terms for measurement (e.g., instrument, survey, questionnaire); (3) terms for evidence-based practice (e.g., innovation, guideline, empirically supported treatment); and (4) terms for behavioral health (e.g., behavioral medicine, mental disease, psychiatry) (Lewis et al., 2018). For the current study, we included a fifth level for each of the following implementation outcomes from Proctor et al. (2011): (1) acceptability, (2) adoption, (3) appropriateness, (4) cost, (5) feasibility, (6) fidelity, (7) penetration, and (8) sustainability. Literature searches were conducted independently for each outcome; thus, eight different sets of search strings were employed. Articles published from 1985 to 2017 were included in the search. Searches were completed from April 2017 to May 2017 (Table 2).

Table 2.

Database search terms.

| Search term | Search string |
|---|---|
| Implementation | (Adopt[tiab] OR adopts[tiab] OR adopted[tiab] OR adoption[tiab] NOT “adoption”[MeSH Terms] OR Implement[tiab] OR implements[tiab] OR implementation[tiab] OR implementation[ot] OR “health plan implementation”[MeSH Terms] OR “quality improvement*”[tiab] OR “quality improvement”[tiab] OR “quality improvement”[MeSH Terms] OR diffused[tiab] OR diffusion[tiab] OR “diffusion of innovation”[MeSH Terms] OR “health information exchange”[MeSH Terms] OR “knowledge translation*”[tw] OR “knowledge exchange*”[tw]) AND |
| Evidence-based practice | (“empirically supported treatment”[All Fields] OR “evidence based practice*”[All Fields] OR “evidence based treatment”[All Fields] OR “evidence-based practice”[MeSH Terms] OR “evidence-based medicine”[MeSH Terms] OR innovation[tw] OR guideline[pt] OR (guideline[tiab] OR guideline’[tiab] OR guideline’’[tiab] OR guideline’pregnancy[tiab] OR guideline’s[tiab] OR guideline1[tiab] OR guideline2015[tiab] OR guidelinebased[tiab] OR guidelined[tiab] OR guidelinedevelopment[tiab] OR guidelinei[tiab] OR guidelineitem[tiab] OR guidelineon[tiab] OR guideliner[tiab] OR guideliner’[tiab] OR guidelinerecommended[tiab] OR guidelinerelated[tiab] OR guidelinertrade[tiab] OR guidelines[tiab] OR guidelines’[tiab] OR guidelines’quality[tiab] OR guidelines’s[tiab] OR guidelines1[tiab] OR guidelines19[tiab] OR guidelines2[tiab] OR guidelines20[tiab] OR guidelinesfemale[tiab] OR guidelinesfor[tiab] OR guidelinesin[tiab] OR guidelinesmay[tiab] OR guidelineson[tiab] OR guideliness[tiab] OR guidelinesthat[tiab] OR guidelinestrade[tiab] OR guidelineswiki[tiab]) OR “guidelines as topic”[MeSH Terms] OR “best practice*”[tw]) AND |
| Measure | (instrument[tw] OR (survey[tw] OR survey’[tw] OR survey’s[tw] OR survey100[tw] OR survey12[tw] OR survey1988[tw] OR survey226[tw] OR survey36[tw] OR surveyability[tw] OR surveyable[tw] OR surveyance[tw] OR surveyans[tw] OR surveyansin[tw] OR surveybetween[tw] OR surveyd[tw] OR surveydagger[tw] OR surveydata[tw] OR surveydelhi[tw] OR surveyed[tw] OR surveyedandtestedthe[tw] OR surveyedpopulation[tw] OR surveyees[tw] OR surveyelicited[tw] OR surveyer[tw] OR surveyes[tw] OR surveyeyed[tw] OR surveyform[tw] OR surveyfreq[tw] OR surveygizmo[tw] OR surveyin[tw] OR surveying[tw] OR surveying’[tw] OR surveyings[tw] OR surveylogistic[tw] OR surveymaster[tw] OR surveymeans[tw] OR surveymeter[tw] OR surveymonkey[tw] OR surveymonkey’s[tw] OR surveymonkeytrade[tw] OR surveyng[tw] OR surveyor[tw] OR surveyor’[tw] OR surveyor’s[tw] OR surveyors[tw] OR surveyors’[tw] OR surveyortrade[tw] OR surveypatients[tw] OR surveyphreg[tw] OR surveyplus[tw] OR surveyprocess[tw] OR surveyreg[tw] OR surveys[tw] OR surveys’[tw] OR surveys’food[tw] OR surveys’usefulness[tw] OR surveysclub[tw] OR surveyselect[tw] OR surveyset[tw] OR surveyset’[tw] OR surveyspot[tw] OR surveystrade[tw] OR surveysuite[tw] OR surveytaken[tw] OR surveythese[tw] OR surveytm[tw] OR surveytracker[tw] OR surveytrade[tw] OR surveyvas[tw] OR surveywas[tw] OR surveywiz[tw] OR surveyxact[tw]) OR (questionnaire[tw] OR questionnaire’[tw] OR questionnaire’07[tw] OR questionnaire’midwife[tw] OR questionnaire’s[tw] OR questionnaire1[tw] OR questionnaire11[tw] OR questionnaire12[tw] OR questionnaire2[tw] OR questionnaire25[tw] OR questionnaire3[tw] OR questionnaire30[tw] OR questionnaireand[tw] OR questionnairebased[tw] OR questionnairebefore[tw] OR questionnaireconsisted[tw] OR questionnairecopyright[tw] OR questionnaired[tw] OR questionnairedeveloped[tw] OR questionnaireepq[tw] OR questionnaireforpediatric[tw] OR questionnairegtr[tw] OR questionnairehas[tw] OR questionnaireitaq[tw] OR questionnairel02[tw] OR questionnairemcesqscale[tw] OR questionnairenurse[tw] OR questionnaireon[tw] OR questionnaireonline[tw] OR questionnairepf[tw] OR questionnairephq[tw] OR questionnairers[tw] OR questionnaires[tw] OR questionnaires’[tw] OR questionnaires’’[tw] OR questionnairescan[tw] OR questionnairesdq11adolescent[tw] OR questionnairess[tw] OR questionnairetrade[tw] OR questionnaireure[tw] OR questionnairev[tw] OR questionnairewere[tw] OR questionnairex[tw] OR questionnairey[tw]) OR instruments[tw] OR “surveys and questionnaires”[MeSH Terms] OR “surveys and questionnaires”[MeSH Terms] OR measure[tiab] OR (measurement[tiab] OR measurement’[tiab] OR measurement’s[tiab] OR measurement1[tiab] OR measuremental[tiab] OR measurementd[tiab] OR measuremented[tiab] OR measurementexhaled[tiab] OR measurementf[tiab] OR measurementin[tiab] OR measuremention[tiab] OR measurementis[tiab] OR measurementkomputation[tiab] OR measurementl[tiab] OR measurementmanometry[tiab] OR measurementmethods[tiab] OR measurementof[tiab] OR measurementon[tiab] OR measurementpro[tiab] OR measurementresults[tiab] OR measurements[tiab] OR measurements’[tiab] OR measurements’s[tiab] OR measurements0[tiab] OR measurements5[tiab] OR measurementsa[tiab] OR measurementsare[tiab] OR measurementscanbe[tiab] OR measurementscheme[tiab] OR measurementsfor[tiab] OR measurementsgave[tiab] OR measurementsin[tiab] OR measurementsindicate[tiab] OR measurementsmoking[tiab] OR measurementsof[tiab] OR measurementson[tiab] OR measurementsreveal[tiab] OR measurementss[tiab] OR measurementswere[tiab] OR measurementtime[tiab] OR measurementts[tiab] OR measurementusing[tiab] OR measurementws[tiab]) OR measures[tiab] OR inventory[tiab]) AND |
| Mental health | (“mental health”[tw] OR “behavioral health”[tw] OR “behavioural health”[tw] OR “mental disorders”[MeSH Terms] OR “psychiatry”[MeSH Terms] OR psychiatry[tw] OR psychiatric[tw] OR “behavioral medicine”[MeSH Terms] OR “mental health services”[MeSH Terms] OR (psychiatrist[tw] OR psychiatrist’[tw] OR psychiatrist’s[tw] OR psychiatristes[tw] OR psychiatristis[tw] OR psychiatrists[tw] OR psychiatrists’[tw] OR psychiatrists’awareness[tw] OR psychiatrists’opinion[tw] OR psychiatrists’quality[tw] OR psychiatristsand[tw] OR psychiatristsare[tw]) OR “hospitals, psychiatric”[MeSH Terms] OR “psychiatric nursing”[MeSH Terms]) AND |
| Acceptability | acceptability[tw] OR satisfaction [tw] OR agreeable[tw] OR |
| Adoption | (adopt[tw] OR adopts[tw] OR adopted[tw] OR adoption[tw] OR ((“intention”[MeSH Terms] OR “intention”[All Fields]) AND adopt[All Fields]) NOT “adoption”[MeSH Terms] OR uptake[tw] OR utilization[tw] OR “initial implementation”[All Fields]) OR |
| Appropriateness | appropriateness[tw] OR applicability[tw] OR applicability[tw] OR compatibility[tw] OR “perceived fit” OR fitness[tw] OR sustainability[tw] OR relevance[tw] OR relevant[tw] OR suitability[tw] OR usefulness][tw] OR practicability[tw] OR |
| Cost | “marginal cost” [tw] OR “cost-effectiveness” OR economics[sh] OR economics[mh] OR cost-benefit analysis[mh] OR “cost benefit”[tw] OR “cost utility” OR |
| Feasibility | feasibility[tw] OR transferability [tw] OR applicability [tw] OR practicability [tw] OR workability [tw] OR “actual fit” [tw] OR “actual utility” [tw] OR “suitability for everyday use”[tw] OR |
| Fidelity | fidelity [tw] OR “delivered as intended” OR adherence[tw] OR “patient adherence”[tw] OR patient compliance[mh] OR compliance[tw] OR compliant[tw] OR integrity [tw] OR “quality of program delivery”[tw] OR |
| Penetration | penetration [tw] OR “integration of practice”[tw] OR infiltration [tw] OR |
| Sustainability | sustain*[tw] OR maintenance[tw] OR “long-term implementation” OR routinization [tw] OR durability [tw] OR institutionalization [tw] OR “capacity building” [tw] OR continuation [tw] OR incorporation [tw] OR integration [tw] OR “sustained use”[tw] |
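As a rough illustration of how the eight outcome-specific searches could be assembled from the term blocks in Table 2, the sketch below joins the four core levels with one outcome level. It assumes the fifth level is combined with the core levels using AND, which the text implies but does not state outright. The term blocks are heavily abbreviated stand-ins for the full strings, and the names `CORE_BLOCKS`, `OUTCOME_BLOCKS`, and `build_query` are hypothetical.

```python
# Abbreviated, illustrative term blocks; the full strings appear in Table 2.
CORE_BLOCKS = {
    "implementation": 'implementation[tiab] OR "knowledge translation*"[tw]',
    "evidence_based_practice": '"evidence based practice*"[All Fields] OR innovation[tw]',
    "measure": "instrument[tw] OR survey[tw] OR questionnaire[tw]",
    "mental_health": '"mental health"[tw] OR "behavioral health"[tw]',
}

# Only two of the eight outcomes are shown, to keep the sketch short.
OUTCOME_BLOCKS = {
    "acceptability": "acceptability[tw] OR satisfaction[tw] OR agreeable[tw]",
    "feasibility": "feasibility[tw] OR transferability[tw]",
}


def build_query(outcome: str) -> str:
    """Parenthesize each block and AND the four core blocks with one outcome block.

    Assumption: the outcome level is combined with the core levels via AND.
    """
    blocks = list(CORE_BLOCKS.values()) + [OUTCOME_BLOCKS[outcome]]
    return " AND ".join(f"({block})" for block in blocks)


# One independent search string per outcome, as in the review design.
queries = {outcome: build_query(outcome) for outcome in OUTCOME_BLOCKS}
```

Parenthesizing every block before joining keeps the OR terms inside a level from interacting with the AND operators between levels.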

Identified articles were vetted through a title and abstract screening followed by full text review to confirm relevance to the study parameters. In brief, we included empirical studies and measure development studies that contained one or more quantitative measures of any of the eight implementation outcomes if they were used in an evaluation of an implementation effort in a behavioral health context. Of note, we decided to retain only fidelity measures that were not specific to one evidence-based practice (EBP) and could be applied generally to be consistent with our goal of identifying broadly applicable measures.

Included articles then progressed to the fourth step, construct assignment. Trained research specialists (C.D., K.M.) mapped measures and/or their scales to one or more of the eight aforementioned implementation outcomes (Proctor et al., 2011). Assignment was based on two sources: the study authors’ definition of what was being measured, and content coding by the research team, who reviewed all items of the measure for content explicitly assessing one of the eight implementation outcomes when compared against the construct definition. Construct assignment was checked and confirmed by a content expert (C.L.) who reviewed the items within each measure and/or scale.

The final step subjected the included measures to “cited-by” searches in PubMed and Embase to identify all empirical articles that used the measure in behavioral health implementation research.

Phase II: data extraction

Once all relevant literature was retrieved, articles were compiled into “measure packets.” These measure packets included the measure itself (as available), the measure development article(s) (or the article with the first empirical use in a behavioral health context), and all additional empirical uses of the measure in behavioral health. To identify all relevant reports of psychometric information, the team of trained research specialists (C.D., K.M.) reviewed each article and electronically extracted information to assess the psychometric and pragmatic rating criteria, referred to hereafter as PAPERS (Psychometric And Pragmatic Evidence Rating Scale). The full rating system and criteria for the PAPERS are published elsewhere (Lewis et al., 2018; Stanick et al., 2019). The current study, which focuses on psychometric properties only, used nine relevant PAPERS criteria: (1) internal consistency, (2) convergent validity, (3) discriminant validity, (4) known-groups validity, (5) predictive validity, (6) concurrent validity, (7) structural validity, (8) responsiveness, and (9) norms. Data on each psychometric criterion were extracted for both the full measure and individual scales as appropriate. Measures were considered “unsuitable for rating” if the format of construct assessment did not produce psychometric information (e.g., qualitative nomination form) or the format of the measure did not conform to the rating scale (e.g., cost analysis formula, penetration formula).

Having extracted all data related to psychometric properties, the quality of information for each of the nine criteria was rated using the following scale: “poor” (−1), “none” (0), “minimal/emerging” (1), “adequate” (2), “good” (3), or “excellent” (4). Final ratings were determined from either a single score or a “rolled up median” approach. If a measure was unidimensional or the measure had only one rating for a criterion in an article packet, then this value was used as the final rating and no further calculations were conducted. If a measure had multiple ratings for a criterion across several articles in a packet, we calculated the median score across articles to generate the final rating for that measure on that criterion. For example, if a measure was used in four different studies, each of which rated internal consistency, we calculated the median score across all four articles to determine the final rating of internal consistency for that measure. This process was conducted for each criterion.

If a measure contained a subset of scales relevant to a construct, the ratings for those individual scales were “rolled up” by calculating the median which was then assigned as the final aggregate rating for the whole measure. For example, if a measure had four scales relevant to acceptability and each was rated for internal consistency, the median of those ratings was calculated and assigned as the final rating of internal consistency for that whole measure. This process was carried out for each psychometric criterion. When reporting the “rolled up median” approach, if the computed median resulted in a non-integer rating, the non-integer was rounded down (e.g., internal consistency ratings of 2 and 3 would result in a 2.5 median which was rounded down to 2). In cases where the median of two scores would equal “0” (e.g., a score of −1 and 1), the lower score would be taken (e.g., −1).
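The “rolled up median” rules described above can be sketched in a few lines. This is a minimal illustration, not the authors’ own tooling; the function name `final_rating` is hypothetical. The tie rule is applied only to the two-score case the text describes, and how a zero median across more than two scores was handled is not stated, so the sketch simply keeps the median in that case.

```python
import math
from statistics import median


def final_rating(ratings):
    """Collapse one criterion's ratings (each from -1 to 4) into a final rating.

    Rules taken from the text:
    - a single rating is used as-is;
    - otherwise the median is taken, and a non-integer median is rounded down;
    - if the median of exactly two scores is 0, the lower score is used.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    if len(ratings) == 1:
        return ratings[0]
    if len(ratings) == 2 and median(ratings) == 0:
        return min(ratings)
    return math.floor(median(ratings))


print(final_rating([2, 3]))   # median 2.5 is rounded down to 2
print(final_rating([-1, 1]))  # median of two scores is 0, so the lower score, -1, is used
```

The same function applies to both roll-ups in the text: across articles in a packet and across scales within a measure.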

In addition to psychometric data, descriptive data were also extracted on each measure. Characteristics included (1) country of origin, (2) concept defined by authors, (3) number of articles contained in each measure packet, (4) number of scales, (5) number of items, (6) setting in which measure had been used, (7) level of analysis, (8) target problem, and (9) stage of implementation as defined by the Exploration, Adoption/Preparation, Implementation, Sustainment (EPIS) model (Aarons et al., 2011).

Phase III: data analysis

Simple statistics (i.e., frequencies) were calculated to report on measure characteristics and availability of psychometric-relevant data. A total score was calculated for each measure by summing the scores given to each of the nine psychometric criteria. The maximum possible rating for a measure was 36 (i.e., each criterion rated 4) and the minimum was −9 (i.e., each criterion rated −1). Bar charts were generated to display visual head-to-head comparisons across all measures within a given construct.
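The total score is a plain sum over the nine criteria, which can be sketched as follows. The names `CRITERIA` and `total_score` are hypothetical, and the example ratings are invented for illustration rather than taken from any measure in the review.

```python
# The nine psychometric PAPERS criteria used in this review.
CRITERIA = (
    "internal_consistency",
    "convergent_validity",
    "discriminant_validity",
    "known_groups_validity",
    "predictive_validity",
    "concurrent_validity",
    "structural_validity",
    "responsiveness",
    "norms",
)


def total_score(ratings):
    """Sum the final ratings (-1 to 4) across all nine criteria."""
    missing = [c for c in CRITERIA if c not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {missing}")
    for criterion in CRITERIA:
        if not -1 <= ratings[criterion] <= 4:
            raise ValueError(f"{criterion} rating must be between -1 and 4")
    return sum(ratings[c] for c in CRITERIA)


# Invented example: evidence for two criteria, "none" (0) for the rest.
example = {c: 0 for c in CRITERIA}
example["internal_consistency"] = 3
example["norms"] = 2
print(total_score(example))  # 5
```

With every criterion rated 4 the sum reaches the stated maximum of 36, and with every criterion rated −1 it reaches the stated minimum of −9.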

Results

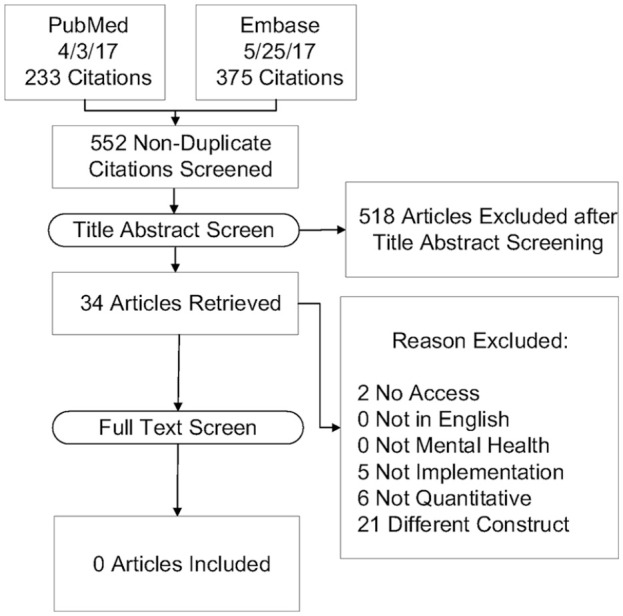

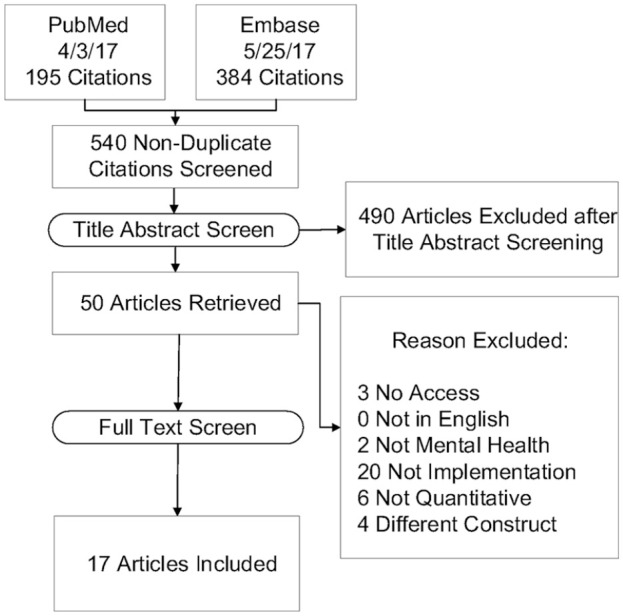

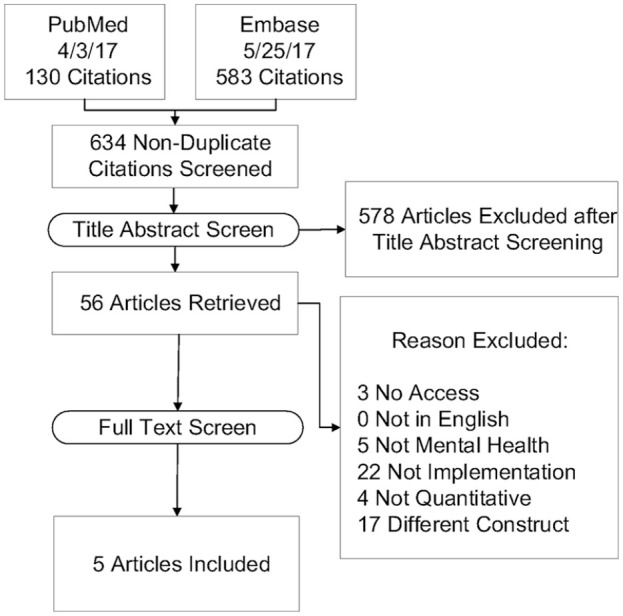

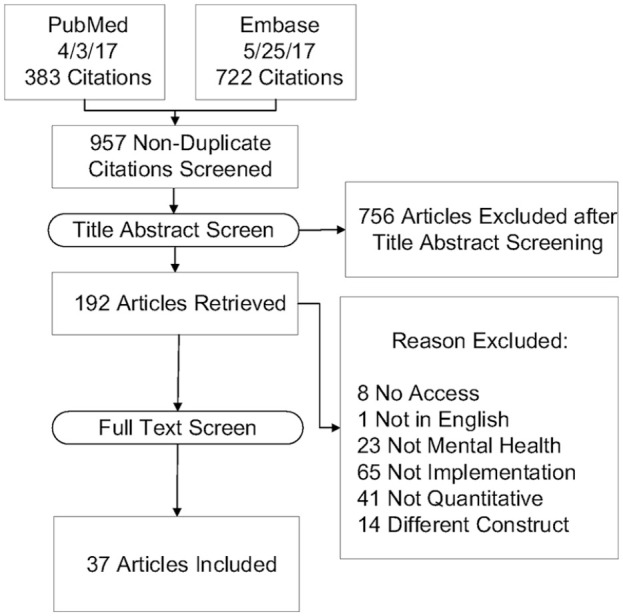

Following the rolled-up approach applied in this study, results are presented at the full measure level. Where appropriate, we indicate the number of scales relevant to a construct within that measure (see Figures A1–A8 in Appendix 1 for PRISMA flowcharts of included and excluded studies).

Overview of measures

Searches of electronic databases yielded 150 measures related to the eight implementation outcomes (acceptability, adoption, appropriateness, cost, feasibility, fidelity, penetration, and sustainability) that have been used in mental or behavioral health care research. Thirty-two measures of acceptability were identified, one of which was a specific scale within a broader measure (i.e., SFTRC Course Evaluation—Attitude scale) (Haug et al., 2008). Twenty-six measures of adoption were identified, three of which were scales within broader measures (e.g., Perceptions of Computerized Therapy Questionnaire—Future Use Intentions scale) (Carper et al., 2013) and two of which were deemed “unsuitable for rating.” As mentioned previously, measures were considered “unsuitable for rating” if the format of construct assessment did not produce psychometric information or the format of the measure did not conform to the rating scale (e.g., Fortney Measure of Adoption Rate) (Fortney et al., 2012). Six measures of appropriateness were identified, of which one was a scale within a broader measure (i.e., Moore & Benbasat Adoption of IT Innovation Measure—Compatibility scale) (Moore & Benbasat, 1991). Eighteen measures of feasibility were identified, four of which were scales within broader measures (e.g., Behavioral Interventionist Satisfaction Survey—Feasibility scale) (McLean, 2013). Eighteen measures of fidelity were identified. Twenty-three measures of penetration were identified, of which 14 were deemed unsuitable for rating (e.g., Pace Proportion Measure of Penetration) (Pace et al., 2014). Finally, 14 measures of sustainability were identified, one of which was deemed unsuitable for rating (i.e., Kirchner Sustainability Measure) (Kirchner et al., 2014), and another was a scale within a larger measure (i.e., Eisen Provider Knowledge & Attitudes Survey—Sustainability scale) (Eisen et al., 2013). Thirty-one measures of implementation cost were identified; however, none of them were suitable for rating and thus their psychometric evidence was not assessed. It is worth noting that the number of measures listed above for each outcome does not add up to 150. This is because 14 of the identified measures had scales relevant to more than one outcome. Of these 14 measures, 11 were included in two outcomes, one was included in three outcomes, and one was included in four.

Characteristics of measures

Table 3 presents the descriptive characteristics of measures used to assess implementation outcomes. Most measures of implementation outcomes that were suitable for rating were used only once (n = 78, 79%) and most were created in the United States (n = 85, 86%). The remaining measures were developed in Australia, Canada, the United Kingdom, Israel, the Netherlands, Spain, and Zimbabwe. The majority of identified measures were used in the outpatient community setting (n = 53, 54%) or a variety of “other” settings (e.g., prison, church) (n = 41, 42%). Half of the measures were used to assess implementation outcomes in the general mental health or substance use fields (n = 49, 50%), and most were applied at the implementation or sustainment stage (n = 64, 65%). A small number of measures showed evidence of predictive validity for other implementation outcomes (n = 15, 15%). Of these, six predicted fidelity (6%), five predicted sustainability (5%), two predicted adoption (2%), and two predicted penetration (2%).

Table 3.

Description of measures and subscales.

| | Acceptability (N = 32) | | Adoption (N = 25) | | Appropriateness (N = 6) | | Feasibility (N = 18) | | Fidelity (N = 18) | | Penetration (N = 9) | | Sustainability (N = 13) | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | n | % | n | % | n | % | n | % | n | % | n | % | n | % |
| Concept defined | | | | | | | | | | | | | | |
| Yes | 6 | 19 | 10 | 40 | 5 | 83 | 2 | 11 | 5 | 28 | 9 | 100 | 2 | 15 |
| No | 26 | 81 | 15 | 60 | 1 | 17 | 16 | 89 | 13 | 72 | 0 | 0 | 11 | 85 |
| One-time use only | | | | | | | | | | | | | | |
| Yes | 14 | 44 | 18 | 72 | 5 | 83 | 10 | 56 | 11 | 61 | 5 | 56 | 7 | 54 |
| No | 18 | 56 | 7 | 28 | 1 | 17 | 8 | 44 | 7 | 39 | 4 | 44 | 6 | 46 |
| Number of items | | | | | | | | | | | | | | |
| 1–5 | 1 | 3 | 4 | 16 | 0 | 0 | 2 | 11 | 2 | 11 | 1 | 11 | 0 | 0 |
| 6–10 | 4 | 13 | 2 | 8 | 0 | 0 | 4 | 22 | 0 | 0 | 0 | 0 | 0 | 0 |
| 11 or more | 25 | 78 | 16 | 64 | 5 | 83 | 10 | 56 | 11 | 61 | 5 | 56 | 11 | 85 |
| Not specified | 2 | 6 | 3 | 12 | 1 | 17 | 2 | 11 | 5 | 28 | 3 | 33 | 2 | 15 |
| Country | | | | | | | | | | | | | | |
| US | 21 | 66 | 15 | 60 | 3 | 50 | 9 | 50 | 15 | 83 | 7 | 78 | 12 | 92 |
| Other | 11 | 34 | 10 | 40 | 3 | 50 | 9 | 50 | 3 | 17 | 2 | 22 | 1 | 8 |
| Setting | | | | | | | | | | | | | | |
| State mental health | 1 | 3 | 1 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Inpatient psychiatry | 1 | 3 | 1 | 4 | 0 | 0 | 1 | 6 | 1 | 6 | 0 | 0 | 0 | 0 |
| Outpatient community | 11 | 34 | 12 | 48 | 1 | 17 | 7 | 39 | 8 | 44 | 4 | 44 | 6 | 46 |
| School mental health | 15 | 47 | 3 | 12 | 1 | 17 | 1 | 6 | 1 | 6 | 0 | 0 | 1 | 8 |
| Residential care | 1 | 3 | 4 | 16 | 0 | 0 | 0 | 0 | 2 | 11 | 0 | 0 | 0 | 0 |
| Other | 4 | 13 | 7 | 28 | 1 | 17 | 4 | 22 | 6 | 33 | 4 | 44 | 4 | 31 |
| Not specified | 0 | 0 | 0 | 0 | 3 | 50 | 4 | 22 | 1 | 6 | 1 | 11 | 2 | 15 |
| Level | | | | | | | | | | | | | | |
| Consumer | 5 | 16 | 1 | 4 | 1 | 17 | 2 | 11 | 0 | 0 | 1 | 11 | 0 | 0 |
| Organization | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Clinic/site | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 11 | 1 | 8 |
| Provider | 20 | 63 | 19 | 76 | 0 | 0 | 13 | 72 | 15 | 83 | 7 | 78 | 9 | 69 |
| System | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Team | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 6 | 0 | 0 | 0 | 0 |
| Director | 1 | 3 | 3 | 12 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 8 |
| Supervisor | 3 | 9 | 1 | 4 | 1 | 17 | 1 | 6 | 1 | 6 | 1 | 11 | 1 | 8 |
| Other | 2 | 6 | 4 | 16 | 1 | 17 | 1 | 6 | 1 | 6 | 0 | 0 | 1 | 8 |
| Not specified | 2 | 6 | 2 | 8 | 3 | 50 | 1 | 6 | 0 | 0 | 0 | 0 | 2 | 15 |
| Population | | | | | | | | | | | | | | |
| General mental health | 3 | 9 | 6 | 24 | 0 | 0 | 2 | 11 | 6 | 33 | 5 | 56 | 3 | 23 |
| Anxiety | 2 | 6 | 1 | 4 | 0 | 0 | 0 | 0 | 1 | 6 | 0 | 0 | 0 | 0 |
| Depression | 4 | 13 | 2 | 8 | 0 | 0 | 2 | 11 | 3 | 17 | 1 | 11 | 3 | 23 |
| Suicidal ideation | 1 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 6 | 1 | 11 | 0 | 0 |
| Alcohol use disorder | 0 | 0 | 1 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Substance use disorder | 5 | 16 | 10 | 40 | 1 | 17 | 5 | 28 | 5 | 28 | 0 | 0 | 3 | 23 |
| Behavioral disorder | 12 | 38 | 1 | 4 | 0 | 0 | 1 | 6 | 0 | 0 | 0 | 0 | 1 | 8 |
| Mania | 1 | 3 | 0 | 0 | 0 | 0 | 2 | 11 | 0 | 0 | 0 | 0 | 0 | 0 |
| Eating disorder | 3 | 9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Grief | 1 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Tic disorder | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Trauma | 1 | 3 | 3 | 12 | 0 | 0 | 0 | 0 | 2 | 11 | 0 | 0 | 1 | 8 |
| Other | 6 | 19 | 5 | 20 | 1 | 17 | 3 | 17 | 1 | 6 | 0 | 0 | 1 | 8 |
| Not specified | 0 | 0 | 0 | 0 | 4 | 67 | 2 | 11 | 1 | 6 | 2 | 22 | 1 | 8 |
| EPIS phase | | | | | | | | | | | | | | |
| Exploration | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Preparation | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Implementation | 3 | 9 | 11 | 44 | 1 | 17 | 2 | 11 | 10 | 56 | 8 | 89 | 2 | 15 |
| Sustainment | 0 | 0 | 5 | 20 | 0 | 0 | 1 | 6 | 2 | 11 | 1 | 11 | 8 | 62 |
| Not specified | 29 | 91 | 9 | 36 | 5 | 83 | 15 | 83 | 6 | 33 | 0 | 0 | 3 | 23 |
| Outcomes assessed | | | | | | | | | | | | | | |
| Acceptability | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Appropriateness | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Adoption | 2 | 6 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Cost | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Feasibility | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Fidelity | 3 | 9 | 0 | 0 | 0 | 0 | 1 | 6 | 1 | 6 | 1 | 11 | 0 | 0 |
| Penetration | 1 | 3 | 0 | 0 | 1 | 17 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Sustainability | 2 | 6 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

EPIS: exploration, adoption/preparation, implementation, sustainment.

Availability of psychometric evidence

Of the 150 measures of implementation constructs, 48 were categorized as unsuitable for rating; unsurprisingly, the majority of these were cost measures (n = 31). For the remaining 102 measures, there was limited psychometric information available (Table 4). Forty-six (45%) measures had no information for internal consistency, 80 (78%) had no information for convergent validity, 97 (94%) had no information for discriminant validity, 93 (91%) had no information for concurrent validity, 81 (80%) had no information for predictive validity, 88 (86%) had no information for known-groups validity, 84 (82%) had no information for structural validity, 95 (93%) had no information for responsiveness, and finally, 46 (45%) had no information on norms.

Table 4.

Data availability.

| Construct | N | Internal consistency | | Convergent validity | | Discriminant validity | | Known-groups validity | | Predictive validity | | Concurrent validity | | Structural validity | | Responsiveness | | Norms | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| | | n | (%) | n | (%) | n | (%) | n | (%) | n | (%) | n | (%) | n | (%) | n | (%) | n | (%) |

| Acceptability | 32 | 19 | 59 | 10 | 31 | – | – | 4 | 13 | 6 | 19 | 1 | 3 | 4 | 13 | 2 | 6 | 20 | 63 |

| Adoption | 25 | 16 | 64 | 4 | 16 | – | – | 2 | 8 | 5 | 20 | 2 | 8 | 4 | 16 | 1 | 4 | 12 | 48 |

| Appropriateness | 6 | 3 | 50 | 1 | 17 | – | – | – | – | 1 | 17 | – | – | 1 | 17 | – | – | 2 | 33 |

| Feasibility | 18 | 5 | 28 | – | – | – | – | 1 | 6 | 1 | 6 | – | – | 1 | 6 | – | – | 9 | 50 |

| Fidelity | 18 | 6 | 33 | 3 | 17 | 2 | 11 | 2 | 11 | 4 | 22 | 1 | 6 | 2 | 11 | – | – | 9 | 50 |

| Penetration | 9 | 3 | 33 | 1 | 11 | – | – | – | – | 2 | 22 | – | – | 1 | 11 | 1 | 11 | 3 | 33 |

| Sustainability | 13 | 6 | 46 | 1 | 8 | – | – | 3 | 23 | 2 | 15 | 3 | 23 | 4 | 30 | – | – | 8 | 62 |

Excluding measures that were deemed unsuitable for rating.

Psychometric evidence rating scale results

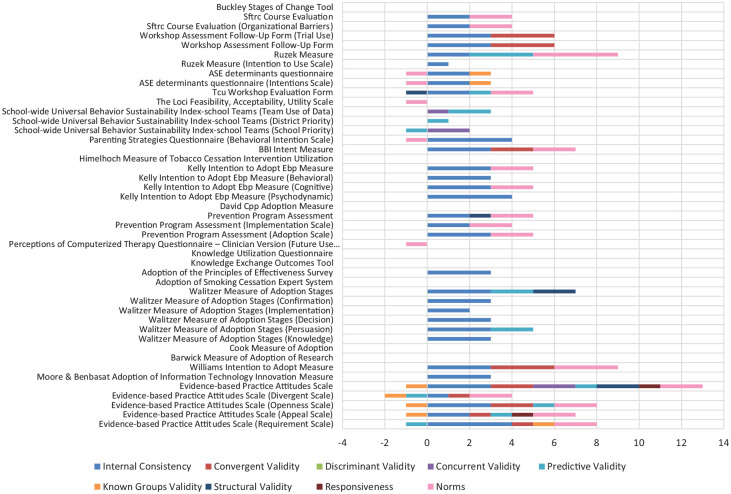

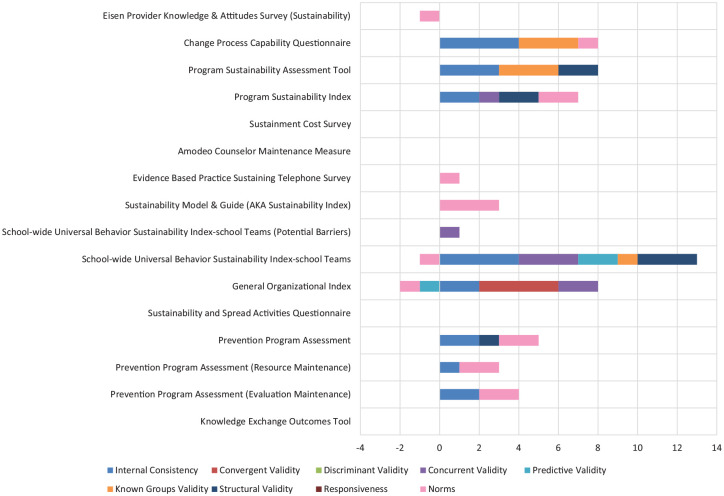

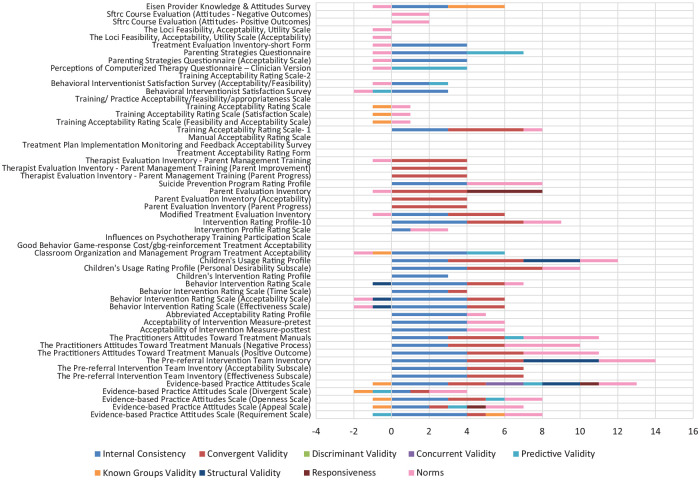

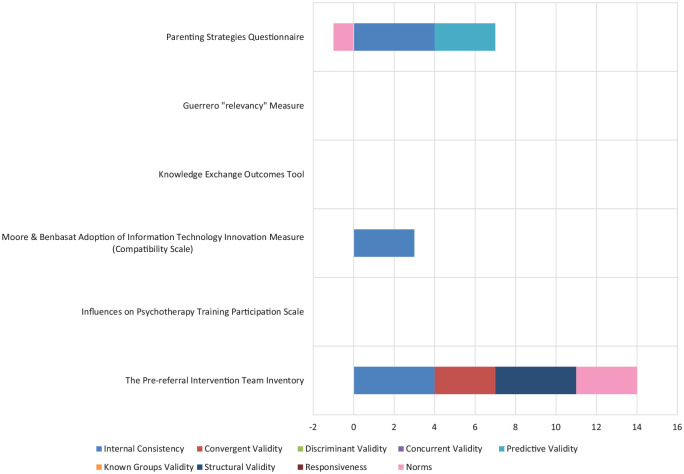

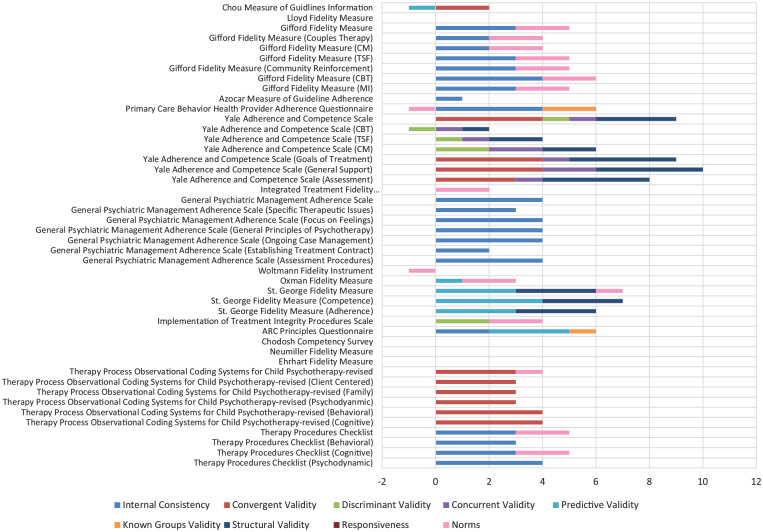

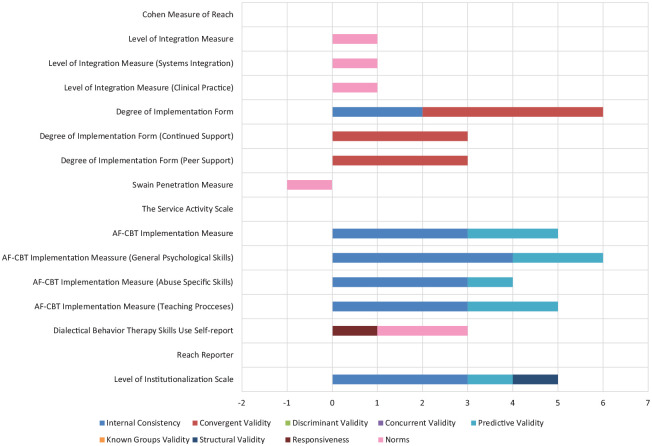

Table 5 describes the median ratings and range of ratings for psychometric properties for measures deemed suitable for rating (n = 102) and those for which information was available (i.e., those with non-zero ratings on PAPERS criteria). Individual ratings for all measures can be found in Table 6 and head-to-head bar graphs can be found in Figures 1 to 7.

Table 5.

Psychometric scores summary data.

| Construct | N | Internal consistency | | Convergent validity | | Discriminant validity | | Known-groups validity | | Predictive validity | | Concurrent validity | | Structural validity | | Responsiveness | | Norms | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| | | M | R | M | R | M | R | M | R | M | R | M | R | M | R | M | R | M | R |

| Acceptability | 32 | 3 | 1–4 | 2 | 1–4 | – | – | –1 | −1–3 | –1 | −1–4 | 2 | 2 | –1 | −1–4 | 1 | 4 | 1 | −1–4 |

| Adoption | 25 | 2 | 1–4 | 2 | 1–3 | – | – | –1 | −1–1 | 1 | −1–3 | 2 | 1–2 | 1 | −1–2 | 1 | 1 | 2 | −1–4 |

| Appropriateness | 6 | 4 | 3–4 | 3 | 3 | – | – | – | – | 3 | 3 | – | – | 4 | 4 | – | – | 1 | −1–3 |

| Feasibility | 18 | 4 | 2–4 | – | – | – | – | –1 | –1 | 1 | 1 | – | – | 2 | 2 | – | – | –1 | −1–2 |

| Fidelity | 18 | 3 | 1–4 | 3 | 2–4 | 1 | −1–2 | 1 | 1–2 | 3 | −1–4 | 1 | 1–2 | 3 | 1–4 | – | – | 2 | −1–2 |

| Penetration | 9 | 3 | 2–4 | 3 | 3–4 | – | – | – | – | 2 | 1–2 | – | – | 1 | 1 | – | – | 1 | −1–2 |

| Sustainability | 13 | 2 | 1–4 | 4 | 4 | – | – | 3 | 1–3 | –1 | −1–2 | 1 | 1–3 | 2 | 1–3 | – | – | 1 | −1–3 |

Note. M = median; R = range. Excluding zeros where psychometric information not available; excluding measures that were deemed unsuitable for rating; scores range from “poor” (−1) to “excellent” (4).
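The summary rule in the note above (drop zeros, then report the median and range of the remaining ratings) can be illustrated with a minimal sketch. The function name `summarize` is hypothetical and is not part of the PAPERS materials; the two input lists are the internal consistency and norms ratings of the six appropriateness measures as listed in Table 6.

```python
from statistics import median

def summarize(ratings):
    """Return (median, min, max) of the non-zero ratings, or None if none exist.

    A rating of 0 means no information was available, so it is excluded,
    mirroring the note under Table 5. Hypothetical helper for illustration.
    """
    rated = [r for r in ratings if r != 0]
    if not rated:
        return None
    return median(rated), min(rated), max(rated)

# Appropriateness measures, in the order listed in Table 6.
internal_consistency = [4, 0, 3, 0, 0, 4]
norms = [3, 0, 0, 0, 0, -1]

print(summarize(internal_consistency))  # (4, 3, 4)
print(summarize(norms))                 # (1.0, -1, 3)
```

Under these assumptions the sketch reproduces the appropriateness entries in Table 5 for internal consistency (median 4, range 3–4) and norms (median 1, range −1–3).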

Table 6.

Individual psychometric ratings.

| Measure name | Internal consistency | Convergent validity | Discriminant validity | Known-groups validity | Predictive validity | Concurrent validity | Structural validity | Responsiveness | Norms | Total score |

|---|---|---|---|---|---|---|---|---|---|---|

| Acceptability | ||||||||||

| Abbreviated Acceptability Rating Profile (Tarnowski & Simonian, 1992) | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 5 |

| Acceptability of Intervention Measure–posttest (Henninger, 2010) | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 6 |

| Acceptability of Intervention Measure–pretest (Henninger, 2010) | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 6 |

| Behavior Intervention Rating Scale (S. N. Elliott & Treuting, 1991) | 4 | 2 | 0 | 0 | 0 | 0 | –1 | 0 | 1 | 6 |

| Behavior Intervention Rating Scale (Acceptability Scale) (S. N. Elliott & Treuting, 1991) | 4 | 2 | 0 | 0 | 0 | 0 | –1 | 0 | –1 | 4 |

| Behavior Intervention Rating Scale (Effectiveness Scale) (S. N. Elliott & Treuting, 1991) | 4 | 2 | 0 | 0 | 0 | 0 | –1 | 0 | –1 | 4 |

| Behavior Intervention Rating Scale (Time Scale) (S. N. Elliott & Treuting, 1991) | 3 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 4 |

| Behavioral Interventionist Satisfaction Survey (K. A. McLean, 2013) | 3 | 0 | 0 | 0 | –1 | 0 | 0 | 0 | –1 | 1 |

| Behavioral Interventionist Satisfaction Survey (Acceptability/Feasibility) (K. A. McLean, 2013) | 2 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | –1 | 2 |

| Children’s Intervention Rating Profile (S. N. Elliott et al., 1986) | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 |

| Children’s Usage Rating Profile (Briesch & Chafouleas, 2009) | 3 | 4 | 0 | 0 | 0 | 0 | 3 | 0 | 2 | 12 |

| Children’s Usage Rating Profile (Personal Desirability Subscale) (Briesch & Chafouleas, 2009) | 4 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 10 |

| Classroom Organization and Management Program Treatment Acceptability (Tanol, 2010) | 4 | 0 | 0 | 0 | 2 | –1 | 0 | 0 | –1 | 4 |

| Eisen Provider Knowledge & Attitudes Survey (Eisen et al., 2013) | 3 | 0 | 0 | 0 | 0 | 3 | 0 | 0 | –1 | 5 |

| Evidence-Based Practice Attitudes Scale (Aarons, 2004) | 3 | 2 | 0 | 2 | 1 | –1 | 2 | 1 | 2 | 12 |

| Evidence-Based Practice Attitudes Scale (Appeal Scale) (Aarons, 2004) | 2 | 1 | 0 | 0 | 1 | –1 | 0 | 1 | 2 | 6 |

| Evidence-Based Practice Attitudes Scale (Divergent Scale) (Aarons, 2004) | 1 | 1 | 0 | 0 | –1 | –1 | 0 | 0 | 2 | 2 |

| Evidence-Based Practice Attitudes Scale (Openness Scale) (Aarons, 2004) | 3 | 2 | 0 | 0 | 1 | –1 | 0 | 0 | 2 | 7 |

| Evidence-Based Practice Attitudes Scale (Requirement Scale) (Aarons, 2004) | 4 | 1 | 0 | 0 | –1 | 1 | 0 | 0 | 2 | 7 |

| Good Behavior Game-response Cost/GBG-reinforcement Treatment Acceptability (Tanol et al., 2010) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Influences on Psychotherapy Training Participation Scale (A. Lyon, 2010) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Intervention Profile Rating Scale (Kutsick et al., 1991) | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 3 |

| Intervention Rating Profile-10 (Power et al., 1995) | 4 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 9 |

| Manual Acceptability Rating Scale (D. Milne, 2010) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Modified Treatment Evaluation Inventory (Johnston & Fine, 1993) | 3 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | –1 | 5 |

| Parent Evaluation Inventory (Kazdin et al., 1989) | 0 | 4 | 0 | 0 | 0 | 0 | 0 | 4 | –1 | 7 |

| Parent Evaluation Inventory (Acceptability) (Kazdin et al., 1989) | 0 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 4 |

| Parent Evaluation Inventory (Parent Progress) (Kazdin et al., 1989) | 0 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 4 |

| Parenting Strategies Questionnaire (Whittingham et al., 2006) | 4 | 0 | 0 | 0 | 3 | 0 | 0 | 0 | –1 | 6 |

| Parenting Strategies Questionnaire (Acceptability Scale) (Whittingham et al., 2006) | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | –1 | 3 |

| Perceptions of Computerized Therapy Questionnaire—Clinician Version (Carper et al., 2013) | 0 | 0 | 0 | 0 | 4 | 0 | 0 | 0 | –1 | 3 |

| SFTRC Course Evaluation (Attitudes—Negative Outcomes) (Carper et al., 2013) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 2 |

| SFTRC Course Evaluation (Attitudes–Positive Outcomes) (Carper et al., 2013) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 2 |

| Suicide Prevention Program Rating Profile (Eckert et al., 2003) | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 4 | 8 |

| The LOCI Feasibility, Acceptability, Utility Scale (Aarons et al., 2015) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | –1 | –1 |

| The LOCI Feasibility, Acceptability, Utility Scale (Acceptability) (Aarons et al., 2015) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | –1 | –1 |

| The Practitioners Attitudes Toward Treatment Manuals (Addis & Krasnow, 2000) | 3 | 3 | 0 | 0 | 1 | 0 | 0 | 0 | 4 | 11 |

| The Practitioners Attitudes Toward Treatment Manuals (Negative Process) (Addis & Krasnow, 2000) | 3 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 4 | 10 |

| The Practitioners Attitudes Toward Treatment Manuals (Positive Outcome) (Addis & Krasnow, 2000) | 4 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 4 | 11 |

| The Pre-referral Intervention Team Inventory (Yetter, 2010) | 4 | 3 | 0 | 0 | 0 | 0 | 4 | 0 | 3 | 14 |

| The Pre-referral Intervention Team Inventory (Acceptability Subscale) (Yetter, 2010) | 4 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 7 |

| The Pre-referral Intervention Team Inventory (Effectiveness Subscale) (Yetter, 2010) | 4 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 7 |

| Therapist Evaluation Inventory—Parent Management Training (Myers, 2008) | 0 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | –1 | 3 |

| Therapist Evaluation Inventory—Parent Management Training (Parent Improvement) (Myers, 2008) | 0 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 4 |

| Therapist Evaluation Inventory—Parent Management Training (Parent Progress) (Myers, 2008) | 0 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 4 |

| Training Acceptability Rating Scale (D. Milne, 2010) | 0 | 0 | 0 | 0 | 0 | –1 | 0 | 0 | 1 | 0 |

| Training Acceptability Rating Scale (Feasibility and Acceptability Scale) (D. Milne, 2010) | 0 | 0 | 0 | 0 | 0 | –1 | 0 | 0 | 1 | 0 |

| Training Acceptability Rating Scale (Satisfaction Scale) (D. Milne, 2010) | 0 | 0 | 0 | 0 | 0 | –1 | 0 | 0 | 1 | 0 |

| Training Acceptability Rating Scale-1 (Davis & Capponi, 1989) | 3 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 8 |

| Training Acceptability Rating Scale-2 (D. Milne & Noone, 1996) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Training/Practice Acceptability/Feasibility/Appropriateness Scale (A. Lyon, 2011) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Treatment Acceptability Rating Form (Reimers, 1988) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Treatment Evaluation Inventory–Short Form (Newton & Sturmey, 2004) | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | –1 | 3 |

| Treatment Plan Implementation Monitoring and Feedback Acceptability Survey (Easton, 2009) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Adoption | ||||||||||

| Adoption of Smoking Cessation Expert System (Hoving et al., 2006) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Adoption of the Principles of Effectiveness Survey (Pankratz et al., 2002) | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 |

| ASE Determinants Questionnaire (Zwerver et al., 2013) | 2 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | –1 | 2 |

| ASE Determinants Questionnaire (Intentions Scale) (Zwerver et al., 2013) | 2 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | –1 | 2 |

| Barwick Measure of Adoption of Research (Barwick et al., 2008) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| BBI Intent Measure (Brothers et al., 2015) | 3 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 7 |

| Buckley Stages of Change Tool (Buckley et al., 2003) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Cook Measure of Adoption (Cook et al., 2009) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| David CPP Adoption Measure (David & Schiff, 2015) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Evidence-Based Practice Attitudes Scale (Aarons, 2004) | 3 | 2 | 0 | 2 | 1 | –1 | 2 | 1 | 2 | 12 |

| Evidence-Based Practice Attitudes Scale (Appeal Scale) (Aarons, 2004) | 2 | 1 | 0 | 0 | 1 | –1 | 0 | 1 | 2 | 6 |

| Evidence-Based Practice Attitudes Scale (Divergent Scale) (Aarons, 2004) | 1 | 1 | 0 | 0 | –1 | –1 | 0 | 0 | 2 | 2 |

| Evidence-Based Practice Attitudes Scale (Openness Scale) (Aarons, 2004) | 3 | 2 | 0 | 0 | 1 | –1 | 0 | 0 | 2 | 7 |

| Evidence-Based Practice Attitudes Scale (Requirement Scale) (Aarons, 2004) | 4 | 1 | 0 | 0 | –1 | 1 | 0 | 0 | 2 | 7 |

| Himelhoch Measure of Tobacco Cessation Intervention Utilization (Himelhoch et al., 2014) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Kelly Intention to Adopt EBP Measure (Kelly & Deane, 2012) | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 5 |

| Kelly Intention to Adopt EBP Measure (Behavioral) (Kelly & Deane, 2012) | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 |

| Kelly Intention to Adopt EBP Measure (Cognitive) (Kelly & Deane, 2012) | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 5 |

| Kelly Intention to Adopt EBP Measure (Psychodynamic) (Kelly & Deane, 2012) | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 4 |

| Knowledge Exchange Outcomes Tool (Skinner, 2007) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Knowledge Utilization Questionnaire (Chagnon et al., 2010) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Moore & Benbasat Adoption of Information Technology Innovation Measure (Moore & Benbasat, 1991) | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 |

| Parenting Strategies Questionnaire (Behavioral Intention Scale) (Moore & Benbasat, 1991) | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | –1 | 3 |

| Perceptions of Computerized Therapy Questionnaire—Clinician Version (Future Use Intentions) (Moore & Benbasat, 1991) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | –1 | –1 |

| Prevention Program Assessment (Stamatakis et al., 2012) | 2 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 2 | 5 |

| Prevention Program Assessment (Adoption Scale) (Stamatakis et al., 2012) | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 5 |

| Prevention Program Assessment (Implementation Scale) (Stamatakis et al., 2012) | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 4 |

| Ruzek Measure (Ruzek et al., 2016) | 2 | 0 | 0 | 0 | 3 | 0 | 0 | 0 | 4 | 9 |

| Ruzek Measure (Intention to Use Scale) (Ruzek et al., 2016) | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 |

| School-wide Universal Behavior Sustainability Index–School Teams (District Priority) (Ruzek et al., 2016) | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 |

| School-wide Universal Behavior Sustainability Index–School Teams (School Priority) (Ruzek et al., 2016) | 0 | 0 | 0 | 2 | –1 | 0 | 0 | 0 | 0 | 1 |

| School-wide Universal Behavior Sustainability Index–School Teams (Team Use of Data) (Ruzek et al., 2016) | 0 | 0 | 0 | 1 | 2 | 0 | 0 | 0 | 0 | 3 |

| SFTRC Course Evaluation (Haug et al., 2008) | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 4 |

| SFTRC Course Evaluation (Organizational Barriers) (Haug et al., 2008) | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 4 |

| TCU Workshop Evaluation Form (Simpson, 2002) | 2 | 0 | 0 | 0 | 1 | 0 | –1 | 0 | 2 | 4 |

| The LOCI Feasibility, Acceptability, Utility Scale (Aarons et al., 2015) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | –1 | –1 |

| Walitzer Measure of Adoption Stages (Walitzer et al., 2015) | 3 | 0 | 0 | 0 | 2 | 0 | 2 | 0 | 0 | 7 |

| Walitzer Measure of Adoption Stages (Confirmation) (Walitzer et al., 2015) | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 |

| Walitzer Measure of Adoption Stages (Decision) (Walitzer et al., 2015) | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 |

| Walitzer Measure of Adoption Stages (Implementation) (Walitzer et al., 2015) | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 |

| Walitzer Measure of Adoption Stages (Knowledge) (Walitzer et al., 2015) | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 |

| Walitzer Measure of Adoption Stages (Persuasion) (Walitzer et al., 2015) | 3 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 5 |

| Williams Intention to Adopt Measure (Williams et al., 2017) | 3 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 3 | 9 |

| Workshop Assessment Follow-Up Form (Simpson, 2002) | 3 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 6 |

| Workshop Assessment Follow-Up Form (Trial Use) (Simpson, 2002) | 3 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 6 |

| Appropriateness | ||||||||||

| The Pre-referral Intervention Team Inventory (Yetter, 2010) | 4 | 3 | 0 | 0 | 0 | 0 | 4 | 0 | 3 | 14 |

| Influences on Psychotherapy Training Participation Scale (A. Lyon, 2010) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Moore & Benbasat Adoption of Information Technology Innovation Measure (Compatibility Scale) (A. Lyon, 2010) | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 |

| Knowledge Exchange Outcomes Tool (Skinner, 2007) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Guerrero “Relevancy” Measure (Guerrero et al., 2016) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Parenting Strategies Questionnaire (Whittingham et al., 2006) | 4 | 0 | 0 | 0 | 3 | 0 | 0 | 0 | –1 | 6 |

| Feasibility | ||||||||||

| Children’s Usage Rating Profile (Feasibility Scale) (Whittingham et al., 2006) | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 6 |

| Training Acceptability Rating Scale (Feasibility and Acceptability Scale) (Whittingham et al., 2006) | 0 | 0 | 0 | 0 | 0 | –1 | 0 | 0 | 1 | 0 |

| Behavioral Interventionist Satisfaction Survey (Acceptability/Feasibility) (Whittingham et al., 2006) | 2 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | –1 | 2 |

| Parenting Strategies Questionnaire (Usability Scale) (Whittingham et al., 2006) | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | –1 | 3 |

| Evital Feasibility Questionnaire (Alonso et al., 2010) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Feasibility of Administering the Screening Tool for Autism in Toddlers and Young Children (Kobak et al., 2011) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | –1 | –1 |

| Feasibility of the Stages of Recovery Instrument (Weeks et al., 2011) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Feasibility Questionnaire for Threshold Assessment Grid (Slade et al., 2001) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | –1 | –1 |

| The Measure of Disseminability (Trent, 2010) | 3 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 5 |

| Feasibility of a Screening Tool (Hides et al., 2007) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 2 |

| Questionnaire for User Interface Satisfaction (Chin & Norman, 1988) | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 4 |

| Ebert Providers’ Perception of IC Questionnaire (Ebert et al., 2014) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Structured Assessment of Feasibility (Bird et al., 2014) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| System Usability Scale (Kobak et al., 2015) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | –1 | –1 |

| Readiness Ruler (Herie Version) (Miller & Rollnick, 2002) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Malte Post-treatment Smoking Cessation Beliefs Measure (Feasibility Scale) (Miller & Rollnick, 2002) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Malte Post-treatment Smoking Cessation Beliefs Measure (Malte et al., 2013) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| The LOCI Feasibility, Acceptability, Utility Scale (Feasibility) (Aarons et al., 2015) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | –1 | –1 |

| The LOCI Feasibility, Acceptability, Utility Scale (Aarons et al., 2015) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | –1 | –1 |

| Fidelity | ||||||||||

| Therapy Procedures Checklist (Psychodynamic) (Weersing et al., 2002) | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 4 |

| Therapy Procedures Checklist (Cognitive) (Weersing et al., 2002) | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 5 |

| Therapy Procedures Checklist (Behavioral) (Weersing et al., 2002) | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 |

| Therapy Procedures Checklist (Weersing et al., 2002) | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 5 |

| Therapy Process Observational Coding Systems for Child Psychotherapy–Revised (Cognitive) (McLeod et al., 2015) | 0 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 4 |

| Therapy Process Observational Coding Systems for Child Psychotherapy–Revised (Behavioral) (McLeod et al., 2015) | 0 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 4 |

| Therapy Process Observational Coding Systems for Child Psychotherapy–Revised (Psychodynamic) (McLeod et al., 2015) | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 |

| Therapy Process Observational Coding Systems for Child Psychotherapy–Revised (Family) (McLeod et al., 2015) | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 |

| Therapy Process Observational Coding Systems for Child Psychotherapy–Revised (Client Centered) (McLeod et al., 2015) | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 |

| Therapy Process Observational Coding Systems for Child Psychotherapy–Revised (McLeod et al., 2015) | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 4 |

| Ehrhart Fidelity Measure (Ehrhart et al., 2015) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Neumiller Fidelity Measure (Neumiller et al., 2009) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Chodosh Competency Survey (Chodosh et al., 2015) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| ARC Principles Questionnaire (Glisson et al., 2013) | 2 | 0 | 0 | 0 | 3 | 1 | 0 | 0 | 0 | 6 |

| Implementation of Treatment Integrity Procedures Scale (Perepletchikova et al., 2007) | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 2 | 4 |

| St. George Fidelity Measure (Adherence) (George et al., 2016) | 0 | 0 | 0 | 0 | 3 | 0 | 3 | 0 | 0 | 6 |

| St. George Fidelity Measure (Competence) (George et al., 2016) | 0 | 0 | 0 | 0 | 4 | 0 | 3 | 0 | 0 | 7 |

| St. George Fidelity Measure (George et al., 2016) | 0 | 0 | 0 | 0 | 3 | 0 | 3 | 0 | 1 | 7 |

| Oxman Fidelity Measure (Oxman et al., 2006) | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 2 | 3 |

| Woltmann Fidelity Instrument (Woltmann et al., 2008) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | –1 | –1 |

| General Psychiatric Management Adherence Scale (Assessment Procedures) (Kolla et al., 2009) | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 4 |

| General Psychiatric Management Adherence Scale (Establishing Treatment Contract) (Kolla et al., 2009) | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 |

| General Psychiatric Management Adherence Scale (Ongoing Case Management) (Kolla et al., 2009) | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 4 |

| General Psychiatric Management Adherence Scale (General Principles of Psychotherapy) (Kolla et al., 2009) | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 4 |

| General Psychiatric Management Adherence Scale (Focus on Feelings) (Kolla et al., 2009) | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 4 |

| General Psychiatric Management Adherence Scale (Specific Therapeutic Issues) (Kolla et al., 2009) | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 |

| General Psychiatric Management Adherence Scale (Kolla et al., 2009) | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 4 |

| Integrated Treatment Fidelity scale (McKee et al., 2013) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 2 |

| Yale Adherence and Competence Scale (Assessment) (Carroll et al., 2000) | 0 | 3 | 0 | 1 | 0 | 0 | 4 | 0 | 0 | 8 |

| Yale Adherence and Competence Scale (General Support) (Carroll et al., 2000) | 0 | 4 | 0 | 2 | 0 | 0 | 4 | 0 | 0 | 10 |

| Yale Adherence and Competence Scale (Goals of Treatment) (Carroll et al., 2000) | 0 | 4 | 0 | 1 | 0 | 0 | 4 | 0 | 0 | 9 |

| Yale Adherence and Competence Scale (CM) (Carroll et al., 2000) | 0 | 0 | 2 | 2 | 0 | 0 | 2 | 0 | 0 | 6 |

| Yale Adherence and Competence Scale (TSF) (Carroll et al., 2000) | 0 | 0 | 1 | 1 | 0 | 0 | 2 | 0 | 0 | 4 |

| Yale Adherence and Competence Scale (CBT) (Carroll et al., 2000) | 0 | 0 | –1 | 1 | 0 | 0 | 1 | 0 | 0 | 1 |

| Yale Adherence and Competence Scale (Carroll et al., 2000) | 0 | 4 | 1 | 1 | 0 | 0 | 3 | 0 | 0 | 9 |

| Primary Care Behavior Health Provider Adherence Questionnaire (Beehler et al., 2013) | 4 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | –1 | 5 |

| Azocar Measure of Guideline Adherence (Azocar et al., 2003) | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 |

| Gifford Fidelity Measure (MI) (Gifford et al., 2012) | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 5 |

| Gifford Fidelity Measure (CBT) (Gifford et al., 2012) | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 6 |

| Gifford Fidelity Measure (Community Reinforcement) (Gifford et al., 2012) | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 5 |

| Gifford Fidelity Measure (TSF) (Gifford et al., 2012) | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 5 |

| Gifford Fidelity Measure (CM) (Gifford et al., 2012) | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 4 |

| Gifford Fidelity Measure (Couples Therapy) (Gifford et al., 2012) | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 4 |

| Gifford Fidelity Measure (Gifford et al., 2012) | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 5 |

| Lloyd Fidelity Measure (Lloyd et al., 2015) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Chou Measure of Guidelines Information (Chou et al., 2011) | 0 | 2 | 0 | 0 | –1 | 0 | 0 | 0 | 0 | 1 |

| Penetration | ||||||||||

| Level of Institutionalization Scale (Goodman et al., 1993) | 3 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 5 |

| Reach Reporter (Balasubramanian et al., 2015) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Dialectical Behavior Therapy Skills Use Self-Report (Dimeff et al., 2011) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 2 | 3 |

| AF-CBT Implementation Measure (Teaching Processes) (Kolko et al., 2012) | 3 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 5 |

| AF-CBT Implementation Measure (Abuse Specific Skills) (Kolko et al., 2012) | 3 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 4 |

| AF-CBT Implementation Measure (General Psychological Skills) (Kolko et al., 2012) | 4 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 6 |

| AF-CBT Implementation Measure (Kolko et al., 2012) | 3 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 5 |

| The Service Activity Scale (Tobin et al., 2001) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Swain Penetration Measure (Swain et al., 2010) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | –1 | –1 |

| Degree of Implementation Form (Peer Support) (Forchuk et al., 2002) | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 |

| Degree of Implementation Form (Continued Support) (Forchuk et al., 2002) | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 |

| Degree of Implementation Form (Forchuk et al., 2002) | 2 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 6 |

| Level of Integration Measure (Clinical Practice) (Beehler et al., 2015) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 |

| Level of Integration Measure (Systems Integration) (Beehler et al., 2015) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 |

| Level of Integration Measure (Beehler et al., 2015) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 |

| Cohen Measure of Reach (Cohen et al., 2015) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Sustainability | ||||||||||

| Knowledge Exchange Outcomes Tool (Skinner, 2007) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Prevention Program Assessment (Evaluation Maintenance) (Stamatakis et al., 2012) | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 4 |

| Prevention Program Assessment (Resource Maintenance) (Stamatakis et al., 2012) | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 3 |

| Prevention Program Assessment (Stamatakis et al., 2012) | 2 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 2 | 5 |

| Sustainability and Spread Activities Questionnaire (Chung et al., 2013) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| General Organizational Index (Bond et al., 2009) | 2 | 4 | 0 | 2 | –1 | 0 | 0 | 0 | –1 | 6 |

| School-wide Universal Behavior Sustainability Index–School Teams (McIntosh et al., 2011) | 4 | 0 | 0 | 3 | 2 | 1 | 3 | 0 | –1 | 12 |

| School-wide Universal Behavior Sustainability Index–School Teams (Potential Barriers) (McIntosh et al., 2011) | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 |

| Sustainability Model & Guide (AKA Sustainability Index) (Maher et al., 2007) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 | 3 |

| Evidence Based Practice Sustaining Telephone Survey (Swain et al., 2010) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 |

| Amodeo Counselor Maintenance Measure (Amodeo et al., 2010) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Sustainment Cost Survey (Roundfield & Lang, 2017) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Program Sustainability Index (Mancini & Marek, 2004) | 2 | 0 | 0 | 1 | 0 | 0 | 2 | 0 | 2 | 7 |

| Program Sustainability Assessment Tool (Luke et al., 2014) | 3 | 0 | 0 | 0 | 0 | 3 | 2 | 0 | 0 | 8 |

| Change Process Capability Questionnaire (Solberg et al., 2008) | 4 | 0 | 0 | 0 | 0 | 3 | 0 | 0 | 1 | 8 |

| Eisen Provider Knowledge & Attitudes Survey (Sustainability) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | –1 | –1 |

Leadership and Organizational Change for Implementation (LOCI); San Francisco Treatment Research Center (SFTRC); Attitude, Social norm, Self-efficacy (ASE); Child Parent Psychotherapy (CPP); Bio-behavioral Intervention (BBI); Texas Christian University (TCU); Cognitive Behavioral Therapy (CBT); Twelve Step Facilitation (TSF); Clinical Management (CM); Motivational Interviewing (MI); Alternatives for Families - A Cognitive Behavioral Therapy (AF-CBT); Also known as (AKA)

Figure 1.

Acceptability ratings.

Figure 2.

Adoption ratings.

Figure 3.

Appropriateness ratings.

Figure 4.

Feasibility ratings.

Figure 5.

Fidelity ratings.

Figure 6.

Penetration ratings.

Figure 7.

Sustainability ratings.

Acceptability

Thirty-two measures of acceptability were identified in mental or behavioral health care research. Information about internal consistency was available for 19 measures, convergent validity for 10 measures, discriminant validity for no measures, concurrent validity for one measure, predictive validity for six measures, known-groups validity for four measures, structural validity for four measures, responsiveness for two measures, and norms for 20 measures. For those measures of acceptability with information available (i.e., those with non-zero ratings on PAPERS criteria), the median rating for internal consistency was “3—good,” “2—adequate” for convergent validity, “2—adequate” for concurrent validity, “−1—poor” for predictive validity, “−1—poor” for known-groups validity, “−1—poor” for structural validity, “1—minimal/emerging” for responsiveness, and “1—minimal/emerging” for norms.

The Pre-referral Intervention Team Inventory had the highest psychometric rating score among measures of acceptability used in mental and behavioral health care (psychometric total maximum score = 14; maximum possible score = 36), with ratings of “4—excellent” for internal consistency, “3—good” for convergent validity, “4—excellent” for structural validity, and “3—good” for norms (Yetter, 2010). There was no information available on any of the remaining psychometric criteria.
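The total score reported above is consistent with summing a measure's ratings across the nine psychometric criteria, each capped at “4—excellent.” The following minimal sketch shows that arithmetic for the Pre-referral Intervention Team Inventory; the names `CRITERIA`, `MAX_RATING`, and `total_score` are illustrative assumptions rather than part of the PAPERS materials.

```python
# Illustrative sketch only; all names here are hypothetical.
CRITERIA = (
    "internal consistency", "convergent validity", "discriminant validity",
    "known-groups validity", "predictive validity", "concurrent validity",
    "structural validity", "responsiveness", "norms",
)
MAX_RATING = 4  # "excellent"

def total_score(ratings):
    """Sum ratings over the nine criteria; criteria with no evidence count as 0."""
    return sum(ratings.get(criterion, 0) for criterion in CRITERIA)

# Highest possible total: nine criteria, each rated "4-excellent".
max_possible = MAX_RATING * len(CRITERIA)

# Ratings reported for the Pre-referral Intervention Team Inventory (Yetter, 2010).
pre_referral = {
    "internal consistency": 4,
    "convergent validity": 3,
    "structural validity": 4,
    "norms": 3,
}

print(total_score(pre_referral), "of", max_possible)  # 14 of 36
```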

Adoption

Twenty-six measures of adoption were identified in mental or behavioral health care research, two of which were deemed unsuitable for rating. Information about internal consistency was available for 16 measures, convergent validity for four measures, discriminant validity for no measures, concurrent validity for two measures, predictive validity for five measures, known-groups validity for two measures, structural validity for four measures, responsiveness for one measure, and norms for 12 measures. For those measures of adoption with information available (i.e., those with non-zero ratings on PAPERS criteria), the median rating for internal consistency was “2—adequate,” “2—adequate” for convergent validity, “2—adequate” for concurrent validity, “1—minimal/emerging” for predictive validity, “−1—poor” for known-groups validity, “1—minimal/emerging” for structural validity, “1—minimal/emerging” for responsiveness, and “2—adequate” for norms.

The Williams “Intention to Adopt” and Ruzek “Measure of Adoption” measures had the highest psychometric rating scores among measures of adoption used in mental and behavioral health care (psychometric total maximum score = 9; maximum possible score = 36), with ratings of “3—good” and “2—adequate” for internal consistency, “3—good” and “0—no evidence” for convergent validity, “0—no evidence” and “3—good” for predictive validity, and “3—good” and “4—excellent” for norms, respectively (Ruzek et al., 2016; Williams et al., 2017). There was no information available on any of the remaining psychometric criteria. It is worth noting that these scores reflect the median of over 88 uses of this measure in behavioral health research.

Appropriateness

Six measures of appropriateness were identified in mental or behavioral health research. Information about internal consistency was available for three measures, convergent validity for one measure, discriminant validity for no measures, concurrent validity for no measures, predictive validity for one measure, known-groups validity for no measures, structural validity for one measure, responsiveness for no measures, and norms for two measures. For those measures of appropriateness with information available (i.e., those with non-zero ratings on PAPERS criteria), the median rating for internal consistency was “4—excellent,” “3—good” for convergent validity, “3—good” for predictive validity, “4—excellent” for structural validity, and “1—minimal/emerging” for norms.

The Pre-referral Intervention Team Inventory had the highest psychometric rating score among measures of appropriateness used in mental and behavioral health care (psychometric total maximum score = 14; maximum possible score = 36), with ratings of “4—excellent” for internal consistency, “3—good” for convergent validity, “4—excellent” for structural validity, and “3—good” for norms (Yetter, 2010). There was no information available on any of the remaining psychometric criteria.

Cost

Thirty-one measures of implementation cost were identified in mental or behavioral health research; however, none of them were suitable for rating and thus their psychometric information was not assessed.

Feasibility

Eighteen measures of feasibility were identified in mental or behavioral health research. Information about internal consistency was available for five measures, convergent validity for no measures, discriminant validity for no measures, concurrent validity for no measures, predictive validity for one measure, known-groups validity for one measure, structural validity for one measure, responsiveness for no measures, and norms for nine measures. For those measures of feasibility with information available (i.e., those with non-zero ratings on PAPERS criteria), the median rating for internal consistency was “4—excellent,” “1—minimal/emerging” for predictive validity, “−1—poor” for known-groups validity, “2—adequate” for structural validity, and “−1—poor” for norms.

The Children’s Usage Rating Profile had the highest psychometric rating score among measures of feasibility used in mental and behavioral health care (psychometric total maximum score = 6; maximum possible score = 36), with ratings of “4—excellent” for internal consistency and “2—adequate” for norms (Briesch & Chafouleas, 2009). There was no information available on any of the remaining psychometric criteria. It is worth noting that there was only one scale relevant to feasibility in this measure and the scores above are rolled up to reflect the score for the broader measure.

Fidelity

Eighteen measures of fidelity were identified in mental or behavioral health research. Information about internal consistency was available for six measures, convergent validity for three measures, discriminant validity for two measures, concurrent validity for one measure, predictive validity for four measures, known-groups validity for two measures, structural validity for two measures, responsiveness for no measures, and norms for nine measures. For those measures of fidelity with information available (i.e., those with non-zero ratings on PAPERS criteria), the median rating for internal consistency was “3—good,” “3—good” for convergent validity, “1—minimal/emerging” for discriminant validity, “1—minimal/emerging” for concurrent validity, “3—good” for predictive validity, “1—minimal/emerging” for known-groups validity, “3—good” for structural validity, and “2—adequate” for norms.

The Yale Adherence and Competence Scale had the highest psychometric rating score among measures of fidelity used in mental and behavioral health care (psychometric total maximum score = 9; maximum possible score = 36), with ratings of “4—excellent” for convergent validity, “1—minimal/emerging” for discriminant validity, “1—minimal/emerging” for concurrent validity, and “3—good” for structural validity (Carroll et al., 2000). There was no information available on any of the remaining psychometric criteria.

Penetration

Twenty-three measures of penetration were identified in mental or behavioral health research of which 14 were deemed unsuitable for rating. Information about internal consistency was available for three measures, convergent validity for one measure, discriminant validity for no measures, concurrent validity for no measures, predictive validity for two measures, known-groups validity for no measures, structural validity for one measure, responsiveness for one measure, and norms for three measures. For those measures of penetration with information available (i.e., those with non-zero ratings on PAPERS criteria), the median rating for internal consistency was “3—good,” “3—good” for convergent validity, “2—adequate” for predictive validity, “1—minimal/emerging” for structural validity, and “1—minimal/emerging” for norms.

The Degree of Implementation Form had the highest psychometric rating score among measures of penetration used in mental and behavioral health care (psychometric total maximum score = 6; maximum possible score = 36), with ratings of “2—adequate” for internal consistency and “4—excellent” for convergent validity (Forchuk et al., 2002). There was no information available on any of the remaining psychometric criteria.

Sustainability

Fourteen measures of sustainability were identified in mental or behavioral health research of which one was deemed unsuitable for rating. Information about internal consistency was available for six measures, convergent validity for one measure, discriminant validity for no measures, concurrent validity for three measures, predictive validity for two measures, known-groups validity for three measures, structural validity for four measures, responsiveness for no measures, and norms for eight measures. For those measures of sustainability with information available (i.e., those with non-zero ratings on PAPERS criteria), the median rating for internal consistency was “2—adequate,” “4—excellent” for convergent validity, “1—minimal/emerging” for concurrent validity, “−1—poor” for predictive validity, “3—good” for known-groups validity, “2—adequate” for structural validity, and “1—minimal/emerging” for norms.

The School-wide Universal Behavior Sustainability Index-school Teams had the highest psychometric rating score among measures of sustainability used in mental and behavioral health care (psychometric total maximum score = 12; maximum possible score = 36), with ratings of “4—excellent” for internal consistency, “3—good” for concurrent validity, “2—adequate” for predictive validity, “1—minimal/emerging” for known-groups validity, “3—good” for structural validity, and “−1—poor” for norms (McIntosh et al., 2011). There was no information available on any of the remaining psychometric criteria.

Discussion

Summary of study findings

This systematic review identified 150 measures of implementation outcomes used in mental and behavioral health, which were unevenly distributed across the eight outcomes (especially when suitability for rating was concerned). We found 32 measures of acceptability, 26 measures of adoption (one was deemed unsuitable for rating), 6 measures of appropriateness, 31 measures of cost (none were deemed suitable for rating), 18 measures of feasibility, 18 measures of fidelity, 23 measures of penetration (14 of which were deemed unsuitable for rating), and 14 measures of sustainability (one of which was deemed unsuitable for rating). Overall, there was limited psychometric information available for measures of implementation outcomes. Norms was the most commonly reported psychometric criterion (N = 63, 52%), followed by internal consistency (N = 58, 48%). Responsiveness was the least reported psychometric property (3%), despite the fact that, for implementation outcomes, responsiveness (or sensitivity to change) is a critically important property. This means that almost all measures of implementation outcomes have no evidence (dis)confirming their capacity to detect real change over time, a gap in the literature that requires significant attention and resources. Finally, we found limited evidence of measures’ reliability and validity. Psychometric ratings using the Psychometric And Pragmatic Evidence Rating Scale (PAPERS; Lewis et al., 2018) ranged from −1 to 14, with a possible minimum score of −9 and a possible maximum score of 36, illustrating a profound need for measurement studies in this field.

Measures were moderately generalizable across populations with the majority of empirical uses occurring in studies providing treatment for general mental health issues, substance use, depression, and other behavioral disorders (n = 84, 63%). The remaining empirical uses occurred in studies evaluating treatments for trauma, anxiety, suicidal ideation, mania, eating disorders, alcohol use, or grief, and several where the population was not specified or did not fit cleanly into any of these categories. Over half of the measure uses occurred in outpatient or school settings (n = 64, 57%), which are the primary settings in which people receive behavioral health care. The remaining empirical uses were in residential, inpatient, state mental health settings, or were not specified.

Comparison with previous systematic review

The findings of this updated review suggest a proliferation of measure development for mental and behavioral health in just the past 2 years (66 new measures were identified), with a continued uneven distribution of measures across implementation outcomes. This demonstrated growth in the number of measures confirms that significant focus is being dedicated to measuring implementation outcomes. Importantly, those outcomes that some may argue are relatively unique to implementation, such as feasibility, compared with those that are common to intervention, such as acceptability, increased 10-fold from 2015 to 2019. It is also worth noting that we found fewer measures of acceptability (n = 32) than in the previous review (n = 50). We believe that this discrepancy in identified measures may be due to our refined set of search terms and more structured construct mapping exercise. That is, we used a more precise definition of acceptability of an evidence-based practice, as opposed to a looser interpretation of satisfaction with organizational processes, which narrowed the set of synonyms included in the search. In this updated review, construct assignment was checked and confirmed by a content expert (CCL) who reviewed items within each measure and/or scale, which was not a process we were able to employ in the initial publication given limits in our funding. This careful item-level review may also explain why some measures identified in our initial systematic review were screened out in this updated study.

While more measures in this new review had psychometric information available (86; 70%) on at least one criterion compared with the measures in the previous review (56; 56%), psychometric information for some criteria, such as discriminant, convergent, and concurrent validity as well as responsiveness, remained limited despite their criticality for scientific evaluations of implementation efforts. We hope that future adoption of measurement reporting standards prompts more reporting and, perhaps, more psychometric testing. Overall, however, this finding illustrates that the field is continuing to grow in its testing and reporting of psychometric properties, with more attention to the production of valid and reliable measures. With continued focus on gathering information and evidence for these important psychometric properties, the field may move toward a consensus battery of implementation outcomes measures that can be used across studies to accumulate evidence about what strategies work best for which interventions, for whom, and under what conditions.