Abstract

Objective To describe the utilization of online resources by patients prior to presentation to an ophthalmic emergency department (ED) and to assess the accuracy of online resources for ophthalmic diagnoses.

Methods This is a prospective survey of patients presenting to an ophthalmic ED for initial evaluation of ocular symptoms. Prior to evaluation, patients completed surveys assessing ocular symptoms, Internet usage, and presumed self-diagnoses. Demographics and characteristics of Internet usage were determined. Accuracy of self-diagnoses was compared between Internet users and nonusers. Diagnoses were classified as high or low acuity based on agreement between senior authors.

Results A total of 144 patients completed surveys. Mean (standard deviation) age was 53.2 years (18.0). One-third of patients used the Internet for health-related searches prior to presentation. Internet users were younger than nonusers (48.2 years [16.5] vs. 55.5 years [18.3], p = 0.02). There were no differences in sex, ethnicity, or race. Overall, there was a threefold difference in the proportion of patients correctly predicting their diagnoses, with Internet users correctly predicting their diagnoses more often than nonusers (41 vs. 13%, p < 0.001). When cases of known trauma were excluded, the difference in proportion increased to fivefold (Internet users 40% vs. nonusers 8%, p < 0.001). Upon classification by acuity level, Internet users demonstrated greater accuracy than nonusers for both high-acuity (42 vs. 17%, p = 0.03) and low-acuity (41 vs. 10%, p = 0.001) diagnoses. Greatest accuracy was in cases of external lid conditions such as chalazia and hordeola (100% [4/4] of Internet users vs. 40% [2/5] of nonusers), conjunctivitis (43% [3/7] of Internet users vs. 25% [2/8] of nonusers), and retinal traction or detachments (57% [4/7] of Internet users vs. 0% [0/4] of nonusers). The most frequently visited Web sites were Google (82%) and WebMD (40%). Patient accuracy did not change according to the number of Web sites visited, but patients who visited the Mayo Clinic Web site had greater accuracy compared with those who visited other Web sites (89 vs. 30%, p = 0.003).

Conclusion Patients with ocular symptoms may seek medical information on the Internet before evaluation by a physician in an ophthalmic ED. Online resources may improve the accuracy of patient self-diagnosis for low- and high-acuity diagnoses.

Keywords: emergency department, Web site, Internet, patient education, self-diagnosis

With increasing accessibility, patients are turning to the Internet for medical guidance. The 2011 U.S. National Health Interview Survey found that 44% of U.S. adults searched for health-related information online. 1 While patient education and autonomy are augmented by access to information on the Internet, online content is not standardized or regulated. Recent studies report that the quality, content, and readability of health-related information on the Internet can vary widely. 2 Additionally, unsubstantiated claims can masquerade as dogma and dangerously mislead patients. 3 Thus, it is important for medical professionals to be aware of how patients access and use data from the Internet to accomplish their health-related goals.

This study reports on the utilization of online resources by patients prior to presentation to an ophthalmic emergency department (ED) and assesses the accuracy of these resources for ophthalmic diagnoses. Our purpose is to better characterize factors that may influence patient behavior in the acute ophthalmic setting as well as to increase understanding of the potential and limitations of online resources.

Methodology

Study Methodology

The study was approved by the Institutional Review Board of the University of Miami and conducted in accordance with the principles of the Declaration of Helsinki. It was conducted in the ophthalmology ED at the Bascom Palmer Eye Institute (BPEI) in Miami, FL from December 2019 to September 2020. The study was temporarily halted from March 2020 to July 2020 due to the COVID-19 pandemic. Patients aged 18 to 90 years presenting to the ED for initial evaluation of ophthalmic symptoms were invited to participate in the survey; assistance, either through study members or family members, was provided for the visually impaired. Family members could complete surveys for patients if they had conducted Internet searches for patients. Patients who had already undergone formal evaluation for the presenting complaint by any physician and patients who were unable to provide informed consent were excluded from the study.

Data Collected

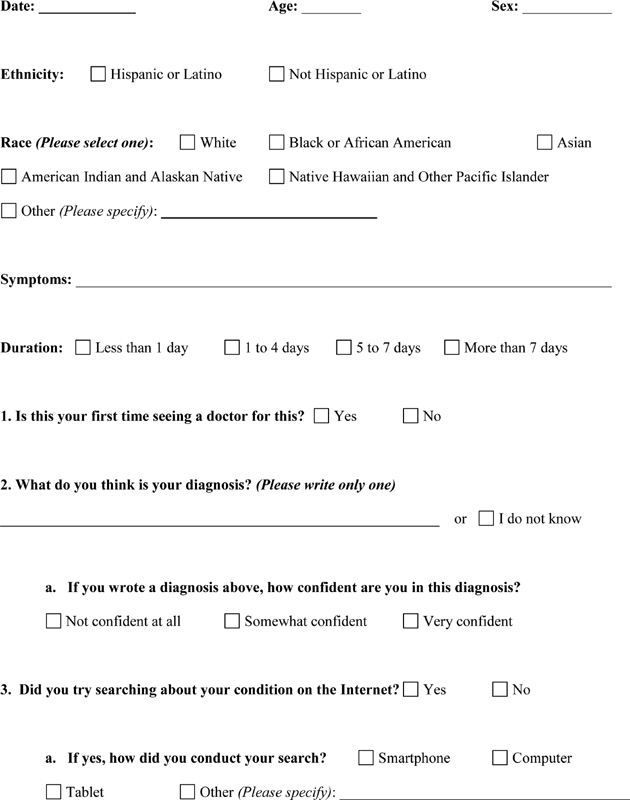

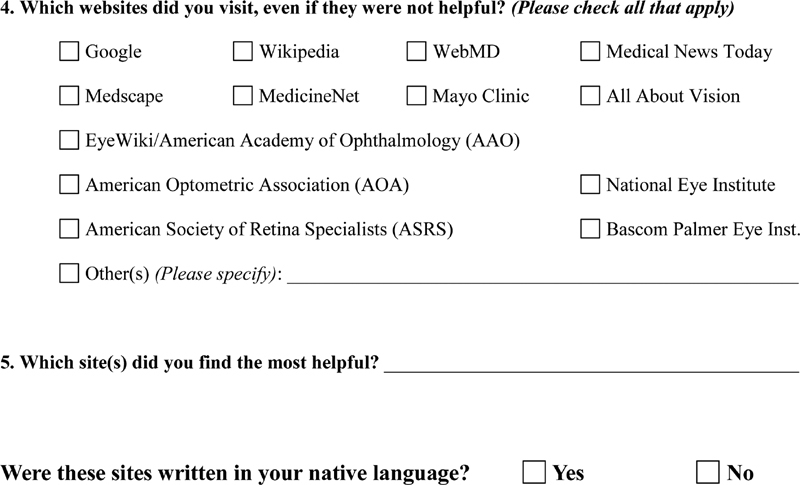

A five-question survey (Appendix 1) was created in three languages (English, Spanish, and Haitian Creole) to collect demographic information (age, sex, and ethnicity/race according to the U.S. Census Bureau), ocular symptoms (including duration of symptoms), Internet usage (mode of access, Web sites visited, and Web sites perceived to be the most helpful), and presumed self-diagnoses. Patients were asked to participate in the survey before evaluation by an eye care professional. Following the clinical encounter, patient-presumed self-diagnoses were compared with physician diagnoses.

Diagnoses were classified as high or low acuity. A diagnosis of high acuity signified a need for emergent or urgent evaluation (e.g., corneal ulcers, uveitis, and retinal detachments). In contrast, a diagnosis of low acuity signified a lack of need for emergent or urgent evaluation (e.g., refractive error, dry eye, chalazia, and hordeola). Three independent graders (J.H., K.C., and J.S.) assigned acuity levels to diagnoses. The acuity level of each diagnosis was determined by agreement between at least two graders.
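The two-of-three agreement rule described above can be illustrated with a minimal Python sketch. The function name `consensus_acuity` and the example grades are hypothetical and are not taken from the study data.

```python
from collections import Counter


def consensus_acuity(grades):
    """Return the acuity label chosen by at least two of three graders.

    `grades` is a list of three labels, each "high" or "low".
    (Hypothetical helper for illustration only.)
    """
    if len(grades) != 3:
        raise ValueError("expected exactly three grades")
    label, votes = Counter(grades).most_common(1)[0]
    if votes < 2:
        # Cannot occur with only two possible labels, but kept as a guard.
        raise ValueError("no two graders agree")
    return label


# Hypothetical example: two graders rate a diagnosis as high acuity.
example = consensus_acuity(["high", "low", "high"])
print(example)
```

With only two possible labels and three graders, at least two graders always agree, so every diagnosis receives an acuity level under this rule.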

Statistical Analysis

Statistical analyses were performed using the SPSS 27.0 statistical package (IBM Corporation, Chicago, IL). Kruskal–Wallis tests were used to compare numerical and ordinal variables (age, number of Web sites, and time to seeking care) between Internet users and nonusers. Pearson's chi-square and Fisher's exact tests were used to compare frequencies of nominal variables between groups. Statistical significance was defined as a two-sided p-value less than 0.05.
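As an illustration of the Pearson chi-square comparison, the following Python sketch applies the test to the all-cases accuracy counts (correct, incorrect, no prediction) for Internet users and nonusers. It is a sketch under stated assumptions rather than the authors' SPSS procedure: the Internet-user "correct" count is taken as 19, the value consistent with the reported 41% of 46, and the closed-form p-value used here is valid only for 2 degrees of freedom.

```python
from math import exp


def pearson_chi_square(table):
    """Pearson chi-square statistic for an r x c table of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    return stat


# Rows: correct, incorrect, no prediction. Columns: Internet users, nonusers.
# Assumption: 19 correct Internet users (41% of 46).
observed = [[19, 13], [10, 12], [17, 73]]

chi2 = pearson_chi_square(observed)
# For a 3 x 2 table, df = (3 - 1) * (2 - 1) = 2, and the chi-square
# survival function with 2 degrees of freedom is exp(-x / 2).
p_value = exp(-chi2 / 2)
print(f"chi2 = {chi2:.2f}, p = {p_value:.5f}")
```

Under these assumptions the p-value falls below 0.001, in line with the reported result for all cases.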

Results

Demographics

Demographics are depicted in Table 1. A total of 144 patients completed surveys. Mean (standard deviation) age was 53.2 years (18.0). Most participants identified as male (53%), Hispanic (69%), and white (83%). About one-third of patients used the Internet for health-related searches prior to presentation. There were no significant differences in sex (p = 0.93), ethnicity (p = 0.98), or race (p = 0.53) between Internet users and nonusers. However, Internet users were significantly younger than nonusers (48.2 years [16.5] vs. 55.5 years [18.3], p = 0.02). Approximately 75% of patients approached agreed to participate, and nonresponders had no distinguishing demographic characteristics.

Table 1. Demographics.

| | Internet users | Nonusers | p-Value a | All |
|---|---|---|---|---|
| n (%) | 46 (32) | 98 (68) | – | 144 |
| Age in y, mean (SD) | 48.2 (16.5) | 55.5 (18.3) | 0.02 | 53.2 (18.0) |
| Sex, n (%) b | | | | |
| Male | 24 (31) | 53 (69) | 0.93 | 77 |
| Female | 21 (32) | 45 (68) | | 66 |
| Ethnicity, n (%) c | | | | |
| Non-Hispanic | 14 (32) | 30 (68) | 0.98 | 44 |
| Hispanic | 32 (32) | 68 (68) | | 100 |
| Race, n (%) c | | | | |
| White | 37 (31) | 82 (69) | 0.53 | 119 |
| Black or African American | 7 (33) | 14 (67) | | 21 |
| Asian | 2 (67) | 1 (33) | | 3 |
| Other | 0 (0) | 1 (100) | | 1 |

Abbreviation: SD, standard deviation.

a Two-sided p-values with α level 0.05 for Internet users versus nonusers. Kruskal–Wallis tests used for age. Pearson's chi-square tests used for sex, ethnicity, and race.

b One patient declined to report sex.

c Ethnicity and race categories are based on the U.S. Census Bureau. No participants identified as American Indian, Alaskan Native, Native Hawaiian, or other Pacific Islander.

Characteristics of Internet Usage

Characteristics of Internet usage are displayed in Table 2. Among Internet users, the most common mode of accessing online resources was a smartphone (77%), followed by a computer (27%) and a tablet (9%). Eighteen different Web sites were visited by Internet users, with the most frequently visited Web sites being Google (82%), WebMD (40%), and Mayo Clinic (20%). The number of Web sites visited by each patient ranged from 1 to 13, with a median of 2. The majority of patients accessed Web sites in their native language (87%). Google (46%) and WebMD (33%) were the Web sites perceived to be the most helpful by patients.

Table 2. Characteristics of Internet usage.

| Characteristic | Item | Value |
|---|---|---|
| Mode, n (%) | Smartphone | 34 (77) |
| | Computer | 12 (27) |
| | Tablet | 4 (9) |
| Web sites visited, n (%) | Google | 37 (82) |
| | WebMD | 18 (40) |
| | Mayo Clinic | 9 (20) |
| | BPEI | 4 (9) |
| | Wikipedia | 4 (9) |
| | EyeWiki/AAO | 3 (7) |
| | MedicineNet | 2 (4) |
| | NEI | 2 (4) |
| | YouTube | 2 (4) |
| | All About Vision | 1 (2) |
| | AOA | 1 (2) |
| | ASRS | 1 (2) |
| | Columbia University | 1 (2) |
| | EyeMed | 1 (2) |
| | Harvard Health | 1 (2) |
| | Healthline | 1 (2) |
| | Medical News Today | 1 (2) |
| | Medscape | 1 (2) |
| | NYU Langone Health | 1 (2) |
| Most helpful Web sites a, n (%) | Google | 11 (46) |
| | WebMD | 8 (33) |
| | AOA | 1 (4) |
| | BPEI | 1 (4) |
| | Healthline | 1 (4) |
| | Mayo Clinic | 1 (4) |
| | MedicineNet | 1 (4) |
| | YouTube | 1 (4) |
| No. of Web sites, median (range) | | 2 (1–13) |
| In native language, n (%) | | 39 (87) |

Abbreviations: AAO, American Academy of Ophthalmology; AOA, American Optometric Association; ASRS, American Society of Retina Specialists; BPEI, Bascom Palmer Eye Institute; NEI, National Eye Institute; NYU, New York University; SD, standard deviation.

a Most helpful as designated by the patient completing the survey.

Accuracy of Ophthalmic Diagnoses

Overall accuracy of ophthalmic diagnoses and comparison between Internet users and nonusers are displayed in Table 3. Of all participants, 22% correctly predicted their diagnoses. Internet users correctly predicted their diagnoses more often than nonusers (41 vs. 13%, p < 0.001). When cases of known trauma (e.g., traumatic corneal abrasions) were excluded, the difference in proportion increased (Internet users 40% vs. nonusers 8%, p < 0.001). Upon classification by level of acuity, Internet users demonstrated greater accuracy than nonusers for both high-acuity (42 vs. 17%, p = 0.03) and low-acuity (41 vs. 10%, p = 0.001) diagnoses. There were no significant differences in presentation to the ED based on Internet usage and level of acuity of diagnoses (p = 0.64).

Table 3. Accuracy of ophthalmic diagnoses, n (%) .

| | Responses | Internet users | Nonusers | p-Value a | All |
|---|---|---|---|---|---|
| All cases | Correct | 19 (41) | 13 (13) | **<0.001** | 32 (22) |
| | Incorrect | 10 (22) | 12 (12) | | 22 (15) |
| | No prediction | 17 (37) | 73 (75) | | 90 (63) |
| | Total no. | 46 | 98 | – | 144 |
| Excluding cases with known trauma | Correct | 17 (40) | 6 (8) | **<0.001** | 23 (19) |
| | Incorrect | 9 (20) | 10 (13) | | 19 (16) |
| | No prediction | 17 (40) | 63 (79) | | 80 (65) |
| | Total no. | 43 | 79 | – | 122 |
| Cases of high acuity b | Correct | 10 (42) | 8 (17) | **0.03** | 18 (25) |
| | Incorrect | 5 (21) | 6 (13) | | 11 (15) |
| | No prediction | 9 (38) | 33 (70) | | 42 (59) |
| | Total no. | 24 | 47 | – | 71 |
| Cases of low acuity c | Correct | 9 (41) | 5 (10) | **0.001** | 14 (19) |
| | Incorrect | 5 (23) | 6 (12) | | 11 (15) |
| | No prediction | 8 (36) | 40 (78) | | 48 (66) |
| | Total no. | 22 | 51 | – | 73 |

Note: “No prediction” indicates participants who did not provide self-diagnoses and instead selected the “I do not know” response.

a Two-sided p-values with α level 0.05. Bolded p-values are significant. Pearson's chi-square tests were used for accuracy based on all cases and all cases excluding those with known trauma. Fisher's exact tests were used for accuracy based on cases of high and low acuities.

b A diagnosis of high acuity signifies the need for emergent or urgent evaluation (e.g., corneal ulcers, uveitis, retinal detachments).

c A diagnosis of low acuity signifies the lack of need for emergent or urgent evaluation (e.g., refractive error, dry eye, chalazia, hordeola).
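The relative difference in accuracy between groups can be checked with a short Python sketch that divides the proportion of correct self-diagnoses among Internet users by the proportion among nonusers. The helper name `accuracy_ratio` is hypothetical, and the Internet-user count for all cases is assumed to be 19 (the value consistent with 41% of 46).

```python
def accuracy_ratio(users_correct, users_total, nonusers_correct, nonusers_total):
    """Ratio of the correct-prediction proportion in users to that in nonusers."""
    users_rate = users_correct / users_total
    nonusers_rate = nonusers_correct / nonusers_total
    return users_rate / nonusers_rate


# All cases: 19/46 Internet users vs. 13/98 nonusers (19 is an assumption, see above).
ratio_all = accuracy_ratio(19, 46, 13, 98)

# Excluding known trauma: 17/43 Internet users vs. 6/79 nonusers.
ratio_no_trauma = accuracy_ratio(17, 43, 6, 79)

print(f"all cases: {ratio_all:.1f}x, excluding trauma: {ratio_no_trauma:.1f}x")
```

These ratios are roughly three and five, respectively. They describe relative proportions only and say nothing about whether Internet use caused the difference.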

Table 4 stratifies accuracy of self-predictions by specific diagnoses. Chalazia and hordeola were the most accurately predicted diagnoses overall (67% correct). When examining accuracy differences between Internet users and nonusers, no diagnosis reached statistical significance. However, 100% (4/4) of Internet users correctly predicted diagnoses of chalazia and hordeola compared with just 40% (2/5) of nonusers. Additionally, 43% (3/7) of Internet users correctly predicted diagnoses of conjunctivitis compared with 25% (2/8) of nonusers, which trended toward statistical significance (p = 0.07). In cases of high acuity, 57% (4/7) of Internet users provided accurate diagnoses for retinal traction and detachments compared with 0% (0/4) of nonusers.

Table 4. Accuracy of ophthalmic diagnoses by diagnoses, n (%).

| | | Internet users | Nonusers | p-Value a | All | Total no. of cases b |
|---|---|---|---|---|---|---|
| Refractive error | Correct | 0 (0) | 0 (0) | – | 0 (0) | 4 (3) |
| | Incorrect | 0 (0) | 0 (0) | | 0 (0) | |
| | No prediction | 1 (100) | 3 (100) | | 4 (100) | |
| Strabismus | Correct | 0 (0) | 0 (0) | – | 0 (0) | 3 (2) |
| | Incorrect | 0 (0) | 0 (0) | | 0 (0) | |
| | No prediction | 0 (0) | 3 (100) | | 3 (100) | |
| Chalazia/hordeola | Correct | 4 (100) | 2 (40) | 0.17 | 6 (67) | 9 (6) |
| | Incorrect | 0 (0) | 0 (0) | | 0 (0) | |
| | No prediction | 0 (0) | 3 (60) | | 3 (33) | |
| Blepharitis | Correct | 0 (0) | 0 (0) | – | 0 (0) | 3 (2) |
| | Incorrect | 0 (0) | 2 (67) | | 2 (67) | |
| | No prediction | 0 (0) | 1 (33) | | 1 (33) | |
| Other orbital inflammation (nontraumatic) | Correct | 1 (100) | 0 (0) | 0.40 | 1 (20) | 5 (3) |
| | Incorrect | 0 (0) | 1 (25) | | 1 (20) | |
| | No prediction | 0 (0) | 3 (75) | | 3 (60) | |
| Dry eye | Correct | 0 (0) | 0 (0) | 1.00 | 0 (0) | 22 (15) |
| | Incorrect | 1 (20) | 3 (18) | | 4 (18) | |
| | No prediction | 4 (80) | 14 (82) | | 18 (82) | |
| Subconjunctival hemorrhage | Correct | 1 (50) | 0 (0) | 0.25 | 1 (13) | 8 (6) |
| | Incorrect | 0 (0) | 0 (0) | | 0 (0) | |
| | No prediction | 1 (50) | 6 (100) | | 7 (88) | |
| Conjunctivitis | Correct | 3 (43) | 2 (25) | 0.07 | 5 (33) | 15 (10) |
| | Incorrect | 4 (57) | 1 (13) | | 5 (33) | |
| | No prediction | 0 (0) | 5 (63) | | 5 (33) | |
| Keratitis | Correct | 2 (66) | 0 (0) | 0.11 | 2 (25) | 8 (6) |
| | Incorrect | 0 (0) | 1 (20) | | 1 (13) | |
| | No prediction | 1 (33) | 4 (80) | | 5 (63) | |
| Anterior uveitis | Correct | 1 (33) | 0 (0) | 1.00 | 1 (14) | 7 (5) |
| | Incorrect | 0 (0) | 1 (25) | | 1 (14) | |
| | No prediction | 2 (67) | 3 (75) | | 5 (72) | |
| Cataract/posterior capsular opacification | Correct | 0 (0) | 1 (33) | – | 1 (33) | 3 (2) |
| | Incorrect | 0 (0) | 0 (0) | | 0 (0) | |
| | No prediction | 0 (0) | 2 (67) | | 2 (67) | |
| Vitreous disease c | Correct | 0 (0) | 0 (0) | 1.00 | 0 (0) | 4 (3) |
| | Incorrect | 1 (50) | 0 (0) | | 1 (25) | |
| | No prediction | 1 (50) | 2 (100) | | 3 (75) | |
| Retinal traction/detachment c | Correct | 4 (57) | 0 (0) | 0.27 | 4 (36) | 11 (8) |
| | Incorrect | 1 (14) | 1 (25) | | 2 (18) | |
| | No prediction | 2 (29) | 3 (75) | | 5 (45) | |
| Retinal vascular disease | Correct | 1 (25) | 0 (0) | 1.00 | 1 (14) | 7 (5) |
| | Incorrect | 1 (25) | 0 (0) | | 1 (14) | |
| | No prediction | 2 (50) | 3 (100) | | 5 (71) | |
| Retinal degeneration | Correct | 0 (0) | 0 (0) | 0.33 | 0 (0) | 3 (2) |
| | Incorrect | 1 (100) | 0 (0) | | 1 (33) | |
| | No prediction | 0 (0) | 2 (100) | | 2 (67) | |
| Optic neuropathy | Correct | 0 (0) | 0 (0) | – | 0 (0) | 3 (2) |
| | Incorrect | 0 (0) | 0 (0) | | 0 (0) | |
| | No prediction | 0 (0) | 3 (100) | | 3 (100) | |
| Inflammation due to trauma/foreign substance | Correct | 2 (66) | 7 (37) | 0.16 | 9 (41) | 22 (15) |
| | Incorrect | 1 (33) | 2 (11) | | 3 (14) | |
| | No prediction | 0 (0) | 10 (53) | | 10 (45) | |

Note: “No prediction” indicates participants who did not provide self-diagnoses and instead selected the “I do not know” response.

a Two-sided p-values with α level 0.05 for Internet users versus nonusers using Fisher's exact tests. Statistics not computed for groups with constant responses.

b Total 144 cases.

c Posterior vitreous detachment included in retinal traction/detachment, not vitreous disease.

Accuracy of self-diagnoses was also examined by presenting symptoms (Table 5). Internet users had 62% (8/13) accuracy when presenting with eye redness, compared with 16% (3/19) of nonusers (p = 0.002). Additionally, Internet users had 83% (5/6) accuracy with symptoms related to the orbit, compared with 18% (2/11) of nonusers (p = 0.002).

Table 5. Accuracy of ophthalmic diagnoses by presenting symptoms, n (%) .

| | | Internet users | Nonusers | p-Value a | All | Total no. of cases |
|---|---|---|---|---|---|---|
| Mild eye discomfort | Correct | 2 (25) | 2 (11) | 0.46 | 4 (12) | 33 (23) |
| | Incorrect | 3 (38) | 5 (26) | | 8 (24) | |
| | No prediction | 3 (38) | 12 (63) | | 21 (63) | |
| Eye pain | Correct | 4 (57) | 8 (26) | 0.25 | 12 (32) | 38 (26) |
| | Incorrect | 0 (0) | 3 (10) | | 3 (8) | |
| | No prediction | 3 (43) | 20 (65) | | 23 (61) | |
| Eye redness | Correct | 8 (62) | 3 (16) | **0.002** | 11 (34) | 32 (22) |
| | Incorrect | 4 (31) | 3 (16) | | 7 (22) | |
| | No prediction | 1 (8) | 13 (68) | | 14 (44) | |
| Tearing | Correct | 1 (33) | 1 (17) | 1.00 | 2 (22) | 9 (6) |
| | Incorrect | 1 (33) | 1 (17) | | 2 (22) | |
| | No prediction | 1 (33) | 4 (67) | | 5 (56) | |
| Visual phenomenon (e.g., flashes, floaters, double vision) | Correct | 3 (27) | 0 (0) | 0.27 | 3 (16) | 19 (13) |
| | Incorrect | 3 (27) | 1 (13) | | 4 (21) | |
| | No prediction | 5 (45) | 7 (88) | | 12 (63) | |
| Decrease in vision | Correct | 4 (33) | 3 (11) | 0.28 | 7 (18) | 39 (27) |
| | Incorrect | 1 (8) | 3 (11) | | 4 (10) | |
| | No prediction | 7 (58) | 21 (78) | | 28 (72) | |
| Morphological change in eye (e.g., corneal defect, chemosis) | Correct | 0 (0) | 0 (0) | 0.25 | 0 (0) | 4 (3) |
| | Incorrect | 1 (100) | 0 (0) | | 1 (25) | |
| | No prediction | 0 (0) | 3 (100) | | 3 (75) | |
| Related to orbit | Correct | 5 (83) | 2 (18) | **0.002** | 7 (41) | 17 (12) |
| | Incorrect | 1 (17) | 0 (0) | | 1 (6) | |
| | No prediction | 0 (0) | 9 (82) | | 9 (53) | |

Note: “No prediction” indicates participants who did not provide self-diagnoses and instead selected the “I do not know” response.

a Two-sided p-values with α level 0.05 for Internet users versus nonusers using Fisher's exact tests. Bolded p-values are significant.

Each Web site was analyzed for diagnostic accuracy (Table 6). Participants who visited the Mayo Clinic Web site demonstrated significantly better accuracy compared with participants who used the Internet but did not visit the Mayo Clinic Web site (89% [8/9] vs. 30% [11/37] correct, p = 0.003). No significant differences were found when analyzing symptom or diagnosis by specific Web site (p > 0.05). There were no significant differences in accuracy based on number of Web sites visited (p = 0.44).

Table 6. Accuracy of ophthalmic diagnoses by web site within Internet users, n (%) .

| | | Visited | Did not visit | p-Value a | Total no. visited |
|---|---|---|---|---|---|
| Google | Correct | 16 (43) | 3 (38) | 1.00 | 37 (82) |
| | Incorrect | 7 (19) | 2 (25) | | |
| | No prediction | 14 (38) | 3 (38) | | |
| Mayo Clinic | Correct | 8 (89) | 11 (30) | **0.003** | 9 (20) |
| | Incorrect | 1 (11) | 9 (24) | | |
| | No prediction | 0 (0) | 17 (46) | | |
| WebMD | Correct | 8 (44) | 11 (41) | 0.86 | 18 (40) |
| | Incorrect | 4 (22) | 5 (19) | | |
| | No prediction | 6 (33) | 11 (41) | | |
| Wikipedia | Correct | 2 (50) | 17 (41) | 0.18 | 4 (9) |
| | Incorrect | 2 (50) | 7 (17) | | |
| | No prediction | 0 (0) | 17 (41) | | |

Notes: Web sites visited by fewer than five participants are not included. “No prediction” indicates participants who did not provide self-diagnoses and instead selected the “I do not know” response.

a Two-sided p-values with α level 0.05 for participants who visited versus did not visit each Web site using Fisher's exact tests. Statistics not computed for groups with constant responses. Bolded p-values are significant.
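To illustrate how a Fisher's exact test operates on counts like those for the Mayo Clinic Web site, the Python sketch below collapses the three response categories into "correct" versus "not correct" (incorrect plus no prediction) and computes a two-sided p-value from the hypergeometric distribution. This 2 × 2 simplification is an assumption made for illustration; the reported p-value may have been computed on the full three-category table, so the two values need not match. The function name `fisher_exact_two_sided` is hypothetical.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact p-value for the 2 x 2 table [[a, b], [c, d]]."""
    row1, row2 = a + b, c + d
    col1 = a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability of x in the top-left cell, margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    p_observed = prob(a)
    # Sum the probabilities of all tables no more likely than the observed one.
    # The small tolerance guards against floating-point ties.
    return sum(
        prob(x)
        for x in range(lowest, highest + 1)
        if prob(x) <= p_observed * (1 + 1e-9)
    )


# Visited Mayo Clinic: 8 correct, 1 not correct.
# Did not visit: 11 correct, 26 not correct (9 incorrect + 17 no prediction).
p_mayo = fisher_exact_two_sided(8, 1, 11, 26)
print(f"two-sided p = {p_mayo:.4f}")
```

Even under this simplification, the difference remains well below the 0.05 significance threshold.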

Time to Seeking Care

No significant differences were found in time to seeking care based on Internet usage (Internet users: 6 [13%] <1 day, 22 [48%] 1–4 days, 4 [9%] 5–7 days, 14 [30%] >7 days; nonusers: 22 [23%] <1 day, 41 [43%] 1–4 days, 13 [14%] 5–7 days, 20 [21%] >7 days; p = 0.32) or level of acuity (p = 0.32).

Discussion

Previous reports focusing on the interface between ophthalmology, patients, and the Internet have examined ophthalmic-related Internet search activity, 4 5 search engine results for specific ocular diseases, 6 and the quality and readability of patient education materials. 2 7 A recent study examined the accuracy of a popular online symptom checker for ophthalmic diagnoses, and using simulated clinical vignettes, the authors found that the correct diagnosis was obtained in only 26% of cases. 8 The purpose of the current study was to determine the utility of online resources for self-diagnosis in a real-world ophthalmic ED setting. Our data suggest that a substantial percentage of patients consult the Internet for health-related information prior to seeking evaluation by an emergency eye care professional.

Similar to the findings of the 2011 U.S. National Health Interview Survey, 9 patients in our study who used the Internet for ophthalmic concerns were significantly younger than those who did not. Additionally, we found that patients who used the Internet for health-related information in the ophthalmic ED setting were three times more likely to predict their diagnoses correctly than those who did not use the Internet. After excluding cases of known trauma, in which disease etiologies are more explicit, the difference in accuracy between Internet users and nonusers grew to fivefold. Greater accuracy of Internet users over nonusers persisted for diagnoses of both high and low acuities.

Online information remains of particular concern in the acute ophthalmic setting, as high-acuity ophthalmic conditions rely heavily on timely presentation and intervention. Such cases include but are not limited to uveitis, retinal detachments, retinal vascular disease, retinal degeneration, and optic neuropathy. Our study found that Internet users seemed to demonstrate better accuracy in self-diagnosis in cases of retinal detachments. Interestingly, we did not find that presentation to the ED or time to seeking care differed with varying levels of acuity. This illustrates the potential of online resources to improve patient recognition of severe pathology such as retinal detachments, but it more importantly highlights a deficit in clinical application as patients did not appear to seek care earlier despite increased recognition.

Although the Internet can help patients understand urgency in some cases, it can also mislead patients into believing their benign symptoms are dangerous and in need of acute care. Many patients continue to seek emergency care for nonacute, benign conditions such as refractive error, blepharitis, chalazia/hordeola, and dry eye. In our study, we found that several patients with benign diseases (chalazia/hordeola and viral/allergic conjunctivitis) still sought emergency care even when the Internet appeared to educate them about their condition. Additionally, this information did not seem to affect their time to seeking care.

The effect of online resources on patient behavior in the acute ophthalmic setting remains unclear. Our study did not detect a difference in time to seeking care between patients who used the Internet and those who did not. However, a limitation to this study is the inability to determine if online resources affected whether patients decided to seek or avoid emergency care. Other limitations to our study include its cross-sectional nature, small sample size due to interruption by the COVID-19 pandemic, and constraints associated with patient-reliant surveys.

Conclusions

It is important to recognize that the Internet will continue to have a growing presence in patients' lives, and health care professionals will need to continue to determine its capabilities and limitations. The Internet may be able to direct patients to seek care when needed and to educate patients when emergency care is less likely to be beneficial, reducing the burden on the health care system. Future studies are needed to investigate the effect of online resources on patient behavior and ultimately utilize this information to create beneficial change within emergency ophthalmic care.

Acknowledgments

The Bascom Palmer Eye Institute received funding from the NIH Core Grant P30EY014801, Department of Defense Grant #W81XWH-13-1-0048, and a Research to Prevent Blindness Unrestricted Grant. The sponsors or funding organizations had no role in the design or conduct of this research.

Footnotes

Conflict of Interest Dr. Jayanth Sridhar is a consultant for Alcon, Alimera Sciences, Inc., Regeneron Pharmaceuticals, Inc., and Oxurion. Dr. Nicolas A. Yannuzzi is a consultant for Genentech, Inc., Novartis International AG, and Alimera Sciences, Inc. None of the other authors reports any disclosures.

Appendix 1 Ophthalmology emergency department survey.

References

- 1. Amante D J, Hogan T P, Pagoto S L, English T M, Lapane K L. Access to care and use of the Internet to search for health information: results from the US National Health Interview Survey. J Med Internet Res. 2015;17(04):e106. doi: 10.2196/jmir.4126.

- 2. Kloosterboer A, Yannuzzi N A, Patel N A, Kuriyan A E, Sridhar J. Assessment of the quality, content, and readability of freely available online information for patients regarding diabetic retinopathy. JAMA Ophthalmol. 2019;137(11):1240–1245. doi: 10.1001/jamaophthalmol.2019.3116.

- 3. Lau D, Ogbogu U, Taylor B, Stafinski T, Menon D, Caulfield T. Stem cell clinics online: the direct-to-consumer portrayal of stem cell medicine. Cell Stem Cell. 2008;3(06):591–594. doi: 10.1016/j.stem.2008.11.001.

- 4. Leffler C T, Davenport B, Chan D. Frequency and seasonal variation of ophthalmology-related Internet searches. Can J Ophthalmol. 2010;45(03):274–279. doi: 10.3129/i10-022.

- 5. Deiner M S, McLeod S D, Wong J, Chodosh J, Lietman T M, Porco T C. Google searches and detection of conjunctivitis epidemics worldwide. Ophthalmology. 2019;126(09):1219–1229. doi: 10.1016/j.ophtha.2019.04.008.

- 6. Kahana A, Gottlieb J L. Ophthalmology on the Internet: what do our patients find? Arch Ophthalmol. 2004;122(03):380–382. doi: 10.1001/archopht.122.3.380.

- 7. Williams A M, Muir K W, Rosdahl J A. Readability of patient education materials in ophthalmology: a single-institution study and systematic review. BMC Ophthalmol. 2016;16:133. doi: 10.1186/s12886-016-0315-0.

- 8. Shen C, Nguyen M, Gregor A, Isaza G, Beattie A. Accuracy of a popular online symptom checker for ophthalmic diagnoses. JAMA Ophthalmol. 2019;137(06):690–692. doi: 10.1001/jamaophthalmol.2019.0571.

- 9. Wimble M. Understanding health and health-related behavior of users of internet health information. Telemed J E Health. 2016;22(10):809–815. doi: 10.1089/tmj.2015.0267.