Abstract

Making optimal decisions in the face of noise requires balancing short-term speed and accuracy. But a theory of optimality should account for the fact that short-term speed can influence long-term accuracy through learning. Here, we demonstrate that long-term learning is an important dynamical dimension of the speed-accuracy trade-off. We study learning trajectories in rats and formally characterize these dynamics in a theory expressed as both a recurrent neural network and an analytical extension of the drift-diffusion model that learns over time. The model reveals that choosing suboptimal response times to learn faster sacrifices immediate reward, but can lead to greater total reward. We empirically verify predictions of the theory, including a relationship between stimulus exposure and learning speed, and a modulation of reaction time by future learning prospects. We find that rats’ strategies approximately maximize total reward over the full learning epoch, suggesting cognitive control over the learning process.

Research organism: Rat

Introduction

Optimal behavior in decision making is frequently defined as maximization of reward over time (Gold and Shadlen, 2002), and this requires balancing the speed and accuracy of one’s choices (Bogacz et al., 2006). For example, imagine you are given a multiple-choice quiz on an esoteric topic with which you are familiar, such as behavioral neuroscience or cognitive psychology, and rewarded for every correct answer. In balancing speed and accuracy, you should spend some time on each question to ensure you get it right. Now imagine that you are given a different quiz on an esoteric topic with which you are not familiar, such as low Reynolds number hydrodynamics or underwater basket weaving. In balancing speed and accuracy, you should now guess on as many questions as you can as quickly as you can in order to maximize reward. The ideal balance of speed and accuracy differs considerably in the cases of high and low competence. However, there is an important additional dynamical aspect to consider: competence can change as a function of experience through learning. For instance, taking the hydrodynamics quiz enough times, you might start to get the hang of it, by going slow enough that you can remember questions and their associated answers, rather than guessing as quickly as you can. Given these almost opposing normative strategies for high and low competence, how does one effectively move from low competence to high competence? In other words, how does an agent strategically manage decision making in light of learning?

In this study, we formalize this problem in the context of a two-choice perceptual decision making task in rodents and simulated agents. Perceptual decisions, in particular two-choice decisions, allow us to leverage one of the most prolific decision making models, the drift-diffusion model (DDM) (Ratcliff, 1978) (and the considerable analytical dissections of it Bogacz et al., 2006), and one of the most prolific paradigms captured by it, the speed-accuracy trade-off (SAT), as a measurement of optimal behavior (i.e. maximization of reward per unit time) (Woodworth, 1899, Henmon, 1911, Garrett, 1922, Pew, 1969, Pachella, 1974, Wickelgren, 1977, Ruthruff, 1996, Ratcliff and Rouder, 1998, Gold and Shadlen, 2002, Bogacz et al., 2006; Bogacz et al., 2010; Heitz and Schall, 2012; Heitz, 2014, Rahnev and Denison, 2018).

Studies of the SAT have focused on how the brain may solve it (Gold and Shadlen, 2002, Roitman and Shadlen, 2002), what the optimal solution is (Bogacz et al., 2006), and whether agents can indeed manage it (Simen et al., 2006, Balci et al., 2011a; Simen et al., 2009; Bogacz et al., 2010, Drugowitsch et al., 2014, Drugowitsch et al., 2015; Manohar et al., 2015). Though most work in this area has taken place in humans and non-human primates, several studies have established the presence of a SAT in rodents (Uchida and Mainen, 2003, Abraham et al., 2004; Rinberg et al., 2006; Reinagel, 2013a; Reinagel, 2013b; Kurylo et al., 2020). The broad conclusion of much of this literature is that after extensive training, many subjects come close to optimal performance (Simen et al., 2009,Bogacz et al., 2010; Balci et al., 2011b; Zacksenhouse et al., 2010, Balci et al., 2011b, Starns and Ratcliff, 2010, Holmes and Cohen, 2014, Drugowitsch et al., 2014, Drugowitsch et al., 2015). When faced with deviations from optimality, several hypotheses have been proposed, including error avoidance, poor internal estimates of time, and a minimization of the cognitive cost associated with an optimal strategy (Maddox and Bohil, 1998, Bogacz et al., 2006, Zacksenhouse et al., 2010).

Past studies have shown how agents behave after reaching steady-state performance (Simen et al., 2009, Starns and Ratcliff, 2010, Bogacz et al., 2010,Zacksenhouse et al., 2010, Balci et al., 2011b, Balci et al., 2011a; Starns and Ratcliff, 2010, Drugowitsch et al., 2014, Drugowitsch et al., 2015), but relatively less attention has been paid to how agents learn to approach near-optimal behavior (but see Law and Gold, 2009, Balci et al., 2011b, Drugowitsch et al., 2019). While maximizing instantaneous reward rate is a sensible goal when the task is fully mastered, it is less clear that this objective is appropriate during learning.

Here, we set out to understand how agents manage the SAT during learning by studying the learning trajectory of rats and simulated agents in a free-response two-alternative forced-choice visual object recognition task (Zoccolan et al., 2009). Rats near-optimally maximized instantaneous reward rate () at the end of learning but chose response times that were too slow to be -optimal early in learning. To understand the rats’ learning trajectory, we examined learning trajectories in a recurrent neural network (RNN) trained on the same task. We derive a reduction of this RNN to a learning drift-diffusion model (LDDM) with time-varying parameters that describes the network’s average learning dynamics. Mathematical analysis of this model reveals a dilemma: at the beginning of learning when error rates are high, is maximized by fast responses (Bogacz et al., 2006). However, fast responses mean minimal stimulus exposure, little opportunity for perceptual processing, and consequently slow learning. Because of this learning speed/ (LS/) trade-off, slow responses early in learning can yield greater total reward over engagement with the task, suggesting a normative basis for the rats’ behavior. We then experimentally tested and confirmed several model predictions by evaluating whether response time and learning speed are causally related, and whether rats choose their response times so as to take advantage of learning opportunities. Our results suggest that rats exhibit cognitive control of the learning process, adapting their behavior to approximately accrue maximal total reward across the entire learning trajectory, and indicate that a policy that prioritizes learning in perceptual tasks may be advantageous from a total reward perspective.

Results

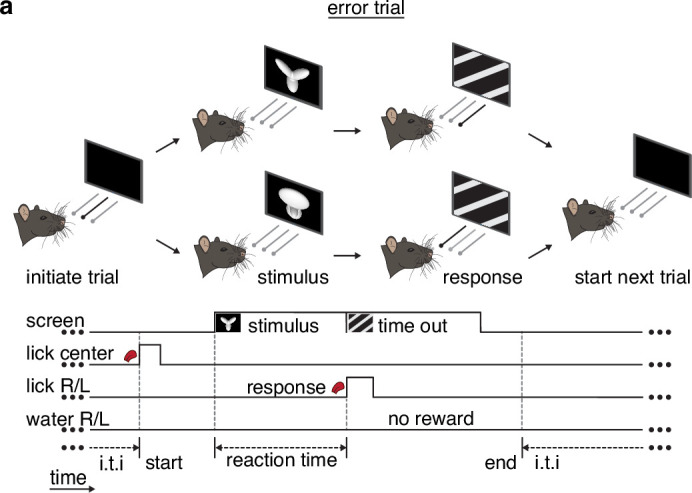

Trained rats solve the SAT

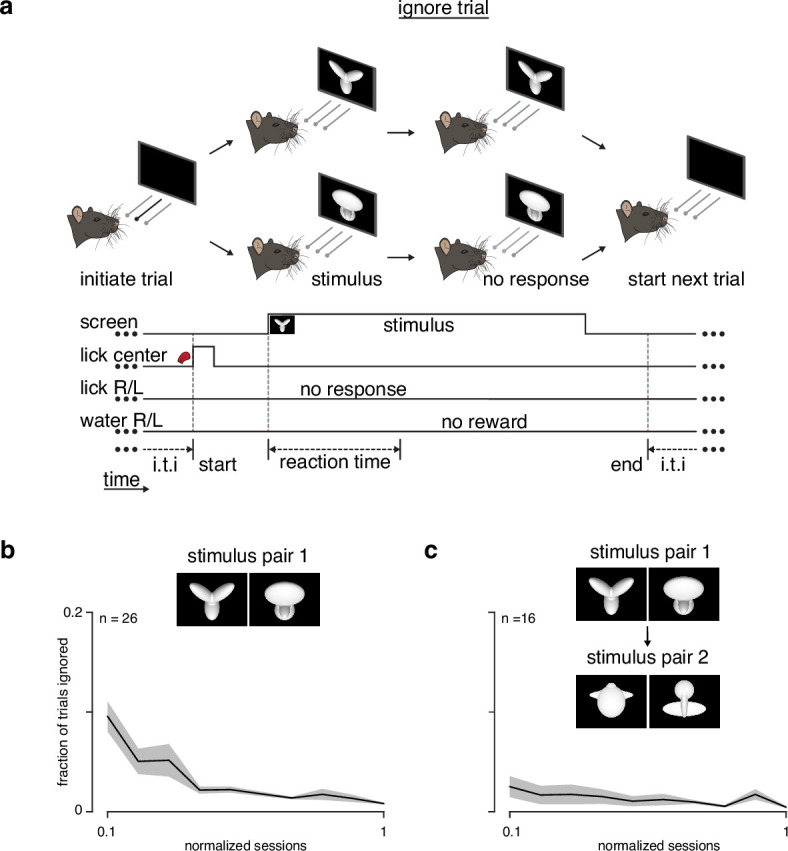

We trained rats on a visual object recognition two-alternative forced-choice task (see Methods) (Zoccolan et al., 2009). The rats began a trial by licking the central of three capacitive lick ports, at which time a static visual object that varied in size and rotation from one of two categories appeared on a screen. After evaluating the stimulus, the rats licked the right or left lick port. When correct, they received a water reward, and when incorrect, a timeout period (Figure 1a, Figure 1—figure supplement 1). Because this was a free-response task, rats were also able to initiate a trial and not make a response, but these ignored trials made up a small fraction of all trials and were not considered during our analysis (Figure 1—figure supplement 2).

Figure 1. Trained rats solve the speed-accuracy trade-off.

(a) Rat initiates trial by licking center port, one of two visual stimuli appears on the screen, rat chooses correct left/right response port for that stimulus and receives a water reward. (b) Speed-accuracy space: a decision making agent’s and mean normalized (a normalization of based on the average timing between one trial and the next, see Methods). Assuming a simple drift-diffusion process, agents that maximize (see Methods) must lie on an optimal performance curve (OPC, black trace) (Bogacz et al., 2006). Points on the OPC relate error rate to mean normalized decision time, where the normalization takes account of task timing parameters (e.g. average response-to-stimulus interval). For a given SNR, an agent’s performance must lie on a performance frontier swept out by the set of possible threshold-to-drift ratios and their corresponding error rates and mean normalized decision times. The intersection point between the performance frontier and the OPC is the error rate and mean normalized decision time combination that maximizes for that SNR. Any other point along the performance frontier, whether above or below the OPC, will achieve a suboptimal. Overall, increases toward the bottom left with maximal instantaneous reward rate at error rate = 0.0 and mean normalized decision time = 0.0. (c) Mean performance across 10 sessions for trained rats () at asymptotic performance plotted in speed-accuracy space. Each cross is a different rat. Color indicates fraction of maximum instantaneous reward rate () as determined by each rat’s performance frontier. Errors are bootstrapped SEMs. (d) Violin plots depicting fraction of maximum, a quantification of distance to the OPC, for same rats and same sessions as c. Fraction of maximum is a comparison of an agent’s current with its optimal given its inferred SNR. Approximately 15 of 26 (∼60%) of rats attain greater than 99% fraction maximum for their individual inferred SNRs. * denotes p < 0.05 one-tailed Wilcoxon signed-rank test for mean >0.99.

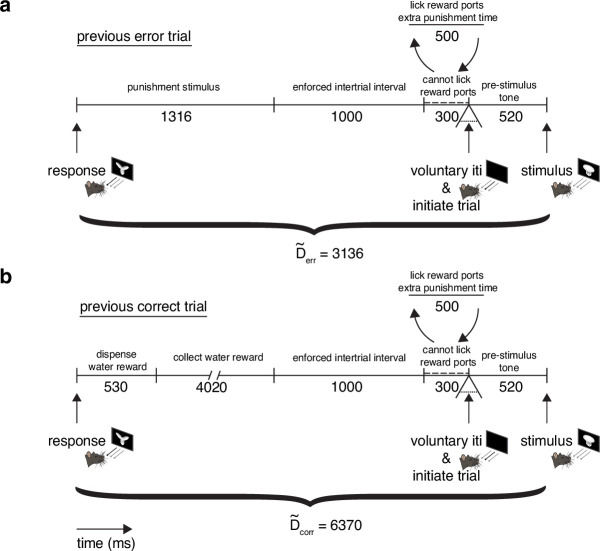

Figure 1—figure supplement 1. Task schematic for error trials.

Figure 1—figure supplement 2. Fraction of ignored trials during learning.

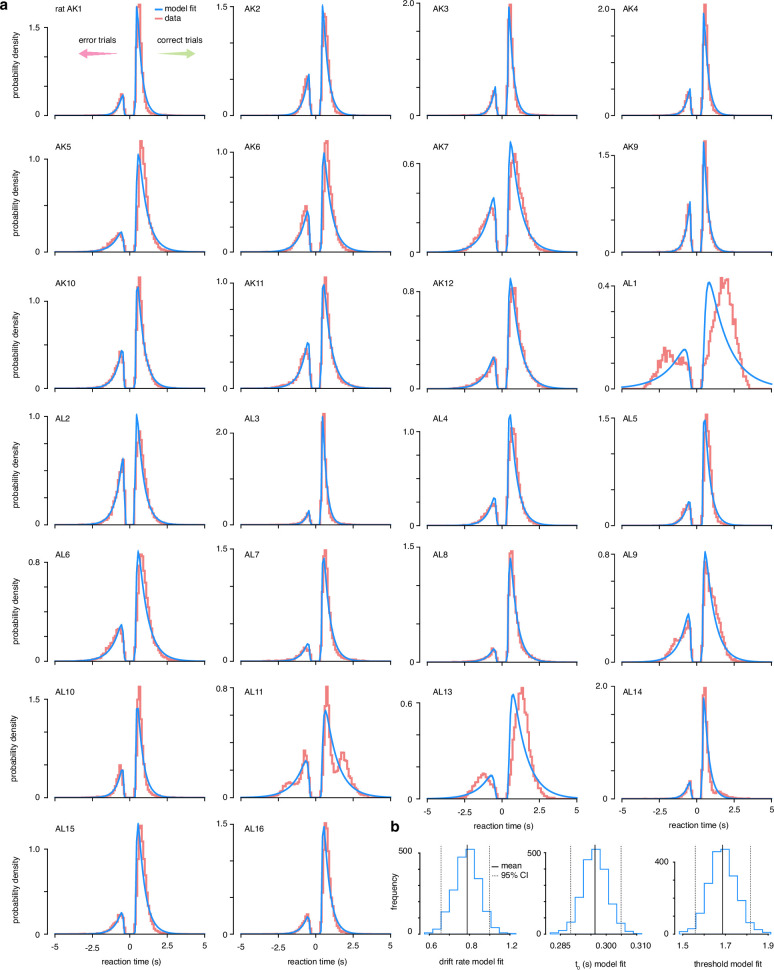

Figure 1—figure supplement 3. Drift-diffusion model data fits.

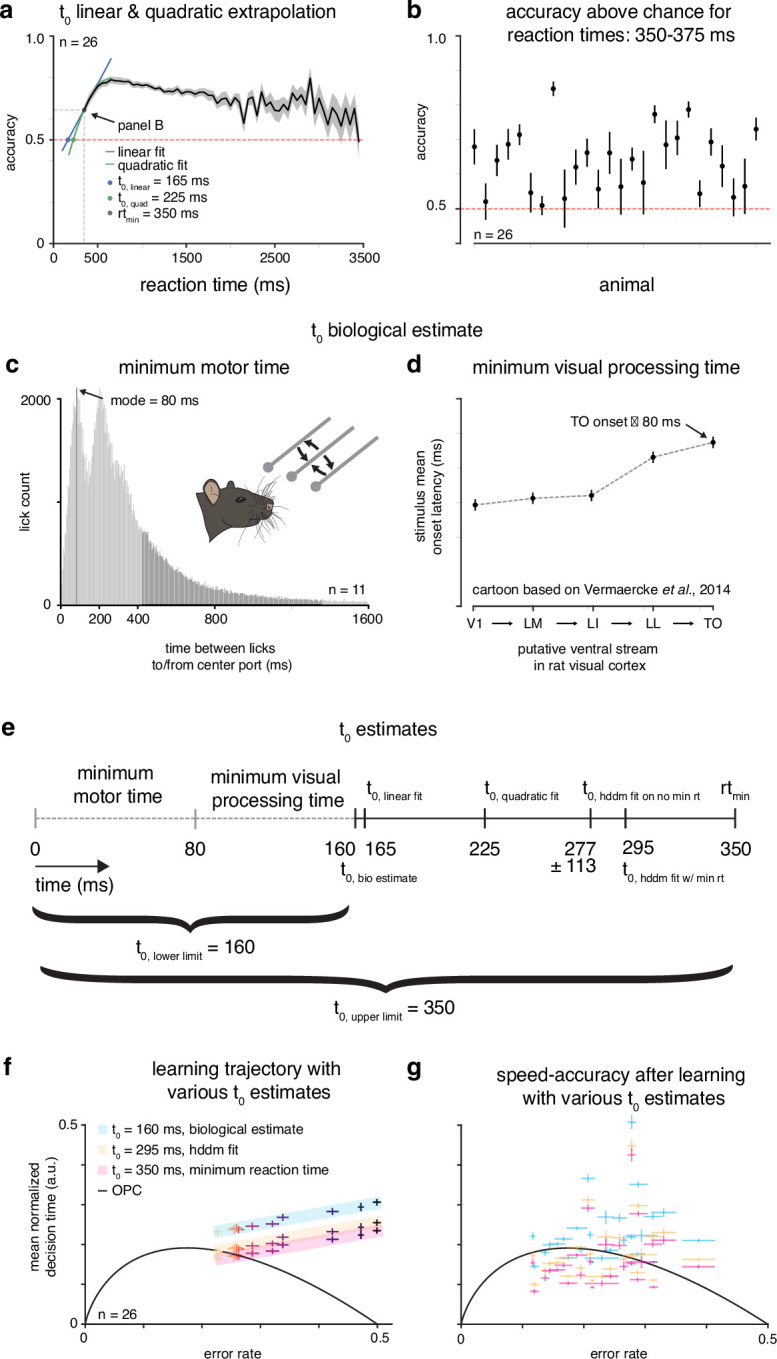

Figure 1—figure supplement 4. Estimating .

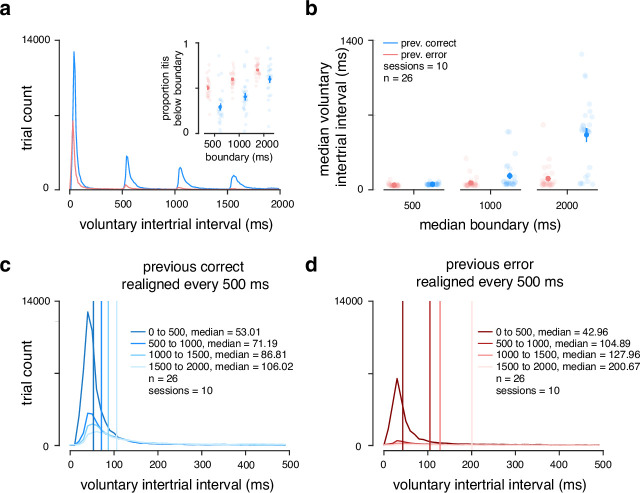

Figure 1—figure supplement 5. Analysis of voluntary intertrial intervals (ITIs).

Figure 1—figure supplement 6. Mandatory post-error () and post-correct () response-to-stimulus interval times.

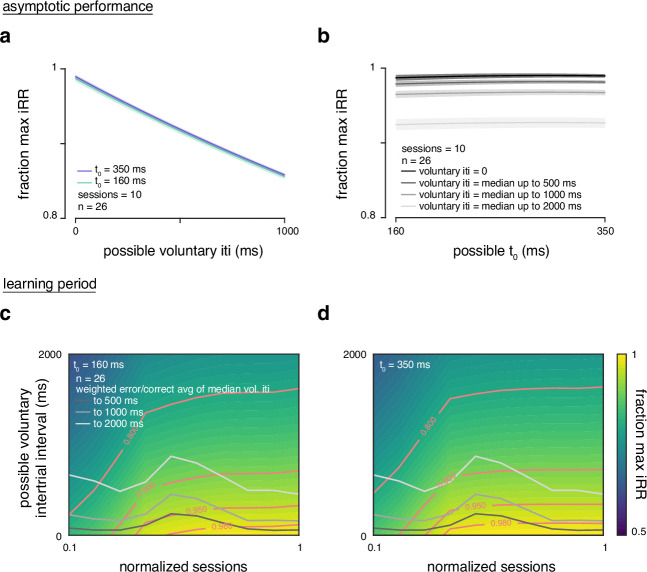

Figure 1—figure supplement 7. Reward rate sensitivity to and voluntary intertrial interval (ITI).

We examined the relationship between error rate () and reaction time () during asymptotic performance using the DDM (Figure 1—figure supplement 3). In the DDM, perceptual information is integrated through time until the level of evidence for one alternative reaches a threshold. The SAT is controlled by the subject’s choice of threshold, and is solved when a subject’s performance lies on an optimal performance curve (OPC; Figure 1b; Bogacz et al., 2006). The OPC defines the mean normalized decision time () and combination for which an agent will collect maximal (see Methods). At any given time, an agent will have some perceptual sensitivity (signal-to-noise ratio [SNR]) which reflects how much information about the stimulus arrives per unit time. Given this SNR, an agent’s position in speed-accuracy space (the space relating and ) is constrained to lie on a performance frontier traced out by different thresholds (Figure 1b). Using a low threshold yields fast but error-prone responses, while using a high threshold yields slow but accurate responses. An agent only maximizes when it chooses the and combination on its performance frontier that intersects the OPC. After learning the task to criterion, over half the subjects collected over 99% of their total possible reward, based on inferred SNRs assuming a DDM (Figure 1c and d).

Calculating mean normalized for comparison with the OPC requires knowing two quantities, and the average non-decision time per error trial . The average non-decision time contains the motor and initial perceptual processing components of , denoted T0; and the post-response timeout on error trials . Mean normalized is then the ratio . In order to determine each subject’s , we estimated T0 through a variety of methods, opting for a biological estimate (measured lickport latency response times and published visual processing latencies; Figure 1—figure supplement 4). To ensure that our results did not depend on our choice of T0, we ran a sensitivity analysis on a wide range of possible values of T0 (Figure 1—figure supplement 4f). We then had to determine , which can contain mandatory and voluntary intertrial intervals. We found that the rats generally kept voluntary intertrial intervals to a minimum, and we interpreted longer intervals as effectively ‘exiting’ the DDM framework (Figure 1—figure supplement 5). As such, we defined to only contain mandatory intertrial intervals (see Methods, Figure 1—figure supplement 6). To ensure that our results did not depend on either choice, we ran a sensitivity analysis on the combined effects of T0 and a containing voluntary intertrial intervals on RR (Figure 1—figure supplement 7). A full discussion of how these parameters were determined is included in the Methods.

Across a population, a uniform stimulus difficulty will reveal different SNRs because the internal perceptual processing ability in every subject will be different. Thus, although we did not explicitly vary stimulus difficulty (Simen et al., 2009, Bogacz et al., 2010; Zacksenhouse et al., 2010,Balci et al., 2011b), as a population, animals clustered along the OPC across a range of (Figure 1d), supporting the assertion that well-trained rats achieve a near maximal in this perceptual task. We note that subjects did not span the entire range of possible , and that the differences in optimal dictated by the OPC for the we did observe are not large. It remains unclear whether our subjects would be optimal over a wider range of task parameters. Notwithstanding, previous work with a similar task found that rats did increase in response to increased penalty times, indicating a sensitivity to these parameters (Reinagel, 2013a). Thus, for our perceptual task and its parameters, trained rats approximately solve the SAT.

Rats do not maximize instantaneous reward rate during learning

Knowing that rats harvested reward near-optimally after learning, we next asked whether rats harvested instantaneous reward near-optimally during learning as well. If rats optimized throughout learning, their trajectories in speed-accuracy space should always track the OPC.

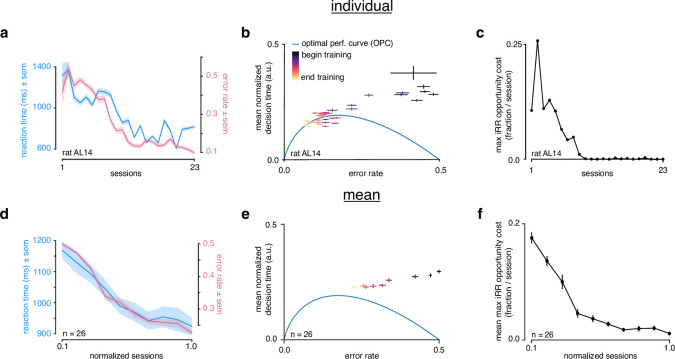

During learning, a representative individual () started with long that decreased as accuracy increased across training time (Figure 2a). Transforming this trajectory to speed-accuracy space revealed that throughout learning the individual did not follow the OPC (Figure 2b). Early in learning, the individual started with a much higher than optimal, but as learning progressed it approached the OPC. The maximum opportunity cost is the fraction of maximum possible relinquished for a choice of threshold (and average ) (see Methods). We found that this individual gave up over 20% of possible at the beginning of learning but harvested reward near-optimally at asymptotic performance (Figure 2c). These trends held when the learning trajectories of individuals were averaged (Figure 2d–f). To ensure that our particular training regime (which involved changes in stimulus size and rotation) was not responsible for these trends, we trained a separate cohort () with a simplified regime that did not involve any changes to the stimuli and we did not observe any meaningful differences (Figure 2—figure supplement 1, see Methods). These results show that rats do not greedily maximize throughout learning and lead to the question: if rats maximize at the end of learning, what principle governs their strategy at the beginning of learning?

Figure 2. Rats do not greedily maximize instantaneous reward rate during learning.

(a) Reaction time (blue) and error rate (pink) for an example subject (rat AL14) across 23 sessions. (b) Learning trajectory of individual subject (rat AL14) in speed-accuracy space. Color map indicates training time. Optimal performance curve (OPC) in blue. (c) Maximum opportunity cost (see Methods) for individual subject (rat AL14). (d) Mean reaction time (blue) and error rate (pink) for rats during learning. Sessions across subjects were transformed into normalized sessions, averaged and binned to show learning across 10 bins. Normalized training time allows averaging across subjects with different learning rates (see Methods). (e) Learning trajectory of rats in speed-accuracy space. Color map and OPC as in a. (f) Maximum opportunity cost of rats in b throughout learning. Errors reflect within-subject session SEMs for a and b and across-subject session SEMs for d, e, and f.

Figure 2—figure supplement 1. Comparison of training regimes.

Learning DDM

To theoretically understand the effect of different learning strategies, we developed a simple linear RNN formalism for our task. This framework enables investigation of how long-term perceptual learning across many trials is influenced by the choice of decision time on individual trials (Figure 3). We first describe this neural network formalism, before showing how it can be analytically reduced to a classic DDM with time-dependent parameters that evolve over the course of learning.

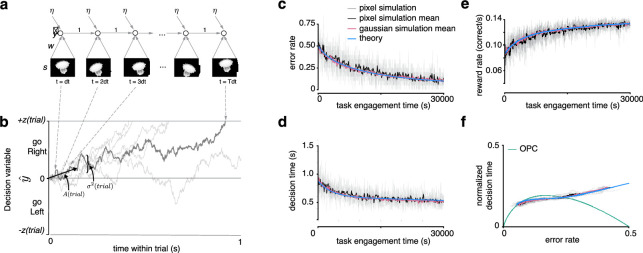

Figure 3. Recurrent neural network and learning drift diffusion model (DDM).

(a) Roll out in time of recurrent neural network (RNN) for one trial. (b) The decision variable for the recurrent neural network (dark gray), and other trajectories of the equivalent DDM for different diffusion noise samples (light gray). (c, d, e) Changes in , , and over a long period of task engagement in the RNN (light gray, pixel simulation individual traces; black, pixel simulation mean; pink, Gaussian simulation mean) compared to the theoretical predictions from the learning DDM (blue). (f) Visualization of traces in c and d in speed-accuracy space along with the optimal performance curve (OPC) in green. The threshold policy was set to be -sensitive for c–f.

Figure 3—figure supplement 1. Analytical reduction of linear drift-diffusion model (LDDM) matches error-corrective learning neural network dynamics during learning.

Linear RNN

Our model takes the form of a simple RNN, depicted unrolled through time in Figure 3a. The network receives noisy sensory input over time during a trial, amplifies this evidence through weighted synaptic connections, and integrates the result until a threshold is reached. After making a decision and receiving feedback, the synaptic connections are updated a small amount according to an error-corrective gradient descent learning rule. Therefore, there are two key timescales in the model: first, the fast activity dynamics during a single trial, which produces a single decision with a certain reaction time; and second, the slow weight dynamics due to learning across many trials. In the following, we denote time within trial as the variable , and the trial number as . We now describe the dynamics on each timescale in greater detail.

Within a trial, dimensional inputs arrive at discrete times , where is a small time step parameter. In our experimental task, might represent the activity of LGN neurons in response to a given visual stimulus. Because of eye motion and noise in the transduction from light intensity to visual activity, the response of individual neurons will only probabilistically relate to the correct answer at any given instant. In our simulations, we take to be the pixel values of the exact images presented to the animals, but transformed at each time point by small rotations (±20°) and translations (±25% of the image width and height), as depicted in Figure 3a. This input variability over time makes temporal integration valuable even in this visual classification task. To perform this integration, each input is filtered through perceptual weights and added to a read-out node (decision variable) along with i.i.d. integrator noise . This integrator noise models internal neural noise. The evolution of the decision variable is given by the simple linear recurrence

| (1) |

until the decision variable hits a threshold that is constant on each trial. Here, the RNN already performs an integration through time (a choice motivated by prior experiments in rodents Brunton et al., 2013), and improvements in performance come from adjusting the input-to-integrator weights to better extract task-relevant sensory information.

Across trials, the perceptual weights are updated to improve performance. In principle this could be accomplished with many possible learning mechanisms such as reinforcement learning (Law and Gold, 2009) or Bayesian inference (Drugowitsch et al., 2019). Here, we investigate gradient-based optimization of an objective function, as commonly used in deep learning approaches (Richards et al., 2019, Saxe et al., 2021). In particular, we consider using gradient descent on the hinge loss, corresponding to standard practice in deep learning. The hinge loss is

| (2) |

where is the correct output sign for the trial. Then the weights are updated by gradient descent on this loss,

| (3) |

where is a small learning rate. The hinge loss is a proxy for accuracy, and so this weight update implements a learning scheme based on error feedback. In essence, perceptual weights are updated after error trials to improve the likelihood of answering correctly in the future.

To summarize the key parameters of the RNN, the model requires specifying the input distribution , the initial perceptual weights , the integrator noise variance , the gradient descent learning rate , and the decision threshold used on each trial. With these parameters specified, the model can be simulated to make predictions for how behavior will evolve over training, as shown in Figure 3c–f, gray and black traces.

Reduction to LDDM

While the behavior of the RNN model obtained in simulations can be compared to data, deep network models remain challenging to understand (Saxe et al., 2021). We therefore sought to mathematically analyze this setting to derive a simple theory of the average learning dynamics that highlights key trade-offs.

We start by noting that the input to the decision variable at each time step is a weighted sum of many random variables, which by the law of large numbers will be approximately Gaussian. We therefore develop a reduction of this model based on an effective Gaussian scalar input distribution. At each time step the input pathway receives a Gaussian input , where parametrizes the signal related to , and the input noise variance parametrizes irreducible noise in input channels that cannot be rejected. This input is multiplied by a scalar weight , added to output noise of variance and sent into the integrating node ,

| (4) |

where we emphasize that and are now both scalar. We may then perform gradient descent on the hinge loss, yielding the update . As expected from the law of large numbers, for the right choice of input signal and parameters and ci, simulations of this effective Gaussian model closely match the full simulation from pixels, as shown in Figure 3c–f, pink trace.

Next, to relate these dynamics to the well-studied DDM framework, we examine behavior when the time step is small () to obtain a continuous time formulation. In the continuum limit, these discrete within-trial dynamics of the network yield decision variables with identical distributions to a drift-diffusion process with an effective SNR and normalized threshold

| (5) |

| (6) |

yielding the mean error rate () and decision time ()

| (7) |

| (8) |

Finally, we assume that the learning rate is small (), such that weights change little on any given trial and the gradient dynamics are driven by the mean update,

| , | (9) |

where denotes the average with respect to the distribution of outputs obtained with perceptual weights and threshold . These average dynamics depend in a complex way on the current performance of the network. We compute these average dynamics analytically (see Methods), yielding the continuous time change in effective SNR in the DDM that is equivalent to gradient descent learning in the underlying neural network model. In particular, gradient descent in the RNN is equivalent to the following SNR dynamics in the DDM:

| (10) |

Here, time measures seconds of task engagement (i.e. it measures time passing within a trial as well as intertrial time and any penalty delays after error trials), and is the average non-decision task engagement time per trial (where and are the average non-decision task engagement times after correct and error trials). The SNR dynamics depend on five parameters: the time constant related to the learning rate, the initial SNR , the asymptotic achievable SNR after learning , the integration-noise to input-noise variance ratio , and the choice of threshold over training. We note that the dependence of the dynamics on the choice of threshold is implicit in , and in Equation 10. The dynamics of this LDDM closely tracks simulated trajectories of the full network from pixels (Figure 3c–f blue trace, Figure 3—figure supplement 1; see Methods).

Remarkably, this reduction shows that the high-dimensional dynamics of the RNN receiving stochastic pixel input and performing gradient descent on the weights (Figure 3, gray trace) can be described by a DDM with a single deterministic scalar variable – the effective SNR – that changes over time (Figure 3, blue trace). Notably, without the mapping to the original RNN, it is not possible to understand what effect error-corrective gradient descent learning would have at the level of the DDM, or how the learning process is influenced by choice of decision times. In particular, the change in SNR that arises from gradient descent on the underlying RNN weights (Equation 10) is not equivalent to that arising from gradient descent on the SNR parameter in the DDM directly because gradient descent is not parametrization invariant.

Learning speed trades off with instantaneous reward rate

The LDDM reveals that learning dynamics depend on the choice of threshold on each trial over learning, because threshold impacts both error rate and decision time, which appear in the SNR dynamics of Equation 10. We next sought to qualitatively understand this relationship. A key prediction of the LDDM is a tension between learning speed and , the LS/ trade-off. This tension is clearest early in learning when are near 50%. Then the rate of change in SNR is

| (11) |

where the proportionality constant does not depend on (see derivation, Methods). Hence learning speed increases with increasing . By contrast, when accuracy is 50% the decreases with increasing ,

| (12) |

When encountering a new task, therefore, agents face a dilemma: they can either harvest a large or they can learn quickly.

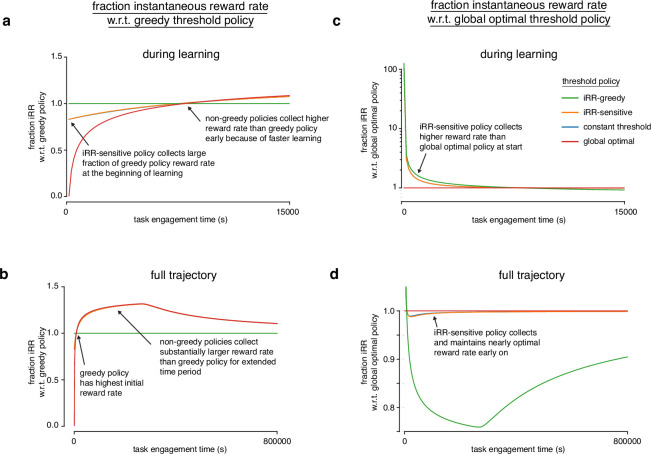

Learning dynamics depend on threshold policies

Just as the standard DDM instantiates different decision making strategies as different choices of threshold (for instance aimed at maximizing , accuracy, or robustness) (Holmes and Cohen, 2014; Zacksenhouse et al., 2010), the LDDM instantiates different learning strategies through the choice of threshold trajectory over learning. Threshold affects and , and through these, the learning dynamics in Equation 10. To consider a range of strategies, we developed four potential threshold policies.

Constant threshold. This policy implements a fixed constant threshold . It serves as a control for behavior that would arise without the ability to modulate decision threshold. Constant thresholds across difficulties have been found to be used as part of near-optimal and presumably cognitively cheaper strategies in humans (Balci et al., 2011b). This policy introduces the parameter z0.

iRR-greedy. This policy sets the threshold to the value that maximizes instantaneous reward on each trial, , such that behavior always lies on the OPC. This instantiates a ‘myopic’ strategy that does not consider how threshold can impact long-term learning. This policy is similar to a previously proposed neural network model of rapid threshold adjustment based on reward rate (Simen et al., 2006). The policy introduces no parameters.

iRR-sensitive. This policy implements a threshold that decays with time constant from an initial value toward the -optimal threshold,

Notably, as the SNR changes due to learning, the target threshold also changes through time. Asymptotically, this policy converges to greedy -optimal behavior; however, by starting with a high initial threshold, it can undergo a transient period where responses are slower or faster than -optimal, potentially influencing learning. It instantiates a heuristic strategy in which behavior differs from -optimal behavior early in learning. This policy introduces two parameters, z0 and .

Global optimal. This policy selects the threshold that maximizes total cumulative reward at some known predetermined end to the task ,

We approximately compute this threshold function using automatic differentiation (see Methods). This policy serves as a normative oracle to which behavior may be compared. We note that this optimal policy considers the full time course of learning and is aware of all task parameters such as the duration of total task engagement , asymptotically achievable SNR , etc. In practice these parameters cannot be known before experiencing the task, and so this policy is not an implementable strategy but a normative reference point. The policy introduces no parameters.

In designing this model, we kept components as simple as possible to highlight key qualitative trade-offs between learning speed and decision strategy. Because of its simplicity, like the standard DDM, it is not meant to quantitatively describe all aspects of behavior. We instead use it to investigate qualitative features of decision making strategy, and expect that these features would be preserved in other related models of perceptual decision making (Usher and McClelland, 2001,Mazurek et al., 2003, Gold and Shadlen, 2007, Heekeren et al., 2004,Heekeren et al., 2008,Ma et al., 2006,Brown and Heathcote, 2008, Ratcliff and McKoon, 2008, Beck et al., 2008, Roitman and Shadlen, 2002; Purcell et al., 2010,Bejjanki et al., 2011; Drugowitsch et al., 2012; Fard et al., 2017).

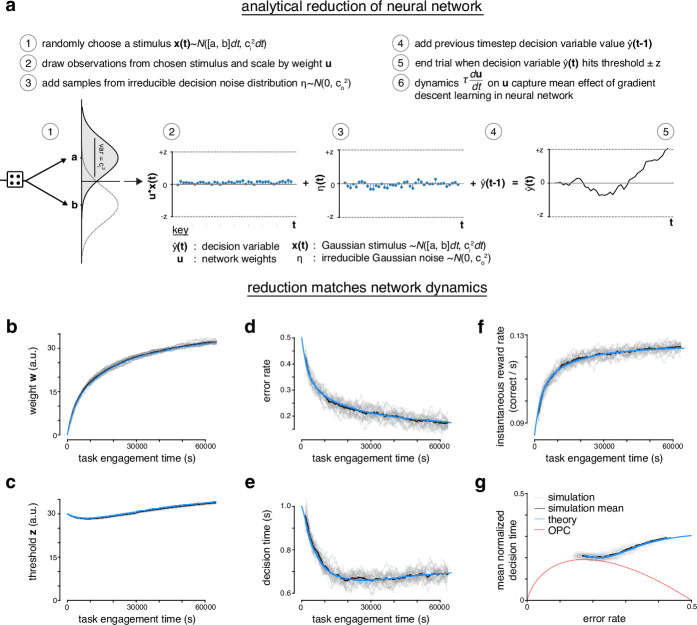

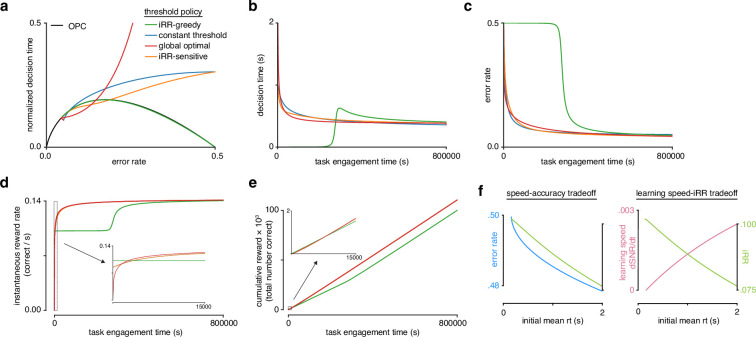

Model reveals that prioritizing learning can maximize total reward

In order to qualitatively understand how these models behave through time, we visualized their learning dynamics. To approximately place the LDDM task parameters in a similar space to the rats, we performed maximum likelihood fitting using automatic differentiation through the discretized reduction dynamics (see Methods). The four policies we considered clustered into two groups, distinguished by their behavior early in learning. A ‘greedy’ group, which contained just the -greedy policy, remained always on the OPC (Figure 4a), and had fast initial response times (Figure 4b), a long initial period at high error (Figure 4c), and high initial (Figure 4d). By contrast, a ‘non-greedy’ group, which contained the -sensitive, constant threshold, and global optimal policies, started far above the OPC (Figure 4a), and had slow initial response times (Figure 4b), rapid improvements in ER (Figure 4c), and low (Figure 4d). Notably, while members of the non-greedy group started off with lower , they rapidly surpassed the slow learning group (Figure 4d) and ultimately accrued more total reward (Figure 4e). Overall, these results show that threshold strategy strongly impacts learning dynamics due to the learning speed/ trade-off (Figure 4f), and that prioritizing learning speed can achieve higher cumulative reward than prioritizing instantaneous reward rate.

Figure 4. Model reveals rat learning dynamics lead to higher instantaneous reward rate and long-term rewards than greedily maximizing instantaneous reward rate.

(a) Model learning trajectories in speed-accuracy space plotted against the optimal performance curve (OPC) (black). (b) Decision time through learning for the four different threshold policies in a. (c) Error rate throughout learning for the four different threshold policies in a. (d) Instantaneous reward rate as a function of task engagement time for the full learning trajectory and a zoom-in on the beginning of learning (inset). (e) Cumulative reward as a function of task engagement time for the full learning trajectory and a zoom-in on the beginning of learning (inset). Threshold policies: -greedy (green), constant threshold (blue), -sensitive (orange), and global optimal (red). (f) In the speed-accuracy trade-off (left), (blue) decreases with increasing initial mean (green) at high error rates (∼0.5) also decreases with increasing initial mean . Thus, at high , an agent solves the speed-accuracy trade-off by choosing fast that result in higher and maximize . In the learning speed/ trade-off (right), initial learning speed (, pink) increases with increasing initial mean , whereas (green) follows the opposite trend. Thus, an agent must trade in order to access higher learning speeds. Plots generated using linear drift-diffusion model (LDDM).

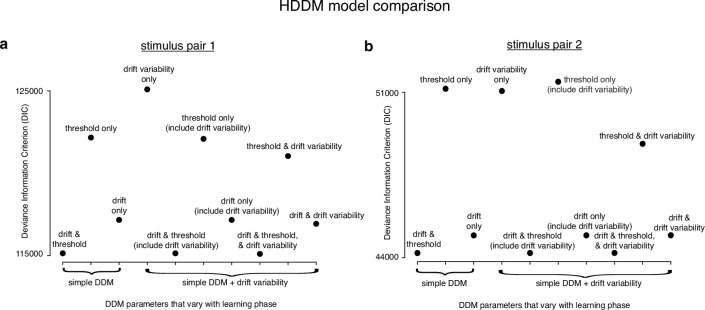

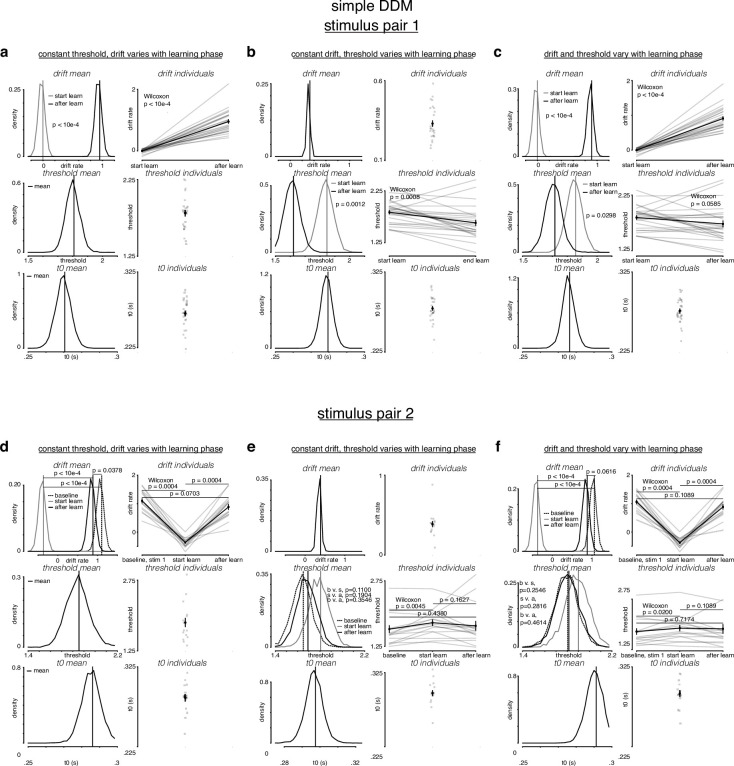

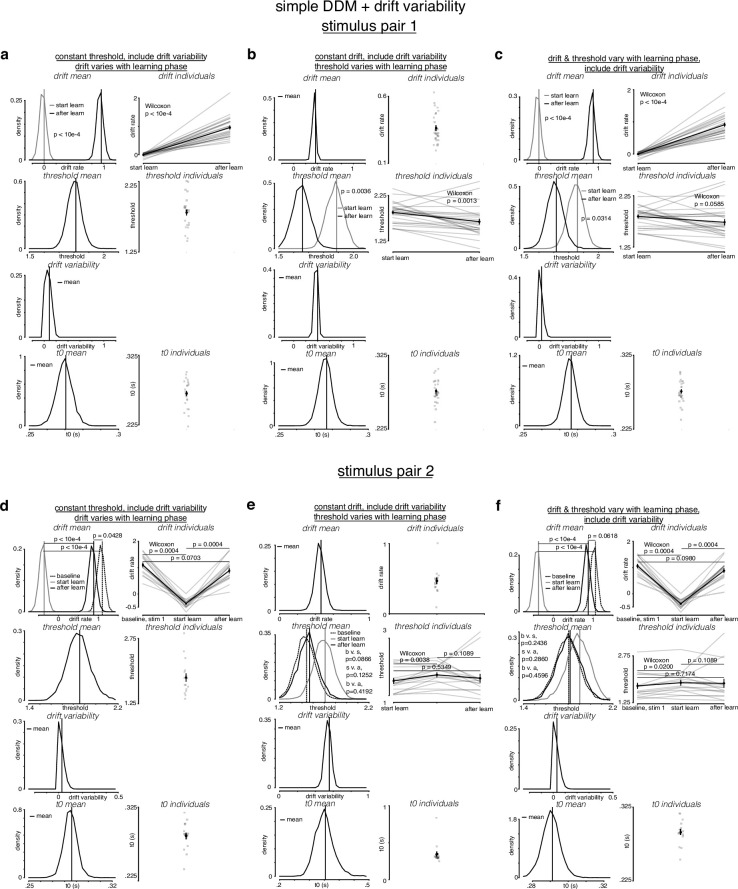

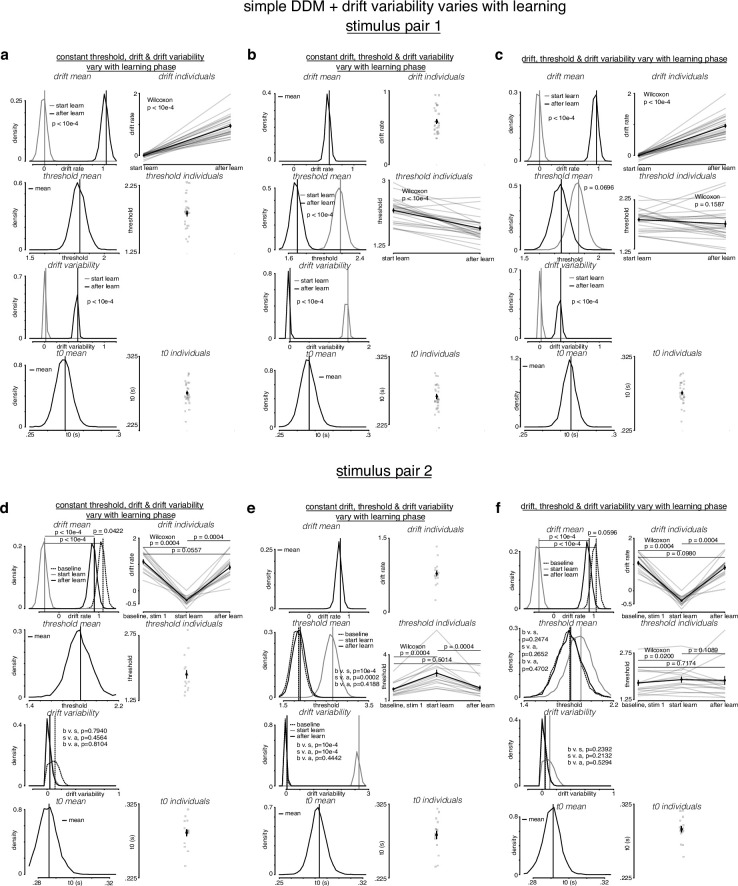

Figure 4—figure supplement 1. Allowing both drift rate and threshold to vary with learning provides the best drift-diffusion model (DDM) fits.

Figure 4—figure supplement 2. Simple drift-diffusion model (DDM) fits indicate threshold decreases and drift rate increases during learning.

Figure 4—figure supplement 3. Simple drift-diffusion model (DDM) + fixed drift rate variability fits indicate threshold decreases and drift rate increases during learning.

Figure 4—figure supplement 4. Simple drift-diffusion model (DDM) + variable drift rate variability fits indicate threshold decreases and drift rate increases during learning.

Figure 4—figure supplement 5. Model reveals rat learning dynamics resemble optimal trajectory without relinquishing initial rewards.

We further analyzed the differences between the three strategies in the non-greedy group. The global optimal policy selects extremely slow initial to maximize the initial speed of learning. By contrast, the -sensitive and constant threshold policies start with moderately slow responses. Nevertheless, we found that these simple strategies accrued 99% of the total reward of the global optimal strategy (Figure 4—figure supplement 5). Hence these more moderate policies, which do not require oracle knowledge of future task parameters, derive most of the benefit in terms of total reward and may reflect a reasonable approach when the duration of task engagement is unknown.

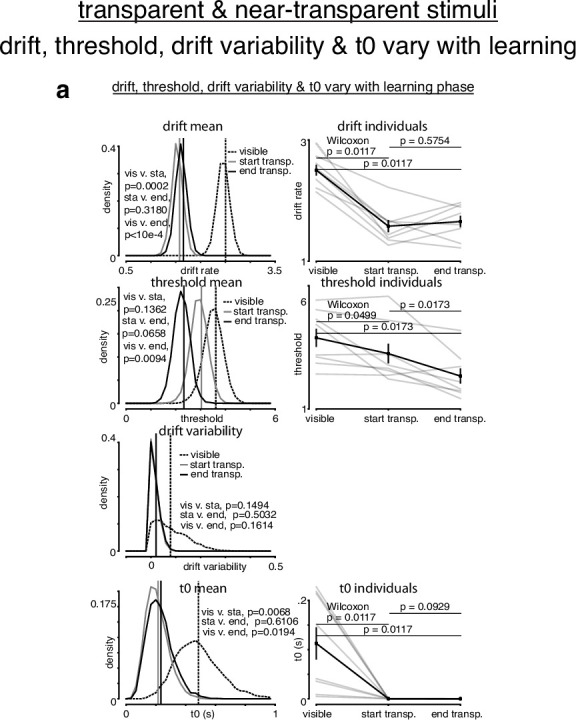

Considering the rats’ trajectories in light of these strategies, their slow responses early in learning stand in stark contrast to the fast responses of the -greedy policy (Figure 2b, Figure 4a). Equally, their responses were faster than the extremely slow initial of the global optimal model. Both the -sensitive and constant threshold models qualitatively matched the rats’ learning trajectory. However, the best DDM parameter fits of the rats’ behavior allowed their thresholds to decrease throughout learning, failing to support the constant threshold model (Figure 4—figure supplements 1–4). Subsequent experiments (Figure 6) provide further evidence against a simple constant threshold strategy. Consistent with substantial improvements in perceptual sensitivity through learning, DDM fits to the rats also showed an increase in drift rate throughout learning (Figure 4—figure supplements 1–4). Similar increases in drift rate have been observed as a universal feature of learning throughout numerous studies fitting learning data with the DDM (Ratcliff et al., 2006; Dutilh et al., 2009,Petrov et al., 2011,Balci et al., 2011b, Liu and Watanabe, 2012, Zhang and Rowe, 2014). These qualitative comparisons suggest that rats adopt a ‘non-greedy’ strategy that trades initial rewards to prioritize learning in order to harvest a higher sooner and accrue more total reward over the course of learning.

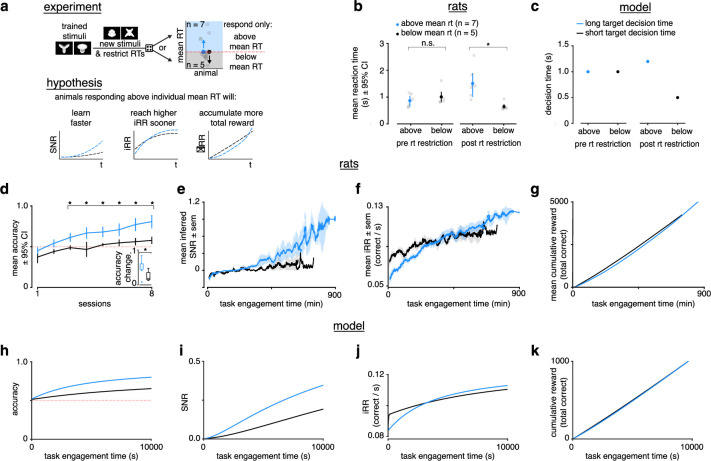

Learning speed scales with reaction time

To test the central prediction of the LDDM that learning (change in SNR) scales with mean , we designed an restriction experiment and studied the effects of the restriction on learning in the rats. Previously trained rats () were randomly divided into two groups in which they would have to learn a new stimulus pair while responding above or below their individual mean (‘slow’ and ‘fast’) for the previously trained stimulus pair (Figure 5a). Before introducing the new stimuli, we carried out practice sessions with the new timing restrictions to reduce potential effects related to a lack of familiarity with the new regime. After the restriction, were significantly different between the two groups (Figure 5b). In the model, we simulated an restriction by setting two different (Figure 5c).

Figure 5. Longer reaction times lead to faster learning and higher instantaneous reward rates.

(a) Schematic of experiment and hypothesized results. Previously trained animals were randomly divided into two groups: could only respond above (blue, ) or below (black, ) their individual mean reaction times for the previously trained stimulus and the new stimulus. Subjects responding above their individual mean reaction times were predicted to learn faster, reach a higher instantaneous reward rate sooner and accumulate more total reward. (b) Mean and individual reaction times before and after the reaction time restriction in rats. The mean reaction time for subjects randomly chosen to respond above their individual mean reaction times (blue, ) was not significantly different to those randomly chosen to respond below their individual means (black, ) before the restriction (Wilcoxon rank-sum test p > 0.05), but were significant after the restriction (Wilcoxon rank-sum test p < 0.05). Errors represent 95% confidence intervals. (c) In the model a long (blue) and a short (black) target decision time were set through a control feedback loop on the threshold, with parameter . (d) Mean accuracy ±95% confidence interval across sessions for rats required to respond above (blue, ) or below (black, ) their individual mean reaction times for a previously trained stimulus. Both groups had initial accuracy below chance because rats assume a response mapping based on an internal assessment of similarity of new stimuli to previously trained stimuli. To counteract this tendency and ensure learning, we chose the response mapping for new stimuli that contradicted the rats’ mapping assumption, having the effect of below-chance accuracy at first. * denotes p < 0.05 in two-sample independent -test. Inset: accuracy change (slope of linear fit to accuracy across sessions to both groups, units: fraction per session). * denotes p < 0.05 in a Wilcoxon rank-sum test. (e) Mean inferred signal-to-noise ratio (SNR), (f) mean, and (g) mean cumulative reward across task engagement time for new stimulus pair for animals in each group. (h) Accuracy, (i) SNR, (j) , and (k) cumulative reward across task engagement time for long (blue) and short (black) target decision times in the linear drift-diffusion model (LDDM).

We found no difference in initial mean session accuracy between the two groups, followed by significantly higher accuracy in the slow group in subsequent sessions (Figure 5d). The slope of accuracy across sessions was significantly higher in the slow group (Figure 5d, inset). Importantly, the fast group had a positive slope and an accuracy above chance by the last session of the experiment, indicating this group learned (Figure 5d).

Because of the SAT in the DDM, however, accuracy could be higher in the slow group even with no difference in perceptual sensitivity (SNR) or learning speed simply because on average they view the stimulus for longer during a trial, reflecting a higher threshold. To see if underlying perceptual sensitivity increased faster in the slow group, we computed the rats’ inferred SNR throughout learning (see Methods, Equation 24), which takes account of the relationship between and . The SNR of the slow group increased faster (Figure 5e), consistent with a learning speed that scales with .

We found that the slow group had a lower initial , but that this exceeded that of the fast group halfway through the experiment (Figure 5f). Similarly, the slow group trended toward a higher cumulative reward by the end of the experiment (Figure 5g). The LDDM qualitatively replicates all of our behavioral findings (Figure 5h–k). These results demonstrate the potential total reward benefit of faster learning, which in this case was a product of enforced slower .

Our experiments and simulations demonstrate that longer lead to faster learning and higher reward for our task setting both in vivo and in silico. Moreover, they are consistent with the hypothesis that rats choose high initial in order to prioritize learning and achieve higher and cumulative rewards during the task.

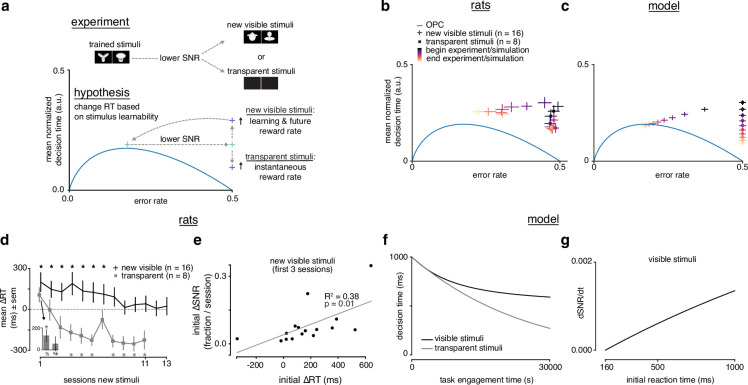

Rats choose reaction time based on learning prospects

The previous experiments suggest that rats trade initial rewards for faster learning. Nonetheless, it is unclear how much control rats exert over their . A control-free heuristic approach, such as adopting a fixed high threshold (our constant threshold policy), might incidentally appear near optimal for our particular task parameters, but might not be responsive to changed task conditions. If an agent is controlling the reward investment it makes in the service of learning, then it should only make that investment if it is possible to learn.

To test whether the rats’ modulations were sensitive to learnability, we conducted a new experiment in which we divided rats into a group that encountered new learnable visible stimuli (, sessions = 13), and another that encountered unlearnable transparent or near-transparent stimuli (, sessions = 11) (Figure 6a). From the perspective of the LDDM, both groups start with approximately zero SNR, however only the group with the visible stimuli can improve that SNR. Because the rats do not know the learnability of new stimuli, we initialize the LDDM with a high threshold to model the belief that any new stimuli may be learnable. If the rats choose their based on how much it is possible to learn, then: (1) rats encountering new stimuli that they can learn will increase their to learn quickly and increase future . (2) Rats encountering new stimuli that they cannot learn might first increase their to learn that there is nothing to learn, but (3) will subsequently decrease to maximize .

Figure 6. Rats choose reaction time based on stimulus learnability.

(a) Schematic of experiment: rats trained on stimulus pair 1 were presented with new visible stimulus pair 2 or transparent (alpha = 0, 0.1) stimuli. If rats change their reaction times based on stimulus learnability, they should increase their reaction times for the new visible stimuli to increase learning and future and decrease their reaction time to increase for the transparent stimuli. (b) Learning across normalized sessions in speed-accuracy space for new visible stimuli (, crosses) and transparent stimuli (, squares). Color map indicates time relative to start and end of the experiment. (c) -sensitive threshold model runs with ‘visible’ (crosses) and ‘transparent’ (squares) stimuli (modeled as containing some signal, and no signal) plotted in speed-accuracy space. The crosses are illustrative and do not reflect any uncertainty. Color map indicates time relative to start and end of simulation. (d) Mean change in reaction time across sessions for visible stimuli or transparent stimuli compared to previously known stimuli. Positive change means an increase relative to previous average. Inset: first and second half of first session for transparent stimuli. * denotes in permutation test. (e) Correlation between initial individual mean change in reaction time (quantity in d) and change in signal-to-noise ratio (SNR) (learning speed: slope of linear fit to SNR per session) for first three sessions with new visible stimuli. R2 and from linear regression in d. Error bars reflect standard error of the mean in b and d. (f) Decision time across time engagement time for visible and transparent stimuli runs in model simulation. (g) Instantaneous change in SNR () as a function of initial reaction time (decision time + non-decision time T0) in model simulation.

Figure 6—figure supplement 1. Reaction time analysis of transparent stimuli experiment.

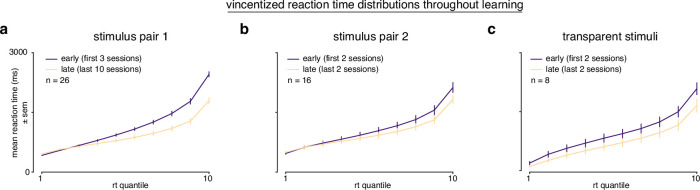

Figure 6—figure supplement 2. Vincentized reaction time distributions throughout learning.

Figure 6—figure supplement 3. Simple drift-diffusion model (DDM) + variable drift rate variability fits for transparent stimuli.

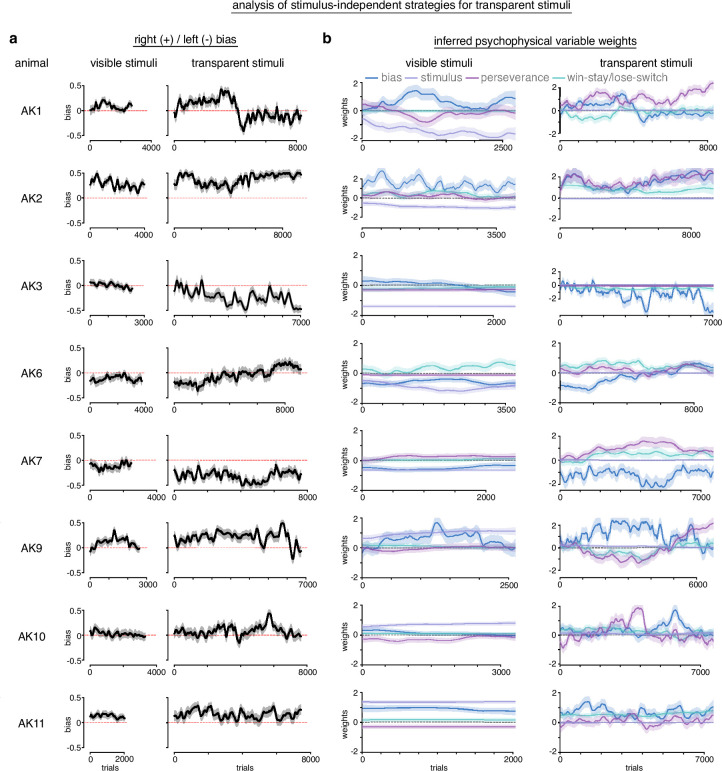

Figure 6—figure supplement 4. Analysis of stimulus-independent strategies for transparent stimuli.

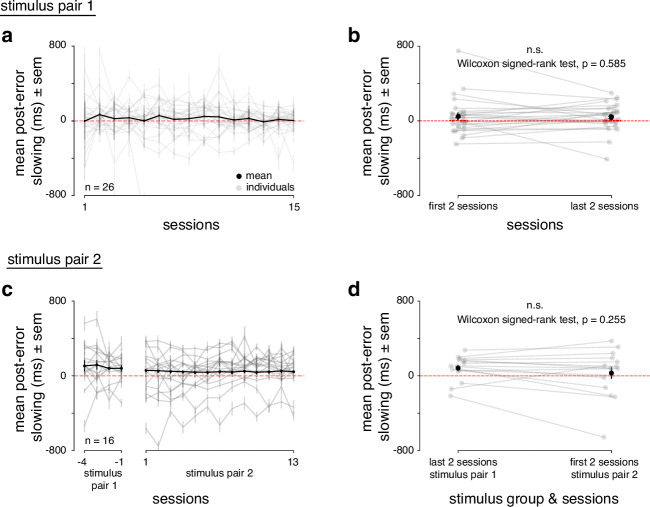

Figure 6—figure supplement 5. Post-error slowing during rat learning dynamics.

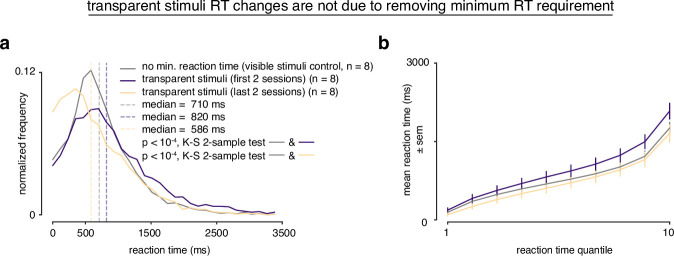

We found that the rats with the visible stimuli qualitatively replicated the same trajectory in speed-accuracy space that we found when rats were trained for the first time (Figure 2b, Figure 6b). Indeed, the best DDM fits were those that allowed both threshold and drift rate to vary with learning, as was the case with the first stimuli the rats encountered, and in line with the LDDM (Figure 4—figure supplements 1–4). Because these previously trained rats had already mastered the task mechanics, this result rules out non-stimulus-related learning effects as the sole explanation for long at the beginning of learning and supports our hypothesis that the slowdown in was attributable to the rats trying to learn the new stimuli efficiently. We calculated the mean change in (mean ΔRT) of new stimuli versus known stimuli. The visible stimuli group had a significant slowdown in lasting many sessions that returned to baseline by the end of the experiment (Figure 6d, black trace).

Rats with the transparent stimuli also approached the OPC by decreasing their across sessions to better maximize (Figure 6b). After a brief initial increase in in the first half of the first session (Figure 6d, inset), rapidly decreased (Figure 6d, gray trace). Notably, fell below the baseline , indicating a strategy of responding quickly, which approaches -optimal behavior for this zero SNR task. Additionally, we considered the rats’ entire distributions to investigate the effect of learnability beyond means. We found that while the distributions changed similarly from the beginning to end of learning for the learnable stimuli (stimulus pair 1 and 2), they differed for the unlearnable (transparent) stimuli, indicating an effect of learnability on the entire distributions (Figure 6—figure supplement 2). Hence, rodents are capable of modulating their strategy depending on their learning prospects.

Although there is no informative signal in this task with transparent stimuli, the rats could still be using stimulus-independent signals, such as choice history or feedback, to drive heuristic strategies. Indeed, DDM fits indicated a non-zero drift rate even in the absence of informative stimuli (Figure 6—figure supplement 3). To investigate whether the rats implemented stimulus-independent heuristic strategies in addition to random choice, we measured left/right bias and quantified the weights of bias, perseverance (choose the same port as the previous trial), and win-stay/lose-switch (choose the port that was correct on the previous trial) (Roy et al., 2021). In general, bias seemed to increase with transparent stimuli in the direction that each individual was already biased during visible stimuli. Perseverance and win-stay/lose-switch also seemed to increase and fluctuate more during transparent stimuli, suggesting a greater reliance on these heuristics now that the stimulus was uninformative (Figure 6—figure supplement 4). Engaging these heuristics may be a way that the rats expedited their choices in order to maximize while still ‘monitoring’ the task for any potentially informative changes or patterns. Despite the fact that the animals’ still engaged these non-optimal heuristics, the lack of learnability in the transparent stimuli still led to a change in strategy that was distinct from that with learnable stimuli.

Importantly, this learnability experiment argues against other simple strategies accounting for the changes in . If rats respond more slowly after error trials, a phenomenon known as post-error slowing (PES), they might exhibit slower early in learning when errors are frequent (Notebaert et al., 2009). Indeed, we found a slight mean post-error slowing effect of about 50 ms that was on average constant throughout learning, though it was highly variable across individuals (Figure 6—figure supplement 5). However, rats viewing transparent stimuli had constrained to 50%, yet their systematically decreased (Figure 6b), such that post-error slowing alone cannot account for their strategy. Similarly, choosing as a simple function of time since encountering a task would not explain the difference in trajectories between visible and transparent stimuli (Figure 6d).

A simulation of this experiment with the -sensitive threshold LDDM qualitatively replicated the rats’ behavior (Figure 6c, f and g). Rodent behavior is thus consistent with a threshold policy that starts with a relatively long upon encountering a new task, and then decays toward the -optimal . All other threshold strategies we considered fail to account for the totality of the results. The -greedy strategy – as before – stays pinned to the OPC and speeds up upon encountering the novel stimuli rather than slowing down. The constant threshold strategy fails to predict the speed-up in for the transparent stimuli if we assume constant diffusion noise. This is because when the perceptual signal is small, mean can be shown to be the squared ratio of threshold to diffusion noise (see Methods). It is thus also possible to explain the speed-up with a constant threshold and increasing diffusion noise. With either interpretation, however, it is clear that a policy where the ratio of threshold to diffusion noise is constant is not compatible with the results. Finally, the global optimal strategy (which has oracle knowledge of the prospects for learning in each task) behaves like the -greedy policy from the start on the transparent stimuli as there is nothing to learn.

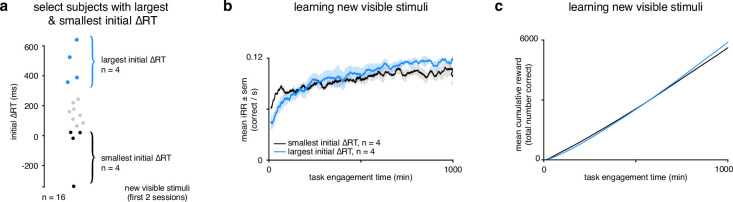

Our restriction experiment showed that higher initial led to faster learning, a higher and more cumulative reward. Consistent with these findings, there was a correlation between initial mean ΔRT and initial ΔSNR across subjects viewing the visible stimuli, indicating the more an animal slowed down, the faster it learned (Figure 6e). We further tested these results in the voluntary setting by tracking and cumulative reward for the rats in the learnable stimuli setting with the largest (blue, ) and smallest (black, ) ‘self-imposed’ change in (Figure 7a). The rats with the largest change started with a lower but ended with a higher mean iRR, and collected more cumulative reward (Figure 7b and c). Thus, in the voluntary setting there is a clear relationship between , learning speed, and its total reward benefits.

Figure 7. Rats that slowed down reaction times the most reached a higher instantaneous reward rate sooner and collected more reward.

(a) Schematic showing segregation of top 25% of subjects () with the largest initial ΔRTs for the new visible stimuli and the bottom 25% of subjects () with the smallest initial ΔRTs. Initial ΔRTs were calculated as an average of the first two sessions for all subjects. (b) Mean for subjects with largest and smallest mean changes in reaction time across task engagement time. (c) Mean cumulative reward over task engagement time for subjects as in b.

Discussion

Summary and limitations

Our theoretical and empirical results identify a trade-off between the need to learn rapidly and the need to accrue immediate reward in a perceptual decision making task. We find that rats adapt their decision strategy to improve learning speed and approximately maximize total reward, effectively navigating this trade-off over the total period of task engagement. In our experiments, rats responded slowly upon encountering novel stimuli, but only when there was a visual stimulus to learn from. This result indicates that they chose to respond more slowly in order to learn quickly, and only made the investment when learning was possible. This behavior requires foregoing both a cognitively easier strategy – fast random choice – and relinquishing a higher immediately available reward for several sessions spanning multiple days. By imposing different response times in groups of animals, we empirically verified our theoretical prediction that slow responses lead to faster learning and greater total reward in our task. These findings collectively show that rats exhibit cognitive control of the learning process, that is, the ability to engage in goal-directed behavior that would otherwise conflict with default or more immediately rewarding responses (Dixon et al., 2012, Shenhav et al., 2013; Shenhav et al., 2017, Cohen et al., 1990; Cohen and Egner, 2017).

Our high-throughput behavioral study with a controlled training protocol permits examination of the entire trajectory of learning, revealing hallmarks of non-greedy decision making. Nonetheless, it is accompanied by several experimental limitations. Our estimation of SNR improvements during learning relies on the DDM. Importantly, while this approach has been widely used in prior work (Brunton et al., 2013; Ratcliff et al., 2006; Balci et al., 2011b; Drugowitsch et al., 2019; Petrov et al., 2011 ), our conclusions are predicated on this model’s approximate validity for our task. Future work could address this issue by using a paradigm in which learners with different response deadlines are tested at the same fixed response deadline, equalizing the impact of stimulus exposure at test. This model-free paradigm is not trivial in rodents, because response deadlines cannot be rapidly instructed. Our study also focuses on one visual perceptual task. Further work should verify our findings with other perceptual tasks across difficulties, modalities, and organisms.

To understand possible learning trajectories, we introduced a theoretical framework based on an RNN, and from this derived an LDDM. The LDDM extends the canonical drift-diffusion framework to incorporate long-term perceptual learning, and formalizes a trade-off between learning speed and instantaneous reward. However, it remains approximate and limited in several ways. The LDDM builds off the simplest form of a DDM, while various extensions and related models have been proposed to better fit behavioral data, including urgency signals (Ditterich, 2006; Cisek et al., 2009; Deneve, 2012; Hanks et al., 2011; Drugowitsch et al., 2012), history-dependent effects (Busse et al., 2011; Scott et al., 2015; Akrami et al., 2018; Odoemene et al., 2018; Pinto et al., 2018; Lak et al., 2018; Mendonça et al., 2018), imperfect sensory integration (Brunton et al., 2013), confidence (Kepecs et al., 2008; Lak et al., 2014; Drugowitsch et al., 2019), and multi-alternative choices (Krajbich and Rangel, 2011, Tajima et al., 2019). Prior work in the DDM framework has investigated learning dynamics with a Bayesian update and constant thresholds across trials (Drugowitsch et al., 2019). Our framework uses simpler error-corrective learning rules, and focuses on how the decision threshold policy over many trials influences long-term learning dynamics and total reward. Future work could combine these approaches to understand how Bayesian updating on each trial would change long-term learning dynamics, and potentially, the optimality of different threshold strategies.

More broadly, it remains unclear whether the drift-diffusion framework in fact underlies perceptual decision making, with a variety of other proposals providing differing accounts (Gold and Shadlen, 2007, Zoltowski et al., 2019; Stine et al., 2020). We speculate that the qualitative learning speed/instantaneous reward rate trade-off that we formally derive in the LDDM would also arise in other models of within-trial decision making dynamics. In addition, on a long timescale over many trials, the LDDM improves performance through error-corrective learning. Future work could investigate learning dynamics under other proposed learning algorithms such as feedback alignment (Lillicrap et al., 2016), node perturbation (Williams, 1992), or reinforcement learning (Law and Gold, 2009). Additionally, the LDDM does not currently include a meta-learning component with which the agent can dynamically gauge the learnability of the task explicitly in order to set its decision threshold. Instead, the LDDM assumes a ‘learnability prior’ implemented as a high initial threshold condition for every new task. This limitation could be solved with a Bayesian observer that predicts learnability based on experience and controls the threshold accordingly. One potential avenue in this direction would be the implementation of the learned value of control theory, which provides a mechanism through which an agent can compare stimulus features to those it has encountered in the past in order to determine control allocation (Lieder et al., 2018). Moreover, the link between the LDDM and cognitive control is implicit: we interpret the choice of threshold in the DDM as a control process (a higher threshold than is optimal reflects control because it requires foregoing present reward in the service of future reward). Future modeling work should make the choice of control explicit, taking into account the inherent cost of control (Shenhav et al., 2013), and then using that choice to determine the decision threshold. Doing so would allow control to not only reflect the choice of threshold, as we have done, but also as a gain term on the drift rate (Leng et al., 2021), which may more completely capture control’s role in two-choice decisions.

Explore/exploit trade-off

Conceptually, the learning speed/instantaneous reward rate trade-off is related to the explore/exploit trade-off common in reinforcement learning, but differs in level of analysis. As traditionally framed in reinforcement learning, an agent has the option of maximizing reward based on its current information (exploitation), or of reaching a potentially larger future reward by expanding its current information (exploration). When framed this way, learning is an act of exploration. However, as framed in our study, learning is a systematic, directed strategy (or ‘action’), that is, exploitation, employed in order to maximize total future reward. The reconciliation between these seemingly contradictory accounts occurs at the meta-level: when an agent is aware that learning is the optimal strategy to maximize total future discounted reward, it is exploiting a strategy that trades learning speed for instantaneous reward rate. However, when that agent is not yet aware whether it can learn, then it must explore this question (i.e. meta-learn) before deciding whether it should exploit an explicit learning strategy (‘exploitation of exploration’) that will also come at the cost of instantaneous reward. Although explained sequentially, these two mechanisms can occur in parallel (i.e. an agent constantly probing its learning prospects). One intriguing finding is that state-of-the-art deep reinforcement learning agents, which succeed in navigating the traditional explore/exploit dilemma on complicated tasks like Atari games (Mnih et al., 2016), nevertheless fail to learn perceptual decisions like those considered here (Leibo et al., 2018). This may be because exploration and exploitation can mean different things depending on the level of analysis, and efficiently learning a perceptual task may require the ‘exploitation of exploration’. Our findings may thus offer routes for improving these artificial systems.

Cognitive control

In order to navigate the learning speed/instantaneous reward rate trade-off, our findings suggest that rats deploy cognitive control of the learning process. Two main features of cognitive control govern its use: it is limited (Shenhav et al., 2017), and it is costly (Krebs et al., 2010; Padmala and Pessoa, 2011, Kool et al., 2010, Dixon et al., 2012; Westbrook et al., 2013; Kool and Botvinick, 2018; Westbrook et al., 2019). If control is costly, then its application needs to be justified by the benefits of its application. The expected value of control (EVC) theory posits that control is allocated in proportion to the EVC (Shenhav et al., 2013). Previous work demonstrated that rats are capable of the economic reasoning required for optimal control allocation (Niyogi et al., 2014a; Niyogi et al., 2014b; Sweis et al., 2018). We demonstrated that rats incur a substantial initial instantaneous reward rate opportunity cost to learn the task more quickly, foregoing a cognitively less demanding fast random strategy that would yield higher initial rewards. Rather than optimizing instantaneous reward rate, which has been the focus of prior theories (Gold and Shadlen, 2002, Balci et al., 2011b; Bogacz et al., 2006), our analysis suggests that rats approximately optimize total reward over task engagement. Relinquishing initial reward to learn faster, a cognitively costly strategy, is justified by a larger total reward over task engagement. This pattern of behavior matches theoretical predictions of the value of learning based on a recent expansion of the EVC theory (Masís et al., 2021).

Assessing the expected value of learning in a new task requires knowing how much can be learned, how quickly one can learn, and for how long the task will be performed (Masís et al., 2021). None of these quantities is directly observable upon first encountering a new task, leading to the question of how rodents know to slow down in one task but not another. Importantly, rats only traded reward for information when learning was possible, a result in line with data demonstrating that humans are more likely to trade reward for information during long experimental time horizons, when learning is more likely (Wilson et al., 2014). Monkeys also reduce their reliance on expected value during decision making in order to explore strategically when it is deemed beneficial (Jahn et al., 2022). Moreover, previous work has highlighted the explicit opportunity cost of longer deliberation times (Drugowitsch et al., 2012), a trade-off that will differ during learning and at asymptotic performance, as we demonstrate here. One possibility is that rats estimate learnability and task duration through meta-learning processes that learn to estimate the value of learning through experience with many tasks (Finn et al., 2017; Wang et al., 2018; Metcalfe, 2009). The amount of control allocated to learning the current task could be proportional to its estimated value, determined based on similarity to previous learning situations and their reward outcomes and control costs (Lieder et al., 2018). Some of this bias for new information, termed curiosity, could be partly endogenous, serving as a useful heuristic for organisms outside of the lab, where rewards are sparse and action spaces are broad (Gottlieb and Oudeyer, 2018). Previous observations of suboptimal decision times in humans analogous to those we observed in rats might reflect incomplete learning, or subjects who think they still have more to learn (Balci et al., 2011b; Bogacz et al., 2010; Cohen et al., 1990). Future work could test further predictions emerging from a control-based theory of learning. An agent should assess both the predicted duration of task engagement and the predicted difficulty of learning in order to determine the optimal decision making strategy early in learning, and this can be tested by, for instance, manipulating the time horizon and difficulty of the task. From a control-based perspective, the expected reward from a task is also relevant to control allocation. Indeed, recent work in humans shows that externally motivating learners with the prospect of a test at the end of a task led to a much higher allocation of time on the harder-to-learn items compared to the case when learners were not warned of a test (Ten et al., 2020).

The trend of a decrease in response time and an increase in accuracy through practice – which we observed in our rats – has been widely observed for decades in the skill acquisition literature, and is known as the Law of Practice (Thorndike, 1913, Newell and Rosenbloom, 1981, Logan, 1992, Heathcote et al., 2000). Accounts of the Law of Practice have posited a cognitive control-mediated transition from shared/controlled to separate/automatic representations of skills with practice (Posner and Snyder, 1975, Shiffrin and Schneider, 1977, Cohen et al., 1990). On this view, control mechanisms are a limited, slow resource that impose unwanted processing delays. Our results suggest an alternative non-mutually exclusive reward-based account for why we may so ubiquitously observe the Law of Practice. Slow responses early in learning may be the goal of cognitive control, as they allow for faster learning, and faster learning leads to higher total reward. When faced with the ever-changing tasks furnished by naturalistic environments, it is the speed of learning which may exert the strongest impact on total reward.

Bounded optimality

More broadly, the optimization of behavior, not in a vacuum, but in the context of one’s constraints – intrinsic and environmentally determined – underlies several general theories of cognition, including theories that explain the allocation of cognitive control (Shenhav et al., 2013; Lieder et al., 2018), the selection of decision heuristics (Gigerenzer, 2008), and the rationale of seemingly irrational economic choices (Kahneman and Tversky, 1979, Juechems et al., 2021). These theories are instances of bounded optimality – a prominent theoretical framework of biological and artificial cognition stating that an agent is optimal when it maximizes reward per unit time within the limitations of its computational architecture (Russell and Subramanian, 1994, Lewis et al., 2014; Gershman et al., 2015; Griffiths et al., 2015; Bhui et al., 2021; Summerfield and Parpart, 2021).

Instances of this framework typically assume that cognitive constraints remain fixed and, more so, that agents do not take alterations of these constraints into account when choosing what to do. There exists, however, a novel theoretical avenue within this framework. An agent can optimize its behavior not only through maximization of reward within constraints, but also through the minimization of those constraints themselves. If an agent can change itself to minimize its constraints by, for example, improving its perceptual representations through learning, the future reward prospects of doing so should be considered in its current choices, even if it is at the expense of current reward. Intelligent agents, like humans, can and do change themselves through learning in order to improve future reward prospects. Our study formalizes this phenomenon in the context of two-choice perceptual decisions, but much work remains to be done in other contexts, modalities, and organisms.

Methods

Behavioral training

Subjects

All care and experimental manipulation of animals were reviewed and approved by the Harvard Institutional Animal Care and Use Committee (IACUC), protocol 27–22. We trained animals on a high-throughput visual object recognition task that has been previously described (Zoccolan et al., 2009). A total of 44 female Long-Evans rats were used for this study, with 38 included in analyses. Twenty-eight rats (AK1–12 and AL1–16) initiated training on stimulus pair 1, and 26 completed it (AK8 and AL12 failed to learn). Another 8 animals (AM1–8) were trained on stimulus pair 1 but were not included in the initial analysis focusing on asymptotic performance and learning (Figure 1d and e; Figure 2) because they were trained after the analyses had been completed. Subjects AM5–8, although trained, did not participate in other behavioral experiments so do not appear in this study. Sixteen animals (AL1–8, AL13–16, and AM1–8) participated in learning stimulus pair 2 (‘new visible stimuli’; canonical-only training regime) while 10 animals (AK1–3, 5–7, 9–12) initially participated in viewing transparent (alpha = 0; AK1, 3, 6, 7, 11) or near-transparent stimuli (alpha = 0.1; AK2, 5, 9, 10, 12), with the subjects sorted randomly into each group. The transparent and near-transparent groups were aggregated but two animals from the near-transparent group were excluded for performing above chance (AK5 and AK12) as this experiment focused on the effects of stimuli that could not be learned. The same 16 animals used for stimulus pair 2 were used for learning stimulus pair 3 under two different reaction time restrictions in which the subjects were sorted randomly. One rat (AL1) was excluded from the outset for not having learned stimulus pair 2. Two additional rats (AL4 and AL7) were excluded for not completing enough trials during practice sessions with the new reaction time restrictions. A final rat (AM1) was excluded because she failed to learn the task. The 12 remaining rats were grouped into seven subjects required to respond above (AL3, AL8, AL13, AL15, AL16, AM3, AM4) and five subjects required to respond below their individual average reaction times (AL2, AL5, AL6, AL14, AM2). Finally, eight rats (AN1–8) were trained on a simplified training regime (‘canonical only’) used as a control for the typical ‘size and rotation’ training object recognition regime (described below). Table 1 summarizes individual subject participation across behavioral experiments.

Table 1. Individual animal participation across behavioral experiments.

| Animal | Sex | Stimulus pair 1 | Stimulus pair 2 | Transparent stimuli | Stimulus pair 3 |

|---|---|---|---|---|---|

| AK1 | F | Size and rotation | Alpha = 0 | ||

| AK2 | F | Size and rotation | Alpha = 0.1 | ||

| AK3 | F | Size and rotation | Alpha = 0.0 | ||

| AK4 | F | Size and rotation | |||

| AK5 | F | Size and rotation | Alpha = 0.1 (excluded)‡ | ||

| AK6 | F | Size and rotation | Alpha = 0 | ||

| AK7 | F | Size and rotation | Alpha = 0 | ||

| AK8 | F | Size and rotation (excluded)* | |||

| AK9 | F | Size and rotation | Alpha = 0.1 | ||

| AK10 | F | Size and rotation | Alpha = 0.1 | ||

| AK11 | F | Size and rotation | Alpha = 0.0 | ||

| AK12 | F | Size and rotation | Alpha = 0.1 (excluded)‡ | ||

| AL1 | F | Size and rotation | Canonical only | (Excluded)§ | |

| AL2 | F | Size and rotation | Canonical only | Below | |

| AL3 | F | Size and rotation | Canonical only | Above | |

| AL4 | F | Size and rotation | Canonical only | Below (excluded) ¶ | |

| AL5 | F | Size and rotation | Canonical only | Below | |

| AL6 | F | Size and rotation | Canonical only | Below | |

| AL7 | F | Size and rotation | Canonical only | Below (excluded)¶ | |

| AL8 | F | Size and rotation | Canonical only | Above | |

| AL9 | F | Size and rotation | |||

| AL10 | F | Size and rotation | |||

| AL11 | F | Size and rotation | |||

| AL12 | F | Size and rotation (excluded)* | |||

| AL13 | F | Size and rotation | Canonical only | Above | |

| AL14 | F | Size and rotation | Canonical only | Below | |

| AL15 | F | Size and rotation | Canonical only | Above | |

| AL16 | F | Size and rotation | Canonical only | Above | |

| AM1 | F | Size and rotation† | Canonical only | Below (excluded)** | |

| AM2 | F | Size and rotation† | Canonical only | Below | |

| AM3 | F | Size and rotation† | Canonical only | Above | |

| AM4 | F | Size and rotation† | Canonical only | Above | |

| AM5 | F | Size and rotation† | |||

| AM6 | F | Size and rotation† | |||

| AM7 | F | Size and rotation† | |||

| AM8 | F | Size and rotation† | |||

| AN1 | F | Canonical only | |||

| AN2 | F | Canonical ony | |||

| AN3 | F | Canonical only | |||

| AN4 | F | Canonical only | |||

| AN5 | F | Canonical only | |||

| AN6 | F | Canonical only | |||

| AN7 | F | Canonical only | |||

| AN8 | F | Canonical only | |||

Failed to learn task.

Not included in initial learning experiment.

Above chance for near-transparent stimuli.

Failed to learn previous stimuli.

Not enough practice trials with reaction time restrictions.

Failed to learn stimuli with reaction time restrictions.

Behavioral training boxes

Rats were trained in high-throughput behavioral training rigs, each made up of four vertically stacked behavioral training boxes. In order to enter the behavioral training boxes, the animals were first individually transferred from their home cages to temporary plastic housing cages that would slip into the behavioral training boxes and snap into place. Each plastic cage had a porthole in front where the animals could stick out their head. In front of the animal in the behavior boxes were three easily accessible stainless steel lickports electrically coupled to capacitive sensors, and a computer monitor (Dell P190S, Round Rock, TX, USA; Samsung 943-BT, Seoul, South Korea) at approximately 40° visual angle from the rats’ location. The three sensors were arranged in a straight horizontal line approximately a centimeter apart and at mouth-height for the rats. The two side ports (L/R) were connected to syringe pumps (New Era Pump Systems, Inc NE-500, Farmingdale, NY, USA) that would automatically dispense water upon a correct trial. The center port was connected to a syringe that was used to manually dispense water during the initial phases of training (see below). Each behavior box was equipped with a computer (Apple Macmini 6,1 running OsX 10.9.5 [13F34] or Macmini 7.1 running OSX El Capitan 10.11.13, Cupertino, CA, USA) running MWorks, an open source software for running real-time behavioral experiments (MWorks 0.5.dev [d7c9069] or 0.6 [c186e7], The MWorks Project https://mworks.github.io/). The capacitive sensors (Phidget Touch Sensor P/N 1129_1, Calgary, Alberta, Canada) were controlled by a microcontroller (Phidget Interface Kit 8/8/8P/N 1018_2) that was connected via USB to the computer. The syringe pumps were connected to the computer via an RS232 adapter (Startech RS-232/422/485 Serial over IP Ethernet Device Server, Lockbourne, OH, USA). To allow the experimenter visual access to the rats’ behavior, each box was, in addition, illuminated with red LEDs, not visible to the rats.

Habituation

Long-Evans rats (Charles River Laboratories, Wilmington, MA, USA) of about 250 g were allowed to acclimate to the laboratory environment upon arrival for about a week. After acclimation, they were habituated to humans for 1 or 2 days. The habituation procedure involved petting and transfer of the rats from their cage to the experimenter’s lap until the animals were comfortable with the handling. Once habituated to handling, the rats were introduced to the training environment. To allow the animals to get used to the training plastic cages, the feedback sounds generated by the behavior rigs, and to become comfortable in the behavior training room, they were transferred to the temporary plastic cages used in our high-throughput behavioral training rigs and kept in the training room for the duration of a training session undergone by a set of trained animals. This procedure was repeated after water deprivation, and during the training session undergone by the trained animals, the new animals were taught to poke their head out of a porthole available in each plastic cage to receive a water reward from a handheld syringe connected to a lickport identical to the ones in the behavior training boxes in the training rigs. Once the animals reliably stuck their head out of the porthole (1 or 2 days) and accessed water from the syringe, they were moved into the behavior boxes.

Early shaping

On their first day in the behavior boxes, rats were individually tutored as follows: Water reward was manually dispensed from the center lickport which is normally used to initiate a trial. When the animal licked the center lickport, a trial began. After a 500 ms tone period, one of two visual objects (stimulus pair 1) appeared on the screen (large front view, degree of visual angle 40°) chosen pseudo-randomly (three randomly consecutive presentations of one stimulus resulted in a subsequent presentation of the other stimulus). This appearance was followed by a 350 ms minimum reaction time that was instituted to promote visual processing of the stimuli. If the animal licked one of the side (L/R) lickports during this time, then the trial was aborted, there would be a minimum intertrial time (1300 ms), and the process would begin again.

At the time of stimulus presentation, a free water reward was dispensed from the correct side (L/R) lickport. If the animals licked the correct side lickport within the allotted amount of time (3500 ms) then an additional reward was automatically dispensed from that port. This portion of training was meant to begin teaching the animals the task mechanics, that is to first lick the center port, and then one of the two side ports.

After the rats were sufficiently engaged with the lickports and began self-initiating trials by licking the center lickport (usually 1 to several days, determined by experimenter) no more water was dispensed manually through the center lickport, but the free water rewards from the side lickports were still given. Once the rats were self-initiating enough trials without manual rewards from the center lickport (>200 per session), the free reward condition was stopped, and only correct responses were rewarded.

Training