Abstract

This study incorporates polarized hyperspectral imaging (PHSI) with deep learning for automatic detection of head and neck squamous cell carcinoma (SCC) on hematoxylin and eosin (H&E) stained tissue slides. A polarized hyperspectral imaging microscope was previously developed in our group. In this paper, we first collected the Stokes vector data cubes (S0, S1, S2, and S3) of histologic slides from 17 patients with SCC using the PHSI microscope, over the wavelength range from 467 nm to 750 nm. Second, we generated synthetic RGB images from the original Stokes vector data cubes. Third, we cropped the synthetic RGB images into image patches of 96×96 pixels and set up a ResNet50-based convolutional neural network (CNN) to classify the image patches of the four Stokes vector parameters (S0, S1, S2, and S3) using transfer learning. To test the performance of the model, each time we trained the model on the image patches (S0, S1, S2, and S3) of 16 of the 17 patients and used the trained model to calculate the testing accuracy on the image patches of the remaining patient (S0, S1, S2, and S3). We repeated the process six times and obtained 24 testing accuracies (S0, S1, S2, and S3) from 6 different patients out of the 17 patients. The preliminary results showed that the average testing accuracy on S3 (84.2%) was higher than the average testing accuracy on S0 (83.5%). Furthermore, for 4 of the 6 test patients, the testing accuracies of S3 (96.0%, 87.3%, 82.8%, and 86.7%) exceeded the corresponding testing accuracies of S0 (93.3%, 85.2%, 80.2%, and 79.0%). The study demonstrated the potential of using polarized hyperspectral imaging and deep learning for automatic detection of head and neck SCC on pathologic slides.

Keywords: Polarized hyperspectral imaging, Stokes vector, head and neck cancer, deep learning, histologic slides, digital pathology

1. INTRODUCTION

Head and neck squamous cell carcinoma (SCC) originates from the mucosal epithelium in the oral cavity, pharynx, and larynx and is a major head and neck malignancy [1]. Computational pathology, also known as digital pathology, is an emerging technology that promises quantitative diagnosis of pathological samples, and traditional computational pathology relies on RGB digitized histology images [2]. Multidimensional optical imaging has grown rapidly in recent years. Rather than measuring only the two-dimensional spatial distribution of light as in conventional photography, multidimensional optical imaging captures information about photons’ spatial coordinates, emittance angles, wavelength, time, and polarization [3].

Hyperspectral imaging (HSI) is an optical imaging method that was originally used in remote sensing and has since been extended to several other fields, including biomedical applications [4]. Hyperspectral imaging acquires the spectrum at every pixel in a two-dimensional (2D) image and constructs a three-dimensional (3D) data cube, from which rich spatial and spectral information can be obtained simultaneously. Hyperspectral imaging has been applied to the detection of head and neck cancer [5–17]. Yushkov et al. [5] developed an acousto-optic hyperspectral imaging system with an amplitude mask, which improved the contrast for phase visualization in stained and unstained histological sections of human thyroid cancer. A pilot study tested the feasibility of a hyperspectral imaging system for in vivo preoperative delineation of the lateral margins of ill-defined basal cell carcinomas (BCCs) in the head and neck region [6]. Our group has investigated several machine learning and deep learning algorithms for head and neck cancer detection based on hyperspectral imaging, including principal component analysis (PCA) [7], tensor-based computation and modeling [8], the incorporation of support vector machine (SVM) into a minimum spanning forest [9, 10], non-negative matrix factorization (NMF) [11], the combination of superpixels, PCA, and SVM [12], as well as convolutional neural networks (CNN) [13, 14, 15, 16, 17].

Polarized light imaging is an effective optical imaging technique for exploring the structure and morphology of biological tissues by measuring their polarization characteristics. It can acquire the 2D spatial polarization information of the tissue, which reflects various physical properties of the tissue, including surface texture, surface roughness, and surface morphology [18, 19, 20, 21, 22]. Several categories of polarized light imaging techniques, namely linear polarization imaging [23, 24, 25], Mueller matrix imaging [26, 27], and Stokes vector imaging [28], have been applied to head and neck cancer detection. The orthogonal polarization spectral (OPS) imaging method, which is a type of linear polarization imaging, was used for the evaluation of anti-vascular tumor treatment and of oral squamous cell carcinoma in tissue [23, 24]. A multispectral digital microscope (MDM) with an orthogonal polarized reflectance (OPR) imaging mode was developed for in vivo detection of oral neoplasia [25]. A 4×4 Mueller matrix imaging and polar decomposition method was applied to the diagnosis of oral precancer [27], and a 3×3 Mueller matrix imaging method was adopted for oral cancer detection [26]. In our previous study, we developed a novel polarized hyperspectral imaging microscope, which is able to distinguish squamous cell carcinoma from normal tissue on hematoxylin and eosin (H&E) stained slides from the larynx based on the spectra of the Stokes vector [28].

Polarized hyperspectral imaging (PHSI) is a combination of polarization measurement, hyperspectral analysis, and spatial imaging technology. It can obtain the polarization, spectral, and morphological information of an object simultaneously [29, 30, 31]. In this paper, we developed a novel dual-modality optical imaging microscope by combining hyperspectral imaging and polarized light imaging. The microscope is capable of acquiring polarization, spectral, and spatial information of an object simultaneously, and it provides more image information for digital pathology than RGB digitized histology images. We combined the polarized hyperspectral imaging microscope with machine learning algorithms for automatic detection of SCC on H&E-stained tissue slides. We also investigated the ability of deep learning algorithms to classify the data collected with the polarized hyperspectral imaging microscope. To the best of our knowledge, this is the first study to use polarized hyperspectral imaging for the detection of head and neck cancer based on full Stokes polarized hyperspectral imaging datasets, with the assistance of deep learning methods. Our home-made polarized hyperspectral microscope has the potential to automatically identify whether an H&E-stained tissue slide contains tumor and to outline the tumor boundary on the slide, thus providing an innovative solution for automatic histopathology analysis.

2. METHODS

2.1. Polarized hyperspectral imaging

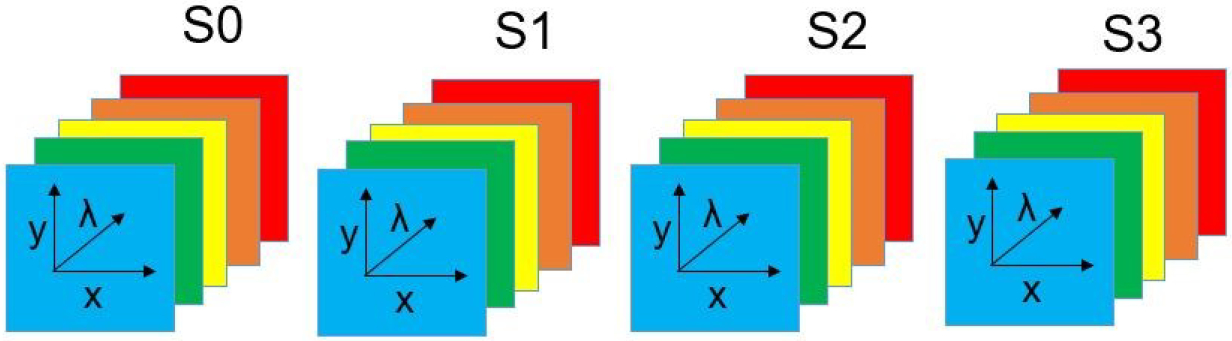

The setup of our home-made polarized hyperspectral microscope has been described in [28]. The system is capable of full Stokes polarized light hyperspectral imaging, which acquires the images of the four Stokes vector parameters (S0, S1, S2, and S3) in the wavelength range from 467 nm to 750 nm. The images were collected under 10× magnification with an image size of 1200 × 1200 pixels, and the field of view of the imaging system was 656 μm × 656 μm. The core components of the imaging system include an optical microscope, two polarizers, two liquid crystal variable retarders (LCVRs), and a SnapScan hyperspectral camera. The LCVRs and polarizers in the system are used for polarized light imaging. The SnapScan hyperspectral camera acquires data by translating the imaging sensor inside the camera. The polarized light imaging components and hyperspectral imaging components work together during image acquisition to obtain the Stokes vector parameters in the visible wavelength range. In the polarized hyperspectral imaging dataset obtained by the system, each Stokes vector parameter corresponds to a 3D data cube with two spatial dimensions and one spectral dimension, as shown in Figure 1.

Figure 1.

Diagram of full-polarization hyperspectral imaging data cubes. The data cube of each Stokes parameter (S0, S1, S2, and S3) has three dimensions including two spatial dimensions (x, y) and one spectral dimension (λ).

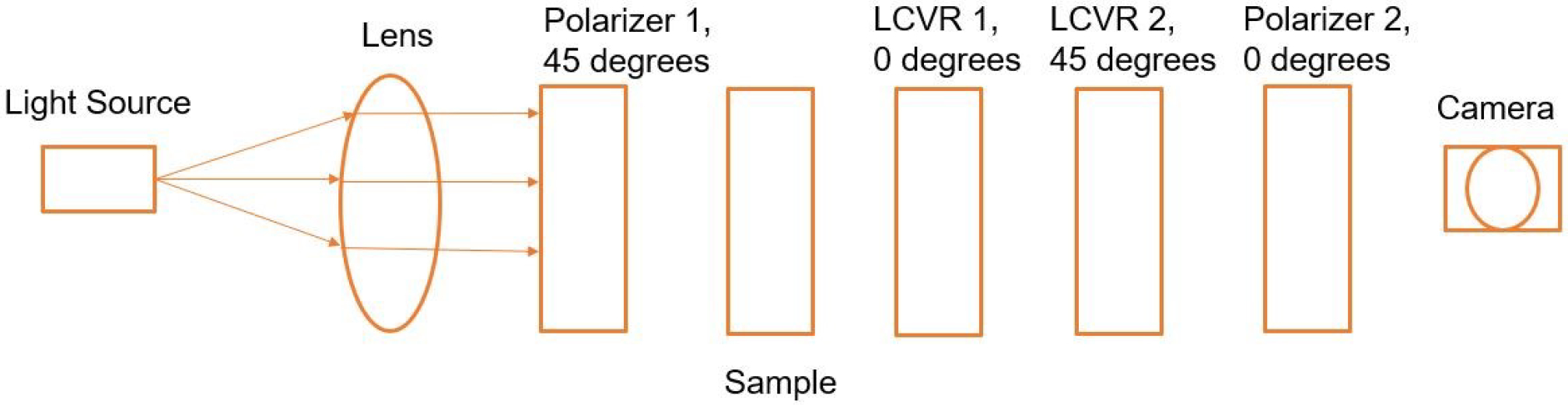

Polarized light imaging is realized by the two polarizers and two LCVRs. Figure 2 shows the schematic of the imaging system with the axis orientations of the polarizers and LCVRs. Polarizer 1 was set at 45 degrees, and polarizer 2 was set at 0 degrees. LCVR 1 was set at 0 degrees, and LCVR 2 was set at 45 degrees. The system is capable of full Stokes polarimetric imaging, which produces all four components of the Stokes vector. Thus, the system can completely define the polarization properties of the transmitted light. The four elements of the Stokes vector (S0, S1, S2, and S3) are calculated according to equation (1):

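$$
\mathbf{S} = \begin{bmatrix} S_0 \\ S_1 \\ S_2 \\ S_3 \end{bmatrix} = \begin{bmatrix} I_h + I_v \\ I_h - I_v \\ 2I_{45} - (I_h + I_v) \\ 2I_{rc} - (I_h + I_v) \end{bmatrix} \tag{1}
$$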

where Ih represents the light intensity measured with a horizontal linear analyzer, in which the retardations of LCVR 1 and LCVR 2 are both set at 0 rad; Iv represents the light intensity measured with a vertical linear analyzer, in which LCVR 1 is set at 0 rad retardation and LCVR 2 is set at π rad retardation; I45 represents the light intensity measured with a linear analyzer oriented at 45 degrees, in which LCVR 1 and LCVR 2 are both set at π/2 rad retardation; and Irc represents the light intensity measured with a right circular analyzer, in which LCVR 1 is set at 0 rad retardation and LCVR 2 is set at π/2 rad retardation. The phase retardation of each LCVR is determined by the voltage applied to it. In addition, the value of S0 is equal to the total light intensity.
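As a minimal sketch of how the four intensity measurements defined above can be combined, the following Python function applies the standard full-Stokes relations (S0 = Ih + Iv, S1 = Ih − Iv, S2 = 2·I45 − S0, S3 = 2·Irc − S0) to a single pixel at a single wavelength. The function name, the assumption of ideal analyzers, and the sample values are illustrative and are not taken from our acquisition software; for a full data cube, the same arithmetic would be applied element-wise.

```python
def stokes_from_intensities(i_h, i_v, i_45, i_rc):
    """Return (S0, S1, S2, S3) for one pixel at one wavelength.

    Assumes ideal analyzers, so that the total intensity equals I_h + I_v.
    """
    s0 = i_h + i_v          # total intensity
    s1 = i_h - i_v          # horizontal minus vertical linear component
    s2 = 2.0 * i_45 - s0    # +45 degree minus -45 degree linear component
    s3 = 2.0 * i_rc - s0    # right minus left circular component
    return s0, s1, s2, s3


# Hypothetical example values (not measured data).
horizontal = stokes_from_intensities(i_h=1.0, i_v=0.0, i_45=0.5, i_rc=0.5)
unpolarized = stokes_from_intensities(i_h=0.5, i_v=0.5, i_45=0.5, i_rc=0.5)
```

For fully horizontally polarized light only S0 and S1 are non-zero, while for unpolarized light only S0 is non-zero, which is a quick sanity check on the sign conventions.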

Figure 2.

Schematic of the polarized light imaging system. The axis orientation of polarizer 1 was set at 45 degrees, and that of polarizer 2 was set at 0 degrees. The fast axis orientation of LCVR 1 was set at 0 degrees, and that of LCVR 2 was set at 45 degrees.

2.2. Sample preparation and data acquisition

Fresh surgical tissue samples were obtained from patients who underwent surgical resection of head and neck cancer, as we described earlier [32]. From each patient, a sample of the primary tumor, a normal tissue sample, and a sample at the tumor-normal margin were collected. Fresh ex vivo tissues were formalin fixed, paraffin embedded, sectioned, stained with hematoxylin and eosin, and digitized using whole-slide scanning. Then, a board-certified pathologist with expertise in head and neck pathology outlined the cancer margin on the digital slides using Aperio ImageScope (Leica Biosystems Inc, Buffalo Grove, IL, USA). The annotations were used as the histology ground truth in this study.

In the image acquisition process, we acquired images of Ih, Iv, I−45, Ilc, and then calculated the Stokes vector parameters (S0, S1, S2, S3). We collected the image data from H&E-stained tissue slides of 17 patients with squamous cell carcinoma. The areas imaged on normal tissue slides were selected from healthy stratified squamous epithelium, and the areas imaged on cancerous tissue slides were selected at or close to cancer nests. We cropped the original images of 1200 × 1200 pixels into image patches of 96 × 96 pixels.
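The cropping step can be sketched as follows. This is an illustration only: it assumes non-overlapping tiles and that incomplete tiles at the image border are discarded, neither of which is specified above, and the function names are hypothetical. Under these assumptions a 1200 × 1200 image yields 12 × 12 = 144 patches, and the last 48 pixels along each axis are not used.

```python
def patch_origins(image_size=1200, patch_size=96, stride=None):
    """Return the top-left (row, col) corner of every complete patch."""
    if stride is None:
        stride = patch_size  # assumption: non-overlapping tiles
    last_start = image_size - patch_size
    starts = range(0, last_start + 1, stride)
    return [(r, c) for r in starts for c in starts]


def crop_patches(image, patch_size=96, stride=None):
    """Crop a square image (a list of rows) into square patches."""
    origins = patch_origins(len(image), patch_size, stride)
    return [
        [row[c:c + patch_size] for row in image[r:r + patch_size]]
        for r, c in origins
    ]


origins = patch_origins()

# Tiny hypothetical 4 x 4 "image" cropped into 2 x 2 patches.
toy_image = [[4 * r + c for c in range(4)] for r in range(4)]
toy_patches = crop_patches(toy_image, patch_size=2)
```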

2.3. Synthetic RGB images

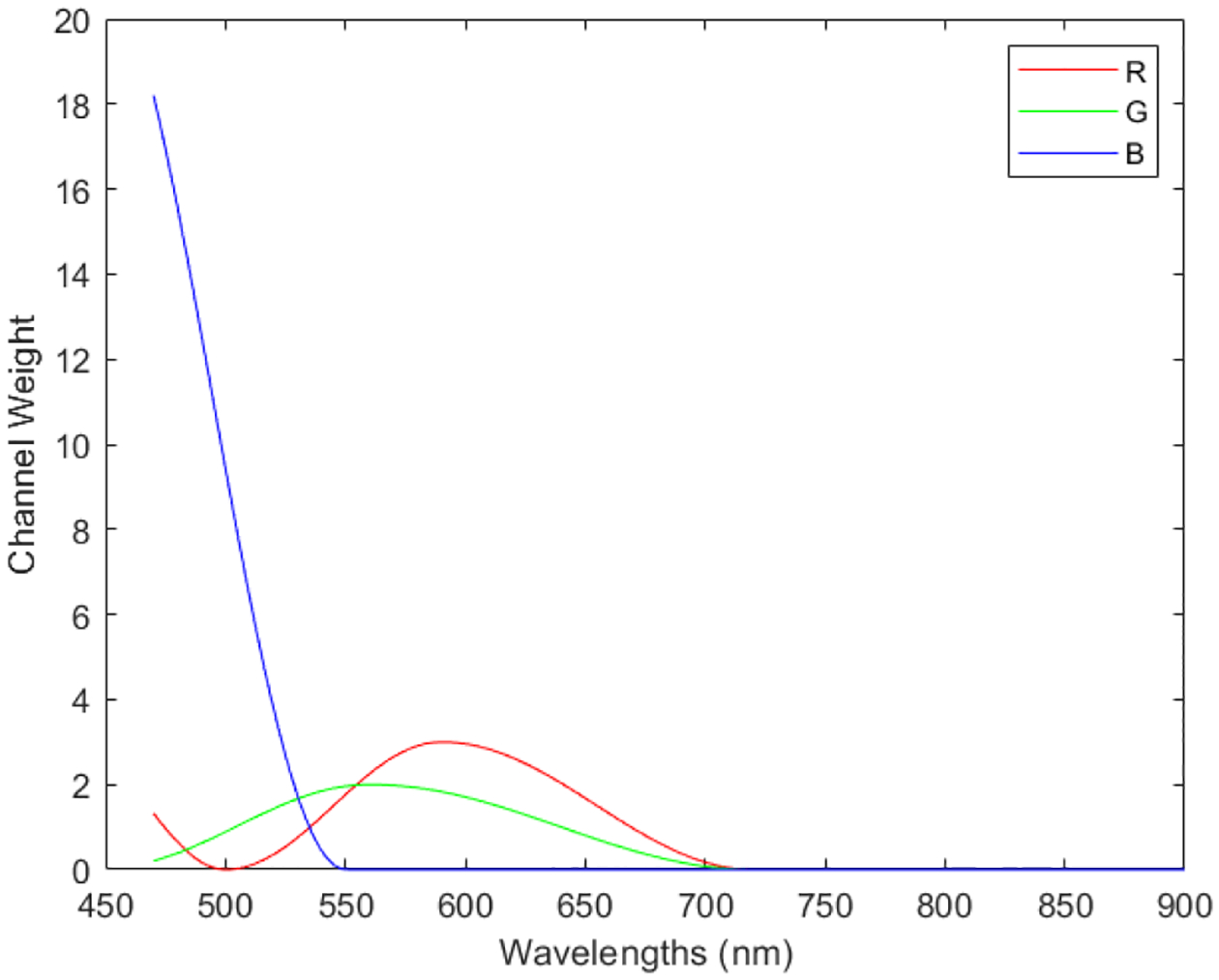

To generate synthetic RGB images, we adopted an HSI-to-RGB transformation function that is similar to the spectral response of the human eye and modified it for our data [33]. The transformation function is shown in Figure 3. We applied this HSI-to-RGB transformation function to all four Stokes vector parameters (S0, S1, S2, and S3) to generate four sets of PHSI-synthesized RGB images.

Figure 3.

Transformation function to synthesize pseudo-RGB images from the polarized hyperspectral data cubes.
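To illustrate the general idea of an HSI-to-RGB transformation, the sketch below collapses the spectrum of one pixel into three channel values by weighted sums over wavelength. The Gaussian channel responses, their center wavelengths and width, and the 5 nm band spacing are placeholder assumptions chosen for illustration; they are not the transformation function shown in Figure 3.

```python
import math


def gaussian_weights(wavelengths, center_nm, width_nm):
    """Gaussian channel response sampled at the bands, normalized to sum to 1."""
    raw = [math.exp(-0.5 * ((w - center_nm) / width_nm) ** 2) for w in wavelengths]
    total = sum(raw)
    return [value / total for value in raw]


def spectrum_to_rgb(spectrum, wavelengths,
                    centers_nm=(610.0, 540.0, 480.0), width_nm=40.0):
    """Collapse one pixel spectrum into (R, G, B) by weighted sums.

    centers_nm and width_nm are hypothetical stand-ins for the real curves.
    """
    channels = []
    for center in centers_nm:
        weights = gaussian_weights(wavelengths, center, width_nm)
        channels.append(sum(w * s for w, s in zip(weights, spectrum)))
    return tuple(channels)


# Hypothetical band centers covering roughly 467 nm to 750 nm.
wavelengths = [467.0 + 5.0 * k for k in range(57)]

flat_rgb = spectrum_to_rgb([1.0] * len(wavelengths), wavelengths)
reddish = [1.0 if w > 600.0 else 0.0 for w in wavelengths]
red_rgb = spectrum_to_rgb(reddish, wavelengths)
```

Because each channel's weights are normalized, a spectrally flat pixel maps to equal R, G, and B values, whereas a spectrum concentrated above 600 nm maps mostly to the red channel.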

2.4. Convolutional neural network classification

In this study, we trained our convolutional neural network (CNN) based on ResNet50 using a transfer learning strategy. A residual neural network (ResNet) is a type of deep convolutional neural network that uses skip connections to jump over some layers, which has been shown to mitigate the problems of vanishing gradients and degradation [34]. ResNet50 is a variant of the ResNet model that has 48 convolution layers along with 1 max pooling layer and 1 average pooling layer. Transfer learning is a strategy in machine learning that focuses on storing knowledge gained while solving one problem and applying it to a different but related problem [35]. Deep learning typically requires more training data and training time than conventional machine learning in tasks such as computer vision, sequential text processing, and audio processing. The weights of trained models can be saved and shared for others to use, and pre-trained models are now extensively used for transfer learning, an approach referred to as deep transfer learning.

First, we loaded the weights of the ResNet50 model trained on ImageNet. Second, we replaced the last predicting layer of the pre-trained ResNet50 model with a set of our own predicting layers, including a flatten layer, a dense layer, a batch normalization layer, an activation layer, a dropout layer, and another dense layer. Third, we froze the weights of the pre-trained ResNet50 model. The frozen pre-trained ResNet50 model was used as a feature extractor and was not updated during training. Finally, we trained our models and updated only the weights of our own predicting layers. Model training was implemented using Keras with a TensorFlow backend on a GeForce RTX 2080 GPU. The optimizer used in the model training was Adam, the loss function was binary cross-entropy, and the activation function of the output layer was sigmoid. Table 1 lists our CNN architecture and the number of parameters in each layer.

Table 1.

CNN architecture.

| Layer | Number of parameters |
|---|---|
| ResNet50 (Model) | 23587712 |
| Flatten | 0 |
| Dense | 524288 |
| BatchNormalization | 1024 |
| Activation (ReLU) | 0 |
| Dropout (0.5) | 0 |
| Dense | 257 |
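The parameter counts of the added layers in Table 1 can be checked with simple arithmetic. The sketch below assumes a 2048-element feature vector after the flatten layer and a 256-unit first dense layer without a bias term; these sizes are inferred from the counts themselves (2048 × 256 = 524288) rather than stated in the text, so they should be read as an assumption.

```python
def dense_params(n_in, n_out, use_bias=True):
    """Weights plus optional biases of a fully connected layer."""
    return n_in * n_out + (n_out if use_bias else 0)


def batch_norm_params(n_features):
    """Scale, shift, moving mean, and moving variance per feature."""
    return 4 * n_features


FEATURES = 2048  # assumed length of the flattened ResNet50 output
HIDDEN = 256     # assumed width of the first dense layer
BACKBONE = 23587712  # ResNet50 count as listed in Table 1

head = {
    "Dense (hidden)": dense_params(FEATURES, HIDDEN, use_bias=False),
    "BatchNormalization": batch_norm_params(HIDDEN),
    "Dense (output)": dense_params(HIDDEN, 1),
}
head_total = sum(head.values())
model_total = BACKBONE + head_total
```

With these assumed sizes the three counts reproduce the Table 1 entries (524288, 1024, and 257), and the added layers account for only about 2% of all parameters in the model.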

Image patches (96 × 96 pixels) generated from the synthetic RGB images of S0, S1, S2, and S3 were used for training the CNN. We took accuracy as the evaluation metric, which is defined as the ratio of the number of correctly classified image patches to the total number of image patches in the ground truth, as is expressed in equation (2):

$$
\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{2}
$$

where true positive (TP) refers to the number of correctly classified cancerous image patches, true negative (TN) refers to the number of correctly classified normal image patches, false positive (FP) refers to the number of normal image patches classified as cancerous, and false negative (FN) refers to the number of cancerous image patches classified as normal.
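As a small worked example of this metric, the sketch below counts TP, TN, FP, and FN from patch-level labels and computes the accuracy as (TP + TN) divided by the total number of patches. The labels (1 for cancerous, 0 for normal) are made-up values for illustration, and the function names are hypothetical.

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, TN, FP, FN) for binary labels, with 1 meaning cancerous."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def accuracy(tp, tn, fp, fn):
    """Fraction of correctly classified patches."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("at least one image patch is required")
    return (tp + tn) / total


# Hypothetical ground truth and predictions for eight patches.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
counts = confusion_counts(y_true, y_pred)
example_accuracy = accuracy(*counts)
```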

3. RESULTS

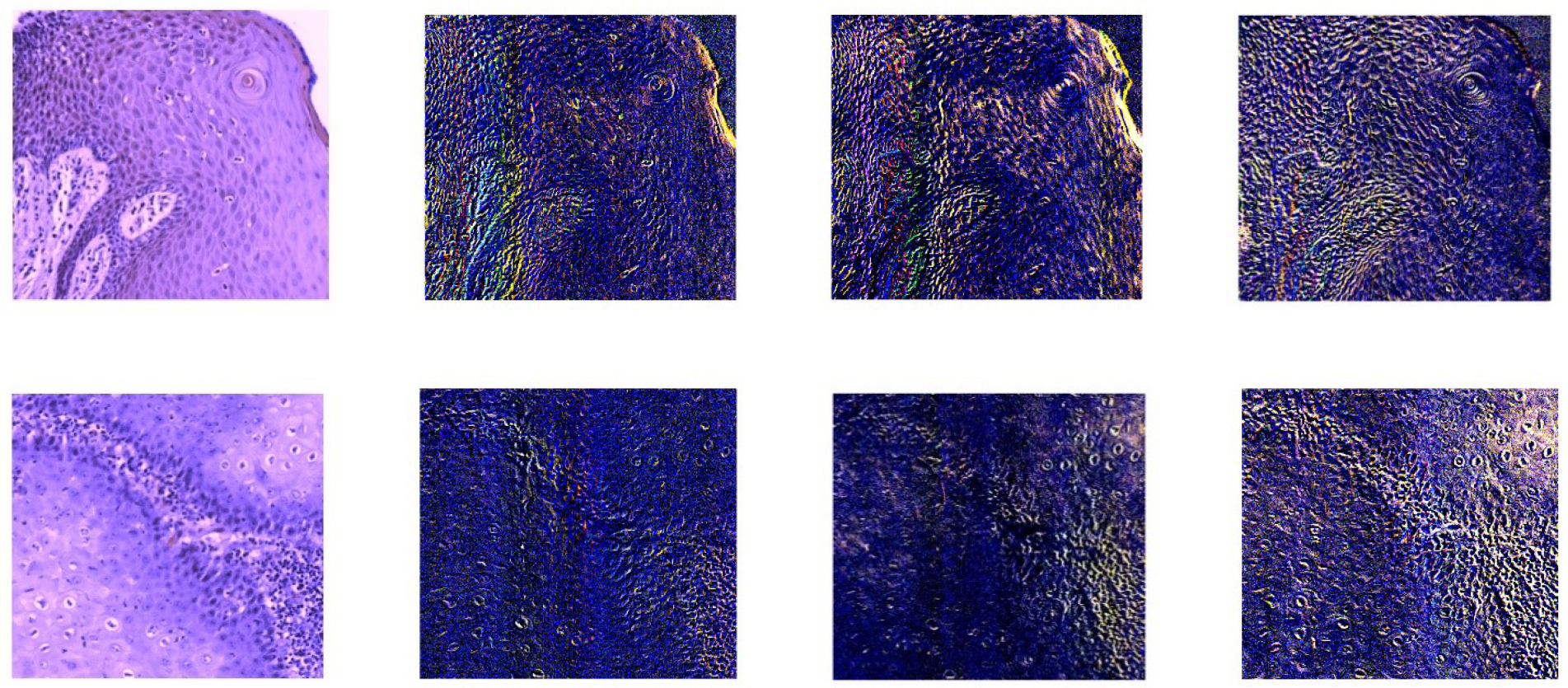

3.1. Comparison of Stokes vector parameters

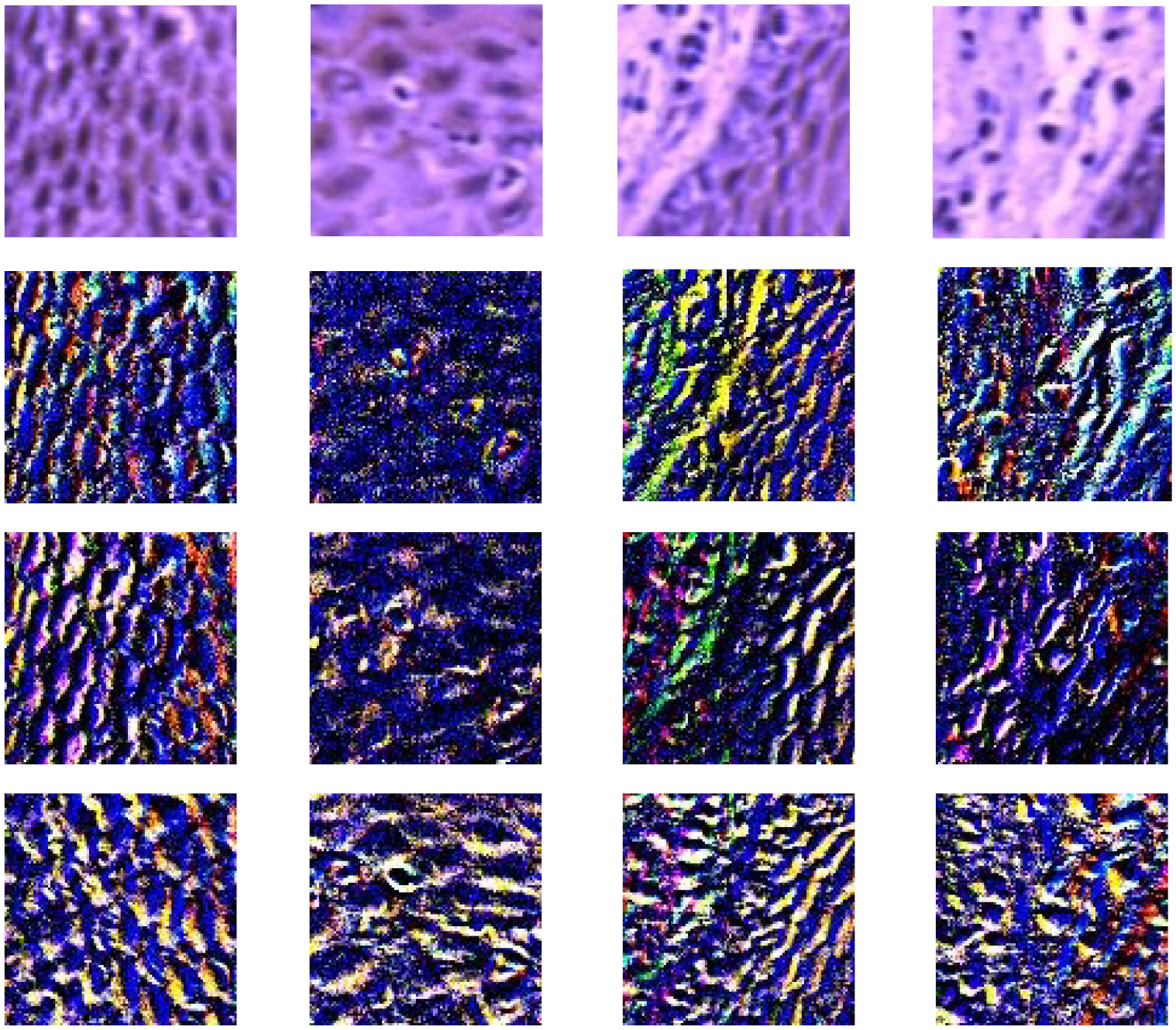

Figure 4 shows the PHSI-synthesized RGB images of the Stokes vector parameters (S0, S1, S2, and S3) from a normal image of the normal slide and a cancerous image of the tumor slide from the same patient. The value of S0 is equal to the light intensity, so the PHSI-synthesized image of S0 looks very similar to images of H&E-stained tissue slides acquired by RGB cameras. In the H&E staining process, hematoxylin stains cell nuclei a purplish blue, and eosin stains the extracellular matrix and cytoplasm pink, with other structures taking on different shades, hues, and combinations of these colors [36]. The overall image intensities of the PHSI-synthesized RGB images of S1, S2, and S3 are all lower than that of S0, as they represent the differences (not the sum) between polarized light components. Compared with the PHSI-synthesized RGB images of S0, the PHSI-synthesized RGB images of S1, S2, and S3 lose some of the purplish blue and pink color information. However, they retain clear structural information and image contrast among cell nuclei, extracellular matrix, and cytoplasm.

Figure 4.

The PHSI-synthesized RGB images of S0, S1, S2, and S3 (left to right) from a normal area (top) and a cancerous area (bottom) of the same patient.

Figure 5 shows the cropped PHSI-synthesized RGB image patches (96×96 pixels) of S0, S1, S2, and S3 from a normal image of a patient. More detailed image features are visible in the PHSI-synthesized RGB image patches. The 4 columns of images shown in Figure 5 belong to 4 different regions of the slide. In each column, we can observe that different Stokes vector parameters preserve different types of structural information on the tissue slide, such as the orientation of the extracellular matrix (ECM) and the contours of cells.

Figure 5.

Four columns of cropped PHSI-synthesized RGB image patches (96×96 pixels) (S0, S1, S2, and S3) showing four different regions on one normal tissue slide.

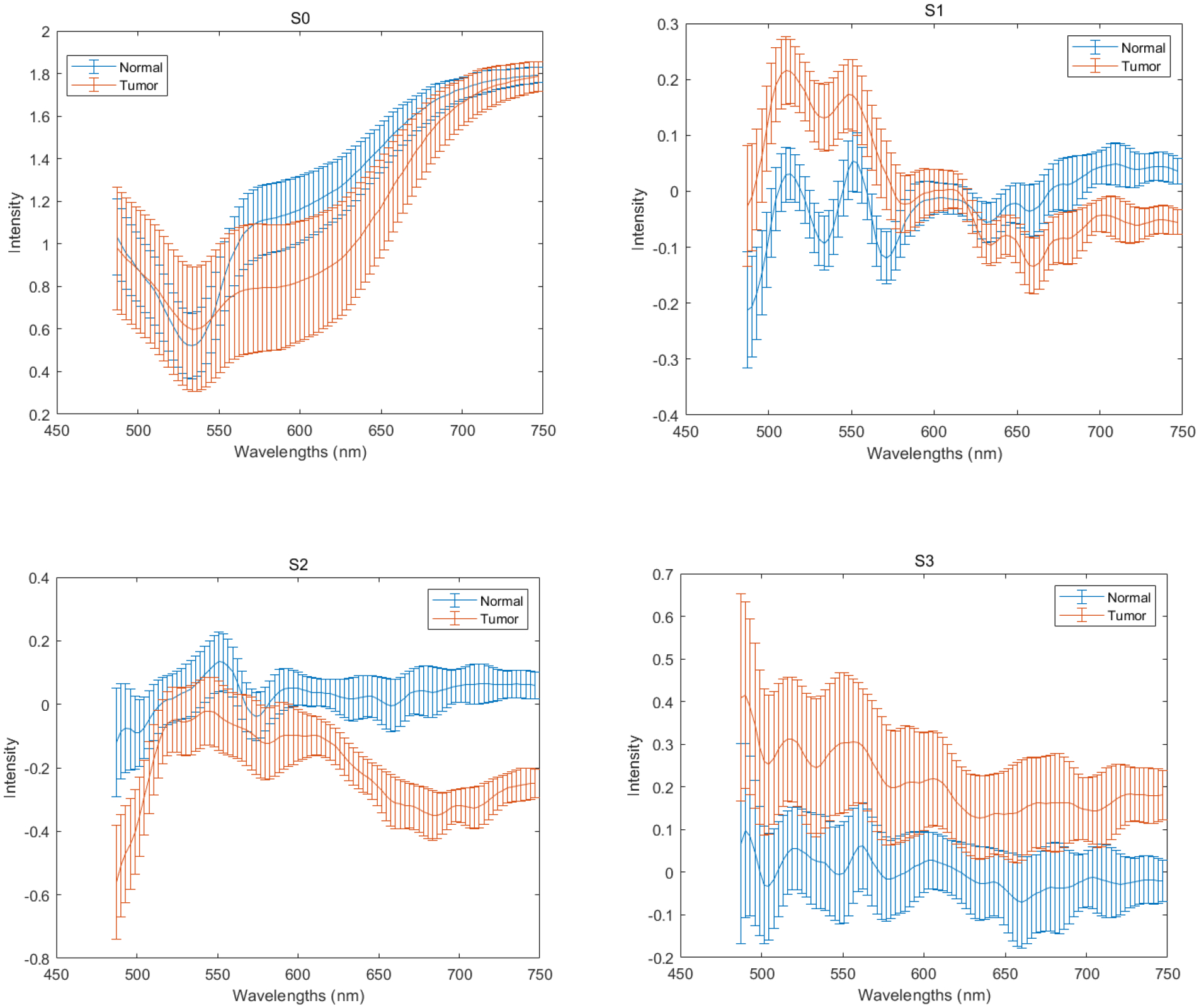

Figure 6 shows the spectra of the Stokes vector parameters (S0, S1, S2, and S3) from a normal image and a tumor image of one patient acquired with the PHSI microscope. Each spectrum is plotted as the average and standard deviation over an area of 200×200 pixels in the image. Differences among the spectra can be observed for S0, S1, S2, and S3.

Figure 6.

The spectra for the Stokes vector parameters (S0, S1, S2, and S3) from the acquired normal image and the tumor image of one patient
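The per-band statistics behind such a plot can be sketched as follows. The data layout (`cube[band][row][col]`), the function name, and the use of the population standard deviation are assumptions made for illustration; the toy cube stands in for a real 200×200 pixel region.

```python
from statistics import mean, pstdev


def region_spectrum(cube, row0, col0, size=200):
    """Return a list of (mean, std) per band over a square region.

    cube is indexed as cube[band][row][col] (assumed layout).
    """
    stats = []
    for band in cube:
        values = [
            value
            for row in band[row0:row0 + size]
            for value in row[col0:col0 + size]
        ]
        stats.append((mean(values), pstdev(values)))
    return stats


# Hypothetical 2-band, 2 x 2 pixel cube used in place of real data.
toy_cube = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[0.0, 2.0], [2.0, 0.0]],
]
toy_stats = region_spectrum(toy_cube, row0=0, col0=0, size=2)
```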

3.2. Performances of the convolutional neural network

Leave-patient-out cross validation was conducted on image patches from 17 patients based on the CNN architecture described above. The patient numbers are as follows: 62, 68, 74, 103, 110, 120, 127, 133, 134, 137, 146, 149, 154, 161, 166, 184, 187. We used the PHSI-synthesized RGB image patches of S0, S1, S2, and S3 belonging to patients 74, 103, 127, 134, 161, and 166 to calculate the testing accuracies. Each time, we used the image patches of a single parameter (S0, S1, S2, or S3) from one patient as the testing data and the image patches of the same parameter from the remaining 16 patients as the training data. In the training process, we implemented 8-fold cross validation. In each fold, we randomly chose 2 patients for validation to avoid bias. The testing accuracies are listed in Table 2.
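The patient-level split described above can be sketched as follows. The sketch assumes that the eight validation pairs form a random partition of the 16 remaining patients, which is one possible reading of the procedure; the function name and the random seed are hypothetical.

```python
import random

PATIENTS = [62, 68, 74, 103, 110, 120, 127, 133, 134,
            137, 146, 149, 154, 161, 166, 184, 187]


def leave_patient_out(patients, test_patient, n_folds=8, seed=0):
    """Return (train, validation) patient lists for one held-out test patient."""
    remaining = [p for p in patients if p != test_patient]
    rng = random.Random(seed)  # fixed seed only for reproducibility
    rng.shuffle(remaining)
    fold_size = len(remaining) // n_folds
    folds = []
    for k in range(n_folds):
        validation = remaining[k * fold_size:(k + 1) * fold_size]
        train = [p for p in remaining if p not in validation]
        folds.append((sorted(train), sorted(validation)))
    return folds


folds = leave_patient_out(PATIENTS, test_patient=74)
validated = sorted(p for _, validation in folds for p in validation)
```

Splitting by patient rather than by patch keeps all patches of the test patient out of both the training and the validation data.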

Table 2.

Testing accuracies for S0, S1, S2, and S3 of patients 74, 127, 134, 166, 161, and 103.

| Patient # | S0 | S1 | S2 | S3 |
|---|---|---|---|---|
| 74 | 93.3% | 71.1% | 40.9% | 96.0% |
| 127 | 85.2% | 55.6% | 64.8% | 87.3% |
| 134 | 80.2% | 51.6% | 66.7% | 82.8% |
| 166 | 79.0% | 16.3% | 69.2% | 86.7% |
| 161 | 80.5% | 61.4% | 52.8% | 74.8% |
| 103 | 82.9% | 73.0% | 61.3% | 77.5% |
| Average | 83.5% | 54.8% | 59.3% | 84.2% |

The results show that the average testing accuracy on S3 (84.2%) was slightly higher than that on S0 (83.5%). This suggests the potential of using polarized hyperspectral imaging and deep learning to increase the accuracy of automatic detection of head and neck SCC on pathologic slides. Furthermore, the testing accuracies of S3 (96.0%, 87.3%, 82.8%, and 86.7%) exceeded those of S0 (93.3%, 85.2%, 80.2%, and 79.0%) for 4 of the 6 test patients (patients 74, 127, 134, and 166). In contrast, the average testing accuracies of S1 (54.8%) and S2 (59.3%) were both lower than that of S0 (83.5%).
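The summary figures quoted above can be recomputed directly from the per-patient values in Table 2, as in the short check below. The variable names are illustrative.

```python
# Per-patient testing accuracies in percent, copied from Table 2.
ACCURACY = {  # patient: (S0, S1, S2, S3)
    74: (93.3, 71.1, 40.9, 96.0),
    127: (85.2, 55.6, 64.8, 87.3),
    134: (80.2, 51.6, 66.7, 82.8),
    166: (79.0, 16.3, 69.2, 86.7),
    161: (80.5, 61.4, 52.8, 74.8),
    103: (82.9, 73.0, 61.3, 77.5),
}

# Column-wise averages, rounded to one decimal place as in Table 2.
averages = [
    round(sum(row[i] for row in ACCURACY.values()) / len(ACCURACY), 1)
    for i in range(4)
]

# Patients for whom S3 gave a higher testing accuracy than S0.
s3_wins = [patient for patient, row in ACCURACY.items() if row[3] > row[0]]
```

The recomputed averages match the last row of Table 2, and S3 exceeds S0 for patients 74, 127, 134, and 166, while S0 is higher for patients 161 and 103.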

4. DISCUSSION AND CONCLUSION

To the best of our knowledge, this is the first work to use Stokes vector based polarized hyperspectral imaging and deep learning in cancer histopathology. The major innovative aspect of this work is the automatic detection of head and neck cancer on pathologic slides using deep learning and different Stokes vector parameters. Our experiment demonstrates the feasibility of using polarized hyperspectral imaging and deep learning for automatic detection of head and neck SCC on pathologic slides.

The current deep learning classifiers were set up only on the PHSI-synthesized RGB images of the Stokes vector parameters and therefore did not use all the wavelength information contained in the Stokes vector data cubes. In future studies, we will build deep learning classifiers based on the complete PHSI data cubes of the four Stokes vector parameters (S0, S1, S2, and S3) from more patients and cancer types, and we will quantitatively compare the performance of the different classifiers.

The current classifiers were trained only on the separate Stokes vector parameters. The preliminary results shown in this paper indicate that each Stokes vector parameter contains its own structural and wavelength information in the PHSI data cubes. Therefore, it is worthwhile to build a deep learning classifier that is capable of using all the information from the four Stokes vector parameters (S0, S1, S2, and S3) for the classification task. One feasible solution might be an ensemble learning strategy. In addition, the images we collected from each tissue slide did not cover all the regions on the slide, and we will acquire more images from all the regions on the tissue slides to generate whole slide images. We aim to show that our home-made polarized hyperspectral microscope has the potential not only to automatically identify whether an H&E-stained tissue slide contains tumor, but also to outline the tumor boundary on the slide by using whole slide images.
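One simple form such an ensemble could take is soft voting, in which the tumor probabilities predicted for the same patch by the four per-parameter classifiers are averaged. The sketch below is a hypothetical illustration of that idea and not a method evaluated in this study; the function name, the equal default weights, the 0.5 threshold, and the example probabilities are all assumptions.

```python
def soft_vote(probabilities, weights=None, threshold=0.5):
    """Combine per-parameter tumor probabilities for one image patch.

    probabilities maps a Stokes parameter name to a sigmoid output in [0, 1].
    Returns the weighted mean probability and the resulting binary decision.
    """
    if weights is None:
        weights = {name: 1.0 for name in probabilities}  # equal weights
    total_weight = sum(weights[name] for name in probabilities)
    score = sum(weights[name] * p for name, p in probabilities.items()) / total_weight
    return score, score >= threshold


# Hypothetical classifier outputs for a single patch.
patch_probabilities = {"S0": 0.9, "S1": 0.4, "S2": 0.5, "S3": 0.8}
score, is_tumor = soft_vote(patch_probabilities)

# Hypothetical weighting that relies only on S0 and S3.
weighted_score, _ = soft_vote(
    patch_probabilities, weights={"S0": 1.0, "S1": 0.0, "S2": 0.0, "S3": 1.0}
)
```

Non-uniform weights are one way to reduce the influence of parameters whose individual classifiers perform poorly, although any weights would need to be chosen on validation data rather than on the test patients.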

ACKNOWLEDGMENTS

This research was supported in part by the U.S. National Institutes of Health (NIH) grants (R01CA156775, R01CA204254, R01HL140325, and R21CA231911) and by the Cancer Prevention and Research Institute of Texas (CPRIT) grant RP190588.

REFERENCES

- 1. Johnson Daniel E, et al., “Head and neck squamous cell carcinoma,” Nature Reviews Disease Primers, 6(1), 1–22 (2020).

- 2. Ortega Samuel, et al., “Hyperspectral and multispectral imaging in digital and computational pathology: a systematic review,” Biomedical Optics Express, 11(6), 3195–3233 (2020).

- 3. Gao Liang, and Wang Lihong V., “A review of snapshot multidimensional optical imaging: measuring photon tags in parallel,” Physics reports, 616, 1–37 (2016).

- 4. Lu G, and Fei B, “Medical hyperspectral imaging: a review,” Journal of biomedical optics, 19(1), 010901 (2014).

- 5. Yushkov KB, and Molchanov VY, “Hyperspectral imaging acousto-optic system with spatial filtering for optical phase visualization,” Journal of biomedical optics, 22(6), 066017 (2017).

- 6. Salmivuori M, et al., “Hyperspectral Imaging System in the Delineation of Ill-defined Basal Cell Carcinomas: A Pilot Study,” Journal of the European Academy of Dermatology and Venereology (2018).

- 7. Lu G, et al., “Hyperspectral imaging for cancer surgical margin delineation: registration of hyperspectral and histological images,” Medical Imaging 2014: Image-Guided Procedures, Robotic Interventions, and Modeling, International Society for Optics and Photonics, 9036 (2014).

- 8. Lu G, et al., “Spectral-spatial classification using tensor modeling for cancer detection with hyperspectral imaging,” Medical Imaging 2014: Image Processing, 903413 (2014).

- 9. Pike R, et al., “A minimum spanning forest based hyperspectral image classification method for cancerous tissue detection,” Medical Imaging 2014: Image Processing, 90341W (2014).

- 10. Pike R, et al., “A Minimum Spanning Forest-Based Method for Noninvasive Cancer Detection With Hyperspectral Imaging,” IEEE Trans. Biomed. Engineering, 63(3), 653–663 (2016).

- 11. Lu G, et al., “Estimation of tissue optical parameters with hyperspectral imaging and spectral unmixing,” Medical Imaging 2015: Biomedical Applications in Molecular, Structural, and Functional Imaging, 94170Q (2015).

- 12. Chung H, et al., “Superpixel-based spectral classification for the detection of head and neck cancer with hyperspectral imaging,” Medical Imaging 2016: Biomedical Applications in Molecular, Structural, and Functional Imaging, 978813 (2016).

- 13. Halicek M, et al., “Tumor margin classification of head and neck cancer using hyperspectral imaging and convolutional neural networks,” Medical Imaging 2018: Image-Guided Procedures, Robotic Interventions, and Modeling, International Society for Optics and Photonics, 10576 (2018).

- 14. Halicek M, et al., “Deep convolutional neural networks for classifying head and neck cancer using hyperspectral imaging,” Journal of biomedical optics, 22(6), 060503 (2017).

- 15. Halicek M, et al., “Optical biopsy of head and neck cancer using hyperspectral imaging and convolutional neural networks,” Journal of biomedical optics, 24(3), 060503 (2019).

- 16. Halicek M, et al., “Hyperspectral imaging of head and neck squamous cell carcinoma for cancer margin detection in surgical specimens from 102 patients using deep learning,” Cancers, 11(9), (2019).

- 17. Halicek M, et al., “Tumor detection of the thyroid and salivary glands using hyperspectral imaging and deep learning,” Biomedical Optics Express, 11(3), (2020).

- 18. Anderson RR, “Polarized light examination and photography of the skin,” Arch. Dermatol, 127(7), 1000–1005 (1991).

- 19. Pierangelo A, et al., “Polarimetric imaging of uterine cervix: a case study,” Optics express, 21(12), 14120–14130 (2013).

- 20. Raković MJ, et al., “Light backscattering polarization patterns from turbid media: theory and experiment,” Applied optics, 38(15), 3399–3408 (1999).

- 21. Liu B, et al., “Mueller polarimetric imaging for characterizing the collagen microstructures of breast cancer tissues in different genotypes,” Optics Communications, 433, 60–67 (2019).

- 22. Chang J, et al., “Division of focal plane polarimeter-based 3×4 Mueller matrix microscope: a potential tool for quick diagnosis of human carcinoma tissues,” Journal of biomedical optics, 21(5), 056002 (2016).

- 23. Lindeboom JA, Mathura KR, and Ince C, “Orthogonal polarization spectral (OPS) imaging and topographical characteristics of oral squamous cell carcinoma,” Oral oncology, 42(6), 581–585 (2006).

- 24. Pahernik S, et al., “Orthogonal polarisation spectral imaging as a new tool for the assessment of antivascular tumour treatment in vivo: a validation study,” British journal of cancer, 86(10), 1622 (2002).

- 25. Roblyer DM, et al., “Multispectral optical imaging device for in vivo detection of oral neoplasia,” Journal of biomedical optics, 13(2), 024019 (2008).

- 26. Manhas S, et al., “Polarized diffuse reflectance measurements on cancerous and noncancerous tissues,” Journal of biophotonics, 2(10), 581–587 (2009).

- 27. Chung J, et al., “Use of polar decomposition for the diagnosis of oral precancer,” Applied optics, 46(15), 3038–3045 (2007).

- 28. Zhou Ximing, et al., “Development of a new polarized hyperspectral imaging microscope,” Imaging, Therapeutics, and Advanced Technology in Head and Neck Surgery and Otolaryngology 2020, Vol. 11213, International Society for Optics and Photonics (2020).

- 29. Dong Y, et al., “Quantitatively characterizing the microstructural features of breast ductal carcinoma tissues in different progression stages by Mueller matrix microscope,” Biomedical optics express, 8(8), 3643–3655 (2017).

- 30. Vasefi Fartash, et al., “Polarization-sensitive hyperspectral imaging in vivo: a multimode dermoscope for skin analysis,” Scientific reports, 4(1), 1–10 (2014).

- 31. Wang Z, et al., “Polarization-resolved hyperspectral stimulated Raman scattering microscopy for label-free biomolecular imaging of the tooth,” Applied Physics Letters, 108(3), 033701 (2016).

- 32. Lu G, et al., “Detection of head and neck cancer in surgical specimens using quantitative hyperspectral imaging,” Clinical Cancer Research, 5426–5436 (2017).

- 33. Ling Ma, et al., “Hyperspectral microscopic imaging for automatic detection of head and neck squamous cell carcinoma using histologic image and machine learning,” Medical Imaging 2020: Digital Pathology, Vol. 11320, International Society for Optics and Photonics (2020).

- 34. He Kaiming, et al., “Deep residual learning for image recognition,” Proceedings of the IEEE conference on computer vision and pattern recognition, 770–778 (2016).

- 35. West Jeremy, Ventura Dan, and Warnick Sean, “Spring research presentation: A theoretical foundation for inductive transfer,” Brigham Young University, College of Physical and Mathematical Sciences, 1(08), (2007).

- 36. Chan John KC, “The wonderful colors of the hematoxylin–eosin stain in diagnostic surgical pathology,” International journal of surgical pathology, 22(1), 12–32 (2014).