Abstract

Background

Transfer learning is a form of machine learning where a model pre-trained on a specific task is reused as a starting point and tailored to another task on a different dataset. While transfer learning has garnered considerable attention in medical image analysis, its use for clinical non-image data is not well studied. Therefore, the objective of this scoping review was to explore the use of transfer learning for non-image data in the clinical literature.

Methods and findings

We systematically searched medical databases (PubMed, EMBASE, CINAHL) for peer-reviewed clinical studies that used transfer learning on human non-image data.

We included 83 studies in the review. More than half of the studies (63%) were published within 12 months of the search. Transfer learning was most often applied to time series data (61%), followed by tabular data (18%), audio (12%) and text (8%). Thirty-three (40%) studies applied an image-based model to non-image data after transforming data into images (e.g. spectrograms). Twenty-nine (35%) studies did not have any authors with a health-related affiliation. Many studies used publicly available datasets (66%) and models (49%), but fewer shared their code (27%).

Conclusions

In this scoping review, we have described current trends in the use of transfer learning for non-image data in the clinical literature. We found that the use of transfer learning has grown rapidly within the last few years. We have identified studies and demonstrated the potential of transfer learning in clinical research in a wide range of medical specialties. More interdisciplinary collaborations and the wider adoption of reproducible research principles are needed to increase the impact of transfer learning in clinical research.

Introduction

There is no doubt that most clinicians will use technologies integrating artificial intelligence (AI) to automate routine clinical tasks in the future. In recent years, the U.S. Food and Drug Administration has been approving an increasing number of AI-based solutions, dominated by deep learning algorithms [1]. Examples include atrial fibrillation detection via smart watches, diagnosis of diabetic retinopathy based on fundus photographs, and other tasks involving pattern recognition [1]. In the past, the development of such algorithms would have taken an enormous effort, both regarding computational capacity and technical expertise. Nowadays, computational tools, including cloud computing, free software, and training materials are more easily accessible than ever before [2]. This means that more researchers, with more diverse backgrounds, have access to machine learning, and that they can focus more on the subject matter when developing AI-based solutions for clinical practice. Despite this trend, machine learning, neural networks, transfer learning, and other elements of AI still seem to be surrounded by mystery in the clinical research community, and AI has yet to reach its potential in the clinic [1].

A neural network is a type of machine learning model inspired by the structure of the human brain (S1 Glossary). In the simplest scenario, the input data flows through layers of artificial neurons, known as hidden layers. Each hidden neuron takes the results from the neurons in the previous layer, calculates a weighted sum, applies a nonlinear function, and feeds the resulting value forward to the next layer. The final layer transforms the results according to the prediction task, for example into probabilities when the task is to predict whether a lung nodule on a chest X-ray is malignant. Fitting or training a neural network means optimizing the weights, or parameters, against some performance metric. Deep learning refers to the use of neural networks with several hidden layers of neurons. In the last decade, several neural network architectures were designed, some consisting of more than 100 layers and tens of millions of weights [3]. In such a deep neural network, neurons at lower levels (i.e. closer to the input layer) ‘learn’ to recognize lower-level features in the data (e.g. circles, vertical and horizontal lines in images), which higher-level neurons combine into more complex features (e.g. a face or some text in an image) [4]. Neural networks are popular tools in machine learning as they can approximate virtually any complex nonlinear association. This flexibility, however, comes at a price, as fitting complex neural networks requires very large datasets, which limits the range of fields where their application is feasible.
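To make the description above concrete, the following is a minimal sketch of a forward pass through a small fully connected network, written in Python with NumPy. The layer sizes, the ReLU nonlinearity in the hidden layers and the sigmoid in the final layer are illustrative assumptions; the weights are random rather than trained, so the output is a valid probability but not a meaningful prediction.

```python
import numpy as np

def relu(z):
    # Nonlinear function applied to each hidden neuron's weighted sum
    return np.maximum(z, 0.0)

def sigmoid(z):
    # Transforms the final score into a probability between 0 and 1
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, layers):
    """Feed one input vector through a list of (weights, bias) pairs.

    Hidden layers: weighted sum followed by ReLU.
    Final layer: weighted sum followed by a sigmoid, read as the
    probability of a binary outcome (e.g. a malignant nodule).
    """
    h = x
    for weights, bias in layers[:-1]:
        h = relu(weights @ h + bias)
    weights, bias = layers[-1]
    return sigmoid(weights @ h + bias)

# Hypothetical architecture: 4 inputs, two hidden layers of 8 neurons, 1 output
sizes = [4, 8, 8, 1]
rng = np.random.default_rng(0)
# Random (untrained) weights; training would optimize these against a loss
layers = [(rng.normal(scale=0.5, size=(n_out, n_in)), np.zeros(n_out))
          for n_in, n_out in zip(sizes[:-1], sizes[1:])]

x = np.array([0.5, -1.2, 3.0, 0.1])
p = forward(x, layers)
print(p)  # one value strictly between 0 and 1
```

Training would adjust the entries of `layers` so that the outputs match observed outcomes; that optimization step is deliberately left out of this sketch.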

Transfer learning circumvents the above-mentioned limitation and unlocks the potential of machine learning for smaller datasets by reusing a pre-trained neural network, built for a specific task and typically on a very large dataset, on another dataset and potentially for a different task. The pre-trained model is also known as the source model, while the new dataset and task are referred to as the target data and target task. A common example is to take a computer vision model, trained to identify everyday objects in millions of images, and further train this model for grading diabetic retinopathy on only a few thousand fundus photographs, instead of training the model from scratch on this smaller dataset [5]. This example demonstrates a type of transfer learning known as fine-tuning, or weight or parameter transfer. Another type of transfer learning is feature-representation transfer, where the features from the hidden layers of a model are used as inputs for another model; other forms of transfer learning also exist [6–8].
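The difference between the two types of transfer learning can be sketched in a few lines of Python with NumPy. In this hypothetical example, `W1_source` and `b1_source` stand in for the hidden layer of a pre-trained source model (here they are random, not actually pre-trained), and the target data are synthetic. Feature-representation transfer keeps the source weights frozen and fits only a new output layer (a logistic regression) on the hidden-layer features, whereas fine-tuning starts from the source weights and continues updating all of them on the target data. This is a toy illustration of the two ideas, not the procedure used in any of the reviewed studies.

```python
import numpy as np

rng = np.random.default_rng(42)

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bce(p, y):
    # Binary cross-entropy; clipping avoids log(0)
    p = np.clip(p, 1e-12, 1 - 1e-12)
    return -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))

# Hypothetical stand-in for the hidden layer of a pre-trained source model.
# In practice these weights would come from training on a large source dataset.
n_features, n_hidden = 10, 16
W1_source = rng.normal(scale=0.5, size=(n_hidden, n_features))
b1_source = np.zeros(n_hidden)

# Small, synthetic target dataset with a binary outcome
X = rng.normal(size=(60, n_features))
y = (X[:, 0] + X[:, 1] > 0).astype(float)

def feature_transfer(X, y, W1, b1, lr=0.1, steps=500):
    """Freeze W1, b1 and fit only a new logistic-regression head."""
    H = relu(X @ W1.T + b1)            # frozen feature representation
    w, b = np.zeros(H.shape[1]), 0.0
    for _ in range(steps):
        grad = sigmoid(H @ w + b) - y  # d(loss)/d(score) for sigmoid + BCE
        w -= lr * H.T @ grad / len(y)
        b -= lr * grad.mean()
    return bce(sigmoid(H @ w + b), y)

def fine_tune(X, y, W1, b1, lr=0.1, steps=500):
    """Start from the source weights and update all weights on target data."""
    W1, b1 = W1.copy(), b1.copy()      # leave the source model untouched
    w, b = np.zeros(W1.shape[0]), 0.0
    for _ in range(steps):
        Z = X @ W1.T + b1
        H = relu(Z)
        grad = (sigmoid(H @ w + b) - y) / len(y)
        dZ = np.outer(grad, w) * (Z > 0)   # backpropagate through ReLU
        w -= lr * H.T @ grad
        b -= lr * grad.sum()
        W1 -= lr * dZ.T @ X
        b1 -= lr * dZ.sum(axis=0)
    return bce(sigmoid(relu(X @ W1.T + b1) @ w + b), y)

loss_feat = feature_transfer(X, y, W1_source, b1_source)
loss_ft = fine_tune(X, y, W1_source, b1_source)
print(f"feature transfer loss: {loss_feat:.3f}, fine-tuning loss: {loss_ft:.3f}")
```

Because the source weights in this sketch are random, the resulting losses say nothing about which approach is better; with a genuinely pre-trained source model, the choice would depend, among other things, on how similar the source and target tasks are and on how much target data is available.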

Applications of transfer learning are common in computer vision, where large datasets are publicly available to train models that can then be adapted to different domains. One of the most influential datasets is ImageNet, which includes more than a million images from everyday life [9]. Two recent scoping reviews focused on transfer learning for medical image analysis, one of which identified around a hundred articles applying ImageNet-based models to clinical prediction tasks [10, 11]. The number of published articles using transfer learning for medical image analysis approximately doubled every year in the last decade, demonstrating an increasing interest in transfer learning [10, 11]. To our knowledge, a comprehensive overview of the use of transfer learning for non-image data types is lacking, even though tabular and time series data seem to dominate the clinical literature.

Therefore, the objective of this scoping review was to fill this knowledge gap by exploring and characterizing studies that used transfer learning for non-image data in clinical research.

Methods

Scoping reviews identify available evidence, examine research practices, and characterize attributes related to a concept (i.e. transfer learning in our case) [12]. This format fits our research objective better than a traditional systematic review and meta-analysis, as the latter requires a more narrowly defined research question. During the process, we followed the ‘PRISMA for Scoping Reviews’ guidelines and the manual for conducting scoping reviews by the Joanna Briggs Institute [13, 14].

Eligibility criteria

In accordance with the aim of this review, we only wanted to include studies using transfer learning for a clinical purpose. Similarly, we were only interested in articles indexed in medical databases, rather than in purely technical databases such as the Institute of Electrical and Electronics Engineers (IEEE) Xplore Digital Library, with which most clinical researchers and practitioners might be unfamiliar. Moreover, such articles are often written in technical language, which limits their utility for the clinical research community. By only including articles indexed in medical databases, we could gauge how exposed clinical researchers are to clinical uses of transfer learning and could also provide a list of articles that can serve as inspiration to clinical researchers interested in transfer learning.

To be considered for inclusion, studies needed to 1) be published, peer-reviewed, written in English, and indexed in a medical database (defined as PubMed, EMBASE, or CINAHL) since database inception, 2) be a clinical study or focus on clinically relevant outcomes or measurements, 3) use data from human participants or synthetic data representing human participants as target data, 4) use transfer learning with either fine-tuning (parameter transfer) or feature-representation transfer, and 5) analyze non-image target data (text, time-series, tabular data, or audio). Accordingly, we excluded preprints and conference abstracts, basic research, cell studies, animal studies, and studies analyzing image data. Videos were considered as blends of audio and images; therefore, we did not include studies analyzing this type of target data. However, we did not exclude studies that converted non-image data into images (e.g. converting an audio file into a spectrogram) and then analyzed them using models from computer vision. Finally, we also excluded studies which combined source data with the target data as an integral part of their analysis as opposed to reusing only a source model. Eligibility criteria were predefined and the review protocol is available online [15].

Information sources and search strategy

We searched PubMed, EMBASE, and CINAHL from database inception until May 18, 2021. The search strategy was drafted by the authors with the assistance of an experienced librarian. The final search strategies for all databases are available online [15]. In brief, we searched for all studies that either specifically mentioned ‘transfer learning’ or included both a similar but less specific phrase (e.g. ‘transfer of learning’, ‘transfer weights’, ‘connectionist network’, etc.) and an AI-related keyword (e.g. ‘artificial intelligence’, ‘neural network’, ‘NLP’, etc.). The search strategy was supplemented by a call-out on Twitter by AH (@adamhulman) on May 25, 2021, and by scanning the references of relevant reviews found during the screening process. As per our aim, we did not include grey literature.

Selection of sources of evidence

The search results from each database were imported into the reference management software EndNote 20.1 (Clarivate Analytics, Philadelphia, PA, USA). Duplicate removal was done by AE and is documented online [15]. Thereafter, the records were transferred to Covidence (Veritas Health Innovation, Melbourne, Australia) for the screening process.

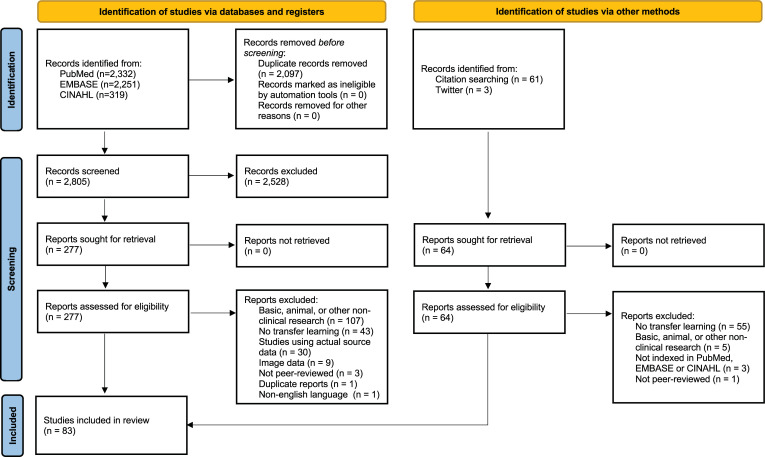

Abstracts and titles were screened against the predefined eligibility criteria by at least two independent authors (AE, MØT, OEA, AH). If an abstract and title clearly did not meet the inclusion criteria, the record was excluded. In case of uncertainty, the record was included for full-text screening. Next, full-text versions of all reports were retrieved if possible and assessed for eligibility. If a report was excluded at this step of the screening process, a reason was documented. At any stage of the screening process, conflicts were resolved through discussion between the dissenting authors. In case of no consensus, the last author (AH) made the final decision. The study selection process is presented using a PRISMA flow diagram [16] in Fig 1.

Fig 1. PRISMA flow diagram [16].

Data charting process

Research questions were pre-specified and published in the scoping review protocol [15]. Data of interest from each included study was extracted by two independent researchers using a pre-developed and tested data extraction form [15]. Disagreements were resolved as described above. Data on study characteristics, such as the study area within medicine, the affiliation of the authors (medical or technical departments), and the aim of the study, was extracted. Furthermore, extracted data included model characteristics, such as the method and origin of the transferred model, the type of transfer learning, the types of source and target data, and the advantages/disadvantages compared with a non-transfer learning method. Lastly, information on the reproducibility of the studies was recorded, i.e. the public availability of the data, the reused model, and the code for the analysis, as well as the software used. The studies are listed by field within medicine and data type in Table 1, and the complete dataset is available in the electronic supplement (S1 Data).

Table 1. Included articles by data type and primary medical specialty.

Some articles could have been assigned to more than one medical specialty, but for the sake of simplicity the authors chose a primary one by consensus.

Statistical analysis

Categorical variables were described with frequencies and percentages. The different combinations of source and target data were visualized using a Sankey diagram. Analyses were conducted in R 4.1.0 (R Foundation for Statistical Computing, Vienna, Austria) and Stata Statistical Software, Release 16.1 (StataCorp, College Station, Texas) was used for data management. The R code and the results are available as an RMarkdown document in the electronic supplement (S1 Code).
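The descriptive analyses were conducted in R; purely as an illustration in Python, frequencies and rounded percentages of a categorical variable can be obtained as follows, here using the counts of target data types reported in the Results. The function name `describe` is hypothetical and is not taken from the authors' supplementary code (S1 Code).

```python
from collections import Counter

def describe(values):
    """Return {category: (frequency, percentage)}, most frequent first."""
    counts = Counter(values)
    total = sum(counts.values())
    return {cat: (n, round(100 * n / total)) for cat, n in counts.most_common()}

# Target data types of the 83 included studies, as reported in the Results
target_types = (["time series"] * 51 + ["tabular"] * 15
                + ["audio"] * 10 + ["text"] * 7)

for category, (n, pct) in describe(target_types).items():
    print(f"{category}: {n} ({pct}%)")
```

Rounding each percentage separately means the percentages need not add up to exactly 100%, which is also why proportions such as 61%, 18%, 12% and 8% can sum to 99%.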

Results

The search resulted in 4,902 records, of which 2,097 were duplicates (Fig 1). After screening the remaining 2,805 records, 2,528 were excluded as irrelevant. Of the 277 records included for full-text review, 194 were excluded, mainly because they focused on basic, animal, or other non-clinical research (n = 107) or did not use transfer learning (n = 43). Another 64 records were identified in reviews or on Twitter, but none of the papers were eligible for inclusion. In total, 83 studies were included in this review (Table 1).

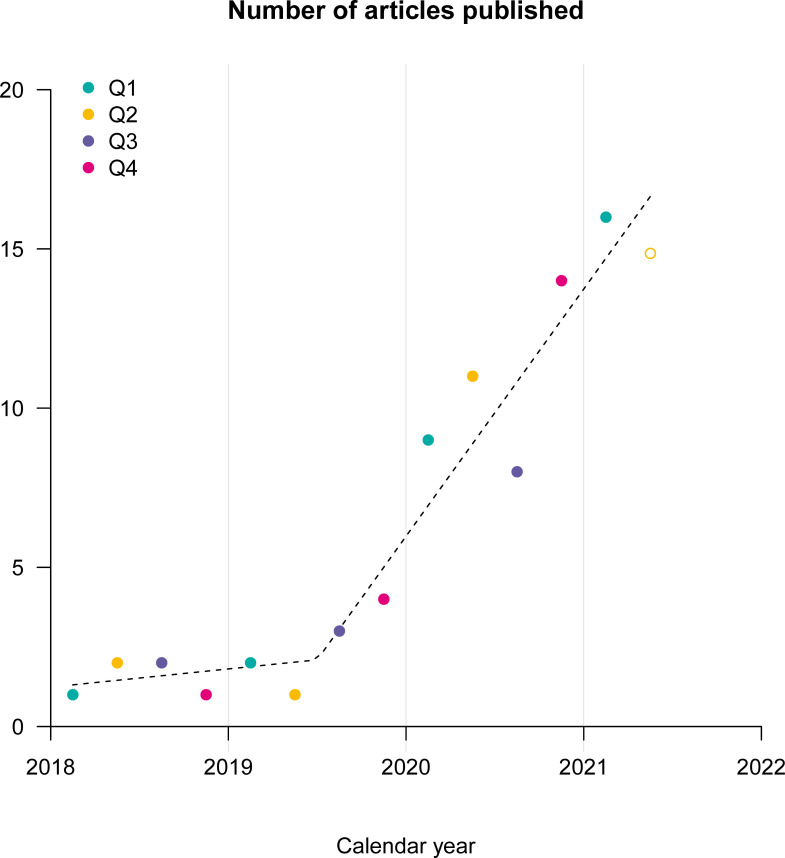

Only one of the identified studies [17] was published before 2018 (in 2012) and 52 out of 83 (63%) studies were published in the 12 months preceding the search conducted on 18th of May, 2021 (Fig 2). Seventeen (20%) studies were published in journals or proceedings of IEEE.

Fig 2. Number of articles published quarterly since 2018.

One article [17] published in 2012 is not shown on this graph. 2021/Q2 is an extrapolated value based on the number of articles in the observation period (01/03/21-18/05/21).

The most common fields of study were neurology (n = 26) and cardiology (n = 18), followed by genetics, infectious diseases and psychiatry (n = 5 each). In line with the medical specialties, analyses of electroencephalography (EEG) and electrocardiogram (ECG) data were common, with 20 and 19 studies, respectively. Study aims were most often described with the terms ‘prediction’, ‘detection’, and ‘classification’.

In total, 50 out of 83 (60%) studies included at least one author with a clinical affiliation and at least one with a technical affiliation. Studies whose authors had only technical affiliations were more common (29 out of 83; 35%) than studies whose authors had only clinical affiliations (4 out of 83; 5%), except among studies analyzing text, all of which were written by clinicians with or without technical co-authors.

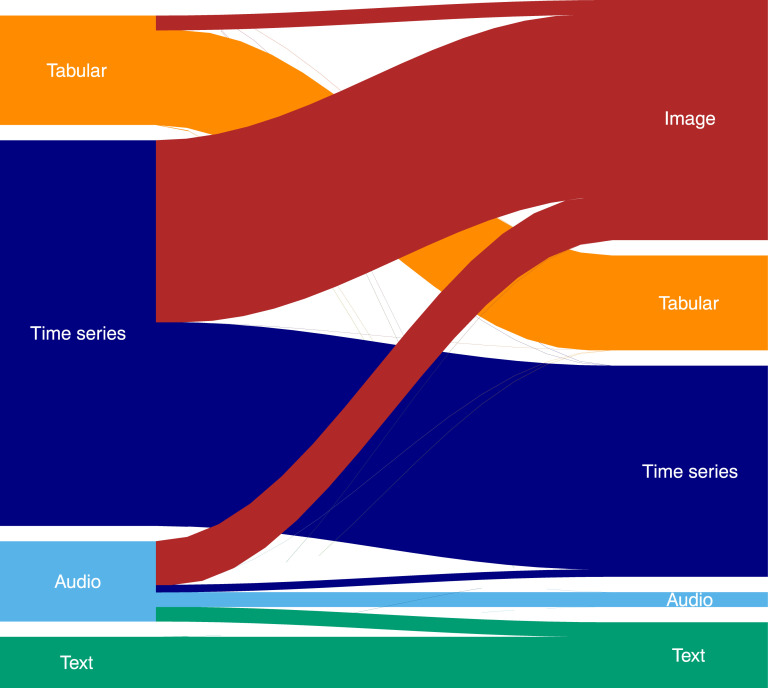

Time series was the most common target data type (n = 51; 61%), followed by tabular data (n = 15; 18%), audio (n = 10; 12%) and text (n = 7; 8%). The reused model was developed in a source dataset of a different type from the target data in 36 out of 83 (43%) of the studies (e.g. transforming time series to spectrograms before applying an image-based model). Transformation before analysis was often applied to time series (25 out of 51; 49%), while it was not used in the analysis of text at all. Image-based models were reused in 33 out of 83 (40%) studies, dominated by models developed in the ImageNet [9] dataset (n = 27). Fig 3 shows the different combinations of source and target data types.

Fig 3.

Visualization of data types (target) analyzed in the studies (on the left side) and the type of data that the reused model (source) was built on (on the right side).

Fine-tuning was approximately three times as common (58 out of 83; 70%) as feature-representation transfer (18 out of 83; 22%). Seven studies applied both approaches. Convolutional neural networks were the most often reused models (58 out of 83; 70%), followed by recurrent neural networks (18 out of 83; 22%). Among the 25 studies applying feature-representation transfer, support vector machines and ‘vanilla’ neural networks were the most common methods used as the final model producing outputs based on the feature representations. Seven studies applied and compared multiple methods for this purpose [18–24].

Half of the studies (41 out of 83; 49%) reused a publicly available model. Among these, 33 out of 41 (80%) models were from an external source, while in the remaining 20% the authors developed the reused model as part of the study and made it publicly available.

Python was the most commonly used software (43 out of 83; 52%), followed by MATLAB (12 out of 83; 14%), while the remaining articles did not mention which software they used for the analysis. Overall, 22 out of 83 (27%) studies made their analysis code publicly available, and 55 out of 83 (66%) studies utilized at least one open access dataset. The PhysioNet repository [25] was mentioned as a data source in 25 of these 55 articles (45%).

Discussion

Applications of transfer learning were identified in a variety of clinical studies, demonstrating the potential of reusing models across different prediction tasks, data types, and even species. Improvements in predictive performance were especially striking when transfer learning was applied to smaller datasets, as compared to training machine learning algorithms from scratch. Image-based models seemed to have a large impact outside of computer vision and were reused for the analysis of time series, audio, and tabular data. Many studies utilized publicly available datasets and models, but surprisingly few shared their code.

Transfer learning via fine-tuning and feature-representation learning was almost unknown in the clinical literature until 2019, when the number of articles started to increase rapidly. This development lags a few years behind the trend seen in medical image analysis [11], which might partly be explained by the impact that computer vision models have on non-image applications too. Also, we only included peer-reviewed articles indexed in medical databases, while the latest developments in computer science are most likely to be published first as preprints or conference proceedings.

More than half of the studies (44 out of 83) were from the fields of neurology and cardiology, while the rest of the studies were more evenly distributed across other fields. This could be due to the high-resolution nature of EEG and ECG data, which lends itself well to machine learning. Also, there are many publicly available datasets and data science competitions that attract the attention of the computer science community.

The majority of study aims were predictions of binary or categorical outcomes at the individual level, e.g. detection of a disease, classification of disease stages, or risk estimation. Few studies focused on prediction of continuous outcomes (e.g. glucose levels [26, 27]) or forecasts at the population level (e.g. COVID-19 trends [28] and dengue fever outbreaks [29]). Prediction models are abundant in the clinical literature; however, most do not make it into routine clinical use [30]. For machine learning algorithms, one of the barriers is that clinical practitioners often consider them black boxes without intuitive interpretations, even though new solutions are constantly being developed to address this problem [31]. Another issue is that machine learning algorithms are often used to analyze modestly sized tabular datasets and (not surprisingly) fail to outperform traditional statistical methods, contributing to even more skepticism. Transfer learning can help to overcome this problem by reusing models trained on large datasets and tailoring them to smaller ones. This provides the opportunity to capitalize on recent developments in machine learning research in new areas of clinical research. However, to ensure the development of easily accessible and clinically relevant models that go beyond the ‘proof-of-concept’ level into clinical deployment, it is crucial that computer scientists and clinical researchers work together, which we observed in fewer than two-thirds of the studies. This proportion would probably have been even lower if we had considered studies indexed in non-clinical databases. Even the clinical studies using transfer learning that we identified are still largely published in rather specialized journals aimed at an audience with some technical background (e.g. IEEE and interdisciplinary journals) and have not yet reached mainstream clinical journals.

We were surprised by the high number of studies reusing a model that was developed in a dataset of a different data type from the target dataset. This also made it difficult to compare the sizes of source and target datasets quantitatively, as they might have been characterized in very different units (e.g. hours of audio recordings in the target dataset vs. number of images in the source dataset), but we observed that the target datasets were often much smaller than the source datasets. For example, models developed in the ImageNet dataset (>1 million images) were used even in smaller clinical studies with <100 patients [24, 32, 33], where the use of machine learning models would otherwise not be recommended or feasible.

Many studies compared long lists of different model architectures (e.g. ResNet [34], Inception [35], GoogLeNet [36], AlexNet [37], VGGNet [38], MobileNet [39], etc.) or even different methods. This, often combined with the use of several performance metrics (area under the ROC curve, F1-score, accuracy, sensitivity, specificity, etc.), makes it difficult to summarize the advantages and disadvantages of transfer learning, even though many studies included comparisons with other deep learning solutions without transfer learning. We observed that the degree of performance improvement varied greatly. Some studies reported a faster and more stable model fitting process with transfer learning due to better utilization of data. Some of these results are described in the sections below characterizing the different data types.
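Because the reviewed studies reported different combinations of metrics, it may help to recall how the most common ones are defined. The sketch below, in plain Python, computes accuracy, sensitivity, specificity and F1-score from binary predictions, and the area under the ROC curve as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case. The labels, scores and the 0.5 classification threshold are made-up assumptions for illustration.

```python
def confusion_metrics(y_true, y_pred):
    """Accuracy, sensitivity, specificity and F1 for binary labels (0/1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    sensitivity = tp / (tp + fn)   # recall, true positive rate
    specificity = tn / (tn + fp)   # true negative rate
    precision = tp / (tp + fp)     # positive predictive value
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    accuracy = (tp + tn) / len(pairs)
    return {"accuracy": accuracy, "sensitivity": sensitivity,
            "specificity": specificity, "f1": f1}

def roc_auc(y_true, scores):
    """AUC as the share of positive-negative pairs ranked correctly
    (ties count as half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical labels and model scores; 0.5 is an assumed threshold
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.4, 0.3, 0.6, 0.1, 0.2, 0.7]
y_pred = [int(s >= 0.5) for s in scores]

print(confusion_metrics(y_true, y_pred))
print(roc_auc(y_true, scores))
```

For these toy inputs, all four threshold-based metrics equal 0.75, while the AUC is 0.9375 (15 of 16 positive-negative pairs ranked correctly), which illustrates that threshold-based metrics and the AUC can give different impressions of the same model.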

Even though half of the studies reused a publicly available model and even more used a publicly available dataset, principles of reproducible research were followed less often. One-third of the studies did not report at all which software they used, and even fewer shared their code. Those that did report it most often used Python, followed by MATLAB, while we did not find a single study using R for the transfer learning part. This might be a consequence of the fact that commonly used libraries like PyTorch, TensorFlow, or fastai are native to Python and only recently became available in R. The authors who shared their code did so almost exclusively on GitHub.

A discussion of data type-specific observations is organized in the following four sections.

Time series

Time series was the most common target data type among the included studies, dominated by studies of sleep staging and seizure detection based on EEG data [19, 32, 40–55] and ECG analyses [23, 24, 56–70] (e.g. arrhythmia classification). Moreover, transfer learning was used for prediction of glucose levels [26, 27], estimation of Parkinson’s disease severity [71], detection of cognitive impairment [33] and schizophrenia [52], and forecasting of infectious disease trends [28] and outbreaks [29], among other applications [72–82].

Almost half of the studies transformed their data into images (e.g. spectrograms, scalograms), most often using Fourier (e.g. short-time or fast) or continuous wavelet transforms, to be able to utilize models from computer vision. A reason for this might be that publicly available time series datasets were rather small until recently, while in computer vision, ImageNet has been described and widely used as a large benchmark dataset for about a decade [9]. In 2020, PTB-XL [83] was described as the largest publicly available annotated clinical ECG-waveform dataset, including 21,837 10-second recordings from almost twenty thousand patients. Recently, Strodthoff et al. [64] used this dataset for ECG analysis in a role similar to that of ImageNet in computer vision and provided benchmark results for a variety of tasks and deep learning methods. The authors also demonstrated that, when the size of the training data was further restricted in an experiment, a transfer learning solution based on PTB-XL provided much more stable performance in another, smaller dataset than models developed from scratch.
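As a minimal sketch of the kind of transformation described above, the following Python/NumPy code computes a magnitude spectrogram with a short-time Fourier transform: the signal is cut into overlapping windows, each window is tapered with a Hann window, and the magnitude of its Fourier transform is taken. The sampling rate, window length, hop size and the synthetic 10 Hz signal are assumptions for illustration; the included studies used their own settings, and libraries such as SciPy (`scipy.signal.spectrogram`) provide tested implementations.

```python
import numpy as np

def spectrogram(signal, fs, window_len=256, hop=128):
    """Magnitude short-time Fourier transform of a 1-D signal.

    Returns (freqs, times, S); S has shape (n_freqs, n_frames) and can be
    treated as a single-channel image.
    """
    window = np.hanning(window_len)               # taper to reduce leakage
    n_frames = 1 + (len(signal) - window_len) // hop
    frames = np.stack([signal[i * hop: i * hop + window_len] * window
                       for i in range(n_frames)])
    S = np.abs(np.fft.rfft(frames, axis=1)).T     # frequency x time
    freqs = np.fft.rfftfreq(window_len, d=1 / fs)
    times = (np.arange(n_frames) * hop + window_len / 2) / fs
    return freqs, times, S

# Hypothetical 10-second "EEG-like" signal: a 10 Hz rhythm plus noise
fs = 256                                          # assumed sampling rate (Hz)
t = np.arange(0, 10, 1 / fs)
rng = np.random.default_rng(0)
x = np.sin(2 * np.pi * 10 * t) + 0.3 * rng.normal(size=t.size)

freqs, times, S = spectrogram(x, fs)
print(S.shape)                          # (129, 19)
print(freqs[S.mean(axis=1).argmax()])   # 10.0
```

The array `S` (frequency by time) is what would be rendered or stored as an image before being passed to a computer vision model. With a 256-sample window at 256 Hz, each frequency bin is 1 Hz wide, so the dominant bin falls on the simulated 10 Hz rhythm.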

Transfer learning can enable the use of machine learning models in small datasets, where it would not be feasible to train models from scratch. This may have a positive impact on the study of rare diseases or minority groups, regardless of the type of data. Lopes et al. [69] used this strategy to detect a rare genetic heart disease based on ECG recordings, although they had only 155 recordings from patients. First, the authors developed a convolutional neural network for sex detection, a task whose outcome was readily available in their database of approximately a quarter of a million ECG recordings. Even though the model was initially trained for a task with little clinical relevance, it still learnt some useful features from the data. This model was then fine-tuned for the detection of the rare genetic heart disease using only 310 recordings from 155 patients and 155 matched controls. With this approach, the authors achieved major improvements in model discrimination compared not only with training the same architecture from scratch, but also with various machine learning approaches and clinical experts [61].

In the previous example, an easily accessible outcome or label (i.e. sex) made it possible to train the base model; however, even this requirement can be avoided by using autoencoders [84]. An autoencoder is an unsupervised machine learning algorithm often used for denoising a dataset by first reducing its dimensions and only keeping relevant information (encoding), before reconstructing the original dataset (decoding). The feature representations learnt during the encoding step may then be transferred to new tasks. Jang et al. [59] developed an autoencoder based on 2.6 million unlabeled ECG recordings, which was then reused as the base of an ECG rhythm classifier. The authors compared this approach to an image-based transfer learning classifier and a model trained from scratch. The autoencoder solution performed best, but its advantage over the model trained from scratch was much smaller than in the previous example. More interestingly, the authors compared the results when using 100%, 50% and 25% of the available data, and found a major drop in performance of the model trained from scratch when using only 25% of the data, while the autoencoder-based transfer learning solution still performed excellently, as it indirectly utilized data from >2 million ECG recordings. The image-based solution had a slightly lower F1-score (indicating worse performance) than the other methods when using 100% of the data, but its performance changed little when using only 25% of the data, so in that setting it outperformed the model trained from scratch.
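The encoding and decoding steps can be illustrated with the simplest possible case, a linear autoencoder trained with squared reconstruction error. For this special case, an optimal solution projects the (centered) data onto its leading principal components, so the weights can be obtained in closed form with a singular value decomposition instead of gradient descent; the deep autoencoder of Jang et al. [59] is trained differently and is far larger. The data, dimensions and function names below are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical unlabeled "source" data: 1,000 recordings summarized by 50
# correlated features that mostly vary along 5 hidden directions
latent = rng.normal(size=(1000, 5))
mixing = rng.normal(size=(5, 50))
X_source = latent @ mixing + 0.1 * rng.normal(size=(1000, 50))

def fit_linear_autoencoder(X, n_code):
    """Closed-form optimum of a linear autoencoder with squared error:
    the code space is spanned by the top principal directions (via SVD)."""
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    W = Vt[:n_code].T                  # shape (n_features, n_code)

    def encode(Z):
        # Encoding: compress each sample to n_code values
        return (Z - mean) @ W

    def decode(C):
        # Decoding: reconstruct the original features from the code
        return C @ W.T + mean

    return encode, decode

encode, decode = fit_linear_autoencoder(X_source, n_code=5)

# Reconstruction error relative to the overall variance of the data
rel_error = np.mean((decode(encode(X_source)) - X_source) ** 2) / X_source.var()
print(f"relative reconstruction error: {rel_error:.4f}")

# Transfer: reuse only the encoder as a feature extractor for a small target set
X_target = rng.normal(size=(20, 5)) @ mixing
features = encode(X_target)
print(features.shape)   # (20, 5)
```

Only the encoder is reused in the last step: it maps new, possibly small, target data to low-dimensional features learned from the larger unlabeled source data, which could then be fed to a classifier.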

EEG data were most often used for sleep staging and epileptic seizure detection. We highlight the study by Raghu et al. [51] using pre-trained convolutional neural networks for seizure detection based on EEG-based spectrograms, because the authors described both fine-tuning and feature-representation learning solutions. In the latter approach, features were fed into a support vector machine classifier, a popular machine learning technique. The authors found that taking features from deeper layers, representing higher-level features, resulted in higher accuracy for most architectures. For almost all architectures, feature-representation transfer was found to be better than fine-tuning regarding accuracy. However, this can partly be a consequence of the fact that many more models were fitted including features from different layers. Further, once the authors found out which layer provided the most useful representation for a specific problem, the optimization process was shortened markedly compared to the fine-tuning process (~4 vs. 52 min with the Inception-v3 architecture).

Audio

Voice recognition and other audio-based applications of AI surround us in our everyday lives. In a clinical setting, doctors have traditionally used audio signals from stethoscopes to screen patients e.g. for heart and lung diseases. Additionally, vocal biomarkers processed with machine learning algorithms are getting more and more attention in a research setting, and are expected to aid diagnosis and monitoring of diseases in the future [85]. Despite the increasing interest and opportunities for relatively easy and cheap data collection, we only found 10 studies that used transfer learning on audio data. However, these studies covered a variety of fields in medicine (and corresponding audio signals): neurology (speech and electromyography [21, 22, 86, 87]), cardiology (heart sound [88, 89]), pulmonology (respiratory sounds [90, 91]), infectious diseases (cough [92]), and otorhinolaryngology (breathing [93]).

Recordings of speech contain different levels of information: linguistic features that can be derived from transcripts, and temporal and acoustic features that can be derived from raw audio recordings. Two studies applied BERT [94], an open source, pre-trained natural language model, to analyze transcripts of speech with the aim of diagnosing Alzheimer’s disease [21, 87]. Balagopalan et al. [87] also tested a model for the same task that included acoustic features, however, this model performed worse than the model only based on linguistic features. This finding highlights the possible complexity of audio data analysis and the importance of benchmarking different models against one another to get the best performing algorithm.

Machine learning algorithms pre-trained on labeled image data, like ImageNet, can recognize features in non-image data like audio [95]. To use image models on audio data, the data has to be transformed, usually into a spectrogram image. We were surprised to find that half of the audio studies used pre-trained image models to analyze audio data, and only two studies used pre-trained audio models. This, again, highlights the impact of image models even outside of computer vision. Of interest, Koike et al. [88] aimed to predict heart disease from heart sounds with transfer learning and compared two types of models: one pre-trained on audio data and others pre-trained on images. They found that the transfer learning model pre-trained on the open source sound database AudioSet [96] performed better than image models of different architectures such as VGG, ResNet, and MobileNet. Open source, labeled audio datasets exist (e.g. AudioSet [96], LibriSpeech [97]), and the results of Koike et al. highlight how models pre-trained on audio might perform better than image models.
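Reusing an ImageNet-based model on audio typically requires the spectrogram to be shaped like the images the model was trained on. The following NumPy sketch shows one plausible set of steps, all of which are assumptions rather than the preprocessing of any reviewed study: log-scaling the magnitudes, rescaling to [0, 1], nearest-neighbour resizing to 224 × 224 pixels, copying the single channel into three colour channels, and normalizing with the channel means and standard deviations commonly used for ImageNet-pretrained models (e.g. in torchvision).

```python
import numpy as np

# Channel-wise statistics commonly used to normalize inputs
# for ImageNet-pretrained models
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406])
IMAGENET_STD = np.array([0.229, 0.224, 0.225])

def spectrogram_to_image(S, size=224):
    """Turn a (freq x time) magnitude spectrogram into a 3 x size x size
    array shaped like the input of an ImageNet-pretrained CNN."""
    log_S = np.log1p(S)                               # compress dynamic range
    scaled = (log_S - log_S.min()) / (log_S.max() - log_S.min() + 1e-12)
    # Nearest-neighbour resize to size x size (assumed; other schemes exist)
    rows = np.linspace(0, S.shape[0] - 1, size).round().astype(int)
    cols = np.linspace(0, S.shape[1] - 1, size).round().astype(int)
    resized = scaled[np.ix_(rows, cols)]
    # Replicate the single channel into three "RGB" channels, then normalize
    rgb = np.repeat(resized[None, :, :], 3, axis=0)
    return (rgb - IMAGENET_MEAN[:, None, None]) / IMAGENET_STD[:, None, None]

rng = np.random.default_rng(0)
S = np.abs(rng.normal(size=(129, 40)))   # hypothetical stand-in for a heart-sound spectrogram
img = spectrogram_to_image(S)
print(img.shape)   # (3, 224, 224)
```

Replicating one channel three times is a simple way to match the expected input shape; alternatives, such as colour-mapping the spectrogram, would be equally plausible design choices.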

With audio data easily obtainable in a clinical setting, there is a great opportunity to develop and improve transfer learning models that can aid clinicians to screen and diagnose patients in a cost-effective way.

Tabular data

Tabular data is probably the most used data type within clinical research. However, we only identified 15 studies using transfer learning on tabular data covering very different fields in medicine: two-thirds of them were from genetics [98–102], pathology [103–105], and intensive care [18, 106], while the remaining five were from surgery [17], neonatology [107], infectious disease [108], pulmonology [109], and pharmacology [110]. Oncological applications like classification of cancer and prediction of cancer survival were common among the studies in genetics or pathology. As an example of zooming in from a broader disease category to a specific, rarer disease, one study developed a model using gene expression data from a broad variety of cancer types and then fine-tuned the model using a much smaller dataset to predict cancer survival in lung cancer patients [101].

Transformation of tabular data into images was rare compared with time series and audio data. We identified only two studies that transformed gene expression data into images [98, 101] and then applied computer vision models for a prediction task. Both studies used open-access gene expression datasets: AlShibili et al. [98] classified cancer types in a dataset from cBioPortal [111], and López-García et al. [101] predicted cancer survival in a dataset from the UCSC Xena Browser [112]. Both studies reported better accuracy, sensitivity, and specificity with transfer learning than with non-transfer learning approaches based on the original tabular data.
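
The sketch below shows one naive way, written in Python with NumPy, to turn a tabular feature vector such as a gene expression profile into a 2-D array that an image model could consume. The row-by-row layout, zero padding, and per-sample min-max scaling are our assumptions for illustration and are not necessarily how [98] or [101] constructed their images.

```python
import math
import numpy as np

def vector_to_image(values):
    """Arrange a 1-D feature vector (e.g. expression of n genes) in a square grid.

    Naive layout for illustration: features are placed row by row in their
    original order, and the grid is zero-padded up to the next perfect square.
    """
    values = np.asarray(values, dtype=float)
    side = math.ceil(math.sqrt(values.size))
    grid = np.zeros(side * side)
    lo, hi = values.min(), values.max()
    # Per-sample min-max scaling maps the values to pixel intensities in [0, 1]
    grid[: values.size] = (values - lo) / (hi - lo) if hi > lo else 0.0
    return grid.reshape(side, side)

# Five hypothetical expression values become a 3 x 3 image with four padded zeros
print(vector_to_image([1, 2, 3, 4, 5]))
```

In practice, such a grid would still need to be resized and replicated across channels to match an ImageNet-pre-trained model. Because a row-by-row layout places arbitrary genes next to each other, an arrangement that groups related features may be preferable.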

We also identified a study reusing a model across species, which highlights another potential of transfer learning. Seddiki et al. [105] reused a model developed on mass spectrometry data from animals to classify human mass spectrometry data. Here, the benefits of transfer learning are clear, as molecular data from animals are often easier to retrieve and share because they raise fewer privacy concerns. This can potentially provide new opportunities within translational clinical research by reusing scientific knowledge gained from animal studies.

Wardi et al. [108] used both a transfer learning approach and a non-transfer learning approach to predict septic shock in an emergency department setting based on data from electronic health records (EHR), such as blood pressure, heart rate, temperature, oxygen saturation, and blood test values. They showed that transfer learning outperformed the traditional machine learning model, especially when only a small fraction of the data was used. Furthermore, the transfer learning model was externally validated with promising results, which supports its clinical utility. The model by Wardi et al. makes individual-specific predictions, which is a prerequisite before an AI-based tool can be implemented in everyday clinical practice to support decision making [1].

Another promising use of transfer learning was described by Gao et al. [99]. The authors developed models for various prediction tasks using genetic data from a heterogeneous population, focusing on how to translate models that perform well in the ethnic majority group to ethnic minority groups. Transfer learning clearly improved performance compared with machine learning models trained from scratch separately for each ethnic minority group. This application demonstrates how transfer learning can be used to tackle inequalities in health research.

Text

We identified only seven studies using transfer learning on text. Applications ranged from risk assessment of psychiatric stressors, diseases, and medication abuse [20, 113–115] to prediction of morbidity and mortality [116, 117] and of adverse incidents from oncological radiation [118]. It is somewhat surprising that we found so few studies, given the massive interest in NLP and the field’s rapid development in recent years [119, 120]. The main reason is likely methodological: our systematic search could only identify studies explicitly mentioning transfer learning or a similar term (e.g. ‘knowledge transfer’), but this does not seem to be common practice in medical text analysis [121–123]. Future studies are warranted on the impact of specific models (e.g. BERT [94]) on clinical research. There are also technical challenges in medical text analysis (e.g. ambiguous abbreviations and specialty-specific jargon) [124]. We can indirectly observe this in our review, where, in contrast to other data types, all seven articles were written either by clinicians and technical authors together or by clinicians alone. This is not to say that technical authors are not interested in the use of transfer learning on clinical text, but rather a reflection that transfer learning in text analysis is still a relatively new method, and studies often focus on technical aspects of NLP and are published in technical journals [119, 120].

Another important reason why we found so few articles on text is that only a few large, annotated, and publicly available EHR datasets currently exist [124]. Removing patient-identifiable data from EHR is one of the key challenges currently limiting the sharing of large medical text datasets, though automatic tools have recently been developed to speed up this task [125]. Indeed, among the seven identified studies, three analyzed public social media posts to predict mental illnesses, two used relatively small institutional EHR datasets (<1000 patients), one used a large UK database with restricted access, and only a single study used a large, curated, and openly available dataset (the MIMIC-III critical care database [126]). A review on the use of all types of NLP on radiological reports found a similar pattern, where most studies used institutional EHR datasets [127], again highlighting the need for more large, annotated, and publicly available datasets.

As a final note on transfer learning in text, we would like to highlight the study by Si et al. [117]. In this paper, the authors proposed a new method to analyze medical records to predict mortality and identify obesity-related comorbidities. In brief, this method took the temporal aspect of each patient’s documents into account when predicting the patient’s risk of mortality within the next year. It makes intuitive sense from a clinical perspective that a recent myocardial infarction could be more informative for mortality than one that occurred more than ten years ago, and this technical development could be important for future research in clinical text analysis.
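
The clinical intuition above can be expressed with a generic weighting scheme in which each document’s representation counts less the older it is. The Python sketch below, assuming NumPy, uses exponential decay with a one-year half-life; the half-life, the toy 2-D document vectors, and the function name are hypothetical, and this is our own illustration, not the method proposed by Si et al. [117].

```python
import numpy as np

def time_weighted_average(doc_vectors, age_days, half_life_days=365.0):
    """Combine per-document vectors into one patient vector.

    Each document's weight halves for every `half_life_days` between the
    document date and the prediction date (exponential decay).
    """
    doc_vectors = np.asarray(doc_vectors, dtype=float)
    age_days = np.asarray(age_days, dtype=float)
    weights = 0.5 ** (age_days / half_life_days)
    weights = weights / weights.sum()   # normalize so the weights sum to 1
    return weights @ doc_vectors        # weighted average over documents

# Hypothetical history: one note from 30 days ago and one from about ten years ago
patient_vector = time_weighted_average([[1.0, 0.0], [0.0, 1.0]], age_days=[30, 3650])
print(patient_vector.round(3))  # [0.999 0.001]
```

With a one-year half-life, a ten-year-old document receives roughly a thousandth of the weight of a document from last month, so it barely influences the patient-level representation.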

Limitations

In our study protocol, we chose to exclude studies combining source and target data (n = 30) from our review, even though we acknowledge the value of this approach in certain scenarios (e.g. [128]). We felt, however, that current data sharing restrictions would present barriers to the practical use and clinical impact of this type of transfer learning. Fine-tuning and feature-representation transfer, on the other hand, can help overcome such barriers by reusing models instead of the actual data, which is why these two forms of transfer learning were the focus of our review.
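
A toy Python sketch (assuming NumPy) illustrates why feature-representation transfer can sidestep data sharing: the source site shares only the weights of a trained feature extractor, and the target site passes its own data through that frozen extractor and trains a new, small prediction head locally. All names and data are hypothetical: a random matrix stands in for a genuinely pre-trained network, and the simulated target labels exist only to give the head something to learn. In fine-tuning, by contrast, the shared weights would also be updated on the target data.

```python
import numpy as np

rng = np.random.default_rng(1)

# Source site (hypothetical): only these encoder weights are shared.
# A random matrix stands in for a genuinely pre-trained feature extractor.
W_encoder = rng.normal(size=(10, 4))

def encode(X):
    """Frozen feature extractor: linear map followed by ReLU; W_encoder is never updated."""
    return np.maximum(X @ W_encoder, 0.0)

def train_head(F, y, lr=0.1, epochs=300):
    """Fit a new logistic-regression head on frozen features by gradient descent."""
    w, b = np.zeros(F.shape[1]), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(F @ w + b)))
        w -= lr * F.T @ (p - y) / len(y)
        b -= lr * float(np.mean(p - y))
    return w, b

# Target site (hypothetical): a small local dataset that never leaves the site.
X_target = rng.normal(size=(50, 10))
F_target = encode(X_target)
y_target = (F_target[:, 0] > F_target[:, 1]).astype(float)  # simulated labels

w_head, b_head = train_head(F_target, y_target)
train_acc = float(np.mean(((F_target @ w_head + b_head) > 0) == y_target))
print(f"training accuracy of the new head: {train_acc:.2f}")
```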

The identification of clinical studies turned out to be more challenging than we expected, as ‘clinical’ is a concept that is difficult to define. We had long discussions on whether to include brain-computer interface studies [129], where transfer learning seems to be a promising method to speed up calibration, with the final aim of helping people with a clinical condition. However, we concluded that the actual prediction models solve engineering problems rather than predict a clinical outcome. Similarly, we excluded NLP studies on biomedical named entity recognition because, even though the datasets might have consisted of medically relevant documents, the task of identifying specific terms is often only indirectly relevant from a clinical perspective, e.g. by speeding up knowledge synthesis. A recent review provides an overview of this approach and its clinical applications [130].

The type of the target data might also be ambiguous, depending on how raw data are defined. Transcripts of audio recordings could easily be considered text, but we tried to determine the form of the data as first produced, e.g. the output of a medical device without further processing. Using this approach, recordings were considered audio data, and transcribing them to text was considered a data transformation.

Our scoping review did not include clinical studies if they were only published as preprints or in proceedings of computer science conferences. We chose these inclusion criteria deliberately, so that we could give an overview of the field from the perspective of clinical researchers. Therefore, our review likely does not include the latest technical developments within AI research and transfer learning, but instead includes the techniques that have started to impact clinical research.

During data extraction, we aimed to find the best proxy variables to answer our research questions, but identifying some of these proved challenging. As described previously, we decided not to extract quantitative data on the size of the source and target datasets, as the unit of observation often differed. It was also difficult to characterize comparisons of transfer learning vs. non-transfer learning solutions, because many studies reported many different models and performance metrics. We were curious about the background of the authors (clinical vs. technical) but used only their affiliations as a proxy, as that information was easily accessible in most cases.

Conclusions

Our scoping review is a roadmap to transfer learning for non-image data in clinical research, providing clinical researchers with an easily accessible resource on this relatively new technique in machine learning. The interest in transfer learning for non-image data in clinical research began to increase rapidly only recently, lagging a few years behind trends in its use for medical image analysis. Applications are unbalanced between different clinical research areas and data types. Neurology and cardiology seem to be among the ‘first movers’ with time series data, partly driven by the public availability of EEG and ECG datasets, which also suit machine learning due to their high-resolution nature. We found fewer classical epidemiological studies than expected, even though transfer learning can help overcome some of the big challenges of the field: data collection on a large scale is often difficult and expensive, and data sharing can be hindered by privacy concerns. In the future, some of the largest epidemiological datasets (e.g. UK Biobank [131]) could serve as source data for the development of machine learning algorithms that a wide range of smaller studies could build on by using transfer learning without access to the actual dataset. This is in line with the FAIR principles [132], which support the reuse of digital assets in an environment with increased volume and complexity of datasets. Moreover, the wider use of reproducible research principles and stronger interdisciplinary collaborations between clinical researchers and computer scientists are crucial for the development of clinically relevant prediction models that can be reused with transfer learning across studies, clinical specialties, or even species.

Supporting information

(PDF)

(XLSX)

(PDF)

(PDF)

(PDF)

Acknowledgments

The authors are grateful to Anne Vils Møller (Librarian at the Royal Danish Library) for her valuable advice on the search strategy. We are also grateful for the comments of Professor Daniel R. Witte and the Epidemiology Group, Steno Diabetes Center Aarhus, Denmark, and of Zeinab Schäfer and Andreas Mathisen from the Department of Computer Science, Aarhus University, Denmark, on the first draft of the manuscript.

Data Availability

The data extracted from the identified articles and then presented in the Results section is available as an electronic supplement along with the code used for the analysis.

Funding Statement

OEA, MGV and AH are employed at Steno Diabetes Center Aarhus that is partly funded by a donation from the Novo Nordisk Foundation. AE and MØT are supported by PhD scholarships from Aarhus University. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1.Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med. 2019;25(1):44–56. doi: 10.1038/s41591-018-0300-7 [DOI] [PubMed] [Google Scholar]

- 2.Howard J, Gugger S. Deep Learning for Coders with fastai and PyTorch. 1 ed: O’Reilly Media, Inc.; 2020. [Google Scholar]

- 3.Khan A, Sohail A, Zahoora U, Qureshi AS. A survey of the recent architectures of deep convolutional neural networks. Artificial Intelligence Review. 2020;53:5455–516. [Google Scholar]

- 4.Zeiler MD, Fergus R. Visualizing and Understanding Convolutional Networks. arXiv. 2013:1311.2901. [Google Scholar]

- 5.Sallam MS, Asnawi AL, Olanrewaju RF, editors. Diabetic Retinopathy Grading Using ResNet Convolutional Neural Network. 2020 IEEE Conference on Big Data and Analytics (ICBDA); 2020. [Google Scholar]

- 6.Pratt LY, Mostow J, Kamm CA, editors. Direct transfer of learned information among neural networks. AAAI’91: Proceedings of the ninth National conference on Artificial intelligence; 1991. [Google Scholar]

- 7.Tan C, Sun F, Kong T, Zhang W, Yang C, Liu C. A Survey on Deep Transfer Learning. arXiv. 2018:1808.01974. [Google Scholar]

- 8.Pan SJ, Yang C. A Survey on Transfer Learning. IEEE Transactions on Knowledge and Data Engineering. 2010;22(10):1345–59. [Google Scholar]

- 9.Deng J, Dong W, Socher R, Li L, Li K, Li F-F, editors. ImageNet: A large-scale hierarchical image database. 2009 IEEE Conference on Computer Vision and Pattern Recognition; 2009. [Google Scholar]

- 10.Morid MA, Borjali A, Del Fiol G. A scoping review of transfer learning research on medical image analysis using ImageNet. Computers in Biology and Medicine. 2021;128:104115. doi: 10.1016/j.compbiomed.2020.104115 [DOI] [PubMed] [Google Scholar]

- 11.Cheplygina V, de Bruijne M, Pluim JPW. Not-so-supervised: A survey of semi-supervised, multi-instance, and transfer learning in medical image analysis. Medical Image Analysis. 2019;54:280–96. doi: 10.1016/j.media.2019.03.009 [DOI] [PubMed] [Google Scholar]

- 12.Munn Z, Peters MDJ, Stern C, Tufanaru C, McArthur A, Aromataris E. Systematic review or scoping review? Guidance for authors when choosing between a systematic or scoping review approach. BMC Medical Research Methodology. 2018;18(1):143. doi: 10.1186/s12874-018-0611-x [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Tricco AC, Lillie E, Zarin W, O’Brien KK, Colquhoun H, Levac D, et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. Ann Intern Med. 2018;169(7):467–73. doi: 10.7326/M18-0850 [DOI] [PubMed] [Google Scholar]

- 14.Peters MDJ, Godfrey C, McInerney P, Munn Z, Tricco AC, Khalil H. Chapter 11: Scoping Reviews. In: Aromataris E, Munn Z, editors. JBI Manual for Evidence Synthesis; 2020. [Google Scholar]

- 15.Andreas E, Ole EA, Mette T, Michala Vilstrup G, Adam H. Transfer learning for non-image data in clinical research: a scoping review protocol. Figshare [Internet]. 2021. Available from: https://figshare.com/articles/online_resource/Transfer_learning_for_non-image_data_in_clinical_research_a_scoping_review_protocol/14638914. [Google Scholar]

- 16.Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. Bmj. 2021;372:n71. doi: 10.1136/bmj.n71 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Chia CC, Karam Z, Lee G, Rubinfeld I, Syed Z. Improving surgical models through one/two class learning. Annu Int Conf IEEE Eng Med Biol Soc. 2012;2012:5098–101. doi: 10.1109/EMBC.2012.6347140 [DOI] [PubMed] [Google Scholar]

- 18.Steinberg E, Jung K, Fries JA, Corbin CK, Pfohl SR, Shah NH. Language models are an effective representation learning technique for electronic health record data. J Biomed Inform. 2021;113:103637. doi: 10.1016/j.jbi.2020.103637 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Zhang H, Silva FHS, Ohata EF, Medeiros AG, Rebouças Filho PP. Bi-Dimensional Approach Based on Transfer Learning for Alcoholism Pre-disposition Classification via EEG Signals. Front Hum Neurosci. 2020;14:365. doi: 10.3389/fnhum.2020.00365 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Dai HJ, Su CH, Lee YQ, Zhang YC, Wang CK, Kuo CJ, et al. Deep Learning-Based Natural Language Processing for Screening Psychiatric Patients. Front Psychiatry. 2020;11:533949. doi: 10.3389/fpsyt.2020.533949 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Roshanzamir A, Aghajan H, Soleymani Baghshah M. Transformer-based deep neural network language models for Alzheimer’s disease risk assessment from targeted speech. BMC Med Inform Decis Mak. 2021;21(1):92. doi: 10.1186/s12911-021-01456-3 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Chlasta K, Wołk K. Towards Computer-Based Automated Screening of Dementia Through Spontaneous Speech. Front Psychol. 2020;11:623237. doi: 10.3389/fpsyg.2020.623237 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Naz M, Shah JH, Khan MA, Sharif M, Raza M, Damaševičius R. From ECG signals to images: a transformation based approach for deep learning. PeerJ Comput Sci. 2021;7:e386. doi: 10.7717/peerj-cs.386 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Tadesse GA, Javed H, Thanh NLN, Thi HDH, Tan LV, Thwaites L, et al. Multi-Modal Diagnosis of Infectious Diseases in the Developing World. IEEE J Biomed Health Inform. 2020;24(7):2131–41. doi: 10.1109/JBHI.2019.2959839 [DOI] [PubMed] [Google Scholar]

- 25.Goldberger AL, Amaral LA, Glass L, Hausdorff JM, Ivanov PC, Mark RG, et al. PhysioBank, PhysioToolkit, and PhysioNet: components of a new research resource for complex physiologic signals. Circulation. 2000;101(23):E215–20. doi: 10.1161/01.cir.101.23.e215 [DOI] [PubMed] [Google Scholar]

- 26.Kushner T, Breton MD, Sankaranarayanan S. Multi- Hour Blood Glucose Prediction in Type 1 Diabetes: A Patient-Specific Approach Using Shallow Neural Network Models. Diabetes Technol Ther. 2020;22(12):883–91. doi: 10.1089/dia.2020.0061 [DOI] [PubMed] [Google Scholar]

- 27.De Bois M, El Yacoubi MA, Ammi M. Adversarial multi-source transfer learning in healthcare: Application to glucose prediction for diabetic people. Comput Methods Programs Biomed. 2021;199:105874. doi: 10.1016/j.cmpb.2020.105874 [DOI] [PubMed] [Google Scholar]

- 28.Li Y, Jia W, Wang J, Guo J, Liu Q, Li X, et al. ALeRT-COVID: Attentive Lockdown-awaRe Transfer Learning for Predicting COVID-19 Pandemics in Different Countries. J Healthc Inform Res. 2021:1–16. doi: 10.1007/s41666-020-00088-y [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Xu J, Xu K, Li Z, Meng F, Tu T, Xu L, et al. Forecast of Dengue Cases in 20 Chinese Cities Based on the Deep Learning Method. Int J Environ Res Public Health. 2020;17(2). doi: 10.3390/ijerph17020453 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Moons KG, Altman DG, Vergouwe Y, Royston P. Prognosis and prognostic research: application and impact of prognostic models in clinical practice. Bmj. 2009;338:b606. doi: 10.1136/bmj.b606 [DOI] [PubMed] [Google Scholar]

- 31.Lundberg SM, Erion G, Chen H, DeGrave A, Prutkin JM, Nair B, et al. From Local Explanations to Global Understanding with Explainable AI for Trees. Nat Mach Intell. 2020;2(1):56–67. doi: 10.1038/s42256-019-0138-9 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Jadhav P, Rajguru G, Datta D, Mukhopadhyay S. Automatic sleep stage classification using time–frequency images of CWT and transfer learning using convolution neural network. Biocybern Biomed Eng. 2020;40(1):494–504. [Google Scholar]

- 33.Yang D, Hong KS. Quantitative Assessment of Resting-State for Mild Cognitive Impairment Detection: A Functional Near-Infrared Spectroscopy and Deep Learning Approach. J Alzheimers Dis. 2021;80(2):647–63. doi: 10.3233/JAD-201163 [DOI] [PubMed] [Google Scholar]

- 34.He K, Zhang X, Ren S, Sun J. Deep Residual Learning for Image Recognition. arXiv. 2015:1512.03385. [Google Scholar]

- 35.Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z. Rethinking the Inception Architecture for Computer Vision. arXiv. 2015:1512.00567. [Google Scholar]

- 36.Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, et al. Going Deeper with Convolutions. arXiv. 2014:1409.4842. [Google Scholar]

- 37.Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. Commun ACM. 2017;60(6):84–90. [Google Scholar]

- 38.Simonyan K, Zisserman A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv. 2014:1409.556. [Google Scholar]

- 39.Howard AG, Zhu M, Chen B, Kalenichenko D, Wang W, Weyand T, et al. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv. 2017:1704.04861. [Google Scholar]

- 40.Abou Jaoude M, Sun H, Pellerin KR, Pavlova M, Sarkis RA, Cash SS, et al. Expert-level automated sleep staging of long-term scalp electroencephalography recordings using deep learning. Sleep. 2020;43(11):zsaa112. doi: 10.1093/sleep/zsaa112 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Andreotti F, Phan H, Cooray N, Lo C, Hu MTM, De Vos M. Multichannel Sleep Stage Classification and Transfer Learning using Convolutional Neural Networks. Annu Int Conf IEEE Eng Med Biol Soc. 2018;2018:171–4. doi: 10.1109/EMBC.2018.8512214 [DOI] [PubMed] [Google Scholar]

- 42.Banluesombatkul N, Ouppaphan P, Leelaarporn P, Lakhan P, Chaitusaney B, Jaimchariya N, et al. MetaSleepLearner: A Pilot Study on Fast Adaptation of Bio-signals-Based Sleep Stage Classifier to New Individual Subject Using Meta-Learning. IEEE J Biomed Health Inform. 2021;25(6):1949–63. doi: 10.1109/JBHI.2020.3037693 [DOI] [PubMed] [Google Scholar]

- 43.Chambon S, Galtier MN, Arnal PJ, Wainrib G, Gramfort A. A Deep Learning Architecture for Temporal Sleep Stage Classification Using Multivariate and Multimodal Time Series. IEEE Trans Neural Syst Rehabil Eng. 2018;26(4):758–69. doi: 10.1109/TNSRE.2018.2813138 [DOI] [PubMed] [Google Scholar]

- 44.Daoud H, Bayoumi MA. Efficient Epileptic Seizure Prediction Based on Deep Learning. IEEE Trans Biomed Circuits Syst. 2019;13(5):804–13. doi: 10.1109/TBCAS.2019.2929053 [DOI] [PubMed] [Google Scholar]

- 45.Gao Y, Gao B, Chen Q, Liu J, Zhang Y. Deep Convolutional Neural Network-Based Epileptic Electroencephalogram (EEG) Signal Classification. Front Neurol. 2020;11:375. doi: 10.3389/fneur.2020.00375 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Ilakiyaselvan N, Nayeemulla Khan A, Shahina A. Deep learning approach to detect seizure using reconstructed phase space images. J Biomed Res. 2020;34(3):240–50. doi: 10.7555/JBR.34.20190043 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Li Q, Li Q, Cakmak AS, Da Poian G, Bliwise D, Vaccarino V, et al. Transfer learning from ECG to PPG for improved sleep staging from wrist-worn wearables. Physiol Meas. 2021. doi: 10.1088/1361-6579/abf1b0 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Narin A. Detection of Focal and Non-focal Epileptic Seizure Using Continuous Wavelet Transform-Based Scalogram Images and Pre-trained Deep Neural Networks. IRBM. 2020. [Google Scholar]

- 49.Nogay HS, Adeli H. Detection of Epileptic Seizure Using Pretrained Deep Convolutional Neural Network and Transfer Learning. Eur Neurol. 2020;83(6):602–14. doi: 10.1159/000512985 [DOI] [PubMed] [Google Scholar]

- 50.O’Shea A, Ahmed R, Lightbody G, Pavlidis E, Lloyd R, Pisani F, et al. Deep Learning for EEG Seizure Detection in Preterm Infants. Int J Neural Syst. 2021;31(8):2150008. doi: 10.1142/S0129065721500088 [DOI] [PubMed] [Google Scholar]

- 51.Raghu S, Sriraam N, Temel Y, Rao SV, Kubben PL. EEG based multi-class seizure type classification using convolutional neural network and transfer learning. Neural Netw. 2020;124:202–12. doi: 10.1016/j.neunet.2020.01.017 [DOI] [PubMed] [Google Scholar]

- 52.Shalbaf A, Bagherzadeh S, Maghsoudi A. Transfer learning with deep convolutional neural network for automated detection of schizophrenia from EEG signals. Phys Eng Sci Med. 2020;43(4):1229–39. doi: 10.1007/s13246-020-00925-9 [DOI] [PubMed] [Google Scholar]

- 53.Wang Y, Cao J, Wang J, Hu D, Deng M. Epileptic Signal Classification with Deep Transfer Learning Feature on Mean Amplitude Spectrum. Annu Int Conf IEEE Eng Med Biol Soc. 2019;2019:2392–5. doi: 10.1109/EMBC.2019.8857082 [DOI] [PubMed] [Google Scholar]

- 54.Yan R, Li F, Zhou DD, Ristaniemi T, Cong F. Automatic sleep scoring: A deep learning architecture for multi-modality time series. J Neurosci Methods. 2021;348:108971. doi: 10.1016/j.jneumeth.2020.108971 [DOI] [PubMed] [Google Scholar]

- 55.Zhang B, Wang W, Xiao Y, Xiao S, Chen S, Chen S, et al. Cross-Subject Seizure Detection in EEGs Using Deep Transfer Learning. Comput Math Methods Med. 2020;2020:7902072. doi: 10.1155/2020/7902072 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Abdelazez M, Rajan S, Chan ADC. Transfer Learning for Detection of Atrial Fibrillation in Deterministic Compressive Sensed ECG. Annu Int Conf IEEE Eng Med Biol Soc. 2020;2020:5398–401. doi: 10.1109/EMBC44109.2020.9175813 [DOI] [PubMed] [Google Scholar]

- 57.Al Rahhal MM, Bazi Y, Al Zuair M, Othman E, BenJdira B. Convolutional Neural Networks for Electrocardiogram Classification. J Med Biol Eng. 2018;38(6):1014–25. [Google Scholar]

- 58.Ghaffari A, Madani N. Atrial fibrillation identification based on a deep transfer learning approach. Biomed Phys Eng Expr. 2019;5:035015. [Google Scholar]

- 59.Jang JH, Kim TY, Yoon D. Effectiveness of Transfer Learning for Deep Learning-Based Electrocardiogram Analysis. Healthc Inform Res. 2021;27(1):19–28. doi: 10.4258/hir.2021.27.1.19 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Jiang F, Xu J, Lu Z, Song B, Hu Z, Li R, et al. A transfer learning approach to detect paroxysmal atrial fibrillation automatically based on ballistocardiogram signal. J Med Imag Health In. 2019;9(9):1943–9. [Google Scholar]

- 61.Bleijendaal H, Ramos LA, Lopes RR, Verstraelen TE, Baalman SWE, Oudkerk Pool MD, et al. Computer versus cardiologist: Is a machine learning algorithm able to outperform an expert in diagnosing a phospholamban p.Arg14del mutation on the electrocardiogram? Heart Rhythm. 2021;18(1):79–87. doi: 10.1016/j.hrthm.2020.08.021 [DOI] [PubMed] [Google Scholar]

- 62.Olsen M, Mignot E, Jennum PJ, Sorensen HBD. Robust, ECG-based detection of Sleep-disordered breathing in large population-based cohorts. Sleep. 2020;43(5):zsz276. doi: 10.1093/sleep/zsz276 [DOI] [PubMed] [Google Scholar]

- 63.Shi H, Wang H, Qin C, Zhao L, Liu C. An incremental learning system for atrial fibrillation detection based on transfer learning and active learning. Comput Methods Programs Biomed. 2020;187:105219. doi: 10.1016/j.cmpb.2019.105219 [DOI] [PubMed] [Google Scholar]

- 64.Strodthoff N, Wagner P, Schaeffter T, Samek W. Deep Learning for ECG Analysis: Benchmarks and Insights from PTB-XL. IEEE J Biomed Health Inform. 2021;25(5):1519–28. doi: 10.1109/JBHI.2020.3022989 [DOI] [PubMed] [Google Scholar]

- 65.Tadesse GA, Zhu T, Liu Y, Zhou Y, Chen J, Tian M, et al. Cardiovascular disease diagnosis using cross-domain transfer learning. Annu Int Conf IEEE Eng Med Biol Soc. 2019;2019:4262–5. doi: 10.1109/EMBC.2019.8857737 [DOI] [PubMed] [Google Scholar]

- 66.Torres-Soto J, Ashley EA. Multi-task deep learning for cardiac rhythm detection in wearable devices. NPJ Digit Med. 2020;3:116. doi: 10.1038/s41746-020-00320-4 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.Weimann K, Conrad TOF. Transfer learning for ECG classification. Sci Rep. 2021;11(1):5251. doi: 10.1038/s41598-021-84374-8 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.Yin W, Yang X, Li L, Zhang L, Kitsuwan N, Shinkuma R, et al. Self-adjustable domain adaptation in personalized ECG monitoring integrated with IR-UWB radar. Biomed Signal Proces. 2019;47:75–87. [Google Scholar]

- 69.Lopes RR, Bleijendaal H, Ramos LA, Verstraelen TE, Amin AS, Wilde AAM, et al. Improving electrocardiogram-based detection of rare genetic heart disease using transfer learning: An application to phospholamban p.Arg14del mutation carriers. Comput Biol Med. 2021;131:104262. doi: 10.1016/j.compbiomed.2021.104262 [DOI] [PubMed] [Google Scholar]

- 70.Yildirim O, Talo M, Ay B, Baloglu UB, Aydin G, Acharya UR. Automated detection of diabetic subject using pre-trained 2D-CNN models with frequency spectrum images extracted from heart rate signals. Comput Biol Med. 2019;113:103387. doi: 10.1016/j.compbiomed.2019.103387 [DOI] [PubMed] [Google Scholar]

- 71.Hssayeni MD, Jimenez-Shahed J, Burack MA, Ghoraani B. Ensemble deep model for continuous estimation of Unified Parkinson’s Disease Rating Scale III. Biomed Eng Online. 2021;20(1):32. doi: 10.1186/s12938-021-00872-w [DOI] [PMC free article] [PubMed] [Google Scholar]

- 72.Agrusa AS, Allegra AB, Kunkel DC, Coleman TP. Robust Methods to Detect Abnormal Initiation in the Gastric Slow Wave from Cutaneous Recordings. Annu Int Conf IEEE Eng Med Biol Soc. 2020;2020:225–31. doi: 10.1109/EMBC44109.2020.9176634 [DOI] [PubMed] [Google Scholar]

- 73.Allen J, Liu H, Iqbal S, Zheng D, Stansby G. Deep learning based photoplethysmography classification for peripheral arterial disease detection: a proof-of-concept study. Physiol Meas. 2021;42(5). doi: 10.1088/1361-6579/abf9f3 [DOI] [PubMed] [Google Scholar]

- 74.Artoni P, Piffer A, Vinci V, LeBlanc J, Nelson CA, Hensch TK, et al. Deep learning of spontaneous arousal fluctuations detects early cholinergic defects across neurodevelopmental mouse models and patients. Proc Natl Acad Sci U S A. 2020;117(38):23298–303. doi: 10.1073/pnas.1820847116 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75.Martinez M, De Leon PL. Falls Risk Classification of Older Adults Using Deep Neural Networks and Transfer Learning. IEEE J Biomed Health Inform. 2020;24(1):144–50. doi: 10.1109/JBHI.2019.2906499 [DOI] [PubMed] [Google Scholar]

- 76.Nasseri M, Pal Attia T, Joseph B, Gregg NM, Nurse ES, Viana PF, et al. Non-invasive wearable seizure detection using long-short-term memory networks with transfer learning. J Neural Eng. 2021;18(5). doi: 10.1088/1741-2552/abef8a [DOI] [PubMed] [Google Scholar]

- 77.Phan H, Chen OY, Koch P, Lu Z, McLoughlin I, Mertins A, et al. Towards More Accurate Automatic Sleep Staging via Deep Transfer Learning. IEEE Trans Biomed Eng. 2020;68(6):1787–98. [DOI] [PubMed] [Google Scholar]

- 78.Phan H, Mikkelsen K, Chén OY, Koch P, Mertins A, Kidmose P, et al. Personalized automatic sleep staging with single-night data: a pilot study with Kullback-Leibler divergence regularization. Physiol Meas. 2020;41(6):064004. doi: 10.1088/1361-6579/ab921e [DOI] [PubMed] [Google Scholar]

- 79.Sadouk L, Gadi T, Essoufi EH. A Novel Deep Learning Approach for Recognizing Stereotypical Motor Movements within and across Subjects on the Autism Spectrum Disorder. Comput Intell Neurosci. 2018;2018:7186762. doi: 10.1155/2018/7186762 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 80.Shyam A, Ravichandran V, Preejith SP, Joseph J, Sivaprakasam M. PPGnet: Deep Network for Device Independent Heart Rate Estimation from Photoplethysmogram. Annu Int Conf IEEE Eng Med Biol Soc. 2019;2019:1899–902. doi: 10.1109/EMBC.2019.8856989 [DOI] [PubMed] [Google Scholar]

- 81.Song Q, Zheng YJ, Sheng WG, Yang J. Tridirectional Transfer Learning for Predicting Gastric Cancer Morbidity. IEEE Trans Neural Netw Learn Syst. 2021;32(2):561–74. doi: 10.1109/TNNLS.2020.2979486 [DOI] [PubMed] [Google Scholar]

- 82.Yang C, Ojha BD, Aranoff ND, Green P, Tavassolian N. Classification of aortic stenosis using conventional machine learning and deep learning methods based on multi-dimensional cardio-mechanical signals. Sci Rep. 2020;10(1):17521. doi: 10.1038/s41598-020-74519-6 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 83.Wagner P, Strodthoff N, Bousseljot R-D, Kreiseler D, Lunze FI, Samek W, et al. PTB-XL, a large publicly available electrocardiography dataset. Scientific Data. 2020;7(1):154. doi: 10.1038/s41597-020-0495-6 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 84. Bank D, Koenigstein N, Giryes R. Autoencoders. arXiv. 2020:2003.05991.

- 85. Fagherazzi G, Fischer A, Ismael M, Despotovic V. Voice for Health: The Use of Vocal Biomarkers from Research to Clinical Practice. Digit Biomark. 2021;5(1):78–88. doi: 10.1159/000515346

- 86. Nodera H, Osaki Y, Yamazaki H, Mori A, Izumi Y, Kaji R. Deep learning for waveform identification of resting needle electromyography signals. Clin Neurophysiol. 2019;130(5):617–23. doi: 10.1016/j.clinph.2019.01.024

- 87. Balagopalan A, Eyre B, Robin J, Rudzicz F, Novikova J. Comparing Pre-trained and Feature-Based Models for Prediction of Alzheimer’s Disease Based on Speech. Front Aging Neurosci. 2021;13:635945. doi: 10.3389/fnagi.2021.635945

- 88. Koike T, Qian K, Kong Q, Plumbley MD, Schuller BW, Yamamoto Y. Audio for Audio is Better? An Investigation on Transfer Learning Models for Heart Sound Classification. Annu Int Conf IEEE Eng Med Biol Soc. 2020;2020:74–7. doi: 10.1109/EMBC44109.2020.9175450

- 89. Tseng KK, Wang C, Huang YF, Chen GR, Yung KL, Ip WH. Cross-Domain Transfer Learning for PCG Diagnosis Algorithm. Biosensors (Basel). 2021;11(4):127. doi: 10.3390/bios11040127

- 90. Demir F, Sengur A, Bajaj V. Convolutional neural networks based efficient approach for classification of lung diseases. Health Inf Sci Syst. 2020;8(1):4. doi: 10.1007/s13755-019-0091-3

- 91. Hsiao CH, Lin TW, Lin CW, Hsu FS, Lin FY, Chen CW, et al. Breathing Sound Segmentation and Detection Using Transfer Learning Techniques on an Attention-Based Encoder-Decoder Architecture. Annu Int Conf IEEE Eng Med Biol Soc. 2020;2020:754–9. doi: 10.1109/EMBC44109.2020.9176226

- 92. Imran A, Posokhova I, Qureshi HN, Masood U, Riaz MS, Ali K, et al. AI4COVID-19: AI enabled preliminary diagnosis for COVID-19 from cough samples via an app. Inform Med Unlocked. 2020;20:100378. doi: 10.1016/j.imu.2020.100378

- 93. Luo J, Liu H, Gao X, Wang B, Zhu X, Shi Y, et al. A novel deep feature transfer-based OSA detection method using sleep sound signals. Physiol Meas. 2020;41(7):075009. doi: 10.1088/1361-6579/ab9e7b

- 94. Devlin J, Chang M-W, Lee K, Toutanova K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv. 2018:1810.04805.

- 95. Palanisamy K, Singhania D, Yao A. Rethinking CNN models for audio classification. arXiv. 2020:2007.11154.

- 96. Gemmeke JF, Ellis DPW, Freedman D, Jansen A, Lawrence W, Moore RC, et al. Audio Set: An ontology and human-labeled dataset for audio events. 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP); 2017.

- 97. Panayotov V, Chen G, Povey D, Khudanpur S. Librispeech: An ASR corpus based on public domain audio books. 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP); 2015.

- 98. AlShibli A, Mathkour H. A Shallow Convolutional Learning Network for Classification of Cancers Based on Copy Number Variations. Sensors (Basel). 2019;19(19):4207. doi: 10.3390/s19194207

- 99. Gao Y, Cui Y. Deep transfer learning for reducing health care disparities arising from biomedical data inequality. Nat Commun. 2020;11(1):5131. doi: 10.1038/s41467-020-18918-3

- 100. Kim S, Kim K, Choe J, Lee I, Kang J. Improved survival analysis by learning shared genomic information from pan-cancer data. Bioinformatics. 2020;36(Suppl_1):i389–i98. doi: 10.1093/bioinformatics/btaa462

- 101. López-García G, Jerez JM, Franco L, Veredas FJ. Transfer learning with convolutional neural networks for cancer survival prediction using gene-expression data. PLoS One. 2020;15(3):e0230536. doi: 10.1371/journal.pone.0230536

- 102. Qiu YL, Zheng H, Devos A, Selby H, Gevaert O. A meta-learning approach for genomic survival analysis. Nat Commun. 2020;11(1):6350. doi: 10.1038/s41467-020-20167-3

- 103. Mostavi M, Chiu YC, Chen Y, Huang Y. CancerSiamese: one-shot learning for predicting primary and metastatic tumor types unseen during model training. BMC Bioinformatics. 2021;22(1):244. doi: 10.1186/s12859-021-04157-w

- 104. Santilli AML, Jamzad A, Sedghi A, Kaufmann M, Logan K, Wallis J, et al. Domain adaptation and self-supervised learning for surgical margin detection. Int J Comput Assist Radiol Surg. 2021;16(5):861–9. doi: 10.1007/s11548-021-02381-6

- 105. Seddiki K, Saudemont P, Precioso F, Ogrinc N, Wisztorski M, Salzet M, et al. Cumulative learning enables convolutional neural network representations for small mass spectrometry data classification. Nat Commun. 2020;11(1):5595. doi: 10.1038/s41467-020-19354-z

- 106. Shickel B, Davoudi A, Ozrazgat-Baslanti T, Ruppert M, Bihorac A, Rashidi P. Deep Multi-Modal Transfer Learning for Augmented Patient Acuity Assessment in the Intelligent ICU. Front Digit Health. 2021;3:640685. doi: 10.3389/fdgth.2021.640685

- 107. He L, Li H, Wang J, Chen M, Gozdas E, Dillman JR, et al. A multi-task, multi-stage deep transfer learning model for early prediction of neurodevelopment in very preterm infants. Sci Rep. 2020;10(1):15072. doi: 10.1038/s41598-020-71914-x

- 108. Wardi G, Carlile M, Holder A, Shashikumar S, Hayden SR, Nemati S. Predicting Progression to Septic Shock in the Emergency Department Using an Externally Generalizable Machine-Learning Algorithm. Ann Emerg Med. 2021;77(4):395–406. doi: 10.1016/j.annemergmed.2020.11.007

- 109. Bae WD, Kim S, Park CS, Alkobaisi S, Lee J, Seo W, et al. Performance improvement of machine learning techniques predicting the association of exacerbation of peak expiratory flow ratio with short term exposure level to indoor air quality using adult asthmatics clustered data. PLoS One. 2021;16(1):e0244233. doi: 10.1371/journal.pone.0244233

- 110. Ekpenyong ME, Edoho ME, Udo IJ, Etebong PI, Uto NP, Jackson TC, et al. A transfer learning approach to drug resistance classification in mixed HIV dataset. Inform Med Unlocked. 2021;24:100568.

- 111. Cerami E, Gao J, Dogrusoz U, Gross BE, Sumer SO, Aksoy BA, et al. The cBio cancer genomics portal: an open platform for exploring multidimensional cancer genomics data. Cancer Discov. 2012;2(5):401–4. doi: 10.1158/2159-8290.CD-12-0095

- 112. Goldman MJ, Craft B, Hastie M, Repečka K, McDade F, Kamath A, et al. Visualizing and interpreting cancer genomics data via the Xena platform. Nat Biotechnol. 2020;38(6):675–8. doi: 10.1038/s41587-020-0546-8

- 113. Al-Garadi MA, Yang YC, Cai H, Ruan Y, O’Connor K, Graciela GH, et al. Text classification models for the automatic detection of nonmedical prescription medication use from social media. BMC Med Inform Decis Mak. 2021;21(1):27. doi: 10.1186/s12911-021-01394-0

- 114. Du J, Zhang Y, Luo J, Jia Y, Wei Q, Tao C, et al. Extracting psychiatric stressors for suicide from social media using deep learning. BMC Med Inform Decis Mak. 2018;18(Suppl 2):43. doi: 10.1186/s12911-018-0632-8

- 115. Howard D, Maslej MM, Lee J, Ritchie J, Woollard G, French L. Transfer Learning for Risk Classification of Social Media Posts: Model Evaluation Study. J Med Internet Res. 2020;22(5):e15371. doi: 10.2196/15371

- 116. Li Y, Rao S, Solares JRA, Hassaine A, Ramakrishnan R, Canoy D, et al. BEHRT: Transformer for Electronic Health Records. Sci Rep. 2020;10(1):7155. doi: 10.1038/s41598-020-62922-y

- 117. Si Y, Roberts K. Patient Representation Transfer Learning from Clinical Notes based on Hierarchical Attention Network. AMIA Jt Summits Transl Sci Proc. 2020;2020:597–606.

- 118. Syed K, Sleeman WC, Hagan M, Palta J, Kapoor R, Ghosh P. Automatic Incident Triage in Radiation Oncology Incident Learning System. Healthcare (Basel). 2020;8(3):272. doi: 10.3390/healthcare8030272

- 119. Alyafeai Z, AlShaibani MS, Ahmad I. A survey on transfer learning in natural language processing. arXiv. 2020:2007.04239.

- 120. Hahn U, Oleynik M. Medical Information Extraction in the Age of Deep Learning. Yearb Med Inform. 2020;29(1):208–20. doi: 10.1055/s-0040-1702001

- 121. Haulcy R, Glass J. Classifying Alzheimer’s Disease Using Audio and Text-Based Representations of Speech. Front Psychol. 2021;11:624137. doi: 10.3389/fpsyg.2020.624137

- 122. Rawat BPS, Jagannatha A, Liu F, Yu H. Inferring ADR causality by predicting the Naranjo Score from Clinical Notes. AMIA Annu Symp Proc. 2020;2020:1041–9.

- 123. Jiang H, Li Y, Zeng X, Xu N, Zhao C, Zhang J, et al. Exploring Fever of Unknown Origin Intelligent Diagnosis Based on Clinical Data: Model Development and Validation. JMIR Med Inform. 2020;8(11):e24375. doi: 10.2196/24375

- 124. Spasic I, Nenadic G. Clinical Text Data in Machine Learning: Systematic Review. JMIR Med Inform. 2020;8(3):e17984. doi: 10.2196/17984

- 125. Neamatullah I, Douglass MM, Lehman L-wH, Reisner A, Villarroel M, Long WJ, et al. Automated de-identification of free-text medical records. BMC Med Inform Decis Mak. 2008;8:32. doi: 10.1186/1472-6947-8-32

- 126. Johnson AE, Pollard TJ, Shen L, Lehman LW, Feng M, Ghassemi M, et al. MIMIC-III, a freely accessible critical care database. Sci Data. 2016;3:160035. doi: 10.1038/sdata.2016.35

- 127. Casey A, Davidson E, Poon M, Dong H, Duma D, Grivas A, et al. A systematic review of natural language processing applied to radiology reports. BMC Med Inform Decis Mak. 2021;21(1):179. doi: 10.1186/s12911-021-01533-7

- 128. Desautels T, Calvert J, Hoffman J, Mao Q, Jay M, Fletcher G, et al. Using Transfer Learning for Improved Mortality Prediction in a Data-Scarce Hospital Setting. Biomed Inform Insights. 2017;9:1178222617712994. doi: 10.1177/1178222617712994

- 129. Zhang K, Xu G, Zheng X, Li H, Zhang S, Yu Y, et al. Application of Transfer Learning in EEG Decoding Based on Brain-Computer Interfaces: A Review. Sensors (Basel). 2020;20(21):6321. doi: 10.3390/s20216321

- 130. Bose P, Srinivasan S, Sleeman WC, Palta J, Kapoor R, Ghosh P. A Survey on Recent Named Entity Recognition and Relationship Extraction Techniques on Clinical Texts. Appl Sci. 2021;11(18):8319.

- 131. Sudlow C, Gallacher J, Allen N, Beral V, Burton P, Danesh J, et al. UK Biobank: An Open Access Resource for Identifying the Causes of a Wide Range of Complex Diseases of Middle and Old Age. PLoS Med. 2015;12(3):e1001779. doi: 10.1371/journal.pmed.1001779

- 132. Wilkinson MD, Dumontier M, Aalbersberg IJ, Appleton G, Axton M, Baak A, et al. The FAIR Guiding Principles for scientific data management and stewardship. Sci Data. 2016;3:160018. doi: 10.1038/sdata.2016.18