Abstract

Objectives

Orthognathic surgery is used to treat moderate to severe occlusal discrepancies. Examinations and measurements for preoperative screening are essential procedures. A careful analysis is needed to decide whether cases require orthognathic surgery. This study developed screening software using a multi-layer perceptron to determine whether orthognathic surgery is required.

Methods

In total, 538 digital lateral cephalometric radiographs were retrospectively collected from a hospital data system. The input data consisted of seven cephalometric variables. All cephalograms were analyzed by the Detectron2 detection and segmentation algorithms. A keypoint region-based convolutional neural network (R-CNN) was used for object detection, and an artificial neural network (ANN) was used for classification. This novel neural network decision support system was created and validated using Keras software. The output data are shown as a number from 0 to 1, with cases requiring orthognathic surgery being indicated by a number approaching 1.

Results

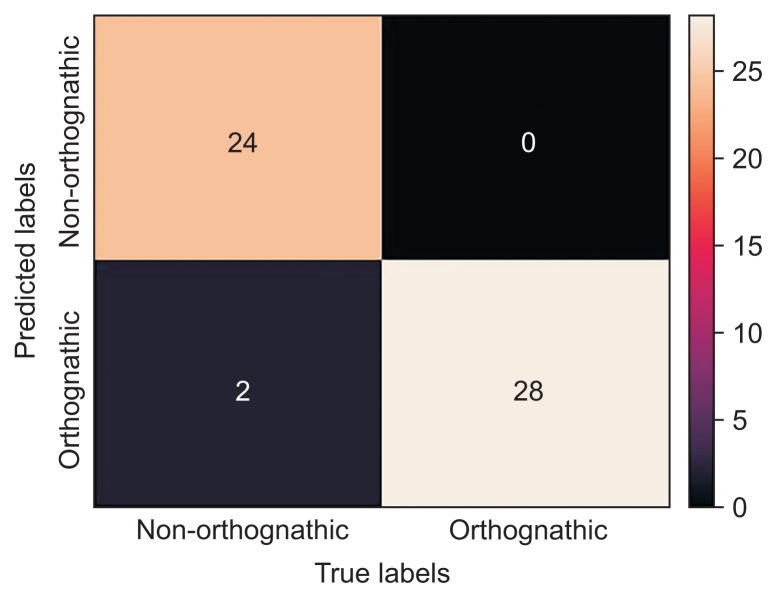

The screening software demonstrated a diagnostic agreement of 96.3% with specialists regarding the requirement for orthognathic surgery. A confusion matrix showed that only 2 out of 54 cases were misdiagnosed (accuracy = 0.963, sensitivity = 1, precision = 0.93, F-value = 0.963, area under the curve = 0.96).

Conclusions

Orthognathic surgery screening with a keypoint R-CNN for object detection and an ANN for classification showed 96.3% diagnostic agreement in this study.

Keywords: Orthognathic Surgery, Cephalometry, Neural Network Models, Classification, Artificial Intelligence

I. Introduction

Orthognathic surgery is used to reposition the jaws in patients with moderate to severe occlusal discrepancies and dentofacial deformities. This field combines dental, medical, and surgical concepts, most notably orthodontics together with oral and maxillofacial surgery, to treat facial deformities that have progressed beyond what tooth movement alone can correct. Accurate preoperative screening is highly important because patients need to be aware of the invasiveness of orthognathic surgery and must plan for its cost.

Prior to orthognathic surgery, orthodontists carefully evaluate examinations and measurements from dental casts, intra- and extra-oral photographs, panoramic and cephalometric radiographs, and the patients' perceptions [1,2]. In particular, detailed interpretation of cephalometric tracings is part of the screening process, definitive diagnosis, treatment planning, and clinical decision-making. However, this interpretation is time-consuming and requires expertise in orthodontics and maxillofacial surgery; without such expertise, the measurements may be misinterpreted.

To overcome these limitations, developments in computer technology have led to the digitization of cephalometric analysis. Moreover, artificial intelligence (AI) expert systems based on deep learning have been shown to be useful in orthodontics [3]. AI encompasses many subfields, including machine learning, which learns from large quantities of data to perform specific tasks without explicit programming, and deep learning, which uses many layers of processing units to learn a specific task with increasing accuracy. Common applications of deep learning include image and speech recognition [4]. Combining AI-aided digitization with neural network systems shows strong potential for an automated decision support system for orthognathic surgery screening. Such a system would benefit potential patients and be convenient for referring dentists and dental specialists.

Deep learning algorithms that analyze very large datasets of digitized cephalograms have been developed to classify skeletal and dental discrepancies [5]. Artificial neural network (ANN) applications have also been created that take cephalometric variables as inputs, learn weights and biases from them, and then use this information to determine whether surgery is required. Moreover, the keypoint region-based convolutional neural network (R-CNN) has shown better object-detection accuracy than earlier neural networks [6]. Therefore, this study applied AI to cephalometric analysis to develop a standardized decision-making system for orthognathic diagnosis. The specific objective was to develop and validate a multi-layer perceptron (MLP) for preoperative orthognathic surgery screening, using a keypoint R-CNN for object detection.

II. Methods

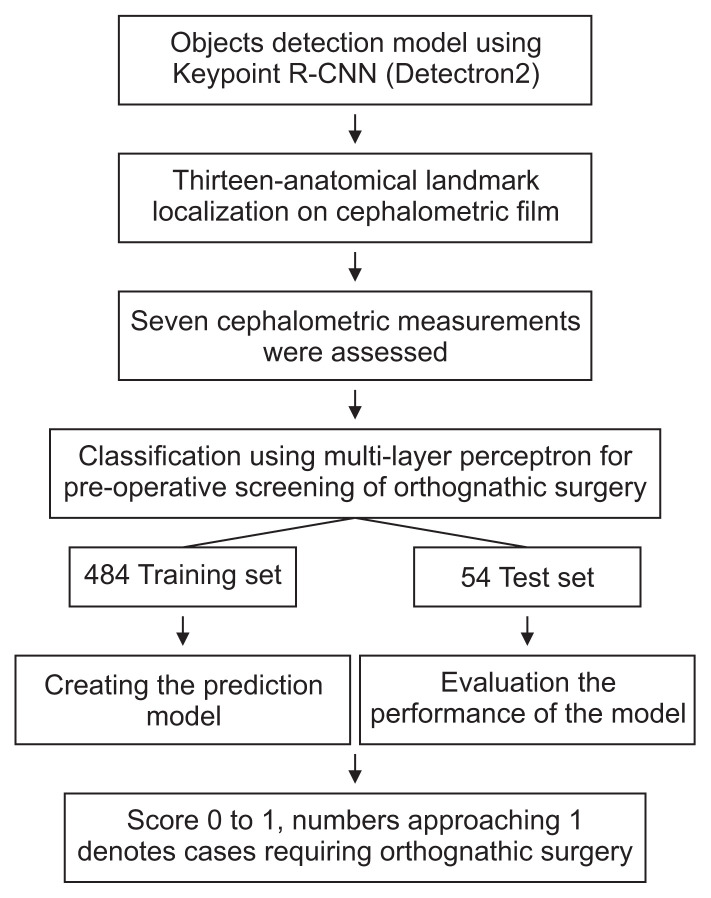

Prior to collecting the cephalometric radiographs and commencing the research, the ethical standards and research procedures were approved by the Institutional Review Board of the Faculty of Dentistry/Faculty of Pharmacy, Mahidol University (COA.No. MU-DT/PY-IRB 2021/032.2903). The flow chart of the entire process is shown in Figure 1.

Figure 1.

Flow chart of the entire process. R-CNN: region-based convolutional neural network.

The data were obtained from patients who visited the Department of Oral and Maxillofacial Radiology, Faculty of Dentistry, Mahidol University, from 2012 to 2021. The seven cephalometric parameters listed in Table 1 were measured in all subjects for the classification [7]: U1 to PP (angle between the axis of the maxillary incisor and the palatal plane), L1 to MP (angle between the axis of the mandibular incisor and the mandibular plane), overjet (anteroposterior overlap of the maxillary incisor over the mandibular incisor), U1 root tip to A-point (distance between the root apex of the maxillary incisor and A-point, referenced to the functional occlusal plane, defined here as the line connecting the mesiobuccal cusp of the mandibular first premolar (L4) and the mesiobuccal cusp of the mandibular first molar (L6)), L1 root tip to B-point (distance between the root apex of the mandibular incisor and B-point, referenced to the functional occlusal plane), ANB (angle between A-point, nasion, and B-point), and Wits (distance between the perpendicular projections of A-point and B-point onto the functional occlusal plane).

Table 1.

Descriptions of the seven cephalometric measurements used to assess dental deformities (U1 to PP, L1 to MP, overjet), alveolar housing (U1 root tip to A-point, L1 root tip to B-point), and skeletal deformities (ANB, Wits) [7]

| Variable | Description |
|---|---|
| U1 to PP (°) | Angle between the axis of the maxillary incisor and the palatal plane |
| L1 to MP (°) | Angle between the axis of the mandibular incisor and the mandibular plane |
| Overjet (mm) | Anteroposterior overlap of the maxillary incisor over the mandibular incisor |
| U1 root tip to A-point (mm) | Distance between the root apex of the maxillary incisor and A-point, referenced to the functional occlusal plane (the line connecting the mesiobuccal cusp of the mandibular first premolar (L4) and the mesiobuccal cusp of the mandibular first molar (L6)) |
| L1 root tip to B-point (mm) | Distance between the root apex of the mandibular incisor and B-point, referenced to the functional occlusal plane |
| ANB (°) | Angle between A-point, nasion, and B-point |
| Wits (mm) | Distance between the perpendicular projections of A-point and B-point onto the functional occlusal plane |
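To make the geometry in Table 1 concrete, the following minimal Python sketch computes four of the measurements from landmark coordinates. It is not the authors' code: the function names, the image orientation (profile facing +x, y increasing downward), the choice of rays that define each angle (measured on the posterior side of each reference plane), and the pixel-to-millimetre factor derived from the 96 dpi metadata are all assumptions made for illustration.

```python
import math
from typing import Tuple

# Pixel coordinates; assumed orientation: profile faces +x, y increases downward.
Point = Tuple[float, float]


def _vec(p: Point, q: Point) -> Point:
    """Vector from q to p."""
    return (p[0] - q[0], p[1] - q[1])


def _dot(u: Point, v: Point) -> float:
    return u[0] * v[0] + u[1] * v[1]


def _cross(u: Point, v: Point) -> float:
    return u[0] * v[1] - u[1] * v[0]


def angle_between(u: Point, v: Point) -> float:
    """Unsigned angle in degrees between two vectors."""
    cos = _dot(u, v) / (math.hypot(*u) * math.hypot(*v))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


def u1_to_pp(u1_tip: Point, u1_root: Point, ans: Point, pns: Point) -> float:
    """Maxillary incisor axis (root -> tip) against the palatal plane, ray toward PNS."""
    return angle_between(_vec(u1_tip, u1_root), _vec(pns, ans))


def l1_to_mp(l1_tip: Point, l1_root: Point, gonion: Point, menton: Point) -> float:
    """Mandibular incisor axis (root -> tip) against the mandibular plane, ray toward gonion."""
    return angle_between(_vec(l1_tip, l1_root), _vec(gonion, menton))


def anb(a: Point, nasion: Point, b: Point) -> float:
    """Signed angle A-N-B in degrees; positive when A lies anterior to B."""
    na, nb = _vec(a, nasion), _vec(b, nasion)
    return math.degrees(math.atan2(_cross(na, nb), _dot(na, nb)))


def wits(a: Point, b: Point, l4: Point, l6: Point, mm_per_px: float) -> float:
    """Distance between the projections of A and B on the functional occlusal plane
    (unit vector L6 -> L4, pointing anteriorly); positive when A projects ahead of B."""
    ux, uy = _vec(l4, l6)
    norm = math.hypot(ux, uy)
    return _dot(_vec(a, b), (ux / norm, uy / norm)) * mm_per_px


# Assumed scale: 96 dpi metadata gives 25.4 / 96 mm per pixel
# (this ignores radiographic magnification).
MM_PER_PX = 25.4 / 96
```

For example, with nasion at (0, 0), A at (10, 100), and B at (0, 200), `anb` returns about 5.7°, positive because A lies anterior to B; swapping the horizontal positions gives a negative value, as expected for a Class III pattern.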

The samples in this retrospective study consisted of 538 digital lateral cephalograms from Thai patients aged 20–40 years. Patients with an unerupted permanent tooth or a missing tooth, a recognizable craniofacial abnormality or deformity, or previous orthodontic, plastic, or other maxillofacial surgical procedures were excluded. The cases included skeletal classes I, II, and III (Table 2). Two orthodontists (SM, ST), each with more than 10 years of experience, decided on the treatment plans. Of the 538 cases, orthodontic treatment without orthognathic surgery was chosen for 256, while 282 were deemed to require orthognathic surgery.

Table 2.

Characteristics of the samples (n = 538)

| Variable | n |
|---|---|
| Type of skeleton | |
| Class I | 43 |
| Class II | 30 |
| Class III | 465 |
| Treatment | |
| Orthognathic surgery | 282 |
| Non-orthognathic surgery | 256 |
| Set allocation | |
| Training set | 484 |
| Test set | 54 |

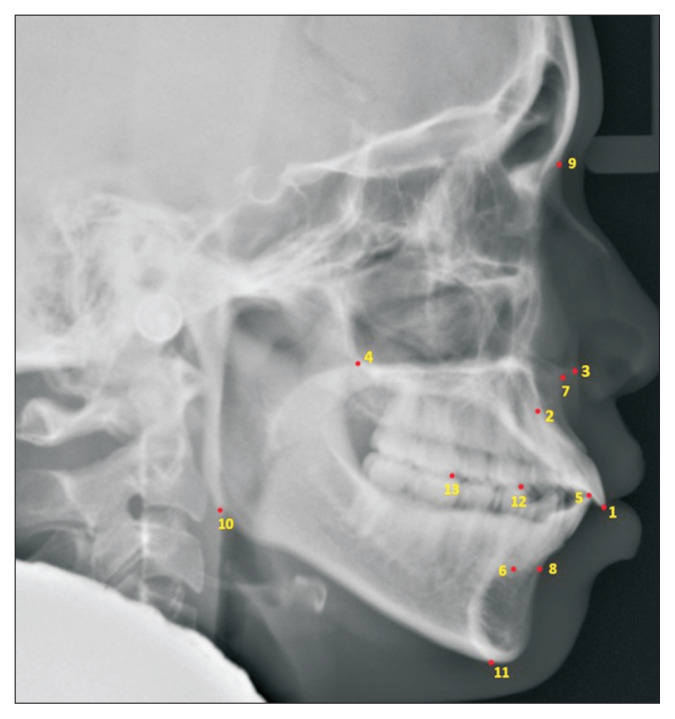

All 538 images were manually annotated by the first author (NC) using Vision Marker II, a web application developed by Digital Storemesh Co. Ltd., and the annotations were validated by an experienced orthodontist (SM). Next, Detectron2 [8], a unified library of object detection algorithms from Facebook AI Research (FAIR) that includes the keypoint R-CNN models [9,10], was used. Detectron2 was run in Google Colaboratory, where Python code was written and executed to locate and label 13 anatomical landmarks (the U1 incisal tip, U1 root tip, ANS, PNS, L1 incisal tip, L1 root tip, A-point, B-point, nasion, gonion, menton, mesiobuccal cusp of L4, and mesiobuccal cusp of L6) (Figure 2), from which the seven measurements were calculated.
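Detectron2 trains keypoint R-CNN models from a list of dataset dictionaries. The sketch below, assuming one instance per cephalogram whose 13 keypoints are the landmarks listed above, shows how a manually annotated image might be converted into such a record. The landmark labels, the bounding-box margin, and the helper name `make_record` are hypothetical; the record keys (`file_name`, `image_id`, `height`, `width`, `annotations`, `bbox`, `bbox_mode`, `category_id`, `keypoints`) follow Detectron2's standard dataset format.

```python
# Hypothetical labels, in the order the landmarks are listed in the text.
LANDMARKS = [
    "U1_incisal_tip", "U1_root_tip", "ANS", "PNS", "L1_incisal_tip",
    "L1_root_tip", "A_point", "B_point", "nasion", "gonion", "menton",
    "L4_mesiobuccal_cusp", "L6_mesiobuccal_cusp",
]


def make_record(file_name, image_id, height, width, points, margin=20.0):
    """Build one Detectron2-style dataset dict: a single instance whose
    13 keypoints are the annotated cephalometric landmarks."""
    missing = [name for name in LANDMARKS if name not in points]
    if missing:
        raise ValueError(f"missing landmarks: {missing}")

    keypoints = []
    for name in LANDMARKS:
        x, y = points[name]
        keypoints.extend([float(x), float(y), 2])  # v = 2: labeled and visible (COCO convention)

    # Box around all landmarks plus an assumed margin, clipped to the image.
    xs = [points[n][0] for n in LANDMARKS]
    ys = [points[n][1] for n in LANDMARKS]
    x0 = max(0.0, min(xs) - margin)
    y0 = max(0.0, min(ys) - margin)
    x1 = min(float(width), max(xs) + margin)
    y1 = min(float(height), max(ys) + margin)

    return {
        "file_name": file_name,
        "image_id": image_id,
        "height": height,
        "width": width,
        "annotations": [{
            "bbox": [x0, y0, x1 - x0, y1 - y0],
            # 1 == detectron2.structures.BoxMode.XYWH_ABS; pass the enum member in practice.
            "bbox_mode": 1,
            "category_id": 0,
            "keypoints": keypoints,
        }],
    }
```

If Detectron2 is installed, a list of such records would typically be registered with `DatasetCatalog.register`, the landmark names attached through `MetadataCatalog.get(name).set(thing_classes=["cephalogram"], keypoint_names=LANDMARKS, keypoint_flip_map=[])`, and the keypoint head sized with `cfg.MODEL.ROI_KEYPOINT_HEAD.NUM_KEYPOINTS = 13`. Setting `cfg.INPUT.RANDOM_FLIP = "none"` is probably sensible here, because mirroring a lateral cephalogram reverses the profile.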

Figure 2.

Lateral cephalogram with the 13 anatomical landmarks labeled by Detectron2 (1: U1 incisal tip, 2: U1 root tip, 3: ANS, 4: PNS, 5: L1 incisal tip, 6: L1 root tip, 7: A-point, 8: B-point, 9: nasion, 10: gonion, 11: menton, 12: mesiobuccal cusp of L4, and 13: mesiobuccal cusp of L6). The image has a vertical and horizontal resolution of 96 dpi and a size of 1020 × 1024 pixels (height × width). See Table 1 for descriptions of the measurements derived from these landmarks.

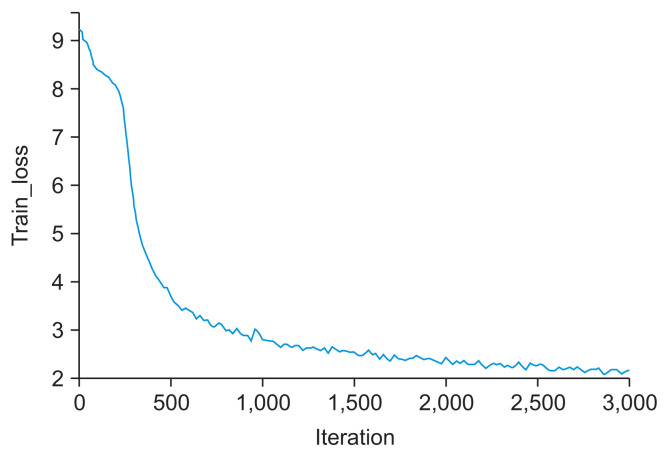

The training loss was then used to evaluate the convergence of the model; a loss that decreases toward 0 and stabilizes indicates that the model is well trained (Figure 3). The root mean square error (RMSE) and the percentage of detected joints (PDJ) [11] were also used to assess landmark detection. The RMSE was 8.54 pixels, which was considered acceptable for images with a vertical and horizontal resolution of 96 dpi and a size of 1020 × 1024 pixels. The PDJ at a threshold of 0.05 was 0.97, indicating satisfactory agreement between the predicted and ground-truth landmark positions (Figure 4).
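The two landmark metrics can be written in a few lines. This sketch assumes that RMSE is the square root of the mean squared Euclidean landmark error and that PDJ counts a landmark as detected when its error is at most threshold × a reference length. The text does not state the reference length, so the image diagonal defined below is purely an assumption (DeepPose [11] normalized by torso diameter), as is the millimetre scale taken from the 96 dpi metadata.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def landmark_errors(pred: Sequence[Point], truth: Sequence[Point]) -> List[float]:
    """Euclidean distance in pixels between each predicted and true landmark."""
    if len(pred) != len(truth) or not pred:
        raise ValueError("pred and truth must be non-empty and of equal length")
    return [math.dist(p, t) for p, t in zip(pred, truth)]


def rmse(pred: Sequence[Point], truth: Sequence[Point]) -> float:
    """Root mean square of the landmark distances."""
    errors = landmark_errors(pred, truth)
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def pdj(pred: Sequence[Point], truth: Sequence[Point],
        threshold: float, ref_length: float) -> float:
    """Fraction of landmarks whose error is within threshold * ref_length."""
    errors = landmark_errors(pred, truth)
    return sum(e <= threshold * ref_length for e in errors) / len(errors)


# Assumed reference length: the diagonal of a 1020 x 1024 image.
IMAGE_DIAGONAL = math.hypot(1020, 1024)
# Assumed scale if the 96 dpi metadata matched the physical size.
MM_PER_PX = 25.4 / 96
```

A call such as `pdj(pred, truth, 0.05, IMAGE_DIAGONAL)` would then give the detected fraction under that assumed normalization. Under the same scale assumption, 8.54 pixels corresponds to about 8.54 × 25.4 / 96 ≈ 2.26 mm; radiographic magnification would change this figure.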

Figure 3.

Training loss of the object detection model, with the graph decreasing to the point of stability.

Figure 4.

Landmark detection evaluated with the percentage of detected joints at a threshold of 0.05. Each circle marks the zone around a landmark within which a prediction counts as detected.

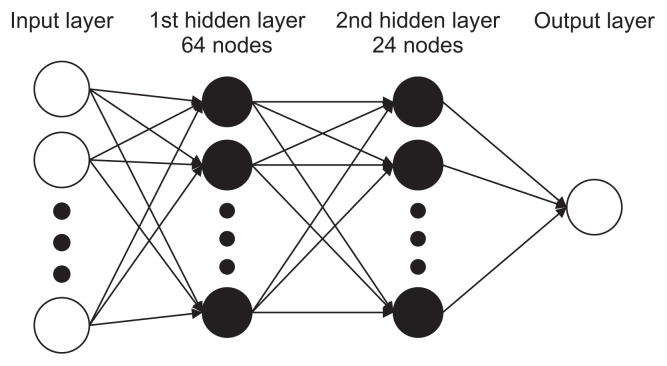

Each case was randomly assigned to either the training or the test set: 484 radiographs were allocated to the training set used to build the prediction model, and the remaining 54 radiographs to the test set. The test set was used to evaluate the performance of the model, and the training set was also re-evaluated as the validation set. To avoid overfitting, iterative learning was stopped at the point of lowest validation error. Min-max normalization was used to scale the input data to the range 0–1. The model was a four-layer neural network consisting of one input layer, two hidden layers (64 nodes in the first and 24 nodes in the second), and one output layer (epochs = 2,000, batch size = 32) (Figure 5). The learning rate was set to 0.01. Nonlinear activation functions were used to improve the learning of the deep neural network: the rectified linear unit (ReLU) function [12] was applied to the hidden layers, and a sigmoid function was used for the output layer. Keras [13], a neural network library for Python, was used to code the neural network models in Google Colaboratory, and backpropagation was used to adjust the weights.
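The following NumPy sketch mirrors the configuration described above: a random 484/54 split, min-max scaling, a 7-64-24-1 network with ReLU hidden layers and a sigmoid output, a learning rate of 0.01, a batch size of 32, up to 2,000 epochs, and keeping the weights at the lowest validation loss. It is an illustration, not the authors' Keras code. The optimizer (plain mini-batch gradient descent), the binary cross-entropy loss, He initialization, the 50-epoch patience, and the names `random_split`, `MLP`, and `train` are assumptions, since the text does not specify them. The seven inputs correspond to the seven cephalometric measurements.

```python
import numpy as np


def random_split(n, n_test, seed=0):
    """Shuffle case indices and return (train_idx, test_idx)."""
    idx = np.random.default_rng(seed).permutation(n)
    return idx[n_test:], idx[:n_test]


def min_max_fit(x):
    """Per-feature minimum and range, estimated on the training data only."""
    lo, hi = x.min(axis=0), x.max(axis=0)
    return lo, np.where(hi > lo, hi - lo, 1.0)


def bce(p, y, eps=1e-7):
    """Binary cross-entropy between predicted probabilities p and labels y."""
    p = np.clip(p, eps, 1.0 - eps)
    return float(-np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))


class MLP:
    """7 -> 64 -> 24 -> 1 perceptron: ReLU hidden layers, sigmoid output."""

    def __init__(self, sizes=(7, 64, 24, 1), seed=0):
        rng = np.random.default_rng(seed)
        # He initialization (an assumption; commonly used with ReLU layers).
        self.W = [rng.normal(0.0, np.sqrt(2.0 / m), size=(m, n))
                  for m, n in zip(sizes[:-1], sizes[1:])]
        self.b = [np.zeros(n) for n in sizes[1:]]

    def forward(self, x):
        """Return the activations of every layer, input first."""
        acts = [x]
        for i, (W, b) in enumerate(zip(self.W, self.b)):
            z = acts[-1] @ W + b
            is_output = i == len(self.W) - 1
            acts.append(1.0 / (1.0 + np.exp(-z)) if is_output else np.maximum(z, 0.0))
        return acts

    def predict(self, x):
        return self.forward(x)[-1].ravel()

    def sgd_step(self, x, y, lr):
        """One backpropagation step on a mini-batch."""
        acts = self.forward(x)
        # For a sigmoid output with binary cross-entropy, dLoss/dz = p - y.
        delta = (acts[-1] - y.reshape(-1, 1)) / len(x)
        for i in reversed(range(len(self.W))):
            grad_W, grad_b = acts[i].T @ delta, delta.sum(axis=0)
            if i > 0:  # propagate through W[i] (before updating it), then the ReLU
                delta = (delta @ self.W[i].T) * (acts[i] > 0)
            self.W[i] -= lr * grad_W
            self.b[i] -= lr * grad_b


def train(model, x_tr, y_tr, x_val, y_val, epochs=2000, batch_size=32,
          lr=0.01, patience=50, seed=0):
    """Mini-batch training that restores the weights with the lowest validation loss."""
    rng = np.random.default_rng(seed)
    best_loss, best, wait = np.inf, None, 0
    for _ in range(epochs):
        order = rng.permutation(len(x_tr))
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]
            model.sgd_step(x_tr[batch], y_tr[batch], lr)
        val_loss = bce(model.predict(x_val), y_val)
        if val_loss < best_loss:
            best_loss, wait = val_loss, 0
            best = ([W.copy() for W in model.W], [b.copy() for b in model.b])
        else:
            wait += 1
            if wait >= patience:
                break
    model.W, model.b = best
    return best_loss
```

Fitting the min-max statistics on the training cases only keeps test information out of the scaling. In Keras the same architecture would be written as `keras.Sequential([keras.Input(shape=(7,)), keras.layers.Dense(64, activation="relu"), keras.layers.Dense(24, activation="relu"), keras.layers.Dense(1, activation="sigmoid")])`, with `keras.callbacks.EarlyStopping(monitor="val_loss", restore_best_weights=True)` providing the stop-at-lowest-validation-loss behaviour.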

Figure 5.

Diagram of the multi-layer perceptron used in this study.

Finally, the output data were expressed as numbers from 0 to 1. Orthognathic surgery was suggested when the output approached 1, whereas non-orthognathic treatment was considered more appropriate when the output approached 0. The data files are freely and openly accessible on the Open Science Framework at https://osf.io/bcd4h/?view_only=8ff663525fc6468cb61109b7fb6abca6.
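The text does not state the exact cut-off used to turn the sigmoid output into a recommendation. Assuming the common 0.5 threshold, the decision rule reduces to a single comparison; the function name and labels below are illustrative only.

```python
def recommend(prob: float, threshold: float = 0.5) -> str:
    """Map the network's sigmoid output to a screening label (0.5 cut-off assumed)."""
    if not 0.0 <= prob <= 1.0:
        raise ValueError("the sigmoid output must lie in [0, 1]")
    return "orthognathic surgery" if prob >= threshold else "non-orthognathic surgery"


for output in (0.91, 0.12):
    print(output, recommend(output))
```

Making the threshold a parameter allows it to be tuned, for example toward higher sensitivity, without changing the trained network.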

III. Results

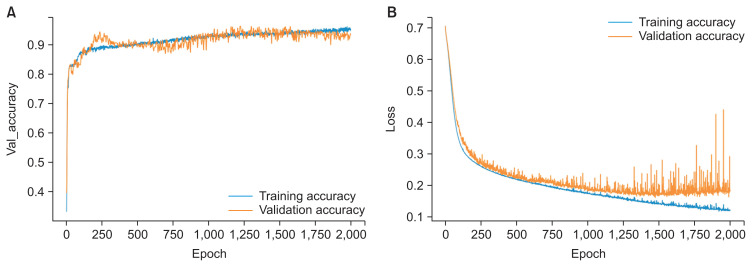

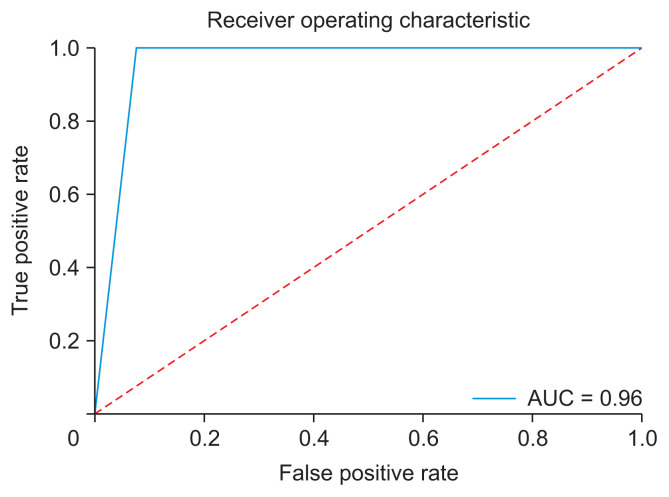

The model showed 96.3% diagnostic agreement for the classification of whether the patient required orthognathic surgery. The training and validation accuracy curves of the neural network model rose to a stable plateau (Figure 6A); accuracy approaching 1 indicates that the model is well trained. Meanwhile, the training and validation loss curves decreased to a stable plateau (Figure 6B); loss approaching 0 likewise indicates a well-trained model.

A receiver operating characteristic (ROC) curve showed the classification performance of the model (Figure 7). The curve rose steeply from the origin toward (0, 1), reflecting a high true-positive rate at a low false-positive rate, which indicates a good classification model. The area under the ROC curve was 0.96, indicating excellent discrimination.

Furthermore, a confusion matrix showed the misdiagnosed cases (Figure 8). Only two of the 54 test cases were misdiagnosed: one was skeletal class II and the other was skeletal class III. The accuracy of the model was 0.963, showing a high rate of correct predictions. The sensitivity was 1, indicating that all cases requiring orthognathic surgery were classified as such. The precision was 0.93, showing that a high proportion of the cases predicted to require surgery actually required it. The F-value was 0.963, showing that both precision and sensitivity were high.
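Because sensitivity was 1, there were no false negatives, so both misclassified test cases must have been non-surgical cases predicted as requiring surgery. Together with the 54 test cases and the reported F-value of 0.963, this implies 26 true positives, 2 false positives, 26 true negatives, and 0 false negatives. That reconstruction is our own arithmetic from the reported metrics, not a value quoted from Figure 8. The sketch below recomputes the reported metrics from those counts; the function names are illustrative.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, tn, fn) with 1 = orthognathic surgery, 0 = non-orthognathic."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    return tp, fp, tn, fn


def screening_metrics(tp, fp, tn, fn):
    """Accuracy, sensitivity (recall), precision, and F-value (F1 score)."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    sensitivity = tp / (tp + fn)
    precision = tp / (tp + fp)
    f_value = 2 * precision * sensitivity / (precision + sensitivity)
    return {"accuracy": accuracy, "sensitivity": sensitivity,
            "precision": precision, "f_value": f_value}


# Labels consistent with the reconstructed counts (not the study's per-case data).
y_true = [1] * 26 + [0] * 28
y_pred = [1] * 26 + [1] * 2 + [0] * 26
counts = confusion_counts(y_true, y_pred)  # (26, 2, 26, 0)
for name, value in screening_metrics(*counts).items():
    print(f"{name}: {value:.3f}")
```

Here accuracy and the F-value coincide (52/54) only because the two errors are both false positives and the classes are nearly balanced; in general the two metrics differ.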

Figure 6.

(A) Training accuracy and validation accuracy of the neural network model, with the graph increasing to the point of stability. (B) Training loss and validation loss of the neural network model, with the graph decreasing to the point of stability.

Figure 7.

The receiver operating characteristic curve rises steeply from the origin toward (0, 1), reflecting a high true-positive rate at a low false-positive rate, with an area under the curve (AUC) of 0.96.
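The AUC has a convenient rank interpretation: it equals the probability that a randomly chosen case requiring surgery receives a higher model output than a randomly chosen non-surgical case, with ties counted as one half (the Mann–Whitney formulation, which matches the area under the empirical ROC curve). Per-case outputs are not reported, so the scores below are made up purely for illustration.

```python
def roc_auc(scores, labels):
    """AUC via pairwise comparison of positive (label 1) and negative (label 0) scores."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


# Hypothetical outputs for four cases: two surgical (1) and two non-surgical (0).
print(roc_auc([0.9, 0.2, 0.8, 0.1], [1, 1, 0, 0]))
```

The quadratic pairwise loop is fine for a 54-case test set; for large datasets a rank-based implementation would be preferable.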

Figure 8.

Confusion matrix showing only two misdiagnosed cases (accuracy = 0.963; sensitivity = 1; precision = 0.93; F-value = 0.963). "Orthognathic" denotes orthognathic surgery combined with orthodontic treatment, and "non-orthognathic" denotes orthodontic treatment without orthognathic surgery.

IV. Discussion

AI has been used in healthcare and medicine for several years, and its applications are developing rapidly [14]. Machine learning methods are usually used to perform either prediction or classification [15]. Orthodontics is widely recognized as the dental discipline that has gained the most precision in structuring and improving its practices through computerization [16].

This study describes the creation and validation of a decision-making model based on a keypoint R-CNN for object detection and an ANN for classification. The procedure began with manual localization of anatomical landmarks validated by an orthodontist, followed by automated landmark localization with the keypoint R-CNN. The keypoint R-CNN is well suited to object localization in two-dimensional images because keypoint detection focuses on locating specific categorical points, such as boundary points, and it may attend particularly closely to object boundaries [17]. ANNs, in turn, are the foundation of deep learning algorithms, a subset of machine learning, and are used to solve complex problems in a wide variety of applications, including prediction. Hence, this study applied an ANN for prediction. Hidden layers can improve the prediction accuracy of ANNs [18]. ReLU was applied as the activation function in the hidden layers to mitigate the vanishing gradient problem and to introduce a degree of sparsity into the network [19].
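A simplified illustration of the two properties attributed to ReLU above, using only the standard library and ignoring the weights (real gradients also depend on them): the logistic function's derivative never exceeds 0.25, so chaining it across layers shrinks gradients geometrically, whereas ReLU's derivative is exactly 1 for positive inputs; and ReLU outputs exact zeros for non-positive inputs, which yields sparse activations.

```python
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def sigmoid_grad(x: float) -> float:
    s = sigmoid(x)
    return s * (1.0 - s)  # peaks at 0.25 when x = 0


def relu(x: float) -> float:
    return max(0.0, x)


def relu_grad(x: float) -> float:
    return 1.0 if x > 0 else 0.0  # subgradient 0 chosen at x = 0


# Activation-derivative factor after 10 layers in each function's best case
# (weights ignored): the sigmoid factor shrinks geometrically, ReLU's does not.
depth = 10
print(sigmoid_grad(0.0) ** depth)  # 0.25 ** 10, about 9.5e-07
print(relu_grad(1.0) ** depth)     # 1.0

# Sparsity: ReLU maps every non-positive pre-activation to exactly zero.
pre_activations = [-2.0, -0.5, 0.0, 0.7, 1.5]
outputs = [relu(v) for v in pre_activations]
print(sum(o == 0.0 for o in outputs) / len(outputs))  # 0.6
```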

Previous studies have analyzed the success rate of neural network-based decision support systems for orthognathic surgery. A decision support system using a deep convolutional neural network for image classification showed 95.4% to 96.4% diagnostic agreement between the actual diagnosis and the AI model's diagnosis regarding orthognathic surgery [20]. Another study employed an ANN for image classification and achieved a diagnostic agreement rate of 96% [21]. In the present study, we created and validated a system that combines a keypoint R-CNN for landmark detection with deep learning-based classification of lateral cephalometric images, and it showed a diagnostic agreement rate of 96.3%. This combination of a keypoint R-CNN and an ANN could therefore be beneficial for determining whether orthognathic surgery is required.

The performance of deep learning depends strongly on the amount of training data. The diagnostic agreement of our model could be improved further by including additional cephalometric radiographs in the training set, and standardizing the images could also help. With more training radiographs, the weight parameters in each layer of the network can be estimated more reliably. Although this study evaluated more than 500 cephalograms with accurate anatomical landmark localization, we still recommend increasing the number of training cephalograms in future research. With further data and training, the model could also be used for a variety of screening and diagnostic purposes [22].

The limitations of this study include the absence of input data on crowding, skeletal asymmetry, soft tissue profiles, and airway spaces. In addition, the patients' perceptions, complaints, and expectations are important. Variation in orthognathic surgery decisions is also relevant; for example, decisions may be affected by the patient's and clinician's preferences, airway space size, or the clinician's experience [3]. Although preferences are very hard to standardize, the patient's subjective needs should be addressed, and agreement among the surgeon, orthodontist, and patient is essential [23].

The keypoint R-CNN also showed very strong performance in detecting facial features. Comparing AI and human identification of other significant anatomical features is therefore a worthwhile topic for future research. Furthermore, the lateral cephalogram is only a two-dimensional image in which bilateral structures overlap, a drawback that three-dimensional cone-beam computed tomography (CBCT) can overcome. CBCT images enable more exact identification of cephalometric landmarks and avoid the superimposition of bilateral landmarks seen in cephalometry [24]. Moreover, merging frontal profiles with CBCT could provide more information on the relationship between the soft tissue and the facial skeletal structure.

In summary, by combining a neural network model with information on clinical decision-making, a supplemental tool for orthognathic screening using digital lateral cephalometry images was created. The model showed a diagnostic agreement rate of 96.3%. Increasing the size of the training data set, evaluating additional important data (especially patients’ perceptions), and using three-dimensional CBCT would further improve this AI-aided approach to orthognathic screening.

Acknowledgments

This study was supported by Mahidol University. We sincerely appreciate the technical advice and support of Assistant Professor Wasit Limprasert, College of Interdisciplinary Studies, Thammasat University, Thailand. We are also grateful for the technical assistance of Digital Storemesh Co. Ltd., and we thank Dr. Sasipa Thiradilok for the help given to the team.

Footnotes

Conflict of Interest

No potential conflict of interest relevant to this article was reported.

References

1. Graber TM, Vanarsdall RL. Orthodontics: current principles & techniques. 3rd ed. St. Louis (MO): Mosby; 2000.

2. Proffit WR, Fields HW. Contemporary orthodontics. 3rd ed. St. Louis (MO): Mosby; 2000.

3. Jung SK, Kim TW. New approach for the diagnosis of extractions with neural network machine learning. Am J Orthod Dentofacial Orthop. 2016;149(1):127–33. doi: 10.1016/j.ajodo.2015.07.030.

4. Wehle HD. Machine learning, deep learning and AI: what's the difference. Proceedings of the Annual Data Scientist Innovation Day Conference; 2017 Mar 28; Brussels, Belgium.

5. Min S, Lee B, Yoon S. Deep learning in bioinformatics. Brief Bioinform. 2017;18(5):851–69. doi: 10.1093/bib/bbw068.

6. Ding X, Li Q, Cheng Y, Wang J, Bian W, Jie B. Local keypoint-based Faster R-CNN. Appl Intell. 2020;50(10):3007–22. doi: 10.1007/s10489-020-01665-9.

7. Wolford L, Stevao E, Alexander CA, Goncalves JR. Orthodontics for orthognathic surgery. In: Miloro M, editor. Peterson's principles of oral and maxillofacial surgery. 2nd ed. Ontario, Canada: BC Decker Inc; 2004. pp. 1111–34.

8. Wu Y, Kirillov A, Massa F, Lo WY, Girshick R. Detectron2 [Internet]. San Francisco (CA): github.com; 2019 [cited 2022 Oct 10]. Available from: https://github.com/facebookresearch/detectron2

9. Wen H, Huang C, Guo S. The application of convolutional neural networks (CNNs) to recognize defects in 3D-printed parts. Materials (Basel). 2021;14(10):2575. doi: 10.3390/ma14102575.

10. Wu Y, Kirillov A, Massa F, Lo WY, Girshick R. Detectron2: a PyTorch-based modular object detection library [Internet]. Menlo Park (CA): Facebook; 2019 [cited 2022 Oct 10]. Available from: https://ai.facebook.com/blog/-detectron2-a-pytorch-based-modular-objectdetection-library-/

11. Toshev A, Szegedy C. DeepPose: human pose estimation via deep neural networks. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; 2014 Jun 23–28; Columbus, OH. pp. 1–5.

12. Agarap AF. Deep learning using rectified linear units (ReLU) [Internet]. Ithaca (NY): arXiv.org; 2018 [cited 2022 Oct 10]. Available from: https://arxiv.org/abs/1803.08375

13. Gulli A, Pal S. Deep learning with Keras. Birmingham, UK: Packt Publishing Ltd; 2017.

14. Noorbakhsh-Sabet N, Zand R, Zhang Y, Abedi V. Artificial intelligence transforms the future of health care. Am J Med. 2019;132(7):795–801. doi: 10.1016/j.amjmed.2019.01.017.

15. Han J, Pei J, Kamber M. Data mining: concepts and techniques. Amsterdam, Netherlands: Elsevier; 2011.

16. Chen YW, Stanley K, Att W. Artificial intelligence in dentistry: current applications and future perspectives. Quintessence Int. 2020;51(3):248–57. doi: 10.3290/j.qi.a43952.

17. Zhang WC, Fu C, Zhu M. Joint object contour points and semantics for instance segmentation [Internet]. Ithaca (NY): arXiv.org; 2020 [cited 2022 Oct 10]. Available from: https://arxiv.org/abs/2008.00460

18. Uzair M, Jamil N. Effects of hidden layers on the efficiency of neural networks. Proceedings of the 2020 IEEE 23rd International Multitopic Conference (INMIC); 2020 Nov 5–7; Bahawalpur, Pakistan. pp. 1–6.

19. Ide H, Kurita T. Improvement of learning for CNN with ReLU activation by sparse regularization. Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN); 2017 May 14–19; Anchorage, AK. pp. 2684–91.

20. Lee KS, Ryu JJ, Jang HS, Lee DY, Jung SK. Deep convolutional neural networks based analysis of cephalometric radiographs for differential diagnosis of orthognathic surgery indications. Appl Sci. 2020;10(6):2124. doi: 10.3390/app10062124.

21. Choi HI, Jung SK, Baek SH, Lim WH, Ahn SJ, Yang IH, et al. Artificial intelligent model with neural network machine learning for the diagnosis of orthognathic surgery. J Craniofac Surg. 2019;30(7):1986–9. doi: 10.1097/SCS.0000000000005650.

22. Poedjiastoeti W, Suebnukarn S. Application of convolutional neural network in the diagnosis of jaw tumors. Healthc Inform Res. 2018;24(3):236–41. doi: 10.4258/hir.2018.24.3.236.

23. Schabel BJ, McNamara JA Jr, Franchi L, Baccetti T. Qsort assessment vs visual analog scale in the evaluation of smile esthetics. Am J Orthod Dentofacial Orthop. 2009;135(4 Suppl):S61–71. doi: 10.1016/j.ajodo.2007.08.019.

24. Ludlow JB, Gubler M, Cevidanes L, Mol A. Precision of cephalometric landmark identification: cone-beam computed tomography vs conventional cephalometric views. Am J Orthod Dentofacial Orthop. 2009;136(3):312.e1–10. doi: 10.1016/j.ajodo.2008.12.018.