Abstract

Objective

Outpatient no-shows have important implications for costs and the quality of care. Predictive models of no-shows could be used to target intervention delivery to reduce no-shows. We reviewed the effectiveness of predictive model-based interventions on outpatient no-shows, intervention costs, acceptability, and equity.

Materials and Methods

Rapid systematic review of randomized controlled trials (RCTs) and non-RCTs. We searched Medline, Cochrane CENTRAL, Embase, IEEE Xplore, and clinical trial registries on March 30, 2022 (updated on July 8, 2022). Two reviewers extracted outcome data, assessed the risk of bias using ROB 2 and ROBINS-I, and assessed the certainty of the evidence using GRADE. We calculated risk ratios (RRs) for the relationship between the intervention and no-show rates (primary outcome), compared with usual appointment scheduling. Meta-analysis was not possible due to heterogeneity.

Results

We included 7 RCTs and 1 non-RCT, in dermatology (n = 2), outpatient primary care (n = 2), endoscopy, oncology, mental health, pneumology, and a magnetic resonance imaging clinic. There was high certainty evidence that predictive model-based text message reminders reduced no-shows (1 RCT, median RR 0.91, interquartile range [IQR] 0.90, 0.92). There was moderate certainty evidence that predictive model-based phone call reminders (3 RCTs, median RR 0.61, IQR 0.49, 0.68) and patient navigators (1 RCT, RR 0.55, 95% confidence interval 0.46, 0.67) reduced no-shows. The effect of predictive model-based overbooking was uncertain. Limited information was reported on cost-effectiveness, acceptability, and equity.

Discussion and Conclusions

Predictive modeling plus text message reminders, phone call reminders, and patient navigator calls are probably effective at reducing no-shows. Further research is needed on the comparative effectiveness of predictive model-based interventions addressed to patients at high risk of no-shows versus nontargeted interventions addressed to all patients.

Keywords: predictive model, decision support system, appointment attendance, no-show, missed appointment

BACKGROUND

No-shows (ie, missed appointments without prior notification to the healthcare provider) are a significant challenge for healthcare systems, with implications for costs, patient waiting times, and quality of care. The global average no-show rate is 23%, but rates vary geographically and among medical specialties, ranging from 43% across Africa to 13% across Oceania.1 In 2019/2020, there were 5 656 365 outpatient no-shows across the National Health Service (NHS) in the United Kingdom,2 with an estimated annual cost as high as £750 million.3 Outpatient no-shows are associated with worse health outcomes4 and are an independent predictor of increased subsequent acute care utilization.5,6

Outpatient no-shows are a complex, multifactorial phenomenon, associated with patient characteristics, including demographics relevant to equity (eg, receipt of welfare payments, travel time to appointment), and with characteristics of the appointment and the healthcare system (eg, lead time).1,4,7,8 Organizational interventions, such as overbooking, and interventions facilitating access to appointments, such as reminders,9–13 transportation to appointments, and financial incentives,14 have been developed to increase attendance, reduce no-shows, or increase timely cancelations and reschedules. These goals are distinct, and preference for one over another may depend on the clinical context. For example, in settings where the main driver of no-shows is the resolution of the patient’s complaint, timely cancelations could free up appointments for other patients. By contrast, clinics that work with patients with progressive, disabling disorders may prioritize increased attendance. In terms of effectiveness, reminders increase appointment attendance and reduce no-show rates.9–13 Financial incentives may be effective at increasing appointment attendance in some settings,15,16 but not in safety-net clinics,14 and there is mixed evidence on the effect of patient navigators, transportation to appointments, and patient contracts.14 Overbooking may decrease no-show rates,17 but there are concerns about its potential impact on patients.18

More recent interventions have attempted to incorporate big data and machine learning. Predictive models that identify appointments at high risk of no-show could complement existing interventions, for instance by guiding the selection of appointments to overbook. They could also serve to select patients for more resource-intensive interventions, such as patient navigators (ie, trained staff contacting patients to help facilitate their care journey).

The study of predictive models of no-shows is timely, because of their increasing availability. A 2020 systematic review identified 50 papers reporting the development of predictive models of no-shows, one-quarter of which had been published in the last 2 years.19 There is also ample opportunity for real-world implementation of such predictive models. For example, a predictive model of no-shows is included in the electronic health record (EHR) supplied by Epic Systems.20 Over 45% of the US population has an EHR in Epic,21 and Epic’s EHR is increasingly used in Canada, the European Union, Australia, and the United Kingdom.22–24 However, there is no published evidence synthesis on the effectiveness of interventions that include a predictive model to reduce no-shows, and the published review of these models reports no information on cost-effectiveness, acceptability, or equity.

OBJECTIVE

We conducted a rapid systematic review to synthesize the evidence on the effect of predictive model-based interventions on outpatient no-shows, and on costs, acceptability, and equity.

MATERIALS AND METHODS

We conducted a rapid systematic review of controlled trials assessing predictive model-based interventions aiming to reduce outpatient no-shows, to inform decision-making on the implementation of the Epic predictive model in the local context in a timely manner.25,26 We structured the review process using the Synthesis Without Meta-analysis (SWiM) guidelines for narrative evidence synthesis, adapted to rapid review.27 The review was prospectively registered (PROSPERO CRD42022321894).28

Data sources and search strategy

We searched Medline, Cochrane CENTRAL, Embase, and IEEE Xplore Digital Library for published studies. We searched ClinicalTrials.gov and the WHO International Clinical Trials Registry Platform (ICTRP) for ongoing studies and studies with unpublished results. We developed a search strategy based on Medical Subject Headings (MeSH) and free-text search terms used in published reviews of no-shows.1,19 The search strategy was initially developed for Medline via Ovid and adapted to IEEE Xplore and Cochrane CENTRAL. Searches were conducted on March 30, 2022. We tested the results against a set of known eligible studies, all of which were retrieved (100% recall). On July 8, 2022, we conducted an additional search with broader terms in Embase via Ovid to identify any additional studies. The search strategies are in Supplementary Methods S1.

Study selection

Study inclusion criteria were:

Randomized controlled trials (RCTs), nonrandomized controlled trials, and interrupted time series.

Assessing interventions based on predictive models and aiming to reduce no-shows or increase attendance, with any comparator.

Conducted in outpatient care with adults with any condition.

Reporting at least 1 outcome domain indicating appointment attendance (ie, no-shows, cancelations, attended appointments).

Study exclusion criteria were:

Studies lacking a contemporaneous control group (eg, studies using historical or simulated controls), and interrupted time series with fewer than 3 data collection points before or fewer than 3 after the intervention. Studies lacking a contemporaneous control group were excluded because some of the determinants of no-shows identified in the literature are seasonal. One systematic review reports that determinants of no-shows include the year, season, and month of the appointment.1 In addition, a systematic review of predictive models of no-shows found that some models used the season and month of the appointment, the weather, and the presence of holidays as predictors of no-shows.19

Studies in pediatric settings (ie, participants were under 18 years old according to the inclusion criteria) or in general practitioner offices, and studies focused exclusively on vaccination appointments or population screening. These studies were excluded because vaccination and population screening are single-instance contacts of large portions of the public with the healthcare system. In contrast, outpatient care often concerns repeat appointments with chronically ill patients. Therefore, outpatient care generates datasets of patients’ attendance history, which can be used to develop predictive models.

One reviewer screened all abstracts and full texts. A second reviewer independently screened 10% of all abstracts and full texts (agreement rate 99.3%). One reviewer hand-searched the reference lists of all included articles for eligible studies. We retained multiple reports of the same study if they reported different information (eg, sensitivity analyses).

Data extraction and quality assessment

One reviewer extracted data from all included studies using a piloted form, and assessed study quality using the Cochrane Risk of Bias tool (ROB 2) or Risk Of Bias In Nonrandomized Studies of Interventions (ROBINS-I).29,30 A second reviewer checked all outcome data and independently assessed study quality. Disagreements were resolved by discussion and arbitration by a senior reviewer. If an included paper cited a previous publication where the predictive model was described, we used that publication in data extraction.

Data extraction included the following information:

Study design, follow-up duration, comparator, sample size, funding source (private/public/mixed), country, and medical specialty of the trial site.

Intervention characteristics, drawing on the template for intervention description and replication (TIDieR) items31: materials/procedures delivered to increase attendance (eg, phone call reminders), by whom, when, and at which dosage, when and how the predictive model was used to identify high-risk patients for intervention delivery, and, if applicable, any information on intervention tailoring.

Predictive model characteristics, including information on the dataset and the analysis used for model development and validation (number of appointments, no-show rate, type of analysis, variable selection technique, predictors), and model discrimination and predictive performance measures (eg, c-statistic with 95% confidence interval [CI]).


The following outcomes:

We extracted outcome data to calculate the risk ratio (RR) of no-shows as our primary outcome (ie, number of no-shows per arm and number of all appointments per arm). Where possible, we extracted outcome data to calculate the RR of secondary outcomes, including appointment attendance, cancelations, and/or reschedules. Intention-to-treat data were extracted where possible. RRs were calculated as: (number of participants who experienced the outcome in question in the intervention group/number of participants assigned to the intervention group)/(number of participants who experienced the outcome in question in the control group/number of participants assigned to the control group).

A narrative summary of costs and cost-effectiveness analyses, acceptability (defined as any measure of perceptions, feedback, or experience of healthcare professionals, patients, or other stakeholders), and intervention equity. For the latter outcome, we extracted a narrative summary of any sensitivity analyses of differences in the intervention effect between participant subgroups. We focused on groups defined by the PROGRESS-Plus framework, which lists characteristics relevant to health equity (age, gender, place of residence, race/ethnicity, language, occupation, religion, education, socioeconomic status).32
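The RR formula for the primary outcome can be sketched in Python as follows. This is an illustration under stated assumptions, not the review's analysis code: the function name `risk_ratio` and the example counts are hypothetical, the 95% CI uses the common normal approximation on the log scale (the review does not state how CIs were obtained), and appointments are treated as independent, which ignores clustering of repeat appointments within the same patient.

```python
import math


def risk_ratio(events_int, total_int, events_ctl, total_ctl, z=1.96):
    """Risk ratio of an outcome (eg, no-show) in the intervention vs control arm.

    RR = (events_int / total_int) / (events_ctl / total_ctl).
    The confidence interval is the normal approximation on log(RR), an
    assumed method; it is undefined when either arm has zero events.
    """
    if events_int == 0 or events_ctl == 0:
        raise ValueError("log-RR interval undefined with zero events in an arm")
    rr = (events_int / total_int) / (events_ctl / total_ctl)
    # Standard error of log(RR) for two independent binomial proportions
    se = math.sqrt(1 / events_int - 1 / total_int + 1 / events_ctl - 1 / total_ctl)
    lower = math.exp(math.log(rr) - z * se)
    upper = math.exp(math.log(rr) + z * se)
    return rr, lower, upper


# Hypothetical counts: 110 no-shows in 1000 intervention appointments,
# 200 no-shows in 1000 control appointments
rr, lower, upper = risk_ratio(110, 1000, 200, 1000)
print(f"RR {rr:.2f} (95% CI {lower:.2f} to {upper:.2f})")  # RR 0.55 (95% CI 0.44 to 0.68)
```

An RR below 1 indicates fewer no-shows in the intervention arm than in the control arm.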

Data synthesis

We grouped results by intervention type. Intervention types were identified from the initial examination and categorization of the data. A meta-analysis was not conducted because of incomplete reporting of outcome data and substantial heterogeneity in outcome measures. Specifically, no-show RRs at the appointment level could be calculated for only 3 studies, which assessed 3 different interventions (phone call reminders, patient navigator, and text message reminders).33 Instead, we present the distribution of RRs (median, interquartile range, and minimum–maximum range). Where it was not possible to calculate RRs, we provided a narrative summary of attendance outcomes as reported in each study. Risk of bias figures were produced using the robvis app.34
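The descriptive summary of RRs (median, interquartile range, and minimum–maximum range) could be computed as in the following sketch. The helper name `summarize_rrs` is hypothetical, and the quartile definition (linear interpolation between ordered values, via Python's `statistics.quantiles` with `method="inclusive"`) is an assumption, since quantile conventions differ between software packages and the review does not specify one. With the two setting-specific RRs from the text message reminder trial (0.89 and 0.93), it gives the median and IQR reported in the Results.

```python
import statistics


def summarize_rrs(rrs):
    """Median, quartiles, and min-max range of a list of risk ratios.

    Quartiles use linear interpolation between ordered values
    (method="inclusive"); this convention is an assumption.
    """
    q1, median, q3 = statistics.quantiles(rrs, n=4, method="inclusive")
    return {"median": median, "q1": q1, "q3": q3, "min": min(rrs), "max": max(rrs)}


# Setting-specific no-show RRs from the text message reminder trial,
# as reported in the Results (min 0.89, max 0.93)
summary = summarize_rrs([0.89, 0.93])
print({name: round(value, 2) for name, value in summary.items()})
# median 0.91, quartiles 0.9 and 0.92, range 0.89 to 0.93
```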

We undertook GRADE assessment of the certainty of the evidence for the comparison of each intervention with usual care for the outcomes of no-shows, cancelations, reschedules, and attendance.35 GRADE assessment indicates the degree of confidence that the identified estimate of effect is close to the true quantity, ranging from very low to high. The assessment takes into consideration the risk of bias in the included studies, risk of publication bias in the body of evidence, directness of evidence, heterogeneity, and precision of effect estimates. GRADE assessment was performed by 2 reviewers who reached a consensus on all decisions. Our approach followed Murad 2017 because the evidence did not include pooled effect estimates.36

For each comparison, we presented the following outcomes in a Summary of Findings table: no-shows (main manuscript), cancelations, reschedules, and attendance (appendix). The Summary of Findings table includes information on the quality of the evidence, the magnitude of the effects of the included interventions, and the overall grading of the evidence for each outcome using the GRADE approach.35 We followed the advice of the GRADE working group in the terminology we used to communicate our GRADE assessments of evidence. As such, we use the terms “is effective,” “is probably effective,” and “may be effective” to refer to high, moderate, and low certainty evidence, respectively. We refer to very low certainty evidence as “uncertain effect.”

RESULTS

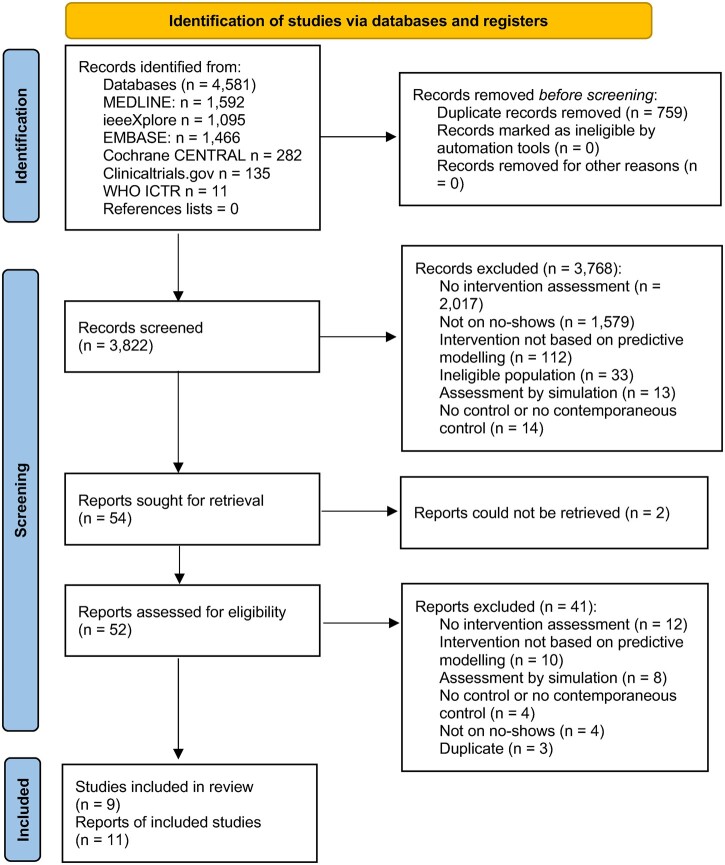

The search yielded 4581 records, of which we screened 3822 after duplicate removal (Figure 1). Of these, 3768 records were excluded after title and abstract screening, primarily because they were not reports of interventional studies (n = 2017) or did not concern no-shows (n = 1579). We included 11 reports of 9 studies: 8 completed studies and 1 incomplete study that was suspended due to COVID-19 (NCT04376736). The suspended study reported no outcome data and was therefore not included in data extraction. One study did not report attendance outcomes but was included in the review because its main outcome (idle magnetic resonance imaging [MRI] scanner time) was relevant to the research question.37

Figure 1.

PRISMA flow chart. Overall, 4581 records were identified and 3822 were screened after duplicate removal. Eight completed studies and 1 registered, suspended study were eligible for inclusion in the review.

All 8 studies were conducted in high-income countries: 5 (63%) in the United States and 1 each in Italy, Singapore, and Spain. All but 1 study were randomized, and none reported industry funding. Two studies were conducted in dermatology,38,39 and 2 in outpatient primary care.20,40 Other settings included a diagnostic MRI clinic, endoscopy, oncology, mental health, and pneumology.20,37,39,41–43

Four intervention types were identified from the initial examination and categorization of the data. Three studies assessed overbooking,37,38,41 3 studies assessed phone call reminders,39,40,42 1 study assessed text message reminders,20 and 1 study assessed patient navigators.43 In all studies, the output of the predictive model was used to select intervention recipients at high risk of a no-show. The median follow-up was 22 weeks (interquartile range [IQR] 11, 25 weeks) and the median sample size was 3851 appointments (IQR 1108, 4425 appointments). The comparator was usual appointment scheduling practice in all studies, but the definition of usual practice varied among studies. A detailed description of the studies is presented in Table 1. The characteristics of the predictive models are presented in Supplementary Table S1.

Table 1.

Characteristics of the included studies

| Study | Design | Participants | Comparator | Predictive model-based intervention | Follow-up |
|---|---|---|---|---|---|
| Phone call reminders | | | | | |
| Lee et al42 | RCT | 800 appointments in 1 women’s and children’s hospital in Singapore; unreported number of patients | Usual practice (no intervention) | The predictive model was used to generate daily reports. Appointments were color-coded based on no-show probability and presented alongside patient contact information and appointment information. Staff contacted the 50 patients at highest no-show risk daily, 2 working days before their appointments, using prespecified call verbiage to confirm attendance, cancel, or reschedule the appointment (up to 3 contact attempts). | 8 days |
| Shah et al40 | RCT | 2247 primary care patients in 1 hospital in the United States; unreported number of appointments | Usual practice (reminders) | Patients classified as no-shows by the predictive model were queued in a dedicated module in TopCare, an online, EHR-interoperable platform, 7 days before their appointment. The platform presented their contact information (name, phone number, appointment date and time, physician, and emergency contact) and the outcome of the reminder call. A trained patient coordinator made a reminder phone call to patients at ≥0.15 no-show risk, using behavioral techniques to increase attendance. | 6 months |
| Valero-Bover et al39 | RCT | 805 dermatology and 303 pneumology patients in 1 hospital in Spain; unreported number of appointments | Usual practice (no intervention) | A reminder phone call was made 1 week before the appointment to patients at high risk of no-show according to the predictive model. Up to 3 contact attempts were made. Patients were encouraged to attend or cancel their appointment. | 2 months |
| Text message reminders | | | | | |
| Ulloa-Perez et al20 | RCT | 125 076 primary care appointments and 33 593 mental health appointments in 1 hospital in the United States; unreported number of patients | Usual practice (reminders) | No-show predictions for the following week’s appointments were generated every night within Epic. An additional text message reminder (ie, 2 reminders in the intervention group vs 1 reminder in the control group) was sent 3 days before the appointment to patients at >0.51 risk of no-show for primary care and >0.21 risk of no-show for mental health. Patients were asked to confirm the appointment or cancel by phone or online. | 7 months |
| Patient navigator | | | | | |
| Percac-Lima et al43 | RCT | 4425 oncology appointments in 1 hospital in the United States; unreported number of patients | Usual practice (reminders) | The predictive model was used to generate reports 7 days and 1 day before the appointment, listing no-show risk and patient information for all appointments (ie, EHR number, name, sex, age, date, time and reason for appointment, physician and contact information, recent hospitalization, language, clinic, location, and the outcome of the navigation phone call made to patients). A trained patient navigator, fluent in English and Spanish, used the report to call the 20% of patients at highest no-show risk per day, 7 days and 1 day before the appointment. The patient navigator confirmed the appointment and offered support as needed, including discussing components of the visit, answering questions, addressing barriers to attendance, and facilitating further patient-staff communication (up to 2 calls per patient; calls lasted 2–10 min). | 5 months |
| Overbooking | | | | | |
| Cronin and Kimball38 | RCT | Dermatology patients in 1 hospital in the United States; unreported sample size | Usual practice (overbooking based on historical booking maximums) | The predictive model was used to generate 2 reports within the Smart Booking system. The first report was used by scheduling staff to manage overbooking for the next 2–60 days; the second was generated daily and used to manage overbooking for the following day. Both reports identified appointments to overbook and suggested which appointment types should be booked in these slots. Physician preferences for overbooking were taken into account. | 4 months |
| | RCT | 2446 endoscopy patients in 1 hospital in the United States; unreported number of appointments | Usual practice (reminders) | The predictive model was used to identify appointments with no-show risk >0.45 scheduled in the following 2 weeks. Staff did not overbook the appointments identified by the model. Instead, researchers actively recruited patients seeking care into “fast track” appointments, that is, appointments without a strictly specified time, in the morning or afternoon half-day slot of the likely no-show. “Fast track” appointments occurred within 2 weeks instead of the usual wait time of >30 days. Patients who opted in received a phone call to finalize the booking. | 9 months |
| Parente et al37 | Non-RCT | 29 320 appointments in 1 magnetic resonance imaging clinic in Italy; unreported number of patients | Usual practice (reminders) | Limited information is provided about the overbooking process. Appointment no-show probabilities, produced using the predictive model, and the average expected appointment duration were used to estimate the number of minutes available for overbooking per day. When the available overbooking minutes equaled the time needed for an examination, the appointment with the highest no-show probability of the day was overbooked. This occurred daily, for appointments scheduled 2 days later. The created appointments were filled on a first-come, first-served basis. | 6 months |

Abbreviations: EHR: electronic health record; RCT: randomized controlled trial.
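Table 1 shows three ways in which model output was turned into a list of intervention recipients: a fixed probability cut-off (eg, ≥0.15 in Shah et al40; >0.51 and >0.21 in Ulloa-Perez et al20), a fixed number of appointments per day (the 50 highest-risk patients in Lee et al42), and a fixed share of each day’s appointments (the top 20% in Percac-Lima et al43). The sketch below contrasts these rules. The `Appointment` type, its fields, the function names, and rounding up in the top-fraction rule are hypothetical choices for illustration and are not taken from the included studies.

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Appointment:
    appointment_id: str  # hypothetical identifier
    day: str             # appointment date
    no_show_risk: float  # model-predicted no-show probability (0 to 1)


def select_by_threshold(appts, threshold, inclusive=True):
    """Fixed cut-off on predicted risk (eg, >= 0.15, or > 0.51)."""
    if inclusive:
        return [a for a in appts if a.no_show_risk >= threshold]
    return [a for a in appts if a.no_show_risk > threshold]


def _ranked_by_day(appts):
    """Group appointments by day, highest predicted risk first."""
    days = defaultdict(list)
    for a in appts:
        days[a.day].append(a)
    return {d: sorted(v, key=lambda a: a.no_show_risk, reverse=True)
            for d, v in days.items()}


def select_top_k_per_day(appts, k):
    """The k highest-risk appointments each day (eg, k = 50)."""
    return [a for ranked in _ranked_by_day(appts).values() for a in ranked[:k]]


def select_top_fraction_per_day(appts, fraction):
    """The top `fraction` of each day's appointments by risk (eg, 0.20)."""
    selected = []
    for ranked in _ranked_by_day(appts).values():
        k = math.ceil(fraction * len(ranked))  # rounding up is an assumption
        selected.extend(ranked[:k])
    return selected


appts = [
    Appointment("a1", "mon", 0.60),
    Appointment("a2", "mon", 0.10),
    Appointment("a3", "mon", 0.30),
    Appointment("a4", "tue", 0.05),
    Appointment("a5", "tue", 0.20),
]
print([a.appointment_id for a in select_by_threshold(appts, 0.15)])          # ['a1', 'a3', 'a5']
print([a.appointment_id for a in select_top_k_per_day(appts, 1)])            # ['a1', 'a5']
print([a.appointment_id for a in select_top_fraction_per_day(appts, 0.20)])  # ['a1', 'a5']
```

A fixed cut-off lets the daily workload vary with the predicted risk of that day’s schedule, whereas the top-k and top-fraction rules fix or bound the daily workload regardless of how many appointments are at high risk.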

Prediction model plus phone call reminders versus usual scheduling practice

In 3 RCTs (analyzing a total of 4959 appointments), patients identified by a predictive model as being at high risk of no-show received phone call reminders.39,40,42 The median no-show RR from these 3 RCTs was 0.61 (IQR 0.49, 0.68; min 0.49, max 0.75). Based on the GRADE assessment, there is moderate certainty evidence that predictive model-based phone call reminders probably reduce no-shows (Table 2); certainty was downgraded for risk of bias (Figure 2). Two of the 3 RCTs (analyzing 3851 appointments) provided very low certainty evidence on the effect of predictive model-based phone call reminders on cancelations and reschedules, owing to risk of bias, indirectness, and imprecision (Supplementary Table S2).40,42 One of the 3 RCTs (analyzing 3851 appointments) provided moderate certainty evidence, downgraded for risk of bias, that predictive model-based phone call reminders probably do not increase attendance (Supplementary Table S2).40

Table 2.

Summary of findings

| Prediction plus phone reminders compared to usual scheduling for managing appointment attendance | | | | | |
|---|---|---|---|---|---|
| Patient or population: outpatients | | | | | |
| Setting: outpatient clinics | | | | | |
| Intervention: prediction plus phone reminders | | | | | |
| Comparison: usual scheduling | | | | | |
| Outcomes | Risk with usual scheduling (a) | Risk with prediction plus phone reminders (95% CI) (a) | Relative effect (95% CI) | No. of participants (studies) | Certainty of the evidence (GRADE) |
| No-shows | 226 per 1000 | 111–169 per 1000 (b) | RR ranged from 0.49 to 0.75 | 4959 (3 RCTs) (b) | Moderate (d) |

| Prediction plus text message reminders compared to usual scheduling for managing appointment attendance | | | | | |
|---|---|---|---|---|---|
| Patient or population: outpatients | | | | | |
| Setting: outpatient clinics | | | | | |
| Intervention: prediction plus text message reminders | | | | | |
| Comparison: usual scheduling | | | | | |
| Outcomes | Risk with usual scheduling (a) | Risk with prediction plus text message reminders (95% CI) (a) | Relative effect (95% CI) | No. of participants (studies) | Certainty of the evidence (GRADE) |
| No-shows | 114 per 1000 | 102–106 per 1000 | RR ranged from 0.89 to 0.93 (c) | 158 669 (1 RCT) | High |

| Prediction plus patient navigator compared to usual scheduling for managing appointment attendance | | | | | |
|---|---|---|---|---|---|
| Patient or population: outpatients | | | | | |
| Setting: outpatient clinics | | | | | |
| Intervention: prediction plus patient navigator | | | | | |
| Comparison: usual scheduling | | | | | |
| Outcomes | Risk with usual scheduling (a) | Risk with prediction plus patient navigator (95% CI) (a) | Relative effect (95% CI) | No. of participants (studies) | Certainty of the evidence (GRADE) |
| No-shows | 131 per 1000 | 72 per 1000 (60–88) | RR 0.55 (0.46–0.67) | 4425 (1 RCT) | Moderate (e) |

Abbreviations: CI: confidence interval; RCTs: randomized controlled trials; RR: risk ratio.

(a) The risk in the intervention group (and its 95% confidence interval) is based on the assumed risk in the comparison group and the relative effect of the intervention (and its 95% CI).

(b) Participant numbers and risk ratios were unavailable for Lee et al42 for all outcomes. Lee et al42 reported a statistically significant 18.7% decrease (P < .001) in the no-show rate.

(c) Results were reported separately for mental health and primary care patients.

(d) Two of 3 studies were at high risk of bias; this included high risk of bias for randomization in the largest study.

(e) Single study at high risk of bias for randomization.
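Footnote (a) of Table 2 describes how the anticipated absolute effects are derived: the intervention-group risk is the comparison-group risk multiplied by the RR, applied to the point estimate and to each CI limit. A minimal sketch follows; the function name is hypothetical, and rounding to the nearest whole number per 1000 is an assumed convention. Applied to the patient navigator row, it reproduces the values shown in Table 2.

```python
def anticipated_risk_per_1000(control_risk_per_1000, rr):
    """Intervention-group risk per 1000 implied by a control risk and an RR.

    Rounded to the nearest whole number (an assumed convention); Python's
    round() rounds exact halves to even, but no halves arise below.
    """
    return round(control_risk_per_1000 * rr)


# Patient navigator row: 131 per 1000 with usual scheduling, RR 0.55 (0.46-0.67)
point = anticipated_risk_per_1000(131, 0.55)
low = anticipated_risk_per_1000(131, 0.46)
high = anticipated_risk_per_1000(131, 0.67)
print(f"{point} per 1000 ({low}-{high})")  # 72 per 1000 (60-88)
```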

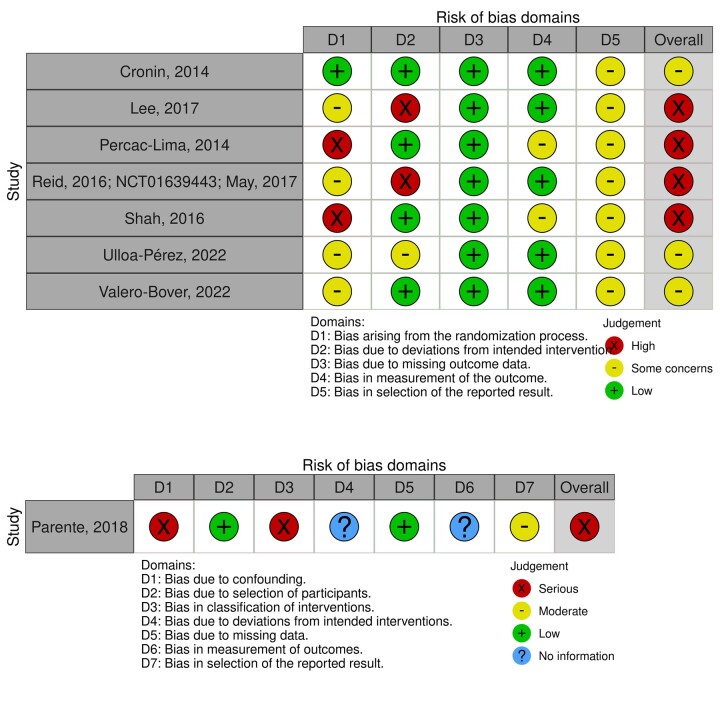

Figure 2.

Risk of bias of the included studies. The figure presents the risk of bias in the included studies, evaluated using the Cochrane Risk of Bias tool (ROB 2) for randomized controlled trials (top panel) and the Risk Of Bias In Nonrandomized Studies of Interventions (ROBINS-I) for nonrandomized controlled trials (bottom panel). Four (57%) randomized controlled trials were at high risk of bias and the nonrandomized controlled trial was at serious risk of bias. There were some concerns for the remaining randomized controlled trials.

Costs, acceptability, and equity

One of the 3 RCTs reported the effect of predictive model-based phone call reminders on mean relative value units per patient (a measure of the value of physician services used in the United States; absolute difference 0.13, 95% CI −0.02 to 0.28).40 In another RCT, the authors conducted postintervention debriefing with 8 physicians and managers, who considered the intervention successful but reported issues with the additional workload resulting from fewer no-shows and with the workload of implementing the intervention.39 No information was reported on equity.

Prediction model plus text message reminders versus usual scheduling practice

In 1 RCT (analyzing a total of 158 669 appointments), patients identified by a predictive model as being at high risk of no-show received text message reminders.20 No-show RRs were reported separately for primary care and mental health appointments (median RR 0.91, IQR 0.90, 0.92; min 0.89, max 0.93) (Table 2). There is high certainty evidence that predictive model-based text message reminders reduce no-shows. This RCT also provided low certainty evidence, downgraded for imprecision, that predictive model-based text message reminders may increase cancelations (median RR 0.98, IQR 0.96, 1.00; min 0.94, max 1.02) (Supplementary Table S2). In this study, the outcome was defined as same-day cancelations.

Costs, acceptability, and equity

A sensitivity analysis found no evidence of heterogeneous intervention effects on no-show rates by age, sex, race, or amount of co-pay.20 No information was reported on costs or acceptability.

Prediction model plus patient navigator versus usual scheduling practice

In 1 RCT (analyzing a total of 4425 appointments), patients identified by a predictive model as being at high risk of no-show received phone calls from a patient navigator.43 The RCT provides moderate certainty evidence, downgraded for risk of bias, that predictive model-based patient navigator calls probably reduce no-shows (RR 0.55, 95% CI 0.46–0.67; Table 2) and increase cancelations (RR 1.16, 95% CI 1.04–1.29; Supplementary Table S2).

Costs, acceptability, and equity

The average net income associated with this intervention was $5000 per month.43 In a subgroup analysis (numeric data unreported; odds ratios of appointment attendance by race, language, gender, age, and insurance subgroup presented in figures), the effect of the patient navigator intervention was significant for English-speaking patients but not for other language groups, for White and African American patients but not for Hispanic and Asian patients, and for Medicare and commercial insurance holders but not for Medicaid holders and self-paying patients. Regarding age, the intervention effect was significant only for patients aged 40–69 years. No information was reported on acceptability.

Prediction model plus overbooking versus usual scheduling practice

In 2 RCTs and 1 non-RCT (analyzing 31 766 appointments), information on the risk of no-show was used to make overbooking decisions.37,38,41 Outcome data could not be summarized because the 3 studies used different outcomes. Owing to risk of bias, inconsistency, indirectness, and imprecision, the evidence is of very low certainty, and it is uncertain whether predictive model-based overbooking has an effect on appointment attendance (Supplementary Table S2).
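Parente et al37 give only limited detail on how no-show probabilities were converted into overbooking decisions (Table 1). One possible reading is sketched below, purely as a hypothetical illustration: it assumes that expected idle time is the sum of each appointment’s no-show probability multiplied by the average appointment duration, that each overbooking uses up one examination’s duration of that time, and that the highest-risk appointments are overbooked first. The function name and parameters are not from the study.

```python
def plan_overbooking(no_show_risks, avg_duration_min, exam_duration_min):
    """Indices of a day's appointments to overbook (hypothetical rule).

    Assumed rule: expected idle minutes = sum(p_no_show * avg duration);
    while those minutes cover one more examination, overbook the
    highest-risk appointment not yet overbooked.
    """
    available_min = sum(p * avg_duration_min for p in no_show_risks)
    # Highest predicted no-show risk first; ties keep schedule order
    by_risk = sorted(range(len(no_show_risks)),
                     key=lambda i: no_show_risks[i], reverse=True)
    overbooked = []
    for i in by_risk:
        if available_min < exam_duration_min:
            break
        overbooked.append(i)
        available_min -= exam_duration_min
    return overbooked


# Five appointments of about 30 min: 1.5 expected no-shows = 45 idle minutes,
# enough for one extra 30-min examination
print(plan_overbooking([0.5, 0.1, 0.4, 0.3, 0.2], 30, 30))  # [0]
```

Under this reading, overbooking stops as soon as the remaining expected idle time is shorter than one examination, which limits, but does not eliminate, the risk of overtime when more patients attend than predicted.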

Costs, acceptability, and equity

Predictive model-based overbooking was associated with a relative increase of 15.4% in hourly revenue in the MRI clinic (absolute difference $8.14; 95% CIs and significance unreported),37 but with higher daily overtime costs in endoscopy (absolute difference $26.13).41 Regarding acceptability, 1 RCT reported that on intervention days, clinics ran longer by an average of 34 min compared with control days (absolute difference 0.47 h [95% CI, 0.06–0.88; P = .02]).41 One non-RCT reported a nonsignificant increase of 6.2 min in patient in-clinic wait time (relative difference 3.66%) and of 10 min in staff overtime (4.05%; 95% CIs and significance unreported).37 In 1 RCT, African American patients were twice as likely as White patients to take up the “fast track” appointments made available by overbooking (adjusted odds ratio 1.99, 95% CI, 1.26–3.17).41,44

DISCUSSION

This rapid systematic review examined 8 studies (7 RCTs and 1 non-RCT) assessing reminder, patient navigator, and overbooking interventions, all of which used predictive modeling and aimed to reduce outpatient no-shows. Findings are limited by the small number of studies. We found that predictive model-based text message reminders were effective at reducing no-shows. Predictive model-based phone call reminders and patient navigator calls were probably effective at reducing no-shows. Finally, it is uncertain whether predictive model-based overbooking has an effect on appointment attendance. In addition, we identified evidence gaps in cost-effectiveness, acceptability, and equity for all interventions.

Regarding secondary outcomes, predictive model-based phone call reminders are probably not effective at increasing attendance, and it is uncertain whether they affect cancelations and reschedules. Predictive model-based text message reminders may increase same-day cancelations, and predictive model-based patient navigator calls probably increase cancelations, possibly by making it easier to cancel for patients who did not intend to attend their appointments. Early cancelations, unlike last-minute cancelations, could free up slots for other patients. Overall, the magnitude and direction of intervention effects in the included studies varied, possibly because of heterogeneity in the samples (eg, medical specialty) and in outcome definitions.

The review findings on reminders align with previous research showing that nontargeted reminders (ie, reminders sent to all patients with scheduled appointments) can reduce no-shows.9–13 Our findings should be interpreted in light of the literature on the effectiveness of nontargeted interventions. A previous review of nontargeted reminder phone calls, compared with no reminders, showed benefits at least comparable to those of predictive model-based phone call reminders.11 A meta-analysis comparing nontargeted reminder text messages with no reminders found that patients receiving these messages were 25% less likely to no-show, a larger benefit than that observed in the included studies of predictive model-based text message reminders.10 We did not find any studies reporting a head-to-head comparison of targeted versus nontargeted interventions to reduce no-shows, so the added value of using a predictive model to target interventions at appointments at high risk of no-shows remains unclear. Relevant to this question, no study reported a full cost-effectiveness analysis of predictive model-based interventions, although the included studies provided limited evidence that patient navigators lead to cost savings, that phone call reminders do not affect costs compared with usual scheduling, and that overbooking increases both overtime costs and revenue.
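As a reminder of how these figures relate, a risk ratio (RR) is the no-show risk in the intervention arm divided by the no-show risk in the comparison arm, and 1 − RR is the relative reduction in no-shows. Being "25% less likely to no-show" therefore corresponds to an RR of about 0.75, whereas the median RR of 0.91 for predictive model-based text message reminders corresponds to a relative reduction of about 9%. The Python sketch below illustrates only this arithmetic; the function names and the appointment counts are hypothetical and are not taken from any included study.

```python
def risk_ratio(events_tx: int, total_tx: int, events_ctl: int, total_ctl: int) -> float:
    """No-show risk in the intervention arm divided by no-show risk in the control arm."""
    return (events_tx / total_tx) / (events_ctl / total_ctl)


def relative_risk_reduction(rr: float) -> float:
    """Relative reduction in no-shows, as a proportion (1 - RR)."""
    return 1.0 - rr


# Hypothetical counts (illustration only): 104 no-shows among 1,000 reminded
# appointments vs 130 no-shows among 1,000 usual-scheduling appointments.
rr = risk_ratio(104, 1000, 130, 1000)
print(f"RR = {rr:.2f}, relative reduction = {relative_risk_reduction(rr):.0%}")

# Converting the summary RRs quoted in the text into relative reductions.
for label, summary_rr in [
    ("nontargeted text reminders (meta-analysis)", 0.75),
    ("predictive model-based text reminders (median)", 0.91),
]:
    print(f"{label}: {relative_risk_reduction(summary_rr):.0%} fewer no-shows")
```

Note that a relative reduction says nothing about absolute numbers: the same RR implies fewer avoided no-shows in a clinic whose baseline no-show rate is low.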

None of the included studies reported a formal acceptability evaluation of the interventions, although some reported stakeholder feedback anecdotally. For example, 1 study reported that staff appreciated the reduction in uncertainty and cognitive effort associated with making overbooking decisions,38 and another reported that management supported the permanent implementation of the intervention.41 However, in both cases, data were not collected systematically and the qualitative analysis methodology was not reported. This leaves an important evidence gap on whether predictive model-based interventions to reduce no-shows have undesirable effects (eg, 1 study reported increased patient in-clinic wait time and staff overtime).37 Evidence on equity impact was limited: reminder interventions potentially had similar effects across demographic groups, whereas the effects of patient navigators differed by language, race, age, and insurance group. Participants were poorly described in terms of PROGRESS characteristics, which are relevant to health equity (ie, age, gender, place of residence, race/ethnicity, language, occupation, religion, education, and socioeconomic status). For example, only half of the trials reported participants’ race/ethnicity. Heterogeneous intervention effects could also be attributable to equity-relevant patient characteristics, but because these characteristics were poorly reported, this hypothesis could not be explored.

Implications for practice

This review showed that several interventions are available to care organizations that have access to predictive models of no-shows. Organizations should select interventions that fit their needs and aims. For example, compared with interventions that seek to facilitate access to care (eg, patient navigators), overbooking may not address the impact of no-shows on individual patients’ outcomes, and it may increase patients’ treatment burden. In 1 included study, appointments created by overbooking carried a risk of unusually long in-clinic wait times and of rescheduling in the event of clinic overload.41 Organizations should therefore monitor predictive model-based interventions after implementation to ensure that they have no undesirable effects and that benefits are experienced uniformly by all patients.

Implications for research

First, research on cost-effectiveness, staff and patient acceptability, and equity is urgently needed to inform implementation. Such research should be patient-centered; none of the studies reported patient or public involvement, or measured patients’ experience other than in-clinic wait time. Patients who have difficulty managing appointments (eg, because of treatment burden) may have strong views about interventions such as overbooking when these are based on models that include prior no-shows as a predictor. In addition, patient-focused outcomes that can affect clinical outcomes should be measured; for example, an increased risk of discharge could lead to delays in care. Second, except for patient navigators, most of the included studies assessed relatively low-resource interventions. Precision care delivery, based on predictive modeling, could support the delivery of resource-intensive interventions that could not feasibly be delivered to all patients. Future studies could evaluate the effectiveness of such interventions for patient groups who are less likely to benefit from low-resource interventions, and develop alternative solutions for them. Third, outcome types and definitions were heterogeneous and poorly reported. Future studies could facilitate evidence synthesis by reporting no-show data completely (ie, the number of no-shows, attended appointments, cancelations, and reschedules per arm). Fourth, the included studies focused on participants classified as being at high risk of no-shows by predictive models. Future research should assess the added value of targeted interventions by comparing targeted and nontargeted interventions (eg, text message reminders sent to all patients) across all clinic patients. Studies should also directly compare different predictive model-based interventions.
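Complete per-arm reporting matters because the no-show rate, and therefore the RR, depends on the chosen denominator. The minimal Python sketch below stores the 4 counts listed above for each arm and computes the no-show RR in 2 ways: over all scheduled appointments, and over appointments that were neither canceled nor rescheduled. The class name, field names, and counts are hypothetical, and the sketch assumes the 4 categories are mutually exclusive; definitions in actual studies may differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ArmCounts:
    """Hypothetical per-arm appointment outcomes, assumed mutually exclusive."""
    no_shows: int
    attended: int
    cancelations: int
    reschedules: int

    @property
    def scheduled(self) -> int:
        # All appointments originally booked in this arm.
        return self.no_shows + self.attended + self.cancelations + self.reschedules

    def no_show_rate(self, exclude_cancel_reschedule: bool = False) -> float:
        # Denominator: all scheduled appointments, or only those still expected
        # to take place (neither canceled nor rescheduled).
        if exclude_cancel_reschedule:
            denominator = self.no_shows + self.attended
        else:
            denominator = self.scheduled
        return self.no_shows / denominator


def risk_ratio(tx: ArmCounts, ctl: ArmCounts, exclude_cancel_reschedule: bool = False) -> float:
    """No-show RR (intervention vs control) under the chosen denominator."""
    return tx.no_show_rate(exclude_cancel_reschedule) / ctl.no_show_rate(exclude_cancel_reschedule)


# Hypothetical trial in which the intervention turns some no-shows into cancelations.
intervention = ArmCounts(no_shows=60, attended=340, cancelations=80, reschedules=20)
control = ArmCounts(no_shows=100, attended=340, cancelations=40, reschedules=20)

for exclude in (False, True):
    rr = risk_ratio(intervention, control, exclude)
    print(f"exclude canceled/rescheduled={exclude}: RR = {rr:.2f}")
```

With these invented counts, the same trial yields an RR of 0.60 or 0.66 depending on the denominator, which is one reason why reporting all 4 counts per arm, rather than a single rate, would make results comparable across studies.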

Strengths and limitations

This is the first evidence synthesis of predictive model-based interventions for no-shows. We searched 6 databases, including 2 trial registries to capture unpublished studies, and performed a complete appraisal of the evidence using the ROB 2, ROBINS-I, and GRADE tools. The review identified effective predictive model-based interventions and mapped important evidence gaps. The findings can be used to guide future research and to support the current implementation of such predictive models in real-life care settings.

First, a meta-analysis could not be performed because of incomplete and unclear reporting of outcome data and heterogeneity in outcome measures and interventions. Although the range of observed RRs is presented, this summary does not account for differences in the relative sizes of the studies. Second, as this is a rapid review, screening was primarily conducted by 1 researcher. Although agreement between reviewers was excellent in the independent screening of 10% of retrieved titles, we may have missed some eligible studies. Third, the findings may not generalize to certain contexts, such as low-income countries and pediatric care. Finally, this review included findings from only 8 studies; more high-quality studies are needed on the effectiveness of predictive model-based interventions.
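Why an unweighted summary of RRs ignores study size can be shown with a short Python sketch. It computes each RR with an approximate 95% CI using the standard log-scale standard error, SE(log RR) = sqrt(1/a − 1/n1 + 1/c − 1/n0), for 3 hypothetical studies. Two of them have identical proportions and so identical point estimates, and they count equally in a range or median even though the larger study's CI is roughly 10 times narrower. All study names and counts are invented for illustration.

```python
import math
import statistics


def rr_with_ci(a: int, n1: int, c: int, n0: int, z: float = 1.96) -> tuple[float, float, float]:
    """Risk ratio with an approximate 95% CI computed on the log scale.

    a/n1: no-shows/appointments in the intervention arm; c/n0: the same for control.
    """
    rr = (a / n1) / (c / n0)
    se_log_rr = math.sqrt(1 / a - 1 / n1 + 1 / c - 1 / n0)
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return rr, lower, upper


# Hypothetical studies: (no-shows, appointments) in the intervention arm, then control.
studies = {
    "small": (12, 100, 20, 100),
    "large": (1200, 10000, 2000, 10000),
    "medium": (90, 1000, 100, 1000),
}

rrs = []
for name, counts in studies.items():
    rr, lower, upper = rr_with_ci(*counts)
    rrs.append(rr)
    print(f"{name}: RR {rr:.2f} (95% CI {lower:.2f}-{upper:.2f})")

# The median (or range) treats every estimate the same, regardless of its precision.
print(f"unweighted median RR = {statistics.median(rrs):.2f}")
```

Here the small and large studies both report an RR of 0.60 and pull the median to 0.60 with equal force; a weighted synthesis would give the large study far more influence, which the summaries used in this review cannot do.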

CONCLUSIONS

Despite the limited number of studies, this rapid review found that predictive model-based text message reminders are effective at reducing outpatient no-shows, and that phone call reminders and patient navigator calls are probably effective at reducing no-shows. Further research is needed on the effectiveness of predictive model-based overbooking, and on cost-effectiveness, acceptability, and equity for all reviewed interventions.


ACKNOWLEDGMENTS

The authors thank Chunhu Shi for the resolution of conflicts in risk-of-bias assessment and Shuhan Liu for the conduct of searches for Embase.

CONFLICT OF INTEREST STATEMENT

None declared.

Contributor Information

Theodora Oikonomidi, Centre for Health Informatics, Division of Informatics, Imaging and Data Science, Manchester Academic Health Science Centre, The University of Manchester, Manchester, UK; National Institute for Health and Care Research Applied Research Collaboration Greater Manchester, Manchester, UK.

Gill Norman, National Institute for Health and Care Research Applied Research Collaboration Greater Manchester, Manchester, UK; Division of Nursing, Midwifery and Social Work, School of Health Sciences, University of Manchester, Manchester, UK.

Laura McGarrigle, National Institute for Health and Care Research Applied Research Collaboration Greater Manchester, Manchester, UK; Wythenshawe Hospital, Manchester University NHS Foundation Trust, Manchester, UK.

Jonathan Stokes, Centre for Primary Care & Health Services Research, The University of Manchester, Manchester, UK; MRC/CSO Social & Public Health Sciences Unit, University of Glasgow, Glasgow, UK.

Sabine N van der Veer, Centre for Health Informatics, Division of Informatics, Imaging and Data Science, Manchester Academic Health Science Centre, The University of Manchester, Manchester, UK; National Institute for Health and Care Research Applied Research Collaboration Greater Manchester, Manchester, UK.

Dawn Dowding, National Institute for Health and Care Research Applied Research Collaboration Greater Manchester, Manchester, UK; Division of Nursing, Midwifery and Social Work, School of Health Sciences, University of Manchester, Manchester, UK.

FUNDING

This research was funded by the National Institute for Health and Care Research Applied Research Collaboration Greater Manchester (NIHR200174). The views expressed in this publication are those of the author(s) and not necessarily those of the National Institute for Health and Care Research or the Department of Health and Social Care.

AUTHOR CONTRIBUTIONS

TO conceived the study, collected and analyzed data, and drafted the report. GN provided methodological support in the design of the study, collected data, and revised the report critically for important intellectual content. LM collected data and revised the report critically for important intellectual content. DD, JS, and SNvdV conceived the study and revised the report critically for important intellectual content.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

DATA AVAILABILITY

The dataset used in the analyses reported in this manuscript will be made available on Open Science Framework upon publication.

REFERENCES

- 1. Dantas LF, Fleck JL, Cyrino Oliveira FL, Hamacher S. No-shows in appointment scheduling – a systematic literature review. Health Policy 2018; 122 (4): 412–21.

- 2. NHS England. Quarterly Hospital Activity Statistics; 2020. https://www.england.nhs.uk/statistics/statistical-work-areas/hospital-activity/quarterly-hospital-activity/. Accessed March 8, 2022.

- 3. Torjesen I. Patients will be told cost of missed appointments and may be charged. BMJ 2015; 351: h3663.

- 4. Wilson R, Winnard Y. Causes, impacts and possible mitigation of non-attendance of appointments within the National Health Service: a literature review. J Health Organ Manag 2022; 36 (7): 892–911.

- 5. Hwang AS, Atlas SJ, Cronin PR, et al. Association between outpatient “no-shows” and subsequent acute care utilization. J Gen Intern Med 2014; 29 (Suppl. 1): S31–S32.

- 6. Hwang AS, Atlas SJ, Cronin P, et al. Appointment “no-shows” are an independent predictor of subsequent quality of care and resource utilization outcomes. J Gen Intern Med 2015; 30 (10): 1426–33.

- 7. Brewster S, Bartholomew J, Holt RIG, Price H. Non-attendance at diabetes outpatient appointments: a systematic review. Diabet Med 2020; 37 (9): 1427–42.

- 8. Wolff DL, Waldorff FB, von Plessen C, et al. Rate and predictors for non-attendance of patients undergoing hospital outpatient treatment for chronic diseases: a register-based cohort study. BMC Health Serv Res 2019; 19 (1): 386.

- 9. Reda S, Makhoul S. Prompts to encourage appointment attendance for people with serious mental illness. Cochrane Database Syst Rev 2001; 2001 (2): CD002085.

- 10. Robotham D, Satkunanathan S, Reynolds J, Stahl D, Wykes T. Using digital notifications to improve attendance in clinic: systematic review and meta-analysis. BMJ Open 2016; 6 (10): e012116.

- 11. McLean SM, Booth A, Gee M, et al. Appointment reminder systems are effective but not optimal: results of a systematic review and evidence synthesis employing realist principles. Patient Prefer Adherence 2016; 10: 479–99.

- 12. Boksmati N, Butler-Henderson K, Anderson K, Sahama T. The effectiveness of SMS reminders on appointment attendance: a meta-analysis. J Med Syst 2016; 40 (4): 90.

- 13. Guy R, Hocking J, Wand H, Stott S, Ali H, Kaldor J. How effective are short message service reminders at increasing clinic attendance? A meta-analysis and systematic review. Health Serv Res 2012; 47 (2): 614–32.

- 14. Crable EL, Biancarelli DL, Aurora M, Drainoni ML, Walkey AJ. Interventions to increase appointment attendance in safety net health centers: a systematic review and meta-analysis. J Eval Clin Pract 2021; 27 (4): 965–75.

- 15. Lee KS, Quintiliani L, Heinz A, et al. A financial incentive program to improve appointment attendance at a safety-net hospital-based primary care hepatitis C treatment program. PLoS One 2020; 15 (2): e0228767.

- 16. Post EP, Cruz M, Harman J. Incentive payments for attendance at appointments for depression among low-income African Americans. Psychiatr Serv 2006; 57 (3): 414–6.

- 17. Bibi Y, Cohen AD, Goldfarb D, Rubinshtein E, Vardy DA. Intervention program to reduce waiting time of a dermatological visit: managed overbooking and service centralization as effective management tools. Int J Dermatol 2007; 46 (8): 830–4.

- 18. Harewood GC. Overbooking in endoscopy: ensure no-one is left behind! Am J Gastroenterol 2016; 111 (9): 1274–5.

- 19. Carreras-García D, Delgado-Gómez D, Llorente-Fernández F, Arribas-Gil A. Patient no-show prediction: a systematic literature review. Entropy (Basel) 2020; 22 (6): 675.

- 20. Ulloa-Perez E, Blasi PR, Westbrook EO, Lozano P, Coleman KF, Coley RY. Pragmatic randomized study of targeted text message reminders to reduce missed clinic visits. Perm J 2022; 26 (1): 64–72.

- 21. Day JA. Why Epic. Johns Hopkins Medicine. https://www.hopkinsmedicine.org/epic/why_epic/. Accessed August 10, 2022.

- 22. Which Hospitals Use Epic in USA? https://digitalhealth.folio3.com/blog/which-hospitals-use-epic/#What_hospitals_that_use_Epic_in_Canada. Accessed August 10, 2022.

- 23. Hoeksma J. Speculation of National Epic Deal with NHS England. Digital Health; 2021. https://www.digitalhealth.net/2021/10/speculation-of-epic-deal-with-nhs-england/. Accessed July 27, 2022.

- 24. MFT Signs Contract with Epic for Ambitious New EPR Solution. Manchester University NHS Foundation Trust; 2020. https://mft.nhs.uk/2020/05/26/for-immediate-use-26th-may-mft-signs-contract-with-epic-for-ambitious-new-epr-solution/. Accessed July 27, 2022.

- 25. Moher D, Stewart L, Shekelle P. All in the family: systematic reviews, rapid reviews, scoping reviews, realist reviews, and more. Syst Rev 2015; 4 (1): 183.

- 26. Polisena J, Garritty C, Kamel C, Stevens A, Abou-Setta AM. Rapid review programs to support health care and policy decision making: a descriptive analysis of processes and methods. Syst Rev 2015; 4 (1): 26.

- 27. Campbell M, McKenzie JE, Sowden A, et al. Synthesis without meta-analysis (SWiM) in systematic reviews: reporting guideline. BMJ 2020; 368: l6890.

- 28. PROSPERO: International Prospective Register of Systematic Reviews. https://www.crd.york.ac.uk/PROSPERO/. Accessed August 10, 2022.

- 29. Sterne JA, Savović J, Page MJ, et al. RoB 2: a revised tool for assessing risk of bias in randomised trials. BMJ 2019; 366: l4898.

- 30. Sterne JA, Hernán MA, Reeves BC, et al. ROBINS-I: a tool for assessing risk of bias in non-randomised studies of interventions. BMJ 2016; 355: i4919.

- 31. Hoffmann TC, Glasziou PP, Boutron I, et al. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ 2014; 348: g1687.

- 32. Tugwell P, Petticrew M, Kristjansson E, et al. Assessing equity in systematic reviews: realising the recommendations of the Commission on Social Determinants of Health. BMJ 2010; 341: c4739.

- 33. McKenzie JE, Brennan SE. Synthesizing and presenting findings using other methods. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA, eds. Cochrane Handbook for Systematic Reviews of Interventions, version 6.3 (updated February 2022). Cochrane; 2022: 321–47.

- 34. McGuinness LA, Higgins JPT. Risk-of-bias VISualization (robvis): an R package and Shiny web app for visualizing risk-of-bias assessments. Res Syn Meth 2021; 12 (1): 55–61.

- 35. Guyatt G, Oxman AD, Akl EA, et al. GRADE guidelines: 1. Introduction-GRADE evidence profiles and summary of findings tables. J Clin Epidemiol 2011; 64 (4): 383–94.

- 36. Murad MH, Mustafa RA, Schünemann HJ, Sultan S, Santesso N. Rating the certainty in evidence in the absence of a single estimate of effect. Evid Based Med 2017; 22 (3): 85–7.

- 37. Parente CA, Salvatore D, Gallo GM, Cipollini F. Using overbooking to manage no-shows in an Italian healthcare center. BMC Health Serv Res 2018; 18 (1): 185.

- 38. Cronin PR, Kimball AB. Success of automated algorithmic scheduling in an outpatient setting. Am J Manag Care 2014; 20 (7): 570–6.

- 39. Valero-Bover D, Gonzalez P, Carot-Sans G, et al. Reducing non-attendance in outpatient appointments: predictive model development, validation, and clinical assessment. BMC Health Serv Res 2022; 22 (1): 451.

- 40. Shah SJ, Cronin P, Hong CS, et al. Targeted reminder phone calls to patients at high risk of no-show for primary care appointment: a randomized trial. J Gen Intern Med 2016; 31 (12): 1460–6.

- 41. Reid MW, May FP, Martinez B, et al. Preventing endoscopy clinic no-shows: prospective validation of a predictive overbooking model. Am J Gastroenterol 2016; 111 (9): 1267–73.

- 42. Lee G, Wang S, Dipuro F, et al. Leveraging on predictive analytics to manage clinic no show and improve accessibility of care. In: 2017 IEEE International Conference on Data Science and Advanced Analytics (DSAA); 2017: 429–38. doi: 10.1109/DSAA.2017.25.

- 43. Percac-Lima S, Cronin PR, Ryan DP, Chabner BA, Daly EA, Kimball AB. Patient navigation based on predictive modeling decreases no-show rates in cancer care. Cancer 2015; 121 (10): 1662–70.

- 44. May FP, Reid MW, Cohen S, Dailey F, Spiegel BMR. Predictive overbooking and active recruitment increases uptake of endoscopy appointments among African American patients. Gastrointest Endosc 2017; 85 (4): 700–5.
