Abstract

Despite the similarity in structure, the hemispheres of the human brain have somewhat different functions. A traditional view of hemispheric organization asserts that there are independent and largely lateralized domain-specific regions in ventral occipitotemporal cortex (VOTC), specialized for the recognition of distinct classes of objects. Here, we offer an alternative account of the organization of the hemispheres, with a specific focus on face and word recognition. This alternative account relies on three computational principles: distributed representations and knowledge, cooperation and competition between representations, and topography and proximity. The crux is that visual recognition results from a network of regions with graded functional specialization that is distributed across both hemispheres. Specifically, the claim is that face recognition, which is acquired relatively early in life, is processed by VOTC regions in both hemispheres. Once literacy is acquired, word recognition, which is co-lateralized with language areas, primarily engages the left VOTC and, consequently, face recognition is primarily, albeit not exclusively, mediated by the right VOTC. We review psychological and neural evidence from a range of studies conducted with normal and brain-damaged adults and children and consider findings that challenge this account. Last, we offer suggestions for future investigations whose findings may further refine this account.

Keywords: hemispheric organization, visual object recognition, neural basis, distributed organization

Hemispheric Organization in the Service of Visual Object Recognition

The organization of the two cerebral hemispheres of the human brain, and specifically their individual and joint contributions to visual object recognition, has been the subject of decades of theoretical controversy. The empirical findings from hundreds of experiments, while important, have still not led to an obvious consensus. Although the structure of the two hemispheres appears remarkably similar, there are thought to be important functional differences between them. The nature of these lateralized differences is at the core of the dispute.

The nature of the functional specialization between hemispheres is intimately related to the nature of functional specialization within each hemisphere. A theoretical account that has largely dominated the literature in this regard claims that there are circumscribed and largely lateralized cortical “modules” subserving individual recognition functions. We first outline this domain-specific account and review the evidence that is taken to support it. We then argue that closer scrutiny of the data, as well as new evidence, suggests an alternative account in which visual recognition is the product of a distributed network of cortical regions that engages both hemispheres, and we lay out a set of computational principles that form the crux of this account. Thereafter, we describe empirical findings that are more consistent with this account than with the traditional one, and propose that the principles of this alternative account apply not only to visual object recognition but also have implications for other cognitive processes. Last, we tackle several empirical challenges that appear to run counter to this account and we lay out some future directions that might help adjudicate between different models of hemispheric organization.

Note that claims about domain-specificity and hemispheric lateralization are logically distinct. That is, a domain-specific module might span both hemispheres or, conversely, a more distributed function might nonetheless be restricted to regions within a single hemisphere. In practice, however, claims about modularity are often accompanied by claims of lateralization, whereas computational principles that might give rise to a more distributed organization would be expected to apply both within and between hemispheres. Thus, although we will need to be mindful of this distinction at various points in our analysis, a consideration of hemispheric organization is unavoidably bound together with a consideration of functional specialization.

Circumscribed Cortical Regions Subserving Recognition of Different Visual Classes

Domain-specific accounts of the organization of the hemispheres for object perception and its distinctive neural correlate have a long and compelling history. For example, Konorski (1967), perhaps the most extreme proponent of modularity and the champion of the “gnostic neuron,” argued that there are nine different subsystems engaged in visual object recognition, including, for example, subsystems for the recognition of small manipulable objects, larger partially manipulable objects, and nonmanipulable objects (see Figure 1; for further description of this view, also see Farah, 1992). The notion of independent brain areas with specific, independent functions is seemingly parsimonious, and theories that speculate about the existence of many independent abilities are intuitively appealing, well cited, and influential (Wilmer et al., 2014).

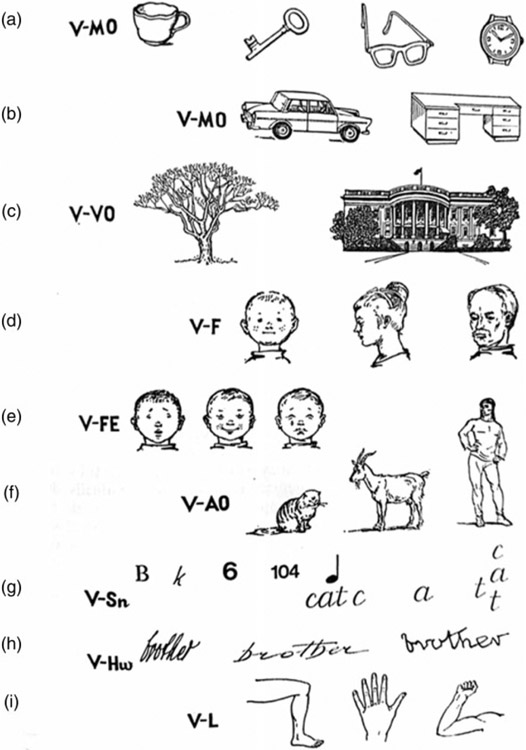

Figure 1.

Catalogue of nine different object recognition subsystems: (a) small, manipulable objects; (b) larger, partially manipulable objects; (c) nonmanipulable objects; (d) human faces; (e) emotional facial expressions; (f) animated objects; (g) signs; (h) handwriting; and (i) positions of limbs. Reprinted from Konorski (1967).

A more recent theoretical view of regional and functional independence, which has had substantial impact in visual cognitive neuroscience, is also one in which there are distinct cortical regions that are individually specialized for the high-level visuoperceptual analysis of different classes of objects (often termed “domain specificity”), although the specific functions do not align with those proposed by Konorski (1967). Much of the evidence to support this view comes from studies using functional magnetic resonance imaging (fMRI), and these investigations have revealed cortical regions with selective, perhaps even dedicated, responses to particular visual classes (Kanwisher, 2010, 2017). For example, regions have been found with a selective response to written words (Cohen & Dehaene, 2004; Cohen et al., 2002; Price & Devlin, 2003; Price & Mechelli, 2005), numerals (Shum et al., 2013), common objects (Grill-Spector, Kushnir, Edelman, Itzchak, & Malach, 1998; Malach et al., 1995), scenes or houses (Epstein & Kanwisher, 1998), hands (Bracci, Caramazza, & Peelen, 2015, 2018), tools (Almeida, Mahon, & Caramazza, 2010; Martin, 2007), and body parts (Peelen, Glaser, Vuilleumier, & Eliez, 2009; Schwarzlose, Baker, & Kanwisher, 2005), with some of these regions—for example, the visual word form area (VWFA), fusiform face area (FFA), and parahippocampal place area (PPA)—showing distinct patterns of lateralization (see Figure 2 for some of these brain-behavior mappings).

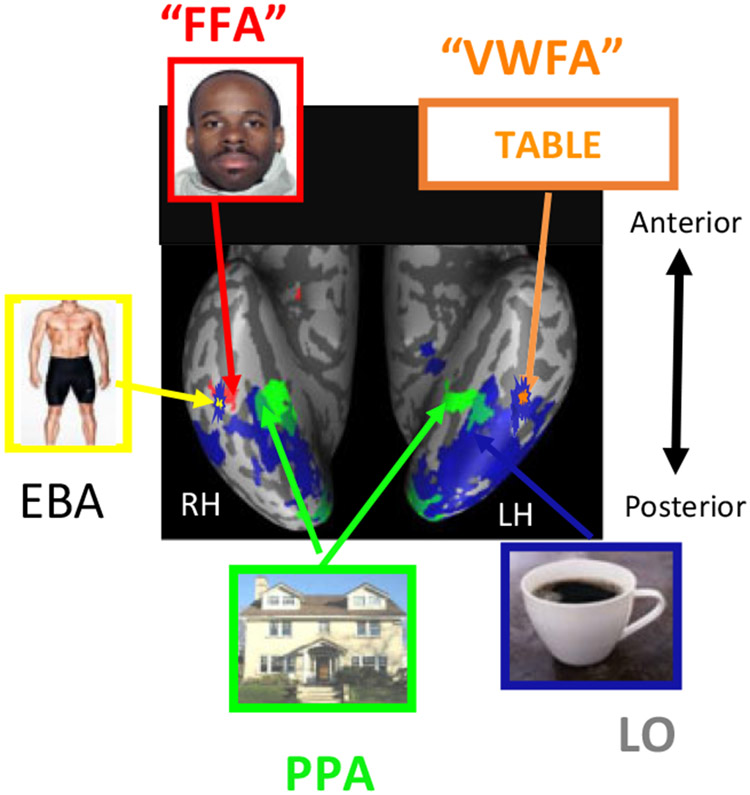

Figure 2.

Ventral stream category-specific topography depicting domain-specific regions on a single representative inflated brain. The regions included are those activated in response to visual words (VWFA), faces (FFA), bodies (EBA), scenes (PPA) and common objects (LO). As there is no single experiment that has examined the cortical activation associated with all these visual classes, this figure is a composite of the results of many different experiments and is a simplification of the domain-specific activation of ventral cortex. Taken with permission from Behrmann and Plaut (2013). VWFA = Visual Word Form Area; FFA = Fusiform Face Area; EBA = Extrastriate Body Area; PPA = Parahippocampal Place Area; LO = Lateral Occipital Area or Complex; RH = right hemisphere; LH = left hemisphere.

Among these candidate domains, two have received the most attention and are probably the strongest contenders for claims about domain-specificity: face recognition and word recognition, associated with the FFA and the right hemisphere (RH) and the VWFA and the left hemisphere (LH), respectively. Whereas both of these areas have been ascribed a distinct function based on extensive empirical findings, there are also several compelling a priori reasons why the processing of these two visual classes would be expected to be highly segregated and independent (Hellige, Laeng, & Michimata, 2010; Kanwisher, 2017; J. Levy, Heller, Banich, & Burton, 1983; Maurer, Rossion, & McCandliss, 2008; Mercure, Dick, Halit, Kaufman, & Johnson, 2008). First, the image properties of faces and words are completely distinct—whereas faces comprise 3D structure with more curved features and with parts which are not easily separable (e.g., eyes, nose and mouth), words are composed of 2D structure with individual letters which occur independently in their own right and are made of mostly straight edges. The image differences, and the engagement of more configural processing for faces and more part-based or compositional processing for words, have led to a view in which there are two distinct mechanisms, one a more holistic system and the other a more feature-based system, such that faces are processed entirely by the former, words are processed entirely by the latter, and other classes of objects are processed by some combination of the two (Boremanse, Norcia, & Rossion, 2014; Busigny & Rossion, 2011; Farah, 1991, 1992; Rossion et al., 2000).

A second motivation for the independence of the subsystems is that face recognition is an evolutionarily old skill and one that is likely to be conserved across species (McKone & Kanwisher, 2005; Sheehan & Nachman, 2014). In contrast, word recognition is only about 5,000 years old (Dehaene & Cohen, 2007) and, until roughly 200 years ago, was limited to a minority of the population. Moreover, word recognition capacity is largely restricted to humans (although extensive training can induce some form of symbol recognition in monkeys; Srihasam, Mandeville, Morocz, Sullivan, & Livingstone, 2012; Srihasam, Vincent, & Livingstone, 2014). Last, the differences in the acquisition of face and word representations are stark: Whereas face recognition is acquired incidentally starting at birth, word recognition usually requires explicit instruction and thousands of hours of practice, and generally starts when children begin formal schooling. Given the persuasive a priori arguments for independence of face and word processing, we now examine in detail the empirical data on this issue.

Face Selective Responses in the RH

Evidence from neuropsychology has played a large and highly informative role with respect to domain-specific claims of a RH face-selective region. Many studies have demonstrated that patients with a bilateral lesion, or even just a unilateral right-sided lesion, are impaired at recognizing known faces and even at judging the similarity of pairs of unknown faces (Barton, 2011; Busigny, Graf, Mayer, & Rossion, 2010; Sergent & Signoret, 1992). Whether the deficit is specific to faces or also affects the recognition of other objects still remains controversial—among the complications for comparing faces and another class of objects is that, relative to faces, these other classes are not well matched on exemplar homogeneity or expertise (Gauthier, Behrmann, & Tarr, 1999, 2004; Geskin & Behrmann, 2018). Last, there are behavioral signatures that are taken as evidence that face processing is domain-specific. For example, compared to other visual classes, face recognition is considered to engage more configural or holistic computations which fail to operate as the face is rotated away from the upright orientation in the picture plane (Farah, Wilson, Drain, & Tanaka, 1998; Yin, 1969; Yovel & Kanwisher, 2005) or when the face is decomposed into parts (Tanaka & Farah, 1993, 2003; for meta-analysis and review, see Richler & Gauthier, 2014).

Consistent with the domain-specificity illustrated in Figure 2, many neuroimaging studies have provided evidence for a selective neural response to the viewing of a face. Early positron emission tomography (PET; Sergent, Ohta, & MacDonald, 1992) and fMRI (Kanwisher, McDermott, & Chun, 1997) studies provided the first observations of face-selective responses in the right ventral occipitotemporal cortex (VOTC), and dozens if not hundreds of studies have replicated and extended these findings (for review, see Grill-Spector, Weiner, Kay, & Gomez, 2017). The face-selective nature of the RH has also gained substantial support from event-related potential (ERP) studies and magnetoencephalography (MEG) studies, which uncover a specific N170 or M170 response to faces that is greater than the response to other tested categories (e.g., birds, cars, or furniture; Bentin, Allison, Puce, Perez, & McCarthy, 1996; Gao et al., 2013). Last, the distinction between face-selective and word-selective sites has also been noted in studies using intracranial recordings in patients monitored for pharmacologically resistant epilepsy (Allison, Puce, Spencer, & McCarthy, 1999; McCarthy, Puce, Belger, & Allison, 1999; Puce et al., 1995, 1996). Further confirmation has been obtained from more recent studies using electrocorticography (Ghuman et al., 2014) and stereotactic electroencephalography for recording and stimulation of neural responses in the same patient group (Parvizi et al., 2012; Rangarajan & Parvizi, 2016). Together, these findings support the domain-specific aspect of face recognition and uncover differences between the way faces and non-face stimuli are processed.

Word Selective Responses in the LH

In complementary fashion to face domain-specificity, empirical evidence from neuropsychology has helped support the claim of an area in the LH that is selective for words (or letter strings). Since the classic studies of Dejerine (1891, 1892) and early descriptions of so-called “pure” alexia (Geschwind, 1965), many single case or small group studies have shown that a lesion to the left VOTC (and not necessarily to the splenium, as argued previously) can impair orthographic processing (Behrmann, Plaut, & Nelson, 1998; Damasio, 1983; Henderson, Friedman, Teng, & Weiner, 1985).

Consistently, neuroimaging studies have uncovered a region of the left VOTC that is preferentially activated by words (Schlaggar & McCandliss, 2007) and many early findings from PET (Petersen, Fox, Snyder, & Raichle, 1990) and fMRI studies (Carlos, Hirshorn, Durisko, Fiez, & Coutanche, 2019; Cohen et al., 2000; Cohen et al., 2002; Price, 2000) attest to the relative specificity of the response to orthographic input. The word-selective nature of the VWFA in the LH has also gained substantial support from studies using either ERPs (Appelbaum, Liotti, Perez, Fox, & Woldorff, 2009; Bentin et al., 1996; for a review, see Maurer & McCandliss, 2008) or MEG (Tarkiainen, Helenius, Hansen, Cornelissen, & Salmelin, 1999), which uncover a specific N170 or M170 response to written words. Also, cortical surface electrophysiological recordings from left VOTC in patients showed a strong LH response to words presented singly or as part of a sentence (Canolty et al., 2007; Nobre, Allison, & McCarthy, 1994), and further confirmation of the LH advantage has been obtained from recent studies using electrocorticography (Ghuman & Fiez, 2018).

A Distributed Account of Hemispheric Organization of Face and Word Perception

As the earlier brief overview makes clear, the evidence favoring the separate processing of faces in the RH and words in the LH supports a strong account of psychological and neural domain segregation. In contrast to the claims of independence of function, however, we have proposed a distributed account in which the systems supporting face and word recognition exhibit graded and overlapping functional specialization both within and, especially, between hemispheres (Behrmann & Plaut, 2013, 2015; Plaut & Behrmann, 2011). This account was initially inspired by close scrutiny of the empirical data—detailed review of many studies has revealed bilateral, rather than unilateral, activation for words (Appelbaum et al., 2009) and for faces (Allison et al., 1999; Carmel & Bentin, 2002).

Perhaps even more compelling is that in those few studies in which cortical responses for faces and words are measured within-individual, as shown in Figure 3(a), there is bilateral activation for faces and for words although there is an asymmetry with greater activation for words than faces over the LH and greater activation for faces than words over the RH (see also Kay & Yeatman, 2017; Matsuo et al., 2015). A similar weighted asymmetry is noted in one of the relatively few ERP studies in which words and faces are displayed as a within-subject factor (Rossion, Joyce, Cottrell, & Tarr, 2003) and the same is found in the electrophysiological responses from surface electrodes in the RH and LH of patients being monitored prior to neurosurgery (Allison, McCarthy, Nobre, Puce, & Belger, 1994).

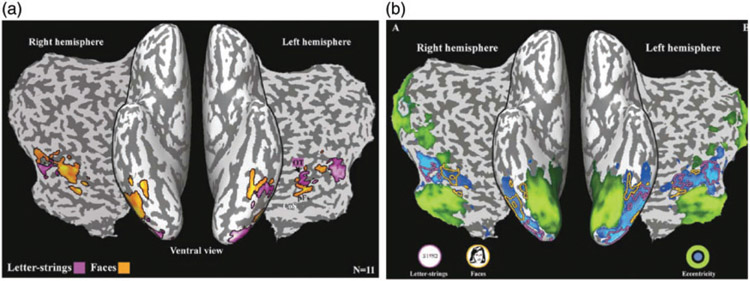

Figure 3.

(a) Activation map showing bilateral activation for faces (orange) and words (mauve) superimposed on both the ventral surface of an inflated brain and on an accompanying flat map. (b) Results of eccentricity mapping in the same individuals. Visual areas responsive to foveally presented images are in blue and to peripheral images in green shown on both the ventral surface of an inflated brain and on an accompanying flat map. Blood-Oxygen-Level-Dependent (BOLD) activation for faces (orange outline) and words (mauve outline) from (a) are superimposed on the eccentricity map and show that both face and word selective activation overlap regions of cortex associated with foveal vision. Adapted with permission from Hasson et al. (2002).
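The weighted asymmetry described above (bilateral activation for both classes, with a stronger response to words over the LH and to faces over the RH) can be made concrete with a laterality index. The sketch below uses the conventional form (LH − RH) / (LH + RH), which is an assumption here rather than a measure reported in the studies cited; the function name and the activation values are hypothetical and purely illustrative, not data.

```python
def laterality_index(lh: float, rh: float) -> float:
    """Return (LH - RH) / (LH + RH): +1 is fully left-lateralized,
    -1 is fully right-lateralized, and 0 is perfectly bilateral.

    Inputs are assumed to be non-negative response magnitudes.
    """
    if lh < 0 or rh < 0:
        raise ValueError("response magnitudes must be non-negative")
    total = lh + rh
    if total == 0:
        raise ValueError("at least one hemisphere must show a response")
    return (lh - rh) / total


# Hypothetical response magnitudes (arbitrary units), chosen only to
# illustrate a graded, non-binary asymmetry. They are NOT empirical values.
responses = {
    "words": {"lh": 3.0, "rh": 1.0},
    "faces": {"lh": 1.0, "rh": 3.0},
}

indices = {
    category: laterality_index(r["lh"], r["rh"])
    for category, r in responses.items()
}

for category, li in indices.items():
    print(f"{category}: laterality index = {li:+.2f}")
```

On a strictly modular, fully lateralized account one would expect indices near +1 for words and −1 for faces; intermediate values of opposite sign are what a graded, bihemispheric organization would look like under this measure.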

In addition to the possible overlap of neural regions, the behavioral signatures typically associated with either holistic or part-based processing may apply to both faces and words. For example, the characteristic face-inversion effect (Farah, Tanaka, & Drain, 1995; Rossion et al., 1999; Yin, 1969), that is, the substantial decrement in performance as a function of stimulus orientation, has been reported for words as well, and reaction time is linearly slowed as a function of deviation from upright for both faces (Valentine, 1988) and words (Koriat & Norman, 1985; Wong, Wong, Lui, Ng, & Ngan, 2019). Last, the pattern of encoding following a RH or LH VOTC lesion is similar. For example, patients with prosopagnosia, a disproportionate impairment in face versus object recognition that usually follows a RH VOTC lesion, make multiple fixations across a face (Stephan & Caine, 2009), a pattern considered to reflect a breakdown in holistic processing. Similarly, patients with pure alexia, a disproportionate impairment in word versus object recognition after a LH VOTC lesion, also make multiple fixations across the word and read in a piecemeal, letter-by-letter fashion (Behrmann, Shomstein, Black, & Barton, 2001).

These observations of similarity in the neural and psychological bases of face and word perception are left unexplained by modular theories of cortical organization. To be clear, they are not incompatible with such theories: It might be the case that the two independent modules just happen to be distributed across the hemispheres in complementary fashion and to operate according to similar principles. However, a modular theory provides no insight into why the face and word modules are organized in this manner, given that, on such a theory, the two modules have nothing to do with each other.

In contrast to a modular account, we have formulated an account that can explain the data supporting partial segregation because face and word representations are not, in fact, independent. This more graded, distributed account is based on three key principles. None of these principles is novel or particularly controversial, but they have important implications when considered together.

The first principle is that representations and knowledge are distributed. We assume that the neural system for visual recognition consists of a set of hierarchically organized cortical areas, ranging from local retinotopic information in primary visual cortex, V1, through more global, object-based and semantic information in anterior temporal cortex (see Grill-Spector & Malach, 2004). At each level, the visual stimulus is represented by the activity of a large number of neurons, and each neuron participates in coding a large number of stimuli. Generally, stimuli that are similar with respect to the information coded by a particular region evoke similar (overlapping) patterns of activity in that region. Knowledge of how features at one level combine to form features at the next level is encoded by the pattern of synaptic connections and strengths between and within the regions. Learning involves modifying these synapses in a way that alters the representations to capture the relevant information in the domain better and to support better behavioral outcomes. With extended experience, expertise develops through the refinement, specialization, and elaboration of representations, requiring the recruitment of additional neurons and regions of cortex.

The second principle is that there is cooperation and competition between representations. The ability of a set of synaptic connections to encode a large number of stimuli depends on the degree to which the relevant knowledge is consistent or systematic (i.e., similar representations at one level correspond to similar representations at another). In general, systematic domains benefit from highly overlapping neural representations that support generalization, whereas unsystematic domains require largely nonoverlapping representations to avoid interference. Thus, if a cortical region represents one type of information (e.g., faces), it is ill-suited to represent another type of information that requires unrelated knowledge (e.g., words), with the result that the domains are better represented separately (due to competition). On the other hand, effective cognitive processing requires the coordination (cooperation) of multiple levels of representation within and across domains. Of course, representations can cooperate directly only to the extent that they are connected—that is, there are synapses between the regions encoding the relevant knowledge of how they are related; otherwise, they must cooperate indirectly through mediating representations. In this way, the neural organization of cognitive processing is strongly constrained by available connectivity for both competition and cooperation (Mahon & Caramazza, 2011).

The third principle is that there are pressures on hemispheric organization associated with topography and proximity. Brain organization must permit sufficient connectivity among neurons to carry out the necessary information processing, but the total axonal volume must fit within the confines of the skull. This constraint is severe: Fully connecting the brain’s 10¹¹ neurons would require more than 20 million cubic meters of axon volume.¹ Clearly, connectivity must be as local as possible. Long-distance projections are certainly present in the brain, but they are relatively rare and presumably play a sufficiently critical functional role to offset the “cost” in volume. In fact, the organization of human neocortex as a folded sheet can be understood as a compromise between the spherical shape that would minimize long-distance axon length and the need for greater cortical area to support highly elaborated representations (see recent papers on cytoarchitectonics and receptor architecture as constraints on functional organization; Amunts & Zilles, 2015; Caspers et al., 2015; Weiner et al., 2014). The organization into two hemispheres is also relevant here, as interhemispheric connectivity is largely restricted to homologous areas and is thus vastly less dense than connectivity within each hemisphere (Suarez et al., 2018). Even at a local scale, the volume of connectivity within an area can be minimized by adopting a topographic organization so that related information is represented in as close proximity as possible (Jacobs & Jordan, 1992). This is seen most clearly in the retinotopic organization of early visual areas, given that light falling on adjacent patches of the retina is highly likely to contain related information (also see Arcaro, Schade, & Livingstone, 2019a, for a related account with strong emphasis on constraints of retinotopy). The relevant dimensions of similarity for higher-level visual areas are, of course, far less well understood, but the local connectivity constraint is no less pertinent (Jacobs, 1997).
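The scale of the wiring constraint can be checked with a back-of-the-envelope calculation. The sketch below takes the neuron count from the text; the axon radius (0.1 µm) and mean axon length (10 cm) are illustrative assumptions chosen for this example, not values given in the source, and the function name is hypothetical. Each axon is treated as a simple cylinder, with one axon per ordered pair of neurons.

```python
import math

N_NEURONS = 1e11        # neuron count, as stated in the text
AXON_RADIUS_M = 0.1e-6  # ASSUMPTION: 0.1 micrometer axon radius
AXON_LENGTH_M = 0.10    # ASSUMPTION: 10 cm average axon length


def full_connectivity_axon_volume(n_neurons: float,
                                  radius_m: float,
                                  length_m: float) -> float:
    """Total volume (cubic meters) of one cylindrical axon per ordered
    pair of distinct neurons, i.e., a fully connected network."""
    n_connections = n_neurons * (n_neurons - 1)
    volume_per_axon = math.pi * radius_m ** 2 * length_m
    return n_connections * volume_per_axon


volume_m3 = full_connectivity_axon_volume(
    N_NEURONS, AXON_RADIUS_M, AXON_LENGTH_M
)
print(f"Axon volume for full connectivity: {volume_m3:.2e} cubic meters")
```

Under these assumed values the total comes out in the tens of millions of cubic meters, which is in line with the figure quoted above and many orders of magnitude beyond what a skull can hold. The exact number depends heavily on the assumed radius and length, so it should be read only as an order-of-magnitude illustration of why connectivity must be predominantly local.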

Despite their differences, both word and face recognition are highly overlearned and, given the high degree of visual similarity among exemplars, place extensive demands on high-acuity vision (Gomez, Natu, Jeska, Barnett, & Grill-Spector, 2018; Hasson, Levy, Behrmann, Hendler, & Malach, 2002; I. Levy, Hasson, Avidan, Hendler, & Malach, 2001). This foveal specificity also holds for many other cases of overlearned visual representations (e.g., Pokémon in Pokémon experts; Gomez, Barnett, & Grill-Spector, 2019). Thus, representations for both words and faces need to cooperate with (i.e., be connected to and, hence, adjacent to) representations of central visual information; as a result, in both hemispheres, words and faces compete for neural space in areas adjacent to retinotopic cortex in which information from central vision is encoded (Hasson, Levy, Behrmann, Hendler, & Malach, 2002; Roberts et al., 2013; Woodhead, Wise, Sereno, & Leech, 2011). These areas are sculpted further over development (Gomez et al., 2018; Nordt et al., 2019) and end up being labeled the VWFA in the LH and the FFA in the RH, although there is generally bilateral activation to both visual classes (see Figure 3).

To minimize connection length, orthographic representations are further constrained to be proximal to language-related information—especially phonology—which is left-lateralized in most right- and left-handed individuals; across the population, word-selective activation is co-lateralized with language areas (Cai, Paulignan, Brysbaert, Ibarrola, & Nazir, 2010; Gerrits, Van der Haegen, Brysbaert, & Vingerhoets, 2019; Van der Haegen, Cai, & Brysbaert, 2012). As a result, letter and word representations come to rely most heavily—albeit not exclusively—on the left VOTC region (VWFA) as an intermediate cortical region bridging between early vision and language. This claim is consistent with the interactive view in which left occipitotemporal regions become specialized for word processing because of top-down predictions from the language system integrating with bottom-up visual inputs (Carreiras et al., 2009; Devlin, Jamison, Gonnerman, & Matthews, 2006; Price & Devlin, 2011). With reading acquisition, as the LH region becomes increasingly tuned to represent words (Nordt et al., 2019), the competition with face representations in that region increases. Consequently, face representations that were initially bilateral in children (Dundas, Plaut, & Behrmann, 2014; Lochy, de Heering, & Rossion, 2019) become more lateralized to the right fusiform region (FFA) albeit, again, not exclusively. Last, the exact site of the VWFA is somewhat lateral (relative to FFA on the lateral to medial axis), and even within the VWFA there is a medial to lateral axis (Bouhali, Bezagu, Dehaene, & Cohen, 2019), with this arrangement likely a result of within-hemisphere competition to maintain close connectivity to areas engaged in phonological processing (Barttfeld et al., 2018; Dehaene et al., 2010).

Empirical Support for the Distributed Account of Hemispheric Organization

We and others have accumulated substantial evidence in support of this distributed view of face and word recognition, and, here, we describe the evidence as it pertains to the three key computational principles.

The first principle, concerning the distribution of representation, has already been addressed by the evidence on the spatial localization of the FFA and VWFA relative to the anterior extrapolation of the fovea in extrastriate cortex, and on the medial-to-lateral organization in high visual-acuity regions (see Figure 3), and so we do not discuss it further.

Shared Representations With Weighting

The second principle of the distributed account states that representations that are compatible are coordinated and co-localized, and that incompatible information, which is subject to competition, is segregated. One possibility is that this incompatibility might result in binary separation between systems for face and word representation but in fact, as revealed earlier (Figure 3(a)), the cortical solution appears to be one of bihemispheric engagement but with greater weighting for face and word lateralization in the RH and LH, respectively.

Although there is considerable evidence for bilateral activation for both faces and words, it remains to be determined whether both hemispheres contribute functionally to both face and word perception rather than just being activated epiphenomenally, for example, through interhemispheric connectivity. To evaluate this, we tested the performance of adults with a unilateral VOTC lesion in either the RH (three patients with prosopagnosia) or the LH (four patients with pure alexia; Behrmann & Plaut, 2014). Face recognition was tested in one task using a same or different discrimination procedure with morphed faces and in a second task in which participants matched the identity of a face across viewpoints. Word recognition was measured in a task requiring the reading aloud of words of different lengths and then in a lexical decision task with matched words and nonwords of various lengths. The key finding from these four experiments was that both patient groups were significantly impaired on all tasks, relative to their own group of matched controls. As predicted, however, a direct comparison of the two groups on some dependent measures revealed differences consistent with the postulated weighted asymmetry. For example, on word recognition, the Alexia group performed more slowly than the Prosopagnosia group and disproportionately so as word length increased. A second example is from the same or different face discrimination task: Although both groups made significantly more errors than respective controls, and there was no overall difference in accuracy between the two patient groups, there was a significant interaction of Patient Group × Condition. Closer scrutiny showed that even for easy discriminations, the Prosopagnosia group was less accurate than the Alexia group, although the groups were equally poor on the medium discrimination trials.

Much of the current research with individuals with neuropsychological deficits such as pure alexia and prosopagnosia examines the findings in terms of a double dissociation. On the traditional account (words and faces are independent and never the twain shall meet), one might predict a double dissociation with the former group impaired at word but not face recognition and the latter group showing the converse. Indeed, recent criteria differentiate between classic and strong dissociations (Shallice, 1988). For the former to hold, the patient’s score on one task should differ significantly from the controls but be in the normal range on the other task, and there should be a statistical difference between the scores on the two tasks. For the latter to hold, there should be a significant difference between the patient’s score and that of controls on both tasks, but one task is performed better than the other. The findings from the aforementioned patient study might be interpreted as satisfying the criteria for the strong but not the classic dissociation in that the patients are impaired at both face and word tasks relative to controls, albeit significantly more so on one than the other (but see McIntosh, 2018, for the value of simple dissociations in neuropsychology). The strong dissociation results we report are at odds with the double dissociation predicted by the traditional account in that both Alexic and Prosopagnosic groups are clearly impaired at both word and face recognition, relative to controls, but the patients with LH lesions were more impaired at word than face recognition and the patients with RH lesions were more impaired at face than word recognition. These findings suggest a relationship between the mechanisms underlying face and word recognition and, when damage to this mechanism occurs, a deficit, albeit weighted, is evident on both tasks.
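The distinction between classic and strong dissociations, as paraphrased above, amounts to a small decision rule. The sketch below is one possible reading of those criteria and not a published procedure: the function name is hypothetical, and the three boolean inputs stand in for the outcomes of statistical tests (patient versus controls on each task, and the patient's two task scores against each other) that are not shown here.

```python
def classify_dissociation(impaired_task_a: bool,
                          impaired_task_b: bool,
                          tasks_differ: bool) -> str:
    """Classify a single patient's profile on two tasks.

    impaired_task_a / impaired_task_b: the patient's score differs
        significantly from controls on that task.
    tasks_differ: the patient's scores on the two tasks differ
        significantly from each other.
    """
    if not tasks_differ:
        # Without a reliable difference between tasks, neither
        # criterion described in the text is met.
        return "no dissociation"
    if impaired_task_a and impaired_task_b:
        # Impaired on both tasks, but one is performed better.
        return "strong"
    if impaired_task_a or impaired_task_b:
        # Impaired on one task, within the normal range on the other.
        return "classic"
    # Scores differ, but both are within the normal range.
    return "no dissociation"


# Profile reported in the text: impaired on both face and word tasks,
# with significantly worse performance on one than on the other.
print(classify_dissociation(True, True, True))
```

On this reading, the patient groups described above fall into the "strong" category, whereas the traditional account would predict "classic" profiles in opposite directions for the two groups.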

The co-occurrence of pure alexia and prosopagnosia has also been reported in some other studies such as that of a case with left occipital arteriovenous malformation (Y.-C. Liu, Wang, & Yen, 2011), Case 3 of Damasio, Damasio, and Van Hoesen (1982), and a few additional cases reported in the overview by Farah (1991) that may also fit this profile. Also relevant is the finding that patients with lesions to the left posterior fusiform gyrus were impaired at processing orthographic and complex nonorthographic stimuli (Roberts et al., 2013) as well as faces (Roberts et al., 2015). These authors also proposed a deficit in a common underlying mechanism, namely, the loss of high spatial frequency visual information coded in this region, such that damage affects both word and face recognition.

Analogous results come from a study of children between the ages of 5 and 17 years in which, following a unilateral posterior injury to the temporal lobe in infancy, there were no differences in the nature and extent of the face recognition deficit as a function of which hemisphere was affected (de Schonen et al., 2005). In addition, in recent studies of patients with impairments following posterior cerebral infarcts, all patients who exhibited word recognition difficulties also had problems in face recognition, regardless of which hemisphere was affected (Asperud, Kühn, Gerlach, Delfi, & Starrfelt, 2019; Gerlach, Marstrand, Starrfelt, & Gade, 2014).

In further support of the distributed view, in one study, adults with developmental dyslexia (DD) not only performed poorly on word recognition, but they also matched faces more slowly and discriminated between similar faces (but not cars) more poorly than controls (Gabay et al., 2017). Moreover, DD individuals showed reduced hemispheric lateralization of words and faces, as demonstrated using a half-field paradigm (see also Sigurdardottir, Ivarsson, Kristinsdottir, & Kristjansson, 2015). The neural profile of children with DD is also atypical in that, relative to controls, they evince a normal Blood-Oxygen-Level-Dependent (BOLD) response to checkerboards and houses but reduced activation to faces in the FFA and to words in the VWFA (Monzalvo, Fluss, Billard, Dehaene, & Dehaene-Lambertz, 2012). It is worth pointing out that, whereas the observation of mixed impairments following unilateral brain damage does not rule out bilateral, co-localized face and word modules, such an account provides no basis for understanding mixed and asymmetric deficits in the acquisition of faces and words, or any other functional relationship between the two domains.

Findings from the selectivity of extrastriate cortex in illiterate adults are also illuminating with respect to the relationship between word and face neural substrates. In one study, the BOLD fMRI response to spoken and written language, visual faces, houses, tools, and checkers was measured in individuals who were illiterate as well as those who became literate in adulthood or in later childhood (Dehaene, Cohen, Morais, & Kolinsky, 2015; Dehaene et al., 2010). Most relevant is that, unsurprisingly, the illiterate individuals revealed no response to written words in the left VWFA region but a response to faces was apparent in this region. In those individuals in whom literacy was acquired, however, this left fusiform area was activated by written words and concomitantly, activation of faces in this region was reduced. This competitive word-face effect was observed both in individuals who acquired literacy in childhood and in those who acquired literacy in adulthood, a finding that speaks to the possibility of ongoing competition in cortex over the lifespan. Somewhat at odds with these findings of a competitive effect with voxels in the LH being increasingly tuned to words, and subsequently voxels in the RH increasing in proportion to reading scores, is a new study conducted with a large number of individuals of varying degrees of literacy (Hervais-Adelman et al., 2019). The findings revealed that the acquisition of literacy does indeed recycle existing object representations, but there was no concomitant impinging on other stimulus categories, and face activation remained detectable in the left VOTC even after word acquisition.

Finally, we have shown that in children who have undergone left posterior lobectomy for the control of medically intractable epilepsy, the VWFA emerges in the RH (T. T. Liu, Freud, Patterson, & Behrmann, 2019; see also Cohen et al., 2004). This atypical localization suggests that the RH must have some capacity for word recognition and that this region can be recruited when necessary. Whether or not this RH VWFA is entirely normal in terms of its functional capability is not yet fully determined.

Developmental Emergence of the FFA and the VWFA

As has been demonstrated previously, face representations are acquired slowly over the course of development (Scherf, Behrmann, Humphreys, & Luna, 2007) and are not adult-like until just after the age of 30 years (Germine, Duchaine, & Nakayama, 2011). Critically, on the distributed account, the claim is that prior to the onset of literacy, which occurs usually around 5 or 6 years of age, there is no hemispheric specialization for face recognition. At the onset of literacy, the LH becomes increasingly tuned for word recognition under the pressure for communication between visual and language areas and, by virtue of the optimization of the left VOTC for orthographic processing, further refinement of face representations occurs primarily, although not exclusively, in the right VOTC (for related ideas and evidence, see Cantlon, Pinel, Dehaene, & Pelphrey, 2011; Dehaene et al., 2015).

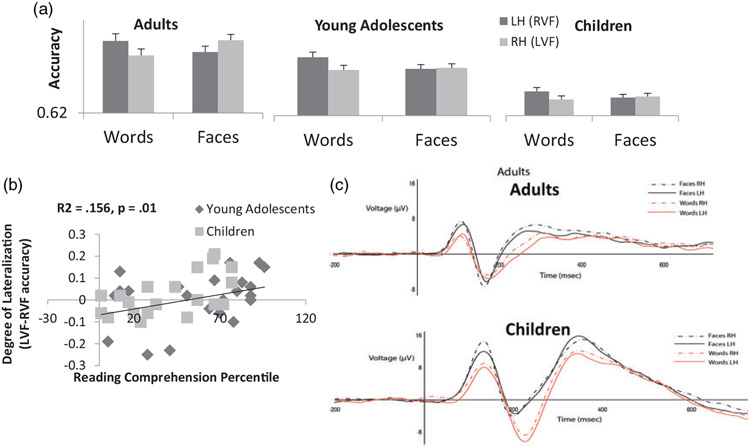

To evaluate this putative sequence of events, we collected behavioral as well as ERP data from children aged 7.5 to 9.5 years, adolescents aged 11 to 13 years, and adults performing a same or different discrimination task with words and faces as stimuli. On each trial, as shown in Figure 4, an initial word is shown centrally at a duration long enough for young children to encode the input, followed by the presentation of the same or a different word, which could appear with equal probability in the right or left visual field (RVF and LVF, respectively). In other blocks of trials, the identical procedure was followed but with faces, rather than words, as input, and the order of the face and word blocks was counterbalanced.

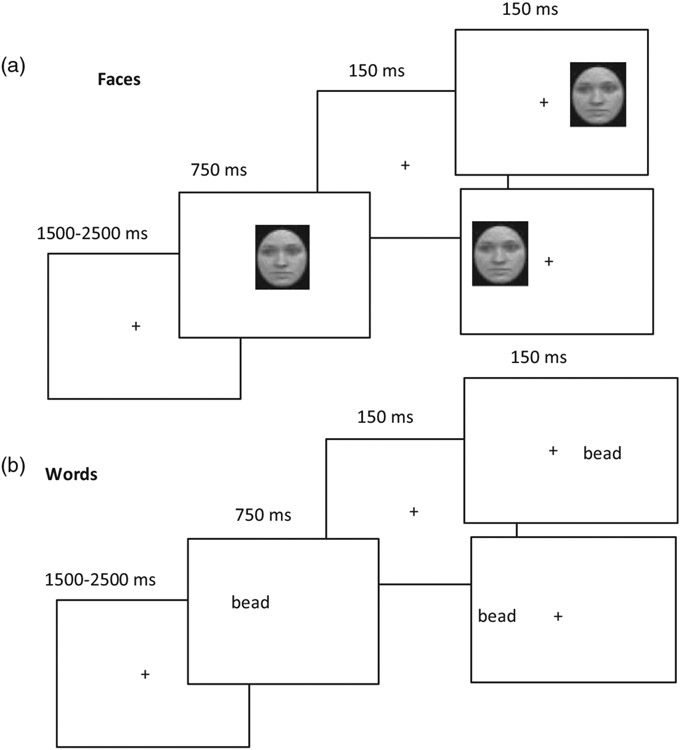

Figure 4.

Schematic depiction demonstrating the experimental procedure for a single trial for same/different matching of face (a) and of word (b) stimuli, respectively. On each trial, a fixation cross is shown in the center of a computer screen. Thereafter, a face or word appears over the fixation, followed by a screen of 150 ms duration containing a fixation cross. Last, a screen displaying a face or word with the stimulus presented with equal probability to the right or left of fixation is shown. Note the central stimulus presentation initially which is then followed by the lateralized probe stimulus. Adapted from Dundas et al. (2013).

Adults showed the expected hemispheric organization, with better performance for the matching task when words were presented to the RVF than LVF and better performance when faces were presented in the LVF than in the RVF (Dundas, Plaut, & Behrmann, 2013; see Figure 5(a)). Although their overall accuracy was roughly equal to that of adults, adolescents showed a hemispheric advantage only for words but not for faces, and the same was true for the children, although their overall accuracy was reduced relative to the other two groups. Of particular relevance was the observation of a significant correlation between reading competence and hemispheric lateralization of faces (after regressing out age, other cognitive scores and face discrimination accuracy) in the children and adolescents (see Figure 5(b)). The same finding of a positive correlation between the reading performance of 5-year-old children and the lateralization of their electrophysiological response to faces has also been reported using fast periodic visual stimulation: The more letters known by the children, the more right-lateralized their face response (Lochy et al., 2019; for further discussion, see section on Challenges to the Distributed Account of Hemispheric Organization of Face and Word Perception). Together, these findings support the notion that face and word recognition do not develop independently and that word lateralization, which emerges earlier, may drive face lateralization.

Figure 5.

(a) Accuracy of responses in a same/different discrimination task in adults, adolescents and children for faces and words as a function of hemifield of probe. (b) Correlation between reading competence and degree of lateralization for faces, calculated as accuracy for LVF-RVF. Note the high correlation between the reading skill and face lateralization in the children and young adolescents. (c) Average wave form in voltage (negative plotted downwards) for face and word discrimination over the RH and LH for adults and children. Note the lateralization of the N170 waveform for both faces and words in the adults and the lateralization for words but not for faces in the children. From Dundas et al. (2013, 2014). LH = left hemisphere; RH = right hemisphere; RVF = right visual field; LVF = left visual field.
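The two quantities behind Figure 5(b), a face lateralization score computed as LVF minus RVF accuracy and its correlation with reading competence once a covariate has been regressed out, can be sketched as follows. This is a simplified illustration, not the analysis reported by Dundas et al. (2013): it removes a single covariate (age) by simple linear regression, whereas the study also removed other cognitive scores and face discrimination accuracy, and all function names and data values are hypothetical.

```python
from math import sqrt


def mean(xs):
    return sum(xs) / len(xs)


def pearson(x, y):
    """Pearson correlation between two equal-length lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def residuals(y, covariate):
    """Residuals of y after a simple linear regression on one covariate."""
    mc, my = mean(covariate), mean(y)
    slope = (sum((c - mc) * (v - my) for c, v in zip(covariate, y))
             / sum((c - mc) ** 2 for c in covariate))
    intercept = my - slope * mc
    return [v - (intercept + slope * c) for c, v in zip(covariate, y)]


def face_lateralization(acc_lvf, acc_rvf):
    """Per-participant lateralization score: LVF accuracy minus RVF accuracy."""
    return [l - r for l, r in zip(acc_lvf, acc_rvf)]


def partial_correlation(x, y, covariate):
    """Correlation of x and y after regressing the covariate out of both."""
    return pearson(residuals(x, covariate), residuals(y, covariate))


# Invented toy data, for illustration only.
age = [7.5, 8.0, 9.0, 11.0, 12.5]
reading = [20, 31, 35, 52, 60]
lat = face_lateralization([0.70, 0.74, 0.76, 0.85, 0.90],
                          [0.71, 0.72, 0.73, 0.78, 0.80])
print(partial_correlation(reading, lat, age))
```

A positive value indicates that, at a given age, better readers show a larger LVF (RH) advantage for faces, which is the direction of the relationship described in the text.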

We also used the identical discrimination paradigm described earlier in children and adults while ERP data were collected simultaneously. We then analyzed the ERP signal only for the centrally presented face or word (as ERPs to laterally presented stimuli tend to be weaker and a motor response was required for these stimuli). The data indicated that the standard N170 ERP component for adults was greater in the LH over RH for words and greater in the RH over LH for faces over posterior electrodes (Dundas et al., 2014; see Figure 5(c)), consistent with the behavioral findings reported earlier. Although the children (aged 7–12 years) showed the greater LH over RH N170 superiority for words, there was neither a behavioral nor a neural hemispheric superiority for faces. These electrophysiological findings further support the claim that the lateralization of word recognition may precede and drive the later lateralization of face perception.

The emergence of the left VWFA and right FFA has also been documented in children aged 6 years who were learning to read in the first trimester of school. In an imaging study, these children evinced activation of voxels specific to written words and digits in the LH VWFA location and, at the same time, RH activation in response to faces increased in proportion to reading scores (Dehaene-Lambertz, Monzalvo, & Dehaene, 2018). These findings further support the claim of an interdependence of face recognition with literacy acquisition.

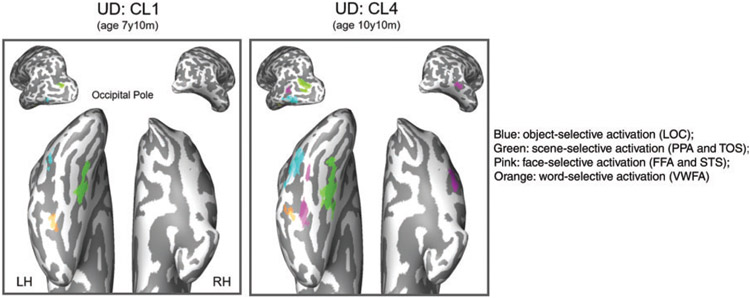

Having shown that the VOTCs of the two hemispheres are weighted differently but still interdependent, the question is what would happen in instances where only a single VOTC is present. This is indeed the situation in children who undergo surgical resection for the management of pharmacologically intractable epilepsy. Recently, we had occasion to conduct a longitudinal study of a single case, a child (from age roughly 7–10 years) with initials UD, who underwent surgical resection of the RH VOTC at age 6 years 9 months (T. T. Liu et al., 2018). Figure 6 shows the functional MRI data from two scans (category localizer [CL] consisting of faces, objects, words, houses, and patterns) at two timepoints roughly 3 years apart. The VWFA was detectable in the LH at the first timepoint at age 7 years 10 months, and by the age of 10 years and 10 months, both the VWFA and the FFA were detectable in the LH. Although not shown in this figure (but see T. T. Liu et al., 2018, Figure 4), the face-selective and word-selective voxels competed for representational space, such that, over time, the location of the voxels that were word-selective (VWFA) shifted rather more lateral than is typical of the lateral-medial axis present in controls, and the extent of the left voxels that were face-selective (FFA) expanded across the course of development and were situated more medial than is typical (see Figure 6).

Figure 6.

Category selectivity maps from a longitudinal study of child UD (right lobectomy at age 6 years 9 months) from CL1 to CL4 (across 7 years 10 months – 10 years 10 months of age) showing regions preferentially responsive to faces, scenes, objects, and words. Most of the category-selective responses were confined to the LH, including the left FFA, left STS, left PPA and left TOS, left LOC, and left VWFA. Of relevance is the emergence of the FFA (FFA1 and FFA2), alongside the VWFA, in the preserved left VOTC. CL = Category Localizer; LH = left hemisphere; RH = right hemisphere.

Finally, we have developed computational (neural network) simulations that illustrate how the adult hemispheric differences emerge over development due to cooperative and competitive interactions in the formation of face and word representations (Plaut & Behrmann, 2011). A network, instantiating the three computational principles articulated earlier, was trained on abstract face, word, and house stimuli, and was required to identify the stimulus at output. The network exhibited the emergence of a LH-biased word recognition system by virtue of connectivity with LH phonology. Although there was some LH involvement in face recognition, the recognition system was mostly RH-biased. Damage to a region analogous to the LH fusiform cortex resulted in a deficit in word identification with a mild deficit in face identification, whereas damage to the RH analogue of the fusiform cortex produced a deficit in face identification with a concurrent mild deficit in word recognition, as observed empirically (Behrmann & Plaut, 2014).

Challenges to the Distributed Account of Hemispheric Organization of Face and Word Perception

Thus far, we have provided a theoretical account of the manner in which the division of labor for face and word recognition emerges with graded asymmetries across the two hemispheres. We have also offered empirical support for this account from a range of investigations, including ERP studies conducted with adults and with children across the course of development, behavioral half-field studies with children and adolescents as well as adults, and neuropsychological studies of patients’ impairment after a unilateral RH or LH lesion. Last, we have instantiated these principles in an artificial network which enabled us to explore the consequences of the core principles and (simulated) brain damage on face and word recognition behavior.

Since the publication of these studies, a number of empirical challenges have risen to the fore. Next, we describe these challenges and our response to them. In the end, we will conclude that there remains much more work to be done and a full resolution of all of the issues awaits further exploration.

Functional Specialization of Visual Cortex in Congenitally Blind Individuals

Our account emphasizes the importance of the nature and degree of visual experience with different types of stimuli in giving rise to graded domain-specific functional specialization among high-level visual cortical areas. A particularly intriguing challenge to our account, therefore, comes from observations that certain general aspects of the organization of visual cortex are preserved in congenitally blind individuals (Reich, Szwed, Cohen, & Amedi, 2011). For example, in blind individuals, the VWFA is activated during Braille reading (Büchel, Price, Frackowiak, & Friston, 1998; Reich et al., 2011; Sadato et al., 1996), when making other highly precise tactile discriminations (Siuda-Krzywicka et al., 2016), and in response to lexically associated auditory “sound-scapes” (Striem-Amit, Cohen, Dehaene, & Amedi, 2012; Striem-Amit, Wang, Bi & Caramazza, 2018). Similarly, the FFA is activated during tactile exploration of a face (Pietrini et al., 2004), vocal emotional expression (Fairhall et al., 2017), and in response to face-associated auditory stimuli such as laughing and whistling (van den Hurk, Van Baelen, & Op de Beeck, 2017). Indeed, visual cortical selectivity in sighted and blind individuals is similar in other respects, too, including the more lateral response to animals compared to the more medial response to tools (Mahon, Anzellotti, Schwarzbach, Zampini, & Caramazza, 2009) and to scenes (i.e., PPA; He et al., 2013; van den Hurk et al., 2017; Wang, Caramazza, Peelen, Han, & Bi, 2015).

It is important to bear in mind that all of the relevant studies were primarily concerned with establishing some statistically reliable relationship between the visual cortical organization of blind and sighted individuals. On close examination, however, the observed relationships appear to be relatively weak. For instance, Reich et al. (2011) reported only a similar peak of activation for blind Braille reading compared to sighted visual reading but provided no additional information about the distribution of the activation pattern or its degree of selectivity. In fact, Büchel et al. (1998) found massively broader activation in congenitally blind and late blind (mean time of onset aged 18 years) individuals compared to VWFA activation in sighted individuals reading the same words. More recently, van den Hurk et al. (2017) found that, in congenitally blind individuals, the topographic organization of responses to visual faces, objects, body parts, and scenes in sighted individuals accounted for only 1% of the variance of the analogous organization under auditory presentation (even when based on unthresholded selectivity labels). Moreover, all of the observed relationships are restricted to the medial-lateral axis and do not capture the detailed hierarchical structure observed for both faces (Grill-Spector et al., 2017) and words (Vinckier et al., 2007; Weiner et al., 2016). Thus, the organization of visual cortex in the blind appears to be only coarsely related to that in sighted individuals. Nonetheless, the fact that even very general aspects of the medial-lateral organization of visual cortex do not depend on visual input indicates that the account we have articulated to this point is incomplete.

Some researchers have suggested that the functions of high-level visual cortex can be characterized at a more abstract level that is not vision-specific (e.g., deriving category-based representations), and that, in the absence of visual input, these regions continue to carry out these functions on tactile or auditory input (Op de Beeck, Pillet, & Ritchie, 2019; Reich et al., 2011; Striem-Amit et al., 2012). While certainly a possibility, such accounts still need to specify not only how other modalities of input access occipitotemporal cortex, but how the same abstract functions emerge through a combination of innate structure and (altered) experience.

The critical question in the current context is whether the computational principles we have proposed can be extended to explain the observed findings. Most researchers ascribe the preservation of coarse domain-specific organization of visual cortex in the blind, at least in part, to pre-existing and possibly innate connectivity with domain-specific regions elsewhere in the brain (see, e.g., Mahon et al., 2009; Op de Beeck et al., 2019). This connectivity pattern accounts for the site of the emergence of future VOTC regions in children; for example, the (future) FFA is connected with RH anterior temporal cortex, superior temporal sulcus, and the amygdala (Grimaldi, Saleem, & Tsao, 2016; Saygin et al., 2012) and the (future) VWFA is connected with LH language-related areas (Bouhali et al., 2014; Saygin et al., 2016; Stevens, Kravitz, Peng, Henry Tessler, & Martin, 2017). Cross-modal activation would then result from back-activation via shared higher level representations. The influences of such connectivity on the functional organization of face and word representations in the fusiform gyrus can be viewed as extending our principle of topography and proximity to include top-down as well as bottom-up constraints and influences.

The question remains, though, as to the origin of such connectivity and whether it is properly interpreted as domain-specific. In this regard, Arcaro, Schade, and Livingstone (2019b; see also Livingstone, Arcaro, & Schade, 2019a) have recently put forth the intriguing proposal that critical aspects of this connectivity, and the categorical organization of visual cortex more generally, can be understood as the consequence of the cross-modal alignment of topographic “protomaps” (Srihasam, Vincent, & Livingstone, 2014) along high-precision to low-precision axes. The eccentricity bias that we emphasize is the visual version of this axis, and analogous distinctions can be made for auditory and tactile discrimination. This alignment—which might arise due to thalamic remapping or via cortical association areas—would allow, for example, high-precision tactile information (in Braille reading) to co-opt high-precision regions of visual cortex in the blind. Evidence for cross-modal activation in VOTC is clear, and there is growing agreement that VOTC can be activated by stimuli from other modalities such as haptics and audition (van den Hurk et al., 2017; von Kriegstein, Kleinschmidt, & Giraud, 2005; von Kriegstein, Kleinschmidt, Sterzer, & Giraud, 2005), favoring a view of multisensory alignment.

Beyond its parsimony, this alignment account has the advantage of being able to explain why difficult tactile discriminations which are not language-related also engage visual cortex (Siuda-Krzywicka et al., 2016) and why the coarse spatial similarity among blind versus sighted visual cortical areas seems to be restricted to the lateral (high-precision) to medial (low-precision) axis. Working out the computational details of how the protomaps become aligned during typical and modality-deprived development in a way that can account for the full range of observed findings remains a challenge for future work.

The Role of Literacy Acquisition as the Trigger for Lateralization

A further challenge for the distributed account concerns the finding of an early RH lateralization for face recognition. We have claimed that, prior to the onset of word recognition, there are no obvious hemispheric differences, and so, early in life, face processing is supported by both hemispheres. As a modal right-handed child starts to learn to read, however, word recognition increasingly tunes the VWFA in the LH to enable communication with language areas (and interactivity between VOTC and language areas in top-down fashion; Price & Devlin, 2011). As a product of cooperative and competitive dynamics, the representations of faces become largely but not exclusively tuned in the RH.

In contrast with our account, some data indicate that the RH lateralization for faces is present in infancy, long before the beginning of literacy. For example, one study using functional near infrared spectroscopy in 5- to 8-month-old children reported a significant difference between visually presented faces, compared with control visual stimuli, in the RH but not in the LH (Otsuka et al., 2007). Similarly, in an investigation that employed an electroencephalography face discrimination paradigm with lateralized presentation of faces to infants in the first postnatal semester, responses to faces were observed only in the RH, and this RH lateralization increased over the course of the first semester (Adibpour, Dubois, & Dehaene-Lambertz, 2018). Because these studies employed only faces and not any other category of homogeneous objects as target stimuli, we do not yet know whether this RH lateralization is specific to faces. It may be the case, for example, that the RH is more sensitive to all visual stimuli at this age, perhaps resulting from an early RH attentional advantage or from a spatial frequency bias (see below for further details).

In a further challenge, in experiments conducted with 4- to 6-month-old infants, natural images of faces were displayed embedded among a stream of common objects, and in a second experiment, phase scrambled versions of the faces and objects were displayed (de Heering & Rossion, 2015). A clear response at the base stimulation frequency was observed for faces but not for the phase-scrambled versions, and this response was present to a greater degree over the RH than LH (see also Leleu et al., 2019). These findings led to the conclusion that selective processes for face processing are present well before word recognition is acquired and hence cannot possibly be the outcome of the hemispheric competition and cooperation that ensues over development.

It is surprising, then, that a fast periodic visual stimulation study conducted with children aged roughly 5 to 6 years showed a strong face-selective response but no lateralization or hemispheric superiority (Lochy et al., 2019), given that a study using the identical methods in infants showed a right-lateralized response for faces (de Heering & Rossion, 2015). Moreover, consistent with our account and with the data from Dundas et al. (2013) shown in Figure 5(b), in Lochy et al. (2019) there was a small positive correlation between the extent of letter knowledge and the degree of RH response superiority for faces (better letter knowledge is associated with greater RH response). Lochy et al. did recognize the inconsistency between the studies in infants and in young children and concluded that there must exist a nonlinearity in the development of face processing, with a very early RH lateralization which then disappears in 5- to 6-year-old children and then re-emerges in adulthood. Exactly why this nonlinearity exists and what purpose it might serve is unknown and requires further investigation.

Although, on the surface, some of these findings appear to contest our distributed account, an early bias toward low spatial frequencies in infancy might afford the early superiority to the RH. This early RH advantage, however, might not be specifically related to the acquisition of cortical face representations. As discussed by Johnson and colleagues (Johnson, 2005; Johnson, Senju, & Tomalski, 2015), many studies have provided evidence for a rapid, low-spatial-frequency (LSF), sub-cortical face-detection system, labeled “ConSpec,” that involves the superior colliculus, pulvinar, and amygdala. The RH advantage in infants then might be the output of this sub-cortical system which supports the orienting of newborns and young infants to top-heavy stimuli like faces and, thus, does not reflect the cortical organization for face processing per se. This bias is akin to that suggested by Arcaro, Schade, and Livingstone (2019a) in which a preference for small dark regions on lighter background coupled with the upper field advantage as in monkeys (Hafed & Chen, 2016) may suffice to drive what appears on the surface to be a preference for faces. In fact, the idea that infants are born with any specific face-related information has been ruled out by demonstrations showing that young infants do not definitively evince a face preference (Cassia, Turati, & Simion, 2004; Turati, Simion, Milani, & Umilta, 2002; for a review, see Morton & Johnson, 1991). Rather, the claim is that bottom-up information, especially with a low spatial frequency bias, might be sufficient to account for an early RH lateralization (see earlier also for an alternative but consistent suggestion of an early RH attentional advantage).

Patients With a Selective Impairment of Either Face or Word Recognition

In support of the claim of bilateral representations of words and faces, we have presented data from patients with a lesion to the RH or LH VOTC and have shown an impairment for the patients in the recognition of both stimulus classes (see Shared Representations With Weighting section), albeit in a weighted fashion, with a greater face deficit following a RH than LH lesion and vice versa for words (Behrmann & Plaut, 2014).

One finding that seems inconsistent with this distributed account is that there are case reports of patients who are impaired in their recognition of only one of the two stimulus classes, with some showing either “pure” alexia (Cohen & Dehaene, 2004) or “pure” prosopagnosia (Busigny et al., 2010; Hills, Pancaroglu, Duchaine, & Barton, 2015; Susilo, Wright, Tree, & Duchaine, 2015) and preservation of the other stimulus class, a pattern suggestive of independence of the domains. How might the distributed account explain the presence of a selective deficit restricted to just one of the visual classes with normal performance on the other?

One possible way in which this might occur is by virtue of individual differences in the nature of the weighted asymmetry of the hemispheres. These hemispheric differences, we have argued, are a direct consequence of the cooperative and competitive forces that ensue over development and these may play out differently in different individuals. As a means of exploring individual differences, we have collected pilot data from an fMRI study in which 14 participants viewed blocks of consecutively shown faces or words (or objects, houses, or scrambled patterns) in an n-back paradigm (participants pressed a response button if two consecutive images were the same). Figure 7 plots the difference in the BOLD response from a simple subtraction of RH minus LH face activation against the difference of LH minus RH word activation. As evident from this figure, there are considerable individual differences in the magnitude of the superiority of one hemisphere over the other. Although many of the points fall close to the diagonal, showing a balanced advantage for the “preferred” stimulus in each hemisphere, there are some cases where there is more of an imbalance. For example, in Figure 7, there is a participant who shows a 0.4 signal advantage for faces in the RH over the LH but a 0.2 advantage for words in the LH over the RH. There is also a second example case which shows the reverse pattern, with greater asymmetry for words than for faces. Although there is no case which shows a sufficiently large degree of laterality for any one domain, this is of course hypothetically possible in a full distribution. We propose, then, that it is likely that some (rare) individuals will fall in the tail of one of these distributions.
Depending on whether the absolute lateralization is strong for faces or for words, such an individual, following a lesion to the RH, might become selectively prosopagnosic or, following a lesion to the LH, might become selectively pure alexic and, of course, these kinds of “pure” cases are rare in their own right. An argument identical to this has already been offered in the literature in accounting for the presence or absence of surface dyslexia in individuals with semantic dementia (Woollams, Ralph, Plaut, & Patterson, 2007). Such an account can explain the common association between face and word processing abilities (and their weighted deficits after a unilateral hemispheric lesion) and can also account for the possibility of dissociations in the same population.
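The two subtractions plotted in Figure 7, and the departure of a point from the diagonal, can be written out as a small sketch. The function names and the four response magnitudes below are hypothetical; they are chosen only so that the indices reproduce the 0.4 and 0.2 values of the example case mentioned above, and they are not data from the pilot study.

```python
def lateralization_indices(face_rh, face_lh, word_rh, word_lh):
    """Return (face index, word index) from BOLD response magnitudes.

    The face index is RH minus LH and the word index is LH minus RH, so a
    positive value means an advantage for the "preferred" hemisphere.
    """
    face_index = face_rh - face_lh
    word_index = word_lh - word_rh
    return face_index, word_index


def imbalance(face_index, word_index):
    """Signed distance from a balanced profile (a point on the diagonal).

    Positive: faces are more strongly lateralized than words.
    Negative: words are more strongly lateralized than faces.
    """
    return face_index - word_index


# Invented response magnitudes for illustration only.
face_index, word_index = lateralization_indices(
    face_rh=1.0, face_lh=0.6, word_rh=0.7, word_lh=0.9)
print(round(face_index, 2), round(word_index, 2))
print(round(imbalance(face_index, word_index), 2))
```

On this reading, an individual in the tail of the distribution would be one whose index for one domain is large while the index for the other domain is near zero.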

Figure 7.

Face and word activation in an fMRI study with blocked presentation of faces and words. Hemispheric lateralization indices for faces (RH–LH) and words (LH–RH) using magnitude of BOLD responses from 15 individuals. LH = left hemisphere; RH = right hemisphere.

There is, in addition, another reasonably large group of individuals who appear to evince a selective impairment in face recognition (“congenital” or “developmental” prosopagnosia; CP for short). The face recognition disorder in such individuals can be as severe as that noted in individuals with a frank RH VOTC lesion and, like those individuals, they rely on voice and other cues such as hairstyle to support individual face recognition (Avidan & Behrmann, 2014; Avidan et al., 2014; Barton, Albonico, Susilo, Duchaine, & Corrow, 2019; Rosenthal et al., 2017). In contrast with the association of word and face recognition deficits in DD, as reviewed in the Shared Representations With Weighting section above, there are now several studies of individuals with CP which have demonstrated a dissociation between face and word recognition (Burns et al., 2017; Rubino, Corrow, Corrow, Duchaine, & Barton, 2016; Starrfelt, Klargaard, Petersen, & Gerlach, 2018).

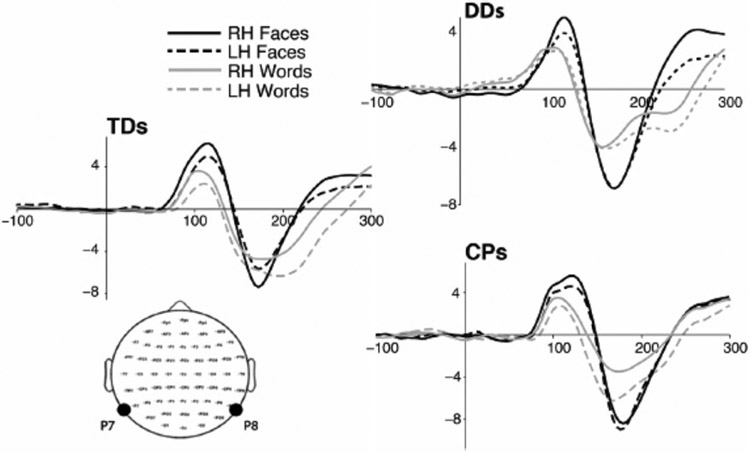

We suggest that, from a theoretical perspective, both the dissociation between face and word processing in CP and their association in DD may be explained within the distributed hemispheric account. On this account, if the acquisition of word recognition is impaired by, for example, a phonological deficit, as in DD, the initial trigger for lateralization, namely, the optimizing of the LH to connect visual and language areas, will not be present. In the absence of this tuning for words in the LH, the competition that drives the lateralization of faces will not arise. In this scenario, both face and word recognition would be adversely impacted and their hemispheric organization affected. If, however, it is face recognition that is initially affected, the acquisition of word recognition can proceed apace and be preserved. The argument then is that there is a chronological sequence: Face lateralization is contingent on the process of preserved literacy acquisition but not vice versa. In an empirical study to examine this claim of temporal staging and the differential reliance of face lateralization on word lateralization, we collected data from two groups of adults, those with DD and those with CP, and from matched control participants using the same behavioral and ERP paradigm shown in Figures 3 and 4.

As depicted in Figure 8, relative to the typically developed controls, who evince a more negative waveform to faces over the RH than LH and a more negative waveform to words over the LH than RH in the expected N170 time window, the DD individuals showed no asymmetries over the LH or RH for either words or faces, as would be expected if face lateralization is contingent on normal lateralized word acquisition. In contrast, in the CP individuals, the ERP waveforms for faces showed no hemispheric differences, but there was a greater negative component over the LH than RH for words in the expected time window, as in the controls. This differential relationship between face and word hemispheric lateralization is borne out by the differences between the DD and CP individuals, and the observed asymmetry is predicted a priori by the distributed account.

Figure 8.

Group averaged ERP waveforms (−100 to 300 ms) measured from the P7 (LH) and P8 (RH) electrodes in TD controls, DD adults, and congenital prosopagnosic adults in response to faces and words. Whereas there is a greater N170 waveform for faces over the RH and words over the LH for the TD controls, the DD group showed neither a RH nor a LH advantage for faces and words, respectively, while the CP group showed the LH advantage for words but no hemispheric advantage for faces. TD = typically developed; DD = developmental dyslexic; CP = congenital prosopagnosic.
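One way to quantify the hemispheric asymmetry described in this caption is to compare the mean amplitude at P8 (RH) and P7 (LH) within an N170 window. The following is a minimal sketch only: the 150 to 200 ms window, the function names, and the waveform values are assumptions made for illustration and are not taken from the study.

```python
def mean_amplitude(times_ms, amplitudes_uv, window=(150, 200)):
    """Mean amplitude (microvolts) of the samples that fall inside the window.

    The default 150-200 ms window is an assumed N170 window, not the study's.
    """
    selected = [a for t, a in zip(times_ms, amplitudes_uv)
                if window[0] <= t <= window[1]]
    if not selected:
        raise ValueError("no samples fall inside the requested window")
    return sum(selected) / len(selected)


def hemispheric_asymmetry(times_ms, p7_uv, p8_uv, window=(150, 200)):
    """P8 (RH) minus P7 (LH) mean amplitude.

    Negative values mean a more negative deflection over the RH;
    positive values mean a more negative deflection over the LH.
    """
    return (mean_amplitude(times_ms, p8_uv, window)
            - mean_amplitude(times_ms, p7_uv, window))


# Invented waveforms sampled every 50 ms from -100 to 300 ms (not real ERP data).
times = list(range(-100, 301, 50))
faces_p7 = [0, 0, 0, 1, 2, -2, -1, 0, 0]
faces_p8 = [0, 0, 0, 1, 2, -4, -3, 0, 0]

face_asymmetry = hemispheric_asymmetry(times, faces_p7, faces_p8)
print(f"faces, P8 - P7 in N170 window: {face_asymmetry:.1f} microvolts")
```

With these invented values the result is negative, the direction the caption describes for faces in TD controls; a value near zero would correspond to the absence of a hemispheric advantage.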

The Nature of Bilateral Hemispheric Representation of Faces and Words

We have suggested that representations of both faces and words exist in both hemispheres and that these bilateral representations play a functional role: a lesion to either hemisphere results in a recognition deficit for both classes of stimuli (albeit weighted depending on which hemisphere is affected; Behrmann & Plaut, 2014; see the Shared Representations With Weighting section above). Thus far, we have not characterized the information content of the representations in each hemisphere and, in particular, have not evaluated whether these representations are the same or not (see section on Moving Forward). One existing proposal suggests that they are not. Barton and colleagues have shown that patients with left fusiform lesions and alexia do have face recognition deficits, but that the deficit is primarily for lip-reading and not for face recognition per se. In complementary fashion, they showed that patients with right fusiform lesions and prosopagnosia do have difficulty reading, but the problem is not in word recognition itself and is, rather, in identifying the font or handwriting of the text (Barton, Fox, Sekunova, & Iaria, 2010; Barton, Sekunova, et al., 2010; Hills et al., 2015). Albonico and Barton (2017) offer a potential resolution to the apparent inconsistency. They conclude that, in addition to their role in word recognition, left VOTC regions participate in face recognition. The key claim is that the left fusiform area codes or represents linear contours at higher spatial frequency, and thus damage affects both word recognition and the processing of facial speech patterns. Whether a general process is also at play in the right fusiform area that would give rise to both prosopagnosia and a deficit in orthographic processing is yet to be determined.

The proposal in favor of LH lip-reading and RH font-perception is not necessarily at odds with the claim of bihemispheric representation of words and faces. Rather, the representation of individual faces and words, as we have suggested, may coexist with the processes engaged in lip-reading in the LH and font-detection in the RH. Clearly, further investigations remain to be conducted to confirm and elucidate this coexistence of processes.

Moving Forward

As we point out in several places throughout this article, much research remains to be done. One obvious line of investigation concerns the representational format of individual faces and words in the two hemispheres, and the extent to which they are the same or different. A multivariate analysis of BOLD data collected when observers view faces and words will be helpful in shedding light on this issue.

Another line of future study concerns the individual differences in hemispheric organization we have outlined in the Nature of Bilateral Hemispheric Representation of Faces and Words section. One hypothesis is that the mature hemispheric profile is an emergent function of the competition for representation in the two hemispheres. But what determines the nature and extent of the competition? One possible factor that might constrain this competition is the inter- as well as intrahemispheric structural connectivity. The prediction is that, across individuals, as the volume of the corpus callosum increases (with the capacity for fast and detailed interhemispheric transmission), word and face representations should become more evenly bilateral. Those with less callosal volume, in contrast, will evince more unilateral specialization for words in the LH and faces in the RH. Relatedly, in those with greater within-hemisphere connectivity (e.g., by virtue of greater integrity of the inferior fronto-occipital fasciculus), we would predict a more unilateral VWFA as a result of stronger connections between left fusiform and left language areas. Through the competitive dynamics we have described, these differential patterns of connectivity would lead to greater lateralization of face representations to the RH. Those with less within-hemisphere connectivity might, then, have more balanced bilateral representations. These claims regarding the relative roles of between- and within-hemisphere connectivity remain speculative, but if these predictions are upheld, they would further consolidate the distributed account of hemispheric organization and the nature of the interdependence of face and word representations.

There is much to be learned about the functional organization of the hemispheres and the manner in which this organization emerges over development. We have offered a framework within which to begin to outline a possible mechanism, and we expect that further tests of its predictions, as well as future challenges, will help refine and extend this framework. We recognize that the description of the findings to date may appear to suffer from a confirmatory bias, and that the theory articulated may be viewed as unnecessarily complex. But this theoretical framework is subject to challenge, as demonstrated earlier, and there are many ways in which the model might be tested further and refuted by new findings. The goal of this study has been to lay out a computational account and empirical findings that examine the emergence of, and constraints on, the pattern of hemispheric organization of human ventral occipitotemporal cortex. This account has offered a number of testable predictions and, based on future investigations, is subject to modification or, if necessary, refutation. Above all, we hope that our focus on principles of hemispheric organization of human visual recognition helps to move the field forward.

Funding

The author(s) received no financial support for the research, authorship, and/or publication of this article.

Footnotes

Declaration of Conflicting Interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

We approximate the brain as $10^{11}$ neurons uniformly distributed within a sphere of radius 6.6 cm and connected by straight axons with cross-sectional radius of 0.1 μm (ignoring overlap). As the average distance between two random points within a sphere of radius $r$ is $\frac{36}{35}r$, the average volume of an axon is $\frac{36}{35}\,(6.6 \times 10^{-2}\,\text{m})\,\pi\,(0.1 \times 10^{-6}\,\text{m})^{2} \cong 2.13 \times 10^{-15}\,\text{m}^{3}$. Thus, the total volume of $10^{22}$ axons (full connectivity) is $2.13 \times 10^{7}\,\text{m}^{3}$ = 21.3 million cubic meters. Even connecting each neuron to only $10^{4}$ others, as is roughly true in the brain, would require 2.13 cubic meters of axon volume if connections were distributed randomly rather than mostly locally.
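The arithmetic in this footnote can be checked with a short script. The sketch below assumes the standard result that the mean distance between two random points in a ball of radius r is 36r/35, and treats each axon as a straight cylinder; the function and variable names are illustrative only.

```python
import math


def mean_axon_volume_m3(brain_radius_m, axon_radius_m):
    """Volume of a straight cylindrical axon of average length.

    Average length is taken as 36/35 times the brain radius, the mean
    distance between two uniformly random points inside a ball.
    """
    mean_length_m = 36 / 35 * brain_radius_m
    return mean_length_m * math.pi * axon_radius_m ** 2


NEURONS = 1e11                # approximate neuron count used in the footnote
BRAIN_RADIUS_M = 6.6e-2       # 6.6 cm
AXON_RADIUS_M = 0.1e-6        # 0.1 micrometers

axon_volume = mean_axon_volume_m3(BRAIN_RADIUS_M, AXON_RADIUS_M)

# Full connectivity: roughly one axon per ordered pair of neurons (10^22 axons).
full_volume = NEURONS * NEURONS * axon_volume

# Sparse but randomly placed connectivity: 10^4 connections per neuron.
sparse_volume = NEURONS * 1e4 * axon_volume

print(f"average axon volume: {axon_volume:.3g} cubic meters")
print(f"full connectivity:   {full_volume:.3g} cubic meters")
print(f"10^4 per neuron:     {sparse_volume:.3g} cubic meters")
```

The three printed values correspond to the per-axon volume, the full-connectivity total, and the sparse random-connectivity total quoted in the footnote.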

Based on the Perception Lecture delivered at the 42nd European Conference on Visual Perception, Leuven, 25 August 2019.

References

- Adibpour P, Dubois J, & Dehaene-Lambertz G (2018). Right but not left hemispheric discrimination of faces in infancy. Nature Human Behaviour, 2, 67–79. doi: 10.1038/s41562-017-0249-4

- Albonico A, & Barton JJS (2017). Face perception in pure alexia: Complementary contributions of the left fusiform gyrus to facial identity and facial speech processing. Cortex, 96, 59–72. doi: 10.1016/j.cortex.2017.08.029

- Allison T, McCarthy G, Nobre AC, Puce A, & Belger A (1994). Human extrastriate visual cortex and the perception of faces, words, numbers and colors. Cerebral Cortex, 5, 544–554.

- Allison T, Puce A, Spencer DD, & McCarthy G (1999). Electrophysiological studies of human face perception. I: Potentials generated in occipitotemporal cortex by face and non-face stimuli. Cerebral Cortex, 9, 415–430.

- Almeida J, Mahon BZ, & Caramazza A (2010). The role of the dorsal visual processing stream in tool identification. Psychological Science, 21, 772–778. doi: 10.1177/0956797610371343

- Amunts K, & Zilles K (2015). Architectonic mapping of the human brain beyond Brodmann. Neuron, 88, 1086–1107. doi: 10.1016/j.neuron.2015.12.001

- Appelbaum LG, Liotti M, Perez R, Fox SP, & Woldorff MG (2009). The temporal dynamics of implicit processing of non-letter, letter, and word-forms in the human visual cortex. Frontiers in Human Neuroscience, 3, 56. doi: 10.3389/neuro.09.056.2009

- Arcaro MJ, Schade PF, & Livingstone MS (2019a). Universal mechanisms and the development of the face network: What you see is what you get. Annual Review of Vision Science, 5, 341–372. doi: 10.1146/annurev-vision-091718-014917

- Arcaro MJ, Schade PF, & Livingstone MS (2019b). Body map proto-organization in newborn macaques. Proceedings of the National Academy of Sciences of the United States of America, 116, 24861–24871. doi: 10.1073/pnas.1912636116

- Asperud J, Kühn CD, Gerlach C, Delfi TS, & Starrfelt R (2019). Word recognition and face recognition following posterior cerebral artery stroke: Overlapping networks and selective contributions. Visual Cognition, 1, 52–65.

- Avidan G, & Behrmann M (2014). Impairment of the face processing network in congenital prosopagnosia. Frontiers in Bioscience (Elite Edition), 6, 236–257.

- Avidan G, Tanzer M, Hadj-Bouziane F, Liu N, Ungerleider LG, & Behrmann M (2014). Selective dissociation between core and extended regions of the face processing network in congenital prosopagnosia. Cerebral Cortex, 24, 1565–1578. doi: 10.1093/cercor/bht007

- Barton JJS (2011). Disorder of higher visual function. Current Opinion in Neurology, 24, 1–5. doi: 10.1097/WCO.0b013e328341a5c2

- Barton JJS, Albonico A, Susilo T, Duchaine B, & Corrow SL (2019). Object recognition in acquired and developmental prosopagnosia. Cognitive Neuropsychology, 36, 54–84. doi: 10.1080/02643294.2019.1593821

- Barton JJS, Fox CJ, Sekunova A, & Iaria G (2010). Encoding in the visual word form area: An fMRI adaptation study of words versus handwriting. Journal of Cognitive Neuroscience, 22, 1649–1661. doi: 10.1162/jocn.2009.21286

- Barton JJS, Sekunova A, Sheldon C, Johnston S, Iaria G, & Scheel M (2010). Reading words, seeing style: The neuropsychology of word, font and handwriting perception. Neuropsychologia, 48, 3868–3877. doi: 10.1016/j.neuropsychologia.2010.09.012

- Barttfeld P, Abboud S, Lagercrantz H, Aden U, Padilla N, Edwards AD, … Dehaene-Lambertz G (2018). A lateral-to-mesial organization of human ventral visual cortex at birth. Brain Structure and Function. doi: 10.1007/s00429-018-1676-3

- Behrmann M, & Plaut DC (2013). Distributed circuits, not circumscribed centers, mediate visual recognition. Trends in Cognitive Sciences, 17, 210–219. doi: 10.1016/j.tics.2013.03.007