Abstract

Selecting appropriate word embedding and deep learning models is vital for achieving good outcomes. Word embeddings are n-dimensional distributed representations of text that attempt to capture the meanings of words. Deep learning models use multiple computing layers to learn hierarchical representations of data. Word embedding techniques combined with deep learning have received much attention and are used in various natural language processing (NLP) applications, such as text classification, sentiment analysis, named entity recognition, and topic modeling. This paper reviews representative methods of the most prominent word embedding and deep learning models. It presents an overview of recent research trends in NLP and a detailed understanding of how to use these models to achieve efficient results on text analytics tasks. The review summarizes, contrasts, and compares numerous word embedding and deep learning models and includes a list of prominent datasets, tools, APIs, and popular publications. Based on a comparative analysis of different techniques for text analytics tasks, a reference for selecting a suitable word embedding and deep learning approach is presented. This paper can serve as a quick reference for learning the basics, benefits, and challenges of various word representation approaches and deep learning models, along with their application to text analytics and a future research outlook. The findings of this study indicate that domain-specific word embeddings and the long short-term memory (LSTM) model can be employed to improve overall text analytics task performance.

Keywords: Word embedding, Natural language processing, Deep learning, Text analytics

Introduction

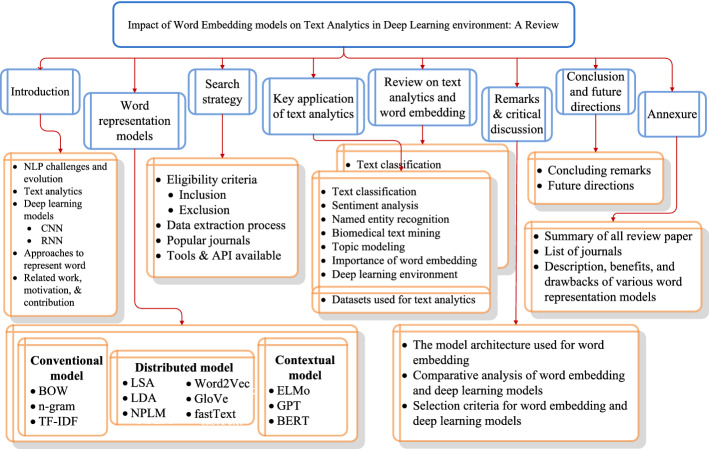

This research investigates the efficacy of word embedding in a deep learning environment for conducting text analytics tasks and summarizes the significant aspects. A systematic literature review provides an overview of existing word embedding and deep learning models. The overall structure of the paper is shown in Fig. 1.

Fig. 1.

Overall structure of the paper

Natural language processing (NLP)

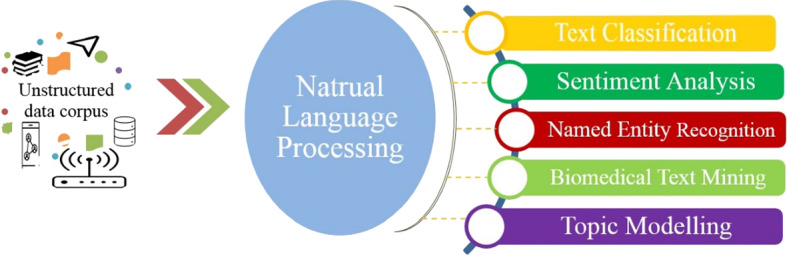

NLP is a branch of linguistics, computer science, and artificial intelligence concerned with the interaction between computers and humans, in particular how to program computers to process and analyze large volumes of natural language data. NLP combines rule-based modeling of human language from computational linguistics with statistical, machine learning, and deep learning models. Speech recognition, natural language understanding (NLU), and natural language generation (NLG) are common challenges in NLP, as shown in Fig. 2. These technologies allow computers to understand and process human language.

Fig. 2.

Challenges and evolution of natural language processing

NLP research has progressed from punch cards and batch processing to the era of Google and other search engines, where millions of web pages can be analyzed in under a second. NLP has evolved from symbolic to statistical to neural approaches. Owing to technological advances, increased computing power, and the abundant availability of corpora, many NLP applications now use deep neural network architectures and achieve state-of-the-art results (Young et al. 2018) (Lavanya and Sasikala 2021).

Text analytics

Most text data is unstructured and dispersed across the internet. This data can yield useful knowledge if it is properly collected, aggregated, formatted, and analyzed. Text analytics can benefit corporations, organizations, and social movements in various ways. The simplest way to perform text analytics tasks is to use manually specified rules that match keywords. However, the performance of such rules deteriorates in the presence of polysemous words, which have different meanings in different contexts. Text analytics therefore uses machine learning, deep learning, and NLP methods to extract meaning from large quantities of text. Businesses can use these insights to improve profitability, customer satisfaction, innovation, and even public safety. Techniques for analyzing unstructured text include text classification, sentiment analysis, named entity recognition (NER) and recommendation systems, biomedical text mining, and topic modeling, among others, as shown in Fig. 3. Each of these techniques is used in a variety of contexts.

Fig. 3.

NLP techniques

Deep learning models

Deep learning methods have become increasingly popular in NLP in recent years. Artificial neural networks (ANNs) with several hidden layers between the input and output layers are known as deep neural networks (DNNs). This survey reviews 193 articles published in the last three years that focus on word embedding and deep learning models for various text analytics tasks. Deep learning models are categorized by their network topology, such as recurrent neural networks (RNNs) and convolutional neural networks (CNNs). RNNs detect patterns over time, while CNNs identify patterns over space.

Convolutional neural networks

CNNs have achieved many successes in image processing and computer vision. The basic architecture of a CNN is depicted in Fig. 4. A CNN consists of several layers: an input layer, a convolutional layer, a pooling layer, and a fully connected layer. The input layer receives the image pixel values and passes them to the convolutional layer. The convolutional layer computes its output by applying kernels (filters), and this output is passed to the pooling layer. The pooling layer reduces the size of the representation and speeds up computation. CNNs readily recognize local patterns regardless of where they occur in the input; in text, such patterns could be key phrases or sentences that indicate a specific objective. As a result, CNNs have grown in popularity as a model architecture for text analytics.

Fig. 4.

Architecture of CNN
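To make the convolution and pooling steps concrete for text, the following NumPy sketch applies a small text CNN to a sentence represented as a matrix of word embeddings (one row per word). It assumes a single filter width, ReLU activation, max-over-time pooling, and a softmax output layer; the function names, sizes, and random weights are hypothetical and are not taken from any of the reviewed studies.

```python
import numpy as np


def conv1d_text(x, filters, bias):
    """Valid 1-D convolution over word positions.

    x: (seq_len, emb_dim) sentence matrix, one embedding per word
    filters: (n_filters, k, emb_dim), each filter spans k consecutive words
    bias: (n_filters,)
    returns: (seq_len - k + 1, n_filters) feature maps
    """
    n_filters, k, _ = filters.shape
    steps = x.shape[0] - k + 1
    out = np.empty((steps, n_filters))
    for t in range(steps):
        window = x[t:t + k]  # the k-word window starting at position t
        out[t] = np.tensordot(filters, window, axes=([1, 2], [0, 1])) + bias
    return out


def text_cnn_forward(x, filters, bias, w_fc, b_fc):
    """Convolution -> ReLU -> max-over-time pooling -> fully connected -> softmax."""
    feature_maps = np.maximum(conv1d_text(x, filters, bias), 0.0)
    pooled = feature_maps.max(axis=0)  # strongest response of each filter anywhere in the text
    logits = w_fc @ pooled + b_fc
    exp = np.exp(logits - logits.max())  # numerically stable softmax
    return exp / exp.sum()


rng = np.random.default_rng(0)
x = rng.normal(size=(7, 4))           # 7 words, hypothetical 4-dimensional embeddings
filters = rng.normal(size=(3, 2, 4))  # 3 filters spanning 2 words (bigram detectors)
w_fc = rng.normal(size=(2, 3))        # 2 output classes
probs = text_cnn_forward(x, filters, np.zeros(3), w_fc, np.zeros(2))
print(probs)
```

Because max pooling keeps only the largest activation of each filter, a key phrase produces the same pooled feature wherever it appears in the sentence, which is why CNNs pick up local patterns regardless of their location.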

Recurrent neural networks

RNN models treat text as a sequence of words and are designed to capture word relationships and sentence patterns for text analytics. A typical representation of an RNN and backpropagation through time is shown in Fig. 5. At time t, the RNN receives the input $x_t$ and computes the network output $y_t$. In addition to the output, it updates its internal hidden state vector $h_t$ and passes this state from the current time step to the next. The recurrence that maintains the internal state is given by Eq. (1).

$$h_t = f_W\left(h_{t-1}, x_t\right) \tag{1}$$

Fig. 5.

A typical representation of RNN

where $h_t$ is the current state of the cell, $f_W$ is a function parameterized by a set of weights W, and $h_{t-1}$ is the previous state. $W_{xh}$ is the weight matrix that transforms the input to the hidden state, $W_{hh}$ is the weight matrix that transforms the previous hidden state to the next hidden state, and $W_{hy}$ is the weight matrix that transforms the hidden state to the output.

The RNN passes the intermediate information through a non-linear transformation function such as tanh, as shown in Eq. (2). The intermediate output is then passed through the softmax function, which outputs values between 0 and 1 that sum to 1, as represented by Eq. (3). RNNs use the backpropagation through time (BPTT) algorithm to learn from the data sequence and improve their predictions. Backpropagation is the recursive application of the chain rule; BPTT computes the total loss L as the sum of the per-time-step losses, as represented in Eq. (4) and shown in Fig. 5, and propagates its gradient back through the unrolled time steps. RNNs suffer from the vanishing and exploding gradient problems. The vanishing gradient problem can be addressed using the gated recurrent unit (GRU) or long short-term memory (LSTM) network architectures.

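$$h_t = \tanh\left(W_{hh}\, h_{t-1} + W_{xh}\, x_t\right) \tag{2}$$

$$y_t = \operatorname{softmax}\left(W_{hy}\, h_t\right) \tag{3}$$

$$L = \sum_{t=1}^{T} L_t \tag{4}$$

where $L_t$ is the loss at time step t.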
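The forward pass of Eqs. (2)–(4) can be sketched in NumPy as below. This is a minimal illustration under stated assumptions: biases are omitted as in Eq. (2), the per-step loss $L_t$ is assumed to be cross-entropy, and the function name and sizes are hypothetical. The BPTT gradients are not written out; deep learning frameworks usually obtain them by automatic differentiation through this unrolled loop.

```python
import numpy as np


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


def rnn_forward(xs, targets, W_xh, W_hh, W_hy, h0):
    """Unroll a vanilla RNN over a sequence.

    xs: (T, input_dim) inputs x_1..x_T; targets: T class indices
    Returns the hidden states, the output distributions and the total loss L.
    """
    h = h0
    hs, ys, loss = [], [], 0.0
    for x_t, target in zip(xs, targets):
        h = np.tanh(W_hh @ h + W_xh @ x_t)  # Eq. (2): new hidden state from h_{t-1} and x_t
        y = softmax(W_hy @ h)               # Eq. (3): output distribution y_t
        loss += -np.log(y[target])          # L_t, assumed here to be cross-entropy
        hs.append(h)
        ys.append(y)
    return hs, ys, loss                     # Eq. (4): L is the sum of the L_t


rng = np.random.default_rng(1)
T, n_in, n_hidden, n_out = 5, 3, 4, 3
xs = rng.normal(size=(T, n_in))
targets = [0, 1, 2, 0, 1]
W_xh = rng.normal(scale=0.5, size=(n_hidden, n_in))
W_hh = rng.normal(scale=0.5, size=(n_hidden, n_hidden))
W_hy = rng.normal(scale=0.5, size=(n_out, n_hidden))
hs, ys, loss = rnn_forward(xs, targets, W_xh, W_hh, W_hy, np.zeros(n_hidden))
print(len(hs), loss)
```

The same weight matrices are reused at every time step, which is what lets an RNN process sequences of any length; it is also why gradients are multiplied through $W_{hh}$ repeatedly during BPTT and can vanish or explode.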

In an LSTM cell at time t, the input vector $x_t$ is passed through three gates (input, forget, and output), which, together with the hidden state and the cell state, control the flow of information. The LSTM architecture is shown in Fig. 6. The input gate $i_t$ receives the input signal and controls how the current cell state is updated, using Eq. (5).

Fig. 6.

The architecture of LSTM

The forget gate $f_t$ is computed using Eq. (6) and removes irrelevant information from the cell state. The output gate $o_t$ is computed using Eq. (7) and controls the output sent to the network at the next step. σ denotes the sigmoid function, tanh the hyperbolic tangent function, and the ⊙ operator the element-wise product. The input modulation gate $m_t$ is given by Eq. (8). Using weight matrices W and bias vectors b, the cell state $c_t$ at time t is updated as defined by Eq. (9). The network then updates the hidden state using these memory units, as shown in Eq. (10).

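$$i_t = \sigma\left(W_{xi}\, x_t + W_{hi}\, h_{t-1} + b_i\right) \tag{5}$$

$$f_t = \sigma\left(W_{xf}\, x_t + W_{hf}\, h_{t-1} + b_f\right) \tag{6}$$

$$o_t = \sigma\left(W_{xo}\, x_t + W_{ho}\, h_{t-1} + b_o\right) \tag{7}$$

$$m_t = \tanh\left(W_{xm}\, x_t + W_{hm}\, h_{t-1} + b_m\right) \tag{8}$$

$$c_t = f_t \odot c_{t-1} + i_t \odot m_t \tag{9}$$

$$h_t = o_t \odot \tanh\left(c_t\right) \tag{10}$$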
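A single LSTM time step can be sketched directly from the gate equations. In this NumPy sketch the dictionaries `W`, `U`, and `b` are a hypothetical packaging of the parameters: `W[g]` plays the role of $W_{xg}$, `U[g]` of $W_{hg}$, and `b[g]` of $b_g$ for each gate g. Many libraries instead stack all gate weights into one matrix for speed; they are kept separate here only to mirror Eqs. (5)–(10).

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step following Eqs. (5)-(10).

    W[g], U[g], b[g]: input weights, recurrent weights and bias for the gates
    g = 'i' (input), 'f' (forget), 'o' (output) and 'm' (input modulation).
    """
    pre = {g: W[g] @ x_t + U[g] @ h_prev + b[g] for g in "ifom"}
    i_t = sigmoid(pre["i"])         # Eq. (5)
    f_t = sigmoid(pre["f"])         # Eq. (6)
    o_t = sigmoid(pre["o"])         # Eq. (7)
    m_t = np.tanh(pre["m"])         # Eq. (8)
    c_t = f_t * c_prev + i_t * m_t  # Eq. (9); * is the element-wise product
    h_t = o_t * np.tanh(c_t)        # Eq. (10)
    return h_t, c_t


rng = np.random.default_rng(2)
n_in, n_hidden = 3, 4
W = {g: rng.normal(scale=0.5, size=(n_hidden, n_in)) for g in "ifom"}
U = {g: rng.normal(scale=0.5, size=(n_hidden, n_hidden)) for g in "ifom"}
b = {g: np.zeros(n_hidden) for g in "ifom"}
h, c = np.zeros(n_hidden), np.zeros(n_hidden)
for x_t in rng.normal(size=(6, n_in)):  # run the cell over a 6-step sequence
    h, c = lstm_step(x_t, h, c, W, U, b)
print(h, c)
```

Because the cell state is updated additively in Eq. (9) rather than being squashed through a non-linearity at every step, gradients can flow across many time steps, which is how the LSTM mitigates the vanishing gradient problem.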

Word to vector representation models

Recent breakthroughs in deep learning have significantly improved several NLP tasks that deal with text semantic analysis, such as text classification, sentiment analysis, NER and recommendation systems, biomedical text mining, and topic modeling. Pre-trained word embeddings are fixed-length vector representations of words that capture generic phrase semantics and linguistic patterns in natural language. Researchers have proposed various methods for obtaining such representations. Word embedding has been shown to be helpful in multiple NLP applications (Moreo et al. 2021).

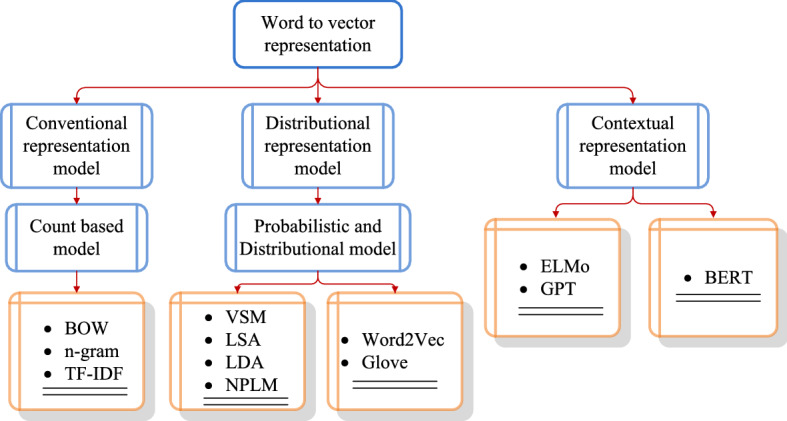

Word embedding techniques can be categorized into conventional, distributional, and contextual word embedding models, as shown in Fig. 7. Conventional word embedding models, also called count-based or frequency-based models, include bag-of-words (BoW), n-gram, and term frequency-inverse document frequency (TF-IDF) models. Distributional word embedding models, also called static word embedding models, consist of probabilistic-distributional models such as the vector space model (VSM), latent semantic analysis (LSA), latent Dirichlet allocation (LDA), the neural probabilistic language model (NPLM), word to vector (Word2Vec), global vectors (GloVe), and fastText. Contextual word embedding models are classified into auto-regressive and auto-encoding models, such as embeddings from language models (ELMo), generative pre-training (GPT), and bidirectional encoder representations from transformers (BERT).

Fig. 7.

Approaches to represent a word

Related work

Selecting an effective word embedding and deep learning approach for text analytics is difficult because datasets vary in size, type, and purpose. Researchers have presented different word embedding models to describe a word's meaning effectively and provide embeddings for downstream processing. Word embedding models have improved over the years to better represent out-of-vocabulary words and to capture the meaning of words in context. Previous studies have shown that deep learning models can successfully predict outcomes by deriving significant patterns from data (Wang et al. 2020).

Systematic studies on deep learning-based emotion analysis (Xu et al. 2020), deep learning-based text classification (Dogru et al. 2021), and the training and evaluation of word embeddings (Torregrossa et al. 2021) compare the performance of word embedding and deep learning models for domain-specific tasks. These studies also give an overview of other related approaches used for similar tasks. The focus of this research is to explore the effectiveness of word embeddings in a deep learning environment for performing text analytics tasks and to recommend their use based on the key findings.

Motivation and contributions

The primary motivation of this study is to cover recent research trends in NLP and to provide a detailed understanding of how to use word embedding and deep learning models to achieve efficient results on text analytics tasks. Existing systematic studies of word embedding models and deep learning approaches focus on specific applications. However, none of them provides a reference for selecting suitable word embedding and deep learning models for text analytics tasks or presents their strengths and weaknesses.

The key contributions of this paper are as follows:

This study examines the contributions of researchers to the overall development of word embedding models and their different NLP applications.

A systematic literature review is done to develop a comprehensive overview of existing word embedding and deep learning models.

The relevant literature is classified according to criteria to review the essential uses of text analytics and word embedding techniques.

The study explores the effectiveness of word embedding in a deep learning environment for performing text analytics tasks and discusses the key findings. The review includes a list of prominent datasets, tools, and APIs available and a list of notable publications.

A reference for selecting a suitable word embedding approach for text analytics tasks is presented based on a comparative analysis of different word embedding techniques to perform text analytics tasks. The comparative analysis is presented in both tabular and graphical forms.

This paper provides a concise overview of the fundamentals, advantages, and challenges of various word representation approaches and deep learning models, as well as a perspective on future research.

The overall structure of the paper is shown in Fig. 1. Section 1 introduces the overview of NLP techniques for performing text analytics tasks, deep learning models, approaches to represent word to vector form, related work, motivation, and key contribution of the study. Section 2 presents the overall development of word embedding models. Section 3 explains the methodology of the conducted systematic literature review. It also covers the eligibility criteria, data extraction process, list of popular journals, and available tools and API. Sections 4 and 5 discuss studies on significant text analytics applications, word embedding models, and deep learning environments. Section 6 discusses a comparative analysis and a reference for selecting a suitable word embedding approach for text analytics tasks. Section 7 concludes the paper with a summary and recommendations for future work, followed by Annexures A and B, which contain an overview of all review papers and the benefits and challenges of various word embedding models.

Word representation models

This section will examine the techniques for word embedding training, describing how they function and how they differ from one another.

Conventional word representation models

Bag of words

The BoW model is a simplifying representation used in NLP and information retrieval. A text is represented as an unordered collection of its words, disregarding grammar and even word order. For text categorization, each word in a document is given a weight based on how frequently it appears in the document and how frequently it appears in different documents. The BoW representations of two statements, consisting of words and their weights, are as follows.

Statement 1: One cat is sleeping, and the other one is running.

Statement 2: One dog is sleeping, and the other one is eating.

| | One | Cat | Is | Sleeping | And | The | Other | Dog | Running | Eating |
|---|---|---|---|---|---|---|---|---|---|---|
| S1 | 2 | 1 | 2 | 1 | 1 | 1 | 1 | 0 | 1 | 0 |
| S2 | 2 | 0 | 2 | 1 | 1 | 1 | 1 | 1 | 0 | 1 |

The two statements contain ten distinct words, so each statement is represented as a ten-element vector. Statement 1 is represented by [2,1,2,1,1,1,1,0,1,0], and Statement 2 by [2,0,2,1,1,1,1,1,0,1]. Each vector element is the count of the corresponding dictionary word in the statement.

BoW has several limitations. Its vectors are sparse, and for long sentences it takes considerable time to obtain the vector representation and to compute sentence similarity. Frequent words dominate: the more often a word occurs, the higher its count, which inflates similarity scores. BoW ignores word order, so it can generate the same vector for sentences with entirely different meanings, losing their contextual meaning. It also cannot handle out-of-vocabulary (unseen) words.
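The BoW vectors of the two example statements can be reproduced with a few lines of Python. This sketch assumes lower-casing and punctuation removal as preprocessing and fixes the dictionary order to match the table; the function names are hypothetical.

```python
import re
from collections import Counter


def tokenize(text):
    """Lower-case the text and keep alphabetic tokens (punctuation is dropped)."""
    return re.findall(r"[a-z]+", text.lower())


def bow_vector(text, vocab):
    """Count of each vocabulary word in the text; word order is discarded."""
    counts = Counter(tokenize(text))
    return [counts[word] for word in vocab]  # Counter returns 0 for absent words


vocab = ["one", "cat", "is", "sleeping", "and", "the", "other", "dog", "running", "eating"]
s1 = "One cat is sleeping, and the other one is running."
s2 = "One dog is sleeping, and the other one is eating."
v1, v2 = bow_vector(s1, vocab), bow_vector(s2, vocab)
print(v1)  # [2, 1, 2, 1, 1, 1, 1, 0, 1, 0]
print(v2)  # [2, 0, 2, 1, 1, 1, 1, 1, 0, 1]
```

The same function also exhibits two of the limitations listed above: a shuffled word sequence such as "running is the other one and one is sleeping cat" yields exactly the same vector as Statement 1, and any word outside `vocab` is silently ignored.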

n-grams

An n-gram is a contiguous sequence of n tokens. For n = 1, 2, and 3, the sequences are called 1-grams, 2-grams, and 3-grams, also known as unigrams, bigrams, and trigrams. The n-gram model divides a sentence into word-level or character-level tokens. Consider two statements:

Statement-1: One cat is sleeping, and the other one is running.

Statement-2: One dog is sleeping, and the other one is eating.

The word-level and character-level unigram and bigram representations are shown in the example below, where _ denotes a space and punctuation is ignored.

| n-gram | Level | Statement 1 | Statement 2 |
|---|---|---|---|
| 1-gram (unigram) | Word | [One, cat, is, sleeping, and, the, other, one, is, running] | [One, dog, is, sleeping, and, the, other, one, is, eating] |
| 1-gram (unigram) | Character | [O, n, e, _, c, a, t, _, i, s, _, s, l, e, e, p, i, n, g, _, a, n, d, _, t, h, e, _, o, t, h, e, r, _, o, n, e, _, i, s, _, r, u, n, n, i, n, g] | [O, n, e, _, d, o, g, _, i, s, _, s, l, e, e, p, i, n, g, _, a, n, d, _, t, h, e, _, o, t, h, e, r, _, o, n, e, _, i, s, _, e, a, t, i, n, g] |
| 2-gram (bigram) | Word | [One cat, cat is, is sleeping, sleeping and, and the, the other, other one, one is, is running] | [One dog, dog is, is sleeping, sleeping and, and the, the other, other one, one is, is eating] |
| 2-gram (bigram) | Character | [On, ne, e_, _c, ca, at, t_, _i, is, s_, _s, sl, le, ee, ep, pi, in, ng, g_, _a, an, nd, d_, _t, th, he, e_, _o, ot, th, he, er, r_, _o, on, ne, e_, _i, is, s_, _r, ru, un, nn, ni, in, ng] | [On, ne, e_, _d, do, og, g_, _i, is, s_, _s, sl, le, ee, ep, pi, in, ng, g_, _a, an, nd, d_, _t, th, he, e_, _o, ot, th, he, er, r_, _o, on, ne, e_, _i, is, s_, _e, ea, at, ti, in, ng] |
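Word-level and character-level n-grams like those in the table can be generated with one sliding-window helper. This is a sketch under the same preprocessing assumptions as the table (punctuation dropped, words joined with "_" for the character level); the helper names are hypothetical.

```python
import re


def words(text):
    """Alphabetic word tokens in their original case; punctuation is dropped."""
    return re.findall(r"[A-Za-z]+", text)


def ngrams(seq, n):
    """All contiguous subsequences of length n (works on lists and strings)."""
    return [seq[i:i + n] for i in range(len(seq) - n + 1)]


def word_ngrams(text, n):
    return [" ".join(gram) for gram in ngrams(words(text), n)]


def char_ngrams(text, n):
    # Words are joined with "_" so that n-grams can span word boundaries, as in the table.
    return ngrams("_".join(words(text)), n)


s1 = "One cat is sleeping, and the other one is running."
s2 = "One dog is sleeping, and the other one is eating."
print(word_ngrams(s1, 2))
print(char_ngrams(s2, 2)[:6])  # ['On', 'ne', 'e_', '_d', 'do', 'og']
```

A sequence of length L yields L − n + 1 n-grams, so Statement 1 (48 characters including the four-letter "_" separators count) gives 48 character unigrams and 47 character bigrams.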

Term frequency-inverse document frequency

TF-IDF measures how relevant a word is to a document. Word relevance is the amount of information the word gives about the document's context. Term frequency (TF) measures how often a term occurs in a document; a term that occurs more often is considered more relevant to that document than other terms. Consider two statements:

Statement-1: One cat is sleeping, and the other one is running.

Statement-2: One dog is sleeping, and the other one is eating.

The TF score of a word in sentences is shown in the example below.

| Statement 1 | One | Cat | Is | Sleeping | And | The | Other | Running |
|---|---|---|---|---|---|---|---|---|
| TF score | 2/10 | 1/10 | 2/10 | 1/10 | 1/10 | 1/10 | 1/10 | 1/10 |
| Value | 0.2 | 0.1 | 0.2 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 |

| Statement 2 | One | Dog | Is | Sleeping | And | The | Other | Eating |
|---|---|---|---|---|---|---|---|---|
| TF score | 2/10 | 1/10 | 2/10 | 1/10 | 1/10 | 1/10 | 1/10 | 1/10 |
| Value | 0.2 | 0.1 | 0.2 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 |

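The TF scores for both statements are misleading: they suggest that the words “one” and “is” are more important than the other words because they obtain the highest score (0.2, from a count of 2). This highlights the need to compute the inverse document frequency (IDF), IDF(w) = log10(N/df(w)), where N = 2 is the number of statements and df(w) is the number of statements that contain the word w.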

| Statement 1 | One | Cat | Is | Sleeping | And | The | Other | Running |
|---|---|---|---|---|---|---|---|---|
| IDF score (log base 10) | log(2/2) | log(2/1) | log(2/2) | log(2/2) | log(2/2) | log(2/2) | log(2/2) | log(2/1) |
| Value | 0 | 0.3 | 0 | 0 | 0 | 0 | 0 | 0.3 |

| Statement 2 | One | Dog | Is | Sleeping | And | The | Other | Eating |
|---|---|---|---|---|---|---|---|---|
| IDF score (log base 10) | log(2/2) | log(2/1) | log(2/2) | log(2/2) | log(2/2) | log(2/2) | log(2/2) | log(2/1) |
| Value | 0 | 0.3 | 0 | 0 | 0 | 0 | 0 | 0.3 |

The TF-IDF score is shown in the example below.

| Statement 1 | One | Cat | Is | Sleeping | And | The | Other | Running |
|---|---|---|---|---|---|---|---|---|
| TF score | 0.2 | 0.1 | 0.2 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 |
| IDF score | 0 | 0.3 | 0 | 0 | 0 | 0 | 0 | 0.3 |
| TF-IDF value | 0 | 0.03 | 0 | 0 | 0 | 0 | 0 | 0.03 |

| Statement 2 | One | Dog | Is | Sleeping | And | The | Other | Eating |
|---|---|---|---|---|---|---|---|---|
| TF score | 0.2 | 0.1 | 0.2 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 |
| IDF score | 0 | 0.3 | 0 | 0 | 0 | 0 | 0 | 0.3 |
| TF-IDF value | 0 | 0.03 | 0 | 0 | 0 | 0 | 0 | 0.03 |

The TF-IDF values identify the words that are most informative for a particular statement: “cat” and “running” for Statement 1, and “dog” and “eating” for Statement 2. TF-IDF thus captures each word's relevance to a document, and the informative words outweigh the frequent words “one” and “is”, which had the highest TF scores but occur in every statement.
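The TF, IDF, and TF-IDF tables can be reproduced with the small sketch below. It assumes the same scheme as the tables (TF as count divided by statement length, IDF as a base-10 logarithm of N/df) and the same preprocessing as the BoW example; the function names are hypothetical. Library implementations such as scikit-learn's `TfidfVectorizer` use a smoothed, natural-log IDF and L2 normalization by default, so their numbers differ from the tables.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


def tf(tokens):
    """Term frequency: count of the term divided by the number of tokens."""
    counts = Counter(tokens)
    return {w: c / len(tokens) for w, c in counts.items()}


def idf(docs):
    """Inverse document frequency with a base-10 logarithm: log10(N / df)."""
    n_docs = len(docs)
    df = Counter(w for doc in docs for w in set(doc))
    return {w: math.log10(n_docs / d) for w, d in df.items()}


def tf_idf(docs):
    weights = idf(docs)
    return [{w: v * weights[w] for w, v in tf(doc).items()} for doc in docs]


docs = [tokenize("One cat is sleeping, and the other one is running."),
        tokenize("One dog is sleeping, and the other one is eating.")]
scores = tf_idf(docs)
print({w: round(v, 2) for w, v in scores[0].items()})
# {'one': 0.0, 'cat': 0.03, 'is': 0.0, 'sleeping': 0.0, 'and': 0.0, 'the': 0.0, 'other': 0.0, 'running': 0.03}
```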

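The cosine similarity of Statements 1 and 2 is computed as

$$\cos(\mathbf{A}, \mathbf{B}) = \frac{\mathbf{A} \cdot \mathbf{B}}{\lVert \mathbf{A} \rVert \, \lVert \mathbf{B} \rVert}$$

where A and B are the vectors of the two statements over the same ten-word vocabulary. In BoW, the raw word frequencies affect the cosine similarity.

| Representation | Cosine similarity |
|---|---|
| BoW | 12 / (√14 × √14) ≈ 0.86 |
| TF-IDF | 0 / (0.03√2 × 0.03√2) = 0 |

With BoW, the frequent words shared by both statements (“one”, “is”) make them appear highly similar. With TF-IDF, the shared words receive zero weight, and the remaining informative words (cat and running versus dog and eating) do not overlap, so the cosine similarity is 0.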
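A small sketch of the cosine computation over the shared ten-word vocabulary (one, cat, is, sleeping, and, the, other, dog, running, eating). The BoW counts are those of the BoW example, and the TF-IDF weights are those of the TF-IDF tables, each placed at its word's position in the shared vocabulary. Because the two TF-IDF vectors have no non-zero word in common, their dot product, and hence their cosine similarity, is 0.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (assumes neither is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Shared vocabulary: one, cat, is, sleeping, and, the, other, dog, running, eating
bow_1 = [2, 1, 2, 1, 1, 1, 1, 0, 1, 0]
bow_2 = [2, 0, 2, 1, 1, 1, 1, 1, 0, 1]
w = 0.1 * math.log10(2)  # TF-IDF weight of a word unique to one statement (about 0.03)
tfidf_1 = [0, w, 0, 0, 0, 0, 0, 0, w, 0]  # cat, running
tfidf_2 = [0, 0, 0, 0, 0, 0, 0, w, 0, w]  # dog, eating
print(round(cosine(bow_1, bow_2), 3))  # 0.857
print(cosine(tfidf_1, tfidf_2))        # 0.0
```

Aligning the vectors by word, not by table column, is what matters here: comparing the per-statement TF-IDF tables column by column would wrongly pair “cat” with “dog” and “running” with “eating”.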

The distributional representation model

In the distributional representation model, the meaning of a word is determined by the contexts in which it is used. Distributional models predict semantic similarity from the similarity of observed contexts: words with similar meanings tend to appear in similar contexts (Harris 1954) (Firth 1957) (Ekaterina Vylomova 2021). VSM is an algebraic model that represents text as a vector of identifiers. Documents in a document space are identified by index terms, and each term is assigned a weight according to its importance (in the simplest case, 0 or 1). Each document $D_i$ is represented by a t-dimensional vector $D_i = (d_{i1}, d_{i2}, \ldots, d_{it})$, where $d_{ij}$ is the weight assigned to the j-th term in the i-th document; the weights can be assigned using the TF-IDF scheme to reflect how much information each term provides.

The similarity coefficient between two documents $D_i$ and $D_j$, denoted $S(D_i, D_j)$, is computed to express the degree of similarity between their terms and term weights. Two documents with similar index terms are close to each other in the space; the distance between two document points is inversely related to the similarity of the corresponding vectors (Salton et al. 1975). A distributional model represents a word or phrase in context, whereas a VSM represents meaning in a high-dimensional space (Erk 2012). VSM suffers from the curse of dimensionality: with larger datasets, the vector space becomes high-dimensional and sparse.

Latent semantic analysis

LSA is an automatic statistical technique for extracting and inferring relations of expected contextual usage of words in passages of discourse. It relies on singular value decomposition (SVD), the technique also used in latent semantic indexing (LSI). A term-document matrix is first constructed, and its correlation structure is used to capture the semantic relationships between the words in the documents. SVD extracts the dominant association patterns in the data and ignores the less important terms, so terms that consistently occur together across documents end up close to each other in the reduced space. The SVD decomposes the t × d term-document matrix X into three matrices, $X = U \Sigma V^{\top}$, where U and V are the matrices of left and right singular vectors and have orthogonal, unit-length columns, and $\Sigma$ is the diagonal matrix of singular values. Computing the SVD is time-consuming, particularly when new terms and documents must be mapped into the space. The LSI approach addresses the synonymy problem by allowing multiple terms to refer to the same concept, and it also partially addresses polysemy (Scott Deerwester et al. 1990) (Flor and Hao 2021).
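The SVD step of LSA can be sketched with NumPy's `np.linalg.svd`. The term-document matrix below is a hypothetical toy example (five terms, four documents), and the choice of scaling the singular vectors by the singular values is one common convention, not necessarily the one used in the cited works.

```python
import numpy as np


def lsa(X, k):
    """Rank-k latent semantic analysis of a term-document matrix X (terms x documents)."""
    U, s, Vt = np.linalg.svd(X, full_matrices=False)  # X = U @ diag(s) @ Vt
    U_k, s_k, Vt_k = U[:, :k], s[:k], Vt[:k, :]       # keep the k largest singular values
    term_vectors = U_k * s_k                          # one k-dim row per term
    doc_vectors = Vt_k.T * s_k                        # one k-dim row per document
    X_k = U_k @ np.diag(s_k) @ Vt_k                   # best rank-k approximation of X
    return term_vectors, doc_vectors, X_k


# Hypothetical toy corpus: rows are terms, columns are four documents.
terms = ["cat", "dog", "pet", "stock", "market"]
X = np.array([
    [1, 0, 1, 0],
    [0, 1, 1, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 1],
], dtype=float)
term_vecs, doc_vecs, X_2 = lsa(X, k=2)
print(np.round(term_vecs, 2))
```

Keeping all singular values reproduces X exactly; truncating to k dimensions discards the weakest patterns, which is where the smoothing that helps with synonymy comes from.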

Latent dirichlet allocation

The LDA model is a generative probabilistic model of a corpus that assigns high probability to members of the corpus and to other similar documents. It is a three-level hierarchical Bayesian model in which each item of the collection is modeled as a finite mixture over an underlying set of topics. Each topic is, in turn, modeled as an infinite mixture over an underlying set of topic probabilities. In text modeling, the topic probabilities provide an explicit representation of a document. A latent topic is characterized by the probability of each word appearing in that topic. LDA does not capture syntactic information; it relies entirely on topic information (Campbell et al. 2015). The LSA and LDA models both construct embeddings from statistical information. The LSA model is based on matrix factorization (SVD), whose factors are not constrained to be non-negative. In contrast, the LDA model is based on word distributions and uses a Dirichlet prior, which is the conjugate prior of the multinomial distribution (Li and Yang 2018).
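The three-level structure of LDA is easiest to see in its generative process: draw a topic mixture per document, a topic per word slot, and a word from that topic. The NumPy sketch below samples from this process with a hypothetical two-topic model; it fixes the document length for simplicity and does not show inference, which in practice is done with variational methods or Gibbs sampling (for example, in gensim's `LdaModel` or scikit-learn's `LatentDirichletAllocation`).

```python
import numpy as np


def lda_generate(n_docs, doc_len, alpha, beta, rng):
    """Sample documents from the LDA generative process.

    alpha: (K,) Dirichlet parameter of the per-document topic mixture
    beta:  (K, V) rows are per-topic word distributions (each sums to 1)
    doc_len is fixed here for simplicity (the original model draws it at random).
    """
    n_topics, vocab_size = beta.shape
    docs, thetas = [], []
    for _ in range(n_docs):
        theta = rng.dirichlet(alpha)                          # topic mixture of this document
        topics = rng.choice(n_topics, size=doc_len, p=theta)  # topic z_n for each word slot
        words = [int(rng.choice(vocab_size, p=beta[z])) for z in topics]  # w_n drawn from topic z_n
        docs.append(words)
        thetas.append(theta)
    return docs, thetas


vocab = ["cat", "dog", "pet", "stock", "market", "price"]  # hypothetical vocabulary
beta = np.array([
    [0.3, 0.3, 0.3, 0.0, 0.05, 0.05],  # a hypothetical "animals" topic
    [0.0, 0.05, 0.05, 0.3, 0.3, 0.3],  # a hypothetical "finance" topic
])
rng = np.random.default_rng(3)
docs, thetas = lda_generate(3, 8, alpha=np.array([0.5, 0.5]), beta=beta, rng=rng)
for doc, theta in zip(docs, thetas):
    print(np.round(theta, 2), [vocab[w] for w in doc])
```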

Neural probabilistic language model

Learning the joint probability function of sequences of words in a language is one of the goals of statistical language modeling. The NPLM addresses the curse of dimensionality by learning a distributed representation for words. Language modeling predicts the probability distribution of the next word given a sequence of preceding words; the joint probability of a sequence is the product of these conditional probabilities, as shown in Eq. (11). In practice, the conditioning context is truncated so that each word depends only on the previous n − 1 words (a Markov assumption), as represented by Eq. (12).

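$$\hat{P}\left(w_1^T\right) = \prod_{t=1}^{T} \hat{P}\left(w_t \mid w_1^{t-1}\right) \tag{11}$$

$$\hat{P}\left(w_t \mid w_1^{t-1}\right) \approx \hat{P}\left(w_t \mid w_{t-n+1}^{t-1}\right) \tag{12}$$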

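where $w_t$ is the t-th word and $w_i^j = (w_i, w_{i+1}, \ldots, w_j)$ denotes a subsequence. The conditional probability $f(i, w_{t-1}, \ldots, w_{t-n+1}) = \hat{P}(w_t = i \mid w_1^{t-1})$ is decomposed into two parts: a mapping C from each word i of the vocabulary V to a real-valued feature vector $C(i) \in \mathbb{R}^m$, and a function g that maps the sequence of feature vectors of the context words to a conditional probability distribution over the words in V for the next word $w_t$, as shown in Eq. (13).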

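$$f\left(i, w_{t-1}, \ldots, w_{t-n+1}\right) = g\left(i, C(w_{t-1}), \ldots, C(w_{t-n+1})\right) \tag{13}$$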

The output of the function g is the estimated probability $\hat{P}(w_t = i \mid w_1^{t-1})$. Language models based on neural networks substantially outperform n-gram models (Bengio et al. 2003) (See 2019).
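The decomposition in Eq. (13) can be sketched as a forward pass: look up the feature vectors C of the context words, concatenate them, and let g (a tanh hidden layer followed by a softmax) produce the next-word distribution. This NumPy sketch assumes a simplified architecture without the optional direct input-to-output connections of Bengio et al. (2003); all sizes, names, and weights are hypothetical.

```python
import numpy as np


def nplm_next_word_probs(context_ids, C, H, d, U, b):
    """Next-word distribution of a simplified NPLM.

    context_ids: indices of the n-1 previous words, in chronological order
    C: (V, m) shared word feature matrix, the mapping C of Eq. (13)
    H: (h, (n-1)*m), d: (h,) hidden layer; U: (V, h), b: (V,) output layer (together: g)
    """
    x = C[context_ids].reshape(-1)  # concatenate the context word feature vectors
    hidden = np.tanh(d + H @ x)
    y = b + U @ hidden              # unnormalized score for every word i in V
    e = np.exp(y - y.max())
    return e / e.sum()              # estimated P(w_t = i | context) for all i


rng = np.random.default_rng(4)
V, m, n, h = 10, 4, 3, 8            # vocabulary, feature dim, n-gram order, hidden units
C = rng.normal(scale=0.1, size=(V, m))
H = rng.normal(scale=0.1, size=(h, (n - 1) * m))
U = rng.normal(scale=0.1, size=(V, h))
probs = nplm_next_word_probs([2, 7], C, H, np.zeros(h), U, np.zeros(V))
print(probs.argmax(), probs.sum())
```

The matrix C is shared across all context positions and is trained jointly with g, so its rows become the learned word embeddings.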

Word2Vec model

Conventional word representation methods treat words as atomic units represented as indices in a dictionary, and therefore do not capture the similarity between words. Word2Vec is a collection of model architectures and optimizations for learning word embeddings from massive datasets. This distributed representation technique uses neural networks to express word similarity adequately.

In many NLP applications, Word2Vec models such as the continuous bag-of-words (CBOW) and Skip-Gram models are used to describe the semantic meanings of words efficiently (Mikolov et al. 2013a). The Word2Vec model takes a text corpus as input, processes it in the hidden layer, and outputs word vectors in the output layer. The model identifies the distinct words, creates a vocabulary, builds contexts, and learns vector representations of the words from training data, as depicted in Fig. 8. Each unique word in the training set corresponds to a specific vector in the space. Words that occur in similar contexts obtain similar vectors, so the degree of similarity between vectors indicates how closely the words are related.

Fig. 8.

Word2Vec model

The CBOW and Skip-Gram architectures are shown in Fig. 9. CBOW uses the context words to predict the target word, whereas Skip-Gram predicts the context words for a given input word.

Fig. 9.

The architecture of (a) CBOW model, (b) Skip-Gram model
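The difference between the two architectures shows up already in how training examples are built from a sentence. The sketch below uses a window size of 2 and hypothetical function names; real word2vec implementations add refinements such as subsampling of frequent words that are not shown here.

```python
def cbow_examples(tokens, window=2):
    """(context words, target word) examples: CBOW predicts the centre word from its context."""
    examples = []
    for t, target in enumerate(tokens):
        lo, hi = max(0, t - window), min(len(tokens), t + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != t]
        examples.append((context, target))
    return examples


def skipgram_pairs(tokens, window=2):
    """(input word, context word) pairs: Skip-Gram predicts each context word from the centre word."""
    pairs = []
    for t, centre in enumerate(tokens):
        for j in range(max(0, t - window), min(len(tokens), t + window + 1)):
            if j != t:
                pairs.append((centre, tokens[j]))
    return pairs


tokens = "one cat is sleeping and the other one is running".split()
print(cbow_examples(tokens)[2])  # (['one', 'cat', 'sleeping', 'and'], 'is')
print(skipgram_pairs(tokens)[:4])
```

CBOW produces one example per position with several inputs, while Skip-Gram produces one pair per (centre, context) combination, which is one reason Skip-Gram is slower to train but sees more updates for rare words.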

The input is a one-hot encoded vector x over a vocabulary of size V. The weights between the input and hidden layers form the V × N input weight matrix W; each row of W is the N-dimensional vector representation $v_w$ of the associated input word, so the i-th row of W is $v_w^{\top}$. The weights between the hidden and output layers form the N × V output weight matrix W′. The input and output weight matrices are used to assign a score to each word in the vocabulary. Given a single context word whose one-hot input has $x_k = 1$ and $x_{k'} = 0$ for $k' \neq k$, the hidden layer uses a linear activation function and simply copies the k-th row of W into the hidden state h, as shown in Eq. (14), where $v_{w_I}$ is the vector representation of the input word $w_I$. The score $u_j$ of each word in the vocabulary is then computed with the output weight matrix, where $v'_{w_j}$ is the j-th column of W′, as shown in Eq. (15).

$$h = W^{\top} x = W_{(k,\cdot)}^{\top} := v_{w_I}^{\top} \tag{14}$$

$$u_j = {v'_{w_j}}^{\top} h \tag{15}$$

The output layer uses the softmax activation function to compute the multinomial probability distribution over words. The output $y_j$ of the j-th unit combines the input vector $v_{w_I}$ (a row of W) and the output vector $v'_{w_j}$ (a column of W′), as illustrated in Eq. (16).

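$$p\left(w_j \mid w_I\right) = y_j = \frac{\exp\left(u_j\right)}{\sum_{j'=1}^{V} \exp\left(u_{j'}\right)} = \frac{\exp\left({v'_{w_j}}^{\top} v_{w_I}\right)}{\sum_{j'=1}^{V} \exp\left({v'_{w_{j'}}}^{\top} v_{w_I}\right)} \tag{16}$$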

For a window size of 2, the words $w_{t-2}$, $w_{t-1}$, $w_{t+1}$, and $w_{t+2}$ are the context words of the target word $w_t$. The Skip-Gram model reverses the CBOW setup: it predicts the context words from the input word. For a window size of 2, $w_t$ is the input word and $w_{t-2}$, $w_{t-1}$, $w_{t+1}$, $w_{t+2}$ are the output context words. The hidden layer is computed in the same way as in the CBOW model. The output layer, however, produces C multinomial distributions, one per context position (panel). For the input word $w_I$, $y_{c,j}$ denotes the output of the j-th unit on the c-th panel, and $u_{c,j}$ is the input to that unit. $w_{c,j}$ is the j-th word on the c-th panel of the output layer, and $w_{O,c}$ is the actual c-th output context word. The output for each word is computed using the output weight matrix, as represented in Eq. (17).

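$$p\left(w_{c,j} = w_{O,c} \mid w_I\right) = y_{c,j} = \frac{\exp\left(u_{c,j}\right)}{\sum_{j'=1}^{V} \exp\left(u_{j'}\right)}, \qquad u_{c,j} = u_j = {v'_{w_j}}^{\top} h \tag{17}$$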

Multiplying the one-hot input by the input-to-hidden weight matrix yields the hidden-layer values. The output layer computes the multinomial distributions using the hidden-to-output weight matrix. The prediction errors of the C panels are summed element-wise, and the total error is backpropagated to update the weights. After training, the input-to-hidden weights (the rows of W) are commonly used as the word vector representations (Mikolov et al. 2013b).
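The training procedure just described can be sketched as one stochastic gradient step of Skip-Gram with a full softmax, following Eqs. (14)–(17): copy the input word's row of W, score all words, sum the panel errors, and backpropagate. This is a minimal NumPy illustration with hypothetical sizes and names; practical implementations replace the full softmax with hierarchical softmax or negative sampling (Mikolov et al. 2013b) to avoid scoring the whole vocabulary.

```python
import numpy as np


def softmax(u):
    e = np.exp(u - u.max())
    return e / e.sum()


def skipgram_step(W, W_out, input_id, context_ids, lr=0.1):
    """One full-softmax SGD update of Skip-Gram for one input word; updates W, W_out in place.

    W: (V, N) input->hidden weights (row k is the input vector of word k)
    W_out: (N, V) hidden->output weights (column j is the output vector of word j)
    Returns the loss -sum_c log p(w_{O,c} | w_I) before the update.
    """
    h = W[input_id].copy()          # Eq. (14): the hidden layer copies one row of W
    y = softmax(W_out.T @ h)        # Eqs. (15)-(17): every panel shares this distribution
    loss = -sum(np.log(y[c]) for c in context_ids)
    err = np.zeros_like(y)
    for c in context_ids:           # add the C panel error vectors element-wise
        e_c = y.copy()
        e_c[c] -= 1.0
        err += e_c
    grad_h = W_out @ err            # error propagated back to the hidden layer
    W_out -= lr * np.outer(h, err)  # update all output vectors
    W[input_id] -= lr * grad_h      # update only the input word's vector
    return loss


rng = np.random.default_rng(5)
V, N = 6, 3                         # hypothetical vocabulary size and embedding size
W = rng.normal(scale=0.1, size=(V, N))
W_out = rng.normal(scale=0.1, size=(N, V))
losses = [skipgram_step(W, W_out, input_id=2, context_ids=[1, 3]) for _ in range(20)]
print(round(losses[0], 3), round(losses[-1], 3))
word_vectors = W                    # rows of W serve as the learned embeddings
```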

GloVe

Word embeddings learned through Word2Vec are good at capturing word semantics and word relatedness, but Word2Vec uses only the information in the local context window and neglects global corpus statistics. GloVe is a hybrid of LSA and CBOW that is efficient and scalable for large corpora (Jiao and Zhang 2021). GloVe is a popular model based on a global co-occurrence matrix, where each element $X_{ij}$ indicates how often the words $w_i$ and $w_j$ co-occur within a given context window. $X_i$ denotes the number of times any word appears in the context of word i, and $P_{ij}$ is the probability that word j appears in the context of word i, as presented in Eqs. (18)–(19).

$$X_i = \sum_{k} X_{ik} \tag{18}$$

$$P_{ij} = P(j \mid i) = \frac{X_{ij}}{X_i} \tag{19}$$

A weighted least-squares regression model approximates the relationship between the word embeddings and the co-occurrence matrix. The function $f(X_{ij})$ is a weighting function over a vocabulary of size V, $w_i$ are the word vectors, $\tilde{w}_j$ are the context word vectors, and $b_i$ and $\tilde{b}_j$ are the biases of words $w_i$ and $w_j$, added to restore symmetry. Eq. (20) gives the weighted least-squares objective, and the weighting function f(x) in Eq. (21) ensures that the weight does not grow excessively when a co-occurrence count is very high.

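$$J = \sum_{i,j=1}^{V} f\left(X_{ij}\right) \left( w_i^{\top} \tilde{w}_j + b_i + \tilde{b}_j - \log X_{ij} \right)^2 \tag{20}$$

$$f(x) = \begin{cases} \left( x / x_{\max} \right)^{\alpha} & \text{if } x < x_{\max} \\ 1 & \text{otherwise} \end{cases} \tag{21}$$

where $x_{\max}$ is a cut-off and α an exponent (Pennington et al. 2014 use $x_{\max} = 100$ and α = 3/4).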

GloVe is an unsupervised learning technique for constructing word vector representations. It is trained on the aggregated global word-word co-occurrence statistics of a corpus, and the resulting representations exhibit meaningful linear substructures of the word vector space. The pre-trained GloVe embeddings trained on Wikipedia 2014 and Gigaword 5 cover a vocabulary of 400K words and are available in 50, 100, 200, and 300 dimensions (Pennington et al. 2014).
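The quantities in Eqs. (18)–(21) can be computed on a toy sentence as below. This sketch makes simplifying assumptions: co-occurrences are counted without the 1/distance weighting used in the original GloVe implementation, the vectors are random rather than trained (GloVe minimizes J with AdaGrad), and all names and sizes are hypothetical.

```python
import math
from collections import defaultdict

import numpy as np


def cooccurrence(tokens, window=2):
    """Symmetric co-occurrence counts X[(w_i, w_j)] within +/- window words."""
    X = defaultdict(float)
    for t, w in enumerate(tokens):
        for j in range(max(0, t - window), min(len(tokens), t + window + 1)):
            if j != t:
                X[(w, tokens[j])] += 1.0
    return X


def cond_prob(X, wi, wj):
    """P_ij = X_ij / X_i, with X_i the total count of words in the context of wi (Eqs. 18-19)."""
    X_i = sum(x for (a, _), x in X.items() if a == wi)
    return X.get((wi, wj), 0.0) / X_i


def weight(x, x_max=100.0, alpha=0.75):
    """Weighting function f(x) of Eq. (21)."""
    return (x / x_max) ** alpha if x < x_max else 1.0


def glove_loss(X, index, w, w_ctx, b, b_ctx):
    """Weighted least-squares objective J of Eq. (20), summed over the non-zero X_ij."""
    J = 0.0
    for (wi, wj), x in X.items():
        i, j = index[wi], index[wj]
        diff = w[i] @ w_ctx[j] + b[i] + b_ctx[j] - math.log(x)
        J += weight(x) * diff ** 2
    return J


tokens = "one cat is sleeping and the other one is running".split()
X = cooccurrence(tokens)
vocab = sorted(set(tokens))
index = {word: k for k, word in enumerate(vocab)}
rng = np.random.default_rng(6)
d = 5                               # hypothetical embedding size
w, w_ctx = rng.normal(scale=0.1, size=(2, len(vocab), d))
b, b_ctx = np.zeros(len(vocab)), np.zeros(len(vocab))
print(X[("one", "is")], round(cond_prob(X, "one", "is"), 3), glove_loss(X, index, w, w_ctx, b, b_ctx))
```

Only non-zero counts enter the sum, since $\log X_{ij}$ is undefined for zero entries; this is also what keeps GloVe training efficient on large, sparse co-occurrence matrices.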

fastText

The fastText model uses internal subword information in the form of character n-grams, which captures the internal structure of words and allows it to handle rare and out-of-vocabulary terms. The method creates word vectors that reflect the syntactic and semantic similarity of words and can produce vectors for unseen words. The Facebook AI Research lab released fastText as an open-source library that generates vectors for unknown words based on their morphology. Each word w is represented by its n-gram features w1, w2, …, wn, which are used as input to the fastText model. For example, with the boundary symbols < and >, the character trigrams of the word “sleeping” are <sl, sle, lee, eep, epi, pin, ing, ng>. Each n-gram has its own vector, and during training the word's own vector is combined with the vectors of all its n-grams, as shown in Fig. 10.

Fig. 10.

The model architecture of fastText

The input to the model consists of whole-word vectors and character-level n-gram vectors, which are combined and averaged (Joulin et al. 2017). Pre-trained fastText word vectors trained on Common Crawl and Wikipedia are available for 157 languages. These models were trained using CBOW with position weights, in dimension 300, with character n-grams of length 5, a window of size 5, and 10 negatives.1
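The n-gram decomposition and the averaging described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the fastText implementation: the vectors are deterministic pseudo-random stand-ins for trained embeddings, the n-gram range of 3 to 6 is assumed (it matches the fastText library's default `minn`/`maxn`, while the released crawl vectors use length 5), and real fastText hashes n-grams into a fixed number of buckets, which is omitted here. All function names are hypothetical.

```python
import random


def char_ngrams(word, min_n=3, max_n=6):
    """Character n-grams of `word` after adding the boundary symbols < and >."""
    wrapped = f"<{word}>"
    grams = []
    for n in range(min_n, max_n + 1):
        for start in range(len(wrapped) - n + 1):
            grams.append(wrapped[start:start + n])
    return grams


def toy_vector(key, dim=4):
    """Stand-in for a learned embedding row: reproducible pseudo-random values."""
    rng = random.Random(key)  # string seeds give the same values on every run
    return [rng.uniform(-1.0, 1.0) for _ in range(dim)]


def word_vector(word, vocabulary, dim=4, min_n=3, max_n=6):
    """Average the vectors of the word's n-grams, plus its own vector if it is in the vocabulary.

    Out-of-vocabulary words still get a vector, built from their n-grams alone.
    """
    vectors = [toy_vector("ngram:" + g, dim) for g in char_ngrams(word, min_n, max_n)]
    if word in vocabulary:
        vectors.append(toy_vector("word:" + word, dim))
    return [sum(column) / len(vectors) for column in zip(*vectors)]


print(char_ngrams("sleeping", 3, 3))
# ['<sl', 'sle', 'lee', 'eep', 'epi', 'pin', 'ing', 'ng>']
```

Because an unseen word such as "sleepwalking" shares n-grams like `<sl` and `sle` with "sleeping", the two averaged vectors share components, which is how fastText transfers information to out-of-vocabulary words.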

Contextual representation models

Conventional and distributional representation approaches learn static word embeddings: after training, each word has a single fixed representation. However, the meaning of a polysemous word can vary depending on the context, and most downstream tasks in natural language processing require understanding the actual context. For example, “apple” is a fruit but usually refers to a company in technical articles. In contextualized word embeddings, neural language models adjust the vector of each word according to the input context.

Embeddings from language models

ELMo representations use vectors derived from a bidirectional LSTM (BiLSTM) trained with a language modeling objective on a large text corpus. ELMo effectively addresses the problem of capturing the syntactic and semantic properties of words and how their use varies across linguistic contexts. ELMo considers the complete sentence when assigning an embedding to each word: its bidirectional design makes each word's embedding depend on both the preceding and the following words in the sentence, as shown in Fig. 11.

Fig. 11.

The architecture of ELMo

For a sequence of N tokens (t1, t2, …, tN), the aim is to maximize the likelihood of the sequence under language models in both directions. A forward language model computes the probability of the sequence by modeling the probability of token tk given its history (t1, t2, …, tk−1). A backward language model is similar but runs over the sequence in reverse, predicting each token from its future context (tk+1, …, tN). The forward and backward language models and the joint objective that maximizes the log likelihood in both directions are shown in Eqs. (22)–(24) (Peters et al. 2018).

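$$p(t_1, t_2, \ldots, t_N) = \prod_{k=1}^{N} p(t_k \mid t_1, t_2, \ldots, t_{k-1}) \tag{22}$$

$$p(t_1, t_2, \ldots, t_N) = \prod_{k=1}^{N} p(t_k \mid t_{k+1}, t_{k+2}, \ldots, t_N) \tag{23}$$

$$\sum_{k=1}^{N} \left( \log p(t_k \mid t_1, \ldots, t_{k-1}; \Theta_x, \overrightarrow{\Theta}_{LSTM}, \Theta_s) + \log p(t_k \mid t_{k+1}, \ldots, t_N; \Theta_x, \overleftarrow{\Theta}_{LSTM}, \Theta_s) \right) \tag{24}$$

where $\Theta_x$ (token representation) and $\Theta_s$ (softmax layer) are shared by both directions, and $\overrightarrow{\Theta}_{LSTM}$ and $\overleftarrow{\Theta}_{LSTM}$ are the LSTM parameters of the forward and backward directions.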
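The joint objective in Eq. (24) simply adds the forward and backward log-likelihoods. The sketch below illustrates only that structure: the two LSTMs are replaced by add-one-smoothed bigram models, which condition on just the previous (or next) token instead of the full history, so this is a stand-in and not ELMo's architecture. The corpus and function names are hypothetical.

```python
import math
from collections import Counter


def train_bigram_lm(sentences):
    """Count statistics for p(token | previous token); a stand-in for an LSTM LM."""
    pair_counts, history_counts, vocab = Counter(), Counter(), set()
    for sent in sentences:
        vocab.update(sent)
        padded = ["<s>"] + sent
        for prev, cur in zip(padded, padded[1:]):
            pair_counts[(prev, cur)] += 1
            history_counts[prev] += 1
    return pair_counts, history_counts, vocab


def log_likelihood(lm, tokens):
    """Sum of log p(t_k | t_{k-1}) with add-one smoothing over the vocabulary."""
    pair_counts, history_counts, vocab = lm
    total, prev = 0.0, "<s>"
    for tok in tokens:
        total += math.log((pair_counts[(prev, tok)] + 1) / (history_counts[prev] + len(vocab)))
        prev = tok
    return total


corpus = [s.split() for s in ["the cat sat", "the dog sat", "a cat ran"]]
forward_lm = train_bigram_lm(corpus)                                # reads left to right
backward_lm = train_bigram_lm([list(reversed(s)) for s in corpus])  # reads right to left


def elmo_objective(tokens):
    """Eq. (24) with toy models: forward plus backward log-likelihood."""
    forward = log_likelihood(forward_lm, tokens)
    backward = log_likelihood(backward_lm, list(reversed(tokens)))
    return forward + backward
```

A fluent sentence such as "the cat sat" scores higher than the scrambled "sat cat the" in both directions, so training to maximize this sum pushes both models toward the observed word order.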

Generative pre-training

The morphology of words in the application domain can be extensively exploited with GPT. GPT extracts features with a unidirectional (left-to-right) language model built on the transformer, whereas ELMo employs a BiLSTM. The architecture of GPT is shown in Fig. 12.

Fig. 12.

The architecture of GPT

GPT maximizes the standard language modeling likelihood over a sequence of tokens (t1, t2, …, tN), as shown in Eq. (25). The language model is a multi-layer transformer decoder that uses self-attention to predict the current token from the k preceding tokens (Vaswani et al. 2017). To produce an output distribution over target tokens, the model applies a multi-headed self-attention operation over the input context tokens, followed by position-wise feed-forward layers, as shown in Eqs. (26)–(28).

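$$L_1 = \sum_{i} \log P(t_i \mid t_{i-k}, \ldots, t_{i-1}; \Theta) \tag{25}$$

$$h_0 = U W_e + W_p \tag{26}$$

$$h_l = \mathrm{transformer\_block}(h_{l-1}) \quad \forall\, l \in [1, n] \tag{27}$$

$$P(t) = \mathrm{softmax}\left( h_n W_e^{\top} \right) \tag{28}$$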

Here $k$ is the size of the context window, $\Theta$ denotes the model parameters, $n$ is the number of layers, $W_e$ is the token embedding matrix, $W_p$ is the position embedding matrix, and $U$ is the context vector of tokens (Radford et al. 2018).
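The data flow of Eqs. (26)–(28) can be traced with tiny matrices. This pure-Python sketch makes strong simplifying assumptions: a single attention head with no learned query/key/value projections, no residual connections, layer normalization, or feed-forward sublayer, and n = 1 block. The embedding matrices are hypothetical. It does keep the defining feature of a GPT-style decoder, the causal mask, so position i attends only to positions up to i.

```python
import math


def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(h):
    """One attention head without learned projections: position i sees only positions <= i."""
    d = len(h[0])
    out = []
    for i, query in enumerate(h):
        scores = [sum(q * k for q, k in zip(query, h[j])) / math.sqrt(d) for j in range(i + 1)]
        weights = softmax(scores)
        out.append([sum(w * h[j][c] for j, w in enumerate(weights)) for c in range(d)])
    return out


def gpt_forward(token_ids, W_e, W_p):
    """Eqs. (26)-(28) with a single simplified block standing in for transformer_block."""
    vocab_size = len(W_e)
    U = [[1.0 if v == t else 0.0 for v in range(vocab_size)] for t in token_ids]  # one-hot rows
    h0 = [[e + p for e, p in zip(row, W_p[pos])] for pos, row in enumerate(matmul(U, W_e))]  # Eq. (26)
    h1 = causal_self_attention(h0)                          # Eq. (27) with n = 1
    logits = matmul(h1, [list(col) for col in zip(*W_e)])   # h_n W_e^T
    return h0, h1, [softmax(row) for row in logits]         # Eq. (28)


W_e = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical: vocabulary of 3 tokens, d = 2
W_p = [[0.1, 0.0], [0.0, 0.1]]              # hypothetical: 2 positions
h0, h1, probs = gpt_forward([2, 0], W_e, W_p)
```

Note that the output layer reuses the token embedding matrix $W_e$ (transposed), as Eq. (28) indicates, so each row of `probs` is a distribution over the same vocabulary used at the input.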

Bidirectional encoder representations from transformers

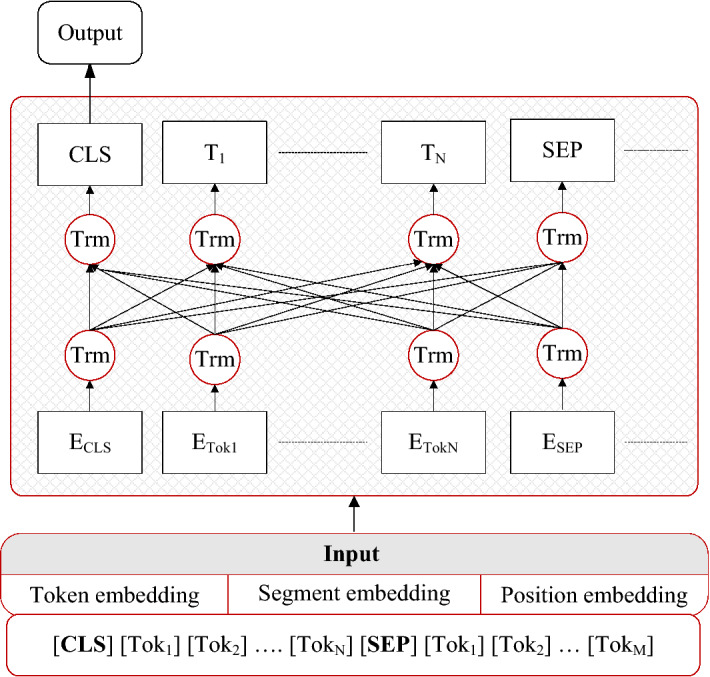

ELMo takes a feature-based approach, adding pre-trained representations as additional features. GPT takes a fine-tuning approach: it introduces minimal task-specific parameters and is trained on downstream tasks by fine-tuning all pre-trained parameters. The BERT architecture is a multi-layer bidirectional transformer encoder, as depicted in Fig. 13.

Fig. 13.

BERT Architecture

BERT is pre-trained with a masked language modeling objective, and its input representation sums the token (word-piece) embeddings with position embeddings and with segment embeddings that distinguish the sentences. It follows a two-stage framework of pre-training and fine-tuning. During pre-training, the model is trained on unlabeled data over several pre-training tasks. For fine-tuning, BERT is first initialized with the pre-trained parameters, and then all parameters are fine-tuned using labeled data from the downstream tasks (Devlin et al. 2019).

BERT uses word-piece embeddings. A special classification token [CLS] is always the first token of every sequence, and the special token [SEP] separates sentences. BERT thus uses a deep, pre-trained transformer network to create dense vector representations of natural language. The BERT-Base and BERT-Large models available on TensorFlow Hub have L = 12/24 hidden layers (transformer blocks), a hidden size of H = 768/1024, and A = 12/16 attention heads (TensorFlow Hub).
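The input layout and the masked language modeling corruption can be sketched as follows. Assumptions: the text is already split into word pieces; the 15% selection rate and the 80/10/10 replacement rule follow Devlin et al. (2019), but here each token is selected independently with probability 0.15, whereas the reference implementation samples a fixed number of positions per sequence. The function names and the small vocabulary are hypothetical.

```python
import random


def bert_inputs(tokens_a, tokens_b):
    """Build [CLS] A [SEP] B [SEP] together with segment ids and position ids."""
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    segment_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    position_ids = list(range(len(tokens)))
    return tokens, segment_ids, position_ids


def mask_tokens(tokens, vocab, rng, mask_prob=0.15):
    """Select ordinary tokens with probability mask_prob; replace a selected token
    with [MASK] 80% of the time, a random vocabulary token 10%, and keep it 10%."""
    corrupted, labels = list(tokens), [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if tok in ("[CLS]", "[SEP]") or rng.random() >= mask_prob:
            continue
        labels[i] = tok  # the model must recover the original token at this position
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = "[MASK]"
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)
        # otherwise the original token is kept unchanged
    return corrupted, labels


tokens, segment_ids, position_ids = bert_inputs(["my", "dog"], ["he", "likes", "play", "##ing"])
corrupted, labels = mask_tokens(tokens, ["cat", "runs", "fast"], random.Random(0))
```

The three id lists index the token, segment, and position embedding tables, whose rows are summed to form the input to the first transformer layer.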

Search strategy

A comprehensive search for potentially relevant literature was undertaken in three electronic data sources (EDS), namely Institute of Electrical and Electronics Engineers (IEEE) Xplore, Scopus, and Science Direct, following the systematic review guidelines of Kitchenham (2004) and Okoli and Schabram (2010). The search covered journal and peer-reviewed conference articles published between 2019 and 2021 and used the keywords “word embedding”, Word2Vec, or GloVe in conjunction with “deep learning”. The search phrases and words used for each EDS are shown in Table 1.

Table 1.

Set of search phrases and words for each of the EDS

| EDS database | Search phrases and words |

|---|---|

| Scopus (Journal articles) | TITLE (“word embedding” OR word2vec OR glove) and TITLE-ABS-KEY(“deep learning”) AND (LIMIT-TO (SUBJAREA, “COMP”)) AND (LIMIT-TO(PUBYEAR,2021) OR LIMIT-TO (PUBYEAR,2020) OR LIMIT-TO(PUBYEAR,2019)) AND (LIMIT-TO(LANGUAGE, “English”)) AND (LIMIT-TO(SRCTYPE, “j”)) |

| Scopus (Peer-reviewed conference articles) | TITLE-ABS-KEY("word embedding" OR "word2vec" OR "Glove") AND TITLE-ABS-KEY("deep learning") AND (LIMIT-TO(EXACTSRCTITLE, "Emnlp Ijcnlp Conference On Empirical Methods In Natural Language Processing And International Joint Conference On Natural Language Processing Proceedings Of The Conference") OR LIMIT-TO(EXACTSRCTITLE, "Aaai Conference On Artificial Intelligence") OR LIMIT-TO(EXACTSRCTITLE, "Acl Ijcnlp Association For Computational Linguistics And The International Joint Conference On Natural Language Processing Of The Asian Federation Of Natural Language Processing Proceedings Of The Conference") OR LIMIT-TO(EXACTSRCTITLE, "Cognitive Modeling And Computational Linguistics Proceedings Of The Workshop") OR LIMIT-TO(EXACTSRCTITLE, "Conference On Computational Natural Language Learning Proceedings")) AND LIMIT-TO(PUBYEAR, 2021) OR LIMIT-TO(PUBYEAR, 2020) OR LIMIT-TO(PUBYEAR, 2019)) AND (LIMIT-TO(LANGUAGE, “English”) AND (LIMIT-TO(SUBJAREA, "COMP")) |

| Science Direct | Articles with these terms: word embedding; word2vec; glove, Title, abstract or author-specified keywords: deep learning, Year: 2019–2021 |

| IEEE Xplore | (“Document Title”: word embedding) OR (“Document Title”:word2vec) OR (“Document Title”: glove) AND (“Document Title”: deep learning) AND (“Abstract”: deep learning) AND (“Author Keywords”: deep learning), Filters Applied: Journals, Year range - 2019–2021 |

Eligibility criteria

Eligibility screening is an essential and strict inspection step for including the most relevant articles in the study. The following criteria were defined to select research examining the impact of word embedding models on text analytics in deep learning environments. The primary study selection criteria are divided into inclusion criteria and exclusion criteria.

Inclusion criteria

Studies that focus primarily on word embedding models applied to, or reviewed for, text analytics.

Studies addressing any text analytics task, such as text classification, sentiment analysis, text summarization, or other text analysis activities, that uses word embedding models.

Articles from the computer science subject area only.

Papers published in leading peer-reviewed conferences focusing on word embedding and natural language processing, or in reputed journals.

Studies published between 2019 and 2021.

Exclusion criteria

Studies not written in English.

Studies focused only on understanding deep learning models, such as their architectural behavior or the motivation for using them.

Articles that do not meet the inclusion criteria.

Articles already retrieved from another EDS (duplicates).

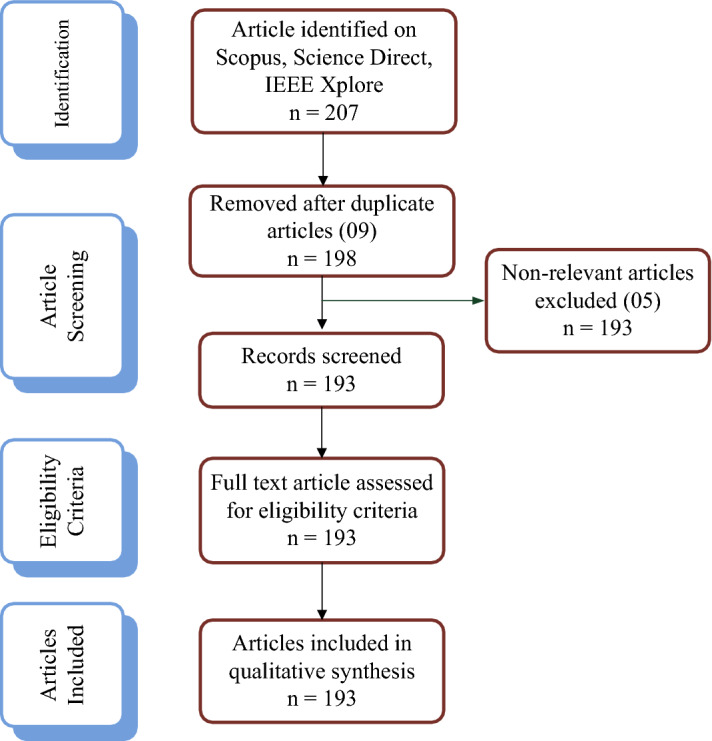

The EDS databases were searched for literature containing the keywords “word embedding OR Word2Vec OR GloVe” and “deep learning” in the title, abstract, and keywords sections. Without restrictions, the databases return a very large number of articles; when the search is confined to 2019 to 2021, the number drops to 207. Further filtering was needed to ensure the quality of the review: only English-language articles in the computer science subject area were retained, and only articles published in leading peer-reviewed conferences focusing on word embedding and natural language processing, or in reputed journals, were included to ensure the study's reliability and quality. The PRISMA diagram in Fig. 14 depicts the article selection criteria and records the articles retained for review.

Fig. 14.

PRISMA diagram

Table 2 summarizes the articles selected for review. Nine studies were excluded as duplicates retrieved from different EDS, and five studies irrelevant to this review were also excluded. The final 193 articles on word embedding models used in conjunction with deep learning, and on their applications in text analytics, were selected to analyze the literature and identify research gaps and directions.

Table 2.

Summary of articles selected for review

| EDS database | Results by query | Articles selected for review | Articles repeated | Articles out of scope |

|---|---|---|---|---|

| Scopus (Journal articles) | 72 | 69 | 00 | 03 |

| Scopus (Peer-reviewed conference articles) | 45 | 45 | 00 | 00 |

| Science Direct | 61 | 58 | 01 | 02 |

| IEEE Xplore | 29 | 21 | 08 | 00 |

| Total | 207 | 193 | 09 | 05 |
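The counts in Table 2 are internally consistent: for each EDS, the number of results returned by the query equals the selected, repeated, and out-of-scope articles combined. A quick Python check, with the numbers copied from Table 2:

```python
# (results by query, selected, repeated, out of scope) per EDS, copied from Table 2
table2 = {
    "Scopus (Journal articles)": (72, 69, 0, 3),
    "Scopus (Peer-reviewed conference articles)": (45, 45, 0, 0),
    "Science Direct": (61, 58, 1, 2),
    "IEEE Xplore": (29, 21, 8, 0),
}

for name, (found, selected, repeated, out_of_scope) in table2.items():
    # Every retrieved record is either selected, a duplicate, or out of scope.
    assert found == selected + repeated + out_of_scope, name

totals = [sum(column) for column in zip(*table2.values())]
print(totals)  # [207, 193, 9, 5]
```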

Data extraction process

A detailed data extraction template was prepared in a spreadsheet to minimize bias in the data extraction process. The spreadsheet was used to extract and maintain the data of each selected study. Table 3 describes the data extraction procedure in detail.

Table 3.

Description of data extraction

| Data items | Description |

|---|---|

| Bibliographic information | Author, title, name of the publisher/journal, year of publication |

| Embedding approach used | The various embedding techniques that have been employed |

| Task and method | Word embedding models and deep learning methodologies used for the analytics task |

| Dataset used | Datasets used for training and testing the proposed method |

| Performance parameter and evaluation | Parameters used for evaluation and the actual performance achieved by the proposed approach |

| Implementation API or tools | Information about various tools and APIs used for experimentation |

| Key/Critical findings | Significant findings and critical discussion related to the proposed approach and techniques used |

| Limitations and Future work | Limitations of the current research work and proposed future work |

Popular journals and year-wise studies

The research is restricted to journal articles and papers from leading peer-reviewed conferences focusing on word embedding and natural language processing, published between 2019 and 2021. The search used the terms word embedding, deep learning, and their applications in text analytics. Only papers that meet the inclusion and exclusion criteria were chosen for review. Because the study began in the fourth quarter of 2021, fewer 2021 publications were captured than for 2020; more publications are expected in the coming years. Articles selected for the study are shown by year in Fig. 15(a). Google Trends2 was used to analyze worldwide Google search queries on word embedding and NLP topics from 2019 through 2021, and the search volume of these queries over time is compared in Fig. 15(b). According to these trends, embedding techniques for natural language processing tasks have evolved significantly. The choice of an effective embedding strategy is critical to the success of an NLP task.

Fig. 15.

(a) Year-wise publication records selected for review, (b) Analysis of search query on word embedding and NLP in Google Trends

Articles published in leading peer-reviewed conferences focusing on word embedding and natural language processing, and in reputed journals, were chosen for review. Elsevier published nearly 50% of the selected publications, IEEE almost 25%, and Springer Nature nearly 10%.

Three Elsevier journals, Information Processing and Management, Knowledge-Based Systems, and Applied Soft Computing, together account for 34 of the papers selected for review, more than any other source. IEEE Access ranks second, with 27 articles selected for evaluation, and Springer's Neural Computing and Applications ranks third. A circular dendrogram of the peer-reviewed conferences and journals selected for the current review, grouped by year, is shown in Fig. 16. The names and abbreviations of these conferences and journals are listed in Table 13 in Annexure A.

Fig. 16.

Peer-reviewed conferences and journals selected for the current review

Table 13.

List of publishers/journals

| Abbreviation | Name of publishers/journals |

|---|---|

| ACM Tran | ACM Transactions on Audio, Speech, and Language Processing |

| AIKDE | AI, Knowledge and Data Engineering |

| AIL | Artificial Intelligence and Law |

| AIM | Artificial Intelligence in Medicine |

| ASCJ | Applied Soft Computing Journal |

| CCPE | Concurrency and Computation: Practice and Experience |

| CEE | Computers and Electrical Engineering |

| CMPB | Computer Methods and Programs in Biomedicine |

| CS | Computers & Security |

| DEIDH | Data-Enabled Intelligence for Digital Health |

| DSE | Data Science and Engineering |

| DSS | Decision Support Systems |

| DTA | Data Technologies and Applications |

| EAAI | Engineering Applications of Artificial Intelligence |

| EIJ | Egyptian Informatics Journal |

| ESA | Expert Systems with Applications |

| ETRI Journal | Electronics and Telecommunications Research Institute |

| FGCS | Future Generation Computer Systems |

| HWCMC | Hindawi Wireless Communications and Mobile Computing |

| IEEE | Institute of Electrical and Electronics Engineers |

| IEEE Tran. PDS | IEEE Transactions on Parallel and Distributed Systems |

| IJCDS | International Journal of Computing and Digital Systems |

| IJCIA | International Journal of Computational Intelligence and Applications |

| IJIES | International Journal of Intelligent Engineering and Systems |

| IJISAE | International Journal of Intelligent Systems and Applications in Engineering |

| IJITEE | International Journal of Innovative Technology and Exploring Engineering |

| IJIV | International Journal on Informatics Visualization |

| IJKIES | International Journal of Knowledge-based and Intelligent Engineering Systems |

| IJMI | International Journal of Medical Informatics |

| IJMLC | International Journal of Machine Learning and Cybernetics |

| IJMS | International Journal of Molecular Sciences |

| IM | Information & Management |

| IMU | Informatics in Medicine Unlocked |

| INASS | The Intelligent Networks And System Society |

| IPM | Information Processing and Management |

| IS | Information Systems |

| ISAT | ISA Transactions |

| IST | Information and Software Technology |

| JBA | Journal of Business Analytics |

| JBI | Journal of Biomedical Informatics |

| JCMSE | Journal of Computational Methods in Sciences and Engineering |

| JDS | Journal of Decision Systems |

| JKSU-CIS | Journal of King Saud University—Computer and Information Sciences |

| JSS | The Journal of Systems and Software |

| JWS | John Wiley & Sons, Ltd |

| KBS | Knowledge-Based Systems |

| KDSD | Knowledge Discovery for Software Development |

| MIS | Mobile Information Systems |

| MM | Microprocessors and Microsystems |

| MTA | Multimedia Tools and Applications |

| NCA | Neural Computing and Applications |

| OSNM | Online Social Networks and Media |

| PRL | Pattern Recognition Letters |

| SNAM | Social Network Analysis and Mining |

| TJEECS | Turkish Journal of Electrical Engineering & Computer Sciences |

| TKDE | Transactions on Knowledge and Data Engineering |

| TSE | Transactions on Software Engineering |

| TVCG | Transactions on Visualization and Computer Graphics |

| AAAI | The Thirty-Third AAAI Conference on Artificial Intelligence |

| ACL | Association for Computational Linguistics |

| ACL & IJCNLP | 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing |

| ANLPW & ACL | Proceedings of the Fourth Arabic Natural Language Processing Workshop—Association for Computational Linguistics |

| CAIDA | 1st International Conference on Artificial Intelligence and Data Analytics |

| CMCL | Proceedings of the Workshop on Cognitive Modeling and Computational Linguistics |

| COLING | Proceedings of the 28th International Conference on Computational Linguistics |

| EMNLP & IJCNLP | (EMNLP & IJCNLP—ACL) Conference on Empirical Methods in Natural Language Processing and 9th International Joint Conference on Natural Language Processing—Association for Computational Linguistics |

| EuroSSP | IEEE European Symposium on Security and Privacy |

| ICIDT | International Conference on Intelligent Decision Technologies |

| ICLR | International Conference on Learning Representations |

| ICNLSP—ACL | Proceedings of the 4th International Conference on Natural Language and Speech Processing, Association for Computational Linguistics |

| IF | Elsevier—Information Fusion |

| IJCAI | Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence |

| IJCAI-DCT | Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence, Doctoral Consortium Track |

| IOT | IEEE Internet of Things Journal |

| IW3C2 | International World Wide Web Conference Committee |

| RepL4NLP—ACL | Proceedings of the 4th Workshop on Representation Learning for NLP, Association for Computational Linguistics |

| SNS | Springer Nature Singapore |

| TASLP | IEEE/ACM Transactions on Audio, Speech, and Language Processing |

| TCSS | IEEE Transactions on Computational Social Systems |

| TII | IEEE Transactions on Industrial Informatics |

| TKDE | IEEE Transactions on Knowledge and Data Engineering |

| TNSE | IEEE Transactions on Network Science and Engineering |

SANAD Single-label Arabic news articles dataset; NADiA News articles datasets in Arabic with multi-labels; HAN Hierarchical attention network; HDBSCAN Hierarchical Density-Based Spatial Clustering of Applications with Noise; LR Logistic regression; LDA Linear discriminant analysis; QDA Quadratic discriminant analysis; NB Naïve Bayes; SVM Support vector machine; KNN k-nearest neighbor; DT Decision tree; RF Random forest; XGBoost Extreme gradient boosting; MLP Multilayer perceptron; LIWC Linguistic Inquiry and Word Count features; NER Named entity recognition; PMMC Process model matching contest dataset; DLMF Digital Library of Mathematical Functions; GB Gradient Boosting; SGC Stochastic Gradient Descent; DFFNN Deep feed-forward neural network

Tools and APIs available for implementing word embedding models

This section provides an overview of the tools and APIs available for implementing word embedding models.

Natural Language Toolkit: Natural Language Toolkit (NLTK)3 is a free and open-source Python library for natural language processing. NLTK provides text processing libraries for classification, tokenization, stemming, lemmatization, tagging, parsing, and semantic reasoning, and it gives access to lexical resources such as WordNet.

Scikit-learn: Scikit-learn4 is a Python toolkit for machine learning that supports supervised and unsupervised learning. It also includes tools for model fitting, model selection, evaluation, and data preprocessing. It is built on two Python libraries, NumPy and SciPy, which are also useful for developing traditional machine learning algorithms.

TensorFlow: TensorFlow5 is a free and open-source library for creating machine learning models. TensorFlow uses Keras as its high-level API for designing and building neural networks. TensorFlow was originally developed by researchers on the Google Brain team to conduct machine learning and deep neural network research. Its flexible architecture allows computation to be deployed across various platforms, such as CPUs, GPUs, and TPUs, and makes it significantly easier for developers to move from model development to deployment.

Keras: Keras6 is a high-level deep learning API developed at Google for implementing neural networks. It is written in Python and is designed to simplify neural network implementation while delegating the underlying computation to a backend framework. Keras supports backends such as TensorFlow, Theano, and Microsoft Cognitive Toolkit. Keras allows users to deploy deep models on smartphones, in browsers, and on the Java virtual machine. It also supports distributed training of deep learning models on clusters of GPUs and TPUs.

PyTorch: PyTorch7 is an open-source machine learning framework originally developed by the Facebook AI Research lab (FAIR) to speed up the path from research prototyping to production deployment. PyTorch has a user-friendly interface that allows quick, flexible experimentation. It supports NLP, machine learning, and computer vision applications. It enables GPU-accelerated tensor computation and the construction of computational graphs. At the time of writing, the most recent version of PyTorch was 1.11, which includes data loading primitives for building flexible, high-performance data pipelines.

Pandas: Pandas8 is an open-source Python library that provides high-performance, easy-to-use data structures and data analysis tools. Pandas is applied in various scientific and commercial fields, including banking, business, and statistics. At the time of writing, the most recent version was pandas 1.4.1, a patch release focused on fixing regressions.

NumPy: Travis Oliphant created Numerical Python (NumPy)9 in 2005 as an open-source package for numerical computing in Python. It provides matrices and functions for linear algebra and the Fourier transform. NumPy's array object, ndarray, comes with many helper functions that make it convenient to work with. At the time of writing, the latest version of NumPy was 1.22.3; NumPy can also integrate smoothly and quickly with a wide range of databases.

SciPy: NumPy provides a fast multidimensional array with rich array manipulation features. SciPy10 is a free Python library built on NumPy. SciPy consists of many functions that operate on NumPy arrays and are helpful for a variety of scientific and engineering tasks. At the time of writing, the latest version of SciPy was 1.8.0; it offers routines for data processing tasks such as optimization, interpolation, signal processing, and statistics.
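Word embeddings are typically stored as a 2-D NumPy ndarray with one row per word. The sketch below uses hypothetical 4-dimensional vectors (real embeddings usually have 50 to 300 or more dimensions) and relies only on NumPy broadcasting, matrix multiplication, and `np.linalg.norm` to compute pairwise cosine similarities and each word's nearest neighbor.

```python
import numpy as np

# Hypothetical 4-dimensional embeddings, one row per word.
vocab = ["king", "queen", "apple"]
E = np.array([
    [0.8, 0.6, 0.1, 0.0],
    [0.7, 0.7, 0.2, 0.1],
    [0.0, 0.1, 0.9, 0.8],
])


def cosine_similarity_matrix(M):
    """Pairwise cosine similarity between the rows of a 2-D ndarray."""
    unit = M / np.linalg.norm(M, axis=1, keepdims=True)  # broadcasting divides each row by its norm
    return unit @ unit.T


S = cosine_similarity_matrix(E)
S_other = S.copy()
np.fill_diagonal(S_other, -np.inf)  # ignore each word's similarity to itself
nearest = {w: vocab[int(np.argmax(S_other[i]))] for i, w in enumerate(vocab)}
print(nearest)
```

Normalizing the rows once and then taking a single matrix product avoids a Python-level loop over word pairs, which matters for vocabularies with hundreds of thousands of words.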

Key applications of text analytics

Key applications of text analytics on unstructured text include text classification, sentiment analysis, NER, recommendation systems, biomedical text mining, and topic modeling.

Text analytics

Text classification

Text classification is the process of categorizing texts into organized groups. Text gathered from a variety of sources offers a great deal of knowledge. It is difficult and time-consuming to extract usable knowledge from unstructured data. Text classification can be done manually or automatically, as shown in Fig. 17.

Fig. 17.

Approaches for text classification

Automatic text classification is becoming increasingly essential due to the availability of enormous corpora. It can be performed using either a rule-based or a data-driven technique. A rule-based technique uses domain knowledge and a set of predefined rules to classify text into multiple groups. A data-driven technique organizes text based on observations of the data: machine learning or deep learning algorithms discover the intrinsic relationship between texts and their labels from the data.

Data-driven techniques that rely solely on handcrafted features often fail to extract relevant knowledge from large datasets. Embedding techniques instead map text into low-dimensional feature vectors, which aids in extracting relationships and meaningful knowledge (Dhar et al. 2020).
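The step of mapping text into a low-dimensional feature vector can be illustrated by averaging word embeddings. In the sketch below, the 3-dimensional embeddings, the two training sentences, and all function names are hypothetical, and a simple nearest-centroid classifier stands in for the machine learning or deep learning model a real system would train on these vectors.

```python
import math

# Hypothetical 3-dimensional word embeddings; in practice these would come from a
# pre-trained model such as Word2Vec, GloVe, or fastText.
EMB = {
    "goal": [0.9, 0.1, 0.0], "match": [0.8, 0.2, 0.1], "team": [0.7, 0.1, 0.2],
    "stock": [0.1, 0.9, 0.1], "market": [0.0, 0.8, 0.2], "shares": [0.1, 0.7, 0.0],
}
DIM = 3


def doc_vector(text):
    """Map a text to a fixed-length vector by averaging the embeddings of known words."""
    vecs = [EMB[w] for w in text.lower().split() if w in EMB]
    if not vecs:
        return [0.0] * DIM
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def train_centroids(labeled_texts):
    """Nearest-centroid 'training': average the document vectors of each class."""
    by_label = {}
    for text, label in labeled_texts:
        by_label.setdefault(label, []).append(doc_vector(text))
    return {label: [sum(col) / len(vs) for col in zip(*vs)] for label, vs in by_label.items()}


def classify(text, centroids):
    """Assign the class whose centroid is most similar to the text's vector."""
    v = doc_vector(text)
    return max(centroids, key=lambda label: cosine(v, centroids[label]))


centroids = train_centroids([("Team wins the match", "sports"), ("Stock market falls", "finance")])
print(classify("a late goal", centroids), classify("shares rise", centroids))  # sports finance
```

Neither "goal" nor "shares" appears in the training sentences, yet both texts are classified correctly because their embeddings lie close to the embeddings of the training words; this is the kind of generalization that handcrafted features alone do not provide.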

Sentiment analysis

Sentences can be articulated in a variety of ways: they may express emotions, judgments, visions or insights, or people's perspectives. The meaning of individual words affects both readers and writers: writers choose specific words to communicate feelings, and readers try to interpret the emotion according to their ability to analyze it. Deep learning systems have already demonstrated outstanding performance in NLP applications such as sentiment classification and emotion detection on many datasets. These models do not require predefined, handcrafted features; instead, they learn high-level representations of the input data on their own (Dessì et al. 2021). Sentiment analysis techniques are divided into lexicon-based approaches, machine learning approaches, and combinations of the two (Mohamed et al. 2020). The internet is an unstructured yet rich source of knowledge that contains many text documents offering opinions and reviews. Personal decisions, businesses, and institutions can all benefit from sentiment recognition (Onan 2021).
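To make the lexicon-based family concrete, here is a minimal sketch. The polarity lexicon, the negation words, and the one-word negation scope are all hypothetical simplifications; practical lexicon-based systems use curated sentiment lexicons and richer rules for negation, intensifiers, and punctuation.

```python
# Hypothetical polarity lexicon and negation words (illustrative values only).
LEXICON = {"good": 1.0, "great": 2.0, "excellent": 2.0, "bad": -1.0, "terrible": -2.0, "boring": -1.0}
NEGATORS = {"not", "never", "no"}


def lexicon_sentiment(text):
    """Sum word polarities; a negator flips the polarity of the word right after it."""
    score, negate = 0.0, False
    for raw in text.lower().split():
        word = raw.strip(".,!?")
        if word in NEGATORS:
            negate = True
            continue
        polarity = LEXICON.get(word, 0.0)
        score += -polarity if negate else polarity
        negate = False
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"
```

For example, `lexicon_sentiment("The acting was not good.")` returns `"negative"`. Such rules need no training data but miss any sentiment word absent from the lexicon, which is one reason machine learning and deep learning approaches, or hybrids, are used instead.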

Named entity recognition

A named entity is a word or phrase that distinguishes one object from a set of entities that share similar features. It narrows the range of entities that describe a subject by using one or more restrictive identifiers. The term Named Entity was first used at the Sixth Message Understanding Conference (MUC-6) to describe the problem of recognizing the names of organizations, persons, and geographic locations in text, as well as monetary, time, and percentage expressions. Interest in NER then surged, with numerous researchers devoting significant time and effort to the subject (Grishman and Sundheim 1996; Nasar et al. 2021). The extraction of intelligent information from text relies heavily on NER. The task is difficult because of the polymorphemic behavior of many words (Khan et al. 2020). NER is used in various NLP applications, including text interpretation, information extraction, question answering, and automatic text summarization. Four main approaches are used in NER: (1) rule-based approaches, which rely on hand-crafted rules; (2) unsupervised learning approaches, which use unsupervised algorithms rather than hand-labeled training examples; (3) feature-based supervised learning approaches, which rely on supervised learning algorithms with carefully engineered features; and (4) deep learning-based approaches, which automatically learn the representations needed for classification and detection from the training data in an end-to-end manner.
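Approach (1) can be sketched as a gazetteer lookup. The gazetteer entries, the greedy longest-match rule, and the BIO output tags (B = beginning, I = inside, O = outside an entity) are assumptions chosen for illustration; BIO is a common labeling convention that the supervised and deep learning approaches also predict, which makes rule-based output directly comparable with theirs.

```python
# Hypothetical gazetteer mapping lowercase token sequences to entity types.
GAZETTEER = {
    ("new", "york"): "LOC",
    ("google",): "ORG",
    ("barack", "obama"): "PER",
}
MAX_LEN = max(len(key) for key in GAZETTEER)


def rule_based_ner(tokens):
    """Greedy longest-match gazetteer lookup; returns one BIO tag per token."""
    tags = ["O"] * len(tokens)
    lowered = [t.lower() for t in tokens]
    i = 0
    while i < len(tokens):
        for n in range(min(MAX_LEN, len(tokens) - i), 0, -1):  # try the longest span first
            label = GAZETTEER.get(tuple(lowered[i:i + n]))
            if label:
                tags[i] = f"B-{label}"
                for j in range(i + 1, i + n):
                    tags[j] = f"I-{label}"
                i += n
                break
        else:  # no entry starts at position i
            i += 1
    return tags
```

For the tokens of "Barack Obama visited Google in New York", the function tags "Barack Obama" as a person, "Google" as an organization, and "New York" as a location. Any name missing from the gazetteer is left untagged, which illustrates the coverage limitation of hand-crafted rules.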

Biomedical text mining

Healthcare experts struggle to classify diseases based on the available data. Clinical named entities must be recognized to analyze massive electronic medical records effectively. Conventional rule-based systems require a significant amount of human effort to create rules and vocabularies, whereas machine learning-based approaches require time-consuming feature extraction. Deep learning models such as LSTM with a conditional random field (CRF) layer have performed well on several datasets. Clinical named entity recognition identifies specific concepts, such as medical tests and therapies, in unstructured texts. It is crucial for converting unstructured electronic medical record text into structured medical information (Yang et al. 2019).
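In an LSTM-CRF tagger, the LSTM produces a score for every tag at every position (emission scores), and the CRF layer adds tag-to-tag transition scores and selects the best-scoring tag sequence with Viterbi decoding. The sketch below shows only that decoding step, with made-up scores and a hypothetical three-tag scheme; training the LSTM and learning the transition scores are omitted.

```python
def viterbi(emissions, transitions, tags):
    """Return the highest-scoring tag sequence under a linear-chain CRF, and its score.

    emissions[t][y]   -- score of tag y at position t (e.g. from a BiLSTM layer)
    transitions[a, b] -- score of moving from tag a to tag b
    """
    best = [dict(emissions[0])]  # best[t][y]: best score of any path ending in y at t
    backpointers = []
    for t in range(1, len(emissions)):
        scores, pointers = {}, {}
        for y in tags:
            prev = max(tags, key=lambda p: best[-1][p] + transitions[p, y])
            scores[y] = best[-1][prev] + transitions[prev, y] + emissions[t][y]
            pointers[y] = prev
        best.append(scores)
        backpointers.append(pointers)
    last = max(tags, key=lambda y: best[-1][y])
    path = [last]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    return path[::-1], best[-1][last]


tags = ["O", "B", "I"]
transitions = {(a, b): 0.0 for a in tags for b in tags}
transitions["O", "I"] = -100.0  # an I tag may not directly follow O
emissions = [{"O": 1.0, "B": 0.5, "I": 0.0}, {"O": 0.0, "B": 0.0, "I": 2.0}]
path, score = viterbi(emissions, transitions, tags)
```

Choosing the best tag at each position independently would give the invalid sequence O, I; the transition scores make the decoder prefer B, I instead. This ability to enforce consistent label sequences is a key reason CRF layers are paired with LSTMs for clinical NER.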

Topic modeling

Topic modeling aims to uncover the underlying structure of document collections. Topic models were first created to retrieve information from massive document collections. Without relying on metadata, topic models can be used to explore collections of journal articles by subject. LSA uses SVD to extract the fundamental themes from a term-document matrix, yielding mathematically independent (orthogonal) topics. Just as principal component analysis reduces the number of features in a prediction task, topic models are essentially a compression technique that maximizes topic variance on a simplified representation of a document collection (Zhao et al. 2021). Whereas text classification organizes text into categories to extract valuable information from it, topic modeling determines abstract topics for a group of texts or documents. Topic modeling is commonly used to extract semantic information from textual material (Kumar et al. 2021).
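The LSA procedure described above can be sketched with `numpy.linalg.svd`. The small term-document count matrix is hypothetical and deliberately contains two clear themes; keeping the top k = 2 singular vectors yields two orthogonal latent topics and the best rank-2 approximation of the matrix.

```python
import numpy as np

terms = ["goal", "match", "stock", "market"]
# Hypothetical term-document count matrix: rows are terms, columns are four documents.
A = np.array([
    [3, 1, 0, 0],
    [1, 3, 0, 0],
    [0, 0, 2, 1],
    [0, 0, 1, 2],
], dtype=float)

# Truncated SVD: A is approximated by U_k diag(s_k) V_k^T, keeping the k strongest topics.
U, s, Vt = np.linalg.svd(A, full_matrices=False)
k = 2
term_topics = U[:, :k] * s[:k]              # each term as a point in k-dimensional topic space
doc_topics = Vt[:k, :].T * s[:k]            # each document in the same space
A_k = U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]  # best rank-k approximation of A

# The two terms with the largest loading on each topic.
top_terms = [{terms[i] for i in np.argsort(-np.abs(U[:, t]))[:2]} for t in range(k)]
print(top_terms)
```

The first topic loads on "goal" and "match" and the second on "stock" and "market". Because the columns of U are orthogonal, the extracted topics are mathematically independent, which is the property of LSA noted above.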

Datasets used for text analytics

This section outlines the datasets commonly used for text analytics, as shown in Table 4. Researchers have offered several text analytics datasets, covering application fields found in the literature such as text classification, sentiment analysis, NER, recommendation systems, and topic modeling. An overview of the reviewed studies in terms of application area, datasets, model architecture, embedding methods, and performance evaluation is given in Annexure A.

Table 4.

Dataset used for text analytics purpose

| Sr. No | Name of dataset | References |

|---|---|---|

| 1 | Amazon product review | Liu et al. (2021b), Wang et al. (2021c), Hajek et al. (2020), Rezaeinia et al. (2019), Hao et al. (2020), Yang et al. (2021a), Dau et al. (2021), and Khan et al. (2021) |

| 2 | Arabic news datasets | Almuzaini and Azmi (2020), Alrajhi and ELAffendi (2019), Almuhareb et al. (2019), and Elnagar et al. (2020) |

| 3 | Fudan dataset | Zhang et al. (2021) and Zhu et al. (2020a) |

| 4 | i2b2: Informatics for Integrating Biology & the Bedside | Yang et al. (2019), Catelli et al. (2021), and Catelli et al. (2020) |

| 5 | IMDB | Li et al. (2021), Wang et al. (2021c), Jang et al. (2020), Hao et al. (2020), and Zhu et al. (2020a) |

| 6 | Yelp | Wang et al. (2021c), Alamoudi and Alghamdi (2021), Hao et al. (2020), Zhu et al. (2020a), Yang et al. (2021a), Dau et al. (2021), Sun et al. (2020a), and Khan et al. (2021) |

| 7 | SemEval | Wang et al. (2021c), Alamoudi and Alghamdi (2021), Naderalvojoud and Sezer (2020), González et al. (2020), Rida-e-fatima et al. (2019), Zhu et al. (2020a), Liu and Shen (2020), and Sharma et al. (2021) |

| 8 | Sogou | Zhang et al. (2021) and Xiao et al. (2019) |

| 9 | Stanford sentiment treebank | Wang et al. (2021c), Naderalvojoud and Sezer (2020), and Rezaeinia et al. (2019) |

| 10 |  | Amin et al. (2020), Alharthi et al. (2021), and Malla and Alphonse (2021) |

| 11 | Wikipedia | Li et al. (2021) and Zhang et al. (2021) |

| 12 | Word-Sim | Hammar et al. (2020), Li et al. (2019a), and Zhu et al. (2020a) |

Amazon dataset: Customer reviews of products purchased through the Amazon website are included in the dataset. The dataset consists of binary and multiclass classifications for review categories. The data is arranged into training and testing sets for both product classification categories.

Arabic news datasets: The Arabic newsgroups dataset contains documents posted to several newsgroups on various themes. Different versions of this dataset are used for text classification, text clustering, and other tasks. The Arabic news texts corpus is organized into nine categories: culture, diversity, economy, international news, local news, politics, society, sports, and technology. It contains 10,161 documents with a total of 1.474 million words.

Fudan dataset: This is an image database for pedestrian detection. The photographs were taken at various locations around campus and on city streets, and each photograph contains at least one pedestrian. The heights of the labeled pedestrians range from 180 to 390 pixels, and all labeled pedestrians are standing upright. The database contains 170 photographs with 345 labeled pedestrians: 96 photographs from the University of Pennsylvania and 74 from Fudan University.

i2b2: Informatics for Integrating Biology & the Bedside (i2b2) is an openly accessible platform for clinical data processing and analytics that allows heterogeneous healthcare and research data to be shared, integrated, standardized, and analyzed. Both annotated and unannotated de-identified hospital discharge summaries are provided for academic research.

Movie review dataset: The movie review (MR) dataset is a collection of movie reviews labeled by sentiment, used to decide whether each review is favorable or unfavorable. It contains 10,662 sentences, evenly split between positive and negative examples.

Yelp dataset: The Yelp dataset supports two sentiment analysis tasks. One predicts fine-grained sentiment labels (Yelp-5), and the other predicts binary positive or negative polarity (Yelp-2). Yelp-5 has 650,000 training and 50,000 testing samples, while Yelp-2 has 560,000 training and 38,000 testing samples.

SemEval: SemEval is a domain-specific dataset of laptop and restaurant reviews that have been thoroughly annotated by humans. It is frequently used for aspect-based sentiment analysis, where the sentiment expressed in a sentence, section, or text span is determined irrespective of the specific entities or their characteristics. The dataset comprises over three thousand English reviews for each domain.

Sogou dataset: The Sogou news dataset combines the SogouCA and SogouCS news corpora. This Chinese dataset contains around 2.7 billion words and is published by Sogou, a Chinese commercial search engine.

Stanford Sentiment Treebank (SST) dataset: The SST dataset is an extension of the movie review dataset. SST-1 provides fine-grained (five-class) sentiment labels, while SST-2 provides binary labels; both are split into training, validation, and testing sets.

Twitter dataset: With the tremendous growth of online social networking websites and blogs, users share valuable information in the form of sentiments, thoughts, and opinions, including reports of epidemic outbreaks. Twitter generates vast amounts of data about epidemic outbreaks, customer reviews of products, and survey information. The Twitter Streaming API can be used to collect a Twitter dataset that includes disease information and supports geographical analysis of Twitter users.

Wikipedia: Wikipedia pages are used as a corpus to train the model. Preprocessing extracts useful information from the pages, such as article abstracts, using a dictionary of selected terms.

WordSim: WordSim is a set of tests for determining the similarity or relatedness of words. The WordSim353 dataset contains 353 word pairs in two groups: the first includes 153 word pairs rated by 13 subjects, and the second includes 200 word pairs rated by 16 subjects.
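Intrinsic evaluation on WordSim-style benchmarks usually correlates a model's similarity scores with the human ratings. The sketch below is a minimal illustration, assuming embeddings are held in a plain dict of NumPy vectors and the benchmark is a list of `(word1, word2, human_score)` tuples; the toy vectors and scores are invented and are not taken from WordSim353. Spearman's rank correlation is computed directly (with average ranks for ties) so that only NumPy is needed.

```python
import numpy as np

def cosine(u, v):
    """Cosine similarity between two vectors."""
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))

def average_ranks(values):
    """Rank values 1..n, giving tied values the mean of their ranks."""
    values = np.asarray(values, dtype=float)
    order = np.argsort(values, kind="mergesort")
    ranks = np.empty(len(values), dtype=float)
    i = 0
    while i < len(values):
        j = i
        # extend j over a run of tied values in sorted order
        while j + 1 < len(values) and values[order[j + 1]] == values[order[i]]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2.0 + 1.0
        i = j + 1
    return ranks

def spearman(x, y):
    """Spearman correlation = Pearson correlation of the rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    return float(np.corrcoef(rx, ry)[0, 1])

def evaluate_similarity(embeddings, pairs):
    """Correlate model cosine similarities with human scores.

    Pairs containing out-of-vocabulary words are skipped and counted.
    """
    model_scores, human_scores, skipped = [], [], 0
    for w1, w2, score in pairs:
        if w1 not in embeddings or w2 not in embeddings:
            skipped += 1
            continue
        model_scores.append(cosine(embeddings[w1], embeddings[w2]))
        human_scores.append(score)
    return spearman(model_scores, human_scores), skipped

# Toy data (hypothetical, for illustration only)
embeddings = {
    "car": np.array([1.0, 0.9, 0.1]),
    "automobile": np.array([0.95, 1.0, 0.05]),
    "fruit": np.array([0.1, 0.2, 1.0]),
    "apple": np.array([0.2, 0.1, 0.9]),
}
pairs = [
    ("car", "automobile", 8.9),
    ("apple", "fruit", 7.5),
    ("car", "fruit", 1.2),
    ("car", "banana", 1.0),  # "banana" is out of vocabulary
]
rho, skipped = evaluate_similarity(embeddings, pairs)
print(f"Spearman rho = {rho:.3f}, skipped = {skipped}")
```

Rank correlation is used rather than Pearson correlation because only the ordering of similarities is expected to match the human judgments, not their scale.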

Review on text analytics, word embedding application, and deep learning environment

Researchers have created numerous text analytics models for many domains. When building such models, the primary questions are which embedding method is suited to which application area and which deep learning strategy is appropriate. Annexure A describes various text analytics strategies with different embedding methods and deep learning algorithms, and reports the performance of each approach by application domain.

Text classification

Text categorization has been extensively researched and applied in many real-world applications. Text classification is the process of assigning texts such as tweets, news articles, and customer reviews to predefined categories. Building text and document classifiers involves feature extraction, dimensionality reduction, classifier selection, and evaluation (Jang et al. 2020). Recent advances have focused on learning low-dimensional, continuous vector representations of words, known as word embeddings, which can be applied directly to downstream applications, including machine translation, natural language interpretation, and text analytics (El-Alami et al. 2021), (Elnagar et al. 2020). Word embedding models use neural networks to represent the context of a target word and its relationships with the surrounding context words (Almuzaini and Azmi 2020). Combining an attention mechanism and feature selection with an LSTM and character embeddings achieves an accuracy of 84.2% in classifying Chinese text (Zhu et al. 2020b). A deep feedforward neural network with the CBOW model achieves an accuracy of 89.56% for fake consumer review detection (Hajek et al. 2020).
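To make the CBOW idea concrete (predicting a target word from the average of its context word vectors), the following is a minimal NumPy sketch with a full softmax output layer. It is a toy illustration under stated assumptions: a tiny invented corpus, a window of 2, plain stochastic gradient descent, and no negative sampling or hierarchical softmax, which production Word2Vec implementations typically use for efficiency. The function names are hypothetical.

```python
import numpy as np

def cbow_pairs(tokens, window):
    """Return (context_word_list, target_word) pairs for CBOW."""
    pairs = []
    for i, target in enumerate(tokens):
        context = tokens[max(0, i - window):i] + tokens[i + 1:i + 1 + window]
        if context:
            pairs.append((context, target))
    return pairs

def train_cbow(tokens, dim=8, window=2, lr=0.1, epochs=200, seed=0):
    vocab = sorted(set(tokens))
    idx = {w: i for i, w in enumerate(vocab)}
    V = len(vocab)
    rng = np.random.default_rng(seed)
    W_in = rng.normal(scale=0.1, size=(V, dim))   # input (context) embeddings
    W_out = rng.normal(scale=0.1, size=(dim, V))  # output (softmax) weights
    pairs = [([idx[c] for c in ctx], idx[t]) for ctx, t in cbow_pairs(tokens, window)]
    losses = []
    for _ in range(epochs):
        total = 0.0
        for ctx, t in pairs:
            h = W_in[ctx].mean(axis=0)              # average of context vectors
            scores = h @ W_out
            probs = np.exp(scores - scores.max())
            probs /= probs.sum()                    # softmax over the vocabulary
            total -= np.log(probs[t])               # cross-entropy loss
            grad_scores = probs.copy()
            grad_scores[t] -= 1.0                   # dLoss/dscores
            grad_h = W_out @ grad_scores            # dLoss/dh, before W_out changes
            W_out -= lr * np.outer(h, grad_scores)
            # each context word receives an equal share of the gradient;
            # np.add.at handles repeated context words correctly
            np.add.at(W_in, ctx, -lr * grad_h / len(ctx))
        losses.append(total / len(pairs))
    return W_in, idx, losses

corpus = "the cat sat on the mat the dog sat on the rug".split()
W_in, idx, losses = train_cbow(corpus)
print(f"loss: first epoch {losses[0]:.3f}, last epoch {losses[-1]:.3f}")
vector_for_cat = W_in[idx["cat"]]  # learned embedding for "cat"
```

After training, the rows of `W_in` are the word embeddings; words that appear in similar contexts (here "cat" and "dog", or "mat" and "rug") are pushed toward similar vectors.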

LSTM with the Word2Vec model achieves an F1-score of 98.03% for word segmentation in the Arabic language (Almuhareb et al. 2019). Neural network-based word embedding efficiently models a word and its context and has become one of the most widely used distributed word representation methods (N.H. Phat and Anh 2020), (Alharthi et al. 2021).
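Many of the results in this section pair an LSTM with pre-trained Word2Vec vectors. A minimal NumPy sketch of a single-layer LSTM forward pass over a sequence of embedding vectors follows. The random weights and the four-word input are placeholders standing in for trained parameters and real Word2Vec vectors, and stacking the gates as [input, forget, candidate, output] in one matrix is one common convention, not necessarily the one used in any cited work.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_forward(X, W, U, b):
    """Run an LSTM over X of shape (T, d); return hidden states of shape (T, H).

    W: (4H, d) input weights, U: (4H, H) recurrent weights, b: (4H,) bias.
    Gate rows are stacked as [input, forget, candidate, output].
    """
    H = U.shape[1]
    h = np.zeros(H)
    c = np.zeros(H)
    outputs = []
    for x_t in X:
        z = W @ x_t + U @ h + b
        i = sigmoid(z[0:H])          # input gate
        f = sigmoid(z[H:2 * H])      # forget gate
        g = np.tanh(z[2 * H:3 * H])  # candidate cell state
        o = sigmoid(z[3 * H:4 * H])  # output gate
        c = f * c + i * g            # update cell memory
        h = o * np.tanh(c)           # new hidden state
        outputs.append(h)
    return np.stack(outputs)

rng = np.random.default_rng(0)
d, H, T = 50, 16, 4                    # embedding size, hidden size, sequence length
X = rng.normal(size=(T, d))            # placeholder for 4 pre-trained word vectors
W = rng.normal(scale=0.1, size=(4 * H, d))
U = rng.normal(scale=0.1, size=(4 * H, H))
b = np.zeros(4 * H)
states = lstm_forward(X, W, U, b)
sentence_vector = states[-1]           # last hidden state, e.g. input to a classifier
print(states.shape)
```

For sequence labeling tasks such as word segmentation, each row of `states` would feed a per-token classifier; for document classification, the last (or a pooled) hidden state is typically passed to a softmax layer.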

Machine learning algorithms such as the Naive Bayes classifier (NBC), support vector machine (SVM), decision tree (DT), and random forest (RF) have been widely used for information retrieval, document categorization, image, video, and human activity classification, bioinformatics, and safety and security (Shaikh et al. 2021). Deep learning models such as a CNN with GloVe embeddings improve citation screening, achieving an accuracy of 84.0% (V Dinter et al. 2021). To classify meaningful information into various categories, a GRU with GloVe embeddings achieves an accuracy of 84.8% (Zulqarnain et al. 2019). Information retrieval systems commonly use text classification methods (Greiner-Petter et al. 2020), (Kastrati et al. 2019). Text classification serves a variety of purposes, such as the classification of news articles (Spinde et al. 2021), (Roman et al. 2021), (Choudhary et al. 2021), (de Mendonça and da Cruz Júnior 2020), (Roy et al. 2020). Word2Vec, GloVe, and fastText have been compared for matching corresponding activity pairs, and the experimental evaluation shows that fastText achieves an F1-score of 91.00% (Shahzad et al. 2019). Extracting meta-textual and word-level features with BERT achieves an accuracy of 95% in classifying insincere questions on question-answering websites (Al-Ramahi and Alsmadi 2021). CNN with the Word2Vec model achieves an accuracy of 90% for text classification tasks (Kim and Hong 2021), (Ochodek et al. 2020). Extracting discriminative semantic features from text that contains polysemous words is challenging. To address this, capsule networks with routing-on-hyperplane (HCapsNet) have been proposed: they build a vectorized representation of semantics and use hyperplanes to decompose each capsule and capture the individual senses of a word. An experimental investigation of this dynamic routing-on-hyperplane approach with Word2Vec on text classification tasks such as sentiment analysis, question classification, and topic classification shows that HCapsNet achieves the highest accuracy, 94.2% (Du et al. 2019). A hierarchical attention network based on Word2Vec embeddings achieves an accuracy of 84.57% in detecting fraud from annual reports (Craja et al. 2020). Text classification that transfers knowledge from one domain to another using an LSTM and the Word2Vec embedding model achieves an accuracy of 90.07% (Pan et al. 2019a). Social media posts are another frequent target of text classification (Hammar et al. 2020). Domain-specific word embeddings outperform the BERT embedding model, achieving an F1-score of 94.45% (Grzeça et al. 2020), (Zuheros et al. 2019), (Xiong et al. 2021). An ensemble deep learning model with RoBERTa embeddings achieves an accuracy of 90.30% in classifying tweets for information collection (Malla and Alphonse 2021), (Hasni and Faiz 2021), (Zheng et al. 2020). A CNN with a domain-specific word embedding model achieves an F1-score of 93.4% in classifying tweets as positive or negative (Shin et al. 2020).
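CNN-plus-Word2Vec text classifiers recur throughout the paragraph above. The sketch below shows the forward pass of a single-window-size CNN of the kind commonly used for sentence classification: look up word vectors, slide convolution filters over windows of consecutive words, apply ReLU, max-pool over time, and apply a softmax layer. The vocabulary, random embedding table, number of filters, and window size are assumptions for illustration; in practice the embedding table would be initialized from pre-trained Word2Vec or GloVe vectors and all weights would be learned.

```python
import numpy as np

def cnn_text_forward(token_ids, emb, filters, f_bias, W_out, b_out):
    """Forward pass of a one-layer CNN text classifier.

    token_ids: list of word indices, length n >= window
    emb: (V, d) embedding table
    filters: (k, window, d) convolution filters; f_bias: (k,)
    W_out: (k, C) output weights; b_out: (C,)
    Returns class probabilities of shape (C,).
    """
    X = emb[token_ids]                          # (n, d) sentence matrix
    k, window, _ = filters.shape
    n = X.shape[0]
    feature_maps = np.empty((k, n - window + 1))
    for t in range(n - window + 1):
        region = X[t:t + window]                # (window, d) word n-gram region
        # dot product of every filter with the current region
        feature_maps[:, t] = np.tensordot(filters, region, axes=([1, 2], [0, 1])) + f_bias
    feature_maps = np.maximum(feature_maps, 0.0)   # ReLU
    pooled = feature_maps.max(axis=1)              # max-over-time pooling, shape (k,)
    logits = pooled @ W_out + b_out
    probs = np.exp(logits - logits.max())
    return probs / probs.sum()                     # softmax

rng = np.random.default_rng(1)
vocab = {"the": 0, "movie": 1, "was": 2, "great": 3, "boring": 4}  # toy vocabulary
V, d, k, window, C = len(vocab), 10, 6, 3, 2
emb = rng.normal(size=(V, d))                      # stand-in for pre-trained vectors
filters = rng.normal(scale=0.1, size=(k, window, d))
f_bias = np.zeros(k)
W_out = rng.normal(scale=0.1, size=(k, C))
b_out = np.zeros(C)
ids = [vocab[w] for w in "the movie was great".split()]
probs = cnn_text_forward(ids, emb, filters, f_bias, W_out, b_out)
print(probs)
```

Max-over-time pooling makes the output size independent of sentence length, which is why this design handles variable-length texts without padding to a fixed size at the pooling stage.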

Text categorization algorithms have been successfully applied to Korean, French, Arabic, Tigrinya, and Chinese for document and tweet classification (Kozlowski et al. 2020), (Jin et al. 2020). CNN with the CBOW model achieves an accuracy of 93.41% in classifying Tigrinya text (Fesseha et al. 2021). LSTM with Word2Vec achieves 99.55% for tagging morphemes in Arabic (Alrajhi and ELAffendi 2019). With Word2Vec, a CNN achieves an accuracy of 96.60% on Chinese microblogs; this result indicates that vectors using Chinese characters as feature units yield better accuracy than word-level vectors (Xu et al. 2020). For Hungarian, lexical consistency can be improved by embedding techniques based on sub-word units, such as character n-grams and lemmatization (Döbrössy et al. 2019). To assess pre-trained word embeddings accurately for downstream tasks, it is necessary to capture word similarity. Traditionally, this is measured by comparing the similarity scores produced by a model with human judgments. The Wikipedia Agent Using Local Embedding Similarities (WALES) has been proposed as an alternative metric for evaluating word similarity; it relies on an agent traversing the Wikipedia hyperlink graph. An evaluation of this graph-based technique on English Wikipedia demonstrates that it measures similarity effectively without explicit human labeling (Giesen et al. 2022). A Doc2Vec embedding model is used to extract features from the text, which are then passed to a CNN for classification; on the Turkish Text Classification 3600 (TTC-3600) dataset, the model classifies text with an accuracy of 94.17% (Dogru et al. 2021). LSTM with CBOW achieves an accuracy of 90.5% in comparing the semantic similarity between words in Chinese (Liao and Ni 2021). The reviewed text classification techniques, with their data sources, application areas, datasets, and performance, are summarized in Table 7 of Annexure A.
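Sub-word methods such as those mentioned for Hungarian represent a word through its character n-grams, so that rare or inflected forms share parameters with related words. A minimal sketch in the spirit of fastText follows: the word is wrapped in boundary markers `<` and `>`, its 3- to 6-character n-grams (plus the whole marked word) are hashed into a fixed number of buckets, and the word vector is the average of the bucket vectors. The bucket count, vector size, random table, the hypothetical `SubwordEmbedder` class, and the use of `zlib.crc32` as the hash are all assumptions; fastText itself uses its own hash function and learns the table during training.

```python
import zlib
import numpy as np

def char_ngrams(word, n_min=3, n_max=6):
    """Character n-grams of a word wrapped in boundary markers."""
    marked = f"<{word}>"
    grams = []
    for n in range(n_min, n_max + 1):
        for i in range(len(marked) - n + 1):
            grams.append(marked[i:i + n])
    if marked not in grams:
        grams.append(marked)   # whole word as an extra unit
    return grams

class SubwordEmbedder:
    """Hypothetical helper: hash n-grams into buckets and average their vectors."""

    def __init__(self, buckets=2000, dim=16, seed=0):
        rng = np.random.default_rng(seed)
        self.buckets = buckets
        # random placeholder; in practice this table is learned
        self.table = rng.normal(scale=0.1, size=(buckets, dim))

    def bucket(self, gram):
        return zlib.crc32(gram.encode("utf-8")) % self.buckets

    def vector(self, word):
        ids = [self.bucket(g) for g in char_ngrams(word)]
        return self.table[ids].mean(axis=0)

emb = SubwordEmbedder()
print(char_ngrams("cat"))
v = emb.vector("házakban")   # an unseen inflected form still gets a vector
```

Because the vector is built from n-grams rather than looked up by whole word, out-of-vocabulary and morphologically rich forms, which are common in languages like Hungarian, still receive a representation.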

Table 7.

Review of text classification

| Sr. No | References | Application area | Name of dataset | Model architecture | Embedding method | Performance |
|---|---|---|---|---|---|---|
| 1. | Craja et al. (2020) | Annual report analysis for fraud detection | EDGAR database | LR, RF, SVM, XGB, ANN, HAN | Word2Vec | HAN achieves an accuracy of 84.57% |
| 2. | Alharthi et al. (2021) | Classification of low-quality Arabic content | Twitter dataset | CNN, LSTM | Word2Vec, AraVec | LSTM achieves an accuracy of 98% |
| 3. | Kozlowski et al. (2020) | French social media tweet analysis for crisis management | French dataset | SVM, CNN | fastText, BERT, FlauBERT | FlauBERT achieves a micro F1-score of 85.4% |
| 4. | Zuheros et al. (2019) | Social networking site tweet analysis for the use of polysemous words | Social media texts, both English and Spanish | XGBoost, HAN, LSTM | GloVe | LSTM + GloVe achieves an F1-score of 97.90% |
| 5. | Liao and Ni (2021) | Semantic similarity between words in the Chinese language | Manually collected datasets from students | SVM, LR, RF, CNN, LSTM | Word2Vec (CBOW, Skip-Gram) | LSTM + CBOW achieves an accuracy of 90.5% |
| 6. | Ochodek et al. (2020) | Estimation of software development | Project data of Poznan University of Technology Company | CNN | DSWE | DSWE model achieves an accuracy of 45.33% |
| 7. | El-Alami et al. (2021) | Arabic text categorization | OSAC datasets (corpus including BBC, CNN, and OSAC) | SVM, MLP, CNN, LSTM, BiLSTM | ULMFiT, ELMo, AraBERT | AraBERT achieves an accuracy of 99% |
| 8. | Elnagar et al. (2020) | Arabic text classification | SANAD, NADiA | GRU, LSTM, CNN, BiGRU, BiLSTM, HAN | Word2Vec | GRU model achieves an accuracy of 96.94% |
| 9. | Shaikh et al. (2021) | Bloom's learning outcomes classification | Sukkur IBA University dataset, Najran University (Saudi Arabia) dataset | SVM, NB, LR, RF, RNN, LSTM | Word2Vec, fastText, DSWE, GloVe | LSTM + DSWE achieves an accuracy of 87% |
| 10. | Zhu et al. (2020b) | Character embedding for Chinese short text classification | THUCNews dataset, Toutiao dataset, Invoice dataset | RNN, LSTM, HAN | Chinese character embedding (AFC) | LSTM + AFC achieves an accuracy of 84.2% |
| 11. | Roman et al. (2021) | Citation intent classification | Citation Context Dataset, SciCite dataset | HDBSCAN | GloVe, BERT | K-means clustering + BERT achieves a precision of 89% |
| 12. | Dinter et al. (2021) | Citation screening to improve the systematic literature review process | 20 publicly available datasets | CNN | GloVe | CNN model achieves an accuracy of 88% |
| 13. | Hammar et al. (2020) | Classification of Instagram posts from the fashion domain | Corpora of Instagram posts, WordSim353, SimLex-999, FashionSim | CNN | fastText, Word2Vec, GloVe | CNN + fastText achieves an F1-score of 61.00% |
| 14. | Spinde et al. (2021) | Classification of news articles to detect bias-inducing words | News articles | LDA, NB, SVM, KNN, DT, RF, XGBoost, MLP | TF-IDF, LIWC | Achieves an F1-score of 43% |
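Table 7 reports accuracy, precision, F1-score, and micro F1-score, which are not interchangeable, particularly on imbalanced data. The sketch below shows how these metrics are computed from true and predicted labels; the label lists are made up for illustration, and the function names are hypothetical. Macro F1 averages the per-class F1 scores, while micro F1 pools true positives, false positives, and false negatives across classes; for single-label multiclass tasks with all classes included, micro F1 equals accuracy.

```python
def per_class_counts(y_true, y_pred, label):
    """True positives, false positives, and false negatives for one label."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
    return tp, fp, fn

def safe_div(a, b):
    return a / b if b else 0.0

def f1_from_counts(tp, fp, fn):
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    return precision, recall, safe_div(2 * precision * recall, precision + recall)

def classification_report(y_true, y_pred):
    labels = sorted(set(y_true) | set(y_pred))
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    per_class = {}
    totals = [0, 0, 0]  # pooled tp, fp, fn for micro averaging
    for label in labels:
        tp, fp, fn = per_class_counts(y_true, y_pred, label)
        per_class[label] = f1_from_counts(tp, fp, fn)  # (precision, recall, F1)
        totals = [totals[0] + tp, totals[1] + fp, totals[2] + fn]
    macro_f1 = sum(f1 for _, _, f1 in per_class.values()) / len(labels)
    _, _, micro_f1 = f1_from_counts(*totals)
    return {"accuracy": accuracy, "macro_f1": macro_f1,
            "micro_f1": micro_f1, "per_class": per_class}

y_true = ["pos", "pos", "neg", "neg", "neg", "neu"]
y_pred = ["pos", "neg", "neg", "neg", "pos", "neu"]
report = classification_report(y_true, y_pred)
print(report["accuracy"], report["macro_f1"], report["micro_f1"])
```

In this toy example the macro F1 (about 0.72) differs from the accuracy and micro F1 (both 4/6), because the rare "neu" class is predicted perfectly and weighs as much as the other classes in the macro average.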