Abstract

Neurons in parietal cortex exhibit task-related activity during decision-making tasks. However, it remains unclear how long-term training to perform different tasks over months or even years shapes neural computations and representations. We examine lateral intraparietal area (LIP) responses during a visual motion delayed-match-to-category task. We consider two pairs of male macaque monkeys with different training histories: one trained only on the categorization task, and another first trained to perform fine motion-direction discrimination (i.e., pretrained). We introduce a novel analytical approach—generalized multilinear models—to quantify low-dimensional, task-relevant components in population activity. During the categorization task, we found stronger cosine-like motion-direction tuning in the pretrained monkeys than in the category-only monkeys, and that the pretrained monkeys’ performance depended more heavily on fine discrimination between sample and test stimuli. These results suggest that sensory representations in LIP depend on the sequence of tasks that the animals have learned, underscoring the importance of considering training history in studies with complex behavioral tasks.

Subject terms: Neuroscience, Computational neuroscience, Sensory processing, Visual system, Working memory

Posterior parietal cortex supports visual categorization in macaque monkeys. Here, the authors quantify low-dimensional neural population activity using tensor regression and find that long-term training history impacts the encoding of categorization.

Introduction

The activity of single neurons in the macaque lateral intraparietal area (LIP) encodes task-relevant information in a variety of decision-making tasks1. As a result, LIP has been proposed to support many different neural computations underlying perceptual decision making, including abstract visual categorization2,3. Throughout a lifetime, animals learn to make many different kinds of decisions in a variety of tasks and contexts, and different animals collect a unique set of experiences that shape their perceptual and decision-making skills and strategies4,5. In contrast, experiments designed to study neural mechanisms of decision making often focus on neurons recorded during a specific task in isolation. However, previously learned neural representations and strategies may impact how a cortical region is recruited when learning a new task. To understand the generality and flexibility of neural representations which support decision making, we aim to compare decision-related LIP activity in animals performing the same tasks, but with different long-term training histories.

In this study, we consider monkeys trained on two tasks using a delayed-match-to-sample paradigm which share the same structure, timings, stimuli, and motor response: a visual motion direction discrimination task and an abstract categorization task. On each trial, the monkey views two stimuli—sample and test—which are separated by a delay period. The monkey must decide if the motion direction of the test stimulus matches the direction of the sample stimulus according to a learned rule. We compare neural responses during categorization in monkeys that are only trained on the categorization task (category-only monkeys) to monkeys that are first trained on the discrimination task before learning the categorization task (pretrained monkeys).

It is plausible that extensive motion discrimination training during the discrimination task could have a lasting impact on the monkeys’ visual motion sensitivity, accompanied by changes in neural responses to visual motion. Similarly, different training histories may lead to different behavioral strategies to perform the categorization task, and different strategies may be reflected by different cortical computations6. There are several reasons that LIP is a strong candidate region for showing experience-related changes in the representation of visual motion as a result of training on perceptual decision-making and categorization tasks. First, while works from a number of groups have focused on LIP’s role in planning saccadic eye movements and directing spatial attention7, many studies have also shown that LIP contributes to the analysis of visual stimuli placed in neurons’ response fields8–11. In particular, LIP neurons robustly respond to visual motion presented in their response fields12,13, likely because LIP receives direct projections from upstream motion processing centers, such as the middle temporal (MT) and medial superior temporal (MST) areas14,15. Second, many previous studies have found category-selective responses in single neurons in LIP during abstract visual categorization tasks16,17. This contrasts with earlier sensory areas like MT which do not encode abstract category information even after extensive training16,18. However, the causal contribution of MT to motion-direction discrimination19 and coarse depth discrimination20 depends on training history. Third, many LIP cells show persistent category-related activity during the delay period which could contribute to working memory as part of a network that includes other regions that support categorization, such as the prefrontal cortex (PFC)21–24. 
Previous studies have found that delay-period activity in LIP depends on training13, and that stimulus encoding and working-memory dependent sustained-firing activity in PFC during cognitive tasks depends on training25,26. Finally, inactivation experiments demonstrate that LIP plays a causal role in sensory evaluation in motion categorization tasks27. We therefore hypothesize that differences in training history could result in differences in LIP population activity during the categorization task which reflect behaviorally relevant aspects of the neural computations underlying performance of the categorization task studied here2,28. Specifically, we aimed to determine how training regimes may influence the encoding of specific motion-direction information beyond category in LIP, the geometry of mixed selectivity of direction and category tuning in the population, and signatures of working memory-related dynamics during the delay period.

How neural populations implement the computations involved in the categorization task (how sample category is computed and then stored during the delay period, or how the test stimulus is compared to the sample) may be obscured in analyses based solely on single neurons (e.g., tuning curves of single neurons) by the mixture of category- and direction-related responses in individual neurons. We therefore take a dimensionality reduction approach to compare the low-dimensional geometry of population responses to better illuminate how LIP encodes different task variables29. Disentangling population responses that encode motion direction (which may reflect bottom-up, sensory-driven signals) from activity encoding correlated cognitive variables (category or match/non-match computations) could support comparisons of LIP responses across animals better than only characterizing the combined response. However, because category and direction are correlated, separating stimulus-driven responses from the higher-level computations that occur at each stage of the trial requires analyzing LIP responses to both the sample and test stimuli across all trials. Our analysis must also include trials with variable timings and lengths because the monkey may respond (and end the trial) anytime during the test stimulus presentation. In addition, we sought to examine single-trial activity for signatures of working memory-related dynamics, not just trial-averaged responses.

Here, we introduce the generalized multilinear model (GMLM) as a model-based dimensionality reduction method for quantifying population activity during flexible tasks. By applying the GMLM to the LIP populations, we quantified population-level differences in LIP activity between animals and compared those differences with behavioral performance with respect to the animals’ training histories. We observed category and direction selectivity in LIP during the categorization task in all subjects. However, we found stronger cosine-like motion-direction tuning in LIP (which is consistent with bottom-up input from motion processing areas like MT) during the categorization task in monkeys first trained on the discrimination task compared to monkeys trained only on categorization. During the test stimulus presentation when the monkeys had to compare the incoming test stimulus to the remembered sample stimulus, sample category could be more reliably decoded from LIP responses irrespective of the test-stimulus direction in the category-only monkeys than in the pretrained monkeys. Behaviorally, the pretrained monkeys were more likely to make categorization errors when the sample and test stimuli were similar than the category-only monkeys. In addition to the effects observed in the mean population response, we examined the structure of single-trial variability in the population which could reflect signatures of different strategies or neural computations in the task. Specifically, we found a difference in oscillatory, single-trial dynamics during the delay period (when the sample stimulus must be stored in working memory) between the category-only and pretrained monkeys by introducing a novel dynamic spike history component to the GMLM. Together these results suggest that different subjects may recruit distinct behavioral and neuronal strategies for performing the categorization task, and that long-term training history may play a role in shaping these differences. 
Low-dimensional encoding of the categorization task in LIP may therefore reflect an animal’s unique training history or a particular task strategy (or both).

Results

Tasks and behavior

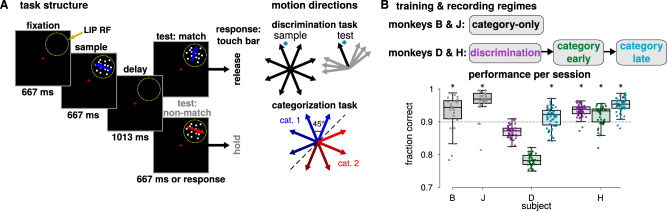

We examined LIP recordings in four monkeys performing two related tasks in which they were required to determine if sequentially presented motion directions matched according to a learned rule (Fig. 1A). In both tasks, the monkey viewed two random dot motion stimuli (sample and test) separated by a delay period. To receive a reward, the animal responded by releasing a touch bar if the two stimuli matched or by continuing to hold the touch bar on non-match trials. On non-match trials, the first non-matching test stimulus was followed by a brief delay and a second test stimulus which always matched the sample category (requiring the monkey to release the touch bar). The delayed-match-to-sample task (discrimination task) was a memory-based, fine-direction discrimination task in which the sample and test motion stimuli matched only if they were in exactly the same direction. By contrast, the delayed-match-to-category task (categorization task) was a rule-based task in which the stimuli matched if the directions belonged to the same category (red or blue) according to a learned arbitrary category rule. In the categorization task, two stimuli could be nearly 180° apart yet belong to the same category (and therefore match), while neighboring directions on different sides of the category boundary did not match. The tasks therefore used the same structure and stimuli, and many of the sample-test pairs were rewarded for the same responses in both contexts (e.g., sample and test stimuli of the same direction matched in both tasks). However, they required performing different perceptual and/or cognitive computations.

Fig. 1. Motion direction discrimination and categorization tasks.

A In both tasks, the animal fixated and viewed a motion direction stimulus (sample). Following a delay period, a second stimulus (test) was presented. The monkey signaled if the sample and test stimuli matched by releasing a touch bar, otherwise the monkey was required to hold the touch bar. In the discrimination task, the sample and test stimuli matched only if the directions were exactly the same. In the categorization task, the stimuli matched if they belonged to the same category: the motion directions were split into two equally sized categories with a 45°–225° boundary (red and blue directions; the boundary was constant for all sessions). The motion stimuli were placed inside the LIP cell’s response field (yellow circle) during recording. B (Top) Training and recording regimes for the four monkeys. (Bottom) Performance during each recording session (dots) for each animal is summarized by the box plots. Colors correspond to the task and training period (discrimination, category-early or late, and category-only). All four monkeys learned to perform the categorization task with a median per-session performance of at least 90%, while chance-level performance was 50% (asterisks denote p < 0.01, one-sided sign test, Holm–Bonferroni corrected; B n = 26, p = 1.25 × 10^−3; J n = 27, p = 2.46 × 10^−5; D-disc. n = 39, p = 1; D-early n = 33, p = 1; D-late n = 59, p = 7.74 × 10^−4; H-disc. n = 55, p = 4.28 × 10^−14; H-early n = 40, p = 1.11 × 10^−3; H-late n = 50, p = 1.13 × 10^−12). Box plots show the median and first and third quartiles over sessions, and the whiskers extend to 1.5 times the interquartile range from the box edges.
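The two match rules described above can be sketched in a few lines of Python. This is only an illustration of the task logic: the function names, the 0/1 category labels (which side is red or blue is arbitrary here), and the example directions are assumptions, and directions lying exactly on the boundary are not treated specially.

```python
def category(direction_deg, boundary_deg=45.0):
    """Return 0 or 1 for the side of the 45°-225° boundary a direction lies on.

    The 0/1 labels are arbitrary stand-ins for the two colored categories.
    """
    rel = (direction_deg - boundary_deg) % 360.0
    return 0 if 0.0 < rel < 180.0 else 1


def is_match(sample_deg, test_deg, task):
    """Apply the match rule of the given task to a sample/test pair."""
    if task == "discrimination":
        # Match only if the two directions are exactly the same.
        return (sample_deg - test_deg) % 360.0 == 0.0
    if task == "categorization":
        # Match if both directions fall on the same side of the boundary.
        return category(sample_deg) == category(test_deg)
    raise ValueError(f"unknown task: {task!r}")


# Hypothetical directions: 60° and 210° are 150° apart but share a category,
# whereas 30° and 60° are neighbors on opposite sides of the 45° boundary.
print(is_match(60, 210, "categorization"), is_match(30, 60, "categorization"))
```

Identical sample and test directions match under both rules, which is why many sample-test pairs are rewarded for the same response in both tasks.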

In one pair of monkeys, B and J22, the monkeys were trained only to perform the categorization task (i.e., without first training the monkeys on fine discrimination), and LIP recordings were made after training was completed (category-only populations). The second pair of monkeys, pretrained monkeys D and H13, was first trained extensively on the discrimination task, and LIP recordings were obtained after training (discrimination populations). The monkeys were then retrained on the categorization task, and a set of LIP recordings was made during an intermediate stage of training (when the monkeys’ performance stabilized; category-early populations). After the category-early recordings, the monkeys received additional training which overemphasized near-category-boundary sample stimuli (the most difficult conditions where the monkeys’ performance was lowest) so that the monkeys’ performance increased. After the second training stage was complete, a final set of LIP neurons was recorded during the categorization task (category-late populations). In this study, the monkeys did not perform both tasks during a single session; they were switched exclusively to the categorization task and retrained over the course of months. Both pairs of animals learned to perform the categorization task at high levels after training was completed (Fig. 1B). In total, we analyzed eight LIP populations recorded during sets of sessions at a particular task or training stage from four animals.

Quantifying direction and category tuning in LIP

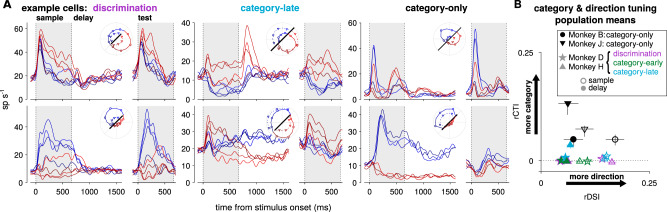

Single-neuron responses show a range of direction and category tuning across the individual neurons in the LIP datasets (Fig. 2A). We applied a receiver operating characteristic analysis for category selectivity in individual neurons. We found that the average category-selective tuning in the category-only population is greater than in the pretrained populations during the sample period (category-only vs pretrained category-late, rCTI increase of 0.051, 95% bootstrapped CI [0.044–0.059]; Fig. 2B). These results suggest differences in task representations in LIP may exist across the pairs of monkeys. Category tuning in the category-only monkey J was even stronger during the delay period compared to the sample stimulus period (one-sided bootstrap test; p < 0.01). In addition, monkey H showed significant category tuning during the delay period, but not during the sample period (p < 0.01). We found similar results using a parametric tuning curve model with cosine-direction tuning (Supplementary Fig. 2).

Fig. 2. LIP recordings during discrimination and categorization tasks.

A Mean firing rates of six single LIP cells recorded during each task. Colors correspond to the stimulus direction and category. Firing rates aligned to the sample stimulus onset are averaged by sample direction (left), and the test stimulus aligned rates are averaged over test direction (right). Although motion categories were not part of the discrimination task, the directions are labeled blue or red for consistency. (Inset polar plots) The mean firing rate for each sample direction during the sample stimulus presentation (circles and solid lines; 0–650 ms after motion onset) and delay period (triangles and dotted lines; 800–1450 ms after motion onset). The solid black line denotes 20 sp s−1. B Mean category and direction tuning measured in single cells during the sample (open symbols) and delay periods (filled symbols) in each population (number of cells per population: B n = 31, J n = 29, D-disc. n = 81, D-early n = 63, D-late n = 137, H-disc. n = 89, H-early n = 106, H-late n = 114). The receiver operating characteristic (ROC) based category tuning index (rCTI45) measures category-specific responses in a range of –0.5 to 0.5, where positive values indicate category-selective neurons while negative values indicate more selectivity for within-category directions. Similarly, positive values of the ROC-based direction selectivity index (rDSI13) indicate direction selectivity. Error bars denote a 95% interval over bootstraps. Individual cell results are shown in Supplementary Fig. 1.
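To make the index concrete, the following is a minimal sketch of one ROC-based category index with the properties stated in the caption (range −0.5 to 0.5, positive for category-selective cells). The exact set of direction pairs used for rCTI in the cited work is not given in this text, so the definition below (mean between-category discriminability minus mean within-category discriminability over all direction pairs) is an assumption, as are the function names and the toy spike counts.

```python
import itertools

import numpy as np


def auc(x, y):
    """Area under the ROC curve: P(x > y) + 0.5 * P(x == y)."""
    x = np.asarray(x, dtype=float)[:, None]
    y = np.asarray(y, dtype=float)[None, :]
    return float(np.mean((x > y) + 0.5 * (x == y)))


def roc_category_index(counts_by_dir, category_by_dir):
    """Between-category minus within-category ROC discriminability.

    counts_by_dir:   dict mapping direction -> spike counts across trials
    category_by_dir: dict mapping direction -> category label
    Assumes at least one within- and one between-category pair exists.
    """
    within, between = [], []
    for a, b in itertools.combinations(sorted(counts_by_dir), 2):
        # |AUC - 0.5| lies in [0, 0.5]; 0 means indistinguishable responses.
        sel = abs(auc(counts_by_dir[a], counts_by_dir[b]) - 0.5)
        same = category_by_dir[a] == category_by_dir[b]
        (within if same else between).append(sel)
    return float(np.mean(between) - np.mean(within))


# Toy cell that separates the categories perfectly but ignores direction.
counts = {0: [1, 2, 3], 1: [1, 2, 3], 2: [10, 11, 12], 3: [10, 11, 12]}
cats = {0: "red", 1: "red", 2: "blue", 3: "blue"}
print(roc_category_index(counts, cats))
```

A cell tuned to direction within a category but not to category itself would push the index toward negative values under this definition.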

However, there are several challenges for teasing apart direction and category tuning in single neurons. For example, some neurons appear to exhibit changes in stimulus-direction preference during the course of the trial (e.g., Fig. 2A bottom, middle; the cell’s preferred direction shifts from red to blue during the sample stimulus period). Traditional tuning curves using spike counts within a fixed window may therefore not capture dynamic representations in this task. In addition, category and direction are highly correlated: tuning to both variables may not be fully identifiable or separable given the responses of a single neuron in a single time window. Finally, the single-cell analysis does not demonstrate how the whole population supports the combination of category and direction encoding during the task30. We therefore next turned to population analysis approaches to better summarize the coding properties of a set of cells.

Low-dimensional representation of direction and category in LIP

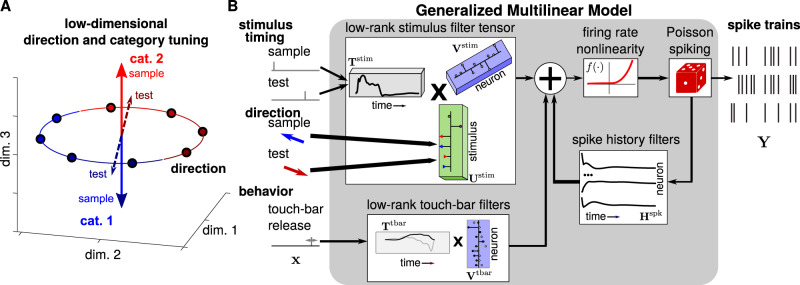

We sought to compare quantitatively the low-dimensional geometry of task variable encoding in LIP spike train responses during both sample and test stimulus presentations across the different populations (Fig. 3A). To accomplish this, we developed the generalized multilinear model (GMLM) as a dimensionality reduction approach that could analyze statistically the LIP population responses to each complete trial during the discrimination and categorization tasks (Fig. 3B). The GMLM is a tensor-regression extension of the generalized linear model (GLM) which describes a single neuron’s spiking response to different task events through a set of linear weights or kernels31–35. By taking a regression approach, we directly quantify low-dimensional activity as a function of the task variables, thereby performing dimensionality reduction and statistical testing within a common framework36. The GMLM fits the data from all cells in a dataset into a compact representation by forming a low-rank tensor of linear kernels that best captures the common motifs in the mean responses to the task events—the sample and test stimuli and the touch-bar release—shared by the population. The neural populations used in this study were not simultaneously recorded and the model does not consider noise correlations between neurons. As the number of components increases, the GMLM will match GLMs fit to the individual cells. However, the GMLM’s assumption of common response motifs in the population enables us to explicitly seek out low-rank structure that would not be directly recovered by single-cell GLMs. As with exponential family principal components analysis37, the GMLM can be applied directly to spike train data rather than requiring smoothed spike rates. The GMLM inherits the GLM’s flexibility for modeling trials with variable structure, including behavioral events like the touch-bar release. The statistical definition of the model can help account for uncertainty in the model fits36. 
Stimulus category and direction are low-dimensional variables, and motion direction tuning in sensory regions such as area MT can be well-captured by simple parametric models38. Therefore, the GMLM is well-suited for modeling how LIP populations represent combinations of these task variables during the categorization task.

Fig. 3. The generalized multilinear model for dimensionality reduction of neural populations.

A We hypothesized that the different stimulus directions and categories (including whether it is the sample or test stimulus) could be modeled as vectors as a function of time in a low-dimensional space where the dimensionality is the number of factors. Here, we constrain the direction tuning, but not category, to be the same over the sample and test stimuli. Thus, dimensionality reduction in our framework can take into account shared temporal dynamics and stimulus tuning information across the two stimulus presentations, possibly reflecting similar bottom-up input. Individual neurons’ stimulus tuning is a linear projection of the low-dimensional space. B Diagram of the GMLM. Incoming stimuli are factorized into temporal events and stimulus weights that encode direction and category information (left; Supplementary Fig. 3E–I). A set of temporal kernels and stimulus coefficients filter the linearized stimuli (left) into a low-dimensional stimulus response space. The touch-bar release event is similarly filtered using a low-dimensional set of temporal kernels. Each individual neuron’s firing rate at each time bin is a nonlinear function (here, exp(·)) of the sum of a linear weighting of the low-dimensional stimulus subspace, a linear weighting of the touch-bar subspace, and recent spike history. Spike trains (right) are modeled as a Poisson process given the instantaneous rate. Given the recorded spike trains, stimuli, and behavioral responses, the stimulus filter tensor, touch-bar filters, and spike history filters can be fit to the data.

The model’s parameters include a set of stimulus components, where each component contains a single temporal kernel (or linear filter) and a set of weights for the stimulus identity. Each component temporally filters the incoming stimulus onset events, and weights the filtered stimuli linearly by stimulus identity. As a result, each individual component contributes to the population encoding for all stimuli (not just a single motion direction or sample/test presentation). Each individual neuron’s tuning to the motion stimuli is a linear combination of the stimulus components. The model also includes a low-dimensional set of components to represent the touch-bar release event: a set of temporal kernels describe the population response to a touch-bar release such that each neuron’s touch-bar tuning is a linear combination of those kernels. Each spike train is then defined as a Poisson process in which the instantaneous firing rate is given by the sum of the filtered stimuli and touch-bar release, plus a linear function of recent spike history. In this formulation, the set of stimulus components is a tensor that represents each neuron’s responses over time to each motion stimulus. The factorized representation of the stimulus into temporal and identity weights captures shared temporal dynamics between different directions or between sample and test stimuli. As the number of components (i.e., rank) in the kernel tensor increases, the model approaches a GLM fit to each cell individually (that is, each fiber of the tensor along the temporal mode is a GLM filter for one neuron for one stimulus).
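The low-rank structure described in this paragraph can be illustrated with a small NumPy sketch. It is a simplified toy, not the fitted model: it keeps only the stimulus components and a per-neuron baseline, omits the touch-bar and spike-history terms, and assumes an exponential nonlinearity (the usual choice for Poisson regression). All names, array sizes, and the simulated trial are hypothetical.

```python
import numpy as np


def stimulus_kernel_tensor(T, S, N):
    """Rank-R tensor K[t, s, n] = sum_r T[t, r] * S[s, r] * N[n, r].

    T: temporal kernels (lags x R), S: stimulus-identity weights (stimuli x R),
    N: neuron loading weights (neurons x R).
    """
    return np.einsum("tr,sr,nr->tsn", T, S, N)


def log_rates(K, baseline, stim_onsets, n_bins):
    """Log rate per bin for every neuron on one trial.

    stim_onsets is a list of (onset_bin, stimulus_index) events, so trials
    may differ in length and in the timing of the sample and test stimuli.
    """
    n_lags = K.shape[0]
    log_rate = np.tile(np.asarray(baseline, dtype=float), (n_bins, 1))
    for onset, s in stim_onsets:
        stop = min(n_bins, onset + n_lags)
        log_rate[onset:stop] += K[: stop - onset, s, :]
    return log_rate


def poisson_log_likelihood(spikes, log_rate):
    """Poisson log-likelihood, dropping the parameter-independent -log(y!)."""
    return float(np.sum(spikes * log_rate - np.exp(log_rate)))


rng = np.random.default_rng(0)
n_lags, n_stim, n_neurons, rank = 40, 16, 30, 7
T, S, N = (0.3 * rng.normal(size=(d, rank)) for d in (n_lags, n_stim, n_neurons))
K = stimulus_kernel_tensor(T, S, N)

# One simulated trial: a sample stimulus at bin 5 and a test stimulus at bin 70.
lam = log_rates(K, baseline=np.full(n_neurons, -3.0),
                stim_onsets=[(5, 2), (70, 11)], n_bins=100)
spikes = rng.poisson(np.exp(lam))
print(poisson_log_likelihood(spikes, lam))

# Parameter counts: low-rank factors versus one free kernel per neuron and stimulus.
print(rank * (n_lags + n_stim + n_neurons), n_lags * n_stim * n_neurons)
```

Because every component is shared across neurons and stimuli, the factorized tensor needs far fewer parameters than a separate kernel for each neuron and stimulus, which is the sense in which the model approaches per-cell GLMs only as the rank grows.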

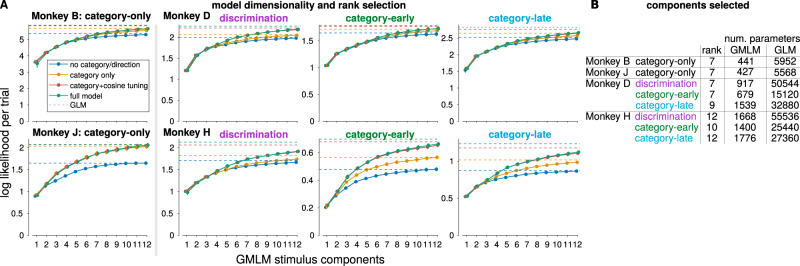

We confirmed that the stimulus-related activity in the LIP population responses was low-dimensional. We varied the number of components to include in the GMLM (i.e., the rank of the stimulus kernel tensor). We compared the GMLM to the corresponding single-cell GLM fits, where the GLMs represent the “full-rank” model. We computed the average likelihood per trial averaged over the neurons in each population for the GMLM fit, relative to the GMLM without any stimulus terms (Fig. 4A). We selected the rank by the number of components needed to explain, on average, 90% of the explainable log-likelihood per trial over all the neurons in each population (Supplementary Fig. 4A). The GMLM required 7–12 stimulus components per population, thereby using only a fraction (less than 8%) of the number of parameters compared to GLM fits to individual cells (Fig. 4B). An example of the GMLM with seven stimulus tensor components fit to the LIP population from monkey B is shown in Supplementary Fig. 5A, B. Different components can have different temporal response dynamics and different stimulus tuning properties: for example, component 5 shows strong differentiation between the two stimulus categories (red and blue), while component 2 does not. The responses to the stimulus for individual cells as a combination of components are illustrated in Supplementary Fig. 5C, D.
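The rank-selection rule can be sketched as follows. The sketch assumes that the "explainable" log-likelihood is the improvement of the full-rank single-cell GLMs over the model without stimulus terms; that reading, the function name, and the example numbers are assumptions made for illustration.

```python
import numpy as np


def select_rank(ll_by_rank, ll_null, ll_full, fraction=0.9):
    """Smallest rank whose held-out log-likelihood gain reaches `fraction`.

    ll_by_rank[i] is the mean cross-validated log-likelihood per trial of the
    rank-(i + 1) model; ll_null is the model without stimulus terms and
    ll_full is the full-rank (per-cell GLM) reference.
    """
    ll_by_rank = np.asarray(ll_by_rank, dtype=float)
    explained = (ll_by_rank - ll_null) / (ll_full - ll_null)
    reached = np.flatnonzero(explained >= fraction)
    if reached.size == 0:
        raise ValueError("no tested rank reaches the requested fraction")
    return int(reached[0]) + 1


# Made-up values: gains of 30%, 60%, 85%, 91%, and 95% of the explainable part.
print(select_rank([-97.0, -94.0, -91.5, -90.9, -90.5], ll_null=-100.0, ll_full=-90.0))
```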

Fig. 4. A low-rank model captures task-relevant LIP activity during the discrimination (DMS) and categorization (DMC) tasks.

A We determined the dimensionality of the population activity by estimating model fitness while increasing the number of components. Each point shows the mean cross-validated log-likelihood per trial of the GMLM relative to a baseline model without any stimulus terms (i.e., the “rank 0” model) for two monkeys during the category task. The log-likelihood shows the average log probability of observing the spike train from a withheld trial from one neuron given the model relative to the log probability of that observation under the null model without stimulus-dependent terms. In a Gaussian model, the log-likelihood is, up to an additive constant, proportional to the negative squared error, and it is therefore related to the variance explained. Here, we instead use the Poisson likelihood which is more appropriate for quantifying spike count observations37,80. The traces show the GMLM with different amounts of category or direction information included. The dashed lines show the corresponding GLM fits (the full-rank model). The GMLM accounts for most of the log-likelihood with a small number of components. B Number of stimulus components (rank) selected for the GMLM for each LIP population to account for 90% of the log-likelihood. The number of stimulus parameters in the low-rank GMLM is compared to total parameters in the equivalent single-cell GLM fits for each population.
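For reference, the two likelihoods contrasted in the caption can be written out explicitly. The notation is introduced here only for illustration and does not appear in the original text: y_t is the observation in time bin t of a trial with T bins, μ_t and σ² are the Gaussian mean and variance, λ_t is the Poisson rate, and Δ is the bin width.

$$
\log p_{\mathrm{Gauss}}(y \mid \mu, \sigma^2) = -\frac{1}{2\sigma^2} \sum_{t=1}^{T} (y_t - \mu_t)^2 - \frac{T}{2} \log\left(2\pi\sigma^2\right)
$$

$$
\log p_{\mathrm{Poisson}}(y \mid \lambda) = \sum_{t=1}^{T} \left[ y_t \log(\lambda_t \Delta) - \lambda_t \Delta - \log(y_t!) \right]
$$

The quantity plotted for a withheld trial is then a difference of two such terms, the log-likelihood under the fitted model minus the log-likelihood under the null model without stimulus terms, so the log(y_t!) term cancels.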

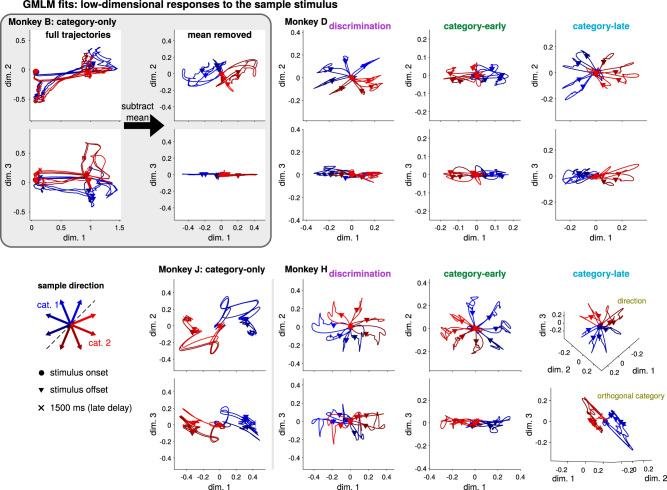

To gain intuition about how LIP dynamics may support the transformation of motion direction input into a representation of category, we visualized the low-dimensional population tuning to the sample stimuli. The top three dimensions of the trajectories show large, stimulus-independent transient responses (Fig. 5, inset). We therefore subtracted the mean response over stimuli and plotted the top three dimensions in the mean-removed responses39. The two category-only LIP populations showed primarily two-dimensional responses with strong category separation, with some direction tuning in the smaller third dimension in monkey J (Fig. 5). For the pretrained monkeys, the trajectories during the discrimination task reflected the stimulus geometry: the model shows two-dimensional transient activity organized by stimulus motion direction. The responses show little stimulus-specific persistent activity during the delay period13: the trajectories return to the origin after stimulus offset. During the category-early phase, the low-dimensional LIP response reflects the stimulus geometry, but the top dimension is aligned to the task axis (i.e., blue and red directions are separated along dimension 1). The trajectories are still two-dimensional without clear delay period encoding. After training was completed, monkey D’s category-late LIP activity showed strong direction tuning during the stimulus presentation which is elongated along the task axis (that is, the axis most oriented to the category along the 135°–315° stimulus directions). In contrast, LIP in monkey H had a three-dimensional stimulus response in the late period: two dimensions reflecting the circular motion directions during the stimulus presentation and a third orthogonal axis for category that was sustained through the delay period. Similar orthogonal stimulus input and working memory representations have been observed in other decision-making tasks36,40. 
In summary, the low-dimensional stimulus components of the LIP activity differed across animals such that the pretrained monkeys’ LIP showed strong, circular representations of motion direction reflecting the physical features of the stimuli, while the category-only monkeys had lower-dimensional responses that more strongly reflected the task-relevant categories.

Fig. 5. Low-dimensional representations of motion direction and category during the sample stimulus presentation and delay period.

The top three dimensions of the GMLM’s sample stimulus encoding, with the mean response over all directions removed, are shown for each of the eight LIP populations through the first 1500 ms of each trial. The dimensions were computed by taking the higher-order singular value decomposition of the stimulus kernel tensor in the GMLM to obtain the dimensions that captured the most variance in the tensor. The inset for monkey B shows the top three dimensions including the mean (left) and the top three dimensions that remain after removing the mean (right). The two plots for each monkey show the top dimension on the x-axis plotted against the second or third dimensions on the y-axis (except for monkey H shown in the 3D plots). The red and blue traces show the response to each motion direction from stimulus onset (circles), to stimulus offset (triangles), and into the delay period (xs denote 1500 ms after sample motion onset). Supplementary Fig. 7 shows the full trajectories (i.e., without removing the mean) for all populations. Supplementary Fig. 8 shows the three-dimensional trajectories (of the cosine-tuned model) as a function of time.
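The projection described in this caption can be approximated with a short NumPy sketch. It performs only the neuron-mode step of a higher-order SVD (an SVD of the tensor unfolded along the neuron mode) after removing the mean over stimuli. The tensor layout (time lags × stimuli × neurons), the function name, and the random example tensor are assumptions, and the published analysis may differ in detail.

```python
import numpy as np


def top_population_dims(K, n_dims=3):
    """Project a stimulus kernel tensor onto its top neuron-mode dimensions.

    K has shape (time lags, stimuli, neurons). The mean over stimuli is
    removed first so that stimulus-independent transients do not dominate.
    Returns trajectories (lags x stimuli x n_dims), the neuron-space axes,
    and the fraction of tensor variance captured by each axis.
    """
    K_centered = K - K.mean(axis=1, keepdims=True)
    n_neurons = K.shape[2]
    # Neuron-mode unfolding: one row per neuron, one column per (lag, stimulus).
    unfolded = K_centered.reshape(-1, n_neurons).T
    U, sing, _ = np.linalg.svd(unfolded, full_matrices=False)
    axes = U[:, :n_dims]
    trajectories = K_centered @ axes
    var_fraction = sing[:n_dims] ** 2 / np.sum(sing ** 2)
    return trajectories, axes, var_fraction


# Random rank-2 tensor standing in for a fitted stimulus kernel tensor.
rng = np.random.default_rng(1)
K_demo = np.einsum("tr,sr,nr->tsn", rng.normal(size=(10, 2)),
                   rng.normal(size=(8, 2)), rng.normal(size=(20, 2)))
traj, axes, frac = top_population_dims(K_demo)
print(traj.shape, np.round(frac, 3))
```

Each slice `traj[:, s, :]` is then one trajectory through time for stimulus `s`, which is the kind of trace plotted for each motion direction.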

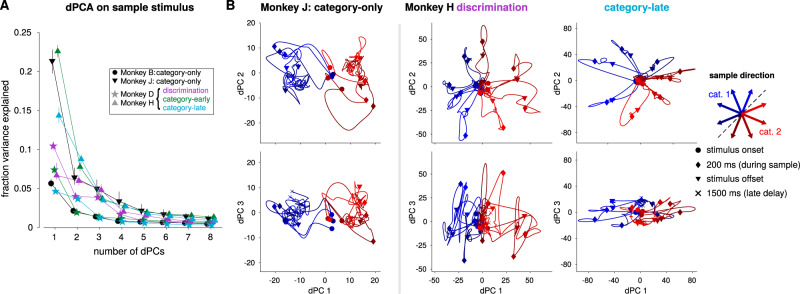

Comparison to demixed principal components analysis

We next compared our approach to demixed principal components analysis (dPCA), an extension of principal components analysis (PCA) for recovering task-relevant subspaces in neural population responses41. We used dPCA to find components that captured the population encoding of the sample stimulus direction during sample and delay periods of the trial. Stimulus-dependent activity was primarily contained within the first few components, confirming the low dimensionality of stimulus-dependent activity (Fig. 6A). The LIP activity projected into the stimulus-dependent dPCs shows similar patterns to the GMLM subspaces (Fig. 6B). In addition, we found similar subspaces by performing PCA on the GLM filters that were fit to each neuron individually (Supplementary Fig. 10).

Fig. 6. Low-dimensional responses to the sample stimulus recovered by dPCA reflect stronger motion-direction tuning in pretrained animals than in category-only animals.

A The percent of the sample-direction-related activity explained by each stimulus dPC. Most of the variance is explained by the first two or three dPCs for all eight populations. Each trace shows the results for a single LIP population. The points denote the median and error bars show a 95% interval over bootstraps. B The low-dimensional activity from two LIP populations during the categorization task projected into the first three dPCs for motion direction. Each trace shows the population response to one sample stimulus direction (denoted by color) from sample onset to 1500 ms after stimulus onset (near the end of the delay period). The markers indicate stimulus onset and offset. In this case, the motion direction components from dPCA simply correspond to PCA performed on the data with the mean response of each neuron across all motion directions subtracted. Because the category is determined completely by motion direction, dPCA cannot find separate “category” and “direction” components. The left column shows the three dPCs for category-only monkey J. The middle and right columns show the dPCs for pretrained-monkey H during the discrimination task and after training on the categorization task, respectively. The dPCs for all populations are shown in Supplementary Fig. 9.

There are several limitations common among dimensionality reduction methods, such as PCA, dPCA, or tensor components analysis42,43, for assessing the specific questions of interest in the current study. For example, bottom-up sensory-driven signals might be shared across the sample and test stimuli during the categorization task, while abstract category computations might differ across presentations. Therefore, comparing data across both stimulus presentations may help to disentangle sensory-driven activity from higher-level responses. However, there are several reasons it is difficult to perform dimensionality reduction to answer these questions with typical methods like dPCA. First, the monkey’s behavioral response is variable and determines the end-time of each trial. Dimensionality reduction approaches based on peristimulus time histograms (PSTH), including dPCA, require temporally aligned trials of equal length, thereby limiting those approaches’ ability to quantify task-related responses through the complete trial36. Second, including both sample and test stimuli on each trial also results in many distinct conditions, which may be impractical for PSTH-based methods (for eight motion directions in the categorization task, dPCA would require computing the mean firing rate independently over 64 stimulus combinations). Third, methods such as PCA and dPCA do not directly quantify how stimulus direction and category are represented in the low-dimensional subspace: additional statistical modeling is required after dimensionality reduction, rather than performing the dimensionality reduction with the statistical questions in mind. Finally, structure in the spike trains beyond mean rate would be missed by PCA or dPCA (e.g., oscillatory dynamics related to working memory44).
Therefore, our method could recover task-dependent, low-dimensional activity similar to existing approaches, while also having the advantage of directly fitting the subspace to the data within a flexible statistical framework.

Contribution of direction and category to LIP population responses

We asked how each task variable contributed to the low-dimensional LIP responses. To do so, we quantified model fit as we monotonically increased the complexity within a set of nested models by including more information about the stimulus identity and category (Fig. 4A). We designed a set of four nested models (i.e., different linearizations of the motion stimuli with increasing complexity) in order to assess what stimulus information is encoded by an LIP population (Supplementary Fig. 3E–I). The simplest model was the no category or direction tuning model. In this model, the linear weights for the stimulus identity only defined whether the stimulus was the sample or test. This model can only capture the average trajectories in time during the task over all stimulus directions. The second model, the category-only model, includes stimulus category weights, but does not consider specific motion directions. The category-only model includes stimulus identity information for the category one and category two motion directions for both the sample and test stimuli. This way, the model can capture category tuning, which may be different for the two stimulus presentations. While the discrimination task had no category, we still fit category weights to the discrimination populations as a control (i.e., to ask what the model produces if category was not actually a behavioral factor in the task because category and direction are correlated variables). The third model included cosine direction tuning and category. In addition to the category weights from the previous model, this GMLM included two coefficients for the sine and cosine of the motion direction. The cosine and sine weights were the same for both sample and test stimuli. Thus, this model constrains the geometry of direction tuning to lie on an ellipse in the low-dimensional space (Fig. 3B). 
The final model, the full model, extends the cosine direction tuning model by allowing different weights for each individual motion direction, rather than constraining the direction information to be cosine tuned.
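The nesting of the four stimulus parameterizations described above can be sketched in a few lines. This is only an illustration under stated assumptions: the eight directions, the boundary along the 45°–225° axis, and the names `features`, `category`, `DIRECTIONS`, and `BOUNDARY` are hypothetical and are not taken from the fitted models.

```python
import math

# Hypothetical task layout (an assumption for illustration): eight evenly
# spaced directions and a category boundary along the 45-225 degree axis.
DIRECTIONS = [22.5 + 45.0 * k for k in range(8)]
BOUNDARY = 45.0


def category(direction_deg):
    """Return 0 or 1 depending on which side of the boundary the direction falls."""
    return 0 if (direction_deg - BOUNDARY) % 360.0 < 180.0 else 1


def features(direction_deg, is_test, model):
    """Stimulus regressors for one presentation under one of the four nested models."""
    if model == "none":
        # Model 1: only whether the stimulus is the sample or the test.
        x = [0.0, 0.0]
        x[int(is_test)] = 1.0
        return x
    # Models 2-4 share category weights that are separate for sample and test.
    cat = [0.0] * 4
    cat[2 * int(is_test) + category(direction_deg)] = 1.0
    if model == "category":
        return cat
    if model == "cosine":
        # Model 3: cosine and sine terms shared by sample and test stimuli.
        theta = math.radians(direction_deg)
        return cat + [math.cos(theta), math.sin(theta)]
    if model == "full":
        # Model 4: one weight per direction, shared by sample and test stimuli.
        return cat + [1.0 if d == direction_deg else 0.0 for d in DIRECTIONS]
    raise ValueError(f"unknown model: {model}")
```

Each parameterization spans the one before it: the sample/test indicator is the sum of the two category columns for that presentation, and any cosine or sine profile over eight directions is a linear combination of the per-direction indicators.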

A majority of the log-likelihood was accounted for by the GMLM without category or direction tuning in all populations, which is consistent with many previous dimensionality reduction results41. Including category improved model fit for all populations. We note that category is correlated with direction: adding a category variable can capture aspects of motion tuning. For example, category could capture much of the stimulus tuning in the monkey D category-late population, which shows a strong red-blue direction preference along the first dimension in the low-dimensional space (Fig. 5). As a result, the improvement of model fit does not imply that the populations encoded category-specific information. However, the category-only model still improved the fit to the category-late populations more strongly than the discrimination populations.

Adding cosine direction tuning during the categorization task accounted for a larger improvement of the model fit over the category-only model for the pretrained monkeys than the category-only monkeys (monkey B, category-only 1.0 ± 0.4%; monkey J, category-only 0.2 ± 0.7%; monkey D, discrimination 4.1 ± 0.3%, category-early 1.6 ± 1.0%, category-late 1.9 ± 0.1%; monkey H, discrimination 8.2 ± 0.6%, category-early 11.9 ± 0.5%, category-late 9.9 ± 0.6%; mean percentage cross-validated log-likelihood accounted for by the cosine-tuned GMLM minus the category-only GMLM). Adding the unconstrained full-direction tuning showed a similar improvement over the category-only model (monkey B, category-only 1.2 ± 0.3%; monkey J, category-only 0.5 ± 0.8%; monkey D, discrimination 4.3 ± 0.3%, category-early 1.8 ± 1.1%, category-late 2.1 ± 0.2%; monkey H, discrimination 8.5 ± 0.6%, category-early 13.1 ± 0.7%, category-late 11.2 ± 0.6%). This can be seen in the log-likelihoods in Fig. 4A where the red and yellow traces overlap for monkey J (left) while the red and green traces are significantly higher for monkey H (right). Thus, direction tuning played a stronger role in the pretrained monkeys’ LIP activity than in the category-only monkeys.

We tested the adequacy of cosine parameterization of direction tuning by comparing with the more flexible full model. The cosine direction tuning model was comparable to the full model for all populations (monkey B, category-only 0.2 ± 0.2%; monkey J, category-only 0.3 ± 0.7%; monkey D, discrimination 0.2 ± 0.2%, category-early 0.2 ± 0.1%, category-late 0.2 ± 0.2%; monkey H, discrimination 0.3 ± 0.4%, category-early 1.1 ± 0.5%, category-late 1.3 ± 0.3%; mean percentage cross-validated log-likelihood accounted for by the full GMLM minus the cosine-tuned GMLM). For these tasks, the direction tuning in the population could therefore be approximated as an ellipse (and thus embedded within a plane).

The parameterizations above assumed that the direction tuning (but not category tuning) is the same for both sample and test stimuli: that is, the difference between the kernels for two motion directions within the same category is the same for both the sample and test stimulus. Such direction-tuning constancy would be consistent with common bottom-up, direction-tuned input from sensory areas such as MT for the two stimulus presentations. The model still includes test category filters, which allow for different category tuning or direction-independent gain differences between sample and test stimuli. We found that including separate sample-test direction tuning in either model did not improve the model fit (Supplementary Fig. 11A). In addition, comparing sample and test direction weighting in the low-dimensional GMLM component space showed similar direction preferences for the two stimuli (Supplementary Fig. 11B). Thus, the GMLM framework can constrain the parameters to enforce constant direction tuning between the two stimuli, and test statistically whether that assumption holds. In our datasets, these tests showed that direction encoding was cosine-tuned and constant across the sample and test stimuli, consistent with bottom-up input. This provides a parameterization of both category and direction by leveraging the two stimulus presentations, which have different cognitive demands, to support the approximate disentangling of the two variables.

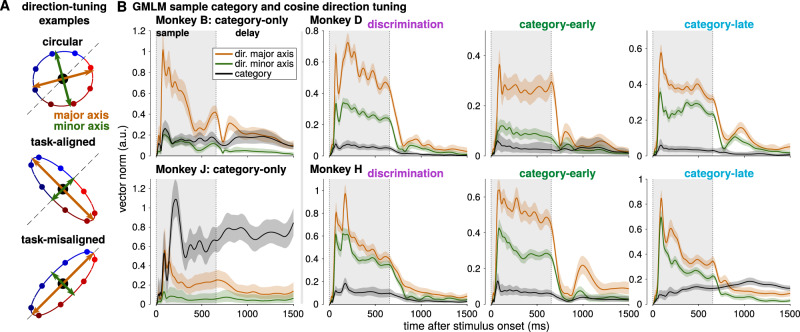

Quantifying the geometry of category and direction in the stimulus subspace

To go beyond visualization of the low-dimensional subspace, we wished to quantitatively assess the geometry of category- and direction-dependent responses in LIP. We therefore focused on the cosine-tuned GMLM, because this choice of parameterization decoupled direction and category, while still capturing a similar subspace to the full model. At each point in time, category was encoded along a vector while direction (parameterized by angle) was encoded on an ellipse in the stimulus subspace (Fig. 7A). The ellipse could be circular, which would represent motion directions uniformly, or elongated so that the population representations are biased toward a preferred motion direction. We compared the norm of major and minor axes of the direction ellipse and the norm of the sample category vector as a function of time relative to stimulus onset (Fig. 7B). Because category and direction are correlated, we applied a Bayesian analysis to take into account uncertainty in the direction and category encoding by exploring the tuning over the posterior distribution of the model parameters given the data, rather than only the best fitting parameters (see Methods section “Bayesian analysis of subspace geometry”).
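The ellipse geometry follows directly from the cosine parameterization: if a direction θ contributes w_cos·cos θ + w_sin·sin θ to the stimulus subspace, the semi-axis lengths are the singular values of the two-column matrix [w_cos, w_sin]. The sketch below computes them in closed form for a single set of loadings. It is not the Bayesian procedure described in the Methods, and `ellipse_axes`, `w_cos`, and `w_sin` are hypothetical names.

```python
import math


def ellipse_axes(w_cos, w_sin):
    """Semi-axis lengths of the ellipse traced by w_cos*cos(t) + w_sin*sin(t).

    w_cos and w_sin are loading vectors (one entry per subspace dimension)
    on the cosine and sine regressors. Returns (major, minor, preferred_deg),
    where preferred_deg is the stimulus angle (modulo 180 degrees) that maps
    onto the major axis.
    """
    # Entries of the 2x2 Gram matrix G = W^T W with W = [w_cos, w_sin].
    a = sum(c * c for c in w_cos)
    b = sum(c * s for c, s in zip(w_cos, w_sin))
    d = sum(s * s for s in w_sin)
    # Closed-form eigenvalues of a symmetric 2x2 matrix; their square roots
    # are the singular values of W, i.e. the semi-axis lengths.
    mean = 0.5 * (a + d)
    radius = math.hypot(0.5 * (a - d), b)
    major = math.sqrt(mean + radius)
    minor = math.sqrt(max(mean - radius, 0.0))
    # Angle of the leading eigenvector of G.
    preferred_deg = math.degrees(0.5 * math.atan2(2.0 * b, a - d)) % 180.0
    return major, minor, preferred_deg
```

Equal major and minor lengths correspond to circular tuning. A ratio well above one indicates that the population over-represents the axis through `preferred_deg`.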

Fig. 7. Quantification of category and direction encoding in LIP.

A Diagram of direction encoding in the cosine-tuned GMLM. Motion stimulus direction is encoded as an ellipse in the low-dimensional stimulus space. The ellipse has major (orange arrows) and minor (green arrows) axes that define its shape. If the axes are of similar length, tuning is approximately circular and the motion directions are evenly distributed in the low-dimensional space (top). If the major axis is elongated compared to the minor axis, the population shows a preferred direction which may be aligned with category (middle) or the null direction (bottom) or anywhere in between. Category is encoded as a vector in addition to the direction ellipse, and the category vector is constant for all motion directions within a category (in contrast to the task-aligned direction tuning which places near-boundary directions closer together). B Bayesian estimate of the geometry of the sample stimulus tuning for the eight LIP populations. Each plot shows the norm of the sample category vector (black) and the norms of the major (orange) and minor (green) axes of the direction-tuning ellipse for an LIP population as a function of time relative to the sample stimulus onset. The solid lines denote the posterior median at each time point, and the shaded regions denote 99% credible intervals. If the major and minor axes have equal norms, then direction would follow a circle in the low-dimensional space.

The category-only LIP population subspaces showed strong category tuning relative to direction tuning. The category vector in monkey B was of similar magnitude to the minor axis of the direction ellipse during stimulus presentation, and stronger during the delay period. Monkey B’s direction-tuned response decayed during the sample period, consistent with the transient direction response in the example cell shown in Fig. 2A (top right). The category vector in monkey J was larger than the direction-tuning ellipse throughout the stimulus presentation and delay period. The direction-tuning ellipses were elongated along a particular motion direction, rather than circular. In addition, the direction ellipse aligned both with category and with the choice biases in monkeys B and J on trials where the sample motion direction was ambiguous (Supplementary Fig. 12). The sample stimuli on ambiguous trials were placed on the category boundary, and the monkeys were rewarded randomly. The ambiguous trials were not used to fit the GMLM. Thus, the stimulus components in the category-only populations reflected category-specific input selection.

The pretrained monkeys’ LIP showed strong direction tuning during both the discrimination and categorization tasks, and task-relevant category tuning emerged after training on the categorization task. LIP reflected strong cosine-like direction tuning during the discrimination task, which in monkey H was nearly circular or uniform across the motion directions (i.e., the major and minor axes of the direction ellipse were of similar length). In the category-early sessions, the direction tuning was less circular than the discrimination and category-late populations, as if the stimulus space was squeezed toward a lower-dimensional space during learning. While a dimensionality shift would be consistent with learning to represent category (a one-dimensional variable) instead of the full space of directions, this effect did not persist after additional training. During the category-late sessions—but not during the discrimination task—monkey D’s direction ellipse was aligned with the task category (i.e., the major axis was along the 135°–315° angles; Supplementary Fig. 12C). Monkey D’s LIP activity showed similarly shaped elliptical tuning across discrimination and category-late recordings, but the activity realigned to place motion category along the major axis after training on the categorization task. The same task-aligned direction encoding during the categorization task was not observed in monkey H. In both the monkey D category-late and monkey H category-early populations, we found stimulus offset activity in the direction ellipse, but not in the category vector. As a result, individual neurons may appear to respond more strongly for a particular category early in the delay period, but the model accounted for this as a direction-tuned response rather than category-specific encoding (Fig. 7B). 
The monkey H category-early and monkey D category-early and category-late populations did not have large category vectors, and the low-dimensional activity instead reflected an elongated direction-tuning ellipse (i.e., the major axis is larger than the minor axis) during stimulus presentation. In the monkey H category-late population, we observed a slow increase in the category vector length over time in the trial, which did not surpass the magnitude of direction tuning until the delay period. In summary, LIP in the pretrained monkeys continued to show strong direction tuning after training on the categorization task, but the tuning either realigned with the motion categories (monkey D) or was supplemented by category tuning alongside the direction-tuned response to the sample stimulus (monkey H).

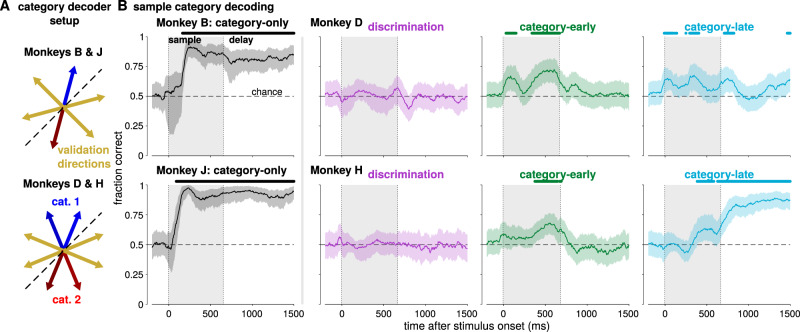

We then asked how the subspace geometry could affect how decoding methods assess category selectivity in LIP (whereas the GMLM is an encoding model). We applied a linear decoding technique previously proposed to reveal category representations independent of motion direction13,45. The decoder classifies the sample category based on spike counts from pseudopopulation trials. We trained and validated the decoder on trials from orthogonal sets of stimulus directions (Fig. 8A). The logic of the decoder is that, if motion direction is represented in the population circularly without any additional category-specific responses, the decoder will not generalize across the training and validation conditions. The discrimination populations provided a control for the method, because the monkey was not yet trained to classify motion category. We indeed found no significant category decoding in the two discrimination populations (Fig. 8B).
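The logic of the generalization test can be illustrated with a noise-free toy population. This is a sketch under stated assumptions and not the decoder used in the analysis: a class-mean linear classifier stands in for the linear decoder, the direction sets in `TRAIN` and `TEST` are hypothetical, and the simulated neurons have purely circular cosine tuning plus an optional category term (`category_gain`) orthogonal to the direction plane.

```python
import math

N_NEURONS = 16
# Hypothetical layout: eight directions, category boundary along 45-225 degrees.
TRAIN = {0: [67.5, 112.5], 1: [247.5, 292.5]}  # two adjacent directions per category
TEST = {0: [157.5, 202.5], 1: [337.5, 22.5]}   # held-out directions


def response(direction_deg, cat, category_gain):
    """Noise-free population response: cosine direction tuning plus a category term."""
    rates = []
    for i in range(N_NEURONS):
        pref = 360.0 * i / N_NEURONS
        tuning = math.cos(math.radians(direction_deg - pref))
        # Neurons alternate in the sign of their category preference, which
        # makes the category vector orthogonal to the direction plane.
        neuron_sign = 1.0 if i % 2 == 0 else -1.0
        cat_sign = 1.0 if cat == 0 else -1.0
        rates.append(tuning + category_gain * neuron_sign * cat_sign)
    return rates


def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def generalization_accuracy(category_gain):
    """Train a class-mean linear decoder on TRAIN directions, test on TEST directions."""
    mu = {c: mean_vector([response(d, c, category_gain) for d in TRAIN[c]])
          for c in (0, 1)}
    weights = [m0 - m1 for m0, m1 in zip(mu[0], mu[1])]
    midpoint = [(m0 + m1) / 2.0 for m0, m1 in zip(mu[0], mu[1])]
    correct, total = 0, 0
    for c in (0, 1):
        for d in TEST[c]:
            r = response(d, c, category_gain)
            score = sum(w * (ri - mi) for w, ri, mi in zip(weights, r, midpoint))
            correct += int((0 if score > 0 else 1) == c)
            total += 1
    return correct / total
```

With `category_gain=0.0` the decoder labels held-out directions by their proximity to the training directions, so half of them are misclassified and accuracy sits at chance. A sufficiently large category term lets the same decoder generalize to all held-out directions.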

Fig. 8. Category and direction decoding in LIP.

A The training and test scheme for a direction-independent category decoder. Pseudopopulation trials were created from 50 random trials sampled with replacement for each direction from each cell. For monkeys B and J, the decoder is trained using one direction from each category (180° apart; red and blue) and validated on the remaining four directions (yellow). For monkeys D and H, the decoder is trained using two adjacent directions from each category (again using opposite directions from each category; red and blue) and validated on the remaining four directions (yellow). B Median decoder generalization performance as a function of time for each of the eight LIP populations. The decoder was trained and tested using spike counts in a 200-ms window centered at the time relative to sample stimulus onset on the x-axis. The shaded regions denote a 99% confidence interval over 1000 random pseudopopulations and the solid lines show the median. Symbols denote decoding significantly greater than chance (50%; p < 0.01 Benjamini–Hochberg corrected, one-sided bootstrap test). Supplementary Fig. 13 shows that we obtain consistent results with higher-rank models. Supplementary Fig. 12 shows that the stimulus tuning in monkeys B and J correlates with behavior on null-direction trials.
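The pseudopopulation construction in panel A amounts to resampling each cell's trials independently within a stimulus direction. A minimal sketch, assuming spike counts are stored per cell and per direction (the `spike_counts` layout and the function name are hypothetical):

```python
import random


def pseudopopulation(spike_counts, directions, n_trials=50, rng=None):
    """Build pseudopopulation trials from separately recorded cells.

    spike_counts[cell][direction] is a list of spike counts, one per recorded
    trial. Returns {direction: list of n_trials population vectors}, where
    each vector holds one independently resampled count per cell.
    """
    rng = rng or random.Random()
    pseudo = {}
    for d in directions:
        # Resample each cell's trials with replacement, independently of other cells.
        per_cell = [rng.choices(cell[d], k=n_trials) for cell in spike_counts]
        # Transpose so that each row is one pseudo-trial across all cells.
        pseudo[d] = [list(trial) for trial in zip(*per_cell)]
    return pseudo
```

Because cells are resampled independently, trial-to-trial correlations between neurons are not preserved in the pseudopopulation.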

The decoder performances during the categorization task were consistent with the GMLM stimulus subspaces. Category could be decoded with high accuracy in monkeys B and J early in the stimulus presentation and throughout the delay period (Fig. 8B). Similarly, the GMLM analysis found strong category tuning beginning early in the stimulus period and continuing through the delay in those populations. The results were different in the pretrained animals. In both category-early and monkey D’s category-late populations, we found decoding above chance during stimulus presentation, but not during the delay. The task-aligned, non-circular response to stimulus direction in monkey H category-early and monkey D category-early and late enabled the decoder to generalize across conditions due to over-representation of signal along the task dimension (135°–315°), rather than a category vector independent of direction. In contrast, in the category-late population for monkey H, the decoder only found weak decoding late in the sample stimulus presentation, which became strong during the delay period. The orthogonal category dimension of monkey H category-late is only stronger than the circular direction coding during the delay period, corresponding to the onset of significant category decoding. The decoder’s failure to generalize during the early sample period can therefore be explained by strong direction selectivity swamping the weaker, orthogonal category signal.

Comparing the sample and test stimuli

The categorization task requires different computations for the test and sample stimuli: the category of the sample stimulus must be computed and stored in short-term memory, while the test stimulus must be compared to the stored sample category. Recent work has suggested that LIP linearly integrates the test and sample stimuli during the test period of the categorization task while PFC shows more nonlinear match/non-match selectivity46. We therefore compared the LIP encoding of the test stimulus to the encoding of the sample stimulus. In the GMLM fits to the categorization populations, we found that test category encoding during the test stimulus presentation was weaker than sample category encoding during the sample presentation (Supplementary Fig. 14). This can be visualized in the low-dimensional subspace for monkey H (Fig. 9A). During the sample stimulus, the subspace reflected category tuning orthogonal to the motion direction response (Fig. 5, bottom right). However, we did not find the same category-selective response to the test stimulus in the stimulus subspace. In addition, LIP population activity projected onto the touch-bar (motor response) subspace showed strong match/non-match separation with little category selectivity (Supplementary Fig. 15). Thus, LIP does not appear simply to extract and sum the categories of the two stimuli to compute match or non-match.

Fig. 9. Matching the test stimulus to the stored sample in the categorization task.

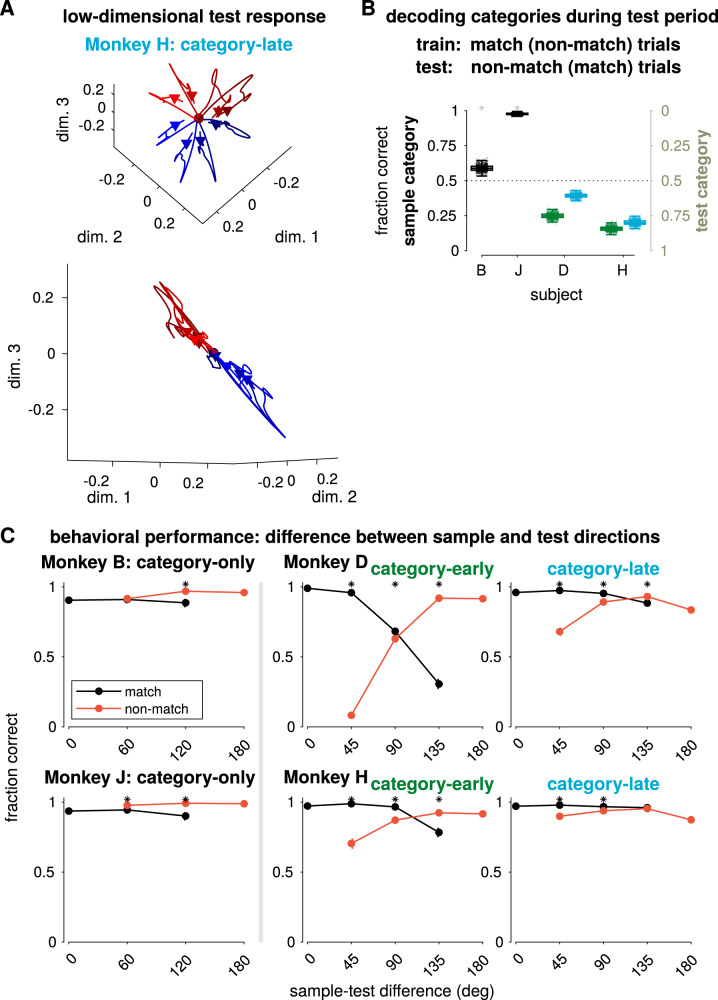

A The low-dimensional test-stimulus response for each direction for monkey H (category-late), with the mean response removed, projected into the same dimensions as in Fig. 5 (bottom right). B Decoding accuracy of sample or test category using the spike counts during the first 200 ms of the test stimulus (excluding the motor response for 95.7% of match trials). The decoder was trained on trials from all stimulus directions, but only from match (or non-match) trials and then tested on non-match (or match) trials. Performances for decoding sample and test category are mirror images (reflected over the 50% chance line): the training sets given to the binary classifiers are the same, but the test sets have opposite category labels. The box plots show the median and 25 and 75% range of decoder performance over bootstraps and the whiskers extend to a 1.5 interquartile range from the edges. All decoders generalized significantly differently from chance (50%; p < 0.01 Benjamini–Hochberg corrected, two-sided bootstrap test with 1000 bootstraps of 50 trials per stimulus direction). C Average performance as a function of the difference in angle between the sample and test stimulus, sorted by match/non-match trials in the categorization task (error bars show a 99% credible interval). Asterisks indicate match and non-match are significantly different (p < 0.01, two-sided rank sum test, Benjamini–Hochberg corrected). Supplementary Fig. 14 compares the category tuning during the sample and test stimulus presentations. Supplementary Fig. 15 shows that the touch-bar subspace (encoding “match” responses) does not reflect category tuning.

We tested if the stored sample category and incoming test stimulus category were separable in the LIP population responses during the test stimulus presentation. We used linear classifiers to decode the sample category from pseudopopulation spike counts during the first 200 ms of the test stimulus presentation. The decoders were trained on trials of all stimulus directions. However, the training set consisted of only match (or non-match) trials, while the validation set included only non-match (or match) trials. For the two category-only animals, monkeys B and J, the sample category decoder generalized across the two conditions (i.e., performed better than chance at 50%; Fig. 9B). We observed the opposite pattern in the pretrained monkeys: the sample decoder performed below chance. Decoding the test stimulus category instead produces a mirror image of these results (the training sets for the classifier are the same, but the test sets have opposite identities). In the category-only animals, sample category can therefore be read out by a single linear decoder regardless of the test stimulus identity, which is consistent with stronger separability of the remembered sample category and the incoming test stimulus in the category-only monkeys than in the pretrained monkeys. Increased separability suggests a coding scheme that reduces interference between the stored sample stimulus category and the specific test stimulus direction40.
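The mirror-image relationship is a matter of arithmetic for a decoder trained on match trials and validated on non-match trials: the training labels are identical for sample and test category, while every validation label is flipped. The short example below uses made-up predictions (the values are hypothetical and serve only to show the bookkeeping).

```python
def accuracy(predictions, labels):
    """Fraction of trials on which the predicted category equals the label."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


# Hypothetical decoder outputs on six non-match validation trials (categories 0/1).
predicted = [0, 0, 1, 1, 0, 1]
sample_category = [0, 1, 1, 1, 0, 0]
# On a non-match trial the test stimulus belongs to the other category.
test_category = [1 - c for c in sample_category]

acc_sample = accuracy(predicted, sample_category)
acc_test = accuracy(predicted, test_category)
# The two accuracies are reflections of each other about the 50% chance line.
assert abs(acc_sample + acc_test - 1.0) < 1e-12
```

A sample-category accuracy above chance therefore implies a test-category accuracy below chance by the same margin, and vice versa.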

We then asked how the monkeys’ performances depended on the similarity between the sample and test stimuli because this gives insight into the strategy the monkeys used to form their match versus non-match decisions. We compared the monkeys’ accuracy as a function of distance between test and sample directions (Fig. 9C). The pretrained monkeys showed a different pattern of accuracy than the category-only monkeys. At small sample-test differences, the pretrained animals showed better performance on match than non-match trials while the category-only monkeys performed similarly or better on non-match. In addition, the pretrained animals showed greater dependence on distance. These effects were greatest during the category-early training phase, but they persisted after extensive training on the order of several months (the total number of categorization task training sessions between the category-early and category-late periods was 78 for monkey D and 65 for monkey H). Therefore, stimulus similarity, which was relevant in the discrimination task, affects categorization behavior more strongly in the pretrained monkeys than in the category-only monkeys, reflecting the monkeys’ strategy.
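The behavioral comparison groups trials by the angular distance between sample and test directions and by trial type. A minimal sketch of that bookkeeping follows, assuming each trial is available as a `(sample_deg, test_deg, is_match, correct)` tuple (a hypothetical record format). It does not reproduce the credible intervals or significance tests reported in Fig. 9C.

```python
from collections import defaultdict


def angular_difference(a_deg, b_deg):
    """Smallest absolute angle between two directions, in [0, 180] degrees."""
    diff = abs(a_deg - b_deg) % 360.0
    return min(diff, 360.0 - diff)


def accuracy_by_distance(trials):
    """Mean accuracy grouped by (is_match, sample-test angular difference).

    trials is an iterable of (sample_deg, test_deg, is_match, correct) tuples.
    """
    tally = defaultdict(lambda: [0, 0])  # key -> [n_correct, n_trials]
    for sample_deg, test_deg, is_match, correct in trials:
        key = (bool(is_match), angular_difference(sample_deg, test_deg))
        tally[key][0] += int(correct)
        tally[key][1] += 1
    return {key: n_correct / n for key, (n_correct, n) in tally.items()}
```

Comparing the match and non-match entries at the same distance gives the contrast described above.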

Single-trial dynamics during the delay period

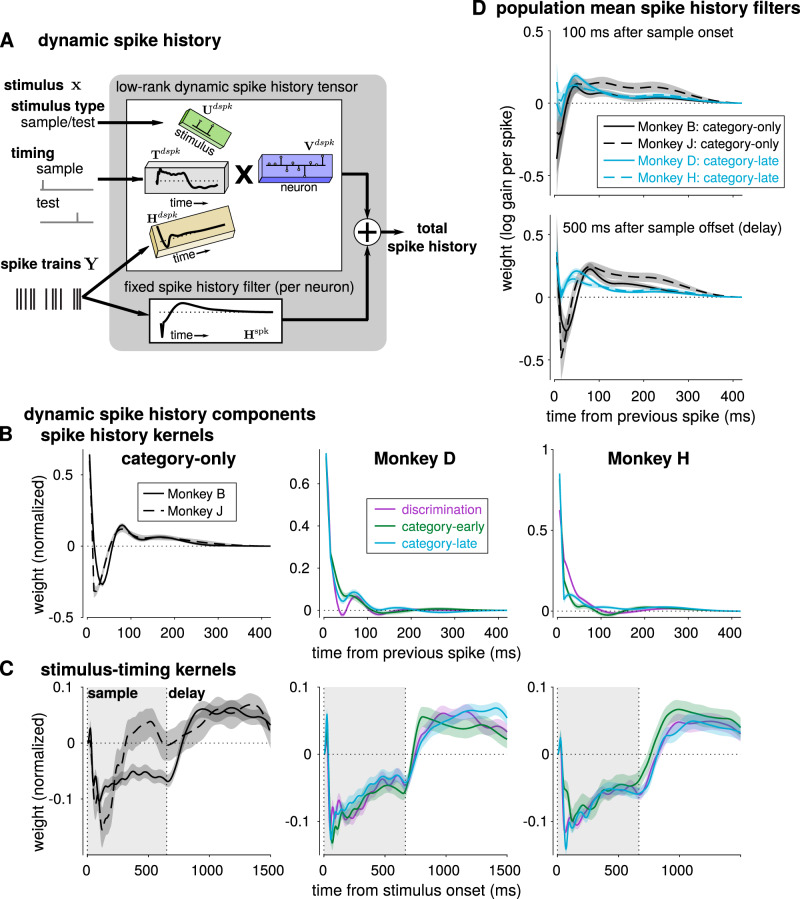

Neural dynamics during single trials may reflect aspects of sensory processing and working memory beyond the mean firing rate44,47. For example, working memory may be supported by persistent activity48 or oscillatory bursts49 while stimulus-related activity may exhibit strong transient responses with quenched variability50. We therefore sought to characterize non-Poisson variability in single trials in LIP during the discrimination and categorization tasks, which could reflect signatures of different strategies in performing the tasks. The GLM framework accounts for non-Poisson variability or single-trial dynamics by conditioning firing rates on recent spiking activity through a spike history filter, an autoregressive term that can reflect a combination of intrinsic factors (e.g., refractory periods, adaptation, facilitation) and network properties (e.g., oscillations)51–53. Typically, GLMs are designed assuming the spike history filter to be constant: that is, spike history has the same effect on spike rate throughout a trial. While fixed spike history effects may be an appropriate assumption in early sensory regions under stimulation with steady-state stimulus statistics, spiking dynamics in higher-order areas such as LIP might vary between the stimulus presentation and the delay period due to both the transition in behavioral task demands and different sources of input (bottom-up vs. top-down) between these two periods of the task24. In order to quantify spike history effects in the discrimination and categorization tasks, we extended our model to allow the spike history filter to change over time during the trial (relative to the stimulus onset), which we have called dynamic spike history filters. However, estimating different spike history effects at each time point in the task for each neuron would be highly impractical (and even infeasible for single neurons).
By instead using the low-dimensional GMLM to look for common changes in spike history effects that occur across the population, we added the dynamic spike history in the GMLM as a low-rank tensor with a linear spike history component and a gain term relative to the stimulus timing54 (Fig. 10A). The spike history feature is a kernel shared by the entire population. We label the filter dynamic because the gain of the dynamic spike history’s contribution changes during the trial. The effect of spike history on a neuron’s instantaneous firing rate therefore changes over the time course of the trial depending on the gain-modulated contribution of the dynamic spike history filter. Because the dynamic spike history filter and gain are shared, the model searches for the main features of spike history that change during the trial in the population. The resulting model can learn to capture distinct spike-history dependent dynamics between stimulus-driven and delay periods of the trial observed across many neurons.
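For a single (rank-1) dynamic component, the description above implies that a neuron's effective spike history kernel at a given time in the trial is its fixed filter plus a gain-scaled copy of the shared kernel. The sketch below illustrates that structure only. The function and argument names are hypothetical, the stimulus-identity scaling is folded into `gain` for simplicity, and the example values are invented rather than fitted.

```python
import math


def effective_history_filter(fixed_filter, shared_kernel, neuron_weight, gain):
    """Effective spike history kernel of one neuron at one time in the trial.

    fixed_filter: the neuron's constant spike history filter (one value per lag).
    shared_kernel: population-wide dynamic spike history kernel (same lags).
    neuron_weight: the neuron's loading on the shared dynamic component.
    gain: value of the stimulus-timing gain at the current time in the trial.
    """
    return [f + neuron_weight * gain * k
            for f, k in zip(fixed_filter, shared_kernel)]


# Hypothetical example values (not fitted parameters).
lags_ms = list(range(1, 101))
# Refractory-like suppression at short lags.
fixed = [-2.0 * math.exp(-lag / 5.0) for lag in lags_ms]
# Damped oscillation at 13 Hz, within the 12-14 Hz range reported for Fig. 10B.
shared = [math.exp(-lag / 40.0) * math.cos(2.0 * math.pi * 13.0 * lag / 1000.0)
          for lag in lags_ms]

during_sample = effective_history_filter(fixed, shared, neuron_weight=0.5, gain=-1.0)
during_delay = effective_history_filter(fixed, shared, neuron_weight=0.5, gain=1.0)
```

At any single time point the result is still an ordinary linear filter on past spikes. Only the gain varies across the trial, so the difference between `during_delay` and `during_sample` is proportional to the shared kernel.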

Fig. 10. Dynamic spike history captures distinct stimulus-driven and delay-period dynamics.

A The dynamic spike history filter is a low-rank, four-way tensor. The tensor includes two temporal kernels: one which filters spike history (gold) and a second which determines the weighting of the spike history component relative to the stimuli (gray). The spike history is scaled by stimulus identity (for simplicity, limited to sample or test stimulus weights only, without any category information). Each neuron adds the (weighted) dynamically filtered spike history to the neuron’s constant spike history filter. The total spike history at any point in a trial is still a linear function of past spiking activity, but the effective linear kernel can change during a trial. The normalized rank-1 dynamic spike history components: B dynamic spike history kernels and C stimulus-timing kernels for each population (posterior median and 99% credible interval). The left columns show the two category-only monkeys, and the middle and right columns show all training stages for monkeys D and H respectively. D The population mean effective spike history filters at two points in the task for the four fully trained categorization populations (mean of MAP estimate of filters ±2 SEM). (top) The population mean spike history at 100 ms after sample stimulus onset. (bottom) The population mean spike history during the delay period (500 ms after sample stimulus offset). Positive weights indicate that a previous spike at the given lag increases a neuron’s probability of firing, while negative weights indicate that spiking is suppressed. Supplementary Fig. 16 quantifies the model fit improvement gained by including dynamic spike history and shows fits with higher-rank dynamic spike history.

We found the main spike history feature that varied during the task in each LIP population by fitting the GMLM with a single dynamic spike history component. Including dynamic spike history improved the cross-validated model performance for all populations (Supplementary Fig. 16A). The GMLM found similar dynamic spike history kernels for the two category-only monkeys, which showed oscillatory dynamics at approximately 12–14 Hz (low-beta; Fig. 10B). In contrast, the dynamic spike history kernel for the pretrained monkeys at all training stages was dominated by a faster timescale decay (time constants for discrimination, category-early, and category-late, respectively: monkey D 10.2, 14.8, and 11.8 ms; monkey H 22.5, 9.0, and 6.6 ms), which suggests stronger gamma-frequency bursts. The stimulus-timing kernels showed that the dynamic spike history weighting is generally aligned with stimulus onset and offset (Fig. 10C). We note that the individual neurons’ fixed spike history kernels act as the mean spike history, effectively centering the timing kernels around zero. The dynamic spike history is therefore reflected by the difference in weight between the sample and delay period, not the absolute value. This change in the dynamic spike history weight would be consistent with a change in network activity between the stimulus-driven and working-memory periods of the task. One notable exception was monkey J: the timing showed only a short transient gain after stimulus onset. The timing of this kernel corresponded with the strong category-dependent transient response in monkey J (Fig. 8B), and thus raises the possibility that the network enters a memory-storage state prior to stimulus offset.

Lastly, we summarized differences in the single trial dynamics across populations by comparing the mean spike history filters across all neurons in each population. Here, we examined population differences in the total spike history: the dynamic spike history filter (which depends on time in the trial) plus the individual neurons’ fixed spike history filters. We computed the population mean spike history kernel at two different points (Fig. 10D): during stimulus-driven activity (100 ms after sample stimulus onset) and during the delay period (500 ms after sample offset). The mean spike history in the category-only monkeys showed a pronounced oscillatory-like trough during the delay period, compared to the pretrained monkeys (Fig. 10D, bottom). Spike history differences between the populations were less evident during stimulus presentation. These results were consistent for higher-rank dynamic spike history tensors (Supplementary Fig. 16A, B). Similar differences can be observed in the spiking autocorrelation during the delay period (Supplementary Fig. 16C). However, the dynamic spike history in the GMLM did not require specifying a fixed window to compute the autocorrelation: the temporal gain term estimates when the spike history effects change in the trial and the stimulus terms help compute spike history effects in the presence of stimulus-driven changes in mean firing rate. Thus, the structure of single-trial variability during the delay period differed between category-only and pretrained monkeys, but was similar within each pair, which suggests that the balance of beta- and gamma-frequency-driven activity during the delay period differed between the animal pairs.

Discussion

Here we examined the low-dimensional geometry of task-related LIP responses in two pairs of monkeys that performed the same motion-direction categorization task but had different training histories. In the monkeys that were pretrained on a motion-direction discrimination task, we found similar direction-dependent LIP activity during the discrimination and categorization tasks: two-dimensional direction-encoding subspaces that reflected the stimulus geometry. Moreover, uniform direction tuning remained a dominant feature of this subspace after training on the categorization task. The common direction tuning observed across the sample and test periods of the task could reflect cosine-like signals from sensory regions such as area MT12,15,55. In contrast, the monkeys trained only on the categorization task showed stronger category tuning and category-aligned direction tuning in LIP compared to activity in animals first trained on the discrimination task. Performing the categorization task may involve computations including input selection and local and/or top-down recurrent dynamics. Our findings indicate that differences in the sequences of tasks learned by the animals over long periods may result in different network configurations that perform the same task, perhaps manifesting in different behavioral strategies.

We hypothesize that these differences may be indicative of the pretrained monkeys still using computational strategies learned for the discrimination task. Indeed, the pretrained monkeys’ behavior showed greater dependence on the angular difference between sample and test stimuli than category-only monkeys, which was a key factor in solving the discrimination task. Because the tasks used the same stimuli and shared many of the same correct or incorrect sample-test pairings, the same neural machinery and behavioral strategies could be recruited and maintained for the categorization task, despite extensive retraining. While many LIP neurons show delay period encoding of category during the categorization task, we did not see direction tuning in the average firing rates during the delay period in the discrimination task (Figs. 2A and 5). It is possible that direction is maintained in working memory in LIP populations during the delay period by sparse bursting activity, but not by persistent firing, which cannot be seen by our analysis using single-neuron recordings44. In addition, previous theoretical work from our lab has demonstrated that recurrent neural networks performing the discrimination task may recruit activity-silent computations to compare sample and test stimuli through short-term synaptic plasticity56. In that study using recurrent neural networks trained on both discrimination and categorization tasks, delay-period sustained activity was observed more often in tasks that required more complex manipulation of the sample stimulus information compared to the discrimination task. Our dynamic spike history analysis revealed single-trial dynamics with low-beta frequency oscillatory structure during the delay period in the category-only monkeys, but not the pretrained monkeys, which could reflect different working memory dynamics in the category-only pair44. 
Together, this raises the possibility that computations learned during the discrimination task which recruited activity-silent working memory during the delay period could explain the observed reduced separability of the sample and test stimulus in the neural subspace in the pretrained monkeys compared to the category-only monkeys57.

Studying how LIP populations encoded task variables in the discrimination and categorization tasks motivated our development of a statistical dimensionality reduction method to quantify the population code. We extended the GLM framework for individual neurons to perform dimensionality reduction on neural populations using a flexible tensor-regression model. In complex decision-making tasks, the trials may not be aligned such that common dimensionality reduction methods can be applied without artificially re-aligning single-trial firing rates by stretching or time-warping41. For example, the touch-bar release ended the trial early at a time determined by the animal in the discrimination and categorization tasks. This modeling framework could extend to many other tasks and questions, given appropriate linearizations of specific tasks. For instance, the tensor could be extended to model slow trial-to-trial changes in stimulus response within a recording session by including coefficients for weighting each trial, thereby generalizing applications of tensor component analysis as in42,43. Here, we applied the GMLM to perform dimensionality reduction to find task-relevant features in spike trains when the events in the task were not exactly aligned on every trial, without the need for an aligned trial structure. Our approach is related to reduced-rank regression58,59 and the recently proposed model-based targeted dimensionality reduction36 with two important distinctions: (1) our model is fit to spike trains through an autoregressive Poisson observation model and (2) we consider a more general tensor decomposition of task-related dynamics. The tensor decomposition is used to describe low-rank temporal dynamics in response to stimulus events, similar to low-rank receptive field models of early visual neurons60–62, and those components are shared across all neurons in a population. 
In contrast to demixed principal components analysis41, which requires balanced conditions across all variables to recover task-relevant subspaces, the cosine-tuned GMLM takes into account cosine-like direction tuning observed in sensory regions in order to disentangle category and direction information even though category is completely determined by direction in the task. Bayesian inference in this model allowed us to quantify uncertainty in the low-dimensional subspace and test hypotheses about the geometry of neural representations. The model showed that direction tuning was similar across the sample and test stimuli, while category-dependent responses differed, which supports this disentangling of category and direction.
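The need for a tuning assumption to disentangle category and direction can be illustrated with a small linear-algebra sketch. This is not the GMLM itself: the function names, the ±1 category coding, and the choice of which side of the boundary is coded +1 are illustrative assumptions. With an unconstrained (one-hot) direction code, a category regressor adds no rank because category is a function of direction; with cosine and sine direction regressors, the category regressor is linearly independent of the direction regressors.

```python
import numpy as np

def category_sign(directions_deg, boundary_deg=45.0):
    # +1 / -1 by side of the 45-225 deg boundary (sign convention is arbitrary).
    rel = (np.asarray(directions_deg, dtype=float) - boundary_deg) % 360.0
    return np.where(rel < 180.0, 1.0, -1.0)

def cosine_design(directions_deg):
    # Columns: intercept, cos(direction), sin(direction), category.
    d = np.asarray(directions_deg, dtype=float)
    theta = np.deg2rad(d)
    return np.column_stack(
        [np.ones_like(theta), np.cos(theta), np.sin(theta), category_sign(d)]
    )

def onehot_design(directions_deg):
    # Columns: one indicator per direction, followed by category.
    d = np.asarray(directions_deg, dtype=float)
    return np.column_stack([np.eye(d.size), category_sign(d)])

dirs8 = 22.5 + 45.0 * np.arange(8)  # pretrained monkeys (D, H)
dirs6 = 15.0 + 60.0 * np.arange(6)  # category-only monkeys (B, J)

for dirs in (dirs8, dirs6):
    r_cos = np.linalg.matrix_rank(cosine_design(dirs))
    r_hot = np.linalg.matrix_rank(onehot_design(dirs))
    print(f"{dirs.size} directions: cosine design rank {r_cos} (4 columns), "
          f"one-hot design rank {r_hot} ({dirs.size + 1} columns)")
```

A full-rank cosine design means that direction and category weights are separately identifiable under the cosine-tuning assumption, whereas the rank-deficient one-hot design cannot separate them.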

There are several important limitations to what can be inferred about behavioral strategy and neural mechanisms in the present study. Primarily, this study included only a small number of animals, as is the norm in non-human primate experiments. Furthermore, multiple cortical and subcortical areas are involved in decision making (such as prefrontal cortex), and our analysis only considered neural activity in LIP. Even within a single region, it is possible that our results could depend on differences in neuron sampling within LIP between animals or sessions (e.g., either more anterior or more posterior), or other factors not directly related to the animals’ training histories. Given the large variation in LIP activity observed between animals within each pair, we cannot measure the magnitude of training history effects relative to variance due to individual differences. We cannot exclude the possibility that animals could switch behavioral strategy with additional training such that, for example, both pairs of monkeys would perform similarly. Consequently, the possibility remains that LIP representations of the categorization task could change to match the currently adopted behavioral strategy, rather than purely reflecting training history. We think this is unlikely because the LIP recordings were made after all animals had received extensive training on the categorization task and their behavioral accuracy had appeared to asymptote at a high level13. We note that, although the LIP recording sessions were performed using different sets of motion directions for the two pairs of monkeys (six directions for the category-only monkeys and eight for the pretrained pair), both pairs of monkeys were trained extensively on a larger set of motion directions13.
We focused on single-neuron recordings, and the components of our model described common motifs in the mean responses to the task variables, while examining more aspects of the single-trial computations involved in categorization would require large population recordings30,63. Finally, there are many training and task variables that could impact learning beyond those considered here. For example, training on the categorization task first could influence direction coding in the discrimination task (that is, learning the tasks in the opposite order from the one we considered), or the use of noisy motion stimuli (e.g., drifting motion direction) in the categorization task could affect the neural dynamics underlying categorization in LIP.

There are multiple ways that the brain could learn to perform the same task. Average results across animals may therefore fail to reflect the neural mechanisms of decision making in individual animals64,65. Individual differences are a major focus of human decision-making research and have led to many insights into cognitive functions including working memory66,67. Here, we explored between-subject differences in the dimensionality and the relationship between direction and category tuning in LIP, and we found differences that correlated with long-term training history. Primates in particular may participate in many experiments and receive extensive training in multiple closely related tasks over the course of years. Experimenters should report and consider animals’ training histories when interpreting such data and when comparing seemingly conflicting results from different labs. In conclusion, the low-dimensional dynamics that posterior parietal cortex enlists to support abstract visual categorization can manifest differently across subjects, and exploring long-term effects of training over more subjects can provide broader perspectives of the diverse neural computations that give rise to decision-making skills.

Methods

Data

All datasets used for this study were the same as in two previous studies from our group (refs. 22 and 13).

Tasks

The details of the tasks have been described previously for the category-only monkeys (B and J)22,45 and the pretrained monkeys (D and H)13. For all animals, stimuli were high-contrast, 100% coherent random dot motion stimuli with a dot velocity of 12° s−1. The motion patch was 9.0° in diameter, and the frame rate was 75 frames s−1. Monkeys were required to keep fixation within a 2° radius of the fixation point during each trial.

For monkeys D and H, there were eight sample stimulus directions for both the discrimination and categorization tasks, spaced 45° apart: {22.5°, 67.5°, 112.5°, 157.5°, 202.5°, 247.5°, 292.5° and 337.5°}. The test stimuli for the discrimination task were 45°, 60°, 75°, or 0° (match) away from the sample stimulus, giving a total of 24 possible motion directions in the task. Test stimuli for the categorization task were the same as the eight sample stimuli. The stimulus presentations were 667 ms and the delay period was 1013 ms.
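The count of 24 motion directions can be checked by enumerating every sample direction combined with every test offset. The sketch below assumes that offsets were applied on both sides of the sample direction (the text does not state this explicitly); the variable names are illustrative.

```python
import numpy as np

# Eight sample directions (degrees), spaced 45 deg apart, as listed in the text.
sample_dirs = 22.5 + 45.0 * np.arange(8)

# Test offsets relative to the sample; 0 deg is a match.
# Assumption: non-zero offsets occur in both rotational directions.
offsets = np.array([0.0, 45.0, 60.0, 75.0])
signed_offsets = np.concatenate([offsets, -offsets[1:]])

# Every sample/offset combination, wrapped into [0, 360).
test_dirs = (sample_dirs[:, None] + signed_offsets[None, :]) % 360.0
unique_dirs = np.unique(test_dirs)

print(unique_dirs.size)       # number of distinct directions in the task
print(np.diff(unique_dirs))   # spacing between neighboring directions
```

Under this assumption the distinct directions fall on a regular 15° grid, which is consistent with the 24 directions stated above.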

The categorization task for monkeys B and J used directions spaced evenly in 60° intervals: {15°, 75°, 135°, 195°, 255° and 315°}. The stimulus presentations were 650 ms and the delay period was 1000 ms.

For all monkeys tested on the categorization task in this study, the motion directions were split evenly into two categories separated by a constant boundary at 45° and 225°.
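As a minimal sketch of this category rule, the function below assigns each direction to one side of the 45°–225° boundary. The function name and the labels 1 and 2 are arbitrary assumptions; the text does not specify which side corresponds to which category.

```python
import numpy as np

def category(direction_deg, boundary_deg=45.0):
    """Label directions 1 or 2 by the side of the boundary line they fall on.

    Directions exactly on the boundary (45 or 225 deg) are not meaningfully
    categorized; they are only used as null-direction samples.
    """
    rel = (np.asarray(direction_deg, dtype=float) - boundary_deg) % 360.0
    return np.where(rel < 180.0, 1, 2)

dirs_pretrained = 22.5 + 45.0 * np.arange(8)     # monkeys D and H
dirs_category_only = 15.0 + 60.0 * np.arange(6)  # monkeys B and J

for name, dirs in [("pretrained", dirs_pretrained),
                   ("category-only", dirs_category_only)]:
    labels = category(dirs)
    print(name, dict(zip(dirs.tolist(), labels.tolist())))
```

Both direction sets split evenly under this rule (four per category for eight directions, three per category for six).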

The categorization task for monkeys B and J included a set of null-direction trials (Supplementary Fig. 12A). In these trials, the sample direction was along the category boundary (45° or 225°) and the test direction was either 135° or 315° (one direction from each category, furthest from the boundary). These trials were not used to fit neural models, but were examined for behavior in Supplementary Fig. 12B. The monkey’s response was randomly rewarded at 50% chance on these trials. We note that monkeys B and J were first trained on a simplified discrimination task in which the test stimulus either matched the sample or was 180° opposite. This version of the discrimination task therefore did not require fine motion direction discrimination, and all correct sample-test response pairs in this task were consistent with the categorization task.

Electrophysiology

Neurons in LIP were recorded using single tungsten microelectrodes. During both the discrimination and categorization tasks, the motion stimuli were placed inside an LIP cell’s response field, which was assessed using a memory-guided saccade task prior to running the main experiment in each session.

In this study, we included only cells with a mean firing rate of at least 2 sp s−1, averaged from sample stimulus onset to test offset. We included N = 31 cells from 26 sessions for monkey B, and N = 29 cells from 27 sessions for monkey J. For monkey D, we included N = 81 cells from 39 sessions for the discrimination task, N = 63 cells from 33 sessions for the category-early period, and N = 137 cells from 59 sessions for the category-late period. For monkey H, we included N = 89 cells from 55 sessions for the discrimination task, N = 106 cells from 40 sessions for the category-early period, and N = 114 cells from 50 sessions for the category-late period.
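The inclusion criterion can be sketched as follows. This is a hypothetical helper, not the authors' code: it assumes spike times and window boundaries are given in seconds per trial and that the mean rate is computed by pooling spikes and window durations across trials, which the text does not specify.

```python
import numpy as np

MIN_RATE_SP_S = 2.0  # inclusion threshold from the text

def mean_rate(spike_times_per_trial, windows):
    """Mean firing rate (sp/s) pooled over trials.

    spike_times_per_trial: list of arrays of spike times (s), one per trial.
    windows: list of (sample_onset, test_offset) tuples (s), one per trial.
    """
    n_spikes = 0
    total_duration = 0.0
    for spikes, (t0, t1) in zip(spike_times_per_trial, windows):
        spikes = np.asarray(spikes, dtype=float)
        n_spikes += np.count_nonzero((spikes >= t0) & (spikes < t1))
        total_duration += t1 - t0
    return n_spikes / total_duration

def include_cell(spike_times_per_trial, windows):
    return mean_rate(spike_times_per_trial, windows) >= MIN_RATE_SP_S

# Toy example: two trials with a 2.347 s window (667 + 1013 + 667 ms).
window = (0.0, 2.347)
trials = [np.array([0.1, 0.5, 0.9, 1.4, 2.0, 2.5]),  # last spike is outside
          np.array([0.2, 0.7, 1.1, 1.9, 2.3])]
print(mean_rate(trials, [window, window]), include_cell(trials, [window, window]))
```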

Data used for modeling

For all the modeling and decoding analyses, we included only correct trials. The null-direction trials of the categorization task for monkeys B and J were not included in model fitting.

We considered a time window in each trial starting from sample stimulus onset until 50 ms after the touch-bar release (if a touch-bar release occurred) or 50 ms after the test motion offset. We discretized the spike trains during each trial into 5 ms bins. We note that on non-match trials the animal was required to hold the touch bar until a second test stimulus (which was always a match) appeared. However, the second test stimulus presentation was never included in our analysis.
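The windowing and binning described above can be sketched as below. The function and argument names are hypothetical, times are assumed to be in milliseconds, and dropping a trailing partial bin is a choice made here for simplicity rather than something stated in the text.

```python
import numpy as np

BIN_MS = 5.0   # bin width from the text
PAD_MS = 50.0  # window extends 50 ms past release or test offset

def bin_trial(spike_times_ms, sample_onset_ms, test_offset_ms, release_ms=None):
    """Return spike counts in 5 ms bins for one trial.

    The window runs from sample onset to 50 ms after the touch-bar release
    if one occurred, otherwise to 50 ms after test offset.
    """
    end = (release_ms if release_ms is not None else test_offset_ms) + PAD_MS
    n_bins = int((end - sample_onset_ms) // BIN_MS)  # drops a partial last bin
    spikes = np.asarray(spike_times_ms, dtype=float)
    idx = np.floor((spikes - sample_onset_ms) / BIN_MS).astype(int)
    idx = idx[(idx >= 0) & (idx < n_bins)]           # keep spikes inside window
    return np.bincount(idx, minlength=n_bins)

# Toy trial: 667 ms sample + 1013 ms delay + 667 ms test, no release.
spikes = [1.0, 4.9, 5.0, 1200.0, 2500.0]
counts = bin_trial(spikes, sample_onset_ms=0.0, test_offset_ms=2347.0)
print(counts.size, counts[:3], counts.sum())

# Same spikes, but the trial ends early with a release at 1800 ms.
counts_release = bin_trial(spikes, 0.0, 2347.0, release_ms=1800.0)
print(counts_release.size, counts_release.sum())
```

Because each trial keeps its own window length, trials that end early with a touch-bar release simply yield shorter count vectors, without any re-alignment or time-warping.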