Abstract

Introduction: Over the last decades, interactive technologies have emerged as a promising solution for the ecological evaluation of executive functioning. We have developed the EXecutive-functions Innovative Tool 360° (EXIT 360°), a new instrument that exploits 360° technologies to provide an ecologically valid assessment of executive functioning. Aim: This work aimed to evaluate the convergent validity of the EXIT 360° by comparing it with traditional neuropsychological tests (NPS) for executive functioning. Methods: Seventy-seven healthy subjects underwent an evaluation that involved: (1) a paper-and-pencil neuropsychological assessment, (2) an EXIT 360° session, involving seven subtasks delivered by VR headset, and (3) a usability assessment. To evaluate convergent validity, statistical correlation analyses were performed between NPS and EXIT 360° scores. Results: Participants completed the whole task in about 8 min, with 88.3% obtaining a high total score (≥12). Regarding convergent validity, the data revealed a significant correlation between the EXIT 360° total score and all NPS. Furthermore, the data showed a correlation between the EXIT 360° total reaction time and timed neuropsychological tests. Finally, the usability assessment showed a good score. Conclusion: This work represents a first validation step towards considering the EXIT 360° as a standardized instrument that uses 360° technologies to conduct an ecologically valid assessment of executive functioning. Further studies will be necessary to evaluate the effectiveness of the EXIT 360° in discriminating between healthy control subjects and patients with executive dysfunctions.

Keywords: executive functions, 360° environments, virtual reality, convergent validity, psychometric assessment, 360° videos

1. Introduction

Neuropsychological assessment is historically considered an integral part of the neurological examination and consists of the normatively informed application of performance-based assessments of various cognitive skills [1]. Among these cognitive abilities, the evaluation of executive functioning represents a challenge for neuropsychologists, due to the complexity of the construct [2] and the methodological difficulties [3,4,5].

The executive functions involve a wide range of neurocognitive processes and behavioral skills (e.g., reasoning, decision making, problem solving, planning, attention, inhibitory control, cognitive flexibility, and working memory) that appear to be crucial in many real-life situations [6]. Their dysfunction, typical in psychiatric and neurological pathologies, constitutes a significant global health challenge, due to its high impact on personal independence, social abilities (e.g., work, school, relationships), and cognitive and psychological development [7,8,9]. Specifically, executive function deficits affect daily tasks such as meal preparation, money management, housekeeping, and shopping [10,11], with an inevitable impact on the person’s quality of life and feelings of personal well-being [12]. Moreover, subjects with executive function impairments show problems in starting and stopping activities, increased distractibility, difficulties in learning, generating or planning strategies, and difficulties with online monitoring and inhibiting irrelevant stimuli [13,14]. Thus, identifying early strategies for evaluating and rehabilitating these disorders appears to be a priority [15].

The executive functions are traditionally evaluated with standard paper-and-pencil neuropsychological tests such as the Modified Wisconsin Card Sorting Test [16], Stroop Test [17], Frontal Assessment Battery [18,19], or the Trail Making Test [20], which allow standardized procedures and scores that make them valid and reliable. However, several studies have demonstrated that these tests were not able to predict the complexity of executive functioning in real-life settings [4,6,21,22,23,24]. An ecological assessment of executive functions appears critical for achieving excellent executive dysfunction management [23], given the significant impact of executive functions on daily life and personal independence [11, 25]. Therefore, innovative neuropsychological tests have been developed aimed at evaluating executive functioning within real-life scenarios [3], such as the Multiple Errands Test (MET) [21], in which patients are assessed while they are carrying out shopping tasks in a real supermarket, or UCSD Performance-based Skills Assessment (UPSA-B), in which patients must perform everyday tasks in two areas of functioning: communication and finances [26]. Data showed that these ecological evaluations provided a more accurate estimate of the patient’s deficits than were obtained within the laboratory [27]. However, they showed several limitations, including extended times, high economic costs, the difficulty of organization, poor controllability of experimental conditions and poor applicability for patients with motor deficits [28].

Therefore, the ecological limitations of the traditional neuropsychological battery and difficulties in administering tests in real-life scenarios have led researchers and clinicians to search for innovative solutions for achieving an ecologically valid evaluation of executive functions. In this framework, the use of interactive technologies (e.g., virtual reality, serious games, and 360° video) appeared as a promising solution, because they simulate real environments, situations, and objects, thereby allowing an ecologically valid assessment of executive functions [29,30,31,32] with a rigorous control on principal variables [33,34,35]. Several studies have shown VR-based tools to be appropriate instruments for assessing and rehabilitating executive functions, because they allowed clinicians to evaluate subjects while performing several everyday tasks in ecologically valid, secure, and controlled environments that reproduce everyday contexts [36,37,38]. Moreover, these VR-based instruments guarantee good control of the perceptual environment, a precise stimuli presentation, greater applicability, and user-friendly interfaces, and enable the acquisition of data and analysis of performance in real-time [14,31,32,33,39,40,41]. Indeed, several studies have shown the efficacy of VR-based assessment tools of executive functions in neurological and psychiatric populations, showing impairments invisible to traditional measurements [27,32,39,42,43,44,45,46,47,48].

In recent years, one of the most promising trends in the VR technology field is 360° technology [49], which has appeared as an interesting instrument in different healthcare sectors, including neuropsychological assessment [49,50,51], rehabilitation [52], and educational training [53]. Specifically, in neuropsychological assessment, the advances in 360° technologies allowed participants to be evaluated in virtual environments (photographs or immersive videos), which they experience from a first-person perspective without particular clinical negative effects (e.g., nausea, vertigo), enhancing the global user experience of evaluation [32]. In this direction, Serino and colleagues have developed a 360° version of the Picture Interpretation Test (PIT) for the detection of executive deficits (only the active visual-searching component), which has been successfully tested on patients with Parkinson’s disease and multiple sclerosis [51,54]. Following these promising results, Borgnis and colleagues developed the EXIT 360° (Executive-functions Innovative Tool 360°) for gathering information about many components of executive functioning (e.g., planning, decision making, problem solving, attention, and working memory) [55]. Indeed, the EXIT 360° was born to provide a complete evaluation of executive functionality, involving participants in a ‘game for health’ delivered via smartphones, in which they must perform everyday subtasks in 360° environments that reproduce different real-life contexts. Two previous studies showed promising and interesting results regarding usability, user experience, and engagement with the EXIT 360° in healthy control subjects [56] and patients with Parkinson’s disease [57]. Participants had a positive global impression of the tool, evaluating it as usable, easy to learn to use, original, friendly, and enjoyable. 
Interestingly, the EXIT 360° also appeared to be an engaging tool, with high spatial presence, ecological validity, and negligible adverse effects.

Since our purpose in developing the EXIT 360° is to produce an innovative tool for evaluating executive functions, this work aimed to assess the convergent validity of the EXIT 360°. The concept of ‘convergent validity’ refers to how closely a new scale or tool relates to other variables and measures of the same construct [58]. In other words, it assumes that tests based on the same or similar constructs should be highly correlated. In this context, one of the most used methods is to correlate the scores of the new assessment tool with those of other tools claimed to measure the same construct [59]. For this purpose, we have compared the EXIT 360° with standardized traditional neuropsychological tests for executive functioning.

2. Materials and Methods

2.1. Participants

Seventy-seven healthy control subjects were consecutively recruited at IRCCS Fondazione Don Carlo Gnocchi in Milan, according to the following inclusion criteria: (a) aged between 18 and 90 years; (b) education ≥ 5 years; (c) absence of cognitive impairment as determined by the Montreal Cognitive Assessment test [60] (MoCA score ≥17.54, cut-off of normality), corrected for age and years of education according to Italian normative data [61]; (d) absence of executive impairments as evaluated by a traditional neuropsychological battery for executive functioning; (e) ability to provide written, signed informed consent. Exclusion criteria: (a) overt hearing or visual impairment or visual hallucinations or vertigo; and (b) systemic, psychiatric, or neurological conditions.

The study was approved by the Fondazione Don Carlo Gnocchi ONLUS Ethics Committee in Milan. All participants received a complete explanation of the study’s purpose and risks before filling in the consent form, based on the revised Declaration of Helsinki (2013).

2.2. Procedure of Study

All participants underwent a one-session evaluation that involved three main phases: a pre-task evaluation (1), followed by an EXIT 360° session (2), and a brief post-task evaluation (3) [62].

2.2.1. Pre-Task Evaluation

Before the study’s initiation, the subjects signed the written informed consent and completed a questionnaire designed to collect the participants’ demographic data (e.g., age, gender, education level). Then, participants underwent an evaluation using traditional paper-and-pencil neuropsychological tests to exclude the presence of frank deficits in global cognitive and executive functioning. In detail, the neuropsychological evaluation allowed assessment of participants’ compliance with the inclusion criteria, and the convergent validity between the traditional neuropsychological tests for executive functions and the EXIT 360°.

The global cognitive profile was investigated using the MoCA test, a sensitive screening tool to exclude the presence of cognitive impairment.

Moreover, the neuropsychological battery for executive functioning included: Trail Making Test (in two specific sub-tests: TMT-A and TMT-B) [20], Phonemic Verbal Fluency Task (F.A.S.) [63], Stroop Test [17], Digit Span Backward [64], Frontal Assessment Battery (FAB) [18,19], Attentive Matrices [65] and Progressive Matrices of Raven [66,67,68]. Table 1 gives a detailed description of the different executive functions evaluated by each of these neuropsychological tests.

Table 1.

Pre-task evaluation: Neuropsychological tests.

| Name | Executive Function |
|---|---|
| Trail Making Test | Visual search; task switching; cognitive flexibility |
| Verbal Fluency Task | Access to vocabulary on phonemic key |
| Stroop Test | Inhibition |
| Digit Span Backward | Working memory |
| Frontal Assessment Battery | Abstraction; cognitive flexibility; motor programming/planning; interference sensitivity; inhibition control |
| Attentive Matrices | Visual search; selective attention |
| Progressive Matrices of Raven | Sustained and selective attention; reasoning |

2.2.2. EXIT 360° Session

After the neuropsychological assessment, all participants underwent an evaluation with the EXIT 360°. The neuropsychologist started the administration by inviting participants to sit on a swivel chair and wear a mobile-powered headset. Before participants put on the headset, the psychologist gave the following general instruction: “You will now wear a headset. Inside this viewer, you will see some 360° rooms of a house. To visualize the whole environment, I ask you to turn yourself around; you are sitting on a swivel chair for this reason. Within these environments, you will be asked to perform some tasks”.

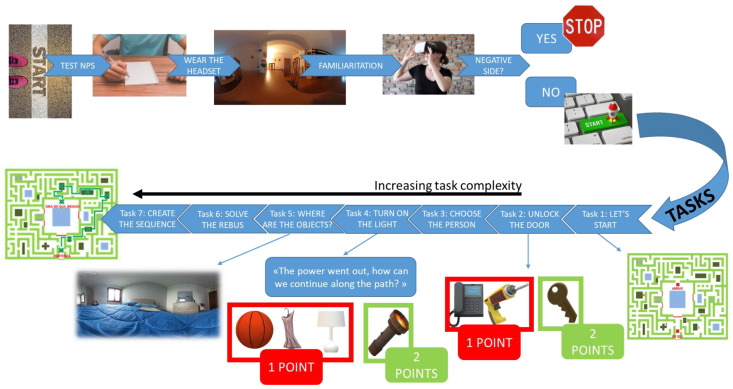

The EXIT 360° consists of 360° immersive domestic photos as virtual environments in which participants have to perform a preliminary familiarization phase and seven subtasks of increasing complexity.

In the first phase (one minute), participants had to familiarize themselves with the technology (they had to explore a 360° neutral environment freely) and report any side effects (such as nausea and vertigo) by answering ad-hoc questions (“Did you feel nauseous and/or dizzy during the exploration?”). If adverse effects occurred (of any intensity), the examiner had to stop the test immediately. Otherwise, subjects were immersed in a 360° environment representing a living room.

The real session (and time registration) started when the participants heard the following instruction: “You are about to enter a house. Your goal is to get out of this house in the shortest time possible. To exit, you will have to complete a path and a series of tasks that you will find along your way. Are you ready to start?”. During the EXIT 360° session, participants are immersed in several virtual environments that they must explore simply by moving their heads and rotating themselves, while remaining seated on the swivel chair.

Participants had to leave the house by completing the domestic path in the shortest possible time, while overcoming all seven subtasks: (1) Let’s Start; (2) Unlock the Door; (3) Choose the Person; (4) Turn On the Light; (5) Where Are the Objects?; (6) Solve the Rebus; and (7) Create the Sequence (for a detailed description, see [55,62]) (Figure 1).

Figure 1.

Evaluation process.

Briefly, the seven subtasks reproduce everyday scenarios that ask the subject to solve specific assignments according to the instructions. Let’s Start requires participants to observe a map and choose the path that allows them to reach the ‘finish’ in the shortest possible time. In the second subtask, the subjects have to open a door choosing between three specific options: key, telephone, and drill. The Choose the Person task requires the participant to explore a living room and select the correct person according to a particular instruction. In task 4, the subjects are immersed in a dark room because ‘the power went out,’ and they have to choose an object (i.e., flashlight) that allows them to continue the journey. In the following task, participants must identify the piece of furniture (among several pieces) on which four specific objects are placed. In task 6, subjects must complete a rebus consisting of many tiles, each containing a number and a geometric shape of different colors. Next to these tiles, a blank tile is inserted containing two question marks for the subject to fill in. Finally, they must memorize a sequence of numbers in the last task, reporting them in reverse.

Overall, the subtasks are designed to evaluate different components of executive functioning (e.g., planning, decision making, divided attention), and their level of complexity changes according to the cognitive load and the presence of confounding variables. Table 2 shows an overview of executive functions that the seven EXIT 360° subtasks could evaluate.

Table 2.

EXIT 360° subtasks and related executive functions.

| Task | Name | Executive Function |
|---|---|---|
| Task 1 | Let’s Start | Planning–Inhibition Control–Visual Search |
| Task 2 | Unlock the Door | Decision Making |
| Task 3 | Choose the Person | Divided Attention–Inhibition Control–Visual Search |
| Task 4 | Turn On the Light | Problem Solving–Planning–Inhibition Control |
| Task 5 | Where Are the Objects? | Visual Search–Selective and Divided Attention–Reasoning |
| Task 6 | Solve the Rebus | Planning–Reasoning–Set shifting–Selective and Divided Attention |
| Task 7 | Create the Sequence | Working Memory–Selective Attention–Inhibition Control |

To respond to subtasks’ requests, the subjects had to choose between three or more options, which allowed them to solve the task in the best possible way. Interestingly, in the mobile-powered headset, participants saw a small white dot/square, a ‘pointer’ that follows their gaze. When participants wanted to answer within the environment, they had to move their head, positioning the white dot over the answer for a few seconds. The response was then selected automatically.

The psychologist recorded all the subjects’ responses and reaction times: participants received two points for a correct answer and one point for a wrong answer.

The digital solution was implemented to allow the subject to proceed automatically across the subtasks when they answered correctly. Where there was an error, the system provided visual feedback to the participant: “You have obtained a score of 1; inform the investigator”. Moreover, response times were calculated from the end of each subtask instruction until the participant provided the answer.

Overall, the EXIT 360° allowed data to be collected about a participant’s total score (range 7–14), and subtask and total reaction times (i.e., time in seconds registered from examiner’s instruction until the participant provided the last correct answer).
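The scoring rule described above (two points for a correct answer, one point for a wrong answer, seven subtasks) can be illustrated with a minimal sketch. The function name `exit360_total_score` and the boolean input format are hypothetical choices made for illustration and are not part of the EXIT 360° software.

```python
N_SUBTASKS = 7  # number of EXIT 360° subtasks reported in the text


def exit360_total_score(correct_flags):
    """Return the total score from seven per-subtask outcomes.

    correct_flags: iterable of booleans, True if the subtask was answered
    correctly. Each correct answer is worth 2 points and each wrong answer
    1 point, so the total always falls in the range 7-14.
    (Hypothetical helper, written only to illustrate the scoring rule.)
    """
    flags = list(correct_flags)
    if len(flags) != N_SUBTASKS:
        raise ValueError(f"expected {N_SUBTASKS} subtask outcomes, got {len(flags)}")
    return sum(2 if ok else 1 for ok in flags)


# Example: five correct and two wrong answers give 5 * 2 + 2 * 1 = 12 points.
example_score = exit360_total_score([True, True, True, True, True, False, False])
```

Under this rule, a total score of 12 or more corresponds to at most two wrong answers.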

2.2.3. Post-Task Evaluation

After the EXIT 360° session, all subjects rated the usability of the EXIT 360° through the System Usability Scale [69,70,71,72], a ten-item questionnaire on a five-point scale, from ‘completely disagree’ to ‘strongly agree’, with the total score (range 0–100) indicating the overall usability of the system.
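For readers unfamiliar with how ten five-point items yield a 0–100 score, the sketch below follows the standard System Usability Scale scoring convention: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5. It assumes responses are coded 1 to 5; the function name `sus_score` is a hypothetical label, and the study's own scoring script is not described in the text.

```python
def sus_score(responses):
    """Compute a System Usability Scale total (0-100) from ten responses.

    responses: sequence of ten integers coded 1 (lowest agreement) to
    5 (highest agreement), in questionnaire order (assumed coding).
    Odd-numbered items are positively worded: contribution = response - 1.
    Even-numbered items are negatively worded: contribution = 5 - response.
    """
    if len(responses) != 10:
        raise ValueError("the SUS has exactly ten items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1
        else:
            total += 5 - response
    return total * 2.5


# A respondent answering 3 to every item obtains the midpoint score of 50.
neutral_score = sus_score([3] * 10)
```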

2.3. Statistical Analysis

Descriptive statistics included frequencies, percentages, median and interquartile range (IQR) for categorical variables, and mean and standard deviation (SD) for continuous measures. Skewness, kurtosis, and histogram plots were examined to check whether the variables were normally distributed and to choose between parametric and non-parametric analyses accordingly. Pearson’s correlation (or Spearman’s correlation) was applied to evaluate the possible relationship between the scores of neuropsychological tests and the EXIT 360° (total score and subtask scores). Moreover, Pearson’s correlation was conducted to compare the total EXIT 360° score with the usability score. Furthermore, we evaluated the association (with univariate and multiple linear regression) between EXIT 360° variables and demographic characteristics to verify the possible influence of socio-demographic features on the results of the innovative tool. All statistical analyses were performed using Jamovi 1.6.7 software. A threshold of p < 0.05 on two-tailed tests was considered statistically significant.
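As an illustration of the two correlation coefficients named above, the following sketch computes Pearson's r and Spearman's rho (as Pearson's r on average ranks) in plain Python. It is not the Jamovi procedure used in the study; the function names and the small data vectors are invented for the example.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        tied_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = tied_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman rank correlation: Pearson's r computed on average ranks."""
    return pearson_r(average_ranks(x), average_ranks(y))


# Invented illustrative data (not study data): a total score and a test score.
total_scores = [12, 13, 14, 11, 13]
test_scores = [30, 33, 35, 28, 31]
r = pearson_r(total_scores, test_scores)
rho = spearman_rho(total_scores, test_scores)
```

Spearman's coefficient depends only on rank order, which is why it is the usual fallback when a variable departs from normality or is ordinal, as with the binary-like subtask scores.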

3. Results

3.1. Participants

Table 3 reports the demographic and clinical characteristics of the whole sample. The subjects (n = 77) are predominantly female (M:F = 29:48), with a mean age of 53.2 years (SD = 20.40, range = 24–89) and a median of 13 years of education (IQR = 13–18, range = 5–18). All participants included in the study showed an absence of cognitive impairment (MoCA corrected score = 25.9 ± 2.62).

Table 3.

Demographic and MoCA scores of the whole sample.

| Subjects [n = 77] | Statistic | Value |
|---|---|---|
| Age (years) | Mean (SD) | 53.2 (20.40) |
| Sex (M:F) | Count | 29:48 |
| Education (years) | Median (IQR) | 13 (13–18) |
| MoCA_raw score | Mean (SD) | 26.9 (2.37) |
| MoCA_correct score | Mean (SD) | 25.9 (2.62) |

M = Male; F = Female; SD = Standard deviation; IQR= Interquartile range; n = Number; MoCA = Montreal Cognitive Assessment.

3.2. Traditional Neuropsychological Assessment

Table 4 reports the mean scores (raw and corrected scores) of neuropsychological tests with the respective cut-off of normality (equivalent score ≥ 2). All participants in the study showed scores within the normal range on all traditional neuropsychological tests for executive functions.

Table 4.

Scores of neuropsychological assessment.

| Neuropsychological Tests | Raw Score Mean (SD) | Corrected Score Mean (SD) | Cut-Off of Normality |
|---|---|---|---|
| Trail Making Test–Part A * | 37.2 (22.9) | 35.1 (19.3) | ≤68 |
| Trail Making Test–Part B * | 94.1 (58.9) | 90.6 (48.4) | ≤177 |
| Trail Making Test–Part B-A * | 56.9 (42.3) | 57 (34.2) | ≤111 |
| Verbal Fluency Task | 41.6 (11.1) | 37.9 (9.46) | ≥23 |
| Stroop Test–Errors | 0.68 (1.09) | 0.62 (1.13) | ≤2.82 |
| Stroop Test–Time * | 22.6 (15.5) | 23.8 (11.5) | ≤31.65 |
| Frontal Assessment Battery | 17.6 (1) | 17.7 (0.85) | ≥14.40 |
| Digit Span Backward | 4.77 (0.99) | 4.56 (0.97) | ≥3.29 |
| Attentive Matrices | 54.3 (5.53) | 48.6 (6.43) | ≥37 |
| Progressive Matrices of Raven | 32.3 (3.63) | 32.3 (3.2) | ≥23.5 |

SD = Standard Deviation; * = Total time in seconds.

3.3. EXIT 360°

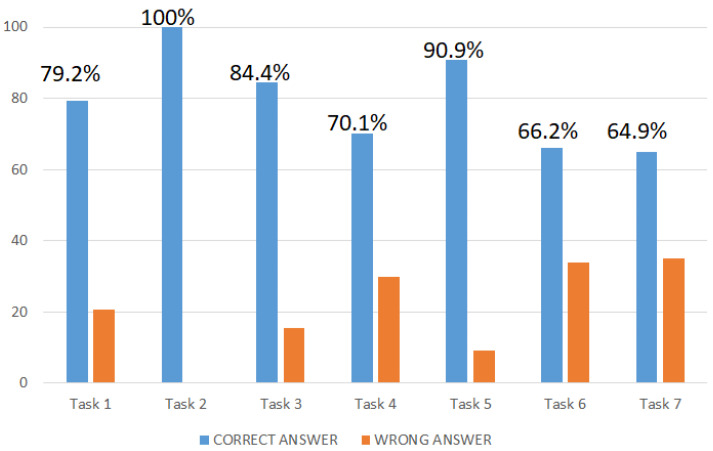

All participants in the study completed the whole task, obtaining only one point for wrong answers or two points for correct ones. Figure 2 reports participants’ scores (%) on all seven subtasks.

Figure 2.

Scores (%) on seven subtasks.

Overall, the descriptive analysis showed that healthy controls obtained a mean total score of 12.6 (±1.02; range = 10–14), with 88.3% of subjects receiving a score of ≥12. Regarding the total reaction time, participants took about 8 min (mean = 480 s ± 130 s; range = 192–963 s) to complete the whole task.

The univariate linear regression shows a significant impact from age (β = −0.451, p < 0.001; R2 = 0.203) and education (p < 0.001; R2 = 0.300) on the EXIT 360° total score, but not from gender (β = −0.0980; p = 0.680; R2 = 0.002). Specifically, regarding education, a significant difference emerges between a low level of education (5 years) and medium to high ones, respectively 13 (β = 1.635, p < 0.001), 16 (β = 1.962, p < 0.001) and 18 (β = 1.923, p < 0.001). Moreover, Pearson’s correlation showed a significant negative correlation between age and total score (r = –0.451; p < 0.001). Regarding the EXIT 360° total reaction time, univariate linear regressions showed no significant impact from all of the demographic characteristics on the time variable (p > 0.05). The multiple linear regression (R2 = 0.342) confirmed the effect of education on the EXIT 360° total score (p < 0.05), but not the impact of age, which showed only a tendency to significance (β = −0.239, p = 0.051). Finally, the variable ‘sex’ did not impact the EXIT 360° total score (β = −0.127, p = 0.528).
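The agreement between the univariate regression and the Pearson correlation for age can be checked with simple arithmetic. In a linear regression with a single predictor, the standardized slope equals Pearson's r and the coefficient of determination equals r squared. The sketch below assumes that the reported β for age is a standardized coefficient, which the text does not state explicitly but which its equality with r suggests.

```python
# Reported values for age versus EXIT 360° total score.
r_age = -0.451          # Pearson's correlation reported in the text
reported_r2 = 0.203     # R2 reported for the univariate regression on age

# With one predictor, R2 is the square of the correlation coefficient.
r_squared = r_age ** 2

# The two reported figures agree to three decimal places.
consistent = round(r_squared, 3) == reported_r2
```

The same identity does not carry over to the multiple regression, where age and education share explained variance, which is consistent with the reduced coefficient reported for age in the joint model.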

3.4. Correlation between Neuropsychological Tests and EXIT 360°

Table 5 shows the correlations (Pearson’s correlation) between the traditional paper-and-pencil neuropsychological tests and the two scores of the EXIT 360°.

Table 5.

Correlation between EXIT 360° scores and neuropsychological assessment. In bold, statistically significant scores. * p < 0.05; ** p < 0.001.

| Neuropsychological Test | EXIT 360° Total Score | EXIT 360° Total Reaction Time |
|---|---|---|
| Montreal Cognitive Assessment | 0.48 ** | −0.31 * |
| Progressive Matrices of Raven | 0.44 ** | - |
| Attentive Matrices | 0.26 * | −0.23 * |
| Frontal Assessment Battery | 0.41 ** | - |
| Verbal Fluency Task | 0.54 ** | - |
| Digit Span Backward | 0.32 * | - |
| Trail Making Test–Part A | - | 0.14 |
| Trail Making Test–Part B | - | 0.27 * |
| Trail Making Test–Part B-A | - | 0.29 * |
| Stroop Test–Errors | −0.32 * | 0.25 * |
| Stroop Test–Time | −0.45 ** | 0.28 * |

Specifically, Pearson’s correlation showed a significant correlation between the EXIT 360° total score and all neuropsychological tests. Moreover, data showed a correlation between the EXIT 360° total reaction time and several tests, particularly the timed ones (e.g., Trail Making Test, Stroop Test, and Attentive Matrices).

Furthermore, data showed no relationship between the EXIT 360° total score and EXIT 360° reaction time (p = 0.587), and only a correlation between the score and time of task 4 (r = 2.31; p < 0.05).

Finally, Table 6 shows the significant correlation between traditional neuropsychological tests and the seven subtask scores (Spearman’s correlation) and reaction time (Pearson’s correlation).

Table 6.

Correlation between subtask scores and neuropsychological assessment. Correlation significances are represented by colors: blue = not statistically significant scores; yellow = scores tending to statistical significance; orange = p < 0.05; red = p < 0.001.

| Test | Task 1 Score | Task 1 Time | Task 2 Score | Task 2 Time | Task 3 Score | Task 3 Time | Task 4 Score | Task 4 Time | Task 5 Score | Task 5 Time | Task 6 Score | Task 6 Time | Task 7 Score | Task 7 Time |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PMR | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | 0.241 | n.s. | 0.484 | n.s. | 0.296 | n.s. |
| AM | n.s. | n.s. | n.s. | n.s. | n.s. | −0.218 | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | 0.284 | −0.226 |
| FAB | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | 0.254 | n.s. | n.s. | n.s. | 0.266 | n.s. | 0.283 | n.s. |
| VFT | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | 0.489 | n.s. | 0.438 | n.s. |
| DS | n.s. | −0.269 | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | 0.251 | n.s. | 0.341 | −0.253 | 0.303 | n.s. |
| TMT-A | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | −0.301 | n.s. | −0.462 | n.s. | −0.299 | 0.244 |
| TMT-B | n.s. | 0.333 | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | −0.31 | n.s. | −0.36 | n.s. | n.s. | n.s. |
| TMT-B-A | n.s. | 0.366 | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | −0.259 | n.s. | n.s. | n.s. | n.s. | n.s. |
| ST_E | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | −0.29 | 0.28 | n.s. | n.s. | n.s. | n.s. |
| ST_T | n.s. | 0.339 | n.s. | n.s. | n.s. | n.s. | −0.282 | n.s. | −0.297 | n.s. | −0.344 | n.s. | −0.329 | 0.286 |

PMR = Progressive Matrices of Raven; AM = Attentive Matrices; FAB = Frontal Assessment Battery; VFT = Verbal Fluency Task; DS = Digit Span Backward; TMT-A = Trail Making Test–Part A; TMT-B = Trail Making Test–Part B; TMT-B-A = Trail Making Test–Part B-A; ST_E = Stroop Test–Errors; ST_T= Stroop Test–Time.

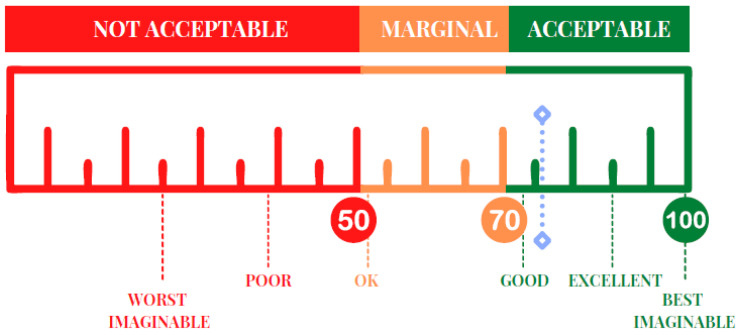

3.5. Usability

The mean value of the usability, calculated with the SUS, was 75.4 ± 13.2, indicating an acceptable level of usability, according to the scale’s score (cut-off = 68) and adjective ratings (Figure 3).

Figure 3.

A graphic representation of the SUS score.

Specifically, according to the cut-off score (cut-off = 68), more than 70% of participants showed scores above the cut-off. In addition, according to the adjective rating, 35.5% of subjects evaluated the EXIT 360° as ‘Good’, 32.9% as ‘Excellent’, and 27.6% as ‘Best imaginable’ [71].

Pearson’s or Spearman’s correlation showed no significant linear correlation between the SUS total score and the demographic characteristics, particularly for age (r = −0.045, p = 0.699) and education (r = −0.096; p = 0.405). Moreover, data showed the absence of a significant correlation between the SUS total score and the total score of the EXIT 360° (r = 0.126; p = 0.276).

4. Discussion

Over the last few years, there has been a growing interest in using VR-based solutions for making an ecologically valid assessment of executive functioning in several clinical populations [37,38,51,54]. Indeed, many studies have shown the efficacy of VR-based tools in evaluating executive functions in neurological and psychiatric populations, showing impairments invisible to traditional measurements [42,51].

Borgnis and colleagues leveraged advances in 360° technologies to develop the EXIT 360°, an innovative assessment instrument that aims to detect several executive deficits quickly, involving participants in a ‘game for health’, in which they must perform everyday subtasks in 360° environments that reproduce different real-life contexts [55,62]. After evaluating the usability of the EXIT 360° in a healthy control sample [56] and subjects with a neurological condition [57], the authors have assessed the convergent validity of this innovative tool for assessing executive functionality, comparing it with traditional standardized neuropsychological tests for executive functioning. Indeed, it is well known that a strong positive correlation between a new tool and other instruments designed on the same construct is evidence of the high convergent validity of the new test [59].

Findings on usability confirmed previous research, demonstrating a good-to-excellent usability score, with over 32% of participants evaluating the EXIT 360° as excellent and 27.6% as the best imaginable [56]. Interestingly, data showed no correlation between the total usability score and the total score of the EXIT 360°. Therefore, the score obtained by the participants in our innovative 360°-based tool is not influenced by the usability level, but only by participants’ performance (as also highlighted by the correlation between neuropsychological tests and total score).

Moreover, our data to test convergent validity showed a significant correlation between the EXIT 360° total score and all neuropsychological tests for executive functioning. Furthermore, an interesting and promising association was found between the EXIT 360° total reaction time and timed neuropsychological tests, such as the Trail Making Test, Stroop Test, and Attentive Matrices. As previously mentioned, a high correlation between the indexes of the new test (EXIT 360°) and the scores of other standardized instruments that evaluate the same construct (i.e., executive functioning) supported the high level of convergent validity of the new tool [59]. Therefore, we can conclude that the EXIT 360° showed a good convergent validity. In other words, the EXIT 360° can be considered an innovative solution to evaluate several components of executive functioning: selective and divided attention, cognitive flexibility, set shifting, working memory, reasoning, inhibition, and planning [55].

Further analysis showed that an evaluation with the EXIT 360° did not require a long administration time; indeed, participants took, on average, about 8 min to complete the entire task. This finding suggests that the EXIT 360° can be considered a quick and useful screening instrument to evaluate executive functioning. As regards the accuracy score, that is, the EXIT 360° total score, most of the participants (over 88%) achieved high scores (≥12). Further studies will be conducted to determine if a score of 12 could be a good cut-off value, able to differentiate between healthy and pathological groups.

In addition, neither EXIT 360° score differed by gender. Moreover, age and education had no impact on the time variable. In contrast, the EXIT 360° total score differed between the low education level (5 years) and the medium-to-high education level groups. A relationship also appeared between age and total score, with older participants obtaining lower scores. However, when the joint impact of the demographic characteristics on the EXIT 360° total score was considered, the results confirmed only the effect of education (with only a tendency to significance for age). As a result, just as with most neuropsychological tests, it will be necessary to provide a standardization of total scores for age and education.

Other analyses were conducted on the seven tasks to evaluate performance on each task (correct answers) and any correlation between the tasks and the neuropsychological tests. These further analyses aimed to determine (1) potential differences in the complexity of the subtasks and (2) the executive functions evaluated by each subtask. Data showed that Task 7 was the most complex (only 64.2% of participants gave a correct answer), followed by Task 6 (66.2%). Except for Task 1, the correlation analysis showed a growing cognitive load across the tasks. Moreover, the EXIT 360°, with its seven subtasks, appeared as a valuable and promising ecologically valid instrument to assess: [a] selective and divided attention (subtasks 3–5–6–7), [b] cognitive flexibility (subtasks 1–4–7–6–7), [c] inhibition control and interference sensitivity (subtasks 1–4–6–7), [d] working memory (subtasks 1–5–6–7), [e] planning (subtasks 4–6–7), [f] visual search (subtasks 3–7), [g] set switching (subtasks 1–5–6), and [h] reasoning (subtasks 5–6–7). These findings supported the rationale behind the concept and design of the EXIT 360° activities, which were built to increase in cognitive load (number of cognitive components evaluated) during the 360° experience. However, introducing confounding variables (distractors) could also increase the difficulty. Indeed, Tasks 5 and 6 assess the same load of executive functions, but Task 6 appeared more complex in terms of the percentage of correct answers (90% vs. 66.2%), due to the addition of confounding variables.
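The per-task percentages of correct answers discussed above can be derived from a participant-by-task matrix of binary outcomes. The sketch below shows one simple way to do this and to rank the tasks by difficulty. The function name and the response matrix are hypothetical and serve only to illustrate the computation.

```python
def percent_correct(responses):
    """responses: one list per participant, with 1 (correct) or 0 (incorrect) per task."""
    n_participants = len(responses)
    n_tasks = len(responses[0])
    return [
        100.0 * sum(participant[t] for participant in responses) / n_participants
        for t in range(n_tasks)
    ]

# Hypothetical responses of five participants to seven tasks (NOT study data).
responses = [
    [1, 1, 1, 1, 1, 1, 0],
    [1, 1, 1, 1, 1, 0, 1],
    [1, 1, 1, 0, 1, 1, 0],
    [1, 1, 1, 1, 1, 0, 1],
    [0, 1, 1, 1, 1, 1, 0],
]

rates = percent_correct(responses)
# Rank task numbers (1-based) from hardest (lowest percent correct) to easiest.
hardest_first = sorted(range(1, len(rates) + 1), key=lambda task: rates[task - 1])

for task in hardest_first:
    print(f"Task {task}: {rates[task - 1]:.1f}% correct")
```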

As regards Task 2, no correlation appeared with the neuropsychological assessment; however, this result is not surprising, since Task 2 was developed to evaluate decision making, which was not measured by the selected tests. Consequently, adding a test of decision-making ability to the neuropsychological battery could demonstrate the capacity of Task 2 to assess this executive function. An additional possible explanation is a ‘ceiling effect’, as all control subjects performed the task correctly.

Since executive function is a complex and heterogeneous construct with a high impact on everyday life and personal independence [11,25], an ecological evaluation of multiple components of executive functioning appears crucial to achieving optimal management of executive dysfunction [23].

Despite the promising results, the present work has some limitations. Firstly, this study did not assess the inter-rater reliability of the EXIT 360° reaction-time measurement. Secondly, the neuropsychological tests chosen for convergent validity do not allow for assessing all components of executive functioning (for example, decision making). A third limitation is the potential session-order effect. We think this effect is unlikely to be large, since the two evaluation sessions of executive functions are based on different methodological paradigms (digital function-led vs. conventional paper-and-pencil tests). Nevertheless, a future study could randomize the order of administration of the sessions to test the potential effect of session order.

5. Conclusions

This work represents a first validation step towards considering the EXIT 360° as a valid and standardized instrument that exploits 360° technologies to conduct an ecologically valid assessment of executive functioning. Further studies will be necessary to: (1) standardize the EXIT 360° total score for age and education; (2) assess the EXIT 360° inter-rater and test-retest reliability, to deepen its potential as a new screening tool; (3) evaluate the effectiveness of the EXIT 360° in discriminating between healthy control subjects and patients with executive dysfunctions; and (4) implement an automated scoring system for response times.

Author Contributions

F.B. (Francesca Borgnis), F.B. (Francesca Baglio) and P.C. conceived and designed the experiments. F.B. (Francesca Borgnis) performed the experiment and collected data. F.B. (Francesca Borgnis) and P.C. developed the new 360° Executive-function Innovative tool. P.C., F.B. (Francesca Borgnis) and F.B. (Francesca Borghesi) conducted the statistical analysis. F.B. (Francesca Borgnis) and F.R. wrote the first manuscript under the final supervision of F.B. (Francesca Baglio), L.L., P.C. and G.R. Moreover, E.P. and F.B. (Francesca Borghesi) supervised the sections on results and discussion. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Fondazione Don Carlo Gnocchi—Milan Ethics Committee on 7 April 2021, project identification code 09_07/04/2021.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study. Written informed consent was obtained from the participants to publish this paper.

Data Availability Statement

Data can be obtained upon reasonable request to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This work was supported by the Italian Ministry of Health (Ricerca Corrente, Program 2022–2024).

References

- 1. Harvey P.D. Clinical applications of neuropsychological assessment. Dialog. Clin. Neurosci. 2012;14:91–99. doi: 10.31887/DCNS.2012.14.1/pharvey.

- 2. Stuss D.T., Alexander M.P. Executive functions and the frontal lobes: A conceptual view. Psychol. Res. 2000;63:289–298. doi: 10.1007/s004269900007.

- 3. Chaytor N., Schmitter-Edgecombe M. The Ecological Validity of Neuropsychological Tests: A Review of the Literature on Everyday Cognitive Skills. Neuropsychol. Rev. 2003;13:181–197. doi: 10.1023/B:NERV.0000009483.91468.fb.

- 4. Goldstein G. Functional Considerations in Neuropsychology. Gr Press/St Lucie Press, Inc.; Delray Beach, FL, USA: 1996.

- 5. Barker L.A., Andrade J., Romanowski C.A.J. Impaired Implicit Cognition with Intact Executive Function After Extensive Bilateral Prefrontal Pathology: A Case Study. Neurocase. 2004;10:233–248. doi: 10.1080/13554790490495096.

- 6. Chan R.C.K., Shum D., Toulopoulou T., Chen E.Y. Assessment of executive functions: Review of instruments and identification of critical issues. Arch. Clin. Neuropsychol. 2008;23:201–216. doi: 10.1016/j.acn.2007.08.010.

- 7. Goel V., Grafman J., Tajik J., Gana S., Danto D. A study of the performance of patients with frontal lobe lesions in a financial planning task. Brain. 1997;120:1805–1822. doi: 10.1093/brain/120.10.1805.

- 8. Green M.F. What are the functional consequences of neurocognitive deficits in schizophrenia? Am. J. Psychiatry. 1996;153:321–330. doi: 10.1176/ajp.153.3.321.

- 9. Green M.F., Kern R.S., Braff D.L., Mintz J. Neurocognitive deficits and functional outcome in schizophrenia: Are we measuring the “right stuff”? Schizophr. Bull. 2000;26:119–136. doi: 10.1093/oxfordjournals.schbul.a033430.

- 10. Chevignard M., Pillon B., Pradat-Diehl P., Taillefer C., Rousseau S., Le Bras C., Dubois B. An ecological approach to planning dysfunction: Script execution. Cortex. 2000;36:649–669. doi: 10.1016/S0010-9452(08)70543-4.

- 11. Fortin S., Godbout L., Braun C.M.J. Cognitive structure of executive deficits in frontally lesioned head trauma patients performing activities of daily living. Cortex. 2003;39:273–291. doi: 10.1016/S0010-9452(08)70109-6.

- 12. Gitlin L.N., Corcoran M., Winter L., Boyce A., Hauck W.W. A randomized, controlled trial of a home environmental intervention: Effect on efficacy and upset in caregivers and on daily function of persons with dementia. Gerontologist. 2001;41:4–14. doi: 10.1093/geront/41.1.4.

- 13. Crawford J.R. Introduction to the assessment of attention and executive functioning. Neuropsychol. Rehabil. 1998;8:209–211. doi: 10.1080/713755574.

- 14. Alderman M.K. Motivation for Achievement: Possibilities for Teaching and Learning. Routledge; England, UK: 2013.

- 15. Van der Linden M., Seron X., Coyette F. La Prise En Charge Des Troubles Exécutifs. Traité De Neuropsychologie Clinique: Tome II. Solal; Marseille, France: 2000. pp. 253–268.

- 16. Nelson H.E. A Modified Card Sorting Test Sensitive to Frontal Lobe Defects. Cortex. 1976;12:313–324. doi: 10.1016/S0010-9452(76)80035-4.

- 17. Stroop J.R. Studies of interference in serial verbal reactions. J. Exp. Psychol. 1935;18:643. doi: 10.1037/h0054651.

- 18. Dubois B., Slachevsky A., Litvan I., Pillon B. The FAB: A frontal assessment battery at bedside. Neurology. 2000;55:1621–1626. doi: 10.1212/WNL.55.11.1621.

- 19. Appollonio I., Leone M., Isella V., Piamarta F., Consoli T., Villa M.L., Forapani E., Russo A., Nichelli P. The frontal assessment battery (FAB): Normative values in an Italian population sample. Neurol. Sci. 2005;26:108–116. doi: 10.1007/s10072-005-0443-4.

- 20. Reitan R.M. Trail Making Test: Manual for administration and scoring. Reitan Neuropsychology Laboratory; Tucson, AZ, USA: 1992.

- 21. Shallice T., Burgess P.W. Deficits in strategy application following frontal lobe damage in man. Brain. 1991;114:727–741. doi: 10.1093/brain/114.2.727.

- 22. Klinger E., Chemin I., Lebreton S., Marie R.M. A virtual supermarket to assess cognitive planning. Annu. Rev. Cyber Ther. Telemed. 2004;2:49–57.

- 23. Burgess P.W., Alderman N., Forbes C., Costello A., Coates L.M.-A., Dawson D.R., Anderson N.D., Gilbert S.J., Dumontheil I., Channon S. The case for the development and use of “ecologically valid” measures of executive function in experimental and clinical neuropsychology. J. Int. Neuropsychol. Soc. 2006;12:194–209. doi: 10.1017/S1355617706060310.

- 24. Chaytor N., Burr R., Schmitter-Edgecombe M. Improving the ecological validity of executive functioning assessment. Arch. Clin. Neuropsychol. 2006;21:217–227. doi: 10.1016/j.acn.2005.12.002.

- 25. Chevignard M.P., Catroppa C., Galvin J., Anderson V. Development and Evaluation of an Ecological Task to Assess Executive Functioning Post Childhood TBI: The Children’s Cooking Task. Brain Impair. 2010;11:125–143. doi: 10.1375/brim.11.2.125.

- 26. Mausbach B.T., Harvey P.D., Pulver A.E., Depp C.A., Wolyniec P.S., Thornquist M.H., Luke J.R., McGrath J.A., Bowie C.R., Patterson T.L. Relationship of the Brief UCSD Performance-based Skills Assessment (UPSA-B) to multiple indicators of functioning in people with schizophrenia and bipolar disorder. Bipolar Disord. 2010;12:45–55. doi: 10.1111/j.1399-5618.2009.00787.x.

- 27. Rand D., Rukan S.B.-A., Weiss P.L., Katz N. Validation of the Virtual MET as an assessment tool for executive functions. Neuropsychol. Rehabil. 2009;19:583–602. doi: 10.1080/09602010802469074.

- 28. Bailey P.E., Henry J.D., Rendell P.G., Phillips L.H., Kliegel M. Dismantling the “age–prospective memory paradox”: The classic laboratory paradigm simulated in a naturalistic setting. Q. J. Exp. Psychol. 2010;63:646–652. doi: 10.1080/17470210903521797.

- 29. Campbell Z., Zakzanis K.K., Jovanovski D., Joordens S., Mraz R., Graham S.J. Utilizing Virtual Reality to Improve the Ecological Validity of Clinical Neuropsychology: An fMRI Case Study Elucidating the Neural Basis of Planning by Comparing the Tower of London with a Three-Dimensional Navigation Task. Appl. Neuropsychol. 2009;16:295–306. doi: 10.1080/09084280903297891.

- 30. Bohil C., Alicea B., Biocca F.A. Virtual reality in neuroscience research and therapy. Nat. Rev. Neurosci. 2011;12:752–762. doi: 10.1038/nrn3122.

- 31. Parsons T.D., Courtney C.G., Arizmendi B., Dawson M. Virtual Reality Stroop Task for neurocognitive assessment. Stud. Health Technol. Informatics. 2011;163:433–439.

- 32. Parsons T.D. Virtual Reality for Enhanced Ecological Validity and Experimental Control in the Clinical, Affective and Social Neurosciences. Front. Hum. Neurosci. 2015;9:660. doi: 10.3389/fnhum.2015.00660.

- 33. Rizzo A.A., Buckwalter J.G., McGee J.S., Bowerly T., Van Der Zaag C., Neumann U., Thiebaux M., Kim L., Pair J., Chua C. Virtual Environments for Assessing and Rehabilitating Cognitive/Functional Performance: A Review of Projects at the USC Integrated Media Systems Center. PRESENCE: Virtual Augment. Real. 2001;10:359–374. doi: 10.1162/1054746011470226.

- 34. Riva G. Virtual Reality in Neuro-Psycho-Physiology: Cognitive, Clinical and Methodological Issues in Assessment and Rehabilitation. Volume 44. IOS Press; Amsterdam, The Netherlands: 1997.

- 35. Riva G. Psicologia Dei Nuovi Media. Il Mulino; Bologna, Italy: 2004.

- 36. Climent G., Banterla F., Iriarte Y. Virtual reality, technologies and behavioural assessment. AULA Ecol. Eval. Atten. Process. 2010:19–28.

- 37. Borgnis F., Baglio F., Pedroli E., Rossetto F., Uccellatore L., Oliveira J.A.G., Riva G., Cipresso P. Available Virtual Reality-Based Tools for Executive Functions: A Systematic Review. Front. Psychol. 2022;13. doi: 10.3389/fpsyg.2022.833136.

- 38. Abbadessa G., Brigo F., Clerico M., De Mercanti S., Trojsi F., Tedeschi G., Bonavita S., Lavorgna L. Digital therapeutics in neurology. J. Neurol. 2021;269:1209–1224. doi: 10.1007/s00415-021-10608-4.

- 39. Armstrong C.M., Reger G.M., Edwards J., Rizzo A.A., Courtney C.G., Parsons T. Validity of the Virtual Reality Stroop Task (VRST) in active duty military. J. Clin. Exp. Neuropsychol. 2013;35:113–123. doi: 10.1080/13803395.2012.740002.

- 40. Cipresso P., Serino S., Pedroli E., Albani G., Riva G. Psychometric reliability of the NeuroVR-based virtual version of the Multiple Errands Test; Proceedings of the 2013 7th International Conference on Pervasive Computing Technologies for Healthcare and Workshops; Venice, Italy. 5–8 May 2013; pp. 446–449.

- 41. Riva G., Carelli L., Gaggioli A., Gorini A., Vigna C., Algeri D., Repetto C., Raspelli S., Corsi R., Faletti G., et al. NeuroVR 1.5 in practice: Actual clinical applications of the open source VR system. Annu. Rev. Cyber Ther. Telemed. 2009;144:57–60.

- 42. Cipresso P., La Paglia F., La Cascia C., Riva G., Albani G., La Barbera D. Break in volition: A virtual reality study in patients with obsessive-compulsive disorder. Exp. Brain Res. 2013;229:443–449. doi: 10.1007/s00221-013-3471-y.

- 43. Josman N., Schenirderman A.E., Klinger E., Shevil E. Using virtual reality to evaluate executive functioning among persons with schizophrenia: A validity study. Schizophr. Res. 2009;115:270–277. doi: 10.1016/j.schres.2009.09.015.

- 44. Aubin G., Béliveau M.-F., Klinger E. An exploration of the ecological validity of the Virtual Action Planning–Supermarket (VAP-S) with people with schizophrenia. Neuropsychol. Rehabil. 2015;28:689–708. doi: 10.1080/09602011.2015.1074083.

- 45. Wiederhold B.K., Riva G. Assessment of executive functions in patients with obsessive compulsive disorder by NeuroVR. Annu. Rev. Cyber Ther. Telemed. 2012;2012:98.

- 46. Raspelli S., Carelli L., Morganti F., Albani G., Pignatti R., Mauro A., Poletti B., Corra B., Silani V., Riva G. A neuro vr-based version of the multiple errands test for the assessment of executive functions: A possible approach. J. Cyber Ther. Rehabil. 2009;2:299–314.

- 47. Raspelli S., Pallavicini F., Carelli L., Morganti F., Poletti B., Corra B., Silani V., Riva G. Validation of a Neuro Virtual Reality-based version of the Multiple Errands Test for the assessment of executive functions. Stud. Health Technol. Informatics. 2011;167:92–97.

- 48. Rouaud O., Graule-Petot A., Couvreur G., Contegal F., Osseby G.V., Benatru I., Giroud M., Moreau T. Apport de l’évaluation écologique des troubles exécutifs dans la sclérose en plaques. Rev. Neurol. 2006;162:964–969. doi: 10.1016/S0035-3787(06)75106-2.

- 49. Negro Cousa E., Brivio E., Serino S., Heboyan V., Riva G., De Leo G. New Frontiers for cognitive assessment: An exploratory study of the potentiality of 360 technologies for memory evaluation. Cyberpsychol. Behav. Soc. Netw. 2019;22:76–81. doi: 10.1089/cyber.2017.0720.

- 50. Pieri L., Serino S., Cipresso P., Mancuso V., Riva G., Pedroli E. The ObReco-360°: A new ecological tool to memory assessment using 360° immersive technology. Virtual Real. 2021;26:639–648. doi: 10.1007/s10055-021-00526-1.

- 51. Serino S., Baglio F., Rossetto F., Realdon O., Cipresso P., Parsons T.D., Cappellini G., Mantovani F., De Leo G., Nemni R., et al. Picture Interpretation Test (PIT) 360°: An Innovative Measure of Executive Functions. Sci. Rep. 2017;7:1–10. doi: 10.1038/s41598-017-16121-x.

- 52. Bialkova S., Dickhoff B. Encouraging Rehabilitation Trials: The Potential of 360 Immersive Instruction Videos; Proceedings of the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR); Osaka, Japan. 23–27 March 2019; pp. 1443–1447.

- 53. Violante M.G., Vezzetti E., Piazzolla P. Interactive virtual technologies in engineering education: Why not 360° videos? Int. J. Interact. Des. Manuf. 2019;13:729–742. doi: 10.1007/s12008-019-00553-y.

- 54. Realdon O., Serino S., Savazzi F., Rossetto F., Cipresso P., Parsons T.D., Cappellini G., Mantovani F., Mendozzi L., Nemni R., et al. An ecological measure to screen executive functioning in MS: The Picture Interpretation Test (PIT) 360°. Sci. Rep. 2019;9:1–8. doi: 10.1038/s41598-019-42201-1.

- 55. Borgnis F., Baglio F., Pedroli E., Rossetto F., Riva G., Cipresso P. A Simple and Effective Way to Study Executive Functions by Using 360° Videos. Front. Neurosci. 2021;15:1–9. doi: 10.3389/fnins.2021.622095.

- 56. Borgnis F., Baglio F., Pedroli E., Rossetto F., Isernia S., Uccellatore L., Riva G., Cipresso P. EXecutive-Functions Innovative Tool (EXIT 360°): A Usability and User Experience Study of an Original 360°-Based Assessment Instrument. Sensors. 2021;21:5867. doi: 10.3390/s21175867.

- 57. Borgnis F., Baglio F., Pedroli E., Rossetto F., Meloni M., Riva G., Cipresso P. A Psychometric Tool for Evaluating Executive Functions in Parkinson’s Disease. J. Clin. Med. 2022;11:1153. doi: 10.3390/jcm11051153.

- 58. Krabbe P.F.M., editor. The Measurement of Health and Health Status. Academic Press; Cambridge, MA, USA: 2017. Chapter 7—Validity; pp. 113–134.

- 59. Chin C.L., Yao G. Convergent validity. Encycl. Qual. Life Well-Being Res. 2014;1.

- 60. Nasreddine Z.S., Phillips N.A., Bédirian V., Charbonneau S., Whitehead V., Collin I., Cummings J.L., Chertkow H. The Montreal Cognitive Assessment, MoCA: A Brief Screening Tool For Mild Cognitive Impairment. J. Am. Geriatr. Soc. 2005;53:695–699. doi: 10.1111/j.1532-5415.2005.53221.x.

- 61. Santangelo G., Siciliano M., Pedone R., Vitale C., Falco F., Bisogno R., Siano P., Barone P., Grossi D., Santangelo F., et al. Normative data for the Montreal Cognitive Assessment in an Italian population sample. Neurol. Sci. 2014;36:585–591. doi: 10.1007/s10072-014-1995-y.

- 62. Borgnis F., Baglio F., Pedroli E., Rossetto F., Meloni M., Riva G., Cipresso P. EXIT 360—Executive-functions innovative tool 360—A simple and effective way to study executive functions in Parkinson’s disease by using 360 videos. Appl. Sci. 2021;11:6791. doi: 10.3390/app11156791.

- 63. Novelli G., Papagno C., Capitani E., Laiacona M. Tre test clinici di memoria verbale a lungo termine: Taratura su soggetti normali. Arch. Psicol. Neurol. Psichiatr. 1986;47(2):278–296.

- 64. Monaco M., Costa A., Caltagirone C., Carlesimo G.A. Forward and backward span for verbal and visuo-spatial data: Standardization and normative data from an Italian adult population. Neurol. Sci. 2013;34:749–754. doi: 10.1007/s10072-012-1130-x.

- 65. Spinnler H., Tognoni G. Standardizzazione e taratura italiana di tests neuropsicologici. [Italian normative values and standardization of neuropsychological tests] Ital. J. Neurol. Sci. 1987;6:1–20.

- 66. Raven J.C. Progressive Matrices: Sets A, B, C, D, and E. University Press; H. K. Lewis & Co. Ltd.; London, UK: 1938.

- 67. Raven J.C. Board and Book Forms. Lewis; London, UK: 1947. Progressive matrices: Sets A, AbB.

- 68. Caffarra P., Vezzadini G., Zonato F., Copelli S., Venneri A. A normative study of a shorter version of Ravens progressive matrices 1938. Neurol. Sci. 2003;24:336–339. doi: 10.1007/s10072-003-0185-0.

- 69. Brooke J. System Usability Scale (SUS): A Quick-and-Dirty Method of System Evaluation User Information. Digital Equipment Co Ltd.; Reading, UK: 1986. p. 43.

- 70. Brooke J. SUS: A ’Quick and Dirty’ Usability Scale. Usability Eval. Ind. 1996;189:4–7.

- 71. Lewis J.R., Sauro J. International Conference on Human Centered Design. Springer; Berlin/Heidelberg, Germany: 2009. The factor structure of the system usability scale; pp. 94–103.

- 72. Bangor A., Kortum P.T., Miller J.T. An Empirical Evaluation of the System Usability Scale. Int. J. Hum.–Comput. Interact. 2008;24:574–594. doi: 10.1080/10447310802205776.
