Abstract

Remote robotic systems are employed in the CERN accelerator complex to perform different tasks, such as the safe handling of cables and their connectors. Without dedicated control, these actions are difficult and require the intervention of operators, who are then exposed to hazardous external agents. In this paper, two novel modules of the CERNTAURO framework are presented to provide a safe and usable solution for managing optical fibres and their connectors. The first module detects touch and slippage, while the second regulates the grasping force and counteracts slippage. The force reference was obtained with a combination of object recognition and a look-up table method. The proposed strategy was validated with tests in the CERN laboratory, and the preliminary experimental results demonstrated statistically significant increases in time-based efficiency and in the overall relative efficiency of the tasks.

Keywords: mobile robots, teleoperation, force control, slippage detection, industrial gripper

1. Introduction

CERN [1,2,3], the European Organization for Nuclear Research, located in Geneva, Switzerland, hosts more than 50 km of underground particle accelerators [4], including the Large Hadron Collider (LHC), the biggest particle accelerator in the world (approximately 27 km in circumference [5]), which contains a huge variety of scientific equipment. The size of the LHC poses continuous challenges for its design, its construction and its maintenance, which is critical to ensure the regular operation of the experimental facility. However, the CERN experimental areas located underground present hazards: radiation, high magnetic fields and a possible lack of oxygen. Therefore, ensuring safe personnel access to the accelerator facilities can be challenging. The use of robotic systems in hazardous environments allows personnel safety to be ensured. Moreover, more accurate and detailed data about the environment can be collected during robotic operation, which in turn enables more accurate and precise operation [6]. Teleoperated robotic platforms can perform some of the maintenance tasks more safely and reliably than humans. The maintenance of the LHC includes a wide range of tasks: visual inspection, screwing, welding, disassembling, reassembling and many others. Each of these tasks requires a different robot, equipped with suitable sensors and tools.

Performing a stable grasp is the main challenge for most robotic manipulators, due to the need to avoid the application of excessive gripping force and prevent slippage as well as any possible damage to the object. The variability of the properties of the objects involved in activities of daily living makes grasping difficult when the robot handles novel objects without having prior information. Too much force could deform or damage the object or the gripper fingers, while too little pressure could let it slip or drop during displacements.

By using a robot gripper, dexterous handling can be achieved using information measured with sensors, such as force sensors, torque sensors, slip sensors and contact sensors [7]. The incipient slip information in human grasping [8] allows one to quickly adjust the gripping force in response to the object characteristics perceived upon initial contact [8,9]. In [10], an adaptive closed-loop grasping algorithm for novel objects is implemented on a robotic gripper instrumented with a force-sensing resistor (FSR) sensor and a laser-based slip sensor. This algorithm can immobilize a novel object within the fingers of the gripper with minimal deformation by estimating the exact minimal grasping force. In [11], a slipping avoidance algorithm is proposed to allow the robot to react to both linear and rotational slippage of the grasped object. The object, grasped with a one-DoF gripper equipped with a six-axis force/tactile sensor, can be safely lifted with a given orientation and information about its centre of gravity, and uncertain friction properties are not necessary. In [12], a model-free intelligent fuzzy sliding mode control strategy is employed in an ad hoc developed gripper sensorised with an FSR on the fingers. Slip information is obtained after three consecutive force variations exceeding a specific threshold. The gripper can dexterously pick and place various objects by using stiffness information and generating an appropriate grasping force, and the anti-slip control strategy can adjust the grasping force online to avoid object slippage. In [13], two different algorithms for controlling the grasping force are implemented in a one-DoF parallel gripper sensorised with six-axis force/tactile sensors. The first algorithm is aimed at avoiding both linear and rotational slippage [14] by applying as little force as possible to the grasped object. The controller does not need information about the properties of the object to be grasped.
The second algorithm achieves the pivoting task, i.e., a controlled rotation of the grasped object to change its orientation without releasing the object. In [15], gecko-inspired adhesives are mounted on the tips of commercial gripper fingers to delicately grasp objects by using little force. The gripper is equipped with tactile sensors [16]. Information about external forces and moments derives from a sensor on the robot’s wrist. The characteristic of the gecko adhesive is that adhesion increases in proportion to the applied shear load. The knowledge of gripper performance combined with the gecko-inspired adhesive properties significantly reduces the overall demand on the gripper actuators; thus, smaller actuators can be used to lighten the tools on robotic arms. In [17], the Vibratory Finger Manipulator, a simple and affordable mechanism based on the stick–slip phenomenon, where the application of vibrations enables friction to be actively controlled, is proposed to potentially augment the capabilities of any generic parallel jaw gripper. This approach generates a propagation force on the touched object, allowing the object to be manipulated with an accuracy better than 2 mm.

During the grasping and lifting of various objects, visual cues and prior knowledge allow humans to prepare for the imminent grasp by adapting the fingertip force to the actual object weight [18]. Humans use vision to obtain an initial estimate of the weight of the object to grasp; subsequently, they use tactile afferent control to improve the grasping precision [19]. Different approaches have been developed to estimate the object’s weight. In [20], active thermography and custom multi-channel neural networks are used to classify the density property. The approach is capable of estimating the weight of an unknown object by evaluating its volume. In [21], the object weight is estimated by measuring the currents flowing in the gripper motor servos and by using linear regression between these and the weight values; the results show an estimation of the weight of the object with an average error of 22.42%.

For the execution of a task (e.g., plugging in/unplugging a connector), information on the object weight is not sufficient. Different solutions have been studied to overcome this problem. In [22], a gripper is proposed for the accurate alignment and holding of the position between the cable and the gripper. The method for plugging in the connector needs information on the exact position of the connector in the gripper. If the tolerance between the connectors is larger than the position error of the robot arm, the connection can be achieved using the position control of conventional industrial robots, without using force information. In [23], different solutions are presented to increase the degree of autonomy of robots involved in underwater intervention missions. In particular, one of the explored tasks involves the plugging in/unplugging of the hot stab connector. The whole procedure allows the task to be completed, but no information is reported about the control of the grasping force. Just two parts of the presented algorithm to perform the task are notable: “Close the gripper completely; Reach the plug waypoint [...] This waypoint is 1 cm deeper than the manipulation waypoint because sometimes it is necessary to exert a bit of force to be sure that the connector has entered completely.” The execution of known and pre-imposed actions is allowed by a controlled environment; again, a new unexpected condition could be unmanaged.

In the case of the handling of optical fibres and their connectors, the interventions at CERN cannot be performed appropriately without a force control law that regulates the force applied by the grippers. Without it, the object could fall or the task could fail, and human intervention in hazardous environments would be necessary.

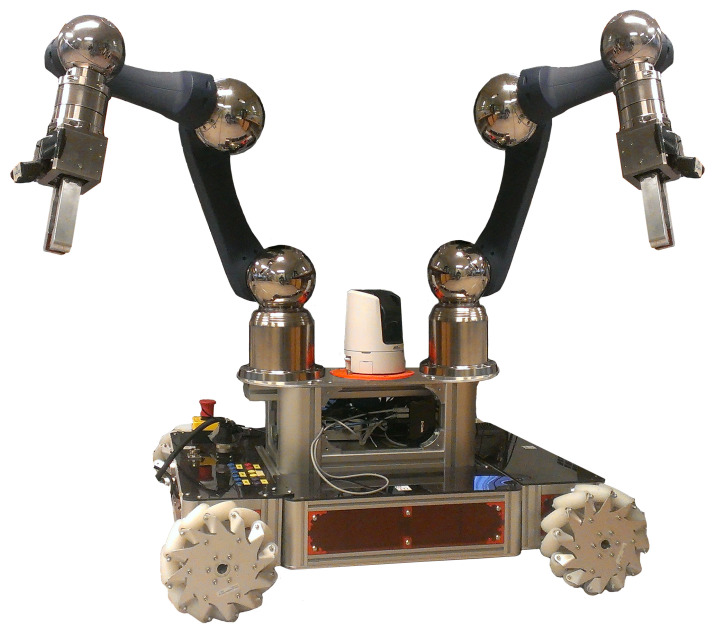

The aim of this work was to overcome this limitation by providing novel modules of the CERNTAURO [24] framework, which is already present at CERN and used for other types of interventions: (i) the first module is a touch-and-slippage algorithm [25], and (ii) the second one is force-and-slippage control [26], developed for prosthetic hands and adapted for this work. CERNBot [27], a robotic platform (Figure 1) built at CERN, was used in the dual-arm configuration. On each arm, an industrial gripper equipped with two ad hoc developed fingers was mounted.

Figure 1. Dual-arm CERNBot.

The strategy also includes an object recognition module (ORM) to recognize metallic objects (e.g., connectors, sockets and patch panels) [28] and choose a force reference from a table for the gripper to perform the desired task. The tasks were performed in a teleoperated way to recreate the conditions of a real intervention in hazardous environments. The combination of the two novel modules and the ORM was employed to safely perform the plugging in and the unplugging of an optical fibre connector by applying the correct force to the connector for its management and to prevent any slipping during the execution of the tasks.

2. Materials and Methods

2.1. Touch-and-Slippage Detection Algorithm

The algorithm is able to detect (i) the first touch between the sensor and an object and (ii) slippage events. To remove the electronic noise from the setup components, the mean value, $\bar{V}_k$, of the FSR conditioned signal (i.e., force or voltage), $V_i$, was computed for every 5 samples.

$$\bar{V}_k = \frac{1}{5}\sum_{i=1}^{5} V_{5(k-1)+i} \tag{1}$$

The steps of the touch identification procedure are the following:

(1) Computation of the average value, $\bar{V}_0$, on 10 samples of the voltage signal in the resting (calibration) period. In the calibration period, no force variation is detected, and $\bar{V}_0$ represents the mean of the background noise magnitude.

(2) Comparison of $\bar{V}_k$ with $\bar{V}_0$ to obtain a mean voltage error, $e_V$.

$$e_V = \left|\bar{V}_k - \bar{V}_0\right| \tag{2}$$

Contact with the object is detected if $e_V$ is greater than the minimum voltage variation, $\Delta V_{min}$, measured by the sensor when pressed.

$$\mathrm{touch} = \begin{cases} 1, & e_V > \Delta V_{min} \\ 0, & \text{otherwise} \end{cases} \tag{3}$$

Next, the value of $\dot{\bar{V}}_k$, i.e., the positive derivative of the voltage signal, is computed. Only the positive derivative is considered, since the negative value corresponds to a pressure increment on the FSR.

$$\dot{\bar{V}}_k = \frac{\bar{V}_k - \bar{V}_{k-1}}{\Delta t} \tag{4}$$

Here, $\Delta t$ is the time between two consecutive 5-sample sets. The derivative of the mean values between two consecutive 5-sample sets is compared with a threshold, $\dot{V}_{th}$, established using the ROC curve [29], ensuring the discrimination between true and false-positive slippage events. A binary value (called $\mathrm{slip}$) is set to 1 when $\dot{\bar{V}}_k$ is higher than the threshold (i.e., slippage occurs); it is 0 otherwise.

$$\mathrm{slip} = \begin{cases} 1, & \dot{\bar{V}}_k > \dot{V}_{th} \\ 0, & \text{otherwise} \end{cases} \tag{5}$$

The proposed approach can be applied to both voltage and force signals by simply choosing the positive derivative for voltage signals or the negative derivative for force signals. In this work, 100 Hz was the sampling rate for calculating touch and slippage.
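The detection steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names, the use of the absolute voltage error and the numeric thresholds in the usage note are assumptions made for the example, while the 5-sample averaging, the 10-sample calibration and the 100 Hz sampling rate come from the text.

```python
from statistics import mean


def block_means(samples, block=5):
    """Average consecutive, non-overlapping blocks of `block` samples."""
    n_blocks = len(samples) // block
    return [mean(samples[i * block:(i + 1) * block]) for i in range(n_blocks)]


def detect_touch_and_slip(voltage, calibration, dv_min, slip_threshold,
                          fs=100.0, block=5):
    """Return two binary lists (touch, slip), one value per 5-sample block.

    voltage        -- FSR conditioned voltage samples acquired at `fs` Hz
    calibration    -- samples recorded at rest; the first 10 give the baseline
    dv_min         -- minimum voltage variation that counts as contact (assumed value)
    slip_threshold -- threshold on the positive derivative (assumed value)
    """
    v_rest = mean(calibration[:10])   # baseline from the resting period
    dt = block / fs                   # time between two consecutive blocks
    touch, slip = [], []
    previous = None
    for v_mean in block_means(voltage, block):
        # Touch: mean voltage error larger than the minimum variation.
        touch.append(1 if abs(v_mean - v_rest) > dv_min else 0)
        # Slip: positive derivative of the block means above the threshold.
        derivative = 0.0 if previous is None else (v_mean - previous) / dt
        slip.append(1 if derivative > slip_threshold else 0)
        previous = v_mean
    return touch, slip
```

For a voltage trace that rests at 4.0 V, drops to 3.0 V when the finger presses the object and then rises to 3.5 V as the pressure decreases, the sketch flags contact from the second block onwards and a single slippage event at the block where the voltage rises.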

2.2. Force Reference Estimation

The normal force reference, $F_{ref}$, is determined by analysing the gripper grasping and manipulating a set of objects (i.e., plugging in and unplugging connectors) available in hazardous environments. For each connector mounted on test benches in the laboratory, the gripper of the robot repeated the grasping and the operation of plugging in/unplugging three times. The grasping forces were acquired with the FSR sensors embedded in the fingers, and the mean values are reported in Table 1.

Table 1. Force references for plugging in and unplugging the reported connectors.

| Connector Type | Manipulation Force (N) |
|---|---|
| FC | 2.3 ± 0.13 |
| Fisher ST-ST | 4.14 ± 0.34 |
| LEMO FIG | 3.67 ± 0.21 |
| LEMO FZG | 3.89 ± 0.16 |

Then, the camera mounted on the robot (used to perform interventions in a teleoperated manner) is used to recognize the object to grasp and to select the corresponding reference normal force, $F_{ref}$, from the look-up table (Table 1).

The strategy also includes the ORM [28], a deep-learning-based object recognition module [30] that allows metallic objects (e.g., connectors, sockets and patch panels) to be recognised according to non-textured attributes. The module is based on region-based convolutional neural networks (Faster-RCNN) pre-trained on COCO [31]. The neural network model chosen for this work to detect a large number of metallic parts was ResNet-101 [32].
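The look-up step can be illustrated with a small Python sketch. The mean forces are those reported in Table 1; the dictionary name, the function name and the idea that the recognition module returns the connector type as a plain string label are assumptions made for illustration only.

```python
# Mean manipulation forces from Table 1, in newtons, keyed by connector type.
FORCE_REFERENCE_N = {
    "FC": 2.3,
    "Fisher ST-ST": 4.14,
    "LEMO FIG": 3.67,
    "LEMO FZG": 3.89,
}


def select_force_reference(label, table=FORCE_REFERENCE_N):
    """Return the force reference (N) for a recognised connector label.

    `label` is assumed to be the class name produced by the object
    recognition step; unknown labels are rejected rather than guessed.
    """
    try:
        return table[label]
    except KeyError:
        raise ValueError(f"no force reference for connector type {label!r}") from None
```

Rejecting unknown labels, instead of falling back to a default force, is a design choice of this sketch: an unrecognised connector would otherwise be grasped with a force that was never measured on the bench.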

2.3. Force-and-Slippage Control

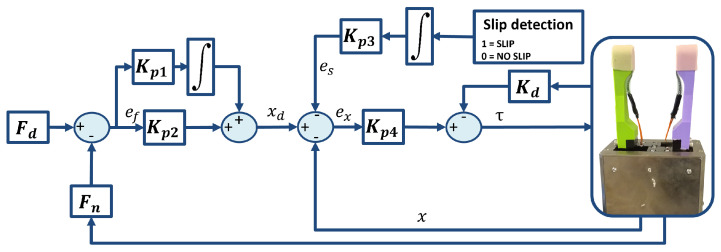

For this work, force-and-slippage control used in the prosthetic field [26] with a touch-and-slippage detection algorithm [25] developed by the authors was chosen (Figure 2). If the proposed strategy works well in a challenging area such as prosthetics, where stability and reduced response times are required, good functioning is also expected in other less stringent but equally challenging areas. The coordination of the fingers was not used, because the gripper only had one motor for moving the two fingers.

Figure 2. Force-and-slippage control law.

$F_n$, i.e., the sum of the components of the normal forces applied by the gripper fingertips [33] on the object surface and acquired with force sensors, is subtracted from the reference force, $F_{ref}$, to obtain a force error, $e_f = F_{ref} - F_n$, that has to be minimized by the control. Then, the reference position, $x_{ref}$, is obtained with proportional–integral (PI) force control,

$$x_{ref} = K_P^f\, e_f + K_I^f \int_0^{t_f} e_f \, dt \tag{6}$$

and is compared with the actual position, $x$. $K_P^f$ and $K_I^f$ are the controller gains, obtained with a trial-and-error procedure assuming them to be much higher than the stiffness of the sensorised fingers, and $t_f$ is the final integration time. An additional contribution, $x_s$, is considered to manage slippage events [34,35]. Therefore, the position error is

$$e_x = x_{ref} + x_s - x \tag{7}$$

where

$$x_s = K_s \int_0^{t_f} \mathrm{slip} \, dt \tag{8}$$

$\mathrm{slip}$ is the binary signal (equal to 0 or 1) obtained using the touch-and-slippage detection algorithm (Section 2.1), and $K_s$ is a constant regulating its weight in the control, obtained with a trial-and-error procedure. The integration of this signal guarantees an increment in the applied grasping force in the presence of slippage [34].

The so-obtained position error should be reduced to zero by PD control written as

$$\tau = K_P\, e_x + K_D\, \dot{e}_x \tag{9}$$

where $\tau$ is the torque; $x$, $\dot{x}$ and $\ddot{x}$ are the position, velocity and acceleration derived from the motor sensors; and $K_P$ and $K_D$ are the proportional and the derivative gains, respectively.
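A discrete-time version of this control law can be sketched as follows. It is only an illustration under stated assumptions: the class name, the rectangular (Euler) integration, the backward-difference derivative of the position error and all gain values are choices made for the example and are not taken from the paper or from the CERNTAURO code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ForceSlipController:
    """PI force loop plus slippage term feeding a PD position loop (sketch)."""
    kp_f: float            # proportional gain of the PI force control
    ki_f: float            # integral gain of the PI force control
    k_s: float             # weight of the integrated slip signal
    kp_x: float            # proportional gain of the PD position control
    kd_x: float            # derivative gain of the PD position control
    dt: float              # control period in seconds (assumed constant)
    force_integral: float = 0.0
    slip_integral: float = 0.0
    prev_error: Optional[float] = None

    def step(self, f_ref, f_n, slip, x):
        """Return the motor torque command for one control period."""
        # PI force control: force error -> reference position.
        e_f = f_ref - f_n
        self.force_integral += e_f * self.dt
        x_ref = self.kp_f * e_f + self.ki_f * self.force_integral
        # Slippage contribution: integral of the binary slip signal.
        self.slip_integral += slip * self.dt
        x_s = self.k_s * self.slip_integral
        # Position error including the slippage term.
        e_x = x_ref + x_s - x
        # PD control on the position error (no derivative on the first step).
        de_x = 0.0 if self.prev_error is None else (e_x - self.prev_error) / self.dt
        self.prev_error = e_x
        return self.kp_x * e_x + self.kd_x * de_x
```

Because the slip signal is integrated, every detected slippage event leaves a persistent offset in the position error, so the commanded grip tightens and stays tighter after the event instead of relaxing as soon as the slip flag returns to zero.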

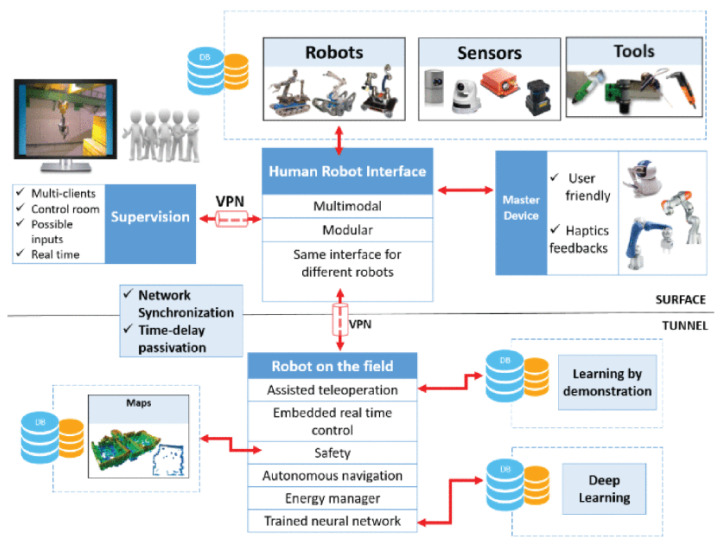

2.4. CERNTAURO Framework

The project in [24] (Figure 3) aims at creating a modular framework adaptable to specific intervention needs, to be upgraded accordingly as new features need to be integrated. The CERNTAURO framework is adaptable to different robots thanks to a configuration layer that takes into account several factors, such as type of hardware, communication layer and user needs, and can perform unmanned tasks in hazardous and semi-structured environments.

Figure 3. CERNTAURO framework.

2.5. CERNBot

CERNBot (Figure 1) is a modular and flexible robotic platform built at CERN for complex interventions in the presence of hazards, such as ionizing radiation. It is made of two robotic arms (Schunk) installed on a mobile platform. CERNBot uses standard industry components for most of its electronic and control hardware, making it a constantly evolving platform, as hardware is upgraded by the manufacturer. Further details can be found in [27]. For this application, an industrial gripper (chosen among those already available and in use) was selected and installed on each robotic arm.

2.6. Multimodal Human–Robot Interface

The human–robot interface (HRI) presented in [36] has been developed for remote robotic intervention in hazardous environments, and it is part of the CERNTAURO framework. According to its definition, a multimodal interface provides different modalities for user interaction: the interaction domain of user and interface, and the interaction domain of user and robot.

In this work, the interaction between the user and the interface and that between the user and the robot were performed with the keyboard.

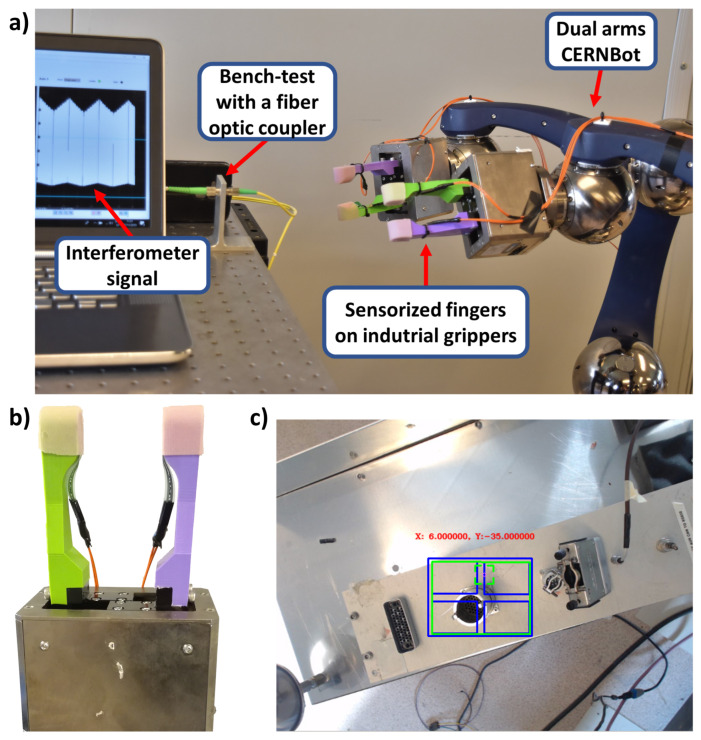

2.7. Experimental Setup and Protocol

CERNBot in the dual-arm configuration (right side of Figure 4a) was positioned in front of a table where a test bench with a fibre optic coupler (centre of Figure 4a) was mounted.

Figure 4. (a) Setup for experimental validation. (b) Detail of the gripper with the two ad hoc fingers. (c) Object recognition for choosing the force reference.

Two commercial industrial grippers (Universal gripper PG; size, 70; Schunk, Lauffen am Neckar, Germany) [33], with fingers made of PLA, instrumented with two FSR sensors Model 402 (Figure 4 [37]) and covered with silicone to increase the grip, were mounted on the robotic arms of CERNBot. This kind of gripper has one motor that drives the ball screw via a toothed belt drive; the rotational movement is then transformed into a linear movement by base jaws mounted on the spindle nuts. The position was measured using an embedded encoder sensor. The position was the same for both fingers due to the parallel mechanism [33].

The user was sitting in front of a monitor and was asked to perform the teleoperated tasks by following the whole operation using the camera mounted on the robot. Communication between the user and the robot was performed by means of an ethernet cable. The user manually chose the type of connector to recognize and handle, and the correct force value was automatically chosen from the table and set in the control.

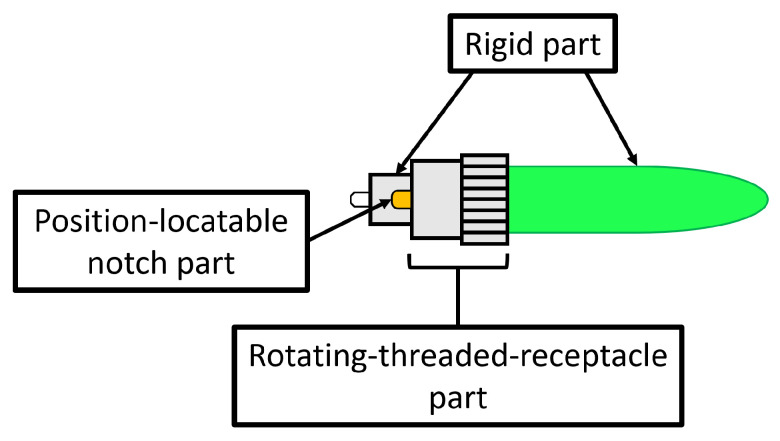

Two kinds of tasks were performed: the unplugging task, i.e., unplugging the FC connector without losing it, and the plugging in task, i.e., plugging in the FC connector. The connector of the optical fibre was made of three parts (Figure 5): the first one was integrated with the cable and therefore rigid; the second one was the rotating threaded receptacle part used for screwing/unscrewing the connector, with the specular part blocking them during use; the last one was a position-locatable notch to align the male and female connectors.

Figure 5. FC connector.

The normal use of this connector involves the screwing/unscrewing of the rotating part and then the grasping of the rigid part to remove the connector from the female part. An interferometer was connected to the backside of the fibre optic coupler to understand if the unplugging or the plugging in was correct, reading signal interruption for the first task and signal recovery for the second one. Information about the signal from the interferometer was read by employing a PC (left side of Figure 4a).

For the plugging in task, the following steps need to be performed:

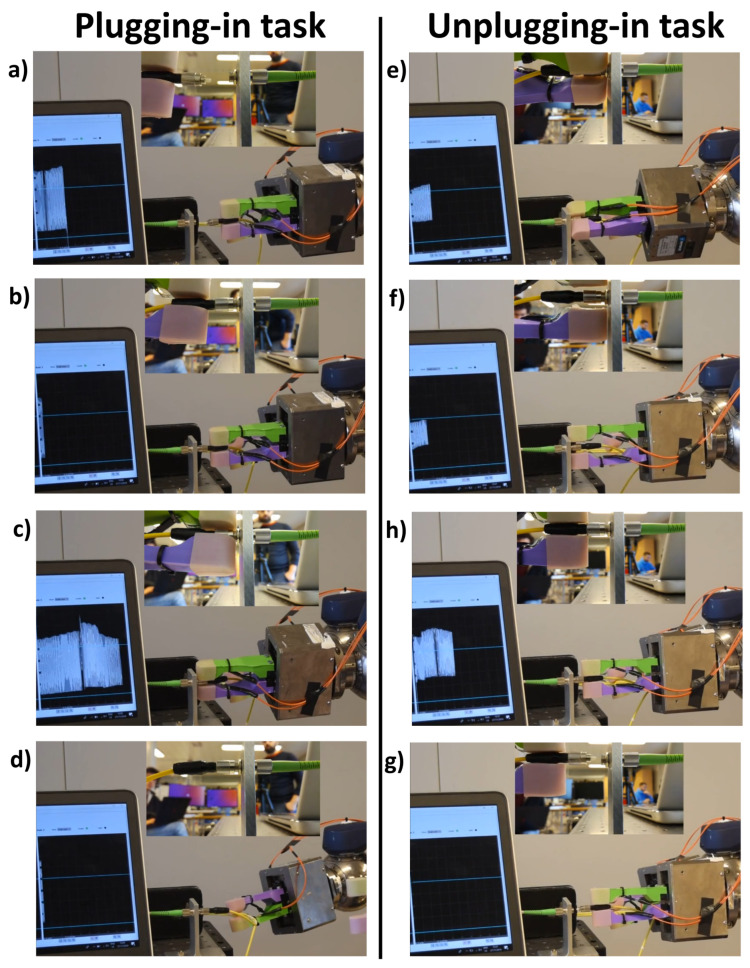

(1) The gripper on the right arm grasps the cable while the gripper on the left arm grasps the FC connector by the rigid part (Figure 6a);

(2) The left arm moves to plug in the cable in the fibre optic coupler, aligning the position-locatable notch and the recess (Figure 6b);

(3) The gripper on the left arm releases the rigid part to grasp the rotating threaded receptacle part and to screw the connector (Figure 6c);

(4) The gripper on the left arm releases the rotating threaded receptacle part (Figure 6d).

Figure 6. Different phases of the two tasks. (a) First step: the two grippers grasp the cable and the FC connector; (b) Second step: the left arm moves to plug in the cable in the fibre optic coupler; (c) Third step: the gripper on the left arm releases the rigid part to grasp the rotating threaded receptacle part and to screw the connector; (d) Fourth step: the gripper on the left arm releases the rotating threaded receptacle part. (e) First step: the two grippers grasp the cable of the FC connector; (f) the gripper on the left arm releases the rotating threaded receptacle part; (g) the gripper on the left arm grasps the connector on the rigid part; (h) the right arm moves to unplug the cable.

For the unplugging task, the following steps need to be performed:

(1) The gripper on the right arm grasps the cable while the gripper on the left arm grasps the FC connector on the rotating threaded receptacle part to unscrew it (Figure 6e);

(2) The gripper on the left arm releases the rotating threaded receptacle part (Figure 6f);

(3) The gripper on the left arm grasps the connector by the rigid part (Figure 6g);

(4) The right arm moves to unplug the cable (Figure 6h).

For these tasks, 15 naive users (12 males and 3 females, aged 26 ± 5 years) were selected to perform the two tasks three times. The tasks were carried out using CERNBot and CERNTAURO with and without the two novel modules: the touch-and-slippage (TAS) and the force-and-slippage (FAS) modules. An expert user explained the basic functionality of the robots in the two operative modalities to each naive user before the first attempt and provided minimal support during the entire test. An Arduino board for each gripper was used to read the voltage values from the conditioning circuit and to calculate touch and slippage for both fingers. The force applied on the sensor surface caused a mechanical deformation, resulting in resistance variations. Such variations were converted, using a voltage divider, into voltage values ranging from 0 to 5 V [38]. A relationship between the output voltage value from the FSR and the force value was established with statistical characterization [39]. The relation between voltage and force was modelled as reported in [40]. Force, touch and slippage information was delivered to the two developed modules implemented in the CERNTAURO framework to obtain the necessary torques for activating the gripper motion.
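The voltage-divider conversion mentioned above can be illustrated with the following sketch. The circuit topology is an assumption: the FSR is placed on the low side of the divider, so that the output voltage drops when the sensor is pressed, which is consistent with the statement in Section 2.1 that a negative voltage derivative corresponds to a pressure increment. The 10 kΩ fixed resistor and the function names are hypothetical, and the voltage-to-force model of [40] is not reproduced here.

```python
def divider_voltage(r_fsr, r_fixed=10_000.0, v_cc=5.0):
    """Output voltage (V) of a divider with the FSR on the low side.

    r_fsr   -- FSR resistance in ohms (decreases as the applied force grows)
    r_fixed -- fixed resistor in ohms (hypothetical value)
    v_cc    -- supply voltage in volts (5 V, matching the 0-5 V range in the text)
    """
    return v_cc * r_fsr / (r_fsr + r_fixed)


def fsr_resistance(v_out, r_fixed=10_000.0, v_cc=5.0):
    """Invert the divider equation to recover the FSR resistance in ohms."""
    if not 0.0 <= v_out < v_cc:
        raise ValueError("v_out must lie in [0, v_cc)")
    return r_fixed * v_out / (v_cc - v_out)
```

With this topology, an unloaded sensor (very high resistance) reads close to 5 V and the reading falls towards 0 V as the grasp tightens; with the FSR on the high side the trend would be reversed, and the sign of the derivative used for slippage detection would have to be swapped accordingly.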

3. Results and Discussion

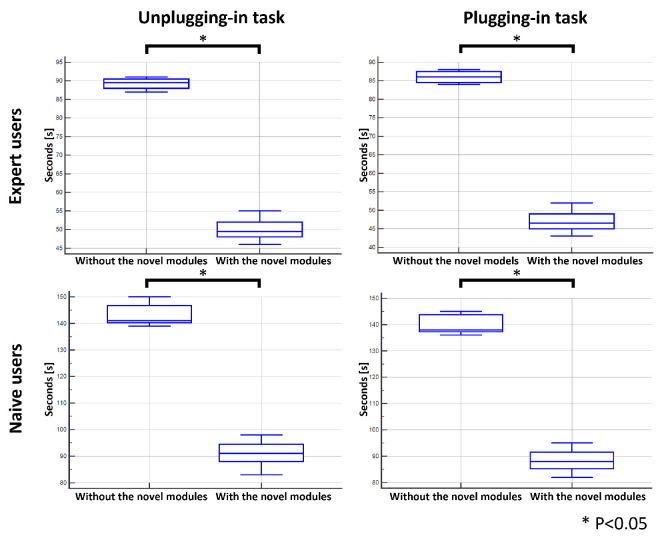

The users were asked to accomplish the two tasks (to unplug and plug in the cable) three times. Moreover, to provide a baseline for the time comparison, the tasks were executed in both modalities by two expert users; their execution time was considered a lower bound of the execution of the tasks. The difference in the execution time was statistically significant (Mann–Whitney test, p < 0.05) for both expert and naive users when the two tasks were performed with the combination of the two novel modules, i.e., TAS and FAS, and the ORM (Figure 7).
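For readers unfamiliar with the test used here, the following sketch computes a two-sided Mann–Whitney U test in plain Python. It relies on the normal approximation without tie or continuity correction, which is a simplification (statistical packages typically offer exact p-values for small samples), and the function name is an assumption; it is not the analysis code used in this study.

```python
import math


def mann_whitney_u(sample_a, sample_b):
    """Return (U, p) for a two-sided Mann-Whitney U test (normal approximation)."""
    # Pool the observations, remembering which sample each one came from.
    pooled = sorted((value, group)
                    for group, sample in enumerate((sample_a, sample_b))
                    for value in sample)
    # Sum the ranks of sample_a, giving tied values their average rank.
    rank_sum_a = 0.0
    i = 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j][0] == pooled[i][0]:
            j += 1
        average_rank = (i + 1 + j) / 2.0          # mean of ranks i+1 .. j
        rank_sum_a += average_rank * sum(1 for k in range(i, j) if pooled[k][1] == 0)
        i = j
    n_a, n_b = len(sample_a), len(sample_b)
    u_a = rank_sum_a - n_a * (n_a + 1) / 2.0
    u = min(u_a, n_a * n_b - u_a)
    # Normal approximation of the distribution of U under the null hypothesis.
    mu = n_a * n_b / 2.0
    sigma = math.sqrt(n_a * n_b * (n_a + n_b + 1) / 12.0)
    z = (u - mu) / sigma
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return u, p
```

The function would be called with the execution times measured with and without the novel modules as the two samples; a p-value below 0.05 corresponds to the significance level reported in this section.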

Figure 7. Task execution time (in seconds) for expert and naive operators. Statistical significance when the novel modules were used is indicated with * p < 0.05 (Mann–Whitney test).

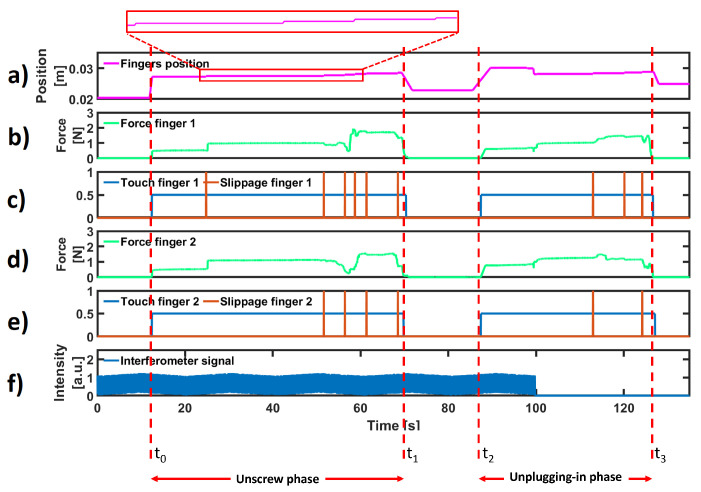

For the sake of brevity, force, touch, slippage, position and interferometer information is shown in Figure 8 for a single unplugging task. This task was performed in two parts: in the first one, the FC connector was unscrewed, and in the second one, the fibre was removed from the fibre optic coupler of the test bench.

Figure 8. Experimental results for a single unplugging task. (a) Position of each finger. (b,d) Normal forces acquired by the FSR sensors on the fingers. (c,e) Detected touch and slippage events. (f) Interferometer signal.

Subplot (a) depicts the position of each finger. As described in Section 2.7, the gripper was symmetric, and the displacement of only one finger is reported. The gripper was positioned close to the test bench with an opening similar to the FC connector diameter (∼10 mm) to reduce the time of operation. For this reason, the position of each finger did not start from zero. Subplots (b) and (d) show the forces applied by the fingers. In subplots (c) and (e), the binary signals related to touch and slippage events detected by each finger [25] are reported. In the last subplot, the signal acquired by the interferometer was used to verify that the connector disconnection/connection had been established (in this case, the loss of the interferometer signal demonstrates the success of the task). In the first phase, the gripper grasped the threaded receptacle, rotated to unscrew it and opened the fingers. In the second phase, it repositioned itself to grasp the rigid part of the connector to remove it from the fibre optic coupler of the test bench. In the first phase, one slippage event was detected, while in the second phase, many slippage events were detected and counteracted. This is normal behaviour, because the FC connector and the fibre are made of different materials and have different friction coefficients. Hence, the vibrations induced by slippage are different for each phase [41].

To measure the effectiveness (i.e., the ability to obtain the desired results in an ideal context) of using the novel modules, the interferometer signal was taken as a reference (Figure 8f). When the FC connector was connected to the coupler, i.e., when a good signal transmission through the optical fibre was guaranteed, the interferometer signal had a maximum amplitude between 1.1 and 1.2. Therefore, if at the end of the plugging in operation the connector was between the fingers of the gripper but the signal was less than 1.1, the task was still considered unsuccessful. Likewise, for the unplugging operation, the task was considered a success when the interferometer signal was equal to 0 and the connector was still between the fingers of the gripper.

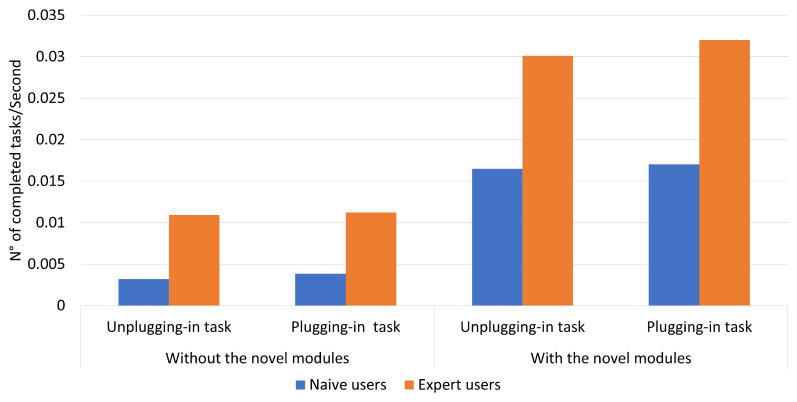

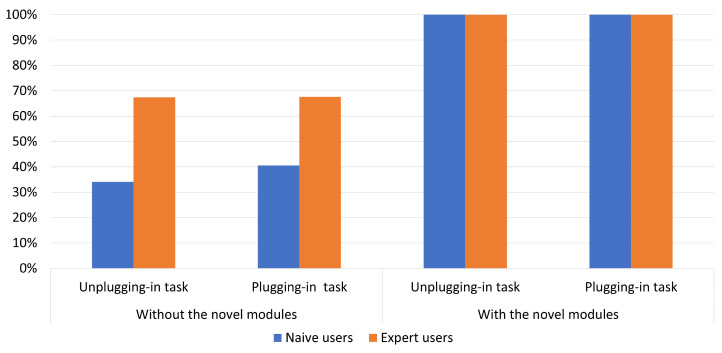

According to ISO-9241, efficiency represents the resources spent by the users to ensure accurate and complete achievement of the goals. Efficiency is measured in terms of the time employed by the participant to complete a task and can be defined in two ways: time-based efficiency (a measure of the time spent by the user to complete the task; Figure 9) and the overall relative efficiency of the task (the ratio of the time taken by the users who completed the task to the total time taken by all users; Figure 10) [42].

Figure 9. Time-based efficiency of the two tasks performed without and with the two novel modules.

Figure 10. Overall relative efficiency of the two tasks performed without and with the two novel modules.

In particular, the time-based efficiency for expert users was about 0.03 with the combination of the two novel modules, twice the value obtained without them. A similar situation was found for naive users, with values of about 0.01 with the novel modules and less than half of that without them.

The overall relative efficiency was equal to 100% for both expert and naive users when the two modules were used. Without the novel modules, the percentages were less than 70% for expert users and less than 40% for naive users.

The combined use of the two novel modules, i.e., TAS and FAS, led to a success rate (i.e., No. of completed tasks/No. of tasks) of 100%.
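The efficiency and success metrics discussed in this section can be computed as in the sketch below. It assumes the definitions commonly used in usability studies, namely time-based efficiency as the mean of completion flag divided by time over all attempts and overall relative efficiency as the time spent on completed attempts over the total time; the exact formulation adopted in [42] may differ, and the function names are chosen for illustration.

```python
def time_based_efficiency(attempts):
    """Mean of (completed / time) over all attempts, in tasks per second.

    attempts -- list of (completed: bool, time_s: float), one per user-task attempt
    """
    return sum((1.0 if done else 0.0) / time_s for done, time_s in attempts) / len(attempts)


def overall_relative_efficiency(attempts):
    """Percentage of the total time that was spent on completed attempts."""
    total_time = sum(time_s for _, time_s in attempts)
    completed_time = sum(time_s for done, time_s in attempts if done)
    return 100.0 * completed_time / total_time


def success_rate(attempts):
    """Percentage of attempts that were completed."""
    return 100.0 * sum(1 for done, _ in attempts if done) / len(attempts)
```

Under these definitions, when every attempt is completed the overall relative efficiency and the success rate are both 100% regardless of the execution times, while the time-based efficiency still reflects how quickly the tasks were carried out.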

4. Comparison with Other Approaches

The analysed works propose different strategies to control a gripper by managing grasping force and slippage.

In [10], the force reference is obtained with the initial grasp, but without lifting the object. Hence, object re-grasping is necessary for the phase immediately following lifting. Instead, the proposed approach detects the needed force to manage the grasped object in an offline phase to avoid a successive re-grasping phase. Moreover, when a slip is detected, a corrective action is performed to make the grasp action more robust. Differently from [11], where friction information is needed to solve an equation for determining the right torque to avoid slipping during grasping, the proposed approach only needs the information of the force reference previously calculated. During the task, only the forces read by the FSR sensors are employed.

Environments such as those where CERNBot is used cannot be equipped with systems to digitally reconstruct the entire workspace (such as optoelectronic systems with markers). Furthermore, human intervention needs to be limited or even forbidden, so knowledge of the actual position of each object within the work environment is difficult to obtain. This makes the methods described in the Introduction section unsuitable for our purposes.

On the other hand, the knowledge of some information about the operation to perform, such as the connectors to manipulate, is possible. Then, since such operations are planned in advance, the determination of the force references, as well as the recognition of new objects, can be made in the laboratory using bench tests.

5. Conclusions

Safety in the work environment is the main advantage of utilizing robotics [43]. Employees who work in hazardous environments can delegate to a robot dangerous tasks that are not possible or safe for humans. The CERNTAURO framework was developed at CERN to help users to perform robotic tasks comfortably, increasing the success rate and safety, and decreasing the intervention time. Teleoperation is currently the only solution to intervene for maintenance in extreme environments. Nevertheless, there are some types of tasks that require particular attention from users. In particular, grasping an optical fibre, and plugging in and unplugging its connector were open challenges.

Until now, the only solution for plugging in or unplugging connectors within hazardous environments was the employment of operators, which exposed them to conditions dangerous to their health. Tests carried out in the laboratory showed a high failure rate when a robot was used without the solutions described in this work, thus still requiring the intervention of operators.

In this work, two novel modules were added to the CERNTAURO framework (i) to detect touching and slipping between the sensor positioned on the gripper finger and the object surface and to use this information (ii) to regulate the force and to avoid slips during the whole task.

The time-based efficiency when using the combination of the two novel modules was found to be twice as much as that achieved in the same task performed without them. The overall relative efficiency achieved using the two novel modules was 100% for both expert and naive users, while the percentage was less than 70% for expert users and less than 40% for naive users without the new modules. The combination of (i) the two novel modules, (ii) the sensorised fingers mounted on each gripper of the CERNBot platform and (iii) the ORM showed 100% success for both expert and naive users, as evidenced by both effectiveness and efficiency. In addition, users reported a decrease in anxiety due to the task to be performed, as the automatic management of force and slippage allowed them to only focus on plugging in or unplugging the connector.

Future work should test these novel modules during interventions in hazardous environments, such as the LHC [3], the collimator in the North Experimental area [44], the Super Proton Synchrotron accelerator [45] or the Antimatter Production facility [46].

Acknowledgments

This work was partly supported by Italian Institute for Labour Accidents (INAIL) with the RehabRobo@work (CUP: C82F17000040001) and PPR AS 1/3 (CUP: E57B16000160005) projects, partly by ANIA Foundation with Development of bionic upper limb prosthesis characterized by personalized interface and sensorial feedback for amputee patients with macro lesion after car accident project, partly by Campus Bio-Medico University Strategic Projects (Call 2018) with the SAFE-MOVER project, and partly by the Italian Ministry of University and Research (PON project research and innovation 2014-2020) with the ARONA project (CUP: B26G18000390005).

Author Contributions

C.G. designed the proposed approach, acquired and analysed the experimental data and wrote the paper; G.L. contributed to the design of the proposed approach and to the analysis of the experimental data and wrote the paper; L.R.B. and F.C. designed the study, supervised the writing and wrote the paper; M.D.C. contributed to the design of the proposed approach and of the experimental setup, wrote the paper and supervised the study; A.M. and L.Z. designed the paper and supervised the writing. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research received no external funding.

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

- 1. Bakker C.J. CERN: European Organization for Nuclear Research. Phys. Today. 1955;8:8. doi: 10.1063/1.3062142.

- 2. Amaldi E. CERN, The European council for nuclear research. Nuovo Cimento. 1955;2:339–354. doi: 10.1007/BF02746094.

- 3. Evans L. Particle accelerators at CERN: From the early days to the LHC and beyond. Technol. Forecast. Soc. Chang. 2016;112:4–12. doi: 10.1016/j.techfore.2016.07.028.

- 4. Lefevre C. The CERN Accelerator Complex. CERN PhotoLab; 2008. Technical Report.

- 5. Brüning O. LHC Design Report: The LHC Main Ring. Volume 1. European Organization for Nuclear Research; Meyrin, Switzerland: 2004. CERN Yellow Reports: Monographs.

- 6. Murphy R.R. Rescue robotics for homeland security. Commun. ACM. 2004;47:66–68. doi: 10.1145/971617.971648.

- 7. Raval S., Patel B. A Review on Grasping Principle and Robotic Grippers. Int. J. Eng. Dev. Res. 2016;4:483–490.

- 8. Johansson R.S., Westling G. Roles of glabrous skin receptors and sensorimotor memory in automatic control of precision grip when lifting rougher or more slippery objects. Exp. Brain Res. 1984;56:550–564. doi: 10.1007/BF00237997.

- 9. Johnson K.L. Contact Mechanics. Cambridge University Press; Cambridge, UK: 1987.

- 10. Ding Z., Paperno N., Prakash K., Behal A. An adaptive control-based approach for 1-click gripping of novel objects using a robotic manipulator. IEEE Trans. Control. Syst. Technol. 2018;27:1805–1812. doi: 10.1109/TCST.2018.2821651.

- 11. Cirillo A., Cirillo P., De Maria G., Natale C., Pirozzi S. Control of linear and rotational slippage based on six-axis force/tactile sensor; Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA); Singapore. 29 May–3 June 2017; Piscataway, NJ, USA: IEEE; 2017. pp. 1587–1594.

- 12. Huang S.J., Chang W.H., Su J.Y. Intelligent robotic gripper with adaptive grasping force. Int. J. Control. Autom. Syst. 2017;15:2272–2282. doi: 10.1007/s12555-016-0249-6.

- 13. Costanzo M., De Maria G., Natale C. Slipping Control Algorithms for Object Manipulation with Sensorized Parallel Grippers; Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA); Brisbane, QLD, Australia. 21–25 May 2018; Piscataway, NJ, USA: IEEE; 2018. pp. 7455–7461.

- 14. De Maria G., Falco P., Natale C., Pirozzi S. Integrated force/tactile sensing: The enabling technology for slipping detection and avoidance; Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA); Seattle, WA, USA. 26–30 May 2015; Piscataway, NJ, USA: IEEE; 2015. pp. 3883–3889.

- 15. Roberge J.P., Ruotolo W., Duchaine V., Cutkosky M. Improving industrial grippers with adhesion-controlled friction. IEEE Robot. Autom. Lett. 2018;3:1041–1048. doi: 10.1109/LRA.2018.2794618.

- 16. Heydarabad S.M., Milella F., Davis S., Nefiti-Meziani S. High-performing adaptive grasp for a robotic gripper using super twisting sliding mode control; Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA); Singapore. 29 May–3 June 2017; Piscataway, NJ, USA: IEEE; 2017. pp. 5852–5857.

- 17. Nahum N., Sintov A. Robotic manipulation of thin objects within off-the-shelf parallel grippers with a vibration finger. Mech. Mach. Theory. 2022;177:105032. doi: 10.1016/j.mechmachtheory.2022.105032.

- 18. Loh M.N., Kirsch L., Rothwell J.C., Lemon R.N., Davare M. Information about the weight of grasped objects from vision and internal models interacts within the primary motor cortex. J. Neurosci. 2010;30:6984–6990. doi: 10.1523/JNEUROSCI.6207-09.2010.

- 19. Chitta S., Sturm J., Piccoli M., Burgard W. Tactile sensing for mobile manipulation. IEEE Trans. Robot. 2011;27:558–568. doi: 10.1109/TRO.2011.2134130.

- 20. Aujeszky T., Korres G., Eid M., Khorrami F. Estimating Weight of Unknown Objects Using Active Thermography. Robotics. 2019;8:92. doi: 10.3390/robotics8040092.

- 21. Mardiyanto R., Suryoatmojo H., Valentino R. Development of autonomous mobile robot for taking suspicious object with estimation of object weight ability; Proceedings of the 2016 International Seminar on Intelligent Technology and Its Applications (ISITIA); Lombok, Indonesia. 28–30 July 2016; Piscataway, NJ, USA: IEEE; 2016. pp. 649–654.

- 22. Yumbla F., Yi J.S., Abayebas M., Moon H. Analysis of the mating process of plug-in cable connectors for the cable harness assembly task; Proceedings of the 2019 19th International Conference on Control, Automation and Systems (ICCAS); Jeju, Korea. 15–18 October 2019; pp. 1074–1079.

- 23. Peñalver A., Pérez J., Fernández J.J., Sales J., Sanz P.J., García J.C., Fornas D., Marín R. Visually-guided manipulation techniques for robotic autonomous underwater panel interventions. Annu. Rev. Control. 2015;40:201–211. doi: 10.1016/j.arcontrol.2015.09.012.

- 24. Di Castro M., Ferre M., Masi A. CERNTAURO: A Modular Architecture for Robotic Inspection and Telemanipulation in Harsh and Semi-Structured Environments. IEEE Access. 2018;6:37506–37522. doi: 10.1109/ACCESS.2018.2849572.

- 25. Gentile C., Cordella F., Rodrigues C.R., Zollo L. Touch-and-slippage detection algorithm for prosthetic hands. Mechatronics. 2020;70:102402. doi: 10.1016/j.mechatronics.2020.102402.

- 26. Cordella F., Gentile C., Zollo L., Barone R., Sacchetti R., Davalli A., Siciliano B., Guglielmelli E. A force-and-slippage control strategy for a poliarticulated prosthetic hand; Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA); Jeju, Korea. 27–29 August 2016; Piscataway, NJ, USA: IEEE; 2016. pp. 3524–3529.

- 27. Di Castro M., Buonocore L.R., Ferre M., Gilardoni S., Losito R., Lunghi G., Masi A. A dual arms robotic platform control for navigation, inspection and telemanipulation; Proceedings of the 16th International Conference on Accelerator and Large Experimental Control Systems (ICALEPCS’17); Barcelona, Spain. 8–13 October 2017; pp. 8–13.

- 28. Veiga Almagro C., Di Castro M., Lunghi G., Marín Prades R., Sanz Valero P.J., Pérez M.F., Masi A. Monocular Robust Depth Estimation Vision System for Robotic Tasks Interventions in Metallic Targets. Sensors. 2019;19:3220. doi: 10.3390/s19143220.

- 29. Peterson W., Birdsall T., Fox W. The theory of signal detectability. Trans. IRE Prof. Group Inf. Theory. 1954;4:171–212. doi: 10.1109/TIT.1954.1057460.

- 30. Di Castro M., Vera J.C., Ferre M., Masi A. Object Detection and 6D Pose Estimation for Precise Robotic Manipulation in Unstructured Environments; Proceedings of the International Conference on Informatics in Control, Automation and Robotics; Lieusaint-Paris, France. 7–9 July 2020; Berlin/Heidelberg, Germany: Springer; 2017. pp. 392–403.

- 31. ModelZoo Object Detection - Tensorflow Object Detection API. [(accessed on 19 July 2019)]. Available online: https://modelzoo.co/model/objectdetection.

- 32. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Las Vegas, NV, USA. 27–30 June 2016; pp. 770–778.

- 33. Schunk Datasheet. [(accessed on 27 December 2022)]. Available online: https://schunk.com/us_en/services/tools-downloads/data-sheets/list/series/EGL/egl.

- 34. Engeberg E.D., Meek S.G. Adaptive sliding mode control for prosthetic hands to simultaneously prevent slip and minimize deformation of grasped objects. IEEE/ASME Trans. Mechatronics. 2011;18:376–385. doi: 10.1109/TMECH.2011.2179061.

- 35. Noce E., Gentile C., Cordella F., Ciancio A., Piemonte V., Zollo L. Grasp control of a prosthetic hand through peripheral neural signals. J. Phys. 2018;1026:12006. doi: 10.1088/1742-6596/1026/1/012006.

- 36. Lunghi G., Marin R., Di Castro M., Masi A., Sanz P.J. Multimodal Human-Robot Interface for Accessible Remote Robotic Interventions in Hazardous Environments. IEEE Access. 2019;7:127290–127319. doi: 10.1109/ACCESS.2019.2939493.

- 37.Datasheet FSR Model 400. Interlink Electronics. [(accessed on 27 December 2022)]. Available online: https://www.google.com.hk/url?sa=t&rct=j&q=&esrc=s&source=web&cd=&ved=2ahUKEwiwkoXpg4r9AhUs7TgGHYW4AzIQFnoECAsQAQ&url=https%3A%2F%2Fcdn.sparkfun.com%2Fdatasheets%2FSensors%2FForceFlex%2F2010-10-26-DataSheet-FSR400-Layout2.pdf&usg=AOvVaw2Fbh3ko6r8nYOzQZ24S16L.

- 38. Lauretti C., Cordella F., Tamantini C., Gentile C., Scotto di Luzio F., Zollo L. A Surgeon-Robot Shared Control for Ergonomic Pedicle Screw Fixation. IEEE Robot. Autom. Lett. 2020;5:2554–2561. doi: 10.1109/LRA.2020.2972892.

- 39. Romeo R.A., Cordella F., Zollo L., Formica D., Saccomandi P., Schena E., Carpino G., Davalli A., Sacchetti R., Guglielmelli E. Development and preliminary testing of an instrumented object for force analysis during grasping; Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Milan, Italy. 25–29 August 2015; Piscataway, NJ, USA: IEEE; 2015. pp. 6720–6723.

- 40. Leone F., Gentile C., Ciancio A.L., Gruppioni E., Davalli A., Sacchetti R., Guglielmelli E., Zollo L. Simultaneous sEMG classification of wrist/hand gestures and forces. Front. Neurorobotics. 2019;13:42. doi: 10.3389/fnbot.2019.00042.

- 41. Adams M.J., Johnson S.A., Lefèvre P., Lévesque V., Hayward V., André T., Thonnard J.L. Finger pad friction and its role in grip and touch. J. R. Soc. Interface. 2013;10:20120467. doi: 10.1098/rsif.2012.0467.

- 42. Ergonomics of human-system interaction - Part 210: Human-centred design for interactive systems. ISO; Geneva, Switzerland: 2010. p. 27.

- 43. Moniz A., Krings B.J. Robots working with humans or humans working with robots? Searching for social dimensions in new human-robot interaction in industry. Societies. 2016;6:23. doi: 10.3390/soc6030023.

- 44. Marcastel F.C. CERN’s Accelerator Complex. CERN; Geneva, Switzerland: 2013. Technical Report.

- 45. Ducimetière L., Jansson U., Schroder G., Vossenberg E.B., Barnes M.J., Wait G. Design of the injection kicker magnet system for CERN’s 14 TeV proton collider LHC; Proceedings of the Digest of Technical Papers. Tenth IEEE International Pulsed Power Conference; Albuquerque, NM, USA. 3–6 July; Piscataway, NJ, USA: IEEE; 1995. pp. 1406–1411.

- 46. Kellerbauer A., Amoretti M., Belov A., Bonomi G., Boscolo I., Brusa R., Büchner M., Byakov V., Cabaret L., Canali C., et al. Proposed antimatter gravity measurement with an antihydrogen beam. Nucl. Instruments Methods Phys. Res. Sect. B. 2008;266:351–356. doi: 10.1016/j.nimb.2007.12.010.
