Abstract

Sports and exercise training research is constantly evolving to maintain, improve, or regain psychophysical, social, and emotional performance. Exercise training research requires a balance between the benefits and the potential risks. There is an inherent risk of scientific misconduct and adverse events in most sports; however, this risk needs to be minimized. We aim to provide a comprehensive overview of the clinical and ethical challenges in sports and exercise research. We also list solutions to improve method design in clinical trials and provide checklists to minimize the chances of scientific misconduct. At the outset, historical milestones of exercise science literature are summarized. This is followed by details about the currently available regulations that help to reduce the risk of violating good scientific practices. We also outline the unique characteristics of sports-related research with a narrative of the major differences between sports and drug-based trials. Emphasis is then placed on the importance of well-designed studies to improve the interpretability of results and the generalizability of the findings. Finally, this review suggests that sports researchers should comply with the available guidelines to improve the planning and conduct of future research, thereby reducing the risk of harm to research participants. The authors suggest creating an oath to prevent malpractice, thereby improving the knowledge standards in sports research. This will also aid in deriving more meaningful implications for future research based on high-quality, ethically sound evidence.

Keywords: research ethics, research, exercise, review, sport, guidelines

1. Introduction

Historical Milestones of Ethical and Scientific Misconduct in Research

Until the early 19th century, ‘truth’ was fundamentally influenced by cults, religion, and monarchism [1]. With the ‘enlightenment’ of academicians, clinicians and researchers in the 19th century [2], scientific research started to impact the lives of people by providing balanced facts, figures and uncertainties, thereby leading to a better explanation for reality (i.e., evidence vs. eminence). However, dualistic thinking still interfered with the newer rationalized approach, as the estimation of reality by scientific methods was still being challenged by the dogmatic view of real truth [3].

Over the last decades, researchers underestimated the importance of good ethical conduct [4] in human research by misinterpreting the probabilistic nature of scientific reasoning. Scientific research has repeatedly been exploited for personal reputation, political power, and terror [3]. The ‘Eugenics program’ originating from the Nazi ideology is an unsettling example of ethical failure and scientific collapse. As part of this program, scientific research was exploited to justify forced sterilization (0.5 million) [5] and mass killing (0.25 million) [6] for the sake of selection and elimination of ‘unfit genetic material’. In 1955, more than 200,000 children received a polio vaccine that had not been handled according to the recommended procedures [7]. Likewise, the thalidomide disaster of 1962 led to limb deformities and teratogenesis in more than ten thousand newborn children [8]. Considering these unethical practices and cases of misconduct, there is a strong need to comply with and re-emphasize the importance of ethics and good scientific practice in research on humans and other species alike.

As scientific research evolved, the Nuremberg code laid the foundation for developing ethical biomedical research principles (e.g., the importance of ‘voluntary and informed consent’) [9]. Based on the Nuremberg code and the previously available medical literature, the first ethical principles (i.e., the Declaration of Helsinki) were put into practice for safe human experimentation by the World Medical Association in 1964. This declaration proved to be a cornerstone of medical research involving humans and emphasized that the health of the patients should be the topmost priority [10]. The year 1979 could also be seen as an important milestone, as the ‘Belmont report’ was introduced, which supported the idea that ‘the interventions and drugs have to eventually show beneficial effects’. The Belmont report suggests that the recruitment, selection and treatment of participants need to be equitable. It also highlights the importance of providing a valid rationale for testing procedures to prevent and minimize the risks or harms to the included participants [11].

As a result of the introduction of ethical principles, it became evident that research designs and results should be independent of political influence and reputational gains. There should also be no undeclared conflicts of interest [12]. Interestingly, sports and exercise science emerged as politically meaningful instruments for showing power during the Cold War (i.e., Eastern socialism versus Western capitalism) [13]. Research findings were either manipulated or sometimes not even published to reduce awareness about the negative effects of performance-enhancing substances [14,15]. Even though these malpractices were strictly against the principles of the Declaration of Helsinki [14], they were prevalent globally, thereby contributing to several incidents of doping in sports [16]. To further minimize unethical research practices, the Good Clinical Practice (GCP) Standards were presented in 1997 to guide the design of clinical trials and the formulation of valid research questions [17]. However, some authors criticize the GCP standards as not being morally sufficient to rule out personal conflicts of interest when compared to the ethical standards of the Declaration of Helsinki [18].

Nowadays, professional development and scientific reputation in the research community are related to an increase in the number of publications in high-ranked journals. However, the increasing number of publications gives very little information about the scientific quality of the employed methods, as some of the published papers either contain manipulated results [19] or methods that could not be replicated [12]. Moral and ethical standards are widely followed by sports researchers as evidenced by the applied methods that are mostly safe, justified, valuable, reliable and ethically approved. However, the ethical approval procedures, the dose and the application of exercise training vary greatly between studies and institutions. The review by Kruk et al., 2013 [20] provides a balanced summary of the various principles based on the Nuremberg Code and the Declaration of Helsinki. GCP standards of blinding (subjects and outcome assessors), randomization, and selection are not consistently considered and are sometimes difficult to follow due to limited financial and organizational resources. There is a prevalent trend in the publication of positive results in the scientific community, as negative results often fail to pass editorial review [21]. Additionally, certain unethical research practices have been observed, such as the multiple publication of data from a single trial (referred to as “data slicing”), the submission of duplicate findings to multiple journals, and instances of plagiarism [22]. These limitations negatively affect the power, validity, interpretability and applicability of the available evidence for future research in sports and exercise science. Previous research showed that, if used systematically, lifestyle change and exercise interventions can prove to be one of the most efficient strategies for obtaining positive health outcomes [23] and longevity [24]. 
Hence, the present article recommends avoiding malpractices and using the underlying ethical standards to balance risks and benefits along with preventing data manipulation and portrayal of false-positive results.

2. Codes of Conduct in Sport Research

All the available codes, declarations, statements, and guidelines aim at providing frameworks for conducting ethical research across disciplines. These frameworks generally cover the regulative, punitive, and educational aspects of research. Codes of ethical conduct not only outline the rules and recommendations for conducting research but also outline punishments in case of non-compliance or misconduct. Hence, these ethical codes and guidelines should be considered the most important educational keystones for researchers as these frameworks allow scientists to design and conduct their studies in a better way. Declarations and guidelines are regularly updated to accommodate newer information and corrections. Thus, one also needs to be flexible when using these guidelines as these reflect ongoing scientific and societal development.

Codes and declarations in sport and exercise science regulate both quantitative and qualitative research and include information about human and animal rights, research design and integrity, authorship and plagiarism. We will categorize these guidelines based on the individuals whom the guidelines aim to protect (e.g., participants or researchers).

The legal codes and norms of a country are inherently binding on the researchers and institutions conducting the research and do not require ratification by the researching individual or organization. These laws can cover data storage, child protection, intellectual property rights, or medical regulations applicable to a specific study. However, ethics codes not only address questions of legality but also include moral parameters of research, such as conducting ‘true’ research. Likewise, if the codes are drafted by a research organization, everyone conducting research for this particular organization is supposed to follow these codes.

Researchers have the responsibility to assess which codes and standards are relevant to their field of research depending on the country, participants, and research institution (Table 1). This can be confirmed by the academic supervisors or the scientific ethics board of the research institution. While there is a growing number of codes and guidelines for different research fields, it is important to consider that none of these alone can cater to the needs of every single research design. For example, the Code of Ethics of the American Sociological Association (ASA) states: “Most of the Ethical Standards are written broadly in order to apply to sociologists in varied roles, and the application of an Ethical Standard may vary depending on the context” [25]. Hence, as ethical standards are not exhaustive, scientific conduct that is not specifically addressed by this Code of Ethics is not necessarily ethical or unethical [25].

Table 1.

Detailed overview of Codes, Declarations, Statements and Guidelines relevant for sports and exercise science research.

| Whom Does It Protect? | What Are the Topics? | Regulating Declarations, Codes and Guidelines |
|---|---|---|
| Research Subject (Humans incl. vulnerable populations, animals, environment) | Anonymity, confidentiality, privacy; Informed consent; Remuneration; Safety and Security; Sexual Harassment; Gender Identity; Human rights; Children’s rights; Disability rights; Animal rights; Anti-Doping | WMA Declaration of Helsinki; WHO Research Guidelines; ASA Code of Ethics; APA Ethical Principles; NRC Guide; BASES Expert Statements; UNICEF procedure for ethical standards; IOC Medical Code and Consensus Statements; WMA Statement on animal use in biomedical research; WADA Anti-Doping Code |
| Research Process | Research questions; Study design; Data collection; Data analysis; Result interpretation; Result sharing; Placebo; Randomised Controlled Trials; Sample Size; Blinding | EQUATOR Reporting Guidelines (CONSORT, etc.); ISA Guidelines; UK MRC Guidelines; UKRIO Code of Practice; MRC Good research practice; Montreal Statement on Research Integrity; Singapore Statement on Research Integrity; EURODAT Guidelines |
| Researcher (Individuals, Institutions) | Conflicts of Interest; Bias; Plagiarism; Authorship; Fraud; Governance; Transparency; Anti-Betting; Anti-corruption | University Ethics Codes and Guidelines; IOC Charta; IOC and IPC Ethics Code; AAAS Brussels Declaration |

It is crucial to recognize the purpose of an ethics code rather than just following it for ticking boxes. Understanding the aims and limitations of an ethics code will allow for a more meaningful application of the underlying principles to the specific context without ignoring the potential limitations of a study. Unintentional transgressions can occur through subconscious bias, fallacies, or human errors. However, the unintentional errors can be mitigated by following the streamlined process of research conception, method development and study conduct following approval from the Institutional Review Boards (IRBs), Ethical Research Commissions (ERCs), supervisors, and peers. In case of intentional errors, the punitive aspect needs to come into action and the transgressors might need to be investigated and sanctioned, either by the research organizations or by law.

3. Differences between Drug and Exercise Trials

Randomized controlled trials (RCTs) are regarded as the highest level of evidence [26,27]. In both cases (exercise and drug studies), RCTs primarily aim at investigating dose-response relationships and establishing causal relationships [28]. Drug trials compare one drug to other alternatives (e.g., another drug, a placebo, or treatment as usual). Likewise, exercise trials often compare one mode of exercise to another exercise or to no-exercise interventions (e.g., usual care, waitlist control, true control, etc.), ideally under caloric, workload or time-matched conditions. However, placebo or sham trials are still rare in sports and exercise research due to their challenging nature [29]. The following quality requirements should be fulfilled for conducting high-quality exercise trials: (a) blinding of assessors, participants and researchers; (b) a placebo/sham intervention (if possible); and (c) adequate randomization and concealed allocation.

3.1. Blinding

The term ‘blinding’ (or ‘masking’) involves keeping several involved key persons unaware of the group allocation, the treatment, or the hypothesis of a clinical trial [30,31,32,33,34]. The term blinding and also the types of blinding (single, double, or triple blind) are being increasingly used and accepted by researchers but there is a lack of clarity and consistency in the interpretation of those terms [33,35,36]. Blinding should be conducted for participants, health care providers, coaches, outcome assessors, data analysts, etc. [31,33,34,37]. The blinding process helps in preventing bias due to differential treatment perceptions and expectations of the involved groups [28,30,31,32,38,39,40].

Previous research has shown that trials with inadequately reported methods [41] and non-blinded assessors [42] or participants [43] tend to overestimate the effects of the intervention. Hence, blinding serves as an important prerequisite for controlling the methodological quality of a clinical trial, thereby reducing bias in assessed outcomes. For this reason, most of the current methodological quality assessment tools and reporting checklists have dedicated sections for ‘blinding’. For example, three out of eleven items are meant for assessing ‘blinding’ in the PEDro scale [44]; the CONSORT checklist for improving the reporting of RCTs also includes a section on ‘blinding’ [45]. In an ideal trial, all individuals involved in the study should be ‘blinded’ [30]. However, choosing whom to ‘blind’ depends on the research question, study design and the research field under consideration. In the case of exercise trials, blinding is either not adequately done or poorly reported [36,46]. The lack of reporting might be the result of a lack of awareness of the blinding procedures rather than the poor methodological conduct of the trial itself [34]. Hence, blinding is not sufficiently addressed in exercise, medicine and psychology trials [47,48] owing to a lack of knowledge, awareness and guidance in these scientific fields, which leads to an increased risk of bias [48].

Blinding of participants is difficult to achieve and maintain [34,39,40,49] in exercise trials as the participants would usually be aware of whether they are in the exercise group or the control (inactive) group [31,39,50]. Likewise, the therapists are generally aware of the interventions they are delivering [51], and the assessors are often aware of the group allocation because, owing to limited financial resources, it is common in sports sciences for the same researchers to be involved in different parts of the research (recruiting, assessment, allocation, training, data handling, analysis). Thus, the adequacy of blinding is usually not assessed as it is often seen as ‘impossible’ in exercise trials.

Consequently, we strongly recommend using independent staff for testing, training, control and supervision to improve the feasibility of blinding the individuals involved in the study [39]. Researchers also need to decide if it is methodologically feasible and ethically acceptable to withhold the information about the hypothesis and the study aims [52] from assessors and participants. This needs to be considered, addressed and justified before the trial commences (i.e., a priori). When reporting the methods of exercise trials, it is important not only to describe who was blinded but also to elaborate on the methods used for blinding [33,48]. This helps the readers and research community to effectively evaluate the level of blinding in the trial under consideration [33,53]. Furthermore, if blinding was carried out, the authors can also include an assessment of the success of the blinding procedure [33,54]. Readers can access more information about the various possibilities for blinding using the following link (http://links.lww.com/PHM/A246 accessed on 10 October 2022) [36].

3.2. “Placebo” (or Sham Intervention)

‘Placebo’ is an important research instrument used in pharmacology trials to demonstrate the true efficacy of a drug by minimizing therapy expectations of the participants [55]. As the term placebo is generally used in a broad manner, precise definitions are difficult. A placebo is used as a control therapy in clinical trials owing to its comparable appearance to the ‘real’ treatment without the specific therapeutic activity [56]. In an ideal research experiment, it would not be possible to differentiate between a placebo and an intervention treatment [57,58]. The participants should not be aware of the treatment group either, because this can reveal whether they received a placebo or the investigated drug [57]. A review of clinical trials comparing ‘no treatment’ to a ‘placebo treatment’ concluded that the placebo treatment had no significant additional effects overall but may produce relevant clinical effects on an individual level [59]. As outlined previously, the placebo effect is rarely investigated in sports and exercise studies. In the few existing studies, it is generally investigated using nutritional supplements, ergogenic aids, or various forms of therapy [60]. Placebos have been shown to have a favorable effect on sports performance [61], implying that these could be used for improving performance without using any additional performance-enhancing drugs [62].

However, it is quite difficult to have an adequate placebo in exercise intervention studies, as there is currently no standard placebo for structured exercise training [28]. For exercise training interventions, a placebo condition is defined as “an intervention that was not generally recognized as efficacious, that lacked adequate evidence for efficacy, and that has no direct pharmacological, biochemical, or physical mechanism of action according to the current standard of knowledge” [63]. As a result, using a placebo in exercise interventions is often seen as impractical and inefficient [57,58]. As the concept of blinding is also linked to the use of a placebo, it is usually difficult to implement in exercise trials.

When it comes to exercise experiments, an active control group is considered to be more effective than a placebo group [10,28]. In other cases, usual care or standard care can also be used as the control intervention [28]. In exercise trials, instead of using the term ‘placebo treatment’, the terms “placebo-like treatment” or “sham interventions” should be applied [64,65]. Previous recommendations by other researchers [61] also underpin our rationale.

3.3. Randomization and Allocation Concealment

Group allocation in a research study should be randomized and concealed by an independent researcher to minimize selection bias [66]. Randomization procedures ensure that differences between the groups, other than those caused by the intervention, occur solely by chance [28,67]. Several methods for randomization are available; however, methods such as stratified randomization are becoming increasingly popular as they ensure a balanced distribution of participants across the different groups with respect to several important characteristics [66]. Other types of randomization, such as cluster randomization, may be appropriate when investigating larger groups, for example, in multicenter trials [28].
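As an illustration of the stratified approach mentioned above, the following minimal Python sketch assigns participants to trial arms using permuted blocks within each stratum. It is not taken from the cited literature; the function name `stratified_block_randomization`, the participant fields (`id`, `sex`, `age_group`), the arm labels, and the fixed seed are all hypothetical choices made for this example. In a real trial, the list would be generated and held by an independent person to preserve allocation concealment.

```python
import random
from collections import Counter, defaultdict


def stratified_block_randomization(participants, stratum_of,
                                   arms=("exercise", "control"), seed=2024):
    """Return {participant id: arm} using permuted blocks within each stratum.

    `stratum_of` maps a participant record to its stratum (a hypothetical
    helper supplied by the caller). A fixed seed makes the list reproducible.
    """
    rng = random.Random(seed)

    # Group participants by stratum (e.g., sex x age group).
    by_stratum = defaultdict(list)
    for person in participants:
        by_stratum[stratum_of(person)].append(person)

    allocation = {}
    for stratum in sorted(by_stratum):
        members = by_stratum[stratum]
        rng.shuffle(members)  # random order of entry into the blocks
        # Each block contains every arm exactly once, in random order.
        for start in range(0, len(members), len(arms)):
            block = list(arms)
            rng.shuffle(block)
            for person, arm in zip(members[start:start + len(arms)], block):
                allocation[person["id"]] = arm
    return allocation


# Hypothetical sample of 12 participants in four strata of three people each.
participants = [
    {"id": i,
     "sex": "f" if i % 2 else "m",
     "age_group": "<40" if i < 6 else "40+"}
    for i in range(12)
]
allocation = stratified_block_randomization(
    participants, lambda p: (p["sex"], p["age_group"]))

# Arm counts per stratum; they differ by at most one within a stratum.
per_stratum = defaultdict(Counter)
for p in participants:
    per_stratum[(p["sex"], p["age_group"])][allocation[p["id"]]] += 1
for stratum, counts in sorted(per_stratum.items()):
    print(stratum, dict(counts))
```

Blocking within strata keeps the arms balanced on the stratification variables even in small samples, which simple (unrestricted) randomization cannot guarantee.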

Since researchers are frequently involved in all phases of a trial (recruitment, allocation, assessment and data processing), randomization should usually be conducted by someone who is not familiar with the project’s aims and hypotheses. In studies with a large number of participants, the interaction between subjects and assessors can significantly impact the results [68]. The randomization procedure used in the clinical trial should be presented in scientific articles and project reports so that readers can understand and replicate the process if needed [66]. Based on the aforementioned aspects, exercise trials are not easily comparable to drug trials and the differences lead to difficulties in conducting scientifically conceptualized exercise trials. However, researchers should strive for quality research by using robust methods and providing detailed information on blinding, randomization, choice of control groups, or sham therapies, as appropriate. Researchers should critically evaluate the risk-benefit ratio of exercise so that the positive impacts of exercise on health can be derived and the cardiovascular risks associated with exercise could be minimized [69].

4. Key Elements of an Ethical Approval in Exercise Science

As previously described, ethical guidelines are needed to protect study participants from potential study risks and to increase the chances of obtaining results that are easy to interpret. Therefore, a prospective ethical approval process is required prior to the recruitment of the participants [70]. This practice benefits both the participants, by safeguarding them against potential risks, and the practitioners who base their clinical decisions on research results. Research results from a study with a strong methodology will enable informed and evidence-based decision making. If the methodology of a research project contains major flaws, it will negatively affect the practical applicability of the observed results [71]. Many journal reviewers recommend rejecting manuscripts without any option to resubmit if no ethical approval information is provided. This demonstrates the importance of ethical approval and proper scientific conduct in research [70].

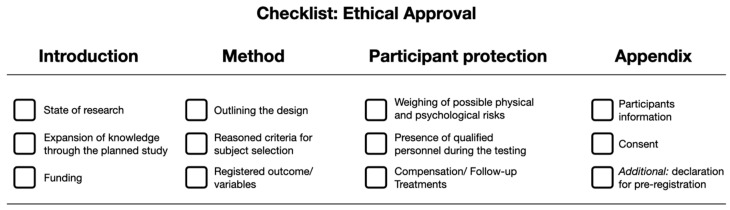

The following key elements need to be addressed in an ethical review proposal: Introduction, method, participant protection, and appendix. These key elements should be detailed in a proposal with at least three crucial characteristics addressed in each section (Figure 1). This hands-on framework would help to expedite the process of decision-making for members of the ethics committee [72].

Figure 1.

Overview of the 4 × 3 short list for outlining ethical approval in sport and exercise science.

The ‘introduction’ section should start with a general overview of the current state of research [4]. Researchers need to describe the rationale of the proposed study in an easy and comprehensible language considering the current state of knowledge on that topic [4]. The description helps to provide a balanced summary of the risks and benefits associated with the interventions in the proposed study. The novelty of the stated research question and the underlying hypothesis must be justified. If the proposed study fails to expand the current literature on the topic under consideration, conducting the study would be a ‘waste’ of time and financial resources for researchers, participants, and funding agencies [73]. Hence, ethical approvals should not be given for research projects that fail to provide novelty in the approach to the respective research area. The introduction should also include information on funding sources including the name of the funding partner, duration of monetary/resource support, and any potential conflicts of interest. If no funding is available, authors should declare that ‘This study received no funding’ [70].

The subsequent ‘methods’ section should include detailed information about the temporal and structural aspects of the study design. Researchers should justify the chosen study design in detail [4,28]. Multiple research designs can be utilized for addressing a specific research question, including experimental, quasi-experimental, and single-case trial designs [74,75]. However, a valid rationale should be provided for choosing a randomized cross-over trial design when the gold standard of a randomized controlled trial is also feasible. Readers are advised to refer to the framework laid down by Hecksteden et al., 2018, for extensive information on this section [28]. Researchers should also provide a broad, global and up-to-date literature-based justification for the interventions or methods employed in the study. For instance, if the participants are asked to consume supplements, the recommendations for the dose need to be explained based on prior high-quality studies and reviews for that supplement [4]. The criteria for subject selection (inclusion and exclusion criteria) and the sample size estimation need to be explained in detail to allow replication of the study in the future [76]. A missing sample size estimation is only acceptable in rare cases and requires a detailed explanation (e.g., pilot trials, exploratory trials to formulate a hypothesis, acceptability trials). Moreover, sufficient details should be provided for the measuring devices used in the study, and a sound rationale should be provided for the choice of each measuring device and the measured parameters [4].
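To make the sample size estimation mentioned above concrete, the following Python sketch applies the common normal-approximation formula for comparing two independent group means, n per group ≈ 2 · ((z₁₋α/₂ + z₁₋β) / d)², where d is the expected standardized mean difference. This is only one of several possible approaches and is not prescribed by the cited references; the function name `n_per_group` and the default values (two-sided α = 0.05, power = 0.80) are assumptions made for illustration. Exact calculations based on the t-distribution give slightly larger numbers.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size_d, alpha=0.05, power=0.80):
    """Approximate participants needed per group for a two-group comparison.

    Uses the normal approximation; `effect_size_d` is the expected
    standardized mean difference (Cohen's d) and must be non-zero.
    """
    if effect_size_d == 0:
        raise ValueError("effect_size_d must be non-zero")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    return math.ceil(2 * ((z_alpha + z_beta) / effect_size_d) ** 2)


# Hypothetical planning scenarios: small, medium and large expected effects.
for d in (0.2, 0.5, 0.8):
    print(f"d = {d}: about {n_per_group(d)} participants per group")
```

The required sample grows quickly as the expected effect shrinks, which is why the assumed effect size should itself be justified from prior studies in the ethics proposal.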

The section on ‘participant protection’ deals with potential risks (physical and psychological adverse outcomes) and benefits to the participants. The focus should be adjusted to the study population under consideration. For example, while conducting a study on a novel weight training protocol with elite athletes, all information and possible effects on the athletes’ performance need to be considered, as their performance level is their ‘human capital’ [4]. The investigators also need to provide information on the individuals responsible for different parts of the study, i.e., treatment provider, outcome assessor, statistician, etc. In some cases, externally qualified personnel are needed during the examination process. For example, a physician might be needed for blood sampling or biopsies and this person should also be familiar with the regulations and procedures to avoid risk to the participants due to a lack of experience in this area. Prior experience and qualifications are required for conducting research with vulnerable groups, such as children, the elderly and pregnant females. Williams et al. (2011) summarized essential aspects of conducting research studies with younger participants [77]. Overall, the personnel should be blinded to the details of the group allocation and participants, if possible [30]. The study applicants also need to provide information about the planned compensation and the follow-up interventions. Harriss and colleagues suggested that the investigators are not expected to offer the treatments in case of injury to the participants during the study (except first aid) [70]. However, this recommendation is not usually documented and translated into research practice.

The ‘appendix’ section should contain relevant details about the following: consent, information to the participants and a declaration of pre-registration. The information to the participant and the consent forms need to be documented in an easy to understand language. A brief summary of the purpose of the study and the tasks to be performed by the participants should also be added. Then, a concise but comprehensive overview of the potential risks and benefits is needed. The next section should include information for participants: the participants’ right to decline participation without any consequence and the right to withdraw their consent at any time without any explanation. The regulations for the storage, sharing and retention of study data need to be detailed [70]. The names and institutional affiliations of all the researchers along with the contact information of the project manager should be listed. A brief overview of the study’s aim, tasks, methods and data acquisition strategies should be described. Finally, consent is needed for processing the recorded personal data [70]. The last section of the ‘appendix’ must include a declaration of pre-registration (e.g., registration in the Open Science Framework or trial registries) to avoid alterations in the procedure afterward and facilitate replication of study methods [78].

5. Study Design and Analysis Models

The process of conceptualizing an exercise trial might involve various pitfalls at every stage (hypothesis formulation, study design, methodology, data acquisition, data processing, statistical analysis, presentation and interpretation of results, etc.). Thus, the entire ‘design package’ needs to be considered when constructing an exercise (training) trial [28]. Formulating an adequate and justified research question is an essential step before starting any research study and is pivotal to achieving adequate study quality [79]. According to Banerjee et al., 2009 [80], “a strong hypothesis serves the purpose of answering major part of the research question even before the study starts”. As outlined in previous sections, ethical research aspects must be taken into account while framing the research question to protect the privacy of the participants and reduce the risks to them. The confidentiality of data should be ensured and the participants should be free to withdraw from the study at any time. The authors should also avoid deceptive research practices [79].

Hecksteden et al., 2018, suggested that RCTs can be regarded as the gold standard for investigating the causal relationships in exercise trials [28]. However, it is sometimes not feasible to conduct RCTs in the field of sports science due to logistical issues, such as smaller sample sizes and blinding the location of the study (e.g., schools, colleges, clinics, etc.). In this case, alternative study designs such as cluster-RCTs, randomized crossover trials, N-of-1 trials, uncontrolled/non-randomized trials, and prospective cohort studies can be considered [81]. Considering the complex nature of exercise interventions, the Consensus on Exercise Reporting Template (CERT) has been developed to supplement the reporting and documentation of randomized exercise trials [81]. Adherence to these templates might help to improve the ethical proposal reporting standards when designing new RCTs.

A recent comment in the journal ‘Nature’ highlights the importance of using the right statistical test and interpreting the results properly. According to the comment, the results of 51 percent of the articles examined from five peer-reviewed journals were misinterpreted [82]. Frequentist statistics and p-values are popular summaries of experimental results, but they leave room for misinterpretation when reported without supplementary information. For instance, authors tend to draw inferences about the results of a study based on certain ‘threshold p-values’ (generally p < 0.05) [83]. However, as the sample size increases, the p-value tends toward zero for any non-zero effect, however small the effect size of the intervention [83]. With the rise of larger datasets and thus potentially larger sample sizes, a fixed p-value threshold becomes questionable. A call for action has recently been raised by more than 800 signatories to retire statistical significance and to stop categorizing results as statistically significant or non-significant. Researchers have also suggested using confidence intervals to improve the interpretation of study results [82]. Although alternative methods such as magnitude-based inference (MBI) exist, there is scarce evidence that MBI has curbed the use of p-values and hypothesis testing by sports researchers [84]. MBI tends to reduce the type II error rate, but it increases the type I error rate to about two to six times that of standard hypothesis testing [85]. In the next paragraphs, we focus on commonly used practices within the frequentist statistics domain.
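The dependence of the p-value on sample size can be illustrated with a short calculation. The following is a minimal sketch rather than an analysis from the cited literature: it assumes two equally sized groups with unit standard deviation, a two-sided z-test, and an observed standardized mean difference that equals a hypothetical true effect of 0.05. The function name `two_group_summary` and all numerical values are illustrative assumptions.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution (mean 0, SD 1)


def two_group_summary(d, n_per_group, alpha=0.05):
    """Return the two-sided p-value and the (1 - alpha) confidence interval
    for a standardized mean difference d between two groups of equal size.

    Assumptions (illustrative only): unit SD in both groups, z-test,
    and an observed difference exactly equal to d.
    """
    se = sqrt(2.0 / n_per_group)                  # standard error of the difference
    z = d / se                                    # test statistic
    p_value = 2.0 * (1.0 - STD_NORMAL.cdf(abs(z)))
    z_crit = STD_NORMAL.inv_cdf(1.0 - alpha / 2.0)
    return p_value, (d - z_crit * se, d + z_crit * se)


# The effect size stays fixed and trivially small; only the sample size grows.
for n in (20, 200, 2000, 20000):
    p, (low, high) = two_group_summary(0.05, n)
    print(f"n per group = {n:>6}: p = {p:.2e}, 95% CI = [{low:.3f}, {high:.3f}]")
```

Under these assumptions the p-value falls below 0.05 only because the sample grows, whereas the confidence interval keeps showing that the estimated effect remains close to 0.05 standard deviations. This is one reason why interval estimates are more informative than a threshold decision alone.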

Frequentist statistical tests are categorized into parametric and non-parametric tests. Non-parametric tests do not require the data to be normally distributed, whereas parametric tests do [86]. The following factors help in deciding on the appropriate statistical test: (a) type of dependent and independent variables (continuous, discrete, or ordinal); (b) type of distribution and whether the groups are independent or matched; (c) levels of observations; and (d) time dependence. Readers can choose the right statistical test based on the type of research data they are planning to use [87,88]. A recent publication outlined 25 common misinterpretations concerning p-values, confidence intervals, and power calculations, along with key considerations for interpreting frequentist statistics [89]. We recommend that sports researchers consider the listed warnings when interpreting the results of statistical tests.
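As a rough illustration of how such factors narrow down the choice of test, the sketch below encodes a deliberately simplified lookup for a continuous outcome. It covers only three of the considerations (number of groups, independent versus matched groups, and approximate normality). The function name `suggest_test` and its parameters are hypothetical, and the mapping is a common textbook convention rather than a rule taken from the cited guides.

```python
def suggest_test(n_groups, matched, normal):
    """Suggest a commonly used frequentist test for a continuous outcome.

    n_groups: number of groups or conditions being compared (>= 2)
    matched:  True if the same or matched participants appear in every group
    normal:   True if the outcome can reasonably be treated as normally distributed

    Simplified sketch: real decisions also depend on the measurement level,
    sample size, variance homogeneity, and time dependence of the data.
    """
    if n_groups < 2:
        raise ValueError("at least two groups or conditions are required")
    if n_groups == 2:
        if matched:
            return "paired t-test" if normal else "Wilcoxon signed-rank test"
        return "independent-samples t-test" if normal else "Mann-Whitney U test"
    if matched:
        return "repeated-measures ANOVA" if normal else "Friedman test"
    return "one-way ANOVA" if normal else "Kruskal-Wallis test"


# Example: two independent training groups with a skewed outcome.
print(suggest_test(n_groups=2, matched=False, normal=False))
```

Such a lookup is only a starting point; the cited guides [87,88] discuss further cases such as categorical outcomes and correlation analyses.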

Among the various frequentist statistical methods, analysis of variance (ANOVA) is one of the most widely used to analyze the results of RCTs. It does not, however, provide an estimate of the difference between groups, which is usually the most important aspect of an RCT [90]. Linear models (e.g., t-tests) suffer from similar issues when analyzing categorical variables, which make up a large part of RCT analysis [91]. Type I errors (false positives, i.e., rejecting a null hypothesis that is correct) and Type II errors (false negatives, i.e., failing to reject a false null hypothesis) are often discussed when interpreting RCT results [80]. Although it is not possible to eliminate these errors completely, there are ways to minimize their likelihood and to report the statistics appropriately. The most commonly used methods for minimizing error rates include the following: (a) increasing the sample size; (b) adjusting for covariates and baseline differences [92]; (c) eliminating significance testing; and (d) reporting a confusion matrix [80,86,93].
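The effect of sample size on the Type II error rate, option (a) above, can be made concrete with a normal-approximation power calculation. This is a minimal sketch under stated assumptions: two equally sized groups, unit standard deviation, a two-sided z-test, and a hypothetical standardized effect of 0.5. The function name `power_two_sided` is illustrative, and a t-based calculation would give slightly different values for small samples.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()


def power_two_sided(d, n_per_group, alpha=0.05):
    """Approximate power of a two-sided two-sample z-test.

    d:           true standardized mean difference (assumed, unit SD)
    n_per_group: participants per group
    alpha:       Type I error rate fixed by the analyst
    """
    z_crit = STD_NORMAL.inv_cdf(1.0 - alpha / 2.0)
    shift = d * sqrt(n_per_group / 2.0)  # expected value of the test statistic
    # Probability that the statistic falls in either rejection region.
    return STD_NORMAL.cdf(shift - z_crit) + STD_NORMAL.cdf(-shift - z_crit)


for n in (16, 32, 64, 128):
    power = power_two_sided(0.5, n)
    print(f"n per group = {n:>3}: power = {power:.2f}, Type II error = {1 - power:.2f}")
```

In this sketch the Type I error rate stays at the chosen alpha, while the Type II error rate shrinks as participants are added. When the true effect is zero, the function returns alpha itself, which matches the definition of a Type I error.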

Mixed logit models are potential solutions for some of the challenges listed above. They combine the advantages of random effects logistic regression analysis with the benefits of regression models [94]. In addition, mixed logit models, as part of the larger framework of generalized linear mixed models, provide a viable alternative for analyzing a wide range of outcomes. To increase the transparency and interpretability of the observed results, mixed logit classification algorithms can be combined with evaluation approaches such as cross-validation and the presentation of a confusion matrix (type I and type II error rates) [86]. Mixed logit models can also be used as predictive models rather than just ‘inference testing’ models.
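A confusion matrix of the kind mentioned above can be computed directly from observed and predicted binary outcomes. The sketch below is independent of any particular model. The labels are invented for illustration (1 = responder, 0 = non-responder), and the function names `confusion_counts` and `error_rates` are hypothetical. The false-positive and false-negative rates correspond, by analogy, to the type I and type II error rates referred to in the text.

```python
def confusion_counts(actual, predicted):
    """Return (true positives, false positives, false negatives, true negatives)
    for two equally long sequences of 0/1 labels."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == 1 and p == 1)
    fp = sum(1 for a, p in pairs if a == 0 and p == 1)
    fn = sum(1 for a, p in pairs if a == 1 and p == 0)
    tn = sum(1 for a, p in pairs if a == 0 and p == 0)
    return tp, fp, fn, tn


def error_rates(actual, predicted):
    """Return (false-positive rate, false-negative rate)."""
    tp, fp, fn, tn = confusion_counts(actual, predicted)
    fpr = fp / (fp + tn) if (fp + tn) else float("nan")
    fnr = fn / (fn + tp) if (fn + tp) else float("nan")
    return fpr, fnr


# Invented example data: ten participants, observed vs. model-predicted response.
observed = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

tp, fp, fn, tn = confusion_counts(observed, predicted)
fpr, fnr = error_rates(observed, predicted)
print(f"TP={tp} FP={fp} FN={fn} TN={tn}")
print(f"false-positive rate={fpr:.2f}, false-negative rate={fnr:.2f}")
```

In a cross-validation setting, the same counts would be computed on held-out participants in each fold and then summarized, so that the reported error rates reflect predictions on data the model has not seen.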

6. Limitations

Despite extensive efforts to incorporate empirical and current evidence regarding good scientific practice and ethics into this paper, some literature may have been omitted. Nonetheless, the paper comprehensively covers key aspects of prevalent ethical misconduct and the standards that should be upheld to prevent such practices. As a result, readers can have confidence in the literature presented, which is based on a substantial body of existing evidence. Readers are also encouraged to engage in critical evaluation and to consider new approaches that could improve the overall scientific literature.

7. Conclusions

We highlighted the various pitfalls and forms of misconduct that can occur in sports and exercise research. Individual researchers associated with a research organization need to comply with the highest available standards. They need to maintain an intact ‘moral compass’ that is unaffected by expectations and environmental constraints, thereby reducing the likelihood of unethical behavior for the sake of publication quantity, interpretability, applicability and societal trust in evidence-based decision-making. To achieve these objectives, a Health and Exercise Research Oath (HERO) could be developed that minimizes the temptation to cheat and could be used by PhD candidates, senior researchers, and professors. Such an oath could help prevent intentional or unintentional malpractice in sport and exercise research, thereby strengthening the knowledge standards based on ethical exercise science research. Overall, this would also improve the applicability and interpretability of research outcomes.

Acknowledgments

We acknowledge the financial support of the German Research Foundation (DFG) and the Open Access Publication Fund of Bielefeld University for the article processing charge.

Author Contributions

Conceptualization, N.K.A., G.R., S.C., A.P., B.K.L., A.N., P.W. and L.D.; methodology, N.K.A., G.R., S.C., A.P., B.K.L., A.N., P.W. and L.D.; resources, P.W. and L.D.; data curation, N.K.A., G.R., S.C., A.P., B.K.L., A.N., P.W. and L.D.; writing—original draft preparation, N.K.A., G.R., S.C., A.P., B.K.L., A.N., P.W. and L.D.; writing—review and editing, N.K.A., G.R., S.C., A.P., B.K.L., A.N., P.W. and L.D.; visualization, N.K.A., G.R., S.C., A.P., B.K.L., A.N., P.W. and L.D.; supervision, P.W. and L.D.; project administration, L.D. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research received no external funding.


References

- 1. Liquin E.G., Metz S.E., Lombrozo T. Science demands explanation, religion tolerates mystery. Cognition. 2020;204:104398. doi: 10.1016/j.cognition.2020.104398.

- 2. Weisz G. The emergence of medical specialization in the nineteenth century. Bull. Hist. Med. 2003;77:536–575. doi: 10.1353/bhm.2003.0150.

- 3. Casadevall A., Fang F.C. (A)Historical science. Infect. Immun. 2015;83:4460–4464. doi: 10.1128/IAI.00921-15.

- 4. West J. Research ethics in sport and exercise science. In: Iphofen R., editor. Handbook of Research Ethics and Scientific Integrity. Springer International Publishing; Cham, Switzerland: 2020. pp. 1091–1107.

- 5. Reilly P.R. Eugenics and involuntary sterilization: 1907–2015. Annu. Rev. Genom. Hum. Genet. 2015;16:351–368. doi: 10.1146/annurev-genom-090314-024930.

- 6. Grodin M.A., Miller E.L., Kelly J.I. The Nazi physicians as leaders in eugenics and “euthanasia”: Lessons for today. Am. J. Public Health. 2018;108:53–57. doi: 10.2105/AJPH.2017.304120.

- 7. Offit P.A. The Cutter incident, 50 years later. N. Engl. J. Med. 2005;352:1411–1412. doi: 10.1056/NEJMp048180.

- 8. Vargesson N. Thalidomide-induced teratogenesis: History and mechanisms. Birth Defects Res. Part C Embryo Today Rev. 2015;105:140–156. doi: 10.1002/bdrc.21096.

- 9. Moreno J.D., Schmidt U., Joffe S. The Nuremberg Code 70 years later. JAMA. 2017;318:795. doi: 10.1001/jama.2017.10265.

- 10. World Medical Association. Declaration of Helsinki: Ethical principles for medical research involving human subjects. JAMA. 2013;310:2191. doi: 10.1001/jama.2013.281053.

- 11. Sims J.M. A brief review of the Belmont report. Dimens. Crit. Care Nurs. 2010;29:173–174. doi: 10.1097/DCC.0b013e3181de9ec5.

- 12. Schachman H.K. From “publish or perish” to “patent and prosper”. J. Biol. Chem. 2006;281:6889–6903. doi: 10.1074/jbc.X600002200.

- 13. Yessis M. Sportsmedicine cold war? Phys. Sportsmed. 1981;9:127. doi: 10.1080/00913847.1981.11711215.

- 14. Catlin D.H., Murray T.H. Performance-enhancing drugs, fair competition, and Olympic sport. JAMA. 1996;276:231–237. doi: 10.1001/jama.1996.03540030065034.

- 15. Nikolopoulos D.D., Spiliopoulou C., Theocharis S.E. Doping and musculoskeletal system: Short-term and long-lasting effects of doping agents. Fundam. Clin. Pharmacol. 2011;25:535–563. doi: 10.1111/j.1472-8206.2010.00881.x.

- 16. Ljungqvist A. Half a century of challenges. Bioanalysis. 2012;4:1531–1533. doi: 10.4155/bio.12.121.

- 17. Otte A., Maier-Lenz H., Dierckx R.A. Good clinical practice: Historical background and key aspects. Nucl. Med. Commun. 2005;26:563–574. doi: 10.1097/01.mnm.0000168408.03133.e3.

- 18. Kimmelman J., Weijer C., Meslin E.M. Helsinki discords: FDA, ethics, and international drug trials. Lancet. 2009;373:13–14. doi: 10.1016/S0140-6736(08)61936-4.

- 19. Horbach S.P.J.M., Breit E., Halffman W., Mamelund S.-E. On the willingness to report and the consequences of reporting research misconduct: The role of power relations. Sci. Eng. Ethics. 2020;26:1595–1623. doi: 10.1007/s11948-020-00202-8.

- 20. Kruk J. Good scientific practice and ethical principles in scientific research and higher education. Cent. Eur. J. Sport Sci. Med. 2013;1:25–29.

- 21. Caldwell A.R., Vigotsky A.D., Tenan M.S., Radel R., Mellor D.T., Kreutzer A., Lahart I.M., Mills J.P., Boisgontier M.P., Consortium for Transparency in Exercise Science (COTES) Collaborators. Moving sport and exercise science forward: A call for the adoption of more transparent research practices. Sport. Med. 2020;50:449–459. doi: 10.1007/s40279-019-01227-1.

- 22. Navalta J.W., Stone W.J., Lyons T.S. Ethical issues relating to scientific discovery in exercise science. Int. J. Exerc. Sci. 2019;12:1–8.

- 23. Vina J., Sanchis-Gomar F., Martinez-Bello V., Gomez-Cabrera M. Exercise acts as a drug; the pharmacological benefits of exercise. Br. J. Pharmacol. 2012;167:1–12. doi: 10.1111/j.1476-5381.2012.01970.x.

- 24. Garatachea N., Pareja-Galeano H., Sanchis-Gomar F., Santos-Lozano A., Fiuza-Luces C., Morán M., Emanuele E., Joyner M.J., Lucia A. Exercise attenuates the major hallmarks of aging. Rejuven. Res. 2015;18:57–89. doi: 10.1089/rej.2014.1623.

- 25. American Sociological Association. American Sociological Association Code of Ethics. American Sociological Association; Washington, DC, USA: 2018.

- 26. Bench S., Day T., Metcalfe A. Randomised controlled trials: An introduction for nurse researchers. Nurse Res. 2013;20:38–44. doi: 10.7748/nr2013.05.20.5.38.e312.

- 27. Cartwright N. What are randomised controlled trials good for? Philos. Stud. 2010;147:59–70. doi: 10.1007/s11098-009-9450-2.

- 28. Hecksteden A., Faude O., Meyer T., Donath L. How to construct, conduct and analyze an exercise training study? Front. Physiol. 2018;9:1007. doi: 10.3389/fphys.2018.01007.

- 29. Beedie C.J. Placebo effects in competitive sport: Qualitative data. J. Sport. Sci. Med. 2007;6:21–28.

- 30. Karanicolas P.J., Farrokhyar F., Bhandari M. Practical tips for surgical research: Blinding: Who, what, when, why, how? Can. J. Surg. 2010;53:345–348.

- 31. Forbes D. Blinding: An essential component in decreasing risk of bias in experimental designs. Evid. Based Nurs. 2013;16:70–71. doi: 10.1136/eb-2013-101382.

- 32. Boutron I., Guittet L., Estellat C., Moher D., Hróbjartsson A., Ravaud P. Reporting methods of blinding in randomized trials assessing nonpharmacological treatments. PLoS Med. 2007;4:e61. doi: 10.1371/journal.pmed.0040061.

- 33. Schulz K.F., Grimes D.A. Blinding in randomised trials: Hiding who got what. Lancet. 2002;359:696–700. doi: 10.1016/S0140-6736(02)07816-9.

- 34. Boutron I., Estellat C., Guittet L., Dechartres A., Sackett D.L., Hróbjartsson A., Ravaud P. Methods of blinding in reports of randomized controlled trials assessing pharmacologic treatments: A systematic review. PLoS Med. 2006;3:e425. doi: 10.1371/journal.pmed.0030425.

- 35. Devereaux P.J. Physician interpretations and textbook definitions of blinding terminology in randomized controlled trials. JAMA. 2001;285:2000. doi: 10.1001/jama.285.15.2000.

- 36. Armijo-Olivo S., Fuentes J., da Costa B.R., Saltaji H., Ha C., Cummings G.G. Blinding in physical therapy trials and its association with treatment effects: A meta-epidemiological study. Am. J. Phys. Med. Rehabil. 2017;96:34–44. doi: 10.1097/PHM.0000000000000521.

- 37. Haahr M.T., Hróbjartsson A. Who is blinded in randomized clinical trials? A study of 200 trials and a survey of authors. Clin. Trials. 2006;3:360–365. doi: 10.1177/1740774506069153.

- 38. Eston R.G. Stages in the development of a research project: Putting the idea together. Br. J. Sport. Med. 2000;34:59–64. doi: 10.1136/bjsm.34.1.59.

- 39. Helmhout P.H., Staal J.B., Maher C.G., Petersen T., Rainville J., Shaw W.S. Exercise therapy and low back pain: Insights and proposals to improve the design, conduct, and reporting of clinical trials. Spine. 2008;33:1782–1788. doi: 10.1097/BRS.0b013e31817b8fd6.

- 40. Kamper S.J. Blinding: Linking evidence to practice. J. Orthop. Sport. Phys. Ther. 2018;48:825–826. doi: 10.2519/jospt.2018.0705.

- 41. Schulz K.F. Empirical evidence of bias. Dimensions of methodological quality associated with estimates of treatment effects in controlled trials. JAMA. 1995;273:408–412. doi: 10.1001/jama.1995.03520290060030.

- 42. Liu C., LaValley M., Latham N.K. Do unblinded assessors bias muscle strength outcomes in randomized controlled trials of progressive resistance strength training in older adults? Am. J. Phys. Med. Rehabil. 2011;90:190–196. doi: 10.1097/PHM.0b013e31820174b3.

- 43. Hróbjartsson A., Emanuelsson F., Skou Thomsen A.S., Hilden J., Brorson S. Bias due to lack of patient blinding in clinical trials. A systematic review of trials randomizing patients to blind and nonblind sub-studies. Int. J. Epidemiol. 2014;43:1272–1283. doi: 10.1093/ije/dyu115.

- 44. de Morton N.A. The PEDro scale is a valid measure of the methodological quality of clinical trials: A demographic study. Aust. J. Physiother. 2009;55:129–133. doi: 10.1016/S0004-9514(09)70043-1.

- 45. Schulz K.F., Altman D.G., Moher D., The CONSORT Group. CONSORT 2010 Statement: Updated guidelines for reporting parallel group randomised trials. BMC Med. 2010;8:18. doi: 10.1186/1741-7015-8-18.

- 46. Devereaux P.J., Choi P.T.-L., El-Dika S., Bhandari M., Montori V.M., Schünemann H.J., Garg A.X., Busse J.W., Heels-Ansdell D., Ghali W.A., et al. An observational study found that authors of randomized controlled trials frequently use concealment of randomization and blinding, despite the failure to report these methods. J. Clin. Epidemiol. 2004;57:1232–1236. doi: 10.1016/j.jclinepi.2004.03.017.

- 47. Fergusson D. Turning a blind eye: The success of blinding reported in a random sample of randomised, placebo controlled trials. BMJ. 2004;328:432. doi: 10.1136/bmj.37952.631667.EE.

- 48. Kolahi J., Bang H., Park J. Towards a proposal for assessment of blinding success in clinical trials: Up-to-date review. Community Dent. Oral Epidemiol. 2009;37:477–484. doi: 10.1111/j.1600-0528.2009.00494.x.

- 49. Boutron I., Tubach F., Giraudeau B., Ravaud P. Blinding was judged more difficult to achieve and maintain in nonpharmacologic than pharmacologic trials. J. Clin. Epidemiol. 2004;57:543–550. doi: 10.1016/j.jclinepi.2003.12.010.

- 50. Chang Y.-K., Chu C.-H., Wang C.-C., Wang Y.-C., Song T.-F., Tsai C.-L., Etnier J.L. Dose–response relation between exercise duration and cognition. Med. Sci. Sport. Exerc. 2015;47:159–165. doi: 10.1249/MSS.0000000000000383.

- 51. Smart N.A., Waldron M., Ismail H., Giallauria F., Vigorito C., Cornelissen V., Dieberg G. Validation of a new tool for the assessment of study quality and reporting in exercise training studies: TESTEX. Int. J. Evid. Based Healthc. 2015;13:9–18. doi: 10.1097/XEB.0000000000000020.

- 52. Bang H., Flaherty S.P., Kolahi J., Park J. Blinding assessment in clinical trials: A review of statistical methods and a proposal of blinding assessment protocol. Clin. Res. Regul. Aff. 2010;27:42–51. doi: 10.3109/10601331003777444.

- 53. Schulz K.F., Chalmers I., Altman D.G. The landscape and lexicon of blinding in randomized trials. Ann. Intern. Med. 2002;136:254. doi: 10.7326/0003-4819-136-3-200202050-00022.

- 54. Webster R.K., Bishop F., Collins G.S., Evers A.W.M., Hoffmann T., Knottnerus J.A., Lamb S.E., Macdonald H., Madigan C., Napadow V., et al. Measuring the success of blinding in placebo-controlled trials: Should we be so quick to dismiss it? J. Clin. Epidemiol. 2021;135:176–181. doi: 10.1016/j.jclinepi.2021.02.022.

- 55. Enck P., Klosterhalfen S. Placebos and the placebo effect in drug trials. Handb. Exp. Pharmacol. 2019;260:399–431. doi: 10.1007/164_2019_269.2.

- 56. Hróbjartsson A., Gøtzsche P.C. Is the placebo powerless? An analysis of clinical trials comparing placebo with no treatment. N. Engl. J. Med. 2001;344:1594–1602. doi: 10.1056/NEJM200105243442106.

- 57. Beedie C., Foad A., Hurst P. Capitalizing on the placebo component of treatments. Curr. Sport. Med. Rep. 2015;14:284–287. doi: 10.1249/JSR.0000000000000172.

- 58. Maddocks M., Kerry R., Turner A., Howick J. Problematic placebos in physical therapy trials. J. Eval. Clin. Pract. 2016;22:598–602. doi: 10.1111/jep.12582.

- 59. Hróbjartsson A., Gøtzsche P.C. Placebo interventions for all clinical conditions. Cochrane Database Syst. Rev. 2010;1:1–368. doi: 10.1002/14651858.CD003974.pub3.

- 60. Beedie C.J., Foad A.J. The placebo effect in sports performance: A brief review. Sport. Med. 2009;39:313–329. doi: 10.2165/00007256-200939040-00004.

- 61. Beedie C., Benedetti F., Barbiani D., Camerone E., Cohen E., Coleman D., Davis A., Elsworth-Edelsten C., Flowers E., Foad A., et al. Consensus statement on placebo effects in sports and exercise: The need for conceptual clarity, methodological rigour, and the elucidation of neurobiological mechanisms. Eur. J. Sport Sci. 2018;18:1383–1389. doi: 10.1080/17461391.2018.1496144.

- 62. Bérdi M., Köteles F., Szabó A., Bárdos G. Placebo effects in sport and exercise: A meta-analysis. Eur. J. Ment. Health. 2011;6:196–212. doi: 10.5708/EJMH.6.2011.2.5.

- 63. Lindheimer J.B., O’Connor P.J., Dishman R.K. Quantifying the placebo effect in psychological outcomes of exercise training: A meta-analysis of randomized trials. Sport. Med. 2015;45:693–711. doi: 10.1007/s40279-015-0303-1.

- 64. Hróbjartsson A. What are the main methodological problems in the estimation of placebo effects? J. Clin. Epidemiol. 2002;55:430–435. doi: 10.1016/S0895-4356(01)00496-6.

- 65. Niemansburg S.L., van Delden J.J.M., Dhert W.J.A., Bredenoord A.L. Reconsidering the ethics of sham interventions in an era of emerging technologies. Surgery. 2015;157:801–810. doi: 10.1016/j.surg.2014.12.001.

- 66. Moher D., Hopewell S., Schulz K.F., Montori V., Gotzsche P.C., Devereaux P.J., Elbourne D., Egger M., Altman D.G. CONSORT 2010 explanation and elaboration: Updated guidelines for reporting parallel group randomised trials. BMJ. 2010;340:c869. doi: 10.1136/bmj.c869.

- 67. Armitage P. The role of randomization in clinical trials. Stat. Med. 1982;1:345–352. doi: 10.1002/sim.4780010412.

- 68. Kramer M.S. Scientific challenges in the application of randomized trials. JAMA. 1984;252:2739. doi: 10.1001/jama.1984.03350190041017.

- 69. Warburton D.E., Taunton J., Bredin S.S., Isserow S. The risk-benefit paradox of exercise. Br. Columbia Med. J. 2016;58:210–218. Available online: https://bcmj.org/articles/risk-benefit-paradox-exercise (accessed on 11 October 2021).

- 70. Harriss D.J., MacSween A., Atkinson G. Ethical standards in sport and exercise science research: 2020 update. Int. J. Sport. Med. 2019;40:813–817. doi: 10.1055/a-1015-3123.

- 71. Stewart R.J., Reider B. The ethics of sports medicine research. Clin. Sport. Med. 2016;35:303–314. doi: 10.1016/j.csm.2015.10.009.

- 72. West J., Bill K., Martin L. What constitutes research ethics in sport and exercise science? Res. Ethics. 2010;6:147–153. doi: 10.1177/174701611000600407.

- 73. Fleming S. Social research in sport (and beyond): Notes on exceptions to informed consent. Res. Ethics. 2013;9:32–43. doi: 10.1177/1747016112472872.

- 74. Ato M., López J.J., Benavente A. A classification system for research designs in psychology. An. Psicol. 2013;29:1038–1059.

- 75. Montero I., León O.G. A guide for naming research studies in Psychology. Int. J. Clin. Health Psychol. 2007;7:847–862.

- 76. Harriss D., Macsween A., Atkinson G. Standards for ethics in sport and exercise science research: 2018 update. Int. J. Sport. Med. 2017;38:1126–1131. doi: 10.1055/s-0043-124001.

- 77. Williams C.A., Cobb M., Rowland T., Winter E. The BASES expert statement on ethics and participation in research of young people. Sport Exerc. Sci. 2011;29:12–13. Available online: https://www.bases.org.uk/imgs/ethics_and_participation_in_research_of_young_people625.pdf (accessed on 10 October 2022).

- 78. Cumming G. The new statistics: Why and how. Psychol. Sci. 2014;25:7–29. doi: 10.1177/0956797613504966.

- 79. Ratan S., Anand T., Ratan J. Formulation of research question – Stepwise approach. J. Indian Assoc. Pediatr. Surg. 2019;24:15. doi: 10.4103/jiaps.JIAPS_76_18.

- 80. Banerjee A., Chitnis U., Jadhav S., Bhawalkar J., Chaudhury S. Hypothesis testing, type I and type II errors. Ind. Psychiatry J. 2009;18:127. doi: 10.4103/0972-6748.62274.

- 81. Major D.H., Røe Y., Grotle M., Jessup R.L., Farmer C., Småstuen M.C., Buchbinder R. Content reporting of exercise interventions in rotator cuff disease trials: Results from application of the Consensus on Exercise Reporting Template (CERT). BMJ Open Sport Exerc. Med. 2019;5:e000656. doi: 10.1136/bmjsem-2019-000656.

- 82. Amrhein V., Greenland S., McShane B. Scientists rise up against statistical significance. Nature. 2019;567:305–307. doi: 10.1038/d41586-019-00857-9.

- 83. Lin M., Lucas H.C., Shmueli G. Research commentary – Too big to fail: Large samples and the p-value problem. Inf. Syst. Res. 2013;24:906–917. doi: 10.1287/isre.2013.0480.

- 84. Lohse K.R., Sainani K.L., Taylor J.A., Butson M.L., Knight E.J., Vickers A.J. Systematic review of the use of “magnitude-based inference” in sports science and medicine. PLoS ONE. 2020;15:e0235318. doi: 10.1371/journal.pone.0235318.

- 85. Sainani K.L. The problem with “magnitude-based inference”. Med. Sci. Sport. Exerc. 2018;50:2166–2176. doi: 10.1249/MSS.0000000000001645.

- 86. Hopkins S., Dettori J.R., Chapman J.R. Parametric and nonparametric tests in spine research: Why do they matter? Glob. Spine J. 2018;8:652–654. doi: 10.1177/2192568218782679.

- 87. Bhalerao S., Parab S. Choosing statistical test. Int. J. Ayurveda Res. 2010;1:187. doi: 10.4103/0974-7788.72494.

- 88. Nayak B.K., Hazra A. How to choose the right statistical test? Indian J. Ophthalmol. 2011;59:85–86. doi: 10.4103/0301-4738.77005.

- 89. Greenland S., Senn S.J., Rothman K.J., Carlin J.B., Poole C., Goodman S.N., Altman D.G. Statistical tests, p values, confidence intervals, and power: A guide to misinterpretations. Eur. J. Epidemiol. 2016;31:337–350. doi: 10.1007/s10654-016-0149-3.

- 90. Vickers A.J. Analysis of variance is easily misapplied in the analysis of randomized trials: A critique and discussion of alternative statistical approaches. Psychosom. Med. 2005;67:652–655. doi: 10.1097/01.psy.0000172624.52957.a8.

- 91. Agresti A. An Introduction to Categorical Data Analysis. 2nd ed. Volume 394. John Wiley & Sons Inc.; Hoboken, NJ, USA: 2007.

- 92. Vickers A.J., Altman D.G. Analysing controlled trials with baseline and follow up measurements. BMJ. 2001;323:1123–1124. doi: 10.1136/bmj.323.7321.1123.

- 93. Rothman K.J. Curbing type I and type II errors. Eur. J. Epidemiol. 2010;25:223–224. doi: 10.1007/s10654-010-9437-5.

- 94. Jaeger T.F. Categorical data analysis: Away from ANOVAs (transformation or not) and towards logit mixed models. J. Mem. Lang. 2008;59:434–446. doi: 10.1016/j.jml.2007.11.007.
