Abstract

This study aimed to evaluate, for the first time, the feasibility of deep learning (DL) models for the multiclass classification of reflux esophagitis (RE) endoscopic images according to the Los Angeles (LA) classification. The images were divided into three groups: normal, LA classification A + B, and LA C + D. Images from the HyperKvasir dataset and Suzhou hospital were divided into training and validation datasets at a ratio of 4:1, while images from Jintan hospital formed the independent test set. Six CNN- or Transformer-based models (MobileNet, ResNet, Xception, EfficientNet, ViT, and ConvMixer) were fine-tuned by transfer learning in Keras. Model predictions were visualized using Gradient-weighted Class Activation Mapping (Grad-CAM). In both the validation set and the test set, the EfficientNet model showed the best performance: accuracy (0.962 and 0.957), recall for LA A + B (0.970 and 0.925) and LA C + D (0.922 and 0.930), macro-recall (0.946 and 0.928), Matthews correlation coefficient (0.936 and 0.884), and Cohen's kappa (0.910 and 0.850), outperforming the other models and, on the test set, the endoscopists. Grad-CAM heatmaps generated from the EfficientNet model highlighted the target lesions on the original images. This study developed a series of DL-based computer vision models with interpretable Grad-CAM to evaluate the feasibility of multiclass classification of RE endoscopic images. To our knowledge, it is the first to suggest that DL-based classifiers show promise in the endoscopic diagnosis of esophagitis.

1. Introduction

Gastroesophageal reflux disease (GERD) is a condition in which gastroesophageal reflux leads to esophageal mucosal lesions and troublesome symptoms [1, 2]. GERD is classified into reflux esophagitis (RE), with mucosal injury, and nonerosive reflux disease (NERD), with symptoms only [3]. The prevalence of RE has recently increased in East Asia owing to westernized lifestyles and diets [4, 5]. Severe complications of RE include ulcer bleeding and strictures. Although death caused by RE is rare, these severe complications are associated with significant morbidity and mortality [6].

According to the third Japanese guideline [3] and the Lyon Consensus [1], GERD diagnosis hinges on identifying esophageal mucosal lesions and then grading the severity of esophagitis. GERD is generally evaluated by clinical symptoms or the response to antisecretory therapy; however, the diagnosis requires endoscopy and reflux monitoring [7]. Endoscopy is necessary for grading esophagitis, which plays a key role in the diagnostic and treatment algorithms for GERD [3]. The Los Angeles (LA) classification is the most widely used and validated scoring system for describing the endoscopic appearance of the esophageal mucosa and stratifying its severity [8].

Deep learning (DL) is a statistical learning method that enables computers to extract features from raw data, including structured data, images, text, and audio, without manual feature engineering. The rapid progress of DL-based artificial intelligence (AI) has reshaped various aspects of clinical practice [9]. DL offers a significant advantage in computer vision for analyzing medical images and videos that contain large quantities of information [10]. In gastroenterology, AI is increasingly integrated into computer-aided diagnosis (CAD) systems to improve lesion detection and characterization in endoscopy [11]. To the best of our knowledge, no previous study has applied DL to the endoscopic classification of RE.

In this multicenter retrospective study, we aimed to evaluate the feasibility of DL models for the multiclass classification of RE endoscopic images according to the LA classification.

2. Methods

2.1. Datasets

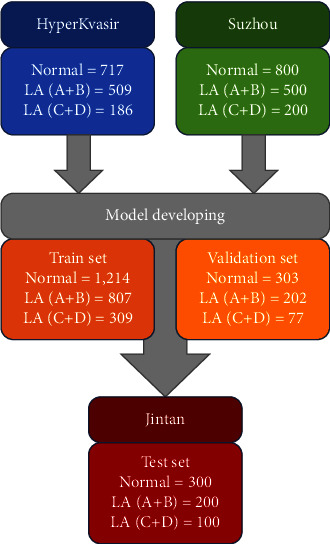

Subjects who underwent upper endoscopy between 2015 and 2021 were recruited from two hospitals: (1) Suzhou: The First Affiliated Hospital of Soochow University and (2) Jintan: Affiliated Hospital of Jiangsu University. In both centers, subjects were excluded if they had (1) esophagitis of other etiologies, e.g., pill-induced, eosinophilic, radiation, or infectious esophagitis; (2) esophageal varices; or (3) esophageal squamous cell carcinoma. This study was approved by the Ethics Committee of The First Affiliated Hospital of Soochow University (IRB approval number 2022098) and conducted in accordance with the Helsinki Declaration of 1975 as revised in 2000. All participants signed informed consent before inclusion. In addition, Z-line endoscopic images were obtained from the open dataset HyperKvasir (https://datasets.simula.no/hyper-kvasir/) [12], currently the largest dataset of gastrointestinal endoscopy. This dataset provides labeled, unlabeled, and segmented image data as well as annotated video data from Bærum Hospital in Norway. The characteristics of the datasets are shown in Figure 1. Each Z-line endoscopic image was labeled as normal, LA classification A + B (LA A + B), or LA classification C + D (LA C + D) by three experienced endoscopists according to the LA classification. The endoscopes used in our hospital included the Olympus GIF-Q260, GIF-H290, and Fuji EG-601WR, while those in the HyperKvasir dataset included Olympus and Pentax endoscopes at the Department of Gastroenterology, Bærum Hospital.

Figure 1.

Characteristics of the datasets.

2.2. Models

2.2.1. CNNs-Based Architectures

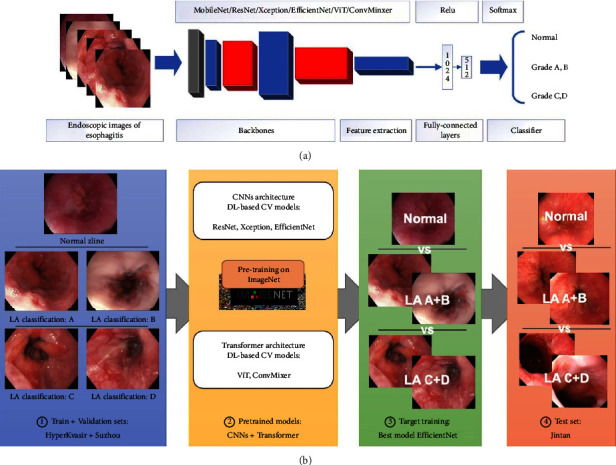

Pretrained convolutional neural networks (CNNs) consist of convolutional layers, average pooling layers, and fully connected layers with ReLU activation. On top of the pretrained CNN layers, which serve as the feature extractor, two dense layers (ReLU activation) and one dense output layer (softmax activation) were added for classification, as shown in Figure 2(a).
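A minimal sketch of this classification head, written in plain Python so it runs without TensorFlow: pooled backbone features pass through two ReLU dense layers and a softmax output over the three classes. The layer sizes and weights below are hypothetical, since the text does not report unit counts; in Keras the same head would be built from `tf.keras.layers.Dense` layers stacked on the pretrained backbone.

```python
import math

def relu(values):
    return [max(0.0, v) for v in values]

def softmax(logits):
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, bias):
    """Fully connected layer; weights[j] is the weight vector of output unit j."""
    return [sum(x * w for x, w in zip(inputs, w_j)) + b_j for w_j, b_j in zip(weights, bias)]

def classification_head(features, layers):
    """features: pooled backbone output; layers: [(W1, b1), (W2, b2), (W3, b3)] (hypothetical)."""
    hidden = relu(dense(features, *layers[0]))  # dense layer 1, ReLU
    hidden = relu(dense(hidden, *layers[1]))    # dense layer 2, ReLU
    return softmax(dense(hidden, *layers[2]))   # probabilities: normal, LA A + B, LA C + D

# Toy weights for illustration only: 2 features -> 2 units -> 1 unit -> 3 classes.
toy_layers = [
    ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]),
    ([[1.0, 1.0]], [0.0]),
    ([[1.0], [0.0], [-1.0]], [0.0, 0.0, 0.0]),
]
probs = classification_head([1.0, 2.0], toy_layers)
```

The predicted class is the index of the largest probability; with the pretrained backbone supplying `features`, only the head's weights would be new.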

Figure 2.

The flowchart of the study.

2.2.2. Transformer-Based Architectures

The Transformer processes all input tokens in parallel using the self-attention mechanism. The Transformer encoder consists of three main components: input embedding, multihead attention, and feed-forward neural networks. As with the CNNs, three dense layers (ReLU or softmax activation) were added on top of the pretrained Transformer-based architectures.
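To make the self-attention mechanism concrete, the following is a minimal single-head sketch of scaled dot-product attention, softmax(Q Kᵀ / sqrt(d_k)) V, in plain Python. The projection matrices are hypothetical inputs; ViT B/16 uses multihead attention with learned projections, layer normalization, and residual connections, all omitted here.

```python
import math

def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    """Multiply an n x m matrix by an m x p matrix (nested lists)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def self_attention(x, w_q, w_k, w_v):
    """x: n tokens x d_model; w_q, w_k, w_v: projection matrices (hypothetical)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(w_k[0])
    output = []
    for q_i in q:
        # Every token attends to every token, so all positions are processed together.
        scores = [sum(a * b for a, b in zip(q_i, k_j)) / math.sqrt(d_k) for k_j in k]
        weights = softmax(scores)
        output.append([sum(w * v_j[c] for w, v_j in zip(weights, v)) for c in range(len(v[0]))])
    return output

identity = [[1.0, 0.0], [0.0, 1.0]]
attended = self_attention([[1.0, 0.0], [0.0, 1.0]], identity, identity, identity)
```

Each output row is a weighted average of the value vectors, so a token's representation mixes in information from the whole image patch sequence.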

2.2.3. Pretrained Models

Six CNN- or Transformer-based models were selected: MobileNet (MobileNet V1), ResNet (ResNet50 V2), Xception (Xception V1), EfficientNet (EfficientNet V2 small), ViT (ViT B/16), and ConvMixer (ConvMixer-768/32). These computer vision models had been pretrained on the ImageNet database (https://www.image-net.org). The pretrained models and parameters were obtained from Keras or TensorFlow Hub (https://hub.tensorflow.google.cn/).

2.3. Training and Validation

2.3.1. Implementation

The CNN- and Transformer-based models were fine-tuned by transfer learning in Keras, with TensorFlow as the backend. The models were compiled with the Adam optimizer and the categorical cross-entropy loss function, using a fixed learning rate of 0.0001 and a batch size of 32. The training code is available at https://osf.io/4tdhu/?view_only=b279429b6a284ad885da7cad79126df7.
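The loss and optimizer named above can be sketched in plain Python: batch-averaged categorical cross-entropy on one-hot labels and one textbook Adam update with the reported learning rate of 0.0001. The β1, β2, and ε values are assumptions (the Keras defaults), and Keras places ε slightly differently internally. In Keras itself, the reported settings would presumably correspond to `model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=1e-4), loss="categorical_crossentropy")` with `batch_size=32` in `model.fit`; the authors' exact code is in the linked repository.

```python
import math

LEARNING_RATE = 1e-4                  # fixed learning rate reported in the text
BETA1, BETA2, EPS = 0.9, 0.999, 1e-7  # assumed Keras defaults (not stated in the text)

def categorical_cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean loss over a batch; y_true rows are one-hot, y_prob rows are softmax outputs."""
    total = 0.0
    for t, p in zip(y_true, y_prob):
        # Clip probabilities so log(0) never occurs.
        total -= sum(ti * math.log(min(max(pi, eps), 1 - eps)) for ti, pi in zip(t, p))
    return total / len(y_true)

def adam_step(theta, grad, m, v, t, lr=LEARNING_RATE):
    """One Adam update (step number t >= 1) of a parameter list; returns (theta, m, v)."""
    m = [BETA1 * mi + (1 - BETA1) * g for mi, g in zip(m, grad)]      # first moment
    v = [BETA2 * vi + (1 - BETA2) * g * g for vi, g in zip(v, grad)]  # second moment
    m_hat = [mi / (1 - BETA1 ** t) for mi in m]                       # bias correction
    v_hat = [vi / (1 - BETA2 ** t) for vi in v]
    theta = [th - lr * mh / (math.sqrt(vh) + EPS) for th, mh, vh in zip(theta, m_hat, v_hat)]
    return theta, m, v
```

On the first step the bias-corrected update is close to `lr * sign(grad)`, which is why a small fixed learning rate such as 0.0001 suits fine-tuning pretrained weights.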

2.3.2. Target Training

Endoscopic images of the Z-line were saved in JPEG format. All images were resized to 331 × 331 pixels, and pixel values were then rescaled from the range 0–255 to 0–1. Based on the LA classification, the images were divided into three groups: normal, LA A + B, and LA C + D. Images from the HyperKvasir dataset and Suzhou hospital were divided into training and validation datasets at a ratio of 4:1. The study flowchart is shown in Figure 2(b).
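The preprocessing and 4:1 split described above might look like the following sketch. Stratifying the split by class, the fixed random seed, and the helper names are assumptions, since the text states only the overall ratio; resizing to 331 × 331 is left to an image library, and in Keras the rescaling step could be done with a `tf.keras.layers.Rescaling(1.0 / 255)` layer.

```python
import random

def stratified_split(labels, train_fraction=0.8, seed=42):
    """Return (train_idx, val_idx), applying the 4:1 ratio within each class (assumed)."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    train, val = [], []
    for label in sorted(by_class):
        idxs = by_class[label][:]
        rng.shuffle(idxs)
        cut = round(len(idxs) * train_fraction)
        train += idxs[:cut]
        val += idxs[cut:]
    return sorted(train), sorted(val)

def normalize(pixels):
    """Rescale 8-bit pixel values from [0, 255] to [0, 1]."""
    return [p / 255.0 for p in pixels]
```

Splitting within each class keeps the proportion of normal, LA A + B, and LA C + D images similar in the training and validation sets.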

2.3.3. External Test

A total of 600 endoscopic images (in JPEG format) from Jintan hospital formed the external test set: 300 normal, 200 LA A + B, and 100 LA C + D (Figure 2(b)). The endoscopes used in Jintan hospital included the Olympus GIF-Q260 and GIF-H290.

2.3.4. Comparison with Endoscopists

To further evaluate the models, the images in the test dataset were also classified by two endoscopists: a junior endoscopist with five years of endoscopic experience and a senior endoscopist with more than ten years of experience.

2.3.5. Visualization of the Model

Model predictions were visualized using Gradient-weighted Class Activation Mapping (Grad-CAM) [13]. Grad-CAM uses the class-specific gradient information flowing into the final convolutional layer of a CNN-based architecture to produce a coarse localization map of the image regions most important for the prediction, without retraining the model. Grad-CAM was applied to the best-performing multiclass model to visually explain its predictions on the original images.
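The core Grad-CAM computation can be sketched in plain Python, assuming the feature maps A^k of the last convolutional layer and the gradients of the class score with respect to them have already been obtained (in TensorFlow this is typically done with `tf.GradientTape`). Each channel weight α_k is the spatial average of its gradient, and the map is ReLU(Σ_k α_k A^k), scaled here to [0, 1]. Upsampling to the input size is omitted.

```python
def grad_cam(feature_maps, gradients):
    """feature_maps, gradients: K channels, each an H x W nested list of floats."""
    # alpha_k: global-average-pooled gradient of the class score for channel k.
    weights = [sum(sum(row) for row in g) / (len(g) * len(g[0])) for g in gradients]
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * w for _ in range(h)]
    for alpha, fmap in zip(weights, feature_maps):
        for i in range(h):
            for j in range(w):
                cam[i][j] += alpha * fmap[i][j]  # weighted sum of feature maps
    cam = [[max(0.0, v) for v in row] for row in cam]  # ReLU keeps positive evidence only
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]  # scale to [0, 1] for display
    return cam

# Toy example: channel 0 supports the class (positive gradient), channel 1 opposes it.
maps = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 2.0], [2.0, 0.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
heatmap = grad_cam(maps, grads)
```

Because only gradients and activations of a trained network are used, the same routine can explain any class without changing the model.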

2.4. Statistical Analysis

The models were trained using Python (version 3.9) and TensorFlow (version 2.8.0). Performance was mainly evaluated by accuracy and recall. True positives (TP), true negatives (TN), false positives (FP), and false negatives (FN) were counted to assess the classifiers, using the following formulas:

$$\text{Accuracy} = \frac{TP + TN}{TP + FP + FN + TN}, \qquad \text{Recall} = \frac{TP}{TP + FN}$$

Macro-recall was calculated as the mean of the recalls. Matthews correlation coefficient (MCC) and Cohen's kappa were calculated as

$$\text{MCC} = \frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}, \qquad \kappa = \frac{p_0 - p_e}{1 - p_e}$$

where $p_0$ is the relative observed agreement among raters and $p_e$ is the hypothetical probability of chance agreement.
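The metrics defined above can be computed from a multiclass confusion matrix as in the sketch below. Two choices here are assumptions rather than details stated in the text: MCC uses Gorodkin's multiclass generalization (which reduces to the binary formula for two classes and is the form implemented by scikit-learn's `matthews_corrcoef`), and macro-recall is taken as the unweighted mean over all classes.

```python
import math

def metrics_from_confusion(cm):
    """cm[i][j] = number of images with true class i predicted as class j."""
    k = len(cm)
    s = sum(sum(row) for row in cm)                          # total images
    c = sum(cm[i][i] for i in range(k))                      # correctly classified
    t = [sum(cm[i]) for i in range(k)]                       # true count per class
    p = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # predicted count per class

    accuracy = c / s
    recalls = [cm[i][i] / t[i] for i in range(k)]
    macro_recall = sum(recalls) / k  # unweighted mean over classes (assumed convention)

    # Multiclass MCC (Gorodkin); equals the binary formula when k == 2.
    numerator = c * s - sum(p[i] * t[i] for i in range(k))
    denominator = math.sqrt((s * s - sum(x * x for x in p)) * (s * s - sum(x * x for x in t)))
    mcc = numerator / denominator if denominator else 0.0

    # Cohen's kappa between predicted and reference labels.
    p0 = c / s
    pe = sum(p[i] * t[i] for i in range(k)) / (s * s)
    kappa = (p0 - pe) / (1 - pe) if pe != 1 else 1.0
    return {"accuracy": accuracy, "recalls": recalls, "macro_recall": macro_recall,
            "mcc": mcc, "kappa": kappa}
```

For a binary matrix `[[TN, FP], [FN, TP]]` the function returns the same MCC as the formula above, which is a convenient sanity check before applying it to three classes.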

3. Results

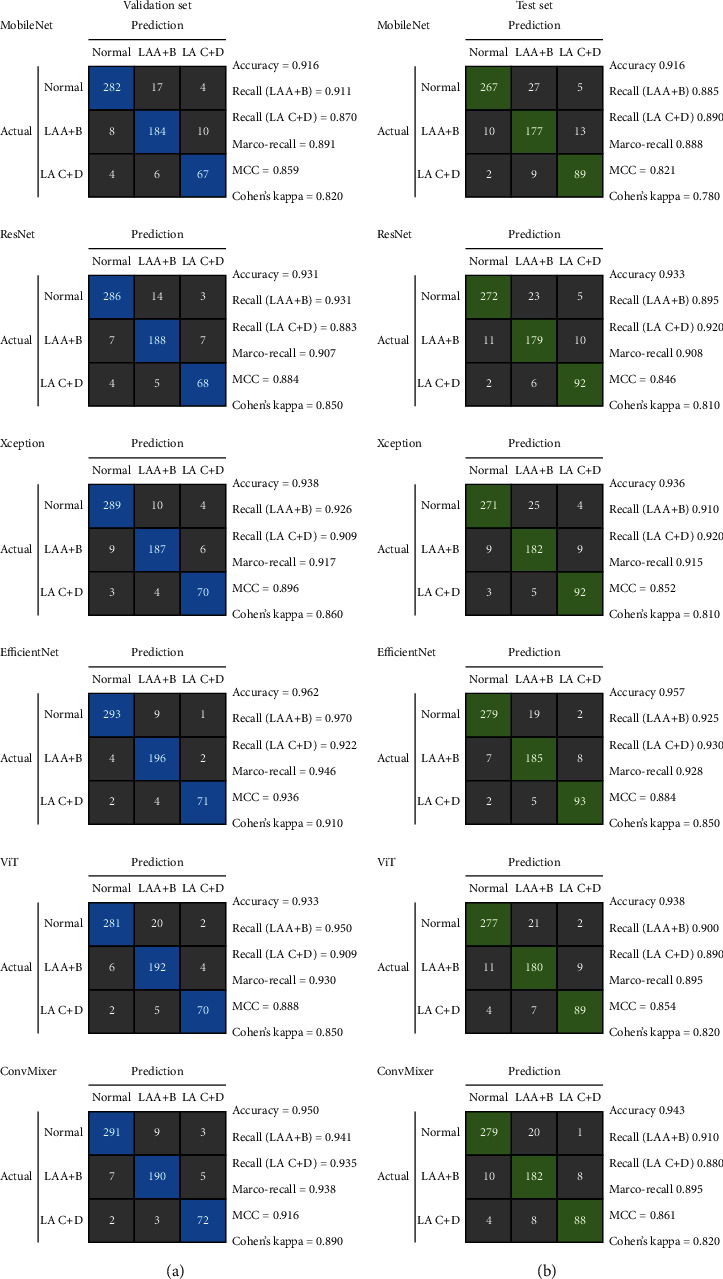

3.1. Performance in the Validation Set

Confusion matrices of the six models on the validation set are shown in Figure 3(a). The EfficientNet model achieved the highest accuracy (0.962), followed by ConvMixer (0.950) and Xception (0.938) (Table 1). The EfficientNet model's recalls for LA A + B and LA C + D were 0.970 and 0.922, respectively, and its macro-recall was 0.946. In terms of multiclass metrics, its MCC and Cohen's kappa were also the highest (0.936 and 0.910).

Figure 3.

Confusion matrices of the models.

Table 1.

Performance metrics of models and endoscopists.

| Models | Accuracy | Matthews correlation coefficient | Cohen's kappa |
|---|---|---|---|
| Validation dataset | | | |
| MobileNet | 0.916 | 0.859 | 0.820 |
| ResNet | 0.931 | 0.884 | 0.850 |
| Xception | 0.938 | 0.896 | 0.860 |
| EfficientNet | **0.962** | **0.936** | **0.910** |
| ViT | 0.933 | 0.888 | 0.850 |
| ConvMixer | 0.950 | 0.916 | 0.890 |
| Test dataset | | | |
| MobileNet | 0.916 | 0.821 | 0.780 |
| ResNet | 0.933 | 0.846 | 0.810 |
| Xception | 0.936 | 0.852 | 0.810 |
| EfficientNet | **0.957** | **0.884** | **0.850** |
| ViT | 0.938 | 0.854 | 0.820 |
| ConvMixer | 0.943 | 0.861 | 0.820 |
| Junior endoscopist | 0.916 | 0.820 | 0.780 |
| Senior endoscopist | 0.945 | 0.864 | 0.830 |

The bold figures indicate the highest numeric values.

3.2. Performance in the Test Set

Confusion matrices on the test set are shown in Figure 3(b). The EfficientNet model again performed best, with an accuracy of 0.957, followed by ConvMixer (0.943) and Xception (0.936) (Table 1). Its recalls for LA A + B and LA C + D were 0.925 and 0.930, respectively, and its macro-recall reached 0.928, better than the other models. In terms of multiclass metrics, its MCC and Cohen's kappa remained the highest (0.884 and 0.850).

3.3. Comparison with the Endoscopists

On the test set, the junior endoscopist achieved an accuracy of 0.916, recalls of 0.885 for LA A + B and 0.840 for LA C + D, a macro-recall of 0.863, an MCC of 0.820, and a Cohen's kappa of 0.780 (Table 1). The senior endoscopist achieved an accuracy of 0.945, recalls of 0.905 for LA A + B and 0.890 for LA C + D, a macro-recall of 0.898, an MCC of 0.864, and a Cohen's kappa of 0.830.
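As a consistency check on the reported numbers, the macro-recall values in the Results appear to match the mean of the two esophagitis-class recalls (LA A + B and LA C + D) to three decimals. The snippet below uses only values stated in the text; that the authors averaged over these two classes is an inference from the numbers, not a definition given in the Methods.

```python
# (recall LA A + B, recall LA C + D, reported macro-recall), copied from the text.
reported = {
    "EfficientNet, validation": (0.970, 0.922, 0.946),
    "EfficientNet, test": (0.925, 0.930, 0.928),
    "Junior endoscopist, test": (0.885, 0.840, 0.863),
    "Senior endoscopist, test": (0.905, 0.890, 0.898),
}

for name, (recall_ab, recall_cd, macro) in reported.items():
    two_class_mean = (recall_ab + recall_cd) / 2
    # Differences of at most 0.0005 are explained by rounding to three decimals.
    print(f"{name}: mean of the two recalls = {two_class_mean:.4f}, reported = {macro:.3f}")
```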

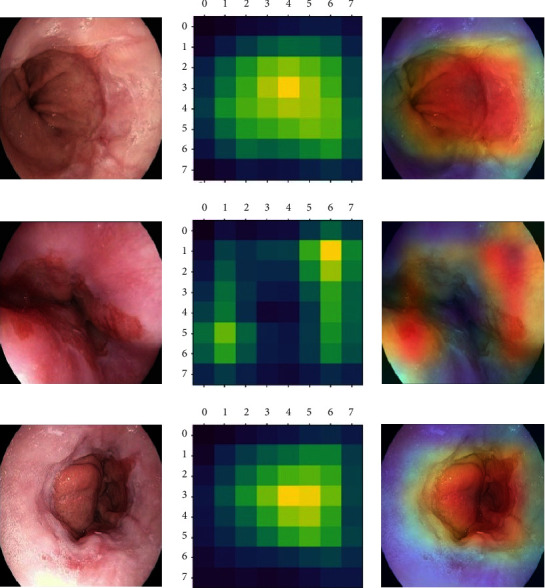

3.4. The Grad-CAM Heatmap

Grad-CAM heatmaps were computed from the gradient information at the last convolutional layer of the EfficientNet model and highlighted the lesions on the original images (Figure 4). The left column displays the original endoscopic images. The middle column shows the Grad-CAM heatmap computed on the output of the last convolutional layer. The right column shows the Grad-CAM heatmap superimposed on the original endoscopic images, where the highlighted regions indicate the lesions identified by the EfficientNet model.
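Producing a superimposed image like those in Figure 4 involves two steps after computing the coarse Grad-CAM map: resizing it to the image size and blending it with the image. The sketch below is a simplified, hypothetical version using nearest-neighbour upsampling and grayscale alpha blending with an assumed weight of 0.4; Grad-CAM overlays are more commonly produced with bilinear resizing and a color map applied to RGB images.

```python
def upsample_nearest(cam, out_h, out_w):
    """Nearest-neighbour resize of a coarse H x W heatmap to out_h x out_w."""
    h, w = len(cam), len(cam[0])
    return [[cam[i * h // out_h][j * w // out_w] for j in range(out_w)] for i in range(out_h)]

def overlay(gray_image, heatmap, alpha=0.4):
    """Alpha-blend a [0, 1] heatmap onto a [0, 1] grayscale image of the same size."""
    return [[(1 - alpha) * pixel + alpha * heat for pixel, heat in zip(img_row, heat_row)]
            for img_row, heat_row in zip(gray_image, heatmap)]
```

A larger `alpha` makes highlighted regions more prominent at the cost of obscuring mucosal detail in the underlying image.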

Figure 4.

Grad-CAM heatmap.


4. Discussion

This study developed a series of multiclass computer vision models, interpreted with Grad-CAM, to evaluate the feasibility of DL for classifying RE endoscopic images according to the LA classification. Six CNN- or Transformer-based models were developed, and the EfficientNet model performed best, outperforming both endoscopists on the test set.

In 1999, Lundell et al. [8] developed the LA classification to describe the endoscopic mucosal appearance and to assess its correlation with clinical changes in patients with RE, with the aim of stratifying clinically relevant severity. According to the LA classification, grade A is defined as one or more mucosal breaks no longer than 5 mm that do not extend between the tops of two mucosal folds; grade B as one or more mucosal breaks longer than 5 mm that still do not extend between the tops of two mucosal folds; grade C as one or more mucosal breaks that are continuous between the tops of two or more mucosal folds but involve no more than 3/4 of the esophageal circumference; and grade D as one or more mucosal breaks involving more than 3/4 of the esophageal circumference [8]. According to the 2021 Japanese guideline [3] and the 2021 ACG guideline [7], RE is classified into mild RE (LA grade A or B) and severe RE (LA grade C or D); the latter corresponds to high-grade esophagitis in the Lyon Consensus [1]. This stratification is essential for detailed diagnosis and therapeutic decision-making [3]. We therefore labeled the images and trained the multiclass models according to these guidelines.

AI is widely applied in a variety of clinical settings to improve the management of gastrointestinal diseases [14]. DL is a subset of machine learning that automatically extracts features from input data via artificial neural networks, such as CNNs and Transformers [15]. The past five years have seen a series of studies assessing the performance of DL in the diagnosis of esophageal diseases [16–22]. The main application is computer vision, including the detection and segmentation of lesions in esophageal endoscopic images or videos [23, 24]. CAD systems are designed to detect and differentiate lesions based on mucosal and vascular patterns, to stratify disease progression, or to assist therapeutic decision-making [20, 25, 26]. Their main advantages are reducing the workload of endoscopists and improving diagnostic accuracy [27, 28].
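The LA grade definitions and the three-class grouping used for labeling, as described at the start of this section, can be summarized as a rule-based function. This is only an illustration of the labeling scheme: the inputs (break length in mm, whether the break is continuous between fold tops, and the fraction of circumference involved) are hypothetical measurements, whereas in this study the endoscopists graded the images visually.

```python
def la_grade(max_break_mm, continuous_between_folds=False, circumference_fraction=0.0):
    """Return None if there is no mucosal break, otherwise LA grade 'A'-'D'."""
    if max_break_mm is None:
        return None                     # no mucosal break
    if continuous_between_folds:
        # C: up to 3/4 of the circumference; D: more than 3/4.
        return "D" if circumference_fraction > 0.75 else "C"
    # Breaks not extending between the tops of two folds: A (<= 5 mm) or B (> 5 mm).
    return "A" if max_break_mm <= 5 else "B"

def three_class_label(grade):
    """Map an LA grade to the training labels: normal, LA A + B, or LA C + D."""
    if grade is None:
        return "normal"
    return "LA A + B" if grade in ("A", "B") else "LA C + D"
```

Merging A with B and C with D mirrors the mild versus severe split in the cited guidelines, which is why the models predict three classes rather than five.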

Recently, Visaggi et al. [29] performed a meta-analysis of machine learning in the diagnosis of esophageal diseases. Their review included a total of 42 studies, nine of which focused on Barrett's esophagus and three on GERD [30–32]. In terms of DL, Ebigbo et al. [33] developed a real-time endoscopic system to distinguish normal Barrett's esophagus from early esophageal adenocarcinoma, which achieved an accuracy of 89.9%. Similarly, de Groof et al. [19] developed a CAD system to improve the detection of dysplastic Barrett's esophagus; the ResNet/UNet-based system achieved high detection accuracy and near-perfect segmentation, outperforming general endoscopists. In 2022, Tang et al. [17] trained a multitask DL model to diagnose esophageal lesions (normal vs. cancer vs. esophagitis), which reportedly achieved a high accuracy (93.43%) in multiclass classification and a satisfactory coefficient (77.84%) in semantic segmentation. Guimaraes et al. [18] proposed a CNN-based multiclass model (normal vs. eosinophilic esophagitis vs. candidiasis) that achieved a good global accuracy (0.915) on the test set, higher than that of endoscopists.

In this multicenter study, six CNN- or Transformer-based computer vision models were adapted by transfer learning to the multiclass classification of RE endoscopic images according to the LA classification. The EfficientNet model achieved the highest accuracy and macro-recall. EfficientNet is a CNN architecture and scaling method that uniformly scales network depth, width, and input resolution using a set of fixed scaling coefficients [34]. Other models, such as Transformer-based architectures built from Transformer blocks, are also designed to improve training efficiency, but their large parameter sizes incur considerable computational overhead. EfficientNetV2, the successor of EfficientNet, is a family of models optimized for floating-point operations and parameter efficiency. In 2021, Google combined training-aware neural architecture search and scaling to further optimize the training speed and parameter efficiency of this new family [35]. EfficientNetV2 overcomes some of the training bottlenecks of the V1 models and outperforms them. Moreover, compared with the Transformer-based models, EfficientNet appeared better suited to this small dataset and limited computing power. Compared with the endoscopists, the EfficientNet model also showed advantages in both accuracy and recall. Interpretability is an essential consideration for DL models: computer scientists and medical practitioners are increasingly concerned with how AI models reach their inferences, especially in computer vision. We therefore applied Grad-CAM to visualize the model's inferential explanations on the original images.
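The compound scaling idea behind EfficientNet can be illustrated with a short calculation. The base coefficients below (α = 1.2 for depth, β = 1.1 for width, γ = 1.15 for resolution) are those reported in the original EfficientNet paper by Tan and Le (2019), not values from this study; EfficientNetV2 adds further constraints to this rule, so the sketch is illustrative only.

```python
# Compound scaling coefficients reported for the original EfficientNet (V1);
# EfficientNetV2 modifies the scaling rule, so these values are illustrative only.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution bases

def compound_scale(phi):
    """Multipliers for network depth, width, and input resolution at coefficient phi."""
    return ALPHA ** phi, BETA ** phi, GAMMA ** phi

# FLOPs grow roughly with depth * width^2 * resolution^2, so each unit of phi
# multiplies FLOPs by about ALPHA * BETA**2 * GAMMA**2 (close to 2).
flops_growth_per_step = ALPHA * BETA ** 2 * GAMMA ** 2

for phi in range(4):
    depth, width, resolution = compound_scale(phi)
    print(f"phi={phi}: depth x{depth:.2f}, width x{width:.2f}, resolution x{resolution:.2f}")
```

Scaling all three dimensions together, rather than only depth or width, is what lets a small variant such as EfficientNetV2-S stay compact while remaining accurate.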

Our study has some limitations. First, we only focused on esophagitis caused by reflux, rather than esophagitis of other etiologies, e.g., radiation, eosinophilic, and pill-induced esophagitis. Further studies based on medical history and biopsy are required to develop more complex classifiers for esophagitis. Second, the image dataset was limited, and video files were not analyzed; more data are still required for validation. Lastly, we did not deploy the models in endoscopic devices. We believe that this study may contribute to future deployment in clinical practice.

5. Conclusions

In this study, we developed, for the first time, a series of DL-based computer vision models with interpretable Grad-CAM to evaluate the feasibility of AI in the multiclass classification of RE endoscopic images. The results suggest that DL-based classifiers show promise in the endoscopic diagnosis of esophagitis. Future work should investigate multimodal fusion for the classification of RE, integrating endoscopic images, clinical symptoms, esophageal pH monitoring, and other data.

Acknowledgments

This study was supported by the National Natural Science Foundation of China (82000540), Science and Technology Plan (Apply Basic Research) of Changzhou City (CJ20210006), Clinical Technology Development Fund of Jiangsu University (JLY20180134), Medical Education Collaborative Innovation Fund of Jiangsu University (JDY2022018), and Youth Program of Suzhou Health Committee (KJXW2019001).

Contributor Information

Jinzhou Zhu, Email: jzzhu@zju.edu.cn.

Weixin Yu, Email: ywx7097@163.com.

Data Availability

The dataset used to support the findings of this study is from HyperKvasir, currently the largest open dataset for gastrointestinal endoscopy (https://datasets.simula.no/hyper-kvasir/).

Ethical Approval

This study was approved by the Ethics Committee of The First Affiliated Hospital of Soochow University (the IRB approval number 2022098). All procedures performed in studies involving human participants were in accordance with the Helsinki Declaration of 1975 as revised in 2000. All subjects gave written informed consent before participation.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Authors' Contributions

Hailong Ge, Xin Zhou, and Jinzhou Zhu provided acquisition, analysis and interpretation of data, and statistical analysis. Yu Wang and Jian Xu performed development of methodology and writing. Feng Mo and Chen Chao were responsible for description and visualization of data. Weixin Yu contributed to study design. Jinzhou Zhu and Hailong Ge provided technical and material support. All authors have read and agreed to the published version of the manuscript. Hailong Ge, Xin Zhou, and Yu Wang contributed equally to this work.

References

1. Gyawali C. P., Kahrilas P. J., Savarino E., et al. Modern diagnosis of GERD: the Lyon Consensus. Gut. 2018;67(7):1351–1362. doi: 10.1136/gutjnl-2017-314722.

2. Kia L., Hirano I. Distinguishing GERD from eosinophilic oesophagitis: concepts and controversies. Nature Reviews Gastroenterology & Hepatology. 2015;12(7):379–386. doi: 10.1038/nrgastro.2015.75.

3. Iwakiri K., Fujiwara Y., Manabe N., et al. Evidence-based clinical practice guidelines for gastroesophageal reflux disease 2021. Journal of Gastroenterology. 2022;57(4):267–285. doi: 10.1007/s00535-022-01861-z.

4. Nirwan J. S., Hasan S. S., Babar Z. U. D., Conway B. R., Ghori M. U. Global prevalence and risk factors of gastro-oesophageal reflux disease (GORD): systematic review with meta-analysis. Scientific Reports. 2020;10(1):5814. doi: 10.1038/s41598-020-62795-1.

5. Sakaguchi M., Manabe N., Ueki N., et al. Factors associated with complicated erosive esophagitis: a Japanese multicenter, prospective, cross-sectional study. World Journal of Gastroenterology. 2017;23(2):318–327. doi: 10.3748/wjg.v23.i2.318.

6. Ohashi S., Maruno T., Fukuyama K., et al. Visceral fat obesity is the key risk factor for the development of reflux erosive esophagitis in 40-69-years subjects. Esophagus. 2021;18(4):889–899. doi: 10.1007/s10388-021-00859-5.

7. Katz P. O., Dunbar K. B., Schnoll-Sussman F. H., Greer K. B., Yadlapati R., Spechler S. J. ACG clinical guideline for the diagnosis and management of gastroesophageal reflux disease. American Journal of Gastroenterology. 2022;117(1):27–56. doi: 10.14309/ajg.0000000000001538.

8. Lundell L. R., Dent J., Bennett J. R., et al. Endoscopic assessment of oesophagitis: clinical and functional correlates and further validation of the Los Angeles classification. Gut. 1999;45(2):172–180. doi: 10.1136/gut.45.2.172.

9. Sumiyama K., Futakuchi T., Kamba S., Matsui H., Tamai N. Artificial intelligence in endoscopy: present and future perspectives. Digestive Endoscopy. 2021;33(2):218–230. doi: 10.1111/den.13837.

10. Tontini G. E., Neumann H. Artificial intelligence: thinking outside the box. Best Practice & Research Clinical Gastroenterology. 2021;52–53:101720. doi: 10.1016/j.bpg.2020.101720.

11. Visaggi P., de Bortoli N., Barberio B., et al. Artificial intelligence in the diagnosis of upper gastrointestinal diseases. Journal of Clinical Gastroenterology. 2022;56(1):23–35. doi: 10.1097/mcg.0000000000001629.

12. Borgli H., Thambawita V., Smedsrud P. H., et al. HyperKvasir, a comprehensive multi-class image and video dataset for gastrointestinal endoscopy. Scientific Data. 2020;7(1):283. doi: 10.1038/s41597-020-00622-y.

13. Jiang H., Xu J., Shi R., et al. A multi-label deep learning model with interpretable grad-CAM for diabetic retinopathy classification. Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society; July 2020; Montreal, Canada. pp. 1560–1563.

14. Le Berre C., Sandborn W. J., Aridhi S., et al. Application of artificial intelligence to gastroenterology and hepatology. Gastroenterology. 2020;158(1):76–94.e2. doi: 10.1053/j.gastro.2019.08.058.

15. Sana M. K., Hussain Z. M., Shah P. A., Maqsood M. H. Artificial intelligence in celiac disease. Computers in Biology and Medicine. 2020;125:103996. doi: 10.1016/j.compbiomed.2020.103996.

16. Struyvenberg M. R., de Groof A. J., van der Putten J., et al. A computer-assisted algorithm for narrow-band imaging-based tissue characterization in Barrett’s esophagus. Gastrointestinal Endoscopy. 2021;93(1):89–98. doi: 10.1016/j.gie.2020.05.050.

17. Tang S., Yu X., Cheang C. F., et al. Diagnosis of esophageal lesions by multi-classification and segmentation using an improved multi-task deep learning model. Sensors. 2022;22(4):1492. doi: 10.3390/s22041492.

18. Guimaraes P., Keller A., Fehlmann T., Lammert F., Casper M. Deep learning-based detection of eosinophilic esophagitis. Endoscopy. 2022;54(3):299–304. doi: 10.1055/a-1520-8116.

19. de Groof A. J., Struyvenberg M. R., van der Putten J., et al. Deep-learning system detects neoplasia in patients with Barrett’s esophagus with higher accuracy than endoscopists in a multistep training and validation study with benchmarking. Gastroenterology. 2020;158(4):915–929. doi: 10.1053/j.gastro.2019.11.030.

20. Ebigbo A., Mendel R., Probst A., et al. Real-time use of artificial intelligence in the evaluation of cancer in Barrett’s oesophagus. Gut. 2020;69(4):615–616. doi: 10.1136/gutjnl-2019-319460.

21. Yuan X. L., Guo L. J., Liu W., et al. Artificial intelligence for detecting superficial esophageal squamous cell carcinoma under multiple endoscopic imaging modalities: a multicenter study. Journal of Gastroenterology and Hepatology. 2022;37(1):169–178. doi: 10.1111/jgh.15689.

22. Yang X. X., Li Z., Shao X. J., et al. Real-time artificial intelligence for endoscopic diagnosis of early esophageal squamous cell cancer (with video). Digestive Endoscopy. 2021;33(7):1075–1084. doi: 10.1111/den.13908.

23. Tokat M., van Tilburg L., Koch A. D., Spaander M. C. Artificial intelligence in upper gastrointestinal endoscopy. Digestive Diseases. 2021;40(4):395–408. doi: 10.1159/000518232.

24. Du W., Rao N., Yong J., et al. Improving the classification performance of esophageal disease on small dataset by semi-supervised efficient contrastive learning. Journal of Medical Systems. 2021;46(1):4. doi: 10.1007/s10916-021-01782-z.

25. Dickson I. Deep-learning AI for neoplasia detection in Barrett oesophagus. Nature Reviews Gastroenterology & Hepatology. 2020;17(2):66–67. doi: 10.1038/s41575-019-0252-5.

26. Chen J., Jiang Y., Chang T. S., et al. Detection of Barrett’s neoplasia with a near-infrared fluorescent heterodimeric peptide. Endoscopy. 2022;54(12):1198–1204. doi: 10.1055/a-1801-2406.

27. Sharma P., Hassan C. Artificial intelligence and deep learning for upper gastrointestinal neoplasia. Gastroenterology. 2022;162(4):1056–1066. doi: 10.1053/j.gastro.2021.11.040.

28. Liu G., Hua J., Wu Z., et al. Automatic classification of esophageal lesions in endoscopic images using a convolutional neural network. Annals of Translational Medicine. 2020;8(7):486. doi: 10.21037/atm.2020.03.24.

29. Visaggi P., Barberio B., Gregori D., et al. Systematic review with meta-analysis: artificial intelligence in the diagnosis of oesophageal diseases. Alimentary Pharmacology & Therapeutics. 2022;55(5):528–540. doi: 10.1111/apt.16778.

30. Pace F., Riegler G., de Leone A., et al. Is it possible to clinically differentiate erosive from nonerosive reflux disease patients? A study using an artificial neural networks-assisted algorithm. European Journal of Gastroenterology and Hepatology. 2010;22(10):1163–1168. doi: 10.1097/MEG.0b013e32833a88b8.

31. Pace F., Buscema M., Dominici P., et al. Artificial neural networks are able to recognize gastro-oesophageal reflux disease patients solely on the basis of clinical data. European Journal of Gastroenterology and Hepatology. 2005;17(6):605–610. doi: 10.1097/00042737-200506000-00003.

32. Horowitz N., Moshkowitz M., Halpern Z., Leshno M. Applying data mining techniques in the development of a diagnostics questionnaire for GERD. Digestive Diseases and Sciences. 2007;52(8):1871–1878. doi: 10.1007/s10620-006-9202-5.

33. Ebigbo A., Mendel R., Rückert T., et al. Endoscopic prediction of submucosal invasion in Barrett’s cancer with the use of artificial intelligence: a pilot study. Endoscopy. 2021;53(9):878–883. doi: 10.1055/a-1311-8570.

34. Abedalla A., Abdullah M., Al-Ayyoub M., Benkhelifa E. Chest X-ray pneumothorax segmentation using U-Net with EfficientNet and ResNet architectures. PeerJ Computer Science. 2021;7:e607. doi: 10.7717/peerj-cs.607.

35. Tan M., Le Q. V. EfficientNetV2: smaller models and faster training. arXiv preprint arXiv:2104.00298. 2021. https://ui.adsabs.harvard.edu/abs/2021arXiv210400298T.
