Abstract

Objectives

This study aimed to develop a deep learning-based screening tool that evaluates upper airway obstruction on lateral cephalograms.

Methods

We developed a novel and practical convolutional neural network model, built on a ResNet backbone, to automatically evaluate upper airway obstruction from lateral cephalograms. A total of 1219 X-ray images were collected for model training and testing.

Results

Compared with VGG16, our model performed better, with a sensitivity of 0.86, specificity of 0.89, positive predictive value (PPV) of 0.90, negative predictive value (NPV) of 0.85, and F1-score of 0.88. Heat maps of the cephalograms provided insight into the features learned by the deep learning model.

Conclusion

This study demonstrated that deep learning can learn effective features from cephalograms and automatically evaluate upper airway obstruction from X-ray images.

Clinical Relevance

A novel and practical deep convolutional neural network model has been established to relieve dentists' screening workload and improve accuracy in evaluating upper airway obstruction.

1. Introduction

Upper airway obstruction can reduce breathing or impede gas exchange, and it is usually associated with sleep-disordered breathing (SDB) [1, 2]. Its causes include polyps, environmental irritants, allergic rhinitis, and adenotonsillar hypertrophy [3, 4]. Increasing evidence shows an association between dentofacial anomalies and upper airway obstruction. Lopatiene et al. analyzed examination results, including dental casts and radiographs, of 49 children with respiratory obstruction and found a significant link between nasal resistance and increased overjet, open bite, and maxillary crowding [5]. Children with upper airway obstruction may present with mouth breathing, which can result in a narrow maxilla, mandibular skeletal retrognathism, increased lower facial height, and a high palate [6]. Most children with this type of malocclusion and craniofacial deformity present to dental clinics complaining of occlusal disorder or dissatisfaction with their profile. The lateral cephalogram is a useful and common tool for dentists to evaluate the severity of upper airway obstruction. Although multiple other tools are used to assess upper airway obstruction, including computed tomography (CT), fluoroscopy, magnetic resonance imaging (MRI), and fibreoptic pharyngoscopy, lateral cephalometry remains an appealing approach for screening in dental clinics because it is cheap, readily available, involves less radiation, and has a certain diagnostic value [7–9]. Cephalometric analysis based on the McNamara method is a classical measurement for airway analysis [10]. However, the landmark-labeling process is time- and energy-consuming even for a senior orthodontist. Moreover, this experience-dependent technique is difficult to master for young dentists and for dentists who have not studied cephalometric measurement, such as endodontists or prosthodontists, which may lead to missed or delayed diagnosis. Thus, an automated method for evaluating upper airway obstruction on lateral cephalograms would help orthodontists work more efficiently.

In the past several years, automated methods based on deep learning have achieved excellent results in diagnosis, segmentation, and detection tasks, among others [11–13]. For example, Mahdi et al. proposed a residual network-based Faster R-CNN model to recognize teeth and evaluate both the positional relationship and the confidence score of the candidates. This model achieved an F1-score of 0.982, indicating that deep learning is useful and reliable for dental assistance [14]. Similarly, deep learning has been used to identify the brand and model of a dental implant from a radiograph with a sensitivity of 93.5% and a specificity of 94.2% [15]. In orthodontics, accurate skeletal classification can assist orthodontists in making treatment plans. Yu et al. developed a deep learning model for skeletal classification solely from the lateral cephalogram. In that work, the model learned from X-ray images labeled by human experts and exhibited superior performance, with >90% sensitivity, specificity, and accuracy for vertical and sagittal skeletal diagnosis [16].

In this paper, we developed a novel deep convolutional neural network (DCNN) based on a ResNet backbone for automated evaluation of upper airway obstruction. Our study makes three main contributions. First, this is the first study to evaluate upper airway obstruction from lateral cephalograms using deep learning with high sensitivity and specificity. Second, heat maps of the cephalograms give insight into the features learned by the model, providing interpretable information about how deep learning evaluates upper airway obstruction. Finally, our model is lightweight and practical and can be deployed in primary-care clinics with low memory and computational overhead.

2. Materials and Methods

2.1. Dataset

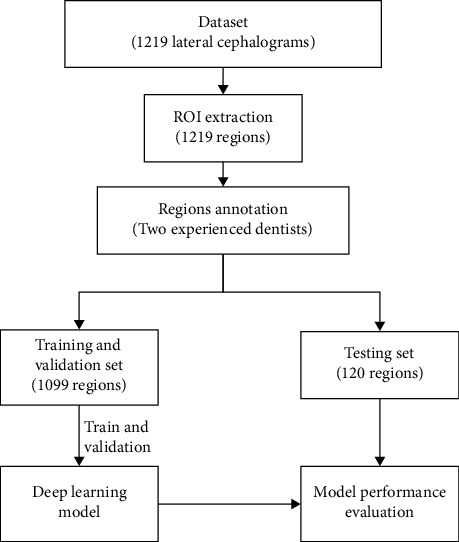

The study flowchart is presented in Figure 1. Cephalometric radiographs were retrospectively examined for 1783 patients who had initially visited our hospital between March and September 2019. We first excluded low-quality images (324 images) and then images in which the anatomic structures (soft palate, tongue, or pharyngeal wall) were difficult to recognize (240 images). A total of 1219 lateral X-ray images remained, of which 610 showed upper airway obstruction and 609 did not. Cephalometric radiographs were taken with a Morita ×550 X-ray machine (tube voltage 80 kV, tube current 10 mA; Morita, Kyoto, Japan). The distance between the X-ray plate and the X-ray machine was 180 cm, and the resolution of the X-ray images was 1752 × 1537 (22.9 cm × 20.1 cm). We cropped the airway region, from the center to the bottom of the original X-ray images, at a resolution of 1000 × 500. The cropped airway regions were randomly divided into 2 groups: a training set (1099 images) and a testing set (120 images). Demographic data are shown in Table 1.
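The exclusion counts and split sizes above can be checked with a short sketch. It assumes a class-stratified random split, which is consistent with the 60/60 testing set reported in Table 1 but is not stated as the authors' procedure; the function name `stratified_split` and the seed are hypothetical.

```python
import numpy as np

# Counts reported in the text.
N_SCREENED, N_LOW_QUALITY, N_UNRECOGNIZABLE = 1783, 324, 240
N_OBSTRUCTION, N_NONOBSTRUCTION = 610, 609
n_included = N_SCREENED - N_LOW_QUALITY - N_UNRECOGNIZABLE  # 1219


def stratified_split(labels, n_test_per_class, seed=0):
    """Randomly hold out the same number of images per class (assumed scheme)."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    test_idx = []
    for cls in np.unique(labels):
        cls_idx = np.flatnonzero(labels == cls)
        test_idx.extend(rng.choice(cls_idx, size=n_test_per_class, replace=False))
    test_idx = np.sort(np.array(test_idx))
    train_idx = np.setdiff1d(np.arange(labels.size), test_idx)
    return train_idx, test_idx


# 1 = obstruction, 0 = nonobstruction.
labels = np.array([1] * N_OBSTRUCTION + [0] * N_NONOBSTRUCTION)
train_idx, test_idx = stratified_split(labels, n_test_per_class=60)
print(n_included, train_idx.size, test_idx.size)
```

With these inputs the training indices contain 550 obstructive and 549 nonobstructive images, matching Table 1.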

Figure 1.

Study flowchart.

Table 1.

Clinical and demographic characteristics of study cohorts.

| Characteristic | Training set (N = 1099) | Testing set (N = 120) |
|---|---|---|
| Median age, years (range) | 12.15 (7–18) | 12.02 (7–18) |
| Sex | | |
| Male | 545 | 54 |
| Female | 554 | 66 |
| Clinical evaluation | | |
| Obstructive | 550 | 60 |
| Nonobstructive | 549 | 60 |

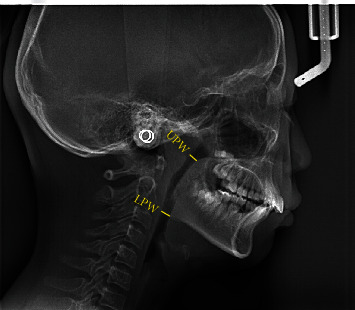

The McNamara method is considered a classic cephalometric analysis for evaluating upper airway dimensions [10]. Linear measurements were performed using ImageJ software (Rasband, W.S., ImageJ, National Institutes of Health, Bethesda, MD, http://rsb.info.nih.gov/ij/). Many studies have applied the McNamara method to assess the upper airway, which can be divided into the nasopharynx (upper pharynx) and the oropharynx (lower pharynx) [17–19]. The upper pharyngeal width (UPW) was measured linearly from a point on the posterior wall of the soft palate to the posterior pharyngeal wall at the site of greatest airway closure. The lower pharyngeal width (LPW) was measured as the linear distance from the intersection of the posterior border of the tongue and the lower border of the mandible to the closest point on the posterior pharyngeal wall [17] (Figure 2). In our research, X-ray images of patients with mixed dentition were manually labelled as “obstruction” (UPW < 12 mm or LPW < 10 mm) or “nonobstruction” (UPW ≥ 12 mm and LPW ≥ 10 mm), and images of patients with permanent dentition were manually labelled as “obstruction” (UPW < 17.4 mm or LPW < 10 mm) or “nonobstruction” (UPW ≥ 17.4 mm and LPW ≥ 10 mm). All measurements were carried out by two experienced dentists, each blinded to the other's results. A third senior orthodontic specialist with 30 years of experience was consulted in cases of disagreement. If the three experts still could not reach agreement, the image was excluded.
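The labelling rule above can be written as a small function. This is an illustrative sketch of the stated thresholds only; the names `label_airway`, `UPW_CUTOFF_MM`, and `LPW_CUTOFF_MM` are hypothetical, and the measurements themselves were made manually by the annotators.

```python
# UPW cutoff depends on dentition stage; the LPW cutoff is the same for both.
UPW_CUTOFF_MM = {"mixed": 12.0, "permanent": 17.4}
LPW_CUTOFF_MM = 10.0


def label_airway(upw_mm, lpw_mm, dentition):
    """Return the image label implied by the UPW/LPW thresholds in the text."""
    upw_cutoff = UPW_CUTOFF_MM[dentition]  # KeyError for an unknown stage
    if upw_mm < upw_cutoff or lpw_mm < LPW_CUTOFF_MM:
        return "obstruction"
    return "nonobstruction"


# Same measurements, different dentition stage, different label.
print(label_airway(15.0, 11.0, "mixed"))
print(label_airway(15.0, 11.0, "permanent"))
```

The example shows why the dentition stage must be known before labelling: a UPW of 15 mm clears the mixed-dentition cutoff but not the permanent-dentition one.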

Figure 2.

Illustration of cephalometric measurements for upper airway. Upper pharyngeal width (UPW): linear distance from a point on the posterior wall of the soft palate (the anterior half part) to the posterior pharyngeal wall where there was the greatest closure of the airway. Lower pharyngeal width (LPW): measured from an intersection point of the posterior border of the tongue and the lower border of mandible to the closest point on the posterior pharyngeal wall.

2.2. Deep Learning Model

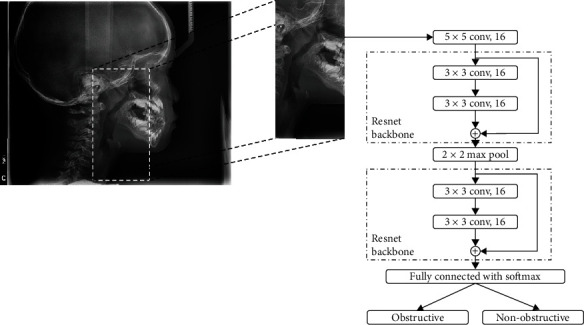

ResNet-18 is a well-known model that has achieved excellent results in image classification [20]. Its basic building block consists of two convolutional layers with a skip connection, and this block has proven to have a strong capacity for feature extraction in image classification tasks. Hence, we chose ResNet-18 as the basis for a lightweight and practical model for automated evaluation of upper airway obstruction. In our model, the two convolutional layers in each block use 3 × 3 kernels with a stride of 1. To mitigate overparameterization, we reduced not only the size and number of kernels but also the number of backbone blocks. The kernel number is 16 in both the first and the second backbone block, and 2 × 2 max pooling is applied between backbone blocks. Features of the airway region are first extracted by an additional convolutional layer with a 5 × 5 kernel and a stride of 1, and the resulting feature maps are passed to the backbone blocks. At the end of the backbone, a fully connected layer with softmax converts the feature maps into the probability of obstruction or nonobstruction. A rectified linear unit (ReLU) is included in every convolutional layer. The model architecture is shown in Figure 3.
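To make the backbone block concrete, the following NumPy-only sketch implements one residual block with two 3 × 3, stride-1 convolutions and 16 kernels. It is an illustration, not the authors' TensorFlow implementation: the placement of the final ReLU after the addition follows the basic block of He et al. and is an assumption here, as are the random weights, the 8 × 8 input size, and all function names.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def relu(x):
    return np.maximum(x, 0.0)


def conv2d_same(x, w, b):
    """Stride-1 convolution with zero padding that preserves H and W.

    x: (H, W, C_in), w: (k, k, C_in, C_out), b: (C_out,)
    """
    k = w.shape[0]
    pad = k // 2
    xp = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))
    # windows has shape (H, W, C_in, k, k)
    windows = sliding_window_view(xp, (k, k), axis=(0, 1))
    return np.einsum("hwcij,ijco->hwo", windows, w) + b


def residual_block(x, w1, b1, w2, b2):
    """Two 3x3 convolutions plus a skip connection from input to output."""
    out = relu(conv2d_same(x, w1, b1))
    out = conv2d_same(out, w2, b2)
    return relu(out + x)  # skip connection: add the block input back


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8, 16))            # toy feature map, 16 channels
w1 = rng.standard_normal((3, 3, 16, 16)) * 0.1
w2 = rng.standard_normal((3, 3, 16, 16)) * 0.1
b = np.zeros(16)
y = residual_block(x, w1, b, w2, b)
print(y.shape)  # (8, 8, 16)
```

Because the input is added back before the final activation, a block whose convolution weights are all zero simply returns `relu(x)`, which is one way to see why skip connections make such blocks easy to optimize.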

Figure 3.

Preprocessing and model architecture.

2.3. Statistical Analysis and Evaluation Criteria

To measure the performance of the model, we used sensitivity (SEN), specificity (SPEC), positive predictive value (PPV), negative predictive value (NPV), and F1-score as evaluation metrics [21–24]. The metrics are calculated as follows:

$$
\begin{aligned}
\mathrm{SEN} &= \frac{TP}{TP + FN}, \qquad
\mathrm{SPEC} = \frac{TN}{TN + FP}, \\
\mathrm{PPV} &= \frac{TP}{TP + FP}, \qquad
\mathrm{NPV} = \frac{TN}{TN + FN}, \\
\mathrm{F1} &= \frac{2 \times \mathrm{PPV} \times \mathrm{SEN}}{\mathrm{PPV} + \mathrm{SEN}},
\end{aligned}
\tag{1}
$$

where TP, FP, TN, and FN denote true positives, false positives, true negatives, and false negatives, respectively. Positive/negative means that the model predicts that the X-ray image is obstructive/nonobstructive, and true/false means that the prediction is right/wrong. Among these metrics, the F1-score is the most comprehensive, as it is the harmonic mean of PPV and SEN. The highest possible F1-score is 1, indicating perfect PPV and SEN, and the lowest possible value is 0, which occurs if either PPV or SEN is zero. All metrics range from 0 to 1.
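As a minimal sketch, the five metrics can be computed directly from confusion-matrix counts. The function name `screening_metrics` and the counts in the example are made up for illustration and are not the study's confusion matrix.

```python
def screening_metrics(tp, fp, tn, fn):
    """Compute SEN, SPEC, PPV, NPV, and F1 from confusion-matrix counts.

    Assumes every denominator is nonzero (no degenerate confusion matrix).
    """
    sen = tp / (tp + fn)    # sensitivity: obstructive images correctly flagged
    spec = tn / (tn + fp)   # specificity: nonobstructive images correctly cleared
    ppv = tp / (tp + fp)    # positive predictive value
    npv = tn / (tn + fn)    # negative predictive value
    f1 = 2 * ppv * sen / (ppv + sen)  # harmonic mean of PPV and SEN
    return {"SEN": sen, "SPEC": spec, "PPV": ppv, "NPV": npv, "F1": f1}


# Hypothetical counts for 10 obstructive and 10 nonobstructive images.
example = screening_metrics(tp=8, fp=1, tn=9, fn=2)
for name, value in example.items():
    print(f"{name}: {value:.2f}")
```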

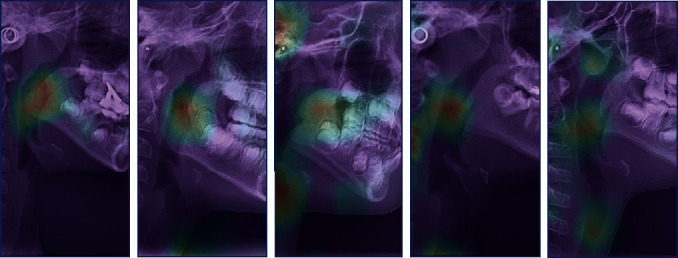

To better understand the features the model uses in the X-ray images, heat maps were generated with class activation mapping (CAM) according to the method proposed by Zhou et al. [25]. Each map visually highlights the region of the cephalogram that is most informative for evaluating upper airway obstruction.
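The core CAM computation can be sketched in a few lines of NumPy: the heat map for a class is the sum of the last convolutional feature maps, each weighted by the classifier weight that connects its channel to that class. The sketch assumes the classifier acts on globally averaged channels, as in Zhou et al.; the paper does not state how its fully connected layer is attached, and the function name, array shapes, and min-max scaling are illustrative choices.

```python
import numpy as np


def class_activation_map(feature_maps, class_weights):
    """Weighted sum of feature maps for one class, scaled to [0, 1].

    feature_maps: (H, W, K) output of the last convolutional layer
    class_weights: (K,) classifier weights for the class of interest
    """
    cam = np.tensordot(feature_maps, class_weights, axes=([2], [0]))  # (H, W)
    cam = cam - cam.min()
    peak = cam.max()
    if peak > 0:
        cam = cam / peak  # min-max scaling for display as a heat map
    return cam


rng = np.random.default_rng(0)
features = rng.random((25, 50, 16))        # toy feature maps, 16 channels
weights_obstruction = rng.standard_normal(16)
heat_map = class_activation_map(features, weights_obstruction)
print(heat_map.shape)  # (25, 50)
```

In practice the low-resolution map is upsampled to the input size and overlaid on the cephalogram, which is how red regions like those in the class activation figure are produced.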

3. Results

The model was developed using 1099 X-ray images for training and 120 X-ray images for testing. All experiments were performed in Python 3.6 and TensorFlow 1.9 on a single NVIDIA RTX 2080Ti [26]. We randomly selected 100 images from the training set as a validation set to monitor training and obtain the highest performance. In the training phase, we used the Adam optimizer with a learning rate of 0.001, the cross-entropy loss function, and a batch size of 50. After 30 epochs, automatic evaluation of upper airway obstruction was performed on the testing dataset. VGG16 is a popular model for classification and diagnosis in many dental applications [27, 28]; hence, we also compared the DCNN with VGG16. On the testing set, the DCNN model achieved a sensitivity of 0.86, specificity of 0.89, PPV of 0.90, NPV of 0.85, and F1-score of 0.88. The DCNN showed higher performance than VGG16 in our study (Table 2).
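For orientation, the training schedule implied by these settings can be worked out with a few lines of arithmetic. The sketch assumes that the 100 validation images were held out from gradient updates and that the final, smaller batch of each epoch was kept; neither detail is stated in the text.

```python
import math

n_train_total = 1099   # training set size reported in the text
n_validation = 100     # images set aside from the training set
batch_size = 50
epochs = 30

n_fit = n_train_total - n_validation               # images used for updates
steps_per_epoch = math.ceil(n_fit / batch_size)    # last batch is partial
total_updates = steps_per_epoch * epochs

print(n_fit, steps_per_epoch, total_updates)
```

Under these assumptions the model sees 999 images per epoch in 20 batches, for 600 optimizer updates in total.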

Table 2.

Performance of the deep learning models on the testing set.

| Model | SEN | SPEC | PPV | NPV | F1-score |
|---|---|---|---|---|---|
| VGG16 | 0.84 | 0.86 | 0.86 | 0.84 | 0.85 |
| ResNet-18 | 0.83 | 0.86 | 0.87 | 0.82 | 0.85 |
| EfficientNet | 0.84 | 0.88 | 0.88 | 0.83 | 0.86 |
| MobileNet v2 | 0.85 | 0.88 | 0.88 | 0.85 | 0.87 |
| DCNN (ours) | 0.86 | 0.89 | 0.90 | 0.85 | 0.88 |
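As a quick consistency check, the F1-score in the DCNN row can be recomputed from the rounded PPV and SEN values in the same row, using the harmonic-mean definition of the F1-score:

$$
\mathrm{F1}_{\mathrm{DCNN}} = \frac{2 \times 0.90 \times 0.86}{0.90 + 0.86} = \frac{1.548}{1.76} \approx 0.88
$$

Because the inputs are already rounded to two decimals, recomputed values for other rows may differ from the tabulated F1-score by about 0.01.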

Figure 4 shows the heat maps created with class activation mapping. According to the heat maps, the upper airway area was activated when the model received a sample with airway obstruction. This indicates a well-trained model that effectively uses the information in the cephalogram: the model learned to focus on the relevant region from the human-annotated labels alone, without additional guidance. The DCNN took about 5 s to analyze 120 lateral cephalograms on a single NVIDIA RTX 2080Ti graphics processing unit.

Figure 4.

Class activation maps. The red area shows key features discovered by deep learning.

4. Discussion

Upper airway obstruction, a hot topic among dentists and otolaryngologists, is closely associated with malocclusion and the development of the craniofacial complex, and it is also the main etiological factor of obstructive sleep apnea syndrome (OSAS) in children [29]. OSAS may lead to problems that are harmful to children, such as inattention, poor learning, failure to thrive, or even pulmonary hypertension [30]. However, missed or delayed diagnosis is common because signs and symptoms in children are not clear and the experience-dependent diagnostic method is difficult to master [31]. For children, who are at an early stage of mental and physical development, airway patency and sleep quality are important. Developing a timely and accurate screening system for upper airway obstruction is therefore valuable.

The reliability of lateral cephalometric images, a two-dimensional analysis method, for assessing pharyngeal volumes has long been debated. A study of 36 prepubertal children aged 4.9–9.8 years compared upper airway assessment by MRI and by cephalometric measurement, and the researchers found that the cephalometric measurements correlated significantly with the MRI measurements. The authors concluded that the cephalometric radiograph was a useful screening tool for evaluating nasopharyngeal or retropalatal airway size [9]. Cephalometric analysis has also been investigated as a useful tool for evaluating OSAS patients [32]. In this paper, we developed a novel and practical DCNN model to automatically evaluate upper airway obstruction using the lateral cephalogram. Our data demonstrated that the deep learning method was able to evaluate upper airway obstruction with high accuracy and to improve screening efficiency in dental clinics.

To the best of our knowledge, only one previous study is similar to ours; it applied artificial intelligence to detect patients with severe obstructive sleep apnea from cephalometric radiographs [33]. However, it focused only on the oropharynx and not on the nasopharynx. Other studies have reported automatic segmentation of the airway space with convolutional neural networks on cone-beam computed tomography (CBCT) images [34, 35]. Admittedly, CBCT offers information on cross-sectional areas, volume, and 3D form that cannot be determined from cephalometric images. However, many studies have confirmed the screening value of cephalometric images [9, 32], which offer advantages including lower cost and a lower radiation dose. In addition, cephalometric images are more widely used by dentists, especially in developing countries and in clinics that cannot afford CBCT machines.

In our study, a DCNN model based on a ResNet backbone was applied for automated evaluation of upper airway obstruction. Our experimental results suggest that a simplified model can overcome overparameterization problems to some extent. In dental applications, image datasets are generally smaller than natural image datasets, since data acquisition requires patient authorization and data annotation requires professional knowledge. It is therefore difficult to collect enough high-quality dental samples for training and testing. Under these conditions, a typical model with a large number of parameters, such as VGG16, suffers from insufficient data and can easily overfit the dataset. To avoid these problems, we used ResNet as the backbone to construct a simplified DCNN model, which showed better performance than VGG16.

Although landmark labeling on the lateral cephalogram is important for orthodontic diagnosis [36–38], errors in landmark identification are widespread and necessitate time-consuming manual correction. Hence, we applied deep learning to classify X-ray images directly rather than relying on key-point identification. Our model showed good performance in both sensitivity and specificity. Moreover, it did not require extra feature extraction steps for training or prediction. The heat maps (Figure 4) also confirmed that the well-trained model can discover abnormal areas in X-ray images by itself without extra processing.

Nevertheless, our study has several limitations. First, although 1219 images is a large number within dental research, it is far fewer than deep learning applications typically require. Second, all 1219 X-ray images were produced by a Morita ×550 at one resolution. Our model may not be robust at other resolutions, which should be addressed by expanding the training set with images at other resolutions. Last but not least, the sensitivity and specificity of our approach may be substantially improved in the future by increasing the sample size and by applying advanced architectures, optimized training strategies, and data generation.

5. Conclusions

This study presents a deep learning model that can detect upper airway obstruction automatically, accurately, and time-efficiently, which would reduce the burden on dentists in clinical work. A simplified DCNN model based on the ResNet backbone structure showed good performance for automatic evaluation of upper airway obstruction on the lateral cephalogram. However, deep learning is not completely accurate in detecting upper airway obstruction. To avoid false-negative diagnoses, regular follow-up and reevaluation are required when necessary.

Acknowledgments

This work was supported by the Sichuan Science and Technology Program (grant number 2022ZDZX0031), the Angelalign Scientific Research Fund (grant number SDTS21-3-21), and the Public Service Platform of Chengdu (grant number 2021-0166-1-2).

Data Availability

The datasets generated and/or analyzed during the current study are not publicly available due to data security but are available from the corresponding author on reasonable request.

Consent

Written informed consent was not required for this study because all patient data were collected retrospectively. The exemption from informed consent will not affect the rights or health of the included patients. The application for a waiver of informed consent was approved by the Institutional Review Board.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Katyal V., Pamula Y., Martin A. J., Daynes C. N., Kennedy J. D., Sampson W. J. Craniofacial and upper airway morphology in pediatric sleep-disordered breathing: systematic review and meta-analysis. American Journal of Orthodontics and Dentofacial Orthopedics. 2013;143(1):20–30.e3. doi: 10.1016/j.ajodo.2012.08.021.
2. Flores-Mir C., Korayem M., Heo G., Witmans M., Major M. P., Major P. W. Craniofacial morphological characteristics in children with obstructive sleep apnea syndrome: a systematic review and meta-analysis. Journal of the American Dental Association (1939). 2013;144(3):269–277. doi: 10.14219/jada.archive.2013.0113.
3. Preston C. B. Chronic nasal obstruction and malocclusion. The Journal of the Dental Association of South Africa. 1981;36(11):759–763.
4. Abe M., Mitani A., Yao A. T., Zong L., Hoshi K., Yanagimoto S. Awareness of malocclusion is closely associated with allergic rhinitis, asthma, and arrhythmia in late adolescents. Healthcare. 2020;8(3):p. 209. doi: 10.3390/healthcare8030209.
5. Lopatiene K., Babarskas A. Malocclusion and upper airway obstruction. Medicina. 2002;38(3):277–283.
6. Stellzig-Eisenhauer A., Meyer-Marcotty P. Interaction between otorhinolaryngology and orthodontics: correlation between the nasopharyngeal airway and the craniofacial complex. GMS Current Topics in Otorhinolaryngology, Head and Neck Surgery. 2010;9, article Doc04. doi: 10.3205/cto000068.
7. Quinlan C. M., Otero H., Tapia I. E. Upper airway visualization in pediatric obstructive sleep apnea. Paediatric Respiratory Reviews. 2019;32:48–54. doi: 10.1016/j.prrv.2019.03.007.
8. Samman N., Mohammadi H., Xia J. Cephalometric norms for the upper airway in a healthy Hong Kong Chinese population. Hong Kong Medical Journal. 2003;9(1):25–30.
9. Pirila-Parkkinen K., Lopponen H., Nieminen P., Tolonen U., Paakko E., Pirttiniemi P. Validity of upper airway assessment in children: a clinical, cephalometric, and MRI study. Angle Orthodontist. 2011;81(3):433–439. doi: 10.2319/063010-362.1.
10. McNamara J. A. A method of cephalometric evaluation. American Journal of Orthodontics and Dentofacial Orthopedics. 1984;86(6):449–469. doi: 10.1016/S0002-9416(84)90352-X.
11. Casalegno F., Newton T., Daher R., et al. Caries detection with near-infrared transillumination using deep learning. Journal of Dental Research. 2019;98(11):1227–1233. doi: 10.1177/0022034519871884.
12. Zhao Y., Li P. C., Gao C. Q., et al. TSASNet: tooth segmentation on dental panoramic X-ray images by two-stage attention segmentation network. Knowledge-Based Systems. 2020;206, article 106338. doi: 10.1016/j.knosys.2020.106338.
13. Endres M. G., Hillen F., Salloumis M., et al. Development of a deep learning algorithm for periapical disease detection in dental radiographs. Diagnostics. 2020;10(6):p. 430. doi: 10.3390/diagnostics10060430.
14. Mahdi F. P., Motoki K., Kobashi S. Optimization technique combined with deep learning method for teeth recognition in dental panoramic radiographs. Scientific Reports. 2020;10(1):p. 12. doi: 10.1038/s41598-020-75887-9.
15. Said M. H., Le Roux M. K., Catherine J. H., Lan R. Development of an artificial intelligence model to identify a dental implant from a radiograph. The International Journal of Oral & Maxillofacial Implants. 2020;35(6):1077–1082. doi: 10.11607/jomi.8060.
16. Yu H. J., Cho S. R., Kim M. J., Kim W. H., Kim J. W., Choi J. Automated skeletal classification with lateral cephalometry based on artificial intelligence. Journal of Dental Research. 2020;99(3):249–256. doi: 10.1177/0022034520901715.
17. Silva N. N., Lacerda R. H., Silva A. W., Ramos T. B. Assessment of upper airways measurements in patients with mandibular skeletal class II malocclusion. Dental Press Journal of Orthodontics. 2015;20(5):86–93. doi: 10.1590/2177-6709.20.5.086-093.oar.
18. Baka Z. M., Fidanboy M. Pharyngeal airway, hyoid bone, and soft palate changes after class II treatment with Twin-block and Forsus appliances during the postpeak growth period. American Journal of Orthodontics and Dentofacial Orthopedics. 2021;159(2):148–157. doi: 10.1016/j.ajodo.2019.12.016.
19. Fareen N., Alam M. K., Khamis M. F., Mokhtar N. Treatment effects of two different appliances on pharyngeal airway space in mixed dentition Malay children. International Journal of Pediatric Otorhinolaryngology. 2019;125:159–163. doi: 10.1016/j.ijporl.2019.07.008.
20. He K. M., Zhang X. Y., Ren S. Q., Sun J. Deep residual learning for image recognition. 2016 IEEE Conference on Computer Vision and Pattern Recognition; 2016; Las Vegas, NV, USA. pp. 770–778.
21. Ali M. M., Ahmed K., Bui F. M., et al. Machine learning-based statistical analysis for early stage detection of cervical cancer. Computers in Biology and Medicine. 2021;139, article 104985. doi: 10.1016/j.compbiomed.2021.104985.
22. Ali M. M., Paul B. K., Ahmed K., Bui F. M., Quinn J. M. W., Moni M. A. Heart disease prediction using supervised machine learning algorithms: performance analysis and comparison. Computers in Biology and Medicine. 2021;136, article 104672. doi: 10.1016/j.compbiomed.2021.104672.
23. Hemu A. A., Mim R. B., Ali M. M., Nayer M., Ahmed K., Bui F. M. Identification of significant risk factors and impact for ASD prediction among children using machine learning approach. Paper presented at: 2022 Second International Conference on Advances in Electrical, Computing, Communication and Sustainable Technologies (ICAECT); 2022; Bhilai, India.
24. Ontor M. Z. H., Ali M. M., Hossain S. S., Nayer M., Ahmed K., Bui F. M. YOLO_CC: deep learning based approach for early stage detection of cervical cancer from cervix images using YOLOv5s model. Paper presented at: 2022 Second International Conference on Advances in Electrical, Computing, Communication and Sustainable Technologies (ICAECT); 2022; Bhilai, India.
25. Zhou B., Khosla A., Lapedriza A., Oliva A., Torralba A. Learning deep features for discriminative localization. 2016 IEEE Conference on Computer Vision and Pattern Recognition; 2016; Las Vegas, NV, USA. pp. 2921–2929.
26. Abadi M., Barham P., Chen J., et al. TensorFlow: a system for large-scale machine learning. Proceedings of OSDI'16: 12th USENIX Symposium on Operating Systems Design and Implementation; 2016; Savannah, GA, USA. pp. 265–283.
27. Matsuda S., Miyamoto T., Yoshimura H., Hasegawa T. Personal identification with orthopantomography using simple convolutional neural networks: a preliminary study. Scientific Reports. 2020;10(1):p. 7. doi: 10.1038/s41598-020-70474-4.
28. Sukegawa S., Yoshii K., Hara T., et al. Deep neural networks for dental implant system classification. Biomolecules. 2020;10(7):p. 984. doi: 10.3390/biom10070984.
29. Garg R. K., Afifi A. M., Garland C. B., Sanchez R., Mount D. L. Pediatric obstructive sleep apnea. Plastic and Reconstructive Surgery. 2017;140(5):987–997. doi: 10.1097/PRS.0000000000003752.
30. Carroll J. L. Obstructive sleep-disordered breathing in children: new controversies, new directions. Clinics in Chest Medicine. 2003;24(2):261–282. doi: 10.1016/S0272-5231(03)00024-8.
31. Rosen C. L., D'Andrea L., Haddad G. G. Adult criteria for obstructive sleep apnea do not identify children with serious obstruction. The American Review of Respiratory Disease. 1992;146(5_part_1):1231–1234. doi: 10.1164/ajrccm/146.5_Pt_1.1231.
32. Deberryborowiecki B., Kukwa A., Blanks R. H. I. Cephalometric analysis for diagnosis and treatment of obstructive sleep apnea. The Laryngoscope. 1988;98(2):226–234. doi: 10.1288/00005537-198802000-00021.
33. Tsuiki S., Nagaoka T., Fukuda T., et al. Machine learning for image-based detection of patients with obstructive sleep apnea: an exploratory study. Sleep & Breathing. 2021;25:2297–2305. doi: 10.1007/s11325-021-02301-7.
34. Shujaat S., Jazil O., Willems H., et al. Automatic segmentation of the pharyngeal airway space with convolutional neural network. Journal of Dentistry. 2021;111, article 103705. doi: 10.1016/j.jdent.2021.103705.
35. Park J., Hwang J., Ryu J., et al. Deep learning based airway segmentation using key point prediction. Applied Sciences. 2021;11(8):p. 3501. doi: 10.3390/app11083501.
36. Nishimoto S., Sotsuka Y., Kawai K., Ishise H., Kakibuchi M. Personal computer-based cephalometric landmark detection with deep learning, using cephalograms on the internet. The Journal of Craniofacial Surgery. 2019;30(1):91–95. doi: 10.1097/SCS.0000000000004901.
37. Dot G., Rafflenbeul F., Arbotto M., Gajny L., Rouch P., Schouman T. Accuracy and reliability of automatic three-dimensional cephalometric landmarking. International Journal of Oral and Maxillofacial Surgery. 2020;49(10):1367–1378. doi: 10.1016/j.ijom.2020.02.015.
38. Lee J. H., Yu H. J., Kim M. J., Kim J. W., Choi J. Automated cephalometric landmark detection with confidence regions using Bayesian convolutional neural networks. BMC Oral Health. 2020;20(1):p. 270. doi: 10.1186/s12903-020-01256-7.
