Abstract

A kernel-based quantum classifier is the most practical and influential quantum machine learning technique for the hyper-linear classification of complex data. We propose a Variational Quantum Approximate Support Vector Machine (VQASVM) algorithm that demonstrates empirical sub-quadratic run-time complexity with quantum operations feasible even on NISQ computers. As a proof of concept, we tested our algorithm on a toy example dataset using cloud-based NISQ machines. We also numerically investigated its performance on the standard Iris flower and MNIST datasets to confirm its practicality and scalability.

Subject terms: Computational science, Information theory and computation, Quantum information

Introduction

Quantum computing opens up exciting new prospects for quantum advantages in machine learning in terms of sample and computational complexity1–5. One of the foundations of these quantum advantages is the ability to form and manipulate data efficiently in a large quantum feature space, especially with the kernel functions used in classification and other classes of machine learning6–14.

The support vector machine (henceforth SVM)15 is one of the most comprehensive models that help conceptualize the basis of supervised machine learning. SVM classifies data by finding the optimal hyperplane associated with the widest margin between the two classes in a feature space. SVM can also perform highly nonlinear classifications using what is known as the kernel trick16–18. The convexity of SVM guarantees that the global optimum can be found.

One of the first quantum algorithms exhibiting an exponential speed-up is the least-squares quantum support vector machine (LS-QSVM)5. However, the quantum advantage of LS-QSVM strongly depends on costly quantum subroutines such as density matrix exponentiation19 and quantum matrix inversion20,21, as well as on components such as quantum random access memory (QRAM)2,22. Because these procedures require fault-tolerant quantum computers, LS-QSVM is unlikely to be realized on noisy intermediate-scale quantum (NISQ) devices23. On the other hand, there are a few quantum kernel-based machine-learning algorithms for near-term quantum applications. Well-known examples are quantum kernel estimators (QKE)8, variational quantum classifiers (VQC)8, and Hadamard or SWAP test classifiers (HTC, STC)10,11. These algorithms are applicable to NISQ devices, as they require no costly operations. However, their training time complexity is even worse than that of classical SVM. For example, the number of measurements required for the kernel matrix evaluation alone in QKE scales as the fourth power of the number of training samples8.

Here, we propose a novel quantum kernel-based classifier that is feasible on NISQ devices and that can exhibit a quantum advantage in terms of accuracy and training time complexity, as discussed in Ref.8. Specifically, we have discovered distinctive quantum circuit designs that can evaluate the objective and decision functions of SVM. The number of measurements for these circuits with a bounded error is independent of the number of training samples, and the depth of these circuits scales only linearly with the size of the training dataset. Meanwhile, the Lagrange multipliers of SVM are encoded by parameterized quantum circuits (PQCs)24 with exponentially fewer parameters than there are multipliers. Therefore, the training time of our model with a variational quantum algorithm (VQA)25 scales sub-quadratically, which is asymptotically lower than that of classical SVM5,26,27. Our model also shows an advantage in classification due to its compatibility with any typical quantum feature map.

Results

Support vector machine (SVM)

Data classification infers the most likely class of an unseen data point given a training dataset . Here, and . Although the data are real-valued in practical machine learning tasks, we allow complex-valued data without loss of generality. We focus on binary classification (i.e., each label is either +1 or −1), because multi-class classification can be conducted with multiple binary SVMs via a one-versus-all or a one-versus-one scheme28. We assume that is linearly separable in the higher-dimensional Hilbert space given some feature map . Then, there should exist two parallel supporting hyperplanes that separate the training data. The goal is to find the hyperplanes for which the margin between them is maximized. To widen the margin even further, the linear-separability condition can be relaxed so that some of the training data can penetrate into the “soft” margin. Because the margin is given as by simple geometry, the mathematical formulation of SVM13 is given as

| 1 |

where the slack variable is introduced to represent each data point's violation of the linear-separability condition. The dual formulation of SVM is expressed as29

| 2 |

where the positive semi-definite (PSD) kernel is for . The values are non-negative Karush-Kuhn-Tucker multipliers. This formulation employs an implicit feature map uniquely determined by the kernel. The global solution is obtained in polynomial time due to convexity29. After optimization, the optimum bias is recovered as for any . Such training data with non-zero weight are known as the support vectors. We estimate the labels of unseen data with a binary classifier:

| 3 |
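The decision rule of Eq. (3) can be sketched in plain Python as follows. This is a minimal illustration, assuming the standard classifier form sgn(Σ_i α_i y_i k(x_i, x) + b); the function names, the linear kernel, and the tie-breaking choice sgn(0) = +1 are our own assumptions rather than part of the original method.

```python
from typing import Callable, Sequence

Vector = Sequence[float]
Kernel = Callable[[Vector, Vector], float]


def linear_kernel(u: Vector, v: Vector) -> float:
    """Dot-product kernel; any positive semi-definite kernel can be passed instead."""
    return sum(a * b for a, b in zip(u, v))


def svm_decision(
    x: Vector,
    train_x: Sequence[Vector],
    train_y: Sequence[int],
    alpha: Sequence[float],
    bias: float,
    kernel: Kernel = linear_kernel,
) -> int:
    """Eq. (3): sign of sum_i alpha_i y_i k(x_i, x) + b, with sgn(0) taken as +1."""
    score = sum(a * y * kernel(xi, x) for a, y, xi in zip(alpha, train_y, train_x)) + bias
    return 1 if score >= 0 else -1


# Hard-margin toy problem: the dual optimum is alpha = (0.5, 0.5), b = 0,
# which gives w = (1, 0) and functional margins of exactly 1.
support_x = [(1.0, 0.0), (-1.0, 0.0)]
support_y = [1, -1]
support_alpha = [0.5, 0.5]
print(svm_decision((2.0, 3.0), support_x, support_y, support_alpha, bias=0.0))   # 1
print(svm_decision((-0.5, 7.0), support_x, support_y, support_alpha, bias=0.0))  # -1
```

Only points with non-zero α contribute to the sum, which is why the classifier depends on the support vectors alone.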

In a first-principles analysis, the complexity of solving Eq. (2) is with accuracy of . A kernel function with complexity of is queried times to construct the kernel matrix, and quadratic programming takes to find for a non-sparse kernel matrix5. Although the complexity of SVM decreases when employing modern programming methods26,27, it is still higher than or equal to due to kernel matrix generation and quadratic programming. Thus, a quantum algorithm that evaluates all terms in Eq. (2) within time and reaches the minimum in fewer than evaluations would have lower complexity than classical algorithms. We apply two transformations to Eq. (2) to realize an efficient quantum algorithm.

Change of variable and bias regularization

Constrained programming problems, such as SVM, are often transformed into unconstrained ones by adding penalty terms for the constraints to the objective function. Although there are well-known methods such as the interior point method29, we prefer the strategies of ‘change of variables’ and ‘bias regularization’ to maintain the quadratic form of SVM. Although these transformations are motivated by the elimination of constraints, they also turn out to lead to an efficient quantum SVM algorithm.

First, to eliminate the inequality constraints, we change the optimization variable to , where and . The -normalized variable is an M-dimensional probability vector given that and . Let us define . We substitute the variables into Eq. (2):

| 4 |

where is the set of M-dimensional probability vectors. Because for an arbitrary due to the positive semi-definiteness of the kernel, is a partial solution that maximizes Eq. (4) with respect to B. Substituting this partial solution into Eq. (4), we have

| 5 |
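For a fixed probability vector β, the maximization over B that leads to Eq. (5) is a one-dimensional concave problem. The derivation below is a sketch, assuming that Eq. (4) reads B − (B²/2)βᵀQβ with Q_ij = y_i y_j k(x_i, x_j) and α = Bβ; these symbol names are our assumptions, since the original equations are not reproduced here.

```latex
\begin{aligned}
&\alpha = B\beta,\quad B = \sum_{i=1}^{M}\alpha_i \ge 0,\quad \sum_{i=1}^{M}\beta_i = 1
\;\Longrightarrow\;
\sum_{i}\alpha_i - \tfrac{1}{2}\,\alpha^{\top}Q\,\alpha = B - \tfrac{B^{2}}{2}\,\beta^{\top}Q\,\beta ,\\
&\frac{\partial}{\partial B}\Big(B - \tfrac{B^{2}}{2}\,\beta^{\top}Q\,\beta\Big) = 1 - B\,\beta^{\top}Q\,\beta = 0,
\qquad
\frac{\partial^{2}}{\partial B^{2}}\Big(\cdot\Big) = -\beta^{\top}Q\,\beta \le 0,\\
&\Longrightarrow\;
B^{\ast} = \frac{1}{\beta^{\top}Q\,\beta}\ \ (\beta^{\top}Q\,\beta > 0),
\qquad
B^{\ast} - \tfrac{(B^{\ast})^{2}}{2}\,\beta^{\top}Q\,\beta = \frac{1}{2\,\beta^{\top}Q\,\beta}.
\end{aligned}
```

Because the remaining objective 1/(2βᵀQβ) is monotonically decreasing in βᵀQβ, maximizing it is the same as minimizing βᵀQβ over probability vectors.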

Finally, because maximizing is identical to minimizing , we have a simpler formula that is equivalent to Eq. (2):

| 6 |

The above Eq. (6) implies that instead of optimizing M bounded free parameters or , we can optimize the -qubit quantum state and define . Therefore, if there exists an efficient quantum algorithm that evaluates the objective function of Eq. (6) given , the complexity of SVM would be improved. In the following sections, we propose quantum circuits with linearly scaling complexity for that purpose.
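To make the encoding concrete, the sketch below maps the amplitudes of a normalized state to β_i = |⟨i|ψ⟩|², evaluates βᵀQβ, and rescales β back to α = B*β with B* = 1/(βᵀQβ). It is a classical illustration, assuming Q_ij = y_i y_j k(x_i, x_j) and the partial solution B* from the change of variables described above; the helper names are hypothetical.

```python
import cmath
import math
from typing import List, Sequence


def beta_from_amplitudes(amplitudes: Sequence[complex]) -> List[float]:
    """beta_i = |<i|psi>|^2 for a normalized state on log2(M) qubits."""
    beta = [abs(amp) ** 2 for amp in amplitudes]
    if not math.isclose(sum(beta), 1.0, rel_tol=1e-9):
        raise ValueError("state vector must be normalized")
    return beta


def quadratic_form(beta: Sequence[float], q: Sequence[Sequence[float]]) -> float:
    """beta^T Q beta (the quadratic form assumed to be minimized in Eq. (6))."""
    m = len(beta)
    return sum(beta[i] * q[i][j] * beta[j] for i in range(m) for j in range(m))


def alpha_from_beta(beta: Sequence[float], q: Sequence[Sequence[float]]) -> List[float]:
    """Undo the change of variables: alpha = B* beta with B* = 1 / (beta^T Q beta)."""
    b_star = 1.0 / quadratic_form(beta, q)
    return [b_star * b for b in beta]


# Two training points x_1 = (1, 0), y_1 = +1 and x_2 = (-1, 0), y_2 = -1 with a
# linear kernel, so Q_ij = y_i y_j <x_i, x_j> = 1 for all i, j.
q = [[1.0, 1.0], [1.0, 1.0]]
# One qubit (M = 2); the relative phase does not change beta.
psi = [1 / math.sqrt(2), cmath.exp(1j * math.pi / 3) / math.sqrt(2)]
beta = beta_from_amplitudes(psi)
alpha = alpha_from_beta(beta, q)
print(beta, alpha)  # both approximately [0.5, 0.5]
```

The insensitivity of β to the phases of the amplitudes illustrates why many different states encode the same multipliers.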

The equality constraint can be relaxed by adding the -regularization term of the bias to Eq. (1). Motivated by the loss-function and regularization perspectives of SVM30, this technique was previously introduced31,32 and developed33,34. The primal and dual forms of SVM become

| 7 |

| 8 |

Note that is positive definite. As before, the change of variables turns Eq. (8) into another equivalent optimization problem:

| 9 |

As the optimal bias is given as according to the Karush-Kuhn-Tucker conditions, the classification formula inherited from Eq. (3) is expressed as

| 10 |

Equations (8) and (9) can be viewed as Eqs. (2) and (6) with a quadratic penalty term on the equality constraint, such that they become equivalent in the limit of . Thus, Eqs. (7), (8), and (9) are relaxed SVM optimization problems with an additional hyperparameter .
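Folding the regularized bias into the kernel gives a decision rule that no longer needs b explicitly. The sketch below assumes that the bias penalty in Eq. (7) has the form b²/(2λ), so that the Karush-Kuhn-Tucker conditions give b = λΣ_i α_i y_i and therefore Σ_i α_i y_i k(x_i, x) + b = Σ_i α_i y_i (k(x_i, x) + λ). Because α = Bβ with B > 0, the sign is unchanged when α is replaced by β. The Gaussian kernel and all numeric values are arbitrary illustrations; the identity holds for any non-negative α, optimal or not.

```python
import math
from typing import Sequence

Point = Sequence[float]


def rbf_kernel(u: Point, v: Point) -> float:
    """Gaussian kernel exp(-|u - v|^2); any PSD kernel works for this identity."""
    return math.exp(-sum((a - b) ** 2 for a, b in zip(u, v)))


def explicit_bias_score(x, train_x, train_y, alpha, lam) -> float:
    """sum_i alpha_i y_i k(x_i, x) + b, with the assumed KKT bias b = lam * sum_i alpha_i y_i."""
    bias = lam * sum(a * y for a, y in zip(alpha, train_y))
    return sum(a * y * rbf_kernel(xi, x) for a, y, xi in zip(alpha, train_y, train_x)) + bias


def folded_score(x, train_x, train_y, weights, lam) -> float:
    """sum_i w_i y_i (k(x_i, x) + lam): the bias is absorbed into a shifted kernel."""
    return sum(w * y * (rbf_kernel(xi, x) + lam) for w, y, xi in zip(weights, train_y, train_x))


def sign(value: float) -> int:
    return 1 if value >= 0 else -1


train_x = [(0.0, 1.0), (1.0, 0.0), (-1.0, -0.5)]
train_y = [1, -1, 1]
alpha = [0.9, 1.1, 0.6]                   # arbitrary non-negative multipliers
lam = 0.5
beta = [a / sum(alpha) for a in alpha]    # l1-normalized weights, as in the change of variables

for x in [(0.0, 2.0), (2.0, 0.0), (-2.0, -1.0)]:
    s_bias = explicit_bias_score(x, train_x, train_y, alpha, lam)
    s_fold = folded_score(x, train_x, train_y, alpha, lam)
    s_beta = folded_score(x, train_x, train_y, beta, lam)
    print(x, sign(s_bias), sign(s_fold), sign(s_beta))
```

Since only the sign matters, the normalized weights β produced by the ansatz can be used directly for classification.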

Variational quantum approximate support vector machine

One way to generate the aforementioned quantum state is amplitude encoding: . However, doing so would be inefficient because the unitary gate of amplitude encoding has a complex structure that scales as 35. Another way to generate is to use a parameterized quantum circuit (PQC), known as an ansatz in this case. Because there is no prior knowledge of the distribution of the s, the initial state should be . The ansatz transforms the initial state into other states depending on the gate parameter vector : . In other words, the optimization parameters encoded by with the ansatz are represented as . Given the lack of prior information, the most efficient ansatz design may be a hardware-efficient ansatz (HEA), which consists of alternating local rotation layers and entanglement layers36,37. The number of qubits and the depth of this ansatz are .

We found quantum circuit designs that compute Eqs. (9) and (10) within time. Conventionally, the quantum kernel function is defined as the Hilbert–Schmidt inner product: 4,7,8,10–12. First, using the above ansatz encoding, we divide the objective function in Eq. (9) into the loss and regularization functions of :

| 11 |

Specifically, the objective function is equal to . Similarly, the decision function in Eq. (10) becomes

| 12 |

Inspired by STC10,11, the quantum circuits in Fig. 1 efficiently evaluate and . The quantum gate embeds the entire training dataset with the corresponding quantum feature map , so that . Therefore, the quantum state after state preparation is . We apply a SWAP test and a joint measurement in the loss and decision circuits to evaluate and :

| 13 |

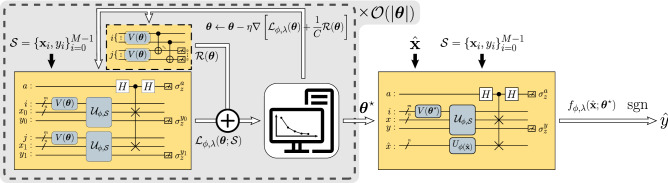

Figure 1.

Circuit architecture of VQASVM. Loss, decision, and regularization circuits are shown in panels (a), (b), and (c), respectively. All qubits of index registers i and j are initialized to , and the rest to . Ansatz is a PQC of qubits that encodes the probability vector . embeds a training dataset with a quantum feature map , which maps classical data to a quantum state . n denotes the number of qubits for the quantum feature map, which is usually N but can be reduced to if an amplitude encoding feature map is used.

Here, is a Pauli Z operator and is a projection measurement operator of state . See “Methods” for detailed derivations. The asymptotic complexities of the loss and decision circuits are linear in the number of training data instances. can be prepared with operations4. See “Methods” for the specific realization used in this article. Because has depth and the SWAP test requires only operations, the overall run-time complexity of evaluating and with bounded error is (see Supplementary Fig. S5 online for numerical verification of the scaling). Similarly, the complexity of estimating with accuracy is due to two parallel s and CNOT gates.
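The regularization term can be checked classically. The sketch below assumes that the regularization function equals Σ_i β_i², which is what two parallel copies of the ansatz followed by register-wise CNOT gates estimate: measuring both registers in the computational basis yields independent samples i and j from β, the CNOTs leave the second register in |i ⊕ j⟩, and the all-zero outcome therefore occurs with probability Σ_i β_i². Whether Fig. 1c reads out exactly this event is our assumption, and the function names are hypothetical. With a given number of shots, the standard error is at most 1/(2√shots), consistent with a shot count that grows as 1/ε² for accuracy ε.

```python
import random
from typing import Sequence


def exact_regularizer(beta: Sequence[float]) -> float:
    """sum_i beta_i^2, the collision probability of the distribution beta."""
    return sum(b * b for b in beta)


def sampled_regularizer(beta: Sequence[float], shots: int, seed: int = 0) -> float:
    """Shot-based estimate emulating two ansatz copies plus bitwise CNOTs.

    Each shot draws i and j independently from beta (one per register); the
    target register then holds i XOR j, which is all-zero exactly when i == j.
    """
    rng = random.Random(seed)
    indices = range(len(beta))
    first = rng.choices(indices, weights=beta, k=shots)
    second = rng.choices(indices, weights=beta, k=shots)
    zero_outcomes = sum(1 for i, j in zip(first, second) if i ^ j == 0)
    return zero_outcomes / shots


beta = [0.5, 0.25, 0.125, 0.125]    # a 2-qubit (M = 4) example distribution
print(exact_regularizer(beta))       # 0.34375
print(sampled_regularizer(beta, shots=20000, seed=7))
```

The estimate only involves the index registers, which is why this circuit needs neither the feature map nor the training data.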

We propose a variational quantum approximate support vector machine (VQASVM) algorithm that solves the SVM optimization problem with VQA25 and transfers the optimized parameters to classify new data effectively. Figure 2 summarizes the process of VQASVM. We estimate , which minimizes the objective function; this is then used for classifying unseen data:

| 14 |

Figure 2.

Variational quantum approximate support vector machine. The white round boxes represent classical calculations whereas the yellow round boxes represent quantum operations. The white arrows represent the flow of classical data whereas the black arrows represent the embedding of classical data. The grey areas indicate the corresponding training phase of each iteration. The regularization circuit in the black dashed box can be omitted for a hard-margin case where .

Following the general scheme of VQA, the gradient descent (GD) algorithm can be applied; classical processors update the parameters of , whereas the quantum processors evaluate the functions for computing gradients. Because the objective function of VQASVM can be expressed as the expectation value of a Hamiltonian, i.e.,

| 15 |

where , the exact gradient can be obtained by the modified parameter-shift rule38,39. GD converges to a local minimum after iterations with a difference of 29, provided that the estimation error of the objective function is smaller than . Therefore, the total run-time complexity of VQASVM is with an error of , as the number of parameters is .
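The idea behind parameter-shift gradients can be illustrated on a single qubit. The source uses a modified parameter-shift rule38,39; the sketch below shows only the standard two-term rule for a gate exp(−iθP/2) generated by a Pauli operator P, applied to ⟨Z⟩ = cos θ after RY(θ)|0⟩, and uses it inside plain gradient descent. The toy circuit, learning rate, and step count are illustrative assumptions, not the VQASVM objective.

```python
import math


def expectation_z(theta: float) -> float:
    """<Z> for RY(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>."""
    amp0, amp1 = math.cos(theta / 2), math.sin(theta / 2)
    return amp0 ** 2 - amp1 ** 2


def parameter_shift_grad(f, theta: float) -> float:
    """Exact derivative for gates exp(-i theta P / 2) with a Pauli generator P."""
    shift = math.pi / 2
    return (f(theta + shift) - f(theta - shift)) / 2


theta = 0.3
print(parameter_shift_grad(expectation_z, theta), -math.sin(theta))  # agree up to rounding

# Plain gradient descent on <Z>; the minimum of -1 is reached at theta = pi.
learning_rate = 0.4
for _ in range(100):
    theta -= learning_rate * parameter_shift_grad(expectation_z, theta)
print(theta)
```

Unlike finite differences, the two shifted evaluations give the exact derivative, so the only error in practice comes from estimating each expectation value with finitely many shots.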

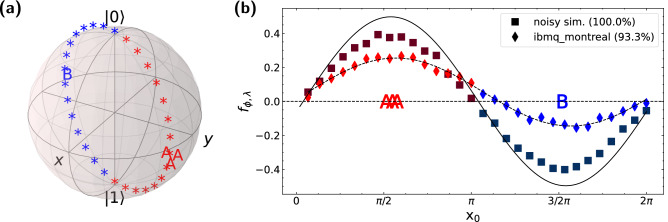

Experiments on IBM quantum processors

We demonstrate the classification of a toy dataset using the VQASVM algorithm on NISQ computers as a proof of concept. Our example dataset is mapped to a Bloch sphere, as shown in Fig. 3a. To limit decoherence, we set the data dimension to and the number of training data instances to . First, we randomly choose a great circle on the Bloch sphere that passes through and . Then, we randomly choose two opposite points on the circle to be the centers of the two classes, A and B. Subsequently, four training data instances are generated close to each class center so that they overlap neither with the test data nor with each other. This results in a good training dataset with the maximum margin, so that a soft-margin treatment is not needed. In addition, thirty test data instances are generated evenly along the great circle and are labelled as 1 or − 1 according to their inner products with the class centers. In this case, we can set the hyperparameter , and the process hence requires no regularization circuit evaluation. The test dataset is non-trivial to classify given that the test data are located mostly in the margin area; the convex hulls of the two training datasets do not include most of the test data.

Figure 3.

Experiments on an ibmq_montreal cloud NISQ processor. (a) The toy training (letters) and test (asterisks) data shown on a Bloch sphere. The color indicates the true class label of the data; i.e., red = class A and blue = class B. The letters A and B represent the training data of class A and class B, respectively. (b) Classification results obtained on the ibmq_montreal QPU (diamonds) and in a noisy simulation (squares), compared to the theoretical values (solid line). is the decision function value of each test datum. The letters A and B represent the training data, located at their longitudinal coordinates on the Bloch sphere (). Curved dashed lines are sine fits of the ibmq_montreal results. Values inside the round brackets in the legend are the classification accuracy rates.

We choose a quantum feature map that embeds data into a Bloch sphere instead of qubits: . Features and are the latitude and the longitude on the Bloch sphere. We use a two-qubit ( and ) RealAmplitudes40 PQC as the ansatz: . In this experiment, we use ibmq_montreal, one of the IBM Quantum Falcon processors. The “Methods” section presents the specific techniques for optimizing quantum circuits against decoherence. The simultaneous perturbation stochastic approximation (SPSA) algorithm is selected to optimize due to its rapid convergence and good robustness to noise41,42. Each circuit is measured times to estimate the expectation values, which was the maximum possible option for ibmq_montreal. Due to the long queue times of a cloud-based QPU, we reduce QPU usage by applying the warm-start and early-stopping techniques explained in the “Methods” section.
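A single-qubit feature map of this kind, together with the kernel it induces, can be sketched as follows. The code assumes the common parameterization |φ(x)⟩ = cos(ϑ/2)|0⟩ + e^{iϕ} sin(ϑ/2)|1⟩ with colatitude ϑ = π/2 − latitude and ϕ = longitude; the exact feature map of the experiment is not reproduced here, so this parameterization and the function names are our assumptions. The fidelity kernel |⟨φ(x)|φ(x′)⟩|² is cross-checked against the pure-state identity (1 + n·n′)/2 for Bloch vectors n and n′.

```python
import cmath
import math
from typing import Sequence, Tuple


def bloch_state(latitude: float, longitude: float) -> Tuple[complex, complex]:
    """Single-qubit state at (latitude, longitude) on the Bloch sphere (assumed convention)."""
    colatitude = math.pi / 2 - latitude
    return (complex(math.cos(colatitude / 2)),
            cmath.exp(1j * longitude) * math.sin(colatitude / 2))


def fidelity_kernel(x: Sequence[float], x_prime: Sequence[float]) -> float:
    """k(x, x') = |<phi(x)|phi(x')>|^2."""
    a, b = bloch_state(*x), bloch_state(*x_prime)
    overlap = a[0].conjugate() * b[0] + a[1].conjugate() * b[1]
    return abs(overlap) ** 2


def bloch_vector(latitude: float, longitude: float) -> Tuple[float, float, float]:
    return (math.cos(latitude) * math.cos(longitude),
            math.cos(latitude) * math.sin(longitude),
            math.sin(latitude))


def kernel_from_bloch_vectors(x: Sequence[float], x_prime: Sequence[float]) -> float:
    """Same kernel via (1 + n . n') / 2, valid for pure single-qubit states."""
    n, m = bloch_vector(*x), bloch_vector(*x_prime)
    return (1 + sum(p * q for p, q in zip(n, m))) / 2


point = (0.3, 1.2)
antipode = (-0.3, 1.2 + math.pi)
other = (-0.7, 2.5)
print(fidelity_kernel(point, point), fidelity_kernel(point, antipode))  # ~1.0 and ~0.0
print(fidelity_kernel(point, other), kernel_from_bloch_vectors(point, other))
```

Under this convention, two class centers placed at opposite points of a great circle have zero kernel value, which matches the maximally separated toy classes described above.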

Figure 3 shows the classification results. Theoretical decision function values were calculated by solving Eq. (9) with convex optimization. The noisy simulation is a classical simulation that emulates the actual QPU based on a noise parameter set estimated from noise measurements. Although the scale of the decision values is reduced, the impact on classification accuracy is negligible because only the signs of the decision values matter. The same reasoning applies to most NISQ-applicable quantum binary classifiers. The accuracy would improve with additional error mitigation and offset calibration on the quantum device. Other VQASVM demonstrations with different datasets can be found in Supplementary Fig. S2 online.

Numerical simulation

In practice, the measurement on quantum circuits is repeated R times to estimate an expectation value within error, which could interfere with the convergence of VQA. However, numerical analysis with the Iris dataset43 confirmed that VQASVM converges even when noise exists in the objective function estimation. The following paragraphs describe the details of the numerical simulation, such as data preprocessing and the choice of the quantum kernel and the ansatz.

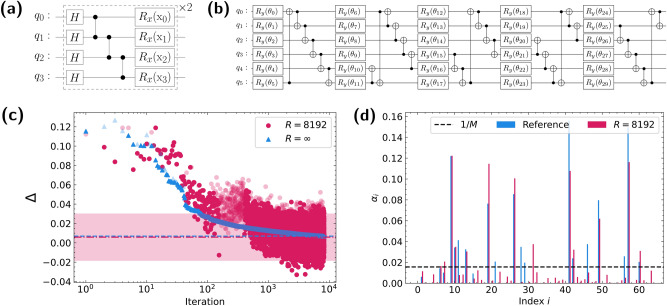

We assigned the labels + 1 to Iris setosa and − 1 to Iris versicolour and Iris virginica for binary classification. The features of the data were scaled so that the range became . We sampled training data instances from the total dataset and treated the rest as the test data. The training kernel matrix constructed with our custom quantum feature map in Fig. 4a is learnable; i.e., the singular values of the kernel matrix decay exponentially. After testing the PQC designs introduced in Ref.36, we chose the PQC exhibited in Fig. 4b as the ansatz for this simulation (see Supplementary Figs. S6–S9 online). The number of PQC parameters is 30, which is less than M. In this simulation, the SPSA optimizer was used for training due to its fast convergence and robustness to noise41,42.

Figure 4.

Numerical analysis on the Iris dataset (). (a) Custom quantum feature map for the Iris dataset. (b) PQC design for with gate parameters. (c) The shaded circles and triangles depict the training convergence of the residual losses. At the final iteration (), the residual loss for repeated measurements (red dot-dashed line) is almost equal to that of the case (blue dashed line), where the error in estimating the expectation value is 0. The red shaded area represents the 95% credible interval of the last 16 residual losses for the case. (d) The spectra of the optimized weights, s, of for the theoretical and cases are compared. The dashed black line indicates the level of the uniform weight . ‘Reference’ in the legend refers to the theoretical values of the s obtained by convex optimization.

The objective and decision functions were evaluated in two scenarios. The first case samples a finite number of measurement results to estimate the expectation values such that the error of the estimation is non-zero. The second case directly calculates the expectation values with zero estimation error, which corresponds to sampling infinitely many measurement results; i.e., . We defined the residual loss of training as at iteration t to compare the convergence. Here, is the theoretical minimum of Eq. (9) as obtained by convex optimization.

Although there is some uncertainty, Fig. 4c shows that SPSA converges to a local minimum despite the estimation noise. Both the and cases exhibit the characteristic convergence rate of SPSA; for a sufficiently large number of iterations t. A clearer visualization can be found in Supplementary Fig. S10 online. In addition, the spectrum of the optimized Lagrange multipliers s mostly coincides with the theory, especially for the significant support vectors (Fig. 4d). Therefore, we conclude that training VQASVM with a finite number of measurements is achievable. The classification accuracy was 95.34% for and 94.19% for .

The empirical speed-up of VQASVM is possible because the number of optimization parameters is exponentially reduced by the PQC encoding. However, in the limit of a large number of qubits (i.e., ), it is unclear whether such a PQC can be trained with VQA to approximate the optimal solution well. Therefore, we performed a numerical analysis to empirically verify that VQASVM with parameters achieves a bounded classification error even for large M. For this simulation, the MNIST dataset44 was used instead of the Iris dataset because there are not enough Iris data points to clarify the asymptotic behavior of VQASVM. In this setting, the maximum possible number of MNIST training data instances for VQASVM is .

Binary images of ‘0’s and ‘1’s with a image size were selected for binary classification, and their features were then reduced to 10 by means of principal component analysis (PCA). The well-known quantum feature map introduced in Ref.8 was chosen for the simulation: , where and . A visualization of the feature map can be found in Supplementary Fig. S4 online. The ansatz architecture used for this simulation was the PQC template shown in Fig. 4b with 19 layers; i.e., the first part of the PQC in Fig. 4b is repeated 19 times. Thus, the number of optimization parameters is .

The numerical simulation shows that although the residual training loss increases linearly with the number of PQC qubits, the rate is extremely low, i.e., . Moreover, we did not observe any critical difference in classification accuracy from the reference, i.e., the theoretical accuracy obtained by convex optimization. Therefore, at least for , we conclude that a run-time complexity of with bounded classification accuracy is empirically achievable for VQASVM due to the number of parameters (see Supplementary Fig. S11 online for a visual summary of the result).

Discussion

In this work, we propose a novel quantum-classical hybrid supervised machine learning algorithm that achieves run-time complexity of , whereas the complexity of the modern classical algorithm is . The main idea of our VQASVM algorithm is to encode optimization parameters that represent the normalized weight for each training data instance in a quantum state using exponentially fewer parameters. We numerically confirmed the convergence and feasibility of VQASVM even in the presence of expectation value estimation error using SPSA. We also observed the sub-quadratic asymptotic run-time complexity of VQASVM numerically. Finally, VQASVM was tested on cloud-based NISQ processors with a toy example dataset to highlight its practical application potential.

Based on the numerical results, we presume that our variational algorithm can bypass the issues arising from the trade-off between the expressibility36 and trainability of PQCs; i.e., a highly expressive PQC is unlikely to be trainable with VQA due to the vanishing gradient variance45, a phenomenon known as the barren plateau46. This problem has become a critical barrier for most VQAs that utilize a PQC to generate solution states. However, given that the SVM is learnable (i.e., the singular values of the kernel matrix decay exponentially5), only a few Lagrange multipliers corresponding to the significant support vectors are critically large; 29,30. Fig. 4d illustrates this statement. Thus, the PQCs encoding the optimal multipliers need not be highly expressive. We speculate that there exists an ansatz generating these sparse probability distributions. Moreover, the optimal solution would have exponential degeneracy because only the measurement probabilities matter, rather than the amplitudes of the state itself. For these reasons, we did not observe a critical decrease in classification accuracy even though the barren plateau exists, i.e., . A more analytic treatment of the trainability of VQASVM and of ansätze generating sparse probability distributions is left for future work.

The VQASVM method manifests distinctive features compared to LS-QSVM, which solves the linear algebraic optimization problem of least-squares SVM47 with the quantum algorithm for linear systems of equations (HHL)20. Provided that fault-tolerant universal quantum computers and efficient quantum data loading with QRAM2,22 are available, the run-time complexity of LS-QSVM is exponentially low: with error and effective condition number . However, near-term implementation of LS-QSVM is infeasible due to lengthy quantum subroutines, which VQASVM avoids. Also, LS-QSVM training has to be repeated for each query of unseen data because the solution state collapses after the final measurements; transferring the solution state to classify multiple test data would violate the no-cloning theorem. VQASVM overcomes these drawbacks. VQASVM is composed of much shorter operations; when decomposed into the same universal gate set, VQASVM circuits are much shallower than HHL circuits of the same moderate system size. The classification phase of VQASVM can be separated from the training phase and performed in parallel; training results are classically saved and transferred to decision circuits on other quantum processing units (QPUs).

We continue the discussion on the advantage of our method compared to other quantum kernel-based algorithms, such as a variational quantum classifier (VQC) and quantum kernel estimator (QKE), which are expected to be realized on near-term NISQ devices8. VQC estimates the label of data as , where is the empirical average of a binary function f(z) such that z is the N-bit computational basis measurement result of quantum circuit . The parameters and b are trained with variational methods that minimize the empirical risk: . This requires quantum circuit measurements per iteration4. Consequently, from a heuristic point of view, the complexity of VQC would match that of VQASVM. However, VQC does not take advantage of strong duality; the optimal state that should generate has no particular structure, whereas the optimal distribution that the VQASVM ansatz should generate is very sparse, i.e., most s are close to zero. Therefore, optimizing VQC with a sufficiently large number of qubits would be vulnerable to issues such as local minima and barren plateaus. On the other hand, QKE estimates the kernel matrix elements from the empirical probability of measuring an N-bit zero sequence on quantum circuit , given the kernel matrix . The estimated kernel matrix is fed into classical kernel-based algorithms, such as SVM. That is why we used QKE as the reference for the numerical analysis. The kernel matrix can be estimated in measurements with a bounded error of . Thus, QKE has much higher complexity than both VQASVM and classical SVM8. In addition, unlike that of QKE, the generalization error of VQASVM converges to zero as due to its exponentially fewer parameters, strengthening the reliability of the training48. The numerically observed linear relation between the decision function error and supports this claim (see Supplementary Fig. S11 online).

VQASVM can be enhanced further with kernel optimization. Like other quantum-kernel-based methods, VQASVM depends crucially on the choice of the quantum feature map. Unlike previous methods (e.g., quantum kernel alignment49), VQASVM can optimize a quantum feature map online during the training process. Given that is tuned along with the other parameters , the optimal parameters should be a saddle point: . In addition, tailored quantum kernels (e.g., ) can be adopted with a simple modification10 of the VQASVM quantum circuits to improve classification accuracy. However, because the quantum advantage in classification accuracy derived from the power of quantum kernels is beyond the scope of this paper, we leave that discussion for the future. Another way to improve VQASVM is boosting. Since VQASVM is not a convex problem, its performance may depend on the initial point, and, like other kernel-based algorithms, it is not immune to overfitting. A boosting method can be applied to improve classification accuracy by cascading low-performance VQASVMs. Because each VQASVM model requires only space, ensemble methods such as boosting are well suited to VQASVM.

Methods

Proof of Eq. (13)

First, we note that the SWAP test operation in Fig. 1 measures the Hilbert–Schmidt inner product between two pure states by estimating , where a is the control qubit and and are states on target qubits b and c, respectively. Quantum registers i and j in Fig. 1 are traced out because measurements are performed only on the a and y qubits. The reduced density matrix on the x and y quantum registers before the controlled-SWAP operation is , which is the statistical mixture of quantum states with probabilities . Let us first consider the decision circuit (Fig. 1b). Given that the states and are prepared, due to separability. Here, can be or . Similarly, for the loss circuit (Fig. 1a), we have states and with probability such that . Therefore, from the definition of our quantum kernel, each term in Eq. (13) matches the loss and decision functions in Eqs. (11) and (12). A more direct proof is provided in Refs.10,11 and in Supplementary Information section B (online).
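The SWAP-test identity used in this proof, ⟨Z_a⟩ = |⟨ψ|φ⟩|² for pure target states, can be checked with a small state-vector simulation. The sketch below handles single-qubit target states only (three qubits in total), uses the basis-index convention 4a + 2b + c, and is a plain-Python illustration rather than the circuit compilation used in the experiments; the function names are hypothetical.

```python
import cmath
import math
from typing import List, Sequence


def _hadamard_on_control(state: Sequence[complex]) -> List[complex]:
    """Apply H to the control qubit a (the most significant bit of the index)."""
    s = 1 / math.sqrt(2)
    out = [0j] * 8
    for k in range(4):
        out[k] = s * (state[k] + state[4 + k])
        out[4 + k] = s * (state[k] - state[4 + k])
    return out


def swap_test_expectation(psi: Sequence[complex], phi: Sequence[complex]) -> float:
    """<Z_a> after H - controlled-SWAP - H on |0>_a |psi>_b |phi>_c."""
    state = [0j] * 8
    for b in range(2):
        for c in range(2):
            state[2 * b + c] = psi[b] * phi[c]
    state = _hadamard_on_control(state)
    # Controlled-SWAP: when a = 1, exchange |b c> = |0 1> and |1 0>.
    state[5], state[6] = state[6], state[5]
    state = _hadamard_on_control(state)
    p0 = sum(abs(amp) ** 2 for amp in state[:4])
    return 2 * p0 - 1   # <Z_a> = P(a = 0) - P(a = 1)


def fidelity(psi: Sequence[complex], phi: Sequence[complex]) -> float:
    return abs(sum(p.conjugate() * q for p, q in zip(psi, phi))) ** 2


psi = [complex(math.cos(0.4)), cmath.exp(0.9j) * math.sin(0.4)]
phi = [complex(math.cos(1.1)), cmath.exp(-0.3j) * math.sin(1.1)]
print(swap_test_expectation(psi, phi), fidelity(psi, phi))  # equal up to rounding
```

On hardware, ⟨Z_a⟩ is estimated from a finite number of shots, so the bounded-error cost of each kernel-type term scales with the number of repetitions rather than with M.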

Realization of quantum circuits

In this article, is realized using uniformly controlled one-qubit gates, which require at most CNOT gates, M one-qubit gates, and a single diagonal -qubit gate35,50. We compiled the quantum feature map with a basis gate set composed of Pauli rotations and CNOT. can then be efficiently implemented by replacing all Pauli rotations with uniformly controlled Pauli rotations. The training data label embedding of can also be easily implemented using a uniformly controlled Pauli X rotation (i.e., setting the rotation angle to if the label is positive and 0 otherwise). Although this procedure allows one to incorporate existing quantum feature maps, the complexity can increase to if the quantum feature map contains all-to-all connecting parameterized two-qubit gates. Nonetheless, even then the complexity of remains linear in the number of training data instances.

Application to IBM quantum processors

Because IBM quantum processors are based on superconducting qubits, all-to-all connections are not possible. Additional SWAP operations among qubits for distant interactions would shorten the effective decoherence time and increase the noise. We carefully selected the physical qubits of ibmq_montreal to reduce the number of SWAP operations. For and single-qubit embedding, and . Thus, multi-qubit interaction is required for the following connections: , , , , and . We initially selected nine qubits connected in a linear topology such that the overall estimated single- and two-qubit gate errors are the lowest among all possible options. The noise parameters and topology of ibmq_montreal are provided by IBM Quantum. Specifically, the physical qubits indexed as 1, 2, 3, 4, 5, 8, 11, 14, and 16 in ibmq_montreal were selected in this article (see Supplementary Fig. S1 online). We then assign virtual qubits in the order of so that the aforementioned required connections can be made between neighboring qubits. In summary, the mapping from virtual qubits to physical qubits proceeds as in this experiment. With this arrangement, the circuit depths of the loss and decision circuits are 60 and 59, respectively, for a balanced dataset and 64 and 63 for an unbalanced dataset in the basis gate set of ibmq_montreal: .

Additional techniques on SPSA

The conventional SPSA algorithm has been adjusted for faster and better convergence. First, a blocking technique is introduced. Assuming that the variance of the objective function is uniform with respect to the parameter , the next iterate is rejected if . With blocking, SPSA converges more rapidly because the objective function is prevented, with some probability, from becoming too large (see Supplementary Fig. S10). Second, early stopping is applied: iterations are terminated once certain conditions are satisfied. Specifically, we stop the SPSA optimization if the average of the last 16 recorded training loss values is greater than or equal to the average of the last 32 recorded values. Early stopping reduces the training time drastically, especially when running on a QPU. Finally, we average the last 16 recorded parameters to yield the result . Combinations of these techniques were selected for better optimization; we adopted all of them as the default condition for the experiments and simulations.
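The three adjustments can be combined in a compact SPSA loop. The sketch below is an illustration, not the authors' implementation: the gain schedules a_k = a/(k + 1 + A)^0.602 and c_k = c/(k + 1)^0.101 are Spall's commonly used defaults, and the blocking tolerance of twice the estimated standard deviation of the objective is our assumption, since the exact rejection threshold is not reproduced here. Early stopping compares the means of the last 16 and the last 32 recorded losses, and the returned point is the average of the last 16 recorded parameters, as described above. A noisy quadratic stands in for the VQASVM objective.

```python
import random
import statistics
from typing import Callable, List, Sequence, Tuple

Objective = Callable[[Sequence[float]], float]


def spsa_minimize(
    f: Objective,
    theta0: Sequence[float],
    iterations: int = 500,
    a: float = 0.2,
    c: float = 0.1,
    big_a: float = 10.0,
    seed: int = 0,
) -> Tuple[List[float], int]:
    """SPSA with blocking, early stopping, and averaging of the last 16 iterates."""
    rng = random.Random(seed)
    theta = list(theta0)
    # Estimate the objective noise once; the 2-sigma blocking tolerance is an assumption.
    samples = [f(theta) for _ in range(10)]
    tolerance = 2 * statistics.stdev(samples)
    current = statistics.fmean(samples)
    losses: List[float] = []
    history: List[List[float]] = []
    for k in range(iterations):
        a_k = a / (k + 1 + big_a) ** 0.602
        c_k = c / (k + 1) ** 0.101
        delta = [rng.choice((-1.0, 1.0)) for _ in theta]   # Rademacher perturbation
        plus = [t + c_k * d for t, d in zip(theta, delta)]
        minus = [t - c_k * d for t, d in zip(theta, delta)]
        diff = f(plus) - f(minus)
        candidate = [t - a_k * diff / (2 * c_k * d) for t, d in zip(theta, delta)]
        candidate_loss = f(candidate)
        # Blocking: reject a step whose loss rises by more than the noise tolerance.
        if candidate_loss < current + tolerance:
            theta, current = candidate, candidate_loss
        losses.append(current)
        history.append(list(theta))
        # Early stopping: mean of the last 16 losses no better than mean of the last 32.
        if len(losses) >= 32 and statistics.fmean(losses[-16:]) >= statistics.fmean(losses[-32:]):
            break
    averaged = [statistics.fmean(column) for column in zip(*history[-16:])]
    return averaged, len(losses)


noise = random.Random(123)


def noisy_quadratic(theta: Sequence[float]) -> float:
    """Stand-in objective with its minimum at (1, -2) plus Gaussian shot-like noise."""
    return (theta[0] - 1) ** 2 + (theta[1] + 2) ** 2 + noise.gauss(0.0, 0.01)


best, used = spsa_minimize(noisy_quadratic, [0.0, 0.0], seed=1)
print(best, used)
```

Each iteration costs three objective evaluations regardless of the number of parameters, which is the main reason SPSA suits QPU-bound objectives.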

Warm-start optimization

In some runs, optimization on IBM Quantum processors yielded vanishing kernel amplitudes, which we attribute to the device error map varying over time. Minimizing the total run time mitigates this problem. Because each QPU access incurs a relatively long queue time, we apply a 'warm-start' technique that reduces the number of QPU uses. First, we initialize and run the first few iterations (32) with a noisy simulation on a CPU, and then evaluate the functions on a QPU for the remaining iterations. Note that the SPSA optimizer requires heavy computation at initialization, for example when the initial variance is calculated; with the warm start, this computation is also carried out on the CPU. With this warm-start method, we obtained better results in some trials.
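The warm-start schedule can be sketched as follows. Plain SPSA runs its first `n_warm = 32` iterations against a simulator loss and the remaining ones against the QPU loss. For brevity, the blocking and early-stopping refinements are omitted. All names here are hypothetical. `sim_loss` and `qpu_loss` are toy stand-ins (a noisy and a noise-free quadratic), not real Qiskit simulator or IBM Quantum calls. The QPU stand-in only counts its evaluations.

```python
import numpy as np


def spsa_step(loss, theta, k, rng, a=0.2, c=0.1, A=10.0, alpha=0.602, gamma=0.101):
    """One plain SPSA update, which uses two loss evaluations."""
    ak = a / (k + 1 + A) ** alpha
    ck = c / (k + 1) ** gamma
    delta = rng.choice([-1.0, 1.0], size=theta.shape)
    grad = (loss(theta + ck * delta) - loss(theta - ck * delta)) / (2 * ck) * delta
    return theta - ak * grad


def warm_start_spsa(sim_loss, qpu_loss, theta0, maxiter=100, n_warm=32, seed=0):
    """Run the first n_warm iterations on a (noisy) simulator, the rest on the QPU."""
    rng = np.random.default_rng(seed)
    theta = np.asarray(theta0, dtype=float).copy()
    for k in range(maxiter):
        loss = sim_loss if k < n_warm else qpu_loss
        theta = spsa_step(loss, theta, k, rng)
    return theta


TOY_TARGET = np.array([0.3, -0.7])
qpu_calls = {"n": 0}
noise_rng = np.random.default_rng(1)


def sim_loss(theta):
    # Hypothetical stand-in for a noisy CPU simulation of the loss circuit.
    return float(np.sum((theta - TOY_TARGET) ** 2) + 0.01 * noise_rng.normal())


def qpu_loss(theta):
    # Hypothetical stand-in for a QPU job; counts how often the device is used.
    qpu_calls["n"] += 1
    return float(np.sum((theta - TOY_TARGET) ** 2))


theta = warm_start_spsa(sim_loss, qpu_loss, np.zeros(2))
print(qpu_calls["n"])  # 136
```

With 100 iterations, the warm start moves 64 of the 200 loss evaluations to the simulator, so the stand-in QPU is queried 136 times.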


Acknowledgements

This work was supported by the Samsung Research Funding & Incubation Center of Samsung Electronics under Project Number SRFC-TF2003-01. We acknowledge the use of IBM Quantum services for this work. The views expressed are those of the authors and do not reflect the official policy or position of IBM or the IBM Quantum team.

Author contributions

S.P. contributed to the development and experimental verification of the theoretical and circuit models; D.K.P. contributed to developing the initial concept and the experimental verification; J.K.R. contributed to the development and validation of the main concept and organization of this work. All co-authors contributed to the writing of the manuscript.

Data availability

The numerical data generated in this work are available from the corresponding author upon reasonable request. See also https://github.com/Siheon-Park/QUIC-Projects.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-023-29495-y.

References

- 1. Sharma K, et al. Reformulation of the no-free-lunch theorem for entangled datasets. Phys. Rev. Lett. 2022;128:070501. doi: 10.1103/PhysRevLett.128.070501.

- 2. Lloyd S, Mohseni M, Rebentrost P. Quantum algorithms for supervised and unsupervised machine learning. arXiv preprint. 2013.

- 3. Biamonte J, et al. Quantum machine learning. Nature. 2017;549:195–202. doi: 10.1038/nature23474.

- 4. Schuld M, Petruccione F. Supervised Learning with Quantum Computers. Springer; 2018.

- 5. Rebentrost P, Mohseni M, Lloyd S. Quantum support vector machine for big data classification. Phys. Rev. Lett. 2014;113:130503. doi: 10.1103/PhysRevLett.113.130503.

- 6. Schuld M, Fingerhuth M, Petruccione F. Implementing a distance-based classifier with a quantum interference circuit. EPL (Europhys. Lett.) 2017;119:60002. doi: 10.1209/0295-5075/119/60002.

- 7. Schuld M, Killoran N. Quantum machine learning in feature Hilbert spaces. Phys. Rev. Lett. 2019;122:040504. doi: 10.1103/PhysRevLett.122.040504.

- 8. Havlíček V, et al. Supervised learning with quantum-enhanced feature spaces. Nature. 2019;567:209–212. doi: 10.1038/s41586-019-0980-2.

- 9. Lloyd S, Schuld M, Ijaz A, Izaac J, Killoran N. Quantum embeddings for machine learning. arXiv preprint. 2020.

- 10. Blank C, Park DK, Rhee J-KK, Petruccione F. Quantum classifier with tailored quantum kernel. NPJ Quantum Inf. 2020;6:41. doi: 10.1038/s41534-020-0272-6.

- 11. Park DK, Blank C, Petruccione F. The theory of the quantum kernel-based binary classifier. Phys. Lett. A. 2020;384:126422. doi: 10.1016/j.physleta.2020.126422.

- 12. Schuld M. Supervised quantum machine learning models are kernel methods. arXiv preprint. 2021.

- 13. Liu Y, Arunachalam S, Temme K. A rigorous and robust quantum speed-up in supervised machine learning. Nat. Phys. 2021;17:1013–1017. doi: 10.1038/s41567-021-01287-z.

- 14. Blank C, da Silva AJ, de Albuquerque LP, Petruccione F, Park DK. Compact quantum kernel-based binary classifier. Quantum Sci. Technol. 2022;7:045007. doi: 10.1088/2058-9565/ac7ba3.

- 15. Cortes C, Vapnik V. Support-vector networks. Mach. Learn. 1995;20:273–297. doi: 10.1007/BF00994018.

- 16. Boser BE, Guyon IM, Vapnik VN. A training algorithm for optimal margin classifiers. In: Proceedings of the Fifth Annual Workshop on Computational Learning Theory. 1992. p. 144–152.

- 17. Vapnik V, Golowich SE, Smola A. Support vector method for function approximation, regression estimation, and signal processing. Adv. Neural Inf. Process. Syst. 1997;20:281–287.

- 18. Guyon I, Vapnik V, Boser B, Bottou L, Solla S. Capacity control in linear classifiers for pattern recognition. In: Proceedings of the 11th IAPR International Conference on Pattern Recognition. Vol. II. Conference B: Pattern Recognition Methodology and Systems. IEEE Comput. Soc. Press; 1992. p. 385–388. doi: 10.1109/ICPR.1992.201798.

- 19. Lloyd S, Mohseni M, Rebentrost P. Quantum principal component analysis. Nat. Phys. 2014;10:631–633. doi: 10.1038/nphys3029.

- 20. Harrow AW, Hassidim A, Lloyd S. Quantum algorithm for linear systems of equations. Phys. Rev. Lett. 2009;103:150502. doi: 10.1103/PhysRevLett.103.150502.

- 21. Aaronson S. Read the fine print. Nat. Phys. 2015;11:291–293. doi: 10.1038/nphys3272.

- 22. Giovannetti V, Lloyd S, Maccone L. Quantum random access memory. Phys. Rev. Lett. 2008;100:160501. doi: 10.1103/PhysRevLett.100.160501.

- 23. Preskill J. Quantum computing in the NISQ era and beyond. Quantum. 2018;2:79. doi: 10.22331/q-2018-08-06-79.

- 24. Benedetti M, Lloyd E, Sack S, Fiorentini M. Parameterized quantum circuits as machine learning models. Quantum Sci. Technol. 2019;4:043001. doi: 10.1088/2058-9565/ab4eb5.

- 25. Cerezo M, et al. Variational quantum algorithms. Nat. Rev. Phys. 2021;3:625–644. doi: 10.1038/s42254-021-00348-9.

- 26. Chang C-C, Lin C-J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2011;2:1–27. doi: 10.1145/1961189.1961199.

- 27. Coppersmith D, Winograd S. Matrix multiplication via arithmetic progressions. J. Symb. Comput. 1990;9:251–280. doi: 10.1016/S0747-7171(08)80013-2.

- 28. Giuntini R, et al. Quantum state discrimination for supervised classification. arXiv preprint. 2021.

- 29. Boyd SP, Vandenberghe L. Convex Optimization. Cambridge University Press; 2004.

- 30. Deisenroth MP, Faisal AA, Ong CS. Mathematics for Machine Learning. Cambridge University Press; 2020.

- 31. Mangasarian O, Musicant D. Successive overrelaxation for support vector machines. IEEE Trans. Neural Netw. 1999;10:1032–1037. doi: 10.1109/72.788643.

- 32. Frieß T-T, Cristianini N, Campbell C. The kernel-adatron algorithm: A fast and simple learning procedure for support vector machines. In: Machine Learning: Proceedings of the Fifteenth International Conference (ICML’98). 1998. p. 188–196.

- 33. Hsu C-W, Lin C-J. A simple decomposition method for support vector machines. Mach. Learn. 2002;46:291–314. doi: 10.1023/A:1012427100071.

- 34. Hsu C-W, Lin C-J. A comparison of methods for multiclass support vector machines. IEEE Trans. Neural Netw. 2002;13:415–425. doi: 10.1109/72.991427.

- 35. Möttönen M, Vartiainen JJ, Bergholm V, Salomaa MM. Transformation of quantum states using uniformly controlled rotations. arXiv preprint. 2004.

- 36. Sim S, Johnson PD, Aspuru-Guzik A. Expressibility and entangling capability of parameterized quantum circuits for hybrid quantum-classical algorithms. Adv. Quantum Technol. 2019;2:1900070. doi: 10.1002/qute.201900070.

- 37. Kandala A, et al. Hardware-efficient variational quantum eigensolver for small molecules and quantum magnets. Nature. 2017;549:242–246. doi: 10.1038/nature23879.

- 38. Mitarai K, Negoro M, Kitagawa M, Fujii K. Quantum circuit learning. Phys. Rev. A. 2018;98:032309. doi: 10.1103/PhysRevA.98.032309.

- 39. Schuld M, Bergholm V, Gogolin C, Izaac J, Killoran N. Evaluating analytic gradients on quantum hardware. Phys. Rev. A. 2019;99:032331. doi: 10.1103/PhysRevA.99.032331.

- 40. Anis MDS, et al. Qiskit: An open-source framework for quantum computing. 2021. doi: 10.5281/zenodo.2573505.

- 41. Spall JC. A one-measurement form of simultaneous perturbation stochastic approximation. Automatica. 1997;33:109–112. doi: 10.1016/S0005-1098(96)00149-5.

- 42. Spall JC. Adaptive stochastic approximation by the simultaneous perturbation method. IEEE Trans. Autom. Control. 2000;45:1839–1853. doi: 10.1109/TAC.2000.880982.

- 43. Fisher RA, Marshall M. Iris data set. 1936.

- 44. Deng L. The MNIST database of handwritten digit images for machine learning research. IEEE Signal Process. Mag. 2012;29:141–142. doi: 10.1109/MSP.2012.2211477.

- 45. Holmes Z, Sharma K, Cerezo M, Coles PJ. Connecting ansatz expressibility to gradient magnitudes and barren plateaus. PRX Quantum. 2022;3:010313. doi: 10.1103/PRXQuantum.3.010313.

- 46. McClean JR, Boixo S, Smelyanskiy VN, Babbush R, Neven H. Barren plateaus in quantum neural network training landscapes. Nat. Commun. 2018;9:4812. doi: 10.1038/s41467-018-07090-4.

- 47. Suykens JAK, Vandewalle J. Least squares support vector machine classifiers. Neural Process. Lett. 1999;9:293–300. doi: 10.1023/A:1018628609742.

- 48. Caro MC, et al. Generalization in quantum machine learning from few training data. Nat. Commun. 2022;13:1–11. doi: 10.1038/s41467-022-32550-3.

- 49. Glick JR, et al. Covariant quantum kernels for data with group structure. arXiv preprint. 2021. doi: 10.48550/arxiv.2105.03406.

- 50. Bergholm V, Vartiainen JJ, Möttönen M, Salomaa MM. Quantum circuits with uniformly controlled one-qubit gates. Phys. Rev. A. 2005;71:052330. doi: 10.1103/PhysRevA.71.052330.
