Abstract

Background

Vaccinations play a critical role in mitigating the impact of COVID-19 and other diseases. Past research has linked misinformation to increased hesitancy and lower vaccination rates. Gaps remain in our knowledge about the main drivers of vaccine misinformation on social media and effective ways to intervene.

Objective

Our longitudinal study had two primary objectives: (1) to investigate the patterns of prevalence and contagion of COVID-19 vaccine misinformation on Twitter in 2021, and (2) to identify the main spreaders of vaccine misinformation. Given our initial results, we further considered the likely drivers of misinformation and its spread, providing insights for potential interventions.

Methods

We collected almost 300 million English-language tweets related to COVID-19 vaccines using a list of over 80 relevant keywords over a period of 12 months. We then extracted and labeled news articles at the source level based on third-party lists of low-credibility and mainstream news sources, and measured the prevalence of different kinds of information. We also considered suspicious YouTube videos shared on Twitter. We focused our analysis of vaccine misinformation spreaders on verified and automated Twitter accounts.

Results

Our findings showed a relatively low prevalence of low-credibility information compared to the entirety of mainstream news. However, the most popular low-credibility sources had reshare volumes comparable to those of many mainstream sources, and had larger volumes than those of authoritative sources such as the US Centers for Disease Control and Prevention and the World Health Organization. Throughout the year, we observed an increasing trend in the prevalence of low-credibility news about vaccines. We also observed a considerable amount of suspicious YouTube videos shared on Twitter. Tweets by a small group of approximately 800 “superspreaders” verified by Twitter accounted for approximately 35% of all reshares of misinformation on an average day, with the top superspreader (@RobertKennedyJr) responsible for over 13% of retweets. Finally, low-credibility news and suspicious YouTube videos were more likely to be shared by automated accounts.

Conclusions

The wide spread of misinformation around COVID-19 vaccines on Twitter during 2021 shows that there was an audience for this type of content. Our findings are also consistent with the hypothesis that superspreaders are driven by financial incentives that allow them to profit from health misinformation. Despite high-profile cases of deplatformed misinformation superspreaders, our results show that in 2021, a few individuals still played an outsized role in the spread of low-credibility vaccine content. As a result, social media moderation efforts would be better served by focusing on reducing the online visibility of repeat spreaders of harmful content, especially during public health crises.

Keywords: content analysis, COVID-19, infodemiology, misinformation, online health information, social media, trend analysis, Twitter, vaccines, vaccine hesitancy

Introduction

The global spread of the novel coronavirus (SARS-CoV-2) over the last 2 years has affected the lives of most people around the world. As of December 2021, over 330 million cases of COVID-19 had been detected and 5.5 million deaths had been recorded due to the pandemic [1]. In the United States, COVID-19 was the third leading cause of death in 2020 according to the National Center for Health Statistics [2]. Despite their socioeconomic repercussions [3,4], nonpharmaceutical interventions such as social distancing, travel restrictions, and national lockdowns have proven to be effective at slowing the spread of the coronavirus [5-7]. As the pandemic evolved, pharmaceutical interventions such as vaccinations and antiviral treatments became increasingly important for managing the pandemic [8,9].

Less than one year into the pandemic, we witnessed the swift development of COVID-19 vaccines, expedited by new mRNA technology [10]. Both Pfizer-BioNTech [11] and Moderna [12] vaccines, among others, obtained emergency authorizations in the United States and Europe by the end of 2020, and governments began to distribute them to the public immediately. Mounting evidence shows that vaccines effectively prevent infections and severe hospitalizations, despite the emergence of new viral strains of the original SARS-CoV-2 virus [13,14]. It was estimated that the US vaccination program averted up to 140,000 deaths by May 2021 [15] and over 10 million hospitalizations by November 2021 [16].

The widespread adoption of vaccines is extremely important to reduce the impact of this highly contagious virus [17]. However, as of December 2021, when supplies were no longer limited, only 62% of US citizens had received two doses of COVID-19 vaccines [18]. Unvaccinated or partially vaccinated individuals still face risks of infection and death that are much higher than the risks for individuals who completed their vaccination cycle [19]. The geographically uneven vaccination coverage of the population can also lead to localized outbreaks and hinder governmental efforts to mitigate the pandemic [20].

Worldwide, most people are in favor of vaccines and vaccination programs, but a proportion of individuals are hesitant about some or all vaccines. Vaccine hesitancy describes a spectrum of attitudes, ranging from people with small concerns to those who completely refuse all vaccines. Previous literature links vaccine hesitancy to several factors that include the political, cultural, and social background of individuals, as well as their personal experience, education, and information environment [21]. Ever since public discourse moved online, concerns have been raised about the spread of false claims regarding vaccines on social media, which may erode public trust in science and promote vaccine hesitancy or refusal [22-25].

After the outbreak of the COVID-19 pandemic, a massive amount of health-related misinformation—the so-called “infodemic” [26]—was observed on multiple social media platforms [27-30], undermining public health policies to contain the disease. Online misinformation included false claims and conspiracy theories about COVID-19 vaccines, hindering the effectiveness of vaccination campaigns [31,32].

A few recent studies reveal a positive association between exposure to misinformation and vaccine hesitancy at the individual level [33] as well as a negative association between the prevalence of online vaccine misinformation and vaccine uptake rates at the population level [34]. Motivated by these findings, the aim of this study was to investigate the spread of COVID-19 vaccine misinformation by analyzing almost 300 million English-language tweets shared during 2021, when vaccination programs were launched in most countries around the world.

There are several studies related to the present work. Yang et al [30] carried out a comparative analysis of English-language COVID-19–related misinformation spreading on Twitter and Facebook during 2020. They compared the prevalence of low-credibility sources on the two platforms, highlighting how verified pages and accounts earned a considerable amount of reshares when posting content originating from unreliable websites. Muric et al [35] released a public data set of Twitter accounts and messages, collected at the end of 2020, which specifically focused on antivaccine narratives. Preliminary analyses show that the online vaccine-hesitancy discourse was fueled by conservative-leaning individuals who shared a large amount of vaccine-related content from questionable sources. Sharma et al [36] focused on identifying coordinated efforts to promote antivaccine narratives on Twitter during the first 4 months of the US vaccination program. They also carried out a content-based analysis of the main misinformation narratives, finding that side effects were often mentioned along with COVID-19 conspiracy theories.

Our work makes two key contributions to existing research. First, we studied the prevalence of COVID-19 vaccine misinformation originating from low-credibility websites and YouTube videos, and compared it to information published on mainstream news websites. As described above, previous studies either analyzed the spread of misinformation about COVID-19 in general (during 2020) or focused specifically on antivaccination messages and narratives. They also analyzed a limited time window, whereas our data cover 12 months of the rollout of COVID-19 vaccination programs. Second, we uncovered the role and the contribution of important groups of vaccine misinformation spreaders, namely verified and automated accounts, whereas previous work focused on detecting either users with a strong antivaccine stance or inauthentic coordinated behavior.

Considering these contributions, we addressed the first research question (RQ):

RQ1: What were the patterns of prevalence and contagion of COVID-19 vaccine misinformation on Twitter in 2021?

Leveraging a data set of millions of tweets, we identified misinformation at the domain level based on a list of low-credibility sources (website domains) compiled by professional fact-checkers and journalists, which is an approach that has been widely adopted in the literature to study unreliable information at scale [37-41]. Additionally, we considered a set of mainstream and public health sources as a baseline for reliable information. We then compared the volume of vaccine misinformation against reliable news, identified temporal trends, and investigated the most shared sources. We also explored the prevalence of misinformation that originated on YouTube and was shared on Twitter [30,42,43].

Analogous to the role of virus superspreaders in pandemic outbreaks [44], recent studies suggest that certain actors play an outsized role in disseminating misleading content [30,39,42]. For example, just 10 accounts were responsible for originating over 34% of the low-credibility content shared on Twitter during an 8-month period in 2020 [45]. To examine how vaccine misinformation was posted and amplified by various actors on social media, we addressed a second RQ:

RQ2: Who were the main spreaders of vaccine misinformation?

Specifically, we analyzed two types of accounts. First, we investigated the presence and characteristics of users who generated the most reshares of misinformation [45,46], with a specific focus on the role of “verified” accounts. Twitter deems these accounts “authentic, notable, and active” (see [47]). Second, we investigated the presence and role of social bots (ie, social media accounts controlled in part by algorithms). Previous studies showed that bots actively amplified low-credibility information in various contexts [38,48,49].

These findings deepen our understanding of the ongoing pandemic and generate actionable knowledge for future health crises.

Methods

Twitter Data Collection

On January 4, 2021, we started a real-time collection of tweets about COVID-19 vaccines using the Twitter application programming interface (API). The tweets were collected by matching relevant keywords through the POST statuses/filter v1.1 API endpoint [50]. This effort is part of our CoVaxxy project, which provides a public dashboard [51] to visualize the relationship between online (mis)information and COVID-19 vaccine adoption in the United States [52].

To capture the online public discourse around COVID-19 vaccines in English, we defined as complete a set as possible of English-language keywords related to the topic. Starting with covid and vaccine as our initial seeds, we employed a snowball sampling technique to identify co-occurring relevant keywords in December 2020 [52,53]. The resulting list contained almost 80 keywords. We show a few examples in Textbox 1; the full list can be accessed through the online repository associated with this project [54]. To validate the data collection procedure, we examined the coverage obtained by adding keywords one at a time, starting with the most common terms. Over 90% of the tweets contained at least one of the three most common keywords: “vaccine,” “vaccination,” or “vaccinate.” This indicates that the collected tweets are very relevant to the topic of vaccines.
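The coverage check described above can be illustrated with a minimal sketch. It assumes that tweets are plain strings, that a keyword matches when it appears as a case-insensitive substring, and that keywords are supplied in decreasing order of frequency. The function name `cumulative_coverage` and the toy tweets are hypothetical and are not taken from the project's code.

```python
def cumulative_coverage(tweets, keywords):
    """Return the fraction of tweets matched after adding each keyword in order.

    Assumption: a tweet matches a keyword if its lowercased text contains it.
    """
    texts = [t.lower() for t in tweets]
    matched = [False] * len(texts)
    coverage = []
    for keyword in keywords:
        keyword = keyword.lower()
        for i, text in enumerate(texts):
            if not matched[i] and keyword in text:
                matched[i] = True
        # Share of all tweets matched by the keywords added so far
        coverage.append(sum(matched) / len(texts) if texts else 0.0)
    return coverage


# Toy data for illustration only
sample_tweets = [
    "Got my vaccine today",
    "Vaccination site opens Monday",
    "Please vaccinate your kids",
    "Moderna booster news",
]
print(cumulative_coverage(sample_tweets, ["vaccine", "vaccination", "vaccinate", "moderna"]))
```

A curve of this kind that rises steeply with the first few keywords and then flattens would be consistent with the observation that the three most common terms cover most of the collection.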

Sample keywords employed to collect tweets about vaccines.

covid19vaccine, covidvaccine, coronavirusvaccine, vaccination, covid19 pfizer, pfizercovidvaccine, oxfordvaccine, getvaccinated, covid19, moderna, vaccine, mrna vaccinate, covax, coronavirus moderna, vax

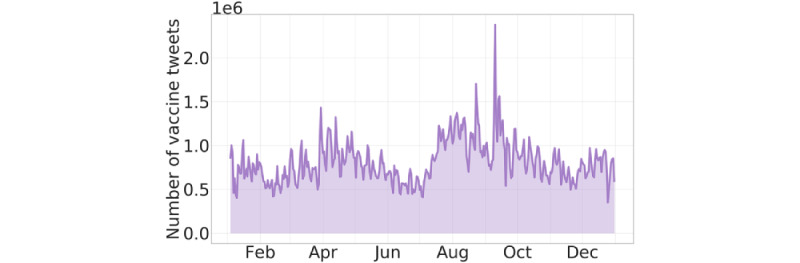

In this study, we analyzed the data collected in the period from January 4 to December 31, 2021. This comprises 294,081,599 tweets shared by 19,581,249 unique users, containing 8,160,838 unique links (URLs) and 1,287,703 unique hashtags. Figure 1 shows the daily volume of vaccine-related tweets collected.

Figure 1.

Time series of the daily number of vaccine-related tweets shared between January 4 and December 31, 2021. The median daily number of tweets is 720,575.

To comply with Twitter’s terms of service, we are only able to share the tweet IDs with the public, accessible through a public repository [54]. One can “rehydrate” the data set by querying the Twitter API or using tools such as Hydrator [55] or twarc [56].

Identifying Online Misinformation

We identified misinformation in our data set using two approaches. Following a common method in the literature [37-41], the first approach identified tweets sharing links to low-credibility websites that were labeled by journalists, fact-checkers, and media experts for repeatedly sharing false news, hoaxes, conspiracy theories, unsubstantiated claims, hyperpartisan propaganda, click-bait, and so on. Specifically, we employed the Iffy+ Misinfo/Disinfo list of low-credibility sources [57]. This list is mainly based on information provided by the Media Bias/Fact Check (MBFC) website [58], an independent organization that reviews and rates the reliability of news sources. Political leaning was not considered for determining inclusion in the Iffy+ list. Instead, the list includes sites labeled by MBFC as having a “Very Low” or “Low” factual-reporting level and those classified as “Questionable” or “Conspiracy-Pseudoscience” sources. The 674 low-credibility sources in the Iffy+ list also include fake-news websites flagged by BuzzFeed, FactCheck.org, PolitiFact, and Wikipedia.

To expand our list of low-credibility sources, we also employed news reliability scores provided by NewsGuard [59], a journalistic organization that routinely assesses the reliability of news websites based on multiple criteria. NewsGuard assigns news outlets a trust score in the range of 0-100. While it considers outlets with scores below 60 as “unreliable,” we adopted a stricter definition and only considered outlets with a score ≤30 as low-credibility sources. This yielded a list of 1181 websites, which we cannot disclose to the public since the NewsGuard data are proprietary. By combining the Iffy+ and NewsGuard lists, we obtained a total of 1718 low-credibility sources.
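As a rough sketch of how the two lists might be merged, the snippet below takes a set of Iffy+ domains and a mapping from domains to NewsGuard scores, keeps NewsGuard outlets with a score of 30 or less, and returns the union. The function name, the input shapes, and the lowercase normalization are assumptions made for illustration. If the merge is a plain set union, the reported sizes (674, 1181, and 1718) would imply an overlap of 674 + 1181 − 1718 = 137 domains; this is an inference from the totals, not a figure stated in the text.

```python
def build_low_credibility_set(iffy_domains, newsguard_scores, max_score=30):
    """Merge Iffy+ domains with NewsGuard outlets scoring at or below max_score.

    iffy_domains: iterable of domain strings (hypothetical input shape).
    newsguard_scores: dict mapping domain -> trust score in 0-100 (hypothetical).
    """
    newsguard_low = {
        domain.lower()
        for domain, score in newsguard_scores.items()
        if score <= max_score
    }
    # Set union removes domains that appear in both lists
    return {domain.lower() for domain in iffy_domains} | newsguard_low
```

The threshold is a parameter so that the analysis could be repeated with a looser cutoff (for example, NewsGuard's own "unreliable" boundary of 60) to check sensitivity.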

We tested the reliability of this domain-based approach to identify misinformation through a qualitative approach similar to that adopted in previous studies [38,60]. We randomly chose 50 low-credibility links in our data set and manually coded them as either “factual,” “misinformation,” or “unverified.” Two authors independently visited the actual web page of each link and researched its content to determine if it was accurate. A link was coded as “factual” if all claims within the article were corroborated by other sources. The “unverified” label was utilized for links that could no longer be accessed (eg, because the web page no longer exists). All other links were coded as “misinformation.” In the event of coding disagreements, authors shared and discussed what they learned during their independent research to reach an agreement on a single label. At the end of this procedure, 7 links were coded as “factual,” 38 as “misinformation,” 4 as “unverified,” and a single article was excluded as it appeared to be a personal blog post. We also note that of the 7 articles labeled as “factual,” 6 were from state propaganda outlets with a selection bias (eg, sputniknews.com or rt.com).

As a second approach, we analyzed links to YouTube videos shared on Twitter that might contain misinformation. We extracted unique video identifiers from links shared in the collected tweets and queried the YouTube API for the video status using the Videos:list endpoint. In light of recent YouTube efforts to remove antivaccine videos according to their COVID-19 policy [61] and their updated policy [62], we considered videos to be suspicious if they were not publicly accessible. Previous research shows that inaccessible videos contain a high proportion of antivaccine content, such as the “Plandemic” conspiracy documentary [30]. The efficacy of this approach to identifying videos that contain antivaccine content is further supported in research that analyzed available videos shared by users that had also shared an inaccessible video [63]. The authors found that the majority of available videos tweeted by these users promulgated an antivaccine or antimandate stance. As some estimates suggest that it takes an average of 41 days for YouTube to remove videos that violate their terms [43], we checked the status of videos in March 2022, at least 2 months after the last video was posted on Twitter.
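The first step of this approach, pulling video identifiers out of shared links, can be sketched with the standard library alone. The URL shapes handled here (`/watch?v=`, `youtu.be/`, `/embed/`, `/shorts/`, `/v/`) are an assumption about which link forms occur; the text does not specify them, and the helper name `extract_youtube_id` is hypothetical. The subsequent status lookup against the YouTube API is not shown.

```python
from urllib.parse import urlparse, parse_qs


def extract_youtube_id(url):
    """Return the video ID from a YouTube URL, or None if none is found."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    # Strip common subdomain prefixes (assumed variants)
    for prefix in ("www.", "m."):
        if host.startswith(prefix):
            host = host[len(prefix):]
    if host == "youtu.be":
        video_id = parsed.path.lstrip("/").split("/")[0]
        return video_id or None
    if host == "youtube.com":
        if parsed.path == "/watch":
            values = parse_qs(parsed.query).get("v")
            return values[0] if values else None
        parts = parsed.path.strip("/").split("/")
        if len(parts) >= 2 and parts[0] in ("embed", "shorts", "v"):
            return parts[1]
    return None


def unique_video_ids(urls):
    """Collect the distinct video IDs found in an iterable of URLs."""
    return {vid for vid in map(extract_youtube_id, urls) if vid}
```

Deduplicating identifiers before querying keeps the number of API requests proportional to the number of distinct videos rather than the number of tweets.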

Sources of Reliable Information

We curated a list of reliable, mainstream sources of vaccine-related news as our baseline to interpret the prevalence of misinformation and characterize its spreading patterns [30]. In particular, we considered websites with a NewsGuard trust score higher than 80, resulting in a list of 2765 sources. We also included the websites of two authoritative sources of COVID-19–related information, namely the US Centers for Disease Control and Prevention (CDC) [64] and the World Health Organization [65]. In the rest of the paper, we use “low credibility” and “mainstream” to refer to the two sets of sources.

Link Extraction

Identifying low- and high-credibility links and YouTube links required extracting the top-level domains from the URLs embedded in tweets and matching them against our lists of web domains. Shortened links occurred frequently in our data set; therefore, we identified the most prevalent link-shortening services (see Textbox 2 for the list) and obtained the original links through HTTP requests.

List of URL-shortening services considered in our analysis.

bit.ly, dlvr.it, liicr.nl, tinyurl.com, goo.gl, ift.tt, ow.ly, fxn.ws, buff.ly, back.ly, amzn.to, nyti.ms, nyp.st, dailysign.al, j.mp, wapo.st, reut.rs, drudge.tw, shar.es, sumo.ly
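The matching step can be sketched as follows. This is a minimal illustration, not the pipeline used in the study: it assumes that a URL matches a list when its host name or any parent domain appears in that list, and it only flags shortened links rather than resolving them over HTTP. The function names are hypothetical; the shortener set is copied from Textbox 2.

```python
from urllib.parse import urlparse

# URL-shortening services listed in Textbox 2
SHORTENERS = {
    "bit.ly", "dlvr.it", "liicr.nl", "tinyurl.com", "goo.gl", "ift.tt",
    "ow.ly", "fxn.ws", "buff.ly", "back.ly", "amzn.to", "nyti.ms", "nyp.st",
    "dailysign.al", "j.mp", "wapo.st", "reut.rs", "drudge.tw", "shar.es",
    "sumo.ly",
}


def get_domain(url):
    """Return the lowercased host name of a URL without a leading 'www.'."""
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def classify_url(url, low_credibility, mainstream):
    """Label a URL as 'shortened', 'low-credibility', 'mainstream', or 'other'."""
    domain = get_domain(url)
    if domain in SHORTENERS:
        return "shortened"  # would need an HTTP request to recover the target
    parts = domain.split(".")
    # Try the full host first, then each parent domain (never the bare suffix)
    for i in range(len(parts) - 1):
        candidate = ".".join(parts[i:])
        if candidate in low_credibility:
            return "low-credibility"
        if candidate in mainstream:
            return "mainstream"
    return "other"
```

Checking parent domains lets a link such as `uk.reuters.com` match a list entry for `reuters.com`; whether the original analysis treated subdomains this way is not stated.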

Bot Detection

To measure the level of bot activity for different types of information, we employed BotometerLite [66], a publicly available tool that can efficiently identify likely automated accounts on Twitter [67]. For each Twitter account, BotometerLite generates a bot score in the range of 0-1, where a higher score indicates that the account is more likely to be automated. BotometerLite evaluates an account by inspecting the profile information that is embedded in each tweet. This enabled us to perform bot analysis at the level of tweets in our data set.

Ethical Considerations

This research is based on observations of public data with minimal risks to human subjects. The study was thus deemed exempt from review by the Indiana University Institutional Review Board (protocol 1102004860). Data collection and analysis were performed in compliance with the terms of service of Twitter.

Results

Prevalence and Contagion of Online Misinformation

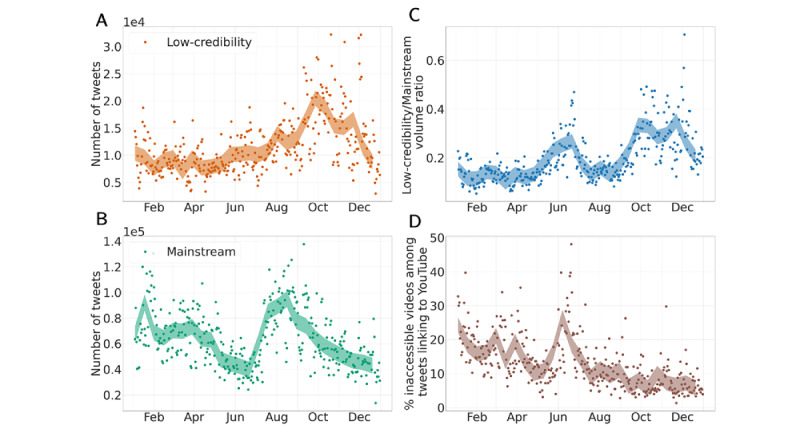

To address RQ1, we compared the prevalence of tweets that linked to domains in our lists of low-credibility and mainstream sources over time. We carried out a similar analysis for suspicious YouTube videos. As shown in Figure 2A-B, we observed a significant increasing trend in the daily prevalence of low-credibility information over time and a significant opposite trend for mainstream news. This is further confirmed in Figure 2C, which shows the daily ratio between the volumes of tweets linking to low-credibility and mainstream news. A significant increasing trend was observed, suggesting that the public discussion about vaccines on Twitter shifted over time away from trustworthy sources and toward low-credibility sources. The peak in July corresponds to a time when the prevalence of mainstream news was particularly low (see Figure 2B). During this period, we also observed a burst of reshares for content originating from Children’s Health Defense (CHD), the most prominent source of vaccine misinformation (further discussed below).

Figure 2.

Timelines of prevalence of vaccine information on Twitter. Trends were evaluated with the nonparametric Mann-Kendall test. Colored bands correspond to a 14-day rolling average with 95% CIs. (A) Daily number of vaccine tweets sharing links to news articles from low-credibility sources. There is a significant increasing trend (P<.001). (B) Daily number of vaccine tweets sharing links to news articles from mainstream sources. There is a significant decreasing trend (P<.001). (C) Ratio between the volumes of tweets sharing links to low-credibility and mainstream sources. There is a significant increasing trend (P<.001). (D) Daily percentage of tweets sharing links to inaccessible YouTube videos among all tweets sharing links to YouTube. There is a significant decreasing trend (P<.001).
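For readers unfamiliar with the Mann-Kendall test named in the caption, the following is a minimal standard-library sketch of the two-sided version with the usual tie correction and normal approximation. It is not the authors' code, and it assumes the test is applied to a plain sequence of daily values; whether the study used raw or smoothed series is not stated. The function name `mann_kendall` is hypothetical.

```python
import math
from collections import Counter


def mann_kendall(series):
    """Return (S, Z, two-sided p) for the Mann-Kendall trend test."""
    n = len(series)
    # S counts concordant minus discordant pairs in time order
    s = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = series[j] - series[i]
            s += (diff > 0) - (diff < 0)
    # Variance of S with a correction for groups of tied values
    tie_term = sum(t * (t - 1) * (2 * t + 5) for t in Counter(series).values())
    var_s = (n * (n - 1) * (2 * n + 5) - tie_term) / 18
    if s > 0:
        z = (s - 1) / math.sqrt(var_s)
    elif s < 0:
        z = (s + 1) / math.sqrt(var_s)
    else:
        z = 0.0
    # Two-sided p-value from the standard normal distribution
    p = math.erfc(abs(z) / math.sqrt(2))
    return s, z, p
```

A positive Z with a small p-value indicates a monotonic increasing trend and a negative Z a decreasing one. The pairwise loop is quadratic in the series length, which is negligible for roughly one year of daily values.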

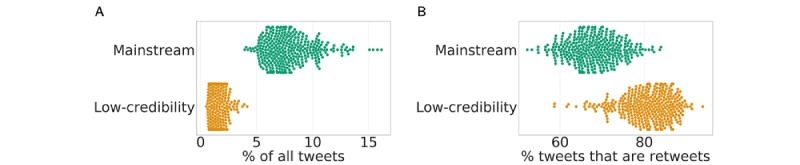

During the entire period of analysis, we found that misinformation was generally less prevalent than mainstream news, as shown in Figure 3A. However, we observed that low-credibility content tended to spread more through retweets compared to mainstream content, as shown in Figure 3B. This indicated that while low-credibility vaccine content was less prevalent overall, it had greater potential for contagion through the social network, suggesting that it might have only spread through a subsection of the population.

Figure 3.

Comparisons between prevalence of tweets linking to mainstream and low-credibility sources. (A) Daily percentage of vaccine tweets and retweets that share links to low-credibility news sources (median: 1.31%) and mainstream news sources (median: 7.53%). The distributions are significantly different according to a two-sided Mann-Whitney test (P<.001). (B) Distributions of the proportion of tweets linking to low-credibility sources (median: 89.19%) and mainstream sources (median: 67.96%) that are retweets. The distributions are significantly different according to a two-sided Mann-Whitney test (P<.001).

Overall, the fraction of vaccine-related tweets linking to YouTube videos was very small (daily median: 0.52%). However, a nonnegligible proportion of these posts (daily median: 10.95%) shared links to inaccessible videos, with a larger prevalence in the first half of 2021 (a peak of 45% was observed in July) and a significant decreasing trend toward the end of the year (see Figure 2D).

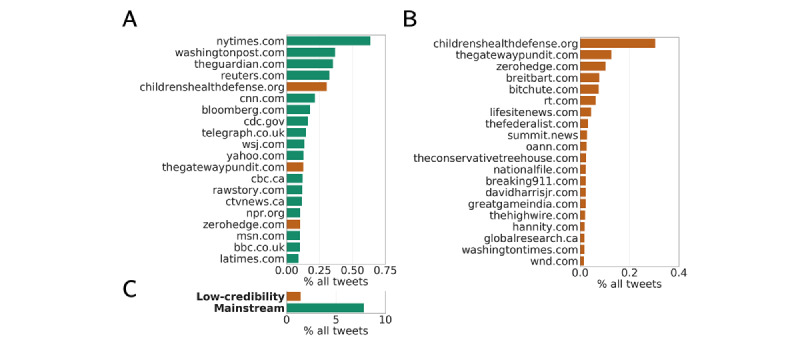

Most Popular Misinformation Sources

Looking at different sources of news about vaccines, Figure 4A shows the 20 most shared websites. We note three unreliable sources in this ranking: childrenshealthdefense.org, thegatewaypundit.com, and zerohedge.com. The most popular low-credibility source was the website of the CHD organization, an antivaccine group led by Robert F Kennedy Jr that became very popular during the pandemic as an alternative and natural medicine site [46,68]. In August 2022, this source was banned from Facebook and Instagram for repeatedly violating their guidelines against spreading medical misinformation [69]. Accounting for approximately 0.30% of all vaccine tweets, the prevalence of CHD was comparable to that of reputable sources such as washingtonpost.com and reuters.com, and was roughly twice the prevalence of CDC links (0.16%). As shown in Figure 4B, CHD tweets were much more widely shared than other low-credibility sources, most of which accounted for less than 0.05% of all shared tweets. CHD accounted for approximately 18% of all tweets linking to low-credibility sources, whereas the aggregated 20 most shared sources generated approximately 61% of all such tweets. Nevertheless, the total fraction of tweets sharing low-credibility news about vaccines accounted for only 1.5% compared to approximately 7.8% of tweets that linked to mainstream sources (see Figure 4C).

Figure 4.

Top sources of vaccine content. (A) The top 20 news sources ranked by percentage of vaccine tweets. (B) The top 20 low-credibility news sources ranked by percentage of vaccine tweets. (C) Percentages of all vaccine tweets linking to low-credibility and mainstream news sources.

Superspreaders of Misinformation

Recent work reveals that accounts disseminating a disproportionate amount of low-credibility content—so-called “superspreaders”—played a central role in the digital misinformation crisis [30,39,42,45,46]. These contributions also show that “verified” accounts often act as superspreaders of unreliable information. Therefore, we further investigated the role of such accounts to address RQ2.

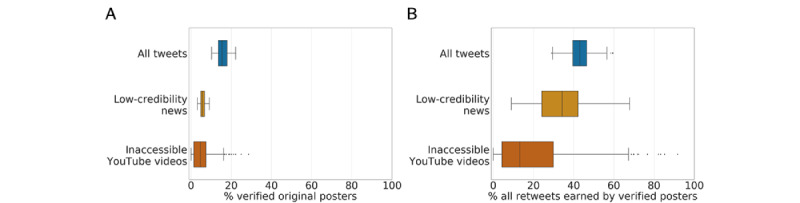

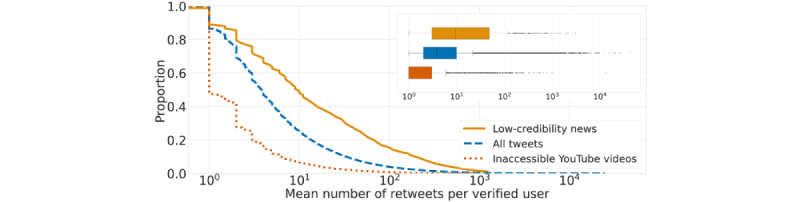

Figure 5 shows that over time, verified accounts represented approximately 15% of those that posted vaccine content, but were consistently responsible for approximately 43% of the retweets of that content. When focusing on the low-credibility content, verified accounts represented an even smaller proportion of accounts, less than 6%. Still, they were responsible for approximately 34% of retweets. These findings highlight a stunning concentration of impact and responsibility for the spread of vaccine misinformation among a small group of verified accounts. While there were substantially fewer verified accounts sharing low-credibility vaccine content (n=828) compared to those sharing vaccine content in general (n=98,612), Figure 6 shows that verified accounts tended to receive more retweets when posting low-credibility content than general vaccine content.

Figure 5.

Comparisons between percentages of original posters who are verified accounts and of retweets earned by verified accounts for different categories of vaccine content. Each data point is a daily proportion. The median daily proportions of verified accounts among posters of vaccine content, low-credibility news, and inaccessible YouTube videos are 15.4%, 5.6%, and 4.5%, respectively. The median daily proportions of retweets earned by verified posters of vaccine content, low-credibility news, and inaccessible YouTube videos are 43.1%, 34.2%, and 13.2%, respectively. All distributions are significantly different from each other according to two-sided Mann-Whitney tests (P<.001).
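The two daily proportions compared in this figure can be computed as in the sketch below. It assumes each original post for a given day is available as a `(user_id, is_verified, retweet_count)` tuple; this record layout and the function name `verified_shares` are hypothetical and chosen only for illustration.

```python
def verified_shares(posts):
    """Return (share of posters who are verified, share of retweets they earned).

    posts: iterable of (user_id, is_verified, retweet_count) for one day.
    """
    posters = {}  # user_id -> verified flag, so each account counts once
    verified_retweets = 0
    total_retweets = 0
    for user_id, is_verified, retweet_count in posts:
        posters[user_id] = is_verified
        total_retweets += retweet_count
        if is_verified:
            verified_retweets += retweet_count
    poster_share = sum(posters.values()) / len(posters) if posters else 0.0
    retweet_share = verified_retweets / total_retweets if total_retweets else 0.0
    return poster_share, retweet_share
```

Repeating this for each day and each content category would yield distributions of daily proportions of the kind summarized by the medians reported in the caption.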

Figure 6.

Distributions of the mean numbers of retweets earned by verified accounts when sharing vaccine content (median 3.82), low-credibility news (median 9.43), and links to inaccessible YouTube videos (median 1). We display the complementary cumulative distributions in the main plot because the distributions are broad. In fact, the box plots (inset) have many outliers. All distributions are significantly different from each other according to two-sided Mann-Whitney tests (P<.001).

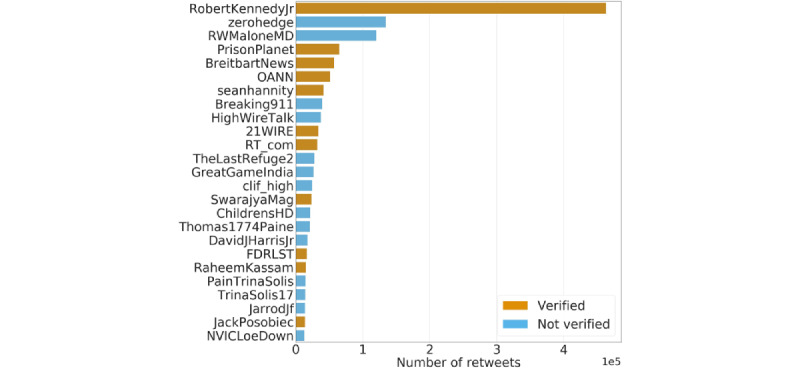

The top 25 accounts are ranked by the number of retweets to their posts linking to low-credibility sources in Figure 7. Eleven of these misinformation superspreaders are accounts that have been verified by Twitter, some of which are associated with untrustworthy news sources (eg, @zerohedge, @BreitbartNews, and @OANN). The top superspreader, Robert Kennedy Jr (@RobertKennedyJr), earned approximately 3.45 times the number of retweets of the second most-retweeted account (@zerohedge). Kennedy was identified as one of the pandemic’s “disinformation dozen” [42,46]. His influence fueled the high prevalence of links to the CHD website within our data set (as shown in Figure 4). His verified account had approximately 3.8 times as many followers as the unverified @ChildrensHD account (416,200 vs 109,800, respectively, as of April 24, 2022). Retweets of Mr. Kennedy’s tweets alone accounted for 13.4% of all retweets of low-credibility vaccine content. A robustness check removing this account from the data yielded consistent results for all analyses reported in this section.

Figure 7.

Top 25 accounts ranked by the number of retweets earned when sharing links to low-credibility news websites. Colors indicate whether accounts are verified (orange) or not (blue).
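A ranking of this kind can be produced with a simple counter, as sketched below. The input is assumed to be one entry per retweet of a low-credibility link, holding the handle of the account that posted the original tweet; this layout and the function name `rank_superspreaders` are hypothetical.

```python
from collections import Counter


def rank_superspreaders(retweeted_authors, k=25):
    """Rank original posters by retweets earned.

    retweeted_authors: iterable with one author handle per retweet.
    Returns a list of (handle, retweets, share of all retweets), top k first.
    """
    counts = Counter(retweeted_authors)
    total = sum(counts.values())
    return [
        (handle, n, n / total)
        for handle, n in counts.most_common(k)
    ]
```

The share column makes the concentration explicit: summing it over the top-ranked accounts shows how much of the total retweet volume a small group accounts for.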

We also investigated the role of verified users in sharing suspicious videos from YouTube. As shown in Figures 5 and 6, we found that verified accounts did not play as central a role in spreading this content as they did for content from low-credibility domains.

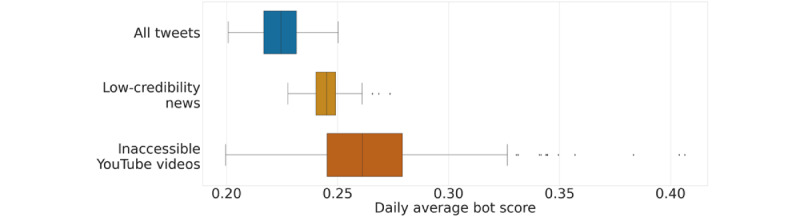

Role of Social Bots

To address RQ2, we also inspected the role of likely automated accounts in spreading COVID-19 vaccine misinformation. As mentioned in the Methods section, we employed BotometerLite [67] to calculate a bot score for all the accounts posting a tweet in our data set. We did not observe notable trends in the activity of likely bots over time; therefore, Figure 8 shows the distributions of daily average bot scores for tweets sharing vaccine content, links to low-credibility sources, and inaccessible YouTube videos.

Figure 8.

Comparison between the daily average bot scores of tweets sharing different categories of vaccine content. The median daily average bot scores of accounts sharing vaccine content, low-credibility news, and inaccessible YouTube videos are 0.22, 0.25, and 0.26, respectively. All distributions are significantly different from each other according to two-sided Mann-Whitney tests (P<.001).

We observed that tweets sharing links to low-credibility sources had significantly higher bot-activity levels than those of vaccine tweets overall. In addition, the daily average bot scores for tweets sharing inaccessible YouTube videos were even higher than those linking to low-credibility sources.

This analysis was carried out at the tweet level, meaning that if a bot-like account tweeted more times, it made a larger contribution. We observed similar results when performing the analysis at the account level by considering the contribution of each account once.
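The difference between the two levels of aggregation can be made concrete with a short sketch. It assumes each tweet is represented as an `(account_id, bot_score)` pair and that an account's score is the same across its tweets (if it varies, the last value seen is used here); both the record layout and the function name `mean_bot_scores` are hypothetical.

```python
def mean_bot_scores(tweets):
    """Return (tweet-level mean, account-level mean) of bot scores.

    tweets: iterable of (account_id, bot_score) pairs, one per tweet.
    """
    tweets = list(tweets)
    if not tweets:
        return 0.0, 0.0
    # Tweet level: prolific accounts contribute once per tweet
    tweet_level = sum(score for _, score in tweets) / len(tweets)
    # Account level: each account contributes exactly once
    per_account = {account: score for account, score in tweets}
    account_level = sum(per_account.values()) / len(per_account)
    return tweet_level, account_level


# Toy data: one bot-like account tweeting three times, one human-like account once
print(mean_bot_scores([("bot", 0.75)] * 3 + [("human", 0.25)]))
```

In this toy case the tweet-level mean is pulled toward the prolific bot-like account, while the account-level mean weights both accounts equally.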

Discussion

We investigated COVID-19 vaccine misinformation spreading on Twitter during 2021 following the rollout of vaccination programs around the world. Leveraging a source-based labeling approach, we identified millions of tweets sharing links to low-credibility and mainstream news websites. While low-credibility information was generally less prevalent than mainstream content over the year, we observed an increasing trend in the reshares of unreliable news during the year and an opposite, decreasing trend for reliable information. Our data mostly capture English-language conversations, which could originate from different countries. However, our aggregate analysis could not disentangle the infodemic trends and peaks associated with different countries as observed in prior work [29].

Focusing on specific news sources, we noticed three low-credibility websites with volumes of reshares comparable to those of reliable sources. Alarmingly, links to the most prominent source of vaccine misinformation, CHD, appeared in roughly twice as many tweets as links to the CDC. Looking at users who earned the most retweets when sharing low-credibility news about vaccines, we observed the presence of many verified accounts. In particular, the verified user who earned the most retweets was Robert Kennedy Jr, the founder of CHD.

Given the increase in misinformation over time and the outsized role of a small group of verified users, we hypothesize that financial incentives may play an important role [68,70]. Low-credibility websites monetize visitors through donations, advertising, and merchandise. Our finding that vaccine misinformation tended to spread more through retweets compared to mainstream news suggests that misinformation content lends itself to such exploitation. Amplification by automated accounts may also have played a role in increasing levels of misinformation, as we found these accounts to be significantly more active at sharing low-credibility news and inaccessible YouTube videos compared to vaccine-related content overall. However, we did not find a trend of increased levels of automated sharing over time.

There are a number of limitations to our study. The source-based approach to identify low-credibility information at scale is not perfect. Credible sources may occasionally report inaccuracies and low-credibility sources often publish a mixture of reliable and unreliable information. Our analysis based on a sample of articles suggests that approximately 76% of articles from low-credibility sources do contain false or misleading content.

While we cannot publicly disclose NewsGuard ratings, they are available to researchers upon agreement, which should ensure reproducibility. We elected to include NewsGuard data because these data are more comprehensive and up-to-date, and the methodology is better documented compared to other ratings such as those from Iffy+ Misinfo/Disinfo. Nevertheless, it would be possible to repeat our analysis using only the free ratings from Iffy+ Misinfo/Disinfo since, as the literature suggests, the ratings are highly correlated [71,72]. In fact, we observed a high overlap between our lists of top sources; for example, 17 of the top 20 sources in Figure 4B are also present in the Iffy+ Misinfo/Disinfo list. More importantly, over 86% of the total number of low-credibility tweets identified with the merged list originate from websites contained in the Iffy+ Misinfo/Disinfo list alone. This suggests that the results are robust with respect to the ratings source.
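The two robustness figures above (the overlap among top sources and the share of low-credibility tweets attributable to the free list alone) reduce to simple set and sum operations. The following is a minimal sketch under stated assumptions, not the code used in the study: the function names, the per-domain count dictionary, and the `site-*.example` domains are all hypothetical placeholders, and the toy numbers are not our data.

```python
def tweet_share_covered(tweet_counts, reference_domains):
    """Fraction of tweets whose linked domain appears in reference_domains.

    tweet_counts: dict mapping a domain to the number of tweets linking to it
    (hypothetical input format, assumed for this sketch).
    """
    total = sum(tweet_counts.values())
    if total == 0:
        raise ValueError("tweet_counts is empty or sums to zero")
    covered = sum(n for domain, n in tweet_counts.items()
                  if domain in reference_domains)
    return covered / total


def top_k_overlap(tweet_counts, reference_domains, k=20):
    """Number of the k most-shared domains that also appear in reference_domains."""
    ranked = sorted(tweet_counts, key=tweet_counts.get, reverse=True)
    return sum(1 for domain in ranked[:k] if domain in reference_domains)


# Toy example with placeholder domains (not real sources or real counts).
merged_counts = {
    "site-a.example": 500,
    "site-b.example": 300,
    "site-c.example": 150,
    "site-d.example": 50,
}
free_list = {"site-a.example", "site-b.example", "site-d.example"}

share = tweet_share_covered(merged_counts, free_list)
overlap = top_k_overlap(merged_counts, free_list, k=3)
print(f"share of tweets covered by the free list: {share:.2f}")
print(f"top-3 domains also in the free list: {overlap}")
```

In this reading, a high covered share means that dropping the proprietary ratings would remove only a small fraction of the tweets labeled as low credibility.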

Similar limitations exist with respect to labeling inaccessible YouTube videos as “low credibility.” For example, some of these videos may be inaccessible due to restricted access or copyright violations. An uploader’s choice to restrict access to a video may serve as a way to circumvent content moderation policies or could be unrelated to antivaccination efforts. However, in the context of the vaccination discussion on Twitter, examinations of videos and their Twitter posters suggest that most inaccessible videos are likely antivaccination in their orientation [63]. In addition, not all accessible videos contain accurate information about vaccines. YouTube may fail to identify content that should be removed according to its own policies. As such, analyses of inaccessible videos should be treated more as lower-bound estimates.

Another limitation is that the BotometerLite algorithm we employed to detect automated accounts is not perfect and may not accurately classify social bots [67]. To gauge the reliability of its scores, we investigated whether bot-like behavior, as identified by BotometerLite, is associated with suspicious activity on Twitter. We used Twitter’s Compliance API [73] to check all accounts for suspension from the platform as of November 2022. We observed a significant positive correlation between the BotometerLite score, binned into 40 equal intervals, and the proportion of accounts suspended (Pearson r=0.93, P<.001). This suggests that the classifier reliably reveals behaviors that eventually lead to suspension on the platform.
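The binned correlation described above can be illustrated with a short sketch. It assumes that scores lie in the interval [0, 1] and that each account carries a Boolean suspension flag; the function names and the synthetic data are hypothetical and serve only to show the procedure, not to reproduce the reported r=0.93. The P value is not computed here.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def binned_suspension_rates(scores, suspended, n_bins=40):
    """Split [0, 1] into n_bins equal intervals and return, for each
    non-empty bin, its midpoint and the proportion of suspended accounts."""
    counts = [0] * n_bins
    hits = [0] * n_bins
    for score, is_suspended in zip(scores, suspended):
        # Clamp so that scores of exactly 1.0 (or slightly out of range)
        # fall into the last (or first) bin.
        b = max(0, min(int(score * n_bins), n_bins - 1))
        counts[b] += 1
        hits[b] += int(is_suspended)
    mids, rates = [], []
    for b in range(n_bins):
        if counts[b] > 0:
            mids.append((b + 0.5) / n_bins)
            rates.append(hits[b] / counts[b])
    return mids, rates


# Synthetic accounts: suspension probability rises with the score
# (an assumption made purely for illustration).
rng = random.Random(0)
scores = [rng.random() for _ in range(20000)]
suspended = [rng.random() < 0.05 + 0.4 * s for s in scores]

mids, rates = binned_suspension_rates(scores, suspended)
r = pearson_r(mids, rates)
print(f"bins used: {len(mids)}, Pearson r = {r:.2f}")
```

Correlating per-bin proportions rather than individual accounts smooths out the binary outcome, so the coefficient describes how the suspension rate changes with the score rather than how well single accounts are predicted.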

Perhaps most importantly, Twitter users may not be very representative of the real-world population across a range of demographic groups [74], although information circulating around Twitter can have a great influence over the news media agenda [75]. Further studies should consider multiple social media platforms simultaneously, especially those with upward adoption trends [76].

Despite these limitations, our findings help map the landscape of online vaccine misinformation and can guide the design of intervention strategies to curb its spread. The presence of misinformation around COVID-19 vaccines on Twitter shows that there was an audience for this type of content, which might reflect a deeper distrust of medicine, health professionals, and science [77]. In a context of widespread uncertainty such as the COVID-19 pandemic, trust is critical for overcoming vaccine hesitancy, and recent research shows how online misinformation fueled vaccine hesitancy and refusal sentiment [25,34].

Our findings reveal the presence of a small number of main producers and repeat spreaders of low-credibility content. Given that these superspreaders played key roles in disseminating vaccine misinformation, a straightforward strategy could be to deplatform them [78,79], an approach whose effects have been examined by recent studies in different contexts [79-81] and that major platforms have applied in notable cases such as Alex Jones [82] and Donald Trump [83].

While social media platforms have legal rights to regulate online conversations, the decisions to deplatform public figures should be made with caution. In fact, past interventions have sparked a lively debate around free speech and caused many users to migrate to alternative platforms [79,81]. It is also unclear whether reducing the supply of false information and increasing the supply of accurate information can “cure” the problem of vaccine hesitancy [32]. An alternative course of action could be to reduce the financial incentives of those who profit from the spread of misinformation. Our results also show that vaccine misinformation is more viral than other kinds of information. Other effective approaches to reduce its spread include lowering the visibility of certain content (“down-ranking”) or not showing that content to users (“shadow banning”), as well as adding warning labels to content that is potentially harmful or inaccurate [84,85]. Platforms should partner with policymakers and researchers in evaluating the impacts of such different interventions [86].

There are several interesting RQs that are outside the scope of the present work, but that could be addressed by future research. For instance, further investigations could address the impact of Twitter’s removal of users due to the January 6 riots on the spread of misinformation in the following months. Other studies could investigate how the CHD organization shifted its antivaccination narratives from children to a broader COVID-19 vaccination campaign and remained the most popular source for the antivaccination movement. Future work could also analyze exposure to low-credibility information, which is more difficult to measure compared to the sharing patterns quantified in this study. This would allow answering the question of whether the spread of low-credibility information was confined to a limited group of people or reached a wide audience. Finally, it is still unclear how governmental and societal changes might have affected conversations around vaccines during the COVID-19 pandemic compared to the (anti)vaccination debate in previous years.

All in all, we believe our work provides actionable insights for addressing the online spread of vaccine misinformation. Such insights can be beneficial during the ongoing pandemic and future health crises.

Acknowledgments

This work is supported in part by the Italian Ministry of Education (PRIN project HOPE), European Union Horizon 2020 (grant 101016233), National Science Foundation (grant ACI-1548562), Knight Foundation, and Craig Newmark Philanthropies.

Abbreviations

- API: application programming interface

- CDC: Centers for Disease Control and Prevention

- CHD: Children's Health Defense

- MBFC: Media Bias/Fact Check

- RQ: research question

Footnotes

Conflicts of Interest: None declared.

References

- 1. COVID-19 dashboard. Center for Systems Science and Engineering at Johns Hopkins University. [2023-02-08]. https://coronavirus.jhu.edu/map.html

- 2. Ahmad FB, Anderson RN. The leading causes of death in the US for 2020. JAMA. 2021 May 11;325(18):1829–1830. doi: 10.1001/jama.2021.5469. https://europepmc.org/abstract/MED/33787821

- 3. Bonaccorsi G, Pierri F, Cinelli M, Flori A, Galeazzi A, Porcelli F, Schmidt AL, Valensise CM, Scala A, Quattrociocchi W, Pammolli F. Economic and social consequences of human mobility restrictions under COVID-19. Proc Natl Acad Sci U S A. 2020 Jul 07;117(27):15530–15535. doi: 10.1073/pnas.2007658117. https://www.pnas.org/doi/abs/10.1073/pnas.2007658117?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub0pubmed

- 4. Bonaccorsi G, Pierri F, Scotti F, Flori A, Manaresi F, Ceri S, Pammolli F. Socioeconomic differences and persistent segregation of Italian territories during COVID-19 pandemic. Sci Rep. 2021 Oct 27;11(1):21174. doi: 10.1038/s41598-021-99548-7.

- 5. Chinazzi M, Davis JT, Ajelli M, Gioannini C, Litvinova M, Merler S, Pastore Y Piontti A, Mu K, Rossi L, Sun K, Viboud C, Xiong X, Yu H, Halloran ME, Longini IM, Vespignani A. The effect of travel restrictions on the spread of the 2019 novel coronavirus (COVID-19) outbreak. Science. 2020 Apr 24;368(6489):395–400. doi: 10.1126/science.aba9757. https://www.science.org/doi/abs/10.1126/science.aba9757?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub0pubmed

- 6. Haug N, Geyrhofer L, Londei A, Dervic E, Desvars-Larrive A, Loreto V, Pinior B, Thurner S, Klimek P. Ranking the effectiveness of worldwide COVID-19 government interventions. Nat Hum Behav. 2020 Dec 16;4(12):1303–1312. doi: 10.1038/s41562-020-01009-0.

- 7. Chang S, Pierson E, Koh PW, Gerardin J, Redbird B, Grusky D, Leskovec J. Mobility network models of COVID-19 explain inequities and inform reopening. Nature. 2021 Jan 10;589(7840):82–87. doi: 10.1038/s41586-020-2923-3.

- 8. Thanh Le T, Andreadakis Z, Kumar A, Gómez Román R, Tollefsen S, Saville M, Mayhew S. The COVID-19 vaccine development landscape. Nat Rev Drug Discov. 2020 May 09;19(5):305–306. doi: 10.1038/d41573-020-00073-5.

- 9. Mitjà O, Clotet B. Use of antiviral drugs to reduce COVID-19 transmission. Lancet Glob Health. 2020 May;8(5):e639–e640. doi: 10.1016/S2214-109X(20)30114-5. https://linkinghub.elsevier.com/retrieve/pii/S2214-109X(20)30114-5

- 10. Ball P. The lightning-fast quest for COVID vaccines - and what it means for other diseases. Nature. 2021 Jan 18;589(7840):16–18. doi: 10.1038/d41586-020-03626-1.

- 11. Pfizer-BioNTech COVID-19 Vaccine EUA Letter of Authorization. Food and Drug Administration. 2020. [2022-01-01]. https://www.fda.gov/media/144412/download

- 12. Moderna COVID-19 Vaccine EUA Letter of Authorization. Food and Drug Administration. 2020. [2022-01-01]. https://www.fda.gov/media/144636/download

- 13. Dagan N, Barda N, Kepten E, Miron O, Perchik S, Katz MA, Hernán MA, Lipsitch M, Reis B, Balicer RD. BNT162b2 mRNA Covid-19 vaccine in a nationwide mass vaccination setting. N Engl J Med. 2021 Apr 15;384(15):1412–1423. doi: 10.1056/NEJMoa2101765. https://europepmc.org/abstract/MED/33626250

- 14. Lopez Bernal J, Andrews N, Gower C, Gallagher E, Simmons R, Thelwall S, Stowe J, Tessier E, Groves N, Dabrera G, Myers R, Campbell CN, Amirthalingam G, Edmunds M, Zambon M, Brown KE, Hopkins S, Chand M, Ramsay M. Effectiveness of Covid-19 vaccines against the B.1.617.2 (Delta) variant. N Engl J Med. 2021 Aug 12;385(7):585–594. doi: 10.1056/NEJMoa2108891. https://europepmc.org/abstract/MED/34289274

- 15. Gupta S, Cantor J, Simon KI, Bento AI, Wing C, Whaley CM. Vaccinations against COVID-19 may have averted up to 140,000 deaths in the United States. Health Aff. 2021 Sep 01;40(9):1465–1472. doi: 10.1377/hlthaff.2021.00619.

- 16. Schneider E, Shah A, Sah P, Moghadas S, Vilches T, Galvani A. The U.S. COVID-19 vaccination program at one year: how many deaths and hospitalizations were averted? The Commonwealth Fund. 2021. Dec 14, [2022-01-01]. https://www.commonwealthfund.org/publications/issue-briefs/2021/dec/us-covid-19-vaccination-program-one-year-how-many-deaths-and

- 17. Randolph HE, Barreiro LB. Herd immunity: understanding COVID-19. Immunity. 2020 May 19;52(5):737–741. doi: 10.1016/j.immuni.2020.04.012. https://linkinghub.elsevier.com/retrieve/pii/S1074-7613(20)30170-9

- 18. Mathieu E, Ritchie H, Ortiz-Ospina E, Roser M, Hasell J, Appel C, Giattino C, Rodés-Guirao L. A global database of COVID-19 vaccinations. Nat Hum Behav. 2021 Jul 10;5(7):947–953. doi: 10.1038/s41562-021-01122-8.

- 19. Johnson AG, Amin AB, Ali AR, Hoots B, Cadwell BL, Arora S, Avoundjian T, Awofeso AO, Barnes J, Bayoumi NS, Busen K, Chang C, Cima M, Crockett M, Cronquist A, Davidson S, Davis E, Delgadillo J, Dorabawila V, Drenzek C, Eisenstein L, Fast HE, Gent A, Hand J, Hoefer D, Holtzman C, Jara A, Jones A, Kamal-Ahmed I, Kangas S, Kanishka F, Kaur R, Khan S, King J, Kirkendall S, Klioueva A, Kocharian A, Kwon FY, Logan J, Lyons BC, Lyons S, May A, McCormick D, MSHI. Mendoza E, Milroy L, O'Donnell A, Pike M, Pogosjans S, Saupe A, Sell J, Smith E, Sosin DM, Stanislawski E, Steele MK, Stephenson M, Stout A, Strand K, Tilakaratne BP, Turner K, Vest H, Warner S, Wiedeman C, Zaldivar A, Silk BJ, Scobie HM. COVID-19 incidence and death rates among unvaccinated and fully vaccinated adults with and without booster doses during periods of Delta and Omicron variant Emergence - 25 U.S. Jurisdictions, April 4-December 25, 2021. MMWR Morb Mortal Wkly Rep. 2022 Jan 28;71(4):132–138. doi: 10.15585/mmwr.mm7104e2.

- 20. Bilal U, Mullachery P, Schnake-Mahl A, Rollins H, McCulley E, Kolker J, Barber S, Diez Roux AV. Heterogeneity in spatial inequities in COVID-19 vaccination across 16 large US cities. Am J Epidemiol. 2022 Aug 22;191(9):1546–1556. doi: 10.1093/aje/kwac076. https://europepmc.org/abstract/MED/35452081

- 21. MacDonald NE, SAGE Working Group on Vaccine Hesitancy. Vaccine hesitancy: definition, scope and determinants. Vaccine. 2015 Aug 14;33(34):4161–4164. doi: 10.1016/j.vaccine.2015.04.036. https://linkinghub.elsevier.com/retrieve/pii/S0264-410X(15)00500-9

- 22. Wilson SL, Wiysonge C. Social media and vaccine hesitancy. BMJ Glob Health. 2020 Oct 23;5(10):e004206. doi: 10.1136/bmjgh-2020-004206. https://gh.bmj.com/lookup/pmidlookup?view=long&pmid=33097547

- 23. Larson HJ. The biggest pandemic risk? Viral misinformation. Nature. 2018 Oct 16;562(7727):309–309. doi: 10.1038/d41586-018-07034-4.

- 24. Burki T. Vaccine misinformation and social media. Lancet Digital Health. 2019 Oct;1(6):e258–e259. doi: 10.1016/S2589-7500(19)30136-0.

- 25. Rathje S, He J, Roozenbeek J, Van Bavel JJ, van der Linden S. Social media behavior is associated with vaccine hesitancy. PNAS Nexus. 2022 Sep;1(4):pgac207. doi: 10.1093/pnasnexus/pgac207. https://europepmc.org/abstract/MED/36714849

- 26. Zarocostas J. How to fight an infodemic. Lancet. 2020 Feb 29;395(10225):676. doi: 10.1016/S0140-6736(20)30461-X. https://europepmc.org/abstract/MED/32113495

- 27. Chen E, Jiang J, Chang HH, Muric G, Ferrara E. Charting the information and misinformation landscape to characterize misinfodemics on social media: COVID-19 infodemiology study at a planetary scale. JMIR Infodemiology. 2022 Feb 8;2(1):e32378. doi: 10.2196/32378. https://europepmc.org/abstract/MED/35190798

- 28. Cinelli M, Quattrociocchi W, Galeazzi A, Valensise CM, Brugnoli E, Schmidt AL, Zola P, Zollo F, Scala A. The COVID-19 social media infodemic. Sci Rep. 2020 Oct 06;10(1):16598. doi: 10.1038/s41598-020-73510-5.

- 29. Gallotti R, Valle F, Castaldo N, Sacco P, De Domenico M. Assessing the risks of 'infodemics' in response to COVID-19 epidemics. Nat Hum Behav. 2020 Dec 29;4(12):1285–1293. doi: 10.1038/s41562-020-00994-6.

- 30. Yang K, Pierri F, Hui P, Axelrod D, Torres-Lugo C, Bryden J, Menczer F. The COVID-19 infodemic: Twitter versus Facebook. Big Data Soc. 2021 May 05;8(1):205395172110138. doi: 10.1177/20539517211013861.

- 31. Hotez P, Batista C, Ergonul O, Figueroa JP, Gilbert S, Gursel M, Hassanain M, Kang G, Kim JH, Lall B, Larson H, Naniche D, Sheahan T, Shoham S, Wilder-Smith A, Strub-Wourgaft N, Yadav P, Bottazzi ME. Correcting COVID-19 vaccine misinformation: Lancet Commission on COVID-19 Vaccines and Therapeutics Task Force Members. EClinicalMedicine. 2021 Mar;33:100780. doi: 10.1016/j.eclinm.2021.100780. https://linkinghub.elsevier.com/retrieve/pii/S2589-5370(21)00060-2

- 32. Pertwee E, Simas C, Larson HJ. An epidemic of uncertainty: rumors, conspiracy theories and vaccine hesitancy. Nat Med. 2022 Mar 10;28(3):456–459. doi: 10.1038/s41591-022-01728-z.

- 33. Loomba S, de Figueiredo A, Piatek SJ, de Graaf K, Larson HJ. Measuring the impact of COVID-19 vaccine misinformation on vaccination intent in the UK and USA. Nat Hum Behav. 2021 Mar 05;5(3):337–348. doi: 10.1038/s41562-021-01056-1.

- 34. Pierri F, Perry BL, DeVerna MR, Yang K, Flammini A, Menczer F, Bryden J. Online misinformation is linked to early COVID-19 vaccination hesitancy and refusal. Sci Rep. 2022 Apr 26;12(1):5966. doi: 10.1038/s41598-022-10070-w.

- 35. Muric G, Wu Y, Ferrara E. COVID-19 vaccine hesitancy on social media: building a public Twitter data set of antivaccine content, vaccine misinformation, and conspiracies. JMIR Public Health Surveill. 2021 Nov 17;7(11):e30642. doi: 10.2196/30642. https://publichealth.jmir.org/2021/11/e30642/

- 36. Sharma K, Zhang Y, Liu Y. COVID-19 vaccine misinformation campaigns and social media narratives. International AAAI Conference on Web and Social Media; June 6-9, 2022; Atlanta, GA. 2022. May 31, pp. 920–931.

- 37. Lazer DMJ, Baum MA, Benkler Y, Berinsky AJ, Greenhill KM, Menczer F, Metzger MJ, Nyhan B, Pennycook G, Rothschild D, Schudson M, Sloman SA, Sunstein CR, Thorson EA, Watts DJ, Zittrain JL. The science of fake news. Science. 2018 Mar 09;359(6380):1094–1096. doi: 10.1126/science.aao2998.

- 38. Shao C, Ciampaglia GL, Varol O, Yang K, Flammini A, Menczer F. The spread of low-credibility content by social bots. Nat Commun. 2018 Nov 20;9(1):4787. doi: 10.1038/s41467-018-06930-7.

- 39. Grinberg N, Joseph K, Friedland L, Swire-Thompson B, Lazer D. Fake news on Twitter during the 2016 U.S. presidential election. Science. 2019 Jan 25;363(6425):374–378. doi: 10.1126/science.aau2706.

- 40. Pennycook G, Rand DG. Fighting misinformation on social media using crowdsourced judgments of news source quality. Proc Natl Acad Sci U S A. 2019 Feb 12;116(7):2521–2526. doi: 10.1073/pnas.1806781116. https://www.pnas.org/doi/abs/10.1073/pnas.1806781116?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub0pubmed

- 41. Bovet A, Makse HA. Influence of fake news in Twitter during the 2016 US presidential election. Nat Commun. 2019 Jan 02;10(1):7. doi: 10.1038/s41467-018-07761-2.

- 42. The Disinformation Dozen. Center for Countering Digital Hate. 2021. [2022-01-01]. https://counterhate.com/research/the-disinformation-dozen/

- 43. Knuutila A, Herasimenka A, Au H, Bright J, Nielsen R, Howard P. COVID-related misinformation on YouTube: the spread of misinformation videos on social media and the effectiveness of platform policies. COMPROP Data Memo 2020.6. Oxford Project on Computational Propaganda. 2020. Sep 21, [2022-01-01]. https://demtech.oii.ox.ac.uk/wp-content/uploads/sites/12/2020/09/Knuutila-YouTube-misinfo-memo-v1.pdf

- 44. Liu Y, Eggo RM, Kucharski AJ. Secondary attack rate and superspreading events for SARS-CoV-2. Lancet. 2020 Mar 14;395(10227):e47. doi: 10.1016/S0140-6736(20)30462-1. https://europepmc.org/abstract/MED/32113505

- 45. DeVerna MR, Aiyappa R, Pacheco D, Bryden J, Menczer F. Identification and characterization of misinformation superspreaders on social media. arXiv. 2022. Jul 27, [2023-02-08].

- 46. Nogara G, Vishnuprasad P, Cardoso F, Ayoub O, Giordano S, Luceri L. The disinformation dozen: an exploratory analysis of Covid-19 disinformation proliferation on Twitter. WebSci '22: 14th ACM Web Science Conference 2022; June 26-29, 2022; Barcelona. 2022.

- 47. How to get the blue checkmark on Twitter. Twitter Help. [2023-02-08]. https://help.twitter.com/en/managing-your-account/about-twitter-verified-accounts

- 48. Ferrara E, Varol O, Davis C, Menczer F, Flammini A. The rise of social bots. Commun ACM. 2016 Jun 24;59(7):96–104. doi: 10.1145/2818717.

- 49. Broniatowski DA, Jamison AM, Qi S, AlKulaib L, Chen T, Benton A, Quinn SC, Dredze M. Weaponized health communication: Twitter bots and Russian trolls amplify the vaccine debate. Am J Public Health. 2018 Oct;108(10):1378–1384. doi: 10.2105/AJPH.2018.304567.

- 50. Twitter API. Twitter Developer Platform. [2023-02-01]. https://developer.twitter.com/en/docs/twitter-api

- 51. Covaxxy. Indiana University Observatory on Social Media. [2023-02-08]. https://osome.iu.edu/tools/covaxxy

- 52. DeVerna MR, Pierri F, Truong BT, Bollenbacher J, Axelrod D, Loynes N, Torres-Lugo C, Yang K, Menczer F, Bryden J. CoVaxxy: a collection of English-language Twitter posts about COVID-19 vaccines. Fifteenth International AAAI Conference on Web and Social Media (ICWSM2021); June 7-10, 2021; virtual. 2021. May 22, pp. 992–999.

- 53. Giovanni MD, Pierri F, Torres-Lugo C, Brambilla M. VaccinEU: COVID-19 Vaccine Conversations on Twitter in French, German and Italian. Sixteenth International AAAI Conference on Web and Social Media (ICWSM); June 6-9, 2022; Atlanta, GA. 2022. May 31, pp. 1236–1244.

- 54. DeVerna MR, Pierri F, Truong B, Bollenbacher J, Axelrod D, Loynes N. CoVaxxy Tweet IDs dataset. Zenodo. 2021. [2022-01-01].

- 55. Hydrator. GitHub. [2023-02-08]. https://github.com/DocNow/hydrator

- 56. twarc. [2023-02-08]. https://twarc-project.readthedocs.io/en/latest/

- 57. Iffy+ Mis/Disinfo Sites. Iffy News. [2022-03-01]. https://iffy.news/iffy-plus/

- 58. Media Bias/Fact Check. [2023-02-08]. https://mediabiasfactcheck.com/

- 59. Rating process and criteria. NewsGuard. 2021. [2021-09-01]. https://www.newsguardtech.com/ratings/rating-process-criteria/

- 60. Shao C, Hui P, Wang L, Jiang X, Flammini A, Menczer F, Ciampaglia GL. Anatomy of an online misinformation network. PLoS One. 2018 Apr 27;13(4):e0196087. doi: 10.1371/journal.pone.0196087. https://dx.plos.org/10.1371/journal.pone.0196087

- 61. COVID-19 medical misinformation policy. YouTube. 2020. [2022-01-01]. https://support.google.com/youtube/answer/9891785?hl=en

- 62. Managing harmful vaccine content on YouTube. YouTube. 2021. Sep, [2022-01-01]. https://blog.youtube/news-and-events/managing-harmful-vaccine-content-youtube/

- 63. Axelrod DS, Harper BP, Paolillo JC. YouTube COVID-19 vaccine misinformation on Twitter: platform interactions and moderation blind spots. Conference on Truth and Trust Online; October 12-14, 2022; Boston, MA. 2022. Aug 31, https://truthandtrustonline.com/wp-content/uploads/2022/10/TTO_2022_paper_1.pdf

- 64. Centers for Disease Control and Prevention (CDC) [2023-02-08]. https://www.cdc.gov/

- 65. World Health Organization. [2023-02-08]. https://www.who.int/

- 66. Botometer Pro. Rapid API. [2023-02-08]. https://rapidapi.com/OSoMe/api/botometer-pro

- 67. Yang K, Varol O, Hui P, Menczer F. Scalable and generalizable social bot detection through data selection. AAAI Conference on Artificial Intelligence; February 7-12, 2020; New York, NY. 2020. Apr 03, pp. 1096–1103.

- 68. Smith MR. How a Kennedy built an anti-vaccine juggernaut amid COVID-19. AP News. 2021. [2022-01-01]. https://apnews.com/article/how-rfk-jr-built-anti-vaccine-juggernaut-amid-covid-4997be1bcf591fe8b7f1f90d16c9321e

- 69. Frenkel S. Facebook and Instagram remove Robert Kennedy Jr's nonprofit for misinformation. New York Times. 2022. [2023-02-08]. https://www.nytimes.com/2022/08/18/technology/facebook-instagram-robert-kennedy-jr-misinformation.html

- 70. Manjoo F. Alex Jones and the wellness-conspiracy industrial complex. New York Times. 2022. [2023-02-08]. https://www.nytimes.com/2022/08/11/opinion/alex-jones-wellness-conspiracy.html

- 71. Broniatowski DA, Kerchner D, Farooq F, Huang X, Jamison AM, Dredze M, Quinn SC, Ayers JW. Twitter and Facebook posts about COVID-19 are less likely to spread misinformation compared to other health topics. PLoS One. 2022 Jan 12;17(1):e0261768. doi: 10.1371/journal.pone.0261768. https://dx.plos.org/10.1371/journal.pone.0261768

- 72. Lin H, Lasser J, Lewandowsky S, Cole R, Gully A, Rand DG, Pennycook G. High level of agreement across different news domain quality ratings. PsyArXiv. 2022. [2023-02-08]. https://psyarxiv.com/qy94s/

- 73. Batch compliance. Twitter Developer Platform. [2023-02-08]. https://developer.twitter.com/en/docs/twitter-api/compliance/batch-compliance/quick-start

- 74. Wojcik S, Hughes A. Sizing Up Twitter Users. Pew Research Center. 2019. Apr 24, [2022-01-01]. https://www.pewresearch.org/internet/2019/04/24/sizing-up-twitter-users/

- 75. McGregor SC, Molyneux L. Twitter’s influence on news judgment: an experiment among journalists. Journalism. 2018 Oct 05;21(5):597–613. doi: 10.1177/1464884918802975.

- 76. Auxier B, Anderson M. Social Media Use in 2021. Pew Research Center. 2021. [2022-01-01]. https://www.pewresearch.org/internet/2021/04/07/social-media-use-in-2021/

- 77. Dubé E, Laberge C, Guay M, Bramadat P, Roy R, Bettinger JA. Vaccine hesitancy: an overview. Hum Vaccin Immunother. 2013 Aug 27;9(8):1763–1773. doi: 10.4161/hv.24657. https://europepmc.org/abstract/MED/23584253

- 78. Rogers R. Deplatforming: following extreme Internet celebrities to Telegram and alternative social media. Eur J Commun. 2020 May 06;35(3):213–229. doi: 10.1177/0267323120922066.

- 79. Jhaver S, Boylston C, Yang D, Bruckman A. Evaluating the effectiveness of deplatforming as a moderation strategy on Twitter. Proc ACM Hum Comput Interact. 2021 Oct 18;5(CSCW2):1–30. doi: 10.1145/3479525.

- 80. Chandrasekharan E, Pavalanathan U, Srinivasan A, Glynn A, Eisenstein J, Gilbert E. You can't stay here: the efficacy of Reddit's 2015 ban examined through hate speech. Proc ACM Hum Comput Interact. 2017 Dec 06;1(CSCW):1–22. doi: 10.1145/3134666.

- 81. Ali S, Saeed M, Aldreabi E, Blackburn J, De CE, Zannettou S. Understanding the effect of deplatforming on social networks. 13th ACM Web Science Conference; June 21-25, 2021; online. 2021.

- 82. Bilton N. The downfall of Alex Jones shows how the internet can be saved. Vanity Fair. 2021. [2022-01-01]. https://www.vanityfair.com/news/2019/04/the-downfall-of-alex-jones-shows-how-the-internet-can-be-saved

- 83. Permanent suspension of @realDonaldTrump. Twitter Blog. [2023-02-08]. https://blog.twitter.com/en_us/topics/company/2020/suspension

- 84. Papakyriakopoulos O, Goodman E. The Impact of Twitter Labels on Misinformation Spread and User Engagement: Lessons from Trump's Election Tweets. ACM Web Conference; April 2022; Lyon, France. 2022. p. 2541.

- 85. Zannettou S. "I Won the Election!": an empirical analysis of soft moderation interventions on Twitter. 15th International Conference on Web and Social Media (ICWSM '21); June 7-10, 2021; virtual. 2021. May 22, pp. 865–876.

- 86. Pasquetto I, Swire-Thompson B, Amazeen MA. Tackling misinformation: What researchers could do with social media data. HKS Misinfo Review. 2020 Dec 9;:1–8. doi: 10.37016/mr-2020-49. https://misinforeview.hks.harvard.edu/article/tackling-misinformation-what-researchers-could-do-with-social-media-data/