Large language models (LLMs), a form of artificial intelligence (AI) technology, can generate text that is increasingly difficult to distinguish from text written by people. In particular, ChatGPT (Fig. 1), an LLM recently released by OpenAI (https://openai.com/), has become a sensation because of its astonishing performance. At the same time, ChatGPT and other LLMs raise concerns in education and scientific publishing because they can write essays, reports, exam answers, and scientific papers.

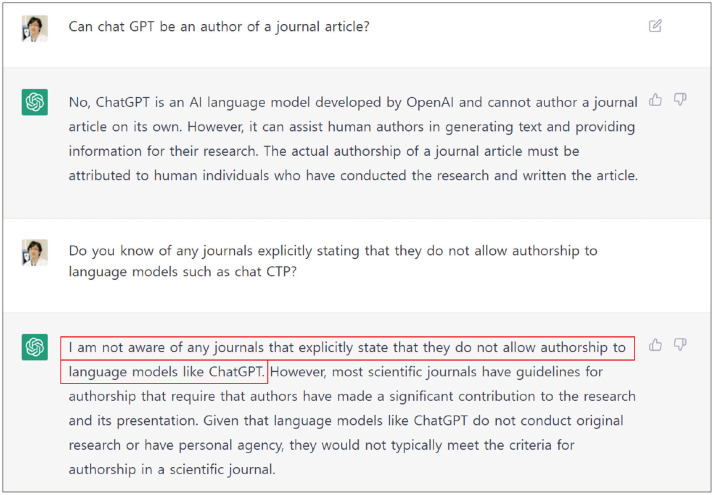

Fig. 1. A screen capture of a conversation with ChatGPT performed at 12 PM (UTC+9) on February 2, 2023. Although the sentences sound plausible and are grammatically correct, the response contains clearly wrong information (red rectangles) because ChatGPT was not trained on data after 2021.

Acknowledging these concerns, leading academic journals and organizations in science, including premier journals such as Nature, Science, and JAMA, have recently announced editorial policies that ban listing these nonhuman technologies as authors, with some even prohibiting the inclusion of AI-generated text in submitted work [1,2,3].

The Korean Journal of Radiology (KJR) fully agrees with these concerns and with the new editorial policies on the use of LLMs described above. Therefore, we explicitly state that no LLM will be accepted as a credited author on scientific papers that KJR publishes.

Footnotes

Conflicts of Interest: The author has no potential conflicts of interest to disclose.

Funding Statement: None

References

- 1. Tools such as ChatGPT threaten transparent science; here are our ground rules for their use. Nature. 2023;613:612. doi: 10.1038/d41586-023-00191-1.

- 2. Thorp HH. ChatGPT is fun, but not an author. Science. 2023;379:313. doi: 10.1126/science.adg7879.

- 3. Flanagin A, Bibbins-Domingo K, Berkwits M, Christiansen SL. Nonhuman “Authors” and implications for the integrity of scientific publication and medical knowledge. JAMA. 2023 Jan 31 [Epub]. doi: 10.1001/jama.2023.1344.