Review Highlights

- Brief overview of the Multilevel Monte Carlo estimator.

- Review of the importance sampling algorithm to reduce the overall variance of the MLMC estimator associated with the option pricing problems in financial engineering.

- Review of the extension of the adaptive sampling algorithm to the multilevel paradigm, with the aim of improving the computational complexity while estimating the Value-at-Risk (VaR) and Conditional VaR (CVaR).

Keywords: MLMC, Option Pricing, Importance Sampling, Risk Management, Adaptive Sampling

Method name: Importance Sampling for Option Pricing and Adaptive Sampling in Efficient Risk Estimation

Graphical abstract

Abstract

In this article, we review recent developments in Multilevel Monte Carlo (MLMC) algorithms, with applications in financial engineering. We focus on recent studies in two subareas, namely option pricing and financial risk management. For the former, we discuss the incorporation of the importance sampling algorithm into the MLMC estimator, yielding a hybrid algorithm that reduces the overall variance of the estimator. For the latter, we discuss studies aimed at constructing an efficient algorithm to estimate the risk measures Value-at-Risk (VaR) and Conditional VaR (CVaR). In this regard, we briefly discuss the motivation for and the construction of an adaptive sampling algorithm that efficiently estimates the nested expectation, which is, in general, computationally expensive.

Specifications table

| Subject area | Mathematics and Statistics |
|---|---|
| More specific subject area | Computational Finance |
| Name of the reviewed methodology | Importance Sampling for Option Pricing and Adaptive Sampling in Efficient Risk Estimation |
| Keywords | MLMC; Option Pricing; Importance Sampling; Risk Management; Adaptive Sampling |
| Resource availability | N.A. |
| Review question | 1. What are the recent developments in the field of Multilevel Monte Carlo? 2. How have these developments led to the improved efficiency of the existing estimator for various applications in finance? 3. What are the shortcomings of the presented studies? 4. What are the recent developments to address these shortcomings? |

Introduction

In computational finance, merely establishing that a solution to a problem exists is not sufficient to achieve tangible financial goals. As in many applications, the solution we seek is, in practice, an approximation to the actual solution. For problems in quantitative finance, an analytical or (possibly) semi-analytical solution is available in only a handful of cases. For the most part, therefore, one must devise efficient methods to arrive at an appropriate solution, which in turn necessitates computational techniques. At the heart of this paper lies a specific computational technique widely used in the finance industry, namely the Monte Carlo simulation approach. Accordingly, we begin with a brief account of this approach, steering the discussion towards recent research and development in the area of Multilevel Monte Carlo (MLMC). Interested readers may refer to [1], [2], [3], [4], [5], [6], [7] for greater clarity on the MLMC approach and its key developments with respect to algorithms and applications in financial engineering. In this article, we primarily focus on the importance sampling approach developed and studied in [8], [9], [10], and on how MLMC has led to algorithms for efficient risk estimation in financial risk management, as discussed in [11], [12]. Before turning to these topics, we give a brief overview of Monte Carlo and MLMC.

Monte Carlo methods have become one of the driving computing tools in the finance industry. The necessity of simulating high-dimensional stochastic models (which in turn may be attributed to the linear development in the complexity corresponding to the size of the problem itself) is one of the primary reasons for this approach becoming a critical computational strategy in the industry. The main objective of this method in computational finance is to achieve the necessary degree of accuracy, which comes at a high computational cost. More specifically, we intend to approximate $\mathbb{E}[P]$, where $P = P(X)$ is a functional of the random variable $X$. The traditional Monte Carlo approach requires a computational complexity of the order of $\mathcal{O}(\varepsilon^{-3})$ to attain a Root Mean Square Error (RMSE) of $\varepsilon$ in a biased context [1]. This limitation led to the introduction of the multilevel framework in [1], which achieves a computational complexity of $\mathcal{O}(\varepsilon^{-2}(\log \varepsilon)^{2})$ in the biased framework.

The idea behind the multilevel architecture is to employ independent standard Monte Carlo estimators on various resolution levels and to use the differences between successive levels as control variates for the Monte Carlo simulation at the most granular level, which in mathematical terms is given by

$$\mathbb{E}[P_L] = \mathbb{E}[P_0] + \sum_{\ell=1}^{L} \mathbb{E}[P_\ell - P_{\ell-1}]. \tag{1}$$

Using the standard Monte Carlo estimator to approximate each expectation on the right-hand side of (1), we obtain

$$Y = \sum_{\ell=0}^{L} Y_\ell, \qquad Y_0 = \frac{1}{N_0}\sum_{i=1}^{N_0} P_0^{(i)}, \qquad Y_\ell = \frac{1}{N_\ell}\sum_{i=1}^{N_\ell}\left(P_\ell^{(i)} - P_{\ell-1}^{(i)}\right) \ \text{for } \ell \geq 1, \tag{2}$$

and therefore $\mathbb{E}[Y] = \mathbb{E}[P_L]$. Here $P_\ell$ is the approximation of the random variable $P$ on level $\ell$, and this approximation is contingent on the application under consideration. For example, if the underlying stochastic process is driven by a stochastic differential equation (SDE), then $P_\ell$ is the approximation of $P$ obtained with some time discretization parameter $h_\ell$. We now present a slightly generalized version of the original theorem by Giles [1]. The stated theorem corresponds to the study carried out in [13].

Theorem 1

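Let $P$ denote a functional of the random variable $X$, and let $P_\ell$ denote the corresponding level $\ell$ numerical approximation. If there exist independent estimators $Y_\ell$ based on $N_\ell$ Monte Carlo samples, each with expected cost $C_\ell$, and positive constants $\alpha, \beta, \gamma, c_1, c_2, c_3$ such that $\alpha \geq \frac{1}{2}\min(\beta, \gamma)$ and

- (a) $\left|\mathbb{E}[P_\ell - P]\right| \leq c_1 2^{-\alpha \ell}$

- (b) $\mathbb{E}[Y_\ell] = \mathbb{E}[P_0]$ for $\ell = 0$, and $\mathbb{E}[Y_\ell] = \mathbb{E}[P_\ell - P_{\ell-1}]$ for $\ell > 0$

- (c) $\mathbb{V}[Y_\ell] \leq c_2 N_\ell^{-1} 2^{-\beta \ell}$

- (d) $C_\ell \leq c_3 N_\ell 2^{\gamma \ell}$

then there exists a positive constant $c_4$ such that for any $\varepsilon < e^{-1}$, there are values $L$ and $N_\ell$ for which the multilevel estimator $Y = \sum_{\ell=0}^{L} Y_\ell$ has an MSE with bound

$$\mathbb{E}\left[(Y - \mathbb{E}[P])^{2}\right] < \varepsilon^{2},$$

with a computational complexity $C$ having the bound

$$C \leq \begin{cases} c_4\, \varepsilon^{-2}, & \beta > \gamma, \\ c_4\, \varepsilon^{-2} (\log \varepsilon)^{2}, & \beta = \gamma, \\ c_4\, \varepsilon^{-2 - (\gamma - \beta)/\alpha}, & \beta < \gamma. \end{cases}$$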

It is evident from the above theorem that the complexity of MLMC depends on the parameters $\alpha$, $\beta$ and $\gamma$. Therefore, one of the main challenges in developing an MLMC-based estimator is to determine the parameters $\alpha$, $\beta$ and $\gamma$ for the underlying approximation. With this brief introduction to MLMC, we now direct our discussion towards its recent developments pertaining to algorithms and financial applications.
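To make the telescoping construction concrete, the following minimal Python sketch estimates a discounted European call price under geometric Brownian motion with Euler time stepping. It is an illustration under stated assumptions rather than the implementation used in the reviewed studies: the parameter values ($S_0 = K = 100$, $r = 0.05$, $\sigma = 0.2$, $T = 1$), the refinement factor 2, the fixed number of samples per level, and the function names `euler_pair` and `mlmc_call_price` are all our own choices. In practice, the number of levels and the samples per level are chosen from estimated variances and costs, a step the sketch omits.

```python
import math
import random


def euler_pair(rng, n_fine, s0, r, sigma, T, refine):
    """Simulate coupled fine and coarse Euler paths; return both terminal values."""
    h = T / n_fine
    s_fine, s_coarse, dw_coarse = s0, s0, 0.0
    for step in range(1, n_fine + 1):
        dw = rng.gauss(0.0, math.sqrt(h))
        s_fine += r * s_fine * h + sigma * s_fine * dw
        dw_coarse += dw
        if step % refine == 0:
            # The coarse step reuses the summed fine Brownian increments.
            s_coarse += r * s_coarse * refine * h + sigma * s_coarse * dw_coarse
            dw_coarse = 0.0
    return s_fine, s_coarse


def mlmc_call_price(samples_per_level, s0=100.0, strike=100.0, r=0.05,
                    sigma=0.2, T=1.0, refine=2, seed=0):
    """Telescoping-sum estimator: level 0 plus Monte Carlo level corrections."""
    rng = random.Random(seed)
    discount = math.exp(-r * T)

    def payoff(s):
        return discount * max(s - strike, 0.0)

    estimate = 0.0
    for level, n_samples in enumerate(samples_per_level):
        n_fine = refine ** level  # number of Euler steps on this level
        total = 0.0
        for _ in range(n_samples):
            s_f, s_c = euler_pair(rng, n_fine, s0, r, sigma, T, refine)
            # Level 0 has no coarser partner, so only the fine payoff is used.
            total += payoff(s_f) - (payoff(s_c) if level > 0 else 0.0)
        estimate += total / n_samples
    return estimate


if __name__ == "__main__":
    print(mlmc_call_price([8000, 4000, 2000, 1000, 500], seed=1))
```

The fine and coarse paths on each level share the same Brownian increments, which is what keeps the variance of the level differences small and lets the finer levels use fewer samples.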

In this article, we discuss four methodological contributions to the paradigm of MLMC simulation. Because the article is a review article, we give a brief overview of these methods, focusing solely on the improvement they exhibit over standard Multilevel algorithms. As the problem of derivative pricing and estimation of failure probabilities is both computationally expensive and of utmost importance in financial institutions, the research discussed is highly relevant in the current scenario.

Importance Sampling Multilevel Algorithm

Since the advent of MLMC in the literature, one direction of its development has been the combination of this algorithm with existing variance reduction techniques. For instance, in [3], [14] the authors studied and analyzed the combination of antithetic variates and MLMC in order to bypass the Lévy area simulation (encountered while using the Milstein discretization scheme) when simulating higher-dimensional SDEs. In our discussion, however, we primarily focus on the combination of the importance sampling algorithm and the multilevel estimator.

The idea of incorporating importance sampling with multilevel estimators is derived from the seminal paper by Arouna [15]. Arouna’s idea relied upon the parametric change of measure and using a search algorithm to approximate the optimal change of the measure parameter, in order to minimize the variance of the standard Monte Carlo estimator. Before we discuss the research undertaken in the area of multilevel pertaining to importance sampling algorithm, we give a brief overview of the parametric importance sampling approach.

Consider the general problem of estimating $\mathbb{E}[F(X)]$, where $X$ is a $d$-dimensional random variable. If $p$ is the multivariate density function of $X$, assumed strictly positive, then

$$\mathbb{E}[F(X)] = \int_{\mathbb{R}^d} F(x)\,p(x)\,dx = \int_{\mathbb{R}^d} F(x+\theta)\,\frac{p(x+\theta)}{p(x)}\,p(x)\,dx,$$

where $\theta \in \mathbb{R}^d$. This implies that $\mathbb{E}[F(X)] = \mathbb{E}\left[F(X+\theta)\,\frac{p(X+\theta)}{p(X)}\right]$. Therefore, we need to determine the optimal value of $\theta$ such that the variance of $F(X+\theta)\,p(X+\theta)/p(X)$ is minimum. Since the mean does not depend on $\theta$, this is represented mathematically as

$$\theta^{*} = \arg\min_{\theta \in \mathbb{R}^d} \mathbb{E}\left[F^{2}(X+\theta)\,\frac{p^{2}(X+\theta)}{p^{2}(X)}\right]. \tag{3}$$

To solve the above problem, one can resort to the Robbins-Monro algorithm, which constructs a sequence of random variables $(\theta_n)_{n \geq 1}$ that approximates $\theta^{*}$. However, the convergence of this algorithm requires a restrictive condition, known as the non-explosion condition (NEC) (given in [16]).

To deal with this restrictive condition, the authors in [17], [18] introduced a truncation-based procedure, which was developed further in [19], [20]. An unconstrained procedure to approximate $\theta^{*}$, which makes extensive use of the regularity of the density function, was introduced in [21] along with a proof of convergence of the algorithm. Besides the stochastic approximation algorithm, one can also use a deterministic algorithm such as the sample average approximation, which, while computationally expensive, provides a better approximation to $\theta^{*}$.
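As a small illustration of the parametric change of measure, consider a standard Gaussian $G$, for which the density ratio reduces to the mean-shift identity $\mathbb{E}[f(G)] = \mathbb{E}[f(G+\theta)\,e^{-\theta G - \theta^{2}/2}]$. The sketch below applies it to the rare-event probability $\mathbb{P}(G > 3)$. The target, the shift $\theta = 3$, and the function names are our own assumptions for illustration; the shift is picked by hand here, whereas the algorithms discussed in this section search for it.

```python
import math
import random


def tail_prob_plain(n, level, rng):
    """Plain Monte Carlo estimate of P(G > level) for standard normal G."""
    return sum(1.0 for _ in range(n) if rng.gauss(0.0, 1.0) > level) / n


def tail_prob_shifted(n, level, theta, rng):
    """Importance sampling estimate using a mean shift of size theta."""
    total = 0.0
    for _ in range(n):
        g = rng.gauss(0.0, 1.0)
        if g + theta > level:
            # Likelihood ratio p(g + theta) / p(g) for the standard normal density.
            total += math.exp(-theta * g - 0.5 * theta * theta)
    return total / n


if __name__ == "__main__":
    rng = random.Random(0)
    exact = 0.5 * math.erfc(3.0 / math.sqrt(2.0))
    print("exact  ", exact)
    print("plain  ", tail_prob_plain(20000, 3.0, rng))
    print("shifted", tail_prob_shifted(20000, 3.0, 3.0, rng))
```

With the shift in place, most samples land in the region of interest, so far fewer samples are wasted than in the plain estimator.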

In problems dealing with the pricing of the options, for the most part the underlying stochastic process (where is a finite time horizon), is governed by some SDEs. The general form of these SDEs is given as follows:

| (4) |

where is a -dimensional Brownian motion on a filtered probability space , with and being the functions satisfying the following condition:

Assumption ensures the existence and the uniqueness of solution to (4). For the most part, constructing an analytical or semi-analytical solution to (4) is not possible, and therefore we need to rely on discretization schemes such as Euler or Milstein in order to simulate the SDEs. For detailed discussion on these discretization schemes, the interested readers may refer to [22]. Further, following the idea of [15], we consider a family of stochastic process , with , being governed by the following SDE:

| (5) |

As a consequence of the Girsanov’s Theorem, we know that there exists a risk-neutral probability measure , which is equivalent to such that,

| (6) |

under which the process is a Brownian motion. Therefore,

| (7) |

Therefore, following the discussion above we have,

Here, . Now the idea of importance sampling Monte Carlo method is to estimate , where is given by,

| (8) |

In the context of Multilevel estimator, we present two approaches studied in [8], [9], [10], adapting the ideas studied by authors in [15], [16] and extending it to multilevel scenarios. Under the parametric change of measure, the general multilevel estimator is defined as,

| (9) |

Under the framework of multilevel estimator, the parametric importance sampling estimator looks like,

| (10) |

Considering the variance of the above estimator, we have [8],

| (11) |

where,

Therefore, as discussed, in order to solve the problem of minimizing the overall variance of the estimator described above, we intend to minimize the variance at each level of resolution, i.e., we aim at approximating for , such that,

| (12) |

Further, pertinent to the discussion carried out in [16] and another application of the Girsanov’s Theorem, the above problem can be reformulated as,

| (13) |

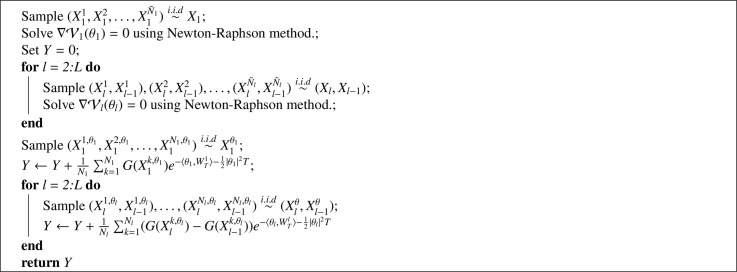

We present below the two algorithm namely, the sample average approximation and stochastic approximation, in order to approximate the ’s as the solution to (13).

Sample Average Approximation

The sample average approximation deals with approximating the above expectations using sample paths. More specifically,

| (14) |

and,

| (15) |

Having approximated the expectation in the minimization problem, the authors used the standard Newton-Raphson algorithm on the functions and in order to approximate , for . In [16] it is proved that if the functional satisfies the non-degeneracy conditions i.e., and and have finite second moments, then by Lemma 2.1 in [16], and are infinitely continuously differentiable. Moreover, both and are both strongly convex, thus implying the existence of the unique minimum and as the solution to equation (13).
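The sample average approximation with Newton-Raphson can be sketched in one dimension. For a standard Gaussian $G$ and a mean shift $\theta$, the second moment of the importance sampling estimator equals $\mathbb{E}[f(G)^{2} e^{-\theta G + \theta^{2}/2}]$, which is smooth and strictly convex in $\theta$. The sketch freezes a sample of $G$, replaces the expectation by its sample average, and applies Newton-Raphson to it. The payoff (a call on a lognormal terminal value with $S_0 = K = 100$, $r = 0.05$, $\sigma = 0.2$, $T = 1$), the single-level setting, and all function names are our own assumptions, not the multilevel construction of [8].

```python
import math
import random


def call_payoff(g, s0=100.0, strike=100.0, r=0.05, sigma=0.2, T=1.0):
    """Call payoff on a lognormal terminal value driven by one Gaussian g."""
    s_T = s0 * math.exp((r - 0.5 * sigma ** 2) * T + sigma * math.sqrt(T) * g)
    return max(s_T - strike, 0.0)


def saa_derivatives(theta, gauss, payoff_sq):
    """Gradient and Hessian of the sample-average second moment at theta."""
    grad = hess = 0.0
    for g, f2 in zip(gauss, payoff_sq):
        w = f2 * math.exp(-theta * g + 0.5 * theta * theta)
        grad += (theta - g) * w
        hess += (1.0 + (theta - g) ** 2) * w
    n = len(gauss)
    return grad / n, hess / n


def saa_newton(gauss, payoff_sq, theta0=0.0, tol=1e-8, max_iter=50):
    """Newton-Raphson on the frozen-sample objective."""
    theta = theta0
    for _ in range(max_iter):
        grad, hess = saa_derivatives(theta, gauss, payoff_sq)
        step = grad / hess
        theta -= step
        if abs(step) < tol:
            break
    return theta


if __name__ == "__main__":
    rng = random.Random(0)
    gauss = [rng.gauss(0.0, 1.0) for _ in range(5000)]
    payoff_sq = [call_payoff(g) ** 2 for g in gauss]
    print(saa_newton(gauss, payoff_sq))
```

Because the sample is frozen, the procedure is deterministic given the draws, at the price of storing all of them, which reflects the memory footprint noted for this approach.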

Adaptive Stochastic Approximation

Under the stochastic approximation, studied in [9], [10] the aim of determining the optimal change of parameter for is carried out using the Robbins-Monro algorithm. Here, we briefly describe the algorithm. Consider a compact convex set such that . Then the recursive algorithm with projection is defined as follows,

| (16) |

where is the Euclidean projection onto the set . The sequence is a decreasing sequence of positive real numbers satisfying,

| (17) |

Also,

| (18) |

The algorithm described above is the constrained version of the Robbins-Monro algorithm. The inclusion of the projection operator in the recursive algorithm is to satisfy the NEC. Similar to the discussion carried out in the previous section, if the non-degeneracy conditions are satisfied i.e., and , further assuming the finite second moment of and , we can conclude the convergence of the , constructed recursively using equation (16), for various level of resolutions.
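The projected recursion can be sketched on a toy problem whose optimum is known in closed form. For $f(G) = e^{G}$ with $G$ standard Gaussian, the second moment under a mean shift is $\mathbb{E}[e^{2G} e^{-\theta G + \theta^{2}/2}] = e^{\theta^{2} - 2\theta + 2}$, which is minimized at $\theta = 1$. The choice of $f$, the compact set $[0, 2]$, the step sequence $\gamma_n = a/(n+b)$ (which sums to infinity while its squares sum to a finite value), and the function name are our own assumptions for illustration.

```python
import math
import random


def projected_robbins_monro(f, n_iter, lower, upper, theta0=0.0,
                            a=1.0, b=10.0, seed=0):
    """Constrained Robbins-Monro search for the variance-minimizing mean shift."""
    rng = random.Random(seed)
    theta = theta0
    for n in range(1, n_iter + 1):
        g = rng.gauss(0.0, 1.0)
        # Unbiased sample of the gradient of E[f(G)^2 exp(-theta*G + theta^2/2)].
        grad = (theta - g) * f(g) ** 2 * math.exp(-theta * g + 0.5 * theta * theta)
        gamma = a / (n + b)
        # Euclidean projection onto [lower, upper] keeps the iterates bounded.
        theta = min(upper, max(lower, theta - gamma * grad))
    return theta


if __name__ == "__main__":
    print(projected_robbins_monro(math.exp, 50000, 0.0, 2.0, seed=3))
```

Each iteration uses a single fresh draw and stores nothing, in contrast to the sample average approximation, but the result depends on the step sequence and on the choice of the compact set.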

The term adaptive is used in the sense that, the estimation of the optimal importance sampling parameter and the MLMC run simultaneously. The multilevel estimator in this case is given as follows,

| (19) |

However, for practical implementation, one needs to stop the approximation procedure after a finite number of iterations.

Having approximated the for , we use the multilevel algorithm described by equation (16) to estimate our expectation. The studies carried out in [8], [9], [10] demonstrate the accuracy of the hybrid importance sampling multilevel algorithm over standard multilevel algorithm, through a series of numerical examples, where the underlying SDEs are multi-dimensional.

As one can readily observe, the sample average approximation is a deterministic algorithm, whereas the stochastic one is not. The robustness and the stability of the sample average approximation make it a dominant choice for estimating . However, the algorithm suffers from a low convergence rate, and large memory footprint [8]. Also, the algorithm does require the calculation of , which in turn requires more conditions on the regularity of the payoff , making it less convenient from the practitioner’s point of view. On the other hand, the stochastic algorithm does not require the calculation of , resulting in less restrictive conditions and making it feasible from the practitioner’s point of view. Although the convergence of the stochastic algorithm is faster than the sample average algorithm, it is not robust and is sensitive to the parameter . Further, it is evident from Algorithm 1 and 2 that both these algorithms are computationally more expensive as compared to the standard MLMC algorithm. Nevertheless, the variance reduction achieved by these algorithms compensates for the increased computational cost. It may be pointed out that the study performed above only deals with the Euler MLMC, restricted to the use of Euler discretization to simulate the underlying SDEs. More recently, a study carried out by authors in [23] generalize this approach, undertaking higher order discretization schemes such as Milstein to simulate the underlying SDEs. The interested reader can refer to the references mentioned therein to get a more rigorous understanding of this hybrid algorithm.

Algorithm 1.

Importance sampling: Sample average approximation

Algorithm 2.

Importance sampling: Stochastic Approximation

MLMC and Efficient Risk Estimation

Risk measurement and the consequent management of risk are essential components of financial engineering. Computing risk measures for a financial portfolio is both challenging and computationally intensive, since it involves a nested expectation, which entails multiple evaluations of the loss to the portfolio for distinct risk scenarios. Further, the cost of computing the loss of a portfolio comprising thousands of derivatives becomes progressively more expensive as the size of the portfolio increases [24]. Value-at-Risk (VaR), Conditional VaR (CVaR), and the probability of a large loss are key risk metrics used to estimate the risk of a financial portfolio. At the core of these estimations is the necessity of evaluating the nested expectation given by

$$\mathbb{E}\left[H\left(\mathbb{E}[X \mid Y]\right)\right], \tag{20}$$

where $H$ is the Heaviside function. More specifically, suppose we need to compute the probability of the expected loss being greater than $L_\eta$, i.e., we are interested in the following computation:

$$\eta = \mathbb{P}\left[\mathbb{E}[\Lambda \mid R_\tau] > L_\eta\right], \tag{21}$$

where $\mathbb{E}[\Lambda \mid R_\tau]$ is the expected loss in a risk-neutral world, with $R_\tau$ being a possible risk scenario at some short risk (time) horizon $\tau$. This is of the form (20) with $X = \Lambda - L_\eta$ and $Y = R_\tau$. Also, $\Lambda$ is the average of many losses incurred from different financial derivatives depending upon similar underlying assets [24], that is,

$$\Lambda = \frac{1}{P}\sum_{i=1}^{P} \Lambda_i, \tag{22}$$

where $P$ is the total number of derivatives and $\Lambda_i$ is the loss from the $i$-th derivative. The average is considered to ensure the boundedness of $\Lambda$ when the portfolio size $P$ increases. A standard and straightforward way to estimate the nested expectation (20) is the Monte Carlo method. This involves simulating $M$ independent scenarios of the risk parameter $R_\tau$ and, for each risk scenario, $N$ independent samples of the total loss. This method was explored in [25] and an extended analysis was carried out in [26]. The total computational cost of this simulation is $\mathcal{O}(\varepsilon^{-3})$ in order to achieve an RMSE of $\varepsilon$ [24]. To handle this issue, we present the ideas studied in [11], [12] in the realm of MLMC.
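The nested estimator described above can be sketched on a toy model for which the answer is known. The model is our own assumption, chosen only for illustration: the outer variable is $Y \sim N(0,1)$ and the inner variable is $X \mid Y \sim N(Y - c, 1)$, so that $\mathbb{E}[X \mid Y] = Y - c$ and the target probability is $1 - \Phi(c)$. The function name and sample sizes are likewise hypothetical.

```python
import math
import random


def nested_mc_probability(n_outer, n_inner, shift, seed=0):
    """Estimate P(E[X|Y] > 0) with an inner sample mean for each outer scenario."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_outer):
        y = rng.gauss(0.0, 1.0)  # outer scenario
        # Inner Monte Carlo estimate of the conditional expectation E[X | Y = y].
        inner_mean = sum(rng.gauss(y - shift, 1.0) for _ in range(n_inner)) / n_inner
        if inner_mean > 0.0:  # Heaviside function applied to the inner estimate
            hits += 1
    return hits / n_outer


if __name__ == "__main__":
    exact = 0.5 * math.erfc(1.0 / math.sqrt(2.0))
    print("exact ", exact)
    print("nested", nested_mc_probability(4000, 64, 1.0, seed=2))
```

The total work is the product of the outer and inner sample sizes, and the inner sample mean introduces a bias in addition to the outer sampling error, which is why both sizes must grow as the tolerance shrinks.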

Adaptive Sampling Multilevel Estimator

As mentioned in the previous section, the cost of standard Monte Carlo to achieve an RMSE of $\varepsilon$ is $\mathcal{O}(\varepsilon^{-3})$. To improve the computational complexity, the authors in [27] developed an efficient algorithm that adapts the sample size used in the inner Monte Carlo sampler to the particular outer-sample random variable. Under certain conditions, they achieved a computational complexity of $\mathcal{O}(\varepsilon^{-5/2})$ for an RMSE of $\varepsilon$. Giles in [11] extended this approach to the multilevel framework and achieved a computational cost of $\mathcal{O}(\varepsilon^{-2}(\log \varepsilon)^{2})$ for an RMSE tolerance $\varepsilon$. Before presenting the work initiated by Giles, we put forth a brief review of the studies carried out in [25] and [27].

The authors in [25], estimated the inner expectation of the equation (20), i.e., , for a given , using the unbiased Monte Carlo estimator, with sample paths, as given by,

| (23) |

where, are the mutually independent samples from the random variable , conditioned on . Again using the Monte Carlo for the outer expectation, we have,

| (24) |

where are the mutually independent samples from the random variable . Further, they proved that if the two random variables and have the joint density and assuming that for , exists, plus there exists a non-negative function , such that,

for all , then the RMSE of the estimator (24) is . Therefore, in order to achieve the RMSE of we need and , leading to the total computational complexity of . Authors in [27] developed an adaptive sampling technique to deal with high computational complexity previously discussed. Their approach was based on the likelihood that an additional sample will result in a negative estimate of having estimated that for given . More specifically they showed that,

where and . Therefore, if , then the probability that is equal to . Based on these observations, the authors in [27] introduced two algorithms, the first being based on the minimization of the total number of samples for all inner Monte Carlo samplers with respect to given tolerance , and the second being iterative, estimating and after every iteration, for given value of , using samples further adding more inner samples till exceeds some error margin threshold. Under these two algorithms it was observed that the overall computational complexity is [11]. The authors in [11] introduced the above algorithms in the realm of multilevel simulation, wherein they used multilevel estimator in order to achieve an approximation to the outer expectation, while making use of the sample size in the inner expectation as the discretization parameter. More specifically,

| (25) |

where,

| (26) |

with being the i.i.d samples of the random variable , given . Also, . Now under the assumptions , it can be proved that [11],

Further, under the assumption that there exists constants and such that, , for all where is the random variable with density , the authors in [11] proved that, if and are the two random variables, satisfying the stated assumption, then,

| (27) |

The above result determines the strong convergence property necessary to analyze the full potential of the MLMC estimator, in this scenario. However, if , then with standard MLMC complexity analysis it is easy to determine that the computational complexity required to achieve RMSE of , we need computational complexity. To cater to this high computational demand, even in the framework of MLMC, the authors undertook the adaptive approach developed in [27] and extended it to the framework of MLMC.

Giles extended the studies carried out by authors in [27] to multilevel paradigm with an aim to reduce the overall computational cost to . In addition to the assumptions stated above, it is further assumed that,

Thus, under the above stated assumptions, it was proved in Lemma 2.5 (for the perfect adaptive sampling) and Theorem 2.7 of [11], that if the maximum number of sample paths is restricted to,

| (28) |

then the further number of sample path of various level of resolutions are given by,

| (29) |

with being some confidentiality constant and for the perfect adaptive sampling and

when the values of and is approximated. Therefore,

| (30) |

thereby leading to the overall computational complexity of the desired order. In a detailed discussion carried out in Section 4 of [11], it was proved (pertaining to the calculation of VaR and CVaR) that in order to achieve the overall computational cost of RMSE, the required computational complexity is for the estimation of VaR and CVaR, respectively. The numerical test on a model problem undertaken shows the efficacy of the algorithm constructed. Readers are directed to the referred paper for detailed discussion on the proofs of the above stated results. It may be noted that the computational complexity increases with an increase in the portfolio size, . A random sub-sampling approach, extending it to a multilevel framework, thereby addressing the dependency on the portfolio size, to achieve the desired RMSE was recently introduced in [24].
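The intuition behind adaptive inner sampling can be sketched with a deliberately simplified rule: keep doubling the number of inner samples until the sample mean is several standard errors away from zero, or until a cap is reached. This is a stand-in of our own and not the sample-size formula of [11] or the algorithms of [27]; the threshold, the doubling schedule, the cap, and the function name are all hypothetical.

```python
import math
import random


def adaptive_inner_mean(sampler, n0=16, n_max=1024, threshold=3.0):
    """Return (sample mean, samples used) under a simple doubling rule."""
    samples = [sampler() for _ in range(n0)]
    while True:
        n = len(samples)
        mean = sum(samples) / n
        var = sum((x - mean) ** 2 for x in samples) / (n - 1)
        std_err = math.sqrt(var / n)
        # Stop once the sign of the mean is clear, or the budget is exhausted.
        confident = mean != 0.0 and abs(mean) >= threshold * std_err
        if confident or n >= n_max:
            return mean, n
        samples.extend(sampler() for _ in range(min(n, n_max - n)))


if __name__ == "__main__":
    rng = random.Random(0)
    print(adaptive_inner_mean(lambda: rng.gauss(5.0, 1.0)))   # far from zero
    print(adaptive_inner_mean(lambda: rng.gauss(0.05, 1.0)))  # close to zero
```

Scenarios whose conditional mean is far from the discontinuity are resolved with few samples, so the bulk of the work is spent only on scenarios near it.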

Estimation of Probabilities

A recent study by the authors in [12] undertakes the more general problem of developing an efficient numerical method to estimate,

| (31) |

for a given tolerance level . The study performed by the authors goes beyond the applications related to the calculation of the nested expectations. We, however, focus our discussion to the applications pertaining to the estimation of risk. In the study carried out for the one-dimensional setting, the authors consider the problem of estimating the . In doing so, they have considered an increasingly accurate approximation converging to , almost surely, as . The algorithm proposed in [12] is thus the generalization of the study performed in [11] as discussed in the Subsection (Adaptive Sampling Multilevel Estimator). As before, the idea is to approximate with , with the choice of being large enough to control the bias generated by the approximation. Therefore, adapting the idea of the multilevel estimator, the corresponding estimator is,

| (32) |

The presented study requires less restrictive conditions than the one discussed in the Subsection (Adaptive Sampling Multilevel Estimator). Before discussing this further, we state the assumptions made by the authors and briefly review their consequences [12].

Assumption 1 For some , and positive valued random variable , define,

assuming is uniformly bounded in .

Assumption 2 Let . Then there exists such that for all , we have,

for all .

Under the assumptions and , it was proven in Proposition 2.3 of [12] that,

which consequently also act as the bound for . Under the same assumptions, we require that , in order to observe computational complexity. Since in most applications (including the one under consideration here), [12], therefore a tighter bound for the bias is essential to determine the computational complexity accurately. This is achieved by further incorporating the assumption and also assuming , for . Then , and . With these assumptions and bounds, it was shown that the computational complexity is bounded as described below,

| (33) |

From the above equation, it is quite evident that the discontinuity of the function affects the computational complexity of the MLMC estimator. The idea proposed in [12] is to use , (where is a random, non-negative integer), to approximate on level . Further, the refinement is performed between levels , where is a supplied parameter based on . The procedure to perform adaptive sampling at level is given in Algorithm 4, wherein the parameter determines the strictness of the refinement. Under ideal conditions, a large value of is desirable in order to observe the maximum benefit for the MLMC complexity. Also, it is important that the refining procedure does not affect the almost sure convergence of to . Further, it is assumed that cost of computing is of the order . A comprehensive work analysis carried out in Section 3 of [12] shows the potential of the above algorithm to achieve the computational cost comparable to the standard MLMC, albeit under some additional assumptions. In the context of the application under consideration, i.e., the calculation of the VaR, we take and consequently, , where, and . Using Algorithm 4, the refining procedure form level to is carried out by adding samples to the already existing samples on the level . Using the variance of , as the approximation of , on level , we can write,

| (34) |

where, is Student’s t-statistic with samples . A thorough analysis undertaken in Section 4 of [12], shows how the above procedure satisfies the underlying assumptions for the MLMC computations stated above. Readers can refer to the referred article for a deeper understanding of the underlying mathematics and proofs.

Algorithm 4.

Adaptive Sampling at level [12]

In conclusion, we would like to highlight specific differences between the algorithm presented above and the one discussed in Subsection (Adaptive Sampling Multilevel Estimator) in the refinement procedure. Firstly, the algorithm studied by Giles is tailor-made to improve the computational cost of nested expectation. In contrast, the approach discussed above is more general and is also applicable to the problem of derivative pricing. Unlike the algorithm developed by Giles in [11], which requires generating samples of , independent of , in the above algorithm, the samples generated for the computation of can be reused in the refinement to . This allows for acceleration in the refinement procedure. Further, as one can observe that the Algorithm 3 returns the number of samples required for the computation of contrary to the Algorithm 4, which returns the estimate of as the output of the refinement process. However, both these algorithms suffer from large kurtosis, as all even moments of are equal, which in turn impacts the robustness of the MLMC algorithm. Therefore, exploring ideas to deal with large kurtosis to obtain a reliable estimate of bias and variance on level is still an open problem.

Algorithm 3.

Adaptive algorithm to deduce [11]

Numerical Illustrations

In this section, we present numerical examples both in the context of option pricing and risk management to demonstrate the efficacy and practicality of the algorithms discussed. As we aim to present the applicability of the discussed algorithm, we intend to keep things simple, working preferably in a single dimension.

Option Pricing

Consider a stochastic process governed by the one dimensional gBm i.e.,

| (35) |

where denotes the riskfree interest rate, denotes the volatility, and is a one-dimensional standard Brownian motion defined on the probability space . Now, under the probability space , the above SDE becomes,

| (36) |

Further, we intend to estimate the value of the European call option, with payoff the function,

| (37) |

where is the strike price. In order to perform simulation, we set , , , and . The price obtained using the closed-form solution is 29.4987. Also, we use the Euler discretization scheme in order to simulate the SDE, i.e.,

| (38) |

where and . Similarly, for the parametric SDE, we have

| (39) |
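The effect of the drift shift on a single level can be sketched as follows. Adding $\sigma\theta$ to the drift of the Euler scheme and multiplying the discounted payoff by the weight $\exp(-\theta W_T - \tfrac{1}{2}\theta^{2} T)$ leaves the expectation unchanged while altering the variance. The parameter values below ($S_0 = K = 100$, $r = 0.05$, $\sigma = 0.2$, $T = 1$, $\theta = 1$) are our own assumptions and are unrelated to the values used for Figure 1; the function name is hypothetical, and the shift is fixed by hand rather than obtained from either approximation algorithm.

```python
import math
import random


def is_euler_call(n_paths, n_steps, theta, s0=100.0, strike=100.0,
                  r=0.05, sigma=0.2, T=1.0, seed=0):
    """Return (sample mean, sample variance) of the weighted discounted payoff."""
    rng = random.Random(seed)
    h = T / n_steps
    discount = math.exp(-r * T)
    total = total_sq = 0.0
    for _ in range(n_paths):
        s, w = s0, 0.0
        for _ in range(n_steps):
            dw = rng.gauss(0.0, math.sqrt(h))
            # Euler step for the SDE with drift shifted by sigma * theta.
            s += (r + sigma * theta) * s * h + sigma * s * dw
            w += dw
        # Likelihood ratio that compensates for the drift shift.
        weight = math.exp(-theta * w - 0.5 * theta * theta * T)
        value = discount * max(s - strike, 0.0) * weight
        total += value
        total_sq += value * value
    mean = total / n_paths
    var = (total_sq / n_paths - mean * mean) * n_paths / (n_paths - 1)
    return mean, var


if __name__ == "__main__":
    print("theta = 0:", is_euler_call(4000, 32, 0.0, seed=5))
    print("theta = 1:", is_euler_call(4000, 32, 1.0, seed=5))
```

Setting `theta = 0` recovers the standard Euler Monte Carlo estimator, so the two calls give a direct comparison of the sample variances at the same cost per path.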

With all the preludes, we present the numerical results to showcase the efficacy of the two importance sampling algorithms.

We have used the formulas from [5] in order to estimate number of levels and samples per level for the MLMC implementation. Also, we have taken and for performing the stochastic approximation, whereas for the deterministic approximation we have used the formulas from [8] in order to approximate . From the practical point of view, we stop both algorithms after a finite number of iterations. For most cases, the number of levels required for the MLMC implementation does not exceed six. Therefore, pre-computing for these levels for a given discretization parameter can accelerate the algorithm substantially. The interested reader may refer to https://bitbucket.org/pefarrell/pymlmc/src/master/ for implementation of MLMC in option pricing. Further, necessary changes with respect to importance sampling can be incorporated in the suggested package. The results of the implementation is presented in Figure 1.

Fig. 1.

Implementation of the algorithms for option pricing

Risk Estimation

In this section, we compare the two algorithms over a model problem studied in [11], [12]. Here we seek to estimate,

| (40) |

where,

The above problem describes a model for a delta-hedged portfolio with a negative Gamma so that the probability of occurrence of a huge loss is very low. Based on the small study performed in Section 4.1 of [11], the above problem can be further modified as , where

In order to perform our numerical experimentation, we set and . For the above values . We present in Figure 2 a visual comparison of the two algorithms discussed concerning risk management. We compare the total number of samples i.e., samples used in the MLMC estimator plus the samples for the adaptive algorithm, for a desired tolerance level.

Fig. 2.

Implementation of algorithm for risk estimation

Figure 2 gives a pictorial justification to the discussion carried out in Section (MLMC and Efficient Risk Estimation). The readers can refer to https://github.com/JSpence97/mlmc-for-probabilities for the software package developed by the authors in [12] to implement the algorithms discussed with respect to risk management.

Conclusion

In this paper, we gave a brief overview of recent trends in multilevel algorithms, covering importance sampling for option pricing and an adaptive sampling approach for determining VaR and CVaR for large portfolios. The algorithms discussed improve the computational efficiency of the standard multilevel estimators, each having its merits and shortcomings. As discussed in Section (Importance Sampling Multilevel Algorithm), the importance sampling algorithm combined with multilevel estimators significantly decreases the variance at various resolution levels. However, this decrease in variance comes at the cost of increased computational complexity in either case and an increased sensitivity when approximating the optimal parameter. As for developing MLMC-based algorithms for efficient risk estimation, discussed in Subsection (Adaptive Sampling Multilevel Estimator), the adaptive sampling approach introduced in this paradigm leads to a significant improvement in the overall computational complexity required to achieve the desired RMSE. The algorithm discussed in Subsection suffers from the dependency on the portfolio size, which is curtailed in the algorithm discussed in Subsection. However, both these algorithms suffer from large kurtosis, impacting the robustness of the MLMC estimator. Overall, the presented ideas have substantially contributed to the research and development of the multilevel algorithm for various applications encountered in financial engineering problems. Nevertheless, enriching the standard algorithm with non-standard variance reduction techniques remains an exciting path for future research.

Ethics statements

The authors state that the work did not involve any data collected from social media platforms.

Declaration of Competing Interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

CRediT authorship contribution statement

Devang Sinha: Conceptualization, Writing – original draft. Siddhartha P. Chakrabarty: Conceptualization, Writing – review & editing.

Acknowledgments

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Contributor Information

Devang Sinha, Email: dsinha@iitg.ac.in.

Siddhartha P. Chakrabarty, Email: pratim@iitg.ac.in.

Appendix A. Algorithms for pricing derivatives

Appendix B. Algorithms for risk management

Data availability

No data was used for the research described in the article.

References

- 1. Giles M.B. Multilevel Monte Carlo path simulation. Operations Research. 2008;56(3):607–617.

- 2. Giles M. Monte Carlo and Quasi-Monte Carlo Methods 2006. Springer; 2008. Improved multilevel Monte Carlo convergence using the Milstein scheme; pp. 343–358.

- 3. Giles M.B., Szpruch L. Monte Carlo and Quasi-Monte Carlo Methods 2012. Springer; 2013. Antithetic multilevel Monte Carlo estimation for multidimensional SDEs; pp. 367–384.

- 4. Giles M., Szpruch L. Multilevel Monte Carlo methods for applications in finance. Recent Developments in Computational Finance: Foundations, Algorithms and Applications. 2013:3–47.

- 5. Lemaire V., Pagès G. Multilevel Richardson–Romberg extrapolation. Bernoulli. 2017;23(4A):2643–2692.

- 6. Belomestny D., Schoenmakers J., Dickmann F. Multilevel dual approach for pricing American style derivatives. Finance and Stochastics. 2013;17(4):717–742.

- 7. Heinrich S. International Conference on Large-Scale Scientific Computing. Springer; 2001. Multilevel Monte Carlo methods; pp. 58–67.

- 8. Kebaier A., Lelong J. Coupling importance sampling and multilevel Monte Carlo using sample average approximation. Methodology and Computing in Applied Probability. 2018;20(2):611–641.

- 9. Alaya M.B., Hajji K., Kebaier A. Improved adaptive multilevel Monte Carlo and applications to finance. arXiv preprint arXiv:1603.02959. 2016.

- 10. Ben Alaya M., Hajji K., Kebaier A. Adaptive importance sampling for multilevel Monte Carlo Euler method. Stochastics. 2022:1–25.

- 11. Giles M.B., Haji-Ali A.-L. Multilevel nested simulation for efficient risk estimation. SIAM/ASA Journal on Uncertainty Quantification. 2019;7(2):497–525.

- 12. Haji-Ali A.-L., Spence J., Teckentrup A.L. Adaptive multilevel Monte Carlo for probabilities. SIAM Journal on Numerical Analysis. 2022;60(4):2125–2149.

- 13. Cliffe K.A., Giles M.B., Scheichl R., Teckentrup A.L. Multilevel Monte Carlo methods and applications to elliptic PDEs with random coefficients. Computing and Visualization in Science. 2011;14(1):3–15.

- 14. Giles M.B., Szpruch L. Antithetic multilevel Monte Carlo estimation for multi-dimensional SDEs without Lévy area simulation. The Annals of Applied Probability. 2014;24(4):1585–1620.

- 15. Arouna B. Adaptative Monte Carlo method, a variance reduction technique. Monte Carlo Methods and Applications. 10.

- 16. Alaya M.B., Hajji K., Kebaier A. Importance sampling and statistical Romberg method. Bernoulli. 2015;21(4):1947–1983.

- 17. Chen H.-F., Guo L., Gao A.-J. Convergence and robustness of the Robbins-Monro algorithm truncated at randomly varying bounds. Stochastic Processes and their Applications. 1987;27:217–231.

- 18. Chen H.F., Zhu Y. Stochastic approximation procedures with randomly varying truncations. Science in China, Ser. A. 1986.

- 19. Andrieu C., Moulines É., Priouret P. Stability of stochastic approximation under verifiable conditions. SIAM Journal on Control and Optimization. 2005;44(1):283–312.

- 20. Lelong J. Almost sure convergence of randomly truncated stochastic algorithms under verifiable conditions. Statistics & Probability Letters. 2008;78(16):2632–2636.

- 21. Lemaire V., Pagès G. Unconstrained recursive importance sampling. The Annals of Applied Probability. 2010;20(3):1029–1067.

- 22. Kloeden P.E., Platen E. Numerical Solution of Stochastic Differential Equations. Springer; 1992. Stochastic differential equations; pp. 103–160.

- 23. Sinha D., Chakrabarty S.P. Multilevel Richardson-Romberg and importance sampling in derivative pricing. arXiv preprint arXiv:2209.00821. 2022.

- 24. Giles M.B., Haji-Ali A.-L. Sub-sampling and other considerations for efficient risk estimation in large portfolios. arXiv preprint arXiv:1912.05484. 2019.

- 25. Gordy M.B., Juneja S. Nested simulation in portfolio risk measurement. Management Science. 2010;56(10):1833–1848.

- 26. Giorgi D., Lemaire V., Pagès G. Limit theorems for weighted and regular multilevel estimators. Monte Carlo Methods and Applications. 2017;23(1):43–70.

- 27. Broadie M., Du Y., Moallemi C.C. Efficient risk estimation via nested sequential simulation. Management Science. 2011;57(6):1172–1194.
