Abstract

Firing across populations of neurons in many regions of the mammalian brain maintains a temporal memory, a neural timeline of the recent past. Behavioral results demonstrate that people can both remember the past and anticipate the future over an analogous internal timeline. This paper presents a mathematical framework for building this timeline of the future. We assume that the input to the system is a time series of symbols—sparse tokenized representations of the present—in continuous time. The goal is to record pairwise temporal relationships between symbols over a wide range of time scales. We assume that the brain has access to a temporal memory in the form of the real Laplace transform. Hebbian associations with a diversity of synaptic time scales are formed between the past timeline and the present symbol. The associative memory stores the convolution between the past and the present. Knowing the temporal relationship between the past and the present allows one to infer relationships between the present and the future. With appropriate normalization, this Hebbian associative matrix can store a Laplace successor representation and a Laplace predecessor representation from which measures of temporal contingency can be evaluated. The diversity of synaptic time constants allows for learning of non-stationary statistics as well as joint statistics between triplets of symbols. This framework synthesizes a number of recent neuroscientific findings including results from dopamine neurons in the mesolimbic forebrain.

Keywords: Temporal memory, prediction, Laplace transform, convolution

1. Introduction

Consider the experience of listening to a familiar melody. As the song unfolds, notes feel as if they recede away from the present, an almost spatial experience. According to Husserl (1966) “points of temporal duration recede, as points of a stationary object in space recede when I ‘go away from the object’.” For a familiar melody, Husserl (1966) argues that events predicted in the future also have an analogous spatial extent, a phenomenon he referred to as protention. This experience is consistent with the hypothesis that the brain maintains an inner timeline extending from the distant past towards the present and from the present forwards into the future. In addition to introspection and phenomenological analysis, one can reach similar conclusions from examination of data in carefully controlled cognitive psychology experiments (Tiganj, Singh, Esfahani, & Howard, 2022).

The evolutionary utility of an extended timeline for future events is obvious. Knowing what will happen when in the future allows for selection of an appropriate action in the present. Indeed, much of computational neuroscience presumes that the fundamental goal of the cortex is to predict the future (Clark, 2013; Friston, 2010; Friston & Kiebel, 2009; Palmer, Marre, Berry, & Bialek, 2015; Rao & Ballard, 1999).

In AI, a great deal of research focuses on reinforcement learning (RL) algorithms that attempt to optimize future outcomes within a particular planning horizon (Dabney et al., 2020; Ke et al., 2018) without a temporal memory. From the perspective of psychology, RL is a natural extension of the Rescorla-Wagner model (Rescorla & Wagner, 1972), an associative model for classical conditioning (Schultz, Dayan, & Montague, 1997; Sutton & Barto, 1981; Waelti, Dickinson, & Schultz, 2001). Associative models describe the connection between a pair of stimuli (or between a stimulus and an outcome, etc.) as a simple scalar value. In simple associative models, variables that affect the strength of an association, such as the number of pairings between stimuli or attention, must all combine to affect a single scalar value. Thus, although the strength of an association can fall off with the time between stimuli, the association itself does not actually convey information about time per se (C. Gallistel, 2021a).

Cognitive psychologists have argued that classical conditioning does not reflect atomic associations between stimuli, but rather explicit storage and retrieval of temporal relationships (Arcediano, Escobar, & Miller, 2005; Balsam & Gallistel, 2009; Cohen & Eichenbaum, 1993; C. Gallistel, Craig, & Shahan, 2019; Namboodiri, 2021). In this view, behavioral associations in classical conditioning reflect learning of temporal contingencies between stimuli, such that knowing that a particular stimulus was experienced in the present changes our expectations for the time at which an outcome will be experienced (Floeder, Jeong, Mohebi, & Namboodiri, 2024; Jeong et al., 2022). Such a theory clearly requires a temporal memory in order to learn temporal relationships between stimuli.

This paper presents a formal hypothesis for how populations of neurons could learn and express temporal relationships between symbols, ignoring similarity structure within stimuli. We assume the existence of a temporal memory expressed in the firing of neurons with an effectively continuous spectrum of time constants, forming the Laplace transform of the recent past (Atanas et al., 2023; Bright et al., 2020; Kanter, Lykken, Moser, & Moser, 2024; Tsao et al., 2018; Zuo et al., 2023). Neurophysiological results suggest that the temporal memory expressed in neural firing extends at least several minutes (Tsao et al., 2018). We additionally hypothesize a neural timeline of the future expressed as a Laplace transform (Cao, Bright, & Howard, in press). The present is part of both the past and the future, so that the current symbol is simultaneously the most recent part of the past and the most imminent part of the future. Hebbian associations between the Laplace transform for the past and the present symbol store temporal relationships between symbols. In addition, a continuous spectrum of synaptic time scales enables learning of temporal relationships over time scales much longer than a few minutes. This spectrum of synaptic time constants also enables learning of higher-order relationships among symbols expressed as their joint statistics.

2. Constructing neural timelines of the past and future

We take as input a finite set of discrete symbols, x, y, etc., that are occasionally presented for an instant in continuous time. There are consistent temporal relationships between some of the symbols, such that knowing one symbol was presented at time may provide information about the occurrence of another symbol at time . For convenience we assume that the time between repetitions of any given symbol is much longer than the temporal relationships that are to be discovered and much longer than the longest time constant . Much like the assumptions necessary to write out the Rescorla-Wagner model (C. Gallistel, 2021b; Namboodiri, 2021), this set of assumptions allows us to imagine that experience is segmented into a series of discrete trials and that each symbol can be presented at most once per trial. This assumption allows easy interpretation of quantities that we will derive.

2.1. The present

Let us take as input a vector valued function of time . The notation refers to a vector with each element a real number, is a transposed vector, so that is the inner product, a scalar, and is the outer product, a matrix. We assume a tokenized representation between symbols, so that where is the Kronecker delta function. We write for the symbol available at time . At instants when no stimulus is presented, , the zero vector. If we present a specific symbol at a specific time , this adds to the basis vector for that symbol multiplied by a delta function over time centered at . At most times, the input is zero. We will occasionally refer to the moment on a particular trial when x is presented, as .

We write to describe the true past that led up to time . The continuous variable runs from , corresponding to the moment of the past closest to the present backwards to , corresponding to the distant past. Whereas is the symbol available in the present at a particular instant , , is the timeline that led up to time . Under the assumption that every symbol is presented at most once per trial, each component of over the interval is either a delta function at some particular or zero everywhere. The goal of the associative memory is to provide a guess about the future that will follow time , , (Figure 1) given the symbol available in the present.

Fig. 1.

Guide to notation. A. Time measured externally is drawn as a horizontal line; the “internal timeline” available to the agent at each moment is drawn as a diagonal line. The remembered past at time is drawn below the horizontal; the predicted future is drawn above the horizontal. Locations on the internal timeline are spaced to suggest logarithmic compression. Consider a case in which x and y are presented many times with a consistent temporal relationship. If x is presented at and then y is presented at some later time . After x is presented at , it recedes into the past, so for we find that . At the moment y is presented, . After the relationship between x and y is learned, then after x is presented y is predicted a time in the future. As time proceeds after presentation of x, the predicted occurrence of y should approach closer and closer to the present. B. Sign conventions. At the present moment , objective time runs from to . corresponds to time . The real Laplace domain variable runs from 0+ to +∞ for both past and future, approximated as and . The units of are ; the values corresponding to different points of the timeline are shown in the same vertical alignment. Cell number for Laplace and inverse spaces are aligned with one another. The variable describes position along the inverse spaces. It is in register with and derived from . C. The stimulus available in the present, provides input to two sets of neural manifolds. One set of neural manifolds represents the past; the other estimates the future. stores temporal relationships between events.

The symbol provided in the present is available to both the past and the future. The present enters the past timeline at its most recent point. In this formulation, the present is also available as the most rearward portion of the future timeline. By associating the past to the rearward portion of the future, we can learn temporal relationships between symbols separated in time. By probing these associations with the present—as the most recent part of the past timeline—we can construct an extended estimate of the future.

2.2. Laplace neural manifolds for the past and the future

We estimate both the past and the future as functions over neural manifolds. Each manifold is a population of processing elements—neurons—each of which is indexed by a position in a coordinate space. We treat the coordinates as effectively continuous and locally Euclidean. At each moment, each neuron is mapped onto a scalar value corresponding to its firing rate over a macroscopic period of time on the order of say 100 ms. We propose that the past and the future are represented by separate manifolds that interact with one another.

The representations for both the past and the future each utilize two connected manifolds. We refer to one kind of manifold, indexed by an effectively continuous variable , as a Laplace space. The other kind of manifold, indexed by an effectively continuous variable , is referred to as an inverse space. Taken together, we refer to these paired representations as a Laplace Neural Manifold. The representations of the past follow previous work in theoretical neuroscience (Howard et al., 2014; Shankar & Howard, 2013), cognitive psychology (Howard, Shankar, Aue, & Criss, 2015; Salet, Kruijne, van Rijn, Los, & Meeter, 2022), and neuroscience (Bright et al., 2020; Cao, Bladon, Charczynski, Hasselmo, & Howard, 2022).

2.2.1. Laplace spaces for remembered past and predicted future

The Laplace space corresponding to the past, which we write as encodes the Laplace transform of , the past leading from the present at time back towards the infinite past:

| (1) |

We restrict to real values on the positive line (but see Aghajan, Kreiman, & Fried, 2023).1 Many neurons tile the axis continuously for each symbol. To the extent that we can ensure a set of exponential receptive fields with a continuous spectrum of values, we have established that is the Laplace transform of the past. Exponential receptive fields over the past with a continuous spectrum of time constants have been observed in many brain regions and species (Atanas et al., 2023; Bright et al., 2020; Cao et al., in press; Danskin et al., 2023; Tsao et al., 2018; Zuo et al., 2023).

The index assigned to a neuron corresponds to the inverse of its functional time constant. The Laplace space corresponding to the future, which we write as is an attempt to estimate the Laplace transform of the future, over the interval . Thus, there is a natural mapping between and within both the past and the future. By convention, is positive for both the past and the future so that is the Laplace transform of for whereas is the Laplace transform of for .

Although is effectively continuous, this does not require that neurons sample evenly. Following previous work in psychology (e.g., Chater & Brown, 2008; Howard & Shankar, 2018; Piantadosi, 2016), neuroscience (Cao et al., 2022; Guo, Huson, Macosko, & Regehr, 2021), and theoretical neuroscience (Lindeberg & Fagerström, 1996; Shankar & Howard, 2013), we assume that is sampled on a logarithmic scale. Let be the neuron number, starting from the largest value of nearest and extending out from the present. We obtain a logarithmic scale by choosing .
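Logarithmic sampling can be sketched in a few lines. The sketch below assumes a geometric series of rate constants, so that the logarithm of the rate falls linearly with cell number; the function name and the parameters `s_max` and `ratio` are illustrative assumptions, not values taken from the text.

```python
import math

def log_spaced_rates(s_max, ratio, n_cells):
    """Return rate constants s_0 > s_1 > ... spaced evenly in log(s).

    Cell 0 has the largest s (shortest time constant, nearest the present).
    s_max and ratio (> 1) are hypothetical parameters chosen for illustration.
    """
    if ratio <= 1.0:
        raise ValueError("ratio must exceed 1 so that s decreases with cell number")
    return [s_max * ratio ** (-n) for n in range(n_cells)]

rates = log_spaced_rates(s_max=10.0, ratio=1.5, n_cells=8)
time_constants = [1.0 / s for s in rates]  # functional time constant of each cell
log_steps = [math.log(rates[n]) - math.log(rates[n + 1])
             for n in range(len(rates) - 1)]  # constant step in log(s)
```

With this choice, each additional cell extends the timeline by a constant factor rather than a constant increment, so a modest number of cells can cover a wide range of time scales.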

2.2.2. Updating Laplace spaces in real time

Suppose that we have arranged for one particular component of or to hold the Laplace transform of one particular symbol, which we write as . Suppose further that is zero in the neighborhood of . Consider how this component, which we write as or , should update as time passes. Let us pick some minimal increment of time on the order of, say, 100 ms. At time , information in for recedes further away from the present, so that

. In contrast, at time , information in the future for comes closer to the present, so that . More generally, suppose that is the Laplace transform of a function over some variable, . Defining , we can update as

| (2) |

where is the translation operator, and we have used the expression for the Laplace transform of translated functions. Equation 2 describes a recipe for updating both with in the absence of new input. Using the sign convention developed here, we fix for and fix for . It is possible to incorporate changes into the rate of flow of subjective time by letting change in register, such that for all . The expression in Eq. 2 holds more generally and can be used to update Laplace transforms over many continuous variables of interest for cognitive neuroscience (Howard & Hasselmo, 2020; Howard, Luzardo, & Tiganj, 2018; Howard et al., 2014).

We are in a position to explain how comes to represent the Laplace transform of over the interval ; a discussion of how comes to estimate the future requires more development and will be postponed. When a symbol is presented at time , it enters the timeline of the past at . So, incorporating the Laplace transform of the most recent part of the past with the past that is already available and then evolving to time we have

| (3) |

At time , the input from time is encoded as the Laplace transform of that symbol a time in the past. At each subsequent time step, an additional factor of accumulates. As time passes, the input from time is always stored as the Laplace transform of a delta function at the appropriate place on the timeline. Because this is true for all stimuli that enter , we conclude that encodes the Laplace transform of the past over the interval .
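The update for the past can be checked numerically. The following is a minimal sketch for a single symbol, assuming that each cell with rate constant `s` decays by a factor `exp(-s * dt)` per time step and that the present input is added before the decay, as the description of Eq. 3 suggests. The names `step_past`, `rates`, and `dt` are illustrative assumptions.

```python
import math

def step_past(F, rates, dt, present=0.0):
    """Advance one symbol's past Laplace space by dt.

    The present input (1.0 when the symbol is shown, else 0.0) joins the
    stored past first; every cell then decays at its own rate s.
    """
    return [math.exp(-s * dt) * (F_s + present) for F_s, s in zip(F, rates)]

rates = [4.0, 2.0, 1.0, 0.5]  # hypothetical rate constants
dt = 0.1                      # hypothetical time step
F = [0.0] * len(rates)

F = step_past(F, rates, dt, present=1.0)  # the symbol is presented once
for _ in range(19):                       # then time passes without input
    F = step_past(F, rates, dt)

elapsed = 20 * dt
# Laplace transform of a delta function located `elapsed` in the past
expected = [math.exp(-s * elapsed) for s in rates]
```

After any number of steps the stored pattern matches the transform of a delta function at the elapsed lag, which is the property the argument above relies on.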

The middle panel of Figure 2 illustrates the profile of activity over and , shown as a function of cell number , resulting from the Laplace transform of a delta function at various moments in time. In the middle panel, the axis for the past is reversed to allow appreciation of the relationship between past time and . Note that the Laplace transform of a delta function has a characteristic shape as a function of cell number that merely translates as time passes. Note that the magnitude of the translation of depends on the value of . It can be shown that for a delta function with . This can be appreciated by noting that the distances between successive lines in the middle panel of Fig. 2 are not constant despite the fact that they correspond to the same time displacement. Whereas goes down as time passes for as the past becomes more remote from the present, increases with the passage of time for as the future grows closer to the present.

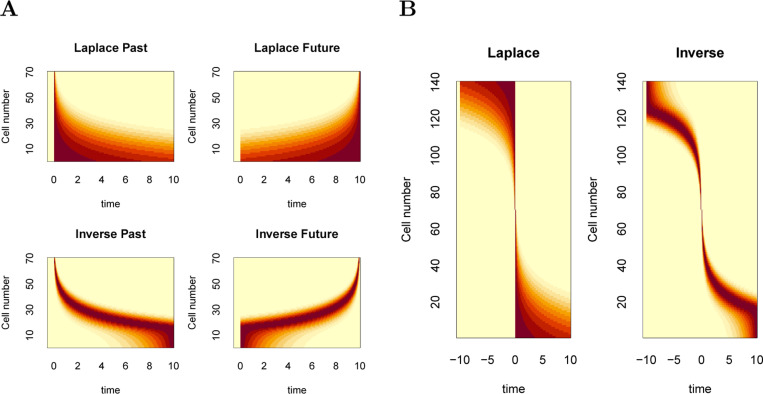

Fig. 2.

Neural manifolds that construct a logarithmically-compressed internal timeline of the past and the future. Top: A temporal relationship exists between x and y such that y always follows x after a delay of seconds. Consider how the internal timeline ought to behave after x is presented at . At time , the past should include x seconds in the past and y seconds in the future. Middle and bottom: Samples of the timeline at evenly-spaced moments between zero and . At each moment, there is a pattern of activity over neurons indexed by . The state of the timeline at earlier moments, closer to , are darker and later moments closer to are lighter. Red lines are neurons coding for x (primarily in the past except precisely at ), blue lines are neurons coding for y (primarily in the future except precisely at ). Middle: Laplace spaces for the past (left) and future (right) shown as a function of cell number ; Bottom: inverse spaces, constructed using the Post approximation, for the past (left) and future (right) shown as a function of log time. Exactly at time , x is available a time 0+ in the future (dark horizontal red line, middle right). Similarly, exactly at , y is available a time 0− in the past (light horizontal blue line, middle left).

There are implementational challenges to building a neural circuit that obeys Eq. 2; these challenges are especially serious when , which requires activation to grow exponentially. If one is willing to restrict the representation of each symbol to the Laplace transform of a delta function at a single point in time, it is straightforward to implement a continuous attractor network (Khona & Fiete, 2021) to allow the “edge” in the Laplace transform as a function of to translate appropriately. Daniels and Howard (submitted) constructed a simple continuous attractor network to demonstrate the feasibility of this approach.

2.2.3. Inverse spaces for remembered past and predicted future

The mammalian brain also contains “time cells” with circumscribed receptive fields (MacDonald, Lepage, Eden, & Eichenbaum, 2011; Pastalkova, Itskov, Amarasingham, & Buzsaki, 2008; Schonhaut, Aghajan, Kahana, & Fried, 2023; Tiganj, Cromer, Roy, Miller, & Howard, 2018). Time cells resemble a “direct” estimate of the past and are reasonably well approximated as:

| (4) |

where is a unimodal function with its maximum at 1 and is here defined to be negative (Fig. 1). estimates the true past in the neighborhood of . As becomes more and more sharp, approaching a delta function, goes to . In this sense is like the inverse Laplace transform of . However, because receptive fields depend only on the ratio of , and because neurons sample the axis logarithmically, is a convolution of and another function of log that controls the blur.

The bottom panel of Figure 2 shows a graphical depiction of the inverse space for the past and the future during the interval between presentation of x and y. The inverse spaces approximate the past, for and the future, for on a log scale. As the delta function corresponding to the time of x recedes into the past, the corresponding bump of activity in also moves, keeping its shape but moving more and more slowly as x recedes further and further into the past. In the future, the delta function corresponding to the predicted time of y should start a time in the future and come closer to the present as time passes. As the prediction for y approaches the present, the corresponding bump of activity in keeps its shape but the speed of the bump accelerates rather than slowing with the passage of time.

It is in principle possible to construct the inverse space from the Laplace space via a linear feedforward operator. Previous papers (e.g., Shankar & Howard, 2013) have made use of the Post approximation to the inverse Laplace transform to construct the inverse space from the Laplace space. This is not neurally reasonable (Gosmann, 2018); the Post approximation is difficult to implement even in artificial neural networks (e.g., Jacques, Tiganj, Howard, & Sederberg, 2021; Tano, Dayan, & Pouget, 2020). A more robust approach would be a continuous attractor network (for a review see Khona & Fiete, 2021) that takes input as the derivative of with respect to . The width of the bump in would depend on internal connections between neurons in and global inhibition would stabilize the activity over . In this case, moving the bump in different directions, corresponding to and is analogous to moving a bump of activity in a ring attractor in different directions. A companion paper fleshes out these ideas (Daniels & Howard, submitted).
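For reference, the Post approximation mentioned above has a closed form when the input is a single delta function. The sketch below uses the standard order-k Post inversion formula applied to `F(s) = exp(-s * tau)`; lags are treated as positive magnitudes (unlike the sign convention of Fig. 1), and the function name and the values of `k` and `tau_star` are illustrative assumptions. It is offered as a numerical illustration of a receptive field, not as the neural mechanism, which the text argues is better served by an attractor network.

```python
import math

def post_inverse_of_delta(tau, tau_star, k):
    """Order-k Post approximation, evaluated at tau_star, to the inverse
    transform of F(s) = exp(-s * tau) (a delta function at lag tau).

    Post's formula is ((-1)**k / k!) * s**(k + 1) * d^k F / ds^k at
    s = k / tau_star. For this F the k-th derivative is
    (-tau)**k * exp(-s * tau), so the alternating signs cancel.
    """
    s = k / tau_star
    return s ** (k + 1) * tau ** k * math.exp(-s * tau) / math.factorial(k)

k = 8           # hypothetical order; larger k gives a sharper field
tau_star = 2.0  # hypothetical preferred lag of one cell
lags = [0.1 * i for i in range(1, 101)]
field = [post_inverse_of_delta(tau, tau_star, k) for tau in lags]
peak_lag = lags[field.index(max(field))]  # lag at which this cell responds most
```

Multiplying the response by `tau_star` leaves a function of the ratio `tau / tau_star` alone, so cells with different preferred lags have receptive fields of the same shape on a logarithmic time axis.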

2.3. Predicting the future from the past

The previous subsection describes how to evolve the Laplace manifold for the past. If we could somehow initialize the representation of the future appropriately then we could use the same approach to evolve the Laplace manifold for the future during periods when no symbol is experienced. Initializing the future will be accomplished via learned temporal relationships between the past and the future.

The model has access to the Laplace transform of the past, as described above. We define the present so that it overlaps with both the most recent part of the past and the most imminent, or “rearward,” part of the future. We form Hebbian associations between the Laplace transform of the past and the Laplace transform of the rearward portion of the future. Recall that products of Laplace transforms are the Laplace transform of the convolution of these functions. Because there is a reflection between the definition of and , the convolution of these two functions measures distances between time points in the past and the present. Later the present stimulus, taken as the Laplace transform of the most recent part of the past, can be used to recover the Laplace transform of an extended future timeline.

There are two sets of weights storing these associations, and . Each set of weights learns associations between the Laplace transform of the past, , and the present stimulus . The two sets of weights are normalized differently. Roughly speaking, stores the Laplace transform of the future conditionalized on the present symbol. In contrast stores the Laplace transform of the past conditionalized on the present symbol. With the assumptions that let us consider discrete trials, these transforms are understandable as pairwise statistics of events corresponding to a presentation of each symbol on a trial. We will see that taken together and enable us to estimate the associative and temporal contingency between each pair of symbols conditionalized on each other symbol.

The learning rate and forgetting rate for the sets of weights fix a time horizon for learning over trials. By choosing a continuous spectrum of forgetting rates and learning rates , both and retain a memory for the history as a function of trials. Continuous forgetting allows the weights to implement a discrete approximation to the Laplace transform. This property of and means that it is in principle possible to aggregate joint statistics between stimuli.

2.3.1. Encoding

The moment a nonzero stimulus is experienced, we assume it is available to both and , triggering a number of operations which presumably occur sequentially within a small window of time on the order of 100 ms. First, the present stimulus updates a prediction for the future via a set of connections organized by . Then these connections are updated by associating the past to the present. Finally the present stimulus is added to the representation of the past. For ease of exposition we will first focus on describing the connections between the past and the future.

We write for a set of connections that associates the Laplace transform of the past to the Laplace transform of the future (Fig. 3). We postpone discussion of the other set of weights . For any particular value , is a matrix describing connections from each symbol in to each symbol in . For each pair of symbols, say x and y, we write for the strength of the connection from the cell corresponding to with in to the cell corresponding to in with . does not include connections between neurons with different values of . On occasion it will be useful to think of the set of connections between a pair of symbols over all values of , which we write as . Similarly, we write for the set of connections from y in to all stimuli in over all values of . We write for the set of connections to y in from all symbols and all values of . In this paper, the superscripting and subscripting of has no significance beyond a visual aid to help keep the indices straight.

Fig. 3.

Schematic figure illustrating . and components for all the possible symbols, here shown schematically as sheets. Two symbols x and y are shown in both and . Each symbol is associated with a population of neurons spanning a continuous set of values, shown as the heavy lines in this cartoon. describes the connections between each symbol in to each symbol in for each value of . The curved lines illustrate the set of weights connecting units corresponding to x in to units corresponding to y in . Connections exist only between units with the same values of . The strength of the connections in varies as a function of in a way that reflects the pairwise history between x and y.

When a particular stimulus y is presented the connections to and from that stimulus in are updated. When y is presented, the connections from y in the past towards all stimuli in the present are updated as

| (5) |

That is, the connections from to every other stimulus for each value of are all scaled down by a value . Later we will consider the implications of a continuous spectrum of values; for now let us just treat as a fixed parameter restricted to be between zero and one. When y is presented, it momentarily becomes available at the “rearward part” of the future. In much the same way that the present enters the past (Eq. 3) at , we assume that the present is also available momentarily in the future at . When y is presented, the connections from each symbol in the past to y in the future are updated as

| (6) |

Connections involving symbols that are not present in the history retained by are not updated. We can understand Eq. 6 as a Hebbian association between the units in , whose current activation is given by and the units in the future corresponding to the present stimulus y (see Fig. 4). More generally, we can understand this learning rule as strengthening connections from the past to the rearward part of the future, . Because the second term is the product of two Laplace transforms, it can also be understood as the Laplace transform of a convolution, here, the convolution of the present with the past.2 Convolution has long been used as an associative operation in mathematical psychology (Jones & Mewhort, 2007; Kato & Caplan, 2017; Murdock, 1982), neural networks (Blouw, Solodkin, Thagard, & Eliasmith, 2016; Eliasmith, 2013; Plate, 1995), and computational neuroscience (Steinberg & Sompolinsky, 2022).
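The two updates can be sketched as an event-driven simulation. This sketch assumes that the Hebbian increment is scaled by one minus the forgetting factor (written `rho` here), an assumption inferred from the later statement that the weights converge to the same value for every choice of that factor. It also assumes that trials are far enough apart for the past to be empty at the start of each trial. All names (`present`, `wait`, `rho`, `rates`) are hypothetical.

```python
import math

def wait(F, rates, dt):
    """Let every symbol in the past recede by dt (no new input)."""
    for symbol in F:
        F[symbol] = [f * math.exp(-s * dt) for f, s in zip(F[symbol], rates)]

def present(M, F, symbol, rho):
    """Apply the operations triggered when `symbol` is presented.

    M[a][b][i] is the weight from a in the past to b in the future at
    rates[i]; F[a][i] is the past Laplace space for symbol a.
    """
    # Decay every connection leaving `symbol` (rule described for Eq. 5).
    for target in M[symbol]:
        M[symbol][target] = [rho * w for w in M[symbol][target]]
    # Hebbian increment from the remembered past to `symbol` (Eq. 6);
    # the (1 - rho) scaling is an assumption of this sketch.
    for source in M:
        M[source][symbol] = [w + (1.0 - rho) * f
                             for w, f in zip(M[source][symbol], F[source])]
    # Finally the present symbol enters the past at lag zero.
    F[symbol] = [f + 1.0 for f in F[symbol]]

rates = [2.0, 1.0, 0.5]
symbols = ["x", "y"]
M = {a: {b: [0.0] * len(rates) for b in symbols} for a in symbols}
lag, rho = 1.5, 0.9

for trial in range(200):
    F = {a: [0.0] * len(rates) for a in symbols}  # empty past at trial start
    present(M, F, "x", rho)
    wait(F, rates, lag)
    present(M, F, "y", rho)

# Laplace transform of a delta function at the x-to-y lag
target = [math.exp(-s * lag) for s in rates]
```

Symbols absent from the past contribute a zero increment, so only the connections from x to y change in this example, and they approach the transform of the lag separating the two symbols.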

Fig. 4.

Learning and expressing pairwise associations with . The horizontal line is time; the diagonal lines indicate the internal timeline at the moments they intersect. Memory for the past is below the horizontal line; prediction of the future is above. When x is presented for the first time, it predicts nothing. When y is presented, the past contains a memory for x in the past. When y is presented, stores the temporal relationship between x in the past and y in the present, that is, the rearward part of the future. In addition to storing learned relationships, connections from each item decay each time it is presented (not shown). When x is repeated much later in time, the stored connections in retrieve a prediction of y in the future.

2.3.2. is a Laplace successor representation

From examination of Eqs. 5 and 6, we see that after each trial is multiplied by when x was presented. For trials on which y was also presented, is added to . Writing as an indicator variable for the history of presentations of y on the trial steps in the past we find at the conclusion of a trial that

| (7) |

Note that if , then after an infinitely long series of trials and for all choices of . Following similar logic, if we relax the assumption that and take the limit as goes to 1, we find that .
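The steps behind these limits can be written out as a per-trial recursion. The notation below is an assumption introduced for this worked example: M_n(s) is the weight from x to y after n trials, h_n is 1 if y was presented on trial n and 0 otherwise, tau_o is the fixed lag between x and y, and rho is the forgetting factor, with the increment scaled by (1 - rho).

```latex
\begin{aligned}
M_{n+1}(s) &= \rho\, M_n(s) + (1-\rho)\, h_{n+1}\, e^{-s\tau_o},\\[4pt]
M_n(s) &= (1-\rho)\sum_{k=0}^{n-1} \rho^{k}\, h_{n-k}\, e^{-s\tau_o}
          \qquad (M_0 = 0),\\[4pt]
h_k = 1 \ \text{for all } k:\quad
\lim_{n\to\infty} M_n(s) &= (1-\rho)\,\frac{1}{1-\rho}\, e^{-s\tau_o} = e^{-s\tau_o}.
\end{aligned}
```

The weights (1 - rho) rho^k sum to one, so the stored value is an exponentially weighted average of the indicator over roughly 1/(1 - rho) recent trials. As rho approaches 1 this average covers ever more trials and tends to the proportion of trials with x on which y also occurred, which gives the conditional probability multiplying the exponential term.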

Now let us relax the assumption that the time lag between x and y always takes the same value. Let the lag be a random variable subject to the constraint that is always . This is not a fundamental restriction; if changed sign, those observations would contribute to instead of . Now, again taking the limit as , we find

| (8) |

where we have used the definition for the Laplace transform of a random variable, again with the understanding that we restrict to be real and positive.

Equation 8 illustrates several important properties of . First, we can see that provides complete information about the distribution of temporal lags between x preceding y. This can be further appreciated by noting that the Laplace transform of the random variable on the right hand side is the moment generating function of . Keeping the computation in the Laplace domain means that there is no blur introduced by going into the inverse space as in previous attempts to build a model for predicting the future (Goh, Ursekar, & Howard, 2022; Shankar, Singh, & Howard, 2016; Tiganj, Gershman, Sederberg, & Howard, 2019). Second, because as long as the expectation of is finite, and captures the pairwise probabilities between all symbols.
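These properties can be illustrated numerically. In the sketch below, a deterministic cycle of lags stands in for the random variable, a `None` entry marks a trial on which y is absent, and the per-trial recursion uses an increment scaled by `1 - rho`; all of these are assumptions of the example rather than details given in the text.

```python
import math

def learn_weight(lags, rho, s, n_trials):
    """Run M <- rho * M + (1 - rho) * h * exp(-s * lag) over trials.

    `lags` is cycled over trials; None marks a trial without y (h = 0).
    """
    M = 0.0
    for n in range(n_trials):
        lag = lags[n % len(lags)]
        increment = 0.0 if lag is None else math.exp(-s * lag)
        M = rho * M + (1.0 - rho) * increment
    return M

lags = [1.0, 2.0, None, 3.0]  # y follows x on 3 of every 4 trials
observed = [lag for lag in lags if lag is not None]
p_y_given_x = len(observed) / len(lags)

def predicted(s):
    """P(y | x) times the average of exp(-s * lag) over observed lags."""
    mean_transform = sum(math.exp(-s * lag) for lag in observed) / len(observed)
    return p_y_given_x * mean_transform

rho = 0.9995  # close to 1, so many trials are averaged
for s in (0.01, 0.5, 2.0):
    print(s, learn_weight(lags, rho, s, 40000), predicted(s))
```

For small values of `s` the exponential terms approach one and the learned weight approaches the conditional probability alone, while larger values of `s` weight short lags more heavily and so carry information about the shape of the lag distribution.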

In the limit as , is closely related to the successor representation (Carvalho, Tomov, de Cothi, Barry, & Gershman, 2024; Dayan, 1993; Gershman, Moore, Todd, Norman, & Sederberg, 2012; Momennejad et al., 2017; Stachenfeld, Botvinick, & Gershman, 2017) with a continuous distribution of discount rates (Kurth-Nelson & Redish, 2009; Masset et al., 2023; Momennejad & Howard, 2018; Sousa et al., 2023; Tano et al., 2020). More precisely, if one assumes a complete compound serial representation of the past and a fixed action policy, then computes the successor representation from RL (Dayan, 1993; Gershman et al., 2012), but with a continuous spectrum of discount rates , one would obtain with the identification . However, computing does not require temporal difference learning. In RL language, is an ensemble of eligibility traces with a continuous spectrum of forgetting rates. Associating this multiscale eligibility trace to outcomes is sufficient to compute , which we might refer to as a Laplace successor representation.

2.3.3. is a Laplace predecessor representation

It is straightforward to construct a Laplace predecessor representation (Namboodiri & Stuber, 2021) using , the Laplace transform of the past, and Hebbian learning. We write out a new set of connections . Adapting Eqs. 5 and 6, when each item y is presented

| (9) |

That is, when y is presented at time and x is available in , is incremented. Following similar steps as for , in the limit as , we get

| (10) |

which can be compared to Equation 8. Thus, with learning as in Eq. 9, we can refer to as a Laplace predecessor representation.

Note that the convention of is different than . Whereas describes relationships between x preceding y, describes relationships between y preceding x. In this sense is like . In addition one must also account for the reflection operator involved in the definition of as compared to and the different marginalization.

The foregoing makes clear that if the brain has access to —an eligibility trace with a continuum of time horizons—it is straightforward to compute either a successor representation or a predecessor representation in a way that maintains complete information about the temporal relationships between stimuli. This approach does not require selecting a single time horizon or time constant for either representation (Floeder et al., 2024).

2.3.4. Measures of contingency using and

Information contained in and can be used to not only describe pairwise relationships between stimuli but also to assess contingency between symbols, allowing solutions to the temporal credit assignment problem. The goal here is not to propose a specific measure of contingency—there are undoubtedly a multiplicity of such rules that could be used for cognitive and neural modeling—but simply to sketch out the properties of and . We continue attending to the limit as .

For this illustration, let us restrict our attention to relationships between three symbols x, y and z. We assume for simplicity that, if they are presented on a trial, the three stimuli are presented in order on each trial. Let us refer to the time lags between symbols as random variables , ; on trials where all three symbols are observed . For convenience let’s assume that the distributions are chosen such that the relative times of presentation do not overlap. We denote the probabilities of each symbol occurring on a trial such that gives the conditional probability that z is observed on a trial given that y is also observed on that trial.

We are interested in how much “credit” to allocate y for the occurrence and timing of z, taking into account x. We will compare , which describes the future occurrences of z conditionalized on y in the present to (Fig. 5). This quantity is —the future of z predicted by x—multiplied by —the past occurrence of x predicted by knowing that y is in the present. That is, describes the future of z predicted by the past occurrence of x that is observed when y is in the present. The reflection operator allows the integration of these two timelines in a way that can be compared to the future of z given that y was observed in the present (Cole, Barnet, & Miller, 1995).

Fig. 5.

Measuring contingency by comparing pairwise relationships between y and z to pairwise relationships conditionalized on x. a. Equation 12 captures the Laplace transform of the random variable . By assumption, on each trial . b. Equation 14 captures the convolution of and . If these intervals are independent across trials then .

We will work through the implications of this high level description under very simple circumstances. Recall that under the circumstances described in this subsection,

| (11) |

| (12) |

Using properties of the Laplace transform we can rewrite as

| (13) |

| (14) |

The second term describes the Laplace transform of the convolution of and . Because the distribution of the sum of two independent random variables is equal to the convolution of their distributions, the Laplace transforms in Eqs. 12 and 14 will enable us to assess the dependence between the times of presentation of x, y, and z.

Associative contingency at

gives information about the pairwise probabilities between each pair of symbols. Suppose that x, y and z occur on different trials. Is the occurrence of z predicted by y or x? Or some more complex situation?

From Eqs. 8 and 10 and basic properties of random variables, we could compare

| (15) |

to

| (16) |

If Eqs 15 and 16 are equal to one another, then credit for z should go to x rather than y. To the extent they differ, then y should get credit for the occurrence of z.

Of course there are limits to how well the future can be predicted with pairwise statistics. More generally, we would like to consider joint statistics. This requires estimating higher order probabilities, e.g., . We establish later that joint statistics can be estimated from . In an environment where joint statistics are important, predicting the future using simple pairwise relationships is untenable. However, it should be possible to recode the symbols into a new set of symbols that can be used to predict the future using pairwise relationships.

Temporal contingency

So that we can focus on temporal contingency, let us assume that all three stimuli are presented on each trial so that . Because contains information about every moment of the distribution it is straightforward to ask whether the distribution of times for z conditionalized on y is higher or lower entropy than the distribution conditionalized on x. It is also possible to use and to capture more subtle temporal relationships.

Recall that the distribution of the sum of two random variables equals the convolution of their distributions if the variables are independent of one another. Thus comparing the distribution of to the distribution of the convolution allows us to assess the dependency across trials of the timing of the three stimuli. Equation 12 shows that the Laplace transform of is stored in , whereas Equation 14 shows that the Laplace transform of is stored in . Comparing these two quantities allows us to assess the dependence between the times of occurrence of x and z conditionalized on y in the present.
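The comparison described here rests on a standard property: for independent lags, the Laplace transform of the summed lag equals the product of the individual transforms. The following sketch checks this on two small, made-up sets of trials. The names `lag_a` and `lag_b` and the specific lag values are hypothetical stand-ins for the two intervals between the three symbols.

```python
import math

def laplace_of_samples(samples, s):
    """Empirical Laplace transform E[exp(-s * t)] over equally likely samples."""
    return sum(math.exp(-s * t) for t in samples) / len(samples)

def compare(pairs, s):
    """Return (transform of the summed lag, product of the two transforms)."""
    lag_a = [a for a, _ in pairs]
    lag_b = [b for _, b in pairs]
    lt_sum = laplace_of_samples([a + b for a, b in pairs], s)
    lt_product = laplace_of_samples(lag_a, s) * laplace_of_samples(lag_b, s)
    return lt_sum, lt_product

# Independent lags: every combination of the two intervals is equally likely.
independent = [(a, b) for a in (1.0, 3.0) for b in (2.0, 5.0)]
# Dependent lags: a short first interval always goes with a short second one.
dependent = [(1.0, 2.0), (3.0, 5.0)]

s = 0.5
ind_sum, ind_product = compare(independent, s)
dep_sum, dep_product = compare(dependent, s)
```

In this toy case the two quantities agree for the independent trials and differ for the coupled trials, which is the kind of discrepancy the comparison between the two stored transforms is meant to detect.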

2.4. Continuum of allows a temporal memory across trials

For the past several subsections we have considered the limit where . That limit is not physically realizable. How should we choose the value of ? The answer is that we should not choose a single value of . In much the same way we treat as a continuous variable rather than treating it as a parameter to be estimated from the data, we can also treat as a continuous variable. Continuous means that maintains a temporal memory of the entire past. Similarly, continuous enables to retain complete information about pairwise relationships as a function of trial history. Similar relationships can be worked out for but we focus on here for simplicity.

Equation 7, which describes the situation where is equal to on each trial, can be rewritten as

where is the Z-transform, the discrete analog of the Laplace transform (Ogata, 1970). An analogous relationship can be written for .

Although the notation is a bit more unwieldy, allowing to vary across trials we see that the trial history of timing is also retained by . Writing the delay between x and y on the trial steps in the past as , and we can write

| (17) |

We understand the Z-transform to be taken over the discrete variable and not the continuous variable .

Because the Z-transform is in principle invertible, information about the entire trial history has been retained by virtue of having a continuum of forgetting rates . Figure 6 illustrates the ability to extract the trial history including timing information of events that follow x from .

Fig. 6.

contains information about both time within a trial and trial history. Left: Consider a single pairing of x and y on the most recent trial. The heatmap shows the degree to which y is cued by x by projected onto log time. The profile as a function of is identical to the profile for future time in Figure 2. If the pairing between x and y had a longer delay, the edge would be further to the right. Right: The single pairing of x and y is followed by an additional series of trials on which x was presented by itself. Now there is an edge in both trial history and time within trial. Additional trials with only x would push this edge further towards the top of the graph. Additional trials with x and y paired would be added to this plot with a time delay that reflects the timing of the pairing.

This illustrates a remarkable property of Laplace-based temporal memory. Although each synaptic matrix with a specific value of forgets exponentially with a fixed time horizon (the time constant is given by ), the set of matrices with a continuum of retains information about the entire trial history. Although each matrix has a specific time horizon, the set of all matrices with continuous values of has a continuity of time horizons, tiling the entire trial history. In practice there must be some numerical imprecision in the biological instantiation of . In principle however, a continuum of forgetting rates means that the past is not forgotten. Instead the past, as a function of trial history, has been written across the continuum of .
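A small numerical sketch may make this property concrete. It assumes, as a simplification, that the weight stored under forgetting rate `rho` is the unnormalized sum of `rho**k` times the contribution from the trial `k` steps in the past; any normalization factor in the original equations is omitted. The names `z_transform`, `solve`, and `history` are hypothetical. With as many distinct forgetting rates as trials, the trial history can be recovered by solving a linear (Vandermonde) system.

```python
def z_transform(history, rho):
    """Weight stored under forgetting rate rho: sum over k of history[k] * rho**k."""
    return sum(h * rho**k for k, h in enumerate(history))

def solve(matrix, rhs):
    """Solve a small dense linear system by Gaussian elimination with pivoting."""
    n = len(rhs)
    aug = [row[:] + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        known = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - known) / aug[i][i]
    return x

# history[k] is the pairing strength on the trial k steps in the past
# (k = 0 is the most recent trial); here pairings occurred 1 and 3 trials ago.
history = [0.0, 1.0, 0.0, 1.0]
rhos = [0.2, 0.4, 0.6, 0.8]

# Each forgetting rate stores only a single exponentially weighted number.
weights = [z_transform(history, rho) for rho in rhos]

# Taken together, the weights determine the full trial history.
vandermonde = [[rho**k for k in range(len(history))] for rho in rhos]
recovered = solve(vandermonde, weights)
```

Systems of this kind become poorly conditioned as the number of trials grows, which is one way to picture the numerical imprecision mentioned above.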

2.5. Estimating three point correlation functions from Z-transform

A great deal of information can be extracted from the trial histories encoded in and . contains the two-point probability distribution of y and z. It would be preferable to predict the occurrence and timing of using the three-point probability distribution.

Because contains information about the paired trial history, in principle we can extract information about the three-point correlation function. The problem of estimating the three-point correlation function between stimuli is straightforward if one has access to the trial history of both the past conditionalized on the present and the future conditionalized on the present. This information is contained in and respectively.

For instance, if z only occurs on trials on which both x and y are presented, but not on trials when only one of them is presented, then we should observe a positive correlation between the trial history encoded in and . Similarly, one can imagine that the joint timing of the presentations of x and y predicts the timing of z, as if all three symbols are being generated by a process that can unfold at different rates.

Access to the joint statistics between symbols can in principle be leveraged to provide a much more complete prediction of the future, especially when integrated into deep networks that recode the symbols into new sets of symbols. Moreover, continuous values of may allow networks built using and to respond to non-stationary statistics.

2.6. Updating the future

Let us return to the problem of generating a prediction of the immediate future. We again restrict our attention to the limit as goes to 1 and assume the system has experienced a very long sequence of trials with the same underlying statistics. Moreover, we assume for the present that only pairwise relationships are important, so we can neglect the temporal credit assignment problem, and construct the Laplace transform of the future that is predicted solely on the basis of the present stimulus.

There are two problems that need to be resolved to write an analog of Eq. 3 for . First, we can only use Eq. 2 to update if is already the Laplace transform of a predicted future; we must create a circumstance that makes that true. Second, we need to address the situation where a prediction reaches the present. Because of the discontinuity at special considerations are necessary to allow the time of a stimulus to pass from the future to the past.

2.6.1. Predicting the future with the present

Equation 8 indicates that the weights in record the future occurrences of y given that x occurs in the present. captures both the probability that y will follow x as well as the distribution of temporal delays at which y is expected to occur. This information is encoded as a probability times the Laplace transform of a random variable. If we only need to consider x in predicting the future, then is precisely how we would like to initialize the future prediction for y in after x is presented (Fig. 4).

We probe with the “immediate past.” When x is presented it enters as . Multiplying from the right with the immediate past, yields a prediction for the future.

| (18) |

More generally, the input to the future at time should be given by . For concision we write this as . Because the past stored in was a probability times the Laplace transform of the distribution of a random variable, the future recovered in this way is also understandable as a probability times the Laplace transform of a random variable. If only Laplace transforms of delta functions can be represented in , then we can imagine sampling from this distribution of future times, perhaps with a preference for times more near to the future.

2.6.2. Continuity of the predicted future through

The neural representation described here approximates a continuous timeline by stitching together separate Laplace neural manifolds for the past and the future. With the passage of time, information in the future moves ever closer to the present. As time passes and a prediction reaches the present, this discontinuity must be addressed. Otherwise, the firing rates will grow exponentially without bound.

We can detect predictions that have reached the present by examining , which only rises from zero when . In practice, we would use which should be on the order of . If the future that is being represented is the Laplace transform of a delta function, then we can simply take components for which to zero for all at the next time step. More generally, if the future that is represented is not simply a delta function, the linearity of the Laplace transform allows us to subtract from all values without affecting the evolution at subsequent time points.

If a prediction reaches the present and is observed, then no further action is needed. If a prediction reaches the present, but is not observed, we can trigger an observation of a “not symbol”, written e.g., to describe the observation of a failed prediction for a stimulus x. Although we won’t pursue it here, one could allow “not symbols” to be predicted by stimuli and to predict other stimuli, allowing for the model to provide appropriate predictions for a relatively complex set of contingencies using only pairwise relationships.

2.6.3. Evolving

Integrating these two additional factors allows us to write a general expression for evolving to .

| (19) |

If the future is expressed as a delta function, continuous attractor networks with an edge are sufficient to support this evolution (Daniels & Howard, submitted). Because the future is in general more complex than a delta function, and predictions for distant parts of the future can change as events happen in the present, additional considerations are necessary.

3. Neural predictions

Regions as widely separated as the cerebellum (De Zeeuw, Lisberger, & Raymond, 2021; Wagner & Luo, 2020), striatum (e.g., van der Meer & Redish, 2011), PFC (e.g., Ning, Bladon, & Hasselmo, 2022; Rainer, Rao, & Miller, 1999), OFC (e.g., Namboodiri et al., 2019; Schoenbaum, Chiba, & Gallagher, 1998; Young & Shapiro, 2011), hippocampus (Duvelle, Grieves, & van der Meer, 2023; Ferbinteanu & Shapiro, 2003) and thalamus (Komura et al., 2001) contain active representations that code for the future. One can find evidence of predictive signals extending over long periods of time that modulate firing in primary visual cortex (Gavornik & Bear, 2014; Homann, Koay, Chen, Tank, & Berry, 2022; H. Kim, Homann, Tank, & Berry, 2019; Yu et al., 2022). Prediction apparently involves a substantial proportion of the brain. Coordinating activity and plasticity over such a wide region would require careful synchronization (Hamid, Frank, & Moore, 2021; Hasselmo, Bodelón, & Wyble, 2002). The timescale of this synchronization, presumably on the order of 100 ms, fixes , places a bound on the fastest timescales that can be observed, and operationalizes the duration of the “present.”

Given the widespread nature of predictive signals, we will not attempt to map specific equations onto specific brain circuits. Rather we will illustrate the observable properties implied by these equations with an eye towards facilitating future empirical work. The predictions fall into two categories. One set of predictions describes properties of ongoing firing of neurons participating in Laplace Neural Manifolds for past and future time. Another set of predictions is a direct consequence of the properties of learned weights. We also briefly discuss the model in this paper in the context of recent empirical work on the computational basis of the dopamine signal (Jeong et al., 2022).

3.1. Active firing neurons

This paper proposes the existence of Laplace Neural Manifolds to code for the identity and time of future events. This implies there should be two related manifolds, one implementing the Laplace space and one implementing the inverse space. Previous neuroscientific work has shown evidence for Laplace and inverse spaces for a timeline for the past. The properties of the proposed neural manifolds for future time can be understood by analogy to the neural manifolds for the past.

3.1.1. Single-cell properties of neurons coding for the past

So-called temporal context cells observed in the entorhinal cortex (Bright et al., 2020; Tsao et al., 2018) are triggered by a particular event and then relax exponentially back to baseline firing with a variety of time constants. The firing of temporal context cells is as one would expect for a population coding . So-called time cells observed in the hippocampus (MacDonald et al., 2011; Pastalkova et al., 2008; Schonhaut, Aghajan, Kahana, & Fried, 2022; Shahbaba et al., 2022; Shikano, Ikegaya, & Sasaki, 2021; Taxidis et al., 2020) and many other brain regions (e.g., Akhlaghpour et al., 2016; Bakhurin et al., 2017; Jin, Fujii, & Graybiel, 2009; Mello, Soares, & Paton, 2015; Subramanian & Smith, 2024; Tiganj et al., 2018; Tiganj, Kim, Jung, & Howard, 2017) fire sequentially as events recede into the past, as one would expect from neurons participating in for . Time cells are consistent with qualitative and quantitative predictions, including the conjecture that time constants are distributed along a logarithmic scale (Cao et al., 2022).

3.1.2. Single-cell and population-level properties of neurons coding for the past and the future

In situations where the future can be predicted, and should behave as mirror images of the corresponding representations of the past. Figure 7A illustrates the firing of cells coding for a stimulus remembered in the past (left) and predicted in the future (right). Neurons participating in the Laplace space, sorted on their values of , are shown in the top; neurons participating in the inverse space, sorted on their values of are shown on the bottom.

Fig. 7.

Predicted firing for Laplace and inverse spaces plotted as heatmaps. A. Consider an experiment in which x precedes y separated by 10 s. The top row shows firing as a function of time for cells in the Laplace space for the past (left) and the future (right). Note that the cells in peak at time zero and then decay exponentially. In contrast cells in peak at 10 s and ramp up exponentially. The bottom row shows firing as a function of time for cells in the Inverse space. B. Consider an experiment in which y is predicted to occur at time zero and then recedes into the past. Cells coding for both past and future are recorded together and sorted on the average time at which they fire. Left: For Laplace spaces, neurons in are sorted to the top of the figure and neurons are sorted to the bottom of the figure. Right: Inverse spaces show similar properties but give rise to a characteristic “pinwheel” shape.

The firing of neurons constituting the Laplace space shows a characteristic shape when plotted as a function of time in this simple experiment. Neurons coding for the past are triggered shortly after presentation of the stimulus and then relax exponentially with a variety of rates. Neurons coding for the future ramp up, peaking as the predicted time of occurrence grows closer. The ramps have different characteristic time constants. Different populations are triggered by the presentation of different symbols (not shown) so that the identity of the remembered and predicted symbols as well as their timing can be decoded from populations coding and . The largest value of in the figure is chosen to be a bit longer than the delay in the experiment, resulting in a subset of neurons that appear to fire more or less constantly throughout the delay (Enel, Wallis, & Rich, 2020).
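The qualitative shapes described above can be sketched with two exponential functions. This is an illustration only: the function names, the rate values, and the choice of a stimulus at time 0 with a predicted event at 10 s (as in the experiment of Figure 7A) are assumptions made for the example, and the firing rates are in arbitrary units.

```python
import math

def past_cell(s, t, t_stim=0.0):
    """Cell coding the past: silent before the stimulus, then exponential decay."""
    return math.exp(-s * (t - t_stim)) if t >= t_stim else 0.0

def future_cell(s, t, t_pred=10.0):
    """Cell coding the future: exponential ramp that peaks at the predicted time."""
    return math.exp(-s * (t_pred - t)) if t <= t_pred else 0.0

rates = [0.1, 0.5, 2.0]               # a few rate constants (1/s)
times = [0.0, 2.5, 5.0, 7.5, 10.0]    # sample times within the 10 s delay

# One row of firing per rate constant, for each of the two populations.
past = {s: [past_cell(s, t) for t in times] for s in rates}
future = {s: [future_cell(s, t) for t in times] for s in rates}
```

With these definitions each future cell is the time-reversed image of the past cell with the same rate constant, and the cell with the smallest rate fires at a substantial level throughout the delay.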

The firing of neurons constituting the inverse space also shows a characteristic shape when plotted as a function of time in this simple experiment. Neurons tile the delay, with more cells firing early in the interval with more narrow receptive fields. The logarithmic compression of results in a characteristic “backwards J” shape for the past and a mirror image “J” shape for the future. Again, different populations would code for different stimuli in the past and in the future (not shown) so that the identity of the remembered and predicted stimuli and their time from the present could be decoded from a population coding . Figure 7B shows firing that would be expected for a population that includes cells coding for the same stimulus, say y, both in the past and the future around the time of a predicted occurrence of that symbol.

3.1.3. Plausible anatomical locations for an internal future timeline

This computational hypothesis should be evaluated with carefully planned analyses. However, the published literature shows evidence that is at least roughly in line with the hypothesis of neural manifolds for future time. Firing that ramps systematically upward in anticipation of important outcomes including planned movements has been observed in (at least) mPFC (Henke et al., 2021), ALM (Inagaki, Inagaki, Romani, & Svoboda, 2018), cerebellum (Garcia-Garcia et al., 2024), and thalamus (Komura et al., 2001). Komura et al. (2001) showed evidence for ramping firing in the thalamus that codes for outcomes in a Pavlovian conditioning experiment. Two recent papers show evidence that ramping neurons during motor preparation in ALM (Affan et al., 2024; Inagaki et al., 2018) and interval timing in mPFC (Cao et al., in press; Henke et al., 2021) do so with a continuous spectrum of time constants.

For instance, Cao et al. (in press), reanalyzing data originally published by Henke et al. (2021), observed the firing of neurons during the reproduction phase of an interval reproduction task. On each trial, the animal is exposed to a delay of seconds, which must then be reproduced. Let us refer to the moment the reproduction phase begins as . Now at time , the beginning of the interval is seconds in the past and the planned movement is a time seconds in the future. Figure 9A shows that some neurons in mPFC ramped down as with a continuum of rate constants and other neurons ramped up as , again with a continuum of rate constants . There are potentially important differences between the empirical results in the paper and the theoretical model presented here (for instance, many of the neurons coding for the time of the future planned movements rescaled the timecourse of their firing depending on the value of on that trial), but the overall correspondence to the predictions described here is striking. In at least some regions, in some tasks, the ongoing firing of cortical neurons codes the time of planned future events via the real Laplace transform of the future.

Fig. 9.

Recent observation of key neural predictions of this approach. A. In this interval reproduction experiment, rodents had to reproduce a delay of some duration . Calling the start of the reproduction period , at time , the beginning of the interval is seconds in the past while the planned end of the reproduction period is seconds in the future. Firing of neurons in rodent mPFC has properties resembling those predicted for and , showing exponential decay/ramping with a continuous spectrum of time constants. Compare to Figure 7A. Adapted from Cao et al. (2024). B. In a classical conditioning experiment, different stimuli predicted a rewarding outcome at different delays. Firing of dopamine neurons was recorded following each of the stimuli. The change in firing as a function of the time until the reward was fitted with an exponential curve (left), indexed by the discount rate . Across dopamine neurons, a wide range of time constants was observed (right). The results are as one would expect if the dopamine system projects information about the time of future events to the rest of the brain via . Adapted from Masset et al. (2023).

There is also circumstantial neurophysiological evidence for sequential firing leading to predicted future events as predicted by for coding for future events. Granule cells in cerebellum appear to fire in sequence in the time leading up to an anticipated reward (Wagner, Kim, Savall, Schnitzer, & Luo, 2017; Wagner & Luo, 2020). During performance of a task in which monkeys must perform a sequence of movements, neurons firing in sequence that decoded the time of future movements were observed in PFC but not in posterior parietal cortex (Watanabe, Kadohisa, Kusunoki, Buckley, & Duncan, 2023). OFC may be another good candidate region to look for “future time cells.” OFC has long been argued by many authors to code for the identity of predicted outcomes (Hikosaka & Watanabe, 2000; Mainen & Kepecs, 2009; Schoenbaum & Roesch, 2005). More recently Enel et al. (2020) showed sequential activation in OFC during a task in which it was possible to predict the value of a reward that was delayed for several seconds. Finally, it should be noted that the properties of over the future are a temporal analog of spatial “distance-to-goal” cells that have been observed in spatial navigation studies (Gauthier & Tank, 2018; Sarel, Finkelstein, Las, & Ulanovsky, 2017).

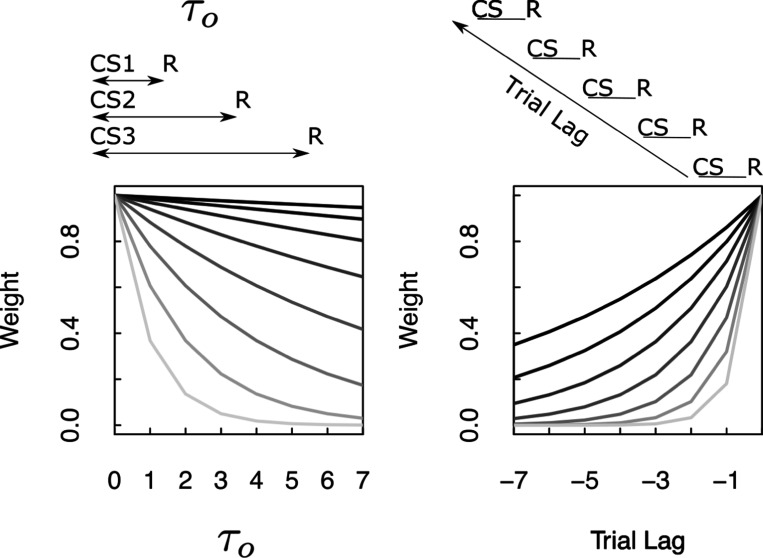

3.2. Predictions from weight matrix

3.2.1. Properties of weights due to

Consider an experiment in which different symbols, denoted cs1, cs2, etc., precede an outcome r by a delay . The value of changes across the different symbols (Figure 8A). Ignoring for the moment, the strength of the connections from each cs to r depends on the value of for that stimulus and the value of for each synapse: . When a particular cs is presented at time , the amount of information that flows along each synapse is and the pulse of input to corresponding to the outcome is .

Fig. 8.

Neural predictions derived from properties of . Left. Plot of the magnitude of the entry in connecting each of the conditioned stimuli cs to the outcome r as a function of the corresponding to that cs. Different lines correspond to entries with different values of . Weights corresponding to different values of show exponential discounting as is manipulated, with a variety of discount rates. Right. Plot of the magnitude of associated with a single pairing of cs and r a certain number of trials in the past. Different lines show the results for different values of . For clarity, these curves have been normalized such that they have the same value at trial lag zero.

Thus, considering each connection as a function of , firing should go down exponentially as a function of with a rate constant that depends on the value of . This pattern of results aligns well with experimental results observed in mid-brain dopamine neurons (Masset et al., 2023; Sousa et al., 2023). It has long been known that firing of dopamine neurons, averaged over neurons, around the time of the conditioned stimulus goes down with delay (Fiorillo, Newsome, & Schultz, 2008). Masset et al. (2023) measured the firing of dopamine neurons to different stimuli that predicted reward delivery at different delays. This study showed that there was a heterogeneity of exponential decay rates in the firing of dopamine neurons in this paradigm (Fig. 9B), much as illustrated in Fig. 8A. In the context of TDRL, this finding is consistent with a continuous spectrum of exponential discount rates (Momennejad & Howard, 2018; Tano et al., 2020). In any event, these findings (Masset et al., 2023; Sousa et al., 2023) are clear evidence that the phasic firing of midbrain dopamine neurons at the time of a predictive stimulus codes for the Laplace transform of the time until future reward.

3.2.2. Properties of weights due to

A continuum of forgetting rates predicts a range of trial history effects. Figure 8B shows the weights in over past trials that result from different values of . This is simply where is the trial recency with values normalized such that the weight at the most recent trial is 1. The weights record the Z-transform of the trial history of reinforcement. Many papers show dependence on previous trial outcomes in response to a cue stimulus in learning and decision-making experiments (Akrami, Kopec, Diamond, & Brody, 2018; Bernacchia, Seo, Lee, & Wang, 2011; Hattori, Danskin, Babic, Mlynaryk, & Komiyama, 2019; Hattori & Komiyama, 2022; Morcos & Harvey, 2016; Scott et al., 2017). These studies show history-dependent effects in a wide range of brain regions and often show a continuous spectrum of decay rates within a brain region (see especially Bernacchia et al., 2011; Danskin et al., 2023). Notably, distributions of time constants for trial history effects co-occur with distributions of ongoing activity in multiple brain regions (Spitmaan, Seo, Lee, & Soltani, 2020).

3.3. Dopamine and learning

The connection between TDRL and neuroscience related to dopamine has been one of the great triumphs of computational neuroscience (Schultz et al., 1997). The standard account is that the firing of dopamine neurons signals reward prediction error (RPE) which drives plasticity. Despite its remarkable success at predicting the findings of many behavioral and neurophysiological experiments, the RPE account has been under increasing strain over recent years. The standard account did not predict the existence of a number of striking effects, including increasing dopamine firing during delay under uncertainty (Fiorillo, Tobler, & Schultz, 2003), dopamine ramps in spatial experiments (Howe, Tierney, Sandberg, Phillips, & Graybiel, 2013), dopamine waves (Hamid et al., 2021), and heterogeneity of dopamine responses across neurons and brain regions (Dabney et al., 2020; Masset, Malik, Kim, Bech Vilaseca, & Uchida, 2022; W. Wei, Mohebi, & Berke, 2021), although many of these phenomena can be accommodated within the RPE framework with elaboration (Gardner, Schoenbaum, & Gershman, 2018; Gershman, 2017; H.R. Kim et al., 2020; Lee, Engelhard, Witten, & Daw, 2022). Jeong et al. (2022) reported the results of several experiments that flatly contradict the standard model. These experiments were proposed to evaluate an alternative hypothesis for dopamine firing in the brain.

Jeong et al. (2022) propose that dopamine signals whether the current stimulus is a cause of reward. The model developed there, referred to as ANCCR, assesses the contingency between a stimulus and outcomes. and contain information about the contingencies, temporal and otherwise, between a symbol and possible outcomes. Both ANCCR and the framework developed in this paper are inspired by a similar critique of Rescorla-Wagner theory and TDRL (C. Gallistel, 2021b). In order to make a complete account of the experiments in Jeong et al. (2022), the current framework would have to be elaborated in several ways. However, the current framework does not require one to specify an intrinsic timescale of association a priori. Perhaps it is possible to develop a generalization of the current framework that does not rely on the simplifying assumption of discrete trials in order to yield readily interpretable measures of contingency.

4. Discussion

This paper takes a phenomenological approach to computational neuroscience. The strategy is to write down equations that, if the brain could somehow obey them, would be consistent with a wide range of observed cognitive and neural phenomena. The phenomenological equations make concrete predictions that can be evaluated with cognitive and neurophysiological experiments. To the extent the predictions hold, the question of how the brain manages to obey these phenomenological equations could then become a separate subject of inquiry. The phenomenological equations require a number of capabilities of neural circuits, both at the level of synapses and in terms of ongoing neural activity. We make those explicit here.

4.1. Circuit assumptions for synaptic weights

The phenomenological equations for the synaptic weights require that the brain uses two continuous variables to organize connections between many neurons, most likely spanning multiple brain regions. For the phenomenological equations to be viable, these continuous variables should be deeply embedded in the functional architecture of the brain. For instance, in order to invert the integral transforms, it is necessary to compute a derivative over these continuous variables. This suggests that a gradient in these continuous variables should be anatomically identifiable. Conceivably, anatomical gradients in gene expression and functional architecture (e.g., Guo et al., 2021; Phillips et al., 2019; Roy, Zhang, Halassa, & Feng, 2022) could generate anatomical gradients in one or both of these variables. Perhaps part of the function of traveling waves of activity such as theta oscillations (Lubenov & Siapas, 2009; Patel, Fujisawa, Berényi, Royer, & Buzsáki, 2012; Zhang & Jacobs, 2015) or dopamine waves (Hamid, Frank, & Moore, 2019) is to make anatomical gradients salient.

4.2. Circuit assumptions for ongoing activity

At the neural level, this framework assumes the existence of circuits that can maintain activity of a Laplace Neural Manifold over time. There is evidence that the brain has found some solution to this problem (Atanas et al., 2023; Bright et al., 2020; Cao et al., in press; Tsao et al., 2018; Zuo et al., 2023). Exponential growth of firing, as proposed by Eq. 19, seems on its face to be a computationally risky proposition (but see Daniels & Howard, submitted). However, this proposal does create testable predictions. Moreover, firing rates that increase monotonically as a function of one or another environmental variable are widely observed. For instance, border cells as an animal approaches a barrier (Solstad, Boccara, Kropff, Moser, & Moser, 2008) and evidence accumulation cells (Roitman & Shadlen, 2002) both increase their firing monotonically. If this monotonic increase in firing reflects movement of an edge along a Laplace Neural Manifold, the characteristic time scale of the increase should be heterogeneous across neurons. If the brain has access to a circuit that pairs a Laplace space with an inverse space, it could reuse this circuit to construct cognitive models for spatial navigation (Howard et al., 2014), evidence accumulation (Howard et al., 2018), and perhaps cognitive computation more broadly (Howard & Hasselmo, 2020). Consistent with this hypothesis, the monotonic cells in spatial navigation and evidence accumulation (border cells and evidence accumulation cells) have sequential analogues (Koay, Charles, Thiberge, Brody, & Tank, 2022; Morcos & Harvey, 2016; Wilson & McNaughton, 1993), as one would expect if they reflect a Laplace space that is coupled with an inverse space.

Perhaps part of the solution to implementing these equations in the brain is to restrict the kinds of functions that can be represented over the Laplace Neural Manifold. A continuous attractor network that can maintain and evolve the Laplace transform of a single delta function per basis vector can readily be constructed (Daniels & Howard, submitted). In this case, each component of the ongoing activity would be at any moment the Laplace transform of a delta function; the synaptic weights would still be able to store distributions over multiple presentations. When an item is presented, the ongoing activity could then perhaps be updated by sampling from the distribution expressed by the weights.

4.3. Generalizing beyond time

It should be possible to extend the current framework to multiple dimensions beyond time, including real space and abstract spaces (Howard et al., 2018, 2014). Properties of the Laplace domain allow data-independent operators that make computation efficient (Howard & Hasselmo, 2020). For instance, given that a state of a Laplace Neural Manifold is the Laplace transform of a function, we can construct the Laplace transform of the translated function (Eq. 2, see also Shankar et al., 2016). Critically, the translation operator is independent of the function to be translated. Restricting our attention to Laplace transforms of delta functions, we can construct the sum or difference using convolution and cross-correlation, respectively (Howard & Hasselmo, 2020; Howard et al., 2015). The binary operators for addition and subtraction also do not need to be learned. Perhaps the control theory that governs behavior is analogous to generic spatial navigation in a continuous space.
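As a minimal numerical sketch of why translation in the Laplace domain is data-independent, the following Python fragment obtains the Laplace transform of a translated function in two ways. It assumes a conventional one-sided real Laplace transform approximated by a Riemann sum. The time grid, the Gaussian test function, and the names `laplace`, `bump`, and `shift` are illustrative assumptions rather than part of the framework, and the sign convention may differ from the one adopted in this paper.

```python
import numpy as np

# Illustrative grid and rate constants (assumptions, not values from the paper).
dt = 0.001
t = np.arange(0.0, 40.0, dt)
s = np.geomspace(0.05, 5.0, 20)

def laplace(f_vals):
    """Riemann-sum approximation of int_0^inf f(t) exp(-s t) dt for each s."""
    return (np.exp(-np.outer(s, t)) * f_vals).sum(axis=1) * dt

def bump(center):
    """Hypothetical test function: a narrow Gaussian centered at `center`."""
    return np.exp(-0.5 * ((t - center) / 0.3) ** 2)

shift = 2.5
F = laplace(bump(5.0))

# Translation applied in the Laplace domain: multiply by exp(-s * shift).
F_translated = np.exp(-s * shift) * F

# Translation applied to the function itself, then transformed.
F_direct = laplace(bump(5.0 + shift))

translation_matches = np.allclose(F_translated, F_direct, rtol=1e-6)
```

The factor `np.exp(-s * shift)` depends only on `s` and the displacement, not on the function being translated, which is the sense in which the operator does not need to be learned.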

4.4. Scale-covariance as a design goal

Because the time constants are sampled along a logarithmic scale, all of the quantities in this paper are scale-covariant. Rescaling time by a constant factor is equivalent to rescaling the time constants by the same factor. Because the time constants are chosen in a geometric series, rescaling time simply translates the representation along the cell number axis. All the components of the model use the same kind of logarithmic scale for time, and so all of them are time scale-covariant, responding to rescaling time with a translation over cell number. Thus any measure that integrates over cell number (and is not subject to edge effects) is scale-invariant.
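The covariance property can be checked numerically. The sketch below assumes, hypothetically, rate constants `s` forming a geometric series with ratio `c` and an input consisting of a single delta function, whose real Laplace transform is `exp(-s * t)`. The specific values of `c`, `s0`, and `k` are arbitrary illustrative choices.

```python
import numpy as np

# Hypothetical bank of 50 cells with rate constants in a geometric series.
c = 1.2
s0 = 10.0
n = np.arange(50)
s = s0 * c ** (-n)

def laplace_of_delta(t):
    """Real Laplace transform, across cells, of a delta function t seconds in the past."""
    return np.exp(-s * t)

k = 5
f_original = laplace_of_delta(2.0)
f_rescaled = laplace_of_delta(2.0 * c ** k)   # time rescaled by a factor c**k

# Rescaling time by c**k translates the pattern by k cells along n
# (ignoring the k cells at the edge of the bank).
shift_ok = np.allclose(f_rescaled[k:], f_original[:-k])
```

Because `s[n] * c**k` equals `s[n - k]`, the rescaled pattern at cell `n` reproduces the original pattern at cell `n - k`; only the cells at the edge of the bank have no counterpart.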

Empirically, there is not a characteristic time scale to associative learning (Balsam & Gallistel, 2009; Burke et al., 2023; C.R. Gallistel & Shahan, 2024; Gershman, 2022); any model that requires choice of a time scale for learning to proceed is thus incorrect. Logarithmic time scales are observed neurally (Cao et al., 2022; Guo et al., 2021). Logarithmic time scales can be understood as a commitment to a world with power law statistics (Piantadosi, 2016; X.-X. Wei & Stocker, 2012) or as an attempt to function in many different environments without a strong prior on the time scales it will encounter (Howard & Shankar, 2018).

Recent work has shown that the use of logarithmic time scales also enables scale-invariant CNNs for vision (Jansson & Lindeberg, 2021) and audition (Jacques, Tiganj, Sarkar, Howard, & Sederberg, 2022). For instance, Jacques et al. (2022) trained deep CNNs to categorize spoken digits. When tested on digits presented at very different speeds than the training examples (imagine someone saying the word “seven” stretched over four seconds), the deep CNN with a logarithmic time axis generalized perfectly. Rescaling time translates the neural representation at each layer; convolution is translation-equivariant; including a maxpool operation over the convolutional layer renders the entire CNN translation-invariant. Time is important not only in speech perception (e.g., Lerner, Honey, Katkov, & Hasson, 2014) but in vision as well (Russ, Koyano, Day-Cooney, Perwez, & Leopold, 2022), suggesting that these ideas can be incorporated into a wide range of sensory systems.
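The chain of reasoning in this argument (rescaling becomes translation, convolution is translation-equivariant, and a max over the convolution output is translation-invariant) can be illustrated with a toy one-layer example. This is only a sketch under stated assumptions and is not the architecture of Jacques et al. (2022): the random `kernel` stands in for a learned filter, and `np.roll` stands in for the shift along a logarithmic time axis produced by rescaling time.

```python
import numpy as np

rng = np.random.default_rng(0)
kernel = rng.standard_normal(5)        # hypothetical stand-in for a learned filter

# A pattern over a (notionally logarithmic) time axis, padded with zeros.
x = np.zeros(40)
x[10:16] = rng.standard_normal(6)

# Rescaling time is modeled as a shift along the log axis (no wrap-around here).
x_rescaled = np.roll(x, 7)

def conv_maxpool(signal):
    """One convolutional layer followed by a global max pool."""
    return np.convolve(signal, kernel, mode="full").max()

# The pooled response is unchanged by the shift.
invariant = np.isclose(conv_maxpool(x), conv_maxpool(x_rescaled))
```

The convolution output for `x_rescaled` is the output for `x` shifted by seven samples, so the maximum over positions is the same in both cases.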

4.5. Convolution, relational memory and cognitive graphs

There is a long-standing tension in psychology between accounts of learning based on simple associations and accounts based on cognitive representations. For instance, Tolman (1948), studying the behavior of rats in spatial mazes, contrasted behaviorist accounts of stimulus-response associations with a “cognitive map.” This paper has already touched on this tension between association and temporal contingency (which requires metric temporal relationships between stimuli) in the study of Pavlovian learning and reward systems in the brain. Fodor and Pylyshyn (1988) used analogous arguments in an early critique of connectionism that echoes to the present day in contemporary debates about whether large language models “understand” language or not. Continuing interest in “neurosymbolic” artificial intelligence can be seen as an extension of this longstanding debate (Marcus, 2018).

For researchers studying episodic memory and neural representations in the hippocampus, cognitive maps rather than simple atomic associations have long been the dominant view (O’Keefe & Nadel, 1978). Cohen and Eichenbaum (1993) emphasized that cognitive maps are more general than spatial maps of the physical environment and can be used to describe other forms of relationships. In their view a relational representation “maintains the ‘compositionality’ of the items, that is, the encoding of items both as perceptually distinct ‘objects’ and as parts of larger scale ‘scenes’ and ‘events’ that capture the relevant relations between them.” In the view of Cohen and Eichenbaum (1993), relational memory is critical for flexible, context-dependent expression of stored knowledge, in much the same way that rats can take a novel shortcut to a reward in a pre-learned maze (Tolman, 1948). These ideas about relational memory have led to “neurosymbolic” computational models developed with specific attention to hippocampal function (Whittington et al., 2020).

The convolutions stored in the Laplace domain are precisely relational representations. The convolution of two functions is neither one function nor the other, but is composed from them. The convolution between the function x was seconds in the past and the function y is in the rearward portion of the future describes an “event” including x and y in a particular relationship. If we substitute functions of physical space rather than functions of time, it would be straightforward to understand this convolution as a “scene” as proposed by Cohen and Eichenbaum (1993). Because simple Hebbian association of Laplace representations is sufficient to perform convolution, it is straightforward to at least write down neural models for relatively complex data structures in the Laplace domain.

Not only do convolutions provide a way to implement relational representations as envisioned by Cohen and Eichenbaum (1993), they also lend themselves to flexible expression of memory. Convolution has an inverse operation, cross-correlation. So, if a third function is the convolution of two functions, then either of the two can be recovered by cross-correlating the third with the other. This property enables symbolic computation (Gayler, 2004; Schlegel, Neubert, & Protzel, 2022). For instance, consider convolutions of delta functions. If one function is a delta function at one position and a second is a delta function at another position, then their convolution is a delta function at the sum of the two positions. Convolution of delta functions is thus mapped onto addition, and cross-correlation (which is just convolution with reflection of one of the functions along the axis) maps onto subtraction. With a bit of creativity to deal with positive and negative numbers, not unlike the treatment used here of a timeline that is defined on both sides of zero, one can build a computational system that implements the group describing the reals under addition, clearly meeting the requirements for a symbolic computer. Coming back to the hippocampus, navigation in a physical space requires vector subtraction. For instance, to know how to get from one physical location to another, we must be able to compute the difference between the two position vectors. One can also perform spatial navigation in abstract spaces using the same data-independent operators (C.R. Gallistel & King, 2011; Howard & Hasselmo, 2020).
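The mapping of convolution onto addition and cross-correlation onto subtraction can be verified with discrete delta functions. In the sketch below the positions `a` and `b`, the grid size, and the helper `delta` are hypothetical illustrative choices. The last lines check the corresponding statement in the Laplace domain, where the transform of a delta function at position `a` is `exp(-s * a)` and convolution becomes elementwise multiplication.

```python
import numpy as np

def delta(pos, size=64):
    """Discrete delta function at integer position `pos` (illustrative helper)."""
    v = np.zeros(size)
    v[pos] = 1.0
    return v

a, b = 7, 12
f, g = delta(a), delta(b)

# Convolution of two deltas is a delta at the sum of their positions.
conv = np.convolve(f, g, mode="full")
peak_sum = int(np.argmax(conv))                      # position a + b

# Cross-correlation of two deltas is a delta at the difference of positions.
# In "full" mode, index len(f) - 1 corresponds to zero lag.
xcorr = np.correlate(g, f, mode="full")
peak_diff = int(np.argmax(xcorr)) - (len(f) - 1)     # lag b - a

# The same addition in the Laplace domain: a product of transforms.
s = np.geomspace(0.01, 1.0, 20)
laplace_ok = np.allclose(np.exp(-s * a) * np.exp(-s * b), np.exp(-s * (a + b)))
```

Neither operator depends on the particular positions being combined, which is what makes them usable as general-purpose addition and subtraction.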

Laplace Neural Manifolds are thus well-suited to not only learn, represent, and store relationships between stimuli but also to flexibly re-express relational information in a context-appropriate manner using data-independent operators. These two properties make Laplace Neural Manifolds ideal for cognitive maps of both real and abstract spaces.

Acknowledgements.

Address correspondence to Marc Howard. The authors gratefully acknowledge discussions with Karthik Shankar, Vijay Namboodiri, Joe McGuire, Nao Uchida and the Uchida lab, and Kia Nobre as well as careful review of previous versions of the manuscript by Nicole Howard, Hallie Botnovcan, Aakash Sarkar, and an anonymous reviewer. This paper is dedicated to the memory of Karthik Shankar. arxiv://2302.10163

Footnotes

Conflict of interest statement

MWH and PBS are co-founders of Cognitive Scientific AI, Inc., which could benefit indirectly from publication of this manuscript.

The sign convention here is distinct from prior papers that did not require (and which will be introduced shortly) to be defined on both sides of zero (Fig. 1).

Because of the sign conventions adopted here, is the Laplace transform of whereas is the transform of . Viewed in this light it is more precise to think of Eq. 6 as learning the Laplace transform of the cross-correlation between the present and the past.

References

- Affan R.O., Bright I.M., Pemberton L., Cruzado N.A., Scott B.B., Howard M. (2024). Ramping dynamics in the frontal cortex unfold over multiple timescales during motor planning. bioRxiv, 2024–02.

- Aghajan Z.M., Kreiman G., Fried I. (2023). Minute-scale periodicity of neuronal firing in the human entorhinal cortex. Cell Reports, 42(11).

- Akhlaghpour H., Wiskerke J., Choi J.Y., Taliaferro J.P., Au J., Witten I. (2016). Dissociated sequential activity and stimulus encoding in the dorsomedial striatum during spatial working memory. eLife, 5, e19507.

- Akrami A., Kopec C.D., Diamond M.E., Brody C.D. (2018). Posterior parietal cortex represents sensory history and mediates its effects on behaviour. Nature, 554(7692), 368–372.

- Arcediano F., Escobar M., Miller R.R. (2005). Bidirectional associations in humans and rats. Journal of Experimental Psychology: Animal Behavior Processes, 31(3), 301–18.

- Atanas A.A., Kim J., Wang Z., Bueno E., Becker M., Kang D., … others (2023). Brain-wide representations of behavior spanning multiple timescales and states in C. elegans. Cell, 186(19), 4134–4151.

- Bakhurin K.I., Goudar V., Shobe J.L., Claar L.D., Buonomano D.V., Masmanidis S.C. (2017). Differential encoding of time by prefrontal and striatal network dynamics. Journal of Neuroscience, 37(4), 854–870.

- Balsam P.D., Gallistel C.R. (2009). Temporal maps and informativeness in associative learning. Trends in Neurosciences, 32(2), 73–78.

- Bernacchia A., Seo H., Lee D., Wang X.J. (2011). A reservoir of time constants for memory traces in cortical neurons. Nature Neuroscience, 14(3), 366–72.

- Blouw P., Solodkin E., Thagard P., Eliasmith C. (2016). Concepts as semantic pointers: A framework and computational model. Cognitive Science, 40(5), 1128–1162.