Abstract

Machine learning has recently come into wide use, especially in the medical field. In the diagnosis of serious diseases such as cancer, deep learning techniques can reduce the workload of experts and provide fast results. The nuclei found in histopathology images are an essential parameter in disease detection. In this study, nucleus segmentation was performed on the colorectal histology MNIST dataset using graph theory (max-flow graph cut), PSO, watershed, and random walker algorithms. In addition, we present MedCLNet, a 10-class visual dataset built from the NCT-CRC-HE-100 K, LC25000, and GlaS datasets, which can be used in deep transfer learning studies, and we propose a transfer learning technique based on it. Deep neural networks pre-trained with the proposed transfer learning method were then used for classification on the colorectal histology MNIST dataset. DenseNet201, DenseNet169, InceptionResNetV2, InceptionV3, ResNet152V2, ResNet101V2, and Xception were used for both transfer learning and classification. The proposed approach was also analyzed before and after transfer learning with hybrid methods (DenseNet169 + SVM, DenseNet169 + GRU). In the multi-class classification on the colorectal histology MNIST dataset, the DenseNet169 model achieved 94.29% accuracy when initialized with random weights and 95.00% accuracy after transfer learning. Compared with the results of empirical studies, the proposed method produced satisfactory outcomes. The application is expected to provide physicians with a second opinion in colon cancer detection and nucleus segmentation.

Graphical Abstract

Keywords: Max-flow graph cut, MedCLNet, Nucleus segmentation, Particle swarm optimization, Transfer learning

Introduction

Colorectal cancer is a common type of cancer and a major cause of death worldwide [1]. Early diagnosis is vital for successful cancer treatment [2]. The most frequently used method for diagnosing colorectal cancer in medical practice is histopathological examination [3], in which pathologists identify cancerous tissue regions by observing changes in tissues and cells under the microscope [4]. Pathological analysis is time-consuming, challenging, and dependent on human experience [5]. Misinterpretation of the data by physicians reduces diagnostic accuracy and may prevent early diagnosis [6]. A study on the concordance of breast biopsy interpretations found that pathologists disagreed with each other in an average of 24.7% of cases; such high variability is an opportunity for computer-assisted histopathology interpretation [7]. Deep convolutional neural networks (CNNs) have shown promise in medical imaging applications [8], but the success of CNN-based deep learning models depends on large labeled datasets [9]. In medical imaging, deep learning algorithms are used for classification [10–15], detection [15, 16], and segmentation [17–20]. Large amounts of unlabeled data are easy to obtain in many areas, including medical imaging, but reliable labeling requires considerable effort from radiologists or other experts and is time-consuming and costly [21]. Because large labeled datasets are not always available in the medical field, new high-performance approaches are needed. Transfer learning can decrease the amount of labeled data required to train a high-performing model: instead of starting from random weights, it starts from a model trained on another large image dataset and uses the limited labeled data for additional training [22–24].
In this study, the MedCLNet dataset, a 10-class visual database of colorectal cancer histopathology images, was collected to train deep neural networks for transfer learning. Modern deep neural networks (DenseNet201, DenseNet169, InceptionResNetV2, InceptionV3, ResNet152V2, ResNet101V2, and Xception) were trained on MedCLNet, then retrained and tested on a different dataset, colorectal histology MNIST. In addition, nucleus segmentation was applied to detect the nuclei in histopathology images, which play an essential role in disease detection. Graph theory, particle swarm optimization (PSO), watershed, and random walker methods, which are unsupervised techniques, were used for the segmentation, again on the colorectal histology MNIST dataset.

For the eight-class classification on the colorectal histology MNIST dataset, the deep learning algorithms were initialized first with random weights, then with ImageNet weights, and finally with weights obtained from the MedCLNet database. Results before and after transfer learning (with MedCLNet weights) are also reported for hybrid methods such as DenseNet169 + support vector machine (SVM) and DenseNet169 + gated recurrent unit (GRU). The empirical results show that the proposed method produces more accurate results. Model performance was evaluated with accuracy (ACC), sensitivity (SN), specificity (SP), Cohen’s kappa (Kappa), the confusion matrix, and ROC-AUC.

The contributions of our paper can be summarized as follows:

A 10-category dataset, MedCLNet, consisting of colon cancer histopathology images, is introduced for use in the transfer learning process in colon cancer applications.

In the detection of colon cancer, the DenseNet201, DenseNet169, InceptionResNetV2, InceptionV3, ResNet152V2, ResNet101V2, and Xception deep neural networks are applied, together with hybrid methods that combine deep networks with GRU and SVM classifiers (DenseNet169 + GRU, DenseNet169 + SVM, etc.).

Deep neural networks pre-trained on the ImageNet database, a popular starting point in transfer learning applications, were also used for disease detection.

Fine-tuning was applied in the proposed MedCLNet-based transfer learning process.

Experimental results are compared without pre-training (random initialization) and with pre-training (ImageNet-based model, MedCLNet-based model).

In the remainder of this paper, the MedCLNet database is described in the “MedCLNet Dataset” section, materials and methods are detailed in the “Material and Method” section, experimental results are presented in the “Experimental Results” section, limitations are discussed in the “Limitations” section, the discussion and conclusions are given in the “Discussion” section, and future work is given in the “Conclusion and Future Work” section.

MedCLNet Dataset

MedCLNet is a ten-category visual database of colon histopathology images built from the NCT-CRC-HE-100 K, LC25000, and GlaS datasets. It contains 4000 images in total, 400 in each category. All images were resized to 224 × 224 with the PIL library [25] and converted to .jpg format. The labels of the ten classes are given in Table 1.
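The resize-and-convert step can be sketched with Pillow, the maintained fork of PIL. This is a minimal sketch under assumptions the text does not state: Pillow's default resampling filter, a JPEG quality of 95, and conversion to RGB first (required because JPEG cannot store alpha or palette modes). `to_medclnet_jpeg` is a hypothetical helper name, not the authors' code.

```python
from io import BytesIO

from PIL import Image

TARGET_SIZE = (224, 224)  # MedCLNet image size


def to_medclnet_jpeg(src, dst, size=TARGET_SIZE, quality=95):
    """Resize one source image (.tif, .jpg, .bmp, ...) to `size` and save it as JPEG.

    `src` and `dst` may be file paths or binary file objects. The quality
    value is an assumption; the text does not state it.
    """
    with Image.open(src) as img:
        rgb = img.convert("RGB")  # JPEG cannot store alpha or palette modes
    resized = rgb.resize(size)    # Pillow's default resampling filter
    resized.save(dst, format="JPEG", quality=quality)


# Usage: an in-memory 768 x 768 RGBA image stands in for an LC25000 or GlaS file.
source = BytesIO()
Image.new("RGBA", (768, 768), (200, 80, 150, 255)).save(source, format="PNG")
source.seek(0)
converted = BytesIO()
to_medclnet_jpeg(source, converted)
converted.seek(0)
result = Image.open(converted)
```

For real files, `src` and `dst` would simply be paths, and the function would be called once per selected image.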

Table 1.

Labels used in the MedCLNet dataset

| Label | Description | Number of images |
|---|---|---|
| 0 | Adipose | 400 |
| 1 | Background | 400 |
| 2 | Debris | 400 |
| 3 | Lymphocytes | 400 |
| 4 | Mucus | 400 |
| 5 | Smooth muscle | 400 |
| 6 | Normal colon mucosa | 400 |
| 7 | Cancer-associated stroma | 400 |
| 8 | Adenocarcinoma | 400 |
| 9 | Benign | 400 |

Datasets Used in the MedCLNet Database

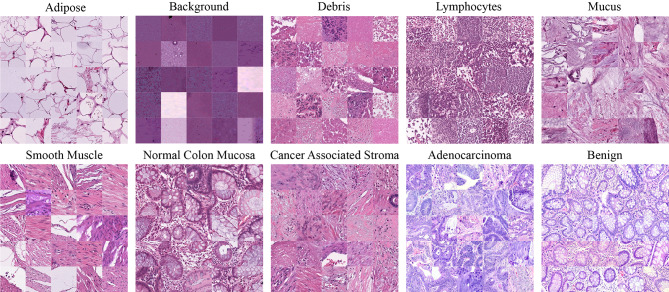

NCT-CRC-HE-100 K dataset: this dataset contains 100,000 images in nine categories: adipose, background, debris, lymphocytes, mucus, smooth muscle, normal colon mucosa, cancer-associated stroma, and colorectal adenocarcinoma epithelium. All images are 224 × 224 in size and in .tif format [26].

LC25000 dataset: this dataset contains lung and colon images. The colon subset consists of 10,000 histopathological images in two classes, 5000 benign and 5000 colon adenocarcinoma, and the lung subset contains 15,000 images. All images are 768 × 768 in size and in .jpg format [27].

GlaS dataset: this dataset contains 165 images in two categories, benign and malignant. The images have different sizes, such as 775 × 522, 589 × 453, and 574 × 433, and all are in .bmp format [28].

A detailed breakdown of the datasets used in the MedCLNet database is given in Table 2, and example images are shown in Fig. 1.

Table 2.

MedCLNet dataset creation process

| Dataset | Label type | Number of images | Image size | Images used in MedCLNet / image size |
|---|---|---|---|---|
| NCT-CRC-HE-100 K | Adipose | 10,407 | 224 × 224 | 400 / 224 × 224 |
| | Background | 10,566 | 224 × 224 | 400 / 224 × 224 |
| | Debris | 11,512 | 224 × 224 | 400 / 224 × 224 |
| | Lymphocytes | 11,557 | 224 × 224 | 400 / 224 × 224 |
| | Mucus | 8896 | 224 × 224 | 400 / 224 × 224 |
| | Smooth muscle | 13,536 | 224 × 224 | 400 / 224 × 224 |
| | Normal colon mucosa | 8763 | 224 × 224 | 400 / 224 × 224 |
| | Cancer-associated stroma | 10,446 | 224 × 224 | 400 / 224 × 224 |
| | Colorectal adenocarcinoma epithelium | 14,317 | 224 × 224 | 149 / 224 × 224 |
| LC25000 | Colon adenocarcinoma | 5000 | 768 × 768 | 160 / 224 × 224 |
| | Colon benign | 5000 | 768 × 768 | 326 / 224 × 224 |
| GlaS | Malignant (adenocarcinoma) | 91 | Different sizes (e.g., 775 × 522, 589 × 453, 574 × 433) | 91 / 224 × 224 |
| | Benign | 74 | Different sizes | 74 / 224 × 224 |

Fig. 1.

The MedCLNet dataset’s samples

The MedCLNet database was created from images in the NCT-CRC-HE-100 K, LC25000, and GlaS datasets. Because of limited technical resources, not all images in the NCT-CRC-HE-100 K and LC25000 datasets could be used. The 4000 images used comprise 3349 images from NCT-CRC-HE-100 K, 486 from LC25000, and 165 from GlaS.
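The per-source counts in Table 2 can be checked against the class totals in Table 1. The short sketch below assumes, as the totals imply, that colorectal adenocarcinoma epithelium (NCT-CRC-HE-100 K), colon adenocarcinoma (LC25000), and malignant (GlaS) images are merged into label 8, and that the LC25000 and GlaS benign images form label 9.

```python
from collections import Counter

# Images drawn from each source dataset (Table 2), keyed by MedCLNet class.
SAMPLED = {
    "NCT-CRC-HE-100K": {
        "Adipose": 400, "Background": 400, "Debris": 400, "Lymphocytes": 400,
        "Mucus": 400, "Smooth muscle": 400, "Normal colon mucosa": 400,
        "Cancer-associated stroma": 400,
        "Adenocarcinoma": 149,  # colorectal adenocarcinoma epithelium
    },
    "LC25000": {"Adenocarcinoma": 160, "Benign": 326},
    "GlaS": {"Adenocarcinoma": 91, "Benign": 74},  # malignant -> adenocarcinoma (assumed)
}

per_class = Counter()
for counts in SAMPLED.values():
    per_class.update(counts)  # add this source's images to the class totals

per_source = {source: sum(counts.values()) for source, counts in SAMPLED.items()}
total = sum(per_source.values())
```

Every class sums to 400, and the source totals reproduce the 3349 / 486 / 165 split stated above.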

Material and Method

MedCLNet was prepared as a source dataset for transfer learning in classification tasks on colon cancer histopathology images. In this study, DenseNet201, DenseNet169, InceptionResNetV2, InceptionV3, ResNet152V2, ResNet101V2, and Xception were trained on MedCLNet, and the resulting weights were then used for classification on new colon histopathology data. Performance was evaluated with ACC, SN, SP, Kappa, the confusion matrix, and ROC-AUC. The dataset used for classification is described in the “Dataset Used in the Classification” section, the CNN-based deep learning models in the “Deep Learning Models” section, and pre-trained transfer learning in the “Transfer Learning and Pre-trained” section. The four additional methods used to evaluate the proposed method are presented in the “Other Methods Applied in the Study” section. Finally, the performance metrics used to interpret the experimental results are given in the “Performance Metrics for Multi-class Classification” section.

Dataset Used in the Classification

The colorectal histology MNIST dataset was used for the eight-class classification. It contains 5000 images, 625 in each category, all 150 × 150 in size and in .tif format [29]. In all classification experiments in this study, a subset of 2800 histopathological images (350 per category) was used (Fig. 2). In transfer learning, deep neural networks are first trained on large, heterogeneous datasets, and the trained models are then retrained and tested on more limited labeled datasets. Three transfer learning settings were evaluated. In the first, the networks were trained on the heterogeneous MedCLNet database and then retrained and tested on a new colon dataset, the colorectal histology MNIST dataset. In the second, networks trained on the large, heterogeneous ImageNet dataset were retrained and tested on the colorectal histology MNIST dataset. In the third, a re-transfer learning technique was applied: networks pre-trained on ImageNet were retrained on MedCLNet and then retrained and tested on the colorectal histology MNIST dataset. As a baseline, networks initialized with random weights were trained and tested on the colorectal histology MNIST dataset. All networks, whether initialized by transfer learning or with random weights, were trained and tested under the same conditions, and the ACC, SN, SP, and Kappa metrics were used to compare the results.
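Drawing 350 of the 625 images in each class can be sketched as balanced random sampling without replacement. The text does not say how the subset was chosen, so the random selection and the seed are assumptions, and `balanced_subset` is a hypothetical helper.

```python
import numpy as np


def balanced_subset(labels, per_class, seed=0):
    """Indices of `per_class` randomly chosen samples from every class, without replacement."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    chosen = [
        rng.choice(np.flatnonzero(labels == c), size=per_class, replace=False)
        for c in np.unique(labels)
    ]
    return np.sort(np.concatenate(chosen))


# colorectal histology MNIST: 8 classes x 625 images; keep 350 per class.
all_labels = np.repeat(np.arange(8), 625)
subset = balanced_subset(all_labels, per_class=350)
```

Sampling per class rather than from the pooled dataset guarantees the exact 350-per-class balance.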

Fig. 2.

Examples of the colorectal histology MNIST dataset

Deep Learning Models

In transfer learning and classification, DenseNet201 [30], DenseNet169 [30], InceptionResNetV2 [31], InceptionV3 [32], ResNet152V2 [33], ResNet101V2 [34], and Xception [35] deep learning models have been used. The hyperparameters used in deep learning models are given in Table 3.

Table 3.

Parameters used in deep learning models

| Hyperparameter | Value |
|---|---|
| Input shape | 224 × 224 |
| Batch size | 32 |
| Epochs | 50 |
| Optimizer | Adam |
| Monitor | Validation loss |
| Mode | Minimum |
| Loss | Categorical cross-entropy |
| Metrics | Accuracy |
| Initial learning rate | 1e-6 |
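The settings in Table 3 map onto Keras roughly as follows. This is a sketch under assumptions: a DenseNet169 backbone with global average pooling and a softmax head, three RGB input channels, and the Monitor/Mode entries interpreted as a `ModelCheckpoint` that keeps the weights with the lowest validation loss (they could equally belong to an early-stopping callback). `build_classifier`, `table3_callbacks`, and the file name `best_model.keras` are hypothetical. `weights=None` corresponds to random initialization and `weights="imagenet"` to ImageNet initialization.

```python
import tensorflow as tf

INPUT_SHAPE = (224, 224, 3)  # Table 3 input shape; 3 RGB channels assumed


def build_classifier(num_classes, weights=None):
    """DenseNet169 backbone + softmax head, compiled with the Table 3 settings.

    weights=None gives random initialization; weights="imagenet" loads
    (and downloads) the ImageNet weights.
    """
    base = tf.keras.applications.DenseNet169(
        include_top=False, weights=weights, input_shape=INPUT_SHAPE, pooling="avg")
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(base.output)
    model = tf.keras.Model(base.input, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=1e-6),  # initial learning rate
        loss="categorical_crossentropy",                         # expects one-hot labels
        metrics=["accuracy"],
    )
    return model


def table3_callbacks(path="best_model.keras"):
    """Keep the weights with the lowest validation loss (Monitor/Mode in Table 3)."""
    return [tf.keras.callbacks.ModelCheckpoint(
        path, monitor="val_loss", mode="min", save_best_only=True)]


# Training call with the remaining Table 3 values (data arrays are placeholders):
# model = build_classifier(num_classes=8)
# model.fit(x_train, y_train_onehot, batch_size=32, epochs=50,
#           validation_data=(x_val, y_val_onehot), callbacks=table3_callbacks())
```

For pre-training on MedCLNet the same function would be called with `num_classes=10`.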

Transfer Learning and Pre-trained

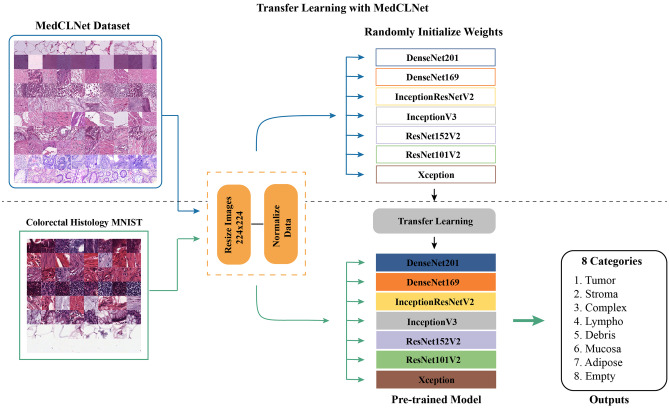

Transfer learning is a deep learning technique inspired by human thinking [15], in which a network is trained on a large dataset (the source or base dataset) and the learned knowledge is reused on a smaller dataset (the target dataset) [14]. In this study, MedCLNet was created from colon cancer histopathology data and used to train deep learning networks. The resulting weights (the learned knowledge) were then used for eight-class classification on a new colon histopathology dataset. The transfer learning process is summarized in Fig. 3.

Fig. 3.

Transfer learning flowchart

In Fig. 3, the deep learning networks are first trained on the MedCLNet database; the learned weights are then used for multi-class classification on a new dataset, the eight-class colorectal histology MNIST dataset.
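Reusing a MedCLNet-trained network for the eight-class task requires swapping its 10-class output layer for an 8-class one. A minimal Keras sketch, assuming the MedCLNet model is a functional model whose second-to-last layer outputs the pooled feature vector (as in a backbone built with `pooling="avg"` plus a softmax head); `replace_head` and the checkpoint name in the comment are hypothetical. The backbone layers, and therefore their learned weights, are shared with the new model.

```python
import tensorflow as tf


def replace_head(pretrained, num_classes):
    """Reuse every layer of `pretrained` except its final classification layer.

    Assumes a functional model whose second-to-last layer outputs the pooled
    feature vector (e.g. a backbone with pooling="avg" followed by a softmax head).
    """
    features = pretrained.layers[-2].output
    outputs = tf.keras.layers.Dense(
        num_classes, activation="softmax", name=f"head_{num_classes}_classes")(features)
    return tf.keras.Model(pretrained.input, outputs)


# Hypothetical usage with a saved 10-class MedCLNet model:
# medclnet_model = tf.keras.models.load_model("medclnet_densenet169.keras")
# crc_model = replace_head(medclnet_model, num_classes=8)
# crc_model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=1e-6),
#                   loss="categorical_crossentropy", metrics=["accuracy"])
```

The same function covers the re-transfer setting: start from an ImageNet-initialized model, train it on MedCLNet, then replace the head again before training on colorectal histology MNIST.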

Other Methods Applied in the Study

In this section, four additional methods are applied to evaluate the performance of the MedCLNet approach.

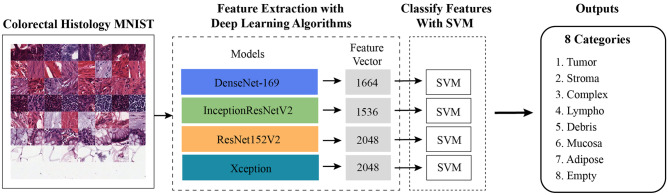

Deep Learning Algorithms with SVM (Method 1)

Classical machine learning methods are generally successful on small datasets, but their success rate is generally lower on larger and more complex datasets, where deep learning algorithms mostly produce very successful results [9]. In the first method, a hybrid was built from the DenseNet-169, ResNet152V2, InceptionResNetV2, and Xception deep learning models and an SVM whose hyperparameters were tuned with GridSearchCV (Fig. 4). The grid search hyperparameters are given in Table 4.

Fig. 4.

Deep learning algorithms + SVM

Table 4.

SVM hyperparameters

| Parameter | Value |
|---|---|
| Estimator | SVC |
| CV (folds) | 10 |
| C | 1, 10, 100, 1000 |
| Gamma | 0.0001, 0.001, 0.01, 0.1, 1 |
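A minimal scikit-learn sketch of the SVM stage of Method 1, assuming the CNN features have already been extracted (with global average pooling, DenseNet-169 yields a 1664-dimensional vector per image). The grid and fold count follow Table 4; the RBF kernel is an assumption implied by the gamma grid (it is `SVC`'s default). Synthetic features from `make_classification` stand in for real CNN features, and `fit_svm_on_features` is a hypothetical helper.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

PARAM_GRID = {"C": [1, 10, 100, 1000], "gamma": [0.0001, 0.001, 0.01, 0.1, 1]}


def fit_svm_on_features(features, labels):
    """Grid-search an SVC (default RBF kernel) with 10-fold cross-validation."""
    search = GridSearchCV(SVC(), PARAM_GRID, cv=10)
    search.fit(features, labels)
    return search


# Stand-in for CNN features: 240 samples, 32 dimensions, 8 classes.
X_demo, y_demo = make_classification(n_samples=240, n_features=32, n_informative=10,
                                     n_classes=8, n_clusters_per_class=1, random_state=0)
svm_search = fit_svm_on_features(X_demo, y_demo)
```

After fitting, `svm_search.predict` uses the SVC refit on all training features with the best (C, gamma) pair. Standardizing the features before the SVC is a common addition but is not mentioned in the text.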

Deep Learning Algorithms with GRU (Method 2)

The GRU, like long short-term memory (LSTM), is a commonly used gated recurrent neural network (RNN) architecture [36]. In the second method, a hybrid was built from the DenseNet-169, ResNet152V2, InceptionResNetV2, and Xception deep learning models and a GRU (Fig. 5).
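The text does not describe how CNN features are fed to the GRU. One common option, assumed here, is to split the feature vector into a short sequence and classify from the GRU's final hidden state. The NumPy sketch below shows the GRU recurrence (update gate z, reset gate r, candidate state); the 13 × 128 reshaping of a 1664-dimensional DenseNet-169 feature vector, the hidden size of 64, and the random weights are all illustrative assumptions. In practice the weights would be learned, for example with a Keras `GRU` layer.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def gru_final_state(sequence, params):
    """Run a single GRU layer over `sequence` (T x d_in) and return the last hidden state."""
    Wz, Uz, bz, Wr, Ur, br, Wh, Uh, bh = params
    h = np.zeros(Uz.shape[0])
    for x in sequence:
        z = sigmoid(Wz @ x + Uz @ h + bz)             # update gate
        r = sigmoid(Wr @ x + Ur @ h + br)             # reset gate
        h_cand = np.tanh(Wh @ x + Uh @ (r * h) + bh)  # candidate state
        h = z * h + (1.0 - z) * h_cand                # blend previous and candidate state
    return h


def random_params(d_in, d_h, rng):
    """Random (untrained) GRU weights, for illustration only."""
    def gate():
        W = rng.normal(0.0, 1.0 / np.sqrt(d_in), size=(d_h, d_in))
        U = rng.normal(0.0, 1.0 / np.sqrt(d_h), size=(d_h, d_h))
        return W, U, np.zeros(d_h)
    return (*gate(), *gate(), *gate())


def softmax(logits):
    e = np.exp(logits - logits.max())
    return e / e.sum()


rng = np.random.default_rng(0)
feature_vector = rng.normal(size=1664)      # stand-in for one DenseNet-169 feature vector
sequence = feature_vector.reshape(13, 128)  # hypothetical: 13 steps of 128 features
h = gru_final_state(sequence, random_params(128, 64, rng))
W_out = rng.normal(0.0, 0.1, size=(8, 64))  # untrained 8-class output layer
probs = softmax(W_out @ h)
```

Because each new state is a gate-weighted blend of the previous state and a tanh output, the hidden state stays within [-1, 1].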

Fig. 5.

Deep learning algorithms + GRU

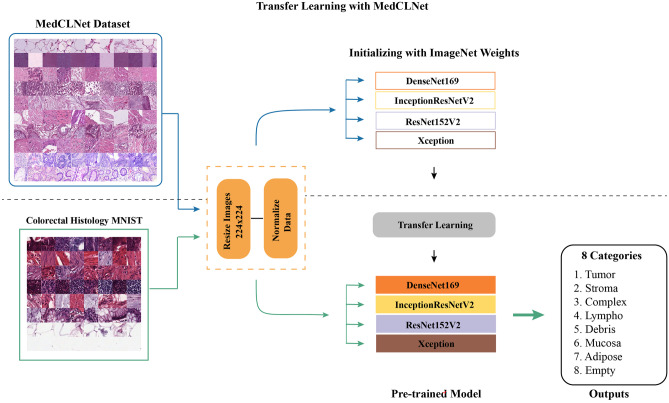

MedCLNet with ImageNet (Method 3)

In the third method, DenseNet-169, ResNet152V2, InceptionResNetV2, and Xception models initialized with ImageNet weights are trained on the MedCLNet dataset, and the resulting weights are used for multi-class classification on the colorectal histology MNIST dataset (Fig. 6).

Fig. 6.

MedCLNet with ImageNet

Fine-Tuning/Frozen (Method 4)

Whether and how to fine-tune a pre-trained network for a new classification task depends on how similar the target data are to the data used in pre-training and on whether the target dataset is large or small [37].

Performance Metrics for Multi-class Classification

In multi-class classification, the confusion matrix is an m × m matrix, where m is the number of classes. A 3 × 3 confusion matrix is given in Table 5 as an example. Taking class Y as the positive class, TP (YY) is the number of correctly predicted class Y samples, and each E entry counts samples of one class misclassified as another: E (YX) and E (YZ) are the false negatives for Y, E (XY) and E (ZY) are the false positives, and the remaining cells are true negatives. ACC, SN, SP, and Kappa metrics are used to evaluate the performance of the applied methods [38–41].

Table 5.

Multi-class classification confusion matrix

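| Actual class (rows) / predicted class (columns) | Class X | Class Y | Class Z |
|---|---|---|---|
| Class X | TP (XX) | E (XY): FP | E (XZ) |
| Class Y | E (YX): FN | TP (YY) | E (YZ): FN |
| Class Z | E (ZX) | E (ZY): FP | TP (ZZ) |

TP true positive, TN true negative, FP false positive, FN false negative, E misclassified samples. The FP and FN annotations apply with class Y as the positive class; the cells outside row Y and column Y (XX, XZ, ZX, ZZ) are then the true negatives.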

In multi-class classification, SN and SP are averaged over the classes using macro, micro, or weighted averaging. This study uses the macro averages of SN and SP. ACC, SNmacro, SPmacro, and Cohen’s kappa are calculated with the formulas in Table 6.

Table 6.

The performance metrics of the multi-class classification

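| Metric | Formula |
|---|---|
| Accuracy | $\mathrm{ACC} = \frac{1}{N}\sum_{i=1}^{m} TP_i$ |
| Sensitivity (class $i$) | $SN_i = \frac{TP_i}{TP_i + FN_i}$ |
| Specificity (class $i$) | $SP_i = \frac{TN_i}{TN_i + FP_i}$ |
| Sensitivity (macro average) | $SN_{macro} = \frac{1}{m}\sum_{i=1}^{m} SN_i$ |
| Specificity (macro average) | $SP_{macro} = \frac{1}{m}\sum_{i=1}^{m} SP_i$ |
| Cohen’s kappa | $\kappa = \frac{p_o - p_e}{1 - p_e}$, with $p_o = \mathrm{ACC}$ and $p_e = \frac{1}{N^2}\sum_{i=1}^{m} n_{i\cdot}\, n_{\cdot i}$ |

Here $m$ is the number of classes, $N$ the total number of samples, $TP_i$, $FN_i$, $FP_i$, and $TN_i$ the counts obtained when class $i$ is taken as the positive class, and $n_{i\cdot}$ and $n_{\cdot i}$ the row (actual) and column (predicted) totals of class $i$ in the confusion matrix.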
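The formulas in Table 6 can be computed directly from an m × m confusion matrix. A minimal NumPy sketch, assuming rows are actual classes and columns predicted classes as in Table 5; `multiclass_metrics` is a hypothetical helper, not the authors' code.

```python
import numpy as np


def multiclass_metrics(cm):
    """ACC, macro SN, macro SP and Cohen's kappa from an m x m confusion matrix.

    cm[i, j] counts samples whose actual class is i and predicted class is j.
    """
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp   # actual class i, predicted as another class
    fp = cm.sum(axis=0) - tp   # predicted as class i, actually another class
    tn = n - tp - fn - fp
    acc = tp.sum() / n
    p_e = np.sum(cm.sum(axis=1) * cm.sum(axis=0)) / n**2  # chance agreement
    return {
        "ACC": acc,
        "SN_macro": np.mean(tp / (tp + fn)),
        "SP_macro": np.mean(tn / (tn + fp)),
        "Kappa": (acc - p_e) / (1.0 - p_e),
    }


example = multiclass_metrics([[5, 1, 0],
                              [1, 3, 1],
                              [0, 0, 4]])
```

For this 3 × 3 example, ACC = 12/15 = 0.8 and κ = 104/149 ≈ 0.698: the chance agreement is p_e = (6·6 + 5·4 + 4·5)/15² = 76/225.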

Experimental Results

This section presents the experimental results of the proposed transfer learning (with MedCLNet), together with the classification and nucleus segmentation results on the colorectal histology MNIST dataset. For colorectal histology MNIST classification, the 2800 selected images were split 9:1 into training and test sets, and 10% of the training portion was held out for validation. This gave 2268 training, 252 validation, and 280 test images.
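The split arithmetic (2800 → 2520 + 280, then 2520 → 2268 + 252) can be reproduced with two calls to scikit-learn’s `train_test_split`. Stratification by class and the fixed random seed are assumptions, since the text gives only the ratios, and `split_9_1_with_validation` is a hypothetical helper.

```python
import numpy as np
from sklearn.model_selection import train_test_split


def split_9_1_with_validation(indices, labels, seed=42):
    """9:1 train/test split, then 10% of the training part held out for validation."""
    idx_trval, idx_test, y_trval, y_test = train_test_split(
        indices, labels, test_size=0.1, stratify=labels, random_state=seed)
    idx_train, idx_val, y_train, y_val = train_test_split(
        idx_trval, y_trval, test_size=0.1, stratify=y_trval, random_state=seed)
    return (idx_train, y_train), (idx_val, y_val), (idx_test, y_test)


labels = np.repeat(np.arange(8), 350)  # 2800 images, 350 per class
indices = np.arange(labels.size)
(train, y_train), (val, y_val), (test, y_test) = split_9_1_with_validation(indices, labels)
```

With stratification, the 280 test images contain exactly 35 images of each class. Changing `test_size` in the first call gives the 70%:30% and 50%:50% settings.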

Results of Transfer Learning Study with the MedCLNet Dataset

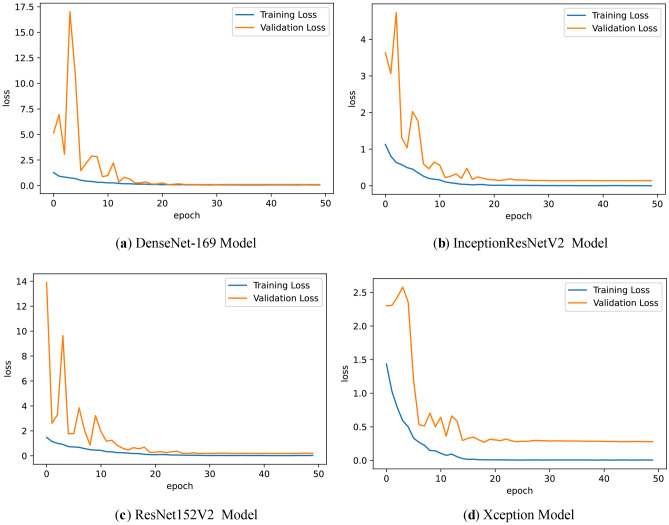

The MedCLNet dataset consists of 4000 images and was used to train the DenseNet201, DenseNet169, InceptionResNetV2, InceptionV3, ResNet152V2, ResNet101V2, and Xception networks. For training, the data were split 9:1 into a training set of 3600 images and a validation set of 400 images. The final training loss, validation loss, training accuracy, and validation accuracy of the networks trained on MedCLNet are given in Table 7, and their loss curves are shown in Fig. 7.

Table 7.

Experimental results of the metrics of the models

| Model | Loss | Accuracy | Validation loss | Validation accuracy |
|---|---|---|---|---|
| DenseNet-169 | 0.0701 | 0.9767 | 0.0934 | 0.9800 |
| InceptionResNetV2 | 0.0060 | 0.9989 | 0.1312 | 0.9575 |
| ResNet152V2 | 0.0423 | 0.9875 | 0.1942 | 0.9350 |
| Xception | 0.0075 | 0.9989 | 0.2699 | 0.9200 |

Fig. 7.

a DenseNet-169 model loss graph, b InceptionResNetV2 model loss graph, c ResNet152V2 model loss graph, and d Xception model loss graph.

The highest validation accuracy value of 98% was obtained from the DenseNet-169 algorithm.

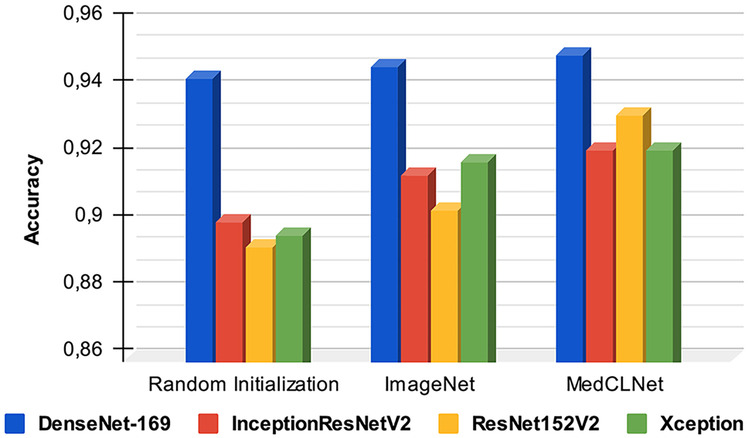

Evaluation of Models Initialized with Pre-trained Networks and with Random Weights in the Classification

The random initialization, ImageNet-based, and MedCLNet-based approaches were used for multi-class classification on the colorectal histology MNIST dataset, and their results are given in Table 8. In the first approach (random initialization), the DenseNet201, DenseNet169, InceptionResNetV2, InceptionV3, ResNet152V2, ResNet101V2, and Xception models were trained from scratch on the training and validation data, and their performance was measured on the test data. In the second approach, the models were initialized with pre-trained ImageNet weights. In the third, classification was performed with the networks pre-trained on the proposed MedCLNet database. Except for ResNet101V2 with ImageNet weights, the models initialized with random weights performed worse than those pre-trained on ImageNet or MedCLNet. With 50 epochs, every model initialized with MedCLNet weights achieved a higher ACC than the same model initialized with ImageNet weights (Table 8). The confusion matrices, loss graphs, and ROC graphs of DenseNet-169 initialized with random weights and with MedCLNet weights are given in Figs. 8, 9, and 10, respectively. Figure 11 compares the ACC of the DenseNet-169, InceptionResNetV2, ResNet152V2, and Xception networks under random, ImageNet, and MedCLNet initialization.

Table 8.

Classification results in the colorectal histology MNIST dataset with pre-trained models and models starting with random weights

| Model | ACC | SNmacro | SPmacro | Kappa | Epochs |
|---|---|---|---|---|---|
| Random initialization (w/o pre-training) | | | | | |
| DenseNet201 | 0.9286 | 0.9362 | 0.9897 | 0.9179 | 50 |
| DenseNet169 | 0.9429 | 0.9449 | 0.9917 | 0.9343 | 50 |
| InceptionResNetV2 | 0.9000 | 0.9096 | 0.9856 | 0.8852 | 50 |
| InceptionV3 | 0.9143 | 0.9204 | 0.9877 | 0.9015 | 50 |
| ResNet152V2 | 0.8929 | 0.9023 | 0.9846 | 0.8770 | 50 |
| ResNet101V2 | 0.9214 | 0.9273 | 0.9887 | 0.9097 | 50 |
| Xception | 0.8964 | 0.9052 | 0.9852 | 0.8811 | 50 |
| ImageNet-based model (w/ pre-training) | | | | | |
| DenseNet201 | 0.9321 | 0.9358 | 0.9902 | 0.9220 | 50 |
| DenseNet169 | 0.9464 | 0.9536 | 0.9923 | 0.9385 | 50 |
| InceptionResNetV2 | 0.9143 | 0.9196 | 0.9877 | 0.9015 | 50 |
| InceptionV3 | 0.9321 | 0.9371 | 0.9901 | 0.9220 | 50 |
| ResNet152V2 | 0.9036 | 0.9114 | 0.9862 | 0.8893 | 50 |
| ResNet101V2 | 0.9000 | 0.9097 | 0.9856 | 0.8852 | 50 |
| Xception | 0.9179 | 0.9297 | 0.9883 | 0.9058 | 50 |
| MedCLNet-based model (w/ pre-training) | | | | | |
| DenseNet201 | 0.9464 | 0.9505 | 0.9922 | 0.9384 | 50 |
| DenseNet169 | 0.9500 | 0.9555 | 0.9928 | 0.9425 | 50 |
| InceptionResNetV2 | 0.9214 | 0.9301 | 0.9887 | 0.9098 | 50 |
| InceptionV3 | 0.9393 | 0.9406 | 0.9913 | 0.9302 | 50 |
| ResNet152V2 | 0.9321 | 0.9356 | 0.9903 | 0.9220 | 50 |
| ResNet101V2 | 0.9393 | 0.9445 | 0.9913 | 0.9303 | 50 |
| Xception | 0.9214 | 0.9251 | 0.9886 | 0.9097 | 50 |

Fig. 8.

a Confusion matrix of DenseNet-169 initialized with random weights. b Confusion matrix of DenseNet-169 initialized with MedCLNet weights

Fig. 9.

a Loss graph for the DenseNet-169 model initialized with random weights. b Loss graph for the DenseNet-169 model initialized with MedCLNet weights

Fig. 10.

a ROC graph for the DenseNet-169 model initialized with random weights. b ROC graph for the DenseNet-169 model initialized with MedCLNet weights

Fig. 11.

ACC plot of DenseNet-169, InceptionResNetV2, ResNet152V2, and Xception models initialized with random initialization, ImageNet, and MedCLNet weights

DenseNet169 produced the best ACC in all three settings. With random weights, its ACC, SNmacro, SPmacro, and Cohen’s kappa were 94.29%, 94.49%, 99.17%, and 93.43%, respectively; with the proposed MedCLNet weights, they were 95.00%, 95.55%, 99.28%, and 94.25%.

Table 9 shows how the amount of training data affects the performance of a CNN-based deep learning model. The experiment was carried out with the DenseNet-169 model, using training/test ratios of 50%:50%, 70%:30%, and 90%:10%, and the ACC, SNmacro, and SPmacro metrics were measured for each. Accuracy increased with the proportion of data used for training, and the best result was achieved with the 90%:10% split.

Table 9.

Results of the DenseNet169 model for different training set/test set ratios

| Training set/test set | ACC | SNmacro | SPmacro |
|---|---|---|---|
| 90%:10% | 0.9429 | 0.9449 | 0.9917 |
| 70%:30% | 0.9262 | 0.9271 | 0.9894 |
| 50%:50% | 0.9257 | 0.9255 | 0.9894 |

Other Experiments Using MedCLNet Weights

This section compares models initialized with random weights (before pre-training) with models initialized with MedCLNet weights (after pre-training). The results show that the models initialized with MedCLNet weights produced better results.

Hybrid of Deep Learning Algorithms and SVM (Method 1)

In this method, image features are extracted with the DenseNet-169, ResNet152V2, InceptionResNetV2, and Xception deep learning models, and an SVM tuned with GridSearchCV uses these features for multi-class classification on the colorectal histology MNIST dataset. Results before and after pre-training are given in Table 10.

Table 10.

Deep learning algorithms with SVM

| Model | ACC (before) | SNmacro (before) | SPmacro (before) | ACC (after) | SNmacro (after) | SPmacro (after) |
|---|---|---|---|---|---|---|
| DenseNet-169 + SVM | 0.9429 | 0.9435 | 0.9917 | 0.9500 | 0.9487 | 0.9927 |
| InceptionResNetV2 + SVM | 0.8929 | 0.8965 | 0.9846 | 0.9393 | 0.9385 | 0.9912 |
| ResNet152V2 + SVM | 0.8929 | 0.8902 | 0.9847 | 0.9036 | 0.9011 | 0.9860 |
| Xception + SVM | 0.8036 | 0.8063 | 0.9718 | 0.8071 | 0.8116 | 0.9724 |

With the DenseNet-169 + SVM (GridSearchCV) architecture, ACC, SNmacro, and SPmacro were 94.29%, 94.35%, and 99.17%, respectively, before pre-training (random initialization), and 95.00%, 94.87%, and 99.27%, respectively, after pre-training (MedCLNet weights).

Hybrid of Deep Learning Algorithms and GRU (Method 2)

In this method, image features are extracted with the DenseNet-169, ResNet152V2, InceptionResNetV2, and Xception deep learning models, and a GRU uses these features for multi-class classification on the colorectal histology MNIST dataset. Results before and after pre-training are given in Table 11.

Table 11.

Deep learning algorithms with GRU

| Model | ACC (before) | SNmacro (before) | SPmacro (before) | ACC (after) | SNmacro (after) | SPmacro (after) |
|---|---|---|---|---|---|---|
| DenseNet-169 + GRU | 0.9071 | 0.9059 | 0.9868 | 0.9357 | 0.9424 | 0.9907 |
| InceptionResNetV2 + GRU | 0.9143 | 0.9233 | 0.9877 | 0.9179 | 0.9263 | 0.9882 |
| ResNet152V2 + GRU | 0.8893 | 0.8876 | 0.9841 | 0.9214 | 0.9267 | 0.9887 |
| Xception + GRU | 0.9107 | 0.9180 | 0.9872 | 0.9143 | 0.9228 | 0.9877 |

With the DenseNet-169 + GRU architecture, ACC, SNmacro, and SPmacro were 90.71%, 90.59%, and 98.68%, respectively, before pre-training (random initialization), and 93.57%, 94.24%, and 99.07%, respectively, after pre-training (MedCLNet weights).

MedCLNet with ImageNet (Method 3)

In this method, the DenseNet-169, ResNet152V2, InceptionResNetV2, and Xception models were initialized with ImageNet weights and trained on the MedCLNet data, and the trained networks were then used for classification on the colorectal histology MNIST dataset. Comparing the multi-class performance of the networks initialized with MedCLNet weights with that of the networks trained with the MedCLNet + ImageNet hybrid method shows that the hybrid method produces more successful results (Fig. 12). The training loss, validation loss, training accuracy, and validation accuracy of the networks trained with MedCLNet + ImageNet are given in Table 12.

Fig. 12.

ACC of the DenseNet-169, ResNet152V2, InceptionResNetV2, and Xception models initialized with MedCLNet weights and with the MedCLNet + ImageNet hybrid method

Table 12.

Experimental results of metrics of hybrid models

| Model | Loss | Accuracy | Validation loss | Validation accuracy |
|---|---|---|---|---|
| DenseNet-169 | 0.0082 | 0.9986 | 0.0875 | 0.9675 |
| InceptionResNetV2 | 0.0046 | 0.9989 | 0.0946 | 0.9800 |
| ResNet152V2 | 0.0671 | 0.9842 | 0.3590 | 0.8825 |
| Xception | 0.0123 | 0.9953 | 0.1399 | 0.9750 |

With DenseNet-169 initialized with MedCLNet weights, ACC, SNmacro, and SPmacro were 95.00%, 95.55%, and 99.28%; with the hybrid method, they were 95.36%, 95.55%, and 99.33%, respectively (Table 13).

Table 13.

MedCLNet and hybrid method

| Model | ACC (MedCLNet) | SNmacro (MedCLNet) | SPmacro (MedCLNet) | ACC (MedCLNet + ImageNet) | SNmacro (MedCLNet + ImageNet) | SPmacro (MedCLNet + ImageNet) |
|---|---|---|---|---|---|---|
| DenseNet-169 | 0.9500 | 0.9555 | 0.9928 | 0.9536 | 0.9555 | 0.9933 |
| InceptionResNetV2 | 0.9214 | 0.9301 | 0.9887 | 0.9286 | 0.9357 | 0.9898 |
| ResNet152V2 | 0.9321 | 0.9356 | 0.9903 | 0.9500 | 0.9541 | 0.9928 |
| Xception | 0.9214 | 0.9251 | 0.9886 | 0.9250 | 0.9296 | 0.9892 |

Fine-Tuning/Frozen (Method 4)

All models in this section were pre-trained on MedCLNet, and results are presented for the unfrozen and frozen fine-tuning settings. In the unfrozen setting, DenseNet-169 achieved ACC, SNmacro, and SPmacro of 95.00%, 95.55%, and 99.28%, respectively. For fine-tuning, the first 30% of the layers of DenseNet-169, InceptionResNetV2, ResNet152V2, and Xception were frozen; in this frozen setting, DenseNet-169 achieved ACC, SNmacro, and SPmacro of 92.14%, 92.91%, and 98.87%, respectively (Table 14).

Table 14.

Fine-tuning results

| Model | ACC (unfrozen) | SNmacro (unfrozen) | SPmacro (unfrozen) | ACC (frozen) | SNmacro (frozen) | SPmacro (frozen) |
|---|---|---|---|---|---|---|
| DenseNet-169 | 0.9500 | 0.9555 | 0.9928 | 0.9214 | 0.9291 | 0.9887 |
| InceptionResNetV2 | 0.9214 | 0.9301 | 0.9887 | 0.8679 | 0.8772 | 0.9811 |
| ResNet152V2 | 0.9321 | 0.9356 | 0.9903 | 0.9036 | 0.9079 | 0.9861 |
| Xception | 0.9214 | 0.9251 | 0.9886 | 0.8250 | 0.8293 | 0.9750 |
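Freezing the first 30% of a network’s layers, as in the frozen setting of Table 14, can be sketched as follows. This assumes that “first 30%” refers to the first 30% of the entries in `model.layers`. The helper `freeze_leading_fraction` is hypothetical and duck-typed, so it works with a Keras model or with any object exposing a `layers` list. With Keras, the model must be recompiled after changing `trainable` for the change to take effect.

```python
from types import SimpleNamespace


def freeze_leading_fraction(model, fraction=0.3):
    """Freeze the first `fraction` of model.layers and leave the rest trainable.

    Works with any object exposing a `layers` list whose items have a
    `trainable` attribute, such as a Keras model. Returns the number frozen.
    """
    layers = list(model.layers)
    n_frozen = int(len(layers) * fraction)
    for index, layer in enumerate(layers):
        layer.trainable = index >= n_frozen
    return n_frozen


# Demo with stand-in layer objects: 10 layers, so the first 3 are frozen.
toy_model = SimpleNamespace(layers=[SimpleNamespace(trainable=True) for _ in range(10)])
frozen_count = freeze_leading_fraction(toy_model, 0.3)

# Hypothetical Keras usage (recompile so the change takes effect):
# freeze_leading_fraction(crc_model, 0.3)
# crc_model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=1e-6),
#                   loss="categorical_crossentropy", metrics=["accuracy"])
```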

Nucleus Segmentation Results

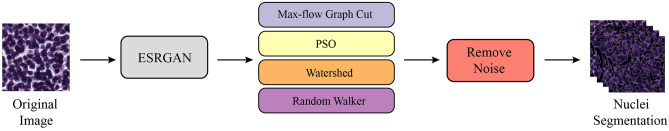

In this section, nuclei are segmented because they are an essential parameter in the detection and grading of cancer in histopathology data. Four algorithms were applied. Max-flow graph cut [42, 43], a graph-theoretic method, represents the image as a network of nodes and of edges connecting the nodes. PSO [44, 45] is a population-based algorithm developed by observing the behavior of bird flocks and fish schools. Watershed [46] and random walker [47] are classical image segmentation algorithms. High image resolution supports fast and efficient processing of the data [48]. The images in the colorectal histology MNIST dataset used for nucleus segmentation are only 150 × 150 pixels, so they were first upscaled to 592 × 592 with enhanced super-resolution generative adversarial networks (ESRGAN) [49]; the segmentation algorithms were then applied, and finally, noise was removed from the images (Fig. 13). The graph cut algorithm is shown in Fig. 14 and the flowchart of the PSO algorithm in Fig. 15. The nucleus segmentation results of the four algorithms are presented in Fig. 16, and the hyperparameters of the PSO algorithm are given in Table 15.
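The text does not state the energy used by its max-flow graph cut. Purely as an illustration, the sketch below builds the classic s–t graph for binary segmentation. Each pixel is a node. Terminal edges carry data costs, here the absolute difference from assumed nucleus and background intensities `mu_fg` and `mu_bg`. Neighboring pixels are joined by edges of constant weight `smoothness`. A minimum cut, found with the Edmonds–Karp max-flow algorithm, separates nucleus pixels (source side) from background (sink side). `graph_cut_segment` is hypothetical. Pure Python keeps it self-contained, but it is only practical for small images.

```python
from collections import deque

import numpy as np


def graph_cut_segment(gray, mu_fg, mu_bg, smoothness=10.0):
    """Binary min-cut segmentation of a small grayscale image.

    Returns a boolean mask that is True for pixels on the source (foreground) side.
    mu_fg / mu_bg are assumed typical intensities of nuclei and background.
    """
    h, w = gray.shape
    n = h * w
    source, sink = n, n + 1
    residual = [dict() for _ in range(n + 2)]  # residual[u][v] = remaining capacity u -> v

    def add_edge(u, v, capacity):
        residual[u][v] = residual[u].get(v, 0.0) + capacity
        residual[v].setdefault(u, 0.0)  # reverse edge for the residual graph

    for i in range(h):
        for j in range(w):
            p = i * w + j
            value = float(gray[i, j])
            add_edge(source, p, abs(value - mu_bg))  # cut (paid) if p ends up background
            add_edge(p, sink, abs(value - mu_fg))    # cut (paid) if p ends up foreground
            if j + 1 < w:                            # 4-neighbour smoothness edges
                add_edge(p, p + 1, smoothness)
                add_edge(p + 1, p, smoothness)
            if i + 1 < h:
                add_edge(p, p + w, smoothness)
                add_edge(p + w, p, smoothness)

    # Edmonds-Karp: repeatedly push flow along shortest augmenting paths.
    while True:
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, cap in residual[u].items():
                if cap > 1e-9 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            break  # `parent` now holds every node reachable from the source
        bottleneck, v = float("inf"), sink
        while parent[v] is not None:
            bottleneck = min(bottleneck, residual[parent[v]][v])
            v = parent[v]
        v = sink
        while parent[v] is not None:
            u = parent[v]
            residual[u][v] -= bottleneck
            residual[v][u] += bottleneck
            v = u

    mask = np.zeros((h, w), dtype=bool)
    for node in parent:
        if node < n:
            mask[node // w, node % w] = True
    return mask
```

Raising `smoothness` trades data fidelity for smoother regions. In a dark 4 × 4 nucleus with one bright noisy pixel, a weight of 10 leaves the bright pixel as background, while a weight of 50 fills it in, because isolating it would cost more than mislabeling it.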

Fig. 13.

Segmentation workflow
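The noise-removal step at the end of the workflow is not specified in detail. One common choice, shown here only as an assumed example, is to discard connected components of the binary nucleus mask that are smaller than a minimum area, similar in spirit to scikit-image's `remove_small_objects`. The function below is a hypothetical, dependency-free version using 4-connectivity, and `min_size` is an illustrative parameter.

```python
from collections import deque


def remove_small_components(mask, min_size=4):
    """Keep only 4-connected foreground components with at least `min_size` pixels."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    out = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            # Breadth-first search collects one connected component.
            component, queue = [], deque([(y, x)])
            seen[y][x] = True
            while queue:
                cy, cx = queue.popleft()
                component.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(component) >= min_size:
                for cy, cx in component:
                    out[cy][cx] = True
    return out


mask = [[1, 1, 0, 0],
        [1, 1, 0, 0],
        [0, 0, 0, 1],  # isolated pixel treated as noise
        [0, 0, 0, 0]]
print(remove_small_components(mask, min_size=4))
```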

Fig. 14.

Flowchart of the graph cut algorithm
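As a minimal sketch of binary max-flow graph cut segmentation (not the authors' implementation), the code below builds an s-t graph in which each pixel is a node, computes a maximum flow with the Edmonds-Karp algorithm, and labels as nucleus every pixel still reachable from the source in the residual graph, which is the source side of the minimum cut. The data costs assume dark nuclei with mean intensity `fg_mean` and a brighter background with mean `bg_mean`; these means, the smoothness weight `lam`, and `sigma` are hypothetical parameters chosen for illustration.

```python
import math
from collections import deque


def graph_cut_segment(img, fg_mean=0.2, bg_mean=0.8, lam=0.5, sigma=0.1):
    """Binary nucleus segmentation by an s-t minimum cut.

    img: 2-D list of intensities in [0, 1]; nuclei assumed darker than background.
    Returns a 2-D list of booleans (True = nucleus, i.e. source side of the cut).
    """
    h, w = len(img), len(img[0])
    n = h * w
    source, sink = n, n + 1
    cap = [dict() for _ in range(n + 2)]  # residual capacities cap[u][v]

    def add_edge(u, v, c):
        cap[u][v] = cap[u].get(v, 0.0) + c
        cap[v].setdefault(u, 0.0)  # reverse edge for the residual graph

    for y in range(h):
        for x in range(w):
            p, i = y * w + x, img[y][x]
            # t-links: cutting source->p labels p background; cutting p->sink labels it nucleus.
            add_edge(source, p, (i - bg_mean) ** 2)
            add_edge(p, sink, (i - fg_mean) ** 2)
            # n-links: cheap to cut between dissimilar neighbors (likely a boundary).
            for dy, dx in ((0, 1), (1, 0)):
                yy, xx = y + dy, x + dx
                if yy < h and xx < w:
                    q = yy * w + xx
                    wgt = lam * math.exp(-((i - img[yy][xx]) ** 2) / (2 * sigma ** 2))
                    add_edge(p, q, wgt)
                    add_edge(q, p, wgt)

    eps = 1e-12
    while True:
        # Breadth-first search for a shortest augmenting path (Edmonds-Karp).
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > eps and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            break  # no augmenting path: `parent` holds the source side of the min cut
        bottleneck, v = math.inf, sink
        while parent[v] is not None:
            bottleneck = min(bottleneck, cap[parent[v]][v])
            v = parent[v]
        v = sink
        while parent[v] is not None:
            u = parent[v]
            cap[u][v] -= bottleneck
            cap[v][u] += bottleneck
            v = u

    return [[(y * w + x) in parent for x in range(w)] for y in range(h)]


img = [[0.1, 0.1, 0.9, 0.9],
       [0.1, 0.1, 0.9, 0.9],
       [0.9, 0.9, 0.9, 0.9],
       [0.9, 0.9, 0.9, 0.9]]
print(graph_cut_segment(img))
```

Edmonds-Karp keeps the sketch short and dependency-free; for 592 × 592 images a dedicated max-flow solver (for example Boykov-Kolmogorov) would be far faster.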

Fig. 15.

Flowchart of the PSO algorithm

Fig. 16.

Results of nucleus segmentation

Table 15.

PSO algorithm hyperparameters

| Parameters | Value |
|---|---|
| Iterations | 100 |
| Swarm size | 5 |
| Inertia weight (w) | 0.99 |
| Acceleration coefficients (c1, c2) | 1.99 |
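The objective that PSO optimizes is not stated in this section. Purely as an illustration, the sketch below assumes PSO searches for a single global intensity threshold that maximizes Otsu's between-class variance, a common way of using PSO for segmentation. It uses the Table 15 values as defaults (100 iterations, swarm size 5, w = 0.99, c1 = c2 = 1.99). The velocity limit `vmax`, the search range [0, 1], the seed, and the function names are additional assumptions.

```python
import random


def between_class_variance(values, t):
    """Otsu criterion w0 * w1 * (mu0 - mu1)^2 for the split values < t vs values >= t."""
    lo = [v for v in values if v < t]
    hi = [v for v in values if v >= t]
    if not lo or not hi:
        return 0.0
    w0, w1 = len(lo) / len(values), len(hi) / len(values)
    mu0, mu1 = sum(lo) / len(lo), sum(hi) / len(hi)
    return w0 * w1 * (mu0 - mu1) ** 2


def pso_threshold(values, iterations=100, swarm_size=5, w=0.99,
                  c1=1.99, c2=1.99, vmax=0.2, seed=0):
    """Global-best PSO over a 1-D threshold in [0, 1] (illustrative objective)."""
    rng = random.Random(seed)
    pos = [rng.random() for _ in range(swarm_size)]
    vel = [rng.uniform(-vmax, vmax) for _ in range(swarm_size)]
    pbest = pos[:]
    pbest_f = [between_class_variance(values, p) for p in pos]
    g = max(range(swarm_size), key=lambda i: pbest_f[i])
    gbest, gbest_f = pbest[g], pbest_f[g]
    for _ in range(iterations):
        for i in range(swarm_size):
            r1, r2 = rng.random(), rng.random()
            # Inertia + pull toward personal best + pull toward swarm best.
            vel[i] = (w * vel[i] + c1 * r1 * (pbest[i] - pos[i])
                      + c2 * r2 * (gbest - pos[i]))
            vel[i] = max(-vmax, min(vmax, vel[i]))     # clamp step size
            pos[i] = max(0.0, min(1.0, pos[i] + vel[i]))
            f = between_class_variance(values, pos[i])
            if f > pbest_f[i]:
                pbest[i], pbest_f[i] = pos[i], f
                if f > gbest_f:
                    gbest, gbest_f = pos[i], f
    return gbest


# Dark "nucleus" intensities and bright "background" intensities.
values = [0.1, 0.15, 0.2, 0.25] * 10 + [0.75, 0.8, 0.85, 0.9] * 10
t = pso_threshold(values)
print(t, [v < t for v in (0.2, 0.8)])
```

With w close to 1 and c1 + c2 close to 4, unconstrained PSO velocities tend to grow, so the sketch clamps each step to `vmax` and keeps positions inside [0, 1].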

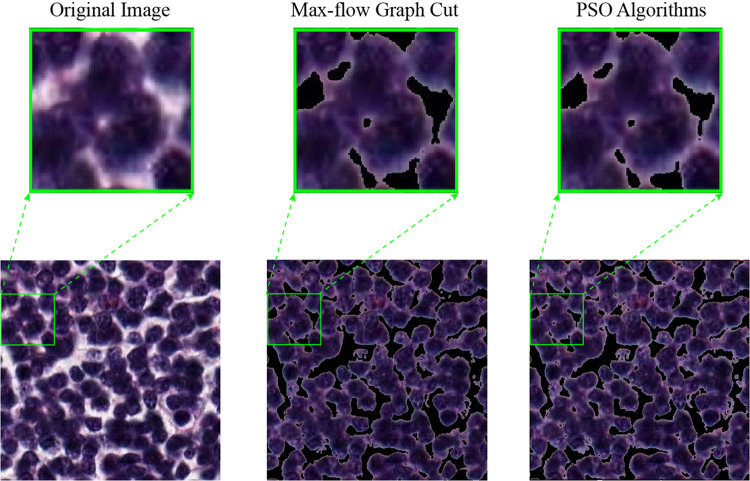

To illustrate the effectiveness of the max-flow graph cut, PSO, watershed, and random walker algorithms in segmenting nuclei, Fig. 16 presents their segmentation results on five histopathological images from the colorectal histology MNIST dataset. The random walker algorithm produced the worst segmentation in all five images.
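For reference, the random walker algorithm labels each unseeded pixel with the seed that a random walk starting there is most likely to reach first, where steps across strong intensity edges are unlikely. The corresponding probabilities solve a discrete Dirichlet problem: each unseeded pixel's probability equals the edge-weighted average of its neighbors' probabilities. The sketch below approximates that solution with Gauss-Seidel sweeps for the two-label case (nucleus vs. background); the seed layout, `beta`, and the number of sweeps are illustrative assumptions. Because the weights depend only on local intensity differences, weak or blurred borders give the walker little to stop at.

```python
import math


def random_walker_two_label(img, seeds, beta=90.0, sweeps=200):
    """Two-label random walker on a 4-connected pixel grid.

    img:   2-D list of intensities in [0, 1].
    seeds: 2-D list with 1 = nucleus seed, 2 = background seed, 0 = unlabeled.
    Returns a boolean mask: True where P(reach a nucleus seed first) > 0.5.
    """
    h, w = len(img), len(img[0])
    # Seeds are fixed boundary conditions; unlabeled pixels start at 0.5.
    prob = [[1.0 if s == 1 else 0.0 if s == 2 else 0.5 for s in row] for row in seeds]

    def weight(a, b):
        # Gaussian edge weight: small across strong intensity edges.
        return math.exp(-beta * (a - b) ** 2)

    for _ in range(sweeps):
        for y in range(h):
            for x in range(w):
                if seeds[y][x]:
                    continue
                num = den = 0.0
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w:
                        wt = weight(img[y][x], img[ny][nx])
                        num += wt * prob[ny][nx]
                        den += wt
                if den > 0.0:
                    # Gauss-Seidel update: weighted average of current neighbor values.
                    prob[y][x] = num / den
    return [[p > 0.5 for p in row] for row in prob]


print(random_walker_two_label([[0.1, 0.1, 0.9, 0.9]], [[1, 0, 0, 2]]))
```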

It failed to detect nuclei, especially in regions where nuclei are dense, and could not find accurate borders in most images. The best results in the study were obtained with the max-flow graph cut and PSO algorithms, and in nucleus boundary detection each of the two outperformed the other in different regions (Fig. 17). In particular, as nucleus density increased, the max-flow graph cut algorithm became less able to determine nucleus borders, and PSO gave better results in dense images. In low-density images, by contrast, max-flow graph cut outperformed PSO. Higher-resolution images would likely allow the algorithms to produce more accurate segmentations: because the images used in this study were both low in resolution and dense, all four algorithms (max-flow graph cut, PSO, watershed, and random walker) missed pixels. All four algorithms segmented low-density regions successfully (Fig. 18), and in these regions max-flow graph cut produced better segmentation results than PSO.

Fig. 17.

PSO algorithm and max-flow graph cut algorithm result comparison

Fig. 18.

Segmentation of lower-density (yellow contour) and higher-density (red contour) images with the max-flow graph cut, PSO, watershed, and random walker algorithms

In the denser regions, PSO produced better results than max-flow graph cut. As Fig. 19 shows, the algorithms determined nucleus boundaries more accurately in less dense regions, whereas in denser regions, combined with the low resolution of the images, they could not determine the borders of the nuclei.

Fig. 19.

Examples of nucleus segmentation with the max-flow graph cut, PSO, watershed, and random walker algorithms

Fig. 19 shows the nucleus segmentation results of the max-flow graph cut, PSO, watershed, and random walker algorithms on a histopathological image. For the region selected from the original image, the random walker algorithm produced the worst segmentation and PSO the best. However, the nucleus borders in this image were not sharp, which made boundary detection difficult for all of the algorithms.

This study on the histopathological classification and segmentation of colon cancer introduced the MedCLNet visual database. In the proposed method, different models were analyzed before and after transfer learning on the eight-class colorectal histology MNIST dataset, and the experimental results showed that the proposed method produced satisfactory results. For segmentation, the low resolution of the images greatly reduced the success of the algorithms. We expect the algorithms to produce better results on higher-resolution datasets, and we expect the max-flow graph cut, PSO, and watershed algorithms in particular to achieve considerably better segmentation in future work. The work will be extended with images of standardized quality. Our study can provide a second perspective to pathologists and other experts in diagnosing colon cancer, and it is anticipated to save time in diagnosing the disease.

Limitations

This study has some limitations: (i) due to a lack of technical equipment, the MedCLNet database is limited to 4000 images in ten categories, and (ii) the proposed transfer learning method was evaluated only on “The Colorectal Histology MNIST dataset,” an eight-class histopathology dataset. A more detailed analysis would apply the proposed method to binary and multi-class classification tasks on other datasets.

Discussion

CNN-based deep neural networks have been frequently applied in medical image processing in recent years [10, 15, 17]. Owing to their high discriminative capability, they can generally produce accurate results when trained on large datasets [9]. In medical imaging, unlabeled data are relatively easy to obtain, but experts are needed to produce reliably labeled images [21]. When deep neural networks are trained from scratch on relatively small datasets, problems such as overfitting and underfitting arise, and transfer learning is a popular solution to such problems [22]. Applications developed with transfer learning frequently use the ImageNet dataset and generally achieve high performance [50]. However, ImageNet consists of heterogeneous samples, so networks pre-trained on it learn from many different, largely non-medical categories. In disease diagnosis, which requires high sensitivity, transfer learning from the heterogeneous ImageNet dataset can therefore be disadvantageous. One of the main contributions of this study is a transfer learning approach based on the MedCLNet dataset, which consists of colon cancer histopathology images. To evaluate the proposed method, the DenseNet201, DenseNet169, InceptionResNetV2, InceptionV3, ResNet152V2, ResNet101V2, and Xception deep neural networks were each initialized with random weights, ImageNet weights, and MedCLNet weights, and the resulting models were compared. The experimental results show that transfer learning with MedCLNet produced more accurate results.
Another contribution of this study is the application of nucleus segmentation to detect nuclei, which play an essential role in disease detection. The max-flow graph cut and PSO algorithms, applied as unsupervised methods, produced successful results in the segmentation application. The primary purpose of this study is to propose a solution that can increase the performance of deep neural networks in medical image classification. The experimental results show that the proposed method is successful; consequently, it may benefit experts in colon cancer detection.

Conclusion and Future Work

This study proposes a transfer learning method based on the MedCLNet visual dataset, which consists of colon cancer histopathology images, and applies it to colon cancer detection. The DenseNet201, DenseNet169, InceptionResNetV2, InceptionV3, ResNet152V2, ResNet101V2, and Xception deep neural networks, together with hybrid methods, are suggested for the transfer learning and classification process. First, the deep neural networks were trained on the MedCLNet visual dataset. Second, the trained networks were applied to tumor detection on the colorectal histology MNIST dataset of histopathology images. The experimental results are compared before and after transfer learning with MedCLNet and ImageNet. Our findings show that transfer learning with MedCLNet is more successful in the disease detection process than deep neural networks initialized with ImageNet or random weights. In the first phase of the study, DenseNet-169 trained on the MedCLNet dataset was the most successful model, with 98.00% validation accuracy. In the test phase of tumor detection from histopathology images, the ResNet152V2 model achieved an accuracy of 89.29% before transfer learning, 90.36% after transfer learning with ImageNet weights, and 93.21% after transfer learning with MedCLNet weights.

In future studies, the number of samples and categories in the dataset can be increased to improve the success of the transfer learning method proposed with MedCLNet, and the method can be analyzed in detail on histopathological datasets of different sizes. In nucleus segmentation, the images can be enhanced with an autoencoder to improve the success of the applied unsupervised learning algorithms.

Author Contribution

All authors contributed to the study conception and design. Material preparation, data collection, and analysis were performed by Hatice Catal Reis and Veysel Turk. The first draft of the manuscript was written by Hatice Catal Reis, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Declarations

Ethics Approval

This study used publicly available datasets, and it was confirmed that no ethical approval was required.

Conflict of Interest

The authors declare no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. Jiang D, Liao J, Duan H, Wu Q, Owen G, Shu C, et al. A machine learning-based prognostic predictor for stage III colon cancer. Scientific Reports. 2020;10(1):1–9. doi: 10.1038/s41598-020-67178-0.

2. Al-shawesh RA, Chen YX. Enhancing Histopathological Colorectal Cancer Image Classification by using Convolutional Neural Network. medRxiv. 2021. doi: 10.1101/2021.03.17.21253390.

3. Liang M, Ren Z, Yang J, Feng W, Li B. Identification of colon cancer using multi-scale feature fusion convolutional neural network based on shearlet transform. IEEE Access. 2020;8:208969–208977. doi: 10.1109/ACCESS.2020.3038764.

4. Sarwinda D, Paradisa RH, Bustamam A, Anggia P. Deep Learning in Image Classification using Residual Network (ResNet) Variants for Detection of Colorectal Cancer. Procedia Computer Science. 2021;179:423–431. doi: 10.1016/j.procs.2021.01.025.

5. AbdElNabi MLR, Wajeeh Jasim M, El-Bakry HM, Hamed N Taha M, Khalifa NEM. Breast and colon cancer classification from gene expression profiles using data mining techniques. Symmetry. 2020;12(3):408. doi: 10.3390/sym12030408.

6. Pacal I, Karaboga D, Basturk A, Akay B, Nalbantoglu U. A comprehensive review of deep learning in colon cancer. Computers in Biology and Medicine. 2020:104003. doi: 10.1016/j.compbiomed.2020.104003.

7. Khened M, Kori A, Rajkumar H, Krishnamurthi G, Srinivasan B. A generalized deep learning framework for whole-slide image segmentation and analysis. Scientific Reports. 2021;11(1):1–14. doi: 10.1038/s41598-021-90444-8.

8. Wu YH, Gao SH, Mei J, Xu J, Fan DP, Zhang RG, et al. JCS: An explainable COVID-19 diagnosis system by joint classification and segmentation. IEEE Transactions on Image Processing. 2021;30:3113–3126. doi: 10.1109/TIP.2021.3058783.

9. Kim YG, Choi G, Go H, Cho Y, Lee H, Lee AR. A fully automated system using a convolutional neural network to predict renal allograft rejection: Extra-validation with giga-pixel Immunostained slides. Scientific Reports. 2019;9(1):1–10. doi: 10.1038/s41598-019-41479-5.

10. Tripathi S, Singh SK. Ensembling handcrafted features with deep features: an analytical study for classification of routine colon cancer histopathological nuclei images. Multimedia Tools and Applications. 2020;79(47):34931–34954. doi: 10.1007/s11042-020-08891-w.

11. Iizuka O, Kanavati F, Kato K, Rambeau M, Arihiro K, Tsuneki M. Deep learning models for histopathological classification of gastric and colonic epithelial tumours. Scientific Reports. 2020;10(1):1–11. doi: 10.1038/s41598-020-58467-9.

12. Kim YG, Kim S, Cho CE, Song IH, Lee HJ, Ahn S, et al. Effectiveness of transfer learning for enhancing tumor classification with a convolutional neural network on frozen sections. Scientific Reports. 2020;10(1):1–9. doi: 10.1038/s41598-020-78129-0.

13. Jain R, Nagrath P, Kataria G, Kaushik VS, Hemanth DJ. Pneumonia detection in chest X-ray images using convolutional neural networks and transfer learning. Measurement. 2020;165:108046. doi: 10.1016/j.measurement.2020.108046.

14. Rehman A, Naz S, Razzak MI, Akram F, Imran M. A deep learning-based framework for automatic brain tumors classification using transfer learning. Circuits, Systems, and Signal Processing. 2020;39(2):757–775. doi: 10.1007/s00034-019-01246-3.

15. An G, Akiba M, Omodaka K, Nakazawa T, Yokota H. Hierarchical deep learning models using transfer learning for disease detection and classification based on small number of medical images. Scientific Reports. 2021;11(1):1–9. doi: 10.1038/s41598-021-83503-7.

16. Brozek-Pluska B, Dziki A, Abramczyk H. Virtual spectral histopathology of colon cancer-biomedical applications of Raman spectroscopy and imaging. Journal of Molecular Liquids. 2020;303:112676. doi: 10.1016/j.molliq.2020.112676.

17. Moshkov N, Mathe B, Kertesz-Farkas A, Hollandi R, Horvath P. Test-time augmentation for deep learning-based cell segmentation on microscopy images. Scientific Reports. 2020;10(1):1–7. doi: 10.1038/s41598-020-61808-3.

18. Wu TC, Wang X, Li L, Bu Y, Umulis DM. Automatic wavelet-based 3D nuclei segmentation and analysis for multicellular embryo quantification. Scientific Reports. 2021;11(1):1–13. doi: 10.1038/s41598-021-88966-2.

19. Wan T, Zhao L, Feng H, Li D, Tong C, Qin Z. Robust nuclei segmentation in histopathology using ASPPU-Net and boundary refinement. Neurocomputing. 2020;408:144–156. doi: 10.1016/j.neucom.2019.08.103.

20. Lagree A, Mohebpour M, Meti N, Saednia K, Lu FI, Slodkowska E, et al. A review and comparison of breast tumor cell nuclei segmentation performances using deep convolutional neural networks. Scientific Reports. 2021;11(1):1–11. doi: 10.1038/s41598-021-87496-1.

21. Enguehard J, O’Halloran P, Gholipour A. Semi-supervised learning with deep embedded clustering for image classification and segmentation. IEEE Access. 2019;7:11093–11104. doi: 10.1109/ACCESS.2019.2891970.

22. Apostolopoulos ID, Mpesiana TA. Covid-19: automatic detection from x-ray images utilizing transfer learning with convolutional neural networks. Physical and Engineering Sciences in Medicine. 2020;43(2):635–640. doi: 10.1007/s13246-020-00865-4.

23. Pathak Y, Shukla PK, Tiwari A, Stalin S, Singh S. Deep transfer learning based classification model for COVID-19 disease. IRBM. 2020. doi: 10.1016/j.irbm.2020.05.003.

24. Mehrotra R, Ansari MA, Agrawal R, Anand RS. A Transfer Learning approach for AI-based classification of brain tumors. Machine Learning with Applications. 2020;2:100003. doi: 10.1016/j.mlwa.2020.100003.

25. Hu M, Zhang Y. The Python/C API: Evolution, Usage Statistics, and Bug Patterns. In: 2020 IEEE 27th International Conference on Software Analysis, Evolution and Reengineering (SANER). 2020. pp. 532–536. doi: 10.1109/SANER48275.2020.9054835.

26. Macenko M, Niethammer M, Marron JS, Borland D, Woosley JT, Guan X, et al. A method for normalizing histology slides for quantitative analysis. In: 2009 IEEE International Symposium on Biomedical Imaging: From Nano to Macro. IEEE; 2009. pp. 1107–1110. doi: 10.1109/ISBI.2009.5193250.

27. Bukhari SUK, Asmara S, Bokhari SKA, Hussain SS, Armaghan SU, Shah SSH. The Histological Diagnosis of Colonic Adenocarcinoma by Applying Partial Self Supervised Learning. medRxiv. 2020. doi: 10.1101/2020.08.15.20175760.

28. Sirinukunwattana K, Pluim JP, Chen H, Qi X, Heng PA, Guo YB, et al. Gland segmentation in colon histology images: The GlaS challenge contest. Medical Image Analysis. 2017;35:489–502. doi: 10.1016/j.media.2016.08.008.

29. Ibrahim N, Pratiwi NKC, Pramudito MA, Taliningsih FF. Non-Complex CNN Models for Colorectal Cancer (CRC) Classification Based on Histological Images. In: Proceedings of the 1st International Conference on Electronics, Biomedical Engineering, and Health Informatics. 2021;746:509–516. doi: 10.1007/978-981-33-6926-9_44.

30. Bressem KK, Adams LC, Erxleben C, Hamm B, Niehues SM, Vahldiek JL. Comparing different deep learning architectures for classification of chest radiographs. Scientific Reports. 2020;10(1):1–16. doi: 10.1038/s41598-020-70479-z.

31. Ho N, Kim YC. Evaluation of transfer learning in deep convolutional neural network models for cardiac short axis slice classification. Scientific Reports. 2021;11(1):1–11. doi: 10.1038/s41598-021-81525-9.

32. Spiesman BJ, Gratton C, Hatfield RG, Hsu WH, Jepsen S, McCornack B, et al. Assessing the potential for deep learning and computer vision to identify bumble bee species from images. Scientific Reports. 2021;11(1):1–10. doi: 10.1038/s41598-021-87210-1.

33. Hwooi SKW, Loo CK, Sabri AQM. Emotion Differentiation Based on Arousal Intensity Estimation from Facial Expressions. In: Information Science and Applications. 2020;621:249–257. doi: 10.1007/978-981-15-1465-4_26.

34. Elakkiya R, Vijayakumar P, Karuppiah M. COVID_SCREENET: COVID-19 Screening in Chest Radiography Images Using Deep Transfer Stacking. Information Systems Frontiers. 2021:1–15. doi: 10.1007/s10796-021-10123-x.

35. Zhu X, Li X, Ong K, Zhang W, Li W, Li L, et al. Hybrid AI-assistive diagnostic model permits rapid TBS classification of cervical liquid-based thin-layer cell smears. Nature Communications. 2021;12(1):1–12. doi: 10.1038/s41467-021-23913-3.

36. Nguyen DTA, Joung J, Kang X. Deep Gated Recurrent Unit-Based 3D Localization for UWB Systems. IEEE Access. 2021;9:68798–68813. doi: 10.1109/ACCESS.2021.3077906.

37. Darma IWAS, Suciati N, Siahaan D. Balinese Carving Recognition using Pre-Trained Convolutional Neural Network. In: 2020 4th International Conference on Informatics and Computational Sciences (ICICoS). 2020. pp. 1–5. doi: 10.1109/ICICoS51170.2020.9299021.

38. Siniosoglou I, Radoglou-Grammatikis P, Efstathopoulos G, Fouliras P, Sarigiannidis P. A unified deep learning anomaly detection and classification approach for smart grid environments. IEEE Transactions on Network and Service Management. 2021;18(2):1137–1151. doi: 10.1109/TNSM.2021.3078381.

39. Zheng C, Xie X, Wang Z, Li W, Chen J, Qiao T, et al. Development and validation of deep learning algorithms for automated eye laterality detection with anterior segment photography. Scientific Reports. 2021;11(1):1–8. doi: 10.1038/s41598-020-79809-7.

40. Markoulidakis I, Rallis I, Georgoulas I, Kopsiaftis G, Doulamis A, Doulamis N. Multiclass Confusion Matrix Reduction Method and Its Application on Net Promoter Score Classification Problem. Technologies. 2021;9(4):81. doi: 10.3390/technologies9040081.

41. Foody GM. Explaining the unsuitability of the kappa coefficient in the assessment and comparison of the accuracy of thematic maps obtained by image classification. Remote Sensing of Environment. 2020;239:111630. doi: 10.1016/j.rse.2019.111630.

42. Goyal A. Image-based clustering and connected component labeling for rapid automated left and right ventricular endocardial volume extraction and segmentation in full cardiac cycle multi-frame MRI images of cardiac patients. Medical and Biological Engineering and Computing. 2019;57(6):1213–1228. doi: 10.1007/s11517-019-01952-9.

43. Qian C, Su E, Yang X. Segmentation of the common carotid intima-media complex in ultrasound images using 2-D continuous max-flow and stacked sparse auto-encoder. Ultrasound in Medicine and Biology. 2020;46(11):3104–3124. doi: 10.1016/j.ultrasmedbio.2020.07.021.

44. Upadhyay P, Chhabra JK. Multilevel thresholding based image segmentation using new multistage hybrid optimization algorithm. Journal of Ambient Intelligence and Humanized Computing. 2021;12:1081–1098. doi: 10.1007/s12652-020-02143-3.

45. Sharif M, Amin J, Raza M, Yasmin M, Satapathy SC. An integrated design of particle swarm optimization (PSO) with fusion of features for detection of brain tumor. Pattern Recognition Letters. 2020;129:150–157. doi: 10.1016/j.patrec.2019.11.017.

46. Patmonoaji A, Tsuji K, Suekane T. Pore-throat characterization of unconsolidated porous media using watershed-segmentation algorithm. Powder Technology. 2020;362:635–644. doi: 10.1016/j.powtec.2019.12.026.

47. Lutton EJ, Collier S, Bretschneider T. A Curvature-Enhanced Random Walker Segmentation Method for Detailed Capture of 3D Cell Surface Membranes. IEEE Transactions on Medical Imaging. 2020;40(2):514–526. doi: 10.1109/TMI.2020.3031029.

48. Horwath JP, Zakharov DN, Mégret R, Stach EA. Understanding important features of deep learning models for segmentation of high-resolution transmission electron microscopy images. npj Computational Materials. 2020;6(1):1–9. doi: 10.1038/s41524-020-00363-x.

49. Wang X, Yu K, Wu S, Gu J, Liu Y, Dong C, et al. ESRGAN: Enhanced super-resolution generative adversarial networks. In: Proceedings of the European Conference on Computer Vision (ECCV) Workshops. 2018. pp. 63–79. doi: 10.1007/978-3-030-11021-5_5.

50. Ayana G, Park J, Choe SW. Patchless Multi-Stage Transfer Learning for Improved Mammographic Breast Mass Classification. Cancers. 2022;14(5):1280. doi: 10.3390/cancers14051280.