Abstract

Learning engagement has gained increasing attention in the field of education. Previous studies have adopted conventional methods to analyze learning engagement, but these methods cannot provide timely feedback to learners. This study proposed an approach that uses deep neural network (DNN) models to automatically analyze group learning engagement and provide feedback in a computer-supported collaborative learning (CSCL) context. A quasi-experimental design was implemented to examine the effects of this automated group learning engagement analysis and feedback approach on collaborative knowledge building, group performance, socially shared regulation, and cognitive load. In total, 120 college students participated; they were assigned to 20 experimental groups and 20 control groups of three students each. The experimental groups used the automated group learning engagement analysis and feedback approach, whereas the control groups used the traditional online collaborative learning approach. Both quantitative and qualitative data were collected and analyzed in depth. The results indicated significant differences between the experimental and control groups in group learning engagement, group performance, collaborative knowledge building, and socially shared regulation. The proposed approach did not increase the cognitive load of the experimental groups. These findings have implications for improving group learning engagement and group performance in CSCL.

Keywords: Computer-supported collaborative learning, Learning engagement, Group performance, Collaborative knowledge building, Socially shared regulation, Cognitive load

Introduction

Computer-supported collaborative learning (CSCL) has been widely adopted in the field of education. As a branch of the learning sciences, CSCL considers how people learn in groups using computers (Stahl et al., 2006). CSCL involves collaboratively solving problems, designing projects, or creating products through social interaction (Muñoz-Carril et al., 2021) and is a constellation of shared learning processes and activities (Lämsä et al., 2021). The benefits of CSCL, such as improved learning performance (Shin et al., 2020), problem-solving abilities (Rosen et al., 2020), and collaboration skills (Law et al., 2021), have been well documented in previous studies. The purpose of CSCL research is to understand technologically mediated peer interaction and learning outcomes (Cress et al., 2019).

Previous studies on CSCL featured a wide variety of research foci, including the use of technological tools to facilitate CSCL (Chen et al., 2018; Shin & Jung, 2020), employment of various strategies to promote CSCL (Pietarinen et al., 2021), and analysis and evaluation of CSCL processes and outcomes using various methods (Lämsä et al., 2021). Recently, learning engagement has received increasing attention in the CSCL field. Learning engagement is defined as the extent of learners’ devotion to a learning activity that results in a desired learning outcome (Hu & Kuh, 2002). Learning engagement is positively related to learning achievement (Li & Baker, 2018) and can significantly predict learning achievement (Liu et al., 2022). Therefore, learning engagement plays a crucial role in improving learning achievement. Many researchers have begun to pay attention to learning engagement in the CSCL context. For example, Qiu (2019) proposed a systemic framework to improve individual learning engagement in a problem-based collaborative learning setting. Huang (2021) adopted collaborative video projects to improve the learning engagement of English as a Foreign Language (EFL) learners.

Although previous studies have enriched our knowledge of individual learning engagement, the understanding of group learning engagement in the CSCL context remains limited. Only a few studies have explored group learning engagement. For example, Sinha et al. (2015) proposed that group learning engagement is a multifaceted construct that includes behavioral, social, cognitive, and conceptual-to-consequential engagement. Curşeu et al. (2020) claimed that group learning engagement reflects the shared experiences of a group and is characterized by group vigor, group absorption, and group dedication. Furthermore, Fredricks et al. (2016) posited that learning engagement includes cognitive, behavioral, emotional, and social engagement. Biasutti and Frate (2018) revealed that group metacognition is crucial to successful collaborative learning. Building on these studies, this study defines group learning engagement as a collective commitment that depends on the extent to which all group members are involved in collaborative learning activities that result in desired learning outcomes. Group learning engagement is a multidimensional construct that includes cognitive, metacognitive, behavioral, emotional, and social engagement. Individual learning engagement underlies group learning engagement. However, group learning engagement is not simply the sum of individual learning engagement, because collaborative learning is a complex system that is not merely the sum of its parts and is often distinct from those parts (Jacobson et al., 2019). Group learning engagement is thus a collective phenomenon whose properties emerge from, but are not reducible to, individual learning engagement.

In the CSCL context, analyzing group learning engagement is crucial for improving group performance. However, traditional methods analyze group learning engagement manually after online collaborative learning has ended, so they cannot provide real-time, personalized feedback to learners. In addition, previous studies have mainly focused on individual learning engagement in CSCL (Qiu, 2019; Xie et al., 2019), and few have examined group learning engagement. Group learning engagement should be analyzed at the group level, yet previous studies have measured it with individual-level instruments such as surveys (Curşeu et al., 2020) or manual ratings (Sinha et al., 2015). Thus, research on the automatic analysis of group learning engagement in the CSCL context is lacking. To close these gaps, this study proposes an automated group learning engagement analysis and feedback approach that uses deep neural network models (DNNs) in the CSCL context. The approach automatically analyzes group learning engagement during online collaborative learning and provides group-specific feedback based on the analysis results. It is intended to provide insight into how to improve group learning engagement and group performance and to enrich our understanding of the nature of CSCL.

The purpose of this study is to examine the impacts of the automated group learning engagement analysis and feedback approach on collaborative knowledge building, group performance, socially shared regulation (SSR), and cognitive load. Collaborative knowledge building is defined as a process in which students learn as a group to build shared knowledge or solve problems through a series of coordinated attempts (Muhonen et al., 2017). Group performance is the quality or quantity of output produced by group members (Weldon & Weingart, 1993). Socially shared regulation is conceptualized as the processes by which group members regulate their collective activity (Järvelä et al., 2016). Cognitive load is a multidimensional construct representing the load that task completion imposes on a learner’s cognitive system (Paas & van Merriënboer, 1994). Feedback can positively promote learning achievements (Mousavi et al., 2021), knowledge-building levels, and group performance (Zheng et al., 2022). Therefore, the following research questions are addressed:

Can the automated group learning engagement analysis and feedback approach promote group learning engagement better than the traditional online collaborative learning approach?

Can the automated group learning engagement analysis and feedback approach improve collaborative knowledge building better than the traditional online collaborative learning approach?

Can the automated group learning engagement analysis and feedback approach enhance group performance better than the traditional online collaborative learning approach?

Can the automated group learning engagement analysis and feedback approach promote socially shared regulation better than the traditional online collaborative learning approach?

Does the automated group learning engagement analysis and feedback approach increase cognitive load compared with the traditional online collaborative learning approach?

This study hypothesizes that the automated group learning engagement analysis and feedback approach has significantly more positive effects on group learning engagement, collaborative knowledge building, group performance, and socially shared regulation than the traditional online collaborative learning approach. We further hypothesize that the approach will reduce, rather than increase, learners’ cognitive load.

Literature review

Learning engagement

Learning engagement has received increasing attention in recent years. Learning engagement is a prerequisite for positive learning effects (Zhang et al., 2020), and researchers have found that learning engagement is positively related to learning achievement (Li & Baker, 2018; Phan et al., 2016). Previous studies have explored individual learning engagement in various contexts. For example, Lan and Hew (2020) examined learning engagement in a massive open online course (MOOC) context and found that it can predict learners’ perceptions of learning. Lu et al. (2017) developed a parallelized action-based algorithm to measure learning engagement in a MOOC. Rizvi et al. (2020) adopted educational process mining techniques to investigate the detailed processes of learning engagement over time in a MOOC. Doo and Bonk (2020) found that self-regulation affected learning engagement in a flipped learning setting. In addition, Moon and Ke (2020) adopted game-based learning to enhance learning engagement and found that different types of in-game actions promoted different levels of learning engagement. Furthermore, previous studies have explored learning engagement from various perspectives, such as the factors influencing learning engagement (Yun et al., 2020), the promotion of learning engagement through blogs, mobile learning, and assessment tools (Bedenlier et al., 2020), and methods for measuring individual learning engagement (Li et al., 2021a, b, c).

Researchers have adopted various methods to measure individual learning engagement. The most frequently used methods include self-report surveys, observational checklists and ratings, and automated measurements based on the timing and accuracy of students’ responses, physiological and neurological sensor readings, and computer vision analysis of facial expressions (Whitehill et al., 2014). Li et al. (2021a, b, c) adopted a short questionnaire and a support vector machine to measure and predict cognitive engagement. Traditionally, individual learning engagement can be measured at a micro or macro level. At the macro level, researchers usually adopt observations, interviews, surveys, teacher ratings, discourse analysis, and cultural and critical analysis to analyze learning engagement from a contextualized and holistic perspective (Li et al., 2021a, b, c; Sinatra et al., 2015). At the micro level, learning engagement has been measured by individuals’ response times, self-reported scales, eye-tracking data, facial expressions, body movements, mouse movements, and trace data collected during learning activities (Li et al., 2021a, b, c; Zhang et al., 2020).

However, very few studies have explored group learning engagement in the CSCL context, even though it is important for improving group performance. Several studies have noted the importance of group learning engagement. For example, Khosa and Volet (2014) found that productive group learning engagement was positively related to better conceptual understanding in collaborative learning activities. Curşeu et al. (2020) revealed that group learning engagement mediated the influence of group identification on group performance. Sinha et al. (2015) found that behavioral and social engagement promoted high-quality cognitive and conceptual-to-consequential engagement. Previous studies have measured group learning engagement with traditional methods such as observations (Sinha et al., 2015), surveys (Curşeu et al., 2020), and scores (Castellanos et al., 2016). Despite this interest, these methods have notable limitations. First, they depend heavily on manual analysis of data, which is time-consuming and labor-intensive. Second, they cannot capture the evolution of learning engagement (Li et al., 2021a, b, c). Third, they cannot provide real-time analysis or personalized feedback to learners.

Therefore, data-driven tracking and analysis of learning engagement is needed in the digital era. Data-driven learning engagement analysis is a bottom-up approach that helps analyze learning engagement and improve the quality of learning and teaching (Yang et al., 2019). Zhang et al. (2020) found that data-driven learning engagement analytics can provide more precise and reliable results than traditional subjective analysis methods. Akhuseyinoglu and Brusilovsky (2021) found that data-driven models performed significantly better than several traditional models in predicting learning engagement. Yang et al. (2019) used data-driven learning engagement analytics to categorize learning engagement and found that this approach yields more robust evidence for pedagogical practice and avoids improper predefined frameworks and misleading interpretations. Nevertheless, there is a dearth of studies on data-driven group learning engagement analytics in the CSCL context. The current study aims to close this gap and provide insight into the effects of a data-driven, automated group learning engagement analysis and feedback approach.

Text mining and its applications in online collaborative learning

Text mining, also known as text data mining, is the process of extracting interesting and nontrivial patterns or knowledge from unstructured text (Tan, 1999). Text mining aims to extract valuable knowledge through various text mining techniques to assist in human decision-making. Text mining consists of two components: text refining, which transforms unstructured text into an intermediate form, and knowledge distillation, which deduces knowledge or patterns from the intermediate form (Tan, 1999). Text mining has been widely used in the areas of search engines, spam email filtering, opinion mining, feature extraction, and sentiment analysis (Chen et al., 2021). In the field of education, text mining has been used for evaluation, student support, analytics, question/content generation, user feedback, and recommendation systems (Ferreira-Mello et al., 2019). Text mining techniques include text classification, clustering, information extraction, information visualization, topic tracking, and summarization (Gupta & Lehal, 2009).
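The two components of text mining can be illustrated with a minimal sketch. Here, under assumptions of our own, the intermediate form produced by text refining is a bag-of-words term-frequency vector, and the pattern produced by knowledge distillation is simply the set of most frequent terms across the corpus. The stop-word list, function names, and English example posts are hypothetical; Chinese transcripts would require word segmentation rather than the regular expression used here.

```python
import re
from collections import Counter

# Hypothetical English stop-word list, for illustration only.
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "is", "we", "i", "it", "for", "our"}


def refine(document: str) -> Counter:
    """Text refining: map unstructured text to an intermediate form,
    here a bag-of-words term-frequency vector."""
    tokens = re.findall(r"[a-z]+", document.lower())
    return Counter(t for t in tokens if t not in STOP_WORDS)


def distill(forms: list, top_k: int = 2) -> list:
    """Knowledge distillation: derive a pattern from the intermediate forms,
    here the most frequent terms across the whole corpus."""
    total = Counter()
    for form in forms:
        total.update(form)
    return total.most_common(top_k)


posts = [
    "We can use the magnetic lasso to create a selection.",
    "The lasso is easier, but the magnetic lasso is more precise.",
    "Let us adjust the brightness of the poster image.",
]
forms = [refine(p) for p in posts]
print(distill(forms))  # [('lasso', 3), ('magnetic', 2)]
```

Real text mining pipelines use richer intermediate forms (e.g., weighted term vectors or learned embeddings), but the split between a refining step and a distillation step stays the same.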

Recently, researchers have adopted various text mining techniques to analyze unstructured, text-based discussion transcripts in online collaborative learning. For example, Wong et al. (2021) adopted latent Dirichlet allocation (LDA) to automatically analyze text-based online discussion content and visualize the learning patterns of social interaction. Xie et al. (2018) employed logistic regression and adaptive boosting models to detect leadership in peer-moderated online collaborative learning. Li and Lai (2022) adopted semantic network analysis techniques to identify knowledge co-construction processes in online collaborative writing. Furthermore, text mining technologies are useful for the automatic analysis of learning engagement. For example, Hayati et al. (2017) adopted a traditional machine learning algorithm, support vector machines (SVM), to classify learners’ cognitive engagement in online discussions. Liu et al. (2022b) utilized a Bayesian probabilistic generative model to automatically analyze learners’ cognitive engagement in MOOC discussions. However, these traditional machine learning methods depend heavily on handcrafted features, which require expertise to design and extract and therefore cannot automatically capture the most useful information in the raw data (Chauhan & Singh, 2018).
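To make the dependence on handcrafted features concrete, the following is a minimal pure-Python multinomial naive Bayes classifier with Laplace smoothing, one of the traditional model families later compared with BERT. It is not the authors’ implementation: the tokenizer (the “handcrafted” feature design), the toy training sentences loosely modeled on the behavioral engagement examples in Table 1, and the label names are all assumptions for illustration.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text: str) -> list:
    # Handcrafted feature design (an assumption for this sketch): lowercase
    # English word tokens; Chinese text would need a word segmenter instead.
    return re.findall(r"[a-z]+", text.lower())


class MultinomialNB:
    """Multinomial naive Bayes with Laplace (add-one) smoothing."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text: str) -> str:
        n_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_label in self.class_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            # Log prior plus the smoothed log likelihood of every token.
            score = math.log(n_label / n_docs)
            for word in tokenize(text):
                score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


texts = [
    "I agree with you. Good idea.",
    "Great idea, I agree.",
    "How can we beautify images? Any ideas?",
    "What filter should we use?",
    "I want to go shopping this afternoon.",
]
labels = ["support", "support", "question", "question", "off-topic"]
model = MultinomialNB().fit(texts, labels)
print(model.predict("I agree, good idea"))  # support
```

Everything the classifier “knows” passes through `tokenize`, so its quality is bounded by that manual design choice; deep models such as BERT instead learn their representations from data.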

Recently, deep learning has attracted increasing attention and has been adopted in the field of education. Deep learning techniques have the potential to outperform traditional machine learning approaches because they learn representations automatically rather than relying on manual feature engineering (Liu et al., 2017; Bashar, 2019). Deep neural networks (DNNs) are the neural networks used in deep learning (Sze et al., 2017). DNNs can learn more complex, high-level features than shallower neural networks, a property that enables them to achieve superior performance in many domains (Sze et al., 2017). Several researchers have adopted DNNs for data-driven learning engagement analytics. For example, Liu et al. (2022a) adopted DNNs to automatically analyze individual cognitive and emotional engagement in MOOC discussions, and Werlang and Jaques (2021) employed DNNs to recognize students’ learning engagement in videos. However, very few studies have adopted DNNs to automatically analyze group learning engagement in the CSCL context. To address this gap, this study uses DNNs to analyze online discussion transcripts in order to assess group learning engagement automatically and provide group-specific feedback. Because the collaborative learning took place entirely online and learners had to interact online to complete the tasks, the online discussion transcripts fully reflect the collaborative learning processes.

Methods

Automated group learning engagement analysis and feedback

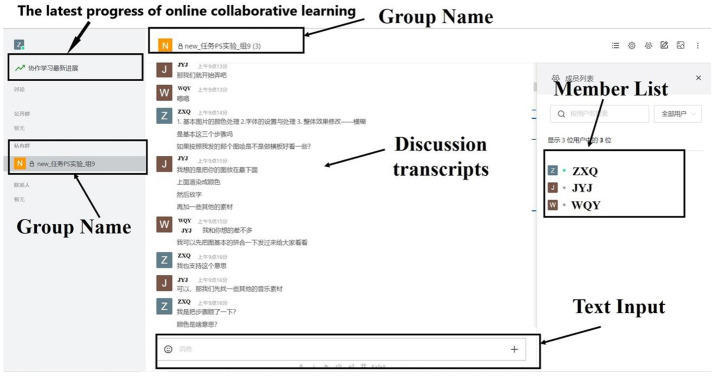

In this study, the automated group learning engagement analysis and feedback approach was proposed and validated through a quasi-experiment. The approach comprised three phases: data collection, automatic analysis of group learning engagement, and provision of group-specific feedback. In the first phase, all participants engaged in online collaborative learning to complete the same tasks, and the online discussion transcripts of each group were automatically recorded by the online collaborative learning platform (see Fig. 1).

Fig. 1.

Screenshot of the online collaborative learning platform

In the second phase, Bidirectional Encoder Representations from Transformers (BERT), a widely used deep neural network model, was adopted to automatically analyze group learning engagement. BERT was selected from among state-of-the-art pretrained language models because it achieved the best performance in prior text classification research (González-Carvajal & Garrido-Merchán, 2020). BERT was proposed by Devlin et al. (2019), who designed it to pretrain deep bidirectional representations from unlabeled text. BERT is pretrained with a masked language modeling objective, in which a bidirectional Transformer recovers masked tokens from their surrounding context (Minaee et al., 2021). BERT takes as input a sequence of at most 512 tokens and outputs a vector representation of the sequence, referred to as an embedding (Sun et al., 2019a, b). More specifically, the input representation of each token is constructed by summing the corresponding token, segment, and position embeddings (Devlin et al., 2019). In the current study, a Chinese pretrained BERT model was adopted with 12 layers, a hidden size of 768, 12 self-attention heads, and 110M parameters; these settings follow the BERT-Base configuration of Devlin et al. (2019). BERT’s framework has two steps: pretraining, in which the model is trained on unlabeled text, and fine-tuning, in which the model is adapted to a specific task using labeled text (González-Carvajal & Garrido-Merchán, 2020).
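The composition of BERT’s input representation can be sketched in pure Python with toy dimensions: each position’s vector is the element-wise sum of its token, segment, and position embeddings, and sequences longer than 512 positions are rejected. The tables below are randomly initialized stand-ins and the token ids are hypothetical; in a trained model these tables are learned, the width is 768, and reference implementations additionally apply layer normalization and dropout to the sum, which this sketch omits.

```python
import random

MAX_POSITIONS = 512  # BERT's position embeddings cover at most 512 tokens


def make_table(rows: int, dim: int, rng: random.Random) -> list:
    """Hypothetical randomly initialized embedding table (rows x dim)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(rows)]


def input_representation(token_ids, segment_ids, token_table, segment_table, position_table):
    """Sum token, segment, and position embeddings for each input position."""
    if len(token_ids) != len(segment_ids):
        raise ValueError("token_ids and segment_ids must have the same length")
    if len(token_ids) > MAX_POSITIONS:
        raise ValueError("sequence exceeds 512 tokens; truncate it first")
    return [
        [t + s + p for t, s, p in zip(token_table[tok], segment_table[seg], position_table[pos])]
        for pos, (tok, seg) in enumerate(zip(token_ids, segment_ids))
    ]


rng = random.Random(0)
dim = 4  # toy width; BERT-Base uses 768
token_table = make_table(10, dim, rng)  # toy vocabulary of 10 token ids
segment_table = make_table(2, dim, rng)  # segment A = 0, segment B = 1
position_table = make_table(MAX_POSITIONS, dim, rng)

# e.g. [CLS] w1 [SEP] w2 [SEP] with hypothetical ids 1, 5, 2, 7, 2
embeddings = input_representation(
    [1, 5, 2, 7, 2], [0, 0, 0, 1, 1], token_table, segment_table, position_table
)
print(len(embeddings), len(embeddings[0]))  # 5 4
```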

A total of 17,118 online discussion transcripts collected by the first author were used to fine-tune, validate, and test BERT. These transcripts were records of online collaborative learning collected, before this study, from college students who completed the same collaborative learning tasks. Two experienced researchers manually classified the 17,118 transcripts into cognitive, metacognitive, behavioral, and emotional engagement. In total, 70% of the data were used as the training set, 20% as the validation set, and 10% as the test set. The fine-tuning hyperparameters were selected based on Turc et al. (2019): a maximum sequence length of 256, a training batch size of 16, 3 training epochs, and a learning rate of 5e-5.
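The split and the reported hyperparameters imply concrete set sizes and a number of optimization steps, which the sketch below works out. It assumes a simple shuffled random split (the text does not say whether the split was stratified), a hypothetical seed, and that the last partial batch of each epoch is kept; the item ids are stand-ins for the coded transcripts.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTuneConfig:
    """Fine-tuning hyperparameters reported in the text."""
    max_seq_length: int = 256
    train_batch_size: int = 16
    num_train_epochs: int = 3
    learning_rate: float = 5e-5


def split_70_20_10(items, seed=42):
    """Shuffle and split into 70% train, 20% validation, 10% test.
    A simple random split is assumed; the seed is hypothetical."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n = len(shuffled)
    n_train = n * 70 // 100  # integer arithmetic avoids float rounding
    n_val = n * 20 // 100
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],  # the remainder (about 10%) becomes the test set
    )


transcripts = list(range(17_118))  # stand-in ids for the 17,118 coded transcripts
train_set, val_set, test_set = split_70_20_10(transcripts)
cfg = FineTuneConfig()
steps_per_epoch = math.ceil(len(train_set) / cfg.train_batch_size)  # last partial batch kept
total_steps = steps_per_epoch * cfg.num_train_epochs
print(len(train_set), len(val_set), len(test_set), steps_per_epoch, total_steps)
# 11982 3423 1713 749 2247
```

Under these assumptions, fine-tuning runs for roughly 2,250 optimizer steps, which is small enough that three epochs at a learning rate of 5e-5 is a common choice for BERT-Base.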

After fine-tuning, the BERT model was used to automatically classify cognitive, metacognitive, behavioral, and emotional engagement during online collaborative learning. Cognitive engagement was classified into remembering, understanding, applying, and evaluating, plus an off-topic category, adapted from Bloom et al. (1956). Bloom’s taxonomy of the cognitive domain includes remembering, understanding, applying, analyzing, evaluating, and creating (Bloom et al., 1956); it was adapted here mainly because a previous study found it appropriate for measuring cognitive engagement (Crompton et al., 2019). Metacognitive engagement was classified into planning, monitoring, and reflection and evaluation, plus an off-topic category, adapted from Zheng et al. (2021). Behavioral engagement was classified into knowledge building, regulation, support and agreement, asking questions, and off-topic information, based on Zheng et al. (2021). Emotional engagement was classified as positive, negative, or neutral, based on Pang and Lee (2008). Table 1 shows examples of cognitive, metacognitive, behavioral, and emotional engagement. In addition, social engagement was measured as the number of interactions between a student and other group members, obtained from the online discussion records. Furthermore, BERT, SVM, logistic regression (LR), and naive Bayes (NB) were compared on the same dataset; the accuracy, precision, recall, and F1-score of each model are shown in Table 2. According to Sokolova et al. (2006), accuracy is the ratio of correct predictions to all predictions, precision is the number of true positives divided by the sum of true positives and false positives, recall is the number of true positives divided by the sum of true positives and false negatives, and the F1-score is the harmonic mean of precision and recall. As shown in Table 2, BERT achieved the highest accuracy in all four dimensions of group learning engagement and clearly outperformed the other models on cognitive and behavioral engagement; on metacognitive and emotional engagement the differences were small (e.g., LR had a slightly higher F1-score for metacognitive engagement, 0.83 versus 0.82). Furthermore, a kappa statistic was calculated to evaluate how closely the automatic classifications agreed with those of human analysts. The kappa values for cognitive, metacognitive, behavioral, and emotional engagement were 0.98, 0.89, 0.99, and 0.99, respectively, indicating that the model and method were reliable for the automatic analysis of group learning engagement.
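The metric definitions of Sokolova et al. (2006) and the agreement check can be sketched directly. Two assumptions apply: the text says only “a kappa statistic”, so Cohen’s kappa (appropriate for two raters, here model versus human) is assumed; and the text does not state how per-class scores were averaged for Table 2, so an unweighted (macro) F1 is shown purely as one option. The labels below are hypothetical.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Correct predictions divided by all predictions."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_class_metrics(y_true, y_pred, label):
    """Precision, recall, and F1 for one class, treating it as 'positive'."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == label and p == label for t, p in pairs)
    fp = sum(t != label and p == label for t, p in pairs)
    fn = sum(t == label and p != label for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 over all labels that occur."""
    labels = sorted(set(y_true) | set(y_pred))
    return sum(per_class_metrics(y_true, y_pred, c)[2] for c in labels) / len(labels)


def cohens_kappa(rater_a, rater_b):
    """(observed agreement - chance agreement) / (1 - chance agreement)."""
    n = len(rater_a)
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(count_a[c] * count_b[c] for c in set(count_a) | set(count_b)) / n ** 2
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical emotional-engagement codes: human analyst vs. model.
human = ["pos", "neu", "neu", "neg", "pos", "neu", "neu", "pos"]
predicted = ["pos", "neu", "pos", "neg", "pos", "neu", "neu", "neu"]
print(accuracy(human, predicted))  # 0.75
print(round(cohens_kappa(human, predicted), 3))  # 0.579
```

The example also shows why kappa is lower than raw agreement: with 75% observed agreement and about 41% agreement expected by chance from the label frequencies, kappa is about 0.58.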

Table 1.

Examples of cognitive, metacognitive, behavioral, and emotional engagement

| Category | Subcategories | Examples |
|---|---|---|
| Cognitive engagement | Remembering | “Xiao Zhang: I remember that image extraction includes creating selection and image stitching.” |
| | Understanding | “Xiao Li: Creating a selection is the first step for image extraction based on my understanding.” |
| | Applying | “Xiao Wang: Yes. We can apply different methods to create a selection for our poster, such as using the lasso, using the wand, using the magnetic lasso, and so on.” |
| | Evaluating | “Xiao Zhang: I prefer using the magnetic lasso to create a selection because it is very precise.” |
| | Off-topic | “Xiao Li: I will go out to pick up a cup of water and come back soon.” |
| Metacognitive engagement | Planning | “Xiao Wang: Hello, everyone, there are many topics, such as fighting the COVID-19 epidemic, various kinds of festivals, 24 solar terms in China, popular films, and so on.” |
| | Monitoring | “Xiao Zhang: We should speed up to confirm the topic of our poster. Time flies.” |
| | Reflection and evaluation | “Xiao Li: I think the topic of fighting the COVID-19 epidemic is suitable for the current situation. This topic is better than others.” |
| | Off-topic | “Xiao Wang: I want to see a film after the discussion.” |
| Behavioral engagement | Knowledge-building | “Xiao Wang: Image adjustment includes saturation adjustment, brightness adjustment, transformation, and so on.” |
| | Regulation | “Xiao Li: We can adjust images first. Then, Xiao Zhang helps with image beautification.” |
| | Support and agreement | “Xiao Wang: I agree with you. Good idea.” |
| | Asking questions | “Xiao Zhang: However, how can we beautify images? Do you have any ideas about it?” |
| | Off-topic | “Xiao Wang: I don’t know. I want to go shopping this afternoon.” |
| Emotional engagement | Positive | “Xiao Zhang: I think it is a good idea to refine our poster by adding a filter.” |
| | Negative | “Xiao Li: Sorry, I have no idea at this moment.” |
| | Neutral | “Xiao Wang: There are many kinds of filters, such as the fresco filter, Gaussian blur filter, motion blur filter, and so on.” |

Table 2.

The performance of different models

| Model | Engagement dimension | Accuracy | Precision | Recall | F1-score |
|---|---|---|---|---|---|
| BERT | Cognitive engagement | 0.76 | 0.75 | 0.76 | 0.75 |
| | Metacognitive engagement | 0.85 | 0.86 | 0.81 | 0.82 |
| | Behavioral engagement | 0.73 | 0.77 | 0.74 | 0.73 |
| | Emotional engagement | 0.99 | 0.97 | 0.96 | 0.96 |
| SVM | Cognitive engagement | 0.57 | 0.57 | 0.57 | 0.54 |
| | Metacognitive engagement | 0.81 | 0.83 | 0.81 | 0.81 |
| | Behavioral engagement | 0.62 | 0.61 | 0.62 | 0.59 |
| | Emotional engagement | 0.97 | 0.94 | 0.97 | 0.95 |
| NB | Cognitive engagement | 0.64 | 0.76 | 0.64 | 0.60 |
| | Metacognitive engagement | 0.84 | 0.82 | 0.84 | 0.77 |
| | Behavioral engagement | 0.63 | 0.70 | 0.63 | 0.56 |
| | Emotional engagement | 0.97 | 0.95 | 0.97 | 0.96 |
| LR | Cognitive engagement | 0.55 | 0.56 | 0.55 | 0.51 |
| | Metacognitive engagement | 0.84 | 0.85 | 0.84 | 0.83 |
| | Behavioral engagement | 0.63 | 0.60 | 0.63 | 0.58 |
| | Emotional engagement | 0.97 | 0.94 | 0.97 | 0.95 |
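Which model leads on each engagement dimension can be checked mechanically from Table 2. The sketch below transcribes only the accuracy and F1-score columns and reports, per dimension, the model or models tied for the top score; it introduces no data beyond the table.

```python
# Accuracy and F1-score for each model, transcribed from Table 2.
TABLE_2 = {
    "Cognitive": {"BERT": (0.76, 0.75), "SVM": (0.57, 0.54), "NB": (0.64, 0.60), "LR": (0.55, 0.51)},
    "Metacognitive": {"BERT": (0.85, 0.82), "SVM": (0.81, 0.81), "NB": (0.84, 0.77), "LR": (0.84, 0.83)},
    "Behavioral": {"BERT": (0.73, 0.73), "SVM": (0.62, 0.59), "NB": (0.63, 0.56), "LR": (0.63, 0.58)},
    "Emotional": {"BERT": (0.99, 0.96), "SVM": (0.97, 0.95), "NB": (0.97, 0.96), "LR": (0.97, 0.95)},
}
ACCURACY, F1 = 0, 1


def best_models(metric):
    """Return, per dimension, the model(s) tied for the top score on `metric`."""
    result = {}
    for dimension, scores in TABLE_2.items():
        top = max(s[metric] for s in scores.values())
        result[dimension] = sorted(m for m, s in scores.items() if s[metric] == top)
    return result


print(best_models(ACCURACY))
print(best_models(F1))
```

BERT has the highest accuracy on all four dimensions, while on F1 LR is marginally ahead for metacognitive engagement and NB ties BERT for emotional engagement; BERT’s advantage is therefore largest for cognitive and behavioral engagement.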

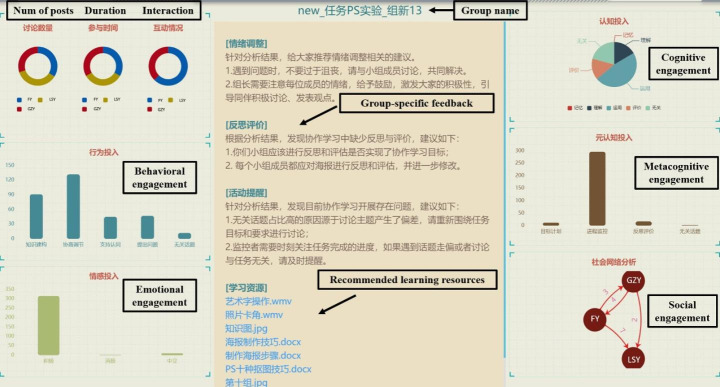

In the third phase, group-specific feedback was automatically provided to each group based on the results of the group learning engagement analysis. Three principles guided the feedback design. The first principle was to design reasonable feedback rules that promote learning engagement: Meyer (2008) found that feedback rules that take learners’ status and progress into account promote effective online learning, so the present study designed feedback rules based on each group’s engagement status and progress. The second principle was to provide suggestive feedback, which offers hints and suggestions that call for certain actions (Deeva et al., 2021). Suggestive feedback contributes to improving learning engagement (Sedrakyan et al., 2019), metacognition (Guasch et al., 2019), and learning achievement (Jin & Lim, 2019); therefore, the present study provided suggestive feedback based on the group learning engagement analysis results. The third principle was to provide useful feedback that reduces cognitive load, as suggested by Redifer et al. (2021); accordingly, this study sought to provide useful and valuable feedback information for learners. The group-specific feedback and recommendations were displayed in the latest-progress module of the online collaborative learning platform, and participants in the experimental groups could switch between the online discussion screen and the feedback screen whenever they needed feedback. Feedback was generated by predefined rules that compare a group’s level of engagement with a threshold; following Lu et al (2017), the threshold was the average level of engagement in the experimental groups. Different feedback and recommendations were provided depending on whether a group’s level of engagement was lower than, equal to, or higher than the threshold, as shown in Table 3. Fig. 2 shows a screenshot of group learning engagement and group-specific feedback.
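The threshold rule can be sketched as follows. Several details are assumptions not stated in the text and are labeled as such: a group’s level of engagement is taken to be the share of its classified messages that fall into the substantive (non-off-topic) categories, “equal to” the average is interpreted with a small tolerance band, and the group data are hypothetical. The messages are the opening sentences of the cognitive engagement rules in Table 3. For off-topic behavioral engagement, a higher-than-average level is the undesirable case, so only the message table changes while the comparison logic stays the same.

```python
from statistics import mean

# Cognitive-engagement subcategories from Table 1 (off-topic excluded).
COGNITIVE = {"remembering", "understanding", "applying", "evaluating"}

# Opening sentences of the cognitive-engagement rules in Table 3.
FEEDBACK = {
    "lower": "According to the analysis results, there was a lack of cognitive engagement in your group.",
    "equal": "According to the analysis results, your group demonstrated a medium level of cognitive engagement.",
    "higher": "According to the analysis results, it was found that your group demonstrated "
              "a high level of cognitive engagement. Well done! Keep going!",
}


def engagement_level(labels, categories):
    """Hypothetical operationalization: share of a group's classified
    messages that fall into the given categories."""
    return sum(label in categories for label in labels) / len(labels)


def compare_to_threshold(level, threshold, tolerance=0.02):
    """'equal' within a hypothetical tolerance band, else 'lower'/'higher'."""
    if abs(level - threshold) <= tolerance:
        return "equal"
    return "lower" if level < threshold else "higher"


def feedback_for_groups(groups, categories, messages):
    levels = {g: engagement_level(labels, categories) for g, labels in groups.items()}
    threshold = mean(list(levels.values()))  # average over the experimental groups
    return {g: messages[compare_to_threshold(lv, threshold)] for g, lv in levels.items()}


# Hypothetical BERT outputs for three experimental groups.
groups = {
    "G1": ["applying", "evaluating", "off-topic", "understanding"],
    "G2": ["off-topic", "off-topic", "remembering", "off-topic"],
    "G3": ["applying", "off-topic"],
}
for group, message in feedback_for_groups(groups, COGNITIVE, FEEDBACK).items():
    print(group, "->", message)
```

Because the threshold is recomputed from the current groups, the same absolute level can trigger different feedback as the cohort’s average changes; a fixed tolerance keeps small fluctuations around the average from flipping a group between the “lower” and “higher” messages.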

Table 3.

The rules of group-specific feedback and recommendations

| Conditions | Group-specific feedback and recommendations |

|---|---|

| Lower than average level of cognitive engagement | According to the analysis results, there was a lack of cognitive engagement in your group. It is suggested that your group have a better understanding of collaborative learning tasks and apply what you have learned to make and evaluate posters when you complete the task. You can do it! |

| Equal to average level of cognitive engagement | According to the analysis results, your group demonstrated a medium level of cognitive engagement. It is suggested that your group continually apply what you have learned as you make posters, reflect and evaluate how to further refine posters. You can do it! |

| Higher than average level of cognitive engagement | According to the analysis results, it was found that your group demonstrated a high level of cognitive engagement. Well done! Keep going! |

| Lower than average level of metacognitive engagement |

1. According to the analysis results, it was found that there is a lack of metacognitive engagement in your group. It is suggested that your group set goals, make plans, assign different roles, and select appropriate strategies to complete the tasks. It is recommended that your group further monitor its collaborative learning progress and detect errors or check reliability. In addition, each group member should reflect and evaluate how to further revise and refine the poster. You can do it! 2. Your group can refer to the recommended learning resources on how to make posters and examples of excellent posters to make plans, monitor, and reflect further. |

| Equal to average level of metacognitive engagement |

1. According to the analysis results, it was found that your group can do better in terms of metacognitive engagement. It is suggested that your group set particular goals and make further detailed plans. It is suggested that your group monitor and control as well as further reflect on and evaluate the collaborative learning progress. If there is only partial understanding or a comprehension failure, you can clearly address and discuss this together. You can do it! 2. Your group can refer to the recommended learning resources to monitor, evaluate and further reflect on collaborative learning processes and outcomes. |

| Higher than average level of metacognitive engagement | According to the analysis results, your group demonstrated a high level of metacognitive engagement. Well done! Keep going! |

| Lower than average level of off-topic behavioral engagement | According to the analysis results, your group is concentrating on completing collaborative learning tasks. Keep going! You can do it! |

| Equal to average level of off-topic behavioral engagement | According to the analysis results, there is off-topic discussion in your group. Don’t get off-topic. Please focus on the collaborative learning tasks. You can do it! |

| Higher than average level of off-topic behavioral engagement | According to the analysis results, there is much off-topic discussion in your group. Don’t discuss anything irrelevant to collaborative learning tasks. Each group member should concentrate on the collaborative learning tasks. The monitor should remind group members in a timely way if there is off-topic discussion. You can do it! |

| Lower than average level of behavioral engagement | 1. According to the analysis results, your group demonstrated a low level of behavioral engagement. It is suggested that the group leader engage each member in building knowledge, regulating, supporting peers, and asking questions. It is recommended that the group solve problems from different perspectives and co-construct knowledge. 2. Your group can refer to the recommended learning resources to complete collaborative learning tasks together. |

| Equal to average level of behavioral engagement | 1. According to the analysis results, your group demonstrated a medium level of behavioral engagement. It is suggested that your group engage more effectively in knowledge building, negotiation and regulation, and support and agreement, and propose new questions. 2. Your group can refer to the recommended learning resources to further engage in collaborative learning. |

| Higher than average level of behavioral engagement | According to the analysis results, your group demonstrated a high level of behavioral engagement. Well done! Keep going! |

| Lower than average level of negative emotion regarding emotional engagement | According to the analysis results, the discussion atmosphere of your group is lively! Your group demonstrated positive emotions and little negative emotion. Keep going! You can do it! |

| Equal to average level of negative emotion regarding emotional engagement | According to the analysis results, your group demonstrated a medium level of negative emotions during collaborative learning. It is suggested that all group members regulate themselves towards becoming more positive and active. It is suggested that each group member share positive ideas with peers. You can do it! |

| Higher than average level of negative emotion regarding emotional engagement | According to the analysis results, your group demonstrated many negative emotions during collaborative learning. It is suggested that the group leader pay attention to the emotions of each group member and motivate each member to be more positive. Group members can discuss with peers when they have difficulties. You are the best. Don’t be sad or worried. Take it easy. You can do it! |

| Lower than average level of positive emotional engagement | According to the analysis results, your group demonstrated less positive emotion during collaborative learning. It is suggested that group members discuss with peers when they have problems. Don’t be sad or worried. All group members should regulate their emotions and become more positive and active. Take it easy. You can do it! |

| Equal to average level of positive emotional engagement | According to the analysis results, your group demonstrated a medium level of positive emotional engagement. It is suggested that each group member regulate himself or herself to become more positive and active. The group leader should pay attention to each member’s emotions and encourage everyone to be more positive. Take it easy. You can do it! |

| Higher than average level of positive emotional engagement | According to the analysis results, your group demonstrated a high level of positive emotional engagement during collaborative learning. Well done! Keep going! |

| Lower than average level of social engagement | According to the analysis results, your group needs to further enhance social engagement. It is suggested that each group member interact with other members to complete collaborative learning tasks. In addition, each group member should build on peers’ ideas, ask peers questions, or provide suggestions for peers. You can do it! |

| Equal to average level of social engagement | According to the analysis results, your group can achieve a better level of social engagement. It is suggested that each group member interact with other members to complete collaborative learning tasks. In addition, each group member should build on peers’ ideas, ask peers questions, or provide suggestions for peers. You can do it! |

| Higher than average level of social engagement | According to the analysis results, your group demonstrated a high level of social engagement during collaborative learning. Keep going! You can do it! |

Fig. 2.

Screenshot of group learning engagement and group-specific feedback
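
The feedback rules in the table above amount to a lookup: each engagement dimension of a group is classified as lower than, equal to, or higher than the average across groups, and the matching message is shown. The sketch below is hypothetical. The paper does not state how "equal to average" was operationalized, so the ±10% tolerance band, the dimension names, and the function names are all assumptions. Note that for off-topic behavior and negative emotion, a below-average level is the desirable outcome and earns praise.

```python
from statistics import mean

# Assumption: a score within ±10% of the all-group average counts as "equal".
TOLERANCE = 0.10

# Dimensions where a higher level is undesirable (off-topic talk, negative emotion).
INVERTED = {"off-topic", "negative emotion"}


def classify(score: float, all_scores: list[float], tol: float = TOLERANCE) -> str:
    """Return 'lower', 'equal', or 'higher' relative to the average of all groups."""
    avg = mean(all_scores)
    if score < avg * (1 - tol):
        return "lower"
    if score > avg * (1 + tol):
        return "higher"
    return "equal"


def needs_suggestions(dimension: str, level: str) -> bool:
    """True if the table gives improvement suggestions rather than praise."""
    if dimension in INVERTED:
        return level != "lower"   # only a below-average level earns praise
    return level != "higher"      # only an above-average level earns praise


# Hypothetical cognitive engagement scores for three groups.
scores = [10.0, 20.0, 30.0]
for s in scores:
    lvl = classify(s, scores)
    print(s, lvl, needs_suggestions("cognitive", lvl))
```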

Participants

A total of 120 college students from a top-10 public university in China voluntarily participated in this study. The participants’ average age was 20 years. They came from a variety of majors, including law, economics, education, psychology, literature, communication, mathematics, computer science, statistics, and physics. The participants were assigned to 40 groups of three students each: 20 experimental groups and 20 control groups. Within each group, the three students differed in age and major and had not collaborated before this experiment. Thirteen groups comprised one male and two female students, and the remaining 27 groups comprised three female students. There were no significant differences between conditions in terms of gender (χ² = 0.07, p = .78), major (χ² = 23.31, p = .22), age (χ² = 10.11, p = .07), or prior knowledge (t = 0.031, p = .976). Informed consent was obtained, and the participants were free to withdraw from the study at any time.

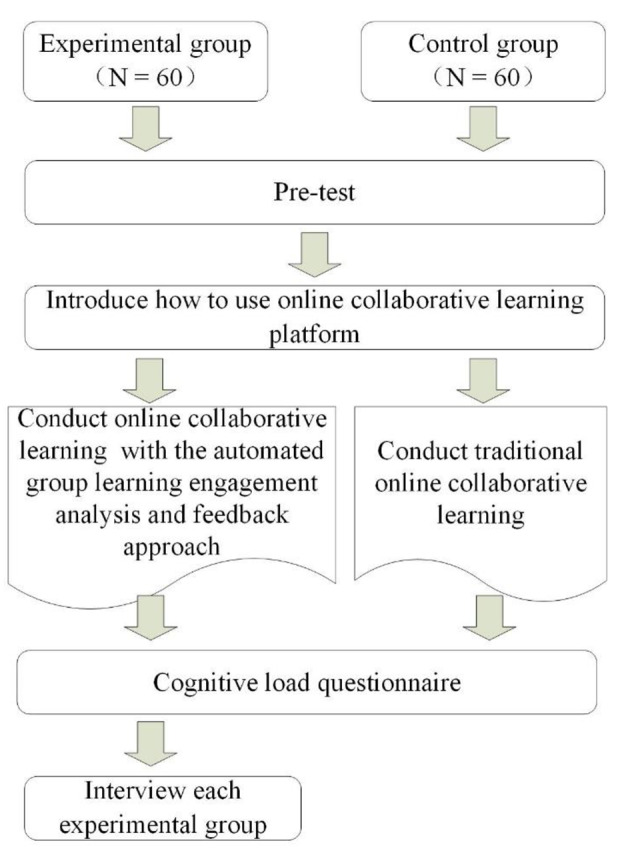

Procedures

This experiment examined the effects of the automated group learning engagement analysis and feedback approach on group learning engagement, group performance, collaborative knowledge building, socially shared regulation behaviors, and cognitive load. The procedure is shown in Fig. 3. First, a 20-min pretest was administered online to all participants. The results revealed no significant difference in pretest scores between the experimental and control groups (t = 0.031, p = .976). Second, the three members of each group were placed in different rooms but participated synchronously. Each student was provided with a laptop with a Wi-Fi connection and a complete software package that included Adobe Photoshop, and group members could share a Photoshop document with one another through the online collaborative learning platform. A research assistant then introduced the collaborative learning activities and explained how to operate the platform. After the introduction, all participants engaged in online collaborative learning for 180 min to complete the tasks together. In accordance with CSCL practices, the online collaborative learning task was to create a poster using Adobe Photoshop. The task comprised four subtasks: discussing and selecting the theme and content of the poster, discussing the techniques and procedures for making it, making the poster in Photoshop, and evaluating and refining it. The task and its objectives were identical to those of the multimedia technology course. The only difference between conditions was that the experimental groups learned via the automated group learning engagement analysis and feedback approach, whereas the control groups learned via the conventional online collaborative learning method; that is, the control groups did not receive the group learning engagement analysis results or group-specific feedback. After completing the online collaborative learning, each group submitted its poster as the group product. A 10-min post-questionnaire was then administered to measure cognitive load. Finally, two research assistants conducted face-to-face, semi-structured focus group interviews with each experimental group (30 min per group) to understand the learners’ perceptions of the proposed approach.

Fig. 3.

The experimental procedures

Instruments

The instruments included a pretest of prior knowledge of Adobe Photoshop and a cognitive load questionnaire. The pretest comprised ten single-answer multiple-choice questions (maximum score 40), five true-false questions (maximum score 10), five multiple-answer multiple-choice questions (maximum score 25), and two short-answer questions (maximum score 25), for a maximum total score of 100. It was developed by a teacher with more than ten years of experience teaching Photoshop, and its purpose was to verify that the two conditions were equivalent in prior knowledge. No posttest was administered, because group performance was measured by the quality of the group product.

The cognitive load questionnaire was adapted from Hwang, Yang, and Wang (2013) and included five items measuring mental load and three items measuring mental effort. Its Cronbach’s alpha was 0.91, indicating good reliability. An example item is “I need to put lots of effort into completing the learning tasks or achieving the learning objectives in this learning activity.”
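
For readers who want to reproduce a reliability coefficient such as the 0.91 reported above, Cronbach’s alpha can be computed from item responses with the standard formula. This is a generic sketch, not the authors’ analysis script; the input layout (one row per respondent, one column per item) and the toy data are assumptions.

```python
from statistics import pvariance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items score matrix.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha requires at least two items")
    items = list(zip(*responses))                      # one tuple of scores per item
    item_variance = sum(pvariance(item) for item in items)
    total_variance = pvariance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variance / total_variance)


# Toy data: three respondents answering two items (not the study's data).
print(round(cronbach_alpha([[1, 2], [2, 1], [3, 3]]), 3))  # 0.667
```

Population and sample variances give the same alpha as long as the same one is used for the items and the total, because the scaling factor cancels in the ratio.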

Data analysis methods

The datasets in this study comprised the pretest scores of the 120 participants, the online discussion transcripts of the 40 groups, the 40 group products, 120 questionnaires, and the interview records of the 20 experimental groups. The independent variable was the learning approach, with two levels: the automated group learning engagement analysis and feedback approach vs. the traditional online collaborative learning approach. The dependent variables were group learning engagement, group performance, collaborative knowledge-building level, socially shared regulation, and cognitive load, and the covariate was the pretest score measuring prior knowledge. All dependent variables and the covariate were measured and calculated at the group level. Group learning engagement comprised cognitive, metacognitive, behavioral, emotional, and social engagement and was analyzed automatically by the deep neural network models embedded in the online collaborative learning platform. Group performance was measured by the total score of each group’s poster. The collaborative knowledge-building level was measured as the sum of the activation quantities of all knowledge nodes in each group’s knowledge graph. Socially shared regulation was coded manually using the coding scheme in Table 4. Cognitive load was measured as each group’s average mental load and mental effort scores. The data were analyzed using computer-assisted knowledge graph analysis, content analysis, lag sequential analysis, and statistical analysis.

Table 4.

The coding scheme of socially shared regulation

| Code | Behaviors | Descriptions | Examples |
|---|---|---|---|
| OG | Setting goals | Setting learning goals and establishing task demands. | “Our group goal is to create a poster using Photoshop.” |
| MP | Planning | Planning how to complete tasks. | “Let’s discuss our plans.” |
| ES | Enacting strategies | Searching and processing information, proposing and examining solutions, and completing tasks. | “We should search for images first and then create a beautiful background.” |
| MC | Monitoring and controlling | Monitoring and controlling the collaborative learning processes and the progress. | “We need to speed it up due to the time limit.” |
| ER | Evaluating and reflecting | Evaluating and reflecting on the collaborative learning process, solutions, and outcomes. | “Let’s evaluate our group product first and then refine further.” |
| AM | Adapting | Modifying the learning goals, plans, or strategies. | “We should revise our plans.” |

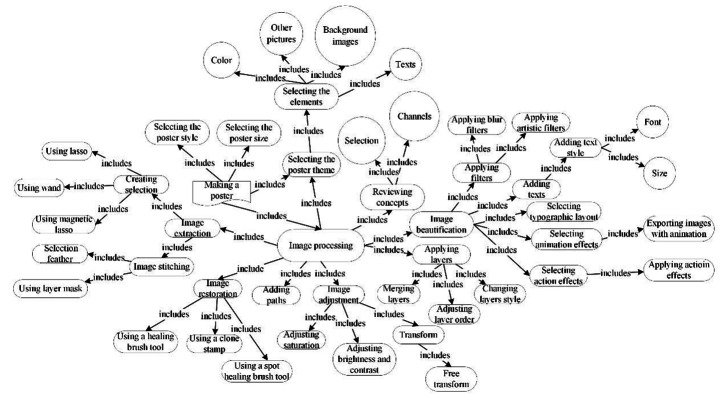

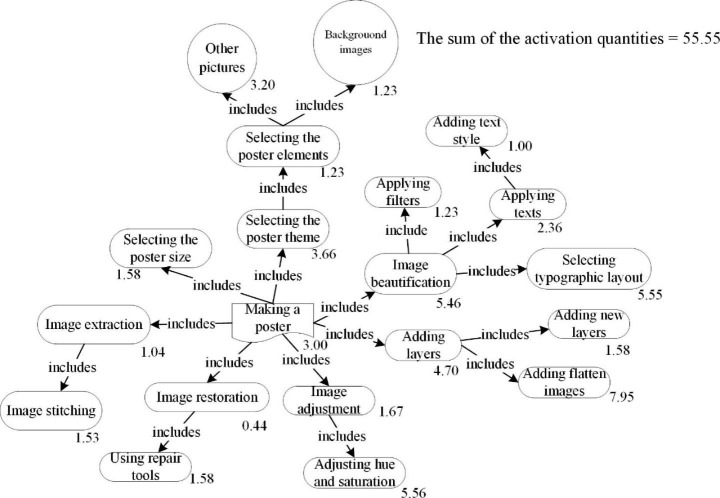

First, two researchers scored the pretest independently; their agreement was κ = 0.85, indicating good reliability. Second, the online discussion transcripts of the 40 groups were analyzed with the computer-assisted knowledge graph method to examine the collaborative knowledge-building level. This method was developed by Zheng (2017) and has been validated in previously published studies (Zheng et al., 2021, 2022). It consists of three steps. The first step is to draw a target knowledge graph that represents the relationships among the components of the target knowledge; Fig. 4 shows the target knowledge graph for this study. The second step is to segment and code the online discussion transcripts according to predefined rules, which specify that a new segment begins whenever the learner, cognitive level, information type, or knowledge subgraph changes. Two research assistants independently segmented the transcripts, with agreement of κ = 0.81. The last step is to automatically calculate the collaborative knowledge-building level, which equals the sum of the activation quantities of all knowledge nodes in the knowledge graph. Each node’s activation quantity reflects the information entropy of the transcript segments associated with that node and is calculated with a validated formula (see Zheng, 2017, for details). The authors developed software to automate these three steps.
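
The segmentation rule in the second step is mechanical enough to sketch. The code below is a hypothetical illustration rather than the authors’ software: the `Unit` fields, the example codes, and the node names are invented, and the per-node activation quantities are treated as given inputs because the entropy formula is only referenced (Zheng, 2017), not stated here.

```python
from dataclasses import dataclass
from itertools import groupby


@dataclass(frozen=True)
class Unit:
    """One coded utterance unit (field names are hypothetical)."""
    learner: str
    cognitive_level: str
    info_type: str
    subgraph: str
    text: str


def segment(units: list[Unit]) -> list[list[Unit]]:
    """Split a transcript into segments: a new segment starts whenever the
    learner, cognitive level, information type, or knowledge subgraph changes."""
    def boundary_key(u: Unit) -> tuple[str, str, str, str]:
        return (u.learner, u.cognitive_level, u.info_type, u.subgraph)
    # groupby merges only *consecutive* units with equal keys, which matches the rule.
    return [list(run) for _, run in groupby(units, key=boundary_key)]


def ckb_level(node_activation: dict[str, float]) -> float:
    """Sum of the activation quantities of all knowledge nodes.

    Per-node values would come from Zheng's (2017) entropy-based formula,
    which is not reproduced here; they are inputs to this sketch."""
    return sum(node_activation.values())


transcript = [
    Unit("A", "understand", "fact", "layers", "Layers stack images."),
    Unit("A", "understand", "fact", "layers", "Each layer can be hidden."),
    Unit("B", "understand", "fact", "layers", "Right, and reordered."),
    Unit("B", "apply", "procedure", "filters", "Let's add a blur filter."),
]
print(len(segment(transcript)))                    # 3
print(ckb_level({"layers": 1.5, "filters": 2.0}))  # 3.5
```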

Fig. 4.

The target knowledge graph

Third, content analysis and lag sequential analysis were employed to analyze socially shared regulation (SSR). Two research assistants coded the online discussion transcripts using the SSR coding scheme adapted from Zheng (2017), shown in Table 4. This coding scheme has been validated in a previous study (Zheng et al., 2021), in which it recognized SSR behaviors more effectively than other coding schemes. Interrater reliability reached κ = 0.90, indicating a high level of consistency. Fourth, two research assistants independently evaluated each group’s poster according to the assessment criteria in Table 5, which cover five dimensions worth up to 20 points each (maximum total 100). These criteria had been used several times in the multimedia technology course to evaluate posters objectively. Each group’s poster score served as its group performance score, and interrater agreement was κ = 0.86, indicating good reliability. Finally, the interview transcripts were analyzed following grounded theory (Corbin & Strauss, 2008) to identify four themes: promoting learning engagement, improving collaborative knowledge building, improving group performance, and promoting socially shared regulation. Two research assistants coded all interview transcripts based on the subcategories shown in Table 13 and resolved discrepancies through face-to-face discussion. Interrater reliability for the interviews reached 0.90, indicating good reliability.
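
The interrater reliabilities in this section are reported as kappa values. Assuming Cohen’s kappa for two raters (the paper reports two coders but does not name the kappa variant), the statistic compares observed agreement with the agreement expected by chance from each rater’s code frequencies. The sketch below is generic, and the example codes are made up.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa: chance-corrected agreement between two raters."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty code lists of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of the two raters' marginal proportions per code.
    expected = sum(count_a[c] * count_b[c] for c in count_a) / n**2
    if expected == 1:
        return 1.0  # both raters used one identical code throughout
    return (observed - expected) / (1 - expected)


# Toy SSR codes for four segments (not the study's data).
a = ["OG", "MP", "ES", "MC"]
b = ["OG", "MP", "ES", "ER"]
print(round(cohens_kappa(a, b), 2))  # 0.69
```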

Table 5.

The assessment criteria for posters

| Dimensions | 16–20 | 11–15 | 6–10 | 1–5 |
|---|---|---|---|---|
| Themes and content | The poster theme and content are innovative and original. | The poster theme and content are somewhat innovative and original. | The poster theme and content lack innovation and originality. | The poster theme and content originate from others. |
| Layers, channels, and filters | Comprehensive use of multiple layers, channels, and filters. | Use of multiple layers and filters without channels. | Use of layers without filters or channels. | Use of only a single layer without filters and channels. |
| Image stitching | The image-stitching effect is natural, and the color matching is appropriate. | The image-stitching effect is natural, but the color matching is unreasonable. | The image-stitching effect is unnatural, and the color matching is unreasonable. | The images are not stitched. |
| Paths | Employs various paths to create a poster, and the effect is natural. | Employs various paths to create a poster, but the effect is unnatural. | Only one type of path is used. | The poster has been created without using a path. |
| Collaboration | All group members collaborated to create and revise posters. | Each group member completed a part, and then the parts were integrated into a poster. | One member completed the poster, and the others revised it. | Only one member created the poster. |

Table 6.

The t test results of group learning engagement of the two groups

| Dimension | Group | Number of groups | Mean | SD | t | d |
|---|---|---|---|---|---|---|
| Cognitive engagement | Experimental group | 20 | 265.40 | 66.92 | 2.31* | 0.73 |
| | Control group | 20 | 217.55 | 64.01 | | |
| Metacognitive engagement | Experimental group | 20 | 333.90 | 89.31 | 2.34* | 0.74 |
| | Control group | 20 | 272.80 | 75.14 | | |
| Behavioral engagement | Experimental group | 20 | 332.45 | 89.65 | 2.26* | 0.72 |
| | Control group | 20 | 272.90 | 76.22 | | |
| Emotional engagement | Experimental group | 20 | 339.15 | 93.03 | 2.39* | 0.76 |
| | Control group | 20 | 267.85 | 95.46 | | |
| Social engagement | Experimental group | 20 | 34.55 | 24.76 | 4.96*** | 1.57 |
| | Control group | 20 | 6.50 | 5.01 | | |

Note. *p < .05. ***p < .001

Results

Analysis of group learning engagement

Independent-samples t tests were used to examine whether group learning engagement differed significantly between the experimental and control groups. As shown in Table 6, there were significant differences in cognitive (t = 2.31, p = .02), metacognitive (t = 2.34, p = .02), behavioral (t = 2.26, p = .03), emotional (t = 2.39, p = .02), and social engagement (t = 4.96, p < .001). Therefore, the automated group learning engagement analysis and feedback approach significantly promoted cognitive, metacognitive, behavioral, emotional, and social engagement.
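
The t and d values in Table 6 can be checked from the reported means, standard deviations, and numbers of groups. The sketch assumes Student’s pooled-variance t test and Cohen’s d computed with the pooled standard deviation; the paper does not name the variant, but these assumptions reproduce the cognitive engagement row.

```python
from math import sqrt


def pooled_t_and_d(m1: float, sd1: float, n1: int,
                   m2: float, sd2: float, n2: int) -> tuple[float, float]:
    """Student's independent-samples t (pooled variance) and Cohen's d."""
    pooled_sd = sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))
    d = (m1 - m2) / pooled_sd
    t = (m1 - m2) / (pooled_sd * sqrt(1 / n1 + 1 / n2))
    return t, d


# Cognitive engagement row of Table 6 (experimental vs. control, 20 groups each).
t, d = pooled_t_and_d(265.40, 66.92, 20, 217.55, 64.01, 20)
print(f"t = {t:.2f}, d = {d:.2f}")  # t = 2.31, d = 0.73
```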

Table 7.

ANCOVA results for the collaborative knowledge building of the two groups

| Group | Number of groups | Mean | SD | Adjusted mean | SE | F | ω² |
|---|---|---|---|---|---|---|---|
| Experimental group | 20 | 602.46 | 364.89 | 602.75 | 56.00 | 20.47*** | 0.29 |
| Control group | 20 | 244.74 | 102.53 | 244.45 | | | |

Note. ***p < .001

Analysis of collaborative knowledge building

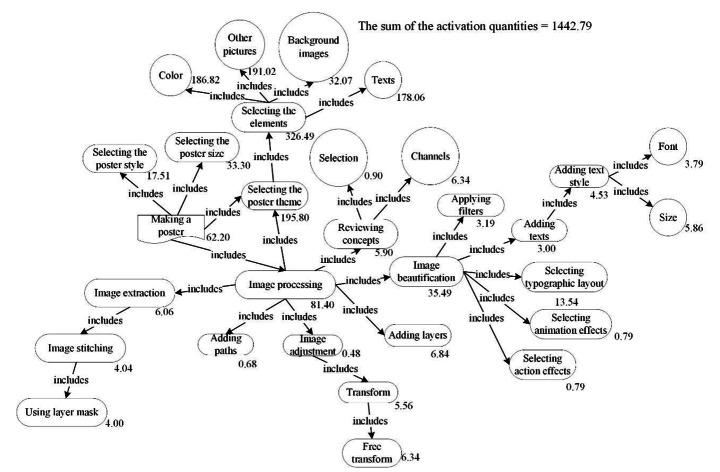

First, the Kolmogorov–Smirnov test was performed to examine whether the data were normally distributed; the pretest and collaborative knowledge-building scores were normally distributed (p > .05). Second, the homogeneity of regression slopes was tested, and the assumption was not violated (F = 2.328, p = .136). Therefore, a one-way analysis of covariance (ANCOVA) was conducted to examine differences in collaborative knowledge building between conditions, with the learning approach as the two-level independent variable, the pretest score as the covariate to control for prior knowledge, and collaborative knowledge building as the dependent variable. Table 7 shows the ANCOVA results, which revealed a significant difference in collaborative knowledge building between the experimental and control groups (F = 20.47, p < .001); the adjusted mean of the experimental groups was higher than that of the control groups. Omega-squared (ω²) was used as the effect size because it corrects the upward bias of eta-squared (η²) (Lakens, 2013). The results indicated that the automated group learning engagement analysis and feedback approach had a large effect (ω² = 0.29) on collaborative knowledge building according to Cohen’s (1988) criteria. Figs. 5 and 6 display the knowledge graphs of an experimental group and a control group, respectively, with the number beside each node denoting its activation quantity. The total activation quantity of the experimental group shown (1,442.79) was much higher than that of the control group shown (55.55). That experimental group co-constructed a knowledge graph with 30 knowledge nodes and 30 relationships, whereas the control group’s knowledge graph had only 20 knowledge nodes and 19 relationships. These results indicate that the experimental groups reached a higher collaborative knowledge-building level than the control groups.


Table 8.

ANCOVA results of the group performances of the two groups

| Group | Number of groups | Mean | SD | Adjusted mean | SE | F | ω² |
|---|---|---|---|---|---|---|---|
| Experimental group | 20 | 93.10 | 2.52 | 93.09 | 0.74 | 53.30*** | 0.57 |
| Control group | 20 | 85.45 | 3.95 | 85.45 | | | |

Note. ***p < .001

Fig. 5.

The knowledge graph of an experimental group

Fig. 6.

The knowledge graph of a control group

Analysis of group performance

The Kolmogorov–Smirnov test showed that the group performance data were normally distributed (p > .05). The assumption of homogeneity of regression slopes was not violated (F = 0.00, p = .986), so ANCOVA was used to examine the difference in group performance between the experimental and control groups while controlling for pretest scores. Table 8 shows the results. Group performance differed significantly between the experimental and control groups (F = 53.30, p < .001), and the adjusted mean of the experimental groups was higher than that of the control groups. The omega-squared value (ω² = 0.57) indicated that the automated group learning engagement analysis and feedback approach had a large effect on group performance.
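
Partial omega-squared can be recovered from a reported F statistic with the formula given by Lakens (2013): ω²p = df_effect × (F − 1) / (df_effect × (F − 1) + N). Whether the authors used exactly this variant is an assumption. With F = 53.30, one effect degree of freedom, and N = 40 groups, it gives approximately 0.57, the value reported for group performance.

```python
def partial_omega_squared(f: float, df_effect: int, n_total: int) -> float:
    """Partial omega-squared from an F statistic (formula as given by Lakens, 2013)."""
    numerator = df_effect * (f - 1)
    return numerator / (numerator + n_total)


# Group performance ANCOVA: F = 53.30, one effect df, 40 groups in total.
print(round(partial_omega_squared(53.30, 1, 40), 2))  # 0.57
```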


Table 9.

Descriptive statistics of socially shared regulation behaviors

| Group | Statistic | OG | MP | ES | MC | ER | AM |
|---|---|---|---|---|---|---|---|
| Experimental group | Number | 43 | 139 | 221 | 270 | 58 | 11 |
| | Mean | 2.15 | 6.95 | 11.05 | 13.50 | 2.90 | 0.55 |
| | SD | 1.66 | 3.51 | 7.96 | 6.72 | 1.68 | 1.35 |
| Control group | Number | 56 | 139 | 141 | 188 | 25 | 10 |
| | Mean | 2.80 | 6.95 | 7.05 | 9.40 | 1.25 | 0.50 |
| | SD | 2.89 | 3.62 | 5.52 | 6.69 | 1.51 | 0.68 |

Note: OG = Orienting goals, MP = Forming plans, ES = Enacting strategies, MC = Monitoring and controlling, ER = Evaluating and reflecting, AM = Adapting metacognition

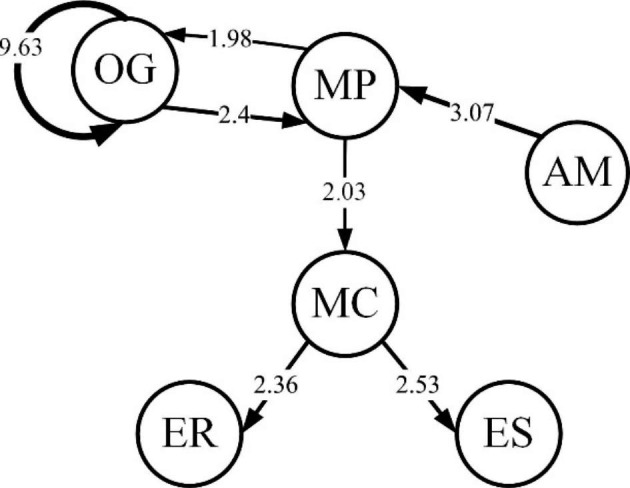

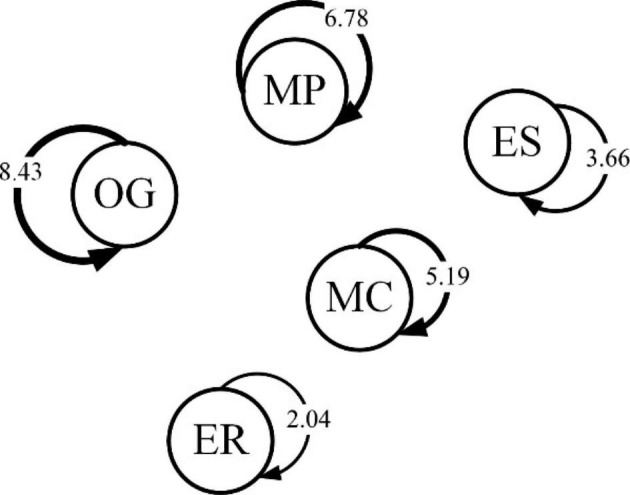

Analysis of socially shared regulation

Table 9 shows the descriptive statistics for socially shared regulation: monitoring and controlling (MC) was the most frequent behavior in both the experimental and control groups. Lag sequential analysis was then used to examine differences in the sequences of socially shared regulation behaviors. Table 10 shows the adjusted residuals for the experimental group; rows represent the starting behaviors, and columns represent the subsequent behaviors. A sequence is significant when its adjusted residual (z score) exceeds 1.96 (p < .05; Bakeman & Quera, 2011).
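
Lag-1 adjusted residuals of the kind reported in Tables 10 and 11 are conventionally computed as z = (observed − expected) / √(expected × (1 − row total / N) × (1 − column total / N)), with expected = row total × column total / N (Bakeman & Quera, 2011). The sketch below assumes that each group’s coded transcript is a list of SSR codes and that transitions are pooled across groups by summing counts; the paper does not say which software or pooling was used, and the toy data are invented.

```python
from collections import Counter
from math import sqrt

CODES = ["OG", "MP", "ES", "MC", "ER", "AM"]


def adjusted_residuals(sequences: list[list[str]],
                       codes: list[str] = CODES) -> dict[tuple[str, str], float]:
    """Lag-1 adjusted residuals (z scores) for every starting -> subsequent code pair.

    Transitions are counted within each sequence, so no spurious transition is
    created between the end of one group's transcript and the start of the next.
    """
    pairs: Counter = Counter()
    for seq in sequences:
        pairs.update(zip(seq, seq[1:]))
    n = sum(pairs.values())
    if n == 0:
        raise ValueError("no transitions to analyze")
    starts: Counter = Counter()   # row totals
    follows: Counter = Counter()  # column totals
    for (a, b), count in pairs.items():
        starts[a] += count
        follows[b] += count
    z = {}
    for a in codes:
        for b in codes:
            expected = starts[a] * follows[b] / n
            variance = expected * (1 - starts[a] / n) * (1 - follows[b] / n)
            z[(a, b)] = (pairs[(a, b)] - expected) / sqrt(variance) if variance > 0 else 0.0
    return z


# Toy data: one group alternating between setting goals and planning.
z = adjusted_residuals([["OG", "MP"] * 10])
significant = sorted(k for k, v in z.items() if v > 1.96)
print(significant)  # [('MP', 'OG'), ('OG', 'MP')]
```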

Table 10.

Adjusted residuals of socially shared regulation for the experimental group

| Starting behavior | OG | MP | ES | MC | ER | AM |
|---|---|---|---|---|---|---|
| Orienting goals (OG) | 9.63* | 2.40* | -2.44 | -2.50 | -2.00 | -0.84 |
| Making plans (MP) | 1.98* | 1.94 | -2.57 | 2.03* | -2.84 | -1.63 |
| Enacting strategies (ES) | -3.52 | -0.71 | 1.39 | 0.39 | 0.19 | 1.13 |
| Monitoring and controlling (MC) | -1.89 | -2.64 | 2.53* | -0.97 | 2.36* | 0.68 |
| Evaluating and reflecting (ER) | -1.72 | -0.46 | -0.25 | 0.28 | 1.85 | 0.19 |
| Adapting metacognition (AM) | -0.74 | 3.07* | -1.56 | 0.00 | -0.99 | -0.42 |

Note. Rows are starting behaviors; columns are subsequent behaviors. *p < .05

Table 11.

Adjusted residuals of socially shared regulation for the control group

| Starting behavior | OG | MP | ES | MC | ER | AM |
|---|---|---|---|---|---|---|
| Orienting goals (OG) | 8.43* | 0.35 | -1.81 | -3.26 | -1.02 | -0.04 |
| Making plans (MP) | -1.49 | 6.78* | -1.37 | -3.26 | -0.55 | -1.87 |
| Enacting strategies (ES) | -1.89 | -2.00 | 3.66* | -0.26 | -1.06 | 1.02 |
| Monitoring and controlling (MC) | -2.08 | -3.42 | -1.52 | 5.19* | 1.03 | 0.54 |
| Evaluating and reflecting (ER) | -0.78 | -2.82 | 0.48 | 1.46 | 2.04* | 0.91 |
| Adapting metacognition (AM) | 0.23 | -0.18 | 1.26 | -1.45 | 0.98 | -0.42 |

Note. Rows are starting behaviors; columns are subsequent behaviors. *p < .05

As shown in Table 10, six significant behavioral sequences occurred only in the experimental group: OG→MP (forming plans after setting goals), MP→MC (monitoring after forming plans), MC→ES (enacting strategies after monitoring), MC→ER (evaluating and reflecting after monitoring), AM→MP (forming plans after adapting metacognition), and MP→OG (setting goals after forming plans). Fig. 7 shows the behavioral transition diagram of the experimental group. In the control group, there were only five significant sequences (Table 11): OG→OG (repeatedly setting goals), MP→MP (repeatedly forming plans), ES→ES (repeatedly enacting strategies), MC→MC (repeatedly monitoring and controlling), and ER→ER (repeatedly evaluating and reflecting). The behavioral transition diagram of the control group is shown in Fig. 8. Thus, every significant sequence in the control group repeated the same socially shared regulation behavior, whereas the experimental group showed significant transitions between different behaviors.

Fig. 7.

SSR behavioral transition diagram of the experimental group

Table 12.

The t test results of cognitive load of the two groups

| Dimension | Group | Number of groups | Mean | SD | t | Cohen’s d |
|---|---|---|---|---|---|---|
| Cognitive load | Experimental group | 20 | 2.55 | 0.85 | 1.36 | 0.25 |
| | Control group | 20 | 2.76 | 0.83 | | |
| Mental load | Experimental group | 20 | 2.63 | 0.92 | 1.30 | 0.24 |
| | Control group | 20 | 2.85 | 0.90 | | |
| Mental effort | Experimental group | 20 | 2.42 | 0.86 | 1.24 | 0.23 |
| | Control group | 20 | 2.62 | 0.89 | | |

Fig. 8.

The SSR behavioral transition diagram of the control group

Analysis of cognitive loads

Independent-samples t tests were used to assess whether cognitive load differed between the experimental and control groups. As shown in Table 12, there was no significant difference in cognitive load (t = 1.36, p = .17), mental load (t = 1.30, p = .19), or mental effort (t = 1.24, p = .21). Therefore, the automated group learning engagement analysis and feedback approach did not increase the learners’ cognitive load.

Table 13.

Interview results of learning perceptions of using the proposed approach

| Category | Subcategory | Mentioned N (%) |
|---|---|---|
| Promoting learning engagement | The automated group learning engagement analysis and feedback approach clearly presented cognitive engagement, metacognitive engagement, behavioral engagement, emotional engagement, and social engagement, which enhanced group learning engagement. | 16/20 (80%) |
| | The automated group learning engagement analysis and feedback approach clearly demonstrated the number of posts of each group member, which promoted learning engagement of our group. | 16/20 (80%) |
| | The automated group learning engagement analysis and feedback clearly demonstrated the social network analysis results, which enhanced social engagement. | 10/20 (50%) |
| Promoting collaborative knowledge building | The automated group learning engagement analysis and feedback motivated us to co-construct knowledge of Photoshop together. | 12/20 (60%) |
| | Our group acquired new knowledge of Photoshop through peers and recommended resources. This new approach is truly useful. | 18/20 (90%) |
| Improving group performance | Our group often reflected upon, evaluated, and improved our products based on the analysis results. | 13/20 (65%) |
| | The new approach improved collaboration skills. | 14/20 (70%) |
| | Our group completed the product using the recommended learning resources. | 15/20 (75%) |
| Promoting socially shared regulation | Our group regulated our learning strategies based on the analysis results, and the proposed approach promoted SSR. | 18/20 (90%) |
| | Our group monitored the collaborative learning progress based on the analysis results. | 16/20 (80%) |
| | Our group adapted our goals and plans based on the analysis results. | 15/20 (75%) |
| | Our group adjusted our emotions based on the analysis results. | 13/20 (65%) |
| Impact on cognitive load | The automated group learning engagement analysis and feedback did not increase cognitive load. | 20/20 (100%) |

Interview results

To gain a deeper understanding of the learners’ perceptions of the automated group learning engagement analysis and feedback approach, the 20 experimental groups were interviewed face-to-face after the online collaborative learning activity. First, as shown in Table 13, interviewees from 16 of the 20 groups (80%) believed that the approach clearly presented cognitive, metacognitive, behavioral, emotional, and social engagement, which enhanced group learning engagement. Interviewees from 16 groups (80%) also reported that the approach clearly showed the number of posts by each group member and by the entire group, which provided group awareness information and promoted learning engagement. In addition, interviewees from half of the groups (50%) reported that the social network analysis results guided and increased interaction among group members, further enhancing group learning engagement. Second, the cognitive engagement analysis results encouraged the learners to co-construct knowledge (60%), and the interviewees reported acquiring new knowledge from peers and the recommended learning resources (90%). Therefore, the automated group learning engagement analysis and feedback approach promoted collaborative knowledge building. Third, the majority of interviewees reported that their collaboration skills improved (70%) and that they often reflected upon and revised their group products based on the analysis results (65%). Therefore, the approach improved group performance. Fourth, most interviewees believed that the approach facilitated socially shared regulation (90%), such as setting goals, enacting strategies, monitoring progress, and regulating behaviors and emotions; interviewees from 13 groups (65%) reported that they were able to adjust their emotions based on the analysis results. Finally, all interviewees (100%) noted that the approach did not increase their cognitive load.

Discussion

In this study, the automated group learning engagement analysis and feedback approach was developed based on deep neural network models, and its effectiveness was validated in the CSCL context. The results indicated that the proposed approach significantly improved group learning engagement, group performance, collaborative knowledge building, and socially shared regulation. The students’ cognitive load did not increase when using the proposed approach. These results were confirmed by participants during the semi-structured interviews.

Effects on group learning engagement and group performance

The results showed a significant difference in group learning engagement between the experimental and control groups, with the experimental group outperforming the control group. There are two possible explanations for this result. First, the students in the experimental group had the opportunity to browse the group learning engagement analysis results, which promoted awareness of learning engagement and further improved group learning engagement. This was confirmed by several interviewees: “Our group often browsed the group learning engagement analysis results, which enabled us to be aware of the latest progress in learning engagement. If we found a low level of group learning engagement, our group would cheer up and keep going.” These findings are in line with Peng et al. (2022), who reported that group awareness improved students’ learning engagement in online collaborative writing. Second, each group received group-specific suggestive feedback, which may have promoted group learning engagement. Espasa et al. (2022) found that suggestive feedback had a positive influence on students’ learning engagement.

The results showed a significant difference in group performance between the experimental and control groups. There are several possible explanations for this result. First, the students in the experimental group had a higher level of group learning engagement than those in the control group, which may have promoted group performance; a previous study found that learning engagement is positively associated with learning performance (Chen, 2017). Second, the students in the experimental group regularly browsed the learning engagement analysis results, which prompted further monitoring, reflection, evaluation, and revision of group products. For example, if there was a lack of reflection and evaluation during collaborative learning, the system provided feedback reminding learners to reflect on and evaluate their processes and the group product, which increased reflection and evaluation. Lei and Chan (2018) found that reflective assessment promotes knowledge advances during online collaborative learning. Third, the students in the experimental group received group-specific suggestive feedback and recommendations based on predefined rules. Sedrakyan et al. (2020) found that suggestive feedback increases learning performance, and Zheng et al. (2022) revealed that group-level feedback promotes group performance. Furthermore, feedback design can moderate the effect of feedback (Schrader & Grassinger, 2021). In the present study, the feedback rules were designed to take into account the learning engagement level of each group, which may have strengthened the effect of the feedback on group performance. Together, these reasons might explain why the group performance of the experimental group was better than that of the control group.

Effects on collaborative knowledge building

The results revealed a significant difference in collaborative knowledge building between the experimental and control groups. There are several possible explanations for this result. First, the automated group learning engagement analysis and feedback approach displayed the results of the group learning engagement analysis, which promoted awareness of the latest progress and improved collaborative knowledge building. Li et al. (2021b) revealed that group awareness contributes to promoting knowledge building in CSCL.

Second, the analysis results of group learning engagement contributed to the monitoring and evaluation of collaborative knowledge building. For example, when the system detected few knowledge-building behaviors, it reminded learners to concentrate on collaborative knowledge building, after which the amount of knowledge building increased significantly. Karaoglan Yilmaz and Yilmaz (2020) indicated that learning analytics can serve as a metacognitive tool for monitoring and evaluating the learning process. Caballé et al. (2011) also found that the provision of valuable information can be useful for monitoring and evaluating the discussion process.

Third, group-specific suggestive feedback contributed to the improvement in collaborative knowledge building. This result is consistent with Zheng et al. (2022), who found that feedback promoted collaborative knowledge building in CSCL. In this study, each group received group-specific suggestive and elaborated feedback. For example, when the system detected unrelated information, it reminded and advised learners to focus on the collaborative learning task and to co-construct knowledge. Swart et al. (2019) found that feedback design moderated the effectiveness of feedback, with elaborated feedback yielding the largest effect. Elaborated suggestive feedback contributes to fostering deep learning and collaborative knowledge improvement (Tan & Chen, 2022). Furthermore, Resendes et al. (2015) stated that group-level formative feedback promoted collaborative knowledge building because such feedback can enhance learners’ knowledge-building abilities. Feedback also facilitates collective knowledge improvement and promotes a deep understanding that is unattainable by one person alone (Hong et al., 2019). This might explain why the collaborative knowledge building of the experimental group was better than that of the control group.

Effects on socially shared regulation

The current study revealed that the experimental group demonstrated more socially shared regulation behaviors than the control group. The main reason might be that the proposed approach clearly displayed the latest progress in cognitive, metacognitive, behavioral, emotional, and social engagement, which promoted group awareness and encouraged the experimental group to regulate their learning jointly during CSCL. Rojas et al. (2022) revealed that group awareness can facilitate group regulation in the CSCL context. As several interviewees said, “We truly like the proposed approach because it clearly demonstrates the results of group learning engagement, which stimulates us to collectively regulate behaviors, emotions, cognition, metacognition, and social interaction.” Furthermore, Pellas (2014) found that learning engagement was closely related to regulation, and Li et al. (2021a) showed that learning engagement was positively associated with socially shared regulation. Moreover, the present study provided group-specific feedback based on the analysis results of group learning engagement, which promoted socially shared regulation to a significant extent. For example, if little interaction among group members was detected, the system reminded learners to communicate and interact with peers, after which social engagement improved significantly. Meyer (2008) argued that feedback rules contribute to regulating and adjusting online learning activities, and Sedrakyan et al. (2020) proposed that learning analytics dashboard feedback can support the regulation of learning. Hence, feedback design may moderate socially shared regulation in CSCL.
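The discussion repeatedly refers to group-specific feedback triggered by predefined rules, for example reminding a group to interact when little interaction is detected, to reflect when reflection is lacking, or to refocus when unrelated posts appear. The sketch below illustrates one way such rules could be expressed, assuming the deep neural network has already labeled each post with an engagement category. The indicator names, thresholds, message wording, and the use of reply-network density as the interaction measure are all hypothetical illustrations; the study's actual rules and cut-offs are not specified in this section.

```python
from dataclasses import dataclass


# Hypothetical per-group indicators for one feedback window. In practice the
# counts would come from classifying each discussion post (e.g., by a BERT-based
# model); here they are simply given as numbers.
@dataclass
class GroupEngagement:
    knowledge_building_posts: int  # posts labeled as knowledge-building (cognitive)
    reflection_posts: int          # posts labeled as reflection/evaluation (metacognitive)
    off_task_posts: int            # posts labeled as unrelated to the task
    total_posts: int
    interaction_density: float     # reply-network density in [0, 1]


# Hypothetical thresholds, chosen only for illustration.
MIN_KB_RATIO = 0.3
MIN_REFLECTION_POSTS = 1
MAX_OFF_TASK_RATIO = 0.2
MIN_DENSITY = 0.5


def reply_density(members: list[str], replies: list[tuple[str, str]]) -> float:
    """Directed reply-network density: distinct (replier, repliee) ties / n(n-1)."""
    n = len(members)
    if n < 2:
        return 0.0
    ties = {(a, b) for a, b in replies if a != b and a in members and b in members}
    return len(ties) / (n * (n - 1))


def suggest_feedback(g: GroupEngagement) -> list[str]:
    """Return the suggestive feedback messages whose (hypothetical) rules fire."""
    messages = []
    total = max(g.total_posts, 1)  # avoid division by zero for silent groups
    if g.knowledge_building_posts / total < MIN_KB_RATIO:
        messages.append("Focus on building knowledge together: explain, question, "
                        "and extend each other's ideas.")
    if g.reflection_posts < MIN_REFLECTION_POSTS:
        messages.append("Take a moment to reflect on and evaluate your process "
                        "and your group product.")
    if g.off_task_posts / total > MAX_OFF_TASK_RATIO:
        messages.append("Some posts are unrelated to the task; please refocus "
                        "on the collaborative task.")
    if g.interaction_density < MIN_DENSITY:
        messages.append("Interaction is low; try replying to and building on "
                        "your peers' posts.")
    return messages
```

For example, in a three-member group where only A and B reply to each other, the reply density is 2/6 ≈ 0.33, so under these assumed thresholds the interaction reminder would fire. Keeping each rule as an independent check lets several messages be issued in the same feedback window and lets each threshold be tuned separately.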

Possible confounding variables in this study included gender, major, age, and prior knowledge, which might affect participants’ regulation behaviors (Yang et al., 2018). To reduce the influence of these variables, this study selected participants for the experimental and control groups who had similar backgrounds in terms of gender, major, age, and prior knowledge. The difference in socially shared regulation between the two groups can therefore be attributed with greater confidence to the automated group learning engagement analysis and feedback approach.

Effects on cognitive load

The findings of this study revealed that the automated group learning engagement analysis and feedback approach did not increase learners’ cognitive load. The proposed approach was effective because it provided valuable information for improving collaborative knowledge building, group performance, and socially shared regulation. Yang et al. (2020) indicated that the use of effective strategies can reduce cognitive load, and Redifer et al. (2021) found that useful information can reduce cognitive load. In addition, appropriately designed feedback can moderate and reduce cognitive load (Swart et al., 2019). In this study, the feedback was not displayed in the online discussion interface, so as to reduce cognitive load and avoid interrupting the discussion; instead, the students in the experimental group could browse the analysis results of group learning engagement as needed. Furthermore, the experimental and control groups completed the same tasks within the same amount of time. These factors might be the principal reason why the cognitive load measures of the two groups did not differ significantly.

Implications

The current study has several implications for teachers, practitioners, and developers in the field of CSCL. First, the automated group learning engagement analysis and feedback approach provides real-time and group-specific feedback, which is useful and effective for guiding learners toward improving learning engagement and performance. Dillenbourg and Fischer (2007) stated that collaborative learning does not occur spontaneously. Feedback and intervention are necessary for improving learning engagement and performance (Lu et al., 2017). Therefore, teachers and practitioners can adopt the proposed approach to help learners improve group learning engagement and learning performance during CSCL.

Second, the automated group learning engagement analysis and feedback approach facilitates socially shared regulation during CSCL. Järvelä et al. (2015) revealed that socially shared regulation contributes to productive collaborative learning. Therefore, the proposed approach can be adopted to promote socially shared regulation by jointly setting collaborative learning goals, forming plans, monitoring collaborative learning processes, and reflecting on and evaluating collaborative learning processes and outcomes.

Third, the automated group learning engagement analysis and feedback approach efficiently provides real-time analysis results and group-specific feedback with the aid of text mining techniques, especially deep neural network models. Text mining techniques play a leading role in automatically analyzing online discussion transcripts (Ahmad et al., 2022). As a deep learning technique, deep neural network models learn features directly from data and can thus overcome the dependence of traditional machine learning algorithms on hand-designed features (Liu et al., 2017). Therefore, developers can also propose new deep neural network models to conduct more accurate and efficient analysis.

Limitations

This study had several limitations. First, this study was not conducted in a curriculum context, and because of COVID-19 the participants were volunteers from only one university, which might limit the generalizability of the findings. Future studies should be framed in a curriculum context and use larger samples to further examine the approach. Second, group-specific feedback was provided based on predefined rules in this study, and different feedback rules might lead to different findings. Future studies should investigate how various feedback rules affect collaborative knowledge building, group performance, socially shared regulation, and cognitive load. Third, because of COVID-19, this study focused on a single production-oriented collaborative learning task of short duration, which might also limit the transferability of the findings. Future studies should examine the proposed approach across various task types and learning domains via longitudinal studies.

Conclusion

This study proposed an approach that employed automated analysis and personalized feedback of group learning engagement in the CSCL context. The findings of this study revealed that the proposed approach significantly improved collaborative knowledge building and group performance and promoted socially shared regulation. This study makes two main contributions. The first is that the study defined the constructs of group learning engagement. The second contribution is that it employed a BERT-based deep neural network model to automatically analyze group learning engagement and provide timely and group-specific feedback based on the analysis results. This study deepens our understanding of group learning engagement and enriches our knowledge of CSCL.

Acknowledgements

This study was funded by the International Joint Research Project of Huiyan International College, Faculty of Education, Beijing Normal University (ICER202101).

Declarations

Conflict of interest