Abstract

Viewing a live facial expression typically elicits a similar expression in the observer (facial mimicry) that is associated with a concordant emotional experience (emotional contagion). The model of embodied emotion proposes that emotional contagion and facial mimicry are functionally linked, although the neural underpinnings are not known. To address this knowledge gap, we employed a live two-person paradigm (n = 20 dyads) using functional near-infrared spectroscopy during live emotive face-processing while also measuring eye-tracking, facial classifications and ratings of emotion. One dyadic partner, the ‘Movie Watcher’, was instructed to produce natural facial expressions while viewing evocative short movie clips. The other dyadic partner, the ‘Face Watcher’, viewed the Movie Watcher's face. Task and rest blocks were implemented by timed epochs of clear and opaque glass that separated partners. Dyadic roles were alternated during the experiment. Mean cross-partner correlations of facial expressions (r = 0.36 ± 0.11 s.e.m.) and mean cross-partner affect ratings (r = 0.67 ± 0.04 s.e.m.) were consistent with facial mimicry and emotional contagion, respectively. Neural correlates of emotional contagion based on covariates of partner affect ratings included the angular and supramarginal gyri, whereas neural correlates of the live facial action units included motor cortex and ventral face-processing areas. Findings suggest distinct neural components for facial mimicry and emotional contagion.

This article is part of a discussion meeting issue ‘Face2face: advancing the science of social interaction’.

Keywords: interactive face-processing, functional near-infrared spectroscopy (fNIRS), hyperscanning, facial mimicry, emotional contagion

1. Introduction

It has long been recognized that live dyadic interactions frequently include unconscious imitation (mimicry) [1–3]. For example, mimicry is commonly observed in conversations, where partners copy non-verbal and verbal features beyond the explicit content of the speech [4–6]. Although the social function of these unconscious ‘imitation’ behaviours is not well understood, it has been proposed that facial mimicry and other forms of converging interactive behaviours represent prosocial responses that generally increase social affiliation [7]. For this reason, dyadic mimicry has been referred to as ‘social glue’ [8] and represents a high-priority topic for behavioural investigation of live, spontaneous social interactions.

The theory of embodied emotion proposes a relationship between the neural systems that underlie dynamic facial mimicry and the processing of emotion [9–12]. Consistent with this theory, it has been noted that simulating a perceived facial expression partially activates the corresponding emotional state, providing a basis for inferring the underlying emotion of the expresser [1]. The significance of this relationship lies in the hypothesis that facial mimicry may be a native biological mechanism that supports the conveyance of emotion between interacting humans and underlies the interpretation of facial expressions. Behavioural evidence for a relationship between facial mimicry and emotional processing has been provided by a paradigm in which mimicry of facial expressions was blocked by a face stabilizer consisting of a pencil held in the mouth of the ‘Face Watcher’. Findings showed that preventing physical mimicry of the facial expression impaired the ability to recognize emotions [13]. A similar experiment that blocked facial mimicry was performed while recording electroencephalogram (EEG) signals during passive viewing of facial expressions with emotional content including anger, fear and happiness. Mu desynchronization was observed when participants could freely move their facial muscles but not when their facial movements were inhibited. The findings were interpreted as consistent with a neural link between motor activity, automatic mimicry of facial expressions and the communication of emotion [14].

This hypothesized neural link between the encoding of facial mimicry and neural activity within the emotion-processing systems has also been investigated with fMRI after botulinum toxin was used to immobilize frown muscles. Self-initiated frown expressions during the effective period of the toxin resulted in reduced responses in the amygdala (a brain region known to be sensitive to emotional stimuli) during gaze at angry faces, relative to responses before botulinum toxin administration. Results were interpreted as support for the hypothesis that mimicry of passively viewed emotional expressions provides a physiological basis for the social transfer of emotion [15]. However, the neural basis for this relationship remains an active and high-priority question.

Humans are thought to be profoundly social [16], and the dynamic and expressive human face is a universally recognized social ‘meter’ [17]. Skill in accurately interpreting facial expressions that indicate another person's situation or emotional status is an everyday requirement for successful social relationships and conventional interpersonal encounters [18,19]. The focus on faces and eyes [20–22] has long provided an entry point to investigate the neural processing of salient visual features and models of face-processing [23–27]. Neural processing of social, cognitive and emotional behaviours is also embedded in decades of behavioural, electrophysiological, computational and functional imaging neuroscience, contributing to an ever-expanding knowledge base of the social brain [16,28–30]. The theoretical frameworks for face and social processing are converging with an increasing focus on natural and spontaneous interactions between individuals.

Current understanding of face and social processing is primarily based on static representations of each. However, models of face-processing, eye contact and social mechanisms including verbal interactions come together with investigations of live and dynamic facial expressions [31–34], spoken language [35,36] and the related dyadic sharing of emotional information. Here we employ live and spontaneous emotion-expressing faces as primary social stimuli. Measures of facial classifications and associated neural responses based on functional near-infrared spectroscopy, fNIRS, and behavioural ratings are applied to investigate cross-brain (dyadic) effects of emotional faces.

The organizational principles of neural coding underlying dynamic and interpersonal social interactions are critically understudied relative to their importance for understanding basic human behaviours in both typical individuals and psychiatric, neurological and/or developmental disorders [37–43]. This barrier to progress is largely due to technical limitations related to the need to acquire neuroimaging data on two or more individuals simultaneously while they engage in interactive behaviours. Conventional neuroimaging methods, functional magnetic resonance imaging (fMRI) and positron emission tomography (PET), are generally constrained to single-subject studies that do not include ecologically valid interactions. Here we address this knowledge gap using a two-person paradigm and neuroimaging technology specialized for hyperscanning in natural conditions. This novel and emerging focus on the dyad rather than single brains interweaves and extends models of dynamic face-processing and interactive social, cognitive and emotional neuroscience.

Obstacles to imaging neural activity in two interacting individuals are largely addressed by the emerging neuroimaging technology fNIRS, which acquires blood oxygen level-dependent (BOLD)-like signals using optical rather than magnetic resonance techniques [44]. This enables functional brain imaging in natural (upright and face-to-face) conditions during hyperscanning of two interacting individuals [45,46]. The signal is based on differential absorption of light by oxyhaemoglobin (OxyHb) and deoxyhaemoglobin (deOxyHb), a proxy for neural activity [47–49]. Although fNIRS has been widely applied for neuroimaging of infants and children, it has not been widely applied in adult cognitive research, largely owing to sparse optode coverage and low spatial resolution (approximately 3 cm) relative to fMRI. However, its tolerance to movement and the absence of a high magnetic field, constraining physical conditions, the supine position and loud noise recommend this alternative technology for two-person live interactive studies. Signals are acquired approximately every 30 ms, which provides a signal-processing advantage for measures of functional connectivity [50] and neural coupling [32,45] even though the underlying signal is the (slow) haemodynamic response. Additional technical advances optimize two-person neuroimaging, including extensive bilateral optode coverage for both participants, ‘smart glass’ technology that occludes or reveals the partner according to a block paradigm to create ‘rest’ blocks in the time series (figure 1a), and multi-modal acquisitions including simultaneous facial classifications, eye-tracking, behavioural ratings and live interactive paradigms.

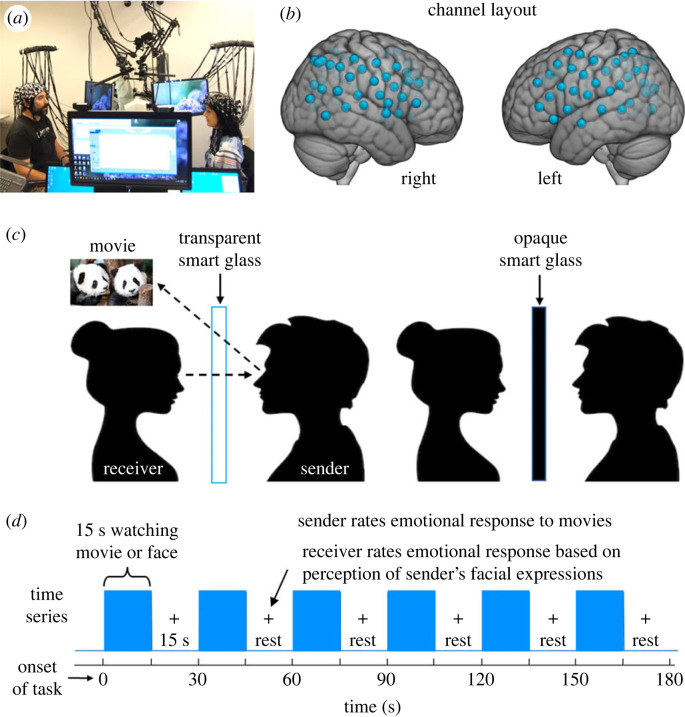

Figure 1.

(a) Set-up for simultaneous neuroimaging of interacting participants separated by a glass panel, i.e. ‘smart glass’ (picture permissions obtained). (b) Channel layout. Right and left hemispheres of a single-rendered brain illustrate median channel locations (blue dots) for 58 channels per participant. Montreal Neurological Institute (MNI) coordinates for each recording channel and corresponding anatomical locations were determined with NIRS-SPM [51]. (c) Paradigm schematic. The ‘smart glass’ divider is transparent during the task (blue bars in (d)) and opaque during rest periods (15 s blank in (d)). (d) Time series for a single run.

Topographical maps of retinal space are distributed throughout the cortex and provide a framework for cortical organization of information-processing [52]. Face-processing modules have been proposed within this framework and are supported by evidence of hierarchical specializations for faces along the ventral stream [19,25]. These foundational findings suggest that live and interactive face-processing may engage these and additional neural systems beyond those thoroughly studied using conventional single-subject, static and simulated face methods. Additional mechanisms for live and interactive faces are also predicted by the interactive brain hypothesis [53], which proposes specialized neural and cognitive effects due to live interactions between individuals. Specifically, we have shown that live and interactive face-processing mechanisms intersect known social mechanisms and engage right-hemisphere regions, including the right angular gyrus, and dorsal stream regions, including somatosensory association cortex and supramarginal gyrus [32–34]. We hypothesize that these dorsal regions will also underlie encoding of emotional information shared during spontaneous dyadic face interactions.

Spontaneous facial mimicry has been widely investigated for its putative role in communication and affiliation. It has been suggested that this mechanism is implemented by prefrontal activity that mediates top-down influences on sensory and social systems [54,55]. This suggestion is also consistent with fMRI findings in which participants observed an emotional facial expression and were asked to produce an incongruent expression. This ‘Stroop-like’ task revealed activity in right frontal regions previously found active during the resolution of sensory conflict [56], in supplementary motor cortex, as expected with engagement of the facial muscles employed in mimicry, and in posterior superior temporal sulcus, as predicted based on known social systems [28]. Findings were interpreted as evidence for a frontal and posterior neural substrate associated with dynamic and adaptive interpretation and encoding of intentional facial expressions [57]. fMRI findings have also suggested a central role for the right inferior frontal gyrus in a task where emotional expressions were intentionally simulated on command [58]. However, the neural correlates of facial mimicry have not been directly investigated in a live two-person paradigm without the confound of cognitive tasks expected to engage executive and conflict-resolution functions. The significance of these questions is enhanced by prior observations of impaired automatic mimicry in children with autism spectrum disorder (ASD), an observation associated with the ‘broken mirror’ theory of autism [59].

Here we focus on a novel multi-modal approach using live dyadic interactions, facial classifications, eye-tracking, neuroimaging using fNIRS, and subjective behavioural reports of conveyed affect during passive gaze at an emotive face. We aim to isolate neural correlates that underlie spontaneous mimicry and emotional contagion. A large body of behavioural evidence suggests that emotional contagion via passive viewing of a facial expression is linked to spontaneous mimicry of the expression. These observations suggest that the neural systems for emotional contagion and facial mimicry may be shared. However, it is not known whether these neural systems are separate components of an integrated biological complex or are bundled together within interactive face-processing and social systems. Here we investigate these alternatives. A priori expectations include right-hemisphere social systems such as regions within the right temporo-parietal junction [28], ventral stream face-processing systems including the lateral occipital cortex and superior temporal gyrus [19,52], regions previously associated with live and interactive faces including right dorsal and temporal-parietal regions [34], and motor cortex associated with faces.

2. Methods

(a) Participants

Adults 18 years of age and older who were healthy and had no known neurological disorders (by self-report) were eligible to participate. The study sample included 40 participants (26 women, 12 men and 2 who identified as another gender; mean age: 26.3 ± 10.5 years; 36 right-handed and 4 left-handed). Eleven participants identified as Asian, five as biracial, one as White Latin X and 23 as White. Dyad types included eight female–female pairs, eight male–female pairs, two female–other pairs and two male–male pairs (see electronic supplementary material, tables S1 and S2). Counterbalancing for dyad type, gender and race was achieved by order of recruitment in a diverse population, which tended to distribute these potentially confounding factors and avoid their influence on findings. There were no repeated measures. The sample size was based on a power analysis and prior dyadic studies in real interactive conditions. Relative signal strengths (beta values) for task-based activation were 0.00055 ± 0.00103, giving an effect size (Cohen's d) of 0.534. Therefore, 15 dyads (n = 30) were required to achieve a power of 0.80. Our a priori target sample size of 20 dyads (n = 40) exceeds this requirement. All participants provided written informed consent in accordance with guidelines approved by the Yale University Human Investigation Committee (HIC no. 1501015178) and were reimbursed for participation. Dyad members were unacquainted prior to the experiments and were assigned in order of recruitment. Laboratory practices are mindful of goals to assure diversity, equity and inclusion, and accruals were monitored by regular evaluations based on expected distributions in the surrounding area. Each participant provided demographic and handedness information before the experiment.
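The power calculation can be approximated from the reported beta values. The section does not state which test was assumed, so the sketch below assumes a two-sided one-sample test at α = 0.05 and uses the normal approximation; it illustrates the arithmetic and is not the authors' calculation. The effect size is the reported mean beta divided by its standard deviation.

```python
from math import ceil
from statistics import NormalDist


def cohens_d(mean: float, sd: float) -> float:
    """Standardized effect size: mean divided by standard deviation."""
    return mean / sd


def n_one_sample_normal_approx(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate n for a two-sided one-sample test (assumed test type).

    Normal approximation: n = ((z_{1 - alpha/2} + z_{power}) / d) ** 2.
    Exact t-based calculations give a slightly larger n.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return ceil(((z_alpha + z_power) / d) ** 2)


d = cohens_d(0.00055, 0.00103)
print(f"d = {d:.3f}")                  # d = 0.534
print(n_one_sample_normal_approx(d))   # 28
```

The normal approximation yields 28 participants; t-based calculations, as used by common power-analysis software, typically add one or two participants, which places the estimate in the range of the 30 participants (15 dyads) reported above.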

(b) Set-up

Partners in each dyad were seated 140 cm apart across a table (figure 1a) and were fitted with an extended head-coverage fNIRS cap (figure 1b). As seen in figure 1a,c, the two participants were separated by a custom-made, controllable ‘smart glass’ window that switched between transparent and opaque states when a programmatically controlled electrical current was applied. Two small 7-inch LCD monitors with a resolution of 1024 × 600 pixels were attached to the top and middle of the smart glass. The monitors were placed in front of and above the heads of each participant, so the screens were clearly visible but did not obstruct the partner's face. Monitors displayed video clips (Movie Watcher only; figure 1c) and cued participants to rate the subjective intensity and valence (positive or negative) of their affective experiences using a dial.

(c) Paradigm

During the interaction, participants took turns in two roles of a dyadic interactive task. One partner, the Movie Watcher, watched short (3–5 s) video clips on the LCD screen (randomly presented using a custom Python script) while the other partner, the Face Watcher, observed the face of the Movie Watcher (figure 1c). Partners alternated roles as Movie Watcher and Face Watcher. Movies were presented in 3 min runs that alternated between 15 s of movies and 15 s of rest (figure 1d). There were five movies in each 15 s task block within the run. Each Movie Watcher saw a total of 60 movie clips (two runs) for each of the three movie types, i.e. a total of 180 movie clips. After each 15 s set of movie stimuli, Movie Watchers used a dial to rate the valence and intensity of their affective response to the movie block on a Likert-type scale (positive: 0 to +5, negative: 0 to −5). Face Watchers rated the intensity of their own affective feelings based on their experience of the Movie Watcher's facial expressions during the same period. These affective ratings are compared between dyad partners to document, epoch by epoch, the extent to which emotion was communicated via facial expression. For descriptive purposes, ratings for all trials and all participants are represented graphically and summarized by a scatterplot that includes both within- and across-subject data. The affect ratings were also analysed dyad by dyad, and the average correlation across dyads is presented to represent the overall strength of the observed association (emotional contagion).

Facial expressions of both partners were acquired by cameras (recorded as part of the Python script) and analysed with OpenFace (details below). Each participant performed the Movie Watching and Face Watching tasks three times, including two runs for every movie type (‘adorables’, ‘creepies’ and ‘neutrals’, see below) for a total of six 3 min runs and a total duration of 18 min. Movie clips were not repeated. Similar to the affect ratings above, facial action units (AUs) for all trials and all participants are represented graphically and summarized by a scatterplot that includes both within- and across-subject data. The mean intensity of the first principal component (PC) of facial AUs was also analysed dyad by dyad, and the average correlation across dyads is presented to represent the overall strength of the observed association (facial mimicry).

(d) Movie library of emotive stimuli to induce natural facial expressions

Emotionally evocative videos (movies) intended to elicit natural facial expressions were collected from publicly accessible sources and trimmed into 3–5 s clips. All video stimuli were tested and rated for emotive properties by laboratory members. The clips contained no political, violent or frightening content, and participants were given general examples of what they might see prior to the start of the experiment. The three categories of videos included: ‘neutrals’, featuring landscapes; ‘adorables’, featuring cute animal antics; and ‘creepies’, featuring spiders, worms and states of decay. Videos were rated prior to use in the experiment according to the intensity of emotions experienced (from 0 to 100 on a continuous-measure Likert-type scale; 0: the specific emotion was not experienced, and 100: emotion was present and highly intense) according to basic emotion types (joy, sadness, anger, disgust, surprise and fear). For example, a video clip of pandas rolling down a hill (from the 'adorables' category) might be rated an 80 for joy, 40 for surprise and 0 for sadness, fear, anger and disgust. Responses were collected and averaged for each video. The final calibrated set used in the experiment consisted of clips that best evoked intense affective reactions (except for the ‘neutrals’ category, from which the lowest-rated videos were chosen).

(e) Instructions to participants

Participants were informed that the experiment aimed to understand live face-processing mechanisms and were instructed according to their role (i.e. Face Watcher or Movie Watcher). The Face Watcher was instructed to look naturally at the face of the Movie Watcher when the smart glass was clear. The Movie Watcher was instructed to look only at the movies and emote natural facial expressions during that same time period. Natural expressions (such as smiles, eye blinks and other natural non-verbal expressions) were expected owing to the emotive qualities of the movies. Participants were instructed not to talk during the runs, and eye-tracking in addition to the scene cameras confirmed compliance with directional gaze instructions.

(f) Functional near-infrared spectroscopy signal acquisition and channel localization

Functional NIRS signal acquisition, optode localization and signal-processing, including global component removal, were similar to methods described previously [45,56,60–64] and are briefly summarized below. Haemodynamic signals were acquired using three wavelengths of light and an 80-fibre multichannel, continuous-wave fNIRS system (LABNIRS, Shimadzu, Kyoto, Japan). Each participant was fitted with an optode cap with predefined channel distances. Three cap sizes were used based on the circumference of the participant's head (60 cm, 56.5 cm or 54.5 cm). Optode distances of 3 cm were designed for the 60 cm cap and were scaled equally to the smaller caps. A lighted fibre-optic probe (Daiso, Hiroshima, Japan) was used to clear hair away from each optode holder before optode placement.

Optodes consisting of 40 emitters and 40 detectors were arranged in a custom matrix providing a total of 58 acquisition channels per participant. For consistency, the most anterior midline optode holder on the cap was centred one channel length above nasion. To ensure acceptable signal-to-noise ratios, intensity was measured for each channel before recording, and adjustments were made for each channel until all optodes were calibrated and able to sense known quantities of light from each laser wavelength [61,65,66]. Anatomical locations of optodes in relation to standard head landmarks were determined for each participant using a structure.io three-dimensional scanner (Occipital, Boulder, CO) and portions of code from the FieldTrip toolbox implemented in MATLAB R2022a [67–71]. Optode locations were used to calculate positions of recording channels (figure 1b), and Montreal Neurological Institute (MNI) coordinates [72] for each channel were obtained with NIRS-SPM software [51] and WFU PickAtlas [73,74].
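A common convention places each fNIRS channel at the midpoint between its emitter and detector. The sketch below assumes that convention and uses hypothetical scanner coordinates (in cm) and a hypothetical emitter–detector pairing; the projection onto the cortex and conversion to MNI coordinates, performed here with NIRS-SPM, are not shown.

```python
import numpy as np


def channel_positions(emitters, detectors, pairs):
    """Return one (x, y, z) position per channel: the emitter-detector midpoint."""
    em = np.asarray(emitters, dtype=float)
    de = np.asarray(detectors, dtype=float)
    return np.array([(em[e] + de[d]) / 2.0 for e, d in pairs])


def source_detector_distances(emitters, detectors, pairs):
    """Euclidean emitter-detector separation per channel (same units as input)."""
    em = np.asarray(emitters, dtype=float)
    de = np.asarray(detectors, dtype=float)
    return np.array([np.linalg.norm(em[e] - de[d]) for e, d in pairs])


# Hypothetical coordinates in cm from a 3D head scan; pairs are (emitter, detector) indices.
emitters = [[0.0, 0.0, 0.0], [3.0, 0.0, 0.0]]
detectors = [[0.0, 3.0, 0.0]]
pairs = [(0, 0), (1, 0)]
print(channel_positions(emitters, detectors, pairs))
print(source_detector_distances(emitters, detectors, pairs))
```

Comparing the computed separations with the nominal 3 cm spacing (scaled for the smaller caps) is one simple way to flag a misdigitized or displaced optode before localization.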

(g) Eye-tracking

Two Tobii Pro X3-120 eye trackers (Tobii Pro, Stockholm, Sweden), one per participant, were used to acquire simultaneous eye-tracking data at a sampling rate of 120 Hz. Eye trackers were mounted on the screen facing each participant. Prior to the start of the experiment, each participant's eye tracker was calibrated with a three-point method: the partner was instructed to stay still and look straight ahead while the participant looked first at the partner's right eye, then left eye, then the tip of the chin. Eye-tracking data could not be acquired for a subset of participants owing to technical signal loss. The eye-tracking served to confirm that there was no eye contact between the Face Watcher and the Movie Watcher; when eye-tracking data were not available, the scene cameras provided this confirmation. Thus, expected gaze directions were confirmed for all participants. This is important because mimicry has been shown to be modulated by direct gaze [75–77].

(h) Facial classification: validation of manual ratings and automated facial action units

Automated facial AUs were acquired simultaneously from both partners using OpenFace [78] and Logitech C920 face cameras to acquire facial features. OpenFace is one of several available platforms that provide algorithmically derived tracking of facial motion in both binary (presence) and continuous (intensity) formats. Automatic detection of facial AUs using these platforms has become a standard building block of facial expression analysis, where facial movements are described as dynamic conformational patterns of facial muscle anatomy. Although a direct relationship between distinct emotions and activation patterns has been postulated [79], here facial expressions are partitioned into discrete muscular components and dynamics without association with emotional labels. Participants rated overall affect intensity and valence rather than naming an emotion associated with the movie clip (Movie Watcher) or facial expression (Face Watcher). The facial AU analysis using OpenFace included 17 separate classifications of anatomical configurations.

Conventional methods to validate the relationship between emotions and facial expressions have employed manual codes [80,81]. For example, Ekman, Friesen & Hager developed a manual observer-based method for coding facial expression measurements [82] referred to as the facial action coding system (FACS). FACS provides a technique to record an objective description of facial expressions based on activations of facial muscles and has provided a foundation to link human emotions with specific human facial expressions. By contrast, application of the OpenFace platform in this investigation does not relate facial AUs to any specific emotion. Spontaneous expressions of the Movie Watcher are classified as discrete constellations of moving parts (AUs) and rated by the Face Watcher using a scale from −5 to +5 indicating affect valence and intensity. There is no inference with respect to a specific emotion.

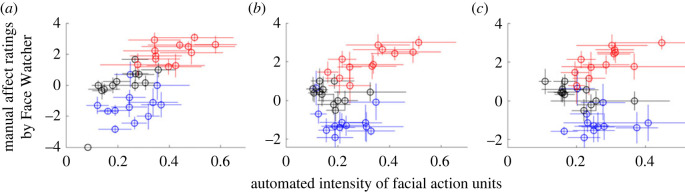

The application of spontaneous and live individual human facial expressions as a stimulus for the investigation of face-processing is novel. Dynamic faces constitute a high-dimensional stimulus space not previously explored by conventional experimental paradigms. To validate this dyadic methodology, we plot the average manual affect ratings (y-axis) against average automatic measures of facial AUs (x-axis) for all three movie types: 'adorables' (red), 'neutrals' (black) and 'creepies' (blue), all 17 AUs, and all participants (see electronic supplementary material, figure S1a,b). Figure 2a–c shows examples of three typical AUs: (a) 12 (Lip Corner Pull), (b) 25 (Lips Part) and (c) 17 (Chin Raiser). Each data point (circle) in the scatterplots represents averaged information from each 15 s task block (figure 1d). Vertical error bars indicate the s.e.m. of all ratings, which, on average, is ±0.34 s.e.m. across all blocks and AUs, and confirms a high level of consistency across participants who viewed the spontaneous and natural expressions from the many expressers (Movie Watchers). Horizontal error bars indicate the s.e.m. of AU intensities which, on average, is ±0.04 s.e.m. across all blocks and AUs. This relatively high variability of the facial AU intensities (x-axis) is consistent with a range of individual differences that consist of natural and spontaneous real human facial expressions from each of the individuals who participated in the experiment [83]. None of these individuals was a part of the research team and all were naive to the experimental details.
These scatterplots and validity metrics illustrate that, in spite of the highly variable faces and facial expressions (x-axis), face raters (y-axis) consistently rated valence and intensity in accordance with movie types: ratings of the ‘adorable’ movies are in the positive range, ratings of the ‘neutral landscapes’ are in the ‘no-affect’ range and ratings of the ‘creepie’ movies are in the negative range. These inter-rater reliability scatterplots serve to validate this core variable of automated facial AUs acquired by OpenFace as a measure of live and spontaneous facial motion.

Figure 2.

Manual affect ratings by the Face Watchers, averaged for all participants, are plotted versus the averaged automated intensity measures of three representative AUs [78] from the Movie Watcher: Lip Corner Pull (12) (a), Lips Part (25) (b) and Chin Raiser (17) (c). Each dot represents a 15 s task block. The y-axis shows the average affect rating of the Face Watcher based on the Movie Watcher's facial expression. The x-axis shows the AU intensity obtained by OpenFace for the 15 s block. The vertical error bars indicate the inter-rater reliability and the horizontal error bars indicate the variation in the facial stimuli presented to the Face Watcher. In spite of the highly variable facial expressions, Face Watchers consistently rated valence and intensity in accordance with expectations of movie types.

Given the uniform classifications of valence and intensity, a principal components analysis (PCA) was applied to represent the facial dynamics. The first principal component (PC1) was used as a modulator of the neural data. This approach included all 17 facial AUs weighted according to their contribution. The Pearson correlation coefficient of the PC1 scores between the two partners was taken as an objective representation of facial movement mimicry from the Movie Watcher (expresser) to the Face Watcher (face viewer). The average of the correlation coefficients across all dyads is taken as the group measure of the strength of that association.
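The PC1-based mimicry measure for one dyad can be sketched with NumPy. This sketch assumes that PC1 is fitted on the pooled AU intensities of both partners, so both partners' scores are projections onto the same axis; the section does not specify which data the PCA was fitted on. The sign of a principal component is arbitrary, but because both partners are projected onto the same component, the cross-partner correlation does not depend on that sign. The synthetic data (36 blocks × 17 AUs) are hypothetical.

```python
import numpy as np


def fit_pc1(au: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Return (column means, unit-length PC1 loadings) for an (n_samples, n_aus) matrix."""
    mu = au.mean(axis=0)
    # Rows of vt are principal axes, ordered by decreasing explained variance.
    _, _, vt = np.linalg.svd(au - mu, full_matrices=False)
    return mu, vt[0]


def pc1_scores(au: np.ndarray, mu: np.ndarray, loading: np.ndarray) -> np.ndarray:
    """Project AU intensities onto PC1 (one score per sample)."""
    return (au - mu) @ loading


def mimicry_r(au_mw: np.ndarray, au_fw: np.ndarray) -> float:
    """Pearson r between partners' PC1 scores, with PC1 fitted on pooled data."""
    mu, loading = fit_pc1(np.vstack([au_mw, au_fw]))
    s_mw = pc1_scores(au_mw, mu, loading)
    s_fw = pc1_scores(au_fw, mu, loading)
    return float(np.corrcoef(s_mw, s_fw)[0, 1])


# Hypothetical synthetic dyad: the Face Watcher partially follows the Movie Watcher.
rng = np.random.default_rng(0)
shared = rng.normal(size=(36, 1)) @ rng.uniform(0.5, 1.5, size=(1, 17))
au_mw = shared + 0.3 * rng.normal(size=(36, 17))
au_fw = 0.5 * shared + 0.5 * rng.normal(size=(36, 17))
r = mimicry_r(au_mw, au_fw)
print(round(r, 2))
```

Repeating this per dyad and averaging the resulting coefficients gives the group-level mimicry estimate described above.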

(i) Functional near-infrared spectroscopy signal processing

Raw optical density variations were acquired at three wavelengths of light (780, 805 and 830 nm) and translated into relative chromophore concentrations using a Beer–Lambert equation [84–86]. Signals were recorded at 30 Hz. Baseline drift was removed using wavelet detrending provided in NIRS-SPM [51]. In accordance with recommendations for best practices using fNIRS data [87], global components attributable to blood pressure and other systemic effects [88] were removed using a PCA spatial global mean filter [60,62,89] before general linear model (GLM) analysis. Because this study involves emotional expressions that originate from specific facial muscle movements, which may cause artefactual noise in the OxyHb signal, we used the HbDiff signal, which combines the OxyHb and deOxyHb signals, for all statistical analyses. However, following best practices [87], baseline activity measures of both OxyHb and deOxyHb signals were also processed as a confirmatory measure. The HbDiff signal averages were taken as the input to the second-level (group) analysis [90]. Comparisons between conditions were based on GLM procedures using NIRS-SPM [51]. Event epochs within the time series were convolved with the haemodynamic response function provided by SPM8 [91] and fitted to the signals, providing individual ‘beta values’ for each participant across conditions. Group results based on these beta values are rendered on a standard MNI brain template (TD-ICBM152 T1 MRI template [72]) in SPM8 using NIRS-SPM software with WFU PickAtlas [73,74].
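The conversion from optical density to chromophore concentration in fNIRS is usually expressed with the modified Beer–Lambert law, ΔOD(λ) = (ε_OxyHb(λ)·ΔOxyHb + ε_deOxyHb(λ)·ΔdeOxyHb) · d · DPF(λ), which is assumed here as the form of the equation cited above. With three wavelengths and two chromophores the system is over-determined, so the sketch solves it by least squares. The extinction coefficients and differential pathlength factors (DPF) are placeholders, not tabulated values, and HbDiff is taken as OxyHb minus deOxyHb, the common definition in the fNIRS literature rather than a detail confirmed for this pipeline.

```python
import numpy as np

# PLACEHOLDER extinction coefficients, columns [OxyHb, deOxyHb], rows 780/805/830 nm.
# Illustrative values only (arbitrary units); use tabulated coefficients for real data.
EPSILON = np.array([
    [0.7, 1.1],  # 780 nm: deOxyHb absorbs more than OxyHb
    [0.8, 0.8],  # 805 nm: near the isosbestic point
    [1.0, 0.7],  # 830 nm: OxyHb absorbs more than deOxyHb
])
DPF = np.array([6.0, 6.0, 6.0])  # placeholder differential pathlength factors
DISTANCE_CM = 3.0                # nominal emitter-detector distance


def mbll(delta_od, epsilon=EPSILON, dpf=DPF, distance_cm=DISTANCE_CM):
    """Invert the modified Beer-Lambert law.

    delta_od has shape (n_wavelengths, n_samples). Returns (d_oxy, d_deoxy),
    each of shape (n_samples,), from a least-squares fit because three
    wavelengths over-determine two chromophores.
    """
    a = epsilon * (distance_cm * dpf)[:, None]            # (3, 2) system matrix
    conc, *_ = np.linalg.lstsq(a, delta_od, rcond=None)   # (2, n_samples)
    return conc[0], conc[1]


def hb_diff(d_oxy, d_deoxy):
    """HbDiff, assumed here to be OxyHb minus deOxyHb."""
    return d_oxy - d_deoxy


# Round trip on synthetic data: 30 s at 30 Hz with opposite-signed changes.
t = np.arange(0, 30, 1 / 30)
oxy_true = 0.01 * np.sin(2 * np.pi * t / 30)
deoxy_true = -0.3 * oxy_true
forward = EPSILON * (DISTANCE_CM * DPF)[:, None]
delta_od = forward @ np.vstack([oxy_true, deoxy_true])
d_oxy, d_deoxy = mbll(delta_od)
print(np.allclose(d_oxy, oxy_true), np.allclose(d_deoxy, deoxy_true))
```

Because task-related OxyHb and deOxyHb changes typically have opposite signs, their difference adds the two responses, which is one motivation for an HbDiff-type signal.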

(j) . General linear model analysis

The primary GLM analysis consisted of fitting four model regressors (referred to as covariates) to the recorded data. Each 30 s block comprised 15 s of task, either movie viewing or face viewing (depending upon the condition), and 15 s of rest. During the 15 s task epochs, visual stimuli were presented to both participants: the Movie Watcher viewed movie clips on a small LCD monitor (figure 1c), and the smart glass was transparent so that the Face Watcher could observe the face of the Movie Watcher. For each movie type, the onsets and durations were used to construct a square-wave block design model, and the three movie types served as the first three covariates. The fourth covariate (referred to as Intensity) was a modulated block design created specifically to test how the Face Watcher's neural responses were modulated by either the affective ratings or the facial AUs of the Movie Watcher.
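The block design and the parametric ('Intensity') covariate described above can be illustrated with a small GLM sketch in Python (NumPy and SciPy). The sketch rests on several assumptions:

- an SPM-style canonical double-gamma haemodynamic response (a peak gamma with shape 6, an undershoot gamma with shape 16, undershoot ratio 1/6);
- mean-centring of the modulator values;
- ordinary least-squares fitting.

It omits the detrending and noise modelling performed by NIRS-SPM. Function names, onsets and modulator values are hypothetical.

```python
import numpy as np
from scipy.stats import gamma


def canonical_hrf(dt, length=32.0):
    """Double-gamma HRF in the style of SPM's canonical response (assumption)."""
    t = np.arange(0.0, length, dt)
    h = gamma.pdf(t, 6) - gamma.pdf(t, 16) / 6.0
    return h / h.sum()


def block_regressor(n_samples, fs, onsets, duration, amplitudes=None):
    """Square wave (optionally with per-block amplitudes) sampled at fs Hz."""
    x = np.zeros(n_samples)
    if amplitudes is None:
        amplitudes = np.ones(len(onsets))
    for onset, amp in zip(onsets, amplitudes):
        start = int(round(onset * fs))
        stop = min(n_samples, int(round((onset + duration) * fs)))
        x[start:stop] = amp
    return x


def build_design(n_samples, fs, onsets_by_type, block_dur, block_onsets, modulator):
    """Three movie-type covariates, one mean-centred modulated covariate and a constant."""
    hrf = canonical_hrf(1.0 / fs)
    cols = [block_regressor(n_samples, fs, on, block_dur) for on in onsets_by_type]
    mod = np.asarray(modulator, dtype=float)
    cols.append(block_regressor(n_samples, fs, block_onsets, block_dur, mod - mod.mean()))
    X = np.column_stack([np.convolve(c, hrf)[:n_samples] for c in cols])
    return np.column_stack([X, np.ones(n_samples)])


def fit_betas(y, X):
    """Ordinary least-squares beta estimates for one channel."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta


# Usage: six 30 s blocks (15 s task + 15 s rest) sampled at 30 Hz.
fs, block_dur = 30.0, 15.0
block_onsets = [0, 30, 60, 90, 120, 150]
onsets_by_type = [[0, 90], [30, 120], [60, 150]]   # hypothetical movie-type order
modulator = [2.0, -1.0, 0.5, 1.0, 3.0, -2.0]       # hypothetical per-block values
n = int(180 * fs)
X = build_design(n, fs, onsets_by_type, block_dur, block_onsets, modulator)
rng = np.random.default_rng(0)
y = X @ np.array([1.0, 0.5, -0.5, 0.8, 0.0]) + 0.1 * rng.normal(size=n)
print(fit_betas(y, X))   # the fourth value estimates the 'Intensity' effect
```

The example's modulator values vary from block to block within each movie type, so the fourth column is not a linear combination of the first three. A modulator that took a single value per movie type would be collinear with the three type regressors in a single-run fit like this one.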

3. Results

(a) . Affective ratings

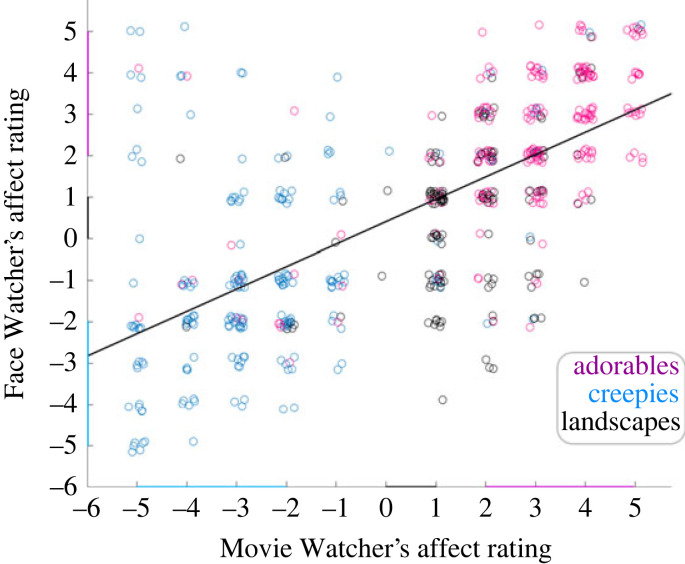

Average emotional ratings are represented on the scatterplot in figure 3, where the Movie Watchers' ratings of affect valence and intensity (x-axis) are plotted against the Face Watchers' ratings (y-axis) for all three movie types: red, black and blue circles represent ‘adorables’, ‘neutrals’ and ‘creepies’, respectively. This graphical illustration indicates that ratings were generally matched within each dyad for both intensity and valence. In particular, expressions associated with the ‘adorable’ movies (red) were rated higher than those associated with the ‘landscapes’ (black). Ratings for the ‘creepy’ movies tended to be more variable because a ‘cringe’ expression (negative valence) of the Movie Watcher in some cases elicited a jovial response (positive valence) from the Face Watcher. Overall, evidence for emotional contagion is provided by the average correlation between the partners' ratings across all movie types (r = 0.67 ± 0.04 s.e.m.).
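The dyad-level summary reported above computes one correlation per dyad and then the mean and standard error across dyads. It can be sketched as follows. The sketch assumes that each partner contributes one numeric affect score per block. How valence and intensity are combined into that score is not specified here, so the dyad labels and ratings below are hypothetical.

```python
import numpy as np


def pearson_r(x, y):
    """Pearson correlation between two equal-length rating sequences."""
    return float(np.corrcoef(x, y)[0, 1])


def mean_and_sem(values):
    """Mean and standard error of the mean (sample SD / sqrt(n))."""
    v = np.asarray(values, dtype=float)
    return float(v.mean()), float(v.std(ddof=1) / np.sqrt(v.size))


# Hypothetical per-block affect scores for three dyads: (Movie Watcher, Face Watcher).
dyads = {
    "dyad_01": ([3, -2, 1, 4, -3, 0], [2, -1, 1, 3, -1, 0]),
    "dyad_02": ([1, 2, -3, 0, 4, -1], [0, 2, -1, 1, 3, -2]),
    "dyad_03": ([-2, 3, 0, 1, -1, 2], [-1, 1, 1, 2, 0, 1]),
}
per_dyad_r = [pearson_r(mw, fw) for mw, fw in dyads.values()]
mean_r, sem_r = mean_and_sem(per_dyad_r)
print(f"r = {mean_r:.2f} ± {sem_r:.2f} s.e.m.")
```

This mirrors the simple averaging of correlation coefficients described in the text. Averaging Fisher z-transformed coefficients is a common alternative.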

Figure 3.

Scatterplot of the Movie Watchers' and Face Watchers' emotional affect based on ratings of valence and intensity. Red: ‘adorables’, blue: ‘creepies’ and black: ‘landscape’ movies. This scatterplot illustrates all within- and across-subject observations. For quantitative purposes, each dyad was analysed separately, and the mean correlation across all dyads was r = 0.67 ± 0.04 s.e.m. The correlation between the two ratings is consistent with emotional contagion. That is, the emotion reported by the Movie Watcher tended to be conveyed to the Face Watcher through the Movie Watcher's facial expression.

(b) . Facial classifications

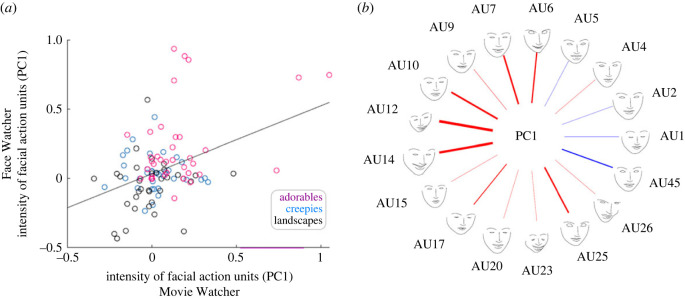

The facial AUs were acquired in real time, simultaneously for both partners, during the task (transparent glass) epochs. Evidence for facial mimicry is provided by the correlation between the PC1 scores calculated for each participant and movie type, shown in figure 4a, where the x- and y-axes represent the PC1 facial classifications of the two dyadic partners. The correlation between the two partners across movie types (r = 0.36 ± 0.11 s.e.m.) is taken as an objective measure of facial movement mimicry from the Movie Watcher to the Face Watcher. Together, these findings are consistent with the hypothesis that facial mimicry occurs during the conveyance of affective perceptions [57,92,93].

Figure 4.

(a) Scatterplot of the Movie Watcher's and Face Watcher's PC1 facial AU scores: Movie Watcher on the x-axis and Face Watcher on the y-axis. The scatterplot illustrates the relationship between the partners' facial AUs for each of the movie types (red, ‘adorables’; blue, ‘creepies’; and black, ‘landscapes’). All 17 AUs are included in the dataset and are represented for each movie type and all participants. Quantification of the relationship between the expresser (Movie Watcher) and the responder (Face Watcher) is based on the average of the individual correlations for each dyad (r = 0.36 ± 0.11 s.e.m.). Findings are consistent with the hypothesis of facial mimicry (sometimes referred to as sensorimotor simulation [1]). That is, the expression on the face of the Movie Watcher showed some tendency to be automatically replicated on the face of the Face Watcher. (b) Relative contributions of each AU to PC1. The AUs are named on the outer circle. The thickness of each line represents the magnitude of the AU's coefficient on PC1, and the colour of the line represents the sign of the coefficient: positive (red) or negative (blue). PC1 explains 37 ± 1.6% (s.e.m.) of the total variance.

Figure 4b illustrates the relative contribution (line thickness) of each facial AU to PC1 and the sign of that contribution (red, positive; blue, negative). As expected from prior reports, AU12 (lip corner puller; zygomaticus major) and AU6 (cheek raiser; orbicularis oculi) are relevant to positive facial reactions, whereas AU4 (brow lowerer), associated with frowning, and AU5 (upper lid raiser) are less relevant in this application [80,94]. Further descriptions of these specific AUs have been reported previously [78]. PC1 accounted for 37 ± 1.6% (s.e.m.) of the total variance.

(c) . Neural responses: how does the expressive face of the Movie Watcher modulate the brain of the Face Watcher?

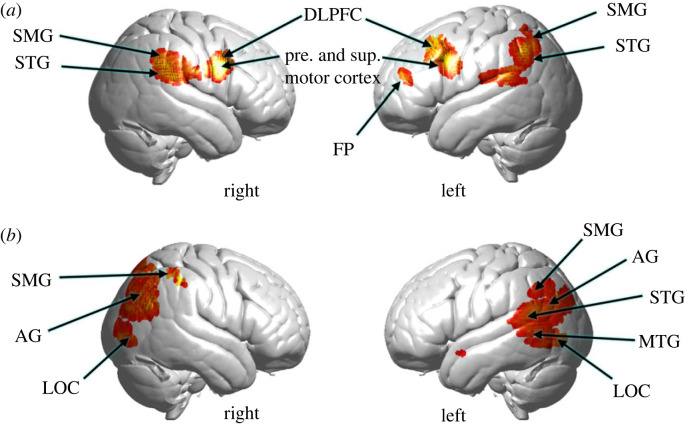

For each dyad, the PC1 of the Movie Watcher's facial AUs was applied as a modulator of the Face Watcher's neural responses. Figure 5a shows increased activity in the right temporal–parietal junction, including the superior temporal gyrus (STG) and supramarginal gyrus (SMG), consistent with prior findings of interactive face gaze [32–34]. It also shows increased activity in bilateral pre- and supplementary motor cortex, consistent with an additional motor response related to face-processing. See electronic supplementary material, table S3.

Figure 5.

(a) The Movie Watcher's facial expressions, as represented by the PC1 of facial AUs, are applied as a modulator (covariate) of the Face Watcher's neural activity. Neural activity was measured as fNIRS signals (combined oxyhaemoglobin (OxyHb) and deoxyhaemoglobin (deOxyHb) signals) acquired while the Face Watcher viewed the emotive face of the Movie Watcher. See electronic supplementary material, table S3 for cluster details. (b) The Movie Watcher's affect valence and intensity ratings are applied as a modulator of the Face Watcher's neural activity, measured as fNIRS signals (combined OxyHb and deOxyHb signals) acquired while the Face Watcher viewed the emotive face of the Movie Watcher. AG, angular gyrus; DLPFC, dorsolateral prefrontal cortex; FP, frontal pole; LOC, lateral occipital cortex; MTG, middle temporal gyrus; SMG, supramarginal gyrus; STG, superior temporal gyrus. See electronic supplementary material, table S4 for cluster details.

(d) . Neural responses: how does the affect rating of the Movie Watcher modulate the brain of the Face Watcher?

The Movie Watcher's average affect intensity and valence ratings for each movie type were employed as a covariate on the neural responses of the Face Watcher, using the GLM described above. Right temporal–parietal junction regions (figure 5b), including SMG, angular gyrus (AG) and lateral occipital cortex (LOC), are observed adjacent and posterior to the areas responsive to the facial AU modulator shown in figure 5a. These regions have been implicated in facial and social processes, including theory of mind [95]. Activity is also observed in left LOC and in the inferior, middle and superior temporal gyri, in addition to the angular and supramarginal gyri. This pattern suggests a robust bilateral neural response during the conveyance of affect from the face of a dyadic partner. See electronic supplementary material, table S4.

4. Discussion

(a) . From the single brain to the dyad: a theoretical shift

Within a dyadic model, a single human brain is only one half of the fundamental social unit. The emerging development of two-brain functional imaging systems advances a paradigm shift from single brains to dyads. As a result, a new set of principles of dyadic function that underlie the neural correlates of human cognition, perception and emotion comes into focus, suggesting an important future direction. Although emotional contagion is recognized as a foundational feature of biological social interactions, its underlying mechanisms are not understood, partly owing to prior technical roadblocks to the investigation of dyadic behaviours. It has been suggested that spontaneous facial mimicry may provide an interactive mechanism for the transfer of emotion from one person to another. This putative mechanism was therefore a focus of this investigation of dyadic exchanges.

We apply a dyadic neuroimaging approach enabled by optical imaging and multi-modal techniques to simultaneously investigate the mechanisms of facial mimicry and emotional contagion. One participant, the ‘Movie Watcher’, generated facial expressions while watching emotionally provocative silent videos, and the other partner, the ‘Face Watcher’, observed the face of the Movie Watcher. Ratings of the emotional experience (dial rotation) were acquired simultaneously for both participants at the end of each 15 s block of similar videos (each 3–4 s in duration). Both affect ratings and facial classifications were applied as modulators of face-processing neural data to highlight the neural systems that were active during live viewing of facial expressions.

The videos viewed by the Movie Watcher included ‘neutrals’ featuring landscapes, ‘adorables’ featuring animal antics, and ‘creepies’ featuring spiders, worms and states of decay. They were intended to induce a variety of facial expressions and emotional responses ranging from positive to negative, although no specific affect was targeted. The Movie Watcher rated their emotional valence and intensity following each 15 s video epoch. The Face Watcher rated their affect based on the expressions on the Movie Watcher's face. Within dyads, the correlation of affect ratings between the Movie Watcher and Face Watcher indicated that affect was transmitted by facial expressions, and it was taken as a measure of ‘emotional contagion’. The correlation between the first principal components (PC1) of all facial AUs of the Movie Watcher's and Face Watcher's faces was taken as a measure of facial mimicry. As such, the effects of facial mimicry and emotional contagion were both acquired simultaneously in the live interactive paradigm.

A primary aim of this investigation was to isolate the neural systems that underlie each of these dyadic functions. Specifically, we tested two alternative hypotheses related to neural organization. The co-occurrence of facial mimicry and emotional contagion suggests that the two functions may rely on a common neural system. Alternatively, the complexity of the contributing motor, social and visual functions suggests that the underlying neural systems may be separate.

In the case of facial mimicry (figure 5a), bilateral motor regions (pre- and supplementary motor cortex) and dorsolateral prefrontal cortex (DLPFC) are prominently featured, providing face validity for the expected motor finding. The additional components, supramarginal gyrus (SMG) and superior temporal gyrus (STG), are also well-known components of the live interactive face system [32–34,96]. Together, these findings suggest that these neural systems support facial mimicry during the exchange of emotional information.

In the case of emotional contagion under the same experimental conditions (figure 5b), clusters of neural activity include:

- bilateral lateral occipital cortex (LOC), a ventral stream face-processing component;
- angular gyrus (AG), a social and interactive face-processing region;
- the dorsal parietal region of SMG, previously observed in live face-processing tasks [34].

The separation of these neural systems suggests that the processes of emotional contagion are distinct from those engaged during simultaneous facial mimicry. Comparison of the activity in figure 5a,b indicates that these regions are not shared between facial mimicry and emotional contagion. We note that this neural finding is also consistent with the lower correlation for facial mimicry (r = 0.36 ± 0.11 s.e.m.) compared with the correlation of emotional ratings (r = 0.67 ± 0.04 s.e.m.). This difference is compatible with the suggestion that emotional contagion and the physical mirroring of emotional responses are processed by separate pathways. These findings motivate future investigation of the functional connections and interactive properties of these neural components.

(b) . Limitations and advantages

Optical imaging of human brain function using fNIRS is limited by the shallow signal source, which is 1.5–2.0 cm from the surface. This restricts interrogation of neural systems to superficial cortex. Thus, theoretical frameworks emerging from haemodynamic dual-brain techniques using fNIRS represent only a subset of the working brain. However, this limitation is balanced by the advantage of imaging live social interactions, which cannot be imaged by conventional magnetic resonance imaging owing to its single-person limitation. fNIRS technology is foundational for novel investigations of live social interactions. Live two-person interactive neuroscience extends the single-subject knowledge base of social behaviour and its neural correlates to an emerging knowledge base related to dyadic behavioural functions. Optical imaging also highlights the supporting role of complementary multi-modal approaches, because these approaches are not encumbered by the physical constraints of a scanner or a high magnetic field. For example, the simultaneous acquisition of behavioural information, including eye-tracking, facial classification and subjective reports, adds components that enrich neural models of live and spontaneous facial processing. However, these novel applications raise new standards for methodological validation. In particular, automated classification of facial AUs (expressions) is still under development. Although state-of-the-art technology has been applied here, future improvements in these methods may increase the precision of these findings. A future direction for dyadic studies of emotional contagion and facial mimicry is cross-brain neural coupling, an emerging cornerstone of interactive neuroscience. Although this lies beyond the scope of the present initial investigation, future studies using these techniques can be designed to investigate neural coupling, functional connectivity and related neural mechanisms that underlie the dyadic interactions involved in emotional contagion and facial mimicry.

Ethics

Ethical approval was obtained from the Yale University Human Research Protection Program (HIC no. 1501015178, 'Neural mechanisms of the social brain'). Informed consent was obtained from each participant by the research team.

Data accessibility

The datasets analysed for this study are available from the Dryad Digital Repository: https://doi.org/10.5061/dryad.dz08kps16 [97] and in the electronic supplementary material [98].

Authors' contributions

J.H.: conceptualization, data curation, formal analysis, funding acquisition, investigation, methodology, project administration, resources, software, supervision, validation, visualization, writing—original draft, writing—review and editing; X.Z.: data curation, formal analysis, investigation, methodology, software, supervision, validation, visualization, writing—review and editing; J.A.N.: data curation, formal analysis, investigation, methodology, software, supervision, validation, visualization, writing—review and editing; A.B.: data curation, investigation, visualization, writing—review and editing.

All authors gave final approval for publication and agreed to be held accountable for the work performed herein.

Conflict of interest declaration

The authors declare that this research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Funding and acknowledgements

This research was partially supported by the National Institute of Mental Health of the National Institutes of Health under award numbers R01MH107513 (PI J.H.) and 1R01MH119430 (PI J.H.). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

References

- 1.Wood A, Rychlowska M, Korb S, Niedenthal P. 2016. Fashioning the face: sensorimotor simulation contributes to facial expression recognition. Trends Cogn. Sci. 20, 227-240. ( 10.1016/j.tics.2015.12.010) [DOI] [PubMed] [Google Scholar]

- 2.Cañigueral R, Krishnan-Barman S, Hamilton AFC. 2022. Social signalling as a framework for second-person neuroscience. Psychon. Bull. Rev. 29, 2083–2095. ( 10.3758/s13423-022-02103-2) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Heyes C. 2011. Automatic imitation. Psychol. Bull. 137, 463-483. ( 10.1037/a0022288) [DOI] [PubMed] [Google Scholar]

- 4.Hale J, Ward JA, Buccheri F, Oliver D, Hamilton AFC. 2020. Are you on my wavelength? Interpersonal coordination in dyadic conversations. J. Nonverbal Behav. 44, 63-83. ( 10.1007/s10919-019-00320-3) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Stephens GJ, Silbert LJ, Hasson U. 2010. Speaker–listener neural coupling underlies successful communication. Proc. Natl Acad. Sci. USA 107, 14 425-14 430. ( 10.1073/pnas.1008662107) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Wilson M, Wilson TP. 2005. An oscillator model of the timing of turn-taking. Psychon. Bull. Rev. 12, 957-968. ( 10.3758/BF03206432) [DOI] [PubMed] [Google Scholar]

- 7.Wang Y, Hamilton AFC. 2013. Understanding the role of the ‘self’ in the social priming of mimicry. PLoS ONE 8, e60249. ( 10.1371/journal.pone.0060249) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Hale J, Hamilton AFC. 2016. Testing the relationship between mimicry, trust and rapport in virtual reality conversations. Scient. Rep. 6, 35295. ( 10.1038/srep35295) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Damasio AR. 1996. The somatic marker hypothesis and the possible functions of the prefrontal cortex. Phil. Trans. R. Soc. Lond. B 351, 1413-1420. ( 10.1098/rstb.1996.0125) [DOI] [PubMed] [Google Scholar]

- 10.Niedenthal PM, Maringer M. 2009. Embodied emotion considered. Emotion Rev. 1, 122-128. ( 10.1177/1754073908100437) [DOI] [Google Scholar]

- 11.Niedenthal PM, Barsalou LW, Winkielman P, Krauth-Gruber S, Ric F. 2005. Embodiment in attitudes, social perception, and emotion. Pers. Social Psychol. Rev. 9, 184-211. ( 10.1207/s15327957pspr0903_1) [DOI] [PubMed] [Google Scholar]

- 12.Winkielman P, Niedenthal P, Wielgosz J, Eelen J, Kavanagh LC. 2015. Embodiment of cognition and emotion. In Attitudes and social cognition. APA handbook of personality and social psychology, vol. 1 (eds M Mikulincer, PR Shaver, E Borgida, JA Bargh), pp. 151–175. Washington, DC: American Psychological Association. ( 10.1037/14341-004) [DOI]

- 13.Oberman LM, Winkielman P, Ramachandran VS. 2007. Face to face: blocking facial mimicry can selectively impair recognition of emotional expressions. Social Neurosci. 2, 167-178. ( 10.1080/17470910701391943) [DOI] [PubMed] [Google Scholar]

- 14.Birch-Hurst K, Rychlowska M, Lewis MB, Vanderwert RE. 2022. Altering facial movements abolishes neural mirroring of facial expressions. Cogn. Affect. Behav. Neurosci. 22, 316-327. ( 10.3758/s13415-021-00956-z) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Hennenlotter A, Dresel C, Castrop F, Ceballos-Baumann AO, Wohlschläger AM, Haslinger B. 2009. The link between facial feedback and neural activity within central circuitries of emotion – new insights from botulinum toxin-induced denervation of frown muscles. Cereb. Cortex 19, 537-542. ( 10.1093/cercor/bhn104) [DOI] [PubMed] [Google Scholar]

- 16.Adolphs R. 2009. The social brain: neural basis of social knowledge. Annu. Rev. Psychol. 60, 693-716. ( 10.1146/annurev.psych.60.110707.163514) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Hari R, Henriksson L, Malinen S, Parkkonen L. 2015. Centrality of social interaction in human brain function. Neuron 88, 181-193. ( 10.1016/j.neuron.2015.09.022) [DOI] [PubMed] [Google Scholar]

- 18.Emery NJ. 2000. The eyes have it: the neuroethology, function and evolution of social gaze. Neurosci. Biobehav. Rev. 24, 581-604. ( 10.1016/S0149-7634(00)00025-7) [DOI] [PubMed] [Google Scholar]

- 19.Gobbini MI, Haxby JV. 2007. Neural systems for recognition of familiar faces. Neuropsychologia 45, 32-41. ( 10.1016/j.neuropsychologia.2006.04.015) [DOI] [PubMed] [Google Scholar]

- 20.Senju A, Johnson MH. 2009. The eye contact effect: mechanisms and development. Trends Cogn. Sci. 13, 127-134. ( 10.1016/j.tics.2008.11.009) [DOI] [PubMed] [Google Scholar]

- 21.Hoffman EA, Haxby JV. 2000. Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nat. Neurosci. 3, 80-84. ( 10.1038/71152) [DOI] [PubMed] [Google Scholar]

- 22.Mccarthy G, Puce A, Gore JC, Allison T. 1997. Face-specific processing in the human fusiform gyrus. J. Cogn. Neurosci. 9, 605-610. ( 10.1162/jocn.1997.9.5.605) [DOI] [PubMed] [Google Scholar]

- 23.Haxby JV, Hoffman EA, Gobbini MI. 2000. The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223-233. ( 10.1016/S1364-6613(00)01482-0) [DOI] [PubMed] [Google Scholar]

- 24.Ishai A, Schmidt CF, Boesiger P. 2005. Face perception is mediated by a distributed cortical network. Brain Res. Bull. 67, 87-93. ( 10.1016/j.brainresbull.2005.05.027) [DOI] [PubMed] [Google Scholar]

- 25.Kanwisher N, Mcdermott J, Chun MM. 1997. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J. Neurosci. 17, 4302-4311. ( 10.1523/JNEUROSCI.17-11-04302.1997) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Pitcher D, Dilks DD, Saxe RR, Triantafyllou C, Kanwisher N. 2011. Differential selectivity for dynamic versus static information in face-selective cortical regions. Neuroimage 56, 2356-2363. ( 10.1016/j.neuroimage.2011.03.067) [DOI] [PubMed] [Google Scholar]

- 27.Powell LJ, Kosakowski HL, Saxe R. 2018. Social origins of cortical face areas. Trends Cogn. Sci. 22, 752-763. ( 10.1016/j.tics.2018.06.009) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Carter RM, Huettel SA. 2013. A nexus model of the temporal–parietal junction. Trends Cogn. Sci. 17, 328-336. ( 10.1016/j.tics.2013.05.007) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Saxe R, Moran JM, Scholz J, Gabrieli J. 2006. Overlapping and non-overlapping brain regions for theory of mind and self reflection in individual subjects. Social Cogn. Affect. Neurosci. 1, 229-234. ( 10.1093/scan/nsl034) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Calder AJ, Lawrence AD, Keane J, Scott SK, Owen AM, Christoffels I, Young AW. 2002. Reading the mind from eye gaze. Neuropsychologia 40, 1129-1138. ( 10.1016/S0028-3932(02)00008-8) [DOI] [PubMed] [Google Scholar]

- 31.Risko EF, Richardson DC, Kingstone A. 2016. Breaking the fourth wall of cognitive science: real-world social attention and the dual function of gaze. Curr. Dir. Psychol. Sci. 25, 70-74. ( 10.1177/0963721415617806) [DOI] [Google Scholar]

- 32.Noah JA, Zhang X, Dravida S, Ono Y, Naples A, Mcpartland JC, Hirsch J. 2020. Real-time eye-to-eye contact is associated with cross-brain neural coupling in angular gyrus. Front. Hum. Neurosci. 14, 19. ( 10.3389/fnhum.2020.00019) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Kelley MS, Noah JA, Zhang X, Scassellati B, Hirsch J. 2021. Comparison of human social brain activity during eye-contact with another human and a humanoid robot. Front. Robot. AI 7, 599581. ( 10.3389/frobt.2020.599581) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Hirsch J, Zhang X, Noah JA, Dravida S, Naples A, Tiede M, Wolf JM, Mcpartland JC. 2022. Neural correlates of eye contact and social function in autism spectrum disorder. PLoS ONE 17, e0265798. ( 10.1371/journal.pone.0265798) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Descorbeth O, Zhang X, Noah JA, Hirsch J. 2020. Neural processes for live pro-social dialogue between dyads with socioeconomic disparity. Social Cogn. Affect. Neurosci. 15, 875-887. ( 10.1093/scan/nsaa120) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Hirsch J, Tiede M, Zhang X, Noah JA, Salama-Manteau A, Biriotti M. 2021. Interpersonal agreement and disagreement during face-to-face dialogue: an fNIRS investigation. Front. Hum. Neurosci. 14, 606397. ( 10.3389/fnhum.2020.606397) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Bolis D, Schilbach L. 2018. Observing and participating in social interactions: action perception and action control across the autistic spectrum. Dev. Cogn. Neurosci. 29, 168-175. ( 10.1016/j.dcn.2017.01.009) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Wheatley T, Boncz A, Toni I, Stolk A. 2019. Beyond the isolated brain: the promise and challenge of interacting minds. Neuron 103, 186-188. ( 10.1016/j.neuron.2019.05.009) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Hasson U, Frith CD. 2016. Mirroring and beyond: coupled dynamics as a generalized framework for modelling social interactions. Phil. Trans. R. Soc. B 371, 20150366. ( 10.1098/rstb.2015.0366) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Hasson U, Ghazanfar AA, Galantucci B, Garrod S, Keysers C. 2012. Brain-to-brain coupling: a mechanism for creating and sharing a social world. Trends Cogn. Sci. 16, 114-121. ( 10.1016/j.tics.2011.12.007) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Redcay E, Schilbach L. 2019. Using second-person neuroscience to elucidate the mechanisms of social interaction. Nat. Rev. Neurosci. 20, 495-505. ( 10.1038/s41583-019-0179-4) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Schilbach L. 2010. A second-person approach to other minds. Nat. Rev. Neurosci. 11, 449. ( 10.1038/nrn2805-c1) [DOI] [PubMed] [Google Scholar]

- 43.Schilbach L, Timmermans B, Reddy V, Costall A, Bente G, Schlicht T, Vogeley K. 2013. Toward a second-person neuroscience. Behav. Brain Sci. 36, 393-414. ( 10.1017/S0140525X12000660) [DOI] [PubMed] [Google Scholar]

- 44.Villringer A, Chance B. 1997. Non-invasive optical spectroscopy and imaging of human brain function. Trends Neurosci. 20, 435-442. ( 10.1016/S0166-2236(97)01132-6) [DOI] [PubMed] [Google Scholar]

- 45.Pinti P, Aichelburg C, Gilbert S, Hamilton A, Hirsch J, Burgess P, Tachtsidis I. 2018. A review on the use of wearable functional near-infrared spectroscopy in naturalistic environments. Jap. Psychol. Res. 60, 347-373. ( 10.1111/jpr.12206) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Pinti P, Tachtsidis I, Hamilton A, Hirsch J, Aichelburg C, Gilbert S, Burgess PW. 2020. The present and future use of functional near-infrared spectroscopy (fNIRS) for cognitive neuroscience. Ann. N Y Acad. Sci. 1464, 5. ( 10.1111/nyas.13948) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Boas DA, Elwell CE, Ferrari M, Taga G. 2014. Twenty years of functional near-infrared spectroscopy: introduction for the special issue. Neuroimage 85, 1-5. ( 10.1016/j.neuroimage.2013.11.033) [DOI] [PubMed] [Google Scholar]

- 48.Ferrari M, Quaresima V. 2012. A brief review on the history of human functional near-infrared spectroscopy (fNIRS) development and fields of application. Neuroimage 63, 921-935. ( 10.1016/j.neuroimage.2012.03.049) [DOI] [PubMed] [Google Scholar]

- 49.Scholkmann F, Holper L, Wolf U, Wolf M. 2013. A new methodical approach in neuroscience: assessing inter-personal brain coupling using functional near-infrared imaging (fNIRI) hyperscanning. Front. Hum. Neurosci. 7, 813. ( 10.3389/fnhum.2013.00813) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Ono Y, Zhang X, Noah JA, Dravida S, Hirsch J. 2022. Bidirectional connectivity between Broca's area and Wernicke's area during interactive verbal communication. Brain Connect. 12, 210-222. ( 10.1089/brain.2020.0790) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Ye J, Tak S, Jang K, Jung J, Jang J. 2009. NIRS-SPM: statistical parametric mapping for near-infrared spectroscopy. Neuroimage 44, 428-447. ( 10.1016/j.neuroimage.2008.08.036) [DOI] [PubMed] [Google Scholar]

- 52.Arcaro MJ, Livingstone MS. 2021. On the relationship between maps and domains in inferotemporal cortex. Nat. Rev. Neurosci. 22, 573-583. ( 10.1038/s41583-021-00490-4) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Di Paolo EA, De Jaegher H. 2012. The interactive brain hypothesis. Front. Hum. Neurosci. 6, 163. ( 10.3389/fnhum.2012.00163) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Wang Y, Hamilton AFC. 2012. Social top-down response modulation (STORM): a model of the control of mimicry in social interaction. Front. Hum. Neurosci. 6, 153. ( 10.3389/fnhum.2012.00153) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Korb S, Goldman R, Davidson RJ, Niedenthal PM. 2019. Increased medial prefrontal cortex and decreased zygomaticus activation in response to disliked smiles suggest top-down inhibition of facial mimicry. Front. Psychol. 10, 1715. ( 10.3389/fpsyg.2019.01715) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Noah JA, Dravida S, Zhang X, Yahil S, Hirsch J. 2017. Neural correlates of conflict between gestures and words: a domain-specific role for a temporal-parietal complex. PLoS ONE 12, e0173525. ( 10.1371/journal.pone.0173525) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Lee TW, Josephs O, Dolan RJ, Critchley HD. 2006. Imitating expressions: emotion-specific neural substrates in facial mimicry. Social Cogn. Affect. Neurosci. 1, 122-135. ( 10.1093/scan/nsl012) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Lee TW, Dolan RJ, Critchley HD. 2008. Controlling emotional expression: behavioral and neural correlates of nonimitative emotional responses. Cereb. Cortex 18, 104-113. ( 10.1093/cercor/bhm035) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59. Hamilton AFC. 2008. Emulation and mimicry for social interaction: a theoretical approach to imitation in autism. Q. J. Exp. Psychol. 61, 101-115. (doi:10.1080/17470210701508798)

- 60. Zhang X, Noah JA, Hirsch J. 2016. Separation of the global and local components in functional near-infrared spectroscopy signals using principal component spatial filtering. Neurophotonics 3, 015004. (doi:10.1117/1.NPh.3.1.015004)

- 61. Noah JA, Ono Y, Nomoto Y, Shimada S, Tachibana A, Zhang X, Bronner S, Hirsch J. 2015. fMRI validation of fNIRS measurements during a naturalistic task. J. Visualized Exp. 100, e52116. (doi:10.3791/52116)

- 62. Zhang X, Noah JA, Dravida S, Hirsch J. 2017. Signal processing of functional NIRS data acquired during overt speaking. Neurophotonics 4, 041409. (doi:10.1117/1.NPh.4.4.041409)

- 63. Dravida S, Noah JA, Zhang X, Hirsch J. 2018. Comparison of oxyhemoglobin and deoxyhemoglobin signal reliability with and without global mean removal for digit manipulation motor tasks. Neurophotonics 5, 011006. (doi:10.1117/1.NPh.5.1.011006)

- 64. Piva M, Zhang X, Noah JA, Chang SWC, Hirsch J. 2017. Distributed neural activity patterns during human-to-human competition. Front. Hum. Neurosci. 11, 571. (doi:10.3389/fnhum.2017.00571)

- 65. Ono Y, Nomoto Y, Tanaka S, Sato K, Shimada S, Tachibana A, Bronner S, Noah JA. 2014. Frontotemporal oxyhemoglobin dynamics predict performance accuracy of dance simulation gameplay: temporal characteristics of top-down and bottom-up cortical activities. Neuroimage 85, 461-470. (doi:10.1016/j.neuroimage.2013.05.071)

- 66. Tachibana A, Noah JA, Bronner S, Ono Y, Onozuka M. 2011. Parietal and temporal activity during a multimodal dance video game: an fNIRS study. Neurosci. Lett. 503, 125-130. (doi:10.1016/j.neulet.2011.08.023)

- 67. Eggebrecht AT, White BR, Ferradal SL, Chen C, Zhan Y, Snyder AZ, Dehghani H, Culver JP. 2012. A quantitative spatial comparison of high-density diffuse optical tomography and fMRI cortical mapping. Neuroimage 61, 1120-1128. (doi:10.1016/j.neuroimage.2012.01.124)

- 68. Ferradal SL, Eggebrecht AT, Hassanpour M, Snyder AZ, Culver JP. 2014. Atlas-based head modeling and spatial normalization for high-density diffuse optical tomography: in vivo validation against fMRI. Neuroimage 85, 117-126. (doi:10.1016/j.neuroimage.2013.03.069)

- 69. Homölle S, Oostenveld R. 2019. Using a structured-light 3D scanner to improve EEG source modeling with more accurate electrode positions. J. Neurosci. Methods 326, 108378. (doi:10.1016/j.jneumeth.2019.108378)

- 70. Okamoto M, Dan I. 2005. Automated cortical projection of head-surface locations for transcranial functional brain mapping. Neuroimage 26, 18-28. (doi:10.1016/j.neuroimage.2005.01.018)

- 71. Singh AK, Okamoto M, Dan H, Jurcak V, Dan I. 2005. Spatial registration of multichannel multi-subject fNIRS data to MNI space without MRI. Neuroimage 27, 842-851. (doi:10.1016/j.neuroimage.2005.05.019)

- 72. Mazziotta J, et al. 2001. A probabilistic atlas and reference system for the human brain: International Consortium for Brain Mapping (ICBM). Phil. Trans. R. Soc. B 356, 1293-1322. (doi:10.1098/rstb.2001.0915)

- 73. Maldjian JA, Laurienti PJ, Kraft RA, Burdette JH. 2003. An automated method for neuroanatomic and cytoarchitectonic atlas-based interrogation of fMRI data sets. Neuroimage 19, 1233-1239. (doi:10.1016/s1053-8119(03)00169-1)

- 74. Maldjian JA, Laurienti PJ, Burdette JH. 2004. Precentral gyrus discrepancy in electronic versions of the Talairach atlas. Neuroimage 21, 450-455. (doi:10.1016/j.neuroimage.2003.09.032)

- 75. de Klerk CCJM, Hamilton AFC, Southgate V. 2018. Eye contact modulates facial mimicry in 4-month-old infants: an EMG and fNIRS study. Cortex 106, 93-103. (doi:10.1016/j.cortex.2018.05.002)

- 76. Wang Y, Hamilton AFC. 2014. Why does gaze enhance mimicry? Placing gaze-mimicry effects in relation to other gaze phenomena. Q. J. Exp. Psychol. 67, 747-762. (doi:10.1080/17470218.2013.828316)

- 77. Wang Y, Ramsey R, Hamilton AFC. 2011. The control of mimicry by eye contact is mediated by medial prefrontal cortex. J. Neurosci. 31, 12 001-12 010. (doi:10.1523/JNEUROSCI.0845-11.2011)

- 78. Baltrušaitis T, Robinson P, Morency LP. 2016. OpenFace: an open source facial behavior analysis toolkit. In Proc. 2016 Inst. Electric. Electron. Engrs (IEEE) Winter Conf. Applications of Computer Vision (WACV), 7–10 March 2016, Lake Placid, NY, 16023491. New York, NY: IEEE. (doi:10.1109/WACV.2016.7477553)

- 79. Ekman P. 1993. Facial expression and emotion. Am. Psychol. 48, 384-392. (doi:10.1037/0003-066X.48.4.384)

- 80. Kartali A, Roglić M, Barjaktarović M, Đurić-Jovičić M, Janković MM. 2018. Real-time algorithms for facial emotion recognition: a comparison of different approaches. In 2018 14th Symp. on Neural Networks and Applications (Neurel), 20-21 Nov. 2018. Belgrade, Serbia.

- 81. Skiendziel T, Rosch AG, Schultheiss OC. 2019. Assessing the convergent validity between the automated emotion recognition software Noldus FaceReader 7 and Facial Action Coding System Scoring. PLoS ONE 14, e0223905. (doi:10.1371/journal.pone.0223905)

- 82. Ekman P, Friesen WV, Hager JC. 2002. Facial action coding system. The manual. Salt Lake City, UT: Research Nexus.

- 83. Binetti N, Roubtsova N, Carlisi C, Cosker D, Viding E, Mareschal I. 2022. Genetic algorithms reveal profound individual differences in emotion recognition. Proc. Natl Acad. Sci. USA 119, e2201380119. (doi:10.1073/pnas.2201380119)

- 84. Hazeki O, Tamura M. 1988. Quantitative analysis of hemoglobin oxygenation state of rat brain in situ by near-infrared spectrophotometry. J. Appl. Physiol. 64, 796-802. (doi:10.1152/jappl.1988.64.2.796)

- 85. Hoshi Y. 2003. Functional near-infrared optical imaging: utility and limitations in human brain mapping. Psychophysiology 40, 511-520. (doi:10.1111/1469-8986.00053)

- 86. Matcher SJ, Elwell CE, Cooper CE, Cope M, Delpy DT. 1995. Performance comparison of several published tissue near-infrared spectroscopy algorithms. Anal. Biochem. 227, 54-68. (doi:10.1006/abio.1995.1252)

- 87. Yücel MA, et al. 2021. Best practices for fNIRS publications. Neurophotonics 8, 012101. (doi:10.1117/1.NPh.8.1.012101)

- 88. Tachtsidis I, Scholkmann F. 2016. False positives and false negatives in functional near-infrared spectroscopy: issues, challenges, and the way forward. Neurophotonics 3, 031405. (doi:10.1117/1.NPh.3.3.031405)

- 89. Noah JA, Zhang XZ, Dravida S, DiCocco C, Suzuki T, Aslin RN, Tachtsidis I, Hirsch J. 2021. Comparison of short-channel separation and spatial domain filtering for removal of non-neural components in functional near-infrared spectroscopy signals. Neurophotonics 8, 015004. (doi:10.1117/1.NPh.8.1.015004)

- 90. Tachtsidis I, Tisdall MM, Leung TS, Pritchard C, Cooper CE, Smith M, Elwell CE. 2009. Relationship between brain tissue haemodynamics, oxygenation and metabolism in the healthy human adult brain during hyperoxia and hypercapnea. Adv. Exp. Med. Biol. 645, 315-320. (doi:10.1007/978-0-387-85998-9_47)

- 91. Penny WD, Friston KJ, Ashburner JT, Kiebel SJ, Nichols TE. 2011. Statistical parametric mapping: the analysis of functional brain images. Amsterdam, The Netherlands: Elsevier.

- 92. Hatfield E, Cacioppo JT, Rapson RL. 1993. Emotional contagion. Curr. Dir. Psychol. Sci. 2, 96-100. (doi:10.1111/1467-8721.ep10770953)

- 93. Wild B, Erb M, Eyb M, Bartels M, Grodd W. 2003. Why are smiles contagious? An fMRI study of the interaction between perception of facial affect and facial movements. Psychiatry Res. 123, 17-36. (doi:10.1016/S0925-4927(03)00006-4)

- 94. Namba S, Sato W, Osumi M, Shimokawa K. 2021. Assessing automated facial action unit detection systems for analyzing cross-domain facial expression databases. Sensors 21, 4222. (doi:10.3390/s21124222)

- 95. Saxe R, Kanwisher N. 2003. People thinking about thinking people: the role of the temporo-parietal junction in ‘theory of mind’. Neuroimage 19, 1835-1842. (doi:10.1016/S1053-8119(03)00230-1)

- 96. Hirsch J, Zhang X, Noah JA, Ono Y. 2017. Frontal temporal and parietal systems synchronize within and across brains during live eye-to-eye contact. Neuroimage 157, 314-330. (doi:10.1016/j.neuroimage.2017.06.018)

- 97. Hirsch J, Zhang X, Noah JA, Bhattacharya A. 2023. Data from: Neural mechanisms for emotional contagion and spontaneous mimicry of live facial expressions. Dryad Digital Repository. (doi:10.5061/dryad.dz08kps16)

- 98. Hirsch J, Zhang X, Noah JA, Bhattacharya A. 2023. Neural mechanisms for emotional contagion and spontaneous mimicry of live facial expressions. Figshare. (doi:10.6084/m9.figshare.c.6412271)

Data Availability Statement

The datasets analysed for this study are available from the Dryad Digital Repository: https://doi.org/10.5061/dryad.dz08kps16 [97] and in the electronic supplementary material [98].