Abstract

Data collected from a validation substudy permit calculation of a bias-adjusted estimate of effect that is expected to equal the estimate that would have been observed had the gold standard measurement been available for the entire study population. In this paper, we develop and apply a framework for adaptive validation to determine when sufficient validation data have been collected to yield a bias-adjusted effect estimate with a prespecified level of precision. Prespecified levels of precision are decided a priori by the investigator, based on the precision of the conventional estimate and allowing for wider confidence intervals that would still be substantively meaningful. We further present an applied example of the use of this method to address exposure misclassification in a study of transmasculine/transfeminine youth and self-harm. Our method provides a novel approach to effective and efficient estimation of classification parameters as validation data accrue, with emphasis on the precision of the bias-adjusted estimate. This method can be applied within any parent epidemiologic study design in which validation data will be collected, and it can be modified to meet alternative criteria given specific study or validation study objectives.

Keywords: epidemiologic methods, quantitative bias analysis, study design, validation substudies

Abbreviations

- CI: confidence interval
- EHR: electronic health record
- ICD-9: International Classification of Diseases, Ninth Revision
- NPV: negative predictive value
- OR: odds ratio
- PPV: positive predictive value
- STRONG: Study of Transition, Outcomes and Gender

Designing epidemiologic studies typically involves calculating the statistical power needed to detect a specific association based on the type of study, the sizes of the comparison groups, the effect size, the risk of the outcome, and the acceptable type I error rate. This approach to planning study size has been criticized because it is rooted in statistical inference that relies on null-hypothesis statistical significance testing (1). Other frameworks for planning study size have been proposed, such as planning based on the desired precision of the estimate of interest or based on available resources (1, 2). A study design based on precision aligns with the goal of many epidemiologic studies: to estimate the magnitude and uncertainty of an underlying effect. Study size based on precision accounts for random error only. Investigators may also want to account for systematic error, such as error due to mismeasurement of the exposure or outcome.

A validation substudy compares an imperfect measurement of a variable with its gold standard measurement, in a subset of the study population. Validation data allow analysts to quantify the degree to which the imperfect measurement approximates the gold standard, and that information can be used to inform calculation of a bias-adjusted estimate of effect. Guidance on optimal sampling of participants for a validation substudy pertains primarily to scenarios in which the complete study population has been enrolled and follow-up has been completed (3–7). Little guidance has been offered for how to conduct a validation substudy that is focused on the precision of the bias-adjusted effect estimate (8). Given the cost and resources required to implement a validation study, researchers might be more inclined to pursue this work if they had guidance regarding the scope of validation efforts needed to meet the objectives of validation.

We previously developed an adaptive approach to validation substudy design to monitor validation data as they accrue until prespecified stopping criteria have been met (9, 10). In our prior work, we illustrated how the adaptive validation approach can be used to monitor validation data until prespecified precision and threshold values of the classification parameters have been met. However, validation data are often used to inform a bias-adjusted estimate of effect. Therefore, in the current study, we extend the adaptive validation approach to provide guidance on validation study design and stopping rules based on the precision of a bias-adjusted effect estimate.

We demonstrate the utility of this approach using data from the Study of Transition, Outcomes and Gender (STRONG) cohort to estimate the association between transmasculine/transfeminine status and self-inflicted injury among transgender and gender-nonconforming children and adolescents (11). As gender identity develops in children and adolescents, it may not match the sex recorded at birth (12–14). The development of gender identity and gender nonconformity in children and adolescents is an evolving area of research. Estimates suggest that 10%–30% of gender-nonconforming children may go on to identify with a gender that differs from their sex recorded at birth (15). Mental health conditions and their sequelae, such as self-inflicted injury, are an especially important concern for the health of transgender or gender-nonconforming youth (16–18). We recognize that binary gender definitions are suboptimal; however, for the purpose of this analysis, a person whose gender identity differs from female sex recorded at birth will be referred to as transmasculine, and a person whose gender identity differs from male sex recorded at birth will be referred to as transfeminine (19, 20).

In the STRONG cohort, investigators previously reported elevated risks of self-inflicted injury and other mental health outcomes among transgender youth, compared with youth sampled from the general population (21). In the current study, we describe an adaptive validation design for planning a validation substudy, in which validation data are collected in successive samples until the bias-adjusted effect estimate reaches a prespecified level of precision. We present an applied example of this design in the examination of the association between transmasculine/transfeminine status and self-inflicted injury, adjusting for the possible misclassification of transmasculine/transfeminine status.

METHODS

Study population

The STRONG study is an electronic health record (EHR)-based cohort study of transgender and gender-nonconforming individuals that was established to understand long-term effects of hormone therapy and surgery on gender dysphoria, mental health, acute conditions such as injury, and chronic illnesses such as cardiovascular disease and cancer (11). The STRONG youth cohort includes individuals aged 3–17 years at the index date, identified using International Classification of Diseases, Ninth Revision (ICD-9), codes and keywords related to transgender or gender-nonconforming status in EHRs from Kaiser Permanente health plans in Georgia, Northern California, and Southern California. In the STRONG youth cohort, the index date corresponds to cohort entry and is defined as the first date with a recorded ICD-9 code or keyword reflecting transgender or gender-nonconforming status between 2006 and 2014. Demographic data collected from the EHRs included the member’s gender, but whether that variable captures gender identity versus sex recorded at birth is unknown. This potential misclassification of sex recorded at birth resulted in potential misclassification of cohort members to transmasculine or transfeminine status. We refer to this measurement as the “misclassified sex recorded at birth.” To overcome this limitation of the available data, cohort members’ archived and complete medical records—the gold standard in this study—were reviewed to determine sex recorded at birth. Medical records were reviewed by keyword search in selected text strings to identify additional anatomy- or therapy-related terms that would unambiguously indicate sex recorded at birth. We refer to this as “gold standard sex recorded at birth.”

Outcome

Self-inflicted injury was identified using ICD-9 codes in EHRs following a previously described method (22). Self-inflicted injury was categorized as ever versus never, which allowed for the occurrence of an event at any point over the course of cohort enrollment and follow-up, before or after the index date.

Exposure status

The exposure of interest was transmasculine and transfeminine status, which can be determined from knowledge of the sex recorded at birth and current gender identity. As introduced above, the misclassified sex recorded at birth was based on the recent EHR data; it was known to be subject to misclassification because it could represent either sex recorded at birth or concurrent gender identity. For example, if the cohort member had a gender code of “female,” this code was assumed to refer to a “female” sex recorded at birth, and therefore represented an individual who would identify as transmasculine. Similarly, a gender code of “male” was assumed to refer to “male” sex recorded at birth, and therefore represented an individual who would identify as transfeminine.

Exposure validation

The misclassified sex recorded at birth variable was validated for all members who were at least 18 years of age as of January 1, 2015 (n = 535; 40% of the youth cohort). Members under 18 years of age on this date were not validated, which was a design decision of the original investigators (11). Although the validation data have been collected, we illustrate the adaptive validation study design as though the validation data were being collected prospectively. In the adaptive validation studies, we compute the bias parameters that are used in the quantitative bias analysis to adjust the observed effect estimate for misclassification. We carry out the approach using the positive predictive value (PPV) and the negative predictive value (NPV), as well as sensitivity and specificity. The PPV was defined as the probability that sex recorded at birth in the gold standard medical record was female among those whose sex recorded at birth in the concurrent EHR data was female. The NPV was defined as the probability that the sex recorded at birth in the gold standard medical record was male among those whose sex recorded at birth in the concurrent EHR data was male. Similarly, sensitivity was defined as the probability that the sex recorded at birth in the concurrent EHR data was female among those whose sex recorded at birth in the gold standard medical record was female, and specificity as the probability that the sex recorded at birth in the concurrent EHR data was male among those whose sex recorded at birth in the gold standard medical record was male. Both the PPV/NPV and sensitivity/specificity were estimated within strata of self-inflicted injury to facilitate the quantitative bias analysis. Because self-inflicted injury could occur before or after the index date, differential exposure misclassification was likely.
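To make the four definitions concrete, the following minimal sketch computes them from a single 2 × 2 table of EHR versus gold standard sex recorded at birth within one stratum of self-inflicted injury. The class name `ValidationTable` and the counts are hypothetical, not the STRONG data. All four quantities can be read from the same table only if records were sampled at random; if sampling is conditional on the EHR value, only the PPV and NPV are valid, and if it is conditional on the gold standard, only sensitivity and specificity are.

```python
from dataclasses import dataclass


@dataclass
class ValidationTable:
    """Hypothetical 2x2 validation counts within one outcome stratum.

    "Exposed" means female sex recorded at birth (transmasculine). In each
    field name, the first letter is the EHR value and the second is the gold
    standard value (f = female, m = male).
    """

    ff: int  # EHR female, gold standard female
    fm: int  # EHR female, gold standard male
    mf: int  # EHR male, gold standard female
    mm: int  # EHR male, gold standard male

    def ppv(self) -> float:
        # P(gold standard female | EHR female)
        return self.ff / (self.ff + self.fm)

    def npv(self) -> float:
        # P(gold standard male | EHR male)
        return self.mm / (self.mf + self.mm)

    def sensitivity(self) -> float:
        # P(EHR female | gold standard female)
        return self.ff / (self.ff + self.mf)

    def specificity(self) -> float:
        # P(EHR male | gold standard male)
        return self.mm / (self.fm + self.mm)


# Illustrative counts only; these are not the STRONG validation data.
table = ValidationTable(ff=40, fm=5, mf=10, mm=45)
print(f"PPV={table.ppv():.3f}, NPV={table.npv():.3f}")
print(f"Se={table.sensitivity():.3f}, Sp={table.specificity():.3f}")
```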

Adaptive validation sampling design

We used the STRONG cohort’s misclassified sex recorded at birth obtained from the EHR and the validation data on gold standard sex recorded at birth obtained from the medical record to calculate the classification parameters within strata of the outcome. We determined the sample size of the validation substudy necessary for the classification parameters to meet a stopping criterion—precision of the bias-adjusted effect estimate that is no more than 80% wider than the conventional estimate. Precision was defined as the ratio of the upper limit of the 95% confidence interval (CI) to the lower limit of the 95% CI. The stopping criterion was set based on the precision of the conventional estimate (1.99) and allowing for wider confidence intervals that would still be substantively meaningful. Investigators should choose a stopping criterion that is substantively meaningful for their research question, similar to precision-based approaches to sample-size calculations that have been advocated (1).
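The stopping criterion is simple arithmetic on confidence limits. A minimal sketch, with hypothetical function names, assuming the criterion is applied to the 95% limits exactly as defined above. Note that using the unrounded conventional precision (2.80/1.41) gives a threshold of about 3.57 rather than the 3.58 obtained from the rounded value 1.99; either way, the bias-adjusted estimates reported in the Results fall below it.

```python
def ci_ratio(lower: float, upper: float) -> float:
    """Precision as defined here: upper 95% limit divided by lower 95% limit."""
    if lower <= 0 or upper < lower:
        raise ValueError("expected 0 < lower <= upper")
    return upper / lower


def stopping_threshold(conv_lower: float, conv_upper: float, max_increase: float = 0.80) -> float:
    """Largest acceptable precision for the bias-adjusted OR."""
    return (1.0 + max_increase) * ci_ratio(conv_lower, conv_upper)


def stopping_rule_met(ba_lower: float, ba_upper: float, threshold: float) -> bool:
    """True once the bias-adjusted CI ratio is no larger than the threshold."""
    return ci_ratio(ba_lower, ba_upper) <= threshold


# Conventional 95% CI reported in the Results: 1.41, 2.80
threshold = stopping_threshold(1.41, 2.80)
print(round(ci_ratio(1.41, 2.80), 2))  # 1.99
print(round(threshold, 2))             # 3.57
print(stopping_rule_met(0.77, 2.52, threshold))  # True
```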

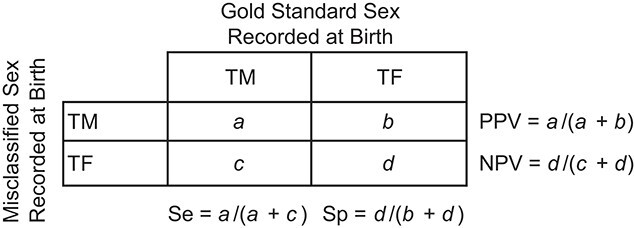

Estimates of PPV and NPV or sensitivity and specificity can be used to account for exposure misclassification in quantitative bias analysis (8). Estimates of PPV and NPV are generated from a validation substudy that conditions on the observed exposure status, as illustrated in Figure 1, or from a random sample of the study population. Estimates of sensitivity and specificity can be calculated either by taking a random sample of the study population for the validation substudy or by conditioning on the gold standard exposure status. In this example, 40% of the study population had validation data, so we were able to condition on the gold standard sex recorded at birth.

Figure 1.

Classification parameters for exposure misclassification in the STRONG youth cohort. Se, sensitivity; Sp, specificity; TF, transfeminine; TM, transmasculine; STRONG, Study of Transition, Outcomes and Gender.

The adaptive validation sampling design and its use in the STRONG cohort have been described previously (9). Briefly, among cohort members with validation data on their sex recorded at birth, we used an iterative beta-binomial Bayesian model to update the classification parameters within strata of self-inflicted injury (23). Before including any validation data, we assume we know nothing about the 4 bias parameters, $\mathrm{PPV}_d$ and $\mathrm{NPV}_d$, where d = 0 (controls) or d = 1 (cases):

$$\mathrm{PPV}_d \sim \mathrm{Beta}(1, 1), \qquad \mathrm{NPV}_d \sim \mathrm{Beta}(1, 1), \qquad d \in \{0, 1\}.$$

This specification of the beta distribution is identical to a uniform distribution, with all values of the classification parameters in (0, 1) having equal probability. This procedure is easily modified to incorporate prior information by choosing different parameters for the beta distribution. We repeatedly updated the bias parameters in blocks of 10 participants in each of the 4 exposure (EHR-defined sex recorded at birth) × outcome (self-harm) categories. Therefore, at each iteration we performed a small (n = 40) validation study to inform the 4 predictive values. Individuals were randomly sampled without replacement, and their gold-standard information was abstracted to estimate the bias parameters. This process of sampling 40 participants and validating their exposure data was repeated until the stopping rule was achieved. In the jth iteration of the validation procedure, $y_{xdj}$ people were found to have the gold standard exposure in the group of people sampled from the observed (possibly misclassified) exposure group x and outcome group d. These data are combined with the prior specifications above; after the jth iteration, the posterior distributions of the PPV and NPV are

$$\mathrm{PPV}_d \mid \text{data} \sim \mathrm{Beta}\left(\alpha_{1d}^{(j)}, \beta_{1d}^{(j)}\right), \qquad \mathrm{NPV}_d \mid \text{data} \sim \mathrm{Beta}\left(\alpha_{0d}^{(j)}, \beta_{0d}^{(j)}\right),$$

where

$$\alpha_{1d}^{(j)} = 1 + \sum_{i=1}^{j} y_{1di}, \qquad \beta_{1d}^{(j)} = 1 + \sum_{i=1}^{j} \left(n_{1di} - y_{1di}\right),$$

$$\alpha_{0d}^{(j)} = 1 + \sum_{i=1}^{j} \left(n_{0di} - y_{0di}\right), \qquad \beta_{0d}^{(j)} = 1 + \sum_{i=1}^{j} y_{0di},$$

and $n_{xdi}$ is the number of people sampled from exposure group x and outcome group d in iteration i (10, or fewer once a group is exhausted). The mean value of each bias parameter’s posterior distribution can be used as a point estimate for the updated parameter; for example, $\widehat{\mathrm{PPV}}_d^{(j)} = \alpha_{1d}^{(j)} / \left(\alpha_{1d}^{(j)} + \beta_{1d}^{(j)}\right)$. We demonstrate the adaptive validation approach under 2 sampling designs: first, sampling conditional on the concurrent sex recorded at birth to compute estimates of the PPV and NPV; and second, sampling conditional on the gold standard sex recorded at birth to compute estimates of sensitivity and specificity.
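The updating step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' R code: the class name `PredictiveValuePosterior` is hypothetical; counts are expressed as records whose gold standard agrees with the EHR value (for the PPV this is $y_{1dj}$, and for the NPV it is the number sampled minus $y_{0dj}$); and the 95% interval is approximated by Monte Carlo draws from `random.betavariate` rather than exact beta quantiles.

```python
import random


class PredictiveValuePosterior:
    """Beta posterior for one predictive value (PPV_d or NPV_d).

    Starts from a Beta(1, 1) (uniform) prior. After each validation block,
    records whose gold standard agrees with the EHR value are added to `a`,
    and records that disagree are added to `b` (conjugate beta-binomial update).
    """

    def __init__(self, a: float = 1.0, b: float = 1.0):
        self.a = a
        self.b = b

    def update(self, n_sampled: int, n_agree: int) -> None:
        if not 0 <= n_agree <= n_sampled:
            raise ValueError("n_agree must lie between 0 and n_sampled")
        self.a += n_agree
        self.b += n_sampled - n_agree

    def mean(self) -> float:
        # Posterior mean, used as the point estimate for the parameter
        return self.a / (self.a + self.b)

    def interval(self, level: float = 0.95, draws: int = 20000, seed: int = 1):
        """Equal-tailed credible interval approximated by Monte Carlo draws."""
        rng = random.Random(seed)
        samples = sorted(rng.betavariate(self.a, self.b) for _ in range(draws))
        lower = samples[int((1 - level) / 2 * draws)]
        upper = samples[int((1 + level) / 2 * draws) - 1]
        return lower, upper


# Hypothetical cell with only 27 validated records, 20 of which agree.
rng = random.Random(2024)
pool = [True] * 20 + [False] * 7
rng.shuffle(pool)  # random order, so taking blocks from the front samples without replacement

posterior = PredictiveValuePosterior()
block_sizes = []
while pool:
    block, pool = pool[:10], pool[10:]
    posterior.update(len(block), sum(block))
    block_sizes.append(len(block))
    # In the full design, all 4 cells are updated here and the
    # stopping rule is checked before drawing the next block.

print(block_sizes)                 # [10, 10, 7]
print(posterior.a, posterior.b)    # 21.0 8.0
print(round(posterior.mean(), 3))  # 0.724
print(posterior.interval())
```

The final block is smaller than 10 because the hypothetical cell runs out of records, mirroring how a sparsely populated exposure × outcome cell can cap the number of validated records.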

Quantitative bias analysis

The variance of the PPV- and NPV-adjusted estimate of effect was previously derived by Marshall (24). The variance of the bias-adjusted odds ratio (OR) is a function of the classification parameters, the bias-adjusted prevalence of the exposure within strata of the outcome, and the sample sizes of both the validation substudy and the total study population. The equations below define the variance of the bias-adjusted (BA) OR using the PPV and NPV computed from an internal validation study, which we use as the foundation for our first sampling design (equation 1).

|

(1) |

where

|

(2) |

|

(3) |

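$$p_{ed} = \mathrm{PPV}_d \, p_{xd} + \left(1 - \mathrm{NPV}_d\right)\left(1 - p_{xd}\right) \qquad (4)$$

and

$$K_d = \frac{p_{xd}\left(1 - p_{xd}\right)\left(\mathrm{PPV}_d + \mathrm{NPV}_d - 1\right)^2}{p_{ed}\left(1 - p_{ed}\right)} \qquad (5)$$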

Marshall similarly reported a derivation of this formula when sensitivity and specificity are used as the bias parameters (24). Equations 6–9 use sensitivity (Se) and specificity (Sp) from the second sampling design to compute the variance of the bias-adjusted OR in equation 1.

|

(6) |

|

(7) |

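$$p_{ed} = \frac{p_{xd} + \mathrm{Sp}_d - 1}{\mathrm{Se}_d + \mathrm{Sp}_d - 1} \qquad (8)$$

$$K_d = \frac{p_{ed}\left(1 - p_{ed}\right)\left(\mathrm{Se}_d + \mathrm{Sp}_d - 1\right)^2}{p_{xd}\left(1 - p_{xd}\right)} \qquad (9)$$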

In equations 1–9, the subscript d (d = 1, 0) refers to the strata of self-inflicted injury (outcome). Subscript x refers to the observed exposure prevalence, which is known to be misclassified. Subscript e refers to the bias-adjusted prevalence, which uses either the PPV and NPV or sensitivity and specificity to bias-adjust the observed exposure prevalence (equations 4 and 8). Finally, f is the proportion of cohort members included in the validation substudy. Marshall defines K as the “coefficient of reliability,” which corresponds to the square of the correlation between the observed exposure and the bias-adjusted exposure (equations 5 and 9) (24).
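The bias-adjusted prevalence and the coefficient of reliability described for equations 4–5 and 8–9 follow from standard probability identities. The sketch below writes them out as we understand them: the bias-adjusted prevalence from PPV/NPV or from Se/Sp, and $K_d$ as the squared correlation between the binary observed and bias-adjusted exposures. The $K_d$ expressions are our own derivation from that definition, not a transcription of Marshall's equations, and the function names are hypothetical. If the predictive values and Se/Sp describe the same joint distribution, both forms must agree, which the usage lines check with made-up values.

```python
def prevalence_from_predictive_values(p_x: float, ppv: float, npv: float) -> float:
    """Bias-adjusted prevalence: P(E=1) = PPV * P(X=1) + (1 - NPV) * P(X=0)."""
    return ppv * p_x + (1.0 - npv) * (1.0 - p_x)


def prevalence_from_se_sp(p_x: float, se: float, sp: float) -> float:
    """Invert P(X=1) = Se * P(E=1) + (1 - Sp) * (1 - P(E=1)) for P(E=1)."""
    youden = se + sp - 1.0
    if youden <= 0:
        raise ValueError("requires Se + Sp > 1")
    return (p_x + sp - 1.0) / youden


def reliability_from_predictive_values(p_x: float, ppv: float, npv: float) -> float:
    """Squared correlation between observed X and bias-adjusted E (PPV/NPV form)."""
    p_e = prevalence_from_predictive_values(p_x, ppv, npv)
    return p_x * (1 - p_x) * (ppv + npv - 1) ** 2 / (p_e * (1 - p_e))


def reliability_from_se_sp(p_x: float, se: float, sp: float) -> float:
    """Squared correlation between observed X and bias-adjusted E (Se/Sp form)."""
    p_e = prevalence_from_se_sp(p_x, se, sp)
    return p_e * (1 - p_e) * (se + sp - 1) ** 2 / (p_x * (1 - p_x))


# Consistency check with hypothetical values: true prevalence 0.5, Se 0.8, Sp 0.9
# imply observed prevalence 0.45, PPV 0.4/0.45, and NPV 0.45/0.55.
p_x, ppv, npv = 0.45, 0.4 / 0.45, 0.45 / 0.55
print(prevalence_from_predictive_values(p_x, ppv, npv), prevalence_from_se_sp(p_x, 0.8, 0.9))
print(reliability_from_predictive_values(p_x, ppv, npv), reliability_from_se_sp(p_x, 0.8, 0.9))
```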

For each iteration of the adaptive validation approach, the parameter values in equations 2–4 or equations 6–8 were estimated to compute the bias-adjusted OR and the corresponding variance, which were used to determine the precision of the bias-adjusted OR. Validation efforts cease once the prespecified level of precision is reached. We plot the P value function of the bias-adjusted OR at each iteration to illustrate the changes in precision. All of the analyses were carried out in R, version 4.0 (R Foundation for Statistical Computing, Vienna, Austria).
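Each iteration therefore reduces to computing the bias-adjusted OR, an approximate variance, the CI ratio, and (for plotting) the P value function. The sketch below is hedged in two ways. First, `log_or_variance` uses a delta-method expression we derived under a simplifying assumption (a common validation fraction f within each outcome stratum, with the predictive values estimated independently of the observed prevalence); it reduces to the familiar 1/[n p(1 − p)] when f = 1 and is not necessarily identical to Marshall's equation 1. Second, all inputs in the usage lines are hypothetical, not STRONG estimates. Function names are ours.

```python
import math
from statistics import NormalDist


def log_or_variance(strata) -> float:
    """Approximate Var[ln OR_BA] by the delta method, summed over outcome strata.

    ASSUMPTION (our derivation, not necessarily Marshall's equation 1): within each
    outcome stratum the same fraction f of records is validated, and the PPV/NPV
    estimates are independent of the observed prevalence. Each stratum is a dict
    with n (stratum size), p_e (bias-adjusted prevalence), k (K_d), and f.
    """
    total = 0.0
    for s in strata:
        # Validation inflates the usual 1 / (n p (1 - p)) by K + (1 - K) / f
        inflation = s["k"] + (1.0 - s["k"]) / s["f"]
        total += inflation / (s["n"] * s["p_e"] * (1.0 - s["p_e"]))
    return total


def exposure_odds_ratio(p_e_cases: float, p_e_noncases: float) -> float:
    """Odds of exposure among cases divided by odds of exposure among noncases."""
    return (p_e_cases / (1 - p_e_cases)) / (p_e_noncases / (1 - p_e_noncases))


def wald_ci(or_hat: float, var_log: float, level: float = 0.95):
    z = NormalDist().inv_cdf(0.5 + level / 2)
    half_width = z * math.sqrt(var_log)
    return or_hat * math.exp(-half_width), or_hat * math.exp(half_width)


def p_value_function(or_hat: float, se_log: float, or0: float) -> float:
    """Two-sided P value for H0: OR = or0; evaluating it over a grid of or0 traces the curve."""
    z = abs(math.log(or_hat) - math.log(or0)) / se_log
    return 2 * (1 - NormalDist().cdf(z))


# Hypothetical inputs for one iteration (not STRONG estimates).
strata = [
    {"n": 200, "p_e": 0.55, "k": 0.50, "f": 0.30},   # cases
    {"n": 1000, "p_e": 0.45, "k": 0.60, "f": 0.05},  # noncases
]
or_ba = exposure_odds_ratio(strata[0]["p_e"], strata[1]["p_e"])
var_log = log_or_variance(strata)
lower, upper = wald_ci(or_ba, var_log)
print(f"OR_BA = {or_ba:.2f} ({lower:.2f}, {upper:.2f}); precision = {upper / lower:.2f}")
print([round(p_value_function(or_ba, math.sqrt(var_log), g), 3) for g in (0.8, 1.0, 1.5, 2.0)])
```

The `inflation` term makes the role of each input visible: when classification is nearly perfect (K close to 1) the validation fraction matters little, whereas with poor classification the variance grows roughly as 1/f.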

RESULTS

The STRONG youth cohort included 1,331 persons, of whom 710 (53%) were classified as transmasculine and 621 (47%) were classified as transfeminine based on the potentially misclassified sex recorded at birth variable (Table 1). There were 113 (16%) and 54 (9%) recorded instances of self-inflicted injury among these transmasculine and transfeminine individuals, respectively. In the conventional model, the OR associating transmasculine versus transfeminine status with self-inflicted injury was 1.99 (95% CI: 1.41, 2.80), using the potentially misclassified sex recorded at birth code to determine exposure status (Table 2).
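The conventional estimate can be reproduced from the Table 1 counts, assuming it is the crude odds ratio with a Wald (log-scale) confidence interval. The function name below is hypothetical.

```python
import math
from statistics import NormalDist


def crude_odds_ratio(a: int, b: int, c: int, d: int, level: float = 0.95):
    """Crude OR with a Wald CI on the log scale from a 2x2 table.

    a: exposed with outcome, b: exposed without outcome,
    c: unexposed with outcome, d: unexposed without outcome.
    """
    or_hat = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return or_hat, or_hat * math.exp(-z * se_log), or_hat * math.exp(z * se_log)


# Table 1: transmasculine (exposed) vs transfeminine, self-inflicted injury yes/no,
# both classified with the misclassified sex recorded at birth.
or_hat, lower, upper = crude_odds_ratio(a=113, b=597, c=54, d=567)
print(f"OR = {or_hat:.2f} (95% CI: {lower:.2f}, {upper:.2f})")  # OR = 1.99 (95% CI: 1.41, 2.80)
```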

Table 1.

Characteristics of the STRONG Youth Cohort Population (n = 1,331), 2006–2014

| Characteristic | Transmasculine a, No. | Transmasculine a, % | Transfeminine a, No. | Transfeminine a, % |
|---|---|---|---|---|
| Total | 710 | 53 | 621 | 47 |
| Age, years | | | | |
| 3–9 | 96 | 14 | 155 | 25 |
| 10–17 | 614 | 86 | 466 | 75 |
| Self-inflicted injury | | | | |
| Yes | 113 | 16 | 54 | 9 |
| No | 597 | 84 | 567 | 91 |

Abbreviation: STRONG, Study of Transition, Outcomes and Gender.

a Transmasculine and transfeminine status were ascertained using the misclassified sex recorded at birth.

Table 2.

Conventional and Bias-Adjusted Effect Estimates for the Association Between Transmasculine (Versus Transfeminine) Gender Status and Self-Inflicted Injury Among Children and Adolescents in the STRONG Youth Cohort, 2006–2014

| Estimation Method | Self-Inflicted Injury: PPV, % | 95% CI | Self-Inflicted Injury: NPV, % | 95% CI | No Self-Inflicted Injury: PPV, % | 95% CI | No Self-Inflicted Injury: NPV, % | 95% CI | No. of Persons in Validation Substudy | OR | 95% CI | Precision a |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Conventional | 100 | | 100 | | 100 | | 100 | | 0 | 1.99 | 1.41, 2.80 | 1.99 |
| Adaptive validation | 81 | 64, 93 | 76 | 56, 90 | 91 | 75, 98 | 84 | 67, 95 | 117 | 1.40 | 0.77, 2.52 | 3.27 |
| Complete validation set | 87 | 76, 95 | 78 | 58, 91 | 93 | 89, 96 | 81 | 76, 86 | 535 | 1.47 | 0.95, 2.26 | 2.38 |
| | Se, % | 95% CI | Sp, % | 95% CI | Se, % | 95% CI | Sp, % | 95% CI | | | | |
| Adaptive validation | 88 | 74, 96 | 73 | 56, 87 | 81 | 66, 93 | 94 | 83, 99 | 118 | 1.37 | 0.76, 2.50 | 3.29 |
| Complete validation set | 89 | 81, 97 | 75 | 63, 87 | 83 | 74, 93 | 92 | 85, 99 | 535 | 1.50 | 0.97, 2.31 | 2.38 |

Abbreviations: CI, confidence interval; NPV, negative predictive value; OR, odds ratio; PPV, positive predictive value; Se, sensitivity; Sp, specificity; STRONG, Study of Transition, Outcomes and Gender.

a Ratio of the upper limit of the 95% CI to the lower limit of the 95% CI.

Adaptive validation: PPV and NPV

Among participants with a self-inflicted injury, the PPV and NPV of the misclassified sex recorded at birth variable, calculated from the complete validation substudy, were 87% (95% CI: 76, 95) and 78% (95% CI: 58, 91), respectively (Table 2). Among those without a self-inflicted injury, the PPV was 93% (95% CI: 89, 96) and the NPV was 81% (95% CI: 76, 86). These were computed from a validated sample of 535 participants (40% of the full cohort), including 283 (53%) transmasculine and 252 (47%) transfeminine cohort members who were at least 18 years of age as of January 1, 2015 (where transmasculine and transfeminine were based on the misclassified sex recorded at birth).

Our prespecified stopping criterion for the validation study was based on no more than an 80% increase in the ratio of the upper to lower confidence limits for the bias-adjusted estimate compared with that ratio for the conventional estimate (precision = 2.80/1.41 = 1.99). We therefore continued with validation until the precision of the bias-adjusted estimate was no greater than 1.80 × 1.99 = 3.58. This criterion was met after 3 iterations of the adaptive validation approach, with an adaptive validation sample of 117 cohort members rather than the full validation set of 535. The sample included 117 persons instead of 120 (3 iterations × 40) because only 27 cohort members in the validation set had both a reported self-inflicted injury and a misclassified sex recorded at birth of male, so the 30 records planned for that group could not be drawn. From this sample, the estimated PPV and NPV were 81% (95% CI: 64, 93) and 76% (95% CI: 56, 90), respectively, among those with a reported self-inflicted injury and 91% (95% CI: 75, 98) and 84% (95% CI: 67, 95), respectively, among those without a reported self-inflicted injury (Table 2, Figure 2). Application of the estimates of the bias parameters from the adaptive validation design yielded a bias-adjusted estimate of $\mathrm{OR}_{\mathrm{BA}}$ = 1.40 (95% CI: 0.77, 2.52), with a precision of 3.27 (2.52/0.77). The $\mathrm{OR}_{\mathrm{BA}}$ using the full validation substudy was 1.47 (95% CI: 0.95, 2.26), with a precision of 2.38 (2.26/0.95) (Table 2, Figure 3).

Figure 2.

Estimates of positive predictive value (PPV) and negative predictive value (NPV) within strata of self-inflicted injury from the adaptive validation sampling approach as compared with the full validation data, STRONG youth cohort, 2006–2014. Bars represent 95% confidence intervals. STRONG, Study of Transition, Outcomes and Gender.

Figure 3.

P value functions for the conventional and bias-adjusted odds ratios and 95% confidence intervals associating transmasculine/transfeminine status with self-inflicted injury under the adaptive validation sampling approach using the positive and negative predictive values as compared with the full validation data, STRONG youth cohort, 2006–2014. STRONG, Study of Transition, Outcomes and Gender.

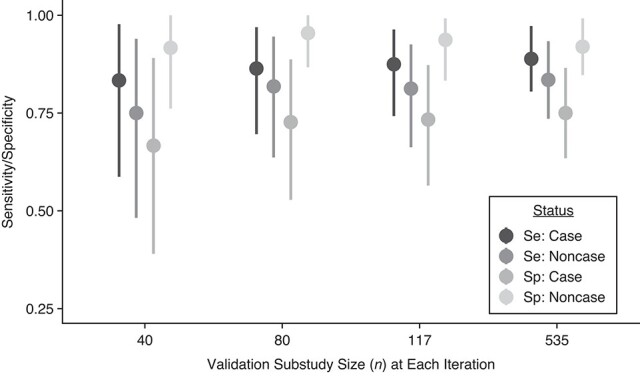

Adaptive validation: sensitivity and specificity

Among persons with a self-inflicted injury, the sensitivity and specificity of the misclassified sex recorded at birth, calculated from the complete validation substudy, were 89% (95% CI: 81, 97) and 75% (95% CI: 63, 87), respectively (Table 2). Among those without a self-inflicted injury, the sensitivity was 83% (95% CI: 74, 93) and the specificity was 92% (95% CI: 85, 99). As with the first sampling approach, the stopping criterion was met after 3 iterations, with 118 cohort members rather than the full validation set of 535. The sample included 118 individuals instead of 120 because only 28 cohort members in the validation set had both a reported self-inflicted injury and a gold standard sex recorded at birth of male. Among those included in the adaptive validation substudy, the sensitivity and specificity were 88% (95% CI: 74, 96) and 73% (95% CI: 56, 87), respectively, among those with a reported self-inflicted injury and 81% (95% CI: 66, 93) and 94% (95% CI: 83, 99), respectively, among those without self-inflicted injury (Table 2, Figure 4). Application of the estimated bias parameters from the adaptive validation design yielded a bias-adjusted estimate of $\mathrm{OR}_{\mathrm{BA}}$ = 1.37 (95% CI: 0.76, 2.50), with a precision of 3.29. The $\mathrm{OR}_{\mathrm{BA}}$ from the full validation substudy was 1.50 (95% CI: 0.97, 2.31), with a precision of 2.38 (Table 2, Figure 5).

Figure 4.

Estimates of sensitivity (Se) and specificity (Sp) from the adaptive validation sampling approach as compared with the full validation data, STRONG youth cohort, 2006–2014. Bars represent 95% confidence intervals. STRONG, Study of Transition, Outcomes and Gender.

Figure 5.

P value functions for the conventional and bias-adjusted odds ratios and 95% confidence intervals associating transmasculine/transfeminine status with self-inflicted injury under the adaptive validation sampling approach using sensitivity and specificity as compared with the full validation data, STRONG youth cohort, 2006–2014. STRONG, Study of Transition, Outcomes and Gender.

DISCUSSION

In this study, we developed and applied an approach to collecting validation data until a prespecified level of precision of the bias-adjusted estimate of effect had been reached. The conventional estimate obtained using the potentially misclassified gender code (OR = 1.99) was further from the null than the bias-adjusted effect estimates obtained by accounting for misclassification of transmasculine/transfeminine status (ORs of 1.37–1.50). Using the adaptive validation approach, the bias-adjusted estimates were similar under both the PPV/NPV approach and the sensitivity/specificity approach. Additionally, both required a similar number of participants to be included in the validation substudy (n = 117 and n = 118, respectively). These estimates were also comparable to the bias-adjusted ORs obtained using the complete validation data, which used 535 validated records. Similarly, the PPV/NPV and sensitivity/specificity estimates calculated from the adaptive validation approach were comparable to those obtained from the complete validation substudy, though estimated with less precision.

The STRONG youth cohort investigators previously reported that transgender and gender-nonconforming youth were more likely to present with mental health conditions, especially those related to anxiety and depression, than population-based comparators (21, 22). Prevalence ratio estimates of self-inflicted injury were especially pronounced among both transmasculine and transfeminine individuals as compared with population-based referents of the same age (21). In another study of the STRONG cohort, Mak et al. (22) reported that suicide attempts were more common among transmasculine cohort members (4.8%) than among transfeminine cohort members (3.0%), although that study included both adults and adolescents. In the current study, we found that transgender and gender-nonconforming youths who were classified as transmasculine were more likely to inflict self-harm than those classified as transfeminine, although the relative excess effect was reduced by half after accounting for potential misclassification of sex recorded at birth.

In recent years, psychiatric epidemiology has increasingly turned towards the use of electronic health registry data for research, including efforts to better understand self-harm (25, 26). While these data sources hold enormous potential for improving our understanding of these constructs, a current challenge within this paradigm is how to best capture nondiagnostic variables that impact health (27). Methods development related to assessment of the validity of nondiagnostic variables in these data sources is critical if we are to continue to use medical record data to improve our understanding of self-harm. This study presents one such approach.

Our method outlines an approach to study design based on precision that accounts for both random and systematic errors (28). An important advance is the development of an approach to validation substudy design suitable for scenarios in which validation data are collected in real time and applicable to any parent epidemiologic study. This method provides a valuable tool for prospective validation, allowing researchers to carefully allocate fixed study resources when implementing validation studies. We illustrated the method using a Bayesian framework for adaptive validation; however, a frequentist approach is also feasible, and no prior specification would be required. We demonstrated the ability of the method to determine when a validation study has generated sufficient data to support a prespecified precision of a bias-adjusted estimate, thereby expanding the considerations for planning of studies based on precision. Using this design, the classification parameters calculated from the limited sample in the adaptive validation set were comparable to those obtained from full validation efforts, yet far fewer persons required validation (117 or 118 vs. 535). Because the outcome was uncommon (13%), a substantial proportion (34%) of transmasculine and transfeminine children and adolescents with a reported self-inflicted injury were included in the validation substudies.

Validation data are often expensive to collect. When the gold standard is measured by medical record review, substantial labor and data-access costs accrue. When the gold standard is measured by bioassay, specimens must be collected, stored, and analyzed. It is almost always true that data for the gold-standard measure are more expensive or difficult to collect than data for the misclassified measure; otherwise, the gold-standard data would have been collected in the first place. Iterative updating of classification parameters informs values of the parameters of interest and can be used as a marker for the point at which sufficient information has been collected, indicating when validation efforts can stop. This process allows researchers to decide how to efficiently allocate resources to validation studies, with the ability to adapt based on the specific needs of their study. Our method may enhance the ability of researchers to save resources allocated to validation, by thoughtfully validating over the study period while regularly assessing the performance of the variables mismeasured in the study. In this approach, the sample size necessary to achieve the specified stopping criterion is potentially smaller than would be required by other approaches, and validation can be completed in parallel with cohort enrollment.

We demonstrated how investigators may use the adaptive validation design to estimate PPV/NPV or sensitivity/specificity. Predictive values are specific to the study from which they arise and are not readily applied outside of the given study population. Moreover, PPV and NPV should be estimated within strata of the outcome, as they depend on prevalence, and may be more difficult to implement concurrent with cohort enrollment if outcome status has not yet been determined. Sensitivity and specificity are more easily transportable to other populations and are also more readily assessed before outcome status is known, especially if nondifferential misclassification can be assumed because of a prospective design. However, to estimate sensitivity and specificity, researchers need to sample at random from the entire study population or have a naturally occurring subset of the population in which the gold standard is measured. Many studies will not have such a subset and so must either sample at random (which is usually inefficient) (3) or sample conditional on the mismeasured value (which allows valid estimation of only the predictive values). Finally, our approach used a simple quantitative bias analysis to determine the precision of the bias-adjusted estimate. It is often preferable to use a probabilistic bias analysis that can incorporate uncertainty of the bias parameters, incorporate random error, and allow for control of potential confounders (8). Still, the adaptive validation design can be used to determine the stopping criteria, and additional analytical approaches can be used to address uncertainties in the study’s analysis.
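The dependence of predictive values on prevalence, which limits their transportability, follows from Bayes' theorem. A small sketch with hypothetical sensitivity, specificity, and prevalence values (the function name is ours):

```python
def predictive_values(se: float, sp: float, prevalence: float):
    """PPV and NPV implied by Bayes' theorem for fixed Se, Sp, and true prevalence."""
    true_pos = se * prevalence
    false_pos = (1 - sp) * (1 - prevalence)
    true_neg = sp * (1 - prevalence)
    false_neg = (1 - se) * prevalence
    return true_pos / (true_pos + false_pos), true_neg / (true_neg + false_neg)


# Same classifier (Se = Sp = 0.9) applied in populations with different prevalence
for prevalence in (0.5, 0.1):
    ppv, npv = predictive_values(se=0.9, sp=0.9, prevalence=prevalence)
    print(f"prevalence {prevalence}: PPV {ppv:.2f}, NPV {npv:.2f}")
```

With identical sensitivity and specificity, the PPV falls from 0.90 to 0.50 when the prevalence drops from 50% to 10%, which is why predictive values estimated in one population (or one outcome stratum) should not be carried over to another.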

Another important consideration is how to decide what level of precision of the bias-adjusted effect estimate is acceptable. In studies that account for random error only, 95% CI widths of 2–3 are commonly used as examples of acceptable precision (1, 29). Multiple parameters contribute to the variance of the bias-adjusted estimate (equations 1–5), including the sample sizes of the study population and the validation substudy, as well as the bias-adjusted exposure prevalence among cases and noncases. It is conceivable that accounting for exposure mismeasurement will yield a more statistically efficient distribution of the exposure, so that, with sufficient validation data, the bias-adjusted estimate may be as precise as the uncorrected estimate. However, the exposure distribution may instead become less statistically efficient after accounting for measurement error, in which case the variance of the bias-adjusted estimate will never reach that of the uncorrected estimate. In general, accounting for measurement error adds a layer of uncertainty and will therefore reduce precision, so researchers will have to decide how large an increase in the 95% CI width is acceptable while still yielding substantively meaningful results.
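A precision-based stopping criterion can also be checked by simulation, in the general style of probabilistic bias analysis (8), without reproducing equations 1–5. This sketch is a swapped-in technique, not the authors' variance formulas: it draws stratum-specific PPV and NPV from Beta posteriors (uniform priors assumed), applies the predictive-value correction, adds conventional random error on the log scale using the standard error of the observed OR, and takes the "width" of a ratio-measure CI to mean the ratio of the upper to the lower limit, which is our reading of the 2–3 range. The counts, validation tallies, and threshold of 3.0 are hypothetical.

```python
import math
import random


def corrected_counts(exposed, unexposed, ppv, npv):
    """Expected true (exposed, unexposed) counts in one outcome stratum."""
    true_exposed = ppv * exposed + (1 - npv) * unexposed
    return true_exposed, exposed + unexposed - true_exposed


def bias_adjusted_interval(cases, noncases, validation, draws=5000, seed=2021):
    """Simulation median and 95% limits of the bias-adjusted OR.

    validation[d] = (ppv_confirmed, ppv_validated, npv_confirmed, npv_validated)
    for outcome stratum d (1 = cases, 0 = noncases).
    """
    rng = random.Random(seed)
    (a1, b1), (a0, b0) = cases, noncases
    # Conventional standard error of the observed log OR (random error only).
    se_conventional = math.sqrt(1 / a1 + 1 / b1 + 1 / a0 + 1 / b0)
    estimates = []
    for _ in range(draws):
        adjusted = {}
        for d, counts in ((1, cases), (0, noncases)):
            pc, pn, nc, nn = validation[d]
            # Uniform Beta(1, 1) priors updated with the validation tallies.
            ppv = rng.betavariate(pc + 1, pn - pc + 1)
            npv = rng.betavariate(nc + 1, nn - nc + 1)
            adjusted[d] = corrected_counts(*counts, ppv, npv)
        (A1, B1), (A0, B0) = adjusted[1], adjusted[0]
        log_or = math.log((A1 * B0) / (B1 * A0))
        # Add conventional random error on the log scale.
        estimates.append(math.exp(log_or + rng.gauss(0, se_conventional)))
    estimates.sort()
    return (estimates[draws // 2],
            estimates[int(0.025 * draws)],
            estimates[int(0.975 * draws) - 1])


def meets_precision_criterion(lower, upper, max_ratio):
    """Stopping check: ratio of upper to lower limit is at most max_ratio."""
    return upper / lower <= max_ratio


cases, noncases = (60, 40), (400, 500)  # hypothetical (exposed, unexposed) counts
scenarios = {
    "80 validated": {1: (17, 20, 19, 20), 0: (18, 20, 18, 20)},
    "400 validated": {1: (85, 100, 95, 100), 0: (88, 100, 90, 100)},
}
results = {label: bias_adjusted_interval(cases, noncases, v)
           for label, v in scenarios.items()}
for label, (median, lower, upper) in results.items():
    print(f"{label}: OR {median:.2f} ({lower:.2f}, {upper:.2f}), "
          f"limit ratio {upper / lower:.2f}, stop: "
          f"{meets_precision_criterion(lower, upper, max_ratio=3.0)}")
```

Rerunning the check after each validation batch, and stopping once `meets_precision_criterion` returns True, mirrors the adaptive logic. As validation data accumulate, the limit ratio in this sketch shrinks toward the value driven by conventional random error alone.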

Our proposed adaptive validation design supports estimation of classification parameters as validation data accrue in epidemiologic studies, which can make validation substudies more effective and efficient and can aid the planning of epidemiologic studies that may be affected by systematic error. In this application, we demonstrated the utility of monitoring validation data as they accrued to determine when the bias-adjusted estimate reached a prespecified stopping criterion. In this example, fewer than 120 validated cohort members were needed to achieve the goals of validation, compared with the 535 cohort members initially validated. Furthermore, conditional on the accuracy of the bias model, the association between transmasculine status (versus transfeminine status) and self-inflicted injury in children and adolescents was overestimated when exposure was based on the potentially misclassified gender code.

ACKNOWLEDGMENTS

Author affiliations: Department of Population Health Sciences, Huntsman Cancer Institute, University of Utah, Salt Lake City, Utah, United States (Lindsay J. Collin); Division of Epidemiology and Community Health, School of Public Health, University of Minnesota, Minneapolis, Minnesota, United States (Richard F. MacLehose); Department of Surgery, Robert Larner, M.D. College of Medicine, University of Vermont, Burlington, Vermont, United States (Thomas P. Ahern); Department of Epidemiology, Rollins School of Public Health, Emory University, Atlanta, Georgia, United States (Michael Goodman, Timothy L. Lash); Department of Epidemiology, School of Public Health, Boston University, Boston, Massachusetts, United States (Jaimie L. Gradus); Department of Research and Evaluation, Kaiser Permanente Southern California, Pasadena, California, United States (Darios Getahun); Department of Health Systems Science, Kaiser Permanente Bernard J. Tyson School of Medicine, Pasadena, California, United States (Darios Getahun); and Division of Research, Kaiser Permanente Northern California, Oakland, California, United States (Michael J. Silverberg).

This work was supported in part by the National Cancer Institute (grant F31CA239566 awarded to L.J.C.) and the National Library of Medicine (grant R01LM013049 awarded to T.L.L.). T.P.A. was supported by an award from the National Institute of General Medical Sciences (grant P20 GM103644). STRONG cohort data were collected with support from the Patient-Centered Outcomes Research Institute (contract AD-12-11-4532) and the Eunice Kennedy Shriver National Institute of Child Health and Human Development (grant R21HD076387 awarded to M.G.). L.J.C. was also supported, in part, by the National Center for Advancing Translational Sciences (grant TL1TR002540). J.L.G. was supported, in part, by the National Institute of Mental Health (grant R01MH109507).

Data are available from Dr. Michael Goodman (mgoodm2@emory.edu) upon approval from the STRONG investigative team.

This work was presented at the 54th Annual Meeting of the Society for Epidemiologic Research (virtual), June 22–25, 2021.

The views expressed in this article are those of the authors and do not reflect those of the National Institutes of Health.

Conflict of interest: none declared.

REFERENCES

- 1. Rothman KJ, Greenland S. Planning study size based on precision rather than power. Epidemiology. 2018;29(5):599–603.

- 2. von Elm E, Altman DG, Egger M, et al. The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement: guidelines for reporting observational studies. Int J Surg. 2014;12(12):1495–1499.

- 3. Holcroft CA, Spiegelman D. Design of validation studies for estimating the odds ratio of exposure-disease relationships when exposure is misclassified. Biometrics. 1999;55(4):1193–1201.

- 4. Spiegelman D, Rosner B, Logan R. Estimation and inference for logistic regression with covariate misclassification and measurement error in main study/validation study designs. J Am Stat Assoc. 2000;95(449):51–61.

- 5. Greenland S. Variance estimation for epidemiologic effect estimates under misclassification. Stat Med. 1988;7(7):745–757.

- 6. Spiegelman D, Gray R. Cost-efficient study designs for binary response data with Gaussian covariate measurement error. Biometrics. 1991;47(3):851–869.

- 7. Holford TR, Stack C. Study design for epidemiologic studies with measurement error. Stat Methods Med Res. 1995;4(4):339–358.

- 8. Lash TL, Fox MP, MacLehose RF, et al. Good practices for quantitative bias analysis. Int J Epidemiol. 2014;43(6):1969–1985.

- 9. Collin LJ, MacLehose RF, Ahern TP, et al. Adaptive validation design: a Bayesian approach to validation substudy design with prospective data collection. Epidemiology. 2020;31(4):509–516.

- 10. Collin LJ, Riis AH, MacLehose RF, et al. Application of the adaptive validation substudy design to colorectal cancer recurrence. Clin Epidemiol. 2020;12:113–121.

- 11. Quinn VP, Nash R, Hunkeler E, et al. Cohort profile: Study of Transition, Outcomes and Gender (STRONG) to assess health status of transgender people. BMJ Open. 2017;7(12):e018121.

- 12. Lombardi E. Enhancing transgender health care. Am J Public Health. 2001;91(6):869–872.

- 13. Steensma TD, van der Ende J, Verhulst FC, et al. Gender variance in childhood and sexual orientation in adulthood: a prospective study. J Sex Med. 2013;10(11):2723–2733.

- 14. Wallien MSC, Cohen-Kettenis PT. Psychosexual outcome of gender-dysphoric children. J Am Acad Child Adolesc Psychiatry. 2008;47(12):1413–1423.

- 15. Costa R, Carmichael P, Colizzi M. To treat or not to treat: puberty suppression in childhood-onset gender dysphoria. Nat Rev Urol. 2016;13(8):456–462.

- 16. Levine DA, Committee on Adolescence, Braverman PK, et al. Office-based care for lesbian, gay, bisexual, transgender, and questioning youth. Pediatrics. 2013;132(1):e297–e313.

- 17. Coleman E, Bockting W, Botzer M, et al. Standards of care for the health of transsexual, transgender, and gender-nonconforming people, version 7. Int J Transgenderism. 2012;13(4):165–232.

- 18. Wilczynski C, Emanuele MA. Treating a transgender patient: overview of the guidelines. Postgrad Med. 2014;126(7):121–128.

- 19. Rosenthal SM. Transgender youth: current concepts. Ann Pediatr Endocrinol Metab. 2016;21(4):185–192.

- 20. Leibowitz SF, Spack NP. The development of a gender identity psychosocial clinic: treatment issues, logistical considerations, interdisciplinary cooperation, and future initiatives. Child Adolesc Psychiatr Clin N Am. 2011;20(4):701–724.

- 21. Becerra-Culqui TA, Liu Y, Nash R, et al. Mental health of transgender and gender nonconforming youth compared with their peers. Pediatrics. 2018;141(5):e20173845.

- 22. Mak J, Shires DA, Zhang Q, et al. Suicide attempts among a cohort of transgender and gender diverse people. Am J Prev Med. 2020;59(4):570–577.

- 23. Gelman A, Carlin JB, Stern HS, et al., eds. Bayesian Data Analysis. 3rd ed. Boca Raton, FL: Chapman & Hall/CRC Press; 2013.

- 24. Marshall RJ. Validation study methods for estimating exposure proportions and odds ratios with misclassified data. J Clin Epidemiol. 1990;43(9):941–947.

- 25. Weissman MM. Big data begin in psychiatry. JAMA Psychiatry. 2020;77(9):967–973.

- 26. Gradus JL, Rosellini AJ, Horváth-Puhó E, et al. Prediction of sex-specific suicide risk using machine learning and single-payer health care registry data from Denmark. JAMA Psychiatry. 2020;77(1):25–34.

- 27. Weissman MM, Pathak J, Talati A. Personal life events—a promising dimension for psychiatry in electronic health records. JAMA Psychiatry. 2020;77(2):115–116.

- 28. Fox MP, Lash TL. Quantitative bias analysis for study and grant planning. Ann Epidemiol. 2020;43:32–36.

- 29. Poole C. Low P values or narrow confidence intervals: which are more durable? Epidemiology. 2001;12(3):291–294.