Abstract

In the last decade, convolutional neural networks (ConvNets) have been a major focus of research in medical image analysis. However, the performance of ConvNets may be limited by a lack of explicit consideration of the long-range spatial relationships in an image. Recently, Vision Transformer architectures have been proposed to address the shortcomings of ConvNets and have produced state-of-the-art performance in many medical imaging applications. Transformers may be a strong candidate for image registration because their substantially larger receptive field enables a more precise comprehension of the spatial correspondence between moving and fixed images. Here, we present TransMorph, a hybrid Transformer-ConvNet model for volumetric medical image registration. This paper also presents diffeomorphic and Bayesian variants of TransMorph: the diffeomorphic variants ensure topology-preserving deformations, and the Bayesian variant produces a well-calibrated registration uncertainty estimate. We extensively validated the proposed models using 3D medical images from three applications: inter-patient and atlas-to-patient brain MRI registration and phantom-to-CT registration. The proposed models are evaluated in comparison to a variety of existing registration methods and Transformer architectures. Qualitative and quantitative results demonstrate that the proposed Transformer-based model leads to a substantial performance improvement over the baseline methods, confirming the effectiveness of Transformers for medical image registration.

Keywords: Image Registration, Deep Learning, Vision Transformer, Computerized Phantom

1. Introduction

Deformable image registration (DIR) is fundamental for many medical imaging analysis tasks. It functions by establishing spatial correspondence in order to minimize the differences between a pair of fixed and moving images. Traditional methods formulate image registration as a variational problem for estimating a smooth mapping between the points in one image and those in another (Avants et al. 2008; Beg et al. 2005; Vercauteren et al. 2009; Heinrich et al. 2013a; Modat et al. 2010). However, such methods are computationally expensive and usually slow in practice because the optimization problem needs to be solved de novo for each pair of unseen images.

Recently, deep neural networks (DNNs), especially convolutional neural networks (ConvNets), have demonstrated state-of-the-art performance in many computer vision tasks, including object detection (Redmon et al. 2016), image classification (He et al. 2016), and segmentation (Long et al. 2015). Ever since the success of U-Net in the ISBI cell tracking challenge of 2015 (Ronneberger et al. 2015), ConvNet-based methods have become a major focus of attention in medical image analysis fields, such as tumor segmentation (Isensee et al. 2021; Zhou et al. 2019), image reconstruction (Zhu et al. 2018), and disease diagnostics (Lian et al. 2018). In medical image registration, ConvNet-based methods can produce significantly improved registration performance while operating orders of magnitude faster (after training) compared to traditional methods. ConvNet-based methods replace the costly per-image optimization seen in traditional methods with a single global function optimization during a training phase. The ConvNets learn the common representation of image registration from training images, enabling rapid alignment of an unseen image pair after training. Initially, the supervision of ground-truth deformation fields (which are usually generated using traditional registration methods) was needed for training the neural networks (Onofrey et al. 2013; Yang et al. 2017b; Rohé et al. 2017). Recently, the focus has shifted towards developing unsupervised methods that do not depend on ground-truth deformation fields (Balakrishnan et al. 2019; Dalca et al. 2019; Kim et al. 2021; de Vos et al. 2019, 2017; Lei et al. 2020; Chen et al. 2020; Zhang 2018). Nearly all of the existing deep-learning-based methods mentioned above used U-Net (Ronneberger et al. 2015) or simply modified versions of U-Net (e.g., tweaking the number of layers or changing down- and up-sampling schemes) as their ConvNet designs.

ConvNet architectures generally have limitations in modeling explicit long-range spatial relations (i.e., relations between two voxels that are far away from each other) present in an image due to the intrinsic locality (i.e., the limited effective receptive field) of convolution operations (Luo et al. 2016). The U-Net (or V-Net (Milletari et al. 2016)) was proposed to overcome this limitation by introducing down- and up-sampling operations into a ConvNet, which theoretically enlarges the receptive field of the ConvNet and, thus, encourages the network to consider long-range relationships between points in images. However, several problems remain: first, the receptive fields of the first several layers are still restricted by the convolution-kernel size, and the global information of an image can only be viewed at the deeper layers of the network; second, it has been shown that as the convolutional layers deepen, the impact from far-away voxels decays quickly (Li et al. 2021). Therefore, the effective receptive field of a U-Net is, in practice, much smaller than its theoretical receptive field, and it is only a portion of the typical size of a medical image. This limits the U-Net’s ability to perceive semantic information and model long-range relationships between points. Yet, it is believed that the ability to comprehend semantic scene information is of great importance in coping with large deformations (Ha et al. 2020). Many works in other fields (e.g., image segmentation) have addressed this limitation of U-Net (Zhou et al. 2019; Jha et al. 2019; Devalla et al. 2018; Alom et al. 2018). To allow for a better flow of multi-scale contextual information throughout the network, Zhou et al. (Zhou et al. 2019) proposed a nested U-Net (i.e., U-Net++), in which complex up- and down-sampling operations along with multiple skip connections were used. Devalla et al. (Devalla et al. 2018) introduced dilated convolution to the U-Net architecture, which enlarges the network’s effective receptive field. A similar idea was proposed by Alom et al. (Alom et al. 2018), where the network’s effective receptive field was increased by deploying recurrent convolutional operations. Jha et al. proposed ResUNet++ (Jha et al. 2019), which incorporates attention mechanisms into U-Net for modeling long-range spatial information. Despite these methods’ promising performance in other medical imaging fields, there has been limited work on using advanced network architectures for medical image registration.

Transformer, which originated from natural language processing tasks (Vaswani et al. 2017), has shown its potential in computer vision tasks. A Transformer deploys self-attention mechanisms to determine which parts of the input sequence (e.g., an image) are essential based on contextual information. Unlike convolution operations, whose effective receptive fields are limited by the size of convolution kernels, the self-attention mechanisms in a Transformer have large effective receptive fields, making a Transformer capable of capturing long-range spatial information (Li et al. 2021). Dosovitskiy et al. (Dosovitskiy et al. 2020) proposed Vision Transformer (ViT), which applies the Transformer encoder from NLP directly to images. It was the first purely self-attention-based network for computer vision and achieved state-of-the-art performance in image recognition. Following its success, Swin Transformer (Liu et al. 2021a) and its variants (Dai et al. 2021; Dong et al. 2021) have demonstrated superior performance in object detection and semantic segmentation. Recently, Transformer-related methods have gained increased attention in medical imaging (Chen et al. 2021b; Xie et al. 2021; Wang et al. 2021b; Li et al. 2021; Wang et al. 2021a; Zhang et al. 2021); the major application has been the task of image segmentation.

Transformer can be a strong candidate for image registration because it can better comprehend the spatial correspondence between the moving and fixed images. Registration is the process of establishing such correspondence, intuitively by comparing different parts of the moving image to the fixed image. A ConvNet has a narrow field of view: it performs convolution locally, and its field of view grows in proportion to the ConvNet’s depth; hence, the shallow layers have a relatively small receptive field, limiting the ConvNet’s ability to associate the distant parts between two images. For example, if the left part of the moving image matches the right part of the fixed image, a ConvNet will be unable to establish the proper spatial correspondence between the two parts if it cannot see both parts concurrently (i.e., when one of the parts falls outside of the ConvNet’s field of view). However, Transformer is capable of handling such circumstances and rapidly focusing on the parts that need deformation, owing to its large receptive field and self-attention mechanism.

Our group has previously shown preliminary results that demonstrated the bridging of ViT and V-Net provided good performance in image registration (Chen et al. 2021a). In this work, we extended that preliminary work and investigated various Transformer models from other tasks (i.e., computer vision and medical imaging tasks). We present a hybrid Transformer-ConvNet framework, TransMorph, for volumetric medical image registration. In this method, the Swin Transformer (Liu et al. 2021a) was employed as the encoder to capture the spatial correspondence between the input moving and fixed images. Then, a ConvNet decoder processed the information provided by the Transformer encoder into a dense displacement field. Long skip connections were deployed to maintain the flow of localization information between the encoder and decoder stages. We also introduced diffeomorphic variations of TransMorph to ensure a smooth and topology-preserving deformation. Additionally, we applied variational inference on the parameters of TransMorph, resulting in a Bayesian model that predicts registration uncertainty based on the given image pair. Qualitative and quantitative evaluation of the experimental results demonstrate the robustness of the proposed method and confirm the efficacy of Transformers for image registration.

The main contributions of this work are summarized as follows:

Transformer-based model: This paper presents the pioneering work on using Transformers for image registration. A novel Transformer-based neural network, TransMorph, was proposed for affine and deformable image registration.

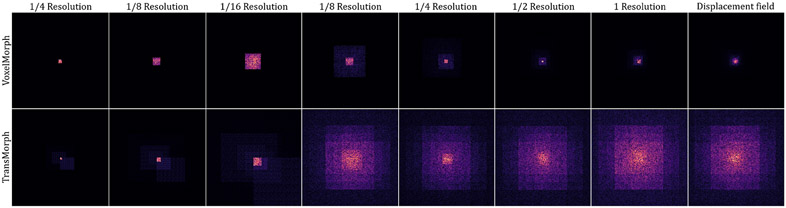

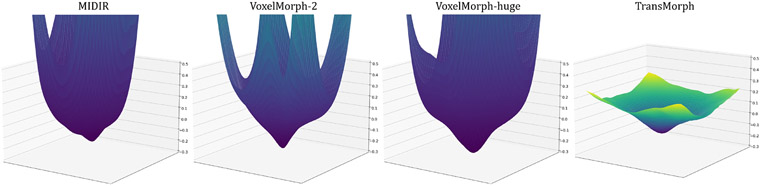

Architecture analysis: Experiments in this paper demonstrate that positional embedding, which is a commonly used element in Transformer by convention, is not required for the proposed hybrid Transformer-ConvNet model. Secondly, we show that Transformer-based models have larger effective receptive fields than ConvNets. Moreover, we demonstrated that TransMorph promotes a flatter registration loss landscape.

Diffeomorphic registration: We demonstrate that TransMorph can be easily integrated into two existing frameworks as a registration backbone to provide diffeomorphic registration.

Uncertainty quantification: This paper also provides a Bayesian uncertainty variant of TransMorph that yields transformation uncertainty and perfectly calibrated appearance uncertainty estimates.

State-of-the-art results: We extensively validate the proposed registration models on two brain MRI registration applications (inter-patient and atlas-to-patient registration) and on a novel application of XCAT-to-CT registration with the aim of creating a population of anatomically variable XCAT phantoms. The datasets used in this study (which include a publicly available dataset, the IXI dataset) contain over 1000 image pairs for training and testing. The proposed models were compared with various registration methods and demonstrated state-of-the-art performance. Eight registration approaches were employed as baselines, including learning-based methods and widely used conventional methods. The performances of four recently proposed Transformer architectures from other tasks (e.g., semantic segmentation, classification, etc.) were also evaluated on the task of image registration.

Open source: We provide the community with a fast and accurate tool for deformable registration. The source code, the pre-trained models, and our preprocessed IXI dataset are publicly available at https://bit.ly/37eJS6N.

The paper is organized as follows. Section 2 discusses related work. Section 3 explains the proposed methodology. Section 4 discusses experimental setup, implementation details, and datasets used in this study. Section 5 presents experimental results. Section 6 discusses the findings based on the results, and Section 7 concludes the paper.

2. Related Work

This section reviews the relevant literature and provides fundamental knowledge for the proposed method.

2.1. Image Registration

Deformable image registration (DIR) establishes spatial correspondence between two images by optimizing an energy function:

$$E(I_m, I_f, \phi) = E_{sim}(I_m \circ \phi, I_f) + \lambda R(\phi), \tag{1}$$

where $I_m$ and $I_f$ denote, respectively, the moving and fixed image, $\phi$ denotes the deformation field that warps the moving image (i.e., $I_m \circ \phi$), $R(\phi)$ imposes smoothness of the deformation field, and $\lambda$ is the regularization hyper-parameter that determines the trade-off between image similarity and deformation field regularity. The optimal warping, $\hat{\phi}$, is given by minimizing this energy function:

$$\hat{\phi} = \arg\min_{\phi} E(I_m, I_f, \phi). \tag{2}$$

In the energy function, $E_{sim}$ measures the level of alignment between the deformed moving image, $I_m \circ \phi$, and the fixed image, $I_f$. Some common choices for $E_{sim}$ are mean squared error (MSE) (Beg et al. 2005; Wolberg and Zokai 2000), normalized cross-correlation (NCC) (Avants et al. 2008), structural similarity index (SSIM) (Chen et al. 2020), and mutual information (MI) (Viola and Wells III 1997). The regularization term, $R(\phi)$, imposes spatial smoothness on the deformation field. A common assumption in most applications is that similar structures exist in both moving and fixed images. As a result, a continuous and invertible deformation field (i.e., a diffeomorphism) is needed to preserve topology, and the regularization, $R(\phi)$, is meant to enforce or encourage this. Isotropic diffusion (equivalent to Gaussian smoothing) (Balakrishnan et al. 2019), anisotropic diffusion (Pace et al. 2013), total variation (Vishnevskiy et al. 2016), and bending energy (Johnson and Christensen 2002) are popular options for $R(\phi)$.
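To make the trade-off in the energy function concrete, the following is a minimal 1D sketch rather than the formulation used in the experiments. It assumes MSE as the similarity term and a diffusion-style regularizer (mean squared gradient of the displacement). The function names, the toy Gaussian images, and the value of `lam` are illustrative assumptions.

```python
import numpy as np

def warp_1d(moving, displacement):
    """Warp a 1D image by sampling it at p + u(p) with linear interpolation."""
    grid = np.arange(moving.size, dtype=float)
    return np.interp(grid + displacement, grid, moving)

def registration_energy(moving, fixed, displacement, lam=0.01):
    """Energy = MSE similarity + lam * diffusion regularizer (assumed choices)."""
    warped = warp_1d(moving, displacement)
    similarity = np.mean((warped - fixed) ** 2)
    smoothness = np.mean(np.gradient(displacement) ** 2)
    return similarity + lam * smoothness

# Toy images: the same Gaussian blob, offset by 4 samples.
grid = np.arange(64, dtype=float)
fixed = np.exp(-0.5 * ((grid - 30.0) / 4.0) ** 2)
moving = np.exp(-0.5 * ((grid - 34.0) / 4.0) ** 2)

# Energy without any deformation versus with the correct constant shift.
energy_identity = registration_energy(moving, fixed, np.zeros(64))
energy_shifted = registration_energy(moving, fixed, np.full(64, 4.0))
```

In this toy setting the constant displacement of 4 samples aligns the two blobs and has zero gradient, so both terms vanish and the energy is lower than for the identity mapping.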

2.1.1. Image registration via deep neural networks

While traditional image registration methods iteratively minimize the energy function in (1) for each pair of moving and fixed images, DNN-based methods optimize the energy function for a training dataset, thereby learning a global representation of image registration that enables alignment of an unseen pair of volumes. DNN methods are often categorized as supervised or unsupervised, with the former requiring a ground truth deformation field for training and the latter relying only on the image datasets.

In supervised DNN methods, the ground-truth deformation fields are either produced synthetically or generated by traditional registration methods (Yang et al. 2017b; Sokooti et al. 2017; Cao et al. 2018). Yang et al. (2017b) proposed a supervised ConvNet that predicts the LDDMM (Beg et al. 2005) momentum from image patches. Sokooti et al. (2017) trained a registration ConvNet with synthetic displacement fields. The ground-truth deformation fields are often computationally expensive to generate, and the registration accuracy of these methods is highly dependent on the quality of the ground truth.

Due to the limitations of supervised methods, the focus of research has switched to unsupervised DNN methods that do not need ground-truth deformation fields. Unsupervised DNNs optimize an energy function on the input images, similar to traditional methods. However, DNN-based methods learn a common registration representation from a training set and then apply it to unseen images. Note that the term “unsupervised” refers to the absence of ground-truth deformation fields, but the network still needs training (this is also known as “self-supervised”). The methods of de Vos et al. (2019) and Balakrishnan et al. (2018, 2019) are representative of unsupervised DNN-based methods.

More recently, diffeomorphic deformation representations have been developed to address the issue of non-smooth deformations in DNN-based methods. We briefly introduce the underlying concepts in the next subsection.

2.1.2. Diffeomorphic image registration

Diffeomorphic deformable image registration is important in many medical image applications, owing to its special properties including topology preservation and transformation invertibility. A diffeomorphic transformation is a smooth and continuous one-to-one mapping with invertible derivatives (i.e., non-zero Jacobian determinant). Such a transformation can be achieved via the time-integration of time-dependent (Beg et al. 2005; Avants et al. 2008) or time-stationary velocity fields (SVFs) (Arsigny et al. 2006; Ashburner 2007; Vercauteren et al. 2009; Hernandez et al. 2009). In the time-dependent setting (e.g., LDDMM (Beg et al. 2005) and SyN (Avants et al. 2008)), a diffeomorphic transformation $\phi$ is obtained via integrating the sufficiently smooth time-varying velocity fields $v^{(t)}$, i.e., $\frac{d}{dt}\phi^{(t)} = v^{(t)}(\phi^{(t)})$, where $\phi^{(0)} = \mathrm{id}$ is the identity transform. On the other hand, in the stationary velocity fields (SVFs) setting (e.g., DARTEL (Ashburner 2007) and diffeomorphic Demons (Vercauteren et al. 2009)), the velocity fields are assumed to be stationary over time, i.e., $\frac{d}{dt}\phi^{(t)} = v(\phi^{(t)})$. Dalca et al. (Dalca et al. 2019) first adopted the diffeomorphism formulation in a deep learning model, using the SVFs setting with an efficient scaling-and-squaring approach (Arsigny et al. 2006). In the scaling-and-squaring approach, the deformation field is represented as a Lie algebra member that is exponentiated to generate a time 1 deformation $\phi^{(1)}$, which is a member of the Lie group: $\phi^{(1)} = \exp(v)$. This means that the exponentiated flow field compels the mapping to be diffeomorphic and invertible using the same flow field. Starting from an initial deformation field:

$$\phi^{(1/2^T)} = p + \frac{v(p)}{2^T}, \tag{3}$$

where $p$ denotes the spatial locations. The $\phi^{(1)}$ can be obtained using the recurrence:

$$\phi^{(1/2^{t-1})} = \phi^{(1/2^t)} \circ \phi^{(1/2^t)}. \tag{4}$$

Thus, $\phi^{(1)} = \phi^{(1/2)} \circ \phi^{(1/2)}$.

In practice, a neural network first generates a displacement field, which is then scaled by $1/2^T$ to produce an initial deformation field $\phi^{(1/2^T)}$. Subsequently, the squaring technique (i.e., Eqn. 4) is applied recursively to $\phi^{(1/2^T)}$ $T$ times via a spatial transformation function, resulting in a final diffeomorphic deformation field $\phi^{(1)}$. Despite the fact that diffeomorphisms are theoretically guaranteed to be invertible, interpolation errors can lead to invertibility errors that increase linearly with the number of interpolation steps (Avants et al. 2008; Mok and Chung 2020).
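The scaling-and-squaring recurrence of Eqns. 3 and 4 can be illustrated with a small 1D sketch. This is a simplified illustration and not the 3D implementation used in this work: it assumes a 1D grid, stores the deformation as a displacement, and uses linear interpolation (`np.interp`) in place of a spatial transformation function. The function name and the choice of 7 squaring steps are assumptions.

```python
import numpy as np

def scaling_and_squaring_1d(velocity, num_steps=7):
    """Integrate a stationary 1D velocity field; returns the displacement of the time-1 map."""
    grid = np.arange(velocity.size, dtype=float)
    # Eq. (3): initial deformation p + v(p) / 2^T, stored as a displacement u(p).
    displacement = velocity / (2.0 ** num_steps)
    for _ in range(num_steps):
        # Eq. (4): (phi o phi)(p) = p + u(p) + u(p + u(p)).
        displacement = displacement + np.interp(grid + displacement, grid, displacement)
    return displacement

grid = np.arange(64, dtype=float)

# A constant velocity integrates to a pure translation by the same amount.
constant_flow = scaling_and_squaring_1d(np.full(64, 3.0))

# A smooth velocity field should yield an order-preserving (invertible) 1D mapping.
smooth_flow = scaling_and_squaring_1d(2.0 * np.sin(2.0 * np.pi * grid / 64.0))
mapped_positions = grid + smooth_flow
```

In 1D, topology preservation corresponds to the mapped positions remaining strictly increasing, which is the analogue of a positive Jacobian determinant in 3D.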

2.2. Self-attention Mechanism and Transformer

Transformer makes use of a self-attention mechanism that estimates the relevance of one input sequence to another via the Query-Key-Value (QKV) model (Vaswani et al. 2017; Dosovitskiy et al. 2020). The input sequences often originate from the flattened patches of an image. Let $x$ be an image volume defined over a 3D spatial domain (i.e., $x \in \mathbb{R}^{H \times W \times L}$). The image is first divided into $N$ flattened 3D patches $x_p \in \mathbb{R}^{N \times P^3}$, where $(H, W, L)$ is the size of the original image, $(P, P, P)$ is the size of each image patch, and $N = HWL/P^3$. Then, a learnable linear embedding $E$ is applied to $x_p$, which projects each patch into a $D \times 1$ vector representation:

$$\hat{x}_e = [x_p^1 E; x_p^2 E; \ldots; x_p^N E], \tag{5}$$

where the dimension $D$ is a user-defined hyperparameter. Then, a learnable positional embedding is added to $\hat{x}_e$ so that the patches can retain their positional information, i.e., $x_e = \hat{x}_e + E_{pos}$, where $E_{pos} \in \mathbb{R}^{N \times D}$. These vector representations, often known as tokens, are subsequently used as inputs for self-attention computations.
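As a rough sketch of the tokenization step, the snippet below splits a small volume into non-overlapping flattened patches, applies a linear embedding, and adds a positional embedding. The volume size, the values of `P` and `D`, and the randomly initialized matrices standing in for the learnable `E` and `E_pos` are all assumptions for illustration.

```python
import numpy as np

def patchify_3d(volume, patch_size):
    """Split an (H, W, L) volume into N flattened patches of length P^3."""
    H, W, L = volume.shape
    P = patch_size
    # Separate each axis into (number of patches, voxels within a patch).
    blocks = volume.reshape(H // P, P, W // P, P, L // P, P)
    # Bring the three patch-index axes to the front, then flatten each patch.
    blocks = blocks.transpose(0, 2, 4, 1, 3, 5)
    return blocks.reshape(-1, P ** 3)

rng = np.random.default_rng(0)
H, W, L, P, D = 8, 8, 12, 4, 16                  # illustrative sizes only
volume = rng.standard_normal((H, W, L))

patches = patchify_3d(volume, P)                 # shape (N, P^3), N = HWL / P^3
E = rng.standard_normal((P ** 3, D)) * 0.02      # random stand-in for the learnable embedding
E_pos = rng.standard_normal((patches.shape[0], D)) * 0.02  # stand-in positional embedding
tokens = patches @ E + E_pos                     # shape (N, D)
```

Here the 8 × 8 × 12 volume with P = 4 gives N = 12 tokens, each of dimension D = 16.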

Self-attention.

To compute self-attention (SA), $x_e \in \mathbb{R}^{N \times D}$ is encoded by $U_{qkv}$ (i.e., a linear layer) to three matrix representations: Queries $Q \in \mathbb{R}^{N \times D_h}$, Keys $K \in \mathbb{R}^{N \times D_h}$, and Values $V \in \mathbb{R}^{N \times D_h}$. The scaled dot-product attention is given by:

$$[Q, K, V] = x_e U_{qkv}, \quad A = \mathrm{softmax}\left(\frac{QK^\top}{\sqrt{D_h}}\right), \quad \mathrm{SA}(x_e) = AV, \tag{6}$$

where $U_{qkv} \in \mathbb{R}^{D \times 3D_h}$ and $A \in \mathbb{R}^{N \times N}$ is the attention weight matrix. Each element of $A$ represents the pairwise similarity between two elements of the input sequence, computed from their respective query and key representations. In general, SA computes a normalized score for each input token based on the dot product of the Query and Key representations. The score is subsequently applied to the Value representation of the token, signifying to the network whether or not to focus on this token.

Multi-head self-attention.

A Transformer employs multi-head self-attention (MSA) rather than a single attention function. MSA is an extension of self-attention in which $h$ self-attention operations (i.e., “heads”) are processed in parallel, thereby effectively increasing the number of trainable parameters. The outputs of the SA operations are then concatenated and projected onto a $D$-dimensional representation:

$$\mathrm{MSA}(x_e) = [\mathrm{SA}_1(x_e); \mathrm{SA}_2(x_e); \ldots; \mathrm{SA}_h(x_e)]\, U_{msa}, \tag{7}$$

where $U_{msa} \in \mathbb{R}^{hD_h \times D}$, and $D_h$ is typically set to $D/h$ in order to keep the number of parameters constant before and after the MSA operation.
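The self-attention and multi-head self-attention operations of Eqns. 6 and 7 can be sketched in a few lines of NumPy. This is an illustrative sketch, not the implementation used in this work: the weight names `U_qkv_heads` and `U_msa`, the random initialization, and the sizes `N`, `D`, and `h` are assumptions.

```python
import numpy as np

def softmax(scores):
    """Row-wise softmax with the usual max-subtraction for numerical stability."""
    shifted = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(shifted)
    return weights / weights.sum(axis=-1, keepdims=True)

def self_attention(tokens, U_qkv):
    """Scaled dot-product attention for one head; returns the output and the weights A."""
    Q, K, V = np.split(tokens @ U_qkv, 3, axis=-1)
    A = softmax(Q @ K.T / np.sqrt(Q.shape[-1]))
    return A @ V, A

def multi_head_self_attention(tokens, U_qkv_heads, U_msa):
    """Run each head, concatenate along the feature axis, and project back to D."""
    heads = [self_attention(tokens, U_qkv)[0] for U_qkv in U_qkv_heads]
    return np.concatenate(heads, axis=-1) @ U_msa

rng = np.random.default_rng(0)
N, D, h = 12, 16, 4          # illustrative sizes only
D_h = D // h                 # per-head dimension, D / h
x_e = rng.standard_normal((N, D))
U_qkv_heads = [rng.standard_normal((D, 3 * D_h)) for _ in range(h)]
U_msa = rng.standard_normal((h * D_h, D))

_, A = self_attention(x_e, U_qkv_heads[0])
msa_out = multi_head_self_attention(x_e, U_qkv_heads, U_msa)
```

Each row of `A` sums to one, so every output token is a convex combination of the Value vectors, and the MSA output keeps the input shape of N × D.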

2.3. Bayesian Deep Learning

Uncertainty estimates help to comprehend what a machine learning model does not know. They indicate the likelihood that a neural network may make an incorrect prediction. Because most deep neural networks are incapable of providing an estimate of the uncertainty in their output values, their predictions are frequently taken at face value and thought to be correct. Bayesian deep learning estimates predictive uncertainty, providing a realistic paradigm for understanding uncertainty within deep neural networks (Gal and Ghahramani 2016). The uncertainty caused by the parameters in a neural network is known as epistemic uncertainty, which is modeled by placing a prior distribution (e.g., a Gaussian prior distribution: $W \sim \mathcal{N}(0, I)$) on the parameters of a network and then attempting to capture how much these weights vary given specific data. Recent efforts in this area include Bayes by Backprop (Blundell et al. 2015), its closely related mean-field variational inference with an assumed Gaussian prior distribution (Tölle et al. 2021), stochastic batch normalization (Atanov et al. 2018), and Monte-Carlo (MC) dropout (Gal and Ghahramani 2016; Kendall and Gal 2017). Applications of Bayesian deep learning in medical imaging include image denoising (Tölle et al. 2021; Laves et al. 2020b) and image segmentation (DeVries and Taylor 2018; Baumgartner et al. 2019; Mehrtash et al. 2020). In deep-learning-based image registration, the majority of methods provide a single, deterministic solution of the unknown geometric transformation. Knowing about epistemic uncertainty helps determine if and to what degree the registration results can be trusted and whether the input data is appropriate for the neural network.

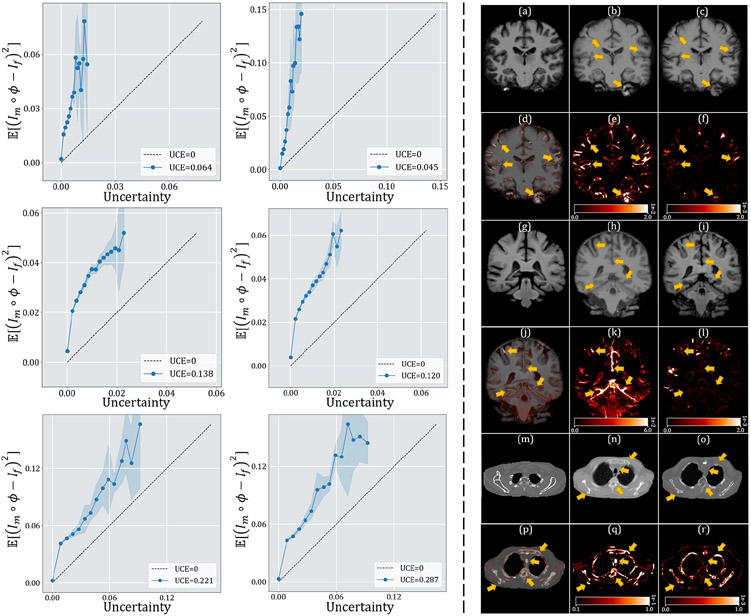

In general, two categories of registration uncertainty may be modeled using the epistemic uncertainty of a deep learning model: transformation uncertainty and appearance uncertainty (Luo et al. 2019; Xu et al. 2022). Transformation uncertainty measures the local ambiguity of the spatial transformation (i.e., the deformation), whereas appearance uncertainty quantifies the uncertainty in the intensity values of registered voxels or the volumes of the registered organs. Transformation uncertainty estimates may be used for uncertainty-weighted registration (Simpson et al. 2011; Kybic 2009), surgical treatment planning, or directly visualized for qualitative evaluations (Yang et al. 2017b). Appearance uncertainty may be translated into dose uncertainties in cumulative dose for radiation or radiopharmaceutical therapy (Risholm et al. 2011; Vickress et al. 2017; Chetty and Rosu-Bubulac 2019; Gear et al. 2018). These registration uncertainty estimates also enable the assessment of operative risks and lead to better-informed clinical decisions (Luo et al. 2019). Cui et al. (Cui et al. 2021) and Yang et al. (Yang et al. 2017b) incorporated MC dropout layers in their registration network designs, which allows for the estimation of transformation uncertainty by sampling multiple deformation field predictions from the network.
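The idea of estimating transformation uncertainty by sampling multiple predictions with dropout kept active can be sketched as follows. This is a toy illustration under strong assumptions: a single random linear layer stands in for a registration network, and the feature dimensions, dropout probability, and number of samples are hypothetical.

```python
import numpy as np

def predict_displacement(features, weights, rng, drop_prob=0.2):
    """One stochastic forward pass of a toy linear 'network' with dropout kept active."""
    keep_mask = rng.random(features.shape) >= drop_prob
    dropped = features * keep_mask / (1.0 - drop_prob)   # inverted dropout scaling
    return dropped @ weights

def mc_dropout_uncertainty(features, weights, num_samples=50, seed=0):
    """Sample several predictions and summarize them by per-voxel mean and variance."""
    rng = np.random.default_rng(seed)
    samples = np.stack(
        [predict_displacement(features, weights, rng) for _ in range(num_samples)]
    )
    return samples.mean(axis=0), samples.var(axis=0)

rng = np.random.default_rng(1)
features = rng.standard_normal((100, 8))   # 100 voxels with 8 hypothetical features each
weights = rng.standard_normal((8, 3))      # maps features to a 3D displacement vector
mean_field, variance_field = mc_dropout_uncertainty(features, weights)
```

The per-voxel variance across samples serves as a simple proxy for transformation uncertainty, while the mean can be used as the final displacement estimate.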

The proposed image registration framework expands on these ideas. In particular, a new registration framework is presented that leverages a Transformer in the network design. We demonstrate that this framework can be readily adapted to several existing techniques to allow diffeomorphism for image registration, and incorporate Bayesian deep learning to estimate registration uncertainty.

3. Methods

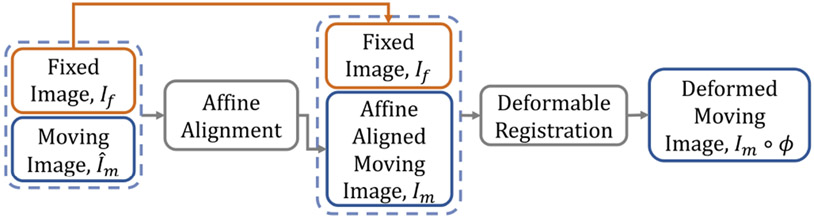

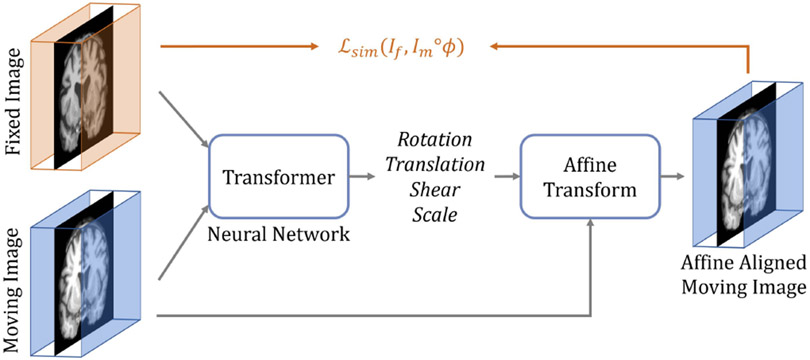

The conventional paradigm of image registration is shown in Fig. 2. The moving and fixed images, denoted respectively as $\hat{I}_m$ and $I_f$, are first affinely transformed into a single coordinate system. The resulting affine-aligned moving image is denoted as $I_m$. Subsequently, $I_m$ is warped to $I_f$ using a deformation field, $\phi$, generated by a DIR algorithm (i.e., $I_m \circ \phi$). Fig. 3 presents an overview of the proposed method. Here, both the affine transformation and the deformable registration are performed using Transformer-based neural networks. The affine Transformer takes $\hat{I}_m$ and $I_f$ as inputs and computes a set of affine transformation parameters (e.g., rotation angle, translation, etc.). These parameters are used to affinely align $\hat{I}_m$ with $I_f$ via an affine transformation function, yielding an aligned image $I_m$. Then, a DIR network computes a deformation field $\phi$ given $I_m$ and $I_f$, which warps $I_m$ using a spatial transformation function (i.e., $I_m \circ \phi$). During training, the DIR network may optionally include supplementary information (e.g., anatomical segmentation). The network architectures, the loss and regularization functions, and the variants of the method are described in detail in the following sections.

Fig. 2:

The conventional paradigm of image registration.

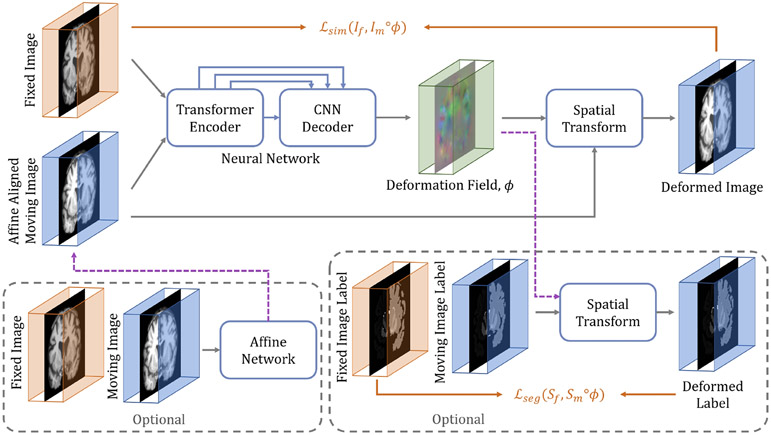

Fig. 3:

The overall framework of the proposed Transformer-based image registration model, TransMorph. The proposed hybrid Transformer-ConvNet network takes two inputs: a fixed image and a moving image that is affinely aligned with the fixed image. The network generates a nonlinear warping function, which is then applied to the moving image through a spatial transformation function. If an image pair has not been affinely aligned, an affine Transformer may be used prior to the deformable registration (left dashed box). Additionally, auxiliary anatomical segmentations may be leveraged during training of the proposed network (right dashed box).

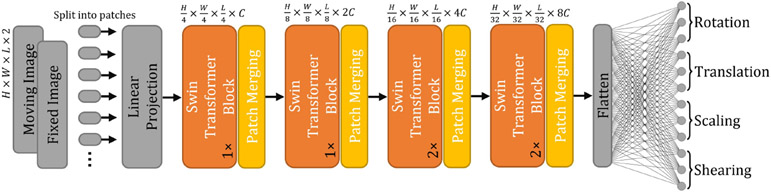

3.1. Affine Transformation Network

Affine transformation is often used as the initial stage in image registration because it facilitates the optimization of the following more complicated DIR processes (de Vos et al. 2019). An affine network examines a pair of moving and fixed images globally and produces a set of transformation parameters that aligns the moving image with the fixed image. Here, the architecture of the proposed Transformer-based affine network is a modified Swin Transformer (Liu et al. 2021a) that takes two 3D volumes as the inputs (i.e., $\hat{I}_m$ and $I_f$) and generates 12 affine parameters: three rotation angles, three translation parameters, three scaling parameters, and three shearing parameters. The details and a visualization of the architecture are shown in Fig. A.19 in the Appendix. We reduced the number of parameters in the original Swin Transformer due to the relative simplicity of affine registration. The specifics of the Transformer’s architecture and parameter settings are covered in a subsequent section.
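To illustrate how 12 affine parameters can define a 3D transformation, the sketch below assembles a 4 × 4 homogeneous matrix from three rotation angles, three translations, three scalings, and three shears. The composition order (scaling, then shearing, then rotation about x, y, and z, then translation) and the upper-triangular shear parameterization are assumptions made for this example and are not necessarily the convention used by the affine network.

```python
import numpy as np

def affine_matrix(rotation, translation, scale, shear):
    """Build a 4x4 homogeneous affine matrix from 12 parameters (angles in radians)."""
    rx, ry, rz = rotation
    Rx = np.array([[1, 0, 0],
                   [0, np.cos(rx), -np.sin(rx)],
                   [0, np.sin(rx), np.cos(rx)]])
    Ry = np.array([[np.cos(ry), 0, np.sin(ry)],
                   [0, 1, 0],
                   [-np.sin(ry), 0, np.cos(ry)]])
    Rz = np.array([[np.cos(rz), -np.sin(rz), 0],
                   [np.sin(rz), np.cos(rz), 0],
                   [0, 0, 1]])
    S = np.diag(scale)                      # per-axis scaling
    hxy, hxz, hyz = shear
    Sh = np.array([[1, hxy, hxz],           # assumed upper-triangular shear
                   [0, 1, hyz],
                   [0, 0, 1]])
    M = np.eye(4)
    M[:3, :3] = Rz @ Ry @ Rx @ Sh @ S       # assumed composition order
    M[:3, 3] = translation
    return M

# Neutral parameters give the identity transform.
identity = affine_matrix((0, 0, 0), (0, 0, 0), (1, 1, 1), (0, 0, 0))

# A quarter turn about z followed by a translation of (1, 2, 3).
quarter_turn = affine_matrix((0, 0, np.pi / 2), (1, 2, 3), (1, 1, 1), (0, 0, 0))
moved_point = quarter_turn @ np.array([1.0, 0.0, 0.0, 1.0])
```

With these conventions, the point (1, 0, 0) is rotated onto the y-axis and then translated, landing at (1, 3, 3).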

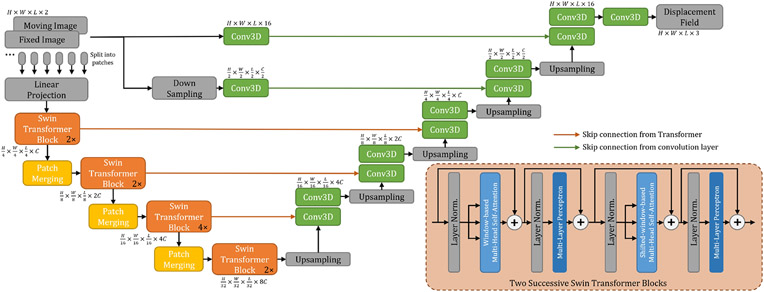

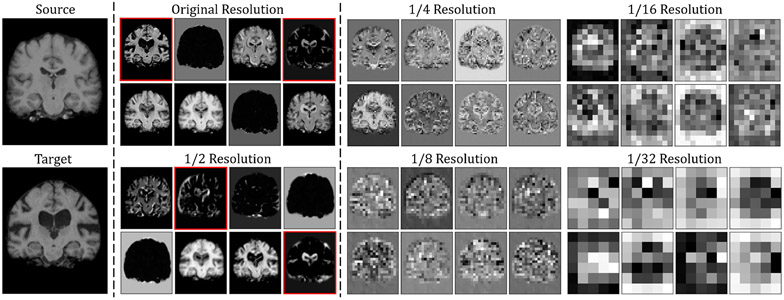

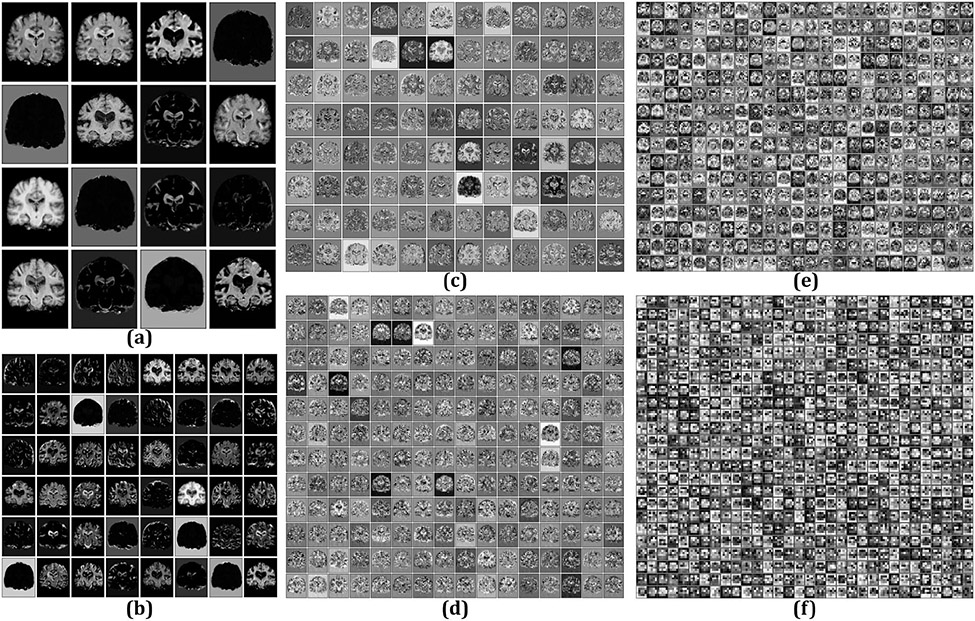

3.2. Deformable Registration Network

Fig. 1 shows the network architecture of the proposed TransMorph. The encoder of the network first splits the input moving and fixed volumes into non-overlapping 3D patches, each of size 2 × P × P × P, where P is typically set to 4 (Dosovitskiy et al. 2020; Liu et al. 2021a; Dong et al. 2021). We denote the $i$th patch as $x_p^i$, where $i \in \{1, \ldots, N\}$ and $N = \frac{H}{P} \times \frac{W}{P} \times \frac{L}{P}$ is the total number of patches. Each patch is flattened and regarded as a “token”, and then a linear projection layer is used to project each token to a feature representation of an arbitrary dimension (denoted as C):

$$z^0 = [x_p^1 E; x_p^2 E; \ldots; x_p^N E], \tag{8}$$

where $E \in \mathbb{R}^{2P^3 \times C}$ denotes the linear projection, and the output $z^0$ has a dimension of N × C.

Fig. 1:

The architecture of the proposed TransMorph registration network.

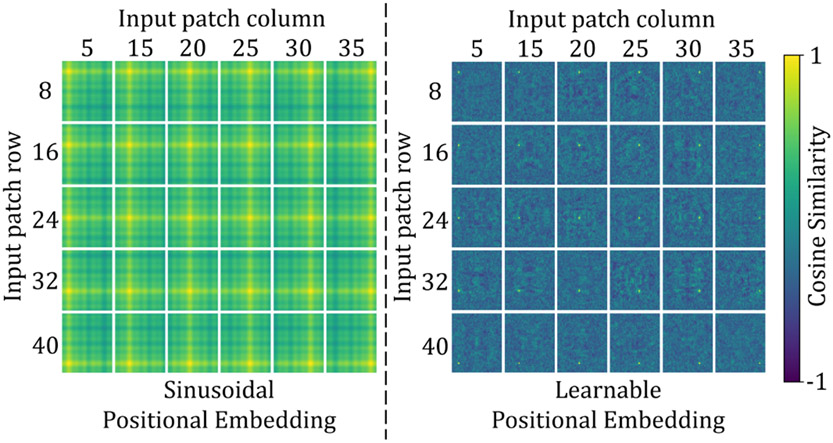

Because the linear projection operates on image patches and does not keep the token’s location relative to the image as a whole, previous Transformer-based models often added a positional embedding to the linear projections in order to integrate the positional information into tokens, i.e., $z^0 + E_{pos}$ (Vaswani et al. 2017; Dosovitskiy et al. 2020; Liu et al. 2021a; Dong et al. 2021). Such Transformers were primarily designed for image classification, where the output is often a vector describing the likelihood of an input image being classified as a certain class. Thus, if the positional embedding is not employed, the Transformer may lose the positional information. However, for pixel-level tasks such as image registration, the network often includes a decoder that generates a dense prediction with the same resolution as the input or target image. The spatial correspondence between voxels in the output image is enforced by comparing the output with the target image using a loss function. Any spatial mismatches between output and target would contribute to the loss and be backpropagated into the Transformer encoder. The Transformer should thereby inherently capture the tokens’ positional information. In this work, we observed, as will be shown in section 6.1.2, that positional embedding is not necessary for image registration, and it only adds extra parameters to the network without improving performance.

Following the linear projection layer, several consecutive stages of patch merging and Swin Transformer blocks (Liu et al. 2021a) are applied to the tokens. The Swin Transformer blocks output the same number of tokens as they receive, while the patch merging layers concatenate the features of each group of 2 × 2 × 2 neighboring tokens and thus reduce the number of tokens by a factor of 2 × 2 × 2 = 8. A linear layer is then applied to the 8C-dimensional concatenated features to produce features of dimension 2C. After four stages of Swin Transformer blocks, with three stages of patch merging in between (i.e., orange boxes in Fig. 1), the number of tokens at the last stage of the encoder has therefore been reduced by a factor of 8³ = 512, and the feature dimension has grown to 8C. The decoder consists of successive upsampling and convolutional layers with a kernel size of 3 × 3. Each upsampled feature map in the decoding stage is concatenated with the corresponding feature map from the encoding path via skip connections and then followed by two consecutive convolutional layers. As shown in Fig. 1, the Transformer encoder can only provide feature maps up to the resolution of the patch grid, owing to the nature of the patch operation (denoted by the orange arrows). Hence, the Transformer may fall short of delivering high-resolution feature maps and aggregating local information at lower layers (Raghu et al. 2021). To address this shortcoming, we employed two convolutional layers using the original and downsampled image pair as inputs to capture local information and generate high-resolution feature maps. The outputs of these layers were concatenated with the feature maps in the decoder to produce a deformation field. The output deformation field was generated by applying sixteen 3 × 3 convolutions. Except for the last convolutional layer, each convolutional layer is followed by a Leaky Rectified Linear Unit (Maas et al. 2013) activation. Finally, the spatial transformation function (Jaderberg et al. 2015) is used to apply a nonlinear warp to the moving image with the deformation field (or the displacement field) provided by the network.
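The token bookkeeping of the encoder can be traced with a short sketch. The helper name `encoder_stage_shapes` and the starting token grid of 40 × 48 × 56 are illustrative assumptions; only the merge rule (2 × 2 × 2 neighbors concatenated to 8C features, then projected to 2C) and the base value C = 96 from Table 2 come from the text.

```python
def encoder_stage_shapes(grid, channels, num_merges=3):
    """Trace (token grid, feature dimension) through the encoder stages.

    Swin Transformer blocks keep the token count unchanged, so only the
    patch merging layers between stages alter the shape.
    """
    shapes = [(tuple(grid), channels)]
    for _ in range(num_merges):
        # Each group of 2 x 2 x 2 neighboring tokens is concatenated (8C) ...
        grid = tuple(g // 2 for g in grid)
        # ... and a linear layer projects the 8C features down to 2C.
        channels *= 2
        shapes.append((grid, channels))
    return shapes


# Hypothetical starting grid of 40 x 48 x 56 tokens with C = 96.
for stage, (grid, dim) in enumerate(encoder_stage_shapes((40, 48, 56), 96), start=1):
    print(f"stage {stage}: tokens {grid}, feature dimension {dim}")
```

Under these assumptions the last stage holds 5 × 6 × 7 tokens with 768 = 8C features, i.e., 512 times fewer tokens than the first stage.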

In the next subsections, we discuss the Swin Transformer block, the spatial transformation function, and the loss functions in detail.

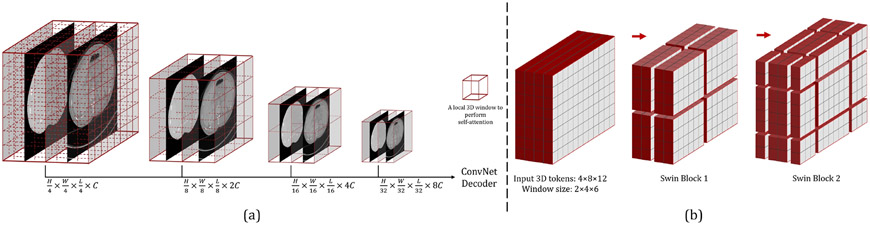

3.2.1. 3D Swin Transformer Block

Swin Transformer (Liu et al. 2021a) can generate hierarchical feature maps at various resolutions by using patch merging layers, making it well suited as a general-purpose backbone for pixel-level tasks like image registration and segmentation. Swin Transformer’s most significant component, apart from the patch merging layers, is the shifted window-based self-attention mechanism. Unlike ViT (Dosovitskiy et al. 2020), which computes the relationships between a token and all other tokens at each step of the self-attention modules, Swin Transformer computes self-attention within evenly partitioned, non-overlapping local windows of the original and the lower-resolution feature maps (as shown in Fig. 5 (a)). In contrast to the original Swin Transformer, this work uses rectangular-parallelepiped windows, whose size may differ along each axis, to accommodate non-square images. At each resolution, the first Swin Transformer block employs a regular window partitioning method, beginning with the top-left voxel, and the feature maps are evenly partitioned into non-overlapping windows of this size. The self-attention is then calculated locally within each window. To introduce connections between neighboring windows, the Swin Transformer uses a shifted window design: in the successive Swin Transformer block, the windowing configuration shifts from that of the preceding block, displacing the windows by half the window size (rounded down) along each axis. As illustrated by an example in Fig. 5 (b), the input feature map has 4 × 8 × 12 voxels. With a window size of 2 × 4 × 6, the feature map is evenly partitioned into 2 × 2 × 2 = 8 windows in the first Swin Transformer block (“Swin Block 1” in Fig. 5 (b)). Then, in the next block, the windows are shifted by (1, 2, 3) voxels, and the number of windows becomes 3 × 3 × 3 = 27. We extended the original 2D efficient batch computation (i.e., cyclic shift) (Liu et al. 2021a,b) to 3D and applied it to the 27 shifted windows, keeping the final number of windows for attention computation at 8. With the windowing-based attention, two consecutive Swin Transformer blocks can be computed as:

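$$
\begin{aligned}
\hat{z}^{\ell} &= \text{W-MSA}\left(\text{LN}\left(z^{\ell-1}\right)\right) + z^{\ell-1},\\
z^{\ell} &= \text{MLP}\left(\text{LN}\left(\hat{z}^{\ell}\right)\right) + \hat{z}^{\ell},\\
\hat{z}^{\ell+1} &= \text{SW-MSA}\left(\text{LN}\left(z^{\ell}\right)\right) + z^{\ell},\\
z^{\ell+1} &= \text{MLP}\left(\text{LN}\left(\hat{z}^{\ell+1}\right)\right) + \hat{z}^{\ell+1},
\end{aligned}
\tag{9}
$$

where W-MSA and SW-MSA denote, respectively, window-based multi-head self-attention and shifted-window-based multi-head self-attention modules; MLP denotes the multi-layer perceptron module (Vaswani et al. 2017); LN denotes layer normalization; and $\hat{z}^{\ell}$ and $z^{\ell}$ denote the output features of the (S)W-MSA and the MLP module for block $\ell$, respectively. The self-attention is computed as:

$$
\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^{\top}}{\sqrt{d}} + B\right)V,
\tag{10}
$$

where $Q$, $K$, and $V$ are the query, key, and value matrices, each with one row per token in a 3D window; $d$ denotes the dimension of the query and key features; and $B$ represents the relative position bias of the tokens in each window. Since the relative position between two tokens along an axis (i.e., x, y, or z) with a window length of $M$ can only take values from $[-M + 1, M - 1]$, the values in $B$ are taken from a smaller bias matrix with $2M - 1$ entries along each axis. For the reasons given previously, we will show in section 6.1.2 that the positional bias $B$ is not needed for the proposed network and that it just adds extra parameters without improving registration performance.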
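To make the size argument for the relative position bias concrete, the following sketch counts the entries involved for a single window. The function names are hypothetical, the window size {5, 6, 7} is the one reported for the brain MR experiments, and the per-head duplication of the table is ignored; it is an illustration of the counting argument, not the authors' implementation.

```python
from math import prod


def relative_offsets(window_len):
    """All possible relative positions between two tokens along one axis."""
    return list(range(-(window_len - 1), window_len))


def bias_sizes(window):
    """Return (entries in the full bias B, entries in the smaller bias table)."""
    tokens = prod(window)   # tokens in one 3D window
    full = tokens * tokens  # one bias value per ordered token pair
    # The smaller table only needs one entry per distinct 3D offset.
    table = prod(len(relative_offsets(m)) for m in window)
    return full, table


print(relative_offsets(3))
print(bias_sizes((5, 6, 7)))
```

For a 5 × 6 × 7 window, the full bias has 210 × 210 = 44100 entries, whereas the table of distinct offsets has only 9 × 11 × 13 = 1287.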

Fig. 5:

(a): Swin Transformer creates hierarchical feature maps by merging image patches. The self-attention is computed within each local 3D window (the red box). The feature maps generated at each resolution are sent into a ConvNet decoder to produce an output. (b): The 3D cyclic shift of local windows for shifted-window-based self-attention computation.
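The window counts in the example of Fig. 5 (b) can be reproduced with a few lines. This is a minimal sketch with hypothetical helper names; it assumes the shift equals half the window size along each axis, as in the original Swin Transformer, and it only counts windows rather than computing attention.

```python
from math import prod


def axis_segments(size, window, shift=0):
    """Split one axis into the pieces cut out by windows displaced by `shift`."""
    cuts = sorted({0, size, *range(shift, size, window)})
    return list(zip(cuts[:-1], cuts[1:]))


def count_windows(shape, window, shifted=False):
    """Count the windows covering a feature map, before any cyclic shift."""
    # Assumption: the shift is half the window size along each axis.
    shifts = [w // 2 if shifted else 0 for w in window]
    return prod(len(axis_segments(s, w, d)) for s, w, d in zip(shape, window, shifts))


print(count_windows((4, 8, 12), (2, 4, 6)))                # regular partition
print(count_windows((4, 8, 12), (2, 4, 6), shifted=True))  # shifted partition
```

The two calls give 8 and 27 windows, matching the example. The cyclic shift exists precisely to avoid processing the 27 irregular windows separately: rolling the feature map lets the attention again run over 8 full-sized windows.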

3.2.2. Loss Functions

The overall loss function for network training derives from the energy function of traditional image registration algorithms (i.e., Eqn. (1)). The loss function consists of two parts: one computes the similarity between the deformed moving and the fixed images, and another one regularizes the deformation field so that it is smooth:

| (11) |

where denotes the image fidelity measure, and denotes the deformation field regularization.

Image Similarity Measure.

In this work, we experimented with two widely used similarity metrics for the image fidelity measure. The first was the mean squared error, which is the mean of the squared difference in voxel values between the deformed moving image and the fixed image:

| (12) |

where denotes the voxel location, and represents the image domain.

The second similarity metric was the local normalized cross-correlation (LNCC) between the deformed moving image and the fixed image:

| (13) |

where the local means denote the mean voxel values of the two images within a local window of size n³ centered at each voxel. We used n = 9 in the experiments.

Deformation Field Regularization.

Optimizing the similarity metric alone would encourage the deformed moving image to be visually as close as possible to the fixed image. The resulting deformation field, however, might not be smooth or realistic. To impose smoothness on the deformation field, a regularizer was added to the loss function. The regularizer encourages the displacement value at a location to be similar to the values at its neighboring locations. Here, we experimented with two regularizers. The first was the diffusion regularizer (Balakrishnan et al. 2019):

| (14) |

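where the regularizer operates on the spatial gradients of the displacement field. The spatial gradients were approximated using forward differences, that is, the difference between the displacement at the next voxel along each axis and the displacement at the current voxel.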
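A forward-difference smoothness penalty of this kind can be sketched in plain Python. The function names, the nested-list layout `u[x][y][z]`, and the choice to average over all available differences are assumptions made for illustration; the normalization used by the authors is not recoverable from the text.

```python
def diffusion_regularizer(u):
    """Mean squared forward difference of a displacement field.

    `u[x][y][z]` is a 3-tuple holding the displacement at voxel (x, y, z).
    """
    nx, ny, nz = len(u), len(u[0]), len(u[0][0])
    total, count = 0.0, 0
    for x in range(nx):
        for y in range(ny):
            for z in range(nz):
                # Forward neighbors along x, y, z (skipped at the far border).
                for dx, dy, dz in ((1, 0, 0), (0, 1, 0), (0, 0, 1)):
                    xn, yn, zn = x + dx, y + dy, z + dz
                    if xn < nx and yn < ny and zn < nz:
                        diff = [a - b for a, b in zip(u[xn][yn][zn], u[x][y][z])]
                        total += sum(d * d for d in diff)
                        count += 1
    return total / count


def make_field(shape, fn):
    """Build a field by evaluating `fn(x, y, z)` at every voxel."""
    nx, ny, nz = shape
    return [[[fn(x, y, z) for z in range(nz)] for y in range(ny)] for x in range(nx)]


constant = make_field((3, 3, 3), lambda x, y, z: (1.0, 0.0, 0.0))     # pure translation
stretch = make_field((3, 3, 3), lambda x, y, z: (2.0 * x, 0.0, 0.0))  # varies along x
print(diffusion_regularizer(constant), diffusion_regularizer(stretch))
```

A pure translation incurs no penalty, because neighboring displacements are identical, while a field that changes from voxel to voxel is penalized in proportion to the squared change.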

The second regularizer was bending energy (Rueckert et al. 1999), which penalizes sharply curved deformations and thus may be helpful for abdominal organ registration. Bending energy operates on the second derivatives of the displacement field and is defined as:

| (15) |

where the derivatives were estimated using the same forward differences that were used previously.

Auxiliary Segmentation Information.

When the organ segmentations of the moving and fixed images are available, TransMorph may leverage this auxiliary information during training to improve the anatomical mapping between the two images. A loss function that quantifies the segmentation overlap is added to the overall loss function (Eqn. 11):

| (16) |

where and represent, respectively, the organ segmentation of and , and is a weighting parameter that controls the strength of . In the field of image registration, it is common to use Dice score (Dice 1945) as a figure of merit to quantify registration performance. Therefore, we directly minimized the Dice loss (Milletari et al. 2016) between and , where k represents the kth structure/organ:

| (17) |

To allow backpropagation of the Dice loss, we used a method similar to that described in (Balakrishnan et al. 2019), in which we designed and as image volumes with K channels, each channel containing a binary mask defining the segmentation of a specific structure/organ. Then, is computed by warping the K-channel with using linear interpolation so that the gradients of can be backpropagated into the network.

3.3. Probabilistic and B-spline Variants

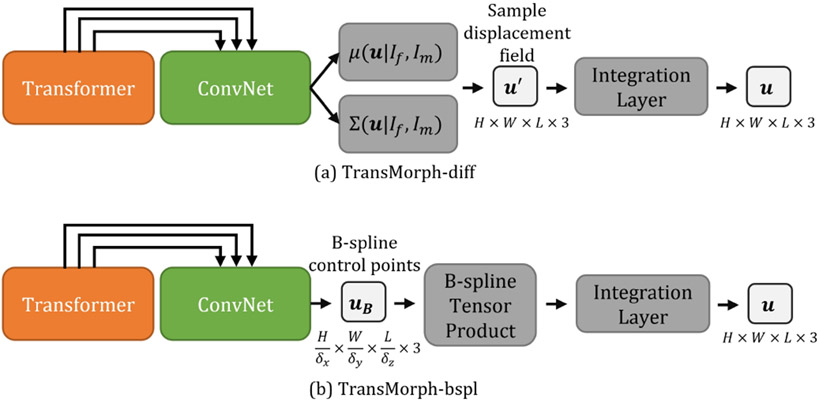

In this section, we demonstrate that by simply altering the decoder, TransMorph can be used in conjunction with concepts from prior research to ensure a diffeomorphic deformation, such that the resulting deformable mapping is continuous, differentiable, and topology-preserving. The diffeomorphic registration was achieved using the scaling-and-squaring approach (described in section 2.1.2) with a stationary velocity field representation (Arsigny et al. 2006). Two existing diffeomorphic models, VoxelMorph-diff (Dalca et al. 2019) and MIDIR (Qiu et al. 2021), have been adopted as bases for the proposed TransMorph diffeomorphic variants, designated TransMorph-diff (Appendix H) and TransMorph-bspl (Appendix I), respectively. The architectures of the two variants are shown in Fig. 6. The detailed derivations of these two variants are given in the corresponding appendices.

Fig. 6:

The probabilistic and B-spline variants of TransMorph. (a): The architecture of the probabilistic diffeomorphic TransMorph. (b): The architecture of the B-spline diffeomorphic TransMorph.

TransMorph-diff was trained using the same loss functions as VoxelMorph-diff (Dalca et al. 2019):

| (18) |

and when anatomical label maps are available:

| (19) |

However, it is important to note that in (Dalca et al. 2019), the segmentation inputs represent anatomical surfaces obtained from label maps. In contrast, we directly used the label maps in this work. They were image volumes with multiple channels, with each channel containing a binary mask defining the segmentation of a certain structure/organ.

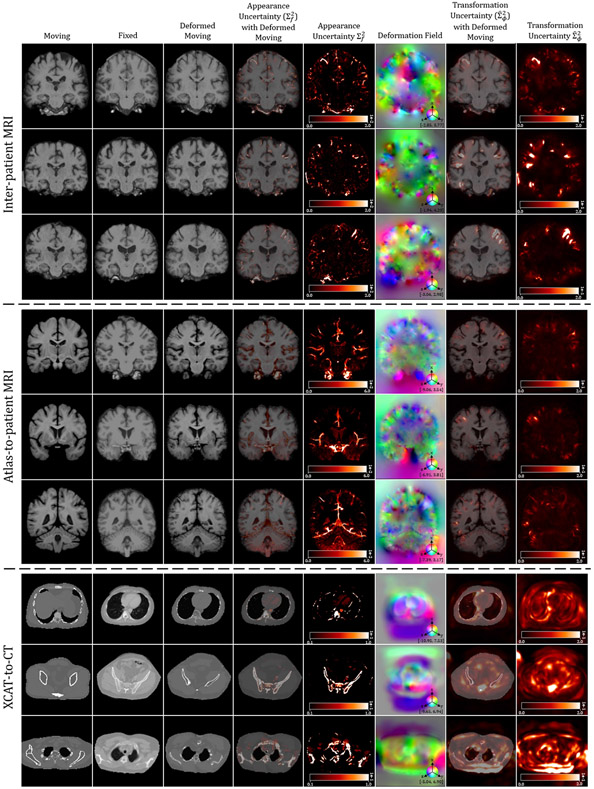

3.4. Bayesian Uncertainty Variant

In this section, we extend the proposed TransMorph to a Bayesian neural network (BNN) using the variational inference framework with Monte Carlo dropout (Gal and Ghahramani 2016), for which we refer readers to (Gal and Ghahramani 2016; Yang et al. 2017a, 2016) for both theoretical and technical details. We denoted the resulting model as TransMorph-Bayes. In this model, Dropout layers were inserted into the Transformer encoder of the TransMorph architecture but not into the ConvNet decoder, in order to avoid imposing excessive regularity for the network parameters and thus decreasing performance. We added a dropout layer after each fully connected layer in the MLPs (Eqn. 9) and after each self-attention computation (Eqn. 10). Note that these are the locations where dropout layers are commonly used for Transformer training. We set the dropout probability p to 0.15 to further avoid the network imposing an excessive degree of regularity on the network weights.

Both the transformation and appearance uncertainty can be estimated as the variability from the predictive mean (i.e., the variance), where the predictive mean of the deformation fields and the deformed images can be estimated by Monte Carlo integration (Gal and Ghahramani 2016):

| (20) |

and

| (21) |

This is equivalent to averaging the outputs of T forward passes through the network during inference, where the t-th deformation field is the one produced by the t-th forward pass. The transformation and appearance uncertainty can be estimated using the predictive variances of the deformation fields and the deformed images, respectively, as:

| (22) |

and

| (23) |
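The Monte Carlo estimates described above amount to a voxel-wise mean and variance over T stochastic forward passes. The sketch below uses a hypothetical helper and hand-written sample values in place of real network outputs, and it assumes the variance is normalized by T; the same routine applies to deformation fields and to deformed images once they are flattened.

```python
def predictive_mean_and_variance(samples):
    """Voxel-wise mean and variance over T stochastic forward passes.

    `samples[t][i]` is the value at voxel i produced by the t-th pass
    (dropout kept active at inference time).
    """
    T = len(samples)
    per_voxel = list(zip(*samples))  # regroup values voxel by voxel
    mean = [sum(vals) / T for vals in per_voxel]
    var = [sum((v - m) ** 2 for v in vals) / T for vals, m in zip(per_voxel, mean)]
    return mean, var


# Two hypothetical passes over a two-voxel image.
samples = [[0.0, 1.0], [2.0, 1.0]]
mean, var = predictive_mean_and_variance(samples)
print(mean, var)
```

The first voxel, where the passes disagree, receives a nonzero variance, while the second voxel, where they agree, receives zero uncertainty.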

3.4.1. Appearance uncertainty calibration

An ideal uncertainty estimate should be properly correlated to the inaccuracy of the registration results; that is, a high uncertainty value should indicate a large registration error, and vice versa. Otherwise, doctors/surgeons may be misled by the erroneous estimate of registration uncertainty and place unwarranted confidence in the registration results, resulting in severe consequences (Luo et al. 2019; Risholm et al. 2013, 2011). The appearance uncertainty given by Eqn. 23 is expressed as the variability from the mean model prediction. Such an appearance uncertainty estimation does not account for the systematic errors (i.e., bias) between the mean registration prediction and the target image; therefore, a low uncertainty value given by Eqn. 23 does not always guarantee an accurate registration result.

When the predicted uncertainty values closely correspond to the expected model error, the uncertainty estimates are considered to be well-calibrated (Laves et al. 2019; Levi et al. 2019). In an ideal scenario, the estimated registration uncertainty should completely reflect the actual registration error. For instance, if the predictive variance of a batch of registered images generated by the network is found to be 0.5, the expectation of the squared error should likewise be 0.5. Accordingly, if the expected model error is quantified by MSE, then the perfect calibration of appearance uncertainty may be defined as follows (Guo et al. 2017; Levi et al. 2019; Laves et al. 2020c):

| (24) |

In the conventional paradigm of Bayesian neural networks, the uncertainty estimate is derived from the predictive variance relative to the predictive mean, as in Eqn. 23. However, it can be shown that this predictive variance can be miscalibrated as a result of overfitting the training dataset (as shown in Appendix B). Therefore, the uncertainty values estimated using Eqn. 23 may be biased. This bias must be corrected in applications such as image denoising or classification (Laves et al. 2019; Guo et al. 2017; Kuleshov et al. 2018; Phan et al. 2018; Laves et al. 2020c,a), such that the uncertainty values closely reflect the expected error. In image registration, however, the expected appearance error can be computed even at test time, since the target image is always known. Therefore, a perfectly calibrated appearance uncertainty quantification may be achieved without additional effort. Here, we propose to replace the predicted mean with the target image in Eqn. 23. Then, the appearance uncertainty is equivalent to the expected error:

| (25) |

A comparison between the two appearance uncertainty estimate methods (i.e., and ) is shown later in this paper.
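The difference between the two estimates can be illustrated numerically. In the sketch below (hypothetical helper and made-up sample values, with a 1/T normalization assumed), measuring the spread around the target image instead of the predictive mean adds exactly the squared bias between the mean prediction and the target, which is the systematic error that the variance alone cannot see.

```python
def appearance_uncertainty(samples, reference=None):
    """Mean squared deviation of T warped images from a reference image.

    With `reference=None` the reference is the predictive mean (variance);
    passing the target image gives the error-based estimate instead.
    """
    T = len(samples)
    if reference is None:
        reference = [sum(vals) / T for vals in zip(*samples)]
    return [sum((v - r) ** 2 for v in vals) / T
            for vals, r in zip(zip(*samples), reference)]


# Three hypothetical passes over a two-voxel warped image, and the target.
samples = [[0.9, 0.2], [1.1, 0.2], [1.0, 0.2]]
target = [0.5, 0.2]

mean = [sum(vals) / len(samples) for vals in zip(*samples)]
variance = appearance_uncertainty(samples)        # spread around the mean
error = appearance_uncertainty(samples, target)   # spread around the target
print(variance, error)
```

At the first voxel the passes agree closely with one another (small variance) but are consistently far from the target, so only the error-based estimate flags the registration as unreliable there.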

4. Experiments

4.1. Datasets and Preprocessing

Three datasets including over 1000 image pairs were used to thoroughly validate the proposed method. The details of each dataset are described in the following sections.

4.1.1. Inter-patient Brain MRI Registration

For the inter-patient brain MR image registration dataset, we used a dataset of 260 T1-weighted brain MRI images acquired at Johns Hopkins University. The images were anonymized and acquired under IRB approval. The dataset was split into 182, 26, and 52 (7:1:2) volumes for training, validation, and test sets. Each image volume was used as a moving image to form two image pairs by randomly matching it to two other volumes in the set (i.e., the fixed images). Then, the roles of the moving and fixed images were swapped to form another two image pairs, resulting in four registration pairings of fixed and moving images. The final data comprises 768, 104, and 208 image pairs for training, validation, and testing, respectively. FreeSurfer (Fischl 2012) was used to perform standard preprocessing procedures for structural brain MRI, including skull stripping, resampling, and affine transformation. The pre-processed image volumes were all cropped to a size of 160 × 192 × 224. Label maps including 30 anatomical structures were obtained using FreeSurfer for evaluating registration performances.

4.1.2. Atlas-to-patient Brain MRI Registration

We used a publicly available dataset to evaluate the proposed model on the atlas-to-patient brain MRI registration task. A total of 576 T1-weighted brain MRI images from the Information extraction from Images (IXI) database² were used as the fixed images. The moving image for this task was an atlas brain MRI obtained from (Kim et al. 2021). The dataset was split into 403, 58, and 115 (7:1:2) volumes for training, validation, and test sets. FreeSurfer was used to pre-process the MRI volumes, following the same pre-processing procedures used for the previous dataset. All image volumes were cropped to a size of 160 × 192 × 224. Label maps of 30 anatomical structures were used to evaluate registration performances.

4.1.3. Learn2Reg OASIS Brain MRI Registration

We additionally evaluated TransMorph on a public registration challenge, OASIS (Marcus et al. 2007; Hoopes et al. 2021), obtained from the 2021 Learn2Reg challenge (Hering et al. 2021) for inter-patient registration. This dataset contains a total of 451 brain T1 MRI images, with 394, 19, and 38 images being used for training, validation, and testing, respectively. FreeSurfer (Fischl 2012) was used to pre-process the brain MRI images, and label maps for 35 anatomical structures were provided for evaluation.

4.1.4. XCAT-to-CT Registration

Computerized phantoms have been widely used in the medical imaging field for algorithm optimization and imaging system validation (Christoffersen et al. 2013; Chen et al. 2019; Zhang et al. 2017). The four-dimensional extended cardiac-torso (XCAT) phantom (Segars et al. 2010) was developed based on anatomical images from the Visible Human Project data. While the current XCAT phantom³ can model anatomical variations through organ and phantom scaling, it cannot completely replicate the anatomical variations seen in humans. As a result, XCAT-to-CT registration (which can be thought of as atlas-to-image registration) has become a key method for creating anatomically variable phantoms (Chen et al. 2020; Fu et al. 2021; Segars et al. 2013). This research used a CT dataset from (Segars et al. 2013) that includes 50 non-contrast chest-abdomen-pelvis (CAP) CT scans that are part of the Duke University imaging database. Selected organs and structures were manually segmented in each patient’s CT scan. The segmented structures included the body outline, bone structures, lungs, heart, liver, spleen, kidneys, stomach, pancreas, large intestine, prostate, bladder, gall bladder, and thyroid. The manual segmentation was done by several medical students, and the results were subsequently corrected by an experienced radiologist at Duke University. The CT volumes have voxel sizes ranging from 0.625 × 0.625 × 5 mm to 0.926 × 0.926 × 5 mm. We used trilinear interpolation to resample all volumes to an identical voxel spacing of 2.5 × 2.5 × 5 mm. The volumes were all cropped and zero-padded to a size of 160 × 160 × 160 voxels. The intensity values were first clipped to the range of [−1000, 700] Hounsfield Units and then normalized to the range of [0, 1]. The XCAT attenuation map was generated with a resolution of 1.1 × 1.1 × 1.1 mm using the material compositions and attenuation coefficients of the constituents at 120 keV. It was then resampled, cropped, and padded so that the resulting volume matched the size of the CT volumes. The XCAT attenuation map’s intensity values were also normalized to the range of [0, 1]. The XCAT and CT images were rigidly registered using the proposed affine network. The dataset was split into 35, 5, and 10 (7:1:2) volumes for training, validation, and testing. We conducted five-fold cross-validation on the fifty image volumes, resulting in 50 testing volumes in total.
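The CT intensity preprocessing can be sketched per voxel as follows. The function name is hypothetical, and the sketch assumes that "normalized" means a linear min-max rescaling over the clipping range, which the text does not state explicitly.

```python
def normalize_ct(hu, lo=-1000.0, hi=700.0):
    """Clip a Hounsfield Unit value to [lo, hi] and rescale it to [0, 1]."""
    clipped = min(max(hu, lo), hi)
    # Assumption: linear min-max rescaling over the clipping window.
    return (clipped - lo) / (hi - lo)


for hu in (-2000.0, -1000.0, -150.0, 700.0, 3000.0):
    print(hu, normalize_ct(hu))
```

Values below −1000 HU (e.g., air outside the body) collapse to 0 and values above 700 HU (e.g., dense bone or metal) collapse to 1, so the network sees a bounded intensity range regardless of scanner outliers.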

4.2. Baseline Methods

We compared TransMorph to various registration methods that have previously demonstrated state-of-the-art registration performance. We begin by comparing TransMorph with four non-deep-learning-based methods. The hyper-parameters of these methods, unless otherwise specified, were empirically set to balance the trade-off between registration accuracy and running time. The methods and their hyperparameter settings are described below:

SyN⁴ (Avants et al. 2008): For both inter-patient and atlas-to-patient brain MR registration tasks, we used the mean squared difference (MSQ) as the objective function, along with a default Gaussian smoothing of 3 and three scales with 180, 80, and 40 iterations, respectively. For XCAT-to-CT registration, we used cross-correlation (CC) as the objective function, a Gaussian smoothing of 5, and three scales with 160, 100, and 40 iterations, respectively.

NiftyReg⁵ (Modat et al. 2010): We used the sum of squared differences (SSD) as the objective function and bending energy as a regularizer for all registration tasks. For inter-patient brain MR registration, we empirically used a regularization weighting of 0.0002 and three scales with 300 iterations each. For atlas-to-patient brain MR registration, the regularization weighting was set to 0.0006, and we used three scales with 500 iterations each. For XCAT-to-CT registration, we used a regularization weight of 0.0005 and five scales with 500 iterations each.

deedsBCV⁶ (Heinrich et al. 2015): The objective function was self-similarity context (SSC) (Heinrich et al. 2013b) by default. For both inter-patient and atlas-to-patient brain MR registration, we used the hyperparameter values suggested in (Hoffmann et al. 2020) for neuroimaging, in which the grid spacing, search radius, and quantization step were set to 6 × 5 × 4 × 3 × 2, 6 × 5 × 4 × 3 × 2, and 5 × 4 × 3 × 2 × 1, respectively. For XCAT-to-CT registration, we used the default parameters suggested for abdominal CT registration (Heinrich et al. 2015), where the grid spacing, search radius, and quantization step were 8 × 7 × 6 × 5 × 4, 8 × 7 × 6 × 5 × 4, and 5 × 4 × 3 × 2 × 1, respectively.

LDDMM⁷ (Beg et al. 2005): MSE was used as the objective function by default. For both inter-patient and atlas-to-patient brain MR registration, we used a smoothing kernel size of 5, a smoothing kernel power of 2, a matching term coefficient of 4, a regularization term coefficient of 10, and 500 iterations. For XCAT-to-CT registration, we used the same kernel size, kernel power, matching term coefficient, and number of iterations. However, the regularization term coefficient was empirically set to 3.

Next, we compared the proposed method with several existing deep-learning-based methods. For a fair comparison, unless otherwise indicated, the loss function (Eqn. 11) that consists of MSE (Eqn. 12) and diffusion regularization (Eqn. 14) was used for inter-patient brain MR registration, while we instead used LNCC (Eqn. 13) for atlas-to-patient MRI registration. For XCAT-to-CT registration, we used the loss function (Eqn. 16) that consists of LNCC (Eqn. 13), bending energy (Eqn. 15), and Dice loss (Eqn. 17). Auxiliary data (organ segmentation) was used for XCAT-to-CT registration only. Recall that the hyperparameters and define, respectively, the weight for deformation field regularization and Dice loss. The detailed parameter settings used for each method were as follows:

VoxelMorph⁸ (Balakrishnan et al. 2018, 2019): We employed two variants of VoxelMorph, in which the second variant doubles the number of convolution filters of the first; they are designated as VoxelMorph-1 and VoxelMorph-2, respectively. For inter-patient and atlas-to-patient brain MR registration, the regularization hyperparameter was set, respectively, to 0.02 and 1, where these values were reported as the optimal values in (Balakrishnan et al. 2019). For XCAT-to-CT registration, we set .

VoxelMorph-diff⁹ (Dalca et al. 2019): For both inter-patient and atlas-to-patient brain MR registration tasks, the loss function (Eqn. 18) was used with set to 0.01 and set to 20. For XCAT-to-CT registration, we used the loss function (Eqn. 19) with and .

CycleMorph¹⁰ (Kim et al. 2021): In CycleMorph, the hyperparameters , , and , correspond to the weights for cycle loss, identity loss, and deformation field regularization. For inter-patient brain MR registration, we set = 0.1, = 0.5, and . Whereas for atlas-to-patient brain MR registration, we set = 0.1, = 0.5, and . These values were recommended in (Kim et al. 2021) as the optimal values for neuroimaging. For XCAT-to-CT registration, we modified CycleMorph by adding a Dice loss with a weighting of 1 to incorporate organ segmentation during training, and we set = 0.1 and = 1. We observed that the value of 1 suggested in (Kim et al. 2021) yielded an over-smoothed deformation field in our application. Therefore, the value of was decreased to 0.1.

MIDIR¹¹ (Qiu et al. 2021): The same loss function and value as VoxelMorph were used. In addition, the control point spacing for B-spline transformation was set to 2 for all tasks, which was shown to be an optimal value in (Qiu et al. 2021).

To evaluate the proposed Swin-Transformer-based network architecture, we compared its performance to existing Transformer-based networks that achieved state-of-the-art performance in other applications (e.g., image segmentation, object detection, etc.). We customized these models to make them suitable for image registration. They were modified to produce 3-dimensional deformation fields that warp the given moving image. Note that the only change between the methods below and VoxelMorph is the network architecture, with the spatial transformation function, loss function, and network training procedures remaining the same. The first three models used the hybrid Transformer-ConvNet architecture (i.e., ViT-V-Net, PVT, and CoTr), while the last model used a pure Transformer-based architecture (i.e., nnFormer). Their network hyperparameter settings were as follows:

ViT-V-Net¹² (Chen et al. 2021a): This registration network was developed based on ViT (Dosovitskiy et al. 2020). We applied the default network hyperparameter settings suggested in (Chen et al. 2021a).

PVT¹³ (Wang et al. 2021c): The default settings were applied, except that the embedding dimensions were set to {20, 40, 200, 320}, the number of heads was set to {2, 4, 8, 16}, and the depth was increased to {3, 10, 60, 3} to achieve a number of parameters comparable to that of TransMorph.

CoTr¹⁴ (Xie et al. 2021): We used the default network settings for all registration tasks.

nnFormer¹⁵ (Zhou et al. 2021): Because nnFormer was also developed on the basis of Swin Transformer, we applied the same Transformer hyperparameter values as in TransMorph to make a fair comparison.

4.3. Implementation Details

The proposed TransMorph was implemented using PyTorch (Paszke et al. 2019) on a PC with an NVIDIA TITAN RTX GPU and an NVIDIA RTX3090 GPU. All models were trained for 500 epochs using the Adam optimization algorithm, with a learning rate of 1 × 10⁻⁴ and a batch size of 1. The brain MR dataset was augmented with flipping in random directions during training, while no data augmentation was applied to the CT dataset. Restricted by the sizes of the image volumes, the window sizes used in Swin Transformer were set to {5, 6, 7} for MR brain registration and {5, 5, 5} for XCAT-to-CT registration. The Transformer hyperparameter settings for TransMorph are listed in the first row of Table 2. Note that the variants of TransMorph (i.e., TransMorph-Bayes, TransMorph-bspl, and TransMorph-diff) share the same Transformer settings as TransMorph. The hyperparameter settings for each proposed variant are described as follows:

Table 2:

The architecture hyperparameters of the TransMorph models used in the ablation study. “Embed. Dimension” denotes the embedding dimension, C, in the very first stage (described in section 3.2); “Swin-T.” denotes Swin Transformer.

| Model | Embed. Dimension | Swin-T. block numbers | Head numbers | Parameters (M) |
|---|---|---|---|---|
| TransMorph | 96 | {2, 2, 4, 2} | {4, 4, 8, 8} | 46.77 |
| TransMorph-tiny | 6 | {2, 2, 4, 2} | {4, 4, 8, 8} | 0.24 |
| TransMorph-small | 48 | {2, 2, 4, 2} | {4, 4, 4, 4} | 11.76 |
| TransMorph-large | 128 | {2, 2, 12, 2} | {4, 4, 8, 16} | 108.34 |
| VoxelMorph-huge | - | - | - | 63.25 |

TransMorph: The identical loss function parameters as VoxelMorph were used for all tasks.

TransMorph-Bayes: The identical loss function parameters as VoxelMorph were applied here for all tasks. The dropout probability was set to 0.15.

TransMorph-bspl: The loss function settings for all tasks were the same ones as those used in VoxelMorph. The control point spacing, , for B-spline transformation was also set to 2, the same value used in MIDIR.

TransMorph-diff: We applied the same loss function parameters as those used in VoxelMorph-diff.

The affine model presented in this work comprises a compact Swin Transformer. The Transformer parameter settings were identical to those of TransMorph, except that the embedding dimension was set to 12, the numbers of Swin Transformer blocks were set to {1, 1, 2, 2}, and the head numbers were set to {1, 1, 2, 2}. The resulting affine model has a total of 19.55 million parameters and a computational complexity of 0.4 GMacs. Because the MRI datasets were affinely aligned as part of the preprocessing, the affine model was only used in the XCAT-to-CT registration.

4.4. Additional Studies

In this section, we present experiments designed to verify the effect of the various Transformer modules in TransMorph architecture. Specifically, we carried out two additional studies of network components and model complexity. They are performed using the validation datasets from the three registration tasks, and the system-level comparisons are reported on test datasets. The following subsections provide detailed descriptions of these studies.

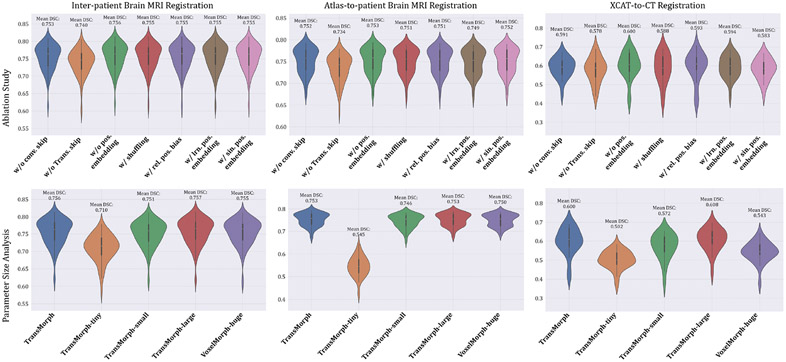

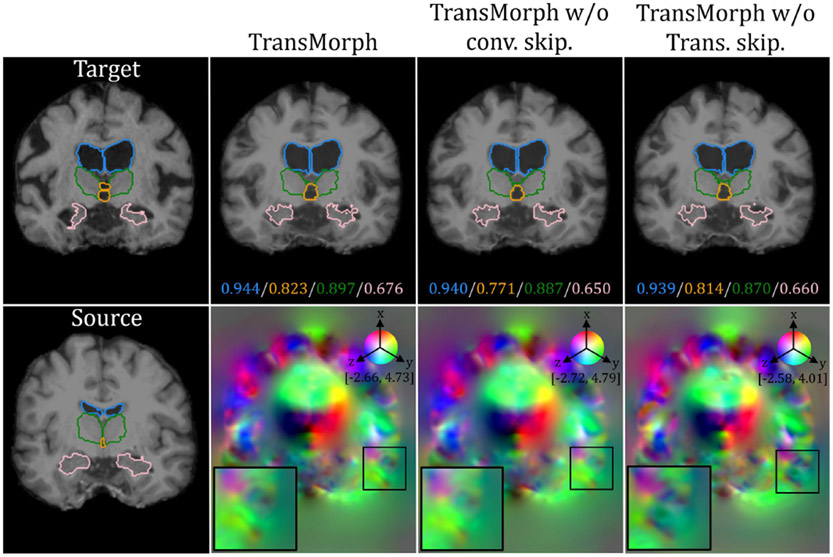

4.4.1. Ablation study on network components

We begin by examining the effects of several network components on registration performance. Table 1 lists three variants of TransMorph that either keep or remove the network’s long skip connections or the positional embeddings in the Transformer encoder. In “w/o conv. skip.”, the long skip connections from the two convolutional layers (the green arrows in Fig. 1) were removed, along with the two convolutional layers themselves. In “w/o trans. skip.”, the long skip connections coming from the Swin Transformer blocks (the orange arrows in Fig. 1) were removed. We claimed in section 3.2 that the positional embedding (see Eqn. 8) was not a necessary element of TransMorph, because the positional information of tokens can be learned implicitly in the network via the consecutive up-sampling in the decoder and backpropagating the loss between output and target. Here, we conducted experiments to study the effectiveness of positional embeddings. Table 1 also lists five variants of TransMorph that either keep or remove the positional embeddings in the Transformer encoder. In the third variant, “w/o positional embedding”, we did not employ any type of positional embedding. In the fourth variant, “w/ shuffling”, we did not employ any positional embedding but instead randomly shuffled the positions of the tokens (i.e., the dimension N of z in Eqn. 8 and 9) just before the self-attention calculation. Following the self-attention calculation, the positions are permuted back into their original order. This way, the self-attention modules in the Transformer encoder are truly invariant to the order of the tokens. In the fifth variant, “w/ rel. positional bias”, we used the relative positional bias in the self-attention computation (i.e., B in Eqn. 10) as used in the Swin Transformer (Liu et al. 2021a). In the second-to-last variant, “w/ lrn. positional embedding”, we added the same learnable positional embedding to the patch embeddings at the start of the Transformer encoder as used in the ViT (Dosovitskiy et al. 2020) while keeping the relative positional bias. In the last variant, “w/ sin. positional embedding”, we substituted the learnable positional embedding with a sinusoidal positional embedding, the same embedding used in the original Transformer (Vaswani et al. 2017), which hardcodes the positional information in the tokens.
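The shuffle-and-restore procedure of the “w/ shuffling” variant can be sketched as follows. The function names are hypothetical, and `toy_attention` is only a stand-in for a self-attention module without positional terms: each output depends on its own token and on an order-independent summary of all tokens.

```python
import random


def shuffled_attention(tokens, attention, rng=random):
    """Apply `attention` to randomly permuted tokens, then undo the permutation."""
    order = list(range(len(tokens)))
    rng.shuffle(order)
    shuffled = [tokens[i] for i in order]
    out = attention(shuffled)
    # Permute the outputs back so that position i again belongs to token i.
    restored = [None] * len(tokens)
    for new_pos, old_pos in enumerate(order):
        restored[old_pos] = out[new_pos]
    return restored


def toy_attention(seq):
    """Stand-in for attention: own value plus an order-independent summary."""
    total_len = sum(len(t) for t in seq)
    return [f"{t}|{total_len}" for t in seq]


tokens = ["t0", "t1", "t2", "t3", "t4"]
print(shuffled_attention(tokens, toy_attention))
```

Because the stand-in carries no positional terms, the shuffled pipeline returns the same result as applying it directly. This is the property the ablation relies on: any positional information the network uses must then come from elsewhere, such as the decoder and the loss.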

Table 1:

The ablation study of TransMorph models with skip connections and positional embedding. “Conv. skip.” denotes the skip-connections from convolutional layers (indicated by green arrows in Fig. 1); “Trans. skip,” denotes the skip-connections from the Transformer blocks (indicated by orange arrows in Fig. 1); “lrn. positional embedding” denotes the learnable positional embedding; “sin. positional embedding” denotes the sinusoidal positional embedding.

| Model | Conv. skip. | Trans. skip. | Parameters (M) |
|---|---|---|---|
| w/o conv. skip. | - | ✓ | 46.70 |
| w/o Trans. skip. | ✓ | - | 41.55 |
| w/o positional embedding | ✓ | ✓ | 46.77 |
| w/ shuffling | ✓ | ✓ | 46.77 |
| w/ rel. positional bias | ✓ | ✓ | 46.77 |
| w/ lrn. positional embedding | ✓ | ✓ | 63.63 |
| w/ sin. positional embedding | ✓ | ✓ | 46.77 |

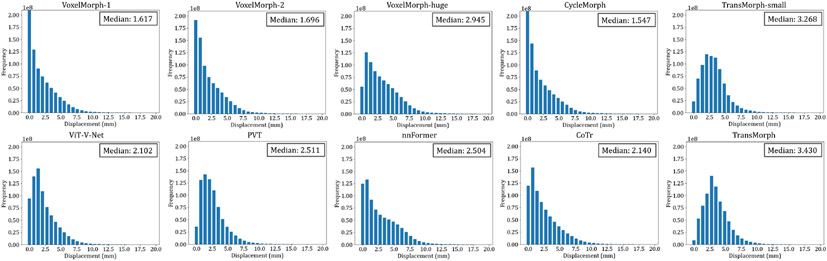

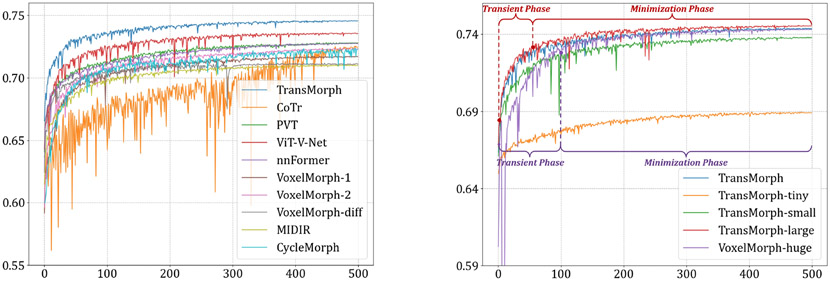

4.4.2. Model complexity study

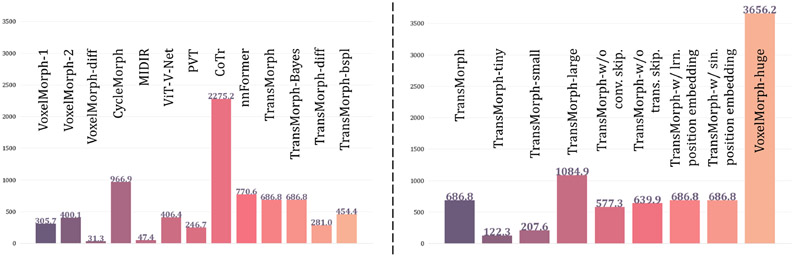

The impact of model complexity on registration performance was also investigated in this paper. Table 2 lists the parameter settings and the number of trainable parameters of four variants of the proposed TransMorph model. In the base model, TransMorph, the embedding dimension C was set to 96, and the number of Swin Transformer blocks in the four stages of the encoder was set to 2, 2, 4, and 2, respectively. Additionally, we introduced TransMorph-tiny, TransMorph-small, and TransMorph-large, which are about 1/200×, 1/4×, and 2× the model size of TransMorph. Finally, we compared our model to a customized VoxelMorph (denoted VoxelMorph-huge), which has a parameter count comparable to that of TransMorph w/ lrn. positional embedding. Specifically, we maintained the same number of layers in VoxelMorph-huge as in VoxelMorph but increased the number of convolution kernels in each layer. As a result, VoxelMorph-huge has 63.25 million trainable parameters.

4.5. Evaluation Metrics

The registration performance of each model was evaluated based on the volume overlap between anatomical/organ segmentations, which was quantified using the Dice score (Dice 1945). We averaged the Dice scores of all anatomical/organ structures for each patient. The mean and standard deviation of these per-patient averages were then compared across the registration methods.
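The overlap measure described above can be sketched as follows. This is a minimal illustration, assuming the segmentations are integer label maps stored as NumPy arrays; the function names `dice_score` and `mean_dice` and the toy arrays are hypothetical and not taken from the paper's code.

```python
import numpy as np


def dice_score(seg_a, seg_b, label):
    """Dice overlap 2|A n B| / (|A| + |B|) for one label in two label maps."""
    a = np.asarray(seg_a) == label
    b = np.asarray(seg_b) == label
    denom = a.sum() + b.sum()
    if denom == 0:
        return float("nan")  # structure absent from both maps
    return 2.0 * np.logical_and(a, b).sum() / denom


def mean_dice(seg_warped, seg_fixed, labels):
    """Average Dice over the given structure labels, skipping absent ones."""
    scores = [dice_score(seg_warped, seg_fixed, lab) for lab in labels]
    return float(np.nanmean(scores))


# Toy 1D "label maps"; labels 1 and 2 stand in for two anatomical structures.
warped = np.array([0, 1, 1, 1, 2, 2, 0, 0])
fixed = np.array([0, 0, 1, 1, 2, 2, 2, 0])
print(dice_score(warped, fixed, 1))  # 2*2 / (3+2) = 0.8
print(mean_dice(warped, fixed, [1, 2]))
```

In practice, `mean_dice` would be computed once per patient, and the mean and standard deviation of those values would then be taken across patients.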

To quantify the regularity of the deformation fields, we also reported the percentages of non-positive values in the determinant of the Jacobian matrix on the deformation fields (i.e., $|J_\phi| \leq 0$).
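A minimal sketch of this regularity measure is given below. It assumes the deformation is φ(x) = x + u(x), with the displacement u stored as a NumPy array of shape (3, D, H, W) in voxel units, and it estimates the spatial derivatives with `np.gradient`. The function names and the two toy fields are hypothetical; the finite-difference scheme used for the reported numbers may differ.

```python
import numpy as np


def jacobian_determinant(disp):
    """Determinant of the Jacobian of phi(x) = x + u(x) at every voxel.

    disp: array of shape (3, D, H, W), displacement u in voxel units.
    """
    # grads[i][j] approximates d u_i / d x_j with finite differences.
    grads = [np.gradient(disp[i]) for i in range(3)]
    jac = np.empty(disp.shape[1:] + (3, 3))
    for i in range(3):
        for j in range(3):
            # Jacobian of phi is the identity plus the gradient of u.
            jac[..., i, j] = grads[i][j] + (1.0 if i == j else 0.0)
    return np.linalg.det(jac)


def percent_folded(disp):
    """Percentage of voxels whose Jacobian determinant is non-positive."""
    det = jacobian_determinant(disp)
    return 100.0 * float(np.mean(det <= 0))


shape = (4, 4, 4)
identity = np.zeros((3,) + shape)  # u = 0, so phi is the identity map
flip = np.zeros((3,) + shape)
# u_0 = -2 * x_0 mirrors the first axis, so the determinant is -1 everywhere.
flip[0] = -2.0 * np.arange(4, dtype=float)[:, None, None]

print(percent_folded(identity))
print(percent_folded(flip))
```

The identity field has no folded voxels, whereas the mirrored field is folded at every voxel, which gives the two extremes of the metric.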

Additionally, for XCAT-to-CT registration, we used the structural similarity index (SSIM) (Wang et al. 2004) to quantify the structural difference between the deformed XCAT and the target CT images. The mean and standard deviation of the SSIM values of all patients were reported and compared.
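For orientation, the SSIM formula of Wang et al. (2004) can be sketched as below. This is a simplified, single-window version that computes the statistics over the whole image; the standard SSIM averages the same expression over local windows, so the values here will not match a windowed implementation. The function name `global_ssim`, the `data_range` argument, and the toy images are assumptions made for illustration; the constants K1 = 0.01 and K2 = 0.03 are the commonly used defaults.

```python
import numpy as np


def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM computed from whole-image statistics."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    c1 = (k1 * data_range) ** 2  # stabilizes the luminance term
    c2 = (k2 * data_range) ** 2  # stabilizes the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    num = (2.0 * mu_x * mu_y + c1) * (2.0 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(num / den)


# Toy images in [0, 1]: a ramp and its intensity-inverted copy.
img = np.linspace(0.0, 1.0, 64).reshape(8, 8)
inverted = 1.0 - img
print(global_ssim(img, img))
print(global_ssim(img, inverted))
```

An image compared with itself scores 1 up to floating-point error, while the inverted copy scores much lower because its covariance with the original is negative.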

5. Results

5.1. Inter-patient Brain MRI Registration

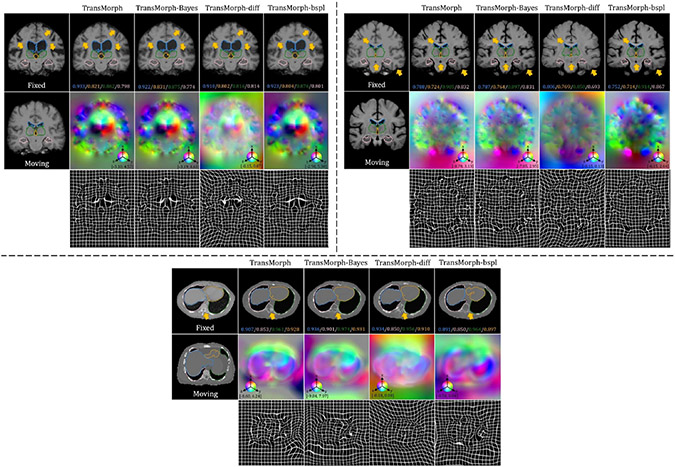

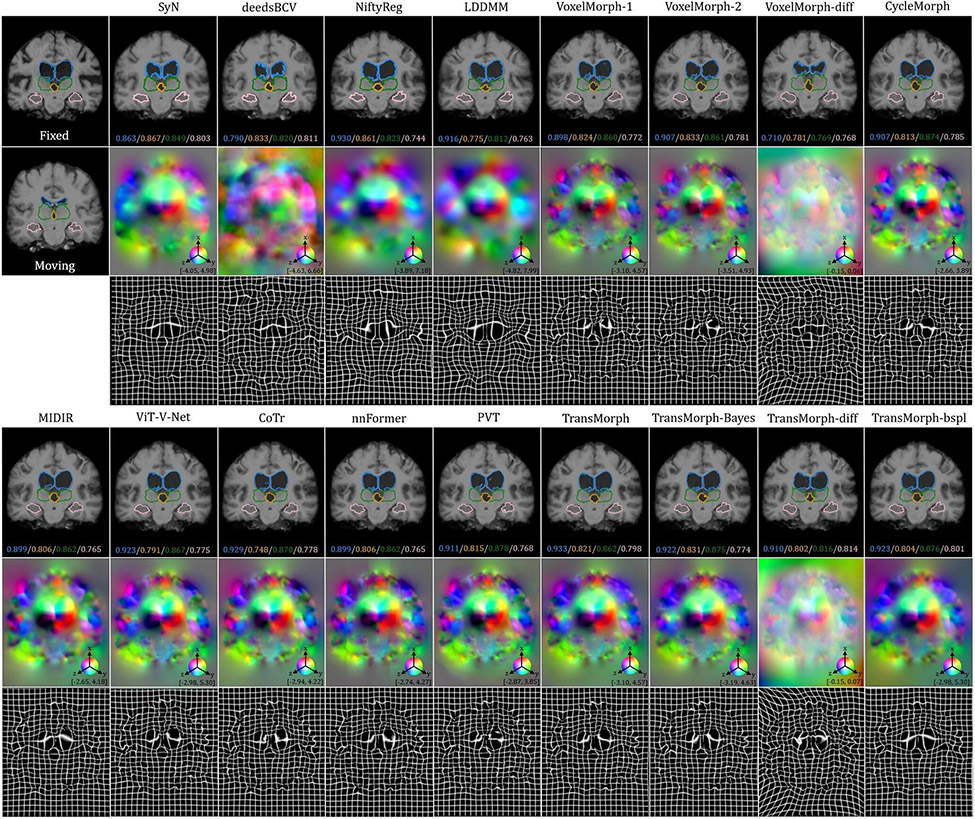

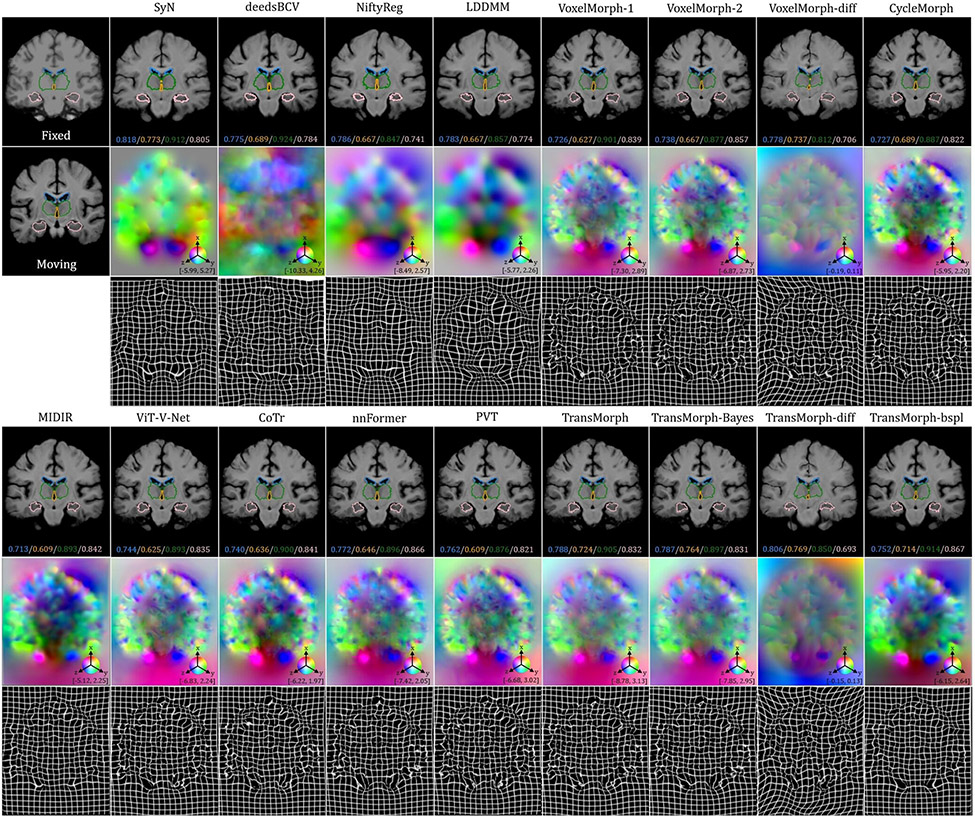

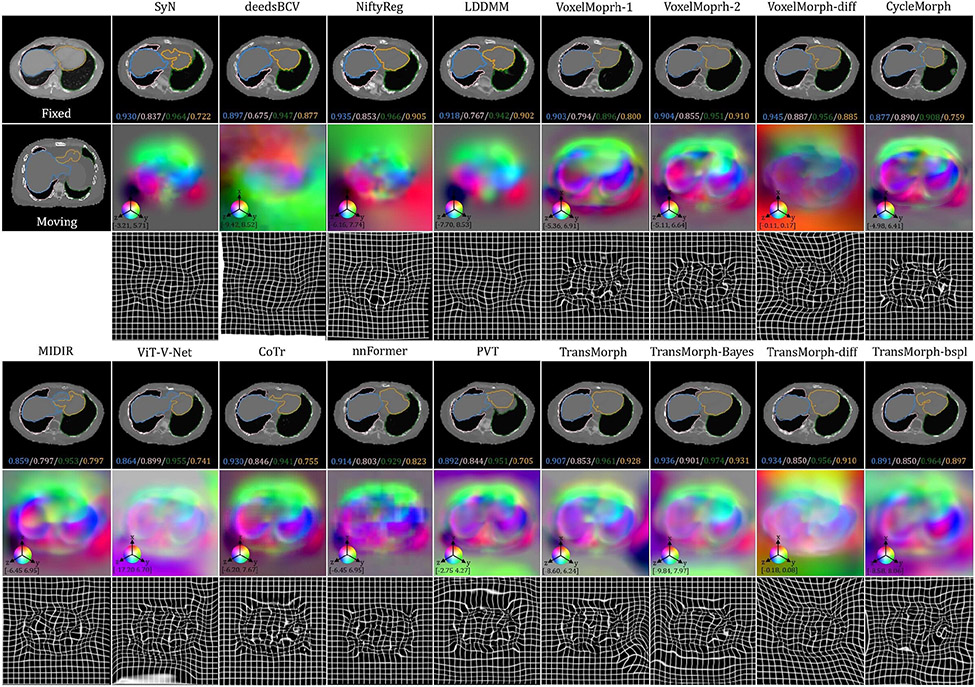

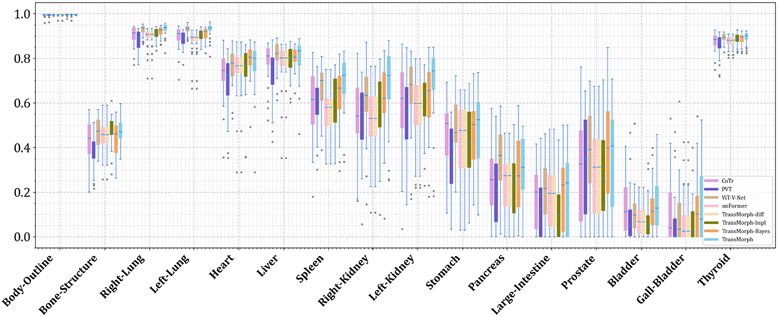

The top-left panel of Fig. 8 shows the qualitative results of a sample slice for inter-patient brain MRI registration. The scores in blue, orange, green, and pink correspond to ventricles, third ventricle, thalami, and hippocampi, respectively. Additional qualitative comparisons across all methods are shown in Fig. C.20 in Appendix C. Among the proposed models, diffeomorphic variants (i.e., TransMorph-diff and TransMorph-bspl) generated smoother displacement fields, with TransMorph-bspl producing the smoothest deformations inside the brain area. On the other hand, TransMorph and TransMorph-Bayes showed better qualitative results (highlighted by the yellow arrows) with higher Dice scores for the delineated structures.

Fig. 8:

Qualitative results of TransMorph (2nd column) and its Bayesian (3rd column), probabilistic (4th column), and B-spline (5th column) variants. Top-left & Top-right panels: Results of inter-patient and atlas-to-patient brain MRI registration. The blue, orange, green, and pink contours define, respectively, the ventricles, third ventricle, thalami, and hippocampi. Bottom panel: Results of XCAT-to-CT registration. The blue, orange, green, and pink contours define, respectively, the liver, heart, left lung, and right lung. The second row in both panels exhibits the displacement fields, where the spatial dimensions x, y, and z are mapped to the R, G, and B color channels, respectively. The [p, q] in color bars denotes the magnitude range of the fields.

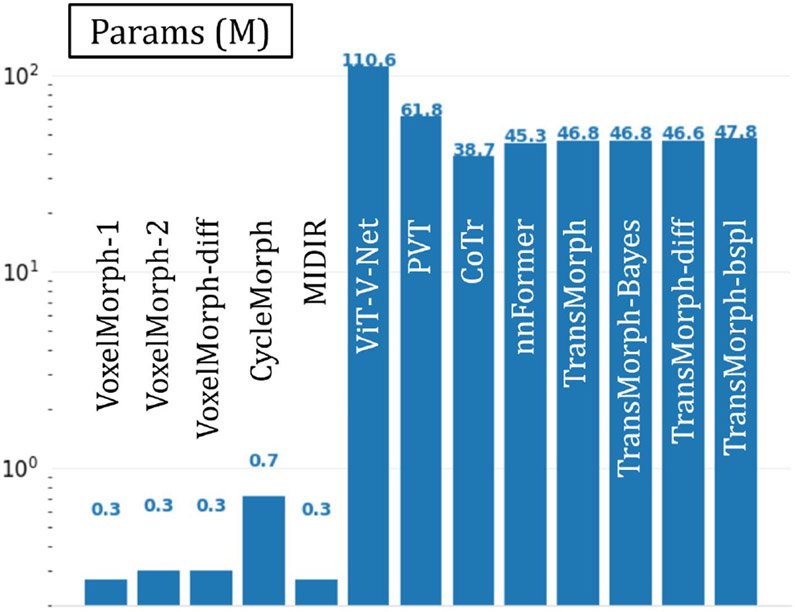

The quantitative evaluations are shown in Table 3. The proposed method, TransMorph, achieved the highest mean Dice score of 0.745. Although the diffeomorphic variants produced slightly lower Dice scores than TransMorph, they still outperformed the existing registration methods and generated almost no foldings (i.e., ≈0% of $|J_\phi| \leq 0$) in the deformation fields. By comparison, TransMorph improved the Dice score by >0.02 over VoxelMorph and CycleMorph. We found that the Transformer-based models (i.e., TransMorph, ViT-V-Net, PVT, CoTr, and nnFormer) generally produced better Dice scores than the ConvNet-based models. Note that even though ViT-V-Net had almost twice the number of trainable parameters (as shown in Fig. 7), TransMorph still outperformed all the other Transformer-based models (including ViT-V-Net) by at least 0.01 in Dice score, demonstrating the superiority of the Swin Transformer over other Transformer architectures. When we conducted hypothesis testing on the results using the paired t-test with Bonferroni correction (Armstrong 2014) (i.e., dividing the significance level by 13, the total number of paired t-tests performed), the p-values between the best-performing TransMorph variant (i.e., TransMorph) and all other methods were p ≪ 0.0005.
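The hypothesis-testing step can be illustrated with a short sketch. The Bonferroni correction can be applied either by comparing each raw p-value against the significance level divided by the number of tests or, equivalently, by multiplying each raw p-value by that number (capped at 1). The function names and all numbers below are made up for illustration and are not results from the paper. Only the t statistic is computed here; converting it to a p-value needs the t distribution with n − 1 degrees of freedom, for which a library routine such as `scipy.stats.ttest_rel` could be used.

```python
import math
import statistics


def paired_t_statistic(scores_a, scores_b):
    """t statistic of the paired differences between two methods."""
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    std_err = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return statistics.mean(diffs) / std_err


def bonferroni_adjust(p_values):
    """Multiply each raw p-value by the number of tests, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def significant(p_values, alpha=0.05):
    """Equivalent view: compare raw p-values against alpha / m."""
    m = len(p_values)
    return [p < alpha / m for p in p_values]


# Hypothetical per-patient Dice scores of two methods on the same patients.
method_a = [0.75, 0.74, 0.76, 0.73]
method_b = [0.72, 0.72, 0.73, 0.71]
print(paired_t_statistic(method_a, method_b))

raw = [0.001, 0.004, 0.02, 0.3]  # made-up raw p-values from four tests
print(bonferroni_adjust(raw))
print(significant(raw))  # threshold is 0.05 / 4 = 0.0125
```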

Table 3:

Quantitative evaluation results of the inter-patient (i.e., the JHU dataset) and the atlas-to-patient (i.e., the IXI dataset) brain MRI registration. Dice score and percentage of voxels with a non-positive Jacobian determinant (i.e., folded voxels) are evaluated for different methods. The bolded numbers denote the highest scores, while the italicized ones indicate the second highest.

| Model | Inter-patient MRI: DSC | Inter-patient MRI: % of $\lvert J_\phi \rvert \leq 0$ | Atlas-to-patient MRI: DSC | Atlas-to-patient MRI: % of $\lvert J_\phi \rvert \leq 0$ |
|---|---|---|---|---|
| Affine | 0.572±0.166 | - | 0.386±0.195 | - |
| SyN | 0.729±0.127 | <0.0001 | 0.645±0.152 | <0.0001 |
| NiftyReg | 0.723±0.131 | 0.061±0.093 | 0.645±0.167 | 0.020±0.046 |
| LDDMM | 0.716±0.131 | <0.0001 | 0.680±0.135 | <0.0001 |
| deedsBCV | 0.719±0.130 | 0.253±0.110 | 0.733±0.126 | 0.147±0.050 |
| VoxelMorph-1 | 0.718±0.134 | 0.426±0.231 | 0.729±0.129 | 1.590±0.339 |
| VoxelMorph-2 | 0.723±0.132 | 0.389±0.222 | 0.732±0.123 | 1.522±0.336 |
| VoxelMorph-diff | 0.715±0.137 | <0.0001 | 0.580±0.165 | <0.0001 |
| CycleMorph | 0.719±0.134 | 0.231±0.168 | 0.737±0.123 | 1.719±0.382 |
| MIDIR | 0.710±0.132 | <0.0001 | 0.742±0.128 | <0.0001 |
| ViT-V-Net | 0.729±0.128 | 0.402±0.249 | 0.734±0.124 | 1.609±0.319 |
| PVT | 0.729±0.130 | 0.427±0.254 | 0.727±0.128 | 1.858±0.314 |
| CoTr | 0.725±0.131 | 0.415±0.258 | 0.735±0.135 | 1.292±0.342 |
| nnFormer | 0.729±0.128 | 0.399±0.234 | 0.747±0.135 | 1.595±0.358 |
| TransMorph-Bayes | 0.744±0.125 | 0.389±0.241 | 0.753±0.123 | 1.560±0.333 |
| TransMorph-diff | 0.730±0.129 | <0.0001 | 0.594±0.163 | <0.0001 |
| TransMorph-bspl | 0.740±0.123 | <0.0001 | 0.761±0.122 | <0.0001 |
| TransMorph | 0.745±0.125 | 0.396±0.240 | 0.754±0.124 | 1.579±0.328 |

Fig. 7:

The number of parameters in each deep-learning-based model. The values are in units of millions of parameters.

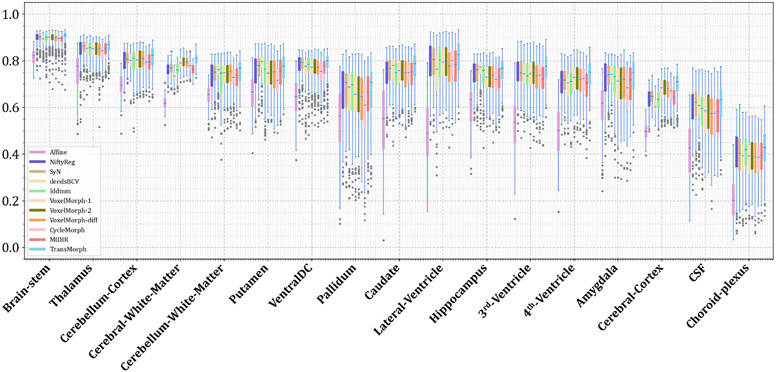

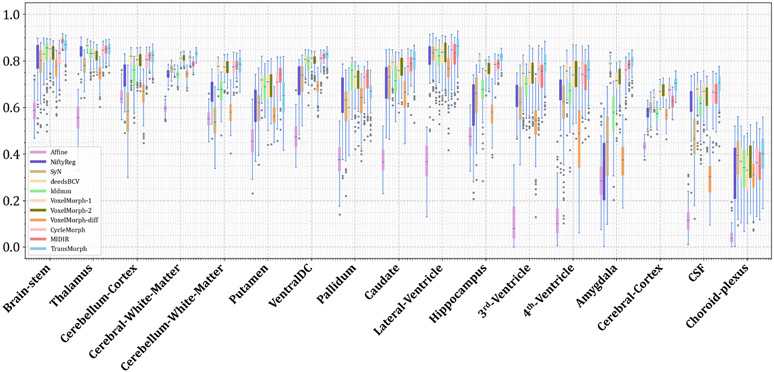

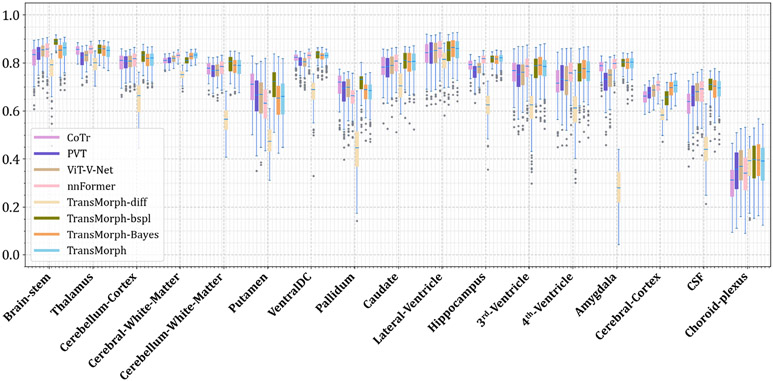

Figs. C.21 and C.22 show additional Dice results for a variety of anatomical structures, with Fig. C.21 comparing TransMorph to current registration techniques (both optimization- and learning-based methods), and Fig. C.22 comparing the Dice scores between the Transformer-based models.

5.2. Atlas-to-patient Brain MRI Registration

The top-right panel of Fig. 8 shows the qualitative results of the TransMorph variants on a sample MRI slice for atlas-to-patient brain MRI registration. As highlighted by the yellow arrows, the diffeomorphic variants produced deformed images that resembled the fixed image less closely in visual appearance. In contrast, the variants without diffeomorphic deformations (i.e., TransMorph and TransMorph-Bayes) produced better qualitative results, with the sulci in the deformed atlas images more closely matching those in the fixed image. Additional qualitative comparisons are shown in Fig. D.23 in Appendix D, where we observed that all the learning-based methods yielded more detailed and precise deformation fields than the conventional methods. This may be due to the large number of parameters in the DNNs, which enables the modeling of more complicated deformations.

Table 3 shows the quantitative evaluation results of the atlas-to-patient registration. The highest mean Dice score of 0.761 was achieved by the proposed TransMorph-bspl with nearly no folded voxels. The second best Dice score of 0.754 was achieved by both TransMorph and TransMorph-Bayes, while TransMorph-Bayes yielded a smaller standard deviation. In comparison to these TransMorph variants, TransMorph-diff produced a lower Dice score of 0.594. However, note that this score is still higher (by 0.014) than the one produced by VoxelMorph-diff, which is the base model of TransMorph-diff. Additionally, we observed that the registration methods that used MSE for training or optimization (i.e., SyN, NiftyReg, LDDMM, VoxelMorph-diff, and TransMorph-diff) resulted in lower Dice scores. This was most likely due to the significant disparity in the intensity values of brain sulci between the atlas and the patient MRI images. As seen in the top-right panel of Fig. 8, the sulci in the atlas image (i.e., the moving image) exhibited low-intensity values comparable to the background, but the sulci in the patient MRI image had intensity values more comparable to the neighboring gyri. Thus, the discrepancies in the sulci intensity values may account for the majority of the MSE loss during training, compelling the registration models to fill the sulci in the atlas image with other brain structures (as shown in Fig. D.23, these models produced significantly smaller sulci than models trained with LNCC), thereby limiting registration performance. The paired t-tests with Bonferroni correction (Armstrong 2014) yielded p-values of p ≪ 0.0005 between the best-performing model (i.e., TransMorph-bspl) and all other methods. This indicates that the proposed method outperformed the comparative registration methods and network architectures.

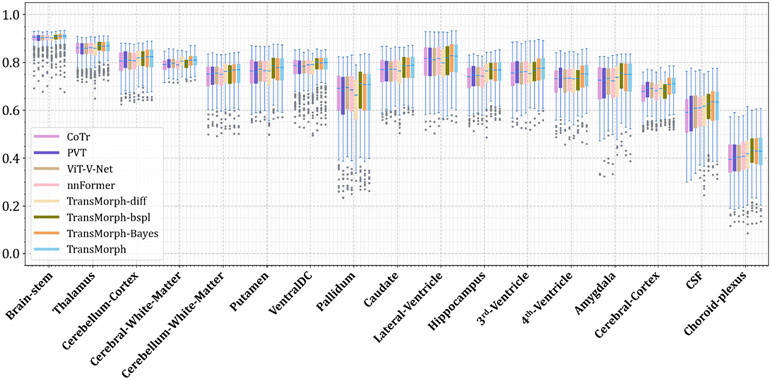

A detailed breakdown of Dice scores for a variety of anatomical structures is shown in Figs. D.24 and D.25 in Appendix D.

5.3. Learn2Reg OASIS Brain MRI Registration

Table 5 shows the quantitative results of the validation and test sets of the challenge. The validation scores of the various methods were obtained from the leaderboard of the challenge, whilst the test scores were obtained directly from the organizers. TransMorph performed similarly to the best-performing method of the challenge (LapIRN (Mok and Chung 2021)) on the validation set, where TransMorph-large achieved the best mean Dice score of 0.862 and mean HdDist95 of 1.431. VoxelMorph-huge performed significantly worse than TransMorph, with a p-value less than 0.01 from a paired t-test. This reveals the superiority of the Transformer-based architecture over the ConvNet despite a comparable number of parameters. On the test set, TransMorph and TransMorph-large achieved mean Dice scores comparable to that of LapIRN. Despite the comparable performance, LapIRN produced much more uniform deformation fields as measured by SDlogJ. In a separate study, we presented a simple extension of TransMorph that significantly outperformed LapIRN while maintaining smooth deformation fields. We direct interested readers to (Chen et al. 2022) for further details. Moreover, LapIRN employed a multiresolution framework in which three ConvNet registration backbones were involved in generating deformation fields at three different scales. TransMorph, however, operated on a single resolution. We underline that TransMorph is a registration backbone, and that it may be easily incorporated into LapIRN or other advanced registration frameworks.

Table 5:

Quantitative evaluation results for brain MRI registration of the OASIS dataset from the 2021 Learn2Reg challenge task 3. Dice scores of 35 cortical and subcortical brain structures, the 95th percentile of the Hausdorff distance (HdDist95), and the standard deviation of the logarithm of the Jacobian determinant (SDlogJ) of the displacement field are evaluated for different methods. The validation results came from the challenge’s leaderboard, whereas the test results came directly from the challenge’s organizers. The bolded numbers denote the highest scores, while the italicized ones indicate the second highest.

| Model | DSC | HdDist95 | SDlogJ |
|---|---|---|---|
| Validation | | | |
| Lv et al. 2022 | 0.827±0.013 | 1.722±0.318 | 0.121±0.015 |
| Siebert et al. 2021 | 0.846±0.016 | 1.500±0.304 | 0.067±0.005 |
| Mok and Chung 2021 | 0.861±0.015 | 1.514±0.337 | 0.072±0.007 |
| VoxelMorph-huge | 0.847±0.014 | 1.546±0.306 | 0.133±0.021 |
| TransMorph | 0.858±0.014 | 1.494±0.288 | 0.118±0.019 |
| TransMorph-Large | 0.862±0.014 | 1.431±0.282 | 0.128±0.021 |
| Test | | | |
| Initial | 0.56 | 3.86 | - |
| Lv et al. 2022 | 0.80 | 1.77 | 0.08 |
| Siebert et al. 2021 | 0.81 | 1.63 | 0.07 |
| Mok and Chung 2021 | 0.82 | 1.67 | 0.07 |
| TransMorph | 0.816 | 1.692 | 0.124 |
| TransMorph-Large | 0.820 | 1.656 | 0.124 |
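The SDlogJ column measures how uniform the local volume change of a deformation is. A minimal sketch is shown below, assuming the deformation is φ(x) = x + u(x) with u stored as a NumPy array of shape (3, D, H, W) in voxel units. The function names, the finite-difference scheme, and the clipping constant `eps` (used to keep the logarithm finite at folded voxels) are assumptions made for illustration; the challenge's official evaluation script may handle these details differently.

```python
import numpy as np


def jacobian_determinant(disp):
    """Determinant of the Jacobian of phi(x) = x + u(x) at every voxel."""
    grads = [np.gradient(disp[i]) for i in range(3)]  # grads[i][j] ~ du_i/dx_j
    jac = np.empty(disp.shape[1:] + (3, 3))
    for i in range(3):
        for j in range(3):
            jac[..., i, j] = grads[i][j] + (1.0 if i == j else 0.0)
    return np.linalg.det(jac)


def sd_log_jacobian(disp, eps=1e-9):
    """Standard deviation of log|J| over all voxels.

    Determinants are clipped to at least eps (an assumption of this sketch)
    so that non-positive values do not produce NaN or -inf.
    """
    det = jacobian_determinant(disp)
    return float(np.log(np.clip(det, eps, None)).std())


# x_0 coordinate replicated over a 6x6x6 grid.
x = np.arange(6, dtype=float)[:, None, None] * np.ones((6, 6, 6))

uniform = np.zeros((3, 6, 6, 6))
uniform[0] = 0.1 * x          # constant stretch: same determinant everywhere

varying = np.zeros((3, 6, 6, 6))
varying[0] = 0.05 * x ** 2    # stretch grows along the axis

print(sd_log_jacobian(uniform))
print(sd_log_jacobian(varying))
```

A uniformly stretched field gives a value near zero, whereas a field whose local stretch varies across the volume gives a larger value, which is the sense in which lower SDlogJ indicates a more uniform deformation.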

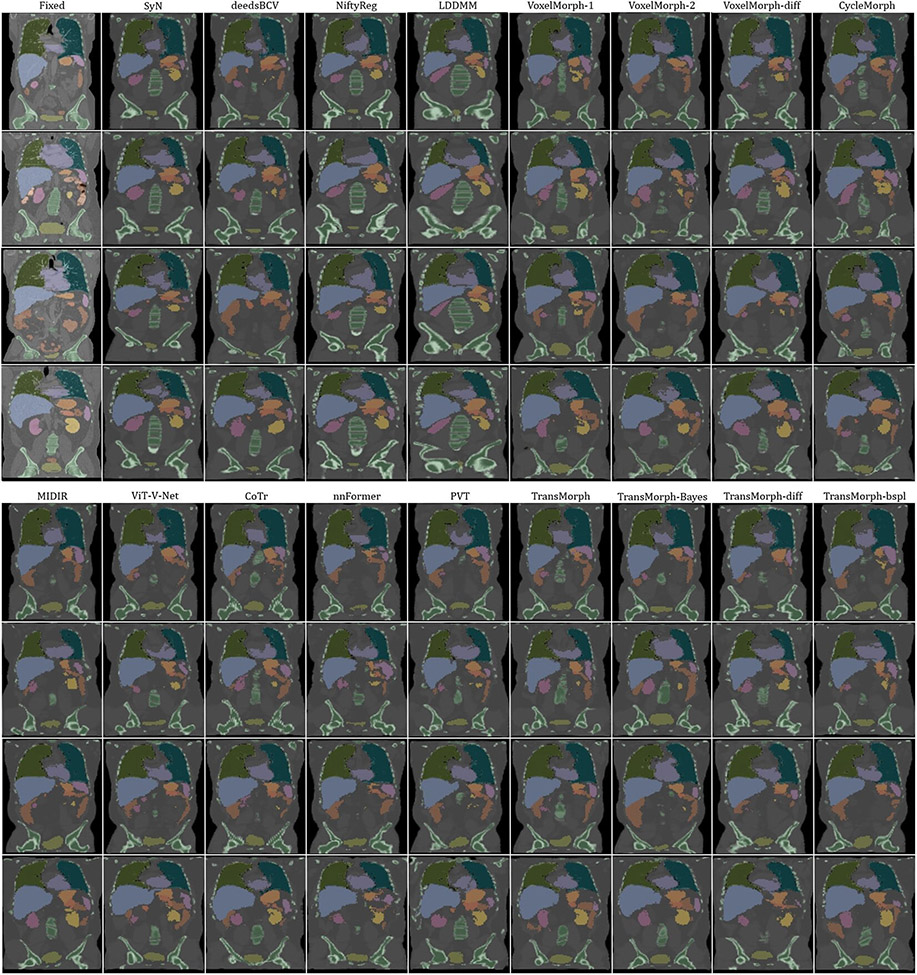

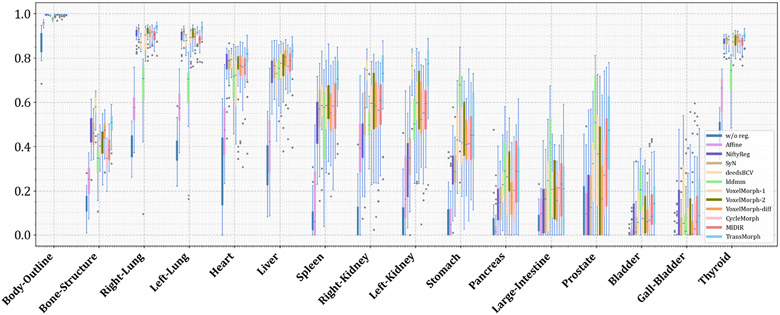

5.4. XCAT-to-CT Registration

The bottom panel of Fig. 8 shows the qualitative results for a representative CT slice. The blue, orange, green, and pink lines denote the liver, heart, left lung, and right lung, respectively, while the values at the bottom show the corresponding Dice scores. Similar to the findings in the previous sections, TransMorph and TransMorph-Bayes gave more accurate registration results (highlighted by the yellow arrows and the delineated structures), while the diffeomorphic variants produced smoother deformations. Additional qualitative comparisons are shown in Fig. E.26 in Appendix E. Certain artifacts are visible in the displacement field produced by nnFormer (as shown in Fig. E.26); these were most likely caused by the patch operations of the Transformers used in its architecture. nnFormer is a near-convolution-free model (convolutional layers are employed only to form displacement fields). In contrast to the relatively small displacements in brain MRI registration, displacements in XCAT-to-CT registration may exceed the patch size. Consequently, the lack of convolutional layers to refine the stitched displacement field patches may have resulted in artifacts. Four example coronal slices of the deformed XCAT phantoms generated by various registration methods are shown in Fig. E.27 in Appendix E.