Abstract

Computational approaches are increasingly being used to model behavioral and neural processes in mood and anxiety disorders. Here we explore the extent to which the parameters of popular learning and decision-making models are implicated in anhedonic symptoms of major depression. We first highlight the parameters of reinforcement learning that have been implicated in anhedonia, focusing, in particular, on the role that choice variability (i.e., “temperature”) may play in explaining heterogeneity across previous findings. We then turn to neuroimaging findings implicating attenuated ventral striatum response in anhedonic responses and discuss possible causes of the heterogeneity in the literature. Taken together, the reviewed findings highlight the potential of the computational approach in teasing apart the observed heterogeneity in both behavioral and functional imaging results. Nevertheless, considerable challenges remain, and we conclude with five unresolved questions that seek to address issues highlighted by the reviewed data.

Keywords: reinforcement learning, mood disorders, anxiety, decision making, computational psychiatry

Introduction

Mood and anxiety disorders are a major worldwide health burden across individual, social, and economic levels (Beddington et al., 2008). Despite a number of effective therapies and growing neuroscientific understanding of disease processes, resistance to established treatment strategies remains high (Yonkers, Warshaw, Massion, & Keller, 1996). This may be, at least in part, because current clinical diagnoses of these disorders rely primarily on subjective symptoms and behaviors, while the goal of neuroscience is to understand objective (i.e., observer-independent) biological mechanisms. Mapping these two approaches onto one another is exceptionally difficult and fraught with potential bias but is ultimately critical if we want to improve our ability to develop new treatments and target current treatments more effectively. To this end, it has been suggested that computational modeling of behavior, the focus of this review, can provide a means of bridging the gap between observable symptoms and behavior on the one hand and underlying neurobiological mechanisms on the other (Huys, Maia, & Frank, 2016; Montague, Dolan, Friston, & Dayan, 2012).

Computational Modeling of Behavior

Computational models of behavior offer a large and powerful explanatory repertoire with the potential of integrating information derived from a variety of different sources (e.g., electrophysiology, neuroimaging, behavior) into a coherent theoretical structure. Applying such models to psychiatric disorder symptomology offers a number of advantages:

Models require hypotheses to be explicitly quantified. That is to say, experimental design and analysis require an explicit proposal of what is driving behavior or neural activity. Rather than specifying that major depression is associated with, say, “negative affective bias” (Roiser, Elliott, & Sahakian, 2012), the component parts driving that bias must be specified. For instance, one must specify if a single parameter drives sensitivity to reward and punishment processing (i.e., whether an individual dislikes punishments to the exact same degree that he or she likes rewards) or whether these should be considered separate processes (allowing particularly extreme dislike of punishments with ambivalence toward rewards, for example).

Although “all models are wrong” (Box, 1976), some are more wrong than others, and evaluation of the components that improve model fit in a model comparison procedure can formally assess the relative strengths of competing hypotheses to explain a given dataset. For example, the relative explanatory validity of single versus separate reward and punishment sensitivity parameters can be directly compared. Simulations and comparison across datasets can then provide further support for or against a given model (Palminteri, Wyart, & Koechlin, 2017).

Rather than relying on summary mean or variance statistics, models can be used to explore trial-by-trial variance. This is particularly important when studying cognitive and learning processes (which are strongly implicated in many psychiatric disorders, including major depression; Rock, Roiser, Riedel, & Blackwell, 2014) in which subjects’ behavior can change dynamically through the task. This temporally rich approach explores variance over time and thus obtains more information from a given dataset. Indeed, subtle effects that are often overwhelmed in collapsed mean accuracy or response times can only be revealed using the modeling approach (White, Ratcliff, Vasey, & McKoon, 2010).

Models can be constrained by our understanding of what is biophysically plausible given our understanding of neuronal and pharmacological interactions. Combined with neuroimaging, winning model parameters can provide us with a means of mechanistically linking observable behavior and underlying neural substrates.

Models can show emergent properties, and explanations that might otherwise be considered excessively complex can be specified and tested. A good example of this is where meta parameters—or variance of model parameters—are implicated in pathologies. For instance, trait anxiety is associated with reduced learning rate adaptability (Browning, Behrens, Jocham, O’Reilly, & Bishop, 2015). Specifying and identifying this alteration without a model would be more difficult from both practical and conceptual standpoints.

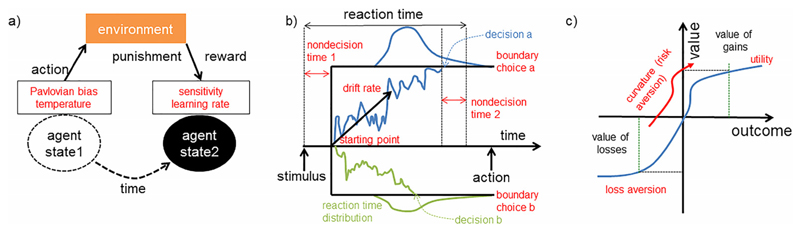

In this review, we focus on the application of computational modeling to anhedonia—diminished reward processing—in major depression. We review findings from two primary classes of models (Figure 1) that have been implicated in anhedonia (and, to a lesser extent, in anxiety, which is often comorbid with depression): (a) reinforcement learning models and (b) reaction time models. We also briefly refer to (c) models of economic choice under uncertainty, as they allow us to illustrate a point about the importance of temperature (see section “Simulation Showing the Importance of Temperature in Decision-Making Models”). Notably, although we consider them separately here, these models can also be combined to generate more complicated models (Pedersen, Frank, & Biele, 2016).

Figure 1. Schematic of the learning and choice models discussed in the article.

a) Illustrative example of reinforcement learning models. b) Illustrative example of reaction time models (e.g., drift diffusion models). c) Illustrative example of models of economic choice under uncertainty.

Reinforcement learning models

Reinforcement learning (RL; Figure 1A) models seek to explain choice behavior based on response to rewards and punishments received from the environment. Specifically, organisms are thought to respond to deviations in expectancy about rewards and punishments based on a prior belief about how likely an event is to occur. Each occurrence of a stimulus–outcome pairing increases the expectancy of that outcome and gradually reduces prediction error (PE). The PE for the current trial is the actual outcome value of the current trial compared to the expected value of the outcome (Bush & Mosteller, 1955; Rescorla & Wagner, 1972). The expected value is calculated by adding the value of the previous trial to the PE of the previous trial multiplied by the rate at which an individual learns the new association, known as the learning rate (Equation 2; Sutton & Barto, 1998). This value calculation can also be influenced by Pavlovian biases that promote actions that lead to reward and inhibit actions that lead to punishment, as an evolutionarily efficient means of maximizing rewards and minimizing punishments (Equation 3; Guitart-Masip et al., 2011; Huys, Golzer, et al., 2016). How these parameters may be related to symptoms of mood disorders is reviewed in Table 1. To compile this table, we primarily focused on salient exemplars of particular paradigms and analysis techniques. However, we also performed a literature search to include as many examples of data consistent or inconsistent with the construct in question as possible.

Table 1.

Reinforcement learning model parameters that could be altered in anhedonia

| Construct | Description | Computational instantiation | Evidence implicating | Evidence exonerating | Missing evidence |
|---|---|---|---|---|---|
| Value-guided behavior | Capacity of value representations to guide choice | Value (Equation 1) | Most studies report broadly intact acquisition | | |
| Feedback insensitivity | “Blunted” response to feedback, both positive and negative | Reduced learning rate (Equation 2) | Chase, Frank et al. (2010), Steele et al. (2007) | Rothkirch, Tonn, Kohler, & Sterzer (2017) | |
| Enhanced punishment sensitivity | Relatively enhanced response to negative feedback | Enhanced learning rate if outcome is aversive | Beevers et al. (2013), Herzallah et al. (2013), Maddox et al. (2012), Murphy, Michael, Robbins, & Sahakian (2003), Taylor Tavares et al. (2008) | Cavanagh, Bismark, Frank, & Allen (2011), Chase, Frank et al. (2010), Whitmer, Frank, & Gotlib (2012) | |
| Reduced reward sensitivity | Relatively reduced response to positive feedback | Reduced learning rate if outcome is appetitive | Beevers et al. (2013), DelDonno et al. (2015), Herzallah et al. (2013), Kunisato et al. (2012), Maddox et al. (2012), O. J. Robinson et al. (2012), Treadway, Bossaller, Shelton, & Zald (2012) | Cavanagh et al. (2011), Chase, Frank et al. (2010), Chase, Michael, Bullmore, Sahakian, & Robbins (2010), Whitmer et al. (2012) | |
| Pavlovian bias | Influence of reward- or punishment-predictive stimuli on behavior | See Equation 3 | Bylsma, Morris, & Rottenberg (2008), Huys, Golzer et al. (2016), Radke, Guths, Andre, Muller, & de Bruijn (2014); see Mkrtchian, Aylward, Dayan, Roiser, & Robinson (2017) for anxiety | | |
| Temperature | Stochastic choice | Temperature (Equation 4) | Huys et al. (2012), Huys et al. (2013), Kunisato et al. (2012); for indirect evidence, see Blanco, Otto, Maddox, Beevers, & Love (2013), Clery-Melin et al. (2011); for trend level, see Chase et al. (2017) | Chung et al. (2017), Rothkirch et al. (2017) | |
| Reduced outcome magnitude sensitivity | Linear or nonlinear scaling of utility across increasing expected value | [Outcome*sensitivity] or [Outcome^sensitivity] | Indirect evidence: Herzallah et al. (2013), Treadway et al. (2012) | | |
| Effort costs | Suppression of responding by effort | [Outcome value − effort cost] | Hershenberg et al. (2016), Treadway et al. (2012), Yang et al. (2016), Yang et al. (2014) | No simple increase in effort costs: Clery-Melin et al. (2011), Sherdell, Waugh, & Gotlib (2012) | |
| Working memory/“model-based” learning | Rapid adaptation of behavior in response to feedback | Various approaches, e.g., control choice in terms of previous outcome (Myers et al., 2016) | N/A | N/A | Little direct examination in MDD |
| Uncertainty-modulated learning | Increases or decreases in learning rate in response to uncertainty | Modulation of learning rate (e.g., Equation 2) by stimulus/outcome uncertainty | N/A | N/A | Little direct examination in MDD (but see Browning et al., 2015, on anxiety) |

Note. Here we define indirect evidence as suggestive that the construct might be significant, but this was not assessed directly via a modeling or other analytic strategy. To complete this table, combinations of the following terms were used in systematic searches: reward, model-based learning, Pavlovian, exploration, decision, choice, punishment learning, with anhedonia or major depression. The goal of the table is to provide an overview of salient exemplars of existing data from studies incorporating depressed, dysphoric, or euthymic individuals, which may be particularly relevant for the constructs listed.

Reaction time models

While RL models are concerned with decision making and choices, diffusion models try to explain the distribution of reaction times to make those choices (Tsetsos, Gao, McClelland, & Usher, 2012; Figure 1B). There are a number of different frameworks, but most share the fundamental concept that information is accumulated (they are sometimes referred to as accumulator models) until a threshold is reached and a decision is made (Ratcliff, Smith, Brown, & McKoon, 2016; Tsetsos et al., 2012). The parameters in such models generally include (a) how far apart the decision options are (boundaries), (b) the rate at which a decision speeds toward the boundaries (drift rate), (c) whether the individual has a bias toward one or another of the options (starting point between the boundaries), and (d) the time to encode the stimuli and process a motor response (nondecision time; White et al., 2010). These parameters are then used to explain the distributions of reaction times for one decision over another (they are most commonly used for tasks in which participants have to decide between one of two responses).
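The four parameters listed above can be made concrete with a small simulation. The following is a minimal sketch, assuming a simple two-boundary diffusion process discretized in small time steps; the function name, the default values, and the unit noise scaling are illustrative assumptions rather than settings taken from any of the cited studies.

```python
import random


def simulate_ddm_trial(drift_rate, boundary, start_fraction=0.5,
                       non_decision_time=0.3, dt=0.001, noise_sd=1.0,
                       max_time=5.0, rng=random):
    """Simulate one trial and return (choice, reaction_time).

    choice is 1 if the upper boundary is reached, 0 if the lower boundary
    is reached, and None if neither is reached within max_time seconds.
    """
    # The starting point encodes any bias toward one of the two options.
    evidence = start_fraction * boundary
    elapsed = 0.0
    while elapsed < max_time:
        # Evidence drifts toward a boundary and is perturbed by Gaussian noise.
        evidence += drift_rate * dt + noise_sd * (dt ** 0.5) * rng.gauss(0.0, 1.0)
        elapsed += dt
        if evidence >= boundary:
            return 1, elapsed + non_decision_time
        if evidence <= 0.0:
            return 0, elapsed + non_decision_time
    return None, max_time + non_decision_time


# Hypothetical parameter values, chosen only for illustration.
rng = random.Random(0)
trials = [simulate_ddm_trial(drift_rate=1.5, boundary=1.0, rng=rng)
          for _ in range(300)]
upper = [rt for choice, rt in trials if choice == 1]
lower = [rt for choice, rt in trials if choice == 0]
```

With a positive drift rate and an unbiased starting point, most simulated trials end at the upper boundary, and the lists `upper` and `lower` hold the reaction time distributions that such a model would be fit to.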

Models of economic choice under uncertainty

Economic models of decision making can describe adaptive choice when options are uncertain or subject to various costs (Figure 1C). In general, most individuals overweight losses relative to equivalent gains and show a preference for certain over risky outcomes with equal or higher expected value. Within this framework, economic decisions can be explained by a combination of two factors: reduced sensitivity to outcome value as value increases (i.e., risk aversion) and an overweighting of losses relative to gains (i.e., loss aversion; Charpentier, Aylward, Roiser, & Robinson, 2016; Sokol-Hessner et al., 2009; Tversky & Kahneman, 1992).
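The two factors described above can be illustrated with a small sketch. It assumes a power-law utility in which an exponent below 1 produces diminishing sensitivity to larger amounts and a multiplier above 1 amplifies losses; the function names and the parameter values (0.8 and 2.0) are hypothetical and are not estimates from the cited studies.

```python
def subjective_utility(amount, risk_aversion=0.8, loss_aversion=2.0):
    """Curved utility: gains are amount ** risk_aversion; losses are
    additionally multiplied by the loss_aversion weight."""
    if amount >= 0:
        return amount ** risk_aversion
    return -loss_aversion * ((-amount) ** risk_aversion)


def expected_utility(outcomes, probabilities, **params):
    """Probability-weighted sum of the subjective utilities of the outcomes."""
    return sum(p * subjective_utility(x, **params)
               for x, p in zip(outcomes, probabilities))


# A 50/50 gamble to win or lose 10 units (objective expected value of 0).
mixed_gamble = expected_utility([10, -10], [0.5, 0.5])

# A 50% chance of 10 units versus a certain 5 units (equal expected value).
risky_gain = expected_utility([10, 0], [0.5, 0.5])
sure_gain = expected_utility([5], [1.0])
```

Under these assumed values, the mixed gamble has negative expected utility (loss aversion) and the certain amount is valued above the gamble of equal expected value (risk aversion).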

There is limited work exploring these models in depression (but see Beevers et al., 2013; Maddox, Gorlick, Worthy, & Beevers, 2012), so we do not review their relationship with anhedonia here. Rather, we use this model to make a point about the temperature parameter that is more clearly illuminated in the absence of the learning that is inherent in RL models (see section “Simulation Showing the Importance of Temperature in Decision-Making Models”). In other words, although we focus primarily on RL as a paradigmatic example of a broader decision-theoretical approach to psychopathology (Montague, 2012), the theoretical and practical issues raised may be broadly applicable across paradigms (e.g., loss or risk aversion but also temporal discounting, Lempert & Pizzagalli, 2010; Pulcu, Trotter et al., 2014, and social decision making, Gradin et al., 2015; Pulcu, Zahn, et al., 2014).

Anhedonia and Impairments of Reward-Directed Behavior

A recurrent feature of a variety of psychiatric disorders is a “loss of interest or pleasure” in previously enjoyable activities. This phenotype is referred to as anhedonia and is particularly prevalent in disorders such as major depression, schizophrenia (SZ), and addiction (Franken, Rassin, & Muris, 2007). Here we focus on major depression, in which anhedonia is a key symptom used for diagnosis. Although anhedonia might be straightforwardly characterized in terms of a reduction in response (across cognitive domains) to rewarding events, many features of the phenotype remain puzzling (Pizzagalli, 2010). For instance, it has been argued that anhedonia should be broken down into anticipatory, decisional, and consummatory components (Argyropoulos & Nutt, 2013; Treadway & Zald, 2011) as well as further distinct disturbances in the social domain (Christianson et al., 2008). Within these categories, responses to certain kinds of reinforcers might be selectively altered (e.g., affective responses to music; Martinez-Molina, Mas-Herrero, Rodriguez-Fornells, Zatorre, & Marco-Pallares, 2016).

Anhedonia is often measured, for both experimental and clinical purposes, using self-report questionnaires and interviews (Rizvi, Pizzagalli, Sproule, & Kennedy, 2016). Although these tools are frequently psychometrically reliable, they suffer from prominent limitations, namely, demand characteristics (i.e., individuals give you the answer they think you expect) and anchoring effects (i.e., individuals give answers consistent with the first answer they gave). Indeed, as highlighted by the memory literature, an individual’s pattern of behavioral responses on cognitive tasks can be a more reliable proxy of the degree of memory encoding than self-report (Shanks & St. John, 1994; Vadillo, Konstantinidis, & Shanks, 2016). Consistent with this is evidence that behavioral measures of anhedonia can vary independently from self-reported anhedonia (Pechtel, Dutra, Goetz, & Pizzagalli, 2013). Moreover, despite clear differences in self-reported anhedonia, prior reviews have yielded little evidence of substantial differences between depressed and healthy individuals in terms of their hedonic responses on consummatory tests (Treadway & Zald, 2011). For instance, the pleasure-evoking properties of sweet tastes are generally similarly rated by depressed and healthy individuals (Treadway & Zald, 2011). This discrepancy is somewhat perplexing: If anhedonic individuals describe a selective lack of interest in rewarding experiences, why is this not borne out in their behavioral responses? The computational approach might help resolve this question. For instance, it may be that, while the end points (e.g., response to sweet tastes) appear identical in anhedonic individuals, the individual components of the mechanisms (e.g., detection of, or learning about, the tastes) that lead to this end point differ. The anhedonic individual may take longer, or require more evidence, to reach the same end point. These effects will be hidden if one measures the end point alone: Computational models enable the specification and exploration of these hidden component parts.

Applying Computational Approaches to Behavioral Findings in Anhedonia

Sensitivity to Value

One way to consider a deficit in reward-oriented behavior is to argue that individuals are able to learn associations between actions and reinforcers but fail to use this information to guide behavior. A typical choice rule (Equation 1), also referred to as an observation model, describes the probability of eliciting a response (i.e., action probability) given an action’s value using what is known as a softmax function:

| (1) |

The value that is entered into this equation needs to be acquired from the environment by the individual. This is commonly described via a RL process where outcomes are positive for gains and negative for losses and, crucially, that includes a free parameter, called sensitivity, that describes how much weight an individual ascribes to those outcomes:

| (2) |

Looking at Equation 1, it is possible to see that if the value is low, then the probability of a response will be diminished. So if anhedonia reduces value—perhaps by decreasing the influence of outcome through the sensitivity parameter—a natural prediction is that it would suppress responding.
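The logic of this prediction can be sketched in a few lines of code. The example below assumes a standard softmax over option values and a delta-rule update in which the sensitivity parameter scales the experienced outcome; the exact functional forms, the function names, and the parameter values are assumptions made for illustration and may differ from the article's Equations 1 and 2. For simplicity, both options are updated on every trial regardless of which one is chosen.

```python
import math


def softmax(values):
    """Map option values to choice probabilities."""
    highest = max(values)  # subtracting the maximum keeps exp() stable
    exps = [math.exp(v - highest) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def update_value(value, outcome, learning_rate, sensitivity):
    """Delta-rule update in which sensitivity scales the experienced outcome."""
    prediction_error = sensitivity * outcome - value
    return value + learning_rate * prediction_error


def learned_preference(sensitivity, n_trials=50, learning_rate=0.2):
    """Probability of choosing an always-rewarded option over a
    never-rewarded one after n_trials of experience with both."""
    rewarded, unrewarded = 0.0, 0.0
    for _ in range(n_trials):
        rewarded = update_value(rewarded, 1.0, learning_rate, sensitivity)
        unrewarded = update_value(unrewarded, 0.0, learning_rate, sensitivity)
    return softmax([rewarded, unrewarded])[0]


# Hypothetical sensitivity values, chosen only to show the direction of the effect.
high = learned_preference(sensitivity=3.0)
low = learned_preference(sensitivity=0.3)
```

In this sketch the low-sensitivity learner still prefers the rewarded option, but its choice probability sits much closer to chance than that of the high-sensitivity learner.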

In two-alternative forced-choice (2AFC) cognitive tasks frequently used to study RL, a participant will have no option but to accept one option, so two equally (un)attractive options will lead to essentially random responding, because neither is favored. In go/no-go tasks, where the alternative choice is to do nothing (no-go), this would lead to random decisions to wait (or, in the case of animal models, engage in grooming behavior). Broadly, however, the empirical evidence has not strongly supported a clear role of reduced sensitivity to value in anhedonia. There are numerous examples of intact or at least adequate instrumental control by reinforcers: The majority of studies in Table 1 report broadly comparable task acquisition across healthy and depressed cohorts.

Learning Rate

While patients with depression can show moderate deficits in memory (Rock et al., 2014), they typically show broadly intact acquisition of the basic reward contingencies employed in RL tasks, indicating intact learning processes. Computational methods provide some support for this view, with tasks that are sensitive to anhedonia showing intact task acquisition (Huys, Pizzagalli, Bogdan, & Dayan, 2013). Moreover, tasks that have been designed to isolate specific learning rates for rewards and punishments (i.e., where the learning rate is different for rewarding and aversive outcomes, respectively; see Equation 2) have provided mixed data but have frequently failed to support diminished reward learning or heightened punishment learning (see Table 1). For example, in the case of the Probabilistic Selection Task (PST), one study showed similar rates of positive and negative learning between controls and patients (Chase, Frank et al., 2010) and a second showed relatively enhanced positive learning in low- but not high-dysphoric individuals (Kunisato et al., 2012), while two further studies showed complex findings depending on trial type (Cavanagh et al., 2011) or rumination induction (Whitmer et al., 2012). Finally, a theoretically compatible but somewhat distinct procedure revealed asymmetrical learning rates as well as an influence of medication (Herzallah et al., 2013). Thus, when taken together, the complexity of these findings argues against a simple alteration of learning rates.

In some cases, differences in performance may emerge because individuals adopt a different learning model from that outlined in Equation 2. Individuals may vary in their tendency to change behavior on the trial immediately after unexpected feedback. Within the basic RL framework, this might be driven by a heightened (Murphy et al., 2003) or by a diminished (Chase, Frank et al., 2010; Steele, Kumar, & Ebmeier, 2007) impact of the previous trial. The broader interpretation of this type of feedback sensitivity is a topic of active debate: Recent computational approaches sometimes posit a win–stay, lose–shift parameter (den Ouden et al., 2013; Myers et al., 2016), which is, in essence, a simplistic 1-back learning model and may reflect the function of working memory (Collins & Frank, 2012). This representational system does not keep track of reinforcement history beyond a very restricted number of recent outcomes (e.g., one). This approach can work well in some environments but will perform poorly on tasks (such as the commonly used Iowa Gambling Task; IGT) in which the most rewarding choice is also associated with large, if occasional, losses and in which incremental learning is necessary.
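A win–stay, lose–shift rule of the kind described above can be written very compactly, which makes its 1-back character explicit. The following is a minimal sketch; the function names, the two-option task, and the reward probabilities are hypothetical and are not taken from the cited models, which typically embed such a rule alongside other components.

```python
import random


def win_stay_lose_shift(previous_choice, previous_outcome, n_options=2, rng=random):
    """Repeat the last choice after a win; otherwise switch to another option.

    Only the single most recent outcome is used, so no longer-run
    reinforcement history is tracked.
    """
    if previous_outcome > 0:
        return previous_choice
    alternatives = [o for o in range(n_options) if o != previous_choice]
    return rng.choice(alternatives)


def run_task(reward_probabilities, n_trials=1000, seed=0):
    """Apply the rule to a probabilistic task and return the choice sequence."""
    rng = random.Random(seed)
    choice = rng.randrange(len(reward_probabilities))
    choices = []
    for _ in range(n_trials):
        outcome = 1 if rng.random() < reward_probabilities[choice] else 0
        choices.append(choice)
        choice = win_stay_lose_shift(choice, outcome, len(reward_probabilities), rng)
    return choices


# Option 0 is rewarded on 80% of trials and option 1 on 20% (illustrative values).
choices = run_task([0.8, 0.2])
proportion_best = choices.count(0) / len(choices)
```

In this particular two-option setup the rule selects the better option on roughly 80% of trials in the long run, whereas an incremental learner could in principle come to choose it almost exclusively; this is one way in which a 1-back strategy can look like a subtle learning deficit.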

It is possible that these sorts of decision-making strategies (as well as different exploratory strategies; Knox, Otto, Stone, & Love, 2011), rather than a more fundamentally impaired learning rate mechanism, are responsible for the relatively subtle deficits that are observed in patient groups. Critically, the adoption of such strategies does not preclude intact learning: They may simply reflect selection of a strategy that will perform favorably in deterministic designs but suboptimally in others (Collins, Brown, Gold, Waltz, & Frank, 2014). Further support for this position is the presence of deficits on tasks with shifting contingencies, such as probabilistic reversal learning (Murphy et al., 2003) and the IGT (Must et al., 2006), performance on which may be particularly dependent on such strategies (e.g., den Ouden et al., 2013). Some modeling approaches (Collins & Frank, 2012; Myers et al., 2016) have therefore sought to combine these two approaches, with both RL and win–stay, lose–shift components in the same model, combined with a free parameter to determine the likelihood of one or another when the two systems come into conflict (e.g., straight after negative feedback). Overall, however, clear evidence for altered learning rates in anhedonia across a variety of paradigms is lacking.

Pavlovian Bias

Learned or prepotent Pavlovian biases can represent powerful decision-making shortcuts that can influence RL. Specifically, approaching rewards and avoiding punishments, or stimuli which may predict them, constitutes a simple behavioral heuristic that will serve well in a wide range of scenarios. These adaptive behavioral heuristics are often referred to as Pavlovian biases. Pavlovian instrumental transfer (PIT) describes the influence that these biases can have over instrumental control (e.g., the simple goal-directed behaviors described by Equations 1 and 2). Experimentally, this manifests, for example, as an extreme difficulty for both humans and animal models in “approaching” (e.g., making a go response to) a cue that has previously been associated with punishment (Guitart-Masip et al., 2011). Cavanagh, Eisenberg, Guitart-Masip, Huys, and Frank (2013) have operationalized Pavlovian influence over RL as a multiplier on stimulus value, which is often represented as an independent term added to the action or instrumental value (cf. Holland, 2004):

| (3) |

where Value(s_t) is a state value and Value(s_t, a) is a state-action value, both being independently learned by a prediction error mechanism (i.e., following the form of Equation 2). Value′ determines choice via a softmax equation (e.g., Equation 1).
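One way to write the combination described here is given below. This is a reconstruction under assumptions rather than a verbatim copy of Equation 3: the symbol \pi for the Pavlovian bias parameter is introduced only for illustration, and in go/no-go formulations the Pavlovian term is typically added to the value of the go action only.

```latex
\mathrm{Value}'(s_t, a) = \mathrm{Value}(s_t, a) + \pi \cdot \mathrm{Value}(s_t)
```

With \pi > 0, a positive state value raises the combined value and a negative state value lowers it, while \pi = 0 recovers purely instrumental control.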

If the value (see Equation 2) is positive (i.e., rewarding), then this bias parameter increases value, whereas if the value is negative (punishing), this parameter serves to decrease value. Examining PIT, Huys, Golzer, et al. (2016) observed a reduced influence of Pavlovian bias on approach and avoidance behavior in depressed individuals compared to healthy controls (HC). Similarly, work has demonstrated that an approach bias for positive stimuli and an avoidance bias for negative stimuli in HC—that is, Pavlovian bias—is absent in depressed individuals (Radke et al., 2014). In other words, although overall instrumental behavior is generally intact in depression, the influence of incidental Pavlovian cues on behavior may be reduced. These findings from PIT or PIT-like paradigms may also be relevant for interpreting data from emotional cues more generally: A meta-analysis of responses to emotional cues, both positive and negative, suggested a general deficit in response to affective cues in depression (Bylsma et al., 2008).

Of note, however, the impact of Pavlovian bias is dependent on individuals having obtained the learned association. If they do not know that a cue leads to reward, then they will not be driven to approach it. As such, the impact of these sorts of biases may change over time. They will have minimal impact at the start of the process before the cue–outcome contingencies have been acquired. Reduced Pavlovian influence in depressed patients could therefore plausibly reflect delayed instrumental learning in some cases (despite eventual intact learning, given enough trials). Notably, reduced Pavlovian influence in depressed individuals may differ from what is found in those with increased clinical anxiety symptoms, who demonstrate increased reliance on Pavlovian avoidance (and not approach) biases (Mkrtchian et al., 2017). Existing work on this topic is limited, so further exploration of the interaction between Pavlovian bias and instrumental learning in major depressive disorder (MDD) would be worthwhile.

Choice Variability (Temperature)

Returning to the softmax choice function (Equation 1), we can add another parameter, which provides a modulatory impact on choice. This parameter is referred to in different ways across the literature, but one common description is temperature, with greater temperature referring to greater response variability:

| (4) |

In principle, it should be possible to distinguish changes in temperature-driven choice variability from the effect of value in the learning model, but in practice, it can be difficult to do so because of the ambiguity surrounding behavioral indicators of exploration. Specifically, choice variability could result from a deliberate exploration of the presented options or simply from a failure to learn or otherwise express the true value of a given choice. Equation 4 reveals the problem explicitly: Value and temperature are directly proportional such that increases in value can simply be counteracted by increases in temperature (or vice versa).
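The trade-off can be checked numerically. The sketch below assumes the common form in which each value is divided by the temperature before entering the softmax; the function name and the numbers are illustrative assumptions, and some papers instead multiply by an inverse temperature.

```python
import math


def softmax_with_temperature(values, temperature):
    """Choice probabilities proportional to exp(value / temperature)."""
    scaled = [v / temperature for v in values]
    highest = max(scaled)  # subtracting the maximum keeps exp() stable
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# A value difference of 1 at temperature 1.
baseline = softmax_with_temperature([1.0, 0.0], temperature=1.0)

# Raising the temperature pushes choice toward chance (more variable responding).
noisy = softmax_with_temperature([1.0, 0.0], temperature=4.0)

# Doubling both the value difference and the temperature changes nothing:
# only the ratio of value to temperature is identifiable from choices.
rescaled = softmax_with_temperature([2.0, 0.0], temperature=2.0)
```

Because `baseline` and `rescaled` are identical, choice data alone cannot tell a learner with larger values and higher temperature apart from one with smaller values and lower temperature.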

Increased reliance on the temperature parameter has been used to explain performance on tasks in which depression is linked to increased exploration (Huys et al., 2012; Kunisato et al., 2012). Reinforcement learning–based analyses have argued that increased exploration drives response switching on RL tasks (Blanco et al., 2013), probabilistic reversal learning (Dombrovski, Szanto, Clark, Reynolds, & Siegle, 2013; Murphy et al., 2003; Taylor Tavares et al., 2008), and IGTs (Must et al., 2006). However, in many of these designs, it is also possible to explain behavior in terms of increased temperature. This, in turn, may be due to impaired task acquisition but could also be due to unmodeled decision-making strategies (e.g., the win–stay, lose–shift process described in section “Learning Rate”).

Perhaps the clearest evidence for the value–temperature trade-off comes from the probabilistic reward task (Pizzagalli, Jahn, & O’Shea, 2005), in which individuals are asked to discriminate between two lengths of lines presented on a screen. One of the responses has a greater probability of reinforcement following an accurate response, so that, for example, the accurate selection of the long line is three times more likely to be rewarded than the accurate selection of the short line. By providing asymmetric reward for one option, a reward-related bias is introduced in healthy individuals who normatively select the rewarded option if unsure (Huys et al., 2013). Individuals with high levels of anhedonia generally fail to show such a bias (Huys et al., 2013). Critically, a meta-analysis of this task using a computational analysis of performance identified increased temperature as the key parameter driving these effects in anhedonia (Huys et al., 2013). In other words, anhedonia led to noisier, more variable choices. As far as we are aware, the only existing study in which temperature was shown to be reduced by major depression/anhedonia (i.e., the opposite effect) was a study of the probabilistic selection task referred to in Table 1 (Chase, Frank et al., 2010). However, a reduced overall learning rate associated with anhedonia was also observed in this study, and it is generally difficult to dissociate estimation of temperature from overall performance or learning rate estimation (Daw, 2011). In summary, therefore, it seems plausible that anhedonia may be associated with increased temperature.

Simulation showing the importance of temperature in decision-making models

Looking at the softmax choice equation (Equation 4), increasing the value of the chosen option can be directly counteracted by increasing the temperature parameter, such that these parameters are now necessarily “underdetermined” or, more informally, “two sides of the same coin.” This point is emphasized by Huys and colleagues (2013) in their analysis of the probabilistic reward task and is valuable for two reasons. First, it increases focus on aspects of paradigm design and model fitting that might obscure identification of choice variability (or be obscured by it). Second, it has implications for sample size and statistical power: Specifically, choice variability, whether well specified or controlled by a particular task, or not, adds noise and provides an impediment to accurately identifying other modeled parameters. This could potentially affect the estimation of model parameters for many choice-oriented tasks across a variety of cognitive domains.

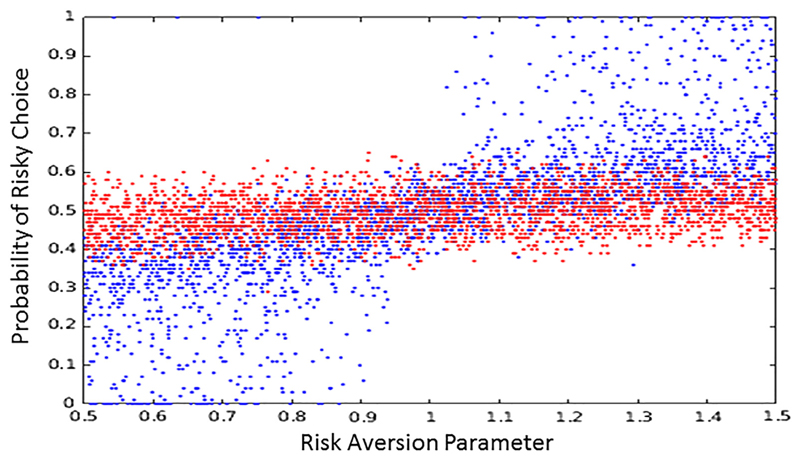

We can illustrate this interaction between value and temperature through simulation. Here we considered economic decision making under uncertainty (Figure 1C) rather than RL, because temperature is more transparently estimated in the absence of trial-by-trial learning (Daw, 2011). Critically, this simulation assumes that values and associated probabilities of outcomes are explicitly stated so that changes in decisions are not confounded with differences in learning the values:

| (5) |

Consider the following: A decision-making paradigm of 100 trials assesses risk preference by asking the participant to choose between a risky 50% chance of winning $1 (vs. $0) and a sure 100% chance of winning $0.50 (i.e., on average, the value of both options is the same). Preference is determined by the subjective valuation of $0.50 relative to $1, which can be captured by, for example, a curved utility function (Equation 5). If the curvature (risk aversion) parameter is greater than 1, the risky option is preferred, and if it is less than 1, the safe option is preferred. However, we might also assume that choice is stochastic, controlled by a softmax function and an accompanying temperature parameter (Equation 4).
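The indifference point at a parameter value of 1 can be checked with a short worked example. This is a sketch under an assumption: it takes the curved utility function to be a power function, with the hypothetical symbol \(\rho\) standing for the curvature parameter, which may differ from the notation of Equation 5.

```latex
\begin{align}
U(x) &= x^{\rho} \\
EU_{\text{risky}} &= 0.5 \cdot 1^{\rho} + 0.5 \cdot 0^{\rho} = 0.5 \\
U_{\text{safe}} &= 0.5^{\rho}
\end{align}
```

Under this assumed form, the two options have equal utility when \(\rho = 1\). For \(\rho > 1\), \(0.5^{\rho} < 0.5\), so the risky option has the higher expected utility; for \(\rho < 1\), \(0.5^{\rho} > 0.5\), so the safe option does.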

We conducted 10,000 simulations of this scenario, allowing temperature to vary between 0 and 2 and the curvature (risk aversion) to vary between 0.5 and 1.5. A multiple regression model was then fit to the resulting data, with risk preference (the proportion of risky options selected) being predicted by temperature, risk aversion, and their interaction. As expected, the curvature/risk aversion parameter (t = 67.96) was a highly significant predictor of risky choice, and temperature on its own had little effect (t = −1.87). Critically, however, their interaction was highly significant (t = −62.99). This interaction reflects the crucial influence of the temperature parameter, which controls the mapping between the key construct of interest (the valuation function) and the dependent measure (risk preference; see Figure 1C). This simulation demonstrates how temperature can play a crucial moderating role, with increases in temperature diminishing the influence of a given manipulation (in this case, risk aversion). Specifically, as can be seen by the red dots in Figure 2, at high temperatures, the probability of a risky choice is essentially random (50%) regardless of the level of risk aversion, and the curvature evident in the low-temperature blue dots is absent.
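A simulation of this kind can be sketched in a few lines. The code below is not the original analysis: it assumes a power utility function, a two-option softmax on the utility difference, uniform sampling of both parameters, a small positive lower bound on temperature (0.01, to avoid division by zero), and ordinary least squares on mean-centered predictors. All function and variable names are hypothetical.

```python
import numpy as np

def simulate_risk_task(n_sims=10_000, n_trials=100, seed=0):
    """Simulate the proportion of risky choices for randomly drawn parameter pairs."""
    rng = np.random.default_rng(seed)
    temperature = rng.uniform(0.01, 2.0, n_sims)  # assumed lower bound of 0.01
    curvature = rng.uniform(0.5, 1.5, n_sims)
    # Assumed power utility: 50% chance of $1 (vs. $0) against a sure $0.50.
    eu_risky = 0.5 * 1.0 ** curvature
    u_safe = 0.5 ** curvature
    # Two-option softmax expressed as a logistic function of the utility difference.
    p_risky = 1.0 / (1.0 + np.exp(-(eu_risky - u_safe) / temperature))
    prop_risky = rng.binomial(n_trials, p_risky) / n_trials
    return temperature, curvature, prop_risky

def fit_moderation(temperature, curvature, prop_risky):
    """Least-squares regression with an interaction term on centered predictors."""
    t_c = temperature - temperature.mean()
    c_c = curvature - curvature.mean()
    design = np.column_stack([np.ones_like(t_c), t_c, c_c, t_c * c_c])
    beta, *_ = np.linalg.lstsq(design, prop_risky, rcond=None)
    names = ["intercept", "temperature", "curvature", "interaction"]
    return dict(zip(names, beta))

temperature, curvature, prop_risky = simulate_risk_task()
coefficients = fit_moderation(temperature, curvature, prop_risky)
```

Under these assumptions, the curvature coefficient is positive and the interaction coefficient is negative, matching the direction of the moderation described above. The exact statistics depend on the sampling scheme and will not reproduce the reported t values.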

Figure 2. Moderation of relationship between the risk aversion parameter (see Equation 5) and risk preference by temperature.

High temperatures are red; low temperatures are blue. A low score on the risk aversion parameter amplifies the utility of small wins, leading to risk aversion, but this is only clearly manifest in behavior if the temperature is low. Likewise, a high score reduces the utility of small wins, leading to risk seeking, but again, only if the temperature is low.

Ultimately, choice variability can have a major impact on observed behavior, and failing to account for it may lead to erroneous inferences regarding the influence of an experimental manipulation. For instance, reduced temperature might lead to a pattern of risky choice in healthy individuals, while high temperature would lead to (relatively) more random responding in a patient group. A pattern of noisier decisions could therefore be incorrectly taken as evidence of reduced risk taking in the patient group. In fact, the consequences are much broader than the present example: High temperature would make any other parameter within the same model harder to estimate, regardless of the paradigm or direction of between-group effects.

A role for temperature in accounting for the influence of effort manipulations?

With the preceding simulation in mind, enhanced temperature-driven choice variability might plausibly help explain conflicting findings within the literature. For example, there are ambiguities in data obtained using effort-based paradigms (e.g., Clery-Melin et al., 2011; Sherdell et al., 2012; Treadway et al., 2012) in which subjects are required to complete effortful tasks (e.g., press a button multiple times quickly or squeeze something with high pressure). On one hand, Treadway and colleagues (2012) found that anhedonic depressed participants were less likely overall to select high-effort option choices with a favorable expected value than healthy control subjects (see also Hershenberg et al., 2016). This would be consistent with reduced sensitivity to reward, given that effort-related costs are matched between the samples. On the other hand, Clery-Melin et al. (2011) showed that, unlike controls, MDD patients did not increase their grip force to obtain rewards of greater value but rather showed similar (mean) output across all rewards. However, this study also observed that effort could be enhanced in response to arousing stimuli in MDD patients but not in controls. In other words, some stimuli do have the capacity to elicit increases in effort in MDD patients, and effort expenditure is not reduced overall; rather, the increase in reward magnitude on this task is specifically ineffective at achieving increases in effort. This goes back to the point that most human research tends to employ forced-choice paradigms in which behavior is described by a choice between two options (i.e., 2AFC). If both options have low motivational properties overall, this would lead to low expected value and hence low overall action probability (e.g., Equation 4). In other words, if neither option is of much interest to the anhedonic participant, then he or she will respond more randomly—likely resulting in a high estimate of the temperature parameter. 
We should stress that this does not necessarily negate the role of reward sensitivity nor effort costs in the observed effort task effects (Treadway et al., 2012); rather, this highlights the broader potential of computational approaches in clarifying observed discrepancies.

Anhedonia Reduces Drift Rate but No Other Parameters in Drift Diffusion Models?

A smaller number of studies have applied the drift diffusion model to depression. In a study using the flanker task, in which subjects have to identify the orientation of an arrow in the face of congruent and incongruent preceding distractors, depressed individuals were slower but more accurate on incongruent trials. Modeling showed that this was driven by reduced prepotent and executive drift rates across both congruent error and correct incongruent trials in depressed individuals compared to controls (Dillon et al., 2015). This replicated an effect of reduced drift rate in depressed patients on a signal (color ratio) detection task (Vallesi, Canalaz, Balestrieri, & Brambilla, 2015) and prior work with the flanker task (Pe, Vandekerckhove, & Kuppens, 2013). Critically, drift rate correlated negatively with overall depressive symptoms (Pe et al., 2013; Vallesi et al., 2015) and with anhedonia specifically (Dillon et al., 2015). In none of the studies was depression associated with increased nondecision time, arguing against a generic psychomotor slowing effect; decision threshold (boundaries), arguing against speed–accuracy trade-offs (Dillon et al., 2015); or starting point, arguing against asymmetrical differences in boundaries.
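To illustrate how these parameters differ, a basic drift diffusion process can be simulated directly. The sketch below is a generic single-drift model with unit noise and Euler time steps, not the dual-process flanker model of the cited studies. The parameter values and the name `simulate_ddm` are illustrative assumptions.

```python
import numpy as np

def simulate_ddm(drift, boundary=1.0, start=0.5, non_decision=0.3,
                 n_trials=2000, dt=0.001, max_time=5.0, seed=0):
    """Return mean response time and accuracy for a basic drift diffusion process."""
    rng = np.random.default_rng(seed)
    evidence = np.full(n_trials, start * boundary)  # start as a fraction of the boundary
    rt = np.full(n_trials, np.nan)
    correct = np.zeros(n_trials, dtype=bool)
    active = np.ones(n_trials, dtype=bool)
    for step in range(1, int(max_time / dt) + 1):
        n_active = int(active.sum())
        if n_active == 0:
            break
        # Euler step: deterministic drift plus Gaussian noise with unit variance per second.
        evidence[active] += drift * dt + np.sqrt(dt) * rng.standard_normal(n_active)
        hit_upper = active & (evidence >= boundary)  # upper boundary = correct response
        hit_lower = active & (evidence <= 0.0)       # lower boundary = error
        finished = hit_upper | hit_lower
        rt[finished] = step * dt + non_decision      # nondecision time shifts every RT
        correct[hit_upper] = True
        active &= ~finished
    done = ~np.isnan(rt)  # ignore the rare trials that never reach a boundary
    return rt[done].mean(), correct[done].mean()

high_drift_rt, high_drift_acc = simulate_ddm(drift=2.0)
low_drift_rt, low_drift_acc = simulate_ddm(drift=0.5)
```

In this simplified model, lowering the drift rate slows responses and reduces accuracy together. By contrast, raising `non_decision` would shift response times without affecting accuracy, and widening `boundary` would trade speed for accuracy.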

However, it should be noted that there is some concern that the diffusion model can be overspecified, especially when trial numbers are limited and the study is not designed to detect more subtle effects (van Ravenzwaaij, Donkin, & Vandekerckhove, 2017). Indeed, very basic versions of the model can outperform more complex versions in simulations (van Ravenzwaaij et al., 2017). This is because more complex models can lead to overfitting, especially when the number of trials recorded is limited, which in turn reduces the power to detect group differences in core parameters (e.g., decision boundaries; van Ravenzwaaij et al., 2017). Comprehensive model comparison is needed in future research to be confident that effects of depression are in fact restricted to drift rate.

Neuroimaging of the Anhedonic Phenotype

Altered Reward-Related Striatal Reactivity in Major Depressive Disorder

The learning and decision-making processes described can be integrated with neuroimaging in an attempt to map model parameters onto the underlying neuronal hardware and bridge the symptomatic, computational, and neurobiological levels. Release of dopamine in the striatum has long been linked to RL models (Kishida et al., 2016; Lohrenz, Kishida, & Montague, 2016; Pessiglione, Seymour, Flandin, Dolan, & Frith, 2006), so a large body of work has focused on the role of the striatum in anhedonia and depression. Before discussing explicitly computational work, we first highlight noncomputational work implicating the ventral striatum (VS) in anhedonia and depression.

A widely used paradigm for assessing reward and motivational processes in psychiatric populations is the monetary incentive delay paradigm (MID; Knutson, Fong, Adams, Varner, & Hommer, 2001; Knutson & Heinz, 2015). Although there are a variety of task versions, the general structure involves an expectancy cue that informs the participant about the amount of a reward he or she will receive if he or she makes a fast response. In general, the task is designed such that it is possible to separately model neural responses to (a) anticipation of reward and (b) receipt of rewarded outcomes.

We identified 13 studies in the literature using this task that are of relevance to the present discussion (Table 2). The task elicits quite reliable activation within the striatum in healthy individuals (Wu, Samanez-Larkin, Katovich, & Knutson, 2014), but there are inconsistencies as to when this activation occurs. While most studies reveal reduced activity in depression and anhedonia, they are coupled variably to anticipation or outcome and to different regions within the striatum. It is often less explicitly articulated (but see Knutson, Bhanji, Cooney, Atlas, & Gotlib, 2008) that the presence of null results—no difference between patients and controls—opens the potential for robust VS activations in the MDD group. Thus there is an obvious parallel with behavioral studies reviewed earlier: While deficits are regularly observed, the overall picture is not one of global hyporesponsivity to rewards. In addition, although there is a theme that less severely depressed individuals are less likely to show deficits (Hagele et al., 2015), Table 2 includes some studies with relatively severe patients that report null findings (Knutson et al., 2008).

Table 2.

Exploring reward processing in the striatum

| Study | Groups | Outcome magnitude | Probability (%) | Response contingent | Task length | Striatum differences | Reported null findings |
|---|---|---|---|---|---|---|---|
| Hagele et al. (2015) | AUD, SZ, MDD, BD (manic), ADHD, HC | ±€0.1, €0.6, €3 | 67 | Yes | 2 × 72 trials | Right VS: Increasing depression severity reduces reward anticipation vs. neutral | |
| Stoy et al. (2012) | MDD (before and during treatment), HC | €0.1, €0.6, €3 | 67 | Yes | 2 × 72 trials | VS: HC > MDD, reward and loss anticipation vs. neutral; partially recovers after treatment | |
| Knutson et al. (2008) | Unmedicated MDD, HC | ±$0.1, $0.2, $1, $5 | 67 | Yes (individually calibrated RT threshold) | 2 × 90 trials | Putamen: HC > MDD, reward outcome vs. neutral | VS: Reward anticipation |
| Admon et al. (2015) | MDD, HC | Variable: mean +$2.15, –$2 | 50 | No; instructed | 5 × 24 trials | Caudate: HC > MDD, reward and loss outcomes vs. neutral | |
| Wacker, Dillon, & Pizzagalli (2009) | Healthy individuals varying in anhedonic symptoms | Variable: mean +$2.15, –$2 | 50 | No; instructed | 5 × 24 trials | VS: Increasing anhedonia reduces reward outcome vs. neutral | VS: Reward anticipation |
| Pizzagalli et al. (2009) | MDD, HC | Variable: mean +$2.15, –$2 | 50 | No; instructed | 5 × 24 trials | Putamen: HC > MDD, reward anticipation vs. neutral; Caudate/VS: HC > MDD, reward outcome vs. neutral | VS: Reward anticipation |
| Smoski, Rittenberg, & Dichter (2011) | MDD, HC | Money (+$1), IAPS pictures | 67 | Yes | 2 × 2 × 40 trials | Putamen: Anticipation Group × Reward Type interaction | Widespread anticipation-related activation; little outcome-related activation |
| Arrondo et al. (2015) | MDD, SZ, HC | High (£1), low (£0.01) | 70 high win, 30 low win | No; instructed | 30 win, 30 neutral trials | VS: HC > MDD/SZ, reward anticipation; relationship of VS anticipation activation with anhedonia in SZ, not MDD | |
| Dichter, Kozink, McClernon, & Smoski (2012) | Remitted MDD, HC | +$1 for wins | 67 | Yes | 20 potential win, 20 neutral | Caudate: remitted MDD > HC, reward anticipation | |
| Mori et al. (2016) | Students with/without subthreshold depression | ±¥0, ¥20, ¥100, ¥500 | N.S. | N.S. | 40 gain, 40 loss, 10 neutral | Differences not within striatum | VS: Reward anticipation |
| Misaki, Suzuki, Savitz, Drevets, & Bodurka (2016) | MDD, HC | ±$0.2, $1 | 66 | Yes (individually calibrated RT threshold) | 15 high win, 15 low win, 15 neutral, 15 high loss, 15 low loss | Left VS: HC > MDD during high win anticipation | No differences seen at low reward anticipation in left VS or low/high anticipation on right VS; no outcome-locked differences but overall activations not strong |
| Ubl et al. (2015) | Remitted MDD, HC | High (±€2), low (±€0.2) wins and losses | 50 (approx.) | Yes (individually calibrated RT threshold) | N.S. | Differences not within striatum | |
| Stringaris et al. (2015) | Clinical, subthreshold depression, HC (adolescent) | 10, 2, 0 points | 66 (approx.) | Yes (individually calibrated RT threshold) | 66 trials | VS: HC > clinical/subthreshold depression, reward anticipation; reduced VS activation to reward anticipation also predicted transition to depression at 2-year follow-up and was related to symptoms of anhedonia. VS: Subthreshold depression > HC, positive outcomes; Subthreshold depression and anhedonia > HC, negative outcomes | |

Note. Table summarizing design and findings of studies of MDD or other depression-related cohorts that employed a reward-based version of the MID task. ADHD = attention-deficit hyperactivity disorder. AUD = alcohol use disorder. BD = bipolar disorder. N.S. = not stated. RT = reaction time. The contents of the table represent all the studies we were able to find using systematic searches for monetary incentive delay fMRI studies. A recent study by Admon et al. (2017) was not included, as it was focused on a dopaminergic drug manipulation, but it also found significant group (control > MDD) differences in the VS coupled to outcomes in the placebo condition.

It should also be noted that some studies, one in healthy adults (Schlagenhauf et al., 2013) and another in a geriatric depressed population (Dombrovski et al., 2015), have demonstrated correlations between VS activation and generic measures of cognitive function (IQ and self-reported executive function, respectively). These findings provide a complication in that cognitive dysfunction and anhedonic symptoms may be correlated and confounded. It is not always possible to correct for these associations within a given analysis. Moreover, the limitations of reverse inference are relevant in interpreting striatal activations coupled to reward events (Poldrack, 2011). Specifically, a reduction in striatal response, frequently observed in the absence of behavioral or symptomatic effects, is often interpreted as reduced reward sensitivity. This is clearly an oversimplification, as many studies have shown that striatal activation can be elicited by a wide range of behaviors and stimuli, including, for instance, punishment (Robinson, Overstreet, Charney, Vytal, & Grillon, 2013). Nevertheless, we interpret the evidence in Table 2 as broadly supporting a role of attenuated VS response to reward in anhedonia.

Integrating Computational Approaches and Brain Imaging

The work reviewed in Table 2 nevertheless shows considerable heterogeneity. As argued earlier, computational models can help isolate sources of this variability. The integration of computational and fMRI methods is sometimes referred to as model-based analysis and represents a departure from the typical approach to modeling stimuli in neuroimaging, which assumes that the eliciting stimulus is essentially equivalent on every trial. Specifically, computational models enable the exploration of parameters that vary on a trial-by-trial basis within subjects. Parameters such as temperature, explored in the section “Simulation Showing the Importance of Temperature in Decision-Making Models,” are generally fixed for individual subjects (i.e., they differ between subjects but do not change on a trial-by-trial basis), but these fixed values can be combined with the pattern of rewards and punishments received by the individual to calculate trial-by-trial regressors like PEs or value. These regressors can then be used as parametric modulators of the fMRI time series to determine their neural correlates. Specifically, PEs at a given time represent deviations from expected values (see also Equation 2):

| (6) |

where the expected values are often updated according to a learning rate:

| (7) |
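The construction of such trial-by-trial regressors can be sketched as follows. The code assumes the standard delta-rule form, in which the PE is the outcome minus the current expected value and the expected value is then incremented by the learning rate times the PE. The function name, initial value, and example outcome sequence are hypothetical.

```python
import numpy as np

def prediction_errors(rewards, learning_rate, initial_value=0.0):
    """Return per-trial expected values and PEs under an assumed delta-rule update."""
    value = initial_value
    values, pes = [], []
    for reward in rewards:
        pe = reward - value          # PE: deviation of the outcome from the expected value
        values.append(value)         # expected value held before the outcome is seen
        pes.append(pe)
        value += learning_rate * pe  # update scaled by the subject's fixed learning rate
    return np.array(values), np.array(pes)

# Hypothetical outcome sequence for one subject with a fixed learning rate of 0.5.
values, pes = prediction_errors([1, 1, 0], learning_rate=0.5)
# values -> [0.0, 0.5, 0.75]; pes -> [1.0, 0.5, -0.75]
```

The resulting `pes` or `values` arrays are the kind of series that would be entered as parametric modulators of outcome or cue events, with the learning rate fixed per subject as described above.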

Previous studies, particularly by Steele and colleagues (2007), have successfully used these or similar models to describe blood oxygenation level–dependent signal changes in the VS, finding that activation coupled to appetitive PEs is altered in major depression (Gradin et al., 2011; Kumar et al., 2008). Other groups have made compatible observations (e.g., Robinson, Cools, Carlisi, Sahakian, & Drevets, 2012; Ubl et al., 2015) such that there is, at present, some evidence of attenuated outcome-locked reward PEs in major depression.

Reinforcement learning–linked striatal response locked to cue or outcome?

The studies in Table 2 generally reported changes to striatal activation locked to outcome or cue. However, the exact timing of the signal has critical implications for our understanding of the underlying deficit. Reduced anticipatory responses but intact outcome-locked responses might suggest a failure to learn about rewards (i.e., if a person has not learned that a cue predicts reward, the individual is not going to anticipate it) but an intact response to the rewards when received. The opposite pattern—reduced outcome but intact anticipation—can be harder to explain in the computational RL framework. Specifically, if outcome value is diminished (i.e., an individual cares less about rewards when they are received), then it is unclear how the individual would learn about the value of those rewards to develop an intact anticipation response. In other words, within RL, an intact response to value at outcome is a crucial step in the pathway to develop intact anticipation of that value. How, therefore, do we account for the outcome-linked effects reviewed in Table 2?

One potential explanation for outcome-linked effects is that there is faster learning or habituation in one group, such that early on in a paradigm, a participant might show intact outcome responses that enable learning, but then this outcome response (but not the anticipation response) declines over time, leaving the anticipatory response in place. It may also potentially be explained within a framework that posits separate systems for anticipation/preparation and consummation, such as wanting versus liking (Berridge & Robinson, 2003), sign tracking versus goal tracking (Flagel, Watson, Robinson, & Akil, 2007), or even anticipatory versus consummatory anhedonia (Argyropoulos & Nutt, 2013).

Another way to account for anticipation versus outcome effects is to account for them explicitly within the same model. For example, Kumar and colleagues (2008) fitted an RL model to reward-related VS activations, observing reward-related hypoactivation in MDD, but they modeled cue and outcome stages within the same framework. Specifically, they employed a modified temporal difference (TD) model (Sutton & Barto, 1998): a real-time development of RL in which reward PEs are computed continuously within a trial, with reference to expected values. This model has outcome PEs (like Equation 6) but also represents PEs during anticipation. Specifically, cues that predict rewards can also elicit their own reward PEs if their presence is uncertain but (more or less) reliably predicts reward. Thus the TD PE signal represents deviations from the expected upcoming reward, whether signaled by cues or experienced directly at the outcome. Kumar and colleagues’ (2008) finding that TD-coupled striatal responses were reduced in MDD compared to controls represents a development of the traditional, independent modeling of cue- and outcome-locked activation, as described in Table 2, toward a unified account in terms of a TD-derived PE signal.
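The way a TD signal spans cue and outcome can be illustrated with a deliberately simplified sketch. It is not the modified model used by Kumar and colleagues (2008): it assumes a trial with only two events (an unpredicted cue followed by an outcome), a single learned cue value, and hypothetical parameter values.

```python
import numpy as np

def td_cue_outcome(rewards, learning_rate=0.2, discount=1.0):
    """Cue- and outcome-locked TD prediction errors for a two-event trial."""
    cue_value = 0.0
    cue_pes, outcome_pes = [], []
    for reward in rewards:
        # Cue onset is assumed unpredictable, so the preceding state has value 0.
        cue_pe = discount * cue_value - 0.0
        # The outcome ends the trial, so the value of the following state is 0.
        outcome_pe = reward + discount * 0.0 - cue_value
        cue_value += learning_rate * outcome_pe
        cue_pes.append(cue_pe)
        outcome_pes.append(outcome_pe)
    return np.array(cue_pes), np.array(outcome_pes)

# A cue that is always followed by a reward of 1 over 100 trials.
cue_pes, outcome_pes = td_cue_outcome(np.ones(100))
```

In this sketch the PE migrates from outcome to cue as learning proceeds: on the first trial it occurs entirely at outcome, and by the last trial almost entirely at cue. A lower learning rate slows this transfer.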

A final way to account for outcome effects is to examine statistical relationships between anticipation- and outcome-locked striatal activations. Across two MDD cohorts using a reward-based guessing task, cue-locked reward expectancy and outcome-locked PE-related activations appeared similar across patients and controls, but the relationship between them differed (Chase et al., 2013; Greenberg et al., 2015). Specifically, on one hand, HC showed a negative relationship between expectancy and outcome, suggesting a different rate of learning-induced transmission from outcome to cue-related activity. On the other hand, individuals with MDD did not show this relationship, despite showing similar overall magnitude of reward anticipation- and outcome-locked responses. When examining this effect at the whole-brain level using individual differences in anhedonia as a moderator (Greenberg et al., 2015), the largest effect size was found very close to a meta-analytically determined anterior caudate region of the VS (Zhang, Chang, Guo, Zhang, & Wang, 2013).

Although the reason for this anticipation–outcome correlation finding is unclear, it provides a novel interpretation of the mixed findings in Table 2 and of unified accounts, such as that of Kumar and colleagues (2008). Specifically, current RL models may not fit VS activation in MDD well owing to the presence of an unmodeled relationship between outcome and cue responses. In other words, attenuated reward-related activation in MDD might simply reflect an inadequate fit of the general linear model rather than hypoactivation per se (Xu, 2015). Critically, this provides a direct parallel with our behavioral simulation (see the section “Simulation Showing the Importance of Temperature in Decision-Making Models”), in which apparent reductions in reward response can actually be driven by poor model fit rather than meaningful parameter differences. Future work, ideally comprising more complex models alongside principled model comparison, is necessary to clarify this.

Five Questions for Future Research

The evidence reviewed earlier reveals a promising start. Notably, the computational approach may provide potential ways of reconciling apparently contradictory findings about the role of reward processing and the VS in anhedonia. However, as is also clear from the preceding simulations, the computational approach adds its own set of complications and assumptions that require further evaluation. In this final section, we outline five unresolved questions, which we believe will prove fruitful for future research.

What Is the Role of Temperature?

As discussed, it may well be that some decision-making differences in anhedonia are attributable to noisier decisions captured by the temperature parameter, but a major empirical challenge for studying the temperature parameter is that it is difficult to separate from value (see also Huys et al., 2013). Given that these two, supposedly distinct, constructs are inextricably linked within the choice rule (e.g., the softmax in Equation 4), it may not be possible to disambiguate them cleanly within one single paradigm. Moreover, although other choice rules for 2AFC paradigms might be considered, they often show very similar properties and a similar underlying mathematical form (Bogacz, Brown, Moehlis, Holmes, & Cohen, 2006; Yuille & Geiger, 2003).

We therefore identify four potential strategies for resolving the role of temperature. First, if noisy, temperature-driven choices are a critical component of anhedonia, then they should be seen across different paradigms and task contingencies. If high choice variability were not also seen on other, conceptually distinct paradigms in the same individuals, then it might be possible to build a case that the effects on an effort task (for instance) are not driven by temperature differences. Second, it may be possible to manipulate outcome uncertainty to promote or reduce noisy decisions (Le Pelley, Suret, & Beesley, 2009), while keeping value consistent (and vice versa). Alternatively, forms of directed exploration have been proposed (Frank, Doll, Oas-Terpstra, & Moreno, 2009; Wilson, Geana, White, Ludvig, & Cohen, 2014), which might exist independently of a more passive, disinterested choice variability and be revealed on certain kinds of paradigms. The temperature parameter as realized within the softmax algorithm (Equation 4) is theoretically silent on this difference, and it may be that isolating these two components will provide more specificity into the underlying alteration in individuals with MDD. Together, these experimental approaches might enable the identification of exploration-related phenomena, which can be modeled independently of option value.

Third, it is worth considering whether a participant whose data provide evidence of high choice variability is just poorly fit by the model employed. Temperature could simply reflect the “residual” behavior that is not explained by the model itself. Thus other potential models of behavior might be explored that would better account for observed performance to clarify whether increased temperature, rather than just a poorly fitting model, is indeed the driving factor. Full comparison of a wide range of models, followed by clear simulations that reiterate nonmodeled behavioral patterns, can help determine the extent of this issue (Palminteri et al., 2017).

Finally, following from the preceding, large sample sizes can also provide benefits to model comparison and fitting. They can provide a clearer picture of parameter distributions and assist in their estimation, while nonnormal or discontinuous distribution of data (e.g., Chase et al., 2017; Chung et al., 2017) may be more readily identified. In addition, deriving more precise predictions from previous studies and/or via direct replication may facilitate Bayesian parameter estimation (Gershman, 2016).

Can We Disentangle Responses to Outcome Versus Cue?

Adopting a reinforcement learning–based approach to modeling and interpreting ventral striatal activation may help to explain differences between findings arising from reward paradigms of different design or contingencies (e.g., see Table 2), without needing to suggest separate mechanisms for cue- and outcome-locked activation. For instance, some paradigms may employ relatively sparse reinforcement, that is, a weak relationship between predictive cues and rewards (e.g., Segarra et al., 2015), and thus show mostly low cue-locked reward anticipation. In this case, outcome-locked activation would be expected to carry a mostly PE-related signal, but it may also vary between subjects or groups in terms of outcome sensitivity, but not in terms of learning rate. In other words, a patient versus control group difference in a sparse reinforcement contingency is more likely to be driven by differences in outcome value or PE than by differences in learning rate. By contrast, in paradigms in which rewards are more frequent, and preceding cues can be effective predictors of the value of the outcome, both outcome- and anticipation-locked activation might show variability related to individual differences in both learning rate and outcome value. Thus, in this case, a group difference related to learning rate might be revealed in a way that would not be apparent in a sparse reinforcement design. Direct exploration of the impact of sparse versus frequent rewards in anhedonic individuals may help shed some light on this issue.

However, there are areas where the explanatory capability of a simple RL model may become limited. Importantly, for example, RL in neuroimaging has generally been applied to describe phasic, spiking responses of midbrain dopamine (DA) neurons (Schultz, Dayan, & Montague, 1997), as opposed to tonic DA release. While modification of the RL framework has been suggested to account for tonic DA fluctuations (Daw, Kakade, & Dayan, 2002; Niv, Daw, Joel, & Dayan, 2007), other evidence suggests that DA release at different timescales can be integrated into a single signal (Hamid et al., 2016). The operation of motivational systems at different timescales may be relevant for MDD, with respect to both behavioral evidence (Dillon et al., 2015) and neural responses to the cue and to the outcome. In particular, several studies have suggested that sustained rather than phasic responses to reward can be associated with MDD (Admon & Pizzagalli, 2015) and response to treatment (Heller et al., 2013). Thus building models that distinguish between the phasic and sustained responses to reward is likely to be an important future direction and may contribute to resolving some of the existing discrepancies with respect to cue- and outcome-locked activations.

What Is the Role of Patient Heterogeneity?

Much of the logic behind the present work is based on the idea that patients with MDD will show high levels of anhedonia, which might drive the reward-related deficits observed. However, although it is true that anhedonia is a core clinical feature of MDD, a diagnosis of MDD may be given on the basis of heightened negative mood, despite normal hedonic tone. A radical consequence of this heterogeneity may be the inability to reproduce patterns of neuroimaging findings across cohorts (Muller et al., 2017). Although reward-based neuroimaging paradigms have been successful in identifying differences between patients with MDD and controls (Zhang et al., 2013), analyzing data as a function of continuous constructs may provide more rigorous tests by accounting for the heterogeneity within a given cohort. For example, using continuous measures, such as anhedonia questionnaire scores (as suggested by the research domain criteria framework; Kozak & Cuthbert, 2016), may help resolve aspects of the conflicting data presented in Table 2. However, this endeavor will also benefit from more effective and validated measures of anhedonia (Rizvi et al., 2016). In this regard, computational methods may provide some assistance: A metric of approach motivation or reward sensitivity derived from a computational model of behavior could, for instance, be used as a between-subject continuous variable in such analyses.

Medication remains another important source of variability, one that can be difficult to control in patient populations (e.g., Hafeman, Chang, Garrett, Sanders, & Phillips, 2012). Potential solutions to this problem are to (a) examine the effect of medication in a control population on a comparable task (Kumar et al., 2008) or (b) examine unmedicated patients to compare with findings from medicated patients (Greenberg et al., 2015; Robinson et al., 2012). All of these possibilities are associated with inferential blind spots, but there should be no reason why the influence of medication could not be isolated eventually, as has been possible with Parkinson’s disease, for example (Cools, Barker, Sahakian, & Robbins, 2001).

How Does Anxiety Interact With Anhedonia?

Related to the question of patient heterogeneity is comorbidity. Anhedonia is highly comorbid with anxiety. If anhedonia can be operationally characterized as reduced processing of rewards, the affective state of anxiety can be operationally characterized as increased processing of threats (Davis, Walker, Miles, & Grillon, 2010; Grillon, 2008; Robinson, Vytal, Cornwell, & Grillon, 2013). These are complementary and competing drives, and a full understanding of mood disorders will likely require integrating understandings of both.

Prior work using computational approaches to understand elevated aversive processing in anxiety is, however, extremely limited (but see Raymond, Steele, & Series, 2017, for a review). From an RL perspective, anxiety induced by threat of shock has been shown to increase a positively signed aversive PE signal to unexpected fearful face stimuli in the VS (Robinson, Overstreet, et al., 2013). This replicates animal work (Oleson, Gentry, Chioma, & Cheer, 2012) and is broadly consistent with a wide range of studies implicating the VS in aversive PEs under normal conditions (Delgado, Li, Schiller, & Phelps, 2008; Robinson, Frank, Sahakian, & Cools, 2010; Seymour, Daw, Dayan, Singer, & Dolan, 2007) but contrasts with the putative attenuated striatal response to reward in anhedonia reviewed earlier in this article. Similarly, while the earlier reviewed evidence did not suggest a role of learning rate differences in the manifestation of anhedonia, trait anxiety has been shown to be negatively associated with the ability to modulate learning rates in response to environmental volatility (Browning et al., 2015). This could potentially result in aversive outcomes being experienced as less predictable and controllable than they are and thus help maintain the anxious state. Moreover, pathological anxiety has also been shown to increase rather than decrease reliance on Pavlovian avoidance parameters (Mkrtchian et al., 2017). Notably, in this particular study, the winning model had two separate Pavlovian parameters: one for approaching rewards and one for avoiding punishments. Anxiety disorders were associated with increased reliance on the avoidance parameter but not on the approach parameter. An interesting question for future work in anhedonia, therefore, is whether a similar, albeit attenuated, bias exists for the approach parameter alone if it is modeled separately. Another study looking at models of economic decision making under uncertainty (Figure 1C) suggested that anxiety was associated with risk aversion but not with loss aversion (Charpentier et al., 2016). Finally, there is evidence that high trait anxiety is associated with increased boundary separation and nondecision time on the drift diffusion model (White et al., 2010) rather than the reduced drift rate seen in anhedonia (although see Aylward, Hales, Robinson, & Robinson, 2017, for potential evidence of drift rate changes in anxiety disorders).
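To make concrete the idea of two separate Pavlovian parameters mentioned above, the following minimal sketch computes the probability of a "go" response when the Pavlovian weight depends on whether the current context is rewarding or punishing. It is an illustrative assumption about how such a model could be parameterized, not the model reported by Mkrtchian et al. (2017); the function name, the argument names, and the logistic choice rule are all hypothetical.

```python
import math


def go_probability(q_go, q_nogo, state_value, go_bias, pav_approach, pav_avoid):
    """Probability of a 'go' response with separate Pavlovian weights.

    state_value > 0 stands for a reward context and state_value < 0 for a
    punishment context (an assumed coding). pav_approach scales the pull
    toward action in reward contexts; pav_avoid scales the suppression of
    action in punishment contexts.
    """
    pav_weight = pav_approach if state_value >= 0 else pav_avoid
    weight_go = q_go + go_bias + pav_weight * state_value
    weight_nogo = q_nogo
    # Logistic (two-option softmax) choice rule on the action weights
    return 1.0 / (1.0 + math.exp(-(weight_go - weight_nogo)))
```

With this parameterization, raising `pav_avoid` lowers the go probability only in punishment contexts, whereas an attenuated approach bias would correspond to a smaller `pav_approach` that affects reward contexts alone. The two effects can therefore be estimated separately rather than being absorbed into a single bias term.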

In sum, research into the computational parameters of anxiety, while in its infancy, reveals effects that might differ in important ways from those in anhedonia, and understanding how these interact in the clinical manifestation of mixed anxiety and depression is a key unresolved question.

What Are the Clinical Implications?

The onset and causes of mood disorders remain puzzling (Kendler & Halberstadt, 2013). The efficacy of our dominant treatment strategies, pharmacotherapy and psychological therapy (NICE, 2014), is unpredictable. The increasing empirical focus in the mood disorders literature on reinforcement processes has been accompanied by increasing acknowledgment of the antidepressant potential of dopaminergic agents (e.g., Fawcett et al., 2016; Racagni, Canonico, Ravizza, Pani, & Amore, 2004), which may act by influencing RL processes. As such, understanding individual differences in reward pathways, for example, using RL paradigms, may offer an effective way to stratify patients and predict treatment response. For example, SSRIs may show reduced efficacy for individuals with high levels of anhedonia and, hence, deficits in reward-related behavior (Vrieze et al., 2012), while there may be learning signatures that indicate that an individual will respond well to psychological intervention (see, e.g., Culver, Vervliet, & Craske, 2015). Future work integrating computational approaches with clinical trials may ultimately improve our ability to target treatments.

Overall Summary

The premise of the present review is that models of learning and choice can help illuminate the core symptoms of mood disorders. Indeed, we find some empirical support for the role of computational model parameters in explaining variability in behavioral and neural responses to obtaining rewards in anhedonia. Most notably, the reviewed data highlight, in contrast to the typical focus on reduced reward or enhanced punishment sensitivity, a potential role for attenuated response to Pavlovian biases, increased choice variability captured by temperature, and reduced drift rates in reaction times in driving anhedonia-linked behavioral variability. These changes may cumulatively manifest as the attenuated striatal response to rewards that is often observed in neuroimaging studies. Critically, the adoption of computational methods has brought to light factors that would have been difficult to identify previously. Our final five unresolved questions build on the insights from the reviewed findings: Resolving these questions will, we hope, take us a step closer to understanding the precise nature of the behavioral deficits underlying mood disorders and to the ultimate goal of improving outcomes for patients.

Funding Information

Oliver J. Robinson is funded by a Medical Research Council Career Development Award (MR/K024280/1). Henry W. Chase is supported by NIMH award 1R21MH108421-01A1.

Footnotes

Author Contributions

Oliver J. Robinson and Henry W. Chase contributed equally to this article.

Competing Interests: The authors declare no conflicts of interest.

Code used in these simulations has been made available online at https://doi.org/10.6084/m9.figshare.5103322.v1 (Chase, 2017).

Supporting Information: https://figshare.com/articles/risk_simulations/5103322

References

- Admon R, Kaiser RH, Dillon DG, Beltzer M, Goer F, Olson DP, et al. Pizzagalli DA. Dopaminergic enhancement of striatal response to reward in major depression. American Journal of Psychiatry. 2017;174:378–386. doi: 10.1176/appi.ajp.2016.16010111.

- Admon R, Nickerson LD, Dillon DG, Holmes AJ, Bogdan R, Kumar P, et al. Pizzagalli DA. Dissociable corticostriatal connectivity abnormalities in major depression in response to monetary gains and penalties. Psychological Medicine. 2015;45:121–131. doi: 10.1017/S0033291714001123.

- Admon R, Pizzagalli DA. Corticostriatal pathways contribute to the natural time course of positive mood. Nature Communications. 2015;6:Article 10065. doi: 10.1038/ncomms10065.

- Argyropoulos SV, Nutt DJ. Anhedonia revisited: Is there a role for dopamine-targeting drugs for depression? Journal of Psychopharmacology. 2013;27:869–877. doi: 10.1177/0269881113494104.

- Arrondo G, Segarra N, Metastasio A, Ziauddeen H, Spencer J, Reinders NR, et al. Murray GK. Reduction in ventral striatal activity when anticipating a reward in depression and schizophrenia: A replicated cross-diagnostic finding. Frontiers in Psychology. 2015;6:Article 1280. doi: 10.3389/fpsyg.2015.01280.

- Aylward J, Hales C, Robinson E, Robinson OJ. Back-translating a rodent measure of negative bias into humans: The impact of induced anxiety and unmedicated mood and anxiety disorders. bioRxiv. 2017. doi: 10.1101/143453.

- Beddington J, Cooper CL, Field J, Goswami U, Huppert FA, Jenkins R, et al. Thomas SM. The mental wealth of nations. Nature. 2008;455:1057–1060. doi: 10.1038/4551057a.

- Beevers CG, Worthy DA, Gorlick MA, Nix B, Chotibut T, Maddox WT. Influence of depression symptoms on history-independent reward and punishment processing. Psychiatry Research. 2013;207(1–2):53–60. doi: 10.1016/j.psychres.2012.09.054.

- Berridge KC, Robinson TE. Parsing reward. Trends in Neurosciences. 2003;26:507–513. doi: 10.1016/S0166-2236(03)00233-9.

- Blanco NJ, Otto AR, Maddox WT, Beevers CG, Love BC. The influence of depression symptoms on exploratory decision-making. Cognition. 2013;129:563–568. doi: 10.1016/j.cognition.2013.08.018.

- Bogacz R, Brown E, Moehlis J, Holmes P, Cohen JD. The physics of optimal decision making: A formal analysis of models of performance in two-alternative forced-choice tasks. Psychological Review. 2006;113:700–765. doi: 10.1037/0033-295X.113.4.700.

- Box GEP. Science and statistics. Journal of the American Statistical Association. 1976;71:791–799. doi: 10.2307/2286841.

- Browning M, Behrens TE, Jocham G, O’Reilly JX, Bishop SJ. Anxious individuals have difficulty learning the causal statistics of aversive environments. Nature Neuroscience. 2015;18:590–596. doi: 10.1038/nn.3961.

- Bush RR, Mosteller F. Stochastic models for learning. John Wiley; Oxford, England: 1955.

- Bylsma LM, Morris BH, Rottenberg J. A meta-analysis of emotional reactivity in major depressive disorder. Clinical Psychology Review. 2008;28:676–691. doi: 10.1016/j.cpr.2007.10.001.

- Cavanagh JF, Bismark AJ, Frank MJ, Allen JJ. Larger error signals in major depression are associated with better avoidance learning. Frontiers in Psychology. 2011;2:Article 331. doi: 10.3389/fpsyg.2011.00331.

- Cavanagh JF, Eisenberg I, Guitart-Masip M, Huys Q, Frank MJ. Frontal theta overrides Pavlovian learning biases. Journal of Neuroscience. 2013;33:8541–8548. doi: 10.1523/JNEUROSCI.5754-12.2013.

- Charpentier CJ, Aylward J, Roiser JP, Robinson OJ. Enhanced risk aversion, but not loss aversion, in unmedicated pathological anxiety. Biological Psychiatry. 2016;81:1014–1022. doi: 10.1016/j.biopsych.2016.12.010.

- Chase H. Risk simulations. figshare. 2017. doi: 10.6084/m9.figshare.5103322.v1. Retrieved Nov 28, 2017.

- Chase HW, Fournier JC, Bertocci MA, Greenberg T, Aslam H, Stiffler R, et al. A pathway linking reward circuitry, impulsive sensation-seeking and risky decision-making in young adults: Identifying neural markers for new interventions. Translational Psychiatry. 2017;7(4):e1096. doi: 10.1038/tp.2017.60.

- Chase HW, Frank MJ, Michael A, Bullmore ET, Sahakian BJ, Robbins TW. Approach and avoidance learning in patients with major depression and healthy controls: Relation to anhedonia. Psychological Medicine. 2010;40:433–440. doi: 10.1017/S0033291709990468.

- Chase HW, Michael A, Bullmore ET, Sahakian BJ, Robbins TW. Paradoxical enhancement of choice reaction time performance in patients with major depression. Journal of Psychopharmacology. 2010;24:471–479. doi: 10.1177/0269881109104883.

- Chase HW, Nusslock R, Almeida JR, Forbes EE, LaBarbara EJ, Phillips ML. Dissociable patterns of abnormal frontal cortical activation during anticipation of an uncertain reward or loss in bipolar versus major depression. Bipolar Disorders. 2013;15:839–854. doi: 10.1111/bdi.12132.

- Christianson JP, Paul ED, Irani M, Thompson BM, Kubala KH, Yirmiya R, et al. The role of prior stressor controllability and the dorsal raphe nucleus in sucrose preference and social exploration. Behavioural Brain Research. 2008;193:87–93. doi: 10.1016/j.bbr.2008.04.024.

- Chung D, Kadlec K, Aimone JA, McCurry K, King-Casas B, Chiu PH. Valuation in major depression is intact and stable in a non-learning environment. Scientific Reports. 2017;7:Article 44374. doi: 10.1038/srep44374.

- Clery-Melin ML, Schmidt L, Lafargue G, Baup N, Fossati P, Pessiglione M. Why don’t you try harder? An investigation of effort production in major depression. PLoS ONE. 2011;6(8):e23178. doi: 10.1371/journal.pone.0023178.

- Collins AG, Brown JK, Gold JM, Waltz JA, Frank MJ. Working memory contributions to reinforcement learning impairments in schizophrenia. Journal of Neuroscience. 2014;34:13747–13756. doi: 10.1523/JNEUROSCI.0989-14.2014.

- Collins AG, Frank MJ. How much of reinforcement learning is working memory, not reinforcement learning? A behavioral, computational, and neurogenetic analysis. European Journal of Neuroscience. 2012;35:1024–1035. doi: 10.1111/j.1460-9568.2011.07980.x.

- Cools R, Barker RA, Sahakian BJ, Robbins TW. Enhanced or impaired cognitive function in Parkinson’s disease as a function of dopaminergic medication and task demands. Cerebral Cortex. 2001;11:1136–1143. doi: 10.1093/cercor/11.12.1136.

- Culver NC, Vervliet B, Craske MG. Compound extinction using the Rescorla–Wagner model to maximize exposure therapy effects for anxiety disorders. Clinical Psychological Science. 2015;3:335–348.

- Davis M, Walker DL, Miles L, Grillon C. Phasic vs. sustained fear in rats and humans: Role of the extended amygdala in fear vs anxiety. Neuropsychopharmacology. 2010;35:105–135. doi: 10.1038/npp.2009.109.

- Daw ND. Trial-by-trial data analysis using computational models. In: Delgado MR, Phelps EA, Robbins TW, editors. Decision making, affect, and learning: Attention and performance XXIII. Oxford, England: Oxford University Press; 2011. pp. 3–38.

- Daw ND, Kakade S, Dayan P. Opponent interactions between serotonin and dopamine. Neural Networks. 2002;15:603–616. doi: 10.1016/S0893-6080(02)00052-7.

- DelDonno SR, Weldon AL, Crane NA, Passarotti AM, Pruitt PJ, Gabriel LB, et al. Langenecker SA. Affective personality predictors of disrupted reward learning and pursuit in major depressive disorder. Psychiatry Research. 2015;230:56–64. doi: 10.1016/j.psychres.2015.08.011.

- Delgado MR, Li J, Schiller D, Phelps EA. The role of the striatum in aversive learning and aversive prediction errors. Philosophical Transactions of the Royal Society, Series B. 2008;363:3787–3800. doi: 10.1098/rstb.2008.0161.