Abstract

Robust perception of self-motion requires integration of visual motion signals with non-visual cues. Neurons in area MSTd may be involved in this sensory integration, as they respond selectively to global patterns of optic flow, as well as translational motion in darkness. Using a virtual reality system, we have characterized the three-dimensional (3D) tuning of MSTd neurons to heading directions defined by optic flow alone, inertial motion alone, and congruent combinations of the two cues. Among 255 MSTd neurons, 98% exhibited significant 3D heading tuning in response to optic flow, whereas 64% were selective for heading defined by inertial motion. Heading preferences for visual and inertial motion could be aligned, but were just as frequently opposite. Moreover, heading selectivity in response to congruent visual/vestibular stimulation was typically weaker than that obtained using optic flow alone, and heading preferences under congruent stimulation were dominated by the visual input. Thus, MSTd neurons did not integrate visual and non-visual cues to achieve better heading selectivity. A simple two-layer neural network, which received eye-centered visual inputs and head-centered vestibular inputs, reproduced the major features of the MSTd data. The network was trained to compute heading in a head-centered reference frame under all stimulus conditions, such that it performed a selective reference frame transformation of visual, but not vestibular, signals. The similarity between network hidden units and MSTd neurons suggests that MSTd is an early stage of sensory convergence involved in transforming optic flow information into a (head-centered) reference frame that facilitates integration with vestibular signals.

Keywords: monkey, MST, optic flow, heading, visual, vestibular

Introduction

For many common behaviors, it is important to know one's direction of heading (here, we consider heading to be the instantaneous direction of translation of one's head/body in space). Many psychophysical and theoretical studies have shown that visual information—specifically, the pattern of optic flow across the retina—plays an important role in computing heading (see Warren, 2003 for review). However, eye movements, head movements, and object motion all confound the optic flow that results from head translation, such that visual information alone is not always sufficient to judge heading accurately (e.g., Warren and Hannon, 1990; Royden et al., 1992; Royden, 1994; Banks et al., 1996; Royden and Hildreth, 1996; Crowell et al., 1998).

For this reason, heading perception often requires the integration of visual motion information with non-visual cues, which may include vestibular, eye/head movement, and proprioceptive signals. Vestibular signals regarding translation are encoded by the otolith organs, which sense linear accelerations of the head through space (Fernandez and Goldberg, 1976a, b). Vestibular contributions to heading perception have not been studied extensively, but there is evidence that humans integrate visual and vestibular signals to estimate heading more robustly (Telford et al., 1995; Ohmi, 1996; Harris et al., 2000; Bertin and Berthoz, 2004). Little is known, however, about how or where this sensory integration takes place in the brain.

In monkeys, several cortical areas (MST, VIP, 7a, STP) are involved in coding patterns of optic flow that typically result from self-motion (Tanaka et al., 1986; Tanaka et al., 1989; Duffy and Wurtz, 1991; Schaafsma and Duysens, 1996; Siegel and Read, 1997; Anderson and Siegel, 1999; Bremmer et al., 2002a; Bremmer et al., 2002b). The dorsal subdivision of MST (MSTd) has been a main focus of investigation, since single neurons in MSTd appear well-suited to signal heading based on optic flow (Duffy and Wurtz, 1995). In addition, electrical microstimulation of MSTd can bias monkeys' heading percepts based on optic flow (Britten and van Wezel, 1998; Britten and Van Wezel, 2002), and lesions to the human homologue of MST can seriously impair one's ability to navigate using optic flow (Vaina, 1998). Thus, MSTd appears to contribute to heading judgments based on optic flow.

Recent studies have also shown that MSTd neurons respond to translation of the body in darkness, suggesting that they might integrate visual and vestibular signals to code heading more robustly (Duffy, 1998; Bremmer et al., 1999; Page and Duffy, 2003). To test this hypothesis further, we have developed a virtual reality system that can move animals along arbitrary paths through a three-dimensional (3D) virtual environment. Importantly, motion trajectories are dynamic (Gaussian velocity profile) such that coding of velocity and acceleration can be distinguished. Moreover, the dynamic pattern of optic flow is precisely matched to the inertial motion of the animal, and the system allows testing of all directions of translation in 3D space. We have used this system to measure the 3D heading tuning of MSTd neurons under conditions in which heading is defined by optic flow only, inertial motion only, or congruent combinations of the two cues. Our physiological findings do not support the idea that MSTd neurons combine sensory cues to code heading more robustly, but modeling does provide an alternate explanation for visual/vestibular convergence in MSTd.

Materials and Methods

Subjects and surgery

Physiological experiments were performed in two male rhesus monkeys (Macaca mulatta) weighing 4–6 kg. The animals were chronically implanted with a circular molded, lightweight plastic ring (5 cm in diameter) that was anchored to the skull using titanium inverted T-bolts and dental acrylic. The ring was placed in the horizontal plane with the center at A-P 0. During experiments, the monkey’s head was firmly anchored to the apparatus by attaching a custom-fitting collar to the plastic ring. Both monkeys were also implanted with scleral coils for measuring eye movements in a magnetic field (Robinson, 1963). After sufficient recovery, animals were trained using standard operant conditioning to fixate visual targets for fluid reward.

Once the monkeys were sufficiently trained, a recording grid (2 × 4 × 0.5 cm) constructed of plastic (Delrin) was fitted inside the ring and stereotaxically secured to the skull using dental acrylic. The grid was placed in the horizontal plane as close as possible to the surface of the skull. The grid contained staggered rows of holes (spaced 0.8 mm apart) that allowed insertion of microelectrodes vertically into the brain via transdural guide tubes that were passed through a small burr hole in the skull (Dickman and Angelaki, 2002). The grid extended from the midline to the area overlying MST bilaterally. All animal surgeries and experimental procedures were approved by the Institutional Animal Care and Use Committee at Washington University and were in accordance with NIH guidelines.

Motion platform and visual stimuli

Translation of the monkey along any arbitrary axis in 3D space was accomplished using a 6 degree-of-freedom motion platform (MOOG 6DOF2000E) (Fig. 1A). Monkeys sat comfortably in a primate chair mounted on top of the platform and inside the magnetic field coil frame. The trajectory of inertial motion was controlled in real time at 60 Hz over an Ethernet interface. This system has a substantial temporal bandwidth, with a 3 dB cutoff at 2 Hz, a maximum acceleration of ±0.6 G, and a maximum excursion of approximately ±20 cm along each axis of translation. Feedback was provided at 60 Hz from optical encoders on each of the 6 movement actuators, allowing accurate measurement of platform motion.

Figure 1.

Experimental setup and heading stimuli. A) Schematic illustration of the ‘virtual reality’ apparatus. The monkey, eye-movement monitoring system (field coil), and projector sit on top of a motion platform with 6 degrees of freedom. B) Illustration of the 26 movement vectors used to measure 3D heading tuning curves. C) Normalized population responses to visual and vestibular stimuli (gray curves) are superimposed on the stimulus velocity and acceleration profiles (solid and dashed black lines). The dotted vertical lines illustrate the 1 s analysis interval used to calculate mean firing rates.

A 3-chip DLP projector (Christie Digital Mirage 2000) was mounted on top of the motion platform to rear-project images onto a 60 × 60 cm tangent screen that was viewed by the monkey from a distance of 30 cm (thus subtending 90 × 90° of visual angle) (Fig. 1A). This projector incorporates special circuitry such that image updating is precisely time-locked to the vertical refresh of the video input (with a one-frame delay). The tangent screen was mounted on the front of the field coil frame. The sides, top and back of the coil frame were covered with black enclosures such that the monkey’s field of view was restricted to visual stimuli presented on the screen. The visual display, with a pixel resolution of 1280 × 1024 and 32-bit color depth, was updated at the same rate as the movement trajectory (60 Hz). Visual stimuli were generated by an OpenGL accelerator board (nVidia Quadro FX3000G), which was housed in a dedicated dual-processor PC. Visual stimuli were plotted with sub-pixel accuracy using hardware anti-aliasing.

In these experiments, visual stimuli depicted movement of the observer through a 3D cloud of ‘stars’ that occupied a virtual space 100 cm wide, 100 cm tall, and 40 cm deep. Star density was 0.01/cm³, with each star being a 0.15 cm × 0.15 cm yellow triangle. Roughly 1500 stars were visible at any time within the field of view of the screen. Accurate rendering of the optic flow, motion parallax, and size cues that accompanied translation of the monkey was achieved by plotting the star field in a 3D virtual workspace and by moving the OpenGL ‘camera’ through this space along the exact trajectory followed by the monkey’s head. All visual stimuli were presented dichoptically at zero disparity (i.e., there were no stereo cues). The display screen was located in the center of the star field before stimulus onset and remained well within the depth of the star field throughout the motion trajectory. To prevent extremely large (near) stars from appearing in the display, a near clipping plane was imposed such that stimulus elements within 5 cm of the eyes were not rendered.

Platform motion and optic flow stimuli could be presented either together or separately (see Experimental Protocol). During simultaneous presentation, stimuli were synchronized by eliminating time lags between platform motion and updating of the visual display using predictive control. In our apparatus, feedback from the motion platform actuators has a one-frame delay (16.7 ms), and there is an additional one-frame delay in the output of the projector. Thus, if we simply used feedback to directly update the visual display, there would be at least a 30–40 ms lag between platform motion and visual motion. To overcome this problem, we performed a dynamical systems analysis of the motion platform and constructed a transfer function that could be used to accurately predict platform motion from the command signal for a desired trajectory. We then time-shifted the predicted position of the platform such that visual motion was synchronous with platform motion (to within ~1 ms). To fine-tune the synchronization of visual and inertial motion stimuli, a world-fixed laser projected a small spot on the tangent screen, and images of a world-fixed cross hair were also rendered on the screen by the video card. While the platform was moved, a delay parameter in the software was adjusted carefully (1 ms resolution) until the laser spot and the cross hair moved precisely together. This synchronization was verified occasionally during the period of data collection.

To evaluate the accuracy of predictions from the transfer function, we input low-pass filtered Gaussian white noise (20 dB cutoff at 4 Hz) as the command signal, and we compared the measured feedback signal (from the actuators) to the predicted position of the platform. We quantified the deviation of the prediction by computing the normalized RMS error between predicted and actual motion:

| (1) |

where Pf is measured feedback position and Pp is the position predicted by the transfer function. The result of equation (1) estimates the error relative to the signal. The normalized error was 0.038 for our noise input, indicating a close match between measured and predicted position of the platform (correlation coefficient = 0.998, p<<0.001, n = 361 samples at 60 Hz). Thus, our dynamic characterization of the motion platform allowed highly synchronous and accurate combinations of visual and inertial motion to be presented.
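The comparison between measured and predicted platform position can be illustrated with a minimal sketch. It assumes that "normalized" means the RMS of the prediction error divided by the RMS of the measured signal, which is one reading of "error relative to the signal"; the exact form used in equation (1) may differ. The function name and the sinusoidal test trace are hypothetical.

```python
import numpy as np

def normalized_rms_error(measured, predicted):
    """RMS prediction error divided by the RMS of the measured signal.

    ASSUMPTION: normalizing by the RMS of the measured position is our
    reading of "error relative to the signal", not a confirmed formula.
    """
    measured = np.asarray(measured, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    error_rms = np.sqrt(np.mean((measured - predicted) ** 2))
    signal_rms = np.sqrt(np.mean(measured ** 2))
    return error_rms / signal_rms

# Hypothetical data: 361 samples at 60 Hz of a 1 Hz position trace (cm),
# with a prediction that is uniformly 2% too small.
t = np.arange(361) / 60.0
measured = 5.0 * np.sin(2.0 * np.pi * 1.0 * t)
predicted = 0.98 * measured
error = normalized_rms_error(measured, predicted)
print(error)
```

Under this definition a value of 0 means a perfect prediction, and the value scales with the fraction of the signal amplitude that the prediction misses.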

Electrophysiological recordings

We recorded extracellularly the activities of single neurons from three hemispheres in two monkeys. A tungsten microelectrode (Frederick Haer, Bowdoinham, ME; tip diameter 3 μm, impedance 1–2 MΩ at 1 kHz) was advanced into the cortex through a transdural guide tube, using a micromanipulator (Frederick Haer, Bowdoinham, ME) mounted on top of the Delrin ring. Single neurons were isolated using a conventional amplifier, a bandpass 8-pole filter (400–5000 Hz), and a dual voltage-time window discriminator (Bak Electronics, Mount Airy, MD). The times of occurrence of action potentials and all behavioral events were recorded with 1 ms resolution by the data acquisition computer. Eye movement traces were low-pass filtered and sampled at 250 Hz. Raw neural signals were also digitized at 25 kHz and stored to disk for offline spike sorting and additional analyses.

Area MSTd was first identified using MRI scans. An initial scan was performed on each monkey prior to any surgeries using a high-resolution sagittal MPRAGE sequence (0.75 mm × 0.75 mm × 0.75 mm voxels). SUREFIT software (Van Essen et al., 2001) was used to segment gray matter from white matter. A second scan was performed after the head holder and recording grid had been surgically implanted. Small cannulae filled with a contrast agent (Gadoversetamide) were inserted into the recording grid during the second scan to register electrode penetrations with the MRI volume. The MRI data were converted to a flat map using CARET software (Van Essen et al., 2001) and the flat map was morphed to match a standard macaque atlas. The data were then refolded and transferred onto the original MRI volume. Thus MRI images were obtained showing the functional boundaries between different cortical areas, along with the expected trajectories of electrode penetrations through the guide tubes. Area MSTd was identified as a region centered about 15 mm lateral to the midline and ~3–6 mm posterior to the interaural plane.

Several other criteria were applied to identify MSTd neurons during recording experiments. First, the patterns of gray and white matter transitions along electrode penetrations were identified. MSTd was usually the first gray matter encountered that modulated its responses to flashing visual stimuli. Second, we mapped MSTd neurons’ receptive fields (RFs) manually by moving a patch of drifting random dots around the visual field and observing a qualitative map of instantaneous firing rates on a custom graphical interface. MSTd neurons typically had large RFs that occupied a quadrant or a hemi-field on the display screen. In most cases, RFs were centered in the contralateral visual field but also extended into the ipsilateral field and included the fovea. Many of the RFs were well contained within the boundaries of our display screen, but some RFs clearly extended beyond the boundaries of the screen. The average RF size was 44°±8° × 58°±13° s.e., which is similar to RF sizes reported previously for MSTd (Van Essen et al., 1981; Desimone and Ungerleider, 1986; Komatsu and Wurtz, 1988b). Moreover, MSTd neurons usually were activated only by large visual stimuli (random-dot patches >10° × 10°), with smaller patches typically evoking little response. These properties are typical of neurons in area MSTd, and distinct from area MSTl (Komatsu and Wurtz, 1988a, b; Tanaka et al., 1993).

To further aid identification of recording locations, electrodes were often further advanced into area MT. There was usually a quiet region 0.3–1 mm long before MT was reached, which helped confirm the localization of MSTd. MT neurons were identified according to several properties including smaller receptive fields (diameter ~ eccentricity), sensitivity to small visual stimuli as well as large stimuli, and similar direction preferences within penetrations roughly normal to the cortical layers (Albright et al., 1984). The changes in receptive field location of MT neurons across guide tube locations were as expected from the known topography of MT (Zeki, 1974; Gattass and Gross, 1981; Van Essen et al., 1981; Desimone and Ungerleider, 1986; Albright and Desimone, 1987; Maunsell and Van Essen, 1987). Thus, we took advantage of the retinotopic organization of MT receptive fields to help identify the locations of our electrodes within MSTd (as described in Fig. 3).

Figure 3.

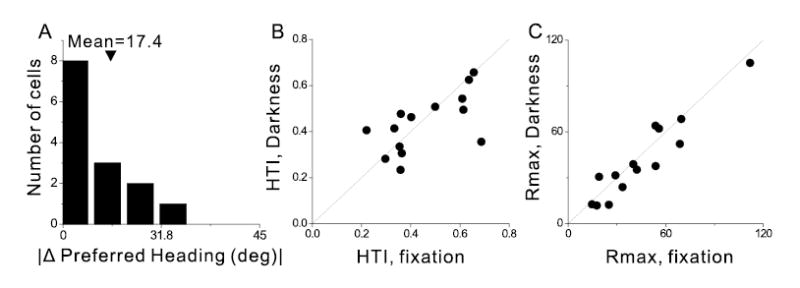

Summary of heading tuning in response to inertial motion in the Vestibular condition versus complete darkness. A) Distribution of the differences in preferred heading for 14 neurons tested under the standard Vestibular condition, with a fixation target, and in complete darkness with no requirement to fixate. The difference in preferred heading was binned according to the cosine of the angle (in accordance with the spherical nature of the data) (Snyder, 1987). B) Scatter plot of HTI values for the same 14 cells tested under both conditions. C) Scatter plot of the maximum response amplitude (Rmax) under both conditions.

Experimental protocol

Once action potentials from a single MSTd neuron were satisfactorily isolated, the RF was mapped as described above. Next, regardless of the strength of visual responses, we tested the 3D heading tuning of the neuron by recording neural activity to heading stimuli presented along 26 heading directions corresponding to all combinations of azimuth and elevation angles in increments of 45 degrees (Fig. 1B). The stimuli were presented for a duration of 2 seconds, although most of the movement occurred within the middle 1 second. The stimulus trajectory had a Gaussian velocity profile and a corresponding biphasic acceleration profile. The motion amplitude was 13 cm (total displacement), with a peak acceleration of ~0.1 G (~0.98 m/s²), and a peak velocity of ~30 cm/s (Fig. 1C). For inertial motion, these accelerations far exceed vestibular thresholds (see Gundry, 1978 for review; Benson et al., 1986; Kingma, 2005).
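The stimulus set described above can be sketched as follows. The 26 directions follow from 8 azimuths at each of three elevations (−45°, 0°, +45°) plus the two poles. The Gaussian width (`sigma = 0.173` s) is not stated in the text; it is an assumed value chosen so that a 13 cm displacement yields a peak velocity near 30 cm/s, and the function names are illustrative.

```python
import numpy as np

def heading_directions():
    """(azimuth, elevation) pairs in degrees: 8 azimuths at each of 3
    elevations plus the two poles, giving 26 directions."""
    directions = [(0, -90), (0, 90)]          # poles; azimuth is arbitrary there
    for ele in (-45, 0, 45):
        for azi in range(0, 360, 45):
            directions.append((azi, ele))
    return directions

def gaussian_velocity(t, duration=2.0, displacement=13.0, sigma=0.173):
    """Velocity (cm/s) at time t (s) for a Gaussian profile centred on the
    stimulus midpoint. ASSUMPTION: sigma is inferred, not given in the text."""
    peak = displacement / (sigma * np.sqrt(2.0 * np.pi))
    return peak * np.exp(-0.5 * ((t - duration / 2.0) / sigma) ** 2)

directions = heading_directions()
t = np.linspace(0.0, 2.0, 2001)               # 1 ms steps over the 2 s stimulus
dt = t[1] - t[0]
velocity = gaussian_velocity(t)               # cm/s
acceleration = np.gradient(velocity, t)       # cm/s^2, biphasic
total_displacement = np.sum(velocity) * dt    # cm

print(len(directions))                        # number of heading directions
print(velocity.max())                         # peak velocity, cm/s
print(acceleration.max() / 981.0)             # peak acceleration, in G
print(total_displacement)                     # total displacement, cm
```

With this assumed width, the peak acceleration also comes out close to the ~0.1 G quoted above, and nearly all of the displacement falls within the middle 1 s of the stimulus.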

The experimental protocol included three primary stimulus conditions: 1) In the Vestibular condition, the monkey was moved along each of the 26 heading trajectories in the absence of optic flow. The screen was blank, except for a head-centered fixation point. Note that we refer to this as the ‘Vestibular’ condition for simplicity, even though other extra-retinal signal contributions (e.g., from body proprioception) cannot be excluded. 2) In the Visual condition, the motion platform was stationary while optic flow simulating movement through the cloud of stars was presented on the screen. 3) In the Combined condition, the animal was moved using the motion platform while a congruent optic flow stimulus was presented. To measure the spontaneous activity of each neuron, additional trials without platform motion or optic flow were interleaved, resulting in a total of 395 trials (including 5 repetitions of each distinct stimulus). During all three cue conditions, the animal was required to fixate a central target (0.2° in diameter), which was introduced first in each trial and had to be fixated for 200 ms before stimulus onset (fixation windows spanned 1.5° × 1.5° of visual angle). The animals were rewarded at the end of each trial for maintaining fixation throughout stimulus presentation. If fixation was broken at any time during the stimulus, the trial was aborted and the data were discarded. Neurons were included in the sample if each stimulus was successfully repeated at least 3 times. Across our sample of MSTd neurons, 85% of cells were isolated long enough for at least 5 stimulus repetitions.

In some experiments, binocular eye movements were monitored to evaluate possible changes in vergence angle during stimulus presentation. Vergence angle was computed as the average difference in position of the two eyes over the middle 1 s interval of the Gaussian velocity profile. Because changes in vergence angle can be elicited by radial optic flow under some circumstances (Busettini et al., 1997), we examined how vergence angle depended on heading direction within the horizontal plane (8 azimuth angles, 45 deg apart). We found no significant dependence of vergence angle on heading direction in any of the three stimulus conditions (One-way ANOVA, p>0.05, see Supplementary Figure 1).

For a subpopulation of MSTd neurons that showed significant tuning in the Vestibular condition, neural responses were also collected during platform motion along each of the 26 different directions in complete darkness (with the projector turned off). In these controls, there was no behavioral requirement to fixate and rewards were delivered manually to keep the animal motivated.

Data analysis

Because the responses of MSTd neurons largely followed stimulus velocity (Fig. 1C), mean firing rates were computed during the middle 1 s interval of each stimulus presentation. When longer duration analyses were used (i.e., 1.5 or 2 s), results were very similar. To quantify the strength of heading tuning for each of the Vestibular, Visual and Combined conditions, the mean firing rate in each trial was considered to represent the magnitude of a 3D vector whose direction was defined by the azimuth and elevation angles of the respective movement trajectory. A Heading Tuning Index (HTI) was then computed as the magnitude of the vector sum of these individual response vectors, normalized by the sum of the magnitudes of the individual response vectors, according to the following equation:

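$$\mathrm{HTI} = \frac{\left|\sum_{i=1}^{n} \mathbf{R}_i\right|}{\sum_{i=1}^{n} \left|\mathbf{R}_i\right|} \tag{2}$$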

where Ri is the mean firing rate for the ith stimulus direction after subtraction of spontaneous activity, and n corresponds to the number of different heading directions tested. The HTI ranges from 0 to 1 (weak to strong tuning). Its statistical significance was assessed using a permutation test based on 1000 random reshufflings of the stimulus directions. The preferred heading direction for each stimulus condition was computed from the azimuth and elevation of the vector sum of the individual responses (numerator of equation 2).
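As an illustration of the index and its permutation test, the following sketch computes the HTI from baseline-subtracted mean rates and the 26 heading directions. It makes two simplifying assumptions: the shuffle is applied to per-direction mean rates rather than to individual trials, and elevation is mapped to the z-axis with positive values upward (the HTI itself does not depend on that sign convention). Function names and the cosine-tuned example neuron are hypothetical.

```python
import numpy as np

def direction_grid():
    """Unit vectors (26 x 3) for 8 azimuths x 3 elevations plus two poles."""
    pairs = [(0, -90), (0, 90)]
    pairs += [(azi, ele) for ele in (-45, 0, 45) for azi in range(0, 360, 45)]
    azi, ele = np.radians(np.array(pairs, dtype=float)).T
    return np.stack([np.cos(ele) * np.cos(azi),
                     np.cos(ele) * np.sin(azi),
                     np.sin(ele)], axis=1)

def heading_tuning_index(rates, directions):
    """|vector sum of responses| / sum of |responses|, in the range 0 to 1."""
    vector_sum = (rates[:, None] * directions).sum(axis=0)
    return np.linalg.norm(vector_sum) / np.abs(rates).sum()

def permutation_p_value(rates, directions, n_perm=1000, seed=0):
    """Fraction of shuffles whose HTI is at least the observed HTI.
    ASSUMPTION: shuffles per-direction means, a simplification."""
    rng = np.random.default_rng(seed)
    observed = heading_tuning_index(rates, directions)
    null = np.array([heading_tuning_index(rng.permutation(rates), directions)
                     for _ in range(n_perm)])
    return float(np.mean(null >= observed))

dirs = direction_grid()
# Hypothetical neuron: cosine tuning around the +x axis, 10 spikes/s modulation.
rates = 10.0 * dirs @ np.array([1.0, 0.0, 0.0])
hti = heading_tuning_index(rates, dirs)
p_value = permutation_p_value(rates, dirs)
flat_hti = heading_tuning_index(np.ones(26), dirs)   # untuned responses
print(hti, p_value, flat_hti)
```

An untuned response (equal rates in all directions) gives an HTI near 0 because the 26 direction vectors cancel, whereas a response confined to a single direction gives an HTI of 1.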

Our heading tuning functions are intrinsically spherical in nature because we have sampled heading directions uniformly around the sphere (Fig. 1B). To plot these spherical data on Cartesian axes (e.g., Fig. 2), we have transformed the data using the Lambert cylindrical equal-area projection (Snyder, 1987). In these flattened representations of the data, the abscissa represents the azimuth angle, and the ordinate represents a sinusoidally transformed version of the elevation angle.
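The flattening step can be sketched in a few lines. In the Lambert cylindrical equal-area projection, the horizontal coordinate is the azimuth and the vertical coordinate is the sine of the elevation, so that equal areas on the sphere map to equal areas on the plot. The function name is illustrative, and the sketch assumes angles given in degrees.

```python
import numpy as np

def lambert_cylindrical(azimuth_deg, elevation_deg):
    """Map spherical angles to plot coordinates.

    x is azimuth in radians; y is sin(elevation), which compresses the
    poles so that area on the sphere is preserved on the flat map.
    """
    x = np.radians(azimuth_deg)
    y = np.sin(np.radians(elevation_deg))
    return x, y

x_pole, y_pole = lambert_cylindrical(180.0, 90.0)
_, y_30 = lambert_cylindrical(0.0, 30.0)
print(x_pole, y_pole, y_30)
```

For example, the band between 0° and 30° elevation covers a quarter of the unit sphere's area (2π·0.5 out of 4π) and occupies a quarter of the map's height (0.5 out of 2).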

Figure 2.

Examples of 3D heading tuning functions for 3 MSTd neurons. Color contour maps show mean firing rate as a function of azimuth and elevation angles. Each contour map shows the Lambert cylindrical equal-area projection of the original spherical data (see Methods, Snyder, 1987). In this projection, the ordinate is a sinusoidally transformed version of elevation angle. Tuning curves along the margins of each color map illustrate mean (±SEM) firing rates plotted as a function of either elevation or azimuth (averaged across azimuth or elevation, respectively). Data from the Vestibular, Visual and Combined stimulus conditions are shown from left to right. A) Data from a neuron with congruent tuning for heading defined by visual and vestibular cues. B) Data from a neuron with opposite heading preferences for visual and vestibular stimuli. C) Data from a neuron with strong tuning for heading defined by optic flow, but no vestibular tuning. D) Definitions of azimuth and elevation angles used to define heading stimuli in 3D.

Mathematical description of heading tuning functions

To simulate the behavior of a network of units that resemble MSTd neurons, it was first necessary to obtain a simple mathematical model that could adequately describe the heading selectivity of MSTd cells. After evaluating several alternatives, we found that the 3D heading tuning of MSTd neurons could be well described by a Modified Sinusoid Function (MSF) having 5 free parameters:

| (3) |

where R is the response amplitude, azi is the azimuth angle (range: 0 to 2π) and ele is the elevation angle (range: –π/2 to π/2). The azi and ele variables in (3) have been expressed in rotated spherical coordinates such that the peak of the function, given by parameters (azip, elep), lies at the preferred heading of the neuron in 3D. Thus, the five free parameters are the preferred azimuth (azip), the preferred elevation (elep), response modulation amplitude (A), the baseline firing rate (DC), and the exponent parameter (n) of the nonlinearity G. The non-linear transformation G() is given by:

| (4) |

where n (constrained to be > 0) is the parameter that controls the nonlinearity. When n is close to zero, G(x) has no effect on the tuning curve. As n gets larger, this function amplifies and narrows the peak of the tuning function, while suppressing and broadening the trough. The operation []* in (3) represents normalization to the range [−1,1] following application of the nonlinearity. This normalization avoids confounding the nonlinearity parameter, n, with the amplitude and DC offset parameters. Goodness of fit was quantified by correlating the mean responses of neurons with the model fits (across heading directions).
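A minimal sketch of a tuning function with the properties described above is given below. Several choices are assumptions rather than the published equations: the nonlinearity is taken to be G(x) = (exp(n·x) − 1)/n, which reduces to x as n approaches zero; its argument is taken to be the cosine of the angle between the stimulus heading and the preferred heading; and the normalization is taken to be division by the peak value G(1). This combination happens to reproduce the [−1, 1] output range for the parameter values quoted for the network model (n = 1, A = 1.462, DC = −0.462), but other forms may do so as well.

```python
import numpy as np

def unit_vector(azi, ele):
    """Unit vector for azimuth and elevation given in radians."""
    return np.array([np.cos(ele) * np.cos(azi),
                     np.cos(ele) * np.sin(azi),
                     np.sin(ele)])

def G(x, n):
    """ASSUMED nonlinearity: tends to x as n -> 0, sharpens the peak as n grows."""
    return (np.exp(n * x) - 1.0) / n

def msf(azi, ele, azi_p, ele_p, A, DC, n):
    """Modified sinusoid sketch with five parameters (azi_p, ele_p, A, DC, n)."""
    # Cosine of the angle between the heading and the preferred heading.
    x = unit_vector(azi, ele) @ unit_vector(azi_p, ele_p)
    # ASSUMED normalization: scale so that the peak of G equals 1.
    g_norm = G(x, n) / G(1.0, n)
    return DC + A * g_norm

azi_p, ele_p = np.radians(90.0), np.radians(-30.0)    # hypothetical preference
peak = msf(azi_p, ele_p, azi_p, ele_p, A=1.462, DC=-0.462, n=1.0)
trough = msf(azi_p + np.pi, -ele_p, azi_p, ele_p, A=1.462, DC=-0.462, n=1.0)
print(peak, trough)
```

The response peaks at the preferred heading and reaches its minimum at the opposite heading; increasing `n` narrows the region around the peak while flattening the trough.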

Network model: design and training

A simple feedforward two-layer artificial neural network was implemented, trained using back propagation, and used to explore intermediate representations of visual and vestibular signals that might contribute to heading perception. The MSF described by equation 3 was used to characterize the heading tuning of input and output units in the model. The nonlinearity (n), amplitude (A), and DC response parameters in the MSF were set to 1, 1.462, and −0.462, respectively, so that the responses of input and output units were normalized to the range of [−1,1]. These values were chosen because they produced tuning functions similar to the majority of MSTd neurons. Hidden layer units in the network were characterized by hyperbolic tangent (sigmoid) activation functions, whereas the output layer units had linear activation functions. The network was fully connected, such that each hidden layer unit was connected to all inputs and each output unit was connected to all hidden layer units. It can be shown that such a network is capable of estimating an arbitrary function, given a sufficient number of hidden units (Bishop, 1995).

The network had 26 visual input units, each with a different heading preference around the sphere (spaced apart as in Fig. 1B). The 26 vestibular input units and 26 output units also had heading preferences spaced uniformly on the sphere. Visual input units coded heading in an eye-centered spatial reference frame, whereas vestibular input units and output units coded heading in a head-centered reference frame. The overall heading estimate of the network, resulting from the activity of the output units, was defined by a population vector (Georgopoulos et al., 1986) and was computed as the vector sum of the responses of the 26 output units. The network also received 12 eye position inputs, each with a response that was a linear function of eye position. Six units coded horizontal eye position (three different positive slopes and three different negative slopes) and 6 units coded vertical eye positions (with the same slopes).
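The population-vector readout can be sketched as a response-weighted sum of the output units' preferred directions. The sketch assumes the 26 preferred directions lie on the same 45° grid as the stimuli and uses simple cosine-tuned output responses; both are illustrative choices, and the function names are hypothetical.

```python
import numpy as np

def direction_grid():
    """Unit vectors (26 x 3) for 8 azimuths x 3 elevations plus two poles."""
    pairs = [(0, -90), (0, 90)]
    pairs += [(azi, ele) for ele in (-45, 0, 45) for azi in range(0, 360, 45)]
    azi, ele = np.radians(np.array(pairs, dtype=float)).T
    return np.stack([np.cos(ele) * np.cos(azi),
                     np.cos(ele) * np.sin(azi),
                     np.sin(ele)], axis=1)

def population_vector(responses, preferred_dirs):
    """Sum each unit's preferred direction weighted by its response,
    then normalize to obtain a unit heading estimate."""
    v = responses @ preferred_dirs
    return v / np.linalg.norm(v)

preferred = direction_grid()
true_heading = np.array([1.0, 0.0, 0.0])
# Hypothetical output-unit responses: cosine tuning around the true heading.
responses = preferred @ true_heading
estimate = population_vector(responses, preferred)
print(estimate)
```

Note that this 26-point grid is not perfectly isotropic, so with plain cosine tuning the simple vector sum is exact for cardinal headings but can be biased by a few degrees for oblique ones.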

The network was trained to compute the correct direction of heading, in a head-centered reference frame, under each of the simulated Vestibular, Visual and Combined conditions. For all combinations of heading directions and eye positions, network connection weights were adjusted to minimize the sum squared error between the actual outputs of the network and the desired outputs, plus the sum of the absolute values of all network weights and biases. The second term caused training to prefer networks with the smallest set of weights and biases. The network was built and trained using the Matlab Neural Network Toolbox, with the basic results being independent of the particular minimization algorithm used. The data presented here were obtained using the Scaled Conjugate Gradient algorithm, which typically provided the best performance. When analyzing the responses of model units (Figs. 10–12), in order to avoid unrealistic negative response values, we subtracted the minimum response of each unit to make all responses positive.
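The training objective described above can be sketched as a forward pass plus a two-term cost. The layer sizes follow the text (26 visual + 26 vestibular + 12 eye position inputs, 26 outputs) and the 150 hidden units mentioned in the caption of Fig. 10. The `penalty` weight, the random initialization, and the function names are hypothetical; the original work used the Matlab Neural Network Toolbox, not this code.

```python
import numpy as np

N_IN, N_HIDDEN, N_OUT = 26 + 26 + 12, 150, 26

def forward(x, W1, b1, W2, b2):
    """Two-layer network: tanh hidden units, linear output units."""
    hidden = np.tanh(x @ W1 + b1)
    return hidden @ W2 + b2

def regularized_loss(x, target, params, penalty=1.0):
    """Sum squared error plus the sum of absolute weights and biases.
    ASSUMPTION: `penalty` = 1 weights the two terms equally."""
    sse = np.sum((forward(x, *params) - target) ** 2)
    l1 = sum(np.abs(p).sum() for p in params)
    return sse + penalty * l1

rng = np.random.default_rng(0)
params = (0.1 * rng.standard_normal((N_IN, N_HIDDEN)), np.zeros(N_HIDDEN),
          0.1 * rng.standard_normal((N_HIDDEN, N_OUT)), np.zeros(N_OUT))
x = rng.uniform(-1.0, 1.0, size=(5, N_IN))        # 5 hypothetical input patterns
target = rng.uniform(-1.0, 1.0, size=(5, N_OUT))  # desired output-unit responses
loss = regularized_loss(x, target, params)
print(loss)
```

The absolute-value term pushes many weights toward zero, which is why training favors networks with a small set of effective connections.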

Figure 10.

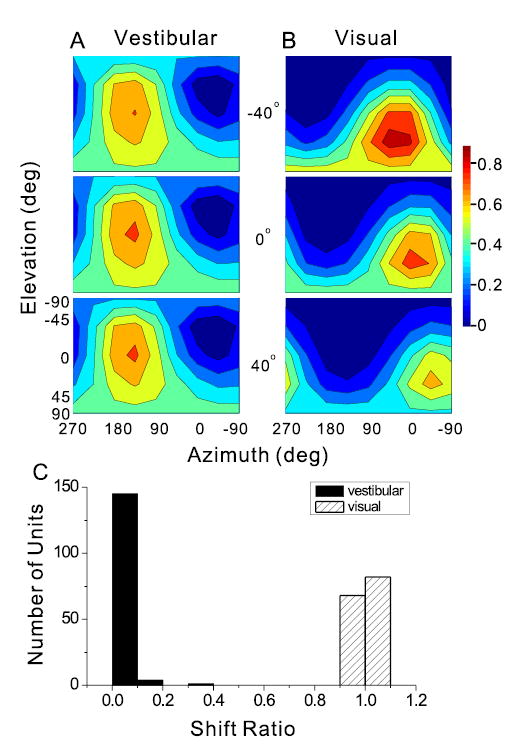

A, B) Example of 3D heading tuning functions for a network hidden unit tested at three horizontal eye positions (from top to bottom, 40° left, 0°, and 40° right) under (A) the Vestibular and (B) the Visual condition. Format similar to Fig. 2. C) Shift ratio distributions for all 150 hidden units under the two single-cue conditions.

Figure 12.

Distribution of the absolute difference in preferred heading, |Δ Preferred Heading|, for the hidden layer units between (A) the Visual and Vestibular conditions, (B) the Combined and Vestibular conditions, and (C) the Combined and Visual conditions. Data are means (±SD) from 5 training sessions. Format as in Fig. 6D–F.

In each simulated stimulus condition, horizontal/vertical eye position took on one of 5 possible values from trial to trial: ±40°, ±20°, and 0°. In the simulated Visual condition, the network was only given visual inputs and eye position inputs, and was trained to compute heading direction in a head-centered frame of reference. Thus, in this condition, the network was required to combine eye position signals with visual inputs, since the latter originated in an eye-centered reference frame. In the simulated Vestibular condition, although input signals were already in a head-centered reference frame, the network was again given both vestibular and eye position inputs. In the simulated Combined condition, all inputs were active and the network was again required to compute heading direction in a head-centered reference frame. Thus, across all stimulus conditions and eye positions, the network was required to selectively transform visual inputs from eye-centered to head-centered, while retaining correct behavior for vestibular inputs. Whereas the reference frames used by the input units were fixed (by design), hidden units in the model could potentially code heading in any reference frame. The reference frames of hidden units were quantified by computing a ‘Shift Ratio’, which was defined as the observed change in heading preference between a pair of eye positions divided by the difference in eye position. Shift ratios near 0 indicate a head-centered reference frame and values near 1 represent an eye-centered reference frame.
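The Shift Ratio defined above can be illustrated for the horizontal case. This sketch assumes that the preferred azimuth and the horizontal eye position share the same sign convention and that the change in preference is taken as the smallest signed angular difference; the function name and example values are hypothetical.

```python
def shift_ratio(pref_a_deg, pref_b_deg, eye_a_deg, eye_b_deg):
    """Change in preferred azimuth divided by the change in eye position.

    The difference in preference is wrapped to [-180, 180) degrees so that
    preferences on either side of 0/360 are compared correctly.
    """
    delta_pref = (pref_b_deg - pref_a_deg + 180.0) % 360.0 - 180.0
    delta_eye = eye_b_deg - eye_a_deg
    return delta_pref / delta_eye

# Preference moves by the full 40 deg eye displacement: eye-centered.
eye_centered = shift_ratio(350.0, 30.0, -20.0, 20.0)
# Preference is unchanged across eye positions: head-centered.
head_centered = shift_ratio(90.0, 90.0, -40.0, 40.0)
# Preference moves by half the eye displacement: intermediate frame.
intermediate = shift_ratio(10.0, 30.0, -20.0, 20.0)
print(eye_centered, head_centered, intermediate)
```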

Results

The activities of 255 MSTd neurons from 2 monkeys (169 from monkey 1 and 86 from monkey 2) were characterized during actual and simulated motions along a variety of different headings in 3D space, using a virtual reality apparatus (Fig. 1A). We recorded from every well-isolated neuron in MSTd that was spontaneously active or that responded to a large flickering field of random dots. MSTd was localized based on multiple criteria, as described in the Methods section. Every MSTd neuron was tested under three stimulus conditions in which heading direction was defined by inertial motion alone (Vestibular condition), optic flow alone (Visual condition), or congruent combinations of inertial and visual motion (Combined condition). Note that heading directions in all conditions are referenced to physical body motion (i.e., “heading direction” for optic flow refers to the direction of simulated body motion).

To quantify heading selectivity in the following analyses, the mean neural firing rate for each heading was calculated from the middle 1s of the stimulus profile (see Methods), a period that contains most of the velocity variation. As illustrated in Fig. 1C, the population responses to either optic flow or inertial motion look much more like delayed and smeared out versions of stimulus velocity than stimulus acceleration (Fig. 1C, compare population responses with black solid and dashed lines). Each population response was computed as a peristimulus time histogram (PSTH), by summing up each cell’s contribution, which was taken along the heading direction that produced the maximum response, with each 50 ms bin being normalized by the maximum bin. The dynamics of MSTd responses were further analyzed by computing correlation coefficients between each neuron’s PSTH and the velocity and acceleration profiles of the stimulus, using a range of correlation delays from 0–300ms. For over 90% of neurons in each stimulus condition, the maximum correlation with velocity was larger than that for acceleration (paired t-test; p<<0.001).
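The lagged-correlation analysis described above can be sketched as follows. This is an illustration under stated assumptions, not the analysis code used for the recordings: the 2 s stimulus duration, the width of the Gaussian velocity profile, and the synthetic "neuron" are all hypothetical, while the 50 ms bins and the 0–300 ms delay range follow the text.

```python
import numpy as np

BIN_MS = 50  # PSTH bin width stated in the text

def max_lagged_corr(psth, profile, max_delay_ms=300, bin_ms=BIN_MS):
    """Largest Pearson correlation between the PSTH and the stimulus
    profile delayed by 0..max_delay_ms (in steps of one bin)."""
    best = -np.inf
    for lag in range(max_delay_ms // bin_ms + 1):
        n = len(psth) - lag
        # Response at time t is compared with the stimulus at time t - lag.
        r = np.corrcoef(psth[lag:], profile[:n])[0, 1]
        best = max(best, r)
    return float(best)

# Assumed stimulus: 2 s duration, Gaussian velocity profile (sigma is hypothetical).
t = np.arange(0, 2000, BIN_MS) / 1000.0
velocity = np.exp(-0.5 * ((t - 1.0) / 0.17) ** 2)
acceleration = np.gradient(velocity, t)

# Synthetic neuron: baseline plus a copy of velocity delayed by 100 ms (2 bins).
psth = 10.0 + 40.0 * np.roll(velocity, 2)

r_vel = max_lagged_corr(psth, velocity)
r_acc = max_lagged_corr(psth, acceleration)
print(r_vel > r_acc)
```

A cell would be classed as velocity-like when its best velocity correlation exceeds its best acceleration correlation, which is the comparison summarized in the text.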

The responses of the majority of MSTd neurons were modulated by heading direction under all three stimulus conditions. Figure 2 shows typical examples of heading tuning in MSTd, illustrated as contour maps of mean firing rate (represented by color) plotted as a function of azimuth (abscissa) and elevation (ordinate). The MSTd cell illustrated in Fig. 2A represents a 'congruent' neuron, for which heading tuning is quite similar for visual and vestibular inputs. The cell exhibited broad, roughly sinusoidal tuning, with a peak response during inertial motion at 0º azimuth and −30º elevation, corresponding to a rightward and slightly upward trajectory (Vestibular condition, left column). A similar preference was observed under the Visual condition (middle column), with the peak response occurring for simulated rightward/upward trajectories. As expected based on this neuron’s congruent tuning for visual and vestibular stimuli, a similar heading preference was also seen in the Combined condition (Fig. 2A, right column). However, the peak response in the Combined condition was not strengthened as compared to the Visual condition, suggesting that sensory information might not be linearly combined in the activities of MSTd neurons.

Congruent cells such as this one might be useful for coding heading under natural conditions in which both inertial and optic flow cues provide information about self-motion. However, many MSTd neurons were characterized by opposite tuning preferences in the Visual and Vestibular conditions. Fig. 2B shows an example of an 'anti-congruent' cell, with a preferred heading for the Vestibular condition that was nearly opposite to that in the Visual condition. Both the heading preference and the maximum response in the Combined condition were similar to those in the Visual condition, suggesting that vestibular cues were strongly de-emphasized in the combined response. A third main type of neuron encountered in MSTd, with heading-selective responses in the Visual and Combined conditions but not in the Vestibular condition, is illustrated in Fig. 2C.

In the following, we first summarize heading tuning properties under each single-cue condition. We then examine closely the relationship between heading tuning in the Combined condition and that in the single-cue conditions.

Single Cue Responses

The strength of heading tuning of MSTd neurons was quantified using a Heading Tuning Index (HTI), which ranges from 0 to 1 (poor and strong tuning, respectively; see Methods). For reference, a neuron with idealized cosine tuning would have an HTI value of 0.31, whereas an HTI value of 1 is reached when firing rate is zero (spontaneous) for all but a single stimulus direction. For the Visual condition, HTI values averaged 0.48 ± 0.16 SD, with all but four cells (251/255, 98%) being significantly tuned, as assessed by a permutation test (p<0.05). For the Vestibular condition, HTI values were generally smaller (mean ± SD: 0.26 ± 0.16), with only 64% of neurons (162/255) being significantly tuned (p<0.05). Across the population, the HTI for the Vestibular condition was significantly smaller than that for the Visual condition (paired t-test, p<<0.001).
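The HTI itself is defined in the Methods, which are not reproduced here. The sketch below therefore rests on two assumptions: a vector-sum form, HTI = |Σ R_i| / Σ |R_i|, where R_i is a vector pointing in stimulus direction i with length equal to the mean response minus spontaneous activity, and a set of 26 headings spaced 45º apart in azimuth and elevation. Under these assumptions the index reproduces both reference values quoted above: 8/26 ≈ 0.31 for full-depth cosine tuning and 1 for a response confined to a single direction. The permutation test shown is a generic shuffle of trials across directions, and all function names are hypothetical.

```python
import numpy as np

def heading_grid():
    """26 unit vectors on a 45-degree azimuth/elevation grid (assumed stimulus set)."""
    dirs = [(0.0, -90.0), (0.0, 90.0)]
    for el in (-45.0, 0.0, 45.0):
        for az in np.arange(0.0, 360.0, 45.0):
            dirs.append((az, el))
    az, el = np.radians(np.array(dirs)).T
    return np.column_stack([np.cos(el) * np.cos(az),
                            np.cos(el) * np.sin(az),
                            np.sin(el)])

def hti(rates, unit_vectors, spontaneous=0.0):
    """Assumed form: |sum of response vectors| / sum of |response vectors|."""
    r = np.asarray(rates, dtype=float) - spontaneous
    denom = np.abs(r).sum()
    if denom == 0:
        return 0.0
    return float(np.linalg.norm(r @ unit_vectors) / denom)

def permutation_p(trials, unit_vectors, n_perm=1000, seed=0):
    """trials: (n_directions, n_repeats) firing rates; shuffles trials across directions."""
    rng = np.random.default_rng(seed)
    observed = hti(trials.mean(axis=1), unit_vectors)
    flat = trials.ravel()
    count = 0
    for _ in range(n_perm):
        shuffled = rng.permutation(flat).reshape(trials.shape)
        count += hti(shuffled.mean(axis=1), unit_vectors) >= observed
    return (count + 1) / (n_perm + 1)

U = heading_grid()
hti_cosine = hti(1.0 + U[:, 0], U)   # full-depth cosine tuning, preferred heading along +x
single_peak = np.zeros(26)
single_peak[5] = 30.0                # response in one direction only
hti_single = hti(single_peak, U)
print(round(hti_cosine, 2), round(hti_single, 2))
```

A neuron would be counted as significantly tuned when `permutation_p` returns a value below 0.05.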

Because of the behavioral requirement to maintain fixation on a head-fixed central target, significant heading tuning under the Vestibular condition might not necessarily represent sensory responses to inertial motion. For example, the observed responses could be driven by a pursuit-like signal related to suppression of the vestibulo-ocular reflex (VOR) (Thier and Erickson, 1992a, b; Fukushima et al., 2000). To examine this possibility, 14 neurons were also tested using a modified Vestibular condition, in which the animal sat in complete darkness and there was no requirement to maintain fixation. We found that heading tuning in complete darkness was very similar to that seen during the standard fixation task. First, the absolute difference in heading preference between these two conditions was small, with a mean value of 17.4º ± 9.9 SD (Fig. 3A). In addition, neither the HTI (paired t-test, p=0.54) nor the maximum response (paired t-test, p=0.18) was significantly different between the two conditions (Fig. 3B, C). These results suggest that heading tuning in the Vestibular condition reflects sensory signals that arise from vestibular and/or proprioceptive inputs, rather than a VOR suppression or pursuit-like signal (see Discussion).

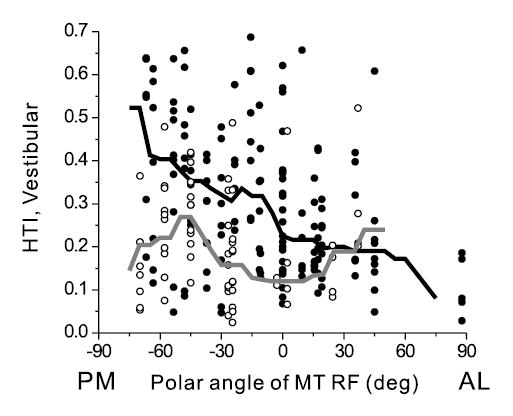

Neurons with significant vestibular tuning were not uniformly distributed within MSTd. Across animals, there was a significant (ANOVA, p<<0.001) difference between Vestibular HTI values for the three hemispheres, with means (± SEM) of 0.20 ± 0.01, 0.26 ± 0.02 and 0.33 ± 0.05 for the left hemisphere of monkey 1, the right hemisphere of monkey 1 and the right hemisphere of monkey 2, respectively. No significant difference between hemispheres was found for Visual HTI values (ANOVA, p=0.42). At most guide tube locations, we advanced the electrodes past MSTd and mapped receptive fields (RFs) in area MT. HTI values for the Vestibular condition are plotted against the polar angle of the underlying MT RFs in Fig. 4. For the two right hemispheres, which also had the largest average HTI values, there was a significant dependence of HTI on MT receptive field location (correlation coefficient, R=−0.4, p<<0.001), with larger HTI values for MT RFs in the lower hemifield (Fig. 4, filled symbols). The solid line through the data points is the running median, computed using 30º bins at a resolution of 5º. Based on the known topography of MT (Zeki, 1974; Gattass and Gross, 1981; Van Essen et al., 1981; Desimone and Ungerleider, 1986; Albright and Desimone, 1987; Maunsell and Van Essen, 1987), this relationship suggests that vestibular tuning tends to be stronger in the posterior-medial portions of MSTd. No such relationship was found for the left hemisphere of monkey 1 (Fig. 4, open symbols and gray line; correlation coefficient, R=−0.13, p=0.5). These results suggest that a gradient of vestibular heading selectivity might exist within area MSTd.
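The running median mentioned above (30º bins at a resolution of 5º) can be sketched as a sliding window over polar angle. The data below are synthetic and purely illustrative, the function name is hypothetical, and no wrap-around of the polar angle is attempted because the abscissa is assumed to span only −90º to 90º.

```python
import numpy as np

def running_median(x, y, bin_width=30.0, step=5.0):
    """Median of y within a window of bin_width centered every `step` units of x."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    centers = np.arange(x.min(), x.max() + step / 2, step)
    medians = np.full(centers.shape, np.nan)
    for i, c in enumerate(centers):
        in_bin = np.abs(x - c) <= bin_width / 2
        if in_bin.any():
            medians[i] = np.median(y[in_bin])
    return centers, medians

# Synthetic example: HTI declining weakly with MT receptive-field polar angle.
rng = np.random.default_rng(0)
polar_angle = rng.uniform(-90.0, 90.0, 150)
hti_vest = 0.3 - 0.001 * polar_angle + rng.normal(0.0, 0.05, 150)
centers, medians = running_median(polar_angle, hti_vest)
```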

Figure 4.

Relationship between the HTI for the Vestibular condition and recording location within MSTd. Recording location was estimated from the polar angle of the underlying MT receptive field, with 90º/–90º corresponding to the upper/lower vertical meridian and 0º to the horizontal meridian in the contralateral visual field. Thus, moving from left to right along the abscissa corresponds roughly to moving from posterior-medial (PM) to anterior-lateral (AL) within MSTd. The thick lines through the data illustrate the running median, using a bin width of 30° and a resolution of 5°. Data are shown separately for the right (filled symbols and black line, n=153) and left hemispheres (open symbols and gray line, n=62).

For cells with significant tuning (p<0.05, permutation test), the heading preference was defined as the azimuth and elevation of the vector average of the neural responses (see Methods). Heading preferences in MSTd were distributed throughout the spherical stimulus space, but there was a significant predominance of cells that preferred lateral rather than fore-aft motion directions in both single-cue conditions (Fig. 5). For both the Visual and Vestibular conditions, the distribution of preferred azimuths was significantly bimodal, with peaks around 0º and 180º (Fisher's test for uniformity against a bipolar alternative, p<0.01, Fisher et al., 1987).

Figure 5.

Distributions of 3D heading preferences of MSTd neurons for (A) the Vestibular condition and (B) the Visual condition. Each data point in the scatter plot corresponds to the preferred azimuth (abscissa) and elevation (ordinate) of a single neuron with significant heading tuning (A, n=162; B, n=251). The data are plotted on Cartesian axes that represent the Lambert cylindrical equal-area projection of the spherical stimulus space. Histograms along the top and right sides of each scatter plot show the marginal distributions.

Relationship between Visual/Vestibular Tuning and Combined Cue Responses

If MSTd neurons integrate vestibular and visual signals to achieve better heading selectivity, the combination of the two cues should produce stronger heading tuning than either single cue alone. Scatter plots of HTI for all paired combinations of the Visual, Vestibular and Combined conditions are illustrated in Fig. 6A-C. A bootstrap analysis revealed that the Visual HTI was significantly larger (p < 0.05) than the Vestibular HTI for 62% (157/255) of MSTd neurons (Fig. 6A; filled symbols above the diagonal). The reverse was true for only 5% (14/255) of neurons (Fig. 6A; filled symbols below the diagonal). Similarly, for 57% (144/255) of the cells, the Combined condition also resulted in larger HTI values compared to the Vestibular condition (Fig. 6B, filled symbols above the diagonal). The reverse was true for only 10% (25/255) of the cells (Fig. 6B, filled symbols below the diagonal).

Figure 6.

Comparison of heading selectivity (A–C) and tuning preferences (D–F) of MSTd neurons across stimulus conditions. A) The Heading Tuning Index (HTI) for the Visual condition plotted against the HTI for the Vestibular condition. B) HTI for the Combined condition vs. HTI for the Vestibular condition. C) HTI for the Combined condition vs. HTI for the Visual condition. Solid (open) circles: Cells with (without) significantly different HTI values for the two conditions (bootstrap, n=1000, p<0.05). Number of cells, n=255. The solid lines indicate the unity-slope diagonal. D–F) Distribution of the difference in preferred heading, |Δ Preferred Heading|, between (D) the Visual and Vestibular conditions (n=160); (E) the Combined vs. Vestibular conditions (n=156); (F) the Combined vs. Visual conditions (n=239). Note that bins were computed according to the cosine of the angle (in accordance with the spherical nature of the data) (Snyder, 1987). Only neurons with significant heading tuning in each pair of conditions have been included.

Although adding optic flow to inertial motion generally improved spatial tuning (paired t-test, p<<0.001), the converse was not true. Instead, adding inertial motion to optic flow actually reduced heading selectivity, such that the average HTI for the Combined condition was lower than that in the Visual condition (paired t-test, p<<0.001). This is illustrated in Fig. 6C, where 36% (93/255) of the neurons had a Combined HTI that was significantly smaller than the Visual HTI (filled symbols below the diagonal). For only 7% (19/255) of the neurons did the combination of cues result in a higher HTI than the optic flow stimulus alone (Fig. 6C, filled symbols above the diagonal). For the remaining 57% of the cells, the tuning indices for the Combined and Visual conditions were not significantly different (Fig. 6C, open symbols). Thus, combining visual and non-visual cues generally did not strengthen, but frequently weakened, the tuning of MSTd neurons to optic flow.

The lack of improvement in HTI under the Combined condition is at least partly due to the fact that many MSTd neurons did not have congruent heading preferences in the Visual and Vestibular conditions (e.g., Fig. 2B). The distributions of the absolute differences in preferred heading (|Δ Preferred Heading|) between the three stimulus conditions are summarized in Fig. 6D-F for all neurons with significant heading tuning. This metric is the smallest angle between a pair of preferred heading vectors in 3D. Note that |Δ Preferred Heading| is not plotted on a linear axis due to the spherical nature of the data. If the preferred heading vectors for the Visual and Vestibular conditions were distributed randomly around the sphere, then |Δ Preferred Heading| would not be distributed uniformly on a linear scale but would rather have a clear peak around 90 deg. Instead, the |Δ Preferred Heading| values have been transformed sinusoidally such that the distribution would be flat if the preferred heading vectors for Visual and Vestibular conditions were not correlated.
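The reasoning behind the sinusoidal transformation can be checked numerically. The sketch below computes the smallest 3D angle between two preferred headings and bins the result in equal steps of its cosine. For uncorrelated (random) heading pairs the cosine-spaced histogram comes out approximately flat, whereas 30º linear bins would peak near 90º. The azimuth/elevation-to-vector convention, the number of bins, and the function names are assumptions made for illustration.

```python
import numpy as np

def heading_vector(azimuth_deg, elevation_deg):
    """Unit vector for a heading; the sign convention does not affect angles between vectors."""
    az, el = np.radians(azimuth_deg), np.radians(elevation_deg)
    return np.array([np.cos(el) * np.cos(az), np.cos(el) * np.sin(az), np.sin(el)])

def delta_preferred_heading(az1, el1, az2, el2):
    """Smallest 3D angle (degrees, 0 to 180) between two preferred headings."""
    c = np.dot(heading_vector(az1, el1), heading_vector(az2, el2))
    return float(np.degrees(np.arccos(np.clip(c, -1.0, 1.0))))

def cosine_bin_edges(n_bins):
    """Bin edges in degrees that are equally spaced in cos(angle)."""
    return np.degrees(np.arccos(np.linspace(1.0, -1.0, n_bins + 1)))

# Uncorrelated preferences: two independent sets of random directions on the sphere.
rng = np.random.default_rng(0)
v = rng.normal(size=(20000, 3))
w = rng.normal(size=(20000, 3))
v /= np.linalg.norm(v, axis=1, keepdims=True)
w /= np.linalg.norm(w, axis=1, keepdims=True)
angles = np.degrees(np.arccos(np.clip(np.sum(v * w, axis=1), -1.0, 1.0)))

flat_counts, _ = np.histogram(angles, bins=cosine_bin_edges(6))
linear_counts, _ = np.histogram(angles, bins=np.linspace(0.0, 180.0, 7))
```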

The distribution of |Δ Preferred Heading| between the Visual and Vestibular conditions was broad and clearly bimodal (Silverman's bimodal test, p < 0.001, Silverman, 1986), indicating that visual and vestibular heading preferences tended to be either matched or opposite (Fig. 6D). The distribution of |Δ Preferred Heading| between the Combined and Vestibular conditions was also very broad and significantly bimodal (Fig. 6E), indicating that the vestibular heading preference was often not a good predictor of the combined preference. In contrast, the difference in heading preference between the Combined and Visual conditions showed a very narrow distribution centered close to 0° (Fig. 6F). Therefore, the heading preference in the Combined condition was strongly dominated by the tuning for optic flow.

Poorly matched heading preferences between the Visual and Vestibular conditions (Fig. 6D) may explain the weakened heading tuning in the Combined condition, relative to Visual, when both cues were provided (Fig. 6C). Indeed, when the difference in HTI between the Combined and Visual conditions was plotted against |Δ Preferred Heading| for the Visual and Vestibular conditions, a significant trend (Spearman rank correlation, R=−0.35, p<<0.001) was observed (Fig. 7). The larger the |Δ Preferred Heading| between the Visual and Vestibular conditions, the smaller the HTI for the Combined relative to the Visual condition. However, even for congruent Visual and Vestibular heading preferences (gray area), heading selectivity was not significantly strengthened in the Combined condition (mean HTI difference ± SD = −0.02 ± 0.01, p=0.23). Therefore, poor matching of the Visual and Vestibular heading preferences only partially explains the weakened tuning in the Combined condition.

Figure 7.

Scatter plot of the difference in HTI between the Combined and Visual conditions plotted against the difference in preferred heading, |Δ Preferred Heading|, between the Vestibular and Visual conditions. Solid (open) symbols: Cells with (without) significantly different HTI values for the Combined and Visual conditions (bootstrap, n=1000, p<0.05, from Fig. 6C). Solid line: best linear fit through all data (both open and solid symbols). Gray area highlights neurons with Vestibular and Visual heading preferences matched to within 45 deg. Only neurons with significant tuning in both the Vestibular and Visual conditions are included (n=160).

To further explore the vestibular contribution to combined cue responses, we estimated a ‘vestibular gain’, defined as the fraction of a cell’s vestibular responses that must be added to the visual responses in order to explain the combined tuning. This can be described as:

$$R_{\text{Combined}} = R_{\text{Visual}} + a \cdot R_{\text{Vestibular}} + b \qquad (5)$$

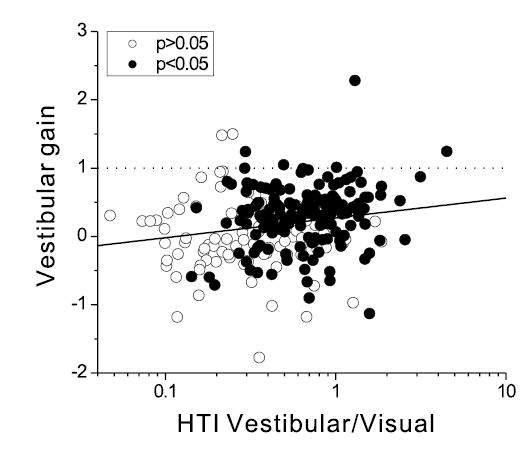

where $R_x$ are matrices of mean firing rates for all heading directions, a is the ‘vestibular gain’ and b is a constant that can account for direction-independent differences between the three conditions. If a = 1, vestibular and visual modulations contribute to the combined response in proportion to the strengths of the individual cue responses. In contrast, when a = 0, vestibular responses make no contribution, such that the combined response is determined exclusively by the visual modulation. For values of a between 0 and 1, vestibular signals contribute less than expected from responses to the single-cue conditions, whereas the reverse is true for values of a larger than 1. The vestibular gain for MSTd neurons with significant vestibular tuning averaged 0.30 ± 0.45 SD and was significantly different from both 0 and 1 (t-test, p<<0.001). Only a weak correlation existed between vestibular gain and the ratio of the Vestibular and Visual HTIs (Fig. 8; linear regression, R=0.19, p=0.002), such that even neurons with strong vestibular tuning typically had vestibular gains < 0.5. Thus, the vestibular signal contribution appeared to be consistently de-emphasized in the Combined condition.
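One way to estimate the vestibular gain is an ordinary least-squares fit. The sketch below assumes that equation 5 has the form Combined = Visual + a · Vestibular + b, with the visual term entering at unit weight, as the verbal definition of a suggests. The fitting procedure actually used is not stated in this section, so the use of `numpy.linalg.lstsq`, the function name, and the synthetic responses are illustrative choices.

```python
import numpy as np

def vestibular_gain(r_vis, r_vest, r_comb):
    """Least-squares estimate of (a, b) in r_comb = r_vis + a * r_vest + b."""
    y = (np.asarray(r_comb, dtype=float) - np.asarray(r_vis, dtype=float)).ravel()
    x = np.asarray(r_vest, dtype=float).ravel()
    design = np.column_stack([x, np.ones_like(x)])   # columns: vestibular response, constant
    (a, b), *_ = np.linalg.lstsq(design, y, rcond=None)
    return float(a), float(b)

# Synthetic cell: 26 headings (assumed count), true gain 0.3 and offset -2 spikes/s.
rng = np.random.default_rng(0)
r_vis = rng.uniform(5.0, 60.0, size=26)
r_vest = rng.uniform(5.0, 30.0, size=26)
r_comb = r_vis + 0.3 * r_vest - 2.0
a, b = vestibular_gain(r_vis, r_vest, r_comb)
print(round(a, 3), round(b, 3))
```

With noiseless synthetic data the fit recovers the generating values (a = 0.3, b = −2); with real responses, a would be read as the fraction of the vestibular modulation that survives in the combined response.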

Figure 8.

Quantification of vestibular contribution to the Combined response. The vestibular gain, a (equation 5) for all 255 MSTd neurons is plotted as a function of the ratio between HTI values for the Vestibular and Visual conditions. Solid (open) symbols denote neurons with (without) significant vestibular tuning, respectively. Solid line: linear regression through all data points.

In summary, these results illustrate that, although the majority of MSTd neurons were significantly tuned for heading in response to both optic flow and inertial motion, heading preferences were poorly matched and responses to the combined stimulus were dominated by the cell’s visual responses. Importantly, adding non-visual motion-related signals to the visual responses did not improve heading selectivity but actually weakened spatial tuning.

Model predictions/simulations

The experimental observations described above appear to be inconsistent with the hypothesis that MSTd neurons have integrated visual and non-visual cues for heading perception. So how might we explain the vestibular heading tuning of MSTd neurons? Related to the potential role of MSTd in sensory integration is the fact that optic flow and vestibular signals are known to originate in different spatial reference frames. Specifically, visual motion signals originate in retinal coordinates (Squatrito and Maioli, 1996; Bremmer et al., 1997; Squatrito and Maioli, 1997), whereas vestibular signals are head/body-centered (Shaikh et al., 2004). It is often assumed that optic flow signals must first be converted into a head-centered reference frame, before being useful for heading perception (Royden, 1994; Royden et al., 1994; Banks et al., 1996).

It is not immediately obvious what patterns of visual/vestibular interactions might be expected within a network that carries out a reference frame transformation selectively for one sensory cue (visual) versus another (vestibular). To explore potential visual/vestibular interactions that could emerge within cell populations that carry out such a selective reference frame transformation, we implemented a very simple two-layer neural network model (Fig. 9) that receives eye-centered visual inputs, head-centered vestibular inputs, and eye position signals. The network was trained to compute the (head-centered) heading direction of the head/body in space regardless of whether this was specified by visual cues, vestibular cues, or both together. To simulate the responses of model neurons, we used a 5-parameter Modified Sinusoid Function (MSF; see equation 3 in Methods) that was found to adequately describe the 3D heading tuning functions of MSTd neurons (average R² = 0.79 ± 0.02 SE and 0.88 ± 0.01 SE for the Vestibular and Visual conditions, respectively). Other technical details regarding the network model can be found in the Methods section.

Figure 9.

Schematic diagram of a simple two-layer, feedforward neural network model that was trained to compute the head-centered direction of heading from eye-centered visual inputs, head-centered vestibular inputs and eye position signals. Hidden units (n=150) have sigmoidal activation functions, whereas output units are linear.

To provide an intuitive appreciation of the computations carried out by the network, Fig. 10A and B illustrate the 3D heading tuning of a typical hidden layer unit under the simulated Vestibular and Visual conditions, plotted separately for three eye positions: 0°, which corresponds to straight ahead (middle row), 40° left (top row), and 40° right (bottom row). Vestibular responses did not depend on eye position, indicating that heading was coded in a head-centered (or body-centered) reference frame (Fig. 10A). In contrast, the visual responses of this hidden unit shifted systematically with eye position, as expected for an eye-centered reference frame (Fig. 10B). This pattern was typical of all hidden units, as illustrated in Fig. 10C, which summarizes the mean shift ratio of all hidden layer units under the Vestibular and Visual conditions (black and gray fills, respectively). The shift ratio was computed as the observed change in preferred heading between a pair of eye positions divided by the difference in eye position. Shift ratios near 0 represent coding of heading in a head-centered frame, whereas shift ratios near 1 correspond to an eye-centered reference frame. The shift ratio of 150 hidden units averaged 0.01 ± 0.04 SD for the Vestibular condition and 1.0 ± 0.01 SD for the Visual condition (Fig. 10C).

As previously demonstrated by Zipser and Andersen (1988), the transformation of optic flow signals from an eye-centered to head-centered reference frame was implemented in the hidden layer of our model through modulation of hidden unit responses by eye position (i.e., gain fields). This is illustrated for the example hidden unit in Fig. 10B, where larger responses were seen when eye position was 40° to the left than 40° to the right. This effect was quantified for all hidden layer units using linear regression. The absolute values of the regression slopes were roughly four-fold larger for the Visual than the Vestibular condition, and this difference in gain field strength was highly significant (paired t-test, p<<0.001). Thus, the network implemented the reference frame transformation for optic flow through gain modulation, while vestibular signals remained largely unaltered. Keeping this functionality of the network in mind, we next summarize the tuning strengths and heading preferences of hidden layer units in the network.

For a direct comparison between response properties of hidden layer units and MSTd neurons, we quantified hidden layer tuning using the same metrics described in previous sections. HTI values for each pairing of Visual, Vestibular and Combined conditions are illustrated in Fig. 11A-C. Each panel shows data from 150 hidden layer units obtained from one representative training session of the network. In agreement with the experimental observations, HTI values for hidden units in the Vestibular condition (0.33 ± 0.007 SE) were significantly smaller than HTI values for the Visual condition (0.37 ± 0.008 SE) (paired t-test, p<<0.001) (Fig. 11A). In addition, HTI values for the Combined condition (0.36 ± 0.006 SE) were significantly larger than those for the Vestibular condition (paired t-test, p<<0.001) (Fig. 11B), but marginally smaller than HTI values for the Visual condition (paired t-test, p=0.025) (Fig. 11C). Mean HTI values were fairly consistent across different network training sessions (Fig. 11D; ANOVA, p>0.05), as were differences in HTI among stimulus conditions. Thus, similar to the properties of MSTd neurons (Fig. 6), hidden units showed stronger heading tuning for the Visual than the Vestibular condition, and slightly weaker heading selectivity for the Combined condition relative to the Visual condition. These differences in HTI were substantially smaller for hidden units than for MSTd neurons, but the overall pattern of results was quite similar.

Figure 11.

Comparison of heading selectivity for hidden layer units across different conditions. Scatter plots of HTI for (A) the Visual versus Vestibular conditions, (B) the Combined versus Vestibular conditions, and (C) the Combined versus Visual conditions. Format as in Fig. 6A–C. (D) Average results (±SEM) from 5 training sessions. Filled circles: Visual vs. Vestibular; Open circles: Combined vs. Vestibular; Triangles: Combined vs. Visual conditions. The stars illustrate the means corresponding to the data in A–C. The lines illustrate the diagonals (unity-slope).

Importantly, there were also striking similarities between hidden layer units and MSTd neurons when considering the relationships between heading preferences across stimulus conditions. Figure 12 summarizes the mean (± SE) of the |Δ Preferred Heading| values from 5 training sessions, in a format similar to that shown for MSTd neurons in Figure 6. There was little correlation between preferred headings for the Vestibular and Visual conditions (Fig. 12A), such that the distribution of |Δ Preferred Heading| was broad with a significant tendency toward bimodality (Silverman's bimodal test, p < 0.01, Silverman, 1986). The distribution of |Δ Preferred Heading| between the Combined and Vestibular conditions, although clearly skewed more towards zero, was also quite broad (Fig. 12B). In contrast, the histogram of |Δ Preferred Heading| between the Combined and Visual conditions was more strongly skewed towards zero (t-test, p<<0.001, Fig. 12C), similar to the MSTd data (Fig. 6F). Interestingly, when the network was trained to compute heading in an eye-centered reference frame under all three stimulus conditions, thus requiring a reference frame change for vestibular signals but not for visual signals, we found the opposite pattern of results (Combined responses were dominated by the vestibular inputs). Thus, a simple network trained to perform a reference frame transformation for only one of two sensory inputs predicts a hidden layer network whose responses are dominated by the transformed input, and an inconsistent pattern of relative heading preferences for the two sensory cues. Considering that a reference frame change is necessary for visual (but not vestibular) cues in order to compute heading in head/body-centered coordinates, the properties of network hidden units qualitatively recapitulate the basic features of heading selectivity observed in area MSTd.

Discussion

Using a virtual reality system to provide actual and/or simulated motion in 3D space, we have shown that, although the majority of MSTd neurons exhibited significant heading tuning in the Vestibular condition, the addition of inertial motion to optic flow generally did not improve heading selectivity. Rather, heading tuning in the Combined condition was dominated by optic flow responses and was typically weakened relative to the Visual condition. In addition, heading preferences between the Vestibular and Visual conditions were often opposite. These findings were qualitatively predicted by the properties of hidden units in a simple neural network that received head-centered vestibular signals and eye-centered optic flow information. The network computed heading in a head-centered reference frame by transforming the visual, but not the vestibular, inputs. Hidden layer properties were biased towards the input that undergoes the transformation, and resemble in many respects the properties of MSTd neurons.

Vestibular signals in MSTd

Spatial tuning driven by inertial motion has been described previously in MSTd (Duffy, 1998; Bremmer et al., 1999; Page and Duffy, 2003). The percentage of neurons with significant vestibular tuning in our experiments (64%) is substantially higher than that of previous studies (e.g., 24% in Duffy, 1998). A likely explanation for these differences is our use of 3D motion and a Gaussian velocity profile that provides a more efficient linear acceleration stimulus to otolith afferents (Fernandez et al., 1972; Fernandez and Goldberg, 1976a, b). Recording location may also be a factor, since our analyses suggest that vestibular tuning is stronger in posterior-medial portions of MSTd in the right hemisphere. Although our data do not allow us to make strong conclusions regarding hemispheric differences, they are consistent with findings of a right-hemispheric-dominance of vestibular responses in fMRI experiments involving caloric stimulation (Fasold et al., 2002).

Although we refer to our inertial motion stimulus as the Vestibular condition, responses to this stimulus might not be exclusively vestibular in nature. There are at least two alternative possibilities regarding their origin. First, they might represent efference copy signals related to VOR cancellation. We have excluded this possibility by showing that responses of a subpopulation of MSTd neurons in the Vestibular condition were nearly identical when tested in complete darkness without any requirement for fixation (Fig. 3). Second, MSTd responses during inertial motion in the absence of optic flow might also arise from skin receptors and body proprioceptors (see Lackner and DiZio, 2005 for review). Although we cannot exclude this possibility, a recent fMRI study has reported only visual and vestibular (but not proprioceptive) activation in area hMT/V5 (Fasold et al., 2004).

Temporal dynamics of MSTd responses

One barrier to integrating vestibular and visual signals for heading perception is that these signals are initially encoded with different temporal dynamics. Linear acceleration during inertial motion is encoded by primary otolith afferents (Fernandez and Goldberg, 1976). In contrast, visual motion is thought to be encoded in terms of velocity signals (Rodman and Albright, 1987; Lisberger and Movshon, 1999; but see Cao et al., 2004). If MSTd neurons integrate vestibular and visual information, these signals should be coded with similar dynamics. Using stimuli with a Gaussian velocity profile has allowed us to demonstrate that the response dynamics of MSTd neurons, in both the Vestibular and Visual conditions, follow the stimulus velocity profile more closely than the biphasic acceleration profile (Fig. 1C). In contrast, previous MSTd studies have used constant velocity stimuli (e.g., Duffy, 1998; Page and Duffy, 2003), which have the disadvantage of not appropriately activating primary otolith afferents. Our results suggest that vestibular and visual responses in MSTd satisfy the temporal requirement of sensory integration.

At the population level, vestibular and visual responses extend considerably beyond the time course of the stimulus (Fig. 1C). This could be due to individual neurons having sustained responses or to latency variation across the population. A detailed analysis of response dynamics will be presented elsewhere, but we note here that both factors clearly contribute to the observed population responses.

Visual-vestibular interactions in MSTd

For neurons that integrate sensory cues for heading perception, a sensible expectation is that heading preferences should be matched for the two single-cue conditions and that heading tuning should be more robust under the Combined condition. In contrast, we found that single-cue heading preferences were frequently opposite and that adding inertial motion cues to optic flow generally impaired heading selectivity. These findings extend previous work done in MSTd using 1D or 2D stimulation (Duffy, 1998; Bremmer et al., 1999). In an experiment involving 1D (fore-aft) motion on a parallel swing, Bremmer et al. (1999) reported that roughly half of MSTd neurons showed opposite heading preferences for visual and vestibular stimulation. In a 2D experiment involving constant-velocity motion in the horizontal plane, Duffy (1998) reported no correlation between heading preferences for visual and vestibular stimulation, and described generally weaker heading selectivity under combined stimulation than visual stimulation. In addition to extending these previous studies to 3D, we have reported an additional relationship between heading strength and heading preference: larger differences in heading preference between single cue conditions were correlated with weaker heading tuning in the Combined condition relative to the Visual condition (Fig. 7). These findings are consistent with some fMRI and PET results, in which activation of area hMT/V5 was significantly smaller when optic flow was combined with caloric or electrical vestibular stimulation (Brandt et al., 1998; Brandt et al., 2002). Thus, the available data suggest that MSTd neurons have not integrated visual and vestibular cues to allow more robust heading perception.

The reference frame problem for heading perception

Vestibular and visual signals not only originate with different temporal dynamics, but also encode motion in different spatial reference frames. Inertial motion signals, originating from the otolith organs of the inner ear, measure linear accelerations of the head, i.e., they reflect heading in head-centered coordinates. On the other hand, visual motion signals originate in eye-centered coordinates and thus encode heading direction relative to the current position of the eyes. For visual and vestibular signals to interact synergistically for heading perception, they may need to be brought into a common spatial reference frame. This common frame could be eye-centered, head-centered, or an intermediate frame in which visual and vestibular signals are dependent on both head and eye position.
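The relationship between the two reference frames can be made concrete with a deliberately simplified example. The sketch below assumes a single horizontal dimension (azimuth only), a shared sign convention for heading and eye-in-head position, and no torsion or translation of the eye relative to the head. The function names are hypothetical.

```python
def wrap_deg(angle_deg):
    """Wrap an angle to the interval [-180, 180) degrees."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def visual_heading_head_centered(heading_eye_deg, eye_position_deg):
    """Convert an eye-centered heading azimuth to head-centered coordinates.

    Assumption: heading relative to the head equals heading relative to the
    eye plus the eye-in-head position, both measured with the same sign.
    """
    return wrap_deg(heading_eye_deg + eye_position_deg)


def vestibular_heading_head_centered(heading_head_deg):
    """Otolith signals are already head-centered, so no conversion is applied."""
    return wrap_deg(heading_head_deg)


# With the eyes deviated by 20 deg, a heading that is straight ahead relative
# to the head lies at -20 deg relative to the eye.
recovered = visual_heading_head_centered(-20.0, 20.0)
```

In this simplified case, only the visual signal requires knowledge of eye position to be expressed in head-centered coordinates.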

Although previous studies have shown that responses of some MST neurons are at least partially compensated for the velocity of eye and head movements (Bradley et al., 1996; Page and Duffy, 1999; Shenoy et al., 1999; Shenoy et al., 2002; Ilg et al., 2004), this does not necessarily indicate that MST codes motion in a head- or world-centered reference frame (i.e., velocity compensation does not necessarily imply position compensation). The reference frame for heading selectivity based on optic flow in MSTd has not yet been examined during static fixation at different eye positions, although MSTd receptive fields have been reported to be largely eye-centered with gain modulations (Squatrito and Maioli, 1996; Bremmer et al., 1997; Squatrito and Maioli, 1997). The reference frame in which MSTd neurons code heading based on vestibular signals is also unknown. Our preliminary results suggest that visual and vestibular information about heading in MSTd is indeed coded in different spatial reference frames, specifically eye-centered and head-centered, respectively (Fetsch et al., 2005). Preliminary results also indicate that many MSTd neurons show gain modulations by eye position in the Visual condition, but rarely in the Vestibular condition (Fetsch et al., 2005).

It has been suggested that optic flow signals are first converted into a head- or body-centered reference frame before being useful for heading perception (Royden, 1994; Royden et al., 1994; Banks et al., 1996). If MSTd is involved in such a reference frame transformation for optic flow (but not vestibular) signals, what patterns of visual/vestibular interactions might be expected to exist? Addressing this question is fundamental to understanding visual/vestibular interactions in MSTd.

What is the role of MSTd in heading perception?

To explore potential visual/vestibular interactions that may emerge within a population of neurons that carry out a selective reference frame transformation, we implemented a simple two-layer neural network model that receives eye-centered visual inputs, head-centered vestibular inputs, and eye position signals. The network was trained to compute the head-centered direction of heading regardless of whether heading was specified by optic flow, vestibular signals, or both. Thus, the network was required to transform visual inputs into a head-centered reference frame and integrate them with vestibular inputs. After training, hidden layer units evolved to have tuning properties similar to those of MSTd neurons. The most important similarities between MSTd neurons and hidden units were the frequently mismatched heading preferences between the Visual and Vestibular conditions, and the dominance of visual responses in the Combined condition (Fig. 12). Interestingly, when the network was instead trained to represent eye-centered heading directions under all cue conditions, we found that responses to the Combined condition were dominated by vestibular responses.
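The general architecture described above can be sketched in a few lines. This is not the network used in this study: it is a minimal one-dimensional (azimuth-only) illustration that assumes von Mises-like input tuning, a tanh hidden layer, a linear output encoding the cosine and sine of head-centered heading, and plain full-batch gradient descent. All function names, unit counts, and parameter values are hypothetical.

```python
import numpy as np


def population_code(angle_deg, n_units=12, kappa=2.0):
    """Responses of n_units with evenly spaced preferred azimuths (assumed tuning)."""
    prefs = np.linspace(0.0, 360.0, n_units, endpoint=False)
    delta = np.radians(angle_deg[:, None] - prefs[None, :])
    return np.exp(kappa * (np.cos(delta) - 1.0))


def make_batch(rng, n, condition):
    """Build inputs and head-centered targets for one stimulus condition."""
    head = rng.uniform(0.0, 360.0, n)          # true head-centered heading
    eye = rng.choice([-20.0, 0.0, 20.0], n)    # eye-in-head position (deg)
    visual = population_code(head - eye)       # eye-centered visual input
    vestibular = population_code(head)         # head-centered vestibular input
    if condition == "vestibular":
        visual = np.zeros_like(visual)
    elif condition == "visual":
        vestibular = np.zeros_like(vestibular)
    x = np.hstack([visual, vestibular, eye[:, None] / 20.0])
    y = np.column_stack([np.cos(np.radians(head)), np.sin(np.radians(head))])
    return x, y


def train(seed=0, n_hidden=30, steps=500, lr=0.05, n_per_condition=200):
    """Train on all three conditions; return the weights and the loss history."""
    rng = np.random.default_rng(seed)
    conditions = ("visual", "vestibular", "combined")
    batches = [make_batch(rng, n_per_condition, c) for c in conditions]
    x = np.vstack([b[0] for b in batches])
    y = np.vstack([b[1] for b in batches])
    w1 = rng.normal(0.0, 0.1, (x.shape[1], n_hidden))
    b1 = np.zeros(n_hidden)
    w2 = rng.normal(0.0, 0.1, (n_hidden, 2))
    b2 = np.zeros(2)
    losses = []
    for _ in range(steps):
        h = np.tanh(x @ w1 + b1)               # hidden layer
        out = h @ w2 + b2                      # linear readout of hidden units
        err = out - y
        losses.append(float(np.mean(err ** 2)))
        g_out = 2.0 * err / err.size           # gradient of the mean squared error
        g_h = (g_out @ w2.T) * (1.0 - h ** 2)  # backpropagate through tanh
        w2 -= lr * (h.T @ g_out)
        b2 -= lr * g_out.sum(axis=0)
        w1 -= lr * (x.T @ g_h)
        b1 -= lr * g_h.sum(axis=0)
    return (w1, b1, w2, b2), losses


params, losses = train()
```

After training such a sketch, the hidden-layer activations could be probed separately under the visual, vestibular, and combined conditions to compare heading preferences across cues, in the same spirit as the comparison between hidden units and MSTd neurons.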

These results suggest that the apparently puzzling properties of visual/vestibular interactions in MSTd may arise because neurons in this area are involved in performing a selective reference frame transformation for visual but not vestibular signals. Our model predicts that responses of MSTd neurons to optic flow should be gain modulated by eye position, whereas responses to inertial motion should not. Our preliminary studies support this prediction (Fetsch et al., 2005). Importantly, linear summation of the hidden layer outputs in the model produces a cue-invariant, head-centered 3D heading representation. Thus, it appears that area MSTd contains the building blocks needed to construct more advanced representations of heading in downstream areas.

Acknowledgments

This work was supported by grants from NIH (EY12814, EY016178, DC04260), the EJLB Foundation (to GCD), and the McDonnell Center for Higher Brain Function. We thank Dr S. Lisberger for helpful comments on an earlier version of the manuscript. We are also grateful to Kim Kocher, Erin White, and Amanda Turner for assistance with animal care and training, and to Christopher Broussard for outstanding computer programming.

References

- Albright TD, Desimone R. Local precision of visuotopic organization in the middle temporal area (MT) of the macaque. Exp Brain Res. 1987;65:582–592. doi: 10.1007/BF00235981.

- Albright TD, Desimone R, Gross CG. Columnar organization of directionally selective cells in visual area MT of the macaque. J Neurophysiol. 1984;51:16–31. doi: 10.1152/jn.1984.51.1.16.

- Anderson KC, Siegel RM. Optic flow selectivity in the anterior superior temporal polysensory area, STPa, of the behaving monkey. J Neurosci. 1999;19:2681–2692. doi: 10.1523/JNEUROSCI.19-07-02681.1999.

- Banks MS, Ehrlich SM, Backus BT, Crowell JA. Estimating heading during real and simulated eye movements. Vision Res. 1996;36:431–443. doi: 10.1016/0042-6989(95)00122-0.

- Benson AJ, Spencer MB, Stott JR. Thresholds for the detection of the direction of whole-body, linear movement in the horizontal plane. Aviat Space Environ Med. 1986;57:1088–1096.

- Bertin RJ, Berthoz A. Visuo-vestibular interaction in the reconstruction of travelled trajectories. Exp Brain Res. 2004;154:11–21. doi: 10.1007/s00221-003-1524-3.

- Bishop CM. Neural Networks for Pattern Recognition. Oxford: Clarendon Press; 1995.

- Bradley DC, Maxwell M, Andersen RA, Banks MS, Shenoy KV. Mechanisms of heading perception in primate visual cortex. Science. 1996;273:1544–1547. doi: 10.1126/science.273.5281.1544.

- Brandt T, Bartenstein P, Janek A, Dieterich M. Reciprocal inhibitory visual-vestibular interaction. Visual motion stimulation deactivates the parieto-insular vestibular cortex. Brain. 1998;121(Pt 9):1749–1758. doi: 10.1093/brain/121.9.1749.

- Brandt T, Glasauer S, Stephan T, Bense S, Yousry TA, Deutschlander A, Dieterich M. Visual-vestibular and visuovisual cortical interaction: new insights from fMRI and PET. Ann N Y Acad Sci. 2002;956:230–241. doi: 10.1111/j.1749-6632.2002.tb02822.x.

- Bremmer F, Duhamel JR, Ben Hamed S, Graf W. Heading encoding in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 2002a;16:1554–1568. doi: 10.1046/j.1460-9568.2002.02207.x.

- Bremmer F, Ilg UJ, Thiele A, Distler C, Hoffmann KP. Eye position effects in monkey cortex. I. Visual and pursuit-related activity in extrastriate areas MT and MST. J Neurophysiol. 1997;77:944–961. doi: 10.1152/jn.1997.77.2.944.

- Bremmer F, Kubischik M, Pekel M, Lappe M, Hoffmann KP. Linear vestibular self-motion signals in monkey medial superior temporal area. Ann N Y Acad Sci. 1999;871:272–281. doi: 10.1111/j.1749-6632.1999.tb09191.x.

- Bremmer F, Klam F, Duhamel JR, Ben Hamed S, Graf W. Visual-vestibular interactive responses in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 2002b;16:1569–1586. doi: 10.1046/j.1460-9568.2002.02206.x.

- Britten KH, van Wezel RJ. Electrical microstimulation of cortical area MST biases heading perception in monkeys. Nat Neurosci. 1998;1:59–63. doi: 10.1038/259.

- Britten KH, Van Wezel RJ. Area MST and heading perception in macaque monkeys. Cereb Cortex. 2002;12:692–701. doi: 10.1093/cercor/12.7.692.

- Busettini C, Masson GS, Miles FA. Radial optic flow induces vergence eye movements with ultra-short latencies. Nature. 1997;390:512–515. doi: 10.1038/37359.

- Cao P, Gu Y, Wang SR. Visual neurons in the pigeon brain encode the acceleration of stimulus motion. J Neurosci. 2004;24:7690–7698. doi: 10.1523/JNEUROSCI.2384-04.2004.

- Crowell JA, Banks MS, Shenoy KV, Andersen RA. Visual self-motion perception during head turns. Nat Neurosci. 1998;1:732–737. doi: 10.1038/3732.

- Desimone R, Ungerleider LG. Multiple visual areas in the caudal superior temporal sulcus of the macaque. J Comp Neurol. 1986;248:164–189. doi: 10.1002/cne.902480203.

- Dickman JD, Angelaki DE. Vestibular convergence patterns in vestibular nuclei neurons of alert primates. J Neurophysiol. 2002;88:3518–3533. doi: 10.1152/jn.00518.2002.

- Duffy CJ. MST neurons respond to optic flow and translational movement. J Neurophysiol. 1998;80:1816–1827. doi: 10.1152/jn.1998.80.4.1816.

- Duffy CJ, Wurtz RH. Sensitivity of MST neurons to optic flow stimuli. I. A continuum of response selectivity to large-field stimuli. J Neurophysiol. 1991;65:1329–1345. doi: 10.1152/jn.1991.65.6.1329.

- Duffy CJ, Wurtz RH. Response of monkey MST neurons to optic flow stimuli with shifted centers of motion. J Neurosci. 1995;15:5192–5208. doi: 10.1523/JNEUROSCI.15-07-05192.1995.

- Fasold O, Trenner MU, Wolfert J, Kaiser T, Villringer A, Wenzel R. Right hemispheric dominance in temporoparietal BOLD-response to right and left neck muscle vibration. In: SFN; 2004.

- Fasold O, von Brevern M, Kuhberg M, Ploner CJ, Villringer A, Lempert T, Wenzel R. Human vestibular cortex as identified with caloric stimulation in functional magnetic resonance imaging. Neuroimage. 2002;17:1384–1393. doi: 10.1006/nimg.2002.1241.

- Fernandez C, Goldberg JM. Physiology of peripheral neurons innervating otolith organs of the squirrel monkey. I. Response to static tilts and to long-duration centrifugal force. J Neurophysiol. 1976a;39:970–984. doi: 10.1152/jn.1976.39.5.970.

- Fernandez C, Goldberg JM. Physiology of peripheral neurons innervating otolith organs of the squirrel monkey. II. Directional selectivity and force-response relations. J Neurophysiol. 1976b;39:985–995. doi: 10.1152/jn.1976.39.5.985.

- Fernandez C, Goldberg JM, Abend WK. Response to static tilts of peripheral neurons innervating otolith organs of the squirrel monkey. J Neurophysiol. 1972;35:978–987. doi: 10.1152/jn.1972.35.6.978.

- Fetsch CR, Gu Y, DeAngelis GC, Angelaki DE. Visual and vestibular heading signals in area MSTd do not share a common spatial reference frame. Soc Neurosci Abstr. 2005.

- Fisher NI, Lewis T, Embleton BJJ. Statistical analysis of spherical data. Cambridge: Cambridge University Press; 1987.

- Fukushima K, Sato T, Fukushima J, Shinmei Y, Kaneko CR. Activity of smooth pursuit-related neurons in the monkey periarcuate cortex during pursuit and passive whole-body rotation. J Neurophysiol. 2000;83:563–587. doi: 10.1152/jn.2000.83.1.563.

- Gattass R, Gross CG. Visual topography of striate projection zone (MT) in posterior superior temporal sulcus of the macaque. J Neurophysiol. 1981;46:621–638. doi: 10.1152/jn.1981.46.3.621.

- Georgopoulos AP, Schwartz AB, Kettner RE. Neuronal population coding of movement direction. Science. 1986;233:1416–1419. doi: 10.1126/science.3749885.

- Gundry AJ. Thresholds of perception for periodic linear motion. Aviat Space Environ Med. 1978;49:679–686.

- Harris LR, Jenkin M, Zikovitz DC. Visual and non-visual cues in the perception of linear self-motion. Exp Brain Res. 2000;135:12–21. doi: 10.1007/s002210000504.

- Ilg UJ, Schumann S, Thier P. Posterior parietal cortex neurons encode target motion in world-centered coordinates. Neuron. 2004;43:145–151. doi: 10.1016/j.neuron.2004.06.006.

- Kingma H. Thresholds for perception of direction of linear acceleration as a possible evaluation of the otolith function. BMC Ear Nose Throat Disord. 2005;5:5. doi: 10.1186/1472-6815-5-5.

- Komatsu H, Wurtz RH. Relation of cortical areas MT and MST to pursuit eye movements. III. Interaction with full-field visual stimulation. J Neurophysiol. 1988a;60:621–644. doi: 10.1152/jn.1988.60.2.621.

- Komatsu H, Wurtz RH. Relation of cortical areas MT and MST to pursuit eye movements. I. Localization and visual properties of neurons. J Neurophysiol. 1988b;60:580–603. doi: 10.1152/jn.1988.60.2.580.