Abstract

The two visual pathway description of Ungerleider and Mishkin (1982) changed the course of late 20th century systems and cognitive neuroscience. Here, I try to re-examine our lab’s work through the lens of Pitcher and Ungerleider’s (2021) new third visual pathway. I also briefly review the literature related to brain responses to static and dynamic visual displays and to visual stimulation involving multiple individuals, and compare existing models of social information processing for the face and body. In this context, I examine how the posterior superior temporal sulcus (pSTS) might generate unique social information relative to other brain regions that also respond to social stimuli. I discuss some of the existing challenges we face with assessing how information flow progresses between structures in the proposed functional pathways, and how some stimulus types and experimental designs may have complicated our data interpretation and model generation. I also note a series of outstanding questions for the field. Finally, I examine the idea of a potential expansion of the third visual pathway, to include aspects of previously proposed ‘lateral’ visual pathways. Doing this would yield a more general entity for processing motion/action (i.e., ‘[inter]action’) that deals with interactions between people, as well as between people and objects. In this framework, I briefly discuss potential hemispheric biases for function, and the different forms of neuropsychological impairment created by focal lesions in the posterior brain, to help situate various brain regions in an expanded [inter]action pathway.

INTRODUCTION: A TRIBUTE

Cognitive, social and systems neuroscientists who study the characteristics of the visual system in human and non-human primates owe so much to the late Dr. Leslie Ungerleider. For decades her groundbreaking work in primate neurophysiology, neuroanatomy and neuroimaging in visual system function has laid the cornerstone for how we think about visual information processing in the primate brain. I dedicate this article to Dr. Ungerleider’s memory and honor her by first trying to put our work into the scientific context that she created, and then considering how that context might be expanded. I would also like to acknowledge the influence and contribution of two close colleagues who are also no longer with us today — Drs. Truett Allison and Shlomo Bentin. We all stand on the shoulders of giants.

VISUAL PATHWAYS: AND THEN THERE WERE THREE…

In 1982, I was embarking on a graduate career and starting to perform studies in the human visual system when the landmark manuscript on parallel visual pathways in the human brain was published (Ungerleider & Mishkin, 1982). The discussion and implications for the field in that paper helped channel and shape my research directions for the decades to come. Ungerleider and Mishkin (1982) had a clear ‘What?’ and ‘Where?’ emphasis for the main functional divisions of the respective ventral and dorsal pathways. Their work was predominantly based on the non-human primate literature – on painstaking studies of single-unit neurophysiology and structural neuroanatomy – and on investigations of object recognition and of object location in space. A slightly different interpretation of the ventral and dorsal visual systems was proposed a decade later (Goodale & Milner, 1992), where the dorsal system was examined from the point of view of ‘How?’ In this formulation, based heavily on the apraxia literature, both spatial location and how an object was handled were important. To non-experts, the two visual pathway model made vision seem simple. However, when the now classic schema of known anatomical areal interconnections in the primate brain was viewed through the data lens of the early 1990s, the story was far from simple even then (Felleman & Van Essen, 1991)!

One vexing issue, which still looms large to this day, relates to exactly how information between the pathways is transferred and used by each visual system. For us, our everyday world is a seamlessly continuous and complete one, and today there are still many questions about how we achieve this holistic view. From existing white matter tract knowledge of human cerebral cortex (e.g., Mori, Oishi, & Faria, 2009; Wang, Metoki, Alm, & Olson, 2018; Zekelman et al., 2022), sometimes there might be no direct white matter connections between brain structures that share common functions. For example, the human posterior superior temporal sulcus (pSTS) and the midfusiform gyrus (FG) (Fig. 1a) exhibit strong sensitivity to faces but have no direct interconnections (Ethofer, Gschwind, & Vuilleumier, 2011). Even today, existing structural interconnections between human brain areas sensitive to faces have been challenging to document clearly (Fig. 1a; Babo-Rebelo et al., 2022; Grill-Spector, Weiner, Kay, & Gomez, 2017).

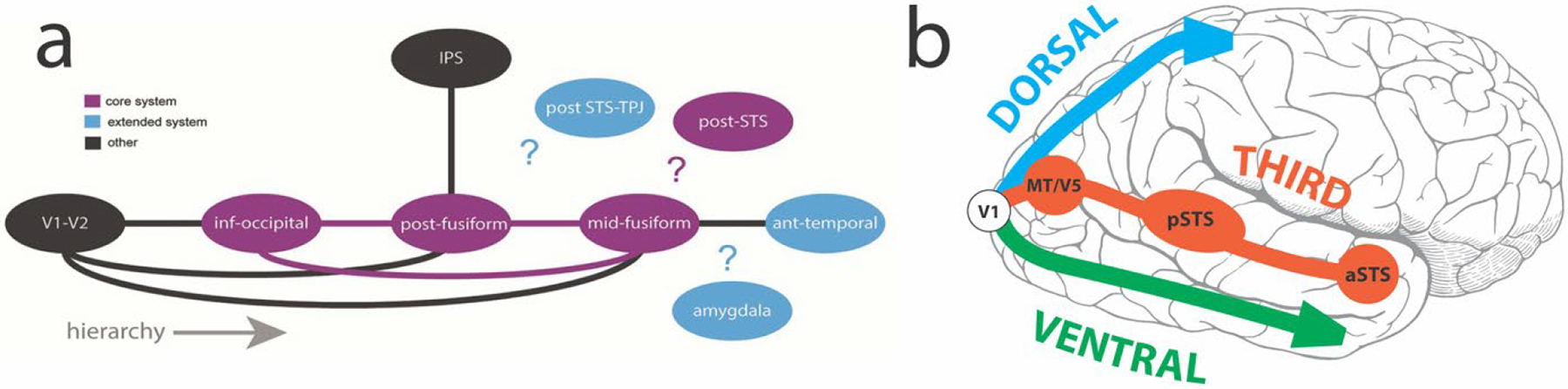

Figure 1. Potential structural and functional connections between main brain structures in the face processing network.

a. Known structural connections between main structures (solid lines), based mainly in the ventral system. Question marks highlight unknown structural connections in the network. Core (purple) and extended (blue) systems are color-coded, as are other (black) important brain regions. Reproduced with CC BY 4.0 Deed from Babo-Rebelo, M., Puce, A., Bullock, D., Hugueville, L., Pestilli, F., Adam, C., … George, N. (2022). Visual Information routes in the posterior dorsal and ventral face network studied with intracranial neurophysiology and white matter tract endpoints. Cereb Cortex, 32(2), 342–366. b. The third visual pathway of Pitcher and Ungerleider (2021). The general directions of the dorsal and ventral pathways are displayed by respective blue and green arrows as they emerge from primary visual cortex (V1). For the third visual pathway, key component structures MT/V5, p(osterior) STS and a(nterior) STS are shown by red-brick colored circles in a pathway to the anterior temporal lobe.

Perhaps a real understanding of the interconnections between dynamic face (and body) and other visually-sensitive brain regions dealing with object motion is lacking? MRI-guided electrical micro-stimulation of ‘face-patches’ in monkey IT cortex highlights their strong interconnections and separation from non-face regions (Moeller, Freiwald, & Tsao, 2008), yet micro-stimulation in face-patches influences activity in ‘object’ regions when ‘face-like’ objects or abstract faces are viewed (Moeller, Crapse, Chang, & Tsao, 2017). What seems to be critical here is the study of visually-sensitive cortex that is not directly responsive to either faces or objects. Recent elegant work taking this line of reasoning has proposed a complex object map, or space, in monkey IT where these category-specific properties can be observed (Bao, She, McGill, & Tsao, 2020). This approach channels a now classic human fMRI study, albeit at coarser spatial scale (Haxby et al., 2001), now refined with a state-of-the-art machine learning data analysis technique known as ‘hyperalignment’. This computationally-demanding method effectively scrubs out individual subject idiosyncrasies in high-resolution fMRI data, showcasing across-subject similarities in category-specific activation patterns in human occipitotemporal cortex (Haxby, Connolly, & Guntupalli, 2014; Haxby, Guntupalli, Nastase, & Feilong, 2020).

A second issue for the original dorsal/ventral visual pathway scheme was that it was not clear where structures such as the posterior STS (pSTS) sat. The pSTS is highly active in many studies dealing with dynamic human form (Allison, Puce, & McCarthy, 2000; Puce & Perrett, 2003; Yovel & O’Toole, 2016), and for this reason, it was seen as part of the dorsal system (Bernstein & Yovel, 2015; O’Toole, Roark, & Abdi, 2002). Working with Truett Allison, we had always regarded the pSTS as an important information integration point between the two visual pathways (Allison et al., 2000).

Perhaps the uncertainty in classifying and connecting other brain structures to the two visual pathways came about because this was not the complete picture? What were we missing? Was this the motivation for David Pitcher and Leslie Ungerleider when they proposed their ‘third visual pathway’, in which the pSTS was a major feature? The third pathway is a freeway linking primary visual cortex with area MT/V5, pSTS and anterior STS (aSTS) (Fig. 1b; Pitcher & Ungerleider, 2021).

Pitcher and Ungerleider’s (2021) questioning of the status quo is not unique: current thinking relating to brain pathways devoted to emotion (de Gelder & Poyo Solanas, 2021), and how emotions arise or progress (Critchley & Garfinkel, 2017; Li & Keil, 2023), for example, has also undergone ‘remodeling’. In terms of visual pathways themselves, the idea of a pathway additional to the ventral and dorsal systems is not new. Weiner and Grill-Spector (2013) proposed an additional lateral pathway that selectively processed information related to faces and limbs and integrated vision, haptics, action, and language. Perplexingly, dynamic visual stimulation was not considered in this model, so structures strongly driven by human face and body motion (such as pSTS) are not included. The pSTS is also not explicitly considered in other ‘lateral’ visual pathway formulations that centered on the lateral occipitotemporal cortex (LOTC, with a left-hemisphere bias) and in which MT/V5, the extrastriate body area (EBA) and middle temporal gyrus (MTG) feature prominently (Lingnau & Downing, 2015; Wurm & Caramazza, 2022), in contrast to the pSTS-centered third visual pathway, which has a right hemisphere bias (Pitcher & Ungerleider, 2021). To complicate the picture still further, the idea of the dorsal system contributing unique knowledge regarding object representations has also been advanced (Freud, Behrmann, & Snow, 2020; Freud, Plaut, & Behrmann, 2016).

INVASIVE HUMAN BRAIN RESPONSES TO OBSERVED FACIAL AND BODY MOTION

Our facial movements provide clear social signals about our emotional states and foci of social attention. Close up, this information related to emotions comes from characteristic changes in upper and lower face parts (e.g., Muri, 2016; Waller, Julle-Daniere, & Micheletta, 2020). In the non-emotional domain, the eyes (via gaze direction) signal the focus of (social) attention, and can shift the (visual) attention of the viewer (Dalmaso, Castelli, & Galfano, 2020). In humans, rhythmic mouth movements (in the 3–8 Hz range) are tightly correlated with rhythmic vocalizations, unlike in non-human primates, where rhythmic facial motion is absent (Ghazanfar & Takahashi, 2014). Therefore, mouth movements provide supplementary information on verbal output. To improve comprehension, even people with normal hearing lipread in noisy environments, or when listening to speakers in their non-native language (Campbell, 2008). So, an opening mouth might be attention-grabbing, as it could signal the onset of an utterance (Carrick, Thompson, Epling, & Puce, 2007; Puce, Smith, & Allison, 2000).

The human brain’s response to viewing gaze changes of others

It has been known for a long time that non-invasive and invasive neurophysiological responses to viewing dynamic gaze aversions and mouth opening movements are significantly larger than those to direct gaze shifts or closing mouths (Allison et al., 2000; Caruana et al., 2014; Ulloa, Puce, Hugueville, & George, 2014). Functional MRI studies from many laboratories have consistently shown that the pSTS is a critical locus for facial motion signals (Campbell et al., 2001; Puce, Allison, Bentin, Gore, & McCarthy, 1998; Yovel & O’Toole, 2016). Neurophysiologically, MT/V5 also shows some selectivity to dynamic faces relative to non-face controls (Campbell, Zihl, Massaro, Munhall, & Cohen, 1997; Miki & Kakigi, 2014; Watanabe, Kakigi, & Puce, 2001). These older findings are consistent with the proposed active loci in the third visual pathway.

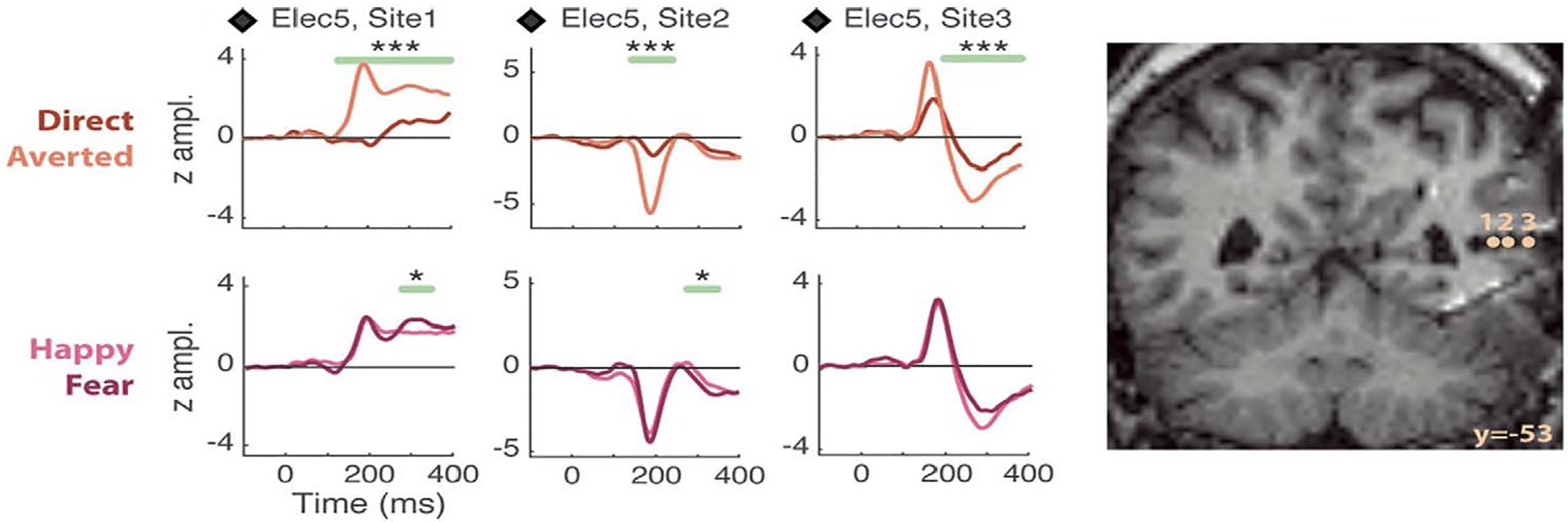

What about the time course for this neural activity? Non-invasive neurophysiological effects discussed above occur in the 170–220 ms post-motion onset range. Intracranial responses recorded to viewed dynamic gaze changes concur with non-invasive data: significantly larger field potentials in pSTS occur to gaze aversions relative to direct gaze transitions. In contrast, modulation of dynamic emotions (happiness versus fear) is not a prominent feature in the STS response (Fig. 2; Babo-Rebelo et al., 2022). This pattern of results was seen in 4 patients (of a total of 11 studied).

Figure 2. Effects of gaze and emotion in pSTS field potentials.

Left panel: Data from an epilepsy patient displays field potentials from 3 electrode contacts within pSTS to viewing a dynamic face changing its gaze (direct, averted) and expression (from neutral to either fear or happiness). Significant differences between averted and direct gaze (top line of plots) were seen, with largest responses for averted gaze. These significant differences could persist beyond the displayed 400 ms epoch (not shown). Emotion conditions (bottom line of plots) do not show prolonged amplitude differences post-emotion change. Two phase reversals in the potential at ~200 ms are seen across the 3 sites – a signal of local generators at these locations. The respective MNI co-ordinate locations (x,y,z) for the 3 electrode contacts were: Site 1: +43,−53,+9; Site 2: +48,−53,+9; Site 3: +54,−53,+9. LEGEND: *: P <0.05, **: P <0.01, ***: P <0.005, corrected-over-time Monte Carlo P values. Right panel: Locations of left panel electrode sites appear on coronal views of the post-implant structural MRI. Reproduced with CC BY 4.0 Deed from Babo-Rebelo, M., Puce, A., Bullock, D., Hugueville, L., Pestilli, F., Adam, C., … George, N. (2022). Visual Information Routes in the Posterior Dorsal and Ventral Face Network Studied with Intracranial Neurophysiology and White Matter Tract Endpoints. Cereb Cortex, 32(2), 342–366.

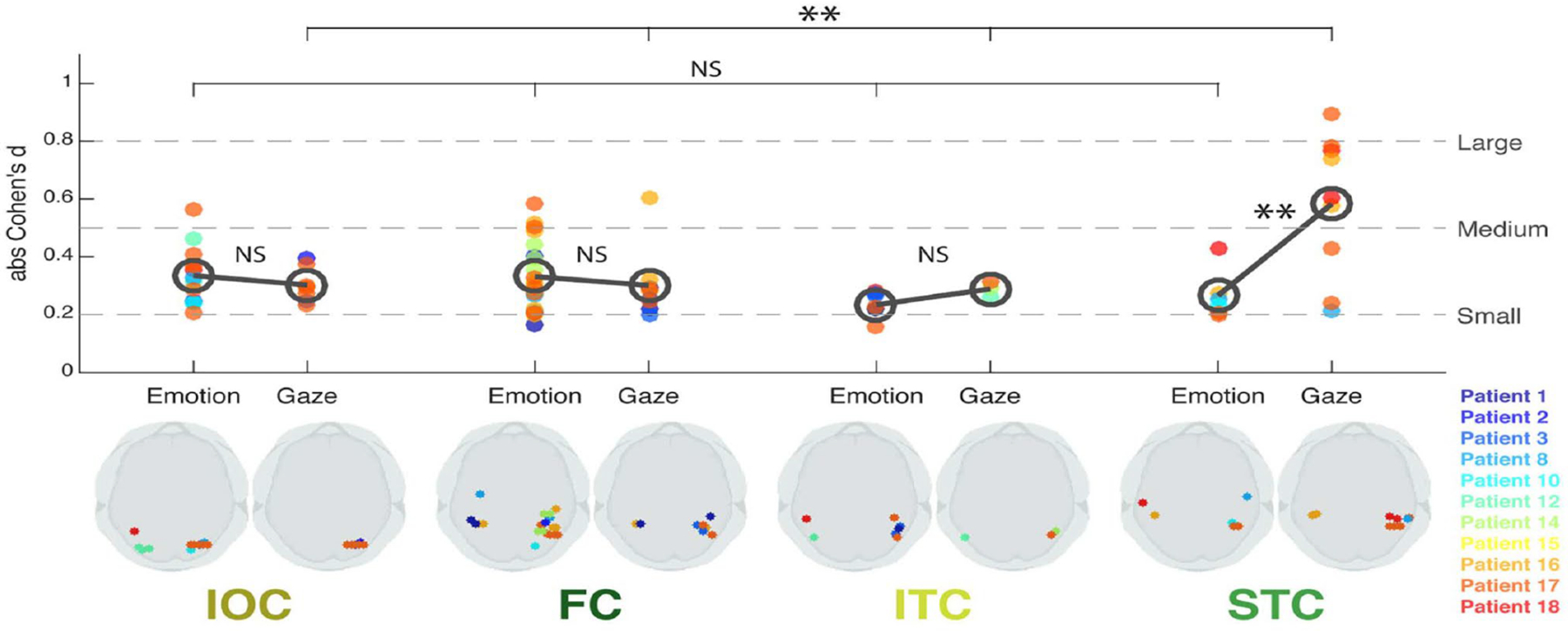

In addition to the STC, or superior temporal cortex, region of interest (ROI) that included cortex on the superior temporal gyrus and in pSTS, 3 other occipitotemporal ROIs were studied: an inferior temporal cortical region (‘ITC’, which included the inferior temporal sulci and gyri), a fusiform cortical region (‘FC’, including the midfusiform sulcus, and occipitotemporal and collateral sulci), and an inferior occipital region (‘IOC’, comprising mainly the inferior occipital gyrus). Effect sizes to viewing facial changes were calculated for normalized amplitudes of bipolar field potentials at active electrode pairs in the 4 ROIs in the 11 patients (Fig. 3). All four ROIs showed responses to both gaze and emotion transitions, but the pSTS (STC ROI on the plot in Fig. 3) was most sensitive to gaze relative to emotion. These effects are not due to motion extent per se: for these same stimuli, the largest facial changes occurred for emotion transitions, specifically in the lower part of the face (Huijgen et al., 2015).

Figure 3. Effect sizes for gaze and emotion within 4 occipitotemporal ROIs.

Bottom panel: Schematic axial slices for each ROI (IOC, FC, ITC, and STC) showing bipolar site pair locations (indicated by dots) responding significantly to Emotion (left side) or Gaze (right side). Color legend for individual patients is at right. Top panel: Effect size (absolute Cohen’s d) plotted as a function of ROI for each patient’s bipolar electrode sites. The dark gray open circles denote mean effect size across sites within each ROI, for Emotion and Gaze, respectively. Statistical comparison of effect sizes between Emotion and Gaze in each ROI (gray bars between open circles) and across ROIs for Emotion and Gaze effects (top bars) was performed. Broken lines on the plot represent commonly accepted evaluations of effect size values. LEGEND: IOC, Inferior Occipital Cortex; FC, Fusiform Cortex; ITC, Inferior Temporal Cortex; STC, Superior Temporal Cortex. NS: not significant; **: P <0.01. Reproduced with CC BY 4.0 Deed from Babo-Rebelo, M., Puce, A., Bullock, D., Hugueville, L., Pestilli, F., Adam, C., … George, N. (2022). Visual Information Routes in the Posterior Dorsal and Ventral Face Network Studied with Intracranial Neurophysiology and White Matter Tract Endpoints. Cereb Cortex, 32(2), 342–366.

Our data indicate that initial gaze processing in pSTS is already underway ~1/5 of a second after the gaze change. Typically, field potentials in human V1 occur at ~100 ms post-stimulus onset (Allison, Puce, Spencer, & McCarthy, 1999), and presumably this information travels to MT/V5 (Watanabe et al., 2001) and then to the pSTS, consistent with the information flow in the third visual pathway. The pSTS is clearly important for processing gaze in real life: lesions of human pSTS can produce deficits in judging gaze direction/social attention in others (Akiyama, Kato, Muramatsu, Saito, Nakachi, & Kashima, 2006; Akiyama, Kato, Muramatsu, Saito, Umeda, & Kashima, 2006).

Amygdala recordings in 5 patients have shown small amplitude responses that were selective to gaze aversion, and not to facial emotion, using the same stimuli and task. The response latency of ~120 ms in the right amygdala (Huijgen et al., 2015) was earlier than that in extrastriate cortex (Babo-Rebelo et al., 2022), raising questions about alternate information flow, perhaps via pulvinar-collicular routes. It has previously been reported that the left amygdala is sensitive to increased eye white area (as seen in fear), whereas the right amygdala responds to various changes in eye white area (including gaze aversions and depicted fear) (Hardee, Thompson, & Puce, 2008). Notably, patients with amygdala injury can have difficulties judging gaze direction (Gosselin, Spezio, Tranel, & Adolphs, 2011) as well as emotions, especially fear (Adolphs, Tranel, Damasio, & Damasio, 1994; Gosselin et al., 2011), so the absence of field potentials to fearful faces in the amygdala is puzzling.

Insular cortex is also sensitive to dynamic eye gaze transitions. Averted gaze changes produce larger invasive neurophysiological responses than do direct gaze transitions. Gaze extent per se is not a factor – as evidenced by smaller evoked responses to spatially-large extreme left-right gaze shifts (Caruana et al., 2014).

The human brain’s response to viewing the mouth movements of others

Earlier, I mentioned the similarity in morphology and latency of non-invasive neural activity and pSTS field potentials to dynamic gaze changes. An outstanding question has been whether there are field potentials in the pSTS and/or other brain regions that are selectively elicited by viewing mouth movements.

In the late 1990s, we recorded field potentials to faces, face parts, objects, and scrambled versions of these stimuli in more than 20 activation tasks in ~ 100 epilepsy surgery patients (Allison et al., 1999; McCarthy, Puce, Belger, & Allison, 1999; Puce, Allison, & McCarthy, 1999). We began some new studies, including a dynamic facial motion task from which we had already collected non-invasive data (i.e., Puce et al., 2000). Here I include data originally published in abstract form (Puce & Allison, 1999), data that generated substantial interest at the Memorial Symposium devoted to Dr. Leslie Ungerleider’s memory (at NIH in September 2022). While these data remain anecdotal, I present them here to stimulate further thinking and seed further studies.

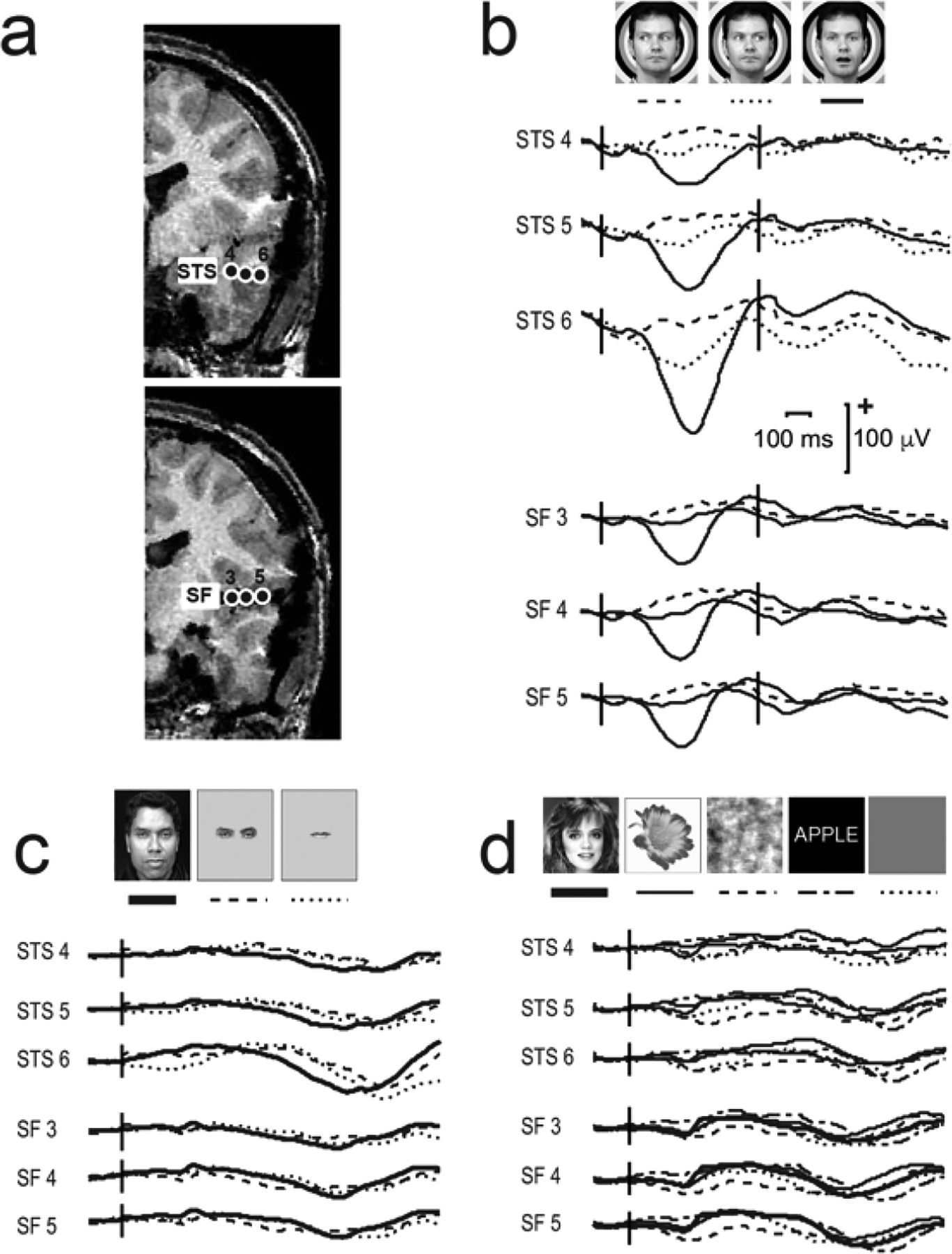

For the dynamic facial motion study, we recorded data from epilepsy surgery patients (who provided informed consent in a study approved by the Human Investigations Committee at the Yale School of Medicine). Talairach coordinates of active electrodes were calculated (Allison et al., 1999). Figure 4 displays data recorded from two depth electrodes in a patient: one electrode was in pSTS, and the other in the Sylvian fissure, abutting insular cortex (Fig. 4a). Averaged field potentials selective to mouth opening were seen at ~400 ms after motion onset and were polarity negative in both sites. At these latencies no prominent evoked activity occurred to dynamic gaze changes (Fig. 4b), static isolated mouths or eyes, full faces (Fig. 4c), or other static visual stimuli (Fig. 4d). Some slow, non-descript, late activity (after 500 ms) to the static full face and parts might be present in STS 6 (Fig. 4c), and general static visual stimuli (Fig. 4d) appear to produce small deflections at earlier (400 ms) and later (800 ms) latencies. This suggests that while these sites show a distinct preference for moving mouths, they retain some degree of general visual responsivity.

Figure 4. Field potentials from STS and Sylvian fissure (insular cortex) from three experiments.

a. Two partial coronal slices of a structural MRI scan display electrode contacts in the STS (top image) and Sylvian fissure (SF; insular cortex) (bottom image). b. The dynamic facial motion study elicits strong responses to mouth opening movements at ~ 400 ms post-motion onset and negligible responses to gaze aversions. c. Isolated static face parts (eyes and mouth) and full faces elicit very long latency responses, particularly in STS contact 6. d. Visual stimuli in general (faces, flowers, scrambled faces or words) do not appear to induce prominent responses at these sites. LEGEND: Talairach co-ordinates (x,y,z) for the electrode contacts were: STS 4 −12,−41,−6; STS 5 −8,−49,−7; STS 6 −8,−55,−7; SF 3 −15,−46,+12; SF 4 −15,−53,+13; SF 5 −15, −60, +14. Data from Puce, A., & Allison, T. (1999). Differential processing of mobile and static faces by temporal cortex (OHBM Annual Meeting abstract). Neuroimage 9(6), S801.

The Sylvian fissure/insula responses (Fig. 4b) are intriguing, given this general region’s known rich functional neuroanatomy (Gogolla, 2017; Uddin, Nomi, Hebert-Seropian, Ghaziri, & Boucher, 2017). Unfortunately, insular recordings are not common, as a number of major branches of the middle cerebral artery course through it (Ture, Yasargil, Al-Mefty, & Yasargil, 2000). In a rare study of intracranial recordings from insular cortex, a sensitivity to gaze aversion in the posterior insula has been previously reported with field potentials in the 200–600 ms range post-motion onset (Caruana et al., 2014), paralleling the anecdotal data presented to moving mouths here.

The location of the depth probe in the Sylvian fissure/insula (Fig. 4) is likely posterior to primary auditory cortex (Heschl’s gyrus) in the temporal lobe, and posterior to gustatory cortex, secondary somatosensory cortex and cortex related to vestibular function in the insula (Gogolla, 2017; Uddin et al., 2017). It is more likely to be near a region sensitive to coughing in the Sylvian fissure (Simonyan, Saad, Loucks, Poletto, & Ludlow, 2007), and a region in the posterior insula known to be sensitive to visual stimuli (Frank & Greenlee, 2018). Notably, epilepsy surgery of the insula (but not temporal lobe) is known to affect emotion recognition – for happiness and surprise (Boucher et al., 2015), emotions where mouth configuration is a prominent component.

DO LOW-LEVEL VISUAL FEATURES DRIVE BRAIN RESPONSES TO OBSERVED FACIAL EMOTIONS?

I have already noted that mouth (opening and closing) movements that appear in a neutral face devoid of emotion generate reliable fMRI activation and neurophysiological activity in the pSTS, and robust non-invasive EEG activity. In studies in our lab, impoverished visual stimuli, i.e., biological motion displays of faces with opening and closing mouths, elicit neurophysiological responses identical to those elicited by full (greyscale) faces (Puce et al., 2000; Rossi, Parada, Kolchinsky, & Puce, 2014). In stark contrast, when biological motion displays of faces with averting and direct dynamic gaze are contrasted to the same motion in full faces, the brain responses are very different (Rossi et al., 2014; Rossi, Parada, Latinus, & Puce, 2015). These striking neurophysiological differences suggest that multiple low-level visual mechanisms might drive these respective effects. Mouth movements occur from the action of an articulated joint – the mandible is physically linked to the cranium via the temporomandibular joint. Hence, a strong response to a biological motion display of a moving mouth might be expected (Rossi et al., 2014). In contrast, a biological motion effect should not be present for (impoverished) eye motion, which does not involve joint action, but arises from the coordinated action of a suite of ocular muscles. This is exactly what we see in our studies (Rossi et al., 2014; Rossi et al., 2015).

The biological motion effect is not the only low-level visual factor that could generate stimulus-driven activity from viewing a dynamic face. Such activity could also come about due to local luminance and contrast changes. For example, when a person is very happy, their smile (or laugh) will likely show teeth. White teeth can be clearly seen against the darker aspect of the lips and mouth cavity. Sometimes the teeth might also be seen in fear. Indeed, displayed teeth can be clearly seen at a distance. The presence, or absence, of teeth in a mouth expression affects neurophysiological sensory responses in the latency range of ~100–200 ms (i.e., P100 and N200). Subjects also rate mouths with visible teeth as being more arousing relative to those without visible teeth (daSilva, Crager, et al., 2016). So, this additional low-level visual effect may explain in part why neural studies of emotion consistently report larger responses to happiness (e.g., daSilva, Crager, & Puce, 2016). Teeth are more likely to be visible in happiness in its canonical form (F. W. Smith et al., 2008).

A local luminance/contrast effect, when discriminating between emotions, could also apply to the eye region. The human sclera is bright white relative to the iris and pupil, and this is unusual relative to other primates, who typically do not have such a high luminance/contrast structure of the eye (Kobayashi & Kohshima, 1997). In gaze aversions, and in the display of emotions such as fear and surprise, high luminance/contrast changes in local visual space occur from iris movement or expansion of the eye – resulting in an eye white area increase relative to a neutral face (Hardee et al., 2008). Like the teeth in a smile, gaze aversions or widened eyes in fearful and surprised expressions can also be well seen at a distance. We believe that the local luminance/contrast change in the eye is the major driver of the larger neurophysiological response in the pSTS during a gaze aversion. Although there is a significant fMRI signal increase in the pSTS, the amygdala appears to be even more sensitive than the pSTS (Hardee et al., 2008).

A third low-level effect that might drive part of the response to a dynamic facial expression may be due to the extent of the facial movement itself. Mouth movements can produce large changes in mouth configuration, when one looks at facial images depicting the net change in the face (Huijgen et al., 2015). The mouth may also take up more area on the face than the eyes, depending on its configuration, e.g., as in a wide smile or a grimace of extreme fear. In contrast, in a gaze change, or widening of the eyes, the main shape of the eye is preserved. In the eye gaze data of Caruana et al. (2014), gaze aversions from direct gaze produced larger neurophysiological effects than did large extreme left-right/right-left gaze transitions, so motion extent, in terms of excursion of the iris, does not appear to be a factor here. One further possibility to consider is that the attention-grabbing nature of the facial motion simply makes us foveate the potentially most informative location in space.

A final point on ‘low-level’ effects: Some facial features function as social cues only when the face is upright. Multiple factors can affect identity recognition, and inverted faces typically serve as control stimuli (e.g., McKone & Yovel, 2009; Rhodes, Brake, & Atkinson, 1993; Rossion, 2009). In the Thatcher Illusion, the eyes and mouth are inverted in an upright face. When the face is viewed upright the result is grotesque, but when viewed inverted no glaring irregularities are noted (P. Thompson, 1980), suggesting that mouth and eye orientation, and therefore configuration, matters. When human face pairs are compared on judgments of gender or emotional expression, inversion impairs difference judgments of expressions and gender, and sameness judgments of expression (see Pallett & Meng, 2015). These data imply that configuration matters when evaluating social cues such as gender or viewed emotions, and that there may be holistic aspects to processing gender and emotions.

WHERE WE LOOK ON SOMEONE’S FACE MATTERS FOR COMPREHENDING SOCIAL INTERACTIONS

In the abovementioned studies, subjects typically fixated on a fixation cross placed at the nose bridge on a [full] face. Alternatively, if face parts were presented in isolation, subjects gazed at a fixation cross at the screen’s center. In everyday life, our eyes rove continuously about the visual scene, and when they land on a person’s face they will not necessarily look at the bridge of the nose. In a social interaction, our eyes travel to the most informative parts of the face. In paintings and naturalistic scenes with people in them, subjects often fixate on faces, and the eye and mouth regions in particular (Bush & Kennedy, 2016; Yarbus, 1967). In the 1960s, the Russian scientist Alfred Yarbus clearly showed that observers ‘triangulate’ a face when viewing it, i.e., they focus their gaze on the two eyes and the mouth, and their eye scanning movements form a triangular shape when they examine a face (Yarbus, 1967).

Much has been made of the information provided by the eyes in emotion recognition and Theory of Mind tasks (Baron-Cohen, Wheelwright, Hill, Raste, & Plumb, 2001). Therefore, it is surprising that when an observer’s gaze is tracked as they successfully recognize dynamic (basic) emotions, healthy subjects can spend more time looking at the mouth region relative to the eyes (Blais, Roy, Fiset, Arguin, & Gosselin, 2012).

SOME ISSUES THAT MAY HAVE COMPLICATED THE SCIENCE

Implicit versus explicit studies involving experiments with facial stimuli

The brain responses that I mainly focused on above are involuntary and are consistently observed during implicit tasks, and likely arise from low-level visual factors. This implicit way of functioning seems more ecologically-valid and might more closely approximate what we do unconsciously in everyday life (Puce et al., 2016; R. Smith & Lane, 2016). Yet, when we read the social attention and emotion recognition literature, so much of it is built on explicit tasks, e.g., requiring emotions to be categorized or named, often by forced choice. How do brain responses to these explicit tasks vary relative to those in implicit tasks, when identical stimulus material is presented?

We studied how neurophysiology is modified across the implicit–explicit task dimension for social attention, low-level visual factors, and emotion. First, in a social attention task, gaze in neutral faces changed with different degrees of aversion. In the implicit task, subjects indicated by button press if gaze deviated to the ‘left’ or ‘right’ and we replicated our averted gaze > direct gaze N170 effect. In the explicit task using the same stimuli, subjects indicated if gaze moved ‘toward’ or ‘away’ from them. This time, N170 was equally large to gaze aversions and gaze returning to look directly at the observer (Latinus et al., 2015).

Second, in 3 different mouth configurations we changed the presence/absence of teeth – I already mentioned effects of visible teeth earlier. In the implicit task, subjects detected infrequent greyscale versions [target] of any of the [color] mouth stimuli. In the explicit task, subjects saw color stimuli only and pressed one of 3 response buttons to indicate if the mouth formed an ‘O’, an arc, or a straight line – mouth configurations typically seen in surprise, happiness or fear. Mouth shapes could occur with, or without, visible teeth. A robust main effect of teeth for P100, N170 and VPP occurred for both tasks, but there were also Teeth X Task interactions in the explicit task. For later potentials: (1) P250 showed no main effects for teeth, but showed Task X Teeth and Task X Mouth Configuration interactions; (2) LPP/P350 was only seen in the implicit task; (3) SPW was seen only in the explicit task (daSilva, Crager, et al., 2016).

Third, faces portraying positive emotions (happiness and pride) and neutral expressions were studied. In the implicit task, subjects looked to see if a freckle was on the face (between the eyes and mouth) (an infrequent target). N170, VPP, and P250 ERPs were significantly greater for both emotions relative to neutral but did not differ between emotions. The late SPW potential significantly differentiated between happy and proud expressions. In the explicit task, subjects pressed one of 3 buttons to differentiate the neutral, happy and proud faces. The same main effects occurred for N170, VPP, P250, LPP and SPW, but this time we also saw Emotion X Task interactions involving P250 and SPW (daSilva, Crager, & Puce, 2016).

Across the above 3 experiments, task interactions with main effects occurred mainly in the longer latency responses, also raising questions about how these neurophysiological changes might impact hemodynamic activation patterns in fMRI studies.

How generalizable are the results we observe in the laboratory to what we experience in everyday life? From our social attention studies, we posited that in real life we might function in two main modes – a ‘non-social’ or default mode (not the same as the resting-state concept), and a ‘socially-aware’ mode (where we explicitly judge others on some social attribute). These modes might function somewhat akin to the implicit and explicit evaluations of faces we use in the lab. When we are in a non-social mode (out in the world and interacting with objects or with others at a superficial level), our sensory systems do some of the hard work for us and differentiate between certain socially salient stimuli – just in case we might wish to explicitly evaluate them further (by switching to social mode). In social mode, our sensory systems likely increase their input gain, so incoming social signals are augmented, enabling us to better evaluate rapidly unfolding social situations (Puce et al., 2016).

Use of morphs in studies of emotion

Creating stimulus sets with genuine dynamic emotional expressions is incredibly challenging, so for many years experimenters resorted to using static faces displayed at the peak of the emotion (e.g., Ekman & Matsumoto, 1993). Early attempts at creating emotional displays with real faces showed how dependent the behavioral performance of subjects was on technical aspects of animated stimuli, such as frame rate (Kamachi et al., 2013). Morphing between static faces of the same identity, but with different expressions at their peak could make blended emotional stimuli. Similarly, neutral faces and emotional expressions at their peak were blended, with different proportions of the emotion being mixed with the neutral face. Unfortunately, dynamic morphed displays using different morphing strategies could produce different experimental results (Vikhanova, Mareschal, & Tibber, 2022). These morphed stimuli were criticized for not being ecologically valid, as real emotions can be initiated by one part of the face and then progressively involve other face parts. So, non-linear facial changes occur in real emotions, but not in morphed displays. It has been argued that this is an important cue for perceiving real emotions (Korolkova, 2018). Indeed, quite different neurophysiological activity can be elicited with real versus artificially-created dynamic facial expressions (Perdikis et al., 2017). A recent review examines some of these issues and challenges for studying emotional expressions (Straulino, Scarpazza, & Sartori, 2023).

Feigned emotional stimuli have also been criticized, as studies using real versus feigned emotional faces can report inconsistent findings. In real life we can generate emotional expressions via 2 motor pathways – a spontaneous and involuntary one, and a volitional one. Two upper motoneuron (UMN) tracts project to the facial nerve nucleus in the pons. In evolutionary terms, the extrapyramidal UMN tract is the older one – it produces involuntary and automatic facial expressions that arise rapidly and can be short lasting. In contrast, the pyramidal UMN, the evolutionarily newer route, allows us to make volitional expressions that can onset more slowly and be longer lasting (see Straulino et al., 2023).

A disconnect between literatures?

Our face and body movements display our emotions, intentions and actions. Social interactions are dynamic and involve the orchestrated dance of a suite of facial and bodily muscles that signal one’s inner mental states rapidly, spontaneously and involuntarily. Alternatively, we can also conceal these important aspects of our inner mental life. As observers parsing the face and bodily movements of others we do not need to explicitly name or note them – we register them effortlessly and unconsciously, and adjust our behavior to suit the social situation we are in. So, in one sense, from motion comes emotion… Unfortunately, the bulk of studies have been based on viewing isolated static stimuli on computer screens and collecting impoverished behavioral measures (e.g., simple button presses). Only relatively recently have naturalistic tasks – which are challenging to perform – become part of mainstream social neuroscience (Morgenroth et al., 2023; Saarimaki, 2021).

Another major issue for the field? How do we make sense of the varied scientific findings that are complicated by an apparent disconnect between the ‘low-level sensory’ and ‘affective’ literatures? This seems most pertinent for studying how the brain perceives and recognizes portrayed emotions. The famous 1977 Sydney Harris cartoon comes to mind where two scientists are at a blackboard solving a complex problem. Between 2 sets of detailed formulae, a text fragment reads, ‘a miracle happens…’. One scientist says to the other ‘I think you should be more specific in Step 2.’ (http://www.sciencecartoonsplus.com/gallery/physics/index.php). So, at the core of our emotion problem, ‘the miracle’ occurs between sensory stimulus registration and recognition of the emotion. It seems that many scientists working on the side of low-level visual effects (e.g., local contrast or brightness) often ignore some important higher-order cognitive and affective confounds, whereas others working at higher levels of functional brain organization often do not consider potentially significant low-level confounds.

That all said, a number of investigators who are studying top-down and bottom-up interactions to better understand how we see an integrated, seamless world report interesting findings. First, retinotopic properties of (newer) category-specific regions in posterior cortex have differential areal properties. For example, some ‘higher-level’ brain areas (e.g., OPA [occipital place area] and LOTC [lateral occipito-temporal complex]) have overlapping multiple retinotopic maps, whereas others such as OFA [occipital face area] do not (Silson, Groen, Kravitz, & Baker, 2016). These results could be clues to the differential computations these areas might provide. Second, ‘typical’ spatial locations (e.g., eyes placed higher and mouth lower in a display) produce larger retinotopic hemodynamic signals and better behavioral measures (de Haas et al., 2016) – a finding with direct implications for the face inversion literature. Third, contralateral bias varies in higher-level category-specific visual regions. For instance, the right FFA [fusiform face area] and FBA [fusiform body area] exhibit more information integration from both hemifields relative to their left hemisphere homologs (Herald, Yang, & Duchaine, 2023) – helping explain why prosopagnosias have a right hemisphere basis (Meadows, 1974).

Fourth, still on biases but at a higher level, top-down social cognitive factors (e.g., in-out group attributes, attitudes, stereotypes, prejudices) can provide a powerful lens which shapes our perception and how we look at others. Specifically, information from the anterior temporal lobe (ATL) on social knowledge and stereotypes from a lifetime of experience can be accessed by orbitofrontal cortex and fed down to the FFA, affecting how sensory information is processed (Brooks & Freeman, 2019). This top-down drive can be unconscious and rapid (Freeman & Johnson, 2016), where facial appearance of an unfamiliar individual can drive strong (right or wrong) impressions (e.g., intelligent, trustworthy etc.) – as shown by behavioral and hemodynamic studies of face-trait spaces (Stolier, Hehman, & Freeman, 2018; Todorov, Said, Engell, & Oosterhof, 2008). The culture that we grow up in shapes our social cognitive impressions (Freeman, Rule, & Ambady, 2009). We form these social impressions quickly – from ‘thin slices of behavior’ – in about 30 seconds or so (Ambady & Rosenthal, 1992). A number of years ago, an interacting top-down/bottom-up connectionist model for person construal was described. Partial parallel interactions were probabilistic in nature, allowing continuously evolving activation states that are consistent with an individual’s goals (Ambady & Freeman, 2011). It would be good to see this used more in multimodal social neuroscience data interpretation.

The disembodied brain and … face?

In the latter half of the 20th century, reductionist approaches in cognitive and social neuroscience focused on identifying the basic building blocks for cognitive and social functions. While this work was fundamental and important, it seemed like we had laid out the jigsaw puzzle pieces on the table, but we had no idea of the overall image that the jigsaw presented. Perhaps part of the problem was that we had disembodied the brain? There it was sitting in a glass jar separated from the body – isolated from interoceptive messages from the body’s internal milieu and from integrated multisensory input from the external world.

During this century, literature on the ‘brain-heart’ and ‘brain-gut’ axes has highlighted the key role of the vagus nerve in interoception and modulation of the viscera. The bidirectional vagal messaging to and from the brain affects both physical and mental function and has major implications for disease (Manea, Comsa, Minca, Dragos, & Popa, 2015; Mayer, Nance, & Chen, 2022). Regular electrical activity of the heart can be recorded as the electrocardiogram (ECG) – a series of waves labelled by alphabetic letters from P-T, with the R-wave signaling the main (ventricular) contraction of the heart (Hari & Puce, 2023). Additionally, electrical activity from smooth muscle contractions of the gut can be recorded with electrogastrography (EGG). The EGG signal is a complex entity where periodic respiratory activity from electrical activity of the diaphragm as well as cardiac activity are also sampled with electrical activity of the gut, due to the effects of volume conduction in the body. The three electrophysiological signals can be easily teased apart from their different power spectral content (for a review see Wolpert, Rebollo, & Tallon-Baudry, 2020).

Studies in social neuroscience are starting to investigate interoceptive neurophysiology. For example, the brain is sensitive to the beating of the heart: a biphasic response occurs in primary somatosensory cortex 280–360 ms after the contraction of the heart’s ventricles (Kern, Aertsen, Schulze-Bonhage, & Ball, 2013). Interoception of cardiac activity can modulate brain activity in somatosensory cortex and behavior during the detection and localization of somatosensory stimuli (Al et al., 2020).

With respect to the ‘disembodied’ face, in social neuroscience, the literature has tended to focus on the face, which is somewhat ironic given how we see our (whole) conspecifics in everyday interactions. In the next section, I discuss some face and body processing models to illustrate how thinking has progressively changed, as knowledge of structural and functional neuroanatomy has grown and (non-invasive) assessment techniques for studying the in-vivo brain and body activity have improved.

It would be remiss of me not to mention the cerebellum. In some ways it is the ‘Cinderella of the brain’. The cerebellum has more neurons than cerebral cortex and is an important contributor to social cognitive function (Schmahmann, 2019), but our focus has always been on the cerebral cortex. Some relatively new work indicates that cerebellar activity can be reliably detected and modeled with MEG and EEG methods (Samuelsson, Sundaram, Khan, Sereno, & Hamalainen, 2020). Perhaps Cinderella will now be able to wear her glass slippers?

‘FACING UP’ TO SOME EXISTING MODELS OF PROCESSING INCOMING INFORMATION FROM THE FACE AND BODY

In 1967 Polish neurophysiologist Jerzy Konorski proposed the idea of ‘gnostic units and areas’ in the central nervous system, predicting neurons and brain areas with selectivity for faces, objects, places and words. His 9-category model (Konorski, 1967) foreshadowed ideas of category-specificity and pre-dated the ‘grandmother cell’ concept by a couple of years (Gross, 2002). The first non-invasive neurophysiological evidence for human category-specific responsivity came from E.R. John – published in a memorial special journal issue (following Dr. Konorski’s untimely death) (John, 1975). Konorski’s predictions included where on the human scalp selective ERP responses would occur in the 10–20 EEG electrode system (Jasper, 1958). E.R. John’s anecdotal data (Fig. 5) showed a clear negative potential at 150–200 ms to a vertical line signifying the letter ‘I’, which was less evident when read as the number ‘1’ (John, 1975)! At the time, neuropsychological studies were amassing evidence on visual agnosias, especially to faces, in patients with acquired brain lesions (Meadows, 1974). In the lab of Truett Allison and Greg McCarthy in the 1990s, a discussion of Konorski’s categories for selective stimulus evaluation was quite common.

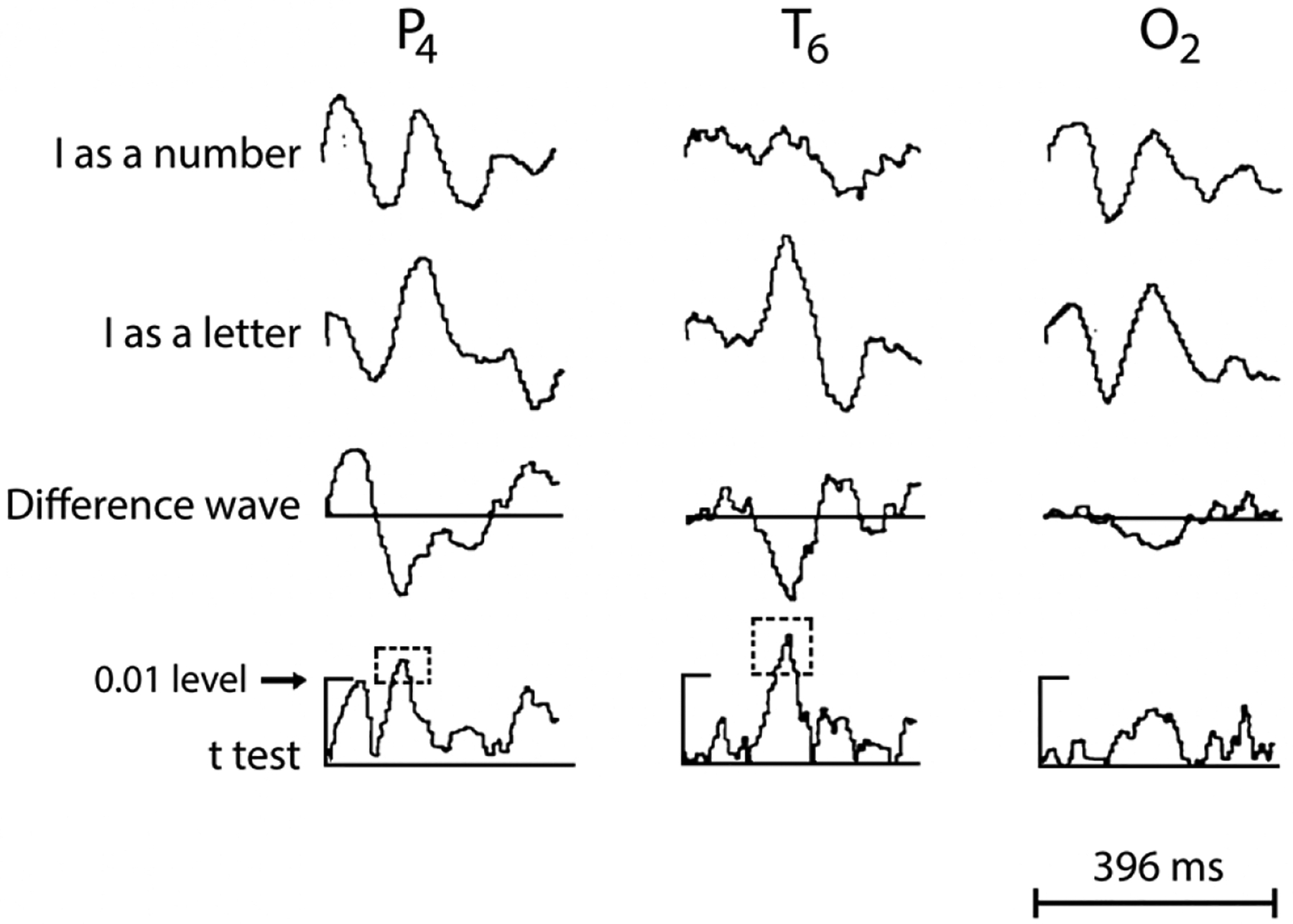

Figure 5. A single subject category-selective non-invasive neurophysiological response.

Averaged EEG data from 10–20 sites on the right parietal (P4), temporal (T6) and occipital (O2) scalp. Top 2 traces show waveforms elicited to 50 presentations of a vertical line described to the subject as the letter ‘I’, and the number ‘1’, respectively. Electrode T6 shows a prominent potential (first peak) in the older ‘negative polarity is up’ display convention. The voltage calibration bar is absent, but activity appears in μV. A time calibration marker (bottom of the figure) shows total time in milliseconds (ms). Trace 3 displays difference waveforms between the two stimulus types. A larger response to the letter relative to the number is seen halfway through the epoch in all 3 sites at ~200 ms. Trace 4 depicts point-by-point t-test values between the two conditions, with significant differences (p < 0.01; identified within the outlined box with broken lines) for sites T6 and P4, but not O2. The original figure showed data from another subject with similar effects in the left temporal scalp. From John, E.R. (1975) Konorski’s concept of gnostic areas and units: some electrophysiological considerations. Acta Neurobiologiae Experimentalis (ANE) 35: 417–429, reprinted with kind permission from the Editor-in-Chief, ANE.

In the 1980s it was noted that a prosopagnosic patient could generate an (unconscious) autonomic Galvanic Skin Response (GSR) to a familiar face, even though he could not identify it (Bauer, 1984). The patient was a motorcycle accident victim who had sustained extensive bilateral occipito-temporal lesions, as seen from the computerized tomography images (Bauer, 1982). It appears that the left pSTS and bilateral V1 were spared, leaving a potential route for the unconscious response to the familiar face.

Not long after Bauer’s (1984) case report, a model for familiar face recognition was proposed by British neuropsychologists Vicky Bruce and Andy Young, which was based on decades of neuropsychological investigations in patients with facial processing deficits (Bruce & Young, 1986). The model also included facial speech processing and facial expression analysis – in a separate parallel pathway to that for familiar face recognition. Following human and monkey fMRI and neurophysiological studies devoted to face perception in the latter two decades of the 20th century this model was refined (Fig. 6a; see Gobbini & Haxby, 2007). Interestingly, the ‘emotion’ component of this later model focused on emotional reactions generated in the viewer of the familiar face, and not on the emotions or other dynamic social signals present on the familiar individual’s face. The original model by Haxby, Hoffman, and Gobbini (2000) was modified by both Gobbini and Haxby (2007) and O’Toole et al. (2002). O’Toole’s model expanded on how dynamic faces are analyzed and had identity signals being sent from the dynamic face from the dorsal pathway (where STS was said to be) to the ventral pathway (Fig. 6b).

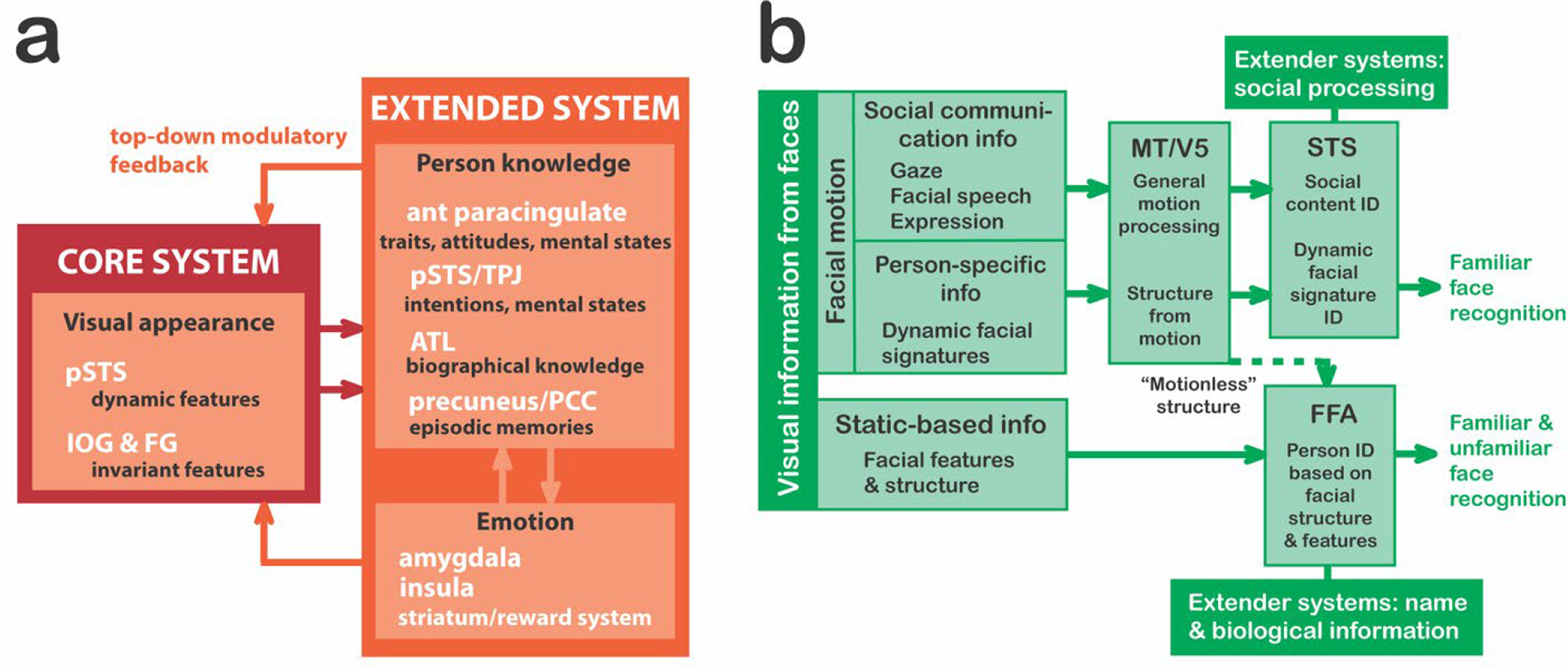

Figure 6. Two models of active brain regions in face perception and recognition.

In both models, an initial branch point occurs where invariant facial features (important for identity recognition) are separated from dynamic aspects of the face (important for emotional expressions and social attention). a. The refined Gobbini and Haxby (2007) familiar face recognition model has a core system that decodes visual appearance via two streams: one for invariant feature identification and another for dynamic face feature perception. Information from the core system passes to the extended system, activating either aspects of person knowledge or our own emotions elicited by that person. b. The O’Toole et al. (2002) model expands the original Haxby et al. (2000) model. Here dynamic aspects of a familiar (and unfamiliar) face (e.g., an expressed emotion) are processed in the dorsal visual pathway and an identity signal is sent to the ventral pathway. STS is assumed to be part of the dorsal pathway.

Years later, Bernstein and Yovel (2015) worked with the ‘Haxby (2000)’ and ‘O’Toole (2002)’ models to try to deal with some existing issues. For example, OFA is a ventral pathway region that extracts form information from faces and is strongly connected to FFA, but neither area shares strong connections with pSTS. Hence, the pSTS was placed in the other pathway, devoted to extracting information from dynamic faces. Bernstein and Yovel (2015) placed aSTS and inferior frontal gyrus – structures reactive to dynamic faces – in the dorsal face pathway because in their view, “the primary functional division between the dorsal and ventral pathways of the face perception network is the dissociation of motion and form information” (Bernstein & Yovel, 2015).

These models have typically not included human voice processing and have remained largely visuocentric, even though the original Bruce and Young (1986) model included speech analysis. A model for the ‘auditory’ face, i.e., processing auditory information from human voices, proposed a parallel structure to the analysis of visual information from the human face (Fig. 7; Belin, Bestelmeyer, Latinus, & Watson, 2011; Belin, Fecteau, & Bedard, 2004).

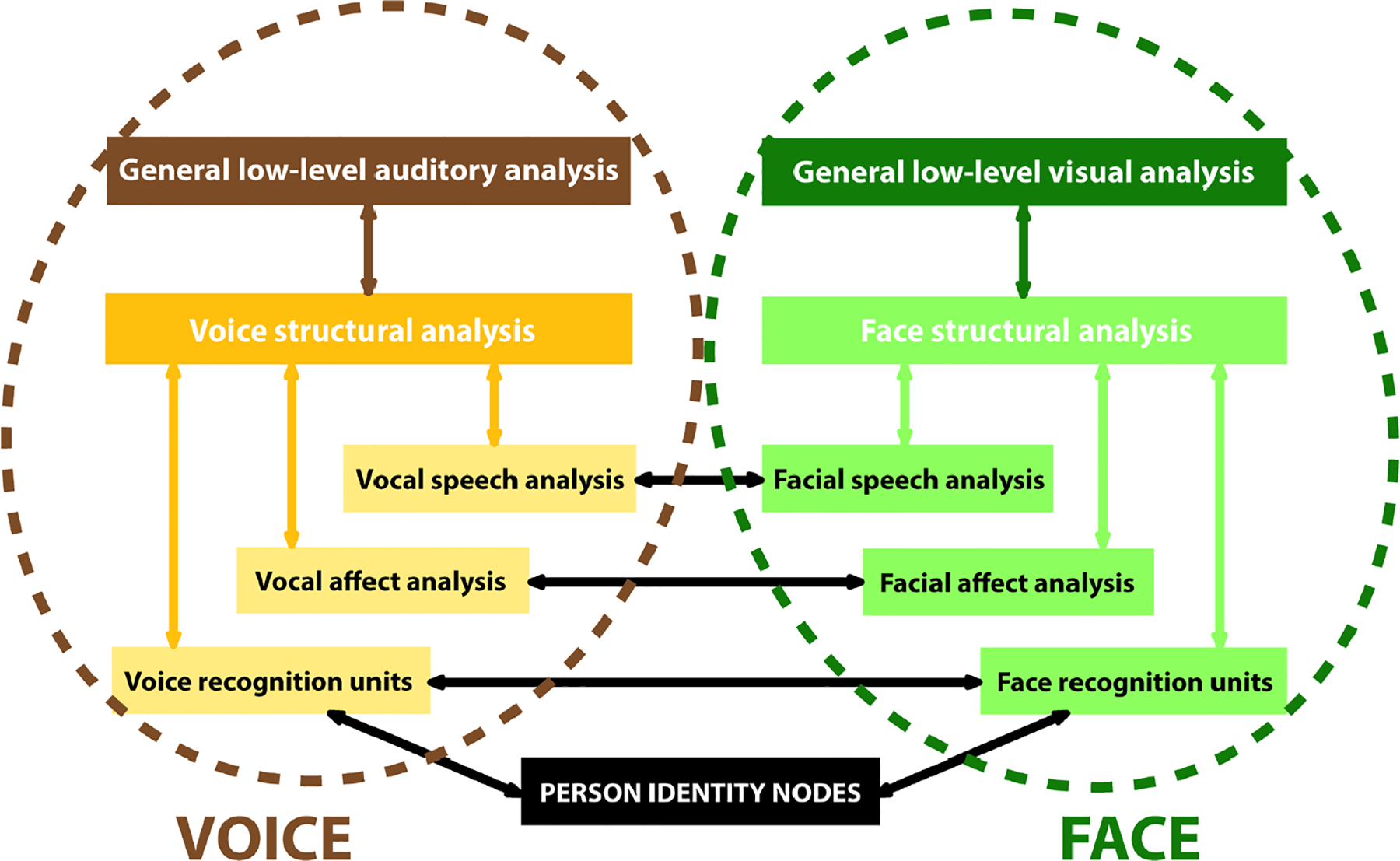

Figure 7. A model of voice perception.

The Belin et al. (2004) VOICE perception model (left: brown/gold flowchart) shows parallelism with the original components of the Bruce & Young (1986) familiar FACE perception pathway (right: green flowchart). There are paths of intercommunication between the two. This model is based on extensive unimodal processing (within each pathway, colored arrows) and multimodal interactions (cross-pathway interactions, black arrows).

As already noted, bodies are important messengers of emotional state and action intent, as well as signaling identity. Not surprisingly, fMRI studies identified areas of occipitotemporal cortex sensitive to human body motion, such as the Extrastriate Body Area (EBA) (Downing, Jiang, Shuman, & Kanwisher, 2001) and Fusiform Body Area (FBA) (Peelen & Downing, 2004). Intracranial field potential studies have reported selective responses to human hands and bodies in ventral and lateral occipitotemporal regions (Pourtois, Peelen, Spinelli, Seeck, & Vuilleumier, 2007; Puce & Allison, 1999). The parallel fMRI studies in our lab also showed lateral regions – most prominently the inferior temporal sulcus. The EBA was sensitive to body parts and wholes (Downing & Peelen, 2016), whereas the FBA responded more vigorously to whole bodies, although it could respond to body parts (Peelen & Downing, 2007). The pSTS also showed a vigorous selective fMRI response to both realistic human hand and leg motion and animated avatars of whole mannequin bodies, faces and hands (J. C. Thompson, Clarke, Stewart, & Puce, 2005; Wheaton, Thompson, Syngeniotis, Abbott, & Puce, 2004). In contrast, the middle temporal gyrus (MTG) is highly active to man-made object/tool motion (Beauchamp, Lee, Haxby, & Martin, 2003).

A challenge for the field has been to place activation to (dynamic) bodies and their parts into existing (face-centric) models of social information processing. It is no surprise that these body-related findings prompted substantial revisions to existing models, e.g., a multisensory model for person recognition using dynamic information from faces, bodies and voices has been proposed. Here the pSTS acts as a ‘neural hub for dynamic person recognition’, sending multisensory information to the aSTS and then onto ATL — a region critical for person recognition. Unisensory auditory (pSTS and aSTS) and visual (OFA and FFA) pathways also send information to the ATL for person recognition (Yovel & O’Toole, 2016). The EBA and FBA are not part of this model. Another important aspect of interpreting ‘body language’ is the unconscious nature and speed with which we make sense of this information – proposed to be possible via the existence of subcortical and cortical pathways, which are intertwined in three interconnected brain networks (Fig. 8; de Gelder, 2006).

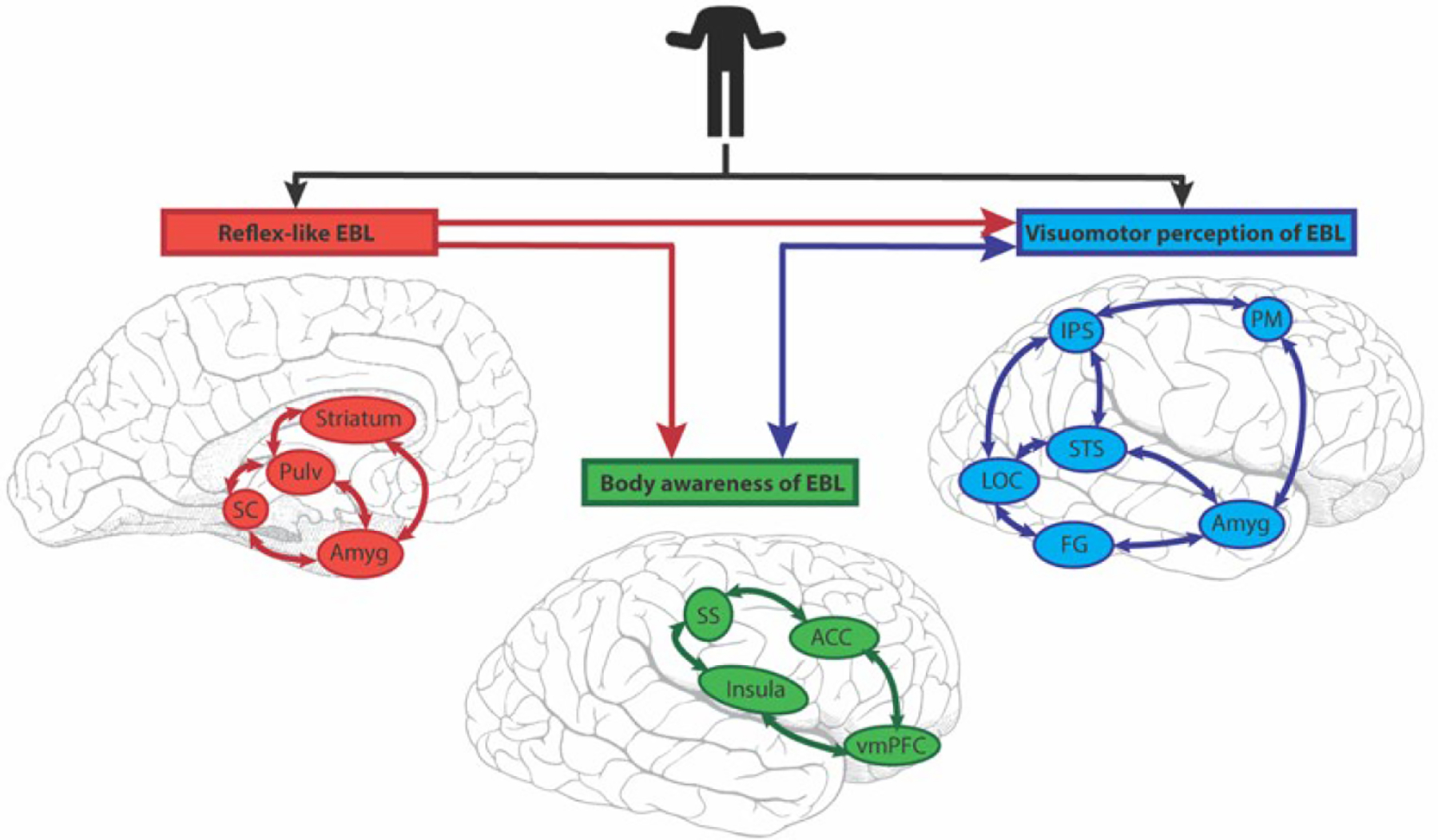

Figure 8. Emotional body language (EBL) processing across three interrelated brain networks.

EBL visual information enters subcortical (red) and cortical (blue) routes in parallel. The subcortical Reflex-like EBL network (red) is rapid and comprises the superior colliculus (SC), pulvinar (Pulv), striatum and amygdala (Amyg). Its output is not amenable to conscious awareness. The (cortical) Visuomotor perception of EBL network (blue) has the core areas of lateral occipital complex (LOC), superior temporal sulcus (STS), intraparietal sulcus (IPS), premotor cortex (PM), fusiform gyrus (FG) and amygdala (Amyg). (The amygdala is common to two networks in this scheme.) The third network is called the (cortical) Body awareness of EBL (green). Its core structures are the insula, somatosensory cortex (SS), anterior cingulate cortex (ACC) and ventromedial prefrontal cortex (vmPFC). It processes incoming information from others, and interoceptive information from the individual. The subcortical (Reflex-like EBL) sends feedforward connections (red lines) to the two cortical networks. Reciprocal interactions (blue lines) exist between the two cortical systems.

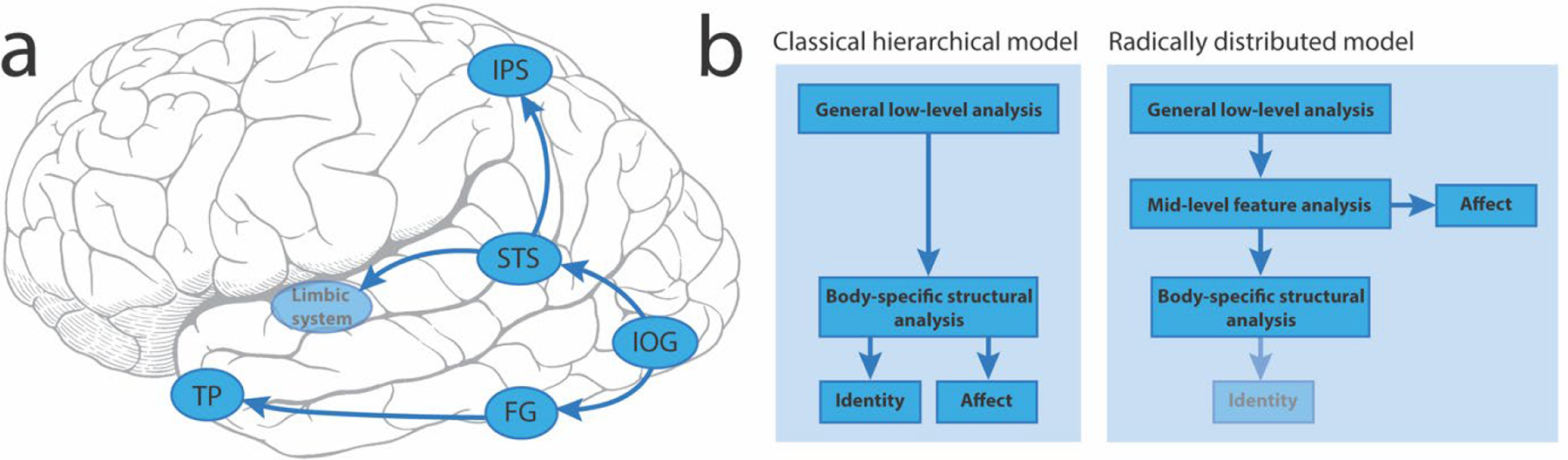

An updated model for recognizing emotion from body motion has been proposed (de Gelder & Poyo Solanas, 2021). The EBA and FBA are not in this model. Processing of body movements starts in the inferior occipital gyrus (IOG), dividing into a ventral and a ‘dorsal’ route where pSTS plays a key role, relaying information to the limbic system and intra-parietal sulcus (IPS). This ‘radically distributed’ model includes a subset of brain regions sensitive to human face motion (Fig. 9a). A major departure from more established models is the addition of a mid-level of feature analysis dealing with affect, which no longer passes through a structural analysis of the body (Fig. 9b). This would allow affective information to be processed more rapidly. Identity recognition would be dealt with via the body specific structural analysis route, but there is no direct link between it and affective analysis of body posture. Given that we have idiosyncratic bodily movements and postures, it is not clear how this information would be extracted in this formulation.

Figure 9. A model for recognizing emotional expressions from an individual’s movements.

a. Brain regions involved in recognizing emotions and their connections. LEGEND: IOG=inferior occipital gyrus; FG=fusiform gyrus; TP=temporal pole; STS=superior temporal sulcus; IPS=intraparietal sulcus. b. Side-by-side flowcharts of the classical hierarchical model for recognizing emotional expressions versus an alternative proposal (radically distributed model) that does not rely on a uniquely hierarchical progression of information from lower- to higher-level brain regions. Note: in this newer model the fainter identity box indicates that this element was not present in the original figure in de Gelder & Poyo Solanas (2021), but from the discussion in the manuscript I surmise that this would be the case.

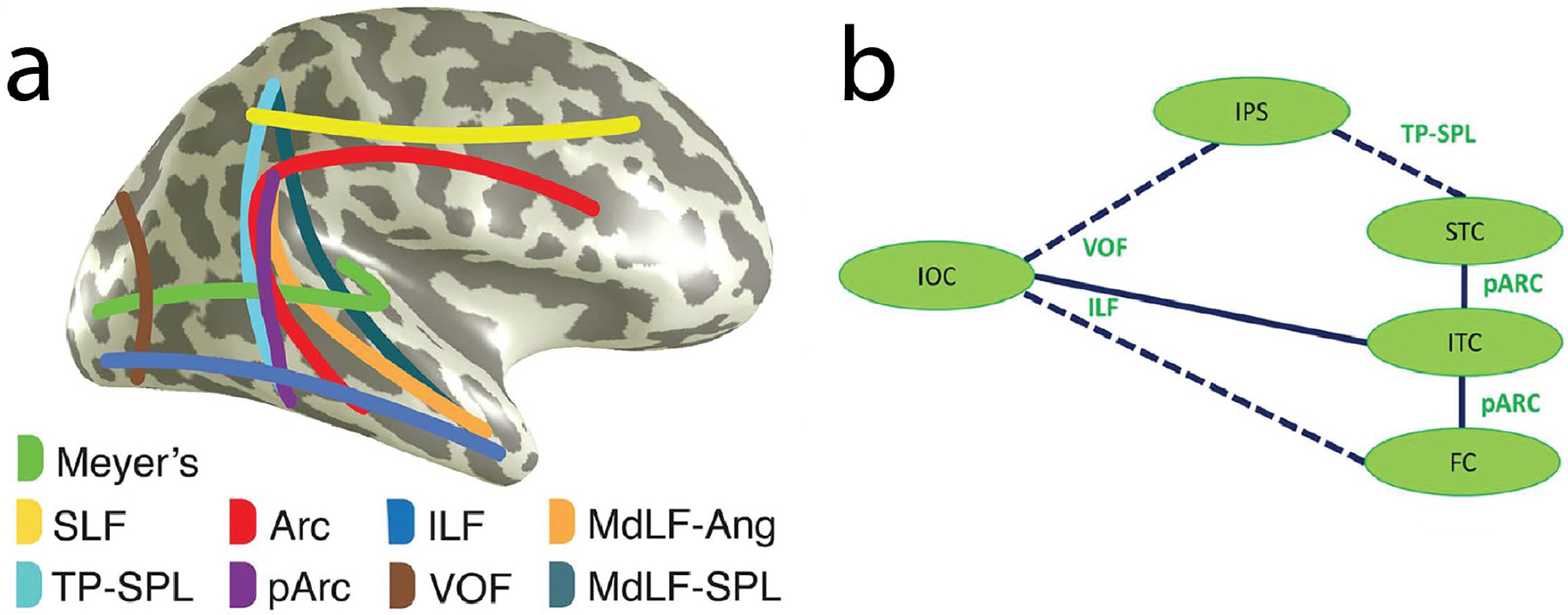

The pathway of de Gelder & Poyo Solanas (2021) has its starting point in the IOG – similar to that of the original suggestion by Haxby and colleagues (2000), their updated model (Gobbini & Haxby, 2007), and that of Ishai (2008). In our recent intracranial study of four occipitotemporal ROIs and dynamic changes in gaze direction and emotion (see Figs. 2 and 3), we evaluated likely white matter pathways that might carry information between these regions (Babo-Rebelo et al., 2022). Posterior brain major white matter pathway endpoints were identified (Bullock et al., 2019) from 1066 healthy brains from the Human Connectome Project (Fig. 10a). Then an overlap analysis between these endpoints and active intracerebral sites in the 11 epilepsy surgery patients was performed. From the overlap analysis and field potential latencies we proposed a potential information flow in part of the occipitotemporal cortex (Fig. 10b).

Figure 10. Putative information flow routes for faces in the posterior brain.

a. Schematic figure of white matter pathways routing information from visually sensitive brain regions. LEGEND: SLF, superior longitudinal fasciculus; TP-SPL, temporoparietal connection of the superior parietal lobule; Arc, arcuate fasciculus; pArc, posterior arcuate fasciculus; ILF, inferior longitudinal fasciculus; VOF, vertical occipital fasciculus; MdLF-Ang, middle longitudinal fasciculus branch of the angular gyrus; MdLF-SPL, middle longitudinal fasciculus branch of the superior parietal lobule. Meyer’s (loop), the optic radiation connecting the lateral geniculate nucleus and occipital lobe, is also included. b. Cartoon of putative routes of information flow relating to faces focusing mainly on fusiform and superior temporal cortex. This schematic is based on an overlap analysis of white matter tract endpoints (from 1066 healthy subjects) and coordinates of active bipolar sites (from epilepsy patients). All data are in MNI space. Solid lines represent routes with overlap between tract endpoints and active sites. Broken lines show connections with overlap at one tract end only, as seen from the data of Babo-Rebelo et al. (2022). Note: short-range fibers aiding information flow across ventral occipitotemporal cortex were not included in the tract endpoint analysis and are not represented here. LEGEND: IOC: inferior occipital cortex, FC: fusiform cortex, ITC: inferior temporal cortex, STC: superior temporal cortex, IPS: intraparietal sulcus. Tract abbreviations are identical to part a. Parts a and b reproduced with CC BY 4.0 Deed from Babo-Rebelo, M., Puce, A., Bullock, D., Hugueville, L., Pestilli, F., Adam, C., … George, N. (2022). Visual information routes in the posterior dorsal and ventral face network studied with intracranial neurophysiology and white matter tract endpoints. Cereb Cortex, 32(2), 342–366.

Our data-driven model (Fig. 10) is incomplete: it was focused on how information might be routed between pSTS and fusiform cortex. We posit that inferior temporal cortex may act as the mediator between the two, with information transfer via the posterior arcuate fasciculus. Our invasive neurophysiological dataset was limited – sampling cortex implanted for clinical needs. Therefore, other connective links in the face pathway could not be evaluated. That said, we propose that combined neurophysiological and neuroanatomical investigations are one way forward for making sense of the complex network of interconnections that allow processing of the human form, and also for visual function in a more general sense.

Looking beyond the visual pathway scheme and existing models of face and body processing, we also need to consider existing social brain network models. In one 4-network model, the pSTS sits in a mentalizing (theory of mind) network, together with the EBA, FFA, temporo-parietal junction, temporal pole, posterior cingulate cortex (PCC), and parts of dorso-medial prefrontal cortex (dmPFC). The other 3 networks include the amygdala network (amygdala, middle fusiform/inferior temporal cortex, and parts of ventro-medial prefrontal/orbitofrontal cortex [vmPFC/OFC]), the empathy network (parts of the insula and middle cingulate cortex) and the mirror/simulation/action perception network (parts of inferior parietal and inferior frontal cortex) (Stanley & Adolphs, 2013). Another literature review-based model makes pSTS a central hub for 3 neural social information processing systems: social perception, action observation, and theory of mind (Yang, Rosenblau, Keifer, & Pelphrey, 2015). This latter formulation does not consider the EBA or FFA.

One other important consideration relates to the actual nature of a real-life social interaction. Such interactions do not happen in isolation and involve at least one other individual. For this reason, the need for ‘2-person’ (or dyadic) social neuroscience studies has been emphasized (Hari & Kujala, 2009; Quadflieg & Koldewyn, 2017; Schilbach et al., 2013). Dynamic dyadic stimuli contain information along (at least) three dimensions (i.e., perceptual, action and social), therefore requiring multiple control conditions. For example, the perceptual dimension includes interpersonal signals such as mutual smiles or coordinated movement patterns. In the action dimension, these can be independent or joint (e.g., reading versus discussing), have opposing goals (e.g., collaborating versus competing), or are positive or negative (e.g., kissing versus punching someone). In the social dimension, acquaintance type (e.g., strangers or acquaintances/family), interaction type (e.g., formal, casual or intimate), and its level (e.g., subordinate or dominant) matter (Quadflieg & Koldewyn, 2017).

Just being an observer for a social interaction is also not enough – our science needs to study the neural sequelae of real-life human interactions. Fortunately, now we have portable technology to perform such studies, but data acquisition and analysis methods are not without pitfalls (Hari & Puce, 2023). Additionally, new dynamic stimulus sets with large numbers of exemplars are being generated – including social and non-social interactions, and interactions with objects (e.g., Monfort et al., 2020).

SLURPING FROM THE BRAIN REGION ‘BOWL OF ALPHABET SOUP’

Altogether we now have a large alphabet soup of brain regions, including the pSTS, OFA, FFA, FBA, and EBA – regions known for evaluating social stimuli. Relevant to the social brain models discussed earlier, where the EBA and FBA belong is important (Taubert, Ritchie, Ungerleider, & Baker, 2022). The pSTS has been proposed to be critical for analysis of social scenarios involving multiple individuals (Quadflieg & Koldewyn, 2017), which would site it in a mentalizing network (Stanley & Adolphs, 2013) or as a central hub for social information processing networks (Yang et al., 2015). The EBA is active for viewing multiple people. Its role is to potentially generate “perceptual predictions about compatible body postures and movements between people that result in enhanced processing when these predictions are violated” (Quadflieg & Koldewyn, 2017). Both STS and EBA augment their activation when individuals’ body postures and movements face each other (see Taubert et al., 2022). Notably, when such facing stimuli are inverted, reliable behavioral decrements occur relative to their upright counterparts (Papeo & Abassi, 2019). When facing body stimuli are evaluated, effective connectivity increases between EBA and pSTS (Bellot, Abassi, & Papeo, 2021), suggesting that delineating their respective roles will be challenging.

Where does EBA belong in the visual streams? Some investigators argue that it does not belong in the ventral stream (Zimmermann, Mars, de Lange, Toni, & Verhagen, 2018). Is the EBA heteromodal? This does not seem to be the case. In contrast, the pSTS has subregions that have multimodal capability (Landsiedel & Koldewyn, 2023). The pSTS also has a complex anterior-posterior gradient of functionality with considerable overlap between functions (Deen, Koldewyn, Kanwisher, & Saxe, 2015). Gradient complexity does not increase in a simple posterior-anterior direction because proximity of the temporo-parietal junction (TPJ) to pSTS complicates the functionality gradient. TPJ is active in Theory of Mind tasks such as false belief. Additional heterogeneity of pSTS functionality is evident from multivoxel pattern analysis (MVPA). While MVPA shows similarities in EBA and pSTS function during observation of dyadic interactions, the EBA shows unique functionality in dyadic interaction conditions that the pSTS does not (Walbrin & Koldewyn, 2019). To add to this complex story, human EBA and pSTS function can be doubly dissociated. In a clever manipulation using visual psychophysics, fMRI-guided TMS delivered to the EBA disrupts body form discrimination, whereas TMS to pSTS disrupts body motion discrimination (Vangeneugden, Peelen, Tadin, & Battelli, 2014). There are two additional alphabet soup ingredients: the pSTS and aSTS have been subdivided to have ‘social interaction’ subregions (i.e., pSTS-SI and aSTS-SI). These are subregions that respond to interactions between multiple individuals, but not to affective information per se (McMahon, Bonner, & Isik, 2023; see also Walbrin & Koldewyn, 2019).

What about dynamic human interactions with objects? The LOC (lateral occipital complex) activates to human interactions with objects – but primarily represents the object information in the interaction or possibly the features of the interaction (Baldassano, Beck, & Fei-Fei, 2017), leading Walbrin and Koldewyn (2019) to suggest that the EBA/LOTC may play a role relative to distinct people and objects (or distinct ways in which to use them) (see also Wu, Wang, Wei, He, & Bi, 2020). Here the term LOTC refers to LOC cortex in close proximity to the EBA. We also need to remember that the classic ventral LOC, essential in object identification, also has visuo-haptic properties, so should be considered as a multisensory region (Amedi, Malach, Hendler, Peled, & Zohary, 2001).

HOW WILL A THIRD VISUAL PATHWAY CHANGE THE EXISTING LANDSCAPE IN SOCIAL AND SYSTEMS NEUROSCIENCE?

How does the third visual pathway proposed by Pitcher and Ungerleider (2021) fit into the existing context of the other two, dorsal and ventral, pathways originally proposed by Ungerleider and Mishkin (1982)? It has been proposed to be a pathway for social perception with component structures strongly activated by human motion/action and social perception. The ventral pathway has always been the stalwart for form processing and as such included human (faces, bodies) and non-human (objects, animals) forms. Acquired lesions in this pathway produce various types of visual agnosia. The dorsal pathway is devoted to processing space, and different types of spatial neglect are likely the most well-known sequelae of acquired lesions of this pathway. The dorsal pathway also codes space in various co-ordinate systems relative to the eye, head, hand and body.

An expanded third/lateral pathway?

For me at least, it might make sense for the third pathway to have the (multisensory) capability to decode:

other human, animal and object (including tool) motion and action;

other human interactions with other humans (including dyads or groups);

other human interactions with other animate beings (e.g., animals) or the natural environment;

other human interactions with objects (including tools);

self-other interactions (with other humans);

self-interactions with animals;

self-interactions with tools.

With the above expanded features, the third/lateral pathway would preserve some parallelism with the ventral form pathway. It might therefore be regarded as an ‘[inter]action’ pathway, and the proposed social perception pathway of Pitcher and Ungerleider (2021) would be an essential component within that scheme.

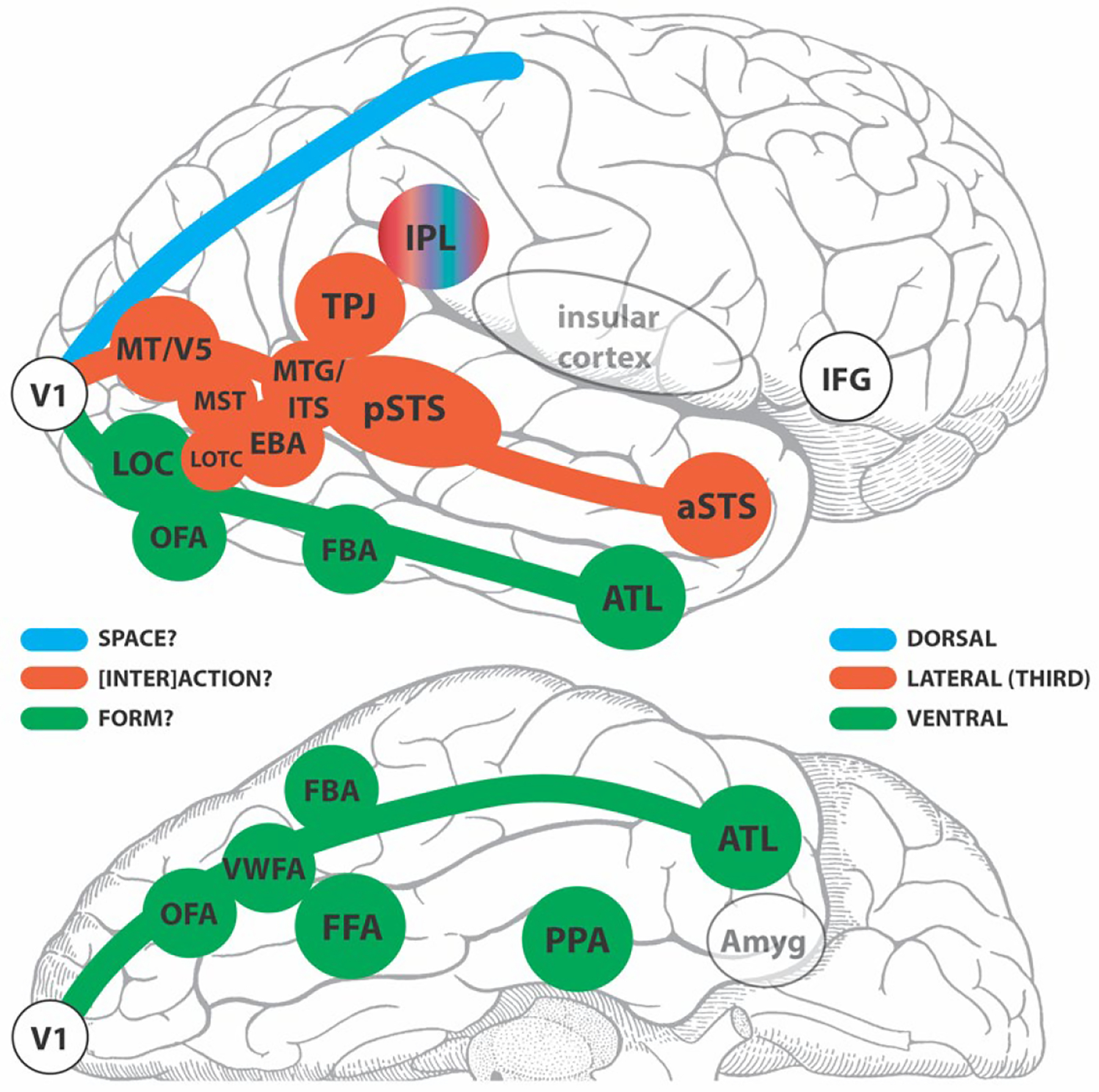

In Figure 11, based on the above proposition, I have tried to sort the ingredients of our brain region alphabet soup into 2 basic divisions – assigning putative membership to the ventral pathway or the expanded third pathway. I have not considered the classical dorsal pathway and its putative elements as this is beyond the scope of this review. Note that I have not included lower-level visual areas V2, V3, V4 here for the sake of simplicity. My purpose here is to start a discussion on what the main tasks of the ventral and lateral/third pathways might be, and who the card-carrying members of each might be. I have based the general distinctions, or biases (Fig. 11), not only on human functional neuroimaging and neurophysiological studies, but also on sequelae of acquired human lesions. I have focused shamelessly on the human side of the fence, as some of the capabilities I have listed cannot easily be tested in non-human primates. At the very least, shining a spotlight on some human impairments in the clinical literature might stimulate future experimentation in healthy subjects that could help fill out the general classification scheme. I would posit that once there is a better consensus on which structures sit in the ventral and third/lateral pathways, then it might be time to tackle the dorsal pathway in a similar exercise to determine pathway members and their standing relative to the other two pathways.

Figure 11. Putative members of the ventral and third/lateral pathways in the human brain?

A schematic of a lateral (top) and partial inferior (bottom) view of a human cerebral hemisphere segregating some selected known functional brain areas into third/lateral or ventral pathways. The dorsal pathway appears as an outline and is not considered further here. The green, red-brick and blue colors identify the 3 pathways for which primary visual cortex (V1) is the departure point. The inferior parietal lobule (IPL) is presented as an outlier and hence does not fit the color scheme. LEGEND: V1=primary visual cortex; LOC=lateral occipital complex; OFA=occipital face area; FFA=fusiform face area; VWFA=visual word form area; FBA=fusiform body area; ATL=anterior temporal lobe; EBA=extrastriate body area; MT/V5=motion-sensitive fifth visual area; MST=human homolog of macaque medial superior temporal area with high-level motion sensitivity; LOTC=lateral occipitotemporal complex; MTG/ITS=middle temporal gyral and inferior temporal sulcal cortex (sensitive to motion of animals and tools); pSTS=posterior superior temporal sulcus; aSTS=anterior superior temporal sulcus; TPJ=temporoparietal junction; IPL=inferior parietal lobule; IFG=inferior frontal gyrus; Amyg=amygdala.

Below I consider in which division of the visual pathways some of the ingredients of our alphabet soup might sit. For the structures appearing in the ventral pathway in Figure 11, a large literature with converging evidence exists from neuropsychological lesion studies with various types of agnosia, epilepsy surgery patients, and neuroimaging studies in healthy subjects, in addition to the monkey studies. With respect to the idea of an expanded lateral (or third) pathway, there are a number of uncertainties that arise from the fact that:

some of the newer category-selective regions have never been definitively seated into the original visual pathway scheme;

the new expanded scheme of the third/lateral pathway (Fig. 11) considers functionality that has not been included in previous pathway classifications.

From the task-related work of Vangeneugden and colleagues (2014) described in the previous section, the EBA would seem to fit best in the ventral stream. That said, resting-state investigations coupled with diffusion-weighted MRI data site it in the dorsal stream, based on effective connectivity measures (Zimmermann et al., 2018). If someone forced my hand on the issue, I would place the EBA in the third/lateral pathway, and not the dorsal stream, based on its task-related response properties. If we regard the third/lateral pathway as an ‘[inter]action’ stream, with social processing as a central component, then the EBA would be a member because, like parts of the pSTS, it activates to dyadic and multiperson interactions.

In real life we evaluate animate (biological) and also inanimate motion (i.e., from man-made objects such as tools) to which we know that the middle temporal gyral and inferior temporal sulcal cortex (MTG/ITS) have respective sensitivities (Beauchamp et al., 2003; Beauchamp & Martin, 2007). Therefore, I would advocate that brain regions with these response properties would sit in this third/lateral ‘[inter]action’ pathway. That said, this raises a lot of questions. What about complex motion deficits in stroke patients, such as the inability to process form from motion, or to recognize motion per se (e.g., discussed by Cowey & Vaina, 2000) – rare cases with posterior brain lesions with very specific deficits? Also, given a three visual pathway scheme, where would first- and second-order motion processing now sit? In the ventral/dorsal visual pathway model, these were proposed to sit in the ventral and dorsal systems, respectively, based on monkey literature and rare acquired lesions in patients (Vaina & Soloviev, 2004).

Social perception involves evaluating interactions with others, relative to our integrated (multisensory and embodied) self. Even our personal space is delimited by our arm length. The TPJ is an important locus for multisensory integration within the self. Notably, when functionality of the TPJ is disrupted by focal epileptic seizures, bizarre phenomena such as out-of-body experiences (OBEs) can occur, and complex visual hallucinations involving the self, and the self relative to others, can be experienced (Blanke, 2004; Blanke & Arzy, 2005). Out-of-body-like sensations can be elicited in epilepsy patients with direct cortical stimulation of the TPJ (Blanke, Ortigue, Landis, & Seeck, 2002), or in healthy subjects using TMS (Blanke et al., 2005). The OBE is an extreme example of an autoscopic phenomenon, where different degrees of multisensory disintegration of visual, proprioceptive, and vestibular senses can take place at the lower level. These lower-level features can also interact with higher-level features such as egocentric visuo-spatial perspective taking and self-location, as well as agency (Blanke & Arzy, 2005). The TPJ is also active in Theory of Mind tasks. Therefore, given that successful interactions with others in the world cannot occur without an intact self, I would situate the TPJ in the [inter]action pathway.

Some outstanding questions

What is the impact of the ‘other’ route from retina to cortex on the visual pathway model?

The three-pathway model’s input in the current formulation is V1 – acknowledging input from the retina via the lateral geniculate route. However, a more rapid, lower-resolution pathway from the retina passes through the superior colliculus and the pulvinar nucleus to ‘extrastriate cortex’. Currently, its exact terminations with respect to the functional areas making up the ventral and third/lateral pathways are not known. Knowing where the optic radiations terminate in individual subjects would be important not only for understanding the structural connectivity, but also for understanding its impact on functionality.

Issues related to underlying short- and long-range white matter fiber connections, and relationships between structural and functional connectivity.

Wang and colleagues (2020) performed a heroic study evaluating data in 677 Human Connectome Project (HCP) healthy subjects across 3 dimensions in MRI-based data: structural connectivity (SC), functional connectivity (FC) using resting state (RS) and face localizer task data, and effective connectivity (EC). Their conclusions need to be interpreted with caution, as the included HCP fMRI data only have RS and a face localizer task (a 0-back and 2-back working memory task with no social evaluation). Their analyses included 9 face network ROIs/hemisphere and they estimated short- vs long-range white matter fibers in their SC connectome analysis. More than ~60% of ROI–ROI connections could be regarded as short-range, with the rest being labelled as long-range, i.e., tracts in the white matter atlas. Greater physical distance between any two face ROIs was associated with an increased amount of long-range fiber connections.

The early visual cortex (presumably V1?), OFA, FFA, STS, inferior frontal gyrus (IFG) and posterior cingulate cortex (PCC) were observed to form a (functional) 6 region core subnetwork, which was active and synchronized across RS and task-related contexts (Wang et al., 2020). Given the low level of task requirements related to social judgments, perhaps this might correspond to the ‘default mode of social processing’ in implicit tasks that I mentioned earlier (Latinus et al., 2015; Puce et al., 2016)?

Overall, Wang et al. (2020) reported that the organization of the 9 ROI face network was highly homogeneous across their 677 subjects from the point of view of SC, RS FC and task-related FC. These results give a real shot in the arm for data analysis using hyperalignment methods (Haxby et al., 2014; Haxby et al., 2020).