Abstract

Influential reinforcement learning (RL) theories propose that “prediction error” signals in the brain’s nigrostriatal system guide learning for trial-and-error decision-making. However, since different decision variables can be learned from quantitatively similar error signals, a critical question is what is the content of decision representations trained by the error signals. We used functional magnetic resonance imaging (fMRI) to monitor neural activity in a two-armed-bandit counterfactual decision task that provided human subjects with information about foregone as well as obtained monetary outcomes so as to dissociate teaching signals that update expected values for each action, vs. signals that train relative preferences between actions (a “policy”). The reward probabilities of both choices varied independently from each other. This specific design allowed us to test whether subjects’ choice behavior was guided by policy-based methods, which directly map states to advantageous actions, or value-based methods such as Q-learning, where choice policies are instead generated by learning an intermediate representation (reward expectancy). Behaviorally, we found human participants’ choices were significantly influenced by obtained as well as foregone rewards from the previous trial. We also found subjects’ blood-oxygen-level-dependent (BOLD) responses in striatum were modulated in opposite directions by the experienced and foregone rewards but not by reward expectancy. This neural pattern, as well as subjects’ choice behavior, is consistent with a teaching signal for developing “habits” or relative action preferences, rather than prediction errors for updating separate action values.

INTRODUCTION

According to influential theories, the dopamine system broadcasts a “prediction error” signal for reinforcement learning (RL) (Barto, 1995; Schultz et al., 1997; Dayan and Abbott, 2001; Rangel et al., 2008). However, relatively little is known about the precise action of this signal in guiding subsequent decisions, and indeed, error-driven learning can support qualitatively different decision-making strategies (Sutton and Barto, 1998; Dayan and Abbott, 2001). Two approaches differ in the content of the information learned. Policy-based (“direct actor”) methods such as the actor/critic learn a “policy” or direct mapping from situations to advantageous actions, adjusting this in light of received rewards (Barto, 1995; Sutton and Barto, 1998; Dayan and Abbott, 2001). In contrast, value-based (“indirect actor”) methods such as Q-learning produce choice policies indirectly by learning an intermediate representation: the expected reward (“value”) for each candidate action (Watkins and Dayan, 1992). These intermediate representations can then be compared to derive a policy.

These algorithms formalize important neuropsychological concepts. Policy learning parallels the notion that reinforcement “stamps in” stimulus-response habits, which is central to contemporary accounts of drug abuse (Thorndike, 1898; Dickinson and Balleine, 2002; Everitt and Robbins, 2005). However, either value or policy learning can be accomplished using teaching signals that, in typical tasks, appear nearly identical. In a typical task, where subjects repeatedly select from different options for rewards, action values can be learned from a prediction error (PE) measuring the difference between the received reward and the option’s previously predicted value. A policy can also be updated by comparing the received reward to an expected (e.g., reference or state) value prediction (Dayan and Abbott, 2001). Indeed, the actor/critic algorithm learns both values and policies using the same error signal (Barto, 1995). Attempts using such tasks to distinguish versions of these signals have produced inconsistent results (Morris et al., 2006; Roesch et al., 2007).

We studied human choices and fMRI signals in an RL task modified to distinguish signals appropriate for updating policies versus value predictions (Fig. 1A). Subjects repeatedly chose between two slot machines, associated with independent probabilities of delivering monetary reward. For each choice, the screen displayed the amount of reward subjects won, but also what they would have won, had they chosen the other option. This information should affect teaching signals for values or policies differently, allowing us to distinguish these computational strategies. If values for the two options are learned separately, then two prediction errors are needed: comparing each value prediction to its associated outcome. In contrast, the policy requires only a single update, depending only on the difference between the obtained and foregone outcomes (such that rewards need not be compared to predictions).

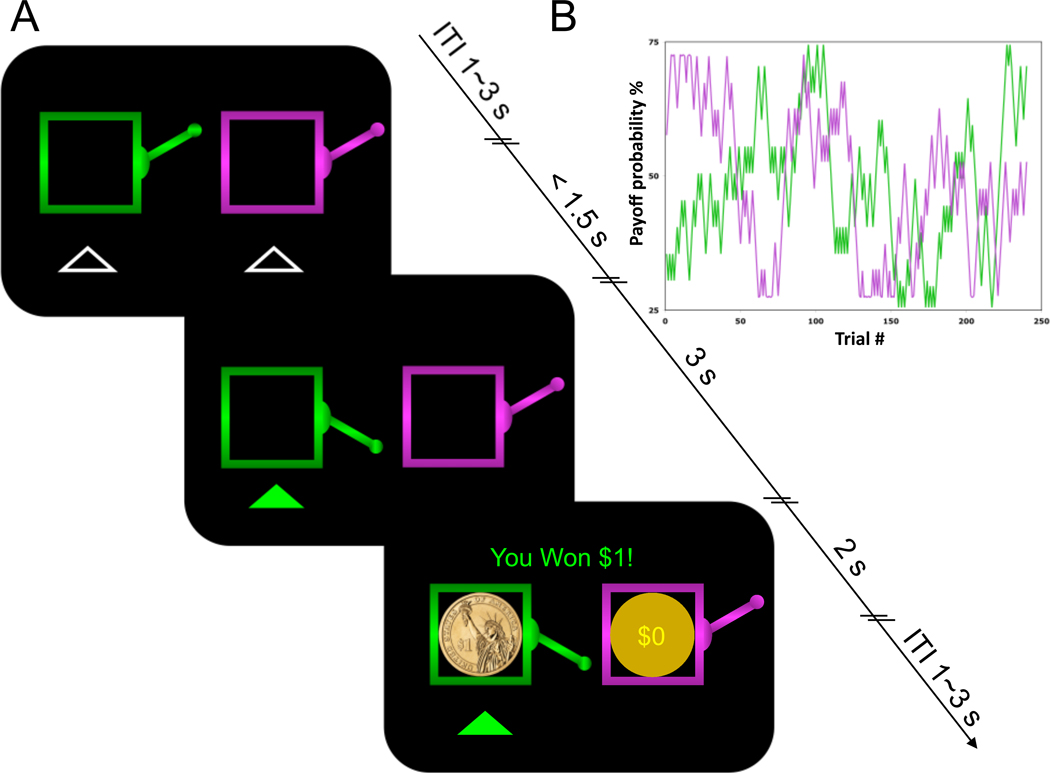

Figure 1.

Experimental Design. A, Timeline of a single trial. B, Example from one subject of changing reward probabilities for both slots.

Because the two rewards were conditionally independent, their separate effects on neural activity could be determined unambiguously. We focused on activity in the ventral striatum, which has been shown to correlate with PE signals similar to those seen in dopaminergic neuron recordings (Schultz et al., 1997; Berns et al., 2001; O'Doherty et al., 2003; Lohrenz et al., 2007).

Materials and Methods

Subjects

Twenty subjects participated in the experiment (13 female, mean age = 21.2 ± 5.0 years, age range = 18–39). The study was approved by the university committee on activities involving human subjects (UCAIHS), and all subjects provided informed consent before the experiment.

Counterfactual Learning Task

The task consisted of four sessions of 60 trials each, separated by short breaks. At the start of each trial, subjects were presented with pictures of two differently colored (green and purple) slot machines. Subjects selected a slot machine using two button boxes, one for each hand (Fig. 1A). The left-right ordering of the slot machines was counterbalanced across subjects but kept constant across the task for each individual. Subjects had a maximum of 1.5 seconds to enter a choice; if no choice was entered during this window, a warning message “Please respond faster!” was displayed for 1 second, followed by a delay of 1–5 seconds before the next trial started. In general, subjects responded well before the timeout, with a mean reaction time of 523 ± 20 ms (mean ± s.e.m.). On valid trials, the chosen slot machine was highlighted and, three seconds later, the outcomes for both chosen and unchosen slot machines were displayed by overlaying a picture of a $1 coin or a circle marked $0 on each machine (Fig. 1A). The outcomes stayed on the screen for 2 seconds, after which the screen was cleared. The trial sequence ended 6.5 seconds after trial onset, and a randomly jittered inter-trial interval (ITI) with a mean of 2 seconds was introduced before the beginning of the next trial.

The payoff for each slot machine (i ∈ {L, R}) on each trial (t) was either $1 or $0 (Fig. 1A), with each payoff drawn independently from a binomial distribution according to a machine-specific probability \(p_{i,t}\) that gradually changed over trials (Fig. 1B). At the beginning of the task (t = 1), both probabilities were independently drawn from a uniform distribution on [0.25, 0.75]. Following each trial, each probability diffused up or down by 0.05 (equiprobably and independently). The updated probabilities \(p_{i,t+1}\) were then reflected off the boundaries [0.25, 0.75] to keep them within that range.
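The payoff schedule described above can be sketched in a few lines of Python. This is a minimal illustration rather than the task code used in the study: the function names (`reflect`, `simulate_reward_probs`, `draw_payoffs`) are hypothetical, and the reflection rule (mirroring any overshoot back inside the boundary) is our reading of "reflected off the boundaries".

```python
import random

def reflect(p, lo=0.25, hi=0.75):
    """Mirror a probability that stepped past a boundary back into [lo, hi]."""
    if p > hi:
        return 2 * hi - p
    if p < lo:
        return 2 * lo - p
    return p

def simulate_reward_probs(n_trials, step=0.05, lo=0.25, hi=0.75, rng=None):
    """Return one dict of reward probabilities {'L': p, 'R': p} per trial."""
    rng = rng or random.Random()
    # Trial 1: independent uniform draws for the two machines.
    p = {m: rng.uniform(lo, hi) for m in ("L", "R")}
    history = []
    for _ in range(n_trials):
        history.append(dict(p))
        # After each trial, each probability steps up or down by `step`
        # (equiprobably and independently), then is reflected into range.
        for m in p:
            p[m] = reflect(p[m] + rng.choice((-step, step)), lo, hi)
    return history

def draw_payoffs(p, rng):
    """Draw a 0/1 payoff for each machine independently."""
    return {m: 1 if rng.random() < p[m] else 0 for m in p}

# Example: 4 sessions of 60 trials, assuming the walk runs across all 240.
rng = random.Random(0)
probs = simulate_reward_probs(240, rng=rng)
payoffs = [draw_payoffs(p, rng) for p in probs]
```

Because both payoffs are drawn on every trial regardless of the choice, the obtained and foregone rewards are available as separate, independently varying quantities for the later analyses.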

Subjects were instructed that they would be paid only according to the accumulated outcomes of the slot machines that they actually chose, not those of the foregone choices. Subjects were also told that the final points they earned would be converted proportionally to dollars, but they were not told the actual scaling factor (which was 0.25).

Behavioral Analysis

We first used a logistic regression analysis to estimate how a subject’s choice on trial t (the dependent variable, for all t ≥ 2) was influenced by the chosen and unchosen rewards (Rc and Ru) on the previous trial. We specified three independent variables based on the events on the preceding trial: reward on the chosen slot machine (coded as 0 for no reward, or +1 or −1 for reward following a left or right choice, respectively), reward on the unchosen slot machine (coded similarly), and the choice on that trial (a dummy variable coded as +1 or −1 for left or right choices, so as to capture any first-order autocorrelation in the choices (Lau and Glimcher, 2005)). We estimated regression weights for each subject individually using maximum likelihood, and report summary statistics for these quantities across subjects (Table 1).
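As an illustration of the predictor coding just described, the following sketch builds the three lagged predictors for one subject. It is not the analysis code: the function names are hypothetical, and one detail is an assumption. We sign the unchosen reward by the side of the unchosen machine, so that a positive weight for either reward means "choose the machine that just paid", which is the reading consistent with the positive weights in Table 1.

```python
import math

def previous_trial_predictors(choices, r_chosen, r_unchosen):
    """Build lagged predictors for trials t >= 2.

    choices: +1 for left, -1 for right; rewards are 0 or 1.
    Returns (X, y) with X rows = (Rc, Ru, repetition) and y = 1 if left.
    """
    X, y = [], []
    for t in range(1, len(choices)):
        side = choices[t - 1]
        rc = side * r_chosen[t - 1]       # +1/-1 if the chosen side paid, else 0
        ru = -side * r_unchosen[t - 1]    # assumption: signed by the unchosen side
        X.append((rc, ru, side))          # `side` captures choice autocorrelation
        y.append(1 if choices[t] == 1 else 0)
    return X, y

def prob_left(x, weights, intercept):
    """Logistic model: probability of a left choice given one predictor row."""
    z = intercept + sum(w * v for w, v in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))

# Toy sequence (not data from the study).
X, y = previous_trial_predictors(
    choices=[1, 1, -1, 1], r_chosen=[1, 0, 1, 0], r_unchosen=[0, 1, 1, 0]
)
```

The rows of `X` can then be passed to any maximum-likelihood logistic regression routine, fit separately for each subject.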

Table 1.

Mean parameter fits ± S.E.M. from 20 subjects for three models

| Q-Learning parameter | Mean ± S.E.M. | Policy-gradient parameter | Mean ± S.E.M. | Logistic regression predictor | Mean ± S.E.M. |
|---|---|---|---|---|---|
| QL,0 | 0.54 ± 0.09 | -- | -- | Rc | 3.44 ± 1.51 |
| QR,0 | 0.61 ± 0.08 | η | 0.48 ± 0.06 | Ru | 2.09 ± 0.53 |
| α | 0.35 ± 0.07 | α | 62.23 ± 20.22 | Repetition | 1.89 ± 0.58 |
| κ * α | 0.24 ± 0.07 | κ | 0.48 ± 0.07 | Intercept | −0.03 ± 0.06 |
| β | 9.08 ± 2.62 | w0 | −14.28 ± 35.87 | -- | -- |

We also fit the parameters of two learning models (detailed below) to each subject’s choices by maximizing the (log) likelihood of the choice sequence:

\[ \hat{\Theta}_s = \arg\max_{\Theta} \sum_{t} \log P(c_{s,t} \mid \Theta) \]

separately for each subject s. Here, \(c_{s,t}\) denotes the choice made by subject s on trial t and Θ is the parameter set. We sought optimal parameters using a nonlinear optimization algorithm (fmincon, Matlab optimization toolbox), with 30 different starting search locations for each subject so as to avoid local maxima. We report negative log likelihoods (smaller values indicate better fit), both pure and penalized for model complexity using the Bayesian information criterion (BIC; Kass and Raftery, 1995). We also report a pseudo-r² statistic (Camerer and Ho, 1999; Daw et al., 2006), defined as (r − l)/r, where l and r are, respectively, the negative log likelihoods of the data under the fit model and under purely random choices (\(P_{c,t} = 0.5\) for all trials and subjects).
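The two fit statistics can be illustrated with a short sketch. Two things here are assumptions rather than statements from the text: that the BIC is expressed on the negative-log-likelihood scale as −LL + (k/2) ln n, and that n = 240 trials per subject (four sessions of 60, ignoring any missed trials). Under those assumptions the values in Table 2 are reproduced to within rounding.

```python
import math

def bic(neg_log_lik, n_params, n_trials):
    """BIC on the negative-log-likelihood scale: -LL + (k / 2) * ln(n)."""
    return neg_log_lik + 0.5 * n_params * math.log(n_trials)

def pseudo_r2(neg_log_lik, n_trials):
    """(r - l) / r, with r the negative log likelihood of random choice."""
    r = n_trials * math.log(2.0)  # each choice has probability 0.5
    return (r - neg_log_lik) / r

N_TRIALS = 240  # assumption: 4 sessions x 60 trials, no missed trials

# Mean -LL and parameter counts taken from Table 2.
direct_actor = {"neg_ll": 107.6, "k": 4}
q_learning = {"neg_ll": 114.3, "k": 5}

for name, m in (("direct actor", direct_actor), ("Q-learning", q_learning)):
    print(name,
          round(bic(m["neg_ll"], m["k"], N_TRIALS), 1),
          round(pseudo_r2(m["neg_ll"], N_TRIALS), 3))
```

A lower BIC indicates a better fit after the complexity penalty, so the comparison favors the model with both the smaller −LL and the smaller parameter count.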

Reinforcement learning models: Q-Learning model

A Q-learning model (Dayan and Abbott, 2001; Sutton and Barto, 1998; Watkins and Dayan, 1992; Daw et al., 2006; Li et al., 2006) learns an expected value (“Q value”) for each option based on experienced outcomes, and then chooses accordingly. We adapted a standard model to allow learning from unchosen as well as chosen rewards; this simply updates each machine’s value on each trial according to its own prediction error. We allow the updating of the unchosen option to be controlled by a distinct learning rate, to capture any differences in attention to the two outcomes. Specifically, at each trial, both values were updated according to the feedback received:

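\[ Q_{c,t+1} = Q_{c,t} + \alpha\,\delta_{c,t}, \qquad Q_{u,t+1} = Q_{u,t} + \kappa\,\alpha\,\delta_{u,t} \]

where α is a free learning rate parameter, κ modulates the learning rate for the unchosen option, and

\[ \delta_{c,t} = R_{c,t} - Q_{c,t}, \qquad \delta_{u,t} = R_{u,t} - Q_{u,t} \tag{1} \]

are prediction errors for the rewards R received on each machine.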

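We further assumed the probability of choosing either machine (i ∈ {L, R}) was softmax in its Q value:

\[ P_{i,t} = \frac{\exp(\beta Q_{i,t})}{\exp(\beta Q_{L,t}) + \exp(\beta Q_{R,t})} \]

with free exploration parameter β and initial Q values (\(Q_{L,0}\) and \(Q_{R,0}\); see Table 1).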
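A minimal sketch of the counterfactual Q-learning model follows, written as the negative log likelihood that a fitting routine would minimize. The function name and data layout are hypothetical; the update uses α for the chosen option and κα for the unchosen option, as described above, and the two-option softmax is written in its equivalent logistic form.

```python
import math

def q_learning_neg_log_lik(choices, rewards, alpha, kappa, beta, q0):
    """Negative log likelihood of a choice sequence under Q-learning.

    choices: list of 'L' / 'R'
    rewards: list of dicts {'L': 0 or 1, 'R': 0 or 1} (both outcomes shown)
    q0:      dict of initial Q values, e.g. {'L': 0.5, 'R': 0.5}
    Returns (negative log likelihood, final Q values).
    """
    q = dict(q0)
    nll = 0.0
    for c, r in zip(choices, rewards):
        u = "R" if c == "L" else "L"
        # Two-option softmax = logistic function of beta * (Q_c - Q_u).
        p_chosen = 1.0 / (1.0 + math.exp(-beta * (q[c] - q[u])))
        nll -= math.log(p_chosen)
        # Each value is updated by its own prediction error.
        delta_c = r[c] - q[c]
        delta_u = r[u] - q[u]
        q[c] += alpha * delta_c
        q[u] += kappa * alpha * delta_u
    return nll, q

# Toy example (not data from the study).
nll, q = q_learning_neg_log_lik(
    choices=["L"], rewards=[{"L": 1, "R": 0}],
    alpha=0.5, kappa=0.5, beta=0.0, q0={"L": 0.5, "R": 0.5},
)
```

With β = 0 the model chooses at random, so each trial contributes ln 2 to the negative log likelihood; this is the baseline r used in the pseudo-r² statistic.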

Policy-gradient model

A second approach to reinforcement learning maintains policy parameters specifying a preference over options, and updates this preference with feedback to achieve stochastic gradient ascent on the expected reward (Dayan and Abbott, 2001; Dayan and Daw, 2008). We again represent the selection policy as softmax; here the chance of choosing machine L is

\[ P_{L,t} = \frac{1}{1 + \exp(-w_t)} \]

with the policy parameter w. Here, \(P_{R,t} = 1 - P_{L,t}\).

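If \(\langle r_R \rangle\) and \(\langle r_L \rangle\) are the average expected rewards on the two slot machines, then the expected reward given any particular policy is:

\[ \langle r \rangle = P_L \langle r_L \rangle + (1 - P_L) \langle r_R \rangle \]

and its gradient with respect to the policy parameter,

\[ \frac{\partial \langle r \rangle}{\partial w} = \frac{\partial P_L}{\partial w} \left( \langle r_L \rangle - \langle r_R \rangle \right) \]

is proportional to the difference between the two reward rates. On each trial, the gradient can thus be sampled stochastically as the difference between obtained and foregone rewards; note that this does not require separately estimating the average values themselves. This algorithm instead works directly with the policy w.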
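The gradient claim can be checked numerically. The sketch below assumes a logistic policy, P_L = 1/(1 + exp(−w)), for which ∂P_L/∂w = P_L(1 − P_L); that parameterization and the function names are our assumptions for illustration. It compares the analytic gradient against a central finite difference of the expected reward.

```python
import math

def p_left(w):
    """Assumed logistic policy: probability of choosing machine L."""
    return 1.0 / (1.0 + math.exp(-w))

def expected_reward(w, r_left, r_right):
    """Expected reward under the policy, given the two mean reward rates."""
    p = p_left(w)
    return p * r_left + (1.0 - p) * r_right

def analytic_gradient(w, r_left, r_right):
    """dP_L/dw * (r_left - r_right), with dP_L/dw = P_L * (1 - P_L)."""
    p = p_left(w)
    return p * (1.0 - p) * (r_left - r_right)

def numeric_gradient(w, r_left, r_right, h=1e-6):
    """Central finite-difference approximation of the same derivative."""
    up = expected_reward(w + h, r_left, r_right)
    down = expected_reward(w - h, r_left, r_right)
    return (up - down) / (2.0 * h)

# Example with reward rates inside the task's [0.25, 0.75] range.
w, r_l, r_r = 0.3, 0.7, 0.4
gap = abs(analytic_gradient(w, r_l, r_r) - numeric_gradient(w, r_l, r_r))
```

The sign of the gradient depends only on which machine has the higher reward rate, which is why a single sampled difference between the two outcomes suffices to push the preference in the right direction.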

To write the gradient rule in a way that relates more directly to the standard case when only the chosen reward is received, we formulate it in terms of the chosen and unchosen rewards (rather than left and right), so that

\[ w_{t+1} = \eta\, w_t + s_t\, \alpha\, P_{c,t} \left( 1 - P_{c,t} \right) \delta_t \]

where \(s_t\) is +1 after a left choice and −1 after a right choice, with error term

\[ \delta_t = R_{c,t} - \kappa R_{u,t} \tag{2} \]

stepsize parameter α, decay parameter η (to allow learning in the case of nonstationarity, as in the task here), and initial w (w0). The final free parameter, κ, again allows for the gradient to skew (e.g. due to differential attention) toward the chosen or unchosen reward (Rc and Ru, respectively).
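The policy-gradient model can be sketched in the same form as a likelihood function. The error term Rc − κRu, the stepsize α, the decay η and the initial preference w0 come from the text; the logistic policy, the sign convention (w is the preference for the left machine) and the P(1 − P) gradient scaling are assumptions made for this illustration, and the function names are hypothetical.

```python
import math

def log_sigmoid(x):
    """Numerically stable log of the logistic function."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))

def policy_gradient_neg_log_lik(choices, rewards, alpha, eta, kappa, w0):
    """Negative log likelihood of a choice sequence under the direct actor.

    choices: list of 'L' / 'R'
    rewards: list of dicts {'L': 0 or 1, 'R': 0 or 1} (both outcomes shown)
    Returns (negative log likelihood, final preference w).
    """
    w = w0  # assumed convention: positive w favors machine L
    nll = 0.0
    for c, r in zip(choices, rewards):
        sign = 1.0 if c == "L" else -1.0
        u = "R" if c == "L" else "L"
        nll -= log_sigmoid(sign * w)          # log P(chosen option)
        delta = r[c] - kappa * r[u]           # single teaching signal, eq. (2)
        # Assumed gradient scaling: P_L * (1 - P_L) for the logistic policy.
        scale = math.exp(log_sigmoid(w) + log_sigmoid(-w))
        w = eta * w + sign * alpha * scale * delta
    return nll, w

# Toy example (not data from the study).
nll, w = policy_gradient_neg_log_lik(
    choices=["L"], rewards=[{"L": 1, "R": 0}],
    alpha=1.0, eta=1.0, kappa=0.5, w0=0.0,
)
```

Unlike the Q-learning sketch, no per-option value is stored: the only state carried across trials is the scalar preference w, and the update never compares a reward to a prediction.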

Imaging acquisition

Functional images (T2*-weighted echo-planar images with BOLD contrast) were collected using a 3T Siemens Allegra head-only scanner and a Nova Medical (Wakefield, MA) NM-011 head coil. To optimize functional sensitivity in the orbitofrontal cortex and temporal lobes, we used a tilted acquisition oriented at 30° above the AC-PC line (Deichmann et al., 2003). This yielded 33 oblique-axial slices with 3 mm inter-slice thickness, 3×3 mm in-plane resolution, with coverage from the base of the orbitofrontal cortex and medial temporal lobes to the superior border of the dorsal anterior cingulate cortex. Repetition time (TR) was 2 seconds. Subjects’ heads were restrained by plastic pads to minimize head movement during the experiment. A T1-weighted structural image (MPRAGE sequence, 1×1×1 mm) was acquired after the functional run for each subject to allow localization of functional activity. High-pass filtering with a cutoff period of 128 seconds was also applied to the data.

Functional imaging analysis

Imaging data were preprocessed and analyzed using SPM5 (Wellcome Department of Imaging Neuroscience, Institute of Neurology, London, U.K.) and xjView (http://www.alivelearn.net/xjview/), except for multiple comparisons correction on final results, which was done using SPM8. Motion effects were corrected by aligning images in each run to the first volume using a 6-parameter rigid body transformation. (To account for additional residual effects of movement, the six scan-to-scan motion parameters produced during realignment were also included as nuisance regressors in the functional analysis.) Mean functional images were then coregistered to the structural image, and normalized into MNI template space using a 12-parameter affine transformation (SPM5 “segment and normalize” estimated from the structural). Normalized functional images were resampled into 2×2×2 mm voxel resolution. A Gaussian kernel with a full width at half maximum (FWHM) of 6 mm was applied for spatial smoothing.

For statistical analysis, we constructed three impulse events for each trial, at the times of slot machine presentation, choice entry, and outcome presentation. The first two events were included to control the overall variance; we focused on the outcome event because the key prediction error signal is associated with the outcome. In three separate GLMs, we modulated the outcome events with different parametric regressors. First, in an initial attempt to seek activity correlated with teaching signals for either policy gradient or prediction errors associated with chosen and unchosen options, we constructed a policy gradient regressor as Rc − κRu, the κ-weighted difference between the obtained and foregone rewards, and also prediction error regressors for both chosen and unchosen options, Rc − Qc and Ru − Qu. These were entered in two different GLMs (one for the policy signal, one for both reward prediction errors) for whole-brain regression analyses (Fig. 2). Next, to more carefully differentiate effects related to either type of signal, we noted that equations (1) and (2) are different linear combinations of the four variables Rc, Ru, Qc, and Qu; we thus constructed a third GLM containing each of these as parametric regressors modulating the outcome impulse event (Fig. 3).
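The logic of the third GLM can be illustrated on synthetic data. In the sketch below, a simulated signal is generated from the policy teaching signal Rc − κRu plus noise and then regressed on the four separate variables. Everything here is an assumption for illustration: the data are random numbers rather than BOLD measurements, the Q regressors are drawn independently instead of coming from a fitted model, no hemodynamic convolution is applied, and the small least-squares solver is a stand-in for SPM.

```python
import random

def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def least_squares(columns, y):
    """Ordinary least squares via the normal equations; adds an intercept."""
    cols = [[1.0] * len(y)] + columns
    xtx = [[sum(p * q for p, q in zip(ci, cj)) for cj in cols] for ci in cols]
    xty = [sum(p * q for p, q in zip(ci, y)) for ci in cols]
    return solve(xtx, xty)

rng = random.Random(1)
n = 500
r_c = [float(rng.random() < 0.5) for _ in range(n)]   # obtained reward
r_u = [float(rng.random() < 0.5) for _ in range(n)]   # foregone reward
q_c = [rng.uniform(0.25, 0.75) for _ in range(n)]     # synthetic Q values
q_u = [rng.uniform(0.25, 0.75) for _ in range(n)]

kappa = 0.5
signal = [rc - kappa * ru + rng.gauss(0.0, 0.05) for rc, ru in zip(r_c, r_u)]

# betas = [intercept, Rc, Ru, Qc, Qu]
betas = least_squares([r_c, r_u, q_c, q_u], signal)
```

For a policy-like signal, the estimated weights are close to +1 for Rc and −κ for Ru, with weights near zero for Qc and Qu. A signal built from value prediction errors would instead carry negative weights on the Q regressor paired with each reward, which is the pattern the third GLM is designed to detect.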

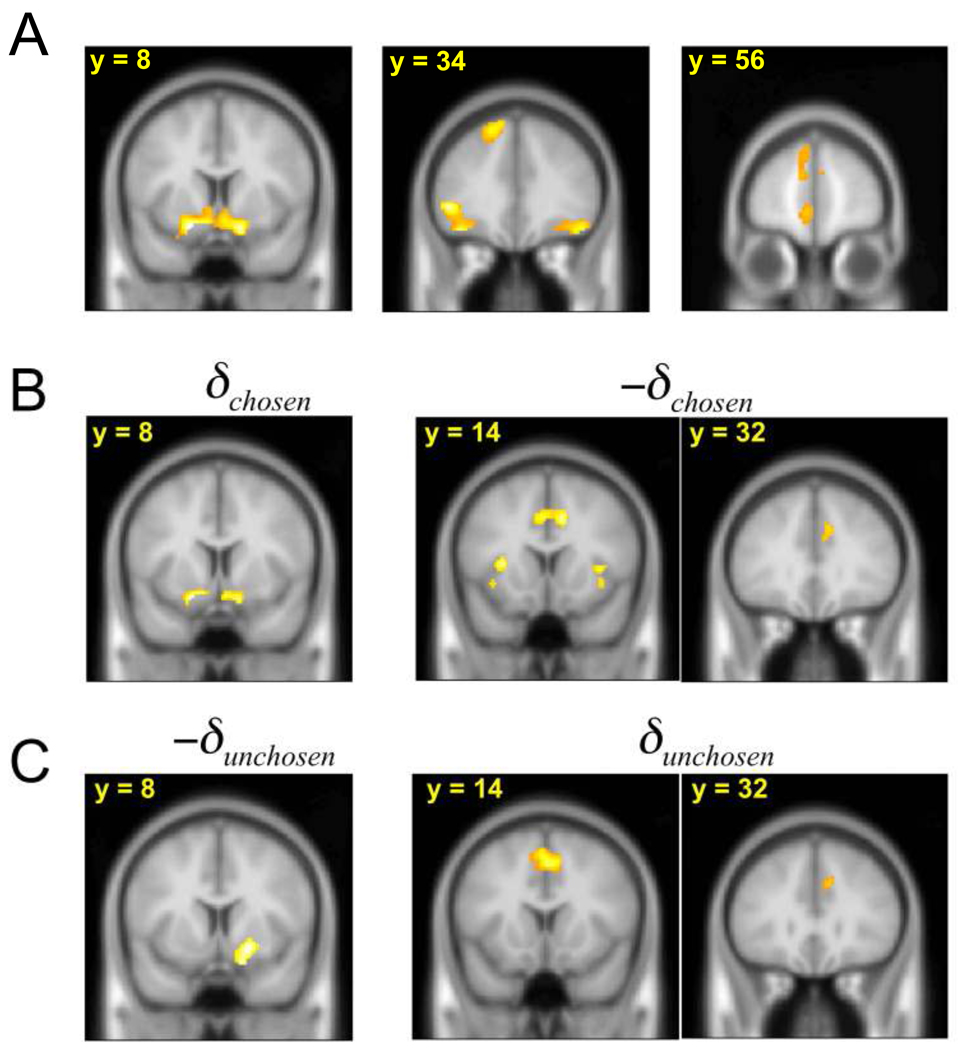

Figure 2.

A, Neural correlates of Rc-κRu. Overlapping view of the neural correlates of B, prediction errors of chosen choices (δchosen and −δchosen) and C, foregone choices (δunchosen and −δunchosen) (p < 0.05).

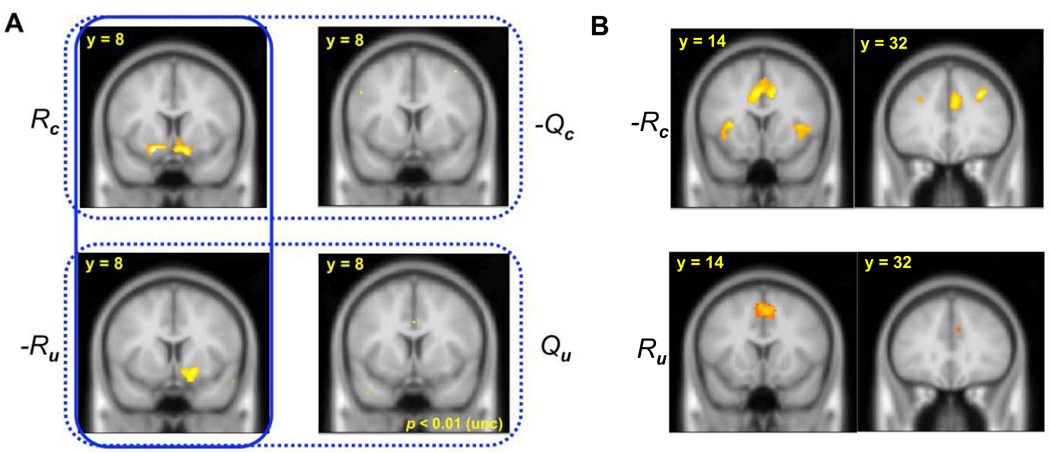

Figure 3.

A, Effect of decision variables in striatum. BOLD activity positively correlates with Rc and −Ru (p < 0.05) but not with –Qc or Qu, even at a loose threshold (p = 0.01, uncorrected). The dotted boxes surround the pairs of effects expected to be significant for value-based learning (Rc-Qc and –(Ru-Qu)) and the solid box surrounds those for policy learning (Rc- κRu). B, Similar activities (bilateral insula, anterior cingulate cortex (ACC) and DLPFC) were positively correlated with –Rc and Ru (p < 0.05).

For all these analyses, the chosen and unchosen rewards were taken directly from the outcomes experienced by the subject, and the Q values were those implied by the Q learning model on each trial, using the outcomes experienced by the subject and the free parameters for the model estimated to best fit the subject’s choice data (Fig. 3). We then convolved these regressors with SPM5’s canonical hemodynamic response function, computed parameter estimates for each subject, and took these estimates to the group random effects level for statistical testing (Friston et al., 1995).

We report significance of activations correcting for whole-brain multiple comparisons using the cluster-level false discovery rate (“topoFDR”) algorithm implemented in SPM8, on maps generated at an underlying uncorrected threshold of p < .001. (Note that we did not employ voxel-level FDR, which has recently been argued to be invalid (Chumbley and Friston, 2009).) Accordingly, except where noted, activations are rendered for display using the p < .001 uncorrected threshold, but retaining only those clusters that pass the p < .05 cluster size correction.

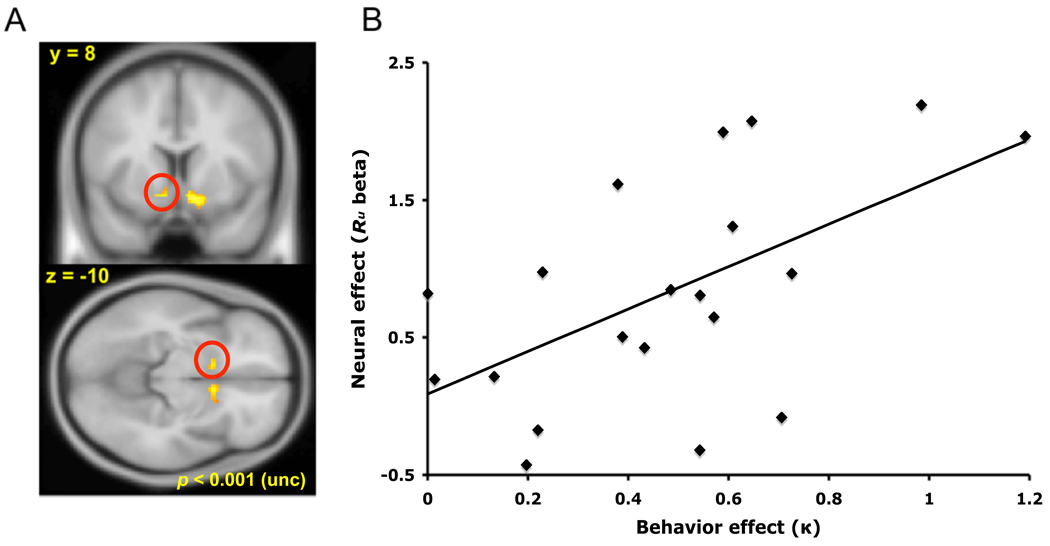

Finally, for the ROI regression analysis (Fig. 4), we first identified two ROIs in striatum using the conjunction (Nichols et al., 2005) of Rc and -Ru (thresholded at p < 0.001, uncorrected; Fig. 4A). We used the average activity from each of these regions for the subsequent regression between the neural effect of Ru (the per-subject regression weight for that variable) and the behavioral effect (the per-subject estimate of κ from the best fitting policy gradient model). Note that this approach largely skirts the problem of multiple comparisons in inter-subject regression analyses, since the initial analysis to identify the ROI (the existence of a conjunction effect, in the mean across subjects) does not bias the subsequent test for the between-subject pattern of variation. Significance levels need thus be corrected only for two comparisons (two ROIs, left and right; Fig. 4A) rather than for the whole-brain multiple comparisons used to select the regions.

Figure 4.

A, Error signaling ROI in left ventral striatum, identified from the conjunction of Rc and –Ru across subjects (p < 0.001, uncorrected). B, In left striatum (circled in Figure 4A), the neural effect size for –Ru was positively correlated, across subjects, with the weight for the unchosen reward, κ, estimated from choice behavior (p = 0.018, Bonferroni corrected; r = 0.57).

All results reported herein were qualitatively the same when Q values were computed using a common set of parameters across subjects, taken as the average over all subjects of those from the individual fits. Results were also invariant to changes in the ordering of the entry of regressors in the design matrix (which, due to serial orthogonalization of parametric regressors in SPM, might hypothetically have impacted their relative significance).

RESULTS

Foregone Reward and Action Selection

First, we assessed subjects’ behavioral sensitivity to the experienced and foregone rewards by using logistic regression to predict each subject’s choices as a function of the feedback she received on the previous trial (Table 1). Across subjects, both experienced (t19=2.27, p <.02) and foregone (t19=3.94, p < .001) rewards significantly predicted the subject’s next choice, with no significant difference in the strength between the two influences (paired samples, t19=.84, p > 0.4). This result is consistent with the previous literature on counterfactual effects, indicating that humans’ choices are affected not only by “what was” but also by “what might have been” (Camille et al., 2004; Coricelli et al., 2005; Lohrenz et al., 2007).

We used two RL models to examine more detailed hypotheses about how experience drove choices via learning (Sutton and Barto, 1998; Dayan and Abbott, 2001; Bhatnagar et al., 2008). A standard value-based model, Q-learning (Watkins and Dayan, 1992), separately tracks the expected value for each option and compares these predicted values at choice time to derive a policy for action selection. In contrast, a policy-based model, the direct actor, learns a policy representing the probability of choosing either action, with an update that is determined by stochastic gradient ascent on the overall expected reward (Dayan and Abbott, 2001; Bhatnagar et al., 2008). Both models were straightforwardly adapted to incorporate foregone rewards, and both included an additional free parameter, κ, to allow for a possible difference in the weighting (e.g. attention) of experienced and foregone rewards (see Materials and Methods and Table 1). We estimated free parameters and compared the models’ fits by maximizing the likelihood of each subject’s choices (Table 1). The policy-based model fit better than the value-based model on average (Table 2). Individually, the policy-based model outperformed the Q-learning model for 19 of 20 subjects according to the Bayesian information criterion (BIC).

Table 2.

Qualities of behavioral fits of both models

| | Direct Actor | Q-Learning |
|---|---|---|
| −LL | 107.6 | 114.3 |
| Pseudo-R² | 0.3534 | 0.3131 |
| # parameters | 4 | 5 |
| BIC | 118.6 | 128.0 |

Neural Correlates of Q-Learning and Policy Gradient

Choice behavior was thus most consistent with a policy-based strategy. However, because the behavioral predictions of the two learning strategies are qualitatively similar to one another, we next tested for neural signatures of teaching signals, about which the two hypotheses make qualitatively distinct predictions. Specifically (see Materials and Methods and Camerer and Ho, 1999), learning the value of each option separately requires two independent prediction error signals, measuring the difference between the rewards received (Rc and Ru) and expected (Qc and Qu) for both chosen and unchosen options (Rc − Qc and Ru − Qu). In contrast, due to the symmetry of the task, updating action preferences requires a unitary signal (formally, the gradient of the expected reward with respect to the policy) proportional to the difference between the obtained and foregone rewards, Rc − κRu.

As a first step, we verified whether these three candidate error signals correlated with blood-oxygen-level-dependent (BOLD) activity in each trial at the time when outcomes were revealed and learning was expected to take place for both Q-learning and policy gradient models. These three signals were extracted from each model using the parameters that best fit the behavior. We focused on ventral striatum, where many previous studies using fMRI have shown error-related neural activity (Berns et al., 2001; O'Doherty et al., 2003; McClure et al., 2003; O'Doherty et al., 2004; Delgado et al., 2005; Daw and Doya, 2006). Accordingly, both the prediction error for the chosen action’s value in the value-based model, Rc-Qc, and that for the policy in the policy-based model, Rc-κRu, correlated positively with BOLD activities in the ventral striatum (Figs. 2A, B; p <.05; unless otherwise noted, all statistics concerning fMRI activations are corrected at the cluster level for false discovery rate due to whole-brain multiple comparisons); the prediction error for the unchosen option Ru-Qu correlated negatively with activity in a similar region (p <.05; Fig. 2C).

The finding that error signals from both models were correlated with BOLD activity in ventral striatum presumably reflects the fact that the candidate error signals are themselves mutually correlated, necessitating finer investigation to separate them. For instance, the chosen value error (Rc − Qc) and policy error (Rc − κRu) signals might each correlate significantly with striatal BOLD by virtue of sharing the same term Rc. More particularly, all these signals consist of different linear combinations of the same variables: the chosen and unchosen rewards, Rc and Ru, and the expected rewards Qc and Qu. Taking advantage of this decomposition and the additivity of the general linear model (GLM) to examine more carefully which error signal was most consistent with striatal BOLD activity, we estimated a GLM that included these four factors as separate regressors. The policy-based model predicts that Rc and Ru should together modulate any teaching-related brain activity in the same voxels but with opposite directions (see Materials and Methods): the weighted combination of these two effects corresponds to the policy error signal. In contrast, the value-based model (Q-Learning) makes no particular claim about spatial overlap or relative direction of Rc and Ru effects (which according to this hypothesis are part of separate error signals that are not necessarily spatially distinct). However, this value-based model does predict that each effect (Rc or Ru) should be accompanied by spatially overlapping activity correlated with its associated prediction (Qc or Qu), with opposite signs for the R and Q components (Rc − Qc and Ru − Qu).

Activity in bilateral ventral striatum positively correlated with Rc (Fig. 3A; p < .05), which is a component of teaching signals in both hypotheses. However, as predicted by the policy model, Ru was negatively correlated with BOLD activity in substantially the same region of striatum (Fig. 3A; p < .05). A conjunction analysis confirmed that these two effects occurred in overlapping voxels (shown bilaterally at p < .001 uncorrected in Fig. 4A; the cluster on the right survived whole-brain correction at p < .05). Furthermore, we tested for differences in the spatial expression of these effects by using the contrasts Rc > −Ru and Rc < −Ru to identify voxels where the positive effect of Rc and the negative effect of Ru differed in size. Consistent with the policy model, no such differences were found in striatum (p < .05). Finally, we tested whether value prediction signals Qc and Qu were correlated with striatal BOLD activity in the directions opposite to their associated rewards (Fig. 3A), as predicted by the value-based model. However, no such correlations were found for either signal, even at a much weaker threshold (shown in Fig. 3A at p < 0.01 uncorrected) and in either positive or negative directions. In all, the results are consistent with net ventral striatal BOLD activity expressing a single teaching signal proportional to Rc − κRu, as predicted by the policy gradient algorithm, but provide no evidence for a pair of value prediction errors for each of the chosen and unchosen options, either overlapping or spatially separate (Lohrenz et al., 2007). (Indeed, the results are also not consistent with an alternative value-based decision variable, i.e. the relative action value [Qc − Qu], since the prediction error, [Rc − Ru] − [Qc − Qu], for this combined quantity also predicts effects of both Qs.)

The lack of significant value prediction-related activity in our study seems to contrast with many other studies in which striatal BOLD activity was demonstrably modulated by value expectation, as for prediction errors (Berns et al., 2001; O'Doherty et al., 2003; Tanaka et al., 2004; Hare et al., 2008). This apparent difference may be due to the design of the current task, which differs from many others in that, because of its symmetric form and the inclusion of counterfactual feedback, the teaching signal for the policy contains no reward expectation term.

Similarly, and strikingly, throughout the rest of the brain, activity correlated with Rc and Ru was observed in almost the same set of neural pathways (Fig. 3B), but with opposite directions of effect. Evidence for spatially non-overlapping effects was found (Rc > -Ru, p <.05) only in posterior portions of the brain: occipital visual cortex and fusiform areas. Over the whole brain, no activity was found to correlate positively or negatively with Qc or Qu even at a lower threshold (p <0.01 uncorrected).

Correlation of Behavioral and Neural Sensitivities to Foregone Reward

Finally, we compared neural and behavioral variation across subjects to investigate whether foregone outcome signaling in ventral striatum was related to choice behavior. In particular, we tested whether, across subjects, there was covariation between estimates of the weight (e.g. attention) given to the foregone outcome as assessed from choice behavior (the parameter κ from the policy-gradient model) and from neural error-related activity in striatum (the effect size for the foregone reward). We first identified areas of error signaling in left and right ventral striatum (using the conjunction of Rc and –Ru effects; Fig. 4A), then tested within each cluster whether the contribution of Ru to this signal covaried across subjects with the weight to foregone rewards estimated from choice behavior. The predicted correlation between the behavioral and neural effects of the foregone reward was significant in left striatum (Fig. 4B, circled in red in Fig. 4A; p = 0.018; Bonferroni corrected for two comparisons, left and right striatum), and trended in the same direction, though not significantly so, in right striatum (p=0.45 corrected; data not shown), suggesting that both neural and behavioral analyses consistently tapped a common learning process.

DISCUSSION

A longstanding question in psychology – dating back to early debates surrounding behaviorism (Thorndike, 1898; Tolman, 1949; Dickinson and Balleine, 2002)– is the representational question: what exactly is learned from reinforcement? Error-driven RL theories are surprisingly ambivalent on this issue. Value-based (Q-Learning) models propose that the difference between obtained and predicted rewards is used to update expected action values, and choice policies are subsequently derived by comparing these intermediate quantities (Barraclough et al., 2004; Daw et al., 2005; O'Reilly and Frank, 2006; Hare et al., 2008; Boorman et al., 2009). Policy-based approaches instead update a choice policy directly, though often alongside a value representation. What makes these two approaches difficult to differentiate is that in most circumstances the policy update signal, derived from the gradient of the expected rewards with respect to the policy, also takes the form of a difference between obtained and expected rewards. Indeed, the signals are so similar that the prominent actor/critic algorithm actually uses the same error signal to update both state values and policies.

Here, we studied a task in which this is not the case, allowing us to distinguish a teaching signal for direct policy preferences from signals for learning value predictions. In particular, foregone reward takes the place of the expected reward in a policy teaching signal, but not in an action value teaching signal. We found evidence that net outcome-related BOLD activity in the striatum is appropriate to learning policies, but no similar evidence for signals appropriate for updating separate action values. That said, the latter (negative) conclusion relies on a stronger test for PE signaling than is often used in the literature. In many studies, and ours as well (Fig. 2), striatal BOLD activity correlated with a PE signal for Q values. We decomposed the activity to show that in our task this correlation was likely due only to outcome- and not prediction-related activity, supporting the policy model. Although most previous authors did not report this particular analysis, many showed other evidence that the striatal BOLD response was modulated by predictions as well as outcomes (e.g. by including outcome as an effect of no interest or contrasts between expected and unexpected outcomes (Berns et al., 2001; McClure et al., 2003; Li et al., 2006; Hare et al., 2008; Li et al., 2011)). Interestingly, Behrens and his colleagues (Behrens et al., 2008; see also Behrens et al., 2007) separately tested both effects in the same manner we do, and also found no effect of predictions. This may be because in their task, like ours, foregone rewards were known, so that expectancies would also disappear from the policy update signal.

A number of recent studies suggest that error-related BOLD activity in striatum may reflect, at least in part, the dopaminergic input from midbrain (Pessiglione et al., 2006; Knutson and Gibbs, 2007; Schonberg et al., 2010). Of course, since the fMRI BOLD signal is a generic metabolic signal not specific to a single underlying neural cause, unit recordings will be required to determine whether our results generalize to the prediction error responses of midbrain dopamine neurons.

Our results do, however, provide positive evidence in the human brain for a teaching signal specifically appropriate for updating action policies. Unlike error signals previously reported in other tasks, which did not probe the distinction since value and policy errors coincided (Morris et al., 2006; Daw et al., 2006; Hampton et al., 2006; Schonberg et al., 2007; Schonberg et al., 2010), this signal cannot alternatively be interpreted as a prediction error for values. Perhaps the best previous evidence for a policy-specific update signal was an influential report of spatially distinct correlates in striatum of a prediction error during a free-choice condition compared to an instructed-choice condition (O'Doherty et al., 2004; see also Tricomi et al., 2004). Although in that task, too, the modeled value and policy teaching signals were substantially the same, activity in the dorsal striatum was specific to the free-choice condition, and was interpreted as a policy teaching signal on that basis. Interestingly, standard actor/critic models do not obviously predict that policy teaching signals will be specific to free-choice conditions; instead, they assert that both the actor (policy learning) and critic (value learning) modules use a common error signal (Barto, 1995). Here, we skirt this interpretational difficulty by investigating whether the information carried by the signal itself is appropriate for training policies or values.

The comparison with the O'Doherty et al. study also points to another unresolved puzzle in both datasets (and in studies of striatal error signaling in humans more generally). Lesion work in rodents localizes stimulus-response policy learning specifically to the dorsolateral striatum (Yin et al., 2004), which corresponds to the dorsal putamen in humans. Most PE-related activity in humans, including, surprisingly, the policy errors in the present study, is instead focused more ventrally, in ventral putamen and caudate (though see Schonberg et al., 2010). Meanwhile, in the O'Doherty et al. (2004) study, the putative policy error in the free-choice condition was localized in the dorsal caudate (dorsomedial striatum), a region that is also not associated with stimulus-response learning in rats (Yin et al., 2005). Thus, further work is needed to identify, in human striatal error signaling, counterparts of the striatal suborganization suggested by rodent work (Tricomi et al., 2009; Schonberg et al., 2010).

Alongside our positive evidence for a policy update signal, our accompanying failure to detect evidence for value update signals in the present task should not be interpreted as contradicting the overwhelming evidence that neural signals, including those in the nigrostriatal system, reflect action value expectations in many other circumstances (Barraclough et al., 2004; Daw et al., 2005; Samejima et al., 2005; O'Reilly and Frank, 2006; Rangel et al., 2008; Hare et al., 2008; Seo and Lee, 2008; Boorman et al., 2009). First, we cannot rule out that our results are idiosyncratic to the present task. For instance, subjects’ brains might have adopted the direct actor strategy here because it is particularly straightforward and efficient given the symmetric information structure of our task design. Second, a true neural value representation would be required even under the wildest possible extrapolation from the current results (namely, that all previously reported error signals, too, can be understood as policy rather than value update signals), since in those tasks even the error signal for policies itself contains value predictions.

The necessity of value predictions for producing an error signal (for policies) does not imply that those value predictions are themselves also learned from that error signal, although the latter is also assumed in standard models like the actor/critic. For instance, even if value predictions are not trained by a nigrostriatal error signal, they may instead arise from prediction processes involving other brain systems. A longstanding idea in psychology and cognitive neuroscience is that the brain relies on multiple learning and memory systems, which can each learn different representations (Knowlton et al., 1996; Poldrack et al., 2001; Dickinson and Balleine, 2002; Daw et al., 2005). In addition to values directly learned from prediction errors, value predictions are also believed to be constructed from still more fundamental information – e.g., from “action-outcome” associations, cognitive maps, or “model-based” RL, often associated with prefrontal or declarative memory systems (Poldrack et al., 2001; Dickinson and Balleine, 2002; Daw et al., 2005). These predictions may, in turn, inform nigrostriatal signals for policy update (Doll et al., 2009; Simon and Daw, 2011; Daw et al., 2011).

Such “model-based” evaluation processes likely played a more prominent role in a pair of recent studies of counterfactual effects on choice (Camille et al., 2004; Coricelli et al., 2005), because in those studies subjects had to evaluate new options on each trial based on a pictorial description, rather than learn a choice policy by trial-and-error as in the present task. The requirement for such explicit evaluation may help to explain why, in those studies, the level of “regret” related to foregone options was specifically associated with distinct neural correlates in orbital prefrontal cortex, whereas both actual and foregone rewards engaged largely identical brain networks in the present study.

In another related study, Lohrenz et al. (2007) reported correlations in striatal BOLD with “fictive error signals” in a decision task in which subjects learned how much to bet on a simulated trading market. This “fictive error” signal (the difference between the obtained reward and the largest possible reward) can be viewed as another instance of the policy gradient signal reported here, and indeed the authors noted this potential interpretation. However, that task involved only a single dimension of action (how much to bet) with a single reward (the movement of the market), rather than two actions with two independent rewards. It would therefore have been difficult to tease apart the constituents of the signal as we have done in our task, for instance to distinguish a counterfactual policy update signal (how much to move the bet policy toward the all-in bet) from other correlated quantities such as a counterfactual value update signal (e.g., updating a stored value prediction for the all-in bet).
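As a rough illustration of the “fictive error” described above, the following sketch computes the gap between the largest possible reward and the obtained reward for a single bet. The payoff rule (bet fraction times market return), the restriction of bets to the interval from 0 to 1, the sign convention (best possible minus obtained), and the function name are all simplifying assumptions for illustration and are not taken from Lohrenz et al. (2007).

```python
def fictive_error(bet, market_return):
    """Gap between the best achievable reward and the obtained reward.

    bet: fraction of the stake invested, assumed to lie in [0, 1].
    market_return: fractional market movement (positive or negative).
    """
    if not 0.0 <= bet <= 1.0:
        raise ValueError("bet must be a fraction between 0 and 1")
    obtained = bet * market_return
    # Best outcome in hindsight: all-in if the market rose, nothing if it fell.
    best_possible = max(market_return, 0.0)
    return best_possible - obtained
```

Under these assumptions, a partial bet before a market rise yields a positive fictive error that shrinks to zero as the bet approaches all-in. Because a single outcome (the market movement) determines both terms, the obtained and foregone components cannot vary independently as they do in the two-option task used here.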

Our findings thus provide specific new evidence about the computational form and function of error-driven learning in the human striatum, adding to an accumulating body of evidence about these processes. The suggestion that these signals directly reinforce choice policies, rather than training value predictions that serve as intermediate quantities in evaluating candidate choices, may have particular relevance for dysfunctions involving compulsive action, such as drug abuse, gambling, and impulse control disorders (Dickinson and Balleine, 2002; Everitt and Robbins, 2005).

Acknowledgements

This project was supported by Award Number R01MH087882 from the National Institute of Mental Health as part of the NSF/NIH CRCNS Program, and by a Scholar Award from the McKnight Foundation. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute of Mental Health or the National Institutes of Health. We thank Peter Dayan for helpful discussions.

References

- Barraclough DJ, Conroy ML, Lee D. Prefrontal cortex and decision making in a mixed-strategy game. Nat Neurosci. 2004;7:404–410. doi: 10.1038/nn1209.

- Barto AG. Adaptive critics and the basal ganglia. In: Houk JC, Davis J, Beiser D, editors. Models of information processing in the basal ganglia. Cambridge MA: MIT Press; 1995. pp. 215–232.

- Behrens TE, Hunt LT, Woolrich MW, Rushworth MF. Associative learning of social value. Nature. 2008;456:245–249. doi: 10.1038/nature07538.

- Behrens TEJ, Woolrich MW, Walton ME, Rushworth MFS. Learning the value of information in an uncertain world. Nat Neurosci. 2007;10:1214–1221. doi: 10.1038/nn1954.

- Berns GS, McClure SM, Pagnoni G, Montague PR. Predictability modulates human brain response to reward. J Neurosci. 2001;21:2793–2798. doi: 10.1523/JNEUROSCI.21-08-02793.2001.

- Bhatnagar S, Sutton RS, Ghavamzadeh M, Lee M. Incremental natural actor-critic algorithms. In: Platt J, Koller D, Singer Y, Roweis S, editors. Advances in neural information processing systems. Vol. 20. Cambridge, MA: MIT Press; 2008. pp. 105–112.

- Boorman ED, Behrens TE, Woolrich MW, Rushworth MF. How green is the grass on the other side? Frontopolar cortex and the evidence in favor of alternative courses of action. Neuron. 2009;62:733–743. doi: 10.1016/j.neuron.2009.05.014.

- Camerer C, Ho T. Experience-weighted attraction learning in normal form games. Econometrica. 1999;67:827–874.

- Camille N, Coricelli G, Sallet J, Pradat-Diehl P, Duhamel JR, Sirigu A. The involvement of the orbitofrontal cortex in the experience of regret. Science. 2004;304:1167–1170. doi: 10.1126/science.1094550.

- Chumbley JR, Friston KJ. False discovery rate revisited: FDR and topological inference using Gaussian random fields. Neuroimage. 2009;44:62–70. doi: 10.1016/j.neuroimage.2008.05.021.

- Coricelli G, Critchley HD, Joffily M, O'Doherty JP, Sirigu A, Dolan RJ. Regret and its avoidance: A neuroimaging study of choice behavior. Nat Neurosci. 2005;8:1255–1262. doi: 10.1038/nn1514.

- Daw ND, Doya K. The computational neurobiology of learning and reward. Curr Opin Neurobiol. 2006;16:199–204. doi: 10.1016/j.conb.2006.03.006.

- Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat Neurosci. 2005;8:1704–1711. doi: 10.1038/nn1560.

- Daw ND, Gershman SJ, Seymour B, Dayan P, Dolan RJ. Model-based influences on humans' choices and striatal prediction errors. Neuron. 2011 doi: 10.1016/j.neuron.2011.02.027. (In press)

- Daw ND, O'Doherty JP, Dayan P, Seymour B, Dolan RJ. Cortical substrates for exploratory decisions in humans. Nature. 2006;441:876–879. doi: 10.1038/nature04766.

- Dayan P, Daw ND. Decision theory, reinforcement learning, and the brain. Cogn Affect Behav Neurosci. 2008;8:429–453. doi: 10.3758/CABN.8.4.429.

- Dayan P, Abbott LF. Theoretical neuroscience: Computational and mathematical modeling of neural systems. Cambridge, MA: The MIT Press; 2001.

- Deichmann R, Gottfried JA, Hutton C, Turner R. Optimized EPI for fMRI studies of the orbitofrontal cortex. Neuroimage. 2003;19:430–441. doi: 10.1016/s1053-8119(03)00073-9.

- Delgado MR, Miller MM, Inati S, Phelps EA. An fMRI study of reward-related probability learning. Neuroimage. 2005;24:862–873. doi: 10.1016/j.neuroimage.2004.10.002.

- Dickinson A, Balleine B. The role of learning in the operation of motivational systems. In: Pashler H, Gallistel R, editors. New York: John Wiley & Sons; 2002. p. 533.

- Doll BB, Jacobs WJ, Sanfey AG, Frank MJ. Instructional control of reinforcement learning: A behavioral and neurocomputational investigation. Brain Res. 2009;1299:74–94. doi: 10.1016/j.brainres.2009.07.007.

- Everitt BJ, Robbins TW. Neural systems of reinforcement for drug addiction: From actions to habits to compulsion. Nat Neurosci. 2005;8:1481–1489. doi: 10.1038/nn1579.

- Friston KJ, Frith CD, Frackowiak RS, Turner R. Characterizing dynamic brain responses with fMRI: A multivariate approach. Neuroimage. 1995;2:166–172. doi: 10.1006/nimg.1995.1019.

- Hampton AN, Bossaerts P, O'Doherty JP. The role of the ventromedial prefrontal cortex in abstract state-based inference during decision making in humans. J Neurosci. 2006;26:8360–8367. doi: 10.1523/JNEUROSCI.1010-06.2006.

- Hare TA, O'Doherty J, Camerer CF, Schultz W, Rangel A. Dissociating the role of the orbitofrontal cortex and the striatum in the computation of goal values and prediction errors. J Neurosci. 2008;28:5623–5630. doi: 10.1523/JNEUROSCI.1309-08.2008.

- Kass RE, Raftery AE. Bayes factors. J Amer Statistical Assoc. 1995;90:773–795.

- Knowlton BJ, Mangels JA, Squire LR. A neostriatal habit learning system in humans. Science. 1996;273:1399–1402. doi: 10.1126/science.273.5280.1399.

- Knutson B, Gibbs SE. Linking nucleus accumbens dopamine and blood oxygenation. Psychopharmacology. 2007;191:813–822. doi: 10.1007/s00213-006-0686-7.

- Lau B, Glimcher PW. Dynamic response-by-response models of matching behavior in rhesus monkeys. J Exp Anal Behav. 2005;84:555–579. doi: 10.1901/jeab.2005.110-04.

- Li J, Delgado MR, Phelps EA. How instructed knowledge modulates the neural systems of reward learning. Proc Natl Acad Sci. 2011;108:55–60. doi: 10.1073/pnas.1014938108.

- Li J, McClure SM, King-Casas B, Montague PR. Policy adjustment in a dynamic economic game. PLoS ONE. 2006;1:e103. doi: 10.1371/journal.pone.0000103.

- Lohrenz T, McCabe K, Camerer CF, Montague PR. Neural signature of fictive learning signals in a sequential investment task. Proc Natl Acad Sci. 2007;104:9493–9498. doi: 10.1073/pnas.0608842104.

- McClure SM, Berns GS, Montague PR. Temporal prediction errors in a passive learning task activate human striatum. Neuron. 2003;38:339–346. doi: 10.1016/s0896-6273(03)00154-5.

- Morris G, Nevet A, Arkadir D, Vaadia E, Bergman H. Midbrain dopamine neurons encode decisions for future action. Nat Neurosci. 2006;9:1057–1063. doi: 10.1038/nn1743.

- Nichols T, Brett M, Andersson J, Wager T, Poline JB. Valid conjunction inference with the minimum statistic. Neuroimage. 2005;25:653–660. doi: 10.1016/j.neuroimage.2004.12.005.

- O'Doherty J, Dayan P, Schultz J, Deichmann R, Friston K, Dolan RJ. Dissociable roles of ventral and dorsal striatum in instrumental conditioning. Science. 2004;304:452–454. doi: 10.1126/science.1094285.

- O'Doherty JP, Dayan P, Friston K, Critchley H, Dolan RJ. Temporal difference models and reward-related learning in the human brain. Neuron. 2003;38:329–337. doi: 10.1016/s0896-6273(03)00169-7.

- O'Reilly RC, Frank MJ. Making working memory work: A computational model of learning in the prefrontal cortex and basal ganglia. Neural Comput. 2006;18:283–328. doi: 10.1162/089976606775093909.

- Pessiglione M, Seymour B, Flandin G, Dolan RJ, Frith CD. Dopamine-dependent prediction errors underpin reward-seeking behaviour in humans. Nature. 2006;442:1042–1045. doi: 10.1038/nature05051.

- Poldrack RA, Clark J, Pare-Blagoev EJ, Shohamy D, Creso Moyano J, Myers C, Gluck MA. Interactive memory systems in the human brain. Nature. 2001;414:546–550. doi: 10.1038/35107080.

- Rangel A, Camerer C, Montague PR. A framework for studying the neurobiology of value-based decision making. Nat Rev Neurosci. 2008;9:545–556. doi: 10.1038/nrn2357.

- Roesch MR, Calu DJ, Schoenbaum G. Dopamine neurons encode the better option in rats deciding between differently delayed or sized rewards. Nat Neurosci. 2007;10:1615–1624. doi: 10.1038/nn2013.

- Samejima K, Ueda Y, Doya K, Kimura M. Representation of action-specific reward values in the striatum. Science. 2005;310:1337–1340. doi: 10.1126/science.1115270.

- Schonberg T, Daw ND, Joel D, O'Doherty JP. Reinforcement learning signals in the human striatum distinguish learners from nonlearners during reward-based decision making. J Neurosci. 2007;27:12860–12867. doi: 10.1523/JNEUROSCI.2496-07.2007.

- Schonberg T, O'Doherty JP, Joel D, Inzelberg R, Segev Y, Daw ND. Selective impairment of prediction error signaling in human dorsolateral but not ventral striatum in Parkinson's disease patients: Evidence from a model-based fMRI study. Neuroimage. 2010;49:772–781. doi: 10.1016/j.neuroimage.2009.08.011.

- Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593.

- Seo H, Lee D. Cortical mechanisms for reinforcement learning in competitive games. Philos Trans R Soc Lond B Biol Sci. 2008;363:3845–3857. doi: 10.1098/rstb.2008.0158.

- Simon DA, Daw ND. Neural correlates of forward planning in a spatial decision task in humans. J Neurosci. 2011 doi: 10.1523/JNEUROSCI.4647-10.2011. (In press)

- Sutton RS, Barto AG. Reinforcement learning: An introduction. Cambridge, Mass: MIT Press; 1998.

- Tanaka SC, Doya K, Okada G, Ueda K, Okamoto Y, Yamawaki S. Prediction of immediate and future rewards differentially recruits cortico-basal ganglia loops. Nat Neurosci. 2004;7:887–893. doi: 10.1038/nn1279.

- Thorndike EL. Animal intelligence: an experimental study of the associative processes in animals. New York and London: The Macmillan company; 1898.

- Tolman EC. Purposive behavior in animals and men. Berkeley, Los Angeles: University of California Press; 1949.

- Tricomi E, Balleine BW, O'Doherty JP. A specific role for posterior dorsolateral striatum in human habit learning. Eur J Neurosci. 2009;29:2225–2232. doi: 10.1111/j.1460-9568.2009.06796.x.

- Tricomi EM, Delgado MR, Fiez JA. Modulation of caudate activity by action contingency. Neuron. 2004;41:281–292. doi: 10.1016/s0896-6273(03)00848-1.

- Watkins CJCH, Dayan P. Q-learning. Machine Learning. 1992;8:279–292.

- Yin HH, Knowlton BJ, Balleine BW. Lesions of dorsolateral striatum preserve outcome expectancy but disrupt habit formation in instrumental learning. Eur J Neurosci. 2004;19:181–189. doi: 10.1111/j.1460-9568.2004.03095.x.

- Yin HH, Ostlund SB, Knowlton BJ, Balleine BW. The role of the dorsomedial striatum in instrumental conditioning. Eur J Neurosci. 2005;22:513–523. doi: 10.1111/j.1460-9568.2005.04218.x.