Abstract

Most models assume that top-down attention enhances the gain of sensory neurons tuned to behaviorally-relevant stimuli (on-target gain). However, theoretical work suggests that when targets and distracters are highly similar, attention should enhance the gain of neurons that are tuned away from the target, because these neurons better discriminate neighboring features (off-target gain). While it is established that off-target neurons support difficult fine discriminations, it is unclear if top-down attentional gain can be optimally applied to informative off-target sensory neurons or if gain is always applied to on-target neurons, irrespective of task demands. To test the optimality of attentional gain in human visual cortex, we used fMRI and an encoding model to estimate the response profile across a set of hypothetical orientation-selective channels during a difficult discrimination task. The results suggest that top-down attention can adaptively modulate off-target neural populations, but only when the discriminanda are precisely specified in advance. Furthermore, logistic regression revealed that activation levels in off-target orientation channels predicted behavioral accuracy on a trial-by-trial basis. Overall, these data suggest that attention does not always increase the gain of sensory-evoked responses, but instead may bias population response profiles in an optimal manner that respects both the tuning properties of sensory neurons and the physical characteristics of the stimulus array.

Introduction

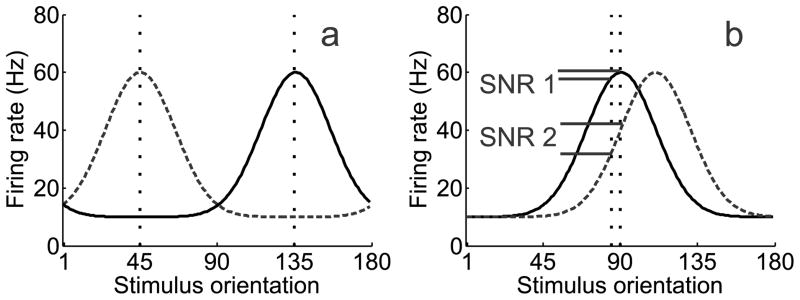

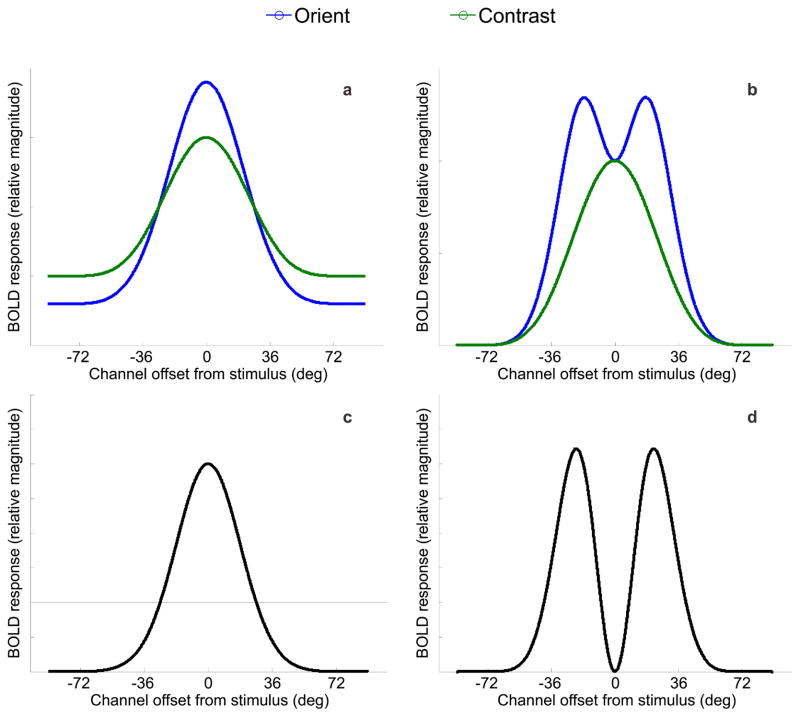

When making a difficult perceptual decision, selective attention ensures that relevant features are preferentially processed over distracters (Desimone and Duncan, 1995, Reynolds and Desimone, 1999). Most accounts, such as the feature similarity gain model, suggest that attention increases the firing rates of neurons tuned to relevant features and suppresses neurons tuned to irrelevant features (Treue and Martinez Trujillo, 1999, Martinez-Trujillo, 2004, Boynton, 2005, Maunsell and Treue, 2006, Reynolds and Heeger, 2009; Lee and Maunsell, 2009). Enhancing the gain of the neurons tuned to a relevant feature (on-target neurons) is optimal when discriminating highly dissimilar features (Figure 1a). However, on-target enhancement is not always ideal; neurons tuned away from the target (off-target neurons) better signal differences between similar features (Figure 1b; Regan and Beverley, 1985, Seung and Sompolinsky, 1993, Hol and Treue, 2001, Itti, Koch and Braun, 2000; Pouget et al., 2001, Schoups et al., 2001, Raiguel et al., 2006; Purushothaman and Bradley, 2004, Jazayeri and Movshon, 2006, Navalpakkam and Itti, 2006, Jazayeri and Movshon, 2007, Navalpakkam and Itti, 2007, Moore, 2008; Scolari and Serences, 2009, 2010).

Figure 1.

(a) Target (135°) and distracter (45°) orientations are marked by vertical dashed lines. During a coarse discrimination with low target/distracter similarity, neurons tuned to the behaviorally relevant feature should be the target of attentional gain, given their large signal-to-noise ratio (SNR). However, (b) during a fine discrimination, off-target neurons that flank the behaviorally-relevant feature theoretically carry more information, yielding a higher SNR. Target (90°) and distracter (92°) orientations are marked by vertical dashed lines. Adapted with permission from Navalpakkam and Itti (2007).

Recent psychophysical studies suggest that top-down attentional gain can be optimally deployed to early sensory neurons in accordance with perceptual demands. However, these behavioral results are equally consistent with the notion that off-target sensory responses are selectively “read-out” by downstream regions (Law and Gold, 2008; 2009), independent from any changes in sensory gain. Both possibilities can account for the behavioral data. One recent functional magnetic resonance imaging (fMRI) study demonstrated a predictive relationship between activation levels in off-target populations in V1 and behavioral performance during a difficult discrimination task (Scolari and Serences, 2010). However, because the data were post-sorted based on behavioral accuracy and attention was not directly manipulated, the observed activation differences cannot be conclusively attributed to top-down attentional gain.

Here we first used fMRI and a forward encoding model (Kay et al., 2008, Brouwer and Heeger, 2009; 2011; reviewed in Naselaris et al., 2011; Serences and Saproo, 2011) to determine if top-down attentional gain is optimally directed to off-target neural populations during a challenging discrimination. Subjects judged whether two simultaneously presented oriented gratings matched, where a ‘mismatch’ grating was rotated slightly away from a pre-cued orientation; the rotational direction was either randomly selected (Experiment 1), or pre-cued (Experiment 2). In addition, we used logistic regression to determine whether behavioral performance was predicted by trial-by-trial changes in off-target responses.

When the rotational offset of the two stimuli was not pre-cued (Experiment 1), top-down attentional gain was applied to on-target neural populations. However, when precise information about the rotational offset was given (Experiment 2), off-target neural populations were selectively enhanced. In both cases, we observed a significant correlation between off-target activation and behavioral performance, suggesting that decision-making mechanisms rely primarily on input from the most informative populations of sensory neurons.

Materials and Methods

Experiment 1

Subjects

Ten neurologically healthy subjects (3 males and 7 females) with an age range of 22 to 48 years (M = 28, SD = 7.36) were recruited from the University of California, San Diego (UCSD) community (one additional subject enrolled in the study, but did not complete both scan sessions). Data from two subjects were discarded: one subject made eye movements to fixate each peripheral stimulus (which was discovered upon debriefing) and the other fell asleep in the scanner. All subjects gave written informed consent as per the Institutional Review Board requirements at UCSD. Subjects completed 1hr of training outside the scanner, followed by two, 2hr scan sessions; compensation included $10/hr for training and $20/hr for scanning.

Experimental paradigm

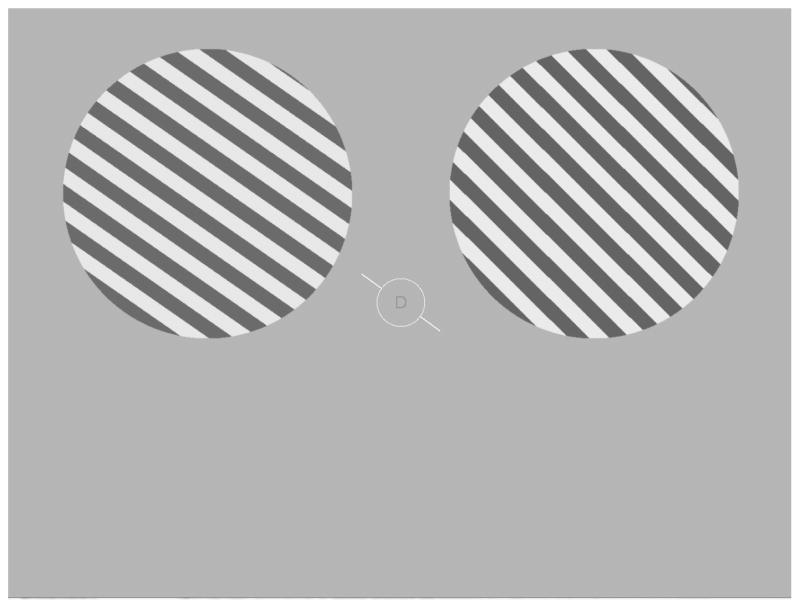

All visual stimuli were generated using the Matlab programming language (v.7.7, Natick, MA) with the Psychophysics Toolbox (v.3; Brainard, 1997, Pelli, 1997) running on a PC laptop with the Windows XP operating system. The luminance output of both the computer monitors used during training in the lab and the projector used during scanning was measured with a Minolta LS-110 photometer and linearized in the stimulus presentation software. Following training (see below), subjects completed 1 of 3 attention tasks during each fMRI scanning session; all tasks used identical displays, and task type depended only on the attended aspect of the display (attend orientation, attend contrast, or attend central letter stream; Figure 2). Throughout each trial, observers maintained fixation on a central white ring (diameter: 1.16° of visual angle) at the center of the screen. An attention cue consisting of a white oriented line (length: 2.52° of visual angle), whose center was occluded by a gray disk demarcated by the ring, was rendered at an orientation that was selected from a set of 10 values spanning the 180° of orientation space in 18° steps (0°, 18°, 36°, 54°, 72°, 90°, 108°, 126°, 144°, and 162°). One second after cue onset, two spatially anti-aliased square wave gratings (0.74 cycles/°) appeared simultaneously for 3s, one centered in each upper quadrant (horizontally and vertically offset from fixation by 4.63° and 2.73° of visual angle, respectively, with the diameter of each grating 6.79°). Each grating was flickered at a rate of 5Hz for the duration of each 3s trial, with the spatial phase of each grating randomly selected on each 100ms presentation. The orientation of one stimulus (either the left or right grating) closely matched the orientation of the central attention cue, differing only by a small amount of jitter (a value drawn pseudorandomly from a uniform range spanning ±3°).
The orientation of the remaining stimulus was either the same as the other stimulus, or was offset by an average of ±7.01° (SEM across subjects = 1.3°, range across subjects: 3°–12°; henceforth termed the ‘deviant grating’). The subject’s task on attend-orientation blocks was to make a button-press response indicating if the two gratings were rendered at the same orientation (match trials), or at different orientations (mismatch trials). The orientation offset of the gratings on mismatch trials was determined separately for each subject in the training session held outside of the scanner (see description below) and then titrated as needed on a scan-by-scan basis to maintain accuracy at approximately 70% in the two main peripheral attention conditions. For four of the subjects, the general orientation of the cue was selected pseudorandomly on a trial-by-trial basis from the set of 10 possible values. For the remaining four subjects, the same general orientation was presented for four consecutive trials (forming ‘mini-blocks’) in an effort to maximize orientation selective responses. However, since this mini-block manipulation had no effect on the magnitude of the orientation selective response or on the attentional modulations, we do not discuss it further. The contrast of either the left or the right stimulus was always selected pseudorandomly from a uniform range extending from 65–75%, and the contrast of the remaining stimulus either matched the first on half the trials or was offset on average by ±6.8% (SEM across subjects = .92%, range across subjects = 3%–16%). On attend-contrast blocks, the subject’s task was to ignore changes in orientation and to instead report whether the contrast of two gratings either matched or did not match. 
On all trials, a central rapid serial visual presentation (RSVP) stream was also presented inside the fixation ring at an average exposure duration of 67ms per letter (letter height: 0.28° of visual angle); in the attend-letter stream condition, subjects reported on each trial whether an ‘X’ or a ‘Y’ was presented. The attention condition (attend orientation, contrast, or letter) was blocked and the order of the blocks was counterbalanced across subjects, with all subjects completing an equal number of each block type.

Figure 2.

Schematic of the general experimental paradigm. Experiment 1: While maintaining fixation on the central ring, subjects attended to either the orientation of the gratings, the contrast of the gratings, or a central letter RSVP stream. The orientation of one grating always closely matched the oriented cue line presented at fixation (randomly selected from a set of 10 orientations equally spaced across 180°); the orientation of the remaining grating either matched the other, or mismatched by a small clockwise (CW) or a counterclockwise (CCW) offset. Similarly, the contrast of one grating ranged from 65–75%, and the second grating either matched the first or mismatched by adding or subtracting a small contrast change. The subject’s task was to decide if the stimuli either matched or mismatched with respect to the relevant attended feature. Experiment 2: The display was identical to Experiment 1 (shown), with the following changes: (1) The central RSVP stream was removed, so subjects only attended to either orientation or contrast on alternating blocks of trials, and (2) The central cue was rendered in either green or red to indicate with 100% validity either a CW or CCW rotational offset in the event of an orientation-mismatch trial.

Each scan contained 40 trials (with four trials containing gratings rendered at each of the 10 possible orientations), and every trial was separated by a 2.5s response window in which only the central fixation ring was present on the screen. Eight blank null-trials, consisting only of the fixation ring for the length of a standard trial (6.5s), were pseudorandomly intermixed with the 40 task trials with the constraint that a block never started with a null-trial. Subjects completed at least nine 312s scans in each scanning session (and four subjects completed 12 scans in the first scan session), for a total of six or seven scans per attention condition across both sessions.

Pre-scanning behavioral training session

Subjects were trained on the task outside of the scanner at least one day before they were scanned. All subjects completed 12 blocks of training on the experimental task (4 blocks of each attention condition). Trial-by-trial auditory error-feedback was provided via either a high or low frequency beep; however, auditory feedback was omitted during scan sessions, where feedback on behavioral performance was only given at the end of each scan. For the attend-orientation condition, the offset between two mismatched gratings was randomly selected from a set of four values (1°, 4°, 8°, and 16°); similarly, in the attend-contrast condition, the percent change in contrast between two mismatched gratings was randomly selected from a set of four values (1%, 4%, 8%, and 16%). The orientation and contrast offsets at which subjects responded correctly on approximately 70% of trials were selected for subsequent scan sessions.

Independent functional localizer scans

Two 312s functional localizer scans were run in each scan session to independently identify voxels within retinotopically organized visual areas that responded to the spatial positions occupied by the stimuli used in the attention scans. On half of the trials, a full contrast flickering checkerboard stimulus (4.31 cycles/°, 5Hz reversal frequency) was presented for 10s in the same central spatial position that was occupied by the fixation circle in the main attention scans. On the remaining trials, two flickering checkerboard stimuli were presented in the spatial locations occupied by the peripheral gratings in the main attention scans (0.74 cycles/°, 5Hz reversal frequency). On all trials, irrespective of the spatial position of the checkerboards, subjects were instructed to make a button-press response within 1s when the contrast of the stimulus decreased slightly. Each contrast-change target was presented for two video frames (33.33ms), and there were 3 targets presented on each trial. The timing of each target was pseudorandomly determined with the following constraints: 1) each target was separated from the previous one by at least 1s, and 2) targets were restricted to a window of 1–9s following the onset of the trial. Each of the 15 stimulus epochs was followed by a blank 10s inter-trial-interval. A general linear model (GLM) was then used to identify voxels that responded more during epochs of peripheral stimulation compared to epochs of central stimulation (see fMRI data acquisition and analysis section below).

Retinotopic mapping procedure

Each subject participated in at least one retinotopic mapping scan, which required passive fixation of a full-contrast checkerboard stimulus and standard presentation parameters (stimulus flickering at 8Hz and subtending 60° of polar angle; Engel et al., 1994, Sereno et al., 1995). This procedure was used to identify ventral visual areas V1, V2v, and V3v (given that the stimuli were centered in the upper quadrants, we did not map dorsal visual areas). We projected the retinotopic mapping data onto a computationally inflated representation of each subject’s gray/white matter boundary in order to better visualize these cortical areas.

fMRI data acquisition and analysis

MRI scanning was performed on a 3T GE MR750 scanner equipped with an 8-channel head coil at the Keck Center for Functional MRI, University of California, San Diego. Anatomical images were acquired using a T1-weighted sequence that yielded images with a 1 mm³ resolution (TR/TE = 11/3.3 ms, TI = 1100 ms, 172 slices, flip angle = 18°). Functional images were acquired using a gradient echo EPI pulse sequence which covered the occipital lobe with 26 transverse slices. Slices were acquired in ascending interleaved order with 3 mm thickness (TR = 1500 ms, TE = 30 ms, flip angle = 90°, image matrix = 64 (AP) × 64 (RL), with FOV = 192 mm (AP) × 192 mm (RL), 0 mm gap, voxel size = 3 mm × 3 mm × 3 mm). Data analysis was performed using BrainVoyager QX (v 1.86; Brain Innovation, Maastricht, The Netherlands) and custom timeseries analysis routines written in Matlab (version 7.1; The MathWorks, Natick, Massachusetts). EPI images were slice-time corrected, motion-corrected (both within and between scans), and high pass filtered (3 cycles/run) to remove low frequency temporal components from the timeseries. The timeseries from each voxel in each observer was then z-transformed on a scan-by-scan basis to normalize the mean response intensity across time to zero. This normalization was done to correct for differences in mean signal intensity across voxels (e.g. differences related to the distance of a given voxel from the coil elements). In addition, we removed the mean activation level across all voxels on each trial to ensure that orientation selectivity resulted from the pattern of activation as opposed to a spatially global change in the BOLD response that was evoked by different orientations. We then added the mean BOLD response for a given attention condition to each respective orientation tuning function (see below) to account for any differences in mean amplitude across the attention conditions.
All reported results are qualitatively unchanged if this spatial normalization step is not performed.
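The two normalization steps described above can be illustrated with a minimal sketch. It assumes a population standard deviation for the z-transform (the text does not say which estimator was used), and the function names and toy values are hypothetical.

```python
from statistics import mean, pstdev

def zscore(timeseries):
    """Z-transform one voxel's timeseries from a single scan.

    Assumption: population SD; the source does not specify the estimator.
    """
    mu = mean(timeseries)
    sd = pstdev(timeseries)
    return [(x - mu) / sd for x in timeseries]

def remove_trial_mean(trial_by_voxel):
    """Subtract the mean across voxels from each trial (one row per trial)."""
    centered = []
    for row in trial_by_voxel:
        mu = mean(row)
        centered.append([x - mu for x in row])
    return centered

# Toy data: one voxel's scan, and two trials measured over three voxels.
scan = [100.0, 102.0, 98.0, 101.0, 99.0]
z = zscore(scan)
trials = [[1.0, 2.0, 3.0], [0.5, 0.5, 2.0]]
centered = remove_trial_mean(trials)
```

After the second step every trial has a mean of zero across voxels, so any remaining orientation selectivity has to come from the spatial pattern of responses rather than from a global amplitude change.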

Voxel selection using independent localizer scans

To identify voxels that responded to the retinotopic positions of the two peripheral stimulus apertures, data from the functional localizer scans were analyzed using a GLM that contained two regressors, each one marking the timecourse of one stimulus type (peripheral or central checkerboard), along with a third regressor to account for shifts in the overall magnitude of the signal in each voxel across each scan (the constant term in the GLM). This boxcar model of each stimulus type was then convolved with the standard difference-of-two-gamma function model of the hemodynamic response function (HRF) that is implemented in BrainVoyager (time to peak of positive response: 5s, time to maximum negative response: 15s, ratio of positive and negative responses: 6, positive and negative response dispersion: 1). Voxels within each identified visual area (V1, V2v, V3v) that responded more to epochs of peripheral stimulation, compared to central stimulation, were used to analyze data from the attention scans if they passed a threshold of p<0.01, corrected for multiple comparisons using the False Discovery Rate (FDR) algorithm implemented in BrainVoyager.
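As a rough illustration of the regressor construction described above, the sketch below convolves a 10s boxcar with a difference-of-two-gamma HRF. The parameterization (each gamma's mode set to the stated peak time, with the dispersion as the scale) is an assumption borrowed from common practice; BrainVoyager's exact formula may differ, and all function names are hypothetical.

```python
import math

def gamma_pdf(t, shape, scale):
    """Gamma probability density, zero for t <= 0."""
    if t <= 0:
        return 0.0
    return (t ** (shape - 1) * math.exp(-t / scale)
            / (math.gamma(shape) * scale ** shape))

def double_gamma_hrf(t, peak=5.0, undershoot=15.0, ratio=6.0, dispersion=1.0):
    """Positive gamma peaking at `peak` minus a scaled gamma peaking at `undershoot`."""
    positive = gamma_pdf(t, peak / dispersion + 1.0, dispersion)
    negative = gamma_pdf(t, undershoot / dispersion + 1.0, dispersion)
    return positive - negative / ratio

def convolve(signal, kernel):
    """Causal discrete convolution, truncated to the length of `signal`."""
    out = [0.0] * len(signal)
    for i in range(len(signal)):
        for j in range(min(i + 1, len(kernel))):
            out[i] += signal[i - j] * kernel[j]
    return out

dt = 0.1                                   # sampling step in seconds
hrf = [double_gamma_hrf(i * dt) for i in range(300)]   # 30 s of HRF
peak_time = max(range(len(hrf)), key=hrf.__getitem__) * dt

# One localizer epoch: 10 s of stimulation followed by a 10 s blank interval.
boxcar = [1.0] * 100 + [0.0] * 100
predicted = [v * dt for v in convolve(boxcar, hrf)]
```

In a full analysis the predicted timecourse would be resampled at the TR and entered as one column of the GLM design matrix, alongside the other stimulus regressor and the constant term.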

Estimating trial-by-trial BOLD responses on attention scans

To estimate the trial-by-trial magnitude of the BOLD response during the main attention scans, we shifted the timeseries from each voxel identified in the functional localizer scans by 6s to account for the temporal lag in the hemodynamic response function, and then extracted and averaged data from the two consecutive 1.5s TRs that corresponded to the duration of each 3s trial (see e.g. Kamitani and Tong, 2005; Serences and Boynton, 2007a,b; Serences et al., 2009 for a similar approach). These trial-by-trial estimates of the BOLD response amplitude were subsequently used as input to the forward encoding model described below to estimate orientation tuning curves in each visual area.
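The extraction step can be sketched as follows, assuming trial onsets are expressed in seconds from the start of the scan and fall on TR boundaries. The function name and the toy timeseries are hypothetical.

```python
TR = 1.5          # seconds per volume
LAG = 6.0         # shift applied to account for the hemodynamic lag, in seconds
TRIAL_DUR = 3.0   # stimulus duration, in seconds

def trial_amplitude(timeseries, onset_s):
    """Average the TRs that cover one trial after shifting by the lag."""
    first = int(round((onset_s + LAG) / TR))
    n_trs = int(round(TRIAL_DUR / TR))
    window = timeseries[first:first + n_trs]
    return sum(window) / len(window)

# Toy z-scored timeseries for one voxel; the stimulus appears at 1.5 s.
voxel = [0.0, 0.0, 0.0, 0.0, 0.0, 2.0, 4.0, 1.0, 0.0, 0.0]
amp = trial_amplitude(voxel, onset_s=1.5)
```

With a 1.5s TR, the 6s shift corresponds to four volumes, so the two averaged samples here are the sixth and seventh entries of the timeseries.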

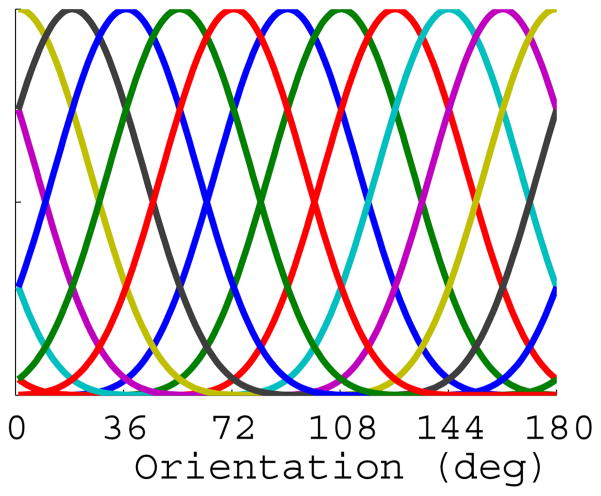

Estimating orientation selective BOLD response profiles

We assumed that the BOLD response in a given voxel represents the pooled activity across a large population of orientation selective neurons, and that the distribution of neural tuning preference is biased within a given voxel due to large-scale feature maps (Freeman et al., 2011; Sasaki et al., 2006; Jia et al., 2011) and/or to subvoxel anisotropies in the number of orientation-selective cortical columns with a specific preference (Kamitani and Tong, 2005, Swisher et al., 2010). Thus, the BOLD response measured from many of the voxels in primary visual cortex exhibits a modest but robust orientation preference (Haynes and Rees, 2005, Kamitani and Tong, 2005, Serences et al., 2009, Freeman et al., 2011). Given this response bias, we inferred changes in the allocation of attentional gain by modeling the response of each voxel as a linearly weighted combination of a set of half-sinusoidal basis functions (Brouwer and Heeger, 2009; 2011). We used half-sinusoidal functions that were raised to the 6th power to approximate the shape of single-unit tuning functions in V1, where the 1/√2 half-bandwidth of orientation tuned cells is approximately 20° (although there is a considerable amount of variability in bandwidth, see Ringach et al., 2002a; Ringach et al., 2002b; Gur et al., 2005; Schiller, 1976). Given that the half-sinusoids were raised to the 6th power, a minimum of seven linearly independent basis functions was required to adequately cover orientation space (Freeman and Adelson, 1991); however, since we presented ten unique orientations in the experiment, we used a set of ten evenly distributed functions (see Figure 3 and text below). Given the width of the selected functions, the use of more than the required seven basis functions is not problematic so long as the number of functions does not exceed the number of measured stimulus values.
While we selected the bandwidth of the basis functions based on physiology studies, all results that we report are robust to reasonable variations in this value (i.e. raising the half-sinusoids to the 5th–8th power, all of which are reasonable choices based on the documented variability of single-unit bandwidths).
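A minimal sketch of such a basis set is given below. It assumes each function takes the form |cos(θ − c)|^6, which has the required 180° period; the exact functional form used in the study is not spelled out in the text, and the names are hypothetical.

```python
import math

def basis_set(n_channels=10, power=6, step_deg=1):
    """Return channel centers, sampled orientations, and one basis row per channel."""
    centers = [i * 180.0 / n_channels for i in range(n_channels)]
    orientations = list(range(0, 180, step_deg))
    basis = []
    for c in centers:
        # |cos| raised to `power`: peaks at 1 on the channel center and
        # falls to 0 at 90 degrees away from it.
        basis.append([abs(math.cos(math.radians(theta - c))) ** power
                      for theta in orientations])
    return centers, orientations, basis

centers, orientations, basis = basis_set()

# Half-bandwidth at 1/sqrt(2) of the peak for the channel centered on 0 degrees.
half_bw = next(theta for theta, r in zip(orientations, basis[0])
               if r < 1.0 / math.sqrt(2.0))
```

On a 1° grid the first orientation at which the response drops below 1/√2 of the peak is close to the approximately 20° half-bandwidth quoted above. Changing `power` to any value from 5 to 8 reproduces the range of bandwidths mentioned in the text.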

Figure 3.

Depiction of the 10 half-cycle sinusoid basis functions, evenly distributed across 180° of orientation space.

We then used this basis set to estimate orientation selective responses in visual cortex using a forward model developed by Brouwer and Heeger (2009; 2011; note that we adopt their terminology and variable naming convention for consistency). Let m be the number of voxels in a given visual area, n be the number of trials, and k be the number of hypothetical orientation channels (10 in this case). The data were first segmented into a training data set (an m × n matrix B1 consisting of data from all but one scan of each of the three task types) and a test data set (B2, consisting of the remaining data from one scan of each of the three task types). The training data in B1 were then mapped onto the full rank matrix of channel outputs (C1, k × n) by the weight matrix (W, m × k) that was estimated using a GLM of the form:

$$B_1 = W C_1 \tag{1}$$

where the ordinary least-squares estimate of W is computed as:

$$\hat{W} = B_1 C_1^{T} \left(C_1 C_1^{T}\right)^{-1} \tag{2}$$

The channel responses (C2) on each trial were then estimated for the test data (B2) using the weights estimated in (2):

$$\hat{C}_2 = \left(\hat{W}^{T} \hat{W}\right)^{-1} \hat{W}^{T} B_2 \tag{3}$$
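The train/test estimation can be sketched on simulated data as follows, assuming the standard least-squares forms of the forward model (B1 = W C1 for training, then inversion of the estimated weights on the held-out data). A pseudoinverse is swapped in for the explicit matrix inverses because it is numerically safer: ten sixth-power half-sinusoids are linearly dependent in exact arithmetic, so the Gram matrices can be ill-conditioned. The voxel count, noise level, and `W_true` are hypothetical simulation values.

```python
import numpy as np

rng = np.random.default_rng(0)

def channel_outputs(stim_deg, centers_deg, power=6):
    """k x n matrix of idealized channel responses to each trial's orientation."""
    diff = np.deg2rad(np.asarray(stim_deg)[None, :]
                      - np.asarray(centers_deg)[:, None])
    return np.abs(np.cos(diff)) ** power

def train_weights(B1, C1):
    """Least-squares estimate of W (m x k) from B1 = W C1, via pseudoinverse."""
    return B1 @ np.linalg.pinv(C1, rcond=1e-8)

def estimate_channels(W_hat, B2):
    """Least-squares estimate of channel responses (k x n) on held-out data."""
    return np.linalg.pinv(W_hat, rcond=1e-8) @ B2

centers = np.arange(10) * 18.0
m_voxels = 50
W_true = rng.random((m_voxels, 10))        # hypothetical voxel weights

train_stim = np.tile(centers, 12)          # 120 simulated training trials
test_stim = np.tile(centers, 4)            # 40 simulated test trials
C1 = channel_outputs(train_stim, centers)
noise = 0.01 * rng.standard_normal((m_voxels, train_stim.size))
B1 = W_true @ C1 + noise
B2 = W_true @ channel_outputs(test_stim, centers)

W_hat = train_weights(B1, C1)
C2_hat = estimate_channels(W_hat, B2)      # one 10-point profile per test trial
```

Each column of `C2_hat` is the estimated channel response profile for one held-out trial; in this simulation it peaks at the channel matching the presented orientation.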

Next, the 10-point channel response function estimated for each trial (in C2) was circularly shifted so that the channel matching the orientation of the attention cue presented on that trial was positioned in the center of the tuning curve, thereby aligning each channel response profile to a common center. The channel response estimation procedure was then iterated across all unique combinations of holding out one scan of each attention condition for use as a test set (i.e., on each iteration we held a total of three scans out from the attend-letter, attend-orientation, and attend-contrast conditions in Experiment 1, and two scans out from the attend-orientation and attend-contrast conditions in Experiment 2; see methods for Experiment 2 below); this ensured that the training set had a balanced number of each trial type. Data were then averaged across all trials from a given condition to generate the orientation tuning curves depicted in Figures 5, 7, and 8 (note that even though the magnitude of the channel responses is influenced by the amplitude of the basis functions, which was set to 1 in this study, the relationship between responses is maintained as long as all channels have the same amplitude; therefore, the y-axes of all data plots read “BOLD response: relative magnitude”).
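The alignment and averaging steps can be sketched as below. Placing the cued channel at index 5 of the 10-point profile is an assumption (a 10-point curve has no exact midpoint), and the function names are hypothetical.

```python
def center_on_cue(profile, cue_index, center_index=None):
    """Circularly shift a channel profile so the cued channel sits at center_index."""
    n = len(profile)
    if center_index is None:
        center_index = n // 2          # assumption: index 5 for a 10-point profile
    shift = center_index - cue_index
    return [profile[(i - shift) % n] for i in range(n)]

def mean_profile(profiles):
    """Average aligned profiles channel by channel."""
    n = len(profiles)
    return [sum(col) / n for col in zip(*profiles)]

# Channel indices stand in for responses so the shift is easy to follow.
shifted = center_on_cue(list(range(10)), cue_index=2)

# Two toy trials with peaks at their own cued channels (2 and 7).
trial_a = [0.0, 0.5, 1.0, 0.5, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
trial_b = [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.5, 1.0, 0.5, 0.0]
average = mean_profile([center_on_cue(trial_a, 2), center_on_cue(trial_b, 7)])
```

After alignment both toy trials peak at the same index, so their average preserves the tuning shape instead of smearing it across orientation space.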

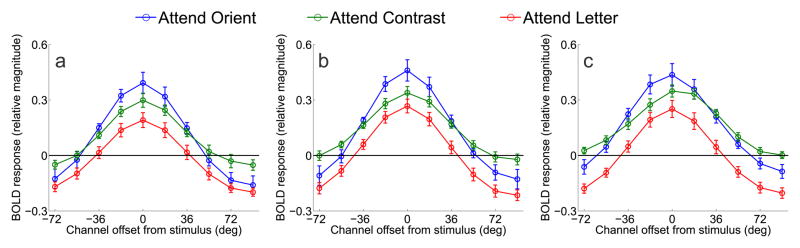

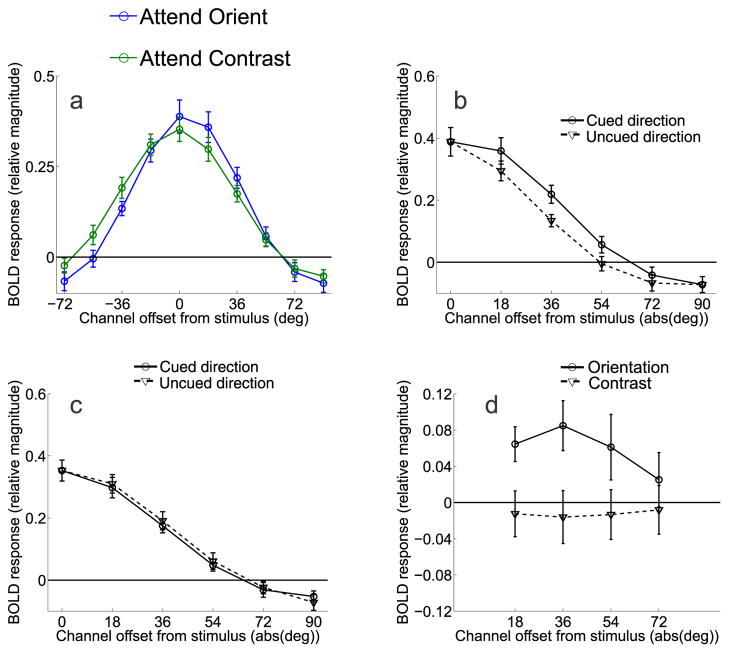

Figure 5.

Orientation-selective tuning curves from V1, V2v, and V3v in Experiment 1. (a) Relative BOLD responses across orientation-selective channels in V1 for each of the three attention conditions averaged across subjects. Orientation-tuning curves from each trial were circularly shifted so that 0° indicates the cued orientation by convention, and the x-axis indicates the orientation preference of each channel with respect to the cue (negative values indicate a preferred orientation that is rotated CW from the cue, and positive values indicate a preferred orientation rotated CCW from the cue). (b) Same as panel a, except that data are from V2v. (c) Same as in panels a and b, but data are from V3v. All error bars are ±1 S.E.M. across subjects.

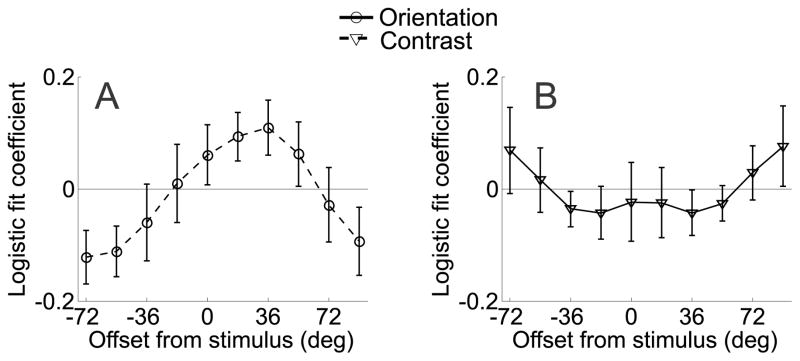

Figure 7.

Channel tuning functions from V1 in Experiment 2, averaged across all subjects. (a) Data from the orientation discrimination condition were shifted so that 0° indicates the cued orientation, positive values indicate channels offset in the cued direction and negative values indicate channels offset in the uncued direction. (b) Comparison of responses in channels offset in the cued direction and the uncued direction when subjects were attending to orientation. (c) Comparison of responses in channels offset in the cued direction and the uncued direction when subjects were attending to contrast. (d) Difference between corresponding channels tuned 18° to 72° in the cued and uncued directions for both the attend-orientation task (solid line) and attend-contrast task (dashed line). All error bars are ±1 S.E.M. across subjects.

Relating behavioral responses to orientation selective BOLD modulations

To determine if the trial-by-trial fluctuations in response magnitude in each orientation channel predicted the behavioral performance of the observer, we used logistic regression to map the continuous BOLD response in each orientation channel onto the binary accuracy value on mismatch trials (i.e. correct/incorrect). The bilateral stimulus presentation format ensured that the left and right stimuli projected into opposite cortical hemispheres, at least in the early stages of occipital cortex that we measured in the present study (i.e. V1-V3v). To map these spatially lateralized responses onto accuracy, we reasoned that accuracy on mismatch trials should be predicted by the extent to which responses in left and right visual cortex differed. Thus, for each channel, we computed the difference between the responses in contralateral and ipsilateral visual areas with respect to the deviant grating on a trial-by-trial basis. These difference scores were then entered into a logistic regression analysis on a channel-by-channel basis to map them onto the probability of a subject responding correctly on mismatch trials (see Figures 6 and 9).
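For a single channel, the analysis amounts to a one-predictor logistic regression of accuracy on the contralateral minus ipsilateral response difference. The sketch below fits it with Newton-Raphson updates; the fitting routine, the function name, and the toy data are all hypothetical, since the source does not state how the model was fit.

```python
import math

def fit_logistic(x, y, n_iter=25):
    """Fit P(correct) = 1 / (1 + exp(-(b0 + b1 * x))) by Newton-Raphson."""
    b0, b1 = 0.0, 0.0
    for _ in range(n_iter):
        p = [1.0 / (1.0 + math.exp(-(b0 + b1 * xi))) for xi in x]
        # Gradient of the log-likelihood.
        g0 = sum(yi - pi for yi, pi in zip(y, p))
        g1 = sum((yi - pi) * xi for xi, yi, pi in zip(x, y, p))
        # Entries of the 2 x 2 information matrix.
        w = [pi * (1.0 - pi) for pi in p]
        h00 = sum(w)
        h01 = sum(wi * xi for wi, xi in zip(w, x))
        h11 = sum(wi * xi * xi for wi, xi in zip(w, x))
        det = h00 * h11 - h01 * h01
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1

# Toy mismatch trials for one channel: contralateral minus ipsilateral
# response, and whether the subject answered correctly (1) or not (0).
diff_scores = [-1.0, -0.5, -0.2, 0.1, 0.3, 0.6, 0.9, 1.2]
correct = [0, 0, 1, 0, 1, 0, 1, 1]
intercept, slope = fit_logistic(diff_scores, correct)
```

A positive `slope` means that a larger contralateral response in that channel goes with a higher probability of a correct response; repeating the fit for each of the ten channels yields one coefficient per channel.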

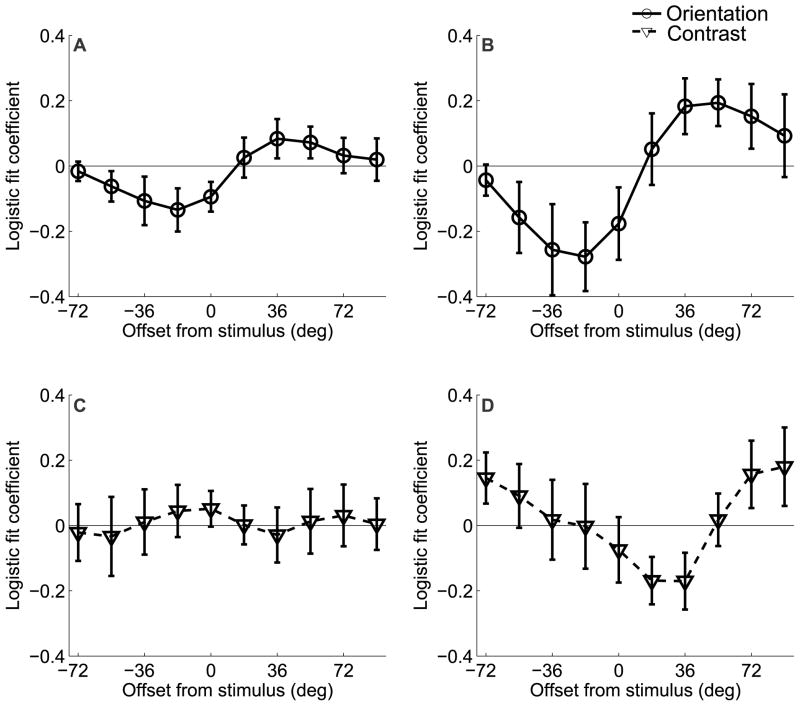

Figure 6.

Results of the logistic regression analysis for Experiment 1 that relates behavioral accuracy to the size of the differential BOLD response extracted from regions of V1 that were contralateral and ipsilateral to the deviant grating. Each channel response difference (contralateral-ipsilateral) was entered into a logistic regression as a single predictor; the logistic fit coefficients of each of the 10 analyses are plotted together (a) for the attend-orientation task across both sessions and (b) Day 2 alone. Note that here, positive values refer to offsets in the direction of the deviant grating, and negative values refer to offsets in the opposite direction. On Day 2, trial-by-trial fluctuations in orientation channels tuned to −18° and +54° predict subjects’ accuracy on attend-orientation mismatch trials. This pattern was not observed for the attend-contrast task on either (c) both sessions combined or (d) Day 2 alone. All error bars are ±1 S.E.M. across subjects.

Figure 9.

Results of the logistic regression analysis for Experiment 2 that relates behavioral accuracy to the size of the differential BOLD response extracted from regions of V1 and V2v that were contralateral and ipsilateral to the deviant grating (similar to Figure 6). Note that here, positive values refer to offsets in the cued direction and negative values refer to offsets in the uncued direction. (a) Increased differential activation in channels tuned to −72° and −54° predicted a lower probability of a correct response, and increased differential activation in the channel tuned to +36° predicted a higher probability of a correct response. (b) No such effect was observed in the attend-contrast task. All error bars are ±1 S.E.M. across subjects.

Assessing statistical significance using a randomization procedure

Due to the non-independence of the channels in both the main data analyses and the logistic regression (because the half-sinusoidal basis functions overlapped, see Figure 3), the assumption of independence in the standard GLM/ANOVA framework was violated. Therefore, we used a randomization procedure to assess the significance of the attentional modulations reported in Figures 5, 7, and 8, and of the logistic fit coefficients reported in Figures 6 and 9. To evaluate the significance of the reported effects, we first ran a standard t-test or ANOVA (as appropriate) to obtain an observed p-value. Next, we randomly shuffled the data associated with the channel response profile on each trial. This re-labeling procedure thus generated a new data set that was the same size as the original data set. We then ran the same statistical test (t-test or ANOVA) on the new data set, and repeated this procedure 10,000 times to generate a distribution of p-values given randomized data. Finally, the probability of obtaining the observed p-value (or lower) was computed against the distribution of p-values generated from the randomized data sets. All reported p-values reflect this probability.
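A simplified sketch of this randomization logic is shown below. Two substitutions are made and should be read as assumptions: the absolute paired t statistic stands in for the p-value (for a fixed sample size a larger |t| always corresponds to a smaller two-tailed p, so the ranking is the same), and "shuffling" is interpreted as permuting channel labels within each response profile. The function names, the simulated profiles, and the reduced number of permutations are hypothetical.

```python
import math
import random

def paired_t(a, b):
    """Paired t statistic for two equal-length lists."""
    d = [x - y for x, y in zip(a, b)]
    n = len(d)
    mu = sum(d) / n
    var = sum((v - mu) ** 2 for v in d) / (n - 1)
    if var == 0.0:
        return 0.0 if mu == 0.0 else math.copysign(math.inf, mu)
    return mu / math.sqrt(var / n)

def randomization_p(profiles, ch_a, ch_b, n_perm=2000, seed=0):
    """Fraction of shuffled data sets whose |t| is at least the observed |t|."""
    rng = random.Random(seed)
    observed = abs(paired_t([p[ch_a] for p in profiles],
                            [p[ch_b] for p in profiles]))
    hits = 0
    for _ in range(n_perm):
        # Permute the channel labels independently within each profile.
        shuffled = [rng.sample(p, len(p)) for p in profiles]
        t = abs(paired_t([p[ch_a] for p in shuffled],
                         [p[ch_b] for p in shuffled]))
        if t >= observed:
            hits += 1
    return hits / n_perm

# Simulated profiles for 8 subjects: a tuned shape plus small perturbations.
shape = [abs(math.cos(math.radians(18 * (j - 5)))) ** 6 for j in range(10)]
profiles = [[v + 0.02 * math.sin(s + j) for j, v in enumerate(shape)]
            for s in range(8)]
p_value = randomization_p(profiles, ch_a=5, ch_b=0)
```

Because the null distribution is built from the data themselves, the overlap between neighboring basis functions is carried into every shuffled data set, which is the motivation for using this approach instead of parametric p-values.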

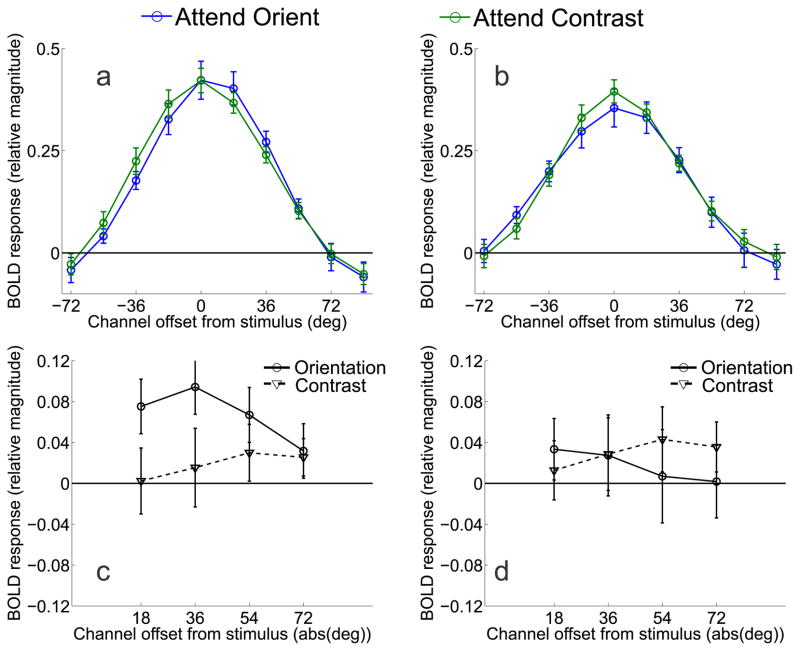

Figure 8.

(a, b) Channel tuning functions from V2v and V3v in Experiment 2, respectively. As in Figure 7a, data have been circularly shifted so that 0° indicates the cued orientation, positive values indicate channels offset in the cued direction and negative values indicate channels offset in the uncued direction. (c) Difference between corresponding channels tuned 18° to 72° in the cued and uncued directions for both the attend-orientation task (solid line) and attend-contrast task (dashed line) in area V2v. (d) Difference between corresponding channels tuned 18° to 72° in the cued and uncued directions for both the attend-orientation task (solid line) and attend-contrast task (dashed line) in area V3v. All error bars are ±1 S.E.M. across subjects.

Experiment 2

Subjects

Twelve subjects (6 males and 6 females) with an age range of 20 to 34 (M = 25.83, SD = 4.69) years were recruited to participate in a single fMRI session. Data from one subject were discarded due to excessive motion, so eleven subjects were included in the final analyses. Three of the subjects had previously participated in Experiment 1, and two others had participated in a different study that used similar stimuli, so they were experienced fMRI subjects and experienced with this stimulus paradigm. Prior to the scan session, each of the five experienced subjects participated in a 1 hr training session consisting of 8 blocks of the task (see below) to acquaint them with the attentional cueing procedure; the remaining six inexperienced subjects participated in two 1 hr training sessions held on separate days. Therefore, more extensive training was employed in Experiment 2 compared to Experiment 1. Following training, all subjects participated in a single 2 hr scan session that was held on a separate day for most subjects (for two experienced subjects, training and scanning occurred on the same day). All subjects gave written informed consent as per the Institutional Review Board requirements at UCSD. Compensation for participating was $10/hr for the training session(s) and $20/hr for the scanning session.

Attention scans

The parameters of the attention scans were nearly identical to those of Experiment 1, except here the letter stream task was omitted and the color of the cue (green or red) informed the subjects of the rotational offset of the mismatched grating in the event of a mismatch trial on attend-orientation scans. For instance, a green cue indicated that a ‘mismatch’ grating would be rotated CW from the cue, whereas a red cue indicated a CCW rotation from the cue; the direction of rotation was counterbalanced on a block-by-block basis. The same orientation was cued for 4 consecutive trials, and although the green and red color cues were also presented on attend-contrast blocks, they provided no relevant information about the task. The order of the attention blocks (attend-orientation and attend-contrast) was counterbalanced across subjects. The mean orientation offset on mismatch trials was 5.71° (SEM across subjects: 0.54°, range: 3.5°–10°) and the mean contrast offset on mismatch trials was 5.87% (SEM across subjects: 0.47%, range: 2.5%–8%).

The stimuli in Experiment 2 were presented on a different screen during scan sessions, such that all visual angles were 72.4% the size of the stimuli used in Experiment 1; the remaining data analysis methods for this experiment were otherwise identical. See Experiment 1 Materials and Methods for a description of the functional localizer scans, retinotopic mapping procedures, fMRI data acquisitions and analysis, and the derivation of orientation selective BOLD response profiles.

Results

Experiment 1

Behavioral task

The behavioral task is shown in Figure 2. Importantly, the stimulus display did not vary as a function of attention instructions: all blocks contained letter targets, orientation matches/mismatches, and contrast matches/mismatches, while only the locus of attention changed. Task difficulty, as operationalized by behavioral response accuracy, was closely matched in the attend-orientation and attend-contrast conditions, whereas accuracy was slightly higher in the attend-letter condition (orientation: M = 71.16%, SEM = 0.99%; contrast: M = 69.77%, SEM = 3.6%; letter: M = 78.92%, SEM = 3.6%).

Feature-selective tuning curves and attentional gain

First, voxels in V1-V3v that responded to the spatial position occupied by the peripheral attention stimuli were identified using independent functional localizer scans (see Materials and Methods). Next, we used a forward encoding model to estimate the response profile across 10 hypothetical orientation channels that were equally distributed across 180° (see Materials and Methods: Orientation selective BOLD response profiles and Figure 3). By examining the shape of these orientation-selective BOLD response profiles in each attention condition, we indirectly estimated the degree to which top-down attentional gain is applied to underlying neural populations when subjects engage in a fine orientation discrimination task. Importantly, any orientation-selective modulations that we observe using BOLD imaging cannot be directly attributed to changes in neural spiking activity, as the BOLD signal can be modulated by multiple sources such as synaptic input from local and distant sources (Heeger et al., 2000, Logothetis et al., 2001, Heeger and Ress, 2002, Logothetis and Wandell, 2004, Logothetis, 2008, Sirotin and Das, 2009, Kleinschmidt and Muller, 2010, Das and Sirotin, 2011, Handwerker and Bandettini, 2011b). However, given that neurons in early sensory areas like V1 are heavily interconnected (Douglas and Martin, 2007), changes in the BOLD signal related to synaptic activity are likely to be tightly correlated with changes in local spiking activity.

Based on optimal models of attentional gain (Figure 1b), we predicted that the BOLD tuning profiles would follow one of two patterns, depicted in Figure 4. Figure 4a illustrates the BOLD activation changes across orientation space predicted by on-target gain, where the cued orientation is indicated by 0° on the x-axis. Figure 4b shows the predicted pattern if off-target gain is applied simultaneously on either side of the cued orientation.

Figure 4.

Model predictions of BOLD responses for orientation (in blue) and contrast (in green) conditions based on (a) on-target gain models of selective attention and (b) the optimal gain hypothesis with bilateral gain. Panels (c) and (d) show difference curves between the model predictions for attend-orientation and attend-contrast tasks in panels (a) and (b), respectively. Note that the model predictions show the greatest divergence at the cued orientation (0°) where gain is either maximized (panel c), or minimized (panel d).

Figure 5 shows the orientation tuning curves from V1, V2v, and V3v when subjects were performing the central RSVP task or the contrast discrimination task (control conditions), and when they were performing the main orientation discrimination task (positive values indicate CCW rotations and negative values indicate CW rotations). As expected, the magnitude of the orientation-selective tuning curve was smaller when subjects were attending the central RSVP task compared to when they were attending to either the contrast or orientation discrimination tasks (main effect of attention condition, p<0.005 for both comparisons in V1, V2v, and in V3v; note that all statistics related to Figures 5–9 were computed using the randomization procedure described in Materials and Methods). The orientation and contrast discrimination conditions evoked a similar overall response amplitude; however, there was a crossover interaction in all tested visual areas such that attending to orientation led to larger responses in channels tuned to the cued orientation and attenuated responses in channels tuned away from the cue, relative to the attend-contrast condition (p<0.01 in all areas, Figure 5). These results are in close accord with previous cases documenting on-target attentional gain (as shown in Figure 4a, Treue and Martinez Trujillo, 1999, Martinez-Trujillo, 2004, Maunsell and Treue, 2006), and are inconsistent with the deployment of off-target attentional gain when performing a fine discrimination.

While the results clearly favor on-target attentional gain, it is still possible that the decision mechanisms selectively read out sensory signals from neuronal populations that carry the most information about a relevant discrimination (Pestilli et al., 2011, Palmer et al., 2000, Eckstein et al., 2002, Gold and Shadlen, 2007, Law and Gold, 2008, 2009). The extent to which such a relationship can be observed is important for two reasons: 1) to establish that fMRI is sensitive enough to measure behaviorally meaningful changes in off-target channels, and 2) to establish that our fine-discrimination task did induce a reliance on off-channel responses to support behavior. First, we computed the differential response between corresponding channels in areas of visual cortex that were contralateral and ipsilateral to the deviant stimulus on mismatch trials. Next, we used logistic regression to estimate how the differential responses measured on each trial related to behavioral accuracy (see Methods: Predicting behavioral responses). The results are shown in Figure 6, where positive values along the x-axis refer to offsets in the same rotational direction as the deviant grating, and negative values refer to offsets in the opposite direction. Trial-by-trial fluctuations in V1 channels predicted accuracy on mismatch trials when subjects were performing the orientation discrimination task, but this effect was more prominent during the second scan session (Figure 6a–b; one-way ANOVA across orientation offsets for both scan sessions combined p = 0.0824; one-way ANOVA across orientation offsets for second scan session p = 0.0055; t-tests at −18° and +54° on second session p = 0.031 and p = 0.029, respectively). No predictive relationships were observed in V2v or V3v (one-way ANOVA across orientation offsets for V2v and V3v for both sessions combined p = 0.5701 and p = 0.4482, respectively, and for the second scan session alone p = 0.7284 and p = 0.9923, respectively).
This is consistent with the notion that a relatively large differential response in contralateral off-target channels is interpreted as evidence in favor of a correct orientation judgment on mismatch trials, whereas a relatively similar response is interpreted as evidence in favor of an incorrect ‘match’ judgment. Importantly, this comparison was not biased by any physical dissimilarity of the stimuli since only mismatch trials were used (and therefore physical characteristics of the stimuli are held constant across correct and incorrect trials). In contrast, no predictive relationship was observed between channel responses and accuracy on mismatch trials when subjects were performing the contrast discrimination task (one-way ANOVA for both sessions combined p = 0.9994 and second session alone p = 0.1632; Figure 6c–d); however, the interaction between attention condition and channel offset did not reach significance (one-way ANOVA on differences between conditions for both scan sessions combined p = 0.6915 and second scan session alone p = 0.097). Nonetheless, the overall results suggest that even if attentional gain is applied to on-channel neural populations when subjects are attending to the orientation of the gratings (Figure 5), the success of perceptual decisions appears to be most strongly influenced by the relative activation of channels tuned beyond the target orientation.
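The logic of this trial-by-trial analysis can be illustrated with a minimal sketch. It uses simulated trials rather than the study's data, a single channel, and a plain Newton-Raphson fit; the function name `fit_logistic`, the trial count, and the generating coefficients are all hypothetical, and the procedure actually used is the one described in Methods: Predicting behavioral responses.

```python
import math
import random


def fit_logistic(x, y, n_iter=25):
    """Fit P(correct) = sigmoid(b0 + b1 * x) by Newton-Raphson.

    x: differential channel response per mismatch trial
       (contralateral minus ipsilateral to the deviant grating)
    y: 1 for a correct response, 0 for an incorrect response
    """
    b0, b1 = 0.0, 0.0
    for _ in range(n_iter):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * xi)))
            w = p * (1.0 - p)
            g0 += yi - p            # gradient of the log-likelihood
            g1 += (yi - p) * xi
            h00 += w                # entries of the (negative) Hessian
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        # Newton step, with the 2 x 2 inverse written out explicitly
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1


# Simulated trials (hypothetical values, chosen only for illustration)
random.seed(0)
true_b0, true_b1 = 0.8, 1.0
diff_response = [random.gauss(0.0, 1.0) for _ in range(400)]
correct = [
    1 if random.random() < 1.0 / (1.0 + math.exp(-(true_b0 + true_b1 * d))) else 0
    for d in diff_response
]

b0_hat, b1_hat = fit_logistic(diff_response, correct)
print(f"intercept = {b0_hat:.2f}, slope = {b1_hat:.2f}")
```

A positive slope for a given channel corresponds to the pattern described above: a larger contralateral-minus-ipsilateral response in that channel goes with a higher probability of a correct 'mismatch' judgment.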

Experiment 2

Although we did not observe any evidence for off-target attentional gain in Experiment 1 (Figure 5), at least two potential issues need to be addressed. First, feature-based attention may be restricted to a single gain field centered at one position in feature space. Given that the direction of the rotational offset on mismatch trials was unknown in advance in Experiment 1, subjects may have thus adopted one of two possible strategies: 1) to focus that single gain field on the orientation indicated by the central cue, as this would slightly elevate the response of informative off-target neural populations relative to a case in which no gain is applied at all, or 2) probabilistically deploy attention to one side of the cue or the other, in an attempt to guess the upcoming rotational offset. Second, it is also possible that if gain was applied to neurons tuned to orientations closely flanking the relevant stimulus (such as the 18° channel), this gain could be effectively smoothed into the estimate of the 0° channel response during the estimation of the forward model. Some smoothing is expected to occur because the basis set of half-cycle sinusoids (Figure 3) was not orthogonal. Thus, to the extent that there is overlap between adjacent basis functions, the estimated channel responses will not be independent and larger responses in channels tuned ±18° from the cue might artificially elevate the estimated response amplitude in the 0° channel. Any of these possibilities may have decreased the probability of observing off-target gain in Experiment 1. We therefore performed a second experiment in which the pre-cue informed subjects about the rotational offset of the deviant orientation stimulus in the event of a mismatch trial.
This manipulation should make it possible to deploy gain to off-target neurons tuned to one side of the cued orientation, and eliminate the symmetry of any smoothing that may occur between adjacent orientation channels, thereby preserving a bias in the shape of the tuning function induced by attention, should one exist.
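The overlap between neighboring basis functions can be made concrete with a short numerical sketch. It assumes 10 channels spaced 18° apart and a half-cycle sinusoid raised to a power; the exponent used here (6) and the function names are illustrative assumptions, since the exact basis set is specified in Materials and Methods and Figure 3 rather than in this section.

```python
import math

N_CHANNELS = 10
SPACING = 180.0 / N_CHANNELS   # 18 degrees between channel centers
EXPONENT = 6                   # assumed sharpening exponent (illustrative)


def channel_response(theta_deg, center_deg, exponent=EXPONENT):
    """Half-cycle sinusoid with a 180-degree period, raised to a power."""
    return abs(math.cos(math.radians(theta_deg - center_deg))) ** exponent


def overlap(center_a, center_b, step=1.0):
    """Normalized inner product of two basis functions over orientation space.

    1.0 means identical functions; 0.0 would mean orthogonal functions.
    """
    thetas = [i * step for i in range(int(180 / step))]
    a = [channel_response(t, center_a) for t in thetas]
    b = [channel_response(t, center_b) for t in thetas]
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))
    return dot / norm


for k in range(0, 6):
    offset = k * SPACING
    print(f"offset {offset:4.0f} deg: overlap = {overlap(0.0, offset):.3f}")
```

Under these assumptions the overlap between the 0° channel and its ±18° neighbors is well above zero, which is the property that allows gain in a flanking channel to leak into the estimate for the 0° channel.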

Behavioral task

In Experiment 2, we used a modified version of Experiment 1 in which we pre-cued the rotational offset (either CW or CCW) of the deviant orientation stimulus on mismatch trials with 100% validity. Task difficulty was closely matched across the two conditions, as indicated by behavioral response accuracy: 72.85% (SEM = 2.4%) in the attend-orientation task, and 69.22% (SEM = 1.05%) in the attend-contrast task.

Feature-selective tuning curves and attentional gain

Figure 7a shows the orientation tuning curves from V1 when subjects were performing either the main orientation discrimination task or the contrast discrimination task. Data were shifted so that the 0° channel indicates the cued orientation and positive values on the x-axis indicate responses in channels that were offset in the cued direction, while negative values indicate responses in channels offset in the uncued direction. Responses in the two attention conditions were similar in overall amplitude. However, there was an interaction such that channel responses were systematically shifted in the cued direction in the attend-orientation condition compared to the attend-contrast condition (attention condition by orientation channel interaction, p<0.005). To highlight this shift in the tuning profiles, Figure 7b shows the magnitude of responses on attend-orientation trials in the cued direction relative to each corresponding offset in the uncued direction, and Figure 7c shows the corresponding comparison on attend-contrast trials. On attend-orientation trials, responses in channels tuned 18° and 36° in the cued direction were larger than the corresponding channels tuned in the uncued direction (p<0.01 and p<0.025, respectively, Figure 7b). No corresponding differences were found between responses on attend-contrast trials (Figure 7c). A direct comparison of the difference between responses in the cued and uncued directions revealed a larger modulation on attend-orientation trials compared to attend-contrast trials in the 18° and 36° channels (p<0.025 for both comparisons, Figure 7d). Note that this asymmetry cannot be explained by the fact that stimuli on mismatch trials were physically rotated in the cued direction, as the same displays were used in both attend-orientation and attend-contrast conditions, thereby controlling for any sensory-related influences on the channel tuning curves. 
This asymmetric shift in the response profile was also evident on attend-orientation trials in V2v (Figures 8a,c), as responses in channels tuned 18°, 36°, and 54° in the cued direction were significantly greater than responses in corresponding channels in the uncued direction on attend-orientation trials (p<0.025, p<0.01, p<0.05, respectively, see solid line in Figure 8c). However, a direct comparison between this response bias on attend-orientation trials and attend-contrast trials did not reach significance in any channel (all p’s>0.16, compare solid and dashed lines in Figure 8c). Finally, no evidence for an attention related asymmetry was present in V3v (Figures 8b,d).
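The alignment and asymmetry measure used in these comparisons can be sketched as follows. The sketch assumes 10 channels ordered by preferred orientation in 18° steps; the function names, the sign convention for the cued direction, and the example response values are hypothetical and are not taken from the study's data or code.

```python
N_CHANNELS = 10
SPACING = 18  # degrees between adjacent channel centers (180 / 10)


def align_to_cue(responses, cued_index, cue_sign):
    """Return {offset_deg: response} with 0 at the cued orientation.

    responses : channel responses ordered by preferred orientation
    cued_index: index of the channel tuned to the cued orientation
    cue_sign  : +1 if the cued rotational direction runs toward higher
                channel indices, -1 if it runs toward lower indices
    Positive offsets are in the cued direction, negative in the uncued one.
    """
    aligned = {}
    for k in range(-4, 6):  # offsets from -72 to +90 degrees
        idx = (cued_index + cue_sign * k) % N_CHANNELS  # circular shift
        aligned[k * SPACING] = responses[idx]
    return aligned


def cued_minus_uncued(aligned):
    """Difference between corresponding channels at 18 to 72 degrees."""
    return {off: aligned[off] - aligned[-off] for off in (18, 36, 54, 72)}


# Made-up response profile, skewed toward higher channel indices
responses = [0.1, 0.2, 0.5, 1.0, 0.9, 0.5, 0.2, 0.1, 0.05, 0.05]
aligned = align_to_cue(responses, cued_index=3, cue_sign=+1)
diffs = cued_minus_uncued(aligned)
for off, d in diffs.items():
    print(f"{off:2d} deg: cued - uncued = {d:+.2f}")
```

Positive differences indicate larger responses in the cued direction; comparing these differences between attend-orientation and attend-contrast trials corresponds to the direct comparison reported for Figures 7d and 8c.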

While these data are consistent with off-target deployment of attentional gain in V1, an alternative explanation is that subjects were actually applying on-target gain to the expected deviant orientation. Because the cue indicated the direction of the rotational offset of mismatched stimuli, treating the cued orientation as the relevant target could potentially contribute to the biased gain pattern that we observed in V1 and in V2v. However, we view this explanation as unlikely given that the average orientation offset on mismatch trials was only 5.71°. Thus, applying on-target gain at this small offset would not be expected to have a large influence on the channel tuned 18° in the cued direction, and certainly would have even less of an impact at 36°, where the observed effect was just as large in both V1 and V2v (that is, statistically there was no difference between responses in the 18° and 36° channels, although the numerical value at 36° was slightly higher in both areas).

We further explored this possibility by repeating our analysis after re-centering the channel responses such that 0° now corresponds to the expected orientation of the deviant stimulus in the event of a mismatch trial (i.e. 0° now indicates the orientation of the expected deviant stimulus). Because the size of the offset was titrated to keep behavioral performance at the desired level, this rotation of the channels was tailored to the offset for each subject on a scan-by-scan basis. If on-target gain was applied to the cued deviant offset in the attend-orientation condition, then we would expect an equivalent response in channels tuned to either side of 0° since there should no longer be any bias in the allocation of attentional gain in the cued direction. Contrary to this prediction, activation in V1 was still greater in the channels in the cued compared to the uncued directions at 18°, 36°, and 54° (all p’s < 0.026). In addition, these differences were significantly greater in the attend-orientation condition compared to the attend-contrast condition (p = 0.034, p = 0.024, for the 18° and 36° channels, respectively, while p = 0.09 in the 54° channel). These results are consistent with off-target gain being directed to channels tuned beyond the deviant orientation.

As in Experiment 1, we assessed the predictive relationship between behavioral accuracy and differential activation levels in orientation channels measured from visual areas contralateral and ipsilateral to the deviant grating on mismatch trials. Here, we averaged corresponding channel responses across V1 and V2v, as both areas exhibited a shifted response function in the attend-orientation condition (Figures 7–8) and the logistic regression revealed a qualitatively similar pattern in both areas. The pattern was similar to that observed in Experiment 1: greater activation in the contralateral channels tuned +36° in the cued direction predicted correct responses on mismatch trials when subjects were attending to orientation (see Figure 9; one-way ANOVA across channels, p = 0.024; t-test at +36°, p = 0.04; marginally significant effect in the +18° channel, p = 0.067). Conversely, greater activation in the contralateral channels tuned to −54° and −72° predicted a higher probability of incorrect responses (t-test at −54°, p = 0.036; t-test at −72°, p = 0.033). No predictive relationship was observed between channel responses and accuracy in the attend-contrast condition (one-way ANOVA across channels, p = 0.81). Finally, there was a significant two-way interaction between orientation channel and attention condition (compare Figure 9a and 9b, p = 0.031). No significant effects were observed in V3v.

Discussion

In Experiment 1, we observed on-target gain in the feature-selective BOLD response profiles even though channels tuned to flanking orientations are theoretically more informative in the context of a fine discrimination task. Nonetheless, the logistic regression analysis shows that activation levels in off-target channels predicted subjects’ accuracy on mismatch trials (Figure 6). This suggests that even in the absence of off-target gain, difficult perceptual discriminations may be facilitated by optimizing the readout of sensory information during decision making (Pestilli et al., 2011, Palmer et al., 2000, Eckstein et al., 2002, Gold and Shadlen, 2007, Law and Gold, 2008, 2009). For example, simulations of fine motion discriminations suggest that MT-like neurons tuned away from a motion target (~±40° relative to the target direction in this case) should be weighted most strongly by parietal sensorimotor neurons that are thought to integrate sensory evidence over time (Law and Gold, 2008, 2009; see also Jazayeri and Movshon, 2006). However, the fact that a predictive relationship between off-target activation and accuracy is not robust until the second scan session suggests that some training may be necessary for optimal read-out to emerge (a point that we revisit below).

Given that the rotational offset between the two gratings was unknown in advance on mismatch trials in Experiment 1 (i.e. CW or CCW), we hypothesized two possible factors that may have reduced the likelihood of observing off-target gain: 1) subjects may have only been capable of applying a single gain field, and they centered this single gain field on the cued channel, or 2) small amounts of gain in channels tuned ±18° from the target may have been smoothed into the estimate of the 0° channel, obscuring any off-target gain. In Experiment 2, these issues were addressed by giving precise advance information about the rotational offset of the deviant grating on mismatch trials. Under these conditions, we observed robust off-target gain, suggesting that feature-based attention can modulate sensory responses in a more complex and optimal manner than previously recognized. Similar off-target modulations have been observed in the context of perceptual learning studies that employed a single well-trained stimulus discrimination (Schoups et al., 2001; Raiguel et al., 2006); however, the present results suggest that top-down attentional gain can be deployed to informative neural populations in a more flexible manner across a range of possible stimulus values (i.e. the 10 orientations used in the present study as opposed to a single well-trained orientation). Furthermore, a logistic regression analysis indicated that the relative amount of off-target activation in the cued direction predicted behavioral accuracy on mismatch trials, just as in Experiment 1. Note that the subjects in Experiment 2 were more experienced with the task than those in Experiment 1 (given either recent exposure to fine orientation discrimination tasks in other fMRI experiments, or an additional training session outside of the scanner; see Methods: Experiment 2 Subjects), which may account for the emergence of this predictive relationship in a single scan session. 
Finally, the data collectively suggest that decisions can be based on informative off-target neural populations, either in the absence of corresponding off-target attentional gain (Experiment 1) or in the presence of off-target gain (Experiment 2). This dissociation is consistent with the possibility that optimal sensory enhancement and optimal read-out of sensory information can make complementary, and perhaps independent, contributions to the efficiency of perceptual decisions.

An important limitation of the present study is that the precise offset of the most-informative sensory neurons cannot be directly inferred based on the point of maximum off-target gain observed in the BOLD signal. This problem arises for two reasons. First, even though changes in the BOLD signal are generally coupled with multi-unit neural activity, especially in areas like V1 where most connections are local (Douglas and Martin, 2007), the BOLD signal is also influenced by other factors such as synaptic input from distal sources and by the response properties of astrocytes (Heeger et al., 2000, Logothetis et al., 2001, Logothetis and Wandell, 2004, Logothetis, 2008, Schummers et al., 2008). Second, the encoding model that we used to estimate the response in each orientation channel is a coarse proxy for the activity level of underlying sensory neurons. The loss of resolution arises because each voxel contains a mixture of multiple neural populations that are tuned to different features, so the feature-based tuning curves we derive will always be less precise than their single-unit counterparts. Despite this inability to pinpoint the exact orientation offset at which attentional gain is being applied, the observation of higher activation levels in channels tuned away from the relevant feature (Figure 7) is consistent with the existence of off-target gain.

The observations that channel responses rotated unilaterally beyond the target predict behavioral accuracy (Experiments 1 and 2) and show enhanced gain (Experiment 2) are seemingly inconsistent with the observation of bilateral modulations in two previous studies. In one, Scolari and Serences (2009) reported psychophysical evidence suggesting that gain is applied bilaterally to neurons tuned to features on either side of the target. The authors reasoned that this was an adaptive pattern given that both sets of neurons should undergo a relatively large positive or negative change in firing rate in response to small orientation offsets. A priori, we expected that the same pattern of bilateral gain would be observed in the present study, and the exact reason for this apparent discrepancy is not clear. However, Scolari and Serences (2009) used a search display that contained multiple distracters, and the task was extremely difficult for many observers (based on the distribution of accuracies). Thus, informative off-target neural populations tuned to both sides of the target might be recruited to combat increased competition from additional distracters, particularly in the context of an extremely challenging search task.

Similarly, Scolari and Serences (2010) observed larger off-target BOLD responses bilaterally after post-sorting trials based on behavioral accuracy in a challenging orientation discrimination task. In contrast, an analogous analysis of the relationship between accuracy and BOLD responses in the present experiment only revealed an effect in channels tuned beyond the cued target orientation (see Figures 6, 9). However, in Scolari and Serences (2010), subjects compared sequentially presented ‘sample’ and ‘test’ stimuli that were always offset by a small CW or CCW rotation. The fact that the test stimulus never matched the sample may have thus encouraged a greater reliance on responses in orientation channels tuned on either side of the target, compared to the present paradigm in which 50% of the trials contained matching stimuli. Again, this explanation is highly speculative, but future psychophysical and neuroimaging studies might address the conditions under which bilateral modulations are observed by systematically manipulating both the number of distracters and the type of discrimination that is being performed.

A common thread among most existing models of top-down attention is a focus on the relationship between the tuning properties of a cell and the physical characteristics of the stimulus array. For instance, the biased competition model holds that attention biases activity levels towards the response evoked by a preferred stimulus when presented alone, even when the preferred stimulus is presented along with an unattended distracter that would normally drive down the response of the cell (Moran and Desimone, 1985; Desimone and Duncan, 1995; Reynolds and Desimone, 1999). Likewise, the feature similarity gain model suggests that attention increases the gain of neurons that are tuned to a relevant feature, and suppresses the gain of neurons tuned away from a relevant feature (Treue and Martinez Trujillo, 1999, Martinez-Trujillo, 2004, Maunsell and Treue, 2006). Critically, both of these accounts focus primarily on the relationship between the relevant stimulus feature and the tuning properties of the recorded neuron, without explicitly factoring in the nature of the perceptual task being performed by the observer. In contrast, optimal gain accounts (Navalpakkam and Itti, 2007, Scolari and Serences, 2009) hold that the magnitude of attentional gain should depend on the relationship between the relevant feature, a neuron’s tuning properties, and the perceptual task that is currently being performed. However, the addition of a task component poses a mystery: how does the brain ‘know’ which neural populations to modulate given a specific task? In the case of on-target gain, a multiplicative enhancement of all sensory neurons would produce the largest positive modulation in neurons that are already strongly driven by the sensory stimulus (McAdams and Maunsell, 1999a,b; Treue and Maunsell, 1999). However, off-target enhancement could not be implemented via this type of across-the-board increase in sensory gain. 
While we have no conclusive answer, perceptual learning following extensive practice has been shown to selectively modulate response properties of neurons tuned away from a target (Schoups et al., 2001; Raiguel et al., 2006; but see Ghose, Yang, and Maunsell, 2002). These off-target modulations might be mediated via a selective reweighting of pre-synaptic inputs (Ghose and Maunsell, 2008; Ghose 2009) that might occur either rapidly in response to attentional demands, or after repeated experience with a specific stimulus. While speculative, the experience-based account is at least consistent with our observation that the predictive relationship between modulations in off-target channels and behavioral performance became more pronounced with practice in Experiment 1 (Figure 6). Future investigations might therefore evaluate potential mechanisms by examining both the flexibility of off-target modulations and the degree to which they grow with practice in the context of a specific task.

Given these findings, existing models might be modified to account for the importance of task demands by stipulating that attention increases the gain of neurons that are most informative given the currently relevant perceptual discrimination (Navalpakkam and Itti, 2007). However, this poses an interesting challenge, as the activity of a sensory neuron is usually thought to convey information about the likelihood that its preferred feature is present in the visual field. Thus, off-target gain might lead to a non-veridical perceptual representation of a relevant feature, which may complicate the decoding of activity patterns during decision making (Navalpakkam and Itti, 2007; Scolari and Serences, 2009). One possible solution is that discriminating between two stimuli does not require the formation of veridical representations. Instead, visual features might be discriminated on the basis of the differential pattern of responses across populations of sensory neurons: a large differential response should count as evidence in favor of a ‘mismatch’ judgment, and a small differential response should be counted as evidence in favor of a ‘match’ judgment. In the present task, this would involve comparing the distance between simultaneously evoked response patterns in left and right visual cortex. If this type of decision rule is utilized, then increasing off-target gain would lead to a greater separation between response patterns evoked by highly similar stimuli (compared to on-target gain), thereby facilitating accurate behavioral performance.
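The decision rule described above can be illustrated with a toy calculation. The sketch assumes 10 idealized channels with half-cycle sinusoid tuning raised to a power, a multiplicative gain applied to a single channel, and the same gain acting on the responses to both stimuli; the exponent, the gain value, and all function names are hypothetical, and only the 5.71° offset is taken from the text.

```python
import math

CENTERS = [18 * k for k in range(-4, 6)]  # channel centers relative to target (deg)
EXPONENT = 6       # assumed tuning exponent (illustrative)
MISMATCH = 5.71    # mean orientation offset on mismatch trials (deg)
GAIN = 1.3         # hypothetical multiplicative gain on one channel


def tuning(theta, center):
    """Idealized channel tuning with a 180-degree period."""
    return abs(math.cos(math.radians(theta - center))) ** EXPONENT


def population_response(theta, gained_center=None):
    """Channel responses to orientation theta; one channel may receive gain."""
    return [
        (GAIN if c == gained_center else 1.0) * tuning(theta, c)
        for c in CENTERS
    ]


def separation(gained_center=None):
    """Euclidean distance between the patterns evoked by target and deviant."""
    a = population_response(0.0, gained_center)
    b = population_response(MISMATCH, gained_center)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


no_gain = separation()
on_target = separation(gained_center=0)     # gain on the channel at the target
off_target = separation(gained_center=36)   # gain on a flanking channel

print(f"no gain:         {no_gain:.4f}")
print(f"on-target gain:  {on_target:.4f}")
print(f"off-target gain: {off_target:.4f}")
```

In this toy setting the channel at the target sits at the flat peak of its tuning curve, so amplifying it barely changes the distance between patterns, whereas amplifying a flanking channel on the steep part of its tuning curve increases the separation more.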

Acknowledgments

We thank Soren K. Andersen, John H. Reynolds, Gijs Brouwer, and Thomas Sprague for helpful discussions related to experimental design and data analysis methods. This work was funded by NIMH RO1-092345 to J.T.S.

References

- Boynton G. Attention and visual perception. Current Opinion in Neurobiology. 2005;15:465–469. doi: 10.1016/j.conb.2005.06.009. [DOI] [PubMed] [Google Scholar]

- Brainard DH. The Psychophysics Toolbox. Spat Vis. 1997;10:433–436. [PubMed] [Google Scholar]

- Brouwer GJ, Heeger DJ. Decoding and Reconstructing Color from Responses in Human Visual Cortex. Journal of Neuroscience. 2009;29:13992–14003. doi: 10.1523/JNEUROSCI.3577-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brouwer GJ, Heeger DJ. Cross-orientation suppression in human visual cortex. Journal of Neurophysiology. 2011 doi: 10.1152/jn.00540.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chen Y, Martinez-Conde S, Macknik SL, Bereshpolova Y, Swadlow HA, Alonso J-M. Task difficulty modulates the activity of specific neuronal populations in primary visual cortex. Nat Neurosci. 2008;11:974–982. doi: 10.1038/nn.2147. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dale AM. Optimal experimental design for event-related fMRI. Hum Brain Mapp. 1999;8:109–114. doi: 10.1002/(SICI)1097-0193(1999)8:2/3<109::AID-HBM7>3.0.CO;2-W. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Das A, Sirotin YB. What could underlie the trial-related signal? A response to the commentaries by Drs. Kleinschmidt and Muller, and Drs. Handwerker and Bandettini. Neuroimage. 2011;55:1413–1418. doi: 10.1016/j.neuroimage.2010.07.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annu Rev Neurosci. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- Douglas RJ, Martin KA. Recurrent neuronal circuits in the neocortex. Curr Biol. 2007;17:R496–500. doi: 10.1016/j.cub.2007.04.024.

- Eckstein MP, Shimozaki SS, Abbey CK. The footprints of visual attention in the Posner cueing paradigm revealed by classification images. J Vis. 2002;2:25–45. doi: 10.1167/2.1.3.

- Engel SA, Rumelhart DE, Wandell BA, Lee AT, Glover GH, Chichilnisky EJ, Shadlen MN. fMRI of human visual cortex. Nature. 1994;369:525. doi: 10.1038/369525a0.

- Freeman J, Brouwer GJ, Heeger DJ, Merriam EP. Orientation Decoding Depends on Maps, Not Columns. Journal of Neuroscience. 2011;31:4792–4804. doi: 10.1523/JNEUROSCI.5160-10.2011.

- Freeman WT, Adelson EH. The design and use of steerable filters. IEEE Transactions on Pattern Analysis and Machine Intelligence. 1991;13:891–906.

- Ghose GM, Yang T, Maunsell JH. Physiological correlates of perceptual learning in monkey V1 and V2. J Neurophysiol. 2002;87:1867–88. doi: 10.1152/jn.00690.2001.

- Ghose GM, Maunsell JH. Spatial summation can explain the attentional modulation of neuronal responses to multiple stimuli in area V4. J Neurosci. 2008;28:5115–5126. doi: 10.1523/JNEUROSCI.0138-08.2008.

- Ghose GM. Attentional modulation of visual responses by flexible input gain. J Neurophysiol. 2009;101:2089–106. doi: 10.1152/jn.90654.2008.

- Gold JI, Shadlen MN. The Neural Basis of Decision Making. Annual Review of Neuroscience. 2007;30:535–574. doi: 10.1146/annurev.neuro.29.051605.113038.

- Gur M, Kagan I, Snodderly DM. Orientation and direction selectivity of neurons in V1 of alert monkeys: functional relationships and laminar distributions. Cereb Cortex. 2005;15:1207–1221. doi: 10.1093/cercor/bhi003.

- Handwerker DA, Bandettini PA. Hemodynamic signals not predicted? Not so: A comment on Sirotin and Das (2009). Neuroimage. 2011a;55:1409–1412. doi: 10.1016/j.neuroimage.2010.04.037.

- Handwerker DA, Bandettini PA. Simple explanations before complex theories: Alternative interpretations of Sirotin and Das’ observations. Neuroimage. 2011b;55:1419–1422. doi: 10.1016/j.neuroimage.2011.01.029.

- Haynes J-D, Rees G. Predicting the orientation of invisible stimuli from activity in human primary visual cortex. Nat Neurosci. 2005;8:686–691. doi: 10.1038/nn1445.

- Heeger DJ, Huk AC, Geisler WS, Albrecht DG. Spikes versus BOLD: what does neuroimaging tell us about neuronal activity? Nat Neurosci. 2000;3:631–633. doi: 10.1038/76572.

- Heeger DJ, Ress D. What does fMRI tell us about neuronal activity? Nat Rev Neurosci. 2002;3:142–151. doi: 10.1038/nrn730.

- Hol K, Treue S. Different populations of neurons contribute to the detection and discrimination of visual motion. Vision Research. 2001;41:685–689. doi: 10.1016/s0042-6989(00)00314-x.

- Itti L, Koch C, Braun J. Revisiting spatial vision: toward a unifying model. J Opt Soc Am A Opt Image Sci Vis. 2000;17:1899–917. doi: 10.1364/josaa.17.001899.

- Jia X, Smith MA, Kohn A. Stimulus selectivity and spatial coherence of gamma components of the local field potential. J Neuroscience. 2011;31:9390–9403. doi: 10.1523/JNEUROSCI.0645-11.2011.

- Jazayeri M, Movshon JA. Optimal representation of sensory information by neural populations. Nat Neurosci. 2006;9:690–696. doi: 10.1038/nn1691.

- Jazayeri M, Movshon JA. Integration of sensory evidence in motion discrimination. Journal of Vision. 2007;7:7–7. doi: 10.1167/7.12.7.

- Kamitani Y, Tong F. Decoding the visual and subjective contents of the human brain. Nat Neurosci. 2005;8:679–685. doi: 10.1038/nn1444.

- Kay KN, Naselaris T, Prenger RJ, Gallant JL. Identifying natural images from human brain activity. Nature. 2008;452:352–355. doi: 10.1038/nature06713.

- Kleinschmidt A, Muller NG. The blind, the lame, and the poor signals of brain function--a comment on Sirotin and Das (2009). Neuroimage. 2010;50:622–625. doi: 10.1016/j.neuroimage.2009.12.075.

- Law C-T, Gold JI. Neural correlates of perceptual learning in a sensory-motor, but not a sensory, cortical area. Nat Neurosci. 2008;11:505–513. doi: 10.1038/nn2070.

- Law C-T, Gold JI. Reinforcement learning can account for associative and perceptual learning on a visual-decision task. Nat Neurosci. 2009;12:655–663. doi: 10.1038/nn.2304.

- Lee J, Maunsell JH. A normalization model of attentional modulation of single unit responses. PLoS One. 2009;4:e4651. doi: 10.1371/journal.pone.0004651.

- Logothetis NK. What we can do and what we cannot do with fMRI. Nature. 2008;453:869–878. doi: 10.1038/nature06976.

- Logothetis NK, Pauls J, Augath M, Trinath T, Oeltermann A. Neurophysiological investigation of the basis of the fMRI signal. Nature. 2001;412:150–157. doi: 10.1038/35084005.

- Logothetis NK, Wandell BA. Interpreting the BOLD signal. Annu Rev Physiol. 2004;66:735–769. doi: 10.1146/annurev.physiol.66.082602.092845.

- Luck SJ, Chelazzi L, Hillyard SA, Desimone R. Neural mechanisms of spatial selective attention in areas V1, V2, and V4 of macaque visual cortex. J Neurophysiol. 1997;77:24–42. doi: 10.1152/jn.1997.77.1.24.

- Martinez-Trujillo JC, Treue S. Feature-Based Attention Increases the Selectivity of Population Responses in Primate Visual Cortex. Current Biology. 2004;14:744–751. doi: 10.1016/j.cub.2004.04.028.

- Maunsell JH, Treue S. Feature-based attention in visual cortex. Trends Neurosci. 2006;29:317–322. doi: 10.1016/j.tins.2006.04.001.

- McAdams CJ, Maunsell JH. Effects of attention on orientation-tuning functions of single neurons in macaque cortical area V4. J Neurosci. 1999a;19:431–441. doi: 10.1523/JNEUROSCI.19-01-00431.1999.

- McAdams CJ, Maunsell JH. Effects of attention on the reliability of individual neurons in monkey visual cortex. Neuron. 1999b;23:765–773. doi: 10.1016/s0896-6273(01)80034-9.

- McAdams CJ, Reid RC. Attention modulates the responses of simple cells in monkey primary visual cortex. J Neurosci. 2005;25:11023–11033. doi: 10.1523/JNEUROSCI.2904-05.2005.

- Moore BCJ. Basic auditory processes involved in the analysis of speech sounds. Philosophical Transactions of the Royal Society B: Biological Sciences. 2008;363:947–963. doi: 10.1098/rstb.2007.2152.

- Moran J, Desimone R. Selective attention gates visual processing in the extrastriate cortex. Science. 1985;229:782–784. doi: 10.1126/science.4023713.

- Motter BC. Focal attention produces spatially selective processing in visual cortical areas V1, V2, and V4 in the presence of competing stimuli. J Neurophysiol. 1993;70:909–919. doi: 10.1152/jn.1993.70.3.909.

- Naselaris T, Kay KN, Nishimoto S, Gallant JL. Encoding and decoding in fMRI. NeuroImage. 2011;56:400–410. doi: 10.1016/j.neuroimage.2010.07.073.

- Navalpakkam V, Itti L. Top-down attention selection is fine grained. Journal of Vision. 2006;6:4–4. doi: 10.1167/6.11.4.

- Navalpakkam V, Itti L. Search Goal Tunes Visual Features Optimally. Neuron. 2007;53:605–617. doi: 10.1016/j.neuron.2007.01.018.

- Palmer J, Verghese P, Pavel M. The psychophysics of visual search. Vision Res. 2000;40:1227–1268. doi: 10.1016/s0042-6989(99)00244-8.

- Pelli DG. The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat Vis. 1997;10:437–442.

- Pestilli F, Carrasco M, Heeger DJ, Gardner JL. Attentional Enhancement via Selection and Pooling of Early Sensory Responses in Human Visual Cortex. Neuron. 2011;72:832–846. doi: 10.1016/j.neuron.2011.09.025.

- Pouget A, Deneve S, Latham PE. The relevance of Fisher Information for theories of cortical computation and attention. In: Braun J, et al., editors. Visual Attention and Neural Circuits. Cambridge, MA: MIT; 2001.

- Purushothaman G, Bradley DC. Neural population code for fine perceptual decisions in area MT. Nature Neuroscience. 2004;8:99–106. doi: 10.1038/nn1373.

- Raiguel S, Vogels R, Mysore SG, Orban GA. Learning to see the difference specifically alters the most informative V4 neurons. J Neurosci. 2006;26:6589–602. doi: 10.1523/JNEUROSCI.0457-06.2006.

- Regan D, Beverley KI. Postadaptation orientation discrimination. J Opt Soc Am A. 1985;2:147–155. doi: 10.1364/josaa.2.000147.

- Reynolds JH, Chelazzi L, Desimone R. Competitive mechanisms subserve attention in macaque areas V2 and V4. J Neurosci. 1999;19:1736–1753. doi: 10.1523/JNEUROSCI.19-05-01736.1999.

- Reynolds JH, Desimone R. The Role of Neural Mechanisms of Attention in Solving the Binding Problem. Neuron. 1999;24:19–29. doi: 10.1016/s0896-6273(00)80819-3.

- Reynolds JH, Heeger DJ. The Normalization Model of Attention. Neuron. 2009;61:168–185. doi: 10.1016/j.neuron.2009.01.002.

- Ringach DL, Bredfeldt CE, Shapley RM, Hawken MJ. Suppression of neural responses to nonoptimal stimuli correlates with tuning selectivity in macaque V1. J Neurophysiol. 2002a;87:1018–1027. doi: 10.1152/jn.00614.2001.

- Ringach DL, Shapley RM, Hawken MJ. Orientation selectivity in macaque V1: diversity and laminar dependence. J Neurosci. 2002b;22:5639–5651. doi: 10.1523/JNEUROSCI.22-13-05639.2002.

- Sasaki Y, Rajimehr R, Kim BW, Ekstrom LB, Vanduffel W, Tootell RBH. The Radial Bias: A Different Slant on Visual Orientation Sensitivity in Human and Nonhuman Primates. Neuron. 2006;51:661–670. doi: 10.1016/j.neuron.2006.07.021.

- Schiller PH, Finlay BL, Volman SF. Quantitative studies of single-cell properties in monkey striate cortex. II. Orientation specificity and ocular dominance. J Neurophysiol. 1976;39:1320–1333. doi: 10.1152/jn.1976.39.6.1320.

- Schoups A, Vogels R, Qian N, Orban G. Practising orientation identification improves orientation coding in V1 neurons. Nature. 2001;412:549–553. doi: 10.1038/35087601.

- Schummers J, Yu H, Sur M. Tuned Responses of Astrocytes and Their Influence on Hemodynamic Signals in the Visual Cortex. Science. 2008;320:1638–1643. doi: 10.1126/science.1156120.

- Scolari M, Serences J. Adaptive allocation of attentional gain. Journal of Neuroscience. 2009;29:11933–11942. doi: 10.1523/JNEUROSCI.5642-08.2009.

- Scolari M, Serences J. Basing perceptual decisions on the most informative sensory neurons. Journal of Neurophysiology. 2010. doi: 10.1152/jn.00273.2010.

- Serences J, Saproo S, Scolari M, Ho T, Muftuler L. Estimating the influence of attention on population codes in human visual cortex using voxel-based tuning functions. Neuroimage. 2009;44:223–231. doi: 10.1016/j.neuroimage.2008.07.043.

- Serences JT, Boynton GM. The representation of behavioral choice for motion in human visual cortex. J Neurosci. 2007a;27:12893–9. doi: 10.1523/JNEUROSCI.4021-07.2007.

- Serences JT, Boynton GM. Feature-based attentional modulations in the absence of direct visual stimulation. Neuron. 2007b;55:301–12. doi: 10.1016/j.neuron.2007.06.015.

- Serences JT, Saproo S. Computational advances towards linking BOLD and behavior. Neuropsychologia. 2011. doi: 10.1016/j.neuropsychologia.2011.07.013.

- Sereno MI, Dale AM, Reppas JB, Kwong KK, Belliveau JW, Brady TJ, Rosen BR, Tootell RB. Borders of multiple visual areas in humans revealed by functional magnetic resonance imaging. Science. 1995;268:889–893. doi: 10.1126/science.7754376.

- Seung HS, Sompolinsky H. Simple Models for Reading Neuronal Population Codes. Proceedings of the National Academy of Sciences. 1993;90:10749–10753. doi: 10.1073/pnas.90.22.10749.

- Sirotin YB, Das A. Anticipatory haemodynamic signals in sensory cortex not predicted by local neuronal activity. Nature. 2009;457:475–479. doi: 10.1038/nature07664.

- Swisher JD, Gatenby JC, Gore JC, Wolfe BA, Moon CH, Kim SG, Tong F. Multiscale pattern analysis of orientation-selective activity in the primary visual cortex. J Neurosci. 2010;30:325–330. doi: 10.1523/JNEUROSCI.4811-09.2010.