Abstract

Background

Mobile health (mHealth) is a growing field aimed at developing mobile information and communication technologies for healthcare. Adolescents are known for their ubiquitous use of mobile technologies in everyday life. However, the use of mHealth tools among adolescents is not well described.

Objective

We examined the usability of four commonly used mobile devices (an iPhone, an Android with touchscreen keyboard, an Android with built-in keyboard, and an iPad) for accessing healthcare information among a group of urban-dwelling adolescents.

Methods

Guided by the FITT (Fit between Individuals, Task, and Technology) framework, a think-aloud protocol was combined with a questionnaire to describe usability on three dimensions: 1) task-technology fit; 2) individual-technology fit; and 3) individual-task fit.

Results

For task-technology fit, we compared the efficiency and effectiveness of each of the devices tested and found that the iPhone was the most usable: it had the fewest errors and prompts and the lowest mean overall task time. For individual-task fit, we compared efficiency and learnability measures by website task and found no statistically significant effect on task steps, task time, or number of errors. We then compared the two mobile applications used for diet tracking and found a statistically significant effect on task steps, task time, and number of errors. For individual-technology fit, interface quality differed significantly across devices, indicating that it is an important factor to consider in developing future mobile devices.

Conclusions

All of our users were able to complete all of the tasks; however, the time needed to complete the tasks differed significantly by mobile device and mHealth application. Future design of mobile technology and mHealth applications should place particular importance on interface quality.

Keywords: adolescents, mobile health (mHealth), usability, health information

1.0 Introduction

Mobile health (mHealth) is aimed at developing and describing the use of mobile information and communication technologies for healthcare purposes. mHealth tools may provide innovative methods to better support information, communication, and documentation needs of patients, clinicians, and other healthcare workers [1]. Early use of mobile devices in healthcare mainly involved handheld devices known as personal digital assistants (PDAs) that provided various information resources such as medication dictionaries or point-of-care decision support [2]. The ability to develop applications on mobile devices was limited due to lack of memory, small screen space, poor graphical display, and inability to transfer data. Mobile technology has advanced and current devices allow for more memory and data storage, full color graphical user interfaces with video capability, wireless access, and integration with cellular devices.

Using mobile technologies to rapidly and accurately assess and modify health-related behavior has great potential to transform healthcare. Several specific advantages may be afforded by mobile technology, including reduction of memory bias, time-stamped data capture, and the provision of real-time personalized, tailored information. The ubiquitous nature of mobile technologies in daily life (e.g., smart phones, sensors) has created opportunities for applications that were not previously possible by allowing clinicians to deliver new health-related interventions in real time [3]. Moreover, by removing geographical and temporal boundaries, these technologies may reduce economic disparities, lessen healthcare costs, and promote more personalized healthcare.

mHealth tools may be particularly relevant to adolescents because they are known to be frequent users of mobile technology [4]. The mobile phone has become the favored communication tool for the majority of American teens [4]. In 2008, 71% of 12- to 17-year-olds [5] and 93% of 18- to 29-year-olds owned cell phones [6]. Additionally, 76% of teens used text messaging [5], with 38% sending text messages on a daily basis [7]. In 2009, 93% of 12- to 17-year-olds went online at a rate equal to that of the youngest adults (18- to 29-year-olds) [6].

Nonetheless, many emerging mHealth innovations have not gone beyond the pilot stage and few have been properly evaluated [8]. As the development of mHealth tools proliferates and the number of users of mHealth technologies increases, there is a need to understand the usability of these mobile devices and applications. In particular, the core set of functions available within these devices and applications that are required for their productive use should be studied. This paper presents the findings of usability testing of four mobile devices for the purpose of accessing health information and using mHealth applications among a group of urban adolescents.

2.0 Methods

2.1 Study Design

A laboratory-based observational study was used to examine and compare the usability characteristics of four mobile devices for accessing health information among adolescents. The four devices were an iPad 2 (Apple, Cupertino, CA), an iPhone 4S (Apple, Cupertino, CA), an Android device with a touchscreen keyboard (Android Impulse 4G, AT&T, Dallas, TX), and an Android device with a built-in keyboard (HTC Status, AT&T, Dallas, TX). These devices were chosen to reflect commonly used mobile tools while ensuring inclusion of a wide breadth of capabilities available in today’s mobile market.

2.2 Participant Recruitment

Participants were recruited from a local public high school in New York City. Initially, a project coordinator went to the site and verbally recruited participants. Snowball sampling was then used to identify additional participants until the desired number was recruited. Columbia University Medical Center Institutional Review Board (IRB) approval was secured prior to the start of study activities. IRB waiver of parental consent was obtained and participants signed assent forms prior to their participation in the study. All participants were compensated with $20.00 at the completion of the study session.

2.3 Tasks

Five tasks were chosen to measure the usability of the devices, each reflecting a different approach to accessing and using health information with a mobile device. Two tasks involved accessing health information via a website using the mobile device’s web-browser, two tasks involved using health-related mobile applications, and one task involved using the calendar included on the device. Two different websites (AIDS Healthcare Foundation and Medline Plus) that included information on HIV prevention were chosen. The two mobile applications (MyfitnessPal and Sparkpeople Diet Tracker) are used to track daily diet and exercise activities. These applications were chosen because they are free to download, are available on both Apple and Android operating systems, and are related to important health promotion topics for adolescents.

2.4 Testing Protocol

At the start of each session, the study procedures were explained to each participant and written assent was obtained. The participants were then asked to fill out a short demographic and computer experience and use questionnaire. Each participant used two pre-assigned devices to carry out the tasks. Device assignment was determined to ensure that each participant would test two different devices and each device was tested a total of ten times. We rotated the order of device testing so that each device would be the first for half of the testers and second for the other half of the testers (e.g. the iPhone would not always be the first device being tested). Devices were randomly assigned to the participants without any consideration of their prior use of the device. Using the assigned devices, each participant carried out the two website tasks, the two diet tracking application tasks, and the calendar task. Participants were asked to think aloud and to verbalize the steps taken to carry out each task as they used the devices. Participants’ interactions with the devices were video- and audio-recorded using a laptop computer and a web-camera. The participants’ faces were not recorded during the sessions. During each session, one member of the study team guided the execution of the protocol (BS) and a second team member managed the video and audio recording devices (YJL or MR). After performing all of the tasks using each device, participants completed the Post-Study System Usability Questionnaire (PSSUQ) [9]. The PSSUQ is a 19-item usability questionnaire designed to be administered immediately following task-based usability testing [9]. The PSSUQ consists of three subscales: system usefulness, information quality, and interface quality. Each item is measured on a 7-point Likert scale. Responses range from 1 (strongly agree) to 7 (strongly disagree). Lower scores on the subscales indicate a higher overall user rating. 
A separate questionnaire was completed for each device tested; therefore, each participant completed two questionnaires.
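The assignment constraints described above (two different devices per participant, ten tests per device, and each device tested first in half of its sessions) can be sketched as follows. This is only an illustration of one schedule that satisfies those constraints, not the procedure the study team used; the function name, the device labels, and the fixed random seed are assumptions.

```python
import random
from collections import Counter

# Device labels are illustrative shorthand for the four study devices.
DEVICES = ["iPhone", "iPad", "Android touchscreen", "Android keyboard"]


def balanced_assignments(n_participants=20, devices=DEVICES, seed=0):
    """Return one (first_device, second_device) pair per participant.

    Each device appears equally often in the first and second position,
    and no participant receives the same device twice.
    """
    n = len(devices)
    if n_participants % n != 0:
        raise ValueError("participants must be a multiple of the device count")
    pairs = []
    for k in range(n_participants):
        first = k % n
        # Shift cycles through 1..n-1, so the second device always differs.
        shift = 1 + (k // n) % (n - 1)
        second = (first + shift) % n
        pairs.append((devices[first], devices[second]))
    # Shuffle so the schedule is not tied to recruitment order.
    random.Random(seed).shuffle(pairs)
    return pairs


pairs = balanced_assignments()
first_counts = Counter(first for first, _ in pairs)
second_counts = Counter(second for _, second in pairs)
print(first_counts, second_counts)
```

With 20 participants and four devices, every device is tested five times first and five times second, for ten tests in total.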

3.0 Theoretical Framework

3.1 FITT Model

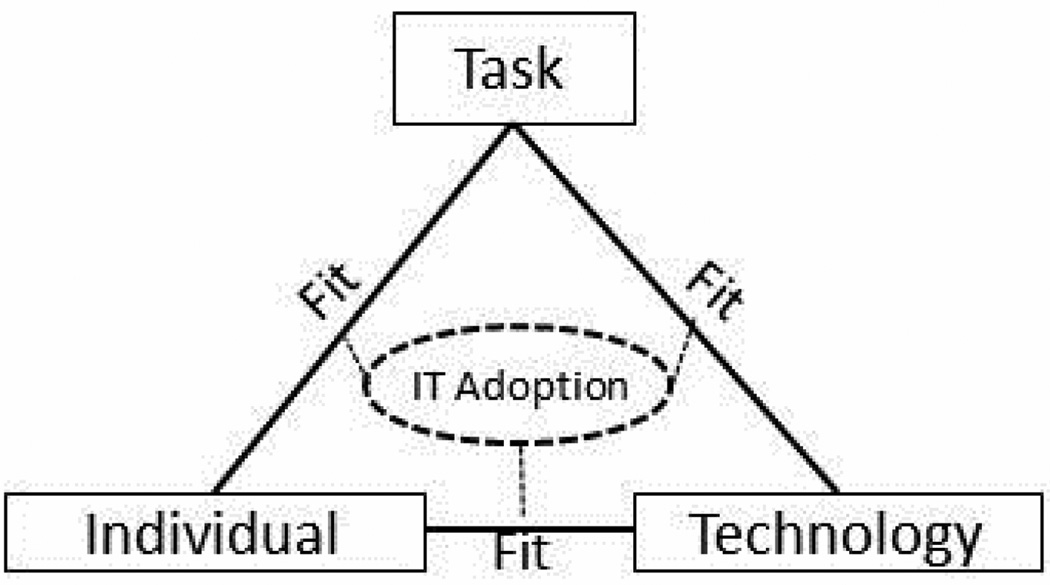

The study was designed based on the FITT (Fit between Individuals, Task, and Technology) model of technology adoption. The FITT model posits that adoption of technology depends on three dimensions: 1) the task-technology fit; 2) the individual-task fit; and 3) the individual-technology fit [10]. Previous models of technology adoption emphasized the interaction between users and technology or between tasks and technology [11, 12]. The FITT model adds an additional dimension, fit between users and tasks, which allows for an enhanced analysis, taking into account the interaction between individual users and tasks carried out using a particular technology [13]. The FITT framework is illustrated in Figure 1. The characteristics of users, such as their experience and comfort with technology; the characteristics of tasks, such as level of complexity; and the characteristics of the technology, such as functions offered, all interact and result in adoption of a technology to carry out certain tasks [10].

Figure 1.

The FITT Model of IT adoption: From Ammenwerth E, Iller C, Mahler C. IT adoption and the interaction of task, technology and individuals: a fit framework and a case study. BMC Med Inform Decis Mak 2006;6:3.

Usability in the model is a characteristic of the technology [10]. The usability of the mobile devices for the tasks and devices chosen was evaluated according to the three dimensions of fit described in the FITT model. We defined usability based on the International Organization for Standardization (ISO) standard for quality in use (ISO/IEC 25010.3) [14]. According to the ISO standard, usability is a characteristic of quality in use and has several sub-characteristics. The ISO defines usability as “the extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency and satisfaction in a specified context of use” [14]. The sub-characteristics of effectiveness, efficiency, and satisfaction can be measured to determine the usability of a particular product [15]. Learnability, an additional component of quality in use, was also used to assess usability for the mobile devices [16].

4.0 Measurement

4.1 Task-technology Fit

To measure task-technology fit, we compared the characteristics of usability across the different devices for the different tasks. Efficiency and effectiveness were the usability characteristics used to measure task-technology fit. Efficiency, which is defined as the resources required to accurately complete tasks [14], was measured by determining the time taken for each task and the number of steps required to complete tasks. Effectiveness, the ability of a user to complete tasks accurately and completely using a particular system, was measured as the number of tasks completed without hints, prompts, or errors [14]. The comparison of these measures across the different devices allowed us to compare the fit between the tasks carried out and the specific device used for the tasks.
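As a rough illustration of how these two measures could be derived from coded session data, the sketch below summarizes a list of task records. The `TaskRecord` structure, its field names, and the example values are hypothetical and are not taken from the study data.

```python
from dataclasses import dataclass


@dataclass
class TaskRecord:
    """One coded task attempt (hypothetical structure)."""
    device: str
    task: str
    minutes: float
    steps: int
    hints: int = 0
    prompts: int = 0
    errors: int = 0

    @property
    def unassisted(self) -> bool:
        # Counts toward effectiveness only if no hints, prompts, or errors occurred.
        return self.hints == 0 and self.prompts == 0 and self.errors == 0


def summarize(records):
    """Return efficiency (mean time, mean steps) and effectiveness for the records."""
    n = len(records)
    if n == 0:
        raise ValueError("no records to summarize")
    return {
        "mean_minutes": sum(r.minutes for r in records) / n,
        "mean_steps": sum(r.steps for r in records) / n,
        # Share of tasks completed without hints, prompts, or errors.
        "effectiveness": sum(r.unassisted for r in records) / n,
    }


# Made-up records for a single device, for illustration only.
records = [
    TaskRecord("device A", "calendar", minutes=1.5, steps=8),
    TaskRecord("device A", "website", minutes=2.5, steps=12, errors=1),
]
summary = summarize(records)
print(summary)
```

Grouping the records by device before calling `summarize` would give the per-device comparison described above.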

4.2 Individual-task Fit

To measure individual-task fit, we examined task success rates by task and learnability. Learnability is defined as “the degree to which the product enables its users to learn its application” [14]. We assessed learnability by measuring the number of hints, prompts, and errors experienced by participants as they completed the tasks. Task success rates were measured by determining the number of tasks completed without hints, prompts, or errors. We compared success rates on similar software to understand the fit between the individual and the task. First, we analyzed differences in task steps, task time, and errors/prompts between the two web-based tasks, which involved accessing the AIDS Healthcare Foundation and Medline Plus websites via a mobile web browser. Second, we compared the efficiency and learnability measures for the two mobile applications used for diet tracking.

4.3 Individual-technology Fit

The fit between individual and technology was measured by satisfaction, a usability characteristic which is defined as the “degree to which users are satisfied with the experience of using a product in a specified context of use” [14] and was measured using the PSSUQ scores. Previous psychometric evaluation of the PSSUQ reported an overall Cronbach’s alpha of 0.97 [17].
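A minimal scoring sketch for the questionnaire is shown below. It assumes the item-to-subscale assignment commonly reported for the 19-item PSSUQ (items 1–8 for system usefulness, 9–15 for information quality, 16–18 for interface quality, and all 19 items for the overall score) and averages only the items a participant answered; both choices are assumptions that should be checked against the instrument documentation, and the function name is hypothetical.

```python
# Assumed item ranges for the 19-item PSSUQ; verify against the instrument.
SUBSCALES = {
    "system_usefulness": range(1, 9),
    "information_quality": range(9, 16),
    "interface_quality": range(16, 19),
    "overall": range(1, 20),
}


def score_pssuq(responses):
    """Score one questionnaire.

    responses maps item number (1-19) to a rating from 1 (strongly agree)
    to 7 (strongly disagree); skipped items may be absent or None.
    Lower scores indicate higher satisfaction.
    """
    scores = {}
    for name, items in SUBSCALES.items():
        answered = [responses[i] for i in items if responses.get(i) is not None]
        for rating in answered:
            if not 1 <= rating <= 7:
                raise ValueError(f"rating out of range: {rating}")
        # Mean of answered items; None if the whole subscale was skipped.
        scores[name] = sum(answered) / len(answered) if answered else None
    return scores


# Made-up responses: every item rated 2 except item 16, rated 5.
example = {item: 2 for item in range(1, 20)}
example[16] = 5
scores = score_pssuq(example)
print(scores)
```

Because each participant completed one questionnaire per device, this function would be applied once per participant-device pair before averaging by device.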

5.0 Data Analysis

The video recordings were analyzed using Morae video-analytic software (TechSmith, Okemos, MI). The first author (BS) completed an analysis of the first eight audio-video recordings. Based on this analysis, a coding framework was developed to identify specific task steps and definitions for prompts, hints, and participant errors. A task step was defined as an action which would change the current view of the mobile device screen. For example, task steps included actions such as tapping an icon or button on the device or within an application or website, typing, scrolling, choosing from a list, tapping a link, and maximizing or minimizing a screen. Prompts and hints were defined as times when a participant was unable to carry out required task steps without assistance. Errors were identified when a participant executed an incorrect action in carrying out task steps. When the task was completed, it was coded as meeting a task goal. For example, the website task involved accessing a pre-determined website and finding a link to information about HIV prevention. For the diet app, participants were asked to add a list of foods to the breakfast record. For the calendar task, participants were asked to add a dinner event to a particular date.

Once the initial coding framework was identified, the research team (BS, RS, and YJL) examined 10% of the recordings together to clarify the coding framework and determine agreement on the coded data. Any questions about the framework and potential disagreements about the coding were discussed until a resolution was reached. The remaining data were then coded according to the framework.

SPSS 18.0 (Chicago, IL) was used for all statistical analysis. We used analysis of variance (ANOVA) and multivariate analysis of variance (MANOVA) to compare scores across user groups. We controlled for previous reported experience with an iPhone in our MANOVA analyses.
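For readers unfamiliar with the test, the F statistic of a one-way ANOVA can be sketched in a few lines. This is a plain-Python illustration of the calculation, not the SPSS procedure used in the study; it does not compute a p-value, and it does not cover MANOVA or the covariate adjustment described above. The function name and the example values are made up.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of numeric groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]

    # Variation of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)

    df_between = k - 1
    df_within = n_total - k
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


# Illustrative values only, e.g. task times for three hypothetical groups.
f_value, df_between, df_within = one_way_anova([[1, 2, 3], [2, 3, 4], [3, 4, 5]])
print(f_value, df_between, df_within)
```

A statistics package would then compare the F value against the F distribution with the two degrees of freedom to obtain the p-value.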

6.0 Results

6.1 Participants

We recruited a total of 20 participants. Of these, 12 (60%) were male and 8 (40%) were female. The mean age of the participants was 15.8 years, with a range of 14 to 18 years (Table 1). Eleven (55%) reported using a computer at least once a day, while nine (45%) reported using a computer several times per week to several times per month. Sixteen (80%) reported using mobile devices at least once per day and three (15%) reported using mobile devices several times per week to several times per month. One participant did not report this information. Seven (35%) of the participants reported iPhones as the devices they used most frequently, while six (30%) reported using Android devices most frequently. Only one (5%) participant reported that a netbook was the device they used most frequently and one (5%) used a tablet. The remainder of the participants did not complete this question on their survey.

Table 1.

Participants’ demographic characteristics

| Variables | n (%) |
|---|---|
| Gender | |
| Male | 12 (60) |
| Female | 8 (40) |
| Age | |
| Mean yrs (range) | 15.8 (14–18) |
| Ethnicity* | |
| Not Hispanic or Latino | 4 (20) |
| Hispanic or Latino | 15 (75) |
| Race* | |
| Black or African American | 2 (10) |
| Pacific Islander | 2 (10) |
| White | 1 (5) |
| Multi-racial | 1 (5) |
| Other | 11 (55) |

\*Numbers do not total 100% due to missing data.

6.2 Task-technology Fit

Based on the participants’ performance of the assigned tasks, we compared the efficiency and effectiveness of each of the devices tested. We measured effectiveness as the percentage of tasks completed using each device with no hints, prompts, or errors. Similar to the other measures, the iPhone was the most usable and had the fewest errors and prompts. The device with the fewest task steps was the iPhone, while the Android keyboard device had the most task steps. The iPhone had the lowest mean overall task time, with a total of 7.50 minutes required for completion of all tasks, while the Android keyboard device had the highest mean task time of 11.50 minutes (Table 2). Test device had a statistically significant effect on task time (F(3,32)=2.92; p<0.05; partial η²=0.215). However, it did not have a statistically significant effect on task steps (F(3,32)=0.466; p>0.05; partial η²=0.042) or number of errors (F(3,32)=1.280; p>0.05; partial η²=0.107).
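The effect sizes reported alongside each F statistic in this section can be checked against the standard relationship between F, its degrees of freedom, and partial eta squared. The sketch below assumes that the reported effect sizes are partial eta squared for a single-factor comparison; the function name is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values and effect sizes as reported for the device comparison, F(3,32).
reported = {
    "task time": (2.92, 0.215),
    "task steps": (0.466, 0.042),
    "errors": (1.280, 0.107),
}

recomputed = {
    measure: partial_eta_squared(f_value, 3, 32)
    for measure, (f_value, _) in reported.items()
}
for measure, value in recomputed.items():
    print(f"{measure}: {value:.3f}")
```

Under that assumption, the recomputed values agree with the reported effect sizes to three decimal places.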

Table 2.

Task steps, task time and errors by device

| Measure | iPhone (n=10) | iPad (n=9) | Android Impulse (n=9) | Android Keyboard (n=10) | p-value |
|---|---|---|---|---|---|
| Task steps | 51.80 | 53.89 | 57.00 | 60.70 | 0.708 |
| Task time | 7.50 | 9.89 | 10.67 | 11.50 | 0.049 |
| Errors/prompts | 4.30 | 8.78 | 8.78 | 6.80 | 0.298 |

Errors include device errors and instances in which the user needed prompting.

Results reported as means. Task time is in minutes.

The iPad had a higher rate of error than the iPhone. These errors were most frequently associated with the use of the calendar, which had different requirements for adding events than the other devices. The need to access a separate icon to open the desired date was not expected. Participants assumed that they needed to tap the date in order to open it to schedule an event, a function that was possible on other devices. However, after tapping the date and not getting the desired result, participants were usually able to independently determine the appropriate action.

6.3 Individual-task Fit

To measure individual-task fit, we examined task success rates by task and also measured learnability. Learnability was evaluated by measuring the number of hints, prompts, and errors experienced by participants while completing the tasks on the different devices. We compared efficiency and learnability measures by website task (AIDS Healthcare Foundation and Medline Plus) and found no statistically significant effect on task steps (F(4,33)=0.694; p=0.411; partial η²=0.021), task time (F(4,33)=0.753; p>0.05; partial η²=0.022), or number of errors (F(4,33)=1.839; p=0.184; partial η²=0.053). We then compared the two mobile applications used for diet tracking (MyfitnessPal and Sparkpeople Diet Tracker) and found a statistically significant effect on all measures: task steps (F(4,33)=38.282; p<0.001; partial η²=0.537), task time (F(4,33)=15.396; p<0.001; partial η²=0.318), and number of errors (F(4,33)=4.488; p=0.042; partial η²=0.120).

6.4 Individual-technology Fit

Individual-technology fit refers to the fit between the individual user and the technology. In the current study, the technology is the mobile device used. Participants rated each device using the PSSUQ. There was no significant difference in the overall satisfaction scores for the different devices (Table 3). There was a significant difference in the mean scores for the different devices on the interface quality subscale (p=0.02, ANOVA). The Android Impulse had the lowest mean score, indicating the highest rating for interface quality. Table 3 provides the overall mean PSSUQ scores as well as the mean scores for the different devices on each subscale. The information quality subscale of the PSSUQ was used as a measure of the participants’ satisfaction with their ability to carry out tasks using the different devices. There was no significant difference between the mean information quality scores among the different devices (p=0.16). The iPad had the lowest mean score for information quality (1.9).

Table 3.

PSSUQ scores by device (7-point scale, where the lower the response, the higher the subject’s usability satisfaction with their system)

| PSSUQ scale | iPad | iPhone | Android Impulse | Android Keyboard | p-value |
|---|---|---|---|---|---|
| System Usefulness | 1.8 | 2.3 | 2.9 | 3.2 | 0.19 |
| Information Quality | 1.9 | 2.6 | 2.8 | 3.4 | 0.16 |
| Interface Quality | 2.6 | 2.2 | 2.0 | 3.7 | 0.02 |
| Overall Satisfaction | 1.8 | 2.4 | 2.7 | 3.4 | 0.11 |

7.0 Discussion

This study reports the usability of four mobile devices for adolescents accessing and using health-related information, with a focus on testing the core set of functions that allow a user to carry out tasks using a mobile device. Our study provides a comprehensive examination of usability among adolescents by incorporating a task-based observational method with a self-report questionnaire which provided new insights into the use of mobile devices. We combined the FITT framework of technology adoption with a standard definition of usability, resulting in a novel approach to usability assessment.

Previous studies on the usability of mobile devices for healthcare have focused on specific applications and not on the technology itself [18–20]. A recent study on the usability of mobile phones for exercise tracking reported on usability of the phone as a data collection device and evaluated usability with the System Usability Scale [18]. Using the FITT framework, we were able to focus on both the technology (hardware) and the application itself (software).

For task-technology fit, findings from our study showed that differences in technology (device) do not significantly affect users’ ability to complete a task but do affect the time it takes. All participants were able to successfully complete the tasks assigned with some prompts from the study team. One error experienced by users involved incorrectly tapping an icon with the expectation of a different response. For example, participants commonly attempted to add an event to the calendar in the iPad by tapping the date on the calendar, which did not have the desired effect. Instead, they needed to tap a (+) sign in order to enter an event on a particular date. Participants also chose incorrect icons or links when they were too close together on the screen or too small to differentiate. As a result, future design of mobile technology should focus on the time needed to effectively use the device, since a motivated user will complete the task but is less likely to want to adopt a technology which takes too much of their time [21, 22].

The second dimension in the FITT model is individual-task fit. Our findings indicated that there was little difference in the time needed for participants to access websites. However, the time required to complete tasks using different mobile applications was significantly different, indicating that the design of mobile applications varies and will affect end-users’ efficiency in completing tasks. Moreover, when examining task success rates across participants, we found that they achieved task completion in most cases but required some help in doing so. Even when participants encountered errors, they usually were able to correct them with minimal assistance. The tasks involved accessing information via websites and using mobile applications that were not necessarily designed for adolescents. Because of this, the websites and applications may have used language that was difficult for our participants to understand.

Additionally, the applications do require some degree of learning. As one of our participants stated, “This is the first time I’m using this app. I would have to learn it.” Our participants showed a high degree of willingness to learn and an expectation about the need to learn to use a particular application. Participants rarely stopped and asked for help, preferring to use a process of elimination to figure out the necessary task step. A higher percentage of errors occurred when participants used the applications as compared to the websites. The applications’ increased degree of interactivity may explain this difference. The website tasks primarily involved information seeking and reading steps that required identification of specific links but less information input. The functions required when using a website, such as searching, clicking a link, and scrolling are commonly found on many websites that can be accessed on other devices like laptop or desktop computers. The applications used on the mobile devices, particularly those in our study, were unique to these types of devices and were new to our group of users. Therefore, the actions required to use them may not be as commonly understood.

The findings from this study illustrate useful lessons for the development of mHealth technology and applications, particularly for adolescents. For the individual-technology fit, interface quality was particularly important to end-users, as ratings on this subscale differed significantly across devices. Although the devices evaluated in this study will rapidly become obsolete, the findings from our study illustrate the need for developing mHealth applications and technology which meet end-users’ needs and pay particular attention to interface quality.

Our study offers new insights into the hardware and software issues associated with using mHealth technology. In addition, this study demonstrates the usefulness of the FITT framework in evaluating mHealth technology. Past research has used the FITT framework mainly to evaluate electronic health records [13, 23, 24]. Findings from our study demonstrate how application of the FITT framework explicated the fit between the individual, technology, and task in mHealth technology.

There were several limitations to our study. First, this was a laboratory-based study. The participants were sitting stationary in a room while being recorded using the devices. These devices are “mobile” and are meant to be used outside or in settings where the user’s movements and environment may not be controlled. The effect of outdoor lighting, noise, interruptions, and movement could not be evaluated within our study design. An additional limitation is the small sample size. Although it is commonly recommended to use 10–20 participants in traditional usability testing [25], this made statistical comparisons limited. Another important limitation of this study is that participants had prior experience with some of the devices tested. Although device testing was randomly assigned, given the small sample size, a possible confounder is that users with previous experience with iPhones would be familiar with its use. Nonetheless, we were unable to find significant differences in the ratings of the devices between frequent and infrequent users or between genders. Individual task and technology fit may be better investigated using a larger sample size.

Learnability is also typically tested by determining how many times a user must perform a task in order to complete it without errors or hints. In our case, we only had users carry out tasks once. Therefore, we inferred learnability by determining the number of errors, hints, and prompts required. Repeated testing may be needed to determine if tasks can be easily learned and retained over time. In addition, our study was limited to a group of urban-dwelling adolescents. Results may not be generalizable to other age groups or to adolescents living in rural or suburban areas. Nonetheless, our participants reported using mobile devices frequently, with 80% using them at least once per day, which is consistent with reports of adolescents’ use of mobile technology [4] and suggests that our sample resembles the broader adolescent population in this respect. Finally, we should note that time was used as an outcome measure for both the task-technology fit and individual-task fit dimensions. As a result, usability issues with the technology (device) and the task (software) may be confounded, since they may both affect the overall time required to complete a task.

8.0 Conclusions

Findings from this study demonstrate the usefulness of exploring each of the FITT dimensions to illustrate the usability characteristics of mobile devices and mHealth applications. While our users were able to complete all tasks, the time needed to complete the tasks varied significantly by device and mobile application. Even though users may be able to complete a task using a mobile device, it is important to design both software and hardware that can be used efficiently. Moreover, interface quality is particularly important in designing mobile devices and mHealth applications for adolescent end-users.

Acknowledgements

This study was funded by HEAL NY Phase 6 - Primary Care Infrastructure "A Medical Home Where Kids Live: Their School" Contract Number C024094 (Subcontract PI: R Schnall) and the Center for Evidence Based Practice in the Underserved NINR P30NR010677 (S. Bakken, Principal Investigator).

Footnotes

Conflicts of Interest

None of the listed authors have any financial or personal relationships with other people or organizations that may inappropriately influence or bias the objectivity of submitted content and/or its acceptance for publication in this journal.

Protection of Human Subjects and Animals in Research.

The procedures used have been reviewed in compliance with ethical standards of the responsible committee on human experimentation at the home institution of the authors. All research activities are in compliance with the World Medical Association Declaration of Helsinki on Ethical Principles for Medical Research Involving Human Subjects.

References

- 1.mHealth Alliance. Frequently Asked Questions, 2011. [cited 2011 August 3];2010 Available from: http://www.mhealthalliance.org/about/frequently-asked-questions. [Google Scholar]

- 2.Istepanian R, Laxminarayan S, Pattichis CS, editors. M-health: Emerging mobile health systems. New York, N.Y: Springer; 2006. [Google Scholar]

- 3.Kleinke JD. Dot-gov: market failure and the creation of a national health information technology system. Health Aff (Millwood) 2005 Sep-Oct;24(5):1246–1262. doi: 10.1377/hlthaff.24.5.1246. [DOI] [PubMed] [Google Scholar]

- 4.Lenhart A. Teens, cell phones and texting. Washington, DC: Pew Research Center; 2010. [Google Scholar]

- 5.Lenhart A. Teens and Mobile Phones Over the Past Five Years: Pew Internet Looks Back. [cited 2010 March 28];Pew Internet and American Life Project. 2009 Available from: http://www.pewinternet.org/Reports/2009/14--Teens-and-Mobile-Phones-Data-Memo/1-Data-Memo/5-How-teens-use-text-messaging.aspx?r=1. [Google Scholar]

- 6.Lenhart A, Purcell K, Smith A, Zickuhr K. Social Media and Young Adults. [cited 2010 March 28];Pew Internet & American Life Project. 2010 Available from: http://www.pewinternet.org/Reports/2010/Social-Media-and-Young-Adults.aspx. [Google Scholar]

- 7.Hachman M. More Kids Using Cell Phones, Study Finds. Pew Internet and American Life Project. [cited 2010 March 28];2009 Available from: http://www.pewinternet.org/Media-Mentions/2009/More-Kids-Using-Cell-Phones-Study-Finds.aspx.

- 8.Curioso WH, Mechael PN. Enhancing 'M-health' with south-to-south collaborations. Health Aff (Millwood) 2010 Feb;29(2):264–267. doi: 10.1377/hlthaff.2009.1057.

- 9.Lewis J, editor. Psychometric evaluation of the post-study system usability questionnaire: The PSSUQ; Human Factors Society 36th Annual Meeting; Boca Raton, Fla. 1992.

- 10.Ammenwerth E, Iller C, Mahler C. IT-adoption and the interaction of task, technology and individuals: a fit framework and a case study. BMC Med Inform Decis Mak. 2006;6:3. doi: 10.1186/1472-6947-6-3.

- 11.Goodhue DL, Thompson RL. Task-Technology Fit and Individual Performance. Management Information Systems Quarterly. 1995;19(2):213–236.

- 12.Venkatesh V, Davis FD. A Theoretical Extension of the Technology Acceptance Model: Four Longitudinal Field Studies. Management Science. 2000;46(2):186–204.

- 13.Tsiknakis M, Kouroubali A. Organizational factors affecting successful adoption of innovative eHealth services: a case study employing the FITT framework. Int J Med Inform. 2009 Jan;78(1):39–52. doi: 10.1016/j.ijmedinf.2008.07.001.

- 14.ISO/IEC FCD 25010. Software product quality requirements and evaluation (SQuaRE): quality models for software product and system quality in use. In: Bevan N, editor. Systems and software engineering. 2009.

- 15.Saffah A, editor. QUIM: A framework for quantifying usability metrics in software quality models; Asia-Pacific Conference on Quality Software; Hong Kong. 2001.

- 16.Bevan N, editor. Extending quality in use to provide a framework for usability measurement; HCI International; San Diego, Ca. 2009.

- 17.Lewis JR. IBM computer usability satisfaction questionnaires: Psychometric evaluation and instructions for use. Int J Human Computer Interact. 1995;7:57–78.

- 18.Heinonen R, Luoto R, Lindfors P, Nygard CH. Usability and feasibility of mobile phone diaries in an experimental physical exercise study. Telemed J E Health. 2012 Mar;18(2):115–119. doi: 10.1089/tmj.2011.0087.

- 19.Whittaker R, Borland R, Bullen C, Lin RB, McRobbie H, Rodgers A. Mobile phone-based interventions for smoking cessation. Cochrane Database Syst Rev. 2009;(4):CD006611. doi: 10.1002/14651858.CD006611.pub2.

- 20.Mulvaney SA, Rothman RL, Dietrich MS, Wallston KA, Grove E, Elasy TA, Johnson KB. Using mobile phones to measure adolescent diabetes adherence. Health Psychol. 2012 Jan;31(1):43–50. doi: 10.1037/a0025543.

- 21.Lee J, Cain C, Young S, Chockley N, Burstin H. The Adoption Gap: Health Information Technology In Small Physician Practices. Health Affairs. 2005 Sep 1;24(5):1364–1366. doi: 10.1377/hlthaff.24.5.1364.

- 22.Ramaiah M, Subrahmanian E, Sriram RD, Lide BB. Workflow and electronic health records in small medical practices. Perspect Health Inf Manag. 2012;9:1d.

- 23.Honekamp W, Ostermann H. Evaluation of a prototype health information system using the FITT framework. Inform Prim Care. 2011;19(1):47–49. doi: 10.14236/jhi.v19i1.793.

- 24.Schnall R, Smith AB, Sikka M, Gordon P, Camhi E, Kanter T, Bakken S. Employing the FITT framework to explore HIV case managers' perceptions of two electronic clinical data (ECD) summary systems. Int J Med Inform. 2012 Jul 27. doi: 10.1016/j.ijmedinf.2012.07.002.

- 25.Faulkner L. Beyond the five-user assumption: benefits of increased sample sizes in usability testing. Behav Res Methods Instrum Comput. 2003 Aug;35(3):379–383. doi: 10.3758/bf03195514.