Abstract

Dopamine is highly implicated both as a teaching signal in reinforcement learning and in motivating actions to obtain rewards. However, theoretical disconnects remain between the temporal encoding properties of dopamine neurons and the behavioral consequences of its release. Here, we demonstrate in rats that dopamine evoked by Pavlovian cues increases during acquisition, but dissociates from stable conditioned appetitive behavior as this signal returns to pre-conditioning levels with extended training. Experimental manipulation of the statistical parameters of the behavioral paradigm revealed that this attenuation of cue-evoked dopamine release during the post-asymptotic period was attributable to acquired knowledge of the temporal structure of the task. In parallel, conditioned behavior became less dopamine dependent after extended training. Thus, the current work demonstrates that as the presentation of reward-predictive stimuli becomes anticipated through the acquisition of task information, there is a shift in the neurobiological substrates that mediate the motivational properties of these incentive stimuli.

Introduction

Reward-related dopamine transmission within the mesolimbic system is hypothesized to function as a reinforcement signal that promotes future behavioral responses to predictive cues (Wise, 2004) as well as a motivational signal that immediately mobilizes behavior through the assignment of incentive value (Berridge, 2007). Phasic dopamine neurotransmission during the contingent pairing of conditioned stimuli (CS) and rewards (unconditioned stimuli, US) shows a dynamic pattern of signaling in which US-evoked phasic responses gradually decrease in parallel with a gradual increase in CS-evoked responses (Ljungberg et al., 1992). This pattern is highly relevant to the motivational properties of stimuli because it is differentially regulated according to the degree to which individuals assign incentive value to reward-predictive cues (Flagel et al., 2011). Indeed, acquired phasic dopamine release at the time of a CS may function similarly to that evoked by primary rewards, providing conditioned reinforcement that supports secondary conditioning through the assignment of incentive value (McClure et al., 2003).

In the context of reinforcement learning, decreased US-evoked dopamine during learning is attributed to the US becoming predictable from the presentation of the CS, and increased CS-evoked dopamine is attributed to the establishment of this stimulus as the earliest predictor of reward. Thus, the presence of a dopamine signal only when rewards are not fully predicted is interpreted as evidence that dopamine acts as a teaching signal, updating predictions when they are inaccurate with regard to the precise timing and value of impending reward. Consistent with a significant role for predictability, CS-evoked responses also diminish when preceded by cues that occur at regular time intervals (Schultz, 1998), confirming that the timing of reward-related events is central to the generation of these signals (Fiorillo et al., 2008) as well as a critical component of learning (Gallistel and Gibbon, 2000).
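To make the teaching-signal account above concrete, the following is a minimal sketch of tabular temporal-difference (TD(0)) learning with a complete-serial-compound stimulus code, in the spirit of the formal models cited in this article (Montague et al., 1996). It is an illustration under stated assumptions, not the analysis used in this study: the trial length, CS and US time steps, learning rate `alpha`, discount `gamma`, and the function name `simulate_td` are all hypothetical. Across trials, the prediction error at reward delivery shrinks while the error at CS onset grows.

```python
import numpy as np

def simulate_td(n_trials=200, n_steps=10, cs_step=2, us_step=8,
                alpha=0.1, gamma=1.0):
    """Tabular TD(0) with a complete-serial-compound (CSC) stimulus code.

    Every time step from CS onset onward has its own value weight. The
    reward arrives on the transition into `us_step`. Returns the
    prediction error at CS onset and at reward delivery for each trial.
    """
    w = np.zeros(n_steps)          # value weights, one per time step
    delta_cs = np.zeros(n_trials)  # prediction error on entering CS onset
    delta_us = np.zeros(n_trials)  # prediction error on reward delivery
    for trial in range(n_trials):
        for t in range(n_steps - 1):
            # Pre-CS steps carry no value: CS onset is treated as unpredictable.
            v_now = w[t] if t >= cs_step else 0.0
            v_next = w[t + 1] if t + 1 >= cs_step else 0.0
            r = 1.0 if t + 1 == us_step else 0.0
            delta = r + gamma * v_next - v_now   # TD prediction error
            if t >= cs_step:
                w[t] += alpha * delta            # update only CS-driven states
            if t + 1 == cs_step:
                delta_cs[trial] = delta
            if t + 1 == us_step:
                delta_us[trial] = delta
    return delta_cs, delta_us

delta_cs, delta_us = simulate_td()
print(f"trial 1:   CS error {delta_cs[0]:.2f}, US error {delta_us[0]:.2f}")
print(f"trial 200: CS error {delta_cs[-1]:.2f}, US error {delta_us[-1]:.2f}")
```

Because the value before CS onset is fixed at zero in this sketch, the CS-evoked error stays high once learned; one way to capture a later decline would be a state representation that also tracks time since the previous trial.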

However, many real-world situations involve uncertainty in the probability and/or timing of rewards and reward-predictive cues. In experimental paradigms involving probabilistic rewards, there is evidence that cue-evoked dopamine signaling scales with probability of reward delivery (Fiorillo et al., 2003). The generation of anticipatory behavior such as approach requires not only knowledge concerning the variability in rewarding outcomes but also the ability to track the temporal pattern of cues for estimating the likelihood of an event occurring at a given time (i.e. hazard rate; Janssen and Shadlen, 2005). Thus, knowledge of task statistics, perhaps acquired through extended experience, may modulate dopamine-encoded prediction errors. However, the evolution of such responses during learning and the environmental conditions contributing to their development remain unclear. In addition to questions regarding the temporal encoding properties of dopamine neurons, these concepts also highlight a theoretical disconnect between the environmental events encoded by dopamine neurotransmission and the behavioral consequences of dopamine release. Indeed, if stimulus-evoked (CS or US) phasic dopamine transmission is attenuated as stimuli become predicted, it is unclear if and how the motivational properties of these stimuli are transmitted and maintained.
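For reference, the hazard rate invoked above is the probability that an event occurs at time t given that it has not yet occurred. Writing f for the probability density (or mass) of the waiting time T and F for its cumulative distribution function, the continuous and discrete forms are:

$$h(t) = \frac{f(t)}{1 - F(t)}, \qquad h(t_i) = \frac{P(T = t_i)}{P(T \ge t_i)}$$

For n equally likely discrete intervals $t_1 < t_2 < \dots < t_n$, this gives $h(t_i) = 1/(n - i + 1)$, rising from $1/n$ at the shortest interval to 1 at the longest.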

Materials and Methods

Animals

Male Sprague Dawley rats weighing ~300–350 g were obtained from Charles River (Hollister, CA), housed individually on a 12-h light–dark cycle with Teklad rodent chow and water available ad libitum except as noted, and weighed and handled daily. Prior to conditioning tasks, rats were food deprived to ~90% of their free-feeding body weight. All experimental procedures were in accordance with the Institutional Animal Care and Use Committee at the University of Washington.

Surgery and electrochemical detection of dopamine

Rats (n = 30) were implanted with carbon-fiber microelectrodes (1.3 mm lateral, 1.3 mm rostral and 6.8 mm ventral of bregma) for in vivo detection of phasic dopamine using fast-scan cyclic voltammetry (FSCV) (Clark et al., 2010). Thirty minutes prior to the start of each experimental session, rats were placed in an operant chamber (Med Associates, VT) and the chronically implanted microsensors were connected to a head-mounted voltammetric amplifier. Of the 20 animals meeting the behavioral criterion, three had electrode placements outside of the nucleus accumbens core and seven failed for technical reasons (e.g. loss of headcap, saturation of signal), leaving 10 rats for analysis. These rats (n = 10) were given a single uncued food pellet, delivered to the food receptacle, prior to the start of each session to assess reward-evoked dopamine signaling. Voltammetric scans were repeated every 100 ms (−0.4 V to +1.3 V at 400 V/s; National Instruments), and dopamine was isolated from the voltammetric signal with chemometric analysis (Heien et al., 2005) using a standard training set based on stimulated dopamine release detected by chronically implanted electrodes. Dopamine concentration was estimated based on the average post-implantation electrode sensitivity (Clark et al., 2010). Peak CS- and US-evoked dopamine release was obtained by taking the largest value in the 2-s period after stimulus presentation. Mixed measures ANOVA was used to compare peak stimulus-evoked dopamine release during learning, with stimulus as the between-group measure and decade (10-trial bin) as the within-group measure. Separate repeated measures ANOVAs for CS, US, and pre-session rewards were used to assess stimulus-evoked dopamine release across both phases of training, with post-hoc tests for linear trend. CS- and US-evoked dopamine release during the first, tenth and last decade was compared with two-way ANOVA and post-hoc t-tests with the Bonferroni correction for multiple comparisons.
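The peak measure described above can be sketched as follows, assuming the chemometric output is already a background-subtracted dopamine concentration trace sampled once per 100-ms scan (10 Hz). The function names `peak_evoked` and `mean_peak`, and the choice to include the onset scan in the 2-s window, are illustrative assumptions rather than details taken from the original analysis.

```python
import numpy as np

def peak_evoked(trace_nM, onset_idx, scan_interval_s=0.1, window_s=2.0):
    """Largest dopamine value in the window starting at stimulus onset.

    trace_nM: 1-D concentration trace, one sample per voltammetric scan.
    onset_idx: index of the scan at which the CS or US was presented.
    """
    n_scans = int(round(window_s / scan_interval_s))  # 2 s at 10 Hz -> 20 scans
    window = np.asarray(trace_nM, dtype=float)[onset_idx:onset_idx + n_scans]
    if window.size < n_scans:
        raise ValueError("trace ends before the peak window is complete")
    return float(window.max())

def mean_peak(trials, onset_idx):
    """Average the per-trial peaks, e.g. over the ten trials of one decade."""
    return float(np.mean([peak_evoked(t, onset_idx) for t in trials]))
```

Taking the maximum over a fixed post-onset window, rather than at a fixed latency, tolerates trial-to-trial jitter in when the phasic response peaks.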

Behavior

Following a single session of magazine training in which 20 food pellets (45 mg; Bio-Serve, NJ) were delivered on a 90-s variable interval, rats were trained on a Pavlovian conditioned approach task (Flagel et al., 2011). During daily sessions, 25 trials were presented with a variable inter-trial interval (ITI) drawn without replacement from the values 30, 40, 50, 60, 70, 80, and 90 s. A trial consisted of a lever/light cue presented for 8 s, followed immediately by delivery of a food pellet and retraction of the lever. Lever presses were recorded but had no consequence for reward delivery. Animals that, by the fifth session, did not approach the predictive cue (as measured by lever pressing) on at least 75% of trials were excluded from subsequent analysis (n = 10). This criterion selects rats that approach the predictive cue (sign-tracking) and excludes animals that approach the site of reward delivery during cue presentation (goal-tracking), as these behaviors are differentially dependent upon intact dopamine neurotransmission (Flagel et al., 2011) and may reflect different learning mechanisms (Clark et al., 2012). Behavioral data were binned into ten-trial epochs (decades) and fit with a standard psychometric function (Weibull) to obtain the best-fit parameter for asymptote. Conditioned approach behavior was compared to stimulus-evoked dopamine release with linear regression separately for the pre- and post-asymptotic phases. All statistical analyses were carried out using Prism (GraphPad Software, La Jolla, CA).
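As an illustration of the curve fitting described above, a minimal sketch using SciPy's `curve_fit` is shown below. The rising three-parameter Weibull form `asymptote * (1 - exp(-(x / latency) ** shape))`, the starting values, the parameter bounds, and the normal-approximation 95% CI on the asymptote are our assumptions; the original fits were carried out in Prism, whose parameterization may differ. A falling measure such as latency would need a decreasing variant of the same function.

```python
import numpy as np
from scipy.optimize import curve_fit

def weibull_rise(x, asymptote, latency, shape):
    """Rising Weibull learning curve: 0 at x = 0, approaching `asymptote`."""
    return asymptote * (1.0 - np.exp(-(x / latency) ** shape))

def fit_asymptote(decades, response):
    """Fit the curve and return (params, 95% CI for the asymptote).

    The CI uses a normal approximation from the parameter covariance.
    """
    x = np.asarray(decades, dtype=float)
    y = np.asarray(response, dtype=float)
    p0 = [y.max(), np.median(x), 1.0]                   # rough starting guess
    lower, upper = [0.0, 1e-6, 1e-6], [np.inf, np.inf, np.inf]
    params, cov = curve_fit(weibull_rise, x, y, p0=p0, bounds=(lower, upper))
    se_asymptote = np.sqrt(cov[0, 0])
    ci = (params[0] - 1.96 * se_asymptote, params[0] + 1.96 * se_asymptote)
    return params, ci
```

Applied to per-decade means of a rising measure such as the probability of lever pressing, the first returned parameter and its interval play the role of the asymptote and 95% CI used to define when performance becomes stable.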

Probe trials

After 15 sessions (375 trials), all animals were given two probe sessions (sessions 16 and 18), counterbalanced for order of presentation and separated by one normal training session. In each probe session, 5 probe trials were presented along with 20 standard trials. In CS-probe sessions, 5 trials were presented with an ITI of 10 s, and the remaining 20 trials occurred with the normal range of ITI values. In US-probe sessions, all trials were identical to normal training sessions except that an uncued reward was delivered during the ITI after every fifth trial. For CS probes, paired t-tests were used to compare cue-evoked dopamine release on probe trials with that on normal trials within the same session. Independent samples t-tests were used to compare cue-evoked dopamine release after short (<60 s) versus long (>60 s) ITIs. For US probes, paired t-tests were used to compare reward-evoked dopamine release on probe trials with that on normal trials within the same session.
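To make the session structure concrete, the sketch below generates the ITI sequence for a CS-probe session. Two details are assumptions not specified in the Methods: that "without replacement" means the seven ITI values are shuffled and drawn as a block, with the set refilled once exhausted, and that probe trials are placed at random positions within the session. The names `standard_itis` and `cs_probe_session` are hypothetical.

```python
import random

ITI_VALUES_S = [30, 40, 50, 60, 70, 80, 90]  # standard ITIs (s)
PROBE_ITI_S = 10                             # shortened ITI on CS-probe trials

def standard_itis(n_trials, rng):
    """Draw ITIs without replacement, reshuffling the full set whenever it
    is exhausted (an assumed block scheme)."""
    itis, block = [], []
    while len(itis) < n_trials:
        if not block:
            block = ITI_VALUES_S[:]
            rng.shuffle(block)
        itis.append(block.pop())
    return itis

def cs_probe_session(rng, n_standard=20, n_probe=5):
    """Return a list of (trial_type, iti_s) tuples for one CS-probe session."""
    schedule = [("standard", iti) for iti in standard_itis(n_standard, rng)]
    # Insert probes at randomly chosen final positions (hypothetical placement).
    for pos in sorted(rng.sample(range(n_standard + n_probe), n_probe)):
        schedule.insert(pos, ("probe", PROBE_ITI_S))
    return schedule

print(cs_probe_session(random.Random(16))[:5])
```

Inserting the probes in ascending order of position guarantees that each probe ends up at exactly the index that was sampled for it.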

Histological verification of recording sites

Animals were anesthetized with sodium pentobarbital, and the recording site was marked with an electrolytic lesion (300 V) by applying current directly through the recording electrode for 20 s. Animals were then transcardially perfused with phosphate-buffered saline followed by 4% paraformaldehyde. Brains were removed, post-fixed in paraformaldehyde, rapidly frozen in an isopentane bath (~5 min), sliced on a cryostat (50-μm coronal sections, −20 °C), and stained with cresyl violet to aid in visualization of anatomical structures.

Pharmacology

A separate cohort of rats (n = 40) was trained as above on the Pavlovian conditioned approach task for either 5 sessions (asymptotic group) or 15 sessions (post-asymptotic training group). Fourteen rats failed to reach the criterion of 75% approach by the fifth session and were excluded from analysis. The last session of training (the 5th or 15th) was followed by a test session in which animals received 5 cue presentations in extinction. Thirty minutes prior to the test session, animals were injected with either the dopamine D1 receptor antagonist SCH23390 (0.01 mg/kg, i.p.) or saline. Conditioned approach behavior on the test day was assessed by two-way ANOVA with training and drug condition as between-group measures, followed by post-hoc t-tests with the Bonferroni correction for multiple comparisons.

Results

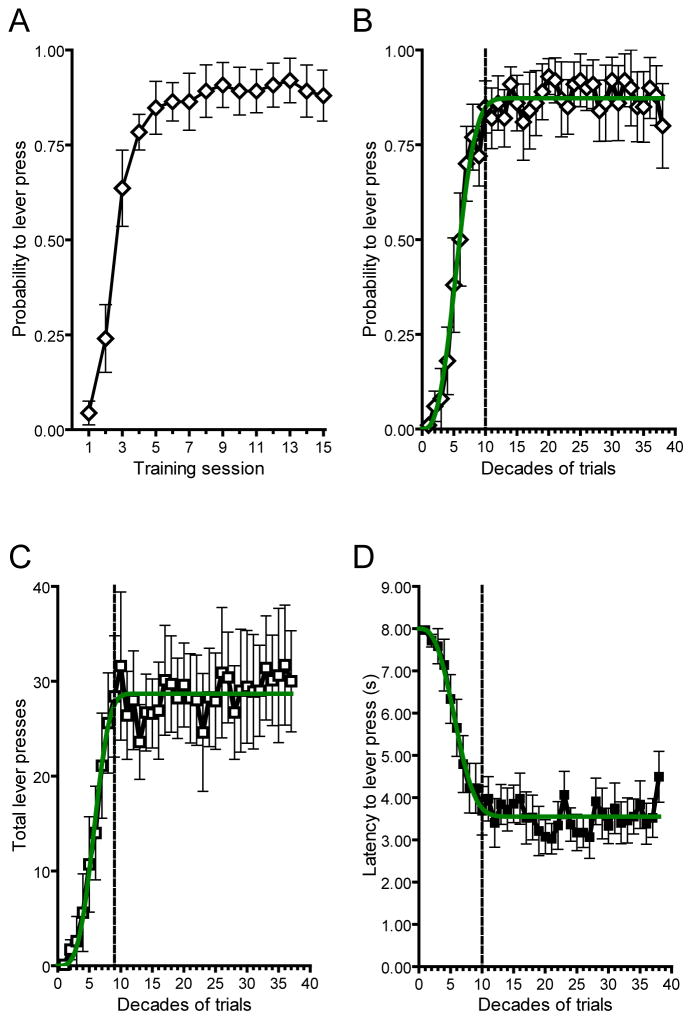

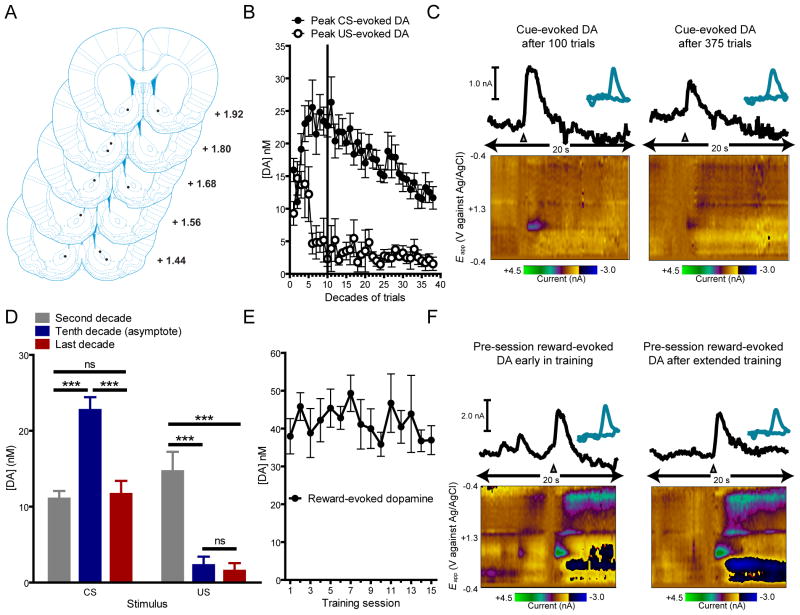

Over 15 sessions (375 trials), conditioned approach behavior increased over the first 4 sessions and remained stable thereafter (Figure 1A; n = 10). To determine the asymptotic performance level, we analyzed three separate behavioral measures in 10-trial epochs (decades; Figure 1B–D) and fit these data with the Weibull function, a standard psychometric tool in the analysis of learning curves (Gallistel et al., 2004). Time to reach asymptote was defined as the first decade in which the mean response level reached the 95% confidence interval (CI) of the best-fit asymptote parameter for each behavioral measure (asymptote for probability = 0.87, 95% CI = 0.83–0.90; total lever presses = 28.69, 95% CI = 26.85–30.53; latency = 3.55, 95% CI = 3.36–3.74). This criterion, which gave similar results across all behavioral measures (Figure 1B–D), was used to divide training into pre-asymptotic (first 100 trials) and post-asymptotic periods for neurochemical analysis (Figure 2A–B). During the pre-asymptotic period, there was a trial-by-trial shift in phasic dopamine activity from the reward to the CS, in agreement with previous reports (Flagel et al., 2011). Consistent with the encoding of a reward-prediction error, cue-evoked phasic dopamine increased (F9, 81 = 6.14, P < 0.0001; posttest for linear trend, P < 0.0001) and was positively correlated (r-squared = 0.46, P < 0.05) with conditioned approach, while reward-evoked dopamine decreased (F9, 81 = 4.54, P < 0.0001; posttest for linear trend, P < 0.0001) and was negatively correlated (r-squared = 0.77, P < 0.0001) with conditioned approach (Figure 2B–C). In the post-asymptotic period of training (trials 101 to 375), the dopamine response to the US did not change further and remained minimal (F9, 234 = 0.58, P > 0.05; posttest for linear trend, P > 0.05).
However, cue-evoked dopamine release declined during the post-asymptotic period back to preconditioning levels (F9, 234 = 4.46, P < 0.0001; posttest for linear trend, P < 0.0001). Comparison of peak US-evoked and CS-evoked phasic dopamine release at different points in training (Figure 2D) using mixed measures ANOVA, with stimulus (CS and US) as the between-group measure and decade of training (second, fifth, and tenth) as the within-group measure, revealed a significant main effect of stimulus (F1,52 = 44.85, P < 0.0001), a significant main effect of decade (F2,52 = 9.13, P < 0.005), and a significant stimulus × decade interaction (F2,52 = 26.95, P < 0.0001). Post-hoc tests showed that US-evoked dopamine release was significantly lower on the tenth (P < 0.001) and last decade (P < 0.0001) of training than on the second decade, whereas the tenth and last decades did not differ significantly from each other. Conversely, CS-evoked dopamine release increased significantly from the second to the tenth decade (P < 0.001) and then decreased significantly from the tenth to the last decade (P < 0.001), returning to a level that did not differ from preconditioning.
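One reading of the asymptote criterion used above is sketched below: the first decade whose mean reaches the fitted asymptote's 95% CI, meaning at or above the lower bound for rising measures (probability, lever presses) and at or below the upper bound for falling ones (latency). The function name and the example decade means are hypothetical; only the CI bounds are taken from the values reported above.

```python
from typing import Optional, Sequence

def first_asymptotic_decade(decade_means: Sequence[float], ci_low: float,
                            ci_high: float, increasing: bool = True) -> Optional[int]:
    """Return the 1-based index of the first decade that reaches the CI,
    or None if the measure never gets there."""
    for decade, mean in enumerate(decade_means, start=1):
        reached = mean >= ci_low if increasing else mean <= ci_high
        if reached:
            return decade
    return None

# Hypothetical per-decade probabilities, checked against the reported CI 0.83-0.90.
example = [0.20, 0.45, 0.66, 0.78, 0.81, 0.80, 0.82, 0.82, 0.82, 0.86]
print(first_asymptotic_decade(example, 0.83, 0.90))
```

Checking only the bound the learning curve approaches from (lower for rising, upper for falling) avoids misclassifying a decade that briefly overshoots the interval.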

Figure 1.

Conditioned approach behavior throughout acquisition and post-asymptotic training. (A) Probability to lever press by training session. Probability to lever press (B), total lever presses (C), and latency to lever press (D) binned by decades of trials. Green line depicts the best fit from the Weibull function for each behavioral measure. Vertical dotted lines denote the decade in training where asymptotic performance was reached as determined by the best fit parameter from the Weibull function. Data are mean ± s.e.m.

Figure 2.

Dopamine dynamics during pre- and post-asymptotic Pavlovian conditioning. (A) All recording sites (●) were within the nucleus accumbens core. The numbers on each plate indicate distance in millimeters anterior from bregma (Paxinos and Watson, 2005). (B) Average peak CS- and US-evoked dopamine release across 375 trials of conditioning. (C) Color-coded observed changes in electrochemical information as a function of applied potential (y axis) plotted over time (x axis). (D) Comparison of peak US-evoked and CS-evoked phasic dopamine release at different points in training. (E) Reward-evoked dopamine release prior to the start of each training session. Reward-evoked dopamine release did not significantly change over sessions as determined by one-way repeated measures ANOVA (F14, 126 = 0.79, P > 0.05; posttest for linear trend, P > 0.05). (F) Color-coded observed changes in electrochemical information as a function of applied potential (y axis) plotted over time (x axis). Gray triangles denote CS onset or reward delivery. Data are mean ± s.e.m. ***P < 0.001, ns = not a statistically significant difference.

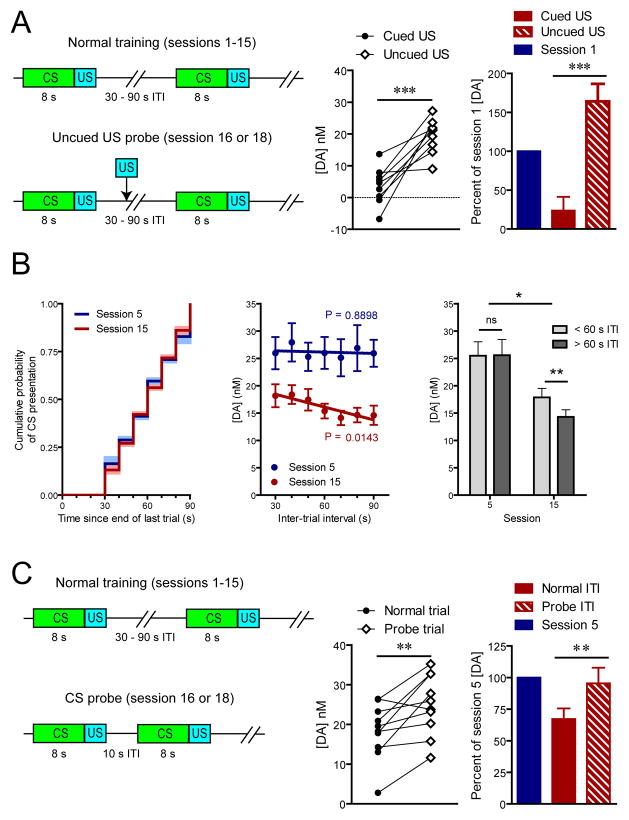

Stable reward-evoked dopamine release observed outside the context of the task (Figure 2E–F) indicates that attenuation of cue-evoked signaling during the post-asymptotic period is not attributable to a general degradation of dopamine transmission. We therefore tested whether task-related contextual suppression of dopamine release developed over the course of training (Figure 3A). Not surprisingly, when uncued rewards were delivered during the task (session 16 or 18), they elicited significantly more dopamine release than cued rewards (t[9] = 5.42, P < 0.001; Figure 3A). Importantly, dopamine release to uncued rewards during this post-asymptotic phase was comparable to the pre-acquisition level (session 1; Figure 3A), indicating that contextual suppression did not develop over this period. Having ruled out these possibilities, we hypothesized that attenuation of CS-evoked dopamine release was conferred by the acquisition of a temporal expectation of CS presentation. This notion is somewhat surprising given that CS presentation occurred at variable time intervals with respect to the end of the previous trial. Nonetheless, if animals had acquired knowledge about the temporal statistics of the task, we would anticipate that their expectation would follow the hazard rate (Figure 3B), under which CS onset after the shortest interval is less predictable than after progressively longer ones, and, importantly, that this expectation would modulate the magnitude of cue-evoked phasic dopamine. Moreover, this temporal estimate would be expected to develop only after the cue had become established as a full predictor of reward and, as such, should be present after post-asymptotic training (session 15) but not immediately after acquisition (session 5).
Consistent with our hypothesis, a pattern emerged over the course of extended training in which cue-evoked dopamine release was higher after shorter inter-trial intervals (ITI) (main effect of ITI; F1,18 = 5.14, P < 0.05; main effect of session; F1,18 = 9.91, P < 0.01; session x ITI interaction; F1,18 = 5.78, P < 0.05), resulting in a significant correlation between phasic dopamine signaling and the ITI after 15 sessions of training (P < 0.05) but not after 5 (P > 0.05; Figure 3B). To test this hypothesis further, we conducted probe trials in which cues were presented after a shorter ITI than the animals had previously experienced (Figure 3C). These probe trials elicited significantly higher dopamine release than regular trials (t[9] = 3.46, P < 0.01; Figure 3C) and restored signaling to the level observed in session 5, suggesting that the attenuation can be attributed solely to the learning of task statistics.
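The hazard-rate expectation described above can be computed directly from a list of experienced ITIs. In the minimal sketch below (the function name `empirical_hazard` is hypothetical), the hazard at each ITI value is the number of trials with that ITI divided by the number of trials whose ITI was at least that long. This treats ITIs as independent draws and ignores the within-session sampling without replacement, which is a simplifying assumption. For the seven equally frequent task values it rises from 1/7 at 30 s to 1 at 90 s, which is why CS onset after the shortest intervals is least predictable.

```python
from collections import Counter
from fractions import Fraction

ITI_VALUES_S = [30, 40, 50, 60, 70, 80, 90]

def empirical_hazard(itis):
    """Discrete hazard h(t) = P(ITI == t) / P(ITI >= t), as exact fractions."""
    counts = Counter(itis)
    at_risk = len(itis)          # trials whose ITI has not yet ended
    hazard = {}
    for t in sorted(counts):
        hazard[t] = Fraction(counts[t], at_risk)
        at_risk -= counts[t]
    return hazard

for iti, h in empirical_hazard(ITI_VALUES_S).items():
    print(f"{iti} s: {h}")
```

Using `Fraction` keeps the values exact, so the uniform case reproduces the 1/(n - i + 1) pattern without rounding.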

Figure 3.

Effect of temporal expectation on stimulus-evoked dopamine release. (A) Illustration of task design (left) and US-evoked dopamine during test sessions (center) relative to preconditioning level (right). (B) Experienced cumulative probability of CS presentation during session 5 and 15 (left), CS-evoked dopamine as a function of ITI length (center), and comparison of short to long ITIs at post-acquisition asymptote and after extended training (right). (C) Illustration of task design (left) and CS-evoked dopamine during the probe session (center) relative to peak levels at post-acquisition asymptote (right). Data are mean ± s.e.m. *P < 0.05, **P < 0.01, ***P < 0.005.

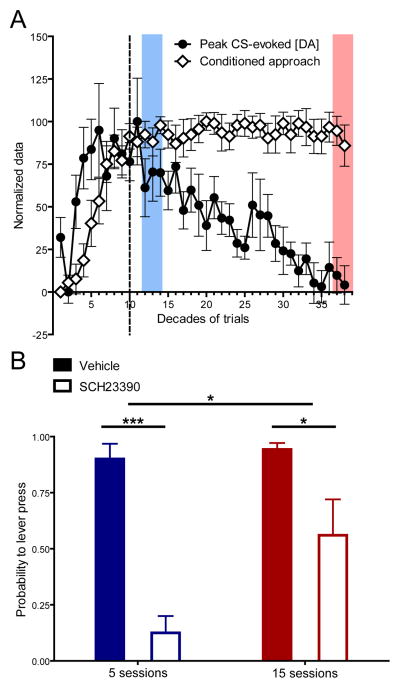

Stable conditioned approach behavior accompanied by diminishing cue-evoked dopamine release introduces a notable dissociation between a behavioral hallmark of acquired incentive value and dopamine encoding of Pavlovian cues (Figure 4A). This separation suggests that the involvement of dopamine in conditioned behavior may change during post-asymptotic learning. Indeed, a diminishing role of dopamine over training has been shown for other reward-related behaviors (Choi et al., 2005). Thus, to determine the dependence of conditioned approach on dopamine D1 receptor activation at post-acquisition asymptote and after extended post-asymptotic training, animals were trained on the Pavlovian conditioned approach task for 125 or 375 trials and then received either the dopamine D1 receptor antagonist SCH23390 or saline during a test session (n = 26). Conditioned approach on the last day of training prior to the test session did not significantly differ between groups. D1 receptor antagonism significantly reduced conditioned approach for both periods of training but was less effective following extended post-asymptotic training (main effect of drug; F1,22 = 45.39, P < 0.0001; main effect of training; F1,22 = 7.73, P < 0.05; drug x training interaction effect; F1,22 = 5.21, P < 0.05; Figure 4B) demonstrating that the dopamine dependence of conditioned behavior changes during post-asymptotic training.

Figure 4.

Temporal dynamics of CS-evoked dopamine and dopamine dependence of conditioned behavior. (A) Normalization of conditioned approach behavior and CS-evoked dopamine across all phases of training. (B) Conditioned approach behavior on a test session after injection of SCH23390 or saline in animals that received either 5 (saline: n = 6, SCH23390: n = 8; blue) or 15 (saline: n = 7, SCH23390: n = 5; red) sessions of training. Shaded blocks in (A) correspond to the point in training where pharmacological experiments were conducted in (B). Vertical dotted line denotes the decade in training where asymptotic performance was reached. Data are mean ± s.e.m. *P < 0.05, ***P < 0.005.

Discussion

A role for dopamine in reinforcement learning is suggested by the correlation between phasic patterns of neurotransmission during the contingent pairing of rewards and predictive stimuli and the encoding of a reward-prediction-error used as a teaching signal in formal models of learning (Montague et al., 1996). However, the contribution of dopamine neurotransmission to processes necessary for the acquisition of conditioned responses during learning and those necessary for maintaining the motivational value that drives performance remains unclear. It has been previously demonstrated that dopamine signaling is required for the acquisition and performance of conditioned approach behavior (Di Ciano et al., 2001) generated by the acquired incentive properties of conditioned stimuli. Specifically, signaling at the dopamine D1 receptor has been associated with phasic dopamine release (Dreyer et al., 2010). Therefore, we compared the effects of a selective dopamine D1 receptor antagonist on CS-elicited conditioned behavior at behavioral asymptote and after extended post-asymptotic training, when CS-evoked phasic dopamine was at its peak or had attenuated, respectively. We found that performance of conditioned approach behavior was completely abolished by dopamine D1 receptor antagonism administered at behavioral asymptote but became significantly less dependent on intact D1 signaling after extended post-asymptotic training. These findings demonstrate that the incentive properties of conditioned stimuli become less dependent upon dopamine following extended training.

One of the defining features of acquired incentive value by a Pavlovian cue is the ability to elicit approach behavior even though engaging the cue has no instrumental consequence for obtaining reward (Berridge, 2007). Here, we examined Pavlovian incentive value, which has been theoretically and experimentally distinguished from instrumental incentive value (Dickinson et al., 2000). Previous work with instrumental learning has demonstrated a transition in the underlying associative structure of conditioned behavior across training, in which responding is sensitive to manipulations of reward outcome early in training but becomes increasingly insensitive as training progresses (i.e. behavior becomes habitual; Dickinson, 1985). This behavioral change is accompanied by a switch in the dopamine dependence of performance from intact dopamine neurotransmission in the ventral striatum to intact dopamine neurotransmission in the dorsal striatum (Vanderschuren et al., 2005). Thus, the current findings demonstrate an important contrast between instrumental and Pavlovian conditioning: the switch in the mechanism underlying conditioned responding involves dopamine dependence in different structures for the former and a generally less dopamine-dependent state for the latter.

The observed attenuation of cue-evoked dopamine release after extended Pavlovian training mirrors findings of a previous report in which the phasic activation of midbrain dopamine neurons in response to cues signaling reward availability attenuated after extensive overtraining (Ljungberg et al., 1992). Here we show that this attenuation is attributable to the developing predictability of trial onset, comparable to that described for manipulations of CS duration (Fiorillo et al., 2008), as animals learn a hazard rate conferred by the statistical parameters of the task. Indeed, the timing of rewards and their predictors is an integral feature of many theoretical accounts of learning (Savastano and Miller, 1998) and an important contribution of the computational reinforcement learning framework (Sutton and Barto, 1998) to traditional associative models (Rescorla and Wagner, 1972).

An alternative interpretation of attenuated cue-evoked dopamine release is that event predictability can become established through occasion setting, in which predictive information about stimulus delivery is provided by the context. Occasion setters offer configural information on expected contingencies between discrete stimuli (Myers and Gluck, 1994). Accordingly, this account would predict that, following sufficient training, CS-US presentation within the context of the session would elicit decreasing phasic dopamine release as the context comes to predict it. However, if the context were suppressing cue-evoked dopamine release after extended training, we would anticipate that this suppression would be present regardless of the temporal relationship between cues (the inter-trial interval). Contrary to this prediction, probe trials presented at shortened time intervals after extended training returned cue-evoked dopamine signaling to the pre-attenuation levels obtained during session 5, supporting the conclusion that attenuation can be attributed to estimates of temporal task statistics and not contextual learning.

These findings provide neurobiological evidence for the encoding of temporal information that could be used to shape and guide adaptive preparatory behavior through the generation of estimates of upcoming events, even if they occur at irregular intervals. Collectively, they demonstrate that dopamine-encoded prediction errors are modulated by ongoing estimates in the timing of reward-predictive events, dissociating them from the motivational significance of these events as they become anticipated.

Acknowledgments

This work was supported by NIH grants F32-DA024540 (JJC), R01-MH079292 (PEMP) and R01 DA027858 (PEMP). The authors thank Scott Ng-Evans for technical assistance.

Footnotes

Conflict of interest: None

References

- 1. Berridge KC. The debate over dopamine’s role in reward: the case for incentive salience. Psychopharmacology. 2007;191:391–431. doi: 10.1007/s00213-006-0578-x.

- 2. Choi WY, Balsam PD, Horvitz JC. Extended habit training reduces dopamine mediation of appetitive response expression. J Neurosci. 2005;25:6729–6733. doi: 10.1523/JNEUROSCI.1498-05.2005.

- 3. Clark JJ, Sandberg SG, Wanat MJ, Gan JO, Horne EA, Hart AS, Akers CA, Parker JG, Willuhn I, Martinez V, Evans SB, Stella N, Phillips PEM. Chronic microsensors for longitudinal, subsecond dopamine detection in behaving animals. Nat Methods. 2010;7:126–129. doi: 10.1038/nmeth.1412.

- 4. Clark JJ, Hollon NG, Phillips PEM. Pavlovian valuation systems in learning and decision making. Curr Opin Neurobiol. 2012;22:1054–1061. doi: 10.1016/j.conb.2012.06.004.

- 5. Di Ciano P, Cardinal RN, Cowell RA, Little SJ, Everitt BJ. Differential involvement of NMDA, AMPA/kainate, and dopamine receptors in the nucleus accumbens core in the acquisition and performance of pavlovian approach behavior. J Neurosci. 2001;21:9471–9477. doi: 10.1523/JNEUROSCI.21-23-09471.2001.

- 6. Dickinson A. Actions and habits: the development of behavioural autonomy. Philos Trans R Soc Lond B. 1985;308:67–78.

- 7. Dickinson A, Smith J, Mirenowicz J. Dissociation of Pavlovian and instrumental incentive learning under dopamine antagonists. Behav Neurosci. 2000;114:468–483. doi: 10.1037//0735-7044.114.3.468.

- 8. Dreyer JK, Herrik KF, Berg RW, Hounsgaard JD. Influence of phasic and tonic dopamine release on receptor activation. J Neurosci. 2010;30:14273–14283. doi: 10.1523/JNEUROSCI.1894-10.2010.

- 9. Fiorillo CD, Tobler PN, Schultz W. Discrete coding of reward probability and uncertainty by dopamine neurons. Science. 2003;299:1898–1902. doi: 10.1126/science.1077349.

- 10. Fiorillo CD, Newsome WT, Schultz W. The temporal precision of reward prediction in dopamine neurons. Nat Neurosci. 2008;11:966–973. doi: 10.1038/nn.2159.

- 11. Flagel SB, Clark JJ, Robinson TE, Mayo L, Czuj A, Willuhn I, Akers CA, Clinton SM, Phillips PE, Akil H. A selective role for dopamine in stimulus-reward learning. Nature. 2011;469:53–57. doi: 10.1038/nature09588.

- 12. Gallistel CR, Gibbon J. Time, rate, and conditioning. Psychol Rev. 2000;107(2):289–344. doi: 10.1037/0033-295x.107.2.289.

- 13. Gallistel CR, Fairhurst S, Balsam P. The learning curve: implications of a quantitative analysis. Proc Natl Acad Sci U S A. 2004;101:13124–13131. doi: 10.1073/pnas.0404965101.

- 14. Heien ML, Khan AS, Ariansen JL, Cheer JF, Phillips PEM, Wassum KM, Wightman RM. Real-time measurement of dopamine fluctuations after cocaine in the brain of behaving rats. Proc Natl Acad Sci U S A. 2005;102:10023–10028. doi: 10.1073/pnas.0504657102.

- 15. Janssen P, Shadlen MN. A representation of the hazard rate of elapsed time in macaque area LIP. Nat Neurosci. 2005;8:234–241. doi: 10.1038/nn1386.

- 16. Ljungberg T, Apicella P, Schultz W. Responses of monkey dopamine neurons during learning of behavioral reactions. J Neurophysiol. 1992;67:145–163. doi: 10.1152/jn.1992.67.1.145.

- 17. McClure SM, Daw ND, Montague PR. A computational substrate for incentive salience. Trends Neurosci. 2003;26:423–428. doi: 10.1016/s0166-2236(03)00177-2.

- 18. Montague PR, Dayan P, Sejnowski TJ. A framework for mesencephalic dopamine systems based on predictive Hebbian learning. J Neurosci. 1996;16:1936–1947. doi: 10.1523/JNEUROSCI.16-05-01936.1996.

- 19. Myers CE, Gluck MA. Context, conditioning, and hippocampal rerepresentation in animal learning. Behav Neurosci. 1994;108:835–847. doi: 10.1037//0735-7044.108.5.835.

- 20. Paxinos G, Watson C. The rat brain in stereotaxic coordinates. Elsevier Academic Press; Amsterdam: 2005.

- 21. Rescorla RA, Wagner AR. A theory of Pavlovian conditioning: variations in the effectiveness of reinforcement and non-reinforcement. In: Black AH, Prokasy WF, editors. Classical Conditioning II: Current Research and Theory. New York: Appleton-Century-Crofts; 1972. pp. 64–99.

- 22. Savastano HI, Miller RR. Time as content in Pavlovian conditioning. Behav Processes. 1998;44:147–162. doi: 10.1016/s0376-6357(98)00046-1.

- 23. Schultz W. Predictive reward signal of dopamine neurons. J Neurophysiol. 1998;80:1–27. doi: 10.1152/jn.1998.80.1.1.

- 24. Sutton RS, Barto AG. Reinforcement Learning: An Introduction. Cambridge, MA: MIT Press; 1998.

- 25. Vanderschuren LJ, Di Ciano P, Everitt BJ. Involvement of the dorsal striatum in cue-controlled cocaine seeking. J Neurosci. 2005;25:8665–8670. doi: 10.1523/JNEUROSCI.0925-05.2005.

- 26. Wise RA. Dopamine, learning and motivation. Nat Rev Neurosci. 2004;5:483–494. doi: 10.1038/nrn1406.